id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

13,419 | 抛弃 Autotools 向 CMake 迈进吧 | https://opensource.com/article/21/5/cmake | 2021-05-24T18:35:39 | [

"CMake",

"make",

"Autotools"

] | https://linux.cn/article-13419-1.html |

>

> CMake 是一个跨平台的编译、测试和打包软件,即使你以前从来没有使用过构建系统,也可以轻松上手。

>

>

>

在我以前的文章 [Autotools 入门](https://opensource.com/article/19/7/introduction-gnu-autotools) 一文中,我说明了如何使用 Autotools 来管理和打包代码。这是一个强大且通用的平台,可轻松集成到许多打包系统中,包括 RPM、APT、[pkgsrc](https://opensource.com/article/19/11/pkgsrc-netbsd-linux) 等等。它的语法和结构可能会令人困惑,但幸运的是,我们还有其他选择,开源的 [CMake](http://cmake.org) 就是其中一个。

CMake 是一个用于构建、测试和打包软件的跨平台套件。它使用简单而清晰的语法,因此即使你以前从未使用过构建系统,也很容易开始使用。

### 安装 CMake

CMake 可能已经安装在你的 Linux 系统上。如果没有,你可以使用发行版的程序包管理器进行安装:

```

$ sudo dnf install cmake

```

在 Debian 或者其他相似的系统上:

```

$ sudo apt install cmake

```

在 Mac 上,你可以使用 [MacPorts](https://opensource.com/article/20/11/macports) 或者 [Homebrew](https://opensource.com/article/20/6/homebrew-linux) 来安装:

```

$ sudo port install cmake

```

在 Windows 上,你可以使用 [Chocolatey](https://opensource.com/article/20/3/chocolatey) 或者直接从 [CMake 网站](https://cmake.org/download) 下载二进制来安装。

### 使用 CMake

对于想要从源代码构建软件的开发人员或用户来说,CMake 是一种快速简便的编译和安装方法。 CMake 分阶段工作:

1. 首先,在 `cmake` 步骤中,CMake 扫描计算机查看一些默认设置。默认设置包括库的位置以及在系统上安装软件的位置。

2. 接下来,使用系统上的 `make` 命令(在 Linux 上是 GUN Make,在 [NetBSD](https://opensource.com/article/19/3/netbsd-raspberry-pi) 上是 NetBSD Make)来编译程序。这个过程通常是将人类可读的源代码转换成机器语言。

3. 最后,在 `make install` 一步中,那些编译过的文件将被拷贝到(在 `cmake` 步骤中扫描出来的)计算机上合适的位置。

这看起来很简单,当你使用 CMake 时就是这样。

### CMake 的可移植性

CMake 在设计时就考虑了可移植性。虽然它不能使你的项目在所有 POSIX 平台上都能正常工作(这取决于作为开发者的你),但它可以确保将标记为要安装的文件安装到已知平台上最合适的位置。而且由于有了 CMake 之类的工具,对于高级用户而言,根据其系统需求自定义和覆盖任何不合适的选项都很容易。

使用 CMake,你只需要知道将哪些文件安装到哪个常规位置即可。它会照顾其他一切。不再需要自定义安装脚本,它们有可能在任何未经测试的操作系统上失败。

### 打包

像 Autotools 一样,CMake 也得到了很好的打包支持。无论它们是打包成 RPM 还是 DEB 或 TGZ(或其他任何东西),将带有 CMake 的项目交给打包者,他们的工作既简单又直接。打包工具支持 CMake,因此可能不需要进行任何修补或者调整。在许多情况下,可以自动将 CMake 项目整合到工作流中。

### 如何使用 CMake

要在项目中使用 CMake,只需在项目目录中创建 `CMakeLists.txt` 文件。首先,声明最低要求的 CMake 版本以及项目名称和版本。CMake 会努力在尽可能长时间内保持兼容性,但是随着你使用的时间越长,并且关注它最新的开发动态,你就会知道哪些特性是你所依赖的。

```

cmake_minimum_required(VERSION 3.10)

project(Hello VERSION 1.0)

```

如你可能已经看到的那样,CMake 的语法是一个带有括号和参数的命令。大写的 `VERSION` 字符串不是任意的,也不只是格式。它们是 `project` 命令中的有效参数。

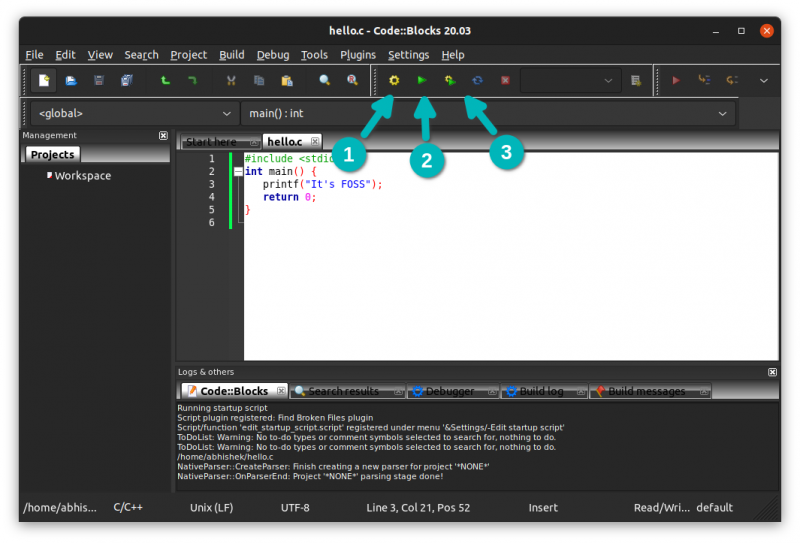

在继续之前,先写一个简单的 C 或者 C++ 的 `hello world` 程序。为了简单,我就写了六行 C 代码,并把它保存在 `hello.c` 中(为了匹配我在 `CMakeLists.txt` 中可执行文件的名字)。

```

#include <stdio.h>

int main() {

printf("Hello open source\n");

return 0;

}

```

不过,不要搞错了,CMake 不仅适用于 C 和 C++。它可以处理任意文件,并且有许多可用的命令,因此它可以帮助你维护许多不同形式的项目。

CMake 网站中记录了所有有效的内置命令及其可用参数,因此无论你要做什么,都可以轻松发现所需的功能。不过,这是一个简单的示例,因此,你需要的下一个命令是必不可少的 —— 你必须为 CMake 定义要构建的代码:

```

add_executable(Hello hello.c)

```

这个命令指定了你编译后的二进制文件的名字为 `Hello`。因此,它与你在终端中执行带有 `-o Hello` 的 `gcc` 命令是一样的。

在一些比较复杂的项目中,你可能还需要使用库文件,你可以使用 `add library` 命令来链接库文件。

在你设置了你想要构建和标记为安装的文件之后,你必须要告诉 CMake 一旦用户安装了程序,最终的应用程序应该在哪个位置。

在这个简单的例子里,你仅需要做的一件事就是在你的 `CMakeLists.txt` 文件里添加 `install` 命令。`install` 命令接受几个参数。但是在这个例子中,你仅需要使用 `TARGET` 命令来指定你要安装文件的名字。

```

install(TARGETS Hello)

```

### 向 CMake 工程添加一些文件

一个软件项目向用户交付的往往不仅仅只有代码,还有一些其他的文件数据,例如手册或者是信息页、示例项目,或者是配置文件。你可以使用与包含编译文件时类似的工作流程,将任意数据包含在 CMake 项目中:在 `CMakelists.txt` 文件中使用 `file` 命令,然后说明一下这些文件要安装在哪里。

例如,你可以在这个项目中包含一个 `assets` 目录,你可以使用 `file` 命令,后面跟上 `COPY` 和 `DESTINATION` 参数来告诉 CMake 将这些额外的文件复制到你的分发包中。

```

file(COPY assets DESTINATION "${CMAKE_CURRENT_BINARY_DIR}")

```

这个 `${CMAKE_CURRENT_BINARY_DIR}` 变量是一个特殊的 CMake 内置变量,表示 CMake 正在处理的目录。换句话说,你的任何文件都会被复制到编译目录(在你运行 `cmake` 命令后,这个过程会更加清晰,到时候回过头来看一下)。

因为这些额外的数据文件有些杂乱不堪(如果你不信的话,可以看一下 `/usr/share` 这个目录)。对于你自己的项目创建一个子文件夹对谁都有好处。最好也带上版本名字。你可以通过在 `CMAKE_CURRENT_BINARY_DIR` 中指定一个新的目录,使用你选择的项目名称,后面跟一个为你的项目命名的特殊变量和你在项目声明中为它设置的 `VERSION`。

```

file(COPY assets DESTINATION "${CMAKE_CURRENT_BINARY_DIR}/Hello-${Hello_VERSION}")

```

### 定义安装位置

你已经定义你要编译的文件,因此现在你要告诉 CMake 你的程序要安装在哪个位置。比如你的主程序,这个要程使用 `install` 命令:

```

install(DIRECTORY "${CMAKE_CURRENT_BINARY_DIR}/Hello-${Hello_VERSION}" TYPE DATA)

```

这里有一些新的参数。`DIRECTORY` 参数指定了数据文件是一个目录,而不是一个文件(`FILE`)或者脚本(`SCRIPT`)。你使用的参数和复制一些额外文件到编译目录时是一样。另外,在 `install` 命令中 `TYPE` 或者 `DESTINATION` 必须要指定其一。`TYPE` 参数指定了通用的文件类型,这些文件通常将会被放到合适的位置。在 Linux 系统上,`TYPE DATA` 一般是 `/usr/local/share` 或者 `/usr/share`,除非用户定义了其他的位置。

这是诸如 CMake 之类的良好构建系统的强大功能之一。你不必担心文件的确切位置,因为你知道用户可以更改 CMake 的首选默认设置,并且 CMake 将构建代码以使其正常工作。

### 运行 CMake

CMake 有多种方式来让你执行命令,你可以在终端或者在一个可交互的程序上执行命令,或者你也可以使用它的图形界面(GUI)。我比较偏向于使用终端命令,但是我也喜欢使用一些其他的方式(相比与在 `Makefile` 中查找那些晦涩的变量然后去修改它们更胜一筹)。

对于编译过开源 C++ 项目的任何人,都熟悉的第一步是创建一个 `build` 目录,进入到该目录,然后运行 `cmake ..` 命令。 我是一个懒惰的打字员,所以我将构建目录命名为 `b`,但是你可以使用最合适的方式:

```

$ mkdir b

$ cd b

$ cmake ..

-- The C compiler identification is GNU 11.1.1

-- The CXX compiler identification is GNU 11.1.1

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/cc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Configuring done

-- Generating done

-- Build files have been written to: /var/home/seth/demo-hello/b

$

```

这或多或少相当于经典的 `./configure; make; make install` 中的 `./configure`。看一下你的构建目录,CMake 已经帮你生成了几个新的文件,来让你的项目更完整。这里生成了 CMake 的数据文件、一个常规的 `Makefile` 文件(这是一个免费提供的 247 行的文件,但对于越复杂的项目,行数要多得多),还有一个包含这个示例程序的任意非编译数据的 `Hello-1.0` 目录。

```

$ ls

CMakeCache.txt

CMakeFiles

Makefile

Hello-1.0

cmake_install.cmake

```

接下来,你可以进行构建。你可以使用 CMake 的 `--build` 选项来做这件事,使用当前的构建目录作为源目录。

```

$ cmake --build .

Scanning dependencies of target Hello

[ 50%] Building C object CMakeFiles/Hello.dir/hello.c.o

[100%] Linking C executable Hello

[100%] Built target Hello

```

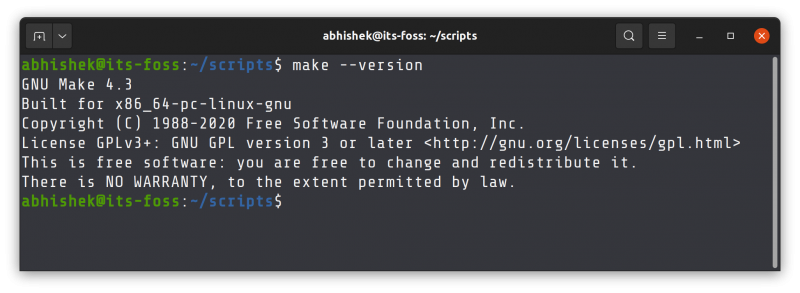

或者你可以运行 `make` 命令。这将读取由 CMake 生成的 `Makefile` 文件。在这个例子中,`make` 默认的行为就是由源程序 `hello.c` 生成目标文件。

```

$ make

Scanning dependencies of target Hello

[ 50%] Building C object CMakeFiles/Hello.dir/hello.c.o

[100%] Linking C executable Hello

[100%] Built target Hello

$

```

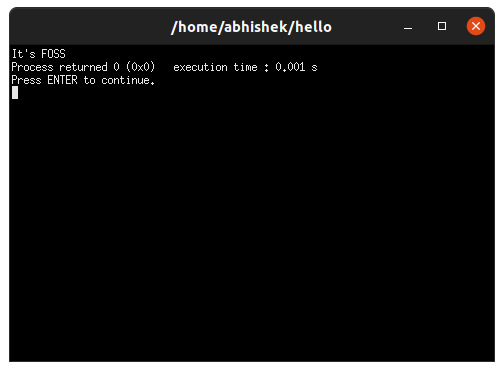

如你所料,`Hello` 二进制可执行文件现在存在于当前的构建目录中。因为它是一个简单的自包含应用程序,所以你可以运行它进行测试:

```

$ ./Hello

Hello open source

$

```

最后,你可以用 `--install` 选项进行安装。因为我不希望我的简单的 “hello world” 应用程序真的被安装到我的系统上,我设置了 `--prefix` 选项,将 CMake 的目标从根目录(`/`)重定向到 `/tmp` 的一个子目录。

```

$ cmake --install . --prefix /tmp/hello/

-- Install configuration: ""

-- Installing: /tmp/dist-hello/usr/local/bin/Hello

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0/assets/file0

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0/assets/file1

```

另外,你也可以运行 `make install` 来调用 `Makefile` 的安装动作。同样,为了避免在我的系统上安装一个演示程序,我在这个例子中设置了 `DESTDIR` 变量,将安装目标重定向到 `/tmp` 的一个子目录:

```

$ mkdir /tmp/dist-hello

$ make install DESTDIR=/tmp/dist-hello

[100%] Built target Hello

Install the project...

-- Install configuration: ""

-- Installing: /tmp/dist-hello/usr/local/bin/Hello

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0/assets/file0

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0/assets/file1

```

看一下输出的内容,来确定它具体的安装位置,这个程序已经安装好了。

### 快速自定义

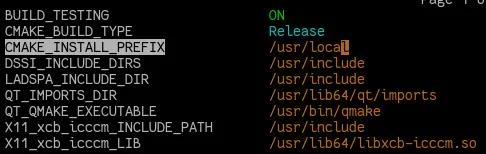

CMake 的安装前缀(由 `CMAKE_INSTALL_PREFIX` 变量指定)默认是在 `/usr/local` 这个位置,但是所有的 CMake 变量都可以在你运行 `cmake` 命令的时候,加一个 `-D` 选项来改变它。

```

$ cmake -DCMAKE_INSTALL_PREFIX=/usr ..

$ make install DESTDIR=/tmp/dist-hello

$ make install DESTDIR=/tmp/dist-hello

[100%] Built target Hello

Install the project...

-- Install configuration: ""

-- Installing: /tmp/dist-hello/usr/bin/Hello

-- Installing: /tmp/dist-hello/usr/share/Hello-1.0

-- Installing: /tmp/dist-hello/usr/share/Hello-1.0/assets/file0

-- Installing: /tmp/dist-hello/usr/share/Hello-1.0/assets/file1

```

所有由 CMake 使用的变量都可以通过这种方式来修改。

### 交互式的 CMake

CMake 的交互模式是一种用于配置安装环境的友好而有用的方法。要让用户知道该项目使用的所有可能的 CMake 变量是一件工作量很大的事,因此 CMake 交互式界面是他们无需查看 `Makefile` 和 `CMakeLists` 即可发现自定义选项的简便方法。

为了调用这个交互式的 CMake,使用 `ccmake` 命令,在这个简单的项目里没有太多的东西。但是对于像 [Rosegarden](https://opensource.com/article/18/3/make-sweet-music-digital-audio-workstation-rosegarden) 这样的大型项目,这将非常有用。

### CMake 的更多知识

还有很多很多的 CMake 知识需要去了解。作为一个开发者,我非常喜欢它简洁的语法、详尽的文档、可扩展性以及便捷性。作为一个用户我非常喜欢 CMake 友好且实用的错误提示信息还有它的用户界面,如果你的项目还未开始使用构建系统,请了解一下 CMake 吧。你以及以后尝试打包你应用程序的任何人都不会后悔。

---

via: <https://opensource.com/article/21/5/cmake>

作者:[Seth Kenlon](https://opensource.com/users/seth) 选题:[lujun9972](https://github.com/lujun9972) 译者:[amwps290](https://github.com/amwps290) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | In my [introduction to Autotools](https://opensource.com/article/19/7/introduction-gnu-autotools), I demonstrated how to manage building and packaging code with GNU Autotools. It's a robust and common platform that integrates easily into many packaging systems, including RPM, APT, [pkgsrc](https://opensource.com/article/19/11/pkgsrc-netbsd-linux), and more. Its syntax and structure can be confusing, but luckily there are alternatives, including the open source [CMake](http://cmake.org) framework.

CMake is a cross-platform suite for building, testing, and packaging software. It uses simple and clearly documented syntax, so it's easy to start using even if you've never used a build system before.

## Install CMake

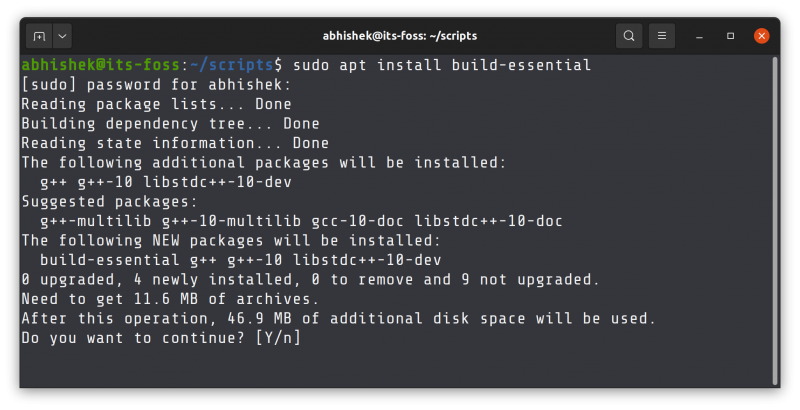

CMake may already be installed on your Linux system. If not, you can install it with your distribution's package manager:

`$ sudo dnf install cmake`

On Debian or similar:

`$ sudo apt install cmake`

For Mac, you can use [MacPorts](https://opensource.com/article/20/11/macports) or [Homebrew](https://opensource.com/article/20/6/homebrew-linux):

`$ sudo port install cmake`

On Windows, you can use [Chocolatey](https://opensource.com/article/20/3/chocolatey) or download a binary directly from the [CMake website](https://cmake.org/download).

## CMake at work

For developers or users who want to build software from source code, CMake is a quick and easy way to compile and install it. CMake works in stages:

- First, during the

`cmake`

step, CMake scans the host system (the computer it's being run on) to discover the default settings. Default settings include where support libraries are located and where new software should be placed on the system. - Next, you use your system's

`make`

command (usually GNU Make on Linux, NetBSD Make on[NetBSD](https://opensource.com/article/19/3/netbsd-raspberry-pi), and so on) to build the application, usually by converting human-readable source code into machine language. - Finally, during the

`make install`

step, the built files are copied to the appropriate locations (as detected during the`cmake`

stage) on your computer.

It seems simple, and it is when you use CMake.

## CMake portability

CMake is designed with portability in mind. While it can't make your project work across all POSIX platforms (that's up to you, as the coder), it can ensure that the files you've marked for installation get installed to the most sensible locations on a known platform. And because of tools like CMake, it's trivial for power users to customize and override any non-optimal value according to their system's needs.

With CMake, all you have to know is which files need to be installed to what general location. It takes care of everything else. No more custom install scripts that break on any untested operating system.

## Packaging

Like Autotools, CMake is well-supported. Hand a project with CMake over to a distro packager, whether they're packaging an RPM or a DEB or a TGZ (or anything else), and their job is simple and direct. Packaging tools know CMake, so it's likely there will not be any patching, hacking, or adjustments required. In many cases, incorporating a CMake project into a pipeline can be automated.

## How to use CMake

To start using CMake with your project, you need only to create a `CMakeLists.txt`

file in your project directory. First, declare the minimum required version of CMake and a project title and version. CMake strives to retain compatibility for as long as possible, but the more you use it and follow its development, the better you'll know what features you rely upon.

```

cmake_minimum_required(VERSION 3.10)

project(Hello VERSION 1.0)

```

As you may already be detecting, the syntax of CMake is a command followed by parameters in parentheses. The capitalized `VERSION`

strings aren't arbitrary or just for style; they're valid parameters for the `project`

command.

Before continuing, generate a sample `hello world`

application in C or C++. For simplicity, I wrote six lines of C code and saved it as `hello.c`

(to match the executable I list in `CMakeLists.txt`

):

```

#include <stdio.h>

int main() {

printf("Hello open source\n");

return 0;

}

```

Make no mistake, though, CMake is useful beyond just C and C++. It can handle arbitrary files and has lots of commands available to it, so it can help you maintain projects in many different forms.

The CMake website documents all valid built-in commands and their available parameters, so it's easy to uncover the functions you need, no matter what you're trying to do. This is a simple example, though, so the next command you need is essential—you must define for CMake what code you're building:

`add_executable(Hello hello.c)`

This sets the name of your compiled binary to `Hello`

, so functionally, it's the same as running `gcc`

with `-o Hello`

in your terminal.

In a complex project, you likely have libraries as well as executables. You can add libraries with the `add_library`

command.

After you've set what files you want built and marked for installation, you must tell CMake where the finished product should end up once a user installs your application.

In this simple example, only one thing is marked for installation, so you only have to add one `install`

line to your `CMakeLists`

. The `install`

command accepts a few parameters, but in this case, all that's necessary is the `TARGETS`

parameter followed by the name of the file to install:

`install(TARGETS Hello)`

### Adding files to a CMake project

A software project rarely just delivers code to its users. There's usually some additional data, such as manual or info pages, example projects, or configuration files. You can include arbitrary data in a CMake project using a similar workflow to when you include compiled files: first, add the file to `CMakeLists.txt`

and then describe how it is to be installed.

For example, to include a directory called `assets`

with your sample application, you use the `file`

command, followed by the `COPY`

and `DESTINATION`

parameters to tell CMake to copy your additional files into your distributable package:

`file(COPY assets DESTINATION "${CMAKE_CURRENT_BINARY_DIR}")`

The `${CMAKE_CURRENT_BINARY_DIR}`

is a special built-in CMake variable representing the path to the directory currently being processed by CMake. In other words, your arbitrary data gets copied to the build directory (this becomes even clearer after you run `cmake`

, so watch for this to come up again later).

Because data directories tend to be crowded places (take a look in `/usr/share`

if you don't believe me), it's to everyone's benefit for you to create a subdirectory for your own project, preferably with versioning. You can do this by specifying a new directory within `CMAKE_CURRENT_BINARY_DIR`

using your chosen project name followed by a special variable named for your project and the `VERSION`

you set for it in your project declaration:

`file(COPY assets DESTINATION "${CMAKE_CURRENT_BINARY_DIR}/Hello-${Hello_VERSION}")`

## Defining install locations

You've defined the file for the build process, so now you must tell CMake where to put it during the install process. Like your main executable, this uses the `install`

command:

`install(DIRECTORY "${CMAKE_CURRENT_BINARY_DIR}/Hello-${Hello_VERSION}" TYPE DATA)`

There are some new parameters here. The `DIRECTORY`

parameter identifies the data source as a directory (rather than `FILE`

or `SCRIPT`

, for instance). You're using the same variables as you used when copying the data files into the build location. Additionally, either a `TYPE`

or a `DESTINATION`

must be provided for `install`

(not both). The `TYPE`

argument specifies a generic file type, which is placed into a location appropriate to the target system. On Linux, a `TYPE DATA`

directory usually gets placed into `/usr/local/share`

or `/usr/share`

, unless the user or packager has defined a different data location.

That's one of the powerful things about a good build system like CMake. You don't have to worry about exactly where files end up because you know that the user can alert CMake of their preferred defaults and that CMake will build the code to make that work.

## Running CMake

CMake has several interfaces. You can use it from your terminal as a command or an interactive application, or you can use its graphical user interface (GUI) front end. I tend to use the terminal command, but I enjoy the other user experiences just as much (they definitely beat scrubbing through Makefiles in search of obscure variables to redefine).

The first step, familiar to anyone who's built their fair share of open source C++ projects, is to create a `build`

directory, change to it, and then run the `cmake ..`

command. I'm a lazy typist, so I name my build directory `b`

, but you can use whatever makes the most sense to you:

```

$ mkdir b

$ cd b

$ cmake ..

-- The C compiler identification is GNU 11.1.1

-- The CXX compiler identification is GNU 11.1.1

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /usr/bin/cc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Configuring done

-- Generating done

-- Build files have been written to: /var/home/seth/demo-hello/b

$

```

This is, more or less, the equivalent of `./configure`

in the classic `./configure; make; make install`

incantation. A look into your build directory reveals that CMake has generated several new files to help your project come together. There's some CMake data, a regular Makefile (that's 247 lines of code for free, but quite a lot more for complex projects), and the `Hello-1.0`

data directory containing the arbitrary non-compiled data distributed with this example application:

```

$ ls

CMakeCache.txt

CMakeFiles

Makefile

Hello-1.0

cmake_install.cmake

```

Next, you can build. You can do this with CMake using the `--build`

option, using the current build directory as the source directory:

```

$ cmake --build .

Scanning dependencies of target Hello

[ 50%] Building C object CMakeFiles/Hello.dir/hello.c.o

[100%] Linking C executable Hello

[100%] Built target Hello

```

Or you can run the `make`

command. This reads the `Makefile`

generated by CMake. In this example, the default action for Make is to compile its target, `hello.c`

:

```

$ make

Scanning dependencies of target Hello

[ 50%] Building C object CMakeFiles/Hello.dir/hello.c.o

[100%] Linking C executable Hello

[100%] Built target Hello

$

```

As you might expect, the `Hello`

binary executable now exists in your current build directory. Because it's a simple self-contained application, you can run it for testing purposes:

```

$ ./Hello

Hello open source

$

```

Finally, you can install with the `--install`

option. Because I don't want my simple "hello world" application to actually get installed on my system, I set the `--prefix`

option to redirect CMake's target from the root directory (`/`

) to a subdirectory in `/tmp`

:

```

$ cmake --install . --prefix /tmp/hello/

-- Install configuration: ""

-- Installing: /tmp/dist-hello/usr/local/bin/Hello

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0/assets/file0

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0/assets/file1

```

Alternately, you can run `make install`

to invoke the install actions of the Makefile. Again, to avoid a demo application to be installed on my system, I set the `DESTDIR`

variable in this example to redirect the install target to a subdirectory in `/tmp`

:

```

$ mkdir /tmp/dist-hello

$ make install DESTDIR=/tmp/dist-hello

[100%] Built target Hello

Install the project...

-- Install configuration: ""

-- Installing: /tmp/dist-hello/usr/local/bin/Hello

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0/assets/file0

-- Installing: /tmp/dist-hello/usr/local/share/Hello-1.0/assets/file1

```

The output confirms its actions, and the application is installed.

## Quick customization

CMake's install prefix (the `CMAKE_INSTALL_PREFIX`

variable) defaults to `/usr/local`

, but any CMake variable can be customized when you run `cmake`

with the `-D`

option:

```

$ cmake -DCMAKE_INSTALL_PREFIX=/usr ..

$ make install DESTDIR=/tmp/dist-hello

$ make install DESTDIR=/tmp/dist-hello

[100%] Built target Hello

Install the project...

-- Install configuration: ""

-- Installing: /tmp/dist-hello/usr/bin/Hello

-- Installing: /tmp/dist-hello/usr/share/Hello-1.0

-- Installing: /tmp/dist-hello/usr/share/Hello-1.0/assets/file0

-- Installing: /tmp/dist-hello/usr/share/Hello-1.0/assets/file1

```

Any variable used by CMake can be customized in this way.

## Interactive CMake

CMake's interactive mode is a friendly and useful method to configure an installation environment. It's a lot to ask your users to know all the possible CMake variables your project uses, so the CMake interactive interface is an easy way for them to discover customization options without looking at Makefiles and CMakeLists.

To invoke an interactive CMake session, use the `ccmake`

command. There's not much to see for this simple example project, but a big project like the digital audio workstation [Rosegarden](https://opensource.com/article/18/3/make-sweet-music-digital-audio-workstation-rosegarden) makes the user interface invaluable.

(Seth Kenlon, CC BY-SA 4.0)

## More CMake

There's much, much more to CMake. As a developer, I enjoy CMake for its simple syntax and extensive [documentation](https://cmake.org/cmake/help/latest/), extensibility, and expediency. As a user, I appreciate CMake for its friendly and helpful error messages and user interfaces. If you're not using a build system for your project, take a look at CMake. You, and anyone trying to package your application later, won't be sorry.

## 3 Comments |

13,420 | 4 款值得一试的 Linux 终端多路复用器 | https://opensource.com/article/21/5/linux-terminal-multiplexer | 2021-05-24T20:51:09 | [

"终端",

"多路复用器"

] | https://linux.cn/article-13420-1.html |

>

> 比较 tmux、GNU Screen、Konsole 和 Terminator,看看哪个最适合你。

>

>

>

Linux 用户通常需要大量的虚拟视觉空间。一个终端窗口是永远不够的,所以终端有了标签。一个桌面太受限制了,所以有了虚拟桌面。当然,应用程序窗口可以堆叠,但当它们堆叠起来时,又有多大的好处呢?哎呀,即使是后台文本控制台也有 `F1` 到 `F7`,可以在任务之间来回翻转。

有了这么多的多任务处理方式,有人发明了终端 *多路复用器* 的概念就不奇怪了。诚然,这是一个令人困惑的术语。在传统的电子学中,“<ruby> 多路复用器 <rt> multiplexer </rt></ruby>”是一个接收多个输入信号并将选定的信号转发到单一输出的部件。终端多路复用器的作用正好相反。它从一个输入(人类在键盘上向一个终端窗口打字)接收指令,并将该输入转发给任意数量的输出(例如,一组服务器)。

然后,“多路复用器”一词在美国也是一个流行的术语,指的是有许多屏幕的电影院(与“<ruby> 影城 <rt> cineplex </rt></ruby>”一词一个意思)。在某种程度上,这很好地描述了终端复用器的作用。它可以在一个框内提供许多屏幕。

不管这个词是什么意思,任何尝试过它的人都有自己的喜好的某一种多路复用器。因此,我决定考察一些流行的终端多路复用器,看看每一个都怎么样。就我的评估标准而言,最低限度,我需要每个多路复用器能够分割*和*堆叠终端窗口。

### tmux

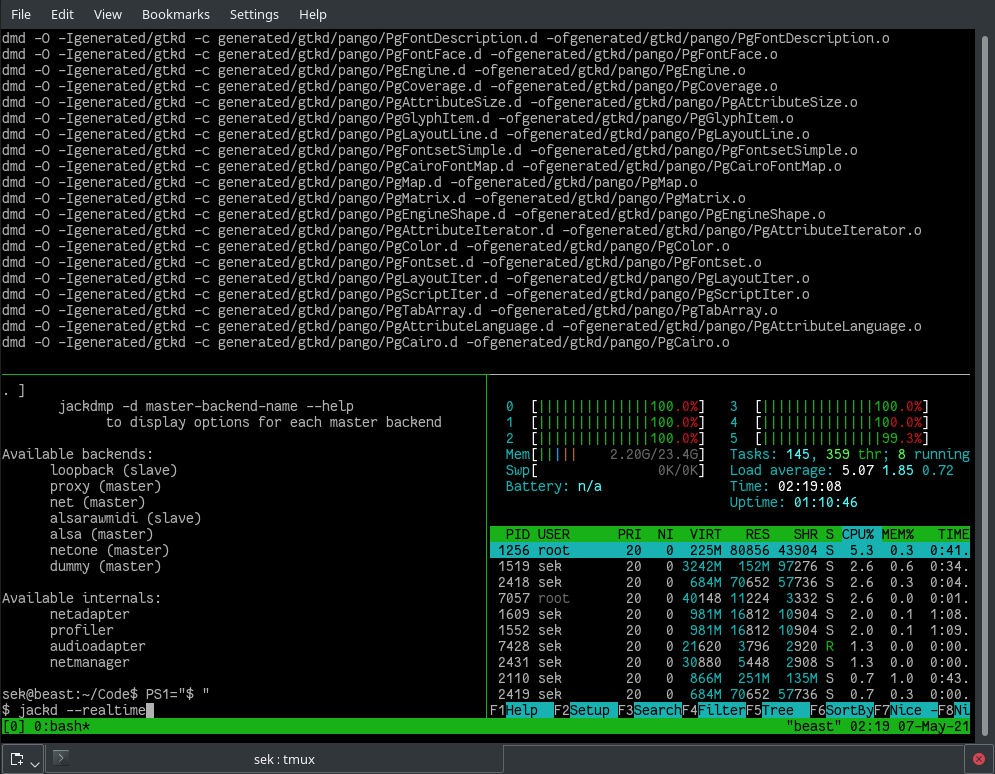

据我所知,是从 tmux 开始使用“多路复用器”这个术语的。它工作的很出色。

它作为一个守护程序运行,这样即使你关闭了正在查看的终端模拟器,你的终端会话仍然处于活动状态。它将你的终端屏幕分割成多个面板,这样你就可以在每个面板上打开独特的终端提示符。

推而广之,这意味着你也可以远程连接到任何数量的系统,并在你的终端中打开它们。利用 tmux 的能力,将输入镜像(或者以电子学术语说是“反向多路复用”)到其他打开的窗格,就能从一个中央命令窗格同时控制几台计算机。

tmux 在 GNU Screen 还只能水平分割的时候就有了垂直分割能力,这吸引了追求最大灵活性的粉丝。而灵活性正是用户在 tmux 中得到的。它可以分割、堆叠、选择和提供服务;几乎没有什么是它做不到的。

#### ? 软件包大小

从软件包中安装 tmux 大约需要 700K,这还不算它所依赖的十几个共享库。

#### ?️ 控制键

tmux 的默认触发键是 `Ctrl+B`,尽管很容易在其配置文件中重新定义。

#### ⌨️ 黑客因子

即使你只是在学习如何使用终端,你也一定会觉得使用 tmux 的人很像黑客。它看起来很复杂,但一旦你了解了正确的键绑定,就很容易使用。它为你提供了很多有用的技巧,让你玩的飞起,而且它是一种快速构建 HUD(抬头显示器)的超简单方法,可以把你需要的所有信息摆在你面前。

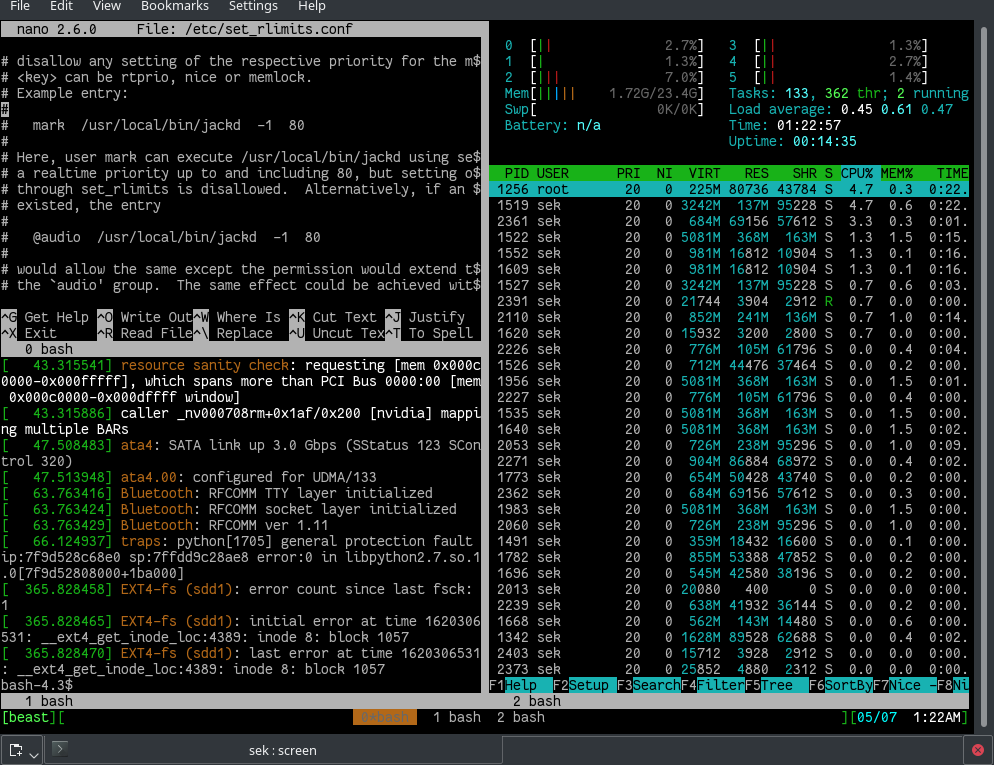

### GNU Screen

像 tmux 一样,GNU Screen 也运行一个守护程序,所以即使你关闭了用来启动它的终端,你的 shell 仍然可用。你可以从不同的计算机上连接并共享屏幕。它可以将你的终端屏幕分割成水平或垂直的窗格。

与 tmux 不同的是,GNU Screen 可以通过串行连接进行连接(`screen 9600 /dev/ttyUSB0` 就可以了),通过按键绑定可以方便地发出 `XON` 和 `XOFF` 信号。

与 SSH 会话相比,在串行连接中需要多路复用器的情况可能并不常见,所以大多数用户并不了解 Screen 这个真正特殊的功能。不过,GNU Screen 是一个很棒的多路复用器,有很多有用的选项。而如果你真的需要同时向多个服务器发送信号,还有专门的工具,比如 ClusterSSH 和 [Ansible](https://opensource.com/article/19/2/quickstart-guide-ansible)。

#### ? 软件包大小

从软件包中安装 GNU Screen 大约需要 970K,这还不算它所依赖的十几个共享库。

#### ?️ 控制键

GNU Screen 的默认触发键是 `Ctrl+A`,这对于熟悉 Bash 快捷键的人来说可能特别烦人。幸运的是,你可以在配置文件中轻松地重新定义这个触发键。

#### ⌨️ 黑客因子

当使用 Screen 通过串行连接到你的路由器或你的原型电路板时,你会成为你所有硬件黑客朋友羡慕的对象。

### Konsole

对于没有标榜自己是多路复用器的 Konsole 来说,令人惊讶的是它也是其中一个。它可以使用 Qt 窗格和标签进行必要的窗口分割和堆叠,但它也可以通过“编辑(将输入复制到)”菜单中的一个选项将输入从一个窗格传到另一个(或全部)。

然而,它所最明显缺乏的功能是作为一个守护程序运行以进行远程重新连接的能力。与 tmux 和 GNU Screen 不同,你不能远程连接到运行 Konsole 的机器并加入会话。对于一些管理员来说,这可能不是一个问题。许多管理员用 [VNC](https://en.wikipedia.org/wiki/Virtual_Network_Computing) 连接到机器的次数比用 [SSH](https://en.wikipedia.org/wiki/Secure_Shell_Protocol) 还要多,所以“重新加入”一个会话就像在 VNC 客户端上点击 Konsole 窗口一样简单。

使用 Konsole 作为多路复用器是 KDE 极客们的大招。Konsole 是我使用的第一个 Linux 终端(直到今天,我有时也会按 `Ctrl+N` 来切换新标签),所以有能力使用这个熟悉的终端作为多路复用器是一个很大的便利。这绝不是必要的,因为无论如何 tmux 和 Screen 都可以在 Konsole 里面运行,但是通过让 Konsole 处理窗格,我就不必调整肌肉记忆。这种微妙的功能包容正是 [KDE 的伟大之处](https://opensource.com/article/19/12/linux-kde-plasma)。

#### ? 软件包大小

Konsole 本身大约是 11KB,但它依赖于 105 个 KDE 和 Qt 库,所以实际上,它至少有 50MB。

#### ?️ 控制键

大多数重要的 Konsole 快捷键以 `Shift+Ctrl` 开始,分割屏幕、打开新标签、复制输入到其他窗格等都是如此。这是 KDE 里的主控台,所以如果你对 Plasma 桌面很熟悉,会感觉快捷键很熟悉。

#### ⌨️ 黑客因子

使用 Konsole 作为你的多路复用器让你有资格称自己为 KDE 高级用户。

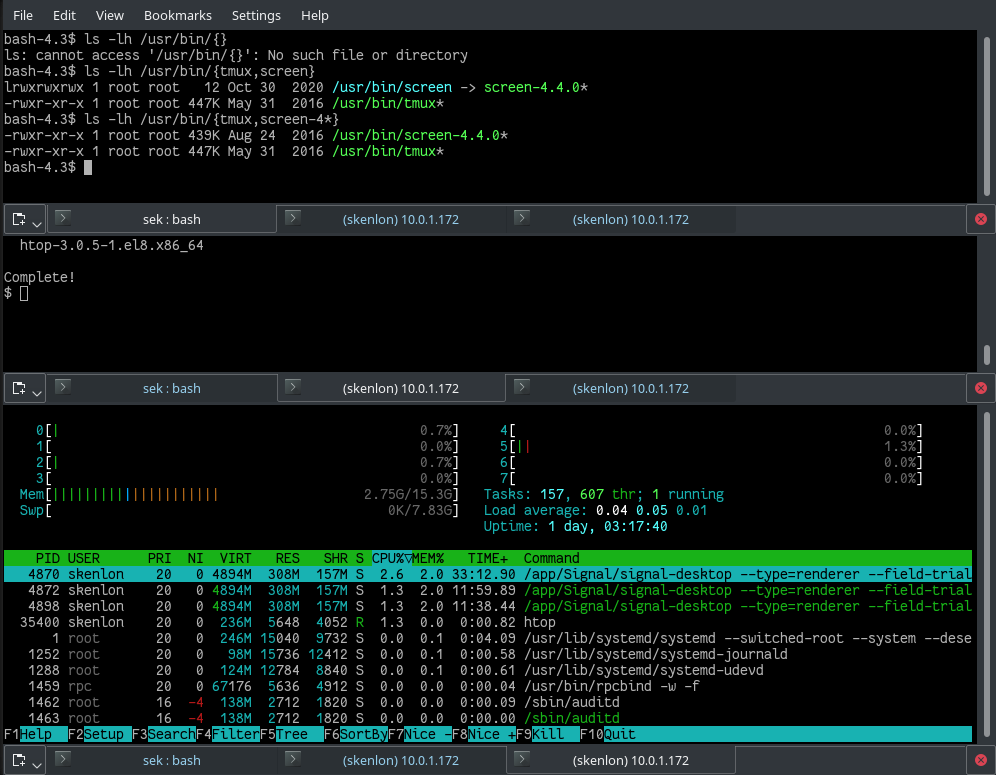

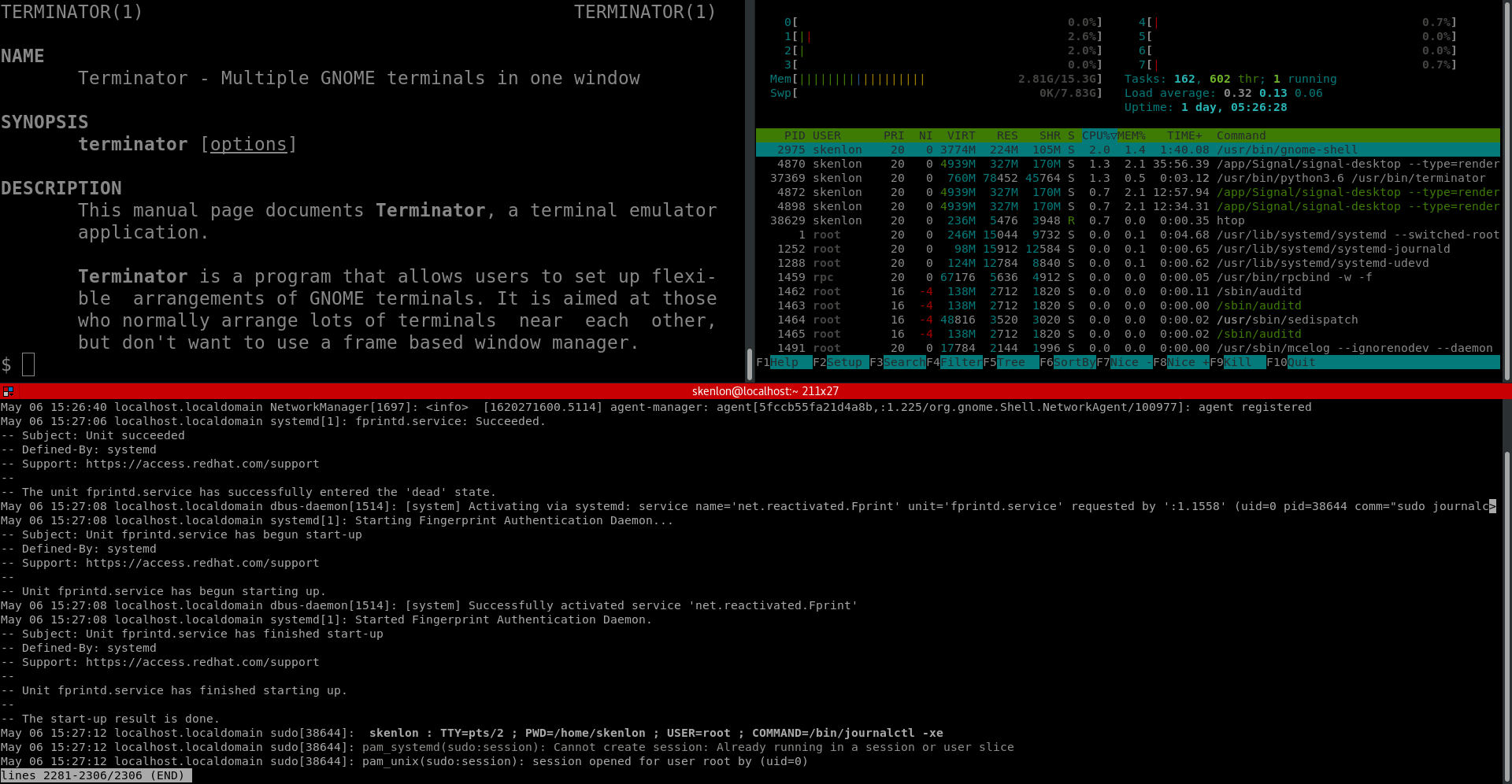

### Terminator

对于 GNOME 用户来说,Terminator 多路复用器是为他们原本极简的 GNOME 终端增加功能的一个简单方法。除了必要的多路复用功能外,Terminator 还可以向所有打开的窗格广播输入,但和 Konsole 一样,它不会在后台运行以便你可以通过 SSH 重新连接到它。话说回来,由于 GNOME 和 Wayland 让 VNC 变得如此简单,你有可能会觉得没有必要通过 SSH 来恢复终端会话。

如果你愿意,Terminator 可以完全由鼠标驱动。Konsole 通过其主菜单也有同样的能力。有了 Terminator,你可以在 Shell 的任何地方点击右键,弹出相关选项,以水平或垂直分割窗口,将窗格分组作为广播目标,广播输入,关闭窗格,等等。你还可以为所有这些动作配置键盘快捷键,所以在许多方面,你可以形成自己的体验。

我认为自己主要是一个 KDE 用户,所以当我说 Terminator 感觉像一个 KDE 应用时,我其实是一种极大的赞美。Terminator 是一个令人惊讶的可配置的和灵活的应用程序。在许多方面,它体现了开源的力量,把简陋的 GNOME 终端变成了一个强大的多路复用器。

#### ? 软件包大小

Terminator 的安装容量为 2.2MB,其中大部分是 Python 模块。但它依赖于 GTK3 和 GNOME,所以如果你没有运行完整的 GNOME 桌面,可以预料你需要一个更大的安装来拉入这些依赖。

#### ?️ 控制键

Terminator 的默认控制键没有什么一致性。你可以用 `Alt` 键来执行一些命令,用 `Ctrl` 来执行其他命令,还可以用 `Shift+Ctrl`、`Ctrl+Alt`、`Shift+Super` 等等,还有鼠标。话说回来,这是我试过的最可配置的多路复用器之一,所以只要有想法,稍加努力,你就能设计出适合你的模式。

#### ⌨️ 黑客因子

当你使用 Terminator 时,你会觉得自己是最现代、最务实的黑客。由于它的各种极客选项,它是多路复用的最佳选择,而且由于它非常灵活,无论你的手是在键盘上,还是键盘和鼠标并用,你都可以同样轻松地使用它。

### 我全要

还有更多的多路复用器和一些具有类似多路复用能力的应用。你不必非要找到*一个*完全按照你想要的方式完成你需要的所有工作的多路复用器。你可以使用不止一个。事实上,你甚至可以同时使用多个,因为 tmux 和 Screen 实际上是 shell,而 Konsole 和 Terminator 是显示 shell 的终端。对唾手可得的工具感到舒适,而且它们能帮助你管理你的工作空间,使你能有效地工作,才是最重要的。

去尝试一下多路复用器,或者在你喜欢的应用程序中发现类似多路复用器的功能。它可能会改变你看待计算的方式。

---

via: <https://opensource.com/article/21/5/linux-terminal-multiplexer>

作者:[Seth Kenlon](https://opensource.com/users/seth) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

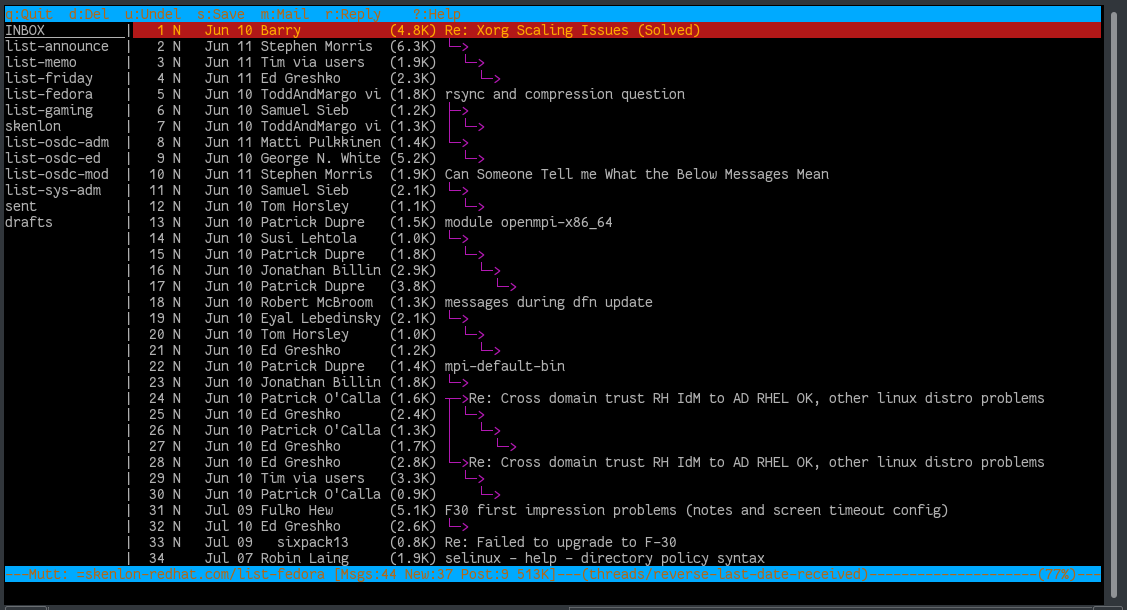

| 200 | OK | Linux users generally need a lot of virtual visual space. One terminal window is never enough, so terminals have tabs. One desktop is too constraining, so there are virtual desktops. And sure, application windows can stack, but how much better is it when they tile? Heck, even the back-end text console has F1 to F7 available for flipping back and forth between tasks.

With this much multitasking going on, it's no surprise that somebody invented the concept of a terminal *multiplexer*. This is admittedly a confusing term. In traditional electronics, a "multiplexer" is a component that receives several input signals and forwards the selected one to a single output. A terminal multiplexer does the opposite. It receives instructions from one input (the human at the keyboard typing into one terminal window) and forwards that input to any number of outputs (for example, a group of servers).

Then again, the term "multiplex" is also a term popular in the US for a cinema with many screens (sharing mindshare with the term "cineplex"). In a way, that's pretty descriptive of what a terminal multiplexer can do: It can provide lots of screens within one frame.

Whatever the term means, anybody who's tried a multiplexer has a favorite. So, I decided to take a look at a few of the popular ones to see how each one measures up. In terms of my evaluation criteria, at the bare minimum, I needed each multiplexer to split *and* stack terminal windows.

## Tmux

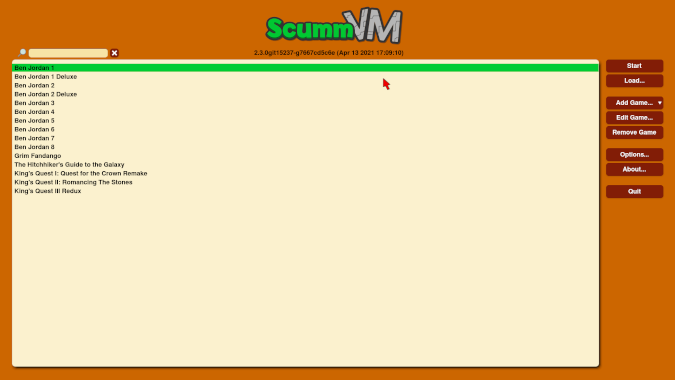

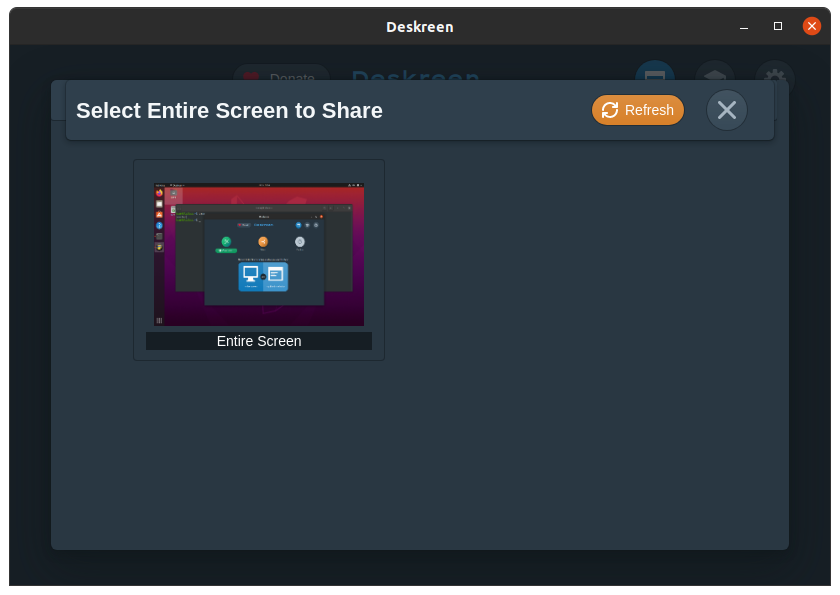

(Seth Kenlon, CC BY-SA 4.0)

As far as I know, it was tmux that started using the "multiplexer" term. It's great at what it does.

It runs as a daemon so that your terminal session remains active even after you close the terminal emulator you're viewing it in. It splits your terminal screen into panes so that you can open unique terminal prompts in each.

By extension, this means you can also connect remotely to any number of systems and have them open in your terminal, too. Using tmux's ability to mirror (or reverse multiplex, in electronics terms) input to other open panes, it's possible to control several computers at once from one central command pane.

Tmux had vertical splits back when GNU Screen only had horizontal splits, which attracted fans looking for maximum flexibility. And flexibility is what users get with tmux. It can split, stack, select, and serve; there's practically nothing it can't do.

### ? Size

Installing tmux from a package occupies roughly 700K, not counting the dozen shared libraries it depends upon.

### ?️ Control

The default trigger key for tmux is **Ctrl+B**, although it's easy to redefine this in its configuration file.

### ⌨️ Hacker factor

Even if you're just learning how to use the terminal, you're sure to feel every bit like the hacker you are by using tmux. It looks complex, but once you get to know the right key bindings it's easy to use. It provides you with lots of useful tricks to keep yourself busy, and it's a pretty easy way to construct a quick HUD with all the information you need in front of you.

## GNU Screen

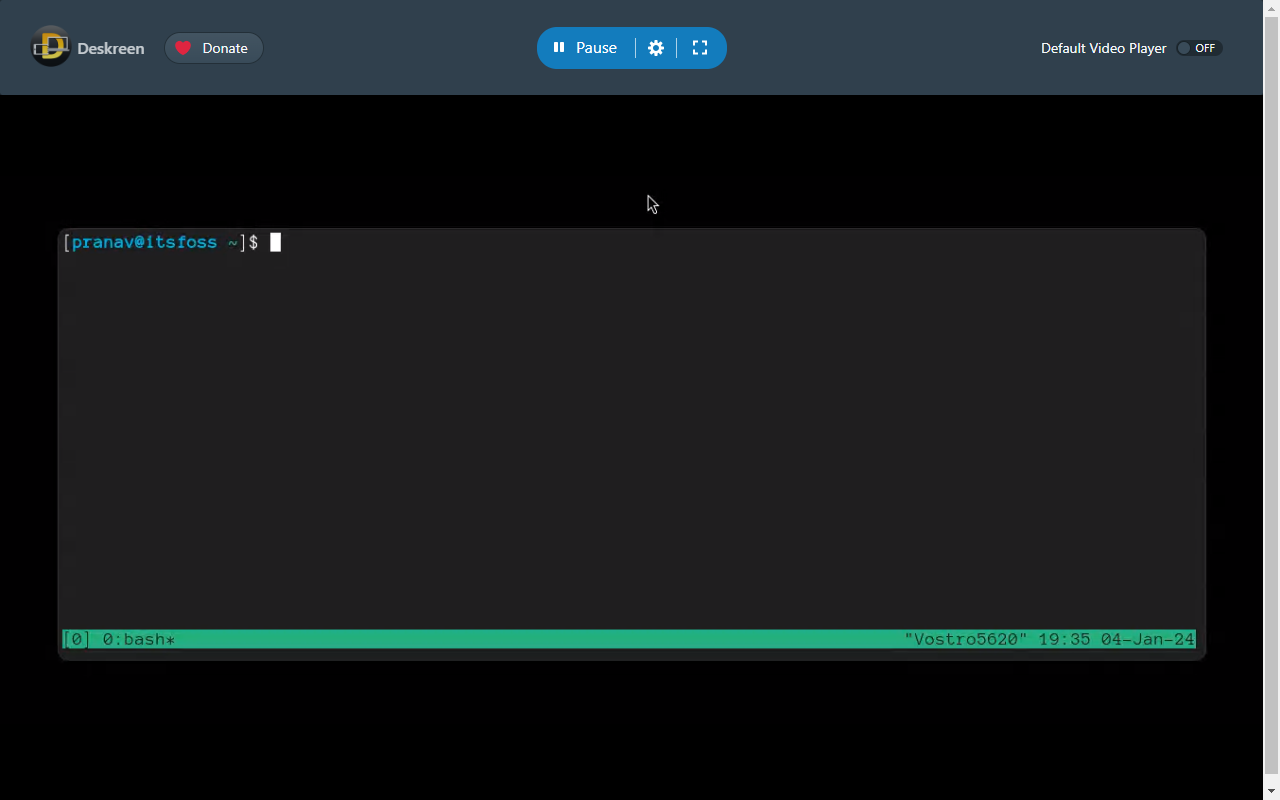

(Seth Kenlon, CC BY-SA 4.0)

Like tmux, GNU Screen runs a daemon, so your shell is available even after you close the terminal you use to launch it. You can connect from separate computers and share Screen. It splits your terminal screen into horizontal or vertical panes.

And unlike tmux, GNU Screen can connect over a serial connection (`screen 9600 /dev/ttyUSB0`

is all it takes), with key bindings for easy XON and XOFF signals.

It's probably less common to need a multiplexer over a serial connection than over an SSH session, so Screen's really special feature is lost on most users. Still, GNU Screen is a great multiplexer with many useful options, and if you really really need to send signals to multiple servers at once, there are always dedicated tools like ClusterSSH and [Ansible](https://opensource.com/article/19/2/quickstart-guide-ansible).

### ? Size

Installing GNU Screen from a package occupies roughly 970K, not counting the dozen shared libraries it depends upon.

### ?️ Control

The default trigger key for GNU Screen is **Ctrl+A**, which can be particularly annoying for anyone familiar with Bash shortcuts. Luckily, you can easily redefine this trigger in the configuration file.

### ⌨️ Hacker factor

You'll be the envy of all your hardware hacker friends when using Screen to connect over a serial connection to your router or your prototype circuit board.

## Konsole

(Seth Kenlon, CC BY-SA 4.0)

For not billing itself as a multiplexer, Konsole is a surprisingly effective one. It can do the requisite splitting and stacking of windows using Qt panes and tabs, but it can also echo input from one pane to another (or all) through an option in the **Edit (Copy input to)** menu.

The most notable feature that it lacks, however, is the ability to run as a daemon for remote reconnection. Unlike Tmux and GNU Screen, you can't connect remotely to a machine running Konsole and join the session. For some admins, this may not be an issue. Many admins [VNC](https://en.wikipedia.org/wiki/Virtual_Network_Computing) to machines more often than they [SSH](https://en.wikipedia.org/wiki/Secure_Shell_Protocol), so "rejoining" a session is as trivial as clicking on the Konsole window in a VNC client.

Using Konsole as a multiplexer is a power move for KDE geeks. Konsole was the first Linux terminal I used (to this day, I sometimes press **Ctrl+N** for a new tab), so having the ability to use this familiar terminal as a multiplexer is a great convenience. It's by no means necessary because tmux and Screen both run inside Konsole anyway, but by letting Konsole handle panes, I don't have to adjust my muscle memory. This kind of subtle feature inclusion is exactly [what makes KDE so great](https://opensource.com/article/19/12/linux-kde-plasma).

### ? Size

Konsole itself is roughly 11KB, but it relies on 105 KDE and Qt libraries, so effectively, it's more like 50MB at minimum.

### ?️ Control

Most important Konsole shortcuts start with **Shift+Ctrl**, and that's the case with splitting screens, opening new tabs, copying input to other panes, and so on. It's just Konsole, so if you're comfortable with the Plasma desktop, this feels familiar.

### ⌨️ Hacker factor

Using Konsole as your multiplexer gives you the right to call yourself a KDE power user.

## Terminator

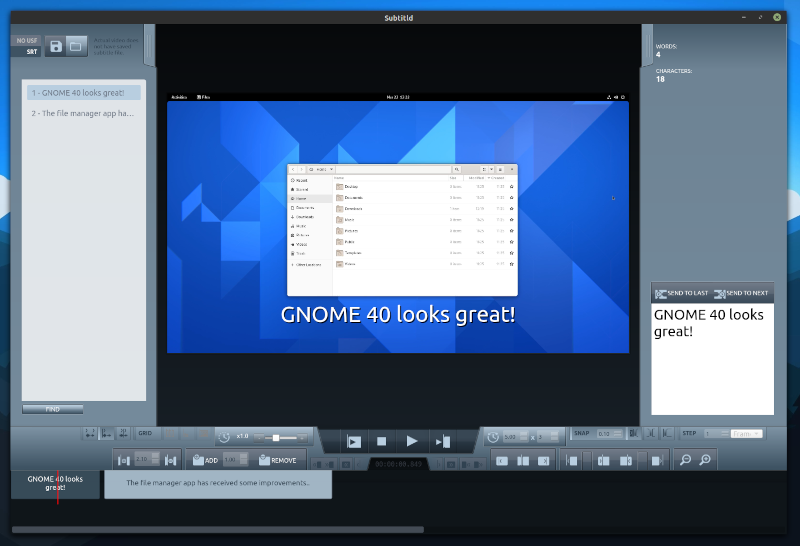

(Seth Kenlon, CC BY-SA 4.0)

For GNOME users, the Terminator multiplexer is an easy way to add power to their otherwise minimal GNOME terminal. In addition to the requisite multiplex features, Terminator can broadcast input to all open panes, but like Konsole, it can't run in the background so that you can reattach to it over SSH. Then again, with GNOME and Wayland making VNC so easy, it's possible that you won't feel the need to SSH in to continue a terminal session.

If you want it to be, Terminator can be entirely mouse-driven. Konsole has the same ability through its main menu. With Terminator, you can right-click anywhere in your shell and bring up relevant options to split the window horizontally or vertically, group panes together to target them for broadcasts, broadcast input, close panes, and so on. You can also configure keyboard shortcuts for all of these actions, so in many ways, you can build your own experience.

I consider myself mostly a KDE user, so when I say Terminator feels like a K-app, I mean that as a great compliment. Terminator is a surprisingly configurable and flexible application. In many ways, it exemplifies the power of open source by taking the humble GNOME Terminal and transforming it into a powerful multiplexer.

### ? Size

Terminator is 2.2MB to install, most of which are Python modules. It relies on GTK3 and GNOME, though, so if you're not running the full GNOME desktop, you can expect a much larger install for pulling in these dependencies.

### ?️ Control

There's not much consistency in Terminator's default controls. You use the **Alt** key for some commands, **Ctrl** for others, **Shift+Ctrl**, **Ctrl+Alt**, **Shift+Super**, and the mouse. Then again, it's one of the most configurable multiplexers I tried, so with an opinion and a little effort, you can design a schema that works for you.

### ⌨️ Hacker factor

You'll feel like the most modern and pragmatic of hackers when you use Terminator. With all of its geeky options, it's a great choice for multiplexing, and because it's so flexible you can use it just as easily whether your hands are on your keyboard or split between your keyboard and mouse.

## Choose them all

There are more multiplexers out there and several applications with multiplex-like abilities. You don't have to find the *one* multiplexer that does everything you need it to do exactly the way you want it done. You're allowed to use more than one. In fact, you can even use more than one at the same time because tmux and Screen are effectively shells, while Konsole and Terminator are terminals that display a shell. The important things are that you feel comfortable with the tools at your fingertips, and they help you manage your workspace so that you can work efficiently.

Go try a multiplexer, or discover multiplex-like features in your favorite application. It might just change the way you view computing.

## 11 Comments |

13,422 | Git 中上下文切换的 4 种方式 | https://opensource.com/article/21/4/context-switching-git | 2021-05-25T09:18:00 | [

"Git",

"分支"

] | https://linux.cn/article-13422-1.html |

>

> 比较 Git 中四种切换分支的方法的优缺点。

>

>

>

所有大量使用 Git 的人都会用到某种形式的上下文切换。有时这只会给你的工作流程增加少量的开销,但有时,这可能是一段痛苦的经历。

让我们用以下这个例子来讨论一些常见的上下文切换策略的优缺点:

>

> 假设你在一个名为 `feature-X` 的分支中工作。你刚刚发现你需要解决一个无关的问题。这不能在 `feature-X` 分支中完成。你需要在一个新的分支 `feature-Y` 中完成这项工作。

>

>

>

### 方案 1:暂存 + 分支

解决此问题最常见的工作流程可能如下所示:

1. 停止分支 `feature-X` 上的工作

2. `git stash`

3. `git checkout -b feature-Y origin/main`

4. 一顿鼓捣,解决 `feature-Y` 的问题

5. `git checkout feature-X` 或 `git switch -`

6. `git stash pop`

7. 继续在 `feature-X` 中工作

**优点:** 这种方法的优点在于,对于简单的更改,这是一个相当简单的工作流程。它可以很好地工作,特别是对于小型仓库。

**缺点:** 使用此工作流程时,一次只能有一个工作区。另外,根据你的仓库的状态,使用暂存是一个麻烦的环节。

### 方案 2:WIP 提交 + 分支

这个解决方案和前一个非常相似,但是它使用 WIP(<ruby> 正在进行的工作 <rt> Work in Progress </rt></ruby>)提交而不是暂存。当你准备好切换回来,而不是弹出暂存时,`git reset HEAD~1` 会展开 WIP 提交,你可以自由地继续,就像之前的方案一样,但不会触及暂存。

1. 停止分支 `feature-X` 上的工作

2. `git add -u`(仅仅添加修改和删除的文件)

3. `git commit -m "WIP"`

4. `git checkout -b feature-Y origin/master`

5. 一顿鼓捣,解决 `feature-Y` 的问题

6. `git checkout feature-X` 或 `git switch -`

7. `git reset HEAD~1`

**优点:** 对于简单的更改,这是一个简单的工作流,也适合于小型仓库。你不需要使用暂存。

**缺点:** 任何时候都只能有一个工作区。此外,如果你或你的代码审阅者不够谨慎,WIP 提交可能会合并到最终产品。

使用此工作流时,你**永远**不要想着将 `--hard` 添加到 `git reset`。如果你不小心这样做了,你应该能够使用 `git reflog` 恢复提交,但是你最好完全避免这种情况发生,否则你会听到心碎的声音。

### 方案 3:克隆一个新仓库

在这个解决方案中,不是创建新的分支,而是为每个新的功能分支创建存储库的新克隆。

**优点:** 你可以同时在多个工作区中工作。你不需要 `git stash` 或者是 WIP 提交。

**缺点:** 需要考虑仓库的大小,因为这可能会占用大量磁盘空间(浅层克隆可以帮助解决这种情况,但它们可能并不总是很合适。)此外,你的仓库克隆将互不可知。因为他们不能互相追踪,所以你必须手动追踪你的克隆的源仓库。如果需要 git 钩子,则需要为每个新克隆设置它们。

### 方案 4:git 工作树

要使用此解决方案,你可能需要了解 `git add worktree`。如果你不熟悉 Git 中的工作树,请不要难过。许多人多年来都对这个概念一无所知。

#### 什么是工作树?

将工作树视为仓库中属于项目的文件。本质上,这是一种工作区。你可能没有意识到你已经在使用工作树了。开始使用 Git 时,你将自动获得第一个工作树。

```

$ mkdir /tmp/foo && cd /tmp/foo

$ git init

$ git worktree list

/tmp 0000000 [master]

```

你可以在以上代码看到,甚至在第一次提交前你就有了一个工作树。接下来去尝试再添加一个工作树到你的项目中吧。

#### 添加一个工作树

想要添加一个新的工作树你需要提供:

1. 硬盘上的一个位置

2. 一个分支名

3. 添加哪些分支

```

$ git clone https://github.com/oalders/http-browserdetect.git

$ cd http-browserdetect/

$ git worktree list

/Users/olaf/http-browserdetect 90772ae [master]

$ git worktree add ~/trees/oalders/feature-X -b oalders/feature-X origin/master

$ git worktree add ~/trees/oalders/feature-Y -b oalders/feature-Y e9df3c555e96b3f1

$ git worktree list

/Users/olaf/http-browserdetect 90772ae [master]

/Users/olaf/trees/oalders/feature-X 90772ae [oalders/feature-X]

/Users/olaf/trees/oalders/feature-Y e9df3c5 [oalders/feature-Y]

```

与大多数其他 Git 命令一样,你需要在仓库路径下使用此命令。一旦创建了工作树,就有了隔离的工作环境。Git 仓库会跟踪工作树在磁盘上的位置。如果 Git 钩子已经在父仓库中设置好了,那么它们也可以在工作树中使用。

请注意到,每个工作树只使用父仓库磁盘空间的一小部分。在这种情况下,工作树需要只大约三分之一的原始磁盘空间。这这非常适合进行扩展。如果你的仓库达到了千兆字节的级别,你就会真正体会到工作树对硬盘空间的节省。

```

$ du -sh /Users/olaf/http-browserdetect

2.9M

$ du -sh /Users/olaf/trees/oalders/feature-X

1.0M

```

**优点:** 你可以同时在多个工作区中工作。你不需要使用暂存。Git 会跟踪所有的工作树。你不需要设置 Git 钩子。这也比 `git clone` 更快,并且可以节省网络流量,因为你可以在飞行模式下执行此操作。你还可以更高效地使用磁盘空间,而无需借助于浅层克隆。

**缺点:** 这是个需要你额外学习和记忆的新东西,但是如果你能养成使用这个功能的习惯,它会给你丰厚的回报。

#### 额外的小技巧

有很多方式可以清除工作树,最受欢迎的方式是使用 Git 来移除工作树:

```

git worktree remove /Users/olaf/trees/oalders/feature-X

```

如果你喜欢 RM 大法,你也可以用 `rm -rf` 来删除工作树。

```

rm -rf /Users/olaf/trees/oalders/feature-X

```

但是,如果执行此操作,则可能需要使用 `git worktree prune` 清理所有剩余的文件。或者你现在可以跳过清理,这将在将来的某个时候通过 `git gc` 自行完成。

#### 注意事项

如果你准备尝试 `git worktree`,请记住以下几点:

* 删除工作树并不会删除该分支。

* 可以在工作树中切换分支。

* 你不能在多个工作树中同时签出同一个分支。

* 像其他命令一样,`git worktree` 需要从仓库内运行。

* 你可以同时拥有许多工作树。

* 要从同一个本地仓库签出创建工作树,否则它们将互不可知。

#### git rev-parse

最后一点注意:在使用 `git worktree` 时,仓库根所在的位置可能取决于上下文。幸运的是,`git rev parse` 可以让你区分这两者。

* 要查找父仓库的根目录,请执行以下操作:

```

git rev-parse --git-common-dir

```

* 要查找你当前所在仓库的根目录,请执行:

```

git rev-parse --show-toplevel

```

### 根据你的需要选择最好的方法

就像很多事情一样,TIMTOWDI(<ruby> 条条大道通罗马 <rt> there's more than one way to do it </rt></ruby>)。重要的是你要找到一个适合你需要的工作流程。你的需求可能因手头的问题而异。也许你偶尔会发现自己将 `git worktree` 作为版本控制工具箱中的一个方便工具。

---

via: <https://opensource.com/article/21/4/context-switching-git>

作者:[Olaf Alders](https://opensource.com/users/oalders) 选题:[lujun9972](https://github.com/lujun9972) 译者:[Chao-zhi](https://github.com/Chao-zhi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Anyone who spends a lot of time working with Git will eventually need to do some form of context switching. Sometimes this adds very little overhead to your workflow, but other times, it can be a real pain.

Let's discuss the pros and cons of some common strategies for dealing with context switching using this example problem:

Imagine you are working in a branch called

`feature-X`

. You have just discovered you need to solve an unrelated problem. This cannot be done in`feature-X`

. You will need to do this work in a new branch,`feature-Y`

.

## Solution #1: stash + branch

Probably the most common workflow to tackle this issue looks something like this:

- Halt work on the branch

`feature-X`

`git stash`

`git checkout -b feature-Y origin/main`

- Hack, hack, hack…

`git checkout feature-X`

or`git switch -`

`git stash pop`

- Resume work on

`feature-X`

**Pros:** The nice thing about this approach is that this is a fairly easy workflow for simple changes. It can work quite well, especially for small repositories.

**Cons:** When using this workflow, you can have only one workspace at a time. Also, depending on the state of your repository, working with the stash can be non-trivial.

## Solution #2: WIP commit + branch

A variation on this solution looks quite similar, but it uses a WIP (Work in Progress) commit rather than the stash. When you're ready to switch back, rather than popping the stash, `git reset HEAD~1`

unrolls your WIP commit, and you're free to continue, much as you did in the earlier scenario but without touching the stash.

- Halt work on the branch

`feature-X`

`git add -u`

(adds only modified and deleted files)`git commit -m "WIP"`

`git checkout -b feature-Y origin/master`

- Hack, hack, hack…

`git checkout feature-X`

or`git switch -`

`git reset HEAD~1`

**Pros:** This is an easy workflow for simple changes and also good for small repositories. You don't have to work with the stash.

**Cons:** You can have only one workspace at any time. Also, WIP commits can sneak into your final product if you or your code reviewer are not vigilant.

When using this workflow, you *never* want to add a `--hard`

to `git reset`

. If you do this accidentally, you should be able to restore your commit using `git reflog`

, but it's less heartstopping to avoid this scenario entirely.

## Solution #3: new repository clone

In this solution, rather than creating a new branch, you make a new clone of the repository for each new feature branch.

**Pros:** You can work in multiple workspaces simultaneously. You don't need `git stash`

or even WIP commits.

**Cons:** Depending on the size of your repository, this can use a lot of disk space. (Shallow clones can help with this scenario, but they may not always be a good fit.) Additionally, your repository clones will be agnostic about each other. Since they can't track each other, you must track where your clones live. If you need git hooks, you will need to set them up for each new clone.

## Solution #4: git worktree

To use this solution, you may need to learn about `git add worktree`

. Don't feel bad if you're not familiar with worktrees in Git. Many people get by for years in blissful ignorance of this concept.

### What is a worktree?

Think of a worktree as the files in the repository that belong to a project. Essentially, it's a kind of workspace. You may not realize that you're already using worktrees. When using Git, you get your first worktree for free.

```

$ mkdir /tmp/foo && cd /tmp/foo

$ git init

$ git worktree list

/tmp 0000000 [master]

```

As you can see, the worktree exists even before the first commit. Now, add a new worktree to an existing project.

### Add a worktree

To add a new worktree, you need to provide:

- A location on disk

- A branch name

- Something to branch from

```

$ git clone https://github.com/oalders/http-browserdetect.git

$ cd http-browserdetect/

$ git worktree list

/Users/olaf/http-browserdetect 90772ae [master]

$ git worktree add ~/trees/oalders/feature-X -b oalders/feature-X origin/master

$ git worktree add ~/trees/oalders/feature-Y -b oalders/feature-Y e9df3c555e96b3f1

$ git worktree list

/Users/olaf/http-browserdetect 90772ae [master]

/Users/olaf/trees/oalders/feature-X 90772ae [oalders/feature-X]

/Users/olaf/trees/oalders/feature-Y e9df3c5 [oalders/feature-Y]

```

Like with most other Git commands, you need to be inside a repository when issuing this command. Once the worktrees are created, you have isolated work environments. The Git repository tracks where the worktrees live on disk. If Git hooks are already set up in the parent repository, they will also be available in the worktrees.

Don't overlook that each worktree uses only a fraction of the parent repository's disk space. In this case, the worktree requires about one-third of the original's disk space. This can scale very well. Once your repositories are measured in the gigabytes, you'll really come to appreciate these savings.

```

$ du -sh /Users/olaf/http-browserdetect

2.9M

$ du -sh /Users/olaf/trees/oalders/feature-X

1.0M

```

**Pros:** You can work in multiple workspaces simultaneously. You don't need the stash. Git tracks all of your worktrees. You don't need to set up Git hooks. This is also faster than `git clone`

and can save on network traffic since you can do this in airplane mode. You also get more efficient disk space use without needing to resort to a shallow clone.

**Cons:** This is yet another thing to remember. However, if you can get into the habit of using this feature, it can reward you handsomely.

## A few more tips

When you need to clean up your worktrees, you have a couple of options. The preferable way is to let Git remove the worktree:

`git worktree remove /Users/olaf/trees/oalders/feature-X`

If you prefer a scorched-earth approach, `rm -rf`

is also your friend:

`rm -rf /Users/olaf/trees/oalders/feature-X`

However, if you do this, you may want to clean up any remaining files with `git worktree prune`

. Or you can skip the `prune`

now, and this will happen on its own at some point in the future via `git gc`

.

## Notable notes

If you're ready to get started with `git worktree`

, here are a few things to keep in mind.

- Removing a worktree does not delete the branch.

- You can switch branches within a worktree.

- You cannot simultaneously check out the same branch in multiple worktrees.

- Like many other Git commands,

`git worktree`

needs to be run from inside a repository. - You can have many worktrees at once.

- Create your worktrees from the same local checkout, or they will be agnostic about each other.

## git rev-parse

One final note: When using `git worktree`

, your concept of where the root of the repository lives may depend on context. Fortunately, `git rev-parse`

allows you to distinguish between the two.

- To find the parent repository's root:

`git rev-parse --git-common-dir`

- To find the root of the repository you're in:

`git rev-parse --show-toplevel`

## Choose the best method for your needs

As in many things, TIMTOWDI (there's more than one way to do it). What's important is that you find a workflow that suits your needs. What your needs are may vary depending on the problem at hand. Maybe you'll occasionally find yourself reaching for `git worktree`

as a handy tool in your revision-control toolbelt.

## Comments are closed. |

13,423 | 对 JavaScript 构建工具的 6 个预测 | https://opensource.com/article/20/11/javascript-build-tools | 2021-05-25T11:21:48 | [

"JavaScript"

] | /article-13423-1.html |

>

> JavaScript 前端工具的生态系统充满着变数和竞争,且只有最好的工具才会存活下来。

>

>

>

生产中使用的代码与开发中的有所不同. 在生产中,我们需要构建一些能运行得够快、能管理各种依赖关系、能自动执行任务、能加载外部模块等功能的包。而那些将开发中的代码转为生产代码的 [JavaScript](https://www.javascript.com/) 工具我们就称之为 *构建工具。*

我们可以通过各个构建步骤以及其重要性来解释前端代码需要被“构建”的原因。

### 前端代码构建步骤

前端代码的构建涉及下面的四个步骤:

#### 1、转译

通过<ruby> 转译 <rt> Transpiling </rt></ruby>,开发者可以使用到语言最新、最热门的更新和扩展,并保持浏览器的兼容性等。下面是使用 [Babel](https://babeljs.io/) 的一个例子:

```

// 数组映射中的箭头函数语法

const double = [1, 2, 3].map((num) => num * 2);

// 转译后

const double = [1, 2, 3].map(function(num) {

return num * 2;

});

```

#### 2、分包

<ruby> 分包 <rt> Bundling </rt></ruby>是处理所有 `import` 与`require` 语句的过程;找到相匹配的 JavaScript 代码片段、包和库;将它们添加到适当的域中;然后将它们打包到一个大的 JavaScript 文件中。常用的分包器包括 Browserify、Webpack 与 Parcel。

#### 3、压缩

<ruby> 压缩 <rt> Minifing </rt></ruby>是通过删除空白和代码注释来减少最终的文件大小。在压缩过程中,我们还可以更进一步添加代码混淆步骤,混淆会更改变量名和方法名,使代码变得晦涩难懂,因此一旦代码交付到客户端,它就不是那么容易能让人读懂。下面是一个使用 Grunt 的例子:

```

// 压缩前

const double = [1, 2, 3].map(function(num) {

return num * 2;

});

// 压缩后

const double=[1,2,3].map(function(num){return num*2;});

```

#### 4、打包

完成上面的所有步骤之后, 我们需要将这些具有兼容性、且经过分包、压缩/混淆过的文件放置到某个地方。<ruby> 打包 <rt> Packaging </rt></ruby>正是这样一个过程,它将上述步骤所产生的结果放置到开发者指定的某个位置上,这通常是通过打包器完成的。

### 前端构建工具

前端工具及构建工具可以分为以下几类:

* 包管理: NPM、Yarn

* 转译器: Babel 等

* 打包器: Webpack、Parcel、Browserify

* 压缩混淆: UglifyJS、Packer、Minify 等

JavaScript 生态系统中有各种各样的构建工具可以使用,包括下面的这些:

#### Grunt 和 Bower

[Grunt](https://gruntjs.com/) 是作为命令行工具引入的,它仅提供一个脚本来指定和配置相关构建任务。[Bower](https://bower.io/) 作为包管理器,提供了一种客户端包的管理方法而紧追其后。这两者,再加上 NPM,它们经常在一起使用,它们看上去似乎可以满足大多数的自动化需求,但 Grunt 的问题在于它无法提供给开发者配置更复杂任务的自由,而 Bower 使开发者管理的程序包是平常的两倍,因为它将前端包、后台包分开了(例如,Bower 组件与 Node 模块)。

**Grunt 与 Bower 的未来:** Grunt 与 Bower 正在退出 JavaScript 工具生态,但是还有一些替代品。

#### Gulp 和 Browserify

[Gulp](https://gulpjs.com/) 是在 Grunt 发布一年半之后才发布的。但 Gulp 却让大家感到很自然、舒服。用 JavaScript 来写构建脚本与用 JSON 来写相比更自由。你可以在 Gulp 的构建脚本中编写函数、即时创建变量、在任何地方使用条件语句 —— 但就这些,并不能说让我们的感觉变得特别自然和舒适,只能说这只是其中的一个可能的原因。[Browserify](http://browserify.org/) 和 Gulp 可以配合使用,Browserify 允许 NPM 包(用于后端 Node 服务器)被直接带入到前端,就这一点已经直接让 Bower 废了。而正是这种用一个包管理器来处理前后端包的方式让人感到更自然和更好。

**Gulp 的未来:** Gulp 可能会被改进,以便匹配当前流行的构建工具,但这完全取决于创造者的意愿。Gulp 仍在使用中,只是不再像以前那么流行了。

#### Webpack 和 NPM/Yarn 脚本

[Webpack](https://webpack.js.org/) 是现代前端开发工具中最热门的宠儿,它是一个开源的 JavaScript 模块打包器。Webpack 主要是为处理 JavaScript 而创造的,但如果包含相应的加载器,它也可以转换 HTML、CSS 和图片等前端资源。通过 Webpack,你也可以像 Gulp 一样编写构建脚本,并通过 [NPM/Yarn](https://github.com/yarnpkg/yarn) 来执行它们。

**Webpack 的未来:** Webpack 是目前 JavaScript 工具生态系统中最热门的工具,最近几乎所有的 JavaScript 库都在使用 React 和 Webpack。Webpack 目前处于第四个版本,不会很快消失。(LCTT 译注:Webpack 目前已经发布了第五个版本了,且还在火热更新中)

#### Parcel

[Parcel](https://parceljs.org/) 是一个 Web 应用打包器,于 2018 年推出,因其开发者体验而与众不同。Parcel 能利用处理器多核功能提供极快的打包性能,且还零配置。但 Parcel 还是一个新星,对于一些大型应用,其采用率并不高。相比之下,开发人员更喜欢使用 Webpack,因为 Webpack 有更广泛的支持和可定制性。

**Parcel 的未来:** Parcel 非常容易使用,如果你统计打包和构建时间,它会比 Webpack 更快,而且它还提供了更好的开发者体验。Parcel 没有被大量采用的原因可能是它仍然比较新。在前端构建工具的生态系统中,Parcel 的前景会非常光明,它将会存在一段时间。

#### Rollup

[Rollup](https://rollupjs.org/guide/en/) 是 JavaScript 的一个模块分包器,它可将一小段代码编译为更大更复杂的库或应用。Rollup 一般建议用来构建 JavaScript 库,特别是那种导入和依赖的第三方库较少的那种库。

**Rollup 的未来:** Rollup 很酷,且正在被迅速采用。它有很多强大的功能,将在很长一段时间内作为前端工具生态系统的一个组成部分而存在。

### 了解更多

JavaScript 前端工具的生态系统充满着变数和竞争,且只有最好的工具才能存活下来。在不久的将来,我们的构建工具将具有更少(或没有)的配置,更方便的定制化,更好的扩展性的和更好的构建速度。

该用什么样的构建工具用于你的前端项目,你需要根据具体的项目需求来做出决定。至于选择什么样的工具,才是最合适自己的,大多数时候,需要我们自己作出取舍。

更多信息, 请看:

* [JavaScript tooling: The evolution and future of JS/frontend build tools](https://qmo.io/blog/javascript-tooling-the-evolution-and-future-of-js-front-end-build-tools/)

* [Tools and modern workflow for frontend developers](https://blog.logrocket.com/tools-and-modern-workflow-for-front-end-developers-505c7227e917/)

* [Modern frontend: The tools and build process explained](https://medium.com/@trevorpoppen/modern-front-end-the-tools-and-build-process-explained-36641b5c1a53)

* [Best build tools in frontend development](https://www.developerdrive.com/best-build-tools-frontend-development/)

*这篇文章最初发表在 [Shedrack Akintayo 的博客](https://www.sheddy.xyz/posts/javascript-build-tools-past-and-beyond) 上,经许可后重新发表。*

---

via: <https://opensource.com/article/20/11/javascript-build-tools>

作者:[Shedrack Akintayo](https://opensource.com/users/shedrack-akintayo) 选题:[lujun9972](https://github.com/lujun9972) 译者:[ywxgod](https://github.com/ywxgod) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

13,425 | 使用开源工具进行 Linux 内存取证 | https://opensource.com/article/21/4/linux-memory-forensics | 2021-05-26T11:20:06 | [

"内存",

"转储",

"取证"

] | https://linux.cn/article-13425-1.html |

>

> 利用 Volatility 找出应用程序、网络连接、内核模块、文件等方面的情况。

>

>

>

计算机的操作系统和应用使用主内存(RAM)来执行不同的任务。这种易失性内存包含大量关于运行应用、网络连接、内核模块、打开的文件以及几乎所有其他的内容信息,但这些信息每次计算机重启的时候都会被清除。

<ruby> 内存取证 <rt> Memory forensics </rt></ruby>是一种从内存中找到和抽取这些有价值的信息的方式。[Volatility](https://github.com/volatilityfoundation/volatility) 是一种使用插件来处理这类信息的开源工具。但是,存在一个问题:在你处理这些信息前,必须将物理内存转储到一个文件中,而 Volatility 没有这种能力。

因此,这篇文章分为两部分:

* 第一部分是处理获取物理内存并将其转储到一个文件中。

* 第二部分使用 Volatility 从这个内存转储中读取并处理这些信息。

我在本教程中使用了以下测试系统,不过它可以在任何 Linux 发行版上工作:

```

$ cat /etc/redhat-release

Red Hat Enterprise Linux release 8.3 (Ootpa)

$

$ uname -r

4.18.0-240.el8.x86_64

$

```

>

> **注意事项:** 部分 1 涉及到编译和加载一个内核模块。不要担心:它并不像听起来那么困难。

>

>

> 一些指南:

>

>

> * 按照以下的步骤。

> * 不要在生产系统或你的主要计算机上尝试任何这些步骤。

> * 始终使用测试的虚拟机(VM)来尝试,直到你熟悉使用这些工具并理解它们的工作原理为止。

>

>

>

### 安装需要的包

在开始之前安装必要的工具。如果你经常使用基于 Debian 的发行版,可以使用 `apt-get` 命令。这些包大多数提供了需要的内核信息和工具来编译代码:

```

$ yum install kernel-headers kernel-devel gcc elfutils-libelf-devel make git libdwarf-tools python2-devel.x86_64-y

```

### 部分 1:使用 LiME 获取内存并将其转储到一个文件中

在开始分析内存之前,你需要一个内存转储供你使用。在实际的取证活动中,这可能来自一个被破坏或者被入侵的系统。这些信息通常会被收集和存储来分析入侵是如何发生的及其影响。由于你可能没有可用的内存转储,你可以获取你的测试 VM 的内存转储,并使用它来执行内存取证。

<ruby> Linux 内存提取器 <rt> Linux Memory Extractor </rt></ruby>([LiME](https://github.com/504ensicsLabs/LiME))是一个在 Linux 系统上获取内存很常用的工具。使用以下命令获得 LiME:

```

$ git clone https://github.com/504ensicsLabs/LiME.git

$

$ cd LiME/src/

$

$ ls

deflate.c disk.c hash.c lime.h main.c Makefile Makefile.sample tcp.c

$

```

#### 构建 LiME 内核模块

在 `src` 文件夹下运行 `make` 命令。这会创建一个以 .ko 为扩展名的内核模块。理想情况下,在 `make` 结束时,`lime.ko` 文件会使用格式 `lime-<your-kernel-version>.ko` 被重命名。

```

$ make

make -C /lib/modules/4.18.0-240.el8.x86_64/build M="/root/LiME/src" modules

make[1]: Entering directory '/usr/src/kernels/4.18.0-240.el8.x86_64'

<< 删节 >>

make[1]: Leaving directory '/usr/src/kernels/4.18.0-240.el8.x86_64'

strip --strip-unneeded lime.ko

mv lime.ko lime-4.18.0-240.el8.x86_64.ko

$

$

$ ls -l lime-4.18.0-240.el8.x86_64.ko

-rw-r--r--. 1 root root 25696 Apr 17 14:45 lime-4.18.0-240.el8.x86_64.ko

$

$ file lime-4.18.0-240.el8.x86_64.ko

lime-4.18.0-240.el8.x86_64.ko: ELF 64-bit LSB relocatable, x86-64, version 1 (SYSV), BuildID[sha1]=1d0b5cf932389000d960a7e6b57c428b8e46c9cf, not stripped

$

```

#### 加载LiME 内核模块

现在是时候加载内核模块来获取系统内存了。`insmod` 命令会帮助加载内核模块;模块一旦被加载,会在你的系统上读取主内存(RAM)并且将内存的内容转储到命令行所提供的 `path` 目录下的文件中。另一个重要的参数是 `format`;保持 `lime` 的格式,如下所示。在插入内核模块之后,使用 `lsmod` 命令验证它是否真的被加载。

```

$ lsmod | grep lime

$

$ insmod ./lime-4.18.0-240.el8.x86_64.ko "path=../RHEL8.3_64bit.mem format=lime"

$

$ lsmod | grep lime

lime 16384 0

$

```

你应该看到给 `path` 命令的文件已经创建好了,而且文件大小与你系统的物理内存(RAM)大小相同(并不奇怪)。一旦你有了内存转储,你就可以使用 `rmmod` 命令删除该内核模块:

```

$

$ ls -l ~/LiME/RHEL8.3_64bit.mem

-r--r--r--. 1 root root 4294544480 Apr 17 14:47 /root/LiME/RHEL8.3_64bit.mem

$

$ du -sh ~/LiME/RHEL8.3_64bit.mem

4.0G /root/LiME/RHEL8.3_64bit.mem

$

$ free -m

total used free shared buff/cache available

Mem: 3736 220 366 8 3149 3259

Swap: 4059 8 4051

$

$ rmmod lime

$

$ lsmod | grep lime

$

```

#### 内存转储中是什么?

这个内存转储文件只是原始数据,就像使用 `file` 命令可以看到的一样。你不可能通过手动去理解它;是的,在这里边有一些 ASCII 字符,但是你无法用编辑器打开这个文件并把它读出来。`hexdump` 的输出显示,最初的几个字节是 `EmiL`;这是因为你的请求格式在上面的命令行中是 `lime`:

```

$ file ~/LiME/RHEL8.3_64bit.mem

/root/LiME/RHEL8.3_64bit.mem: data

$

$ hexdump -C ~/LiME/RHEL8.3_64bit.mem | head

00000000 45 4d 69 4c 01 00 00 00 00 10 00 00 00 00 00 00 |EMiL............|

00000010 ff fb 09 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000020 b8 fe 4c cd 21 44 00 32 20 00 00 2a 2a 2a 2a 2a |..L.!D.2 ..*****|

00000030 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a |****************|

00000040 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 2a 20 00 20 |************* . |

00000050 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

00000080 00 00 00 00 00 00 00 00 00 00 00 00 70 78 65 6c |............pxel|

00000090 69 6e 75 78 2e 30 00 00 00 00 00 00 00 00 00 00 |inux.0..........|

000000a0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

$

```

### 部分 2:获得 Volatility 并使用它来分析你的内存转储

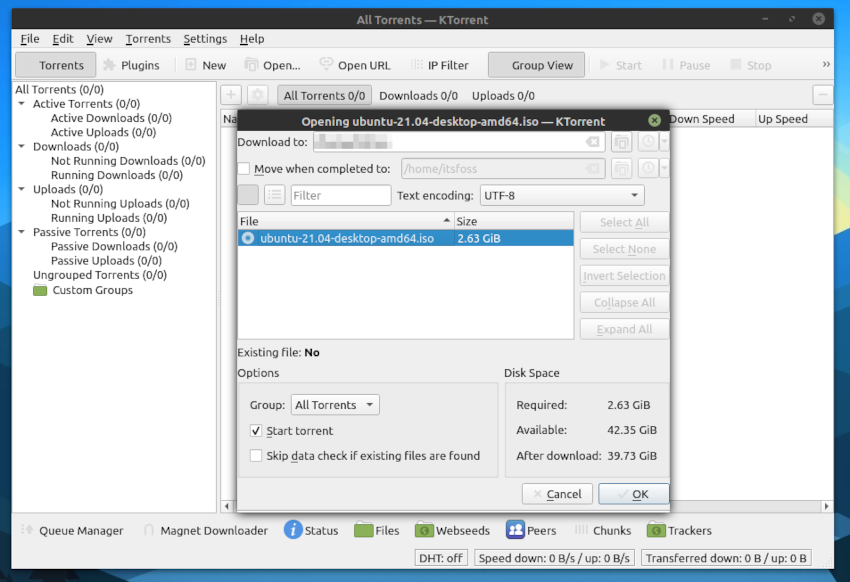

现在你有了要分析的示例内存转储,使用下面的命令获取 Volatility 软件。Volatility 已经用 Python 3 重写了,但是本教程使用的是用 Python 2 写的原始的 Volatility 包。如果你想用 Volatility 3 进行实验,可以从合适的 Git 仓库下载它,并在以下命令中使用 Python 3 而不是 Python 2:

```

$ git clone https://github.com/volatilityfoundation/volatility.git

$

$ cd volatility/

$

$ ls

AUTHORS.txt contrib LEGAL.txt Makefile PKG-INFO pyinstaller.spec resources tools vol.py

CHANGELOG.txt CREDITS.txt LICENSE.txt MANIFEST.in pyinstaller README.txt setup.py volatility

$

```

Volatility 使用两个 Python 库来实现某些功能,所以使用以下命令来安装它们。否则,在你运行 Volatility 工具时,你可能看到一些导入错误;你可以忽略它们,除非你正在运行的插件需要这些库;这种情况下,工具将会报错:

```

$ pip2 install pycrypto

$ pip2 install distorm3

```

#### 列出 Volatility 的 Linux 配置文件

你将要运行的第一个 Volatility 命令列出了可用的 Linux 配置文件,运行 Volatility 命令的主要入口点是 `vol.py` 脚本。使用 Python 2 解释器调用它并提供 `--info` 选项。为了缩小输出,查找以 Linux 开头的字符串。正如你所看到的,并没有很多 Linux 配置文件被列出:

```

$ python2 vol.py --info | grep ^Linux

Volatility Foundation Volatility Framework 2.6.1

LinuxAMD64PagedMemory - Linux-specific AMD 64-bit address space.

$

```

#### 构建你自己的 Linux 配置文件

Linux 发行版是多种多样的,并且是为不同架构而构建的。这就是为什么配置文件是必要的 —— Volatility 在提取信息前必须知道内存转储是从哪个系统和架构获得的。有一些 Volatility 命令可以找到这些信息;但是这个方法很费时。为了加快速度,可以使用以下命令构建一个自定义的 Linux 配置文件:

移动到 Volatility 仓库的 `tools/linux`目录下,运行 `make` 命令:

```

$ cd tools/linux/

$

$ pwd

/root/volatility/tools/linux

$

$ ls

kcore Makefile Makefile.enterprise module.c

$

$ make

make -C //lib/modules/4.18.0-240.el8.x86_64/build CONFIG_DEBUG_INFO=y M="/root/volatility/tools/linux" modules

make[1]: Entering directory '/usr/src/kernels/4.18.0-240.el8.x86_64'

<< 删节 >>

make[1]: Leaving directory '/usr/src/kernels/4.18.0-240.el8.x86_64'

$

```

你应该看到一个新的 `module.dwarf` 文件。你也需要 `/boot` 目录下的 `System.map` 文件,因为它包含了所有与当前运行的内核相关的符号:

```

$ ls

kcore Makefile Makefile.enterprise module.c module.dwarf

$

$ ls -l module.dwarf

-rw-r--r--. 1 root root 3987904 Apr 17 15:17 module.dwarf

$

$ ls -l /boot/System.map-4.18.0-240.el8.x86_64

-rw-------. 1 root root 4032815 Sep 23 2020 /boot/System.map-4.18.0-240.el8.x86_64

$

$

```

要创建一个自定义配置文件,移动回到 Volatility 目录并且运行下面的命令。第一个参数提供了一个自定义 .zip 文件,文件名是你自己命名的。我经常使用操作系统和内核版本来命名。下一个参数是前边创建的 `module.dwarf` 文件,最后一个参数是 `/boot` 目录下的 `System.map` 文件:

```

$

$ cd volatility/

$

$ zip volatility/plugins/overlays/linux/Redhat8.3_4.18.0-240.zip tools/linux/module.dwarf /boot/System.map-4.18.0-240.el8.x86_64

adding: tools/linux/module.dwarf (deflated 91%)

adding: boot/System.map-4.18.0-240.el8.x86_64 (deflated 79%)

$

```

现在自定义配置文件就准备好了,所以在前边给出的位置检查一下 .zip 文件是否被创建好。如果你想知道 Volatility 是否检测到这个自定义配置文件,再一次运行 `--info` 命令。现在,你应该可以在下边的列出的内容中看到新的配置文件:

```

$

$ ls -l volatility/plugins/overlays/linux/Redhat8.3_4.18.0-240.zip

-rw-r--r--. 1 root root 1190360 Apr 17 15:20 volatility/plugins/overlays/linux/Redhat8.3_4.18.0-240.zip

$

$

$ python2 vol.py --info | grep Redhat

Volatility Foundation Volatility Framework 2.6.1

LinuxRedhat8_3_4_18_0-240x64 - A Profile for Linux Redhat8.3_4.18.0-240 x64

$

$

```

#### 开始使用 Volatility

现在你已经准备好去做一些真正的内存取证了。记住,Volatility 是由自定义的插件组成的,你可以针对内存转储来获得信息。命令的通用格式是:

```

python2 vol.py -f <memory-dump-file-taken-by-Lime> <plugin-name> --profile=<name-of-our-custom-profile>

```

有了这些信息,运行 `linux_banner` 插件来看看你是否可从内存转储中识别正确的发行版信息:

```

$ python2 vol.py -f ~/LiME/RHEL8.3_64bit.mem linux_banner --profile=LinuxRedhat8_3_4_18_0-240x64

Volatility Foundation Volatility Framework 2.6.1

Linux version 4.18.0-240.el8.x86_64 ([[email protected]][4]) (gcc version 8.3.1 20191121 (Red Hat 8.3.1-5) (GCC)) #1 SMP Wed Sep 23 05:13:10 EDT 2020

$

```

#### 找到 Linux 插件

到现在都很顺利,所以现在你可能对如何找到所有 Linux 插件的名字比较好奇。有一个简单的技巧:运行 `--info` 命令并抓取 `linux_` 字符串。有各种各样的插件可用于不同的用途。这里列出一部分:

```

$ python2 vol.py --info | grep linux_

Volatility Foundation Volatility Framework 2.6.1

linux_apihooks - Checks for userland apihooks

linux_arp - Print the ARP table

linux_aslr_shift - Automatically detect the Linux ASLR shift

<< 删节 >>

linux_banner - Prints the Linux banner information

linux_vma_cache - Gather VMAs from the vm_area_struct cache

linux_volshell - Shell in the memory image

linux_yarascan - A shell in the Linux memory image

$

```

使用 `linux_psaux` 插件检查内存转储时系统上正在运行哪些进程。注意列表中的最后一个命令:它是你在转储之前运行的 `insmod` 命令。

```

$ python2 vol.py -f ~/LiME/RHEL8.3_64bit.mem linux_psaux --profile=LinuxRedhat8_3_4_18_0-240x64

Volatility Foundation Volatility Framework 2.6.1

Pid Uid Gid Arguments

1 0 0 /usr/lib/systemd/systemd --switched-root --system --deserialize 18

2 0 0 [kthreadd]

3 0 0 [rcu_gp]

4 0 0 [rcu_par_gp]

861 0 0 /usr/libexec/platform-python -Es /usr/sbin/tuned -l -P

869 0 0 /usr/bin/rhsmcertd

875 0 0 /usr/libexec/sssd/sssd_be --domain implicit_files --uid 0 --gid 0 --logger=files

878 0 0 /usr/libexec/sssd/sssd_nss --uid 0 --gid 0 --logger=files

<< 删节 >>

11064 89 89 qmgr -l -t unix -u

227148 0 0 [kworker/0:0]

227298 0 0 -bash

227374 0 0 [kworker/u2:1]

227375 0 0 [kworker/0:2]

227884 0 0 [kworker/0:3]

228573 0 0 insmod ./lime-4.18.0-240.el8.x86_64.ko path=../RHEL8.3_64bit.mem format=lime

228576 0 0

$

```

想要知道系统的网络状态吗?运行 `linux_netstat` 插件来找到在内存转储期间网络连接的状态:

```

$ python2 vol.py -f ~/LiME/RHEL8.3_64bit.mem linux_netstat --profile=LinuxRedhat8_3_4_18_0-240x64

Volatility Foundation Volatility Framework 2.6.1

UNIX 18113 systemd/1 /run/systemd/private

UNIX 11411 systemd/1 /run/systemd/notify

UNIX 11413 systemd/1 /run/systemd/cgroups-agent

UNIX 11415 systemd/1

UNIX 11416 systemd/1

<< 删节 >>

$

```

接下来,使用 `linux_mount` 插件来看在内存转储期间哪些文件系统被挂载:

```

$ python2 vol.py -f ~/LiME/RHEL8.3_64bit.mem linux_mount --profile=LinuxRedhat8_3_4_18_0-240x64

Volatility Foundation Volatility Framework 2.6.1

tmpfs /sys/fs/cgroup tmpfs ro,nosuid,nodev,noexec

cgroup /sys/fs/cgroup/pids cgroup rw,relatime,nosuid,nodev,noexec

systemd-1 /proc/sys/fs/binfmt_misc autofs rw,relatime

sunrpc /var/lib/nfs/rpc_pipefs rpc_pipefs rw,relatime

/dev/mapper/rhel_kvm--03--guest11-root / xfs rw,relatime

tmpfs /dev/shm tmpfs rw,nosuid,nodev

selinuxfs /sys/fs/selinux selinuxfs rw,relatime

<< 删节 >>

cgroup /sys/fs/cgroup/net_cls,net_prio cgroup rw,relatime,nosuid,nodev,noexec

cgroup /sys/fs/cgroup/cpu,cpuacct cgroup rw,relatime,nosuid,nodev,noexec

bpf /sys/fs/bpf bpf rw,relatime,nosuid,nodev,noexec

cgroup /sys/fs/cgroup/memory cgroup ro,relatime,nosuid,nodev,noexec

cgroup /sys/fs/cgroup/cpuset cgroup rw,relatime,nosuid,nodev,noexec

mqueue /dev/mqueue mqueue rw,relatime

$

```

好奇哪些内核模块被加载了吗?Volatility 也为这个提供了一个插件 `linux_lsmod`:

```

$ python2 vol.py -f ~/LiME/RHEL8.3_64bit.mem linux_lsmod --profile=LinuxRedhat8_3_4_18_0-240x64

Volatility Foundation Volatility Framework 2.6.1

ffffffffc0535040 lime 20480

ffffffffc0530540 binfmt_misc 20480

ffffffffc05e8040 sunrpc 479232

<< 删节 >>

ffffffffc04f9540 nfit 65536

ffffffffc0266280 dm_mirror 28672

ffffffffc025e040 dm_region_hash 20480

ffffffffc0258180 dm_log 20480

ffffffffc024bbc0 dm_mod 151552

$

```

想知道哪些文件被哪些进程打开了吗?使用 `linux_bash` 插件可以列出这些信息:

```

$ python2 vol.py -f ~/LiME/RHEL8.3_64bit.mem linux_bash --profile=LinuxRedhat8_3_4_18_0-240x64 -v

Volatility Foundation Volatility Framework 2.6.1

Pid Name Command Time Command

-------- -------------------- ------------------------------ -------

227221 bash 2021-04-17 18:38:24 UTC+0000 lsmod

227221 bash 2021-04-17 18:38:24 UTC+0000 rm -f .log

227221 bash 2021-04-17 18:38:24 UTC+0000 ls -l /etc/zzz

227221 bash 2021-04-17 18:38:24 UTC+0000 cat ~/.vimrc

227221 bash 2021-04-17 18:38:24 UTC+0000 ls

227221 bash 2021-04-17 18:38:24 UTC+0000 cat /proc/817/cwd

227221 bash 2021-04-17 18:38:24 UTC+0000 ls -l /proc/817/cwd

227221 bash 2021-04-17 18:38:24 UTC+0000 ls /proc/817/

<< 删节 >>

227298 bash 2021-04-17 18:40:30 UTC+0000 gcc prt.c

227298 bash 2021-04-17 18:40:30 UTC+0000 ls

227298 bash 2021-04-17 18:40:30 UTC+0000 ./a.out

227298 bash 2021-04-17 18:40:30 UTC+0000 vim prt.c

227298 bash 2021-04-17 18:40:30 UTC+0000 gcc prt.c

227298 bash 2021-04-17 18:40:30 UTC+0000 ./a.out

227298 bash 2021-04-17 18:40:30 UTC+0000 ls

$

```

想知道哪些文件被哪些进程打开了吗?使用 `linux_lsof` 插件可以列出这些信息:

```

$ python2 vol.py -f ~/LiME/RHEL8.3_64bit.mem linux_lsof --profile=LinuxRedhat8_3_4_18_0-240x64

Volatility Foundation Volatility Framework 2.6.1

Offset Name Pid FD Path

------------------ ------------------------------ -------- -------- ----

0xffff9c83fb1e9f40 rsyslogd 71194 0 /dev/null

0xffff9c83fb1e9f40 rsyslogd 71194 1 /dev/null

0xffff9c83fb1e9f40 rsyslogd 71194 2 /dev/null

0xffff9c83fb1e9f40 rsyslogd 71194 3 /dev/urandom

0xffff9c83fb1e9f40 rsyslogd 71194 4 socket:[83565]

0xffff9c83fb1e9f40 rsyslogd 71194 5 /var/log/messages

0xffff9c83fb1e9f40 rsyslogd 71194 6 anon_inode:[9063]

0xffff9c83fb1e9f40 rsyslogd 71194 7 /var/log/secure

<< 删节 >>

0xffff9c8365761f40 insmod 228573 0 /dev/pts/0

0xffff9c8365761f40 insmod 228573 1 /dev/pts/0

0xffff9c8365761f40 insmod 228573 2 /dev/pts/0

0xffff9c8365761f40 insmod 228573 3 /root/LiME/src/lime-4.18.0-240.el8.x86_64.ko

$

```

#### 访问 Linux 插件脚本位置

通过读取内存转储和处理这些信息,你可以获得更多的信息。如果你会 Python,并且好奇这些信息是如何被处理的,可以到存储所有插件的目录,选择一个你感兴趣的,并看看 Volatility 是如何获得这些信息的:

```

$ ls volatility/plugins/linux/

apihooks.py common.py kernel_opened_files.py malfind.py psaux.py

apihooks.pyc common.pyc kernel_opened_files.pyc malfind.pyc psaux.pyc

arp.py cpuinfo.py keyboard_notifiers.py mount_cache.py psenv.py

arp.pyc cpuinfo.pyc keyboard_notifiers.pyc mount_cache.pyc psenv.pyc

aslr_shift.py dentry_cache.py ld_env.py mount.py pslist_cache.py

aslr_shift.pyc dentry_cache.pyc ld_env.pyc mount.pyc pslist_cache.pyc

<< 删节 >>

check_syscall_arm.py __init__.py lsmod.py proc_maps.py tty_check.py

check_syscall_arm.pyc __init__.pyc lsmod.pyc proc_maps.pyc tty_check.pyc

check_syscall.py iomem.py lsof.py proc_maps_rb.py vma_cache.py

check_syscall.pyc iomem.pyc lsof.pyc proc_maps_rb.pyc vma_cache.pyc

$

$

```

我喜欢 Volatility 的理由是他提供了许多安全插件。这些信息很难手动获取:

```

linux_hidden_modules - Carves memory to find hidden kernel modules

linux_malfind - Looks for suspicious process mappings

linux_truecrypt_passphrase - Recovers cached Truecrypt passphrases

```

Volatility 也允许你在内存转储中打开一个 shell,所以你可以运行 shell 命令来代替上面所有命令,并获得相同的信息:

```

$ python2 vol.py -f ~/LiME/RHEL8.3_64bit.mem linux_volshell --profile=LinuxRedhat8_3_4_18_0-240x64 -v

Volatility Foundation Volatility Framework 2.6.1

Current context: process systemd, pid=1 DTB=0x1042dc000

Welcome to volshell! Current memory image is:

file:///root/LiME/RHEL8.3_64bit.mem

To get help, type 'hh()'

>>>

>>> sc()

Current context: process systemd, pid=1 DTB=0x1042dc000

>>>

```

### 接下来的步骤

内存转储是了解 Linux 内部情况的好方法。试一试 Volatility 的所有插件,并详细研究它们的输出。然后思考这些信息如何能够帮助你识别入侵或安全问题。深入了解这些插件的工作原理,甚至尝试改进它们。如果你没有找到你想做的事情的插件,那就写一个并提交给 Volatility,这样其他人也可以使用它。

---

via: <https://opensource.com/article/21/4/linux-memory-forensics>

作者:[Gaurav Kamathe](https://opensource.com/users/gkamathe) 选题:[lujun9972](https://github.com/lujun9972) 译者:[RiaXu](https://github.com/ShuyRoy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | A computer's operating system and applications use the primary memory (or RAM) to perform various tasks. This volatile memory, containing a wealth of information about running applications, network connections, kernel modules, open files, and just about everything else is wiped out each time the computer restarts.

Memory forensics is a way to find and extract this valuable information from memory. [Volatility](https://github.com/volatilityfoundation/volatility) is an open source tool that uses plugins to process this type of information. However, there's a problem: Before you can process this information, you must dump the physical memory into a file, and Volatility does not have this ability.

Therefore, this article has two parts:

- The first part deals with acquiring the physical memory and dumping it into a file.

- The second part uses Volatility to read and process information from this memory dump.

I used the following test system for this tutorial, but it will work on any Linux distribution:

```

$ cat /etc/redhat-release

Red Hat Enterprise Linux release 8.3 (Ootpa)

$

$ uname -r

4.18.0-240.el8.x86_64

$

```

A note of caution:Part 1 involves compiling and loading a kernel module. Don't worry; it isn't as difficult as it sounds. Some guidelines:

- Follow the steps.

- Do not try any of these steps on a production system or your primary machine.

- Always use a test virtual machine (VM) to try things out until you are comfortable using the tools and understand how they work.

## Install the required packages

Before you get started, install the requisite tools. If you are using a Debian-based distro, use the equivalent `apt-get`

commands. Most of these packages provide the required kernel information and tools to compile the code:

`$ yum install kernel-headers kernel-devel gcc elfutils-libelf-devel make git libdwarf-tools python2-devel.x86_64-y`

## Part 1: Use LiME to acquire memory and dump it to a file

Before you can begin to analyze memory, you need a memory dump at your disposal. In an actual forensics event, this could come from a compromised or hacked system. Such information is often collected and stored to analyze how the intrusion happened and its impact. Since you probably do not have a memory dump available, you can take a memory dump of your test VM and use that to perform memory forensics.

Linux Memory Extractor ([LiME](https://github.com/504ensicsLabs/LiME)) is a popular tool for acquiring memory on a Linux system. Get LiME with:

```

$ git clone https://github.com/504ensicsLabs/LiME.git

$

$ cd LiME/src/

$

$ ls

deflate.c disk.c hash.c lime.h main.c Makefile Makefile.sample tcp.c

$

```

### Build the LiME kernel module

Run the `make`

command inside the `src`

folder. This creates a kernel module with a .ko extension. Ideally, the `lime.ko`

file will be renamed using the format `lime-<your-kernel-version>.ko`

at the end of `make`

:

```

$ make

make -C /lib/modules/4.18.0-240.el8.x86_64/build M="/root/LiME/src" modules

make[1]: Entering directory '/usr/src/kernels/4.18.0-240.el8.x86_64'

<< snip >>

make[1]: Leaving directory '/usr/src/kernels/4.18.0-240.el8.x86_64'

strip --strip-unneeded lime.ko

mv lime.ko lime-4.18.0-240.el8.x86_64.ko

$

$

$ ls -l lime-4.18.0-240.el8.x86_64.ko

-rw-r--r--. 1 root root 25696 Apr 17 14:45 lime-4.18.0-240.el8.x86_64.ko

$

$ file lime-4.18.0-240.el8.x86_64.ko

lime-4.18.0-240.el8.x86_64.ko: ELF 64-bit LSB relocatable, x86-64, version 1 (SYSV), BuildID[sha1]=1d0b5cf932389000d960a7e6b57c428b8e46c9cf, not stripped

$

```

### Load the LiME kernel module

Now it's time to load the kernel module to acquire the system memory. The `insmod`

command helps load the kernel module; once loaded, the module reads the primary memory (RAM) on your system and dumps the memory's contents to the file provided in the `path`

directory on the command line. Another important parameter is `format`

; keep the format `lime`

, as shown below. After inserting the kernel module, verify that it loaded using the `lsmod`

command:

```

$ lsmod | grep lime

$

$ insmod ./lime-4.18.0-240.el8.x86_64.ko "path=../RHEL8.3_64bit.mem format=lime"

$

$ lsmod | grep lime

lime 16384 0

$

```

You should see that the file given to the `path`