id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

13,746 | Debian 和 Ubuntu:有什么不同?应该选择哪一个? | https://itsfoss.com/debian-vs-ubuntu/ | 2021-09-02T23:08:31 | [

"Ubuntu",

"Debian"

] | https://linux.cn/article-13746-1.html |

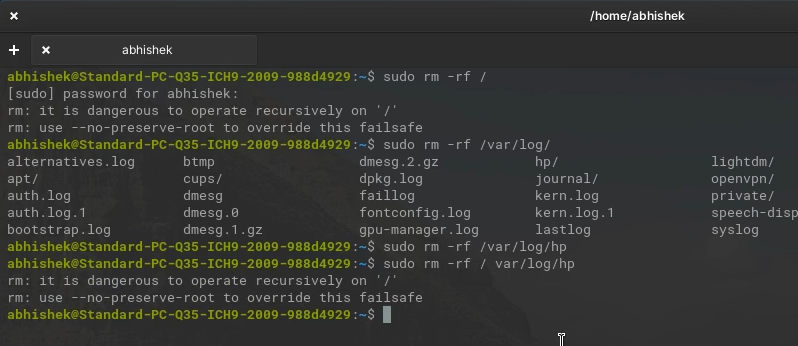

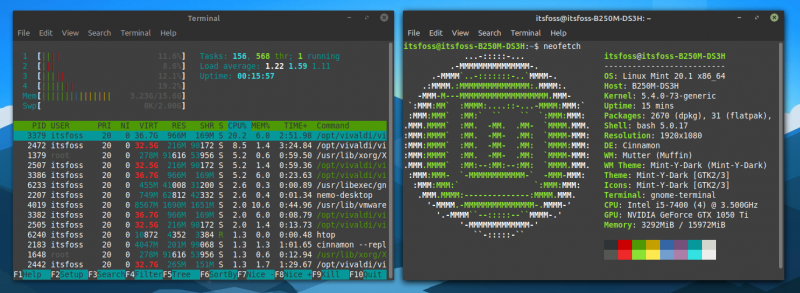

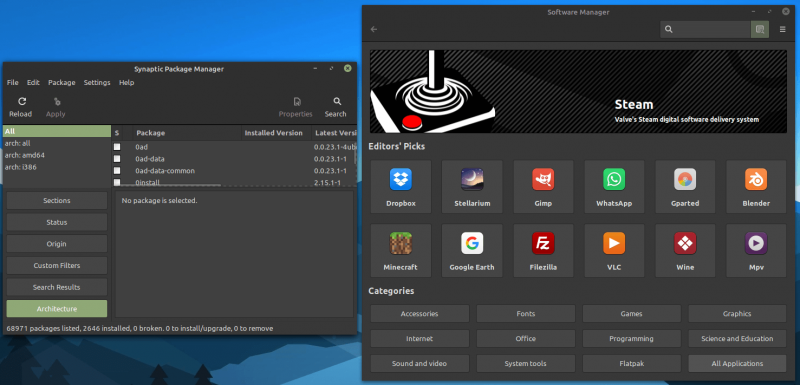

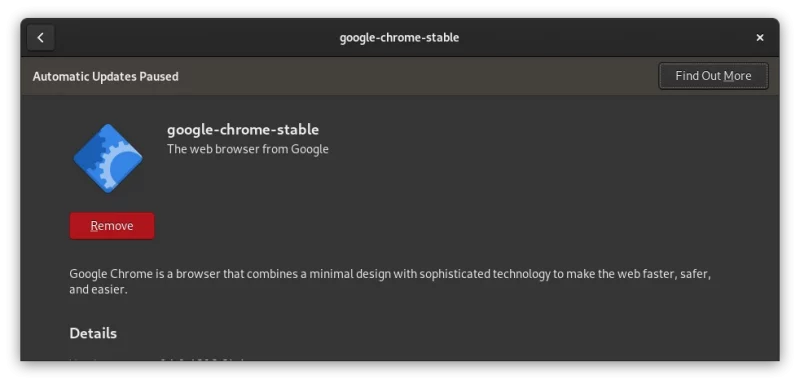

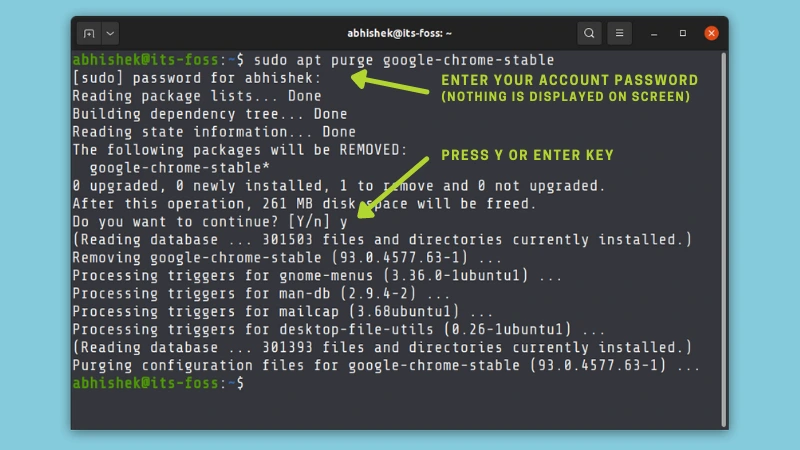

在 Debian 和 Ubuntu 系统中,你都可以 [使用 apt-get 命令](https://itsfoss.com/apt-get-linux-guide/) 来管理应用。你也可以在这两个发行版中安装 DEB 安装包。很多时候,你会在这两个发行版中发现同样的包安装命令。

它们两者是如此的相似,那么,它们两者之间有什么区别呢?

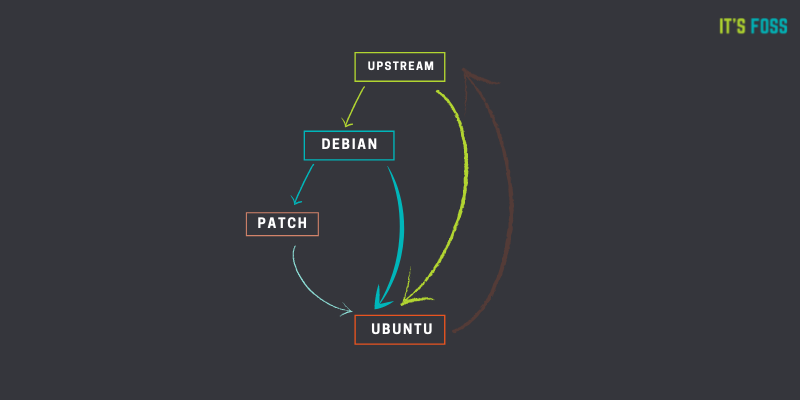

Debian 和 Ubuntu 属于同一系列的发行版。Debian 是由 Ian Murdock 在 1993 年创建的最初的发行版。Ubuntu 是 Mark Shuttleworth 在 2004 年基于 Debian 创建的发行版。

### Ubuntu 基于 Debian:这意味着什么?

Linux 发行版虽然有数百个,但其中只有少数是从零开始的独立发行版。 [Debian](https://www.debian.org/)、Arch、Red Hat 是其中几个不派生于其它发行版的使用最广的发行版。

Ubuntu 源自 Debian。这意味着 Ubuntu 使用与 Debian 相同的 APT 包管理系统,并共享来自 Debian 库中的大量包和库。它建立在 Debian 基础架构上。

这就是大多数“衍生”发行版所做的。它们使用相同的包管理器,并与基础发行版共享包。但它们也做了一些改变,添加了一些自己的包。这就是 Ubuntu 和 Debian 的不同之处,尽管它是从 Debian 衍生而来的。

### Ubuntu 和 Debian 的不同之处

因此,Ubuntu 构建在 Debian 架构和基础设施上,也与 Debian 一样是用 .DEB 格式的软件包。

这意味着使用 Ubuntu 和使用 Debian 是一样的吗?并不完全如此。有很多因素可以用来区分两个不同的发行版。

让我逐一讨论这些因素来比较 Ubuntu 和 Debian。请记住,有些比较适用于桌面版本,而有些比较适用于服务器版本。

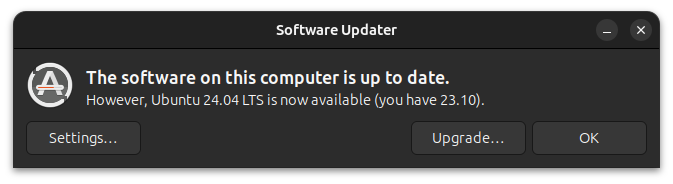

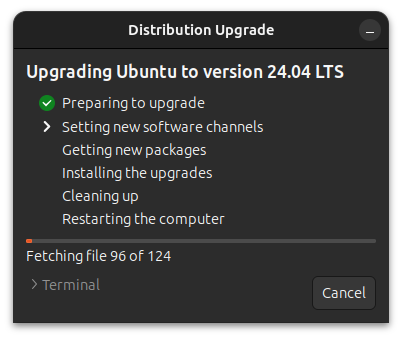

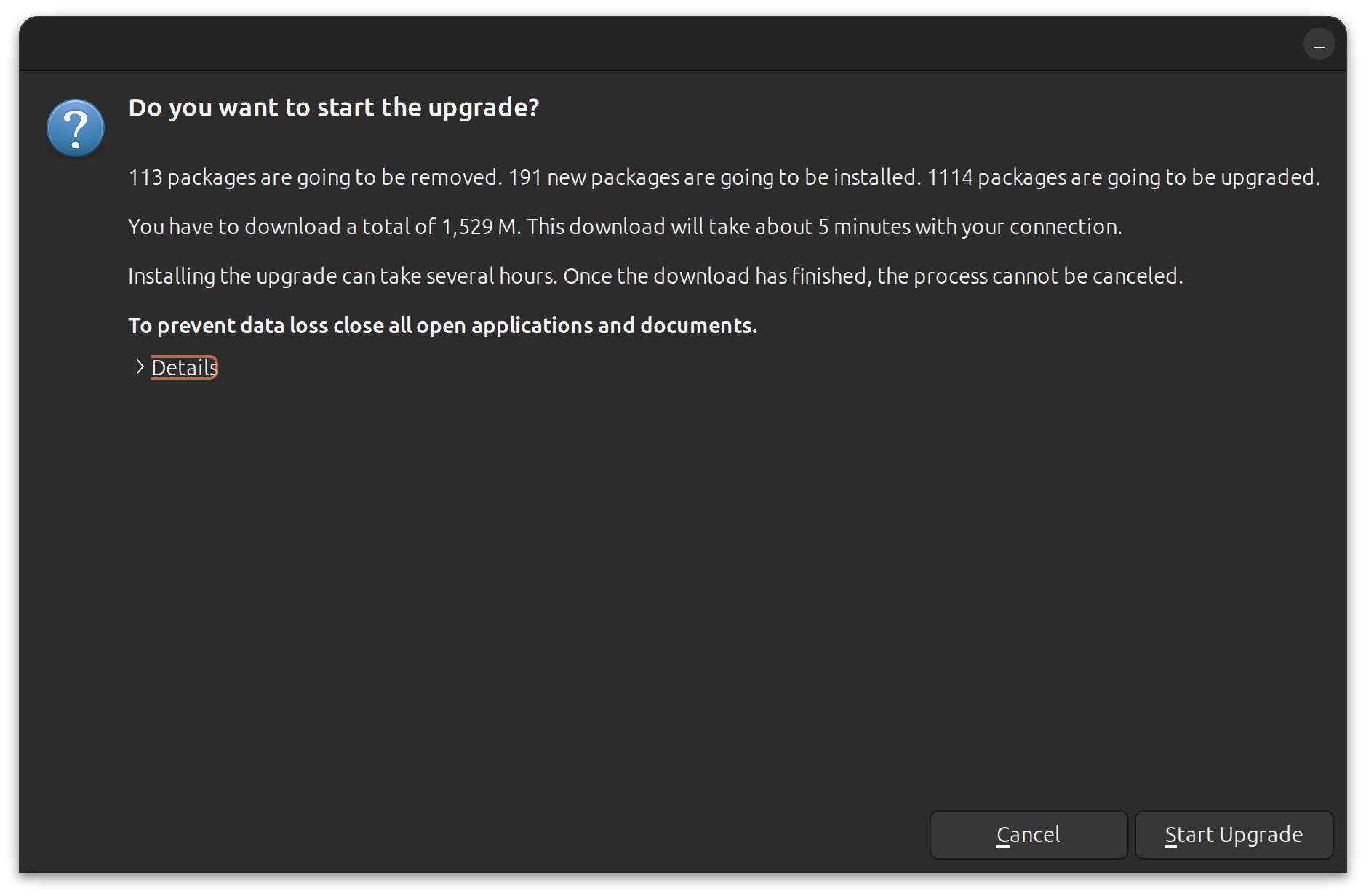

#### 1、发布周期

Ubuntu 有两种发布版本:LTS(长期支持)和常规版本。[Ubuntu LTS 版本](https://itsfoss.com/long-term-support-lts/) 每两年发布一次,并且会提供五年的支持。你可以选择升级到下一个可用的 LTS 版本。LTS 版本被认为更稳定。

还有一个非 LTS 版本,每六个月发布一次。这些版本仅仅提供九个月的支持,但是它们会有一些新的软件版本和功能。在当前的版本到达维护年限时,你应当升级到下一个 Ubuntu 版本。

所以基本上,你可以根据这些版本在稳定性和新特性之间进行选择。

另一方面,Debian 有三个不同的版本:稳定版、测试版和非稳定版。非稳定版是为了实际测试,应该避免使用。

测试版不是那么不稳定。它是用来为下一个稳定版做准备。有一些 Debian 用户更倾向于使用测试版来获取新的特性。

然后是稳定版。这是 Debian 的主要版本。Debian 稳定版可能没有最新的软件和功能,但在稳定性方面毋庸置疑。

每两年 Debian 会发布一个稳定版,并且会提供三年的支持。此后,你应当升级到下一个可用的稳定版。

#### 2、软件更新

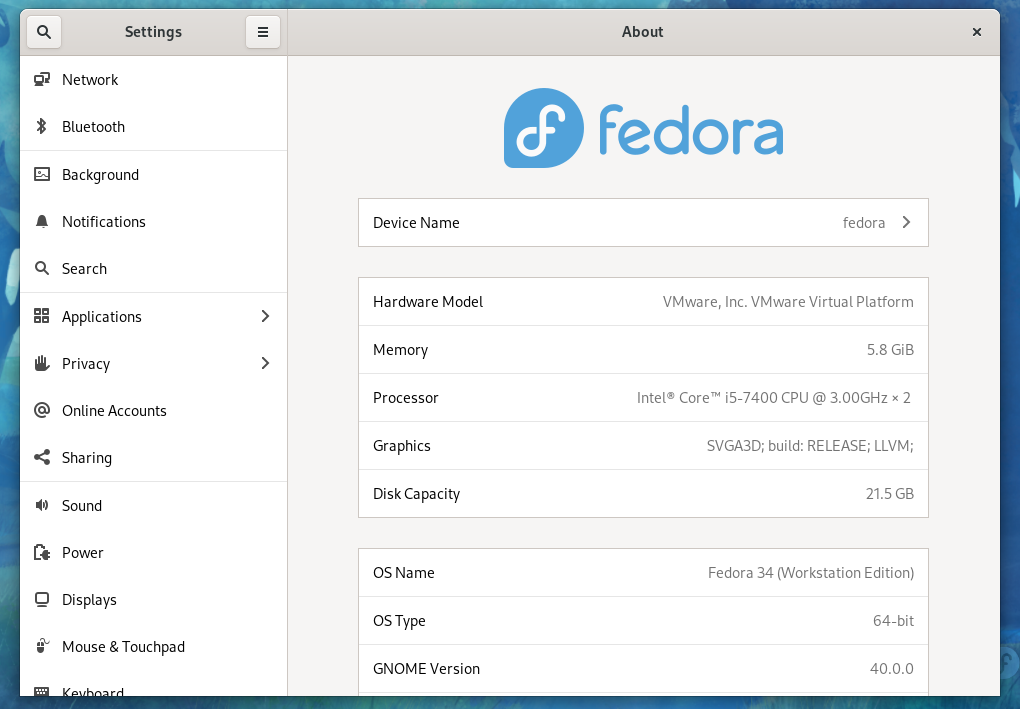

Debian 更关注稳定性,这意味着它并不总是使用最新版本的软件。例如,最新的 Debian 11 用的 GNOME 版本为 3.38,并不是最新版的 GNOME 3.40。

对于 GIMP、LibreOffice 等其它软件也是如此。这是你必须对 Debian 做出的妥协。这就是“Debian stable = Debian stale”笑话在 Linux 社区流行的原因。

Ubuntu LTS 版本也关注稳定性。但是它们通常拥有较新版本的常见软件。

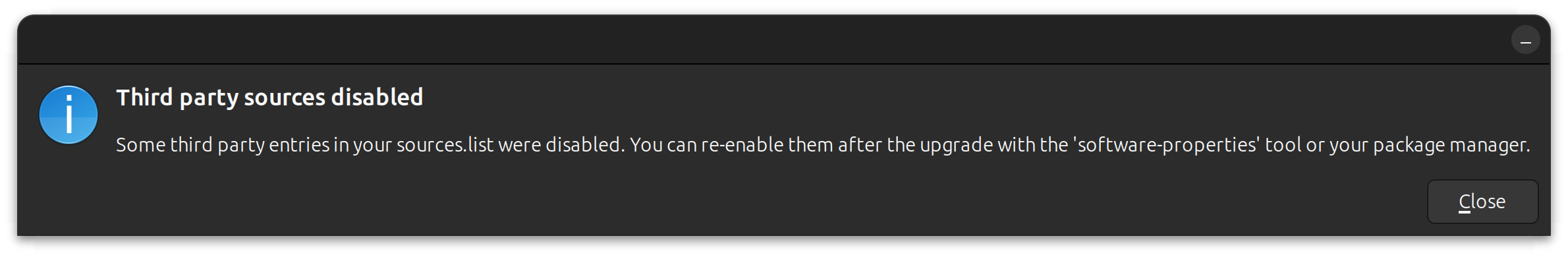

你应该注意,对于某些软件,从开发者的仓库安装也是一种选择。例如,如果你想要安装最新版的 Docker,你可以在 Debian 和 Ubuntu 中添加 Docker 仓库。

总体来说,相比较于 Ubuntu ,Debian 稳定版的软件版本会更旧。

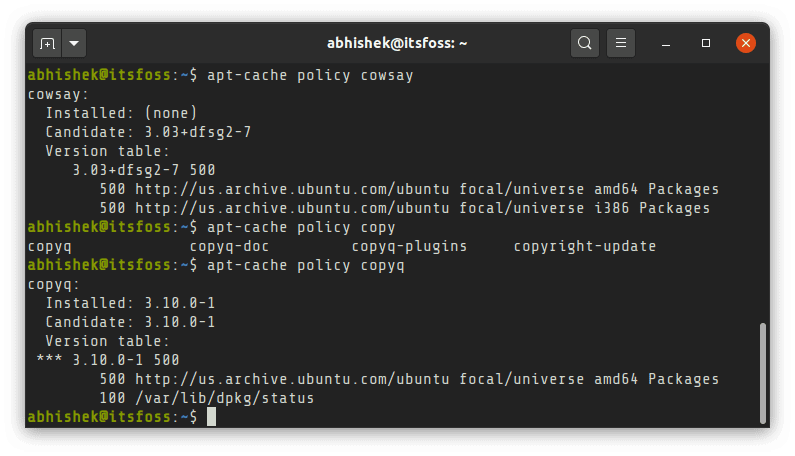

#### 3、软件可用性

Debian 和 Ubuntu 都拥有一个巨大的软件仓库。然而,[Ubuntu 还有 PPA](https://itsfoss.com/ppa-guide/)(<ruby> 个人软件包存档 <rt> Personal Package Archive </rt></ruby>)。通过 PPA,安装更新版本的软件或者获取最新版本的软件都将会变的更容易。

你可以在 Debian 中尝试使用 PPA,但是体验并不好。大多数时候你都会遇到问题。

#### 4、支持的平台

Ubuntu 可以在 64 位的 x86 和 ARM 平台上使用。它不再提供 32 位的镜像。

另一方面,Debian 支持 32 位和 64 位架构。除此之外,Debian 还支持 64 位 ARM(arm64)、ARM EABI(armel)、ARMv7(EABI hard-float ABI,armhf)、小端 MIPS(mipsel)、64 位小端 MIPS(mips64el)、64 位小端 PowerPC(ppc64el) 和 IBM System z(s390x)。

所以它也被称为 “<ruby> 通用操作系统 <rt> universal operating system </rt></ruby>”。

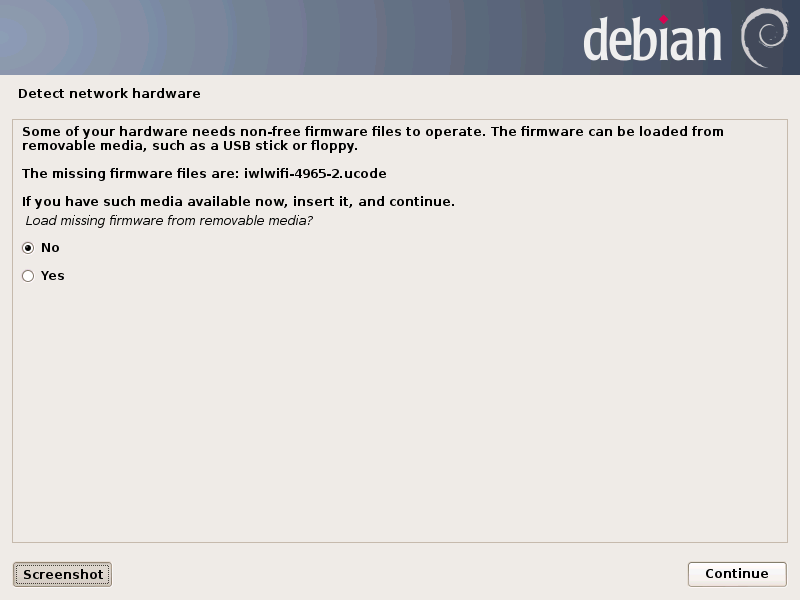

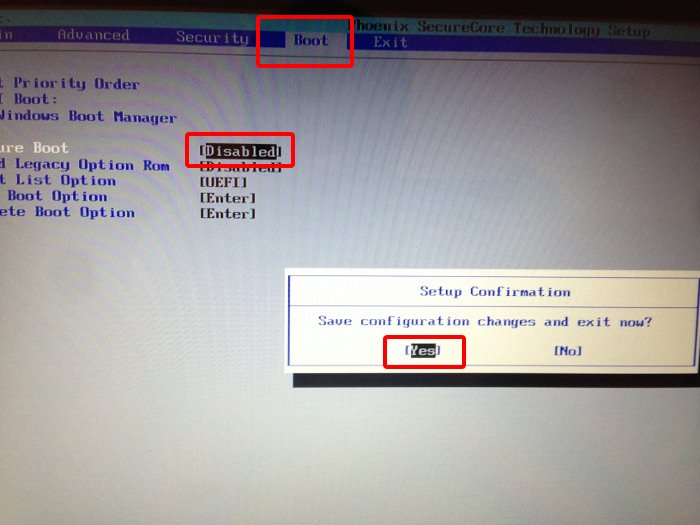

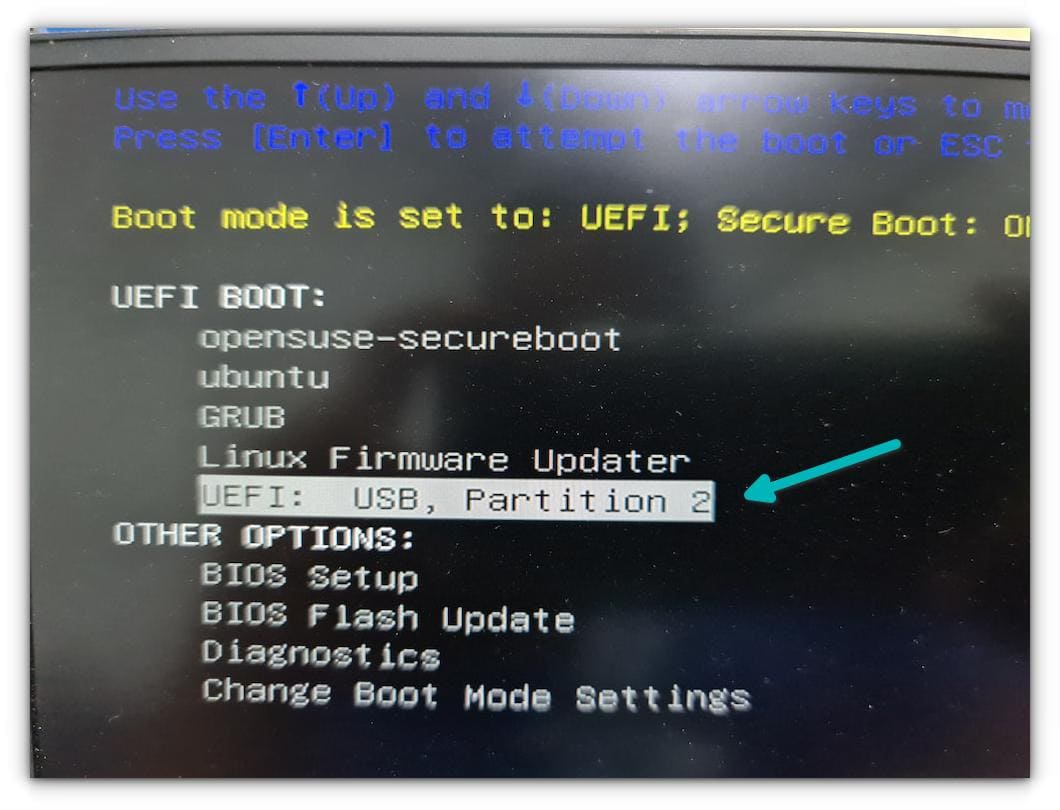

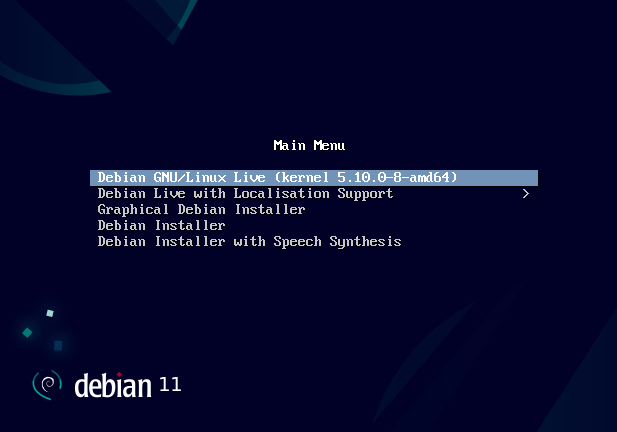

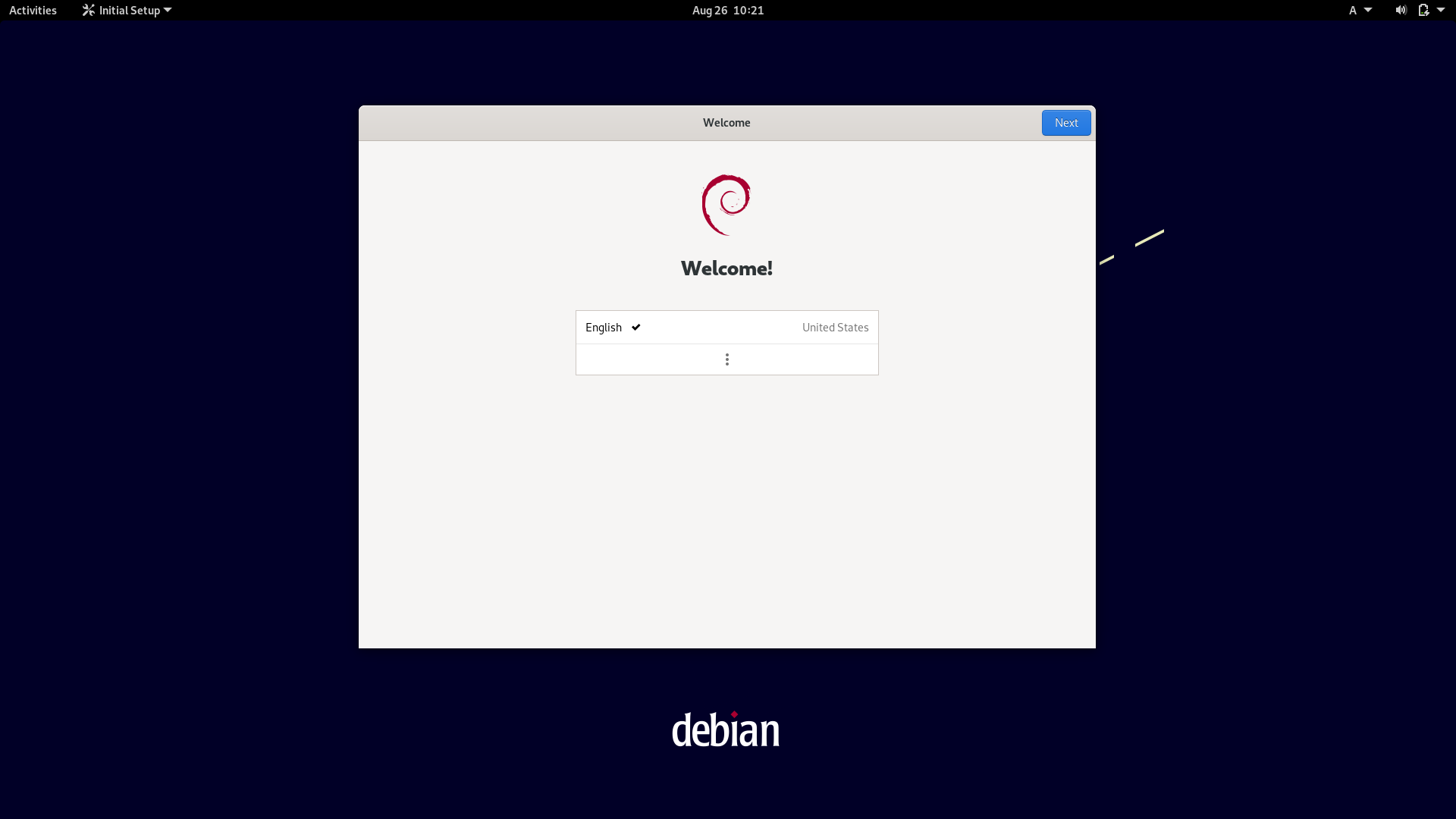

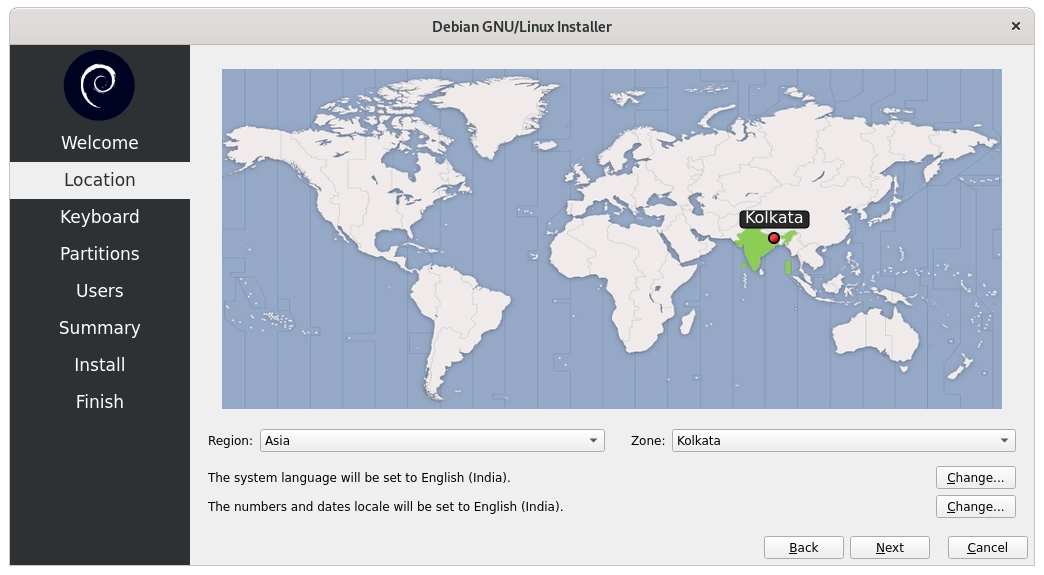

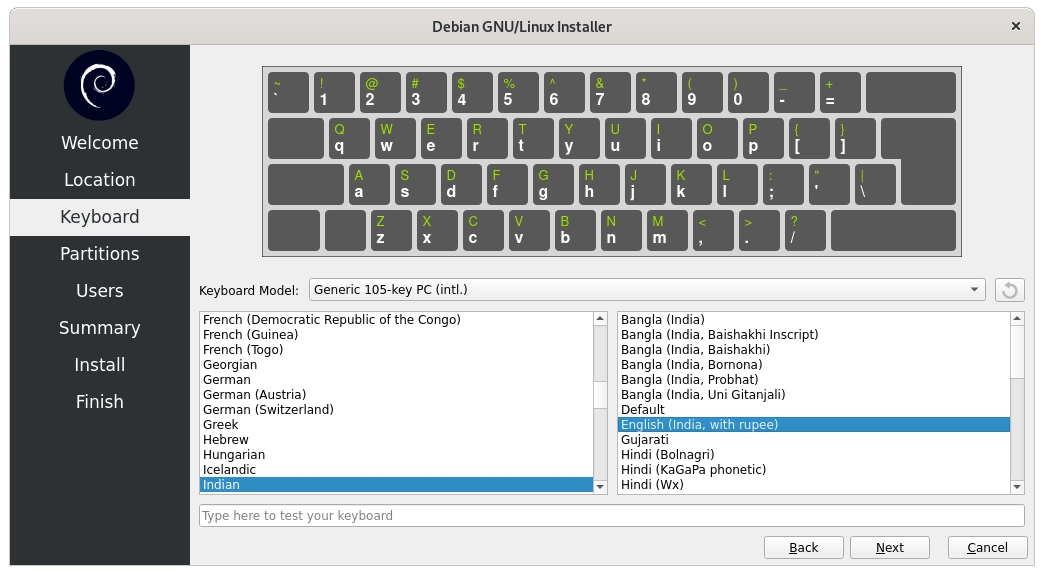

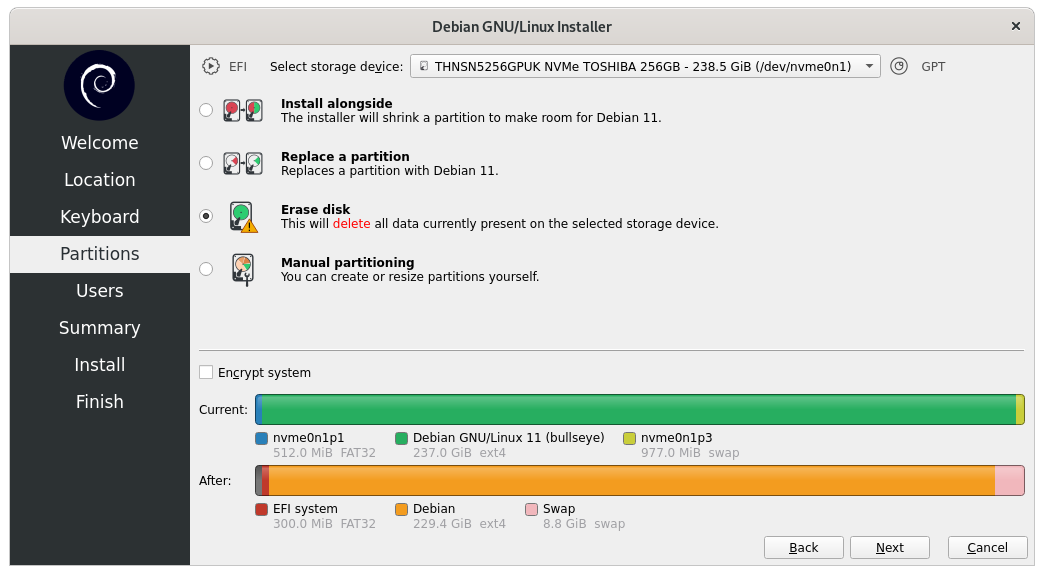

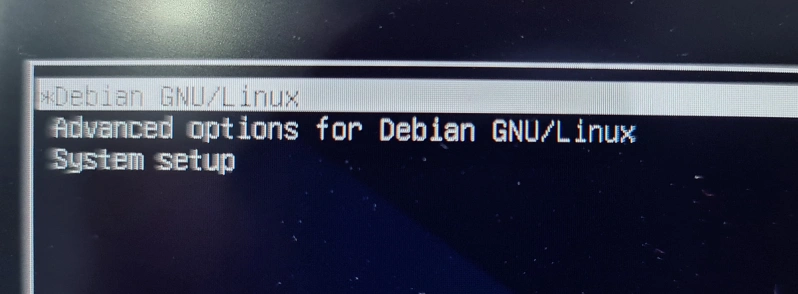

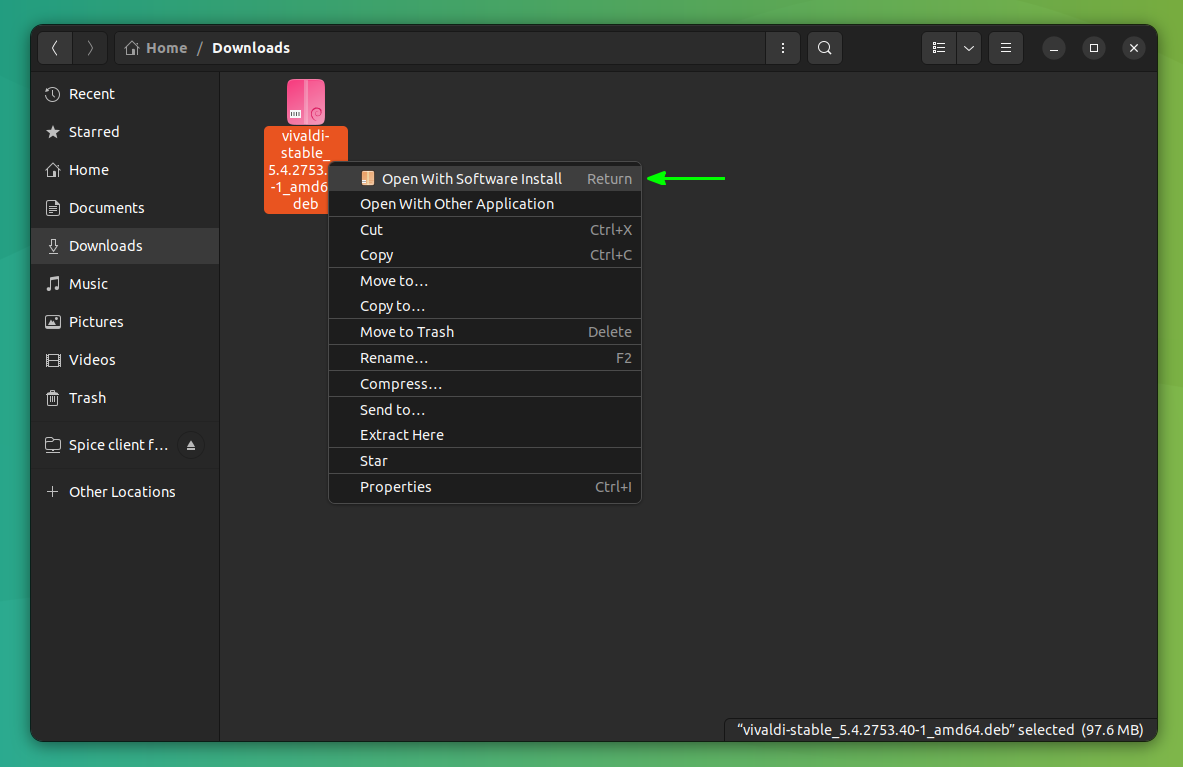

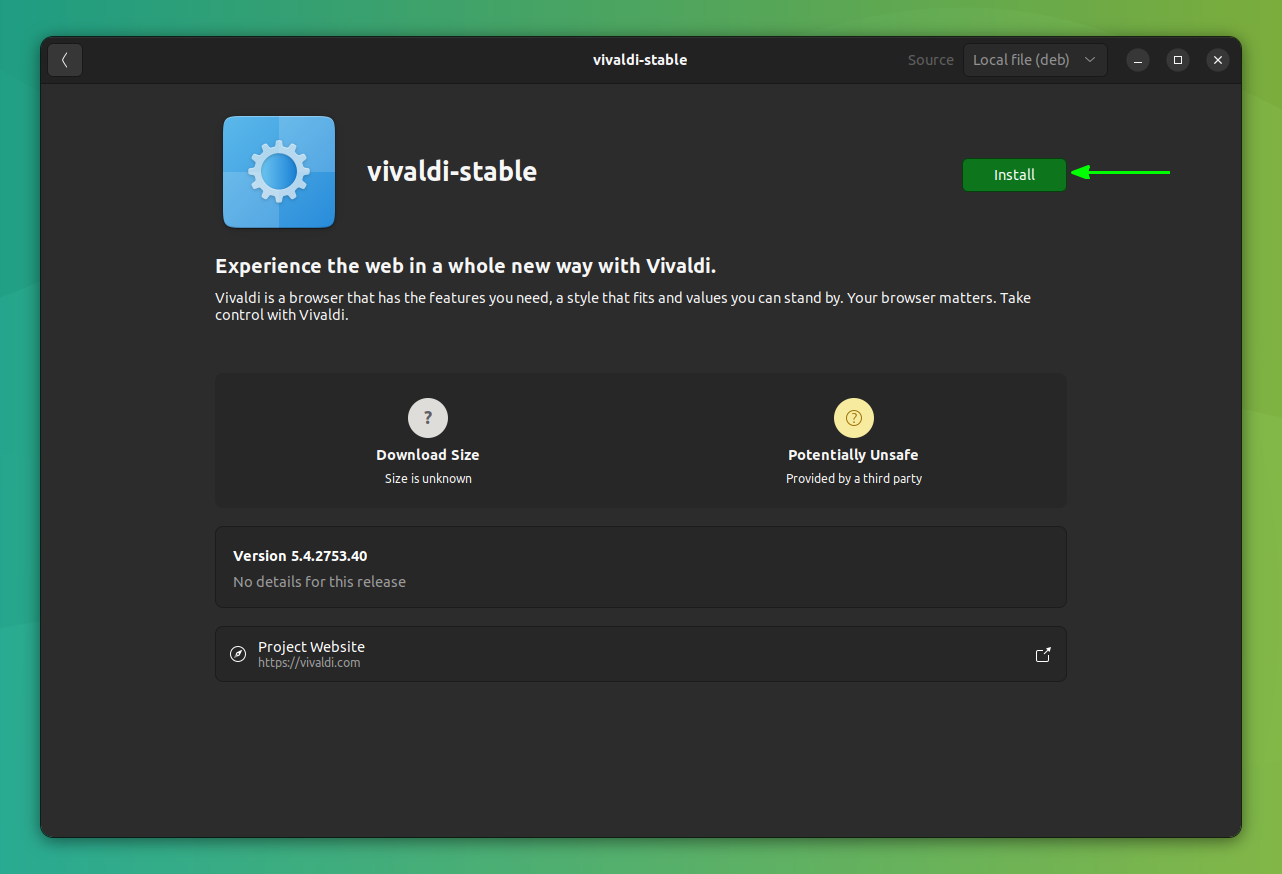

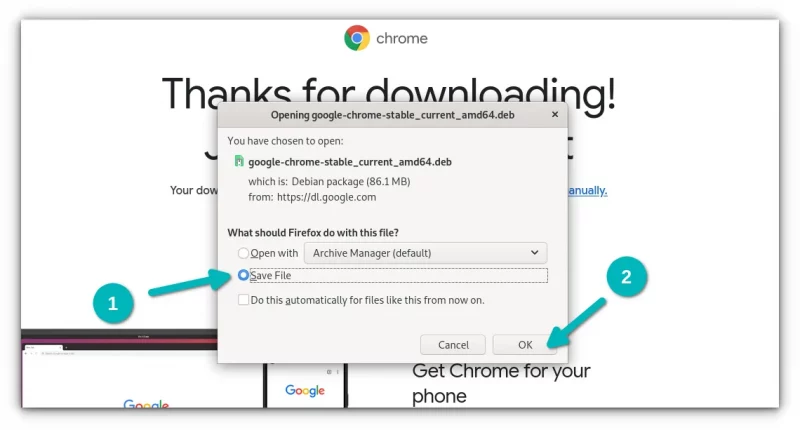

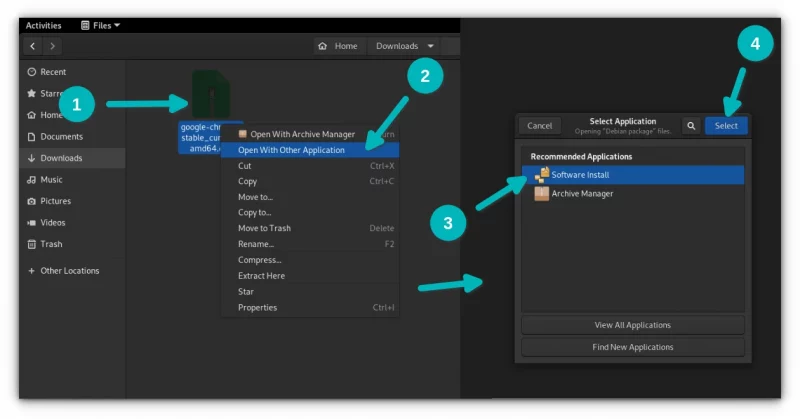

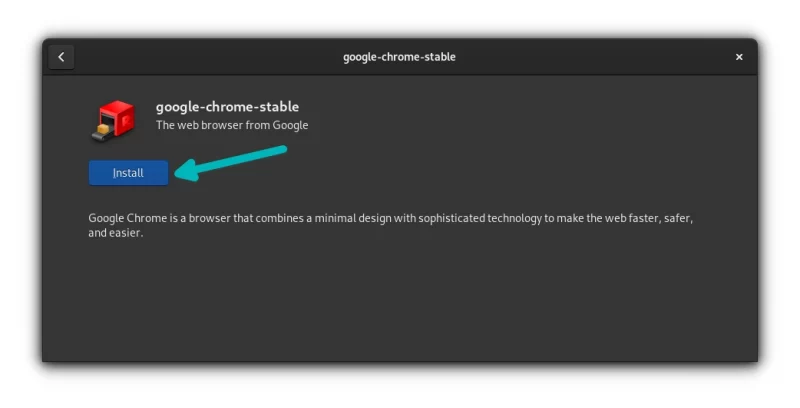

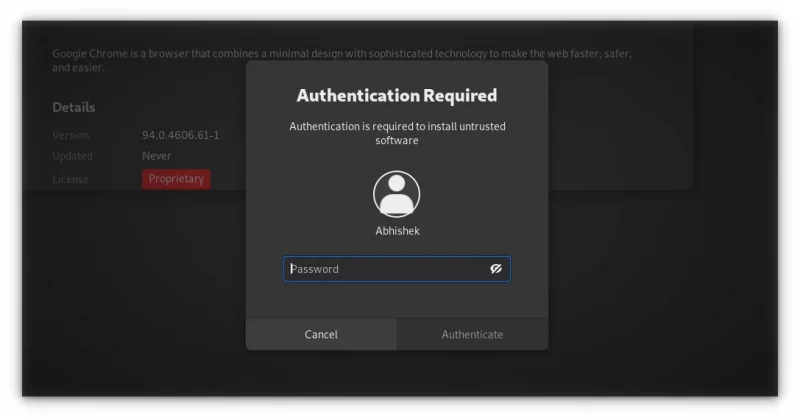

#### 5、安装

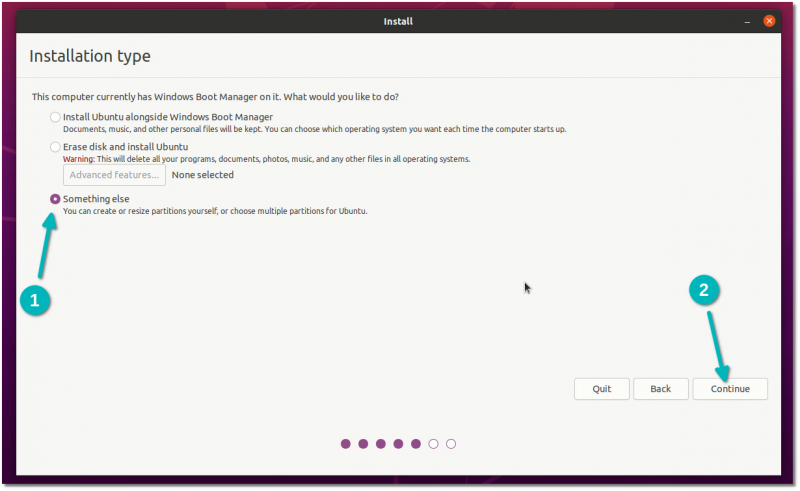

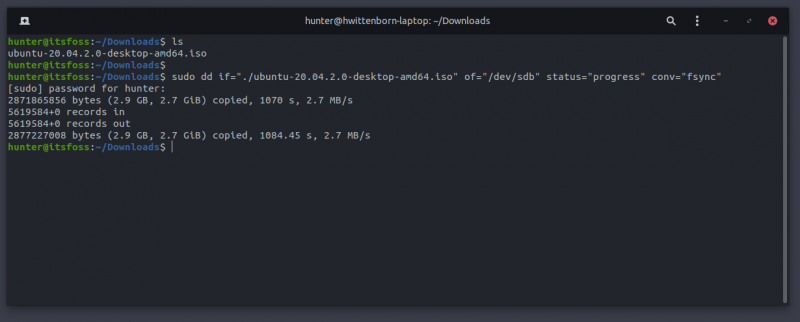

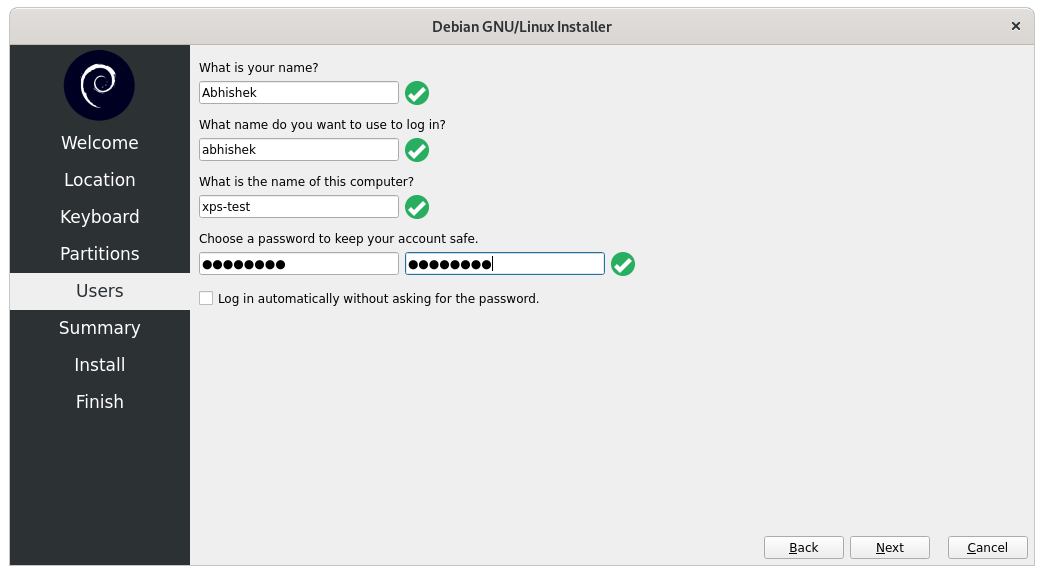

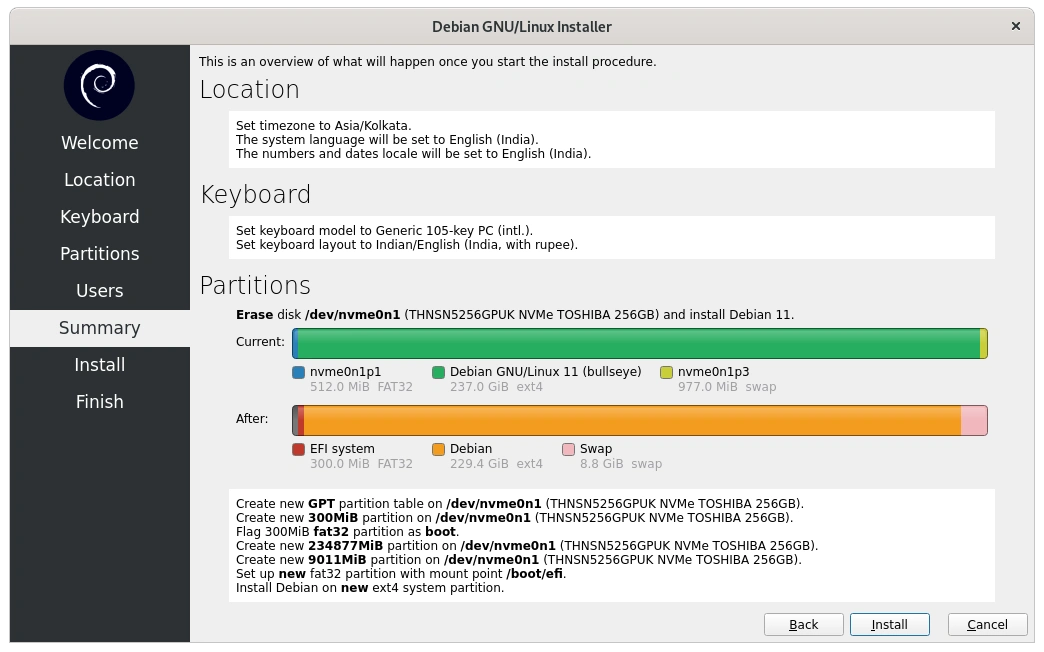

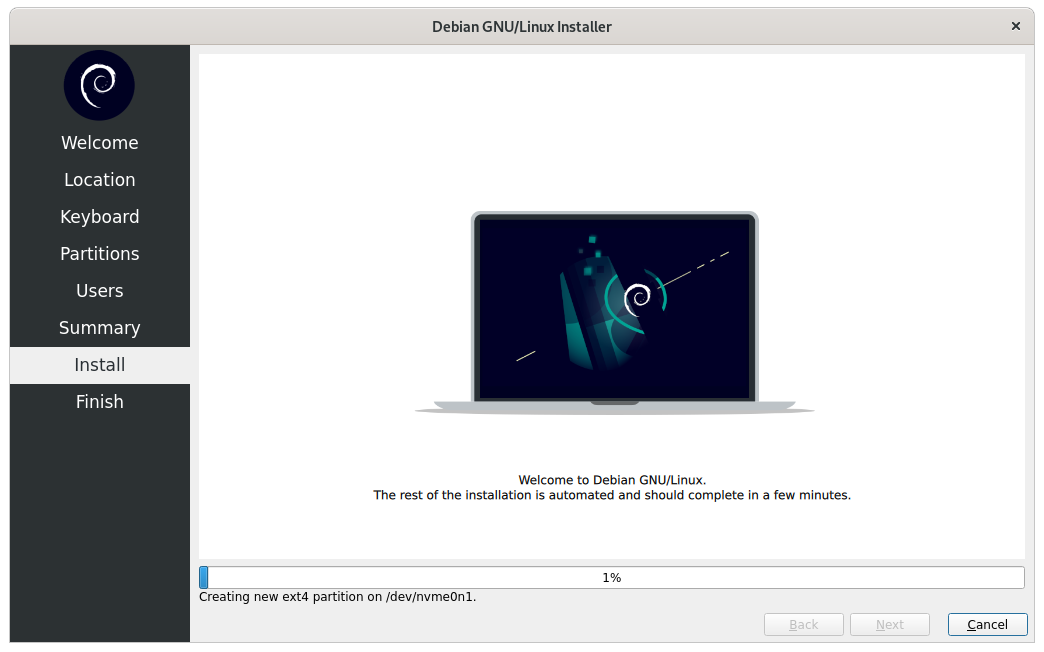

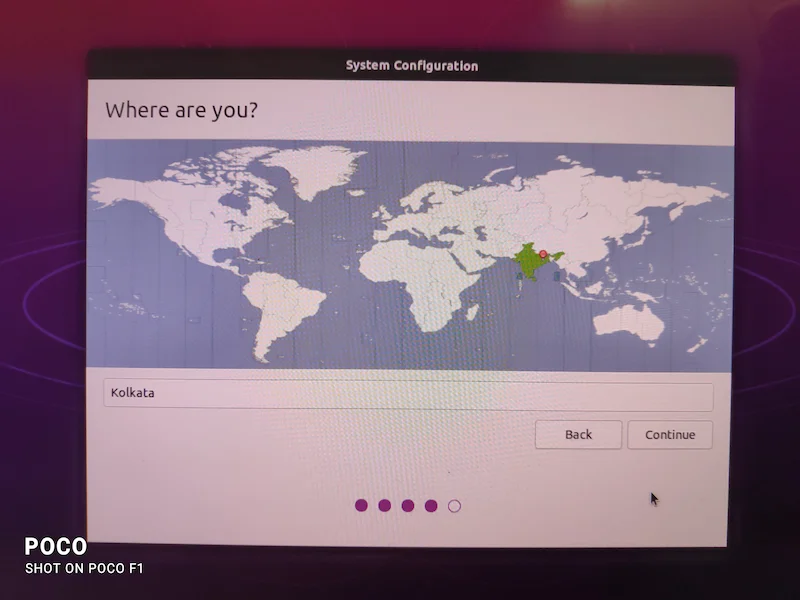

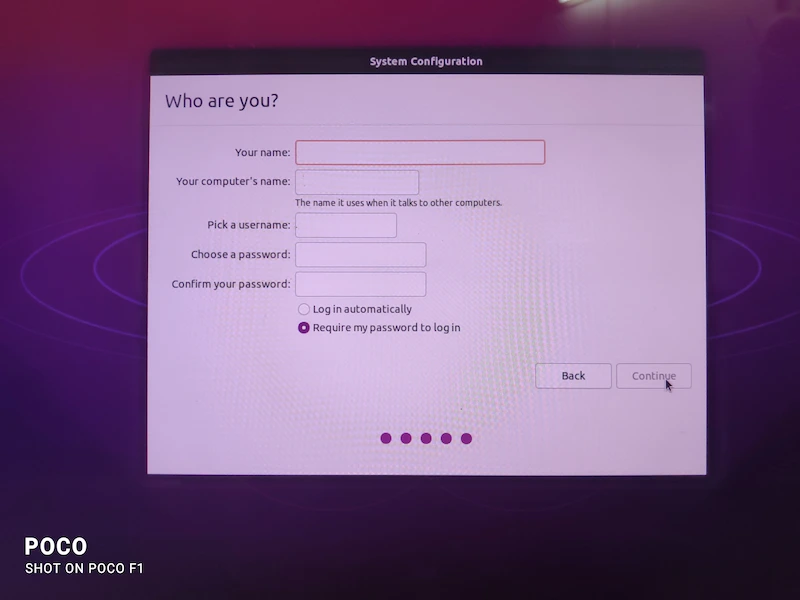

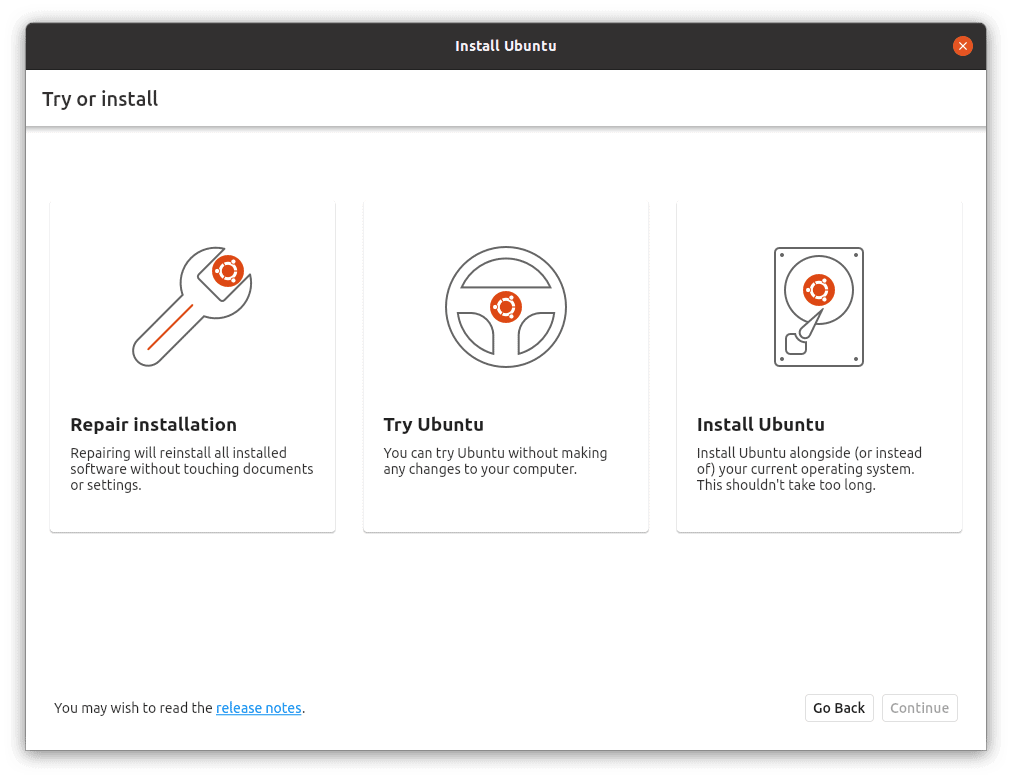

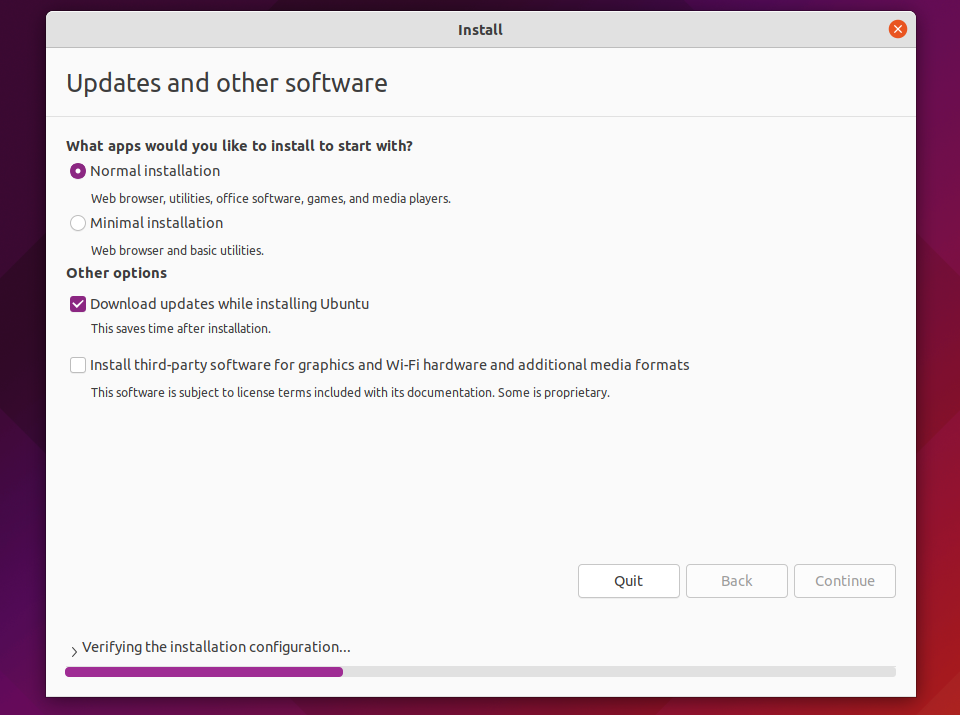

[安装 Ubuntu](https://itsfoss.com/install-ubuntu/) 比安装 Debian 容易得多。我并不是在开玩笑。即使对于有经验的 Linux 用户,Debian 也可能令人困惑。

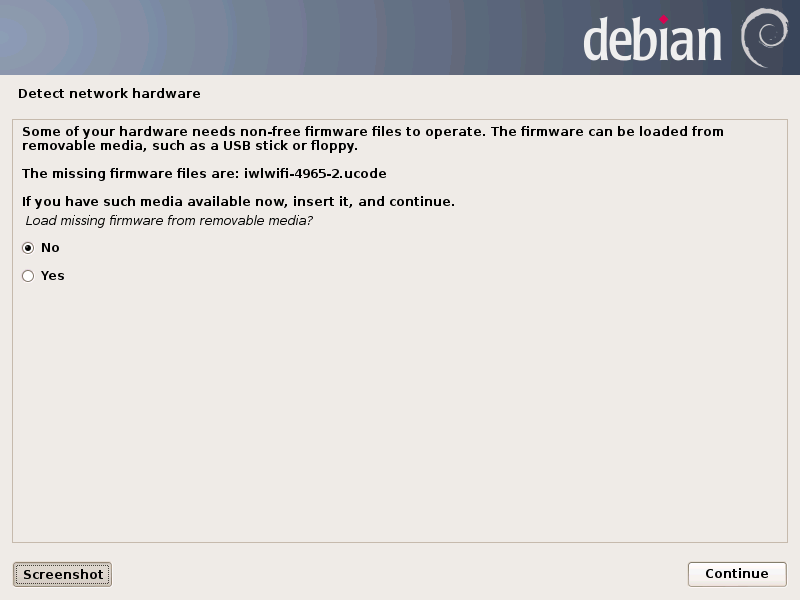

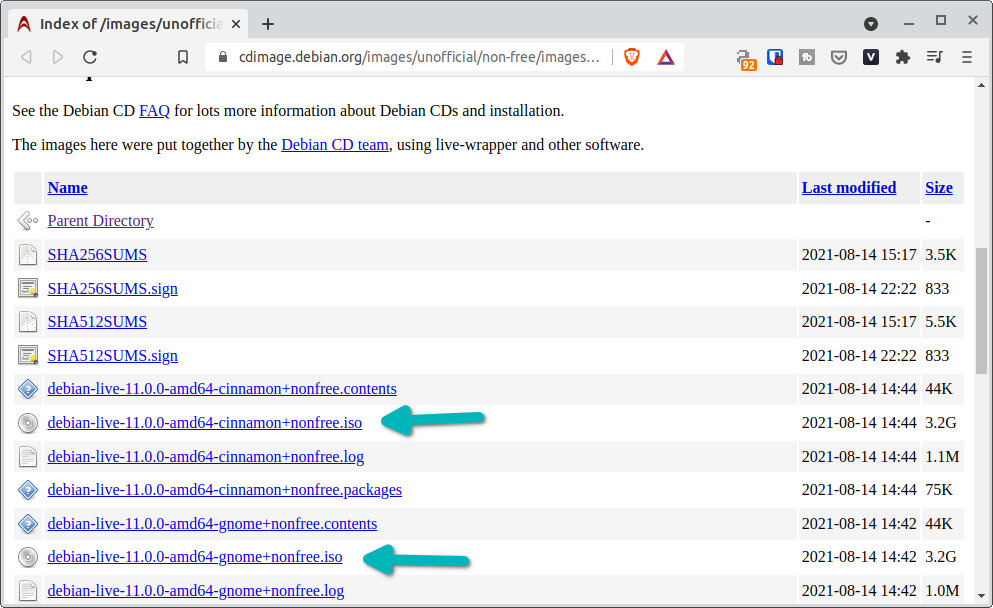

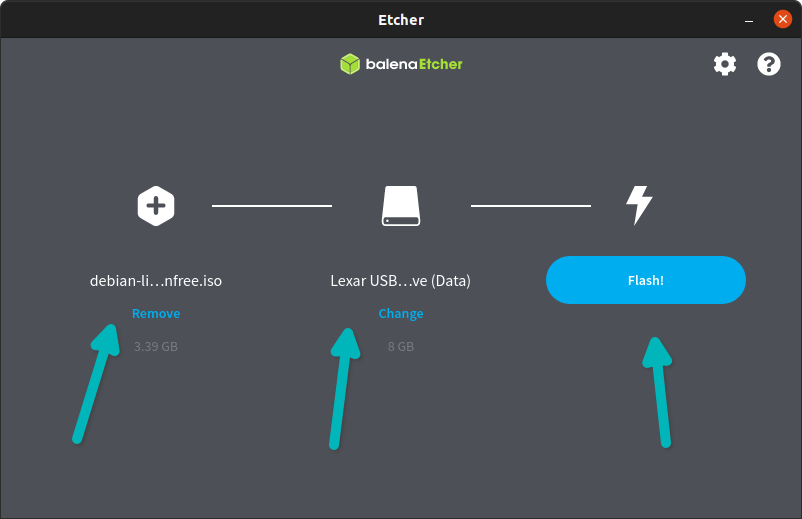

当你下载 Debian 的时候,它默认提供的是最小化镜像。此镜像没有非自由(非开源)的固件。如果你继续安装它,你就可能会发现你的网络适配器和其它硬件将无法识别。

有一个单独的包含固件的非自由镜像,但它是隐藏的,如果你不知道,你可能会大吃一惊。

Ubuntu 在默认提供的镜像中包含专有驱动程序和固件时要宽容的多。

此外,Debian 安装程序看起来很旧,而 Ubuntu 安装程序看起来就比较现代化。Ubuntu 安装程序还可以识别磁盘上其它已安装的操作系统,并为你提供将 Ubuntu 与现有操作系统一起安装的选项(双引导)。但我在测试时并没有注意到 Debian 有此选项。

#### 6、开箱即用的硬件支持

就像之前提到的,Debian 主要关注 [FOSS](https://itsfoss.com/what-is-foss/)(自由和开源软件)。这意味着 Debian 提供的内核不包括专有驱动程序和固件。

这并不是说你无法使其工作,而是你必须添加/启动额外的存储库并手动安装。这可能令人沮丧,特别是对于初学者来说。

Ubuntu 并不完美,但在提供开箱即用的驱动程序和固件方面,它比 Debian 好得多。这意味着更少的麻烦和更完整的开箱即用体验。

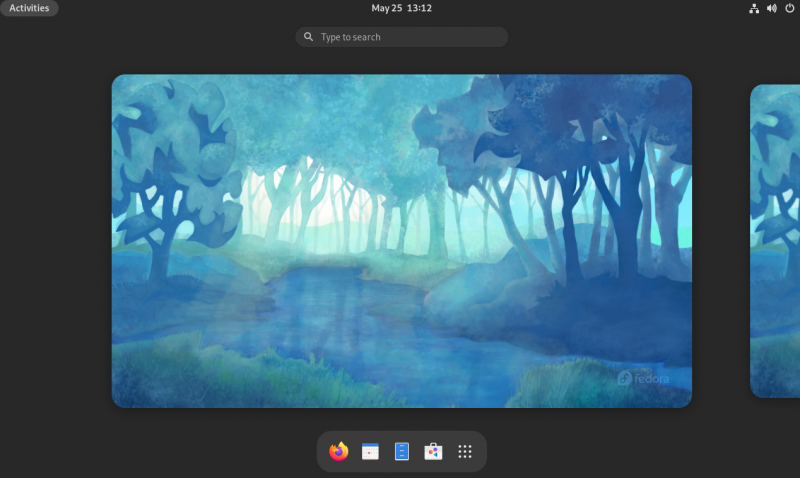

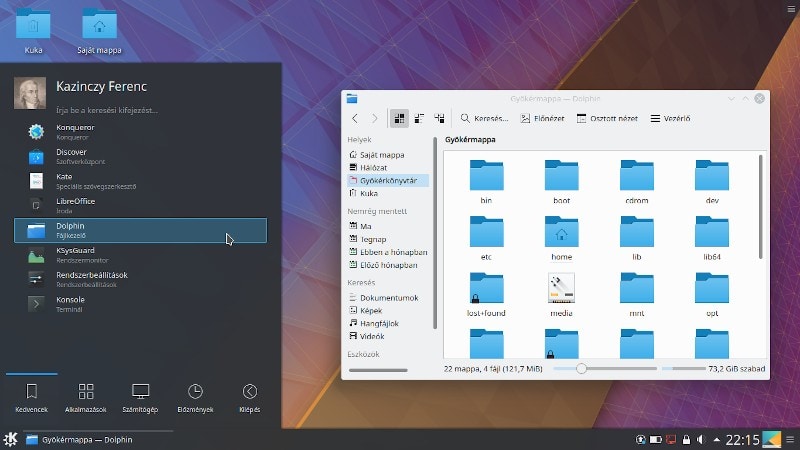

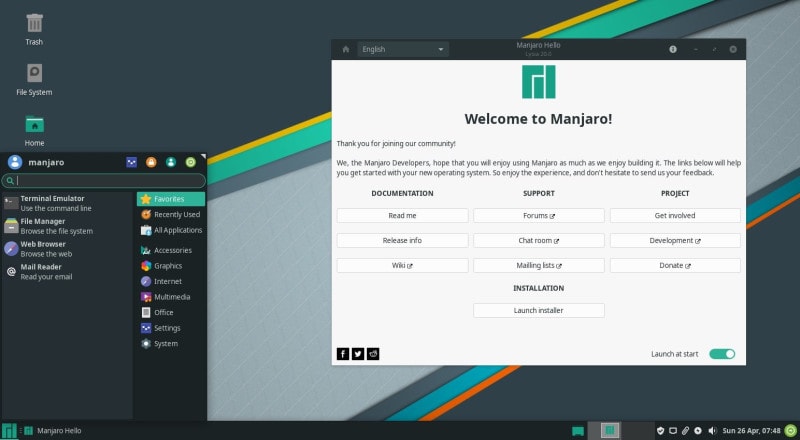

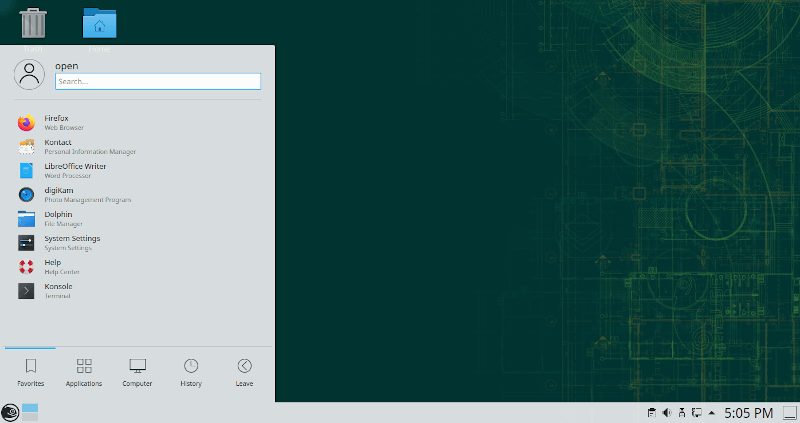

#### 7、桌面环境选择

Ubuntu 默认使用定制的 GNOME 桌面环境。你可以在其上安装 [其它桌面环境](https://itsfoss.com/best-linux-desktop-environments/),或者选择 [各种不同桌面风格的 Ubuntu](https://itsfoss.com/which-ubuntu-install/),如 Kubuntu(使用 KDE 桌面)、Xubuntu(使用 Xfce 桌面)等。

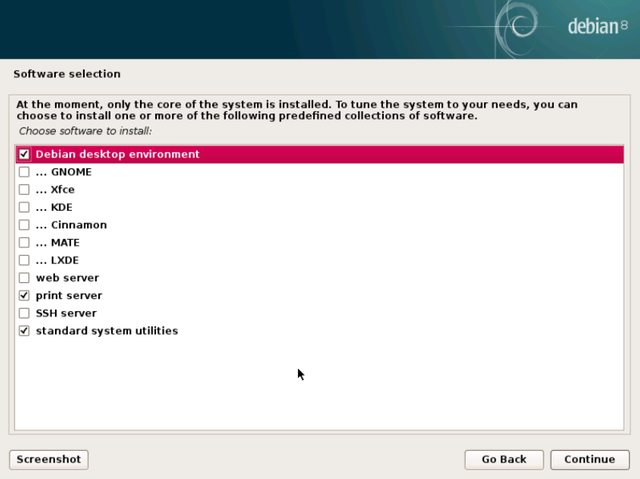

Debian 也默认安装了 GNOME 桌面。但是它会让你在安装的过程中选择你要安装的桌面环境。

你还可以从其网站获取 [特定桌面环境的 ISO 镜像](https://cdimage.debian.org/debian-cd/current-live/amd64/iso-hybrid/)。

#### 8、游戏性

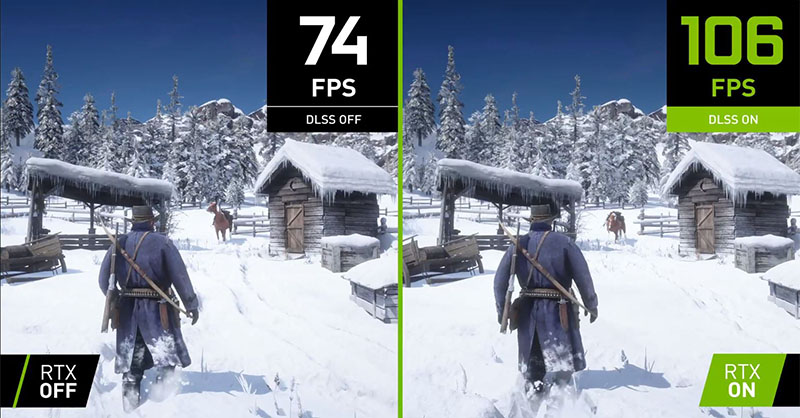

由于 Stream 及其 Proton 项目,Linux 上的游戏总体上有所改善。尽管如此,游戏在很大程度上取决于硬件。

在硬件兼容性上,Ubuntu 比 Debian 在支持专有驱动程序方面要好。

并不是说在 Debian 中不能做到这一点,而是需要一些时间和精力来实现。

#### 9、性能

性能部分没有明显的“赢家”,无论是在服务器版本还是在桌面版本。 Debian 和 Ubuntu 作为桌面和服务器操作系统都很受欢迎。

性能取决于你系统的硬件和你所使用的软件组件。你可以在你的操作系统中调整和控制你的系统。

#### 10、社区和支持

Debian 是一个真正的社区项目。此项目的一切都由其社区成员管理。

Ubuntu 由 [Canonical](https://canonical.com/) 提供支持。然而,它并不是一个真正意义上的企业项目。它确实有一个社区,但任何事情的最终决定权都掌握在 Canonical 手中。

就支持而言,Ubuntu 和 Debian 都有专门的论坛,用户可以在其中寻求帮助和提出建议。

Canonical 还为其企业客户提供收费的专业支持。Debian 则没有这样的功能。

### 结论

Debian 和 Ubuntu 都是桌面或服务器操作系统的可靠选择。 APT 包管理器和 DEB 包对两者都是通用的,因此提供了一些相似的体验。

然而,Debian 仍然需要一定程度的专业知识,特别是在桌面方面。如果你是 Linux 新手,坚持使用 Ubuntu 将是你更好的选择。在我看来,你应该积累一些经验,熟悉了一般的 Linux,然后再尝试使用 Debian。

并不是说你不能从一开始就使用 Debian,但对于 Linux 初学者来说,这并不是一种很好的体验。

欢迎你对这场 Debian 与 Ubuntu 辩论发表意见。

---

via: <https://itsfoss.com/debian-vs-ubuntu/>

作者:[Abhishek Prakash](https://itsfoss.com/author/abhishek/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[perfiffer](https://github.com/perfiffer) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

You can [use apt-get commands](https://itsfoss.com/apt-get-linux-guide/) for managing applications in both Debian and Ubuntu. You can install DEB packages in both distributions as well. Many times, you’ll find common package installation instructions for both distributions.

So, what’s the difference between the two, if they are so similar?

Debian and Ubuntu belong to the same side of the distribution spectrum. Debian is the original distribution created by Ian Murdock in 1993. Ubuntu was created in 2004 by Mark Shuttleworth and it is based on Debian.

## Ubuntu is based on Debian: What does it mean?

While there are hundreds of Linux distributions, only a handful of them are independent ones, created from scratch. [Debian](https://www.debian.org/?ref=itsfoss.com), Arch, Red Hat are some of the biggest distributions that do not derive from any other distribution.

Ubuntu is derived from Debian. It means that Ubuntu uses the same APT packaging system as Debian and shares a huge number of packages and libraries from Debian repositories. It utilizes the Debian infrastructure as base.

That’s what most ‘derived’ distributions do. They use the same package management system and share packages as the base distribution. But they also add some packages and changes of their own. And that is how Ubuntu is different from Debian despite being derived from it.

## Difference between Ubuntu and Debian

So, Ubuntu is built on Debian architecture and infrastructure and uses .DEB packages same as Debian.

Does it mean using Ubuntu is the same as using Debian? Not quite so. There are many more factors involved that distinguish one distribution from the other.

Let me discuss these factors one by one to compare Ubuntu and Debian. Please keep in mind that some comparisons are applicable to desktop editions while some apply to the server editions.

### 1. Release cycle

Ubuntu has two kinds of releases: LTS and regular. [Ubuntu LTS (long term support) release](https://itsfoss.com/long-term-support-lts/) comes out every two years and they get support for five years. You have the option to upgrade to the next available LTS release. The LTS releases are considered more stable.

There are also non-LTS releases every six months. These releases are supported for nine months only, but they have newer software versions and features. You have to upgrade to the next [Ubuntu version when the current one reaches end of life](https://itsfoss.com/end-of-life-ubuntu/).

So basically, you have the option to choose between stability and new features based on these releases.

On the other hand, Debian has three different releases: Stable, Testing and Unstable. Unstable is for actual testing and should be avoided.

The testing branch is not that unstable. It is used for preparing the next stable branch. Some Debian users prefer the testing branch to get newer features.

And then comes the stable branch. This is the main Debian release. It may not have the latest software and feature but when it comes to stability, Debian Stable is rock solid.

There is a new stable release every two years and it is supported for a total of five years. The first three years are by the Debian security team and the next two years by volunteers (in the form of the Debian LTS team). After that, you have to upgrade to the next available stable release.

### 2. Software freshness

Debian’s focus on stability means that it does not always aim for the latest versions of the software. For example, the latest Debian 11 features GNOME 3.38, not the latest GNOME 3.40.

The same goes for other software like GIMP, LibreOffice, etc. This is a compromise you have to make with Debian. This is why “Debian stable = Debian stale” joke is popular in the Linux community.

Ubuntu LTS releases also focus on stability. But they usually have more recent versions of the popular software.

You should note that for * some software*, installing from the developer’s repository is also an option. For example, if you want the latest Docker version, you can add Docker repository in both Debian and Ubuntu.

Overall, software in Debian Stable often have older versions when compared to Ubuntu.

### 3. Software availability

Both Debian and Ubuntu has a huge repository of software. However, [Ubuntu also has PPA](https://itsfoss.com/ppa-guide/) (Personal Package Archive). With PPA, installing newer software or getting the latest software version becomes a bit easier.

You may try using PPA in Debian but it won’t be a smooth experience. You’ll encounter issues most of the time.

### 4. Supported platforms

Ubuntu is available on 64-bit x86 and ARM platforms. It does not provide 32-bit ISO anymore.

Debian, on the other hand, supports both 32-bit and 64-bit architecture. Apart from that Debian also supports 64-bit ARM (arm64), ARM EABI (armel), ARMv7 (EABI hard-float ABI, armhf), little-endian MIPS (mipsel), 64-bit little-endian MIPS (mips64el), 64-bit little-endian PowerPC (ppc64el) and IBM System z (s390x).

No wonder it is called the ‘universal operating system’.

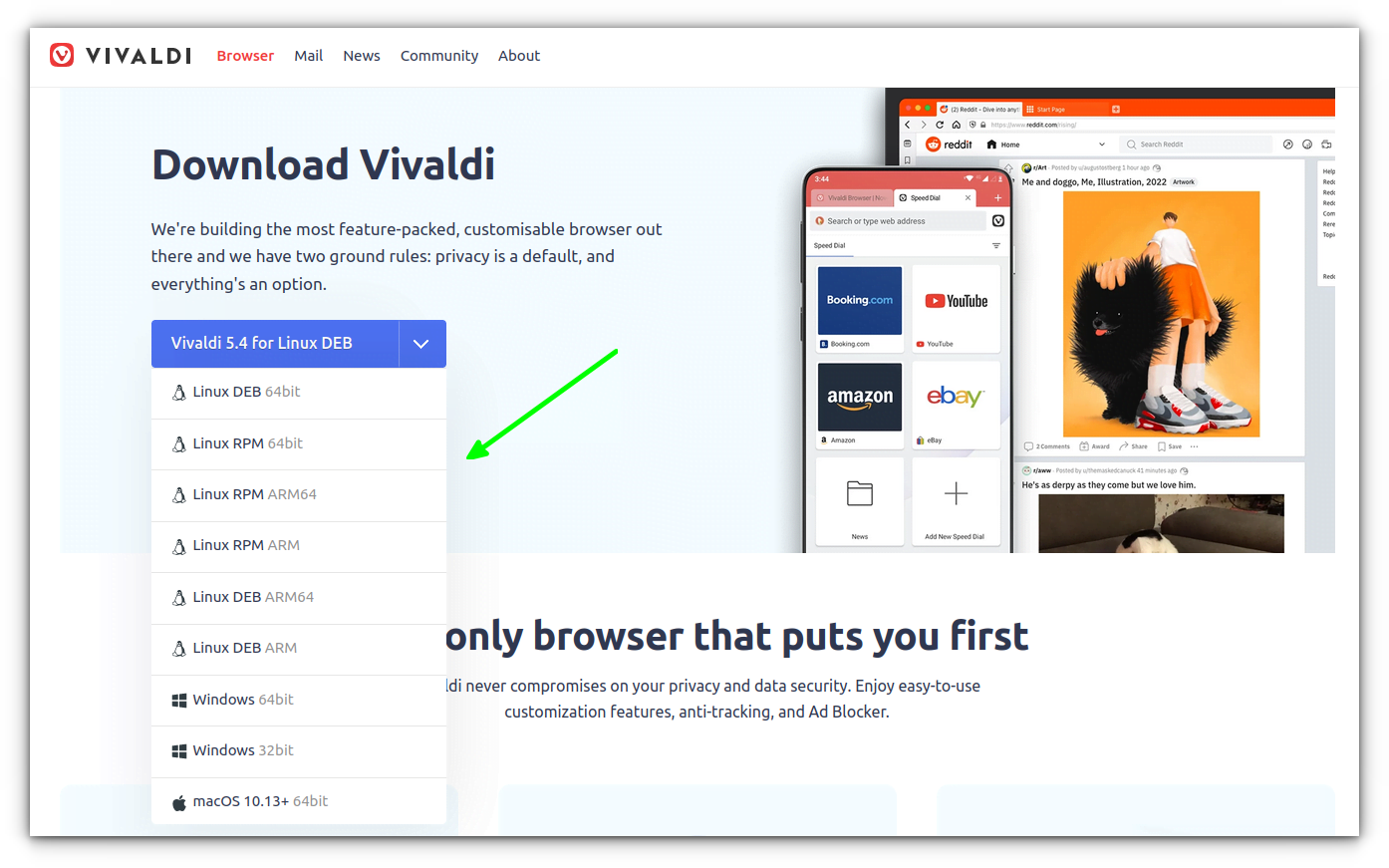

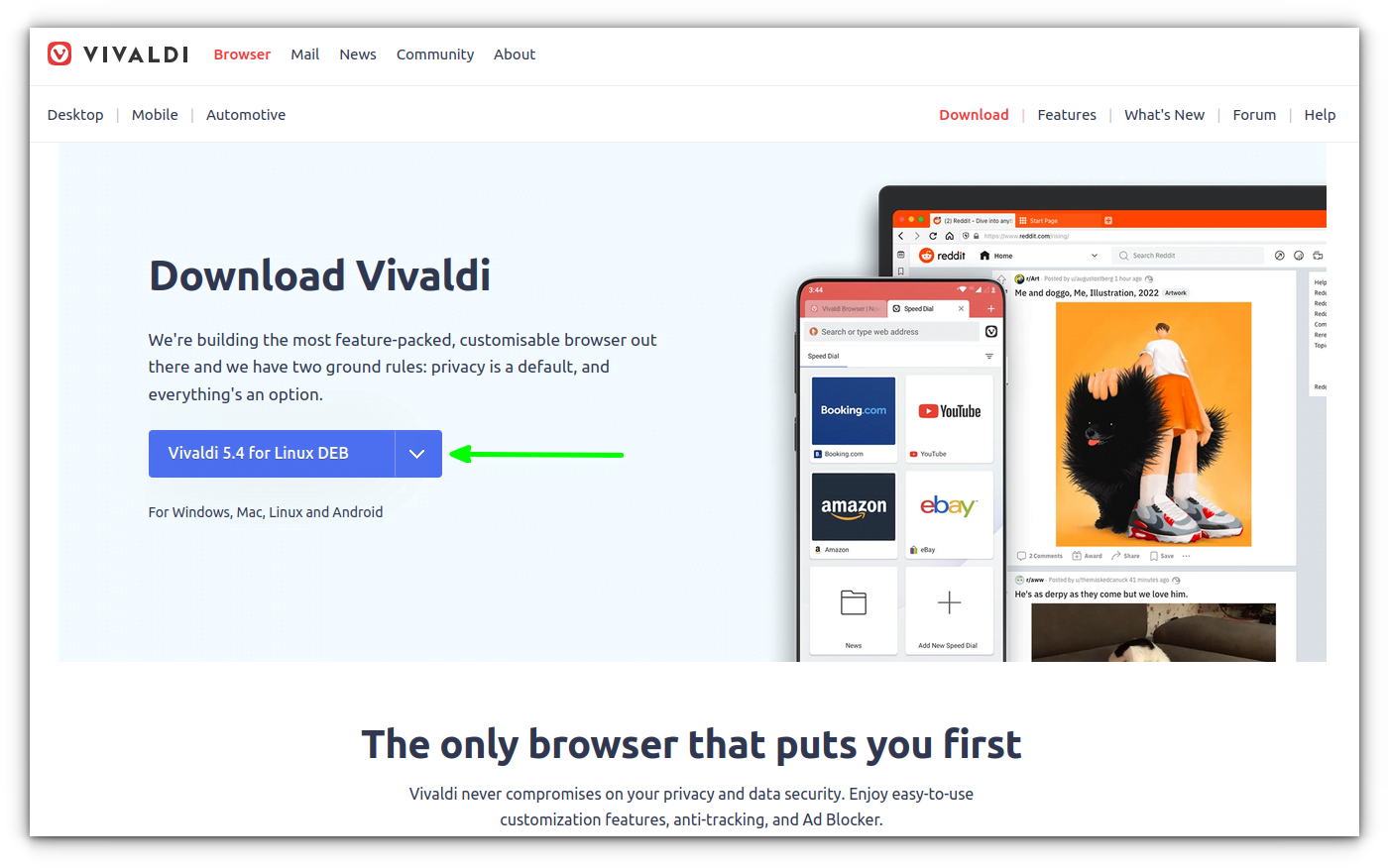

### 5. Installation

[Installing Ubuntu](https://itsfoss.com/install-ubuntu/) is a lot easier than [installing Debian](https://itsfoss.com/install-debian-easily/). I am not kidding. Debian could be confusing even for intermediate Linux user.

When you download Debian, it provides a minimal ISO by default. This ISO has no non-free (not open source) firmware. You go on to install it and realize that your network adapters and other hardware won’t be recognized.

There is a separate non-free ISO that contains firmware but it is hidden and if you do not know that, you are in for a bad surprise.

Ubuntu is a lot more forgiving when it comes to including proprietary drivers and firmware in the default ISO.

Also, the Debian installer looks old, whereas the Ubuntu installer is modern looking. Ubuntu installer also recognizes other installed operating systems on the disk and gives you the option to install Ubuntu alongside the existing ones (dual boot). I have not noticed it with Debian installer in my testing.

### 6. Out of the box hardware support

As mentioned earlier, Debian focuses primarily on [FOSS](https://itsfoss.com/what-is-foss/) (free and open source software). This means that the kernel provided by Debian does not include proprietary drivers and firmware.

It’s not that you cannot make it work but you’ll have to do add/enable additional repositories and install it manually. This could be discouraging, specially for the beginners.

Ubuntu is not perfect but it is a lot better than Debian for providing drivers and firmware out of the box. This means less hassle and a more complete out-of-the-box experience.

### 7. Desktop environment choices

Ubuntu uses a customized GNOME desktop environment by default. You may install [other desktop environments](https://itsfoss.com/best-linux-desktop-environments/) on top of it or opt for [various desktop based Ubuntu flavors](https://itsfoss.com/which-ubuntu-install/) like Kubuntu (for KDE), Xubuntu (for Xfce) etc.

Debian also installs GNOME by default. But its installer gives you choice to install desktop environment of your choice during the installation process.

You may also get [DE specific ISO images from its website](https://cdimage.debian.org/debian-cd/current-live/amd64/iso-hybrid/?ref=itsfoss.com).

### 8. Gaming

Gaming on Linux has improved in general thanks to Steam and its Proton project. Still, gaming depends a lot on hardware.

And when it comes to hardware compatibility, Ubuntu is better than Debian for supporting proprietary drivers.

Not that it cannot be done in Debian but it will require some time and effort to achieve that.

### 9. Performance

There is no clear ‘winner’ in the performance section, whether it is on the server or on the desktop. Both Debian and Ubuntu are popular as desktop as well as server operating systems.

The performance depends on your system’s hardware and the software component you use. You can tweak and control your system in both operating systems.

### 10. Community and support

Debian is a true community project. Everything about this project is governed by its community members.

Ubuntu is backed by [Canonical](https://canonical.com/?ref=itsfoss.com). However, it is not entirely a corporate project. It does have a community but the final decision on any matter is in Canonical’s hands.

As for support goes, both Ubuntu and Debian have dedicated forums where users can seek help and advice.

Canonical also offers professional support for a fee to its enterprise clients. Debian has no such features.

## Conclusion

Both Debian and Ubuntu are solid choices for desktop or server operating systems. The apt package manager and DEB packaging is common to both and thus give a somewhat similar experience.

However, Debian still needs a certain level of expertise, especially on the desktop front. If you are new to Linux, sticking with Ubuntu will be a better choice for you. In my opinion, you should gain some experience, get familiar with Linux in general and then try your hands on Debian.

It’s not that you cannot jump onto the Debian wagon from the start, but it is more likely to be an overwhelming experience for Linux beginners.

**Your opinion on this Debian vs Ubuntu debate is welcome.** |

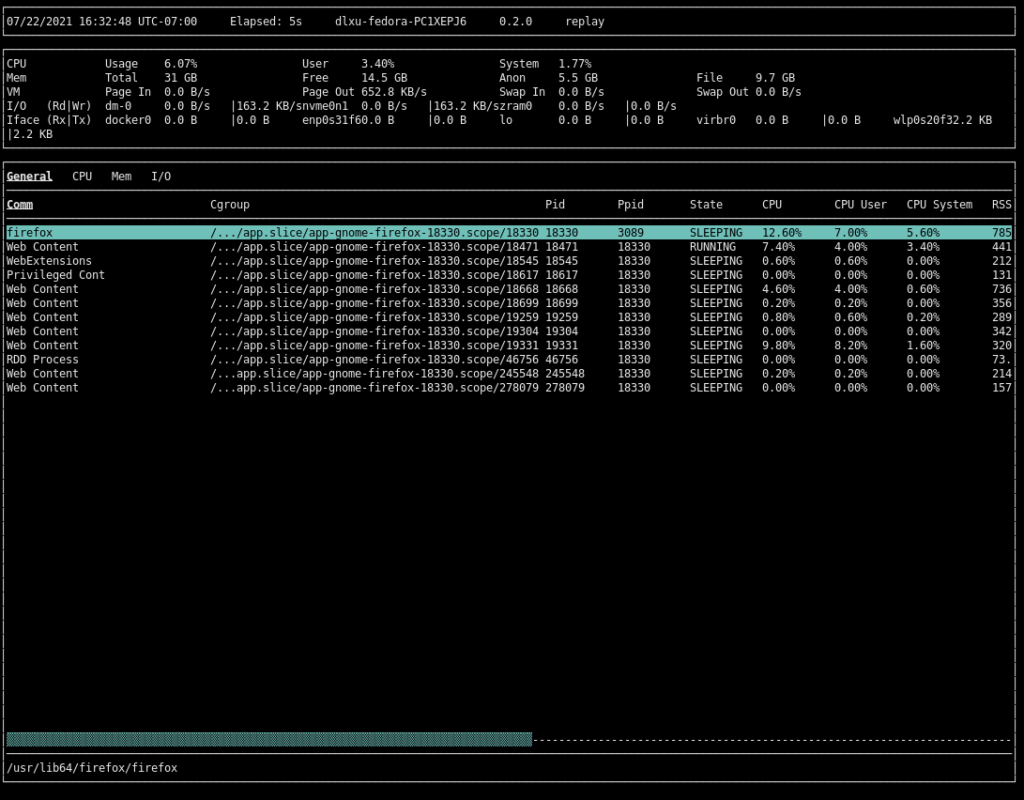

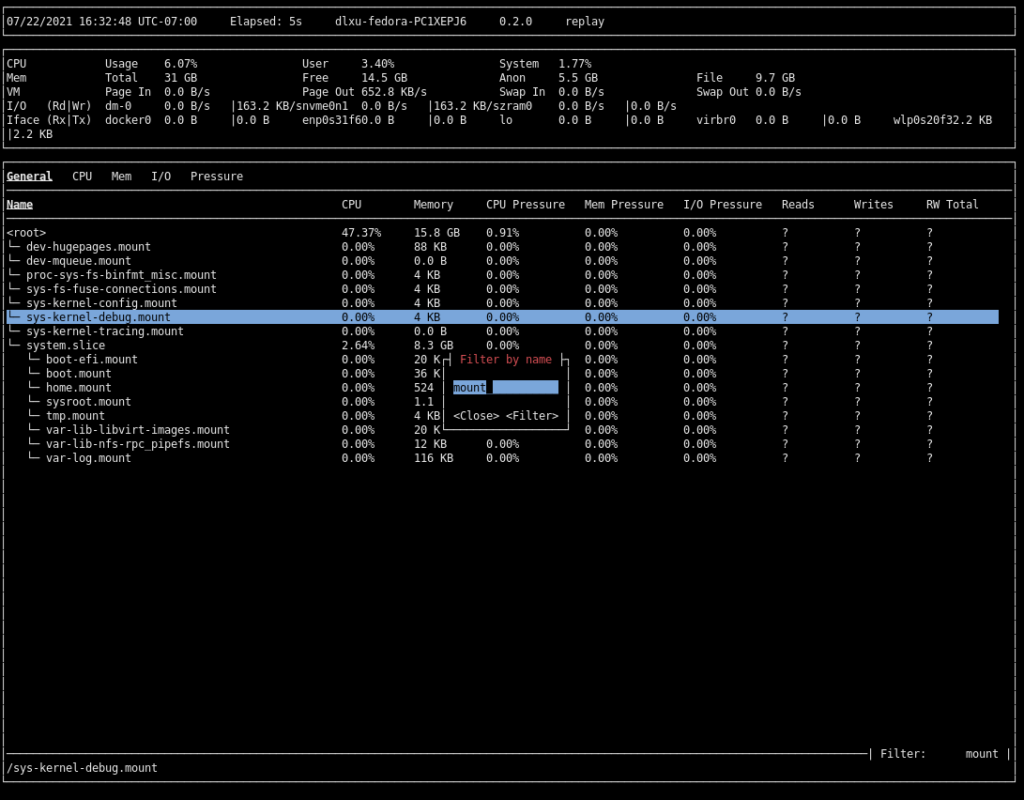

13,747 | 我们离不开的 Linux 内核模块 | https://opensource.com/article/21/8/linux-kernel-module | 2021-09-03T06:57:01 | [

"内核",

"模块",

"Linux"

] | https://linux.cn/article-13747-1.html |

>

> 开源爱好者们对他们所喜爱的 Linux 内核模块进行了评价。

>

>

>

Linux 内核今年就要满 30 岁了! 如果你像我们一样对此特别重视,那么让我们本周用几个特别的文章来庆祝 Linux。

今天,我们先来看看来自社区对“**你不能没有哪个 Linux 内核模块?为什么?**”的回答,让我们听听这 10 位爱好者是怎么说的。

### #1

我猜一些内核开发者听到我的回答后会尖叫着跑开。不过,我还是在这里列出了两个最具争议性的模块:

* 第一个是 NVIDIA,因为我的工作笔记本和个人台式机上都有 NVIDIA 显卡。

* 另一个可能产生的仇恨较少。VMware 的 VMNET 和 VMMON 模块,以便能够运行 VMware Workstation。

— [Peter Czanik](https://opensource.com/users/czanik)

### #2

我最喜欢的是 [zram](https://en.wikipedia.org/wiki/Zram) 模块。它在内存中创建了一个压缩块设备,然后它可以作为交换分区使用。在内存有限的情况下(例如,在虚拟机上),还有如果你担心频繁的 I/O 操作会磨损你的 SSD 或者甚至更糟糕的基于闪存的存储,那么使用基于 zram 的交换分区是非常理想的。

— [Stephan Avenwedde](https://opensource.com/users/hansic99)

### #3

最有用的内核模块无疑是 snd-hda-intel,因为它支持大多数集成声卡。我可以一边听音乐,一边在 Linux 桌面上编码一个音频编曲器。

— [Joël Krähemann](https://opensource.com/users/joel2001k)

### #4

如果没有我用 Broadcom 文件生成的 kmod-wl,我的笔记本就没有价值了。我有时会收到关于内核污染的信息,但没有无线网络的笔记本电脑有什么用呢?

— [Gregory Pittman](https://opensource.com/users/greg-p)

### #5

我不能没有蓝牙。没有它,我的鼠标、键盘、扬声器和耳机除了用来挡住门板还有啥用?

— [Gary Smith](https://opensource.com/users/greptile)

### #6

我要冒昧地说 *全* 都是。 说真的,我们已经到了随机拿一块硬件,插入它,它就可以工作的地步。

* USB 串行适配器能正常工作

* 显卡可以使用(尽管可能不是最好的)

* 网卡正常工作

* 声卡正常工作

所有这些模块整体带来大量可以工作的驱动程序,令人印象深刻。我记得在过去那些糟糕的日子里,我们曾经大喊 xrandr 魔法字符串才能来使投影仪工作。而现在,是的,当设备基本不能正常工作时,才真的罕见。

如果我不得不把它归结为一个,那就是 raid6。

— [John 'Warthog9' Hawley](https://opensource.com/users/warthog9)

### #7

对于这个问题,我想回到 20 世纪 90 年代末。我是一家小公司的 Unix 系统管理员(兼任 IS 经理)。我们的磁带备份系统死了,由于“小公司”预算有限,我们没有急于更换或现场维修。所以我们必须得把它送去维修。

在那两个星期里,我们没有办法进行磁带备份。没有一个系统管理员愿意处于这种境地。

但后来我想起了读过的 [如何使用软盘磁带机](https://tldp.org/HOWTO/Ftape-HOWTO.html),我们刚好有一台刚换下来的塔式电脑,它有一个软盘磁带机。

于是我用 Linux 重新安装了它,设置了 ftape 内核驱动模块,进行了一些备份/恢复测试,然后将我们最重要的备份运行到 QIC 磁带上。在这两个星期里,我们依靠 ftape 备份重要数据。

所以,对于那些让软盘磁带机在 1990 年代的 Linux 上工作的无名英雄,你真是太厉害了!

— [Jim Hall](https://opensource.com/users/jim-hall)

### #8

嗯,这很简单。是 kvm 内核模块。就个人而言,我无法想象在没有虚拟机的情况下完成日常工作。我愿意相信我们大多数人都是这样。kvm 模块在使 Linux 成为云战略的核心方面也发挥了很大作用。

— [Gaurav Kamathe](https://opensource.com/users/gkamathe)

### #9

对我来说,是 dm-crypt,它是用于 LUKS 的。参见:

* <https://www.redhat.com/sysadmin/disk-encryption-luks>

* <https://manpages.debian.org/unstable/cryptsetup-bin/cryptsetup.8.en.html>

知道别人无法看到你的磁盘上的内容是非常棒的,例如,如果你的笔记本丢失或被盗时。

— [Maximilian Kolb](https://opensource.com/users/kolb)

### #10

对于密码学基础,很难超越 crypto 模块和它的 C API,它是如此简洁明了。

在日常生活中,还有什么比蓝牙提供的即插即用更有价值的吗?

— [Marty Kalin](https://opensource.com/users/mkalindepauledu)

在评论中与我们分享。你的生活中不能没有什么 Linux 内核模块?

---

via: <https://opensource.com/article/21/8/linux-kernel-module>

作者:[Jen Wike Huger](https://opensource.com/users/jen-wike) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | The Linux kernel is turning 30 this year! If you're like us, that's a big deal and we are celebrating Linux this week with a couple of special posts.

Today we start with a roundup of responses from around the community answering "What Linux kernel module can you not live without? And, why?" Let's hear what these 10 enthusiasts have to say.

I guess some kernel developers will run away screaming when they hear my answer. Still, I list here two of the most controversial modules:

- First is NVIDIA, as I have an NVIDIA graphics card on my work laptop and my personal desktop.

- The other one probably generates less hatred—the VMNET and VMMON modules from VMware to be able to run VMware Workstation. —

[Peter Czanik](https://opensource.com/users/czanik)

My favorite is the [zram](https://en.wikipedia.org/wiki/Zram) module. It creates a compressed block device in memory, which can then be used as a swap partition. Using a zram-based swap partition is ideal when memory is limited (for example, on virtual machines) and if you are worried about wearing out your SSD or, even worse, your flash-based storage because of frequent I/O operations. —[Stephan Avenwedde](https://opensource.com/users/hansic99)

The most useful kernel module is definitively snd-hda-intel since it supports most integrated sound cards. I listen to music while coding an audio sequencer on the Linux desktop. —[Joël Krähemann](https://opensource.com/users/joel2001k)

My laptop would be worthless without the kmod-wl that I generate with the Broadcom file. I sometimes get messages about tainting the kernel, but what good is a laptop without wireless? —[Gregory Pittman](https://opensource.com/users/greg-p)

I can't live without Bluetooth. Without it, my mouse, keyboard, speakers, and headset would be doorstops. —[Gary Smith](https://opensource.com/users/greptile)

I'm going to go out on a limb and say *all of them*. Seriously, we've gotten to the point where I grab a random piece of hardware, plug it in, and it just works.

- USB serial adapter just works

- Video card just works (though maybe not at its best)

- Network card just works

- Sound card just works

It's tough not to be utterly impressed with the broad scope of the driver work that all the modules bring to the whole. I remember the bad old days when we used to yell out xrandr magic strings to make projectors work, and now—yeah, it's a genuine rarity when stuff doesn't (mostly) just work.

If I had to nail it down to one, though, it'd be raid6. —[John 'Warthog9' Hawley](https://opensource.com/users/warthog9)

I'm going to go back to the late 1990s for this one. I was a Unix systems administrator (and double duty as IS manager) for a small company. Our tape backup system died, and because of "small company" limited budgets, we didn't have a rush replacement or onsite repair on it. So we had to send it in for repair.

During those two weeks, we didn't have a way to make tape backups. No systems administrator wants to be in that position.

But then I remembered reading the [Floppy Tape How-to](https://tldp.org/HOWTO/Ftape-HOWTO.html), and we happened to have a tower PC we'd just replaced that had a floppy tape drive.

So I reinstalled it with Linux, set up the **ftape** kernel driver module, ran a few backup/recovery tests, then ran our most important backups to QIC tapes. For those two weeks, we relied on **ftape** backups of critical data.

So to the unsung hero out there who made floppy tape drives work on 1990s Linux, you are awesome! —[Jim Hall](https://opensource.com/users/jim-hall)

Well, that's easy. It's the kvm kernel modules. On a personal front, I cannot imagine doing my day-to-day work without VMs. I'd like to believe that's the case with most of us. The kvm modules also play a big part in making Linux central to the cloud strategy. —[Gaurav Kamathe](https://opensource.com/users/gkamathe)

For me, it's dm-crypt, which is used for LUKS. See:

[https://www.redhat.com/sysadmin/disk-encryption-luks](https://www.redhat.com/sysadmin/disk-encryption-luks)[https://manpages.debian.org/unstable/cryptsetup-bin/cryptsetup.8.en.html](https://manpages.debian.org/unstable/cryptsetup-bin/cryptsetup.8.en.html)

It's fantastic to know others cannot see what's on your disk, for example, if you lose your notebook or it gets stolen. —[Maximilian Kolb](https://opensource.com/users/kolb)

For cryptography basics, it's hard to beat the crypto module and its C API, which is straightforward.

For day-to-day life, is there anything more valuable than the plug-and-play that Bluetooth provides? —[Marty Kalin](https://opensource.com/users/mkalindepauledu)

Share with us in the comments: What Linux kernel module can you not live without?

## 1 Comment |

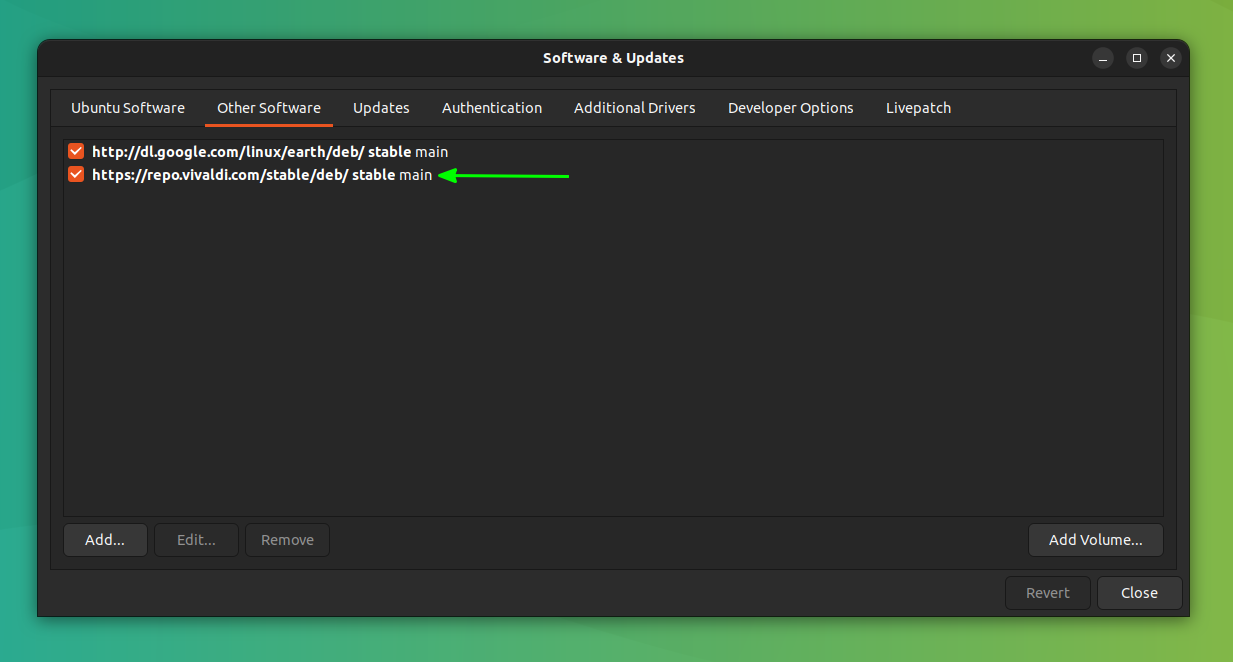

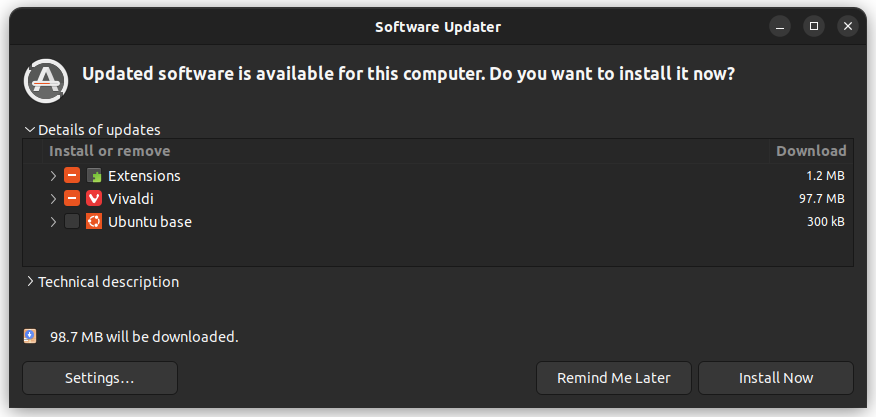

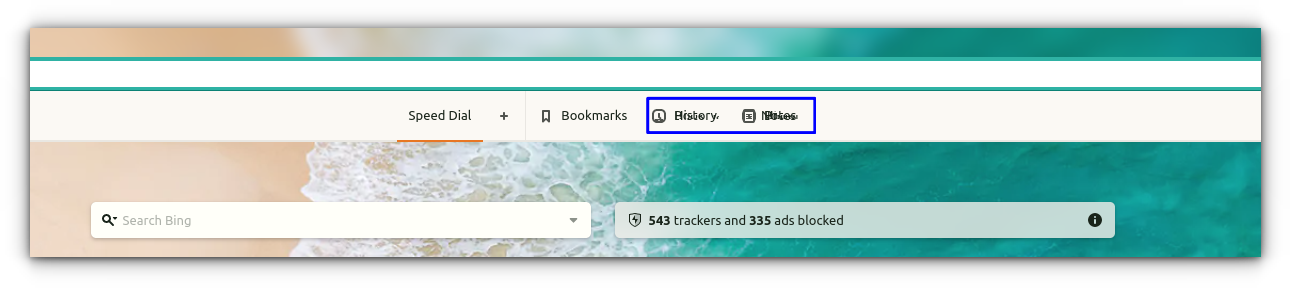

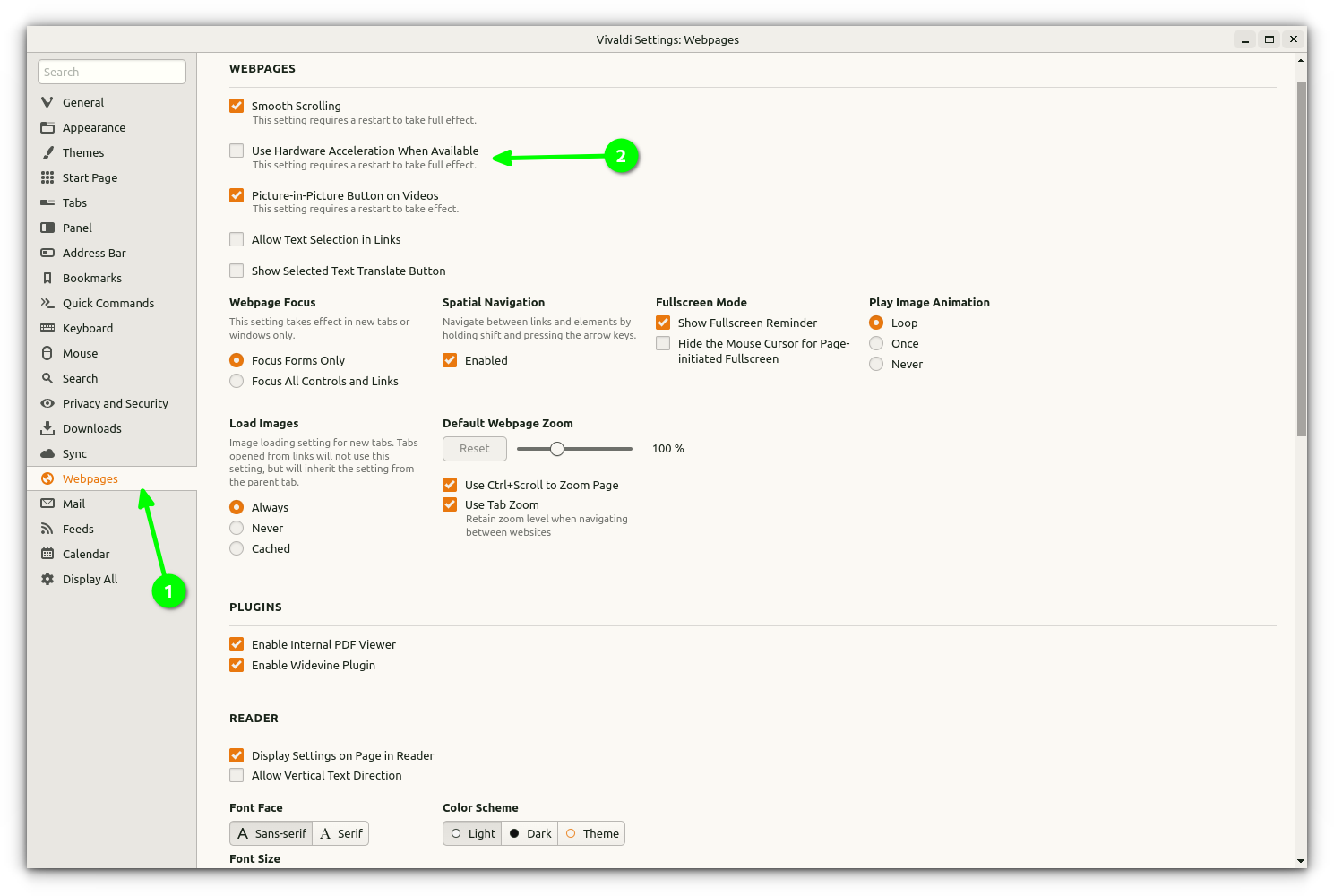

13,749 | 安装 elementary OS 6 “Odin” 后要做的 10 件事 | https://www.debugpoint.com/2021/08/10-things-to-do-after-install-elementary-os-6/ | 2021-09-04T08:14:00 | [

"elementaryOS"

] | /article-13749-1.html |

>

> 一个精心准备的在安装 elementary OS 6 “Odin” 后要做的事情的列表。

>

>

>

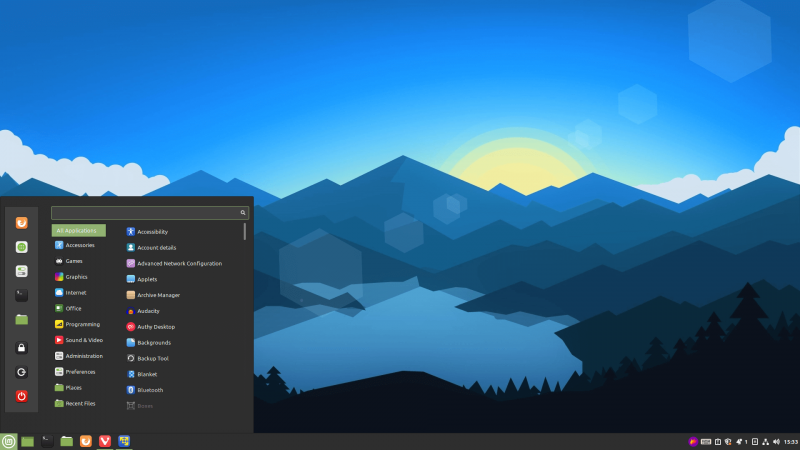

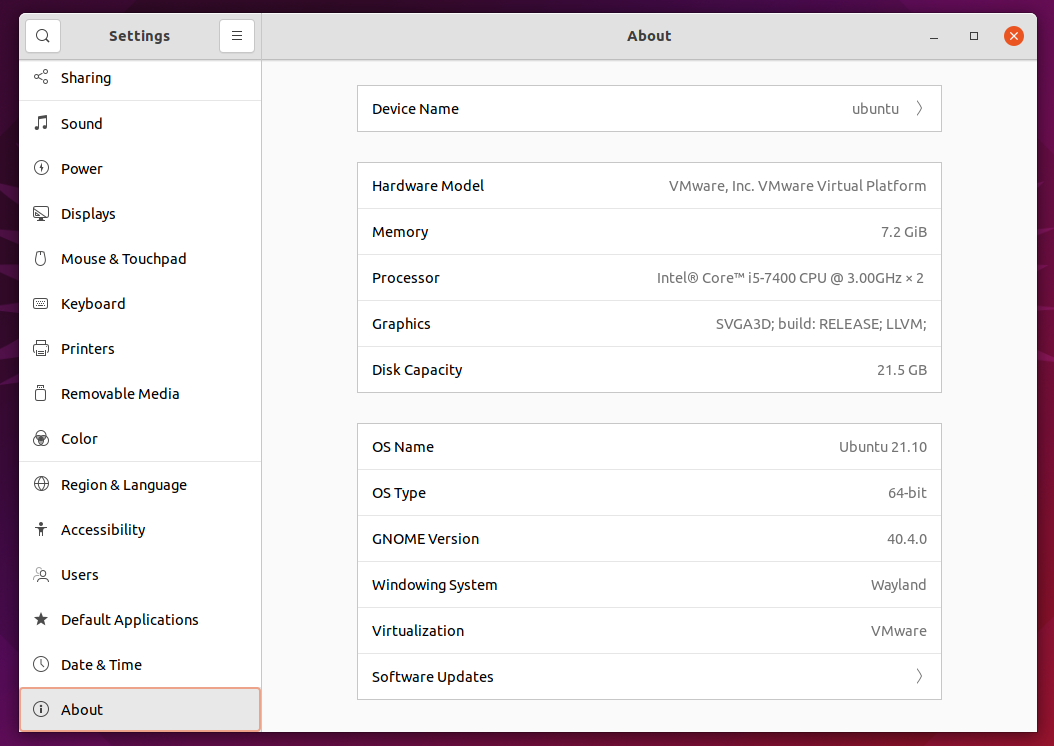

在经过两年多的开发后 [elementary OS 6 “Odin”](https://www.debugpoint.com/2021/08/elementary-os-6/) 于不久前发布,此次版本更新在核心模块、 Pantheon 桌面、原生应用方面带来了一大批新特性。elementary OS 6 “Odin” 是基于 Ubuntu 20.04 LTS 的。

如果你完成了安装,你可能想要尝试通过一些特定的设置来使你的系统更加的个性化。这里描述的选项是通用的,在某些情况下可能对你没有用,但是我们觉得有必要列出一些基本的东西,让你有合适的方式来探索这个漂亮的 elementary OS。

### 安装完 elementary OS 6 “Odin” 后要做的事情

准备步骤:

首先确保你已经连上了互联网,你可以在顶部的通知区域查看可用的网络列表

#### 1、更改主机名

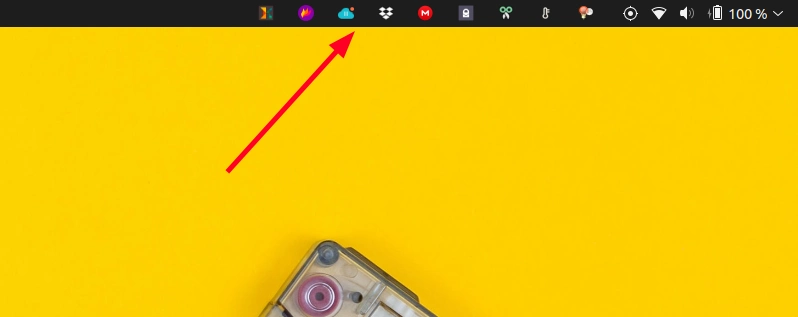

这可能不是你想做的第一件事。但是我不知道为什么在安装过程中没有给出更改主机名的选项。例如,见下图的终端提示, 这个主机名是 elementary OS 的默认硬件配置。在我看来这一点都不好。

打开终端并运行下列命令以更改主机名:

```

hostnamectl set-hostname your-new-hostname

```

示例:

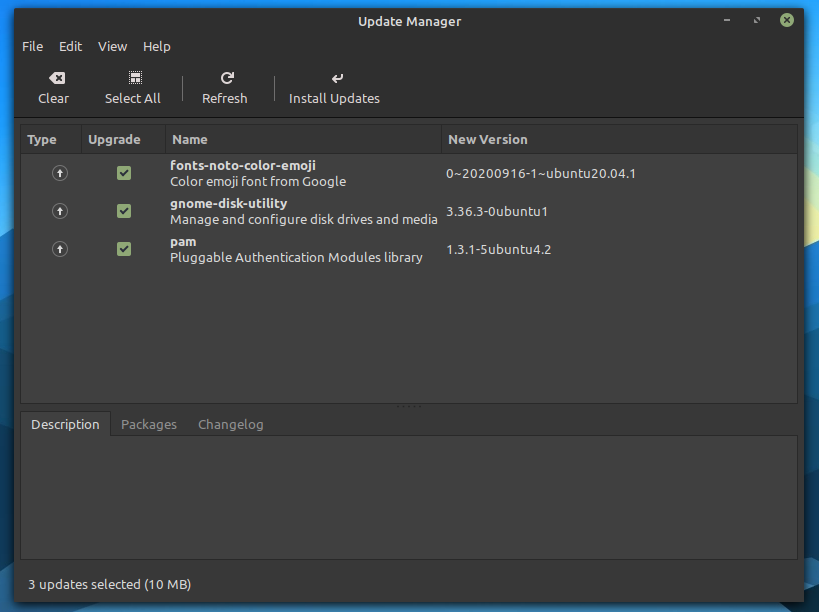

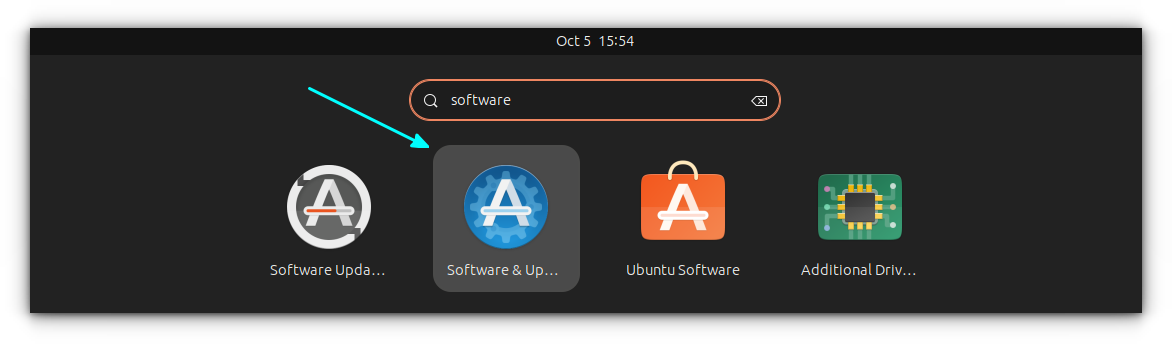

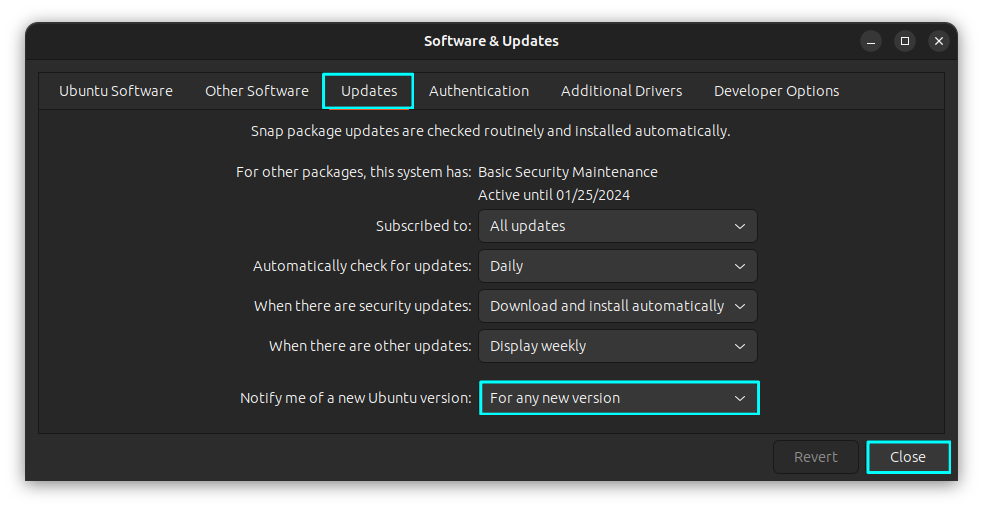

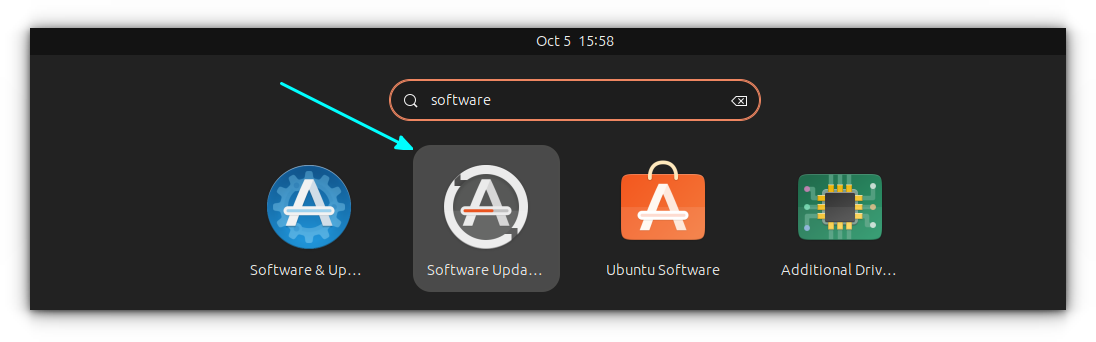

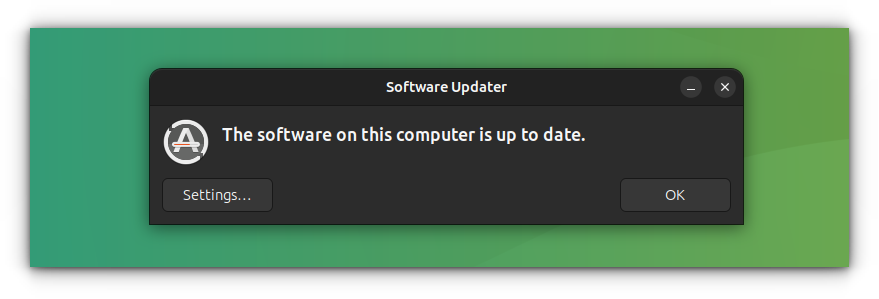

#### 2、升级你的系统

在安装任何 Linux 发行版后,你应该做的第一件事就是确保系统处于最新的软件包和安全更新状态。

你可以通过打开应用中心来检查或者安装更新。

或者打开终端运行下列命令:

```

sudo apt update

sudo apt upgrade

```

#### 3、安装 Pantheon Tweaks

Pantheon Tweaks 是 elementary OS 的必备应用。它提供了一些无法通过系统原生设置程序修改的额外的设置和配置选项,请打开终端并运行以下命令以安装 Pantheon Tweaks。注意:先前版本的 Tweak 工具叫做 elementary Tweaks,从 Odin 版本开始更名为 Pantheon Tweaks。

```

sudo apt install software-properties-common

sudo add-apt-repository -y ppa:philip.scott/pantheon-tweaks

sudo apt install -y pantheon-tweaks

```

安装后打开系统设置,你可以在那里找到 “<ruby> 调整 <rt> Tweaks </rt></ruby>” 选项。

[这里](https://www.debugpoint.com/2021/07/elementary-tweaks-install/) 提供了更详细的安装指南(如果你需要了解更多信息)。

### 4、配置 Dock

Dock 是整个桌面的中心。老实说,Dock 中默认包含的应用并不常用,因此你可以通过以下步骤配置 Dock 中的项目。

* 移除:右键单击并取消 “<ruby> 在 Dock 中驻留 <rt> Keep in Dock </rt></ruby>” 选项。

* 添加新的项目:单击顶部的应用程序。然后右键单击你想要放在 Dock 的应用图标。选择 “<ruby> 添加到 Dock <rt> Add to Dock </rt></ruby>”。

在我看来,你应该至少把文件管理、截图工具、Firefox 、计算器,以及其他的一些应用添加到 Dock。然后移除 Dock 上那些你不需要的应用。

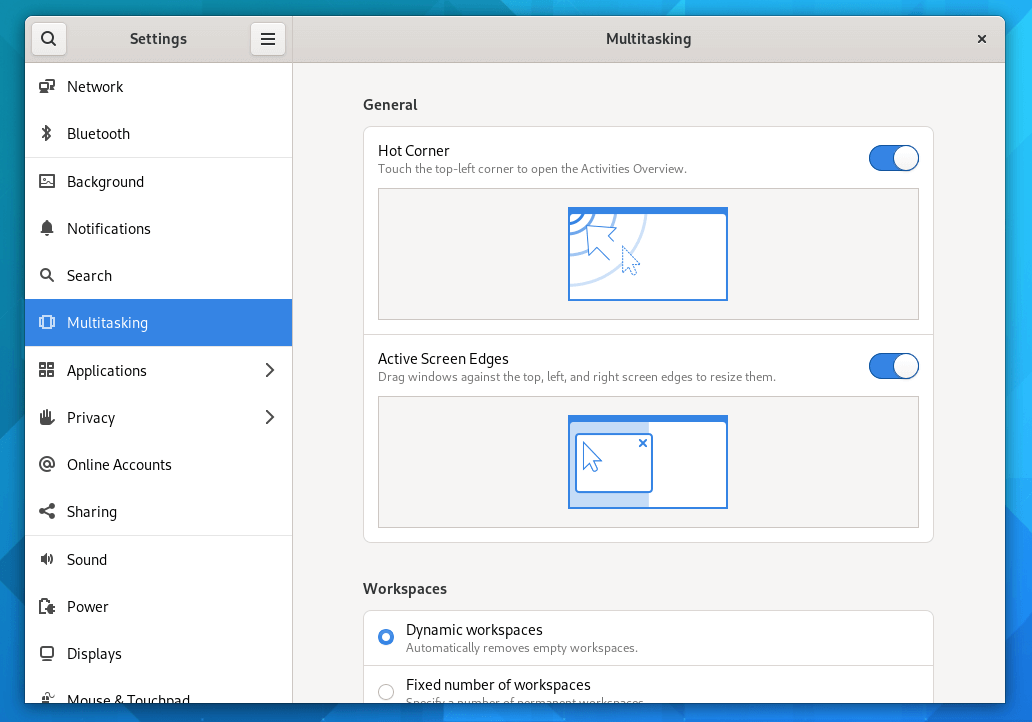

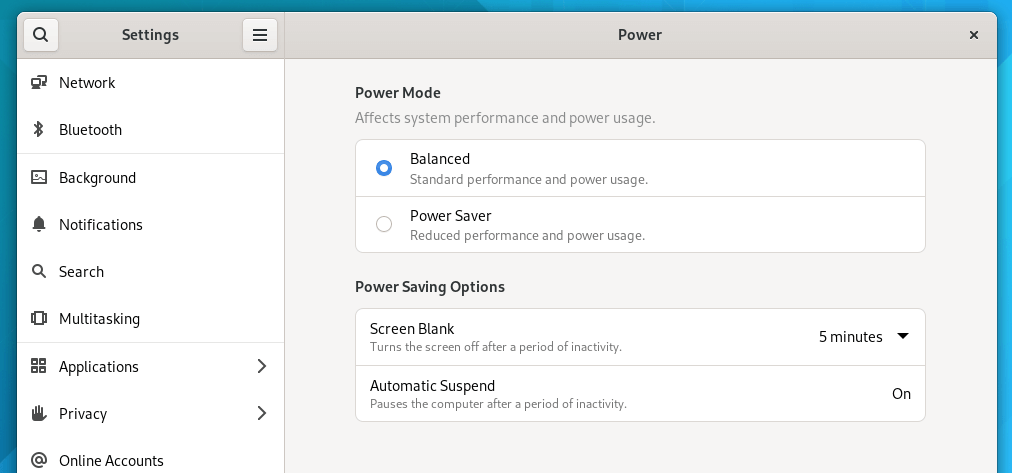

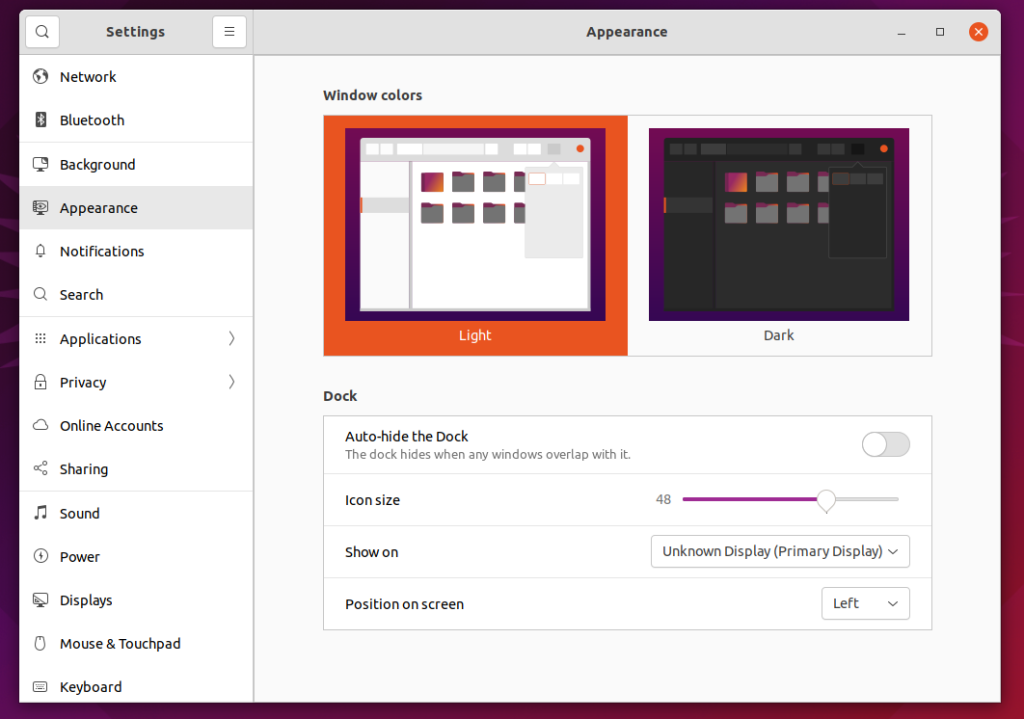

#### 5、更改外观

elementary OS 6 Odin 改进了桌面的整体外观,为整个桌面和应用程序提供了自带的强调色和原生的夜间模式,同时,系统自带了许多漂亮的壁纸。你可以通过 “应用 > 系统设置 > 桌面” 来定制壁纸、外观、面板和多任务视图。

按照你希望的样子来配置你系统的外观。

你也可以基于日出和日落的时间来设置夜间模式。

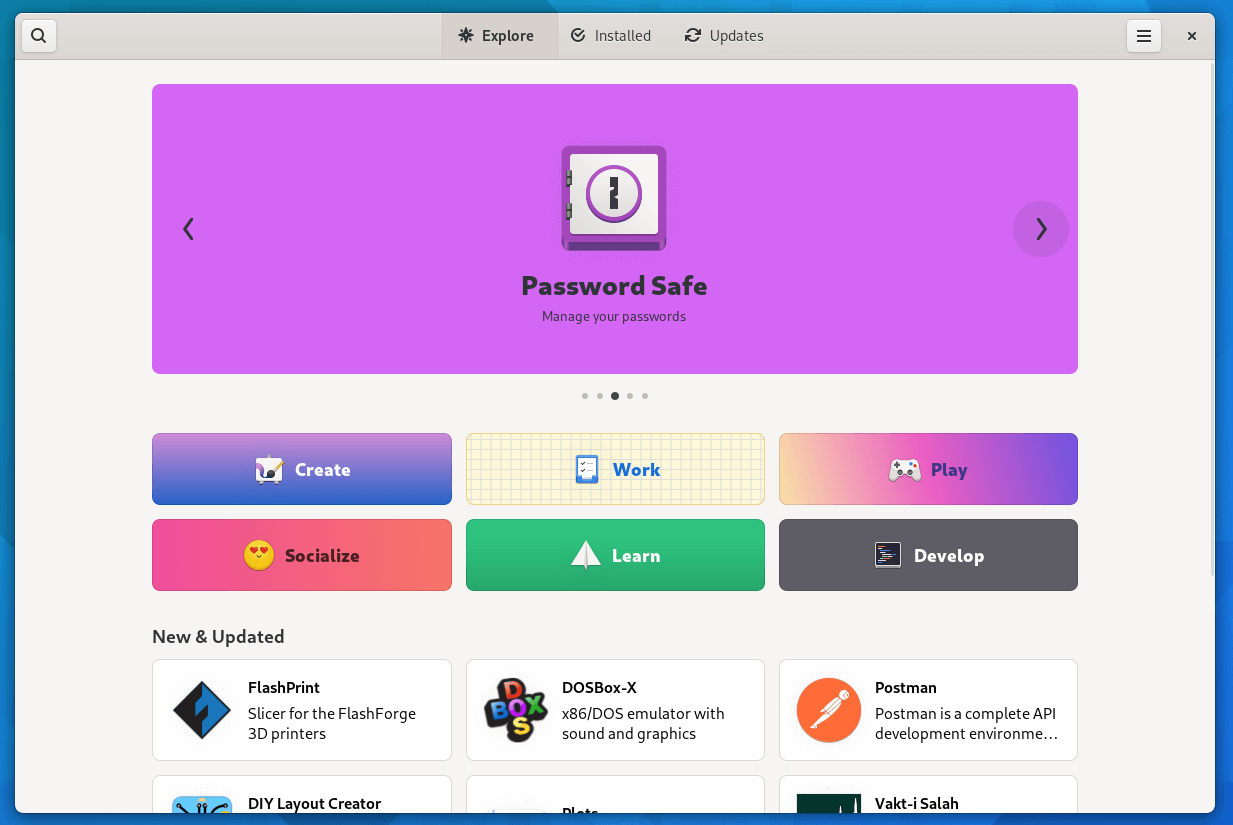

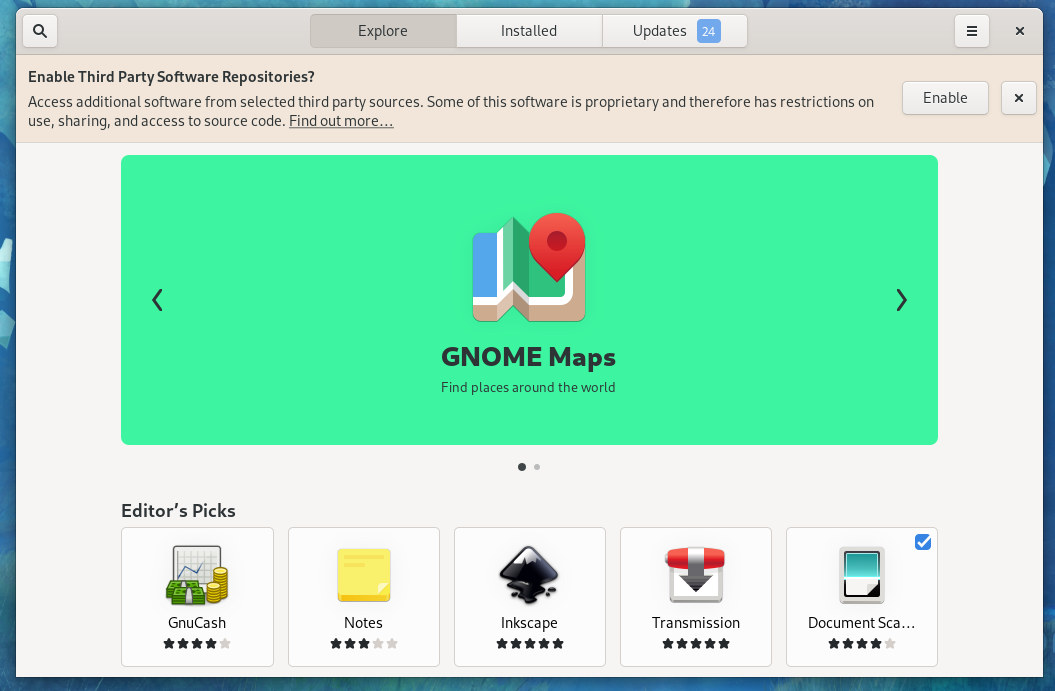

#### 6、安装其他的应用

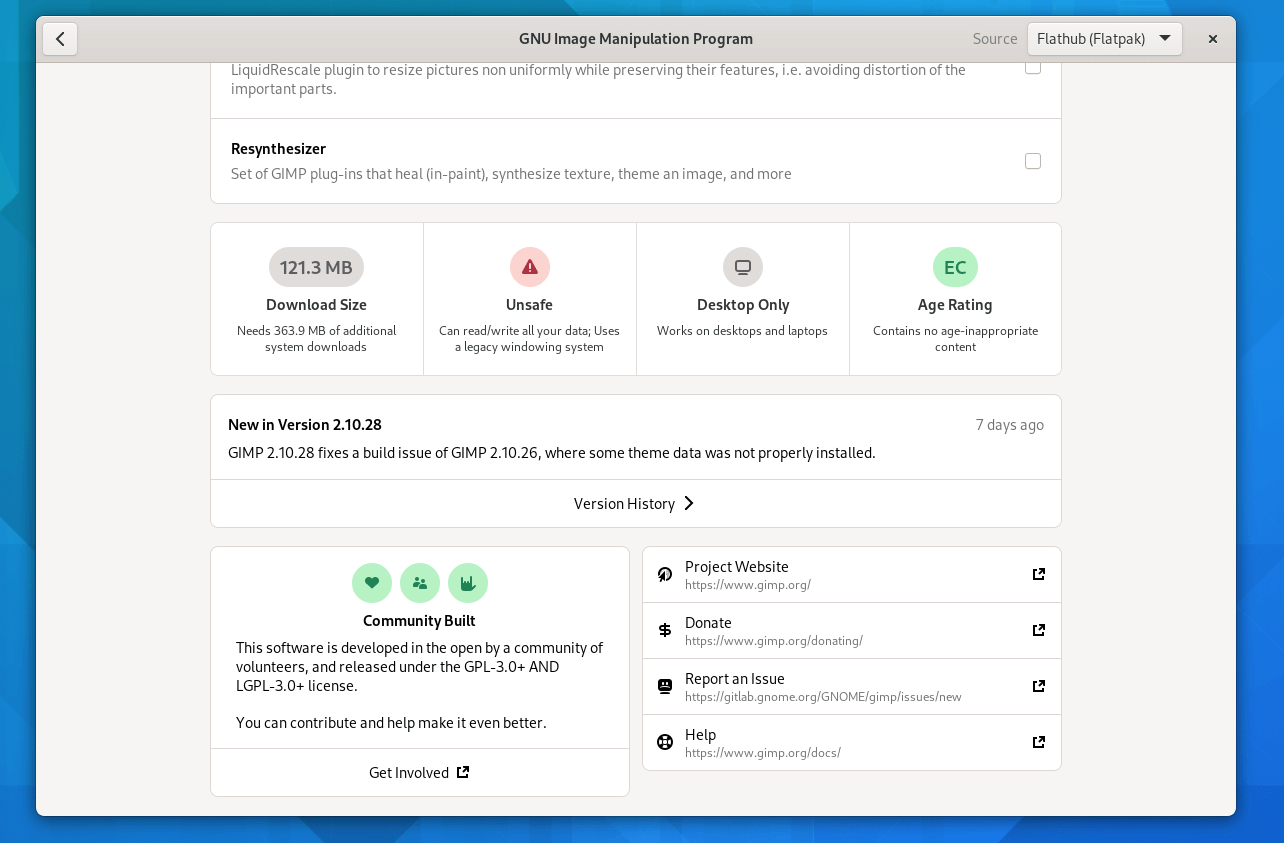

自带的应用中心非常适合这个系统,我发现它是 Linux 桌面最好的应用商店之一。然而,有时候需要安装没有预装的必要应用(大多数是知名的应用)。下面是个新系统推荐安装的软件列表。(说真的,为什么 LibreOffice 没有预装?)

* firefox

* gimp

* gedit

* inkscape

* obs-studio

* libreoffice

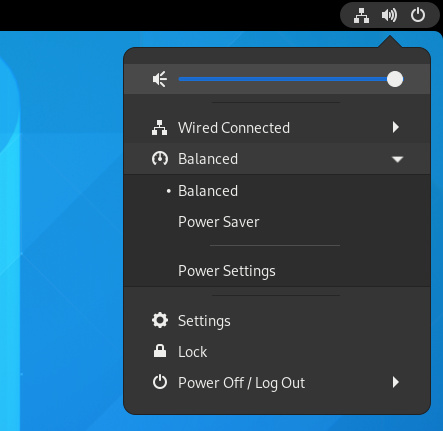

#### 7、一些针对笔记本电脑的省电贴士

有许多方法可以配置你的 elementary OS(或者一般的 Linux 桌面),以达到延长电池寿命的目的。记住,电池寿命取决于你的笔记本硬件,以及电池和笔记本的使用年限。所以,遵循下面的一些建议,最大限度的利用你的笔记本电池。

* 安装 [tlp](https://linrunner.de/tlp/)。`tlp` 是一个简单易用的命令行程序,用来帮你在 Linux 上延长电池寿命。你只需要安装它,默认情况下,它会处理好其他的设置。安装命令:

```

sudo add-apt-repository ppa:linrunner/tlp

sudo apt update

sudo apt-get install tlp

sudo tlp start

```

* 关闭蓝牙,默认情况下,蓝牙是开启状态。在需要的时候再启动它。

* 通过下面的命令安装 `thermald`。这个实用程序(实际是个守护进程)控制着你的 CPU 的 P-States 和 T-States 的温度以及 CPU 发热。

```

sudo apt install thermald

```

* 根据你的需要将亮度调到最小。

#### 8、安装磁盘实用程序

在很多情况下,你发现你需要格式化 USB 或者向 USB 中写入一些东西。默认情况下,系统没有安装任何相关的应用。你可以安装以下这些易用的应用。

* gnome-disk-utility

* gparted

#### 9、启用最大化和最小化选项

许多用户喜欢在窗口标题栏左边或者右边使用最大化、最小化的按钮,elementary OS 默认只提供关闭和恢复两个选项。这没什么问题,因为这就是它的设计理念。然而你可以通过使用 Pantheon Tweaks 来开启最大化和最小化按钮,具体的方式是:“调整 > 外观 > 窗口控制”。

#### 10、在 Odin 中学习新的多点触控手势

如果你是笔记本用户,并且使用 elementary OS “Odin”,那么你一定要看看这些超酷的新触控手势。三根手指向上滑动,就会平滑的打开多任务视图,展示打开的应用程序和工作空间。用三根手指向左或向右滑动,就能在动态工作空间之间流畅的切换,使任务之间的切换更快。

用两根手指也可以在原生应用中实现类似的功能。

### 结束语

我希望这篇安装 elementary OS 6 “Odin” 后要做的 10 件事能帮助到你,让你可以上手使用 elementary OS 6 “Odin”,尽管这些事情完全是用户的偏好,因此这些事情有可能适合你也有可能不适用于你,但总的来说,这些都是一般用户喜欢的预期调整。

如果你觉得有更多的东西应该添加到列表中,请在下面的评论中告诉我。

---

via: <https://www.debugpoint.com/2021/08/10-things-to-do-after-install-elementary-os-6/>

作者:[Arindam](https://www.debugpoint.com/author/admin1/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[anine09](https://github.com/anine09) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | ('Connection aborted.', RemoteDisconnected('Remote end closed connection without response')) | null |

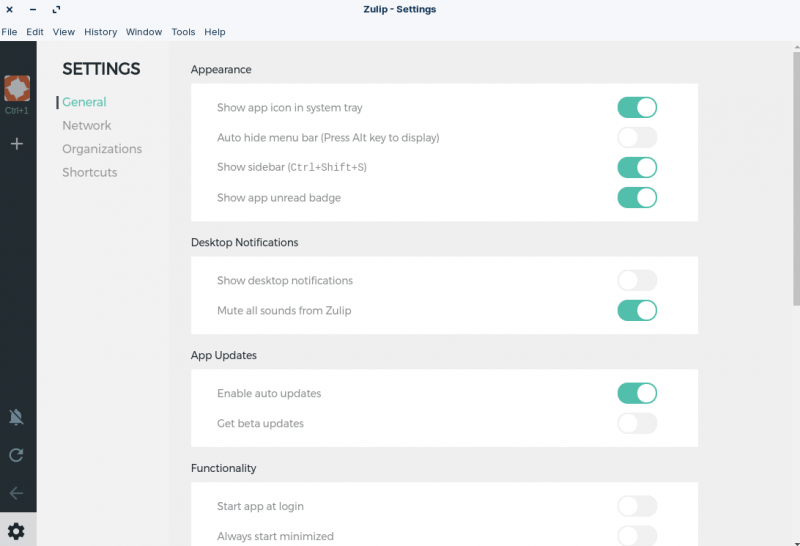

13,750 | Zulip:一个不错的开源的 Slack 替代品 | https://itsfoss.com/zulip/ | 2021-09-04T08:37:51 | [

"Zulip",

"Slack"

] | https://linux.cn/article-13750-1.html |

>

> Zulip 是一个开源的协作平台,它把自己定位为一个更好的 Slack 替代品。让我们来了解一下。

>

>

>

当涉及到你的工作时,消息和协作平台有很大的不同。

虽然有几个选择,但 Slack 是许多组织使用的一个流行选择。但是,可以自托管的 Slack 的开源替代方案如何呢?

Zulip 就是这样一个软件。

### Zulip:开源的协作消息应用

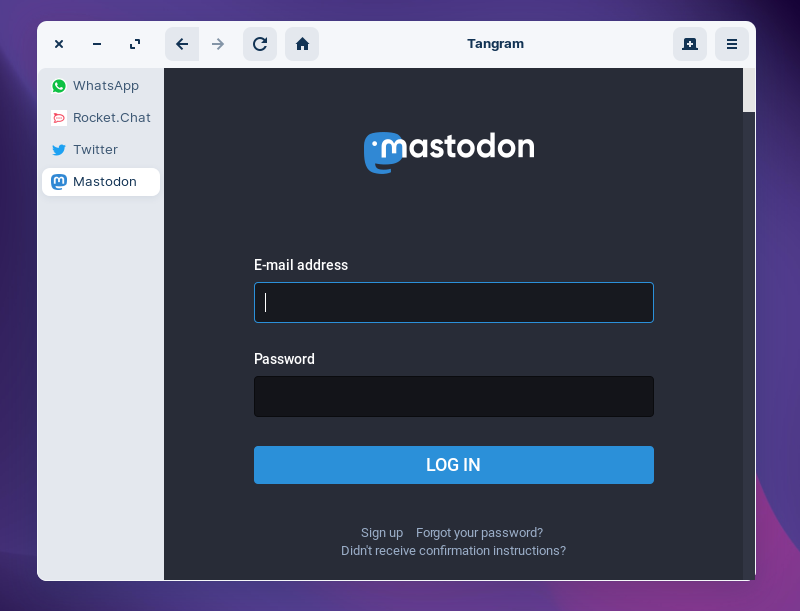

如果你想多了解,我必须提到还有更多的 [Slack 开源替代品](https://itsfoss.com/open-source-slack-alternative/)。

但在这里,我重点介绍 Zulip。

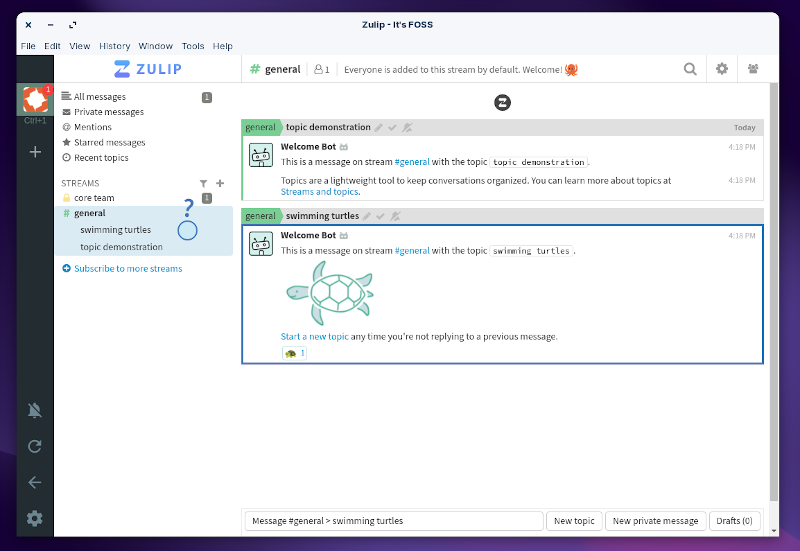

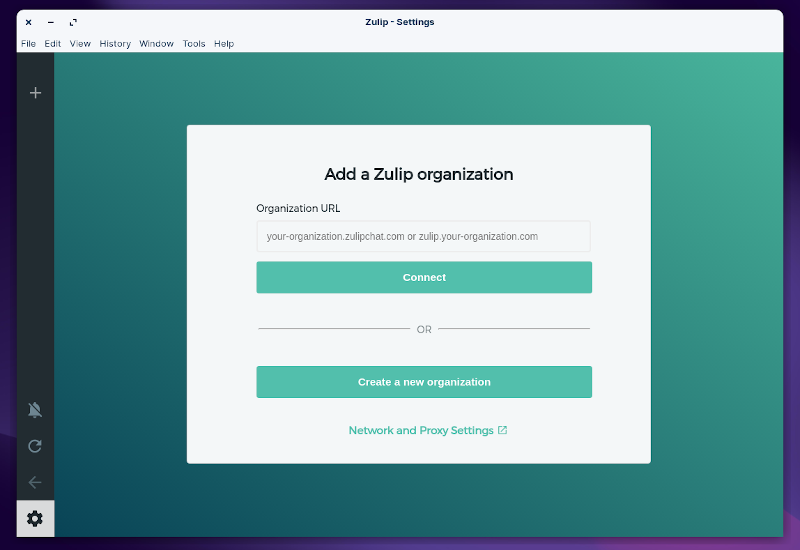

Zulip 是一个自由而开源的消息应用,有付费托管选项和自托管的能力。

它旨在提供与 Slack 类似的体验,同时努力帮助你利用话题提高对话的有效性。

与 Slack 中的频道相比,Zulip 聊天添加了话题(类似标签),以快速过滤与你有关的对话。

### Zulip 的特点

你可以通过 Zulip 获得大部分的基本功能。这里列出主要的亮点,你可以发现:

* 支持 Markdown

* 频道的主题

* 支持拖放文件

* 代码块

* 集成 GitHub 来跟踪问题

* 支持电子邮件通知

* 自托管选项

* 信息编辑

* 集成 GIPHY

* 用 Zoom、Jitsi 或 BigBlueButton 进行视频通话

除了上述功能外,你可以预期得到你通常在 Slack 和其他方面得到的基本选项。

此外,如果你愿意,你还可以将它与 Matrix 和 IRC 整合。

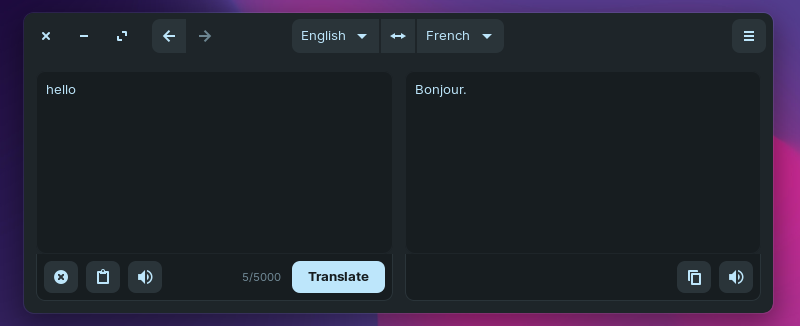

在我简短的测试使用中,其用户界面对于有效的沟通来说是足够好的。然而,我没能找到任何黑暗模式或改变主题的能力。

它看起来比 Slack 更简单直白,这样可以改善用户体验方面的问题。

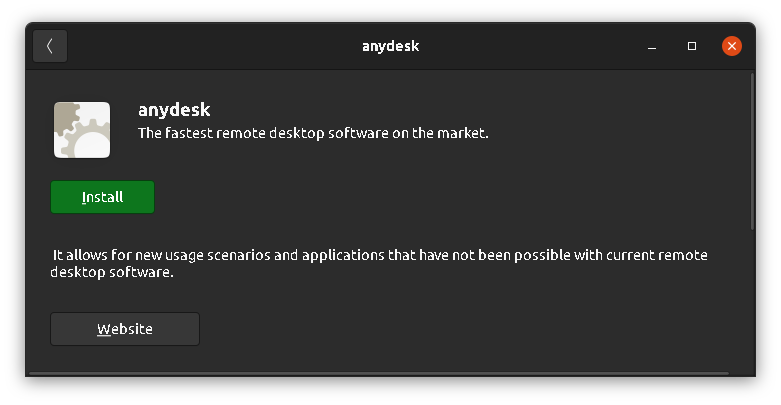

### 在 Linux 中安装 Zulip

Zulip 在其官方网站上以 AppImage 文件的形式提供。如果你需要帮助,可以参考我们关于 [在 Linux 中使用 AppImage](https://itsfoss.com/use-appimage-linux/) 的指南。

它也有一个 Snap 包。所以,你可以在任何一个 Linux 发行版上使用它们中的任何一个。

你也可以使用 APT 通过终端为基于 Ubuntu/Debian 的发行版安装它。如果你想这样做,请看它的 [官方说明](https://zulip.com/help/desktop-app-install-guide)。

Zulip 可用于 Windows、Mac 和 Linux。你也应该发现它可用于 Android 和 iOS 手机。

* [Zulip](https://zulip.com/)

你可以在网络、桌面和智能手机上使用 Zulip,所以可以把它当做 Slack 的合适替代品。

你试过了吗?你用什么消息平台来进行工作协作?欢迎在评论中分享你的想法。

---

via: <https://itsfoss.com/zulip/>

作者:[Ankush Das](https://itsfoss.com/author/ankush/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | **Brief:** Zulip is an open-source collaboration platform that pitches itself as a better replacement to Slack. Let us take a closer look.

Messaging and collaboration platforms make a big difference when it comes to your work.

While there are several options available, Slack is a popular one used by many organizations. But, what about an open-source alternative to Slack that you can self-host?

Zulip is one such software.

## Zulip: Open Source Collaboration Messaging App

If you want to explore, I must mention that there are more [open-source alternatives to Slack](https://itsfoss.com/open-source-slack-alternative/) out there.

Here, I focus on Zulip.

Zulip is a free and open-source messaging application with paid hosted options and the ability to self-host.

It aims to provide a similar experience to Slack while striving to help you improve the effectiveness of conversations using topics.

In contrast to channels in Slack, Zulip chat adds topics (which are like tags) to quickly filter through the conversations that matter to you.

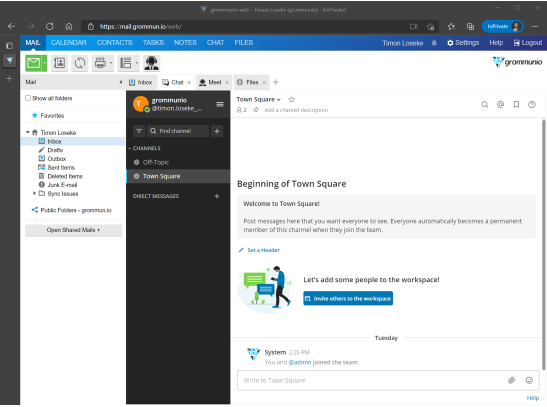

## Features of Zulip

You get most of the essential features with Zulip. To list the key highlights, you can find:

- Markdown support

- Topics for channels

- Drag and drop file support

- Code blocks

- GitHub integration to track issues

- Email notification support

- Self-host option

- Message editing

- GIPHY integration

- Video calls with Zoom, Jitsi, or BigBlueButton

In addition to the features mentioned, you should expect the basic options that you usually get with Slack and others.

Also, you can integrate it with Matrix and IRC if you want.

In my brief test usage, the user interface is good enough for effective communication. However, I failed to find any dark mode or the ability to change a theme.

It looks more straightforward than Slack so that it can improve the user experience side of things.

## Install Zulip in Linux

Zulip is available as an AppImage file from its official website. You may refer to our guide on[ using AppImage in Linux](https://itsfoss.com/use-appimage-linux/) in case you need help.

It is also available as a snap package. So, you can utilize either of them for any Linux distro.

You can also install it through the terminal for Ubuntu/Debian-based distros using APT. Take a look at its [official instructions](https://zulip.com/help/desktop-app-install-guide) if you want that.

Zulip is available for Windows, Mac, and Linux. You should also find it available for Android and iOS mobile phones.

Considering that you can use Zulip on the web, desktop, and smartphones, it is a suitable replacement for Slack.

*Have you tried it yet? What messaging platform do you use to collaborate for work? Feel free to share your thoughts in the comments.* |

13,752 | 通过 ftrace 来分析 Linux 内核 | https://opensource.com/article/21/7/linux-kernel-ftrace | 2021-09-05T15:20:00 | [

"strace",

"ftrace",

"追踪"

] | https://linux.cn/article-13752-1.html |

>

> 通过 `ftrace` 来了解 Linux 内核内部工作方式是一个好方法。

>

>

>

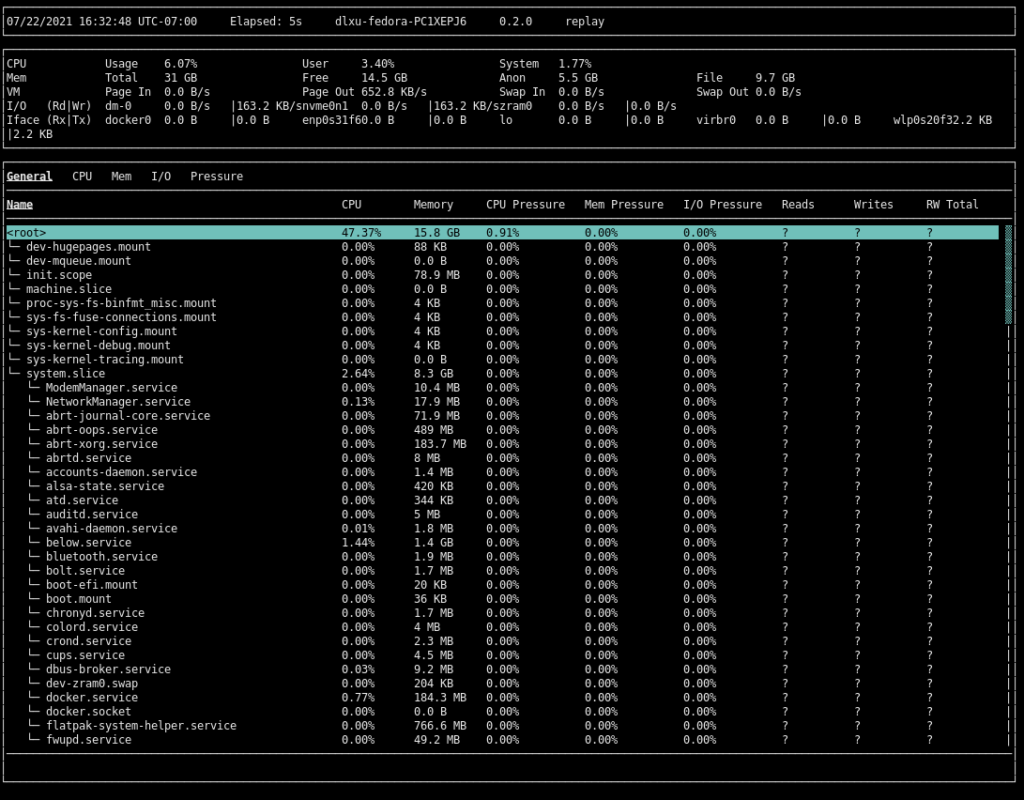

操作系统的内核是最难以理解的软件之一。自从你的系统启动后,它会一直在后台运行。尽管每个用户都不与内核直接交互,但他们在内核的帮助下完成自己的计算任务。与内核的交互发生在调用系统调用或者用户日常使用的各种库或应用间接调用了系统调用。

在之前的文章里我介绍了如何使用 [strace](/article-11545-1.html) 来追踪系统调用。然而,使用 `strace` 时你的视野是有限的。它允许你查看特定参数的系统调用。并在工作完成后,看到其返回值或状态,以表明是成功还是失败。但是你无法知道内核在这段时间内发生了什么。除了系统调用外,还有很多其他活动内核中发生,而你却视而不见。

### ftrace 介绍

本文的旨在通过使用一个名为 `ftrace` 的机制来阐明追踪内核函数的一些情况。它使得任何 Linux 用户可以轻松地追踪内核,并且了解更多关于 Linux 内核内部如何工作。

`ftrace` 默认产生的输出往往是巨大的,因为内核总是忙碌的。为了节省空间,很多情况下我会通过截断来给出尽量小的输出。

我使用 Fedora 来演示下面的例子,但是它们应该在其他最新的 Linux 发行版上同样可以运行。

### 启用 ftrace

`ftrace` 现在已经是内核中的一部分了,你不再需要事先安装它了。也就是说,如果你在使用最近的 Linux 系统,那么 `ftrace` 是已经启用了的。为了验证 `ftrace` 是否可用,运行 `mount` 命令并查找 `tracefs`。如果你看到类似下面的输出,表示 `ftrace` 已经启用,你可以轻松地尝试本文中下面的例子。下面有些命令需要在 root 用户下使用(用 `sudo` 执行是不够的)。

```

# mount | grep tracefs

none on /sys/kernel/tracing type tracefs (rw,relatime,seclabel)

```

要想使用 `ftrace`,你首先需要进入上面 `mount` 命令中找到的特定目录中,在那个目录下运行文章中的其他命令。

```

# cd /sys/kernel/tracing

```

### 一般的工作流程

首先,你需要理解捕捉踪迹和获取输出的一般流程。如果你直接运行 `ftrace`,不会运行任何特定的 `ftrace` 命令。相反的,基本操作是通过标准 Linux 命令来写入或读取一些文件。

一般的步骤如下:

1. 通过写入一些特定文件来启用/结束追踪

2. 通过写入一些特定文件来设置/取消追踪时的过滤规则

3. 从文件中读取基于第 1 和 2 步的追踪输出

4. 从文件中清除早期输出或缓冲区

5. 缩小到你的特定用例(你要追踪的内核函数),重复 1、2、3、4 步

### 可用的追踪器类型

有多种不同的追踪器可供使用。之前提到,在运行任何命令前,你需要进入一个特定的目录下,因为需要的文件在这些目录下。我在我的例子中使用了相对路径(而不是绝对路径)。

你可以查看 `available_tracers` 文件内容来查看所有可用的追踪器类型。你可以看下面列出了几个。不需要担心这些:

```

$ pwd

/sys/kernel/tracing

$ sudo cat available_tracers

hwlat blk mmiotrace function_graph wakeup_dl wakeup_rt wakeup function nop

```

在所有输出的追踪器中,我会聚焦于下面三个特殊的:启用追踪的 `function` 和 `function_graph`,以及停止追踪的 `nop`。

### 确认当前的追踪器

通常情况默认的追踪器设定为 `nop`。即在特殊文件中 `current_tracer` 中的 “无操作”,这意味着追踪目前是关闭的:

```

$ pwd

/sys/kernel/tracing

$ sudo cat current_tracer

nop

```

### 查看追踪输出

在启用任何追踪功能之前,请你看一下保存追踪输出的文件。你可以用 [cat](https://opensource.com/article/19/2/getting-started-cat-command) 命令查看名为 `trace` 的文件的内容:

```

# cat trace

# tracer: nop

#

# entries-in-buffer/entries-written: 0/0 #P:8

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

```

### 启用 function 追踪器

你可以通过向 `current_tracer` 文件写入 `function` 来启用第一个追踪器 `function`(文件原本内容为 `nop`,意味着追踪是关闭的)。把这个操作看成是启用追踪的一种方式:

```

$ pwd

/sys/kernel/tracing

$ sudo cat current_tracer

nop

$ echo function > current_tracer

$

$ cat current_tracer

function

```

### 查看 function 追踪器的更新追踪输出

现在你已启用追踪,是时候查看输出了。如果你查看 `trace` 文件内容,你将会看到许多被连续写入的内容。我通过管道只展示了文件内容的前 20 行。根据左边输出的标题,你可以看到在某个 CPU 上运行的任务和进程 ID。根据右边输出的内容,你可以看到具体的内核函数和其父函数。中间显示了时间戳信息:

```

# sudo cat trace | head -20

# tracer: function

#

# entries-in-buffer/entries-written: 409936/4276216 #P:8

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

<idle>-0 [000] d... 2088.841739: tsc_verify_tsc_adjust <-arch_cpu_idle_enter

<idle>-0 [000] d... 2088.841739: local_touch_nmi <-do_idle

<idle>-0 [000] d... 2088.841740: rcu_nocb_flush_deferred_wakeup <-do_idle

<idle>-0 [000] d... 2088.841740: tick_check_broadcast_expired <-do_idle

<idle>-0 [000] d... 2088.841740: cpuidle_get_cpu_driver <-do_idle

<idle>-0 [000] d... 2088.841740: cpuidle_not_available <-do_idle

<idle>-0 [000] d... 2088.841741: cpuidle_select <-do_idle

<idle>-0 [000] d... 2088.841741: menu_select <-do_idle

<idle>-0 [000] d... 2088.841741: cpuidle_governor_latency_req <-menu_select

```

请记住当追踪打开后,这意味着追踪结果会被一直连续写入直至你关闭追踪。

### 关闭追踪

关闭追踪是简单的。你只需要在 `current_tracer` 文件中用 `nop` 替换 `function` 追踪器即可:

```

$ sudo cat current_tracer

function

$ sudo echo nop > current_tracer

$ sudo cat current_tracer

nop

```

### 启用 function\_graph 追踪器

现在尝试第二个名为 `function_graph` 的追踪器。你可以使用和上面相同的步骤:在 `current_tracer` 文件中写入 `function_graph`:

```

$ sudo echo function_graph > current_tracer

$ sudo cat current_tracer

function_graph

```

### function\_tracer 追踪器的追踪输出

注意到目前 `trace` 文件的输出格式已经发生变化。现在,你可以看到 CPU ID 和内核函数的执行时长。接下来,一个花括号表示一个函数的开始,以及它内部调用了哪些其他函数:

```

# cat trace | head -20

# tracer: function_graph

#

# CPU DURATION FUNCTION CALLS

# | | | | | | |

6) | n_tty_write() {

6) | down_read() {

6) | __cond_resched() {

6) 0.341 us | rcu_all_qs();

6) 1.057 us | }

6) 1.807 us | }

6) 0.402 us | process_echoes();

6) | add_wait_queue() {

6) 0.391 us | _raw_spin_lock_irqsave();

6) 0.359 us | _raw_spin_unlock_irqrestore();

6) 1.757 us | }

6) 0.350 us | tty_hung_up_p();

6) | mutex_lock() {

6) | __cond_resched() {

6) 0.404 us | rcu_all_qs();

6) 1.067 us | }

```

### 启用追踪的设置来增加追踪的深度

你可以使用下面的步骤来调整追踪器以看到更深层次的函数调用。完成之后,你可以查看 `trace` 文件的内容并发现输出变得更加详细了。为了文章的可读性,这个例子的输出被省略了:

```

# cat max_graph_depth

0

# echo 1 > max_graph_depth ## or:

# echo 2 > max_graph_depth

# sudo cat trace

```

### 查找要追踪的函数

上面的步骤足以让你开始追踪。但是它产生的输出内容是巨大的,当你想试图找到自己感兴趣的内容时,往往会很困难。通常你更希望能够只追踪特定的函数,而忽略其他函数。但如果你不知道它们确切的名称,你怎么知道要追踪哪些进程?有一个文件可以帮助你解决这个问题 —— `available_filter_functions` 文件提供了一个可供追踪的函数列表:

```

$ sudo wc -l available_filter_functions

63165 available_filter_functions

```

### 查找一般的内核函数

现在试着搜索一个你所知道的简单内核函数。用户空间由 `malloc` 函数用来分配内存,而内核由 `kmalloc` 函数,它提供类似的功能。下面是所有与 `kmalloc` 相关的函数:

```

$ sudo grep kmalloc available_filter_functions

debug_kmalloc

mempool_kmalloc

kmalloc_slab

kmalloc_order

kmalloc_order_trace

kmalloc_fix_flags

kmalloc_large_node

__kmalloc

__kmalloc_track_caller

__kmalloc_node

__kmalloc_node_track_caller

[...]

```

### 查找内核模块或者驱动相关函数

在 `available_filter_functions` 文件的输出中,你可以看到一些以括号内文字结尾的行,例如下面的例子中的 `[kvm_intel]`。这些函数与当前加载的内核模块 `kvm_intel` 有关。你可以运行 `lsmod` 命令来验证:

```

$ sudo grep kvm available_filter_functions | tail

__pi_post_block [kvm_intel]

vmx_vcpu_pi_load [kvm_intel]

vmx_vcpu_pi_put [kvm_intel]

pi_pre_block [kvm_intel]

pi_post_block [kvm_intel]

pi_wakeup_handler [kvm_intel]

pi_has_pending_interrupt [kvm_intel]

pi_update_irte [kvm_intel]

vmx_dump_dtsel [kvm_intel]

vmx_dump_sel [kvm_intel]

$ lsmod | grep -i kvm

kvm_intel 335872 0

kvm 987136 1 kvm_intel

irqbypass 16384 1 kvm

```

### 仅追踪特定的函数

为了实现对特定函数或模式的追踪,你可以利用 `set_ftrace_filter` 文件来指定你要追踪上述输出中的哪些函数。这个文件也接受 `*` 模式,它可以扩展到包括具有给定模式的其他函数。作为一个例子,我在我的机器上使用 ext4 文件系统。我可以用下面的命令指定 ext4 的特定内核函数来追踪:

```

# mount | grep home

/dev/mapper/fedora-home on /home type ext4 (rw,relatime,seclabel)

# pwd

/sys/kernel/tracing

# cat set_ftrace_filter

#### all functions enabled ####

$

$ echo ext4_* > set_ftrace_filter

$

$ cat set_ftrace_filter

ext4_has_free_clusters

ext4_validate_block_bitmap

ext4_get_group_number

ext4_get_group_no_and_offset

ext4_get_group_desc

[...]

```

现在当你可以看到追踪输出时,你只能看到与内核函数有关的 `ext4` 函数,而你之前已经为其设置了一个过滤器。所有其他的输出都被忽略了:

```

# cat trace |head -20

## tracer: function

#

# entries-in-buffer/entries-written: 3871/3871 #P:8

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

cupsd-1066 [004] .... 3308.989545: ext4_file_getattr <-vfs_fstat

cupsd-1066 [004] .... 3308.989547: ext4_getattr <-ext4_file_getattr

cupsd-1066 [004] .... 3308.989552: ext4_file_getattr <-vfs_fstat

cupsd-1066 [004] .... 3308.989553: ext4_getattr <-ext4_file_getattr

cupsd-1066 [004] .... 3308.990097: ext4_file_open <-do_dentry_open

cupsd-1066 [004] .... 3308.990111: ext4_file_getattr <-vfs_fstat

cupsd-1066 [004] .... 3308.990111: ext4_getattr <-ext4_file_getattr

cupsd-1066 [004] .... 3308.990122: ext4_llseek <-ksys_lseek

cupsd-1066 [004] .... 3308.990130: ext4_file_read_iter <-new_sync_read

```

### 排除要被追踪的函数

你并不总是知道你想追踪什么,但是,你肯定知道你不想追踪什么。因此,有一个 `set_ftrace_notrace` —— 请注意其中的 “no”。你可以在这个文件中写下你想要的模式,并启用追踪。这样除了所提到的模式外,任何其他东西都会被追踪到。这通常有助于删除那些使我们的输出变得混乱的普通功能:

```

$ sudo cat set_ftrace_notrace

#### no functions disabled ####

```

### 具有目标性的追踪

到目前为止,你一直在追踪内核中发生的一切。但是,它无法帮助你追踪与某个特定命令有关的事件。为了达到这个目的,你可以按需打开和关闭跟踪,并且在它们之间,运行我们选择的命令,这样你就不会在跟踪输出中得到额外的输出。你可以通过向 `tracing_on` 写入 `1` 来启用跟踪,写 `0` 来关闭跟踪。

```

# cat tracing_on

0

# echo 1 > tracing_on

# cat tracing_on

1

### Run some specific command that we wish to trace here ###

# echo 0 > tracing_on

# cat tracing_on

0

```

### 追踪特定的 PID

如果你想追踪与正在运行的特定进程有关的活动,你可以将该 PID 写入一个名为 `set_ftrace_pid` 的文件,然后启用追踪。这样一来,追踪就只限于这个 PID,这在某些情况下是非常有帮助的。

```

$ sudo echo $PID > set_ftrace_pid

```

### 总结

`ftrace` 是一个了解 Linux 内核内部工作的很好方式。通过一些练习,你可以学会对 `ftrace` 进行调整以缩小搜索范围。要想更详细地了解 `ftrace` 和它的高级用法,请看 `ftrace` 的核心作者 Steven Rostedt 写的这些优秀文章。

* [调试 Linux 内核,第一部分](https://lwn.net/Articles/365835/)

* [调试 Linux 内核,第二部分](https://lwn.net/Articles/366796/)

* [调试 Linux 内核,第三部分](https://lwn.net/Articles/370423/)

---

via: <https://opensource.com/article/21/7/linux-kernel-ftrace>

作者:[Gaurav Kamathe](https://opensource.com/users/gkamathe) 选题:[lujun9972](https://github.com/lujun9972) 译者:[萌新阿岩](https://github.com/mengxinayan) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | An operating system's kernel is one of the most elusive pieces of software out there. It's always there running in the background from the time your system gets turned on. Every user achieves their computing work with the help of the kernel, yet they never interact with it directly. The interaction with the kernel occurs by making system calls or having those calls made on behalf of the user by various libraries or applications that they use daily.

I've covered how to trace system calls in an earlier article using

. However, with [strace](https://opensource.com/article/19/10/strace)`strace`

, your visibility is limited. It allows you to view the system calls invoked with specific parameters and, after the work gets done, see the return value or status indicating whether they passed or failed. But you had no idea what happened inside the kernel during this time. Besides just serving system calls, there's a lot of other activity happening inside the kernel that you're oblivious to.

## Ftrace Introduction

This article aims to shed some light on tracing the kernel functions by using a mechanism called `ftrace`

. It makes kernel tracing easily accessible to any Linux user, and with its help you can learn a lot about Linux kernel internals.

The default output generated by the `ftrace`

is often massive, given that the kernel is always busy. To save space, I've kept the output to a minimum and, in many cases truncated the output entirely.

I am using Fedora for these examples, but they should work on any of the latest Linux distributions.

## Enabling ftrace

`Ftrace`

is part of the Linux kernel now, and you no longer need to install anything to use it. It is likely that, if you are using a recent Linux OS, `ftrace`

is already enabled. To verify that the `ftrace`

facility is available, run the mount command and search for `tracefs`

. If you see output similar to what is below, `ftrace`

is enabled, and you can easily follow the examples in this article. These commands must be run as the root user (`sudo`

is insufficient.)

```

# mount | grep tracefs

none on /sys/kernel/tracing type tracefs (rw,relatime,seclabel)

```

To make use of `ftrace`

, you first must navigate to the special directory as specified in the mount command above, from where you'll run the rest of the commands in the article:

`# cd /sys/kernel/tracing`

## General work flow

First of all, you must understand the general workflow of capturing a trace and obtaining the output. If you're using `ftrace`

directly, there isn't any special `ftrace-`

specific commands to run. Instead, you basically write to some files and read from some files using standard command-line Linux utilities.

The general steps:

- Write to some specific files to enable/disable tracing.

- Write to some specific files to set/unset filters to fine-tune tracing.

- Read generated trace output from files based on 1 and 2.

- Clear earlier output or buffer from files.

- Narrow down to your specific use case (kernel functions to trace) and repeat steps 1, 2, 3, 4.

## Types of available tracers

There are several different kinds of tracers available to you. As mentioned earlier, you need to be in a specific directory before running any of these commands because the files of interest are present there. I use relative paths (as opposed to absolute paths) in my examples.

You can view the contents of the `available_tracers`

file to see all the types of tracers available. You can see a few listed below. Don't worry about all of them just yet:

```

# pwd

/sys/kernel/tracing

# cat available_tracers

hwlat blk mmiotrace function_graph wakeup_dl wakeup_rt wakeup function nop

```

Out of all the given tracers, I focus on three specific ones: `function`

and `function_graph`

to enable tracing, and `nop`

to disable tracing.

## Identify current tracer

Usually, by default, the tracer is set to `nop`

. That is, "No operation" in the special file `current_tracer`

, which usually means tracing is currently off:

```

# pwd

/sys/kernel/tracing

# cat current_tracer

nop

```

## View Tracing output

Before you enable any tracing, take a look at the file where the tracing output gets stored. You can view the contents of the file named `trace`

using the [cat](https://opensource.com/article/19/2/getting-started-cat-command) command:

```

# cat trace

# tracer: nop

#

# entries-in-buffer/entries-written: 0/0 #P:8

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

```

## Enable function tracer

You can enable your first tracer called `function`

by writing `function`

to the file `current_tracer`

(its earlier content was `nop`

, indicating that tracing was off.) Think of this operation as a way of enabling tracing:

```

# pwd

/sys/kernel/tracing

# cat current_tracer

nop

# echo function > current_tracer

# cat current_tracer

function

```

## View updated tracing output for function tracer

Now that you've enabled tracing, it's time to view the output. If you view the contents of the `trace`

file, you see a lot of data being written to it continuously. I've piped the output and am currently viewing only the top 20 lines to keep things manageable. If you follow the headers in the output on the left, you can see which task and Process ID are running on which CPU. Toward the right side of the output, you see the exact kernel function running, followed by its parent function. There is also time stamp information in the center:

```

# sudo cat trace | head -20

# tracer: function

#

# entries-in-buffer/entries-written: 409936/4276216 #P:8

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

<idle>-0 [000] d... 2088.841739: tsc_verify_tsc_adjust <-arch_cpu_idle_enter

<idle>-0 [000] d... 2088.841739: local_touch_nmi <-do_idle

<idle>-0 [000] d... 2088.841740: rcu_nocb_flush_deferred_wakeup <-do_idle

<idle>-0 [000] d... 2088.841740: tick_check_broadcast_expired <-do_idle

<idle>-0 [000] d... 2088.841740: cpuidle_get_cpu_driver <-do_idle

<idle>-0 [000] d... 2088.841740: cpuidle_not_available <-do_idle

<idle>-0 [000] d... 2088.841741: cpuidle_select <-do_idle

<idle>-0 [000] d... 2088.841741: menu_select <-do_idle

<idle>-0 [000] d... 2088.841741: cpuidle_governor_latency_req <-menu_select

```

Remember that tracing is on, which means the output of tracing continues to get written to the trace file until you turn tracing off.

## Turn off tracing

Turning off tracing is simple. All you have to do is replace `function`

tracer with `nop`

in the `current_tracer`

file and tracing gets turned off:

```

# cat current_tracer

function

# echo nop > current_tracer

# cat current_tracer

nop

```

## Enable function_graph tracer

Now try the second tracer, called `function_graph`

. You can enable this using the same steps as before: write `function_graph`

to the `current_tracer`

file:

```

# echo function_graph > current_tracer

# cat current_tracer

function_graph

```

## Tracing output of function_graph tracer

Notice that the output format of the `trace`

file has changed. Now, you can see the CPU ID and the duration of the kernel function execution. Next, you see curly braces indicating the beginning of a function and what other functions were called from inside it:

```

# cat trace | head -20

# tracer: function_graph

#

# CPU DURATION FUNCTION CALLS

# | | | | | | |

6) | n_tty_write() {

6) | down_read() {

6) | __cond_resched() {

6) 0.341 us | rcu_all_qs();

6) 1.057 us | }

6) 1.807 us | }

6) 0.402 us | process_echoes();

6) | add_wait_queue() {

6) 0.391 us | _raw_spin_lock_irqsave();

6) 0.359 us | _raw_spin_unlock_irqrestore();

6) 1.757 us | }

6) 0.350 us | tty_hung_up_p();

6) | mutex_lock() {

6) | __cond_resched() {

6) 0.404 us | rcu_all_qs();

6) 1.067 us | }

```

## Enable trace settings to increase the depth of tracing

You can always tweak the tracer slightly to see more depth of the function calls using the steps below. After which, you can view the contents of the `trace`

file and see that the output is slightly more detailed. For readability, the output of this example is omitted:

```

# cat max_graph_depth

0

# echo 1 > max_graph_depth ## or:

# echo 2 > max_graph_depth

# sudo cat trace

```

## Finding functions to trace

The steps above are sufficient to get started with tracing. However, the amount of output generated is enormous, and you can often get lost while trying to find out items of interest. Often you want the ability to trace specific functions only and ignore the rest. But how do you know which processes to trace if you don't know their exact names? There is a file that can help you with this—`available_filter_functions`

provides you with a list of available functions for tracing:

```

# wc -l available_filter_functions

63165 available_filter_functions

```

## Search for general kernel functions

Now try searching for a simple kernel function that you are aware of. User-space has `malloc`

to allocate memory, while the kernel has its `kmalloc`

function, which provides similar functionality. Below are all the `kmalloc`

related functions:

```

# grep kmalloc available_filter_functions

debug_kmalloc

mempool_kmalloc

kmalloc_slab

kmalloc_order

kmalloc_order_trace

kmalloc_fix_flags

kmalloc_large_node

__kmalloc

__kmalloc_track_caller

__kmalloc_node

__kmalloc_node_track_caller

[...]

```

## Search for kernel module or driver related functions

From the output of `available_filter_functions`

, you can see some lines ending with text in brackets, such as `[kvm_intel]`

in the example below. These functions are related to the kernel module `kvm_intel`

, which is currently loaded. You can run the `lsmod`

command to verify:

```

# grep kvm available_filter_functions | tail

__pi_post_block [kvm_intel]

vmx_vcpu_pi_load [kvm_intel]

vmx_vcpu_pi_put [kvm_intel]

pi_pre_block [kvm_intel]

pi_post_block [kvm_intel]

pi_wakeup_handler [kvm_intel]

pi_has_pending_interrupt [kvm_intel]

pi_update_irte [kvm_intel]

vmx_dump_dtsel [kvm_intel]

vmx_dump_sel [kvm_intel]

# lsmod | grep -i kvm

kvm_intel 335872 0

kvm 987136 1 kvm_intel

irqbypass 16384 1 kvm

```

## Trace specific functions only

To enable tracing of specific functions or patterns, you can make use of the `set_ftrace_filter`

file to specify which functions from the above output you want to trace.

This file also accepts the `*`

pattern, which expands to include additional functions with the given pattern. As an example, I am using the `ext4`

filesystem on my machine. I can specify `ext4`

specific kernel functions to trace using the following commands:

```

# mount | grep home

/dev/mapper/fedora-home on /home type ext4 (rw,relatime,seclabel)

# pwd

/sys/kernel/tracing

# cat set_ftrace_filter

#### all functions enabled ####

$

$ echo ext4_* > set_ftrace_filter

$

$ cat set_ftrace_filter

ext4_has_free_clusters

ext4_validate_block_bitmap

ext4_get_group_number

ext4_get_group_no_and_offset

ext4_get_group_desc

[...]

```

Now, when you see the tracing output, you can only see functions `ext4`

related to kernel functions for which you had set a filter earlier. All the other output gets ignored:

```

# cat trace |head -20

## tracer: function

#

# entries-in-buffer/entries-written: 3871/3871 #P:8

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

cupsd-1066 [004] .... 3308.989545: ext4_file_getattr <-vfs_fstat

cupsd-1066 [004] .... 3308.989547: ext4_getattr <-ext4_file_getattr

cupsd-1066 [004] .... 3308.989552: ext4_file_getattr <-vfs_fstat

cupsd-1066 [004] .... 3308.989553: ext4_getattr <-ext4_file_getattr

cupsd-1066 [004] .... 3308.990097: ext4_file_open <-do_dentry_open

cupsd-1066 [004] .... 3308.990111: ext4_file_getattr <-vfs_fstat

cupsd-1066 [004] .... 3308.990111: ext4_getattr <-ext4_file_getattr

cupsd-1066 [004] .... 3308.990122: ext4_llseek <-ksys_lseek

cupsd-1066 [004] .... 3308.990130: ext4_file_read_iter <-new_sync_read

```

## Exclude functions from being traced

You don't always know what you want to trace but, you surely know what you don't want to trace. For that, there is this file aptly named `set_ftrace_notrace`

—notice the "no" in there. You can write your desired pattern in this file and enable tracing, upon which everything except the mentioned pattern gets traced. This is often helpful to remove common functionality that clutters our output:

```

# cat set_ftrace_notrace

#### no functions disabled ####

```

## Targetted tracing

So far, you've been tracing everything that has happened in the kernel. But that won't help us if you wish to trace events related to a specific command. To achieve this, you can turn tracing on and off on-demand and, and in between them, run our command of choice so that you do not get extra output in your trace output. You can enable tracing by writing `1`

to `tracing_on`

, and `0`

to turn it off:

```

# cat tracing_on

0

# echo 1 > tracing_on

# cat tracing_on

1

### Run some specific command that we wish to trace here ###

# echo 0 > tracing_on

# cat tracing_on

0

```

## Tracing specific PID

If you want to trace activity related to a specific process that is already running, you can write that PID to a file named `set_ftrace_pid`

and then enable tracing. That way, tracing is limited to this PID only, which is very helpful in some instances:

`# echo $PID > set_ftrace_pid`

## Conclusion

`Ftrace`

is a great way to learn more about the internal workings of the Linux kernel. With some practice, you can learn to fine-tune `ftrace`

and narrow down your searches. To understand `ftrace`

in more detail and its advanced usage, see these excellent articles written by the core author of `ftrace`

himself—Steven Rostedt.

## Comments are closed. |

13,753 | 如何使用 youtube-dl 只下载音频 | https://itsfoss.com/youtube-dl-audio-only/ | 2021-09-05T15:32:00 | [

"音频",

"youtube-dl"

] | https://linux.cn/article-13753-1.html |

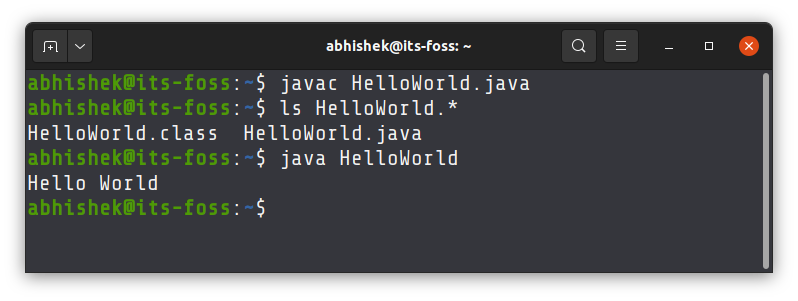

[youtube-dl](https://github.com/ytdl-org/youtube-dl) 是一个多功能的命令行工具,用于从 YouTube 和许多其他网站下载视频。我用它来做我自己的 YouTube 视频的备份。

默认情况下,你会 [使用 youtube-dl 下载视频](https://itsfoss.com/download-youtube-linux/)。用 youtube-dl 只提取音频怎么样? 其实很简单。让我告诉你步骤。

>

> **注意**

>

>

> 从网站下载视频可能违反他们的政策。这取决于你是否选择下载视频或音频。

>

>

>

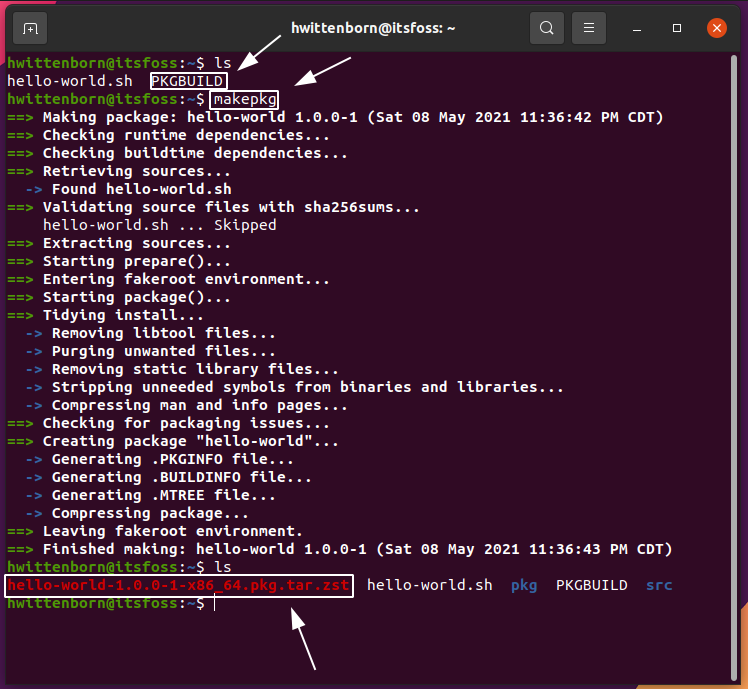

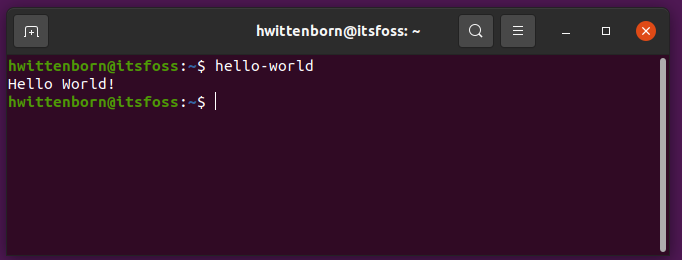

### 使用 youtube-dl 只下载音频

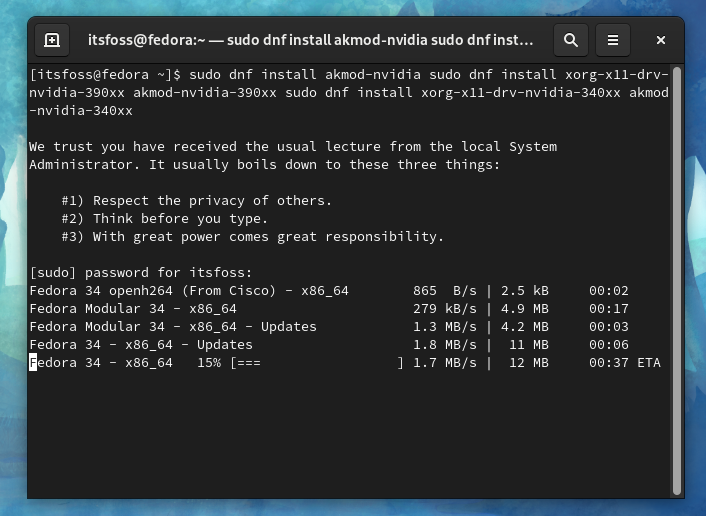

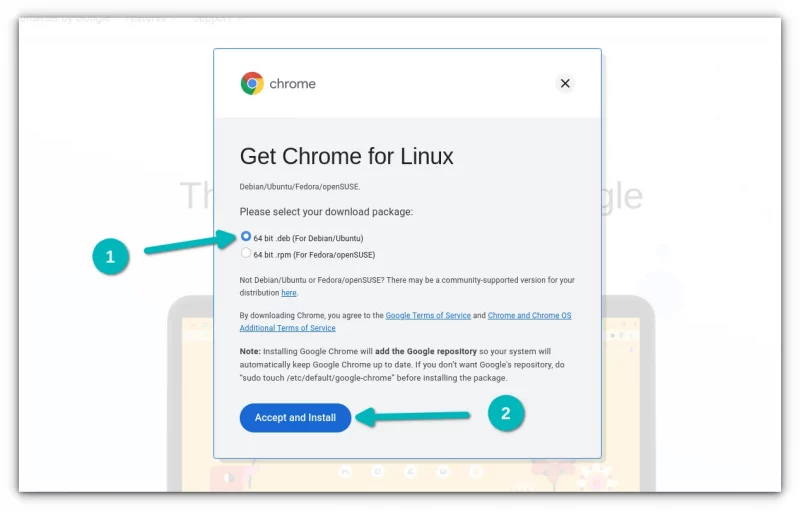

请确保你已经在你的 Linux 发行版上安装了 `youtube-dl`。

```

sudo snap install youtube-dl

```

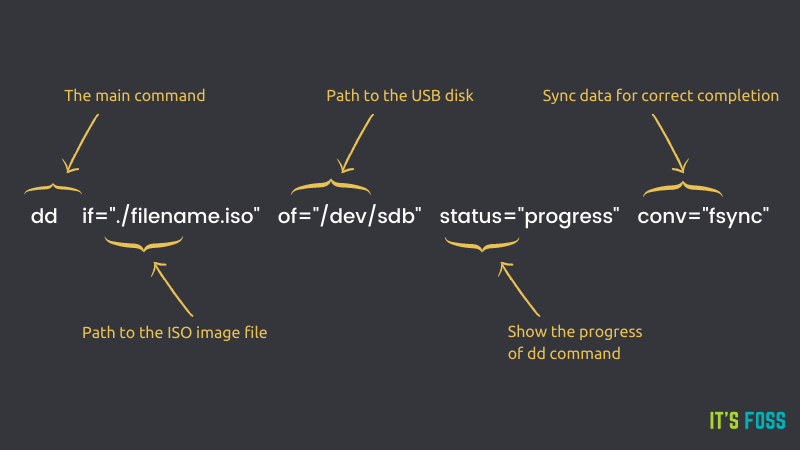

如果你只想从 YouTube 视频中下载音频,你可以使用 `youtube-dl` 的 `-x` 选项。这个提取音频的选项将视频文件转换为纯音频文件。

```

youtube-dl -x video_URL

```

该文件被保存在你运行 `youtube-dl` 命令的同一目录下。

这是我下载 Zorin OS 16 评论视频的画外音的示例:

```

youtube-dl -x https://www.youtube.com/watch?v=m_PmLG7HqbQ

[youtube] m_PmLG7HqbQ: Downloading webpage

[download] Destination: Zorin OS 16 Review - It's a Visual Masterpiece-m_PmLG7HqbQ.m4a

[download] 100% of 4.26MiB in 00:03

[ffmpeg] Correcting container in "Zorin OS 16 Review - It's a Visual Masterpiece-m_PmLG7HqbQ.m4a"

[ffmpeg] Post-process file Zorin OS 16 Review - It's a Visual Masterpiece-m_PmLG7HqbQ.m4a exists, skipping

```

你注意到音频格式了吗?它是 .m4a 格式。你可以把音频格式指定为你所选择的格式。

比如你想提取 MP3 格式的音频。你可以像这样使用它:

```

youtube-dl -x --audio-format mp3 video_URL

```

下面是我之前展示的同一个例子。你可以看到它 [使用 ffmpeg 转换](https://itsfoss.com/ffmpeg/) m4a 文件为 mp3:

```

youtube-dl -x --audio-format mp3 https://www.youtube.com/watch?v=m_PmLG7HqbQ

[youtube] m_PmLG7HqbQ: Downloading webpage

[download] Zorin OS 16 Review - It's a Visual Masterpiece-m_PmLG7HqbQ.m4a has already been downloaded

[download] 100% of 4.26MiB

[ffmpeg] Correcting container in "Zorin OS 16 Review - It's a Visual Masterpiece-m_PmLG7HqbQ.m4a"

[ffmpeg] Destination: Zorin OS 16 Review - It's a Visual Masterpiece-m_PmLG7HqbQ.mp3

Deleting original file Zorin OS 16 Review - It's a Visual Masterpiece-m_PmLG7HqbQ.m4a (pass -k to keep)

```

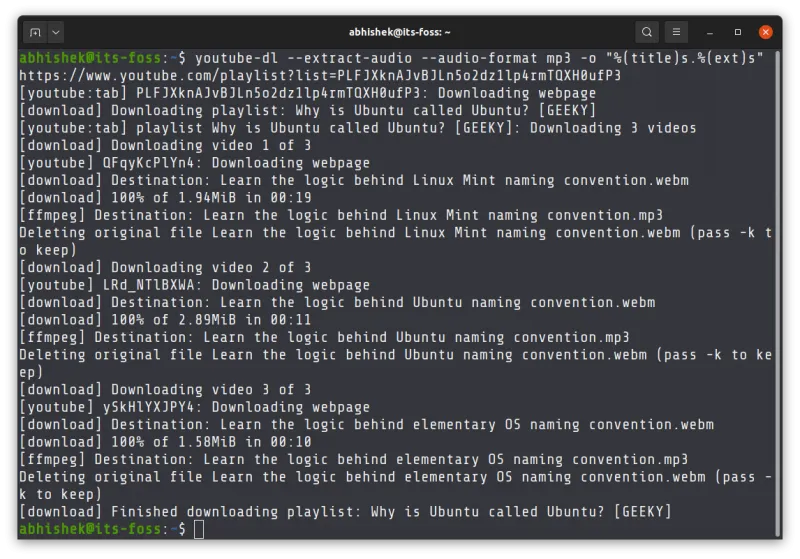

### 以 MP3 格式下载整个 YouTube 播放列表

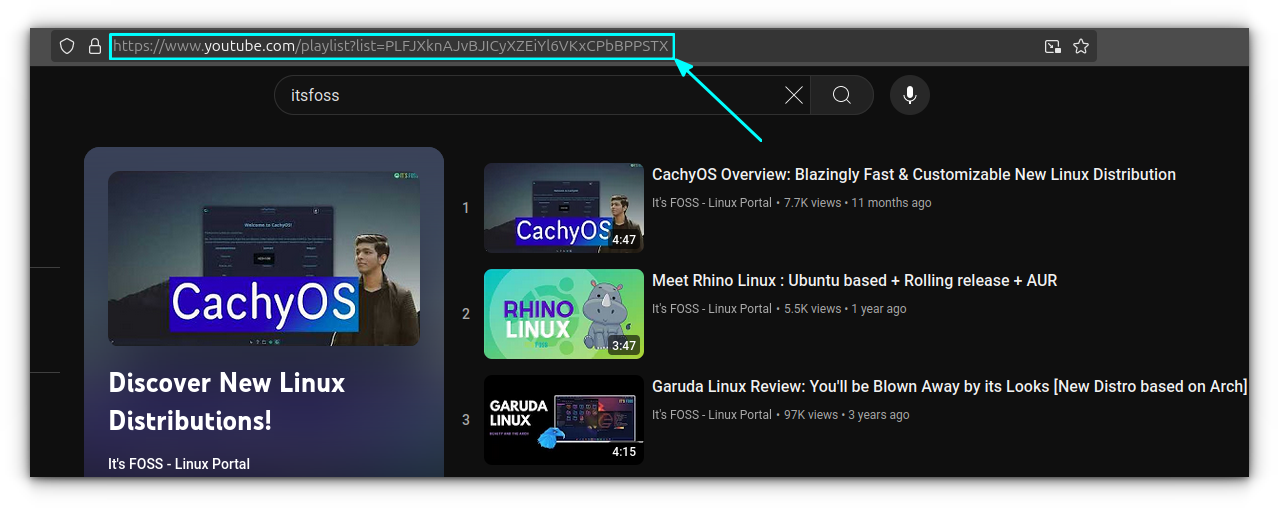

是的,你完全可以这样做。最主要的是要在这里得到播放列表的 URL。它通常是以下格式:

```

https://www.youtube.com/playlist?list=XXXXXXXXXXXXXXXXXXX

```

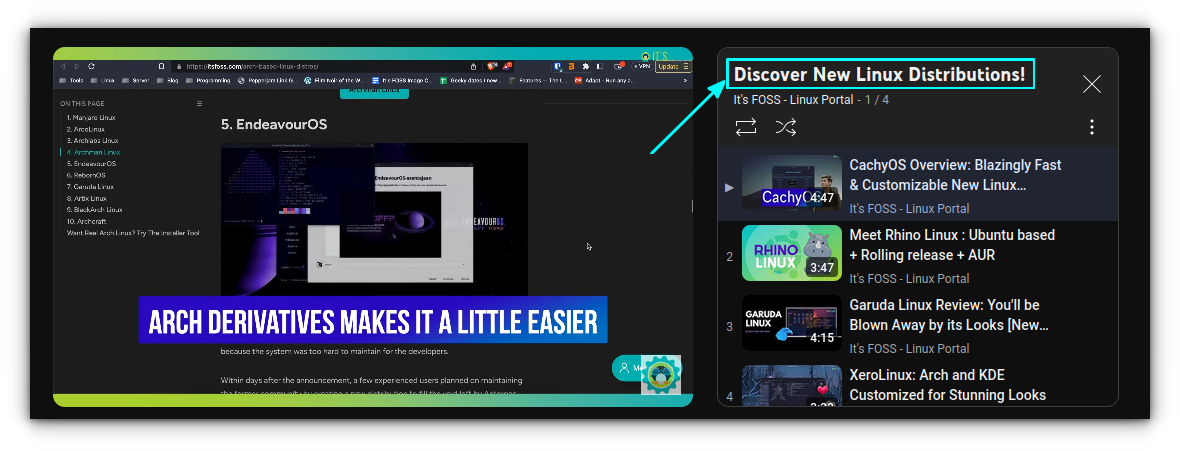

要获得一个播放列表的 URL,当播放列表显示在右边栏时,点击其名称。

它将带你到播放列表页面,你可以在这里复制 URL。

现在你有了播放列表的 URL,你可以用它来下载 MP3 格式的音频文件,方法如下:

```

youtube-dl --extract-audio --audio-format mp3 -o "%(title)s.%(ext)s" playlist_URL

```

那个看起来很可怕的 `-o "%(title)s.%(ext)s"` 指定了输出文件(选项 `-o`),并指示它使用视频的标题和扩展名(本例为 mp3)来命名音频文件。

我希望你觉得这个技巧对你有帮助。享受音频文件吧。

---

via: <https://itsfoss.com/youtube-dl-audio-only/>

作者:[Abhishek Prakash](https://itsfoss.com/author/abhishek/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

[youtube-dl](https://github.com/ytdl-org/youtube-dl?ref=itsfoss.com) is a versatile command line tool for downloading videos from YouTube and many other websites. I use it for making back up of my own YouTube videos.

By default, you [use youtube-dl for downloading videos](https://itsfoss.com/download-youtube-linux/). How about extracting only the audio with youtube-dl? That’s very simple actually. Let me show you the steps.

## Install youtube-dl first

Please make sure that you have installed youtube-dl on your Linux distribution first.

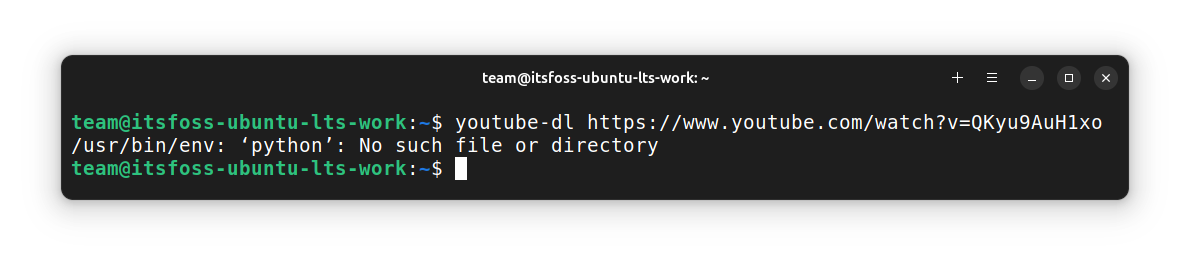

Since 2021, the usual snap and default package manager installations of youtube-dl give a broken utility. So, you need to install the Nightly version of youtube-dl to make it work.

The Nightly release contains recent changes and fixes so that you can run it without any error. I will describe the easiest way to set it up. First go to the Nightly release GitHub page.

Here, you can get a python file, named `youtube-dl`

. Just download it. Once downloaded, copy that file to `/usr/local/bin/`

. For this, open a terminal where you downloaded the file and then run:

```

sudo cp youtube-dl /usr/local/bin/

```

Now, give the file execution permission using the `chmod`

command.

```

sudo chmod a+rx /usr/local/bin/youtube-dl

```

You cannot just run `youtube-dl`

now, because of issue with `python`

naming. It uses `python`

while in Ubuntu-based systems, it is `python3`

.

So, [you can create an alias](https://linuxhandbook.com/linux-alias-command/) for youtube-dl in your `.bashrc`

.

```

alias youtube-dl='python3 /usr/local/bin/youtube-dl'

```

That’s it. You have set up youtube-dl in your system.

**Remember**In order to download audio using youtube-dl, you should have

[FFMPEG installed on your system](https://itsfoss.com/ffmpeg/).

## Download only audio with youtube-dl

If you only want to download audio from a YouTube video, you can use the `-x`

option with youtube-dl. This extract-audio option converts the video files to audio-only files.

```

youtube-dl -x video_URL

```

The file is saved in the same directory from where you ran the youtube-dl command.

Here’s an example where I downloaded the voice-over of our [Install Google Chrome on Ubuntu 24.04](https://www.youtube.com/watch?v=Cfo5BR2FGEQ) video.

Did you notice the audio format? It is in .m4a format. You may specify the audio format to something of your choice.

Say you want to extract the audio in MP3 format. You can use it like this:

```

youtube-dl -x --audio-format mp3 video_URL

```

Here’s the same example I showed previously. You can see that it [uses ffmpeg to convert](https://itsfoss.com/ffmpeg/) the m4a file into MP3.

`-k`

option to preserve the old file along with the new one.## Download entire YouTube playlist in MP3 format

Yes, you can totally do that. The main thing is to get the URL of the playlist here. It is typically in the following format:

```

https://www.youtube.com/playlist?list=XXXXXXXXXXXXXXXXXXX

```

To get the URL of a playlist, click on its name when the playlist is being displayed in the right sidebar.

It will take you to the playlist page, and you can copy the URL here.

Now that you have the playlist URL, you can use it to download the audio files in MP3 format in the following fashion:

```

youtube-dl --extract-audio --audio-format mp3 -o "%(title)s.%(ext)s" playlist_URL

```

That scary looking `-o "%(title)s.%(ext)s"`

specifies the output file (with option -o) and instructs it to use the title of the video and the extension (MP3 in this case) for naming the audio files.

I hope you find this quick tip helpful. Enjoy the audio files 😄 |

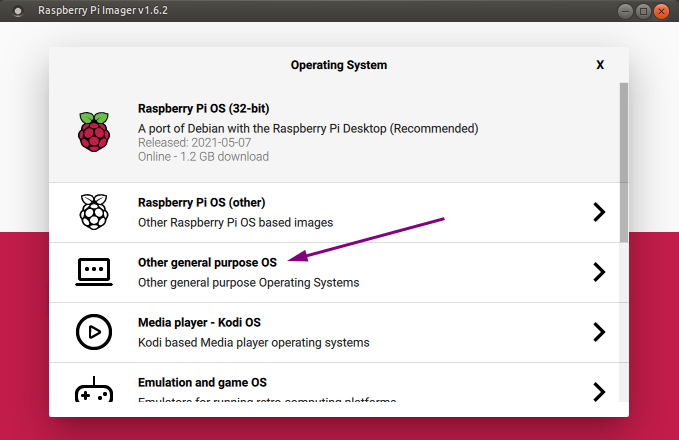

13,755 | 满足日常需求的应用(二):办公套件 | https://fedoramagazine.org/apps-for-daily-needs-part-2-office-suites/ | 2021-09-05T23:20:36 | [

"办公套件"

] | https://linux.cn/article-13755-1.html |

今天,几乎每个家庭都有一台台式电脑或笔记本电脑。这是因为计算机已经成为一个非常重要的要求。此外,不管是为了工作还是学习,许多人需要创建电子版的文档和演示文稿。因此,办公套件是几乎所有计算机上的必备应用程序。本文将介绍一些你可以在 Fedora Linux 上使用的开源办公套件。这些软件你可能需要安装。如果你不熟悉如何在 Fedora Linux 中添加软件包,请参阅我之前的文章 [安装 Fedora 34 工作站后要做的事情](https://fedoramagazine.org/things-to-do-after-installing-fedora-34-workstation/)。下面是满足日常需求的办公套件类的应用程序列表。

### LibreOffice

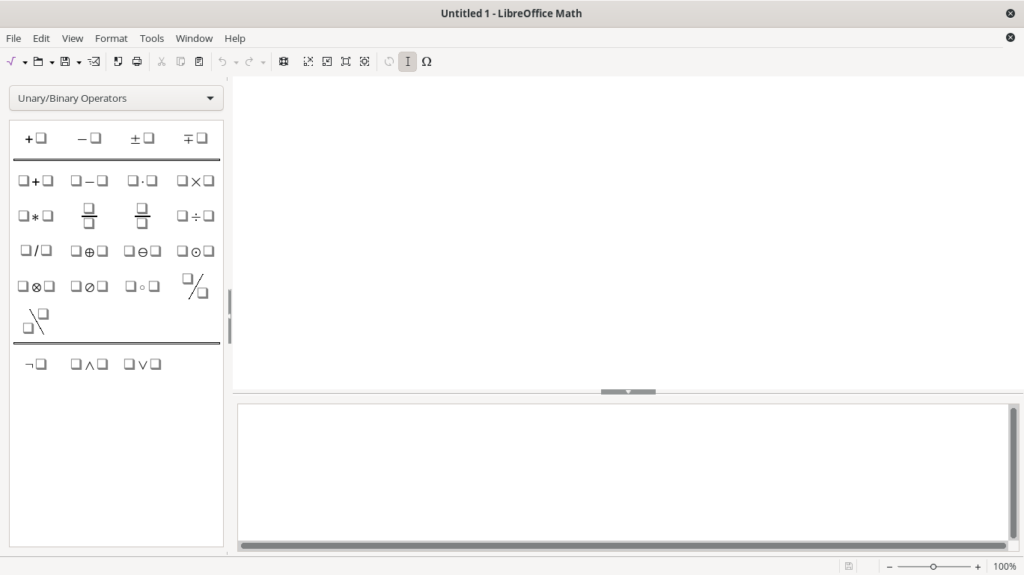

LibreOffice 是 GNU/Linux 用户中最流行的办公套件。它的用户界面和用户体验类似于微软 Office。这使得 LibreOffice 对于那些刚刚从微软 Office 迁移过来的人来说很容易学习。LibreOffice 有完整的功能,可以满足你在文档和演示方面的工作需要。它由六个应用程序组成:Writer、Calc、Impress、Draw、Math 和 Base。

第一个应用程序是 Writer,用于创建各种类型的文档,如信件、传真、议程、会议记录等。它是一个全功能的文字处理和桌面出版工具。第二个应用程序是 Calc,它是一个电子表格程序,非常适合以表格形式呈现数据和记录数据。Calc 可以创建简单的表格或进行专业的数据分析。第三个应用程序是 Impress,它是一个易于使用的演示应用程序。你可以很容易地选择你在演示幻灯片中想要的功能,如文本、图像、表格、图表等。

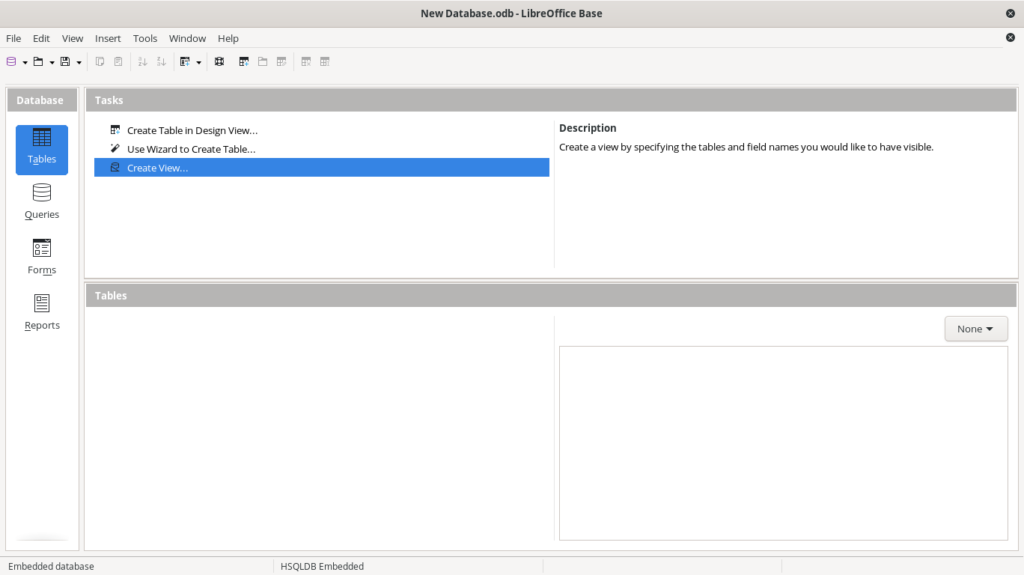

前面提到的三个 LibreOffice 应用程序是创建文档和演示文稿中最常用的应用程序。然而,LibreOffice 提供的其他三个应用程序也非常有用。第一个是 Draw,它可以用来创建从简单到复杂的图纸和图表。下一个应用程序是 Math,它可以帮助我们做出完美格式的数学和科学公式。最后一个是 Base,这是一个用于处理数据库的应用程序。

更多信息请见此链接:<https://www.libreoffice.org/>

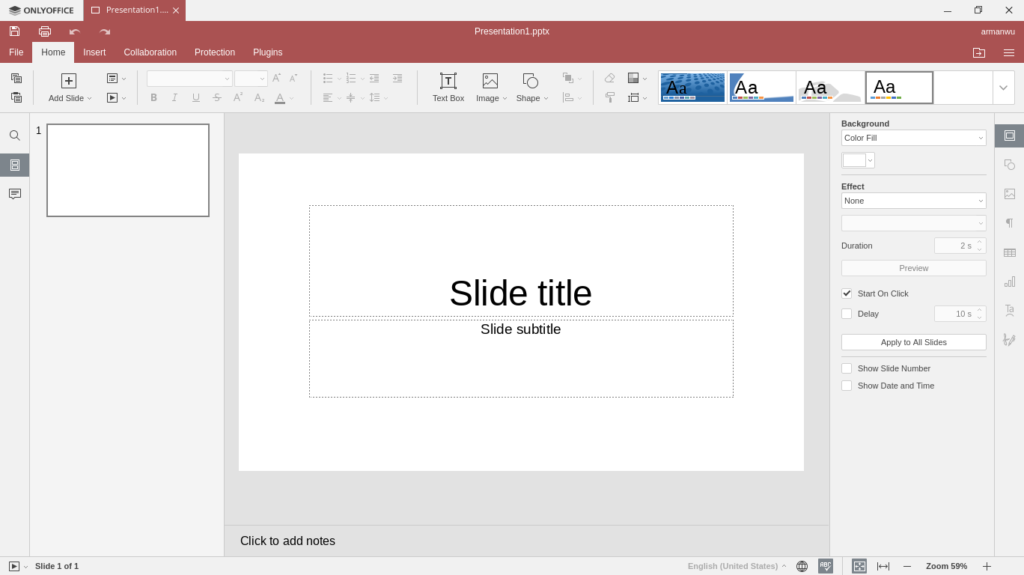

### ONLYOFFICE

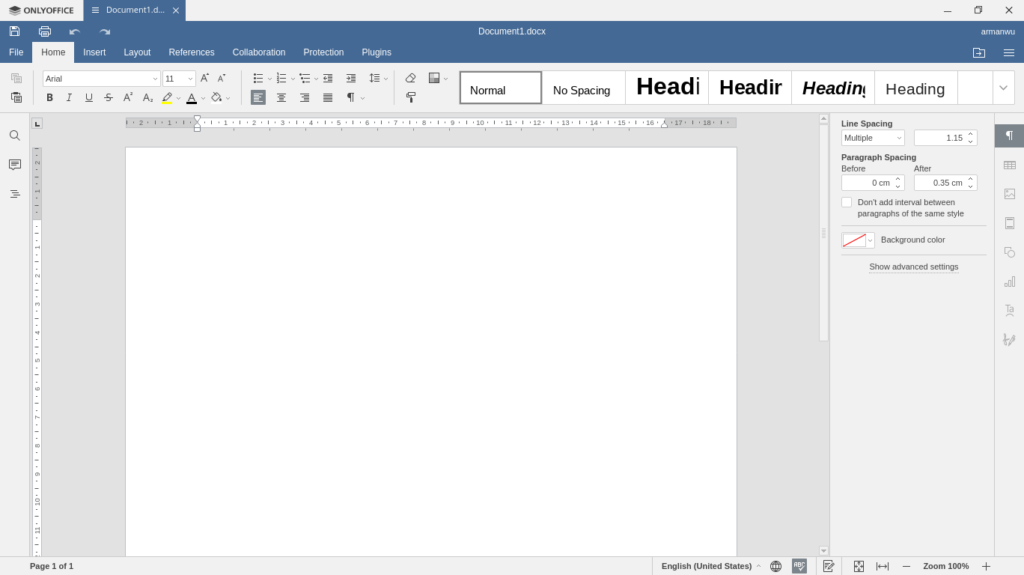

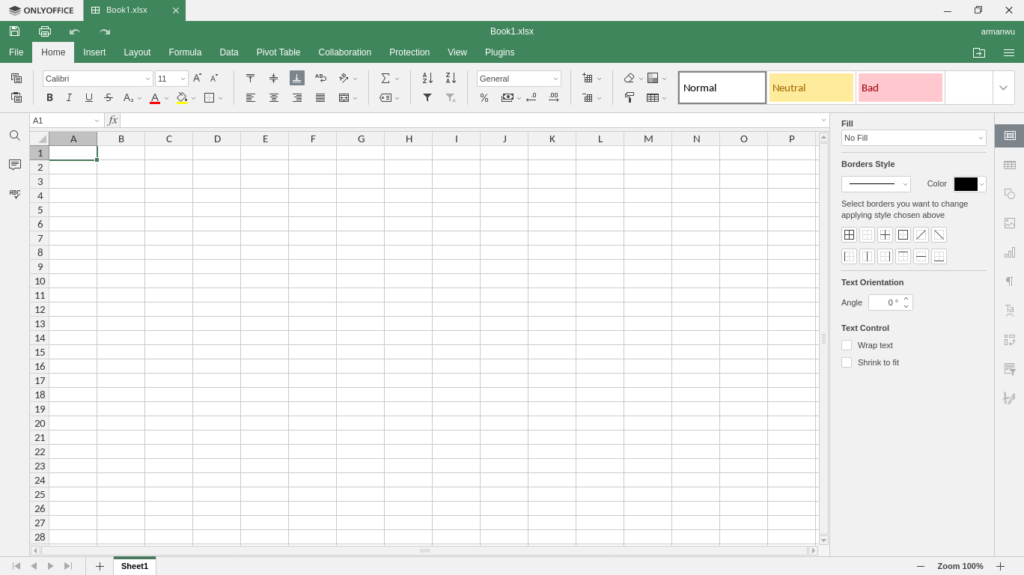

ONLYOFFICE 是一款与微软 Office 高度兼容的办公套件应用程序。因此,我们与使用微软 Office 的同事协作时就不必担心了,因为它可以读取各种文件格式,如 docx、xlsx 和 pptx。

ONLYOFFICE 提供了三种具有简洁和现代外观的应用程序。我们可以很容易地找到我们需要的功能和工具。虽然功能没有 LibreOffice 那么齐全,但也足以帮助我们创建良好的文档和演示文稿。

第一个应用程序是文档编辑器,它的功能与 LibreOffice 的 Writer 相同。它具有文字处理器所需的所有基本功能,如管理字体和样式、格式化文本、调整行距和段距、插入页眉和页脚、自定义页面布局和设置页边距。第二个应用程序是电子表格编辑器,它是一个用于处理数据并将其创建为表格格式的文件的应用程序。它是一个具有与 Calc 相同功能的应用程序。最后一个是演示文稿编辑器,它是一个演示文稿应用程序,其功能类似于 Impress。

不幸的是,ONLYOFFICE 在官方的 Fedora Linux 软件库中并没有提供。但是你仍然可以使用 Flatpak 或 Appimages 在 Fedora Linux 上安装它。

更多信息请见此链接:<https://www.onlyoffice.com/desktop.aspx>

### Calligra

Calligra 是一个由 KDE 创建的办公套件。因此,这个应用程序实际上更适合于 KDE Plasma 桌面环境的用户。但它仍然可以在其他桌面环境中良好运行,例如使用 GNOME 的 Fedora 工作站。

Calligra 提供的几个应用程序的外观与 LibreOffice 或 ONLYOFFICE 略有不同。对于那些习惯于主流办公套件应用程序的人来说,可能需要一些适应。然而,Calligra 仍然是一个可靠的办公套件,足以支持我们的日常需求。

第一个应用程序是 Words,它是一个具有桌面出版功能的直观的文字处理器。它具有帮助我们进行文档创作的全面功能。第二个应用程序是 Sheets,它具有与 Calc 和电子表格编辑器相同的功能,是一个功能齐全的电子表格应用程序。第三个应用程序是 Stage,它可以帮助我们制作演示幻灯片。

这三个 Calligra 应用程序是创建文档和演示文稿最常用的应用程序。另外还有三个应用程序也非常有用。第一个是 Karbon,它可以用来创建从简单到复杂的图纸和图表。下一个应用程序是 Plan,这是一个项目管理应用程序,可以帮助管理具有多种资源的中等规模的项目。最后一个是 KEXI,它是一个可视化数据库应用程序的创建器。

更多信息请见此链接:<https://calligra.org/>

### 总结

这篇文章介绍了 3 个可以在 Fedora Linux 上使用的办公套件,以满足你的日常需要。如果你想拥有具有一套完整功能的办公套件,那么 LibreOffice 可能是正确的选择。如果想与微软 Office 有良好的兼容性,那么你可以选择 ONLYOFFICE。然而,如果你想要一个不同的用户界面和创建文档和演示文稿的体验,你可以试试 Calligra。希望这篇文章能帮助你选择合适的办公套件。如果你有使用这些应用程序的经验,请在评论中分享你的经验。

---

via: <https://fedoramagazine.org/apps-for-daily-needs-part-2-office-suites/>

作者:[Arman Arisman](https://fedoramagazine.org/author/armanwu/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Today, almost every family has a desktop computer or laptop. That’s because the computer has become a very important requirement. Moreover, many people have to create documents and presentations in digital format for work or study. Therefore, the office suites are must-have application on almost all computers. This article will introduce some of the open source office suites that you can use on Fedora Linux. You may need to install the software mentioned. If you are unfamiliar with how to add software packages in Fedora Linux, see my earlier article [Things to do after installing Fedora 34 Workstation](https://fedoramagazine.org/things-to-do-after-installing-fedora-34-workstation/). Here is the list of apps for daily needs in the office suites category.

## LibreOffice

LibreOffice is the most popular office suite among GNU/Linux users. It has a user interface and user experience similar to Microsoft Office. This makes LibreOffice easy to learn for those who have just migrated from Microsoft Office. LibreOffice has complete features to meet your needs working on documents and presentations. It consists of six applications: Writer, Calc, Impress, Draw, Math, and Base.

The first application is Writer that is used to create various kinds of documents, such as letters, faxes, agendas, minutes, etc. It is a full-featured word processing and desktop publishing tool. The second application is Calc which is a spreadsheet program that is perfect for presenting data and documenting it in tabular format. Calc can create simple tables or do professional data analysis. The third application is Impress which is an easy-to-use presentation application. You can easily choose what you features want in your presentation slides, such as text, images, tables, diagrams, etc.

The three LibreOffice applications mentioned earlier are the most commonly used applications in creating documents and presentations. However, LibreOffice provides three other applications that are also very useful. The first is Draw which can be used to create drawings and diagrams, ranging from simple to complex. The next application is Math which can help us make perfectly formatted mathematical and scientific formulas. The last is Base which is an application for processing databases.

More information is available at this link: [https://www.libreoffice.org/](https://www.libreoffice.org/)

## ONLYOFFICE

ONLYOFFICE is an office suite application that is highly compatible with Microsoft Office. Therefore we do not have to worry about collaborating with colleagues who use Microsoft Office because it can read various file formats such as docx, xlsx, and pptx.

ONLYOFFICE provides three applications with a clean and modern look. We can easily find the features and tools that we need. Although the features are not as complete as LibreOffice, they are very sufficient to help us create good documents and presentations.

The first application is Documents Editor which has the same function as Writer from LibreOffice. It has all the basic features needed in a word processor, such as managing fonts and styles, formatting text, adjusting line and paragraph spacing, inserting headers and footers, customizing page layout, and setting margins. The second application is Spreadsheet Editor which is an application for processing data and creating it as a document in tabular format. It is an application with the same functionality as Calc. The last one is Presentations Editor which is a presentation application with functions similar to Impress.

Unfortunately ONLYOFFICE is not available in the official Fedora Linux repositories. But you can still install it on Fedora Linux using Flatpak or Appimages.

More information is available at this link: [https://www.onlyoffice.com/desktop.aspx](https://www.onlyoffice.com/desktop.aspx)

## Calligra

Calligra is an office suite created by KDE. Therefore, this application is actually more suitable for users of the KDE Plasma desktop environment. But it can still run well on other desktop environments, such as Fedora Workstation using GNOME.

Calligra provides several applications with a slightly different look from LibreOffice or ONLYOFFICE. It may take some adjustment for those who are used to mainstream office suite applications. However, Calligra is still a reliable office suite to support our daily needs.

The first application is Words which is an intuitive word processor with desktop publishing features. It has a full range of features to help us in document creation. The second application is Sheets which has the same functionality as Calc and Spreadsheet Editors as a fully-featured spreadsheet application. The third application is Stage which can help us in making presentation slides.

The three Calligra applications are the most commonly used applications for creating documents and presentations. There are three other applications that are also very useful. The first is Karbon which can be used to create drawings and diagrams, ranging from simple to complex. The next application is Plan which is project management application that can help in managing moderately large projects with multiple resources. The last is KEXI which is a visual database application creator.

More information is available at this link: [https://calligra.org/](https://calligra.org/)

## Conclusion

This article presented 3 office suites for your daily needs that you can use on Fedora Linux. If you want a complete set of features in your office suite, then LibreOffice may be the right choice. For good compatibility with Microsoft Office, then you may choose ONLYOFFICE. However, if you want a different user interface and experience in creating documents and presentations, you can try Calligra. Hopefully this article can help you to choose the right office suite. If you have experience in using these applications, please share your experience in the comments.

## dragontao

wps maybe another good choice 🙂

## Arman Arisman

Of course. But unfortunately WPS is not open source software 🙂

Thanks for adding information through your comments.

## Adam

Freeoffice – free version of SoftMaker office – https://www.freeoffice.com/en/download/applications

## Arman Arisman

Thanks for adding information for this article. I’ve never tried it. Is it open source software?

## Adam

(freeware/registerware)

## Arman Arisman

Oh I see. Thanks for your info 🙂

## HelloWorls

OpenOffice is also good.

## Arman Arisman

Of course. Thank you for completing my article 🙂

## Null_Pointer_00

Hello.

No, OpenOffice is no good at all.

https://itsfoss.com/libreoffice-letter-openoffice/

https://blog.documentfoundation.org/blog/2020/10/12/open-letter-to-apache-openoffice/

Regards.

## Arman Arisman

I think everyone has different preferences and needs. Thanks for the opinion 🙂

## PissedOff Veteran

Having tried all the above packages I/we have decided the only one that comes close to providing for our needs is LibreOffice.

Therefore we have cleaned our all others to avoid having people inadvertently using such as Calibreoffice etc and causing a ruckus.

Actually started using LibreOffice way before there was LibreOffice.

Started with it’s Great Grand Father, “Star office.”

Later used Open Office but there were problems. So tried LibreOffice which had just branched off Open Office. It had even more problems so stuck with Open Office for a while, then Libre Office cleaned up the problems that was incompatible with us and we have been with it ever since.

## Arman Arisman

Wow! You have a lot of experience related to the use of office applications in the Linux world. Thank you for sharing here. Nice info!

## Ralf Seidenschwang

I mainly need to concert documents into PDF files, and therefore LibreOffice is a very good choice.

The electronic paper file didn’t get around everywhere, so I still have to maintain a couple paper folders for mostly extremely unimportant things.

I often get the impression that a different format is chooses by intend to make the communications more difficult than needed and to provoke dispute. Our governments – that’s how they tick.

## Arman Arisman

Hi, Ralf. Yes, LibreOffice is a good choice. Agree with you. And thanks for your opinion too 🙂

## Ralf Seidenschwang

Opinions change from time to time, knowledge too.

Thanks for the nice article.

## Arman Arisman

Sure. You’re welcome, Ralf 🙂

## Bill

I’m curious to see if this series looks into other kinds of software I’ve always found very lacking on Linux. I couldn’t ever find a good todo list app, calendar app, note taking app, or email app. For that matter, I wouldn’t say that any of the apps mentioned in this series of articles so far are as good as their closed source counterparts. But I’ll keep reading. Maybe there are apps I’m not aware of out there. I’d love to use Linux as my full time OS, but as far as I can tell, the apps just aren’t there.

## Arman Arisman

Hi, Bill. Thank you for reading and giving your opinion 🙂

I think this goes back to our needs. In using office suites, I personally have had enough with open source software as I wrote in this article. But some of my friends have to use other applications because they have special needs.

To be honest there are still many fields that do not have good open source software.

## Eric

I like LibreOffice for work-related tasks, Thunderbird for email, and Chromium and Firefox for browsers on Linux. Oh, and Lollypop and Rhythmbox for managing music 🙂 But I do use Microsoft Office to do my work, as there are some things that LibreOffice doesn’t handle correctly, but I have a VirtualBox VM for that.

I just wish Wine worked more consistently with Blizzard’s launcher and games. It was doing well in Fedora 33, but now it seems to be broken in 34. Steam is consistently great, though, including at running Windows games via Proton.

I like that I can install Fedora or any other distro on a system I’ve built myself and get so much great functionality without paying money to Microshaft for an OS, or be locked into Apple’s strange and pricey hardware configurations. I even have a home-built Linux PC hooked to my TV instead of some set top box, and I wouldn’t have it any other way. On top of that, I have a Raspberry Pi with a 4-drive USB 3 enclosure hooked to it and running Open Media Vault acting as a NAS! So, bottom line, Linux has a lot of uses and flexibility, and it’s all free. It’s so nice to have available and can do a lot of things very well.

## Arman Arisman

Thanks for sharing here, Eric! 🙂

## Ralf Seidenschwang