id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

13,592 | 在 Linux 上用密码加密和解密文件 | https://opensource.com/article/21/7/linux-age | 2021-07-18T10:26:11 | [

"加密",

"解密"

] | https://linux.cn/article-13592-1.html |

>

> age 是一个简单的、易于使用的工具,允许你用一个密码来加密和解密文件。

>

>

>

文件的保护和敏感文档的安全加密是用户长期以来关心的问题。即使越来越多的数据被存放在网站和云服务上,并由具有越来越安全和高强度密码的用户账户来保护,但我们能够在自己的文件系统中存储敏感数据仍有很大的价值,特别是我们能够快速和容易地加密这些数据时。

[age](https://github.com/FiloSottile/age) 能帮你这样做。它是一个小型且易于使用的工具,允许你用一个密码加密一个文件,并根据需要解密。

### 安装 age

`age` 可以从众多 Linux 软件库中 [安装](https://github.com/FiloSottile/age#installation)。

在 Fedora 上安装它:

```

$ sudo dnf install age -y

```

在 macOS 上,使用 [MacPorts](https://opensource.com/article/20/11/macports) 或 [Homebrew](https://opensource.com/article/20/6/homebrew-mac) 来安装。在 Windows 上,使用 [Chocolatey](https://opensource.com/article/20/3/chocolatey) 来安装。

### 用 age 加密和解密文件

`age` 可以用公钥或用户自定义密码来加密和解密文件。

#### 在 age 中使用公钥

首先,生成一个公钥并写入 `key.txt` 文件:

```

$ age-keygen -o key.txt

Public key: age16frc22wz6z206hslrjzuv2tnsuw32rk80pnrku07fh7hrmxhudawase896m9

```

### 使用公钥加密

要用你的公钥加密一个文件:

```

$ touch mypasswds.txt | age -r \

ageage16frc22wz6z206hslrjzuv2tnsuw32rk80pnrku07fh7hrmxhudawase896m9 \

> mypass.tar.gz.age

```

在这个例子中,我使用生成的公钥加密文件 `mypasswds.txt`,保存在名为 `mypass.tar.gz.age` 的加密文件中。

### 用公钥解密

如需解密加密文件,使用 `age` 命令和 `--decrypt` 选项:

```

$ age --decrypt -i key.txt -o mypass.tar.gz mypass.tar.gz.age

```

在这个例子中,`age` 使用存储在 `key.text` 中的密钥,并解密了我在上一步创建的加密文件。

### 使用密码加密

不使用公钥的情况下对文件进行加密被称为对称加密。它允许用户设置密码来加密和解密一个文件。要做到这一点:

```

$ age --passphrase --output mypasswd-encrypted.txt mypasswd.txt

Enter passphrase (leave empty to autogenerate a secure one):

Confirm passphrase:

```

在这个例子中,`age` 提示你输入一个密码,它将通过这个密码对输入文件 `mypasswd.txt` 进行加密,并生成加密文件 `mypasswd-encrypted.txt`。

### 使用密码解密

如需将用密码加密的文件解密,可以使用 `age` 命令和 `--decrypt` 选项:

```

$ age --decrypt --output passwd-decrypt.txt mypasswd-encrypted.txt

```

在这个例子中,`age` 提示你输入密码,只要你提供的密码与加密时设置的密码一致,`age` 随后将 `mypasswd-encrypted.txt` 加密文件的内容解密为 `passwd-decrypt.txt`。

### 不要丢失你的密钥

无论你是使用密码加密还是公钥加密,你都\_不能\_丢失加密数据的凭证。根据设计,如果没有用于加密的密钥,通过 `age` 加密的文件是不能被解密的。所以,请备份你的公钥,并记住这些密码!

### 轻松实现加密

`age` 是一个真正强大的工具。我喜欢把我的敏感文件,特别是税务记录和其他档案数据,加密到 `.tz` 文件中,以便以后访问。`age` 是用户友好的,使其非常容易随时加密。

---

via: <https://opensource.com/article/21/7/linux-age>

作者:[Sumantro Mukherjee](https://opensource.com/users/sumantro) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[turbokernel](https://github.com/turbokernel)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Encryption and security for protecting files and sensitive documents have long been a concern for users. Even as more and more of our data is housed on websites and cloud services, protected by user accounts with ever-more secure and challenging passwords, there's still great value in being able to store sensitive data on our own filesystems, especially when we can encrypt that data quickly and easily.

[Age](https://github.com/FiloSottile/age) allows you to do this. It is a small, easy-to-use tool that allows you to encrypt a file with a single passphrase and decrypt it as required.

## Install age

Age is available to [install](https://github.com/FiloSottile/age#installation) from most Linux repositories.

To install it on Fedora:

`$ sudo dnf install age -y`

On macOS, use [MacPorts](https://opensource.com/article/20/11/macports) or [Homebrew](https://opensource.com/article/20/6/homebrew-mac). On Windows, use [Chocolatey](https://opensource.com/article/20/3/chocolatey).

## Encrypting and decrypting files with age

Age can encrypt and decrypt files with either a public key or a passphrase set by the user.

### Using age with a public key

First, generate a public key and write the output to a `key.txt`

file:

```

$ age-keygen -o key.txt

Public key: age16frc22wz6z206hslrjzuv2tnsuw32rk80pnrku07fh7hrmxhudawase896m9

```

## Encrypt with a public key

To encrypt a file with your public key:

`$ touch mypasswds.txt | age -r ageage16frc22wz6z206hslrjzuv2tnsuw32rk80pnrku07fh7hrmxhudawase896m9 > mypass.tar.gz.age`

In this example, the file `mypasswds.txt`

is encrypted with the public key I generated and put inside an encrypted file called `mypass.tar.gz.age`

.

## Decrypt with a public key

To decrypt the information you've protected, use the `age`

command and the `--decrypt`

option:

`$ age --decrypt -i key.txt -o mypass.tar.gz mypass.tar.gz.age`

In this example, age uses the key stored in `key.text`

and decrypts the file I created in the previous step.

## Encrypt with a passphrase

Encrypting a file without a public key is known as symmetrical encryption. It allows a user to set the passphrase to encrypt and decrypt a file. To do so:

```

$ age --passphrase --output mypasswd-encrypted.txt mypasswd.txt

Enter passphrase (leave empty to autogenerate a secure one):

Confirm passphrase:

```

In this example, age prompts you for a passphrase, which it uses to encrypt the input file `mypasswd.txt`

and render the file `mypasswd-encrypted.txt`

in return.

## Decrypt with a passphrase

To decrypt a file encrypted with a passphrase, use the `age`

command with the `--decrypt`

option:

`$ age --decrypt --output passwd-decrypt.txt mypasswd-encrypted.txt `

In this example, age prompts you for the passphrase, then decrypts the contents of the `mypasswd-encrypted.txt`

file into `passwd-decrypt.txt`

, as long as you provide the passphrase that matches the one set during encryption.

## Don't lose your keys

Whether you're using passphrase encryption or public-key encryption, you *must not* lose the credentials for your encrypted data. By design, a file encrypted with age cannot be decrypted without the key used to encrypt it. So back up your public key, and remember those passphrases!

## Easy encryption at last

Age is a really robust tool. I like to encrypt my sensitive files, especially tax records and other archival data, into a `.tz`

file for later access. Age is user-friendly and makes it very easy to get started with encryption on the go.

## 1 Comment |

13,594 | 用于 Web 前端开发的 9 个 JavaScript 开源框架 | https://opensource.com/article/20/5/open-source-javascript-frameworks | 2021-07-18T20:53:03 | [

"JavaScript",

"前端"

] | https://linux.cn/article-13594-1.html |

>

> 根据 JavaScript 框架的优点和主要特点对许多 JavaScript 框架进行细分。

>

>

>

大约十年前,JavaScript 社区开始见证一场 JavaScript 框架的激战。在本文中,我将介绍其中最著名的一些框架。值得注意的是,这些都是开源的 JavaScript 项目,这意味着你可以在 [开源许可证](https://opensource.com/article/17/9/open-source-licensing) 下自由地使用它们,甚至为它们的源代码和社区做出贡献。

不过,在开始之前,了解一些 JavaScript 开发者谈论框架时常用的术语,将对后续的内容大有裨益。

| 术语 | 释义 |

| --- | --- |

| [文档对象模型(DOM)](https://www.w3schools.com/js/js_htmldom.asp) | 网站的树形结构表示,每一个节点都是代表网页一部分的对象。万维网联盟(W3C),是万维网的国际标准组织,维护着 DOM 的定义。 |

| [虚拟 DOM](https://reactjs.org/docs/faq-internals.html) | 用户界面(UI)以“虚拟”或“理想”的方式保存在内存中,并通过 [ReactDOM](https://reactjs.org/docs/react-dom.html) 等一些库与“真实” DOM 同步。要进一步探索,请阅读 ReactJS 的虚拟 DOM 和内部文档。 |

| [数据绑定](https://en.wikipedia.org/wiki/Data_binding) | 一个编程概念,为访问网站上的数据提供一致的接口。Web 元素与 DOM 维护的元素的<ruby> 属性 <rt> property </rt></ruby> 或 <ruby> 特性 <rt> attribute </rt></ruby> 相关联(LCTT 译注:根据 MDN 的解释,Javascript 的<ruby> 属性 <rt> property </rt></ruby>是对象的特征,通常描述与数据结构相关的特征;<ruby> 特性 <rt> attribute </rt></ruby> 是指元素所有属性节点的一个实时集合)。例如,当需要在网页表单中填写密码时,数据绑定机制可以用密码验证逻辑检验,确保密码格式有效。 |

我们已经清楚了常用的术语,下面我们来探索一下开源的 JavaScript 框架有哪些。

| 框架 | 简介 | 许可证 | 发布日期 |

| --- | --- | --- | --- |

| [ReactJS](https://github.com/facebook/react) | 目前最流行的 JavaScript 框架,由 Facebook 创建 | MIT 许可证 | 2013-5-24 |

| [Angular](https://github.com/angular/angular) | Google 创建的流行的 JavaScript 框架 | MIT 许可证 | 2010-1-5 |

| [VueJS](https://github.com/vuejs/vue) | 快速增长的 JavaScript 框架 | MIT 许可证 | 2013-7-28 |

| [MeteorJS](https://github.com/meteor/meteor) | 超乎于 JavaScript 框架的强大框架 | MIT 许可证 | 2012-1-18 |

| [KnockoutJS](https://github.com/knockout/knockout) | 开源的 MVVM(<ruby> 模型-视图-视图模型 <rt> Model-View-ViewModel </rt></ruby>) 框架 | MIT 许可证 | 2010-7-5 |

| [EmberJS](https://github.com/emberjs/ember.js) | 另一个开源的 MVVM 框架 | MIT 许可证 | 2011-12-8 |

| [BackboneJS](https://github.com/jashkenas/backbone) | 带有 RESTful JSON 和<ruby> 模型-视图-主持人 <rt> Model-View-Presenter </rt></ruby>模式的 JavaScript 框架 | MIT 许可证 | 2010-9-30 |

| [Svelte](https://github.com/sveltejs/svelte) | 不使用虚拟 DOM 的 JavaScript 开源框架 | MIT 许可证 | 2016-11-20 |

| [AureliaJS](https://github.com/aurelia/framework) | 现代 JavaScript 模块的集合 | MIT 许可证 | 2018-2-14 |

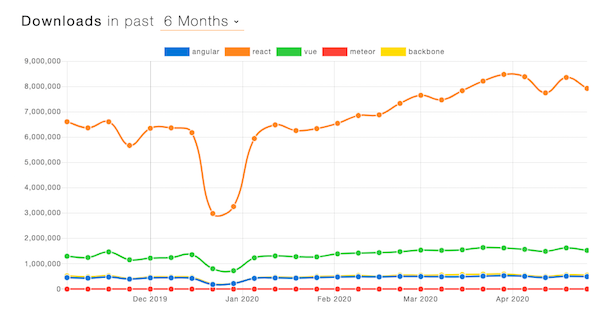

为了说明情况,下面是每个框架的 NPM 包下载量的公开数据,感谢 [npm trends](https://www.npmtrends.com/angular-vs-react-vs-vue-vs-meteor-vs-backbone)。

### ReactJS

[ReactJS](https://reactjs.org) 是由 Facebook 研发的,它虽然在 Angular 之后发布,但明显是当今 JavaScript 框架的领导者。React 引入了一个虚拟 DOM 的概念,这是一个抽象副本,开发者能在框架内仅使用他们想要的 ReactJS 功能,而无需重写整个项目。此外,React 项目活跃的开源社区无疑成为增长背后的主力军。下面是一些 React 的主要优势:

* 合理的学习曲线 —— React 开发者可以轻松地创建 React 组件,而不需要重写整个 JavaScript 的代码。在 ReactJS 的 [首页](https://reactjs.org/) 查看它的优点以及它如何使编程更容易。

* 高度优化的性能 —— React 的虚拟 DOM 的实现和其他功能提升了应用程序的渲染性能。请查看 ReactJS 的关于如何对其性能进行基准测试,并对应用性能进行衡量的相关 [描述](https://reactjs.org/docs/perf.html)。

* 优秀的支持工具 —— [Redux](https://redux.js.org/)、[Thunk](https://github.com/reduxjs/redux-thunk) 和 [Reselect](https://github.com/reduxjs/reselect) 是构建良好、可调式代码的最佳工具。

* 单向数据绑定 —— 模型使用 Reach 流,只从所有者流向子模块,这使得在代码中追踪因果关系更加简单。请在 ReactJS 的 [数据绑定页](https://reactjs.org/docs/two-way-binding-helpers.html) 阅读更多相关资料。

谁在使用 ReactJS?Facebook 自从发明它,就大量使用 React 构建公司首页,据说 [Instagram](https://instagram-engineering.com/react-native-at-instagram-dd828a9a90c7) 完全基于 ReactJS 库。你可能会惊讶地发现,其他知名公司如 [纽约时报](https://open.nytimes.com/introducing-react-tracking-declarative-tracking-for-react-apps-2c76706bb79a)、[Netflix](https://medium.com/dev-channel/a-netflix-web-performance-case-study-c0bcde26a9d9) 和 [可汗学院](https://khan.github.io/react-components/) 也在他们的技术栈中使用了 ReactJS。

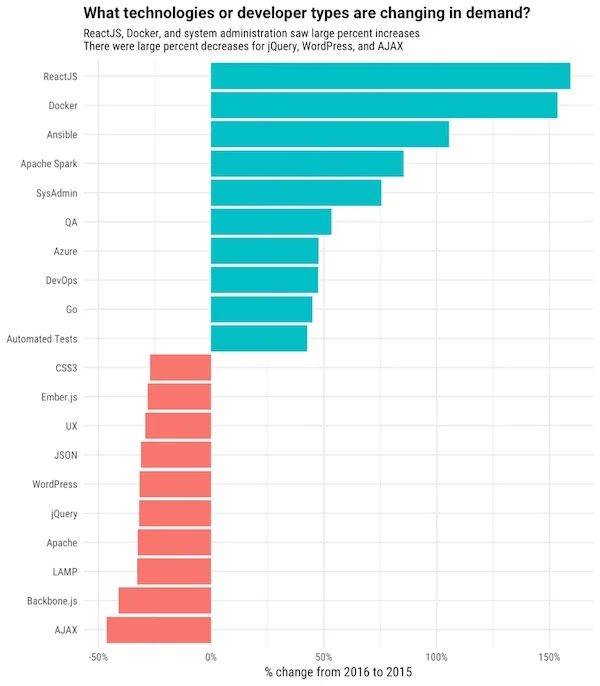

更令人惊讶的是 ReactJS 开发者的工作机会,正如在下面 Stackoverflow 所做的研究中看到的,嘿,你可以从事开源项目并获得报酬。这很酷!

*Stackoverflow 的研究显示了对 ReactJS 开发者的巨大需求——[来源:2017 年开发者招聘趋势——Stackoverflow 博客](https://stackoverflow.blog/2017/03/09/developer-hiring-trends-2017)*

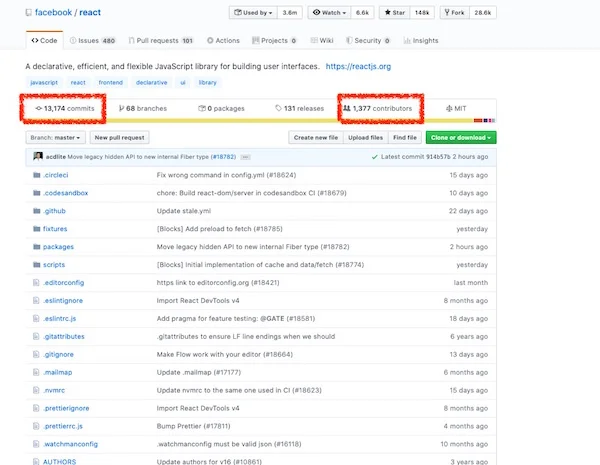

[ReactJS 的 GitHub](https://github.com/facebook/react) 目前显示超过 13,000 次提交和 1,377 位贡献者。它是一个在 MIT 许可证下的开源项目。

### Angular

就开发者数量来说,也许 React 是现在最领先的 JavaScript 框架,但是 [Angular](https://angular.io) 紧随其后。事实上,开源开发者和初创公司更乐于选择 React,而较大的公司往往更喜欢 Angular(下面列出了一些例子)。主要原因是,虽然 Angular 可能更复杂,但它的统一性和一致性适用于大型项目。例如,在我整个职业生涯中一直研究 Angular 和 React,我观察到大公司通常认为 Angular 严格的结构是一种优势。下面是 Angular 的另外一些关键优势:

* 精心设计的命令行工具 —— Angular 有一个优秀的命令行工具(CLI),可以轻松起步和使用 Angular 进行开发。ReactJS 提供命令行工具和其他工具,同时 Angular 有广泛的支持和出色的文档,你可以参见 [这个页面](https://cli.angular.io/)。

* 单向数据绑定 —— 和 React 类似,单向数据绑定模型使框架受更少的不必要的副作用的影响。

* 更好的 TypeScript 支持 —— Angular 与 [TypeScript](https://www.typescriptlang.org/) 有很好的一致性,它其实是 JavaScript 强制类型的拓展。它还可以转译为 JavaScript,强制类型是减少错误代码的绝佳选择。

像 YouTube、[Netflix](https://netflixtechblog.com/netflix-likes-react-509675426db)、[IBM](https://developer.ibm.com/technologies/javascript/tutorials/wa-react-intro/) 和 [Walmart](https://medium.com/walmartlabs/tagged/react) 等知名网站,都已在其应用程序中采用了 Angular。我通过自学使用 Angular 来开始学习前端 JavaScript 开发。我参与了许多涉及 Angular 的个人和专业项目,但那是当时被称为 AngularJS 的 Angular 1.x。当 Google 决定将版本升级到 2.0 时,他们对框架进行了彻底的改造,然后变成了 Angular。新的 Angular 是对之前的 AngularJS 的彻底改造,这一举动带来了一部分新开发者也驱逐了一部分原有的开发者。

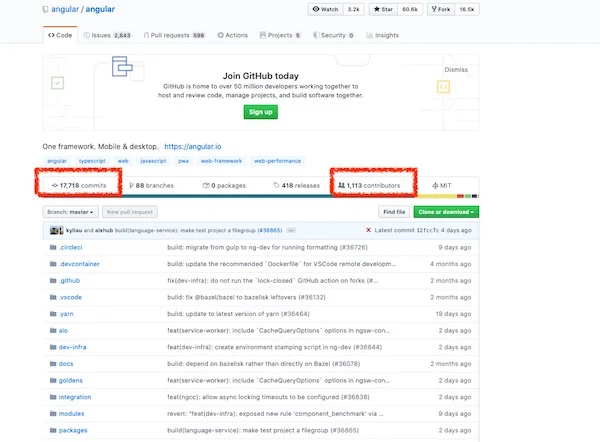

截止到撰写本文,[Angular 的 GitHub](https://github.com/angular/angular) 页面显示 17,781 次提交和 1,133 位贡献者。它也是一个遵循 MIT 许可证的开源项目,因此你可以自由地在你的项目或贡献中使用。

### VueJS

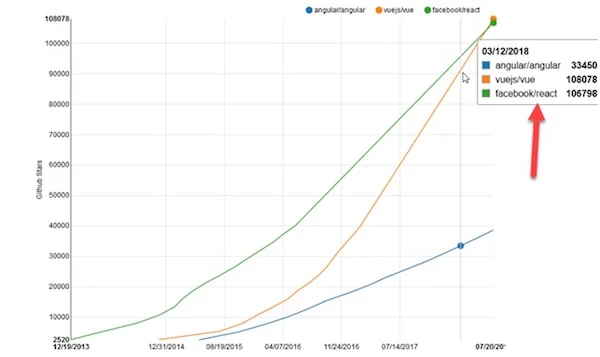

[VueJS](https://vuejs.org) 是一个非常有趣的框架。它是 JavaScript 框架领域的新来者,但是在过去几年里它的受欢迎程度显著增加。VueJS 由 [尤雨溪](https://www.freecodecamp.org/news/between-the-wires-an-interview-with-vue-js-creator-evan-you-e383cbf57cc4/) 创建,他是曾参与过 Angular 项目的谷歌工程师。该框架现在变得如此受欢迎,以至于许多前端工程师更喜欢 VueJS 而不是其他 JavaScript 框架。下图描述了该框架随着时间的推移获得关注的速度。

这里有一些 VueJS 的主要优点:

* 更容易地学习曲线 —— 与 Angular 或 React 相比,许多前端开发者都认为 VueJS 有更平滑的学习曲线。

* 小体积 —— 与 Angular 或 React 相比,VueJS 相对轻巧。在 [官方文档](https://vuejs.org/v2/guide/comparison.html#Size) 中,它的大小据说只有约 30 KB;而 Angular 生成的项目超过 65 KB。

* 简明的文档 —— VueJS 有全面清晰的文档。请自行查看它的 [官方文档](https://vuejs.org/v2/guide/)。

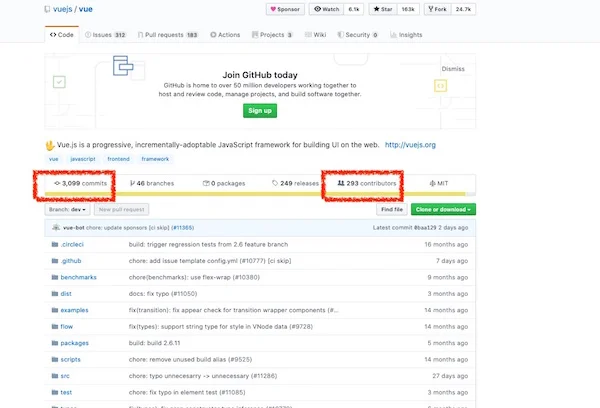

[VueJS 的 GitHub](https://github.com/vuejs/vue) 显示该项目有 3,099 次提交和 239 位贡献者。

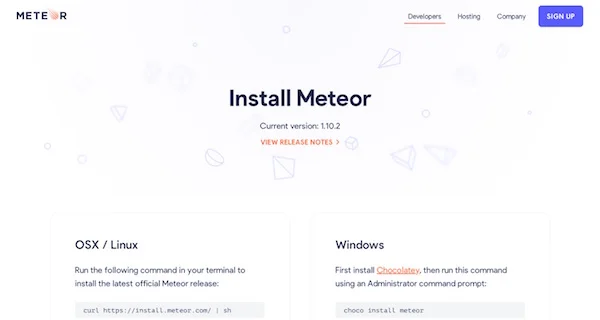

### MeteorJS

[MeteorJS](https://www.meteor.com) 是一个自由开源的 [同构框架](https://en.wikipedia.org/wiki/Isomorphic_JavaScript),这意味着它和 NodeJS 一样,同时运行客户端和服务器的 JavaScript。Meteor 能够和任何其他流行的前端框架一起使用,如 Angular、React、Vue、Svelte 等。

Meteor 被高通、马自达和宜家等多家公司以及如 Dispatch 和 Rocket.Chat 等多个应用程序使用。[您可以其在官方网站上查看更多案例](https://www.meteor.com/showcase)。

Meteor 的一些主要功能包括:

* 在线数据 —— 服务器发送数据而不是 HTML,并由客户端渲染。在线数据主要是指 Meteor 在页面加载时通过一个 WebSocket 连接服务器,然后通过该链接传输所需要的数据。

* 用 JavaScript 开发一切 —— 客户端、应用服务、网页和移动界面都可以用 JavaScript 设计。

* 支持大多数主流框架 —— Angular、React 和 Vue 都可以与 Meteor 结合。因此,你仍然可以使用最喜欢的框架如 React 或 Angular,这并不防碍 Meteor 为你提供一些优秀的功能。

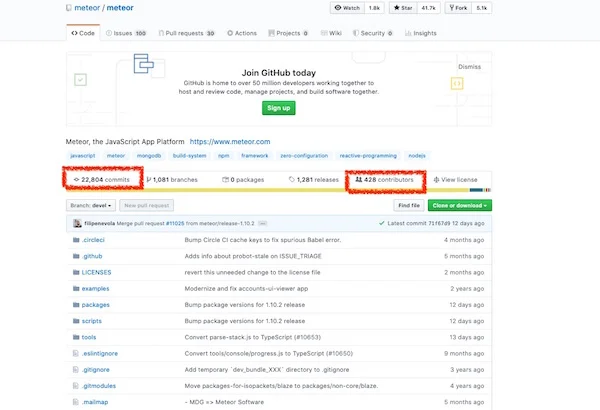

截止到目前,[Meteor 的 GitHub](https://github.com/meteor/meteor) 显示 22,804 次提交和 428 位贡献者。这对于开源项目来说相当多了。

### EmberJS

[EmberJS](https://emberjs.com) 是一个基于 [模型-视图-视图模型(MVVM)](https://en.wikipedia.org/wiki/Model%E2%80%93view%E2%80%93viewmodel) 模式的开源 JavaScript 框架。如果你从来没有听说过 EmberJS,你肯定会惊讶于有多少公司在使用它。Apple Music、Square、Discourse、Groupon、LinkedIn、Twitch、Nordstorm 和 Chipotle 都将 EmberJS 作为公司的技术栈之一。你可以通过查询 [EmberJS 的官方页面](https://emberjs.com/ember-users) 来发掘所有使用 EmberJS 的应用和客户。

Ember 虽然和我们讨论过的其他框架有类似的好处,但这里有些独特的区别:

* 约定优于配置 —— Ember 将命名约定标准化并自动生成结果代码。这种方法学习曲线有些陡峭,但可以确保程序员遵循最佳实践。

* 成熟的模板机制 —— Ember 依赖于直接文本操作,直接构建 HTML 文档,而并不关心 DOM。

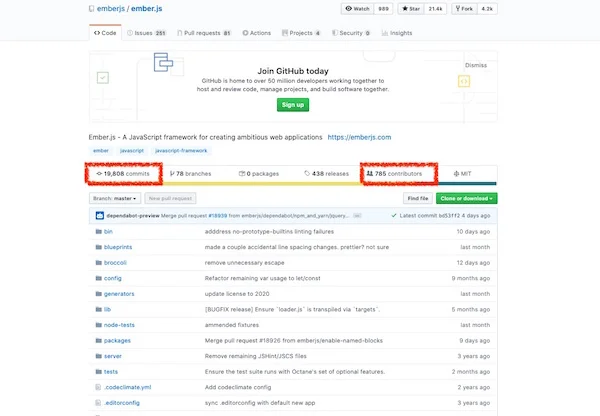

正如所期待的那样,作为一个被许多应用程序使用的框架,[Ember 的 GitHub](https://github.com/emberjs) 页面显示该项目拥有 19,808 次提交和 785 位贡献者。这是一个巨大的数字!

### KnockoutJS

[KnockoutJS](https://knockoutjs.com) 是一个独立开源的 JavaScript 框架,采用 [模板-视图-视图模型(MVVM)](https://en.wikipedia.org/wiki/Model%E2%80%93view%E2%80%93viewmodel) 模式与模板。尽管与 Angular、React 或 Vue 相比,听说过这个框架的人可能比较少,这个项目在开发者社区仍然相当活跃,并且有以下功能:

* 声明式绑定 —— Knockout 的声明式绑定系统提供了一种简洁而强大的方式来将数据链接到 UI。绑定简单的数据属性或使用单向绑定很简单。请在 [KnockoutJS 的官方文档页面](https://knockoutjs.com/documentation/binding-syntax.html) 阅读更多相关信息。

* 自动 UI 刷新。

* 依赖跟踪模板。

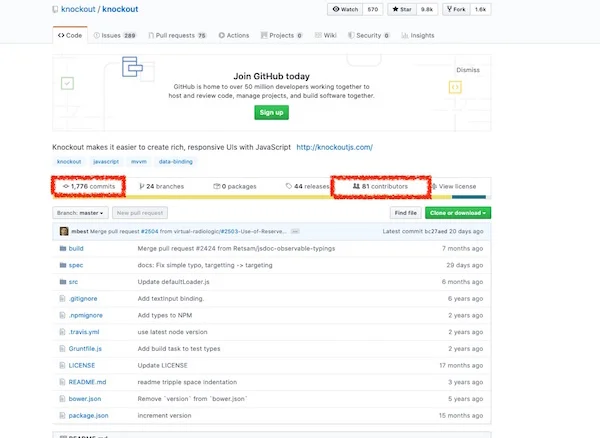

[Knockout 的 GitHub](https://github.com/knockout/knockout) 页面显示约有 1,766 次提交和 81 位贡献者。与其他框架相比,这些数据并不重要,但是该项目仍然在积极维护中。

### BackboneJS

[BackboneJS](https://backbonejs.org) 是一个具有 RESTful JSON 接口,基于<ruby> 模型-视图-主持人 <rt> Model-View-Presenter </rt></ruby>(MVP)设计范式的轻量级 JavaScript 框架。

这个框架据说已经被 [Airbnb](https://medium.com/airbnb-engineering/our-first-node-js-app-backbone-on-the-client-and-server-c659abb0e2b4)、Hulu、SoundCloud 和 Trello 使用。你可以在 [Backbone 的页面](https://sites.google.com/site/backbonejsja/examples) 找到上面所有这些案例来研究。

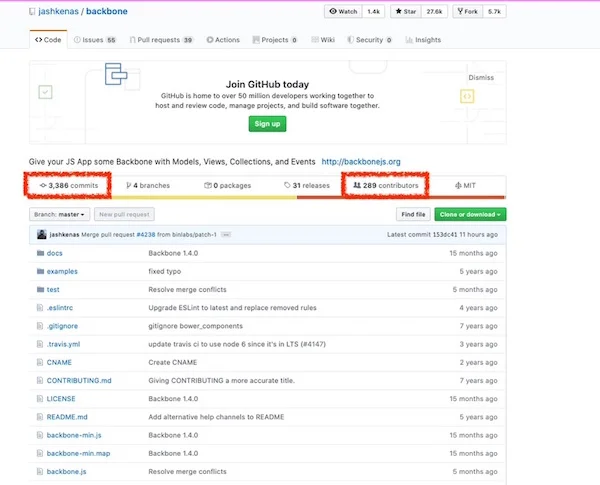

[BackboneJS 的 GitHub](https://github.com/jashkenas/backbone) 页面显示有 3,386 次提交和 289 位贡献者。

### Svelte

[Svelte](https://svelte.dev) 是一个开源的 JavaScript 框架,它生成操作 DOM 的代码,而不是包含框架引用。在构建时而非运行时将应用程序转换为 JavaScript 的过程,在某些情况下可能会带来轻微的性能提升。

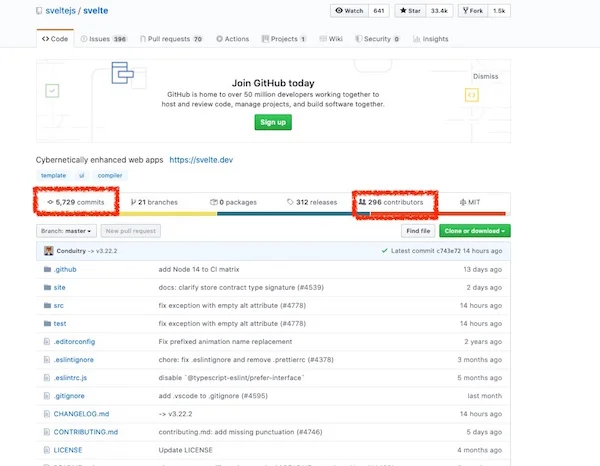

[Svelte 的 GitHub](https://github.com/sveltejs/svelte) 页面显示,截止到本文撰写为止,该项目有 5,729 次提交和 296 位贡献者。

### AureliaJS

最后我们介绍一下 [Aurelia](https://aurelia.io)。Aurelia 是一个前端 JavaScript 框架,是一个现代 JavaScript 模块的集合。Aurelia 有以下有趣的功能:

* 快速渲染 —— Aurelia 宣称比当今其他任何框架的渲染速度都快。

* 单向数据流 —— Aurelia 使用一个基于观察的绑定系统,将数据从模型推送到视图。

* 使用原生 JavaScript 架构 —— 可以用原生 JavaScript 构建网站的所有组件。

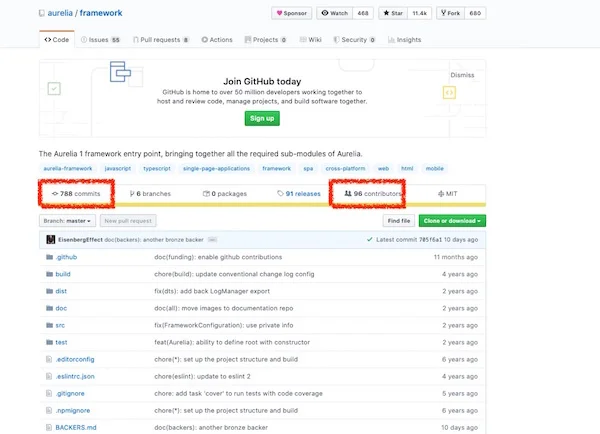

[Aurelia 的 GitHub](https://github.com/aurelia/framework) 页面显示,截止到撰写本文为止该项目有 788 次提交和 96 位贡献者。

这就是我在查看 JavaScript 框架世界时发现的新内容。我错过了其他有趣的框架吗?欢迎在评论区分享你的想法。

---

via: <https://opensource.com/article/20/5/open-source-javascript-frameworks>

作者:[Bryant Son](https://opensource.com/users/brson) 选题:[lujun9972](https://github.com/lujun9972) 译者:[stevending1st](https://github.com/stevending1st) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | About a decade ago, the JavaScript developer community began to witness fierce battles emerging among JavaScript frameworks. In this article, I will introduce some of the most well-known of these frameworks. And it's important to note that these are all open source JavaScript projects, meaning that you can freely utilize them under an [open source license](https://opensource.com/article/17/9/open-source-licensing) and even contribute to the source code and communities.

If you prefer to follow along as I explore these frameworks, you can watch my video.

Before getting started, though, it will be useful to learn some of the terminology JavaScript developers commonly use when discussing frameworks.

Term | What It Is |

---|---|

|

[Virtual DOM](https://reactjs.org/docs/faq-internals.html)[ReactDOM](https://reactjs.org/docs/react-dom.html). To explore further, please read ReactJS's virtual DOM and internals documentation.[Data Binding](https://en.wikipedia.org/wiki/Data_binding)Now that we are clear about common terms, let's explore what open source JavaScript frameworks are out there.

Framework | About | License | Release Date |

---|---|---|---|

|

[Angular](https://github.com/angular/angular)[VueJS](https://github.com/vuejs/vue)[MeteorJS](https://github.com/meteor/meteor)[KnockoutJS](https://github.com/knockout/knockout)[EmberJS](https://github.com/emberjs/ember.js)[BackboneJS](https://github.com/jashkenas/backbone)[Svelte](https://github.com/sveltejs/svelte)[AureliaJS](https://github.com/aurelia/framework)For context, here is the publicly available data on popularity based on NPM package downloads per framework, thanks to [npm trends](https://www.npmtrends.com/angular-vs-react-vs-vue-vs-meteor-vs-backbone).

## ReactJS

[ReactJS](https://reactjs.org) was invented by Facebook, and it is the clear leader among JavaScript frameworks today, though it was invented well after Angular. React introduces a concept called a virtual DOM, an abstract copy where developers can utilize only the ReactJS features that they want, without having to rewrite the entire project to work within the framework. In addition, the active open source community with the React project has definitely been the workhorse behind the growth. Here are some of React's key strengths:

- Reasonable learning curve—React developers can easily create the React components without rewriting the entire code in JavaScript. See the benefits of ReactJS and how it makes it the programming easier on ReactJS's

[front page](https://reactjs.org/). - Highly optimized for performance—React's virtual DOM implementation and other features boost app rendering performance. See ReactJS's

[description](https://reactjs.org/docs/perf.html)of how its performance can be benchmarked and measured in terms of how the app performs. - Excellent supporting tools—

[Redux](https://redux.js.org/),[Thunk](https://github.com/reduxjs/redux-thunk), and[Reselect](https://github.com/reduxjs/reselect)are some of the best tools for building well-structured, debuggable code. - One way data binding—The model used in Reach flows from owner to child only making it simpler to trace cause and effect in code. Read more about it on ReactJS's

[page on data binding](https://reactjs.org/docs/two-way-binding-helpers.html).

Who is using ReactJS? Since Facebook invented it, the company itself heavily uses React for its frontpage, and [Instagram](https://instagram-engineering.com/react-native-at-instagram-dd828a9a90c7) is said to be completely based on the ReactJS library. You might be surprised to know that other well-known companies like [New York Times](https://open.nytimes.com/introducing-react-tracking-declarative-tracking-for-react-apps-2c76706bb79a), [Netflix](https://medium.com/dev-channel/a-netflix-web-performance-case-study-c0bcde26a9d9), and [Khan Academy](https://khan.github.io/react-components/) also implement ReactJS in their technology stacks.

What may be even more surprising is the availability of jobs for ReactJS developers, as you can see below from research done by Stackoverflow. Hey, you can work on an open source project and get paid to do it. That is pretty cool!

Stackoverflow shows the huge demand for ReactJS developers—[Source: Developer Hiring Trends in 2017 - Stackoverflow Blog](https://stackoverflow.blog/2017/03/09/developer-hiring-trends-2017)

[ReactJS's GitHub](https://github.com/facebook/react) currently shows over 13K commits and 1,377 contributors to the open source project. And it is an open source project under MIT License.

## Angular

React may now be the leading JavaScript framework in terms of the number of developers, but [Angular](https://angular.io) is close behind. In fact, while React is the more popular choice among open source developers and startup companies, larger corporations tend to prefer Angular (a few are listed below). The main reason is that, while Angular might be more complicated, its uniformity and consistency works well for larger projects. For example, I have worked on both Angular and React throughout my career, and I observed that the larger companies generally consider Angular's strict structure a strength. Here are some other key strengths of Angular:

- Well-designed Command Line Interface: Angular has an excellent Command Line Interface (CLI) tool to easily bootstrap and to develop with Angular. ReactJS also offers the Command Line Interface as well as other tools, but Angular has extensive support and excellent documentation, as you can see on

[this page](https://cli.angular.io/). - One way data binding—Similarly to React, the one-way data binding model makes the framework less susceptible to unwanted side effects.

- Great support for TypeScript—Angular has excellent alignment with

[TypeScript](https://www.typescriptlang.org/), which is effectively JavaScript more type enforcement. It also transcompiling to JavaScript, making it a great option to enforce types for less buggy code.

Well-known websites like YouTube, [Netflix](https://netflixtechblog.com/netflix-likes-react-509675426db), [IBM](https://developer.ibm.com/technologies/javascript/tutorials/wa-react-intro/), and [Walmart](https://medium.com/walmartlabs/tagged/react) all have implemented Angular into their applications. I personally started front-end JavaScript development with Angular by educating myself on the subject. I have worked on quite a few personal and professional projects involving Angular, but that was Angular 1.x, which was called by AngularJS at the time. When Google decided to upgrade the version to 2.0, they completely revamped the framework, and then it became Angular. The new Angular was a complete transformation of the previous AngularJS, which drove off some existing developers while bringing new developers.

[Angular's](https://github.com/angular/angular)[ GitHub](https://github.com/angular/angular) page shows 17,781 commits and 1,133 contributors at the time of this writing. It is also an open source project with an MIT License, so you can feel free to use it for your project or to contribute.

## VueJS

[VueJS](https://vuejs.org) is a very interesting framework. It is a newcomer to the JavaScript framework scene, but its popularity has increased significantly over the course of a few years. VueJS was created by [Evan Yu](https://www.freecodecamp.org/news/between-the-wires-an-interview-with-vue-js-creator-evan-you-e383cbf57cc4/), a former Google engineer who had worked on the Angular project. The framework got so popular that many front-end engineers now prefer VueJS over other JavaScript frameworks. The chart below depicts how fast the framework gained traction over time.

Here are some of the key strengths of VueJS:

- Easier learning curve—Even compared to Angular or React, many front-end developers feel that VueJS has the lowest learning curve.

- Small size—VueJS is relatively lightweight compared to Angular or React. In its

[official documentation](https://vuejs.org/v2/guide/comparison.html#Size), its size is stated to be only about 30 KB, while the project generated by Angular, for example, is over 65 KB. - Concise documentation—Vue has thorough but concise and clear documentation. See

[its official documentation](https://vuejs.org/v2/guide/)for yourself.

[VueJS's GitHub](https://github.com/vuejs/vue) shows 3,099 commits and 239 contributors.

## MeteorJS

[MeteorJS](https://www.meteor.com) is a free and open source [isomorphic framework](https://en.wikipedia.org/wiki/Isomorphic_JavaScript), which means, just like NodeJS, it runs both client and server-side JavaScript. Meteor can be used in conjunction with any other popular front-end framework like Angular, React, Vue, Svelte, etc.

Meteor is used by several corporations such as Qualcomm, Mazda, and Ikea, and many applications like Dispatch and Rocket.Chat. [See the case studies on its official website.](https://www.meteor.com/showcase)

Some of the key features of Meteor include:

- Data on the wire—The server sends the data, not HTML, and the client renders it. Data on the wire refers mostly to the way that Meteor forms a WebSocket connection to the server on page load, and then transfers the data needed over that connection.

- Develop everything in JavaScript—Client-side, application server, webpage, and mobile interface can be all designed with JavaScript.

- Supports most major frameworks—Angular, React, and Vue can be all combined and used in conjunction with Meteor. Thus, you can still use your favorite framework, like React or Angular, but still leverage some of the great features that Meteor offers.

As of now, [Meteor's](https://github.com/meteor/meteor)[ GitHub](https://github.com/meteor/meteor) shows 22,804 commits and 428 contributors. That is quite a lot for open source activities!

## EmberJS

[EmberJS](https://emberjs.com) is an open source JavaScript framework based on the [Model-view-viewModel(MVVM)](https://en.wikipedia.org/wiki/Model%E2%80%93view%E2%80%93viewmodel) pattern. If you've never heard about EmberJS, you'll definitely be surprised how many companies are using it. Apple Music, Square, Discourse, Groupon, LinkedIn, Twitch, Nordstorm, and Chipotle all leverage EmberJS as one of their technology stacks. Check [EmberJS's official page](https://emberjs.com/ember-users) to discover all applications and customers that use EmberJS.

Although Ember has similar benefits to the other frameworks we've discussed, here are some of its unique differentiators:

- Convention over configuration—Ember standardizes naming conventions and automatically generates the result code. This approach has a little more of a learning curve but ensures that programmers follow best-recommended practices.

- Fully-fledged templating mechanism—Ember relies on straight text manipulation, building the HTML document dynamically while knowing nothing about DOM.

As one might expect from a framework used by many applications, [Ember's GitHub](https://github.com/emberjs) page shows 19,808 commits and 785 contributors. That is huge!

## KnockoutJS

[KnockoutJS](https://knockoutjs.com) is a standalone open source JavaScript framework adopting a [Model-View-ViewModel (MVVM)](https://en.wikipedia.org/wiki/Model%E2%80%93view%E2%80%93viewmodel) pattern with templates. Although fewer people may have heard about this framework compared to Angular, React, or Vue, the project is still quite active among the development community and leverages features such as:

- Declarative binding—Knockout's declarative binding system provides a concise and powerful way to link data to the UI. It's generally easy to bind to simple data properties or to use a single binding. Read more about it

[here at KnockoutJS's official documentation page](https://knockoutjs.com/documentation/binding-syntax.html). - Automatic UI refresh

- Dependency tracking templating

[Knockout's GitHub](https://github.com/knockout/knockout) page shows about 1,766 commits and 81 contributors. Those numbers are not as significant compared to the other frameworks, but the project is still actively maintained.

## BackboneJS

[BackboneJS](https://backbonejs.org) is a lightweight JavaScript framework with a RESTful JSON interface and is based on Model-View-Presenter (MVP) design paradigm.

The framework is said to be used by [Airbnb](https://medium.com/airbnb-engineering/our-first-node-js-app-backbone-on-the-client-and-server-c659abb0e2b4), Hulu, SoundCloud, and Trello. You can find all of these case studies on [Backbone's page](https://sites.google.com/site/backbonejsja/examples).

The [BackboneJS GitHub](https://github.com/jashkenas/backbone) page shows 3,386 commits and 289 contributors.

## Svelte

[Svelte](https://svelte.dev) is an open source JavaScript framework that generates the code to manipulate DOM instead of including framework references. This process of converting an app into JavaScript at build time rather than run time might offer a slight boost in performance in some scenarios.

[Svelte's](https://github.com/sveltejs/svelte)[ GitHub](https://github.com/sveltejs/svelte) page shows 5,729 commits and 296 contributors as of this writing.

## AureliaJS

Last on the list is [Aurelia](https://aurelia.io). Aurelia is a front-end JavaScript framework that is a collection of modern JavaScript modules. Aurelia has the following interesting features:

- Fast rendering—Aurelia claims that its framework can render faster than any other framework today.

- Uni-directional data flow—Aurelia uses an observable-based binding system that pushes the data from the model to the view.

- Build with vanilla JavaScript—You can build all of the website's components with plain JavaScript.

[Aurelia's](https://github.com/aurelia/framework)[ GitHub](https://github.com/aurelia/framework) page shows 788 commits and 96 contributors as of this writing.

So that is what I found when looking at what is new in the JavaScript framework world. Did I miss any interesting frameworks? Feel free to share your ideas in the comment section!

## 6 Comments |

13,595 | 从实际代码开始编写好的示例 | https://jvns.ca/blog/2021/07/08/writing-great-examples/ | 2021-07-18T23:16:25 | [

"代码",

"示例"

] | https://linux.cn/article-13595-1.html |

当编写程序时,我花费了大量时间在编写好的示例上。我从未见过有人写过关于如何写出好的示例,所以我就写了一下如何写出一份好的示例。

基础思路就是从你写的真实代码开始,然后删除不相关的细节,使其成为一个独立的例子,而不是无中生有地想出一些例子。

我将会谈论两种示例:基于真实案例的示例和奇怪的示例

### 好的示例是真实的

为了说明为什么好的案例应该是真实的,我们就先讨论一个不真实的案例。假设我们在试图解释 Python 的 lambda 函数(这只是我想到的第一个概念)。你可以举一个例子,使用 `map` 和 lambda 来让一组数字变为原先的两倍。

```

numbers = [1, 2, 3, 4]

squares = map(lambda x: x * x, numbers)

```

我觉得这个示例不是真实的,有如下两方面的原因:

* 将一组数字作平方运算不是在真实的程序中完成的事,除非是欧拉项目或某种东西(更多的可能是针对列表的操作)

* `map` 在 Python 中并不常用,即便是做这个我也更愿意写 `[x*x for x in numbers]`

一个更加真实的 Python lambdas 的示例是使用 `sort` 函数,就像这样:

```

children = [{"name": "ashwin", "age": 12}, {"name": "radhika", "age": 3}]

sorted_children = sorted(children, key=lambda x: x['age'])

```

但是这个示例是被精心设计的(为什么我们需要对这些孩子按照年龄进行排序呢?)。所以我们如何来做一个真实的示例呢?

### 如何让你的示例真实起来:看你所写实际代码

我认为最简单的来生成一个例子的方法就是,不是凭空出现一个例子(就像我用那个`儿童`的例子),而只是从真正的代码开始!

举一个例子吧,如果我要用 `sort.+key` 来编写一串 Python 代码,我会发现很多我按某个标准对列表进行排序的真实例子,例如:

* `tasks.sort(key=lambda task: task['completed_time'])`

* `emails = reversed(sorted(emails, key=lambda x:x['receivedAt']))`

* `sorted_keysizes = sorted(scores.keys(), key=scores.get)`

* `shows = sorted(dates[date], key=lambda x: x['time']['performanceTime'])`

在这里很容易看到一个规律——这些基本是按时间排序的!因此,你可以明白如何将按时间排序的某些对象(电子邮件、事件等)的简单实例轻松地放在一起。

### 现实的例子有助于“布道”你试图解释的概念

当我试图去解释一个想法(就好比 Python Lambdas)的时候,我通常也会试图说服读者,说这是值得学习的想法。Python lambdas 是如此的有用!当我去试图说服某个人 lambdas 是很好用的时候,让他想象一下 lambdas 如何帮助他们完成一项他们将要去做的任务或是以及一项他们以前做过的任务,对说服他会很有帮助。

### 从真实代码中提炼出示例可能需要很长时间

我给出如何使用 `lambda` 和 `sort` 函数的解释例子是十分简单的,它并不需要花费我很长时间来想出来,但是将真实的代码提炼出为一个独立的示例则是会需要花费很长的时间!

举个例子,我想在这篇文章中融入一些奇怪的 CSS 行为的例子来说明创造一个奇怪的案例是十分有趣的。我花费了两个小时来解决我这周遇到的一个实际的问题,确保我理解 CSS 的实际情况,并将其变成一个小示例。

最后,它“仅仅”用了 [五行 HTML 和一点点的 CSS](https://codepen.io/wizardzines/pen/0eda7725a46c919dcfdd3fa80aff3d41) 来说明了这个问题,看起来并不想是我花费了好多小时写出来的。但是最初它却是几百行的 JS/CSS/JavaScript,它需要花费很长时间来将所有的代码化为核心的很少的代码。

但我认为花点时间把示例讲得非常简单明了是值得的——如果有成百上千的人在读你的示例,你就节省了他们这么多时间!

### 就这么多了!

我觉得还有更多关于示例可以去讲的——几种不同类型的有用示例,例如:

* 可以更多的改变人的思维而不是直接提供使用的惊喜读者的示例代码

* 易于复制粘贴以用作初始化的示例

也许有一天我还会再写一些呢? :smiley:

---

via: <https://jvns.ca/blog/2021/07/08/writing-great-examples/>

作者:[Julia Evans](https://jvns.ca/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[zepoch](https://github.com/zepoch) 校对:[turbokernel](https://github.com/turbokernel)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | null |

13,598 | 现实中的应用程序是如何丢失数据? | https://theartofmachinery.com/2021/06/06/how_apps_lose_data.html | 2021-07-20T06:27:45 | [

"备份"

] | https://linux.cn/article-13598-1.html |

现代应用程序开发的一大优点是,像硬件故障或如何设置 RAID 这类问题是由云提供商操心的。优秀的云供应商不太可能丢失你的应用数据,所以有时我会被询问现在为什么还要备份?下面是一些现实世界的故事。

### 故事之一

第一个故事来自一个数据科学项目:它基本上是一个从正在进行的研究中来收集数据的庞大而复杂的管道,然后用各种不同的方式处理以满足一些尖端模型的需要。这个面向用户的应用程序还没有推出,但是一个由数据科学家和开发人员组成的团队已经为建立这个模型和它的数据集工作了好几个月。

在项目中工作的人有他们自己的实验工作的开发环境。他们会在终端中做一些类似 `export ENVIRONMENT=simonsdev` 的事情,然后所有在终端上运行的软件都会在那个环境下运行,而不是在生产环境下。

该团队迫切需要推出一个面向用户的应用程序,以便那些花钱的人能够从他们几个月的投资中真正看到一些回报。在一个星期六,一位工程师试图赶工一些工作。他在晚上很晚的时候做完了一个实验,决定收拾东西回家。他启动了一个清理脚本来删除他的开发环境中的所有内容,但奇怪的是,这比平时花费了更长的时间。这时他意识到,他已经忘记了哪个终端被配置为指向哪个环境。(LCTT 译注:意即删除了生产环境。)

### 故事之二

第二个故事来自于一个商业的网页和手机应用。后端有一个由一组工程师负责的微服务体系结构。这意味着部署需要协调,但是使用正式的发布过程和自动化简化了一些。新代码在准备好后会被审查并合并到主干中,并且高层开发人员通常会为每个微服务标记版本,然后自动部署到临时环境。临时环境中的版本会被定期收集到一个元版本中,在自动部署到生产环境之前,该版本会得到各个人的签署(这是一个合规环境)。

有一天,一位开发人员正在开发一个复杂的功能,而其他开发该微服务的开发人员都同意将他们正在开发的代码提交到主干,也都知道它还不能被实际发布。长话短说,并不是团队中的每个人都收到了消息,而代码就进入了发布管道。更糟糕的是,那些实验性代码需要一种新的方式来表示用户配置文件数据,因此它有一个临时数据迁移,它在推出到生产环境时运行,损坏了所有的用户配置文件。

### 故事之三

第三个故事来自另一款网页应用。这个有一个更简单的架构:大部分代码在一个应用程序中,数据在数据库中。然而,这个应用程序也是在很大的截止日期压力下编写的。事实证明,在开发初期,当彻底更改的数据库架构很常见时,添加一项功能来检测此类更改并清理旧数据,这实际上对发布前的早期开发很有用,并且始终只是作为开发环境的临时功能。不幸的是,在匆忙构建应用的其余部分并推出时,我们忘记了这些代码。当然,直到有一天它在生产环境中被触发了。

### 事后分析

对于任何故障的事后分析,很容易忽视大局,最终将一切归咎于一些小细节。一个特例是发现某人犯了一些错误,然后责怪那个人。这些故事中的所有工程师实际上都是优秀的工程师(雇佣 SRE 顾问的公司不是那些在长期雇佣中偷工减料的公司),所以解雇他们,换掉他们并不能解决任何问题。即使你拥有 100 倍的开发人员,它仍然是有限的,所以在足够的复杂性和压力下,错误也会发生。最重要的解决方案是备份,无论你如何丢失数据(包括来自恶意软件,这是最近新闻中的一个热门话题),它都能帮助你。如果你无法容忍没有副本,就不要只有一个副本。

故事之一的结局很糟糕:没有备份。该项目的六个月的数据收集白干了。顺便说一句,有些地方只保留一个每日快照作为备份,这个故事也是一个很好的例子,说明了这也会出错:如果数据丢失发生在星期六,并且你准备在星期一尝试恢复,那么一日备份就只能得到星期日的一个空数据备份。

故事之二并不算好,但结果要好得多。备份是可用的,但数据迁移也是可逆的。不好的部分是发布是在推出前完成的,并且修复工作必须在生产站点关闭时进行编码。我讲这个故事的主要原因是为了提醒大家,备份并不仅仅是灾难性的数据丢失。部分数据损坏也会发生,而且可能会更加混乱。

故事之三还好。尽管少量数据永久丢失,但大部分数据可以从备份中恢复。团队中的每个人都对没有标记极其明显的危险代码感到非常难过。我没有参与早期的开发,但我感觉很糟糕,因为恢复数据所需的时间比正常情况要长得多。如果有一个经过良好测试的恢复过程,我认为该站点应该在总共不到 15 分钟的时间内重新上线。但是第一次恢复没有成功,我不得不调试它为什么不能成功,然后重试。当一个生产站点宕机了,需要你重新启动它,每过 10 秒钟都感觉过了一个世纪。值得庆幸的是,老板们比某些人更能理解我们。他们实际上松了一口气,因为这一场可能使公司沉没的一次性灾难只导致了几分钟的数据丢失和不到一个小时的停机时间。

在实践中,备份“成功”但恢复失败的情况极为普遍。很多时候,小型数据集上进行恢复测试是可以正常工作的,但在生产规模的大数据集上就会失败。当每个人都压力过大时,灾难最有可能发生,而生产站点的故障只会增加压力。在时间合适的时候测试和记录完整的恢复过程是一个非常好的主意。

---

via: <https://theartofmachinery.com/2021/06/06/how_apps_lose_data.html>

作者:[Simon Arneaud](https://theartofmachinery.com) 选题:[lujun9972](https://github.com/lujun9972) 译者:[PearFL](https://github.com/PearFL) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | A great thing about modern app development is that there are cloud providers to worry about things like hardware

failures or how to set up RAID. Decent cloud providers are extremely unlikely to lose your app’s data, so sometimes I

get asked what backups are really for these days. Here are some real-world stories that show exactly what.

## Story #1

This first story is from a data science project: it was basically a big, complex pipeline that took data collected from ongoing research and crunched it in various ways to feed some cutting-edge model. The user-facing application hadn’t been launched yet, but a team of data scientists and developers had been working on building the model and its dataset for several months.

The people working on the project had their own development environments for experimental work. They’d do something

like `export ENVIRONMENT=simonsdev`

in a terminal, and then all

the software running in that terminal would run against that environment instead of the production environment.

The team was under a lot of pressure to get a user-facing app launched so that stakeholders could actually see some results from their several months of investment. One Saturday, an engineer tried to catch up with some work. He finished an experiment he was doing late in the evening, and decided to tidy up and go home. He fired off a cleanup script to delete everything from his development environment, but strangely it took a lot longer than usual. That’s when he realised he’d lost track of which terminal was configured to point to which environment.

## Story #2

Story #2 is from a commercial web and mobile app. The backend had a microservice architecture worked on by a team of engineers. That meant deployments required co-ordination, but things were simplified a bit using a formal release process and automation. New code would get reviewed and merged into master when ready, and every so often a senior developer would tag a release for each microservice, which would then automatically deploy to the staging environment. The releases in the staging environment would periodically get collected together into a meta-release that got signoff from various people (it was a compliance environment) before being automatically deployed to production.

One day a developer was working on a complex feature, and the other developers working on that microservice agreed that the work-in-progress code should be committed to master with the understanding that it shouldn’t be actually released yet. To cut a long story short, not everyone in the team got the message, and the code got into the release pipeline. Worse, the experimental code required a new way to represent user profile data, so it had an ad-hoc data migration that ran on launch into production and corrupted all user profiles.

## Story #3

Story #3 is from another web app. This one had a much simpler architecture: most of the code was in one app, and the data was in a database. However, this app had also been written under a lot of deadline pressure. It turned out that early on in development, when radical database schema changes were common, a feature was added to detect such changes and clean up old data. This was actually useful for early development before launch, and was always meant to be a temporary feature for development environments only. Unfortunately, the code was forgotten about in the rush to build the rest of the app and get to launch. Until, of course, one day it got triggered in the production environment.

## Postmortem

With any outage postmortem, it’s easy to lose sight of the big picture and end up blaming everything on some little detail. A special case of that is finding some mistake someone made and then blaming that person. All of the engineers in these stories were actually good engineers (companies that hire SRE consultants aren’t the ones to cut corners with their permanent hires), so firing them and replacing them wouldn’t have solved any problem. Even if you have 100x developers, that 100x is still finite, so mistakes will happen with enough complexity and pressure. The big-picture solution is back ups, which help you however you lose the data (including from malware, which is a hot topic in the news lately). If you’re not okay with having zero copies of it, don’t have one copy.

Story #1 had a bad end: there were no backups. The project was set back by nearly six months of data collection. By the way, some places only keep a single daily snapshot as a backup, and this story is a good example of how that can go wrong, too: if the data loss happened on Saturday and recovery was attempted on Monday, the one-day backup would only have an empty database from the Sunday.

Story #2 wasn’t fun, but worked out much better. Backups were available, but the data migration was reversible, too. The unfun part was that the release was done just before lunch and the fix had to be coded up while the production site was down. The main reason I’m telling this story is as a reminder that backups aren’t just about catastrophic data loss. Partial data corruption happens, too, and can be extra messy.

Story #3 was so-so. A small amount of data was lost permanently, but most was recovered from the backup. Everyone on the team felt pretty bad about not flagging the now-extremely-obviously-dangerous code. I wasn’t involved in the early development, but I felt bad because the recovery took a lot longer than it should have. With a well-tested recovery process, I think the site should have been back online in under 15mins total. But the recovery didn’t work first time, and I had to debug why not and retry. When a production site is down and it’s on you to get it up again, every 10s feels like an eternity. Thankfully, the stakeholders were much more understanding than some. They were actually relieved that a one-off disaster that could have sunk the company only resulted in minutes of lost data and under an hour of downtime.

It’s extremely common in practice for the backup to “work” but the recovery to fail. Often the recovery works when tested on small datasets, but fails on production-sized datasets. Disaster is most likely to strike when everyone is stressed out, and having the production site down only increases the pressure. It’s a really good idea to test and document the full recovery process while times are good. |

13,599 | 不同的编程语言是如何读写数据的 | https://opensource.com/article/21/7/programming-read-write | 2021-07-20T06:54:11 | [

"编程语言",

"读写"

] | https://linux.cn/article-13599-1.html |

>

> 每种编程语言都有其独特的完成任务的方式,这也说明了为什么有这么多语言可供选择。

>

>

>

在 Jim Hall 的《[不同的编程语言如何完成相同的事情](https://opensource.com/article/21/4/compare-programming-languages)》文章中,他演示了 13 种不同的语言如何使用不同的语法来完成同一个任务。经验是,编程语言往往有很多相似之处。一旦你了解了一种编程语言,你就可以通过理解它的语法和结构来学习另一种。

本着同样的精神,Jim 的文章比较了不同编程语言如何读写数据。无论数据来自配置文件还是用户创建的文件,在存储设备上处理数据都是程序员的常见任务。以这种方式涵盖所有编程语言是不切实际的,但最近的 [Opensource.com](http://Opensource.com) 系列文章提供了对这些编程语言采用的不同方法的深入了解:

* [C](https://opensource.com/article/21/3/file-io-c)

* [C++](https://opensource.com/article/21/3/ccc-input-output)

* [Java](https://opensource.com/article/21/3/io-java)

* [Groovy](https://opensource.com/article/21/4/groovy-io)

* [Lua](https://opensource.com/article/21/3/lua-files)

* [Bash](https://opensource.com/article/21/3/input-output-bash)

* [Python](https://opensource.com/article/21/6/reading-and-writing-files-python)

### 读写数据

用计算机读写数据的过程和你在现实生活中读写数据的过程类似。要访问书中的数据,你首先要打开它,然后阅读单词或将生词写入书中,然后合上书。

当程序需要从文件中读取数据时,你向程序传入一个文件位置,然后计算机将该数据读入内存中并解析它。同样,当程序需要将数据写入文件时,计算机会将新数据放入系统的内存写入缓冲区,然后将其同步到存储设备上的文件中。

下面是这些操作的一些伪代码:

1. 在内存中加载文件。

2. 读取文件内容,或将数据写入文件。

3. 关闭文件。

### 从文件中读取数据

从 [Opensource.com](http://Opensource.com) 系列文章的语言中,你可以看到读取文件的三种趋势。

#### C

在 C 语言中,打开文件可能涉及检索单个字符(直到 `EOF` 指示符,表示文件结束)或一个数据块,具体取决于你的需求和方法。根据你的目标,它可能感觉像一个主要是手工的过程,但这正是其他语言所模仿的。

```

FILE *infile;

int ch;

infile = fopen(argv[1], "r");

do {

ch = fgetc(infile);

if (ch != EOF) {

printf("%c", ch);

}

} while (ch != EOF);

fclose(infile);

```

你还可以选择将文件的某些部分加载到系统缓冲区中,然后在缓冲区外工作。

```

FILE *infile;

char buffer[300];

infile = fopen(argv[1], "r");

while (!feof(infile)) {

size_t buffer_length;

buffer_length = fread(buffer, sizeof(char), 300, infile);

}

printf("%s", buffer);

fclose(infile);

```

#### C++

C++ 简化了一些步骤,允许你将数据解析为字符串。

```

std::string sFilename = "example.txt";

std::ifstream fileSource(sFilename);

std::string buffer;

while (fileSource >> buffer) {

std::cout << buffer << std::endl;

}

```

#### Java

Java 和 Groovy 类似于 C++。它们使用名为 `Scanner` 的类来设置数据流或对象,这样就会包含你选择的文件内容。你可以通过标记(字节、行、整数等)扫描文件。

```

File myFile = new File("example.txt");

Scanner myScanner = new Scanner(myFile);

while (myScanner.hasNextLine()) {

String line = myScanner.nextLine();

System.out.println(line);

}

myScanner.close();

```

#### Groovy

```

def myFile = new File('example.txt')

def myScanner = new Scanner(myFile)

while (myScanner.hasNextLine()) {

def line = myScanner.nextLine()

println(line)

}

myScanner.close()

```

#### Lua

Lua 和 Python 进一步抽象了整个过程。你不必有意识地创建数据流,你只需给一个变量赋值为 `open` 函数的返回值,然后解析该变量的内容。这种方式快速,最简且容易。

```

myFile = io.open('example.txt', 'r')

lines = myFile:read("*all")

print(lines)

myFile:close()

```

#### Python

```

f = open('example.tmp', 'r')

for line in f:

print(line)

f.close()

```

### 向文件中写入数据

就写代码来说,写入是读取的逆过程。因此,将数据写入文件的过程与从文件中读取数据基本相同,只是使用了不同的函数。

#### C

在 C 语言中,你可以使用 `fputc` 函数将字符写入文件:

```

fputc(ch, outfile);

```

或者,你可以使用 `fwrite` 将数据写入缓冲区。

```

fwrite(buffer, sizeof(char), buffer_length, outfile);

```

#### C++

因为 C++ 使用 `ifstream` 库为数据打开缓冲区,所以你可以像 C 语言那样将数据写入缓冲区(C++ 库除外)。

```

std::cout << buffer << std::endl;

```

#### Java

在 Java 中,你可以使用 `FileWriter` 类来创建一个可以写入数据的对象。它的工作方式与 `Scanner` 类非常相似,只是方向相反。

```

FileWriter myFileWriter = new FileWriter("example.txt", true);

myFileWriter.write("Hello world\n");

myFileWriter.close();

```

#### Groovy

类似地,Groovy 使用 `FileWriter`,但使用了稍微 “groovy” 的语法。

```

new FileWriter("example.txt", true).with {

write("Hello world\n")

flush()

}

```

#### Lua

Lua 和 Python 很相似,都使用名为 `open` 的函数来加载文件,`writer` 函数来写入数据,`close` 函数用于关闭文件。

```

myFile = io.open('example.txt', 'a')

io.output(myFile)

io.write("hello world\n")

io.close(myFile)

```

#### Python

```

myFile = open('example.txt', 'w')

myFile.write('hello world')

myFile.close()

```

### File 模式

许多语言在打开文件时会指定一个“模式”。模式有很多,但这是常见的定义:

* **w** 表示写入

* **r** 表示读取

* **r+** 表示可读可写

* **a** 表示追加

某些语言,例如 Java 和 Groovy,允许你根据用于加载文件的类来确定模式。

无论编程语言以何种方式来确定文件模式,你都需要确保你是在 *追加* 数据,除非你打算用新数据覆盖文件。编程语言不像文件选择器那样,没有内置的提示来警告你防止数据丢失。

### 新语言和旧把戏

每种编程语言都有其独特完成任务的方式,这就是为什么有这么多语言可供选择。你可以而且应该选择最合适你的语言。但是,你一旦了解了编程的基本结构,你可以随意尝试其他语言,而不必担心不知道如何完成基本任务。通常情况下,实现目标的途径是相似的,所以只要你牢记基本概念,它们就很容易学习。

---

via: <https://opensource.com/article/21/7/programming-read-write>

作者:[Alan Smithee](https://opensource.com/users/alansmithee) 选题:[lujun9972](https://github.com/lujun9972) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | In his article * How different programming languages do the same thing*, Jim Hall demonstrates how 13 different languages accomplish the same exact task with different syntax. The lesson is that programming languages tend to have many similarities, and once you know one programming language, you can learn another by figuring its syntax and structure.

In the same spirit, Jim's article compares how different programming languages read and write data. Whether that data comes from a configuration file or from a file a user creates, processing data on a storage device is a common task for coders. It's not practical to cover all programming languages in this way, but a recent Opensource.com series provides insight into different approaches taken by these coding languages:

## Reading and writing data

The process of reading and writing data with a computer is similar to how you read and write data in real life. To access data in a book, you first open it, then you read words or you write new words into the book, and then you close the book.

When your code needs to read data from a file, you provide your code with a file location, and then the computer brings that data into its RAM and parses it from there. Similarly, when your code needs to write data to a file, the computer places new data into the system's in-memory write buffer and synchronizes it to the file on the storage device.

Here's some pseudo-code for these operations:

- Load a file in memory.

- Read the file's contents, or write data to the file.

- Close the file.

## Reading data from a file

You can see three trends in how the languages in the Opensource.com series read files.

### C

In C, opening a file can involve retrieving a single character (up to the `EOF`

designator, signaling the end of the file) or a block of data, depending on your requirements and approach. It can feel like a mostly manual process, depending on your goal, but the general process is exactly what the other languages mimic.

```

FILE *infile;

int ch;

infile = fopen(argv[1], "r");

do {

ch = fgetc(infile);

if (ch != EOF) {

printf("%c", ch);

}

} while (ch != EOF);

fclose(infile);

```

You can also choose to load some portion of a file into the system buffer and then work out of the buffer.

```

FILE *infile;

char buffer[300];

infile = fopen(argv[1], "r");

while (!feof(infile)) {

size_t buffer_length;

buffer_length = fread(buffer, sizeof(char), 300, infile);

}

printf("%s", buffer);

fclose(infile);

```

### C++

C++ simplifies a few steps, allowing you to parse data as strings.

```

std::string sFilename = "example.txt";

std::ifstream fileSource(sFilename);

std::string buffer;

while (fileSource >> buffer) {

std::cout << buffer << std::endl;

}

```

### Java

Java and Groovy are similar to C++. They use a class called `Scanner`

to set up a data object or stream containing the contents of the file of your choice. You can "scan" through the file by tokens (byte, line, integer, and many others).

```

File myFile = new File("example.txt");

Scanner myScanner = new Scanner(myFile);

while (myScanner.hasNextLine()) {

String line = myScanner.nextLine();

System.out.println(line);

}

myScanner.close();

```

### Groovy

```

def myFile = new File('example.txt')

def myScanner = new Scanner(myFile)

while (myScanner.hasNextLine()) {

def line = myScanner.nextLine()

println(line)

}

myScanner.close()

```

### Lua

Lua and Python abstract the process further. You don't have to consciously create a data stream; you just assign a variable to the results of an `open`

function and then parse the contents of the variable. It's quick, minimal, and easy.

```

myFile = io.open('example.txt', 'r')

lines = myFile:read("*all")

print(lines)

myFile:close()

```

### Python

```

f = open('example.tmp', 'r')

for line in f:

print(line)

f.close()

```

## Writing data to a file

In terms of code, writing is the inverse of reading. As such, the process for writing data to a file is basically the same as reading data from a file, except using different functions.

### C

In C, you can write a character to a file with the `fputc`

function.

`fputc(ch, outfile);`

Alternately, you can write data to the buffer with `fwrite`

.

`fwrite(buffer, sizeof(char), buffer_length, outfile);`

### C++

Because C++ uses the `ifstream`

library to open a buffer for data, you can write data to the buffer, as with C (except with C++ libraries).

`std::cout << buffer << std::endl;`

### Java

In Java, you can use the `FileWriter`

class to create a data object that you can write data to. It works a lot like the `Scanner`

class, except going the other way.

```

FileWriter myFileWriter = new FileWriter("example.txt", true);

myFileWriter.write("Hello world\n");

myFileWriter.close();

```

### Groovy

Similarly, Groovy uses `FileWriter`

but with a slightly "groovier" syntax.

```

new FileWriter("example.txt", true).with {

write("Hello world\n")

flush()

}

```

### Lua

Lua and Python are similar, both using functions called `open`

to load a file, `write`

to put data into it, and `close`

to close the file.

```

myFile = io.open('example.txt', 'a')

io.output(myFile)

io.write("hello world\n")

io.close(myFile)

```

### Python

```

myFile = open('example.txt', 'w')

myFile.write('hello world')

myFile.close()

```

## File modes

Many languages specify a "mode" when opening files. Modes vary, but this is common notation:

**w**to write**r**to read**r+**to read and write**a**to append only

Some languages, such as Java and Groovy, let you determine the mode based on which class you use to load the file.

Whichever way your programming language determines a file's mode, it's up to you to ensure that you're *appending* data—unless you intend to overwrite a file with new data. Programming languages don't have built-in prompts to warn you against data loss, the way file choosers do.

## New language and old tricks

Every programming language has a unique way of accomplishing a task; that's why there are so many languages to choose from. You can and should choose the language that works best for you. But once you understand the basic constructs of programming, you can also feel free to try out different languages, without fear of not knowing how to accomplish basic tasks. More often than not, the pathways to a goal are similar, so they're easy to learn as long as you keep the basic concepts in mind.

## Comments are closed. |

13,601 | FreeDOS 简史 | https://opensource.com/article/21/6/history-freedos | 2021-07-21T10:49:34 | [

"FreeDOS"

] | https://linux.cn/article-13601-1.html |

>

> 经历了近 30 年的发展, FreeDOS 已经成为了世界先进的 DOS。

>

>

>

>

> 一个大师正在给他的一个弟子讲 [编程之道](https://www.mit.edu/~xela/tao.html)。 “编程之道包含在所有的软件中 —— 不管它多么微不足道,” 大师说道。

>

>

> “编程之道在手持计算器里面吗?” 弟子问道。

>

>

> “是的,” 大师回答道。

>

>

> “编程之道在电子游戏里面吗?” 弟子继续问道。

>

>

> “即便是电子游戏中,” 大师说。

>

>

> “那编程之道在个人电脑的 DOS 里面吗?”

>

>

> 大师咳嗽了一下,稍稍改变了一下姿势,说道,“今天的课就到这里吧。”

>

>

> ——《编程之道》,Geoffrey James,InfoBooks,1987

>

>

>

过去,计算仅限于昂贵的大型机和“大铁疙瘩”计算机系统,如 PDP 11。但是微处理器的出现在 20 世纪 70 年代带来了一场计算革命。你终于可以在家里有一台电脑了——“个人电脑”时代已经到了!

我记得看到的最早的个人电脑包括 Commodore、TRS-80 和 Apple。个人电脑成了一个热门话题,所以 IBM 决定进入这个市场。在经历了一个快速开发周期之后,IBM 于 1981 年 8 月发布了 IBM 5150 个人电脑(最初的“IBM PC”)。

从零开始创建一台计算机并非易事,因此 IBM 以用“现成”的硬件来构建 PC 而闻名,并从外部开发商那里获得了其他组件的授权。其中之一是微软授权的操作系统。反过来,微软从西雅图计算机产品公司获得了 86-DOS ,进行了各种更新,并将新版本作为 IBM PC-DOS 与 IBM PC 一起首次亮相。

### 早期的 DOS

运行在最多只有 640 千字节内存中的 DOS,除了管理硬件和允许用户启动应用程序之外,真的做不了什么。因此,PC-DOS 1.0 命令行非常贫乏,只包含了一些设置日期和时间、管理文件、控制终端和格式化软盘的命令。DOS 还包括一个 BASIC 语言解释器,这是那个时代所有个人计算机的一个标准功能。

直到 PC-DOS 2.0,DOS 才变得更加有趣,为命令行添加了新的命令,并包含了其他有用的工具。但对我来说,直到 1991 年的 MS-DOS 5.0 才有了“现代感”。微软在这个版本中对 DOS 进行了大修,更新了许多命令,并用一个新的全屏编辑器取代了老旧的 Edlin 编辑器,使之更方便用户使用。DOS 5 还包括我喜欢的其他特性,比如基于微软 QuickBASIC 编译器的新 BASIC 解释器,简称 QBASIC. 如果你曾经在 DOS 上玩过 Gorillas 游戏,那可能就是在 MS-DOS 5.0 中运行的。

尽管进行了这些升级,但我对 DOS 命令行并不完全满意。DOS 从来没有偏离原来的设计,有其局限性。DOS 为用户提供了一些工具,可以从命令行执行一些事情 —— 否则,你就得使用 DOS 命令行来启动应用程序。微软认为用户大部分时间都会花在几个关键的应用程序上,比如文字处理器或电子表格。

但是开发人员想要一个功能更强的 DOS,此时一个细分行业正在萌芽,以提供小巧优雅的工具和程序。有些是全屏应用程序,但也有许多是增强 DOS 命令环境的命令行实用程序。当我学会一点 C 语言编程时,我开始编写自己的实用程序,扩展或替换 DOS 命令行。尽管 MS-DOS 的基础相当有限,但我发现第三方实用程序加上我自己的工具创建了一个功能强大的 DOS 命令行。

### FreeDOS

1994 年初,我开始在科技杂志上看到很多对微软高管的采访,他们说下一个版本的 Windows 将完全取代 DOS。我以前使用过 Windows,但如果你还记得那个时代,你就知道 Windows 3.1 并不是一个很好的平台。Windows 3.1 很笨重,有很多毛病,如果一个应用程序崩溃,它可能会使整个 Windows 系统瘫痪。我也不喜欢 Windows 的图形用户界面。我更喜欢在命令行做我的工作,而不是用鼠标。

我考虑过 Windows,并决定,“如果 Windows 3.2 或 Windows 4.0 会像 Windows 3.1 一样,我就不会去使用它。” 但我有什么选择?此时,我已经尝试过 Linux,并认为 [Linux 很棒](https://opensource.com/article/17/5/how-i-got-started-linux-jim-hall-freedos),但是 Linux 没有任何应用程序。我的文字处理器、电子表格和其他程序都在 DOS 上。我需要 DOS。

然后我有了个主意!我想,“如果开发人员能够在互联网上共同编写一个完整的 Unix 操作系统,那么我们当然可以对 DOS 做同样的事情。”毕竟,与 Unix 相比,DOS 是一个相当简单的操作系统。DOS 一次运行一个任务(单任务),并且有一个更简单的内存模型。编写我们自己的 DOS 应该不难。

因此,在 1994 年 6 月 29 日,我在一个名为 Usenet 的留言板网络上向 “comp.os.msdos.apps” [发布了一个公告](https://groups.google.com/g/comp.os.msdos.apps/c/oQmT4ETcSzU/m/O1HR8PE2u-EJ):

>

> PD-DOS 项目公告:

>

>

> 几个月前,我发表了关于启动公共领域版本的 DOS 的文章。 当时大家对此的普遍支持,许多人都同意这样的说法:“开始编写吧!”所以,我就……

>

>

> 宣布首次生产 PD-DOS 的努力。我已经写了一个“清单”,描述了这样一个项目的目标和工作大纲,以及一个“任务列表”,它准确地显示了需要编写什么。我会把这些贴在这里,供大家讨论。

>

>

>

\* 关于这个名字的说明 —— 我希望这个新的 DOS 成为每个人都可以使用的东西,我天真地认为,当每个人都可以使用它时,它就是“公共领域”。我很快就意识到了这种差别,所以我们把 “PD-DOS” 改名为 “Free-DOS”,然后去掉连字符变成 “FreeDOS”。

一些开发人员联系我,提供他们为替换或增强 DOS 命令行而创建的实用程序,类似于我自己的努力。就在项目宣布几个月后,我们汇集了我们的实用程序,并创建了一个实用的系统,我们在 1994 年 9 月发布了一个 “Alpha 1” 版本。在那些日子里,发展是相当迅速的,我们在 1994 年 12 月发布了 “Alpha 2”,1995 年 1 月发布了 “Alpha 3”,1995 年 6 月发布了“Alpha 4”。

### 一个现代的 DOS

从那以后,我们一直致力于使 FreeDOS 成为 “现代” DOS。而这种现代化大部分都集中在创建一个丰富的命令行环境上。是的,DOS 仍然需要支持应用程序,但是我们相信 FreeDOS 也需要一个强大的命令行环境。这就是为什么 FreeDOS 包含了许多有用的工具,包括浏览目录、管理文件、播放音乐、连接网络的命令,……以及类似 Unix 的实用程序集合,如 `less`、`du`、`head`、`tail`、`sed` 和 `tr`。

虽然 FreeDOS 的开发已经放缓,但它并没有停止。开发人员继续为 FreeDOS 编写新程序,并向 FreeDOS 添加新功能。我对 FreeDOS 1.3 RC4 的几个重要补充感到特别兴奋,FreeDOS 1.3 RC4 是即将发布的 FreeDOS 1.3 的最新候选版本。最近的一些更新如下:

* Mateusz Viste 创建了一个新的电子书阅读器,名为 Ancient Machine Book(AMB),我们利用它作为 FreeDOS 1.3 RC4 中的新帮助系统。

* Rask Ingemann Lambertsen、Andrew Jenner、TK Chia 和其他人正在更新 GCC 的 IA-16 版本,包括一个新的libi86 库,它提供了与 Borland TurboC++ 编译器的 C 库的某种程度的兼容性。

* Jason Hood 更新了一个可卸载的 CD-ROM 重定向器,以替代微软的 MSCDEX,最多支持 10 个驱动器。

* SuperIlu 创建了 DOjS,这是一个 Javascript 开发画布,具有集成的编辑器、图形和声音输出,以及鼠标、键盘和操纵杆输入。

* Japheth 创建了一个 DOS32PAE 扩展程序,它能够通过 PAE 分页使用大量的内存。

尽管 FreeDOS 有了新的发展,我们仍然忠于我们的 DOS 根基。在我们继续朝着 FreeDOS 1.3 “最终”版本努力时,我们带着几个核心假设,包括:

* **兼容性是关键** —— 如果 FreeDOS 不能运行经典 DOS 应用程序,它就不是真正的 “DOS”。虽然我们提供了许多优秀的开源工具、应用程序和游戏,但你也可以运行你的传统的 DOS 应用程序。

* **继续在旧 PC 上运行(XT、286、386 等)** —— FreeDOS 1.3 将保持 16 位英特尔架构,但在可能的情况下将支持扩展驱动程序支持的新硬件。为此,我们继续专注于单用户命令行环境。

* **FreeDOS 是开源软件** —— 我一直说,如果人们不能访问、学习和修改源代码,FreeDOS 就不是“自由的 DOS”。FreeDOS 1.3 将包括尽可能多地包括使用公认的开源许可证的软件。但 DOS 实际上早于 GNU 通用公共许可证(1989)和开放源码定义(1998),因此一些 DOS 软件可能会使用它自己的“免费源代码”许可证,而不是标准的“开源”许可。当我们考虑将软件包纳入 FreeDOS 时,我们将继续评估任何许可证,以确保它们是合适的“开放源码”,即使它们没有得到正式承认。

我们欢迎你的帮助,使 FreeDOS 强大!请加入我们的电子邮件列表,我们欢迎所有新来者和贡献者。我们通过电子邮件列表进行交流,不过这个列表的信件量非常小,所以不太可能撑爆你的收件箱。

访问 FreeDOS 网站 [www.freedos.org](https://www.freedos.org/)。

---

via: <https://opensource.com/article/21/6/history-freedos>

作者:[Jim Hall](https://opensource.com/users/jim-hall) 选题:[lujun9972](https://github.com/lujun9972) 译者:[zxy-wyx](https://github.com/zxy-wyx) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | A master was explaining the nature of [The Tao of Programming](https://www.mit.edu/~xela/tao.html) to one of his novices. "The Tao is embodied in all software—regardless of how insignificant," said the master.

"Is Tao in a hand-held calculator?" asked the novice.

"It is," came the reply.

"Is the Tao in a video game?" continued the novice.

"It is even in a video game," said the master.

"And is the Tao in the DOS for a personal computer?"

The master coughed and shifted his position slightly. "The lesson is over for today," he said.

The Tao of Programming, Geoffrey James, InfoBooks, 1987

Computing used to be limited only to expensive mainframes and "Big Iron" computer systems like the PDP11. But the advent of the microprocessor brought about a computing revolution in the 1970s. You could finally have a computer in your home—the "personal computer" had arrived!

The earliest personal computers I remember seeing included the Commodore, TRS-80, and Apple. The personal computer became such a hot topic that IBM decided to enter the market. After a rapid development cycle, IBM released the IBM 5150 Personal Computer (the original "IBM PC") in August 1981.

Creating a computer from scratch is no easy task, so IBM famously used "off-the-shelf" hardware to build the PC, and licensed other components from outside developers. One of those was the operating system, licensed from Microsoft. In turn, Microsoft acquired 86-DOS from Seattle Computer Products, applied various updates, and debuted the new version with the IBM PC as IBM PC-DOS.

## Early DOS

Running in memory *up to* 640 kilobytes, DOS really couldn't do much more than manage the hardware and allow the user to launch applications. As a result, the PC-DOS 1.0 command line was pretty anemic, only including a few commands to set the date and time, manage files, control the terminal, and format floppy disks. DOS also included a BASIC language interpreter, which was a standard feature in all personal computers of the era.

It wasn't until PC-DOS 2.0 that DOS became more interesting, adding new commands to the command line, and including other useful tools. But for me, it wasn't until MS-DOS 5.0 in 1991 that DOS began to feel "modern." Microsoft overhauled DOS in this release, updating many of the commands and replacing the venerable Edlin editor with a new full-screen editor that was more user-friendly. DOS 5 included other features that I liked, as well, such as a new BASIC interpreter based on Microsoft QuickBASIC Compiler, simply called QBASIC. If you've ever played the Gorillas game on DOS, it was probably in MS-DOS 5.0.

Despite these upgrades, I wasn't entirely satisfied with the DOS command line. DOS never strayed far from the original design, which proved limiting. DOS gave the user a few tools to do some things from the command line—otherwise, you were meant to use the DOS command line to launch applications. Microsoft assumed the user would spend most of their time in a few key applications, such as a word processor or spreadsheet.

But developers wanted a more functional DOS, and a sub-industry sprouted to offer neat tools and programs. Some were full-screen applications, but many were command-line utilities that enhanced the DOS command environment. When I learned a bit of C programming, I started writing my own utilities that extended or replaced the DOS command line. And despite the rather limited underpinnings of MS-DOS, I found that the third-party utilities, plus my own, created a powerful DOS command line.

## FreeDOS

In early 1994, I started seeing a lot of interviews with Microsoft executives in tech magazines saying the next version of Windows would totally do away with DOS. I'd used Windows before—but if you remember the era, you know Windows 3.1 wasn't a great platform. Windows 3.1 was clunky and buggy—if an application crashed, it might take down the entire Windows system. And I didn't like the Windows graphical user interface, either. I preferred doing my work at the command line, not with a mouse.

I considered Windows and decided, If Windows 3.2 or Windows 4.0 will be anything like Windows 3.1, I want nothing to do with it.

But what were my options? I'd already experimented with Linux at this point, and thought [Linux was great](https://opensource.com/article/17/5/how-i-got-started-linux-jim-hall-freedos)—but Linux didn't have any applications. My word processor, spreadsheet, and other programs were on DOS. I needed DOS.

Then I had an idea! I thought, If developers can come together over the internet to write a complete Unix operating system, surely we can do the same thing with DOS.

After all, DOS was a fairly straightforward operating system compared to Unix. DOS ran one task at a time (single-tasking) and had a simpler memory model. It shouldn't be *that* hard to write our own DOS.

So on June 29, 1994, I [posted an announcement](https://groups.google.com/g/comp.os.msdos.apps/c/oQmT4ETcSzU/m/O1HR8PE2u-EJ) to `comp.os.msdos.apps`

, on a message board network called Usenet:

ANNOUNCEMENT OF PD-DOS PROJECT:

A few months ago, I posted articles relating to starting a public domain version of DOS. The general support for this at the time was strong, and many people agreed with the statement, "start writing!" So, I have...

Announcing the first effort to produce a PD-DOS. I have written up a "manifest" describing the goals of such a project and an outline of the work, as well as a "task list" that shows exactly what needs to be written. I'll post those here, and let discussion follow.

** A note about the name—I wanted this new DOS to be something that everyone could use, and I naively assumed that when everyone could use it, it was "public domain." I quickly realized the difference, and we renamed "PD-DOS" to "Free-DOS"—and later dropped the hyphen to become "FreeDOS."*

A few developers reached out to me, to offer utilities they had created to replace or enhance the DOS command line, similar to my own efforts. We pooled our utilities and created a useful system that we released as "Alpha 1" in September 1994, just a few months after announcing the project. Development was pretty swift in those days, and we followed up with "Alpha 2" in December 1994, "Alpha 3" in January 1995, and "Alpha 4" in June 1995.

## A modern DOS

Since then, we've always focused on making FreeDOS a "modern" DOS. And much of that modernization is centered on creating a rich command-line environment. Yes, DOS still needs to support applications, but we believe FreeDOS needs a strong command-line environment, as well. That's why FreeDOS includes dozens of useful tools, including commands to navigate directories, manage files, play music, connect to networks, ... and a collection of Unix-like utilities such as `less`

, `du`

, `head`

, `tail`

, `sed`

, and `tr`

.

While FreeDOS development has slowed, it has not stopped. Developers continue to write new programs for FreeDOS, and add new features to FreeDOS. I'm particularly excited about several great additions to FreeDOS 1.3 RC4, the latest release candidate for the forthcoming FreeDOS 1.3. A few recent updates:

- Mateusz Viste created a new ebook reader called Ancient Machine Book (AMB) that we've leveraged as the new help system in FreeDOS 1.3 RC4

- Rask Ingemann Lambertsen, Andrew Jenner, TK Chia, and others are updating the IA-16 version of GCC, including a new

*libi86*library that provides some degree of compatibility with the Borland Turbo C++ compiler's C library - Jason Hood has updated an unloadable CD-ROM redirector substitute for Microsoft's MSCDEX, supporting up to 10 drives

- SuperIlu has created DOjS, a Javascript development canvas with an integrated editor, graphics and sound output, and mouse, keyboard, and joystick input

- Japheth has created a DOS32PAE extender that is able to use huge amounts of memory through PAE paging

Despite all of the new development on FreeDOS, we remain true to our DOS roots. As we continue working toward FreeDOS 1.3 "final," we carry several core assumptions, including:

**Compatibility is key**—FreeDOS isn't really "DOS" if it can't run classic DOS applications. While we provide many great open source tools, applications, and games, you can run your legacy DOS applications, too.**Continue to run on old PCs (XT, '286, '386, etc)**—FreeDOS 1.3 will remain 16-bit Intel but will support new hardware with expanded driver support, where possible. For this reason, we continue to focus on a single-user command-line environment.**FreeDOS is open source software**—I've always said that FreeDOS isn't a "free DOS" if people can't access, study, and modify the source code. FreeDOS 1.3 will include software that uses recognized open source licenses as much as possible. But DOS actually pre-dates the GNU General Public License (1989) and the Open Source Definition (1998) so some DOS software might use its own "free with source code" license that isn't a standard "open source" license. As we consider packages to include in FreeDOS, we continue to evaluate any licenses to ensure they are suitably "open source," even if they are not officially recognized.

We welcome your help in making FreeDOS great! Please join us on our email list—we welcome all newcomers and contributors. We communicate over an email list, but the list is fairly low volume so is unlikely to fill up your Inbox.

Visit the FreeDOS website at [www.freedos.org](https://www.freedos.org/).

## 1 Comment |

13,602 | 进阶教程:用 Python 和 NLTK 进行 NLP 分析 | https://opensource.com/article/20/8/nlp-python-nltk | 2021-07-21T11:56:00 | [

"NLP",

"自然语言处理"

] | https://linux.cn/article-13602-1.html |

>

> 进一步学习自然语言处理的基本概念

>

>

>

在 [之前的文章](https://opensource.com/article/20/8/intro-python-nltk) 里,我介绍了<ruby> 自然语言处理 <rt> natural language processing </rt></ruby>(NLP)和宾夕法尼亚大学研发的<ruby> 自然语言处理工具包 <rt> Natural Language Toolkit </rt></ruby> ([NLTK](http://www.nltk.org/))。我演示了用 Python 解析文本和定义<ruby> 停顿词 <rt> stopword </rt></ruby>的方法,并介绍了<ruby> 语料库 <rt> corpus </rt></ruby>的概念。语料库是由文本构成的数据集,通过提供现成的文本数据来辅助文本处理。在这篇文章里,我将继续用各种语料库对文本进行对比和分析。

这篇文章主要包括以下部分:

* <ruby> 词网 <rt> WordNet </rt></ruby>和<ruby> 同义词集 <rt> synset </rt></ruby>

* <ruby> 相似度比较 <rt> Similarity comparison </rt></ruby>

* <ruby> 树 <rt> Tree </rt></ruby>和<ruby> 树库 <rt> treebank </rt></ruby>

* <ruby> 命名实体识别 <rt> Named entity recognition </rt></ruby>

### 词网和同义词集

<ruby> <a href="https://en.wikipedia.org/wiki/WordNet"> 词网 </a> <rt> WordNet </rt></ruby> 是 NLTK 里的一个大型词汇数据库语料库。词网包含各单词的诸多<ruby> 认知同义词 <rt> cognitive synonyms </rt></ruby>(认知同义词常被称作“<ruby> 同义词集 <rt> synset </rt></ruby>”)。在词网里,名词、动词、形容词和副词,各自被组织成一个同义词的网络。

词网是一个很有用的文本分析工具。它有面向多种语言的版本(汉语、英语、日语、俄语和西班牙语等),也使用多种许可证(从开源许可证到商业许可证都有)。初代版本的词网由普林斯顿大学研发,面向英语,使用<ruby> 类 MIT 许可证 <rt> MIT-like license </rt></ruby>。

因为一个词可能有多个意义或多个词性,所以可能与多个同义词集相关联。每个同义词集通常提供下列属性:

| **属性** | **定义** | **例子** |

| --- | --- | --- |

| <ruby> 名称 <rt> Name </rt></ruby> | 此同义词集的名称 | 单词 `code` 有 5 个同义词集,名称分别是 `code.n.01`、 `code.n.02`、 `code.n.03`、`code.v.01` 和 `code.v.02` |

| <ruby> 词性 <rt> POS </rt></ruby> | 此同义词集的词性 | 单词 `code` 有 3 个名词词性的同义词集和 2 个动词词性的同义词集 |

| <ruby> 定义 <rt> Definition </rt></ruby> | 该词作对应词性时的定义 | 动词 `code` 的一个定义是:(计算机科学)数据或计算机程序指令的<ruby> 象征性排列 <rt> symbolic arrangement </rt></ruby> |

| <ruby> 例子 <rt> Example </rt></ruby> | 使用该词的例子 | `code` 一词的例子:We should encode the message for security reasons |

| <ruby> 词元 <rt> Lemma </rt></ruby> | 与该词相关联的其他同义词集(包括那些不一定严格地是该词的同义词,但可以大体看作同义词的);词元直接与其他词元相关联,而不是直接与<ruby> 单词 <rt> word </rt></ruby>相关联 | `code.v.02` 的词元是 `code.v.02.encipher`、`code.v.02.cipher`、`code.v.02.cypher`、`code.v.02.encrypt`、`code.v.02.inscribe` 和 `code.v.02.write_in_code` |

| <ruby> 反义词 <rt> Antonym </rt></ruby> | 意思相反的词 | 词元 `encode.v.01.encode` 的反义词是 `decode.v.01.decode` |

| <ruby> 上义词 <rt> Hypernym </rt></ruby> | 该词所属的一个范畴更大的词 | `code.v.01` 的一个上义词是 `tag.v.01` |

| <ruby> 分项词 <rt> Meronym </rt></ruby> | 属于该词组成部分的词 | `computer` 的一个分项词是 `chip` |

| <ruby> 总项词 <rt> Holonym </rt></ruby> | 该词作为组成部分所属的词 | `window` 的一个总项词是 `computer screen` |

同义词集还有一些其他属性,在 `<你的 Python 安装路径>/Lib/site-packages` 下的 `nltk/corpus/reader/wordnet.py`,你可以找到它们。

下面的代码或许可以帮助理解。

这个函数:

```

from nltk.corpus import wordnet

def synset_info(synset):

print("Name", synset.name())

print("POS:", synset.pos())

print("Definition:", synset.definition())

print("Examples:", synset.examples())

print("Lemmas:", synset.lemmas())

print("Antonyms:", [lemma.antonyms() for lemma in synset.lemmas() if len(lemma.antonyms()) > 0])

print("Hypernyms:", synset.hypernyms())

print("Instance Hypernyms:", synset.instance_hypernyms())

print("Part Holonyms:", synset.part_holonyms())

print("Part Meronyms:", synset.part_meronyms())

print()

synsets = wordnet.synsets('code')

print(len(synsets), "synsets:")

for synset in synsets:

synset_info(synset)

```

将会显示:

```

5 synsets:

Name code.n.01

POS: n

Definition: a set of rules or principles or laws (especially written ones)

Examples: []

Lemmas: [Lemma('code.n.01.code'), Lemma('code.n.01.codification')]

Antonyms: []

Hypernyms: [Synset('written_communication.n.01')]

Instance Hpernyms: []

Part Holonyms: []

Part Meronyms: []

...

Name code.n.03

POS: n

Definition: (computer science) the symbolic arrangement of data or instructions in a computer program or the set of such instructions

Examples: []

Lemmas: [Lemma('code.n.03.code'), Lemma('code.n.03.computer_code')]

Antonyms: []

Hypernyms: [Synset('coding_system.n.01')]

Instance Hpernyms: []

Part Holonyms: []

Part Meronyms: []

...

Name code.v.02

POS: v

Definition: convert ordinary language into code

Examples: ['We should encode the message for security reasons']

Lemmas: [Lemma('code.v.02.code'), Lemma('code.v.02.encipher'), Lemma('code.v.02.cipher'), Lemma('code.v.02.cypher'), Lemma('code.v.02.encrypt'), Lemma('code.v.02.inscribe'), Lemma('code.v.02.write_in_code')]

Antonyms: []

Hypernyms: [Synset('encode.v.01')]

Instance Hpernyms: []

Part Holonyms: []

Part Meronyms: []

```

<ruby> 同义词集 <rt> synset </rt></ruby>和<ruby> 词元 <rt> lemma </rt></ruby>在词网里是按照树状结构组织起来的,下面的代码会给出直观的展现:

```

def hypernyms(synset):

return synset.hypernyms()

synsets = wordnet.synsets('soccer')

for synset in synsets:

print(synset.name() + " tree:")

pprint(synset.tree(rel=hypernyms))

print()

```

```

code.n.01 tree:

[Synset('code.n.01'),

[Synset('written_communication.n.01'),

...

code.n.02 tree:

[Synset('code.n.02'),

[Synset('coding_system.n.01'),

...

code.n.03 tree:

[Synset('code.n.03'),

...

code.v.01 tree:

[Synset('code.v.01'),

[Synset('tag.v.01'),

...

code.v.02 tree:

[Synset('code.v.02'),

[Synset('encode.v.01'),

...

```

词网并没有涵盖所有的单词和其信息(现今英语有约 17,0000 个单词,最新版的 词网 涵盖了约 15,5000 个),但它开了个好头。掌握了“词网”的各个概念后,如果你觉得它词汇少,不能满足你的需要,可以转而使用其他工具。或者,你也可以打造自己的“词网”!

#### 自主尝试

使用 Python 库,下载维基百科的 “[open source](https://en.wikipedia.org/wiki/Open_source)” 页面,并列出该页面所有单词的<ruby> 同义词集 <rt> synset </rt></ruby>和<ruby> 词元 <rt> lemma </rt></ruby>。

### 相似度比较

相似度比较的目的是识别出两篇文本的相似度,在搜索引擎、聊天机器人等方面有很多应用。

比如,相似度比较可以识别 `football` 和 `soccer` 是否有相似性。

```

syn1 = wordnet.synsets('football')

syn2 = wordnet.synsets('soccer')

# 一个单词可能有多个 同义词集,需要把 word1 的每个同义词集和 word2 的每个同义词集分别比较

for s1 in syn1:

for s2 in syn2:

print("Path similarity of: ")

print(s1, '(', s1.pos(), ')', '[', s1.definition(), ']')

print(s2, '(', s2.pos(), ')', '[', s2.definition(), ']')

print(" is", s1.path_similarity(s2))

print()

```

```

Path similarity of:

Synset('football.n.01') ( n ) [ any of various games played with a ball (round or oval) in which two teams try to kick or carry or propel the ball into each other's goal ]

Synset('soccer.n.01') ( n ) [ a football game in which two teams of 11 players try to kick or head a ball into the opponents' goal ]

is 0.5

Path similarity of:

Synset('football.n.02') ( n ) [ the inflated oblong ball used in playing American football ]

Synset('soccer.n.01') ( n ) [ a football game in which two teams of 11 players try to kick or head a ball into the opponents' goal ]

is 0.05

```

两个词各个同义词集之间<ruby> 路径相似度 <rt> path similarity </rt></ruby>最大的是 0.5,表明它们关联性很大([<ruby> 路径相似度 <rt> path similarity </rt></ruby>](https://www.nltk.org/howto/wordnet.html)指两个词的意义在<ruby> 上下义关系的词汇分类结构 <rt> hypernym/hypnoym taxonomy </rt></ruby>中的最短距离)。

那么 `code` 和 `bug` 呢?这两个计算机领域的词的相似度是:

```

Path similarity of:

Synset('code.n.01') ( n ) [ a set of rules or principles or laws (especially written ones) ]

Synset('bug.n.02') ( n ) [ a fault or defect in a computer program, system, or machine ]

is 0.1111111111111111

...

Path similarity of:

Synset('code.n.02') ( n ) [ a coding system used for transmitting messages requiring brevity or secrecy ]

Synset('bug.n.02') ( n ) [ a fault or defect in a computer program, system, or machine ]

is 0.09090909090909091

...

Path similarity of:

Synset('code.n.03') ( n ) [ (computer science) the symbolic arrangement of data or instructions in a computer program or the set of such instructions ]

Synset('bug.n.02') ( n ) [ a fault or defect in a computer program, system, or machine ]

is 0.09090909090909091

```

这些是这两个词各同义词集之间<ruby> 路径相似度 <rt> path similarity </rt></ruby>的最大值,这些值表明两个词是有关联性的。

NLTK 提供多种<ruby> 相似度计分器 <rt> similarity scorers </rt></ruby>,比如:

* path\_similarity

* lch\_similarity

* wup\_similarity

* res\_similarity

* jcn\_similarity

* lin\_similarity

要进一步了解这些<ruby> 相似度计分器 <rt> similarity scorers </rt></ruby>,请查看 [WordNet Interface](https://www.nltk.org/howto/wordnet.html) 的 Similarity 部分。

#### 自主尝试