id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

13,259 | 用 Bash 读写文件 | https://opensource.com/article/21/3/input-output-bash | 2021-04-01T22:37:26 | [

"Bash",

"读写",

"文件"

] | https://linux.cn/article-13259-1.html |

>

> 学习 Bash 读取和写入数据的不同方式,以及何时使用每种方法。

>

>

>

当你使用 Bash 编写脚本时,有时你需要从一个文件中读取数据或向一个文件写入数据。有时文件可能包含配置选项,而另一些时候这个文件是你的用户用你的应用创建的数据。每种语言处理这个任务的方式都有些不同,本文将演示如何使用 Bash 和其他 [POSIX](https://opensource.com/article/19/7/what-posix-richard-stallman-explains) shell 处理数据文件。

### 安装 Bash

如果你在使用 Linux,你可能已经有了 Bash。如果没有,你可以在你的软件仓库里找到它。

在 macOS 上,你可以使用默认终端,Bash 或 [Zsh](https://opensource.com/article/19/9/getting-started-zsh),这取决于你运行的 macOS 版本。

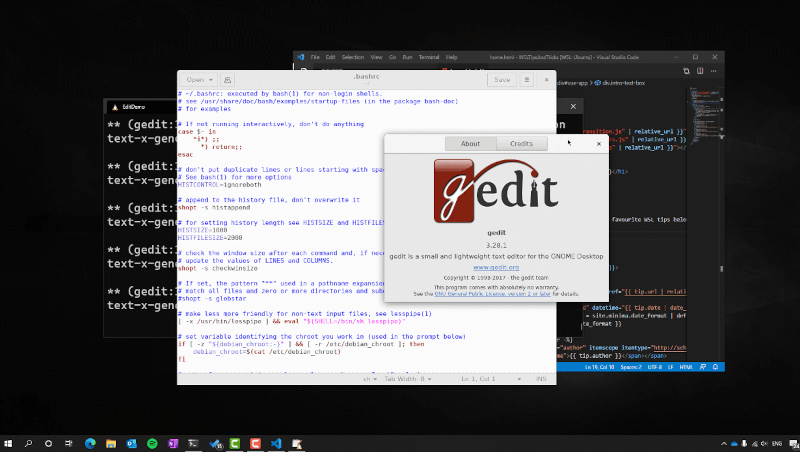

在 Windows 上,有几种方法可以体验 Bash,包括微软官方支持的 [Windows Subsystem for Linux](https://opensource.com/article/19/7/ways-get-started-linux#wsl)(WSL)。

安装 Bash 后,打开你最喜欢的文本编辑器并准备开始。

### 使用 Bash 读取文件

除了是 [shell](https://www.redhat.com/sysadmin/terminals-shells-consoles) 之外,Bash 还是一种脚本语言。有几种方法可以从 Bash 中读取数据。你可以创建一种数据流并解析输出, 或者你可以将数据加载到内存中。这两种方法都是有效的获取信息的方法,但每种方法都有相当具体的用例。

#### 在 Bash 中援引文件

当你在 Bash 中 “<ruby> 援引 <rt> source </rt></ruby>” 一个文件时,你会让 Bash 读取文件的内容,期望它包含有效的数据,Bash 可以将这些数据放入它建立的数据模型中。你不会想要从旧文件中援引数据,但你可以使用这种方法来读取配置文件和函数。

(LCTT 译注:在 Bash 中,可以通过 `source` 或 `.` 命令来将一个文件读入,这个行为称为 “sourcing”,英文原意为“一次性(试)采购”、“寻找供应商”、“获得”等,考虑到 Bash 的语境和发音,我建议可以翻译为“援引”,或有不当,供大家讨论参考 —— wxy)

例如,创建一个名为 `example.sh` 的文件,并输入以下内容:

```

#!/bin/sh

greet opensource.com

echo "The meaning of life is $var"

```

运行这段代码,看见失败了:

```

$ bash ./example.sh

./example.sh: line 3: greet: command not found

The meaning of life is

```

Bash 没有一个叫 `greet` 的命令,所以无法执行那一行,也没有一个叫 `var` 的变量记录,所以文件没有意义。为了解决这个问题,建立一个名为 `include.sh` 的文件:

```

greet() {

echo "Hello ${1}"

}

var=42

```

修改你的 `example.sh` 脚本,加入一个 `source` 命令:

```

#!/bin/sh

source include.sh

greet opensource.com

echo "The meaning of life is $var"

```

运行脚本,可以看到工作了:

```

$ bash ./example.sh

Hello opensource.com

The meaning of life is 42

```

`greet` 命令被带入你的 shell 环境,因为它被定义在 `include.sh` 文件中,它甚至可以识别参数(本例中的 `opensource.com`)。变量 `var` 也被设置和导入。

#### 在 Bash 中解析文件

另一种让数据“进入” Bash 的方法是将其解析为数据流。有很多方法可以做到这一点. 你可以使用 `grep` 或 `cat` 或任何可以获取数据并管道输出到标准输出的命令。另外,你可以使用 Bash 内置的东西:重定向。重定向本身并不是很有用,所以在这个例子中,我也使用内置的 `echo` 命令来打印重定向的结果:

```

#!/bin/sh

echo $( < include.sh )

```

将其保存为 `stream.sh` 并运行它来查看结果:

```

$ bash ./stream.sh

greet() { echo "Hello ${1}" } var=42

$

```

对于 `include.sh` 文件中的每一行,Bash 都会将该行打印(或 `echo`)到你的终端。先用管道把它传送到一个合适的解析器是用 Bash 读取数据的常用方法。例如, 假设 `include.sh` 是一个配置文件, 它的键和值对用一个等号(`=`)分开. 你可以用 `awk` 甚至 `cut` 来获取值:

```

#!/bin/sh

myVar=`grep var include.sh | cut -d'=' -f2`

echo $myVar

```

试着运行这个脚本:

```

$ bash ./stream.sh

42

```

### 用 Bash 将数据写入文件

无论你是要存储用户用你的应用创建的数据,还是仅仅是关于用户在应用中做了什么的元数据(例如,游戏保存或最近播放的歌曲),都有很多很好的理由来存储数据供以后使用。在 Bash 中,你可以使用常见的 shell 重定向将数据保存到文件中。

例如, 要创建一个包含输出的新文件, 使用一个重定向符号:

```

#!/bin/sh

TZ=UTC

date > date.txt

```

运行脚本几次:

```

$ bash ./date.sh

$ cat date.txt

Tue Feb 23 22:25:06 UTC 2021

$ bash ./date.sh

$ cat date.txt

Tue Feb 23 22:25:12 UTC 2021

```

要追加数据,使用两个重定向符号:

```

#!/bin/sh

TZ=UTC

date >> date.txt

```

运行脚本几次:

```

$ bash ./date.sh

$ bash ./date.sh

$ bash ./date.sh

$ cat date.txt

Tue Feb 23 22:25:12 UTC 2021

Tue Feb 23 22:25:17 UTC 2021

Tue Feb 23 22:25:19 UTC 2021

Tue Feb 23 22:25:22 UTC 2021

```

### Bash 轻松编程

Bash 的优势在于简单易学,因为只需要一些基本的概念,你就可以构建复杂的程序。完整的文档请参考 [GNU.org](http://GNU.org) 上的 [优秀的 Bash 文档](http://gnu.org/software/bash)。

---

via: <https://opensource.com/article/21/3/input-output-bash>

作者:[Seth Kenlon](https://opensource.com/users/seth) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | When you're scripting with Bash, sometimes you need to read data from or write data to a file. Sometimes a file may contain configuration options, and other times the file is the data your user is creating with your application. Every language handles this task a little differently, and this article demonstrates how to handle data files with Bash and other [POSIX](https://opensource.com/article/19/7/what-posix-richard-stallman-explains) shells.

## Install Bash

If you're on Linux, you probably already have Bash. If not, you can find it in your software repository.

On macOS, you can use the default terminal, either Bash or [Zsh](https://opensource.com/article/19/9/getting-started-zsh), depending on the macOS version you're running.

On Windows, there are several ways to experience Bash, including Microsoft's officially supported [Windows Subsystem for Linux](https://opensource.com/article/19/7/ways-get-started-linux#wsl) (WSL).

Once you have Bash installed, open your favorite text editor and get ready to code.

## Reading a file with Bash

In addition to being [a shell](https://www.redhat.com/sysadmin/terminals-shells-consoles), Bash is a scripting language. There are several ways to read data from Bash: You can create a sort of data stream and parse the output, or you can load data into memory. Both are valid methods of ingesting information, but each has pretty specific use cases.

### Source a file in Bash

When you "source" a file in Bash, you cause Bash to read the contents of a file with the expectation that it contains valid data that Bash can fit into its established data model. You won't source data from any old file, but you can use this method to read configuration files and functions.

For instance, create a file called `example.sh`

and enter this into it:

```

#!/bin/sh

greet opensource.com

echo "The meaning of life is $var"

```

Run the code to see it fail:

```

$ bash ./example.sh

./example.sh: line 3: greet: command not found

The meaning of life is

```

Bash doesn't have a command called `greet`

, so it could not execute that line, and it has no record of a variable called `var`

, so there is no known meaning of life. To fix this problem, create a file called `include.sh`

:

```

greet() {

echo "Hello ${1}"

}

var=42

```

Revise your `example.sh`

script to include a `source`

command:

```

#!/bin/sh

source include.sh

greet opensource.com

echo "The meaning of life is $var"

```

Run the script to see it work:

```

$ bash ./example.sh

Hello opensource.com

The meaning of life is 42

```

The `greet`

command is brought into your shell environment because it is defined in the `include.sh`

file, and it even recognizes the argument (`opensource.com`

in this example). The variable `var`

is set and imported, too.

### Parse a file in Bash

The other way to get data "into" Bash is to parse it as a data stream. There are many ways to do this. You can use `grep`

or `cat`

or any command that takes data and pipes it to stdout. Alternately, you can use what is built into Bash: the redirect. Redirection on its own isn't very useful, so in this example, I also use the built-in `echo`

command to print the results of the redirect:

```

#!/bin/sh

echo $( < include.sh )

```

Save this as `stream.sh`

and run it to see the results:

```

$ bash ./stream.sh

greet() { echo "Hello ${1}" } var=42

$

```

For each line in the `include.sh`

file, Bash prints (or echoes) the line to your terminal. Piping it first to an appropriate parser is a common way to read data with Bash. For instance, assume for a moment that `include.sh`

is a configuration file with key and value pairs separated by an equal (`=`

) sign. You could obtain values with `awk`

or even `cut`

:

```

#!/bin/sh

myVar=`grep var include.sh | cut -d'=' -f2`

echo $myVar

```

Try running the script:

```

$ bash ./stream.sh

42

```

## Writing data to a file with Bash

Whether you're storing data your user created with your application or just metadata about what the user did in an application (for instance, game saves or recent songs played), there are many good reasons to store data for later use. In Bash, you can save data to files using common shell redirection.

For instance, to create a new file containing output, use a single redirect token:

```

#!/bin/sh

TZ=UTC

date > date.txt

```

Run the script a few times:

```

$ bash ./date.sh

$ cat date.txt

Tue Feb 23 22:25:06 UTC 2021

$ bash ./date.sh

$ cat date.txt

Tue Feb 23 22:25:12 UTC 2021

```

To append data, use the double redirect tokens:

```

#!/bin/sh

TZ=UTC

date >> date.txt

```

Run the script a few times:

```

$ bash ./date.sh

$ bash ./date.sh

$ bash ./date.sh

$ cat date.txt

Tue Feb 23 22:25:12 UTC 2021

Tue Feb 23 22:25:17 UTC 2021

Tue Feb 23 22:25:19 UTC 2021

Tue Feb 23 22:25:22 UTC 2021

```

## Bash for easy programming

Bash excels at being easy to learn because, with just a few basic concepts, you can build complex programs. For the full documentation, refer to the [excellent Bash documentation](http://gnu.org/software/bash) on GNU.org.

## Comments are closed. |

13,261 | 为什么需要关心服务网格 | https://opensource.com/article/21/3/service-mesh | 2021-04-02T20:14:59 | [

"服务网格",

"微服务",

"云原生"

] | https://linux.cn/article-13261-1.html |

>

> 在微服务环境中,服务网格为开发和运营提供了好处。

>

>

>

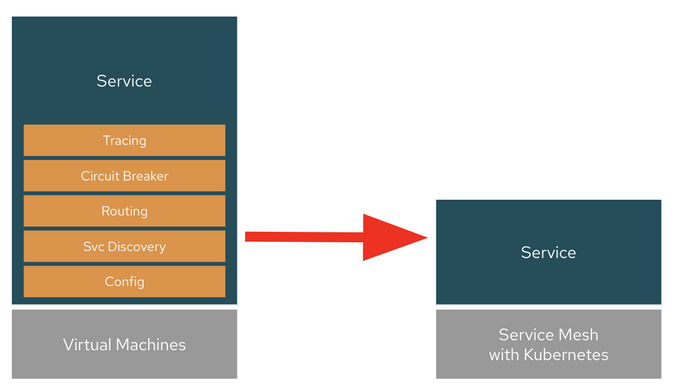

很多开发者不知道为什么要关心<ruby> <a href="https://www.redhat.com/en/topics/microservices/what-is-a-service-mesh"> 服务网格 </a> <rt> Service Mesh </rt></ruby>。这是我在开发者见面会、会议和实践研讨会上关于云原生架构的微服务开发的演讲中经常被问到的问题。我的回答总是一样的:“只要你想简化你的微服务架构,它就应该运行在 Kubernetes 上。”

关于简化,你可能也想知道,为什么分布式微服务必须设计得如此复杂才能在 Kubernetes 集群上运行。正如本文所解释的那样,许多开发人员通过服务网格解决了微服务架构的复杂性,并通过在生产中采用服务网格获得了额外的好处。

### 什么是服务网格?

服务网格是一个专门的基础设施层,用于提供一个透明的、独立于代码的 (polyglot) 方式,以消除应用代码中的非功能性微服务能力。

### 为什么服务网格对开发者很重要

当开发人员将微服务部署到云时,无论业务功能如何,他们都必须解决非功能性微服务功能,以避免级联故障。这些功能通常可以体现在服务发现、日志、监控、<ruby> 韧性 <rt> resiliency </rt></ruby>、认证、<ruby> 弹性 <rt> elasticity </rt></ruby>和跟踪等方面。开发人员必须花费更多的时间将它们添加到每个微服务中,而不是开发实际的业务逻辑,这使得微服务变得沉重而复杂。

随着企业加速向云计算转移,服务网格 可以提高开发人员的生产力。Kubernetes 加服务网格平台不需要让服务负责处理这些复杂的问题,也不需要在每个服务中添加更多的代码来处理云原生的问题,而是负责向运行在该平台上的任何应用(现有的或新的,用任何编程语言或框架)提供这些服务。那么微服务就可以轻量级,专注于其业务逻辑,而不是云原生的复杂性。

### 为什么服务网格对运维很重要

这并没有回答为什么运维团队需要关心在 Kubernetes 上运行云原生微服务的服务网格。因为运维团队必须确保在 Kubernetes 环境上的大型混合云和多云上部署新的云原生应用的强大安全性、合规性和可观察性。

服务网格由一个用于管理代理路由流量的控制平面和一个用于注入<ruby> 边车 <rt> Sidecar </rt></ruby>的数据平面组成。边车允许运维团队做一些比如添加第三方安全工具和追踪所有服务通信中的流量,以避免安全漏洞或合规问题。服务网格还可以通过在图形面板上可视化地跟踪指标来提高观察能力。

### 如何开始使用服务网格

对于开发者和运维人员,以及从应用开发到平台运维来说,服务网格可以更有效地管理云原生功能。

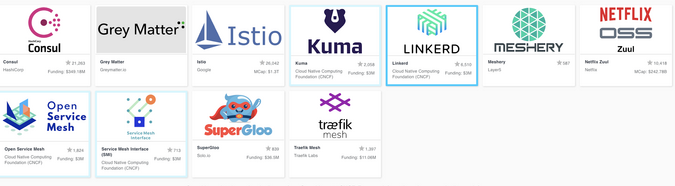

你可能想知道从哪里开始采用服务网格来配合你的微服务应用和架构。幸运的是,有许多开源的服务网格项目。许多云服务提供商也在他们的 Kubernetes 平台中提供 服务网格。

你可以在 [CNCF Service Mesh Landscape](https://landscape.cncf.io/card-mode?category=service-mesh&grouping=category) 页面中找到最受欢迎的服务网格项目和服务的链接。

---

via: <https://opensource.com/article/21/3/service-mesh>

作者:[Daniel Oh](https://opensource.com/users/daniel-oh) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Many developers wonder why they should care about [service mesh](https://www.redhat.com/en/topics/microservices/what-is-a-service-mesh). It's a question I'm asked often in my presentations at developer meetups, conferences, and hands-on workshops about microservices development with cloud-native architecture. My answer is always the same: "As long as you want to simplify your microservices architecture, it should be running on Kubernetes."

Concerning simplification, you probably also wonder why distributed microservices must be designed so complexly for running on Kubernetes clusters. As this article explains, many developers solve the microservices architecture's complexity with service mesh and gain additional benefits by adopting service mesh in production.

## What is a service mesh?

A service mesh is a dedicated infrastructure layer for providing a transparent and code-independent (polyglot) way to eliminate nonfunctional microservices capabilities from the application code.

(Daniel Oh, CC BY-SA 4.0)

## Why service mesh matters to developers

When developers deploy microservices to the cloud, they have to address nonfunctional microservices capabilities to avoid cascading failures, regardless of business functionalities. Those capabilities typically can be represented in service discovery, logging, monitoring, resiliency, authentication, elasticity, and tracing. Developers must spend more time adding them to each microservice rather than developing actual business logic, which makes the microservices heavy and complex.

As organizations accelerate their move to the cloud, the service mesh can increase developer productivity. Instead of making the services responsible for dealing with those complexities and adding more code into each service to deal with cloud-native concerns, the Kubernetes + service mesh platform is responsible for providing those services to any application (existing or new, in any programming language or framework) running on the platform. Then the microservices can be lightweight and focus on their business logic rather than cloud-native complexities.

## Why service mesh matters to ops

This doesn't answer why ops teams need to care about the service mesh for operating cloud-native microservices on Kubernetes. It's because the ops teams have to ensure robust security, compliance, and observability for spreading new cloud-native applications across large hybrid and multi clouds on Kubernetes environments.

The service mesh is composed of a control plane for managing proxies to route traffic and a data plane for injecting sidecars. The sidecars allow the ops teams to do things like adding third-party security tools and tracing traffic in all service communications to avoid security breaches or compliance issues. The service mesh also improves observation capabilities by visualizing tracing metrics on graphical dashboards.

## How to get started with service mesh

Service mesh manages cloud-native capabilities more efficiently—for developers and operators and from application development to platform operation.

You might want to know where to get started adopting service mesh in alignment with your microservices applications and architecture. Luckily, there are many open source service mesh projects. Many cloud service providers also offer service mesh capabilities within their Kubernetes platforms.

(Daniel Oh, CC BY-SA 4.0)

You can find links to the most popular service mesh projects and services on the [CNCF Service Mesh Landscape](https://landscape.cncf.io/card-mode?category=service-mesh&grouping=category) webpage.

## Comments are closed. |

13,262 | 如何从硬件到防火墙建立一个家庭实验室 | https://opensource.com/article/19/3/home-lab | 2021-04-02T21:52:30 | [

"家庭实验室"

] | https://linux.cn/article-13262-1.html |

>

> 了解一下用于构建自己的家庭实验室的硬件和软件方案。

>

>

>

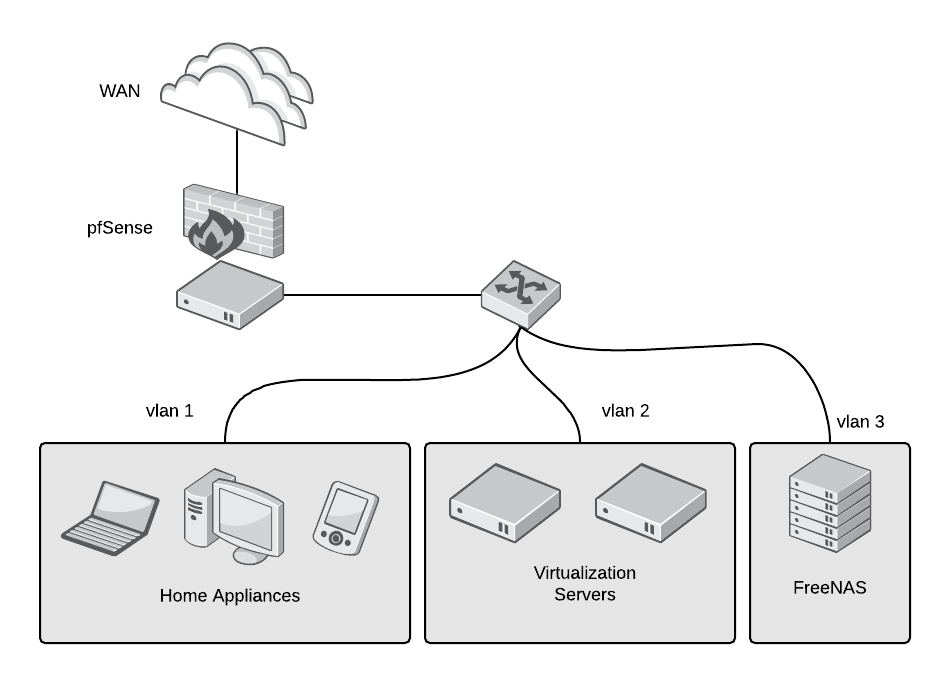

你有想过创建一个家庭实验室吗?或许你想尝试不同的技术,构建开发环境、亦或是建立自己的私有云。拥有一个家庭实验室的理由很多,本教程旨在使入门变得更容易。

规划家庭实验室时,需要考虑三方面:硬件、软件和维护。我们将在这里查看前两方面,并在以后的文章中讲述如何节省维护计算机实验室的时间。

### 硬件

在考虑硬件需求时,首先要考虑如何使用实验室以及你的预算、噪声、空间和电力使用情况。

如果购买新硬件过于昂贵,请搜索当地的大学、广告以及诸如 eBay 或 Craigslist 之类的网站,能获取二手服务器的地方。它们通常很便宜,并且服务器级的硬件可以使用很多年。你将需要三类硬件:虚拟化服务器、存储设备和路由器/防火墙。

#### 虚拟化服务器

一个虚拟化服务器允许你去运行多个共享物理机资源的虚拟机,同时最大化利用和隔离资源。如果你弄坏了一台虚拟机,无需重建整个服务器,只需虚拟一个好了。如果你想进行测试或尝试某些操作而不损坏整个系统,仅需要新建一个虚拟机来运行即可。

在虚拟服务器中,需考虑两个最重要的因素是 CPU 的核心数及其运行速度以及内存容量。如果没有足够的资源够全部虚拟机共享,那么它们将被过度分配并试着获取其他虚拟机的 CPU 的周期和内存。

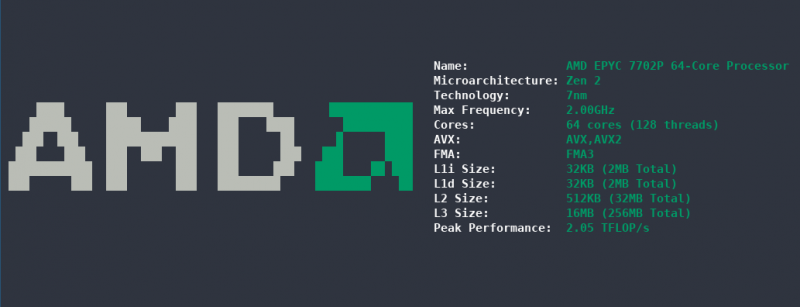

因此,考虑一个多核 CPU 的平台。你要确保 CPU 支持虚拟化指令(因特尔的 VT-x 指令集和 AMD 的 AMD-V 指令集)。能够处理虚拟化的优质的消费级处理器有因特尔的 i5 或 i7 和 AMD 的 Ryzen 处理器。如果你考虑服务器级的硬件,那么因特尔的志强系列和 AMD 的 EPYC 都是不错的选择。内存可能很昂贵,尤其是最近的 DDR4 内存。当我们估计所需多少内存时,请为主机操作系统的内存至少分配 2 GB 的空间。

如果你担心电费或噪声,则诸如因特尔 NUC 设备之类的解决方案虽然外形小巧、功耗低、噪音低,但是却以牺牲可扩展性为代价。

#### NAS

如果希望装有硬盘驱动器的计算机存储你的所有个人数据,电影,图片等,并为虚拟化服务器提供存储,则需要<ruby> 网络附加存储 <rt> Network-attached storage </rt></ruby>(NAS)。

在大多数情况下,你不太可能需要一颗强力的 CPU。实际上,许多商业 NAS 的解决方案使用低功耗的 ARM CPU。支持多个 SATA 硬盘的主板是必须的。如果你的主板没有足够的端口,请使用<ruby> 主机总线适配器 <rt> host bus adapter </rt> <ruby> (HBA)SAS 控制器添加额外的端口。 </ruby></ruby>

网络性能对于 NAS 来说是至关重要的,因此最好选择<ruby> 千兆 <rt> gigabit </rt></ruby>网络(或更快网络)。

内存需求根据你的文件系统而有所不同。ZFS 是 NAS 上最受欢迎的文件系统之一,你需要更多内存才能使用诸如缓存或重复数据删除之类的功能。<ruby> 纠错码 <rt> Error-correcting code </rt></ruby>(ECC)的内存是防止数据损坏的最佳选择(但在购买前请确保你的主板支持)。最后但同样重要的,不要忘记使用<ruby> 不间断电源 <rt> uninterruptible power supply </rt></ruby>(UPS),因为断电可能会使得数据出错。

#### 防火墙和路由器

你是否曾意识到,廉价的路由器/防火墙通常是保护你的家庭网络不受外部环境影响的主要部分?这些路由器很少及时收到安全更新(如果有的话)。现在害怕了吗?好吧,[确实](https://opensource.com/article/18/5/how-insecure-your-router)!

通常,你不需要一颗强大的 CPU 或是大量内存来构建你自己的路由器/防火墙,除非你需要高吞吐率或是执行 CPU 密集型任务,像是虚拟私有网络服务器或是流量过滤。在这种情况下,你将需要一个支持 AES-NI 的多核 CPU。

你可能想要至少 2 个千兆或更快的<ruby> 以太网卡 <rt> Ethernet network interface cards </rt></ruby>(NIC),这不是必需的,但我推荐使用一个管理型交换机来连接你自己的装配的路由器,以创建 VLAN 来进一步隔离和保护你的网络。

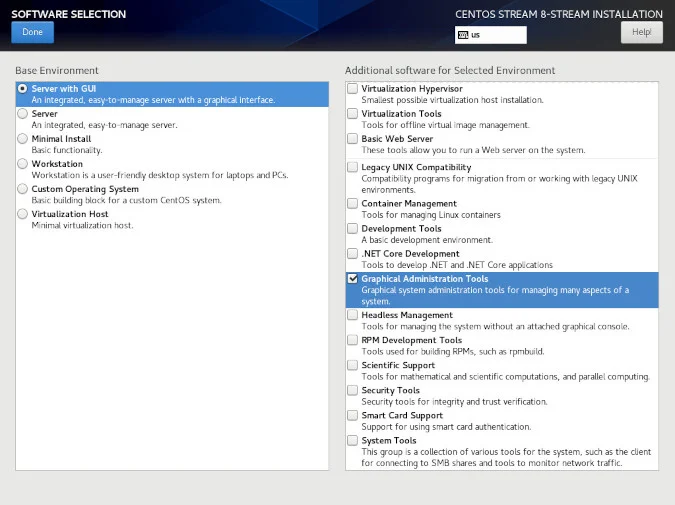

### 软件

在选择完你的虚拟化服务器、NAS 和防火墙/路由器后,下一步是探索不同的操作系统和软件,以最大程度地发挥其作用。尽管你可以使用 CentOS、Debian或 Ubuntu 之类的常规 Linux 发行版,但是与以下软件相比,它们通常花费更多的时间进行配置和管理。

#### 虚拟化软件

[KVM](https://www.linux-kvm.org/page/Main_Page)(<ruby> 基于内核的虚拟机 <rt> Kernel-based Virtual Machine </rt></ruby>)使你可以将 Linux 变成虚拟机监控程序,以便可以在同一台机器中运行多个虚拟机。最好的是,KVM 作为 Linux 的一部分,它是许多企业和家庭用户的首选。如果你愿意,可以安装 [libvirt](https://libvirt.org/) 和 [virt-manager](https://virt-manager.org/) 来管理你的虚拟化平台。

[Proxmox VE](https://www.proxmox.com/en/proxmox-ve) 是一个强大的企业级解决方案,并且是一个完全开源的虚拟化和容器平台。它基于 Debian,使用 KVM 作为其虚拟机管理程序,并使用 LXC 作为容器。Proxmox 提供了强大的网页界面、API,并且可以扩展到许多群集节点,这很有用,因为你永远不知道何时实验室容量不足。

[oVirt](https://ovirt.org/)(RHV)是另一种使用 KVM 作为虚拟机管理程序的企业级解决方案。不要因为它是企业级的,就意味着你不能在家中使用它。oVirt 提供了强大的网页界面和 API,并且可以处理数百个节点(如果你运行那么多服务器,我可不想成为你的邻居!)。oVirt 用于家庭实验室的潜在问题是它需要一套最低限度的节点:你将需要一个外部存储(例如 NAS)和至少两个其他虚拟化节点(你可以只在一个节点上运行,但你会遇到环境维护方面的问题)。

#### 网络附加存储软件

[FreeNAS](https://freenas.org/) 是最受欢迎的开源 NAS 发行版,它基于稳定的 FreeBSD 操作系统。它最强大的功能之一是支持 ZFS 文件系统,该文件系统提供了数据完整性检查、快照、复制和多个级别的冗余(镜像、条带化镜像和条带化)。最重要的是,所有功能都通过功能强大且易于使用的网页界面进行管理。在安装 FreeNAS 之前,请检查硬件是否支持,因为它不如基于 Linux 的发行版那么广泛。

另一个流行的替代方法是基于 Linux 的 [OpenMediaVault](https://www.openmediavault.org/)。它的主要功能之一是模块化,带有可扩展和添加特性的插件。它包括的功能包括基于网页管理界面,CIFS、SFTP、NFS、iSCSI 等协议,以及卷管理,包括软件 RAID、资源配额,<ruby> 访问控制列表 <rt> access control lists </rt></ruby>(ACL)和共享管理。由于它是基于 Linux 的,因此其具有广泛的硬件支持。

#### 防火墙/路由器软件

[pfSense](https://www.pfsense.org/) 是基于 FreeBSD 的开源企业级路由器和防火墙发行版。它可以直接安装在服务器上,甚至可以安装在虚拟机中(以管理虚拟或物理网络并节省空间)。它有许多功能,可以使用软件包进行扩展。尽管它也有命令行访问权限,但也可以完全使用网页界面对其进行管理。它具有你所希望路由器和防火墙提供的所有功能,例如 DHCP 和 DNS,以及更高级的功能,例如入侵检测(IDS)和入侵防御(IPS)系统。你可以侦听多个不同接口或使用 VLAN 的网络,并且只需鼠标点击几下即可创建安全的 VPN 服务器。pfSense 使用 pf,这是一种有状态的数据包筛选器,它是为 OpenBSD 操作系统开发的,使用类似 IPFilter 的语法。许多公司和组织都有使用 pfSense。

---

考虑到所有的信息,是时候动手开始建立你的实验室了。在之后的文章中,我将介绍运行家庭实验室的第三方面:自动化进行部署和维护。

---

via: <https://opensource.com/article/19/3/home-lab>

作者:[Michael Zamot (Red Hat)](https://opensource.com/users/mzamot) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wyxplus](https://github.com/wyxplus) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Do you want to create a homelab? Maybe you want to experiment with different technologies, create development environments, or have your own private cloud. There are many reasons to have a homelab, and this guide aims to make it easier to get started.

There are three categories to consider when planning a home lab: hardware, software, and maintenance. We'll look at the first two categories here and save maintaining your computer lab for a future article.

## Hardware

When thinking about your hardware needs, first consider how you plan to use your lab as well as your budget, noise, space, and power usage.

If buying new hardware is too expensive, search local universities, ads, and websites like eBay or Craigslist for recycled servers. They are usually inexpensive, and server-grade hardware is built to last many years. You'll need three types of hardware: a virtualization server, storage, and a router/firewall.

### Virtualization servers

A virtualization server allows you to run several virtual machines that share the physical box's resources while maximizing and isolating resources. If you break one virtual machine, you won't have to rebuild the entire server, just the virtual one. If you want to do a test or try something without the risk of breaking your entire system, just spin up a new virtual machine and you're ready to go.

The two most important factors to consider in a virtualization server are the number and speed of its CPU cores and its memory. If there are not enough resources to share among all the virtual machines, they'll be overallocated and try to steal each other's CPU cycles and memory.

So, consider a CPU platform with multiple cores. You want to ensure the CPU supports virtualization instructions (VT-x for Intel and AMD-V for AMD). Examples of good consumer-grade processors that can handle virtualization are Intel i5 or i7 and AMD Ryzen. If you are considering server-grade hardware, the Xeon class for Intel and EPYC for AMD are good options. Memory can be expensive, especially the latest DDR4 SDRAM. When estimating memory requirements, factor at least 2GB for the host operating system's memory consumption.

If your electricity bill or noise is a concern, solutions like Intel's NUC devices provide a small form factor, low power usage, and reduced noise, but at the expense of expandability.

### Network-attached storage (NAS)

If you want a machine loaded with hard drives to store all your personal data, movies, pictures, etc. and provide storage for the virtualization server, network-attached storage (NAS) is what you want.

In most cases, you won't need a powerful CPU; in fact, many commercial NAS solutions use low-powered ARM CPUs. A motherboard that supports multiple SATA disks is a must. If your motherboard doesn't have enough ports, use a host bus adapter (HBA) SAS controller to add extras.

Network performance is critical for a NAS, so select a gigabit network interface (or better).

Memory requirements will differ based on your filesystem. ZFS is one of the most popular filesystems for NAS, and you'll need more memory to use features such as caching or deduplication. Error-correcting code (ECC) memory is your best bet to protect data from corruption (but make sure your motherboard supports it before you buy). Last, but not least, don't forget an uninterruptible power supply (UPS), because losing power can cause data corruption.

### Firewall and router

Have you ever realized that a cheap router/firewall is usually the main thing protecting your home network from the exterior world? These routers rarely receive timely security updates, if they receive any at all. Scared now? Well, [you should be](https://opensource.com/article/18/5/how-insecure-your-router)!

You usually don't need a powerful CPU or a great deal of memory to build your own router/firewall, unless you are handling a huge throughput or want to do CPU-intensive tasks, like a VPN server or traffic filtering. In such cases, you'll need a multicore CPU with AES-NI support.

You may want to get at least two 1-gigabit or better Ethernet network interface cards (NICs), also, not needed, but recommended, a managed switch to connect your DIY-router to create VLANs to further isolate and secure your network.

## Software

After you've selected your virtualization server, NAS, and firewall/router, the next step is exploring the different operating systems and software to maximize their benefits. While you could use a regular Linux distribution like CentOS, Debian, or Ubuntu, they usually take more time to configure and administer than the following options.

### Virtualization software

** KVM** (Kernel-based Virtual Machine) lets you turn Linux into a hypervisor so you can run multiple virtual machines in the same box. The best thing is that KVM is part of Linux, and it is the go-to option for many enterprises and home users. If you are comfortable, you can install

**and**

[libvirt](https://libvirt.org/)**to manage your virtualization platform.**

[virt-manager](https://virt-manager.org/)** Proxmox VE** is a robust, enterprise-grade solution and a full open source virtualization and container platform. It is based on Debian and uses KVM as its hypervisor and LXC for containers. Proxmox offers a powerful web interface, an API, and can scale out to many clustered nodes, which is helpful because you'll never know when you'll run out of capacity in your lab.

** oVirt (RHV)** is another enterprise-grade solution that uses KVM as the hypervisor. Just because it's enterprise doesn't mean you can't use it at home. oVirt offers a powerful web interface and an API and can handle hundreds of nodes (if you are running that many servers, I don't want to be your neighbor!). The potential problem with oVirt for a home lab is that it requires a minimum set of nodes: You'll need one external storage, such as a NAS, and at least two additional virtualization nodes (you can run it just on one, but you'll run into problems in maintenance of your environment).

### NAS software

** FreeNAS** is the most popular open source NAS distribution, and it's based on the rock-solid FreeBSD operating system. One of its most robust features is its use of the ZFS filesystem, which provides data-integrity checking, snapshots, replication, and multiple levels of redundancy (mirroring, striped mirrors, and striping). On top of that, everything is managed from the powerful and easy-to-use web interface. Before installing FreeNAS, check its hardware support, as it is not as wide as Linux-based distributions.

Another popular alternative is the Linux-based ** OpenMediaVault**. One of its main features is its modularity, with plugins that extend and add features. Among its included features are a web-based administration interface; protocols like CIFS, SFTP, NFS, iSCSI; and volume management, including software RAID, quotas, access control lists (ACLs), and share management. Because it is Linux-based, it has extensive hardware support.

### Firewall/router software

** pfSense** is an open source, enterprise-grade FreeBSD-based router and firewall distribution. It can be installed directly on a server or even inside a virtual machine (to manage your virtual or physical networks and save space). It has many features and can be expanded using packages. It is managed entirely using the web interface, although it also has command-line access. It has all the features you would expect from a router and firewall, like DHCP and DNS, as well as more advanced features, such as intrusion detection (IDS) and intrusion prevention (IPS) systems. You can create multiple networks listening on different interfaces or using VLANs, and you can create a secure VPN server with a few clicks. pfSense uses pf, a stateful packet filter that was developed for the OpenBSD operating system using a syntax similar to IPFilter. Many companies and organizations use pfSense.

With all this information in mind, it's time for you to get your hands dirty and start building your lab. In a future article, I will get into the third category of running a home lab: using automation to deploy and maintain it.

## 4 Comments |

13,263 | 如何用 C++ 读写文件 | https://opensource.com/article/21/3/ccc-input-output | 2021-04-03T20:40:00 | [

"输入输出"

] | https://linux.cn/article-13263-1.html |

>

> 如果你知道如何在 C++ 中使用输入输出(I/O)流,那么(原则上)你便能够处理任何类型的输入输出设备。

>

>

>

在 C++ 中,对文件的读写可以通过使用输入输出流与流运算符 `>>` 和 `<<` 来进行。当读写文件的时候,这些运算符被应用于代表硬盘驱动器上文件类的实例上。这种基于流的方法有个巨大的优势:从 C++ 的角度,无论你要读取或写入的内容是文件、数据库、控制台,亦或是你通过网络连接的另外一台电脑,这都无关紧要。因此,知道如何使用流运算符来写入文件能够被转用到其他领域。

### 输入输出流类

C++ 标准库提供了 [ios\_base](https://en.cppreference.com/w/cpp/io/ios_base) 类。该类作为所有 I/O 流的基类,例如 [basic\_ofstream](https://en.cppreference.com/w/cpp/io/basic_ofstream) 和 [basic\_ifstream](https://en.cppreference.com/w/cpp/io/basic_ifstream)。本例将使用读/写字符的专用类型 `ifstream` 和 `ofstream`。

* `ofstream`:输出文件流,并且其能通过插入运算符 `<<` 来实现。

* `ifstream`:输入文件流,并且其能通过提取运算符 `>>` 来实现。

该两种类型都是在头文件 `<fstream>` 中所定义。

从 `ios_base` 继承的类在写入时可被视为数据接收器,在从其读取时可被视为数据源,与数据本身完全分离。这种面向对象的方法使 <ruby> <a href="https://en.wikipedia.org/wiki/Separation_of_concerns"> 关注点分离 </a> <rt> separation of concerns </rt></ruby> 和 <ruby> <a href="https://en.wikipedia.org/wiki/Dependency_injection"> 依赖注入 </a> <rt> dependency injection </rt></ruby> 等概念易于实现。

### 一个简单的例子

本例程是非常简单:实例化了一个 `ofstream` 来写入,和实例化一个 `ifstream` 来读取。

```

#include <iostream> // cout, cin, cerr etc...

#include <fstream> // ifstream, ofstream

#include <string>

int main()

{

std::string sFilename = "MyFile.txt";

/******************************************

* *

* WRITING *

* *

******************************************/

std::ofstream fileSink(sFilename); // Creates an output file stream

if (!fileSink) {

std::cerr << "Canot open " << sFilename << std::endl;

exit(-1);

}

/* std::endl will automatically append the correct EOL */

fileSink << "Hello Open Source World!" << std::endl;

/******************************************

* *

* READING *

* *

******************************************/

std::ifstream fileSource(sFilename); // Creates an input file stream

if (!fileSource) {

std::cerr << "Canot open " << sFilename << std::endl;

exit(-1);

}

else {

// Intermediate buffer

std::string buffer;

// By default, the >> operator reads word by workd (till whitespace)

while (fileSource >> buffer)

{

std::cout << buffer << std::endl;

}

}

exit(0);

}

```

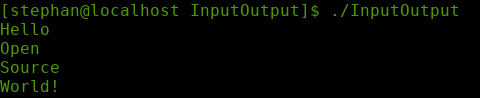

该代码可以在 [GitHub](https://github.com/hANSIc99/cpp_input_output) 上查看。当你编译并且执行它时,你应该能获得以下输出:

这是个简化的、适合初学者的例子。如果你想去使用该代码在你自己的应用中,请注意以下几点:

* 文件流在程序结束的时候自动关闭。如果你想继续执行,那么应该通过调用 `close()` 方法手动关闭。

* 这些文件流类继承自 [basic\_ios](https://en.cppreference.com/w/cpp/io/basic_ios)(在多个层次上),并且重载了 `!` 运算符。这使你可以进行简单的检查是否可以访问该流。在 [cppreference.com](https://en.cppreference.com/w/cpp/io/basic_ios/operator!) 上,你可以找到该检查何时会(或不会)成功的概述,并且可以进一步实现错误处理。

* 默认情况下,`ifstream` 停在空白处并跳过它。要逐行读取直到到达 [EOF](https://en.wikipedia.org/wiki/Newline) ,请使用 `getline(...)` 方法。

* 为了读写二进制文件,请将 `std::ios::binary` 标志传递给构造函数:这样可以防止 [EOL](https://en.wikipedia.org/wiki/Newline) 字符附加到每一行。

### 从系统角度进行写入

写入文件时,数据将写入系统的内存写入缓冲区中。当系统收到系统调用 [sync](https://en.wikipedia.org/wiki/Sync_%28Unix%29) 时,此缓冲区的内容将被写入硬盘。这也是你在不告知系统的情况下,不要卸下 U 盘的原因。通常,守护进程会定期调用 `sync`。为了安全起见,也可以手动调用 `sync()`:

```

#include <unistd.h> // needs to be included

sync();

```

### 总结

在 C++ 中读写文件并不那么复杂。更何况,如果你知道如何处理输入输出流,(原则上)那么你也知道如何处理任何类型的输入输出设备。对于各种输入输出设备的库能让你更容易地使用流运算符。这就是为什么知道输入输出流的流程会对你有所助益的原因。

---

via: <https://opensource.com/article/21/3/ccc-input-output>

作者:[Stephan Avenwedde](https://opensource.com/users/hansic99) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wyxplus](https://github.com/wyxplus) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | In C++, reading and writing to files can be done by using I/O streams in conjunction with the stream operators `>>`

and `<<`

. When reading or writing to files, those operators are applied to an instance of a class representing a file on the hard drive. This stream-based approach has a huge advantage: From a C ++ perspective, it doesn't matter what you are reading or writing to, whether it's a file, a database, the console, or another PC you are connected to over the network. Therefore, knowing how to write files using stream operators can be transferred to other areas.

## I/O stream classes

The C++ standard library provides the class [ios_base](https://en.cppreference.com/w/cpp/io/ios_base). This class acts as the base class for all I/O stream-compatible classes, such as [basic_ofstream](https://en.cppreference.com/w/cpp/io/basic_ofstream) and [basic_ifstream](https://en.cppreference.com/w/cpp/io/basic_ifstream). This example will use the specialized types for reading/writing characters, `ifstream`

and `ofstream`

.

`ofstream`

means*output file stream*, and it can be accessed with the insertion operator,`<<`

.`ifstream`

means*input file stream*, and it can be accessed with the extraction operator,`>>`

.

Both types are defined inside the header `<fstream>`

.

A class that inherits from `ios_base`

can be thought of as a data sink when writing to it or as a data source when reading from it, completely detached from the data itself. This object-oriented approach makes concepts such as [separation of concerns](https://en.wikipedia.org/wiki/Separation_of_concerns) and [dependency injection](https://en.wikipedia.org/wiki/Dependency_injection) easy to implement.

## A simple example

This example program is quite simple: It creates an `ofstream`

, writes to it, creates an `ifstream`

, and reads from it:

```

#include <iostream> // cout, cin, cerr etc...

#include <fstream> // ifstream, ofstream

#include <string>

int main()

{

std::string sFilename = "MyFile.txt";

/******************************************

* *

* WRITING *

* *

******************************************/

std::ofstream fileSink(sFilename); // Creates an output file stream

if (!fileSink) {

std::cerr << "Canot open " << sFilename << std::endl;

exit(-1);

}

/* std::endl will automatically append the correct EOL */

fileSink << "Hello Open Source World!" << std::endl;

/******************************************

* *

* READING *

* *

******************************************/

std::ifstream fileSource(sFilename); // Creates an input file stream

if (!fileSource) {

std::cerr << "Canot open " << sFilename << std::endl;

exit(-1);

}

else {

// Intermediate buffer

std::string buffer;

// By default, the >> operator reads word by workd (till whitespace)

while (fileSource >> buffer)

{

std::cout << buffer << std::endl;

}

}

exit(0);

}

```

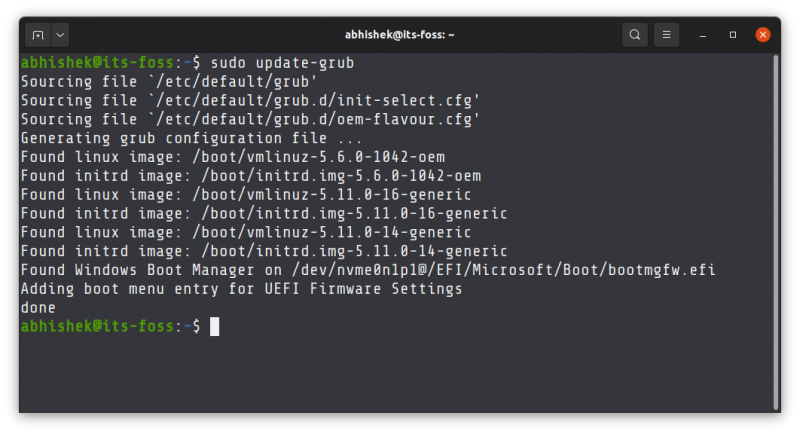

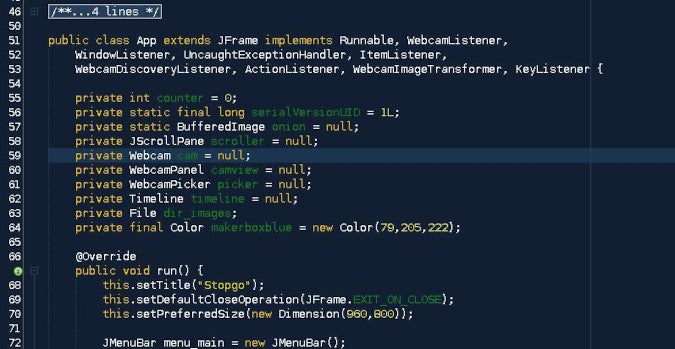

This code is available on [GitHub](https://github.com/hANSIc99/cpp_input_output). When you compile and execute it, you should get the following output:

(Stephan Avenwedde, CC BY-SA 4.0)

This is a simplified, beginner-friendly example. If you want to use this code in your own application, please note the following:

- The file streams are automatically closed at the end of the program. If you want to proceed with the execution, you should close them manually by calling the

`close()`

method. - These file stream classes inherit (over several levels) from

[basic_ios](https://en.cppreference.com/w/cpp/io/basic_ios), which overloads the`!`

operator. This lets you implement a simple check if you can access the stream. On[cppreference.com](https://en.cppreference.com/w/cpp/io/basic_ios/operator!), you can find an overview of when this check will (and won't) succeed, and you can implement further error handling. - By default,

`ifstream`

stops at white space and skips it. To read line by line until you reach[EOF](https://en.wikipedia.org/wiki/End-of-file), use the`getline(...)`

-method. - For reading and writing binary files, pass the

`std::ios::binary`

flag to the constructor: This prevents[EOL](https://en.wikipedia.org/wiki/Newline)characters from being appended to each line.

## Writing from the systems perspective

When writing files, the data is written to the system's in-memory write buffer. When the system receives the system call [sync](https://en.wikipedia.org/wiki/Sync_%28Unix%29), this buffer's contents are written to the hard drive. This mechanism is also the reason you shouldn't remove a USB stick without telling the system. Usually, *sync* is called on a regular basis by a daemon. If you really want to be on the safe side, you can also call *sync* manually:

```

#include <unistd.h> // needs to be included

sync();

```

## Summary

Reading and writing to files in C++ is not that complicated. Moreover, if you know how to deal with I/O streams, you also know (in principle) how to deal with any kind of I/O device. Libraries for various kinds of I/O devices let you use stream operators for easy access. This is why it is beneficial to know how I/O steams work.

## Comments are closed. |

13,264 | NewsFlash: 一款支持 Feedly 的现代开源 Feed 阅读器 | https://itsfoss.com/newsflash-feedreader/ | 2021-04-03T21:07:00 | [

"RSS"

] | https://linux.cn/article-13264-1.html |

有些人可能认为 RSS 阅读器已经不再,但它们仍然坚持在这里,特别是当你不想让大科技算法来决定你应该阅读什么的时候。Feed 阅读器可以帮你自助选择阅读来源。

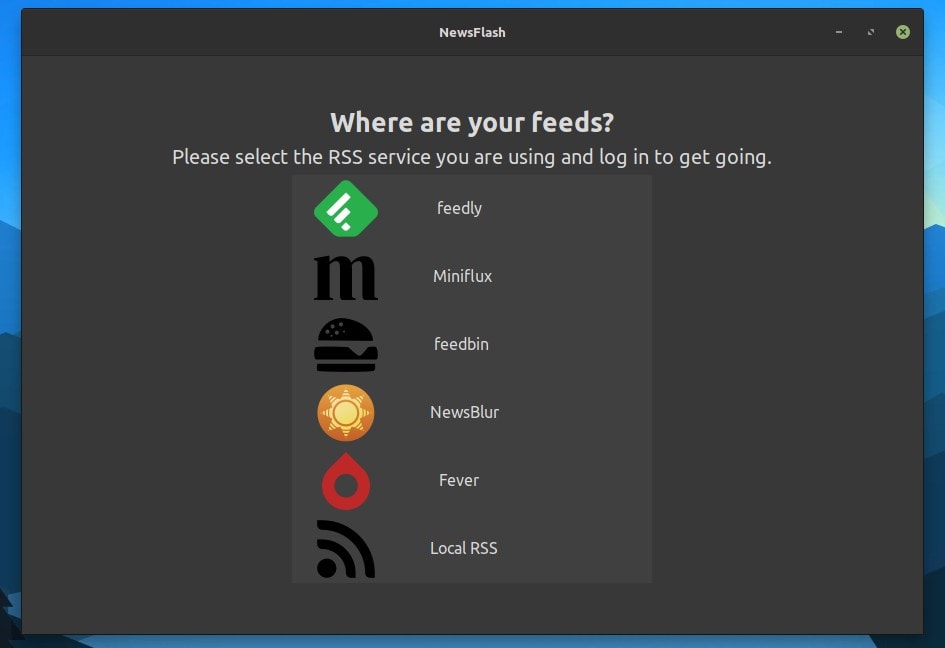

我最近遇到一个很棒的 RSS 阅读器 NewsFlash。它支持通过基于网页的 Feed 阅读器增加 feed,例如 [Feedly](https://feedly.com/) 和 NewsBlur。这是一个很大的安慰,因为如果你已经使用这种服务,就不必人工导入 feed,这节省了你的工作。

NewsFlash 恰好是 [FeedReadeer](https://jangernert.github.io/FeedReader/) 的精神继承者,原来的 FeedReader 开发人员也参与其中。

如果你正在找适用的 RSS 阅读器,我们整理了 [Linux Feed 阅读器](https://itsfoss.com/feed-reader-apps-linux/) 列表供您参考。

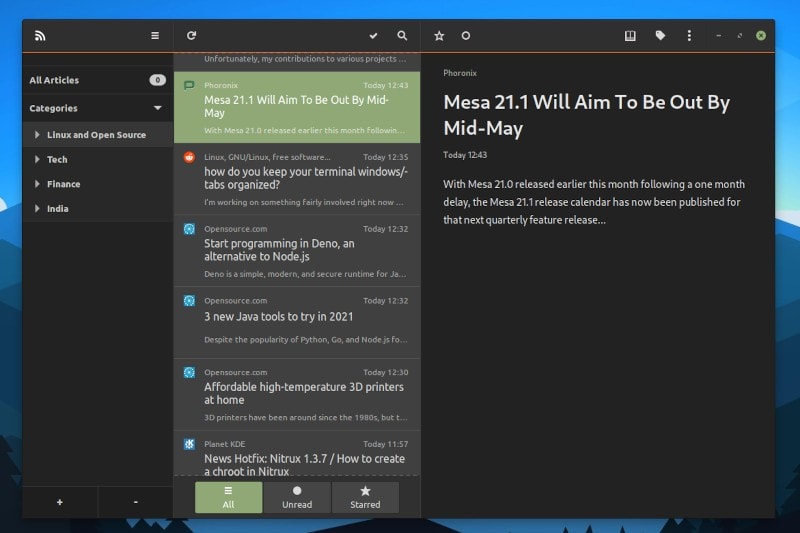

### NewsFlash: 一款补充网页 RSS 阅读器账户的 Feed 阅读器

请注意,NewsFlash 并不只是针对基于网页的 RSS feed 账户量身定做的,你也可以选择使用本地 RSS feed,而不必在多设备间同步。

不过,如果你在用是任何一款支持的基于网页的 feed 阅读器,那么 NewsFlash 特别有用。

这里,我将重点介绍 NewsFlash 提供的一些功能。

### NewsFlash 功能

* 支持桌面通知

* 快速搜索、过滤

* 支持标签

* 便捷、可重定义的键盘快捷键

* 本地 feed

* OPML 文件导入/导出

* 无需注册即可在 Feedly 库中轻松找到不同 RSS Feed

* 支持自定义字体

* 支持多主题(包括深色主题)

* 启动/禁止缩略图

* 细粒度调整定期同步间隔时间

* 支持基于网页的 Feed 账户,例如 Feedly、Fever、NewsBlur、feedbin、Miniflux

除上述功能外,当你调整窗口大小时,还可以打开阅读器视图,这是一个细腻的补充功能。

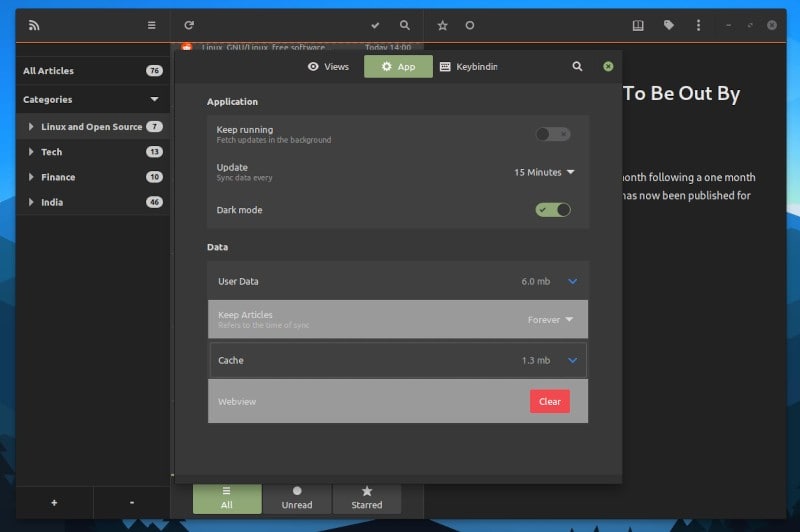

账户重新设置也很容易,这将删除所有本地数据。是的,你可以手动清除缓存并设置到期时间,并为你关注的所有 feed 设置一个用户数据存在本地的到期时间。

### 在 Linux 上安装 NewsFlash

你无法找到适用于各种 Linux 发行版的官方软件包,只有 [Flatpak](https://flathub.org/apps/details/com.gitlab.newsflash)。

对于 Arch 用户,可以从 [AUR](https://itsfoss.com/aur-arch-linux/) 下载。

幸运的是,[Flatpak](https://itsfoss.com/what-is-flatpak/) 软件包可以让你轻松在 Linux 发行版上安装 NewsFlash。具体请参阅我们的 [Flatpak 指南](https://itsfoss.com/flatpak-guide/)。

你可以参考 NewsFlash 的 [GitLab 页面](https://gitlab.com/news-flash/news_flash_gtk) 去解决大部分问题。

### 结束语

我现在用 NewsFlash 作为桌面本地解决方案,不用基于网页的服务。你可以通过直接导出 OPML 文件在移动 feed 应用上得到相同的 feed。这已经被我验证过了。

用户界面易于使用,也提供了数一数二的新版 UX。虽然这个 RSS 阅读器看似简单,但提供了你可以找到的所有重要功能。

你怎么看 NewsFlash?你喜欢用其他类似产品吗?欢迎在评论区中分享你的想法。

---

via: <https://itsfoss.com/newsflash-feedreader/>

作者:[Ankush Das](https://itsfoss.com/author/ankush/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[DCOLIVERSUN](https://github.com/DCOLIVERSUN) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Some may choose to believe that RSS readers are dead, but they’re here to stay. Especially when you don’t want the Big tech algorithm to decide what you should read. With a feed reader, you can choose your own reading sources.

I’ve recently come across a fantastic RSS reader NewsFlash. It also supports adding feeds through web-based feed readers like [Feedly](https://feedly.com/) and NewsBlur. That’s a big relief because if you are already such a service, you don’t have to import your feeds manually.

NewsFlash happens to be the spiritual successor to [FeedReader](https://jangernert.github.io/FeedReader/) with the original developer involved as well.

In case you’re wondering, we’ve already covered a list of [Feed Reader apps for Linux](https://itsfoss.com/feed-reader-apps-linux/) if you’re looking for more options.

## NewsFlash: A Feed Reader To Complement Web-based RSS Reader Account

It is important to note that NewsFlash isn’t just tailored for web-based RSS feed accounts, you can choose to use local RSS feeds as well without needing to sync them on multiple devices.

However, it is specifically helpful if you’re using any of the supported web-based feed readers.

Here, I’ll be highlighting some of the features that it offers.

## Features of NewsFlash

- Desktop Notifications support

- Fast search and filtering

- Supports tagging

- Useful keyboard shortcuts that can be later customized

- Local feeds

- Import/Export OPML files

- Easily discover various RSS Feeds using Feedly’s library without needing to sign up for the service

- Custom Font Support

- Multiple themes supported (including a dark theme)

- Ability to enable/disable the Thumbnails

- Tweak the time for regular sync intervals

- Support for web-based Feed accounts like Feedly, Fever, NewsBlur, feedbin, Miniflux

In addition to the features mentioned, it also opens the reader view when you re-size the window, so that’s a subtle addition.

If you want to reset the account, you can easily do that as well – which will delete all your local data as well. And, yes, you can manually clear the cache and set an expiry for user data to exist locally for all the feeds you follow.

**Recommended Read:**

## Installing NewsFlash in Linux

You do not get any official packages available for various Linux distributions but limited to a [Flatpak](https://flathub.org/apps/details/com.gitlab.newsflash).

For Arch users, you can find it available in [AUR](https://itsfoss.com/aur-arch-linux/).

Fortunately, the [Flatpak](https://itsfoss.com/what-is-flatpak/) package makes it easy for you to install it on any Linux distro you use. You can refer to our [Flatpak guide](https://itsfoss.com/flatpak-guide/) for help.

In either case, you can refer to its [GitLab page](https://gitlab.com/news-flash/news_flash_gtk) and compile it yourself.

## Closing Thoughts

I’m currently using it by moving away from web-based services as a local solution on my desktop. You can simply export the OPML file to get the same feeds on any of your mobile feed applications, that’s what I’ve done.

The user interface is easy to use and provides a modern UX, if not the best. You can find all the essential features available while being a simple-looking RSS reader as well.

What do you think about NewsFlash? Do you prefer using something else? Feel free to share your thoughts in the comments. |

13,267 | strace 可以解决什么问题? | https://jvns.ca/blog/2021/04/03/what-problems-do-people-solve-with-strace/ | 2021-04-05T09:48:36 | [

"strace"

] | https://linux.cn/article-13267-1.html |

昨天我 [在 Twitter 上询问大家用 strace 解决了什么问题?](https://twitter.com/b0rk/status/1378014888405168132),和往常一样,大家真的是给出了自己的答案! 我收到了大约 200 个答案,然后花了很多时间手动将它们归为 9 类。

这些解决的问题都是关于寻找程序依赖的文件、找出程序卡住或慢的原因、或者找出程序失败的原因。这些总体上与我自己使用 `strace` 的内容相吻合,但也有一些我没有想到的东西!

我不打算在这篇文章里解释什么是 `strace`,但我有一本 [关于它的免费杂志](https://wizardzines.com/zines/strace) 和 [一个讲座](https://www.youtube.com/watch?v=4pEHfGKB-OE) 以及 [很多博文](https://jvns.ca/categories/strace)。

### 问题 1:配置文件在哪里?

最受欢迎的问题是“这个程序有一个配置文件,但我不知道它在哪里”。这可能也是我最常使用 `strace` 解决的问题,因为这是个很简单的问题。

这很好,因为一个程序有一百万种方法来记录它的配置文件在哪里(在手册页、网站上、`--help`等),但只有一种方法可以让它真正打开它(用系统调用!)。

### 问题 2:这个程序还依赖什么文件?

你也可以使用 `strace` 来查找程序依赖的其他类型的文件,比如:

* 动态链接库(“为什么我的程序加载了这个错误版本的 `.so` 文件?"),比如 [我在 2014 年调试的这个 ruby 问题](https://jvns.ca/blog/2014/03/10/debugging-shared-library-problems-with-strace/)

* 它在哪里寻找它的 Ruby gem(Ruby 出现了几次这种情况!)

* SSL 根证书

* 游戏的存档文件

* 一个闭源程序的数据文件

* [哪些 node\_modules 文件没有被使用](https://indexandmain.com/post/shrink-node-modules-with-refining)

### 问题 3:为什么这个程序会挂掉?

你有一个程序,它只是坐在那里什么都不做,这是怎么回事?这个问题特别容易回答,因为很多时候你只需要运行 `strace -p PID`,看看当前运行的是什么系统调用。你甚至不需要看几百行的输出。

答案通常是“正在等待某种 I/O”。“为什么会卡住”的一些可能的答案(虽然还有很多!):

* 它一直在轮询 `select()`

* 正在 `wait()` 等待一个子进程完成

* 它在向某个没有响应的东西发出网络请求

* 正在进行 `write()`,但由于缓冲区已满而被阻止。

* 它在 stdin 上做 `read()`,等待输入。

有人还举了一个很好的例子,用 `strace` 调试一个卡住的 `df` 命令:“用 `strace df -h` 你可以找到卡住的挂载,然后卸载它”。

### 问题 4:这个程序卡住了吗?

这是上一个问题的变种:有时一个程序运行的时间比你预期的要长,你只是想知道它是否卡住了,或者它是否还在继续进行。

只要程序在运行过程中进行系统调用,用 `strace` 就可以超简单地回答这个问题:只需 `strace` 它,看看它是否在进行新的系统调用!

### 问题 5:为什么这个程序很慢?

你可以使用 `strace` 作为一种粗略的剖析工具:`strace -t` 会显示每次系统调用的时间戳,这样你就可以寻找大的漏洞,找到罪魁祸首。

以下是 Twitter 上 9 个人使用 `strace` 调试“为什么这个程序很慢?”的小故事。

* 早在 2000 年,我帮助支持的一个基于 Java 的网站在适度的负载下奄奄一息:页面加载缓慢,甚至完全加载不出来。我们对 J2EE 应用服务器进行了测试,发现它每次只读取一个类文件。开发人员没有使用 BufferedReader,这是典型的 Java 错误。

* 优化应用程序的启动时间……运行 `strace` 可以让人大开眼界,因为有大量不必要的文件系统交互在进行(例如,在同一个配置文件上反复打开/读取/关闭;在一个缓慢的 NFS 挂载上加载大量的字体文件,等等)。

* 问自己为什么在 PHP 中从会话文件中读取(通常是小于 100 字节)非常慢。结果发现一些 `flock` 系统调用花了大约 60 秒。

* 一个程序表现得异常缓慢。使用 `strace` 找出它在每次请求时,通过从 `/dev/random` 读取数据并耗尽熵来重新初始化其内部伪随机数发生器。

* 我记得最近一件事是连接到一个任务处理程序,看到它有多少网络调用(这是意想不到的)。

* `strace` 显示它打开/读取同一个配置文件数千次。

* 服务器随机使用 100% 的 CPU 时间,实际流量很低。原来是碰到打开文件数限制,接受一个套接字时,得到 EMFILE 错误而没有报告,然后一直重试。

* 一个工作流运行超慢,但是没有日志,结果它做一个 POST 请求花了 30 秒而超时,然后重试了 5 次……结果后台服务不堪重负,但是也没有可视性。

* 使用 `strace` 注意到 `gethostbyname()` 需要很长时间才能返回(你不能直接看到 `gethostbyname`,但你可以看到 `strace` 中的 DNS 数据包)

### 问题 6:隐藏的权限错误

有时候程序因为一个神秘的原因而失败,但问题只是有一些它没有权限打开的文件。在理想的世界里,程序会报告这些错误(“Error opening file /dev/whatever: permission denied”),当然这个世界并不完美,所以 `strace` 真的可以帮助解决这个问题!

这其实是我最近使用 `strace` 做的事情。我使用了一台 AxiDraw 绘图仪,当我试图启动它时,它打印出了一个难以理解的错误信息。我 `strace` 它,结果发现我的用户没有权限打开 USB 设备。

### 问题 7:正在使用什么命令行参数?

有时候,一个脚本正在运行另一个程序,你想知道它传递的是什么命令行标志!

几个来自 Twitter 的例子。

* 找出实际上是用来编译代码的编译器标志

* 由于命令行太长,命令失败了

### 问题 8:为什么这个网络连接失败?

基本上,这里的目标是找到网络连接的域名 / IP 地址。你可以通过 DNS 请求来查找域名,或者通过 `connect` 系统调用来查找 IP。

一般来说,当 `tcpdump` 因为某些原因不能使用或者只是因为比较熟悉 `strace` 时,就经常会使用 `strace` 调试网络问题。

### 问题 9:为什么这个程序以一种方式运行时成功,以另一种方式运行时失败?

例如:

* 同样的二进制程序在一台机器上可以运行,在另一台机器上却失败了

* 可以运行,但被 systemd 单元文件生成时失败

* 可以运行,但以 `su - user /some/script` 的方式运行时失败

* 可以运行,作为 cron 作业运行时失败

能够比较两种情况下的 `strace` 输出是非常有用的。虽然我在调试“以我的用户身份工作,而在同一台计算机上以不同方式运行时却失败了”时,第一步是“看看我的环境变量”。

### 我在做什么:慢慢地建立一些挑战

我之所以会想到这个问题,是因为我一直在慢慢地进行一些挑战,以帮助人们练习使用 `strace` 和其他命令行工具。我的想法是,给你一个问题,一个终端,你可以自由地以任何方式解决它。

所以我的目标是用它来建立一些你可以用 `strace` 解决的练习题,这些练习题反映了人们在现实生活中实际使用它解决的问题。

### 就是这样!

可能还有更多的问题可以用 `strace` 解决,我在这里还没有讲到,我很乐意听到我错过了什么!

我真的很喜欢看到很多相同的用法一次又一次地出现:至少有 20 个不同的人回答说他们使用 `strace` 来查找配置文件。而且和以往一样,我觉得这样一个简单的工具(“跟踪系统调用!”)可以用来解决这么多不同类型的问题,真的很令人高兴。

---

via: <https://jvns.ca/blog/2021/04/03/what-problems-do-people-solve-with-strace/>

作者:[Julia Evans](https://jvns.ca/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Yesterday I [asked on Twitter about what problems people are solving with strace](https://twitter.com/b0rk/status/1378014888405168132) and

as usual everyone really delivered! I got 200 answers and then spent a bunch of

time manually categorizing them into 9 categories of problems.

All of the problems are about either finding files a program depends on, figuring out why a program is stuck or slow, or finding out why a program is failing. These generally matched up with what I use strace for myself, but there were some things I hadn’t thought of too!

I’m not going to explain what strace is in this post but I have a [free zine about it](https://wizardzines.com/zines/strace) and [a talk](https://www.youtube.com/watch?v=4pEHfGKB-OE) and [lots of blog posts](https://jvns.ca/categories/strace).

### problem 1: where’s the config file?

The #1 most popular problem was “this program has a configuration file and I don’t know where it is”. This is probably my most common use for strace too, because it’s such a simple question.

This is great because there are a million ways for a program to document where

its config file is (in a man page, on its website, in `--help`

, etc), but

there’s only one way for it to actually open it (with a system call!)

### problem 2: what other files does this program depend on?

You can also use strace to find other types of files a program depends on, like:

- dynamically linked libraries (“why is my program loading the wrong version of this

`.so`

file?”) like[this ruby problem I debugged in 2014](https://jvns.ca/blog/2014/03/10/debugging-shared-library-problems-with-strace/) - where it’s looking for its Ruby gems (Ruby specifically came up a few times!)

- SSL root certificates

- a game’s save files

- a closed-source program’s data files

[which node_modules files aren’t being used](https://indexandmain.com/post/shrink-node-modules-with-refining)

### problem 3: why is this program hanging?

You have a program, it’s just sitting there doing nothing, what’s going

on? This one is especially easy to answer because a lot of the time you just

need to run `strace -p PID`

and look at what system call is currently running.

You don’t even have to look through hundreds of lines of output!

The answer is usually ‘waiting for some kind of I/O’. Some possible answers for “why is this stuck” (though there are a lot more!):

- it’s polling forever on a

`select()`

- it’s

`wait()`

ing for a subprocess to finish - it’s making a network request to something that isn’t responding

- it’s doing

`write()`

but it’s blocked because the buffer is full - it’s doing a

`read()`

on stdin and it’s waiting for input

Someone also gave a nice example of using strace to debug a stuck `df`

: ‘with strace df -h you can find the stuck mount and unmount it".

### problem 4: is this program stuck?

A variation on the previous one: sometimes a program has been running for longer than you expected, and you just want to know if it’s stuck or of it’s still making progress.

As long as the program makes system calls while it’s running, this is super easy to answer with strace – just strace it and see if it’s making new system calls!

### problem 5: why is this program slow?

You can use strace as a sort of coarse profiling tool – `strace -t`

will show

the timestamp of each system call, so you can look for big gaps and find the culprit.

Here are 9 short stories from Twitter of people using strace to debug “why is this program slow?”.

- Back in 2000, a Java-based web site that I helped support was dying under modest load: pages loaded slowly, if at all. We straced the J2EE application server and found that it was reading class files one. byte. at. a. time. Devs weren’t using BufferedReader, classic Java mistake.

- Optimizing app startup times… running strace can be an eye-opening experience, in terms of the amount of unnecessary file system interaction going on (e.g. open/read/close on the same config file over and over again; loading gobs of font files over a slow NFS mount, etc)

- Asked myself why reading from session files in PHP (usually <100 bytes)

was incredibly slow. Turned out some

`flock`

-syscalls took ~60s - A program was behaving abnormally slow. Used strace to figure out it was re-initializing its internal pseudo-random number generator on every request by reading from /dev/random and exhausting entropy

- Last thing I remember was attaching to a job worker and seeing just how many network calls it was making (which was unexpected).

- Why is this program so slow to start? strace shows it opening/reading the same config file thousands of times.

- Server using 100% CPU time randomly with low actual traffic. Turns out it’s hitting the number of open files limit accepting a socket, and retrying forever after getting EMFILE and not reporting it.

- A workflow was running super slow but no logs, ends up it was trying to do a post request that was taking 30s before timing out and then retrying 5 times… ends up the backend service was overwhelmed but also had no visibility

- using strace to notice that gethostbyname() is taking a long time to return (you can’t see the

`gethostbyname`

directly but you can see the DNS packets in strace)

### problem 6: hidden permissions errors

Sometimes a program is failing for a mysterious reason, but the problem is just that there’s some file that it doesn’t have permission to open. In an ideal world programs would report those errors (“Error opening file /dev/whatever: permission denied”), but of course the world is not perfect, so strace can really help with this!

This is actually the most recent thing I used strace for: I was using an

AxiDraw pen plotter and it printed out an inscrutable error message when I

tried to start it. I `strace`

d it and it turned out that my user just didn’t

have permission to open the USB device.

### problem 7: what command line arguments are being used?

Sometimes a script is running another program, and you want to know what command line flags it’s passing!

A couple of examples from Twitter:

- find what compiler flags are actually being used to build some code

- a command was failing due to having too long a command line

### problem 8: why is this network connection failing?

Basically the goal here is just to find which domain / IP address the network

connection is being made to. You can look at the DNS request to find the domain

or the `connect`

system call to find the IP.

In general there are a lot of stories about using strace to debug network

issues when `tcpdump`

isn’t available for some reason or just because it’s what

the person is more familiar with.

### problem 9: why does this program succeed when run one way and fail when run in another way?

For example:

- the same binary works on one machine, fails on another machine

- works when you run it, fails when spawned by a systemd unit file

- works when you run it, fails when you run it as “su - user /some/script”

- works when you run it, fails when run as a cron job

Being able to compare the strace output in both cases is very helpful. Though my first step when debugging “this works as my user and fails when run in a different way on the same computer” would be “look at my environment variables”.

### problem 10: how does this Linux kernel API work?

Another one quite a few people mentioned is figuring out how a Linux kernel API (for example netlink, io_uring, hdparm, I2C, etc).

Even though these APIs are usually documented, sometimes the documentation is confusing or there aren’t very many examples, so often it’s easier to just strace an existing application and see how it interacts with the Linux kernel.

### problem 11: general reverse engineering

strace is also great for just generally figuring out “how does this program

work?”. As a simple example of this, here’s a blog post on [figuring out how killall works using strace](https://jvns.ca/blog/2013/12/22/fun-with-strace/).

### what I’m doing with this: slowly building some challenges

The reason I’m thinking about this is that I’ve been slowly working on some challenges to help people practice using strace and other command line tools. The idea is that you’re given a problem to solve, a terminal, and you’re free to solve it in any way you want.

So my goal is to use this to build some practice problems that you can solve with strace that reflect the kinds of problems that people actually use it for in real life.

### that’s all!

There are probably more problems that can be solved with strace that I haven’t covered here – I’d love to hear what I’ve missed!

I really loved seeing how many of the same uses came up over and over and over again – at least 20 different people replied saying that they use strace to find config files. And as always I think it’s really delightful how such a simple tool (“trace system calls!”) can be used to solve so many different kinds of problems. |

13,268 | 用 Lua 操作文件中的数据 | https://opensource.com/article/21/3/lua-files | 2021-04-05T10:25:03 | [

"Lua",

"读写",

"文件"

] | https://linux.cn/article-13268-1.html |

>

> 了解 Lua 如何处理数据的读写。

>

>

>

有些数据是临时的,存储在 RAM 中,只有在应用运行时才有意义。但有些数据是要持久的,存储在硬盘上供以后使用。当你编程时,无论是简单的脚本还是复杂的工具套件,通常都需要读取和写入文件。有时文件可能包含配置选项,而另一些时候这个文件是你的用户用你的应用创建的数据。每种语言都会以不同的方式处理这项任务,本文将演示如何使用 Lua 处理文件数据。

### 安装 Lua

如果你使用的是 Linux,你可以从你的发行版软件库中安装 Lua。在 macOS 上,你可以从 [MacPorts](https://opensource.com/article/20/11/macports) 或 [Homebrew](https://opensource.com/article/20/6/homebrew-mac) 安装 Lua。在 Windows 上,你可以从 [Chocolatey](https://opensource.com/article/20/3/chocolatey) 安装 Lua。

安装 Lua 后,打开你最喜欢的文本编辑器并准备开始。

### 用 Lua 读取文件

Lua 使用 `io` 库进行数据输入和输出。下面的例子创建了一个名为 `ingest` 的函数来从文件中读取数据,然后用 `:read` 函数进行解析。在 Lua 中打开一个文件时,有几种模式可以启用。因为我只需要从这个文件中读取数据,所以我使用 `r`(代表“读”)模式:

```

function ingest(file)

local f = io.open(file, "r")

local lines = f:read("*all")

f:close()

return(lines)

end

myfile=ingest("example.txt")

print(myfile)

```

在这段代码中,注意到变量 `myfile` 是为了触发 `ingest` 函数而创建的,因此,它接收该函数返回的任何内容。`ingest` 函数返回文件的行数(从一个称为 `lines` 的变量中0。当最后一步打印 `myfile` 变量的内容时,文件的行数就会出现在终端中。

如果文件 `example.txt` 中包含了配置选项,那么我会写一些额外的代码来解析这些数据,可能会使用另一个 Lua 库,这取决于配置是以 INI 文件还是 YAML 文件或其他格式存储。如果数据是 SVG 图形,我会写额外的代码来解析 XML,可能会使用 Lua 的 SVG 库。换句话说,你的代码读取的数据一旦加载到内存中,就可以进行操作,但是它们都需要加载 `io` 库。

### 用 Lua 将数据写入文件

无论你是要存储用户用你的应用创建的数据,还是仅仅是关于用户在应用中做了什么的元数据(例如,游戏保存或最近播放的歌曲),都有很多很好的理由来存储数据供以后使用。在 Lua 中,这是通过 `io` 库实现的,打开一个文件,将数据写入其中,然后关闭文件:

```

function exgest(file)

local f = io.open(file, "a")

io.output(f)

io.write("hello world\n")

io.close(f)

end

exgest("example.txt")

```

为了从文件中读取数据,我以 `r` 模式打开文件,但这次我使用 `a` (用于”追加“)将数据写到文件的末尾。因为我是将纯文本写入文件,所以我添加了自己的换行符(`/n`)。通常情况下,你并不是将原始文本写入文件,你可能会使用一个额外的库来代替写入一个特定的格式。例如,你可能会使用 INI 或 YAML 库来帮助编写配置文件,使用 XML 库来编写 XML,等等。

### 文件模式

在 Lua 中打开文件时,有一些保护措施和参数来定义如何处理文件。默认值是 `r`,允许你只读数据:

* `r` 只读

* `w` 如果文件不存在,覆盖或创建一个新文件。

* `r+` 读取和覆盖。

* `a` 追加数据到文件中,或在文件不存在的情况下创建一个新文件。

* `a+` 读取数据,将数据追加到文件中,或文件不存在的话,创建一个新文件。

还有一些其他的(例如,`b` 代表二进制格式),但这些是最常见的。关于完整的文档,请参考 [Lua.org/manual](http://lua.org/manual) 上的优秀 Lua 文档。

### Lua 和文件

和其他编程语言一样,Lua 有大量的库支持来访问文件系统来读写数据。因为 Lua 有一个一致且简单语法,所以很容易对任何格式的文件数据进行复杂的处理。试着在你的下一个软件项目中使用 Lua,或者作为 C 或 C++ 项目的 API。

---

via: <https://opensource.com/article/21/3/lua-files>

作者:[Seth Kenlon](https://opensource.com/users/seth) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Some data is ephemeral, stored in RAM, and only significant while an application is running. But some data is meant to be persistent, stored on a hard drive for later use. When you program, whether you're working on a simple script or a complex suite of tools, it's common to need to read and write files. Sometimes a file may contain configuration options, and other times the file is the data that your user is creating with your application. Every language handles this task a little differently, and this article demonstrates how to handle data files with Lua.

## Installing Lua

If you're on Linux, you can install Lua from your distribution's software repository. On macOS, you can install Lua from [MacPorts](https://opensource.com/article/20/11/macports) or [Homebrew](https://opensource.com/article/20/6/homebrew-mac). On Windows, you can install Lua from [Chocolatey](https://opensource.com/article/20/3/chocolatey).

Once you have Lua installed, open your favorite text editor and get ready to code.

## Reading a file with Lua

Lua uses the `io`

library for data input and output. The following example creates a function called `ingest`

to read data from a file and then parses it with the `:read`

function. When opening a file in Lua, there are several modes you can enable. Because I just need to read data from this file, I use the `r`

(for "read") mode:

```

function ingest(file)

local f = io.open(file, "r")

local lines = f:read("*all")

f:close()

return(lines)

end

myfile=ingest("example.txt")

print(myfile)

```

In the code, notice that the variable `myfile`

is created to trigger the `ingest`

function, and therefore, it receives whatever that function returns. The `ingest`

function returns the lines (from a variable intuitively called `lines`

) of the file. When the contents of the `myfile`

variable are printed in the final step, the lines of the file appear in the terminal.

If the file `example.txt`

contains configuration options, then I would write some additional code to parse that data, probably using another Lua library depending on whether the configuration was stored as an INI file or YAML file or some other format. If the data were an SVG graphic, I'd write extra code to parse XML, probably using an SVG library for Lua. In other words, the data your code reads can be manipulated once it's loaded into memory, but all that's required to load it is the `io`

library.

## Writing data to a file with Lua

Whether you're storing data your user is creating with your application or just metadata about what the user is doing in an application (for instance, game saves or recent songs played), there are many good reasons to store data for later use. In Lua, this is achieved through the `io`

library by opening a file, writing data into it, and closing the file:

```

function exgest(file)

local f = io.open(file, "a")

io.output(f)

io.write("hello world\n")

io.close(f)

end

exgest("example.txt")

```

To read data from the file, I open the file in `r`

mode, but this time I use `a`

(for "append") to write data to the end of the file. Because I'm writing plain text into a file, I added my own newline character (`\n`

). Often, you're not writing raw text into a file, and you'll probably use an additional library to write a specific format instead. For instance, you might use an INI or YAML library to help write configuration files, an XML library to write XML, and so on.

## File modes

When opening files in Lua, there are some safeguards and parameters to define how a file should be handled. The default is `r`

, which permits you to read data only:

**r**for read only**w**to overwrite or create a new file if it doesn't already exist**r+**to read and overwrite**a**to append data to a file or make a new file if it doesn't already exist**a+**to read data, append data to a file, or make a new file if it doesn't already exist

There are a few others (`b`

for binary formats, for instance), but those are the most common. For the full documentation, refer to the excellent Lua documentation on [Lua.org/manual](http://lua.org/manual).

## Lua and files

Like other programming languages, Lua has plenty of library support to access a filesystem to read and write data. Because Lua has a consistent and simple syntax, it's easy to perform complex processing on data in files of any format. Try using Lua for your next software project, or as an API for your C or C++ project.

## Comments are closed. |

13,270 | 如何使用 Linux anacron 命令 | https://opensource.com/article/21/2/linux-automation | 2021-04-06T08:42:00 | [

"anacron",

"cron"

] | /article-13270-1.html |

>

> 与其手动执行重复性的任务,不如让 Linux 为你做。

>

>

>

在 2021 年,人们有更多的理由喜欢 Linux。在这个系列中,我将分享使用 Linux 的 21 个不同理由。自动化是使用 Linux 的最佳理由之一。

我最喜欢 Linux 的一个原因是它愿意为我做工作。我不想执行重复性的任务,这些任务会占用我的时间,或者容易出错,或者我可能会忘记,我安排 Linux 为我做这些工作。

### 为自动化做准备

“自动化”这个词既让人望而生畏,又让人心动。我发现用模块化的方式来处理它是有帮助的。

#### 1、你想实现什么?

首先,要知道你想产生什么结果。你是要给图片加水印吗?从杂乱的目录中删除文件?执行重要数据的备份?为自己明确定义任务,这样你就知道自己的目标是什么。如果有什么任务是你发现自己每天都在做的,甚至一天一次以上,那么它可能是自动化的候选者。

#### 2、学习你需要的应用

将大的任务分解成小的组件,并学习如何手动但以可重复和可预测的方式产生每个结果。在 Linux 上可以做的很多事情都可以用脚本来完成,但重要的是要认识到你当前的局限性。学习如何自动调整几张图片的大小,以便可以方便地通过电子邮件发送,与使用机器学习为你的每周通讯生成精心制作的艺术品之间有天壤之别。有的事你可以在一个下午学会,而另一件事可能要花上几年时间。然而,我们都必须从某个地方开始,所以只要从小做起,并时刻注意改进的方法。

#### 3、自动化

在 Linux 上使用一个自动化工具来定期实现它。这就是本文介绍的步骤!

要想自动化一些东西,你需要一个脚本来自动化一个任务。在测试时,最好保持简单,所以本文自动化的任务是在 `/tmp` 目录下创建一个名为 `hello` 的文件。

```

#!/bin/sh

touch /tmp/hello

```

将这个简单的脚本复制并粘贴到一个文本文件中,并将其命名为 `example`。

### Cron

每个安装好的 Linux 系统都会有的内置自动化解决方案就是 cron 系统。Linux 用户往往把 cron 笼统地称为你用来安排任务的方法(通常称为 “cron 作业”),但有多个应用程序可以提供 cron 的功能。最通用的是 [cronie](https://github.com/cronie-crond/cronie);它的优点是,它不会像历史上为系统管理员设计的 cron 应用程序那样,假设你的计算机总是开着。

验证你的 Linux 发行版提供的是哪个 cron 系统。如果不是 cronie,你可以从发行版的软件仓库中安装 cronie。如果你的发行版没有 cronie 的软件包,你可以使用旧的 anacron 软件包来代替。`anacron` 命令是包含在 cronie 中的,所以不管你是如何获得它的,你都要确保在你的系统上有 `anacron` 命令,然后再继续。anacron 可能需要管理员 root 权限,这取决于你的设置。

```

$ which anacron

/usr/sbin/anacron

```

anacron 的工作是确保你的自动化作业定期执行。为了做到这一点,anacron 会检查找出最后一次运行作业的时间,然后检查你告诉它运行作业的频率。

假设你将 anacron 设置为每五天运行一次脚本。每次你打开电脑或从睡眠中唤醒电脑时,anacron都会扫描其日志以确定是否需要运行作业。如果一个作业在五天或更久之前运行,那么 anacron 就会运行该作业。

### Cron 作业

许多 Linux 系统都捆绑了一些维护工作,让 cron 来执行。我喜欢把我的工作与系统工作分开,所以我在我的主目录中创建了一个目录。具体来说,有一个叫做 `~/.local` 的隐藏文件夹(“local” 的意思是它是为你的用户账户定制的,而不是为你的“全局”计算机系统定制的),所以我创建了子目录 `etc/cron.daily` 来作为 cron 在我的系统上的家目录。你还必须创建一个 spool 目录来跟踪上次运行作业的时间。

```

$ mkdir -p ~/.local/etc/cron.daily ~/.var/spool/anacron

```

你可以把任何你想定期运行的脚本放到 `~/.local/etc/cron.daily` 目录中。现在把 `example` 脚本复制到目录中,然后 [用 chmod 命令使其可执行](https://opensource.com/article/19/8/linux-chmod-command)。

```

$ cp example ~/.local/etc/cron.daily

# chmod +x ~/.local/etc/cron.daily/example

```

接下来,设置 anacron 来运行位于 `~/.local/etc/cron.daily` 目录下的任何脚本。

### anacron

默认情况下,cron 系统的大部分内容都被认为是系统管理员的领域,因为它通常用于重要的底层任务,如轮换日志文件和更新证书。本文演示的配置是为普通用户设置个人自动化任务而设计的。

要配置 anacron 来运行你的 cron 作业,请在 `/.local/etc/anacrontab` 创建一个配置文件:

```

SHELL=/bin/sh

PATH=/sbin:/bin:/usr/sbin:/usr/bin

1 0 cron.mine run-parts /home/tux/.local/etc/cron.daily/

```

这个文件告诉 anacron 每到新的一天(也就是每日),延迟 0 分钟后,就运行(`run-parts`)所有在 `~/.local/etc/cron.daily` 中找到的可执行脚本。有时,会使用几分钟的延迟,这样你的计算机就不会在你登录后就被所有可能的任务冲击。不过这个设置适合测试。

`cron.mine` 值是进程的一个任意名称。我称它为 `cron.mine`,但你也可以称它为 `cron.personal` 或 `penguin` 或任何你想要的名字。

验证你的 `anacrontab` 文件的语法:

```

$ anacron -T -t ~/.local/etc/anacrontab \

-S /home/tux/.var/spool/anacron

```

沉默意味着成功。

### 在 .profile 中添加 anacron

最后,你必须确保 anacron 以你的本地配置运行。因为你是以普通用户而不是 root 用户的身份运行 anacron,所以你必须将它引导到你的本地配置:告诉 anacron 要做什么的 `anacrontab` 文件,以及帮助 anacron 跟踪每一个作业最后一次执行是多少天的 spool 目录:

```

anacron -fn -t /home/tux/.local/etc/anacrontab \

-S /home/tux/.var/spool/anacron

```

`-fn` 选项告诉 anacron *忽略* 时间戳,这意味着你强迫它无论如何都要运行你的 cron 作业。这完全是为了测试的目的。

### 测试你的 cron 作业

现在一切都设置好了,你可以测试作业了。从技术上讲,你可以在不重启的情况下进行测试,但重启是最有意义的,因为这就是设计用来处理中断和不规则的登录会话的。花点时间重启电脑、登录,然后寻找测试文件:

```

$ ls /tmp/hello

/tmp/hello

```

假设文件存在,那么你的示例脚本已经成功执行。现在你可以从 `~/.profile` 中删除测试选项,留下这个作为你的最终配置。

```

anacron -t /home/tux/.local/etc/anacrontab \

-S /home/tux/.var/spool/anacron

```

### 使用 anacron

你已经配置好了你的个人自动化基础设施,所以你可以把任何你想让你的计算机替你管理的脚本放到 `~/.local/etc/cron.daily` 目录下,它就会按计划运行。

这取决于你希望作业运行的频率。示例脚本是每天执行一次。很明显,这取决于你的计算机在任何一天是否开机和醒着。如果你在周五使用电脑,但把它设置在周末,脚本就不会在周六和周日运行。然而,在周一,脚本会执行,因为 anacron 会知道至少有一天已经过去了。你可以在 `~/.local/etc` 中添加每周、每两周、甚至每月的目录,以安排各种各样的间隔。

要添加一个新的时间间隔:

1. 在 `~/.local/etc` 中添加一个目录(例如 `cron.weekly`)。

2. 在 `~/.local/etc/anacrontab` 中添加一行,以便在新目录下运行脚本。对于每周一次的间隔,其配置如下。`7 0 cron.mine run-parts /home/tux/.local/etc/cron.weekly/`(`0` 的值可以选择一些分钟数,以适当地延迟脚本的启动)。

3. 把你的脚本放在 `cron.weekly` 目录下。

欢迎来到自动化的生活方式。它不会让人感觉到,但你将会变得更有效率。

---

via: <https://opensource.com/article/21/2/linux-automation>

作者:[Seth Kenlon](https://opensource.com/users/seth) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

13,271 | 值得现在就去尝试的四款开源聊天应用软件 | https://opensource.com/article/20/4/open-source-chat | 2021-04-06T10:35:41 | [

"聊天"

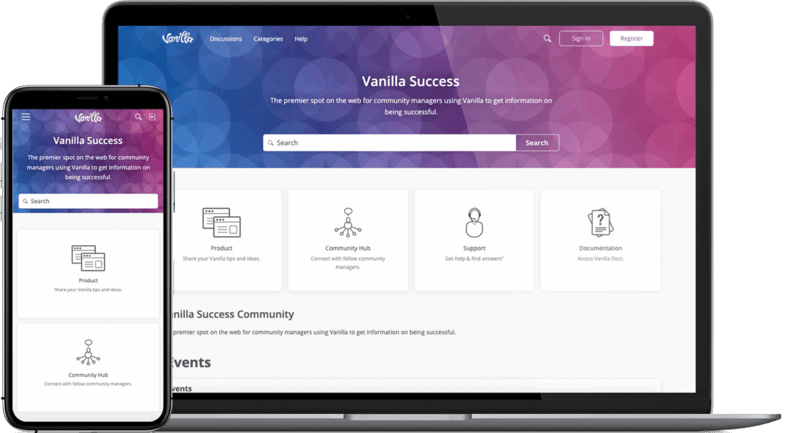

] | /article-13271-1.html |

>

> 现在,远程协作已作为一项必不可少的能力,让开源实时聊天成为你工具箱中必不可少的一部分吧。

>

>

>

清晨起床后,我们通常要做的第一件事是检查手机,看看是否有同事和朋友发来的重要信息。无论这是否是一个好习惯,但这种行为早已成为我们日常生活的一部分。

>

> 人是理性动物。他可以为任何他想相信的事情想出一个理由。 – 阿纳托尔·法朗士

>

>

>

无论理由是否合理,我们每天都在使用的一系列的通讯工具,例如电子邮件、电话、网络会议工具或社交网络。甚至在 COVID-19 之前,居家办公就已经使这些通信工具成为我们生活中的重要部分。随着疫情出现,居家办公成为新常态,我们交流方式的方方面面正面临着前所未有的改变,这让这些工具变得不可或缺。

### 为什么需要聊天?

作为全球团队的一部分进行远程工作时,我们必须要有一个相互协作的环境。聊天应用软件在帮助我们保持相互联系中起着至关重要的作用。与电子邮件相比,聊天应用软件可提供与全球各地的同事快速、实时的通信。

选择一款聊天应用软件需要考虑很多因素。为了帮助你选择最适合你的应用软件,在本文中,我将探讨四款开源聊天应用软件,和一个当你需要与同事“面对面”时的开源视频通信工具,然后概述在高效的通讯应用软件中,你应当考虑的一些功能。

### 四款开源聊天软件

#### Rocket.Chat

[Rocket.Chat](https://rocket.chat/) 是一个综合性的通讯平台,其将频道分为公开房间(任何人都可以加入)和私有房间(仅受邀请)。你还可以直接将消息发送给已登录的人员。其能共享文档、链接、照片、视频和<ruby> 动态图 <rt> GIF </rt></ruby>,以及进行视频通话,并可以在平台中发送语音信息。

Rocket.Chat 是自由开源软件,但是其独特之处在于其可自托管的聊天系统。你可以将其下载到你的服务器上,无论它是本地服务器或是在公有云上的虚拟专用服务器。

Rocket.Chat 是完全免费,其 [源码](https://github.com/RocketChat/Rocket.Chat) 可在 Github 获得。许多开源项目都使用 Rocket.Chat 作为他们官方交流平台。该软件在持续不断的发展且不断更新和改进新功能。

我最喜欢 Rocket.Chat 的地方是其能够根据用户需求来进行自定义操作,并且它使用机器学习在用户通讯间进行自动的、实时消息翻译。你也可以下载适用于你移动设备的 Rocket.Chat,以便能随时随地使用。

#### IRC

IRC(<ruby> <a href="https://en.wikipedia.org/wiki/Internet_Relay_Chat"> 互联网中继聊天 </a> <rt> Internet Relay Chat </rt></ruby>)是一款实时、基于文本格式的通信软件。尽管其是最古老的电子通讯形式之一,但在许多知名的软件项目中仍受欢迎。

IRC 频道是单独的聊天室。它可以让你在一个开放的频道中与多人进行聊天或与某人私下一对一聊天。如果频道名称以 `#` 开头,则可以假定它是官方的聊天室,而以 `##` 开头的聊天室通常是非官方的聊天室。

[上手 IRC](https://opensource.com/article/16/6/getting-started-irc) 很容易。你的 IRC 昵称可以让人们找到你,因此它必须是唯一的。但是,你可以完全自主地选择 IRC 客户端。如果你需要比标准 IRC 客户端更多功能的应用程序,则可以使用 [Riot.im](https://opensource.com/article/17/5/introducing-riot-IRC) 连接到 IRC。

考虑到它悠久的历史,你为什么还要继续使用 IRC?出于一个原因是,其仍是我们所依赖的许多自由及开源项目的家园。如果你想参于开源软件开发和社区,可以选择用 IRC。

#### Zulip

[Zulip](https://zulipchat.com/) 是十分流行的群聊应用程序,它遵循基于话题线索的模式。在 Zulip 中,你可以订阅<ruby> 流 <rt> stream </rt></ruby>,就像在 IRC 频道或 Rocket.Chat 中一样。但是,每个 Zulip 流都会拥有一个唯一的<ruby> 话题 <rt> topic </rt></ruby>,该话题可帮助你以后查找对话,因此其更有条理。

与其他平台一样,它支持表情符号、内嵌图片、视频和推特预览。它还支持 LaTeX 来分享数学公式或方程式、支持 Markdown 和语法高亮来分享代码。

Zulip 是跨平台的,并提供 API 用于编写你自己的程序。我特别喜欢 Zulip 的一点是它与 GitHub 的集成整合功能:如果我正在处理某个<ruby> 议题 <rt> issue </rt></ruby>,则可以使用 Zulip 的标记回链某个<ruby> 拉取请求 <rt> pull request </rt></ruby> ID。

Zulip 是开源的(你可以在 GitHub 上访问其 [源码](https://github.com/zulip/zulip))并且免费使用,但它有提供预置支持、[LDAP](https://en.wikipedia.org/wiki/Lightweight_Directory_Access_Protocol) 集成和更多存储类型的付费产品。

#### Let's Chat

[Let's Chat](https://sdelements.github.io/lets-chat/) 是一个面向小型团队的自托管的聊天解决方案。它使用 Node.js 和 MongoDB 编写运行,只需鼠标点击几下即可将其部署到本地服务器或云服务器。它是自由开源软件,可以在 GitHub 上查看其 [源码](https://github.com/sdelements/lets-chat)。

Let's Chat 与其他开源聊天工具的不同之处在于其企业功能:它支持 LDAP 和 [Kerberos](https://en.wikipedia.org/wiki/Kerberos_(protocol)) 身份验证。它还具有新用户想要的所有功能:你可以在历史记录中搜索过往消息,并使用 @username 之类的标签来标记人员。

我喜欢 Let's Chat 的地方是它拥有私人的受密码保护的聊天室、发送图片、支持 GIPHY 和代码粘贴。它不断更新,不断增加新功能。

### 附加:开源视频聊天软件 Jitsi

有时,文字聊天还不够,你还可能需要与某人面谈。在这种情况下,如果不能选择面对面开会交流,那么视频聊天是最好的选择。[Jitsi](https://jitsi.org/) 是一个完全开源的、支持多平台且兼容 WebRTC 的视频会议工具。

Jitsi 从 Jitsi Desktop 开始,已经发展成为许多 [项目](https://jitsi.org/projects/),包括 Jitsi Meet、Jitsi Videobridge、jibri 和 libjitsi,并且每个项目都在 GitHub 上开放了 [源码](https://github.com/jitsi)。

Jitsi 是安全且可扩展的,并支持诸如<ruby> 联播 <rt> simulcast </rt></ruby>和<ruby> 带宽预估 <rt> bandwidth estimation </rt></ruby>之类的高级视频路由的概念,还包括音频、录制、屏幕共享和拨入功能等经典功能。你可以来为你的视频聊天室设置密码以保护其不受干扰,并且它还支持通过 YouTube 进行直播。你还可以搭建自己的 Jitsi 服务器,并将其托管在本地或<ruby> 虚拟专用服务器 <rt> virtual private server </rt></ruby>(例如 Digital Ocean Droplet)上。

我最喜欢 Jitsi 的是它是免费且低门槛的。任何人都可以通过访问 [meet.jit.si](http://meet.jit.si) 来立即召开会议,并且用户无需注册或安装即可轻松参加会议。(但是,注册的话能拥有日程安排功能。)这种入门级低门槛的视频会议服务让 Jitsi 迅速普及。

### 选择一个聊天应用软件的建议

各种各样的开源聊天应用软件可能让你很难抉择。以下是一些选择一款聊天应用软件的一般准则。

* 最好具有交互式的界面和简单的导航工具。

* 最好寻找一种功能强大且能让人们以各种方式使用它的工具。

* 如果与你所使用的工具有进行集成整合的话,可以重点考虑。一些工具与 GitHub 或 GitLab 以及某些应用程序具有良好的无缝衔接,这将是一个非常有用的功能。

* 有能托管到云主机的工具将十分方便。

* 应考虑到聊天服务的安全性。在私人服务器上托管服务的能力对许多组织和个人来说是必要的。

* 最好选择那些具有丰富的隐私设置,并拥有私人聊天室和公共聊天室的通讯工具。

由于人们比以往任何时候都更加依赖在线服务,因此拥有备用的通讯平台是明智之举。例如,如果一个项目正在使用 Rocket.Chat,则必要之时,它还应具有跳转到 IRC 的能力。由于这些软件在不断更新,你可能会发现自己已经连接到多个渠道,因此集成整合其他应用将变得非常有价值。

在各种可用的开源聊天服务中,你喜欢和使用哪些?这些工具又是如何帮助你进行远程办公?请在评论中分享你的想法。

---

via: <https://opensource.com/article/20/4/open-source-chat>

作者:[Sudeshna Sur](https://opensource.com/users/sudeshna-sur) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wyxplus](https://github.com/wyxplus) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

13,273 | 《代码英雄》第四季(2):大型机 GE-225 和 BASIC 的诞生 | https://www.redhat.com/en/command-line-heroes/season-4/mainframes | 2021-04-07T09:18:25 | [

"大型机",

"代码英雄"

] | https://linux.cn/article-13273-1.html |

>

> 代码英雄讲述了开发人员、程序员、黑客、极客和开源反叛者如何彻底改变技术前景的真实史诗。

>

>

>

>

> **什么是《代码英雄》**

>

>

> <ruby> 代码英雄 <rt> Command Line Heroes </rt></ruby>是世界领先的企业开源软件解决方案供应商红帽(Red Hat)精心制作的原创音频播客,讲述开发人员、程序员、黑客、极客和开源反叛者如何彻底改变技术前景的真实史诗。该音频博客邀请到了谷歌、NASA 等重量级企业的众多技术大牛共同讲述开源、操作系统、容器、DevOps、混合云等发展过程中的动人故事。

>

>

>

本文是《[代码英雄](https://www.redhat.com/en/command-line-heroes)》系列播客[《代码英雄》第四季(2):大型机: GE-225 和 BASIC 的诞生](https://www.redhat.com/en/command-line-heroes/season-4/mainframes)的[音频](https://cdn.simplecast.com/audio/a88fbe/a88fbe81-5614-4834-8a78-24c287debbe6/d27b7b80-6530-4f0a-b6df-a237c6939687/clh-s4-ep2-mainframe-the-ge-225-vfinal_tc.mp3)脚本。

>

> 导语:二战后,计算机行业开始蓬勃发展。<ruby> 通用电气 <rt> General Electric </rt></ruby>(GE)的 CEO 拒绝进入这个市场。但一小队反叛的员工不顾规则,秘密进取。他们创造了 GE 225。这是工程上的一次巨大飞跃,它将计算从一个小众市场推向了主流,为今天的科技行业播下了种子。

>

>

> 在创建通用的<ruby> 大型机 <rt> mainframe </rt></ruby>之前,计算机通常是为了执行单一功能而制造的。William Ocasio 回忆了 GE 的第一台专用计算机 ERMA 是如何帮助银行每天处理成千上万的交易的。John Joseph 讲述了 GE 的几名关键员工是如何蒙骗他们的 CEO 建立一个计算机部门的。Tomas Kellner 解释了他们的工作如何产生了一台革命性的机器:GE 225。而 Joy Lisi Rankin 则介绍了<ruby> 达特茅斯学院 <rt> Dartmouth College </rt></ruby>的工程师们如何对 GE 225 用于<ruby> 分时 <rt> time-sharing </rt></ruby>计算,并利用它创建了 BASIC —— 使计算变得更加方便的重要里程碑。

>

>

>

**00:00:05 - Saron Yitbarek**:

让我们回到几十年前,回到 40 年代末、50 年代初,当时计算机行业有“白雪公主和七个小矮人”的说法。众所周知,“白雪公主”指的是 IBM,而<ruby> 通用电气公司 <rt> General Electric </rt></ruby>便是“七个小矮人”之一。这是一家偶尔生产定制机器,但从没在公开市场售卖计算机的公司。

**00:00:32**:

通用计算机是 IBM 的地盘,而 IBM 是 GE 的第二大客户(第一是美国政府)。IBM 经常采购 GE 生产的真空管、电机、变压器和开关等设备,GE 时任总裁 Ralph Cordiner 对此非常满意。所以,每当 GE 的部门主管将转向计算机业务的计划书提交到总裁办公室时,收到的答复都是封面上大写的橙色字母:RJC,Ralph Cordiner 一次又一次地拒绝他们。

**00:01:19**:

事实上,在 Cordiner 担任 GE 总裁兼首席执行官的 13 年时间里,GE 的态度从未改变。即使研究指出计算机是电子工业中增长最快的领域,但 Cordiner 总是用愤怒回应挑战他底线的员工们。然而,一小群叛逆的员工看到了制造大型机的机会,他们不想错失良机。然而他们不知道的是,这台大型机将拯救银行业,打开分时系统的大门,并孕育出新的编程语言。在上一季,我们听了 John Kemeny 和 Thomas Kurtz 在<ruby> 达特茅斯学院 <rt> Dartmouth College </rt></ruby>创造 BASIC 的故事,了解到因为 BASIC 是解释型语言,早期的计算机没有足够的资源运行它。像 BASIC 这么好的点子,正等待着像 GE-225 这样合适的设备出现,让它大放异彩。这一章,让我们一起揭开那台差点被扼杀在摇篮中的大型机,那鲜为人知的故事。这台房间大小的机器打开了新世界的大门,它鼓舞了像<ruby> 史蒂夫•沃兹尼亚克 <rt> Steve Wozniak </rt></ruby>和<ruby> 比尔•盖茨 <rt> Bill Gates </rt></ruby>这样有远见的代码英雄,鼓舞他们推动个人电脑革命,它的创造在今天仍然意义非凡。我是 Saron Yitbarek,欢迎收听<ruby> 代码英雄 <rt> Command Line Heros </rt></ruby>,Red Hat 的原创播客第四季:硬件设备。

>

> **00:03:05 - 话音 1**:

>

>

> Adams 先生觉得他的新支票账户很好用。与其他公司直接从<ruby> 埃姆维尔国家银行 <rt> Elmvale National Bank </rt></ruby>兑现不同,用这个账号可以从自家银行兑现。与其他银行一样,自家银行把支票送到<ruby> 联邦储备银行 <rt> Federal Reserve Bank </rt></ruby>去执行兑现。联邦储备银行正是为处理每天来自数百家银行的数千张支票而设立的。

>

>

>

**00:03:29 - Saron Yitbarek**:

1947 年,支票开始流行,银行工作人员的时间耗费在无穷无尽的支票当中。战后经济的蓬勃发展,更是让银行被铺天盖地的支票所淹没。为了腾出时间手工填写账簿,他们被迫下午 2 点就早早关门,但即使这样,他们仍然赶不上进度。他们迫切的希望,能有一台快速、强大的机器,让银行跟上商业发展的步伐。当时<ruby> 美国银行 <rt> Bank of America </rt></ruby>每天要处理数百万张支票。下面有请<ruby> 西北大学凯洛格管理学院 <rt> Kellogg School of Management at Northwestern University </rt></ruby>的教授 William Ocasio。

**00:04:12 - Will Ocasio**:

难以想象,银行如果没有电脑可用会是什么样子。银行本身就是繁重、文书密集型的行业,美国银行分支机构又那么多。有这么多的信息,他们想要能够快速的传递和处理这些信息。这对于这样一个大公司来说真的很重要。他们知道计算机才是未来的方向。

**00:04:39 - Saron Yitbarek**:

所以,1950 美国银行与<ruby> 斯坦福研究院 <rt> Stanford Research Institute </rt></ruby>(SRI)签约,希望找到自动处理这些支票的方法。SRI 花了五年时间制造了一台原型机,并将其命名为<ruby> 电子记录会计机 <rt> electronic recording machine accounting </rt></ruby>简称 ERMA。ERMA 有超过 100 万英尺的电线,8000 个真空管,重约 25 吨,每天可以处理 5 万笔交易。

**00:05:11**: