id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

13,099 | Arch Linux 的 pacman 命令入门 | https://itsfoss.com/pacman-command/ | 2021-02-09T11:14:00 | [

"pacman"

] | https://linux.cn/article-13099-1.html |

>

> 这本初学者指南向你展示了在 Linux 中可以使用 pacman 命令做什么,如何使用它们来查找新的软件包,安装和升级新的软件包,以及清理你的系统。

>

>

>

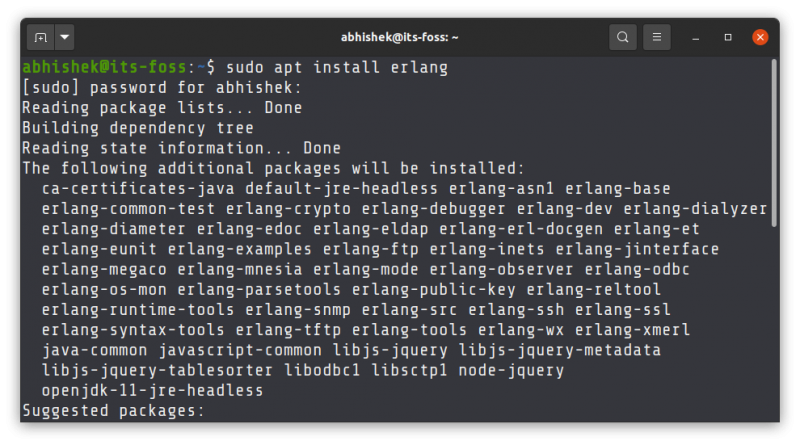

[pacman](https://www.archlinux.org/pacman/) 包管理器是 [Arch Linux](https://www.archlinux.org/) 和其他主要发行版如 Red Hat 和 Ubuntu/Debian 之间的主要区别之一。它结合了简单的二进制包格式和易于使用的 [构建系统](https://wiki.archlinux.org/index.php/Arch_Build_System)。`pacman` 的目标是方便地管理软件包,无论它是来自 [官方库](https://wiki.archlinux.org/index.php/Official_repositories) 还是用户自己构建的软件库。

如果你曾经使用过 Ubuntu 或基于 debian 的发行版,那么你可能使用过 `apt-get` 或 `apt` 命令。`pacman` 在 Arch Linux 中是同样的命令。如果你 [刚刚安装了 Arch Linux](https://itsfoss.com/install-arch-linux/),在安装 Arch Linux 后,首先要做的 [几件事](https://itsfoss.com/things-to-do-after-installing-arch-linux/) 之一就是学习使用 `pacman` 命令。

在这个初学者指南中,我将解释一些基本的 `pacman` 命令的用法,你应该知道如何用这些命令来管理你的基于 Archlinux 的系统。

### Arch Linux 用户应该知道的几个重要的 pacman 命令

与其他包管理器一样,`pacman` 可以将包列表与软件库同步,它能够自动解决所有所需的依赖项,以使得用户可以通过一个简单的命令下载和安装软件。

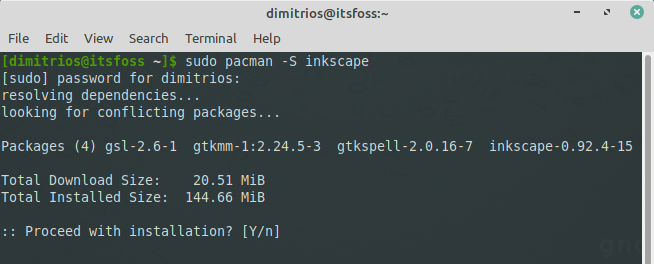

#### 通过 pacman 安装软件

你可以用以下形式的代码来安装一个或者多个软件包:

```

pacman -S 软件包名1 软件包名2 ...

```

`-S` 选项的意思是<ruby> 同步 <rt> synchronization </rt></ruby>,它的意思是 `pacman` 在安装之前先与软件库进行同步。

`pacman` 数据库根据安装的原因将安装的包分为两组:

* **显式安装**:由 `pacman -S` 或 `-U` 命令直接安装的包

* **依赖安装**:由于被其他显式安装的包所 [依赖](https://wiki.archlinux.org/index.php/Dependency),而被自动安装的包。

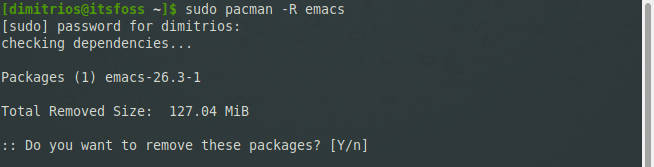

#### 卸载已安装的软件包

卸载一个包,并且删除它的所有依赖。

```

pacman -R 软件包名

```

删除一个包,以及其不被其他包所需要的依赖项:

```

pacman -Rs 软件包名

```

如果需要这个依赖的包已经被删除了,这条命令可以删除所有不再需要的依赖项:

```

pacman -Qdtq | pacman -Rs -

```

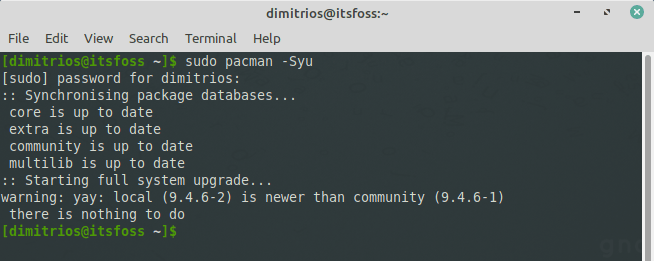

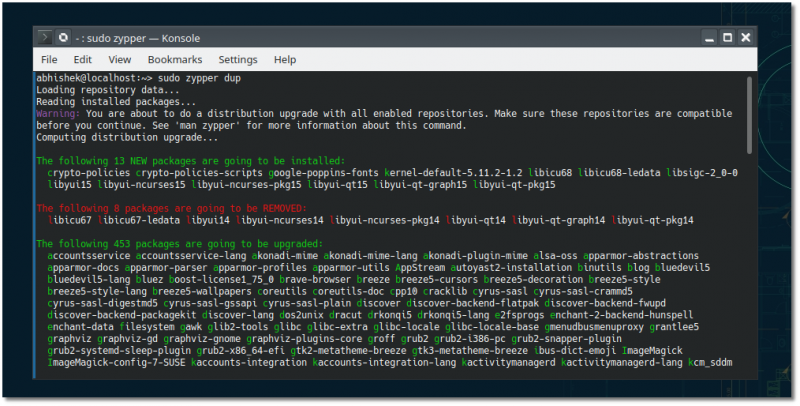

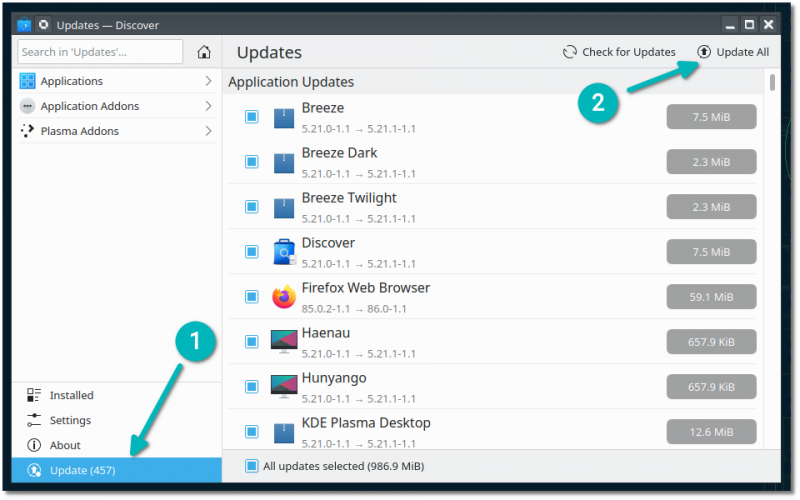

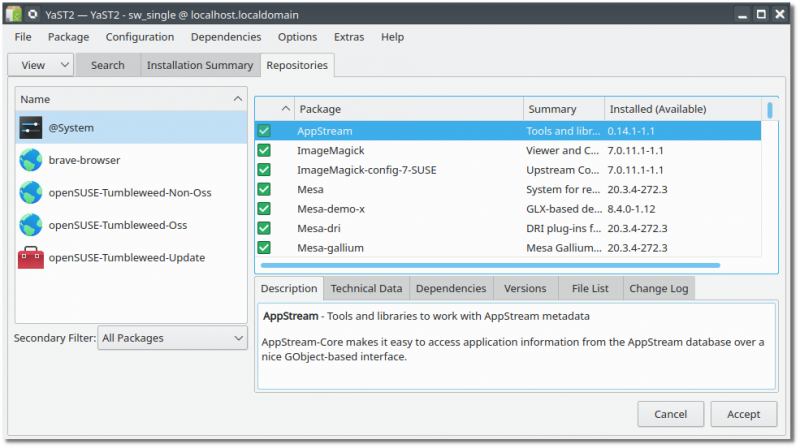

#### 升级软件包

`pacman` 提供了一个简单的办法来 [升级 Arch Linux](https://itsfoss.com/update-arch-linux/)。你只需要一条命令就可以升级所有已安装的软件包。这可能需要一段时间,这取决于系统的新旧程度。

以下命令可以同步存储库数据库,*并且* 更新系统的所有软件包,但不包括不在软件库中的“本地安装的”包:

```

pacman -Syu

```

* `S` 代表同步

* `y` 代表更新本地存储库

* `u` 代表系统更新

也就是说,同步到中央软件库(主程序包数据库),刷新主程序包数据库的本地副本,然后执行系统更新(通过更新所有有更新版本可用的程序包)。

>

> 注意!

>

>

> 对于 Arch Linux 用户,在系统升级前,建议你访问 [Arch-Linux 主页](https://www.archlinux.org/) 查看最新消息,以了解异常更新的情况。如果系统更新需要人工干预,主页上将发布相关的新闻。你也可以订阅 [RSS 源](https://www.archlinux.org/feeds/news/) 或 [Arch 的声明邮件](https://mailman.archlinux.org/mailman/listinfo/arch-announce/)。

>

>

> 在升级基础软件(如 kernel、xorg、systemd 或 glibc) 之前,请注意查看相应的 [论坛](https://bbs.archlinux.org/),以了解大家报告的各种问题。

>

>

> 在 Arch 和 Manjaro 等滚动发行版中不支持**部分升级**。这意味着,当新的库版本被推送到软件库时,软件库中的所有包都需要根据库版本进行升级。例如,如果两个包依赖于同一个库,则仅升级一个包可能会破坏依赖于该库的旧版本的另一个包。

>

>

>

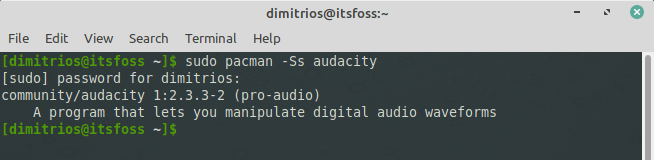

#### 用 Pacman 查找包

`pacman` 使用 `-Q` 选项查询本地包数据库,使用 `-S` 选项查询同步数据库,使用 `-F` 选项查询文件数据库。

`pacman` 可以在数据库中搜索包,包括包的名称和描述:

```

pacman -Ss 字符串1 字符串2 ...

```

查找已经被安装的包:

```

pacman -Qs 字符串1 字符串2 ...

```

根据文件名在远程软包中查找它所属的包:

```

pacman -F 字符串1 字符串2 ...

```

查看一个包的依赖树:

```

pactree 软件包名

```

#### 清除包缓存

`pacman` 将其下载的包存储在 `/var/cache/Pacman/pkg/` 中,并且不会自动删除旧版本或卸载的版本。这有一些优点:

1. 它允许 [降级](https://wiki.archlinux.org/index.php/Downgrade) 一个包,而不需要通过其他来源检索以前的版本。

2. 已卸载的软件包可以轻松地直接从缓存文件夹重新安装。

但是,有必要定期清理缓存以防止文件夹增大。

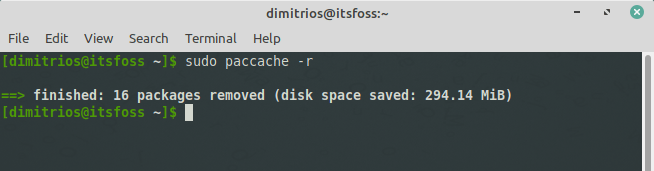

[pacman contrib](https://www.archlinux.org/packages/?name=pacman-contrib) 包中提供的 [paccache(8)](https://jlk.fjfi.cvut.cz/arch/manpages/man/paccache.8) 脚本默认情况下会删除已安装和未安装包的所有缓存版本,但最近 3 个版本除外:

```

paccache -r

```

要删除当前未安装的所有缓存包和未使用的同步数据库,请执行:

```

pacman -Sc

```

要从缓存中删除所有文件,请使用清除选项两次,这是最激进的方法,不会在缓存文件夹中留下任何内容:

```

pacman -Scc

```

#### 安装本地或者第三方的包

安装不是来自远程存储库的“本地”包:

```

pacman -U 本地软件包路径.pkg.tar.xz

```

安装官方存储库中未包含的“远程”软件包:

```

pacman -U http://www.example.com/repo/example.pkg.tar.xz

```

### 额外内容:用 pacman 排除常见错误

下面是使用 `pacman` 管理包时可能遇到的一些常见错误。

#### 提交事务失败(文件冲突)

如果你看到以下报错:

```

error: could not prepare transaction

error: failed to commit transaction (conflicting files)

package: /path/to/file exists in filesystem

Errors occurred, no packages were upgraded.

```

这是因为 `pacman` 检测到文件冲突,不会为你覆盖文件。

解决这个问题的一个安全方法是首先检查另一个包是否拥有这个文件(`pacman-Qo 文件路径`)。如果该文件属于另一个包,请提交错误报告。如果文件不属于另一个包,请重命名“存在于文件系统中”的文件,然后重新发出更新命令。如果一切顺利,文件可能会被删除。

你可以显式地运行 `pacman -S –overwrite 要覆盖的文件模式**,强制`pacman` 覆盖与 给模式匹配的文件,而不是手动重命名并在以后删除属于该包的所有文件。

#### 提交事务失败(包无效或损坏)

在 `/var/cache/pacman/pkg/` 中查找 `.part` 文件(部分下载的包),并将其删除。这通常是由在 `pacman.conf` 文件中使用自定义 `XferCommand` 引起的。

#### 初始化事务失败(无法锁定数据库)

当 `pacman` 要修改包数据库时,例如安装包时,它会在 `/var/lib/pacman/db.lck` 处创建一个锁文件。这可以防止 `pacman` 的另一个实例同时尝试更改包数据库。

如果 `pacman` 在更改数据库时被中断,这个过时的锁文件可能仍然保留。如果你确定没有 `pacman` 实例正在运行,那么请删除锁文件。

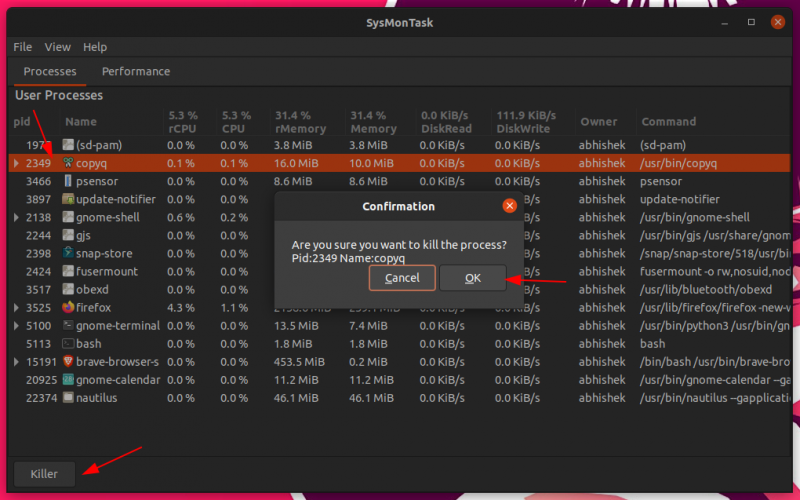

检查进程是否持有锁定文件:

```

lsof /var/lib/pacman/db.lck

```

如果上述命令未返回任何内容,则可以删除锁文件:

```

rm /var/lib/pacman/db.lck

```

如果你发现 `lsof` 命令输出了使用锁文件的进程的 PID,请先杀死这个进程,然后删除锁文件。

我希望你喜欢我对 `pacman` 基础命令的介绍。

---

via: <https://itsfoss.com/pacman-command/>

作者:[Dimitrios Savvopoulos](https://itsfoss.com/author/dimitrios/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[Chao-zhi](https://github.com/Chao-zhi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

The [pacman](https://www.archlinux.org/pacman/?ref=itsfoss.com) package manager is one of the main difference between [Arch Linux](https://www.archlinux.org/?ref=itsfoss.com) and other major distributions like Red Hat and Ubuntu/Debian. It combines a simple binary package format with an easy-to-use [build system](https://wiki.archlinux.org/index.php/Arch_Build_System?ref=itsfoss.com). The aim of pacman is to easily manage packages, either from the [official repositories](https://wiki.archlinux.org/index.php/Official_repositories?ref=itsfoss.com) or the user’s own builds.

If you ever used Ubuntu or Debian-based distributions, you might have used the apt-get or apt commands. Pacman is the equivalent in Arch Linux. If you [just installed Arch Linux](https://itsfoss.com/install-arch-linux/), one of the first few [things to do after installing Arch Linux](https://itsfoss.com/things-to-do-after-installing-arch-linux/) is to learn to use pacman commands.

In this beginner’s guide, I’ll explain some of the essential usage of the pacmand command that you should know for managing your Arch-based system.

## Essential pacman commands Arch Linux users should know

Like other package managers, pacman can synchronize package lists with the software repositories to allow the user to download and install packages with a simple command by solving all required dependencies.

### Install packages with pacman

You can install a single package or multiple packages using pacman command in this fashion:

`pacman -S _package_name1_ _package_name2_ ...`

The -S stands for synchronization. It means that pacman first synchronizes

The pacman database categorises the installed packages in two groups according to the reason why they were installed:

**explicitly-installed**: the packages that were installed by a generic pacman -S or -U command**dependencies**: the packages that were implicitly installed because[required](https://wiki.archlinux.org/index.php/Dependency?ref=itsfoss.com)by another package that was explicitly installed.

### Remove an installed package

To remove a single package, leaving all of its dependencies installed:

`pacman -R package_name_`

To remove a package and its dependencies which are not required by any other installed package:

`pacman -Rs _package_name_`

To remove dependencies that are no longer needed. For example, the package which needed the dependencies was removed.

`pacman -Qdtq | pacman -Rs -`

### Upgrading packages

Pacman provides an easy way to [update Arch Linux](https://itsfoss.com/update-arch-linux/). You can update all installed packages with just one command. This could take a while depending on how up-to-date the system is.

The following command synchronizes the repository databases * and* updates the system’s packages, excluding “local” packages that are not in the configured repositories:

`pacman -Syu`

- S stands for sync

- y is for refresh (local cache)

- u is for system update

Basically it is saying that sync to central repository (master package database), refresh the local copy of the master package database and then perform the system update (by updating all packages that have a newer version available).

#### Attention! Read this before you update

If you are an Arch Linux user before upgrading, it is advised to visit the [Arch Linux home page](https://www.archlinux.org/?ref=itsfoss.com) to check the latest news for out-of-the-ordinary updates. If manual intervention is needed an appropriate news post will be made. Alternatively, you can subscribe to the [RSS feed](https://www.archlinux.org/feeds/news/?ref=itsfoss.com) or the [arch-announce mailing list](https://mailman.archlinux.org/mailman/listinfo/arch-announce/?ref=itsfoss.com).

Be also mindful to look over the appropriate [forum](https://bbs.archlinux.org/?ref=itsfoss.com) before upgrading fundamental software (such as the kernel, xorg, systemd, or glibc), for any reported problems.

**Partial upgrades are unsupported** at a rolling release distribution such as Arch and Manjaro. That means when new library versions are pushed to the repositories, all the packages in the repositories need to be rebuilt against the libraries. For example, if two packages depend on the same library, upgrading only one package, might break the other package which depends on an older version of the library.

### Use pacman to search for packages

Pacman queries the local package database with the -Q flag, the sync database with the -S flag and the files database with the -F flag.

Pacman can search for packages in the database, both in packages’ names and descriptions:

`pacman -Ss _string1_ _string2_ ...`

To search for already installed packages:

`pacman -Qs _string1_ _string2_ ...`

To search for package file names in remote packages:

`pacman -F _string1_ _string2_ ...`

To view the dependency tree of a package:

`pactree _package_naenter code hereme_`

### Cleaning the package cache

Pacman stores its downloaded packages in /var/cache/pacman/pkg/ and does not remove the old or uninstalled versions automatically. This has some advantages:

- It allows to

[downgrade](https://wiki.archlinux.org/index.php/Downgrade?ref=itsfoss.com)a package without the need to retrieve the previous version through other sources. - A package that has been uninstalled can easily be reinstalled directly from the cache folder.

However, it is necessary to clean up the cache periodically to prevent the folder to grow in size.

The [paccache(8)](https://jlk.fjfi.cvut.cz/arch/manpages/man/paccache.8?ref=itsfoss.com) script, provided within the [pacman-contrib](https://www.archlinux.org/packages/?name=pacman-contrib&ref=itsfoss.com) package, deletes all cached versions of installed and uninstalled packages, except for the most recent 3, by default:

`paccache -r`

To remove all the cached packages that are not currently installed, and the unused sync database, execute:

`pacman -Sc`

To remove all files from the cache, use the clean switch twice, this is the most aggressive approach and will leave nothing in the cache folder:

`pacman -Scc`

### Installing local or third-party packages

Install a ‘local’ package that is not from a remote repository:

`pacman -U _/path/to/package/package_name-version.pkg.tar.xz_`

Install a ‘remote’ package, not contained in an official repository:

`pacman -U http://www.example.com/repo/example.pkg.tar.xz`

## Bonus: Troubleshooting common errors with pacman

Here are some common errors you may encounter while managing packages with pacman.

### Failed to commit transaction (conflicting files)

If you see the following error:

```

error: could not prepare transaction

error: failed to commit transaction (conflicting files)

package: /path/to/file exists in filesystem

Errors occurred, no packages were upgraded.

```

This is happening because pacman has detected a file conflict and will not overwrite files for you.

A safe way to solve this is to first check if another package owns the file (pacman -Qo * /path/to/file*). If the file is owned by another package, file a bug report. If the file is not owned by another package, rename the file which ‘exists in filesystem’ and re-issue the update command. If all goes well, the file may then be removed.

Instead of manually renaming and later removing all the files that belong to the package in question, you may explicitly run **pacman -S –overwrite glob package** to force pacman to overwrite files that match

*.*

*glob*### Failed to commit transaction (invalid or corrupted package)

Look for .part files (partially downloaded packages) in /var/cache/pacman/pkg/ and remove them. It is often caused by usage of a custom XferCommand in pacman.conf.

### Failed to init transaction (unable to lock database)

When pacman is about to alter the package database, for example installing a package, it creates a lock file at /var/lib/pacman/db.lck. This prevents another instance of pacman from trying to alter the package database at the same time.

If pacman is interrupted while changing the database, this stale lock file can remain. If you are certain that no instances of pacman are running then delete the lock file.

Check if a process is holding the lock file:

`lsof /var/lib/pacman/db.lck`

If the above command doesn’t return anything, you can remove the lock file:

`rm /var/lib/pacman/db.lck`

If you find the PID of the process holding the lock file with lsof command output, kill it first and then remove the lock file.

I hope you like my humble effort in explaining the basic pacman commands. Please leave your comments below and don’t forget to subscribe on our social media. Stay safe! |

13,100 | 我为什么要用 D 语言写脚本? | https://opensource.com/article/21/1/d-scripting | 2021-02-09T13:44:46 | [

"D语言"

] | https://linux.cn/article-13100-1.html |

>

> D 语言以系统编程语言而闻名,但它也是编写脚本的一个很好的选择。

>

>

>

D 语言由于其静态类型和元编程能力,经常被宣传为系统编程语言。然而,它也是一种非常高效的脚本语言。

由于 Python 在自动化任务和快速实现原型想法方面的灵活性,它通常被选为脚本语言。这使得 Python 对系统管理员、[管理者](https://opensource.com/article/20/3/automating-community-management-python)和一般的开发人员非常有吸引力,因为它可以自动完成他们可能需要手动完成的重复性任务。

我们自然也可以期待任何其他的脚本编写语言具有 Python 的这些特性和能力。以下是我认为 D 是一个不错的选择的两个原因。

### 1、D 很容易读和写

作为一种类似于 C 的语言,D 应该是大多数程序员所熟悉的。任何使用 JavaScript、Java、PHP 或 Python 的人对 D 语言都很容易上手。

如果你还没有安装 D,请[安装 D 编译器](https://tour.dlang.org/tour/en/welcome/install-d-locally),这样你就可以[运行本文中的 D 代码](https://tour.dlang.org/tour/en/welcome/run-d-program-locally)。你也可以使用[在线 D 编辑器](https://run.dlang.io/)。

下面是一个 D 代码的例子,它从一个名为 `words.txt` 的文件中读取单词,并在命令行中打印出来:

```

open

source

is

cool

```

用 D 语言写脚本:

```

#!/usr/bin/env rdmd

// file print_words.d

// import the D standard library

import std;

void main(){

// open the file

File("./words.txt")

//iterate by line

.byLine

// print each number

.each!writeln;

}

```

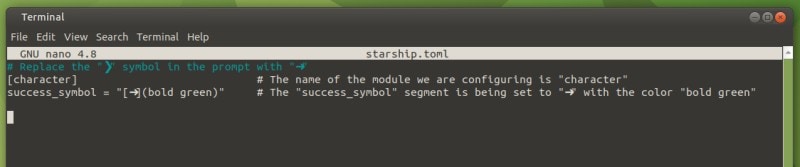

这段代码以 [释伴](https://en.wikipedia.org/wiki/Shebang_(Unix)) 开头,它将使用 [rdmd](https://dlang.org/rdmd.html) 来运行这段代码,`rdmd` 是 D 编译器自带的编译和运行代码的工具。假设你运行的是 Unix 或 Linux,在运行这个脚本之前,你必须使用`chmod` 命令使其可执行:

```

chmod u+x print_words.d

```

现在脚本是可执行的,你可以运行它:

```

./print_words.d

```

这将在你的命令行中打印以下内容:

```

open

source

is

cool

```

恭喜你,你写了第一个 D 语言脚本。你可以看到 D 是如何让你按顺序链式调用函数,这让阅读代码的感觉很自然,类似于你在头脑中思考问题的方式。这个[功能让 D 成为我最喜欢的编程语言](https://opensource.com/article/20/7/d-programming)。

试着再写一个脚本:一个非营利组织的管理员有一个捐款的文本文件,每笔金额都是单独的一行。管理员想把前 10 笔捐款相加,然后打印出金额:

```

#!/usr/bin/env rdmd

// file sum_donations.d

import std;

void main()

{

double total = 0;

// open the file

File("monies.txt")

// iterate by line

.byLine

// pick first 10 lines

.take(10)

// remove new line characters (\n)

.map!(strip)

// convert each to double

.map!(to!double)

// add element to total

.tee!((x) { total += x; })

// print each number

.each!writeln;

// print total

writeln("total: ", total);

}

```

与 `each` 一起使用的 `!` 操作符是[模板参数](http://ddili.org/ders/d.en/templates.html)的语法。

### 2、D 是快速原型设计的好帮手

D 是灵活的,它可以快速地将代码敲打在一起,并使其发挥作用。它的标准库中包含了丰富的实用函数,用于执行常见的任务,如操作数据(JSON、CSV、文本等)。它还带有一套丰富的通用算法,用于迭代、搜索、比较和 mutate 数据。这些巧妙的算法通过定义通用的 [基于范围的接口](http://ddili.org/ders/d.en/ranges.html) 而按照序列进行处理。

上面的脚本显示了 D 中的链式调用函数如何提供顺序处理和操作数据的要领。D 的另一个吸引人的地方是它不断增长的用于执行普通任务的第三方包的生态系统。一个例子是,使用 [Vibe.d](https://vibed.org) web 框架构建一个简单的 web 服务器很容易。下面是一个例子:

```

#!/usr/bin/env dub

/+ dub.sdl:

dependency "vibe-d" version="~>0.8.0"

+/

void main()

{

import vibe.d;

listenHTTP(":8080", (req, res) {

res.writeBody("Hello, World: " ~ req.path);

});

runApplication();

}

```

它使用官方的 D 软件包管理器 [Dub](https://dub.pm/getting_started),从 [D 软件包仓库](https://code.dlang.org)中获取 vibe.d Web 框架。Dub 负责下载 Vibe.d 包,然后在本地主机 8080 端口上编译并启动一个 web 服务器。

### 尝试一下 D 语言

这些只是你可能想用 D 来写脚本的几个原因。

D 是一种非常适合开发的语言。你可以很容易从 D 下载页面安装,因此下载编译器,看看例子,并亲自体验 D 语言。

---

via: <https://opensource.com/article/21/1/d-scripting>

作者:[Lawrence Aberba](https://opensource.com/users/aberba) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | The D programming language is often advertised as a system programming language due to its static typing and metaprogramming capabilities. However, it's also a very productive scripting language.

Python is commonly chosen for scripting due to its flexibility for automating tasks and quickly prototyping ideas. This makes Python very appealing to sysadmins, [managers](https://opensource.com/article/20/3/automating-community-management-python), and developers in general for automating recurring tasks that they might otherwise have to do manually.

It is reasonable to expect any other script-writing language to have these Python traits and capabilities. Here are two reasons why I believe D is a good option.

## 1. D is easy to read and write

As a C-like language, D should be familiar to most programmers. Anyone who uses JavaScript, Java, PHP, or Python will know their way around D.

If you don't already have D installed, [install a D compiler](https://tour.dlang.org/tour/en/welcome/install-d-locally) so that you can [run the D code](https://tour.dlang.org/tour/en/welcome/run-d-program-locally) in this article. You may also use the [online D editor](https://run.dlang.io/).

Here is an example of D code that reads words from a file named `words.txt`

and prints them on the command line:

```

open

source

is

cool

```

Write the script in D:

```

#!/usr/bin/env rdmd

// file print_words.d

// import the D standard library

import std;

void main(){

// open the file

File("./words.txt")

//iterate by line

.byLine

// print each number

.each!writeln;

}

```

This code is prefixed with a [shebang](https://en.wikipedia.org/wiki/Shebang_(Unix)) that will run the code using [rdmd](https://dlang.org/rdmd.html), a tool that comes with the D compiler to compile and run code. Assuming you are running Unix or Linux, before you can run this script, you must make it executable by using the `chmod`

command:

`chmod u+x print_words.d`

Now that the script is executable, you can run it:

`./print_words.d`

This should print the following on your command line:

```

open

source

is

cool

```

Congratulations! You've written your first D script. You can see how D enables you to chain functions in sequence to make reading the code feel natural, similar to how you think about problems in your mind. This [feature makes D my favorite programming language](https://opensource.com/article/20/7/d-programming).

Try writing another script: A nonprofit manager has a text file of donations with each amount on separate lines. The manager wants to sum the first 10 donations and print the amounts:

```

#!/usr/bin/env rdmd

// file sum_donations.d

import std;

void main()

{

double total = 0;

// open the file

File("monies.txt")

// iterate by line

.byLine

// pick first 10 lines

.take(10)

// remove new line characters (\n)

.map!(strip)

// convert each to double

.map!(to!double)

// add element to total

.tee!((x) { total += x; })

// print each number

.each!writeln;

// print total

writeln("total: ", total);

}

```

The `!`

operator used with `each`

is the syntax of a [template argument](http://ddili.org/ders/d.en/templates.html).

## 2. D is great for quick prototyping

D is flexible for hammering code together really quickly and making it work. Its standard library is rich with utility functions for performing common tasks, such as manipulating data (JSON, CSV, text, etc.). It also comes with a rich set of generic algorithms for iterating, searching, comparing, and mutating data. These cleverly crafted algorithms are oriented towards processing sequences by defining generic [range-based interfaces](http://ddili.org/ders/d.en/ranges.html).

The script above shows how chaining functions in D provides a gist of sequential processing and manipulating data. Another appeal of D is its growing ecosystem of third-party packages for performing common tasks. An example is how easy it is to build a simple web server using the [Vibe.d](https://vibed.org) web framework. Here's an example:

```

#!/usr/bin/env dub

/+ dub.sdl:

dependency "vibe-d" version="~>0.8.0"

+/

void main()

{

import vibe.d;

listenHTTP(":8080", (req, res) {

res.writeBody("Hello, World: " ~ req.path);

});

runApplication();

}

```

This uses the official D package manager, [Dub](https://dub.pm/getting_started), to fetch the vibe.d web framework from the [D package repository](https://code.dlang.org). Dub takes care of downloading the Vibe.d package, then compiling and spinning up a web server on localhost port 8080.

## Give D a try

These are only a couple of reasons why you might want to use D for writing scripts.

D is a great language for development. It's easy to install from the D download page, so download the compiler, take a look at the examples, and experience D for yourself.

## Comments are closed. |

13,103 | Python 之禅:时机最重要 | https://opensource.com/article/19/12/zen-python-timeliness | 2021-02-09T23:17:15 | [

"Python"

] | https://linux.cn/article-13103-1.html |

>

> 这是 Python 之禅特别系列的一部分,重点是第十五和第十六条原则:现在与将来。

>

>

>

Python 一直在不断发展。Python 社区对特性请求的渴求是无止境的,对现状也总是不满意的。随着 Python 越来越流行,这门语言的变化会影响到更多的人。

确定什么时候该进行变化往往很难,但 [Python 之禅](https://www.python.org/dev/peps/pep-0020/) 给你提供了指导。

### <ruby> 现在有总比永远没有好 <rt> Now is better than never </rt></ruby>

总有一种诱惑,就是要等到事情完美才去做,虽然,它们永远没有完美的一天。当它们看起来已经“准备”得足够好了,那就大胆采取行动吧,去做出改变吧。无论如何,变化总是发生在*某个*现在:拖延的唯一作用就是把它移到未来的“现在”。

### <ruby> 虽然将来总比现在好 <rt> Although never is often better than right now </rt></ruby>

然而,这并不意味着应该急于求成。从测试、文档、用户反馈等方面决定发布的标准。在变化就绪之前的“现在”,并不是一个好时机。

这不仅对 Python 这样的流行语言是个很好的经验,对你个人的小开源项目也是如此。

---

via: <https://opensource.com/article/19/12/zen-python-timeliness>

作者:[Moshe Zadka](https://opensource.com/users/moshez) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Python is always evolving. The Python community has an unending appetite for feature requests but also an unending bias toward the status quo. As Python gets more popular, changes to the language affect more people.

The exact timing for when a change happens is often hard, but the [Zen of Python](https://www.python.org/dev/peps/pep-0020/) offers guidance.

## Now is better than never.

There is always the temptation to delay things until they are perfect. They will never be perfect, though. When they look "ready" enough, that is when it is time to take the plunge and put them out there. Ultimately, a change always happens at *some* now: the only thing that delaying does is move it to a future person's "now."

## Although never is often better than *right now*.

This, however, does not mean things should be rushed. Decide the criteria for release in terms of testing, documentation, user feedback, and so on. "Right now," as in before the change is ready, is not a good time.

This is a good lesson not just for popular languages like Python, but also for your personal little open source project.

## Comments are closed. |

13,104 | 9 个易用的基于 Arch 的用户友好型 Linux 发行版 | https://itsfoss.com/arch-based-linux-distros/ | 2021-02-10T11:28:59 | [

"Arch"

] | https://linux.cn/article-13104-1.html | 在 Linux 社区中,[Arch Linux](https://www.archlinux.org/) 有一群狂热的追随者。这个轻量级的发行版以 DIY 的态度提供了最前沿的更新。

但是,Arch 的目标用户是那些更有经验的用户。因此,它通常被认为是那些技术不够(或耐心不够)的人所无法触及的。

事实上,只是最开始的步骤,[安装 Arch Linux 就足以把很多人吓跑](https://itsfoss.com/install-arch-linux/)。与大多数其他发行版不同,Arch Linux 没有一个易于使用的图形安装程序。安装过程中涉及到的磁盘分区,连接到互联网,挂载驱动器和创建文件系统等只用命令行工具来操作。

对于那些不想经历复杂的安装和设置的人来说,有许多用户友好的基于 Arch 的发行版。

在本文中,我将向你展示一些 Arch 替代发行版。这些发行版附带了图形安装程序、图形包管理器和其他工具,比它们的命令行版本更容易使用。

### 更容易设置和使用的基于 Arch 的 Linux 发行版

请注意,这不是一个排名列表。这些数字只是为了计数的目的。排第二的发行版不应该被认为比排第七的发行版好。

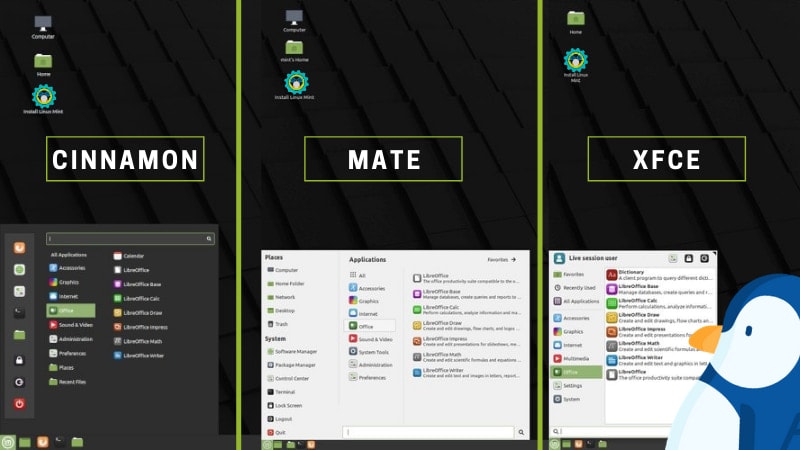

#### 1、Manjaro Linux

[Manjaro](https://manjaro.org/) 不需要任何介绍。它是几年来最流行的 Linux 发行版之一,它值得拥有。

Manjaro 提供了 Arch Linux 的所有优点,同时注重用户友好性和可访问性。Manjaro 既适合新手,也适合有经验的 Linux 用户。

**对于新手**,它提供了一个用户友好的安装程序,系统本身也设计成可以在你[最喜爱的桌面环境](https://itsfoss.com/best-linux-desktop-environments/) (DE)或窗口管理器中直接“开箱即用”。

**对于更有经验的用户**,Manjaro 还提供多种功能,以满足每个个人的口味和喜好。[Manjaro Architect](https://itsfoss.com/manjaro-architect-review/) 提供了安装各种 Manjaro 风格的选项,并为那些想要完全自由地塑造系统的人提供了各种桌面环境、文件系统([最近推出的 ZFS](https://itsfoss.com/manjaro-20-release/)) 和引导程序的选择。

Manjaro 也是一个滚动发布的前沿发行版。然而,与 Arch 不同的是,Manjaro 首先测试更新,然后将其提供给用户。稳定在这里也很重要。

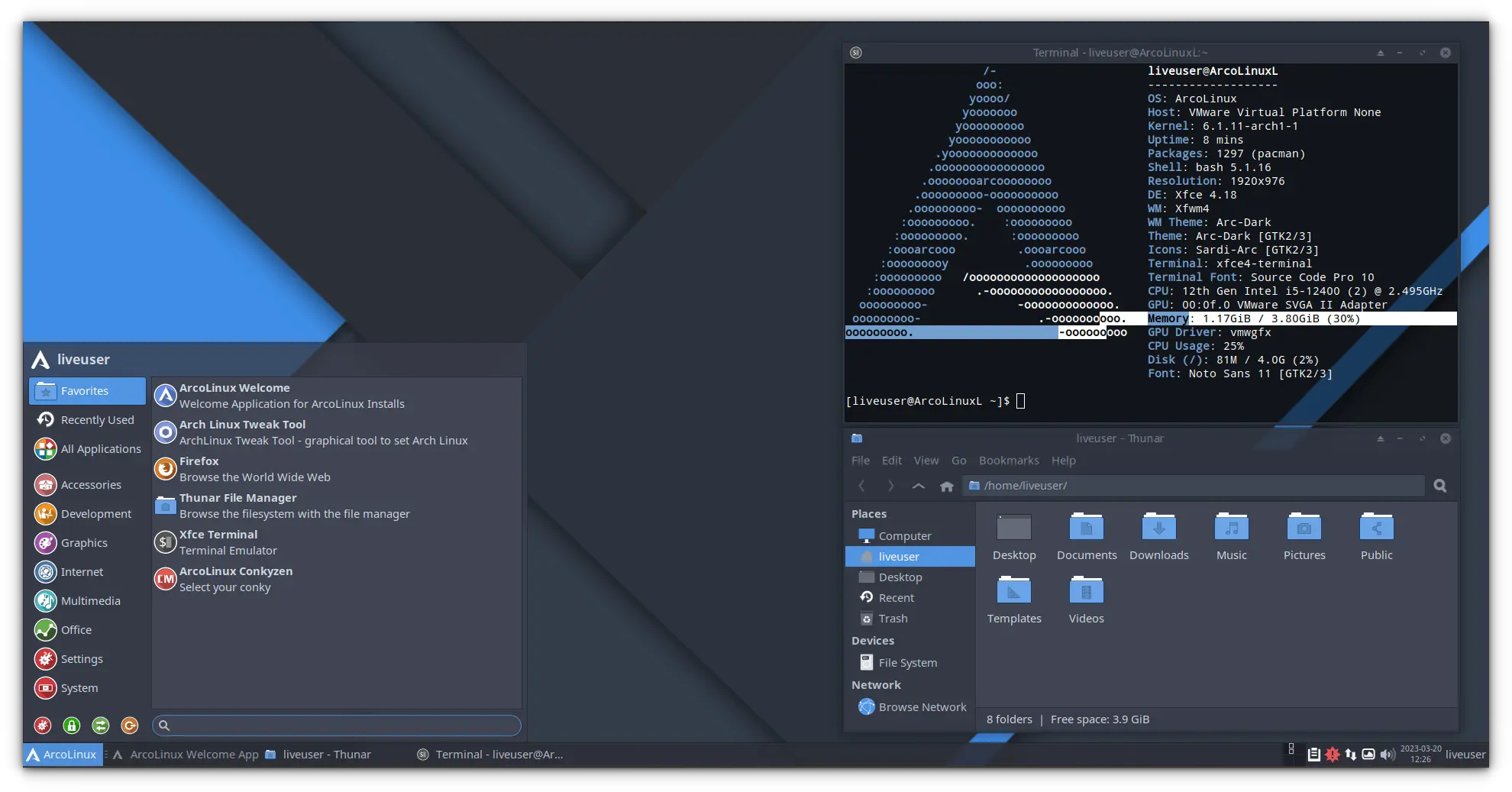

#### 2、ArcoLinux

[ArcoLinux](https://arcolinux.com/)(以前称为 ArchMerge)是一个基于 Arch Linux 的发行版。开发团队提供了三种变体。ArcoLinux、ArcoLinuxD 和 ArcoLinuxB。

ArcoLinux 是一个功能齐全的发行版,附带有 [Xfce 桌面](https://www.xfce.org/)、[Openbox](http://openbox.org/wiki/Main_Page) 和 [i3 窗口管理器](https://i3wm.org/)。

**ArcoLinuxD** 是一个精简的发行版,它包含了一些脚本,可以让高级用户安装任何桌面和应用程序。

**ArcoLinuxB** 是一个让用户能够构建自定义发行版的项目,同时还开发了几个带有预配置桌面的社区版本,如 Awesome、bspwm、Budgie、Cinnamon、Deepin、GNOME、MATE 和 KDE Plasma。

ArcoLinux 还提供了各种视频教程,因为它非常注重学习和获取 Linux 技能。

#### 3、Archlabs Linux

[ArchLabs Linux](https://itsfoss.com/archlabs-review/) 是一个轻量级的滚动版 Linux 发行版,基于最精简的 Arch Linux,带有 [Openbox](https://en.wikipedia.org/wiki/Openbox) 窗口管理器。[ArchLabs](https://archlabslinux.com/) 在观感设计中受到 [BunsenLabs](https://www.bunsenlabs.org/) 的影响和启发,主要考虑到中级到高级用户的需求。

#### 4、Archman Linux

[Archman](https://archman.org/en/) 是一个独立的项目。Arch Linux 发行版对于没有多少 Linux 经验的用户来说通常不是理想的操作系统。要想在最小的挫折感下让事情变得更有意义,必须要有相当的背景知识。Archman Linux 的开发人员正试图改变这种评价。

Archman 的开发是基于对开发的理解,包括用户反馈和体验组件。根据团队过去的经验,将用户的反馈和要求融合在一起,确定路线图并完成构建工作。

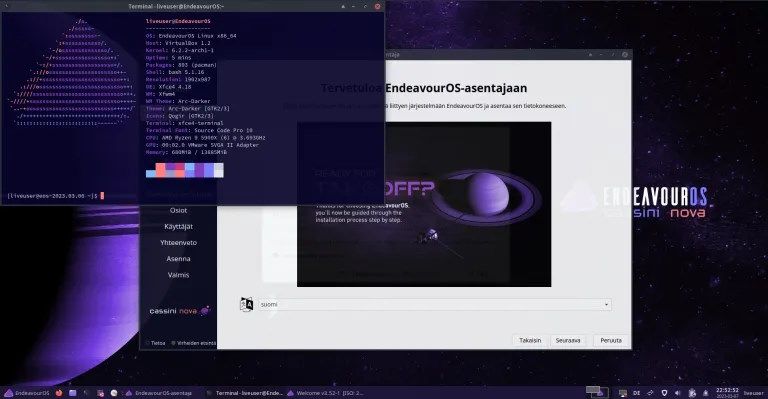

#### 5、EndeavourOS

当流行的基于 Arch 的发行版 [Antergos 在 2019 结束](https://itsfoss.com/antergos-linux-discontinued/) 时,它留下了一个友好且非常有用的社区。Antergos 项目结束的原因是因为该系统对于开发人员来说太难维护了。

在宣布结束后的几天内,一些有经验的用户通过创建一个新的发行版来填补 Antergos 留下的空白,从而维护了以前的社区。这就是 [EndeavourOS](https://itsfoss.com/endeavouros/) 的诞生。

[EndeavourOS](https://endeavouros.com/) 是轻量级的,并且附带了最少数量的预装应用程序。一块近乎空白的画布,随时可以个性化。

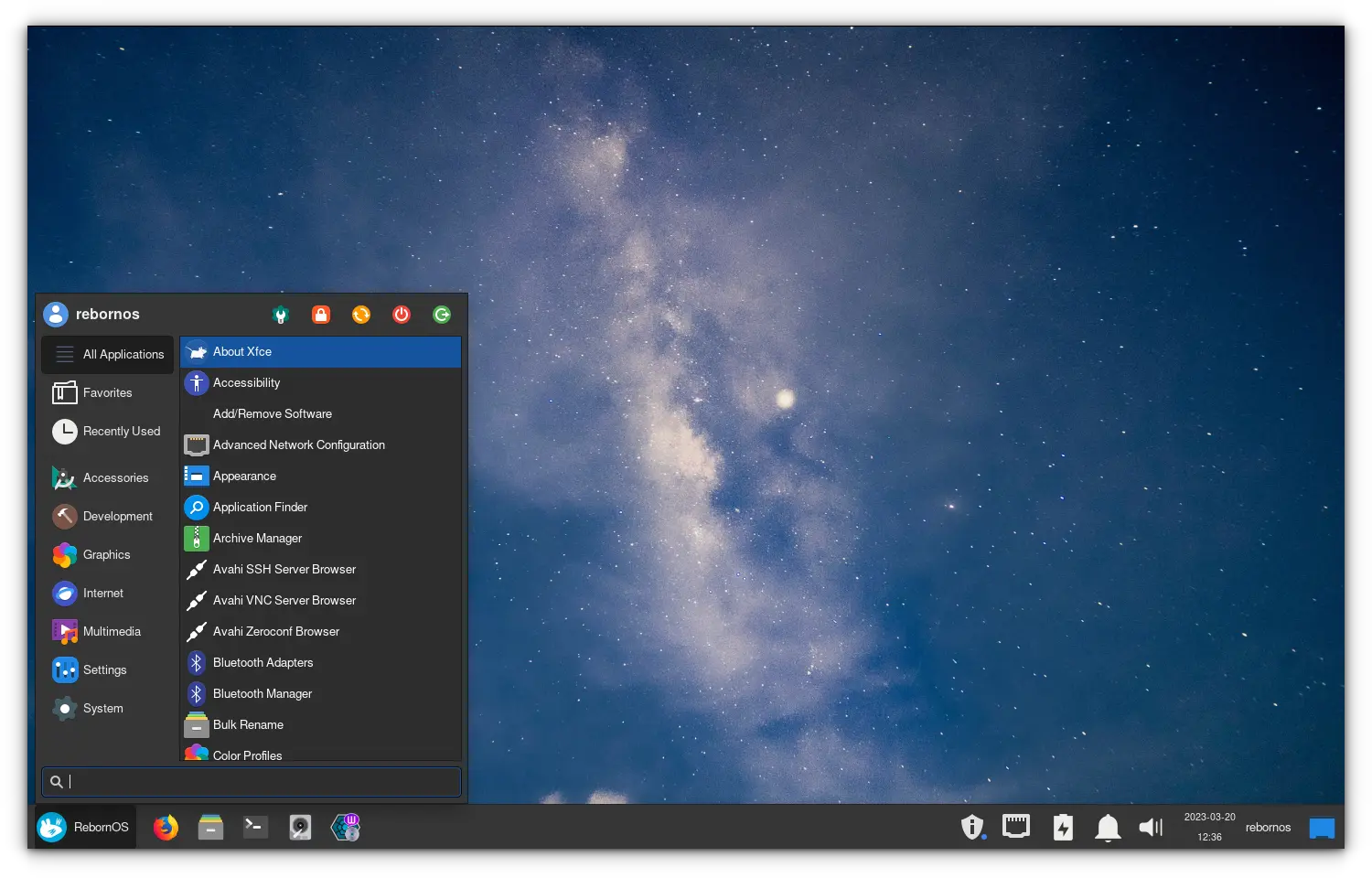

#### 6、RebornOS

[RebornOS](https://rebornos.org/) 开发人员的目标是将 Linux 的真正威力带给每个人,一个 ISO 提供了 15 个桌面环境可供选择,并提供无限的定制机会。

RebornOS 还声称支持 [Anbox](https://anbox.io/),它可以在桌面 Linux 上运行 Android 应用程序。它还提供了一个简单的内核管理器 GUI 工具。

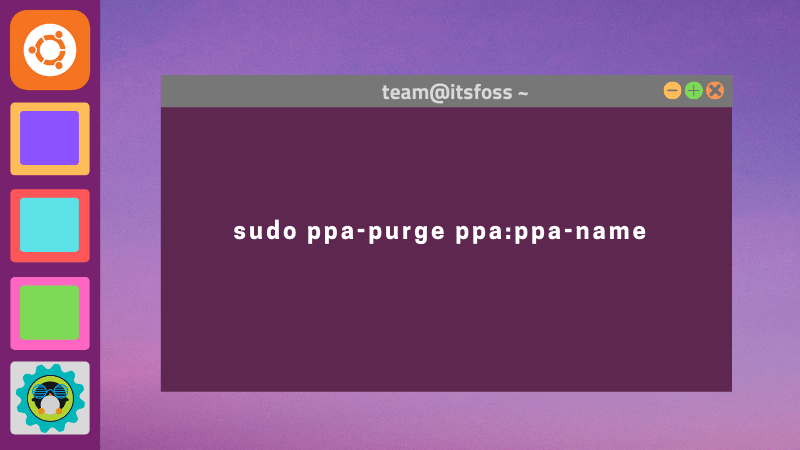

再加上 [Pacman](https://itsfoss.com/pacman-command/)、[AUR](https://itsfoss.com/aur-arch-linux/),以及定制版本的 Cnchi 图形安装程序,Arch Linux 终于可以让最没有经验的用户也能够使用了。

#### 7、Chakra Linux

一个社区开发的 GNU/Linux 发行版,它的亮点在 KDE 和 Qt 技术。[Chakra Linux](https://www.chakralinux.org/) 不在特定日期安排发布,而是使用“半滚动发布”系统。

这意味着 Chakra Linux 的核心包被冻结,只在修复安全问题时才会更新。这些软件包是在最新版本经过彻底测试后更新的,然后再转移到永久软件库(大约每六个月更新一次)。

除官方软件库外,用户还可以安装 Chakra 社区软件库 (CCR) 的软件包,该库为官方存储库中未包含的软件提供用户制作的 PKGINFOs 和 [PKGBUILD](https://wiki.archlinux.org/index.php/PKGBUILD) 脚本,其灵感来自于 Arch 用户软件库(AUR)。

#### 8、Artix Linux

[Artix Linux](https://artixlinux.org/) 也是一个基于 Arch Linux 的滚动发行版,它使用 [OpenRC](https://en.wikipedia.org/wiki/OpenRC)、[runit](https://en.wikipedia.org/wiki/Runit) 或 [s6](https://en.wikipedia.org/wiki/S6_(software)) 作为初始化工具而不是 [systemd](https://en.wikipedia.org/wiki/Systemd)。

Artix Linux 有自己的软件库,但作为一个基于 `pacman` 的发行版,它可以使用 Arch Linux 软件库或任何其他衍生发行版的软件包,甚至可以使用明确依赖于 systemd 的软件包。也可以使用 [Arch 用户软件库](https://itsfoss.com/aur-arch-linux/)(AUR)。

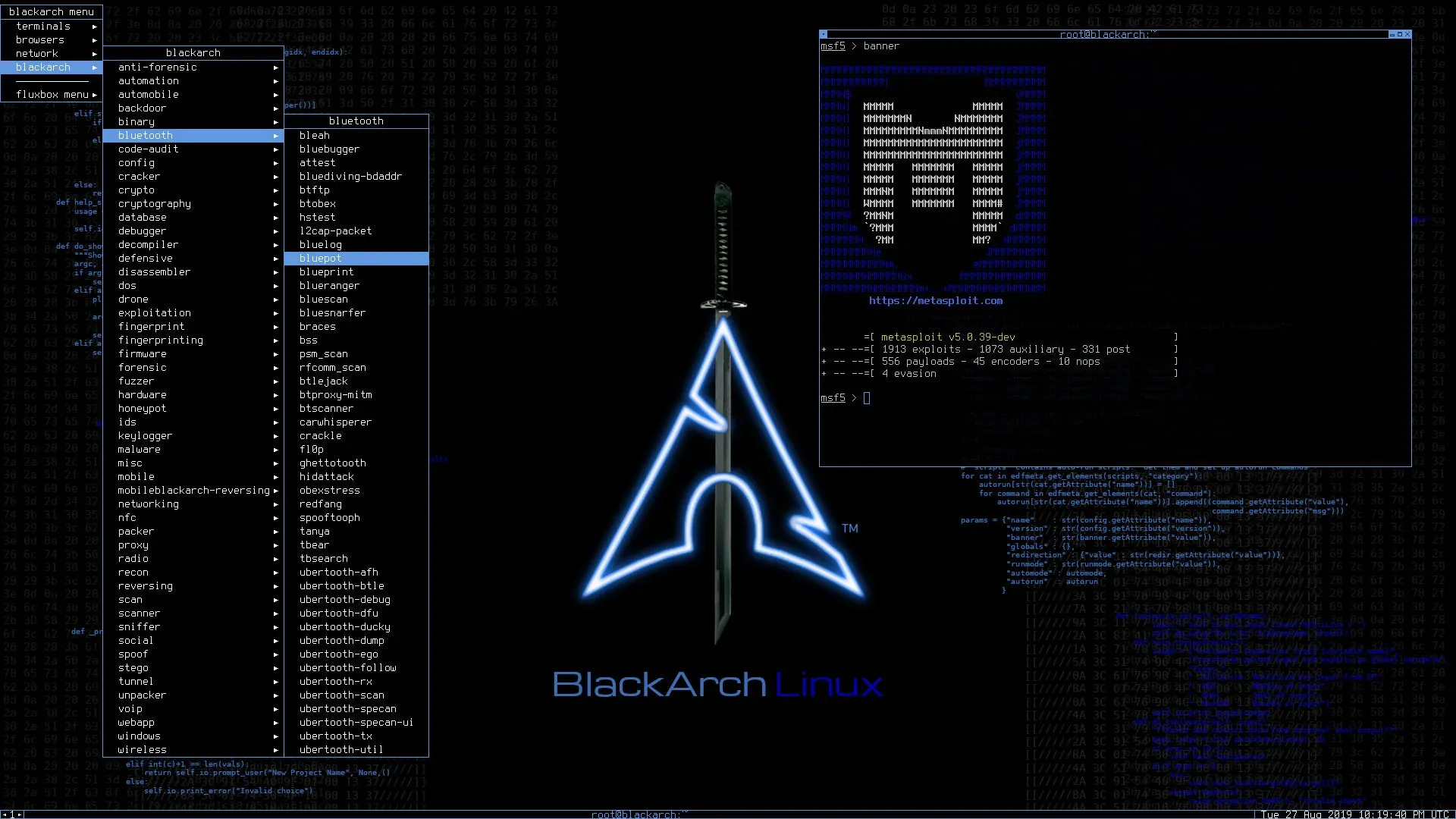

#### 9、BlackArch Linux

BlackArch 是一个基于 Arch Linux 的 [渗透测试发行版](https://itsfoss.com/linux-hacking-penetration-testing/),它提供了大量的网络安全工具。它是专门为渗透测试人员和安全研究人员创建的。该软件库包含 2400 多个[黑客和渗透测试工具](https://itsfoss.com/best-kali-linux-tools/) ,可以单独安装,也可以分组安装。BlackArch Linux 兼容现有的 Arch Linux 包。

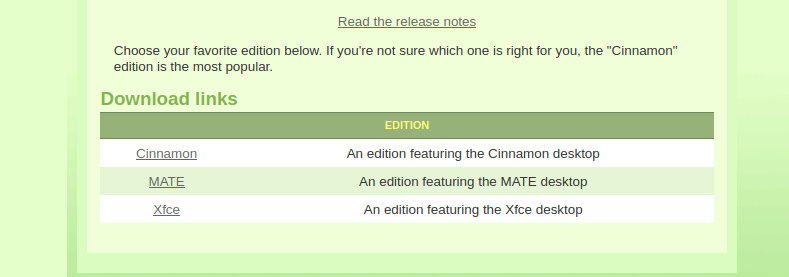

### 想要真正的原版 Arch Linux 吗?可以使用图形化 Arch 安装程序简化安装

如果你想使用原版的 Arch Linux,但又被它困难的安装所难倒。幸运的是,你可以下载一个带有图形安装程序的 Arch Linux ISO。

Arch 安装程序基本上是 Arch Linux ISO 的一个相对容易使用的基于文本的安装程序。它比裸奔的 Arch 安装容易得多。

#### Anarchy Installer

[Anarchy installer](https://anarchyinstaller.org/) 打算为新手和有经验的 Linux 用户提供一种简单而无痛苦的方式来安装 ArchLinux。在需要的时候安装,在需要的地方安装,并且以你想要的方式安装。这就是 Anarchy 的哲学。

启动安装程序后,将显示一个简单的 [TUI 菜单](https://en.wikipedia.org/wiki/Text-based_user_interface),列出所有可用的安装程序选项。

#### Zen Installer

[Zen Installer](https://sourceforge.net/projects/revenge-installer/) 为安装 Arch Linux 提供了一个完整的图形(点击式)环境。它支持安装多个桌面环境 、AUR 以及 Arch Linux 的所有功能和灵活性,并且易于图形化安装。

ISO 将引导一个临场环境,然后在你连接到互联网后下载最新稳定版本的安装程序。因此,你将始终获得最新的安装程序和更新的功能。

### 总结

对于许多用户来说,基于 Arch 的发行版会是一个很好的无忧选择,而像 Anarchy 这样的图形化安装程序至少离原版的 Arch Linux 更近了一步。

在我看来,[Arch Linux 的真正魅力在于它的安装过程](https://itsfoss.com/install-arch-linux/),对于 Linux 爱好者来说,这是一个学习的机会,而不是麻烦。Arch Linux 及其衍生产品有很多东西需要你去折腾,但是在折腾的过程中你就会进入到开源软件的世界,这里是神奇的新世界。下次再见!

---

via: <https://itsfoss.com/arch-based-linux-distros/>

作者:[Dimitrios Savvopoulos](https://itsfoss.com/author/dimitrios/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[Chao-zhi](https://github.com/Chao-zhi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

In the Linux community, [Arch Linux has a cult following](https://itsfoss.com/why-arch-linux/). This lightweight distribution provides bleeding-edge updates with a DIY (do it yourself) attitude.

However, [Arch Linux](https://www.archlinux.org/?ref=its-foss) is also aimed at more experienced users. As such, it is generally considered to be beyond the reach of those who need more technical expertise (or persistence) to use it.

Things like [installing Arch Linux](https://itsfoss.com/install-arch-linux/) are paint points for new users as it does not feature an easy-to-understand graphical installer like Ubuntu. The process is getting easier at the time of updating this article.

Here, I will list some of these Arch alternative distributions that are easier to set up and use. These distributions come with a graphical installer, graphical package manager, and other tools that are easier to use than their command-line alternatives.

## 1. Manjaro Linux

[Manjaro](https://manjaro.org/?ref=itsfoss.com) doesn’t need any introduction. It is one of the most popular Linux distributions for several years and deserves it.

Manjaro provides all the benefits of Arch Linux combined with a focus on user-friendliness and accessibility. Manjaro is suitable for both newcomers and experienced Linux users alike.

For newcomers, a user-friendly installer is provided, and the system is designed to work fully ‘straight out of the box’ with your [favourite desktop environment](https://itsfoss.com/best-linux-desktop-environments/) (DE) or window manager.

Manjaro is also a rolling release cutting-edge distribution. However, unlike Arch, Manjaro tests the updates first and then provides them to its users. Stability is better there.

[Long-Term Support versions](https://itsfoss.com/long-term-support-lts/)instead of Arch-based rolling releases.

## 2. ArcoLinux

[ArcoLinux](https://arcolinux.com/?ref=itsfoss.com) (previously known as ArchMerge) is a distribution based on Arch Linux. The development team offers three variations. ArcoLinux, ArcoLinuxD and ArcoLinuxB.

ArcoLinux is a full-featured distribution that ships with the [Xfce desktop](https://www.xfce.org/?ref=itsfoss.com), [Openbox](http://openbox.org/wiki/Main_Page?ref=itsfoss.com), and [i3 window managers](https://i3wm.org/?ref=itsfoss.com) by default.

**ArcoLinuxD** is a minimal distribution that includes scripts that enable power users to install any desktop and application.

**ArcoLinuxB** is a project that gives users the power to build custom distributions, while also developing several community editions with pre-configured desktops, such as Awesome, bspwm, Budgie, Cinnamon, Deepin, GNOME, MATE and KDE Plasma.

ArcoLinux also provides various video tutorials as it places a strong focus on learning and acquiring Linux skills.

## 3. Archlabs Linux

[Distrowatch](https://distrowatch.com/table.php?distribution=archlabs)

[ArchLabs Linux](https://itsfoss.com/archlabs-review/) is a lightweight rolling release Linux distribution based on a minimal Arch Linux base with the [Openbox](https://en.wikipedia.org/wiki/Openbox?ref=itsfoss.com) window manager. [ArchLabs](https://archlabslinux.com/?ref=itsfoss.com) is influenced and inspired by the look and feel of [BunsenLabs](https://www.bunsenlabs.org/?ref=itsfoss.com) with the intermediate to advanced user in mind.

It comes with a live session of the dk window manager, based on dwm and bspwm. Other desktops like i3WM, Openbox etc are available to install through the installer.

## 4. Archman Linux

[Archman](https://archman.org/en/?ref=itsfoss.com), a name derived from a combination of **Arch** Linux and Pac**man** package management, is an independent project based on Arch Linux. Arch Linux distros, in general, are not ideal operating systems for users with little Linux experience.

Considerable background reading is necessary for things to make sense with minimal frustration. Developers of Archman Linux are trying to change that reputation.

Archman’s development is based on an understanding of development that includes user feedback and experience components. With past experience, the feedback and requests from the users are blended, and the road maps are determined, and the build works are done.

They ship Xfce, KDE Plasma, Mate, Deepin, Gnome, Lxde, and Lxqt versions, while XFCE remains the main version.

## 5. EndeavourOS

When the popular Arch-based distribution [Antergos was discontinued in 2019](https://itsfoss.com/antergos-linux-discontinued/), it left a friendly and extremely helpful community behind. The Antergos project ended because the system was too hard to maintain for the developers.

Within days after the announcement, a few experienced users planned on maintaining the former community by creating a new distribution to fill the void left by Antergos. That’s how [EndeavourOS](https://itsfoss.com/endeavouros/) was born.

[EndeavourOS](https://endeavouros.com/?ref=itsfoss.com) is lightweight and ships with a minimum amount of preinstalled apps. An almost blank canvas ready to personalize.

Later in 2022, [it added ARM installation support](https://news.itsfoss.com/endeavouros-artemis-release/) too. XFCE is their default desktop, offering several others through the online installer.

## 6. RebornOS

[RebornOS](https://rebornos.org/?ref=itsfoss.com) developers’ goal is to bring the true power of Linux to everyone, with one ISO for 15 desktop environments and full of unlimited opportunities for customization.

RebornOS also claims to have support for [Anbox](https://anbox.io/?ref=itsfoss.com) for running Android applications on desktop Linux. It also offers a simple kernel manager GUI tool.

Coupled with [Pacman](https://itsfoss.com/pacman-command/), the [AUR](https://itsfoss.com/aur-arch-linux/), and a customized version of Calamares graphical installer, Arch Linux is finally available for even the least inexperienced users.

## 7. Garuda Linux

Garuda Linux is an easy-to-install Linux distribution based on Arch Linux. It is known for its heavily customized desktops and availability of GUI tools to carry out complex operations.

It uses the BTRFS file system, with automatic system snapshots before each upgrade, accessible from the GRUB menu.

Another exciting feature is its Chaotic-AUR as a repository featuring one of the biggest precompiled software choices out of the box. Currently, there are around 2400 packages.

It offers several desktop environments, and users can download them individually.

## 8. Artix Linux

[Distrowatch](https://distrowatch.com/table.php?distribution=artix)

[Artix Linux](https://artixlinux.org/?ref=itsfoss.com) is a rolling-release distribution based on Arch Linux that uses [OpenRC](https://en.wikipedia.org/wiki/OpenRC?ref=itsfoss.com), [runit](https://en.wikipedia.org/wiki/Runit?ref=itsfoss.com) or [s6](https://en.wikipedia.org/wiki/S6_(software)?ref=itsfoss.com) init instead of [systemd](https://en.wikipedia.org/wiki/Systemd?ref=itsfoss.com).

Artix Linux has its own package repositories but as a pacman-based distribution, it can use packages from Arch Linux repositories or any other derivative distribution, even packages explicitly depending on systemd. The [Arch User Repository](https://itsfoss.com/aur-arch-linux/) (AUR) can also be used.

## 9. BlackArch Linux

[BlackArch](https://blackarch.org/) is a [penetration testing distribution](https://itsfoss.com/linux-hacking-penetration-testing/) based on Arch Linux that provides many cyber security tools. It is specially created for penetration testers and security researchers. The repository contains more than 2800+ [hacking and pen-testing tools](https://itsfoss.com/best-kali-linux-tools/) that can be installed individually or in groups. BlackArch Linux is compatible with existing Arch Linux packages.

BlackArch is a relatively new project that offers a Full ISO containing multiple window managers and a slim ISO featuring the XFCE Desktop Environment.

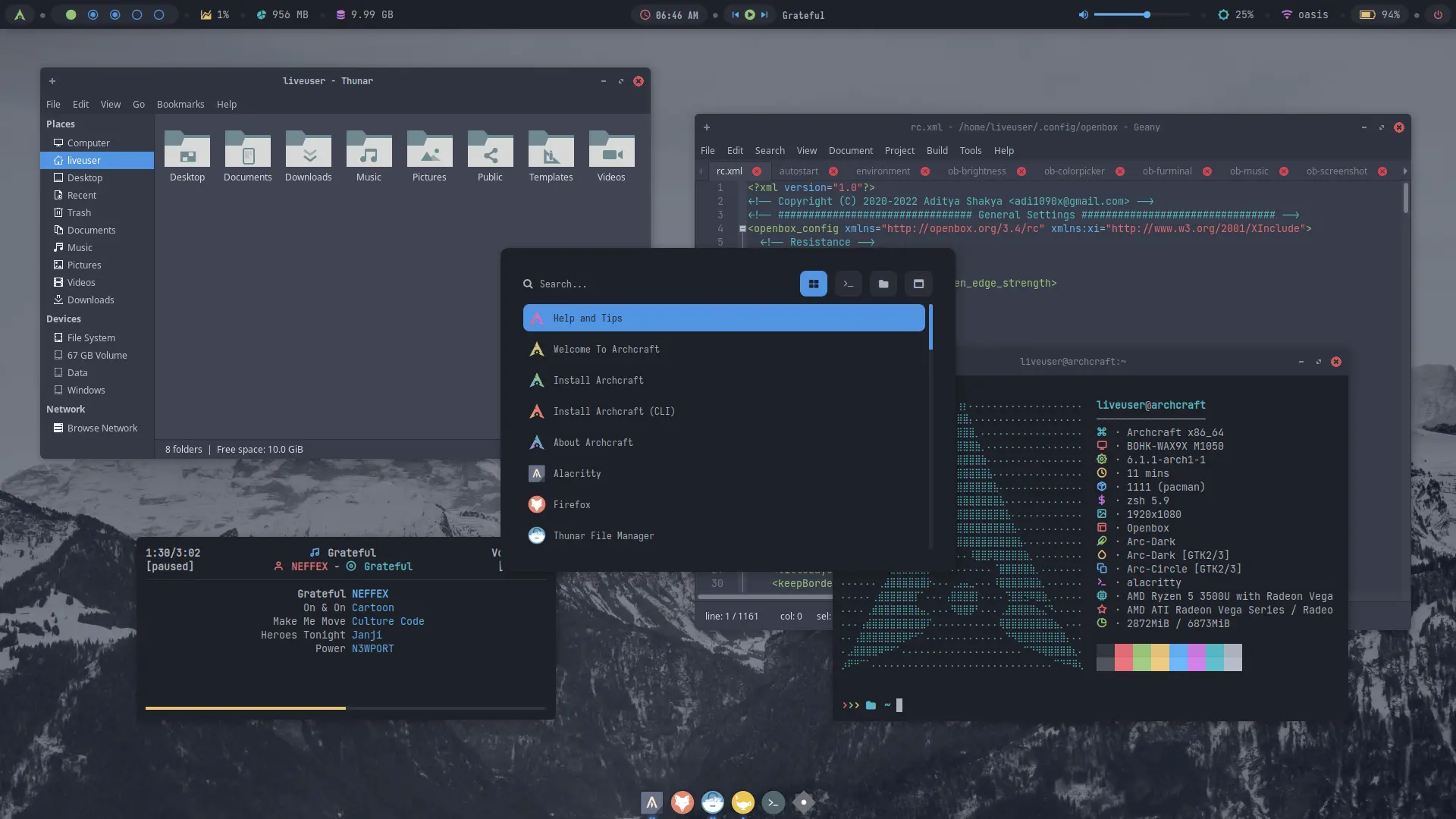

## 10. Archcraft

Archcraft is a very minimal and lightweight distribution that can run under 500Mb of memory, thanks to the lightweight applications.

It provides bspwm, LXDE, Openbox, and Xfce as desktops and also features built-in support for AUR.

## Want Real Arch Linux? Try The Installer Tool

The recent releases of Arch Linux have an installer tool called `archinstall`

, which is available along with the ISO. You can [use this tool to install Arch Linux](https://itsfoss.com/install-arch-linux-virtualbox/) easily. It is still a text-based installer but easier to understand.

In either case, you can try graphical installers like [Zen Installer](https://sourceforge.net/projects/revenge-installer/?ref=itsfoss.com) to make things easy.

These Arch-based distributions allow Linux newbies to experience Arch by eliminating some of the hassles. You can try them if you want to try something new for a change!

*💬 What is your favorite Arch-based distribution?* |

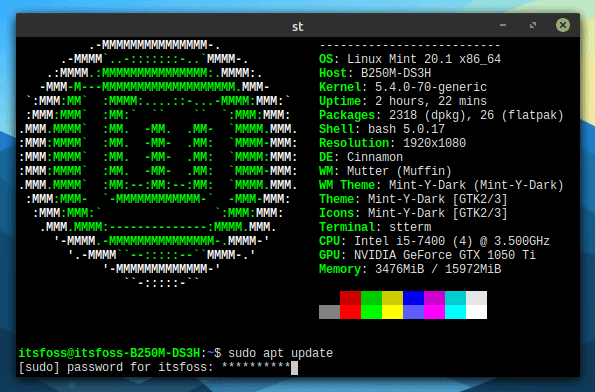

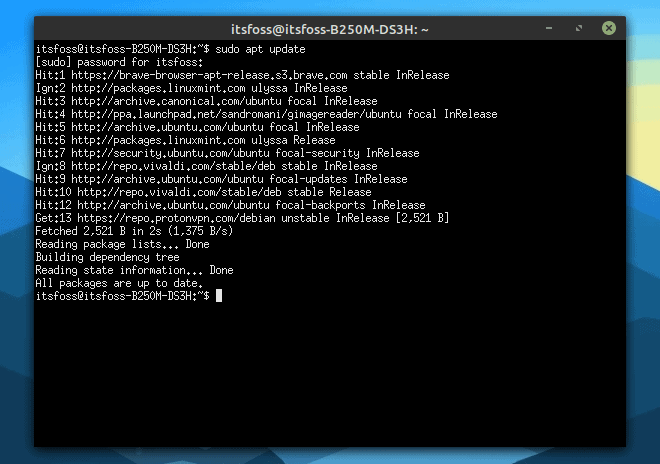

13,106 | 基础:如何在 Linux 中运行一个 Shell 脚本 | https://itsfoss.com/run-shell-script-linux/ | 2021-02-10T23:53:00 | [

"脚本",

"shell"

] | https://linux.cn/article-13106-1.html |

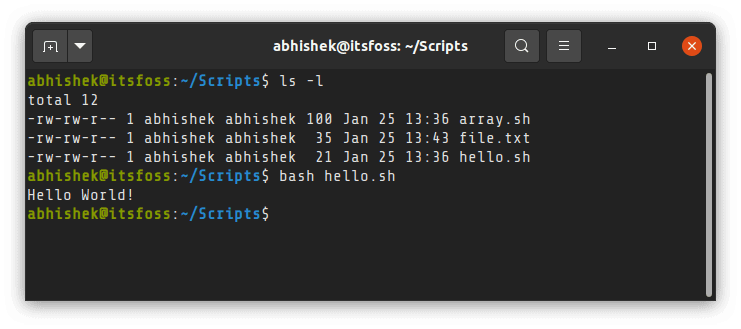

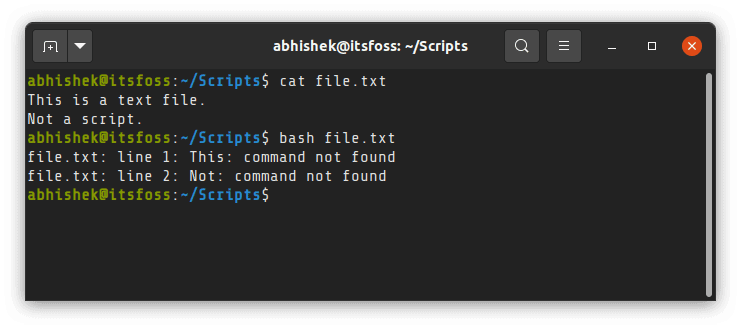

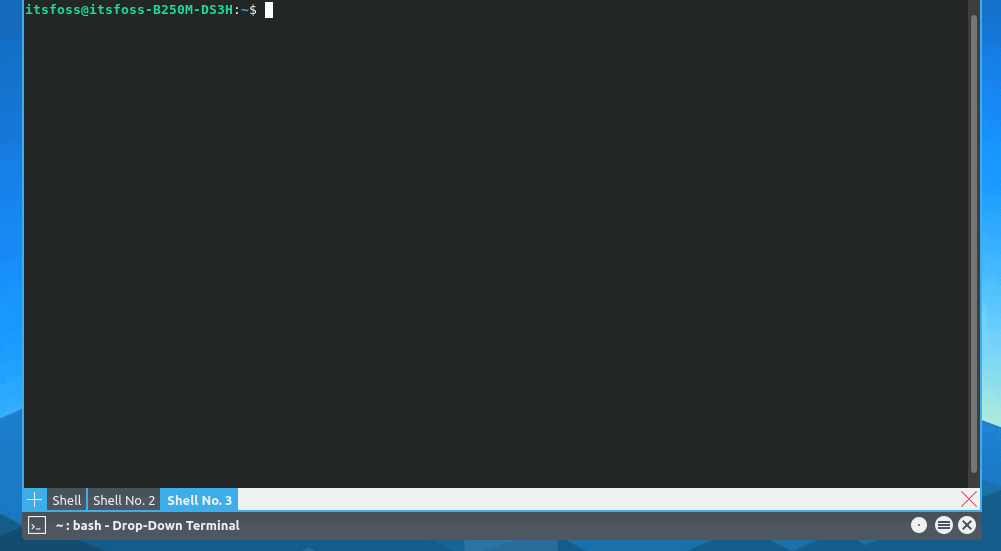

在 Linux 中有两种运行 shell 脚本的方法。你可以使用:

```

bash script.sh

```

或者,你可以像这样执行 shell 脚本:

```

./script.sh

```

这可能很简单,但没太多解释。不要担心,我将使用示例来进行必要的解释,以便你能理解为什么在运行一个 shell 脚本时要使用给定的特定语法格式。

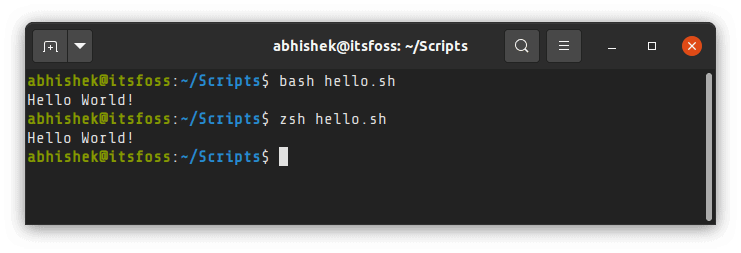

我将使用这一行 shell 脚本来使需要解释的事情变地尽可能简单:

```

abhishek@itsfoss:~/Scripts$ cat hello.sh

echo "Hello World!"

```

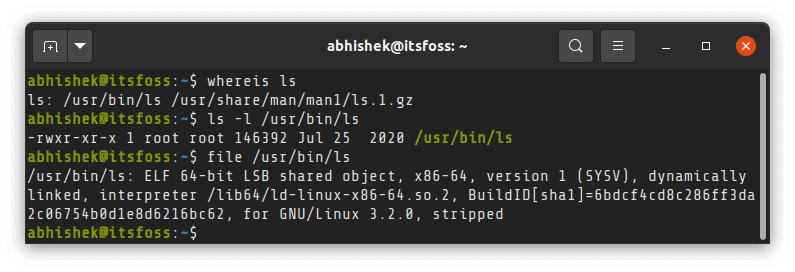

### 方法 1:通过将文件作为参数传递给 shell 以运行 shell 脚本

第一种方法涉及将脚本文件的名称作为参数传递给 shell 。

考虑到 bash 是默认 shell,你可以像这样运行一个脚本:

```

bash hello.sh

```

你知道这种方法的优点吗?**你的脚本不需要执行权限**。对于简单的任务非常方便快速。

如果你还不熟悉,我建议你 [阅读我的 Linux 文件权限详细指南](https://linuxhandbook.com/linux-file-permissions/) 。

记住,将其作为参数传递的需要是一个 shell 脚本。一个 shell 脚本是由命令组成的。如果你使用一个普通的文本文件,它将会抱怨错误的命令。

在这种方法中,**你要明确地具体指定你想使用 bash 作为脚本的解释器** 。

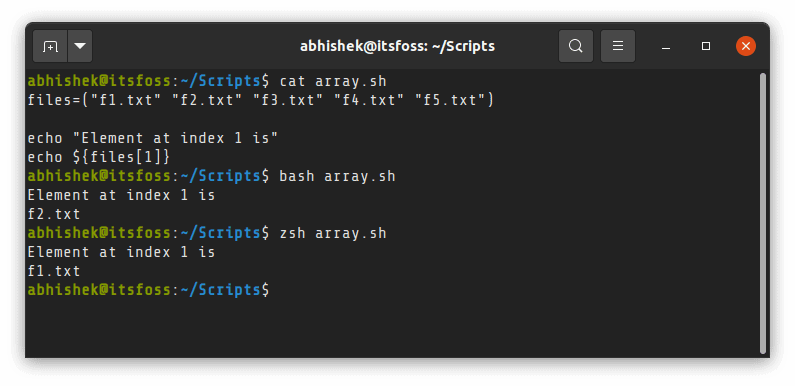

shell 只是一个程序,并且 bash 只是 Shell 的一种实现。还有其它的 shell 程序,像 ksh 、[zsh](https://www.zsh.org) 等等。如果你安装有其它的 shell ,你也可以使用它们来代替 bash 。

例如,我已安装了 zsh ,并使用它来运行相同的脚本:

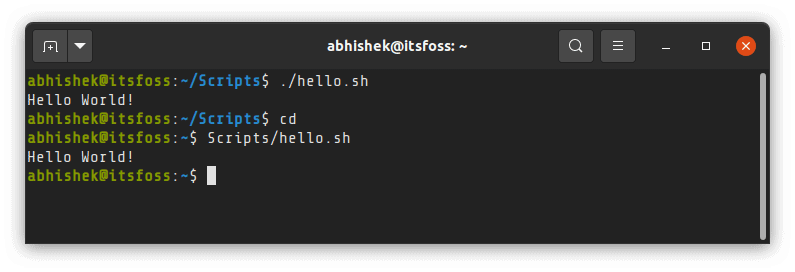

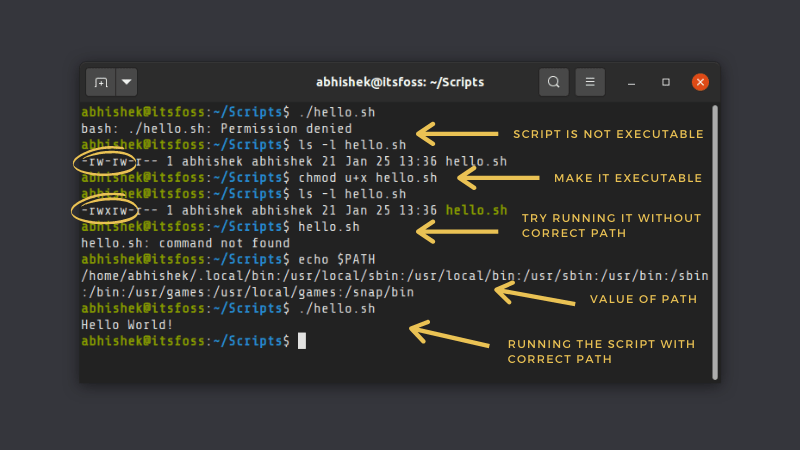

### 方法 2:通过具体指定 shell 脚本的路径来执行脚本

另外一种运行一个 shell 脚本的方法是通过提供它的路径。但是要这样做之前,你的文件必须是可执行的。否则,当你尝试执行脚本时,你将会得到 “权限被拒绝” 的错误。

因此,你首先需要确保你的脚本有可执行权限。你可以 [使用 chmod 命令](https://linuxhandbook.com/chmod-command/) 来给予你自己脚本的这种权限,像这样:

```

chmod u+x script.sh

```

使你的脚本是可执行之后,你只需输入文件的名称及其绝对路径或相对路径。大多数情况下,你都在同一个目录中,因此你可以像这样使用它:

```

./script.sh

```

如果你与你的脚本不在同一个目录中,你可以具体指定脚本的绝对路径或相对路径:

在脚本前的这个 `./` 是非常重要的(当你与脚本在同一个目录中)。

为什么当你在同一个目录下,却不能使用脚本名称?这是因为你的 Linux 系统会在 `PATH` 环境变量中指定的几个目录中查找可执行的文件来运行。

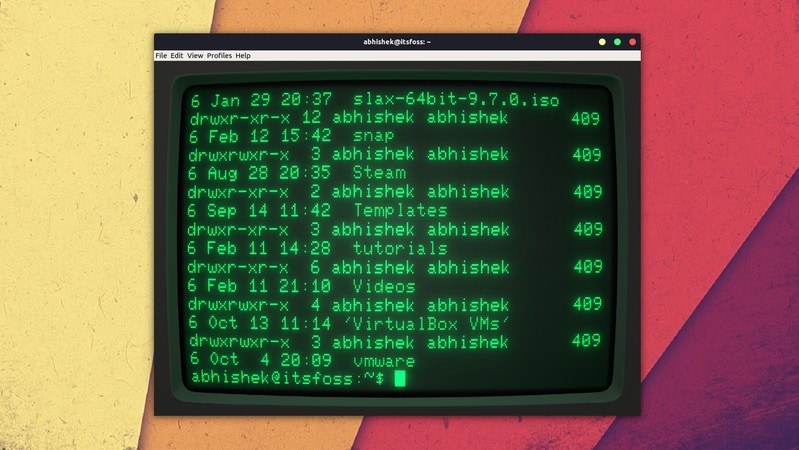

这里是我的系统的 `PATH` 环境变量的值:

```

abhishek@itsfoss:~$ echo $PATH

/home/abhishek/.local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin

```

这意味着在下面目录中具有可执行权限的任意文件都可以在系统的任何位置运行:

* `/home/abhishek/.local/bin`

* `/usr/local/sbin`

* `/usr/local/bin`

* `/usr/sbin`

* `/usr/bin`

* `/sbin`

* `/bin`

* `/usr/games`

* `/usr/local/games`

* `/snap/bin`

Linux 命令(像 `ls`、`cat` 等)的二进制文件或可执行文件都位于这些目录中的其中一个。这就是为什么你可以在你系统的任何位置通过使用命令的名称来运作这些命令的原因。看看,`ls` 命令就是位于 `/usr/bin` 目录中。

当你使用脚本而不具体指定其绝对路径或相对路径时,系统将不能在 `PATH` 环境变量中找到提及的脚本。

>

> 为什么大多数 shell 脚本在其头部包含 #! /bin/bash ?

>

>

> 记得我提过 shell 只是一个程序,并且有 shell 程序的不同实现。

>

>

> 当你使用 `#! /bin/bash` 时,你是具体指定 bash 作为解释器来运行脚本。如果你不这样做,并且以 `./script.sh` 的方式运行一个脚本,它通常会在你正在运行的 shell 中运行。

>

>

> 有问题吗?可能会有。看看,大多数的 shell 语法是大多数种类的 shell 中通用的,但是有一些语法可能会有所不同。

>

>

> 例如,在 bash 和 zsh 中数组的行为是不同的。在 zsh 中,数组索引是从 1 开始的,而不是从 0 开始。

>

>

>

>

>

> 使用 `#! /bin/bash` 来标识该脚本是 bash 脚本,并且应该使用 bash 作为脚本的解释器来运行,而不受在系统上正在使用的 shell 的影响。如果你使用 zsh 的特殊语法,你可以通过在脚本的第一行添加 `#! /bin/zsh` 的方式来标识其是 zsh 脚本。

>

>

> 在 `#!` 和 `/bin/bash` 之间的空格是没有影响的。你也可以使用 `#!/bin/bash` 。

>

>

>

### 它有帮助吗?

我希望这篇文章能够增加你的 Linux 知识。如果你还有问题或建议,请留下评论。

专家用户可能依然会挑出我遗漏的东西。但这种初级题材的问题是,要找到信息的平衡点,避免细节过多或过少,并不容易。

如果你对学习 bash 脚本感兴趣,在我们专注于系统管理的网站 [Linux Handbook](https://linuxhandbook.com) 上,我们有一个 [完整的 Bash 初学者系列](https://linuxhandbook.com/tag/bash-beginner/) 。如果你想要,你也可以 [购买带有附加练习的电子书](https://www.buymeacoffee.com/linuxhandbook) ,以支持 Linux Handbook。

---

via: <https://itsfoss.com/run-shell-script-linux/>

作者:[Abhishek Prakash](https://itsfoss.com/author/abhishek/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[robsean](https://github.com/robsean) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

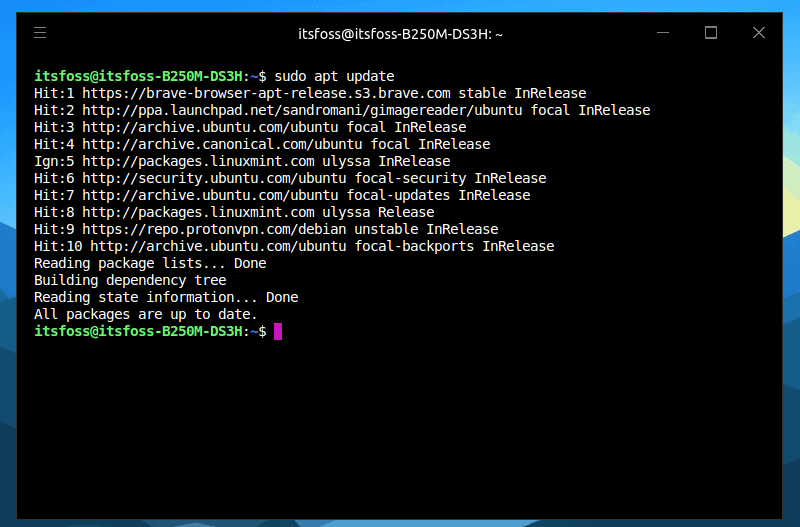

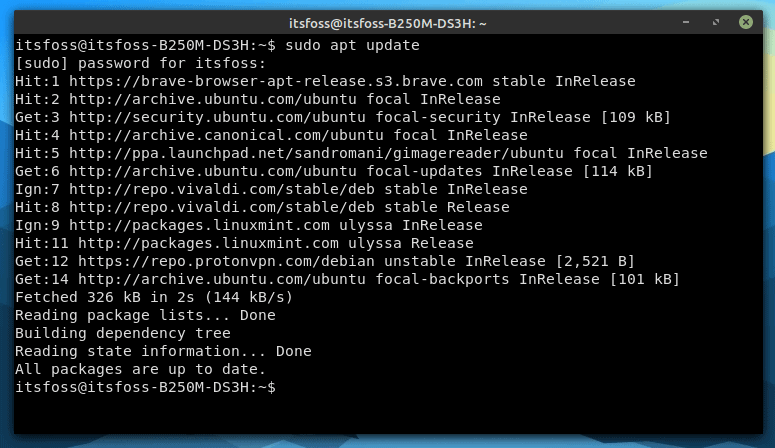

There are two ways to run a shell script in Linux. You can use:

`bash script.sh`

Or you can execute the shell script like this:

`./script.sh`

That maybe simple, but it doesn’t explain a lot. Don’t worry, I’ll do the necessary explaining with examples so that you understand why a particular syntax is used in the given format while running a shell script.

I am going to use this one line shell script to make things as uncomplicated as possible:

```

abhishek@itsfoss:~/Scripts$ cat hello.sh

echo "Hello World!"

```

## Method 1: Running a shell script by passing the file as argument to shell

The first method involves passing the script file name as an argument to the shell.

Considering that bash is the default shell, you can run a script like this:

`bash hello.sh`

Do you know the advantage of this approach? **Your script doesn’t need to have the execute permission**. Pretty handy for quick and simple tasks.

If you are not familiar already, I advise you to [read my detailed guide on file permission in Linux](https://linuxhandbook.com/linux-file-permissions/?ref=itsfoss.com).

Keep in mind that it needs to be a shell script that you pass as argument. A shell script is composed of commands. If you use a normal text file, it will complain about incorrect commands.

In this approach, **you explicitly specified that you want to use bash as the interpreter** for the script.

Shell is just a program and bash is an implementation of that. There are other such shells program like ksh, [zsh](https://www.zsh.org/?ref=itsfoss.com), etc. If you have other shells installed, you can use that as well instead of bash.

For example, I installed zsh and used it to run the same script:

## Method 2: Execute shell script by specifying its path

The other method to run a shell script is by providing its path. But for that to be possible, your file must be executable. Otherwise, you’ll have “permission denied” error when you try to execute the script.

So first you need to make sure that your script has the execute permission. You can [use the chmod command](https://linuxhandbook.com/chmod-command/?ref=itsfoss.com) to give yourself this permission like this:

`chmod u+x script.sh`

Once your script is executable, all you need to do is to type the file name along with its [absolute or relative path](https://linuxhandbook.com/absolute-vs-relative-path/?ref=itsfoss.com). Most often you are in the same directory so you just use it like this:

`./script.sh`

If you are not in the same directory as your script, you can specify it the absolute or relative path to the script:

### That ./ before the script is important (when you are in the same directory as the script)

Why can you not use the script name when you are in the same directory? That is because your Linux systems looks for the executables to run in a few selected directories that are specified in the PATH variable.

Here’s the value of PATH variable for my system:

```

abhishek@itsfoss:~$ echo $PATH

/home/abhishek/.local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin

```

This means that any file with execute permissions in one of the following directories can be executed from anywhere in the system:

- /home/abhishek/.local/bin

- /usr/local/sbin

- /usr/local/bin

- /usr/sbin

- /usr/bin

- /sbin

- /bin

- /usr/games

- /usr/local/games

- /snap/bin

The binaries or executable files for Linux commands like ls, cat etc are located in one of those directories. This is why you are able to run these commands from anywhere on your system just by using their names. See, the ls command is located in /usr/bin directory.

When you specify the script WITHOUT the absolute or relative path, it cannot find it in the directories mentioned in the PATH variable.

### Why most shell scripts contain #! /bin/bash at the beginning of the shell scripts?

Remember how I mentioned that shell is just a program and there are different implementations of shells.

When you use the #! /bin/bash, you are specifying that the script is to run with bash as interpreter. If you don’t do that and run a script in ./script.sh manner, it is usually run with whatever shell you are running.

Does it matter? It could. See, most of the shell syntax is common in all kind of shell but some might differ.

For example, the array behavior is different in bash and zsh shells. In zsh, the array index starts at 1 instead of 0.

Using #! /bin/bash indicates that the script is bash shell script and should be run with bash as interpreter irrespective of the shell which is being used on the system. If you are using zsh specific syntax, you can indicate that it is zsh script by adding #! /bin/zsh as the first line of the script.

The space between #! /bin/bash doesn’t matter. You can also use #!/bin/bash.

## Was it helpful?

I hope this article added to your Linux knowledge. If you still have questions or suggestions, please leave a comment.

Expert users can still nitpick this article about things I missed out. But the problem with such beginner topics is that it is not easy to find the right balance of information and avoid having too much or too few details.

If you are interested in learning bash script, we have an [entire Bash Beginner Series](https://linuxhandbook.com/tag/bash-beginner/?ref=itsfoss.com) on our sysadmin focused website [Linux Handbook](https://linuxhandbook.com/?ref=itsfoss.com).

[Bash Tutorial Series for Beginners: Start Learning Bash ScriptingThe 10 chapter series will teach the bash scripting essentials.](https://linuxhandbook.com/tag/bash-beginner/)

If you want, you may also purchase the ebook with additional exercises to support Linux Handbook.

[Learn Bash Quickly - Bash Beginner Book for Linux UsersThis book is available for FREE to Linux Handbook members.Learn Bash Quickly will teach you everything you need to get started with bash scripting. Each bash concept is explained with easy to understand examples. You’ll learn to:Create and run a bash scriptUse variables and pass arguments to scriptU…](https://linuxhandbook.gumroad.com/l/ejfGu) |

13,107 | 基于 Tmux 的多会话终端管理示例 | https://www.ostechnix.com/tmux-command-examples-to-manage-multiple-terminal-sessions/ | 2021-02-11T10:10:00 | [

"Tmux"

] | https://linux.cn/article-13107-1.html |

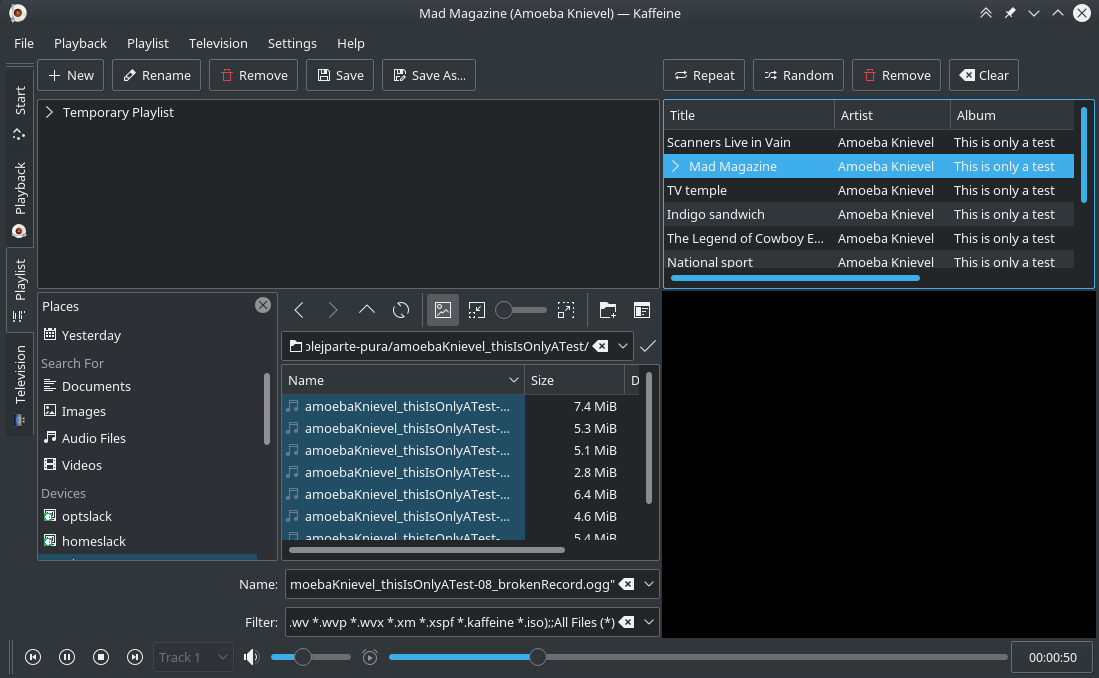

我们已经了解到如何通过 [GNU Screen](https://www.ostechnix.com/screen-command-examples-to-manage-multiple-terminal-sessions/) 进行多会话管理。今天,我们将要领略另一个著名的管理会话的命令行实用工具 **Tmux**。类似 GNU Screen,Tmux 是一个帮助我们在单一终端窗口中创建多个会话,同一时间内同时运行多个应用程序或进程的终端复用工具。Tmux 自由、开源并且跨平台,支持 Linux、OpenBSD、FreeBSD、NetBSD 以及 Mac OS X。本文将讨论 Tmux 在 Linux 系统下的高频用法。

### Linux 下安装 tmux

Tmux 可以在绝大多数的 Linux 官方仓库下获取。

在 Arch Linux 或它的变种系统下,执行下列命令来安装:

```

$ sudo pacman -S tmux

```

Debian、Ubuntu 或 Linux Mint:

```

$ sudo apt-get install tmux

```

Fedora:

```

$ sudo dnf install tmux

```

RHEL 和 CentOS:

```

$ sudo yum install tmux

```

SUSE/openSUSE:

```

$ sudo zypper install tmux

```

以上,我们已经完成 Tmux 的安装。之后我们继续看看一些 Tmux 示例。

### Tmux 命令示例: 多会话管理

Tmux 默认所有命令的前置命令都是 `Ctrl+b`,使用前牢记这个快捷键即可。

>

> **注意**:**Screen** 的前置命令都是 `Ctrl+a`.

>

>

>

#### 创建 Tmux 会话

在终端中运行如下命令创建 Tmux 会话并附着进入:

```

tmux

```

抑或,

```

tmux new

```

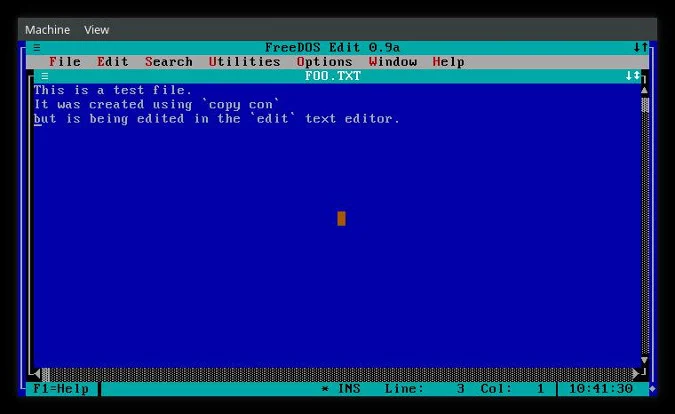

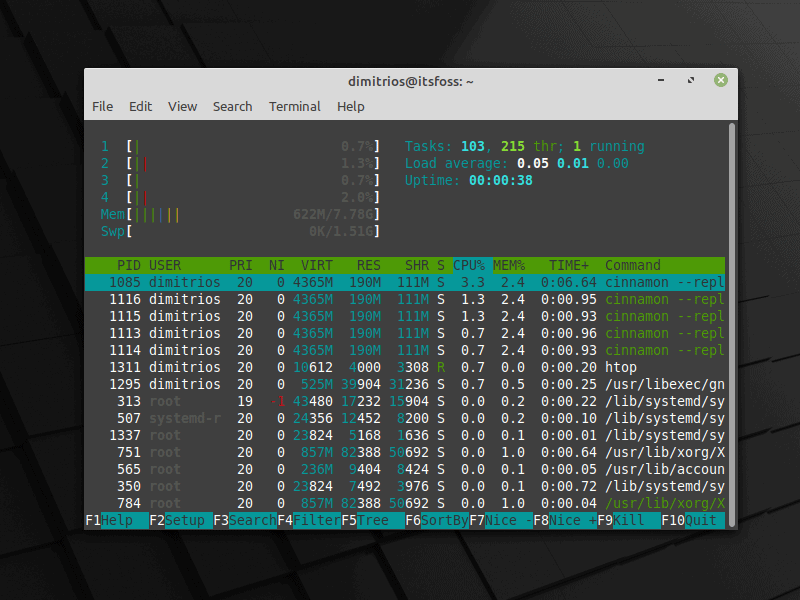

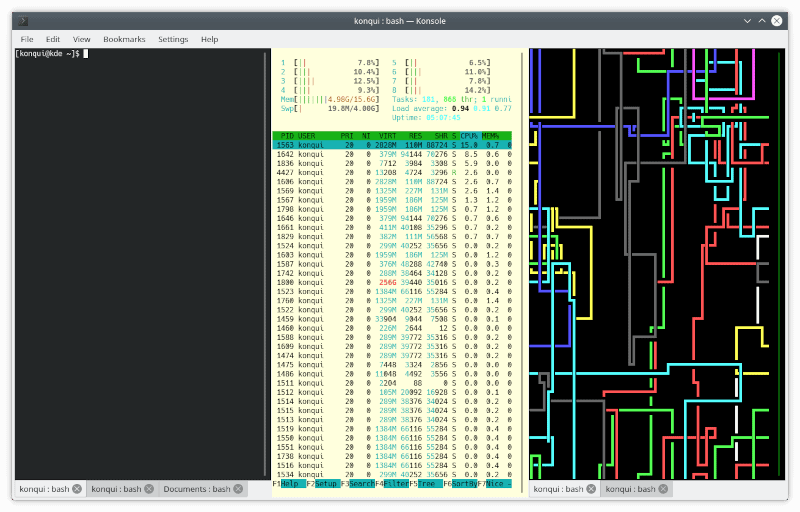

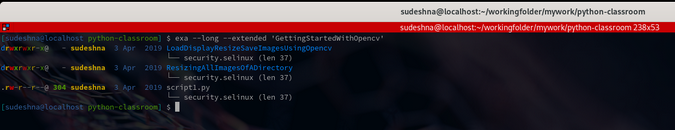

一旦进入 Tmux 会话,你将看到一个 **沉在底部的绿色的边栏**,如下图所示。

*创建 Tmux 会话*

这个绿色的边栏能很容易提示你当前是否身处 Tmux 会话当中。

#### 退出 Tmux 会话

退出当前 Tmux 会话仅需要使用 `Ctrl+b` 和 `d`。无需同时触发这两个快捷键,依次按下 `Ctrl+b` 和 `d` 即可。

退出当前会话后,你将能看到如下输出:

```

[detached (from session 0)]

```

#### 创建有名会话

如果使用多个会话,你很可能会混淆运行在多个会话中的应用程序。这种情况下,我们需要会话并赋予名称。譬如需要 web 相关服务的会话,就创建一个名称为 “webserver”(或任意一个其他名称) 的 Tmux 会话。

```

tmux new -s webserver

```

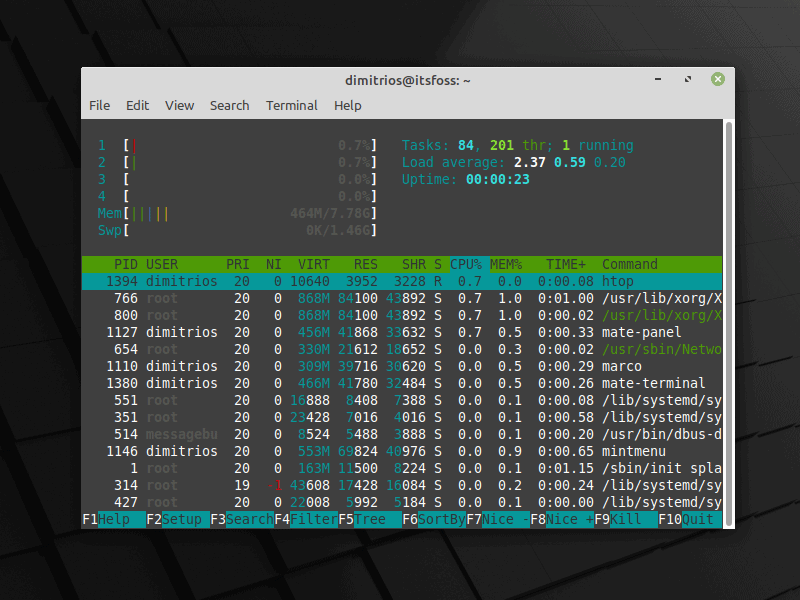

这里是新的 Tmux 有名会话:

*拥有自定义名称的 Tmux 会话*

如你所见上述截图,这个 Tmux 会话的名称已经被标注为 “webserver”。如此,你可以在多个会话中,轻易的区分应用程序的所在。

退出会话,轻按 `Ctrl+b` 和 `d`。

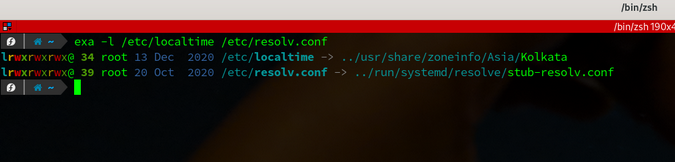

#### 查看 Tmux 会话清单

查看 Tmux 会话清单,执行:

```

tmux ls

```

示例输出:

*列出 Tmux 会话*

如你所见,我们开启了两个 Tmux 会话。

#### 创建非附着会话

有时候,你可能想要简单创建会话,但是并不想自动切入该会话。

创建一个非附着会话,并赋予名称 “ostechnix”,运行:

```

tmux new -s ostechnix -d

```

上述命令将会创建一个名为 “ostechnix” 的会话,但是并不会附着进入。

你可以通过使用 `tmux ls` 命令验证:

*创建非附着会话*

#### 附着进入 Tmux 会话

通过如下命令,你可以附着进入最后一个被创建的会话:

```

tmux attach

```

抑或,

```

tmux a

```

如果你想附着进入任意一个指定的有名会话,譬如 “ostechnix”,运行:

```

tmux attach -t ostechnix

```

或者,简写为:

```

tmux a -t ostechnix

```

#### 关闭 Tmux 会话

当你完成或者不再需要 Tmux 会话,你可以通过如下命令关闭:

```

tmux kill-session -t ostechnix

```

当身处该会话时,使用 `Ctrl+b` 以及 `x`。点击 `y` 来关闭会话。

可以通过 `tmux ls` 命令验证。

关闭所有 Tmux 服务下的所有会话,运行:

```

tmux kill-server

```

谨慎!这将终止所有 Tmux 会话,并不会产生任何警告,即便会话存在运行中的任务。

如果不存在活跃的 Tmux 会话,将看到如下输出:

```

$ tmux ls

no server running on /tmp/tmux-1000/default

```

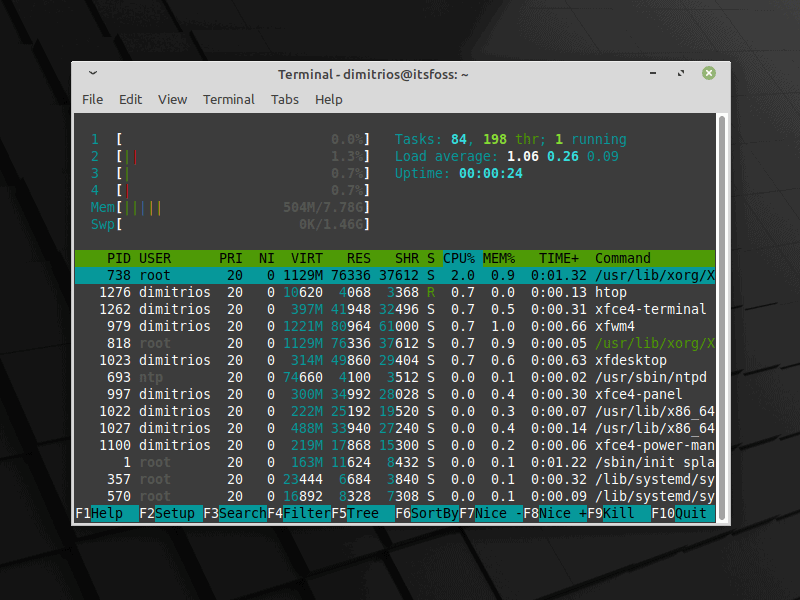

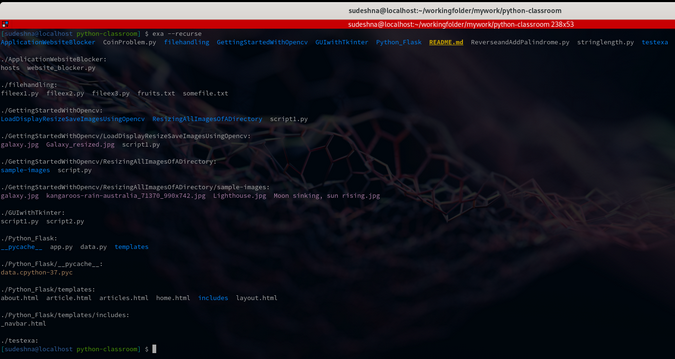

#### 切割 Tmux 窗口

切割窗口成多个小窗口,在 Tmux 中,这个叫做 “Tmux 窗格”。每个窗格中可以同时运行不同的程序,并同时与所有的窗格进行交互。每个窗格可以在不影响其他窗格的前提下可以调整大小、移动位置和控制关闭。我们可以以水平、垂直或者二者混合的方式切割屏幕。

##### 水平切割窗格

欲水平切割窗格,使用 `Ctrl+b` 和 `"`(半个双引号)。

*水平切割 Tmux 窗格*

可以使用组合键进一步切割面板。

##### 垂直切割窗格

垂直切割面板,使用 `Ctrl+b` 和 `%`。

*垂直切割 Tmux 窗格*

##### 水平、垂直混合切割窗格

我们也可以同时采用水平和垂直的方案切割窗格。看看如下截图:

*切割 Tmux 窗格*

首先,我通过 `Ctrl+b` `"` 水平切割,之后通过 `Ctrl+b` `%` 垂直切割下方的窗格。

如你所见,每个窗格下我运行了不同的程序。

##### 切换窗格

通过 `Ctrl+b` 和方向键(上下左右)切换窗格。

##### 发送命令给所有窗格

之前的案例中,我们在每个窗格中运行了三个不同命令。其实,也可以发送相同的命令给所有窗格。

为此,使用 `Ctrl+b` 然后键入如下命令,之后按下回车:

```

:setw synchronize-panes

```

现在在任意窗格中键入任何命令。你将看到相同命令影响了所有窗格。

##### 交换窗格

使用 `Ctrl+b` 和 `o` 交换窗格。

##### 展示窗格号

使用 `Ctrl+b` 和 `q` 展示窗格号。

##### 终止窗格

要关闭窗格,直接键入 `exit` 并且按下回车键。或者,按下 `Ctrl+b` 和 `x`。你会看到确认信息。按下 `y` 关闭窗格。

*关闭窗格*

##### 放大和缩小 Tmux 窗格

我们可以将 Tmux 窗格放大到当前终端窗口的全尺寸,以获得更好的文本可视性,并查看更多的内容。当你需要更多的空间或专注于某个特定的任务时,这很有用。在完成该任务后,你可以将 Tmux 窗格缩小(取消放大)到其正常位置。更多详情请看以下链接。

* [如何缩放 Tmux 窗格以提高文本可见度?](https://ostechnix.com/how-to-zoom-tmux-panes-for-better-text-visibility/)

#### 自动启动 Tmux 会话

当通过 SSH 与远程系统工作时,在 Tmux 会话中运行一个长期运行的进程总是一个好的做法。因为,它可以防止你在网络连接突然中断时失去对运行进程的控制。避免这个问题的一个方法是自动启动 Tmux 会话。更多详情,请参考以下链接。

* [通过 SSH 登录远程系统时自动启动 Tmux 会话](https://ostechnix.com/autostart-tmux-session-on-remote-system-when-logging-in-via-ssh/)

### 总结

这个阶段下,你已经获得了基本的 Tmux 技能来进行多会话管理,更多细节,参阅 man 页面。

```

$ man tmux

```

GNU Screen 和 Tmux 工具都能透过 SSH 很好的管理远程服务器。学习 Screen 和 Tmux 命令,像个行家一样,彻底通过这些工具管理远程服务器。

---

via: <https://www.ostechnix.com/tmux-command-examples-to-manage-multiple-terminal-sessions/>

作者:[sk](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[chensanle](https://github.com/chensanle) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

13,109 | 英特尔 Optane:用于数据中心内存缓存 | https://www.networkworld.com/article/3387117/intel-formally-launches-optane-for-data-center-memory-caching.html#tk.rss_all | 2021-02-12T11:17:46 | [

"内存"

] | https://linux.cn/article-13109-1.html |

>

> 英特尔推出了包含 3D Xpoint 内存技术的 Optane 持久内存产品线。英特尔的这个解决方案介乎于 DRAM 和 NAND 中间,以此来提升性能。

>

>

>

英特尔在 2019 年 4 月的[大规模数据中心活动](https://www.networkworld.com/article/3386142/intel-unveils-an-epic-response-to-amds-server-push.html)中正式推出 Optane 持久内存产品线。它已经问世了一段时间,但是目前的 Xeon 服务器处理器还不能充分利用它。而新的 Xeon8200 和 9200 系列可以充分利用 Optane 持久内存的优势。

由于 Optane 是英特尔的产品(与美光合作开发),所以意味着 AMD 和 ARM 的服务器处理器不能够支持它。

正如[我之前所说的](https://www.networkworld.com/article/3279271/intel-launches-optane-the-go-between-for-memory-and-storage.html),OptaneDC 持久内存采用与美光合作研发的 3D Xpoint 内存技术。3D Xpoint 是一种比 SSD 更快的非易失性内存,速度几乎与 DRAM 相近,而且它具有 NAND 闪存的持久性。

第一个 3D Xpoint 产品是被称为英特尔“尺子”的 SSD,因为它们被设计成细长的样子,很像尺子的形状。它们被设计这样是为了适合 1u 的服务器机架。在发布的公告中,英特尔推出了新的利用四芯或者 QLC 3D NAND 内存的英特尔 SSD D5-P4325 [尺子](https://www.theregister.co.uk/2018/02/02/ruler_and_miniruler_ssd_formats_look_to_banish_diskstyle_drives/) SSD,可以在 1U 的服务器机架上放 1PB 的存储。

OptaneDC 持久内存的可用容量最初可以通过使用 128GB 的 DIMM 达到 512GB。英特尔数据中心集团执行副总裁及总经理 Navin Shenoy 说:“OptaneDC 持久内存可达到的容量是 DRAM 的 2 到 4 倍。”

他说:“我们希望服务器系统的容量可以扩展到每个插槽 4.5TB 或者 8 个插槽 36TB,这是我们第一代 Xeon 可扩展芯片的 3 倍。”

### 英特尔Optane内存的使用和速度

Optane 有两种不同的运行模式:内存模式和应用直连模式。内存模式是将 DRAM 放在 Optane 内存之上,将 DRAM 作为 Optane 内存的缓存。应用直连模式是将 DRAM 和 OptaneDC 持久内存一起作为内存来最大化总容量。并不是每个工作负载都适合这种配置,所以应该在对延迟不敏感的应用程序中使用。正如英特尔推广的那样,Optane 的主要使用情景是内存模式。

几年前,当 3D Xpoint 最初发布时,英特尔宣称 Optane 的速度是 NAND 的 1000 倍,耐用是 NAND 的 1000 倍,密度潜力是 DRAM 的 10 倍。这虽然有点夸张,但这些因素确实很令人着迷。

在 256B 的连续 4 个缓存行中使用 Optane 内存可以达到 8.3GB/秒的读速度和 3.0GB/秒的写速度。与 SATA SSD 的 500MB/秒左右的读/写速度相比,可以看到性能有很大提升。请记住,Optane 充当内存,所以它会缓存被频繁访问的 SSD 中的内容。

这是了解 OptaneDC 的关键。它能将非常大的数据集存储在离内存非常近的位置,因此具有很低延迟的 CPU 可以最小化访问较慢的存储子系统的访问延迟,无论存储是 SSD 还是 HDD。现在,它提供了一种可能性,即把多个 TB 的数据放在非常接近 CPU 的地方,以实现更快的访问。

### Optane 内存的一个挑战

唯一真正的挑战是 Optane 插进内存所在的 DIMM 插槽。现在有些主板的每个 CPU 有多达 16 个 DIMM 插槽,但是这仍然是客户和设备制造商之间需要平衡的电路板空间:Optane 还是内存。有一些 Optane 驱动采用了 PCIe 接口进行连接,可以减轻主板上内存的拥挤。

3D Xpoint 由于它写数据的方式,提供了比传统的 NAND 闪存更高的耐用性。英特尔承诺 Optane 提供 5 年保修期,而很多 SSD 只提供 3 年保修期。

---

via: <https://www.networkworld.com/article/3387117/intel-formally-launches-optane-for-data-center-memory-caching.html>

作者:[Andy Patrizio](https://www.networkworld.com/author/Andy-Patrizio/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[RiaXu](https://github.com/ShuyRoy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

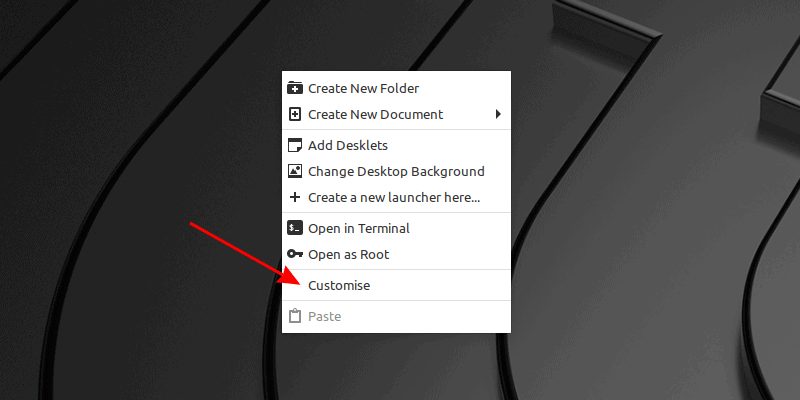

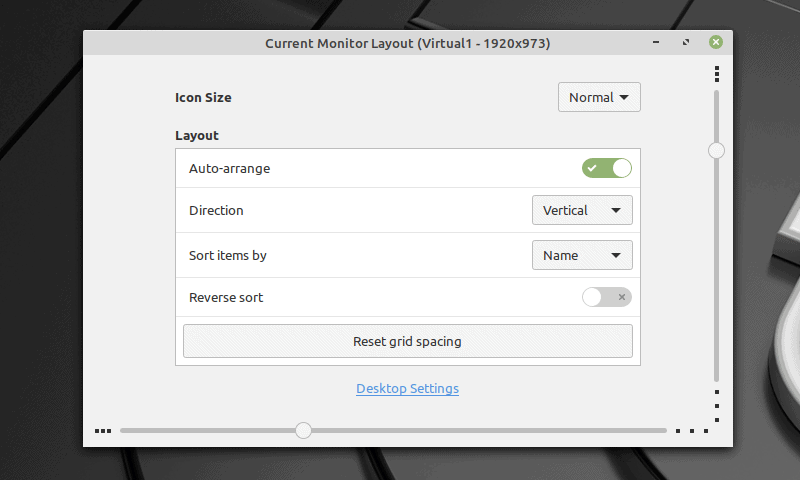

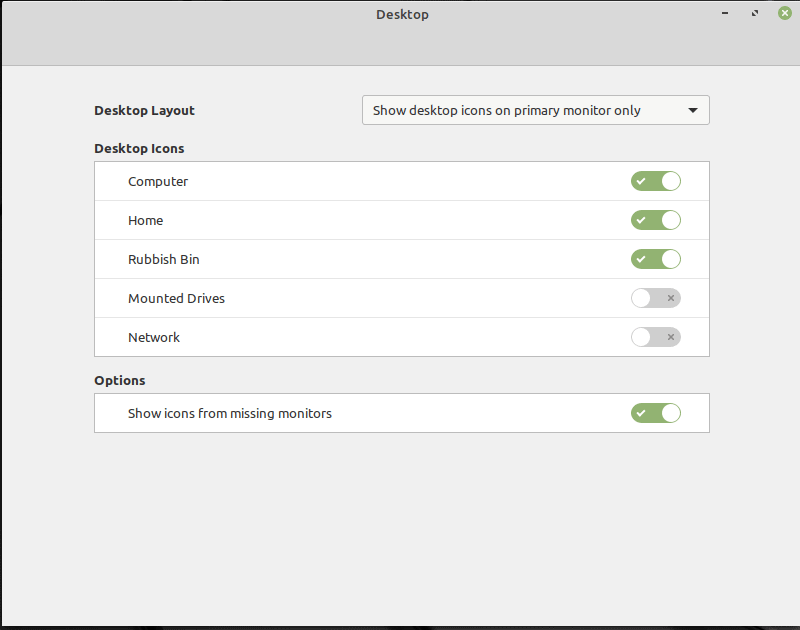

13,110 | 使用 Material Shell 扩展将你的 GNOME 桌面打造成平铺式风格 | https://itsfoss.com/material-shell/ | 2021-02-12T16:23:05 | [

"平铺"

] | https://linux.cn/article-13110-1.html | 平铺式窗口的特性吸引了很多人的追捧。也许是因为它很好看,也许是因为它能提高 [Linux 快捷键](https://itsfoss.com/ubuntu-shortcuts/) 玩家的效率。又或者是因为使用不同寻常的平铺式窗口是一种新奇的挑战。

从 i3 到 [Sway](https://itsfoss.com/sway-window-manager/),Linux 桌面拥有各种各样的平铺式窗口管理器。配置一个平铺式窗口管理器需要一个陡峭的学习曲线。

这就是为什么像 [Regolith 桌面](https://itsfoss.com/regolith-linux-desktop/) 这样的项目会存在,给你预先配置好的平铺桌面,让你可以更轻松地开始使用平铺窗口。

让我给你介绍一个类似的项目 —— Material Shell。它可以让你用上平铺式桌面,甚至比 [Regolith](https://regolith-linux.org/) 还简单。

### Material Shell 扩展:将 GNOME 桌面转变成平铺式窗口管理器

[Material Shell](https://material-shell.com) 是一个 GNOME 扩展,这就是它最好的地方。这意味着你不需要注销并登录其他桌面环境。你只需要启用或关闭这个扩展就可以自如的切换你的工作环境。

我会列出 Material Shell 的各种特性,但是也许视频更容易让你理解:

这个项目叫做 Material Shell 是因为它遵循 [Material Design](https://material.io/) 原则。因此这个应用拥有一个美观的界面。这就是它最重要的一个特性。

#### 直观的界面

Material Shell 添加了一个左侧面板,以便快速访问。在此面板上,你可以在底部找到系统托盘,在顶部找到搜索和工作区。

所有新打开的应用都会添加到当前工作区中。你也可以创建新的工作区并切换到该工作区,以将正在运行的应用分类。其实这就是工作区最初的意义。

在 Material Shell 中,每个工作区都可以显示为具有多个应用程序的行列,而不是包含多个应用程序的程序框。

#### 平铺式窗口

在工作区中,你可以一直在顶部看到所有打开的应用程序。默认情况下,应用程序会像在 GNOME 桌面中那样铺满整个屏幕。你可以使用右上角的布局改变器来改变布局,将其分成两半、多列或多个应用网格。

这段视频一目了然的显示了以上所有功能:

#### 固定布局和工作区

Material Shell 会记住你打开的工作区和窗口,这样你就不必重新组织你的布局。这是一个很好的特性,因为如果你对应用程序的位置有要求的话,它可以节省时间。

#### 热建/快捷键

像任何平铺窗口管理器一样,你可以使用键盘快捷键在应用程序和工作区之间切换。

* `Super+W` 切换到上个工作区;

* `Super+S` 切换到下个工作区;

* `Super+A` 切换到左边的窗口;

* `Super+D` 切换到右边的窗口;

* `Super+1`、`Super+2` … `Super+0` 切换到某个指定的工作区;

* `Super+Q` 关闭当前窗口;

* `Super+[鼠标拖动]` 移动窗口;

* `Super+Shift+A` 将当前窗口左移;

* `Super+Shift+D` 将当前窗口右移;

* `Super+Shift+W` 将当前窗口移到上个工作区;

* `Super+Shift+S` 将当前窗口移到下个工作区。

### 安装 Material Shell

>

> 警告!

>

>

> 对于大多数用户来说,平铺式窗口可能会导致混乱。你最好先熟悉如何使用 GNOME 扩展。如果你是 Linux 新手或者你害怕你的系统发生翻天覆地的变化,你应当避免使用这个扩展。

>

>

>

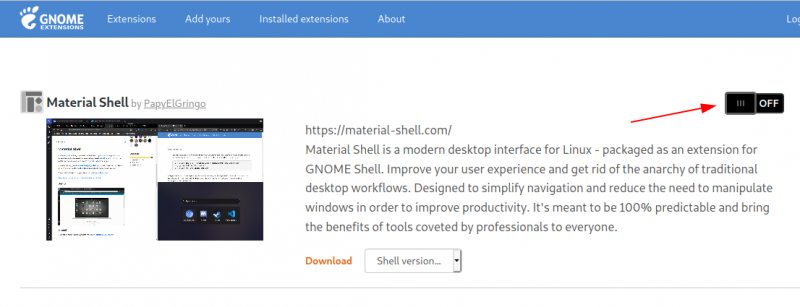

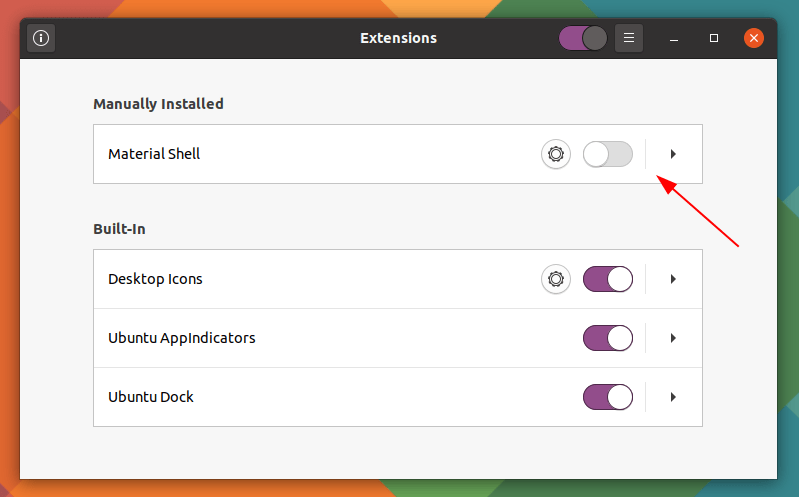

Material Shell 是一个 GNOME 扩展。所以,请 [检查你的桌面环境](https://itsfoss.com/find-desktop-environment/),确保你运行的是 GNOME 3.34 或者更高的版本。

除此之外,我注意到在禁用 Material Shell 之后,它会导致 Firefox 的顶栏和 Ubuntu 的坞站消失。你可以在 GNOME 的“扩展”应用程序中禁用/启用 Ubuntu 的坞站扩展来使其变回原来的样子。我想这些问题也应该在系统重启后消失,虽然我没试过。

我希望你知道 [如何使用 GNOME 扩展](https://itsfoss.com/gnome-shell-extensions/)。最简单的办法就是 [在浏览器中打开这个链接](https://extensions.gnome.org/extension/3357/material-shell/),安装 GNOME 扩展浏览器插件,然后启用 Material Shell 扩展即可。

如果你不喜欢这个扩展,你也可以在同样的链接中禁用它。或者在 GNOME 的“扩展”应用程序中禁用它。

### 用不用平铺式?

我使用多个电脑屏幕,我发现 Material Shell 不适用于多个屏幕的情况。这是开发者将来可以改进的地方。

除了这个毛病以外,Material Shell 是个让你开始使用平铺式窗口的好东西。如果你尝试了 Material Shell 并且喜欢它,请 [在 GitHub 上给它一个星标或赞助它](https://github.com/material-shell/material-shell) 来鼓励这个项目。

由于某些原因,平铺窗户越来越受欢迎。最近发布的 [Pop OS 20.04](https://itsfoss.com/pop-os-20-04-review/) 也增加了平铺窗口的功能。有一个类似的项目叫 PaperWM,也是这样做的。

但正如我前面提到的,平铺布局并不适合所有人,它可能会让很多人感到困惑。

你呢?你是喜欢平铺窗口还是喜欢经典的桌面布局?

---

via: <https://itsfoss.com/material-shell/>

作者:[Abhishek Prakash](https://itsfoss.com/author/abhishek/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[Chao-zhi](https://github.com/Chao-zhi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | There is something about tiling windows that attracts many people. Perhaps it looks good or perhaps it is time-saving if you are a fan of [keyboard shortcuts in Linux](https://itsfoss.com/ubuntu-shortcuts/). Or maybe it’s the challenge of using the uncommon tiling windows.

[Image Source](https://imgur.com/gallery/wxBZFN5)

From i3 to [Sway](https://itsfoss.com/sway-window-manager/), there are so many tiling window managers available for Linux desktop. Configuring a tiling window manager itself requires a steep learning curve.

This is why projects like [Regolith desktop](https://itsfoss.com/regolith-linux-desktop/) exist to give you preconfigured tiling desktop so that you can get started with tiling windows with less effort.

Let me introduce you to a similar project named Material Shell that makes using tiling feature even easier than [Regolith](https://regolith-linux.org/).

## Material Shell GNOME Extension: Convert GNOME desktop into a tiling window manager

[Material Shell](https://material-shell.com) is a GNOME extension and that’s the best thing about it. This means that you don’t have to log out and log in to another [desktop environment](https://itsfoss.com/what-is-desktop-environment/) or window manager. You can enable or disable it from within your current session.

I’ll list the features of Material Shell but it will be easier to see it in action:

The project is called Material Shell because it follows the [Material Design](https://material.io/) guideline and thus gives the applications an aesthetically pleasing interface. Here are its main features:

### Intuitive interface

Material Shell adds a left panel for quick access. On this panel, you can find the system tray at the bottom and the search and workspaces on the top.

All the new apps are added to the current workspace. You can create new workspace and switch to it for organizing your running apps into categories. This is the essential concept of workspace anyway.

In Material Shell, every workspace can be visualized as a row with several apps rather than a box with several apps in it.

### Tiling windows

In a workspace, you can see all your opened applications on the top all the time. By default, the applications are opened to take the entire screen like you do in GNOME desktop. You can change the layout to split it in half or multiple columns or a grid of apps using the layout changer in the top right corner.

This video shows all the above features at a glance:

### Persistent layout and workspaces

That’s not it. Material Shell also remembers the workspaces and windows you open so that you don’t have to reorganize your layout again. This is a good feature to have as it saves time if you are particular about which application goes where.

### Hotkeys/Keyboard shortcut

Like any tiling windows manager, you can use keyboard shortcuts to navigate between applications and workspaces.

`Super+W`

Navigate to the upper workspace.`Super+S`

Navigate to the lower workspace.`Super+A`

Focus the window at the left of the current window.`Super+D`

Focus the window at the right of the current window.`Super+1`

,`Super+2`

…`Super+0`

Navigate to specific workspace`Super+Q`

Kill the current window focused.`Super+[MouseDrag]`

Move window around.`Super+Shift+A`

Move the current window to the left.`Super+Shift+D`

Move the current window to the right.`Super+Shift+W`

Move the current window to the upper workspace.`Super+Shift+S`

Move the current window to the lower workspace.

## Installing Material Shell

Warning!

Tiling windows could be confusing for many users. You should be familiar with GNOME Extensions to use it. Avoid trying it if you are absolutely new to Linux or if you are easily panicked if anything changes in your system.

Material Shell is a GNOME extension. So, please [check your desktop environment](https://itsfoss.com/find-desktop-environment/) to make sure you are running ** GNOME 3.34 or higher version**.

I would also like to add that tiling windows could be confusing for many users.

Apart from that, I noticed that after disabling Material Shell it removes the top bar from Firefox and the Ubuntu dock. You can get the dock back by disabling/enabling Ubuntu dock extension from the Extensions app in GNOME. I haven’t tried but I guess these problems should also go away after a system reboot.

I hope you know [how to use GNOME extensions](https://itsfoss.com/gnome-shell-extensions/). The easiest way is to just [open this link in the browser](https://extensions.gnome.org/extension/3357/material-shell/), install GNOME extension plugin and then enable the Material Shell extension.

If you don’t like it, you can disable it from the same extension link you used earlier or use the GNOME Extensions app:

**To tile or not?**

I use multiple screens and I found that Material Shell doesn’t work well with multiple monitors. This is something the developer(s) can improve in the future.

Apart from that, it’s a really easy to get started with tiling windows with Material Shell. If you try Material Shell and like it, appreciate the project by [giving it a star or sponsoring it on GitHub](https://github.com/material-shell/material-shell).

For some reasons, tiling windows are getting popular. Recently released [Pop OS 20.04](https://itsfoss.com/pop-os-20-04-review/) also added tiling window features. There is a similar project called [PaperWM](https://itsfoss.com/paperwm/) which does the same.

But as I mentioned previously, tiling layouts are not for everyone and it could confuse many people.

How about you? Do you prefer tiling windows or you prefer the classic desktop layout? |

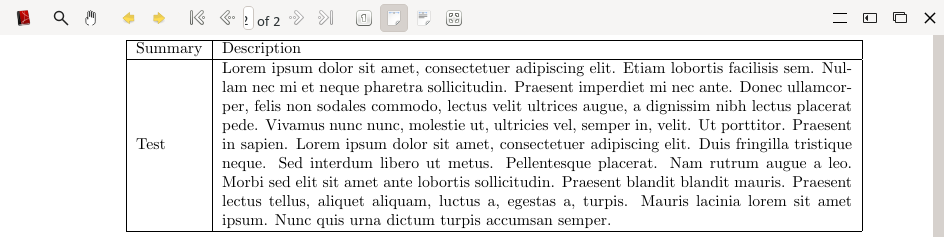

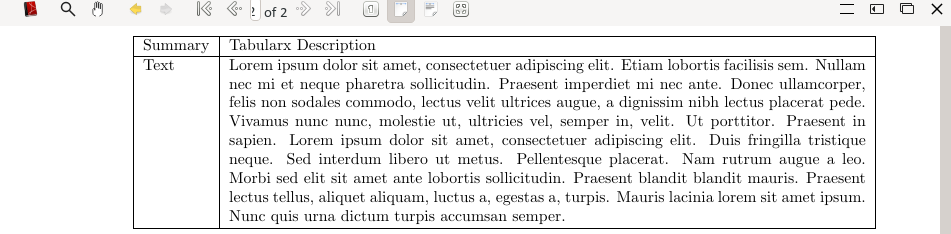

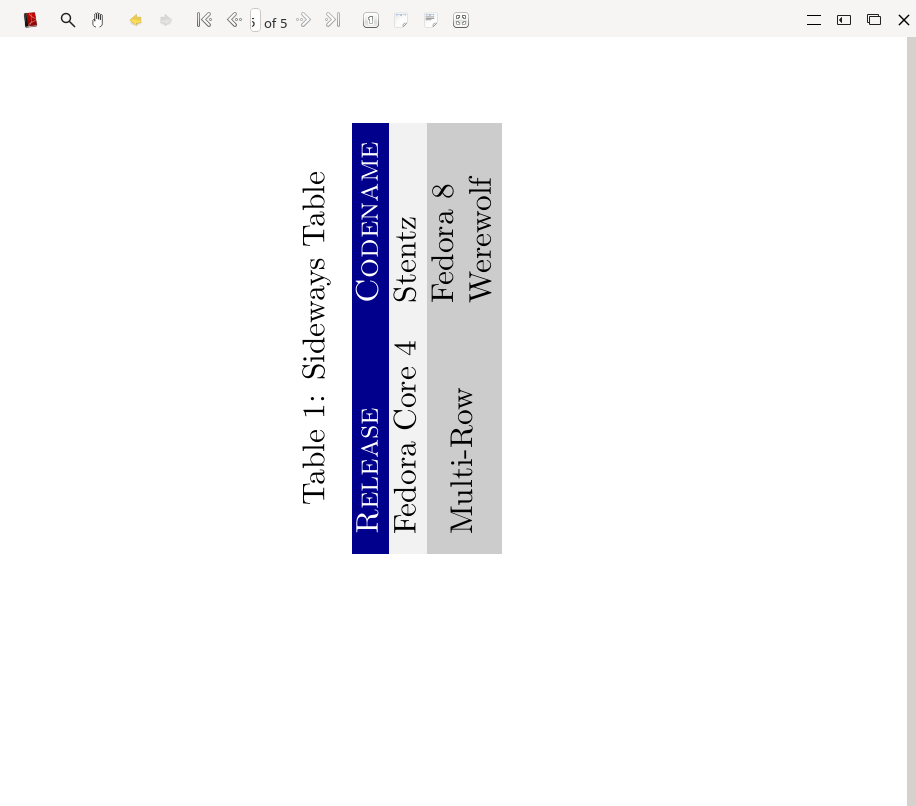

13,112 | LaTeX 排版(1):列表 | https://fedoramagazine.org/latex-typesetting-part-1/ | 2021-02-13T09:41:11 | [

"LaTex"

] | https://linux.cn/article-13112-1.html |

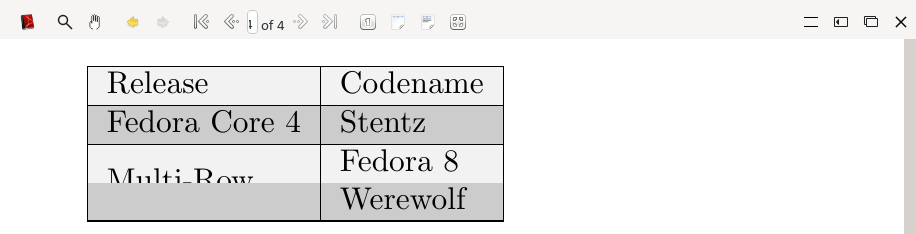

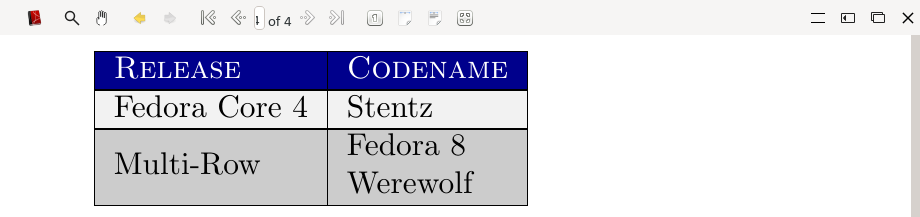

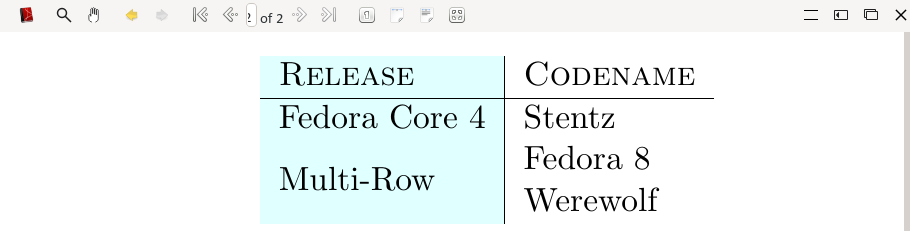

本系列基于前文《[在 Fedora 上用 LaTex 和 TeXstudio 排版你的文档](https://fedoramagazine.org/typeset-latex-texstudio-fedora)》和《[LaTeX 基础](https://fedoramagazine.org/fedora-classroom-latex-101-beginners)》,本文即系列的第一部分,是关于 LaTeX 列表的。

### 列表类型

LaTeX 中的列表是封闭的环境,列表中的每个项目可以取一行文字到一个完整的段落。在 LaTeX 中有三种列表类型:

* `itemize`:<ruby> 无序列表 <rt> unordered list </rt></ruby>/<ruby> 项目符号列表 <rt> bullet list </rt></ruby>

* `enumerate`:<ruby> 有序列表 <rt> ordered list </rt></ruby>

* `description`:<ruby> 描述列表 <rt> descriptive list </rt></ruby>

### 创建列表

要创建一个列表,需要在每个项目前加上控制序列 `\item`,并在项目清单前后分别加上控制序列 `\begin{<类型>}` 和 `\end`{<类型>}`(将其中的`<类型>` 替换为将要使用的列表类型),如下例:

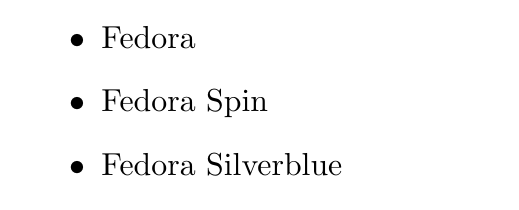

#### itemize(无序列表)

```

\begin{itemize}

\item Fedora

\item Fedora Spin

\item Fedora Silverblue

\end{itemize}

```

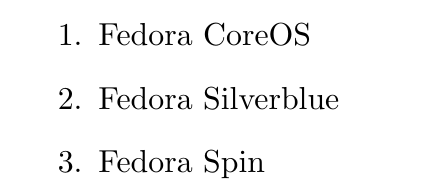

#### enumerate(有序列表)

```

\begin{enumerate}

\item Fedora CoreOS

\item Fedora Silverblue

\item Fedora Spin

\end{enumerate}

```

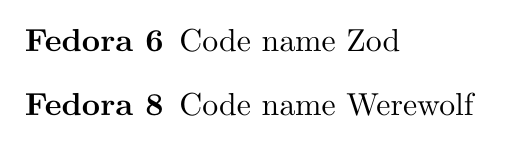

#### description(描述列表)

```

\begin{description}

\item[Fedora 6] Code name Zod

\item[Fedora 8] Code name Werewolf

\end{description}

```

### 列表项目间距

可以通过在导言区加入 `\usepackage{enumitem}` 来自定义默认的间距,宏包 `enumitem` 启用了选项 `noitemsep` 和控制序列 `\itemsep`,可以在列表中使用它们,如下例所示:

#### 使用选项 noitemsep

将选项 `noitemsep` 封闭在方括号内,并同下文所示放在控制序列 `\begin` 之后,该选项将移除默认的间距。

```

\begin{itemize}[noitemsep]

\item Fedora

\item Fedora Spin

\item Fedora Silverblue

\end{itemize}

```

#### 使用控制序列 \itemsep

控制序列 `\itemsep` 必须以一个数字作为后缀,用以表示列表项目之间应该有多少空间。

```

\begin{itemize} \itemsep0.75pt

\item Fedora Silverblue

\item Fedora CoreOS

\end{itemize}

```

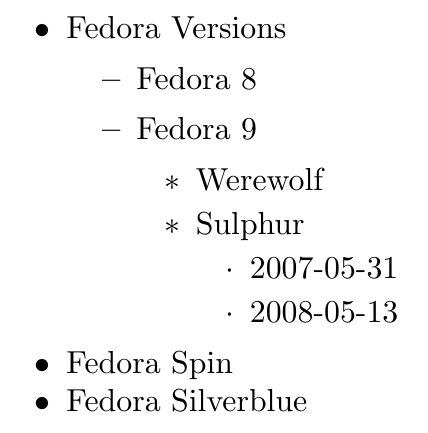

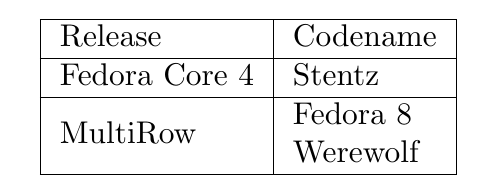

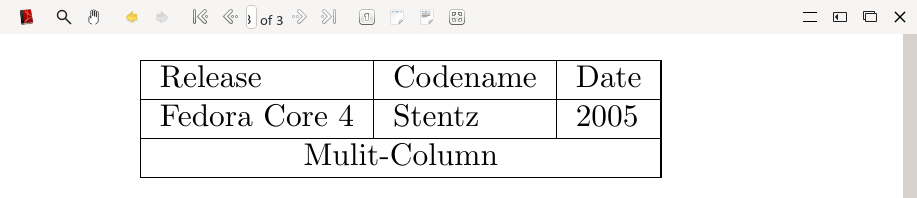

### 嵌套列表

LaTeX 最多最多支持四层嵌套列表,如下例:

#### 嵌套无序列表

```

\begin{itemize}[noitemsep]

\item Fedora Versions

\begin{itemize}

\item Fedora 8

\item Fedora 9

\begin{itemize}

\item Werewolf

\item Sulphur

\begin{itemize}

\item 2007-05-31

\item 2008-05-13

\end{itemize}

\end{itemize}

\end{itemize}

\item Fedora Spin

\item Fedora Silverblue

\end{itemize}

```

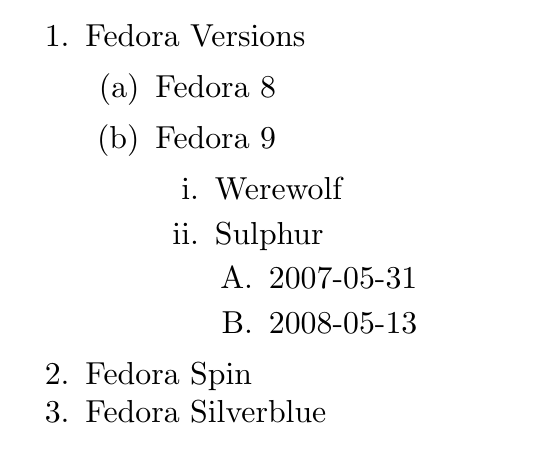

#### 嵌套有序列表

```

\begin{enumerate}[noitemsep]

\item Fedora Versions

\begin{enumerate}

\item Fedora 8

\item Fedora 9

\begin{enumerate}

\item Werewolf

\item Sulphur

\begin{enumerate}

\item 2007-05-31

\item 2008-05-13

\end{enumerate}

\end{enumerate}

\end{enumerate}

\item Fedora Spin

\item Fedora Silverblue

\end{enumerate}

```

### 每种列表类型的列表样式名称

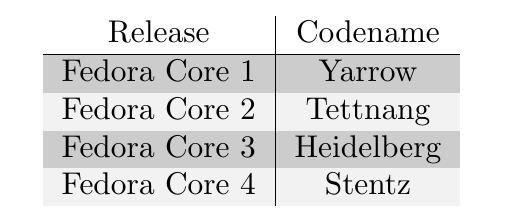

| **enumerate(有序列表)** | **itemize(无序列表)** |

| --- | --- |

| `\alph*` (小写字母) | `$\bullet$` (●) |

| `\Alph*` (大写字母) | `$\cdot$` (•) |

| `\arabic*` (阿拉伯数字) | `$\diamond$` (◇) |

| `\roman*` (小写罗马数字) | `$\ast$` (✲) |

| `\Roman*` (大写罗马数字) | `$\circ$` (○) |

| | `$-$` (-) |

### 按嵌套深度划分的默认样式

| **嵌套深度** | **enumerate(有序列表)** | **itemize(无序列表)** |

| --- | --- | --- |

| 1 | 阿拉伯数字 | (●) |

| 2 | 小写字母 | (-) |

| 3 | 小写罗马数字 | (✲) |

| 4 | 大写字母 | (•) |

### 设置列表样式

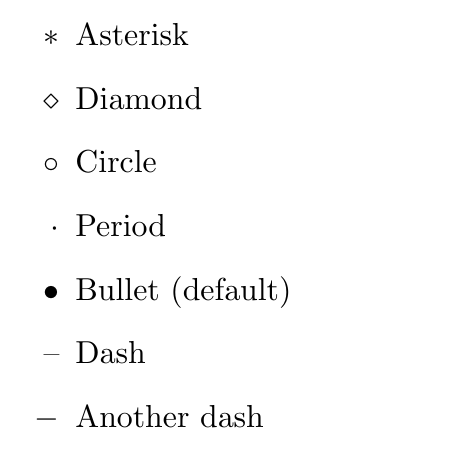

下面的例子列举了无序列表的不同样式。

```

% 无序列表样式

\begin{itemize}

\item[$\ast$] Asterisk

\item[$\diamond$] Diamond

\item[$\circ$] Circle

\item[$\cdot$] Period

\item[$\bullet$] Bullet (default)

\item[--] Dash

\item[$-$] Another dash

\end{itemize}

```

有三种设置列表样式的方式,下面将按照优先级从高到低的顺序分别举例。

#### 方式一:为各项目单独设置

将需要的样式名称封闭在方括号内,并放在控制序列 `\item` 之后,如下例:

```

% 方式一

\begin{itemize}

\item[$\ast$] Asterisk

\item[$\diamond$] Diamond

\item[$\circ$] Circle

\item[$\cdot$] period

\item[$\bullet$] Bullet (default)

\item[--] Dash

\item[$-$] Another dash

\end{itemize}

```

#### 方式二:为整个列表设置

将需要的样式名称以 `label=` 前缀并封闭在方括号内,放在控制序列 `\begin` 之后,如下例:

```

% 方式二

\begin{enumerate}[label=\Alph*.]

\item Fedora 32

\item Fedora 31

\item Fedora 30

\end{enumerate}

```

#### 方式三:为整个文档设置

该方式将改变整个文档的默认样式。使用 `\renewcommand` 来设置项目标签的值,下例分别为四个嵌套深度的项目标签设置了不同的样式。

```

% 方式三

\renewcommand{\labelitemi}{$\ast$}

\renewcommand{\labelitemii}{$\diamond$}

\renewcommand{\labelitemiii}{$\bullet$}

\renewcommand{\labelitemiv}{$-$}

```

### 总结

LaTeX 支持三种列表,而每种列表的风格和间距都是可以自定义的。在以后的文章中,我们将解释更多的 LaTeX 元素。

关于 LaTeX 列表的延伸阅读可以在这里找到:[LaTeX List Structures](https://en.wikibooks.org/wiki/LaTeX/List_Structures)

---

via: <https://fedoramagazine.org/latex-typesetting-part-1/>

作者:[Earl Ramirez](https://fedoramagazine.org/author/earlramirez/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[rakino](https://github.com/rakino) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

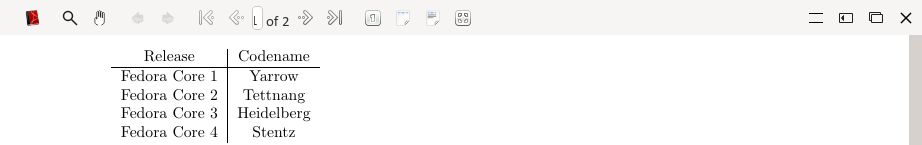

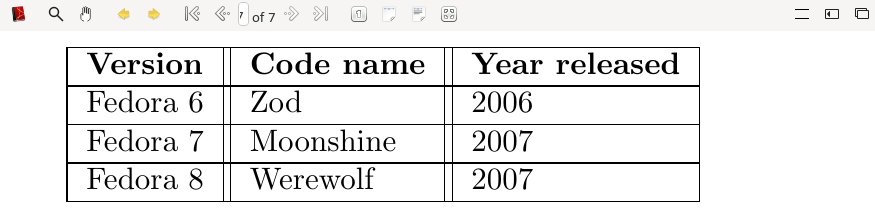

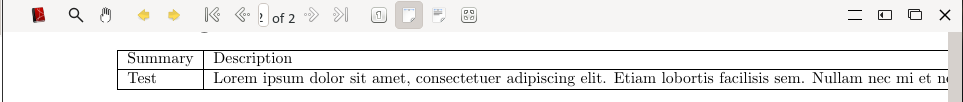

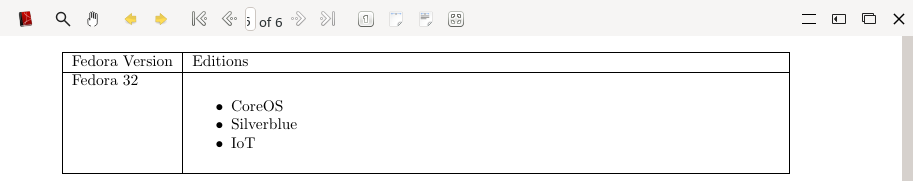

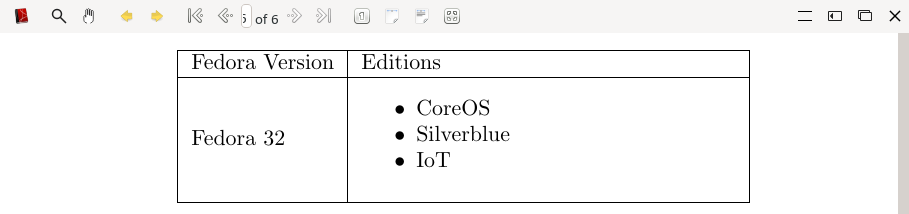

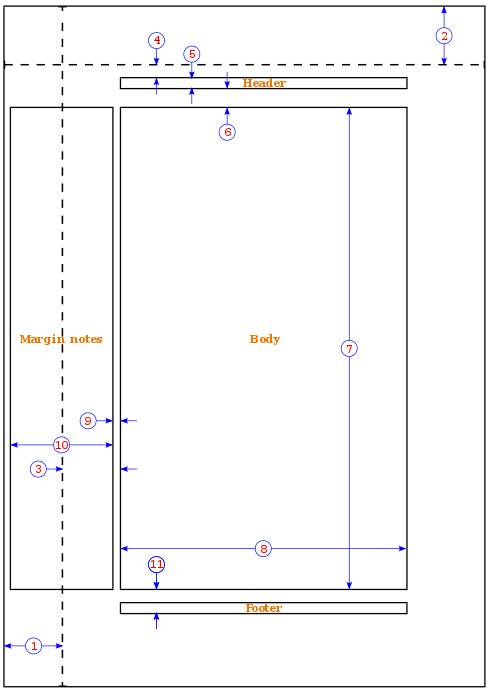

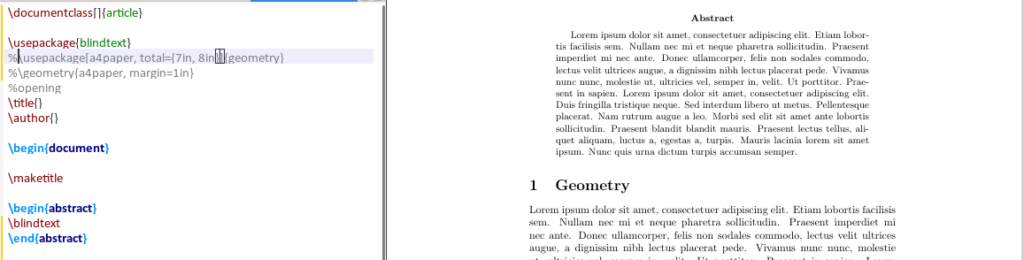

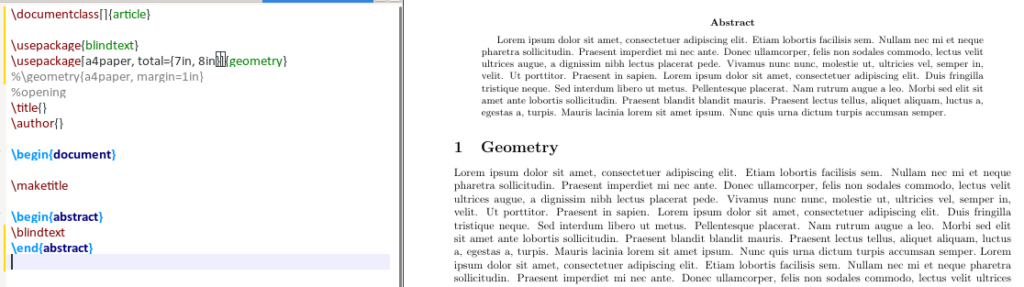

| 200 | OK | This series builds on the previous articles: [Typeset your docs with LaTex and TeXstudio on Fedora](https://fedoramagazine.org/typeset-latex-texstudio-fedora) and [LaTeX 101 for beginners](https://fedoramagazine.org/fedora-classroom-latex-101-beginners). This first part of the series is about LaTeX lists.

## Types of lists

LaTeX lists are enclosed environments, and each item in the list can take a line of text to a full paragraph. There are three types of lists available in LaTeX. They are:

**Itemized**: unordered or bullet**Enumerated**: ordered**Description**: descriptive

## Creating lists

To create a list, prefix each list item with the \*item* command. Precede and follow the list of items with the \*begin*{<type>} and \*end*{<type>} commands respectively where <type> is substituted with the type of the list as illustrated in the following examples.

### Itemized list

\begin{itemize} \item Fedora \item Fedora Spin \item Fedora Silverblue \end{itemize}

### Enumerated list

\begin{enumerate} \item Fedora CoreOS \item Fedora Silverblue \item Fedora Spin \end{enumerate}

### Descriptive list

\begin{description} \item[Fedora 6] Code name Zod \item[Fedora 8] Code name Werewolf \end{description}

## Spacing list items

The default spacing can be customized by adding \*usepackage{enumitem}* to the preamble. The *enumitem* package enables the *noitemsep* option and the \*itemsep* command which you can use on your lists as illustrated below.

### Using the noitemsep option

Enclose the *noitemsep* option in square brackets and place it on the \*begin* command as shown below. This option removes the default spacing.

\begin{itemize}[noitemsep] \item Fedora \item Fedora Spin \item Fedora Silverblue \end{itemize}

### Using the \itemsep command

The \*itemsep* command must be suffixed with a number to indicate how much space there should be between the list items.

\begin{itemize} \itemsep0.75pt \item Fedora Silverblue \item Fedora CoreOS \end{itemize}

## Nesting lists

LaTeX supports nested lists up to four levels deep as illustrated below.

### Nested itemized lists

\begin{itemize}[noitemsep] \item Fedora Versions \begin{itemize} \item Fedora 8 \item Fedora 9 \begin{itemize} \item Werewolf \item Sulphur \begin{itemize} \item 2007-05-31 \item 2008-05-13 \end{itemize} \end{itemize} \end{itemize} \item Fedora Spin \item Fedora Silverblue \end{itemize}

### Nested enumerated lists

\begin{enumerate}[noitemsep] \item Fedora Versions \begin{enumerate} \item Fedora 8 \item Fedora 9 \begin{enumerate} \item Werewolf \item Sulphur \begin{enumerate} \item 2007-05-31 \item 2008-05-13 \end{enumerate} \end{enumerate} \end{enumerate} \item Fedora Spin \item Fedora Silverblue \end{enumerate}

## List style names for each list type

Enumerated | Itemized |

\alph* | $\bullet$ |

\Alph* | $\cdot$ |

\arabic* | $\diamond$ |

\roman* | $\ast$ |

\Roman* | $\circ$ |

$-$ |

## Default style by list depth

Level | Enumerated | Itemized |

1 | Number | Bullet |

2 | Lowercase alphabet | Dash |

3 | Roman numerals | Asterisk |

4 | Uppercase alphabet | Period |

## Setting list styles

The below example illustrates each of the different itemiszed list styles.

% Itemize style \begin{itemize} \item[$\ast$] Asterisk \item[$\diamond$] Diamond \item[$\circ$] Circle \item[$\cdot$] Period \item[$\bullet$] Bullet (default) \item[--] Dash \item[$-$] Another dash \end{itemize}

There are three methods of setting list styles. They are illustrated below. These methods are listed by priority; highest priority first. A higher priority will override a lower priority if more than one is defined for a list item.

### List styling method 1 – per item

Enclose the name of the desired style in square brackets and place it on the \*item* command as demonstrated below.

% First method \begin{itemize} \item[$\ast$] Asterisk \item[$\diamond$] Diamond \item[$\circ$] Circle \item[$\cdot$] period \item[$\bullet$] Bullet (default) \item[--] Dash \item[$-$] Another dash \end{itemize}

### List styling method 2 – on the list

Prefix the name of the desired style with *label=*. Place the parameter, including the *label=* prefix, in square brackets on the \*begin* command as demonstrated below.

% Second method \begin{enumerate}[label=\Alph*.] \item Fedora 32 \item Fedora 31 \item Fedora 30 \end{enumerate}

### List styling method 3 – on the document

This method changes the default style for the entire document. Use the \*renewcommand* to set the values for the labelitems. There is a different labelitem for each of the four label depths as demonstrated below.

% Third method \renewcommand{\labelitemi}{$\ast$} \renewcommand{\labelitemii}{$\diamond$} \renewcommand{\labelitemiii}{$\bullet$} \renewcommand{\labelitemiv}{$-$}

## Summary

LaTeX supports three types of lists. The style and spacing of each of the list types can be customized. More LaTeX elements will be explained in future posts.

Additional reading about LaTeX lists can be found here: [LaTeX List Structures](https://en.wikibooks.org/wiki/LaTeX/List_Structures)

## kd

Helpful, thanks

## Earl Ramirez

Glad that is was helpful

## Jorge Dominguez

Thanks for the tips, I’m starting out with LaTeX, so this was indeed helpful

## Earl Ramirez