id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

12,764 | Fedora 33 正式发布了 | https://fedoramagazine.org/announcing-fedora-33/ | 2020-10-28T15:50:19 | [

"Fedora"

] | https://linux.cn/article-12764-1.html |

>

> 本文译自 Fedora 官方发布公告。

>

>

>

今天,我很兴奋地与大家分享数千名 Fedora 项目贡献者辛勤工作的成果:我们的最新版本 Fedora 33 来了! 这是一个有着很多变化的大版本,但我相信所有这些工作也会让您感到舒适,实现我们的目标:即为您带来最新的稳定、强大、健壮的自由和开源软件,并提供许多易于使用的产品。

如果您不想耽误时间,直接体验,现在就去 <https://getfedora.org/> 下载吧,欲了解详情请继续阅读!

### 找到适合您的 Fedora 风味!

Fedora Edition 是针对桌面、服务器和云环境中各种“体现”特定用途的目标产品,现在它也适用于物联网。

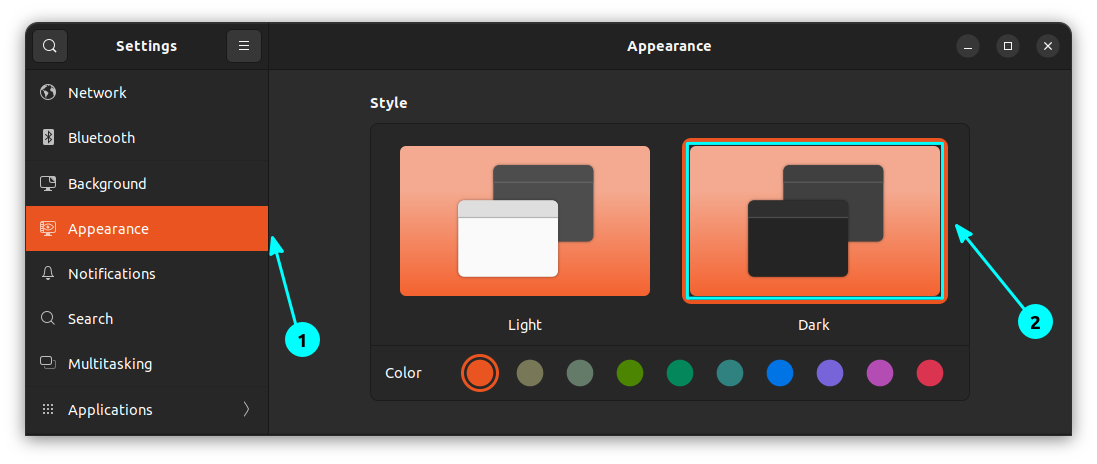

Fedora Workstation 专注于桌面,尤其是面向那些想要“只管去用”的 Linux 操作系统体验的软件开发者。这个版本的特点是 [GNOME 3.38](https://www.gnome.org/news/2020/09/gnome-3-38-released/),它一如既往地有很多很棒的改进。新增的 Tour 应用可以帮助新用户学习它们的操作方式。和我们所有其他面向桌面的变体一样,Fedora Workstation 现在使用 [BTRFS 作为默认文件系统](https://fedoramagazine.org/btrfs-coming-to-fedora-33/)。这些发布的版本中带来了很多伟大的增强功能,这个先进的文件系统为之奠定了基础。为了您的视觉享受,Fedora 33 Workstation 现在默认提供了一个动画背景(它会基于一天中的时间变化)。

Fedora CoreOS 是一个新兴的 Fedora 版本。它是一个自动更新的、最小化的操作系统,用于安全地、大规模地运行容器化工作负载。它提供了几个[更新流](https://docs.fedoraproject.org/en-US/fedora-coreos/update-streams/),可以遵循大致每两周一次的自动更新。目前 **next** 流是基于 Fedora 33 的,**testing** 和 **stable** 流后继也会跟进。您可以从[下载页面](https://getfedora.org/en/coreos/download?stream=next)中找到关于跟随 **next** 流发布的工件的信息,并在 [Fedora CoreOS 文档](https://docs.fedoraproject.org/en-US/fedora-coreos/getting-started/)中找到关于如何使用这些工件的信息。

新晋升为 Edition 状态的 [Fedora IoT](https://getfedora.org/iot),为物联网生态系统和边缘计算用例提供了坚实的基础。在许多功能之外,Fedora 33 IoT 还引入了<ruby> 平台抽象安全 <rt> Platform AbstRaction for SECurity </rt></ruby>(PARSEC),这是一个开源倡议,以平台无关的方式为硬件安全和加密服务提供了通用 API。

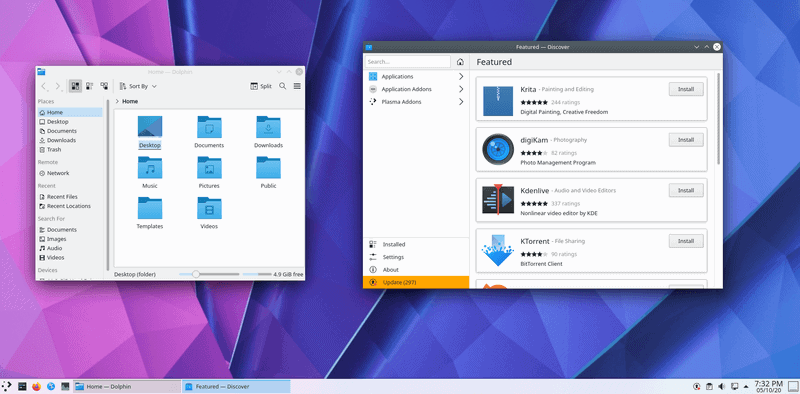

当然,我们制作的不仅仅是“官方版本”,还有 [Fedora Spin](https://spins.fedoraproject.org/)和 [Lab](https://labs.fedoraproject.org/)。[Fedora Spin](https://spins.fedoraproject.org/) 和 [Lab](https://labs.fedoraproject.org/) 针对不同的受众和用例,包括 [Fedora CompNeuro](https://labs.fedoraproject.org/en/comp-neuro/),它为神经科学带来了大量的开源计算建模工具,以及 [KDE Plasma](https://spins.fedoraproject.org/en/kde/) 和 [Xfce](https://spins.fedoraproject.org/en/xfce/)等桌面环境。

此外,别忘了我们还有备用架构:[ARM AArch64、Power 和 S390x](https://alt.fedoraproject.org/alt/)。在 Fedora 33 中提供的新功能,AArch64 用户可以使用 .NET Core 语言进行跨平台开发。我们改进了对 Pine64 设备、NVidia Jetson 64 位平台以及 Rockchip 片上系统(SoC)设备的支持,包括 Rock960、RockPro64 和 Rock64。(不过,有个最新的说明:在其中一些设备上可能会出现启动问题。从现有的 Fedora 32 升级是没问题的。更多信息将在[常见错误](https://fedoraproject.org/wiki/Common_F33_bugs)页面上公布。)

我们也很高兴地宣布,Fedora 云镜像和 Fedora CoreOS 将首次与 Fedora 33 一起在亚马逊的 [AWS 市场](https://aws.amazon.com/marketplace) 中提供。Fedora 云镜像在亚马逊云中已经存在了十多年,您可以通过 AMI ID 或[点击一下](https://getfedora.org/en/coreos/download?tab=cloud_launchable&stream=stable)来启动我们的官方镜像。该市场提供了获得同样东西的另一种方式,显著扩大了 Fedora 的知名度。这也将使我们的云镜像可以更快地在新的 AWS 区域中可用。特别感谢 David Duncan 让这一切成为现实!

### 常规改进

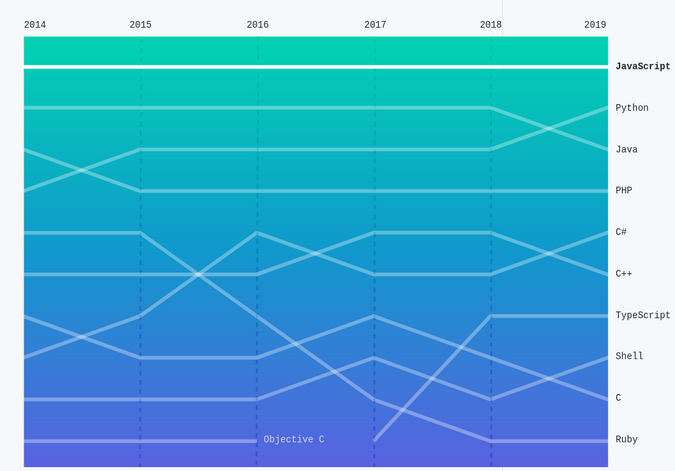

无论您使用的是哪种版本的 Fedora,您都会得到开源世界提供的最新版本。遵循我们的 [First](https://docs.fedoraproject.org/en-US/project/#_first) 原则,我们更新了关键的编程语言和系统库包,包括 Python 3.9、Ruby on Rails 6.0 和 Perl 5.32。在 Fedora KDE 中,我们沿用了 Fedora 32 Workstation 中的工作,默认启用了 EarlyOOM 服务,以改善低内存情况下的用户体验。

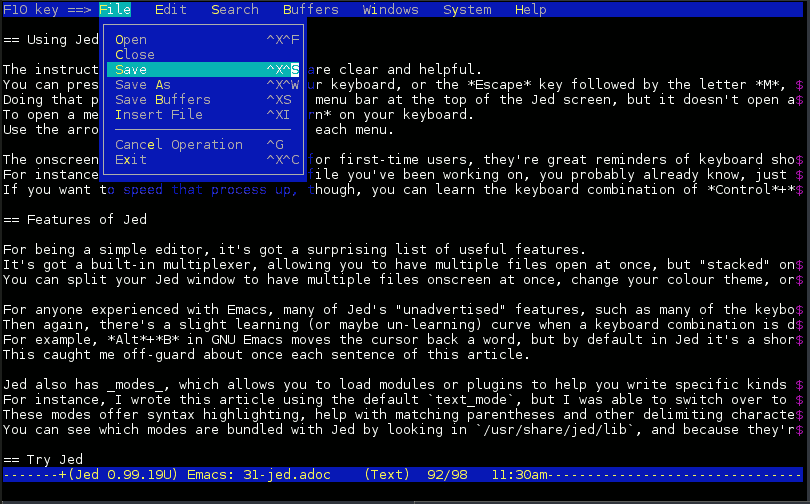

为了让 Fedora 的默认体验更好,我们将 nano 设置为默认编辑器。nano 是一个对新用户友好的编辑器。当然,那些想要像 vi 这样强大的编辑器的用户可以自己设置默认编辑器。

我们很高兴您能试用新版本! 前往 <https://getfedora.org/> 并立即下载它。或者如果您已经在运行 Fedora 操作系统,请按照这个简单的[升级说明](https://docs.fedoraproject.org/en-US/quick-docs/upgrading/)进行升级。关于 Fedora 33 新特性的更多信息,请参见[发布说明](https://docs.fedoraproject.org/en-US/fedora/f33/release-notes/)。

### 关于安全启动的说明

<ruby> 安全启动 <rt> Secure Boot </rt></ruby>是一种安全标准,它确保只有官方签署的操作系统软件才能加载到您的计算机上。这对于防止持久恶意软件非常重要,因为这些恶意软件可能会隐藏在您的计算机固件中,甚至在重新安装操作系统时也能存活。然而,在 [Boot Hole](https://access.redhat.com/security/vulnerabilities/grub2bootloader) 漏洞发生后,用于签署 Fedora <ruby> 引导加载器 <rt> Bootloader </rt></ruby>软件的加密证书将被撤销,并被新的证书取代。由于这将产生大范围的影响,撤销应该要到 2021 年第二季度或更晚才会广泛推行。

然而,一些用户可能已经从其他操作系统或固件更新中收到了这种撤销。在这种情况下,Fedora 将不能在启用了安全启动时进行安装。要说明的是,这不会影响大多数用户。如果它确实影响到了您,您可以暂时禁用安全启动。我们会在大范围的证书撤销之前发布一个用新证书签署的更新,在所有支持的版本上都可以使用,到那时,安全启动应该可以重新启用。

### 万一出现问题时……

如果您遇到问题,请查看 [Fedora 33 常见错误](https://fedoraproject.org/wiki/Common_F33_bugs)页面;如果您有疑问,请访问我们的 [Ask Fedora](http://ask.fedoraproject.org) 用户支持平台。

### 谢谢大家

感谢在这个发布周期中为 Fedora 项目做出贡献的成千上万的人,尤其是那些在疫情大流行期间为使这个版本准时发布而付出额外努力的人。Fedora 是一个社区,很高兴看到我们如此互相支持。

---

via: <https://fedoramagazine.org/announcing-fedora-33/>

作者:[Matthew Miller](https://fedoramagazine.org/author/mattdm/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Today, I’m excited to share the results of the hard work of thousands of contributors to the Fedora Project: our latest release, Fedora 33, is here! This is a big release with a lot of change, but I believe all that work will also make it a comfortable one, fulfilling our goal of bringing you the latest stable, powerful, and robust free and open source software in many easy to use offerings.

If you just want to get to the bits without delay, head over to[ https://getfedora.org/](https://getfedora.org/) right now. For details, read on!

**Find the Fedora flavor that’s right for you!**

Fedora Editions are targeted outputs geared toward specific “showcase” uses on the desktop, in server and cloud environments—and now for Internet of Things as well.

Fedora Workstation focuses on the desktop, and in particular, it’s geared toward software developers who want a “just works” Linux operating system experience. This release features [GNOME 3.38](https://www.gnome.org/news/2020/09/gnome-3-38-released/), which has plenty of great improvements as usual. The addition of the Tour application helps new users learn their way around. And like all of our other desktop-oriented variants, Fedora Workstation now uses [BTRFS as the default filesystem](https://fedoramagazine.org/btrfs-coming-to-fedora-33/). This advanced filesystem lays the foundation for bringing a lot of great enhancements in upcoming releases. For your visual enjoyment, Fedora 33 Workstation now features an animated background (based on time of day) by default.

Fedora CoreOS is an emerging Fedora Edition. It’s an automatically-updating, minimal operating system for running containerized workloads securely and at scale. It offers several [update streams](https://docs.fedoraproject.org/en-US/fedora-coreos/update-streams/) that can be followed for automatic updates that occur roughly every two weeks. Currently the **next** stream is based on Fedora 33, with the **testing** and **stable** streams to follow. You can find information about released artifacts that follow the **next** stream from [the download page](https://getfedora.org/en/coreos/download?stream=next) and information about how to use those artifacts in the [Fedora CoreOS Documentation](https://docs.fedoraproject.org/en-US/fedora-coreos/getting-started/).

[Fedora IoT](https://getfedora.org/iot), newly promoted to Edition status, provides a strong foundation for IoT ecosystems and edge computing use cases. Among many other features, Fedora 33 IoT introduces the Platform AbstRaction for SECurity (PARSEC), an open-source initiative to provide a common API to hardware security and cryptographic services in a platform-agnostic way.

Of course, we produce more than just the Editions.[ Fedora Spins](https://spins.fedoraproject.org/) and[ Labs](https://labs.fedoraproject.org/) target a variety of audiences and use cases, including [Fedora CompNeuro](https://labs.fedoraproject.org/en/comp-neuro/), which brings a plethora of open source computational modelling tools for neuroscience, and desktop environments like[ KDE Plasma](https://spins.fedoraproject.org/en/kde/) and[ Xfce](https://spins.fedoraproject.org/en/xfce/).

And, don’t forget our alternate architectures: [ARM AArch64, Power, and S390x](https://alt.fedoraproject.org/alt/). New in Fedora 33, AArch64 users can use the .NET Core language for cross-platform development. We have improved support for Pine64 devices, NVidia Jetson 64 bit platforms, and the Rockchip system-on-a-chip devices including the Rock960, RockPro64, and Rock64. (However, a late-breaking note: there may be problems booting on some of these devices. Upgrading from existing Fedora 32 will be fine. More info will be on the [Common Bugs](https://fedoraproject.org/wiki/Common_F33_bugs) page as we have it.)

We’re also excited to announce that the Fedora Cloud Base Image and Fedora CoreOS will be available in Amazon’s [AWS Marketplace](https://aws.amazon.com/marketplace) for the first time with Fedora 33. Fedora cloud images have been available in the Amazon cloud for over a decade, and you can launch our official images by AMI ID or [with a click](https://getfedora.org/en/coreos/download?tab=cloud_launchable&stream=stable). The Marketplace provides an alternate way to get the same thing, with significantly wider visibility for Fedora. This will also make our cloud images available in new AWS regions more quickly. Thank you especially to David Duncan for making this happen!

## General improvements

No matter what variant of Fedora you use, you’re getting the latest the open source world has to offer. Following our “[First](https://docs.fedoraproject.org/en-US/project/#_first)” foundation, we’ve updated key programming language and system library packages, including Python 3.9, Ruby on Rails 6.0, and Perl 5.32. In Fedora KDE, we’ve followed the work in Fedora 32 Workstation and enabled the EarlyOOM service by default to improve the user experience in low-memory situations.

To make the default Fedora experience better, we’ve set nano as the default editor. nano is a friendly editor for new users. Those of you who want the power of editors like vi can, of course, set your own default.

We’re excited for you to try out the new release! Go to[ https://getfedora.org/](https://getfedora.org/) and download it now. Or if you’re already running a Fedora operating system, follow the easy[ upgrade instructions](https://docs.fedoraproject.org/en-US/quick-docs/upgrading/). For more information on the new features in Fedora 33, see the[ release notes](https://docs.fedoraproject.org/en-US/fedora/f33/release-notes/).

**A note on Secure Boot**

Secure Boot is a security standard which ensures that only officially-signed operating system software can load on your computer. This is important for preventing persistent malware which could hide itself in your computer’s firmware and survive even an operating system reinstallation. However, in the wake of the [Boot Hole](https://access.redhat.com/security/vulnerabilities/grub2bootloader) vulnerability, the cryptographic certificate used to sign Fedora bootloader software will be revoked and replaced with a new one. Because this will have a broad impact, revocation should not happen widely until the second quarter of 2021 or later.

However, some users may have received this revocation from other operating systems or firmware updates already. In that case, Fedora installations will not boot with Secure Boot enabled. To be clear, this will not affect most users. If it does affect you, you can boot with Secure Boot disabled for the time being. We will release an update signed with the new certificate to be available on all supported releases well before broad-scale certificate revocation takes place, and at that point Secure Boot should be reenabled.

**In the unlikely event of a problem….**

If you run into a problem, check out the[ Fedora 33 Common Bugs](https://fedoraproject.org/wiki/Common_F33_bugs) page, and if you have questions, visit our[ Ask Fedora](http://ask.fedoraproject.org) user-support platform.

**Thank you everyone**

Thanks to the thousands of people who contributed to the Fedora Project in this release cycle, and especially to those of you who worked extra hard to make this another on-time release during a pandemic. Fedora is a community, and it’s great to see how much we’ve supported each other.

## Brad Smith

Outstanding! I applaud all the work accomplished by the Fedora team and open source contributors everywhere.

## Michal Konečný

Is EarlyOOM enabled automatically when doing upgrade or is this only for new installations?

## monotux

My installation is a few years old and earlyoom.service is enabled on my machine. So I think it’s default and has been default since 32?

## Abhi

i am using fedora from about 5 years it was my first linux distro. and i love it sometimes it makes me feel that i am made for it.

## Matthias

Thank you.

## Leslie Satenstein, Montreal,Que,Canada

I have been using the beta version for the past month. Never a burp, never an issue.

What a great relief to have the distribution work, “Right out of the Box”.

Congratulations to all who worked to deliver this version, and the past versions.

## Truls Gulbrandsen

The same goes for me – almost – I have experienced a couple of bugs. They have been reported and were solved promptly.

Thank you and keep up the good work-

## Tobias, Germany

Vielen Dank für das großartige Release.

## Roberto

And Fedora Silverblue?

🙁

## Eduard Lucena

Silverblue got updated too: https://silverblue.fedoraproject.org/download

## Calvin Walton

If you have an existing Silverblue install, you can rebase to 33 using the instructions here: https://docs.fedoraproject.org/en-US/fedora-silverblue/updates-upgrades-rollbacks/ (distribution release upgrades are not yet integrated into the Software gui tool in Silverblue)

## Grey the earthling

Yes they are!

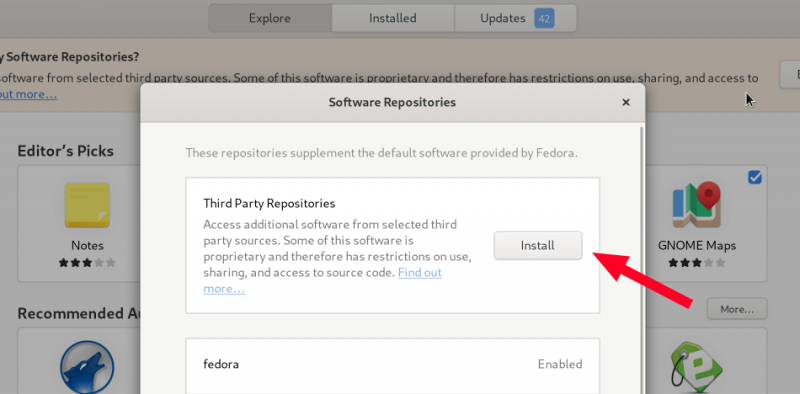

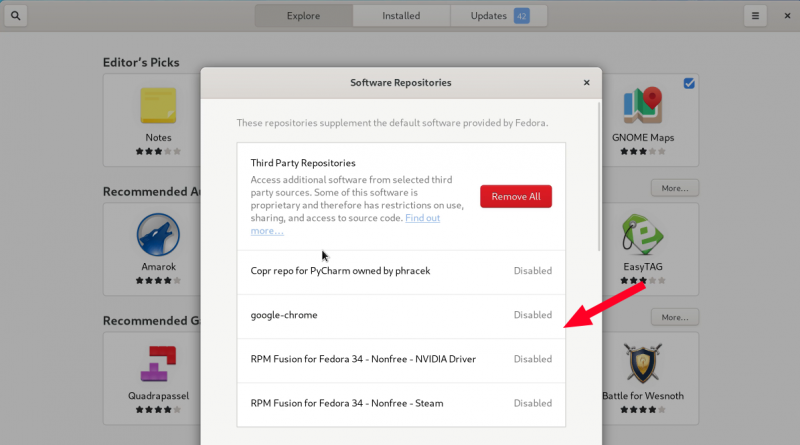

I upgraded from Silverblue 31 to 32 using GNOME Software (I disabled third-party repos using the terminal first) and those instructions now say:

## Calvin Walton

Heh, serves me right, I should have actually read the page I was linking! Very nice 🙂

## Sriniwas

Thank you fedora team, I hope someday I can contribute to this amazing project

## martin

A big thanks to everyone involved in making this great distribution!

## Lyes Saadi

The fedora background in the illustration of the article is outdated 😛 !

## flowers

Thank you

## Robert

Thanks for taking bold steps like BTRFS by default! Together with Wayland, Pipewire, fwupd, Silverblue etc. Fedora pushes for great innovation in the desktop space and I’m happily riding with you 🙂

## Rui Quaresma

Great distribution for those who like packages packaged by the distribution itself, good system for hackers.

## XHess

Ich liebe Fedora 33. btrfs rockt und Wayland made my day. 👍🏻 Alles andere natürlich auch.

Danke!

## Arek P.

The greatest gift on my birthday 🙂 Thank you !!! 🙂

## Dinu Radhakrishnan

Thank you team Fedora.

I have been using the beta version of both the Workstation and the Server editions since release, without any issues.

## 荒野无灯

I do like Fedora.

Thanks to all of you guys made this great Linux dist

## tfks

Brilliant work as always!

## Patrick

Thank you so much for your hard and complicated work Fedora team for this new exciting release!!!

## stephane keglo

Thank You ,Fedora Teams for your amazing work

## Dutchy

This is a huge release for home and office users!

– Btrfs offers lots of flexibility and safety, finally a step into the future fs-wise (option to use snapshots, no more lengthy integrity checks and corrupted files when the laptop runs out of juice)

– EarlyOOM and zram means no more systems getting swapped to death, this is a HUGE usability improvement that end users will notice (or actually, they will no longer notice anything besides their browser tabs oopsing now and then)

While many users will shrug off these features as being unnecessary, to regular users these could mean the difference between having a system that works very reliably vs one that often causes troubles, and losing data and having to watch at a stuck desktop after opening too many browser tabs isn’t part of the former.

Great work from all the devs!

## Anon Ymous

Fedora 33 is awesome! Someone should write an article here on the “Docker support” features of fedora 33 tho. I boot it up, no docker manager or anything installed by default, I take it one has to download something? Anyway, Fedora 33 is really nice, does anyone know if CENTos or RHEL is planning to go to btrfs by default? That would be interesting to know!

## Matthew Miller

## Anon Ymous

to: Matthew Miller Thank you for the reply and answering ppl’s questions, that is how articles are done right!

In the release notes, there are supposed to be new “Docker support” features – im not sure what the writer meant by that. Maybe it was a reference to podman, although that is not “new”, so i wonder why someone wrote it in the release notes. No biggie, time will tell. Again, thanks.

## Jaranguda

I’m glad didn’t upgrade before too late 🙂 I have a lot of docker images for testing purpose. Will try podman before doing the upgrade to make sure everything works perfectly

## Badtux

BTRFS is not production-ready. It has failed at every production load I’ve thrown at it. Red Hat is not going to go to BTRFS until that is consistently not true.

## monotux

I’m not a proponent for BTRFS (I like my ZFS for important stuff), but there’s a lot of FUD regarding BTRFS thrown around in places like this. Had it not been ready for production use, why would major players (hello FB) and at least two major distros (SuSE, Fedora, probably more?) use it by default?

## Dutchy

Btrfs is a poor fit for high performance cattle servers. It is great for end users’ pets systems however. Fedora is only making btrfs the default for the workstation edition so what you say doesn’t apply.

## Anon Ymous

NVIDIA with Fedora 33

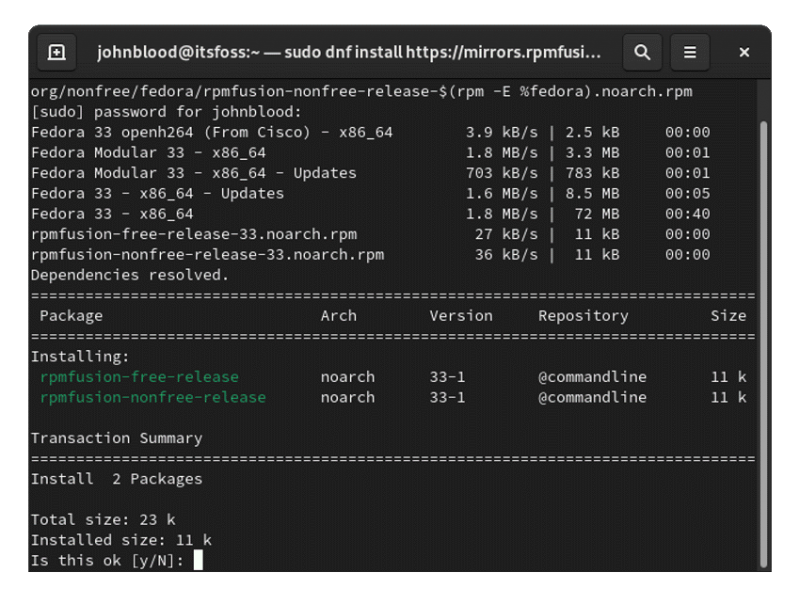

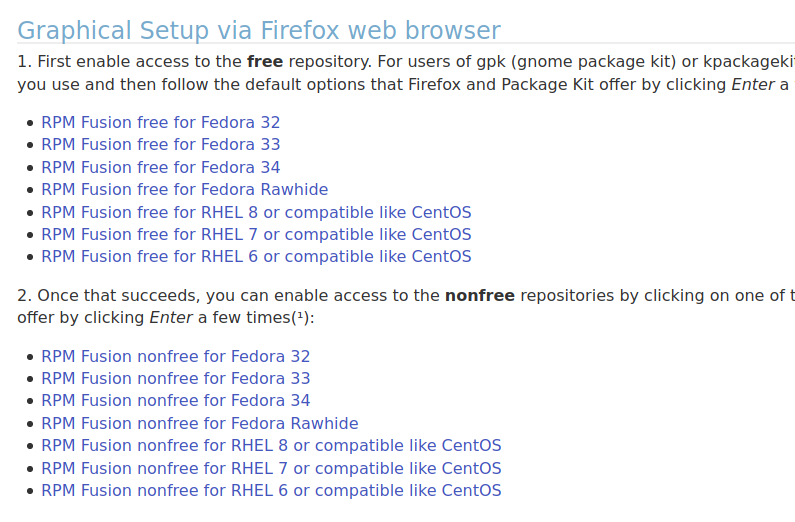

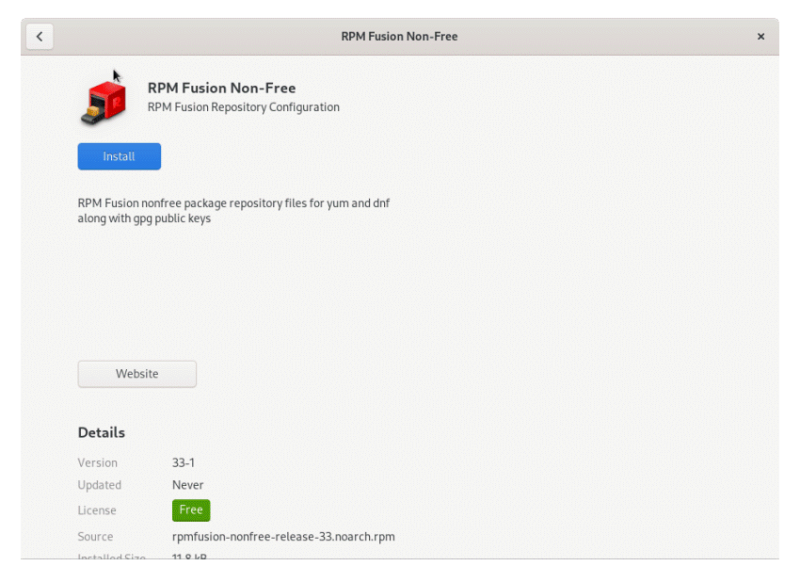

I have a dell g5 gaming rig with geforce rtx 2080 installed. after about 10 min of fedora 33 boot up the screen goes blank. Here is a VERY simple way to fix it in less than a minute!

1) sudo dnf update

2) sudo dnf install https://download1.rpmfusion.org/free/fedora/rpmfusion-free-release-$(rpm -E %fedora).noarch.rpm

3) sudo dnf install https://download1.rpmfusion.org/nonfree/fedora/rpmfusion-nonfree-release-$(rpm -E %fedora).noarch.rpm

4) sudo dnf install akmod-nvidia

sudo reboot or reboot the computer.

BAM! Problem fixed!

Notice when you go to settings/about it will show “NVIDIA Corporation TU104 [GeForce RTX 2080]”

for the graphics driver and the system will not blank the screen out. You are welcome 🙂

## Sergey

Thank you for the instruction !

What is you DE ? I read that the drivers NVIDIA you like do not work properly with wayland and therefore you need to use X11, otherwise steam and proton will not work.

Thank you.

## Stan Genchev

I have bad news for you. Fedora devs are testing the 5.9 version of the Linux kernel and it will probably appear as an update next week. The problem is that Nvidia will not support kernel 5.9 for a few more months (1 to 2 probably, but it could be more). They stated it themselves. So you have two options:

1 – Do not update your system for a few months and check every once in a while if the driver has picked up support and is in the rpmfusion repos.

2 – Get an AMD GPU (or and Intel if you don’t play games) and live a happy life 😀

Personally, I would go with the second option.

## Alexander Borro

If that’s true. There is no way kernel 5.9 should be pushed out to Fedora users. Nvidia is far too widespread in use, it would break systems without notice. I feel confident the Fedora Team know this. and wouldn’t do such an (unprofessional) thing.

Congrats Fedora 33 team. Running great here with my current RTX card. 🙂

## Vaclav

This one is related to GAMING workloads only (CUDA).

System update went surprisingly smoothly and guickly. It was super easy – One button click (upgrade) did the trick. (also have a CUDA GPU)

Great work Fedora team!

## Heliosstyx

Thanks to all contributors for their work building this high-quality and innovative Fedora product-line. It’s very impressive to see what people can do with common objectives to give their best creating Fedora. Thank you Mr. Miller for your above easy to understand article.

## polaris

I just switched to Fedora 32 yesterday…

## Filipe Guarino

Awsome to see the great work that fedora team have done. Thanks to community too.

## Francisco Reyes

Gracias a todo el equipo de Fedora. Excelente trabajo. 👍

## garcia88jose

Thank you for all effort. Just amazing.

## Chucho Linux

Fedora 33

gnome-shell[42352]: segfault at 18 ip 00007ffa91fae87f sp 00007fff957cf790 error 4 in libmutter-7.so.0.0.0[7ffa91f29000+10a000]

Any idea?

## William Whinn

I rebased to Silverblue 33 from 32 without an issue but because of the new BTrFS system, I think I’m going to nuke and pave so I can take advantage of that. Think I’ll stick to Silverblue over vanilla Workstation though, it’s a solid workhorse that got me through a data science master’s degree and is currently getting me through another in bioinformatics. Thank you all for your hard work, I celebrate every release.

## I

Just to ask about Raspberry pi 4 support. Will that be also?

## frantisekz

Fedora 33 works on Raspberry Pi 4. However:

It’s not officially supported

There is no 3D acceleration support just yet (that should come with Linux 5.10)

## Mario Soto

Fantastic!!!…Thank you all that made this possible and be safe.

## Dong Nguyen

Thank you Fedora team for your great work.

## Rey

Congratulation Fedora team, you really do an amazing work, thank you!

## max

Amazing, been on beta 33 for a while, and thoroughly impressed. Also, the advanced partioner finally fixed some long standing snags for me, which always made it a pain to do a fresh install they way I wanted it to, so no more workarounds needed, yay!

Now it only needs a kernel-lts package and it will be the perfect distro… pretty please?? 🙂

Congratulations everyone involved!

## Sam

Thank you guys, thank you fedora team, amazing work 👏🏻!

## Wang

Fedora 33 default deny ssh-rsa, through a new /etc/crypto-policies/back-ends/opensshserver.config which did not contain ssh-rsa config.

## Jonathan

Just noting as Wang pointed out that ssh-rsa signatures on public keys are off by default in Fedora 33. If at all possible, I recommend leaving the default policy in place, and upgrading your SSH server and/or client keys, rather than downgrading security as many are doing. I wrote up my experiences at https://dev.to/bowmanjd/upgrade-ssh-client-keys-and-remote-servers-after-fedora-33-s-new-crypto-policy-47ag

## Tim

Hi Jonathan, Thanks for the explaination and suggestions. What I failed to get what why they removed rsa keys from the policy list. Where would that rationale be documented?

## Bad Tux

ssh-rsa was deprecated because it uses the SHA1 hash algorithm for signature digests, and it is trivially easy to create payloads that have an equivalent SHA1 hash. This deprecation came from upstream, not from Fedora. See, e.g.,

https://lwn.net/Articles/821544/

## Ben

nice

## Christopher Augustus

I was able to get an old HP BlueStream upgraded to 33. Fairly difficult due to a 50 GB hard drive that was almost full. Ended up tinkering with the DNF configuration file to tell it to download the upgrade files to an attached flashdrive. When that did not free up enough space, I removed GIMP (sorry little critter) and some shared library files. USB 2.0 is very slow, but it is now upgraded. I have been using the old HP BlueStream running Fedora for Zoom meetings, internet browsing, and playing with Linux features for almost a year and half now. Very happy I can stay on the cutting edge of Open Source software with Fedora 33! (And yes, I reset the DNF configuration back so update will work.)

## Daniel Berto

Fedora 33 is just amazing!!! Thanks guys!!!

## Emerson Lima

Congratulations Fedora team and the whole community too! More productivity, less headache.

Fedora 33 (Thirty Three) 64-bit

Intel® Core™ i7-9750HF CPU @ 2.60GHz × 12

NVIDIA Corporation TU117M [GeForce GTX 1650 Mobile / Max-Q]

## Atul Kumar Pandey

Kudos to the Fedora team, congratulations for your efforts in this critical time.

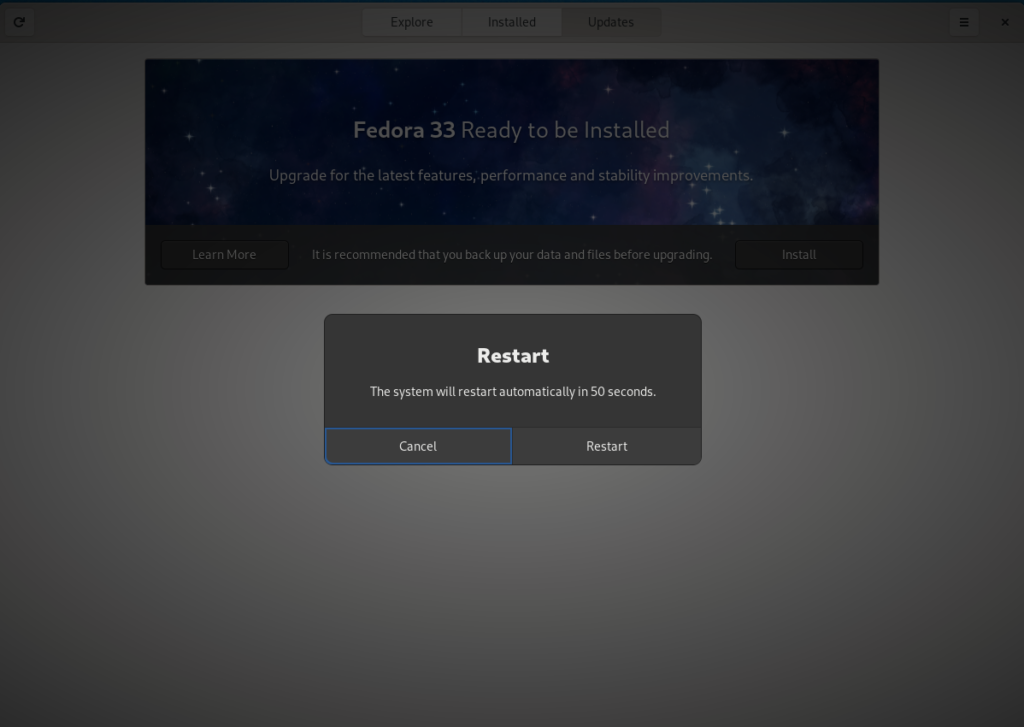

## Jens

If you click on “learn more” in the Fedora 33 announcement (in F32’s Software), it points to https://fedoramagazine.org/whats-new-fedora-33-workstation, which gives you a 404. Maybe that should be fixed 😉

## Zaro

Thanks!

Installed it on my new Thinkpad T14, and so far it’s awesome! Keep up the greak work.

## Hunter B

Can we set mount point options like ‘compress’ before installation within Anaconda?

## Jorge

Congratulations for the new release!

Matthew, Gnome Software shows a banner announcing the new Fedora 33 release. If you click on “More info” button it goes to https://fedoramagazine.org/whats-new-fedora-33-workstation, which doesn’t have any info (Page not found)

## Hyuho

Congratulations, Thanks to All~!

what is fedora 33’s kernel version?

## Marcel Hergaarden

Fedora 33 is really great. Thanks for all the hard work, much appreciated.

## rolf deenen

I am updating right now after receiving a notification that a Fedora 33 was available. I did notice that the fedora updater has a button reading: “Learn more”. This refers to a page: https://fedoramagazine.org/whats-new-fedora-33-workstation but this page doesn’t exist (Yet?).

## Zhao

Good news, thanks for your work!

## svsv sarma

Thank you Fedora 33, so far so good.

I am using Fedora-cinnamon-33 live. I am unable to install Samsung Printer ML-1670 with:

sudo dnf config-manager –add-repo=https://negativo17.org/repos/fedora-uld.repo

sudo yum -y install uld.

It worked well with F32. Perhaps I have to wait for some time.

## Wolfram Volpi

I had the same issue installing Samsung SL-M2020W printer:

# yum -y install uld

Error: Unable to find a match: uld

## Priyatam

Congratulations !! And a HUUGE thanks to the fedora team !

## Silvia

It doesn’t how to upgrade from a previous version :-/

## Matthew Miller

https://docs.fedoraproject.org/en-US/quick-docs/upgrading/

## PK

F33 the best releae yet!

## Franklin

Muchas Gracias a todo el equipo de Fedora!!

## Llaith

Superb. Sounds like the Fedora Project is going from strength to strength. I’ve been a Fedora user since Fedora 22, and I literally cant praise about the teams and their choices enough!

There is so much to get excited about with the IoT and AWS offerings, not to mention the ARM version, that I think it’s starting to be a open-and-shut case to use Fedora for modern cloud/enterprise development.

## Wyoming USA

The video software added to 33 is much better than 32. Fedora has become my favorite OS. Thanks for all the hard work everyone has contributed!

## e5r5

prosze zróbcie fedorę w moim języku.

pokażcie jak tłumaczyc i jak ustawić tak by moja angielska zaczęła gadać poprawnie

## Juergen

Is it possible to setup F33 and btrfs with luks encrypted disks?

## Chris Murphy

Yes. Simple check “Encrypt my data” when using Automatic partitioning. When using Custom (Manual) partitioning, you’ll find it in a dialog revealed by clicking the Volume:Modify button. (Figure 21 in the install guide.)

## Antonio Retali

Congratulations for your excellent work Fedora Team!

I have a small doubt about the last IoT as an ‘Official Edition’, where is the corresponding IoT subfolder at download.fedora.project.org/pub/fedora/linux/releases/33/?

I knew that this release is available at download.fedoraproject.org/pub/alt/iot/33/IoT/, but many mirrors don’t take the pub/alt branch into account because sometimes it’s considered that all the official editions are only in the releases/ subfolder.

## C Narayanan

Although I continue to update to newer versions, shutdown is usually an issue where it simply hangs many times. It’s an unresolved issue for as long as I remember with Fedora.

## Metcomm

Once again a seamless and trouble-free upgrade. I use 2 encrypted partitions on my ageing laptop, one for “/” and one for “home” and the install was perfect. I have already noticed a significant improvement in overall performance.

Many thank Fedora team!

## Malik

Thank you fedora team

## Ivan

I switched to Debian a few months ago due to performance issues with Fedora, memory running out a lot, but now that zRAM and Btrfs are implemented to improve that performance, I’m wanting to switch back 🙂

## lemc

As I have been doing for two years, I did a fresh install of Fedora Workstation 33 using the netinst server image. When there was the option for the “Base Environment”, I just changed from “Fedora Server Edition” to “Fedora Workstation”. Other than that, I used mostly default settings of the Anaconda installer. However, when checking “File Systems” in the Gnome System Monitor utility, the “Type” of the first two partitions, “/” and “/boot”, is “xfs”. Shouldn’t it be BTRFS, the new default ?

## Dave Hugh

Thanks for all the good work. Another flawless DNF based upgrade of my DIY wireless router and laptop. Very happy with Fedora, a great combination of stability, ease of use, and rapid advance in software technology

## Robert Varga

I’m surprised how much Fedora has progressed since my last adventure with it (very long ago). I’m by no means new to Linux, avid user since 2005, and have had dire bad experiences with much earlier Fedora releases in the past, but this is incredible. The ease of use and amount of polish is astounding.

Thank you for your work!

## Sebastian

Hi guys , im trying to upgrade from fredora 30 to 31 and i have a problem when i using this command at the end :

sudo dnf system-upgrade download –releasever=31

an this is the error:

Error: Transaction test error:

el archivo /usr/include/X11/extensions/XKBgeom.h de la instalación de libX11-devel-1.6.12-1.fc31.x86_64 entra en conflicto con el archivo del paquete xorg-x11-proto-devel-7.7-23.fc27.noarch

Can anyone help me ? becouse i want to update to this version , from 30 > 31 > 32 > 33

## Gregory Bartholomew

Since they are just -devel packages, I’d try adding

.

## Sebastian

Nop, keeps popping up the same message,

i did this command :

sudo dnf system-upgrade download –releasever=31 –allowerasing

and

sudo dnf –refresh –allowerasing upgrade

Maybe is another thing.

## Sebastian

Didnt work

i tried :

sudo dnf –allowerasing upgrade

sudo dnf –refresh –allowerasing upgrade

sudo dnf system-upgrade download –releasever=31 –allowerasing

## Matthew Miller

It’s unclear why a F27 package (xorg-x11-proto-devel-7.7-23.fc27.noarch) is still around — something is not right on your system. I’d suggest running

to see what it reports.

But as noted, since this is a devel package, try just removing it, and any other devel packages that are reported as problems. (sudo dnf remove xorg-x11-proto-devel). You can always add back any you need after the update.

## Sebastian

I been doing systems upgrades since fedora 27 , but recently someone told me that i must to avoid the https://www.if-not-true-then-false.com/ guide for installing the Nvidia drivers, also i have to rebuild manualy grub2 after kernel updates, maybe that guide messed something ( i been doing this since fedora 24 )

I just run dnf check i got nothing.

and i runned the sudo dnf remove …. and nothing happend :

All matches were filtered out by exclude filtering for argument: xorg-x11-proto-devel

No se han seleccionado paquetes para eliminar.

Dependencias resueltas.

Nada por hacer.

¡Listo!

Maybe is better if ill do an clean install.

## Gregory Bartholomew

It is very strange/unusual that the package is “there, but not there”. I’m sure there is a way to fix it. Maybe try

and/or

## Sebastian

Thanks for the quick response , the first command didn’t output anything.

and the second one throwed this :

error: Error de dependencias:

pkgconfig(compositeproto) >= 0.4 es necesario por (instalado) libXcomposite-devel-0.4.4-16.fc30.x86_64

pkgconfig(damageproto) >= 1.1 es necesario por (instalado) libXdamage-devel-1.1.4-16.fc30.x86_64

pkgconfig(fixesproto) >= 5.0 es necesario por (instalado) libXfixes-devel-5.0.3-9.fc30.x86_64

pkgconfig(inputproto) es necesario por (instalado) libXi-devel-1.7.10-1.fc30.x86_64

pkgconfig(kbproto) es necesario por (instalado) libX11-devel-1.6.7-1.fc30.x86_64

pkgconfig(randrproto) >= 1.5 es necesario por (instalado) libXrandr-devel-1.5.1-9.fc30.x86_64

pkgconfig(recordproto) es necesario por (instalado) libXtst-devel-1.2.3-9.fc30.x86_64

pkgconfig(renderproto) >= 0.9 es necesario por (instalado) libXrender-devel-0.9.10-9.fc30.x86_64

pkgconfig(videoproto) es necesario por (instalado) libXv-devel-1.0.11-9.fc30.x86_64

pkgconfig(xextproto) es necesario por (instalado) libXext-devel-1.3.3-11.fc30.x86_64

pkgconfig(xextproto) es necesario por (instalado) libXtst-devel-1.2.3-9.fc30.x86_64

pkgconfig(xf86vidmodeproto) es necesario por (instalado) libXxf86vm-devel-1.1.4-11.fc30.x86_64

pkgconfig(xineramaproto) es necesario por (instalado) libXinerama-devel-1.1.4-3.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXau-devel-1.0.9-1.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXv-devel-1.0.11-9.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXcursor-devel-1.1.15-5.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXdamage-devel-1.1.4-16.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXft-devel-2.3.2-12.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libICE-devel-1.0.9-15.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXfixes-devel-5.0.3-9.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libSM-devel-1.2.3-2.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXrandr-devel-1.5.1-9.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libX11-devel-1.6.7-1.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXcomposite-devel-0.4.4-16.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXt-devel-1.1.5-11.20190424gitba4ec9376.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) libXrender-devel-0.9.10-9.fc30.x86_64

pkgconfig(xproto) es necesario por (instalado) qt-devel-1:4.8.7-49.fc30.x86_64

xorg-x11-proto-devel es necesario por (instalado) libXau-devel-1.0.9-1.fc30.x86_64

xorg-x11-proto-devel es necesario por (instalado) libXi-devel-1.7.10-1.fc30.x86_64

Any thoughts ?

## Gregory Bartholomew

I think the first command may have fixed things even though it didn’t output anything. Try using “dnf remove xorg-x11-proto-devel-7.7-23.fc27.noarch” now and dnf should let you remove all those dependencies at once.

## Sebastian

Sorry , i have to reply over your first comment.

I did that and nothing, this didnt remove anything, this is the output :

All matches were filtered out by exclude filtering for argument: xorg-x11-proto-devel-7.7-23.fc27.noarch

No se han seleccionado paquetes para eliminar.

Dependencias resueltas.

Nada por hacer.

¡Listo!

## Gregory Bartholomew

Do you have any “exclude=” lines in /etc/dnf/dnf.conf? That might be causing this issue.

## Sebastian

Yes …. 🙁

exclude=xorg-x11* kernel*

i do not remember why i have excluded xorg-x11 .. but i remember that i exclude kernel* because i was so sick to every time the kernel was upgraded or updated, i have to reinstall the nvidia drivers , if not , i got no desktop so i have manage to boot in init mode 3 and make the install

this is happening for the guide that everybody told me that i do not need to follow.

I do not remember why is that there.

## Gregory Bartholomew

No problem. Glad you figured it out. It’s not an unreasonable thing to do. I exclude kernel-* from time to time as well when the latest kernels don’t work with zfs. 🙂

## Sebastian

Now works …

Sorry , everything is in spanish maybe this weekend a will try to update this system to 31 and then to 32 one after other.

Ejecutando verificación de operación

Verificación de operación exitosa.

Ejecutando prueba de operaciones

Prueba de operación exitosa.

¡Listo!

¡Descarga completa! Use ‘dnf system-upgrade reboot’ para iniciar la actualización.

Para limpiar la caché de metadatos y transacción, use ‘dnf system-upgrade clean’

Los paquetes descargados se han guardado en caché para la próxima transacción.

Puede borrar los paquetes de la caché ejecutando ‘dnf clean packages’.

Thanks for the help !!!

## nitz

When will anaconda installer will be changed? It’s intuitive.

## Eddy

after pulling off my hair using this uber shit called opensuse Fedora ROCKS!

## irlm

Mais um utilizador a usar Fedora Rawhide 34 no Dell E5570, esta distrobuição está perfeita, mesmo em fase de desenvolvimento.

## ivo magalhaes

Fedora user from Portugal, very good work…

## gombosg

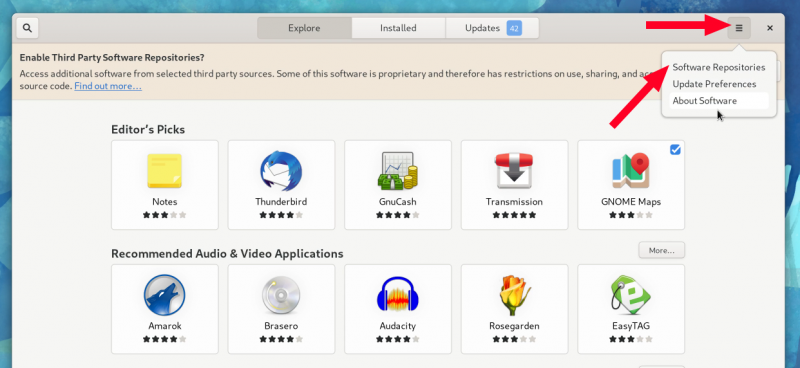

I waited the usual 1 week safety period after release, then updated via GNOME Software.

It was literally 2 clicks. Unbelievable! All external repos updated automatically. Nothing left to do.

Been using Fedora exclusively for >3 years, this was probably one of the easiest updates. Thanks guys! (Or thanks us, because I also maintain a few packages… :))

Next up: converting my main filesystem to BTRFS somehow 😛

## Jatin

Unreal Engine 4.25 (latest stable unreal) .. Projects requires CoreMinimal.h header file which needs sys/sysctl.h as an include which is not there in fedora 33 and was in fedora 32 🙁

## Casque Fou

Upgrade stalles on 83%…

Upgrade from 32 unfinished and no boot possible anymore!

Hopefully a reinstall will do.

## John M

I have used Fedora for a few years now and have updated the previous versions without any issues, but 32 to 33 did not go well. I use the Cinnamon desk, GIGABYTE X470 board, AMD Ryzen 5 3600, Radeon RX 5600.

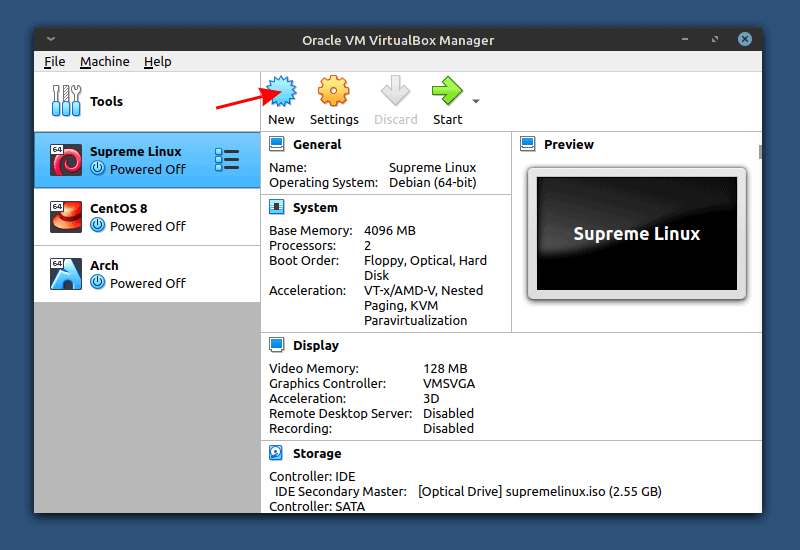

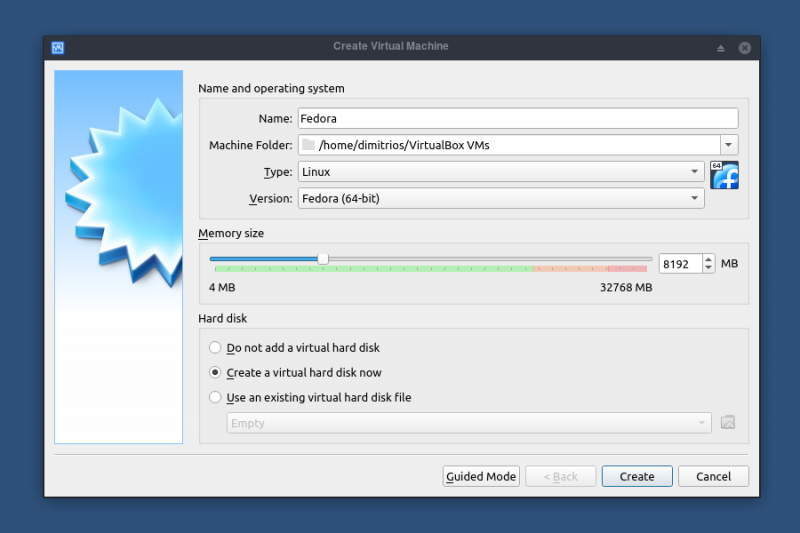

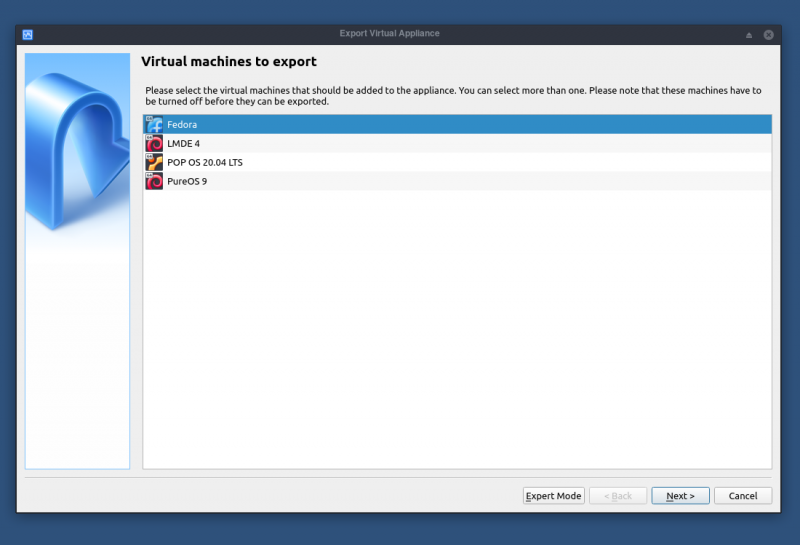

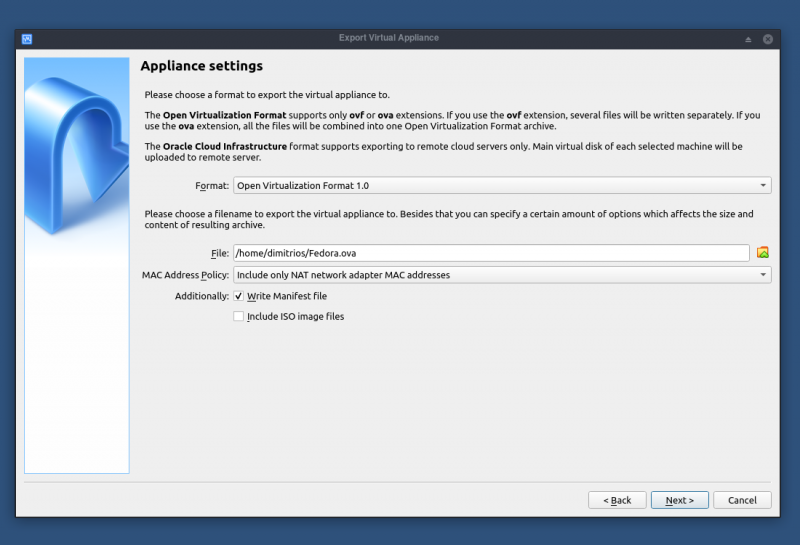

I upgraded from within Fedora 32.. The upgrade down loaded, installed and appeared to launch without a hitch. When I ran GPARTED I saw that all the partitions were ext4 other than efi, none were btrfs. I do not remember seeing an option to pick my file system. The deal breaking though was that Virtual Box 6.1 was uninstalled which contained a virtual machine that I had spent a bit of time to configure to my liking(poof gone). Fortunately I imaged the disk prior and restored Fedora 32, with no loss other than time.

So my questions are:

Did I miss an option choice to install btrfs, I thought Fedora 33 default was btrfs.

Why is Virtual Box not supported?

## Marius Qayin

As a Linux Noob, trying to rid myself of a system that want to decide how i am using my computer, trying to avoid a culture where a computer is an appliance that you have to throw away if broken or to old. Trying to be more free…. Is Fedora a good choice?

## Rafał

Czy w Fedora 33 można zmienić język na polski? / Is it possible to change the language in Polish in Fedora 33? |

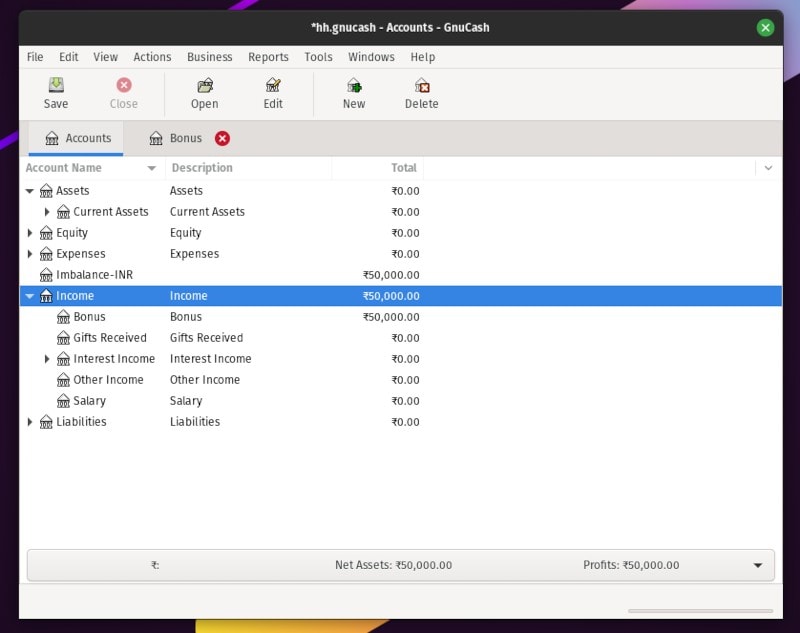

12,766 | 使用 Ansible 的 Kubernetes 模块实现容器编排自动化 | https://opensource.com/article/20/9/ansible-modules-kubernetes | 2020-10-28T21:19:13 | [

"Kubernetes",

"Ansible"

] | https://linux.cn/article-12766-1.html |

>

> 将 Kubernetes 与 Ansible 结合实现云端自动化。此外,还可以参照我们的 Ansible 的 k8s 模块速查表。

>

>

>

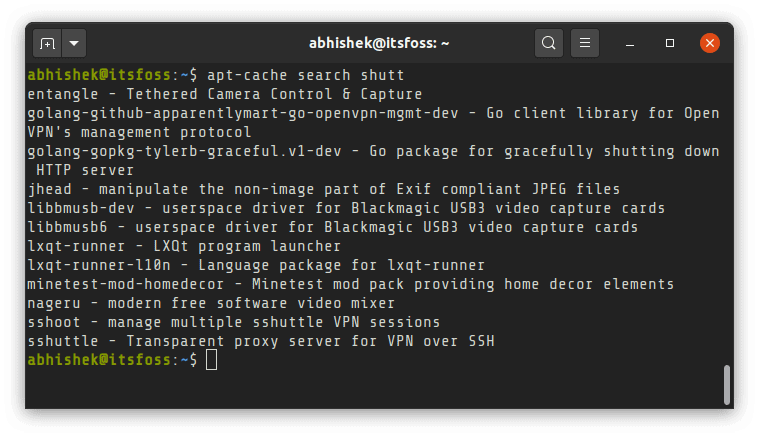

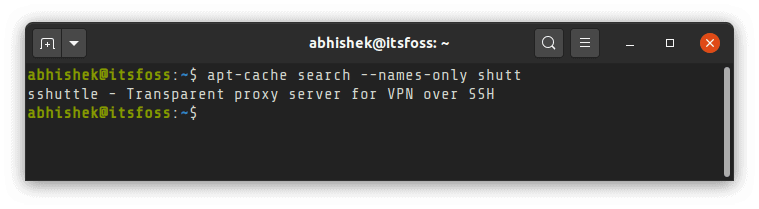

[Ansible](https://opensource.com/resources/what-ansible) 是实现自动化工作的优秀工具,而 [Kubernetes](https://opensource.com/resources/what-is-kubernetes) 则是容器编排方面的利器,要是把两者结合起来,会有怎样的效果呢?正如你所猜测的,Ansible + Kubernetes 的确可以实现容器编排自动化。

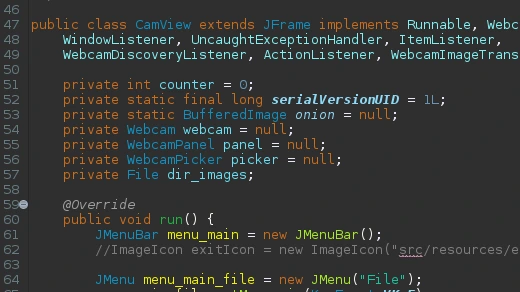

### Ansible 模块

实际上,Ansible 本身只是一个用于解释 YAML 文件的框架。它真正强大之处在于它[丰富的模块](https://docs.ansible.com/ansible/latest/modules/modules_by_category.html),所谓<ruby> 模块 <rt> module </rt></ruby>,就是在 Ansible <ruby> 剧本 <rt> playbook </rt></ruby> 中让你得以通过简单配置就能调用外部应用程序的一些工具。

Ansible 中有模块可以直接操作 Kubernetes,也有对一些相关组件(例如 [Docker](https://opensource.com/resources/what-docker) 和 [Podman](http://podman.io))实现操作的模块。学习使用一个新模块的过程和学习新的终端命令、API 一样,可以先从文档中了解这个模块在调用的时候需要接受哪些参数,以及这些参数在外部应用程序中产生的具体作用。

### 访问 Kubernetes 集群

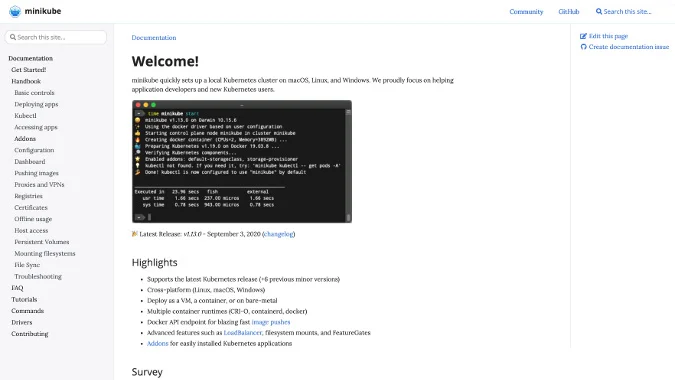

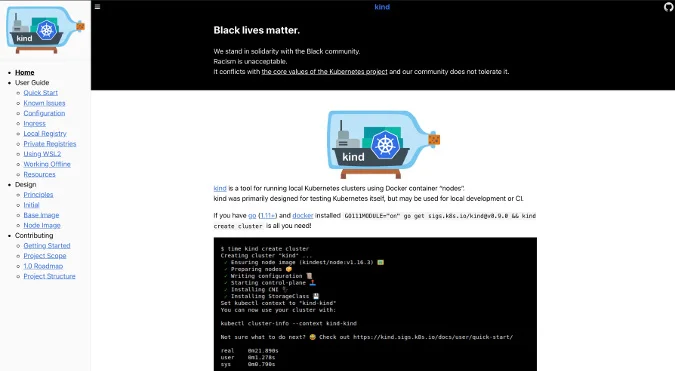

在使用 Ansible Kubernetes 模块之前,先要有能够访问 Kubernetes 集群的权限。在没有权限的情况下,可以尝试使用一个短期在线试用账号,但我们更推荐的是按照 Kubernetes 官网上的指引,或是参考 Braynt Son 《[入门 Kubernetes](https://opensource.com/article/18/10/getting-started-minikube)》的教程安装 [Minikube](https://kubernetes.io/docs/tasks/tools/install-minikube)。Minikube 提供了一个单节点 Kubernetes 实例的安装过程,你可以像使用一个完整集群一样对其进行配置和交互。

* 下载 [Ansible k8s 速记表](https://opensource.com/downloads/ansible-k8s-cheat-sheet)(需注册)

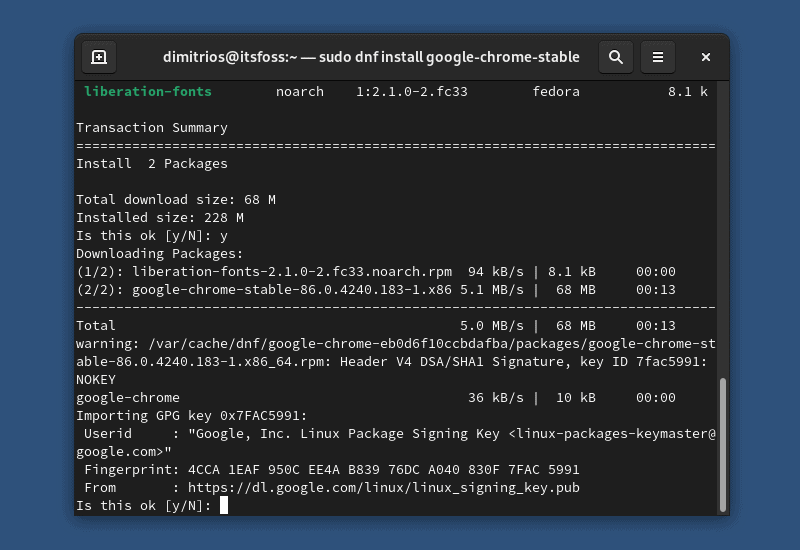

在安装 Minikube 之前,你需要确保你的环境支持虚拟化并安装 `libvirt`,然后对 `libvirt` 用户组授权:

```

$ sudo dnf install libvirt

$ sudo systemctl start libvirtd

$ sudo usermod --append --groups libvirt `whoami`

$ newgrp libvirt

```

#### 安装 Python 模块

为了能够在 Ansible 中使用 Kubernetes 相关的模块,你需要安装以下这些 Python 模块:

```

$ pip3.6 install kubernetes --user

$ pip3.6 install openshift --user

```

#### 启动 Kubernetes

如果你使用的是 Minikube 而不是完整的 Kubernetes 集群,请使用 `minikube` 命令在本地创建一个最精简化的 Kubernetes 实例:

```

$ minikube start --driver=kvm2 --kvm-network default

```

然后等待 Minikube 完成初始化,这个过程所需的时间会因实际情况而异。

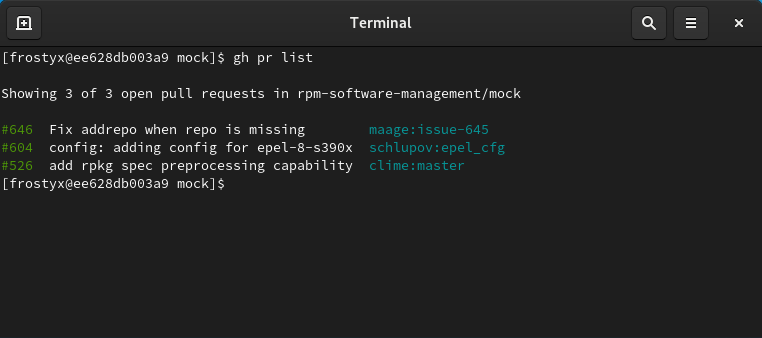

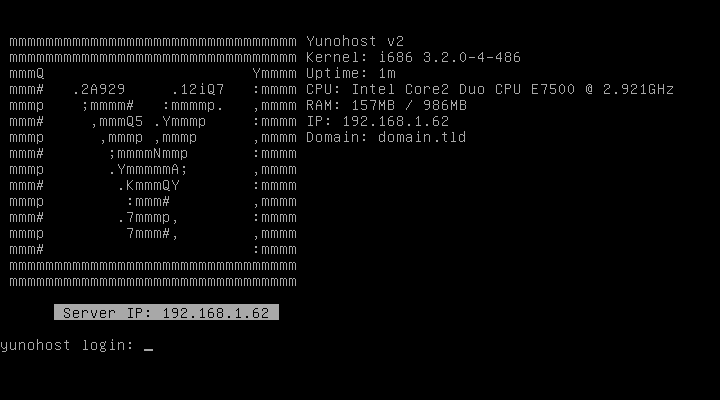

### 获取集群信息

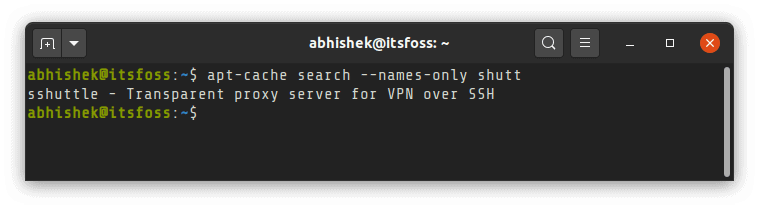

集群启动以后,通过 `cluster-info` 选项就可以获取到集群相关信息了:

```

$ kubectl cluster-info

Kubernetes master is running at https://192.168.39.190:8443

KubeDNS is running at https://192.168.39.190:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

```

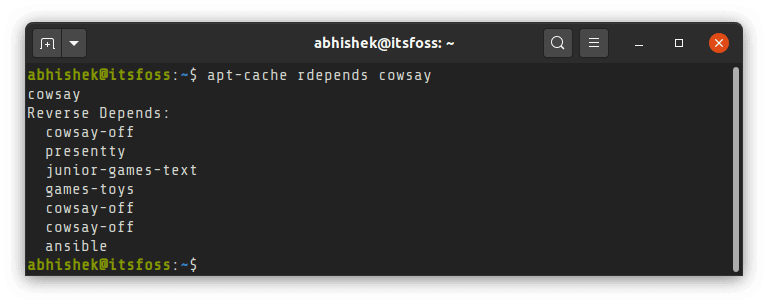

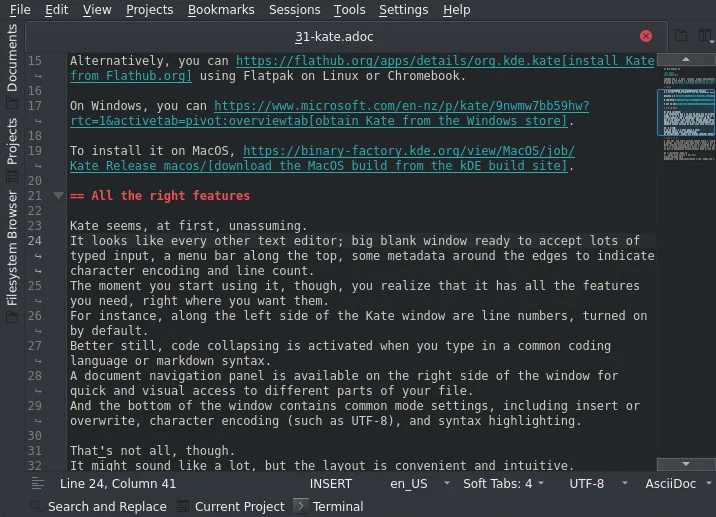

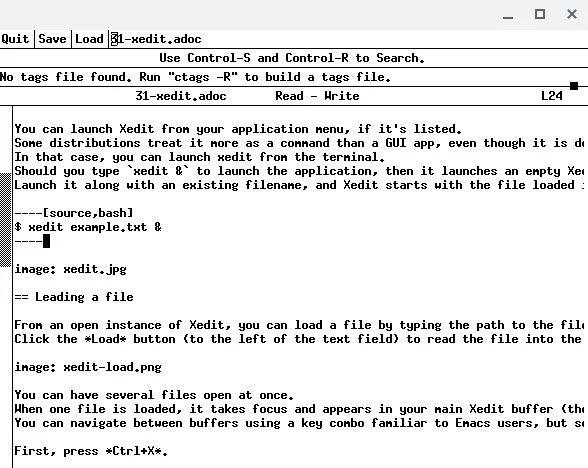

### 使用 k8s 模块

Ansible 使用 `k8s` 这个模块来实现对 Kubernetes 的操作,在剧本中使用 `k8s` 模块就可以对 Kuvernetes 对象进行管理。这个模块描述了 `kubectl` 命令的最终状态,例如对于以下这个使用 `kubectl` 创建新的[命名空间](https://opensource.com/article/19/10/namespaces-and-containers-linux)的操作:

```

$ kubectl create namespace my-namespace

```

这是一个很简单的操作,而对这个操作的最终状态用 YAML 文件来描述是这样的:

```

- hosts: localhost

tasks:

- name: create namespace

k8s:

name: my-namespace

api_version: v1

kind: Namespace

state: present

```

如果你使用的是 Minikube,那么主机名(`hosts`)应该定义为 `localhost`。需要注意的是,所使用的模块也定义了可用参数的语法(例如 `api_version` 和 `kind` 参数)。

在运行这个剧本之前,先通过 `yamllint` 命令验证是否有错误:

```

$ yamllint example.yaml

```

确保没有错误之后,运行剧本:

```

$ ansible-playbook ./example.yaml

```

可以验证新的命名空间是否已经被创建出来:

```

$ kubectl get namespaces

NAME STATUS AGE

default Active 37h

kube-node-lease Active 37h

kube-public Active 37h

kube-system Active 37h

demo Active 11h

my-namespace Active 3s

```

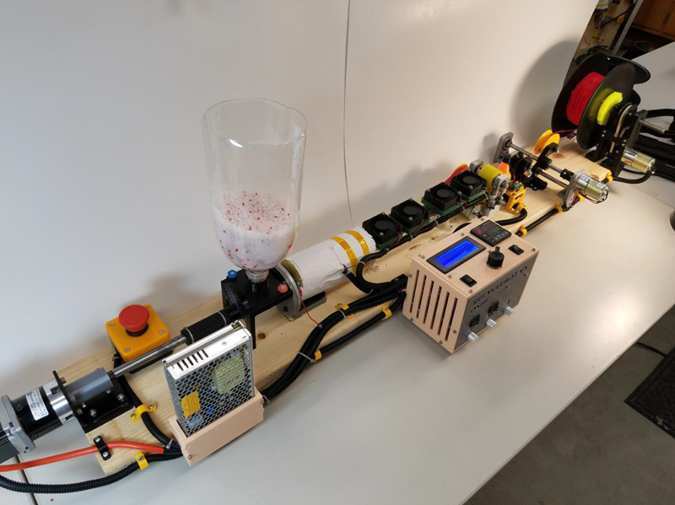

### 使用 Podman 拉取容器镜像

容器是个 Linux 系统,几乎是最小化的,可以由 Kubernetes 管理。[LXC 项目](https://www.redhat.com/sysadmin/exploring-containers-lxc)和 Docker 定义了大部分的容器规范。最近加入容器工具集的是 Podman,它不需要守护进程就可以运行,为此受到了很多用户的欢迎。

通过 Podman 可以从 Docker Hub 或者 [Quay.io](http://Quay.io) 等存储库拉取容器镜像。这一操作对应的 Ansible 语法也很简单,只需要将存储库网站提供的镜像路径写在剧本中的相应位置就可以了:

```

- name: pull an image

podman_image:

name: quay.io/jitesoft/nginx

```

使用 `yamllint` 验证:

```

$ yamllint example.yaml

```

运行剧本:

```

$ ansible-playbook ./example.yaml

[WARNING]: provided hosts list is empty, only localhost is available.

Note that the implicit localhost does not match 'all'

PLAY [localhost] ************************

TASK [Gathering Facts] ************************

ok: [localhost]

TASK [create k8s namespace] ************************

ok: [localhost]

TASK [pull an image] ************************

changed: [localhost]

PLAY RECAP ************************

localhost: ok=3 changed=1 unreachable=0 failed=0

skipped=0 rescued=0 ignored=0

```

### 使用 Ansible 实现部署

Ansible 除了可以执行小型维护任务以外,还可以通过剧本实现其它由 `kubectl` 实现的功能,因为两者的 YAML 文件之间只有少量的差异。在 Kubernetes 中使用的 YAML 文件只需要稍加改动,就可以在 Ansible 剧本中使用。例如下面这个用于使用 `kubectl` 命令部署 Web 服务器的 YAML 文件:

```

apiVersion: apps/v1

kind: Deployment

metadata:

name: my-webserver

spec:

selector:

matchLabels:

run: my-webserver

replicas: 1

template:

metadata:

labels:

run: my-webserver

spec:

containers:

- name: my-webserver

image: nginx

ports:

- containerPort: 80

```

如果你对其中的参数比较熟悉,你只要把 YAML 文件中的大部分内容放到剧本中的 `definition` 部分,就可以在 Ansible 中使用了:

```

- name: deploy a web server

k8s:

api_version: v1

namespace: my-namespace

definition:

kind: Deployment

metadata:

labels:

app: nginx

name: nginx-deploy

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: my-webserver

image: quay.io/jitesoft/nginx

ports:

- containerPort: 80

protocol: TCP

```

执行完成后,使用 `kubectl` 命令可以看到预期中的的<ruby> 部署 <rt> deployment </rt></ruby>:

```

$ kubectl -n my-namespace get pods

NAME READY STATUS

nginx-deploy-7fdc9-t9wc2 1/1 Running

```

### 在云上使用模块

随着现在越来越多的开发和部署工作往云上转移的趋势,我们必须了解如何在云上实现自动化。其中 `k8s` 和 `podman_image` 这两个模块只是云开发中的其中一小部分。你可以在你的工作流程中寻找一些需要自动化的任务,并学习如何使用 Ansible 让你在这些任务上事半功倍。

---

via: <https://opensource.com/article/20/9/ansible-modules-kubernetes>

作者:[Seth Kenlon](https://opensource.com/users/seth) 选题:[lujun9972](https://github.com/lujun9972) 译者:[HankChow](https://github.com/HankChow) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | [Ansible](https://opensource.com/resources/what-ansible) is one of the best tools for automating your work. [Kubernetes](https://opensource.com/resources/what-is-kubernetes) is one of the best tools for orchestrating containers. What happens when you combine the two? As you might expect, Ansible combined with Kubernetes lets you automate your container orchestration.

## Ansible modules

On its own, Ansible is basically just a framework for interpreting YAML files. Its true power comes from its [many modules](https://docs.ansible.com/ansible/latest/modules/modules_by_category.html). Modules are what enable you to invoke external applications with just a few simple configuration settings in a playbook.

There are a few modules that deal directly with Kubernetes, and a few that handle related technology like [Docker](https://opensource.com/resources/what-docker) and [Podman](http://podman.io). Learning a new module is often similar to learning a new terminal command or a new API. You get familiar with a module from its documentation, you learn what arguments it accepts, and you equate its options to how you might use the application it interfaces with.

## Access a Kubernetes cluster

To try out Kubernetes modules in Ansible, you must have access to a Kubernetes cluster. If you don't have that, then you might try to open a trial account online, but most of those are short term. Instead, you can install [Minikube](https://kubernetes.io/docs/tasks/tools/install-minikube), as described on the Kubernetes website or in Bryant Son's excellent article on [getting started with Minikube](https://opensource.com/article/18/10/getting-started-minikube). Minikube provides a local instance of a single-node Kubernetes install, allowing you to configure and interact with it as you would a full cluster.

**[Download the Ansible k8s cheat sheet]**

Before installing Minikube, you must ensure that your environment is ready to serve as a virtualization backend. You may need to install `libvirt`

and grant yourself permission to the `libvirt`

group:

```

$ sudo dnf install libvirt

$ sudo systemctl start libvirtd

$ sudo usermod --append --groups libvirt `whoami`

$ newgrp libvirt

```

### Install Python modules

To prepare for using Kubernetes-related Ansible modules, you should also install a few helper Python modules:

```

$ pip3.6 install kubernetes --user

$ pip3.6 install openshift --user

```

### Start Kubernetes

If you're using Minikube instead of a Kubernetes cluster, use the `minikube`

command to start up a local, miniaturized Kubernetes instance on your computer:

`$ minikube start --driver=kvm2 --kvm-network default`

Wait for Minikube to initialize. Depending on your internet connection, this could take several minutes.

## Get information about your cluster

Once you've started your cluster successfully, you can get information about it with the `cluster-info`

option:

```

$ kubectl cluster-info

Kubernetes master is running at https://192.168.39.190:8443

KubeDNS is running at https://192.168.39.190:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

```

## Use the k8s module

The entry point for using Kubernetes through Ansible is the `k8s`

module, which enables you to manage Kubernetes objects from your playbooks. This module describes states resulting from `kubectl`

instructions. For instance, here's how you would create a new [namespace](https://opensource.com/article/19/10/namespaces-and-containers-linux) with `kubectl`

:

`$ kubectl create namespace my-namespace`

It's a simple action, and the YAML representation of the same result is similarly terse:

```

- hosts: localhost

tasks:

- name: create namespace

k8s:

name: my-namespace

api_version: v1

kind: Namespace

state: present

```

In this case, the host is defined as `localhost`

, under the assumption that you're running this against Minikube. Notice that the module in use defines the syntax of the parameters available (such as `api_version`

and `kind`

).

Before using this playbook, verify it with `yamllint`

:

`$ yamllint example.yaml`

Correct any errors, and then run the playbook:

`$ ansible-playbook ./example.yaml`

Verify that the new namespace has been created:

```

$ kubectl get namespaces

NAME STATUS AGE

default Active 37h

kube-node-lease Active 37h

kube-public Active 37h

kube-system Active 37h

demo Active 11h

my-namespace Active 3s

```

## Pull a container image with Podman

Containers are Linux systems, almost impossibly minimal in scope, that can be managed by Kubernetes. Much of the container specifications have been defined by the [LXC project](https://www.redhat.com/sysadmin/exploring-containers-lxc) and Docker. A recent addition to the container toolset is Podman, which is popular because it runs without requiring a daemon.

With Podman, you can pull a container image from a repository, such as Docker Hub or Quay.io. The Ansible syntax for this is simple, and all you need to know is the location of the container, which is available from the repository's website:

```

- name: pull an image

podman_image:

name: quay.io/jitesoft/nginx

```

Verify it with `yamllint`

:

`$ yamllint example.yaml`

And then run the playbook:

```

$ ansible-playbook ./example.yaml

[WARNING]: provided hosts list is empty, only localhost is available.

Note that the implicit localhost does not match 'all'

PLAY [localhost] ************************

TASK [Gathering Facts] ************************

ok: [localhost]

TASK [create k8s namespace] ************************

ok: [localhost]

TASK [pull an image] ************************

changed: [localhost]

PLAY RECAP ************************

localhost: ok=3 changed=1 unreachable=0 failed=0

skipped=0 rescued=0 ignored=0

```

## Deploy with Ansible

You're not limited to small maintenance tasks with Ansible. Your playbook can interact with Ansible in much the same way a configuration file does with `kubectl`

. In fact, in many ways, the YAML you know by using Kubernetes translates to your Ansible plays. Here's a configuration you might pass directly to `kubectl`

to deploy an image (in this example, a web server):

```

apiVersion: apps/v1

kind: Deployment

metadata:

name: my-webserver

spec:

selector:

matchLabels:

run: my-webserver

replicas: 1

template:

metadata:

labels:

run: my-webserver

spec:

containers:

- name: my-webserver

image: nginx

ports:

- containerPort: 80

```

If you know these parameters, then you mostly know the parameters required to accomplish the same with Ansible. You can, with very little modification, move that YAML into a `definition`

element in your Ansible playbook:

```

- name: deploy a web server

k8s:

api_version: v1

namespace: my-namespace

definition:

kind: Deployment

metadata:

labels:

app: nginx

name: nginx-deploy

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: my-webserver

image: quay.io/jitesoft/nginx

ports:

- containerPort: 80

protocol: TCP

```

After running this, you can see the deployment with `kubectl`

, as usual:

```

$ kubectl -n my-namespace get pods

NAME READY STATUS

nginx-deploy-7fdc9-t9wc2 1/1 Running

```

## Modules for the cloud

As more development and deployments move to the cloud, it's important to understand how to automate the important aspects of your cloud. The `k8s`

and `podman_image`

modules are only two examples of modules related to Kubernetes and a mere fraction of modules developed for the cloud. Take a look at your workflow, find the tasks you want to track and automate, and see how Ansible can help you do more by doing less.

## 1 Comment |

12,768 | 我的第一次开源贡献:做出决定 | https://opensource.com/article/19/11/my-first-open-source-contribution-mistake-decisions | 2020-10-30T11:24:01 | [

"开源",

"贡献"

] | /article-12768-1.html |

>

> 一位新的开源贡献者告诉你如何加入到开源项目中。

>

>

>

先前,我把我的第一次开源贡献的拖延归咎于[冒牌综合症](https://opensource.com/article/19/10/my-first-open-source-contribution-mistakes)。但还有一个我无法忽视的因素:我做出决定太艰难了。在[成千上百万](https://github.blog/2018-02-08-open-source-project-trends-for-2018/)的开源项目中选择时,选择一个要做贡献的项目是难以抉择的。如此重负,以至于我常常不得不关掉我的笔记本去思考:“或许我改天再做吧”。

错误之二是让我对做出决定的恐惧妨碍了我做出第一次贡献。在理想世界里,也许开始我的开源之旅时,心中就已经有了一个真正关心和想去做的具体项目,但我有的只是总得为开源项目做出贡献的模糊目标。对于那些处于同一处境的人来说,这儿有一些帮助我挑选出合适的项目(或者至少是一个好的项目)来做贡献的策略。

### 经常使用的工具

一开始,我不认为有必要将自己局限于已经熟悉的工具或项目。有一些项目我之前从未使用过,但由于它们的社区很活跃,或者它们解决的问题很有趣,因此看起来很有吸引力。

但是,考虑我投入到这个项目中的时间有限,我决定继续投入到我了解的工具上去。要了解工具需求,你需要熟悉它的工作方式。如果你想为自己不熟悉的项目做贡献,则需要完成一个额外的步骤来了解代码的功能和目标。这个额外的工作量可能是有趣且值得的,但也会使你的工作时间加倍。因为我的目标主要是贡献,投入到我了解的工具上是缩小范围的很好方式。回馈一个你认为有用的项目也是有意义的。

### 活跃而友好的社区

在选择项目的时候,我希望在那里有人会审查我写的代码才会觉得有信心。当然,我也希望审核我代码的人是个和善的人。毕竟,把你的作品放在那里接受公众监督是很可怕的。虽然我对建设性的反馈持开放态度,但开发者社区中的一些有毒角落是我希望避免的。

为了评估我将要加入的社区,我查看了我正在考虑加入的仓库的<ruby> 议题 <rt> issue </rt></ruby>部分。我要查看核心团队中是否有人定期回复。更重要的是,我试着确保没有人在评论中互相诋毁(这在议题讨论中是很常见的)。我还留意了那些有行为准则的项目,概述了什么是适当的和不适当的在线互动行为。

### 明确的贡献准则

因为这是我第一次为开源项目做出贡献,在此过程中我有很多问题。一些项目社区在流程的文档记录方面做的很好,可以用来指导挑选其中的议题并发起拉取请求。 [Gatsby](https://www.gatsbyjs.org/contributing/) 是这种做法的典范,尽管那时我没有选择它们,因为在此之前我从未使用过该产品。

这种清晰的文档帮助我们缓解了一些不知如何去做的不安全感。它也给了我希望:项目对新的贡献者是开放的,并且会花时间来查看我的工作。除了贡献准则外,我还查看了议题部分,看看这个项目是否使用了“<ruby> 第一个好议题 <rt> good first issue </rt></ruby>”标志。这是该项目对初学者开放的另一个迹象(并可以帮助你学会要做什么)。

### 总结

如果你还没有计划好选择一个项目,那么选择合适的领域进行你的第一个开源贡献更加可行。列出一系列标准可以帮助自己缩减选择范围,并为自己的第一个拉取请求找到一个好的项目。

---

via: <https://opensource.com/article/19/11/my-first-open-source-contribution-mistake-decisions>

作者:[Galen Corey](https://opensource.com/users/galenemco) 选题:[lujun9972](https://github.com/lujun9972) 译者:[chenmu-kk](https://github.com/chenmu-kk) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

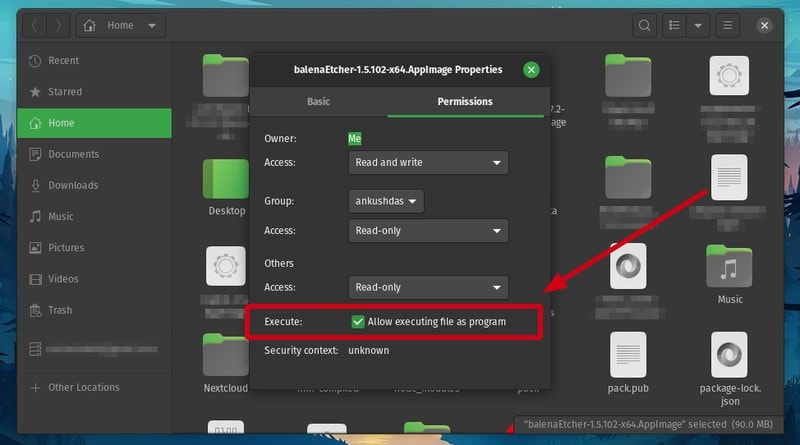

12,769 | 用这个创建可引导 USB 的工具在电脑上尝试 Linux | https://opensource.com/article/20/10/fedora-media-writer | 2020-10-30T12:21:59 | [

"USB"

] | /article-12769-1.html |

>

> Fedora Media Writer 是创建临场版 USB 以尝试 Linux 的方便方法。

>

>

>

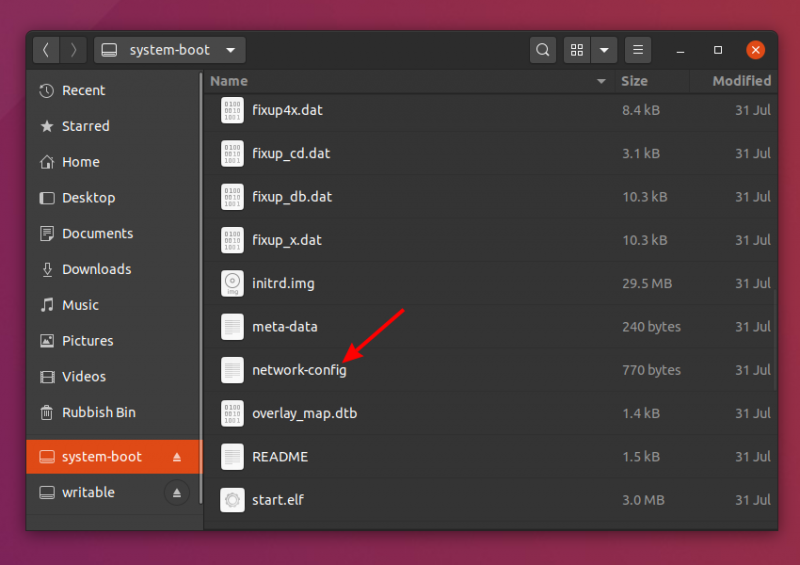

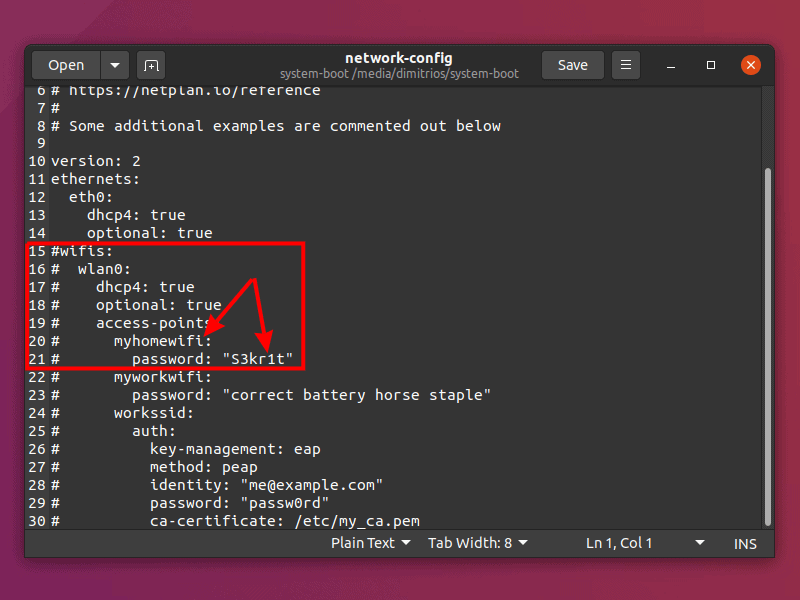

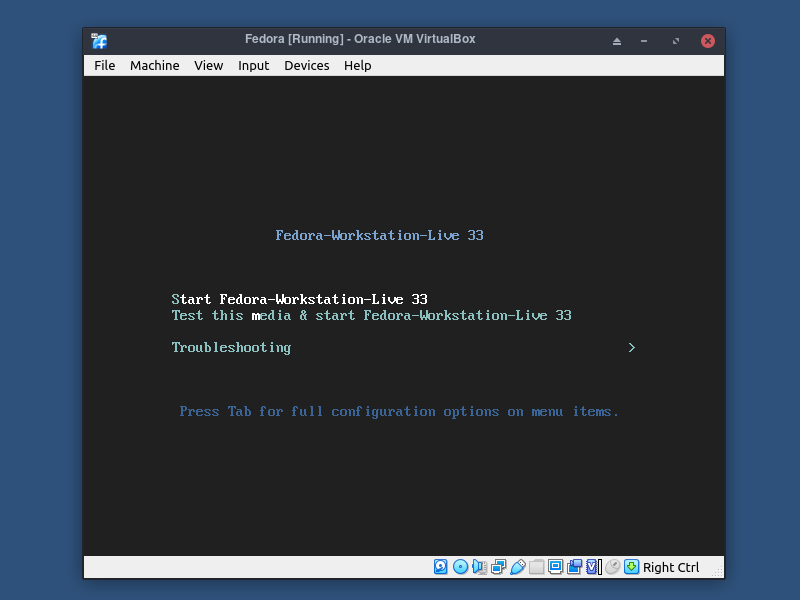

[Fedora Media Writer](https://github.com/FedoraQt/MediaWriter) 是一个小巧、轻量、全面的工具,它简化了 Linux 的入门体验。它可以下载 Fedora 的 Workstation 或 Server 版本并写入到一个可以在任何系统上引导的 USB 驱动器上,使你无需将其安装到硬盘上就可以试用 Fedora Linux。

Media Writer 工具可以用来创建一个<ruby> 临场体验的 <rt> Live </rt></ruby>、可引导的 USB 驱动器。在你的平台上安装 Fedora Media Writer 应用后,你可以下载并烧录最新的 Fedora Workstation 或 Server 稳定版,也可以选择烧录你下载的任何其他镜像。而且它并不局限于英特尔 64 位设备。它还可以用于 ARM 设备,如[树莓派](https://fedoraproject.org/wiki/Architectures/ARM/Raspberry_Pi),这些设备日益变得更加强大和有用。

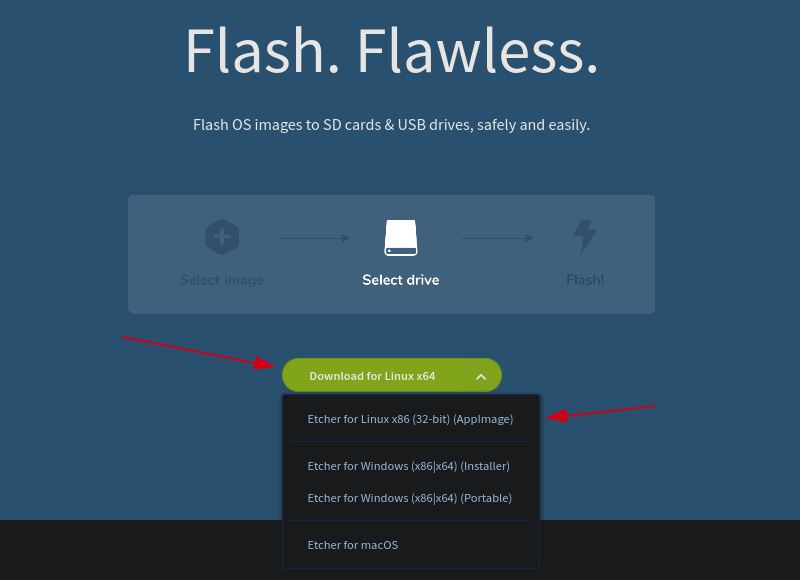

### 安装 Fedora Media Writer

[安装 Fedora Media Writer](https://docs.fedoraproject.org/en-US/fedora/f32/install-guide/install/Preparing_for_Installation/#_fedora_media_writer) 有几种方式。你可以通过 GitHub [从源码编译](https://github.com/FedoraQt/MediaWriter#building)、下载 MacOS 或 Windows 版本、使用 `dnf` 或 `yum` 安装 RPM 包,或者以 Flatpak 的形式获得。

在 Fedora 上:

```

$ sudo dnf install mediawriter

```

最新版本请参见 GitHub 仓库的[发布](https://github.com/FedoraQt/MediaWriter/releases)部分。

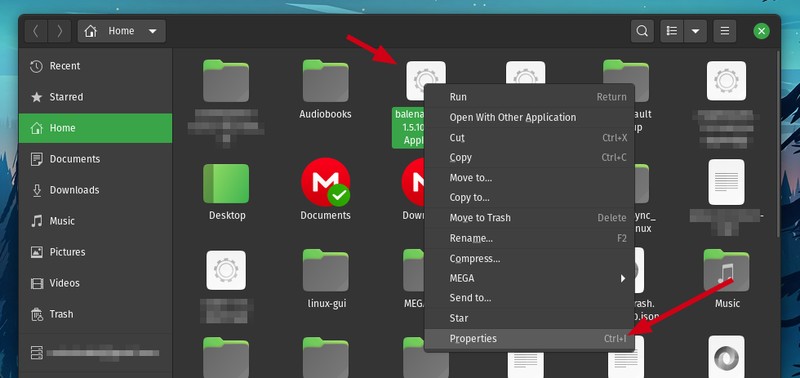

### 准备好你的媒体介质

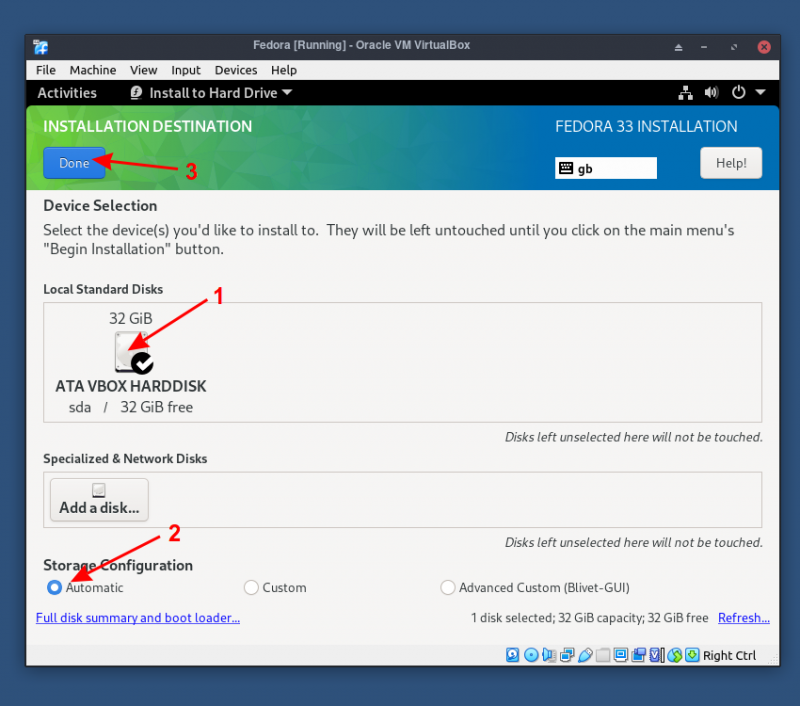

首先,你需要一个 USB 驱动器来安装你的 Linux 操作系统。这就是 Fedora Media Writer 要烧录的设备。这个驱动器必须是空白或可擦除的,因为 **该 USB 驱动器上的所有数据都会被删除**。如果有任何数据,哪怕只是一个文件,如果你不想丢失,那么你必须在继续之前备份它!

确认你的 USB 驱动器是可擦除的之后,将它插入你的电脑并启动 Fedora Media Writer。

### 使用 Fedora Media Writer

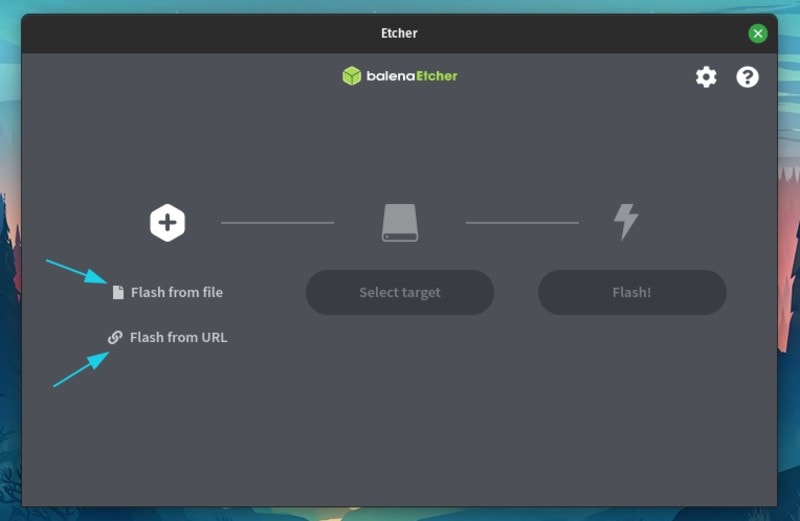

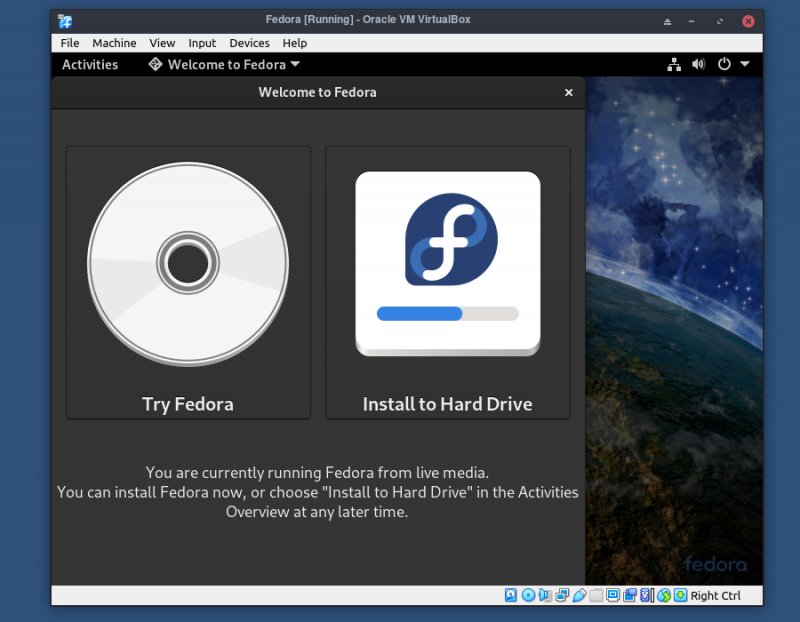

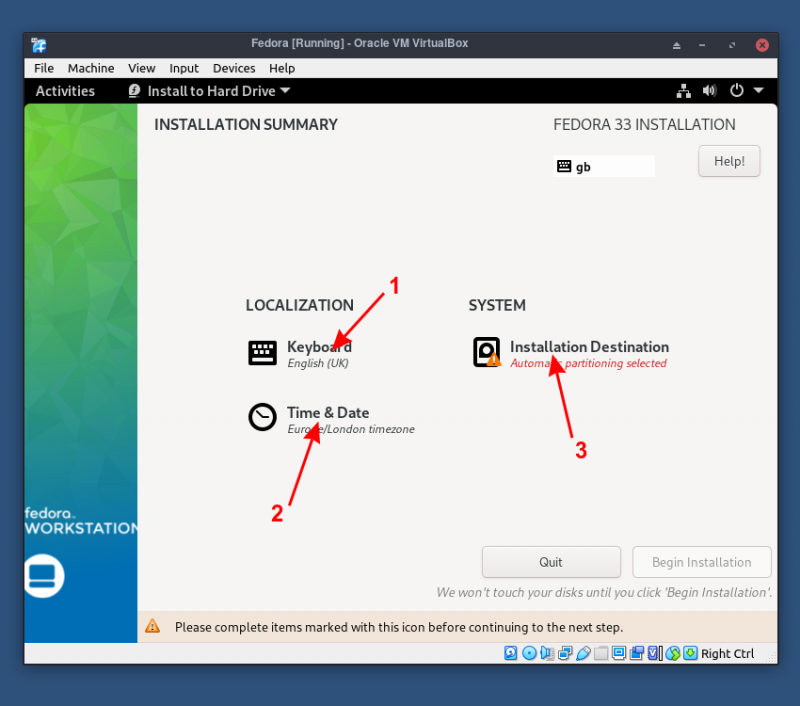

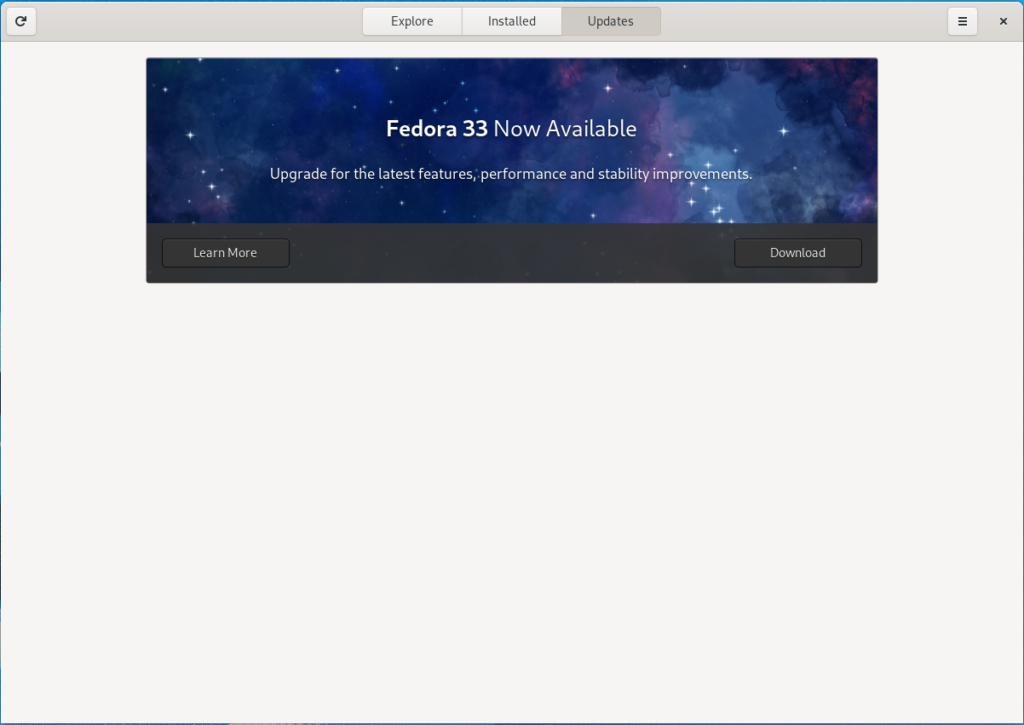

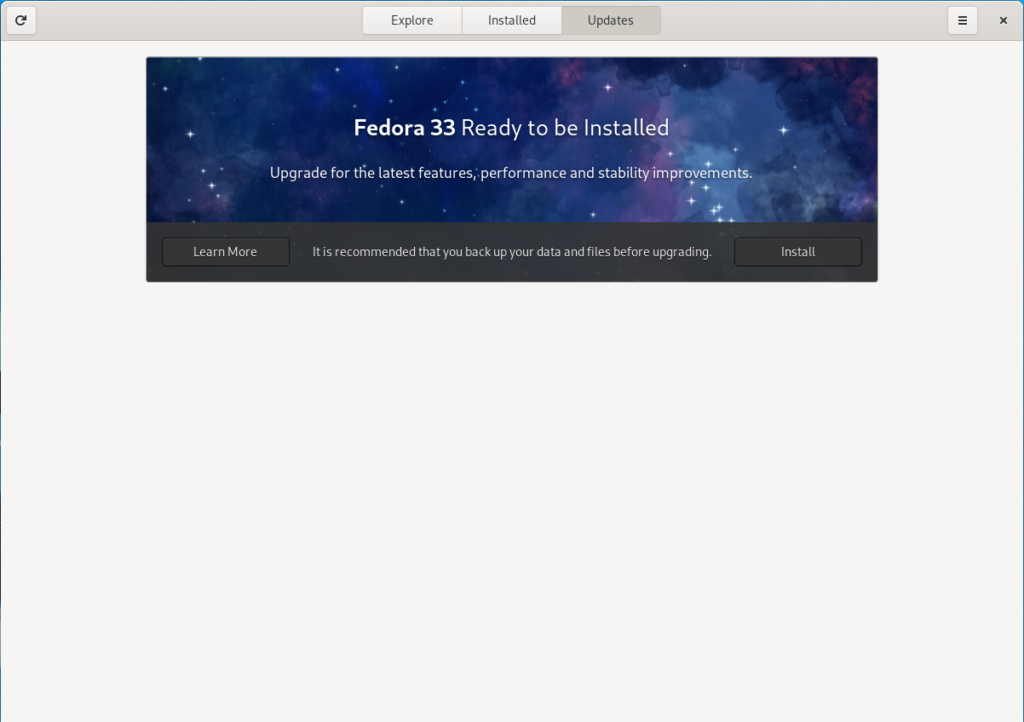

当你启动 Fedora Media Writer 时,你会看到一个页面,提示你从互联网上获取一个可引导的镜像,或者从你的硬盘上加载一个自定义镜像。第一个选择是 Fedora 的最新版本。Workstation 版本适用于台式机和笔记本电脑,Server 版本适用于虚拟化、机架服务器或任何你想作为服务器运行的情况。

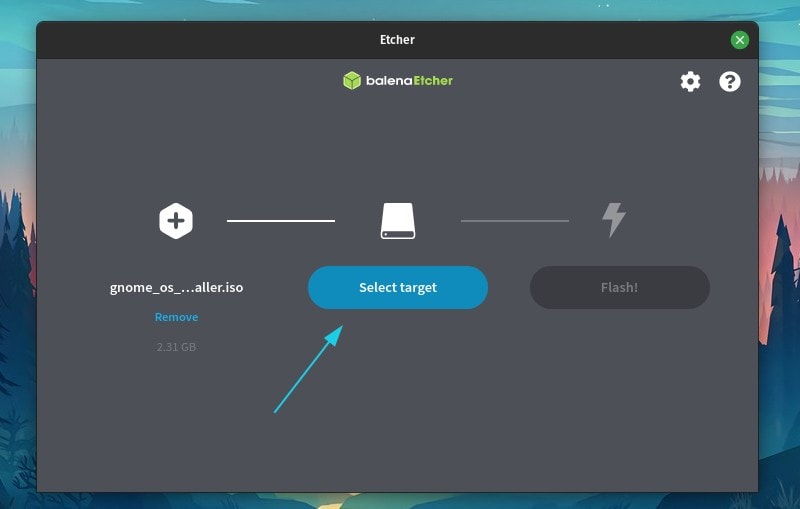

如果你选择了 Fedora 镜像,Media Writer 会下载一个光盘镜像(通常称为 “iso”,文件扩展名为 .iso),并将其保存到你的下载文件夹中,这样你就可以重复使用它来烧录另一个驱动器。

另外还有 Fedora Spins,这是来自 Fedora 社区的镜像,它旨在满足小众的兴趣。例如,如果你是 [MATE 桌面](https://opensource.com/article/19/12/mate-linux-desktop)的粉丝,那么你会很高兴地发现 Media Writer 提供了 MATE spin。它有很多,所以请滚动查看所有的选择。如果你是 Linux 的新手,不要被吓到或感到困惑:这些额外的选项是为老用户准备的,这些用户除了默认的选项外,还发展出了自己的偏好,所以对你来说,只使用 Workstation 或 Server 选项就行,这取决于你是想把 Fedora 作为一个桌面还是作为一个服务器操作系统来运行。

如果你需要一个与你当前使用的 CPU 不同架构的镜像,从窗口右上角的下拉菜单中选择 CPU 架构。

如果你已经将镜像保存在硬盘上,请选择“Custom Image”选项,并选择你要烧录到 USB 的发行版的 .iso 文件。

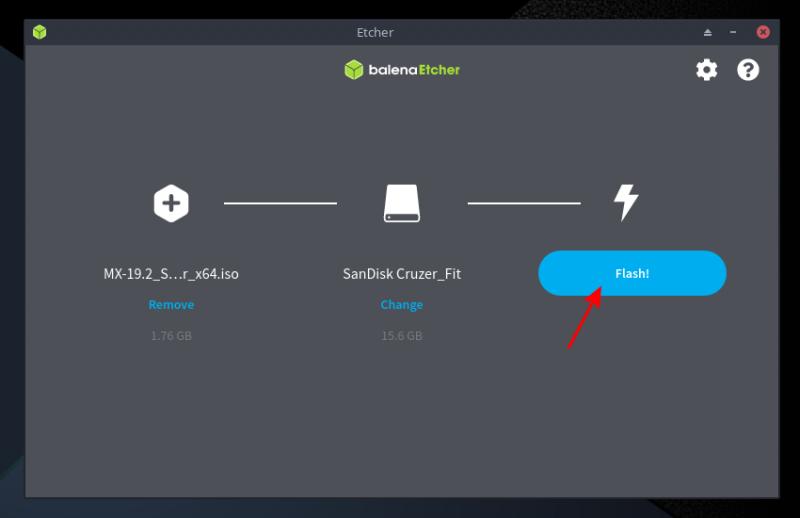

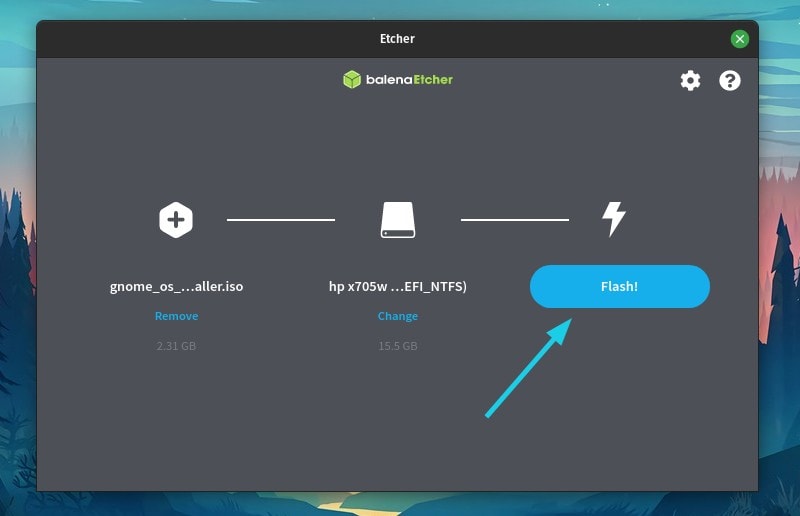

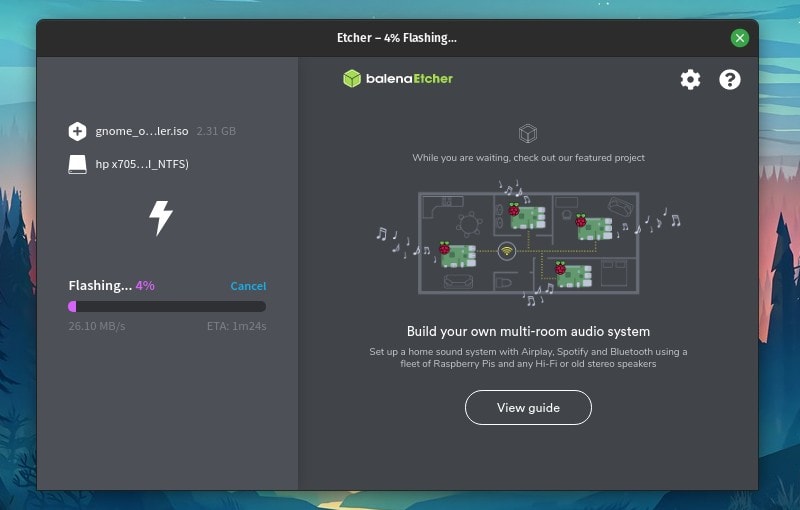

### 写入 USB 驱动器

当你下载或选择了一个镜像,你必须确认你要将该镜像写入驱动器。

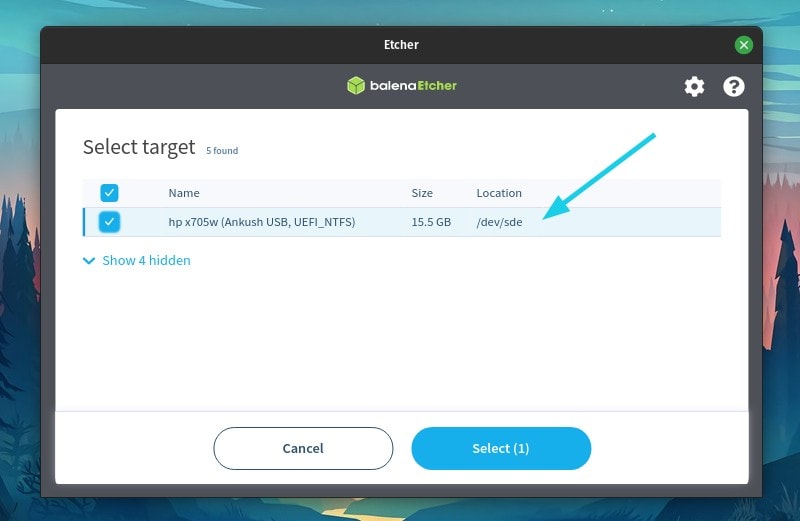

驱动器选择下拉菜单只显示了外部驱动器,所以你不会意外地覆盖自己的硬盘驱动器。这是 Fedora Media Writer 的一个重要功能,它比你在网上看到的许多手动说明要安全得多。然而,如果你的计算机上连接了多个外部驱动器,除了你想覆盖的那个,你应该将它们全部移除,以增加安全性。

选择你要安装镜像的驱动器,然后单击“Write to Disk”按钮。

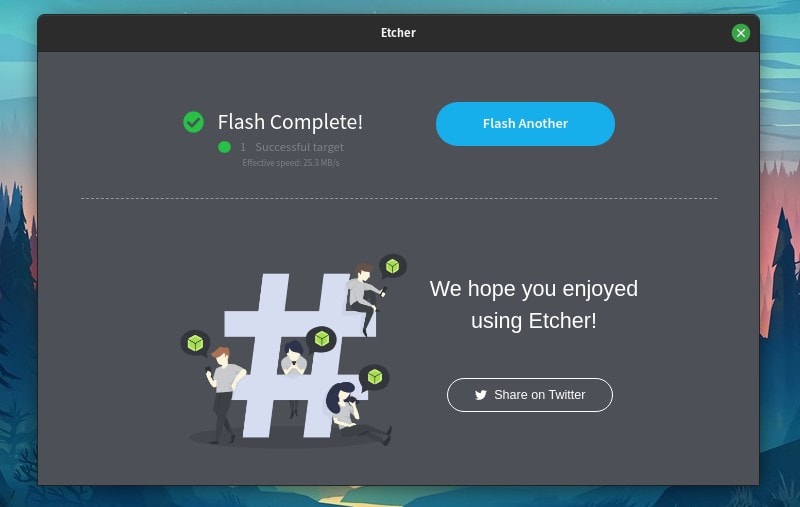

稍等一会儿,镜像就会被写入到你的驱动器,然后可以看看 Don Watkins 对[如何从 USB 驱动器启动到 Linux](https://opensource.com/article/20/4/first-linux-computer)的出色介绍。

### 开始使用 Linux

Fedora Media Writer 是一种将 Fedora Workstation 或 Server,或任何 Linux 发行版烧录到 USB 驱动器的方法,因此你可以在安装它到设备上之前试用它。试试吧,并在评论中分享你的经验和问题。

---

via: <https://opensource.com/article/20/10/fedora-media-writer>

作者:[Sumantro Mukherjee](https://opensource.com/users/sumantro) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

12,770 | 《代码英雄》第二季(9):特别篇 - 开发者推广大使圆桌会议 | https://www.redhat.com/en/command-line-heroes/season-2/developer-advocacy-roundtable | 2020-10-30T14:41:00 | [

"开发者",

"代码英雄"

] | https://linux.cn/article-12770-1.html |

>

> 代码英雄讲述了开发人员、程序员、黑客、极客和开源反叛者如何彻底改变技术前景的真实史诗。

>

>

>

>

> **什么是《代码英雄》**

>

>

> <ruby> 代码英雄 <rt> Command Line Heroes </rt></ruby>是世界领先的企业开源软件解决方案供应商红帽(Red Hat)精心制作的原创音频播客,讲述开发人员、程序员、黑客、极客和开源反叛者如何彻底改变技术前景的真实史诗。该音频博客邀请到了谷歌、NASA 等重量级企业的众多技术大牛共同讲述开源、操作系统、容器、DevOps、混合云等发展过程中的动人故事。

>

>

>

本文是《[代码英雄](https://www.redhat.com/en/command-line-heroes)》系列播客[第二季(9):特别篇 - 开发人员拥护者圆桌会议](https://www.redhat.com/en/command-line-heroes/season-2/developer-advocacy-roundtable)的[音频](https://dts.podtrac.com/redirect.mp3/audio.simplecast.com/7a9b329b.mp3)脚本。

>

> 导语:<ruby> 开发者推广大使 <rt> developer advocate </rt></ruby>在开源社区中起到的作用是举足轻重的。我们邀请了几位这样的推广大使来到本期节目中,以向我们揭示他们的工作方式,并阐述这份工作背后的意义。

>

>

> 来自 Mozilla 的 Sandra Persing、Twilio 的 Ricky Robinett 与 红帽的 Robyn Bergerron 将在此接受 Saron 的采访,分享他们的工作内容、他们支持社区的方式,以及他们对 2019 年的展望。

>

>

>

**00:00:06 - Saron Yitbarek**:

大家好,我是 Saron Yitbarek,这里是红帽的原创播客节目《<ruby> 代码英雄 <rt> Command Line Heroes </rt></ruby>》的特别篇。我们的节目在第 2 季去了很多地方。我们探索了[编程语言](/article-12595-1.html)、[数据大爆炸](/article-12649-1.html)、[安全危机](/article-12641-1.html)以及[无服务器](/article-12717-1.html)时代的到来,我们甚至去了[火星](/article-12744-1.html)。

**00:00:28**:

但是在结束了第 2 季之后,我们还有一个地方要去。我们要走进推广大使和思想领袖们的认识当中,他们帮着塑造了开发人员所做的全部工作。有时,他们被称为<ruby> 开发者推广大使 <rt> developer advocate </rt></ruby>,或者说他们担任开发者关系的职位,或开发者布道师。

**00:00:50**:

从开发人员的角度来看,无论他们的具体头衔是什么,他们似乎都做着许多相同的事情。你在会议上见过他们发表主题演讲。你在播客上听过他们接受采访,就像在本期节目中一样。你可能还阅读过他们的博文。但是他们是谁呢?他们到底在用自己的声音做了些什么?

**00:01:10**:

为了迎接 2019 年的到来,我们为大家召集了一个优秀人物的圆桌会议。尽管他们的头衔各不相同,但他们的目的是一致的。他们来到这里,是为了帮助开发人员,并确保其声音和需求能被大众听到。这些人都是典型的代码英雄。

**00:01:29**:

来自<ruby> 湾区 <rt> Bay Area </rt></ruby>的 Sandra Persing 是 Mozilla 的<ruby> 全球策略师 <rt> Global Strategist </rt></ruby>,也是 DevRel 峰会的创始人。

**00:01:38 - Sandra Persing**:

嗨, Saron。

**00:01:39 - Saron Yitbarek**:

你好。还有同样来自旧金山的 Ricky Robinett。他是 Twilio 的开发人员网络总监。

**00:01:47 - Ricky Robinett**:

你好。

**00:01:49 - Saron Yitbarek**:

从凤凰城外和我一起来的是 Robyn Bergeron,Red Hat 的社区架构师。

**00:01:55 - Robyn Bergeron**:

嗨!你好吗?

**00:01:56 - Saron Yitbarek**:

我很好。

**00:01:57 - Robyn Bergeron**:

看到你真高兴。

**00:01:57 - Saron Yitbarek**:

在不同的地方,这份工作的践行者被冠以不同的头衔。有<ruby> 开发者推广大使 <rt> Developer Advocate </rt></ruby>、<ruby> 开发者布道师 <rt> Developer Evangelist </rt></ruby>、<ruby> 开发者关系 <rt> Developer Relations </rt></ruby>;对于新事物来说,基本定义非常重要。因此,我认为一个好的起点是来定义这些东西是什么,尤其是定义你可以在这个领域做什么。所以,你能告诉我你的头衔以及这个头衔实质上的含义吗? Ricky,让我们从你开始吧。

**00:02:22 - Ricky Robinett**:

好的,听起来很棒。我的头衔是<ruby> 开发者网络总监 <rt> Director of the Developer Network </rt></ruby>。我有幸供职于一个开发者关系专家团队。我们有一个布道师团队、一个教育团队和一个社区团队。因此,这绝对是一个大杂烩,有你听说过的各种不同的头衔,我们将其汇集在这个团队之中。

**00:02:43 - Saron Yitbarek**:

厉害啊。Sandra,你呢?

**00:02:45 - Sandra Persing**:

我在 Mozilla 担任活动和赞助的<ruby> 全球策略师 <rt> Global Strategist </rt></ruby>,并与 Mozilla 新兴技术小组的开发者拓展团队合作。我通常会将我的日常活动解释为大量的研究、交流和探索,而这都将最终影响我们评估与决策应当如何投资我们的资源:我们的时间、金钱、纪念品、演说者等等,以回馈开发者社区,同时也从开发者社区得到反馈。这份工作既有布道的一面,也有倡导的一面。

**00:03:24 - Saron Yitbarek**:

很棒。 Robyn,该你了。

**00:03:26 - Robyn Bergeron**:

你好。好吧,我的正式头衔是<ruby> 社区架构师 <rt> Community Architect </rt></ruby>。人们就我这个头衔有过很多问题。我曾被称为<ruby> 社区经理 <rt> Community Manager </rt></ruby>,也曾被称为<ruby> 开发者推广大使 <rt> Developer Advocate </rt></ruby>,甚至还在过去的一份工作中做过<ruby> 运维推广大使 <rt> Operations Advocate </rt></ruby>。但是我想我所做的是……嗯,你知道,像是“社区经理”之类头衔所暗示的“你在管理着社区中发自内心为项目做出贡献的人”的这种想法,其实相当愚蠢,因此,我喜欢把我所做的事情看作是构建一个框架,人们可以真正顺利地参与其中,确保过程中没有障碍,并确保他们可以完成所有他们想要做的事情。

**00:04:02 - Saron Yitbarek**:

Ricky,由于你基本上是负责整个开发者网络的总监,你不仅仅是置身于推广大使团队、布道团队,也是社区团队之中,这有点儿像是你在运行着整个项目。你是怎样理解的这个的,是向开发者推广还是推广开发者?

**00:04:20 - Ricky Robinett**:

是的,这是个很棒的问题。 Phil Nash 是我们团队中的一名布道师,他对此有一个很好的认识,而我要将它剽窃过来。我们可以从很多不同的角度来解释这份工作,但说到底,我们的工作其实大体上就是帮助他人。

**00:04:33 - Saron Yitbarek**:

我喜欢这一点。是的。

**00:04:35 - Ricky Robinett**:

我们帮助开发人员,有时候这些帮助看起来就像是在 Stack Overflow 上回答问题,有时看起来像是构建一款新工具,有时看起来像组织一个活动,有时看起来又像是发起一次产品的内部改动。因此,我认为这就是我所听说过的最好的认识 —— 我们的责任是帮助。

**00:04:57 - Saron Yitbarek**:

的确。Sandra,你的职位让我感兴趣的一点是,你不光是策略师,还是全球策略师。让世界各地的开发人员组织起来,并且帮助他们,这是你在 Mozilla 的工作的一部分。在全球范围内的推广倡导会是什么样的呢?在不同的国家、不同的大洲之间,这份工作的含义会有所不同吗?

**00:05:20 - Sandra Persing**:

对,确实存在一些不同。我们今年刚刚在新加坡举办了第三届 DevRel 年度峰会。在过去的两年间,我们在西雅图主办了这项活动,而到了新加坡,我们看到了不同的视角。当我们走出湾区时,就连某些最基本的组织方式都需要做出改变。比如,如何确保一切都能在线下运转,因为连接性是一个大问题;再比如,我们该怎样使一名工作于印尼的开发人员能在峰会过程中与社区充分互动,无论他是在线上参加聚会,还是来到现场。

**00:05:59 - Sandra Persing**:

我们总会发现,所谓的“基础”,一些对我们来说如此寻常的事,比如在湾区举办一次简单的聚会——这再常见不过了,对吧?你高呼一声“我要参会”,就会发现到处都有聚会可以参加。然而对于,比如,越南的开发者来说,此事可以是具有特殊意义,且对他们而言相当重要的。这种聚会很宝贵,丰富了他们的开发者生活。

**00:06:27 - Saron Yitbarek**:

我注意到的一件事是,大家都以某种方式提到了社区。而我自己也运营着一个社区,Code Newbie,而且我经常被一些公司聘用为他们的布道师或社区经理。有一件事我一直都有点儿担心,也许有点畏惧;我在想,在过去的三四年里,我一直在尽我所能,尽心尽力地负责着这个社区。

**00:06:55**:

但如果我为一家公司工作,我就必须要牺牲这些吗?我是不是必须要将公司置于社区的需求之上?我该如何平衡这种关系呢?

**00:07:06**:

所以我在想,Robyn,或许我们可以问问你这个<ruby> 社区架构师 <rt> Community Architect </rt></ruby>,你是如何区分这两者的呢?或者说,你是如何平衡这两者的?

**00:07:16 - Robyn Bergeron**:

好吧,这当然是一个有趣的平衡。我的意思是,我以前的一个工作实际上是担任 Fedora 项目负责人。而你知道,Fedora 是 Red Enterprise Linux 的上游,在这里,你角色的一部分确实是某种找寻平衡的行为,对吧?两者之间的平衡是让社区里的人们开心,让公司对社区所做的事情高兴,同时要确保每个人都是快乐大家庭的一员。

**00:07:41**:

而且,你知道,我想当你在这个职位上做得最好的时候,你肯定会时不时地激怒公司决策层的某些人。但是你知道的,最终还是要用结果来证明,对吧?

**00:07:59**:

人们总是问我,你是如何平衡 Ansible 和红帽之间的关系的,你知道,当 Ansible 被红帽收购时,就像是 —— 哦我的天哪,红帽会接管它,然后对它做些糟糕的事情,并摆脱 Ubuntu 的支持吗?

**00:08:12**:

而这就像是,拥有整个项目的全部目的就是为了破坏它,就像是为了不要吸引 4000 名贡献者而故意变得糟糕一样。

**00:08:22**:

确保你的管理层信任你,并始终与人们保持清晰的沟通以了解实际发生的事情,并确保沟通通道的两侧都不会出现意外,这是成功与否的部分原因,也许并不总是会成功,但肯定会让人感到惊讶。

**00:08:41 - Saron Yitbarek**:

嗯嗯。确实。Ricky,你呢?当你在做这么多不同事情的时候,你是如何看待这种关系中的平衡的?

**00:08:49 - Ricky Robinett**:

我认为你必须相信公司和技术。你必须相信,你所带给开发人员的东西将会对他们的生活、职业生涯以及公司产生影响。

**00:09:03**:

另一方面,你必须让高管们相信这种方法。因此,我们非常幸运的一点是我们的 CEO 是一名开发人员,而且在很多方面,他是我们和开发者社区打交道的原动力。我们的布道师们的使命是激励并装备开发人员。因此,有时候我们会说这能激励并装备他们吗?因为如果不能的话,我们就不应该这么做,因为这超出了我们的职权限范围。

**00:09:36 - Saron Yitbarek**:

嗯嗯。Sandra,我觉得你有点儿优势,因为 Mozilla 是一个非营利性组织,对吧?所以我觉得或许 ——

**00:09:44 - Sandra Persing**:

我刚想说。

**00:09:46 - Saron Yitbarek**:

跟我讲讲这个吧。

**00:09:47 - Sandra Persing**:

Mozilla 的历史就是我们是一家叛逆的公司。我们一直在反抗企业家,对吗?出走 Netscape ,并与我们的创始人之一 Mitchell Baker 一起走过的整段历史,确保了 Web ——

**00:10:01**:

—— 乃至互联网是面向所有人的开放而自由的资源。我的意思是,我们仍然,我们每个人,每一个 Mozilla 人,都信奉这一口号,我们对此深信不疑。因此,这绝对是一家令人惊叹的、100% 拥抱了社区的公司。

**00:10:22 - Saron Yitbarek**:

确实。所以,Ricky,我还清楚地记得那件红色的运动夹克,我还记得你亲自出马做的那些很棒的演示,感觉那种联系并帮助开发人员的方式非常新颖。这个想法是怎么在 Twilio 上产生的?

**00:10:41 - Ricky Robinett**:

嗯,你这么说真是太好了。我们确实相信我们是站在巨人的肩膀之上。你会想到 Apple 公司的 Guy Kawasaki,有人在我们之前就采用了这种营销方式。我认为我们很幸运能在正确的时间把它带给开发人员。

**00:11:03**:

而且有这么多的人进来,带着我们如何能够做到这一点以及如何能够不断提高方法水平的想法。但是我实际上不知道是谁发明了红色运动夹克,因此现在我需要去探寻历史 ——

**00:11:19 - Saron Yitbarek**:

你一定得找到答案。

**00:11:19 - Ricky Robinett**:

—— 有关这是何时开始的历史。

**00:11:20 - Saron Yitbarek**:

这是件很棒的夹克。

**00:11:21 - Ricky Robinett**:

知道了,我今天下午就去找。

**00:11:25 - Saron Yitbarek**:

而我想知道的是,布道和推广的想法在 Twilio 是如何随着时间而发生变化的。你知道,曾经你们只是一家小小的初创公司,一家叛逆的初创公司,然后现如今成了一家大公司。随着公司本身的变化,布道的形式又是如何变化的呢?

**00:11:46 - Ricky Robinett**:

是的,开始时,我觉得我本可以一年 365 天都在<ruby> 黑客马拉松 <rt> hackathon </rt></ruby>上渡过,而在纽约,每个周末你都要从五到六个黑客马拉松之中抉择一个。我们在布道方面所做的许多事情都是黑客马拉松场景,而现在,场景不同了。确实,最大的变化在于公司外部而不是内部。

**00:12:11**:

因此,我谈到了布道师的激励和装备。所以令人高兴的是,这些年来这个任务并没有发生任何变化,他们激励和装备的方式一直在变化,但任务本身并没有什么改变。

**00:12:26 - Saron Yitbarek**:

那么 Robyn,随着 DevOps 和 DevSecOps 的兴起,推广大使在你和社区架构师看来如何呢?会有运维推广大使吗?

**00:12:39 - Robyn Bergeron**:

嗯,实际上那是 …… 我的意思是说,孩子们,那是我辍学后的第一份工作。我不建议这样做,不要在家做这个事情。

**00:12:47 - Saron Yitbarek**:

留在学校里继续上学。

**00:12:48 - Robyn Bergeron**:

听着。你看,小姑娘,我的第一份工作实际上是在 Motorola 担任<ruby> 系统管理员 <rt> SysAdmin </rt></ruby>多年,而当我从担任 Fedora 负责人跳槽时,我在 Elastic Search 工作了一段时间,你知道,那个头衔是开发者推广大使,因此我花了几个学期在大学里攻读 C。但是我的心一直都在运维这边。我开始对自己真的是一名开发者推广大使感到疑惑?我觉得我只是在推广运维人员而已。我开始自称是一名运维推广大使,每个人都为之注目。

**00:13:22**:

每个人都说,嗯,这真的是一个很酷的头衔。我的意思是,你知道,我基本上只是在公司内部倡导其他人在做什么。

**00:13:33 - Saron Yitbarek**:

所以,Sandra,我们谈到了推广大使和布道师在世界不同地区看起来如何不同,但是我想知道,随着我们变得越来越全球化,联系越来越紧密,更大范围和形式的布道对你来说是不是也在随着时间流逝而变化?

**00:13:52 - Sandra Persing**:

你知道什么是开发者关系吗?我们是在推销我们的产品吗?你知道,而我注意到的是,即使是大公司也正在远离这种策略。要明白的是,做一个真实的人,真正留心倾听并回应开发人员的需求是最为重要的,而不是去推销产品。

**00:14:17**:

我总是回过头来和我在 Mozilla 的团队分享一个理念,开发者们实际上是我们所合作过的最聪明、最具有创造力的客户群体之一。他们可以大老远就闻到商业销售的气味。因此,我们必须对于如何共享信息保持精明,就像它必须是一群有着才华和智慧的人聚集在一起,成为我们与开发者社区进行沟通的创新方式。

**00:14:48 - Saron Yitbarek**:

嗯,真的,我很喜欢这样的想法,需要将不同的技能,我想,还有不同的背景,集合在一起,才能很好地服务于开发者,也能帮助开发者自助。

**00:15:01**:

当我想到开发者推广大使的兴起时,在我看来,它与开源的兴起息息相关。这感觉几乎就像是开源贡献者越多,开源就变得越重要,重视它的大公司也越多,他们几乎必须与这些开源贡献者、这些开发人员建立起更好的关系,并且我感觉这二者真的是紧密相关的。

**00:15:27**:

因此我很好奇你的想法。那么 Robyn,让我们从你开始吧。是这样的吗?推广大使的这个想法与开源的兴起有所联系吗?

**00:15:37 - Robyn Bergeron**:

如果你是一家销售软件或是销售许可证和长期支持的公司,你知道,无论你的开源公司的业务模型是什么,如果你没有这种反馈回路,或者你没有真正关注人们在说什么,那你最终会犯下一个众所周知的错误。我认为,真正能在全世界范围内每天都做到这一点,就是成功和失败之间的区别,没有人愿意花时间去做错误的事情。那是个坏主意。

**00:16:10 - Saron Yitbarek**:

是的,这通常是个坏主意。很好。因此,我想知道你们每个人各自都在关注什么,都真正在思考什么。因此,Ricky,让我们从你开始吧。在你的 Twilio 角色中,你试图为开发者文化带来什么样的改进呢?

**00:16:27 - Ricky Robinett**:

如果我要说的我与开发者交谈时听到过最多的一个短语,那就是“我不是开发者,但是 …… ”,而这可能是一直萦绕在我脑海中的最大的事情之一 —— 开发者定义的扩大化。

**00:16:45**:

对我们很多人来说,“冒牌综合症”是一个非常真实的现象。令人惊讶的是,即使是你所认识的一些最好的开发者也在为此努力。而对我来说,我们所有人能在我们的文化中做的最重要的事情之一,就是让人们说,“你知道吗?我是一名开发者。我用代码解决问题。”

**00:17:08**:

因此,我最喜欢的故事之一是,我们社区有一个名为 Doug McKenziethat 的成员,他是个魔术师。他自学了 PHP,以便在魔术中使用技术。

**00:17:23 - Saron Yitbarek**:

噢,很棒。

**00:17:23 - Ricky Robinett**:

Doug 之所以如此之酷,是因为他超级谦虚,就像是:“哦,我不是一名开发者。”而突然之间你发现,他正在编写比许多人所见过的更为复杂的代码,可以完成令人震惊的事情。因此,我感觉世界上有许多 Doug McKenzies 这样的人,他们都在用代码做着伟大的事情,我们有机会让他们成为社区的一份子,并且在这项工作中拥有自己的身份。

**00:17:51 - Saron Yitbarek**:

我喜欢这个故事,因为它让我想起了我曾经为 Code Newbie 播客采访过的一个人,她是一名作家而且有很多写作任务,必须学习 Git 才能撰写有关 Git 的文章,她写了很多这方面的文章,又逐渐转向了其他的编程主题,最终,你知道,几年过去之后,她实际上已经成为了一名开发者却不自知。

**00:18:13**:

在我采访她的时候,我说:“嘿,你知道自己是一名开发者吗?”她说:“不,我是个作家。”而我说:“你可以二者兼备。它们并不互相排斥。”但是,是的,这种说法换个角度说,“嘿,我实际上是在编程和创造,因此现在我可以自称为一名码农了”,这种认识对人们来说很难,要做到这样需要一段时间。

**00:18:32 - Ricky Robinett**:

是的,当然是这样。

**00:18:33 - Saron Yitbarek**:

是的。因此,Robyn,对你来说,最近几年来你一直在努力推动的最积极的变化是什么?

**00:18:41 - Robyn Bergeron**:

只是确保在我们的成长过程中,我们不会失去对大局观的把握。我们的主要目标是使人们易于使用、易于贡献、易于实际完成生活中的事情,确保我们不会偏离这个目标,或者在实际项目的某些结构层面获得更多的工程帮助,并确保我们保持所有这些东西都井井有条。我们在这方面做得很好,我不知道是不是,但我认为这很重要。我不知道自己会不会因此而获得诺贝尔和平奖,但我知道这对许多贡献者都非常重要。

**00:19:19 - Saron Yitbarek**:

这对我来说很有用。Sandra,你呢?作为全球策略师,你近些年来所推动的最积极的变化是什么?

**00:19:26 - Sandra Persing**:

在我脑海中真正突出的两件事是扩展开发者角色这个定义,这一点非常重要。我们想要发展我们的社区,对吗?那么为什么要限制开发者的定义和描述呢?

**00:19:43**:

当我们在 Sundance 与 Reggie Watts 和 Chris Milk 等著名人物进行座谈时,我们给电影制作人、制片人、决策者一个机会,让他们说:“哦,我们也能做到。我们不需要受限于作为创意电影制作人能做些什么。”

**00:20:00**:

我们可以采纳技术,也可以成为开发者,而这相当令人耳目一新。我们在 Mozilla 经历的另一个时刻是让一位芝加哥的灯光艺术家 Ian Brill 与我们合作进行一个项目,我们把这个项目标记为 Arch,我们为今年两个重要的 JavaScript 开发者活动带来了这种巨型的塑料灯,LED 灯,带着七个可编程的树莓派结构。这是为了邀请更多的程序员(无论他们是否自称为开发者)来尝试 Mozilla 今年大力倡导的两种语言: WebAssembly 和 Rust。

**00:20:49**:

因此,我们创建了两个简单的模板,说,“尝试一下吧”,但这不是我们所想要推动的编程。这不是编程,而是“是的,有几行代码。它是编程语言,但你要做的是创建几行代码,现在可以将它们转化为艺术”,而这让许许多多的新人进入到了我们的群体之中,也就是把他们带到我们的桌子旁边,然后他们会编写他们的代码行,在 Arch 之下走过,去查看目前正在结构中循环着的灯光表现。这真是太神奇了。

**00:21:22 - Saron Yitbarek**:

哇。这听上去很美,确实美。因此,我想知道的是,当我们谈论到为开发者做推广大使时,我们谈论了很多有关社区的话题,也谈论了那个最终的想法,无论我们的职业头衔是什么,我们真的只是想要帮助人们,开发者需要什么方面的帮助? Ricky,让我们从你开始吧。开发者们说他们需要从你这里得到什么呢?

**00:21:44 - Ricky Robinett**:

哇。是的,这是个好问题。我认为,我们所发现的其中一件事是,科技变化如此之快,我们被问到的很多问题都是,“我从哪里开始?我首先要做什么?我怎么知道我走的路是正确的呢?”对我们来说,这可能是我们投入最多的领域之一,我们称之为帮助人们发现他们用代码改变世界的能力。

**00:22:16 - Saron Yitbarek**:

噢,这很美妙。我赞成。

**00:22:18 - Ricky Robinett**:

嗯,谢谢你。是的,这令人兴奋。因此我们构建了一款名为 TwilioQuest 的工具,以帮助人们发现这种力量,帮助他们知道从何处开始。但我只是感觉,你知道,人们一直在寻找自己的身份或获得允许使用该身份的方式,而对每个人而言这就已经是写代码,是用代码或软件解决问题。有许多人想这么做,然而却不知道从哪里开始。所以这是我们经常思考的一件事。

**00:22:51 - Saron Yitbarek**:

是的。因此,Robyn,对于你们红帽来说,红帽的开发者所寻求的是什么呢?

**00:23:00 - Robyn Bergeron**:

很多时候,是有人来找你,他们遇到了一些障碍,也许是“不知怎么的,我的公关人员被机器人遗漏了”,但是很多时候,也有人会说,“嘿,我有一个很酷的想法。或许它不太适合这里,但是我认为它可能会改善社区的运行方式,或者可能会是其它我们正在研究的东西的不错的辅助工具。我该怎么办呢?”这就像是,“好吧,我该如何帮你入门呢?”你知道吗?“我能怎么做呢?比如说,你只是需要有人同意吗?因为我在这儿整天都基本上是对任何事情点头,让人们知道,这行得通,你当然有权力这样做”,所以这是我所认为最好的事情是,你可以做。至少在我看来,就是确保人们在自己的前进道路上没有阻碍,或者如果阻碍他们前进的一件事是正等着某人说可以,我一直在重申,你不需要别人的许可,但是如果有人需要,那么看在上帝的份上,请给他们许可。

**00:23:58 - Saron Yitbarek**:

所以,最后一个问题,我们需要总结一下,我将向你们逐一询问。你在 2019 年将要倡导的最重要的事情是什么呢?如果你有魔杖,那么你想要改变的下一件大事是什么呢? Sandra,让我们从你开始吧。

**00:24:15 - Sandra Persing**:

嘿,我就知道你会这么做。好吧,我们将在 2019 年进行的最大挑战和最为激动人心的项目是真正兑现我们的承诺,使 Web 成为最棒、最大、最易于访问的平台之一。我们总是告诉开发者这是你应当为之构建并部署的地方,但是我们知道 Web 本身非常复杂,而且有着多个浏览器供应商,有时候这并不是一个正确的说法,这对我们而言是一个长期的挑战,尤其是在 Mozilla,在这里我们想要保持 Web 开放、自由,并且能被所有人访问。我们想要继续确保能够兑现对开发人员的承诺,确保网络确实是开放的、可访问的,对所有人都开放。

**00:25:10 - Saron Yitbarek**:

嗯,爱了。Ricky,你呢?

**00:25:13 - Ricky Robinett**:

只需要确保我们为开发人员提供服务,使得他们能够在全世界范围内进行线上或是线下的聚会。更容易专注于你所看到的内容,并且无需记得开发人员是在何处,即使你没有看到他们。因此,我会挥舞我的魔杖,并在全世界更多的地方,找出我们如何能够帮助那里的开发人员。

**00:25:36 - Sandra Persing**:

我只想说,我喜欢 Jarod 有关<ruby> 暗物质开发者 <rt> dark matter developer </rt></ruby>的演讲。实在是太棒了。

**00:25:42 - Ricky Robinett**:

当你第一次听到它的时候,感觉像是一个了不起的概念,就像是,“哇,真的是一回事儿。”

**00:25:50 - Saron Yitbarek**:

那就给我们讲讲吧,什么是暗物质开发者呢?

**00:25:52 - Sandra Persing**:

本质上,有一些开发者,他们不参与你的聚会,不参与 GitHub 和在线社区,不为 Stack Overflow 做贡献。这些开发者仍在努力工作并作出贡献,但我们不知道。我们知道他们就在那里,但我们看不到他们。我们无法认出他们,而这些人实际上是开发者社区中非常重要的一部分,我们往往会忽略他们,但不能这样。忽略那些不愿发表意见的社区将损害我们与开发者的关系,我们需要更加积极主动地寻找宇宙中那些暗物质开发者。

**00:26:34 - Saron Yitbarek**:

哦,我喜欢。这很酷。是的,Jarod 实际上在 Twilio 工作,对吗?

**00:26:38 - Sandra Persing**:

是的。是的,他负责运营亚太区的 DevRel。

**00:26:42 - Robyn Bergeron**:

我从前在红帽的同事之一 Chris Grams,现在在一家名为 Tidelift 的公司里工作,他曾经有一个名为<ruby> 暗物质很重要 <rt> Dark Matter Matters </rt></ruby>的博客,因为它有点儿像是 ——

**00:26:52 - Saron Yitbarek**:

嗯嗯。

**00:26:54 - Robyn Bergeron**:

你知道,你所看不到的东西实际上仍然很重要,所以 ——

**00:26:58 - Saron Yitbarek**:

确实是这样。 Robyn,你呢?你会用你的魔杖做什么呢?

**00:27:03 - Robyn Bergeron**:

哦,用我的魔杖,有很多事情,但是根据这次谈话,我想我会更好地管理彼此之间的依赖关系,而且也许并不令人惊讶,尤其是当我们之中有许多人在 OpenStack、 OPNFV、 Ansible 以及所有这些建立在彼此之上的东西工作时。只是确保我们项目之间的关系比你筋疲力尽时所能看到的关系更加明显。因此,我非常期待来年的事情,因为我们在事物上越来越受关注。现在这非常令人兴奋。

**00:27:39 - Saron Yitbarek**:

吸引力总是那么好。它是如此令人兴奋。好吧,我想感谢你们所有人,非常感谢你们今天加入我们并分享你们的思维、想法和故事。非常感谢大家。那么现在说再见?

**00:27:51 - Robyn Bergeron**:

再见,各位。

**00:27:54 - Sandra Persing**:

很好。非常感谢你, Saron。能上这期节目很荣幸。

**00:27:58 - Ricky Robinett**:

它已经开始了,游戏工作室对于开源的态度已经开始转变。

**00:27:59 - Saron Yitbarek**:

确实。今天的圆桌会议包括红帽的社区架构师 Robyn Bergeron、 Mozilla 的全球策略师 Sandra Persing 以及 Twilio 的开发者网络总监 Ricky Robinet。我认为自己很幸运,能有这样的平台让我分享对于我们社区将何去何从的愿景,无论是这档播客节目还是在其他地方,但我想要指出的是,你无需拥有自己的播客就能够成为推广大使。成为推广大使只是意味着睁大眼睛并代表他人大声疾呼。这确实可以是每个人的工作。因此,我希望 Robyn、 Sandra 和 Ricky 给了你一点儿有关倡导你觉得重要的事情的启发。

**00:28:50 - Saron Yitbarek**:

同时,《代码英雄》第 3 季已经在制作中了。当新剧集在今年春天推出时,你可以成为最早了解新剧集的人之一。如果你尚未注册,请在 Apple Podcasts、 Google Podcasts 或任何你获得播客节目的地方进行订阅。只需点击一下,100% 免费。我是 Saron Yitbarek。非常感谢你的收听,在第 3 季到来之前,继续编程。

>

> **什么是 LCTT SIG 和 LCTT LCRH SIG**

>

>

> LCTT SIG 是 LCTT <ruby> 特别兴趣小组 <rt> Special Interest Group </rt></ruby>,LCTT SIG 是针对特定领域、特定内容的翻译小组,翻译组成员将遵循 LCTT 流程和规范,参与翻译,并获得相应的奖励。LCRH SIG 是 LCTT 联合红帽(Red Hat)发起的 SIG,当前专注任务是《代码英雄》系列播客的脚本汉化,已有数十位贡献者加入。敬请每周三、周五期待经过我们精心翻译、校对和发布的译文。

>

>

> 欢迎[加入 LCRH SIG](/article-12436-1.html) 一同参与贡献,并领取红帽(Red Hat)和我们联合颁发的专属贡献者证书。

>

>

>

---

via: <https://www.redhat.com/en/command-line-heroes/season-2/developer-advocacy-roundtable>

作者:[Red Hat](https://www.redhat.com/en/command-line-heroes) 选题:[bestony](https://github.com/bestony) 译者:[JonnieWayy](https://github.com/JonnieWayy) 校对:[windgeek](https://github.com/windgeek), [Northurland](https://github.com/Northurland), [wxy](https://github.com/wxy)

本文由 [LCRH](https://github.com/LCTT/LCRH) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Developer advocates play important roles in open source communities. We brought a few of them together to explain how and why they do what they do.

Sandra Persing (Mozilla), Ricky Robinett (Twilio), and Robyn Bergeron (Red Hat) sit down with Saron to share what they're working on, how they support their communities, and what they're looking forward to in 2019.

**00:06** - *Saron Yitbarek*

Hi everybody, I'm Saron Yitbarek and this is a special bonus episode of Command Line Heroes, an original podcast from Red Hat. This podcast went a lot of places in Season 2. We explored programming languages, data overload, security crises, the advent of serverless. I mean, we even went to Mars.

**00:28** - *Saron Yitbarek*

But after closing out Season 2, there was still one more place we wanted to go. We wanted to go inside the minds of the advocates and thought leaders who help shape all the work that developers do. Sometimes they're called developer advocates or they're in developer relations or they're developer evangelists.

**00:50** -*Saron Yitbarek*

Whatever their exact titles, from the developer's perspective, they seem to do a lot of the same things. You've seen them give keynotes at conferences. You've heard them getting interviewed on podcasts, like this one. You've probably read their blog posts. But who are they, and what exactly are they using their voices to get done?

**01:10** - *Saron Yitbarek*