id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

12,588 | 使用 GraphQL 作为 API 网关来监控微服务 | https://opensource.com/article/20/8/microservices-graphql | 2020-09-06T20:51:36 | [

"GraphQL",

"微服务"

] | https://linux.cn/article-12588-1.html |

>

> 在问题导致关键的微服务瘫痪之前,使用 GraphQL 的监控功能帮助你及早发现问题。

>

>

>

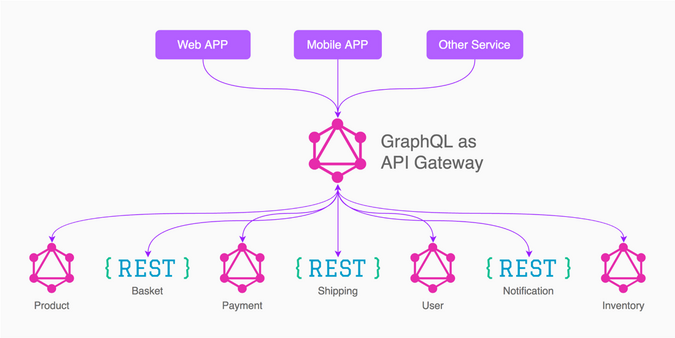

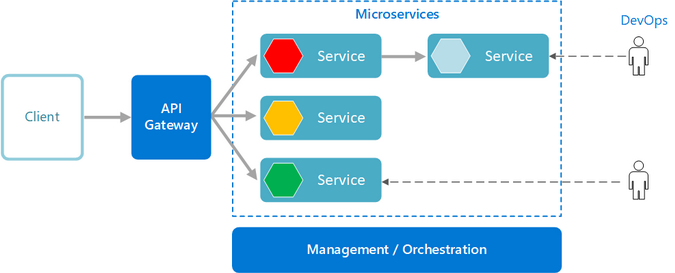

[微服务](https://opensource.com/resources/what-are-microservices)和 [GraphQL](https://opensource.com/article/19/6/what-is-graphql) 就像面包和黄油一样,是一个很好的组合。它们本身都很棒,结合起来就更棒了。了解你的微服务的健康状况是很重要的,因为它们运行着重要的服务。如果等到某个关键的服务崩溃了才诊断问题,那是很愚蠢的。让 GraphQL 帮助你及早发现问题并不需要花费太多精力。

常规的健康检查可以让你观察和测试你的服务,在问题影响到你的业务、客户或项目之前,尽早得到通知。说起来很简单,但健康检查到底要做什么呢?

以下是我在设计服务检查时考虑的因素:

服务器健康检查的要求:

1. 我需要了解我的微服务的可用性状态。

2. 我希望能够管理服务器的负载。

3. 我希望对我的微服务进行端到端(e2e)测试。

4. 我应该能够预测中断。

### 做服务器健康检查的方法

进行健康检查可能比较棘手,因为理论上,你可以检查的东西几乎是无穷无尽的。我喜欢从小处着手,运行最基本的测试:ping 测试。这只是测试运行应用的服务器是否可用。然后,我加强测试以评估特定问题,思考服务器中最重要的元素。我想到那些如果突然消失的话将是灾难性的事情。

1. \*\*Ping 检查:\*\*Ping 是最简单的监控类型。它只是检查你的应用是否在线。

2. \*\*脚本化浏览器:\*\*脚本化浏览器比较高级。像 [Selenium](https://www.selenium.dev/) 这样的浏览器自动化工具可以让你实现自定义的监控规则集。

3. \*\*API 测试:\*\*API 测试用于监控 API 端点。这是 ping 检查模型的高级版本,你可以根据 API 响应来定义监控计划。

### 使用 GraphQL 进行健康检查

在一个典型的基于 REST 的微服务中,你需要从头开始构建健康检查功能。这是一个时间密集型的过程,但使用 GraphQL 就不用担心了。

根据它的[网站](https://graphql.org/)称:

>

> “GraphQL 是一种用于 API 的查询语言,也是一种用现有数据完成这些查询的运行时环境。GraphQL 为你的 API 中的数据提供了一个完整的、可理解的描述,让客户有能力精确地仅查询他们所需要的东西,让 API 更容易随着时间的推移而进化,并实现强大的开发者工具。”

>

>

>

当你启动一个 GraphQL 微服务时,你还可以获得监控微服务的运行状况的供给。这是一个隐藏的宝贝。

正如我上面提到的,你可以用 GraphQL 端点执行 API 测试以及 ping 检查。

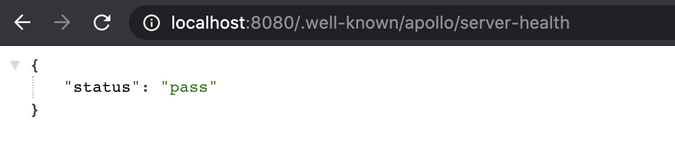

Apollo GraphQL 服务器提供了一个默认的端点,它可以返回有关你的微服务和服务器健康的信息。它不是很复杂:如果服务器正在运行,它就会返回状态码 200。

默认端点是 `<server-host>/.well-known/apollo/server-health`。

### 高级健康检查

在某些情况下,基本的健康检查可能不足以确保系统的完整性。例如,紧密耦合的系统需要更多的业务逻辑来确保系统的健康。

Apollo GraphQL 在定义服务器的同时,通过声明一个 `onHealthCheck` 函数来有效地管理这种情况。

```

* Defining the Apollo Server */

const apollo = new ApolloServer({

playground: process.env.NODE_ENV !== 'production',

typeDefs: gqlSchema,

resolvers: resolver,

onHealthCheck: () => {

return new Promise((resolve, reject) => {

// Replace the `true` in this conditional with more specific checks!

if (true) {

resolve();

} else {

reject();

}

});

}

});

```

当你定义一个 `onHealthCheck` 方法时,它返回一个 promise,如果服务器准备好了,它就会返回 `resolve`,如果有错误,它就会返回 `reject`。

GraphQL 让监控 API 变得更容易。此外,在你的服务器基础架构中使用它可以使代码变得可扩展。如果你想尝试采用 GraphQL 作为你的新基础设施定义,请参见我的 GitHub 仓库中的[示例代码和配置](https://github.com/riginoommen/example-graphql)。

---

via: <https://opensource.com/article/20/8/microservices-graphql>

作者:[Rigin Oommen](https://opensource.com/users/riginoommen) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | [Microservices](https://opensource.com/resources/what-are-microservices) and [GraphQL](https://opensource.com/article/19/6/what-is-graphql) are a great combination, like bread and butter. They're both great on their own and even better together. Knowing the health of your microservices is important because they run important services—it would be foolish to wait until something critical breaks before diagnosing a problem. It doesn't take much effort to let GraphQL help you detect issues early.

Routine health checks allow you to watch and test your services to get early notifications about problems before they affect your business, clients, or project. That's easy enough to say, but what does it really mean to do a health check?

Here are the factors I think about when designing a service checkup:

**Requirements for a server health check:**

- I need to understand the availability status of my microservice.

- I want to be able to manage the server load.

- I want end-to-end (e2e) testing of my microservices.

- I should be able to predict outages.

## Ways to do server health checks

Coming up with health checks can be tricky because, in theory, there's nearly an infinite number of things you could check for. I like to start small and run the most basic test: a ping test. This simply tests whether the server running the application is available. Then I ramp up my tests to evaluate specific concerns, thinking about the elements of my server that are most important. I think about the things that would be disastrous should they disappear suddenly.

**Ping check:**Ping is the simplest monitor type. It just checks that your application is online.**Scripted browser:**Scripted browsers are more advanced; browser automation tools like[Selenium](https://www.selenium.dev/)enable you to implement custom monitoring rule sets.**API tests:**API tests are used to monitor API endpoints. This is an advanced version of the ping check model, where you can define the monitoring plan based on the API responses.

## Health check with GraphQL

In a typical REST-based microservice, you need to build health check features from scratch. It's a time-intensive process, but it's not something you have to worry about with GraphQL.

According to its [website](https://graphql.org/):

"GraphQL is a query language for APIs and a runtime for fulfilling those queries with your existing data. GraphQL provides a complete and understandable description of the data in your API, gives clients the power to ask for exactly what they need and nothing more, makes it easier to evolve APIs over time, and enables powerful developer tools."

When you bootstrap a GraphQL microservice, you also get a provision to monitor the health of the microservice. This is something of a hidden gem.

As I mentioned above, you can perform API tests as well as ping checks with the GraphQL endpoint.

Apollo GraphQL Server provides a default endpoint that returns information about your microservices and server health. It's not very complex: it returns status code 200 if the server is running.

The default endpoint is `<server-host>/.well-known/apollo/server-health`

.

## Advanced health checks

In some cases, basic health checks may not be enough to ensure the integrity of a system. For example, tightly coupled systems require more business logic to ensure the health of the system.

Apollo GraphQL is efficient enough to manage this use case by declaring an `onHealthCheck`

function while defining the server:

```

* Defining the Apollo Server */

const apollo = new ApolloServer({

playground: process.env.NODE_ENV !== 'production',

typeDefs: gqlSchema,

resolvers: resolver,

onHealthCheck: () => {

return new Promise((resolve, reject) => {

// Replace the `true` in this conditional with more specific checks!

if (true) {

resolve();

} else {

reject();

}

});

}

});

```

When you define an `onHealthCheck`

method, it returns a promise that *resolves* if the server is ready and *rejects* if there is an error.

GraphQL makes monitoring APIs easier. In addition, using it for your server infrastructure makes things scalable. If you want to try adopting GraphQL as your new infrastructure definition, see my GitHub repo for [example code and configuration](https://github.com/riginoommen/example-graphql).

## Comments are closed. |

12,589 | Linux 黑话解释:什么是显示服务器,用来做什么? | https://itsfoss.com/display-server/ | 2020-09-06T21:29:00 | [

"显示服务器"

] | https://linux.cn/article-12589-1.html |

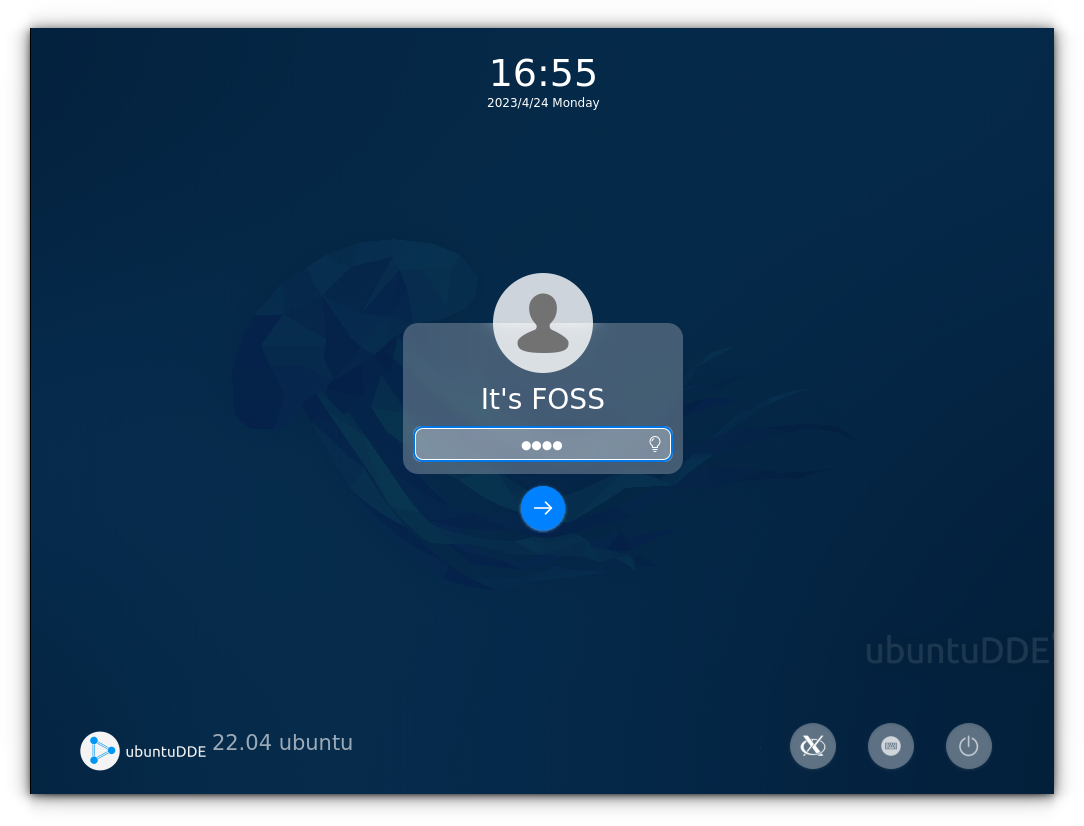

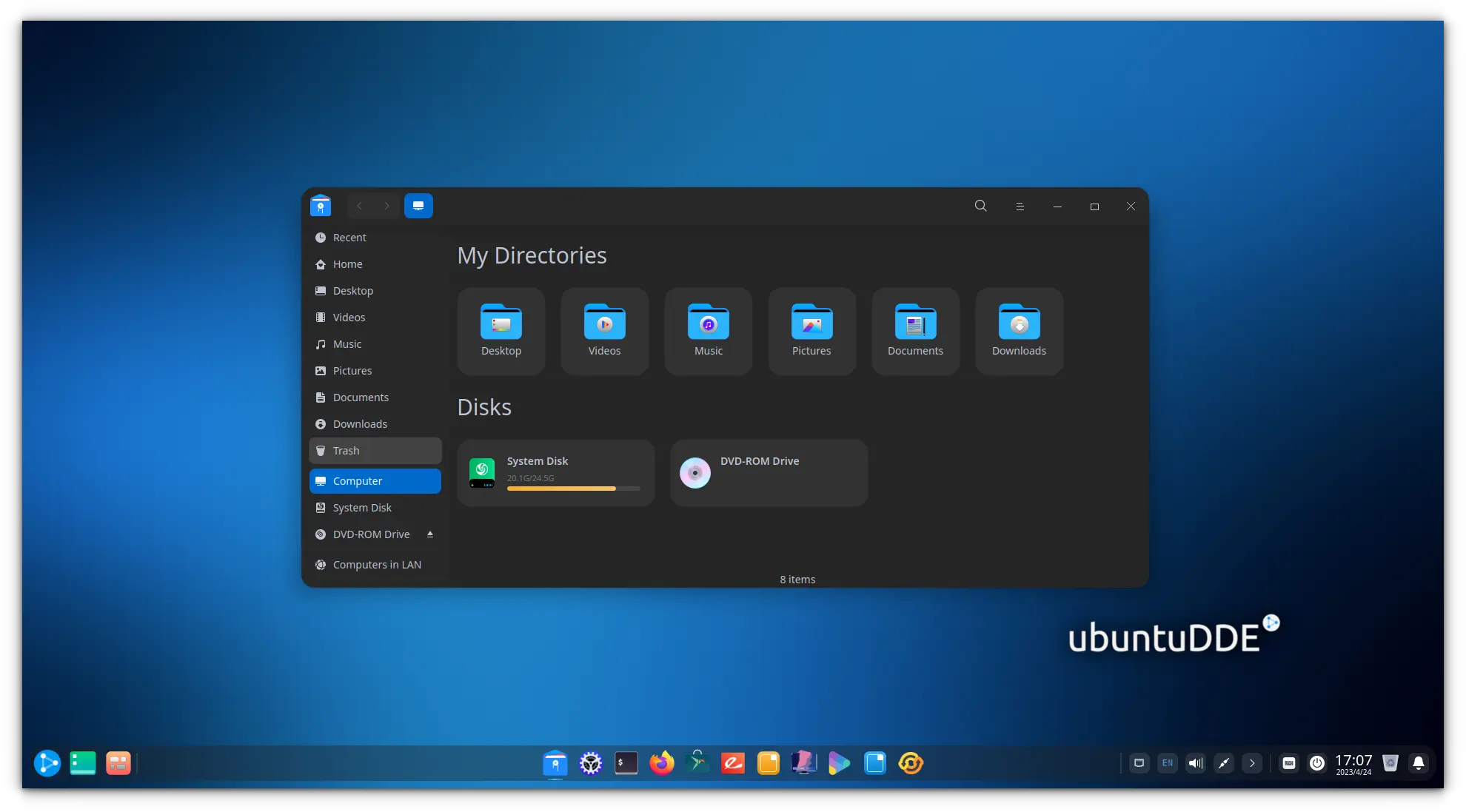

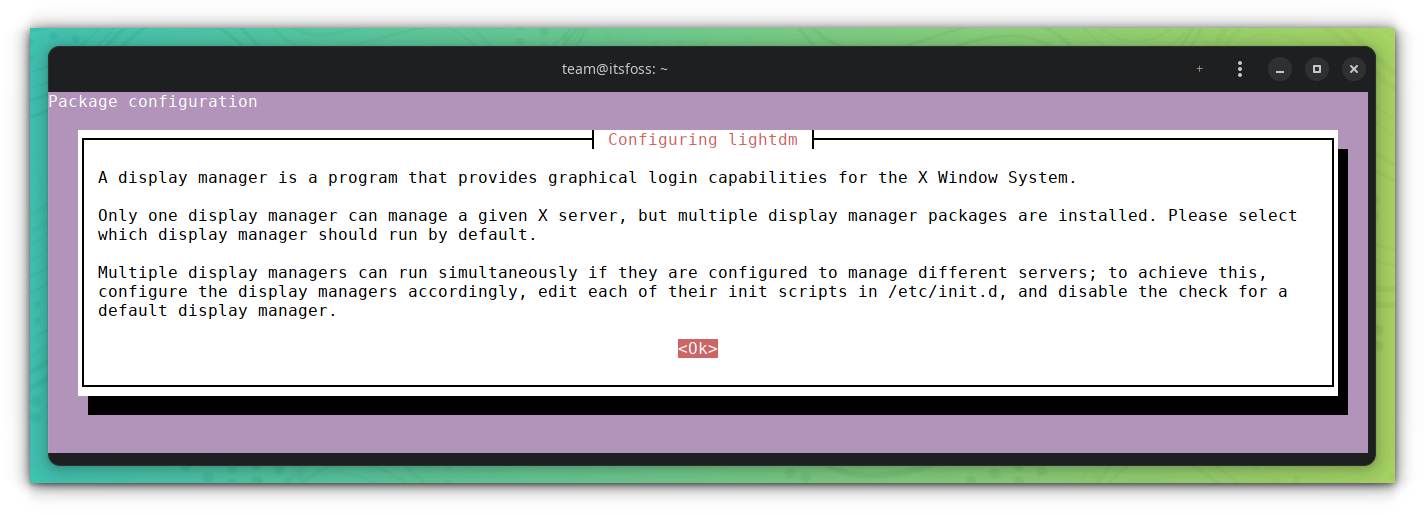

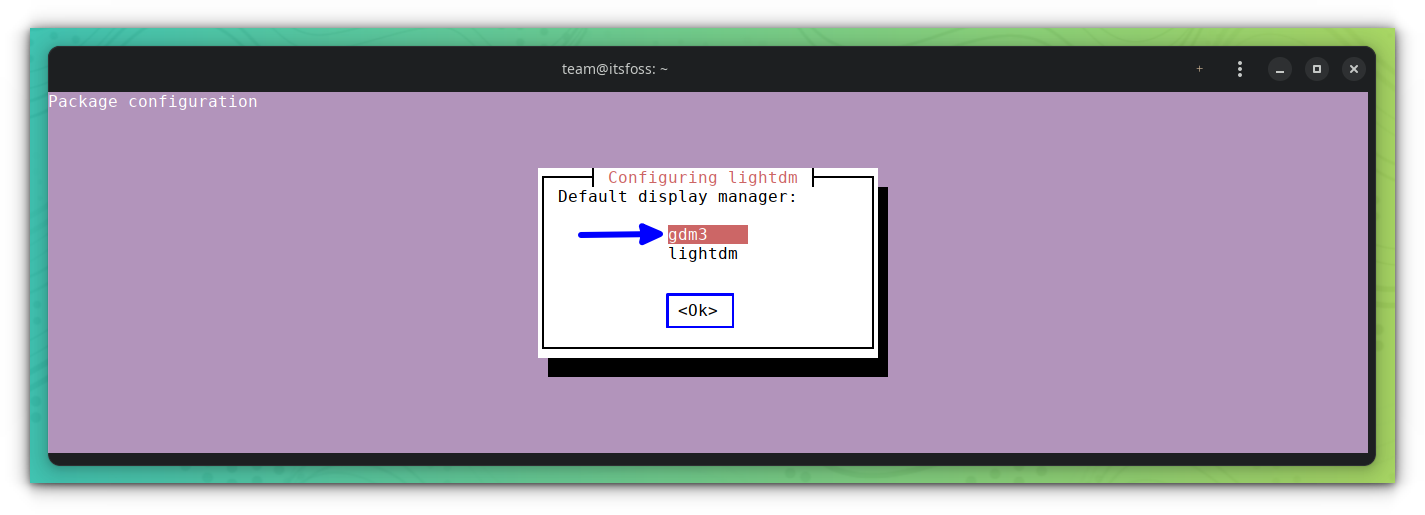

在 Linux 相关的文章、新闻和讨论中,你会经常遇到<ruby> 显示服务器 <rt> display server </rt></ruby>、Xorg、Wayland 等名词。

在这篇解释文章中,我将讨论 Linux 中的显示服务器。

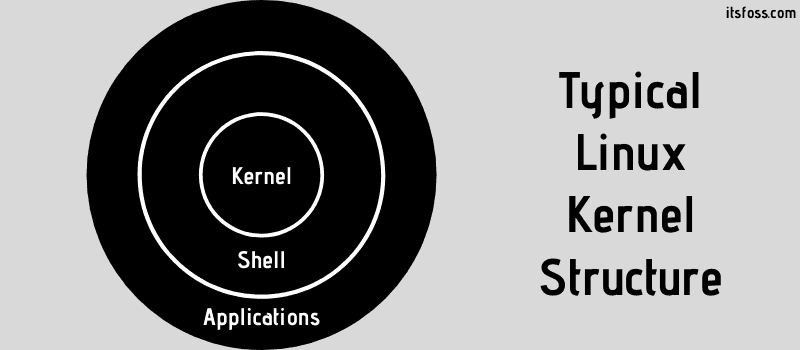

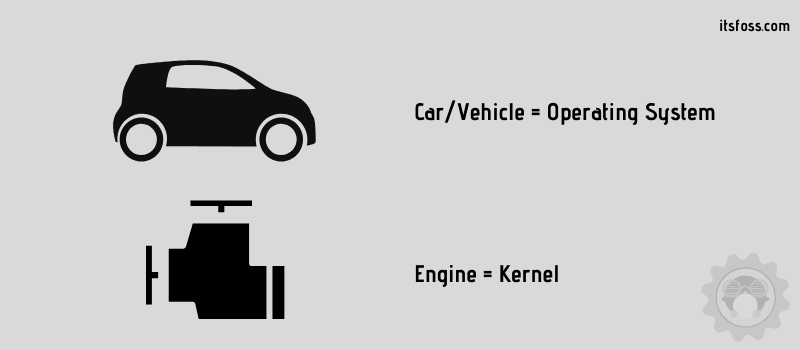

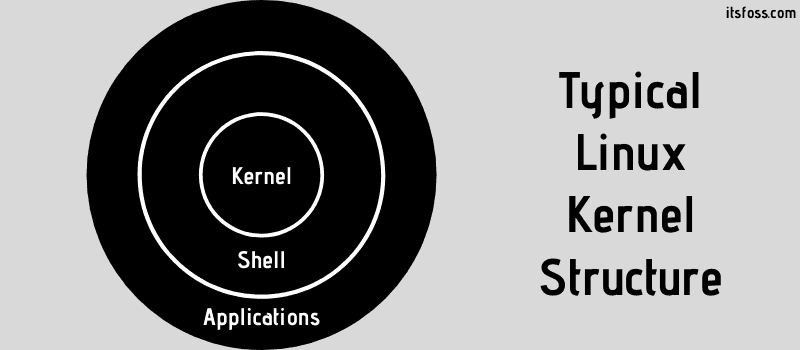

### 什么是显示服务器?

显示服务器是一个程序,它负责协调其客户端与操作系统的其他部分之间,以及硬件和操作系统之间的输入和输出。基本上,多亏了显示服务器,你才能以图形化的方式使用你的计算机(GUI)。如果没有显示服务器,你只能局限于命令行界面(TTY)。

显示服务器提供了一个图形环境的框架,使你可以使用鼠标和键盘与应用程序进行交互。

显示服务器通过[显示服务器协议](https://en.wikipedia.org/wiki/X_Window_System_core_protocol)(如 X11)与客户端进行通信。显示服务器是图形用户界面 —— 特别是窗口系统 —— 中的一个关键组件。

不要把显示服务器和[桌面环境](/article-12579-1.html)混淆。桌面环境的下层使用的是显示服务器。

听起来很熟悉,但又不完全清楚?让我来解释一下。

### Linux 上的显示服务器协议

Linux 中有三种显示服务器协议,分别是 X11、Wayland 和 Mir。下面我就给大家简单介绍一下这些显示服务器。

#### X11

X11(也称 X)是已经存在多年的传统显示服务器。它是 Linux 发行版中最常用的显示服务器。

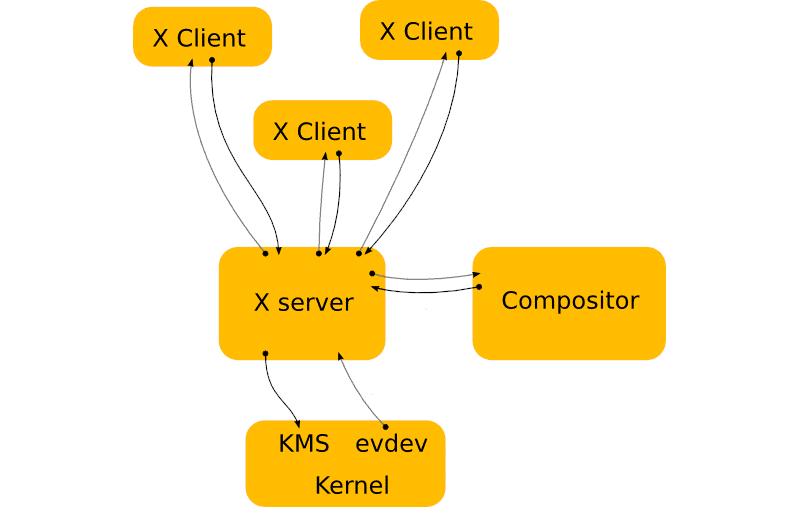

X11 通信协议,使用显示服务器 [X.org 服务器](https://en.wikipedia.org/wiki/X.Org_Server)。它接收来自[设备驱动程序](https://en.wikipedia.org/wiki/Device_driver)的输入事件,并将它们提供给它的一个客户端。

显示服务器也从客户端接收数据,它处理数据并进行合成,在 Linux 上,它将数据传递给三个内核组件之一:[DRM](https://en.wikipedia.org/wiki/Direct_Rendering_Manager)、[gem](https://en.wikipedia.org/wiki/Graphics_Execution_Manager) 或 [KMS 驱动](https://en.wikipedia.org/wiki/KMS_driver)。

[X.Org](http://X.Org) 服务器是一个显示服务器,它依靠第二个程序:[合成窗口管理器](https://en.wikipedia.org/wiki/Compositing_window_manager),来进行合成。例如 [Mutter](https://en.wikipedia.org/wiki/Mutter_(window_manager)) 或 [KWin](https://en.wikipedia.org/wiki/KWin)。GNOME 使用的是 Mutter。

#### Wayland

按照其网站的说法,Wayland “旨在作为 X 的更简单的替代品,更容易开发和维护”。

而事实上 [Wayland](https://wayland.freedesktop.org/) 就是现代的显示服务器,它应该取代传统的 X 显示服务器。

对它的采用还在普及中。Ubuntu 曾试图在 17.10 版本中改用 Wayland 作为默认的显示服务器,但这个尝试遭到了负面反馈。

很多 GUI 应用程序及其框架都依赖于 X 服务器。这些应用程序在 Wayland 上无法正常工作。

这迫使 Ubuntu 继续使用 X 作为默认显示服务器。它仍然提供了使用 Wayland 的选项,但不再是默认的了。

即使在今天,绝大多数的发行版都默认使用 X 显示服务器。

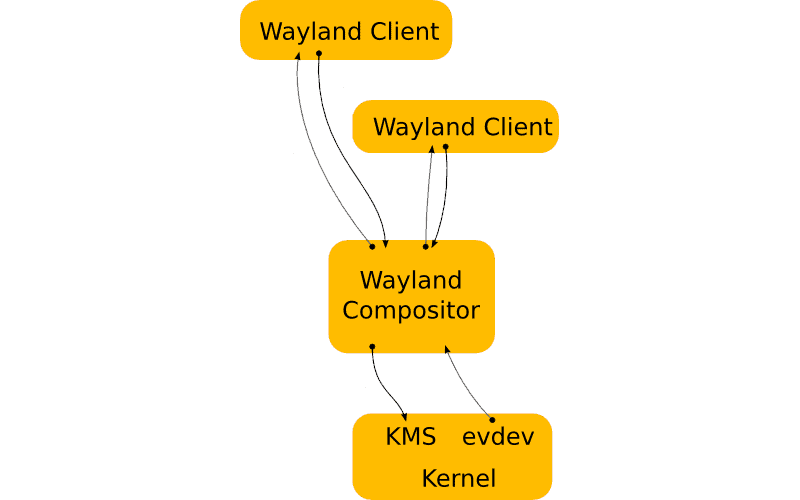

实施 Wayland 显示服务器协议的显示服务器,被称为 [Wayland 合成器](https://en.wikipedia.org/wiki/Wayland_compositor)。和 X11 上的一样,Wayland 合成器负责处理其客户端的输入和输出,但同时也进行[合成](https://en.wikipedia.org/wiki/Compositing),这与 X11 相反。

几个 Wayland 合成器是 [Weston](https://en.wikipedia.org/wiki/Weston_(software))、[Mutter](https://en.wikipedia.org/wiki/Mutter_(software))、[KWin](https://en.wikipedia.org/wiki/KWin) 或 [Enlightenment](https://en.wikipedia.org/wiki/Enlightenment_(software))。

#### Mir

[Mir 显示服务器](https://mir-server.io/)自带的 Mir 显示服务器协议,与 X11 和 Wayland 使用的协议不同。它是由 [Canonical](https://canonical.com/) 开发的,作为 Unity 开发的一部分,打算成为 [Ubuntu](https://itsfoss.com/install-ubuntu/) 的首选显示服务器。

但在 2017 年,它已经被 [Ubuntu] 桌面版的 Wayland 显示服务器所取代,不过 Mir 的开发还在继续,用于物联网(IoT)应用。

#### 为什么我们还在使用 Xorg?

Wayland 作为比较新的产品,相比 Xorg 来说,还不是很稳定。作为客户端的程序,必须知道如何与显示服务器进行通信。

因此,很多程序在使用 Wayland 时可能无法运行。Ubuntu 默认切换到 Wayland 的实验证实了这一点。

### 结束语

我希望你对 Linux 中的显示服务器概念有了更好的理解。我已经尽量不谈太多的技术细节,但我无法完全避免。

欢迎你的反馈和建议。

---

via: <https://itsfoss.com/display-server/>

作者:[Dimitrios Savvopoulos](https://itsfoss.com/author/dimitrios/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | In Linux related articles, news and discussions, you’ll often come across the term display server, Xorg, Wayland etc.

In this explainer article, I’ll discuss display servers in Linux.

## What is display server in Linux?

A display server is a program which is responsible for the input and output coordination of its clients, to and from the rest of the operating system, and among the hardware and the operating system. Basically, thanks to a display server, you can use your computer graphically (GUI). Without the display server, you would only be restricted to a command line interface ([TTY](https://itsfoss.com/what-is-tty-in-linux/)).

The display server provides the framework for a graphical environment so that you can use mouse and keyboard to interact with applications.

The display server communicates with its clients over the [display server protocol](https://en.wikipedia.org/wiki/X_Window_System_core_protocol), like X11. The display server is a key component in any graphical user interface, specifically the windowing system.

Don’t confuse display server with [desktop environment](https://itsfoss.com/what-is-desktop-environment/). The desktop environment uses display server underneath it.

Sounds familiar but it is not fully clear? Let me explain.

## Display server communications protocols in Linux

There are three display protocols available in Linux, the X11, Wayland and Mir. I’ll give you a brief introduction for these display servers.

### X11

The X11 (also refer as X) is the legacy display server that has been existed for years. It is the most common display server used in Linux distributions.

The X11 communication protocol, uses the [X.org Server](https://en.wikipedia.org/wiki/X.Org_Server) display server. It receives input events from [device drivers](https://en.wikipedia.org/wiki/Device_driver) and makes them available to one of its clients.

The display server also receives data from its clients, it processes the data and does the compositing and on Linux it passes the data to one of three kernel components – the [DRM](https://en.wikipedia.org/wiki/Direct_Rendering_Manager), [gem](https://en.wikipedia.org/wiki/Graphics_Execution_Manager) or [KMS driver](https://en.wikipedia.org/wiki/KMS_driver).

The X.Org Server is a display server that relies on a second program, the [compositing window manager](https://en.wikipedia.org/wiki/Compositing_window_manager), to do the compositing. Examples are [Mutter](https://en.wikipedia.org/wiki/Mutter_(window_manager)) or [KWin](https://en.wikipedia.org/wiki/KWin). GNOME uses Mutter.

### Wayland

As per its website, Wayland is “intended as a simpler replacement for X, easier to develop and maintain”.

And indeed [Wayland](https://wayland.freedesktop.org/) is the modern display server that is supposed to replace the legacy X display server.

Its adoption is still a work in progress. Ubuntu tried to switch to Wayland as the default display server with version 17.10 but the experiment met with negative feedback.

A lot of GUI applications and their frameworks depend on the X server. These applications didn’t work as intended on Wayland.

This forced Ubuntu to stay on X as default display server. It still provides the option to use Wayland but it is not default anymore.

An overwhelming majority of distributions use X display server by default even today.

Display servers that put into effect the Wayland display server protocol, are called [Wayland compositors](https://en.wikipedia.org/wiki/Wayland_compositor). Like any X11, a Wayland compositor is responsible for handling input and output for its clients but also does the [compositing](https://en.wikipedia.org/wiki/Compositing) – in contrast to X11.

A few Wayland compositors are [Weston](https://en.wikipedia.org/wiki/Weston_(software)), [Mutter](https://en.wikipedia.org/wiki/Mutter_(software)), [KWin](https://en.wikipedia.org/wiki/KWin) or [Enlightenment](https://en.wikipedia.org/wiki/Enlightenment_(software)).

### Mir

The [Mir display server](https://mir-server.io/) comes with its own Mir display server protocol which differs to those used by X11 and Wayland. It was developed by [Canonical](https://canonical.com/), as part of the development of Unity, and was intended to be the display server of choice for [Ubuntu](https://itsfoss.com/install-ubuntu/).

As of 2017, it has been replaced with the Wayland display server for desktop editions of Ubuntu, although Mir’s development continued for Internet of Things (IoT) applications.

### Why are we still using Xorg?

Wayland as it is relatively new, is not very stable yet, compared to Xorg. The programs, which act as clients, in order to communicate with a display server, must know how to do it.

For this reason, many programs might not run when using Wayland. Ubuntu’s experiment to switch to Wayland by default confirmed this.

### Conclusion

I hope you have a bit better understanding of display server concept in Linux. I have tried to not go into too much technical details but I could not avoid them altogether.

Your feedback and suggestion is welcome. |

12,591 | 通过集中日志记录来减少安全风险 | https://opensource.com/article/19/2/reducing-security-risks-centralized-logging | 2020-09-07T23:10:42 | [

"日志",

"安全"

] | https://linux.cn/article-12591-1.html |

>

> 集中日志并结构化待处理的日志数据可缓解与缺少日志相关的风险

>

>

>

日志记录和日志分析对于保护基础设施安全来说至关重要,尤其是当我们考虑到通用漏洞的时候。这篇文章基于我在 FOSDEM'19 上的闪电秀《[Let's use centralized log collection to make incident response teams happy](https://fosdem.org/2019/schedule/event/lets_use_centralized_log_collection_to_make_incident_response_teams_happy/)》,目的是提高大家对日志匮乏这种安全问题的重视,提供一种避免风险的方法,并且倡议更多的安全实践(利益声明: 我为 NXLog 工作)。

### 为什么要收集日志?为什么要集中日志记录?

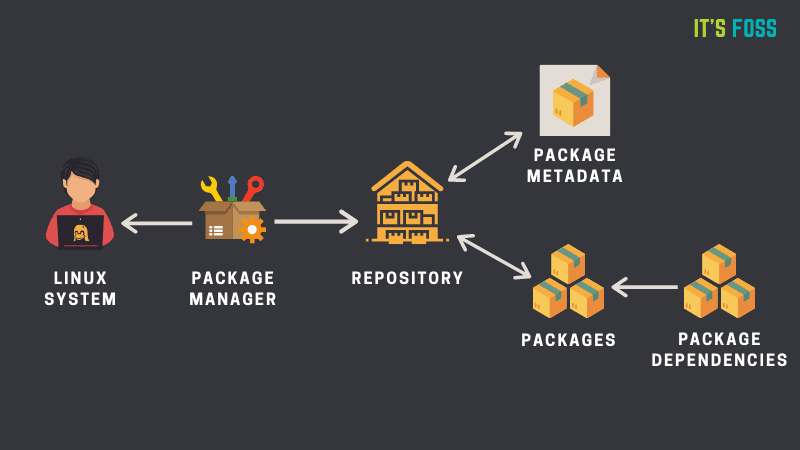

确切的说,日志是写入磁盘的仅追加的记录序列。在实际生活中,日志可以在你尝试寻找异常的根源时帮助你调查基础设施的问题。当你有多个使用自己的标准与格式的日志的异构系统,并且想用一种可靠的方法来接收和处理它们的时候,挑战就来临了。这通常以元数据为代价的。集中日志记录解决方案需要共性,这种共性常常会去除许多开源日志记录工具所提供的丰富的元数据。

### 日志记录与监控匮乏的安全风险

<ruby> 开源 Web 应用程序安全项目 <rt> Open Web Application Security Project </rt></ruby>([OWASP](https://www.owasp.org/index.php/Main_Page))是一个为业界贡献了许多杰出项目(包括许多专注于软件安全的[工具](https://github.com/OWASP))的非营利组织。OWASP 定期为应用开发人员和维护者报告最危险的安全挑战。在最新一版《[10 项最严重的 Web 应用程序安全风险](https://www.owasp.org/index.php/Top_10-2017_Top_10)》中,OWASP 将日志记录和监控匮乏加入了列表中。OWASP 警告下列情况会导致日志记录、检测、监控和主动响应的匮乏:

* 未记录重要的可审计性事件,如:登录、登录失败和高额交易。

* 告警和错误事件未能产生、产生不足或不清晰的日志信息。

* 日志信息仅在本地存储。

* 对于实时或准实时的主动攻击,应用程序无法检测、处理和告警。

可以通过集中日志记录(例如,不仅将日志本地存储)和结构化日志数据以进一步分析来缓解上述情形(例如,在告警仪表盘和安全套件中)。

举例来说, 假设一个 DNS 查询会导向名为 [hacked.badsite.net](http://hacked.badsite.net) 的恶意网站。通过 DNS 监控,管理员监控并且主动的分析 DNS 请求与响应。DNS 监控的效果依赖于充足的日志记录与收集来发现潜在问题,同样也依赖于结构化 DNS 日志的结果来进一步分析。

```

2019-01-29

Time (GMT) Source Destination Protocol-Info

12:42:42.112898 SOURCE_IP xxx.xx.xx.x DNS Standard query 0x1de7 A hacked.badsite.net

```

你可以在 [NXLog 社区版](https://nxlog.co/products/nxlog-community-edition/download) 中自己尝试一下这个例子,也可以尝试其他例子和代码片段。 (再次声明:我为 NXLog 工作)

### 重要的一点:非结构化数据与结构化数据

花费一点时间来考虑下日志数据格式是很重要的。例如,让我们来考虑以下日志消息:

```

debug1: Failed password for invalid user amy from SOURCE_IP port SOURCE_PORT ssh2

```

这段日志包含了一个预定义的结构,例如冒号前面的元数据关键词(`debug1`)然而,余下的日志字段是一个未结构化的字符串(`Failed password for invalid user amy from SOURCE_IP port SOURCE_PORT ssh2`)。因此,即便这个消息是人类可轻松阅读的格式,但它不是一个计算机容易解析的格式。

非结构化的事件数据存在局限性,包括难以解析、搜索和分析日志。重要的元数据通常以一种自由字符串的形式作为非结构化数据字段,就像上面的例子一样。日志管理员会在他们尝试标准化/归一化日志数据与集中日志源的过程中遇到这个问题。

### 接下来怎么做

除了集中和结构化日志之外,确保你收集了正确的日志数据——Sysmon、PowerShell、Windows 事件日志、DNS 调试日志、ETW、内核监控、文件完整性监控、数据库日志、外部云日志等等。同样也要选用适当的工具和流程来来收集、汇总和帮助理解数据。

希望这对你从不同日志源中集中日志收集提供了一个起点:将日志发送到仪表盘、监控软件、分析软件以及像安全性资讯与事件管理(SIEM)套件等外部源。

你的集中日志策略会是怎么样?请在评论中分享你的想法。

---

via: <https://opensource.com/article/19/2/reducing-security-risks-centralized-logging>

作者:[Hannah Suarez](https://opensource.com/users/hcs) 选题:[lujun9972](https://github.com/lujun9972) 译者:[leommxj](https://github.com/leommxj) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Logging and log analysis are essential to securing infrastructure, particularly when we consider common vulnerabilities. This article, based on my lightning talk * Let's use centralized log collection to make incident response teams happy* at FOSDEM'19, aims to raise awareness about the security concerns around insufficient logging, offer a way to avoid the risk, and advocate for more secure practices

*(disclaimer: I work for NXLog).*

## Why log collection and why centralized logging?

Logging is, to be specific, an append-only sequence of records written to disk. In practice, logs help you investigate an infrastructure issue as you try to find a cause for misbehavior. A challenge comes up when you have heterogeneous systems with their own standards and formats, and you want to be able to handle and process these in a dependable way. This often comes at the cost of metadata. Centralized logging solutions require commonality, and that commonality often removes the rich metadata many open source logging tools provide.

## The security risk of insufficient logging and monitoring

The Open Web Application Security Project ([OWASP](https://www.owasp.org/index.php/Main_Page)) is a nonprofit organization that contributes to the industry with incredible projects (including many [tools](https://github.com/OWASP) focusing on software security). The organization regularly reports on the riskiest security challenges for application developers and maintainers. In its most recent report on the [top 10 most critical web application security risks](https://www.owasp.org/index.php/Top_10-2017_Top_10), OWASP added Insufficient Logging and Monitoring to its list. OWASP warns of risks due to insufficient logging, detection, monitoring, and active response in the following types of scenarios.

- Important auditable events, such as logins, failed logins, and high-value transactions are not logged.

- Warnings and errors generate none, inadequate, or unclear log messages.

- Logs are only being stored locally.

- The application is unable to detect, escalate, or alert for active attacks in real time or near real time.

These instances can be mitigated by centralizing logs (i.e., not storing logs locally) and structuring log data for processing (i.e., in alerting dashboards and security suites).

For example, imagine a DNS query leads to a malicious site named **hacked.badsite.net**. With DNS monitoring, administrators monitor and proactively analyze DNS queries and responses. The efficiency of DNS monitoring relies on both sufficient logging and log collection in order to catch potential issues as well as structuring the resulting DNS log for further processing:

```

2019-01-29

Time (GMT) Source Destination Protocol-Info

12:42:42.112898 SOURCE_IP xxx.xx.xx.x DNS Standard query 0x1de7 A hacked.badsite.net

```

You can try this yourself and run through other examples and snippets with the [NXLog Community Edition](https://nxlog.co/products/nxlog-community-edition/download) *(disclaimer again: I work for NXLog).*

## Important aside: unstructured vs. structured data

It's important to take a moment and consider the log data format. For example, let's consider this log message:

`debug1: Failed password for invalid user amy from SOURCE_IP port SOURCE_PORT ssh2`

This log contains a predefined structure, such as a metadata keyword before the colon (**debug1**). However, the rest of the log field is an unstructured string (**Failed password for invalid user amy from SOURCE_IP port SOURCE_PORT ssh2**). So, while the message is easily available in a human-readable format, it is not a format a computer can easily parse.

Unstructured event data poses limitations including difficulty of parsing, searching, and analyzing the logs. The important metadata is too often set in an unstructured data field in the form of a freeform string like the example above. Logging administrators will come across this problem at some point as they attempt to standardize/normalize log data and centralize their log sources.

## Where to go next

Alongside centralizing and structuring your logs, make sure you're collecting the right log data—Sysmon, PowerShell, Windows EventLog, DNS debug, NetFlow, ETW, kernel monitoring, file integrity monitoring, database logs, external cloud logs, and so on. Also have the right tools and processes in place to collect, aggregate, and help make sense of the data.

Hopefully, this gives you a starting point to centralize log collection across diverse sources; send them to outside sources like dashboards, monitoring software, analytics software, specialized software like security information and event management (SEIM) suites; and more.

What does your centralized logging strategy look like? Share your thoughts in the comments.

## Comments are closed. |

12,592 | 研究人员表示,到 2245 年信息量可能占世界质量的一半 | https://www.networkworld.com/article/3570438/information-could-be-half-the-worlds-mass-by-2245-says-researcher.html | 2020-09-07T23:31:01 | [

"信息",

"质量"

] | https://linux.cn/article-12592-1.html |

>

> 根据一位理论物理学家的说法,由于创建和存储数字信息所使用的能源和资源数量,数据应该被视为物理的,而不仅仅是看不见的一和零。

>

>

>

一位大学学者建议,数字内容应该与气体、液体、等离子体和固体一样,被视为第五种物质状态。

英国朴茨茅斯大学高级讲师、发表在《AIP Advances》杂志上的《[信息灾难](https://aip.scitation.org/doi/10.1063/5.0019941)》一文的作者 Melvin Vopson 称,由于以物理和数字方式创建、存储和分发数据所使用的能量和资源,数据已经发生了演变,现在应该被视为质量。

Vopson 还声称,数字比特正在走向压倒地球的道路,最终将超过原子的数量。

给数字信息分配质量的想法建立在一些现有数据点的基础之上。Vopson 引用了 IBM 的一项估计,发现数据每天以 2.5 万亿字节的速度产生。他还将每英寸超过 1 <ruby> 太比特 <rt> terabit </rt></ruby>的数据存储密度考虑在内,将比特的大小与原子的大小进行比较。

假设数据生成量每年增长 50%,根据宣布 Vopson 研究的[媒体发布](https://publishing.aip.org/publications/latest-content/digital-content-on-track-to-equal-half-earths-mass-by-2245/),“比特的数量将在大约 150 年内等于地球上的原子数量。”

新闻稿中写道:“大约 130 年后,维持数字信息创造所需的动力将等于地球上目前产生的所有动力,到 2245 年,地球上一半的质量将转化为数字信息质量。”

Vopson 补充说,COVID-19 大流行正在提高数字数据创造的速度,并加速这一进程。

他警告说,一个饱和点即将到来:“即使假设未来的技术进步将比特大小降低到接近原子本身的大小,这个数字信息量所占的比重将超过地球的大小,从而导致我们所定义的‘信息灾难’。”Vopson 在[论文](https://aip.scitation.org/doi/full/10.1063/5.0019941)中写道。

“我们正在一点一点地改变这个星球,这是一场看不见的危机,”Vopson 说,他是希捷科技公司的前研发科学家。

Vopson 并不是一个人在探索,信息并不是简单的不可察觉的 1 和 0。根据发布的消息,Vopson 借鉴了爱因斯坦广义相对论中的质能对比;将热力学定律应用于信息的 Rolf Landauer 的工作;以及数字比特的发明者 Claude Shannon 的工作。

“当一个人将信息内容带入现有的物理理论中时,这几乎就像物理学中的一切都多了一个维度,”Vopson 说。

他的论文总结道,随着增长速度似乎不可阻挡,数字信息生产“将消耗地球上大部分的电力能源,从而导致道德和环境问题。”他的论文总结道。

有趣的是,除此以外,Vopson 还提出,如果像他所预测的那样,未来地球的质量主要由信息位组成,并且有足够的动力创造出来(不确定),那么“可以设想未来的世界主要由计算机模拟,并由数字比特和计算机代码主导,”他写道。

---

via: <https://www.networkworld.com/article/3570438/information-could-be-half-the-worlds-mass-by-2245-says-researcher.html>

作者:[Patrick Nelson](https://www.networkworld.com/author/Patrick-Nelson/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

12,594 | 为什么排序的复杂度为 O(N log N) | https://theartofmachinery.com/2019/01/05/sorting_is_nlogn.html | 2020-09-08T14:49:21 | [

"排序"

] | https://linux.cn/article-12594-1.html |

基本上所有正而八经的算法教材都会解释像<ruby> 快速排序 <rt> quicksort </rt></ruby>和<ruby> 堆排序 <rt> heapsort </rt></ruby>这样的排序算法有多快,但并不需要复杂的数学就能证明你可以逐渐趋近的速度有多快。

>

> 关于标记的一个严肃说明:

>

>

> 大多数计算机专业的科学家使用大写字母 O 标记来指代“趋近,直到到达一个常数比例因子”,这与数学专业所指代的意义是有所区别的。这里我使用的大 O 标记的含义与计算机教材所指相同,但至少不会和其他数学符号混用。

>

>

>

### 基于比较的排序

先来看个特例,即每次比较两个值大小的算法(快速排序、堆排序,及其它通用排序算法)。这种思想后续可以扩展至所有排序算法。

#### 一个简单的最差情况下的计数角度

假设有 4 个互不相等的数,且顺序随机,那么,可以通过只比较一对数字完成排序吗?显然不能,证明如下:根据定义,要对该数组排序,需要按照某种顺序重新排列数字。换句话说,你需要知道用哪种排列方式?有多少种可能的排列?第一个数字可以放在四个位置中的任意一个,第二个数字可以放在剩下三个位置中的任意一个,第三个数字可以放在剩下两个位置中的任意一个,最后一个数字只有剩下的一个位置可选。这样,共有 4×3×2×1=4!=24 种排列可供选择。通过一次比较大小,只能产生两种可能的结果。如果列出所有的排列,那么“从小到大”排序对应的可能是第 8 种排列,按“从大到小”排序对应的可能是第 24 种排列,但无法知道什么时候需要的是其它 22 种排列。

通过 2 次比较,可以得到 2×2=4 种可能的结果,这仍然不够。只要比较的次数少于 5(对应 2<sup> 5</sup> = 32 种输出),就无法完成 4 个随机次序的数字的排序。如果 `W(N)` 是最差情况下对 `N` 个不同元素进行排序所需要的比较次数,那么,

两边取以 2 为底的对数,得:

`N!` 的增长近似于 `N<sup> N</sup>` (参阅 [Stirling 公式](http://hyperphysics.phy-astr.gsu.edu/hbase/Math/stirling.html)),那么,

这就是最差情况下从输出计数的角度得出的 `O(N log N)` 上限。

#### 从信息论角度的平均状态的例子

使用一些信息论知识,就可以从上面的讨论中得到一个更有力的结论。下面,使用排序算法作为信息传输的编码器:

1. 任取一个数,比如 15

2. 从 4 个数字的排列列表中查找第 15 种排列

3. 对这种排列运行排序算法,记录所有的“大”、“小”比较结果

4. 用二进制编码发送比较结果

5. 接收端重新逐步执行发送端的排序算法,需要的话可以引用发送端的比较结果

6. 现在接收端就可以知道发送端如何重新排列数字以按照需要排序,接收端可以对排列进行逆算,得到 4 个数字的初始顺序

7. 接收端在排列表中检索发送端的原始排列,指出发送端发送的是 15

确实,这有点奇怪,但确实可以。这意味着排序算法遵循着与编码方案相同的定律,包括理论所证明的不存在通用的数据压缩算法。算法中每次比较发送 1 比特的比较结果编码数据,根据信息论,比较的次数至少是能表示所有数据的二进制位数。更技术语言点,[平均所需的最小比较次数是输入数据的香农熵,以比特为单位](https://en.wikipedia.org/wiki/Shannon%27s_source_coding_theorem)。熵是衡量信息等不可预测量的数学度量。

包含 `N` 个元素的数组,元素次序随机且无偏时的熵最大,其值为 `log<sub> 2</sub> N!` 个比特。这证明 `O(N log N)` 是一个基于比较的对任意输入排序的最优平均值。

以上都是理论说法,那么实际的排序算法如何做比较的呢?下面是一个数组排序所需比较次数均值的图。我比较的是理论值与快速排序及 [Ford-Johnson 合并插入排序](https://en.wikipedia.org/wiki/Merge-insertion_sort) 的表现。后者设计目的就是最小化比较次数(整体上没比快速排序快多少,因为生活中相对于最大限度减少比较次数,还有更重要的事情)。又因为<ruby> 合并插入排序 <rt> merge-insertion sort </rt></ruby>是在 1959 年提出的,它一直在调整,以减少了一些比较次数,但图示说明,它基本上达到了最优状态。

一点点理论导出这么实用的结论,这感觉真棒!

#### 小结

证明了:

1. 如果数组可以是任意顺序,在最坏情况下至少需要 `O(N log N)` 次比较。

2. 数组的平均比较次数最少是数组的熵,对随机输入而言,其值是 `O(N log N)` 。

注意,第 2 个结论允许基于比较的算法优于 `O(N log N)`,前提是输入是低熵的(换言之,是部分可预测的)。如果输入包含很多有序的子序列,那么合并排序的性能接近 `O(N)`。如果在确定一个位之前,其输入是有序的,插入排序性能接近 `O(N)`。在最差情况下,以上算法的性能表现都不超出 `O(N log N)`。

### 一般排序算法

基于比较的排序在实践中是个有趣的特例,但从理论上讲,计算机的 [CMP](https://c9x.me/x86/html/file_module_x86_id_35.html) 指令与其它指令相比,并没有什么特别之处。在下面两条的基础上,前面两种情形都可以扩展至任意排序算法:

1. 大多数计算机指令有多于两个的输出,但输出的数量仍然是有限的。

2. 一条指令有限的输出意味着一条指令只能处理有限的熵。

这给出了 `O(N log N)` 对应的指令下限。任何物理上可实现的计算机都只能在给定时间内执行有限数量的指令,所以算法的执行时间也有对应 `O(N log N)` 的下限。

#### 什么是更快的算法?

一般意义上的 `O(N log N)` 下限,放在实践中来看,如果听人说到任何更快的算法,你要知道,它肯定以某种方式“作弊”了,其中肯定有圈套,即它不是一个可以处理任意大数组的通用排序算法。可能它是一个有用的算法,但最好看明白它字里行间隐含的东西。

一个广为人知的例子是<ruby> 基数排序 <rt> radix sort </rt></ruby>算法,它经常被称为 `O(N)` 排序算法,但它只能处理所有数字都能放入 `k` 比特的情况,所以实际上它的性能是 `O(kN)`。

什么意思呢?假如你用的 8 位计算机,那么 8 个二进制位可以表示 2<sup> 8</sup>=256 个不同的数字,如果数组有上千个数字,那么其中必有重复。对有些应用而言这是可以的,但对有些应用就必须用 16 个二进制位来表示,16 个二进制位可以表示 2<sup> 16</sup>=65,536 个不同的数字。32 个二进制位可以表示 2<sup> 32</sup>=4,294,967,296 不同的数字。随着数组长度的增长,所需要的二进制位数也在增长。要表示 N 个不同的数字,需要 `k ≥ log<sub> 2</sub> N` 个二进制位。所以,只有允许数组中存在重复的数字时, `O(kN)` 才优于 `O(N log N)`。

一般意义上输入数据的 `O(N log N)` 的性能已经说明了全部问题。这个讨论不那么有趣因为很少需要在 32 位计算机上对几十亿整数进行排序,[如果有谁的需求超出了 64 位计算机的极限,他一定没有告诉别人](https://sortbenchmark.org/)。

---

via: <https://theartofmachinery.com/2019/01/05/sorting_is_nlogn.html>

作者:[Simon Arneaud](https://theartofmachinery.com) 选题:[lujun9972](https://github.com/lujun9972) 译者:[silentdawn-zz](https://github.com/silentdawn-zz) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Any decent algorithms textbook will explain how fast sorting algorithms like quicksort and heapsort are, but it doesn’t take crazy maths to prove that they’re as asymptotically fast as you can possibly get.

### A pedantic note about notation

Most computer scientists use big-O notation to mean “asymptotically equal, up to a constant scaling factor”, which isn’t quite what it means to other mathematicians. Sorry, I’ll use big-O like in CS textbooks, but at least I won’t mix it with other mathematical notation.

## Comparison-based sorting

Let’s look at the special case of algorithms that compare values two at a time (like quicksort and heapsort, and most other popular algorithms). The ideas can be extended to all sorting algorithms later.

### A simple counting argument for the worst case

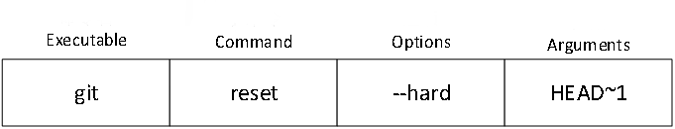

Suppose you have an array of four elements, all different, in random order. Can you sort it by comparing just one pair of elements? Obviously not, but here’s one good reason that proves you can’t: By definition, to sort the array, you need to how to rearrange the elements to put them in order. In other words, you need to know which permutation is needed. How many possible permutations are there? The first element could be moved to one of four places, the second one could go to one of the remaining three, the third element has two options, and the last element has to take the one remaining place. So there are possible permutations to choose from, but there are only two possible results from comparing two different things: “BIGGER” and “SMALLER”. If you made a list of all the possible permutations, you might decide that “BIGGER” means you need permutation #8 and “SMALLER” means you need permutation #24, but there’s no way you could know when you need the other 22 permutations.

With two comparisons, you have possible outputs, which still isn’t enough. You can’t sort every possible shuffled array unless you do at least five comparisons (). If is the worst-case number of comparisons needed to sort different elements using some algorithm, we can say

Taking a logarithm base 2,

Asymptotically, grows like (see also [Stirling’s

formula](http://hyperphysics.phy-astr.gsu.edu/hbase/Math/stirling.html)), so

And that’s an limit on the worst case just from counting outputs.

### Average case from information theory

We can get a stronger result if we extend that counting argument with a little information theory. Here’s how we could use a sorting algorithm as a code for transmitting information:

- I think of a number — say, 15

- I look up permutation #15 from the list of permutations of four elements

- I run the sorting algorithm on this permutation and record all the “BIGGER” and “SMALLER” comparison results

- I transmit the comparison results to you in binary code

- You re-enact my sorting algorithm run, step by step, referring to my list of comparison results as needed

- Now that you know how I rearranged my array to make it sorted, you can reverse the permutation to figure out my original array

- You look up my original array in the permutation list to figure out I transmitted the number 15

Okay, it’s a bit strange, but it could be done. That means that sorting algorithms are bound by the same laws as

normal encoding schemes, including the theorem proving there’s no universal data compressor. I transmitted one bit per

comparison the algorithm does, so, on average, the number of comparisons must be at least the number of bits needed to

represent my data, according to information theory. More technically, [the average number of comparisons must be at least

the Shannon entropy of my input data, measured in bits](https://en.wikipedia.org/wiki/Shannon%27s_source_coding_theorem). Entropy is a mathematical measure of the information

content, or unpredictability, of something.

If I have an array of elements that could be in any possible order without bias, then entropy is maximised and is bits. That proves that is an optimal average for a comparison-based sort with arbitrary input.

That’s the theory, but how do real sorting algorithms compare? Below is a plot of the average number of comparisons

needed to sort an array. I’ve compared the theoretical optimum against naïve quicksort and the [Ford-Johnson merge-insertion sort](https://en.wikipedia.org/wiki/Merge-insertion_sort), which was designed to

minimise comparisons (though it’s rarely faster than quicksort overall because there’s more to life than minimising

comparisons). Since it was developed in 1959, merge-insertion sort has been tweaked to squeeze a few more comparisons

out, but the plot shows it’s already almost optimal.

It’s nice when a little theory gives such a tight practical result.

### Summary so far

Here’s what’s been proven so far:

- If the array could start in any order, at least comparisons are needed in the worst case

- The average number of comparisons must be at least the entropy of the array, which is for random input

Note that #2 allows comparison-based sorting algorithms to be faster than if the input is low entropy (in other words, more predictable). Merge sort is close to if the input contains many sorted subarrays. Insertion sort is close to if the input is an array that was sorted before being perturbed a bit. None of them beat in the worst case unless some array orderings are impossible as inputs.

## General sorting algorithms

Comparison-based sorts are an interesting special case in practice, but there’s nothing theoretically special about

[ CMP](https://c9x.me/x86/html/file_module_x86_id_35.html) as opposed to any other instruction on a computer. Both arguments

above can be generalised to any sorting algorithm if you note a couple of things:

- Most computer instructions have more than two possible outputs, but still have a limited number

- The limited number of outputs means that one instruction can only process a limited amount of entropy

That gives us the same lower bound on the number of instructions. Any physically realisable computer can only process a limited number of instructions at a time, so that’s an lower bound on the time required, as well.

### But what about “faster” algorithms?

The most useful practical implication of the general bound is that if you hear about any asymptotically faster algorithm, you know it must be “cheating” somehow. There must be some catch that means it isn’t a general purpose sorting algorithm that scales to arbitrarily large arrays. It might still be a useful algorithm, but it’s a good idea to read the fine print closely.

A well-known example is radix sort. It’s often called an sorting algorithm, but the catch is that it only works if all the numbers fit into bits, and it’s really .

What does that mean in practice? Suppose you have an 8-bit machine. You can represent different numbers in 8 bits, so if you have an array of thousands of numbers, you’re going to have duplicates. That might be okay for some applications, but for others you need to upgrade to at least 16 bits, which can represent numbers distinctly. 32 bits will support different numbers. As the size of the array goes up, the number of bits needed will tend to go up, too. To represent different numbers distinctly, you’ll need . So, unless you’re okay with lots of duplicates in your array, is effectively .

The need for of input data in the general case actually proves the overall result by itself. That argument isn’t

so interesting in practice because we rarely need to sort billions of integers on a 32-bit machine, and [if anyone’s hit the limits of a 64-bit machine, they haven’t told the rest of us](https://sortbenchmark.org/). |

12,595 | 《代码英雄》第二季(2):Hello, World | https://www.redhat.com/en/command-line-heroes/season-2/hello-world | 2020-09-08T22:45:36 | [

"代码英雄",

"编程语言"

] | https://linux.cn/article-12595-1.html |

>

> 代码英雄讲述了开发人员、程序员、黑客、极客和开源反叛者如何彻底改变技术前景的真实史诗。

>

>

>

>

> **什么是《代码英雄》**

>

>

> <ruby> 代码英雄 <rt> Command Line Heroes </rt></ruby>是世界领先的企业开源软件解决方案供应商红帽(Red Hat)精心制作的原创音频播客,讲述开发人员、程序员、黑客、极客和开源反叛者如何彻底改变技术前景的真实史诗。该音频博客邀请到了谷歌、NASA 等重量级企业的众多技术大牛共同讲述开源、操作系统、容器、DevOps、混合云等发展过程中的动人故事。

>

>

>

本文是《[代码英雄](https://www.redhat.com/en/command-line-heroes)》系列播客[第二季(2):Hello, World](https://www.redhat.com/en/command-line-heroes/season-2/hello-world)的[音频](https://dts.podtrac.com/redirect.mp3/audio.simplecast.com/e6ef40b1.mp3)脚本。

>

> 导语:每一种新的编程语言的诞生,都是为了做一些以前无法完成的事情。如今,有非常多编程语言可以选择,但哪些才是你真正需要了解的?

>

>

> 本集将深入探讨编程语言的历史。我们将会了解到被称为 “神奇葛丽丝” 的天才 —— 海军少将葛丽丝·哈伯。多亏了她,开发者不再需要数学博士的学历就能使用机器代码编写程序。参与录制的还有 Jupyter 项目的 Carol Willing,她是 Python 基金会的前理事;以及《<ruby> 纽约时报杂志 <rt> New York Times Magazine </rt></ruby>》和《<ruby> 连线 <rt> Wired </rt></ruby>》的撰稿人 Clive Thompson,他最近正在撰写一本关于程序员如何思考的书。

>

>

>

**00:00:07 - 各种语言**:

“你好,世界。”

**00:00:12** - Saron Yitbarek\*\*:

你好,世界。现在我们发出的是信号还是噪音?我们日日夜夜无休止地写代码,做测试工作,我们追求的事情只有一个:我们是否传递了我们的信号?

**00:00:29 - 各种语言**:

你好。

**00:00:29 - Saron Yitbarek**:

或者我们的信号是不是丢失了?难道我们一直在制造无意义的杂音吗?

**00:00:40**:

我是 Saron Yitbarek,这是代码英雄第二季,来自红帽原创播客。今天的话题将在“翻译”中挖掘。这关于编程语言的故事:它们是怎么来的,如何选择其中一个语言来学习。我们将深入研究如何与机器对话。探究这些语言是如何演化的,以及我们如何用它们来让我们的工作变得更好。

**00:01:08 - Sari**:

Daisy,daisy,来告诉我你的正确回答。

**00:01:11 - Saron Yitbarek**:

如果你像我一样是一个开发者,你可能也经受着去精通多种语言的压力。最起码你得会 Java,更好一些得懂 Python、Go、JavaScript、Node,甚至能够用 C++ 思考。也许为了增加可信度,可能你还要熟悉古典的 COBOL。甚至你还得学点新东西,比如 Dart。实在太累了。

**00:01:36**:

奇怪的是,我们正在讨论的计算机只讲一种语言:机器语言,以二进制形式飘过的 1 和 0。说到底,我们学习的每个语言都是殊途同归。不管有多抽象,它都是用来简化我们工作的道具。

**00:02:03**:

这就是我希望你们记住的,故事开始了,从那一刻,那一个经典辉煌的时刻,一个女人说:“你知道么?我是人类,我不想用二进制来讨论思考,我就想用英语去编程。”

**00:02:22**:

在今天可能看来是一个简单的概念。但曾几何时,使用人类的方式与计算机对话这个主意 —— 说轻点是一个笑话,严重点就是亵渎。

**00:02:37**:

代码英雄第二季,将讲述成就我们基础的螺丝钉展开。这一讲的英雄是一个女主人公,如果你想要进一步了解故事,你必须知道她的经历。在此给你们隆重介绍:软件界的第一夫人。

>

> **00:02:58 - 发言人**:

>

>

> 尊敬的先生们和女士们,我非常荣幸的给你们介绍<ruby> 葛丽丝·玛丽·哈伯 <rt> Grace Mary Hopper </rt></ruby>准将,谢谢。

>

>

> **00:03:02 - 葛丽丝·哈伯**:

>

>

> 我曾到加拿大的圭尔夫大学演讲,那时候我必须在多伦多的机场办理入境手续。我把护照递给移民官,他打量了护照和我说:“你是在哪里工作的?”

>

>

> **00:03:17**:

>

>

> 然后我说,“美国海军。”

>

>

> **00:03:20**:

>

>

> 她再次深深的打量我一下。然后他说:“你一定是海军里最老的。”

>

>

>

**00:03:28 - Saron Yitbarek**:

她就是 1985 年担任海军少将的葛丽丝·哈伯。在麻省理工学院发表了著名的演讲时,她 79 岁。即使在那时,她也是一个传奇人物。她是独立编程语言之母,实现通过编译器,用人类语言替代数学符号编程这一伟大成就的女性。

**00:03:51**:

她获得了国家技术奖章,她去世后(1992 年),被追授国家自由勋章。以上记录纯属真实,他们称她w为“神奇葛丽丝”。

**00:04:03 - Claire Evans**:

如果有人能够被称为天生的程序员,那么这个人一定是葛丽丝。

**00:04:06 - Saron Yitbarek**:

这是 Claire Evans,一个科技记者和《<ruby> 宽带 <rt> Broad Band </rt></ruby>》一书的作者,而这本书讲述了科技领域的女性先锋。Evans 描绘了早期的计算机世界,在 1940 年,当葛丽丝·哈伯还是一个年轻的女孩时,她加入了美军的海军预备役部队。当时的电脑有一个小房子那么大。

**00:04:25 - Claire Evans**:

早期的那些程序员,他们就像一个圣职者。他们是一群擅长做枯燥乏味事情的人,因为那会儿没有编译器,没有编程语言,人们是确实是一个比特一个比特地对机器编程。

**00:04:42**:

想要改变这种现实,你真的必须是一个特别的人,而葛丽丝真的就是那种人。

**00:04:49 - Saron Yitbarek**:

现在,她意识到让人类使用机器语言的是一种多么疯狂的限制。就像走路的时候,去告诉你身体的每个原子该怎么做。这是可能的吗?是,效率呢?不高。

**00:05:06**:

在程序员的意图和计算机的行为之间一定有一条捷径。自从有了算盘,人类一直在将数学思维机械化。一定有一种方法可以在计算机上再次实现这一点。

**00:05:19 - Claire Evans**:

在过去,数学家必须做出所有的步骤。所有这些枯燥的,一步一步的工作都是为了得到一个真实而有趣的答案。然后有了计算机后,它尽可能地承担了这些机械的运算。所以解放了数学家去进行高层面的、更智慧的、面向系统的思考。

**00:05:39**:

只不过,事实并没有如此发展。有了计算机,数学家就变成了程序员。他们又一次被这些枯燥无味的编程所束缚,做着这些一点一滴的繁琐的规则导向的小计算。

**00:05:53**:

因此,我认为,葛丽丝的期望是,她希望计算机能够辅助数学家,然后数学家就可以去更进行宏大的思考,做更伟大的事情,而不至于让他们陷入细节之中。

**00:06:12 - Saron Yitbarek**:

葛丽丝也加入了思想家的行列。我们都知道,<ruby> 帕斯卡 <rt> Pascal </rt></ruby>在 17 世纪发明了第一台计算器。<ruby> 巴比奇 <rt> Babbage </rt></ruby>在 19 世纪发明了分析机。这些伟大的杰作是发明家们解放人类的大脑的创作。这些发明让我们处理数据的能力空前绝后。这就是葛丽丝踏入的领域,发明了所有人都认为不可能的东西。

**00:06:42 - Claire Evans**:

我觉得,葛丽丝的想法是,人们应该用人类语言与计算机沟通。这个语言必须独立于机器的,因此不同计算机可以交互操作,这在当时是革命性的。

**00:06:59**:

早期她称这个为 “自动化编程”,在程序员和计算机人的群体中,被认为是像太空学员一样。

**00:07:12**:

而那些迟迟不上岸的人们被看成了尼安德特人,这在当时是计算机界的一个巨大的分歧。

**00:07:21 - Saron Yitbarek**:

葛丽丝要说服她的上级跨越这个分歧并不容易。但她认为这是她的责任,她必须尝试。

>

> **00:07:30 - 葛丽丝·哈伯**:

>

>

> 这里总是有一条线,那条线代表了你的老板在那个时候会相信什么。当然,如果你过线了是得不到预算的。所以你对那条线有双重责任:不要踩过它,但也要不断教育你的老板,让你把这条线移得更远,这样下次你就能走得更远。

>

>

>

**00:07:52 - Saron Yitbarek**:

她推动的那个未来,就是我们的现在。几乎你我依赖的每一种语言都归功于她和她的第一个编译器。因此太空学员们活了下来,尼安德特人灭亡了。

**00:08:07**:

写代码不用数字,而是用人类风格的语言是她的观点。你敲下“执行操作 8”,或“关闭 文件 C”的精简操作,才是符合人类习惯的编程。

**00:08:21 - Claire Evans**:

葛丽丝独特地意识到,计算将成为改变世界的事情。但是如果没有人知道如何接触并使用计算机,那么它就不会成为改变世界的事情。因此她想确保它对尽可能多的人开放,尽可能多的人可以使用。

**00:08:37 - Claire Evans**:

这就需要编程在可理解性和可读性上做一些妥协。因此最终创造一个编程语言的目标就是给机器提供更多切入点,让它脱离这个神职,让它的开发面向大众和未来新一代。

**00:08:59 - Saron Yitbarek**:

我想在这里打断并强调下 Claire 的说法:现在我们所已知的编程语言,都来源于科技开放的愿望。这让计算机不再是数学博士们的专属玩具,让编程开发变得更容易。

**00:09:14**:

编译器所有工作的本质,是让程序变得更好理解,更有人性。

**00:09:21**:

Claire 有一个猜测,为什么葛丽丝是做出改变的那个人,这与她在二战期间的工作有关。

**00:09:29 - Claire Evans**:

她的工作是解决扫雷问题、弹道问题和海洋学问题。她用很多不同的、交叉的学科来模拟战争里的所有的暴力、混乱和现实的灾难,并将他们转化成在 Mark I 计算机上运行的程序。

**00:09:45**:

她知道如何在语言之间去做翻译转换。我的意思不是说计算机语言,是人类语言。她知道如何倾听一个提出复杂问题的人的意见,并尝试理解问题的背景,其信息和所涉及的专业规律,然后将这些转为计算机可以理解的程序。

**00:10:06**:

从这个角度看她如同早期的编译器。就像一个人类版本的编译器,因为她知道你必须理解他们才能满足他们的需求。

**00:10:17 - Saron Yitbarek**:

编译器干的事情就是一个解释和理解。我觉得我们应该在学习新语言,或想知道为什么有一些东西根本不能编译的时候牢记这个理念。编译器的工作就是满足你使用生活中说的语言来编程。

**00:10:32**:

葛丽丝知道人类一旦可以学会讲编程语言,而编译器可以将我们的意图转换为机器语言,就像为洪水打开了大门一样。

**00:10:43 - Claire Evans**:

她知道如果计算机太孤立和太具体,就不会发展为一个产业,从而成为改变世界的力量。也就是说计算机的从业者,可以让提出问题的人跟机器直接沟通。

**00:11:00**:

因此她真的是一个善于人类语言翻译的专家,至少我是这么想的。这使她有独一无二的机会,去思考和创建编程语言。

**00:11:15 - Saron Yitbarek**:

葛丽丝对英文式数据处理语言的研究最终演变成了 COBOL,它在某种意味上很不错。因为它不浮华,很适合商务用途,葛丽丝·哈伯也是这样的人。

**00:11:28**:

从某种角度看,她给了我们一个很像她的语言。像葛丽丝一样,COBOL 语言也很长寿,它现在已经 60 多了。

**00:11:50**:

葛丽丝的编译器就像一个<ruby> 巴别鱼 <rt> babelfish </rt></ruby>,在程序员和机器之间充当翻译,不过它们翻译的都是高度概括性的语言。

**00:12:03**:

然后,几十年之后,另一个重要的思潮在语言界兴起。想象下画面:自由软件社区在 1980 年出现,但是 Unix 的替代品 GNU 被开发出来后,却没有任何自由开放的编译器随之出现。

**00:12:22**:

为了让 GNU 给我们提供一个真正的开源 UNIX 替代品,为了让编程语言在开源世界蓬勃发展,社区需要找来一个格蕾丝·哈伯 —— 我们需要一个开源编译器。

**00:12:38**:

这是 Langdon White,红帽的平台架构师,来讲讲他对这个事情的理解。

**00:12:45 - Langdon White**:

在 80 年代,你要买一个编译器很轻松就要花上上万块钱。费用是最大的问题,我没有多余的钱去给自己买编译器。再一个事实是,我必须为所有我想要的目标平台买一个对应的编译器。那个时代大部分是 Unix 平台,但是细节和风格各不相同。

**00:13:06**:

因此你就不能买一个,你需要为不同的架构,不同的供应商购买多个编译器。

**00:13:14 - Saron Yitbarek**:

Langdon 指出这不仅仅是成本问题,在一些情况下,对编码工作也带来了问题。

**00:13:21 - Langdon White**:

大家都没有意识到,你如何用很特殊的方式来组织你的代码是很重要的。做嵌套 for 循环或者做嵌套 while 循环之类的事情可能是可以的,这取决于编译器。

**00:13:38**:

因此,大家都应该明白,如果你不知道编译是如何优化你的代码的,就很难知道如何写出最优的代码。

**00:13:49 - Saron Yitbarek**:

必须要提的是,我们需要一个免费的、可获得的、可值得信赖的编译器。这就是 GNU C 语言编译器:GCC。它横空出世在 1987 年,它是格蕾丝·哈伯的编译器革命和自由软件运动的结合。

**00:14:12**:

它是使编译更标准化,从而让所有人可以编译别人写的代码。我们编程语言的丰富性要归功于它。

**00:14:22 - Carol Willing**:

GCC 是开放的,可以说将编程语言推向一个更高的层次。

**00:14:29 - Saron Yitbarek**:

这是 Jupiter 项目成员 Carol Willing,她是 Python 软件基金会的前理事。

**00:14:35 - Carol Willing**:

人们开始意识到,专有的语言会在特定时间内被服务于一个目的,但并不能得到开发者社区的认可和热爱。因为如果你是一个开发者,你希望学习最常用的,以及未来最流行的东西。

**00:14:59**:

我没必要去发展一种将我锁定在一种技术上的技能。我想 Python 成功的一个原因是因为它是开源的,它有非常简洁的语法。

**00:15:11**:

它的特点就是允许人们用常见的方法,快速高效地解决问题,也允许大家合作解决问题。我想这就是好的程序和代码库的标志:如果你可以用最小的工作量完成你的工作,并且与他人分享,这是确实很棒的事情。

**00:15:35 - Saron Yitbarek**:

这么多年过去了,GCC 逐渐的支持了 Java、C++、Ada 和 Fortran 语言,我还可以继续说说它的进步。

**00:15:43 - Carol Willing**:

通过像 GCC 这样的通用底层接口,人们可以根据自己的特殊需求来定制语言。例如,在 Python 的世界里,有大量的库,甚至具体到科学 Python 世界里,我们有 numpy,还有 scikit-image、scikit-learn 这样的东西。

**00:16:08**:

每个库都是为一个特定目的而工作。因此,我们也看到了生物信息学和自然语言处理之类的东西。而人们可以在一个共同的基础上,做出很多不同的事情。而且可以把它们放到编程语言或库里,使他们能够在他们特定的行业或领域中优化问题的解决。

**00:16:42 - Saron Yitbarek**:

因此,这就是编译器技术一头撞进开源运动的结果吧?随着时间的推移,这种不同技术的碰撞,爆炸般地创造了一个新的、被社区支持的语言,大家都可以学习和完善它。

**00:16:58**:

现在有成百上千的编程语言存活着。

**00:17:03 - Carol Willing**:

随着开源软件越来越流行和被广泛接受,我们看到了编程语言的大量激增。现在有大量围绕着手机技术的编程语言,不同的编程语言也让游戏开发更加简单快速便捷。各种用途的语言,如 Python 和 Ruby,它们算是现代网页开发和交付网页应用和网站的基础。

**00:17:34 - Saron Yitbarek**:

这个队伍还会继续壮大。是的,我们建造的这个巴别塔在未来会更加拥挤。但是你可以把它当作一个聚宝盆,一个语言的盛宴。下面我们将会帮你们梳理这个盛宴。

**00:17:55**:

现在我们已经知道编程语言泛滥的原因了。但是这些对我们有什么具体的意义?我们如何选择对我们重要的语言呢?这是个大问题,因此我们找了一些人帮忙:Clive Thompson,是最好的作家之一,他能让科技世界变得更有意义。他是《连线》的专栏记者,《纽约时报》杂志的特约撰稿人,他现在正在写一本关于计算机程序员心理学的书。

**00:18:24**:

当我们挑选我们想要学习的编程语言时,我们需要知道我们到底想要什么。

**00:18:31**:

这是我和 Clive 反复讨论得出的结论。

**00:18:35**:

当我作为一个开发新人第一次入手的时候,我就说:“让我选一个最好的编程语言,然后掌握并熟练运用它,不就完事了么。”

**00:18:44**:

不过事情不会这样简单,否则为什么有那么多的编程语言呢?

**00:18:49 - Clive Thompson**:

每个语言都有那么点它的特长。因此通常来说,有人创造一个新语言是因为现有的语言满足不了他们的需求。JavaScript 就是一个典型的例子。

**00:19:03**:

<ruby> 网景公司 <rt> Netscape </rt></ruby>曾经在 90 年代中开发了一款浏览器,所有的网站管理员想做一些更具交互性的事情。他们希望有一种方法,使其可以在网站上运行一些脚本。

**00:19:16**:

当然这些需求都提给了网景。然后他们说:“好吧,现在我们没有可以做到这一点的办法,我们需要一个可以集成到我们浏览器的脚本语言。”

**00:19:25**:

于是他们雇佣了 Brendan Eich,一个被认为很资深的家伙。他当时 32 岁,其他人 21 岁的样子。

**00:19:32 - Saron Yitbarek**:

这在开发者圈里就很资深了

**00:19:35 - Clive Thompson**:

他们给他 10 天时间开发 JavaScript。因此他真的就 10 天没有睡觉,然后疯狂地捣鼓出一个脚本语言。它看起来有点挫,有点糟,但是可以运行。它允许人们做一些简单的事情,它还有按钮,对话框,弹框和其他东西。

**00:19:54**:

这就是造一个编程语言的例子,用来做在当时没有办法完成的事情。

**00:20:01 - Saron Yitbarek**:

是不是很有意思。

**00:20:03 - Clive Thompson**:

这就是为什么存在这么多编程语言,人们不断进取,去做别人做不到的事。

**00:20:11 - Saron Yitbarek**:

这也是为什么我对开发者和编程语言之间的关系很感兴趣,我们对这些工具有很强的依赖性。为什么呢?

**00:20:22 - Clive Thompson**:

有几个原因。一个是有时你学习你的第一个编程语言完全是一场意外,有点像你的初恋。

**00:20:30**:

我觉得不同性格的人,也会匹配不同类型的编程语言。例如 Facebook 是用 PHP 设计的。PHP 是有点像毛球,它很不规则,它的成长断断续续,Facebook 也有点这种感觉。

**00:20:49**:

与此相反,来自谷歌的伙计决定:“我们想要一个超高性能的语言,因为在谷歌我们的东西都高速运行着,维护着万亿级的用户吞吐。”

**00:21:02**:

因此他们决定开发一个 Go 语言,Go 非常适合高性能计算。

**00:21:08 - Saron Yitbarek**:

让我们深入一点。作为一个开发者,我有自己的个性,我是不是对一部分编程语言有天然的喜欢呢?或者我工作用的编程语言可能会影响我的个性?

**00:21:25 - Clive Thompson**:

两者肯定都存在。但我确实认为当你遇到你喜欢的编程语言的时候,有一种强烈的共鸣感。你上计算机课程时学习了必修语言:他们都教 Java,有时会多教一门 JavaScript 或 Python。

**00:21:46**:

问题是,你被强制要求,所以你学了它。但当你有选择余地时会怎么样呢?这就是你真正看到一个人的那种情感或心理风格是如何倾向于一门语言的地方,因为我和一个人谈过,他试着学了一堆 JavaScript。

**00:22:03**:

这是个看起来有点丑的语言,它简直是花括号的噩梦。所以他试了又试,试了又试,失败了又失败。直到有一天他看到朋友在用 Python。在他看起来,这是多么的纯净和美妙的语言。因为他是个作家,而 Python 被认为是一个书写型的编程语言,缩进使一切都易于阅读。

**00:22:28 - Clive Thompson**:

它和他一拍即合,主要在于 Python 的工作方式和外观,漂亮的排版打动了他。

**00:22:39 - Saron Yitbarek**:

和 Clive 的交流让我意识到,有一些编程语言是硬塞给我们的。我说的是那些古老的编程语言,已经类似于拉丁语了。

**00:22:53**:

其实我们也有很多选择,去选择适合我们个性的编程语言。如果你想知道最新的东西有哪些,你去问问开发者们周末在用什么就知道了。

**00:23:05**:

下面是我们对话的更多内容:

**00:23:08 - Clive Thompson**:

当我问人们:“你闲暇的时候做什么?”回答肯定是些稀奇古怪的东西。我想实际上其中一个好的,最值得称颂的开发者的特点,就是他们是富有好奇心。

**00:23:22**:

我知道有人会说,“我要学习 Erlang了,就是为了好玩。”

**00:23:26 - Saron Yitbarek**:

我尝试想象人们在周末开发这些项目,项目不是最重要的,他们在学习工具,编程语言才是重要的事情。这才是他们真的想要的状态。

**00:23:41 - Clive Thompson**:

确切的说,你将看到为什么大家不停的重复迭代开发这些日历和待办事项,因为这是一个快速的学习方法。但需要指出的是,编程语言做了什么以及如何工作都与我们没关系,我只需要尽可能的构建我的东西。

**00:23:56**:

他们想知道用那样的编程语言思考是什么感觉。是不是会感觉很轻松、刺激、游刃有余?我目前的使用的语言没这个感觉,是不是入门之后一切事情都变得简单了?

**00:24:13 - Clive Thompson**:

很有可能你遇到一个新语言后会非常兴奋,想起来都很兴奋。

**00:24:20 - Saron Yitbarek**:

我是一个 Ruby 从业者,作为 Ruby on Rails 开发者我非常自豪,我想大概是两年前,另一个非常著名的 Ruby 开发者,Justin Serrals 做了一个非常好的演讲,倡导编程语言从实用角度出发,没有必要那么性感。

**00:24:41**:

他的观点是,Ruby 之所以是一个令人兴奋的编程语言的原因在于它很新,而且差不多几年前都已经得到印证了,它已经不需要更新什么了。它已经是一个稳定、成熟的编程语言了。不过因为它的成熟,开发者对它也就不那么兴奋,我们逐步把目光转移到新的花样来了。

**00:25:05**:

从某个意义上说,很有可能我们的好奇心会扼杀了一个编程语言,或者让它变得庸俗,而这与编程语言本身的好坏没有关系。

**00:25:18 - Clive Thompson**:

我认为这是完全正确的。事实上,你看到的开发者这个极度好奇心和自学欲望的特点,会让他们不断地寻找新鲜事物。并用这种语言去复制其他语言已经基本做得很好的东西。

**00:25:37 - Saron Yitbarek**:

是的。

**00:25:37 - Clive Thompson**:

就是这样,好奇心有好处,同时也是一个陷阱。

**00:25:43 - Saron Yitbarek**:

是的,说的很好。

**00:25:49**:

有时我们的好奇心可能是一个陷阱,但是它也是编程语言革命的动力。开发每个新编程语言的时候他们会说,“有没有别的可能?”它们出现的原因在于,开发人员想要做不一样的事情。

**00:26:06**:

我们想要全新的表达方式。

>

> **00:26:08 - 葛丽丝·哈伯**:

>

>

> 我向你们承诺一些事情。

>

>

>

**00:26:11 - Saron Yitbarek**:

我想葛丽丝·哈伯想在这儿最后讲两句。

>

> **00:26:15 - 葛丽丝·哈伯**:

>

>

> 在未来 12 个月里,你们谁要是说我们一直都是这么做的,我将会瞬间实质化在你的旁边,然后我 24 小时围绕着你。我倒是要看看我能不能引起你的注意。我们不能再相信那句话,这是很危险的。

>

>

>

**00:26:34 - Saron Yitbarek**:

葛丽丝的故事和第一款编译器提醒我们,只要能可以找到表达方法,我们总有更好的办法做事情。

**00:26:43**:

不管编程语言有多抽象,不管我们在机器的 1 和 0 高位还是低位浮动,我们都需要确保它是一个明智选择。我们选择一个语言,是让它帮助我们的想法更容易表达出来。

**00:27:03**:

如果你想更深一步学习编程语言和和编译器,你不会很孤独,我们收集了一些很有用的文档帮你深入学习。敬请在我们节目下面留言。请查看 [redhat.com/commandlineheroes](http://redhat.com/commandlineheroes)

**00:27:20**:

下一期节目,我们将关注开源贡献者的艰辛之路。那些维护者的真实奋斗生活是什么样的?我们如何提出第一个拉取请求?

**00:27:32**:

我们将带你们简单认识开源贡献。

**00:27:39**:

代码英雄是红帽的原创播客。你可以在苹果播客、谷歌播客以及其他可能的地方免费收听这个节目。

**00:27:48**:

我是 Saron Yitbarek,坚持编程,下期再见。

>

> **什么是 LCTT SIG 和 LCTT LCRH SIG**

>

>

> LCTT SIG 是 LCTT <ruby> 特别兴趣小组 <rt> Special Interest Group </rt></ruby>,LCTT SIG 是针对特定领域、特定内容的翻译小组,翻译组成员将遵循 LCTT 流程和规范,参与翻译,并获得相应的奖励。LCRH SIG 是 LCTT 联合红帽(Red Hat)发起的 SIG,当前专注任务是《代码英雄》系列播客的脚本汉化,已有数十位贡献者加入。敬请每周三、周五期待经过我们精心翻译、校对和发布的译文。

>

>

> 欢迎[加入 LCRH SIG](/article-12436-1.html) 一同参与贡献,并领取红帽(Red Hat)和我们联合颁发的专属贡献者证书。

>

>

>

---

via: <https://www.redhat.com/en/command-line-heroes/season-2/hello-world>

作者:[Red Hat](https://www.redhat.com/en/command-line-heroes) 选题:[bestony](https://github.com/bestony) 译者:[guevaraya](https://github.com/guevaraya) 校对:[acyanbird](https://github.com/acyanbird),[wxy](https://github.com/wxy)

本文由 [LCRH](https://github.com/LCTT/LCRH) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Every new programming language is created to do something previously impossible. Today, there are quite a few to choose from. But which ones do you really need to know?

This episode dives into the history of programming languages. We recognize the genius of “Amazing Grace,” also known as Rear Admiral Grace Hopper. It’s thanks to her that developers don’t need a PhD in mathematics to write their programs in machine code. We’re joined by Carol Willing of Project Jupyter, former Director of the Python Software Foundation, and Clive Thompson, a contributor to The New York Times Magazine and Wired who’s writing a book about how programmers think.

**00:07** - *Multiple voices*

Hello world.

**00:12** -*Saron Yitbarek*

Hello world. Is it signal, or is it noise? All the work we do as developers, all the countless hours of coding and stressing and testing, it all comes down to that one question: are we getting our signal across?

**00:29** - *Multiple voices*

Hello.

**00:29** - *Saron Yitbarek*

Or is our signal lost? Are we just making noise.

**00:40** - *Saron Yitbarek*

I'm Saron Yitbarek and this is season two of Command Line Heroes, an original podcast from Red Hat. In today's episode we're found in translation. It's all about programming languages: where they came from, and how we choose which ones to learn. We're doing a deep dive into the ways we talk to our machines. How those languages are evolving. And how we can use them to make our work sing.

**01:08** - *Siri*

Daisy, daisy, give me your answer true.

**01:11** - *Saron Yitbarek*

If you're a developer like me, you probably feel pressure to be a polyglot, to be fluent in a whole bunch of languages. You better know Java. Better know Python. Go JavaScript, Node. Gotta be able to dream in C++. Maybe know a classic like COBOL, just for cred. And you might even be worrying about a newcomer, like Dart... It's exhausting.

**01:36** - *Saron Yitbarek*

The weird thing is, the computers that we're talking to really only speak one language: machine language. All those ones and zeroes flying by, all those bits. Every single language we learn is, at the end of the day, just another pathway to the same place. It all gets boiled down, no matter how abstracted we are when we're doing the work.

**02:03** - *Saron Yitbarek*

And that's what I want you to keep in mind as our story begins, because we're starting with the moment, the completely brilliant moment, when one woman said: "You know what? I'm a human. I don't talk in bits, I don't think in bits. I want to program using English words."

**02:22** - *Saron Yitbarek*

Might seem like a simple concept today, but once upon a time, that idea, the idea you should be able to talk to a computer in your own way was a joke at best, sacrilege at worst.

**02:37** - *Saron Yitbarek*

Season Two of Command Line Heroes is all about the nuts and bolts that underpin everything we do. And this episode's hero is a woman whose journey you need to know if you want to fully understand our reality. So, I give you: the first lady of software.

**02:58** - *Speaker 5*

Ladies and gentlemen, it's with great pleasure that I present Commodore Grace Mary Hopper. Thank you.

**03:02** - *Grace Hopper*

So I went to Canada to speak at the University of Guelph, and I had to go through immigration at Toronto Airport. And I handed my passport to the immigration officer and he looked at it and looked at me and said, "What are you?"

**03:17** - *Grace Hopper*

And I said, "United States Navy."

**03:20** - *Grace Hopper*

He took a second real hard look at me. And then he said, "You must be the oldest one they've got."

**03:28** - *Saron Yitbarek*

That was Rear Admiral Grace Hopper in 1985. She was 79-years old at the time and delivering her famous lecture at M.I.T.. Even then, Grace Hopper was a legend. She was the god mother of independent programming languages. The woman who used compilers so we could use human language instead of mathematical symbols.

**03:51** - *Saron Yitbarek*

She received the National Medal of Technology, and after she died, the National Medal of Freedom. So: not a slouch. They called her " Amazing Grace."

**04:03** - *Claire Evans*

If anybody was born to be a computer programmer, it was Grace.

**04:06** - *Saron Yitbarek*

That's Claire Evans, a tech journalist and the author of a book called Broad Band, about pioneering women in tech. Evans describes that early word of computers, the one Grace Hopper stepped into in the 1940s when she was a young woman in the Navy Reserves and computers were the size of a small room.

**04:25** - *Claire Evans*

All those early programmers, they were like a priesthood. They were people that were specifically good at something that was incredibly tedious, because this is before compilers, before programming languages, when people were really programming at the machine level, bit by bit.

**04:42** - *Claire Evans*

In order to have the constitution to do that kind of thing, you really have to be a specific kind of person, and Grace really was that kind of person.

**04:49** - *Saron Yitbarek*

Right away, she saw how crazy limiting it was for humans to be using a language meant for machines. It was like trying to walk down the street by telling every atom in your body what to do. Possible? Sure. Efficient? No.

**05:06** - *Saron Yitbarek*

There had to be a shortcut between a programmer's intentions and a computer's actions. Humans had been mechanizing mathematical thinking ever since the abacus. There had to be a way to do that again with computers.

**05:19** - *Claire Evans*

In the past, mathematicians used to have to do all this arithmetic. All this tedious step-by-step work in order to get to the really interesting solutions. And then computers came along and offered the possibility of doing that arithmetic by machine, therefore freeing the mathematician to think lofty, intellectual, systems-oriented thoughts.

**05:39** - *Claire Evans*

Except for, that's not really what happened. Ultimately, the computer came along and then the mathematician had to become a programmer. And then they were stuck once again doing all these bit-by-bit tedious rule-oriented, little calculations in order to write the programs.

**05:53** - *Claire Evans*

So, I think, Grace's perspective on it was she wanted to make sure that computers could assist mathematicians to think great thoughts and do great things without bogging them down in the details.

**06:12** - *Saron Yitbarek*

Hopper was joining a long line of thinkers. You've got Pascal in the 17th century with the first calculator. You've got Babbage in the 19th century with the analytical engine. There's this big, beautiful line of inventors who want to build things to supercharge the human brain. Things that let us process more data than we could ever manage on our own. That's the lineage Grace Hopper was stepping into when she invented something that everybody thought was impossible.

**06:42** - *Claire Evans*

I mean, Grace's idea that people should be able to communicate with their computers using natural language, and that language should be machine independent, therefore interoperable between different computing machines was revolutionary in its time.

**06:59** - *Claire Evans*

People that got behind her, this notion that she called, in the early days, "automatic programming," were considered to be like space cadets in the community of programmers and computer people.

**07:12** - *Claire Evans*

And the people who weren't onboard eventually became known as the Neanderthals, it was this huge rift in the computing community.

**07:21** - *Saron Yitbarek*

Hopper did not have an easy time convincing her superiors to cross that rift. But she saw it as her duty to try.

**07:30** - *Grace Hopper*

There's always a line out here. That line represents what your boss will believe at that moment in time. Now, of course, if you step over it, you don't get the budget. So you have a double responsibility to that line: don't step over it, but also keep on educating your boss so you move the line further out, so next time you can go further into the future.

**07:52** - *Saron Yitbarek*

That future she pushed toward is our present. Pretty much every language that you and I rely on owes a debt to Hopper and her first compiler. So that's space cadets “one”, Neanderthals “zero”.

**08:07** - *Saron Yitbarek*

The idea that we could code not with numbers, but with human-style language. All of a sudden you're typing "Go to Operation 8," or, "Close file C." It was programming made human.

**08:21** - *Claire Evans*

Grace was uniquely aware of the fact that computing was going to be a world changing thing, but it wasn't going to be a world changing thing if nobody understood how to access it or use it. And she wanted to make sure that it was as open to as many people as possible and as accessible to as many people as possible.

**08:37** - *Claire Evans*

And that required a certain level of concession to comprehensibility and readability. So ultimately the desire to create programming languages came from wanting to give more points of entry to the machine and taking it away from this priesthood and opening it up to a greater public and a new generation.

**08:59** - *Saron Yitbarek*

I wanna pause here and just underline something Claire said: programming languages as we know them today come from a desire to open the tech up. To take it away from a priesthood of math PhDs and make development accessible.

**09:14** - *Saron Yitbarek*

The spirit of the compiler that does all that work, it's motivated by a sense of empathy and understanding.

**09:21** - *Saron Yitbarek*

Claire's got a theory about why Hopper was the woman to deliver that change. It has to do with Hopper's work during World War II.

**09:29** - *Claire Evans*

She was doing mine sweeping problems, ballistics problems, oceanography problems. She was applying all of these different, diverse disciplines representing all the violent, chaotic, messy realities of the war and translating them into programs to run on the Mark I computer.

**09:45** - *Claire Evans*

She knew how to do that translation between languages. And I don't mean computer languages, I mean like human languages. She understood how to listen to somebody who was presenting a complex problem, try to understand where they were coming from, what the constraints and affordances of their discipline was and then translate that into something that the computer could understand.

**10:06** - *Claire Evans*

In a way she was like the earliest compiler. Like, the embodied human compiler because she understood that you had to meet people on their level.

**10:17** - *Saron Yitbarek*

Compiling as an act of empathy and understanding. I think we can all keep that in mind when we learn new languages, or wonder why something isn't compiling at all. The compiler's job should be to meet your language where it lives.

**10:32** - *Saron Yitbarek*

Grace Hopper knew that once humans could learn to speak programming languages, and once compilers began translating our intentions into machine language, well, it was like opening the floodgates.

**10:43** - *Claire Evans*

She knew that computing could never develop as an industry, as a world changing force if it was too siloed and too specific. And people who were its practitioners couldn't communicate with the people whose problems needed to be put on the machine, so to speak.

**11:00** - *Claire Evans*

So she was really quite expert at that kind of human translation, which I think, yeah, made her uniquely qualified to be thinking about and creating programming languages.

**11:15** - *Saron Yitbarek*

Hopper's work on English-style data processing language eventually evolved into COBOL, which is sort of perfect, because COBOL is not flashy, it's very down to business, and so was Grace Hopper.

**11:28** - *Saron Yitbarek*

In a way, she gave us a language that sounded a lot like her. Also like Hopper, COBOL's got longevity. It's still around 60 years later.

**11:50** - *Saron Yitbarek*

Okay, so Grace Hopper's compilers were like babelfish, parlaying meaning between programmers and their machines. And they were translating things from higher and higher levels of abstraction.

**12:03** - *Saron Yitbarek*

Then, a few decades later, we get another crucial ingredient added to the language mix. So picture this: the free software community took off in the 1980s, but when the Unix alternative, GNU, was created, there weren't any free and open compilers to go with it.

**12:22** - *Saron Yitbarek*

In order for GNU to give us a real open-source alternative to Unix, in order for programming languages to thrive in the open-source world, the community would need to pull a Grace Hopper — we needed an open-source compiler.

**12:38** - *Saron Yitbarek*

Here's Langdon White, a platform architect at Red Hat talking about where things stood.

**12:45** - *Langdon White*

In the 80s, you can still go spend ten grand pretty easily on a compiler. The free part was a big deal. I don't have an extra ten grand lying around to go buy myself a compiler. Plus the fact that I'd have to buy myself a compiler for every platform I want to target. So, in those days it was mostly Unix, but all the different flavors.

**13:06** - *Langdon White*

So you wouldn't be able to just buy one, you would also have to buy multiple for different architectures or different vendors.

**13:14** - *Saron Yitbarek*

Langdon notes that it was more than just costs at stake. In some cases, it was the coding work itself.

**13:21** - *Langdon White*

People don't realize that it matters how you structure your code in very specific ways. So doing nested for loops or doing nested while loops or that kind of thing may be okay, depending on the compiler.

**13:38** - *Langdon White*

So, you know, if you don't know how it's optimizing your code it's very, very difficult to know how to write your code to get the most optimization.

**13:49** - *Saron Yitbarek*

Suffice to say, we needed a free option, an accessible option, a trustworthy option. What we needed was the GNU C compiler, the GCC. That was the beast, first released in 1987, that merged Grace Hopper's compiler revolution with the free software movement.

**14:12** - *Saron Yitbarek*

It standardized things and let everybody compile what anybody else had written. The richness of our languages is thanks to that moment.

**14:22** - *Carol Willing*

The fact that the GCC was open sort of moved languages to a different level.

**14:29** - *Saron Yitbarek*

Carol Willing works at Project Jupiter, and she's the former director of the Python Software Foundation.

**14:35** - *Carol Willing*

People started realizing that proprietary languages, while they served a purpose at the time, they weren't going to gain the acceptance and adoration of the developer community. Because if I'm a developer, I'm going to want to learn what is most commonly used and as well as what is new and on the forefront.

**14:59** - *Carol Willing*

I don't necessarily want to develop a skill that will lock me into one technology. I think one of the reasons that Python was so successful is it was open source, it had a very clean syntax.

**15:11** - *Carol Willing*

What it was doing is it was allowing people in a common way to solve problems quickly, efficiently. And to also allow people to collaborate. And I think those are the signs of good programs and good libraries is: if you can get your work done with a minimum amount of headache and you can share it with others. I think that's golden.

**15:35** - *Saron Yitbarek*

Over the years, the GCC evolved to support Java, C++, Ada, Fortran, I could go on.

**15:43** - *Carol Willing*

By having a common underlying interface like the GCC, it lets people take languages and then customize them to their particular needs. For example, in the Python world, there's many different libraries and even specifically in the scientific Python world, we've got things like numpy, and scikit-image, scikit-learn.

**16:08** - *Carol Willing*

And each of those had sort of a more specific task that they are known for. So, we've also seen things like bioinformatics and natural language processing. And so people are able to do many different things working off a common base. But then putting elements into their languages or libraries that allow them to optimize problem solving in their particular industry or area.

**16:42** - *Saron Yitbarek*

So, this is what happens when compiler technology runs headfirst into the open source movement, right? Over time, the collision creates a big bang of new community-supported languages that anybody can learn and build with.

**16:58** - *Saron Yitbarek*

There are hundreds and hundreds of those languages being used today.

**17:03** - *Carol Willing*

As open source became more popular and more accepted, what we've seen is a proliferation of languages. There are a number of languages around cell phone technology and mobile. Different languages that help make game development more straightforward. All purpose languages, like Python and Ruby that were sort of foundational for the web development and delivery of web applications and websites.

**17:34** - *Saron Yitbarek*

The list goes on. And, yeah, that Tower of Babel we're building is just going to get more packed in the future. But look, you can also see it as a cornucopia, a language feast. And we're going to help you sort through that feast — next.

**17:55** - *Saron Yitbarek*

Okay, so I know where the language flood came from. But how do we make sense of it all? How do we pick out languages that matter to us? It's a big question, so I brought in some help: Clive Thompson's one of the best writers out there for making sense of the tech world. He's a columnist at Wired, a contributing writer at the New York Times Magazine, and he's working on a book now about the psychology of computer programmers.

**18:24** - *Saron Yitbarek*

Which is perfect, because we need to know what's going on in our brains when we choose which ones to learn.

**18:31** - *Saron Yitbarek*

Here's me and Clive hashing it all out.

**18:35** - *Saron Yitbarek*

When I was first starting out as a new developer, I just said, "Lemme find the best one, I'm going to get really good at it. And then I'll be done."

**18:44** - *Saron Yitbarek*

But it's not quite that simple. Why are there so many programming languages?

**18:49** - *Clive Thompson*

Each language has sort of a bias of things that it's good at. So, typically, the reason that someone creates a new language is there's something they want to do that existing languages aren't good for. JavaScript is a good example of that.

**19:03** - *Clive Thompson*

Netscape had created a browser back in the mid '90s and all these web masters wanted to do something a little more interactive. They wanted there to be a way to have a bit of scripting inside their website.

**19:16** - *Clive Thompson*

And so these demands were coming to Netscape. And they were like, "Alright, we need, there's nothing out there that can do this, we need a scripting language that we build inside our browser."

**19:25** - *Clive Thompson*

And so they hired Brendan Eich, who was considered a senior guy. He was like 32, and everyone else was like 21.

**19:32** - *Saron Yitbarek*

That's senior in developer world.

**19:35** - *Clive Thompson*

And they gave him ten days to make JavaScript. So he literally just didn't sleep for ten days and he frantically cranked out a scripting language. And it was kind of ungainly and kind of a mess. But it worked. And it allowed people to do very simple things, it had buttons and dialogs and popups and whatnots.

**19:54** - *Clive Thompson*

And so that's an example of a language that came, was created to do something that wasn't really possible at that point in time.

**20:01** - *Saron Yitbarek*

That's fascinating.

**20:03** - *Clive Thompson*

So that's why so many languages exist, is that people keep on finding a reason to do something that no one else can do.

**20:11** - *Saron Yitbarek*

So what is so interesting to me about the relationship between developers and our programming languages is we have such a strong attachment to these tools, why is that?

**20:22** - *Clive Thompson*

There's a couple reasons why. One is that sometimes it's literally just an accident of what was the first language that you learned. And it's kind of like your first love.

**20:30** - *Clive Thompson*

And I think it's also like different personalities work with different types of languages. Like, you look at Facebook, and it was designed using PHP. And PHP is kind of a hairball of a language. It's very irregular. And it sort of grew in these fits and starts. And that's sort of the way Facebook feels, too.

**20:49** - *Clive Thompson*

In comparison, the guys at Google decided, "We want a super high performance language, because Google, at Google we're all about things running really fast, and things, sustaining trillions of users at once."

**21:02** - *Clive Thompson*

And so they decide to make Go, and Go is a really terrific language for that sort of high-performance computing.

**21:08** - *Saron Yitbarek*

Let's go a little bit deeper. Is it that I, as a developer, with my specific personality, am going to be naturally attracted to certain languages? Or that the language I work in might influence my personality?

**21:25** - *Clive Thompson*

Well, definitely both. But I do think that there is a really strong resonance that people find when they hit a language that they like. You go to a computer science curriculum and you sort of learn what you have to learn: they're all teaching Java. Sometimes more JavaScript or Python.

**21:46** - *Clive Thompson*

But the point is, you're forced to learn it, so you learn it. But what do people do when they have the choice? And this is where you really see how the sort of emotional or psychological style of someone gravitates towards a language, because I talked to one guy who tried learning JavaScript a bunch.

**22:03** - *Clive Thompson*

And it was just sort of, it's kind of an ugly language to look at. It's got the curly bracket nightmare going on. And so he tried and failed and tried and failed and tried and failed. And then one day he saw a friend working in Python. And it just looked so clean and beautiful to him. He was kind of a writer, and it's often regarded as a writerly type of a language, because the indentation makes everything easy to read.

**22:28** - *Clive Thompson*

And it just clicked with him, because there was just something about the way that Python worked and looked, it's beautiful sparseness, that hit him.

**22:39** - *Saron Yitbarek*

So, talking with Clive I realized there are languages that are thrust upon us. I'm talking about those ancient languages that are just baked into everything we work with — the code Latin.

**22:53** - *Saron Yitbarek*

But there're also languages we choose, the languages that fit our personalities. And if you want to know what's new and shaking things up, you want to ask a developer what they use on the weekend.

**23:05** - *Saron Yitbarek*

Here's more of our conversation:

**23:08** - *Clive Thompson*

So when I ask people: "What are you doing in your spare time?" It'll be all this weird stuff. I think it's actually one of the things that sort of, you know, nice and laudatory about developer behavior is that they tend to be very curious people.

**23:22** - *Clive Thompson*

I know people that decided, "I'm going to learn Erlang, just for the hell of it."

**23:26** - *Saron Yitbarek*

I'm trying to imagine these projects that people are working on on the weekends. And it's almost like the project is secondary. It's like the learning of the tool, the language, is more important. That's really what they're there for.

**23:41** - *Clive Thompson*

Exactly. This, is sort of why you'll see people just constantly re-iterating over and over again these calendaring and to-do list things because it's a very quick way, just to figure out, yeah, what does this language do and how does it work and it almost doesn't matter what I'm building, so long as I'm building something.

**23:56** - *Clive Thompson*

They want to know what it feels like to think in that language. Is it going to be, is it going to feel easy, and thrilling and fluent in a way that I'm not experiencing with the current languages? Is it going to open up doors to make things easier to do?

**24:13** - *Clive Thompson*

So there's a possibility space that occurs when you encounter a new language that can be really exciting. Imaginatively exciting.

**24:20** - *Saron Yitbarek*

So I'm a Ruby, a very proud Ruby on Rails developer, I think it was about two years ago that another pretty well known Ruby developer, Justin Serrals, did this really great talk advocating for this idea that a language doesn't need to be sexy to be used.

**24:41** - *Saron Yitbarek*

And his thesis, his whole point was that Ruby was really exciting because it was new. And it kind of got to a point about a couple years ago where it just didn't need any more things. It was done. It was a stable language, it was a mature language, and, as a result of it being mature, it's not as exciting for developers. It's not this new toy to play, and so we kind of slowly moved away from it on to the next shiny thing.

**25:05** - *Saron Yitbarek*