id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

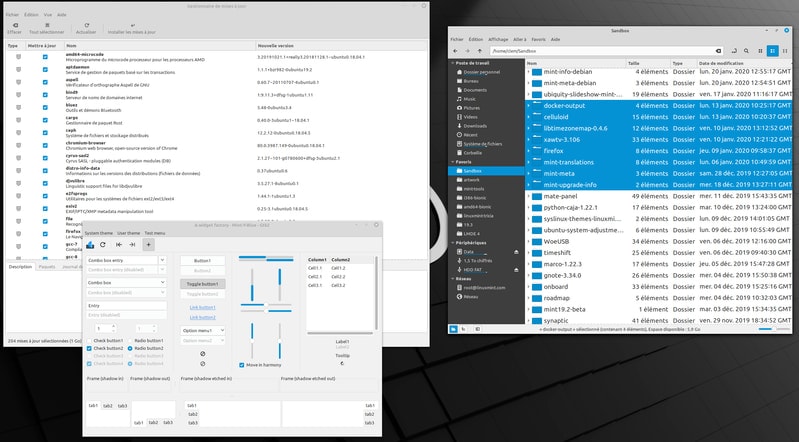

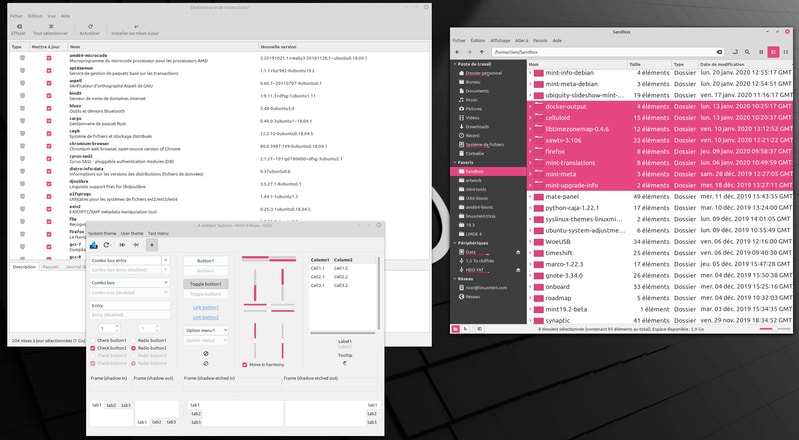

12,257 | 如何在 Ubuntu 中处理自动的无人值守升级 | https://itsfoss.com/auto-updates-ubuntu/ | 2020-05-27T23:40:53 | [

"Ubuntu",

"升级"

] | https://linux.cn/article-12257-1.html |

>

> 本教程教你如何处理无人值守的升级,即 Ubuntu Linux 的自动系统更新。

>

>

>

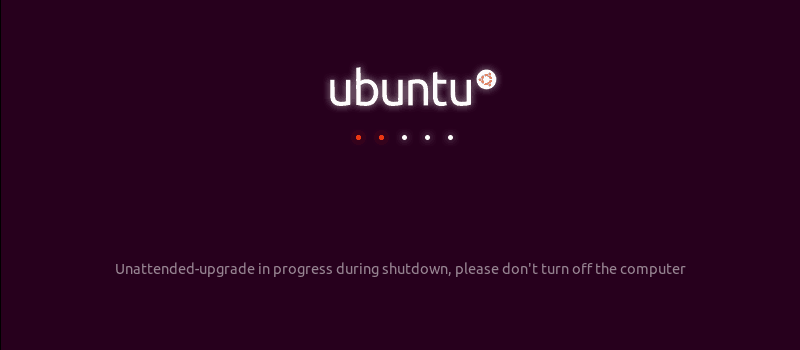

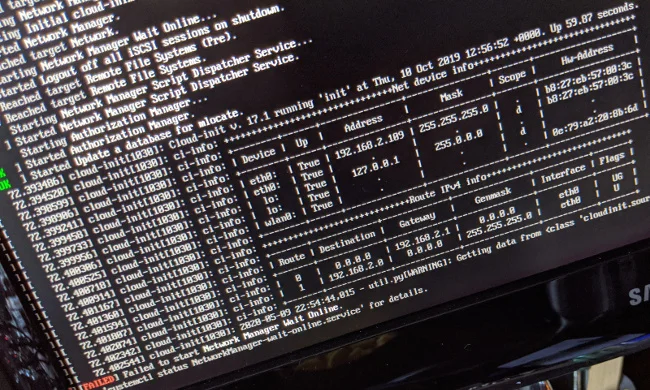

有时,当你尝试[关闭 Ubuntu 系统](https://itsfoss.com/schedule-shutdown-ubuntu/)时,可能看到这个阻止你关闭的页面:

>

> 关机过程中正在进行无人值守升级,请不要关闭计算机。

>

>

>

你可能会问这个“无人值守升级”是什么,怎么会在你不知情的情况下运行呢?

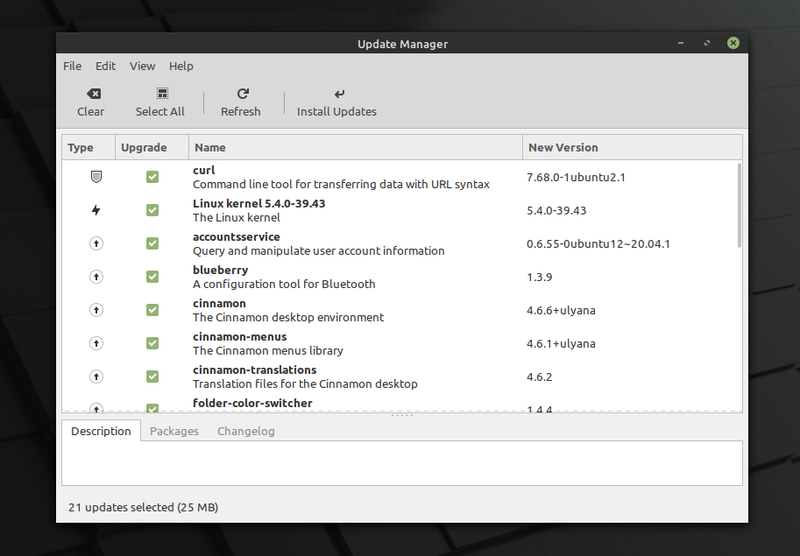

原因是 [Ubuntu](https://ubuntu.com/) 非常重视系统的安全性。默认情况下,它会每天自动检查系统更新,如果发现安全更新,它会下载这些更新并自行安装。对于正常的系统和应用更新,它会通过软件更新程序通知你。

由于所有这些都是在后台发生的,所以在你尝试关闭系统或尝试自行安装应用程序之前,你甚至不会意识到这一点。

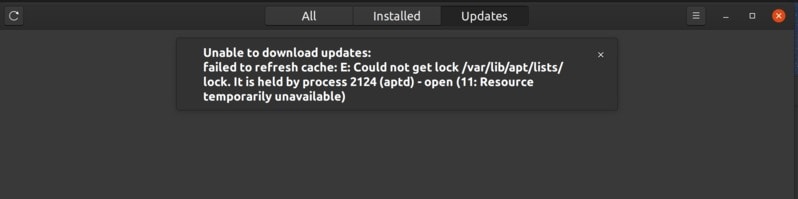

在这些无人值守的升级过程中,尝试安装新软件,会导致著名的[无法获得锁定的错误](https://itsfoss.com/could-not-get-lock-error/)。

如你所见,自动更新带来了一些小麻烦。你可以选择禁用自动更新,但这意味着你必须一直手动检查并[更新你的 Ubuntu 系统](https://itsfoss.com/update-ubuntu/)。

>

> **你真的需要禁用自动更新吗?**

>

>

> 请注意,这是一项安全功能。Linux 实际上允许你禁用系统中的所有功能,甚至禁用这些安全功能。

>

>

> 但是我认为,作为普通用户,**你不应该禁用自动更新**。毕竟,它可以确保你的系统安全。

>

>

> 为了确保系统的安全性,你可以忍受自动更新所带来的小麻烦。

>

>

>

现在,你已经收到警告,还是觉得承担手动更新系统的额外任务更好,那么让我们看看如何处理自动更新。

与往常一样,有两种方法可以做到:GUI 和命令行。 我将向你展示两种方法。

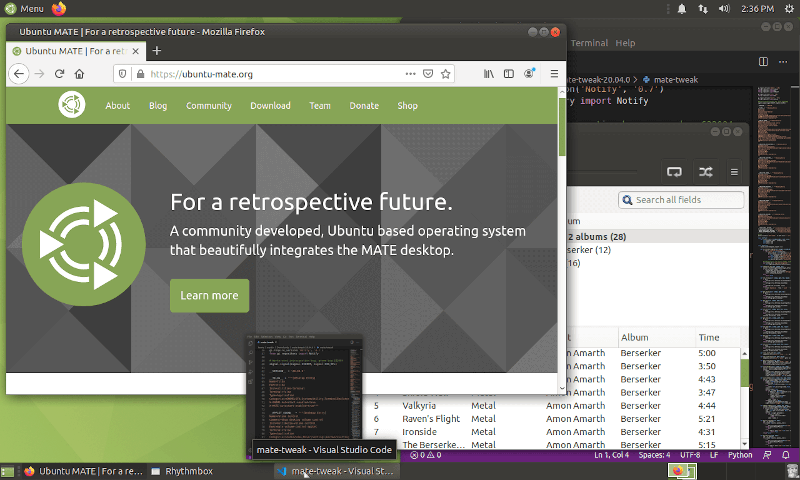

我在这里使用 Ubuntu 20.04,但是这些步骤对 Ubuntu 18.04 和任何其他 Ubuntu 版本均有效。

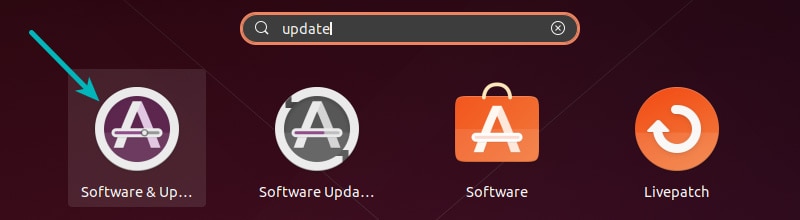

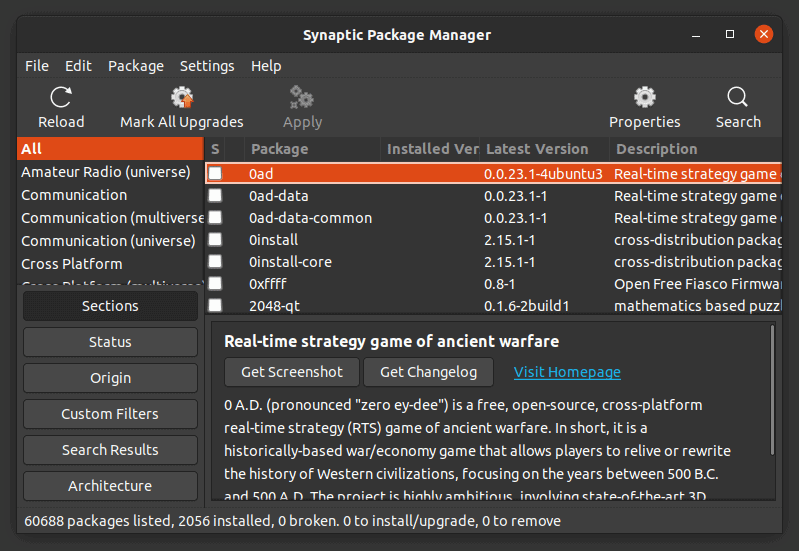

### 方法 1:以图形方式禁用 Ubuntu 中的自动更新

进入菜单并查找“软件和更新”工具。

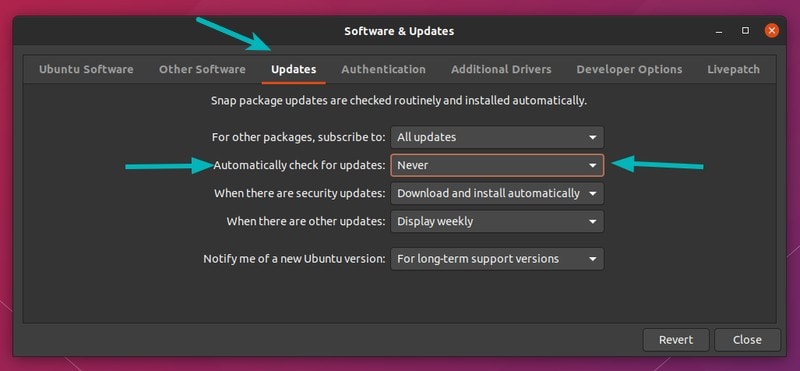

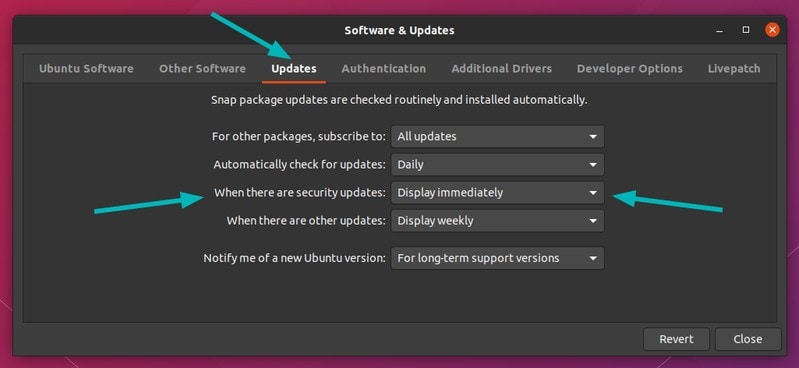

在此处,进入“更新”选项卡。查找“自动检查更新”。默认情况下,它设置为“每日”。

你可以将其更改为“从不”,你的系统将永远不会检查更新。如果不检查更新,它就不会找到要安装的新的更新。

如果这样做,那么必须不时手动更新系统。但是,这是额外的工作,你可能不会一直记得。

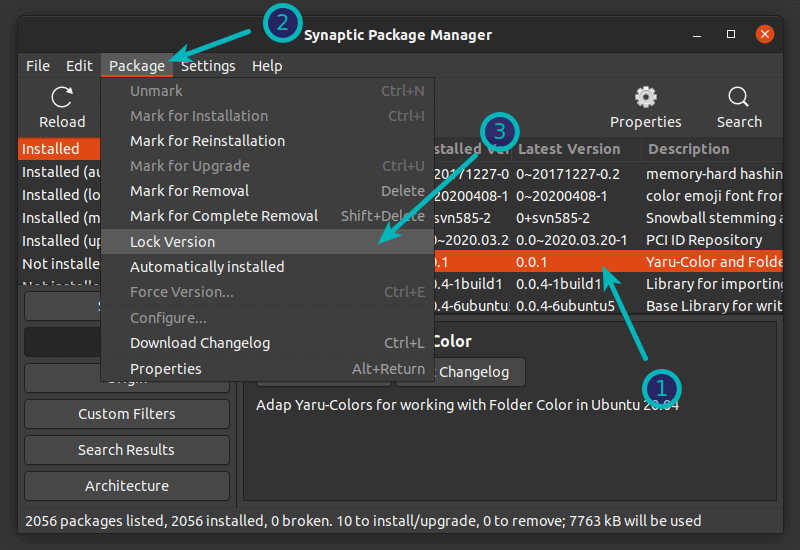

#### 在 Ubuntu 中处理自动更新的更好方法

就个人而言,我建议让它自己检查更新。如果你不希望它自动安装更新,那么可以更改该行为,以通知有关安全更新的可用性。

保持“自动检查更新”为“每日”,然后将“有安全更新时”选项更改为“立即显示”,而不是“自动下载并安装”。

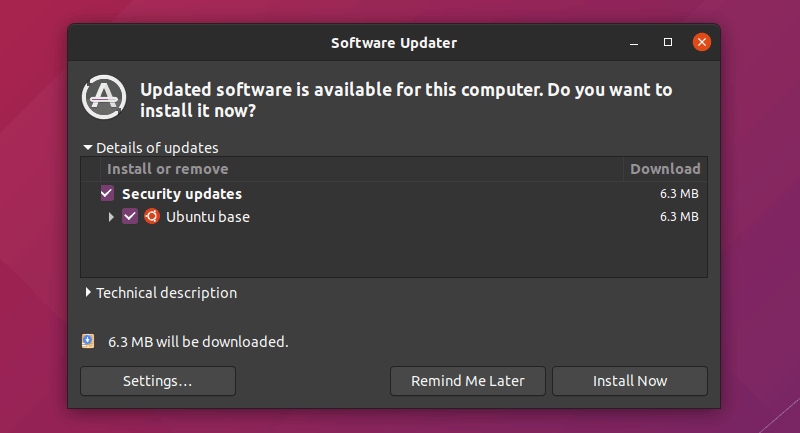

这样,它会检查是否有更新,而不是在后台自动安装更新,软件更新程序会通知你有可用于系统的更新。而你的系统已经完成正常的系统和软件更新。

使用此设置,关闭系统时将不会看到“正在进行的无人值守升级”。但是,由于两个不同的进程无法同时使用 apt 包管理器,因此你仍然可能会遇到“无法锁定”错误。

我相信这是一个更好的解决方案,你不认为是么?

如我承诺的同时有 GUI 和命令行方法一样,让我向你展示如何在终端中禁用无人值守的升级。

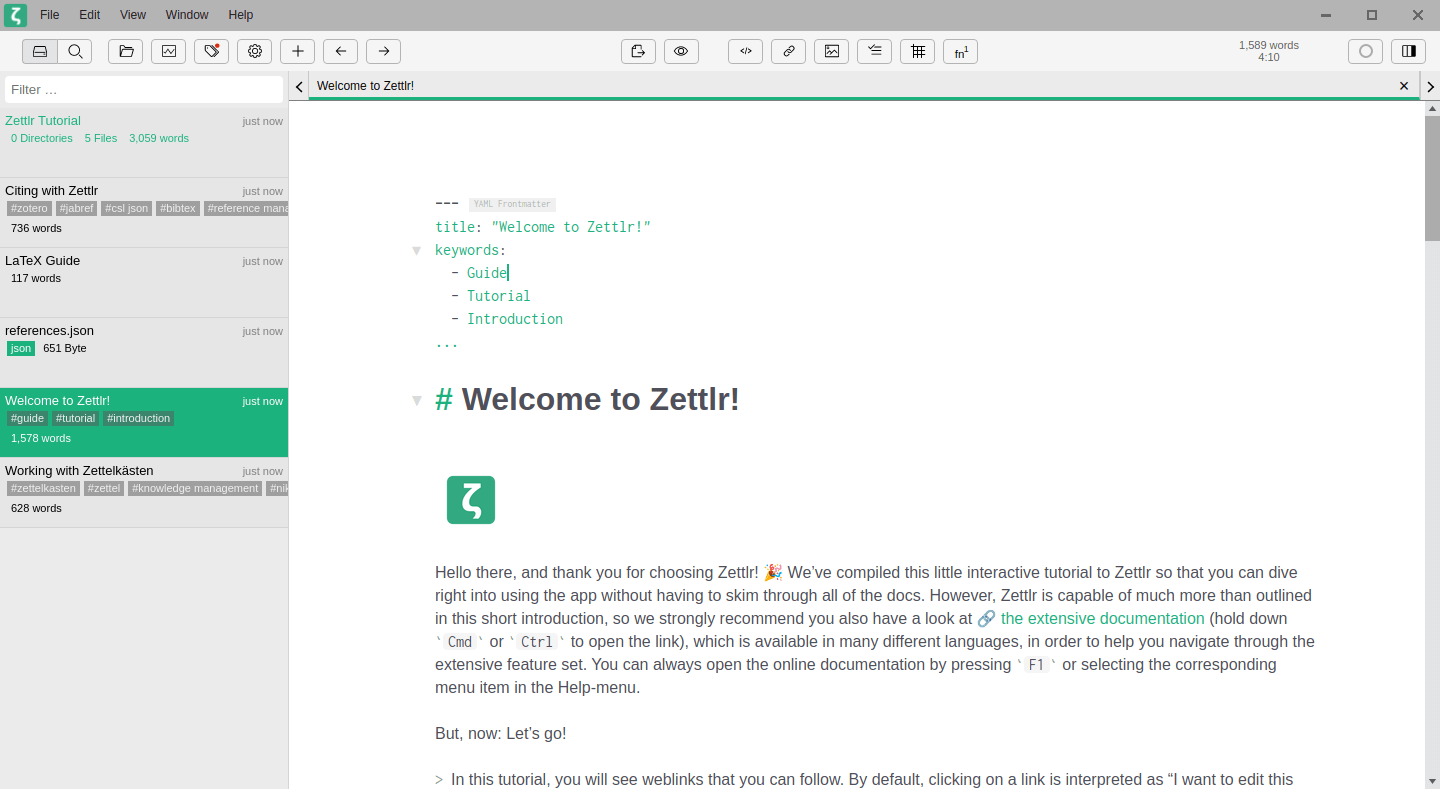

### 如何在 Ubuntu 中使用命令行禁用自动更新

你可以在 `/etc/apt/apt.conf.d/20auto-upgrades` 中找到自动升级设置。Ubuntu 终端中的默认文本编辑器是 Nano,因此你可以使用以下命令来编辑此文件:

```

sudo nano /etc/apt/apt.conf.d/20auto-upgrades

```

现在,如果你不希望系统自动检查更新,那么可以将 `APT::Periodic::Update-Package-Lists` 的值更改为 `"0"`。

```

APT::Periodic::Update-Package-Lists "0";

APT::Periodic::Unattended-Upgrade "0";

```

如果你希望它检查更新但不自动安装无人值守的升级,那么可以选择将其设置为:

```

APT::Periodic::Update-Package-Lists "1";

APT::Periodic::Unattended-Upgrade "0";

```

### 最后

由于某种原因,启用了自动安全更新,建议你保持这种状态。这个小烦恼实际上并不值得冒险损害系统安全性。你怎么看?

---

via: <https://itsfoss.com/auto-updates-ubuntu/>

作者:[Abhishek Prakash](https://itsfoss.com/author/abhishek/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | **Brief: This tutorial teaches you how to handle the unattended upgrade i.e. the automatic system updates in Ubuntu Linux.**

Sometimes, when you try to [shutdown your Ubuntu system](https://itsfoss.com/schedule-shutdown-ubuntu/), you may come across this screen that stops you from shutting down:

**Unattended-upgrade in progress during shutdown, please don’t turn off the computer.**

You might wonder what is this “unattended upgrade” and how come it is running without your knowledge.

The reason is that [Ubuntu](https://ubuntu.com/) takes your system’s security very seriously. By default, it automatically checks for system updates daily and if it finds any security updates, it downloads those updates and install them on its own. For normal system and application updates, it notifies you via the Software Updater tool.

Since all this happens in the background, you don’t even realize it until you try to shutdown your system or try to install applications on your own.

Trying to install a new software when these unattended upgrades are in progress leads to the famous [could not get lock error](https://itsfoss.com/could-not-get-lock-error/).

As you can see, the automatic updates present a couple of minor annoyance. You may choose to disable the auto updates but that would mean that you’ll have to check and [update your Ubuntu system](https://itsfoss.com/update-ubuntu/) manually all the time.

Do you really need to disable auto updates?

Please note that this is a security feature. Linux allows you to do practically everything in your system even disabling these security features.

But in my opinion, as a regular user, ** you should not disable the automatic updates**. It keeps your system safe after all.

For the sake of your system’s security, you may tolerate the minor annoyances that come with the automatic updates.

Now that you have been warned and you think it is better to take up the additional task of manually updating your system, let’s see how to handle the auto updates.

As always, there are two ways to do it: GUI and command line. I’ll show you both methods.

I have used Ubuntu 20.04 here but the steps are valid for Ubuntu 18.04 and any other Ubuntu version.

## Method 1: Disable automatic updates in Ubuntu graphically

Go to the menu and look for ‘software & updates’ tool.

In here, go to Updates tab. Now look for the “Automatically check for updates”. By default it is set to Daily.

You can change it to Never and your system will never check for updates on its own again. And if it won’t check for updates, it won’t find new updates to install.

If you do this, you must manually update your system from time to time. But that’s an additional chore to do and you may not remember it all the time.

### Slightly better way to handle auto updates in Ubuntu

Personally, I would suggest to let it check for updates on its own. If you don’t want it installing the updates automatically, you can change that behavior to get notified about the availability of security updates.

Keep “Automatically check for updates” to Daily and change “When there are security updates” option to “Display immediately” instead of “Download and install automatically”.

This way, it checks for updates and if there are updates, instead of installing them automatically in the background, the Software Updater tool notifies you that updates are available for your system. Your system already does that for normal system and software updates.

With this setup, you won’t see the “unattended upgrades in progress” when you shutdown your system However, you may still encounter the ‘could not get lock’ error because two separate processes cannot use apt package manager at the same time.

I believe this is a better solution, don’t you you think?

As I promised both GUI and command line methods, let me show you how to disable unattended upgrades in the terminal.

## How to disable automatic updates in Ubuntu using command line

You’ll find the auto-upgrades settings in the **/etc/apt/apt.conf.d/20auto-upgrades** file. The default text editor in Ubuntu terminal is Nano so you can use this command to edit this configuration file:

`sudo nano /etc/apt/apt.conf.d/20auto-upgrades`

Now, if you don’t want your system to check for updates automatically, you can change the value of APT::Periodic::Update-Package-Lists to 0.

```

APT::Periodic::Update-Package-Lists "0";

APT::Periodic::Unattended-Upgrade "0";

```

If you want it to check for updates but don’t install the unattended-upgrades automatically, you can choose to set it like this:

```

APT::Periodic::Update-Package-Lists "1";

APT::Periodic::Unattended-Upgrade "0";

```

**In the end…**

The automatic security updates are enabled automatically for a reason and I recommend you keep it like this. A couple of minor annoyances are not really worth risking the security of your system. What do you think? |

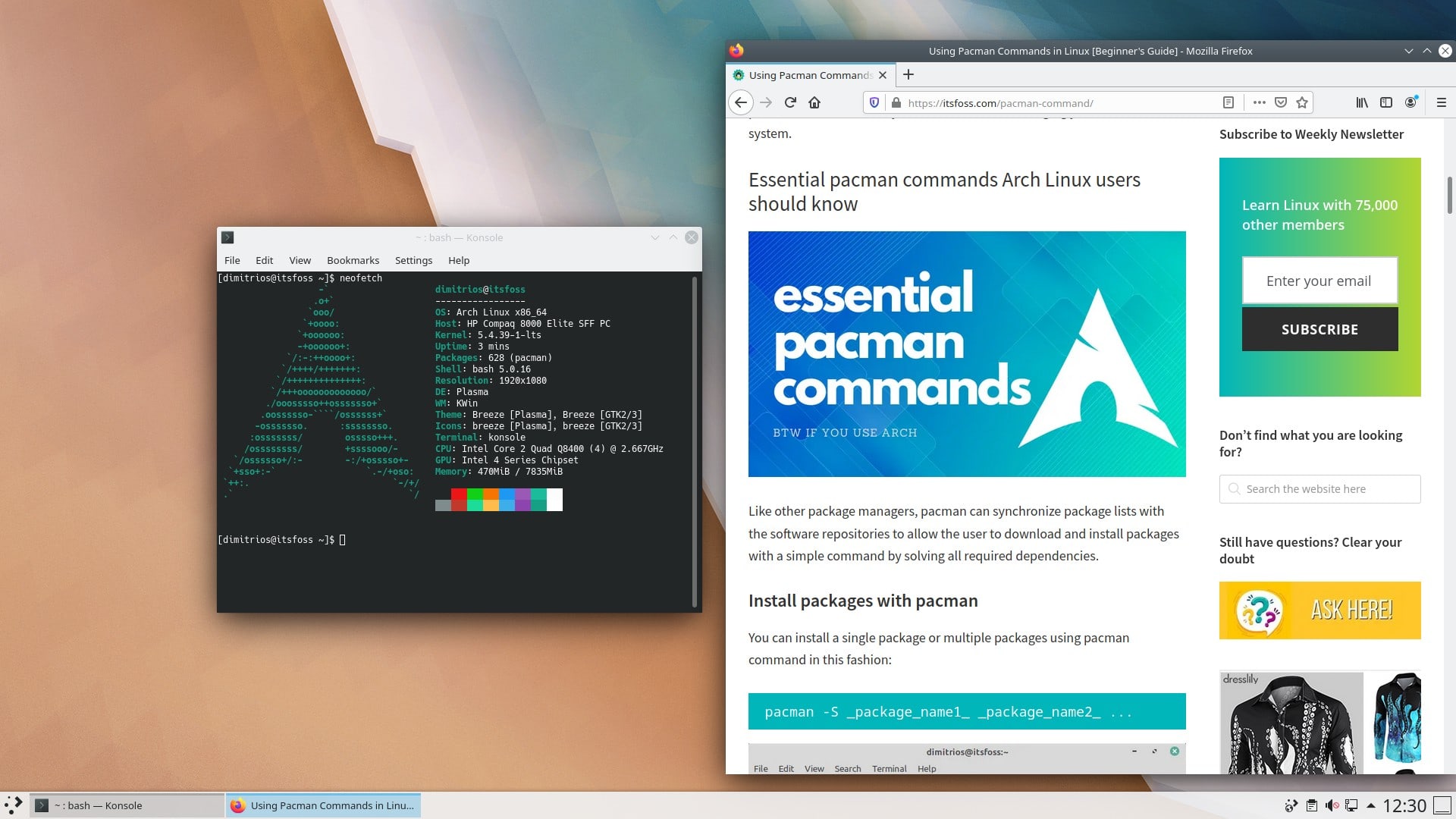

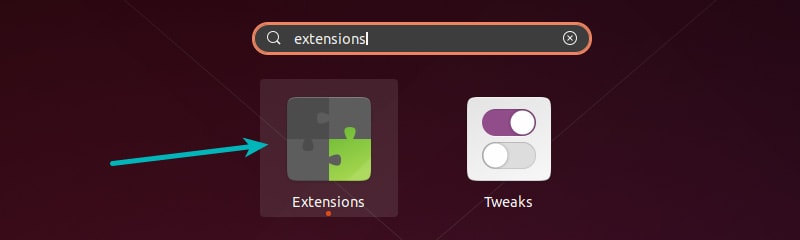

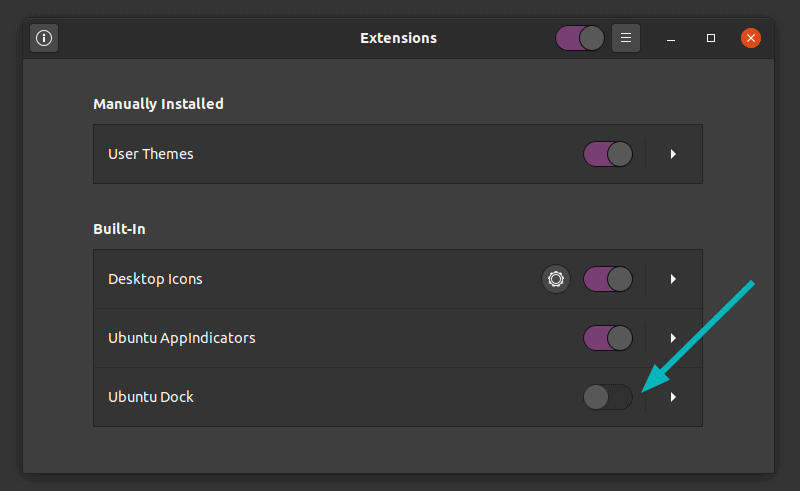

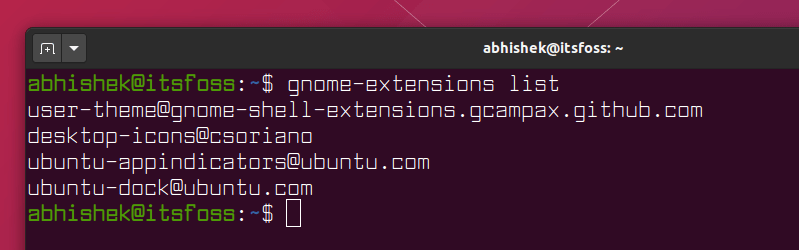

12,258 | 如何在 Arch Linux 上正确安装和设置 KDE Plasma? | https://itsfoss.com/install-kde-arch-linux/ | 2020-05-28T06:37:30 | [

"Arch",

"KDE"

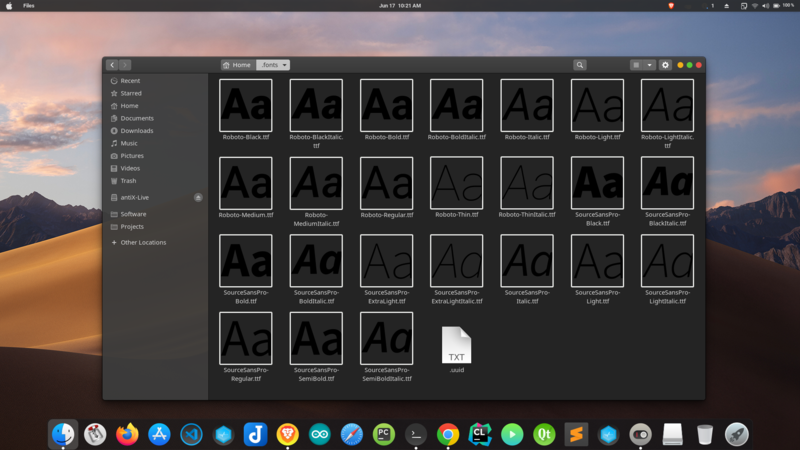

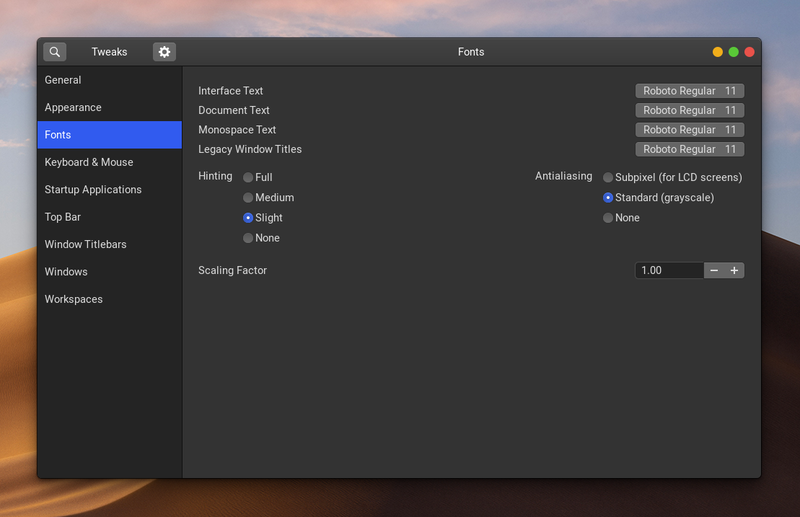

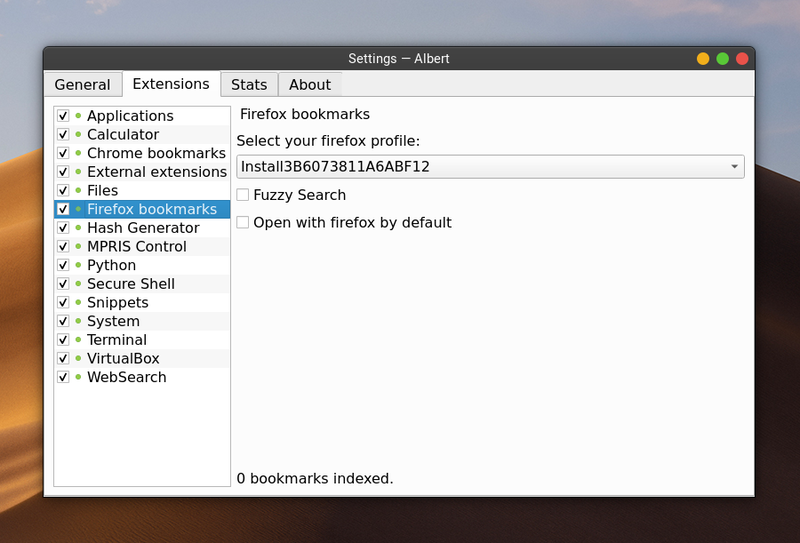

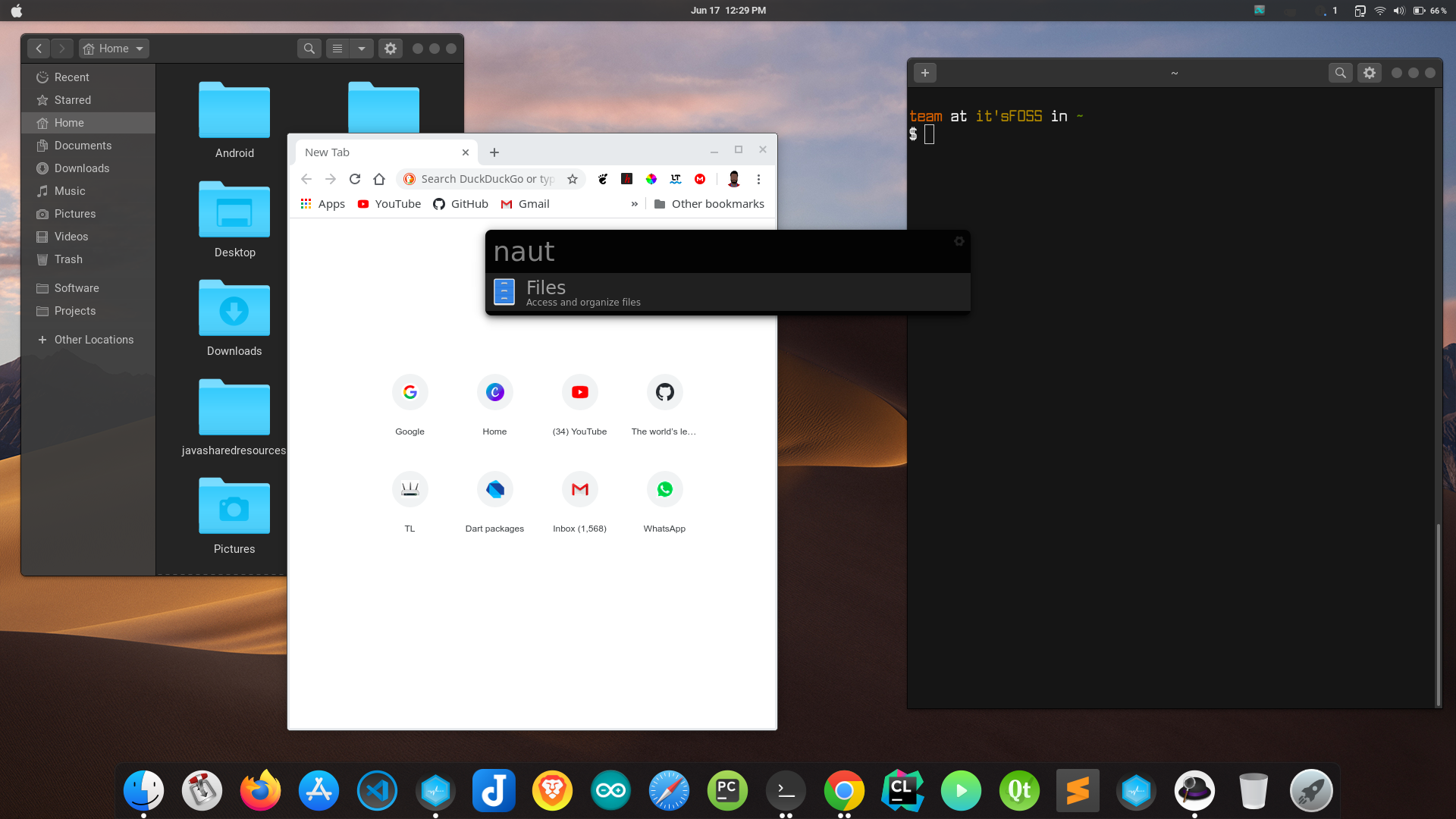

] | https://linux.cn/article-12258-1.html | 我相信你是按照[这份很棒的指南安装来 Arch Linux](/article-9170-1.html) 的,这篇指南最后提到了 [GNOME 桌面](https://www.gnome.org/)的安装步骤。

但现在,不是每个人都是 GNOME 的粉丝,有几个读者要求我们向他们展示如何在 [Arch Linux](https://www.archlinux.org/) 上配置 [KDE 桌面](https://kde.org/plasma-desktop)。

因此,我创建了这个指南来演示如何在 Arch Linux 上正确安装和配置 KDE 桌面(也被称为 KDE Plasma桌面)的步骤。

### 如何在 Arch Linux 上安装和设置 KDE 桌面环境?

请记住,KDE 不允许直接以 root 身份登录。如果你已经安装了 Arch Linux 并以 root 身份使用,你应该创建一个新的用户,并给它以 root 身份运行命令的 sudo 权限。

如果你只是最小化安装了 Arch Linux,那么你可能是以 TTY 终端方式登录的。如果你使用的是其他桌面环境,步骤也是一样的。

让我们开始吧!

#### 步骤 1:创建一个 sudo 用户(如果你只有 root 用户)

你可以使用 [useradd 命令](https://linuxhandbook.com/useradd-command/)来创建一个新用户。我创建的用户名为 `dimitrios`(这是我的名字)。你可以使用与你的名字匹配的东西。

选项 `-m` 为新创建的用户创建一个主目录。

```

useradd -m dimitrios

```

你还应该为这个用户设置一个密码。使用此命令:

```

passwd dimitrios

```

现在你已经创建了用户,给它 sudo 权限。首先,安装 sudo 和一个像 [nano](https://www.nano-editor.org/) 这样的[命令行文本编辑器](https://itsfoss.com/command-line-text-editors-linux/)。

```

pacman -S sudo nano

```

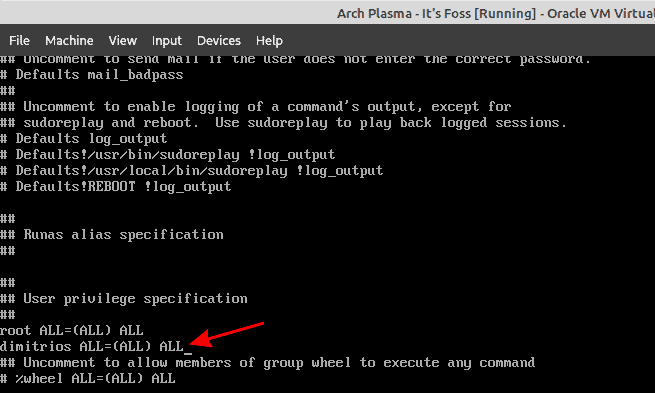

sudo 的配置文件是 `/etc/sudoers`。该文件应该使用 `visudo` 来编辑,它会锁定 `sudoers` 文件,将编辑的内容保存到一个临时文件中,并在复制到 `/etc/sudoers` 之前检查该文件的语法。

要使用 `nano` 作为 `visudo` 编辑器,请这样设置:

```

EDITOR=nano visudo

```

像我的示例那样添加下面这一行,然后保存并退出。

```

dimitrios ALL=(ALL) ALL

```

保存你的更改,然后退出编辑器。你现在在 Arch Linux 上有了一个 sudo 用户。

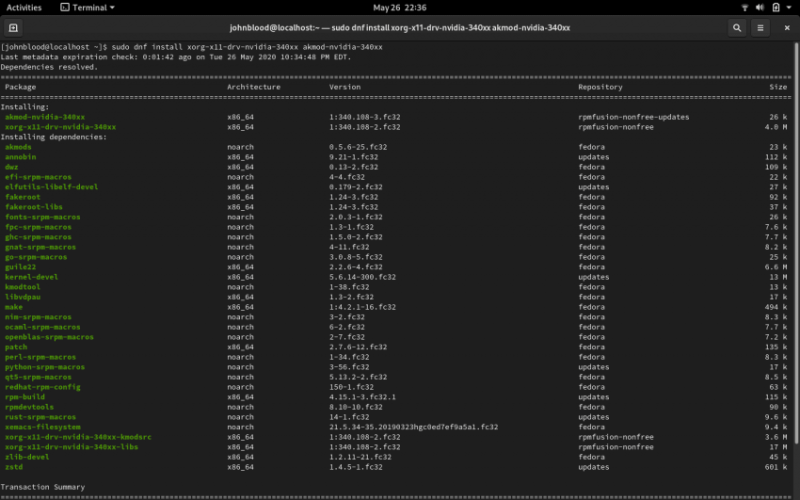

#### 步骤 2 :安装 KDE Plasma 桌面

要运行 KDE 桌面,你需要以下软件包。

* [Xorg](https://wiki.archlinux.org/index.php/Xorg) 组

* [KDE Plasma](https://kde.org/plasma-desktop) 桌面环境

* [Wayland](https://wiki.archlinux.org/index.php/Wayland) KDE Plasma 的会话

* [KDE 应用程序](https://www.archlinux.org/groups/x86_64/kde-applications/)组(包括 KDE 特有的应用程序:Dolphin 管理器和其他有用的应用程序)。

你可以用下面的命令安装上述部分。

```

pacman -S xorg plasma plasma-wayland-session kde-applications

```

安装后,启用显示管理器和网络管理器服务。

```

systemctl enable sddm.service

systemctl enable NetworkManager.service

```

快完成了。关闭你的系统。

```

shutdown now

```

重新打开系统电源,你应该会看到 KDE 的登录界面。你还记得为你的 sudo 用户设置的密码吗?用它来登录。

#### 接下来?

你可能会想探索一下[基本的 pacman 命令](https://itsfoss.com/pacman-command/),了解一下 [Arch 用户资源库](/article-12107-1.html),了解一下 [AUR 助手](/article-12019-1.html)。

希望这篇教程对你在 Arch Linux 上安装 KDE 桌面有所帮助。如果你在安装过程中遇到任何障碍或困难,请在下面的评论中告诉我们。

你最喜欢的桌面环境或窗口管理器是什么?请告诉我们,别忘了在我们的社交媒体上订阅。

---

via: <https://itsfoss.com/install-kde-arch-linux/>

作者:[Dimitrios Savvopoulos](https://itsfoss.com/author/dimitrios/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

I believe you followed the [fantastic It’s FOSS guide on installing Arch Linux](https://itsfoss.com/install-arch-linux/). The guide ends with steps mentioning the installation procedure for [GNOME desktop](https://www.gnome.org/?ref=itsfoss.com).

Now, not everyone is a GNOME fan and several readers requested that we show them how to configure the [KDE desktop](https://kde.org/plasma-desktop?ref=itsfoss.com) on [Arch Linux](https://www.archlinux.org/?ref=itsfoss.com).

And thus I created this guide to demonstrate the steps for properly installing and configuring the KDE desktop (also known as KDE Plasma desktop) on Arch Linux.

## How to install and setup KDE desktop environment on Arch Linux

Please keep in mind that KDE doesn’t allow login as root directly. If you have installed Arch Linux and using it as root, you should create a new user and give it sudo rights for running commands as root.

If you just have a bare minimum installation of Arch Linux, you probably are logging into a [TTY terminal](https://itsfoss.com/what-is-tty-in-linux/). If you are using some other desktop environment, steps remain the same.

Let’s go!

### Step 1: Create a sudo user (if you have only root user)

You can use the [useradd command](https://linuxhandbook.com/useradd-command/?ref=itsfoss.com) for creating a new user. I am creating user named dimitrios (that’s my name). You can use something that matches your name.

The option -m creates a home directory for the newly created user.

`useradd -m dimitrios`

You should also set a password for this user. Use this command:

`passwd dimitrios`

Now that you have created the user, give it sudo access. First, install sudo and a [command line text editor](https://itsfoss.com/command-line-text-editors-linux/) like [nano](https://www.nano-editor.org/?ref=itsfoss.com):

`pacman -S sudo nano`

The configuration file for sudo is /etc/sudoers. It should always be edited with the visudo command. visudo locks the sudoers file, saves edits to a temporary file, and checks that file’s grammar before copying it to /etc/sudoers.

To use nano as the visudo editor, use:

`EDITOR=nano visudo`

Add the following line like I do in the example, then save and exit.

`dimitrios ALL=(ALL) ALL`

Save your changes and exit the editor. You now have a sudo user on Arch Linux.

### Step 2: Installing KDE Plasma desktop

To run KDE desktop, you need the following packages:

[Xorg](https://wiki.archlinux.org/index.php/Xorg?ref=itsfoss.com)group[KDE Plasma](https://kde.org/plasma-desktop?ref=itsfoss.com)Desktop Environment[Wayland](https://wiki.archlinux.org/index.php/Wayland?ref=itsfoss.com)session for KDE Plasma[KDE applications](https://www.archlinux.org/groups/x86_64/kde-applications/?ref=itsfoss.com)group (consists of KDE specific applications including the Dolphin manager and other useful apps)

You can install of the above using the following command:

`pacman -S xorg plasma plasma-wayland-session kde-applications `

Once installed, enable the [Display Manager](https://itsfoss.com/display-manager/) and Network Manager services:

```

systemctl enable sddm.service

systemctl enable NetworkManager.service

```

Almost there. Shutdown your system:

`shutdown now`

Power on your system and you should see the KDE login. Do you remember the password you set up for your sudo user? Use it to login.

### What next?

You may want to explore the [essential pacman commands](https://itsfoss.com/pacman-command/), to know what’s going on with the [Arch User Repository](https://itsfoss.com/aur-arch-linux/) and learn more about [AUR helpers](https://itsfoss.com/best-aur-helpers/).

I hope you found this tutorial helpful in installing KDE desktop on Arch Linux. Please let us know in the comments below if you encounter any obstacles or difficulties during the installation.

What’s your favourite Desktop environment or Window Manager? Let us know and don’t forget to subscribe on our social media. |

12,260 | 出于安全原因,OpenSSH 决定弃用 SHA-1 验证算法 | https://www.zdnet.com/article/openssh-to-deprecate-sha-1-logins-due-to-security-risk/ | 2020-05-28T17:57:07 | [

"OpenSSH",

"SHA-1"

] | https://linux.cn/article-12260-1.html |

>

> 破坏一个 SHA-1 生成的 SSH 验证密钥,现在的成本大约为 5 万美元,使得重要的远程服务器面临着被攻击的风险。

>

>

>

最受欢迎的连接和管理远程服务器的实用工具 OpenSSH 今天宣布,计划放弃对其 SHA-1 验证方案的支持。在这个宣布当中,OpenSSH 团队列举了 SHA-1 散列算法的安全问题,该算法目前被认为是不安全的。

SHA-1 算法在 2017 年 2 月的一次实际的、真实世界的攻击中被破解,当时谷歌密码学家披露的 [SHAttered](https://shattered.it/) 技术可以使两个不同的文件拥有相同的 SHA-1 文件签名(这称之为“碰撞”)。

在当时,创建一个 SHA-1 碰撞被认为需要非常昂贵的计算成本,谷歌的专家认为 SHA-1 在实际应用中至少还能用上五年,直到成本下降。

然而,随后在 2019 年 5 月和 2020 年 1 月发布的研究报告中,详细介绍了一种更新的方法,将 SHA-1 选择-前缀碰撞攻击chosen-prefix collision attack的成本分别降低到 [11 万美元](https://www.zdnet.com/article/sha-1-collision-attacks-are-now-actually-practical-and-a-looming-danger/)和 [5 万美元](https://eprint.iacr.org/2020/014.pdf)以下。

对于高级威胁攻击者,例如国家黑客和高端网络犯罪集团,如果他们能够生成一个 SSH 认证密钥,使他们能够远程不被发现地访问关键服务器,那么 5 万美元是一个很小的代价。

### OpenSSH 关闭 ssh-rsa 模式

“由于这个原因,我们将在不久的未来版本中默认禁用 ssh-rsa 公钥签名算法。”OpenSSH 开发者今天表示。

OpenSSH 应用程序使用 ssh-rsa 模式来[生成 SSH 验证密钥](https://www.ssh.com/ssh/public-key-authentication)。这些密钥中的一个存储在用户要登录的服务器上,另一个存储在用户的本地 OpenSSH 客户端中,这样用户就可以在每次登录时不需要输入密码就可以访问服务器,而是以本地认证密钥来代替。

在默认情况下,OpenSSH ssh-rsa 模式通过使用 SHA-1 哈希函数生成这些密钥,这意味着这些密钥容易受到 SHAterred 攻击,使攻击者能够生成重复的密钥。

“遗憾的是,尽管有更好的替代方案存在,但这种算法仍然被广泛使用,它是原始的 SSH RFC 规定的唯一剩下的公钥签名算法”,OpenSSH 的开发人员今天表示。

OpenSSH 团队现在要求服务器所有者检查他们的密钥是否已经使用默认的 ssh-rsa 模式生成,并使用不同的模式生成新的密钥。

OpenSSH 团队表示,推荐的模式是 rsa-sha2-256/512(从 OpenSSH 7.2 开始支持)、ssh-ed25519(从 OpenSSH 6.5 开始支持)或 ecdsa-sha2-nistp256/384/521(从 OpenSSH 5.7 开始支持)。

>

> 这里是最近公告中的最好的消息。让我们为所有的嵌入式系统和设备永远不会看到 OpenSSH 中废弃的 ssh-rsa SHA-1 密钥的升级而默哀一分钟。

>

>

> - Julio (@juliocesarfort) 2020 年 5 月 27 日

>

>

>

OpenSSH 项目将在未来(目前还未确定)的版本中默认禁用 ssh-rsa 模式,但是在此之前,他们还计划默认启用 UpdateHostKeys 功能,让服务器所有者可以轻松自动地从旧的 ssh-rsa 模式迁移到更好的验证算法。

依赖 OpenSSH 管理远程系统的服务器管理员可以在 [OpenSSH 8.3 的变更日志](http://www.openssh.com/txt/release-8.3)中找到更多关于如何测试他们的服务器是否有基于弱 SHA-1 的密钥的详细信息。

在之前的一个版本中,在 8.2 版本中,OpenSSH 团队还增加了对[基于 FIDO/U2F 的硬件安全密钥的支持](https://www.zdnet.com/article/openssh-adds-support-for-fidou2f-security-keys/),这也可以用来更安全地登录远程服务器。

---

via: <https://www.zdnet.com/article/openssh-to-deprecate-sha-1-logins-due-to-security-risk/>

作者:[Catalin Cimpanu](https://www.zdnet.com/meet-the-team/us/catalin.cimpanu/) 选题:[wxy](https://github.com/wxy) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](/article-12257-1.html) 荣誉推出

| 200 | OK | # OpenSSH to deprecate SHA-1 logins due to security risk

OpenSSH, the most popular utility for connecting to and managing remote servers, has announced today plans to drop support for its SHA-1 authentication scheme.

The OpenSSH team cited security concerns with the SHA-1 hashing algorithm, currently considered insecure.

The algorithm was broken in a practical, real-world attack in February 2017, when Google cryptographers disclosed [SHAttered](https://shattered.it/), a technique that could make two different files appear as they had the same SHA-1 file signature.

At the time, creating an SHA-1 collision was considered computationally expensive, and Google experts thought SHA-1 could still be used in practice for at least half a decade until the cost would go down.

However, subsequent research released in May 2019 and in January 2020, detailed an updated methodology to cut down the cost of an SHA-1 chosen-prefix collision attack to [under $110,000](https://www.zdnet.com/article/sha-1-collision-attacks-are-now-actually-practical-and-a-looming-danger/) and [under $50,000](https://eprint.iacr.org/2020/014.pdf), respectively.

For advanced threat actors, such as nation-state and high-end cybercrime groups, $50,000 is a small price to pay if they could generate an SSH authentication key that grants them remote and undetected access to critical servers.

### OpenSSH to disable "ssh-rsa" mode

"For this reason, we will be disabling the 'ssh-rsa' public key signature algorithm by default in a near-future release," OpenSSH developers said today.

The OpenSSH app uses the "ssh-rsa" mode to generate [SSH authentication keys](https://www.ssh.com/ssh/public-key-authentication). One of these keys is stored on the server a user wants to log in, and the other is stored in the user's local OpenSSH client, allowing users to access servers without having to enter their passwords on every login, presenting the local authentication key instead.

By default, the OpenSSH ssh-rsa mode generates these keys by using the SHA-1 hashing function, meaning these keys are susceptible to SHAterred attacks, allowing threat actors to generate duplicate keys.

"This algorithm is unfortunately still used widely despite the existence of better alternatives, being the only remaining public key signature algorithm specified by the original SSH RFCs," OpenSSH devs said today.

The OpenSSH team is now asking server owners to check if their keys have been generated with the default ssh-rsa mode, and generate new ones using a different mode.

Recommended modes are rsa-sha2-256/512 (supported since OpenSSH 7.2), ssh-ed25519 (supported since OpenSSH 6.5) or ecdsa-sha2-nistp256/384/521 (supported since OpenSSH 5.7), the OpenSSH team said.

here's the best piece of the recent announcement. RIP & one minute silence to all embedded systems and appliances that will never see an upgrade for deprecating ssh-rsa SHA-1 based keys in OpenSSH.

— Julio (@juliocesarfort)[pic.twitter.com/zf5VewugC6][May 27, 2020]

The OpenSSH project will by disabling the ssh-rsa mode by default in a future (currently unspecified) release, however, before that, they also plan to enable the *UpdateHostKeys *feature by default, to allow server owners to easily and automatically migrate from the old ssh-rsa mode to better authentication algorithms.

Server owners who rely on OpenSSH to manage remote systems can find additional details on how to test their server for weak SHA-1-based keys in the [OpenSSH 8.3 changelog](http://www.openssh.com/txt/release-8.3).

In a previous release, in version 8.2, the OpenSSH team also added [support for FIDO/U2F-based hardware security keys](https://www.zdnet.com/article/openssh-adds-support-for-fidou2f-security-keys/), which can also be used to log into remote servers in even a safer manner.

#### What's in a name? These DevOps tools come with strange backstories

[Editorial standards](/editorial-guidelines/) |

12,261 | 攒一台“Linus Torvalds 牌”电脑 | https://www.zdnet.com/article/look-whats-inside-linus-torvalds-latest-linux-development-pc/ | 2020-05-28T22:46:00 | [

"Linus"

] | https://linux.cn/article-12261-1.html |

前两天,Linus Torvalds 说他更新了其主力电脑,[据称编译内核时快了三倍](/article-12249-1.html)。虽然 Torvalds [用来编程时喜欢用戴尔 XPS 开发者版笔记本电脑](/article-7795-1.html),但他还有一台开发工作站,最近他将它升级为基于 AMD 锐龙 Threadripper 3970x 高速处理器。这是 Linus 15 年来第一次换用 AMD 的 CPU。

作为世界上最著名的程序员之一、Linux 操作系统之父以及 Git 的缔造者,他的一举一动都会引来开源社区的兴趣,因此大家对这台新电脑的配置也相当感兴趣。

### “Linus Torvalds 牌”电脑配置清单

Torvalds 是一个喜欢自己攒电脑的人。“我通常都是自己攒电脑。我会重新使用上一台机器的机箱或 SSD 之类的东西,但这一次是全新打造的。”

在这次攒机之前,他的机器是 i9-9900k。通常情况下,Torvalds 会直接去当地的 Fry's 商店直接购买一些比较基础的配件,但由于病毒的影响,这次都是从亚马逊买的。几周后就到货了(这年头电脑配件几天就发货了),最后两件是上周五到的。

以下列出这台电脑的具体配置,大家感兴趣的可以按图索骥。

#### CPU:AMD 锐龙 Threadripper 3970X

锐龙 Threadripper 3970X 并不便宜,而且它是目前最快的 CPU。AMD Threadripper 3970x 搭载了 32 个核心。它采用 AMD 的 7 纳米 Zen 2 核心架构,拥有 88 个 PCIe 4.0。AMD 声称它比竞争对手快了 90%。Phoronix 的独立测试发现,“[Threadripper 3970X 在性能上绝对占优势](https://www.phoronix.com/scan.php?page=article&item=amd-linux-3960x-3970x&num=1),超过了 Core i9 10980XE。”

“最初,我其实是计划用 AM4 主板和 Ryzen 3950X,这是比较主流的升级过程。” Torvalds 说,“Ryzen 3950X 是英特尔 i9-9900K 的升级版,但只是不多的升级。”

“通常情况下,我都会选择普通的消费级 CPU,因为它们往往是性价比最高的,而对于英特尔 CPU 来说,我其实喜欢它们只是因为集成了显卡。我不太在意 GPU,所以集成式的就好了,这样可以避免了挑选相匹配的 GPU,以及风扇不好的 GPU 可能产生的噪音。”

Torvalds 为此“来回折腾了好一阵子”,正如他所说的:“Threadripper 的功率让我担心噪音问题。但我决定做一次大升级,因为与传统的英特尔 Xeon 高核数平台不同,AMD 的 Threadripper 系列仍然属于‘物美价廉’的范畴。所以我咬咬牙就上了,到目前为止,我很满意。”

#### 主板:技嘉 Aorus TRX40 Master

对于顶级 CPU,你当然需要最好的主板:技嘉 Aorus RX40 Master。它使用的是 16+3 相英飞凌供电,考虑到新 CPU 恐怖的规格,技嘉还给主板的供电覆盖上了加强散热模块,模块以密集的散热鳍片热管为主。

在这里,Torvalds 主要关心的是:

“要有一块看起来不错的电源传输和风扇控制的主板。在我所攒的机器中,我希望的是最基本的东西都要可靠,没有什么比电源传输更基本的东西了。很久以前,我有几个系统因为电源断电而变得不可靠,现在我对这个问题已经变得很虔诚了。所以我就找一些适合超频的东西,然后**不超频**。”

简而言之,他要的是一台能承受高负载的 PC,但他不会把机器推到极限。话虽如此,Torvalds 很讨厌“这款主板的默认风扇设置(非常烦人的小高转速风扇,用于电压调节器模块 VRM 散热),但你可以通过 BIOS 设置调整得更好。另外请大家注意:这是一款 E-ATX 主板,所以在出现错误的情况下可能会很不方便。”

#### CPU 散热器和风扇:猫头鹰(Noctua) NF-A14 PWM、NH-U14S 和 NF-A15

高性能 CPU 的发热自然很高,因此 CPU 风扇也需要一台强劲的,但是,更重要的是,需要安静,Torvalds 以前就在用猫头鹰,感觉很好,这次依然用的是它。

Torvalds 使用了两款 CPU 散热器的风扇。其中 NH-U14S 是主打的,而多出的 NF-A15 风扇则是针对该散热器的推拉式配置。

既然他这么担心噪音问题,你问为什么不选择水冷呢?“我不喜欢水冷的。可靠性让我担心,而且我不觉得 AIO 系统会比好的风冷系统好。另外,定制那些系统太费劲了,我担心水泵和潺潺的噪音。” Torvalds 说。

Torvalds 说,“额外的风扇是因为我喜欢那种推拉式的设置,140mm 大的猫头鹰风扇低速运行,我不用担心噪音问题。即使是在负载下加速运行时,我也不觉得那些风扇的噪音很烦人。它更多的是一种舒缓的‘嗖嗖’的白噪音,没有劣质风扇所带来的恼人的呜呜声或嘎嘎声。”

#### 机箱:德商必酷(Be Quiet)Dark Base 700

显然程序员在工作时都想要安静,Torvalds 也不例外,那么德商必酷(Be Quiet)Dark Base 700 PC 机箱自然是首选。

其实 Torvalds 更喜欢猫头鹰,德商必酷只是第二选择,但是猫头鹰没有做机箱产品。

#### 附加风扇:德商必酷(Be Quiet)Silent Wings 3

高性能 PC 需要大量的电能,这又意味着它们会产生大量的热量。 自然,重视安静的 Torvalds 又选择了一块德商必酷(Be Quiet) Silent Wings 3 风扇。

为什么要多一个风扇?Torvalds 解释说:“多出一个风扇(机箱已经有两个)是因为我最初订购了这个机箱,然后在看的时候,我觉得前面的进气口看起来比后面的输出口更受限制(因为前面板的缘故),反正我在等其他配件到货,所以我决定多加一个进气口风扇会更有利于空气流通,希望能造成机箱的正压,减少灰尘的担心。”

最终,为了做一台安静强大的PC,所有的努力都是值得的。

“有了 BIOS 中正确的风扇控制设置(假设你选对了风扇头。主板的纸质手册上的图片太可怕了,而且我在第一次攒机的时候把 CPU 和系统风扇头弄错了),你的机器在空载的时候基本上是静音的,而且在满载的情况下没有任何恼人的呜呜声(但不是静音)。”

#### 电源:海韵(Seasonic)Focus GX-850

快速的 CPU 也需要大量的电能,海韵(Seasonal)Focus GX-850 可以提供澎湃的电能。

GX-850 并不是 Torvald 的首选,但在新冠期间只能选到它,不过“它应该是可靠的”,Torvalds 说。他对基础的电源传输非常在意:“我基本上会考虑机器的最高功率使用量是多少?然后挑选一个额定功率为 2 倍的电源,然后寻找评论和有信誉的品牌。”

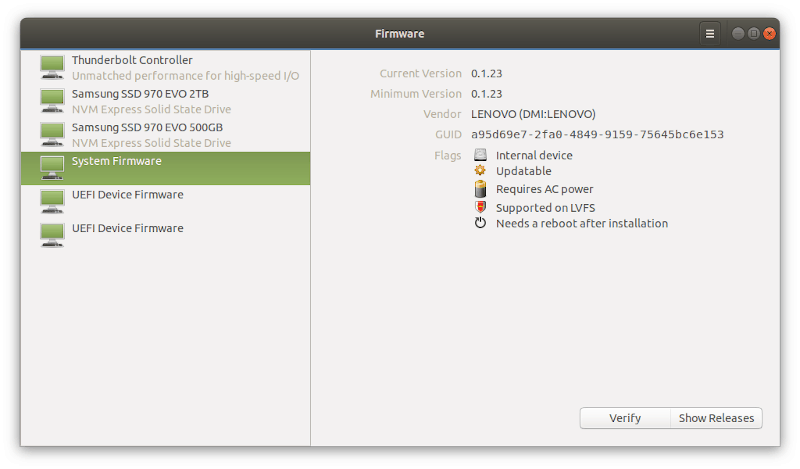

#### 存储:1TB 三星 EVO 970 SSD

说到存储,Torvalds 说现在已经是固态硬盘(SSD)的天下:“到现在为止,我已经十几年没接触过旋转介质了,在过去的几代产品中,我尽量避免布线这些麻烦,只用 m.2 外形的固态硬盘。我用过几款三星的固态硬盘,它们一直都很好。几代以前有很多坏的固态硬盘,现在已经不是什么问题了,但我还是坚持用适合自己的东西。”

#### 内存:4 条 16GB DDR4-2666

在理想的情况下,当然是 64GB 的纠错码(ECC)内存更好,不过这次他只是选择了高质量的通用 DDR RAM。

事实证明,内存是 Torvalds 的一个痛点:“这其实是我攒的机器里面最不喜欢的部分 —— 它是个好内存,但我真的很想要 ECC(错误校正代码)内存。我很难在亚马逊上找到任何(价格合理)的东西,所以这个我觉得是暂时的‘够用了’,实际用起来起来还不错。”

此外,他继续说道:“其实我甚至不需要 64GB 内存,因为我做的东西往往都不太需要内存,但我想把四个内存通道都填满,而且内存很便宜。”

虽然游戏和人工智能和机器学习的开发者们都很关心图形,但视频和图像处理并不在 Torvalds 的兴趣范围之内。他用的是“随便选的蓝宝石 RX580 显卡。对于我所做的工作(台式机使用,不玩游戏)来说,这已经足够了。”

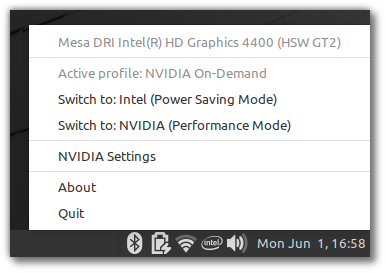

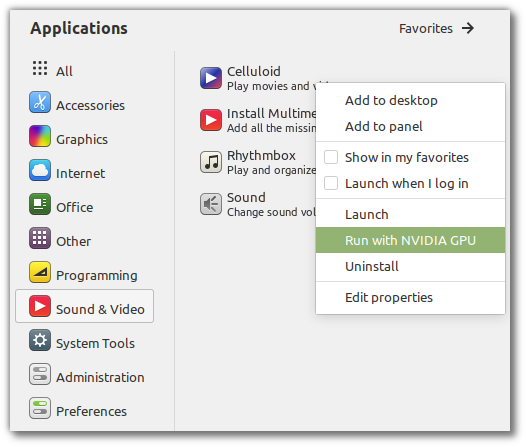

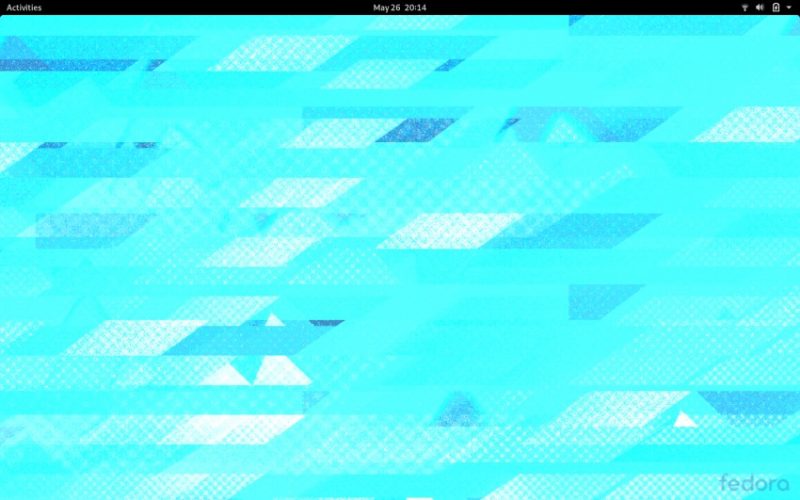

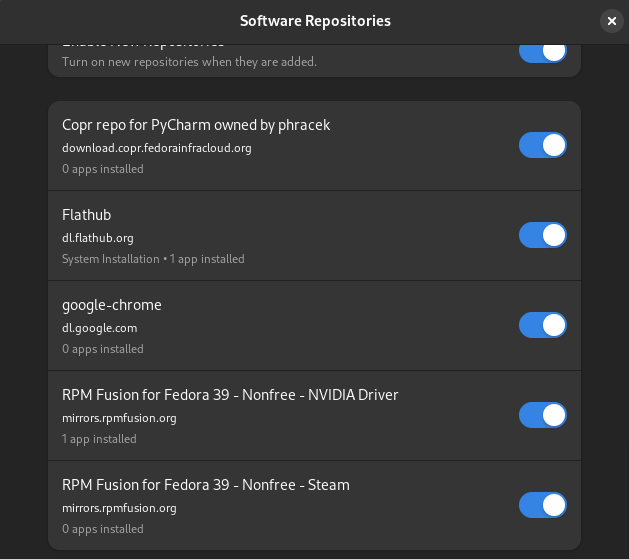

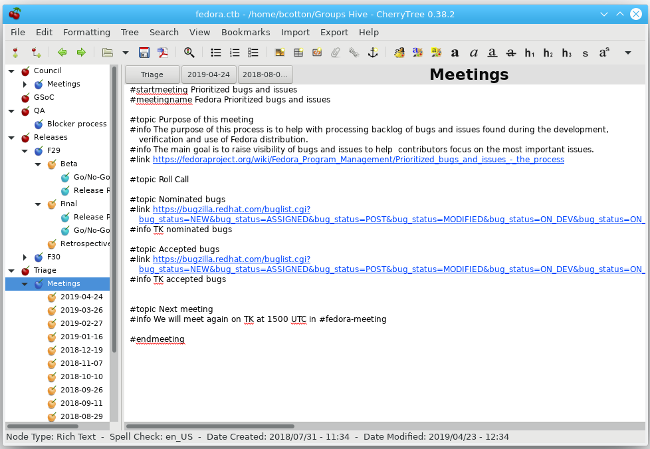

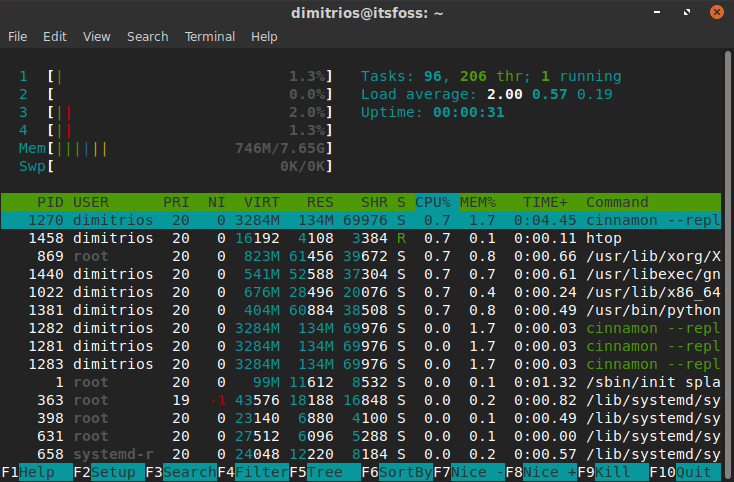

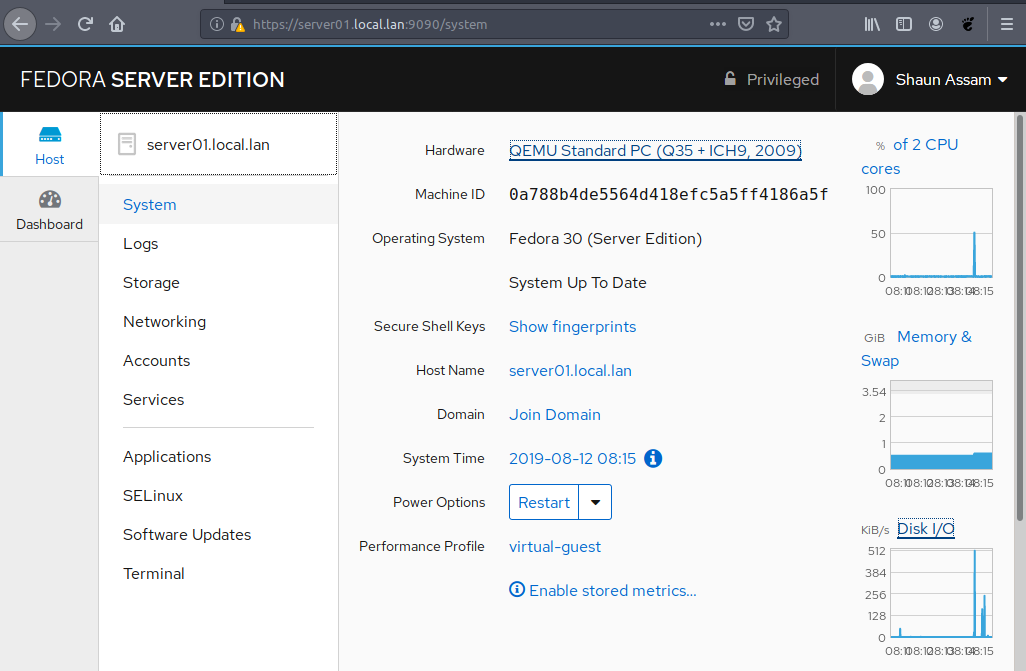

#### 操作系统:Linux Fedora 32

这没什么说的,Torvalds 肯定是用的 Linux ,他一直很喜欢用 Red Hat 的 Fedora 进行开发。当然,用的是最新版本的 Fedora 32。

“把它拼凑在一起,确保所有的风扇设置正确,然后在上面安装 Fedora 32,你就有了一个相当不错的工作站”,Torvalds 说。

除了这台工作站之外,Torvalds 日常使用笔记本电脑进行编程,他使用的是戴尔 XPS 13。

Torvalds 说,“通常情况下,我不会说出品牌,但对 XPS 13 我破例了,我实在太喜欢它了,以至于在我女儿上大学的时候,我还为她买了一台。”

### 羡慕吗?

要不要也攒一台呢,也就 3 万来块钱 :D

| 200 | OK | # Look what's inside Linus Torvalds' latest Linux development PC

In a [Linux Kernel Mailing List (LKML](https://lkml.org/)), Linus Torvalds, Linux's top developer, talked about the latest progress in the next version of Linux: [Linux 5.7-rc7](http://lkml.iu.edu/hypermail/linux/kernel/2005.3/00406.html). Along the way, he mentioned, "for the first time in about 15 years, my desktop isn't Intel-based." In his newest development box, he's "rocking an [AMD Threadripper 3970x](https://www.zdnet.com/article/amd-unveils-worlds-most-powerful-desktop-cpus/)." But a computer is more than just a processor no matter how speedy it is, so I talked with Torvalds to see exactly what's in his new box.

First, he's already impressed by its performance:

"My 'allmodconfig' test builds are now three times faster than they used to be, which doesn't matter so much right now during the calming down period, but I will most definitely notice the upgrade during the next merge window," said Torvalds.

The AMD Threadripper 3970x comes with 32 cores. It's built using AMD's 7-nanometer "Zen 2" core architecture, and it boasts 88 PCIe 4.0. AMD claims it's up to 90% faster than its competition. [Phoronix](https://www.phoronix.com/)'s independent tests found the "[Threadripper 3970X absolutely dominates in performance](https://www.phoronix.com/scan.php?page=article&item=amd-linux-3960x-3970x&num=1) and "outpaces the Core i9 10980XE."

Torvalds is a build-your-own box type of guy.

"I typically build all my own machines. Usually they are frankenboxes -- I'll re-use the case or the SSD from the previous machine or something like that. This time it was an all-new build," he said.

Why do it yourself?

"I don't like having others build my machine, partly because I have my own specs I care most about, but partly I get self-conscious about getting donations that I no longer really need," Torvalds said.

Before this latest build, his box was an [i9-9900k](https://www.zdnet.com/article/intel-9600k-9700k-and-9900k-cpu-specs/). Normally, Torvalds would just pop into the local Fry's to pick up some of the more basic stuff directly, but with the virus, this time it was all from Amazon. The pieces came in over a few weeks (no more two-day shipping of computer parts these days); the last two pieces came last Friday.

So, here's Torvald's annotated hardware list:

### CPU

**See it now:**[Ryzen Threadripper 3970X](https://www.amazon.com/AMD-Ryzen-Threadripper-3970X-64-Thread/dp/B0815JJQQ8/?tag=zdnet-deals-20)

"Initially, my plan was actually to do an AM4 board and just a Ryzen 3950X, which is the more mainstream upgrade," Torvalds said, but the "Ryzen 3950X would have been an upgrade over the Intel i9-9900K, but it would only have been an incremental one."

"Normally, I go for the regular consumer CPU's, since they tend to be the best bang for the buck, and in the case of Intel CPU's I actually like that they just have integrated graphics. I don't care about the GPU very much, so an integrated one is fine, and it avoids the worry about picking the right GPU and possible noise-levels from one with bad fans."

Torvalds went "back-and-forth about that for a while," because, as he said:

"The Threadripper power use made me worry about noise. But I decided to do a big upgrade because unlike the traditional Intel Xeon high-core-count platform, AMD's Threadripper series still falls in the 'good bang for the buck.' So I bit the bullet, and am so far quite pleased."

### Motherboard

**See it now: Gigabyte Aorus TRX40 Master**

Here, Torvald's main concern was:

"A board that had what looked like good power delivery and fan control. In the builds I do, I really want the basics to be solid, and there's little more basic than power delivery. Long long ago I had a couple of systems that were unreliable due to power brown-out, and I've gotten religious about this now. So I look for something that is good for overclocking, and then I do _not_ overclock things."

In short, he wants a PC that can handle a heavy load, but he's not going to push the machine to its limits. That said, Torvalds absolutely detests:

"The default fan settings of this motherboard (very whiny small high-RPM fan for VRM [Voltage Regulator Module] cooling), but you can tweak the BIOS settings to something much better. Also note to anybody else: This is an E-ATX board, so it can be inconvenient in the wrong case."

### Fan

**See it now:**[Noctua NF-A14 PWM, Premium Quiet Fan](https://www.amazon.com/Noctua-NF-A14-PWM-Premium-Cooling/dp/B00CP6QLY6/?tag=zdnet-deals-20)

As you can tell, noise is a big issue for Torvalds. He cares deeply about it:

"So I want good fans and coolers, and I've had good experiences with Noctua before," Torvalds said. "The extra fan is because I like that push-pull setup, and with a big 140mm Noctua fan running at low speed, I'm not worried about noise levels. Even when it ramps up under load, I don't find the noise of those fans annoying. It's more of a soothing 'whoosh" white noise sound, none of the annoying whining or rattling that you get with bad fans."

### CPU Cooler

**See it now:**[Noctua NH-U14S](https://www.amazon.com/Noctua-NH-U14S-Premium-Cooler-NF-A15/dp/B00C9FLSLY/?tag=zdnet-deals-20)and Noctua[NF-A15](https://www.amazon.com/Noctua-NF-A15-PWM-Premium-Cooling/dp/B00AED7XFI/?tag=zdnet-deals-20)

Torvalds uses two CPU cooler fans. The NH-U14S is the main one, while the extra NF-A15 fan is for the push-pull configuration of that cooler.

With all his concern about noise, why not water-cooling, you ask?

"I'm not a fan of water-cooling. Reliability worries me, and I'm not convinced the AIO systems are any better than a good air cooling system. And the custom ones are way too much effort, and I worry about the pump and gurgling noises," Torvalds said.

### Case

**See it now:**[Be Quiet Dark Base 700](https://www.amazon.com/Power-Modular-Platinum-fuente-alimentación/dp/B0773NWQNQ/?tag=zdnet-deals-20)

For the case, it's once again all about noise reduction.

"I like Noctua fans better than the Be Quiet ones for some reason. But Be Quiet would have been my second choice, and Noctua doesn't make cases," he said.

### Extra fan

**See it now:**[Silent Wings 3](https://www.amazon.com/quiet-BL066-SILENTWINGS-1450RPM-50-5CFM/dp/B01JMEDDYY/?tag=zdnet-deals-20)

Why an extra fan? Torvalds explained:

"The extra fan (the case comes with two already) is because I initially ordered the case, and then when looking at it I decided that it looks like the front intake looks more restricted than the back output (because of the front panel), and since I was waiting for other parts to be delivered anyway, I decided that an extra intake fan would be better for airflow, and hopefully cause positive case pressure and less worry about dust."

In the end, all the effort to make a quiet powerful PC was worth it.

"With the right fan control setup in the BIOS (and assuming you picked the right fan headers: The motherboard paper manual had horrible pictures and I got the CPU and system fan header the wrong way round for the first build), you have a machine that is basically silent when idling, and without any annoying whine (but not silent) under full load."

### Power Supply Unit

**See it now:**[Seasonic Focus GX-850](https://www.amazon.com/Seasonic-GX-850-Full-Modular-Application-SSR-850FX/dp/B07WVMDZMZ/?tag=zdnet-deals-20)

The GX-850 wasn't Torvald's first choice, but availability during the time of the coronavirus made it what it is, but "it should be solid," Torvalds said. He cares deeply about power delivery basics:

"I basically go 'what's the top power use of the machine?,' and then pick a power supply with a rating 2x that, and then look for reviews and reputable brands."

### Storage

**See it now:**[1TB Samsung EVO 970](https://www.amazon.com/Samsung-970-EVO-1TB-MZ-V7E1T0BW/dp/B07BN217QG/?tag=zdnet-deals-20)

When it comes to storage, Torvalds is solid-state drives (SSD) all the way:

"I've refused to touch spinning media for over a decade by now, and for the last several generations I've tried to avoid the hassle with cabling, etc., by just going with an m.2 form factor. I've had several of the Samsung SSD's, they've been fine. A few generations ago there were lots of bad SSD's, these days it's much less of an issue, but I've stuck with what works for me."

### Memory

**See it now:**[4x16GB DDR4-2666](https://www.amazon.com/s?k=4x16GB+DDR4-2666+memory&i=electronics&ref=nb_sb_noss&tag=zdnet-deals-20)

RAM proved to be a sore spot for Torvalds:

"This is actually the least favorite part of the build for me -- it's fine memory, but I really wanted ECC [Error-correcting code} memory. I had a hard time finding anything [priced sanely] on Amazon, so this I feel is a temporary 'good enough for now' thing that works fine in practice."

Besides, he continued:

"I don't actually even need 64GB of RAM, since the stuff I do doesn't tend to be all that memory-intensive, but I wanted to populate all four memory channels, and RAM is cheap."

While games and artificial intelligence and machine learning developers care deeply about graphics, video and image processing doesn't interest Torvalds much. He used:

"Some random Sapphire RX580 graphics card. It's overkill for what I do (desktop use, no games)."

### Linux

**See it now:**[Fedora 32](https://getfedora.org/)

That's it.

"Slap it all together, make sure you get all the fan settings right, and (in my case) install Fedora 32 on it, and you've got a fairly pleasant workstation," Torvalds said.

While for his main workstation, Torvalds builds his own, he also has cutting edge OEM PCs for "access to new technology that I might not otherwise have bought myself."

For his laptop, he uses a [Dell XPS 13](https://www.anrdoezrs.net/links/9041660/type/dlg/sid/zd-4db3425b61b14bbcb0560d6eee7de6e5--/https://www.dell.com/en-us/work/shop/overview/cp/linuxsystems).

"Normally, Torvalds said, "I wouldn't name names, but I'm making an exception for the XPS 13 just because I liked it so much that I also ended up buying one for my daughter when she went off to college.

#### Here's all the hardware and where to buy it

Torvalds concluded:

"If the above makes you go 'Linus has too much hardware,' you'd be right. Usually I have one main box, and usually it's something I built myself."

**Related Stories:**

[Editorial standards](/editorial-guidelines/) |

12,263 | 在 Linux 上检查 MySQL/MariaDB 数据库正常运行时间的三种方法 | https://www.2daygeek.com/check-mysql-mariadb-database-server-uptime-linux/ | 2020-05-29T21:10:38 | [

"MySQL",

"MariaDB"

] | https://linux.cn/article-12263-1.html |

我们都知道在 Linux 中使用 `uptime` 命令的目的。它用于检查 [Linux 系统的正常运行时间](https://www.2daygeek.com/linux-system-server-uptime-check/)以及系统上次启动以来运行的时间。

而 Linux 管理员的工作是保持系统正常运行。

如果要检查 Linux 上的其他服务(例如 [Apache](https://www.2daygeek.com/check-find-apache-httpd-web-server-uptime-linux/)、MySQL、MariaDB、sftp 等)运行了多长时间,该怎么做?

每个服务都有自己的命令来检查服务的正常运行时间。但是你也可以为此使用其他命令。

### 方法 1:如何使用 ps 命令在 Linux 上检查 MySQL/MariaDB 数据库的正常运行时间

[ps 命令](https://www.2daygeek.com/linux-ps-command-find-running-process-monitoring/)的意思是<ruby> 进程状态 <rt> process status </rt></ruby>。这是最基本的命令之一,它显示了系统正在运行的进程的详细信息。

为此,你首先需要使用 [pidof 命令](https://www.2daygeek.com/check-find-parent-process-id-pid-ppid-linux/)查找 [MySQL](https://www.2daygeek.com/category/mysql/)/[MariaDB](https://www.2daygeek.com/category/mariadb/) 的 PID。

```

# pidof mysqld | cut -d" " -f1

2412

```

获取 MySQL/MariaDB 的 PID 后,请在 `ps` 命令中使用 `--etime` 选项获得正常运行时间。

* `--etime`:自进程启动以来经过的时间,形式为 `[[DD-]hh:]mm:ss`。

```

# ps -p 2412 -o etime

ELAPSED

2-08:49:30

```

或者,在 `ps` 命令中使用 `--lstart` 选项来获取指定 PID 的正常运行时间。

```

# ps -p 2412 -o lstart

STARTED

Sat May 2 03:02:15 2020

```

MySQL/MariaDB 进程已经运行了 2 天 03 小时 02 分 15 秒。

### 方法 2:如何使用 systemctl 命令在 Linux 上检查 MySQL/MariaDB 数据库的正常运行时间

[systemctl 命令](https://www.2daygeek.com/sysvinit-vs-systemd-cheatsheet-systemctl-command-usage/)用于控制 systemd 系统和服务管理器。

systemd 是新的初始化系统和系统管理器,现在大多数 Linux 发行版都淘汰了传统的 SysVinit 管理器而采用了 systemd。

```

# systemctl status mariadb

或者

# systemctl status mysql

● mariadb.service - MariaDB 10.1.44 database server

Loaded: loaded (/usr/lib/systemd/system/mariadb.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/mariadb.service.d

└─migrated-from-my.cnf-settings.conf

Active: active (running) since Sat 2020-05-02 03:02:18 UTC; 2 days ago

Docs: man:mysqld(8)

https://mariadb.com/kb/en/library/systemd/

Process: 2448 ExecStartPost=/bin/sh -c systemctl unset-environment _WSREP_START_POSITION (code=exited, status=0/SUCCESS)

Process: 2388 ExecStartPre=/bin/sh -c [ ! -e /usr/bin/galera_recovery ] && VAR= || VAR=/usr/bin/galera_recovery; [ $? -eq 0 ] && systemctl set-environment _WSREP_START_POSITION=$VAR || exit 1 (code=exited, status=0/SUCCESS)

Process: 2386 ExecStartPre=/bin/sh -c systemctl unset-environment _WSREP_START_POSITION (code=exited, status=0/SUCCESS)

Main PID: 2412 (mysqld)

Status: "Taking your SQL requests now…"

CGroup: /system.slice/mariadb.service

└─2412 /usr/sbin/mysqld

May 03 21:41:26 ns2.2daygeek.com mysqld[2412]: 2020-05-03 21:41:26 140328136861440 [Warning] Host name '1.1.1.1' could not be resolved: … not known

May 04 02:00:46 ns2.2daygeek.com mysqld[2412]: 2020-05-04 2:00:46 140328436418304 [Warning] IP address '1.1.1.1' has been resolved to the host name '2…ss itself.

May 04 03:01:31 ns2.2daygeek.com mysqld[2412]: 2020-05-04 3:01:31 140328436111104 [Warning] IP address '1.1.1.1' could not be resolved: Temporary fai…resolution

May 04 04:03:06 ns2.2daygeek.com mysqld[2412]: 2020-05-04 4:03:06 140328136861440 [Warning] IP address '1.1.1.1' could not be resolved: Name or ser… not known

May 04 07:23:54 ns2.2daygeek.com mysqld[2412]: 2020-05-04 7:23:54 140328435189504 [Warning] IP address '1.1.1.1' could not be resolved: Name or service not known

May 04 08:03:31 ns2.2daygeek.com mysqld[2412]: 2020-05-04 8:03:31 140328436418304 [Warning] IP address '1.1.1.1' could not be resolved: Name or service not known

May 04 08:25:56 ns2.2daygeek.com mysqld[2412]: 2020-05-04 8:25:56 140328135325440 [Warning] IP address '1.1.1.1' could not be resolved: Name or service not known

Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

Hint: Some lines were ellipsized, use -l to show in full.

```

### 方法 3:如何使用 MySQLAdmin 命令在 Linux 上检查 MySQL/MariaDB 数据库的正常运行时间

[MySQLAdmin](https://www.2daygeek.com/linux-mysqladmin-command-administrate-mysql-mariadb-server/) 是安装 MySQL 软件包时安装的 MySQL 服务器命令行程序。

MySQLAdmin 客户端允许你在 MySQL 服务器上执行一些基本的管理功能。

它用于创建数据库、删除数据库、设置 root 密码、更改 root 密码、检查 MySQL 状态、验证 MySQL 功能、监视 mysql 进程以及验证服务器的配置。

```

# mysqladmin -u root -pPassword version

mysqladmin Ver 8.42 Distrib 5.7.27, for Linux on x86_64

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Server version 5.7.27

Protocol version 10

Connection Localhost via UNIX socket

UNIX socket /var/lib/mysql/mysql.sock

Uptime: 1 day 10 hours 44 min 13 sec

```

---

via: <https://www.2daygeek.com/check-mysql-mariadb-database-server-uptime-linux/>

作者:[Magesh Maruthamuthu](https://www.2daygeek.com/author/magesh/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 404 | Not Found | null |

12,264 | 怎样在树莓派上安装 TT-RSS | https://opensource.com/article/20/2/ttrss-raspberry-pi | 2020-05-29T23:04:19 | [

"RSS"

] | https://linux.cn/article-12264-1.html |

>

> 使用 Tiny Tiny RSS 来保护你阅读新闻馈送时的隐私。

>

>

>

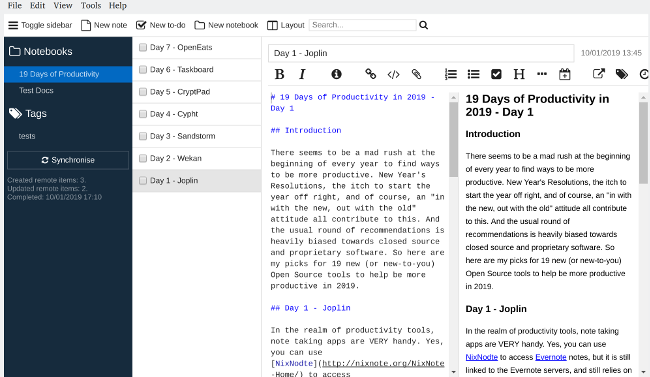

[Tiny Tiny RSS](https://tt-rss.org/)(TT-RSS)是一个自由开源的基于 Web 的新闻<ruby> 馈送 <rt> feed </rt></ruby>(RSS/Atom)阅读器和聚合工具。它非常适合那些注重隐私,并且仍然依赖 RSS 来获取日常新闻的人。TT-RSS 是自行托管的软件,因此你可以 100% 的掌控你的服务器、数据以及你的全部隐私。它还支持大量的插件、扩展和主题。你喜欢黑暗模式的界面?没问题。想基于关键词过滤发来的消息?TT-RSS 也能让你得偿所愿。

现在你知道 TT-RSS 是什么了,那么为什么你可能会想用它。我会讲述要把它安装到树莓派或 Debian 10 服务器上你需要了解的所有的东西。

### 安装和配置 TT-RSS

要把 TT-RSS 安装到树莓派上,你还需要安装和配置最新版本的 PHP(本文撰写时 PHP 最新版本是 7.3)、后端数据库 PostgreSQL、Nginx web 服务器、Git,最后才是 TT-RSS。

#### 1、安装 PHP 7

安装 PHP 7 是整个过程中最复杂的部分。幸运的是,它并不像看起来那样困难。从安装下面的支持包开始:

```

$ sudo apt install -y ca-certificates apt-transport-https

```

现在,添加存储库 PGP 密钥:

```

$ wget -q https://packages.sury.org/php/apt.gpg -O- | sudo apt-key add -

```

下一步,把 PHP 库添加到你的 apt 源:

```

$ echo "deb https://packages.sury.org/php/ buster main" | sudo tee /etc/apt/sources.list.d/php.list

```

然后更新你的存储库索引:

```

$ sudo apt update

```

最后,安装 PHP 7.3(或最新版本)和一些通用组件:

```

$ sudo apt install -y php7.3 php7.3-cli php7.3-fpm php7.3-opcache php7.3-curl php7.3-mbstring php7.3-pgsql php7.3-zip php7.3-xml php7.3-gd php7.3-intl

```

上面的命令默认你使用的后端数据库是 PostgreSQL,会安装 `php7.3-pgsql`。如果你想用 MySQL 或 MariaDB,你可以把命令参数改为 `php7.3-mysql`。

下一步,确认 PHP 已安装并在你的树莓派上运行着:

```

$ php -v

```

现在是时候安装和配置 Web 服务器了。

#### 2、安装 Nginx

可以用下面的命令安装 Nginx:

```

$ sudo apt install -y nginx

```

修改默认的 Nginx 虚拟主机配置,这样 Web 服务器才能识别 PHP 文件以及知道如何处理它们。

```

$ sudo nano /etc/nginx/sites-available/default

```

你可以安全地删除原文件中的所有内容,用下面的内容替换:

```

server {

listen 80 default_server;

listen [::]:80 default_server;

root /var/www/html;

index index.html index.htm index.php;

server_name _;

location / {

try_files $uri $uri/ =404;

}

location ~ \.php$ {

include snippets/fastcgi-php.conf;

fastcgi_pass unix:/run/php/php7.3-fpm.sock;

}

}

```

按 `Ctrl+O` 保存修改后的配置文件,然后按 `Ctrl+X` 退出 Nano。你可以用下面的命令测试你的新配置文件:

```

$ nginx -t

```

如果没有报错,重启 Nginx 服务:

```

$ systemctl restart nginx

```

#### 3、安装 PostgreSQL

接下来是安装数据库服务器。在树莓派上安装 PostgreSQL 超级简单:

```

$ sudo apt install -y postgresql postgresql-client postgis

```

输入下面的命令看一下数据库服务器安装是否成功:

```

$ psql --version

```

#### 4、创建 Tiny Tiny RSS 数据库

在做其他事之前,你需要创建一个数数据库,用来给 TT-RSS 软件保存数据。首先,登录 PostgreSQL 服务器:

```

sudo -u postgres psql

```

下一步,新建一个用户,设置密码:

```

CREATE USER username WITH PASSWORD 'your_password' VALID UNTIL 'infinity';

```

然后创建一个给 TT-RSS 用的数据库:

```

CREATE DATABASE tinyrss;

```

最后,给新建的用户赋最高权限:

```

GRANT ALL PRIVILEGES ON DATABASE tinyrss to user_name;

```

这是安装数据库的步骤。你可以输入 `\q` 来退出 `psql` 程序。

#### 5、安装 Git

安装 TT-RSS 需要用 Git,所以输入下面的命令安装 Git:

```

$ sudo apt install git -y

```

现在,进入到 Nginx 服务器的根目录:

```

$ cd /var/www/html

```

下载 TT-RSS 最新源码:

```

$ git clone https://git.tt-rss.org/fox/tt-rss.git tt-rss

```

注意,这一步会创建一个 `tt-rss` 文件夹。

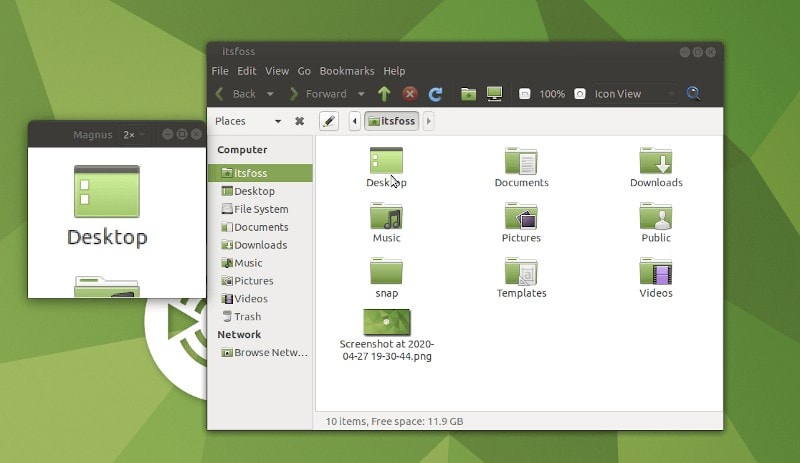

#### 6、安装和配置Tiny Tiny RSS

现在是安装和配置你的新 TT-RSS 服务器的最后时刻了。首先,确认下你在浏览器中能打开 `http://your.site/tt-rss/install/index.php`。如果浏览器显示 `403 Forbidden`,那么就证明 `/var/www/html` 文件夹的权限没有设置正确。下面的命令通常能解决这个问题:

```

$ chmod 755 /var/www/html/ -v

```

如果一切正常,你会看到 TT-RSS 安装页面,它会让你输入一些数据的信息。你只需要输入前面你创建的数据库用户名和密码;数据库名;主机名填 `localhost`;端口填 `5432`。

点击“Test Configuration”。如果一切正常,你会看到一个标记着“Initialize Database”的红色按钮。点击它来开始安装。结束后,你会看到一个配置文件,你可以把它复制到 TT-RSS 的目录,另存为 `config.php`。

安装过程结束后,浏览器输入 `http://yoursite/tt-rss/` 打开 TT-RSS,使用默认的凭证登录(用户名:`admin`,密码:`password`)。登录后系统会提示你修改密码。我强烈建议你尽快修改密码。

### 配置 TT-RSS

如果一切正常,你现在就可以开始使用 TT-RSS 了。建议你新建一个非管理员用户,使用新用户名登录,并开始导入你的馈送、订阅,按照你的意愿来配置它。

最后,并且是超级重要的事,不要忘了阅读 TT-RSS 维基上的 [Updating Feeds](https://tt-rss.org/wiki/UpdatingFeeds) 部分。它讲述了如何创建一个简单的 systemd 服务来更新馈送。如果你跳过了这一步,你的 RSS 馈送就不会自动更新。

### 总结

呵!工作量不小,但是你做完了!你现在有自己的 RSS 聚合服务器了。想了解 TT-RSS 更多的知识?我推荐你去看官方的 [FAQ](https://tt-rss.org/wiki/FAQ)、[支持](https://community.tt-rss.org/c/tiny-tiny-rss/support)论坛,和详细的[安装](https://tt-rss.org/wiki/InstallationNotes)笔记。如果你有任何问题,尽情地在下面评论吧。

---

via: <https://opensource.com/article/20/2/ttrss-raspberry-pi>

作者:[Patrick H. Mullins](https://opensource.com/users/pmullins) 选题:[lujun9972](https://github.com/lujun9972) 译者:[lxbwolf](https://github.com/lxbwolf) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | [Tiny Tiny RSS](https://tt-rss.org/) (TT-RSS) is a free and open source web-based news feed (RSS/Atom) reader and aggregator. It's ideally suited to those who are privacy-focused and still rely on RSS for their daily news. Tiny Tiny RSS is self-hosted software, so you have 100% control of the server, your data, and your overall privacy. It also supports a wide range of plugins, add-ons, and themes, Want a dark mode interface? No problem. Want to filter your incoming news based on keywords? TT-RSS has you covered there, as well.

Now that you know what TT-RSS is and why you may want to use it, I'll explain everything you need to know about installing it on a Raspberry Pi or a Debian 10 server.

## Install and configure TT-RSS

To install TT-RSS on a Raspberry Pi, you must also install and configure the latest version of PHP (7.3 as of this writing), PostgreSQL for the database backend, the Nginx web server, Git, and finally, TT-RSS.

### 1. Install PHP 7

Installing PHP 7 is, by far, the most involved part of this process. Thankfully, it's not as difficult as it might appear. Start by installing the following support packages:

`$ sudo apt install -y ca-certificates apt-transport-https`

Now, add the repository PGP key:

`$ wget -q https://packages.sury.org/php/apt.gpg -O- | sudo apt-key add -`

Next, add the PHP repository to your apt sources:

`$ echo "deb https://packages.sury.org/php/ buster main" | sudo tee /etc/apt/sources.list.d/php.list`

Then update your repository index:

`$ sudo apt update`

Finally, install PHP 7.3 (or the latest version) and some common components:

`$ sudo apt install -y php7.3 php7.3-cli php7.3-fpm php7.3-opcache php7.3-curl php7.3-mbstring php7.3-pgsql php7.3-zip php7.3-xml php7.3-gd php7.3-intl`

The command above assumes you're using PostgreSQL as your database backend and installs **php7.3-pgsql**. If you'd rather use MySQL or MariaDB, you can easily change this to **php7.3-mysql**.

Next, verify that PHP is installed and running on your Raspberry Pi:

`$ php -v`

Now it's time to install and configure the webserver.

### 2. Install Nginx

Nginx can be installed via apt with:

`$ sudo apt install -y nginx`

Modify the default Nginx virtual host configuration so that the webserver will recognize PHP files and know what to do with them:

`$ sudo nano /etc/nginx/sites-available/default`

You can safely delete everything in the original file and replace it with:

```

server {

listen 80 default_server;

listen [::]:80 default_server;

root /var/www/html;

index index.html index.htm index.php;

server_name _;

location / {

try_files $uri $uri/ =404;

}

location ~ \.php$ {

include snippets/fastcgi-php.conf;

fastcgi_pass unix:/run/php/php7.3-fpm.sock;

}

}

```

Use **Ctrl+O** to save your new configuration file and then **Ctrl+X** to exit Nano. You can test your new configuration with:

`$ nginx -t`

If there are no errors, restart the Nginx service:

`$ systemctl restart nginx`

### 3. Install PostgreSQL

Next up is installing the database server. Installing PostgreSQL on the Raspberry Pi is super easy:

`$ sudo apt install -y postgresql postgresql-client postgis`

Check to see if the database server was successfully installed by entering:

`$ psql --version`

### 4. Create the Tiny Tiny RSS database

Before you can do anything else, you need to create a database that the TT-RSS software will use to store data. First, log into the PostgreSQL server:

`sudo -u postgres psql`

Next, create a new user and assign a password:

`CREATE USER username WITH PASSWORD 'your_password' VALID UNTIL 'infinity';`

Then create the database that will be used by TT-RSS:

`CREATE DATABASE tinyrss;`

Finally, grant full permissions to the new user:

`GRANT ALL PRIVILEGES ON DATABASE tinyrss to user_name;`

That's it for the database. You can exit the **psql** app by typing **\q**.

### 5. Install Git

Installing TT-RSS requires Git, so install Git with:

`$ sudo apt install git -y`

Now, change directory to wherever Nginx serves web pages:

`$ cd /var/www/html`

Then download the latest source for TT-RSS:

`$ git clone https://git.tt-rss.org/fox/tt-rss.git tt-rss`

Note that this process creates a new **tt-rss** folder.

### 6. Install and configure Tiny Tiny RSS

It's finally time to install and configure your new TT-RSS server. First, verify that you can open ** http://your.site/tt-rss/install/index.php** in a web browser. If you get a

**403 Forbidden**error, your permissions are not set properly on the

**/var/www/html**folder. The following will usually fix this issue:

`$ chmod 755 /var/www/html/ -v`

If everything goes as planned, you'll see the TT-RSS Installer page, and it will ask you for some database information. Just tell it the database username and password that you created earlier; the database name; **localhost** for the hostname; and **5432 **for the port.

Click **Test Configuration** to continue. If all went well, you should see a red button labeled **Initialize Database.** Click on it to begin the installation. Once finished, you'll have a configuration file that you can copy and save as **config.php** in the TT-RSS directory.

After finishing with the installer, open your TT-RSS installation at ** http://yoursite/tt-rss/** and log in with the default credentials (username:

**admin**, password:

**password**). The system will recommend that you change the admin password as soon as you log in. I highly recommend that you follow that advice and change it as soon as possible.

## Set up TT-RSS

If all went well, you can start using TT-RSS right away. It's recommended that you create a new non-admin user, log in as the new user, and start importing your feeds, subscribing, and configuring it as you see fit.

Finally, and this is super important, don't forget to read the [Updating Feeds](https://tt-rss.org/wiki/UpdatingFeeds) section on TT-RSS's wiki. It describes how to create a simple systemd service that will update your feeds. If you skip this step, your RSS feeds will not update automatically.

## Conclusion

Whew! That was a lot of work, but you did it! You now have your very own RSS aggregation server. Want to learn more about TT-RSS? I recommend checking out the official [FAQ](https://tt-rss.org/wiki/FAQ), the [support](https://community.tt-rss.org/c/tiny-tiny-rss/support) forum, and the detailed [installation](https://tt-rss.org/wiki/InstallationNotes) notes. Feel free to comment below if you have any questions or issues.

## Comments are closed. |

12,267 | 如何为开源软件写作文章 | https://opensource.com/article/20/5/write-about-open-source-software | 2020-05-31T11:51:23 | [

"写作",

"作者"

] | https://linux.cn/article-12267-1.html |

>

> 通过以下提示,成为一个更好的作者。

>

>

>

开始接触开源社区的一个方法就是写关于它的文章。你可以贡献技术文档,分享你如何使用软件,或者为我们社区写一篇文章。但是开始写作说起来容易做起来难。我听到的最常见的两个不写文章的借口是:“我没有什么新东西可写”和“我不是一个好的作者”。我在这里是为了打破这两个误区。

### 你应该写什么?

>

> “寻找那些经常被遗漏的故事。” 埃里克·拉尔森

>

>

>

对于某些人来说,写作的最大障碍是找到要写的想法或话题。很容易就落入了这样的思维陷阱:“这个话题已经写过了,何必再麻烦。”

我不是第一个写文章的人,也不会是最后一个。我带来的是我独特的视角和这些年来所学到的东西。曾经有人向我请教过一些关于写作入门的建议,或者是如何让自己的写作更上一层楼。我决定把这些建议变成一篇文章。

文如其人,没有人会以你的方式来述事,你的经验和观点可能正是别人所需要的。

这里有一些提示,可以帮助你想出一个主题:

* 你最近学到了什么东西?写出你是如何学习的,你学到了什么,或使你感到惊讶的事情。

* 你经常被问到的问题是什么?把答案写出来。

* 你最近是否在搜索一篇怎么做的文章,但在搜索结果中,你对排名靠前的文章不满意?请写出你要找的文章。

* 你是否参加过会议或研讨会?写一篇会后总结来说明你所学到的东西。

* 你开始使用新工具了吗?写一份操作指南或入门指南。

### 你写的是什么类型的文章?

有不同类型的文章,包括:

* 技术文档

* 操作指南

* 博客

* 白皮书或电子书

* 回顾性的文章

内容的类型会影响你的写作风格和语气。博客更加非正式和是对话式的。而技术文档更正式,更具有指导性。

### 你是为谁而写的?

每一篇文章都应该有一个单一的受众。受众是指你为之写作的对象是什么类型的人。在你开始写作之前,写下你的读者的一些特征是有助于写作的。重要的是要考虑到你要为谁写,以及你**不是为**谁写的 —— 确定你的目标受众将决定要包括哪些内容和不包括哪些内容。

例如,我在写这篇文章的时候,我设想的目标受众是这样的:

* 有基本的写作和语法知识

* 有兴趣提高写作能力

* 在技术领域担任开发人员、销售工程师、客户经理或类似职位的工作

* 不是经验丰富或资深的作者,可能在个人或工作博客上发表过几篇文章,想写更多的文章。

* 非虚构写作

如果你有针对多个受众的内容,可以考虑针对不同的受众将其分解成不同的内容。对你的受众要考虑的一些方面:

* 专业水平:新手、中级、高级

* 作用:管理人员、个人贡献者

* 目标:他们为什么要阅读这些?

### 言语很重要

你选择的言语会对读者产生影响。晦涩难懂的词汇会使文章更难理解,不常见的词汇会让读者觉得自己很笨,某些词汇可能会不小心冒犯读者。作为一个作者,你的目标是避免所有这些。下面是怎么做的:

#### 使用日常用语

不要把写作作为炫耀你的词汇量或你从“每日一字”台历上学到的单词的方式。写作是为了让读者能够理解。每一篇文章都有相关的阅读水平。如果你写的是技术文档,那么你的目标大约是初中的阅读水平。这并不意味着你的受众只有初中的教育水平。它意味着你的写作会更容易被人理解。你想让人们对这些言语过目不忘,还是想让他们觉得自己学到了什么?虽然你可以使用长而复杂的词汇,但并不意味着你应该这样做。使用简单的语言不一定意味着你的文章会很无聊。

使用 [Hemingway 应用](http://www.hemingwayapp.com/)等工具来检查你的作品的可读性(它不是开源的,但很优秀)。比如说,在初稿之后,这篇文章被评定为五年级的阅读水平。Hemingway 还提供了如何改进写作的建议 —— 找出难以理解的句子或需要改变选词的地方。

如果你想要找出替代的词汇,可以查看 [Plain English Campaign](http://www.plainenglish.co.uk/the-a-z-of-alternative-words.html) 的建议或 [Power Thesaurus](https://www.powerthesaurus.org/) 的众包建议。

#### 知道应该规避哪些词

>

> “每次你想写‘非常’的时候,就用‘该死的’代替;你的编辑会把它删掉,而作品就会如它应有的那样。” 马克·吐温

>

>

>

在写教程或指南的时候,这里有一些要避免的词,包括“<ruby> 简单 <rt> simple </rt></ruby>”、“<ruby> 容易 <rt> easy </rt></ruby>”和“<ruby> 就这样 <rt> just </rt></ruby>”。你是你所写的主题的专家,经过多年的实践,可能会觉得事情很简单。而初学者可能会觉得事情不“简单”,也不“容易”。你的读者可能会感到沮丧,因为他们觉得过程或解释并不简单。

你是否曾经因为无法理解作者的意思而不得不反复重读一个句子或段落?你有没有因为一篇文章对你来说没有意义而放弃过?我有过。

作为一个作者,你希望你的读者感到困惑或不理解吗?我希望不会。

在你的写作中要避免的其它词语:

* <ruby> 这件事 <rt> That </rt></ruby>

* <ruby> 真的 <rt> Really </rt></ruby>

* <ruby> 非常 <rt> Very </rt></ruby>

* <ruby> 所以 <rt> So </rt></ruby>

* <ruby> 为了 <rt> In order to </rt></ruby>

一般来说,这些词可以在不改变句子意思的情况下删除。

在我写完之后,我会在文档中搜索这些词。当我在这篇文章中搜索时,我发现了以下这句话:

>

> “这并不意味着你的受众只有初中的教育水平*这件事*,而意味着你的写作会更容易被理解*这件事*。”

>

>

>

这句话中出现了两个“<ruby> 这件事 <rt> That </rt></ruby>”的例子。它们并没有给句子增加价值。它们可以被删除而不改变其含义。删除这些词汇可以缩短句子,并且更短的句子更容易理解。而说到短句,我还把它改写成了两句话。

>

> “这并不意味着你的受众只有初中的教育水平。它意味着你的写作会更容易被人理解。”

>

>

>

#### 使用包容性语言

词语和短语的历史背景可能会导致人们感到被排斥或冒犯。在写作时,你要让读者感到被包容。当你使用包容性的语言时,会让读者感到被理解、被尊重,感觉被包容。我参考了 Buffer 中的这篇[关于使用包容性语言的指南](https://open.buffer.com/inclusive-language-tech/)。

### 修订和编辑

>

> “几乎所有好的写作都是从糟糕的第一次努力开始的。你需要从某个地方开始。” 安妮·拉莫特

>

>

>

写作是一个迭代的过程。如果你认为作者们坐在办公桌前,在一个小时内就能完成一篇文章准备发表,那么请你再想一想。有些文章需要我花几个星期的时间来完成。以下是我的标准流程:

* 写一个粗略的初稿。我说的粗略,我的意思是*粗陋*。写的时候不用担心语法问题。目的是让文字从我的脑海中浮现出来,写在纸上。这一步可能需要一个小时到几周的时间。

* 将草稿放一段时间。这可能需要几个小时到几天的时间,这取决于出版时间线。

* 审阅草稿。进行调整和编辑。

* 征求反馈意见,可以是同事或朋友的反馈意见。在这个阶段的反馈中,我的重点是明确性。是否有意义?是否有什么令人困惑的地方?有什么缺失的部分?

* 纳入反馈意见。无论你的写作经验有多丰富,让其他作者审阅你的作品,都会让你的作品变得更好。

在这个阶段,我有了一个相当扎实的草稿。现在到了我最不喜欢的写作部分 —— 编辑。我之前提到的 Hemingway 应用不仅能告诉你阅读水平,还能提供改进写作的建议。我还使用 Grammarly 来帮助编辑语法。关于 Grammarly 的开源替代工具,请查看[语言工具网站](https://languagetool.org/)或这篇文章中的[开源写作工具](https://opensource.com/article/20/3/open-source-writing-tools)。

我的写作挑战之一就是适当地使用逗号。Grammarly 可以帮助我找出我缺失或滥用逗号的地方。Grammarly 发现了 43 个这篇文章的最终草稿的正确性问题。其中大部分是逗号错误。

除了语法错误之外,该应用程序还提供了一些建议,以帮助提高清晰度、参与度和表达能力(其中一些功能可能在免费版中没有)。这包括单词选择和使用主动语气与被动语气等。有些建议我接受,有些则拒绝。在审查了所有的警报和建议后,Grammarly 会在各个方面进行反馈。

不要害怕寻求写作上的帮助。每一个好的作者背后都有一个好的编辑或好的编辑应用。

### 文体指南

文体指南为改善书面交流提供了标准。它们包括标点符号、语法和用词等方面。如果是为你的公司撰写文档,请检查一下公司是否有文体指南。如果没有文体指南,或者你是为自己写的,下面是一些常用的文体指南:

* [芝加哥文体手册](https://www.chicagomanualofstyle.org/home.html)

* [谷歌开发者文档指南](https://developers.google.com/style)

* [微软写作文体指南](https://docs.microsoft.com/en-us/style-guide/welcome/)

* [美联社文体手册](https://www.apstylebook.com/)

写作是一种与社区分享自己的思想和知识的方式。开始写作的唯一方法就是开始打字。用这些建议来微调你的写作。

---

via: <https://opensource.com/article/20/5/write-about-open-source-software>

作者:[Dawn Parzych](https://opensource.com/users/dawnparzych) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | One way to get started with an open source community is to write about it. You can contribute to technical documentation, share how you use the software, or write an article for Opensource.com. But getting started writing is easier said than done. The two most common excuses I hear for not writing are: "I have nothing new to say" and "I'm not a good writer." I'm here to dispel both of those myths.

## What should you write about?

"Hunt for the stories that often get left out."

—Erik Larson

For some people, the biggest hurdle to writing is generating an idea or topic to write about. It's common to fall into the trap of thinking, "This topic has been written about, so why bother."

I'm not the first person to write an article on writing, and I won't be the last. What I bring is my unique perspective and things I've learned over the years. I've had people ask me for tips on getting started or how to get better at writing. I decided to turn those suggestions into an article.

Your writing is a reflection of you. Nobody will tell the story the way you do. Your experiences and perspective may be just what somebody else needs.

Here are some prompts to help you come up with a topic:

- What is something you've recently learned? Write about how you learned, what you learned, or what surprised you.

- What questions are you frequently asked? Write up the answer.

- Did you recently search for a how-to article and weren't satisfied with any of the top search results? Write the article you were looking for.

- Did you attend a conference or workshop? Write a post-event summary of what you learned.

- Did you start using a new tool? Write up a how-to or getting-started guide.

## What type of article are you writing?

There are different types of writing, including:

- Technical documentation

- How-to guide

- Blog

- White paper or eBook

- Retrospective

The type of content will influence your writing style and tone of voice. A blog is more informal and conversational. Technical documentation is more formal and instructional.

## Who are you writing for?

Each piece of writing should have a single audience. The audience is the type of person you are writing for. Before you begin writing, it helps to jot down a few characteristics of your reader. It's important to consider who you are writing for, as well as who you *aren't* writing for—identifying your target audience will shape what to include and what not to include.

For example, this is who I envisioned when writing this article:

- Has basic knowledge of writing and grammar

- Is interested in improving writing skills

- Works in technology as a developer, sales engineer, customer success manager, or similar role

- Is not an experienced or advanced writer, may have a couple of published articles on a personal or work blog, and wants to write more

- Is writing non-fiction

If you have content for multiple audiences, consider breaking it down into different pieces for the different audiences. Some areas to consider in your audience:

- Level of expertise: novice, intermediate, advanced

- Role: manager, individual contributor

- Goals: why are they reading?

## Words matter

The words you choose will have an impact on the reader. Difficult words can make an article harder to understand. Unfamiliar words can make the reader feel stupid. Certain words can accidentally offend a reader. Your goal as a writer is to avoid all of these. Here's how.

### Use everyday language

Don't write as a way to show off your vocabulary or the words you've learned from your "Word of the Day" calendar. Write so a reader can understand. There is a reading level associated with every piece of writing. If you're writing technical documentation, aim for about an eighth-grade reading level. This doesn't imply your audience has only an eighth-grade education. It means your writing will be more easily understood. Do you want people to get hung up on the language, or do you want them to walk away feeling like they learned something? Just because you can use big, complicated words doesn't mean you should. Using simple language does not necessarily mean your article will be boring.

Use tools like [Hemingway App](http://www.hemingwayapp.com/) to check the readability of your writing (it's not open source, but it's excellent). For example, after the first draft, this article was assessed at a fifth-grade reading level. Hemingway also provides suggestions on how to improve your writing—identifying hard-to-read sentences or places to alter word choice.

If you're struggling with coming up with alternative words, check out the suggestions at the [Plain English Campaign](http://www.plainenglish.co.uk/the-a-z-of-alternative-words.html) or the crowdsourced suggestions at [Power Thesaurus](https://www.powerthesaurus.org/).

### Know which words to avoid

"Substitute 'damn' every time you're inclined to write 'very'; your editor will delete it and the writing will be just as it should be."

—Mark Twain

When writing a tutorial or how-to guide, here are some words to avoid, including "simple," "easy," and "just." You, an expert on the topic you're writing about, may find things easy after years of practice. A beginner may not find things simple or easy. Your readers may get frustrated because they don't find the process or explanation simple.

Have you ever had to reread a sentence or paragraph multiple times because you couldn't figure out what the writer was saying? Have you ever given up on an article because it wasn't making sense to you? I have.

As a writer, do you want your readers to feel confused or unintelligent? I hope not.

Other words to avoid in your writing:

- That

- Really

- Very

- So

- In order to

Generally, these words can be deleted without changing the meaning of a sentence.

After I finish writing, I search a document for instances of those words. When I searched through this document, I found the following sentence:

"This doesn't imply that your audience only has an eighth-grade education, it means that your writing will be more easily understood."

Two instances of "that" appear in this sentence. They're not adding value to the sentence. They can be deleted without changing its meaning. Removing those instances shortens the sentence, and shorter sentences are easier to understand. And speaking of shorter sentences, I also re-wrote it as two sentences.

"This doesn't imply your audience has only an eighth-grade education. It means your writing will be more easily understood."

### Use inclusive language

The historical context of words and phrases can lead to people feeling excluded or offended. When writing, you want to make the reader feel included. When you use inclusive language, it makes your reader feel understood, respected, and like they belong. I refer to this [guide on using inclusive language](https://open.buffer.com/inclusive-language-tech/) from Buffer.

## Revising and editing

"Almost all good writing begins with terrible first efforts. You need to start somewhere."

—Anne Lamott

Writing is an iterative process. If you think writers sit down at their desk and, within an hour, have a completed article ready to be published, think again. Some articles take me weeks to complete. Here's a standard process for me:

- Write a rough first draft. By rough, I mean

*rough*. I write without worrying about grammar. The idea is to get words out of my head and onto paper. This step can take anywhere from an hour to a couple of weeks. - Put the draft away for some time. This can be anywhere from a couple of hours to a couple of days, depending on the publishing timeline.

- Review the draft. Make tweaks and edits.

- Ask for feedback, either from a coworker or a friend. During this phase of feedback, I focus on clarity. Does everything make sense? Is anything confusing, any missing sections?

- Incorporate feedback. No matter how experienced you are at writing, other writers reviewing your work will make it better.

At this stage, I have a pretty solid draft. Now comes my least favorite part of writing—editing. The Hemingway App I mentioned earlier not only tells you the reading level but also provides suggestions to improve your writing. I also use Grammarly to help with grammar edits. For open source alternatives to Grammarly, check out [Language Tool](https://languagetool.org/) or this article on [open source writing tools](https://opensource.com/article/20/3/open-source-writing-tools).

One of my writing challenges is using commas appropriately. Grammarly helps me figure out where I miss or misuse a comma. Grammarly identified 43 issues related to the correctness of this article's final draft. The majority were comma errors.

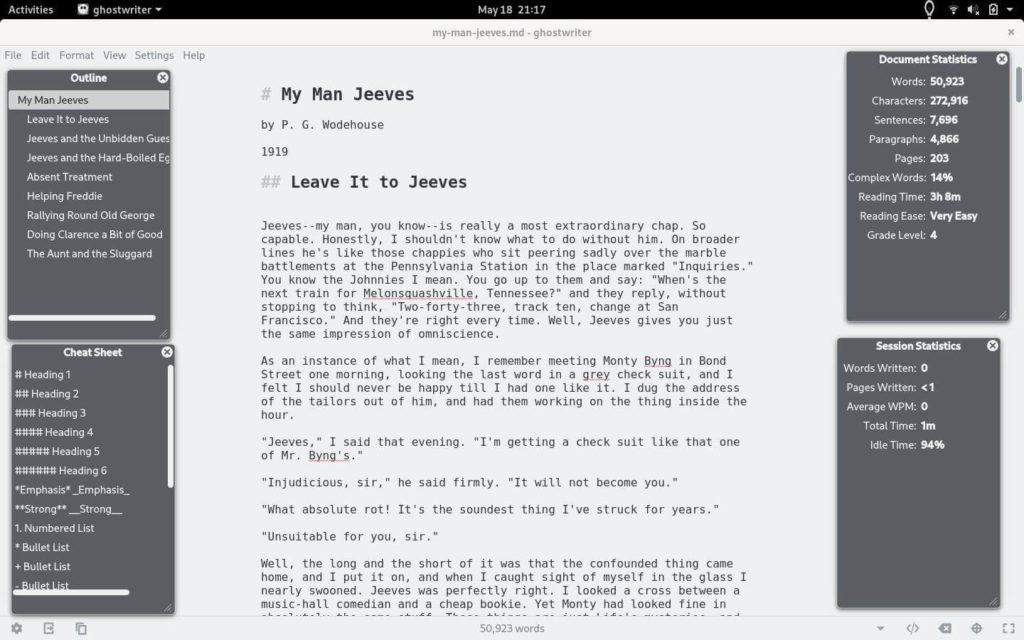

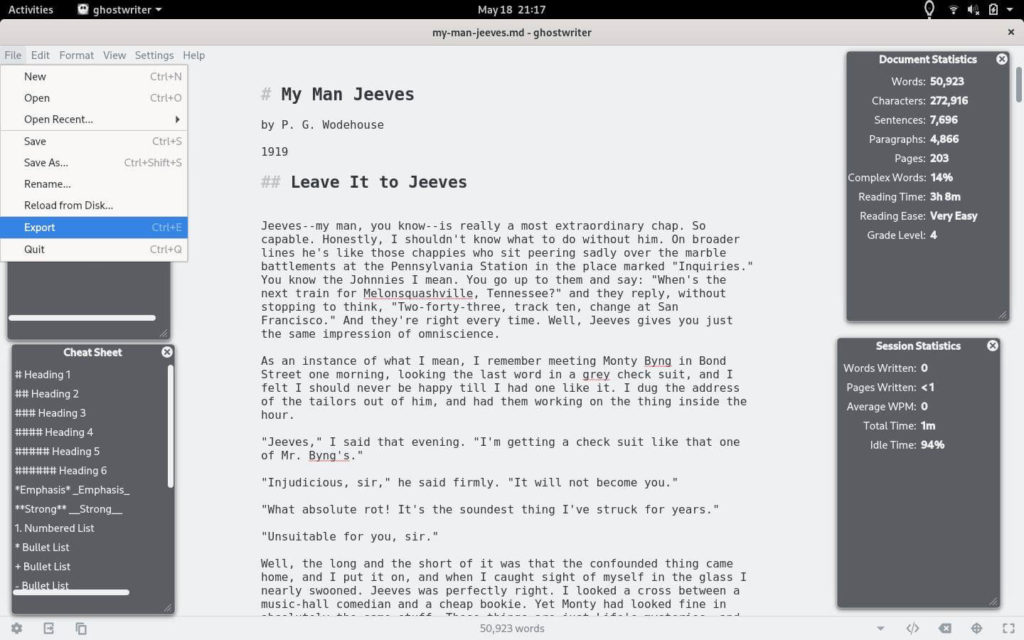

(Dawn Parzych, CC BY-SA 4.0)

In addition to grammatical errors, the app also provides suggestions to help with clarity, engagement, and delivery (some of these features may not be available in the free version). This includes things like word choice and using active vs. passive voice. Some suggestions I accept; others I reject. After reviewing all the alerts and suggestions, Grammarly reports back across all dimensions.

(Dawn Parzych, CC BY-SA 4.0)

Don't be afraid to ask for help with your writing. Behind every good writer is a good editor or a good editing app.

## Style guides

Style guides provide standards for improving communication in writing. They include aspects such as punctuation, grammar, and word usage. If writing a document for your company, check to see if it uses a style guide. If it doesn't have a style guide, or if you're writing for yourself, here are some common style guides to follow:

[Chicago Manual of Style](https://www.chicagomanualofstyle.org/home.html)[Google Developer Documentation Guide](https://developers.google.com/style)[Microsoft Writing Style Guide](https://docs.microsoft.com/en-us/style-guide/welcome/)[AP Stylebook](https://www.apstylebook.com/)

Writing is a way to share your thoughts and knowledge with the community. The only way to get started writing is to start typing. Use these suggestions to fine-tune your writing.

## 2 Comments |

12,268 | 如何使用 VTY Shell 配置路由器 | https://opensource.com/article/20/5/vty-shell | 2020-05-31T12:24:26 | [

"路由",

"FRR"

] | https://linux.cn/article-12268-1.html |

>

> FRR(free range routing)给了你实现多种协议的选择。本指南将帮助你入门。

>

>

>

最近,我写了一篇文章,解释了如何使用 [Quagga](/article-12199-1.html) 路由套件实现<ruby> 开放式最短路径优先 <rt> Open Shortest Path First </rt></ruby>(OSPF)。可以使用多个软件套件代替 Quagga 来实现不同的路由协议。其中一种是 FRR(free range routing)。

### FRR

[FRR](https://en.wikipedia.org/wiki/FRRouting) 是一个路由软件套件,它衍生自 Quagga,并在 GNU GPL2 许可下分发。与 Quagga 一样,它为类 Unix 平台提供了所有主要路由协议的实现,例如 OSPF、<ruby> 路由信息协议 <rt> Routing Information Protocol </rt></ruby>(RIP)、<ruby> 边界网关协议 <rt> Border Gateway Protocol </rt></ruby>(BGP) 和<ruby> 中间系统到中间系统 <rt> Intermediate system-to-intermediate system </rt></ruby> (IS-IS)。

开发了 Quagga 的一些公司,例如 Big Switch Networks、Cumulus、Open Source Routing 和 6wind,创建了 FRR 以在 Quagga 的良好基础上进行改善。

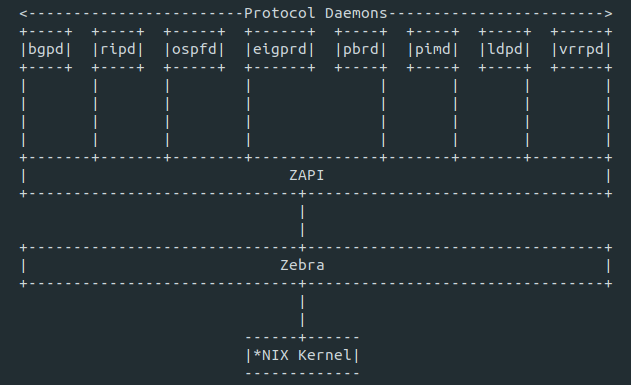

#### 体系结构

FRR 是一组守护进程,它们可以共同构建路由表。每个主协议都在其自己的守护进程中实现,并且这些守护进程与独立于协议的核心守护进程 Zebra 通信,后者提供内核路由表更新、接口查找以及不同路由协议之间路由的重新分配。每个特定协议的守护进程负责运行相关协议并根据交换的信息构建路由表。

### VTY shell

[VTYSH](http://docs.frrouting.org/projects/dev-guide/en/latest/vtysh.html) 是 FRR 路由引擎的集成 shell。它将每个守护进程中定义的所有 CLI 命令合并,并在单个 shell 中将它们呈现给用户。它提供了类似于 Cisco 的命令行模式,并且许多命令与 Cisco IOS 命令相似。CLI 有不同的模式,某些命令仅在特定模式下可用。

### 设置

在本教程中,我们将使用 FRR 配置动态路由来实现路由信息协议(RIP)。我们可以通过两种方式来做到这一点:在编辑器中编辑协议守护进程配置文件或使用 VTY Shell。在此例中,我们将使用 VTY shell。我们的设置包括两个名为 Alpha 和 Beta 的 CentOS 7.7 主机。这两台主机都有两个网络接口,并共享对 192.168.122.0/24 网络的访问。我们将广播 10.12.11.0/24 和 10.10.10.0/24 网络的路由。

对于主机 Alpha:

* eth0 IP:192.168.122.100/24

* 网关:192.168.122.1

* eth1 IP:10.10.10.12/24

对于主机 Beta:

* eth0 IP:192.168.122.50/24

* 网关:192.168.122.1

* eth1 IP:10.12.11.12/24

#### 安装软件包

首先,我们需要在两台主机上都安装 FRR 软件包。可以按照[官方 FRR 文档](http://docs.frrouting.org/projects/dev-guide/en/latest/building-frr-for-centos7.html)中的说明进行操作。

#### 启用 IP 转发

对于路由,我们需要在两台主机上都启用 IP 转发,因为这将由 Linux 内核执行:

```

sysctl -w net.ipv4.conf.all.forwarding = 1

sysctl -w net.ipv6.conf.all.forwarding = 1

sysctl -p

```

#### 启用 RIPD 守护进程

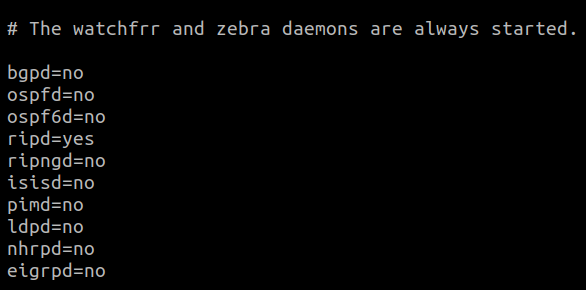

安装后,所有配置文件将保存在 `/etc/frr` 目录中。 必须通过编辑 `/etc/frr/daemons` 文件显式启用守护进程。该文件确定启动 FRR 服务时激活哪些守护进程。要启用特定的守护进程,只需将相应的 `no` 改为 `yes`。之后的服务重启将启动守护进程。

#### 防火墙配置

由于 RIP 协议使用 UDP 作为传输协议,并被分配了 520 端口,因此我们需要在 `firewalld` 配置中允许该端口。

```

firewall-cmd --add-port=520/udp –permanent

firewalld-cmd -reload

```

现在,我们可以使用以下命令启动 FRR 服务:

```

systemctl start frr

```

#### 使用 VTY 进行配置

现在,我们需要使用 VTY Shell 配置 RIP。

在主机 Alpha 上:

```

[root@alpha ~]# vtysh

Hello, this is FRRouting (version 7.2RPKI).

Copyright 1996-2005 Kunihiro Ishiguro, et al.

alpha# configure terminal

alpha(config)# router rip

alpha(config-router)# network 192.168.122.0/24

alpha(config-router)# network 10.10.10.0/24

alpha(config-router)# route 10.10.10.5/24

alpha(config-router)# do write

Note: this version of vtysh never writes vtysh.conf

Building Configuration...

Configuration saved to /etc/frr/ripd.conf

Configuration saved to /etc/frr/staticd.conf

alpha(config-router)# do write memory

Note: this version of vtysh never writes vtysh.conf

Building Configuration...

Configuration saved to /etc/frr/ripd.conf

Configuration saved to /etc/frr/staticd.conf

alpha(config-router)# exit

```

类似地,在主机 Beta 上:

```

[root@beta ~]# vtysh

Hello, this is FRRouting (version 7.2RPKI).

Copyright 1996-2005 Kunihiro Ishiguro, et al.

beta# configure terminal

beta(config)# router rip

beta(config-router)# network 192.168.122.0/24

beta(config-router)# network 10.12.11.0/24

beta(config-router)# do write

Note: this version of vtysh never writes vtysh.conf

Building Configuration...

Configuration saved to /etc/frr/zebra.conf

Configuration saved to /etc/frr/ripd.conf

Configuration saved to /etc/frr/staticd.conf

beta(config-router)# do write memory

Note: this version of vtysh never writes vtysh.conf

Building Configuration...

Configuration saved to /etc/frr/zebra.conf

Configuration saved to /etc/frr/ripd.conf

Configuration saved to /etc/frr/staticd.conf

beta(config-router)# exit

```

完成后,像下面这样检查两台主机路由:

```

[root@alpha ~]# ip route show

default via 192.168.122.1 dev eth0 proto static metric 100

10.10.10.0/24 dev eth1 proto kernel scope link src 10.10.10.12 metric 101

10.12.11.0/24 via 192.168.122.50 dev eth0 proto 189 metric 20

192.168.122.0/24 dev eth0 proto kernel scope link src 192.168.122.100 metric 100

```

我们可以看到 Alpha 上的路由表通过 `192.168.122.50` 包含了 `10.12.11.0/24` 的条目,它是通过 RIP 提供的。

类似地,在 Beta 上,该表通过 `192.168.122.100` 包含了 `10.10.10.0/24` 的条目。

```

[root@beta ~]# ip route show

default via 192.168.122.1 dev eth0 proto static metric 100

10.10.10.0/24 via 192.168.122.100 dev eth0 proto 189 metric 20

10.12.11.0/24 dev eth1 proto kernel scope link src 10.12.11.12 metric 101

192.168.122.0/24 dev eth0 proto kernel scope link src 192.168.122.50 metric 100

```

### 总结

如你所见,设置和配置相对简单。要增加复杂性,我们可以向路由器添加更多的网络接口,以为更多的网络提供路由。可以在编辑器中编辑配置文件来进行配置,但是使用 VTY Shell 在单个组合会话中为我们提供了所有 FRR 守护进程的前端。

---

via: <https://opensource.com/article/20/5/vty-shell>

作者:[M Umer](https://opensource.com/users/noisybotnet) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Recently, I wrote an article explaining how we can implement Open Shortest Path First (OSPF) using the [Quagga](https://opensource.com/article/20/4/quagga-linux) routing suite. There are multiple software suites that can be used instead of Quagga to implement different routing protocols. One such option is free range routing (FRR).

## FRR

[FRR](https://en.wikipedia.org/wiki/FRRouting) is a routing software suite, which has been derived from Quagga and is distributed under GNU GPL2 license. Like Quagga, it provides implementations of all major routing protocols such as OSPF, Routing Information Protocol (RIP), Border Gateway Protocol (BGP), and Intermediate system-to-intermediate system (IS-IS) for Unix-like platforms.

Several companies, such as Big Switch Networks, Cumulus, Open Source Routing, and 6wind, who were behind the development of Quagga, created FRR to improve on Quagga's well-established foundations.

### Architecture

FRR is a suite of daemons that work together to build the routing table. Each major protocol is implemented in its own daemon, and these daemons talk to the core and protocol-independent daemon Zebra, which provides kernel routing table updates, interface lookups, and redistribution of routes between different routing protocols. Each protocol-specific daemon is responsible for running the relevant protocol and building the routing table based on the information exchanged.

## VTY shell

[VTYSH](http://docs.frrouting.org/projects/dev-guide/en/latest/vtysh.html) is an integrated shell for the FRR routing engine. It amalgamates all the CLI commands defined in each of the daemons and presents them to the user in a single shell. It provides a Cisco-like modal CLI, and many of the commands are similar to Cisco IOS commands. There are different modes to the CLI, and certain commands are only available within a specific mode.

## Setup

In this tutorial, we'll be implementing the routing information protocol (RIP) to configure dynamic routing using FRR. We can do this in two ways—either by editing the protocol daemon configuration file in an editor or by using the VTY shell. We'll be using the VTY shell in this example. Our setup includes two CentOS 7.7 hosts, named Alpha and Beta. Both hosts have two network interfaces and share access to the 192.168.122.0/24 network. We'll be advertising routes for 10.12.11.0/24 and 10.10.10.0/24 networks.

**For Host Alpha:**

- eth0 IP: 192.168.122.100/24

- Gateway: 192.168.122.1

- eth1 IP: 10.10.10.12/24

**For Host Beta:**

- eth0 IP: 192.168.122.50/24

- Gateway: 192.168.122.1

- eth1 IP: 10.12.11.12/24

### Installation of package

First, we need to install the FRR package on both hosts; this can be done by following the instructions in the [official FRR documentation](http://docs.frrouting.org/projects/dev-guide/en/latest/building-frr-for-centos7.html).

### Enable IP forwarding

For routing, we need to enable IP forwarding on both hosts since that will performed by the Linux kernel.

```

sysctl -w net.ipv4.conf.all.forwarding = 1

sysctl -w net.ipv6.conf.all.forwarding = 1

sysctl -p

```

### Enabling the RIPD daemon