id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

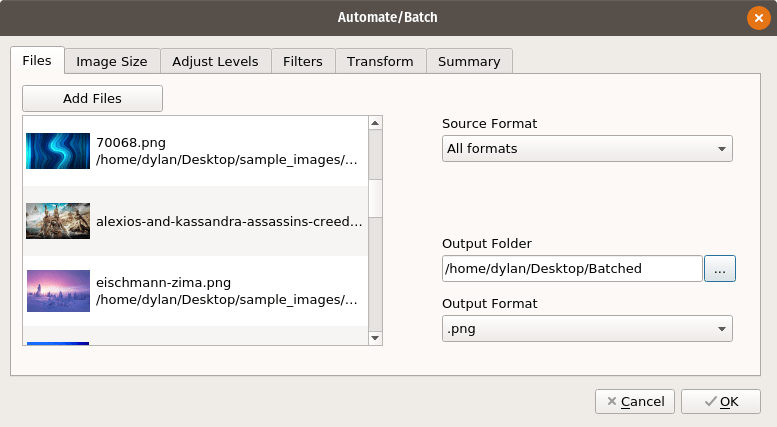

12,427 | GIMP 教程:如何使用 GIMP 裁剪图像 | https://itsfoss.com/crop-images-gimp/ | 2020-07-17T23:25:42 | [

"GIMP"

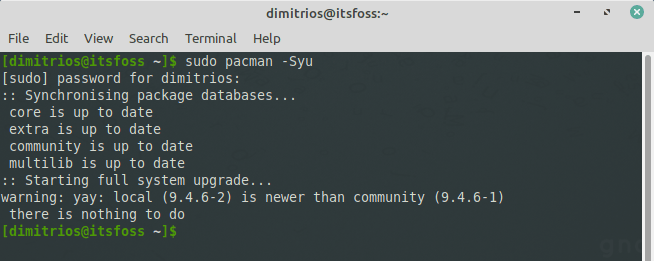

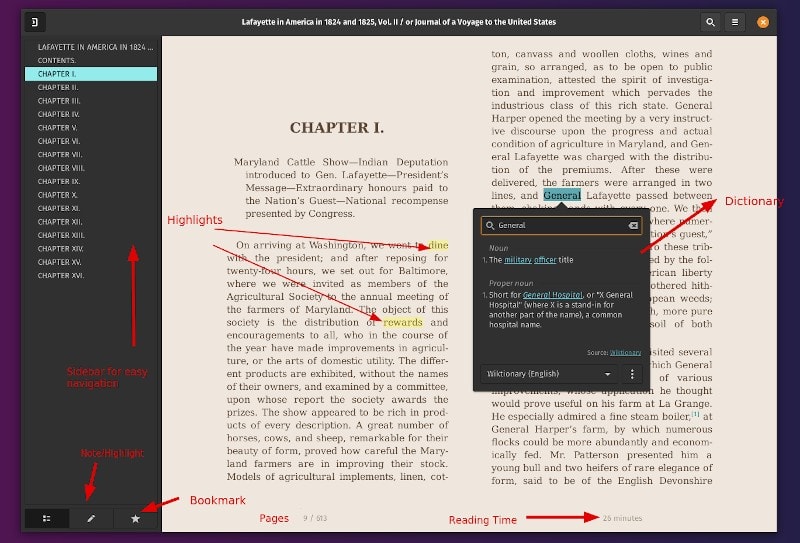

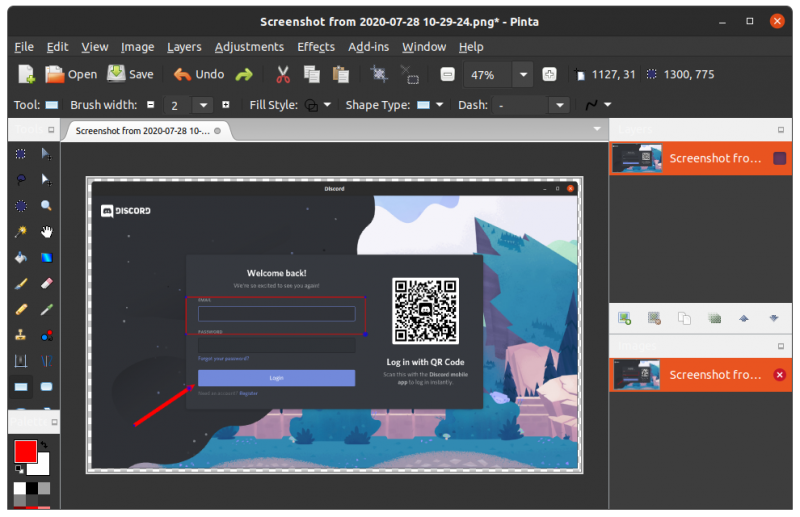

] | https://linux.cn/article-12427-1.html | 你可能想在 [GIMP](https://www.gimp.org/) 中裁剪图像的原因有很多。例如,你可能希望删除无用的边框或信息来改善图像,或者你可能希望最终图像的焦点是在一个特定细节上。

在本教程中,我将演示如何在 GIMP 中快速裁剪图像而又不影响精度。让我们一起来看看吧。

### 如何在 GIMP 中裁剪图像

#### 方法 1

裁剪只是一种将图像修整成比原始图像更小区域的操作。裁剪图像的过程很简单。

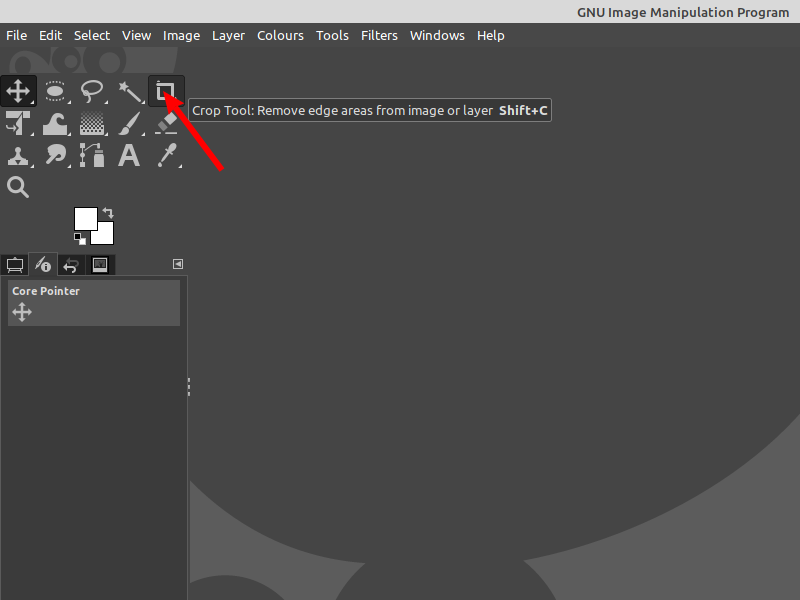

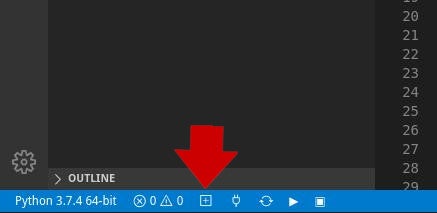

你可以通过“工具”面板访问“裁剪工具”,如下所示:

你还可以通过菜单访问裁剪工具:“<ruby> 工具 → 变形工具 → 裁剪 <rt> Tools → Transform Tools → Crop </rt></ruby>”。

激活该工具后,你会注意到画布上的鼠标光标会发生变化,以表示正在使用“裁剪工具”。

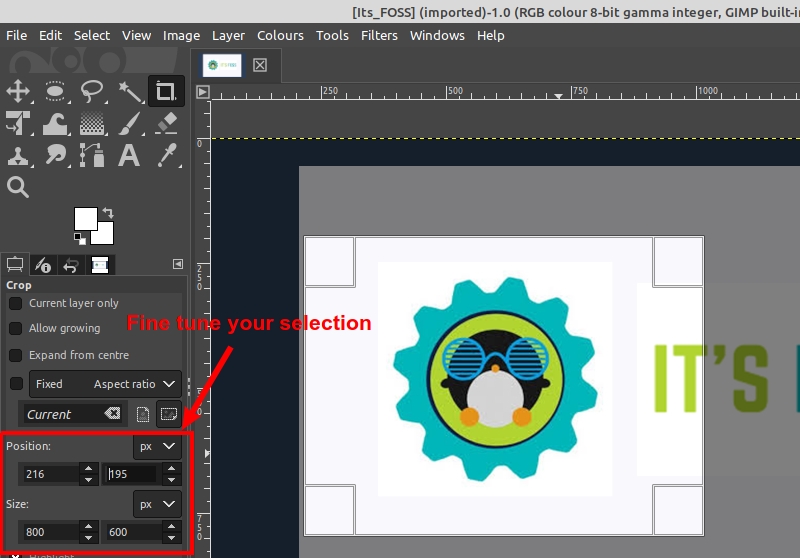

现在,你可以在图像画布上的任意位置单击鼠标左键,并将鼠标拖到某个位置以创建裁剪边界。此时你不必担心精度,因为你可以在实际裁剪之前修改最终选区。

此时,将鼠标光标悬停在所选内容的四个角上会更改鼠标光标并高亮显示该区域。现在,你可以微调裁剪的选区。你可以单击并拖动任何边或角来移动部分选区。

选定完区域后,你只需按键盘上的回车键即可进行裁剪。

如果你想重新开始或者不裁剪,你可以按键盘上的 `Esc` 键。

#### 方法 2

裁剪图像的另一种方法是使用“矩形选择工具”进行选择:“<ruby> 工具 → 选择工具 → 选择矩形 <rt> Tools → Selection Tools → Rectangle Select </rt></ruby>”。

然后,你可以使用与“裁剪工具”相同的方式高亮选区,并调整选区。选择好后,可以通过以下方式裁剪图像来适应选区:“<ruby> 图像 → 裁剪为选区 <rt> Image → Crop to Selection </rt></ruby>”。

### 总结

对于 GIMP 用户而言,精确裁剪图像可以视为一项基本功能。你可以选择哪种方法更适合你的需求并探索其潜力。

如果你对过程有任何疑问,请在下面的评论中告诉我。如果你“渴望”更多 [GIMP 教程](https://itsfoss.com/tag/gimp-tips/),请确保在你喜欢的社交媒体平台上订阅!

---

via: <https://itsfoss.com/crop-images-gimp/>

作者:[Dimitrios Savvopoulos](https://itsfoss.com/author/dimitrios/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

There are many reasons you may want to crop an image in [GIMP](https://www.gimp.org/?ref=itsfoss.com). You may want to remove useless borders or information to improve your image, or you may want the focus of the final image to be a specific detail for example.

In this tutorial, I will demonstrate how to cut out an image in GIMP quickly and without compromising the precision. Let’s see.

## Method 1: Use the crop tool in GIMP

Cropping is just an operation to trim the image down to a smaller region than the original one. The procedure to crop an image is straightforward.

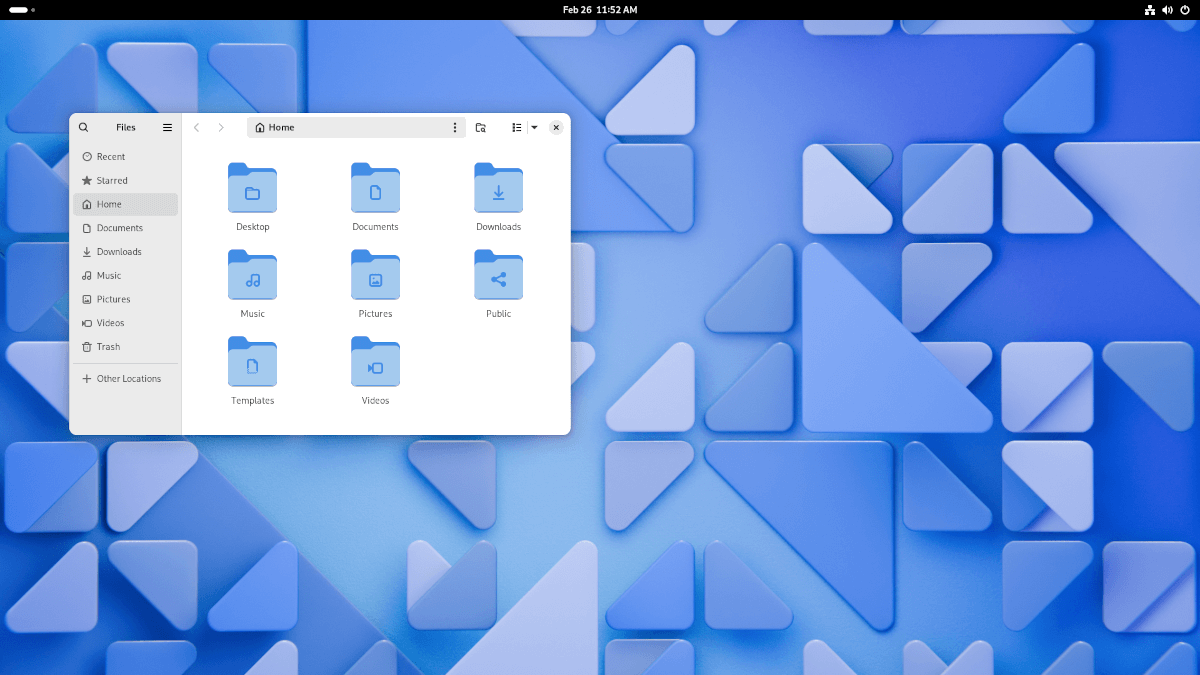

You can get to the Crop Tool through the Tools palette like this:

Use Crop Tool for cropping images in GIMP

You can also access the crop tool through the menus:

**Tools → Transform Tools → Crop**

Once the tool is activated, you’ll notice that your mouse cursor on the canvas will change to indicate the Crop Tool is being used.

Now you can Left-Click anywhere on your image canvas, and drag the mouse to a location to create the cropping boundaries. You don’t have to worry about the precision at this point, as you will be able to modify the final selection before actually cropping.

At this point hovering your mouse cursor over any of the four corners of the selection will change the mouse cursor, and highlight that region. This allows you to now fine-tune the selection for cropping. You can click and drag any side or corner to move that portion of the selection.

Once the region is good enough to be cropped, you can just press the “**Enter**” key on your keyboard to crop.

If at any time you’d like to start over or decide not to crop at all, you can press the “**Esc**” key on your keyboard.

## Method 2: Use rectangle select tool to crop images

Another way to crop an image is to make a selection first, using the **Rectangle Select Tool**.

**Tools → Selection Tools → Rectangle Select**

You can then highlight a selection the same way as the **Crop Tool**, and adjust the selection as well. Once you have a selection you like, you can crop the image to fit that selection through

**Image → Crop to Selection**

## Conclusion

Cropping precisely an image can be considered a fundamental asset for a GIMP user. You may choose which method fits better to your needs and explore its potential.

If you have any questions about the procedure, please let me know in the comments below. If you are “craving” more [GIMP tutorials](https://itsfoss.com/tag/gimp/), make sure to subscribe on your favorite social media platforms! |

12,429 | 深入了解定制 Bash | https://fedoramagazine.org/customizing-bash/ | 2020-07-19T11:04:00 | [

"Bash",

"点文件"

] | https://linux.cn/article-12429-1.html |

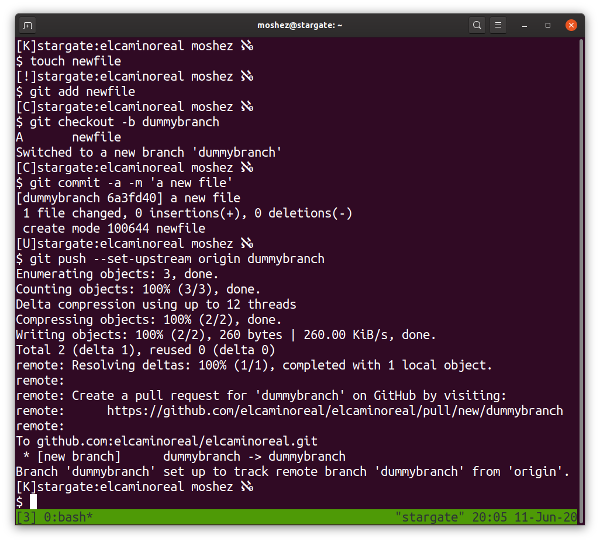

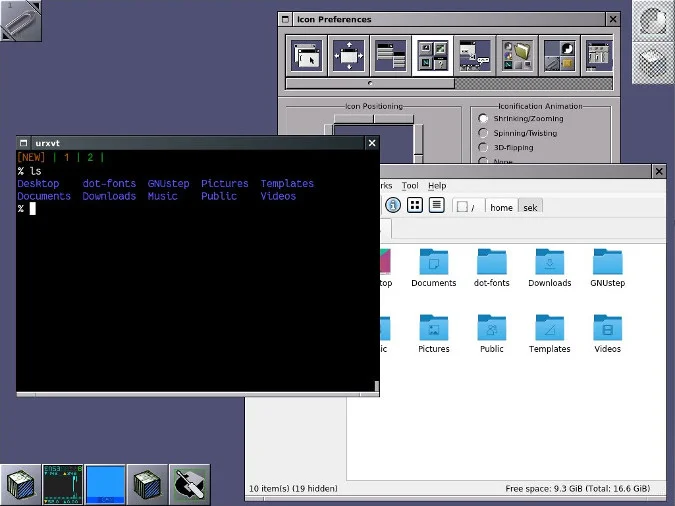

操作系统的最外层 —— 也就是跟你直接打交道的 —— 叫做 [shell](https://en.wikipedia.org/wiki/Shell_(computing))(“外壳”)。Fedora 预装了好几种不同的 shell。shell 可以是图形界面,或者字符界面。在文档中,你常常能见到两个母缩写词 GUI (<ruby> 图形用户界面 <rt> Graphical User Interface </rt></ruby>)跟 CLI(<ruby> 命令行界面 <rt> Command-Line Interface </rt></ruby>),它们用来区分图形和基于字符的 shell/界面。[GNOME](https://en.wikipedia.org/wiki/GNOME) 和 [Bash](https://en.wikipedia.org/wiki/Bash_(Unix_shell)) 分别是 Fedora 默认的图形和命令行界面,不过你也可以使用其它 [GUI](https://fedoramagazine.org/fedoras-gaggle-of-desktops/) 或者 [CLI](https://en.wikipedia.org/wiki/Comparison_of_command_shells) shell。

接下来在这篇文章中,我们会讨论一些比较推荐的 Bash 字符界面的点文件配置。

### Bash 概览

Bash 参考手册中写道:

>

> 根本上来说,shell 只是一个能执行命令的宏处理器。宏处理器这个词意味着通过扩展文本跟符号,从而构成更复杂的表达式。

>

>

> **Bash 参考手册** **第五版,Bash 5.0** **2019 年 5 月**

>

>

>

除了能使用其它程序之外,Bash shell 还含有一些内置命令和关键字。Bash 内置功能很强大,甚至能够作为一门 [高级语言](https://en.wikipedia.org/wiki/High-level_programming_language) 独当一面。Bash 的几个关键字和操作符类似于 [C 语言](https://en.wikipedia.org/wiki/C_(programming_language))。

Bash 能够以交互式或非交互式模式启动。Bash 的交互模式是一个很多人都熟悉的典型的终端/命令行界面。[GNOME 终端](https://en.wikipedia.org/wiki/GNOME_Terminal) 默认以交互模式打开 Bash。Bash 在非交互模式下运行的例子是,当命令和数据从文件或 shell 脚本通过 [管道](https://en.wikipedia.org/wiki/Pipeline_(Unix)) 传送到 Bash 时。其它 Bash 可以运行的模式包括:<ruby> 登录 <rt> login </rt></ruby>、<ruby> 非登录 <rt> non-login </rt></ruby>、<ruby> 远程 <rt> remote </rt></ruby>、POSIX、Unix sh、<ruby> 受限 <rt> restricted </rt></ruby>,以及使用与用户不同的 UID/GID 模式。各种模式是可以相互组合的。比如,交互式 + 受限 + POSIX 或者非交互式 + 非登录 + 远程。不同的启动模式,决定着 Bash 所读取的启动文件。理解这些操作模式,有助于帮助我们修改启动文件。

根据 Bash 参考手册,它会:

>

> 1. 从文件中...、从作为 `-c` 调用选项传入参数的字符...,或者从用户的终端中读取输入。

> 2. 将输入分解成单词和操作符,遵循 [它的] 引用规则。...这些标记使用元字符隔开。这一步执行别名展开。

> 3. 将标记解析成简单与复合命令。

> 4. 执行各种 shell 展开...,将展开之后的标记分解成文件名...以及命令和参数的列表。

> 5. 执行必要的重定向...并从参数列表中去除重定向操作符及其操作数。

> 6. 执行命令。

> 7. 必要时等待命令完成,并收集退出状态。

>

>

> **Bash 参考文档** **第五版,Bash 版本 5.0** **2019 年 5 月**

>

>

>

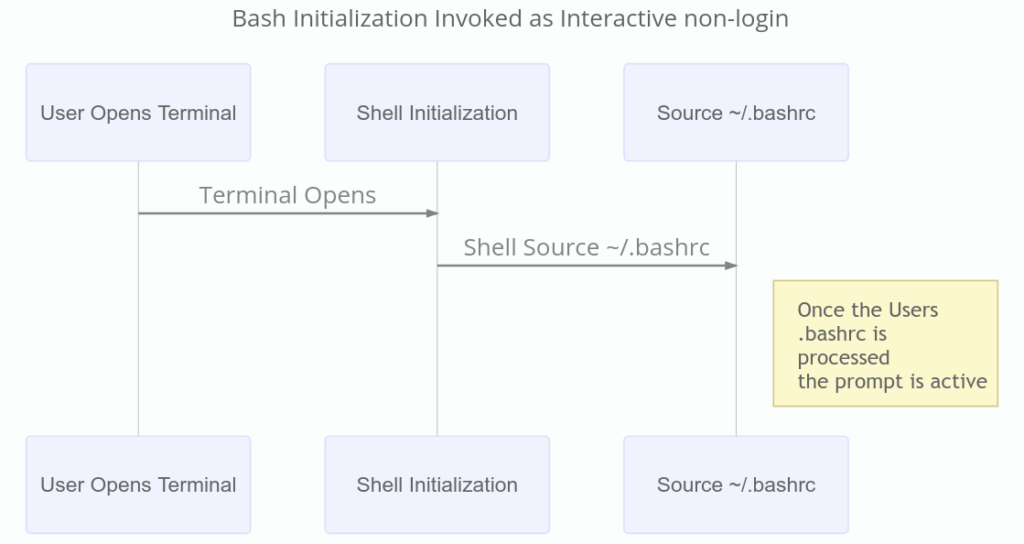

当用户开启终端模拟器进入命令行环境时,便启动了一次交互式 shell 会话。GNOME 终端默认以非登录模式为用户打开 Shell。你可以在 “<ruby> 编辑 → 首选项 → 配置文件 → 命令 <rt> Edit → Preferences → Profilles → Command </rt></ruby>” 中配置 GNOME 终端以何种模式(登录与非登录式)启动。也可以在 Bash 启动时通过向其传递 `-login` 标志来要求进入登录模式。要注意一点,Bash 的登录模式与非交互模式并不互斥。可以让 Bash 同时以登录模式和非交互模式运行。

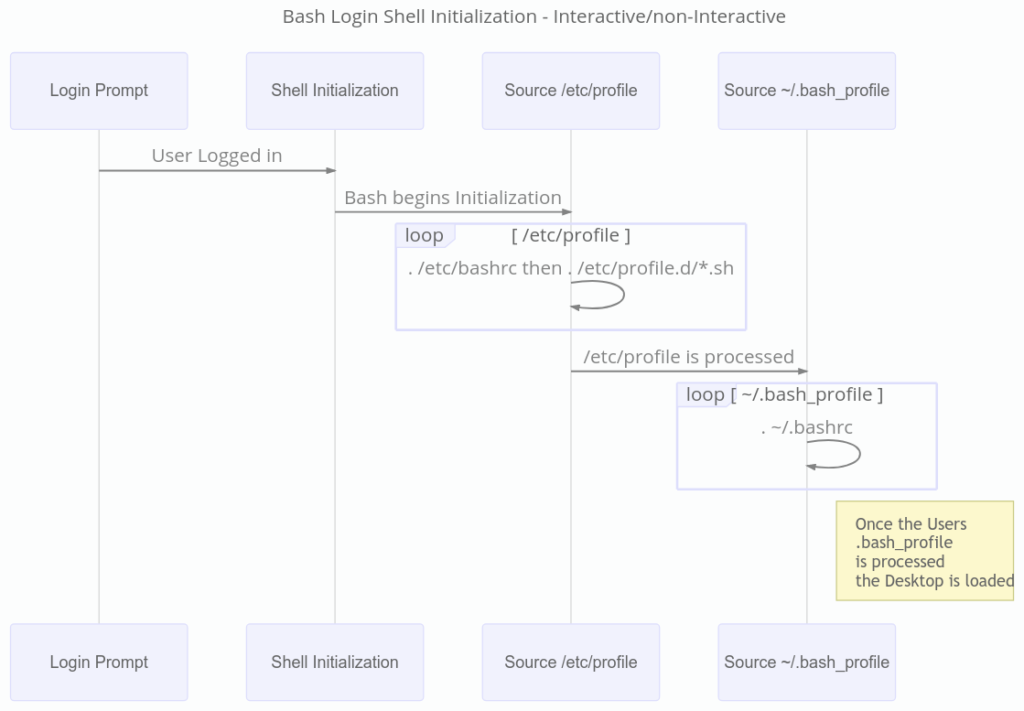

### 启动 Bash

除非传入 `-noprofile` 选项,否则登录模式的 Bash shell 会默认读取并执行某些初始化文件中命令。如果 `/etc/profile` 存在,它会是第一个被执行的文件,紧接着是按 `~/.bash_profile`、`~/.bash_login` 或 `~/.profile`顺序找到的第一个文件。当用户退出登录模式的 shell 时,或者有脚本在非交互式登录模式的 shell 中调用了内置 `exit`命令,Bash 会读取并执行 `~/.bash_logout` 中的命令,如果 `/etc/bash_logout` 存在的话,会紧接着执行它。通常来说,`/etc/profile` 会<ruby> 援引 <rt> source </rt></ruby> `/etc/bashrc` 文件,读取并执行其中的命令,然后查找并读取执行 `/etc/profile.d` 目录中以 `.sh` 结尾的文件。同样的,`~/.bash_profile` 通常也会<ruby> 援引 <rt> source </rt></ruby> `~/.bashrc` 文件。`/etc/bashrc` 和 `~/.bashrc` 都会进行检查,避免重复<ruby> 援引 <rt> source </rt></ruby>。

(LCTT 译注:在 Bash 中,脚本会通过 `source` 或 `.` 命令来将另外一个脚本引入其中,这个行为称之为 “source”、“sourcing”,但是该行为一直没有公认且常用的翻译方法。经过多番斟酌,我认为可以译做“援引”,理由如下:1、“援引”具有“引用、引入”的意思,符合该行为;2、“援”这个词的发音和“source” 常见的汉语意思“源”同音,便于记忆。以上是我们的愚见,供大家参考讨论。—— 老王,2020/7/19)

一个交互式的 shell,如果不是登录 shell,那么当它第一次被调用的时候,会执行 `~/.bashrc` 文件。这是用户在 Fedora 上打开终端时通常会进入的 shell 类型。当 Bash 以非交互模式启动 —— 就像运行脚本时那样 —— 它会寻找 `BASH_ENV`环境变量。如果找到了,就会展开它的值作为文件名,接着读取并执行该文件。效果跟执行以下命令相同:

```

if [ -n "$BASH_ENV" ]; then . "$BASH_ENV"; fi

```

值得注意的是,不会使用 `PATH` 环境变量的值来搜索该文件名(LCTT 译注:意即不会检索搜索路径)。

### 重要的用户点文件

Bash 最广为人知的用户点文件是 `~/.bashrc`。通过编辑该文件,可以设置大多数个性化选项。由于我们常常需要设置一些选项,会改动上面提及甚至没有提及的文件,所以大部分自定义选项会成为一个麻烦事。Bash 环境具有很高的可定制性,正是为了适应不同用户的不同需求。

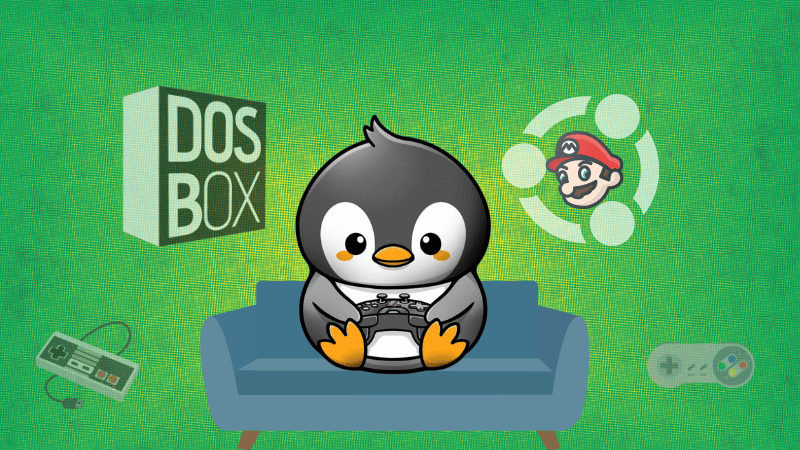

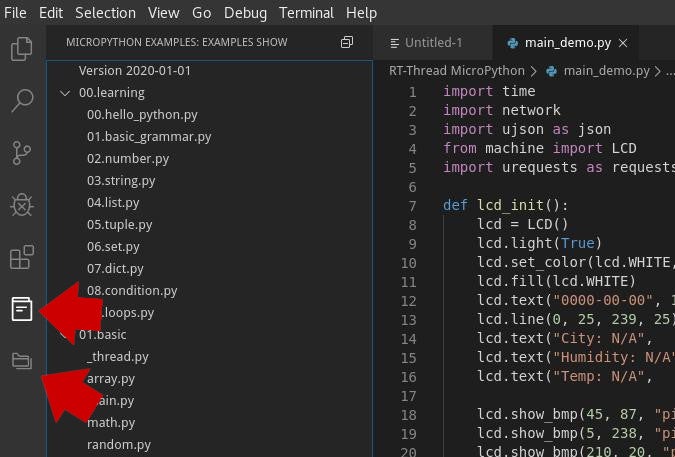

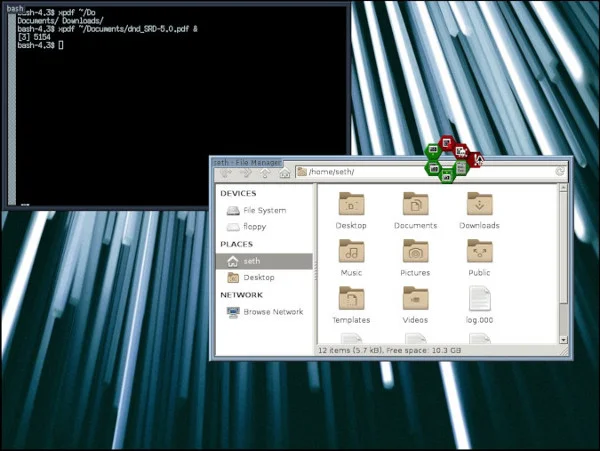

当登录 shell 正常退出时,如果 `~/.bash_logout` 和 `/etc/bash_logout` 存在,它们会被调用。下一幅图展示了 Bash 作为交互式 shell 启动时的过程。例如,当用户从桌面环境打开终端模拟器时,会按照以下顺序进行。

我们已经知道,在不同的启动模式下,Bash 会执行不同的命令,所以很明显,只有几种最需要关注的典型启动模式。分别是非交互、交互式登录 shell,和非交互式、交互式非登录 shell。如果想定义某些全局环境,那么需要将一个具有唯一名称、以 `.sh` 为后缀的文件(例如 `custom.sh`)放置在 `/etc/profile.d` 目录。

对于非交互式非登录启动方式,需要特别注意。在这种模式下,Bash 会检查 `BASH_ENV` 变量。如果定义了该变量,Bash 会援引它所指向的文件。另外,处理 `BASH_ENV` 时并不会使用 `PATH` 变量所存储的值(LCTT 译注:意即不会检索搜索路径),所以它必须包含执行文件的绝对路径。比如说,如果有人希望非交互式执行脚本时,shell 能读取 `~/.bashrc` 文件中的设置,那么他可以把类似下面这样的内容放在一个名为 `/etc/profile.d/custom.sh` 的文件中...

```

# custom.sh

.

.

.

# 如果使用 Fedora Workstation

BASH_ENV="/home/username/.bashrc"

.

.

.

# 如果使用 Fedora Silverblue Workstation

BASH_ENV="/var/home/username/.bashrc"

export BASH_ENV

```

上面这份脚本会让每个 shell 脚本在运行之前先执行该用户的 `~/.bashrc`。

用户一般都会自定义他们的系统环境,以便契合他们自己的工作习惯与偏好。举例来说,用户可以通过别名来实现这种程度的自定义。拥有相同起始参数、需要频繁使用的命令是制作别名的最佳选择。以下展示了一些来自 `~/.bashrc` 文件中定义的别名。

```

# .bashrc

# 执行全局文件

if [ -f /etc/bashrc ];

then . /etc/bashrc

fi

.

.

.

# 用户别名和函数

alias ls='ls -hF --color=auto'

alias la='ls -ahF --color=auto'

# 让 dir 命令用起来像在 Windows 那样

alias dir='ls --color=auto --format=long'

# 用颜色高亮 grep 结果

alias grep='grep --color=auto'

```

在系统中,别名是一种自定义各种命令的方法。它能减少击键次数,而且让命令用起来更方便。针对用户级别的别名通常存放在该用户的 `~/.bashrc` 文件中。

如果你发现自己经常要去历史中查找曾经执行过的某条命令,那可能需要改改历史设置了。你依然可以在 `~/.bashrc` 文件中设置针对用户级别的历史选项。比如说,如习惯同时使用多个终端,那你可能要启用 `histappend` 选项。某些 Bash 相关的 shell 选项本质上是布尔值(接收 `on` 或 `off`),通常可以用内置命令 `shopt` 启用或禁用。接收更复杂的值的 Bash 选项(如 `HISTTIMEFORMAT`),常常通过赋值给环境变量来达到配置目的。以下演示如何以 shell 选项和环境变量定制 Bash。

```

# 配置 Bash 历史

# 用制表符扩展目录环境变量,并设置 histappend

shopt -s direxpand histappend

# ignoreboth 等同于 ignorespace 和 ignoredup

HISTCONTROL='ignoreboth'

# 控制 `history` 输出中的时间格式

HISTTIMEFORMAT="[%F %T] "

# 无限历史记录

# NB:在新版 Bash 中,任何 < 0 的写法都有效,但是在 CentOS/RHEL 中,只有这样才行得通

HISTSIZE=

HISTFILESIZE=

# 或者对于使用新版 Bash 的人

HISTSIZE=-1

HISTFILESIZE=-1

```

上面例子中的 `direxpand` 选项,可以让 Bash 在文件名补全时,用单词展开结果替换目录名。它会改变 readline 编辑缓冲区的内容,所以你所输入的东西已经被补全得到的结果替换了。

`HISTCONTROL` 变量用于启用或禁用命令历史的某些过滤选项。重复行、以空白打头的行,都能通过该选项将它们从命令历史中过滤掉。引用自 Dusty Mabe,这是我从他那儿得到的技巧:

>

> `ignoredup` 可以让历史不记录重复条目(如果你反复执行同一条命令)。`ignorespace` 会忽略前面有空白的条目,当你在设置一个包含敏感信息的环境变量或者执行一条不想被记录进磁盘的命令时,这就很有用。`ignoreboth`相当于这两条选项的结合体。

>

>

> **Dusty Mabe – Redhat首席软件工程师,2020.6.19**

>

>

>

对于命令行重度用户,Bash 有一个 `CDPATH` 环境变量。如果 `CDPATH` 包含一系列供 `cd` 命令搜索的目录,且提供一个相对路径作为第一个参数,那么它会按顺序检查所有列出的目录,寻找匹配的子目录并切换到第一个匹配结果目录。

```

# .bash_profile

# 设置 CDPATH

CDPATH="/var/home/username/favdir1:/var/home/username/favdir2:/var/home/username/favdir3"

# 也可以写成这样

CDPATH="/:~:/var:~/favdir1:~/favdir2:~/favdir3"

export CDPATH

```

`CDPATH` 通常像 `PATH` 一样的方式进行更新 —— 通过在赋值右侧引用自身来保留原来的值。

```

# .bash_profile

# 设置 CDPATH

CDPATH="/var/home/username/favdir1:/var/home/username/favdir2:/var/home/username/favdir3"

# 或者写成这样

CDPATH="/:~:/var:~/favdir1:~/favdir2:~/favdir3"

CDPATH="$CDPATH:~/favdir4:~/favdir5"

export CDPATH

```

`PATH` 是另一个极其重要的变量。它是系统上的命令的搜索路径。注意,有些应用要求将它们自己的目录加入 `PATH` 变量,这样才能正常使用。跟 `CDPATH` 一样,通过在赋值右侧引用原值来追加新值到 `PATH` 变量。如果你希望将新值前置,只需要把原来的值(`$PATH`)放到列表末尾即可。还有注意的是在 Fedora,这一列值通过冒号分隔(`:`)。

```

# .bash_profile

# 添加 PATH 值到 PAHT 环境变量

PATH="$PATH:~/bin:~:/usr/bin:/bin:~/jdk-13.0.2:~/apache-maven-3.6.3"

export PATH

```

命令提示符是另一个流行的自定义选项。它有七个可定制的参数:

>

> * `PROMPT_COMMAND`:如果设置了,会在每一个主提示符(`$PS1`)出现之前执行该值。

> * `PROMPT_DIRTRIM`:如果设置成大于零的数,则该值用作展开 `\w` 和 `\W` 提示符字符串转义符时保留的尾随目录组件数量。删除的字符将替换为省略号。

> * `PS0`:这个参数的值像 `PS1` 一样展开,在交互式 shell 读取命令之后、执行命令之前展示。

> * `PS1`:主提示符字符串。默认值是 `\s-\v\$` 。

> * `PS2`:次提示符字符串。默认是 `>` 。在显示之前,`PS2` 像 `PS1` 那样展开。

> * `PS3`:这个参数的值用作 `select` 命令的提示符。如果这个变量没有设置,`select` 命令会用 `#?` 作为提示符。

> * `PS4`:这个参数的值像 `PS1` 那样展开,如果设置了 `-x` 选项,这个展开值会在命令行被回显之前作为提示符显示。展开后的值的第一个字符在必要时会复制数次,指示间接层数。默认值是 `+` 。

>

>

> **Bash 参考文档** **第五版,Bash 版本 5.0** **2019 年 5 月**

>

>

>

Bash 的这一个方面就可以用整篇文章来讨论。你可以找到许许多多信息和例子。在本文末尾链接的存储库中提供了一些点文件范例,包括提示符重新配置。你可以随意使用该存储库中的例子进行学习和体验。

### 总结

既然你已经掌握了一些 Bash 的工作原理,那就可以轻松修改你的 Bash 点文件,满足你自己的需求和习惯。美化你的提示符,制作一些别名,这样你的电脑才真的属于你。查看 `/etc/profile`、`/etc/bashrc` 和 `/etc/profile.d/` 这些文件的内容,获得一些启发。

你也可以在这里写一些关于终端模拟器的评论。有很多办法可以将你最喜欢的终端,完全配置成你想要的样子。你或许早就想到了,但是通常可以通过……嗯……用户家目录的点文件实现这个目的。终端模拟器也可以作为登录会话启动,有些人总喜欢用登录环境。你使用终端和电脑的姿势,取决于你如何修改(或不修改)你的点文件。

如果你很好奇自己的命令行处于什么会话状态,使用下面这个脚本来判断一下。

```

#!/bin/bash

case "$-" in

(*i*) echo This shell is interactive ;;

(*) echo This shell is not interactive ;;

esac

```

把这几行放到一个文件里,加上可执行权限,然后运行,就能看到你当前处于何种类型的 shell。`$-` 在 Bash 中是一个变量,如果是交互式 shell,它会包含字母 `i`。此外,你可以直接输出 `$-` 变量然后检查它的输出中是否含有 `i` 标记。

```

$ echo $-

```

### 参考信息

可以参考以下资料以获取更多信息和示例。Bash 手册也是一个很好的信息来源。请注意,确保你的本地手册页记录了你当前运行的 Bash 版本的特性,因为在网上找到的信息有时可能太老(过时了)或太新(你的系统还没有安装)。

* <https://opensource.com/tags/command-line>

* <https://opensource.com/downloads/bash-cheat-sheet>(在该网站中,你必须要输入一个有效的电子邮箱地址,或者注册,才能下载。)

* <https://opensource.com/article/19/12/bash-script-template>

对本文有各种形式(点文件示例、提示,以及脚本文件)贡献的社区成员:

* Micah Abbott – 首席质量工程师

* John Lebon – 首席软件工程师

* Dusty Mabe – 首席软件工程师

* Colin Walters – 高级首席软件工程师

示例点文件和脚本可以在这个存储库中找到:

* <https://github.com/TheOneandOnlyJakfrost/bash-article-repo>

请仔细检查上面所提供的存储库中的信息。有些可能已经过时了。里面还包含很多开发中的自定义脚本和<ruby> 宠物容器 <rt> pet container </rt></ruby>配置例子,那些不是点文件。我推荐从 John Lebon 的点文件开始学习,从头到尾都含有完善的解说,它们是我见过的最详细的,并且包含了非常好的描述。祝你学得开心!

---

via: <https://fedoramagazine.org/customizing-bash/>

作者:[Stephen Snow](https://fedoramagazine.org/author/jakfrost/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[nophDog](https://github.com/nophDog) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | The outermost layer of your operating system – the part you interact with – is called the [shell](https://en.wikipedia.org/wiki/Shell_(computing)). Fedora comes with several preinstalled shells. Shells can be either graphical or text-based. In documentation, you will often see the acronyms GUI (Graphical User Interface) and CLI (Command-Line Interface) used to distinguish between graphical and text-based shells/interfaces. Other [GUI](https://fedoramagazine.org/fedoras-gaggle-of-desktops/) and [CLI](https://en.wikipedia.org/wiki/Comparison_of_command_shells) shells can be used, but [GNOME](https://en.wikipedia.org/wiki/GNOME) is Fedora’s default GUI and [Bash](https://en.wikipedia.org/wiki/Bash_(Unix_shell)) is its default CLI.

The remainder of this article will cover recommended dotfile practices for the Bash CLI.

## Bash overview

From the Bash reference manual:

At its base, a shell is simply a macro processor that executes commands. The term macro processor means functionality where text and symbols are expanded to create larger expressions.

Reference Documentation for Bash

Edition 5.0, for Bash Version 5.0.

May 2019

In addition to helping the user start and interact with other programs, the Bash shell also includes several built-in commands and keywords. Bash’s built-in functionality is extensive enough that it is considered a [high-level programming language](https://en.wikipedia.org/wiki/High-level_programming_language) in its own right. Several of Bash’s keywords and operators resemble those of [the C programming language](https://en.wikipedia.org/wiki/C_(programming_language)).

Bash can be invoked in either interactive or non-interactive mode. Bash’s interactive mode is the typical terminal/command-line interface that most people are familiar with. [GNOME Terminal](https://en.wikipedia.org/wiki/GNOME_Terminal), by default, launches Bash in interactive mode. An example of when Bash runs in non-interactive mode is when commands and data are [piped](https://en.wikipedia.org/wiki/Pipeline_(Unix)) to it from a file or shell script. Other modes of operation that Bash can operate in include: login, non-login, remote, POSIX, unix sh, restricted, and with a different UID/GID than the user. Various combinations of these modes are possible. For example interactive+restricted+POSIX or non-interactive+non-login+remote. Which startup files Bash will process depends on the combination of modes that are requested when it is invoked. Understanding these modes of operation is necessary when modifying the startup files.

According to the Bash reference manual, Bash …

1. Reads its input from a file …, from a string supplied as an argument to the -c invocation option …, or from the user’s terminal.

2. Breaks the input into words and operators, obeying [its] quoting rules. … These tokens are separated by metacharacters. Alias expansion is performed by this step.

3. Parses the tokens into simple and compound commands.

4. Performs the various shell expansions …, breaking the expanded tokens into lists of filenames … and commands and arguments.

5. Performs any necessary redirections … and removes the redirection operators and their operands from the argument list.

6. Executes the command.

7. Optionally waits for the command to complete and collects its exit status.

Reference Documentation for Bash

Edition 5.0, for Bash Version 5.0.

May 2019

When a user starts a terminal emulator to access the command line, an interactive shell session is started. GNOME Terminal, by default, launches the user’s shell in non-login mode. Whether GNOME Terminal launches the shell in login or non-login mode can be configured under *Edit* → *Preferences* → *Profiles* → *Command*. Login mode can also be requested by passing the *–login* flag to Bash on startup. Also note that Bash’s *login* and *non-interactive* modes are not exclusive. It is possible to run Bash in both *login* and *non-interactive* mode at the same time.

## Invoking Bash

Unless it is passed the *—**noprofile* flag, a Bash login shell will read and execute the commands found in certain initialization files. The first of those files is */etc/profile* if it exists, followed by one of *~/.bash_profile*, *~/.bash_login*, or *~/.profile*; searched in that order. When the user exits the login shell, or if the script calls the *exit* built-in in the case of a non-interactive login shell, Bash will read and execute the commands found in *~/.bash_logout* followed by */etc/bash_logout* if it exists. The file */etc/profile* will normally source */etc/bashrc*, reading and executing commands found there, then search through */etc/profile.d* for any files with an *sh* extension to read and execute. As well, the file *~/.bash_profile* will normally source the file *~/.bashrc*. Both */etc/bashrc* and *~/.bashrc* have checks to prevent double sourcing.

An interactive shell that is not a login shell, will source the *~/.bashrc* file when it is first invoked. This is the usual type of shell a user will enter when opening a terminal on Fedora. When Bash is started in non-interactive mode – as it is when running a shell script – it will look for the *BASH_ENV* variable in the environment. If it is found, will expand the value, and use the expanded value as the name of a file to read and execute. Bash behaves just as if the following command were executed:

if [ -n "$BASH_ENV" ]; then . "$BASH_ENV"; fi

It is important to note that the value of the *PATH* variable is not used to search for the filename.

## Important user-specific dotfiles

Bash’s best-known user dotfile is *~/.bashrc*. Most user customization is done by editing this file. Most user customization, may be a stretch since there are reasons to modify all of the mentioned files; as well as other files that have not been mentioned. Bash’s environment is designed to be highly customizable in order to suit the needs of many different users with many different tastes.

When a Bash login shell exits cleanly, *~/.bash_logout* and then */etc/bash_logout* will be called if they exist. The next diagram is a sequence diagram showing the process Bash follows when being invoked as an interactive shell. The below sequence is followed, for example, when the user opens a terminal emulator from their desktop environment.

Armed with the knowledge of how Bash behaves under different invocation methods, it becomes apparent that there are only a few typical invocation methods to be most concerned with. These are the non-interactive and interactive login shell, and the non-interactive and interactive non-login shell. If global environment customizations are needed, then the desired settings should be placed in a uniquely-named file with a *.sh* extension (*custom.sh*, for example) and that file should be placed in the */etc/profile.d* directory.

The non-interactive, non-login invocation method needs special attention. This invocation method causes Bash to check the *BASH_ENV* variable. If this variable is defined, the file it references will be sourced. Note that the values stored in the *PATH* environment variable are not utilized when processing *BASH_ENV*. So it must contain the full path to the file to be sourced. For example, if someone wanted the settings from their *~/.bashrc* file to be available to shell scripts they run non-interactively, they could place something like the following in a file named */etc/profile.d/custom.sh* …

# custom.sh . . . #If Fedora Workstation BASH_ENV="/home/username/.bashrc" . . . #If Fedora Silverblue Workstation BASH_ENV="/var/home/username/.bashrc" export BASH_ENV

The above profile drop-in script will cause the user’s *~/.bashrc* file to be sourced just before every shell script is executed.

Users typically customizie their system environment so that it will better fit their work habits and preferences. An example of the sort of customization that a user can make is an alias. Commands frequently run with the same set of starting parameters are good candidates for aliases. Some example aliases are provided in the *~/.bashrc* file shown below.

# .bashrc # Source global definitions if [ -f /etc/bashrc ]; then . /etc/bashrc fi . . . # User specific aliases and functions alias ls='ls -hF --color=auto' alias la='ls -ahF --color=auto' # make the dir command work kinda like in windows (long format) alias dir='ls --color=auto --format=long' # make grep highlight results using color alias grep='grep --color=auto'

Aliases are a way to customize various commands on your system. They can make commands more convenient to use and reduce your keystrokes. Per-user aliases are often configured in the user’s *~/.bashrc* file.

If you find you are looking back through your command line history a lot, you may want to configure your history settings. Per-user history options can also be configured in *~/.bashrc*. For example, if you have a habit of using multiple terminals at once, you might want to enable the *histappend* option. Bash-specific shell options that are [boolean](https://en.wikipedia.org/wiki/Boolean_data_type) in nature (take either *on* or *off* as a value) are typically enabled or disabled using the *shopt* built-in command. Bash settings that take a more complex value (for example, *HISTTIMEFORMAT*) tend to be configured by assigning the value to an environment variable. Customizing Bash with both shell options and environment variable is demonstrated below.

# Configure Bash History # Expand dir env vars on tab and set histappend shopt -s direxpand histappend # - ignoreboth = ignorespace and ignoredup HISTCONTROL='ignoreboth' # Controls the format of the time in output of `history` HISTTIMEFORMAT="[%F %T] " # Infinite history # NB: on newer bash, anything < 0 is the supported way, but on CentOS/RHEL # at least, only this works HISTSIZE= HISTFILESIZE= # or for those of us on newer Bash HISTSIZE=-1 HISTFILESIZE=-1

The *direxpand* option shown in the example above will cause Bash to replace directory names with the results of word expansion when performing filename completion. This will change the contents of the readline editing buffer, so what you typed is masked by what the completion expands it to.

The *HISTCONTROL* variable is used to enable or disable some filtering options for the command history. Duplicate lines, lines with leading blank spaces, or both can be filtered from the command history by configuring this setting. To quote Dusty Mabe, the engineer I got the tip from:

Dusty Mabe – Redhat Principle Software Engineer, June 19, 2020

ignoredupmakes history not log duplicate entries (if you are running a command over and over).ignorespaceignores entries with a space in the front, which is useful if you are setting an environment variable with a secret or running a command with a secret that you don’t want logged to disk.ignorebothdoes both.

For users who do a lot of work on the command line, Bash has the *CDPATH* environment variable. If *CDPATH* is configured with a list of directories to search, the *cd* command, when provided a relative path as its first argument, will check all the listed directories in order for a matching subdirectory and change to the first one found.

# .bash_profile # set CDPATH CDPATH="/var/home/username/favdir1:/var/home/username/favdir2:/var/home/username/favdir3" # or could look like this CDPATH="/:~:/var:~/favdir1:~/favdir2:~/favdir3"

*CDPATH* should be updated the same way *PATH* is typically updated – by referencing itself on the right hand side of the assignment to preserve the previous values.

# .bash_profile # set CDPATH CDPATH="/var/home/username/favdir1:/var/home/username/favdir2:/var/home/username/favdir3" # or could look like this CDPATH="/:~:/var:~/favdir1:~/favdir2:~/favdir3" CDPATH="$CDPATH:~/favdir4:~/favdir5"

*PATH* is another very important variable. It is the search path for commands on the system. Be aware that some applications require that their own directories be included in the *PATH* variable to function properly. As with *CDPATH*, appending new values to *PATH* can be done by referencing the old values on the right hand side of the assignment. If you want to prepend the new values instead, simply place the old values (*$PATH*) at the end of the list. Note that on Fedora, the list values are separated with the colon character (**:**).

# .bash_profile # Add PATH values to the PATH Environment Variable PATH="$PATH:~/bin:~:/usr/bin:/bin:~/jdk-13.0.2:~/apache-maven-3.6.3" export PATH

The command prompt is another popular candidate for customization. The command prompt has seven customizable parameters:

PROMPT_COMMANDIf set, the value is executed as a command prior to issuing each primary prompt ($PS1).

PROMPT_DIRTRIMIf set to a number greater than zero, the value is used as the number of trailing directory components to retain when expanding the \w and \W prompt string escapes. Characters removed are replaced with an ellipsis.

PS0The value of this parameter is expanded likePS1and displayed by interactive shells after reading a command and before the command is executed.

PS1The primary prompt string. The default value is ‘\s-\v\$‘. …

PS2The secondary prompt string. The default is ‘‘.>PS2is expanded in the same way asPS1before being displayed.

PS3The value of this parameter is used as the prompt for theselectcommand. If this variable is not set, theselectcommand prompts with ‘#?‘Reference Documentation for Bash

PS4The value of this parameter is expanded likePS1and the expanded value is the prompt printed before the command line is echoed when the-xoption is set. The first character of the expanded value is replicated multiple times, as necessary, to indicate multiple levels of indirection. The default is ‘‘.+

Edition 5.0, for Bash Version 5.0.

May 2019

An entire article could be devoted to this one aspect of Bash. There are copious quantities of information and examples available. Some example dotfiles, including prompt reconfiguration, are provided in a repository linked at the end of this article. Feel free to use and experiment with the examples provided in the repository.

## Conclusion

Now that you are armed with a little knowledge about how Bash works, feel free to modify your Bash dotfiles to suit your own needs and preferences. Pretty up your prompt. Go nuts making aliases. Or otherwise make your computer truly yours. Examine the content of */etc/profile*, */etc/bashrc*, and */etc/profile.d/* for inspiration.

Some comments about terminal emulators are fitting here. There are ways to setup your favorite terminal to behave exactly as you want. You may have already realized this, but often this modification is done with a … wait for it … dotfile in the users home directory. The terminal emulator can also be started as a login session, and some people always use login sessions. How you use your terminal, and your computer, will have a bearing on how you modify (or not) your dotfiles.

If you’re curious about what type session you are in at the command line the following script can help you determine that.

#!/bin/bash case "$-" in (*i*) echo This shell is interactive ;; (*) echo This shell is not interactive ;; esac

Place the above in a file, mark it executable, and run it to see what type of shell you are in. *$-* is a variable in Bash that contains the letter **i** when the shell is interactive. Alternatively, you could just echo the $- variable and inspect the output for the presence of the **i** flag:

$ echo $-

## Reference information

The below references can be consulted for more information and examples. The Bash man page is also a great source of information. Note that your local man page is guaranteed to document the features of the version of Bash you are running whereas information found online can sometimes be either too old (outdated) or too new (not yet available on your system).

[https://opensource.com/tags/command-line](https://opensource.com/tags/command-line)

[https://opensource.com/downloads/bash-cheat-sheet](https://opensource.com/downloads/bash-cheat-sheet)

You will have to enter a valid email address at the above site, or sign up, to download from it.

[https://opensource.com/article/19/12/bash-script-template](https://opensource.com/article/19/12/bash-script-template)

Community members who provided contributions to this article in the form of example dotfiles, tips, and other script files:

- Micah Abbott – Principal Quality Engineer

- John Lebon – Principal Software Engineer

- Dusty Mabe – Principal Software Engineer

- Colin Walters – Senior Principal Software Engineer

A repository of example dotfiles and scripts can be found here:

[https://github.com/TheOneandOnlyJakfrost/bash-article-repo](https://github.com/TheOneandOnlyJakfrost/bash-article-repo)

Please carefully review the information provided in the above repository. Some of it may be outdated. There are many examples of not only dotfiles for Bash, but also custom scripts and pet container setups for development. I recommend starting with John Lebon’s dotfiles. They are some of the most detailed I have seen and contain very good descriptions throughout. Enjoy!

## Esc

Respect, wow

## Duke

Engineering precision. Nice job!

## Vernon Van Steenkist

A couple of my favorites.

set -o vi

This command puts your shell in vi keybindings mode (normally it is in Emacs keybindings mode). Especially useful if you use a keyboard that doesn’t have arrow keys like an IPhone or IPad. Good quick tutorial and references at

https://catonmat.net/bash-vi-editing-mode-cheat-sheet

Automatically add sub-directories to your PATH

export PATH=$PATH$(find $HOME/.scripts -not ( -name CVS -prune ) -type d -printf “:%p”)

This command adds all the sub-directories in $HOME/.scripts which contain my personal scripts to my PATH while excluding the CVS version control directories.

Also, if you quickly don’t want to run the alias version of a command, just put a backslash in front of it. For example

\ls

will output a directory listing without all the color etc directives that you put in you alias version of ls.

## geirha

Never export CDPATH! You’ll get some unpleasant surprises when a script using cd accidentally hits a path from CDPATH

## Stephen Snow

Hello @geirha,

Thank you for the information. Could you possibly elaborate on the “unpleasant surprises” for everyone’s edification?

Stephen

## geirha

Sorry, I meant to link to the pitfalls page. Here:

https://mywiki.wooledge.org/BashPitfalls#pf48

In short, if cd gets a hit in CDPATH, it will output the absolute path of the directory it ends up in (to alert the user it “ended up” elsewhere), which causes breakage if a script is using cd as part of a command substitution

## Stephen Snow

Hello,

Thanks for the link. I corrected the article to reflect your advice. I could see this being a problem under certain situations.

## geirha

You should also move it to .bashrc. .bash_profile is only meant to be read during login, so it’s mainly useful for setting environment variables like PATH, not for setting variables that configure your interactive session like HISTSIZE, PS1 and CDPATH.

## Jan

Hi Stephen,

geirha possibly refers to the following:

https://mywiki.wooledge.org/BashPitfalls#export_CDPATH.3D.:.2BAH4-.2FmyProject

## Stephen Snow

Thank you @Jan,

I think exporting CDPATH is fine since the potential error would really be a problem of the script writer as opposed to a problem with CDPATH being exported. The script writer should never assume anything about the environment of the system beforehand. When I write scripts for a specific function on my system, I know the environment and write with that in mind, but not so for generic scripts I am sharing with others. Therefore I then have to account for the potential pitfalls within the script or with specific instructions on how to use it.

## Steve

Additionally, always have “.” as the first component of your CDPATH variable, else you shall see surprising behaviour (eg; you have, ~/images and CDPATH=~/Projects/. Now, if you are in ~ and do ‘cd images’ you will end up in ~/Projects/images, if it exists)

## Robin Meade

I like how the author followed the best practice of putting PATH customizations in

, which is where Fedora always put them prior to this commit: https://src.fedoraproject.org/rpms/bash/c/739b272e5f5d10cf27a847a44d09eb7f4b6ec89b?branch=master

## Gregory Bartholomew

Putting PATH in the login profile makes more sense to me as well. Especially when you understand that environment variables are always inherited by sub-processes. It is inefficient and you shouldn’t need to recreate or redefine a bunch of variables every time you spawn a shell. Doing that is also quite error-prone as can be seen from commit https://src.fedoraproject.org/rpms/bash/c/e3b3cd9cec4a3bd12a792536c0ea131f5ba5bd72?branch=master.

I also don’t like that /etc/bashrc gets sourced from /etc/profile or that everything under /etc/profile.d gets sourced by both /etc/profile and /etc/bashrc. Everything sourcing everything else, multiple times, seems like a bit of an inefficient mess. And I’ve seen plenty of cases where scripts under /etc/profile.d ended up creating massively long environment variables as well because of this problem (it’s not just a problem for the PATH environment variable). And what if I place a script under /etc/profile.d that does something like a file system mount? Would I potentially end up with infinite mounts on top of each other? Would I have to wait on the mount to complete every time a bash shell gets spawned?

What if, for efficiency’s sake, I want to spawn a bash login shell with the just the minimal login environment defined, but I don’t want to run all the start-up scripts that every installed program has dropped under the /etc directory? From the documentation, it would seem like bash –norc ought to do that, but the way everything is sourced (multiple times) from everything else, it appears that that option to the bash command is completely meaningless at this time.

I think a much cleaner design would be to simply source ~/.bash_profile once on bash login shells (falling back to /etc/profile if that doesn’t exist). And to always source ~/.bashrc (falling back to /etc/bashrc if that doesn’t exist). ~/.bash_profile should be for stuff that should only be run once and ~/.bashrc should be stuff that should be run every time.

Just my two cents.

## Stephen Snow

Hello Gregory,

Multiple sourcing is a poor practice, and there is a check in /etc/bashrc to prevent dual sourcing it but not in ~/.bashrc. I chose to present the tips as the GNU Bash manual indicates. For example, the documentation purposely shows alias’s and functions in /etc/bashrc and ~/.bashrc and this is noted in comments in the profile files /etc/profile and ~/.bash_profile as well.

From /etc/profile …

Stephen

## Gregory Bartholomew

Yeah, those comments seem inaccurate at the least. If they were true, how would ~/.bash_profile and ~/.bashrc get called? I know they aren’t called from the global scripts.

That check for double sourcing appears unreliable/bogus too. What if I run “bash –login” followed by “bash –login”? Since the only double sourcing check in done in /etc/bashrc, and since everything under /etc/profile.d is sourced from /etc/profile, all the scripts under /etc/profile.d will still get double sourced.

I suspect there should really be a /etc/bashrc.d in addition to /etc/profile.d so that there would be a cleaner divide between the two types of scripts rather than all the stuff under /etc/profile.d getting run many many many times over every time a bash script is run somewhere.

Again, just my two cents. There may well be some details of which I am unaware that make a cleaner divide between run-once and run-always scripts impractical.

## Stephen Snow

The article was more about Bash than Fedora per se. I didn’t mean to give the impression that you should follow everything I typed there verbatim. In fact I would expect those interested to review the material, read the links to get more informed, then customize their system to their own needs. What I was trying to get across specifically was the order of startup, what files are or could be accessed then, and how might you do some customization to suit your workflow and use case needs.

## George N. White III

In these days of projects with participants spread across multiple organizations and using different linux distros it is worth mentioning a common source of confusion when fedora users interact with debian/Ubuntu or macOS users. It is helpful to realize that users of other distros may have dash (default system shell on debian) or zsh (default user shell on recent macOS).

## Stephen Snow

Hello, and thank you for noting there are different default shells in use by other Linux Distro’s as well as Mac’s. I had originally opened this article stating that there is a large variety of choice of shell to use even in Fedora, but the focus would be on Bash, and it’s use within the context of a Fedora system. The broader topic of different shells, and their relative benefits/drawbacks would be a rather large (amount of) content (for a magazine article) if you wanted to attempt to do the topic justice. It would likely need to be a multi-part series. Generally, as a preference, I use zsh on my Fedora system. For this article and some time leading up to it, I have been using Bash.

## John

Please correct me if I’m wrong but in Wayland the ‘profile’ scripts are no longer sourced.

## Stephen Snow

Hello John,

You are likely referring to the discussion around this https://ask.fedoraproject.org/t/ld-library-path-is-not-set-by-bash-profile-anymore-after-upgrade-to-fedora-31/4247/13. In particular, this is relevant in how the startup scripts are processed, and in fact if they are processed as expected in the first place. The article is written from the POV of the Bash manual, so there are certain expectations on the startup behavior and the subsequent behavior of the shell. Specifically, that the distro is following the suggested sequence of login and startup for processing the environment scripts. This is not so clear cut now with Fedora as of F31, since for instance the Gnome Session is now managed by systemd(https://blogs.gnome.org/benzea/2019/10/01/gnome-3-34-is-now-managed-using-systemd/). So the ~/.bash_profile file is sourced (for now, but this may change) and for the interactive terminal sessions ~/.bashrc is still sourced.

## Gregory Bartholomew

For anyone who might be interested, I’ve found that an easy way to block a lot of the double sourcing is to add the following line to /etc/profile.d/sh.local:

The above will block /etc/bashrc from ever being run, however, so if you use it, you will need to duplicate anything in /etc/bashrc that you want in your ~/.bashrc. For me, this amounted to the following:

You want to keep ~/.bashrc very minimal because everything in it is run every time bash is executed (without the –norc parameter).

I’ve also tweaked my ~/.bash_profile and added a /etc/profile.d/ps1.sh to set the bash prompt for login shells. The end result of editing these four files is that now when I run just

bash(or when a bash script is run), there is no chance that any of the content under /etc/profile.d will be re-evaluated. This is easily verified because spawning a non-login bash shell reveils the default bash prompt (because /etc/profile.d/ps1.sh was not sourced). Below are the four files I’ve changed in their entirety. This is just an experiment at this time, so there may be problems with this setup that I have not noticed yet./etc/profile.d/sh.local

/etc/profile.d/ps1.sh

~/.bash_profile

~/.bashrc

I’ve also set gnome-terminal to always run bash as a login shell. This makes sense because the shell that gnome-terminal starts is never the child process of another bash shell — it cannot inherit an already initialized bash environment, so it needs to be run as login shell each time.

## Robin Meade

Cool! Thanks for writing that up.

I don’t find the need to set gnome-terminal to always run bash as a login shell. On my system, gnome-session is run by a bash login shell. My process table shows:

I do need to log out and back in for changes to my .bash_profile to take effect; that’s the only downside I see to not setting gnome-terminal to always run bash as a login shell.

## Gregory Bartholomew

Yeah, it looks like you have a somewhat non-standard setup there where gnome-session is a child process of a bash login shell.

I think your best option to get around having to logout and back in in that case would be to create something like ~/.gnome-terminal that ends with “exec bash”. Then set as a custom command for gnome-terminal something like:

/bin/bash -c “source $HOME/.gnome-terminal”

instead of your login shell. I haven’t tested such a setup, but it should give your a third option for where you can place configuration settings that would not require you to log out and back in (and also would not get run every time bash is run).

Just an idea,

gb

## Gregory Bartholomew

Just realized that you should probably check for the existence of the file first, so maybe something more like:

And if you want to eliminate the requirement that ~/.gnome-terminal end in “exec bash”, maybe something like:

## Robin Meade

OK, thanks Gregory. I was running Gnome using X. But with Wayland, bash is still executed as a login shell when gnome-session starts. See https://gitlab.gnome.org/GNOME/gnome-session/-/blob/master/gnome-session/gnome-session.in Anyway, I’m OK with needing to log out and back into my Gnome session to pick-up any changes to environment variables that I set in my ~/.bash_profile.

## Gregory Bartholomew

Interesting. I too now see that gnome-session is listed as being spawned from a bash login shell. However, experiments suggest that gnome-terminal is not a child of that bash login shell. I still have to set gnome-terminal to run bash as a login shell to get the content under /etc/profile.d processed (e.g. PS1) with my setup.

What I can make of the output of pstree seems to suggest that gnome-terminal is being spawned directly from systemd somehow (or perhaps from DBus as the article Steven linked earlier suggests).

## smeagol

export HISTTIMEFORMAT=”%m/%d/%y %H:%M:%S ”

^^ this is what I use. Do you like it? Can it be improved upon? |

12,430 | 将英式英语翻译成美式英语的开源工具 | https://opensource.com/article/20/6/british-american-cli-translator | 2020-07-19T11:56:51 | [

"翻译"

] | https://linux.cn/article-12430-1.html |

>

> 使用这些命令行翻译器将英式英语转换为美式英语(或反之)。

>

>

>

去年圣诞节,我和我的妻子去了家乡佛罗里达的皮尔斯堡,并且经常光顾海滩上的一家当地人场所。在那儿,我们遇到了一对来自英国中部地区的夫妇。音乐很响,喝酒也很闹,所以很难听清楚。另外,即使他们“似乎”在说英语,但有时理解他们在说什么仍然是一个挑战。我以为我在澳大利亚的时光会给我足够的语言能力,但是,可惜,很多东西都超出了我的想象。不止是一般的 “soccer 是 football” 或 “trunk是 boot” 之类的困惑。

幸运的是,有开源方案可以将“英式”翻译成“美式”。我们可能使用相同的语言,但是我们共同的言语却比多塞特郡沙夫茨伯里的之字形山要曲折得多!

如果你碰巧在酒吧有 Linux 笔记本,那么你可以使用方便的开源命令行程序,它就叫 [美英英语翻译器](https://github.com/hyperreality/American-British-English-Translator)(MIT 许可证)。

我在我的改造运行 Linux 的 MacBook 上安装这个翻译器:

```

$ npm install -g american-british-english-translator

```

这需要我首先[安装 Node.js](https://nodejs.org/en/download/)。

### 声明!

除了翻译单词和短语,美英英语翻译器还能分析(嗯,剖析)文档。我用一个叫《独立宣言》的流行文件试了一下。它分析并返回了许多结果。其中一个比较有趣的是:

```

fall:

issue: Different meanings(意思不同)

details:

American English: autumn(秋天)

British English:

"""

to become pregnant. (Either as in 'I fell pregnant' or as in 'She fell for a baby.');(怀孕 —— 无论是“我怀孕了”还是“她怀孕了”)

```

并非如《宣言》作者所指控的“在公海俘虏”(taken captive on the high seas):

>

> ”他在公海上俘虏我们的同胞,强迫他们拿起武器来反对自己的国家,成为残杀自己亲人和朋友的刽子手,或是死于自己的亲人和朋友的手下。“(He has constrained our fellow Citizens taken Captive on the high Seas to bear Arms against their Country, to become the executioners of their friends and Brethren, or to fall themselves by their Hands.)

>

>

>

### 还有一个

对于简单的单词替换,你可以尝试 [Common\_Language](https://github.com/willettk/common_language),这是一个开源(MIT 许可)Python 脚本。

当然,要与同伴一起喝几杯,你也许需要实际交流,并能理解他们。这就是需要用到 MARY 了。

[MaryTTS](https://github.com/marytts/marytts) 是一个使用 Java(GNU 许可)编写的开源多语言文本到语音合成平台。方便的[基于浏览器的界面](http://mary.dfki.de:59125/)使你可以轻松地将美国酒吧谈话内容转换为男声或女声的正确英语(或其他语言)。

现在我可以和我的英国朋友们见面了(可以安全地再来一次),喝几杯!像当地人一样讲英语!大家干杯!

---

via: <https://opensource.com/article/20/6/british-american-cli-translator>

作者:[Jeff Macharyas](https://opensource.com/users/jeffmacharyas) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Last Christmas, my wife and I traveled to my hometown of Ft. Pierce, Florida, and frequented a local establishment on the beach. There, we met a couple from The Midlands in the UK. The music was loud and so was the beer, so it was a bit hard to hear. Plus, even though it "seemed" they were speaking English, it was sometimes a challenge to understand what they were saying. I thought my time in Australia would have given me enough linguistic power, but, alas, a lot went over my head. There was more than the usual "soccer is football" or "trunk is a boot" sort of confusion.

Fortunately, there are open source solutions to translate "British" into "American." We may speak the same language, but our shared speech takes more twists and turns than Zig Zag Hill in Shaftesbury, Dorset!

If you happen to be at the bar with your Linux laptop, you can use a handy open source command-line program, cleverly called the [American British English Translator](https://github.com/hyperreality/American-British-English-Translator) (MIT license).

I installed the Translator on my repurposed MacBook running Linux with:

`$ npm install -g american-british-english-translator`

Which required me to [install Node.js](https://nodejs.org/en/download/) first.

## I do declare!

In addition to translating words and phrases, the American British English Translator will analyze, ummm, analyse, documents. I gave this a go with a popular document called *The Declaration of Independence*. (tl;dr). It analyzed the document and returned many results. One of the more interesting was:

```

fall:

issue: Different meanings

details:

American English: autumn

British English:

"""

to become pregnant. (Either as in 'I fell pregnant' or as in 'She fell for a baby.');

```

Not exactly "taken captive on the high seas" as the *Declaration's *authors accused:

"He has constrained our fellow Citizens taken Captive on the high Seas to bear Arms against their Country, to become the executioners of their friends and Brethren, or to fall themselves by their Hands."

## Along comes MARY

For simple word replacements, you can try [Common_Language](https://github.com/willettk/common_language), an open source (MIT license) Python script.

Of course, if you're sharing a pint with your mates, you may need to actually speak to them in a voice and language they will understand. This would be a good time to bring MARY along with you.

[MaryTTS](https://github.com/marytts/marytts) is an open source, multilingual text-to-speech synthesis platform written in Java (GNU license). The handy [browser-based interface](http://mary.dfki.de:59125/) makes it easy to translate your American bar talk into right good English (or other languages) in either a male or female voice.

So, now I am ready to meet my British friends (when it is safe to do so again), grab a few pints, and—Bob's your uncle—speak English like a native! Cheerio, y'all!

## 4 Comments |

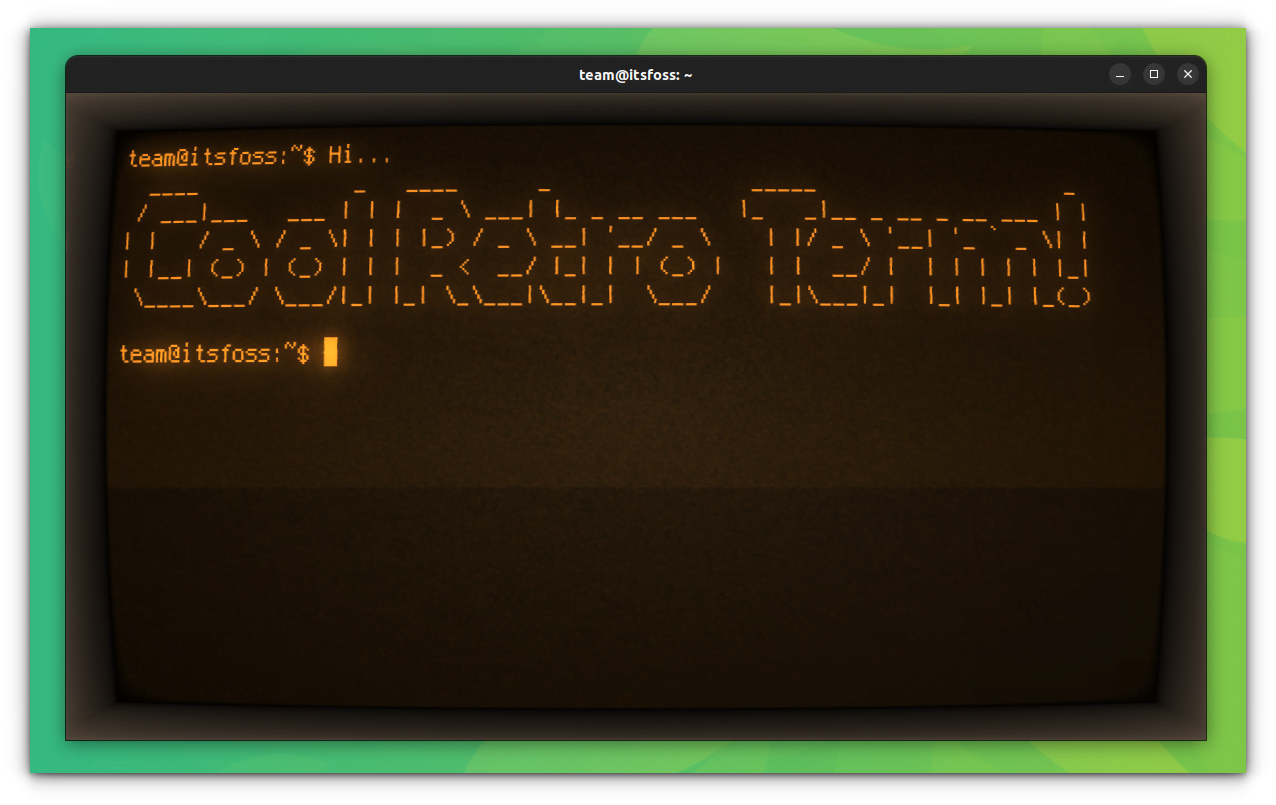

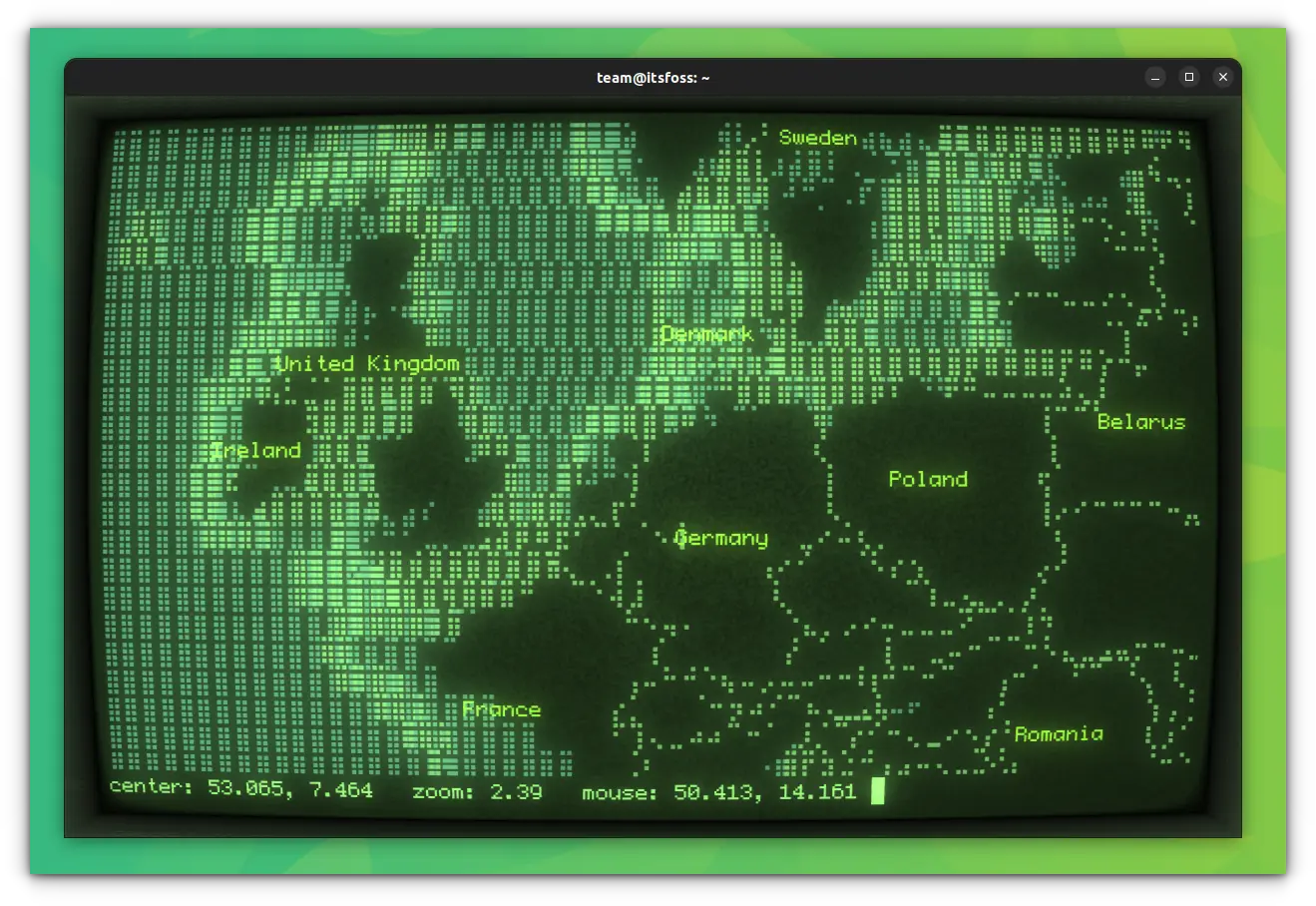

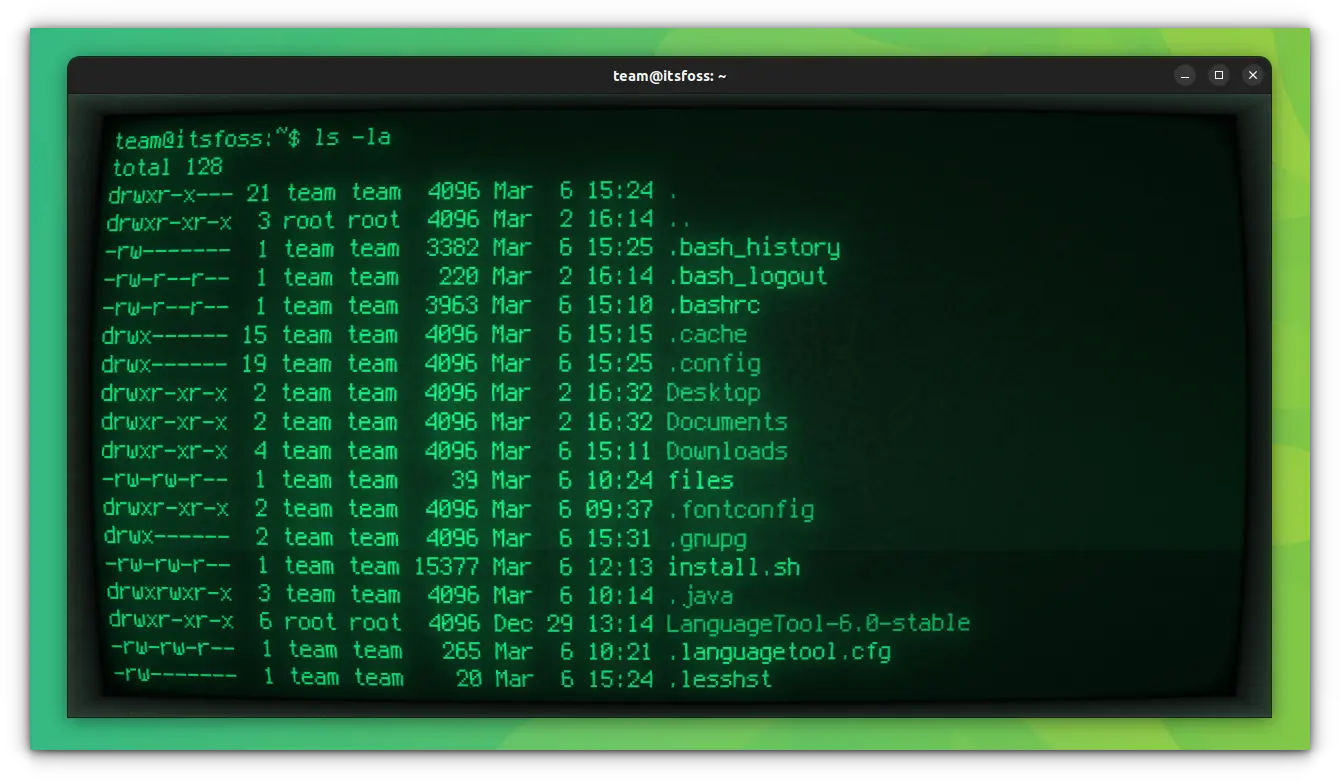

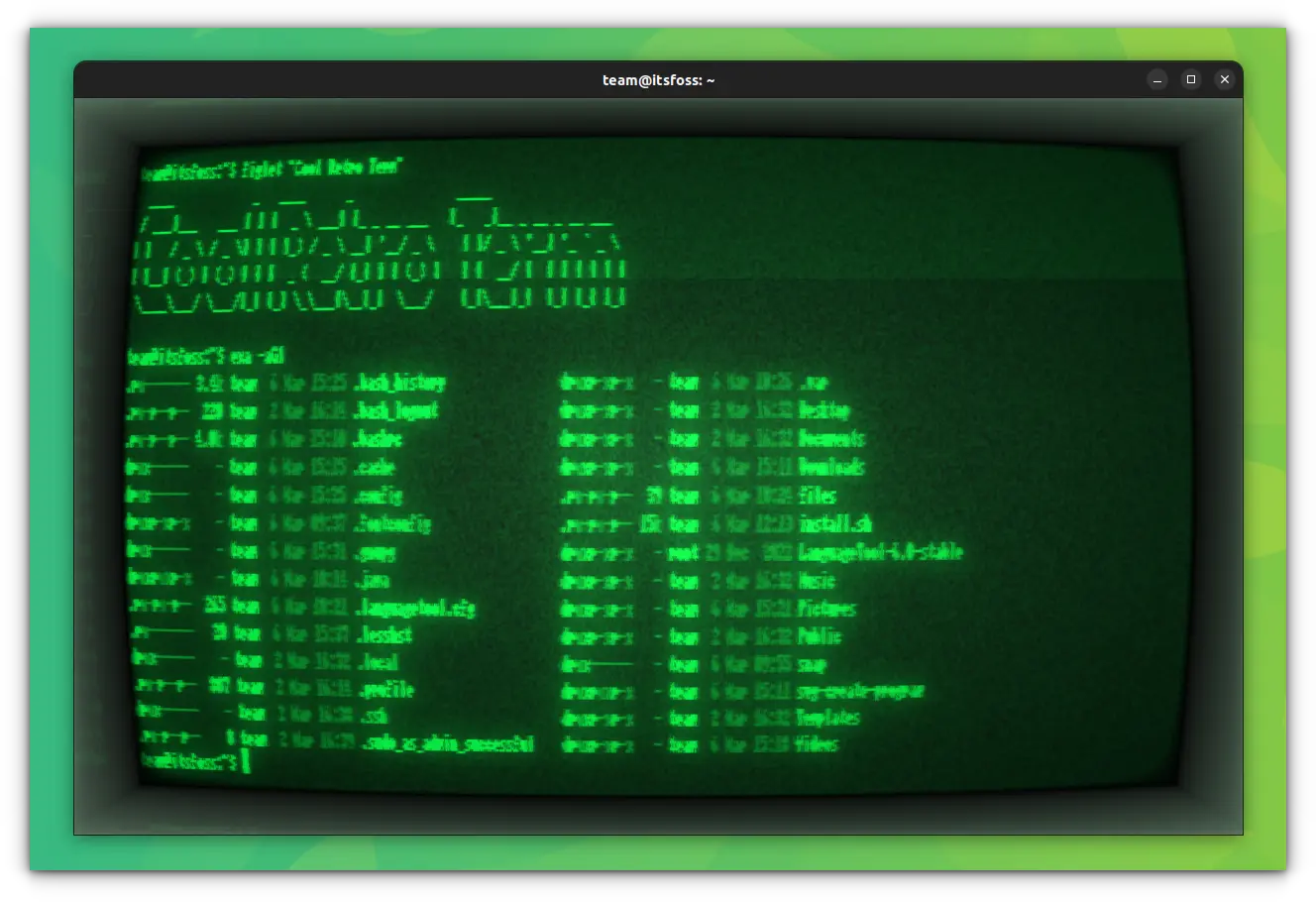

12,432 | 让你的 Linux 终端变得酷炫复古 | https://itsfoss.com/cool-retro-term/ | 2020-07-19T21:41:00 | [

"终端",

"怀旧"

] | https://linux.cn/article-12432-1.html |

>

> 怀旧?那就安装复古终端应用程序 [cool-retro-term](https://github.com/Swordfish90/cool-retro-term) 吧 —— 顾名思义,它既酷又复古。

>

>

>

你还记得那个到处都是 CRT 显示器,终端屏幕经常闪烁的年代吗?你不需要那么老,就能见证它的存在。如果你看上世纪九十年代初的电影,你会看到大量的 CRT 显示器和绿色/黑白的命令提示符。那种极客的光环,让它感觉很酷。

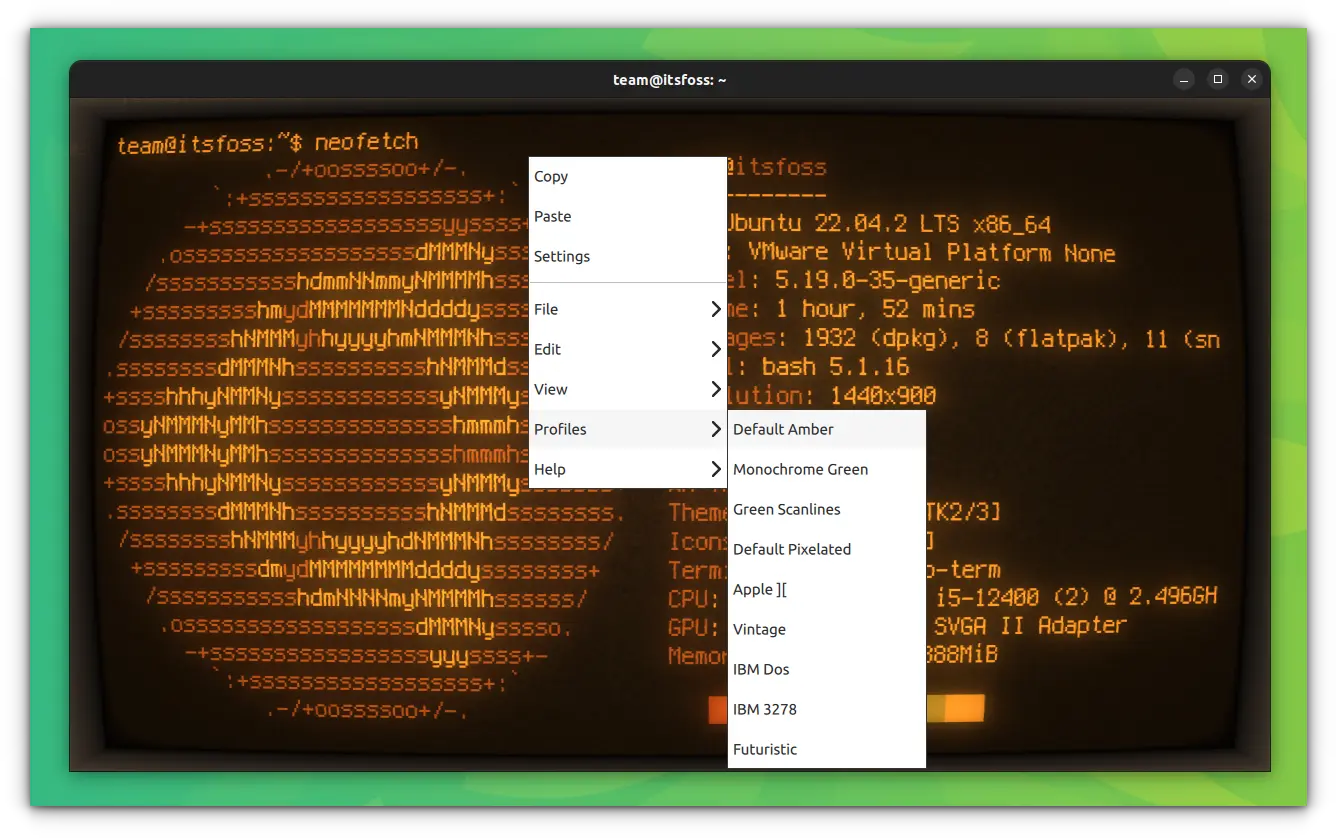

如果你厌倦了你的仿真终端的外观,你需要一些酷炫的“新”东西,[cool-retro-term](https://github.com/Swordfish90/cool-retro-term) 会给你一个复古的终端机外观,让你重温过去。你还可以改变它的颜色、动画种类,并为它添加一些效果。

### Linux 中用 Snap 包安装

在 Linux 中安装 `cool-retro-term` 的方法有很多种。一种与发行版无关的方法是使用 Snap 软件包。如果你的 Linux 发行版支持 Snap,你可以使用这个命令来安装 `cool-retro-term`:

```

sudo snap install cool-retro-term --classic

```

Ubuntu 用户也可以在软件中心找到它。

如果你不想使用 Snap 包,也不用担心。还有其他的方法来安装它。

### 在基于 Ubuntu 的 Linux 发行版中安装

有一些 PPA 可以轻松地将 `cool-retro-term` 安装到基于 Ubuntu 的发行版上,如 Linux Mint、Elementary OS、Linux Lite 等。

对于 Ubuntu 18.04、18.10、19.04、Linux Mint 19 及更高版本,请使用此 PPA:

```

sudo add-apt-repository ppa:vantuz/cool-retro-term

sudo apt update

sudo apt install cool-retro-term

```

对于 Ubuntu 16.04 和 Linux Mint 18 系列,请用此 PPA:

```

sudo add-apt-repository ppa:noobslab/apps

sudo apt update

sudo apt-get install cool-retro-term

```

### 在基于 Arch 的 Linux 发行版中安装

在基于 Arch 的 Linux 发行版(如 Antergos 和 Manjaro)中安装 `cool-retro-term`,使用以下命令:

```

sudo pacman -S cool-retro-term

```

### 从源码中安装

如果要[从源代码中安装](https://itsfoss.com/install-software-from-source-code/)这个应用程序,你需要先[安装 Git 和一些依赖关系](https://itsfoss.com/install-git-ubuntu/)。在基于 Ubuntu 的发行版中,一些已知的依赖关系是:

```

sudo apt-get install git build-essential qmlscene qt5-qmake qt5-default qtdeclarative5-dev qtdeclarative5-controls-plugin qtdeclarative5-qtquick2-plugin libqt5qml-graphicaleffects qtdeclarative5-dialogs-plugin qtdeclarative5-localstorage-plugin qtdeclarative5-window-plugin

```

其他发行版的已知依赖关系可以在 [cool-retro-term 的 GitHub 存储库](https://github.com/Swordfish90/cool-retro-term)上找到。

现在使用下面的命令来编译程序:

```

git clone https://github.com/Swordfish90/cool-retro-term.git

cd cool-retro-term

qmake && make

```

程序编译完成后,你可以用这个命令来运行它:

```

./cool-retro-term

```

如果你喜欢把这个应用放在程序菜单中,以便快速访问,而不用每次都用命令手动运行它,你可以使用下面的命令:

```

sudo cp cool-retro-term.desktop /usr/share/applications

```

你可以在这里学习更多的终端技巧。享受 Linux 中的复古终端 :)

---

via: <https://itsfoss.com/cool-retro-term/>

作者: [Abhishek Prakash](https://itsfoss.com/author/abhishek/) 译者:[wxy](https://github.com/wxy) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](/article-11810-1.html) 荣誉推出

| 200 | OK |

Nostalgic about the past? Get a slice of the past by installing the retro terminal app [cool-retro-term](https://github.com/Swordfish90/cool-retro-term?ref=its-foss), which, as the name suggests, is both cool and retro at the same time.

Do you remember the time when there were CRT monitors everywhere and the terminal screen used to flicker? You don’t need to be old to have witnessed it. If you watch movies set in the early ’90s, you’ll see plenty of CRT monitors with green/B&W command prompts. It has a geeky aura which makes it cooler.

If you are tired of terminal appearance and you need something cool and ‘new’, cool-retro-term will give you a vintage terminal appearance to relive the past. You can also change its color, and animation kind, and add some effect to it.

## Install Cool Retro Term in Linux [Recommended]

Since it is a quite popular tool, it is available on the official repositories of almost all Linux distributions. In Ubuntu, you can install it by running the following command a terminal:

`sudo apt install cool-retro-term`

Similarly, if you are running Arch Linux, use:

`sudo pacman -S cool-retro-term`

Or, in Fedora:

`sudo dnf install cool-retro-term`

To remove the package installed from repositories, you can use the respective removal command:

```

sudo apt remove cool-retro-term

sudo pacman -Rs cool-retro-term

sudo dnf remove cool-retro-term

```

Respectively for Ubuntu, Arch Linux, and Fedora.

## Install Cool Retro Term in Older Ubuntu-based Linux distributions

There are PPAs available for easily installing cool-retro-term for Ubuntu 18.04 and Linux Mint 19.

```

sudo add-apt-repository ppa:vantuz/cool-retro-term

sudo apt update

sudo apt install cool-retro-term

```

To remove, run:

```

sudo apt remove cool-retro-term

sudo add-apt-repository --remove ppa:vantuz/cool-retro-term

sudo apt update

```

## Install cool-retro-term from the source code [Not Recommended]

For [installing this application from the source code](https://itsfoss.com/install-software-from-source-code/), you need to [install Git and a number of dependencies](https://itsfoss.com/install-git-ubuntu/) first. Some of the known dependencies in Ubuntu-based distributions are:

```

sudo apt install \

git \

build-essential \

qmlscene \

qt5-qmake \

qt5-default \

qtdeclarative5-dev \

qml-module-qtquick-controls2 \

qml-module-qtgraphicaleffects \

qml-module-qtquick-dialogs \

qml-module-qtquick-localstorage \

qml-module-qtquick-window2 \

qml-module-qt-labs-settings \

qml-module-qt-labs-folderlistmodel \

qtquickcontrols2-5-dev

```

Extra packages needed for at least Ubuntu 20.04

```

sudo apt install \

qtquickcontrols2-5-dev \

qml-module-qt-labs-platform \

qml-module-qtquick-controls \

qml-module-qtquick-layouts \

qml-module-qtquick-localstorage

```

Now, run the following command to install it:

```

git clone --recursive https://github.com/Swordfish90/cool-retro-term.git

cd cool-retro-term

qmake && make

```

Once the program is compiled, you can run it with this command:

`./cool-retro-term`

If you like to have this app in the program menu for quick access so that you won’t have to run it manually each time with the commands, you can use the command below:

`sudo cp cool-retro-term.desktop /usr/share/applications`

To remove the Cool Retro Term, if you did not copy the desktop file to */usr/share/applications*, just remove the `cool-retro-term`

folder from the location, where you performed the commands. Else, delete the desktop file from */usr/share/applications* also.

Now run:

`sudo apt autoremove`

Or remove all the unnecessary dependency packages.

## Install Cool Retro Term in Linux as Snap

If [your Linux distribution has Snap support enabled](https://itsfoss.com/install-snap-linux/), you can use this command to install cool-retro-term:

`sudo snap install cool-retro-term --classic`

Ubuntu users can find it in the Software Center as well.

To remove it, use:

`sudo snap remove cool-retro-term`

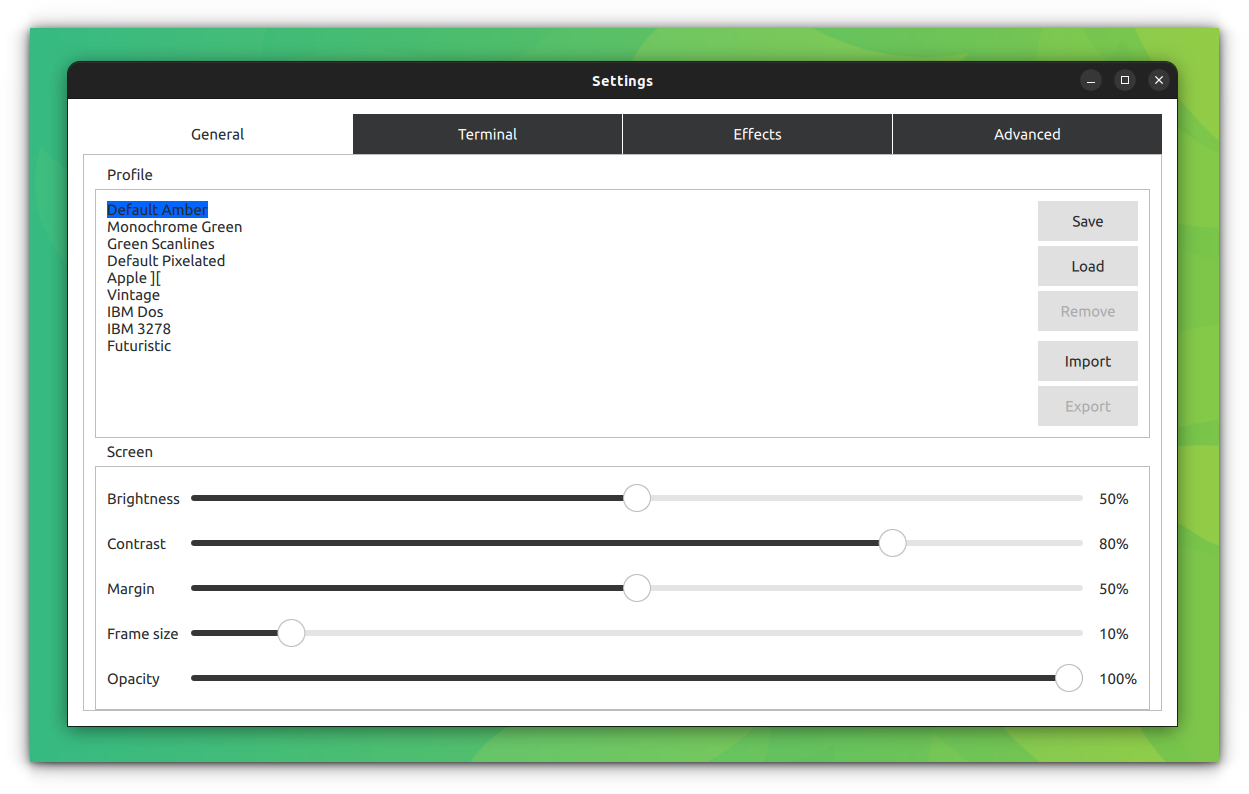

## Tweaking Cool Retro Term

Cool-retro-term offers several ways to tweak the appearance. You can access the settings by right-clicking on the interface if there is no menu panel by default.

From here, you can go to the **Settings **option for further granular tweaks.

In my opinion, it’s one of the [best terminal emulators for Linux](https://itsfoss.com/linux-terminal-emulators/). It gets all the points for bringing back memories. Enjoy the vintage terminal in Linux :)

## Want more retro stuff in Linux?

If you like retro stuff like this, there are plenty more such thing to take you back into the 80s and 90s.

For example, playing [retro games with DOSBox](https://itsfoss.com/ubuntu-dosbox/).

[Install DOSBox in Ubuntu to Play Retro GamesThe DOSbox emulator lets you use the disk operating systems of the 80s. You can also use it to play retro games on Linux.](https://itsfoss.com/ubuntu-dosbox/)

Got a spare system? Turn it into a retro gaming console with specially crafted Linux distros.

[Linux Distros That Turn Your PC into Retro Gaming ConsoleSteam Deck is making news for all the right reasons. It is a fantastic piece of tech, powered by a variant of Arch Linux (SteamOS 3.0) developed by Valve. While you can install any other operating system in it, it is best to have it as it is for](https://itsfoss.com/retro-gaming-console-linux-distros/)

Or just read about others re-exploring the software from the bygone era.

[Trying Common Desktop Environment on a Modern Linux DistroBill shares his re-experience with Common Desktop Environment (CDE), the de-facto standard windowing environment on UNIX systems in the 90s.](https://itsfoss.com/common-desktop-environment/)

|

12,433 | 在 Fedora 32 上运行 Docker | https://fedoramagazine.org/docker-and-fedora-32/ | 2020-07-20T09:16:00 | [

"Docker",

"Fedora"

] | https://linux.cn/article-12433-1.html |

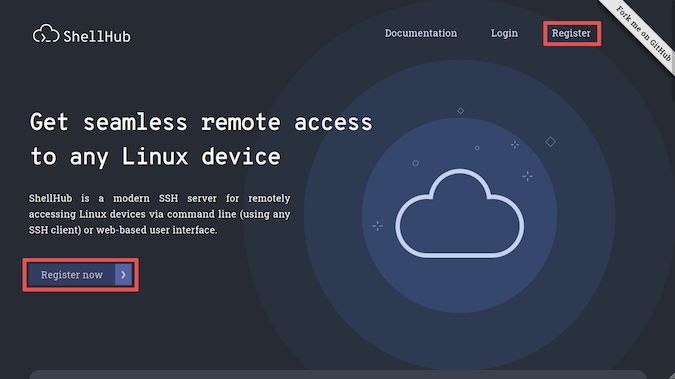

随着 Fedora 32 的发布,[Docker](https://docs.docker.com/) 的普通用户面临着一个小挑战。在编写本文时,Fedora 32 不支持 Docker。虽然还有其他选择,例如 [Podman](https://podman.io/getting-started/) 和 [Buildah](https://buildah.io/),但是对于许多现有用户而言,现在切换可能不是最佳时机。因此,本文可以帮助你在 Fedora 32 上设置 Docker 环境。

### 步骤 0:消除冲突

此步骤适用于从 Fedora 30 或 31 升级的任何用户。如果全新安装的 Fedora 32,那么可以跳到步骤 1。

删除 docker 及其所有相关组件:

```

sudo dnf remove docker-*

sudo dnf config-manager --disable docker-*

```

### 步骤 1:系统准备

在 Fedora 的最后两个版本中,操作系统已迁移到两项新技术: CGroups 和用于防火墙的 NFTables。尽管这些新技术的详细信息不在本教程的讨论范围之内,但是令人遗憾的是,Docker 还不支持这些新技术。因此,你必须进行一些更改才能在 Fedora 上使用 Docker。

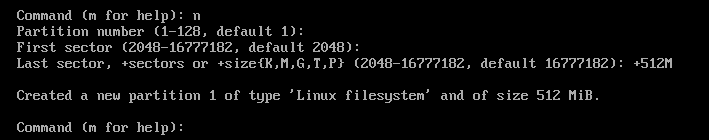

#### 启用旧的 CGroups

Fedora 仍然支持 CGroups 的先前实现,可以使用以下命令启用它:

```

sudo grubby --update-kernel=ALL --args="systemd.unified_cgroup_hierarchy=0"

```

#### 在防火墙中将 Docker 列入白名单

为了让 Docker 具有网络访问权限,需要两个命令:

```

sudo firewall-cmd --permanent --zone=trusted --add-interface=docker0

sudo firewall-cmd --permanent --zone=FedoraWorkstation --add-masquerade

```

第一个命令将 Docker 接口添加到受信任的环境,它允许 Docker 建立远程连接。第二个命令将允许 Docker 建立本地连接。当有多个 Docker 容器作为开发环境时,这特别有用。

### 步骤 2:安装 Moby

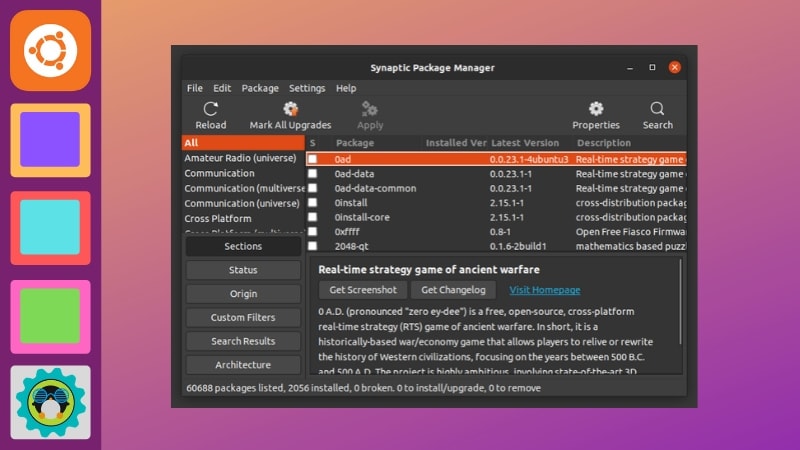

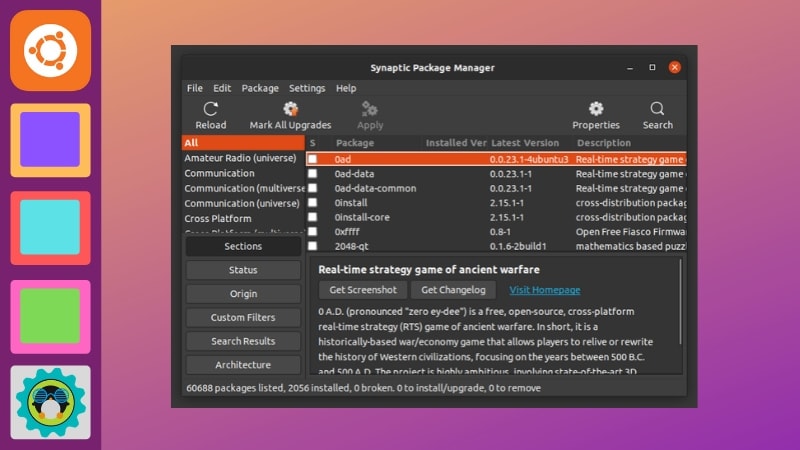

[Moby](https://mobyproject.org/) 是 Docker 的开源白牌版本。它基于相同的代码,但不带商标。它包含在 Fedora 的主仓库中,因此很容易安装:

```

sudo dnf install moby-engine docker-compose

```

这将安装 `moby-engine`、`docker-compose`、`containerd` 和其他一些相关的库。安装完成后,你必须启用系统级守护程序才能运行 Docker:

```

sudo systemctl enable docker

```

### 步骤 3:重新启动并测试

为了确保正确处理所有系统和设置,你现在必须重启计算机:

```

sudo systemctl reboot

```

之后,你可以使用 Docker hello-world 包来验证安装:

```

sudo docker run hello-world

```

除非遇到问题,不然你就会看到来自 Docker 的问候!

#### 以管理员身份运行

可选地,你现在还可以将用户添加到 Docker 的组帐户中,以便无需输入 `sudo` 即可启动 Docker 镜像:

```

sudo groupadd docker

sudo usermod -aG docker $USER

```

注销并登录以使更改生效。如果以管理员权限运行容器的想法让你有所顾虑,那么你应该研究 Podman。

### 总结

从现在起,Docker 将按照你的习惯工作,包括 `docker-compose` 和所有与 `docker` 相关的工具。不要忘记查看官方文档,这在很多情况下可以为你提供帮助。

Fedora 32 上 Docker 的当前状态并不理想。缺少官方软件包可能会困扰一些人,有一个[上游问题](https://github.com/docker/for-linux/issues/955)对此进行了讨论。对 [CGroups](https://github.com/moby/moby/issues/40360) 和 [NFTables](https://github.com/moby/moby/issues/26824) 缺少支持更多是技术问题,但是你可以查看它们在其公共问题上的进展。

这些指导应可以让你就像什么都没有发生一样继续工作。如果这不能满足你的需求,请不要忘记到 Moby 或 Docker Github 页面去寻求解决你的技术问题,或者看看 Podman,长期来看,它可能会更加强大。

---

via: <https://fedoramagazine.org/docker-and-fedora-32/>

作者:[Kevin "Eonfge" Degeling](https://fedoramagazine.org/author/eonfge/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | With the release of Fedora 32, regular users of [Docker](https://docs.docker.com/) have been confronted by a small challenge. At the time of writing, Docker is not supported on Fedora 32. There are alternatives, like [Podman](https://podman.io/getting-started/) and [Buildah](https://buildah.io/), but for many existing users, switching now might not be the best time. As such, this article can help you set up your Docker environment on Fedora 32.

*NOTE*: A more recent article covering[ Docker and Fedora 34/35](https://fedoramagazine.org/docker-and-fedora-35/) is now available.

## Step 0: Removing conflicts

This step is for any user upgrading from Fedora 30 or 31. If this is a fresh installation of Fedora 32, you can move on to step 1.

To remove docker and all its related components:

sudo dnf remove docker-* sudo dnf config-manager --disable docker-*

## Step 1: System preparation

With the last two versions of Fedora, the operating system has moved to two new technologies: CGroups and NFTables for the Firewall. While the details of these new technologies is behind the scope of this tutorial, it’s a sad fact that docker doesn’t support them yet. As such, you’ll have to make some changes to facilitate Docker on Fedora.

### Enable old CGroups

The previous implementation of CGroups is still supported and it can be enabled using the following command.

sudo grubby --update-kernel=ALL --args="systemd.unified_cgroup_hierarchy=0"

### Whitelist docker in firewall

To allow Docker to have network access, two commands are needed.

sudo firewall-cmd --permanent --zone=trusted --add-interface=docker0 sudo firewall-cmd --permanent --zone=FedoraWorkstation --add-masquerade

The first command will add the Docker-interface to the trusted environment which allows Docker to make remote connections. The second command will allow docker to make local connections. This is particularly useful when multiple Docker containers are in as a development environment.

## Step 2: installing Moby

[Moby](https://mobyproject.org/) is the open-source, white label version of Docker. It’s based on the same code but it does not carry the trademark. It’s included in the main Fedora repository, which makes it easy to install.

sudo dnf install moby-engine docker-compose

This installs *moby-engine*, *docker-compose*, *containerd* and some other related libraries. Once installed, you’ll have to enable the system-wide daemon to run docker.

sudo systemctl enable docker

## Step 3: Restart and test

To ensure that all systems and settings are properly processed, you’ll now have to reboot your machine.

sudo systemctl reboot

After that, you can validate your installation using the Docker hello-world package.

sudo docker run hello-world

You are then greeted by the Hello from Docker! unless something went wrong.

### Running as admin

Optionally, you can now also add your user to the group account of Docker, so that you can start docker images without typing *sudo*.

sudo groupadd docker sudo usermod -aG docker $USER

Logout and login for the change to take effect. If the thought of running containers with administrator privileges concerns you, then you should look into Podman.

## In summary

From this point on, Docker will work how you’re used to, including docker-compose and all docker-related tools. Don’t forget to check out the official documentation which can help you in many cases where something isn’t quite right.

The current state of Docker on Fedora 32 is not ideal. The lack of an official package might bother some, and there is an [issue upstream](https://github.com/docker/for-linux/issues/955) where this is discussed. The missing support for both [CGroups](https://github.com/moby/moby/issues/40360) and [NFTables](https://github.com/moby/moby/issues/26824) is more technical, but you can check their progress in their public issues.

These instruction should allow you to continue working like nothing has happened. If this has not satisfied your needs, don’t forget to address your technical issues at the Moby or Docker Github pages, or take a look at Podman which might prove more robust in the long-term future.

## Vincent Rubiolo

Hi there and thanks for the article, a useful sumup of the various threads lying around which will benefit a lot of people moving to Fedora32!

Just a note that w.r.t cgroups, it’s the move to cgroupsv2 which is a problem for Docker and which requires to move back to cgroupsv1.

The Redhat Enable Sysadmin site did a great article about this when Fedora 31 was released: https://www.redhat.com/sysadmin/fedora-31-control-group-v2, I can only recommend it.

## Ricky Zhang

I confirmed this is the exact problem starting form Fedora 31.

## Christian Groove

Podman is a wonderful replacement for the unsafe docker. I used it to run my accounting software, in a container that is executed as a non-root service under a technical user and a customized selinux config.

## Guus

It would be interesting to know why you find docker unsafe. Do you have a link to a document that explains this?

P.S. I am currently not a user of docker, neither of podman. But I do want to understand the technologies behind both and I would like to know why pod is safe and docker is not.

## Stephen Snow

One of the reasons Podman was created was to facilitate the use of rootless containers. or containers without root privileges on the host. Docker requires the Docker Daemon which runs as root. That is the fundamental difference.

## Mark

I prefer docker to podman from an admin and disk space point of view. With the docker daemon you have a single point to view all running containers that may impact your system plus all the images are stored in one place. Plus while ‘docker stats’ is not that accurate you do get an idea of what impact each container is having.

With podman and users building and running containers as they see fit, plus each user having their own images and container overlays in their home directory structure, on a multi-user system it is a waste of disk space an no easy overview of what containers are running.

From a system admin viewpoint Podman should be used only on single user developer workstations, not on a multi-user server.

## Stephen Snow

I would think that the added security of Podman would have been a plus from the Sys Admin POV. Podman is part of a trio of software for managing containers and images > buildah, podman, and skopeo.

## shy

There are certain things everyone show know about Docker:

https://github.com/docker/for-linux/issues/477

## Mehdi

But, podman also gives a root shell when you run a container (using run -ti) with it. How is that rootless? Would you please explain?

## Stephen Snow

Hello @Medhi,

When a user starts podman using the run command and the -ti options, they are running a container in their own namespace, which should be specified in /etc/subuid . The -ti just tells the podman run command it is a tty(-t) and interactive(-i). While you can be “root” within the container since you are the owner of the namespace used when the container is run, the container is rootless on your system.

## Olivier

To me all the unsafe is here :

and dockerd run as root. You can access host through volume and do what you want, such as change root password on the host…

## kayke

you can use this command is a little bit safer then usermod

sudo setfacl –modify user:$USER:rw /var/run/docker.sock

## shy

Podman development is progressing. There is podman-compose to replace docker-compose https://github.com/containers/podman-compose/

XWIKI runs on podman using podman-compose https://forum.xwiki.org/t/xwiki-docker-images-run-on-podman/6869

Pi-hole runs on podman using podman-compose https://discourse.pi-hole.net/t/pi-hole-runs-on-podman/34719

## Alejandro

Awesome, this is exactly what I mentioned in previous comment! Will have a look 😀

## Daniel

Thank you! I was missing an article to point this out.

## Yogesh Sharma

Following can also help

sudo dnf install podman-docker

Description : This package installs a script named docker that emulates the Docker CLI by executes podman commands, it also creates links between all Docker CLI man pages and podman.

## Alejandro

Nice article, I struggled to find an article a few months back when I moved to Fedora 32 regarding the state of Docker. Or one that actually worked for me, anyway. I decided to start investing time in Podman*, which I love, although for some reason -probably me- not all the same commands I had for docker worked.

Slightly off-topic, I wish Podman had a gender neutral name.

## Einer

Hmmmm ….. is “Alejandro” a “gender neutral name”? …….. Maybe you should change it ……..

(Podman == Pod Manager … looks pretty “gender neutral” to me ..)

## Alberto Patino

podmanwoman or podwomanman or podwo(man)^2 maybe

## John

What’s wrong with the name Podman?

## Alan

PodMan is a gender neutral name. The man stands for manager not male.

## W T Costa

There is also a question of the docker network and the firewallD. I managed to make it work by adding the docker0 network to the default group.

But I’m a user of another distribution (‘de’ …) and I’m still getting started with Fedora.

Aguém indicates a good tutorial, even simple, FirewallD?

## Sebastiaan Franken

I’ve found the firewalld man page to be a good starting point. Also, the firewalld website (firewalld [dot] org) has quite a bit of info!

## Stephane

Heads up, this worked but completely broke my libvirtd virtual machine.. Something about python-nftables failing.

I had to revert all the steps to boot the VM again… I will probably have to use podman instead of docker.

## Esteban

Check to make sure the bridges don’t overlap. It’s happened to me.

## drakkai

I’m using the docker repo for F31 on F32 without problems.

[docker-ce-stable]

name=Docker CE Stable – $basearch

baseurl=https://download.docker.com/linux/fedora/31/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://download.docker.com/linux/fedora/gpg

## Elad

How is this working ? 🙂

## Bruno

Same for me. The fedora 31 Docker repo works fine in Fedora 32.

Fortunately I also don’t use firewalld but ufw, so I didn’t have to change anything in the Firewall also 😉

Great article,

## Tomasz Gąsior

For me, reverting firewalld backend from nftables to iptables worked better than changing firewalld settings.

## Mark

Yes, as far as I am aware docker-ce runs without any issues. While I don’t use it on Fedora I run it on CentOS8 which is also cgroups2 without any issues.

## Sergey

https://www.redhat.com/en/blog/world-domination-cgroups-rhel-8-welcome-cgroups-v2

RHEL 8 ships with cgroups v1 as the default.

## Heiko Loewe

Hi,

awesome article.

But what about Nvidia integration. I found nothing on github how to install the nvidia-docker-plugin with moby.

Heiko

## vic

This is sad. I loved using older Fedora, due to RedHat heritage.

Due to Docker issues, I’m starting to look at PopOS.

## Trung LE

I am not a big fan of moby and love to switch to podman if possible. I wish one day commercial support for podman would get on par with docker.

Btw, if people start running into issue with libvirt after installing moby-engine, check this https://bugzilla.redhat.com/show_bug.cgi?id=1829090

## Anonymous

Thanks for the guide, this publication rocks! Zsh users here will need to quote “docker-*” in the first two commands to avoid expansion.

sudo dnf ‘remove docker-

‘‘sudo dnf config-manager ‘–disable docker-

## Tim Hughes

This breaks vagrant-libvirt integration with the following error:

To fix I deleted everything under

and then reinstall firewalld

sudo dnf reinstall -y firewalld

restarted firewalld and libvirt

sudo systemctl restart libvirtd

## Hubbs

This has completely broken libvirtd VM networking. The VMs can not access the Internet. The docker rules prevent libvirtd for putting the proper firewall rules in places. If VMs need to access the Internet, I need to restart firewalld and libvirtd. In turn, my docker containers will fail. Podman is useless because the work flow at my employer, where no one else is using a linux box, requires the ability to manipulate docker containers that podman doesn’t support now or doesn’t easily support. |

12,435 | OWL 项目:物联网正尝试在灾难中让一切保持联络 | https://www.networkworld.com/article/3564980/project-owl-iot-trying-to-hold-connectivity-together-in-disasters.html | 2020-07-21T13:56:28 | [

"IoT"

] | https://linux.cn/article-12435-1.html |

>

> 当自然灾害破坏了传统的通信连接时,配置在<ruby> 多跳网络 <rt> mesh network </rt></ruby>的物联网设备可以迅速部署以提供基本的连接。

>

>

>

OWL 项目负责人在最近的开源峰会上说,一个以多跳网络、物联网和 LoRa 连接为中心的开源项目可以帮助急救和受灾人员在自然灾害之后保持联系。

OWL 项目的应用场景是当在自然灾害之后频繁发生的通信中断时。无论是蜂窝网络还是有线网络,大范围的中断会频繁阻碍急救服务、供应和在暴风雨或其他重大灾难后必须解决关键问题的信息流。

该项目通过一大群“<ruby> 鸭子 <rt> duck </rt></ruby>”(便宜、易于部署且不需要现有基础设施支持的小型无线模块)实现这个目的。一些“鸭子”是太阳能的,其它一些则用的是耐用电池。每只“鸭子”配备一个 LoRa 无线电,用于在网络上和其它“鸭子”进行通信,同时还配备有 Wi-Fi,而且可能配备蓝牙和 GPS 来实现其他功能。

这个想法是这样的,当网络瘫痪时,用户可以使用他们的智能手机或者笔记本电脑与“鸭子”建立一个 Wi-Fi 连接,这个“鸭子”可以将小块的信息传递到网络的其他部分。信息向网络后端传递,直到到达“<ruby> 鸭子爸爸 <rt> papaduck </rt></ruby>”,“鸭子爸爸”装备了可以与云上的 OWL 数据管理系统连接的卫星系统(OWL 代表 ”<ruby> 组织 <rt> organization </rt></ruby>、<ruby> 位置 <rt> whereabouts </rt></ruby>和<ruby> 物流 <rt> logistics </rt></ruby>”)。信息可以通过云在智能手机或者网页上进行可视化,甚至可以通过 API 插入到现有的系统中。

秘密在于“<ruby> 鸭群 <rt> ClusterDuck </rt></ruby>” 协议,这是一个开源固件,即使在一些模块不能正常工作的网络中,它仍然能保持信息流通。它就是设计用来工作在大量便宜且容易获取的计算硬件上,类似树莓派的硬件,这样可以更容易且更快捷的建立一个“鸭群”网络。

创始人 Bryan Knouse 表示,这个项目的创建,是因为在 2017 年和 2018 年的毁灭性飓风中,要与受影响社区进行有效的通信而采取救援措施,面临着巨大的困难。

“我们的一些创始成员经历了这些灾难,然后我们会问‘我们该做些什么?’”,他说道。

在马亚圭斯,该项目有一批来自波多黎各大学的学生和教授,大多数的系统测试都在那里进行。Knouse 说,校园中目前有 17 个太阳能“鸭子”,分布在屋顶和树上,并且计划增加数量。

他说,“这种关系实际上创建了一个开源社区,这些学生和教授正在帮助我们开发这个项目。”

---

via: <https://www.networkworld.com/article/3564980/project-owl-iot-trying-to-hold-connectivity-together-in-disasters.html>

作者:[Jon Gold](https://www.networkworld.com/author/Jon-Gold/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[Yufei-Yan](https://github.com/Yufei-Yan) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

12,438 | 我的 Linux 故事:从用户到贡献者 | https://opensource.com/article/20/7/linux-user-contributor | 2020-07-22T11:41:00 | [

"Linux",

"故事"

] | /article-12438-1.html |

>

> 开源社区欢迎来自不同背景和技能的贡献者。

>

>

>

我是一名 IT 专业人士,拥有超过 15 年经验,担任过不同职位 —— 包括系统管理员、高级 Linux 管理员、DevOps 工程师、自动化顾问和高级<ruby> 敏捷专家 <rt> scrum master </rt></ruby>。我开始是在 Ubuntu 上学习 Linux,但是后来作为系统管理员转到 CentOS,然后我又转到 Fedora 作为个人使用。但是我对技术的喜爱要远比我使用第一个 Linux 发行版要早的多,而且是来自于一部电影。

我最喜欢的电影是《<ruby> 黑客 <rt> Hackers </rt></ruby>》。最精彩的一幕发生在电影的开头。电影一开始,一群特工冲进一所房子抓捕臭名昭著的黑客 Zero Cool。我们马上发现 Zero Cool 其实是 11 岁的 Dade Murphy,他在一天之内成功瘫痪了 1507 台计算机系统。他被指控犯罪,他的家人被处以重罚。而且,在他 18 岁之前,他都被禁止使用电脑或按键式电话。

<ruby> 劳伦斯·梅森 <rt> Laurence Mason </rt></ruby>扮演的 Paul Cook,又名 Nikon 勋爵,是我最喜欢角色。其中一个主要原因是,我从没有看过一个黑客电影里面的人物长的像我,所以我被他的形象深深吸引了。他很神秘。这让我耳目一新,并且感到自豪,我对 IT 充满了热情,我也是一个和他很像的极客。

### 成为一个 Linux 贡献者

15 年前,我开始使用 Linux。当我成为一个 Linux 管理员的时候,Linux 就成了我的激情所在。我一直尝试找到某种方式能够为开源作出贡献,当时我还不知道该从哪开始。因为这个社区实在是太大了,我不知道自己能否真正成为一个有影响力的人,但当我发现一些人认可我的兴趣,还对我进行指导,我开始彻底打开心扉,问各种问题,并且从社区中学习。自从那以后,Fedora 社区一直是我做贡献的最主要社区。

我现在对于向开源做贡献还是有点稚嫩。当我意识到我可以用代码以外的方式来贡献时,我对开源的想法发生了改变。我更喜欢通过文档做一些贡献,因为我本质上不是一个软件开发人员,而且社区里面最迫切的需求正是文档。请记住:用户的技能和开发人员的技能同样重要。

### 我的硬件是什么?

硬件也很重要,而且现在几乎所有东西都可以运行 Linux。现在,我家里的配置包括:

* 联想 Thinksever TS140,64 GB 内存,4 x 1 TB SSD 和一个存储数据的 1 TB 机械硬盘

* 使用 RAID 5 配置的 164 TB Synology NAS