id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

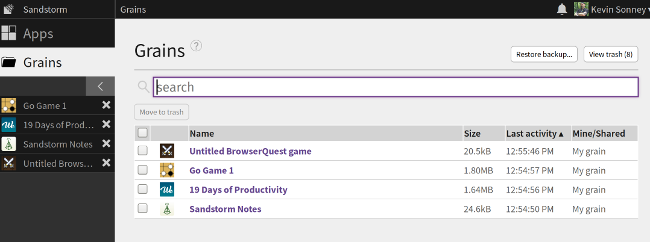

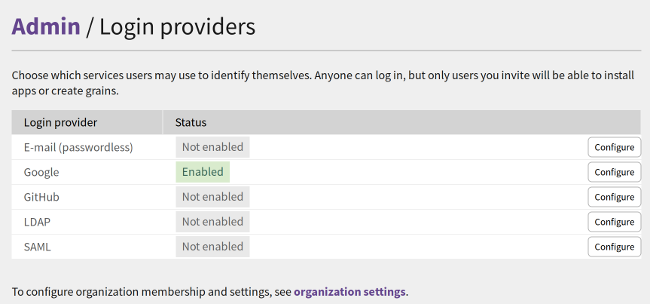

10,457 | Turtl:Evernote 的开源替代品 | https://opensource.com/article/17/12/using-turtl-open-source-alternative-evernote | 2019-01-19T13:38:50 | [

"Evernote",

"笔记"

] | https://linux.cn/article-10457-1.html |

>

> 如果你正在寻找一个 Evernote 和 Google Keep 的替代品,那么 Turtl 是一个可靠的记笔记工具。

>

>

>

我认识的每个人都会记笔记,许多人使用在线笔记应用,如 Evernote、Simplenote 或 Google Keep。这些都是很好的工具,但你不得不担忧信息的安全性和隐私性 —— 特别是考虑到 [Evernote 2016 年的隐私策略变更](https://blog.evernote.com/blog/2016/12/15/evernote-revisits-privacy-policy/)。如果你想要更好地控制笔记和数据,你需要转向开源工具。

无论你离开 Evernote 的原因是什么,都有开源替代品。让我们来看看其中一个选择:Turtl。

### 入门

[Turtl](https://turtlapp.com/) 背后的开发人员希望你将其视为“具有绝对隐私的 Evernote”。说实话,我不能保证 Turtl 提供的隐私级别,但它是一个非常好的笔记工具。

要开始使用 Turtl,[下载](https://turtlapp.com/download/)适用于 Linux、Mac OS 或 Windows 的桌面客户端,或者获取它的 [Android 应用](https://turtlapp.com/download/)。安装它,然后启动客户端或应用。系统会要求你输入用户名和密码。Turtl 使用密码来生成加密密钥,根据开发人员的说法,加密密钥会在将笔记存储在设备或服务器上之前对其进行加密。

### 使用 Turtl

你可以使用 Turtl 创建以下类型的笔记:

* 密码

* 档案

* 图片

* 书签

* 文字笔记

无论你选择何种类型的笔记,你都可以在类似的窗口中创建:

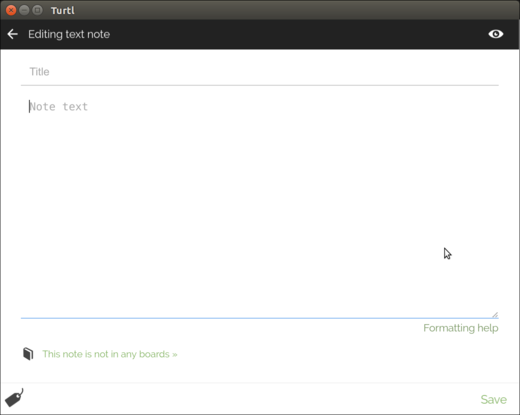

*在 Turtl 中创建新笔记*

添加笔记标题、文字并(如果你正在创建文件或图像笔记)附加文件或图像等信息。然后单击“保存”。

你可以通过 [Markdown](https://en.wikipedia.org/wiki/Markdown) 为你的笔记添加格式。因为没有工具栏快捷方式,所以你需要手动添加格式。

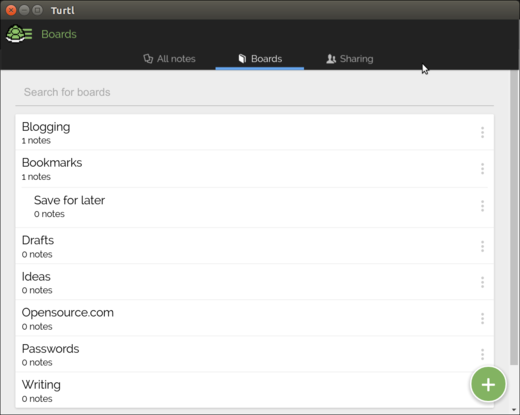

如果你需要整理笔记,可以将它们添加到“白板”中。白板就像 Evernote 中的笔记本。要创建新的白板,请单击 “Board” 选项卡,然后单击“创建”按钮。输入白板的标题,然后单击“创建”。

*在 Turtl 中创建新的白板*

要向白板中添加笔记,请创建或编辑笔记,然后单击笔记底部的“此笔记不在任何白板”的链接。选择一个或多个白板,然后单击“完成”。

要为笔记添加标记,请单击记事本底部的“标记”图标,输入一个或多个以逗号分隔的关键字,然后单击“完成”。

### 跨设备同步你的笔记

例如,如果你在多台计算机和 Android 设备上使用 Turtl,Turtl 会在你上线时同步你的笔记。但是,我在同步时遇到了一个小问题:我手机上创建的笔记经常不会同步到我的笔记本电脑上。我尝试通过单击窗口左上角的图标手动同步,然后单击立即同步,但这并不总是有效。我发现偶尔需要单击“设置”图标,然后单击“清除本地数据”。然后我需要重新登录 Turtl,但所有数据都能正确同步了。

### 一个疑问和一些问题

当我开始使用 Turtl 时,我被一个疑问所困扰:我的笔记保存在哪里?事实证明,Turtl 背后的开发人员位于美国,这也是他们服务器的所在地。虽然 Turtl 使用的加密[非常强大](https://turtlapp.com/docs/security/encryption-specifics/)并且你的笔记在服务器上加密,但我偏执地认为你不应该在 Turtl 中保存任何敏感内容(或在任何类似的在线笔记中)。

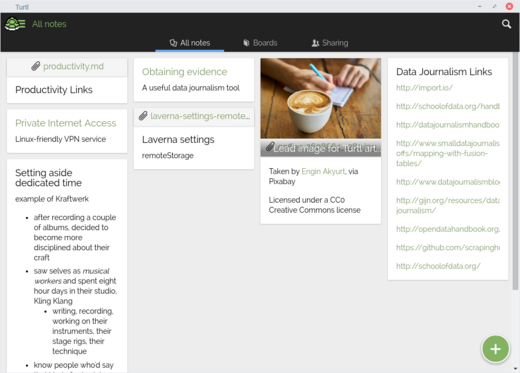

Turtl 以平铺视图显示笔记,让人想起 Google Keep:

*Turtl 中的一系列笔记*

无法在桌面或 Android 应用上将其更改为列表视图。这对我来说不是问题,但我听说有些人因为它没有列表视图而不喜欢 Turtl。

说到 Android 应用,它并不差。但是,它没有与 Android 共享菜单集成。如果你想把在其他应用中看到或阅读的内容添加到 Turtl 笔记中,则需要手动复制并粘贴。

我已经在我的 Linux 笔记本,[运行 GalliumOS 的 Chromebook](https://opensource.com/article/17/4/linux-chromebook-gallium-os),还有一台 Android 手机上使用 Turtl 几个月了。对所有这些设备来说这都是一种非常无缝的体验。虽然它不是我最喜欢的开源笔记工具,但 Turtl 做得非常好。试试看它,它可能是你正在寻找的简单的笔记工具。

---

via: <https://opensource.com/article/17/12/using-turtl-open-source-alternative-evernote>

作者:[Scott Nesbitt](https://opensource.com/users/scottnesbitt) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Just about everyone I know takes notes, and many people use an online note-taking application like Evernote, Simplenote, or Google Keep. Those are all good tools, but you have to wonder about the security and privacy of your information—especially in light of [Evernote's privacy flip-flop of 2016](https://blog.evernote.com/blog/2016/12/15/evernote-revisits-privacy-policy/). If you want more control over your notes and your data, you really need to turn to an open source tool.

Whatever your reasons for moving away from Evernote, there are open source alternatives out there. Let's look at one of those alternatives: Turtl.

## Getting started

The developers behind [Turtl](https://turtlapp.com/) want you to think of it as "Evernote with ultimate privacy." To be honest, I can't vouch for the level of privacy that Turtl offers, but it is a quite a good note-taking tool.

To get started with Turtl, [download](https://turtlapp.com/download/) a desktop client for Linux, Mac OS, or Windows, or grab the [Android app](https://turtlapp.com/download/). Install it, then fire up the client or app. You'll be asked for a username and passphrase. Turtl uses the passphrase to generate a cryptographic key that, according to the developers, encrypts your notes before storing them anywhere on your device or on their servers.

## Using Turtl

You can create the following types of notes with Turtl:

- Password

- File

- Image

- Bookmark

- Text note

No matter what type of note you choose, you create it in a window that's similar for all types of notes:

Creating a new text note with Turtl

Add information like the title of the note, some text, and (if you're creating a File or Image note) attach a file or an image. Then click **Save**.

You can add formatting to your notes via [Markdown](https://en.wikipedia.org/wiki/Markdown). You need to add the formatting by hand—there are no toolbar shortcuts.

If you need to organize your notes, you can add them to **Boards**. Boards are just like notebooks in Evernote. To create a new board, click on the **Boards** tab, then click the **Create a board** button. Type a title for the board, then click **Create**.

Create a new board in Turtl

To add a note to a board, create or edit the note, then click the **This note is not in any boards** link at the bottom of the note. Select one or more boards, then click **Done**.

To add tags to a note, click the **Tags** icon at the bottom of a note, enter one or more keywords separated by commas, and click **Done**.

## Syncing your notes across your devices

If you use Turtl across several computers and an Android device, for example, Turtl will sync your notes whenever you're online. However, I've encountered a small problem with syncing: Every so often, a note I've created on my phone doesn't sync to my laptop. I tried to sync manually by clicking the icon in the top left of the window and then clicking **Sync Now**, but that doesn't always work. I found that I occasionally need to click that icon, click **Your settings**, and then click **Clear local data**. I then need to log back into Turtl, but all the data syncs properly.

## A question, and a couple of problems

When I started using Turtl, I was dogged by one question: *Where are my notes kept online?* It turns out that the developers behind Turtl are based in the U.S., and that's also where their servers are. Although the encryption that Turtl uses is [quite strong](https://turtlapp.com/docs/security/encryption-specifics/) and your notes are encrypted on the server, the paranoid part of me says that you shouldn't save anything sensitive in Turtl (or any online note-taking tool, for that matter).

Turtl displays notes in a tiled view, reminiscent of Google Keep:

A collection of notes in Turtl

There's no way to change that to a list view, either on the desktop or on the Android app. This isn't a problem for me, but I've heard some people pan Turtl because it lacks a list view.

Speaking of the Android app, it's not bad; however, it doesn't integrate with the Android **Share** menu. If you want to add a note to Turtl based on something you've seen or read in another app, you need to copy and paste it manually.

I've been using a Turtl for several months on a Linux-powered laptop, my [Chromebook running GalliumOS](https://opensource.com/article/17/4/linux-chromebook-gallium-os), and an Android-powered phone. It's been a pretty seamless experience across all those devices. Although it's not my favorite open source note-taking tool, Turtl does a pretty good job. Give it a try; it might be the simple note-taking tool you're looking for.

## 7 Comments |

10,458 | 计算机实验室之树莓派:课程 1 OK01 | https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/ok01.html | 2019-01-19T16:36:00 | [

"树莓派"

] | https://linux.cn/article-10458-1.html |

OK01 课程讲解了树莓派如何入门,以及在树莓派上如何启用靠近 RCA 和 USB 端口的 OK 或 ACT 的 LED 指示灯。这个指示灯最初是为了指示 OK 状态的,但它在第二版的树莓派上被改名为 ACT。

### 1、入门

我们假设你已经访问了[下载](https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/downloads.html)页面,并且已经获得了必需的 GNU 工具链。也下载了一个称为操作系统模板的文件。请下载这个文件并在一个新目录中解开它。

### 2、开始

现在,你已经展开了这个模板文件,在 `source` 目录中创建一个名为 `main.s` 的文件。这个文件包含了这个操作系统的代码。具体来看,这个文件夹的结构应该像下面这样:

```

build/

(empty)

source/

main.s

kernel.ld

LICENSE

Makefile

```

用文本编辑器打开 `main.s` 文件,这样我们就可以输入汇编代码了。树莓派使用了称为 ARMv6 的汇编代码变体,这就是我们即将要写的汇编代码类型。

>

> 扩展名为 `.s` 的文件一般是汇编代码,需要记住的是,在这里它是 ARMv6 的汇编代码。

>

>

>

首先,我们复制下面的这些命令。

```

.section .init

.globl _start

_start:

```

实际上,上面这些指令并没有在树莓派上做任何事情,它们是提供给汇编器的指令。汇编器是一个转换程序,它将我们能够理解的汇编代码转换成树莓派能够理解的机器代码。在汇编代码中,每个行都是一个新的命令。上面的第一行告诉汇编器 <sup id="fnref1"> <a href="#fn1" rel="footnote"> 1 </a></sup> 在哪里放我们的代码。我们提供的模板中将它放到一个名为 `.init` 的节中的原因是,它是输出的起始点。这很重要,因为我们希望确保我们能够控制哪个代码首先运行。如果不这样做,首先运行的代码将是按字母顺序排在前面的代码!`.section` 命令简单地告诉汇编器,哪个节中放置代码,从这个点开始,直到下一个 `.section` 或文件结束为止。

>

> 在汇编代码中,你可以跳行、在命令前或后放置空格去提升可读性。

>

>

>

接下来两行是停止一个警告消息,它们并不重要。<sup id="fnref2"> <a href="#fn2" rel="footnote"> 2 </a></sup>

### 3、第一行代码

现在,我们正式开始写代码。计算机执行汇编代码时,是简单地一行一行按顺序执行每个指令,除非明确告诉它不这样做。每个指令都是开始于一个新行。

复制下列指令。

```

ldr r0,=0x20200000

```

>

> `ldr reg,=val` 将数字 `val` 加载到名为 `reg` 的寄存器中。

>

>

>

那是我们的第一个命令。它告诉处理器将数字 `0x20200000` 保存到寄存器 `r0` 中。在这里我需要去回答两个问题,<ruby> 寄存器 <rt> register </rt></ruby>是什么?`0x20200000` 是一个什么样的数字?

寄存器在处理器中就是一个极小的内存块,它是处理器保存正在处理的数字的地方。处理器中有很多寄存器,很多都有专门的用途,我们在后面会一一接触到它们。最重要的有十三个(命名为 `r0`、`r1`、`r2`、…、`r9`、`r10`、`r11`、`r12`),它们被称为通用寄存器,你可以使用它们做任何计算。由于是写我们的第一行代码,我们在示例中使用了 `r0`,当然你可以使用它们中的任何一个。只要后面始终如一就没有问题。

>

> 树莓派上的一个单独的寄存器能够保存任何介于 `0` 到 `4,294,967,295`(含)之间的任意整数,它可能看起来像一个很大的内存,实际上它仅有 32 个二进制比特。

>

>

>

`0x20200000` 确实是一个数字。只不过它是以十六进制表示的。下面的内容详细解释了十六进制的相关信息:

>

> 延伸阅读:十六进制解释

>

>

> 十六进制是另一种表示数字的方式。你或许只知道十进制的数字表示方法,十进制共有十个数字:`0`、`1`、`2`、`3`、`4`、`5`、`6`、`7`、`8` 和 `9`。十六进制共有十六个数字:`0`、`1`、`2`、`3`、`4`、`5`、`6`、`7`、`8`、`9`、`a`、`b`、`c`、`d`、`e` 和 `f`。

>

>

> 你可能还记得十进制是如何用位制来表示的。即最右侧的数字是个位,紧接着的左边一位是十位,再接着的左边一位是百位,依此类推。也就是说,它的值是 100 × 百位的数字,再加上 10 × 十位的数字,再加上 1 × 个位的数字。

>

>

>

>

>

> 从数学的角度来看,我们可以发现规律,最右侧的数字是 10<sup> 0</sup> = 1s,紧接着的左边一位是 10<sup> 1</sup> = 10s,再接着是 10<sup> 2</sup> = 100s,依此类推。我们设定在系统中,0 是最低位,紧接着是 1,依此类推。但如果我们使用一个不同于 10 的数字为幂底会是什么样呢?我们在系统中使用的十六进制就是这样的一个数字。

>

>

>

>

>

>

>

>

> 上面的数学等式表明,十进制的数字 567 等于十六进制的数字 237。通常我们需要在系统中明确它们,我们使用下标 <sub> 10</sub> 表示它是十进制数字,用下标 <sub> 16</sub> 表示它是十六进制数字。由于在汇编代码中写上下标的小数字很困难,因此我们使用 0x 来表示它是一个十六进制的数字,因此 0x237 的意思就是 237<sub> 16</sub> 。

>

>

> 那么,后面的 `a`、`b`、`c`、`d`、`e` 和 `f` 又是什么呢?好问题!在十六进制中为了能够写每个数字,我们就需要额外的东西。例如 9<sub> 16</sub> = 9×16<sup> 0</sup> = 9<sub> 10</sub> ,但是 10<sub> 16</sub> = 1×16<sup> 1</sup> + 1×16<sup> 0</sup> = 16<sub> 10</sub> 。因此,如果我们只使用 0、1、2、3、4、5、6、7、8 和 9,我们就无法写出 10<sub> 10</sub> 、11<sub> 10</sub> 、12<sub> 10</sub> 、13<sub> 10</sub> 、14<sub> 10</sub> 、15<sub> 10</sub> 。因此我们引入了 6 个新的数字,这样 a<sub> 16</sub> = 10<sub> 10</sub> 、b<sub> 16</sub> = 11<sub> 10</sub> 、c<sub> 16</sub> = 12<sub> 10</sub> 、d<sub> 16</sub> = 13<sub> 10</sub> 、e<sub> 16</sub> = 14<sub> 10</sub> 、f<sub> 16</sub> = 15<sub> 10</sub> 。

>

>

> 所以,我们就有了另一种写数字的方式。但是我们为什么要这么麻烦呢?好问题!由于计算机总是工作在二进制中,事实证明,十六进制是非常有用的,因为每个十六进制数字正好是四个二进制数字的长度。这种方法还有另外一个好处,那就是许多计算机的数字都是十六进制的整数倍,而不是十进制的整数倍。比如,我在上面的汇编代码中使用的一个数字 20200000<sub> 16</sub> 。如果我们用十进制来写,它就是一个不太好记住的数字 538968064<sub> 10</sub> 。

>

>

> 我们可以用下面的简单方法将十进制转换成十六进制:

>

>

>

>

>

> 1. 我们以十进制数字 567 为例来说明。

> 2. 将十进制数字 567 除以 16 并计算其余数。例如 567 ÷ 16 = 35 余数为 7。

> 3. 在十六进制中余数就是答案中的最后一位数字,在我们的例子中它是 7。

> 4. 重复第 2 步和第 3 步,直到除法结果的整数部分为 0。例如 35 ÷ 16 = 2 余数为 3,因此 3 就是答案中的下一位。2 ÷ 16 = 0 余数为 2,因此 2 就是答案的接下来一位。

> 5. 一旦除法结果的整数部分为 0 就结束了。答案就是反序的余数,因此 567<sub> 10</sub> = 237<sub> 16</sub>。

>

>

> 转换十六进制数字为十进制,也很容易,将数字展开即可,因此 237<sub> 16</sub> = 2×16<sup> 2</sup> + 3×16<sup> 1</sup> +7 ×16<sup> 0</sup> = 2×256 + 3×16 + 7×1 = 512 + 48 + 7 = 567。

>

>

>

因此,我们所写的第一个汇编命令是将数字 20200000<sub> 16</sub> 加载到寄存器 `r0` 中。那个命令看起来似乎没有什么用,但事实并非如此。在计算机中,有大量的内存块和设备。为了能够访问它们,我们给每个内存块和设备指定了一个地址。就像邮政地址或网站地址一样,它用于标识我们想去访问的内存块或设备的位置。计算机中的地址就是一串数字,因此上面的数字 20200000<sub> 16</sub> 就是 GPIO 控制器的地址。这个地址是由制造商的设计所决定的,他们也可以使用其它地址(只要不与其它的冲突即可)。我之所以知道这个地址是 GPIO 控制器的地址是因为我看了它的手册,<sup id="fnref3"> <a href="#fn3" rel="footnote"> 3 </a></sup> 地址的使用没有专门的规范(除了它们都是以十六进制表示的大数以外)。

### 4、启用输出

阅读了手册可以得知,我们需要给 GPIO 控制器发送两个消息。我们必须用它的语言告诉它,如果我们这样做了,它将非常乐意实现我们的意图,去打开 OK 的 LED 指示灯。幸运的是,它是一个非常简单的芯片,为了让它能够理解我们要做什么,只需要给它设定几个数字即可。

```

mov r1,#1

lsl r1,#18

str r1,[r0,#4]

```

>

> `mov reg,#val` 将数字 `val` 放到名为 `reg` 的寄存器中。

>

>

> `lsl reg,#val` 将寄存器 `reg` 中的二进制操作数左移 `val` 位。

>

>

> `str reg,[dest,#val]` 将寄存器 `reg` 中的数字保存到地址 `dest + val` 上。

>

>

>

这些命令的作用是在 GPIO 的第 16 号插针上启用输出。首先我们在寄存器 `r1` 中获取一个必需的值,接着将这个值发送到 GPIO 控制器。因此,前两个命令是尝试取值到寄存器 `r1` 中,我们可以像前面一样使用另一个命令 `ldr` 来实现,但 `lsl` 命令对我们后面能够设置任何给定的 GPIO 针比较有用,因此从一个公式中推导出值要比直接写入来好一些。表示 OK 的 LED 灯是直接连线到 GPIO 的第 16 号针脚上的,因此我们需要发送一个命令去启用第 16 号针脚。

寄存器 `r1` 中的值是启用 LED 针所需要的。第一行命令将数字 1<sub> 10</sub> 放到 `r1` 中。在这个操作中 `mov` 命令要比 `ldr` 命令快很多,因为它不需要与内存交互,而 `ldr` 命令是将需要的值从内存中加载到寄存器中。尽管如此,`mov` 命令仅能用于加载某些值。<sup id="fnref4"> <a href="#fn4" rel="footnote"> 4 </a></sup> 在 ARM 汇编代码中,基本上每个指令都使用一个三字母代码表示。它们被称为助记词,用于表示操作的用途。`mov` 是 “move” 的简写,而 `ldr` 是 “load register” 的简写。`mov` 是将第二个参数 `#1` 移动到前面的 `r1` 寄存器中。一般情况下,`#` 肯定是表示一个数字,但我们已经看到了不符合这种情况的一个反例。

第二个指令是 `lsl`(逻辑左移)。它的意思是将第一个参数的二进制操作数向左移第二个参数所表示的位数。在这个案例中,将 1<sub> 10</sub> (即 1<sub> 2</sub> )向左移 18 位(将它变成 1000000000000000000<sub> 2</sub>=262144<sub> 10</sub> )。

如果你不熟悉二进制表示法,可以看下面的内容:

>

> 延伸阅读: 二进制解释

>

>

> 与十六进制一样,二进制是写数字的另一种方法。在二进制中只有两个数字,即 `0` 和 `1`。它在计算机中非常有用,因为我们可以用电路来实现它,即电流能够通过电路表示为 `1`,而电流不能通过电路表示为 `0`。这就是计算机能够完成真实工作和做数学运算的原理。尽管二进制只有两个数字,但它却能够表示任何一个数字,只是写起来有点长而已。

>

>

>

>

>

> 这个图片展示了 567<sub> 10</sub> 的二进制表示是 1000110111<sub> 2</sub> 。我们使用下标 2 来表示这个数字是用二进制写的。

>

>

> 我们在汇编代码中大量使用二进制的其中一个巧合之处是,数字可以很容易地被 `2` 的幂(即 `1`、`2`、`4`、`8`、`16`)乘或除。通常乘法和除法都是非常难的,而在某些特殊情况下却变得非常容易,所以二进制非常重要。

>

>

>

>

>

> 将一个二进制数字左移 `n` 位就相当于将这个数字乘以 2<sup> n</sup>。因此,如果我们想将一个数乘以 4,我们只需要将这个数字左移 2 位。如果我们想将它乘以 256,我们只需要将它左移 8 位。如果我们想将一个数乘以 12 这样的数字,我们可以有一个替代做法,就是先将这个数乘以 8,然后再将那个数乘以 4,最后将两次相乘的结果相加即可得到最终结果(N × 12 = N × (8 + 4) = N × 8 + N × 4)。

>

>

>

>

>

> 右移一个二进制数 `n` 位就相当于这个数除以 2<sup> n</sup> 。在右移操作中,除法的余数位将被丢弃。不幸的是,如果对一个不能被 2 的幂次方除尽的二进制数字做除法是非常难的,这将在 [课程 9 Screen04](https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/screen04.html) 中讲到。

>

>

>

>

>

> 这个图展示了二进制常用的术语。一个<ruby> 比特 <rt> bit </rt></ruby>就是一个单独的二进制位。一个“<ruby> 半字节 <rt> nibble </rt></ruby>“ 是 4 个二进制位。一个<ruby> 字节 <rt> byte </rt></ruby>是 2 个半字节,也就是 8 个比特。<ruby> 半字 <rt> half </rt></ruby>是指一个字长度的一半,这里是 2 个字节。<ruby> 字 <rt> word </rt></ruby>是指处理器上寄存器的大小,因此,树莓派的字长是 4 字节。按惯例,将一个字最高有效位标识为 31,而将最低有效位标识为 0。顶部或最高位表示最高有效位,而底部或最低位表示最低有效位。一个 kilobyte(KB)就是 1000 字节,一个 megabyte 就是 1000 KB。这样表示会导致一些困惑,到底应该是 1000 还是 1024(二进制中的整数)。鉴于这种情况,新的国际标准规定,一个 KB 等于 1000 字节,而一个 Kibibyte(KiB)是 1024 字节。一个 Kb 是 1000 比特,而一个 Kib 是 1024 比特。

>

>

> 树莓派默认采用小端法,也就是说,从你刚才写的地址上加载一个字节时,是从一个字的低位字节开始加载的。

>

>

>

再强调一次,我们只有去阅读手册才能知道我们所需要的值。手册上说,GPIO 控制器中有一个 24 字节的集合,由它来决定 GPIO 针脚的设置。第一个 4 字节与前 10 个 GPIO 针脚有关,第二个 4 字节与接下来的 10 个针脚有关,依此类推。总共有 54 个 GPIO 针脚,因此,我们需要 6 个 4 字节的一个集合,总共是 24 个字节。在每个 4 字节中,每 3 个比特与一个特定的 GPIO 针脚有关。我们想去启用的是第 16 号 GPIO 针脚,因此我们需要去设置第二组 4 字节,因为第二组的 4 字节用于处理 GPIO 针脚的第 10-19 号,而我们需要第 6 组 3 比特,它在上面的代码中的编号是 18(6×3)。

最后的 `str`(“store register”)命令去保存第一个参数中的值,将寄存器 `r1` 中的值保存到后面的表达式计算出来的地址上。这个表达式可以是一个寄存器,在上面的例子中是 `r0`,我们知道 `r0` 中保存了 GPIO 控制器的地址,而另一个值是加到它上面的,在这个例子中是 `#4`。它的意思是将 GPIO 控制器地址加上 `4` 得到一个新的地址,并将寄存器 `r1` 中的值写到那个地址上。那个地址就是我们前面提到的第二组 4 字节的位置,因此,我们发送我们的第一个消息到 GPIO 控制器上,告诉它准备启用 GPIO 第 16 号针脚的输出。

### 5、生命的信号

现在,LED 已经做好了打开准备,我们还需要实际去打开它。意味着需要给 GPIO 控制器发送一个消息去关闭 16 号针脚。是的,你没有看错,就是要发送一个关闭的消息。芯片制造商认为,在 GPIO 针脚关闭时打开 LED 更有意义。<sup id="fnref5"> <a href="#fn5" rel="footnote"> 5 </a></sup> 硬件工程师经常做这种反常理的决策,似乎是为了让操作系统开发者保持警觉。可以认为是给自己的一个警告。

```

mov r1,#1

lsl r1,#16

str r1,[r0,#40]

```

希望你能够认识上面全部的命令,先不要管它的值。第一个命令和前面一样,是将值 `1` 推入到寄存器 `r1` 中。第二个命令是将二进制的 `1` 左移 16 位。由于我们是希望关闭 GPIO 的 16 号针脚,我们需要在下一个消息中将第 16 比特设置为 1(想设置其它针脚只需要改变相应的比特位即可)。最后,我们写这个值到 GPIO 控制器地址加上 40<sub> 10</sub> 的地址上,这将使那个针脚关闭(加上 28 将打开针脚)。

### 6、永远幸福快乐

似乎我们现在就可以结束了,但不幸的是,处理器并不知道我们做了什么。事实上,处理器只要通电,它就永不停止地运转。因此,我们需要给它一个任务,让它一直运转下去,否则,树莓派将进入休眠(本示例中不会,LED 灯会一直亮着)。

```

loop$:

b loop$

```

>

> `name:` 下一行的名字。

>

>

> `b label` 下一行将去标签 `label` 处运行。

>

>

>

第一行不是一个命令,而是一个标签。它给下一行命名为 `loop$`,这意味着我们能够通过名字来指向到该行。这就称为一个标签。当代码被转换成二进制后,标签将被丢弃,但这对我们通过名字而不是数字(地址)找到行比较有用。按惯例,我们使用一个 `$` 表示这个标签只对这个代码块中的代码起作用,让其它人知道,它不对整个程序起作用。`b`(“branch”)命令将去运行指定的标签中的命令,而不是去运行它后面的下一个命令。因此,下一行将再次去运行这个 `b` 命令,这将导致永远循环下去。因此处理器将进入一个无限循环中,直到它安全关闭为止。

代码块结尾的一个空行是有意这样写的。GNU 工具链要求所有的汇编代码文件都是以空行结束的,因此,这就可以你确实是要结束了,并且文件没有被截断。如果你不这样处理,在汇编器运行时,你将收到烦人的警告。

### 7、树莓派上场

由于我们已经写完了代码,现在,我们可以将它上传到树莓派中了。在你的计算机上打开一个终端,改变当前工作目录为 `source` 文件夹的父级目录。输入 `make` 然后回车。如果报错,请参考排错章节。如果没有报错,你将生成三个文件。 `kernel.img` 是你的编译后的操作系统镜像。`kernel.list` 是你写的汇编代码的一个清单,它实际上是生成的。这在将来检查程序是否正确时非常有用。`kernel.map` 文件包含所有标签结束位置的一个映射,这对于跟踪值非常有用。

为安装你的操作系统,需要先有一个已经安装了树莓派操作系统的 SD 卡。如果你浏览 SD 卡中的文件,你应该能看到一个名为 `kernel.img` 的文件。将这个文件重命名为其它名字,比如 `kernel_linux.img`。然后,复制你编译的 `kernel.img` 文件到 SD 卡中原来的位置,这将用你的操作系统镜像文件替换现在的树莓派操作系统镜像。想切换回来时,只需要简单地删除你自己的 `kernel.img` 文件,然后将前面重命名的文件改回 `kernel.img` 即可。我发现,保留一个原始的树莓派操作系统的备份是非常有用的,万一你要用到它呢。

将这个 SD 卡插入到树莓派,并打开它的电源。这个 OK 的 LED 灯将亮起来。如果不是这样,请查看故障排除页面。如果一切如愿,恭喜你,你已经写出了你的第一个操作系统。[课程 2 OK02](https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/ok02.html) 将指导你让 LED 灯闪烁和关闭闪烁。

---

1. 是的,我说错了,它告诉的是链接器,它是另一个程序,用于将汇编器转换过的几个代码文件链接到一起。直接说是汇编器也没有大问题。 [↩](#fnref1)

2. 其实它们对你很重要。由于 GNU 工具链主要用于开发操作系统,它要求入口点必须是名为 `_start` 的地方。由于我们是开发一个操作系统,无论什么时候,它总是从 `_start` 开时的,而我们可以使用 `.section .init` 命令去设置它。因此,如果我们没有告诉它入口点在哪里,就会使工具链困惑而产生警告消息。所以,我们先定义一个名为 `_start` 的符号,它是所有人可见的(全局的),紧接着在下一行生成符号 `_start` 的地址。我们很快就讲到这个地址了。 [↩](#fnref2)

3. 本教程的设计减少了你阅读树莓派开发手册的难度,但是,如果你必须要阅读它,你可以在这里 [SoC-Peripherals.pdf](https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/downloads/SoC-Peripherals.pdf) 找到它。由于添加了混淆,手册中 GPIO 使用了不同的地址系统。我们的操作系统中的地址 0x20200000 对应到手册中是 0x7E200000。 [↩](#fnref3)

4. `mov` 能够加载的值只有前 8 位是 `1` 的二进制表示的值。换句话说就是一个 0 后面紧跟着 8 个 `1` 或 `0`。 [↩](#fnref4)

5. 一个很友好的硬件工程师是这样向我解释这个问题的: [↩](#fnref5)

原因是现在的芯片都是用一种称为 CMOS 的技术来制成的,它是互补金属氧化物半导体的简称。互补的意思是每个信号都连接到两个晶体管上,一个是使用 N 型半导体的材料制成,它用于将电压拉低,而另一个使用 P 型半导体材料制成,它用于将电压升高。在任何时刻,仅有一个半导体是打开的,否则将会短路。P 型材料的导电性能不如 N 型材料。这意味着三倍大的 P 型半导体材料才能提供与 N 型半导体材料相同的电流。这就是为什么 LED 总是通过降低为低电压来打开它,因为 N 型半导体拉低电压比 P 型半导体拉高电压的性能更强。

还有一个原因。早在上世纪七十年代,芯片完全是由 N 型材料制成的(NMOS),P 型材料部分使用了一个电阻来代替。这意味着当信号为低电压时,即便它什么事都没有做,芯片仍然在消耗能量(并发热)。你的电话装在口袋里什么事都不做,它仍然会发热并消耗你的电池电量,这不是好的设计。因此,信号设计成 “活动时低”,而不活动时为高电压,这样就不会消耗能源了。虽然我们现在已经不使用 NMOS 了,但由于 N 型材料的低电压信号比 P 型材料的高电压信号要快,所以仍然使用了这种设计。通常在一个 “活动时低” 信号名字上方会有一个条型标记,或者写作 `SIGNAL_n` 或 `/SIGNAL`。但是即便这样,仍然很让人困惑,那怕是硬件工程师,也不可避免这种困惑!

---

via: <https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/ok01.html>

作者:[Robert Mullins](http://www.cl.cam.ac.uk/%7Erdm34) 选题:[lujun9972](https://github.com/lujun9972) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Raspberry Pi

# Lesson 1 OK01

The OK01 lesson contains an explanation about how to get started and teaches how

to enable the 'OK' or 'ACT' **LED** on the Raspberry

Pi board near the RCA and USB ports. This light was originally labelled OK but has been renamed to ACT on the revision

2 Raspberry Pi boards.

Contents |

## 1 Getting Started

I am assuming at this point that you have already visited the [

Downloads](downloads.html) page, and got the necessary GNU Toolchain. Also on the downloads

page is a file called OS Template. Please download this and extract its contents

to a new directory.

## 2 The Beginning

The '.s' file extension is commonly used for all forms of assembly code, it is up to us to remember this is ARMv6.

Now that you have extracted the template, create a new file in the 'source' directory called 'main.s'. This file will contain the code for this operating system. To be explicit, the folder structure should look like:

build/(empty)source/ main.s kernel.ld LICENSE Makefile

Open 'main.s' in a text editor so that we can begin typing assembly code. The Raspberry Pi uses a variety of assembly code called ARMv6, so that is what we'll need to write in.

Copy in these first commands.

.section .init

.globl _start

_start:

As it happens, none of these actually do anything on the Raspberry Pi, these are

all instructions to the assembler. The assembler is the program that will translate

between assembly code that we understand, and binary machine code that the Raspberry

Pi understands. In Assembly Code, each line is a new command. The first line here

tells the Assembler[ [1]](#note1)

where to put our code. The template I provided causes the code in the section called

.init to be put at the start of the output. This

is important, as we want to make sure we can control which code runs first. If we

don't do this, the code in the alphabetically first file name will run first! The

.section command simply tells the assembler which

section to put the code in, from this point until the next

.section or the end of the file.

In assembly code, you may skip lines, and put spaces before and after commands to aid readability.

The next two lines are there to stop a warning message and aren't all that important.[2]

## 3 The First Line

Now we're actually going to code something. In assembly code, the computer simply goes through the code, doing each instruction in order, unless told otherwise. Each instruction starts on a new line.

Copy the following instruction.

ldr r0,=0x20200000

ldr reg,=val puts the number val into the register named reg.

That is our first command. It tells the processor to store the number 0x20200000 into the register r0. I shall need to answer two questions here, what is a register, and how is 0x20200000 a number?

A single register can store any integer between 0 and 4,294,967,295 inclusive on the Raspberry Pi, which might seem like a large amount of memory, but it is only 32 binary bits.

A register is a tiny piece of memory in the processor, which is where the processor stores the numbers it is working on right now. There are quite a few of these, many of which have a special meaning, which we will come to later. Importantly there are 13 (named r0,r1,r2,...,r9,r10,r11,r12) which are called General Purpose, and you can use them for whatever calculations you need to do. Since it's the first, I've used r0 in this example, but I could very well have used any of the others. As long as you're consistent, it doesn't matter.

0x20200000 is indeed a number. However it is written in Hexadecimal notation. To learn more about hexadecimal expand the box below:

So our first command is to put the number 2020000016 into r0. That doesn't

sound like it would be much use, but it is. In computers, there are an awful lot

of chunks of memory and devices. In order to access them all, we give each one an

address. Much like a postal address or a web address this is just a means of identifying

the location of the device or chunks of memory we want. Addresses in computers are

just numbers, and so the number 2020000016 happens to be the address

of the GPIO controller. This is just a design decision taken by the manufacturers,

they could have used any other address (providing it didn't conflict with anything

else). I know this address only because I looked it up in a manual[ [3]](#note3), there is no particular

system to the addresses (other than that they are all large round numbers in hexadecimal).

## 4 Enabling Output

Having read the manual, I know we're going to need to send two messages to the GPIO controller. We need to talk its language, but if we do, it will obligingly do what we want and turn on the OK LED. Fortunately, it is such a simple chip, that it only needs a few numbers in order to understand what to do.

mov r1,#1

lsl r1,#18

str r1,[r0,#4]

mov reg,#val puts the number val into the register named reg.

lsl reg,#val shifts the binary representation of the number in reg by val places to the left.

str reg,[dest,#val] stores the number in reg at the address given by dest + val.

These commands enable output to the 16th GPIO pin. First we get a necessary value in r1, then send it to the GPIO controller. Since the first two instructions are just trying to get a value into r1, we could use another ldr command as before, but it will be useful to us later to be able to set any given GPIO pin, so it is better to deduce the value from a formula than write it straight in. The OK LED is wired to the 16th GPIO pin, and so we need to send a command to enable the 16th pin.

The value in r1 is needed to enable the LED pin. The first line puts the number

110 into r1. The mov command is faster

than the ldr command, because it does not involve

a memory interaction, whereas ldr loads the value

we want to put into the register from memory. However, mov

can only be used to load certain values[ [4]](#note4). In ARM assembly code, almost every instruction

begins with a three letter code. This is called the mnemonic, and is supposed to

hint at what the operation does. mov is short

for move and ldr is short for load register.

mov moves the second argument

#1 into the first r1. In general,

# must be used to denote numbers, but we have

already seen a counterexample to this.

The second instruction is lsl or logical shift

left. This means shift the binary representation for the first argument left by

the second argument. In this case this will shift the binary representation of 110

(which is 12) left by 18 places (making it 10000000000000000002=26214410).

If you are unfamiliar with binary, expand the box below:

Once again, I only know that we need this value from reading the manual[ [3]](#note3). The manual says

that there is a set of 24 bytes in the GPIO controller, which determine the settings

of the GPIO pin. The first 4 relate to the first 10 GPIO pins, the second 4 relate

to the next 10 and so on. There are 54 GPIO pins, so we need 6 sets of 4 bytes,

which is 24 bytes in total. Within each 4 byte section, every 3 bits relates to

a particular GPIO pin. Since we want the 16th GPIO pin, we need the second set of

4 bytes because we're dealing with pins 10-19, and we need the 6th set of 3 bits,

which is where the number 18 (6×3) comes from in the code above.

Finally the str 'store register' command stores the value in the first argument, r1 into the address computed from the expression afterwards. The expression can be a register, in this case r0, which we know to be the GPIO controller address, and another value to add to it, in this case #4. This means we add 4 to the GPIO controller address and write the value in r1 to that location. This happens to be the location of the second set of 4 bytes that I mentioned before, and so we send our first message to the GPIO controller, telling it to ready the 16th GPIO pin for output.

## 5 A Sign Of Life

Now that the LED is ready to turn on, we need to actually turn it on. This means

sending a message to the GPIO controller to turn pin 16 off. Yes, *turn it off*.

The chip manufacturers decided it made more sense[ [5]](#note5) to have the LED turn on when

the GPIO pin is off. Hardware engineers often seem to take these sorts of decisions,

seemingly just to keep OS Developers on their toes. Consider yourself warned.

mov r1,#1

lsl r1,#16

str r1,[r0,#40]

Hopefully you should recognise all of the above commands, if not their values. The

first puts a 1 into r1 as before. The second

shifts the binary representation of this 1 left by 16 places. Since we want to turn

pin 16 off, we need to have a 1 in the 16th bit of this next message (other values

would work for other pins). Finally we write it out to the address which is 4010

added to the GPIO controller address, which happens to be the address to write to

turn a pin off (28 would turn the pin on).

## 6 Happily Ever After

It might be tempting to finish now, but unfortunately the processor doesn't know we're done. In actuality, the processor never will stop. As long as it has power, it continues working. Thus, we need to give it a task to do forever more, or the Raspberry Pi will crash (not much of a problem in this example, the light is already on).

loop$:

b loop$

name: labels the next line name.

b label causes the next line to be executed to be label.

The first line here is not a command, but a label. It names the next line loop$. This means we can now refer to the line by name. This is called a label. Labels get discarded when the code is turned into binary, but they're useful for our benefit for referring to lines by name, not number (address). By convention we use a $ for labels which are only important to the code in this block of code, to let others know they're not important to the overall program. The b (branch) command causes the next line to be executed to be the one at the label specified, rather than the one after it. Therefore, the next line to be executed will be this b, which will cause it to be executed again, and so on forever. Thus the processor is stuck in a nice infinite loop until it is switched off safely.

The new line at the end of the block is intentional. The GNU toolchain expects all assembly code files to end in an empty line, so that it is sure you were really finished, and the file hasn't been cut off. If you don't put one, you get an annoying warning when the assembler runs.

## 7 Pi Time

So we've written the code, now to get it onto the pi. Open a terminal on your computer and change the current working directory to the parent directory of the source directory. Type make and then press enter. If any errors occur, please refer to the troubleshooting section. If not, you will have generated three files. kernel.img is the compiled image of your operating system. kernel.list is a listing of the assembly code you wrote, as it was actually generated. This is useful to check that things were generated correctly in future. The kernel.map file contains a map of where all the labels ended up, which can be useful for chasing around values.

To install your operating system, first of all get a Raspberry PI SD card which has an operating system installed already. If you browse the files in the SD card, you should see one called kernel.img. Rename this file to something else, such as kernel_linux.img. Then, copy the file kernel.img that make generated onto the SD Card. You've just replaced the existing operating system with your own. To switch back, simply delete your kernel.img file, and rename the other one back to kernel.img. I find it is always helpful to keep a backup of you original Raspberry Pi operating system, in case you need it again.

Put the SD card into a Raspberry Pi and turn it on. The OK LED should turn on. If

not please see the troubleshooting page. If so, congratulations, you just wrote

your first operating system. See [Lesson 2: OK02](ok02.html) for a guide

to making the LED flash on and off. |

10,459 | Flatpak 新手指南 | https://www.ostechnix.com/flatpak-new-framework-desktop-applications-linux/ | 2019-01-20T11:31:08 | [

"Flatpak"

] | https://linux.cn/article-10459-1.html |

以前,我们介绍 Ubuntu 推出的 [Snaps](http://www.ostechnix.com/introduction-ubuntus-snap-packages/)。Snaps 是由 Canonical 公司为 Ubuntu 开发的,并随后移植到其他的 Linux 发行版,如 Arch、Gentoo、Fedora 等等。由于一个 snap 包中含有软件的二进制文件和其所需的所有依赖和库,所以可以在无视软件版本、在任意 Linux 发行版上安装软件。和 Snaps 类似,还有一个名为 Flatpak 的工具。也许你已经知道,为不同的 Linux 发行版打包并分发应用是一件多么费时又复杂的工作,因为不同的 Linux 发行版的库不同,库的版本也不同。现在,Flatpak 作为分发桌面应用的新框架可以让开发者完全摆脱这些负担。开发者只需构建一个 Flatpak app 就可以在多种发行版上安装使用。这真是又酷又棒!

用户也完全不用担心库和依赖的问题了,所有的东西都和 app 打包在了一起。更重要的是 Flatpak app 们都自带沙箱,而且与宿主操作系统的其他部分隔离。对了,Flatpak 还有一个很棒的特性,它允许用户在同一个系统中安装同一应用的多个版本,例如 VLC 播放器的 2.1 版、2.2 版、2.3 版。这使开发者测试同一个软件的多个版本变得更加方便。

在本文中,我们将指导你如何在 GNU/Linux 中安装 Flatpak。

### 安装 Flatpak

Flatpak 可以在大多数的主流 Linux 发行版上安装使用,如 Arch Linux、Debian、Fedora、Gentoo、Red Hat、Linux Mint、openSUSE、Solus、Mageia 还有 Ubuntu。

在 Arch Linux 上,使用这一条命令来安装 Flatpak:

```

$ sudo pacman -S flatpak

```

对于 Debian 用户,Flatpak 被收录进 Stretch 或之后版本的默认软件源中。要安装 Flatpak,直接执行:

```

$ sudo apt install flatpak

```

对于 Fedora 用户,Flatpak 是发行版默认安装的软件。你可以直接跳过这一步。

如果因为某种原因没有安装的话,可以执行:

```

$ sudo dnf install flatpak

```

对于 RHEL 7 用户,安装 Flatpak 的命令为:

```

$ sudo yum install flatpak

```

如果你在使用 Linux Mint 18.3,那么 Flatpat 也随系统默认安装,所以跳过这一步。

在 openSUSE Tumbleweed 中,使用 Zypper 包管理来安装 Flatpak:

```

$ sudo zypper install flatpak

```

而 Ubuntu 需要添加下面的软件源再安装 Flatpak,命令如下:

```

$ sudo add-apt-repository ppa:alexlarsson/flatpak

$ sudo apt update

$ sudo apt install flatpak

```

Gnome 提供了一个 Flatpak 插件,安装它就可以使用图形界面来安装 Flatpak app 了。插件的安装命令为:

```

$ sudo apt install gnome-software-plugin-flatpak

```

如果你是用发行版没有在上述的说明里,请你参考官方[安装指南](https://flatpak.org/setup/)。

### 开始使用 Flatpak

有不少流行应用都支持 Flatpak 安装,如 Gimp、Kdenlive、Steam、Spotify、Visual Sudio Code 等。

下面让我来一起学习 flatpak 的基本操作命令。

首先,我们需要添加远程仓库。

#### 添加软件仓库

**添加 Flathub 仓库:**

**Flathub** 是一个包含了几乎所有 flatpak 应用的仓库。运行这条命令来启用它:

```

$ sudo flatpak remote-add --if-not-exists flathub https://flathub.org/repo/flathub.flatpakrepo

```

对于流行应用来说,Flathub 已经可以满足需求。如果你想试试 GNOME 应用的话,可以添加 GNOME 的仓库。

**添加 GNOME 仓库:**

GNOME 仓库包括了所有的 GNOME 核心应用,它提供了两种版本:<ruby> 稳定版 <rt> stable </rt></ruby>和<ruby> 每日构建版 <rt> nightly </rt></ruby>。

使用下面的命令来添加 GNOME 稳定版仓库:

```

$ wget https://sdk.gnome.org/keys/gnome-sdk.gpg

$ sudo flatpak remote-add --gpg-import=gnome-sdk.gpg --if-not-exists gnome-apps https://sdk.gnome.org/repo-apps/

```

需要注意的是,GNOME 稳定版仓库中的应用需要 **3.20 版本的 org.gnome.Platform 运行时环境**。

安装稳定版运行时环境,请执行:

```

$ sudo flatpak remote-add --gpg-import=gnome-sdk.gpg gnome https://sdk.gnome.org/repo/

```

如果想使用每日构建版的 GNOME 仓库,使用如下的命令:

```

$ wget https://sdk.gnome.org/nightly/keys/nightly.gpg

$ sudo flatpak remote-add --gpg-import=nightly.gpg --if-not-exists gnome-nightly-apps https://sdk.gnome.org/nightly/repo-apps/

```

同样,每日构建版的 GNOME 仓库也需要 **org.gnome.Platform 运行时环境的每日构建版本**。

执行下面的命令安装每日构建版的运行时环境:

```

$ sudo flatpak remote-add --gpg-import=nightly.gpg gnome-nightly https://sdk.gnome.org/nightly/repo/

```

#### 查看软件仓库

要查看已经添加的软件仓库,执行下面的命令:

```

$ flatpak remotes

Name Options

flathub system

gnome system

gnome-apps system

gnome-nightly system

gnome-nightly-apps system

```

如你所见,上述命令会列出你添加到系统中的软件仓库。此外,执行结果还表明了软件仓库的配置是<ruby> 用户级 <rt> per-user </rt></ruby>还是<ruby> 系统级 <rt> system-wide </rt></ruby>。

#### 删除软件仓库

要删除软件仓库,例如 flathub,用这条命令:

```

$ sudo flatpak remote-delete flathub

```

这里的 flathub 是软件仓库的名字。

#### 安装 Flatpak 应用

这一节,我们将学习如何安装 flatpak 应用。

要安装一个应用,只要一条命令就能完成:

```

$ sudo flatpak install flathub com.spotify.Client

```

所有的稳定版 GNOME 软件仓库中的应用,都使用“stable”作为版本名。

例如,想从稳定版 GNOME 软件仓库中安装稳定版 Evince,就执行:

```

$ sudo flatpak install gnome-apps org.gnome.Evince stable

```

所有的每日构建版 GNOME 仓库中的应用,都使用“master”作为版本名。

例如,要从每日构建版 GNOME 软件仓库中安装 gedit 的每次构建版本,就执行:

```

$ sudo flatpak install gnome-nightly-apps org.gnome.gedit master

```

如果不希望应用安装在<ruby> 系统级 <rt> system-wide </rt></ruby>,而只安装在<ruby> 用户级 <rt> per-user </rt></ruby>,那么你可以这样安装软件:

```

$ flatpak install --user <name-of-app>

```

所有的应用都会被存储在 `$HOME/.var/app/` 目录下.

```

$ ls $HOME/.var/app/

com.spotify.Client

```

#### 执行 Flatpak 应用

你可以直接使用<ruby> 应用启动器 <rt> application launcher </rt></ruby>来运行已安装的 Flatpak 应用。如果你想从命令行启动的话,以 Spotify 为例,执行下面的命令:

```

$ flatpak run com.spotify.Client

```

#### 列出已安装的 Flatpak 应用

要查看已安装的应用程序和运行时环境,执行:

```

$ flatpak list

```

想只查看已安装的应用,那就用这条命令:

```

$ flatpak list --app

```

如果想查询已添加的软件仓库中的可安装程序和可安装的运行时环境,使用命令:

```

$ flatpak remote-ls

```

只列出可安装的应用程序的命令是:

```

$ flatpak remote-ls --app

```

查询指定远程仓库中的所有可安装的应用程序和运行时环境,这里以 gnome-apps 为例,执行命令:

```

$ flatpak remote-ls gnome-apps

```

只列出可安装的应用程序,这里以 flathub 为例:

```

$ flatpak remote-ls flathub --app

```

#### 更新应用程序

更新所有的 Flatpak 应用程序,执行:

```

$ flatpak update

```

更新指定的 Flatpak 应用程序,执行:

```

$ flatpak update com.spotify.Client

```

#### 获取应用详情

执行下面的命令来查看已安装应用程序的详细信息:

```

$ flatpak info io.github.mmstick.FontFinder

```

输出样例:

```

Ref: app/io.github.mmstick.FontFinder/x86_64/stable

ID: io.github.mmstick.FontFinder

Arch: x86_64

Branch: stable

Origin: flathub

Date: 2018-04-11 15:10:31 +0000

Subject: Workaround appstream issues (391ef7f5)

Commit: 07164e84148c9fc8b0a2a263c8a468a5355b89061b43e32d95008fc5dc4988f4

Parent: dbff9150fce9fdfbc53d27e82965010805f16491ec7aa1aa76bf24ec1882d683

Location: /var/lib/flatpak/app/io.github.mmstick.FontFinder/x86_64/stable/07164e84148c9fc8b0a2a263c8a468a5355b89061b43e32d95008fc5dc4988f4

Installed size: 2.5 MB

Runtime: org.gnome.Platform/x86_64/3.28

```

#### 删除应用程序

要删除一个 Flatpak 应用程序,这里以 spotify 为例,执行:

```

$ sudo flatpak uninstall com.spotify.Client

```

如果你需要更多信息,可以参考 Flatpak 的帮助。

```

$ flatpak --help

```

到此,希望你对 Flatpak 有了一些基础了解。

如果你觉得这篇指南有些帮助,请在你的社交媒体上分享它来支持我们。

稍后还有更多精彩内容,敬请期待~

---

via: <https://www.ostechnix.com/flatpak-new-framework-desktop-applications-linux/>

作者:[SK](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wwhio](https://github.com/wwhio) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

10,460 | 理解 Linux 中的 /etc/services 文件 | https://kerneltalks.com/linux/understanding-etc-services-file-in-linux/ | 2019-01-20T12:16:00 | [

"inetd",

"services"

] | https://linux.cn/article-10460-1.html |

这篇文章将帮助你了解 Linux 中 `/etc/services` 文件,包括它的内容,格式以及重要性。

Internet 守护程序(`ineted`)是 Linux 世界中的重要服务。它借助 `/etc/services` 文件来处理所有网络服务。在本文中,我们将向你介绍这个文件的内容,格式以及它对于 Linux 系统的意义。

`/etc/services` 文件包含网络服务和它们映射端口的列表。`inetd` 或 `xinetd` 会查看这些细节,以便在数据包到达各自的端口或服务有需求时,它会调用特定的程序。

作为普通用户,你可以查看此文件,因为文件一般都是可读的。要编辑此文件,你需要有 root 权限。

```

$ ll /etc/services

-rw-r--r--. 1 root root 670293 Jun 7 2013 /etc/services

```

### /etc/services 文件格式

```

service-name port/protocol [aliases..] [#comment]

```

最后两个字段是可选的,因此用 `[` `]` 表示。

其中:

* `service-name` 是网络服务的名称。例如 [telnet](https://kerneltalks.com/config/configure-telnet-server-linux/)、[ftp](https://kerneltalks.com/config/ftp-server-configuration-steps-rhel-6/) 等。

* `port/protocol` 是网络服务使用的端口(一个数值)和服务通信使用的协议(TCP/UDP)。

* `alias` 是服务的别名。

* `comment` 是你可以添加到服务的注释或说明。以 `#` 标记开头。

### /etc/services 文件示例

```

# 每行描述一个服务,形式如下:

#

# service-name port/protocol [aliases ...] [# comment]

tcpmux 1/tcp # TCP port service multiplexer

rje 5/tcp # Remote Job Entry

echo 7/udp

discard 9/udp sink null

```

在这里,你可以看到可选的最后两个字段的用处。`discard` 服务的别名为 `sink` 或 `null`。

---

via: <https://kerneltalks.com/linux/understanding-etc-services-file-in-linux/>

作者:[kerneltalks](https://kerneltalks.com) 选题:[lujun9972](https://github.com/lujun9972) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | *The article helps you to understand /etc/services file in Linux. Learn about content, format & importance of this file.*

Internet daemon is an important service in the Linux world. It takes care of all network services with the help of `/etc/services`

file. In this article, we will walk you through the content, format of this file, and what it means to a Linux system.

`/etc/services`

file contains a list of network services and ports mapped to them. `inetd`

or `xinetd`

looks at these details so that it can call a particular program when a packet hits respective port and demand for service.

As a normal user, you can view this file since the file is world-readable. To edit this file you need to have root privileges.

```

$ ll /etc/services

-rw-r--r--. 1 root root 670293 Jun 7 2013 /etc/services

```

`/etc/services`

file format

```

service-name port/protocol [aliases..] [#comment]

```

Last two fields are optional hence denoted in `[`

`]`

where –

- service-name is the name of the network service. e.g.

[telnet](https://kerneltalks.com/config/configure-telnet-server-linux/),[FTP](https://kerneltalks.com/config/ftp-server-configuration-steps-rhel-6/), etc. - port/protocol is the port being used by that network service (numerical value) and protocol (TCP/UDP) used for communication by service.

- alias is an alternate name for service.

- a comment is a note or description you can add to service. Starts with

`#`

mark

#### Sample` /etc/services`

file

```

# Each line describes one service, and is of the form:

#

# service-name port/protocol [aliases ...] [# comment]

tcpmux 1/tcp # TCP port service multiplexer

rje 5/tcp # Remote Job Entry

echo 7/udp

discard 9/udp sink null

```

Here, you can see the use of optional last two fields as well. `discard`

service has an alternate name as `sink`

or `null`

.

## Share Your Comments & Feedback: Cancel reply |

10,461 | Caffeinated 6.828:实验 7:最终的 JOS 项目 | https://pdos.csail.mit.edu/6.828/2018/labs/lab7/ | 2019-01-20T20:57:00 | [

"6.828"

] | https://linux.cn/article-10461-1.html |

### 简介

对于最后的项目,你有两个选择:

* 继续使用你自己的 JOS 内核并做 [实验 6](https://pdos.csail.mit.edu/6.828/2018/labs/lab6/index.html),包括实验 6 中的一个挑战问题。(你可以随意地、以任何有趣的方式去扩展实验 6 或者 JOS 的任何部分,当然了,这不是课程规定的。)

* 在一个、二个或三个人组成的团队中,你选择去做一个涉及了你的 JOS 的项目。这个项目必须是涉及到与实验 6 相同或更大的领域(如果你是团队中的一员)。

目标是为了获得乐趣或探索更高级的 O/S 的话题;你不需要做最新的研究。

如果你做了你自己的项目,我们将根据你的工作量有多少、你的设计有多优雅、你的解释有多高明、以及你的解决方案多么有趣或多有创意来为你打分。我们知道时间有限,因此也不期望你能在本学期结束之前重写 Linux。要确保你的目标是合理的;合理地设定一个绝对可以实现的最小目标(即:控制你的实验 6 的规模),如果进展顺利,可以设定一个更大的目标。

如果你做了实验 6,我们将根据你是否通过了测试和挑战练习来为你打分。

### 交付期限

>

> 11 月 3 日:Piazza 讨论和 1、2、或 3 年级组选择(根据你的最终选择来定)。使用在 Piazza 上的 lab7 标记/目录。在 Piazza 上的文章评论区与其它人计论想法。使用这些文章帮你去找到有类似想法的其它学生一起组建一个小组。课程的教学人员将在 Piazza 上为你的项目想法给出反馈;如果你想得到更详细的反馈,可以与我们单独讨论。

>

>

>

.

>

> 11 月 9 日:在 [提交网站](https://6828.scripts.mit.edu/2018/handin.py/) 上提交一个提议,只需要一到两个段落就可以。提议要包括你的小组成员列表、你的计划、以及明确的设计和实现打算。(如果你做实验 6,就不用做这个了)

>

>

>

.

>

> 12 月 7 日:和你的简短报告一起提交源代码。将你的报告放在与名为 “README.pdf” 的文件相同的目录下。由于你只是这个实验任务小组中的一员,你可能需要去使用 git 在小组成员之间共享你的项目代码。因此你需要去决定哪些源代码将作为你的小组项目的共享起始点。一定要为你的最终项目去创建一个分支,并且命名为 `lab7`。(如果你做了实验 6,就按实验 6 的提交要求做即可。)

>

>

>

.

>

> 12 月 11 日这一周:简短的课堂演示。为你的 JOS 项目准备一个简短的课堂演示。为了你的项目演示,我们将提供一个投影仪。根据小组数量和每个小组选择的项目类型,我们可能会限制总的演讲数,并且有些小组可能最终没有机会上台演示。

>

>

>

.

>

> 12 月 11 日这一周:助教们验收。向助教演示你的项目,因此我们可能会提问一些问题,去了解你所做的一些细节。

>

>

>

### 项目想法

如果你不做实验 6,下面是一个启迪你的想法列表。但是,你应该大胆地去实现你自己的想法。其中一些想法只是一个开端,并且本身不在实验 6 的领域内,并且其它的可能是在更大的领域中。

* 使用 [x86 虚拟机支持](http://www.intel.com/technology/itj/2006/v10i3/1-hardware/3-software.htm) 去构建一个能够运行多个访客系统(比如,多个 JOS 实例)的虚拟机监视器。

* 使用 Intel SGX 硬件保护机制做一些有用的事情。[这是使用 Intel SGX 的最新的论文](https://www.usenix.org/system/files/conference/osdi14/osdi14-paper-baumann.pdf)。

* 让 JOS 文件系统支持写入、文件创建、为持久性使用日志、等等。或许你可以从 Linux EXT3 上找到一些启示。

* 从 [软更新](http://www.ece.cmu.edu/%7Eganger/papers/osdi94.pdf)、[WAFL](https://ng.gnunet.org/sites/default/files/10.1.1.40.3691.pdf)、ZFS、或其它较高级的文件系统上找到一些使用文件系统的想法。

* 给一个文件系统添加快照功能,以便于用户能够查看过去的多个时间点上的文件系统。为了降低空间使用量,你或许要使用一些写时复制技术。

* 使用分页去提供实时共享的内存,来构建一个 [分布式的共享内存](http://www.cdf.toronto.edu/%7Ecsc469h/fall/handouts/nitzberg91.pdf)(DSM)系统,以便于你在一个机器集群上运行多线程的共享内存的并行程序。当一个线程尝试去访问位于另外一个机器上的页时,页故障将给 DSM 系统提供一个机会,让它基于网络去从当前存储这个页的任意一台机器上获取这个页。

* 允许进程在机器之间基于网络进行迁移。你将需要做一些关于一个进程状态的多个片段方面的事情,但是由于在 JOS 中许多状态是在用户空间中,它或许从 Linux 上的进程迁移要容易一些。

* 在 JOS 中实现 [分页](http://en.wikipedia.org/wiki/Paging) 到磁盘,这样那个进程使用的内存就可以大于真实的内存。使用交换空间去扩展你的内存。

* 为 JOS 实现文件的 [mmap()](http://en.wikipedia.org/wiki/Mmap)。

* 使用 [xfi](http://static.usenix.org/event/osdi06/tech/erlingsson.html) 将一个进程的代码沙箱化。

* 支持 x86 的 [2MB 或 4MB 的页大小](http://en.wikipedia.org/wiki/Page_(computer_memory))。

* 修改 JOS 让内核支持进程内的线程。从查看 [课堂上的 uthread 任务](http://pdos.csail.mit.edu/6.828/2018/homework/xv6-uthread.html) 去开始。实现调度器触发将是实现这个项目的一种方式。

* 在 JOS 的内核中或文件系统中(实现多线程之后),使用细粒度锁或无锁并发。Linux 内核使用 [读复制更新](http://en.wikipedia.org/wiki/Read-copy-update) 去执行无需上锁的读取操作。通过在 JOS 中实现它来探索 RCU,并使用它去支持无锁读取的名称缓存。

* 实现 [外内核论文](http://pdos.csail.mit.edu/6.828/2018/readings/engler95exokernel.pdf) 中的想法。例如包过滤器。

* 使 JOS 拥有软实时行为。用它来辨识一些应用程序时非常有用。

* 使 JOS 运行在 64 位 CPU 上。这包括重设计虚拟内存让它使用 4 级页表。有关这方面的文档,请查看 [参考页](http://pdos.csail.mit.edu/6.828/2018/reference.html)。

* 移植 JOS 到一个不同的微处理器。这个 [osdev wiki](http://wiki.osdev.org/Main_Page) 或许对你有帮助。

* 为 JOS 系统增加一个“窗口”系统,包括图形驱动和鼠标。有关这方面的文档,请查看 [参考页](http://pdos.csail.mit.edu/6.828/2018/reference.html)。[sqrt(x)](http://web.mit.edu/amdragon/www/pubs/sqrtx-6.828.html) 就是一个 JOS “窗口” 系统的示例。

* 在 JOS 中实现 [dune](https://www.usenix.org/system/files/conference/osdi12/osdi12-final-117.pdf),以提供特权硬件指令给用户空间应用程序。

* 写一个用户级调试器,添加类似跟踪的功能;硬件寄存器概要(即:Oprofile);调用跟踪等等。

* 为(静态的)Linux 可运行程序做一个二进制仿真。

---

via: <https://pdos.csail.mit.edu/6.828/2018/labs/lab7/>

作者:[csail.mit](https://pdos.csail.mit.edu) 选题:[lujun9972](https://github.com/lujun9972) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | # Lab 7: Final JOS project

**

Piazza Discussion Due, November 2, 2018 **

Proposals Due, November 8, 2018

Code repository Due, December 6, 2018

Check-off and in-class demos, Week of December 10, 2018

## Introduction

For the final project you have two options:

- Work on your own and do

[lab 6](../lab6/index.html), including

one challenge exercise in lab 6. (You are free, of course, to extend lab 6,

or any part of JOS, further in interesting ways, but it isn't required.)

- Work in a team of one, two or three, on a project of your choice that

involves your JOS. This project must be of the same scope as lab 6 or larger

(if you are working in a team).

The goal is to have fun and explore more advanced O/S topics; you don't have to

do novel research.

If you are doing your own project, we'll grade you on how much you got

working, how elegant your design is, how well you can explain it, and how

interesting and creative your solution is. We do realize that time is limited,

so we don't expect you to re-write Linux by the end of the semester. Try to make

sure your goals are reasonable; perhaps set a minimum goal that's definitely

achievable (e.g., something of the scale of lab 6) and a more ambitious goal if

things go well.

If you are doing lab 6, we will grade you on whether you pass the tests and

the challenge exercise.

## Deliverables

Nov 3: Piazza discussion and form groups of 1, 2, or 3 (depending on which

final project option you are choosing). Use the lab7

tag/folder on Piazza. Discuss ideas with others in comments on their Piazza

posting. Use these postings to help find other students interested in similar

ideas for forming a group. Course staff will provide feedback on project ideas

on Piazza; if you'd like more detailed feedback, come chat with us in

person.

Nov 9: Submit a proposal at [ the submission website](https://6828.scripts.mit.edu/2018/handin.py/),

just a paragraph or two. The proposal should include your group members list,

the problem you want to address, how you plan to address it, and what are you

proposing to specifically design and implement. (If you are doing lab 6, there

is nothing to do for this deliverable.)

Dec 7: submit source code along with a brief write-up.

Put the write-up under the top-level source directory with the

name "`README.pdf`". Since some of you will be working in groups for this lab assignment, you may

want to use git to share your project code between group members. You will need

to decide on whose source code you will use as a starting point for your group

project. Make sure to create a branch for your final project, and name it

**lab7**. (If you do lab 6, follow the lab 6 submission instructions.)

Week of Dec 11: short in-class demonstration. Prepare a short in-class

demo of your JOS project. We will provide a projector that you can use to

demonstrate your project. Depending on the number of groups and the kinds of

projects that each group chooses, we may decide to limit the total number of

presentations, and some groups might end up not presenting in class.

Week of Dec 11: check-off with TAs. Demo your project to the TAs so that

we can ask you some questions and find out in more detail what you did.

## Project ideas

If you are not doing lab 6, here's a list of ideas to get you started

thinking. But, you should feel free to pursue your own ideas. Some of the ideas

are starting points and by themselves not of the scope of lab 6, and others are

likely to be much of larger scope.

- Build a virtual machine monitor that can run multiple guests (for

example, multiple instances of JOS), using

[x86 VM support](http://www.intel.com/technology/itj/2006/v10i3/1-hardware/3-software.htm).

- Do something useful with the hardware protection of Intel SGX.

[Here

is a recent paper using Intel SGX](https://www.usenix.org/system/files/conference/osdi14/osdi14-paper-baumann.pdf).

- Make the JOS file system support writing, file creation, logging for

durability, etc., perhaps taking ideas from Linux EXT3.

- Use file system ideas from

[Soft

updates](http://www.ece.cmu.edu/~ganger/papers/osdi94.pdf),

[WAFL](https://ng.gnunet.org/sites/default/files/10.1.1.40.3691.pdf),

ZFS, or another advanced file system.

- Add snapshots to a file system, so that a user can look at

the file system as it appeared at various points in the past.

You'll probably want to use some kind of copy-on-write for disk

storage to keep space consumption down.

- Build a

[distributed shared memory](http://www.cdf.toronto.edu/~csc469h/fall/handouts/nitzberg91.pdf) (DSM) system, so that you can run

multi-threaded shared memory parallel programs on a cluster of

machines, using paging to give the appearance of real shared memory.

When a thread tries to access a page that's on another machine, the

page fault will give the DSM system a chance to fetch the page over

the network from whatever machine currently stores it.

- Allow processes to migrate from one machine to another over the

network. You'll need to do something about the various pieces of a process's

state, but since much state in JOS is in user-space it may be easier than

process migration on Linux.

- Implement

[paging](http://en.wikipedia.org/wiki/Paging) to disk

in JOS, so that processes can be bigger than RAM. Extend your pager

with swapping.

- Implement

[mmap()](http://en.wikipedia.org/wiki/Mmap) of files

for JOS.

- Use

[xfi](http://static.usenix.org/event/osdi06/tech/erlingsson.html) to

sandbox code within a process.

- Support x86

[2MB or 4MB pages](http://en.wikipedia.org/wiki/Page_(computer_memory)).

- Modify JOS to have kernel-supported threads inside processes. See

[in-class

uthread assignment](http://pdos.csail.mit.edu/6.828/2018/homework/xv6-uthread.html) to get started. Implementing scheduler activations

would be one way to do this project.

- Use fine-grained locking or lock-free concurrency in JOS in the kernel or

in the file server (after making it multithreaded). The linux kernel uses

[read copy

update](http://en.wikipedia.org/wiki/Read-copy-update) to be able to perform read operations without holding locks.

Explore RCU by implementing it in JOS and use it to support a name cache with

lock-free reads.

- Implement ideas from the

[Exokernel papers](http://pdos.csail.mit.edu/6.828/2018/readings/engler95exokernel.pdf), for example the packet filter.

- Make JOS have soft real-time behavior. You will have to identify some

application for which this is useful.

- Make JOS run on 64-bit CPUs. This includes redoing the virtual

memory system to use 4-level pages tables. See

[reference page](http://pdos.csail.mit.edu/6.828/2018/reference.html)

for some documentation.

- Port JOS to a different microprocessor. The

[osdev wiki](http://wiki.osdev.org/Main_Page) may be helpful.

- A window system for JOS, including graphics driver and mouse. See

[reference

page](http://pdos.csail.mit.edu/6.828/2018/reference.html) for some documentation. [sqrt(x)](http://web.mit.edu/amdragon/www/pubs/sqrtx-6.828.html) is

an example JOS window system (and writeup).

- Implement

[dune](https://www.usenix.org/system/files/conference/osdi12/osdi12-final-117.pdf)

to export privileged hardware instructions to user-space applications in JOS.

- Write a user-level debugger; add strace-like functionality;

hardware register profiling (e.g. Oprofile); call-traces

- Binary emulation for (static) Linux executables |

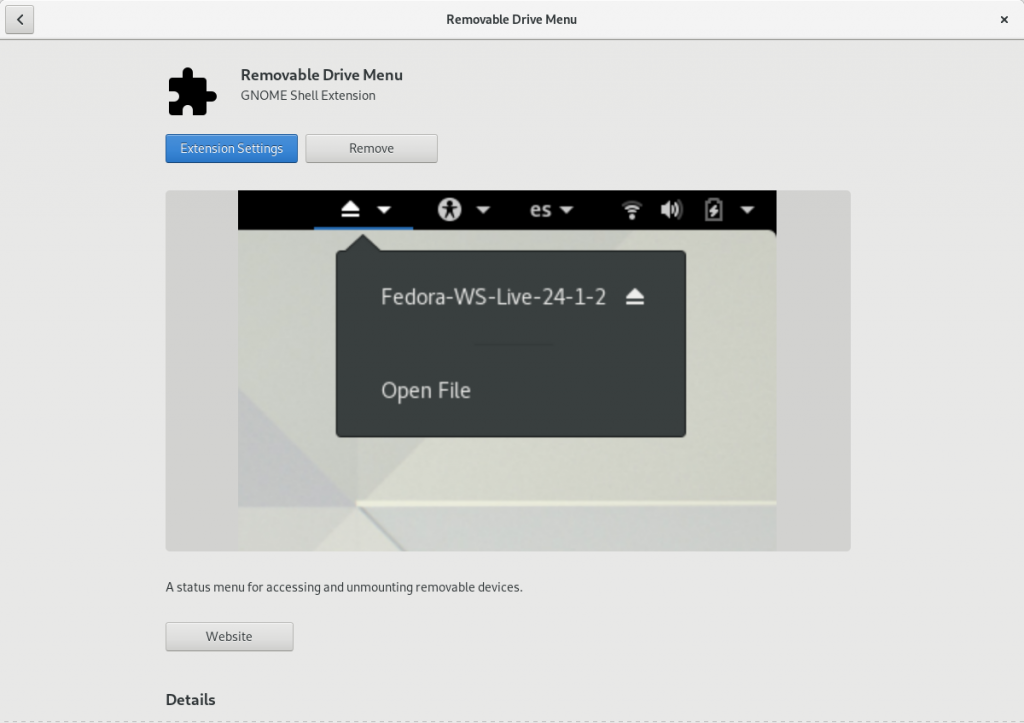

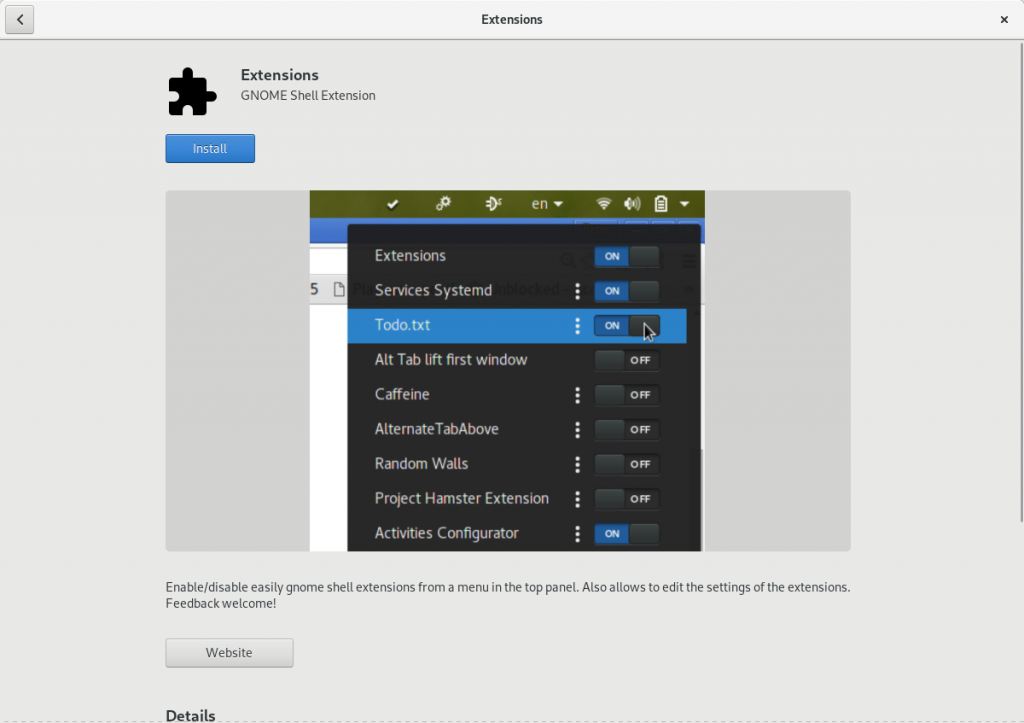

10,462 | 4 个值得一提的 Firefox 扩展插件 | https://opensource.com/article/18/6/firefox-open-source-extensions | 2019-01-21T11:09:09 | [

"Firefox",

"扩展",

"插件"

] | /article-10462-1.html |

>

> 这些扩展可以使火狐更具生产力和使用乐趣。

>

>

>

自从大约 12 年前 Firefox(火狐浏览器)v2.0 推出以来, 我一直是它的用户。它不是那时最好的网络浏览器,但是总会有一个理由让我回到它:我最喜爱的浏览器扩展插件不能工作在其它浏览器上。

如今,我喜欢现下的 Firefox,因为它快速、可定制和开源,我也很欣赏那些体现了原开发人员从未想到过的想法的扩展插件:如果你想在没有鼠标的情况下浏览网页呢?如果你不喜欢盯着晚上从显示器里发出来的强光呢?如何在 YouTube 和其他视频托管网站上使用一个更专业的播放器来获得更好的性能和更多播放控制呢?如果你需要更复杂的方法来禁用跟踪器和加快加载页面,该怎么办?

幸运的是,这些问题都有答案,我将展现给你我最喜爱的扩展 —— 所有这些都是免费软件或开源的 (即,在 [GNU GPL](https://www.gnu.org/licenses/gpl-3.0.en.html)、[MPL](https://www.mozilla.org/en-US/MPL/) 或 [Apache](https://www.apache.org/licenses/LICENSE-2.0) 许可帧下) ,它们可以使一个优秀的浏览器更优秀。

尽管术语<ruby> 加载项 <rt> add-on </rt></ruby>和<ruby> 扩展 <rt> extension </rt></ruby>的含义稍微不同,但我在本文中的使用不会区分它们。

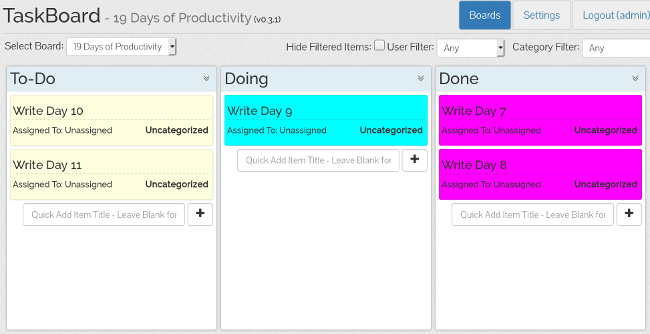

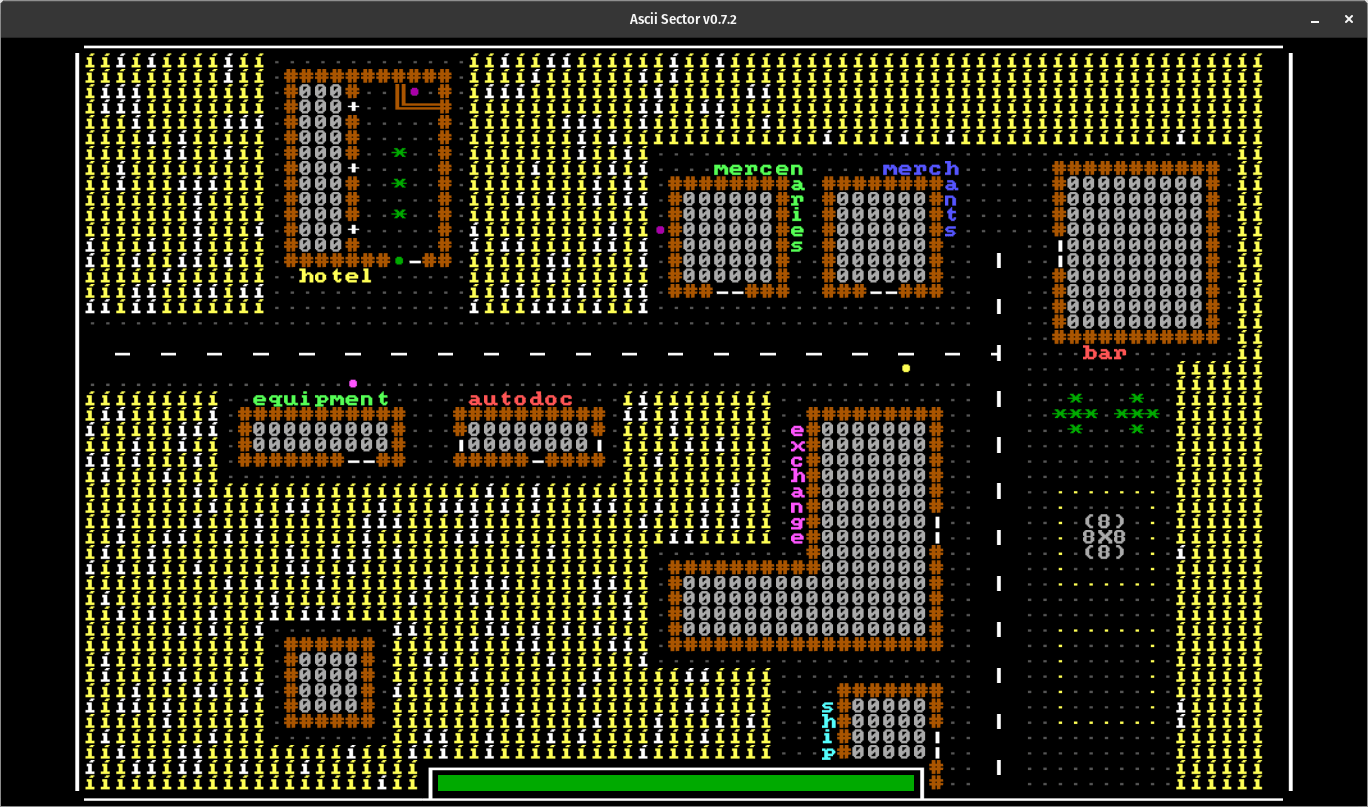

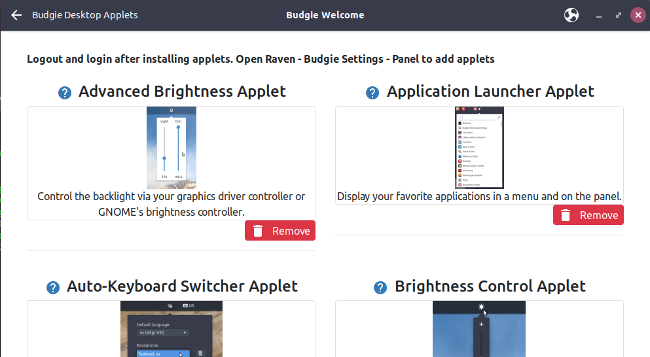

### Tridactyl

*Tridactyl 的新选项卡页面,展示了链接的指引。*

[Tridactyl](https://addons.mozilla.org/en-US/firefox/addon/tridactyl-vim/) 使你能够在大多数浏览活动中使用键盘。它的灵感来自于现已不复存在的 [Vimperator](https://github.com/vimperator/vimperator-labs) 和 [Pentadactyl](https://addons.mozilla.org/en-US/firefox/addon/pentadactyl/),而它们受到了 [Vim](https://www.vim.org/) 的默认键绑定的启发。由于我已经习惯了 Vim 和其他命令行应用程序,我发现了它的功能类似于使用键值 `h/j/k/l` 进行导航,用 `f/F` 可以与超链接进行交互,而且创建自定义的键绑定和命令非常方便。

Tridactyl 最近刚刚实现了一个可选的本地信使(目前,仅适用于 GNU/Linux 和 Mac OSX),提供了更酷的功能。例如,有了它,你可以隐藏 Firefox 用户界面上的一些元素(以 Vimperator 和 Pentadactyl 的方式)、在外部程序中打开链接或当前页(我经常用 [mpv](https://mpv.io/) 和 [youtube-dl](https://rg3.github.io/youtube-dl/index.html) 播放视频)、通过按 `Ctrl-I`(或者任意你选择的组合键)用你喜爱的编辑器来编辑文本框的内容。

话虽如此,但要记住,这是一个相对早期的项目,细节可能还是很粗糙。另一方面,它的开发非常活跃,当你回顾它早期的缺陷时,未尝不是一种乐趣。

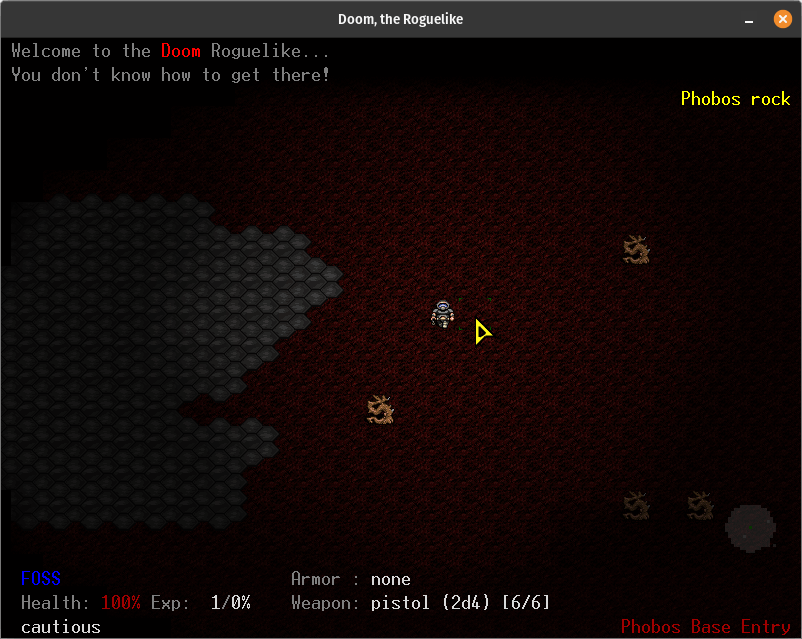

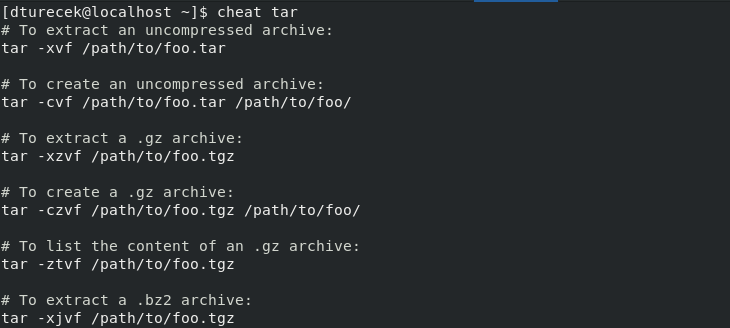

### Open With

*Open With 提供的菜单。我可以用这里列出的一个外部程序打开当前页面。*

说到与外部程序的互动,有时能够用鼠标来做到这一点还是让人很高兴的。这是 [Open With](https://addons.mozilla.org/en-US/firefox/addon/open-with/) 的用武之地。

除了添加的上下文菜单(如屏幕截图所示)之外,你还可以通过单击加载项栏上的扩展图标来找到自己定义的命令。如[它在 Mozilla Add-ons 页面上](https://addons.mozilla.org/en-US/firefox/addon/open-with/) 的图标和描述所示,它主要是为了切换到其它的 web 浏览器,但我也可以轻松地将它与 mpv 和 youtube-dl 相配合。

它也提供了键盘快捷方式,但它们受到了严重限制。可以在扩展设置的下拉列表中选择的组合不超过三种。相反,Tridactyl 允许我将命令分配给几乎任何没有被 Firefox 所阻止的东西。没错,Open With 目前为鼠标而准备的。

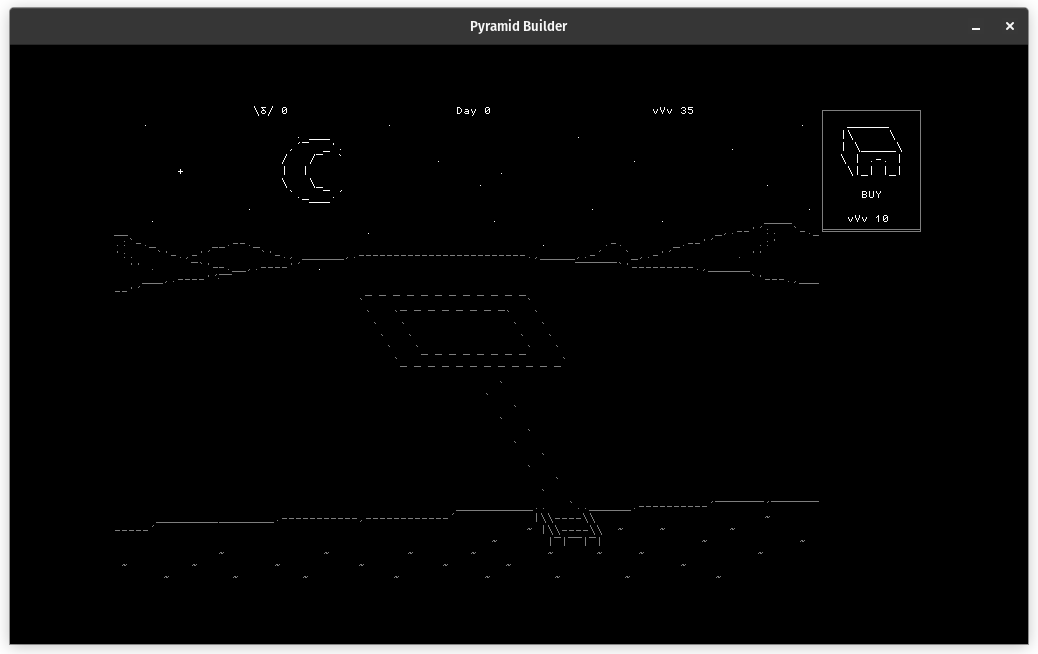

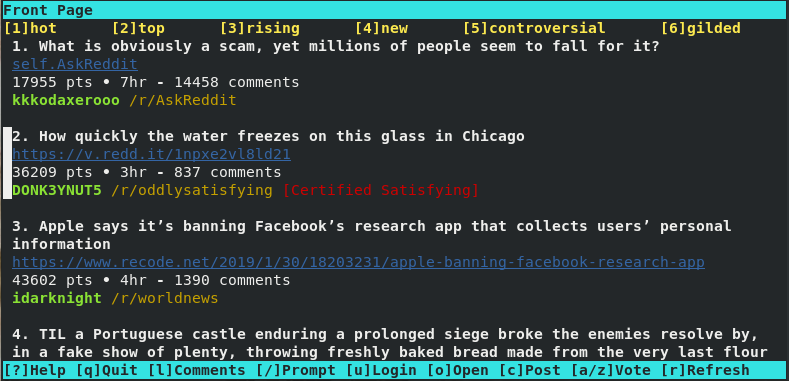

### Stylus

!")

*在这个屏幕截图中,我刚刚搜索并为当前正在浏览的 Stylus 的网站安装了一个黑暗主题。即使是弹出窗口也可以定制风格(称为 Deepdark Stylus)!*

[Stylus](https://addons.mozilla.org/en-US/firefox/addon/styl-us/) 是一个用户样式管理器,这意味着可以通过编写自定义 CSS 规则并将其加载到 Stylus 中来更改任何网页的外观。如果你不懂 CSS,在如 [userstyles.org](https://userstyles.org/) 这样网站上有大量的其他人制作的样式。

现在,你可能会问,“这不就是 [Stylish](https://addons.mozilla.org/en-US/firefox/addon/stylish/) 么?” 你是对的!Stylus 是基于 Stylish 的,并提供了更多的改进:它不包含任何远程记录、尊重你的隐私,所有开发都是公开的(尽管 Stylish 仍在积极开发,我一直未能找到最新版本的源代码),而且它还支持 [UserCSS](https://github.com/openstyles/stylus/wiki/Usercss)。

UserCSS 是一种有趣的格式,尤其是对于开发人员来说。我已经为不同的网站写了几种用户样式(主要是黑暗主题,和为了提高可读性的调整),虽然 Stylus 的内部编辑器很好,我还是喜欢用 Neovim 编辑代码。为了做到这样我所需要做的就是用 “.user.css” 作为本地加载文件的后缀名,在 Stylus 里启动 “Live Reload” 选项,只要我在 Neovim 中保存文件就会应用所有的更改。它也支持远程 UserCSS 文件,因此,每当我将更改推送到 GitHub 或任何基于 git 的开发平台时,它们将自动对用户可用。(我提供了指向该文件的原始版本的链接,以便他们可以轻松地访问它。)

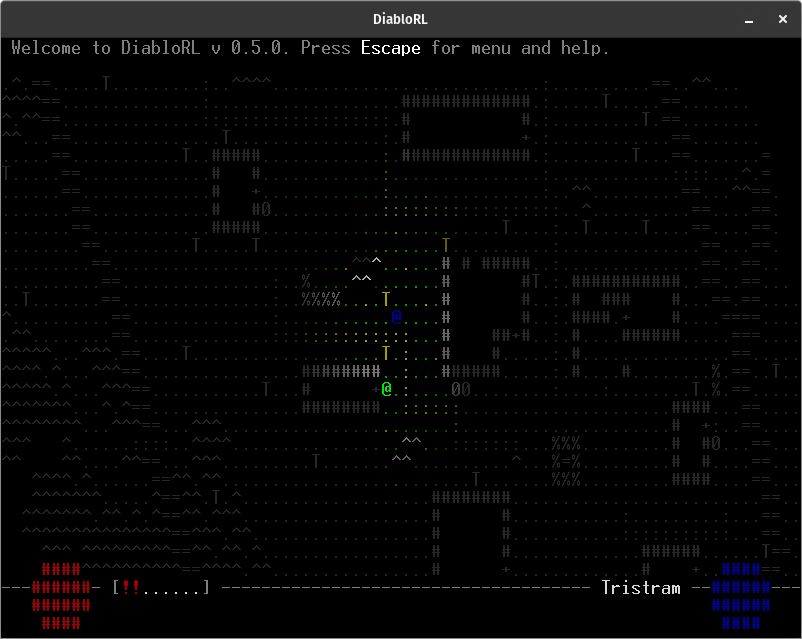

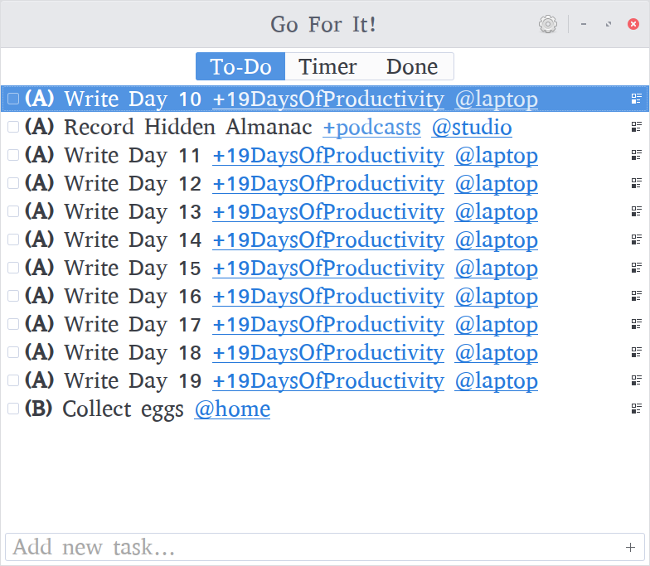

### uMatrix

*uMatrix 的用户界面,显示当前访问过的网页的当前规则。*

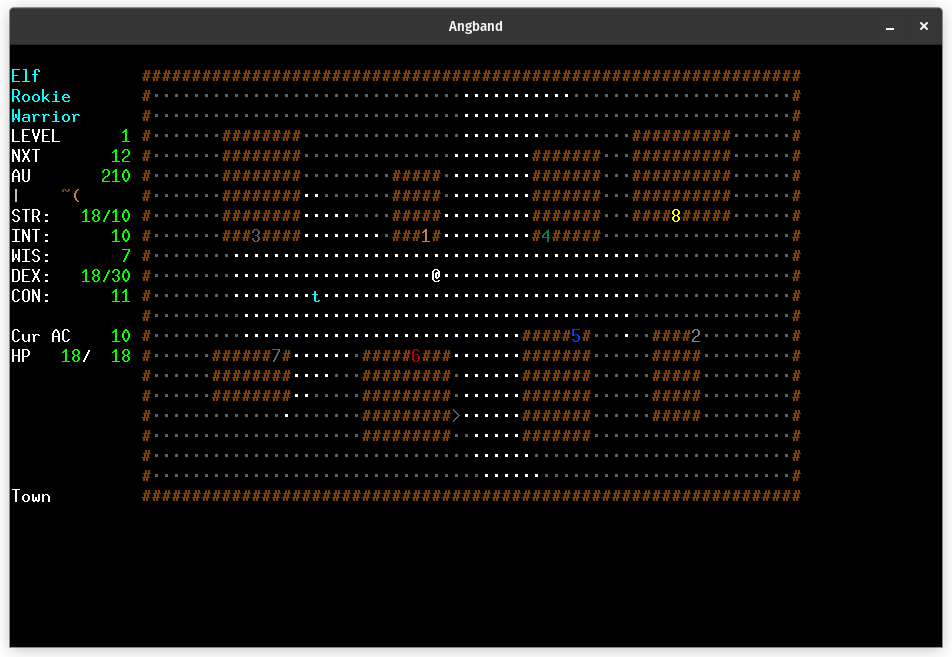

Jeremy Garcia 在他发表在 Opensource.com 的[文章](https://opensource.com/article/18/5/firefox-extensions)中提到了一个优秀的拦截器 uBlock Origin。我想提请大家关注另一个由 [gorhill](https://addons.mozilla.org/en-US/firefox/user/gorhill/) 开发的扩展插件: uMatrix 。

[uMatrix](https://addons.mozilla.org/en-US/firefox/addon/umatrix) 允许你为网页上的某些请求设置拦截规则,可以通过点击该加载项的弹出窗口来切换(在上面的屏幕截图中可以看到)。这些请求的区别在于脚本的类别、脚本发起的请求、cookies、CSS 规则、图像、媒体、帧,和被 uMatrix 标记为“other” 的其它内容。例如,你可以设置全局规则,以便在默认情况下允许所有请求,并将特定的请求添加到黑名单中(更方便的方法),或在默认情况下阻止所有内容,并手动将某些请求列入白名单(更安全的方法)。如果你一直在使用 NoScript 或 RequestPolicy,你可以从它们 [导入](https://github.com/gorhill/uMatrix/wiki/FAQ) 你的白名单规则。

另外 uMatrix 支持 [hosts 文件](https://en.wikipedia.org/wiki/Hosts_(file)),可用于阻止来自某些域的请求。不要与 uBlock Origin 所使用的筛选列表混淆,它使用的语法同 Adblock Plus 一样。默认情况下,uMatrix 会通过几个 hosts 文件阻止已知的分发广告、跟踪器和恶意软件的服务器,如果需要,你可以添加更多外部数据源。

那么你将选择哪一个:uBlock Origin 或 uMatrix ?就个人而言,我在电脑上两个都用,而只在安卓手机上用 uMatrix 。[据 gorhill 所说](https://github.com/gorhill/uMatrix/issues/32#issuecomment-61372436),两者之间存在某种重叠,但它们有不同的目标用户和目地。如果你想要的只是阻止跟踪器和广告的简单方法,uBlock Origine 是更好的选择;另一方面,如果你希望对网页在浏览器中可以执行或不能执行的操作进行精细的控制,即使需要一些时间来进行配置,并且可能会阻止某些网站如预期的工作,uMatrix 也是更好的选择。

### 结论

目前,这些是 Firefox 里我最喜欢的扩展。Tridactyl 通过依靠键盘和与外部程序交互,加快了浏览导航速度;Open With 能让我用鼠标在另外一个程序中打开页面;Stylus 是全面的用户样式管理器,对用户和开发人员都很有吸引力;uMatrix 本质上是 Firefox 的防火墙,可以用于过滤未知的请求。

尽管我基本上只是讨论了这些加载项的好处,但没有一个软件是完美的。如果你喜欢它们中的任何一个,并认为它们的某些方面可以改进,我建议你去它们的 Github 页面,并查看它们的贡献指南。通常情况下,自由开源软件的开发人员是欢迎错误报告和提交请求的。告诉你的朋友或道谢也是帮助开发者的好方法,特别是如果这些开发者是在业余时间从事他们的项目的话。

---

via: <https://opensource.com/article/18/6/firefox-open-source-extensions>

作者:[Zsolt Szakács](https://opensource.com/users/zsolt) 选题:[lujun9972](https://github.com/lujun9972) 译者:[lixinyuxx](https://github.com/lixinyuxx) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

10,463 | 红宝石(Ruby)史话 | https://twobithistory.org/2017/11/19/the-ruby-story.html | 2019-01-21T11:35:00 | [

"Ruby",

"Rails",

"RoR"

] | https://linux.cn/article-10463-1.html |

尽管我很难说清楚为什么,但 Ruby 一直是我最喜爱的一门编程语言。如果用音乐来类比的话,Python 给我的感觉像是<ruby> 朋克摇滚 <rt> punk rock </rt></ruby>,简单、直接,但略显单调,而 Ruby 则像是爵士乐,从根本上赋予了程序员表达自我的自由,虽然这可能会让代码变复杂,编写出来的程序对其他人来说不直观。

Ruby 社区一直将<ruby> 灵活表达 <rt> freedom of expression </rt></ruby>视为其核心价值。可我不认同这对于 Ruby 的开发和普及是最重要的。创建一门编程语言也许是为了更高的性能,也许是为了在抽象上节省更多的时间,可 Ruby 就有趣在它并不关心这些,从它诞生之初,它的目标就是让程序员更快乐。

### 松本·行弘

<ruby> 松本·行弘 <rt> Yukihiro Matsumoto </rt></ruby>,亦称为 “Matz”,于 1990 年毕业于筑波大学。筑波是东京东北方向上的一个小城市,是科学研究与技术开发的中心之一。筑波大学以其 STEM 计划广为流传。松本·行弘在筑波大学的信息科学专业学习过,且专攻编程语言。他也在 Ikuo Nakata 的编程语言实验室工作过。(LCTT 译注:STEM 是<ruby> 科学 <rt> Science </rt></ruby>、<ruby> 技术 <rt> Technology </rt></ruby>、<ruby> 工程 <rt> Engineering </rt></ruby>、<ruby> 数学 <rt> Mathematics </rt></ruby>四门学科英文首字母的缩写。)

松本从 1993 年开始制作 Ruby,那时他才刚毕业几年。他制作 Ruby 的起因是觉得那时的脚本语言缺乏一些特性。他在使用 Perl 的时候觉得这门语言过于“玩具”,此外 Python 也有点弱,用他自己的话说:

>

> 我那时就知道 Python 了,但我不喜欢它,因为我认为它不是一门真正的面向对象的语言。面向对象就像是 Python 的一个附件。作为一个编程语言狂热者,我在 15 年里一直是面向对象的忠实粉丝。我真的想要一门生来就面向对象而且易用的脚本语言。我为此特地寻找过,可事实并不如愿。<sup id="fnref1"> <a href="#fn1" rel="footnote"> 1 </a></sup>

>

>

>

所以一种解释松本创造 Ruby 的动机就是他想要创造一门更好,且面向对象的 Perl。

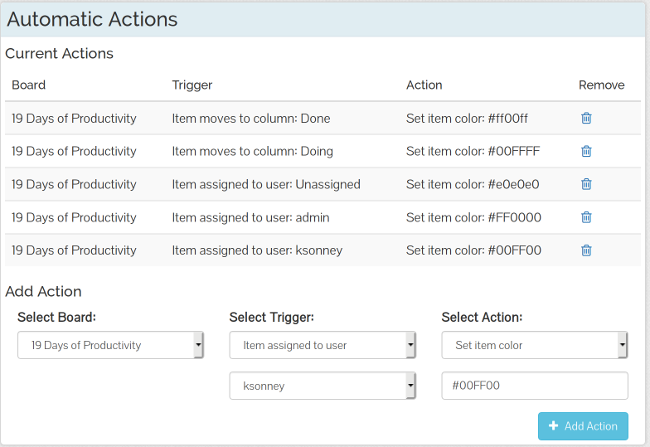

但在其他场合,松本说他创造 Ruby 主要是为了让他自己和别人更快乐。2008 年,松本在谷歌技术讲座结束时放映了这张幻灯片:

他对听众说到,

>

> 我希望 Ruby 能帮助世界上的每一个程序员更有效率地工作,享受编程并感到快乐。这也是制作 Ruby 语言的主要意图。<sup id="fnref2"> <a href="#fn2" rel="footnote"> 2 </a></sup>

>

>

>

松本开玩笑的说他制作 Ruby 的原因很自私,因为他觉得其它的语言乏味,所以需要创造一点让自己开心的东西。

这张幻灯片展现了松本谦虚的一面。其实,松本是一位摩门教践行者,因此我很好奇他传奇般的友善有多少归功于他的宗教信仰。无论如何,他的友善在 Ruby 社区广为流传,甚至有一条称为 MINASWAN 的原则,即“<ruby> 松本人很好,我们也一样 <rt> Matz Is Nice And So We Are Nice </rt></ruby>”。我想那张幻灯片一定震惊了来自 Google 的观众。我想谷歌技术讲座上的每张幻灯片都充满着代码和运行效率的指标,来说明一个方案比另一个更快更有效,可仅仅放映崇高的目标的幻灯片却寥寥无几。

Ruby 主要受到 Perl 的影响。Perl 则是由 Larry Wall 于 20 世纪 80 年代晚期创造的语言,主要用于处理和转换基于文本的数据。Perl 因其文本处理和正则表达式而闻名于世。对于 Ruby 程序员,Perl 程序中的很多语法元素都不陌生,例如符号 `$`、符号 `@`、`elsif` 等等。虽然我觉得,这些不是 Ruby 应该具有的特征。除了这些符号外,Ruby 还借鉴了 Perl 中的正则表达式的处理和标准库。

但影响了 Ruby 的不仅仅只有 Perl 。在 Ruby 之前,松本制作过一个仅用 Emacs Lisp 编写的邮件客户端。这一经历让他对 Emacs 和 Lisp 语言运行的内部原理有了更多的认识。松本说 Ruby 底层的对象模型也受其启发。在那之上,松本添加了一个 Smalltalk 风格的信息传递系统,这一系统随后成为了 Ruby 中任何依赖 `#method_missing` 的操作的基石。松本也表示过 Ada 和 Eiffel 也影响了 Ruby 的设计。

当时间来到了给这门新语言命名的时候,松本和他的同事 Keiju Ishitsuka 挑选了很多个名字。他们希望名字能够体现新语言和 Perl、shell 脚本间的联系。在[这一段非常值得一读的即时消息记录](http://blade.nagaokaut.ac.jp/cgi-bin/scat.rb/ruby/ruby-talk/88819)中,Ishitsuka 和 松本也许花了太多的时间来思考 <ruby> shell <rt> 贝壳 </rt></ruby>、<ruby> clam <rt> 蛤蛎 </rt></ruby>、<ruby> oyster <rt> 牡蛎 </rt></ruby>和<ruby> pearl <rt> 珍珠 </rt></ruby>之间的关系了,以至于差点把 Ruby 命名为“<ruby> Coral <rt> 珊瑚虫 </rt></ruby>”或“<ruby> Bisque <rt> 贝类浓汤 </rt></ruby>”。幸好,他们决定使用 Ruby,因为它就像 pearl 一样,是一种珍贵的宝石。此外,<ruby> Ruby <rt> 红宝石 </rt></ruby> 还是 7 月的生辰石,而 <ruby> Pearl <rt> 珍珠 </rt></ruby> 则是 6 月的生辰石,采用了类似 C++ 和 C# 的隐喻,暗示着她们是改进自前辈的编程语言。(LCTT 译注:Perl 和 Pearl 发音相同,所以也常以“珍珠”来借喻 Perl;shell 是操作系统提供的用户界面,这里指的是命令行界面;更多有关生辰石的[信息](https://zh.wikipedia.org/zh-hans/%E8%AA%95%E7%94%9F%E7%9F%B3)。)

### Ruby 西渐

Ruby 在日本的普及很快。1995 年 Ruby 刚刚发布后不久后,松本就被一家名为 Netlab 的日本软件咨询财团(全名 Network Applied Communication Laboratory)雇用,并全职为 Ruby 工作。到 2000 年时,在 Ruby 发布仅仅 5 年后,Ruby 在日本的流行度就超过了 Python。可这时的 Ruby 才刚刚进入英语国家。虽然从 Ruby 的诞生之初就存在讨论它的日语邮件列表,但是英语的邮件列表直到 1998 年才建立起来。起初,在英语的邮件列表中交流的大多是日本的 Ruby 狂热者,可随着 Ruby 在西方的逐渐普及而得以改变。

在 2000 年,Dave Thomas 出版了第一本涵盖 Ruby 的英文书籍《Programming Ruby》。因为它的封面上画着一把锄头,所以这本书也被称为锄头书。这是第一次向身处西方的程序员们介绍了 Ruby。就像在日本那样,Ruby 的普及很快,到 2002 年时,英语的 Ruby 邮件列表的通信量就超过了日语邮件列表。

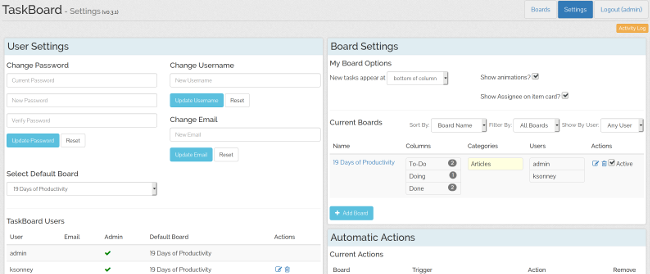

时间来到了 2005 年,Ruby 更流行了,但它仍然不是主流的编程语言。然而,Ruby on Rails 的发布让一切都不一样了。Ruby on Rails 是 Ruby 的“杀手级应用”,没有别的什么项目能比它更推动 Ruby 的普及了。在 Ruby on Rails 发布后,人们对 Ruby 的兴趣爆发式的增长,看看 TIOBE 监测的语言排行:

有时人们开玩笑的说,Ruby 程序全是基于 Ruby-on-Rails 的网站。虽然这听起来就像是 Ruby on Rails 占领了整个 Ruby 社区,但在一定程度上,这是事实。因为编写 Rails 应用时使用的语言正是 Ruby。Rails 欠 Ruby 的和 Ruby 欠 Rails 的一样多。

Ruby 的设计哲学也深深地影响了 Rails 的设计与开发。Rails 之父 David Heinemeier Hansson 常常提起他第一次与 Ruby 的接触的情形,那简直就是一次传教。他说,那种经历简直太有感召力了,让他感受到要为松本的杰作(指 Ruby)“传教”的使命。<sup id="fnref3"> <a href="#fn3" rel="footnote"> 3 </a></sup> 对于 Hansson 来说,Ruby 的灵活性简直就是对 Python 或 Java 语言中自上而下的设计哲学的反抗。他很欣赏 Ruby 这门能够信任自己的语言,Ruby 赋予了他自由选择<ruby> 程序表达方式 <rt> express his programs </rt></ruby>的权力。

就像松本那样,Hansson 声称他创造 Rails 时因为对现状的不满并想让自己能更开心。他也认同让程序员更快乐高于一切的观点,所以检验 Rails 是否需要添加一项新特性的标准是“<ruby> 更灿烂的笑容标准 <rt> The Principle of The Bigger Smile </rt></ruby>”。什么功能能让 Hansson 更开心就给 Rails 添加什么。因此,Rails 中包括了很多非正统的功能,例如 “Inflector” 类和 `Time` 扩展(“Inflector”类试图将单个类的名字映射到多个数据库表的名字;`Time` 扩展允许程序员使用 `2.days.ago` 这样的表达式)。可能会有人觉得这些功能太奇怪了,但 Rails 的成功表明它的确能让很多人的生活得更快乐。

因此,虽然 Rails 的火热带动了 Ruby 的普及看起来是一个偶然,但事实上 Rails 体现了 Ruby 的很多核心准则。此外,很难看到使用其他语言开发的 Rails,正是因为 Rails 的实现依赖于 Ruby 中<ruby> 类似于宏的类方法调用 <rt> macro-like class method calls </rt></ruby>来实现模型关联这样的功能。一些人认为这么多的 Ruby 开发需要基于 Ruby on Rails 是 Ruby 生态不健康的表现,但 Ruby 和 Ruby on Rails 结合的如此紧密并不是没有道理的。

### Ruby 之未来

人们似乎对 Ruby(及 Ruby on Rails)是否正在消亡有着异常的兴趣。早在 2011 年,Stack Overflow 和 Quora 上就充斥着程序员在咨询“如果几年后不再使用 Ruby 那么现在是否有必要学它”的话题。这些担忧对 Ruby 并非没有道理,根据 TIOBE 指数和 Stack Overflow 趋势,Ruby 和 Ruby on Rails 的人气一直在萎缩,虽然它也曾是热门新事物,但在更新更热的框架面前它已经黯然失色。

一种解释这种趋势的理论是程序员们正在舍弃动态类型的语言转而选择静态类型的。TIOBE 指数的趋势中可以看出对软件质量的需求在上升,这意味着出现在运行时的异常变得难以接受。他们引用 TypeScript 来说明这一趋势,TypeScript 是 JavaScript 的全新版本,而创造它的目的正是为了保证客户端运行的代码能受益于编译所提供的安全保障。

我认为另一个更可能的原因是比起 Ruby on Rails 推出的时候,现在存在着更多有竞争力的框架。2005 年它刚刚发布的时候,还没有那么多用于创建 Web 程序的框架,其主要的替代者还是 Java。可在今天,你可以使用为 Go、Javascript 或者 Python 开发的各种优秀的框架,而这还仅仅是主流的选择。Web 的世界似乎正走向更加分布式的结构,与其使用一块代码来完成从数据库读取到页面渲染所有事务,不如将事务拆分到多个组件,其中每个组件专注于一项事务并将其做到最好。在这种趋势下,Rails 相较于那些专攻于 JavaScript 前端通信的 JSON API 就显得过于宽泛和臃肿。

总而言之,我们有理由对 Ruby 的未来持乐观态度。因为不管是 Ruby 还是 Rails 的开发都还很活跃。松本和其他的贡献者们都在努力开发 Ruby 的第三个主要版本。新的版本将比现在的版本快上 3 倍,以减轻制约着 Ruby 发展的性能问题。虽然从 2005 年起,越来越多的 Web 框架被开发出来,但这并不意味着 Ruby on Rails 就失去了其生存空间。Rails 是一个富有大量功能的成熟的工具,对于一些特定类型的应用开发一直是非常好的选择。

但就算 Ruby 和 Rails 走上了消亡的道路,Ruby 让程序员更快乐的信条一定会存活下来。Ruby 已经深远的影响了许多新的编程语言的设计,这些语言的设计中能够看到来自 Ruby 的很多理念。而其他的新生语言则试着变成 Ruby 更现代的实现,例如 Elixir 是一个强调函数式编程范例的语言,仍在开发中的 Crystal 目标是成为使用静态类型的 Ruby 。世界上许多程序员都喜欢上了 Ruby 及其语法,因此它的影响必将会在未来持续很长一段时间。

喜欢这篇文章吗?这里每两周都会发表一篇这样的文章。请在推特上关注我们 [@TwoBitHistory](https://twitter.com/TwoBitHistory) 或者订阅我们的 [RSS](https://twobithistory.org/feed.xml),这样新文章发布的第一时间你就能得到通知。

---

1. <http://ruby-doc.org/docs/ruby-doc-bundle/FAQ/FAQ.html> [↩](#fnref1)

2. <https://www.youtube.com/watch?v=oEkJvvGEtB4?t=30m55s> [↩](#fnref2)

3. <http://rubyonrails.org/doctrine/>

---

via: <https://twobithistory.org/2017/11/19/the-ruby-story.html>

作者:[Two-Bit History](https://twobithistory.org) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wwhio](https://github.com/wwhio) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Ruby has always been one of my favorite languages, though I’ve sometimes found it hard to express why that is. The best I’ve been able to do is this musical analogy: Whereas Python feels to me like punk rock—it’s simple, predictable, but rigid—Ruby feels like jazz. Ruby gives programmers a radical freedom to express themselves, though that comes at the cost of added complexity and can lead to programmers writing programs that don’t make immediate sense to other people.

I’ve always been aware that freedom of expression is a core value of the Ruby community. But what I didn’t appreciate is how deeply important it was to the development and popularization of Ruby in the first place. One might create a programming lanugage in pursuit of better peformance, or perhaps timesaving abstractions—the Ruby story is interesting because instead the goal was, from the very beginning, nothing more or less than the happiness of the programmer.

## Yukihiro Matsumoto

Yukihiro Matsumoto, also known as “Matz,” graduated from the University of Tsukuba in 1990. Tsukuba is a small town just northeast of Tokyo, known as a center for scientific research and technological devlopment. The University of Tsukuba is particularly well-regarded for its STEM programs. Matsumoto studied Information Science, with a focus on programming languages. For a time he worked in a programming language lab run by Ikuo Nakata.

Matsumoto started working on Ruby in 1993, only a few years after graduating. He began working on Ruby because he was looking for a scripting language with features that no existing scripting language could provide. He was using Perl at the time, but felt that it was too much of a “toy language.” Python also fell short; in his own words:

I knew Python then. But I didn’t like it, because I didn’t think it was a true object-oriented language—OO features appeared to be an add-on to the language. As a language maniac and OO fan for 15 years, I really wanted a genuine object-oriented, easy-to-use scripting language. I looked for one, but couldn’t find one.

[1]

So one way of understanding Matsumoto’s motivations in creating Ruby is that he was trying to create a better, object-oriented version of Perl.

But at other times, Matsumoto has said that his primary motivation in creating Ruby was simply to make himself and others happier. Toward the end of a Google tech talk that Matsumoto gave in 2008, he showed the following slide:

He told his audience,

I hope to see Ruby help every programmer in the world to be productive, and to enjoy programming, and to be happy. That is the primary purpose of the Ruby language.

[2]

Matsumoto goes on to joke that he created Ruby for selfish reasons, because he was so underwhelmed by other languages that he just wanted to create something that would make him happy.

The slide epitomizes Matsumoto’s humble style. Matsumoto, it turns out, is a practicing Mormon, and I’ve wondered whether his religious commitments have any bearing on his legendary kindness. In any case, this kindness is so well known that the Ruby community has a principle known as MINASWAN, or “Matz Is Nice And So We Are Nice.” The slide must have struck the audience at Google as an unusual one—I imagine that any random slide drawn from a Google tech talk is dense with code samples and metrics showing how one engineering solution is faster or more efficient than another. Few, I suspect, come close to stating nobler goals more simply.

Ruby was influenced primarily by Perl. Perl was created by Larry Wall in the

late 1980s as a means of processing and transforming text-based reports. It

became well-known for its text processing and regular expression capabilities.

A Perl program contains many syntactic elements that would be familiar to a

Ruby programmer—there are `$`

signs, `@`

signs, and even `elsif`

s, which I’d

always thought were one of Ruby’s less felicitous idiosyncracies. On a deeper

level, Ruby borrows much of Perl’s regular expression handling and standard

library.

But Perl was by no means the only influence on Ruby. Prior to beginning work on

Ruby, Matsumoto worked on a mail client written entirely in Emacs Lisp. The

experience taught him a lot about the inner workings of Emacs and the Lisp

language, which Matsumoto has said influenced the underlying object model of

Ruby. On top of that he added a Smalltalk-style messsage passing system which

forms the basis for any behavior relying on Ruby’s `#method_missing`

. Matsumoto

has also claimed Ada and Eiffel as influences on Ruby.

When it came time to decide on a name for Ruby, Matsumoto and a colleague,

Keiju Ishitsuka, considered several alternatives. They were looking for

something that suggested Ruby’s relationship to Perl and also to shell

scripting. In an [instant message

exchange](http://blade.nagaokaut.ac.jp/cgi-bin/scat.rb/ruby/ruby-talk/88819)

that is well-worth reading, Ishitsuka and Matsumoto probably spend too much

time thinking about the relationship between shells, clams, oysters, and

pearls and get close to calling the Ruby language “Coral” or “Bisque” instead.

Thankfully, they decided to go with “Ruby”, the idea being that it was, like

“pearl”, the name of a valuable jewel. It also turns out that the birthstone

for June is a pearl while the birthstone for July is a ruby, meaning that the

name “Ruby” is another tongue-in-cheek “incremental improvement” name like C++

or C#.

## Ruby Goes West

Ruby grew popular in Japan very quickly. Soon after its initial release in 1995, Matz was hired by a Japanese software consulting group called Netlab (also known as Network Applied Communication Laboratory) to work on Ruby full-time. By 2000, only five years after it was initially released, Ruby was more popular in Japan than Python. But it was only just beginning to make its way to English-speaking countries. There had been a Japanese-language mailing list for Ruby discussion since almost the very beginning of Ruby’s existence, but the English-language mailing list wasn’t started until 1998. Initially, the English-language mailing list was used by Japanese Rubyists writing in English, but this gradually changed as awareness of Ruby grew.

In 2000, Dave Thomas published *Programming Ruby*, the first English-language

book to cover Ruby. The book became known as the “pickaxe” book for the pickaxe

it featured on its cover. It introduced Ruby to many programmers in the West

for the first time. Like it had in Japan, Ruby spread quickly, and by 2002 the

English-language Ruby mailing list had more traffic than the original

Japanese-language mailing list.

By 2005, Ruby had become more popular, but it was still not a mainstream programming language. That changed with the release of Ruby on Rails. Ruby on Rails was the “killer app” for Ruby, and it did more than any other project to popularize Ruby. After the release of Ruby on Rails, interest in Ruby shot up across the board, as measured by the TIOBE language index:

It’s sometimes joked that the only programs anybody writes in Ruby are Ruby-on-Rails web applications. That makes it sound as if Ruby on Rails completely took over the Ruby community, which is only partly true. While Ruby has certainly come to be known as that language people write Rails apps in, Rails owes as much to Ruby as Ruby owes to Rails.

The Ruby philosophy heavily informed the design and implementation of Rails.

David Heinemeier Hansson, who created Rails, often talks about how his first

contact with Ruby was an almost religious experience. He has said that the

encounter was so transformative that it “imbued him with a calling to do

missionary work in service of Matz’s creation.” 3 For Hansson, Ruby’s

no-shackles approach was a politically courageous rebellion against the

top-down impositions made by languages like Python and Java. He appreciated

that the language trusted him and empowered him to make his own judgements

about how best to express his programs.

Like Matsumoto, Hansson claims that he created Rails out of a frustration with

the status quo and a desire to make things better for himself. He, like

Matsumoto, prioritized programmer happiness above all else, evaluating

additions to Rails by what he calls “The Principle of The Bigger Smile.”

Whatever made Hansson smile more was what made it into the Rails codebase.

As a result, Rails would come to include unorthodox features like the

“Inflector” class (which tries to map singular class names to plural database

table names automatically) and Rails’ `Time`

extensions (allowing programmers

to write cute expressions like `2.days.ago`

). To some, these features were

truly weird, but the success of Rails is testament to the number of people who

found it made their lives much easier.

And so, while it might seem that Rails was an incidental application of Ruby that happened to become extremely popular, Rails in fact embodies many of Ruby’s core principles. Futhermore, it’s hard to see how Rails could have been built in any other language, given its dependence on Ruby’s macro-like class method calls to implement things like model associations. Some people might take the fact that so much of Ruby development revolves around Ruby on Rails as a sign of an unhealthy ecosystem, but there are good reasons that Ruby and Ruby on Rails are so intertwined.

## The Future of Ruby

People seem to have an inordinate amount of interest in whether or not Ruby (and Ruby on Rails) are dying. Since as early as 2011, it seems that Stack Overflow and Quora have been full of programmers asking whether or not they should bother learning Ruby if it will no longer be around in the next few years. These concerns are not unjustified; according to the TIOBE index and to Stack Overflow trends, Ruby and Ruby on Rails have been shrinking in popularity. Though Ruby on Rails was once the hot new thing, it has since been eclipsed by hotter and newer frameworks.

One theory for why this has happened is that programmers are abandoning dynamically typed languages for statically typed ones. Analysts at TIOBE index figure that a rise in quality requirements have made runtime exceptions increasingly unacceptable. They cite TypeScript as an example of this trend—a whole new version of JavaScript was created just to ensure that client-side code could be written with the benefit of compile-time safety guarantees.