id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

10,350 | 在 Linux 中使用 SoundConverter 轻松转换音频文件格式 | https://itsfoss.com/sound-converter-linux/ | 2018-12-15T15:05:10 | [

"音乐",

"格式"

] | https://linux.cn/article-10350-1.html |

>

> 如果你正在寻找将音频文件格式转换为 wav、mp3、ogg 或任何其他格式,SoundConverter 是你在 Linux 中需要的工具。

>

>

>

最近我购买了一些没有 DRM 的音乐。我是从 [SaReGaMa](https://en.wikipedia.org/wiki/Saregama) 那里买的,这是一家印度历史最悠久,规模最大的音乐品牌。下载的文件采用高清质量的 WAV 格式。

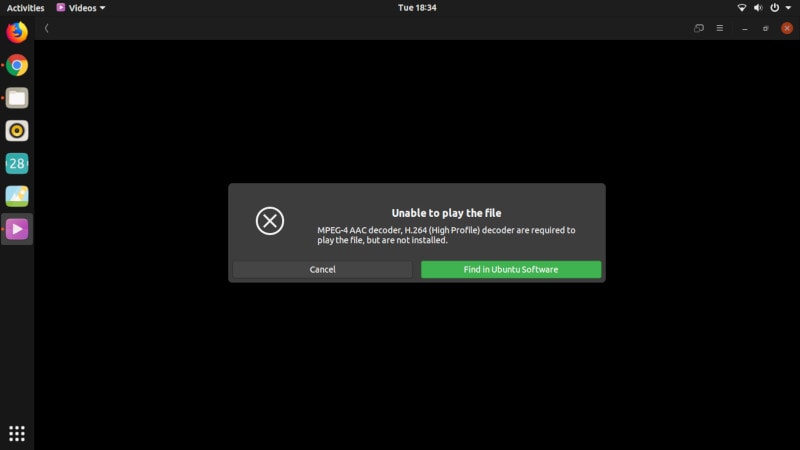

不幸的是,Rhythmbox 无法播放 WAV。最重要的是,单个文件大小约为 70MB。想象一下,将这么大的音乐传输到智能手机。它会不必要地占用大量空间。

所以我认为是时候将 WAV 文件转换为 MP3 —— 这个长青且最流行的音乐文件格式。

为此,我需要一个在 Linux 中的音频转换器。在这个教程中,我将向你展示如何使用名为 SoundCoverter 的 GUI 工具轻松地将音频文件从一种格式转换为另一种格式。

### 在 Linux 中安装 SoundConverter

[SoundConverter](http://soundconverter.org/) 是一款流行的自由开源软件。它应该可以在大多数 Linux 发行版的官方仓库中找到。

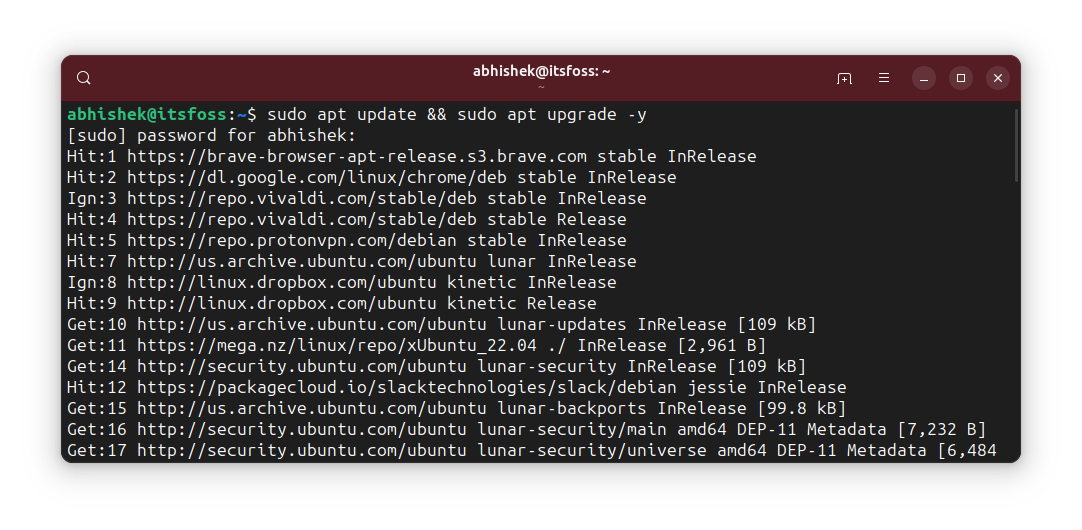

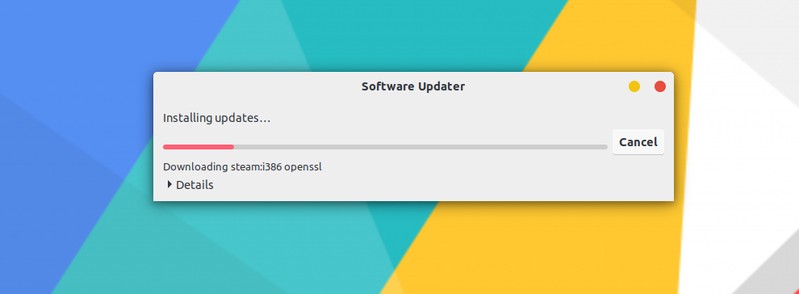

Ubuntu/Linux Mint 用户只需在软件中心搜索 SoundConverter 并从那里安装即可。

*SoundConverter 可以从软件中心安装*

或者,你可以使用命令行方式。在基于 Debian 和 Ubuntu 的系统中,你可以使用以下命令:

```

sudo apt install soundconverter

```

在 Arch、Fedora 和其他非基于 Debian 的发行版中,你可以使用你的发行版的软件中心或软件包管理器。

### 在 Linux 中使用 SoundConverter 转换音频文件格式

安装完 SoundConverter 后,在菜单中搜索并启动它。

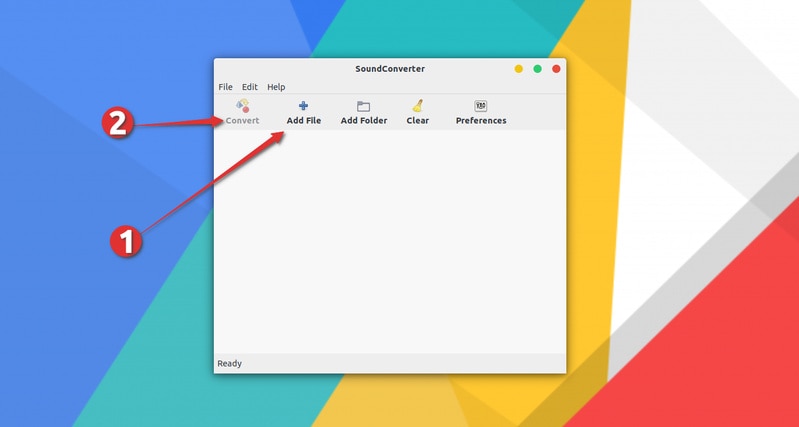

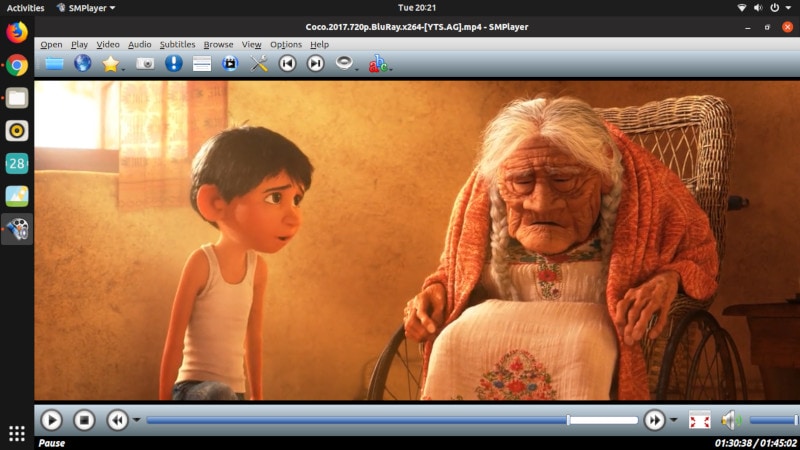

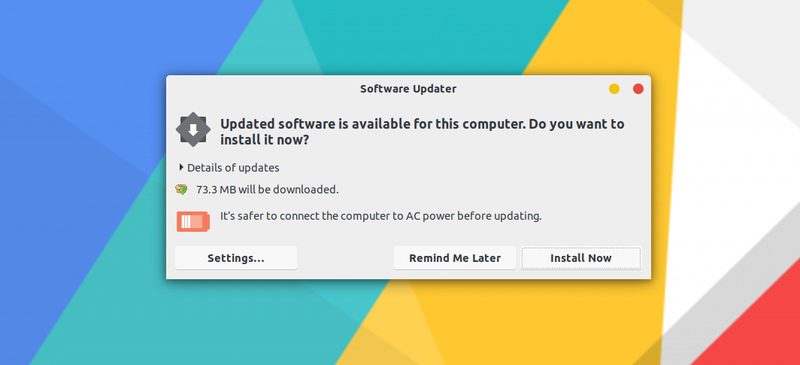

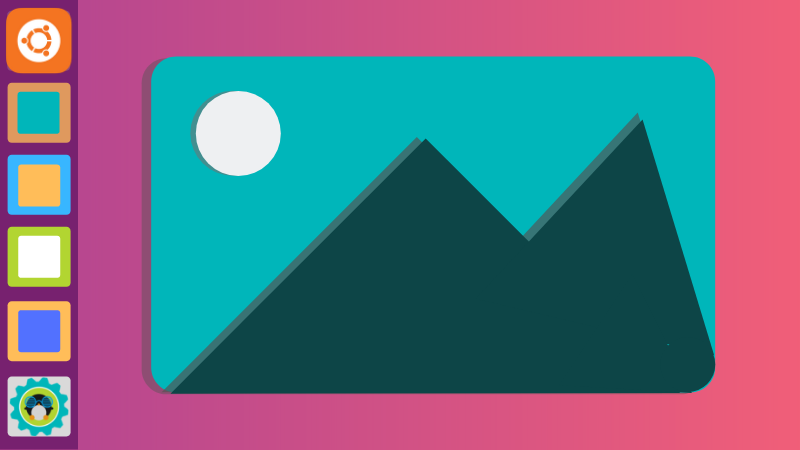

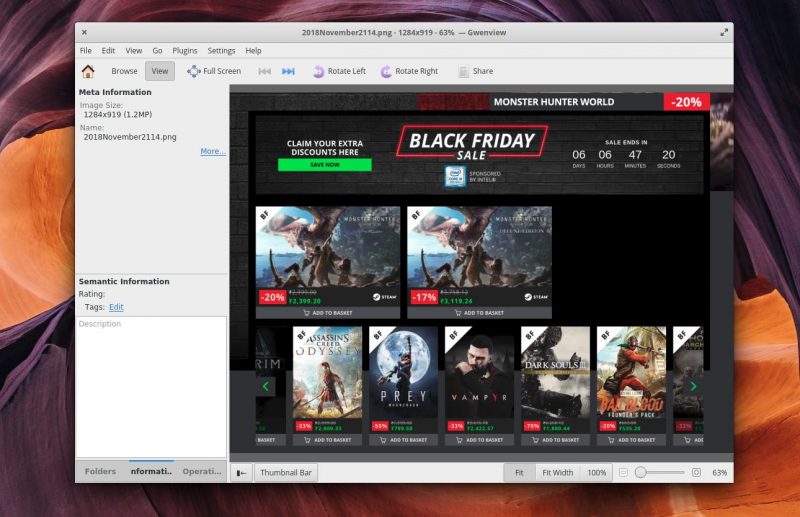

默认界面看起来像这样,它不能比这简单:

*简单的界面*

转换音频文件格式只要选择文件并单击转换。

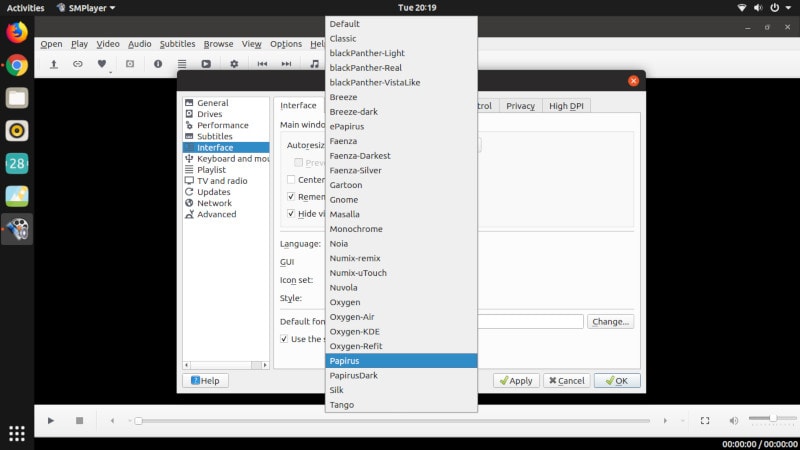

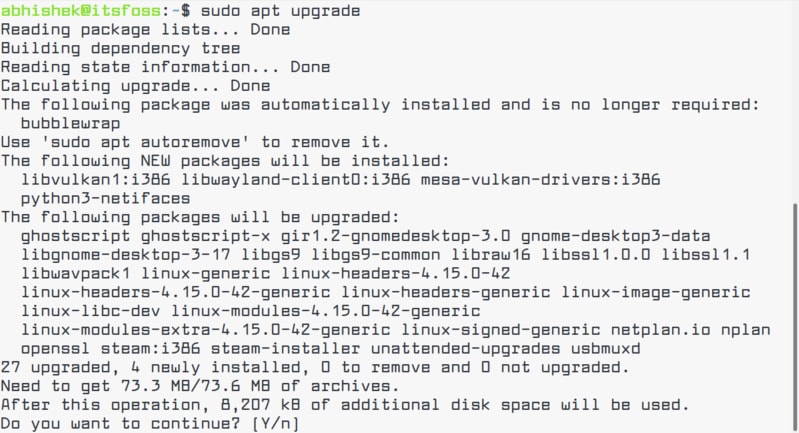

但是,我建议你至少在第一次运行时检查下默认设置。默认情况下,它会将音频文件转换为 OGG 文件格式,你可能不希望这样。

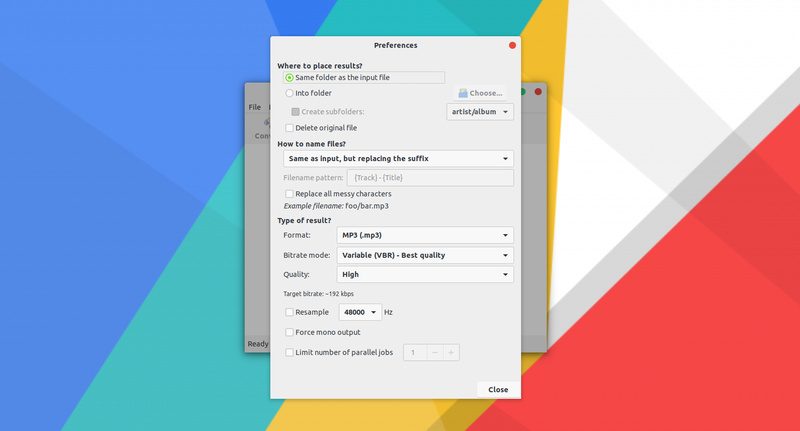

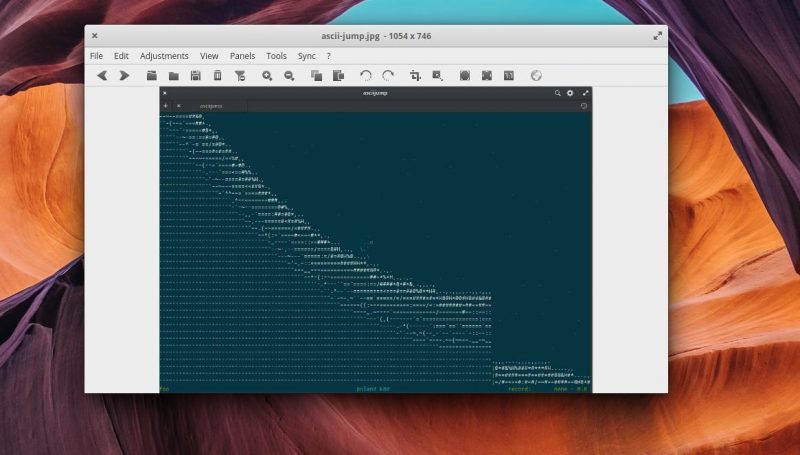

*可以在“首选项”中更改默认输出设置*

要更改默认输出设置,请单击界面上的“首选项”图标。你会在这里看到很多可更改的选择。

你可以更改默认输出格式、比特率、质量等。你还可以选择是否要将转换后的文件保存在与原始文件相同的文件夹中。

转换后还可以选择自动删除原始文件。我不认为你应该使用那个选项。

你还可以更改输出文件名。默认情况下,它只会更改后缀,但你也可以选择根据曲目编号、标题、艺术家等进行命名。为此,原始文件中应包含适当的元数据。

说到元数据,你听说过 [MusicBrainz Picard](https://itsfoss.com/musicbrainz-picard/) 吗?此工具可帮助你自动更新本地音乐文件的元数据。

### 总结

我之前用讨论过用一个小程序 [在 Linux 中录制音频](https://itsfoss.com/record-streaming-audio/)。这些很棒的工具通过专注某个特定的任务使得生活更轻松。你可以使用成熟和更好的音频编辑工具,如 [Audacity](https://www.audacityteam.org/),但对于较小的任务,如转换音频文件格式,它可能用起来很复杂。

我希望你喜欢 SoundConverter。如果你使用其他工具,请在评论中提及,我会在 FOSS 中提及。使用开心!

---

via: <https://itsfoss.com/sound-converter-linux/>

作者:[Abhishek Prakash](https://itsfoss.com/author/abhishek/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

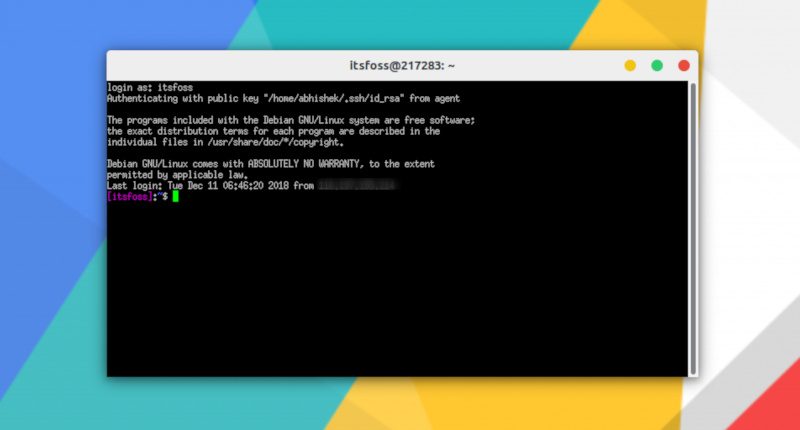

So recently I purchased some DRM-free music. I got it from [SaReGaMa](https://en.wikipedia.org/wiki/Saregama?ref=itsfoss.com), the oldest and the largest music label in India. The downloaded files were in HD quality and in WAV format.

Unfortunately, Rhythmbox doesn’t play the WAV files. On top of that, a single file was around 70 MB in size. Imagine transferring such large music files to smartphones. It would eat up a lot of space unnecessarily.

So I thought it was time to convert the WAV files to MP3, the evergreen and the most popular music file format.

And for this task, I needed an audio converter in Linux. There are plenty of [audio editors for Linux](https://itsfoss.com/best-audio-editors-linux/) but I needed a simple tool for this task.

In this quick tutorial, I’ll show you how can you convert your audio files from one format to another easily with a GUI tool called SoundConverter.

## Installing SoundConverter in Linux

If you prefer videos, you can watch this video from our YouTube channel.

[SoundConverter](http://soundconverter.org/?ref=itsfoss.com) is a popular free and open source software. It should be available in the official repository of most Linux distributions.

Ubuntu/Linux Mint users can simply search for SoundConverter in the software center and install it from there.

Alternatively, you can use the command line way. In Debian and Ubuntu-based systems, you can use the following command:

`sudo apt install soundconverter`

For Arch, Fedora and other non-Debian based distributions, you can use the software centre or the package manager of your distribution.

## Using SoundConverter to convert audio file formats in Linux

Once you have installed SoundConverter, search for it in the menu and start it.

The default interface looks like this and it cannot be more simple than this:

Converting audio file format is as easy as selecting the file and clicking on convert.

However, I would advise you to check the default settings at least on the first run. By default it converts the audio file to OGG file format and you may not want that.

To change the default output settings, click on the Preferences icon visible on the interface. You’ll see plenty of options to change here.

You can change the default output format, bitrate, quality etc. You can also choose if you want to keep the converted files in the same folder as the original or not.

There is also an option of automatically deleting the original file after conversion. I don’t think you should use that option.

You can also change the output file name. By default, it will just change the suffix but you can also choose to name it based on track number, title, artist etc. For that to happen, you should have proper metadata on the original file.

Speaking of metadata, have you heard of [MusicBrainz Picard](https://itsfoss.com/musicbrainz-picard/)? This tool helps you automatically updates the metadata of your local music files.

**Conclusion**

I have discussed [recording audio in Linux](https://itsfoss.com/record-streaming-audio/) previously with a similar tiny application. Such nifty tools actually make life easier with their focused aim of completing a certain task. You may use full-fledged and a lot better [audio editing tool like Audacity](https://itsfoss.com/audacity-recording/) but that may be complicated to use for smaller tasks like converting audio file formats.

I hope you like SoundConverter. If you use some other tool, do mention that in the comments and I may cover it here on It’s FOSS. Enjoy! |

10,351 | Caffeinated 6.828:实验 4:抢占式多任务处理 | https://pdos.csail.mit.edu/6.828/2018/labs/lab4/ | 2018-12-16T12:58:17 | [

"6.828",

"多任务"

] | https://linux.cn/article-10351-1.html |

### 简介

在本实验中,你将在多个同时活动的用户模式环境之间实现抢占式多任务处理。

在 Part A 中,你将在 JOS 中添加对多处理器的支持,以实现循环调度。并且添加基本的环境管理方面的系统调用(创建和销毁环境的系统调用、以及分配/映射内存)。

在 Part B 中,你将要实现一个类 Unix 的 `fork()`,它将允许一个用户模式中的环境去创建一个它自已的副本。

最后,在 Part C 中,你将在 JOS 中添加对进程间通讯(IPC)的支持,以允许不同用户模式环境之间进行显式通讯和同步。你也将要去添加对硬件时钟中断和优先权的支持。

#### 预备知识

使用 git 去提交你的实验 3 的源代码,并获取课程仓库的最新版本,然后创建一个名为 `lab4` 的本地分支,它跟踪我们的名为 `origin/lab4` 的远程 `lab4` 分支:

```

athena% cd ~/6.828/lab

athena% add git

athena% git pull

Already up-to-date.

athena% git checkout -b lab4 origin/lab4

Branch lab4 set up to track remote branch refs/remotes/origin/lab4.

Switched to a new branch "lab4"

athena% git merge lab3

Merge made by recursive.

...

athena%

```

实验 4 包含了一些新的源文件,在开始之前你应该去浏览一遍:

```

kern/cpu.h Kernel-private definitions for multiprocessor support

kern/mpconfig.c Code to read the multiprocessor configuration

kern/lapic.c Kernel code driving the local APIC unit in each processor

kern/mpentry.S Assembly-language entry code for non-boot CPUs

kern/spinlock.h Kernel-private definitions for spin locks, including the big kernel lock

kern/spinlock.c Kernel code implementing spin locks

kern/sched.c Code skeleton of the scheduler that you are about to implement

```

#### 实验要求

本实验分为三部分:Part A、Part B 和 Part C。我们计划为每个部分分配一周的时间。

和以前一样,你需要完成实验中出现的、所有常规练习和至少一个挑战问题。(不是每个部分做一个挑战问题,是整个实验做一个挑战问题即可。)另外,你还要写出你实现的挑战问题的详细描述。如果你实现了多个挑战问题,你只需写出其中一个即可,虽然我们的课程欢迎你完成越多的挑战越好。在动手实验之前,请将你的挑战问题的答案写在一个名为 `answers-lab4.txt` 的文件中,并把它放在你的 `lab` 目录的根下。

### Part A:多处理器支持和协调多任务处理

在本实验的第一部分,将去扩展你的 JOS 内核,以便于它能够在一个多处理器的系统上运行,并且要在 JOS 内核中实现一些新的系统调用,以便于它允许用户级环境创建附加的新环境。你也要去实现协调的循环调度,在当前的环境自愿放弃 CPU(或退出)时,允许内核将一个环境切换到另一个环境。稍后在 Part C 中,你将要实现抢占调度,它允许内核在环境占有 CPU 一段时间后,从这个环境上重新取回对 CPU 的控制,那怕是在那个环境不配合的情况下。

#### 多处理器支持

我们继续去让 JOS 支持 “对称多处理器”(SMP),在一个多处理器的模型中,所有 CPU 们都有平等访问系统资源(如内存和 I/O 总线)的权力。虽然在 SMP 中所有 CPU 们都有相同的功能,但是在引导进程的过程中,它们被分成两种类型:引导程序处理器(BSP)负责初始化系统和引导操作系统;而在操作系统启动并正常运行后,应用程序处理器(AP)将被 BSP 激活。哪个处理器做 BSP 是由硬件和 BIOS 来决定的。到目前为止,你所有的已存在的 JOS 代码都是运行在 BSP 上的。

在一个 SMP 系统上,每个 CPU 都伴有一个本地 APIC(LAPIC)单元。这个 LAPIC 单元负责传递系统中的中断。LAPIC 还为它所连接的 CPU 提供一个唯一的标识符。在本实验中,我们将使用 LAPIC 单元(它在 `kern/lapic.c` 中)中的下列基本功能:

* 读取 LAPIC 标识符(APIC ID),去告诉那个 CPU 现在我们的代码正在它上面运行(查看 `cpunum()`)。

* 从 BSP 到 AP 之间发送处理器间中断(IPI) `STARTUP`,以启动其它 CPU(查看 `lapic_startap()`)。

* 在 Part C 中,我们设置 LAPIC 的内置定时器去触发时钟中断,以便于支持抢占式多任务处理(查看 `apic_init()`)。

一个处理器使用内存映射的 I/O(MMIO)来访问它的 LAPIC。在 MMIO 中,一部分物理内存是硬编码到一些 I/O 设备的寄存器中,因此,访问内存时一般可以使用相同的 `load/store` 指令去访问设备的寄存器。正如你所看到的,在物理地址 `0xA0000` 处就是一个 IO 入口(就是我们写入 VGA 缓冲区的入口)。LAPIC 就在那里,它从物理地址 `0xFE000000` 处(4GB 减去 32MB 处)开始,这个地址对于我们在 KERNBASE 处使用直接映射访问来说太高了。JOS 虚拟内存映射在 `MMIOBASE` 处,留下一个 4MB 的空隙,以便于我们有一个地方,能像这样去映射设备。由于在后面的实验中,我们将介绍更多的 MMIO 区域,你将要写一个简单的函数,从这个区域中去分配空间,并将设备的内存映射到那里。

>

> **练习 1**、实现 `kern/pmap.c` 中的 `mmio_map_region`。去看一下它是如何使用的,从 `kern/lapic.c` 中的 `lapic_init` 开始看起。在 `mmio_map_region` 的测试运行之前,你还要做下一个练习。

>

>

>

##### 引导应用程序处理器

在引导应用程序处理器之前,引导程序处理器应该会首先去收集关于多处理器系统的信息,比如总的 CPU 数、它们的 APIC ID 以及 LAPIC 单元的 MMIO 地址。在 `kern/mpconfig.c` 中的 `mp_init()` 函数,通过读取内存中位于 BIOS 区域里的 MP 配置表来获得这些信息。

`boot_aps()` 函数(在 `kern/init.c` 中)驱动 AP 的引导过程。AP 们在实模式中开始,与 `boot/boot.S` 中启动引导加载程序非常相似。因此,`boot_aps()` 将 AP 入口代码(`kern/mpentry.S`)复制到实模式中的那个可寻址内存地址上。不像使用引导加载程序那样,我们可以控制 AP 将从哪里开始运行代码;我们复制入口代码到 `0x7000`(`MPENTRY_PADDR`)处,但是复制到任何低于 640KB 的、未使用的、页对齐的物理地址上都是可以运行的。

在那之后,通过发送 IPI `STARTUP` 到相关 AP 的 LAPIC 单元,以及一个初始的 `CS:IP` 地址(AP 将从那儿开始运行它的入口代码,在我们的案例中是 `MPENTRY_PADDR` ),`boot_aps()` 将一个接一个地激活 AP。在 `kern/mpentry.S` 中的入口代码非常类似于 `boot/boot.S`。在一些简短的设置之后,它启用分页,使 AP 进入保护模式,然后调用 C 设置程序 `mp_main()`(它也在 `kern/init.c` 中)。在继续唤醒下一个 AP 之前, `boot_aps()` 将等待这个 AP 去传递一个 `CPU_STARTED` 标志到它的 `struct CpuInfo` 中的 `cpu_status` 字段中。

>

> **练习 2**、阅读 `kern/init.c` 中的 `boot_aps()` 和 `mp_main()`,以及在 `kern/mpentry.S` 中的汇编代码。确保你理解了在 AP 引导过程中的控制流转移。然后修改在 `kern/pmap.c` 中的、你自己的 `page_init()`,实现避免在 `MPENTRY_PADDR` 处添加页到空闲列表上,以便于我们能够在物理地址上安全地复制和运行 AP 引导程序代码。你的代码应该会通过更新后的 `check_page_free_list()` 的测试(但可能会在更新后的 `check_kern_pgdir()` 上测试失败,我们在后面会修复它)。

>

>

>

.

>

> **问题 1**、比较 `kern/mpentry.S` 和 `boot/boot.S`。记住,那个 `kern/mpentry.S` 是编译和链接后的,运行在 `KERNBASE` 上面的,就像内核中的其它程序一样,宏 `MPBOOTPHYS` 的作用是什么?为什么它需要在 `kern/mpentry.S` 中,而不是在 `boot/boot.S` 中?换句话说,如果在 `kern/mpentry.S` 中删掉它,会发生什么错误? 提示:回顾链接地址和加载地址的区别,我们在实验 1 中讨论过它们。

>

>

>

##### 每个 CPU 的状态和初始化

当写一个多处理器操作系统时,区分每个 CPU 的状态是非常重要的,而每个 CPU 的状态对其它处理器是不公开的,而全局状态是整个系统共享的。`kern/cpu.h` 定义了大部分每个 CPU 的状态,包括 `struct CpuInfo`,它保存了每个 CPU 的变量。`cpunum()` 总是返回调用它的那个 CPU 的 ID,它可以被用作是数组的索引,比如 `cpus`。或者,宏 `thiscpu` 是当前 CPU 的 `struct CpuInfo` 缩略表示。

下面是你应该知道的每个 CPU 的状态:

* **每个 CPU 的内核栈**

因为内核能够同时捕获多个 CPU,因此,我们需要为每个 CPU 准备一个单独的内核栈,以防止它们运行的程序之间产生相互干扰。数组 `percpu_kstacks[NCPU][KSTKSIZE]` 为 NCPU 的内核栈资产保留了空间。

在实验 2 中,你映射的 `bootstack` 所引用的物理内存,就作为 `KSTACKTOP` 以下的 BSP 的内核栈。同样,在本实验中,你将每个 CPU 的内核栈映射到这个区域,而使用保护页做为它们之间的缓冲区。CPU 0 的栈将从 `KSTACKTOP` 处向下增长;CPU 1 的栈将从 CPU 0 的栈底部的 `KSTKGAP` 字节处开始,依次类推。在 `inc/memlayout.h` 中展示了这个映射布局。

* **每个 CPU 的 TSS 和 TSS 描述符**

为了指定每个 CPU 的内核栈在哪里,也需要有一个每个 CPU 的任务状态描述符(TSS)。CPU i 的任务状态描述符是保存在 `cpus[i].cpu_ts` 中,而对应的 TSS 描述符是定义在 GDT 条目 `gdt[(GD_TSS0 >> 3) + i]` 中。在 `kern/trap.c` 中定义的全局变量 `ts` 将不再被使用。

* **每个 CPU 当前的环境指针**

由于每个 CPU 都能同时运行不同的用户进程,所以我们重新定义了符号 `curenv`,让它指向到 `cpus[cpunum()].cpu_env`(或 `thiscpu->cpu_env`),它指向到当前 CPU(代码正在运行的那个 CPU)上当前正在运行的环境上。

* **每个 CPU 的系统寄存器**

所有的寄存器,包括系统寄存器,都是一个 CPU 私有的。所以,初始化这些寄存器的指令,比如 `lcr3()`、`ltr()`、`lgdt()`、`lidt()`、等待,必须在每个 CPU 上运行一次。函数 `env_init_percpu()` 和 `trap_init_percpu()` 就是为此目的而定义的。

>

> **练习 3**、修改 `mem_init_mp()`(在 `kern/pmap.c` 中)去映射每个 CPU 的栈从 `KSTACKTOP` 处开始,就像在 `inc/memlayout.h` 中展示的那样。每个栈的大小是 `KSTKSIZE` 字节加上未映射的保护页 `KSTKGAP` 的字节。你的代码应该会通过在 `check_kern_pgdir()` 中的新的检查。

>

>

>

.

>

> **练习 4**、在 `trap_init_percpu()`(在 `kern/trap.c` 文件中)的代码为 BSP 初始化 TSS 和 TSS 描述符。在实验 3 中它就运行过,但是当它运行在其它的 CPU 上就会出错。修改这些代码以便它能在所有 CPU 上都正常运行。(注意:你的新代码应该还不能使用全局变量 `ts`)

>

>

>

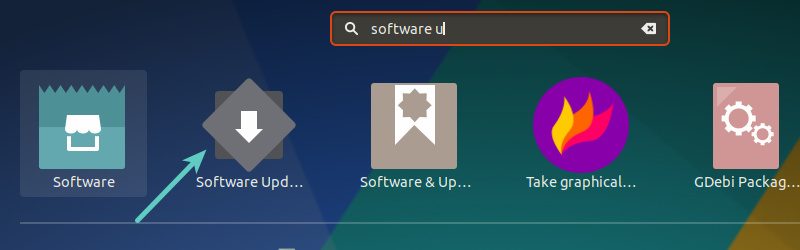

在你完成上述练习后,在 QEMU 中使用 4 个 CPU(使用 `make qemu CPUS=4` 或 `make qemu-nox CPUS=4`)来运行 JOS,你应该看到类似下面的输出:

```

...

Physical memory: 66556K available, base = 640K, extended = 65532K

check_page_alloc() succeeded!

check_page() succeeded!

check_kern_pgdir() succeeded!

check_page_installed_pgdir() succeeded!

SMP: CPU 0 found 4 CPU(s)

enabled interrupts: 1 2

SMP: CPU 1 starting

SMP: CPU 2 starting

SMP: CPU 3 starting

```

##### 锁定

在 `mp_main()` 中初始化 AP 后我们的代码快速运行起来。在你更进一步增强 AP 之前,我们需要首先去处理多个 CPU 同时运行内核代码的争用状况。达到这一目标的最简单的方法是使用大内核锁。大内核锁是一个单个的全局锁,当一个环境进入内核模式时,它将被加锁,而这个环境返回到用户模式时它将释放锁。在这种模型中,在用户模式中运行的环境可以同时运行在任何可用的 CPU 上,但是只有一个环境能够运行在内核模式中;而任何尝试进入内核模式的其它环境都被强制等待。

`kern/spinlock.h` 中声明大内核锁,即 `kernel_lock`。它也提供 `lock_kernel()` 和 `unlock_kernel()`,快捷地去获取/释放锁。你应该在以下的四个位置应用大内核锁:

* 在 `i386_init()` 时,在 BSP 唤醒其它 CPU 之前获取锁。

* 在 `mp_main()` 时,在初始化 AP 之后获取锁,然后调用 `sched_yield()` 在这个 AP 上开始运行环境。

* 在 `trap()` 时,当从用户模式中捕获一个<ruby> 陷阱 <rt> trap </rt></ruby>时获取锁。在检查 `tf_cs` 的低位比特,以确定一个陷阱是发生在用户模式还是内核模式时。

* 在 `env_run()` 中,在切换到用户模式之前释放锁。不能太早也不能太晚,否则你将可能会产生争用或死锁。

>

> **练习 5**、在上面所描述的情况中,通过在合适的位置调用 `lock_kernel()` 和 `unlock_kernel()` 应用大内核锁。

>

>

> 如果你的锁定是正确的,如何去测试它?实际上,到目前为止,还无法测试!但是在下一个练习中,你实现了调度之后,就可以测试了。

>

>

>

.

>

> **问题 2**、看上去使用一个大内核锁,可以保证在一个时间中只有一个 CPU 能够运行内核代码。为什么每个 CPU 仍然需要单独的内核栈?描述一下使用一个共享内核栈出现错误的场景,即便是在它使用了大内核锁保护的情况下。

>

>

> **小挑战!**大内核锁很简单,也易于使用。尽管如此,它消除了内核模式的所有并发。大多数现代操作系统使用不同的锁,一种称之为细粒度锁定的方法,去保护它们的共享的栈的不同部分。细粒度锁能够大幅提升性能,但是实现起来更困难并且易出错。如果你有足够的勇气,在 JOS 中删除大内核锁,去拥抱并发吧!

>

>

> 由你来决定锁的粒度(一个锁保护的数据量)。给你一个提示,你可以考虑在 JOS 内核中使用一个自旋锁去确保你独占访问这些共享的组件:

>

>

> * 页分配器

> * 控制台驱动

> * 调度器

> * 你将在 Part C 中实现的进程间通讯(IPC)的状态

>

>

>

#### 循环调度

本实验中,你的下一个任务是去修改 JOS 内核,以使它能够在多个环境之间以“循环”的方式去交替。JOS 中的循环调度工作方式如下:

* 在新的 `kern/sched.c` 中的 `sched_yield()` 函数负责去选择一个新环境来运行。它按顺序以循环的方式在数组 `envs[]` 中进行搜索,在前一个运行的环境之后开始(或如果之前没有运行的环境,就从数组起点开始),选择状态为 `ENV_RUNNABLE` 的第一个环境(查看 `inc/env.h`),并调用 `env_run()` 去跳转到那个环境。

* `sched_yield()` 必须做到,同一个时间在两个 CPU 上绝对不能运行相同的环境。它可以判断出一个环境正运行在一些 CPU(可能是当前 CPU)上,因为,那个正在运行的环境的状态将是 `ENV_RUNNING`。

* 我们已经为你实现了一个新的系统调用 `sys_yield()`,用户环境调用它去调用内核的 `sched_yield()` 函数,并因此将自愿把对 CPU 的控制禅让给另外的一个环境。

>

> **练习 6**、像上面描述的那样,在 `sched_yield()` 中实现循环调度。不要忘了去修改 `syscall()` 以派发 `sys_yield()`。

>

>

> 确保在 `mp_main` 中调用了 `sched_yield()`。

>

>

> 修改 `kern/init.c` 去创建三个(或更多个!)运行程序 `user/yield.c`的环境。

>

>

> 运行 `make qemu`。在它终止之前,你应该会看到像下面这样,在环境之间来回切换了五次。

>

>

> 也可以使用几个 CPU 来测试:`make qemu CPUS=2`。

>

>

>

> ```

> ...

> Hello, I am environment 00001000.

> Hello, I am environment 00001001.

> Hello, I am environment 00001002.

> Back in environment 00001000, iteration 0.

> Back in environment 00001001, iteration 0.

> Back in environment 00001002, iteration 0.

> Back in environment 00001000, iteration 1.

> Back in environment 00001001, iteration 1.

> Back in environment 00001002, iteration 1.

> ...

> ```

>

> 在程序 `yield` 退出之后,系统中将没有可运行的环境,调度器应该会调用 JOS 内核监视器。如果它什么也没有发生,那么你应该在继续之前修复你的代码。

>

>

> **问题 3**、在你实现的 `env_run()` 中,你应该会调用 `lcr3()`。在调用 `lcr3()` 的之前和之后,你的代码引用(至少它应该会)变量 `e`,它是 `env_run` 的参数。在加载 `%cr3` 寄存器时,MMU 使用的地址上下文将马上被改变。但一个虚拟地址(即 `e`)相对一个给定的地址上下文是有意义的 —— 地址上下文指定了物理地址到那个虚拟地址的映射。为什么指针 `e` 在地址切换之前和之后被解除引用?

>

>

>

.

>

> **问题 4**、无论何时,内核从一个环境切换到另一个环境,它必须要确保旧环境的寄存器内容已经被保存,以便于它们稍后能够正确地还原。为什么?这种事件发生在什么地方?

>

>

>

.

>

> 小挑战!给内核添加一个小小的调度策略,比如一个固定优先级的调度器,它将会给每个环境分配一个优先级,并且在执行中,较高优先级的环境总是比低优先级的环境优先被选定。如果你想去冒险一下,尝试实现一个类 Unix 的、优先级可调整的调度器,或者甚至是一个彩票调度器或跨步调度器。(可以在 Google 中查找“彩票调度”和“跨步调度”的相关资料)

>

>

> 写一个或两个测试程序,去测试你的调度算法是否工作正常(即,正确的算法能够按正确的次序运行)。如果你实现了本实验的 Part B 和 Part C 部分的 `fork()` 和 IPC,写这些测试程序可能会更容易。

>

>

>

.

>

> 小挑战!目前的 JOS 内核还不能应用到使用了 x87 协处理器、MMX 指令集、或流式 SIMD 扩展(SSE)的 x86 处理器上。扩展数据结构 `Env` 去提供一个能够保存处理器的浮点状态的地方,并且扩展上下文切换代码,当从一个环境切换到另一个环境时,能够保存和还原正确的状态。`FXSAVE` 和 `FXRSTOR` 指令或许对你有帮助,但是需要注意的是,这些指令在旧的 x86 用户手册上没有,因为它是在较新的处理器上引入的。写一个用户级的测试程序,让它使用浮点做一些很酷的事情。

>

>

>

#### 创建环境的系统调用

虽然你的内核现在已经有了在多个用户级环境之间切换的功能,但是由于内核初始化设置的原因,它在运行环境时仍然是受限的。现在,你需要去实现必需的 JOS 系统调用,以允许用户环境去创建和启动其它的新用户环境。

Unix 提供了 `fork()` 系统调用作为它的进程创建原语。Unix 的 `fork()` 通过复制调用进程(父进程)的整个地址空间去创建一个新进程(子进程)。从用户空间中能够观察到它们之间的仅有的两个差别是,它们的进程 ID 和父进程 ID(由 `getpid` 和 `getppid` 返回)。在父进程中,`fork()` 返回子进程 ID,而在子进程中,`fork()` 返回 0。默认情况下,每个进程得到它自己的私有地址空间,一个进程对内存的修改对另一个进程都是不可见的。

为创建一个用户模式下的新的环境,你将要提供一个不同的、更原始的 JOS 系统调用集。使用这些系统调用,除了其它类型的环境创建之外,你可以在用户空间中实现一个完整的类 Unix 的 `fork()`。你将要为 JOS 编写的新的系统调用如下:

* `sys_exofork`:

这个系统调用创建一个新的空白的环境:在它的地址空间的用户部分什么都没有映射,并且它也不能运行。这个新的环境与 `sys_exofork` 调用时创建它的父环境的寄存器状态完全相同。在父进程中,`sys_exofork` 将返回新创建进程的 `envid_t`(如果环境分配失败的话,返回的是一个负的错误代码)。在子进程中,它将返回 0。(因为子进程从一开始就被标记为不可运行,在子进程中,`sys_exofork` 将并不真的返回,直到它的父进程使用 …. 显式地将子进程标记为可运行之前。)

* `sys_env_set_status`:

设置指定的环境状态为 `ENV_RUNNABLE` 或 `ENV_NOT_RUNNABLE`。这个系统调用一般是在,一个新环境的地址空间和寄存器状态已经完全初始化完成之后,用于去标记一个准备去运行的新环境。

* `sys_page_alloc`:

分配一个物理内存页,并映射它到一个给定的环境地址空间中、给定的一个虚拟地址上。

* `sys_page_map`:

从一个环境的地址空间中复制一个页映射(不是页内容!)到另一个环境的地址空间中,保持一个内存共享,以便于新的和旧的映射共同指向到同一个物理内存页。

* `sys_page_unmap`:

在一个给定的环境中,取消映射一个给定的已映射的虚拟地址。

上面所有的系统调用都接受环境 ID 作为参数,JOS 内核支持一个约定,那就是用值 “0” 来表示“当前环境”。这个约定在 `kern/env.c` 中的 `envid2env()` 中实现的。

在我们的 `user/dumbfork.c` 中的测试程序里,提供了一个类 Unix 的 `fork()` 的非常原始的实现。这个测试程序使用了上面的系统调用,去创建和运行一个复制了它自己地址空间的子环境。然后,这两个环境像前面的练习那样使用 `sys_yield` 来回切换,父进程在迭代 10 次后退出,而子进程在迭代 20 次后退出。

>

> **练习 7**、在 `kern/syscall.c` 中实现上面描述的系统调用,并确保 `syscall()` 能调用它们。你将需要使用 `kern/pmap.c` 和 `kern/env.c` 中的多个函数,尤其是要用到 `envid2env()`。目前,每当你调用 `envid2env()` 时,在 `checkperm` 中传递参数 1。你务必要做检查任何无效的系统调用参数,在那个案例中,就返回了 `-E_INVAL`。使用 `user/dumbfork` 测试你的 JOS 内核,并在继续之前确保它运行正常。

>

>

>

.

>

> **小挑战!**添加另外的系统调用,必须能够读取已存在的、所有的、环境的重要状态,以及设置它们。然后实现一个能够 fork 出子环境的用户模式程序,运行它一小会(即,迭代几次 `sys_yield()`),然后取得几张屏幕截图或子环境的检查点,然后运行子环境一段时间,然后还原子环境到检查点时的状态,然后从这里继续开始。这样,你就可以有效地从一个中间状态“回放”了子环境的运行。确保子环境与用户使用 `sys_cgetc()` 或 `readline()` 执行了一些交互,这样,那个用户就能够查看和突变它的内部状态,并且你可以通过给子环境给定一个选择性遗忘的状况,来验证你的检查点/重启动的有效性,使它“遗忘”了在某些点之前发生的事情。

>

>

>

到此为止,已经完成了本实验的 Part A 部分;在你运行 `make grade` 之前确保它通过了所有的 Part A 的测试,并且和以往一样,使用 `make handin` 去提交它。如果你想尝试找出为什么一些特定的测试是失败的,可以运行 `run ./grade-lab4 -v`,它将向你展示内核构建的输出,和测试失败时的 QEMU 运行情况。当测试失败时,这个脚本将停止运行,然后你可以去检查 `jos.out` 的内容,去查看内核真实的输出内容。

### Part B:写时复制 Fork

正如在前面提到过的,Unix 提供 `fork()` 系统调用作为它主要的进程创建原语。`fork()` 系统调用通过复制调用进程(父进程)的地址空间来创建一个新进程(子进程)。

xv6 Unix 的 `fork()` 从父进程的页上复制所有数据,然后将它分配到子进程的新页上。从本质上看,它与 `dumbfork()` 所采取的方法是相同的。复制父进程的地址空间到子进程,是 `fork()` 操作中代价最高的部分。

但是,一个对 `fork()` 的调用后,经常是紧接着几乎立即在子进程中有一个到 `exec()` 的调用,它使用一个新程序来替换子进程的内存。这是 shell 默认去做的事,在这种情况下,在复制父进程地址空间上花费的时间是非常浪费的,因为在调用 `exec()` 之前,子进程使用的内存非常少。

基于这个原因,Unix 的最新版本利用了虚拟内存硬件的优势,允许父进程和子进程去共享映射到它们各自地址空间上的内存,直到其中一个进程真实地修改了它们为止。这个技术就是众所周知的“写时复制”。为实现这一点,在 `fork()` 时,内核将复制从父进程到子进程的地址空间的映射,而不是所映射的页的内容,并且同时设置正在共享中的页为只读。当两个进程中的其中一个尝试去写入到它们共享的页上时,进程将产生一个页故障。在这时,Unix 内核才意识到那个页实际上是“虚拟的”或“写时复制”的副本,然后它生成一个新的、私有的、那个发生页故障的进程可写的、页的副本。在这种方式中,个人的页的内容并不进行真实地复制,直到它们真正进行写入时才进行复制。这种优化使得一个`fork()` 后在子进程中跟随一个 `exec()` 变得代价很低了:子进程在调用 `exec()` 时或许仅需要复制一个页(它的栈的当前页)。

在本实验的下一段中,你将实现一个带有“写时复制”的“真正的”类 Unix 的 `fork()`,来作为一个常规的用户空间库。在用户空间中实现 `fork()` 和写时复制有一个好处就是,让内核始终保持简单,并且因此更不易出错。它也让个别的用户模式程序在 `fork()` 上定义了它们自己的语义。一个有略微不同实现的程序(例如,代价昂贵的、总是复制的 `dumbfork()` 版本,或父子进程真实共享内存的后面的那一个),它自己可以很容易提供。

#### 用户级页故障处理

一个用户级写时复制 `fork()` 需要知道关于在写保护页上的页故障相关的信息,因此,这是你首先需要去实现的东西。对用户级页故障处理来说,写时复制仅是众多可能的用途之一。

它通常是配置一个地址空间,因此在一些动作需要时,那个页故障将指示去处。例如,主流的 Unix 内核在一个新进程的栈区域中,初始的映射仅是单个页,并且在后面“按需”分配和映射额外的栈页,因此,进程的栈消费是逐渐增加的,并因此导致在尚未映射的栈地址上发生页故障。在每个进程空间的区域上发生一个页故障时,一个典型的 Unix 内核必须对它的动作保持跟踪。例如,在栈区域中的一个页故障,一般情况下将分配和映射新的物理内存页。一个在程序的 BSS 区域中的页故障,一般情况下将分配一个新页,然后用 0 填充它并映射它。在一个按需分页的系统上的一个可执行文件中,在文本区域中的页故障将从磁盘上读取相应的二进制页并映射它。

内核跟踪有大量的信息,与传统的 Unix 方法不同,你将决定在每个用户空间中关于每个页故障应该做的事。用户空间中的 bug 危害都较小。这种设计带来了额外的好处,那就是允许程序员在定义它们的内存区域时,会有很好的灵活性;对于映射和访问基于磁盘文件系统上的文件时,你应该使用后面的用户级页故障处理。

##### 设置页故障服务程序

为了处理它自己的页故障,一个用户环境将需要在 JOS 内核上注册一个页故障服务程序入口。用户环境通过新的 `sys_env_set_pgfault_upcall` 系统调用来注册它的页故障入口。我们给结构 `Env` 增加了一个新的成员 `env_pgfault_upcall`,让它去记录这个信息。

>

> **练习 8**、实现 `sys_env_set_pgfault_upcall` 系统调用。当查找目标环境的环境 ID 时,一定要确认启用了权限检查,因为这是一个“危险的”系统调用。 “`

>

>

>

##### 在用户环境中的正常和异常栈

在正常运行期间,JOS 中的一个用户环境运行在正常的用户栈上:它的 `ESP` 寄存器开始指向到 `USTACKTOP`,而它所推送的栈数据将驻留在 `USTACKTOP-PGSIZE` 和 `USTACKTOP-1`(含)之间的页上。但是,当在用户模式中发生页故障时,内核将在一个不同的栈上重新启动用户环境,运行一个用户级页故障指定的服务程序,即用户异常栈。其它,我们将让 JOS 内核为用户环境实现自动的“栈切换”,当从用户模式转换到内核模式时,x86 处理器就以大致相同的方式为 JOS 实现了栈切换。

JOS 用户异常栈也是一个页的大小,并且它的顶部被定义在虚拟地址 `UXSTACKTOP` 处,因此用户异常栈的有效字节数是从 `UXSTACKTOP-PGSIZE` 到 `UXSTACKTOP-1`(含)。尽管运行在异常栈上,用户页故障服务程序能够使用 JOS 的普通系统调用去映射新页或调整映射,以便于去修复最初导致页故障发生的各种问题。然后用户级页故障服务程序通过汇编语言 `stub` 返回到原始栈上的故障代码。

每个想去支持用户级页故障处理的用户环境,都需要为它自己的异常栈使用在 Part A 中介绍的 `sys_page_alloc()` 系统调用去分配内存。

##### 调用用户页故障服务程序

现在,你需要去修改 `kern/trap.c` 中的页故障处理代码,以能够处理接下来在用户模式中发生的页故障。我们将故障发生时用户环境的状态称之为捕获时状态。

如果这里没有注册页故障服务程序,JOS 内核将像前面那样,使用一个消息来销毁用户环境。否则,内核将在异常栈上设置一个陷阱帧,它看起来就像是来自 `inc/trap.h` 文件中的一个 `struct UTrapframe` 一样:

```

<-- UXSTACKTOP

trap-time esp

trap-time eflags

trap-time eip

trap-time eax start of struct PushRegs

trap-time ecx

trap-time edx

trap-time ebx

trap-time esp

trap-time ebp

trap-time esi

trap-time edi end of struct PushRegs

tf_err (error code)

fault_va <-- %esp when handler is run

```

然后,内核安排这个用户环境重新运行,使用这个栈帧在异常栈上运行页故障服务程序;你必须搞清楚为什么发生这种情况。`fault_va` 是引发页故障的虚拟地址。

如果在一个异常发生时,用户环境已经在用户异常栈上运行,那么页故障服务程序自身将会失败。在这种情况下,你应该在当前的 `tf->tf_esp` 下,而不是在 `UXSTACKTOP` 下启动一个新的栈帧。

去测试 `tf->tf_esp` 是否已经在用户异常栈上准备好,可以去检查它是否在 `UXSTACKTOP-PGSIZE` 和 `UXSTACKTOP-1`(含)的范围内。

>

> **练习 9**、实现在 `kern/trap.c` 中的 `page_fault_handler` 的代码,要求派发页故障到用户模式故障服务程序上。在写入到异常栈时,一定要采取适当的预防措施。(如果用户环境运行时溢出了异常栈,会发生什么事情?)

>

>

>

##### 用户模式页故障入口点

接下来,你需要去实现汇编程序,它将调用 C 页故障服务程序,并在原始的故障指令处恢复程序运行。这个汇编程序是一个故障服务程序,它由内核使用 `sys_env_set_pgfault_upcall()` 来注册。

>

> **练习 10**、实现在 `lib/pfentry.S` 中的 `_pgfault_upcall` 程序。最有趣的部分是返回到用户代码中产生页故障的原始位置。你将要直接返回到那里,不能通过内核返回。最难的部分是同时切换栈和重新加载 EIP。

>

>

>

最后,你需要去实现用户级页故障处理机制的 C 用户库。

>

> **练习 11**、完成 `lib/pgfault.c` 中的 `set_pgfault_handler()`。 ”`

>

>

>

##### 测试

运行 `user/faultread`(make run-faultread)你应该会看到:

```

...

[00000000] new env 00001000

[00001000] user fault va 00000000 ip 0080003a

TRAP frame ...

[00001000] free env 00001000

```

运行 `user/faultdie` 你应该会看到:

```

...

[00000000] new env 00001000

i faulted at va deadbeef, err 6

[00001000] exiting gracefully

[00001000] free env 00001000

```

运行 `user/faultalloc` 你应该会看到:

```

...

[00000000] new env 00001000

fault deadbeef

this string was faulted in at deadbeef

fault cafebffe

fault cafec000

this string was faulted in at cafebffe

[00001000] exiting gracefully

[00001000] free env 00001000

```

如果你只看到第一个 “this string” 行,意味着你没有正确地处理递归页故障。

运行 `user/faultallocbad` 你应该会看到:

```

...

[00000000] new env 00001000

[00001000] user_mem_check assertion failure for va deadbeef

[00001000] free env 00001000

```

确保你理解了为什么 `user/faultalloc` 和 `user/faultallocbad` 的行为是不一样的。

>

> **小挑战!**扩展你的内核,让它不仅是页故障,而是在用户空间中运行的代码能够产生的所有类型的处理器异常,都能够被重定向到一个用户模式中的异常服务程序上。写出用户模式测试程序,去测试各种各样的用户模式异常处理,比如除零错误、一般保护故障、以及非法操作码。

>

>

>

#### 实现写时复制 Fork

现在,你有个内核功能要去实现,那就是在用户空间中完整地实现写时复制 `fork()`。

我们在 `lib/fork.c` 中为你的 `fork()` 提供了一个框架。像 `dumbfork()`、`fork()` 应该会创建一个新环境,然后通过扫描父环境的整个地址空间,并在子环境中设置相关的页映射。重要的差别在于,`dumbfork()` 复制了页,而 `fork()` 开始只是复制了页映射。`fork()` 仅当在其中一个环境尝试去写入它时才复制每个页。

`fork()` 的基本控制流如下:

1. 父环境使用你在上面实现的 `set_pgfault_handler()` 函数,安装 `pgfault()` 作为 C 级页故障服务程序。

2. 父环境调用 `sys_exofork()` 去创建一个子环境。

3. 在它的地址空间中,低于 UTOP 位置的、每个可写入页、或写时复制页上,父环境调用 `duppage` 后,它应该会映射页写时复制到子环境的地址空间中,然后在它自己的地址空间中重新映射页写时复制。[ 注意:这里的顺序很重要(即,在父环境中标记之前,先在子环境中标记该页为 COW)!你能明白是为什么吗?尝试去想一个具体的案例,将顺序颠倒一下会发生什么样的问题。] `duppage` 把两个 PTE 都设置了,致使那个页不可写入,并且在 “avail” 字段中通过包含 `PTE_COW` 来从真正的只读页中区分写时复制页。

然而异常栈是不能通过这种方式重映射的。对于异常栈,你需要在子环境中分配一个新页。因为页故障服务程序不能做真实的复制,并且页故障服务程序是运行在异常栈上的,异常栈不能进行写时复制:那么谁来复制它呢?

`fork()` 也需要去处理存在的页,但不能写入或写时复制。

4. 父环境为子环境设置了用户页故障入口点,让它看起来像它自己的一样。

5. 现在,子环境准备去运行,所以父环境标记它为可运行。

每次其中一个环境写一个还没有写入的写时复制页时,它将产生一个页故障。下面是用户页故障服务程序的控制流:

1. 内核传递页故障到 `_pgfault_upcall`,它调用 `fork()` 的 `pgfault()` 服务程序。

2. `pgfault()` 检测到那个故障是一个写入(在错误代码中检查 `FEC_WR`),然后将那个页的 PTE 标记为 `PTE_COW`。如果不是一个写入,则崩溃。

3. `pgfault()` 在一个临时位置分配一个映射的新页,并将故障页的内容复制进去。然后,故障服务程序以读取/写入权限映射新页到合适的地址,替换旧的只读映射。

对于上面的几个操作,用户级 `lib/fork.c` 代码必须查询环境的页表(即,那个页的 PTE 是否标记为 `PET_COW`)。为此,内核在 `UVPT` 位置精确地映射环境的页表。它使用一个 [聪明的映射技巧](https://pdos.csail.mit.edu/6.828/2018/labs/lab4/uvpt.html) 去标记它,以使用户代码查找 PTE 时更容易。`lib/entry.S` 设置 `uvpt` 和 `uvpd`,以便于你能够在 `lib/fork.c` 中轻松查找页表信息。

>

> **练习 12**、在 `lib/fork.c` 中实现 `fork`、`duppage` 和 `pgfault`。

>

>

> 使用 `forktree` 程序测试你的代码。它应该会产生下列的信息,在信息中会有 ‘new env'、'free env'、和 'exiting gracefully’ 这样的字眼。信息可能不是按如下的顺序出现的,并且环境 ID 也可能不一样。

>

>

>

> ```

> 1000: I am ''

> 1001: I am '0'

> 2000: I am '00'

> 2001: I am '000'

> 1002: I am '1'

> 3000: I am '11'

> 3001: I am '10'

> 4000: I am '100'

> 1003: I am '01'

> 5000: I am '010'

> 4001: I am '011'

> 2002: I am '110'

> 1004: I am '001'

> 1005: I am '111'

> 1006: I am '101'

> ```

>

>

.

>

> **小挑战!**实现一个名为 `sfork()` 的共享内存的 `fork()`。这个版本的 `sfork()` 中,父子环境共享所有的内存页(因此,一个环境中对内存写入,就会改变另一个环境数据),除了在栈区域中的页以外,它应该使用写时复制来处理这些页。修改 `user/forktree.c` 去使用 `sfork()` 而是不常见的 `fork()`。另外,你在 Part C 中实现了 IPC 之后,使用你的 `sfork()` 去运行 `user/pingpongs`。你将找到提供全局指针 `thisenv` 功能的一个新方式。

>

>

>

.

>

> **小挑战!**你实现的 `fork` 将产生大量的系统调用。在 x86 上,使用中断切换到内核模式将产生较高的代价。增加系统调用接口,以便于它能够一次发送批量的系统调用。然后修改 `fork` 去使用这个接口。

>

>

> 你的新的 `fork` 有多快?

>

>

> 你可以用一个分析来论证,批量提交对你的 `fork` 的性能改变,以它来(粗略地)回答这个问题:使用一个 `int 0x30` 指令的代价有多高?在你的 `fork` 中运行了多少次 `int 0x30` 指令?访问 `TSS` 栈切换的代价高吗?等待 …

>

>

> 或者,你可以在真实的硬件上引导你的内核,并且真实地对你的代码做基准测试。查看 `RDTSC`(读取时间戳计数器)指令,它的定义在 IA32 手册中,它计数自上一次处理器重置以来流逝的时钟周期数。QEMU 并不能真实地模拟这个指令(它能够计数运行的虚拟指令数量,或使用主机的 TSC,但是这两种方式都不能反映真实的 CPU 周期数)。

>

>

>

到此为止,Part B 部分结束了。在你运行 `make grade` 之前,确保你通过了所有的 Part B 部分的测试。和以前一样,你可以使用 `make handin` 去提交你的实验。

### Part C:抢占式多任务处理和进程间通讯(IPC)

在实验 4 的最后部分,你将修改内核去抢占不配合的环境,并允许环境之间显式地传递消息。

#### 时钟中断和抢占

运行测试程序 `user/spin`。这个测试程序 fork 出一个子环境,它控制了 CPU 之后,就永不停歇地运转起来。无论是父环境还是内核都不能回收对 CPU 的控制。从用户模式环境中保护系统免受 bug 或恶意代码攻击的角度来看,这显然不是个理想的状态,因为任何用户模式环境都能够通过简单的无限循环,并永不归还 CPU 控制权的方式,让整个系统处于暂停状态。为了允许内核去抢占一个运行中的环境,从其中夺回对 CPU 的控制权,我们必须去扩展 JOS 内核,以支持来自硬件时钟的外部硬件中断。

##### 中断规则

外部中断(即:设备中断)被称为 IRQ。现在有 16 个可能出现的 IRQ,编号 0 到 15。从 IRQ 号到 IDT 条目的映射是不固定的。在 `picirq.c` 中的 `pic_init` 映射 IRQ 0 - 15 到 IDT 条目 `IRQ_OFFSET` 到 `IRQ_OFFSET+15`。

在 `inc/trap.h` 中,`IRQ_OFFSET` 被定义为十进制的 32。所以,IDT 条目 32 - 47 对应 IRQ 0 - 15。例如,时钟中断是 IRQ 0,所以 IDT[IRQ\_OFFSET+0](即:IDT[32])包含了内核中时钟中断服务程序的地址。这里选择 `IRQ_OFFSET` 是为了处理器异常不会覆盖设备中断,因为它会引起显而易见的混淆。(事实上,在早期运行 MS-DOS 的 PC 上, `IRQ_OFFSET` 事实上是 0,它确实导致了硬件中断服务程序和处理器异常处理之间的混淆!)

在 JOS 中,相比 xv6 Unix 我们做了一个重要的简化。当处于内核模式时,外部设备中断总是被关闭(并且,像 xv6 一样,当处于用户空间时,再打开外部设备的中断)。外部中断由 `%eflags` 寄存器的 `FL_IF` 标志位来控制(查看 `inc/mmu.h`)。当这个标志位被设置时,外部中断被打开。虽然这个标志位可以使用几种方式来修改,但是为了简化,我们只通过进程所保存和恢复的 `%eflags` 寄存器值,作为我们进入和离开用户模式的方法。

处于用户环境中时,你将要确保 `FL_IF` 标志被设置,以便于出现一个中断时,它能够通过处理器来传递,让你的中断代码来处理。否则,中断将被屏蔽或忽略,直到中断被重新打开后。我们使用引导加载程序的第一个指令去屏蔽中断,并且到目前为止,还没有去重新打开它们。

>

> **练习 13**、修改 `kern/trapentry.S` 和 `kern/trap.c` 去初始化 IDT 中的相关条目,并为 IRQ 0 到 15 提供服务程序。然后修改 `kern/env.c` 中的 `env_alloc()` 的代码,以确保在用户环境中,中断总是打开的。

>

>

> 另外,在 `sched_halt()` 中取消注释 `sti` 指令,以便于空闲的 CPU 取消屏蔽中断。

>

>

> 当调用一个硬件中断服务程序时,处理器不会推送一个错误代码。在这个时候,你可能需要重新阅读 [80386 参考手册](https://pdos.csail.mit.edu/6.828/2018/labs/readings/i386/toc.htm) 的 9.2 节,或 [IA-32 Intel 架构软件开发者手册 卷 3](https://pdos.csail.mit.edu/6.828/2018/labs/readings/ia32/IA32-3A.pdf) 的 5.8 节。

>

>

> 在完成这个练习后,如果你在你的内核上使用任意的测试程序去持续运行(即:`spin`),你应该会看到内核输出中捕获的硬件中断的捕获帧。虽然在处理器上已经打开了中断,但是 JOS 并不能处理它们,因此,你应该会看到在当前运行的用户环境中每个中断的错误属性并被销毁,最终环境会被销毁并进入到监视器中。

>

>

>

##### 处理时钟中断

在 `user/spin` 程序中,子环境首先运行之后,它只是进入一个高速循环中,并且内核再无法取得 CPU 控制权。我们需要对硬件编程,定期产生时钟中断,它将强制将 CPU 控制权返还给内核,在内核中,我们就能够将控制权切换到另外的用户环境中。

我们已经为你写好了对 `lapic_init` 和 `pic_init`(来自 `init.c` 中的 `i386_init`)的调用,它将设置时钟和中断控制器去产生中断。现在,你需要去写代码来处理这些中断。

>

> **练习 14**、修改内核的 `trap_dispatch()` 函数,以便于在时钟中断发生时,它能够调用 `sched_yield()` 去查找和运行一个另外的环境。

>

>

> 现在,你应该能够用 `user/spin` 去做测试了:父环境应该会 fork 出子环境,`sys_yield()` 到它许多次,但每次切换之后,将重新获得对 CPU 的控制权,最后杀死子环境后优雅地终止。

>

>

>

这是做回归测试的好机会。确保你没有弄坏本实验的前面部分,确保打开中断能够正常工作(即: `forktree`)。另外,尝试使用 `make CPUS=2 target` 在多个 CPU 上运行它。现在,你应该能够通过 `stresssched` 测试。可以运行 `make grade` 去确认。现在,你的得分应该是 65 分了(总分为 80)。

#### 进程间通讯(IPC)

(严格来说,在 JOS 中这是“环境间通讯” 或 “IEC”,但所有人都称它为 IPC,因此我们使用标准的术语。)

我们一直专注于操作系统的隔离部分,这就产生了一种错觉,好像每个程序都有一个机器完整地为它服务。一个操作系统的另一个重要服务是,当它们需要时,允许程序之间相互通讯。让程序与其它程序交互可以让它的功能更加强大。Unix 的管道模型就是一个权威的示例。

进程间通讯有许多模型。关于哪个模型最好的争论从来没有停止过。我们不去参与这种争论。相反,我们将要实现一个简单的 IPC 机制,然后尝试使用它。

##### JOS 中的 IPC

你将要去实现另外几个 JOS 内核的系统调用,由它们共同来提供一个简单的进程间通讯机制。你将要实现两个系统调用,`sys_ipc_recv` 和 `sys_ipc_try_send`。然后你将要实现两个库去封装 `ipc_recv` 和 `ipc_send`。

用户环境可以使用 JOS 的 IPC 机制相互之间发送 “消息” 到每个其它环境,这些消息有两部分组成:一个单个的 32 位值,和可选的一个单个页映射。允许环境在消息中传递页映射,提供了一个高效的方式,传输比一个仅适合单个的 32 位整数更多的数据,并且也允许环境去轻松地设置安排共享内存。

##### 发送和接收消息

一个环境通过调用 `sys_ipc_recv` 去接收消息。这个系统调用将取消对当前环境的调度,并且不会再次去运行它,直到消息被接收为止。当一个环境正在等待接收一个消息时,任何其它环境都能够给它发送一个消息 — 而不仅是一个特定的环境,而且不仅是与接收环境有父子关系的环境。换句话说,你在 Part A 中实现的权限检查将不会应用到 IPC 上,因为 IPC 系统调用是经过慎重设计的,因此可以认为它是“安全的”:一个环境并不能通过给它发送消息导致另一个环境发生故障(除非目标环境也存在 Bug)。

尝试去发送一个值时,一个环境使用接收者的 ID 和要发送的值去调用 `sys_ipc_try_send` 来发送。如果指定的环境正在接收(它调用了 `sys_ipc_recv`,但尚未收到值),那么这个环境将去发送消息并返回 0。否则将返回 `-E_IPC_NOT_RECV` 来表示目标环境当前不希望来接收值。

在用户空间中的一个库函数 `ipc_recv` 将去调用 `sys_ipc_recv`,然后,在当前环境的 `struct Env` 中查找关于接收到的值的相关信息。

同样,一个库函数 `ipc_send` 将去不停地调用 `sys_ipc_try_send` 来发送消息,直到发送成功为止。

##### 转移页

当一个环境使用一个有效的 `dstva` 参数(低于 `UTOP`)去调用 `sys_ipc_recv` 时,环境将声明愿意去接收一个页映射。如果发送方发送一个页,那么那个页应该会被映射到接收者地址空间的 `dstva` 处。如果接收者在 `dstva` 已经有了一个页映射,那么已存在的那个页映射将被取消映射。

当一个环境使用一个有效的 `srcva` 参数(低于 `UTOP`)去调用 `sys_ipc_try_send` 时,意味着发送方希望使用 `perm` 权限去发送当前映射在 `srcva` 处的页给接收方。在 IPC 成功之后,发送方在它的地址空间中,保留了它最初映射到 `srcva` 位置的页。而接收方也获得了最初由它指定的、在它的地址空间中的 `dstva` 处的、映射到相同物理页的映射。最后的结果是,这个页成为发送方和接收方共享的页。

如果发送方和接收方都没有表示要转移这个页,那么就不会有页被转移。在任何 IPC 之后,内核将在接收方的 `Env` 结构上设置新的 `env_ipc_perm` 字段,以允许接收页,或者将它设置为 0,表示不再接收。

##### 实现 IPC

>

> **练习 15**、实现 `kern/syscall.c` 中的 `sys_ipc_recv` 和 `sys_ipc_try_send`。在实现它们之前一起阅读它们的注释信息,因为它们要一起工作。当你在这些程序中调用 `envid2env` 时,你应该去设置 `checkperm` 的标志为 0,这意味着允许任何环境去发送 IPC 消息到另外的环境,并且内核除了验证目标 envid 是否有效外,不做特别的权限检查。

>

>

> 接着实现 `lib/ipc.c` 中的 `ipc_recv` 和 `ipc_send` 函数。

>

>

> 使用 `user/pingpong` 和 `user/primes` 函数去测试你的 IPC 机制。`user/primes` 将为每个质数生成一个新环境,直到 JOS 耗尽环境为止。你可能会发现,阅读 `user/primes.c` 非常有趣,你将看到所有的 fork 和 IPC 都是在幕后进行。

>

>

>

.

>

> **小挑战!**为什么 `ipc_send` 要循环调用?修改系统调用接口,让它不去循环。确保你能处理多个环境尝试同时发送消息到一个环境上的情况。

>

>

>

.

>

> **小挑战!**质数筛选是在大规模并发程序中传递消息的一个很巧妙的用法。阅读 C. A. R. Hoare 写的 《Communicating Sequential Processes》,Communications of the ACM\_ 21(8) (August 1978), 666-667,并去实现矩阵乘法示例。

>

>

>

.

>

> **小挑战!**控制消息传递的最令人印象深刻的一个例子是,Doug McIlroy 的幂序列计算器,它在 [M. Douglas McIlroy,《Squinting at Power Series》,Software–Practice and Experience, 20(7) (July 1990),661-683](https://swtch.com/%7Ersc/thread/squint.pdf) 中做了详细描述。实现了它的幂序列计算器,并且计算了 sin ( x + x <sup> 3)</sup> 的幂序列。

>

>

>

.

>

> **小挑战!**通过应用 Liedtke 的论文([通过内核设计改善 IPC 性能](http://dl.acm.org/citation.cfm?id=168633))中的一些技术、或你可以想到的其它技巧,来让 JOS 的 IPC 机制更高效。为此,你可以随意修改内核的系统调用 API,只要你的代码向后兼容我们的评级脚本就行。

>

>

>

**Part C 到此结束了。**确保你通过了所有的评级测试,并且不要忘了将你的小挑战的答案写入到 `answers-lab4.txt` 中。

在动手实验之前, 使用 `git status` 和 `git diff` 去检查你的更改,并且不要忘了去使用 `git add answers-lab4.txt` 添加你的小挑战的答案。在你全部完成后,使用 `git commit -am 'my solutions to lab 4’` 提交你的更改,然后 `make handin` 并关注它的动向。

---

via: <https://pdos.csail.mit.edu/6.828/2018/labs/lab4/>

作者:[csail.mit](https://pdos.csail.mit.edu) 选题:[lujun9972](https://github.com/lujun9972) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | **

Part A due Thursday, October 18, 2018

Part B due Thursday, October 25, 2018

Part C due Thursday, November 1, 2018

**

In this lab you will implement preemptive multitasking among multiple simultaneously active user-mode environments.

In part A you will add multiprocessor support to JOS, implement round-robin scheduling, and add basic environment management system calls (calls that create and destroy environments, and allocate/map memory).

In part B, you will implement a Unix-like `fork()`

,

which allows a user-mode environment to create copies of

itself.

Finally, in part C you will add support for inter-process communication (IPC), allowing different user-mode environments to communicate and synchronize with each other explicitly. You will also add support for hardware clock interrupts and preemption.

Use Git to commit your Lab 3 source, fetch the latest version of the course

repository, and then create a local branch called `lab4` based on our

lab4 branch, `origin/lab4`:

athena%Lab 4 contains a number of new source files, some of which you should browse before you start:cd ~/6.828/labathena%add gitathena%git pullAlready up-to-date. athena%git checkout -b lab4 origin/lab4Branch lab4 set up to track remote branch refs/remotes/origin/lab4. Switched to a new branch "lab4" athena%git merge lab3Merge made by recursive. ... athena%

kern/cpu.h |

Kernel-private definitions for multiprocessor support |

kern/mpconfig.c |

Code to read the multiprocessor configuration |

kern/lapic.c |

Kernel code driving the local APIC unit in each processor |

kern/mpentry.S |

Assembly-language entry code for non-boot CPUs |

kern/spinlock.h |

Kernel-private definitions for spin locks, including the big kernel lock |

kern/spinlock.c |

Kernel code implementing spin locks |

kern/sched.c |

Code skeleton of the scheduler that you are about to implement |

This lab is divided into three parts, A, B, and C. We have allocated one week in the schedule for each part.

As before,

you will need to do all of the regular exercises described in the lab

and *at least one* challenge problem.

(You do not need to do one challenge problem per part,

just one for the whole lab.)

Additionally, you will need to write up a brief

description of the challenge problem that you implemented.

If you implement more than one challenge problem,

you only need to describe one of them in the write-up,

though of course you are welcome to do more.

Place the write-up in a file called `answers-lab4.txt`

in the top level of your `lab` directory

before handing in your work.

In the first part of this lab,

you will first extend JOS to run on a multiprocessor system,

and then implement some new JOS kernel system calls

to allow user-level environments to create

additional new environments.

You will also implement *cooperative* round-robin scheduling,

allowing the kernel to switch from one environment to another

when the current environment voluntarily relinquishes the CPU (or exits).

Later in part C you will implement *preemptive* scheduling,

which allows the kernel to re-take control of the CPU from an environment

after a certain time has passed even if the environment does not cooperate.

We are going to make JOS support "symmetric multiprocessing" (SMP), a multiprocessor model in which all CPUs have equivalent access to system resources such as memory and I/O buses. While all CPUs are functionally identical in SMP, during the boot process they can be classified into two types: the bootstrap processor (BSP) is responsible for initializing the system and for booting the operating system; and the application processors (APs) are activated by the BSP only after the operating system is up and running. Which processor is the BSP is determined by the hardware and the BIOS. Up to this point, all your existing JOS code has been running on the BSP.

In an SMP system, each CPU has an accompanying local APIC (LAPIC) unit.

The LAPIC units are responsible for delivering interrupts throughout

the system. The LAPIC also provides its connected CPU with a unique

identifier. In this lab, we make use of the following basic

functionality of the LAPIC unit (in `kern/lapic.c`):

`cpunum()`

). `STARTUP`

interprocessor interrupt (IPI) from

the BSP to the APs to bring up other CPUs (see

`lapic_startap()`

).`apic_init()`

).

A processor accesses its LAPIC using memory-mapped I/O (MMIO).

In MMIO, a portion of *physical* memory is hardwired to the

registers of some I/O devices, so the same load/store instructions

typically used to access memory can be used to access device

registers. You've already seen one IO hole at physical address

`0xA0000` (we use this to write to the VGA display buffer).

The LAPIC lives in a hole starting at physical address

`0xFE000000` (32MB short of 4GB), so it's too high for us to

access using our usual direct map at KERNBASE. The JOS virtual memory

map leaves a 4MB gap at `MMIOBASE` so we have a place to map

devices like this. Since later labs introduce more MMIO regions,

you'll write a simple function to allocate space from this region and

map device memory to it.

Exercise 1.

Implement `mmio_map_region`

in `kern/pmap.c`. To

see how this is used, look at the beginning of

`lapic_init`

in `kern/lapic.c`. You'll have to do

the next exercise, too, before the tests for

`mmio_map_region`

will run.

Before booting up APs, the BSP should first collect information

about the multiprocessor system, such as the total number of

CPUs, their APIC IDs and the MMIO address of the LAPIC unit.

The `mp_init()`

function in `kern/mpconfig.c`

retrieves this information by reading the MP configuration

table that resides in the BIOS's region of memory.

The `boot_aps()`

function (in `kern/init.c`) drives

the AP bootstrap process. APs start in real mode, much like how the

bootloader started in `boot/boot.S`, so `boot_aps()`

copies the AP entry code (`kern/mpentry.S`) to a memory

location that is addressable in the real mode. Unlike with the

bootloader, we have some control over where the AP will start

executing code; we copy the entry code to `0x7000`

(`MPENTRY_PADDR`

), but any unused, page-aligned

physical address below 640KB would work.

After that, `boot_aps()`

activates APs one after another, by

sending `STARTUP`

IPIs to the LAPIC unit of the corresponding

AP, along with an initial `CS:IP`

address at which the AP

should start running its entry code (`MPENTRY_PADDR`

in our

case). The entry code in `kern/mpentry.S` is quite similar to

that of `boot/boot.S`. After some brief setup, it puts the AP

into protected mode with paging enabled, and then calls the C setup

routine `mp_main()`

(also in `kern/init.c`).

`boot_aps()`

waits for the AP to signal a

`CPU_STARTED`

flag in `cpu_status`

field of

its `struct CpuInfo`

before going on to wake up the next one.

Exercise 2.

Read `boot_aps()`

and `mp_main()`

in

`kern/init.c`, and the assembly code in

`kern/mpentry.S`. Make sure you understand the control flow

transfer during the bootstrap of APs. Then modify your implementation

of `page_init()`

in `kern/pmap.c` to avoid adding

the page at `MPENTRY_PADDR`

to the free list, so that we

can safely copy and run AP bootstrap code at that physical address.

Your code should pass the updated `check_page_free_list()`

test (but might fail the updated `check_kern_pgdir()`

test, which we will fix soon).

Question

`KERNBASE`

just like

everything else in the kernel, what is the purpose of macro

`MPBOOTPHYS`

? Why is it

necessary in

When writing a multiprocessor OS, it is important to distinguish

between per-CPU state that is private to each processor, and global

state that the whole system shares. `kern/cpu.h` defines most

of the per-CPU state, including `struct CpuInfo`

, which stores

per-CPU variables. `cpunum()`

always returns the ID of the

CPU that calls it, which can be used as an index into arrays like

`cpus`

. Alternatively, the macro `thiscpu`

is

shorthand for the current CPU's `struct CpuInfo`

.

Here is the per-CPU state you should be aware of:

**Per-CPU kernel stack**.

Because multiple CPUs can trap into the kernel simultaneously,

we need a separate kernel stack for each processor to prevent them from

interfering with each other's execution. The array

`percpu_kstacks[NCPU][KSTKSIZE]`

reserves space for NCPU's

worth of kernel stacks.

In Lab 2, you mapped the physical memory that `bootstack`

refers to as the BSP's kernel stack just below

`KSTACKTOP`

.

Similarly, in this lab, you will map each CPU's kernel stack into this

region with guard pages acting as a buffer between them. CPU 0's

stack will still grow down from `KSTACKTOP`

; CPU 1's stack

will start `KSTKGAP`

bytes below the bottom of CPU 0's

stack, and so on. `inc/memlayout.h` shows the mapping layout.

**Per-CPU TSS and TSS descriptor**.

A per-CPU task state segment (TSS) is also needed in order to specify

where each CPU's kernel stack lives. The TSS for CPU *i* is stored

in `cpus[i].cpu_ts`

, and the corresponding TSS descriptor is

defined in the GDT entry `gdt[(GD_TSS0 >> 3) + i]`

. The

global `ts`

variable defined in `kern/trap.c` will

no longer be useful.

**Per-CPU current environment pointer**.

Since each CPU can run different user process simultaneously, we

redefined the symbol `curenv`

to refer to

`cpus[cpunum()].cpu_env`

(or `thiscpu->cpu_env`

), which

points to the environment *currently* executing on the

*current* CPU (the CPU on which the code is running).

**Per-CPU system registers**.

All registers, including system registers, are private to a

CPU. Therefore, instructions that

initialize these registers, such as `lcr3()`

,

`ltr()`

, `lgdt()`

, `lidt()`

, etc., must

be executed once on each CPU. Functions `env_init_percpu()`

and `trap_init_percpu()`

are defined for this purpose.

In addition to this, if you have added any extra per-CPU state or performed any additional CPU-specific initialization (by say, setting new bits in the CPU registers) in your solutions to challenge problems in earlier labs, be sure to replicate them on each CPU here!

Exercise 3.

Modify `mem_init_mp()`

(in `kern/pmap.c`) to map

per-CPU stacks starting

at `KSTACKTOP`

, as shown in

`inc/memlayout.h`. The size of each stack is

`KSTKSIZE`

bytes plus `KSTKGAP`

bytes of

unmapped guard pages. Your code should pass the new check in

`check_kern_pgdir()`

.

Exercise 4.

The code in `trap_init_percpu()`

(`kern/trap.c`)

initializes the TSS and

TSS descriptor for the BSP. It worked in Lab 3, but is incorrect

when running on other CPUs. Change the code so that it can work

on all CPUs. (Note: your new code should not use the global

`ts`

variable any more.)

When you finish the above exercises, run JOS in QEMU with 4 CPUs using

`make qemu CPUS=4` (or `make qemu-nox CPUS=4`), you

should see output like this:

... Physical memory: 66556K available, base = 640K, extended = 65532K check_page_alloc() succeeded! check_page() succeeded! check_kern_pgdir() succeeded! check_page_installed_pgdir() succeeded! SMP: CPU 0 found 4 CPU(s) enabled interrupts: 1 2 SMP: CPU 1 starting SMP: CPU 2 starting SMP: CPU 3 starting

Our current code spins after initializing the AP in

`mp_main()`

. Before letting the AP get any further, we need

to first address race conditions when multiple CPUs run kernel code

simultaneously. The simplest way to achieve this is to use a *big

kernel lock*.

The big kernel lock is a single global lock that is held whenever an

environment enters kernel mode, and is released when the environment

returns to user mode. In this model, environments in user mode can run

concurrently on any available CPUs, but no more than one environment can

run in kernel mode; any other environments that try to enter kernel mode

are forced to wait.

`kern/spinlock.h` declares the big kernel lock, namely

`kernel_lock`

. It also provides `lock_kernel()`

and `unlock_kernel()`

, shortcuts to acquire and

release the lock. You should apply the big kernel lock at four locations:

`i386_init()`

, acquire the lock before the BSP wakes up the

other CPUs.

`mp_main()`

, acquire the lock after initializing the AP,

and then call `sched_yield()`

to start running environments

on this AP.

`trap()`

, acquire the lock when trapped from user mode.

To determine whether a trap happened in user mode or in kernel mode,

check the low bits of the `tf_cs`

.

`env_run()`

, release the lock Exercise 5.

Apply the big kernel lock as described above, by calling

`lock_kernel()`

and `unlock_kernel()`

at

the proper locations.

How to test if your locking is correct? You can't at this moment! But you will be able to after you implement the scheduler in the next exercise.

Question

Challenge!

The big kernel lock is simple and easy to use. Nevertheless, it

eliminates all concurrency in kernel mode. Most

modern operating systems use different locks to protect different

parts of their shared state, an

approach called *fine-grained locking*.

Fine-grained locking can increase performance significantly, but is

more difficult to implement and error-prone. If you are brave

enough, drop the big kernel lock and embrace concurrency in JOS!

It is up to you to decide the locking granularity (the amount of data that a lock protects). As a hint, you may consider using spin locks to ensure exclusive access to these shared components in the JOS kernel:

Your next task in this lab is to change the JOS kernel so that it can alternate between multiple environments in "round-robin" fashion. Round-robin scheduling in JOS works as follows:

`sched_yield()`

in the new `envs[]`

array

in circular fashion,

starting just after the previously running environment

(or at the beginning of the array

if there was no previously running environment),

picks the first environment it finds

with a status of `ENV_RUNNABLE`

(see `env_run()`

to jump into that environment. `sched_yield()`

must never run the same environment

on two CPUs at the same time. It can tell that an environment

is currently running on some CPU (possibly the current CPU)

because that environment's status will be `ENV_RUNNING`

.`sys_yield()`

,

which user environments can call

to invoke the kernel's `sched_yield()`

function

and thereby voluntarily give up the CPU to a different environment. Exercise 6.

Implement round-robin scheduling in `sched_yield()`

as described above. Don't forget to modify

`syscall()`

to dispatch `sys_yield()`

.

Make sure to invoke `sched_yield()`

in `mp_main`

.

Modify `kern/init.c` to create three (or more!) environments

that all run the program `user/yield.c`.

Run `make qemu`.

You should see the environments

switch back and forth between each other

five times before terminating, like below.

Test also with several CPUS: `make qemu CPUS=2`.

... Hello, I am environment 00001000. Hello, I am environment 00001001. Hello, I am environment 00001002. Back in environment 00001000, iteration 0. Back in environment 00001001, iteration 0. Back in environment 00001002, iteration 0. Back in environment 00001000, iteration 1. Back in environment 00001001, iteration 1. Back in environment 00001002, iteration 1. ...

After the `yield` programs exit, there will be no runnable

environment in the system, the scheduler should

invoke the JOS kernel monitor.

If any of this does not happen,

then fix your code before proceeding.

Question

`env_run()`

you should have

called `lcr3()`

. Before and after the call to

`lcr3()`

, your code makes references (at least it should)

to the variable `e`

, the argument to `env_run`

.

Upon loading the `%cr3`

register, the addressing context

used by the MMU is instantly changed. But a virtual

address (namely `e`

) has meaning relative to a given

address context--the address context specifies the physical address to

which the virtual address maps. Why can the pointer `e`

be

dereferenced both before and after the addressing switch?

Challenge! Add a less trivial scheduling policy to the kernel, such as a fixed-priority scheduler that allows each environment to be assigned a priority and ensures that higher-priority environments are always chosen in preference to lower-priority environments. If you're feeling really adventurous, try implementing a Unix-style adjustable-priority scheduler or even a lottery or stride scheduler. (Look up "lottery scheduling" and "stride scheduling" in Google.)

Write a test program or two

that verifies that your scheduling algorithm is working correctly

(i.e., the right environments get run in the right order).

It may be easier to write these test programs

once you have implemented `fork()`

and IPC

in parts B and C of this lab.

Challenge!

The JOS kernel currently does not allow applications

to use the x86 processor's x87 floating-point unit (FPU),

MMX instructions, or Streaming SIMD Extensions (SSE).

Extend the `Env`

structure

to provide a save area for the processor's floating point state,

and extend the context switching code

to save and restore this state properly

when switching from one environment to another.

The `FXSAVE`

and `FXRSTOR`

instructions may be useful,

but note that these are not in the old i386 user's manual

because they were introduced in more recent processors.

Write a user-level test program

that does something cool with floating-point.

Although your kernel is now capable of running and switching between

multiple user-level environments,

it is still limited to running environments

that the *kernel* initially set up.

You will now implement the necessary JOS system calls

to allow *user* environments to create and start

other new user environments.

Unix provides the `fork()`

system call

as its process creation primitive.

Unix `fork()`

copies

the entire address space of calling process (the parent)

to create a new process (the child).

The only differences between the two observable from user space

are their process IDs and parent process IDs

(as returned by `getpid`

and `getppid`

).

In the parent,

`fork()`

returns the child's process ID,

while in the child, `fork()`

returns 0.

By default, each process gets its own private address space, and

neither process's modifications to memory are visible to the other.

You will provide a different, more primitive

set of JOS system calls

for creating new user-mode environments.

With these system calls you will be able to implement

a Unix-like `fork()`

entirely in user space,

in addition to other styles of environment creation.

The new system calls you will write for JOS are as follows:

`sys_exofork`

:`sys_exofork`

call.

In the parent, `sys_exofork`

will return the `envid_t`

of the newly created

environment

(or a negative error code if the environment allocation failed).

In the child, however, it will return 0.

(Since the child starts out marked as not runnable,

`sys_exofork`

will not actually return in the child

until the parent has explicitly allowed this

by marking the child runnable using....)`sys_env_set_status`

:`ENV_RUNNABLE`

or `ENV_NOT_RUNNABLE`

.

This system call is typically used

to mark a new environment ready to run,

once its address space and register state

has been fully initialized.`sys_page_alloc`

:`sys_page_map`

:`sys_page_unmap`

:

For all of the system calls above that accept environment IDs,

the JOS kernel supports the convention

that a value of 0 means "the current environment."

This convention is implemented by `envid2env()`

in `kern/env.c`.

We have provided a very primitive implementation

of a Unix-like `fork()`

in the test program `user/dumbfork.c`.

This test program uses the above system calls

to create and run a child environment

with a copy of its own address space.

The two environments

then switch back and forth using `sys_yield`

as in the previous exercise.

The parent exits after 10 iterations,

whereas the child exits after 20.

Exercise 7.

Implement the system calls described above

in `kern/syscall.c` and make sure `syscall()` calls

them.

You will need to use various functions

in `kern/pmap.c` and `kern/env.c`,

particularly `envid2env()`

.

For now, whenever you call `envid2env()`

,

pass 1 in the `checkperm`

parameter.

Be sure you check for any invalid system call arguments,

returning `-E_INVAL`

in that case.

Test your JOS kernel with `user/dumbfork`

and make sure it works before proceeding.

Challenge!

Add the additional system calls necessary

to *read* all of the vital state of an existing environment

as well as set it up.

Then implement a user mode program that forks off a child environment,

runs it for a while (e.g., a few iterations of `sys_yield()`

),

then takes a complete snapshot or *checkpoint*

of the child environment,

runs the child for a while longer,

and finally restores the child environment to the state it was in

at the checkpoint

and continues it from there.

Thus, you are effectively "replaying"

the execution of the child environment from an intermediate state.

Make the child environment perform some interaction with the user

using `sys_cgetc()`

or `readline()`

so that the user can view and mutate its internal state,

and verify that with your checkpoint/restart

you can give the child environment a case of selective amnesia,

making it "forget" everything that happened beyond a certain point.

This completes Part A of the lab;

make sure it passes all of the Part A tests when you run

`make grade`, and hand it in using `make

handin` as usual. If you are trying to figure out why a particular

test case is failing, run `./grade-lab4 -v`, which will

show you the output of the kernel builds and QEMU runs for each

test, until a test fails. When a test fails, the script will stop,

and then you can inspect `jos.out` to see what the

kernel actually printed.

As mentioned earlier,

Unix provides the `fork()`

system call

as its primary process creation primitive.

The `fork()`

system call

copies the address space of the calling process (the parent)

to create a new process (the child).

xv6 Unix implements `fork()`

by copying all data from the

parent's pages into new pages allocated for the child.

This is essentially the same approach

that `dumbfork()`

takes.

The copying of the parent's address space into the child is

the most expensive part of the `fork()`

operation.

However, a call to `fork()`

is frequently followed almost immediately

by a call to `exec()`

in the child process,

which replaces the child's memory with a new program.

This is what the the shell typically does, for example.

In this case,

the time spent copying the parent's address space is largely wasted,

because the child process will use

very little of its memory before calling `exec()`

.

For this reason,

later versions of Unix took advantage

of virtual memory hardware

to allow the parent and child to *share*

the memory mapped into their respective address spaces

until one of the processes actually modifies it.

This technique is known as *copy-on-write*.

To do this,

on `fork()`

the kernel would

copy the address space *mappings*

from the parent to the child

instead of the contents of the mapped pages,

and at the same time mark the now-shared pages read-only.

When one of the two processes tries to write to one of these shared pages,

the process takes a page fault.

At this point, the Unix kernel realizes that the page

was really a "virtual" or "copy-on-write" copy,

and so it makes a new, private, writable copy of the page for the

faulting process.

In this way, the contents of individual pages aren't actually copied

until they are actually written to.

This optimization makes a `fork()`

followed by

an `exec()`

in the child much cheaper:

the child will probably only need to copy one page

(the current page of its stack)

before it calls `exec()`

.

In the next piece of this lab, you will implement a "proper"

Unix-like `fork()`

with copy-on-write,

as a user space library routine.

Implementing `fork()`

and copy-on-write support in user space

has the benefit that the kernel remains much simpler

and thus more likely to be correct.

It also lets individual user-mode programs

define their own semantics for `fork()`

.

A program that wants a slightly different implementation

(for example, the expensive always-copy version like `dumbfork()`

,

or one in which the parent and child actually share memory afterward)

can easily provide its own.

A user-level copy-on-write `fork()`

needs to know about

page faults on write-protected pages, so that's what you'll

implement first.

Copy-on-write is only one of many possible uses

for user-level page fault handling.

It's common to set up an address space so that page faults indicate when some action needs to take place. For example, most Unix kernels initially map only a single page in a new process's stack region, and allocate and map additional stack pages later "on demand" as the process's stack consumption increases and causes page faults on stack addresses that are not yet mapped. A typical Unix kernel must keep track of what action to take when a page fault occurs in each region of a process's space. For example, a fault in the stack region will typically allocate and map new page of physical memory. A fault in the program's BSS region will typically allocate a new page, fill it with zeroes, and map it. In systems with demand-paged executables, a fault in the text region will read the corresponding page of the binary off of disk and then map it.

This is a lot of information for the kernel to keep track of. Instead of taking the traditional Unix approach, you will decide what to do about each page fault in user space, where bugs are less damaging. This design has the added benefit of allowing programs great flexibility in defining their memory regions; you'll use user-level page fault handling later for mapping and accessing files on a disk-based file system.

In order to handle its own page faults,

a user environment will need to register

a *page fault handler entrypoint* with the JOS kernel.

The user environment registers its page fault entrypoint

via the new `sys_env_set_pgfault_upcall`

system call.

We have added a new member to the `Env`

structure,

`env_pgfault_upcall`

,

to record this information.

Exercise 8.

Implement the `sys_env_set_pgfault_upcall`

system call.

Be sure to enable permission checking

when looking up the environment ID of the target environment,

since this is a "dangerous" system call.

During normal execution,

a user environment in JOS

will run on the *normal* user stack:

its `ESP` register starts out pointing at `USTACKTOP`

,

and the stack data it pushes resides on the page

between `USTACKTOP-PGSIZE`

and `USTACKTOP-1`

inclusive.

When a page fault occurs in user mode,

however,

the kernel will restart the user environment

running a designated user-level page fault handler

on a different stack,

namely the *user exception* stack.

In essence, we will make the JOS kernel

implement automatic "stack switching"

on behalf of the user environment,

in much the same way that the x86 *processor*

already implements stack switching on behalf of JOS

when transferring from user mode to kernel mode!

The JOS user exception stack is also one page in size,

and its top is defined to be at virtual address `UXSTACKTOP`

,

so the valid bytes of the user exception stack

are from `UXSTACKTOP-PGSIZE`

through `UXSTACKTOP-1`

inclusive.

While running on this exception stack,

the user-level page fault handler

can use JOS's regular system calls to map new pages or adjust mappings

so as to fix whatever problem originally caused the page fault.

Then the user-level page fault handler returns,

via an assembly language stub,

to the faulting code on the original stack.

Each user environment that wants to support user-level page fault handling

will need to allocate memory for its own exception stack,

using the `sys_page_alloc()`

system call introduced in part A.

You will now need to

change the page fault handling code in `kern/trap.c`

to handle page faults from user mode as follows.

We will call the state of the user environment at the time of the

fault the *trap-time* state.

If there is no page fault handler registered,

the JOS kernel destroys the user environment with a message as before.

Otherwise,

the kernel sets up a trap frame on the exception stack that looks like

a `struct UTrapframe`

from `inc/trap.h`:

<-- UXSTACKTOP trap-time esp trap-time eflags trap-time eip trap-time eax start of struct PushRegs trap-time ecx trap-time edx trap-time ebx trap-time esp trap-time ebp trap-time esi trap-time edi end of struct PushRegs tf_err (error code) fault_va <-- %esp when handler is run

The kernel then arranges for the user environment to resume execution

with the page fault handler

running on the exception stack with this stack frame;

you must figure out how to make this happen.

The `fault_va` is the virtual address

that caused the page fault.

If the user environment is *already* running on the user exception stack

when an exception occurs,

then the page fault handler itself has faulted.

In this case,

you should start the new stack frame just under the current

`tf->tf_esp`

rather than at `UXSTACKTOP`

.

You should first push an empty 32-bit word, then a `struct UTrapframe`

.

To test whether `tf->tf_esp`

is already on the user

exception stack, check whether it is in the range

between `UXSTACKTOP-PGSIZE`

and `UXSTACKTOP-1`

, inclusive.

Exercise 9.

Implement the code in `page_fault_handler`

in

`kern/trap.c`

required to dispatch page faults to the user-mode handler.

Be sure to take appropriate precautions

when writing into the exception stack.

(What happens if the user environment runs out of space

on the exception stack?)

Next, you need to implement the assembly routine that will

take care of calling the C page fault handler and resume

execution at the original faulting instruction.

This assembly routine is the handler that will be registered

with the kernel using `sys_env_set_pgfault_upcall()`

.

Exercise 10.

Implement the `_pgfault_upcall`

routine

in `lib/pfentry.S`.

The interesting part is returning to the original point in

the user code that caused the page fault.

You'll return directly there, without going back through

the kernel.

The hard part is simultaneously switching stacks and

re-loading the EIP.

Finally, you need to implement the C user library side of the user-level page fault handling mechanism.

Exercise 11.

Finish `set_pgfault_handler()`

in `lib/pgfault.c`.

Run `user/faultread` (`make run-faultread`). You should see:

... [00000000] new env 00001000 [00001000] user fault va 00000000 ip 0080003a TRAP frame ... [00001000] free env 00001000

Run `user/faultdie`. You should see:

... [00000000] new env 00001000 i faulted at va deadbeef, err 6 [00001000] exiting gracefully [00001000] free env 00001000

Run `user/faultalloc`. You should see:

... [00000000] new env 00001000 fault deadbeef this string was faulted in at deadbeef fault cafebffe fault cafec000 this string was faulted in at cafebffe [00001000] exiting gracefully [00001000] free env 00001000

If you see only the first "this string" line, it means you are not handling recursive page faults properly.

Run `user/faultallocbad`. You should see:

... [00000000] new env 00001000 [00001000] user_mem_check assertion failure for va deadbeef [00001000] free env 00001000

Make sure you understand why `user/faultalloc` and

`user/faultallocbad` behave differently.

Challenge!

Extend your kernel so that not only page faults,

but *all* types of processor exceptions

that code running in user space can generate,

can be redirected to a user-mode exception handler.

Write user-mode test programs

to test user-mode handling of various exceptions

such as divide-by-zero, general protection fault,

and illegal opcode.

You now have the kernel facilities

to implement copy-on-write `fork()`

entirely in user space.

We have provided a skeleton for your `fork()`

in `lib/fork.c`.

Like `dumbfork()`

,

`fork()`

should create a new environment,

then scan through the parent environment's entire address space

and set up corresponding page mappings in the child.

The key difference is that,

while `dumbfork()`

copied *pages*,

`fork()`

will initially only copy page *mappings*.

`fork()`

will

copy each page only when one of the environments tries to write it.

The basic control flow for `fork()`

is as follows:

`pgfault()`

as the C-level page fault handler,

using the `set_pgfault_handler()`

function

you implemented above.`sys_exofork()`

to create

a child environment.`duppage`

, which should

map the page copy-on-write into the address

space of the child and then `duppage`

sets both PTEs so that

the page is not writeable, and to contain `PTE_COW`

in the

"avail" field to distinguish copy-on-write pages from genuine

read-only pages.

The exception stack is *not* remapped this way, however.

Instead you need to allocate a fresh page in the child for

the exception stack. Since the page fault handler will be

doing the actual copying and the page fault handler runs

on the exception stack, the exception stack cannot be made

copy-on-write: who would copy it?

`fork()`

also needs to handle pages that are

present, but not writable or copy-on-write.

Each time one of the environments writes a copy-on-write page that it hasn't yet written, it will take a page fault. Here's the control flow for the user page fault handler:

`_pgfault_upcall`

,

which calls `fork()`

's `pgfault()`

handler.`pgfault()`

checks that the fault is a write

(check for `FEC_WR`

in the error code) and that the

PTE for the page is marked `PTE_COW`

.

If not, panic.`pgfault()`

allocates a new page mapped

at a temporary location and copies

the contents of the faulting page into it.

Then the fault handler maps the new page at the

appropriate address with read/write permissions,

in place of the old read-only mapping.The user-level `lib/fork.c` code must consult the environment's page

tables for several of the operations above (e.g., that the PTE for a page is

marked `PTE_COW`

). The kernel maps the environment's page tables at

`UVPT`

exactly for this purpose. It uses a [clever mapping trick](uvpt.html) to make it to make it easy to lookup

PTEs for user code. `lib/entry.S` sets up `uvpt`

and

`uvpd`

so that you can easily lookup page-table information in

`lib/fork.c`.

Exercise 12.

Implement `fork`

, `duppage`

and

`pgfault`

in `lib/fork.c`.

Test your code with the `forktree` program.

It should produce the following messages,

with interspersed 'new env', 'free env',

and 'exiting gracefully' messages.

The messages may not appear in this order, and the

environment IDs may be different.

1000: I am '' 1001: I am '0' 2000: I am '00' 2001: I am '000' 1002: I am '1' 3000: I am '11' 3001: I am '10' 4000: I am '100' 1003: I am '01' 5000: I am '010' 4001: I am '011' 2002: I am '110' 1004: I am '001' 1005: I am '111' 1006: I am '101'

Challenge!

Implement a shared-memory `fork()`

called `sfork()`

. This version should have the parent

and child *share* all their memory pages

(so writes in one environment appear in the other)

except for pages in the stack area,

which should be treated in the usual copy-on-write manner.

Modify `user/forktree.c`

to use `sfork()`

instead of regular `fork()`

.

Also, once you have finished implementing IPC in part C,

use your `sfork()`

to run `user/pingpongs`.

You will have to find a new way to provide the functionality

of the global `thisenv`

pointer.

Challenge!

Your implementation of `fork`

makes a huge number of system calls. On the x86, switching into

the kernel using interrupts has non-trivial cost. Augment the

system call interface

so that it is possible to send a batch of system calls at once.

Then change `fork`

to use this interface.

How much faster is your new `fork`

?

You can answer this (roughly) by using analytical

arguments to estimate how much of an improvement batching

system calls will make to the performance of your

`fork`

: How expensive is an `int 0x30`

instruction? How many times do you execute `int 0x30`

in your `fork`

? Is accessing the `TSS` stack

switch also expensive? And so on...

Alternatively, you can boot your kernel on real hardware

and *really* benchmark your code. See the `RDTSC`

(read time-stamp counter) instruction, defined in the IA32

manual, which counts the number of clock cycles that have

elapsed since the last processor reset. QEMU doesn't emulate

this instruction faithfully (it can either count the number of

virtual instructions executed or use the host TSC, neither of

which reflects the number of cycles a real CPU would

require).

This ends part B. Make sure you pass all of the Part B tests when you run

`make grade`.

As usual, you can hand in your submission

with `make handin`.

In the final part of lab 4 you will modify the kernel to preempt uncooperative environments and to allow environments to pass messages to each other explicitly.

Run the `user/spin` test program.

This test program forks off a child environment,

which simply spins forever in a tight loop

once it receives control of the CPU.

Neither the parent environment nor the kernel ever regains the CPU.

This is obviously not an ideal situation

in terms of protecting the system from bugs or malicious code

in user-mode environments,

because any user-mode environment can bring the whole system to a halt

simply by getting into an infinite loop and never giving back the CPU.

In order to allow the kernel to *preempt* a running environment,

forcefully retaking control of the CPU from it,

we must extend the JOS kernel to support external hardware interrupts

from the clock hardware.

External interrupts (i.e., device interrupts) are referred to as IRQs.

There are 16 possible IRQs, numbered 0 through 15.

The mapping from IRQ number to IDT entry is not fixed.

`pic_init`

in `picirq.c` maps IRQs 0-15

to IDT entries `IRQ_OFFSET`

through `IRQ_OFFSET+15`

.

In `inc/trap.h`,

`IRQ_OFFSET`

is defined to be decimal 32.

Thus the IDT entries 32-47 correspond to the IRQs 0-15.

For example, the clock interrupt is IRQ 0.

Thus, IDT[IRQ_OFFSET+0] (i.e., IDT[32]) contains the address of

the clock's interrupt handler routine in the kernel.

This `IRQ_OFFSET`

is chosen so that the device interrupts

do not overlap with the processor exceptions,

which could obviously cause confusion.

(In fact, in the early days of PCs running MS-DOS,

the `IRQ_OFFSET`

effectively *was* zero,

which indeed caused massive confusion between handling hardware interrupts

and handling processor exceptions!)

In JOS, we make a key simplification compared to xv6 Unix.

External device interrupts are *always* disabled

when in the kernel (and, like xv6, enabled when in user space).

External interrupts are controlled by the `FL_IF`

flag bit

of the `%eflags`

register

(see `inc/mmu.h`).

When this bit is set, external interrupts are enabled.

While the bit can be modified in several ways,

because of our simplification, we will handle it solely

through the process of saving and restoring `%eflags`

register

as we enter and leave user mode.

You will have to ensure that the `FL_IF`

flag is set in

user environments when they run so that when an interrupt arrives, it

gets passed through to the processor and handled by your interrupt code.

Otherwise, interrupts are *masked*,

or ignored until interrupts are re-enabled.

We masked interrupts with the very first instruction of the bootloader,

and so far we have never gotten around to re-enabling them.

Exercise 13.

Modify `kern/trapentry.S` and `kern/trap.c` to

initialize the appropriate entries in the IDT and provide

handlers for IRQs 0 through 15. Then modify the code

in `env_alloc()`

in `kern/env.c` to ensure

that user environments are always run with interrupts enabled.

Also uncomment the `sti` instruction in `sched_halt()` so

that idle CPUs unmask interrupts.

The processor never pushes an error code

when invoking a hardware interrupt handler.

You might want to re-read section 9.2 of the

[

80386 Reference Manual](../../readings/i386/toc.htm),

or section 5.8 of the

[