id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

10,132 | 在 Linux 手册页中查看整个 Arch Linux Wiki | https://www.ostechnix.com/how-to-browse-and-read-entire-arch-wiki-as-linux-man-pages/ | 2018-10-19T23:23:02 | [

"Wiki",

"man"

] | https://linux.cn/article-10132-1.html |

不久之前,我写了篇关于一个名叫 [arch-wiki-cli](https://www.ostechnix.com/search-arch-wiki-website-commandline/) 的命令行脚本的文章,使用它可以在终端命令行中查看 Arch Linux Wiki。使用这个脚本,你可以很轻松的用你喜欢的文本浏览器查看整个 Arch Wiki 网站。显然,使用这个脚本需要你有网络连接。我今天偶然发现了一个名为 Arch-wiki-man 的程序,与其有着相同的功能。就跟名字说的一样,它可以让你在命令行查看 Arch Wiki,但是无需联网。它可以以手册页的形式为你显示来自 Arch Wiki 的任何文章。它会下载整个 Arch Wiki 到本地,并每两天自动推送一次。因此,你的系统上总能有一份 Arch Wiki 最新的副本。

### 安装 Arch-wiki-man

Arch-wiki-man 在 [AUR](https://aur.archlinux.org/packages/arch-wiki-man/) 中可用,所以你可以通过类似[Yay](https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/) 的 AUR 帮助程序安装它。

```

$ yay -S arch-wiki-man

```

另外,它也可以使用 NPM 安装。首先确保你已经[安装了 NoodJS](https://www.ostechnix.com/install-node-js-linux/),然后使用以下命令安装它。

```

$ npm install -g arch-wiki-man

```

### 以手册页的形式查看整个 Arch Wiki

Arch-wiki-man 的典型语法如下:

```

$ awman <search-query>

```

下面看一些具体的例子:

#### 搜索一个或多个匹配项

只需要下面的命令,就可以搜索 [Arch Linux 安装指南](https://www.ostechnix.com/install-arch-linux-latest-version/)。

```

$ awman Installation guide

```

上面的命令将会从 Arch Wiki 中搜索所有包含 “Installation guide” 的条目。如果对于给出的搜索条目有很多的匹配项,将会展示为一个选择菜单。使用上下方向键或是 Vim 风格的方向键(`j`/`k`),移动到你想查看的指南上,点击回车打开。然后就会像下面这样,以手册页的形式展示指南的内容。

awman 指的是 arch wiki man 的首字母组合。

它支持手册页的所有操作,所以你可以像使用手册页一样使用它。按 `h` 查看帮助选项。

要退出选择菜单而不显示手册页,只需要按 `Ctrl+c`。

输入 `q` 返回或者/并且退出手册页。

#### 在标题或者概述中搜索匹配项

awman 默认只会在标题中搜索匹配项。但是你也可以指定它同时在标题和概述中搜索匹配项。

```

$ awman -d vim

```

或者,

```

$ awman --desc-search vim

```

#### 在目录中搜索匹配项

不同于在标题和概述中搜索匹配项,它也能够扫描整个内容以匹配。不过请注意,这样将会使搜索进程明显变慢。

```

$ awman -k emacs

```

或者,

```

$ awman --apropos emacs

```

#### 在 web 浏览器中打开搜索结果

如果你不想以手册页的形式查看 Arch Wiki 指南,你也可以像下面这样在 web 浏览器中打开它。

```

$ awman -w pacman

```

或者,

```

$ awman --web pacman

```

这条命令将会在 web 浏览器中打开匹配结果。请注意,使用这个选项需要网络连接。

#### 在其他语言中搜索

awman 默认打开的是英文的 Arch Wiki 页面。如果你想用其他的语言查看搜索结果,例如西班牙语,只需要像这样做:

```

$ awman -l spanish codecs

```

使用以下命令查看可用的语言:

```

$ awman --list-languages

```

#### 升级本地的 Arch Wiki 副本

就像我已经说过的,更新会每两天自动推送一次。或者你也可以使用以下命令手动更新。

```

$ awman-update

[email protected] /usr/lib/node_modules/arch-wiki-man

└── [email protected]

arch-wiki-md-repo has been successfully updated or reinstalled.

```

:)

---

via: <https://www.ostechnix.com/how-to-browse-and-read-entire-arch-wiki-as-linux-man-pages/>

作者:[SK](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[dianbanjiu](https://github.com/dianbanjiu) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

10,133 | 一个简单而美观的跨平台播客应用程序 | https://www.ostechnix.com/cpod-a-simple-beautiful-and-cross-platform-podcast-app/ | 2018-10-19T23:40:08 | [

"pod"

] | https://linux.cn/article-10133-1.html |

播客在过去几年中变得非常流行。 播客就是所谓的“<ruby> 信息娱乐 <rt> infotainment </rt></ruby>”,它们通常是轻松的,但也会为你提供有价值的信息。 播客在过去几年中已经非常火爆了,如果你喜欢某些东西,就很可能有个相关的播客。 Linux 桌面版上有很多播客播放器,但是如果你想要一些视觉上美观、有顺滑的动画并且可以在每个平台上运行的东西,那就并没有很多替代品可以替代 CPod 了。 CPod(以前称为 Cumulonimbus)是一个开源而成熟的播客应用程序,适用于 Linux、MacOS 和 Windows。

CPod 运行在一个名为 Electron 的东西上 —— 这个工具允许开发人员构建跨平台(例如 Windows、MacOS 和 Linux)的桌面图形化应用程序。 在本简要指南中,我们将讨论如何在 Linux 中安装和使用 CPod 播客应用程序。

### 安装 CPod

转到 CPod 的[发布页面](https://github.com/z-------------/CPod/releases)。 下载并安装所选平台的二进制文件。 如果你使用 Ubuntu / Debian,你只需从发布页面下载并安装 .deb 文件,如下所示。

```

$ wget https://github.com/z-------------/CPod/releases/download/v1.25.7/CPod_1.25.7_amd64.deb

$ sudo apt update

$ sudo apt install gdebi

$ sudo gdebi CPod_1.25.7_amd64.deb

```

如果你使用其他发行版,你可能需要使用发布页面中的 AppImage。

从发布页面下载 AppImage 文件。

打开终端,然后转到存储 AppImage 文件的目录。 更改权限以允许执行:

```

$ chmod +x CPod-1.25.7-x86_64.AppImage

```

执行 AppImage 文件:

```

$ ./CPod-1.25.7-x86_64.AppImage

```

你将看到一个对话框询问是否将应用程序与系统集成。 如果要执行此操作,请单击“yes”。

### 特征

#### 探索标签页

CPod 使用 Apple iTunes 数据库查找播客。 这很好,因为 iTunes 数据库是最大的这类数据库。 如果某个播客存在,那么很可能就在 iTunes 上。 要查找播客,只需使用探索部分中的顶部搜索栏即可。 探索部分还展示了一些受欢迎的播客。

#### 主标签页

主标签页在打开应用程序时是默认打开的。 主标签页显示你已订阅的所有播客的所有剧集的时间顺序列表。

在主页选项卡中,你可以:

1. 标记剧集阅读。

2. 下载它们进行离线播放

3. 将它们添加到播放队列中。

#### 订阅标签页

你当然可以订阅你喜欢的播客。 你可以在订阅标签页中执行的其他一些操作是:

1. 刷新播客艺术作品

2. 导出订阅到 .OPML 文件中,从 .OPML 文件中导入订阅。

#### 播放器

播放器可能是 CPod 最美观的部分。 该应用程序根据播客的横幅更改整体外观。 底部有一个声音可视化器。 在右侧,你可以查看和搜索此播客的其他剧集。

#### 缺点/缺失功能

虽然我喜欢这个应用程序,但 CPod 确实有一些特性和缺点:

1. 糟糕的 MPRIS 集成 —— 你可以从桌面环境的媒体播放器对话框中播放或者暂停播客,但这是不够的。 播客的名称未显示,你可以转到下一个或者上一个剧集。

2. 不支持章节。

3. 没有自动下载 —— 你必须手动下载剧集。

4. 使用过程中的 CPU 使用率非常高(即使对于 Electron 应用程序而言)。

### 总结

虽然它确实有它的缺点,但 CPod 显然是最美观的播客播放器应用程序,并且它具有最基本的功能。 如果你喜欢使用视觉上美观的应用程序,并且不需要高级功能,那么这就是你的完美应用。我知道我肯定会使用它。

你喜欢 CPod 吗? 请将你的意见发表在下面的评论中。

**资源**

* [CPod GitHub 仓库](https://github.com/z-------------/CPod)

---

via: <https://www.ostechnix.com/cpod-a-simple-beautiful-and-cross-platform-podcast-app/>

作者:[EDITOR](https://www.ostechnix.com/author/editor/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[Flowsnow](https://github.com/Flowsnow) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

10,134 | 介绍 Fedora 上的 Swift | https://fedoramagazine.org/introducing-swift-fedora/ | 2018-10-20T09:35:21 | [

"Swift"

] | https://linux.cn/article-10134-1.html |

Swift 是一种使用现代方法构建安全性、性能和软件设计模式的通用编程语言。它旨在成为各种编程项目的最佳语言,从系统编程到桌面应用程序,以及扩展到云服务。继续阅读了解它以及如何在 Fedora 中尝试它。

### 安全、快速、富有表现力

与许多现代编程语言一样,Swift 被设计为比基于 C 的语言更安全。例如,变量总是在使用之前初始化。检查数组和整数是否溢出。内存自动管理。

Swift 将意图放在语法中。要声明变量,请使用 `var` 关键字。要声明常量,请使用 `let`。

Swift 还保证对象永远不会是 `nil`。实际上,尝试使用已知为 `nil` 的对象将导致编译时错误。当使用 `nil` 值时,它支持一种称为 **optional** 的机制。optional 可能包含 `nil`,但使用 `?` 运算符可以安全地解包。

更多的功能包括:

* 与函数指针统一的闭包

* 元组和多个返回值

* 泛型

* 对范围或集合进行快速而简洁的迭代

* 支持方法、扩展和协议的结构体

* 函数式编程模式,例如 `map` 和 `filter`

* 内置强大的错误处理

* 拥有 `do`、`guard`、`defer` 和 `repeat` 关键字的高级控制流

### 尝试 Swift

Swift 在 Fedora 28 中可用,包名为 **swift-lang**。安装完成后,运行 `swift` 并启动 REPL 控制台。

```

$ swift

Welcome to Swift version 4.2 (swift-4.2-RELEASE). Type :help for assistance.

1> let greeting="Hello world!"

greeting: String = "Hello world!"

2> print(greeting)

Hello world!

3> greeting = "Hello universe!"

error: repl.swift:3:10: error: cannot assign to value: 'greeting' is a 'let' constant

greeting = "Hello universe!"

~~~~~~~~ ^

3>

```

Swift 有一个不断发展的社区,特别的,有一个[工作组](https://swift.org/server/)致力于使其成为一种高效且有力的服务器端编程语言。请访问其[主页](http://swift.org)了解更多参与方式。

图片由 [Uillian Vargas](https://unsplash.com/photos/7oJpVR1inGk?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText) 发布在 [Unsplash](https://unsplash.com/search/photos/fast?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText) 上。

---

via: <https://fedoramagazine.org/introducing-swift-fedora/>

作者:[Link Dupont](https://fedoramagazine.org/author/linkdupont/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Swift is a general-purpose programming language built using a modern approach to safety, performance, and software design patterns. It aims to be the best language for a variety of programming projects, ranging from systems programming to desktop applications and scaling up to cloud services. Read more about it and how to try it out in Fedora.

## Safe, Fast, Expressive

Like many modern programming languages, Swift was designed to be safer than C-based languages. For example, variables are always initialized before they can be used. Arrays and integers are checked for overflow. Memory is automatically managed.

Swift puts intent right in the syntax. To declare a variable, use the *var* keyword. To declare a constant, use *let*.

Swift also guarantees that objects can never be *nil*; in fact, trying to use an object known to be *nil* will cause a compile-time error. When using a *nil* value is appropriate, it supports a mechanism called **optionals**. An optional may contain *nil*, but is safely unwrapped using the **?** operator.

Some additional features include:

- Closures unified with function pointers

- Tuples and multiple return values

- Generics

- Fast and concise iteration over a range or collection

- Structs that support methods, extensions, and protocols

- Functional programming patterns, e.g., map and filter

- Powerful error handling built-in

- Advanced control flow with do, guard, defer, and repeat keywords

## Try Swift out

Swift is available in Fedora 28 under then package name **swift-lang**. Once installed, run *swift* and the REPL console starts up.

$ swift Welcome to Swift version 4.2 (swift-4.2-RELEASE). Type :help for assistance. 1> let greeting="Hello world!" greeting: String = "Hello world!" 2> print(greeting) Hello world! 3> greeting = "Hello universe!" error: repl.swift:3:10: error: cannot assign to value: 'greeting' is a 'let' constant greeting = "Hello universe!" ~~~~~~~~ ^ 3>

Swift has a growing community, and in particular, a [work group](https://swift.org/server/) dedicated to making it an efficient and effective server-side programming language. Be sure to visit [its home page](http://swift.org) for more ways to get involved.

Photo by [Uillian Vargas](https://unsplash.com/photos/7oJpVR1inGk?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText) on [Unsplash](https://unsplash.com/search/photos/fast?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText).

## Lan

Swift is almost useless on linux-based operating system without a way to create iOS and macOS applications. 🙁

## Paul W. Frields

@Lan: Swift is used in scientific applications as well, independently of iOS and MacOS.

## Cris

What are the benefits of Swift over Julia? It was a rhetorical question. I understand that it’s good to have alternatives but I don’t see this expanding beyond the Apple ecosystem. Just another FireWire®

## Tpk

Swift is a first class language for Tensorflow for example

## Ray Scott

I don’t think Apple have ever had the intention of porting their frameworks to Linux. I believe Swift support on Linux is intended to be used for writing backend services, but even that’s not possible I imagine without Apple’s Foundation module.

## Link Dupont

The Swift binary distribution from swift.org and the source code distribution available on GitHub include an implementation of the Foundation module. From the README:

Fedora’s swift-lang package includes this module.

## Ray Scott

Thanks for informing me. I might actually give it a try! 🙂

## Robb

Do you know if SourceKittenDaemon is included, or might be in the future?

## Ron Olson

It isn’t included as it’s not officially part of Apple’s toolchain. I’d never heard of it until now; I’ll take a look to see how difficult it would be to package.

## Robb

Thanks! It’s the equivalent of Rust Racer – the editor plugin that really brings home the benefits of working with an inferencing, statically typed language.

## Ron Olson

The Linux version of Swift includes the Foundation framework, along with Dispatch (GCD on MacOS) and, specifically for Linux, Glibc.

## Ron Olson

My use of Swift on Linux has been to test out code, algorithms, etc.; I can log into a powerful 16-core Linux box and use multithreaded Swift code without dealing with my much older, and much slower, Mac.

## M Leslie Satenstein

As far as I know from what I read about recent C compilers, uninitialized pointers and arrays are Nulled. Numbers (int, a char, float, are set to zero at function prologue.

My gcc C compiler tells me about a variable’s first use before being set.

If not C, I would still use C. The human brain knows better how to manage memory allocations.

## Andi

So, Fedora opens up the possibility to use Swift in order to develop Apps on Fedora? Is it right? Normally, I have to do it with my iMac.

## Robb

Really happy to see support for Fedora!

IMO there’s one piece of the puzzle remaining, to develop Swift on Linux: autocomplete and other code intelligence support. And

thatwill only arrive if “SourceKittenDaemon” is ported to Linux:https://github.com/terhechte/SourceKittenDaemon/issues/74

Without this part of the picture, we can’t get a lot of the benefits from working with Swift: the smart development environment that helps out with documentation and more as we go.

So personally, I switched to Rust as my compiled, modern, friendly Linux development language. But I’d like to see Swift give it some competition.

## Robb

Really happy to see this support for Swift.

I gave Swift dev on Fedora a try, but the lack of an intelligent code plugin held me back:

https://github.com/terhechte/SourceKittenDaemon/issues/74

No, I don’t NEED autocompletion and in-place documentation to code, but it makes my life so much more pleasant. I gave Rust a try, and it’s pretty much fulfilling the Swift promise, on Linux. Rust’s “Racer” code intelligence app compiles fine.

I’d love to give Swift a real shot on Fedora, but without IDE/editor support, we don’t get the full benefits.

## Paul W. Frields

I believe one can get Swift plugins for VS Code, which is available on Fedora, if you want to use that.

## Robb

Thanks, yep – that’s the path I followed, which led me to the SourceKittenDaemon dependency. If I’m right, that’s the piece which actually does the heavy lifting for the Swift plugins in VSC, Emacs, etc.

## Ray

I tried that this afternoon, but the plugin complained about a missing SourceKit dependency. I think the VSCode plugins are only expected to work on macOS, and won’t work on Linux until the SourceKit related issues are resolved.

## ANDRE GOMPEL

For assertive programming, from C, to Erlang, Julia, Go, Rust, Swift (and more), so many “me too languages” who do almost the same thing as C (C99) !

For system low level, the differences are so minor, that rather than a yet another language (YAL), I would much prefer as “a pragma statement” at the beginning of the source file, a choice for “YAFC” (yet another flavor of C).

So in most case (I know it is not always easy) theses file could be linked together.

In most cases it is doable.

Noe that many years ago, DEC (Digital Equipment Corporation) almost achieved the same goal on VAX/VMS.

The main issues are :

* “multi-core” processing (Like in Erlang).

Memory management (Automatic garbage collection is rarely suitable for system low level, i.e self contained , without O/S resources).

Object Oriented (when available) name mangling.

In line assembly code (Often necessary for system low level).

I am not sure thought, that a language adequate for system low level, which include device drivers, scheduler, semaphores, access to memory, and I/O mapped, embedded system (without an OS)…) will be the same for “a great application level” programming language, which in most cases run on top of an O/S and all its resources.

Microsoft Visual Studio:

I know, many people love IDE’s (I still prefer the UNIX philosophy of separate solutions: compiler, linker, debugger, Makefiles or similar).

I see a danger for the Open Source, to leave the control wide open to a large corporation (Microsoft) for the much appreciated “Visual Studio”, for which there seem to be no great “pure open source” equivalent.

GTK+ and Qt provide decent GUI frameworks. (Not KDE, bloated, never finished). Wondering if it ever will be a great FOSS, IDE build with either one?

Please comment.

Andre Gompel

## Frederik

For C++, the combination of Gnome builder and glade works pretty well. You’re buying into the entire glib ecosystem which is a no-go for some — personally that was the case, but I’ve realize that you simply can’t get anything real done without buying into one ecosystem or another. I personally like how glib does things, more than I like how Qt does things (lot of legacy cruft that hasn’t been dumped). I use the C-API of Glib, not the C++ API, which loses a lot of values over the C API on it’s insistence on using classes.

Gnome builder lacks a few integrations out of the box. I’d love to see a glade plugin that will at least allow me to launch glade from the IDE; at the moment I have to open my UI files from somewhere outside of Gnome builder. The git plugin seems to be hidden somewhere on the internet, that I can’t find (please provide a link if you know where to find it).

Given it’s dependency on meson (which is awesome), I would love to see a better integration with the Gnome builder.

## Michael J. Ryan

FYI: Visual Studio Code is completely Open-Source with an MIT license [1]. Not to be confused with the Visual Studio IDE. It’s quickly become one of the best developer environments on the planet imho. I use it on Windows, Linux and Mac regularly, as I tend to prefer the same tools everywhere as much as possible.

[1] https://github.com/Microsoft/vscode

## Ron Olson

I can also confirm that VS Code has syntax highlighting for Swift under Linux; I’m using it right now. 🙂

## Elmmur

Actually, the VS Code application that Microsoft provides is closed source.

License, see 5. :

http://code.visualstudio.com/license

If you would like to use actually FOSS VS code, you have to build it yourself:

https://github.com/Microsoft/vscode/wiki/How-to-Contribute

Or use flatpak OSS version with MS marketplace integrated:

https://flathub.org/apps/details/com.visualstudio.code.oss

More details:

https://github.com/flathub/com.visualstudio.code.oss/issues/6#issuecomment-380152999

## Elmmur

Give KDevelop a chance.

GomeBuilder is deeply tied to gnome projects. They even pin gnome projects at the front page, which will be wholey dowloaded if you click on them. That actually holds me back from it. But yeah maybe that’s why it’s called Gnome Builder. Anyway, KDevelop looks more like a general purpouse IDE to me.

## Thomas An

Can we use the kind of libraries with swift in Fedora? For examples, GLib, Gtk, DBus and so on. If so, it would be nice 🙂

## Ron Olson

You can interact with native libraries/headers, etc. Take a look at this (now old) project I wrote to learn how to do this very thing: https://github.com/tachoknight/Crazy8sBot. You could just as well work with any other library via the ‘unsafe’ bindings.

## Van

We can do server side on linux with Vapor (https://github.com/vapor/vapor) or Kitura (https://github.com/IBM-Swift/Kitura), Perfect. Apple also developed SwiftNIO for supporting server side frameworks (https://github.com/apple/swift-nio)

## steve

I downloaded and tried it. sorry to be so selective but there is no gui? modern programming languages have good editors, gui and graphical design of the screens. this looks like it runs in vi and is terminal based only. useless in today’s world. Unless i am doing something wrong in which case please tell me what it is.

## Ron Olson

Turning it around, good editors know about languages; gcc does not come with its own editor, but most (all?) Linux-based editors know how to highlight C/C++ code (though sometimes there’s a disconnect between the editor highlighting and the language features

coughC++20 experimental features).Visual Studio Code provides syntax highlighting for Swift; there is no Linux-based XCode (yet!)

## Ron Olson

I mentioned this elsewhere, but in case you didn’t see it, you can use Visual Studio Code, Microsoft’s free editor, on Linux with Swift syntax highlighting. Because it has a built-in terminal, you can use the Swift REPL in the editor whilst writing Swift code; I do this all the time.

## Paul Dufresne

hum… tried:

import Glibc

at line 1 of swift, and it seems to generate a few errors.

## Ron Olson

This happens when the REPL can’t find its Linux headers; this isn’t unique to Fedora (I’ve seen it happen with the official Ubuntu versions as well) and the solution for me is to add:

alias swift=”swift -I /usr/lib/swift-lldb/swift/clang/include/”

in my shell configuration of choice (I use both bash and fish so in .bashrc or config.fish, respectively)

## Paul Dufresne

Works fine. Thanks! |

10,136 | 命令行小技巧:读取文件的不同方式 | https://fedoramagazine.org/commandline-quick-tips-reading-files-different-ways/ | 2018-10-21T00:05:18 | [

"文件"

] | https://linux.cn/article-10136-1.html |

作为图形操作系统,Fedora 的使用是令人愉快的。你可以轻松地点击完成任何任务。但你可能已经看到了,在底层还有一个强大的命令行。想要在 shell 下体验,只需要在 Fedora 系统中打开你的终端应用。这篇文章是向你展示常见的命令行使用方法的系列文章之一。

在这部分,你将学习如何以不同的方式读取文件,如果你在系统中打开一个终端完成一些工作,你就有可能需要读取一两个文件。

### 一应俱全的大餐

对命令行终端的用户来说, `cat` 命令众所周知。 当你 `cat` 一个文件,你很容易的把整个文件内容展示在你的屏幕上。而真正发生在底层的是文件一次读取一行,然后一行一行写入屏幕。

假设你有一个文件,叫做 `myfile`, 这个文件每行只有一个单词。为了简单起见,每行的单词就是这行的行号,就像这样:

```

one

two

three

four

five

```

所以如果你 `cat` 这个文件,你就会看到如下输出:

```

$ cat myfile

one

two

three

four

five

```

并没有太惊喜,不是吗? 但是有个有趣的转折,只要使用 `tac` 命令,你可以从后往前 `cat` 这个文件。(请注意, Fedora 对这种有争议的幽默不承担任何责任!)

```

$ tac myfile

five

four

three

two

one

```

`cat` 命令允许你以不同的方式装饰输出,比如,你可以输出行号:

```

$ cat -n myfile

1 one

2 two

3 three

4 four

5 five

```

还有其他选项可以显示特殊字符和其他功能。要了解更多, 请运行 `man cat` 命令, 看完之后,按 `q` 即可退出回到 shell。

### 挑选你的食物

通常,文件太长会无法全部显示在屏幕上,您可能希望能够像文档一样查看它。 这种情况下,可以试试 `less` 命令:

```

$ less myfile

```

你可以用方向键,也可以用 `PgUp`/`PgDn` 来查看文件, 按 `q` 就可以退回到 shell。

实际上,还有一个 `more` 命令,其基于老式的 UNIX 系统命令。如果在退回 shell 后仍想看到该文件的内容,则可能需要使用它。而 `less` 命令则让你回到你离开 shell 之前的样子,并且清除屏幕上你看到的所有的文件内容。

### 一点披萨或甜点

有时,你所需的输出只是文件的开头。 比如,有一个非常长的文件,当你使用 `cat` 命令时,会显示这个文件所有内容,前几行的内容很容易滚动过去,导致你看不到。`head` 命令会帮你获取文件的前几行:

```

$ head -n 2 myfile

one

two

```

同样,你会用 `tail` 命令来查看文件的末尾几行:

```

$ tail -n 3 myfile

three

four

five

```

当然,这些只是在这个领域的几个简单的命令。但它们可以让你在阅读文件时容易入手。

---

via: <https://fedoramagazine.org/commandline-quick-tips-reading-files-different-ways/>

作者:[Paul W. Frields](https://fedoramagazine.org/author/pfrields/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[distant1219](https://github.com/distant1219) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Fedora is delightful to use as a graphical operating system. You can point and click your way through just about any task easily. But you’ve probably seen there is a powerful command line under the hood. To try it out in a shell, just open the Terminal application in your Fedora system. This article is one in a series that will show you some common command line utilities.

In this installment you’ll learn how to read files in different ways. If you open a Terminal to do some work on your system, chances are good that you’ll need to read a file or two.

## The whole enchilada

The **cat** command is well known to terminal users. When you **cat** a file, you’re simply displaying the whole file to the screen. Really what’s happening under the hood is the file is read one line at a time, then each line is written to the screen.

Imagine you have a file with one word per line, called *myfile*. To make this clear, the file will contain the word equivalent for a number on each line, like this:

one two three four five

So if you **cat** that file, you’ll see this output:

$cat myfileone two three four five

Nothing too surprising there, right? But here’s an interesting twist. You can also **cat** that file backward. For this, use the **tac** command. (Note that Fedora takes no blame for this debatable humor!)

$tac myfilefive four three two one

The **cat** file also lets you ornament the file in different ways, in case that’s helpful. For instance, you can number lines:

$cat -n myfile1 one 2 two 3 three 4 four 5 five

There are additional options that will show special characters and other features. To learn more, run the command **man cat**, and when done just hit **q** to exit back to the shell.

## Picking over your food

Often a file is too long to fit on a screen, and you may want to be able to go through it like a document. In that case, try the **less** command:

$less myfile

You can use your arrow keys as well as **PgUp/PgDn** to move around the file. Again, you can use the **q** key to quit back to the shell.

There’s actually a **more** command too, based on an older UNIX command. If it’s important to you to still see the file when you’re done, you might want to use it. The **less** command brings you back to the shell the way you left it, and clears the display of any sign of the file you looked at.

## Just the appetizer (or dessert)

Sometimes the output you want is just the beginning of a file. For instance, the file might be so long that when you **cat** the whole thing, the first few lines scroll past before you can see them. The **head** command will help you grab just those lines:

$head -n 2 myfileone two

In the same way, you can use **tail** to just grab the end of a file:

$tail -n 3 myfilethree four five

Of course these are only a few simple commands in this area. But they’ll get you started when it comes to reading files.

## jun.zhou

thankyou.The article is very simple,but userfull.

## João Rodrigues

The name cat comes from the word concatenate (and not from the animal).

Another useful uses of head and tail (that I always mix-up) are the signed “-n” arguments.

tail -n +NUM makes tail start outputting at line NUM

So, if you would want to print all the lines except the first one, you’d use

$ tail -n +2 myfile

two

three

four

five

head -n -NUM outputs every line except the last NUM lines.

So, if you want to output everything but the last line:

$ head -n -1 myfile

one

two

three

four

## Paul W. Frields

Great additional tips, João! We were hoping these short articles would invite helpful comments like this.

## svsv sarma

Very educative and informative indeed. Different moods of the same command is interesting. With GUI, Terminal has lost its importance. But in Linux, particularly in Fedora, Terminal has its own significance, for use with DNF, APT and Dracut etc. I am beginning and trying hard to understand its power now.

However, with the command $ cat or $ tac, the Terminal stuns and no output is shown!

## Paul W. Frields

The reason no output is shown is that, if you don’t include a file name, both

catandtacexpect to be fed data from the standard input (usually provided by a pipe). We’ll definitely cover using the pipe in a future article.## ryuuy

better way is using Ruby

https://github.com/tombenner/ru

https://www.sitepoint.com/ru-ruby-shell/

$ru ‘map(:to_i).sum’ myfile

sum of numbers in file

## Rich

Launching an entire interpreted language to apply line numbers to text files is not a great use of the Unix commandline; especially when atomic Unix commands provide the functionality that’s sought.

That becomes particularly important when using shell built-in commands, like “printf” or “read”. One of the goals of scripting should be to do as much as possible in one process, without loading up external commands, or deferring to other interpreters. This is why you’ll often see forum applause when someone solves a tricky regex using only bash built-in string manipulation and matching; or using a minimum of external atomic commands like “tr”, “cat” and “tac”, etc.

## peter p

i also like using sed for printing lines of a file.

sed 25q

will print the first 25 lines.

while

sed -n 7,10p

prints lines 7 thru 10.

## Declan McNulty

My favourite is “tail -f “. The f stands for “follow”. As the file changes on disk, any additional lines appended to the end are immediately displayed.

Very useful for following a log file in real time for troubleshooting.

For example you could run:

tail -f /var/log/messages

…while asking a user to repeat some action that did not complete as expected last time.

## Rich

…or uppercase, “tail -F /path/to/file” which is useful for following a logfile that either doesn’t exist yet, or you expect to be rotated.

## Daniel

a very useful flag for tail is:

tail -f myfile

to output appended data as the file grows.

## John G

I had not noticed that

will keep the file output in the terminal after you exit, where

will not.

Thanks for this interesting fact!

## Mehdi

Nice article!

Also, one useful of tail that I learned from our network guy is the following:

tail -f filename

This shows the end content of the file real-time; very useful for observing log files for example on a development system.

## Ralph

You mentioned that less does clear the screen on quit, while more leaves the last viewed screen visible, and recommended to use the more pager if one wanted the last screen still visible on return to the shell prompt.

Although this is true for less’es default behaviour there is no need to use more any more (no pun intended) and one should ditch it, unless one is forced to view text files on legacy Unices like e.g. AIX, HP-UX or Solaris, where less per se isn’t available.

For the folks who are only out and about on Linux less should almost always be preferred.

If you want less to not clear the screen on quit simply use the -X option.

e.g.

less -X /etc/fstab

Now when you press q you will be returned to the shell prompt, but still see above it the last viewed screen page of the file you viewed.

If you require this behaviour in general and you are tired of always typing extra options that you need (also others besides -X), you could either set an alias (e.g. in your ~/.bashrc and export BASH_ENV=$HOME/.bashrc),

or export the environment variable LESS and assign it any numer of options that you could read about in man less (e.g. also in .bashrc or .bash_profile).

Or simply pass LESS=”-X” as environment to the immediately to the issued command.

e.g.

LESS=-X man less

## João Rodrigues

A less known fact about man is that you can render man pages in postscript format.

$ man -t bash > bash.ps

$ xdg-open bash.ps

It’s great if you need a printed version of a man page.

You can also read man pages in html

$ man –html=firefox bash

or

$ export BROWSER=$(which firefox)

$ man -H bash

## fmatejic

while read line; do printf “$line\n”; done < /etc/fstab

Without calling extend command 😉

## Barry

It’s interesting to me as someone who has used UNIX (since BSD 4.2) and Linux since the kernel version was less than 1! That newcomers see Linux as a GUI OS with an add-on command line! The history of UNIX/Linux is that it is a command line OS with a bolt-on GUI!

The power of the command line is incredible and no effort spent learning it is a waste. You can achieve lots, quickly and easily.

If you use Linux, learn something about the command line, don’t fear it! Its not difficult and could make you more productive.

As an example imagine you have 1000 photos in a directory with names like IMG0001.PNG and you wanted to rename them to img-.png where was replaced with the creation date… Could you do this from the GUI? I could (given a hot cup of coffee and a few mins) craft a command or script to do this.

## Ivan

Why not using awk? 🙂

1) Print the line from 3 to end:

awk ‘{if (NR>=3 ) {print $0}}’ filename

2) Print the line between 2 and 30:

awk ‘{if (NR>=2 && NR <=30 ) {print $0}}’ filename

3) print the lines from 1 to 10:

awk ‘{if (NR<=10) {print $0}}’ filename

## Rahul Karkhanis

Wonderfully written article …

My favourite is “nl”

The “nl ” also lets you ornament the file in different ways, in case that’s helpful. For instance, you can number lines:

$ nl myfile

1 one

2 two

3 three

4 four

5 five

do refer man page for much more interesting options |

10,137 | 如何在双系统引导下替换 Linux 发行版 | https://itsfoss.com/replace-linux-from-dual-boot/ | 2018-10-21T00:22:39 | [

"双引导"

] | https://linux.cn/article-10137-1.html |

>

> 在双系统引导的状态下,你可以将已安装的 Linux 发行版替换为另一个发行版,同时还可以保留原本的个人数据。

>

>

>

假设你的电脑上已经[以双系统的形式安装了 Ubuntu 和 Windows](https://itsfoss.com/install-ubuntu-1404-dual-boot-mode-windows-8-81-uefi/),但经过[将 Linux Mint 与 Ubuntu 比较](https://itsfoss.com/linux-mint-vs-ubuntu/)之后,你又觉得 [Linux Mint](https://www.linuxmint.com/) 会更适合自己的时候,你会怎样做?又该如何在[删除 Ubuntu](https://itsfoss.com/uninstall-ubuntu-linux-windows-dual-boot/) 的同时[在双系统中安装 Mint](https://itsfoss.com/guide-install-linux-mint-16-dual-boot-windows/) 呢?

你或许觉得应该首先从在双系统中卸载 [Ubuntu](https://www.ubuntu.com/),然后使用 Linux Mint 重新安装成双系统。但实际上并不需要这么麻烦。

如果你已经在双系统引导中安装了一种 Linux 发行版,就可以轻松替换成另一个发行版了,而且也不必卸载已有的 Linux 发行版,只需要删除其所在的分区,然后在腾出的磁盘空间上安装另一个 Linux 发行版就可以了。

与此同时,更换 Linux 发行版后,仍然会保留原本 home 目录中包含所有文件。

下面就来详细介绍一下。

### 在双系统引导中替换 Linux 发行版

<https://youtu.be/ptF2RUehbKs>

这是我的演示范例。我使用双系统引导同时安装了 Windows 10 和 Linux Mint 19,然后我会把 Linux Mint 19 替换成 Elementary OS 5,同时在替换后保留我的个人文件(包括音乐、图片、视频和 home 目录中的文件)。

你需要做好以下这些准备:

* 使用 Linux 和 Windows 双系统引导

* 需要安装的 Linux 发行版的 USB live 版

* 在外部磁盘备份 Windows 和 Linux 中的重要文件(并非必要,但建议备份一下)

#### 在替换 Linux 发行版时要记住保留你的 home 目录

如果想让个人文件在安装新 Linux 系统的过程中不受影响,原有的 Linux 系统必须具有单独的 root 目录和 home 目录。你可能会发现我的[双系统引导教程](https://itsfoss.com/guide-install-elementary-os-luna/)在安装过程中不选择“与 Windows 共存”选项,而选择“其它”选项,然后手动创建 root 和 home 分区。所以,手动创建单独的 home 分区也算是一个磨刀不误砍柴工的操作。因为如果要在不丢失文件的情况下,将现有的 Linux 发行版替换为另一个发行版,需要将 home 目录存放在一个单独的分区上。

不过,你必须记住现有 Linux 系统的用户名和密码才能使用与新系统中相同的 home 目录。

如果你没有单独的 home 分区,也可以后续再进行创建。但这并不是推荐做法,因为这个过程会比较复杂,有可能会把你的系统搞乱。

下面来看看如何替换到另一个 Linux 发行版。

#### 步骤 1:为新的 Linux 发行版创建一个 USB live 版

尽管上文中已经提到了它,但我还是要重复一次以免忽略。

你可以使用 Windows 或 Linux 中的启动盘创建器(例如 [Etcher](https://etcher.io/))来创建 USB live 版,这个过程比较简单,这里不再详细叙述。

#### 步骤 2:启动 USB live 版并安装 Linux

你应该已经使用过双系统启动,对这个过程不会陌生。使用 USB live 版重新启动系统,在启动时反复按 F10 或 F12 进入 BIOS 设置。选择从 USB 启动,就可以看到进入 live 环境或立即安装的选项。

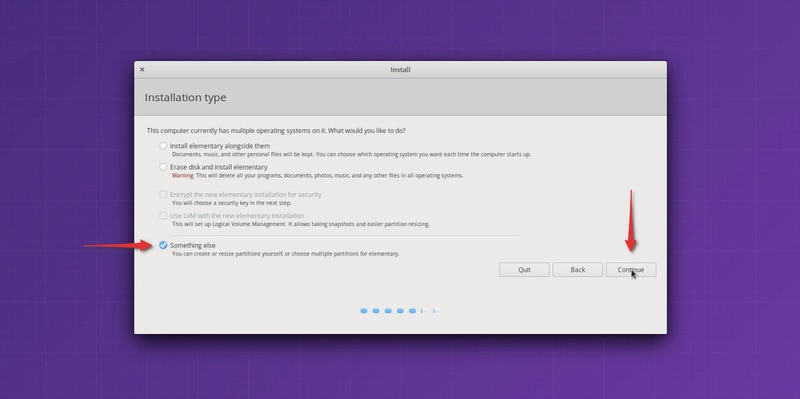

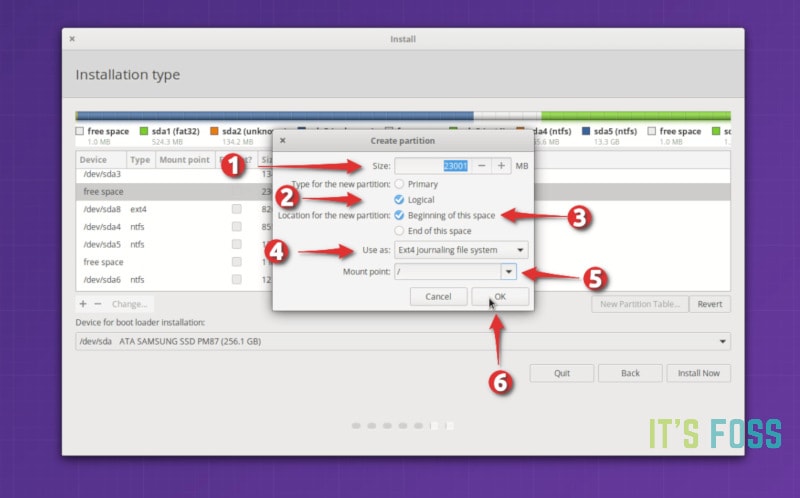

在安装过程中,进入“安装类型”界面时,选择“其它”选项。

*在这里选择“其它”选项*

#### 步骤 3:准备分区操作

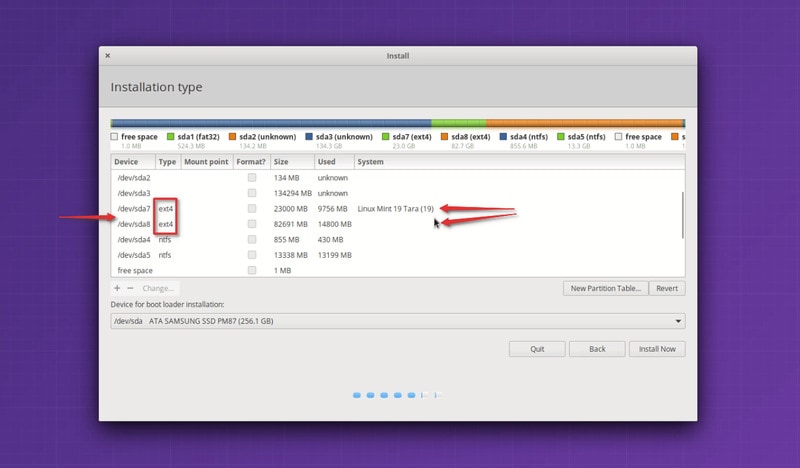

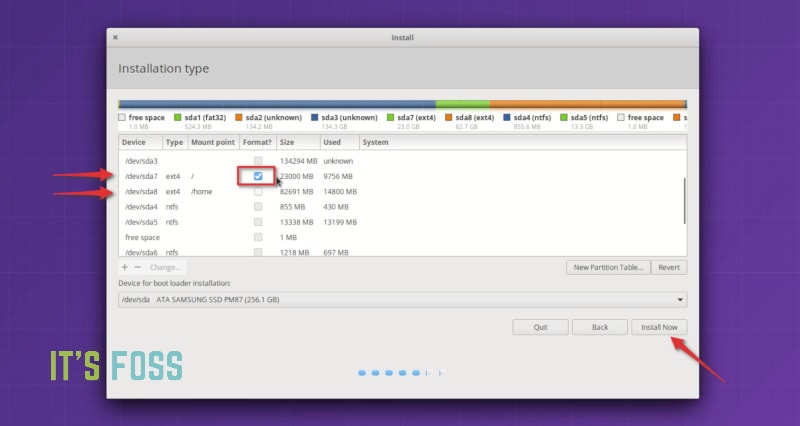

下图是分区界面。你会看到使用 Ext4 文件系统类型来安装 Linux。

*确定 Linux 的安装位置*

在上图中,标记为 Linux Mint 19 的 Ext4 分区是 root 分区,大小为 82691 MB 的第二个 Ext4 分区是 home 分区。在这里我这里没有使用[交换空间](https://itsfoss.com/swap-size/)。

如果你只有一个 Ext4 分区,就意味着你的 home 目录与 root 目录位于同一分区。在这种情况下,你就无法保留 home 目录中的文件了,这个时候我建议将重要文件复制到外部磁盘,否则这些文件将不会保留。

然后是删除 root 分区。选择 root 分区,然后点击 `-` 号,这个操作释放了一些磁盘空间。

*删除 root 分区*

磁盘空间释放出来后,点击 `+` 号。

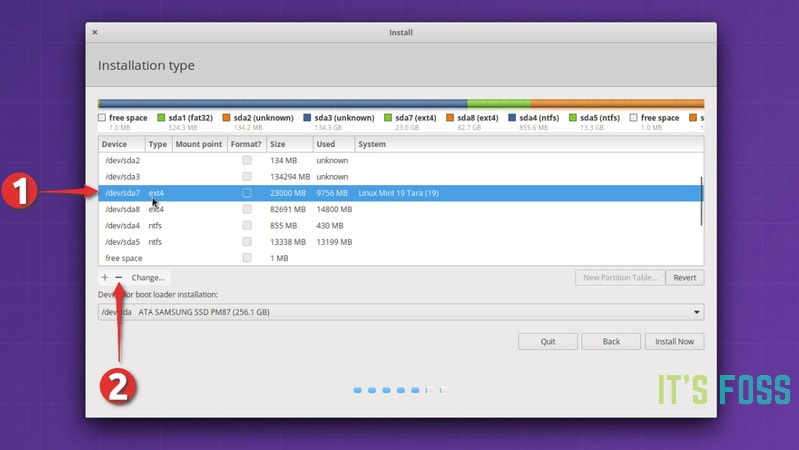

*创建新的 root 分区*

现在已经在可用空间中创建一个新分区。如果你之前的 Linux 系统中只有一个 root 分区,就应该在这里创建 root 分区和 home 分区。如果需要,还可以创建交换分区。

如果你之前已经有 root 分区和 home 分区,那么只需要从已删除的 root 分区创建 root 分区就可以了。

*创建 root 分区*

你可能有疑问,为什么要经过“删除”和“添加”两个过程,而不使用“更改”选项。这是因为以前使用“更改”选项好像没有效果,所以我更喜欢用 `-` 和 `+`。这是迷信吗?也许是吧。

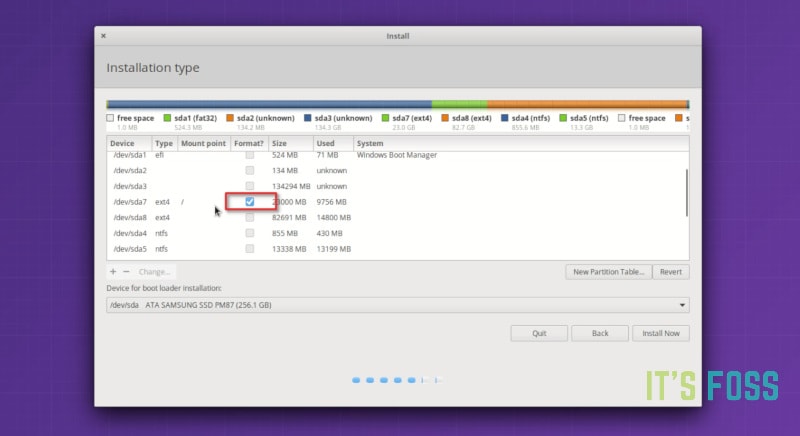

这里有一个重要的步骤,对新创建的 root 分区进行格式化。在没有更改分区大小的情况下,默认是不会对分区进行格式化的。如果分区没有被格式化,之后可能会出现问题。

*格式化 root 分区很重要*

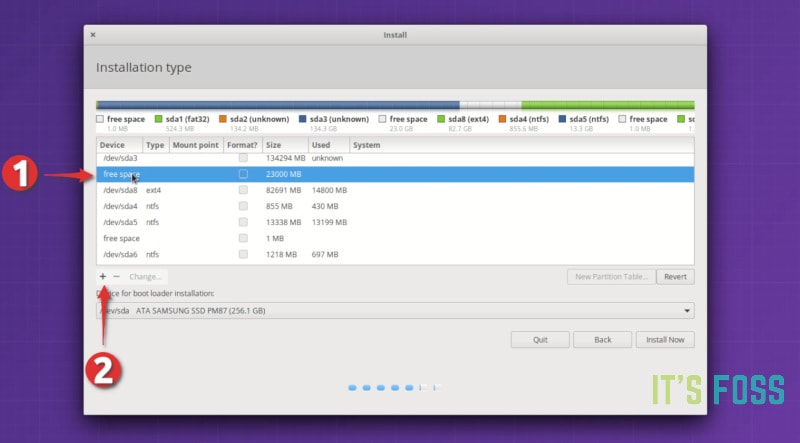

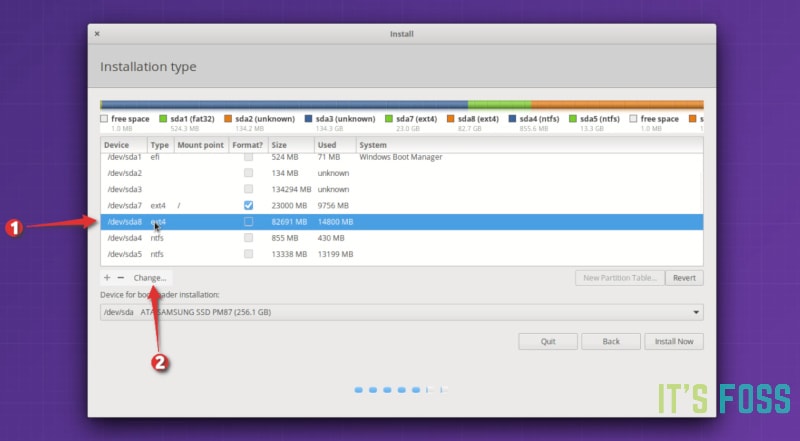

如果你在新的 Linux 系统上已经划分了单独的 home 分区,选中它并点击更改。

*修改已有的 home 分区*

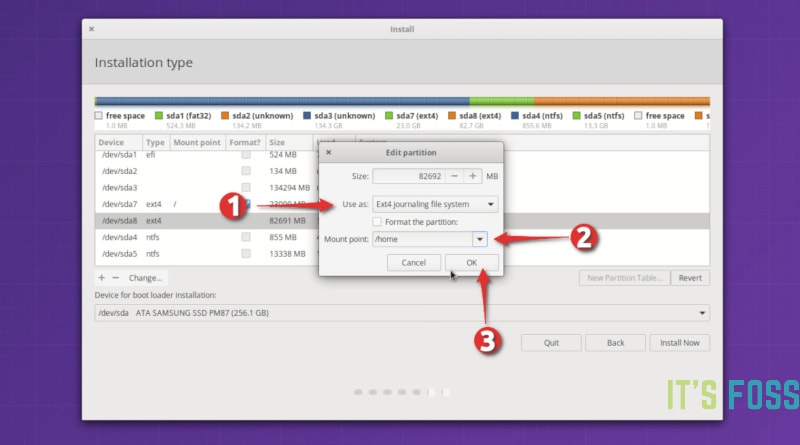

然后指定将其作为 home 分区挂载即可。

*指定 home 分区的挂载点*

如果你还有交换分区,可以重复与 home 分区相同的步骤,唯一不同的是要指定将空间用作交换空间。

现在的状态应该是有一个 root 分区(将被格式化)和一个 home 分区(如果需要,还可以使用交换分区)。点击“立即安装”可以开始安装。

*检查分区情况*

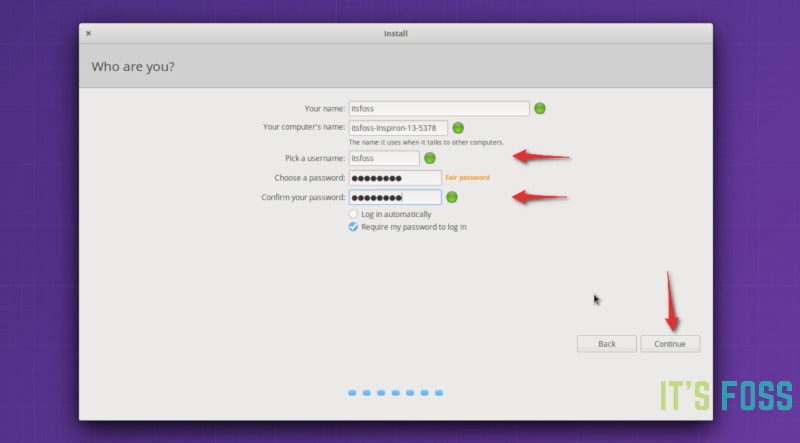

接下来的几个界面就很熟悉了,要重点注意的是创建用户和密码的步骤。如果你之前有一个单独的 home 分区,并且还想使用相同的 home 目录,那你必须使用和之前相同的用户名和密码,至于设备名称则可以任意指定。

*要保持 home 分区不变,请使用之前的用户名和密码*

接下来只要静待安装完成,不需执行任何操作。

*等待安装完成*

安装完成后重新启动系统,你就能使用新的 Linux 发行版。

在以上的例子中,我可以在新的 Linux Mint 19 中使用原有的 Elementary OS 中的整个 home 目录,并且其中所有视频和图片都原封不动。岂不美哉?

---

via: <https://itsfoss.com/replace-linux-from-dual-boot/>

作者:[Abhishek Prakash](https://itsfoss.com/author/abhishek/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[HankChow](https://github.com/HankChow) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK |

Suppose you managed to [successfully dual boot Ubuntu and Windows](https://itsfoss.com/install-ubuntu-1404-dual-boot-mode-windows-8-81-uefi/). But after reading the [Linux Mint versus Ubuntu discussion](https://itsfoss.com/linux-mint-vs-ubuntu/), you realized that [Linux Mint](https://www.linuxmint.com/?ref=itsfoss.com) is more suited for your needs. What would you do now? How would you [remove Ubuntu](https://itsfoss.com/uninstall-ubuntu-linux-windows-dual-boot/) and [install Mint in dual boot](https://itsfoss.com/guide-install-linux-mint-16-dual-boot-windows/)?

You might think that you need to uninstall [Ubuntu](https://www.ubuntu.com/?ref=itsfoss.com) from dual boot first and then repeat the dual booting steps with Linux Mint. Let me tell you something. You don’t need to do all of that.

If you already have a Linux distribution installed in dual boot, you can easily replace it with another. You simply delete its partition and install the new distribution on the disk space vacated by the previous distribution.

Another good news is that you *may* be able to keep your Home directory with all your documents and pictures while switching the Linux distributions.

Let me show you how to switch Linux distributions.

## Replace one Linux with another from dual boot

Let me describe the scenario I am going to use here. I have Linux Mint 19 installed on my system in dual boot mode with Windows 10. I am going to replace it with elementary OS 5. I’ll also keep my personal files (music, pictures, videos, documents from my home directory) while switching distributions.

Let’s first take a look at the requirements:

- A system with Linux and Windows dual boot

- Live USB of Linux you want to install

- Backup of your important files in Windows and in Linux on an external disk (optional yet recommended)

### Things to keep in mind for keeping your home directory while changing Linux distribution

If you want to keep your files from existing Linux install as it is, you must have a separate root and home directory. You might have noticed that in my [dual boot tutorials](https://itsfoss.com/guide-install-elementary-os-luna/), I always go for ‘Something Else’ option and then manually create root and home partitions instead of choosing ‘Install alongside Windows’ option. This is where all the troubles in manually creating separate home partition pay off.

Keeping Home on a separate partition is helpful in situations when you want to replace your existing Linux install with another without losing your files.

Note: You must remember the exact username and password of your existing Linux install in order to use the same home directory as it is in the new distribution.

If you don’t have a separate Home partition, you may create it later as well BUT I won’t recommend that. That process is slightly complicated and I don’t want you to mess up your system.

With that much background information, it’s time to see how to replace a Linux distribution with another.

### Step 1: Create a live USB of the new Linux distribution

Alright! I already mentioned it in the requirements but I still included it in the main steps to avoid confusion.

You can create a live USB using a start up disk creator like [Etcher](https://etcher.io/?ref=itsfoss.com) in Windows or Linux. The process is simple so I am not going to list the steps here.

### Step 2: Boot into live USB and proceed to installing Linux

Since you have already dual booted before, you probably know the drill. Plugin the live USB, restart your system and at the boot time, press F10 or F12 repeatedly to enter BIOS settings.

In here, choose to boot from the USB. And then you’ll see the option to try the live environment or installing it immediately.

You should start the installation procedure. When you reach the ‘Installation type’ screen, choose the ‘Something else’ option.

### Step 3: Prepare the partition

You’ll see the partitioning screen now. Look closely and you’ll see your Linux installation with Ext4 file system type.

In the above picture, the Ext4 partition labeled as Linux Mint 19 is the root partition. The second Ext4 partition of 82691 MB is the Home partition. I [haven’t used any swap space](https://itsfoss.com/swap-size/) here.

Now, if you have just one Ext4 partition, that means that your home directory is on the same partition as root. In this case, you won’t be able to keep your Home directory. I suggest that you copy the important files to an external disk else you’ll lose them forever.

It’s time to delete the root partition. Select the root partition and click the – sign. This will create some free space.

When you have the free space, click on + sign.

Now you should create a new partition out of this free space. If you had just one root partition in your previous Linux install, you should create root and home partitions here. You can also create the swap partition if you want to.

If you had root and home partition separately, just create a root partition from the deleted root partition.

You may ask why did I use delete and add instead of using the ‘change’ option. It’s because a few years ago, using change didn’t work for me. So I prefer to do a – and +. Is it superstition? Maybe.

One important thing to do here is to mark the newly created partition for format. f you don’t change the size of the partition, it won’t be formatted unless you explicitly ask it to format. And if the partition is not formatted, you’ll have issues.

Now if you already had a separate Home partition on your existing Linux install, you should select it and click on change.

You just have to specify that you are mounting it as home partition.

If you had a swap partition, you can repeat the same steps as the home partition. This time specify that you want to use the space as swap.

At this stage, you should have a root partition (with format option selected) and a home partition (and a swap if you want to). Hit the install now button to start the installation.

The next few screens would be familiar to you. What matters is the screen where you are asked to create user and password.

If you had a separate home partition previously and you want to use the same home directory, you MUST use the same username and password that you had before. Computer name doesn’t matter.

Your struggle is almost over. You don’t have to do anything else other than waiting for the installation to finish.

Once the installation is over, restart your system. You’ll have a new Linux distribution or version.

In my case, I had the entire home directory of Linux Mint 19 as it is in the elementary OS. All the videos, pictures I had remained as it is. Isn’t that nice? |

10,139 | 在 Linux 命令行中使用 ls 列出文件的技巧 | https://opensource.com/article/18/10/ls-command | 2018-10-22T08:27:00 | [

"ls"

] | https://linux.cn/article-10139-1.html |

>

> 学习一些 Linux `ls` 命令最有用的变化。

>

>

>

我在 Linux 中最先学到的命令之一就是 `ls`。了解系统中文件所在目录中的内容非常重要。能够查看和修改不仅仅是一些文件还有所有文件也很重要。

我的第一个 Linux 备忘录是[单页 Linux 手册](http://hackerspace.cs.rutgers.edu/library/General/One_Page_Linux_Manual.pdf),它于 1999 年发布,成了我的首选参考资料。当我开始探索 Linux 时,我把它贴在桌子上并经常参考它。它在第一页第一列的底部介绍了 `ls -l` 列出文件的命令。

之后,我将学习这个最基本命令的其它迭代。通过 `ls` 命令,我开始了解 Linux 文件权限的复杂性,以及哪些是我的文件,哪些需要 root 或者 sudo 权限来修改。随着时间的推移,我习惯了使用命令行,虽然我仍然使用 `ls -l` 来查找目录中的文件,但我经常使用 `ls -al`,这样我就可以看到可能需要更改的隐藏文件,比如那些配置文件。

根据 Eric Fischer 在 [Linux 文档项目](http://www.tldp.org/LDP/LG/issue48/fischer.html)中关于 `ls` 命令的文章,该命令的起源可以追溯到 1961 年 MIT 的<ruby> 相容分时系统 <rt> Compatible Time-Sharing System </rt></ruby>(CTSS)上的 `listf` 命令。当 CTSS 被 [Multics](https://en.wikipedia.org/wiki/Multics) 代替时,命令变为 `list`,并有像 `list -all` 的开关。根据[维基百科](https://en.wikipedia.org/wiki/Ls),`ls` 出现在 AT&T Unix 的原始版本中。我们今天在 Linux 系统上使用的 `ls` 命令来自 [GNU Core Utilities](http://www.gnu.org/s/coreutils/)。

大多数时候,我只使用几个迭代的命令。我通常用 `ls` 或 `ls -al` 查看目录内容,但是你还应该熟悉许多其它选项。

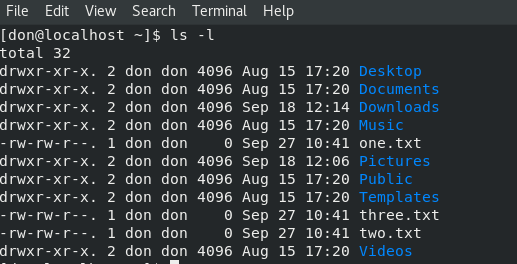

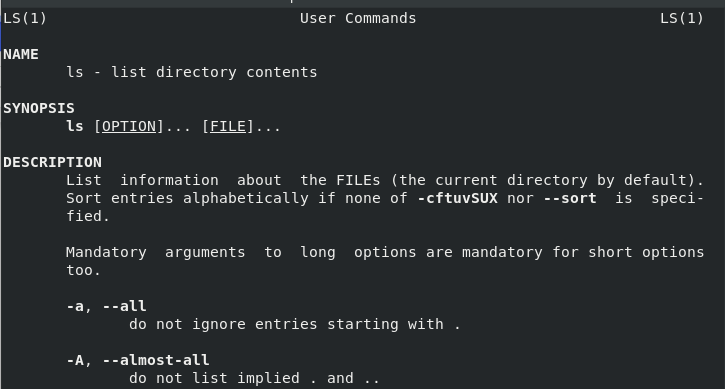

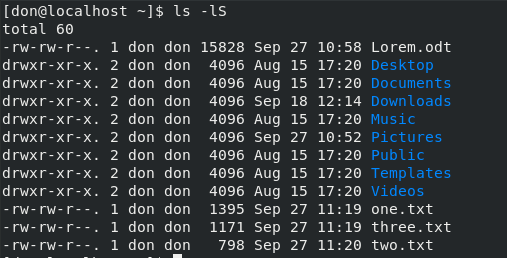

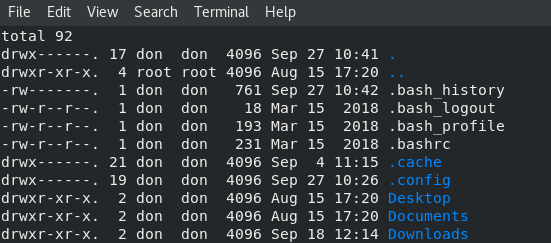

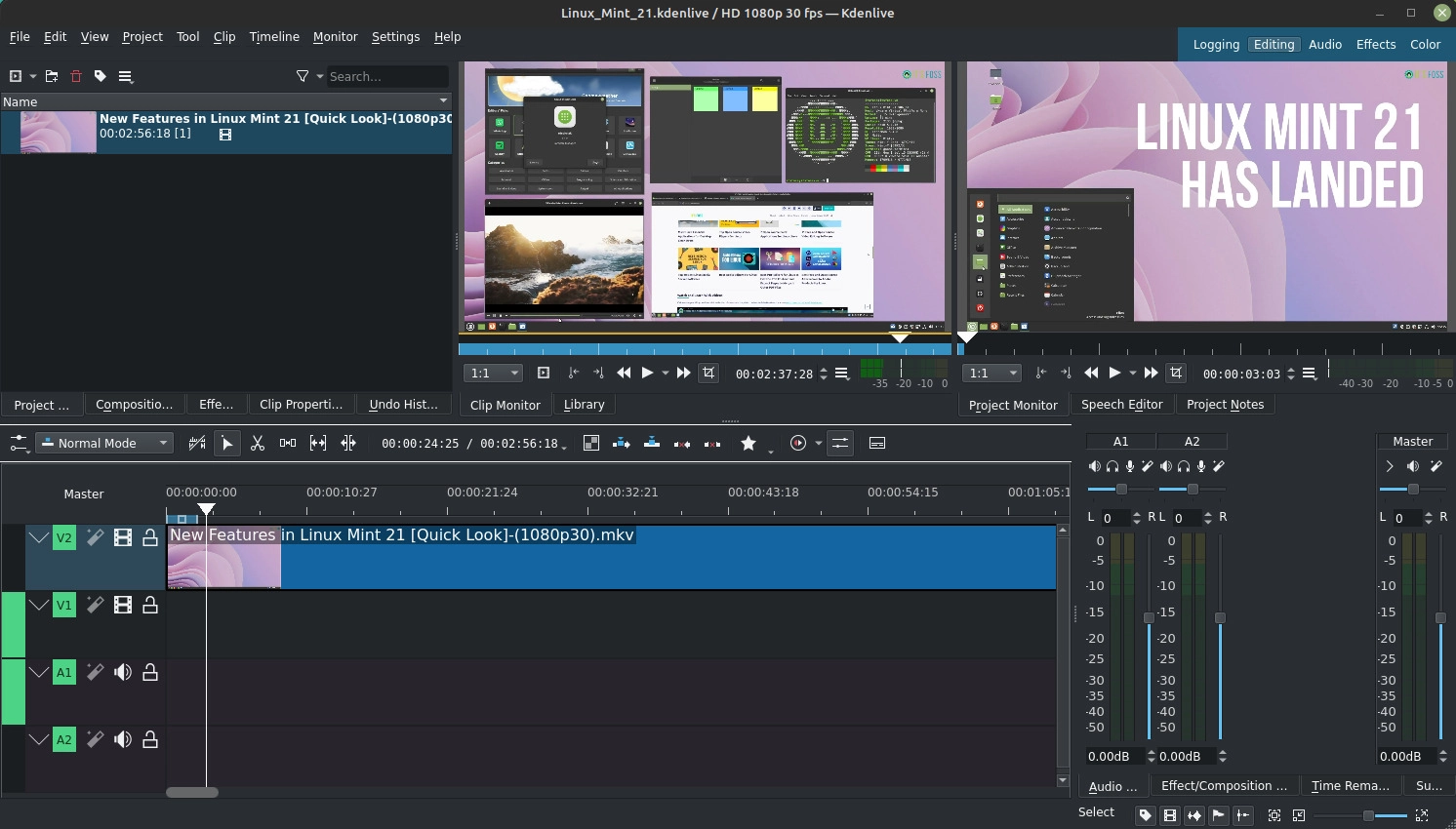

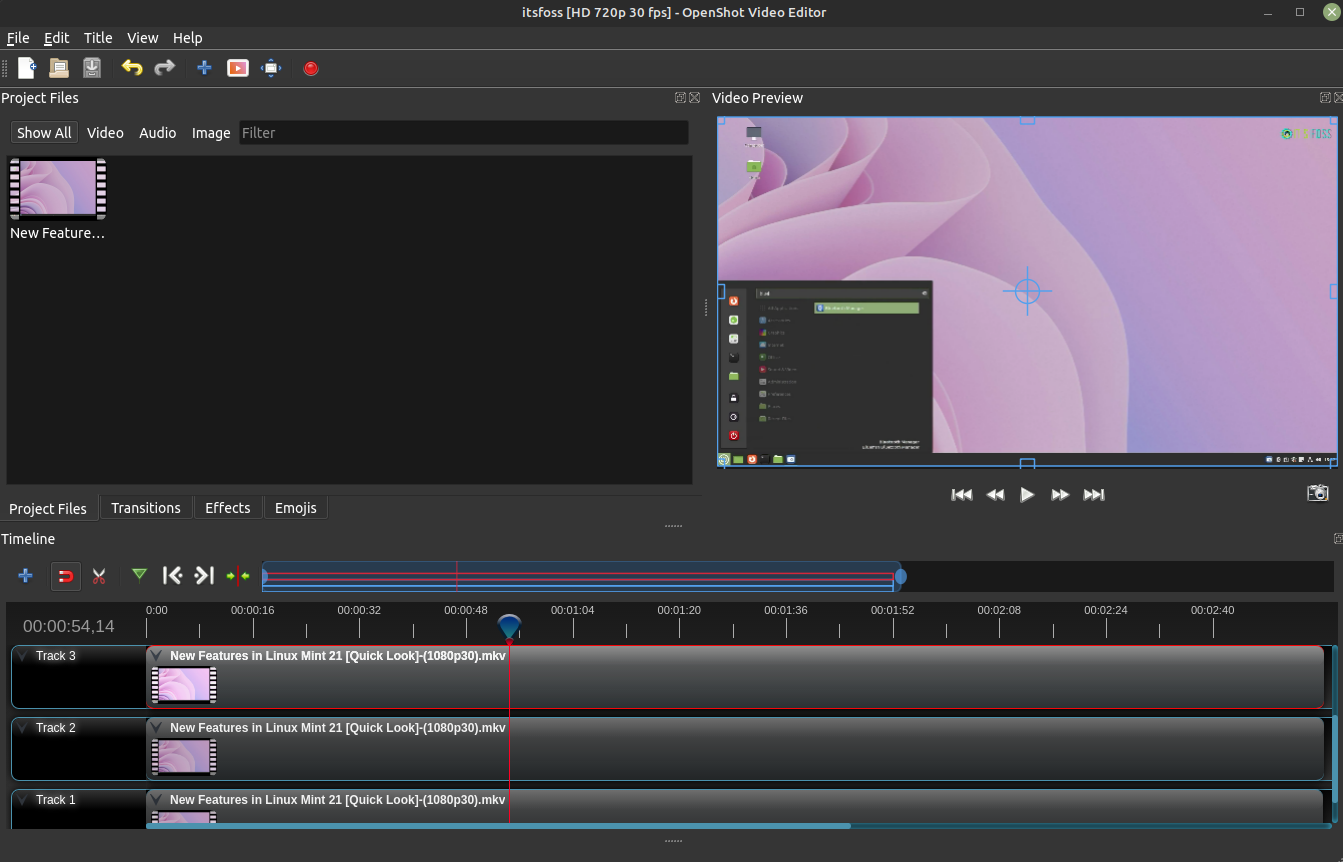

`ls -l` 提供了一个简单的目录列表:

在我的 Fedora 28 系统的手册页中,我发现 `ls` 还有许多其它选项,所有这些选项都提供了有关 Linux 文件系统的有趣且有用的信息。通过在命令提示符下输入 `man ls`,我们可以开始探索其它一些选项:

要按文件大小对目录进行排序,请使用 `ls -lS`:

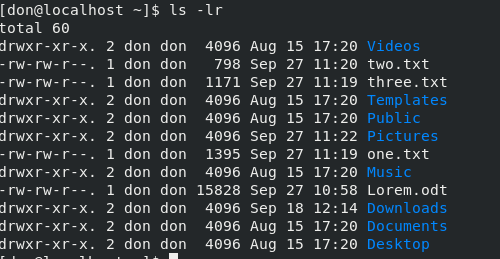

要以相反的顺序列出内容,请使用 `ls -lr`:

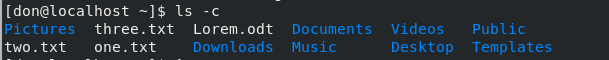

要按列列出内容,请使用 `ls -c`:

`ls -al` 提供了同一目录中所有文件的列表:

以下是我认为有用且有趣的一些其它选项:

* 仅列出目录中的 .txt 文件:`ls *.txt`

* 按文件大小列出:`ls -s`

* 按时间和日期排序:`ls -t`

* 按扩展名排序:`ls -X`

* 按文件大小排序:`ls -S`

* 带有文件大小的长格式:`ls -ls`

要生成指定格式的目录列表并将其定向到文件供以后查看,请输入 `ls -al > mydirectorylist`。最后,我找到的一个更奇特的命令是 `ls -R`,它提供了计算机上所有目录及其内容的递归列表。

有关 `ls` 命令的所有迭代的完整列表,请参阅 [GNU Core Utilities](https://www.gnu.org/software/coreutils/manual/html_node/ls-invocation.html#ls-invocation)。

---

via: <https://opensource.com/article/18/10/ls-command>

作者:[Don Watkins](https://opensource.com/users/don-watkins) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[pityonline](https://github.com/pityonline)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | One of the first commands I learned in Linux was `ls`

. Knowing what’s in a directory where a file on your system resides is important. Being able to see and modify not just some but *all* of the files is also important.

My first LInux cheat sheet was the [One Page Linux Manual](http://hackerspace.cs.rutgers.edu/library/General/One_Page_Linux_Manual.pdf), which was released in1999 and became my go-to reference. I taped it over my desk and referred to it often as I began to explore Linux. Listing files with `ls -l`

is introduced on the first page, at the bottom of the first column.

Later, I would learn other iterations of this most basic command. Through the `ls`

command, I began to learn about the complexity of the Linux file permissions and what was mine and what required root or sudo permission to change. I became very comfortable on the command line over time, and while I still use `ls -l`

to find files in the directory, I frequently use `ls -al`

so I can see hidden files that might need to be changed, like configuration files.

According to an article by Eric Fischer about the `ls`

command in the [Linux Documentation Project](http://www.tldp.org/LDP/LG/issue48/fischer.html), the command's roots go back to the `listf`

command on MIT’s Compatible Time Sharing System in 1961. When CTSS was replaced by [Multics](https://en.wikipedia.org/wiki/Multics), the command became `list`

, with switches like `list -all`

. According to [Wikipedia](https://en.wikipedia.org/wiki/Ls), `ls`

appeared in the original version of AT&T Unix. The `ls`

command we use today on Linux systems comes from the [GNU Core Utilities](http://www.gnu.org/s/coreutils/).

Most of the time, I use only a couple of iterations of the command. Looking inside a directory with `ls`

or `ls -al`

is how I generally use the command, but there are many other options that you should be familiar with.

`$ ls -l`

provides a simple list of the directory:

Using the man pages of my Fedora 28 system, I find that there are many other options to `ls`

, all of which provide interesting and useful information about the Linux file system. By entering `man ls`

at the command prompt, we can begin to explore some of the other options:

To sort the directory by file sizes, use `ls -lS`

:

To list the contents in reverse order, use `ls -lr`

:

To list contents by columns, use `ls -c`

:

`ls -al`

provides a list of all the files in the same directory:

Here are some additional options that I find useful and interesting:

- List only the .txt files in the directory:

`ls *.txt`

- List by file size:

`ls -s`

- Sort by time and date:

`ls -d`

- Sort by extension:

`ls -X`

- Sort by file size:

`ls -S`

- Long format with file size:

`ls -ls`

- List only the .txt files in a directory:

`ls *.txt`

To generate a directory list in the specified format and send it to a file for later viewing, enter `ls -al > mydirectorylist`

. Finally, one of the more exotic commands I found is `ls -R`

, which provides a recursive list of all the directories on your computer and their contents.

For a complete list of the all the iterations of the `ls`

command, refer to the [GNU Core Utilities](https://www.gnu.org/software/coreutils/manual/html_node/ls-invocation.html#ls-invocation).

## 4 Comments |

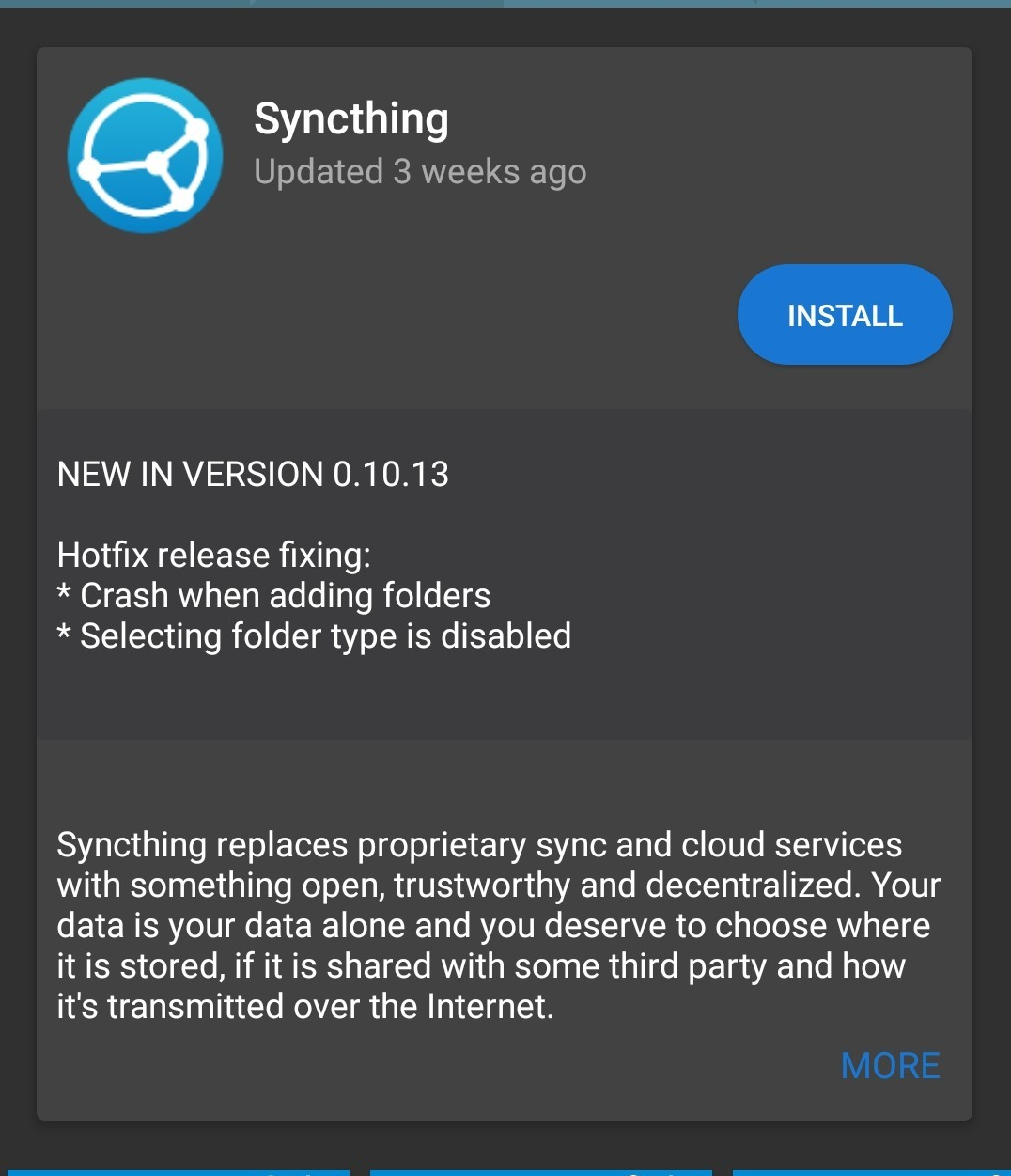

10,140 | PyTorch 1.0 预览版发布:Facebook 最新 AI 开源框架 | https://itsfoss.com/pytorch-open-source-ai-framework/ | 2018-10-22T08:44:30 | [] | https://linux.cn/article-10140-1.html | Facebook 在人工智能项目中广泛使用自己的开源 AI 框架 PyTorch,最近,他们已经发布了 PyTorch 1.0 的预览版本。

如果你尚不了解,[PyTorch](https://pytorch.org/) 是一个基于 Python 的科学计算库。

PyTorch 利用 [GPU 超强的运算能力](https://en.wikipedia.org/wiki/General-purpose_computing_on_graphics_processing_units) 来实现复杂的 [张量](https://en.wikipedia.org/wiki/Tensor) 计算 和 [深度神经网络](https://www.techopedia.com/definition/32902/deep-neural-network)。 因此, 它被世界各地的研究人员和开发人员广泛使用。

这一新的可以投入使用的 [预览版](https://code.fb.com/ai-research/facebook-accelerates-ai-development-with-new-partners-and-production-capabilities-for-pytorch-1-0) 已于 2018 年 10 月 2 日周二在旧金山 [The Midway](https://www.themidwaysf.com/) 举办的 [PyTorch 开发人员大会](https://pytorch.fbreg.com/) 宣布。

### PyTorch 1.0 候选版本的亮点

候选版本中的一些主要新功能包括:

#### 1、 JIT

JIT 是一个编译工具集,使研究和生产更加接近。 它包含一个基于 Python 语言的叫做 Torch Script 的脚本语言,也有能使现有代码与它自己兼容的方法。

#### 2、 全新的 torch.distributed 库: “C10D”

“C10D” 能够在不同的后端上启用异步操作, 并在较慢的网络上提高性能。

#### 3、 C++ 前端 (实验性功能)

虽然它被特别提到是一个不稳定的 API (估计是在预发行版中), 这是一个 PyTorch 后端的纯 C++ 接口, 遵循 API 和建立的 Python 前端的体系结构,以实现高性能、低延迟的研究和开发直接安装在硬件上的 C++ 应用程序。

想要了解更多,可以在 GitHub 上查看完整的 [更新说明](https://github.com/pytorch/pytorch/releases/tag/v1.0rc0)。

第一个 PyTorch 1.0 的稳定版本将在夏季发布。(LCTT 译注:此信息可能有误)

### 在 Linux 上安装 PyTorch

为了安装 PyTorch v1.0rc0, 开发人员建议使用 [conda](https://conda.io/), 同时也可以按照[本地安装页面](https://pytorch.org/get-started/locally/)所示,使用其他方法可以安装,所有必要的细节详见文档。

#### 前提

* Linux

* Pip

* Python

* [CUDA](https://www.pugetsystems.com/labs/hpc/How-to-install-CUDA-9-2-on-Ubuntu-18-04-1184/) (对于使用 Nvidia GPU 的用户)

我们已经知道[如何安装和使用 Pip](https://itsfoss.com/install-pip-ubuntu/),那就让我们来了解如何使用 Pip 安装 PyTorch。

请注意,PyTorch 具有 GPU 和仅限 CPU 的不同安装包。你应该安装一个适合你硬件的安装包。

#### 安装 PyTorch 的旧版本和稳定版

如果你想在 GPU 机器上安装稳定版(0.4 版本),使用:

```

pip install torch torchvision

```

使用以下两个命令,来安装仅用于 CPU 的稳定版:

```

pip install http://download.pytorch.org/whl/cpu/torch-0.4.1-cp27-cp27mu-linux_x86_64.whl

pip install torchvision

```

#### 安装 PyTorch 1.0 候选版本

使用如下命令安装 PyTorch 1.0 RC GPU 版本:

```

pip install torch_nightly -f https://download.pytorch.org/whl/nightly/cu92/torch_nightly.html

```

如果没有GPU,并且更喜欢使用 仅限 CPU 版本,使用如下命令:

```

pip install torch_nightly -f https://download.pytorch.org/whl/nightly/cpu/torch_nightly.html

```

#### 验证 PyTorch 安装

使用如下简单的命令,启动终端上的 python 控制台:

```

python

```

现在,按行输入下面的示例代码以验证您的安装:

```

from __future__ import print_function

import torch

x = torch.rand(5, 3)

print(x)

```

你应该得到如下输出:

```

tensor([[0.3380, 0.3845, 0.3217],

[0.8337, 0.9050, 0.2650],

[0.2979, 0.7141, 0.9069],

[0.1449, 0.1132, 0.1375],

[0.4675, 0.3947, 0.1426]])

```

若要检查是否可以使用 PyTorch 的 GPU 功能, 可以使用以下示例代码:

```

import torch

torch.cuda.is_available()

```

输出结果应该是:

```

True

```

支持 PyTorch 的 AMD GPU 仍在开发中, 因此, 尚未按[报告](https://github.com/pytorch/pytorch/issues/10657#issuecomment-415067478)提供完整的测试覆盖,如果您有 AMD GPU ,请在[这里](https://rocm.github.io/install.html#installing-from-amd-rocm-repositories)提出建议。

现在让我们来看看一些广泛使用 PyTorch 的研究项目:

### 基于 PyTorch 的持续研究项目

* [Detectron](https://github.com/facebookresearch/Detectron): Facebook AI 研究院的软件系统, 可以智能地进行对象检测和分类。它之前是基于 Caffe2 的。今年早些时候,Caffe2 和 PyTorch [合力](https://caffe2.ai/blog/2018/05/02/Caffe2_PyTorch_1_0.html)创建了一个研究 + 生产的 PyTorch 1.0

* [Unsupervised Sentiment Discovery](https://github.com/NVIDIA/sentiment-discovery): 广泛应用于社交媒体的一些算法

* [vid2vid](https://github.com/NVIDIA/vid2vid): 逼真的视频到视频的转换

* [DeepRecommender](https://github.com/NVIDIA/DeepRecommender/) 我们在过去的[网飞的 AI 文章](https://itsfoss.com/netflix-open-source-ai/)中介绍了这些系统是如何工作的

领先的 GPU 制造商英伟达在[更新](https://news.developer.nvidia.com/pytorch-1-0-accelerated-on-nvidia-gpus/)这方面最近的发展,你也可以阅读正在进行的合作的研究。

### 我们应该如何应对这种 PyTorch 的能力?

想到 Facebook 在社交媒体算法中应用如此令人惊叹的创新项目, 我们是否应该感激这一切或是感到惊恐?这几乎是[天网](https://en.wikipedia.org/wiki/Skynet_(Terminator))! 这一新改进的发布的 PyTorch 肯定会推动事情进一步向前! 在下方评论,随时与我们分享您的想法!

---

via: <https://itsfoss.com/pytorch-open-source-ai-framework/>

作者:[Avimanyu Bandyopadhyay](https://itsfoss.com/author/avimanyu/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[distant1219](https://github.com/distant1219) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Facebook already uses its own Open Source AI,

For those who are not familiar, [PyTorch](https://pytorch.org/) is a Python-based library for Scientific Computing.

PyTorch harnesses the [superior computational power of Graphical Processing Units (GPUs)](https://en.wikipedia.org/wiki/General-purpose_computing_on_graphics_processing_units) for carrying out complex [Tensor](https://en.wikipedia.org/wiki/Tensor) computations and implementing [deep neural networks](https://www.techopedia.com/definition/32902/deep-neural-network). So, it is used widely across the world by numerous researchers and developers.

This new ready-to-use [Preview Release](https://code.fb.com/ai-research/facebook-accelerates-ai-development-with-new-partners-and-production-capabilities-for-pytorch-1-0) was announced at the [PyTorch Developer Conference](https://pytorch.fbreg.com/) at [The Midway](https://www.themidwaysf.com/), San Francisco, CA on Tuesday, October 2, 2018.

## Highlights of PyTorch 1.0 Release Candidate

Some of the main new features in the release candidate are:

### 1. JIT

JIT is a set of compiler tools to bring research close to production. It includes a Python-based language called Torch Script and also ways to make existing code compatible with itself.

### 2. New torch.distributed library: “C10D”

“C10D” enables asynchronous operation on different backends with performance improvements on slower networks and more.

### 3. C++ frontend (experimental)

Though it has been specifically mentioned as an unstable API (expected in a pre-release), this is a pure C++ interface to the PyTorch backend that follows the API and architecture of the established Python frontend to enable research in high performance, low latency and C++ applications installed directly on hardware.

To know more, you can take a look at the complete [update notes](https://github.com/pytorch/pytorch/releases/tag/v1.0rc0) on GitHub.

The first stable version PyTorch 1.0 will be released in summer.

## Installing PyTorch on Linux

To install PyTorch v1.0rc0, the developers recommend [using Conda](https://learnubuntu.com/install-conda/) while there also other ways to do that as shown on their [local installation page](https://pytorch.org/get-started/locally/) where they have documented everything necessary in detail.

### Prerequisites

- Linux

- Pip

- Python

[CUDA](https://www.pugetsystems.com/labs/hpc/How-to-install-CUDA-9-2-on-Ubuntu-18-04-1184/)(For Nvidia GPU owners)

As we recently showed you [how to install and use Pip](https://itsfoss.com/install-pip-ubuntu/), let’s get to know how we can install PyTorch with it.

Note that PyTorch has GPU and CPU-only variants. You should install the one that suits your hardware.

### Installing old and stable version of PyTorch

If you want the stable release (version 0.4) for your GPU, use:

`pip install torch torchvision`

Use these two commands in succession for a CPU-only stable release:

```

pip install http://download.pytorch.org/whl/cpu/torch-0.4.1-cp27-cp27mu-linux_x86_64.whl

pip install torchvision

```

### Installing PyTorch 1.0 Release Candidate

You install PyTorch 1.0 RC GPU version with this command:

`pip install torch_nightly -f https://download.pytorch.org/whl/nightly/cu92/torch_nightly.html`

If you do not have a GPU and would prefer a CPU-only version, use:

`pip install torch_nightly -f https://download.pytorch.org/whl/nightly/cpu/torch_nightly.html`

### Verifying your PyTorch installation

Startup the python console on a terminal with the following simple command:

`python`

Now enter the following sample code line by line to verify your installation:

```

from __future__ import print_function

import torch

x = torch.rand(5, 3)

print(x)

```

You should get an output like:

```

tensor([[0.3380, 0.3845, 0.3217],

[0.8337, 0.9050, 0.2650],

[0.2979, 0.7141, 0.9069],

[0.1449, 0.1132, 0.1375],

[0.4675, 0.3947, 0.1426]])

```

To check whether you can use PyTorch’s GPU capabilities, use the following sample code:

```

import torch

torch.cuda.is_available()

```

The resulting output should be:

`True`

Support for AMD GPUs for PyTorch is still under development, so complete test coverage is not yet provided as reported [here](https://github.com/pytorch/pytorch/issues/10657#issuecomment-415067478), suggesting this [resource](https://rocm.github.io/install.html#installing-from-amd-rocm-repositories) in case you have an AMD GPU.

Lets now look into some research projects that extensively use PyTorch:

## Ongoing Research Projects based on PyTorch

[Detectron](https://github.com/facebookresearch/Detectron): Facebook AI Research’s software system to intelligently detect and classify objects. It is based on Caffe2. Earlier this year, Caffe2 and PyTorch[joined forces](https://caffe2.ai/blog/2018/05/02/Caffe2_PyTorch_1_0.html)to create a Research + Production enabled PyTorch 1.0 we talk about.[Unsupervised Sentiment Discovery](https://github.com/NVIDIA/sentiment-discovery): Such methods are extensively used with social media algorithms.[vid2vid](https://github.com/NVIDIA/vid2vid): Photorealistic video-to-video translation[DeepRecommender](https://github.com/NVIDIA/DeepRecommender/)(We covered how such systems work on our past[Netflix AI article](https://itsfoss.com/netflix-open-source-ai/))

Nvidia, leading GPU manufacturer covered more on this with their own [update](https://news.developer.nvidia.com/pytorch-1-0-accelerated-on-nvidia-gpus/) on this recent development where you can also read about ongoing collaborative research endeavours.

## How should we react to such PyTorch capabilities?

To think Facebook applies such amazingly innovative projects and more in its social media algorithms, should we appreciate all this or get alarmed? This is almost [Skynet](https://en.wikipedia.org/wiki/Skynet_(Terminator))! This newly improved production-ready pre-release of PyTorch will certainly push things further ahead! Feel free to share your thoughts with us in the comments below! |

10,143 | 如何在 Arch Linux(UEFI)上安装 GRUB | http://fasterland.net/how-to-install-grub-on-arch-linux-uefi.html | 2018-10-22T22:15:28 | [

"UEFI",

"GRUB"

] | https://linux.cn/article-10143-1.html |

前段时间,我写了一篇在安装 Windows 后在 Arch Linux 上[如何重新安装 Grub](http://fasterland.net/reinstall-grub-arch-linux.html)的教程。

几周前,我不得不在我的笔记本上从头开始重新安装 Arch Linux,同时我发现安装 Grub 并不像我想的那么简单。

出于这个原因,由于在新安装 Arch Linux 时在 UEFI bios 中安装 Grub 并不容易,所以我要写这篇教程。

### 定位 EFI 分区

在 Arch Linux 上安装 Grub 的第一件重要事情是定位 EFI 分区。让我们运行以下命令以找到此分区:

```

# fdisk -l

```

我们需要检查标记为 EFI System 的分区,我这里是 `/dev/sda2`。

之后,我们需要在例如 `/boot/efi` 上挂载这个分区:

```

# mkdir /boot/efi

# mount /dev/sdb2 /boot/efi

```

另一件重要的事情是将此分区添加到 `/etc/fstab` 中。

#### 安装 Grub

现在我们可以在我们的系统中安装 Grub:

```

# grub-mkconfig -o /boot/grub/grub.cfg

# grub-install --target=x86_64-efi --efi-directory=/boot/efi --bootloader-id=GRUB

```

#### 自动将 Windows 添加到 Grub 菜单中

为了自动将 Windows 条目添加到 Grub 菜单,我们需要安装 os-prober:

```

# pacman -Sy os-prober

```

要添加它,让我们运行以下命令:

```

# os-prober

# grub-mkconfig -o /boot/grub/grub.cfg

# grub-install --target=x86_64-efi --efi-directory=/boot/efi --bootloader-id=GRUB

```

你可以在[这里](https://wiki.archlinux.org/index.php/GRUB)找到更多关于在 Arch Linux 上 Grub 的信息。

---

via: <http://fasterland.net/how-to-install-grub-on-arch-linux-uefi.html>

作者:[Francesco Mondello](http://fasterland.net/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

10,144 | 系统管理员需知的 16 个 iptables 使用技巧 | https://opensource.com/article/18/10/iptables-tips-and-tricks | 2018-10-22T22:48:44 | [

"iptables"

] | /article-10144-1.html |

>

> iptables 是一款控制系统进出流量的强大配置工具。

>

>

>

现代 Linux 内核带有一个叫 [Netfilter](https://en.wikipedia.org/wiki/Netfilter) 的数据包过滤框架。Netfilter 提供了允许、丢弃以及修改等操作来控制进出系统的流量数据包。基于 Netfilter 框架的用户层命令行工具 `iptables` 提供了强大的防火墙配置功能,允许你添加规则来构建防火墙策略。[iptables](https://en.wikipedia.org/wiki/Iptables) 丰富复杂的功能以及其巴洛克式命令语法可能让人难以驾驭。我们就来探讨一下其中的一些功能,提供一些系统管理员解决某些问题需要的使用技巧。

### 避免封锁自己

应用场景:假设你将对公司服务器上的防火墙规则进行修改,你需要避免封锁你自己以及其他同事的情况(这将会带来一定时间和金钱的损失,也许一旦发生马上就有部门打电话找你了)

#### 技巧 #1: 开始之前先备份一下 iptables 配置文件。

用如下命令备份配置文件:

```

/sbin/iptables-save > /root/iptables-works

```

#### 技巧 #2: 更妥当的做法,给文件加上时间戳。

用如下命令加时间戳:

```

/sbin/iptables-save > /root/iptables-works-`date +%F`

```

然后你就可以生成如下名字的文件:

```

/root/iptables-works-2018-09-11

```

这样万一使得系统不工作了,你也可以很快的利用备份文件恢复原状:

```

/sbin/iptables-restore < /root/iptables-works-2018-09-11

```

#### 技巧 #3: 每次创建 iptables 配置文件副本时,都创建一个指向最新的文件的链接。

```

ln –s /root/iptables-works-`date +%F` /root/iptables-works-latest

```

#### 技巧 #4: 将特定规则放在策略顶部,底部放置通用规则。

避免在策略顶部使用如下的一些通用规则:

```

iptables -A INPUT -p tcp --dport 22 -j DROP

```

你在规则中指定的条件越多,封锁自己的可能性就越小。不要使用上面非常通用的规则,而是使用如下的规则:

```

iptables -A INPUT -p tcp --dport 22 –s 10.0.0.0/8 –d 192.168.100.101 -j DROP

```

此规则表示在 `INPUT` 链尾追加一条新规则,将源地址为 `10.0.0.0/8`、 目的地址是 `192.168.100.101`、目的端口号是 `22` (`--dport 22` ) 的 TCP(`-p tcp` )数据包通通丢弃掉。

还有很多方法可以设置更具体的规则。例如,使用 `-i eth0` 将会限制这条规则作用于 `eth0` 网卡,对 `eth1` 网卡则不生效。

#### 技巧 #5: 在策略规则顶部将你的 IP 列入白名单。

这是一个有效地避免封锁自己的设置:

```

iptables -I INPUT -s <your IP> -j ACCEPT

```

你需要将该规则添加到策略首位置。`-I` 表示则策略首部插入规则,`-A` 表示在策略尾部追加规则。

#### 技巧 #6: 理解现有策略中的所有规则。

不犯错就已经成功了一半。如果你了解 iptables 策略背后的工作原理,使用起来更为得心应手。如果有必要,可以绘制流程图来理清数据包的走向。还要记住:策略的预期效果和实际效果可能完全是两回事。

### 设置防火墙策略

应用场景:你希望给工作站配置具有限制性策略的防火墙。

#### 技巧 #1: 设置默认规则为丢弃

```

# Set a default policy of DROP

*filter

:INPUT DROP [0:0]

:FORWARD DROP [0:0]

:OUTPUT DROP [0:0]

```

#### 技巧 #2: 将用户完成工作所需的最少量服务设置为允许

该策略需要允许工作站能通过 DHCP(`-p udp --dport 67:68 -sport 67:68`)来获取 IP 地址、子网掩码以及其他一些信息。对于远程操作,需要允许 SSH 服务(`-dport 22`),邮件服务(`--dport 25`),DNS 服务(`--dport 53`),ping 功能(`-p icmp`),NTP 服务(`--dport 123 --sport 123`)以及 HTTP 服务(`-dport 80`)和 HTTPS 服务(`--dport 443`)。

```

# Set a default policy of DROP

*filter

:INPUT DROP [0:0]

:FORWARD DROP [0:0]

:OUTPUT DROP [0:0]

# Accept any related or established connections

-I INPUT 1 -m state --state RELATED,ESTABLISHED -j ACCEPT

-I OUTPUT 1 -m state --state RELATED,ESTABLISHED -j ACCEPT

# Allow all traffic on the loopback interface

-A INPUT -i lo -j ACCEPT

-A OUTPUT -o lo -j ACCEPT

# Allow outbound DHCP request

-A OUTPUT –o eth0 -p udp --dport 67:68 --sport 67:68 -j ACCEPT

# Allow inbound SSH

-A INPUT -i eth0 -p tcp -m tcp --dport 22 -m state --state NEW -j ACCEPT

# Allow outbound email

-A OUTPUT -i eth0 -p tcp -m tcp --dport 25 -m state --state NEW -j ACCEPT

# Outbound DNS lookups

-A OUTPUT -o eth0 -p udp -m udp --dport 53 -j ACCEPT

# Outbound PING requests

-A OUTPUT –o eth0 -p icmp -j ACCEPT

# Outbound Network Time Protocol (NTP) requests

-A OUTPUT –o eth0 -p udp --dport 123 --sport 123 -j ACCEPT

# Outbound HTTP

-A OUTPUT -o eth0 -p tcp -m tcp --dport 80 -m state --state NEW -j ACCEPT

-A OUTPUT -o eth0 -p tcp -m tcp --dport 443 -m state --state NEW -j ACCEPT

COMMIT

```

### 限制 IP 地址范围

应用场景:贵公司的 CEO 认为员工在 Facebook 上花费过多的时间,需要采取一些限制措施。CEO 命令下达给 CIO,CIO 命令 CISO,最终任务由你来执行。你决定阻止一切到 Facebook 的访问连接。首先你使用 `host` 或者 `whois` 命令来获取 Facebook 的 IP 地址。

```

host -t a www.facebook.com

www.facebook.com is an alias for star.c10r.facebook.com.

star.c10r.facebook.com has address 31.13.65.17

whois 31.13.65.17 | grep inetnum

inetnum: 31.13.64.0 - 31.13.127.255

```

然后使用 [CIDR 到 IPv4 转换](http://www.ipaddressguide.com/cidr) 页面来将其转换为 CIDR 表示法。然后你得到 `31.13.64.0/18` 的地址。输入以下命令来阻止对 Facebook 的访问:

```

iptables -A OUTPUT -p tcp -i eth0 –o eth1 –d 31.13.64.0/18 -j DROP

```

### 按时间规定做限制 - 场景1

应用场景:公司员工强烈反对限制一切对 Facebook 的访问,这导致了 CEO 放宽了要求(考虑到员工的反对以及他的助理提醒说她负责更新他的 Facebook 页面)。然后 CEO 决定允许在午餐时间访问 Facebook(中午 12 点到下午 1 点之间)。假设默认规则是丢弃,使用 iptables 的时间功能便可以实现。

```

iptables –A OUTPUT -p tcp -m multiport --dport http,https -i eth0 -o eth1 -m time --timestart 12:00 –timestop 13:00 –d 31.13.64.0/18 -j ACCEPT

```

该命令中指定在中午12点(`--timestart 12:00`)到下午 1 点(`--timestop 13:00`)之间允许(`-j ACCEPT`)到 Facebook.com (`-d [31.13.64.0/18][5]`)的 http 以及 https (`-m multiport --dport http,https`)的访问。

### 按时间规定做限制 - 场景2

应用场景:在计划系统维护期间,你需要设置凌晨 2 点到 3 点之间拒绝所有的 TCP 和 UDP 访问,这样维护任务就不会受到干扰。使用两个 iptables 规则可实现:

```

iptables -A INPUT -p tcp -m time --timestart 02:00 --timestop 03:00 -j DROP

iptables -A INPUT -p udp -m time --timestart 02:00 --timestop 03:00 -j DROP

```

该规则禁止(`-j DROP`)在凌晨2点(`--timestart 02:00`)到凌晨3点(`--timestop 03:00`)之间的 TCP 和 UDP (`-p tcp and -p udp`)的数据进入(`-A INPUT`)访问。

### 限制连接数量

应用场景:你的 web 服务器有可能受到来自世界各地的 DoS 攻击,为了避免这些攻击,你可以限制单个 IP 地址到你的 web 服务器创建连接的数量:

```

iptables –A INPUT –p tcp –syn -m multiport -–dport http,https –m connlimit -–connlimit-above 20 –j REJECT -–reject-with-tcp-reset

```

分析一下上面的命令。如果单个主机在一分钟之内新建立(`-p tcp -syn`)超过 20 个(`-connlimit-above 20`)到你的 web 服务器(`--dport http,https`)的连接,服务器将拒绝(`-j REJECT`)建立新的连接,然后通知对方新建连接被拒绝(`--reject-with-tcp-reset`)。

### 监控 iptables 规则

应用场景:由于数据包会遍历链中的规则,iptables 遵循 “首次匹配获胜” 的原则,因此经常匹配的规则应该靠近策略的顶部,而不太频繁匹配的规则应该接近底部。 你怎么知道哪些规则使用最多或最少,可以在顶部或底部附近监控?

#### 技巧 #1: 查看规则被访问了多少次

使用命令:

```

iptables -L -v -n –line-numbers

```

用 `-L` 选项列出链中的所有规则。因为没有指定具体哪条链,所有链规则都会被输出,使用 `-v` 选项显示详细信息,`-n` 选项则显示数字格式的数据包和字节计数器,每个规则开头的数值表示该规则在链中的位置。

根据数据包和字节计数的结果,你可以将访问频率最高的规则放到顶部,将访问频率最低的规则放到底部。

#### 技巧 #2: 删除不必要的规则

哪条规则从来没有被访问过?这些可以被清除掉。用如下命令查看:

```

iptables -nvL | grep -v "0 0"

```

注意:两个数字 0 之间不是 Tab 键,而是 **5** 个空格。

#### 技巧 #3: 监控正在发生什么

可能你也想像使用 `top` 命令一样来实时监控 iptables 的情况。使用如下命令来动态监视 iptables 中的活动,并仅显示正在遍历的规则:

```

watch --interval=5 'iptables -nvL | grep -v "0 0"'

```

`watch` 命令通过参数 `iptables -nvL | grep -v “0 0“` 每隔 5 秒输出 iptables 的动态。这条命令允许你查看数据包和字节计数的变化。

### 输出日志

应用场景:经理觉得你这个防火墙员工的工作质量杠杠的,但如果能有网络流量活动日志最好了。有时候这比写一份有关工作的报告更有效。

使用工具 [FWLogwatch](http://fwlogwatch.inside-security.de/) 基于 iptables 防火墙记录来生成日志报告。FWLogwatch 工具支持很多形式的报告并且也提供了很多分析功能。它生成的日志以及月报告使得管理员可以节省大量时间并且还更好地管理网络,甚至减少未被注意的潜在攻击。

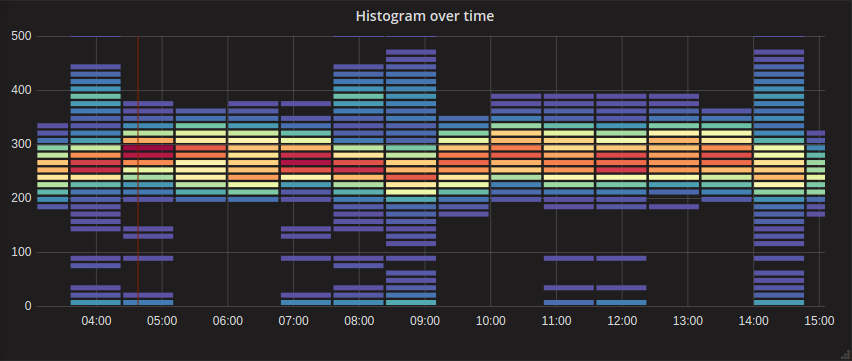

这里是一个 FWLogwatch 生成的报告示例:

### 不要满足于允许和丢弃规则

本文中已经涵盖了 iptables 的很多方面,从避免封锁自己、配置 iptables 防火墙以及监控 iptables 中的活动等等方面介绍了 iptables。你可以从这里开始探索 iptables 甚至获取更多的使用技巧。

---

via: <https://opensource.com/article/18/10/iptables-tips-and-tricks>

作者:[Gary Smith](https://opensource.com/users/greptile) 选题:[lujun9972](https://github.com/lujun9972) 译者:[jrg](https://github.com/jrglinux) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

10,145 | 5 个很酷的平铺窗口管理器 | https://fedoramagazine.org/5-cool-tiling-window-managers/ | 2018-10-23T22:13:31 | [

"窗口管理器"

] | https://linux.cn/article-10145-1.html |

Linux 桌面生态中有多种窗口管理器(WM)。有些是作为桌面环境的一部分开发的。有的则被用作独立程序。平铺窗口管理器就是这种情况,它提供了一个更轻量级的自定义环境。本文介绍了五种这样的平铺窗口管理器供你试用。

### i3

[i3](https://i3wm.org/) 是最受欢迎的平铺窗口管理器之一。与大多数其他此类 WM 一样,i3 专注于低资源消耗和用户可定制性。

您可以参考 [Magazine 上的这篇文章](https://fedoramagazine.org/getting-started-i3-window-manager/)了解 i3 安装细节以及如何配置它。

### sway

[sway](https://swaywm.org/) 是一个平铺 Wayland 合成器。它有与现有 i3 配置兼容的优点,因此你可以使用它来替换 i3 并使用 Wayland 作为显示协议。

您可以使用 `dnf` 从 Fedora 仓库安装 sway:

```

$ sudo dnf install sway

```

如果你想从 i3 迁移到 sway,这里有一个[迁移指南](https://github.com/swaywm/sway/wiki/i3-Migration-Guide)。

### Qtile

[Qtile](http://www.qtile.org/) 是另一个平铺管理器,也恰好是用 Python 编写的。默认情况下,你在位于 `~/.config/qtile/config.py` 下的 Python 脚本中配置 Qtile。当此脚本不存在时,Qtile 会使用默认[配置](https://github.com/qtile/qtile/blob/develop/libqtile/resources/default_config.py)。

Qtile 使用 Python 的一个好处是你可以编写脚本来控制 WM。例如,以下脚本打印屏幕详细信息:

```

> from libqtile.command import Client

> c = Client()

> print(c.screen.info)

{'index': 0, 'width': 1920, 'height': 1006, 'x': 0, 'y': 0}

```

要在 Fedora 上安装 Qlite,请使用以下命令:

```

$ sudo dnf install qtile

```

### dwm

[dwm](https://dwm.suckless.org/) 窗口管理器更侧重于轻量级。该项目的一个目标是保持 dwm 最小。例如,整个代码库从未超过 2000 行代码。另一方面,dwm 不容易定制和配置。实际上,改变 dwm 默认配置的唯一方法是[编辑源代码并重新编译程序](https://dwm.suckless.org/customisation/)。

如果你想尝试默认配置,你可以使用 `dnf` 在 Fedora 中安装 dwm:

```

$ sudo dnf install dwm

```

对于那些想要改变 dwm 配置的人,Fedora 中有一个 dwm-user 包。该软件包使用用户主目录中 `~/.dwm/config.h` 的配置自动重新编译 dwm。

### awesome

[awesome](https://awesomewm.org/) 最初是作为 dwm 的一个分支开发,使用外部配置文件提供 WM 的配置。配置通过 Lua 脚本完成,这些脚本允许你编写脚本以自动执行任务或创建 widget。

你可以使用这个命令在 Fedora 上安装 awesome:

```

$ sudo dnf install awesome

```

---

via: <https://fedoramagazine.org/5-cool-tiling-window-managers/>

作者:[Clément Verna](https://fedoramagazine.org) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | The Linux desktop ecosystem offers multiple window managers (WMs). Some are developed as part of a desktop environment. Others are meant to be used as standalone application. This is the case of tiling WMs, which offer a more lightweight, customized environment. This article presents five such tiling WMs for you to try out.

### i3

[i3](https://i3wm.org/) is one of the most popular tiling window managers. Like most other such WMs, i3 focuses on low resource consumption and customizability by the user.

You can refer to [this previous article in the Magazine](https://fedoramagazine.org/getting-started-i3-window-manager/) to get started with i3 installation details and how to configure it.

### sway

[sway](https://swaywm.org/) is a tiling Wayland compositor. It has the advantage of compatibility with an existing i3 configuration, so you can use it to replace i3 and use Wayland as the display protocol.

You can use *dnf* to install *sway* from Fedora repository:

$sudo dnf install sway

If you want to migrate from i3 to sway, there’s a small [migration guide](https://github.com/swaywm/sway/wiki/i3-Migration-Guide) available.

### Qtile

[Qtile](http://www.qtile.org/) is another tiling manager that also happens to be written in Python. By default, you configure Qtile in a Python script located under *~/.config/qtile/config.py.* When this script is not available, Qtile uses a default [configuration](https://github.com/qtile/qtile/blob/develop/libqtile/resources/default_config.py).

One of the benefits of Qtile being in Python is you can write scripts to control the WM. For example, the following script prints the screen details:

> from libqtile.command import Client > c = Client() > print(c.screen.info) {'index': 0, 'width': 1920, 'height': 1006, 'x': 0, 'y': 0}

To install Qlite on Fedora, use the following command:

$sudo dnf install qtile

### dwm

The [dwm](https://dwm.suckless.org/) window manager focuses more on being lightweight. One goal of the project is to keep dwm minimal and small. For example, the entire code base never exceeded 2000 lines of code. On the other hand, dwm isn’t as easy to customize and configure. Indeed, the only way to change dwm default configuration is to [edit the source code and recompile the application](https://dwm.suckless.org/customisation/).

If you want to try the default configuration, you can install dwm in Fedora using *dnf:*

$sudo dnf install dwm

For those who wand to change their dwm configuration, the *dwm-user *package is available in Fedora. This package automatically recompiles dwm using the configuration stored in the user home directory at *~/.dwm/config.h.*

### awesome

[awesome](https://awesomewm.org/) originally started as a fork of dwm, to provide configuration of the WM using an external configuration file. The configuration is done via Lua scripts, which allow you to write scripts to automate tasks or create widgets.

You can check out awesome on Fedora by installing it like this:

$sudo dnf install awesome

## Mark McIntyre

you have

for Qtile. it should be

.

## Clément Verna

Thanks updated

## Emiliano

Great article, thanks! (current awesome user :-P). I’ll try Sway, thanks!

## Hendrik

Note sway will soon release 1.0 version (https://github.com/swaywm/sway/issues/1735) backing up its own Wayland compositor library.

If you are a Awesome user you might want to check out Way Cooler which is a Wayland compositor implementing the Awesome api.

## John

Great article, but some screenshots would be nice, I think.

## freegoat

Why didn’t gTile get mentioned?

## Dann Griggs

i3 looks interesting, but I can reliably break it on my Fedora 28 install. Just install the basic i3 packages, log out of Gnome, and try it. Works fine, neat. Now exit i3 and do: ‘cp /etc/i3/config ~/.i3/config’. Try to start i3 again, it hangs during start up, no keystrokes work, and you have to switch to a VT to kill it. This makes customizing/configuring i3 a problem. Basically, if ~/.i3/config exists, you can’t start i3.

Anyone else run into this?

## Marian Šámal

Not sure, but I think the path should he ~/.config/i3/config

## Jens Petersen

There is also good ol’ xmonad, which is still well alive and kicking 🙂

waymonad is also under development.

## Sharlatan

How about StumpWM (CommonLisp base) so any configuration could load withing current session without lo-gout.

## svsv sarma

In the beginning, while learning, I used to tinker with WMs. But now I prefer the default GNOME and trust it to be memory friendly. So, forget about WMs and get on with the work.

## Nick

Pro-tip:

i3wm works very well when combined with the XFCE desktop environment.

Due to the modularity of XFCE you can easily replace the default window manager with i3 while retaining all the other niceties of a DE.

## Bill O

how about Xmonad?

## Bill O

I’ve tried a few times, moving from xmonad to i3, but never successfully.

## dg

I didn’t like i3 that much. I would love some detailed qtile and sway tutorial. Which is the most modern tiling manager? |

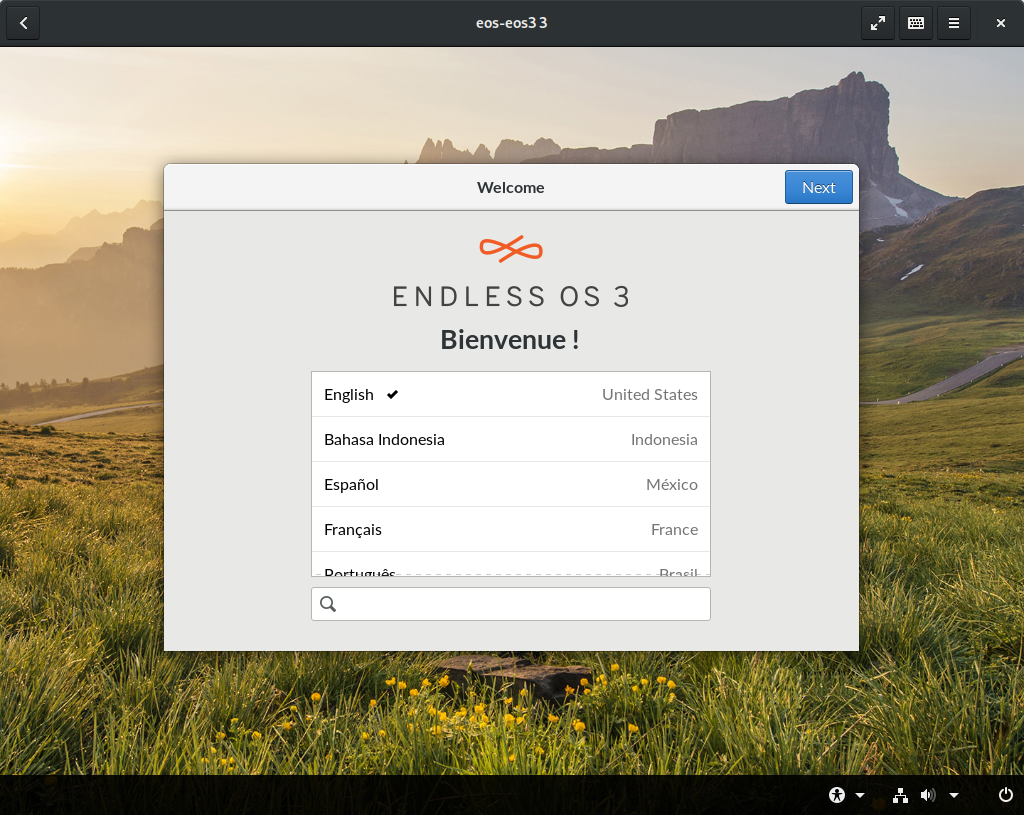

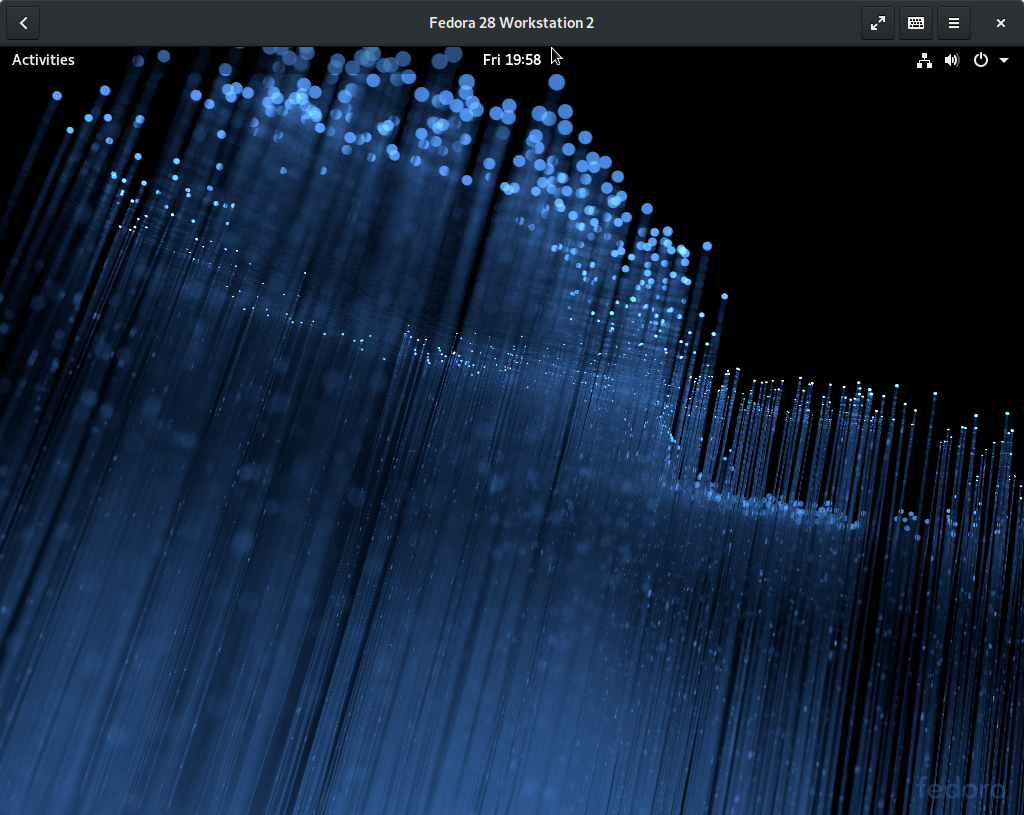

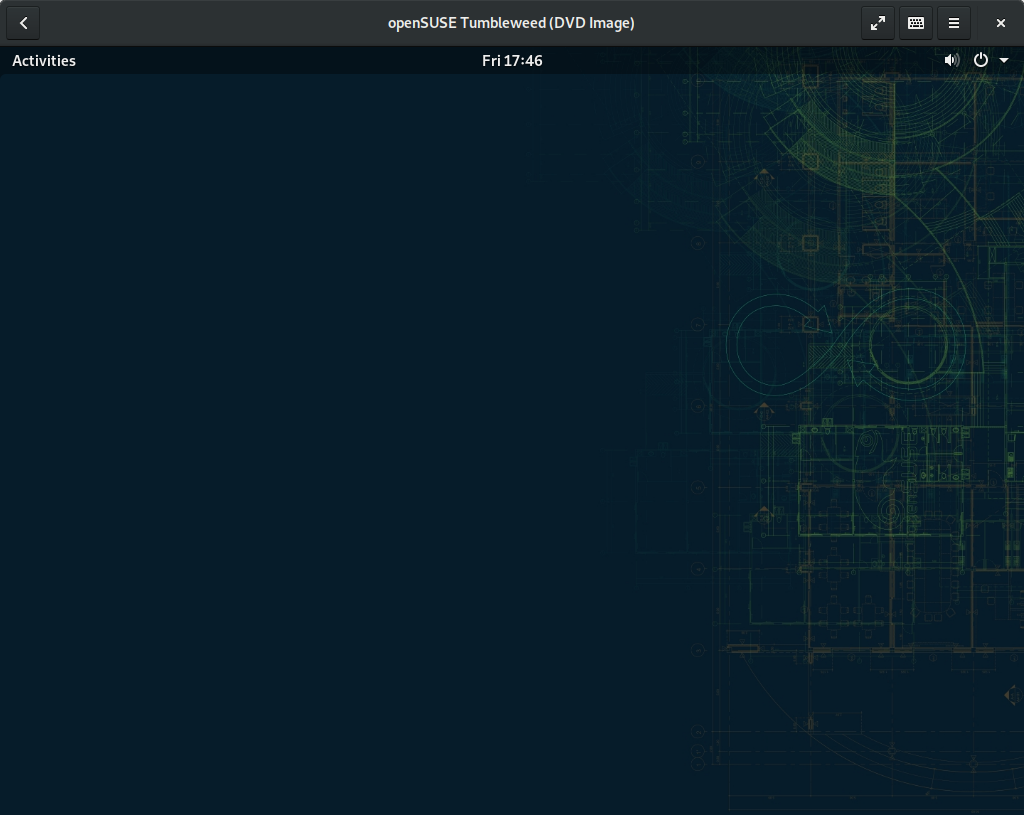

10,146 | 2018 年最好的 Linux 发行版 | https://www.linux.com/blog/learn/intro-to-linux/2018/1/best-linux-distributions-2018 | 2018-10-24T09:43:14 | [

"发行版",

"Linux"

] | https://linux.cn/article-10146-1.html |

>