id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

10,246 | 8 个很棒的 pytest 插件 | https://opensource.com/article/18/6/pytest-plugins | 2018-11-19T11:41:53 | [

"pytest",

"测试",

"Python"

] | https://linux.cn/article-10246-1.html |

>

> Python 测试工具最好的一方面是其强大的生态系统。这里列出了八个最好的插件。

>

>

>

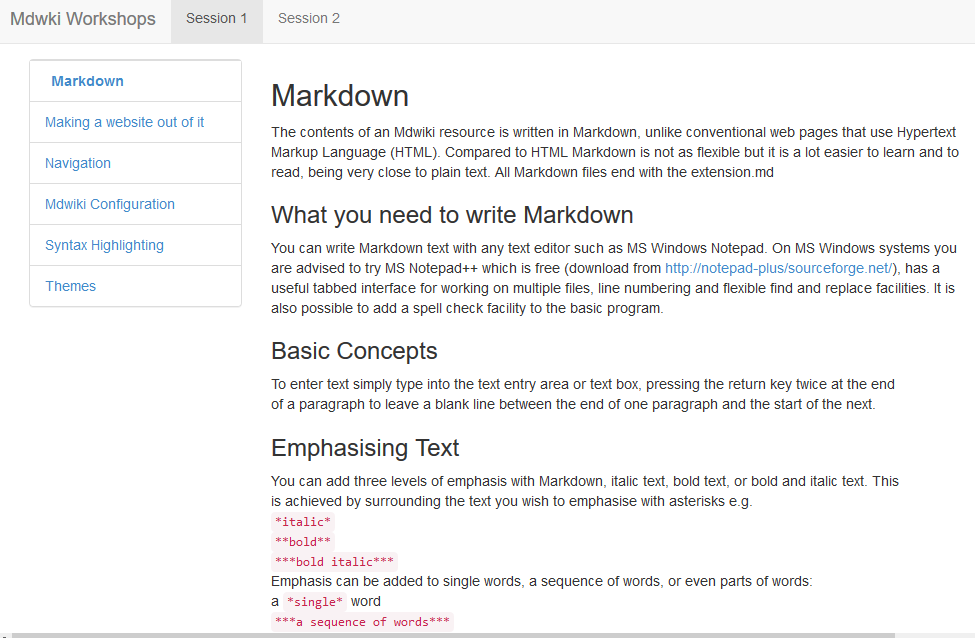

我们是 [pytest](https://docs.pytest.org/en/latest/) 的忠实粉丝,并将其作为工作和开源项目的默认 Python 测试工具。在本月的 Python 专栏中,我们分享了为什么我们喜欢 `pytest` 以及一些让 `pytest` 测试工作更有趣的插件。

### 什么是 pytest?

正如该工具的网站所说,“pytest 框架可以轻松地编写小型测试,也能进行扩展以支持应用和库的复杂功能测试。”

`pytest` 允许你在任何名为 `test_*.py` 的文件中定义测试,并将其定义为以 `test_*` 开头的函数。然后,pytest 将在整个项目中查找所有测试,并在控制台中运行 `pytest` 时自动运行这些测试。pytest 接受[标志和参数](https://docs.pytest.org/en/latest/usage.html),它们可以在测试运行器停止时更改,这些包含如何输出结果,运行哪些测试以及输出中包含哪些信息。它还包括一个 `set_trace()` 函数,它可以进入到你的测试中。它会暂停您的测试, 并允许你与变量进行交互,不然你只能在终端中“四处翻弄”来调试你的项目。

`pytest` 最好的一方面是其强大的插件生态系统。因为 `pytest` 是一个非常流行的测试库,所以多年来创建了许多插件来扩展、定制和增强其功能。这八个插件是我们的最爱。

### 8 个很棒的插件

#### 1、pytest-sugar

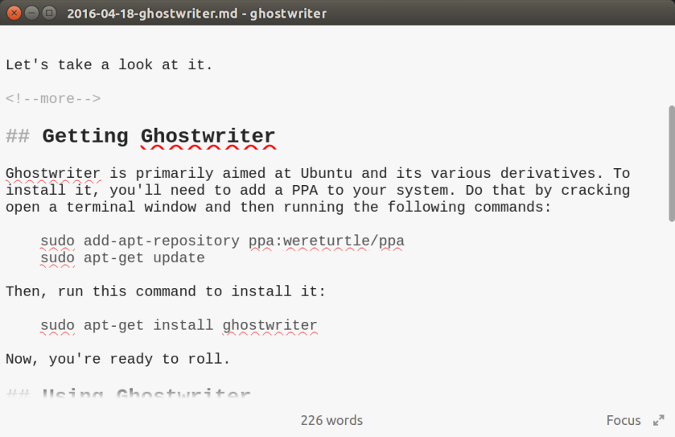

[pytest-sugar](https://github.com/Frozenball/pytest-sugar) 改变了 `pytest` 的默认外观,添加了一个进度条,并立即显示失败的测试。它不需要配置,只需 `pip install pytest-sugar`,用 `pytest` 运行测试,来享受更漂亮、更有用的输出。

#### 2、pytest-cov

[pytest-cov](https://github.com/pytest-dev/pytest-cov) 在 `pytest` 中增加了覆盖率支持,来显示哪些代码行已经测试过,哪些还没有。它还将包括项目的测试覆盖率。

#### 3、pytest-picked

[pytest-picked](https://github.com/anapaulagomes/pytest-picked) 对你已经修改但尚未提交 `git` 的代码运行测试。安装库并运行 `pytest --picked` 来仅测试自上次提交后已更改的文件。

#### 4、pytest-instafail

[pytest-instafail](https://github.com/pytest-dev/pytest-instafail) 修改 `pytest` 的默认行为来立即显示失败和错误,而不是等到 `pytest` 完成所有测试。

#### 5、pytest-tldr

一个全新的 `pytest` 插件,可以将输出限制为你需要的东西。`pytest-tldr`(`tldr` 代表 “too long, didn’t read” —— 太长,不想读),就像 pytest-sugar 一样,除基本安装外不需要配置。不像 pytest 的默认输出那么详细,[pytest-tldr](https://github.com/freakboy3742/pytest-tldr) 将默认输出限制为失败测试的回溯信息,并忽略了一些令人讨厌的颜色编码。添加 `-v` 标志会为喜欢它的人返回更详细的输出。

#### 6、pytest-xdist

[pytest-xdist](https://github.com/pytest-dev/pytest-xdist) 允许你通过 `-n` 标志并行运行多个测试:例如,`pytest -n 2` 将在两个 CPU 上运行你的测试。这可以显著加快你的测试速度。它还包括 `--looponfail` 标志,它将自动重新运行你的失败测试。

#### 7、pytest-django

[pytest-django](https://pytest-django.readthedocs.io/en/latest/) 为 Django 应用和项目添加了 `pytest` 支持。具体来说,`pytest-django` 引入了使用 pytest fixture 测试 Django 项目的能力,而省略了导入 `unittest` 和复制/粘贴其他样板测试代码的需要,并且比标准的 Django 测试套件运行得更快。

#### 8、django-test-plus

[django-test-plus](https://django-test-plus.readthedocs.io/en/latest/) 并不是专门为 `pytest` 开发,但它现在支持 `pytest`。它包含自己的 `TestCase` 类,你的测试可以继承该类,并使你能够使用较少的按键来输出频繁的测试案例,例如检查特定的 HTTP 错误代码。

我们上面提到的库绝不是你扩展 `pytest` 的唯一选择。有用的 pytest 插件的前景是广阔的。查看 [pytest 插件兼容性](https://plugincompat.herokuapp.com/)页面来自行探索。你最喜欢哪些插件?

---

via: <https://opensource.com/article/18/6/pytest-plugins>

作者:[Jeff Triplett](https://opensource.com/users/jefftriplett), [Lacery Williams Henschel](https://opensource.com/users/laceynwilliams) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | We are big fans of [pytest](https://docs.pytest.org/en/latest/) and use it as our default Python testing tool for work and open source projects. For this month's Python column, we're sharing why we love pytest and some of the plugins that make testing with pytest so much fun.

## What is pytest?

As the tool's website says, "The pytest framework makes it easy to write small tests, yet scales to support complex functional testing for applications and libraries."

Pytest allows you to define your tests in any file called `test_*.py`

and as functions that begin with `test_*`

. Pytest will then find all your tests, across your whole project, and run them automatically when you run `pytest`

in your console. Pytest accepts [flags and arguments](https://docs.pytest.org/en/latest/usage.html) that can change when the testrunner stops, how it outputs results, which tests are run, and what information is included in the output. It also includes a `set_trace()`

function that can be entered into your test; this will pause your tests and allow you to interact with your variables and otherwise "poke around" in the console to debug your project.

One of the best aspects of pytest is its robust plugin ecosystem. Because pytest is such a popular testing library, over the years many plugins have been created to extend, customize, and enhance its capabilities. These eight plugins are among our favorites.

## Great 8

**1. pytest-sugar**

`pytest-sugar`

changes the default look and feel of pytest, adds a progress bar, and shows failing tests instantly. It requires no configuration; just `pip install pytest-sugar`

, run your tests with `pytest`

, and enjoy the prettier, more useful output.**2. pytest-cov**

`pytest-cov`

adds coverage support for pytest to show which lines of code have been tested and which have not. It will also include the percentage of test coverage for your project.**3. pytest-picked**

`pytest-picked`

runs tests based on code that you have modified but not committed to `git`

yet. Install the library and run your tests with `pytest --picked`

to test only files that have been changed since your last commit.**4. pytest-instafail**

`pytest-instafail`

modifies pytest's default behavior to show failures and errors immediately instead of waiting until pytest has finished running every test.**5. pytest-tldr**

A brand-new pytest plugin that limits the output to just the things you need.

`pytest-tldr`

(the `tldr`

stands for "too long, didn't read"), like `pytest-sugar`

, requires no configuration other than basic installation. Instead of pytest's default output, which is pretty verbose, `pytest-tldr`

's default limits the output to only tracebacks for failing tests and omits the color-coding that some find annoying. Adding a `-v`

flag returns the more verbose output for those who prefer it.**6. pytest-xdist**

`pytest-xdist`

allows you to run multiple tests in parallel via the `-n`

flag: `pytest -n 2`

, for example, would run your tests on two CPUs. This can significantly speed up your tests. It also includes the `--looponfail`

flag, which will automatically re-run your failing tests.**7. pytest-django**

`pytest-django`

adds pytest support to Django applications and projects. Specifically, `pytest-django`

introduces the ability to test Django projects using pytest fixtures, omits the need to import `unittest`

and copy/paste other boilerplate testing code, and runs faster than the standard Django test suite.**8. django-test-plus **

`django-test-plus`

isn't specific to pytest, but it now supports pytest. It includes its own `TestCase`

class that your tests can inherit from and enables you to use fewer keystrokes to type out frequent test cases, like checking for specific HTTP error codes.The libraries we mentioned above are by no means your only options for extending your pytest usage. The landscape for useful pytest plugins is vast. Check out the [Pytest Plugins Compatibility](https://plugincompat.herokuapp.com/) page to explore on your own. Which ones are your favorites?

## Comments are closed. |

10,247 | COPR 仓库中 4 个很酷的新软件(2018.10) | https://fedoramagazine.org/4-cool-new-projects-try-copr-october-2018/ | 2018-11-19T12:01:00 | [

"COPR"

] | https://linux.cn/article-10247-1.html |

COPR 是软件的个人存储库的[集合](https://copr.fedorainfracloud.org/),它包含那些不在标准的 Fedora 仓库中的软件。某些软件不符合允许轻松打包的标准。或者它可能不符合其他 Fedora 标准,尽管它是自由开源的。COPR 可以在标准的 Fedora 包之外提供这些项目。COPR 中的软件不受 Fedora 基础设施的支持,或者是由项目自己背书的。但是,它是尝试新的或实验性软件的一种很好的方法。

这是 COPR 中一组新的有趣项目。

[编者按:这些项目里面有一个并不适合通过 COPR 分发,所以从本文中 也删除了。相关的评论也删除了,以免误导读者。对此带来的不便,我们深表歉意。]

(LCTT 译注:本文后来移除了对“GitKraken”项目的介绍。)

### Music On Console

[Music On Console](http://moc.daper.net/) 播放器(简称 mocp)是一个简单的控制台音频播放器。它有一个类似于 “Midnight Commander” 的界面,并且很容易使用。你只需进入包含音乐的目录,然后选择要播放的文件或目录。此外,mocp 提供了一组命令,允许直接从命令行进行控制。

#### 安装说明

该仓库目前为 Fedora 28 和 29 提供 Music On Console 播放器。要安装 mocp,请使用以下命令:

```

sudo dnf copr enable Krzystof/Moc

sudo dnf install moc

```

### cnping

[Cnping](https://github.com/cnlohr/cnping) 是小型的图形化 ping IPv4 工具,可用于可视化显示 RTT 的变化。它提供了一个选项来控制每个数据包之间的间隔以及发送的数据大小。除了显示的图表外,cnping 还提供 RTT 和丢包的基本统计数据。

#### 安装说明

该仓库目前为 Fedora 27、28、29 和 Rawhide 提供 cnping。要安装 cnping,请使用以下命令:

```

sudo dnf copr enable dreua/cnping

sudo dnf install cnping

```

### Pdfsandwich

[Pdfsandwich](http://www.tobias-elze.de/pdfsandwich/) 是将文本添加到图像形式的文本 PDF 文件 (如扫描书籍) 的工具。它使用光学字符识别 (OCR) 创建一个额外的图层, 包含了原始页面已识别的文本。这对于复制和处理文本很有用。

#### 安装说明

该仓库目前为 Fedora 27、28、29、Rawhide 以及 EPEL 7 提供 pdfsandwich。要安装 pdfsandwich,请使用以下命令:

```

sudo dnf copr enable merlinm/pdfsandwich

sudo dnf install pdfsandwich

```

---

via: <https://fedoramagazine.org/4-cool-new-projects-try-copr-october-2018/>

作者:[Dominik Turecek](https://fedoramagazine.org) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | COPR is a [collection](https://copr.fedorainfracloud.org/) of personal repositories for software that isn’t carried in the standard Fedora repositories. Some software doesn’t conform to standards that allow easy packaging. Or it may not meet other Fedora standards, despite being free and open source. COPR can offer these projects outside the standard set of Fedora Fedora packages. Software in COPR isn’t supported by Fedora infrastructure or signed by the project. However, it can be a neat way to try new or experimental software.

Here’s a set of new and interesting projects in COPR.

**[Editors’ Note:** One of these projects was unsuitable for distribution through COPR and has been removed from this article. Pursuant comments have also been removed to prevent confusion for additional readers. We apologize for any inconvenience.]

### Music On Console

[Music On Console](http://moc.daper.net/) player, or mocp, is a simple console audio player. It has an interface similar to the Midnight Commander and is easy use. You simply navigate to a directory with music files and select a file or directory to play. In addition, mocp provides a set of commands, allowing it to be controlled directly from command line.

#### Installation instructions

The repo currently provides Music On Console player for Fedora 28 and 29. To install mocp, use these commands:

sudo dnf copr enable krzysztof/Moc sudo dnf install moc

### cnping

[Cnping](https://github.com/cnlohr/cnping) is a small graphical ping tool for IPv4, useful for visualization of changes in round-trip time. It offers an option to control the time period between each packet as well as the size of data sent. In addition to the graph shown, cnping provides basic statistics on round-trip times and packet loss.

#### Installation instructions

The repo currently provides cnping for Fedora 27, 28, 29 and Rawhide. To install cnping, use these commands:

sudo dnf copr enable dreua/cnping sudo dnf install cnping

### Pdfsandwich

[Pdfsandwich](http://www.tobias-elze.de/pdfsandwich/) is a tool for adding text to PDF files which contain text in an image form — such as scanned books. It uses optical character recognition (OCR) to create an additional layer with the recognized text behind the original page. This can be useful for copying and working with the text.

#### Installation instructions

The repo currently provides pdfsandwich for Fedora 27, 28, 29 and Rawhide, and for EPEL 7. To install pdfsandwich, use these commands:

sudo dnf copr enable merlinm/pdfsandwich sudo dnf install pdfsandwich

## wk

The last one didn’t work:

dnf copr enable merlinm/pdfsandwich

(blah blah blah)

Do you want to continue? [y/N]: y

Repository successfully enabled.

[root@localhost bot]# dnf install pdfsandwich

Failed to synchronize cache for repo ‘merlinm-pdfsandwich’, disabling.

Last metadata expiration check: 0:21:56 ago on Wed 24 Oct 2018 11:52:22 AM PDT.

No match for argument: pdfsandwich

Error: Unable to find a match

#

## Paul W. Frields

Whatever problem you were encountering, it doesn’t seem to still be there. Works OK here.

## wk

I tried it again. Still gives the same error.

## Chris Moller

[1] ~/Downloads >sudo dnf copr enable Krzystof/Moc

You are about to enable a Copr repository. Please note that this

repository is not part of the main distribution, and quality may vary.

The Fedora Project does not exercise any power over the contents of

this repository beyond the rules outlined in the Copr FAQ at

https://docs.pagure.org/copr.copr/user_documentation.html#what-i-can-build-in-copr,

and packages are not held to any quality or security level.

Please do not file bug reports about these packages in Fedora

Bugzilla. In case of problems, contact the owner of this repository.

Do you want to continue? [y/N]: y

Error: Such repository does not exist.

[1] ~/Downloads >

## Guus

I have the same problem with the Krzystof/Moc repo. It does not seem to exist. Maybe the machine is offline now and again?

## Fred Weigel

The copr for moc should be:

krzysztof/Moc

## Guus

I can confirm this modification is correct. krzysztof/Moc is the right name. Remark the lowercase for krzysztof. This appears to be significant.

## Fred Weigel

: fred@tara ~ $; mocp

FATAL_ERROR: TiMidity-Plugin: Error processing TiMidity-Configuration!

Configuration file is:

## Dan Čermák

You can work around that issue by creating an empty timidity.conf file in your home directory (just touch it, it needn’t have any contents).

## Fred Weigel

Thanks! Using strace, the file name should be timidity.cfg.

## Nazar

Seems like I’m unable to count up to 4…

## Clément Verna

@Nazar please have a look at the Editor’s Note.

## Vaibhav Singh

[student@localhost-localdomain ~]$ sudo dnf copr enable Krzystof/Moc

[sudo] password for student:

You are about to enable a Copr repository. Please note that this

repository is not part of the main distribution, and quality may vary.

The Fedora Project does not exercise any power over the contents of

this repository beyond the rules outlined in the Copr FAQ at

https://docs.pagure.org/copr.copr/user_documentation.html#what-i-can-build-in-copr,

and packages are not held to any quality or security level.

Please do not file bug reports about these packages in Fedora

Bugzilla. In case of problems, contact the owner of this repository.

Do you want to continue? [y/N]: y

Error: Such repository does not exist.

[student@localhost-localdomain ~]$ sudo dnf install moc

Last metadata expiration check: 4:32:04 ago on Thu 25 Oct 2018 07:10:44 PM IST.

No match for argument: moc

Error: Unable to find a match

Please Help

Give a solution

## ddrfr

moc is ideal

## Vaibhav Singh

These two does’t work

sudo dnf copr enable Krzystof/Moc

sudo dnf install mocg

[work@localhost-localdomain ~]$ sudo dnf copr enable Krzystof/Moc

[sudo] password for work :

You are about to enable a Copr repository. Please note that this

repository is not part of the main distribution, and quality may vary.

The Fedora Project does not exercise any power over the contents of

this repository beyond the rules outlined in the Copr FAQ at

https://docs.pagure.org/copr.copr/user_documentation.html#what-i-can-build-in-copr,

and packages are not held to any quality or security level.

Please do not file bug reports about these packages in Fedora

Bugzilla. In case of problems, contact the owner of this repository.

Do you want to continue? [y/N]: y

Error: Such repository does not exist.

[work@localhost-localdomain ~]$ sudo dnf install moc

Last metadata expiration check: 0:33:37 ago on Fri 26 Oct 2018 10:47:51 AM IST.

No match for argument: moc

Error: Unable to find a match

## Larry

Let me get this straight, third party software that doesn’t conform to Fedora standards……Isn’t that one of the issues with Windoze, is “downloading software from other places,” or a far as that goes apps on Android not from the “play store.” Better yet when I used Ubuntu installing PPA’s from outside Ubuntu’s repositories, very unwise move. I don’t see a problem with installing software from outside repositories.

## Nick

For those that get an error which says “Error: Such repository does not exist.” on the first one, it is because the command is “sudo dnf copr enable krzysztof/Moc” not “sudo dnf copr enable krzystof/Moc”. Hope that helps.

## Clément Verna

Thanks I have edited the article to reflect this change

## Brenton Horne

I’ve created my own Copr for the application menu, window switch and dmenu replacement, Rofi (https://copr.fedorainfracloud.org/coprs/fusion809/Rofi/). Why am I mentioning this? Partly because I was surprised I had to package it, I would have thought it’d be in Fedora’s rather large and comprehensive repos. Also because it goes exceptionally well with i3 as a replacement for dmenu, but with a larger feature set. Might be worthwhile covering in a future article. |

10,249 | gitbase:用 SQL 查询 Git 仓库 | https://opensource.com/article/18/11/gitbase | 2018-11-19T13:14:50 | [

"Git",

"SQL"

] | https://linux.cn/article-10249-1.html |

>

> gitbase 是一个使用 go 开发的的开源项目,它实现了在 Git 仓库上执行 SQL 查询。

>

>

>

Git 已经成为了代码版本控制的事实标准,但尽管 Git 相当普及,对代码仓库的深入分析的工作难度却没有因此而下降;而 SQL 在大型代码库的查询方面则已经是一种久经考验的语言,因此诸如 Spark 和 BigQuery 这样的项目都采用了它。

所以,source{d} 很顺理成章地将这两种技术结合起来,就产生了 gitbase(LCTT 译注:source{d} 是一家开源公司,本文作者是该公司开发者关系副总裁)。gitbase 是一个<ruby> 代码即数据 <rt> code-as-data </rt></ruby>的解决方案,可以使用 SQL 对 git 仓库进行大规模分析。

[gitbase](https://github.com/src-d/gitbase) 是一个完全开源的项目。它站在了很多巨人的肩上,因此得到了足够的发展竞争力。下面就来介绍一下其中的一些“巨人”。

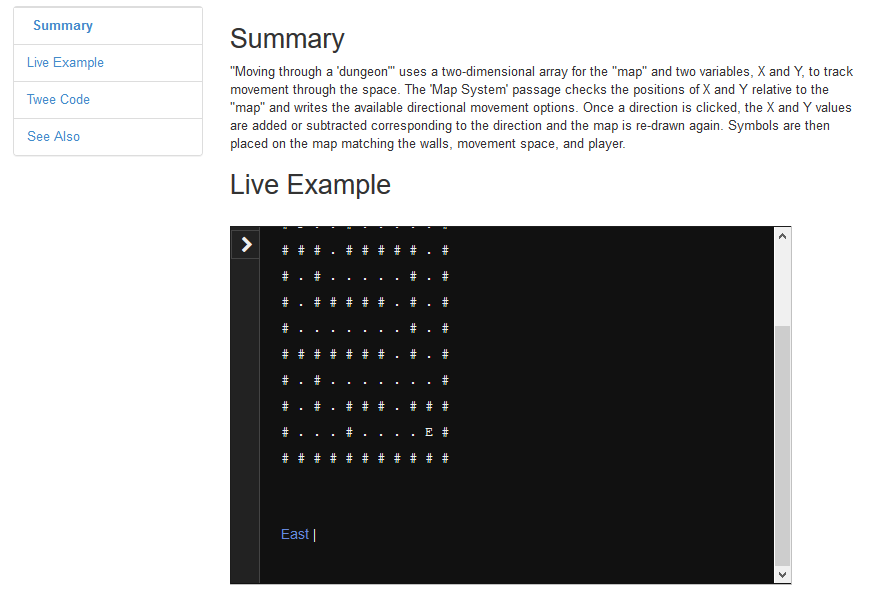

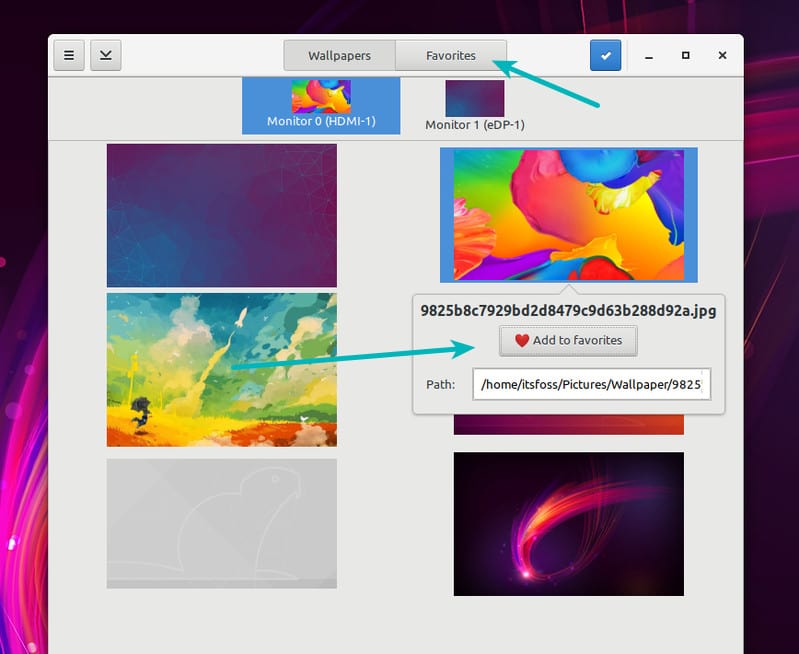

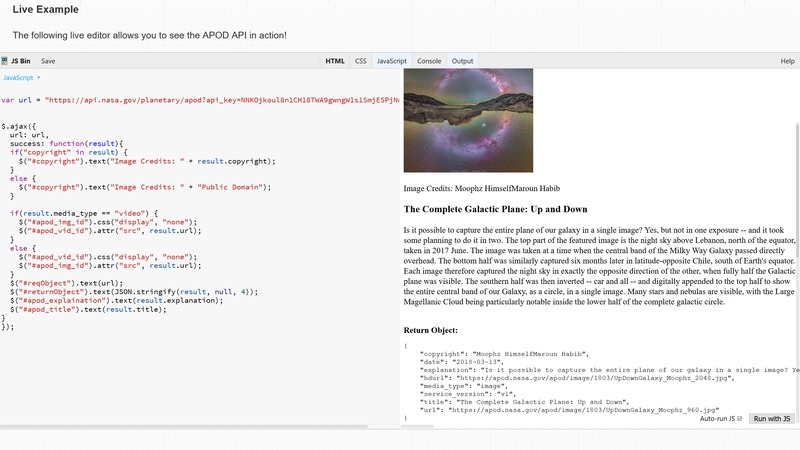

*[gitbase playground](https://github.com/src-d/gitbase-web) 为 gitbase 提供了一个可视化的操作环境。*

### 用 Vitess 解析 SQL

gitbase 通过 SQL 与用户进行交互,因此需要能够遵循 MySQL 协议来对通过网络传入的 SQL 请求作出解析和理解,万幸由 YouTube 建立的 [Vitess](https://github.com/vitessio/vitess) 项目已经在这一方面给出了解决方案。Vitess 是一个横向扩展的 MySQL 数据库集群系统。

我们只是使用了这个项目中的部分重要代码,并将其转化为一个可以让任何人在数分钟以内编写出一个 MySQL 服务器的[开源程序](https://github.com/src-d/go-mysql-server),就像我在 [justforfunc](http://justforfunc.com/) 视频系列中展示的 [CSVQL](https://youtu.be/bcRDXAraprk) 一样,它可以使用 SQL 操作 CSV 文件。

### 用 go-git 读取 git 仓库

在成功解析 SQL 请求之后,还需要对数据集中的 git 仓库进行查询才能返回结果。因此,我们还结合使用了 source{d} 最成功的 [go-git](https://github.com/src-d/go-git) 仓库。go-git 是使用纯 go 语言编写的具有高度可扩展性的 git 实现。

借此我们就可以很方便地将存储在磁盘上的代码仓库保存为 [siva](https://github.com/src-d/siva) 文件格式(这同样是 source{d} 的一个开源项目),也可以通过 `git clone` 来对代码仓库进行复制。

### 使用 enry 检测语言、使用 babelfish 解析文件

gitbase 集成了我们开源的语言检测项目 [enry](https://github.com/src-d/enry) 以及代码解析项目 [babelfish](https://github.com/bblfsh/bblfshd),因此在分析 git 仓库历史代码的能力也相当强大。babelfish 是一个自托管服务,普适于各种源代码解析,并将代码文件转换为<ruby> 通用抽象语法树 <rt> Universal Abstract Syntax Tree </rt></ruby>(UAST)。

这两个功能在 gitbase 中可以被用户以函数 `LANGUAGE` 和 `UAST` 调用,诸如“查找上个月最常被修改的函数的名称”这样的请求就需要通过这两个功能实现。

### 提高性能

gitbase 可以对非常大的数据集进行分析,例如来自 GitHub 高达 3 TB 源代码的 Public Git Archive([公告](https://blog.sourced.tech/post/announcing-pga/))。面临的工作量如此巨大,因此每一点性能都必须运用到极致。于是,我们也使用到了 Rubex 和 Pilosa 这两个项目。

#### 使用 Rubex 和 Oniguruma 优化正则表达式速度

[Rubex](https://github.com/moovweb/rubex) 是 go 的正则表达式标准库包的一个准替代品。之所以说它是准替代品,是因为它没有在 `regexp.Regexp` 类中实现 `LiteralPrefix` 方法,直到现在都还没有。

Rubex 的高性能是由于使用 [cgo](https://golang.org/cmd/cgo/) 调用了 [Oniguruma](https://github.com/kkos/oniguruma),它是一个高度优化的 C 代码库。

#### 使用 Pilosa 索引优化查询速度

索引几乎是每个关系型数据库都拥有的特性,但 Vitess 由于不需要用到索引,因此并没有进行实现。

于是我们引入了 [Pilosa](https://github.com/pilosa/pilosa) 这个开源项目。Pilosa 是一个使用 go 实现的分布式位图索引,可以显著提升跨多个大型数据集的查询的速度。通过 Pilosa,gitbase 才得以在巨大的数据集中进行查询。

### 总结

我想用这一篇文章来对开源社区表达我衷心的感谢,让我们能够不负众望的在短时间内完成 gitbase 的开发。我们 source{d} 的每一位成员都是开源的拥护者,github.com/src-d 下的每一行代码都是见证。

你想使用 gitbase 吗?最简单快捷的方式是从 sourced.tech/engine 下载 source{d} 引擎,就可以通过单个命令运行 gitbase 了。

想要了解更多,可以听听我在 [Go SF 大会](https://www.meetup.com/golangsf/events/251690574/)上的演讲录音。

本文在 [Medium](https://medium.com/sourcedtech/gitbase-exploring-git-repos-with-sql-95ec0986386c) 首发,并经许可在此发布。

---

via: <https://opensource.com/article/18/11/gitbase>

作者:[Francesc Campoy](https://opensource.com/users/francesc) 选题:[lujun9972](https://github.com/lujun9972) 译者:[HankChow](https://github.com/HankChow) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Git has become the de-facto standard for code versioning, but its popularity didn't remove the complexity of performing deep analyses of the history and contents of source code repositories.

SQL, on the other hand, is a battle-tested language to query large codebases as its adoption by projects like Spark and BigQuery shows.

So it is just logical that at source{d} we chose these two technologies to create gitbase: the code-as-data solution for large-scale analysis of git repositories with SQL.

[Gitbase](https://github.com/src-d/gitbase) is a fully open source project that stands on the shoulders of a series of giants which made its development possible, this article aims to point out the main ones.

*The gitbase playground provides a visual way to use gitbase.*

## Parsing SQL with Vitess

Gitbase's user interface is SQL. This means we need to be able to parse and understand the SQL requests that arrive through the network following the MySQL protocol. Fortunately for us, this was already implemented by our friends at YouTube and their [Vitess](https://github.com/vitessio/vitess) project. Vitess is a database clustering system for horizontal scaling of MySQL.

We simply grabbed the pieces of code that mattered to us and made it into an [open source project ](https://github.com/src-d/go-mysql-server)that allows anyone to write a MySQL server in minutes (as I showed in my [justforfunc](http://justforfunc.com/) episode [CSVQL—serving CSV with SQL](https://youtu.be/bcRDXAraprk)).

## Reading git repositories with go-git

Once we've parsed a request we still need to find how to answer it by reading the git repositories in our dataset. For this, we integrated source{d}'s most successful repository [go-git](https://github.com/src-d/go-git). Go-git is a* *highly extensible Git implementation in pure Go.

This allowed us to easily analyze repositories stored on disk as [siva](https://github.com/src-d/siva) files (again an open source project by source{d}) or simply cloned with *git clone*.

## Detecting languages with enry and parsing files with babelfish

Gitbase does not stop its analytic power at the git history. By integrating language detection with our (obviously) open source project [enry](https://github.com/src-d/enry) and program parsing with [babelfish](https://github.com/bblfsh/bblfshd). Babelfish is a self-hosted server for universal source code parsing, turning code files into Universal Abstract Syntax Trees (UASTs)

These two features are exposed in gitbase as the user functions *LANGUAGE *and *UAST*. Together they make requests like *"find the name of the function that was most often modified during the last month" *possible.

## Making it go fast

Gitbase analyzes really large datasets—e.g. Public Git Archive, with 3TB of source code from GitHub ([announcement](https://blog.sourced.tech/post/announcing-pga/)) and in order to do so every CPU cycle counts.

This is why we integrated two more projects into the mix: Rubex and Pilosa.

### Speeding up regular expressions with Rubex and Oniguruma

[Rubex](https://github.com/moovweb/rubex) is a quasi-drop-in replacement for Go's *regexp *standard library package. I say quasi because they do not implement the *LiteralPrefix* method on the *regexp.Regexp* type, but I also had never heard about that method until right now.

Rubex gets its performance from the highly optimized C library [Oniguruma](https://github.com/kkos/oniguruma) which it calls using [cgo](https://golang.org/cmd/cgo/).

### Speeding up queries with Pilosa indexes

Indexes are a well-known feature of basically every relational database, but Vitess does not implement them since it doesn't really need to.

But again open source came to the rescue with [Pilosa](https://github.com/pilosa/pilosa), a distributed bitmap index implemented in Go which made gitbase usable on massive datasets. Pilosa is an open source, distributed bitmap index that dramatically accelerates queries across multiple, massive datasets.

## Conclusion

I'd like to use this blog post to personally thank the open source community that made it possible for us to create gitbase in such a shorter period that anyone would have expected. At source{d} we are firm believers in open source and every single line of code under github.com/src-d (including our OKRs and investor board) is a testament to that.

Would you like to give gitbase a try? The fastest and easiest way is with source{d} Engine. Download it from sourced.tech/engine and get gitbase running with a single command!

Want to know more? Check out the recording of my talk at the [Go SF meetup](https://www.meetup.com/golangsf/events/251690574/).

*The article was originally published on Medium and is republished here with permission.*

## Comments are closed. |

10,250 | Chrony:一个类 Unix 系统上 NTP 客户端和服务器替代品 | https://www.ostechnix.com/chrony-an-alternative-ntp-client-and-server-for-unix-like-systems/ | 2018-11-19T14:34:07 | [

"NTP",

"时间"

] | https://linux.cn/article-10250-1.html |

在这个教程中,我们会讨论如何安装和配置 **Chrony**,一个类 Unix 系统上 NTP 客户端和服务器的替代品。Chrony 可以更快的同步系统时钟,具有更好的时钟准确度,并且它对于那些不是一直在线的系统很有帮助。Chrony 是自由开源的,并且支持 GNU/Linux 和 BSD 衍生版(比如 FreeBSD、NetBSD)、macOS 和 Solaris 等。

### 安装 Chrony

Chrony 可以从大多数 Linux 发行版的默认软件库中获得。如果你使用的是 Arch Linux,运行下面的命令来安装它:

```

$ sudo pacman -S chrony

```

在 Debian、Ubuntu、Linux Mint 上:

```

$ sudo apt-get install chrony

```

在 Fedora 上:

```

$ sudo dnf install chrony

```

当安装完成后,如果之前没有启动过的话需启动 `chronyd.service` 守护进程:

```

$ sudo systemctl start chronyd.service

```

使用下面的命令让它每次重启系统后自动运行:

```

$ sudo systemctl enable chronyd.service

```

为了确认 `chronyd.service` 已经启动,运行:

```

$ sudo systemctl status chronyd.service

```

如果一切正常,你将看到类似下面的输出:

```

● chrony.service - chrony, an NTP client/server

Loaded: loaded (/lib/systemd/system/chrony.service; enabled; vendor preset: ena

Active: active (running) since Wed 2018-10-17 10:34:53 UTC; 3min 15s ago

Docs: man:chronyd(8)

man:chronyc(1)

man:chrony.conf(5)

Main PID: 2482 (chronyd)

Tasks: 1 (limit: 2320)

CGroup: /system.slice/chrony.service

└─2482 /usr/sbin/chronyd

Oct 17 10:34:53 ubuntuserver systemd[1]: Starting chrony, an NTP client/server...

Oct 17 10:34:53 ubuntuserver chronyd[2482]: chronyd version 3.2 starting (+CMDMON

Oct 17 10:34:53 ubuntuserver chronyd[2482]: Initial frequency -268.088 ppm

Oct 17 10:34:53 ubuntuserver systemd[1]: Started chrony, an NTP client/server.

Oct 17 10:35:03 ubuntuserver chronyd[2482]: Selected source 85.25.84.166

Oct 17 10:35:03 ubuntuserver chronyd[2482]: Source 85.25.84.166 replaced with 2403

Oct 17 10:35:03 ubuntuserver chronyd[2482]: Selected source 91.189.89.199

Oct 17 10:35:06 ubuntuserver chronyd[2482]: Selected source 106.10.186.200

```

可以看到,Chrony 服务已经启动并且正在工作!

### 配置 Chrony

NTP 客户端需要知道它要连接到哪个 NTP 服务器来获取当前时间。我们可以直接在该 NTP 配置文件中的 `server` 或者 `pool` 项指定 NTP 服务器。通常,默认的配置文件位于 `/etc/chrony/chrony.conf` 或者 `/etc/chrony.conf`,取决于 Linux 发行版版本。为了更可靠的同步时间,建议指定至少三个服务器。

下面几行是我的 Ubuntu 18.04 LTS 服务器上的一个示例。

```

[...]

# About using servers from the NTP Pool Project in general see (LP: #104525).

# Approved by Ubuntu Technical Board on 2011-02-08.

# See http://www.pool.ntp.org/join.html for more information.

pool ntp.ubuntu.com iburst maxsources 4

pool 0.ubuntu.pool.ntp.org iburst maxsources 1

pool 1.ubuntu.pool.ntp.org iburst maxsources 1

pool 2.ubuntu.pool.ntp.org iburst maxsources 2

[...]

```

从上面的输出中你可以看到,[NTP 服务器池项目](https://www.ntppool.org/en/) 已经被设置成为了默认的时间服务器。对于那些好奇的人,NTP 服务器池项目是一个时间服务器集群,用来为全世界千万个客户端提供 NTP 服务。它是 Ubuntu 以及其他主流 Linux 发行版的默认时间服务器。

在这里, \* `iburst` 选项用来加速初始的同步过程 \* `maxsources` 代表 NTP 源的最大数量

请确保你选择的 NTP 服务器是同步的、稳定的、离你的位置较近的,以便使用这些 NTP 源来提升时间准确度。

### 在命令行中管理 Chronyd

chrony 有一个命令行工具叫做 `chronyc` 用来控制和监控 chrony 守护进程(`chronyd`)。

为了检查是否 chrony 已经同步,我们可以使用下面展示的 `tracking` 命令。

```

$ chronyc tracking

Reference ID : 6A0ABAC8 (t1.time.sg3.yahoo.com)

Stratum : 3

Ref time (UTC) : Wed Oct 17 11:48:51 2018

System time : 0.000984587 seconds slow of NTP time

Last offset : -0.000912981 seconds

RMS offset : 0.007983995 seconds

Frequency : 23.704 ppm slow

Residual freq : +0.006 ppm

Skew : 1.734 ppm

Root delay : 0.089718960 seconds

Root dispersion : 0.008760406 seconds

Update interval : 515.1 seconds

Leap status : Normal

```

我们可以使用命令确认现在 chrony 使用的时间源:

```

$ chronyc sources

210 Number of sources = 8

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^- chilipepper.canonical.com 2 10 377 296 +102ms[ +104ms] +/- 279ms

^- golem.canonical.com 2 10 377 302 +105ms[ +107ms] +/- 290ms

^+ pugot.canonical.com 2 10 377 297 +36ms[ +38ms] +/- 238ms

^- alphyn.canonical.com 2 10 377 279 -43ms[ -42ms] +/- 238ms

^- dadns.cdnetworks.co.kr 2 10 377 1070 +40ms[ +42ms] +/- 314ms

^* t1.time.sg3.yahoo.com 2 10 377 169 -13ms[ -11ms] +/- 80ms

^+ sin1.m-d.net 2 10 275 567 -9633us[-7826us] +/- 115ms

^- ns2.pulsation.fr 2 10 377 311 -75ms[ -73ms] +/- 250ms

```

`chronyc` 工具可以对每个源进行统计,比如使用 `sourcestats` 命令获得漂移速率和进行偏移估计。

```

$ chronyc sourcestats

210 Number of sources = 8

Name/IP Address NP NR Span Frequency Freq Skew Offset Std Dev

==============================================================================

chilipepper.canonical.com 32 16 89m +6.293 14.345 +30ms 24ms

golem.canonical.com 32 17 89m +0.312 18.887 +20ms 33ms

pugot.canonical.com 32 18 89m +0.281 11.237 +3307us 23ms

alphyn.canonical.com 31 20 88m -4.087 8.910 -58ms 17ms

dadns.cdnetworks.co.kr 29 16 76m -1.094 9.895 -83ms 14ms

t1.time.sg3.yahoo.com 32 16 91m +0.153 1.952 +2835us 4044us

sin1.m-d.net 29 13 83m +0.049 6.060 -8466us 9940us

ns2.pulsation.fr 32 17 88m +0.784 9.834 -62ms 22ms

```

如果你的系统没有连接到互联网,你需要告知 Chrony 系统没有连接到 互联网。为了这样做,运行:

```

$ sudo chronyc offline

[sudo] password for sk:

200 OK

```

为了确认你的 NTP 源的状态,只需要运行:

```

$ chronyc activity

200 OK

0 sources online

8 sources offline

0 sources doing burst (return to online)

0 sources doing burst (return to offline)

0 sources with unknown address

```

可以看到,我的所有源此时都是离线状态。

一旦你连接到互联网,只需要使用命令告知 Chrony 你的系统已经回到在线状态:

```

$ sudo chronyc online

200 OK

```

为了查看 NTP 源的状态,运行:

```

$ chronyc activity

200 OK

8 sources online

0 sources offline

0 sources doing burst (return to online)

0 sources doing burst (return to offline)

0 sources with unknown address

```

所有选项和参数的详细解释,请参考其帮助手册。

```

$ man chronyc

$ man chronyd

```

这就是文章的所有内容。希望对你有所帮助。在随后的教程中,我们会看到如何使用 Chrony 启动一个本地的 NTP 服务器并且配置客户端来使用这个服务器同步时间。

保持关注!

---

via: <https://www.ostechnix.com/chrony-an-alternative-ntp-client-and-server-for-unix-like-systems/>

作者:[SK](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[zianglei](https://github.com/zianglei) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

10,252 | 设计更快的网页(三):字体和 CSS 调整 | https://fedoramagazine.org/design-faster-web-pages-part-3-font-css-tweaks/ | 2018-11-19T22:54:41 | [

"CSS"

] | https://linux.cn/article-10252-1.html |

欢迎回到我们为了构建更快网页所写的系列文章。本系列的[第一部分](/article-10166-1.html)和[第二部分](/article-10217-1.html)讲述了如何通过优化和替换图片来减少浏览器脂肪。本部分会着眼于在 CSS([层叠式样式表](https://en.wikipedia.org/wiki/Cascading_Style_Sheets))和字体中减掉更多的脂肪。

### 调整 CSS

首先,我们先来看看问题的源头。CSS 的出现曾是技术的一大进步。你可以用一个集中式的样式表来装饰多个网页。如今很多 Web 开发者都会使用 Bootstrap 这样的框架。

这些框架当然方便,可是很多人都会将整个框架直接复制粘贴走。Bootstrap 非常大:目前 Bootstrap 4.0 的“最小”版本也有 144.9 KB. 在这个以 TB 来计数据的时代,它可能不算多。但就像所说的那样,一头小牛也能搞出大麻烦。

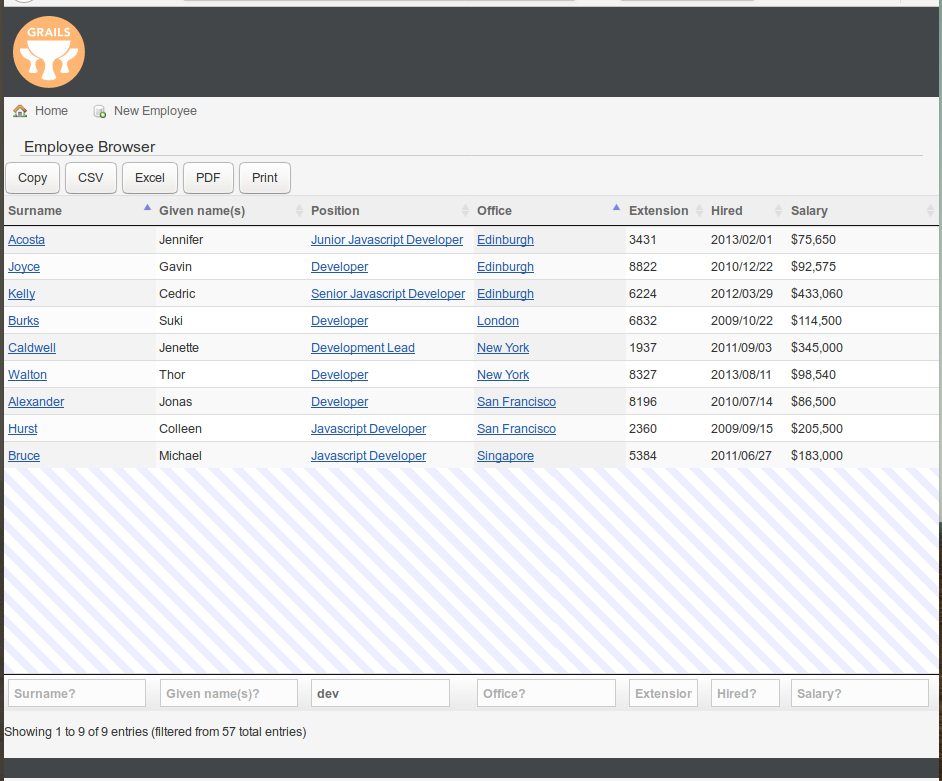

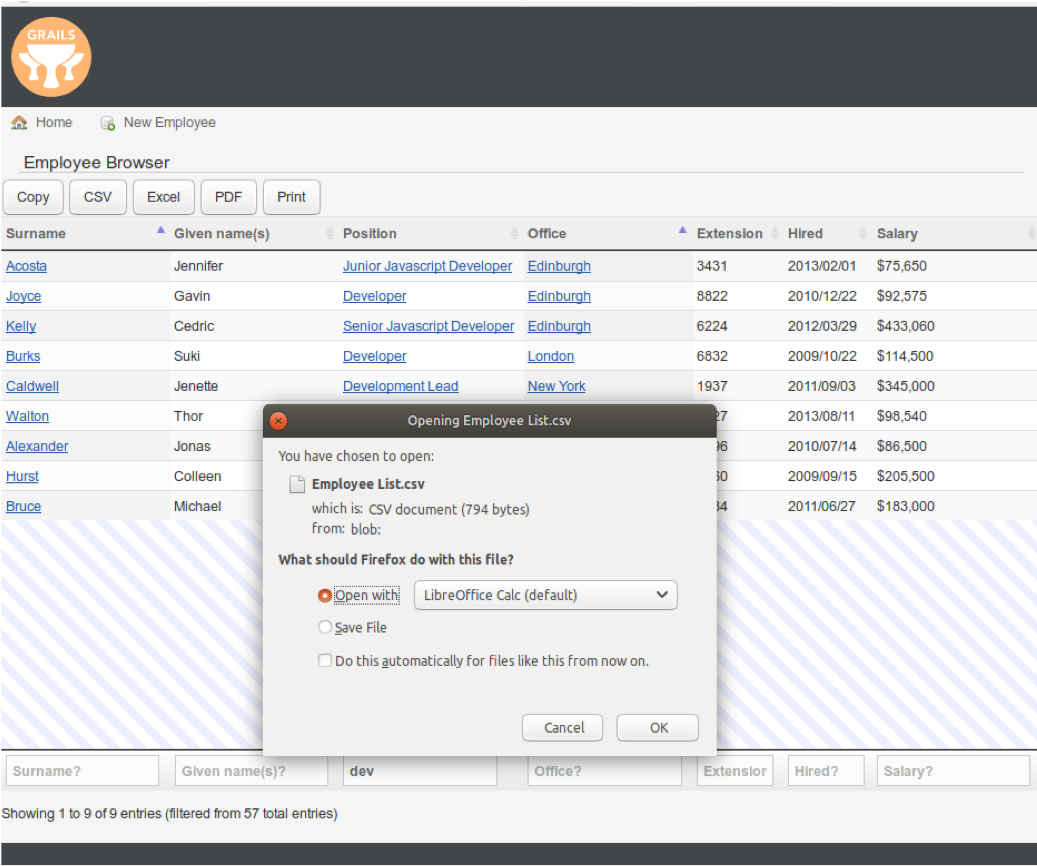

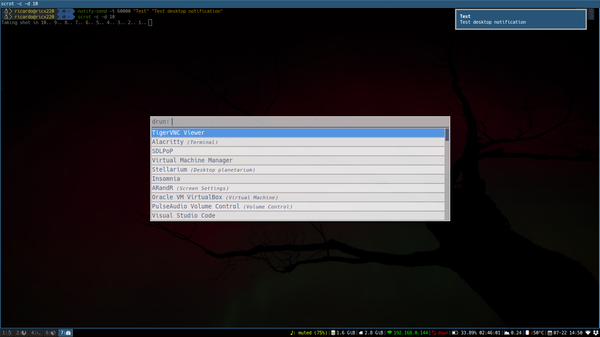

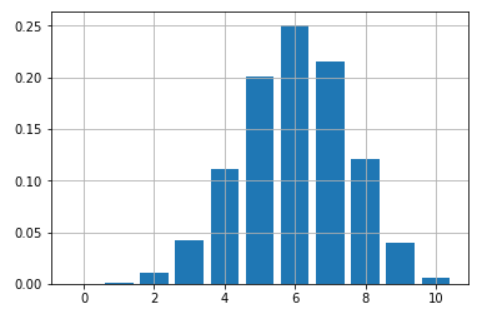

我们回头来看 [getfedora.org](https://getfedora.org) 的例子。我们在[第一部分](/article-10166-1.html)中提过,第一个分析结果显示 CSS 文件占用的空间几乎比 HTML 本身还要大十倍。这里显示了所有用到的样式表:

那是九个不同的样式表。其中的很多样式在这个页面中并没有用上。

#### 移除、合并、以及压缩/缩小化

Font-awesome CSS 代表了包含未使用样式的极端。这个页面中只用到了这个字体的三个字形。如果以 KB 为单位,getfedora.org 用到的 font-awesome CSS 最初有 25.2 KB. 在清理掉所有未使用的样式后,它只有 1.3 KB 了。这只有原来体积的 4% 左右!对于 Bootstrap CSS,原来它有 118.3 KB,清理掉无用的样式后只有 13.2 KB,这就是差异。

下一个问题是,我们必须要这样一个 `bootstrap.css` 和 `font-awesome.css` 吗?或者,它们能不能合起来呢?没错,它们可以。这样虽然不会节省更多的文件空间,但浏览器成功渲染页面所需要发起的请求更少了。

最后,在合并 CSS 文件后,尝试去除无用样式并缩小它们。这样,它们只有 4.3 KB 大小,而你省掉了 10.1 KB.

不幸的是,在 Fedora 软件仓库中,还没有打包好的缩小工具。不过,有几百种在线服务可以帮到你。或者,你也可以使用 [CSS-HTML-JS Minify](https://github.com/juancarlospaco/css-html-js-minify),它用 Python 编写,所以容易安装。现在没有一个可用的工具来净化 CSS,不过我们有 [UnCSS](https://uncss-online.com/) 这样的 Web 服务。

### 字体改进

[CSS3](https://developer.mozilla.org/en-US/docs/Web/CSS/CSS3) 带来了很多开发人员喜欢的东西。它可以定义一些渲染页面所用的字体,并让浏览器在后台下载。此后,很多 Web 设计师都很开心,尤其是在他们发现了 Web 设计中图标字体的用法之后。像 [Font Awesome](https://fontawesome.com/) 这样的字体集现在非常流行,也被广泛使用。这是这个字体集的大小:

```

current free version 912 glyphs/icons, smallest set ttf 30.9KB, woff 14.7KB, woff2 12.2KB, svg 107.2KB, eot 31.2

```

所以问题是,你需要所有的字形吗?很可能不需要。你可以通过 [FontForge](https://fontforge.github.io/en-US/) 来去除这些无用字形,但这需要很大的工作量。你还可以用 [Fontello](http://fontello.com/). 你可以使用公共实例,也可以配置你自己的版本,因为它是自由软件,可以在 [Github](https://github.com/fontello/fontello) 上找到。

这种自定义字体集的缺点在于,你必须自己来托管字体文件。你也没法使用其它在线服务来提供更新。但与更快的性能相比,这可能算不上一个缺点。

### 总结

现在,你已经做完了所有对内容本身的操作,来最大限度地减少浏览器加载和解释的内容。从现在开始,只有服务器的管理技巧才才能帮到你了。

有一个很简单,但很多人都做错了的事情,就是使用一些智能缓存。比如,CSS 或者图片文件可以缓存一周。但无论如何,如果你用了 Cloudflare 这样的代理服务或者自己构建了代理,首先要做的都应该是缩小页面。用户喜欢可以快速加载的页面。他们会(默默地)感谢你,服务器的负载也会更小。

---

via: <https://fedoramagazine.org/design-faster-web-pages-part-3-font-css-tweaks/>

作者:[Sirko Kemter](https://fedoramagazine.org/author/gnokii/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[StdioA](https://github.com/StdioA) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Welcome back to this series of articles on designing faster web pages. [Part 1](https://fedoramagazine.org/design-faster-web-pages-part-1-image-compression/) and [part 2](https://fedoramagazine.org/design-faster-web-pages-part-2-image-replacement/) of this series covered how to lose browser fat through optimizing and replacing images. This part looks at how to lose additional fat in CSS ([Cascading Style Sheets](https://en.wikipedia.org/wiki/Cascading_Style_Sheets)) and fonts.

## Tweaking CSS

First things first: let’s look at where the problem originates. CSS was once a huge step forward. You can use it to style several pages from a central style sheet. Nowadays, many web developers use frameworks like Bootstrap.

While these frameworks are certainly helpful, many people simply copy and paste the whole framework. Bootstrap is huge; the “minimal” version of 4.0 is currently 144.9 KB. Perhaps in the era of terabytes of data, this isn’t much. But as they say, even small cattle makes a mess.

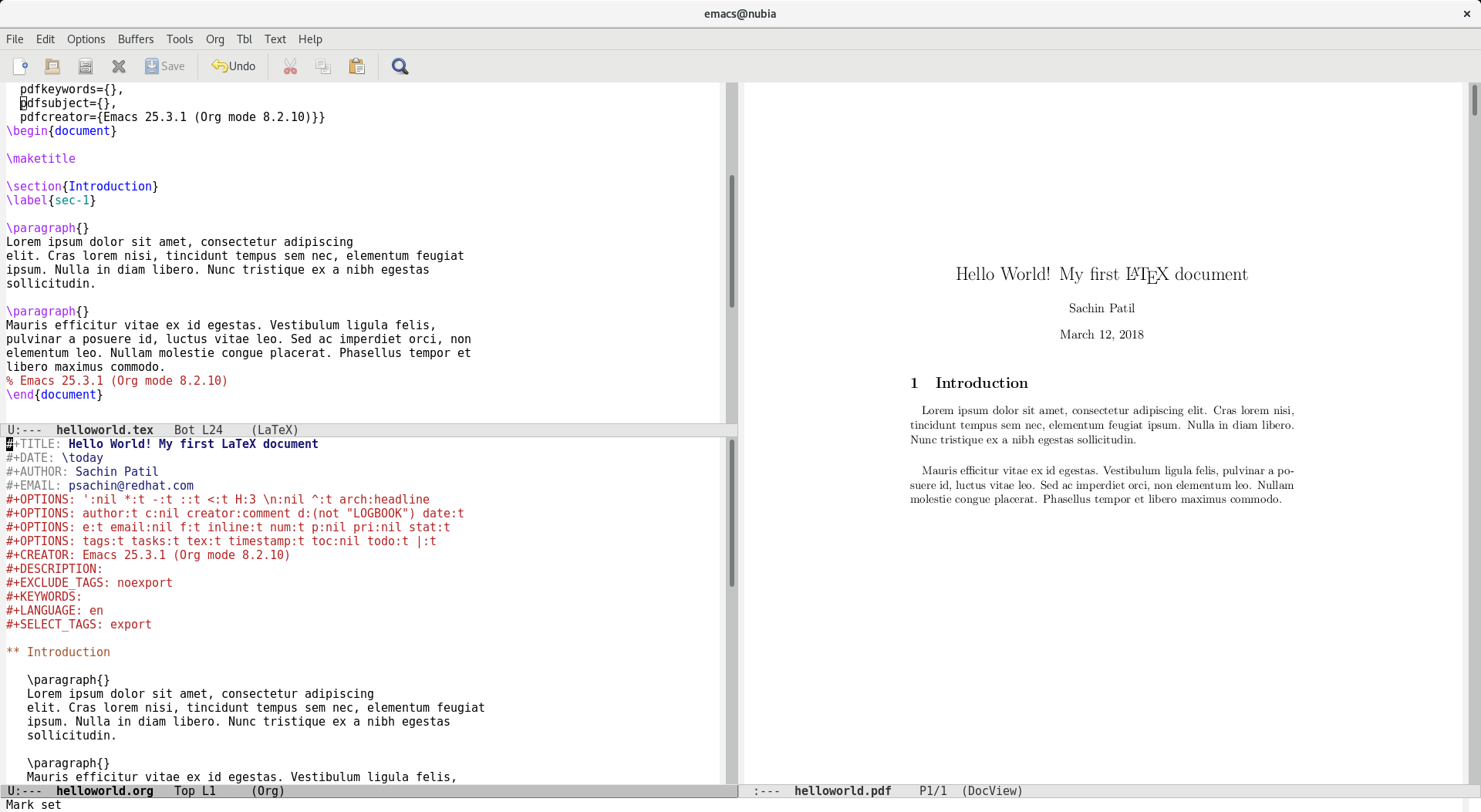

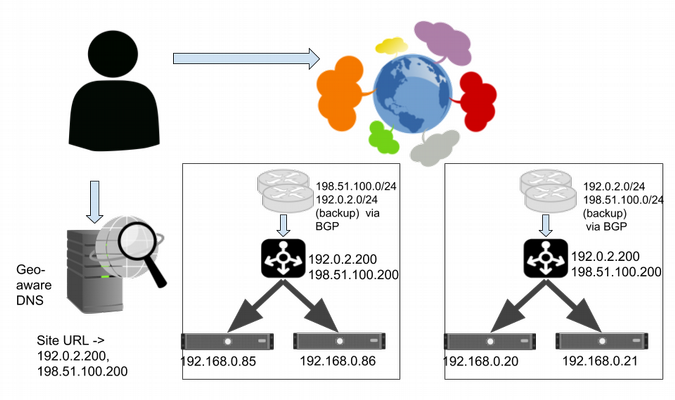

Look back at the [getfedora.org](https://getfedora.org) example. Recall in [part 1](https://fedoramagazine.org/design-faster-web-pages-part-1-image-compression/), the first analysis showed the CSS files used nearly ten times more space than the HTML itself. Here’s a display of the stylesheets used:

That’s nine different stylesheets. Many styles in them that are also unused on the page.

### Remove, merge, and compress/minify

The font-awesome CSS inhabits the extreme end of included, unused styles. There are only three glyphs of the font used on the page. To make that up in KB, the font-awesome CSS used at getfedora.org is originally 25.2 KB. After cleaning out all unused styles, it’s only 1.3 KB. This is only about 4% of its original size! For Bootstrap CSS, the difference is 118.3 KB original, and 13.2 KB after removing unused styles.

The next question is, must there be a bootstrap.css and a font-awesome.css? Or can they be combined? Yes, they can. That doesn’t save much file space, but the browser now requests fewer files to succesfully render the page.

Finally, after merging the CSS files, try to remove unused styles and minify them. In this way, you save 10.1 KB for a final size of 4.3 KB.

Unfortunately, there’s no packaged “minifier” tool in Fedoras repositories yet. However, there are hundreds of online services to do that for you. Or you can use [CSS-HTML-JS Minify](https://github.com/juancarlospaco/css-html-js-minify), which is Python, and therefore easy to isntall. There’s not an available tool to purify CSS, but there are web services like [UnCSS](https://uncss-online.com/).

## Font improvement

[CSS3](https://developer.mozilla.org/en-US/docs/Web/CSS/CSS3) came with something a lot of web developer like. They could define fonts the browser downloads in the background to render the page. Since then, a lot of web designers are very happy, especially after they discovered the usage of icon fonts for web design. Font sets like [Font Awesome](https://fontawesome.com/) are quiet popular today and widely used. Here’s the size of that content:

current free version 912 glyphs/icons, smallest set ttf 30.9KB, woff 14.7KB, woff2 12.2KB, svg 107.2KB, eot 31.2

So the question is, do you need all the glyphs? In all probability, no. You can get rid of them with [FontForge](https://fontforge.github.io/en-US/), but that’s a lot of work. You could also use [Fontello](http://fontello.com/). Use the public instance, or set up your own, as it’s free software and available on [Github](https://github.com/fontello/fontello).

The downside of such customized font sets is you must host the font by yourself. You can’t use other online font services to provide updates. But this may not really be a downside, compared to faster performance.

## Conclusion

Now you’ve done everything you can to the content itself, to minimize what the browser loads and interprets. From now on, only tricks with the administration of the server can help.

One easy to do, but which many people do wrong, is decide on some intelligent caching. For instance, a CSS or picture file can be cached for a week. Whatever you do, if you use a proxy service like Cloudflare or build your own proxy, minimze the pages first. Users like fast loading pages. They’ll (silently) thank you for it, and the server will have a smaller load, too.

## W

Critical Path CSS And render blocking should be mentioned.

## Sirko Kemter

could not should

## Maimela

this was an interesting topic. i will put it to good use (still a noob when it comes to web development) i don’t even have my own website running

## Afan Haqul Fadillah

Really Helpful,, keep it up????

## John

Most of the time I am not interested in stories in front-end development, but this whole series by Sirko blow my mind. I happened to open the link the today, and spent half a hour to read all three posts. Everything about this post, the story telling, open source tools used … is just so awesome. Good work! I hope to see more of your stories here on Fedora magazine.

## Sirko Kemter

thx for the feedback. More posts lets see

## Dave Cooper

I’ve really enjoyed this series. Tackling the amazing bloat in online content is massively overdue – even it involve the current generation of web developers in writing actual code 😉 ! |

10,253 | more、less 和 most 的区别 | https://www.ostechnix.com/the-difference-between-more-less-and-most-commands/ | 2018-11-19T23:21:23 | [

"more",

"less",

"most",

"分页"

] | https://linux.cn/article-10253-1.html |

如果你是一个 Linux 方面的新手,你可能会在 `more`、`less`、`most` 这三个命令行工具之间产生疑惑。在本文当中,我会对这三个命令行工具进行对比,以及展示它们各自在 Linux 中的一些使用例子。总的来说,这几个命令行工具之间都有相通和差异,而且它们在大部分 Linux 发行版上都有自带。

我们首先来看看 `more` 命令。

### more 命令

`more` 是一个老式的、基础的终端分页阅读器,它可以用于打开指定的文件并进行交互式阅读。如果文件的内容太长,在一屏以内无法完整显示,就会逐页显示文件内容。使用回车键或者空格键可以滚动浏览文件的内容,但有一个限制,就是只能够单向滚动。也就是说只能按顺序往下翻页,而不能进行回看。

**更正**

有的 Linux 用户向我指出,在 `more` 当中是可以向上翻页的。不过,最原始版本的 `more` 确实只允许向下翻页,在后续出现的较新的版本中也允许了有限次数的向上翻页,只需要在浏览过程中按 `b` 键即可向上翻页。唯一的限制是 `more` 不能搭配管道使用(如 `ls | more`)。(LCTT 译注:此处原作者疑似有误,译者使用 `more` 是可以搭配管道使用的,或许与不同 `more` 版本有关)

按 `q` 即可退出 `more`。

**更多示例**

打开 `ostechnix.txt` 文件进行交互式阅读,可以执行以下命令:

```

$ more ostechnix.txt

```

在阅读过程中,如果需要查找某个字符串,只需要像下面这样输入斜杠(`/`)之后接着输入需要查找的内容:

```

/linux

```

按 `n` 键可以跳转到下一个匹配的字符串。

如果需要在文件的第 `10` 行开始阅读,只需要执行:

```

$ more +10 file

```

就可以从文件的第 `10` 行开始显示文件的内容了。

如果你需要让 `more` 提示你按空格键来翻页,可以加上 `-d` 参数:

```

$ more -d ostechnix.txt

```

如上图所示,`more` 会提示你可以按空格键翻页。

如果需要查看所有选项以及对应的按键,可以按 `h` 键。

要查看 `more` 的更多详细信息,可以参考手册:

```

$ man more

```

### less 命令

`less` 命令也是用于打开指定的文件并进行交互式阅读,它也支持翻页和搜索。如果文件的内容太长,也会对输出进行分页,因此也可以翻页阅读。比 `more` 命令更好的一点是,`less` 支持向上翻页和向下翻页,也就是可以在整个文件中任意阅读。

在使用功能方面,`less` 比 `more` 命令具有更多优点,以下列出其中几个:

* 支持向上翻页和向下翻页

* 支持向上搜索和向下搜索

* 可以跳转到文件的末尾并立即从文件的开头开始阅读

* 在编辑器中打开指定的文件

**更多示例**

打开文件:

```

$ less ostechnix.txt

```

按空格键或回车键可以向下翻页,按 `b` 键可以向上翻页。

如果需要向下搜索,在输入斜杠(`/`)之后接着输入需要搜索的内容:

```

/linux

```

按 `n` 键可以跳转到下一个匹配的字符串,如果需要跳转到上一个匹配的字符串,可以按 `N` 键。

如果需要向上搜索,在输入问号(`?`)之后接着输入需要搜索的内容:

```

?linux

```

同样是按 `n` 键或 `N` 键跳转到下一个或上一个匹配的字符串。

只需要按 `v` 键,就会将正在阅读的文件在默认编辑器中打开,然后就可以对文件进行各种编辑操作了。

按 `h` 键可以查看 `less` 工具的选项和对应的按键。

按 `q` 键可以退出阅读。

要查看 `less` 的更多详细信息,可以参考手册:

```

$ man less

```

### most 命令

`most` 同样是一个终端阅读工具,而且比 `more` 和 `less` 的功能更为丰富。`most` 支持同时打开多个文件。你可以在打开的文件之间切换、编辑当前打开的文件、迅速跳转到文件中的某一行、分屏阅读、同时锁定或滚动多个屏幕等等功能。在默认情况下,对于较长的行,`most` 不会将其截断成多行显示,而是提供了左右滚动功能以在同一行内显示。

**更多示例**

打开文件:

```

$ most ostechnix1.txt

```

按 `e` 键可以编辑当前文件。

如果需要向下搜索,在斜杠(`/`)或 `S` 或 `f` 之后输入需要搜索的内容,按 `n` 键就可以跳转到下一个匹配的字符串。

如果需要向上搜索,在问号(`?`)之后输入需要搜索的内容,也是通过按 `n` 键跳转到下一个匹配的字符串。

同时打开多个文件:

```

$ most ostechnix1.txt ostechnix2.txt ostechnix3.txt

```

在打开了多个文件的状态下,可以输入 `:n` 切换到下一个文件,使用 `↑` 或 `↓` 键选择需要切换到的文件,按回车键就可以查看对应的文件。

要打开文件并跳转到某个字符串首次出现的位置(例如 linux),可以执行以下命令:

```

$ most file +/linux

```

按 `h` 键可以查看帮助。

**按键操作列表**

移动:

* 空格键或 `D` 键 – 向下滚动一屏

* `DELETE` 键或 `U` 键 – 向上滚动一屏

* `↓` 键 – 向下移动一行

* `↑` 键 – 向上移动一行

* `T` 键 – 移动到文件开头

* `B` 键 – 移动到文件末尾

* `>` 键或 `TAB` 键 – 向右滚动屏幕

* `<` 键 – 向左滚动屏幕

* `→` 键 – 向右移动一列

* `←` 键 – 向左移动一列

* `J` 键或 `G` 键 – 移动到某一行,例如 `10j` 可以移动到第 10 行

* `%` 键 – 移动到文件长度某个百分比的位置

窗口命令:

* `Ctrl-X 2`、`Ctrl-W 2` – 分屏

* `Ctrl-X 1`、`Ctrl-W 1` – 只显示一个窗口

* `O` 键、`Ctrl-X O` – 切换到另一个窗口

* `Ctrl-X 0` – 删除窗口

文件内搜索:

* `S` 键或 `f` 键或 `/` 键 – 向下搜索

* `?` 键 – 向上搜索

* `n` 键 – 跳转到下一个匹配的字符串

退出:

* `q` 键 – 退出 `most` ,且所有打开的文件都会被关闭

* `:N`、`:n` – 退出当前文件并查看下一个文件(使用 `↑` 键、`↓` 键选择下一个文件)

要查看 `most` 的更多详细信息,可以参考手册:

```

$ man most

```

### 总结

`more` – 传统且基础的分页阅读工具,仅支持向下翻页和有限次数的向上翻页。

`less` – 比 `more` 功能丰富,支持向下翻页和向上翻页,也支持文本搜索。在打开大文件的时候,比 `vi` 这类文本编辑器启动得更快。

`most` – 在上述两个工具功能的基础上,还加入了同时打开多个文件、同时锁定或滚动多个屏幕、分屏等等大量功能。

以上就是我的介绍,希望能让你通过我的文章对这三个工具有一定的认识。如果想了解这篇文章以外的关于这几个工具的详细功能,请参阅它们的 `man` 手册。

---

via: <https://www.ostechnix.com/the-difference-between-more-less-and-most-commands/>

作者:[SK](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[HankChow](https://github.com/HankChow) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

10,254 | 写直白的代码 | http://matthewrocklin.com/blog/work/2018/01/27/write-dumb-code | 2018-11-20T00:08:38 | [

"编程"

] | https://linux.cn/article-10254-1.html |

为开源项目作贡献最好的方式是为它减少代码,我们应致力于写出让新手程序员无需注释就容易理解的代码,让维护者也无需花费太多精力就能着手维护。

在学生时代,我们会更多地用复杂巧妙的技术去挑战新的难题。首先我们会学习循环,然后是函数啊,类啊,等等。当我们到达一定高的程度,能用更高级的技术写更长的程序,我们会因此受到称赞。此刻我们发现老司机们用 monads 而新手们用 loop 作循环。

之后我们毕业找了工作,或者和他人合作开源项目。我们用在学校里学到的各种炫技寻求并骄傲地给出解决方案的代码实现。

*哈哈,我能扩展这个项目,并实现某牛 X 功能啦,我这里能用继承啦,我太聪明啦!*

我们实现了某个小的功能,并以充分的理由觉得自己做到了。现实项目中的编程却不是针对某某部分的功能而言。以我个人的经验而言,以前我很开心的去写代码,并骄傲地向世界展示我所知道的事情。有例为证,作为对某种编程技术的偏爱,这是用另一种元编程语言构建的一个 [线性代数语言](https://github.com/mrocklin/matrix-algebra),注意,这么多年以来一直没人愿意碰它。

在维护了更多的代码后,我的观点发生了变化。

1. 我们不应去刻意探求如何构建软件。软件是我们为解决问题所付出的代价,那才是我们真实的目的。我们应努力为了解决问题而构建较小的软件。

2. 我们应使用尽可能简单的技术,那么更多的人就越可能会使用,并且无需理解我们所知的高级技术就能扩展软件的功能。当然,在我们不知道如何使用简单技术去实现时,我们也可以使用高级技术。

所有的这些例子都不是听来的故事。我遇到的大部分人会认同某些部分,但不知为什么,当我们向一个新项目贡献代码时又会忘掉这个初衷。直觉里用复杂技术去构建的念头往往会占据上风。

### 软件是种投入

你写的每行代码都要花费人力。写代码当然是需要时间的,也许你会认为只是你个人在奉献,然而这些代码在被审阅的时候也需要花时间理解,对于未来维护和开发人员来说,他们在维护和修改代码时同样要花费时间。否则他们完全可以用这时间出去晒晒太阳,或者陪伴家人。

所以,当你向某个项目贡献代码时,请心怀谦恭。就像是,你正和你的家人进餐时,餐桌上却没有足够的食物,你只索取你所需的部分,别人对你的自我约束将肃然起敬。以更少的代码去解决问题是很难的,你肩负重任的同时自然减轻了别人的重负。

### 技术越复杂越难维护

作为学生,逐渐使用高端技术证明了自己的价值。这体现在,首先我们有能力在开源项目中使用函数,接着是类,然后是高阶函数,monads 等等。我们向同行显示自己的解决方案时,常因自己所用技术高低而感到自豪或卑微。

而在现实中,和团队去解决问题时,情况发生了逆转。现在,我们致力于尽可能使用简单的代码去解决问题。简单方式解决问题使新手程序员能够以此扩展并解决其他问题。简单的代码让别人容易上手,效果立竿见影。我们藉以只用简单的技术去解决难题,从而展示自己的价值。

*看,我用循环替代了递归函数并且一样达到了我们的需求。当然我明白这是不够聪明的做法,不过我注意到新手同事在这里会遇上麻烦,我觉得这种改变将有所帮助吧。*

如果你是个好的程序员,你不需要证明你知道很多炫技。相应的,你可以通过用一个简单的方法解决一个问题来显示你的价值,并激发你的团队在未来的时间里去完善它。

### 当然,也请保持节制

话虽如此,过于遵循 “用简单的工具去构建” 的教条也会降低生产力。通常用递归会比用循环解决问题更简单,用类或 monad 才是正确的途径。还有两种情况另当别论,一是只是只为满足自我而创建的系统,或者是别人毫无构建经验的系统。

---

via: <http://matthewrocklin.com/blog/work/2018/01/27/write-dumb-code>

作者:[Matthew Rocklin](http://matthewrocklin.com) 译者:[plutoid](https://github.com/plutoid) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

10,255 | Lisp 是怎么成为上帝的编程语言的 | https://twobithistory.org/2018/10/14/lisp.html | 2018-11-20T17:15:00 | [

"Lisp"

] | https://linux.cn/article-10255-1.html |

当程序员们谈论各类编程语言的相对优势时,他们通常会采用相当平淡的措词,就好像这些语言是一条工具带上的各种工具似的 —— 有适合写操作系统的,也有适合把其它程序黏在一起来完成特殊工作的。这种讨论方式非常合理;不同语言的能力不同。不声明特定用途就声称某门语言比其他语言更优秀只能导致侮辱性的无用争论。

但有一门语言似乎受到和用途无关的特殊尊敬:那就是 Lisp。即使是恨不得给每个说出形如“某某语言比其他所有语言都好”这类话的人都来一拳的键盘远征军们,也会承认 Lisp 处于另一个层次。 Lisp 超越了用于评判其他语言的实用主义标准,因为普通程序员并不使用 Lisp 编写实用的程序 —— 而且,多半他们永远也不会这么做。然而,人们对 Lisp 的敬意是如此深厚,甚至于到了这门语言会时而被加上神话属性的程度。

大家都喜欢的网络漫画合集 xkcd 就至少在两组漫画中如此描绘过 Lisp:[其中一组漫画](https://xkcd.com/224/)中,某人得到了某种 Lisp 启示,而这好像使他理解了宇宙的基本构架。

在[另一组漫画](https://xkcd.com/297/)中,一个穿着长袍的老程序员给他的徒弟递了一沓圆括号,说这是“文明时代的优雅武器”,暗示着 Lisp 就像原力那样拥有各式各样的神秘力量。

另一个绝佳例子是 Bob Kanefsky 的滑稽剧插曲,《上帝就在人间》。这部剧叫做《永恒之火》,撰写于 1990 年代中期;剧中描述了上帝必然是使用 Lisp 创造世界的种种原因。完整的歌词可以在 [GNU 幽默合集](https://www.gnu.org/fun/jokes/eternal-flame.en.html)中找到,如下是一段摘抄:

>

> 因为上帝用祂的 Lisp 代码

>

>

> 让树叶充满绿意。

>

>

> 分形的花儿和递归的根:

>

>

> 我见过的奇技淫巧之中没什么比这更可爱。

>

>

> 当我对着雪花深思时,

>

>

> 从未见过两片相同的,

>

>

> 我知道,上帝偏爱那一门

>

>

> 名字是四个字母的语言。

>

>

>

(LCTT 译注:“四个字母”,参见:[四字神名](https://zh.wikipedia.org/wiki/%E5%9B%9B%E5%AD%97%E7%A5%9E%E5%90%8D),致谢 [no1xsyzy](https://github.com/LCTT/TranslateProject/issues/11320))

以下这句话我实在不好在人前说;不过,我还是觉得,这样一种 “Lisp 是奥术魔法”的文化模因实在是有史以来最奇异、最迷人的东西。Lisp 是象牙塔的产物,是人工智能研究的工具;因此,它对于编程界的俗人而言总是陌生的,甚至是带有神秘色彩的。然而,当今的程序员们[开始怂恿彼此,“在你死掉之前至少试一试 Lisp”](https://www.reddit.com/r/ProgrammerHumor/comments/5c14o6/xkcd_lisp/d9szjnc/),就像这是一种令人恍惚入迷的致幻剂似的。尽管 Lisp 是广泛使用的编程语言中第二古老的(只比 Fortran 年轻一岁)<sup id="fnref1"> <a href="#fn1" rel="footnote"> 1 </a></sup> ,程序员们也仍旧在互相怂恿。想象一下,如果你的工作是为某种组织或者团队推广一门新的编程语言的话,忽悠大家让他们相信你的新语言拥有神力难道不是绝佳的策略吗?—— 但你如何能够做到这一点呢?或者,换句话说,一门编程语言究竟是如何变成人们口中“隐晦知识的载体”的呢?

Lisp 究竟是怎么成为这样的?

*Byte 杂志封面,1979年八月。*

### 理论 A :公理般的语言

Lisp 的创造者<ruby> 约翰·麦卡锡 <rt> John McCarthy </rt></ruby>最初并没有想过把 Lisp 做成优雅、精炼的计算法则结晶。然而,在一两次运气使然的深谋远虑和一系列优化之后,Lisp 的确变成了那样的东西。 <ruby> 保罗·格雷厄姆 <rt> Paul Graham </rt></ruby>(我们一会儿之后才会聊到他)曾经这么写道, 麦卡锡通过 Lisp “为编程作出的贡献就像是欧几里得对几何学所做的贡献一般” <sup id="fnref2"> <a href="#fn2" rel="footnote"> 2 </a></sup>。人们可能会在 Lisp 中看出更加隐晦的含义 —— 因为麦卡锡创造 Lisp 时使用的要素实在是过于基础,基础到连弄明白他到底是创造了这门语言、还是发现了这门语言,都是一件难事。

最初, 麦卡锡产生要造一门语言的想法,是在 1956 年的<ruby> 达特茅斯人工智能夏季研究项目 <rt> Darthmouth Summer Research Project on Artificial Intelligence </rt></ruby>上。夏季研究项目是个持续数周的学术会议,直到现在也仍旧在举行;它是此类会议之中最早开始举办的会议之一。 麦卡锡当初还是个达特茅斯的数学助教,而“<ruby> 人工智能 <rt> artificial intelligence </rt></ruby>(AI)”这个词事实上就是他建议举办该会议时发明的 <sup id="fnref3"> <a href="#fn3" rel="footnote"> 3 </a></sup>。在整个会议期间大概有十人参加 <sup id="fnref4"> <a href="#fn4" rel="footnote"> 4 </a></sup>。他们之中包括了<ruby> 艾伦·纽厄尔 <rt> Allen Newell </rt></ruby>和<ruby> 赫伯特·西蒙 <rt> Herbert Simon </rt></ruby>,两名隶属于<ruby> 兰德公司 <rt> RAND Corporation </rt></ruby>和<ruby> 卡内基梅隆大学 <rt> Carnegie Mellon </rt></ruby>的学者。这两人不久之前设计了一门语言,叫做 IPL。

当时,纽厄尔和西蒙正试图制作一套能够在命题演算中生成证明的系统。两人意识到,用电脑的原生指令集编写这套系统会非常困难;于是他们决定创造一门语言——他们的原话是“<ruby> 伪代码 <rt> pseudo-code </rt></ruby>”,这样,他们就能更加轻松自然地表达这台“<ruby> 逻辑理论机器 <rt> Logic Theory Machine </rt></ruby>”的底层逻辑了 <sup id="fnref5"> <a href="#fn5" rel="footnote"> 5 </a></sup>。这门语言叫做 IPL,即“<ruby> 信息处理语言 <rt> Information Processing Language </rt></ruby>”;比起我们现在认知中的编程语言,它更像是一种高层次的汇编语言方言。 纽厄尔和西蒙提到,当时人们开发的其它“伪代码”都抓着标准数学符号不放 —— 也许他们指的是 Fortran <sup id="fnref6"> <a href="#fn6" rel="footnote"> 6 </a></sup>;与此不同的是,他们的语言使用成组的符号方程来表示命题演算中的语句。通常,用 IPL 写出来的程序会调用一系列的汇编语言宏,以此在这些符号方程列表中对表达式进行变换和求值。

麦卡锡认为,一门实用的编程语言应该像 Fortran 那样使用代数表达式;因此,他并不怎么喜欢 IPL <sup id="fnref7"> <a href="#fn7" rel="footnote"> 7 </a></sup>。然而,他也认为,在给人工智能领域的一些问题建模时,符号列表会是非常好用的工具 —— 而且在那些涉及演绎的问题上尤其有用。麦卡锡的渴望最终被诉诸行动;他要创造一门代数的列表处理语言 —— 这门语言会像 Fortran 一样使用代数表达式,但拥有和 IPL 一样的符号列表处理能力。

当然,今日的 Lisp 可不像 Fortran。在会议之后的几年中,麦卡锡关于“理想的列表处理语言”的见解似乎在逐渐演化。到 1957 年,他的想法发生了改变。他那时候正在用 Fortran 编写一个能下国际象棋的程序;越是长时间地使用 Fortran ,麦卡锡就越确信其设计中存在不当之处,而最大的问题就是尴尬的 `IF` 声明 <sup id="fnref8"> <a href="#fn8" rel="footnote"> 8 </a></sup>。为此,他发明了一个替代品,即条件表达式 `true`;这个表达式会在给定的测试通过时返回子表达式 `A` ,而在测试未通过时返回子表达式 `B` ,*而且*,它只会对返回的子表达式进行求值。在 1958 年夏天,当麦卡锡设计一个能够求导的程序时,他意识到,他发明的 `true` 条件表达式让编写递归函数这件事变得更加简单自然了 <sup id="fnref9"> <a href="#fn9" rel="footnote"> 9 </a></sup>。也是这个求导问题让麦卡锡创造了 `maplist` 函数;这个函数会将其它函数作为参数并将之作用于指定列表的所有元素 <sup id="fnref10"> <a href="#fn10" rel="footnote"> 10 </a></sup>。在给项数多得叫人抓狂的多项式求导时,它尤其有用。

然而,以上的所有这些,在 Fortran 中都是没有的;因此,在 1958 年的秋天,麦卡锡请来了一群学生来实现 Lisp。因为他那时已经成了一名麻省理工助教,所以,这些学生可都是麻省理工的学生。当麦卡锡和学生们最终将他的主意变为能运行的代码时,这门语言得到了进一步的简化。这之中最大的改变涉及了 Lisp 的语法本身。最初,麦卡锡在设计语言时,曾经试图加入所谓的 “M 表达式”;这是一层语法糖,能让 Lisp 的语法变得类似于 Fortran。虽然 M 表达式可以被翻译为 S 表达式 —— 基础的、“用圆括号括起来的列表”,也就是 Lisp 最著名的特征 —— 但 S 表达式事实上是一种给机器看的低阶表达方法。唯一的问题是,麦卡锡用方括号标记 M 表达式,但他的团队在麻省理工使用的 IBM 026 键盘打孔机的键盘上根本没有方括号 <sup id="fnref11"> <a href="#fn11" rel="footnote"> 11 </a></sup>。于是 Lisp 团队坚定不移地使用着 S 表达式,不仅用它们表示数据列表,也拿它们来表达函数的应用。麦卡锡和他的学生们还作了另外几样改进,包括将数学符号前置;他们也修改了内存模型,这样 Lisp 实质上就只有一种数据类型了 <sup id="fnref12"> <a href="#fn12" rel="footnote"> 12 </a></sup>。

到 1960 年,麦卡锡发表了他关于 Lisp 的著名论文,《用符号方程表示的递归函数及它们的机器计算》。那时候,Lisp 已经被极大地精简,而这让麦卡锡意识到,他的作品其实是“一套优雅的数学系统”,而非普通的编程语言 <sup id="fnref13"> <a href="#fn13" rel="footnote"> 13 </a></sup>。他后来这么写道,对 Lisp 的许多简化使其“成了一种描述可计算函数的方式,而且它比图灵机或者一般情况下用于递归函数理论的递归定义更加简洁” <sup id="fnref14"> <a href="#fn14" rel="footnote"> 14 </a></sup>。在他的论文中,他不仅使用 Lisp 作为编程语言,也将它当作一套用于研究递归函数行为方式的表达方法。

通过“从一小撮规则中逐步实现出 Lisp”的方式,麦卡锡将这门语言介绍给了他的读者。后来,保罗·格雷厄姆在短文《<ruby> <a href="http://languagelog.ldc.upenn.edu/myl/llog/jmc.pdf"> Lisp 之根 </a> <rt> The Roots of Lisp </rt></ruby>》中用更易读的语言回顾了麦卡锡的步骤。格雷厄姆只用了七种原始运算符、两种函数写法,以及使用原始运算符定义的六个稍微高级一点的函数来解释 Lisp。毫无疑问,Lisp 的这种只需使用极少量的基本规则就能完整说明的特点加深了其神秘色彩。格雷厄姆称麦卡锡的论文为“使计算公理化”的一种尝试 <sup id="fnref15"> <a href="#fn15" rel="footnote"> 15 </a></sup>。我认为,在思考 Lisp 的魅力从何而来时,这是一个极好的切入点。其它编程语言都有明显的人工构造痕迹,表现为 `While`,`typedef`,`public static void` 这样的关键词;而 Lisp 的设计却简直像是纯粹计算逻辑的鬼斧神工。Lisp 的这一性质,以及它和晦涩难懂的“递归函数理论”的密切关系,使它具备了获得如今声望的充分理由。

### 理论 B:属于未来的机器

Lisp 诞生二十年后,它成了著名的《<ruby> <a href="https://en.wikipedia.org/wiki/Jargon_File"> 黑客词典 </a> <rt> Hacker’s Dictionary </rt></ruby>》中所说的,人工智能研究的“母语”。Lisp 在此之前传播迅速,多半是托了语法规律的福 —— 不管在怎么样的电脑上,实现 Lisp 都是一件相对简单直白的事。而学者们之后坚持使用它乃是因为 Lisp 在处理符号表达式这方面有巨大的优势;在那个时代,人工智能很大程度上就意味着符号,于是这一点就显得十分重要。在许多重要的人工智能项目中都能见到 Lisp 的身影。这些项目包括了 [SHRDLU 自然语言程序](https://hci.stanford.edu/winograd/shrdlu/)、[Macsyma 代数系统](https://en.wikipedia.org/wiki/Macsyma) 和 [ACL2 逻辑系统](https://en.wikipedia.org/wiki/ACL2)。

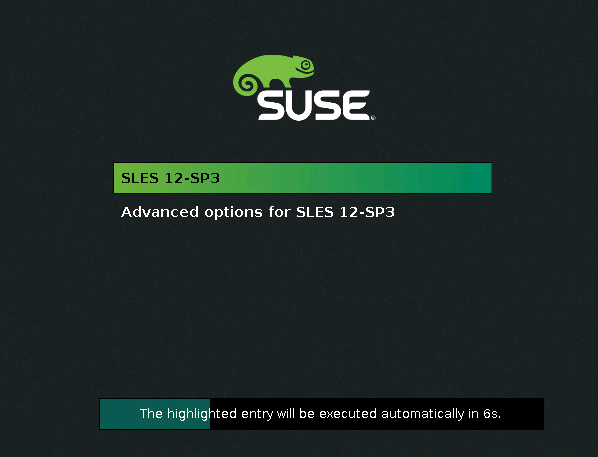

然而,在 1970 年代中期,人工智能研究者们的电脑算力开始不够用了。PDP-10 就是一个典型。这个型号在人工智能学界曾经极受欢迎;但面对这些用 Lisp 写的 AI 程序,它的 18 位地址空间一天比一天显得吃紧 <sup id="fnref16"> <a href="#fn16" rel="footnote"> 16 </a></sup>。许多的 AI 程序在设计上可以与人互动。要让这些既极度要求硬件性能、又有互动功能的程序在分时系统上优秀发挥,是很有挑战性的。麻省理工的<ruby> 彼得·杜奇 <rt> Peter Deutsch </rt></ruby>给出了解决方案:那就是针对 Lisp 程序来特别设计电脑。就像是我那[关于 Chaosnet 的上一篇文章](https://twobithistory.org/2018/09/30/chaosnet.html)所说的那样,这些<ruby> Lisp 计算机 <rt> Lisp machines </rt></ruby>会给每个用户都专门分配一个为 Lisp 特别优化的处理器。到后来,考虑到硬核 Lisp 程序员的需求,这些计算机甚至还配备上了完全由 Lisp 编写的开发环境。在当时那样一个小型机时代已至尾声而微型机的繁盛尚未完全到来的尴尬时期,Lisp 计算机就是编程精英们的“高性能个人电脑”。

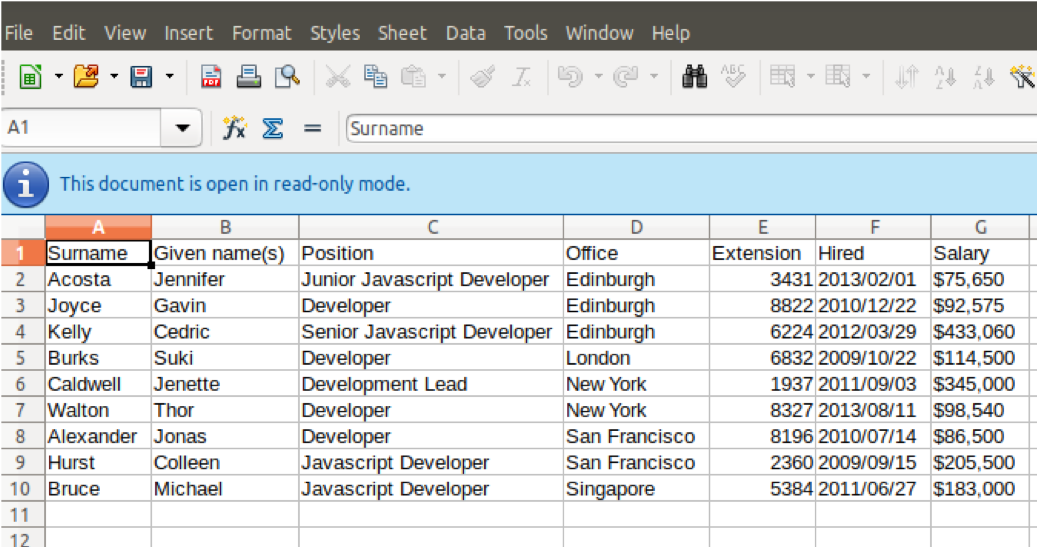

有那么一会儿,Lisp 计算机被当成是未来趋势。好几家公司雨后春笋般出现,追着赶着要把这项技术商业化。其中最成功的一家叫做 Symbolics,由麻省理工 AI 实验室的前成员创立。上世纪八十年代,这家公司生产了所谓的 3600 系列计算机,它们当时在 AI 领域和需要高性能计算的产业中应用极广。3600 系列配备了大屏幕、位图显示、鼠标接口,以及[强大的图形与动画软件](https://youtu.be/gV5obrYaogU?t=201)。它们都是惊人的机器,能让惊人的程序运行起来。例如,之前在推特上跟我聊过的机器人研究者 Bob Culley,就能用一台 1985 年生产的 Symbolics 3650 写出带有图形演示的寻路算法。他向我解释说,在 1980 年代,位图显示和面向对象编程(能够通过 [Flavors 扩展](https://en.wikipedia.org/wiki/Flavors_(programming_language))在 Lisp 计算机上使用)都刚刚出现。Symbolics 站在时代的最前沿。

*Bob Culley 的寻路程序。*

而以上这一切导致 Symbolics 的计算机奇贵无比。在 1983 年,一台 Symbolics 3600 能卖 111,000 美金 <sup id="fnref16:1"> <a href="#fn16" rel="footnote"> 16 </a></sup>。所以,绝大部分人只可能远远地赞叹 Lisp 计算机的威力和操作员们用 Lisp 编写程序的奇妙技术。不止他们赞叹,从 1979 年到 1980 年代末,Byte 杂志曾经多次提到过 Lisp 和 Lisp 计算机。在 1979 年八月发行的、关于 Lisp 的一期特别杂志中,杂志编辑激情洋溢地写道,麻省理工正在开发的计算机配备了“大坨大坨的内存”和“先进的操作系统” <sup id="fnref17"> <a href="#fn17" rel="footnote"> 17 </a></sup>;他觉得,这些 Lisp 计算机的前途是如此光明,以至于它们的面世会让 1978 和 1977 年 —— 诞生了 Apple II、Commodore PET 和 TRS-80 的两年 —— 显得黯淡无光。五年之后,在 1985 年,一名 Byte 杂志撰稿人描述了为“复杂精巧、性能强悍的 Symbolics 3670”编写 Lisp 程序的体验,并力劝读者学习 Lisp,称其为“绝大数人工智能工作者的语言选择”,和将来的通用编程语言 <sup id="fnref18"> <a href="#fn18" rel="footnote"> 18 </a></sup>。

我问过<ruby> 保罗·麦克琼斯 <rt> Paul McJones </rt></ruby>(他在<ruby> 山景城 <rt> Mountain View </rt> <rt> </rt></ruby>的<ruby> 计算机历史博物馆 <rt> Computer History Museum </rt></ruby>做了许多 Lisp 的[保护工作](http://www.softwarepreservation.org/projects/LISP/)),人们是什么时候开始将 Lisp 当作高维生物的赠礼一样谈论的呢?他说,这门语言自有的性质毋庸置疑地促进了这种现象的产生;然而,他也说,Lisp 上世纪六七十年代在人工智能领域得到的广泛应用,很有可能也起到了作用。当 1980 年代到来、Lisp 计算机进入市场时,象牙塔外的某些人由此接触到了 Lisp 的能力,于是传说开始滋生。时至今日,很少有人还记得 Lisp 计算机和 Symbolics 公司;但 Lisp 得以在八十年代一直保持神秘,很大程度上要归功于它们。

### 理论 C:学习编程

1985 年,两位麻省理工的教授,<ruby> 哈尔·阿伯尔森 <rt> Harold <q> Hal </q> Abelson </rt></ruby>和<ruby> 杰拉尔德·瑟斯曼 <rt> Gerald Sussman </rt></ruby>,外加瑟斯曼的妻子<ruby> 朱莉·瑟斯曼 <rt> Julie Sussman </rt></ruby>,出版了一本叫做《<ruby> 计算机程序的构造和解释 <rt> Structure and Interpretation of Computer Programs </rt></ruby>》的教科书。这本书用 Scheme(一种 Lisp 方言)向读者们示范了如何编程。它被用于教授麻省理工入门编程课程长达二十年之久。出于直觉,我认为 SICP(这本书的名字通常缩写为 SICP)倍增了 Lisp 的“神秘要素”。SICP 使用 Lisp 描绘了深邃得几乎可以称之为哲学的编程理念。这些理念非常普适,可以用任意一种编程语言展现;但 SICP 的作者们选择了 Lisp。结果,这本阴阳怪气、卓越不凡、吸引了好几代程序员(还成了一种[奇特的模因](https://knowyourmeme.com/forums/meme-research/topics/47038-structure-and-interpretation-of-computer-programs-hugeass-image-dump-for-evidence))的著作臭名远扬之后,Lisp 的声望也顺带被提升了。Lisp 已不仅仅是一如既往的“麦卡锡的优雅表达方式”;它现在还成了“向你传授编程的不传之秘的语言”。

SICP 究竟有多奇怪这一点值得好好说;因为我认为,时至今日,这本书的古怪之处和 Lisp 的古怪之处是相辅相成的。书的封面就透着一股古怪。那上面画着一位朝着桌子走去,准备要施法的巫师或者炼金术士。他的一只手里抓着一副测径仪 —— 或者圆规,另一只手上拿着个球,上书“eval”和“apply”。他对面的女人指着桌子;在背景中,希腊字母 λ (lambda)漂浮在半空,释放出光芒。

*SICP 封面上的画作。*

说真的,这上面画的究竟是怎么一回事?为什么桌子会长着动物的腿?为什么这个女人指着桌子?墨水瓶又是干什么用的?我们是不是该说,这位巫师已经破译了宇宙的隐藏奥秘,而所有这些奥秘就蕴含在 eval/apply 循环和 Lambda 演算之中?看似就是如此。单单是这张图片,就一定对人们如今谈论 Lisp 的方式产生了难以计量的影响。

然而,这本书的内容通常并不比封面正常多少。SICP 跟你读过的所有计算机科学教科书都不同。在引言中,作者们表示,这本书不只教你怎么用 Lisp 编程 —— 它是关于“现象的三个焦点:人的心智、复数的计算机程序,和计算机”的作品 <sup id="fnref19"> <a href="#fn19" rel="footnote"> 19 </a></sup>。在之后,他们对此进行了解释,描述了他们对如下观点的坚信:编程不该被当作是一种计算机科学的训练,而应该是“<ruby> 程序性认识论 <rt> procedural epistemology </rt></ruby>”的一种新表达方式 <sup id="fnref20"> <a href="#fn20" rel="footnote"> 20 </a></sup>。程序是将那些偶然被送入计算机的思想组织起来的全新方法。这本书的第一章简明地介绍了 Lisp,但是之后的绝大部分都在讲述更加抽象的概念。其中包括了对不同编程范式的讨论,对于面向对象系统中“时间”和“一致性”的讨论;在书中的某一处,还有关于通信的基本限制可能会如何带来同步问题的讨论 —— 而这些基本限制在通信中就像是光速不变在相对论中一样关键 <sup id="fnref21"> <a href="#fn21" rel="footnote"> 21 </a></sup>。都是些高深难懂的东西。

以上这些并不是说这是本糟糕的书;这本书其实棒极了。在我读过的所有作品中,这本书对于重要的编程理念的讨论是最为深刻的;那些理念我琢磨了很久,却一直无力用文字去表达。一本入门编程教科书能如此迅速地开始描述面向对象编程的根本缺陷,和函数式语言“将可变状态降到最少”的优点,实在是一件让人印象深刻的事。而这种描述之后变为了另一种震撼人心的讨论:某种(可能类似于今日的 [RxJS](https://rxjs-dev.firebaseapp.com/) 的)流范式能如何同时具备两者的优秀特性。SICP 用和当初麦卡锡的 Lisp 论文相似的方式提纯出了高级程序设计的精华。你读完这本书之后,会立即想要将它推荐给你的程序员朋友们;如果他们找到这本书,看到了封面,但最终没有阅读的话,他们就只会记住长着动物腿的桌子上方那神秘的、根本的、给予魔法师特殊能力的、写着 eval/apply 的东西。话说回来,书上这两人的鞋子也让我印象颇深。

然而,SICP 最重要的影响恐怕是,它将 Lisp 由一门怪语言提升成了必要的教学工具。在 SICP 面世之前,人们互相推荐 Lisp,以学习这门语言为提升编程技巧的途径。1979 年的 Byte 杂志 Lisp 特刊印证了这一事实。之前提到的那位编辑不仅就麻省理工的新 Lisp 计算机大书特书,还说,Lisp 这门语言值得一学,因为它“代表了分析问题的另一种视角” <sup id="fnref22"> <a href="#fn22" rel="footnote"> 22 </a></sup>。但 SICP 并未只把 Lisp 作为其它语言的陪衬来使用;SICP 将其作为*入门*语言。这就暗含了一种论点,那就是,Lisp 是最能把握计算机编程基础的语言。可以认为,如今的程序员们彼此怂恿“在死掉之前至少试试 Lisp”的时候,他们很大程度上是因为 SICP 才这么说的。毕竟,编程语言 [Brainfuck](https://en.wikipedia.org/wiki/Brainfuck) 想必同样也提供了“分析问题的另一种视角”;但人们学习 Lisp 而非学习 Brainfuck,那是因为他们知道,前者的那种 Lisp 视角在二十年中都被看作是极其有用的,有用到麻省理工在给他们的本科生教其它语言之前,必然会先教 Lisp。

### Lisp 的回归

在 SICP 出版的同一年,<ruby> 本贾尼·斯特劳斯特卢普 <rt> Bjarne Stroustrup </rt></ruby>发布了 C++ 语言的首个版本,它将面向对象编程带到了大众面前。几年之后,Lisp 计算机的市场崩盘,AI 寒冬开始了。在下一个十年的变革中, C++ 和后来的 Java 成了前途无量的语言,而 Lisp 被冷落,无人问津。

理所当然地,确定人们对 Lisp 重新燃起热情的具体时间并不可能;但这多半是保罗·格雷厄姆发表他那几篇声称 Lisp 是首选入门语言的短文之后的事了。保罗·格雷厄姆是 Y-Combinator 的联合创始人和《Hacker News》的创始者,他这几篇短文有很大的影响力。例如,在短文《<ruby> <a href="http://www.paulgraham.com/avg.html"> 胜于平庸 </a> <rt> Beating the Averages </rt></ruby>》中,他声称 Lisp 宏使 Lisp 比其它语言更强。他说,因为他在自己创办的公司 Viaweb 中使用 Lisp,他得以比竞争对手更快地推出新功能。至少,[一部分程序员](https://web.archive.org/web/20061004035628/http://wiki.alu.org/Chris-Perkins)被说服了。然而,庞大的主流程序员群体并未换用 Lisp。

实际上出现的情况是,Lisp 并未流行,但越来越多 Lisp 式的特性被加入到广受欢迎的语言中。Python 有了列表推导式。C# 有了 Linq。Ruby……嗯,[Ruby 是 Lisp 的一种](http://www.randomhacks.net/2005/12/03/why-ruby-is-an-acceptable-lisp/)。就如格雷厄姆之前在 2001 年提到的那样,“在一系列常用语言中所体现出的‘默认语言’正越发朝着 Lisp 的方向演化” <sup id="fnref23"> <a href="#fn23" rel="footnote"> 23 </a></sup>。尽管其它语言变得越来越像 Lisp,Lisp 本身仍然保留了其作为“很少人了解但是大家都该学的神秘语言”的特殊声望。在 1980 年,Lisp 的诞生二十周年纪念日上,麦卡锡写道,Lisp 之所以能够存活这么久,是因为它具备“编程语言领域中的某种近似局部最优” <sup id="fnref24"> <a href="#fn24" rel="footnote"> 24 </a></sup>。这句话并未充分地表明 Lisp 的真正影响力。Lisp 能够存活超过半个世纪之久,并非因为程序员们一年年地勉强承认它就是最好的编程工具;事实上,即使绝大多数程序员根本不用它,它还是存活了下来。多亏了它的起源和它的人工智能研究用途,说不定还要多亏 SICP 的遗产,Lisp 一直都那么让人着迷。在我们能够想象上帝用其它新的编程语言创造世界之前,Lisp 都不会走下神坛。

---

via: <https://twobithistory.org/2018/10/14/lisp.html>

作者:[Two-Bit History](https://twobithistory.org) 选题:[lujun9972](https://github.com/lujun9972) 译者:[Northurland](https://github.com/Northurland) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

---

1. John McCarthy, “History of Lisp”, 14, Stanford University, February 12, 1979, accessed October 14, 2018, <http://jmc.stanford.edu/articles/lisp/lisp.pdf> [↩](#fnref1)

2. Paul Graham, “The Roots of Lisp”, 1, January 18, 2002, accessed October 14, 2018, <http://languagelog.ldc.upenn.edu/myl/llog/jmc.pdf>. [↩](#fnref2)

3. Martin Childs, “John McCarthy: Computer scientist known as the father of AI”, The Independent, November 1, 2011, accessed on October 14, 2018, <https://www.independent.co.uk/news/obituaries/john-mccarthy-computer-scientist-known-as-the-father-of-ai-6255307.html>. [↩](#fnref3)

4. Lisp Bulletin History. <http://www.artinfo-musinfo.org/scans/lb/lb3f.pdf> [↩](#fnref4)

5. Allen Newell and Herbert Simon, “Current Developments in Complex Information Processing,” 19, May 1, 1956, accessed on October 14, 2018, <http://bitsavers.org/pdf/rand/ipl/P-850_Current_Developments_In_Complex_Information_Processing_May56.pdf>. [↩](#fnref5)

6. ibid. [↩](#fnref6)

7. Herbert Stoyan, “Lisp History”, 43, Lisp Bulletin #3, December 1979, accessed on October 14, 2018, <http://www.artinfo-musinfo.org/scans/lb/lb3f.pdf> [↩](#fnref7)

8. McCarthy, “History of Lisp”, 5. [↩](#fnref8)

9. ibid. [↩](#fnref9)

10. McCarthy “History of Lisp”, 6. [↩](#fnref10)

11. Stoyan, “Lisp History”, 45 [↩](#fnref11)

12. McCarthy, “History of Lisp”, 8. [↩](#fnref12)

13. McCarthy, “History of Lisp”, 2. [↩](#fnref13)

14. McCarthy, “History of Lisp”, 8. [↩](#fnref14)

15. Graham, “The Roots of Lisp”, 11. [↩](#fnref15)

16. Guy Steele and Richard Gabriel, “The Evolution of Lisp”, 22, History of Programming Languages 2, 1993, accessed on October 14, 2018, <http://www.dreamsongs.com/Files/HOPL2-Uncut.pdf>. [↩](#fnref16) [↩<sup> 1</sup>](#fnref16:1)

17. Carl Helmers, “Editorial”, Byte Magazine, 154, August 1979, accessed on October 14, 2018, <https://archive.org/details/byte-magazine-1979-08/page/n153>. [↩](#fnref17)

18. Patrick Winston, “The Lisp Revolution”, 209, April 1985, accessed on October 14, 2018, <https://archive.org/details/byte-magazine-1985-04/page/n207>. [↩](#fnref18)

19. Harold Abelson, Gerald Jay. Sussman, and Julie Sussman, Structure and Interpretation of Computer Programs (Cambridge, Mass: MIT Press, 2010), xiii. [↩](#fnref19)

20. Abelson, xxiii. [↩](#fnref20)

21. Abelson, 428. [↩](#fnref21)

22. Helmers, 7. [↩](#fnref22)

23. Paul Graham, “What Made Lisp Different”, December 2001, accessed on October 14, 2018, <http://www.paulgraham.com/diff.html>. [↩](#fnref23)

24. John McCarthy, “Lisp—Notes on its past and future”, 3, Stanford University, 1980, accessed on October 14, 2018, <http://jmc.stanford.edu/articles/lisp20th/lisp20th.pdf>. [↩](#fnref24)

| 200 | OK | When programmers discuss the relative merits of different programming languages, they often talk about them in prosaic terms as if they were so many tools in a tool belt—one might be more appropriate for systems programming, another might be more appropriate for gluing together other programs to accomplish some ad hoc task. This is as it should be. Languages have different strengths and claiming that a language is better than other languages without reference to a specific use case only invites an unproductive and vitriolic debate.

But there is one language that seems to inspire a peculiar universal reverence:

Lisp. Keyboard crusaders that would otherwise pounce on anyone daring to

suggest that some language is better than any other will concede that Lisp is

on another level. Lisp transcends the utilitarian criteria used to judge other

languages, because the median programmer has never used Lisp to build anything

practical and probably never will, yet the reverence for Lisp runs so deep that

Lisp is often ascribed mystical properties. Everyone’s favorite webcomic,

*xkcd*, has depicted Lisp this way at least twice: In [one

comic](https://xkcd.com/224/), a character reaches some sort of Lisp

enlightenment, which appears to allow him to comprehend the fundamental

structure of the universe. In [another comic](https://xkcd.com/297/), a robed,

senescent programmer hands a stack of parentheses to his padawan, saying that

the parentheses are “elegant weapons for a more civilized age,” suggesting that

Lisp has all the occult power of the Force.

Another great example is Bob Kanefsky’s parody of a

song called “God Lives on Terra.” His parody, written in the mid-1990s and

called “Eternal Flame”, describes how God must have created the world using

Lisp. The following is an excerpt, but the full set of lyrics can be found in

the [GNU Humor

Collection](https://www.gnu.org/fun/jokes/eternal-flame.en.html):

For God wrote in Lisp code

When he filled the leaves with green.

The fractal flowers and recursive roots:

The most lovely hack I’ve seen.

And when I ponder snowflakes,

never finding two the same,

I know God likes a language

with its own four-letter name.

I can only speak for myself, I suppose, but I think this “Lisp Is Arcane Magic”

cultural meme is the most bizarre and fascinating thing ever. Lisp was

concocted in the ivory tower as a tool for artificial intelligence research, so

it was always going to be unfamiliar and maybe even a bit mysterious to the

programming laity. But programmers now [urge each other to “try Lisp before you

die”](https://www.reddit.com/r/ProgrammerHumor/comments/5c14o6/xkcd_lisp/d9szjnc/)

as if it were some kind of mind-expanding psychedelic. They do this even though

Lisp is now the second-oldest programming language in widespread use, younger

only than Fortran, and even then by just one year. 1 Imagine if your job were

to promote some new programming language on behalf of the organization or team

that created it. Wouldn’t it be great if you could convince everyone that your

new language had divine powers? But how would you even do that? How does a

programming language come to be known as a font of hidden knowledge?

How did Lisp get to be this way?

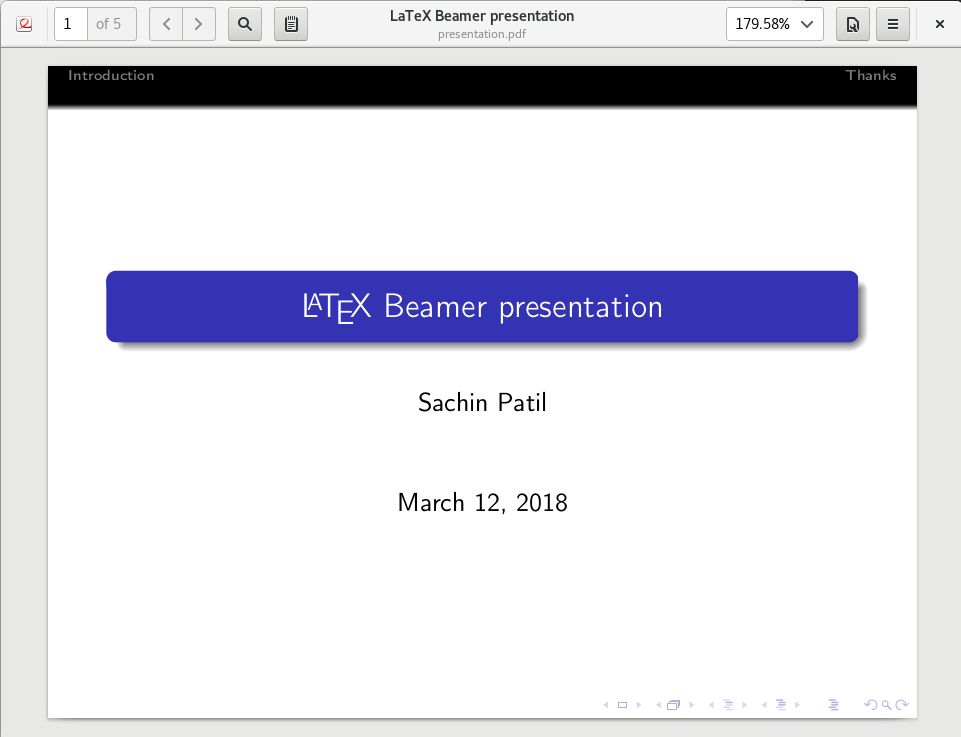

*The cover of Byte Magazine, August, 1979.*

## Theory A: The Axiomatic Language

John McCarthy, Lisp’s creator, did not originally intend for Lisp to be an

elegant distillation of the principles of computation. But, after one or two

fortunate insights and a series of refinements, that’s what Lisp became. Paul

Graham—we will talk about him some more later—has written that, with Lisp,

McCarthy “did for programming something like what Euclid did for geometry.” 2

People might see a deeper meaning in Lisp because McCarthy built Lisp out of

parts so fundamental that it is hard to say whether he invented it or

discovered it.

McCarthy began thinking about creating a language during the 1956 Darthmouth

Summer Research Project on Artificial Intelligence. The Summer Research Project

was in effect an ongoing, multi-week academic conference, the very first in the

field of artificial intelligence. McCarthy, then an assistant professor of

Mathematics at Dartmouth, had actually coined the term “artificial

intelligence” when he proposed the event. 3 About ten or so people attended

the conference for its entire duration.

Among them were Allen Newell and Herbert Simon, two researchers affiliated with the RAND Corporation and Carnegie Mellon that had just designed a language called IPL.

[4](#fn:4)Newell and Simon had been trying to build a system capable of generating proofs

in propositional calculus. They realized that it would be hard to do this while

working at the level of the computer’s native instruction set, so they decided

to create a language—or, as they called it, a “pseudo-code”—that would help

them more naturally express the workings of their “Logic Theory Machine.” 5

Their language, called IPL for “Information Processing Language”, was more of a

high-level assembly dialect then a programming language in the sense we mean

today. Newell and Simon, perhaps referring to Fortran, noted that other

“pseudo-codes” then in development were “preoccupied” with representing

equations in standard mathematical notation.

Their language focused instead on representing sentences in propositional calculus as lists of symbolic expressions. Programs in IPL would basically leverage a series of assembly-language macros to manipulate and evaluate expressions within one or more of these lists.

[6](#fn:6)McCarthy thought that having algebraic expressions in a language,

Fortran-style, would be useful. So he didn’t like IPL very much. 7 But he

thought that symbolic lists were a good way to model problems in artificial

intelligence, particularly problems involving deduction. This was the germ of

McCarthy’s desire to create an algebraic list processing language, a language

that would resemble Fortran but also be able to process symbolic lists like

IPL.

Of course, Lisp today does not resemble Fortran. Over the next few years,

McCarthy’s ideas about what an ideal list processing language should look like

evolved. His ideas began to change in 1957, when he started writing routines

for a chess-playing program in Fortran. The prolonged exposure to Fortran

convinced McCarthy that there were several infelicities in its design, chief

among them the awkward `IF`

statement. 8 McCarthy invented an alternative,

the “true” conditional expression, which returns sub-expression A if the

supplied test succeeds and sub-expression B if the supplied test fails and

which

*also*only evaluates the sub-expression that actually gets returned. During the summer of 1958, when McCarthy worked to design a program that could perform differentiation, he realized that his “true” conditional expression made writing recursive functions easier and more natural.

The differentiation problem also prompted McCarthy to devise the

[9](#fn:9)*maplist*function, which takes another function as an argument and applies it to all the elements in a list.

This was useful for differentiating sums of arbitrarily many terms.

[10](#fn:10)None of these things could be expressed in Fortran, so, in the fall of 1958,

McCarthy set some students to work implementing Lisp. Since McCarthy was now an

assistant professor at MIT, these were all MIT students. As McCarthy and his

students translated his ideas into running code, they made changes that further

simplified the language. The biggest change involved Lisp’s syntax. McCarthy

had originally intended for the language to include something called

“M-expressions,” which would be a layer of syntactic sugar that made Lisp’s

syntax resemble Fortran’s. Though M-expressions could be translated to

S-expressions—the basic lists enclosed by parentheses that Lisp is known for—

S-expressions were really a low-level representation meant for the machine. The

only problem was that McCarthy had been denoting M-expressions using square

brackets, and the IBM 026 keypunch that McCarthy’s team used at MIT did not

have any square bracket keys on its keyboard. 11 So the Lisp team stuck with

S-expressions, using them to represent not just lists of data but function

applications too. McCarthy and his students also made a few other

simplifications, including a switch to prefix notation and a memory model

change that meant the language only had one real type.

[12](#fn:12)In 1960, McCarthy published his famous paper on Lisp called “Recursive

Functions of Symbolic Expressions and Their Computation by Machine.” By that

time, the language had been pared down to such a degree that McCarthy realized

he had the makings of “an elegant mathematical system” and not just another

programming language. 13 He later wrote that the many simplifications that

had been made to Lisp turned it “into a way of describing computable functions

much neater than the Turing machines or the general recursive definitions used

in recursive function theory.”

In his paper, he therefore presented Lisp both as a working programming language and as a formalism for studying the behavior of recursive functions.

[14](#fn:14)McCarthy explained Lisp to his readers by building it up out of only a very

small collection of rules. Paul Graham later retraced McCarthy’s steps, using

more readable language, in his essay [“The Roots of

Lisp”](http://languagelog.ldc.upenn.edu/myl/llog/jmc.pdf). Graham is able to

explain Lisp using only seven primitive operators, two different notations for

functions, and a half-dozen higher-level functions defined in terms of the

primitive operators. That Lisp can be specified by such a small sequence of

basic rules no doubt contributes to its mystique. Graham has called McCarthy’s

paper an attempt to “axiomatize computation.” 15 I think that is a great way

to think about Lisp’s appeal. Whereas other languages have clearly artificial

constructs denoted by reserved words like

`while`

or `typedef`

or ```

public

static void

```

, Lisp’s design almost seems entailed by the very logic of

computing. This quality and Lisp’s original connection to a field as esoteric

as “recursive function theory” should make it no surprise that Lisp has so much

prestige today.## Theory B: Machine of the Future

Two decades after its creation, Lisp had become, according to the famous

[ Hacker’s Dictionary](https://en.wikipedia.org/wiki/Jargon_File), the “mother

tongue” of artificial intelligence research. Early on, Lisp spread quickly,

probably because its regular syntax made implementing it on new machines

relatively straightforward. Later, researchers would keep using it because of

how well it handled symbolic expressions, important in an era when so much of

artificial intelligence was symbolic. Lisp was used in seminal artificial

intelligence projects like the

[SHRDLU natural language program](https://hci.stanford.edu/winograd/shrdlu/), the

[Macsyma algebra system](https://en.wikipedia.org/wiki/Macsyma), and the

[ACL2 logic system](https://en.wikipedia.org/wiki/ACL2).

By the mid-1970s, though, artificial intelligence researchers were running out

of computer power. The PDP-10, in particular—everyone’s favorite machine for

artificial intelligence work—had an 18-bit address space that increasingly was

insufficient for Lisp AI programs. 16 Many AI programs were also supposed to

be interactive, and making a demanding interactive program perform well on a

time-sharing system was challenging. The solution, originally proposed by Peter

Deutsch at MIT, was to engineer a computer specifically designed to run Lisp

programs. These Lisp machines, as I described in

[my last post on Chaosnet](/2018/09/30/chaosnet.html), would give each user a dedicated processor optimized for Lisp. They would also eventually come with development environments written entirely in Lisp for hardcore Lisp programmers. Lisp machines, devised in an awkward moment at the tail of the minicomputer era but before the full flowering of the microcomputer revolution, were high-performance personal computers for the programming elite.

For a while, it seemed as if Lisp machines would be the wave of the future.

Several companies sprang into existence and raced to commercialize the

technology. The most successful of these companies was called Symbolics,

founded by veterans of the MIT AI Lab. Throughout the 1980s, Symbolics produced

a line of computers known as the 3600 series, which were popular in the AI

field and in industries requiring high-powered computing. The 3600 series

computers featured large screens, bit-mapped graphics, a mouse interface, and

[powerful graphics and animation software](https://youtu.be/gV5obrYaogU?t=201).

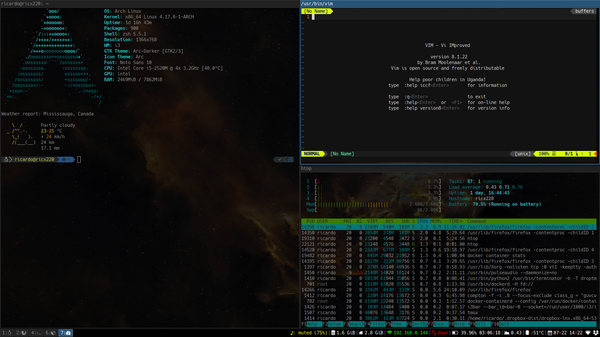

These were impressive machines that enabled impressive programs. For example,

Bob Culley, who worked in robotics research and contacted me via Twitter, was

able to implement and visualize a path-finding algorithm on a Symbolics 3650

in 1985. He explained to me that bit-mapped graphics and object-oriented

programming (available on Lisp machines via [the Flavors

extension](https://en.wikipedia.org/wiki/Flavors_(programming_language))) were

very new in the 1980s. Symbolics was the cutting edge.

*Bob Culley’s path-finding program.*

As a result, Symbolics machines were outrageously expensive. The Symbolics 3600

cost $110,000 in 1983. 16 So most people could only marvel at the power of

Lisp machines and the wizardry of their Lisp-writing operators from afar. But

marvel they did.

*Byte Magazine*featured Lisp and Lisp machines several times from 1979 through to the end of the 1980s. In the August, 1979 issue, a special on Lisp, the magazine’s editor raved about the new machines being developed at MIT with “gobs of memory” and “an advanced operating system.”

He thought they sounded so promising that they would make the two prior years—which saw the launch of the Apple II, the Commodore PET, and the TRS-80—look boring by comparison. A half decade later, in 1985, a