| id | title | fromurl | date | tags | permalink | content | fromurl_status | status_msg | from_content |

|---|---|---|---|---|---|---|---|---|---|

9,597 | 在 Linux 下 9 个有用的 touch 命令示例 | https://www.linuxtechi.com/9-useful-touch-command-examples-linux/ | 2018-05-02T12:43:20 | [

"touch"

] | https://linux.cn/article-9597-1.html | `touch` 命令用于创建空文件,也可以更改 Unix 和 Linux 系统上现有文件时间戳。这里所说的更改时间戳意味着更新文件和目录的访问以及修改时间。

让我们来看看 `touch` 命令的语法和选项:

**语法**:

```

# touch {选项} {文件}

```

`touch` 命令中使用的选项:

在这篇文章中,我们将介绍 Linux 中 9 个有用的 `touch` 命令示例。

### 示例:1 使用 touch 创建一个空文件

要在 Linux 系统上使用 `touch` 命令创建空文件,键入 `touch`,然后输入文件名。如下所示:

```

[root@linuxtechi ~]# touch devops.txt

[root@linuxtechi ~]# ls -l devops.txt

-rw-r--r--. 1 root root 0 Mar 29 22:39 devops.txt

```

### 示例:2 使用 touch 创建批量空文件

可能会出现一些情况,我们必须为某些测试创建大量空文件,这可以使用 `touch` 命令轻松实现:

```

[root@linuxtechi ~]# touch sysadm-{1..20}.txt

```

在上面的例子中,我们创建了 20 个名为 `sysadm-1.txt` 到 `sysadm-20.txt` 的空文件,你可以根据需要更改名称和数字。
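Brace expansion such as `{1..20}` is a feature of bash and similar shells, not of `touch` itself. As a minimal sketch, assuming a plain POSIX `sh` that lacks brace expansion, the same 20 files can be created with a loop. The scratch directory `demo_dir` is a hypothetical name used only for illustration.

```shell
# Work in a throwaway directory so the example leaves nothing behind elsewhere.
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1

# POSIX-sh equivalent of: touch sysadm-{1..20}.txt
i=1
while [ "$i" -le 20 ]; do
    touch "sysadm-$i.txt"
    i=$((i + 1))
done

# Count the files that were created.
file_count=$(ls sysadm-*.txt | wc -l)
echo "created $file_count files"
```

The loop is longer than the brace form, but it behaves the same in any POSIX shell.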

### 示例:3 改变/更新文件和目录的访问时间

假设我们想要改变名为 `devops.txt` 文件的访问时间,在 `touch` 命令中使用 `-a` 选项,然后输入文件名。如下所示:

```

[root@linuxtechi ~]# touch -a devops.txt

```

现在使用 `stat` 命令验证文件的访问时间是否已更新:

```

[root@linuxtechi ~]# stat devops.txt

File: 'devops.txt'

Size: 0 Blocks: 0 IO Block: 4096 regular empty file

Device: fd00h/64768d Inode: 67324178 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)

Context: unconfined_u:object_r:admin_home_t:s0

Access: 2018-03-29 23:03:10.902000000 -0400

Modify: 2018-03-29 22:39:29.365000000 -0400

Change: 2018-03-29 23:03:10.902000000 -0400

Birth: -

```
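To compare only the two timestamps instead of reading the full `stat` report, a format string can help. This is a sketch that assumes GNU coreutils `stat` (the `-c` option; BSD and macOS `stat` use different flags), and `demo_file` is a hypothetical scratch file.

```shell
demo_file=$(mktemp)

# Put both timestamps in the past, then refresh only the access time.
touch -t 201803292239.29 "$demo_file"
touch -a "$demo_file"

# %X = access time, %Y = modification time, both in seconds since the epoch.
atime=$(stat -c %X "$demo_file")
mtime=$(stat -c %Y "$demo_file")
echo "atime=$atime mtime=$mtime"
```

After `touch -a`, the access time should be newer than the modification time, which stays at the 2018 value.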

**改变目录的访问时间:**

假设我们在 `/mnt` 目录下有一个 `nfsshare` 文件夹,让我们用下面的命令改变这个文件夹的访问时间:

```

[root@linuxtechi ~]# touch -a /mnt/nfsshare/

[root@linuxtechi ~]# stat /mnt/nfsshare/

File: '/mnt/nfsshare/'

Size: 6 Blocks: 0 IO Block: 4096 directory

Device: fd00h/64768d Inode: 2258 Links: 2

Access: (0755/drwxr-xr-x) Uid: ( 0/ root) Gid: ( 0/ root)

Context: unconfined_u:object_r:mnt_t:s0

Access: 2018-03-29 23:34:38.095000000 -0400

Modify: 2018-03-03 10:42:45.194000000 -0500

Change: 2018-03-29 23:34:38.095000000 -0400

Birth: -

```

### 示例:4 更改访问时间而不用创建新文件

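Sometimes we want to update a file's timestamps only if the file already exists, without creating it. The `-c` option of `touch` does exactly that: if the file exists, its access and modification times are updated; if it does not exist, no file is created.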

```

[root@linuxtechi ~]# touch -c sysadm-20.txt

[root@linuxtechi ~]# touch -c winadm-20.txt

[root@linuxtechi ~]# ls -l winadm-20.txt

ls: cannot access winadm-20.txt: No such file or directory

```
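The same check can be scripted. A minimal sketch, using a hypothetical scratch directory `demo_dir`, that confirms `touch -c` leaves a missing file missing:

```shell
demo_dir=$(mktemp -d)

# -c (--no-create) must not create a file that does not already exist.
touch -c "$demo_dir/winadm-20.txt"

if [ -e "$demo_dir/winadm-20.txt" ]; then
    created=yes
else
    created=no
fi
echo "file created: $created"
```

Testing with `[ -e ... ]` avoids parsing the error message that `ls` prints.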

### 示例:5 更改文件和目录的修改时间

在 `touch` 命令中使用 `-m` 选项,我们可以更改文件和目录的修改时间。

Let's change the modification time of the file `devops.txt`:

```

[root@linuxtechi ~]# touch -m devops.txt

```

现在使用 `stat` 命令来验证修改时间是否改变:

```

[root@linuxtechi ~]# stat devops.txt

File: 'devops.txt'

Size: 0 Blocks: 0 IO Block: 4096 regular empty file

Device: fd00h/64768d Inode: 67324178 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)

Context: unconfined_u:object_r:admin_home_t:s0

Access: 2018-03-29 23:03:10.902000000 -0400

Modify: 2018-03-29 23:59:49.106000000 -0400

Change: 2018-03-29 23:59:49.106000000 -0400

Birth: -

```

同样的,我们可以改变一个目录的修改时间:

```

[root@linuxtechi ~]# touch -m /mnt/nfsshare/

```

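### Example 6: Change the access and modification times in one go

Use the `-am` option of `touch` to change the access and modification times together, for example `touch -am devops.txt`. Then cross-check both times with `stat`: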

```

[root@linuxtechi ~]# stat devops.txt

File: 'devops.txt'

Size: 0 Blocks: 0 IO Block: 4096 regular empty file

Device: fd00h/64768d Inode: 67324178 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)

Context: unconfined_u:object_r:admin_home_t:s0

Access: 2018-03-30 00:06:20.145000000 -0400

Modify: 2018-03-30 00:06:20.145000000 -0400

Change: 2018-03-30 00:06:20.145000000 -0400

Birth: -

```

### 示例:7 将访问和修改时间设置为特定的日期和时间

每当我们使用 `touch` 命令更改文件和目录的访问和修改时间时,它将当前时间设置为该文件或目录的访问和修改时间。

假设我们想要将特定的日期和时间设置为文件的访问和修改时间,这可以使用 `touch` 命令中的 `-c` 和 `-t` 选项来实现。

日期和时间可以使用以下格式指定:

```

[[CC]YY]MMDDhhmm[.ss]

```

其中:

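* `CC` – first two digits of the year

* `YY` – last two digits of the year

* `MM` – month (01-12)

* `DD` – day of the month (01-31)

* `hh` – hour (00-23)

* `mm` – minute (00-59)

* `ss` – seconds (optional, given after the dot)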

让我们将 `devops.txt` 文件的访问和修改时间设置为未来的一个时间(2025 年 10 月 19 日 18 时 20 分)。

```

[root@linuxtechi ~]# touch -c -t 202510191820 devops.txt

```

使用 `stat` 命令查看更新访问和修改时间:
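The `stat` output for this step is not reproduced above. As a minimal sketch, assuming GNU coreutils `stat` and a hypothetical scratch file `demo_file`, the following sets a timestamp that includes the optional seconds field and prints both times. The time zone is pinned to UTC only to make the result reproducible.

```shell
demo_file=$(mktemp)

# Pin the time zone so touch -t and stat interpret the timestamp identically.
export TZ=UTC

# Format is [[CC]YY]MMDDhhmm[.ss]; the optional .ss part sets the seconds.
touch -c -t 202510191820.30 "$demo_file"

# %x = access time, %y = modification time, in human-readable form.
atime_text=$(stat -c %x "$demo_file")
mtime_text=$(stat -c %y "$demo_file")
echo "Access: $atime_text"
echo "Modify: $mtime_text"
```

Both lines should begin with `2025-10-19 18:20:30`, while the change time (ctime) still reflects when the command was run.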

根据日期字符串设置访问和修改时间,在 `touch` 命令中使用 `-d` 选项,然后指定日期字符串,后面跟文件名。如下所示:

```

[root@linuxtechi ~]# touch -c -d "2010-02-07 20:15:12.000000000 +0530" sysadm-20.txt

```

使用 `stat` 命令验证文件的状态:

```

[root@linuxtechi ~]# stat sysadm-20.txt

File: ‘sysadm-20.txt’

Size: 0 Blocks: 0 IO Block: 4096 regular empty file

Device: fd00h/64768d Inode: 67324189 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)

Context: unconfined_u:object_r:admin_home_t:s0

Access: 2010-02-07 20:15:12.000000000 +0530

Modify: 2010-02-07 20:15:12.000000000 +0530

Change: 2018-03-30 10:23:31.584000000 +0530

Birth: -

```

**注意:**在上述命令中,如果我们不指定 `-c`,如果系统中不存在该文件那么 `touch` 命令将创建一个新文件,并将时间戳设置为命令中给出的。

### 示例:8 使用参考文件设置时间戳(-r)

在 `touch` 命令中,我们可以使用参考文件来设置文件或目录的时间戳。假设我想在 `devops.txt` 文件上设置与文件 `sysadm-20.txt` 文件相同的时间戳,`touch` 命令中使用 `-r` 选项可以轻松实现。

**语法:**

```

# touch -r {reference-file} {target-file}

```

```

[root@linuxtechi ~]# touch -r sysadm-20.txt devops.txt

```
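To confirm that `-r` really copied the timestamps, the two files can be compared numerically. This is a sketch assuming GNU `stat`; the files live in a hypothetical scratch directory `demo_dir`.

```shell
demo_dir=$(mktemp -d)
reference_file="$demo_dir/sysadm-20.txt"
target_file="$demo_dir/devops.txt"

# Give the reference file an old timestamp; the target gets the current time.
touch -t 201002072015.12 "$reference_file"
touch "$target_file"

# Copy the access and modification times from the reference file to the target.
touch -r "$reference_file" "$target_file"

ref_mtime=$(stat -c %Y "$reference_file")
target_mtime=$(stat -c %Y "$target_file")
echo "reference=$ref_mtime target=$target_mtime"
```

The two numbers printed should be identical after `touch -r`.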

### 示例:9 在符号链接文件上更改访问和修改时间

默认情况下,每当我们尝试使用 `touch` 命令更改符号链接文件的时间戳时,它只会更改原始文件的时间戳。如果你想更改符号链接文件的时间戳,则可以使用 `touch` 命令中的 `-h` 选项来实现。

**语法:**

```

# touch -h {symbolic-link-file}

```

```

[root@linuxtechi opt]# ls -l /root/linuxgeeks.txt

lrwxrwxrwx. 1 root root 15 Mar 30 10:56 /root/linuxgeeks.txt -> linuxadmins.txt

[root@linuxtechi ~]# touch -t 203010191820 -h linuxgeeks.txt

[root@linuxtechi ~]# ls -l linuxgeeks.txt

lrwxrwxrwx. 1 root root 15 Oct 19 2030 linuxgeeks.txt -> linuxadmins.txt

```
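The difference between the link's own timestamp and the target's can also be checked in a script. A sketch assuming GNU `stat` on Linux, with the link and target created in a hypothetical scratch directory `demo_dir`:

```shell
demo_dir=$(mktemp -d)
cd "$demo_dir" || exit 1

touch linuxadmins.txt
ln -s linuxadmins.txt linuxgeeks.txt

# -h changes the link itself; without it, touch follows the link to the target.
touch -h -t 203010191820 linuxgeeks.txt

# stat without -L reports on the link; with -L it follows the link to the target.
link_mtime=$(stat -c %Y linuxgeeks.txt)
target_mtime=$(stat -L -c %Y linuxgeeks.txt)
echo "link=$link_mtime target=$target_mtime"
```

Only the link's modification time moves to 2030; the target keeps the time at which it was created.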

这就是本教程的全部了。我希望这些例子能帮助你理解 `touch` 命令。请分享你的宝贵意见和评论。

---

via: <https://www.linuxtechi.com/9-useful-touch-command-examples-linux/>

作者:[Pradeep Kumar](https://www.linuxtechi.com/author/pradeep/) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | In this blog post, we will cover touch command in Linux with 9 useful practical examples.

Touch command is used to change file timestamps and also used to create empty or blank files in Linux.

#### Syntax

# touch {options} {file}

Options:

Let’s delve into some practical examples to showcase its utility.

## 1) Create an empty file

One of the main functions of the touch command is to create an empty (blank) file. An example is shown below:

[root@linuxtechi ~]# touch devops.txt [root@linuxtechi ~]# ls -l devops.txt -rw-r--r--. 1 root root 0 Mar 29 22:39 devops.txt [root@linuxtechi ~]#

## 2) Create empty files in bulk

There can be scenarios where we have to create lots of empty files for testing. This can be easily achieved using the touch command with shell brace expansion, as shown:

[root@linuxtechi ~]# touch sysadm-{1..20}.txt

In the above example, we have created 20 empty files with name sysadm-1.txt to sysadm-20.txt, you can change the name and numbers based on your requirements.

## 3) Update access time of a file and directory

Let’s assume we want to change access time of a file called “**devops.txt**“, to do this use ‘**-a**‘ option in touch command followed by file name, example is shown below,

[root@linuxtechi ~]# touch -a devops.txt [root@linuxtechi ~]#

Now verify whether access time of a file has been updated or not using ‘stat’ command

```

[root@linuxtechi ~]# stat devops.txt

File: ‘devops.txt’

Size: 0 Blocks: 0 IO Block: 4096 regular empty file

Device: fd00h/64768d Inode: 67324178 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)

Context: unconfined_u:object_r:admin_home_t:s0

Access: 2018-03-29 23:03:10.902000000 -0400

Modify: 2018-03-29 22:39:29.365000000 -0400

Change: 2018-03-29 23:03:10.902000000 -0400

Birth: -

[root@linuxtechi ~]#

```

**Change access time of a directory**,

Let’s assume we have a ‘nfsshare’ folder under /mnt, Let’s change the access time of this folder using the below command,

[root@linuxtechi ~]# touch -a /mnt/nfsshare/ [root@linuxtechi ~]#

```

[root@linuxtechi ~]# stat /mnt/nfsshare/

File: ‘/mnt/nfsshare/’

Size: 6 Blocks: 0 IO Block: 4096 directory

Device: fd00h/64768d Inode: 2258 Links: 2

Access: (0755/drwxr-xr-x) Uid: ( 0/ root) Gid: ( 0/ root)

Context: unconfined_u:object_r:mnt_t:s0

Access: 2018-03-29 23:34:38.095000000 -0400

Modify: 2018-03-03 10:42:45.194000000 -0500

Change: 2018-03-29 23:34:38.095000000 -0400

Birth: -

[root@linuxtechi ~]#

```

## 4) Change Access time without creating new file

There can be situations where we want to change the access time of a file only if it exists, and avoid creating it otherwise. With the '**-c**' option, touch updates the timestamps of a file if it exists and does not create the file if it doesn't exist.

[root@linuxtechi ~]# touch -c sysadm-20.txt [root@linuxtechi ~]# touch -c winadm-20.txt [root@linuxtechi ~]# ls -l winadm-20.txt ls: cannot access winadm-20.txt: No such file or directory [root@linuxtechi ~]#

Note: winadm-20.txt does not exist, which is why we get an error when listing the file.

## 5) Change Modification time of a file and directory

Using ‘**-m**‘ option in touch command, we can change the modification time of a file and directory,

Let’s change the modification time of a file called “devops.txt”,

[root@linuxtechi ~]# touch -m devops.txt [root@linuxtechi ~]#

Now verify whether modification time has been changed or not using stat command,

```

[root@linuxtechi ~]# stat devops.txt

File: ‘devops.txt’

Size: 0 Blocks: 0 IO Block: 4096 regular empty file

Device: fd00h/64768d Inode: 67324178 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)

Context: unconfined_u:object_r:admin_home_t:s0

Access: 2018-03-29 23:03:10.902000000 -0400

Modify: 2018-03-29 23:59:49.106000000 -0400

Change: 2018-03-29 23:59:49.106000000 -0400

Birth: -

[root@linuxtechi ~]#

```

Similarly, we can change modification time of a directory,

[root@linuxtechi ~]# touch -m /mnt/nfsshare/ [root@linuxtechi ~]#

## 6) Changing access and modification time in one go

Use “**-am**” option in touch command to change the access and modification together or in one go, example is shown below,

[root@linuxtechi ~]# touch -am devops.txt [root@linuxtechi ~]#

Cross verify the access and modification time using stat,

[root@linuxtechi ~]# stat devops.txt File: ‘devops.txt’ Size: 0 Blocks: 0 IO Block: 4096 regular empty file Device: fd00h/64768d Inode: 67324178 Links: 1 Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root) Context: unconfined_u:object_r:admin_home_t:s0 Access: 2018-03-30 00:06:20.145000000 -0400 Modify: 2018-03-30 00:06:20.145000000 -0400 Change: 2018-03-30 00:06:20.145000000 -0400 Birth: - [root@linuxtechi ~]#

## 7) Set the Access & modification time to a specific date and time

Whenever we change the access and modification time of a file or directory using the touch command, it sets the current time as the access and modification time of that file or directory.

Let's assume we want to set a specific date and time as the access and modification time of a file. This can be achieved using the '-c' and '-t' options of the touch command.

Date and time can be specified in the format: [[CC]YY]MMDDhhmm[.ss]

Where:

- CC – First two digits of a year

- YY – Second two digits of a year

- MM – Month of the Year (01-12)

- DD – Day of the Month (01-31)

- hh – Hour of the day (00-23)

- mm – Minutes of the hour (00-59)

- ss – Seconds of the minute (optional)

Let's set the access and modification time of the devops.txt file to a future date and time (year 2025, 10th month, 19th day, 18th hour, 20th minute):

[root@linuxtechi ~]# touch -c -t 202510191820 devops.txt

Use stat command to view the update access & modification time,

Set the Access and Modification time based on date string, Use ‘-d’ option in touch command and then specify the date string followed by the file name, example is shown below,

[root@linuxtechi ~]# touch -c -d "2010-02-07 20:15:12.000000000 +0530" sysadm-20.txt [root@linuxtechi ~]#

Verify the status using stat command,

[root@linuxtechi ~]# stat sysadm-20.txt File: ‘sysadm-20.txt’ Size: 0 Blocks: 0 IO Block: 4096 regular empty file Device: fd00h/64768d Inode: 67324189 Links: 1 Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root) Context: unconfined_u:object_r:admin_home_t:s0 Access: 2010-02-07 20:15:12.000000000 +0530 Modify: 2010-02-07 20:15:12.000000000 +0530 Change: 2018-03-30 10:23:31.584000000 +0530 Birth: - [root@linuxtechi ~]#

**Note:** In the above commands, if we don't specify '-c', touch creates a new file if it doesn't exist on the system and sets its timestamps to whatever is given in the command.

## 8) Set timestamps to a file using a reference file

In touch command we can use a reference file for setting the timestamps of file or directory. Let’s assume I want to set the same timestamps of file “sysadm-20.txt” on “devops.txt” file. This can be easily achieved using ‘-r’ option in touch.

Syntax:

# touch -r {reference-file} actual-file

[root@linuxtechi ~]# touch -r sysadm-20.txt devops.txt [root@linuxtechi ~]#

## 9) Change Access & Modification time on symbolic link file

By default, when we change the timestamps of a symbolic link with the touch command, only the timestamps of the original (target) file change. To change the timestamps of the symbolic link itself, use the '-h' option of the touch command.

Syntax:

# touch -h {symbolic link file}

[root@linuxtechi opt]# ls -l /root/linuxgeeks.txt lrwxrwxrwx. 1 root root 15 Mar 30 10:56 /root/linuxgeeks.txt -> linuxadmins.txt [root@linuxtechi ~]# touch -t 203010191820 -h linuxgeeks.txt [root@linuxtechi ~]# ls -l linuxgeeks.txt lrwxrwxrwx. 1 root root 15 Oct 19 2030 linuxgeeks.txt -> linuxadmins.txt [root@linuxtechi ~]#

That’s all from this blog post, I hope you have found these examples useful and informative. Please do share your queries and feedback in below comments section.

Read Also: [17 useful rsync (remote sync) Command Examples in Linux](https://www.linuxtechi.com/rsync-command-examples-linux/)

|

9,599 | How to Resume Partially Transferred Files over SSH Using rsync | https://www.ostechnix.com/how-to-resume-partially-downloaded-or-transferred-files-using-rsync/ | 2018-05-02T21:36:09 | [

"scp",

"rsync"

] | https://linux.cn/article-9599-1.html |

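Large files copied over SSH with the `scp` command can be interrupted, cancelled, or corrupted for many reasons, such as a power failure, a network failure, or user intervention. The other day I was copying an Ubuntu 16.04 ISO file to my remote system. Unfortunately the power went out and the network connection dropped immediately. The result? The copy was terminated. This is only a simple example: the Ubuntu ISO is not that big, and I could simply restart the copy once power was restored. In production, however, you may not want to start over when transferring large files.

Moreover, you cannot resume an aborted transfer with `scp`: rerunning it simply overwrites the existing file. What to do then? This is where `rsync` comes in handy. `rsync` can resume an interrupted copy or download. For the curious, `rsync` is a fast, versatile file-copying program for copying and transferring files or folders between remote and local systems.

It offers a large number of options that control every aspect of its behavior and allow very flexible specification of the set of files to copy. It is famous for its delta-transfer algorithm, which reduces the amount of data sent over the network by sending only the differences between the source files and the existing files at the destination. `rsync` is widely used for backups and mirroring, and as an improved copy command for everyday use.

Like `scp`, `rsync` can copy files over SSH. If you want to download or transfer large files and folders over SSH, I recommend `rsync`. Note that `rsync` must be installed on both sides (the remote and the local system) to resume a partially transferred file.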

### Resume partially transferred files using rsync

Let me show you an example. I will copy the Ubuntu 16.04 ISO from my local system to the remote system with this command:

```

$ scp Soft_Backup/OS\ Images/Linux/ubuntu-16.04-desktop-amd64.iso sk@192.168.43.2:/home/sk/

```

Here,

* `sk` is the username on my remote system.

* `192.168.43.2` is the IP address of the remote machine.

Now I press `CTRL+C` to interrupt it.

Sample output:

```

sk@192.168.43.2's password:

ubuntu-16.04-desktop-amd64.iso 26% 372MB 26.2MB/s 00:39 ETA^c

```

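As you can see in the output above, I terminated the copy when it reached 26%.

If I rerun the command above, it simply overwrites the existing file. In other words, the copy does not resume where I left off.

To resume the copy, we can use the `rsync` command as shown below.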

```

$ rsync -P --rsh=ssh Soft_Backup/OS\ Images/Linux/ubuntu-16.04-desktop-amd64.iso sk@192.168.43.2:/home/sk/

```

Sample output:

```

sk@192.168.43.2's password:

sending incremental file list

ubuntu-16.04-desktop-amd64.iso

380.56M 26% 41.05MB/s 0:00:25

```

See? The copy resumed where we left off earlier. You can also use `--partial` instead of `-P` (`-P` is shorthand for `--partial --progress`), as shown below.

```

$ rsync --partial --rsh=ssh Soft_Backup/OS\ Images/Linux/ubuntu-16.04-desktop-amd64.iso sk@192.168.43.2:/home/sk/

```

Here, the `--partial` or `-P` option tells `rsync` to keep the partially transferred file so that the transfer can be resumed.
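A related option, not used in the original article, is `--append-verify` (available in rsync 3.0.0 and later): it continues a transfer by appending to the shorter destination file and then verifies the checksum of the whole file. The sketch below simulates an interrupted copy locally instead of over SSH. The directories and the file name `image.iso` are hypothetical, and the rsync step is skipped if `rsync` is not installed.

```shell
src_dir=$(mktemp -d)
dst_dir=$(mktemp -d)

# Build a 1 MiB source file and a truncated copy that stands in for an
# interrupted transfer.
head -c 1048576 /dev/urandom > "$src_dir/image.iso"
head -c 262144 "$src_dir/image.iso" > "$dst_dir/image.iso"

if command -v rsync >/dev/null 2>&1; then
    # Append the missing tail, then verify the checksum of the whole file.
    rsync --partial --append-verify "$src_dir/image.iso" "$dst_dir/"
    cmp -s "$src_dir/image.iso" "$dst_dir/image.iso" && resume_status=complete
else
    resume_status=skipped
fi
echo "resume: ${resume_status:-failed}"
```

For a real remote transfer, the destination would be a `user@host:path` argument as in the commands above.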

Alternatively, we can resume a partially transferred file over SSH with the following command.

```

$ rsync -avP Soft_Backup/OS\ Images/Linux/ubuntu-16.04-desktop-amd64.iso sk@192.168.43.2:/home/sk/

```

Or,

```

$ rsync -av --partial Soft_Backup/OS\ Images/Linux/ubuntu-16.04-desktop-amd64.iso sk@192.168.43.2:/home/sk/

```

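That's it. You now know how to resume cancelled, interrupted, and partially downloaded files with the `rsync` command. As you can see, it is not difficult. If `rsync` is installed on both systems, we can easily resume the copy as described above.

If you find this tutorial helpful, please share it on your social and professional networks and support us. More good stuff is on the way. Stay tuned!

Cheers!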

---

via: <https://www.ostechnix.com/how-to-resume-partially-downloaded-or-transferred-files-using-rsync/>

作者:[SK](https://www.ostechnix.com/author/sk/) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

9,600 | Pet: A Simple Command-Line Snippet Manager | https://www.ostechnix.com/pet-simple-command-line-snippet-manager/ | 2018-05-02T21:53:06 | [

"命令行"

] | https://linux.cn/article-9600-1.html |

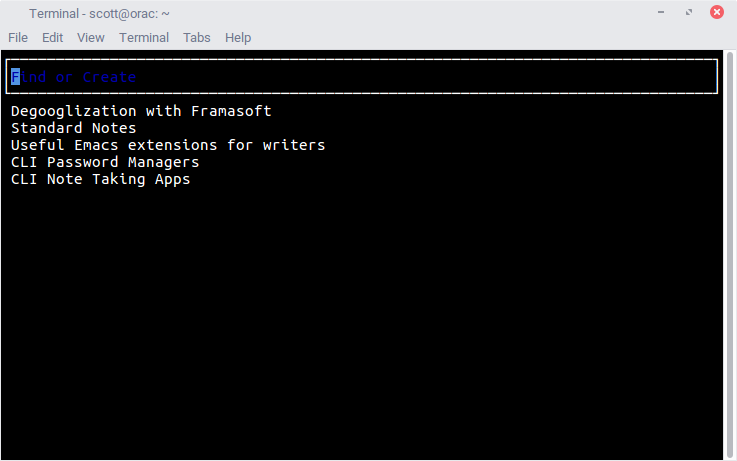

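We can't remember every command, right? Apart from the ones we use all the time, it is nearly impossible to remember long commands that we rarely need. That is why external tools that help us find a command when we need it are useful. In the past we have reviewed two such tools, "Bashpast" and "Keep". With Bashpast, we can easily bookmark Linux commands for easier repeated invocation, and the Keep utility can store important, lengthy commands in the terminal so that they are available on demand. Today we look at another tool in this series that helps you remember commands: "Pet", a simple command-line snippet manager written in Go.

With Pet, you can:

* Register/add your important, long, and complex command snippets.

* Search the saved snippets interactively.

* Run snippets directly without typing them over and over.

* Edit saved snippets easily.

* Sync snippets via Gist.

* Use variables in snippets.

* And many more features are on the way.

### Install the Pet CLI snippet manager

Since it is written in Go, make sure Go is installed on your system.

After installing Go, grab the latest binary from the [**Pet releases page**](https://github.com/knqyf263/pet/releases).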

```

wget https://github.com/knqyf263/pet/releases/download/v0.2.4/pet_0.2.4_linux_amd64.zip

```

For 32-bit machines:

```

wget https://github.com/knqyf263/pet/releases/download/v0.2.4/pet_0.2.4_linux_386.zip

```

Extract the downloaded archive:

```

unzip pet_0.2.4_linux_amd64.zip

```

For 32-bit:

```

unzip pet_0.2.4_linux_386.zip

```

Copy the `pet` binary to a directory in your PATH (such as `/usr/local/bin`):

```

sudo cp pet /usr/local/bin/

```

Finally, make it executable:

```

sudo chmod +x /usr/local/bin/pet

```

If you are on an Arch-based system, you can install it from the AUR with any AUR helper.

Using [Pacaur](https://www.ostechnix.com/install-pacaur-arch-linux/):

```

pacaur -S pet-git

```

Using [Packer](https://www.ostechnix.com/install-packer-arch-linux-2/):

```

packer -S pet-git

```

Using [Yaourt](https://www.ostechnix.com/install-yaourt-arch-linux/):

```

yaourt -S pet-git

```

Using [Yay](https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/):

```

yay -S pet-git

```

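In addition, you need to install [fzf](https://github.com/junegunn/fzf) or [peco](https://github.com/peco/peco) to enable interactive search. See their official GitHub pages for installation instructions.

### Usage

Run `pet` without any arguments to see the list of available commands and general options.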

```

$ pet

pet - Simple command-line snippet manager.

Usage:

pet [command]

Available Commands:

configure Edit config file

edit Edit snippet file

exec Run the selected commands

help Help about any command

list Show all snippets

new Create a new snippet

search Search snippets

sync Sync snippets

version Print the version number

Flags:

--config string config file (default is $HOME/.config/pet/config.toml)

--debug debug mode

-h, --help help for pet

Use "pet [command] --help" for more information about a command.

```

To view the help for a specific command, run:

```

$ pet [command] --help

```

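#### Configure Pet

The default configuration works well. However, you can change the default directory for saved snippets, the selector to use (fzf or peco), the default text editor for editing snippets, the Gist ID details, and so on.

To configure Pet, run: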

```

$ pet configure

```

This command opens the default configuration in your default text editor (vim in my case). Change or edit the values as required.

```

[General]

snippetfile = "/home/sk/.config/pet/snippet.toml"

editor = "vim"

column = 40

selectcmd = "fzf"

[Gist]

file_name = "pet-snippet.toml"

access_token = ""

gist_id = ""

public = false


```

#### Create snippets

To create a new snippet, run:

```

$ pet new

```

Add the command and a description, then press ENTER to save it.

```

Command> echo 'Hell1o, Welcome1 2to OSTechNix4' | tr -d '1-9'

Description> Remove numbers from output.

```

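This is a simple command that removes the digits 1 to 9 from the output of `echo`. You could easily remember it, but if you rarely use it you may have forgotten it completely after a few days. Of course we can search the history with `CTRL+R`, but Pet makes it easier, and it lets you store as many entries as you like.

Another cool feature is that we can easily add the previous command. To do so, add the following lines to your `.bashrc` or `.zshrc` file.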

```

function prev() {

PREV=$(fc -lrn | head -n 1)

sh -c "pet new `printf %q "$PREV"`"

}

```

Run the following command for the saved changes to take effect:

```

source .bashrc

```

Or:

```

source .zshrc

```

Now run any command, for example:

```

$ cat Documents/ostechnix.txt | tr '|' '\n' | sort | tr '\n' '|' | sed "s/.$/\\n/g"

```

To add the command above, you do not need `pet new`. Simply run:

```

$ prev

```

Add a description for the snippet and press ENTER to save it.

#### List snippets

To view the saved snippets, run:

```

$ pet list

```

#### Edit snippets

If you want to edit the description or command of a snippet, run:

```

$ pet edit

```

This opens all saved snippets in your default text editor, where you can edit or change them as needed.

```

[[snippets]]

description = "Remove numbers from output."

command = "echo 'Hell1o, Welcome1 2to OSTechNix4' | tr -d '1-9'"

output = ""

[[snippets]]

description = "Alphabetically sort one line of text"

command = "\t prev"

output = ""

```

#### Use tags in snippets

To attach tags to a snippet, use the `-t` flag as shown below.

```

$ pet new -t

Command> echo 'Hell1o, Welcome1 2to OSTechNix4' | tr -d '1-9'

Description> Remove numbers from output.

Tag> tr command examples

```

#### Execute snippets

To execute a saved snippet, run:

```

$ pet exec

```

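Select the snippet you want to run from the list and press ENTER to run it.

Remember that fzf or peco must be installed for this feature to work.

#### Search snippets

If you have saved many snippets, you can easily search them by a string or keyword, as shown below.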

```

$ pet search

```

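Enter a search term or keyword to narrow down the results.

#### Sync snippets

First, you need an access token. Go to <https://github.com/settings/tokens/new> and create one (only the "gist" scope is required).

Then configure Pet with the following command: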

```

$ pet configure

```

Set the token as `access_token` in the `[Gist]` section.

Once that is done, you can upload your snippets to Gist as shown below.

```

$ pet sync -u

Gist ID: 2dfeeeg5f17e1170bf0c5612fb31a869

Upload success

```

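You can also download the snippets on another PC. To do so, edit the configuration file there and set `gist_id` in `[Gist]` to the Gist ID.

After that, download the snippets with: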

```

$ pet sync

Download success

```

For more details, see the help:

```

pet -h

```

Or:

```

pet [command] -h

```

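That's all. I hope this helps. As you can see, Pet is quite simple to use. If you struggle to remember lengthy commands, the Pet utility will certainly be useful.

Cheers!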

---

via: <https://www.ostechnix.com/pet-simple-command-line-snippet-manager/>

作者:[SK](https://www.ostechnix.com/author/sk/) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

9,601 | 每个 Linux 新手都应该知道的 10 个命令 | https://opensource.com/article/18/4/10-commands-new-linux-users | 2018-05-02T22:17:37 | [

"命令行"

] | https://linux.cn/article-9601-1.html |

> Get started with the Linux command line with these 10 basic commands.

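You may think you're new to Linux, but you're really not. There are [3.74 billion](https://hostingcanada.org/state-of-the-internet/) global internet users, and all of them use Linux in some way, since Linux servers power 90% of the internet. Most modern routers run Linux or Unix, and the [TOP500 supercomputers](https://www.top500.org/statistics/details/osfam/1) also rely on Linux. If you own an Android smartphone, your operating system is built on the Linux kernel.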

换句话说,Linux 无处不在。

但是使用基于 Linux 的技术和使用 Linux 本身是有区别的。如果你对 Linux 感兴趣,但是一直在使用 PC 或者 Mac 桌面,你可能想知道你需要知道什么才能使用 Linux 命令行接口(CLI),那么你来到了正确的地方。

下面是你需要知道的基本的 Linux 命令。每一个都很简单,也很容易记住。换句话说,你不必成为比尔盖茨就能理解它们。

### 1、 ls

你可能会想:“这是(is)什么东西?”不,那不是一个印刷错误 —— 我真的打算输入一个小写的 l。`ls`,或者说 “list”, 是你需要知道的使用 Linux CLI 的第一个命令。这个 list 命令在 Linux 终端中运行,以显示在存放在相应文件系统下的所有主要目录。例如,这个命令:

```

ls /applications

```

显示存储在 `applications` 文件夹下的每个文件夹,你将使用它来查看文件、文件夹和目录。

显示所有隐藏的文件都可以使用命令 `ls -a`。

### 2、 cd

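This is the command you use to go (or "change") to a directory. It is how you navigate from one folder to another. Say you are in your `Downloads` folder and want to go to a folder called `Gym Playlist`. Simply typing `cd Gym Playlist` will not work: because the name contains a space, the shell does not treat it as a single folder name and reports that the folder you are looking for does not exist. To go to that folder you need to include a backslash. The command looks like this: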

```

cd Gym\ Playlist

```
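Quoting the folder name is an equivalent alternative to the backslash. A minimal sketch, using a hypothetical scratch directory `demo_dir` so that it can be run anywhere:

```shell
demo_dir=$(mktemp -d)
mkdir "$demo_dir/Gym Playlist"

# Quoting the name keeps the space as part of a single argument,
# just like escaping it with a backslash.
cd "$demo_dir/Gym Playlist" || exit 1

current_name=$(basename "$PWD")
echo "now in: $current_name"
```

Either form works; quoting tends to be easier to read when a path contains several spaces.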

要从当前文件夹返回到上一个文件夹,你可以在该文件夹输入 `cd ..`。把这两个点想象成一个后退按钮。

### 3、 mv

该命令将文件从一个文件夹转移到另一个文件夹;`mv` 代表“移动”。你可以使用这个简单的命令,就像你把一个文件拖到 PC 上的一个文件夹一样。

例如,如果我想创建一个名为 `testfile` 的文件来演示所有基本的 Linux 命令,并且我想将它移动到我的 `Documents` 文件夹中,我将输入这个命令:

```

mv /home/sam/testfile /home/sam/Documents/

```

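The first part of the command (`mv`) says I want to move a file, the second part (`/home/sam/testfile`) names the file I want to move, and the third part (`/home/sam/Documents/`) indicates where I want the file to go.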

### 4、 快捷键

好吧,这不止一个命令,但我忍不住把它们都包括进来。为什么?因为它们能节省时间并避免经历头痛。

* `CTRL+K` 从光标处剪切文本直至本行结束

* `CTRL+Y` 粘贴文本

* `CTRL+E` 将光标移到本行的末尾

* `CTRL+A` 将光标移动到本行的开头

* `ALT+F` 跳转到下一个空格处

* `ALT+B` 回到前一个空格处

* `ALT+Backspace` 删除前一个词

* `CTRL+W` 剪切光标前一个词

* `Shift+Insert` 将文本粘贴到终端中

* `Ctrl+D` 注销

这些命令在许多方面都能派上用场。例如,假设你在命令行文本中拼错了一个单词:

```

sudo apt-get intall programname

```

你可能注意到 `install` 拼写错了,因此该命令无法工作。但是快捷键可以让你很容易回去修复它。如果我的光标在这一行的末尾,我可以按下两次 `ALT+B` 来将光标移动到下面用 `^` 符号标记的地方:

```

sudo apt-get^intall programname

```

现在,我们可以快速地添加字母 `s` 来修复 `install`,十分简单!

### 5、 mkdir

这是你用来在 Linux 环境下创建目录或文件夹的命令。例如,如果你像我一样喜欢 DIY,你可以输入 `mkdir DIY` 为你的 DIY 项目创建一个目录。

### 6、 at

如果你想在特定时间运行 Linux 命令,你可以将 `at` 添加到语句中。语法是 `at` 后面跟着你希望命令运行的日期和时间,然后命令提示符变为 `at>`,这样你就可以输入在上面指定的时间运行的命令。

例如:

```

at 4:08 PM Sat

at> cowsay 'hello'

at> CTRL+D

```

这将会在周六下午 4:08 运行 `cowsay` 程序。

### 7、 rmdir

这个命令允许你通过 Linux CLI 删除一个目录。例如:

```

rmdir testdirectory

```

请记住,这个命令不会删除里面有文件的目录。这只在删除空目录时才起作用。

### 8、 rm

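If you want to remove files, `rm` is the command you want. It can delete files and directories. To delete a single file, type `rm testfile`; to delete a directory together with the files inside it, type `rm -r testdirectory`.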
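The difference between `rmdir` and `rm -r` is easy to see on a directory that still contains a file. A minimal sketch in a hypothetical scratch directory `demo_dir`:

```shell
demo_dir=$(mktemp -d)
mkdir "$demo_dir/testdirectory"
touch "$demo_dir/testdirectory/testfile"

# rmdir refuses to remove a directory that still contains files.
if rmdir "$demo_dir/testdirectory" 2>/dev/null; then
    rmdir_result=removed
else
    rmdir_result=refused
fi

# rm -r removes the directory together with everything inside it.
rm -r "$demo_dir/testdirectory"
echo "rmdir: $rmdir_result"
```

Because `rm -r` deletes without asking, `rm -ri` can be used instead to confirm each removal interactively.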

### 9、 touch

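The `touch` command, also known as the "make file" command, lets you create new, empty files from the Linux CLI. Much like `mkdir` creates directories, `touch` creates files. For example, `touch testfile` creates an empty file named `testfile`.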

### 10、 locate

这个命令是你在 Linux 系统中用来查找文件的命令。就像在 Windows 中搜索一样,如果你忘了存储文件的位置或它的名字,这是非常有用的。

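For example, if you have a document about blockchain use cases but cannot remember its title, you can type `locate blockchain`, or search for "blockchain use cases" by separating the words with asterisks (`*`). For example: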

```

locate -i '*blockchain*use*cases*'

```
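`locate` searches a prebuilt index (on many systems refreshed by `updatedb`), so a file created a moment ago may not show up yet. As an alternative that is not part of the original article, `find` walks the directory tree directly. The sketch below assumes a `find` that supports `-iname` (GNU, BSD, and BusyBox do) and uses a hypothetical file name.

```shell
demo_dir=$(mktemp -d)
touch "$demo_dir/Blockchain-Use-Cases-2018.txt"

# -iname matches case-insensitively; quote the pattern so the shell passes
# the asterisks to find instead of expanding them itself.
match=$(find "$demo_dir" -iname '*blockchain*use*cases*')
echo "found: $match"
```

`find` is slower than `locate` on large trees, but it always reflects the current state of the filesystem.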

还有很多其他有用的 Linux CLI 命令,比如 `pkill` 命令,如果你开始关机但是你意识到你并不想这么做,那么这条命令很棒。但是这里描述的 10 个简单而有用的命令是你开始使用 Linux 命令行所需的基本知识。

---

via: <https://opensource.com/article/18/4/10-commands-new-linux-users>

作者:[Sam Bocetta](https://opensource.com/users/sambocetta) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | You may think you're new to Linux, but you're really not. There are [3.74 billion](https://hostingcanada.org/state-of-the-internet/) global internet users, and all of them use Linux in some way since Linux servers power 90% of the internet. Most modern routers run Linux or Unix, and the [TOP500 supercomputers](https://www.top500.org/statistics/details/osfam/1) also rely on Linux. If you own an Android smartphone, your operating system is constructed from the Linux kernel.

In other words, Linux is everywhere.

But there's a difference between using Linux-based technologies and using Linux itself. If you're interested in Linux, but have been using a PC or Mac desktop, you may be wondering what you need to know to use the Linux command line interface (CLI). You've come to the right place.

The following are the fundamental Linux commands you need to know. Each is simple and easy to commit to memory. In other words, you don't have to be Bill Gates to understand them.

## 1. ls

You're probably thinking, "Is what?" No, that wasn't a typographical error – I really intended to type a lower-case L. `ls`, or "list," is the number one command you need to know to use the Linux CLI. This list command functions within the Linux terminal to reveal all the major directories filed under a respective filesystem. For example, this command:

`ls /applications`

shows every folder stored in the applications folder. You'll use it to view files, folders, and directories.

All hidden files are viewable by using the command `ls -a`.

## 2. cd

This command is what you use to go (or "change") to a directory. It is how you navigate from one folder to another. Say you're in your Downloads folder, but you want to go to a folder called Gym Playlist. Simply typing `cd Gym Playlist` won't work: the shell treats the space as a separator between arguments, so it won't recognize the name and will report the folder you're looking for doesn't exist. To bring up that folder, you'll need to include a backslash. The command should look like this:

`cd Gym\ Playlist`

To go back from the current folder to its parent folder, type `cd ..`. Think of the two dots like a back button.

## 3. mv

This command transfers a file from one folder to another; `mv` stands for "move." You can use this short command like you would drag a file to a folder on a PC.

For example, if I create a file called `testfile` to demonstrate all the basic Linux commands, and I want to move it to my Documents folder, I would issue this command:

`mv /home/sam/testfile /home/sam/Documents/`

The first piece of the command (`mv`) says I want to move a file, the second part (`/home/sam/testfile`) names the file I want to move, and the third part (`/home/sam/Documents/`) indicates the location where I want the file transferred.

## 4. Keyboard shortcuts

Okay, this is more than one command, but I couldn't resist including them all here. Why? Because they save time and take the headache out of your experience.

- `CTRL+K` Cuts text from the cursor until the end of the line

- `CTRL+Y` Pastes text

- `CTRL+E` Moves the cursor to the end of the line

- `CTRL+A` Moves the cursor to the beginning of the line

- `ALT+F` Jumps forward to the next space

- `ALT+B` Skips back to the previous space

- `ALT+Backspace` Deletes the previous word

- `CTRL+W` Cuts the word behind the cursor

- `Shift+Insert` Pastes text into the terminal

- `Ctrl+D` Logs you out

These commands come in handy in many ways. For example, imagine you misspell a word in your command text:

`sudo apt-get intall programname`

You probably noticed "install" is misspelled, so the command won't work. But keyboard shortcuts make it easy to go back and fix it. If my cursor is at the end of the line, I can press `ALT+B` twice to move the cursor to the place noted below with the `^` symbol:

`sudo apt-get^intall programname`

Now, we can quickly add the letter `s` to fix `install`. Easy peasy!

## 5. mkdir

This is the command you use to make a directory or a folder in the Linux environment. For example, if you're big into DIY hacks like I am, you could enter `mkdir DIY` to make a directory for your DIY projects.

## 6. at

If you want to run a Linux command at a certain time, you can add `at` to the equation. The syntax is `at` followed by the date and time you want the command to run. Then the command prompt changes to `at>` so you can enter the command(s) you want to run at the time you specified above.

For example:

`at 4:08 PM Sat`

`at> cowsay 'hello'`

`at> CTRL+D`

This will run the program cowsay at 4:08 p.m. on Saturday afternoon.

## 7. rmdir

This command allows you to remove a directory through the Linux CLI. For example:

`rmdir testdirectory`

Bear in mind that this command will *not* remove a directory that has files inside. This only works when removing empty directories.

## 8. rm

If you want to remove files, the `rm` command is what you want. It can delete files and directories. To delete a single file, type `rm testfile`, or to delete a directory and the files inside it, type `rm -r testdirectory`.

## 9. touch

The `touch` command, otherwise known as the "make file command," allows you to create new, empty files using the Linux CLI. Much like `mkdir` creates directories, `touch` creates files. For example, `touch testfile` will make an empty file named testfile.

## 10. locate

This command is what you use to find a file in a Linux system. Think of it like search in Windows. It's very useful if you forget where you stored a file or what you named it.

For example, if you have a document about blockchain use cases, but you can't think of the title, you can punch in `locate blockchain`, or you can look for "blockchain use cases" by separating the words with asterisks (`*`). For example:

`locate -i '*blockchain*use*cases*'`

There are tons of other helpful Linux CLI commands, like the `pkill` command, which is great if you start a shutdown and realize you didn't mean to. But the 10 simple and useful commands described here are the essentials you need to get started using the Linux command line.

|

9,602 | How to Quickly Monitor Multiple Hosts in Linux | https://www.ostechnix.com/how-to-quickly-monitor-multiple-hosts-in-linux/ | 2018-05-03T21:27:00 | [

"who",

"rwho"

] | https://linux.cn/article-9602-1.html |

有很多监控工具可用来监控本地和远程 Linux 系统,一个很好的例子是 [Cockpit](https://www.ostechnix.com/cockpit-monitor-administer-linux-servers-via-web-browser/)。但是,这些工具的安装和使用比较复杂,至少对于新手管理员来说是这样。新手管理员可能需要花一些时间来弄清楚如何配置这些工具来监视系统。如果你想要快速而粗略地在局域网中一次监控多台主机,你可能需要了解一下 “rwho” 工具。只要安装了 rwho 实用程序,它就会立即开始监控本地和远程系统。你什么都不用配置!你所要做的就是在要监视的系统上安装 “rwho” 工具。

请不要将 rwho 视为功能丰富且完整的监控工具。这只是一个简单的工具,它只监视远程系统的“正常运行时间”(`uptime`),“负载”(`load`)和**登录的用户**。使用 “rwho” 实用程序,我们可以发现谁在哪台计算机上登录;一个被监视的计算机的列表,列出了正常运行时间(自上次重新启动以来的时间);有多少用户登录了;以及在过去的 1、5、15 分钟的平均负载。不多不少!而且,它只监视同一子网中的系统。因此,它非常适合小型和家庭办公网络。

### 在 Linux 中监控多台主机

让我来解释一下 `rwho` 是如何工作的。每个在网络上使用 `rwho` 的系统都将广播关于它自己的信息,其他计算机可以使用 `rwhod` 守护进程来访问这些信息。因此,网络上的每台计算机都必须安装 `rwho`。此外,为了分发或访问其他主机的信息,必须允许 `rwho` 端口(例如端口 `513/UDP`)通过防火墙/路由器。

好的,让我们来安装它。

我在 Ubuntu 16.04 LTS 服务器上进行了测试,`rwho` 在默认仓库中可用,所以,我们可以使用像下面这样的 APT 软件包管理器来安装它。

```

$ sudo apt-get install rwho

```

在基于 RPM 的系统如 CentOS、 Fedora、 RHEL 上,使用以下命令来安装它:

```

$ sudo yum install rwho

```

如果你在防火墙/路由器之后,确保你已经允许使用 rwhod 513 端口。另外,使用命令验证 `rwhod` 守护进程是否正在运行:

```

$ sudo systemctl status rwhod

```

如果它尚未启动,运行以下命令启用并启动 `rwhod` 服务:

```

$ sudo systemctl enable rwhod

$ sudo systemctl start rwhod

```

现在是时候来监视系统了。运行以下命令以发现谁在哪台计算机上登录:

```

$ rwho

ostechni ostechnix:pts/5 Mar 12 17:41

root server:pts/0 Mar 12 17:42

```

正如你所看到的,目前我的局域网中有两个系统。本地系统用户是 `ostechnix` (Ubuntu 16.04 LTS),远程系统的用户是 `root` (CentOS 7)。可能你已经猜到了,`rwho` 与 `who` 命令相似,但它会监视远程系统。

而且,我们可以使用以下命令找到网络上所有正在运行的系统的正常运行时间:

```

$ ruptime

ostechnix up 2:17, 1 user, load 0.09, 0.03, 0.01

server up 1:54, 1 user, load 0.00, 0.01, 0.05

```

这里,`ruptime`(类似于 `uptime` 命令)显示了我的 Ubuntu(本地) 和 CentOS(远程)系统的总运行时间。明白了吗?棒极了!

你可以在以下位置找到有关局域网中所有其他机器的信息:

```

$ ls /var/spool/rwho/

whod.ostechnix whod.server

```

它很小,但却非常有用,可以发现谁在哪台计算机上登录,以及正常运行时间和系统负载详情。

请注意,这种方法有一个严重的漏洞。由于有关每台计算机的信息都通过网络进行广播,因此该子网中的每个人都可能获得此信息。通常情况下可以,但另一方面,当有关网络的信息分发给非授权用户时,这可能是不必要的副作用。因此,强烈建议在受信任和受保护的局域网中使用它。

更多的信息,查找 man 手册页。

```

$ man rwho

```

好了,这就是全部了。更多好东西要来了,敬请期待!

干杯!

---

via: <https://www.ostechnix.com/how-to-quickly-monitor-multiple-hosts-in-linux/>

作者:[SK](https://www.ostechnix.com/author/sk/) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

9,603 | 如何使用 Linux 防火墙隔离本地欺骗地址 | https://opensource.com/article/18/2/block-local-spoofed-addresses-using-linux-firewall | 2018-05-04T07:33:12 | [

"iptables",

"防火墙"

] | https://linux.cn/article-9603-1.html |

>

> 如何使用 iptables 防火墙保护你的网络免遭黑客攻击。

>

>

>

即便是被入侵检测和隔离系统所保护的远程网络,黑客们也在寻找各种精巧的方法入侵。IDS/IPS 不能停止或者减少那些想要接管你的网络控制权的黑客攻击。不恰当的配置允许攻击者绕过所有部署的安全措施。

在这篇文章中,我将会解释安全工程师或者系统管理员该怎样避免这些攻击。

几乎所有的 Linux 发行版都带着一个内建的防火墙来保护运行在 Linux 主机上的进程和应用程序。大多数防火墙都按照 IDS/IPS 解决方案设计,这样的设计的主要目的是检测和避免恶意包获取网络的进入权。

Linux 防火墙通常有两种接口:iptables 和 ipchains 程序(LCTT 译注:在支持 systemd 的系统上,采用的是更新的接口 firewalld)。大多数人将这些接口称作 iptables 防火墙或者 ipchains 防火墙。这两个接口都被设计成包过滤器。iptables 是有状态防火墙,其基于先前的包做出决定。ipchains 不会基于先前的包做出决定,它被设计为无状态防火墙。

在这篇文章中,我们将会专注于内核 2.4 之后出现的 iptables 防火墙。

有了 iptables 防火墙,你可以创建策略或者有序的规则集,规则集可以告诉内核该如何对待特定的数据包。在内核中的是Netfilter 框架。Netfilter 既是框架也是 iptables 防火墙的项目名称。作为一个框架,Netfilter 允许 iptables 勾连被设计来操作数据包的功能。概括地说,iptables 依靠 Netfilter 框架构筑诸如过滤数据包数据的功能。

每个 iptables 规则都被应用到一个表中的链上。一个 iptables 链就是一个比较包中相似特征的规则集合。而表(例如 `nat` 或者 `mangle`)则描述不同的功能目录。例如, `mangle` 表用于修改包数据。因此,特定的修改包数据的规则被应用到这里;而过滤规则被应用到 `filter` 表,因为 `filter` 表过滤包数据。

iptables 规则有一个匹配集,以及一个诸如 `DROP` 或者 `REJECT` 的目标,目标告诉 iptables 对符合规则的包做什么。因此,没有目标和匹配集,iptables 就不能有效地处理包。目标指的是当一个包匹配某条规则时要采取的特定措施;而匹配条件则是每个数据包都必须满足、iptables 才会对其进行处理的条件。

现在我们已经知道 iptables 防火墙如何工作,让我们着眼于如何使用 iptables 防火墙检测并拒绝或丢弃欺骗地址吧。

### 打开源地址验证

作为一个安全工程师,在处理远程的欺骗地址的时候,我采取的第一步是在内核打开源地址验证。

源地址验证是一种内核层级的特性,这种特性丢弃那些伪装成来自你的网络的包。这种特性使用反向路径过滤器方法来检查收到的包的源地址是否可以通过包到达的接口可以到达。(LCTT 译注:到达的包的源地址应该可以从它到达的网络接口反向到达,只需反转源地址和目的地址就可以达到这样的效果)

利用下面简单的脚本可以打开源地址验证而不用手工操作:

```

#!/bin/sh

#作者: Michael K Aboagye

#程序目标: 打开反向路径过滤

#日期: 7/02/18

#在屏幕上显示 “enabling source address verification”

echo -n "Enabling source address verification…"

#将值0覆盖为1来打开源地址验证

echo 1 > /proc/sys/net/ipv4/conf/default/rp_filter

echo "completed"

```

上面的脚本在执行的时候只显示了 `Enabling source address verification` 这条信息而不会换行。默认的反向路径过滤的值是 `0`,`0` 表示没有源验证。因此,第二行简单地将默认值 `0` 覆盖为 `1`。`1` 表示内核将会通过确认反向路径来验证源地址。

最后,你可以使用下面的命令通过选择 `DROP` 或者 `REJECT` 目标之一来丢弃或者拒绝来自远端主机的欺骗地址。但是,处于安全原因的考虑,我建议使用 `DROP` 目标。

像下面这样,用你自己的 IP 地址代替 `IP_address` 占位符。另外,你必须选择使用 `REJECT` 或者 `DROP` 中的一个,这两个目标不能同时使用。

```

# 二选一:DROP(上文推荐)或 REJECT,不能同时使用

iptables -A INPUT -i internal_interface -s IP_address -j DROP

iptables -A INPUT -i internal_interface -s 192.168.0.0/16 -j DROP

```

这篇文章只提供了如何使用 iptables 防火墙来避免远端欺骗攻击的基础知识。

---

via: <https://opensource.com/article/18/2/block-local-spoofed-addresses-using-linux-firewall>

作者:[Michael Kwaku Aboagye](https://opensource.com/users/revoks) 译者:[leemeans](https://github.com/leemeans) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Attackers are finding sophisticated ways to penetrate even remote networks that are protected by intrusion detection and prevention systems. No IDS/IPS can halt or minimize attacks by hackers who are determined to take over your network. Improper configuration allows attackers to bypass all implemented network security measures.

In this article, I will explain how security engineers or system administrators can prevent these attacks.

Almost all Linux distributions come with a built-in firewall to secure processes and applications running on the Linux host. Most firewalls are designed as IDS/IPS solutions, whose primary purpose is to detect and prevent malicious packets from gaining access to a network.

A Linux firewall usually comes with two interfaces: iptables and ipchains. Most people refer to these interfaces as the "iptables firewall" or the "ipchains firewall." Both interfaces are designed as packet filters. Iptables acts as a stateful firewall, making decisions based on previous packets. Ipchains does not make decisions based on previous packets; hence, it is designed as a stateless firewall.

In this article, we will focus on the iptables firewall, which comes with kernel version 2.4 and beyond.

With the iptables firewall, you can create policies, or ordered sets of rules, which communicate to the kernel how it should treat specific classes of packets. Inside the kernel is the Netfilter framework. Netfilter is both a framework and the project name for the iptables firewall. As a framework, Netfilter allows iptables to hook functions designed to perform operations on packets. In a nutshell, iptables relies on the Netfilter framework to build firewall functionality such as filtering packet data.

Each iptables rule is applied to a chain within a table. An *iptables chain* is a collection of rules that are compared against packets with similar characteristics, while a table (such as nat or mangle) describes diverse categories of functionality. For instance, a mangle table alters packet data. Thus, specialized rules that alter packet data are applied to it, and filtering rules are applied to the filter table because the filter table filters packet data.

Iptables rules have a set of matches, along with a target, such as `DROP` or `REJECT`, that instructs iptables what to do with a packet that conforms to the rule. Thus, without a target and a set of matches, iptables can’t effectively process packets. A target simply refers to a specific action to be taken if a packet matches a rule. Matches, on the other hand, must be met by every packet in order for iptables to process them.

Now that we understand how the iptables firewall operates, let's look at how to use iptables firewall to detect and reject or drop spoofed addresses.

## Turning on source address verification

The first step I, as a security engineer, take when I deal with spoofed addresses from remote hosts is to turn on source address verification in the kernel.

Source address verification is a kernel-level feature that drops packets pretending to come from your network. It uses the reverse path filter method to check whether the source of the received packet is reachable through the interface it came in.

To turn on source address verification, use the simple shell script below instead of doing it manually:

```

#!/bin/sh

#author’s name: Michael K Aboagye

#purpose of program: to enable reverse path filtering

#date: 7/02/18

#displays “enabling source address verification” on the screen

echo -n "Enabling source address verification…"

#Overwrites the value 0 to 1 to enable source address verification

echo 1 > /proc/sys/net/ipv4/conf/default/rp_filter

echo "completed"

```

The preceding script, when executed, displays the message `Enabling source address verification` without appending a new line. The default value of the reverse path filter is 0, which means no source validation. Thus, the second command simply overwrites the default value 0 with 1, which means that the kernel will validate the source by confirming the reverse path.
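One caveat, as far as I understand the kernel's behavior: the `default` entry is the template for interfaces created later, while interfaces that already exist keep their own `rp_filter` files, and the kernel uses the higher of the `all` and per-interface values. The sketch below is an assumption-laden variant, not the script above: it writes 1 to every `rp_filter` file it finds. The function name `enable_rp_filter` and the demo directory are hypothetical; the demo runs against a temporary copy of the `/proc/sys/net/ipv4/conf` layout so it can be tried without root.

```shell
#!/bin/sh
# enable_rp_filter DIR: write 1 to every <name>/rp_filter file under DIR.
# (Hypothetical helper for illustration; not part of the original script.)
enable_rp_filter() {
    conf_dir=$1
    for f in "$conf_dir"/*/rp_filter; do
        # Skip the unexpanded pattern if nothing matched.
        [ -e "$f" ] || continue
        echo 1 > "$f"
    done
}

# Dry run against a throwaway directory that mimics the /proc layout.
demo=$(mktemp -d)
mkdir "$demo/all" "$demo/default" "$demo/eth0"
for d in all default eth0; do
    echo 0 > "$demo/$d/rp_filter"
done

enable_rp_filter "$demo"

# On a real host this would be run as root:
#   enable_rp_filter /proc/sys/net/ipv4/conf
```

Passing the directory as an argument keeps the helper testable; check your kernel's documentation before relying on the `all`/`default` semantics described here.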

Finally, you can use the following command to drop or reject spoofed addresses from remote hosts by choosing either one of these targets: `DROP` or `REJECT`. However, I recommend using `DROP` for security reasons.

Replace the `IP_address` placeholder with your own IP address, as shown below. Also, you must choose to use either `REJECT` or `DROP`; the two targets don’t work together.

```

# Pick one target: DROP (recommended above) or REJECT, not both.

iptables -A INPUT -i internal_interface -s IP_address -j DROP

iptables -A INPUT -i internal_interface -s 192.168.0.0/16 -j DROP

```

This article provides only the basics of how to prevent spoofing attacks from remote hosts using the iptables firewall.

|

9,605 | 为什么MIT的专利许可不讨人喜欢? | https://opensource.com/article/18/3/patent-grant-mit-license | 2018-05-04T19:53:56 | [

"MIT",

"许可证",

"专利"

] | https://linux.cn/article-9605-1.html |

>

> 提要:传统观点认为,Apache 许可证拥有“真正”的专利许可,那 MIT 许可证呢?

>

>

>

我经常听到说,[MIT 许可证](https://opensource.org/licenses/MIT)中没有专利许可,或者它只有一些“默示”专利许可的可能性。如果 MIT 许可证很敏感的话,那么它可能会因为大家对其较为年轻的同伴 [Apache 许可证](https://www.apache.org/licenses/LICENSE-2.0)的不断称赞而产生自卑感,传统观点认为,Apache 许可证拥有“真正”的专利许可。

这种区分经常重复出现,以至于人们认为,在许可证文本中是否出现“专利”一词具有很大的法律意义。不过,对“专利”一词的强调是错误的。1927 年,[美国最高法院表示](https://scholar.google.com/scholar_case?case=6603693344416712533):

>

> “专利所有人使用的任何语言,或者专利所有人向其他人展示的任何行为,使得其他人可以从中合理地推断出专利所有人同意他依据专利来制造、使用或销售,便构成了许可行为,并可以作为侵权行为的辩护理由。”

>

>

>

MIT 许可证无疑拥有明示许可。该许可证不限于授予任何特定类型的知识产权。但其许可证声明里不使用“专利”或“版权”一词。您上次听到有人表示担心 MIT 许可证仅包含默示版权许可是什么时候了?

既然授予权利的文本中没有“版权”和“专利”,让我们来研究一下 MIT 许可证中的字眼,看看我们能否了解到哪些权利被授予。

| | |

| --- | --- |

| **特此授予以下权限,** | 这是授予权限的直接开始。 |

| *免费,* | 为了获得权限,不需要任何费用。 |

| *任何人获得本软件的副本和相关文档文件(本“软件”),* | 我们定义了一些基本知识:许可的主体和受益人。 |

| **无限制地处理本软件,** | 不错,这很好。现在我们正在深究此事。我们并没有因为细微差别而乱搞,“无限制”非常明确。 |

| *包括但不限于* | 对示例列表的介绍指出,该列表不是一种转弯抹角的限制,它只是一个示例列表。 |

| *使用、复制、修改、合并、发布、分发、再许可和/或销售本软件副本的权利,并允许获得本软件的人员享受同等权利,* | 我们可以对软件采取各种各样的行动。虽然有一些建议涉及[专利所有人的专有权](http://uscode.house.gov/view.xhtml?req=granuleid:USC-prelim-title35-section271&num=0&edition=prelim)和[版权所有者的专有权](https://www.copyright.gov/title17/92chap1.html#106),但这些建议并不真正关注特定知识产权法提供的专有权的具体清单;重点是软件。 |

| *受以下条件限制:* | 权限受条件限制。 |

| *上述版权声明和权限声明应包含在本软件的所有副本或主要部分中。* | 这种情况属于所谓的<ruby> 不设限许可 <rp> ( </rp> <rt> permissive license </rt> <rp> ) </rp></ruby>。 |

| *本软件按“原样”提供,不附有任何形式的明示或暗示保证,包括但不限于对适销性、特定用途适用性和非侵权性的保证。在任何情况下,作者或版权所有者都不承担任何索赔、损害或其他责任。无论它是以合同形式、侵权或是其他方式,如由它引起,在其作用范围内、与该软件有联系、该软件的使用或者由这个软件引起的其他行为。* | 为了完整起见,我们添加免责声明。 |

没有任何信息会导致人们认为,许可人会保留对使用专利所有人创造的软件的行为起诉专利侵权的权利,并允许其他人“无限制地处理本软件”。

为什么说这是默示专利许可呢?没有充足的理由这么做。我们来看一个默示专利许可的案例。[Met-Coil Systems Corp. 诉Korners Unlimited](https://scholar.google.com/scholar_case?case=4152769754469052201) 的专利纠纷涉及专利的默示许可([美国专利 4,466,641](https://patents.google.com/patent/US4466641),很久以前已过期),该专利涉及用于连接供暖和空调系统中使用的金属管道段。处理该专利纠纷上诉的美国法院认定,专利权人(Met-Coil)出售其成型机(一种不属于专利保护主体的机器,但用于弯曲金属管道端部的法兰,使其作为以专利方式连接管道的一部分)授予其客户默示专利许可;因此,所谓的专利侵权者(Korners Unlimited)向这些客户出售某些与专利有关的部件(与 Met-Coil 机器弯曲产生的法兰一起使用的特殊角件)并不促成专利的侵权,因为客户被授予了许可(默示许可)。

通过销售其目的是在使用受专利保护的发明中发挥作用的金属弯曲机,专利权人向机器的购买者授予了专利许可。在 Met-Coil 案例中,可以看到需要谈论“默示”许可,因为根本不存在书面许可;法院也试图寻找由行为默示的许可。

现在,让我们回到 MIT 许可证。这是一个明示许可证。这个明示许可证授予了专利许可吗?事实上,在授予“无限制地处理软件”权限的情况下,MIT 许可证的确如此。没有比通过直接阅读授予许可的文字来得出结论更有效的办法了。

“明示专利许可”一词可以用于两种含义之一:

* 包括授予专利权利的明示许可证,或

* 明确提及专利权利的许可证。

其中第一项是与默示专利许可的对比。如果没有授予专利权利的明示许可,人们可以在分析中继续查看是否默示了专利许可。

人们经常使用第二个含义“明示专利许可”。不幸的是,这导致一些人错误地认为缺乏这样的“明示专利许可”会让人寻找默示许可。但是,第二种含义没有特别的法律意义。没有明确提及专利权利并不意味着没有授予专利权利的明示许可。因此,没有明确提及专利权利并不意味着仅受限于专利权利的默示许可。

说完这一切之后,那它究竟有多重要呢?

并没有多重要。当个人和企业根据 MIT 协议贡献软件时,他们并不希望稍后对那些使用专利所有人为之做出贡献的软件的人们主张专利权利。这是一个强有力的声明,当然,我没有直接看到贡献者的期望。但是根据 20 多年来我对依据 MIT 许可证贡献代码的观察,我没有看到任何迹象表明贡献者认为他们应该保留后续对使用其贡献的代码的行为征收专利许可费用的权利。恰恰相反,我观察到了与许可证中“无限制”这个短语一致的行为。

本讨论基于美国法律。其他司法管辖区的法律专家可以针对在其他国家的结果是否有所不同提出意见。

---

作者简介:Scott Peterson 是红帽公司(Red Hat)法律团队成员。很久以前,一位工程师就一个叫做 GPL 的奇怪文件向 Scott 征询法律建议,这个决定性的问题让 Scott 走上了探索包括技术标准和开源软件在内的协同开发法律问题的纠结之路。

译者简介:薛亮,集慧智佳知识产权咨询公司高级咨询师,擅长专利检索、专利分析、竞争对手跟踪、FTO 分析、开源软件知识产权风险分析,致力于为互联网企业、高科技公司提供知识产权咨询服务。

| 200 | OK | Too often, I hear it said that the [MIT License](https://opensource.org/licenses/MIT) has no patent license, or that it has merely some possibility of an "implied" patent license. If the MIT License was sensitive, it might develop an inferiority complex in light of the constant praise heaped on its younger sibling, the [Apache License](https://www.apache.org/licenses/LICENSE-2.0), which conventional wisdom says has a "real" patent license.

This distinction is repeated so often that one might be tempted to think there is some great legal significance in whether or not the word "patent" appears in the text of a license. That emphasis on the word "patent" is misguided. In 1927, the [U.S. Supreme Court said](https://scholar.google.com/scholar_case?case=6603693344416712533):

"Any language used by the owner of the patent, or any conduct on his part exhibited to another from which that other may properly infer that the owner consents to his use of the patent in making or using it, or selling it, upon which the other acts, constitutes a license and a defense to an action for a tort."

The MIT License unquestionably has an express license. That license is not limited to the granting of any particular flavor of intellectual property rights. The statement of license does not use the word "patent" or the word "copyright." When was the last time you heard someone expressing concern that the MIT License merely had an implied copyright license?

Since neither "copyright" nor "patent" appear in the text of the grant of rights, let us look to the words in the MIT License to see what we might learn about what rights are being granted.

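| | |
| --- | --- |
| **Permission is hereby granted,** | That's a straightforward start to the granting of permissions. |
| *free of charge,* | No fee is needed in order to benefit from the permission. |
| *to any person obtaining a copy of this software and associated documentation files (the "Software"),* | We have some basics defined: subject of the license and who is to benefit. |
| **to deal in the Software without restriction,** | Well, that's pretty good. Now we are getting to the heart of the matter. We are not messing around with nuance: "without restriction" is pretty clear. |
| *including without limitation* | This introduction to a list of examples points out that the list is not a backhanded limitation: It is a list of examples. |
| *the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so,* | We have a mixed assortment of actions one might undertake with respect to software. While there is some suggestion of [the exclusive rights of a patent owner](http://uscode.house.gov/view.xhtml?req=granuleid:USC-prelim-title35-section271&num=0&edition=prelim) and [the exclusive rights of a copyright owner](https://www.copyright.gov/title17/92chap1.html#106), the list does not really focus on the specific enumeration of exclusive rights provided by a particular intellectual property law; the focus is on the software. |
| *subject to the following conditions:* | The permissions are subject to a condition. |
| *The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.* | The condition is of the type that has become typical of so-called permissive licenses. |
| *THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.* | For completeness, let's include the disclaimer. |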

There is nothing that would lead one to believe that the licensor wanted to preserve their right to pursue patent infringement claims against the use of software that the patent owner created and permitted others "to deal in the Software without restriction."

Why call this an implied patent license? There is no good reason to do so. Let's look at an example of an implied patent license. The patent dispute in [Met-Coil Systems Corp. v. Korners Unlimited](https://scholar.google.com/scholar_case?case=4152769754469052201) concerns an implied license for a patent ([US Patent 4,466,641](https://patents.google.com/patent/US4466641), long ago expired) on a way of connecting sections of metal ducts of the kind used in heating and air conditioning systems. The U.S. court that handles appeals in patent disputes concluded that the patent owner's (Met-Coil) sale of its roll-forming machine (a machine that is not the subject of the patent, but that is used to bend flanges at the ends of metal ducts as a part of making duct connections in a patented way) granted an implied license under the patent to its customers; thus, sale by the alleged patent infringer (Korners Unlimited) of certain patent-related components (special corner pieces for use with the flanges that resulted from the Met-Coil machine's bending) to those customers was not a contributory patent infringement because the customers were licensed (with an implied license).

By selling the metal-bending machine whose purpose was to play a role in using the patented invention, the patent owner granted a patent license to purchasers of the machine. In the Met-Coil case, one can see a need to talk about an "implied" license because there was no written license at all; the court was finding a license implied by behavior.

Now, let's return to the MIT License. There is an express license. Does that express license grant patent rights? Indeed, with permission granted "to deal in the Software without restriction," it does. And there is no need to arrive at that conclusion via anything more than a direct reading of the words that grant the license.

The phrase "express patent license" could be used with either of two intended meanings:

- an express license that includes a grant of patent rights, or

- a license that expressly refers to patent rights.

The first of those is the contrast to an implied patent license. If there is no express license that grants patent rights, one might move on in the analysis to see if a patent license might be implied.

People have often used the phrase "express patent license" with the second meaning. Unfortunately, that has led some to incorrectly assume that lack of such an "express patent license" leaves one looking for an implied license. However, the second meaning has no particular legal significance. The lack of expressly referring to patent rights does not mean that there is no express license that grants patent rights, and thus the lack of expressly referring to patent rights does not mean that one is limited to an implied grant of patent rights.

Having said all this, how much does it matter?

Not much. When people and companies contribute software under the MIT License, they do so without expecting to be able to later assert patent rights against those who use the software that the patent owner has contributed. That's a strong statement, and of course, I cannot see directly the expectations of contributors. But over the 20+ years that I have observed contribution of code under the MIT License, I see no indication that contributors think that they are reserving the right to later charge patent license fees for use of their contributed code. Quite the contrary: I observe behavior consistent with the "without restriction" phrase in the license.

This discussion is based on the law in the United States. I invite experts on the law in other jurisdictions to offer their views on whether the result would be different in other countries.

|

9,606 | vrms 助你在 Debian 中查找非自由软件 | https://www.ostechnix.com/the-vrms-program-helps-you-to-find-non-free-software-in-debian/ | 2018-05-04T20:06:26 | [

"自由软件",

"vrms",

"rms"

] | https://linux.cn/article-9606-1.html |

有一天,我在 Digital ocean 上读到一篇有趣的指南,它解释了[自由和开源软件之间的区别](https://www.digitalocean.com/community/tutorials/Free-vs-Open-Source-Software)。在此之前,我认为两者都差不多。但是,我错了。它们之间有一些显著差异。在阅读那篇文章时,我想知道如何在 Linux 中找到非自由软件,因此有了这篇文章。

### 向 “Virtual Richard M. Stallman” 问好,这是一个在 Debian 中查找非自由软件的 Perl 脚本

**Virtual Richard M. Stallman** ,简称 **vrms**,是一个用 Perl 编写的程序,它在你基于 Debian 的系统上分析已安装软件的列表,并报告所有来自非自由和 contrib 树的已安装软件包。对于那些不太清楚区别的人,自由软件应该符合以下[**四项基本自由**](https://www.gnu.org/philosophy/free-sw.html)。

* **自由 0** – 不管任何目的,随意运行程序的自由。

* **自由 1** – 研究程序如何工作的自由,并根据你的需求进行调整。访问源代码是一个先决条件。

* **自由 2** – 重新分发副本的自由,这样你可以帮助别人。

* **自由 3** – 改进程序,并向公众发布改进的自由,以便整个社区获益。访问源代码是一个先决条件。

任何不满足上述四个条件的软件都不被视为自由软件。简而言之,**自由软件意味着用户有运行、复制、分发、研究、修改和改进软件的自由。**

现在让我们来看看安装的软件是自由的还是非自由的,好么?

vrms 包存在于 Debian 及其衍生版(如 Ubuntu)的默认仓库中。因此,你可以使用 `apt` 包管理器安装它,使用下面的命令。

```

$ sudo apt-get install vrms

```

安装完成后,运行以下命令,在基于 debian 的系统中查找非自由软件。

```

$ vrms

```

在我的 Ubuntu 16.04 LTS 桌面版上输出的示例。

```

Non-free packages installed on ostechnix

unrar Unarchiver for .rar files (non-free version)

1 non-free packages, 0.0% of 2103 installed packages.

```

如上面的输出所示,我的 Ubuntu 中安装了一个非自由软件包。

如果你的系统中没有任何非自由软件包,则应该看到以下输出。

```

No non-free or contrib packages installed on ostechnix! rms would be proud.

```

vrms 不仅可以在 Debian 上找到非自由软件包,还可以在 Ubuntu、Linux Mint 和其他基于 deb 的系统中找到非自由软件包。

**限制**

不过,vrms 有一些限制。就像我已经提到的那样,它列出了安装的非自由和 contrib 部分的软件包。但是,某些发行版并未遵循“确保专有软件仅存在于 vrms 识别为‘非自由’的仓库中”这一策略,也不努力维护这种分离。在这种情况下,vrms 将不能识别非自由软件,并且始终会报告你的系统上没有安装非自由软件。如果你使用的是像 Debian 和 Ubuntu 这样的发行版,遵循将专有软件保留在非自由仓库的策略,vrms 一定会帮助你找到非自由软件包。

就是这些。希望它是有用的。还有更好的东西。敬请关注!

干杯!

---

via: <https://www.ostechnix.com/the-vrms-program-helps-you-to-find-non-free-software-in-debian/>

作者:[SK](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

9,607 | 用 PGP 保护代码完整性(三):生成 PGP 子密钥 | https://www.linux.com/blog/learn/pgp/2018/2/protecting-code-integrity-pgp-part-3-generating-pgp-subkeys | 2018-05-04T20:29:00 | [

"PGP"

] | https://linux.cn/article-9607-1.html |

>

> 在第三篇文章中,我们将解释如何生成用于日常工作的 PGP 子密钥。

>

>

>

在本系列教程中,我们提供了使用 PGP 的实用指南。在此之前,我们介绍了[基本工具和概念](/article-9524-1.html),并介绍了如何[生成并保护您的主 PGP 密钥](/article-9529-1.html)。在第三篇文章中,我们将解释如何生成用于日常工作的 PGP 子密钥。

#### 清单

1. 生成 2048 位加密子密钥(必要)

2. 生成 2048 位签名子密钥(必要)

3. 生成一个 2048 位验证子密钥(推荐)

4. 将你的公钥上传到 PGP 密钥服务器(必要)

5. 设置一个刷新的定时任务(必要)

#### 注意事项

现在我们已经创建了主密钥,让我们创建用于日常工作的密钥。我们创建 2048 位的密钥是因为很多专用硬件(我们稍后会讨论这个)不能处理更长的密钥,但同样也是出于实用的原因。如果我们发现自己处于一个 2048 位 RSA 密钥也不够好的世界,那将是由于计算或数学有了基本突破,因此更长的 4096 位密钥不会产生太大的差别。

#### 创建子密钥

要创建子密钥,请运行:

```

$ gpg --quick-add-key [fpr] rsa2048 encr

$ gpg --quick-add-key [fpr] rsa2048 sign

```

用你密钥的完整指纹替换 `[fpr]`。

你也可以创建验证密钥,这能让你将你的 PGP 密钥用于 ssh:

```

$ gpg --quick-add-key [fpr] rsa2048 auth

```

你可以使用 `gpg --list-key [fpr]` 来查看你的密钥信息:

```

pub rsa4096 2017-12-06 [C] [expires: 2019-12-06]

111122223333444455556666AAAABBBBCCCCDDDD

uid [ultimate] Alice Engineer <[email protected]>

uid [ultimate] Alice Engineer <[email protected]>

sub rsa2048 2017-12-06 [E]

sub rsa2048 2017-12-06 [S]

```

#### 上传你的公钥到密钥服务器

你的密钥创建已完成,因此现在需要你将其上传到一个公共密钥服务器,使其他人能更容易找到密钥。 (如果你不打算实际使用你创建的密钥,请跳过这一步,因为这只会在密钥服务器上留下垃圾数据。)

```

$ gpg --send-key [fpr]

```

如果此命令不成功,你可以尝试指定一台密钥服务器以及端口,这很有可能成功:

```

$ gpg --keyserver hkp://pgp.mit.edu:80 --send-key [fpr]

```

大多数密钥服务器彼此进行通信,因此你的密钥信息最终将与所有其他密钥信息同步。

**关于隐私的注意事项:**密钥服务器是完全公开的,因此在设计上会泄露有关你的潜在敏感信息,例如你的全名、昵称以及个人或工作邮箱地址。如果你签名了其他人的钥匙或某人签名了你的钥匙,那么密钥服务器还会成为你的社交网络的泄密者。一旦这些个人信息发送给密钥服务器,就不可能被编辑或删除。即使你撤销签名或身份,它也不会将你的密钥记录删除,它只会将其标记为已撤消 —— 这甚至会显得更显眼。

也就是说,如果你参与公共项目的软件开发,以上所有信息都是公开记录,因此通过密钥服务器另外让这些信息可见,不会导致隐私的净损失。

#### 上传你的公钥到 GitHub

如果你在开发中使用 GitHub(谁不是呢?),则应按照他们提供的说明上传密钥:

* [添加 PGP 密钥到你的 GitHub 账户](https://help.github.com/articles/adding-a-new-gpg-key-to-your-github-account/)

要生成适合粘贴的公钥输出,只需运行:

```

$ gpg --export --armor [fpr]

```

#### 设置一个刷新定时任务

你需要定期刷新你的钥匙环,以获取其他人公钥的最新更改。你可以设置一个定时任务来做到这一点:

```

$ crontab -e

```

在新行中添加以下内容:

```

@daily /usr/bin/gpg2 --refresh-keys >/dev/null 2>&1

```

**注意:**检查你的 `gpg` 或 `gpg2` 命令的完整路径,如果你的 `gpg` 是旧式的 GnuPG v.1,请使用 gpg2。

通过 Linux 基金会和 edX 的免费“[Introduction to Linux](https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux)” 课程了解关于 Linux 的更多信息。

---

via: <https://www.linux.com/blog/learn/pgp/2018/2/protecting-code-integrity-pgp-part-3-generating-pgp-subkeys>

作者:[Konstantin Ryabitsev](https://www.linux.com/users/mricon) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,608 | Caffeinated 6.828:练习 shell | https://sipb.mit.edu/iap/6.828/lab/shell/ | 2018-05-06T10:31:57 | [

"MIT"

] | https://linux.cn/article-9608-1.html |

通过在 shell 中实现多项功能,该作业将使你更加熟悉 Unix 系统调用接口和 shell。你可以在支持 Unix API 的任何操作系统(一台 Linux Athena 机器、装有 Linux 或 Mac OS 的笔记本电脑等)上完成此作业。请在第一次上课前将你的 shell 提交到[网站](https://exokernel.scripts.mit.edu/submit/)。

如果你在练习中遇到困难或不理解某些内容时,你不要羞于给[员工邮件列表](mailto:[email protected])发送邮件,但我们确实希望全班的人能够自行处理这级别的 C 编程。如果你对 C 不是很熟悉,可以认为这个是你对 C 熟悉程度的检查。再说一次,如果你有任何问题,鼓励你向我们寻求帮助。

下载 xv6 shell 的[框架](https://sipb.mit.edu/iap/6.828/files/sh.c),然后查看它。框架 shell 包含两个主要部分:解析 shell 命令并实现它们。解析器只能识别简单的 shell 命令,如下所示:

```

ls > y

cat < y | sort | uniq | wc > y1

cat y1

rm y1

ls | sort | uniq | wc

rm y

```

将这些命令剪切并粘贴到 `t.sh` 中。

你可以按如下方式编译框架 shell 的代码:

```

$ gcc sh.c

```

它会生成一个名为 `a.out` 的文件,你可以运行它:

```

$ ./a.out < t.sh

```

执行会崩溃,因为你还没有实现其中的几个功能。在本作业的其余部分中,你将实现这些功能。

### 执行简单的命令

实现简单的命令,例如:

```

$ ls

```

解析器已经为你构建了一个 `execcmd`,所以你唯一需要编写的代码是 `runcmd` 中的 case ' '。要测试你可以运行 “ls”。你可能会发现查看 `exec` 的手册页是很有用的。输入 `man 3 exec`。

你不必实现引用(即将双引号之间的文本视为单个参数)。

### I/O 重定向

实现 I/O 重定向命令,这样你可以运行:

```

echo "6.828 is cool" > x.txt

cat < x.txt

```

解析器已经识别出 '>' 和 '<',并且为你构建了一个 `redircmd`,所以你的工作就是在 `runcmd` 中为这些符号填写缺少的代码。确保你的实现在上面的测试输入中正确运行。你可能会发现 `open`(`man 2 open`) 和 `close` 的 man 手册页很有用。

请注意,此 shell 不会像 `bash`、`tcsh`、`zsh` 或其他 UNIX shell 那样处理引号,并且你的示例文件 `x.txt` 预计包含引号。

### 实现管道

实现管道,这样你可以运行命令管道,例如:

```

$ ls | sort | uniq | wc

```

解析器已经识别出 “|”,并且为你构建了一个 `pipecmd`,所以你必须编写的唯一代码是 `runcmd` 中的 case '|'。测试你可以运行上面的管道。你可能会发现 `pipe`、`fork`、`close` 和 `dup` 的 man 手册页很有用。

现在你应该可以正确地使用以下命令:

```

$ ./a.out < t.sh

```

无论是否完成挑战任务,不要忘记将你的答案提交给[网站](https://exokernel.scripts.mit.edu/submit/)。

### 挑战练习

如果你想进一步尝试,可以将所选的任何功能添加到你的 shell。你可以尝试以下建议之一:

* 实现由 `;` 分隔的命令列表

* 通过实现 `(` 和 `)` 来实现子 shell

* 通过支持 `&` 和 `wait` 在后台执行命令

* 实现参数引用

所有这些都需要改变解析器和 `runcmd` 函数。

---

via: <https://sipb.mit.edu/iap/6.828/lab/shell/>

作者:[mit](https://sipb.mit.edu) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | null |

9,609 | 递归:梦中梦 | https://manybutfinite.com/post/recursion/ | 2018-05-06T11:15:00 | [

"递归"

] | https://linux.cn/article-9609-1.html |

>

> “方其梦也,不知其梦也。梦之中又占其梦焉,觉而后知其梦也。”

>

>

> —— 《庄子·齐物论》

>

>

>

**递归**是很神奇的,但是在大多数的编程类书藉中对递归讲解的并不好。它们只是给你展示一个递归阶乘的实现,然后警告你递归运行的很慢,并且还有可能因为栈缓冲区溢出而崩溃。“你可以将头伸进微波炉中去烘干你的头发,但是需要警惕颅内高压并让你的头发生爆炸,或者你可以使用毛巾来擦干头发。”难怪人们不愿意使用递归。但这种建议是很糟糕的,因为在算法中,递归是一个非常强大的思想。

我们来看一下这个经典的递归阶乘:

```

#include <stdio.h>

int factorial(int n)
{
    int previous = 0xdeadbeef;

    if (n == 0 || n == 1) {
        return 1;
    }

    previous = factorial(n-1);
    return n * previous;
}

int main(int argc)
{
    int answer = factorial(5);
    printf("%d\n", answer);
}

```

*递归阶乘 - factorial.c*

函数调用自身的这个观点在一开始是让人很难理解的。为了让这个过程更形象具体,下图展示的是当调用 `factorial(5)` 并且达到 `n == 1`这行代码 时,[栈上](https://github.com/gduarte/blog/blob/master/code/x86-stack/factorial-gdb-output.txt) 端点的情况:

每次调用 `factorial` 都生成一个新的 [栈帧](https://manybutfinite.com/post/journey-to-the-stack)。这些栈帧的创建和 [销毁](https://manybutfinite.com/post/epilogues-canaries-buffer-overflows/) 是使得递归版本的阶乘慢于其相应的迭代版本的原因。在调用返回之前,累积的这些栈帧可能会耗尽栈空间,进而使你的程序崩溃。

而这些担心经常是存在于理论上的。例如,对于每个 `factorial` 的栈帧占用 16 字节(这可能取决于栈对齐以及其它因素)。如果在你的电脑上运行着现代的 x86 的 Linux 内核,一般情况下你拥有 8 MB 的栈空间,因此,`factorial` 程序中的 `n` 最多可以达到 512,000 左右。这是一个 [巨大无比的结果](https://gist.github.com/gduarte/9944878),它将花费 8,971,833 比特来表示这个结果,因此,栈空间根本就不是什么问题:一个极小的整数 —— 甚至是一个 64 位的整数 —— 在我们的栈空间被耗尽之前就早已经溢出了成千上万次了。

过一会儿我们再去看 CPU 的使用,现在,我们先从比特和字节回退一步,把递归看作一种通用技术。我们的阶乘算法可归结为:将整数 N、N-1、 … 1 推入到一个栈,然后将它们按相反的顺序相乘。我们使用程序的调用栈来实现这一点,这只是一个实现细节:我们也可以在堆上分配一个栈并使用它。虽然调用栈具有特殊的特性,但是它也只是又一种数据结构而已,你可以随意使用。我希望这个示意图可以让你明白这一点。

当你将调用栈视为一种数据结构,有些事情将变得更加清晰明了:将那些整数堆积起来,然后再将它们相乘,这并不是一个好的想法。那是一种有缺陷的实现:就像你拿螺丝刀去钉钉子一样。相对更合理的是使用一个迭代过程去计算阶乘。

但是,螺丝钉太多了,我们只能挑一个。有一个经典的面试题,在迷宫里有一只老鼠,你必须帮助这只老鼠找到一个奶酪。假设老鼠能够在迷宫中向左或者向右转弯。你该怎么去建模来解决这个问题?

就像现实生活中的很多问题一样,你可以将这个老鼠找奶酪的问题简化为一个图,一个二叉树的每个结点代表在迷宫中的一个位置。然后你可以让老鼠在任何可能的地方都左转,而当它进入一个死胡同时,再回溯回去,再右转。这是一个老鼠行走的 [迷宫示例](https://github.com/gduarte/blog/blob/master/code/x86-stack/maze.h):

每到边缘(线)都让老鼠左转或者右转来到达一个新的位置。如果向哪边转都被拦住,说明相关的边缘不存在。现在,我们来讨论一下!这个过程无论你是调用栈还是其它数据结构,它都离不开一个递归的过程。而使用调用栈是非常容易的:

```

#include <stdio.h>
#include "maze.h"

int explore(maze_t *node)
{
    int found = 0;

    if (node == NULL)
    {
        return 0;
    }

    if (node->hasCheese){
        return 1;// found cheese
    }

    found = explore(node->left) || explore(node->right);
    return found;
}

int main(int argc)
{
    int found = explore(&maze);
}

```

*递归迷宫求解 [下载](https://manybutfinite.com/code/x86-stack/maze.c)*

当我们在 `maze.c:13` 中找到奶酪时,栈的情况如下图所示。你也可以在 [GDB 输出](https://github.com/gduarte/blog/blob/master/code/x86-stack/maze-gdb-output.txt) 中看到更详细的数据,它是使用 [命令](https://github.com/gduarte/blog/blob/master/code/x86-stack/maze-gdb-commands.txt) 采集的数据。

它展示了递归的良好表现,因为这是一个适合使用递归的问题。而且这并不奇怪:当涉及到算法时,*递归是规则,而不是例外*。它出现在如下情景中——进行搜索时、进行遍历树和其它数据结构时、进行解析时、需要排序时——它无处不在。正如众所周知的 pi 或者 e,它们在数学中像“神”一样的存在,因为它们是宇宙万物的基础,而递归也和它们一样:只是它存在于计算结构中。

Steven Skiena 的优秀著作 [算法设计指南](http://www.amazon.com/Algorithm-Design-Manual-Steven-Skiena/dp/1848000693/) 的精彩之处在于,他通过 “战争故事” 作为手段来诠释工作,以此来展示解决现实世界中的问题背后的算法。这是我所知道的拓展你的算法知识的最佳资源。另一个读物是 McCarthy 的 [关于 LISP 实现的原创论文](https://github.com/papers-we-love/papers-we-love/blob/master/comp_sci_fundamentals_and_history/recursive-functions-of-symbolic-expressions-and-their-computation-by-machine-parti.pdf)。递归在语言中既是它的名字也是它的基本原理。这篇论文既可读又有趣,在工作中能看到大师的作品是件让人兴奋的事情。

回到迷宫问题上。虽然它在这里很难离开递归,但是并不意味着必须通过调用栈的方式来实现。你可以使用像 `RRLL` 这样的字符串去跟踪转向,然后,依据这个字符串去决定老鼠下一步的动作。或者你可以分配一些其它的东西来记录追寻奶酪的整个状态。你仍然是实现了一个递归的过程,只是需要你实现一个自己的数据结构。

那样似乎更复杂一些,因为栈调用更合适。每个栈帧记录的不仅是当前节点,也记录那个节点上的计算状态(在这个案例中,我们是否只让它走左边,或者已经尝试向右)。因此,代码已经变得不重要了。然而,有时候我们因为害怕溢出和期望中的性能而放弃这种优秀的算法。那是很愚蠢的!

正如我们所见,栈空间是非常大的,在耗尽栈空间之前往往会遇到其它的限制。一方面可以通过检查问题大小来确保它能够被安全地处理。而对 CPU 的担心是由两个广为流传的有问题的示例所导致的:<ruby> 哑阶乘 <rt> dumb factorial </rt></ruby>和可怕的无记忆的 O( 2<sup> n</sup> ) [Fibonacci 递归](http://stackoverflow.com/questions/360748/computational-complexity-of-fibonacci-sequence)。它们并不是栈递归算法的正确代表。

事实上栈操作是非常快的。通常,栈对数据的偏移是非常准确的,它在 [缓存](https://manybutfinite.com/post/intel-cpu-caches/) 中是热数据,并且是由专门的指令来操作它的。同时,使用你自己定义的在堆上分配的数据结构的相关开销是很大的。经常能看到人们写的一些比栈调用递归更复杂、性能更差的实现方法。最后,现代的 CPU 的性能都是 [非常好的](https://manybutfinite.com/post/what-your-computer-does-while-you-wait/) ,并且一般 CPU 不会是性能瓶颈所在。在考虑牺牲程序的简单性时要特别注意,就像经常考虑程序的性能及性能的[测量](https://manybutfinite.com/post/performance-is-a-science)那样。

下一篇文章将是探秘栈系列的最后一篇了,我们将了解尾调用、闭包、以及其它相关概念。然后,我们就该深入我们的老朋友—— Linux 内核了。感谢你的阅读!

---

via:<https://manybutfinite.com/post/recursion/>

作者:[Gustavo Duarte](http://duartes.org/gustavo/blog/about/) 译者:[qhwdw](https://github.com/qhwdw) 校对:[FSSlc](https://github.com/FSSlc)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | **Recursion** is magic, but it suffers from the most awkward introduction in programming books. They’ll show you a recursive factorial implementation, then warn you that while it sort of works it’s terribly slow and might crash due to stack overflows. “You could always dry your hair by sticking your head into the microwave, but watch out for intracranial pressure and head explosions. Or you can use a towel.” No wonder people are suspicious of it. Which is too bad, because **recursion is the single most powerful idea in algorithms**.

Let’s take a look at the classic recursive factorial:
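A minimal sketch of such a function in C, assuming a 64-bit unsigned result. The `assert` guard on `n` is an addition for illustration and is not part of the classic version:

```c
#include <assert.h>
#include <stdint.h>

/* Classic recursive factorial: n! = n * (n-1)!, with 0! = 1! = 1.
 * Each call pushes a new stack frame until n reaches the base case. */
uint64_t factorial(unsigned n)
{
    assert(n <= 20);              /* illustrative guard: 21! overflows 64 bits */
    if (n < 2)
        return 1;                 /* base case ends the recursion */
    return n * factorial(n - 1);  /* the multiply happens after the call returns */
}
```

Calling `factorial(5)` nests five calls before the first one returns, which is the pile of frames discussed next.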


The idea of a function calling itself is mystifying at first. To make it concrete, here is *exactly* what is [on the stack](https://github.com/gduarte/blog/blob/master/code/x86-stack/factorial-gdb-output.txt) when `factorial(5)` is called and reaches `n == 1`:

Each call to `factorial` generates a new [stack frame](/post/journey-to-the-stack). The creation and [destruction](/post/epilogues-canaries-buffer-overflows/) of these stack frames is what makes the recursive factorial slower than its iterative counterpart. The accumulation of these frames before the calls start returning is what can potentially exhaust stack space and crash your program.

These concerns are often theoretical. For example, the stack frames for `factorial` take 16 bytes each (this can vary depending on stack alignment and other factors). If you are running a modern x86 Linux kernel on a computer, you normally have 8 megabytes of stack space, so factorial could handle `n` up to ~512,000. This is a [monstrously large result](https://gist.github.com/gduarte/9944878) that takes 8,971,833 bits to represent, so stack space is the least of our problems: a puny integer - even a 64-bit one - will overflow tens of thousands of times over before we run out of stack space.
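The estimate above is a plain division of stack size by frame size. Here is a small sketch in C, assuming the 16-byte frame quoted above (real frame sizes depend on the compiler and its flags). The helper names `depth_bound` and `current_depth_bound` are hypothetical; `getrlimit` with `RLIMIT_STACK` is the POSIX call for reading the stack limit:

```c
#include <stddef.h>
#include <stdint.h>
#include <sys/resource.h>

/* Rough upper bound on recursion depth: stack size divided by frame size. */
size_t depth_bound(size_t stack_bytes, size_t frame_bytes)
{
    return frame_bytes ? stack_bytes / frame_bytes : 0;
}

/* Same bound, using the soft stack limit the process is running with. */
size_t current_depth_bound(size_t frame_bytes)
{
    struct rlimit rl;
    if (getrlimit(RLIMIT_STACK, &rl) != 0)
        return 0;                           /* limit unknown: claim nothing */
    if (rl.rlim_cur == RLIM_INFINITY)
        return frame_bytes ? SIZE_MAX : 0;  /* no soft limit configured */
    return depth_bound((size_t)rl.rlim_cur, frame_bytes);
}
```

With 8 MiB and 16-byte frames, `depth_bound(8u * 1024 * 1024, 16)` gives 524,288, the "~512,000" figure. Treat it as an optimistic ceiling, since frames from `main` and the C runtime share the same stack.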

We’ll look at CPU usage in a moment, but for now let’s take a step back from the bits and bytes and look at recursion as a general technique. Our factorial algorithm boils down to pushing integers N, N-1, … 1 onto a stack, then multiplying them in reverse order. The fact we’re using the program’s call stack to do this is an implementation detail: we could allocate a stack on the heap and use that instead. While the call stack does have special properties, it’s just another data structure at your disposal. I hope the diagram makes that clear.
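To make the "implementation detail" point concrete, here is a sketch of the same process with the integers pushed onto a heap-allocated array instead of the call stack. The function name and the `n <= 20` guard are assumptions for illustration:

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Same process as the recursive version, but the pending multiplicands
 * live in a heap-allocated array instead of in call-stack frames. */
uint64_t factorial_heap_stack(unsigned n)
{
    assert(n <= 20);                        /* keep the result within 64 bits */

    unsigned *stack = malloc((n + 1) * sizeof *stack);
    if (stack == NULL)
        abort();                            /* out of memory: give up loudly */

    size_t top = 0;
    for (unsigned i = n; i >= 1; i--)       /* push N, N-1, ..., 1 */
        stack[top++] = i;

    uint64_t result = 1;
    while (top > 0)                         /* pop and multiply: 1, 2, ..., N */
        result *= stack[--top];

    free(stack);
    return result;
}
```

The result matches the recursive version; only the place where the pending integers wait has changed.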

Once you see the call stack as a data structure, something else becomes clear: piling up all those integers to multiply them afterwards is *one dumbass idea*. *That* is the real lameness of this implementation: it’s using a screwdriver to hammer a nail. It’s far more sensible to use an iterative process to calculate factorials.
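For comparison, a sketch of the iterative process, again with a hypothetical name and an illustrative overflow guard. Nothing piles up: one accumulator is updated in place.

```c
#include <assert.h>
#include <stdint.h>

/* Iterative factorial: a single accumulator, no pending frames. */
uint64_t factorial_iter(unsigned n)
{
    assert(n <= 20);                   /* 21! no longer fits in 64 bits */
    uint64_t result = 1;
    for (unsigned i = 2; i <= n; i++)
        result *= i;                   /* multiply as we go */
    return result;
}
```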

But there are *plenty* of screws out there, so let’s pick one. There is a traditional interview question where you’re given a mouse in a maze, and you must help the mouse search for cheese. Suppose the mouse can turn either left or right in the maze. How would you model and solve this problem?

Like most problems in life, you can reduce this rodent quest to a graph, in particular a binary tree where the nodes represent positions in the maze. You could then have the mouse attempt left turns whenever possible, and backtrack to turn right when it reaches a dead end. Here’s the mouse walk in an [example maze](https://github.com/gduarte/blog/blob/master/code/x86-stack/maze.h):

Each edge (line) is a left or right turn taking our mouse to a new position. If either turn is blocked, the corresponding edge does not exist. Now we’re talking! This process is *inherently* recursive whether you use the call stack or another data structure. But using the call stack is just *so easy*:

Code listing: [maze.c](/code/x86-stack/maze.c)
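As a rough illustration of how little code the call-stack version needs, here is a minimal sketch. It is not the linked `maze.c`: the `struct node` layout and the name `explore` are assumptions made for this example.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical maze node: a position with optional left and right turns. */
struct node {
    bool has_cheese;
    struct node *left;     /* NULL when the left turn is blocked */
    struct node *right;    /* NULL when the right turn is blocked */
};

/* Depth-first search: try left first, backtrack, then try right.
 * The call stack remembers where we are and which turn comes next. */
bool explore(const struct node *n)
{
    if (n == NULL)
        return false;      /* blocked turn: nothing to explore */
    if (n->has_cheese)
        return true;       /* found it */
    return explore(n->left) || explore(n->right);
}
```

The short-circuit `||` is what encodes "only go right if the left turn failed".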

Below is the stack when we find the cheese in maze.c:13. You can also see the detailed [GDB output](https://github.com/gduarte/blog/blob/master/code/x86-stack/maze-gdb-output.txt) and [commands](https://github.com/gduarte/blog/blob/master/code/x86-stack/maze-gdb-commands.txt) used to gather data.

This shows recursion in a much better light because it’s a suitable problem. And that’s no oddity: when it comes to algorithms, *recursion is the rule, not the exception*. It comes up when we search, when we traverse trees and other data structures, when we parse, when we sort: it’s *everywhere*. You know how **pi** or **e** come up in math all the time because they’re in the foundations of the universe? Recursion is like that: it’s in the fabric of computation.

Steven Skiena’s excellent [Algorithm Design Manual](http://www.amazon.com/Algorithm-Design-Manual-Steven-Skiena/dp/1848000693/) is a great place to see that in action as he works through his “war stories” and shows the reasoning behind algorithmic solutions to real-world problems. It’s the best resource I know of to develop your intuition for algorithms. Another good read is McCarthy’s [original paper on LISP](https://github.com/papers-we-love/papers-we-love/blob/master/comp_sci_fundamentals_and_history/recursive-functions-of-symbolic-expressions-and-their-computation-by-machine-parti.pdf). Recursion is both in its title and in the foundations of the language. The paper is readable and fun, and it’s always a pleasure to see a master at work.

Back to the maze. While it’s hard to get away from recursion here, it doesn’t mean it must be done via the call stack. You could for example use a string like `RRLL` to keep track of the turns, and rely on the string to decide on the mouse’s next move. Or you can allocate something else to record the state of the cheese hunt. You’d still be implementing a recursive process, but rolling your own data structure.
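Here is one way the turn-string idea might look, as a sketch under assumptions: the `struct node` layout, the `MAX_TURNS` cap, and the function names are all hypothetical. The string of turns is the only state, and each step replays it from the entrance to find the current position.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_TURNS 64   /* hypothetical cap; deeper positions count as dead ends */

/* Hypothetical maze node, as in a binary-tree model of the maze. */
struct node {
    bool has_cheese;
    struct node *left;     /* NULL when the left turn is blocked */
    struct node *right;    /* NULL when the right turn is blocked */
};

/* Replay a string of turns such as "RRLL" starting from the entrance. */
static const struct node *follow(const struct node *root,
                                 const char *path, size_t len)
{
    const struct node *n = root;
    for (size_t i = 0; i < len; i++)
        n = (path[i] == 'L') ? n->left : n->right;
    return n;
}

/* Left-first search without recursion. On success, path holds the
 * NUL-terminated turns that lead from the entrance to the cheese. */
bool find_cheese(const struct node *root, char path[MAX_TURNS + 1])
{
    size_t len = 0;
    if (root == NULL)
        return false;

    for (;;) {
        const struct node *cur = follow(root, path, len);
        if (cur->has_cheese) {
            path[len] = '\0';
            return true;
        }
        if (len < MAX_TURNS && cur->left != NULL) {
            path[len++] = 'L';              /* prefer the left turn */
            continue;
        }
        if (len < MAX_TURNS && cur->right != NULL) {
            path[len++] = 'R';              /* left is blocked: go right */
            continue;
        }
        /* Dead end: undo turns until a left turn can become a right turn. */
        bool resumed = false;
        while (len > 0) {
            char last = path[--len];
            const struct node *parent = follow(root, path, len);
            if (last == 'L' && parent->right != NULL) {
                path[len++] = 'R';
                resumed = true;
                break;
            }
        }
        if (!resumed) {
            path[0] = '\0';
            return false;                   /* every turn has been tried */
        }
    }
}
```

Compared with the call-stack version, the bookkeeping for "which turn did I take here?" is now explicit, and replaying the string costs time proportional to the current depth on every step.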

That’s likely to be more complex because the call stack fits like a glove. Each stack frame records not only the current node, but also the state of computation in that node (in this case, whether we’ve taken only the left, or are already attempting the right). Hence the code becomes trivial. Yet we sometimes give up this sweetness for fear of overflows and hopes of performance. That can be foolish.

As we’ve seen, the stack is large and frequently other constraints kick in before stack space does. One can also check the problem size and ensure it can be handled safely. The CPU worry is instilled chiefly by two widespread pathological examples: the dumb factorial and the hideous O(2<sup>n</sup>) [recursive Fibonacci](http://stackoverflow.com/questions/360748/computational-complexity-of-fibonacci-sequence) without memoization. These are **not** indicative of sane stack-recursive algorithms.
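For contrast with the pathological Fibonacci, here is a sketch of the memoized variant. The table size, the names, and the use of 0 as a "not computed yet" marker are choices made for this example. Each value is computed once, so the work grows linearly with `n` instead of exponentially, and the assert doubles as the problem-size check mentioned above.

```c
#include <assert.h>
#include <stdint.h>

#define FIB_MAX 93   /* fib(93) is the largest Fibonacci number below 2^64 */

/* Cache of computed values; 0 means "not computed yet", which is safe
 * because fib(n) >= 1 for every n >= 2 stored here. */
static uint64_t memo[FIB_MAX + 1];

uint64_t fib_memo(unsigned n)
{
    assert(n <= FIB_MAX);      /* check the problem size up front */
    if (n < 2)
        return n;              /* fib(0) = 0, fib(1) = 1 */
    if (memo[n] == 0)
        memo[n] = fib_memo(n - 1) + fib_memo(n - 2);
    return memo[n];
}
```

It is still call-stack recursion, with a depth of at most 93 frames.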

The reality is that stack operations are *fast*. Often the offsets to data are known exactly, the stack is hot in the [caches](/post/intel-cpu-caches/), and there are dedicated instructions to get things done. Meanwhile, there is substantial overhead involved in using your own heap-allocated data structures. It’s not uncommon to see people write something that ends up *more complex and less performant* than call-stack recursion. Finally, modern CPUs are [pretty good](/post/what-your-computer-does-while-you-wait/) and often not the bottleneck. Be careful about sacrificing simplicity and, as always with performance, [measure](/post/performance-is-a-science).

The next post is the last in this stack series, and we’ll look at Tail Calls, Closures, and Other Fauna. Then it’ll be time to visit our old friend, the Linux kernel. Thanks for reading!

|

9,610 | Format your academic papers on Linux with groff -me | https://opensource.com/article/18/2/how-format-academic-papers-linux-groff-me | 2018-05-06T13:49:28 | [

"groff"

] | https://linux.cn/article-9610-1.html |

>

> Learn to use simple macros to add footnotes, quotes, subheadings, and other formatting to your class papers.

>

>

>

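When I discovered Linux in 1993, I was an undergraduate student. I was excited to have the power of a Unix system in my dorm room, but despite its many capabilities, Linux lacked applications. Word processors like LibreOffice and OpenOffice were still years away. If you wanted to use a word processor, you would likely boot your system into MS-DOS and use WordPerfect, the shareware GalaxyWrite, or a similar program.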

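That was my approach, since I needed to write papers for my classes, but I preferred staying in Linux. I knew from our "big Unix" campus computer lab that Unix systems provided a set of text-formatting programs called `nroff` and `troff`. They are different interfaces to the same system: `nroff` generates plain-text output, suitable for screens or line printers, while `troff` generates very attractive output, usually for printing on a laser printer.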

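On Linux, `nroff` and `troff` are combined as GNU troff, more commonly known as [groff](https://www.gnu.org/software/groff/). I was happy to see a version of groff included in early Linux distributions, so I set out to learn how to use it to write class papers. The first macro set I learned was the `-me` macro package, a straightforward, easy-to-learn macro set.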

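The first thing to know about `groff` is that it processes and formats text according to a set of macros. A macro is usually a two-character command, set on a line by itself, with a leading dot. A macro may take one or more options. When `groff` encounters one of these macros while processing a document, it automatically formats the text accordingly.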

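Below, I'll share the basics of using `groff -me` to write simple documents like class papers. I won't go into depth on topics such as nested lists, keeps and displays, or tables and figures.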

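### Paragraphs

Let's start with an easy example you see in almost every type of document: paragraphs. Paragraphs can be formatted with the first line either indented or not (that is, flush against the left margin). Many printed documents, including academic papers, magazines, journals, and books, use a combination of the two types, with the first (leading) paragraph in a document or chapter flush left and all other (regular) paragraphs indented. In `groff -me`, you can use both paragraph types: leading paragraphs (`.lp`) and regular paragraphs (`.pp`).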

```
.lp
This is the first paragraph.
.pp
This is a standard paragraph.
```

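### Text formatting

The macro to format text in bold is `.b`, and the macro for italics is `.i`. If you put `.b` or `.i` on a line by itself, all text that comes after it will be in bold or italics. But it's more likely you just want to put one or a few words in bold or italics. To make one word bold or italic, put that word on the same line as `.b` or `.i`, as an option. To format multiple words in bold or italics, enclose your text in quotes.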

```
.pp
You can do basic formatting such as
.i italics
or
.b "bold text."
```

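In the above example, the period at the end of the bold text will also be in bold. In most cases, that's not what you want: only the words should be bold, not the trailing period. To get the effect you want, you can add a second argument to `.b` or `.i` to indicate any text that should follow the bold or italic text in normal type. For example, you can do this to ensure that the trailing period doesn't show up in bold.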

```
.pp
You can do basic formatting such as
.i italics
or
.b "bold text" .
```

### Lists

With `groff -me`, you can create two types of lists: bulleted lists (`.bu`) and numbered lists (`.np`).

```