| id | title | fromurl | date | tags | permalink | content | fromurl_status | status_msg | from_content |
|---|---|---|---|---|---|---|---|---|---|

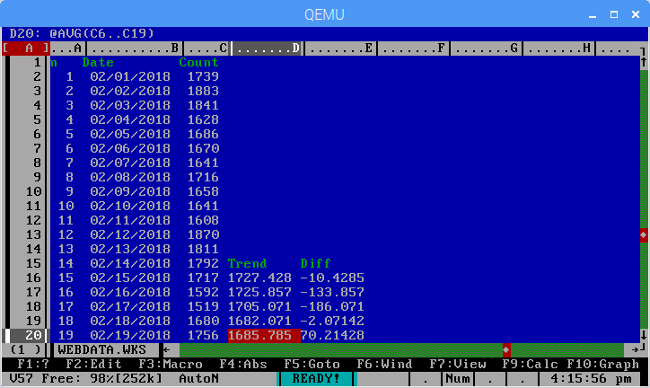

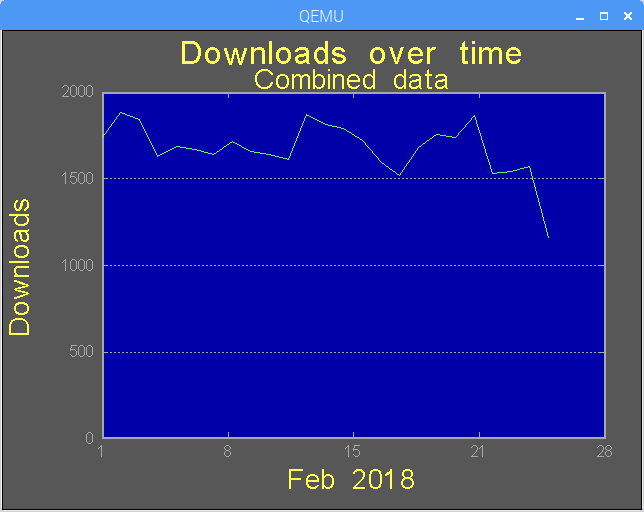

9,493 | 快捷教程:如何在命令行上编辑文件 | https://www.howtoforge.com/faq/how-to-edit-files-on-the-command-line | 2018-03-29T12:59:54 | [

"编辑器"

] | https://linux.cn/article-9493-1.html |

此次教程中,我们将向您展示三种命令行编辑文件的方式。本文一共覆盖了三种命令行编辑器,vi(或 vim)、nano 和 emacs。

### 在命令行上使用 Vi 或 Vim 编辑文件

您可以使用 `vi` 编辑文件。运行如下命令,打开文件:

```

vi /path/to/file

```

现在,您可以看见文件中的内容了(如果文件存在的话。请注意,如果此文件不存在,`vi` 会打开一个空缓冲区,文件要到保存时才会真正创建)。

`vi` 最重要的命令莫过于此:

键入 `i` 进入<ruby> 编辑 <rt> Insert </rt></ruby>模式。如此,您可以编辑文本。

退出编辑模式请键入 `ESC`。

正处于光标之下的字符,使用 `x` 键删除(您千万不要在编辑模式这样做,如果您这样做了,将不会删除光标下的字符,而是会在光标下插入 `x` 字符)。因此,当您仅仅使用 `vi` 打开文本(LCTT 译注:此时默认进入指令模式),此时您可以使用 `x` 键立即删除字符。在编辑模式下,您需要键入 `ESC` 退出编辑模式。

如果您做了修改,想要保存文件,请键入 `:x`(同样,您不能在编辑模式执行此操作。请按 `ESC` 退出编辑模式,完成此操作)。

如果您并没有做修改,键入 `:q` 退出文本(您同样不能在编辑模式执行此操作)。

如果您做了修改,但却想不保存文本而直接退出,请键入 `:q!`(同样不能在编辑模式执行此操作)。

请注意在上述所有操作中,您都可以使用方向键操控光标在文本中的位置。

以上所有都是 `vi` 编辑器的内容。请注意,`vim` 编辑器或多或少也会支持这些操作,如果您想深层次了解 `vim`,请看 [这里](https://www.howtoforge.com/vim-basics)。

### 使用 Nano 命令行编辑器编辑文件

接下来是 Nano 编辑器。您可以执行 `nano` 命令调用它:

```

nano

```

这里是 `nano` 的用户界面:

您也可以直接用它打开文件。

```

nano [filename]

```

例如:

```

nano test.txt

```

如您所见,用户界面大致被分成四个部分。编辑器顶部显示编辑器版本、正在编辑的文件和编辑状态。然后是实际编辑区域,在这里,您能看见文件的内容。编辑区域下方的高亮行显示重要的信息,最后两行显示执行基础任务的快捷键,对初学者很有帮助。

这里是您前期应当了解的快捷键列表。

使用方向键浏览文本,退格键删除文本,`Ctrl+O` 保存文件修改。当您尝试保存时,`nano` 会征询您的确认(请参阅截图中主编辑器下方区域):

注意,在这个阶段,您有一个选项,可以保存为不同的系统格式。键入 `Alt+D` 选择 DOS 格式,`Alt+M` 选择 Mac 格式。

敲回车保存更改。

继续,剪切文本使用 `Ctrl+K`,粘贴文本使用 `Ctrl+U`。这些快捷键同样可以用来剪切和粘贴单个单词,但您需要先选中单词:将光标置于单词的第一个字符处,键入 `Alt+A`,然后使用方向键选中整个单词。

现在来进行搜索操作。使用 `Ctrl+W` 可以执行一个简单的搜索,同时搜索和替换您可以使用 `Ctrl+\\`。

这些就是 `nano` 的一些基础功能,如果您是初次使用 `nano` 编辑器,它们能帮您顺利起步。更多内容,请阅读我们的完整介绍,点击 [这里](https://www.howtoforge.com/linux-nano-command/)。

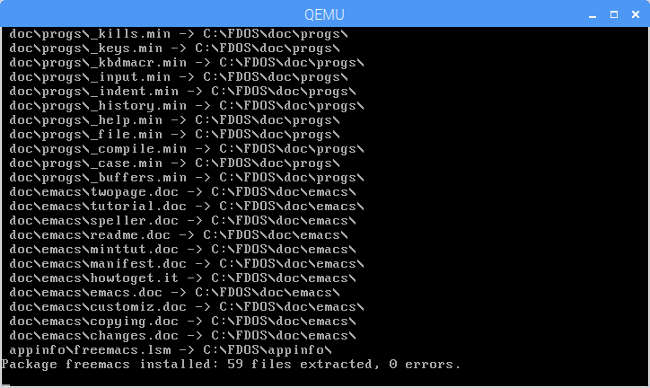

### 使用 Emacs 命令行编辑器编辑文件

接下来登场的是 Emacs。如果系统未安装此软件,您可以使用下面的命令在您的系统中安装它:

```

sudo apt-get install emacs

```

和 `nano` 一致,您可以使用下面的方式在 `emacs` 中直接打开文件:

```

emacs -nw [filename]

```

注意:`-nw` 选项确保 `emacs` 在本窗口启动,而不是打开一个新窗口,默认情况下,它会打开一个新窗口。

一个实例:

```

emacs -nw test.txt

```

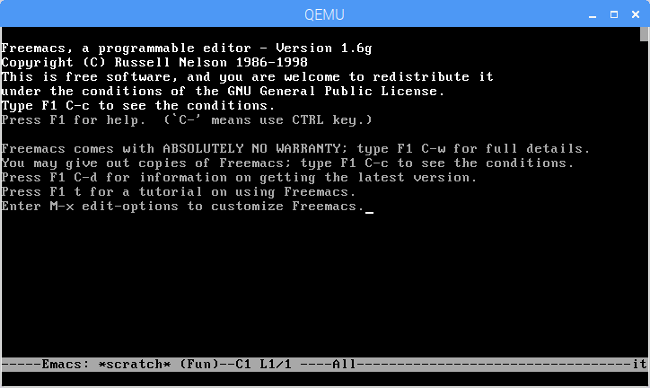

下面是编辑器的用户界面:

和 `nano` 一样,`emacs` 的界面同样被分割成了几个部分。第一部分是最上方的菜单区域,和您在图形界面下的应用程序一致。接下来是显示文本(您打开的文件文本)内容的主编辑区域。

编辑区域下方坐落着另一个高亮菜单条,显示了文件名,编辑模式(如截图内的 ‘Text’)和状态(`**` 为已修改,`-` 为未修改,`%%` 为只读)。最后是提供输入指令的区域,同时也能查看输出。

现在开始基础操作,当您做了修改、想要保存时,在 `Ctrl+x` 之后键入 `Ctrl+s`。最后,在面板最后一行会向您显示一些信息:‘Wrote ........’。这里有一个例子:

现在,如果您放弃修改并且退出时,在 `Ctrl+x` 之后键入`Ctrl+c`。编辑器将会立即询问,如下图:

输入 `n` 之后键入 `yes`,之后编辑器将会不保存而直接退出。

请注意,Emacs 中 `C` 代表 `Ctrl`,`M` 代表 `Alt`。比如,当你看见 `C-x`,这意味着按下 `Ctrl+x`。

至于其他基本编辑操作,以删除为例,大多数人习惯使用 `Backspace`/`Delete` 键。不过,还有一些快捷键能让删除更顺手。比如,使用 `Ctrl+k` 删除从光标到行尾的内容,`Alt+d` 删除一个单词,`Alt+k` 删除一个整句。

在键入 `Ctrl+x` 之后键入 `u` 将撤销操作,输入 `Ctrl+g` 之后输入 `Ctrl+_` 恢复撤销的操作。使用 `Ctrl+s` 向前搜索,`Ctrl+r` 反向搜索。

继续,使用 `Alt+Shift+%` 执行替换操作。您将被询问要替换单词。回复并回车。之后编辑器将会询问您是否替换。例如,下方截图展示了 `emacs` 询问使用者关于单词 ‘This’ 的替换操作。

输入替换文本并回车。每一个替换操作 `emacs` 都会等待询问,下面是首次询问:

键入 `y` 之后,单词将会被替换。

这些就是几乎所有的基础操作,您在开始使用 `emacs` 时需要了解掌握的。对了,我们忘记讨论如何访问顶部菜单,其实这些可以通过使用 `F10` 访问它们。

按 `Esc` 键三次,退出这些菜单。

---

via: <https://www.howtoforge.com/faq/how-to-edit-files-on-the-command-line>

作者:[Falko Timme, Himanshu Arora](https://www.howtoforge.com) 译者:[CYLeft](https://github.com/CYLeft) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | # How do I edit files on the command line?

**Authors:** Falko Timme, Himanshu Arora, Till Brehm

In this tutorial, we will show you how to edit files on the command line. This article covers three command-line editors, vi (or vim), nano, and emacs. These editors are available on all Linux distributions like Arch Linux, CentOS, Debian, Fedora, and Ubuntu.

## Editing Files with Vi or Vim Command Line Editor

To edit files on the command line, you can use an editor such as vi. To open the file, run

vi /path/to/file

Now you see the contents of the file, if there are any. Note that if the file does not exist yet, vi opens an empty buffer, and the file is only created when you save.

As an example, I opened the /etc/passwd file with vi:

The most important commands in vi are these:

Press i to enter the Insert mode. Now you can type in your text.

To leave the Insert mode press ESC.

To delete the character that is currently under the cursor you must press x (and you must not be in Insert mode because if you are you will insert the character x instead of deleting the character under the cursor). So if you have just opened the file with vi, you can immediately use x to delete characters. If you are in Insert mode you have to leave it first with ESC.

If you have made changes and want to save the file, press :x (again you must not be in Insert mode. If you are, press ESC to leave it).

If you haven't made any changes, press :q to leave the file (but you must not be in Insert mode).

If you have made changes, but want to leave the file without saving the changes, press :q! (but you must not be in Insert mode).

Please note that during all these operations you can use your keyboard's arrow keys to navigate the cursor through the text.

So that was all about the vi editor. Please note that the vim editor also works more or less in the same way, although if you'd like to know vim in depth, head [here](https://www.howtoforge.com/vim-basics).

## Editing Files with Nano Command Line Editor

Next up is the Nano editor. You can invoke it simply by running the 'nano' command:

nano

Here's what the nano UI looks like:

You can also launch the editor directly with a file.

nano [filename]

For example:

nano test.txt

The UI, as you can see, is broadly divided into four parts. The line at the top shows editor version, the file being edited, and the editing status. Then comes the actual edit area where you'll see the contents of the file. The highlighted line below the edit area shows important messages, and the last two lines are really helpful for beginners as they show keyboard shortcuts that you use to perform basic tasks in nano.

So here's a quick list of some of the shortcuts that you should know upfront.

Use arrow keys to navigate the text, the Backspace key to delete text, and **Ctrl+o** to save the changes you make. When you try saving the changes, nano will ask you for confirmation (see the line below the main editor area in screenshot below):

Note that at this stage, you also have an option to save in different OS formats. Pressing **Alt+d** enables the DOS format, while **Alt+m** enables the Mac format.
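For readers unsure what these formats change: the only difference is the bytes that end each line. The following is a minimal sketch, not part of nano itself, assuming a POSIX shell with `printf`, `wc`, `tr`, and `cmp`; the scratch directory and file names are hypothetical.

```shell
# Hypothetical scratch files; each holds the same two lines of text.
workdir=$(mktemp -d)
printf 'one\ntwo\n'     > "$workdir/unix.txt"   # Unix: LF
printf 'one\r\ntwo\r\n' > "$workdir/dos.txt"    # DOS/Windows: CR+LF
printf 'one\rtwo\r'     > "$workdir/mac.txt"    # classic Mac OS: CR

# The DOS file is larger by exactly one CR byte per line.
unix_bytes=$(( $(wc -c < "$workdir/unix.txt") ))
dos_bytes=$(( $(wc -c < "$workdir/dos.txt") ))
mac_bytes=$(( $(wc -c < "$workdir/mac.txt") ))
echo "unix=$unix_bytes dos=$dos_bytes mac=$mac_bytes"

# Stripping the carriage returns turns the DOS file back into the Unix one.
tr -d '\r' < "$workdir/dos.txt" > "$workdir/converted.txt"
cmp -s "$workdir/unix.txt" "$workdir/converted.txt" && echo "converted file matches unix.txt"
```

If a file saved in DOS format later shows stray `^M` characters in another tool, this difference in line endings is the likely cause.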

Press enter and your changes will be saved.

Moving on, to cut and paste lines of text use **Ctrl+k** and **Ctrl+u**. These keyboard shortcuts can also be used to cut and paste individual words, but you'll have to select the words first, something you can do by pressing **Alt+A** (with the cursor under the first character of the word) and then using the arrow keys to select the complete word.

Now come the search operations. A simple search can be initiated using **Ctrl+w**, while a search and replace operation can be done using **Ctrl+\**.

So those were some of the basic features of nano that should give you a head start if you're new to the editor. For more details, read our comprehensive coverage [here](https://www.howtoforge.com/linux-nano-command/).

## Editing Files with Emacs Command Line Editor

Next comes **Emacs**. If it is not already installed, you can install the editor on a Debian- or Ubuntu-based system using the following command:

sudo apt-get install emacs

Like nano, you can directly open a file to edit in emacs in the following way:

emacs -nw [filename]

**Note**: The **-nw** flag makes sure emacs launches inside the terminal itself, instead of in a separate window, which is the default behavior.

For example:

`emacs -nw test.txt`

Here's the editor's UI:

Like nano, the emacs UI is also divided into several parts. The first part is the top menu area, which is similar to the one you'd see in graphical applications. Then comes the main edit area, where the text (of the file you've opened) is displayed.

Below the edit area sits another highlighted bar that shows things like the name of the file, the editing mode ('Text' in the screenshot above), and the status (** for modified, - for non-modified, and %% for read only). Then comes the final area, where you provide input instructions and see output.

Now coming to basic operations, after making changes, if you want to save them, use **Ctrl+x** followed by **Ctrl+s**. The last section will show you a message along the lines of '**Wrote ........**'. Here's an example:

Now, if you want to discard changes and quit the editor, use **Ctrl+x** followed by **Ctrl+c**. The editor will confirm this through a prompt - see screenshot below:

Type 'n' followed by a 'yes' and the editor will quit without saving the changes.

*Please note that Emacs represents 'Ctrl' as 'C' and 'Alt' as 'M'. So, for example, whenever you see something like C-x, it means Ctrl+x.*

As for other basic editing operations, deleting is simple, as it works through the Backspace/Delete keys that most of us are already used to. However, there are shortcuts that make deleting smoother. For example, use **Ctrl+k** to delete from the cursor to the end of the line, **Alt+d** to delete a word, and **Alt+k** to delete a sentence.

Undoing is achieved through '**Ctrl+x**' followed by '**u**', and to re-do, press **Ctrl+g** followed by **Ctrl+_**. Use **Ctrl+s** for forward search and **Ctrl+r** for reverse search.

Moving on, to launch a replace operation, use the Alt+Shift+% keyboard shortcut. You'll be asked for the word you want to replace. Enter it. Then the editor will ask you for the replacement. For example, the following screenshot shows emacs asking user about the replacement for the word 'This'.

Input the replacement text and press Enter. Before each replacement, emacs will ask for your confirmation:

Press 'y' and the word will be replaced.

So that's pretty much all the basic editing operations that you should know to start using emacs. Oh, and yes, those menus at the top - we haven't discussed how to access them. Well, those can be accessed using the F10 key.

To come out of these menus, press the Esc key three times. |

9,494 | 发现 GPL 违规怎么办?多种法律救济可用 | https://opensource.com/article/18/3/gpl-and-license-contract-debate | 2018-03-29T13:30:51 | [

"GPL"

] | https://linux.cn/article-9494-1.html |

>

> 提要:最近一系列的法律案件为解决 GPL 违规问题提供了一些启示。

>

>

>

2017 年 4 月份,位于加州的一家美国联邦法院在 [Artifex Software, Inc. 诉 Hancom, Inc. 案](/article-8522-1.html)(2017 WL 1477373)中做出了一项[裁决](https://www.fsf.org/blogs/licensing/update-on-artifex-v-hancom-gnu-gpl-compliance-case-1),为针对 GPL 违规的救济方式提供了新的视角。令人遗憾的是,这起案件由于对法院裁定 GPL 是合同的一些错误解释而重新引发了已持续数十年之久的 GPL 究竟是许可还是合同的[辩论](/article-8971-1.html)。在研究救济措施的新进展之前,值得我们去重新审视为什么这种争辩依然存在。

当您考虑针对 GPL 违规的救济措施时,您可能会想到针对版权侵权主张权利,这种法律原则似乎适用于强制执行 GPL,因为版权侵权最有力的救济措施之一就是<ruby> 禁令救济 <rp> ( </rp> <rt> injunctive relief </rt> <rp> ) </rp></ruby>。对于 GPL 违规,这通常意味着防止侵权者分发违规软件。版权法还规定了实际和法定损害赔偿。相反,合同违约的救济措施相当有限,尽管也存在其他形式的救济,但通常只用于使一方完全避免造成损失。正如 Hancom 公司在其简易判决动议(虽然[被法院驳回](/article-8971-1.html))中所指出的,对于 GPL 软件来说,可能很难进行损失计算。

关于为什么 GPL 应该被视为许可而不是合同,已经有很多想法提出。例如,自由软件基金会(FSF)[一直认为](https://www.gnu.org/philosophy/enforcing-gpl.en.html) [GPL 不是合同](https://www.gnu.org/philosophy/enforcing-gpl.en.html)。合同和开源许可证之间的这种区别可以在协议的性质中找到:合同是契约或承诺的交换,而开源许可证则给出了使用许可证的条件。在 Jacobsen 诉 Katzer 案(535 F.3d 1373)中,法院支持这种看法,认为<ruby> 艺术许可协议 <rp> ( </rp> <rt> Artistic License </rt> <rp> ) </rp></ruby>列举了条件而非契约。有鉴于此,违反许可证将导致强有力救济措施的观点让许可/合同争辩陷入平息。

我们再来看 Artifex,该公司针对许可违规(根据上述分析)以及合同违约均提出了权利主张。有很多文章讨论了法院对 GPL 构成合同的分析,其中也包括 FSF [发表的文章](https://www.fsf.org/blogs/licensing/motion-to-dismiss-denied-in-recent-gnu-gpl-case),所以本文不会详细讨论这个看法。总结其分析结果,法院认为创建合同的要素(要约、接受和对价)得到了充分的陈述,其中大部分聚焦在对 GPL 的接受上(如果 GPL 被视为合同)。法院试图寻找 GPL 之外的接受证据,在 Hancom 制作的 Ghostscript 在线描述资料以及该产品的双重许可性质中已经找到。因此,法院认定可能存在合同。

在这里,我们关注的是法院合同分析之外的两个问题。首先,注意上面使用的“可能”这个词的重要性。Artifex 的判令来自于一个驳回动议,只评估 Artifex 主张的合理性而非优劣。法院对此事没有进一步的法律分析。所以如果这一点已经被提起诉讼,它可能会或可能没有找到合法的合同。既然这一点在第二个动议中已经得到了承认,并且[各方私下达成了和解](https://www.artifex.com/news/artifex-and-hancom-reach-settlement-over-ghostscript-open-source-dispute/),所以我们不知道这个争议会如何结束。

其次,尽管可能的合同权利主张很重要,但还有更有趣的第二个问题。在 Artifex 案之前,版权和合同的讨论也被搁置,其中一部分原因是由于<ruby> 优先适用 <rp> ( </rp> <rt> preemption </rt> <rp> ) </rp></ruby>问题。当美国国会颁布<ruby> 版权法 <rp> ( </rp> <rt> Copyright Act </rt> <rp> ) </rp></ruby>时,它取代了任何与其不一致的州法的权利主张,例如有的州法对等同权提供版权保护。如果州法的权利主张(例如违约)涉及与“(联邦)版权法本质上不同的权利”(引自 Artifex),则可以避免优先适用的问题。在确定是否存在优先适用问题时,法院会询问州法的权利主张是否有超出联邦版权法的“额外要素”。

在争论一个“额外要素”来证实其合同违约的权利主张时,Artifex 引用了 [Versata Software, Inc. 诉 Ameriprise Fin., Inc. 案](https://opensource.com/law/14/12/gplv2-court-decisions-versata)(2014 WL 950065)中版权法自身没有强加任何开源义务的主张。因此,任何“额外要素”(例如开源责任)都不在联邦版权法的范围之内,从而使得违反了州法中的合同权利主张变得可能。因此,Artifex 提出了这一概念以及与域外侵权有关的另一个概念(不在本文讨论范围),法院认定合同违约权利主张可以继续进行,同时允许进行合同法和版权法意义下的可能的救济,且不能对其中任意一个权利主张构成减损。

这一案件的最终效应仍有待观察,但结果是为针对 GPL 违规行为通过版权侵权和合同违约来实施多种救济措施铺平了道路。

---

作者简介:Chris Gillespie 就职于红帽公司(Redhat)。

译者简介:薛亮,集慧智佳知识产权咨询公司高级咨询师,擅长专利检索、专利分析、竞争对手跟踪、FTO 分析、开源软件知识产权风险分析,致力于为互联网企业、高科技公司提供知识产权咨询服务。

| 200 | OK | Last April, a federal court in California [handed down a decision](https://www.fsf.org/blogs/licensing/update-on-artifex-v-hancom-gnu-gpl-compliance-case-1) in *Artifex Software, Inc. v. Hancom, Inc.,* 2017 WL 1477373 (N.D. Cal. 2017), adding a new perspective to the forms of remedies available for breach of the General Public License (GPL). Sadly, this case reignited the decades-old license/contract debate due to some misinterpretations under which the court ruled the GPL to be a contract. Before looking at the remedy developments, it’s worth reviewing why the license debate even exists.

When you think about remedies for a GPL breach, you probably imagine a claim for copyright infringement. This doctrine of law seems fit to enforce the GPL, as one of the most powerful remedies available for copyright infringement is injunctive relief. For GPL violations, this usually means preventing the infringer from distributing noncompliant software. Copyright law also provides for actual and statutory damages. In contrast, remedies for breach of contract are rather limited, typically only used to make a party whole from resulting losses, although other forms of recovery may be possible. For GPL software, loss calculations may be difficult, as Hancom pointed out in their motion for summary judgment (though this was rejected by the court).

There have been a number of ideas advanced as to why the GPL should be viewed as a license as opposed to a contract. For example, the Free Software Foundation [has long held that](https://www.gnu.org/philosophy/enforcing-gpl.en.html) the [GPL is not a contract](https://www.gnu.org/philosophy/enforcing-gpl.en.html). This distinction between a contract and an open source license can be found in the nature of the agreement: a contract is an exchange of *promises* or *covenants*, whereas an open source license gives *conditions* upon which the license may be used. Courts have supported this idea, with *Jacobsen v. Katzer*, 535 F.3d 1373 (Fed. Cir. 2008), holding that the Artistic License enumerated conditions and not covenants. In light of this, the license/contract debate has quieted with the idea that a breach of license is a viable cause of action with powerful remedies.

This leads us to *Artifex*, where a claim was brought for *both* breach of license (to be expected based on the above), *as well as* breach of contract. There have been a number of articles discussing the court’s contract analysis, including by the [FSF](https://www.fsf.org/blogs/licensing/motion-to-dismiss-denied-in-recent-gnu-gpl-case), so this article will not discuss this idea at length. To summarize the analysis, the court found that the elements creating a contract (offer, acceptance, and consideration) were sufficiently pleaded, with most of its focus on acceptance of the GPL if viewed as a contract. The court looked for indications of acceptance outside the GPL, finding it in online representations Hancom made about Ghostscript and the dual-licensing nature of the product. Accordingly, the court found that a contract *might* exist.

Here, we are focused on two issues beyond the court’s contract analysis. First, it’s important to note the significance of the word “might” as used above. *Artifex’s* order came from a motion to dismiss, assessing only the *plausibility* of Artifex’s claim, not the *merits* of it. The court conducted no further legal analysis on the matter, so if the point had actually been litigated, it may or may not have found a legal contract. Since the point was conceded in the second motion and [the parties settled privately](https://www.artifex.com/news/artifex-and-hancom-reach-settlement-over-ghostscript-open-source-dispute/), we won’t know how the argument would have played out.

Next, though a possible contract claim is important, there is a more interesting second issue. Pre-*Artifex*, the copyright versus contract discussion was also put in abeyance in part due to preemption. When Congress enacted the Copyright Act, it superseded any inconsistent state law claims, such as state-law copyright protections for equivalent rights. Preemption could be avoided if the state law claim (e.g., breach of contract) involved rights "qualitatively different from the [federal] copyright rights" (quoting Artifex). In determining whether preemption exists, a court will ask whether the state law claim has an “extra element” that reaches outside the scope of federal copyright law.

In arguing for an “extra element” to substantiate their breach of contract claim, Artifex relied on *Versata Software, Inc. v. Ameriprise Fin., Inc.*, [2014 WL 950065 (W.D. Tex. 2014)](https://opensource.com/law/14/12/gplv2-court-decisions-versata), which concluded that the copyright laws themselves do not impose any open source obligations. Thus any “extra elements” (such as an open source obligation) would be outside the scope of federal copyright law, thereby making a state-law breach of contract claim viable. Accordingly, *Artifex* advanced this concept along with another concept relating to extraterritorial infringement (which is outside the scope of this article), with the court finding that the breach of contract claim could proceed, allowing possible recovery under *both* contract and copyright law, without detracting from either claim.

The ultimate effect of this case remains to be seen, but the result paints a path of multiple remedies via copyright infringement and breach of contract for violations of the GPL.

|

9,495 | 如何在 Linux 中查找最大的 10 个文件 | https://www.2daygeek.com/how-to-find-search-check-print-top-10-largest-biggest-files-in-linux/ | 2018-03-30T16:08:00 | [

"find",

"du"

] | https://linux.cn/article-9495-1.html |

当系统的磁盘空间不足时,您可能会使用 `df`、`du` 或 `ncdu` 命令进行检查,但这些命令只会显示当前目录的文件,并不会显示整个系统范围的文件。

您得花费大量的时间才能用上述命令获取系统中最大的文件,因为要进入到每个目录重复运行上述命令。

这种方法比较麻烦,也并不恰当。

如果是这样,那么该如何在 Linux 中找到最大的 10 个文件呢?

我在谷歌上搜索了很久,却没发现类似的文章,我反而看到了很多关于列出当前目录中最大的 10 个文件的文章。所以,我希望这篇文章对那些有类似需求的人有所帮助。

本教程中,我们将教您如何使用以下四种方法在 Linux 系统中查找最大的前 10 个文件。

### 方法 1

在 Linux 中没有特定的命令可以直接执行此操作,因此我们需要将多个命令结合使用。

```

# find / -type f -print0 | xargs -0 du -h | sort -rh | head -n 10

1.4G /swapfile

1.1G /home/magi/ubuntu-17.04-desktop-amd64.iso

564M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqTFU0XzkzUlJUZzA

378M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqeldzUmhPeC03Zm8

377M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqRGd4V0VrOXM4YVU

100M /usr/lib/x86_64-linux-gnu/libOxideQtCore.so.0

93M /usr/lib/firefox/libxul.so

84M /var/lib/snapd/snaps/core_3604.snap

84M /var/lib/snapd/snaps/core_3440.snap

84M /var/lib/snapd/snaps/core_3247.snap

```

详解:

* `find`:在目录结构中搜索文件的命令

* `/`:在整个系统(从根目录开始)中查找

* `-type`:指定文件类型

+ `f`:普通文件

* `-print0`:在标准输出显示完整的文件名,其后跟一个空字符(null)

* `|`:控制操作符,将一条命令的输出传递给下一个命令以供进一步处理

* `xargs`:将标准输入转换成命令行参数的命令

* `-0`:以空字符(null)而不是空白字符(LCTT 译者注:即空格、制表符和换行)来分割记录

* `du -h`:以可读格式计算磁盘空间使用情况的命令

* `sort`:对文本文件进行排序的命令

* `-r`:反转结果

* `-h`:按人类可读的数值(如 2K、1G)进行比较

* `head`:输出文件开头部分的命令

* `-n 10`:打印前 10 个文件
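The pipeline above can be tried safely on a small scratch directory before running it against `/` as root. The following is only a sketch under stated assumptions: GNU `sort` (for the `-h` flag) and a readable `/dev/urandom`; the helper name `largest_files` and the file names are hypothetical and not part of the original article.

```shell
# Hypothetical helper: list the N largest regular files under a directory.
# Relies on GNU sort's -h flag to order human-readable sizes such as 4.0K or 1.1G.
largest_files() {
    find "$1" -type f -print0 2>/dev/null \
        | xargs -0 du -h 2>/dev/null \
        | sort -rh \
        | head -n "${2:-10}"
}

# Build a scratch tree with files of known sizes instead of scanning /.
demo_dir=$(mktemp -d)
mkdir "$demo_dir/sub"
head -c 3145728 /dev/urandom > "$demo_dir/big.bin"         # 3 MiB
head -c 1048576 /dev/urandom > "$demo_dir/sub/medium.bin"  # 1 MiB
head -c 1024    /dev/urandom > "$demo_dir/small.bin"       # 1 KiB

# Ask for the two largest files; each output line is "<size><TAB><path>".
top=$(largest_files "$demo_dir" 2)
printf '%s\n' "$top"
```

Discarding error output with `2>/dev/null` is a design choice for the sketch: on a real system, unreadable directories would otherwise fill the screen with "Permission denied" messages.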

### 方法 2

这是查找 Linux 系统中最大的前 10 个文件的另一种方法。我们依然使用多个命令共同完成这个任务。

```

# find / -type f -exec du -Sh {} + | sort -rh | head -n 10

1.4G /swapfile

1.1G /home/magi/ubuntu-17.04-desktop-amd64.iso

564M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqTFU0XzkzUlJUZzA

378M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqeldzUmhPeC03Zm8

377M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqRGd4V0VrOXM4YVU

100M /usr/lib/x86_64-linux-gnu/libOxideQtCore.so.0

93M /usr/lib/firefox/libxul.so

84M /var/lib/snapd/snaps/core_3604.snap

84M /var/lib/snapd/snaps/core_3440.snap

84M /var/lib/snapd/snaps/core_3247.snap

```

详解:

* `find`:在目录结构中搜索文件的命令

* `/`:在整个系统(从根目录开始)中查找

* `-type`:指定文件类型

+ `f`:普通文件

* `-exec`:在所选文件上运行指定命令

* `du`:计算文件占用的磁盘空间的命令

* `-S`:不包含子目录的大小

* `-h`:以可读格式打印

* `{} +`:`{}` 是占位符,会被替换为找到的文件名;末尾的 `+` 表示把尽可能多的文件名一次性传给同一条 `du` 命令

* `|`:控制操作符,将一条命令的输出传递给下一个命令以供进一步处理

* `sort`:对文本文件进行按行排序的命令

* `-r`:反转结果

* `-h`:按人类可读的数值(如 2K、1G)进行比较

* `head`:输出文件开头部分的命令

* `-n 10`:打印前 10 个文件
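The `{} +` ending used with `-exec` in this method batches many file names into one command invocation, whereas the more familiar `{} \;` runs the command once per file. A minimal sketch of the difference, using a hypothetical scratch directory and a trivial `sh -c` command in place of `du`:

```shell
# Three empty files in a hypothetical scratch directory.
batch_dir=$(mktemp -d)
touch "$batch_dir/a" "$batch_dir/b" "$batch_dir/c"

# With '+', find appends as many paths as fit onto a single command line.
batched_calls=$(find "$batch_dir" -type f -exec sh -c 'echo called' sh {} + | wc -l)
batched_calls=$((batched_calls))    # normalise any padding from wc

# With '\;', the command runs once for every matching file.
per_file_calls=$(find "$batch_dir" -type f -exec sh -c 'echo called' sh {} \; | wc -l)
per_file_calls=$((per_file_calls))

echo "batched=$batched_calls per_file=$per_file_calls"
```

On a whole filesystem, fewer invocations of `du` usually means a noticeably faster scan, which is presumably why the `+` form is used here.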

### 方法 3

这里介绍另一种在 Linux 系统中搜索最大的前 10 个文件的方法。

```

# find / -type f -print0 | xargs -0 du | sort -n | tail -10 | cut -f2 | xargs -I{} du -sh {}

84M /var/lib/snapd/snaps/core_3247.snap

84M /var/lib/snapd/snaps/core_3440.snap

84M /var/lib/snapd/snaps/core_3604.snap

93M /usr/lib/firefox/libxul.so

100M /usr/lib/x86_64-linux-gnu/libOxideQtCore.so.0

377M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqRGd4V0VrOXM4YVU

378M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqeldzUmhPeC03Zm8

564M /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqTFU0XzkzUlJUZzA

1.1G /home/magi/ubuntu-17.04-desktop-amd64.iso

1.4G /swapfile

```

详解:

* `find`:在目录结构中搜索文件的命令

* `/`:在整个系统(从根目录开始)中查找

* `-type`:指定文件类型

+ `f`:普通文件

* `-print0`:输出完整的文件名,其后跟一个空字符(null)

* `|`:控制操作符,将一条命令的输出传递给下一个命令以供进一步处理

* `xargs`:将标准输入转换成命令行参数的命令

* `-0`:以空字符(null)而不是空白字符来分割记录

* `du`:计算文件占用的磁盘空间的命令

* `sort`:对文本文件进行按行排序的命令

* `-n`:根据数字大小进行比较

* `tail -10`:输出文件结尾部分的命令(最后 10 个文件)

* `cut`:从每行删除特定部分的命令

* `-f2`:只选择特定字段值

* `-I{}`:将初始参数中出现的每个替换字符串都替换为从标准输入读取的名称

* `-s`:仅显示每个参数的总和

* `-h`:用可读格式打印输出

* `{}`:`xargs -I{}` 的占位符,会被替换为从标准输入读取的每个文件名

### 方法 4

还有一种在 Linux 系统中查找最大的前 10 个文件的方法。

```

# find / -type f -ls | sort -k 7 -r -n | head -10 | column -t | awk '{print $7,$11}'

1494845440 /swapfile

1085984380 /home/magi/ubuntu-17.04-desktop-amd64.iso

591003648 /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqTFU0XzkzUlJUZzA

395770383 /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqeldzUmhPeC03Zm8

394891761 /home/magi/.gdfuse/magi/cache/0B5nso_FPaZFqRGd4V0VrOXM4YVU

103999072 /usr/lib/x86_64-linux-gnu/libOxideQtCore.so.0

97356256 /usr/lib/firefox/libxul.so

87896064 /var/lib/snapd/snaps/core_3604.snap

87793664 /var/lib/snapd/snaps/core_3440.snap

87089152 /var/lib/snapd/snaps/core_3247.snap

```

详解:

* `find`:在目录结构中搜索文件的命令

* `/`:在整个系统(从根目录开始)中查找

* `-type`:指定文件类型

+ `f`:普通文件

* `-ls`:在标准输出中以 `ls -dils` 的格式列出当前文件

* `|`:控制操作符,将一条命令的输出传递给下一个命令以供进一步处理

* `sort`:对文本文件进行按行排序的命令

* `-k`:按指定列进行排序

* `-r`:反转结果

* `-n`:根据数字大小进行比较

* `head`:输出文件开头部分的命令

* `-10`:打印前 10 个文件

* `column`:将其输入格式化为多列的命令

* `-t`:确定输入包含的列数并创建一个表

* `awk`:模式扫描和处理语言

* `'{print $7,$11}'`:只打印指定的列
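One caveat with picking columns 7 and 11 out of `-ls` output is that file names containing spaces are split across several columns. As a hedged alternative, not taken from the original article, GNU `find` can print the size in bytes and the path directly with `-printf`; the helper name `largest_by_bytes` and the file names below are hypothetical.

```shell
# Hypothetical helper: print "<bytes><TAB><path>" for the N largest files.
# Assumes GNU find, whose -printf supports %s (size in bytes) and %p (path).
largest_by_bytes() {
    find "$1" -type f -printf '%s\t%p\n' 2>/dev/null \
        | sort -rn \
        | head -n "${2:-10}"
}

# Scratch files of known size, one with a space in its name.
size_dir=$(mktemp -d)
head -c 3000 /dev/zero > "$size_dir/big file.bin"
head -c 200  /dev/zero > "$size_dir/small.bin"

biggest=$(largest_by_bytes "$size_dir" 1)
printf '%s\n' "$biggest"
```

Unlike `du`, `%s` reports the file's length in bytes rather than the disk blocks it occupies, so the two approaches can rank sparse or compressed files differently.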

---

via: <https://www.2daygeek.com/how-to-find-search-check-print-top-10-largest-biggest-files-in-linux/>

作者:[Magesh Maruthamuthu](https://www.2daygeek.com/author/magesh/) 译者:[jessie-pang](https://github.com/jessie-pang) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 404 | Not Found | null |

9,496 | 我是如何创造“开源”这个词的 | https://opensource.com/article/18/2/coining-term-open-source-software | 2018-03-30T09:47:00 | [

"开源"

] | https://linux.cn/article-9496-1.html |

>

> Christine Peterson 最终公开讲述了二十年前那决定命运的一天。

>

>

>

2 月 3 日是术语“<ruby> <a href="https://opensource.com/resources/what-open-source"> 开源软件 </a> <rt> open source software </rt></ruby>”创立 20 周年的纪念日。由于开源软件渐受欢迎,并且为这个时代强有力的重要变革提供了动力,我们仔细反思了它的初生到崛起。

我是 “开源软件” 这个词的始作俑者,它是我在<ruby> 前瞻协会 <rt> Foresight Institute </rt></ruby>担任执行董事时提出的。我不像其它人是个软件开发者,所以感谢 Linux 程序员 Todd Anderson 对这个术语的支持并将它提交小组讨论。

这是我对于它如何想到的,如何提出的,以及后续影响的记叙。当然,还有一些有关该术语的其它记叙,例如 Eric Raymond 和 Richard Stallman 写的,而我的,则写于 2006 年 1 月 2 日。

但直到今天,我才公诸于世。

---

推行术语“开源软件”是特别为了让新手和商业人士更加理解这个领域,对它的推广被认为对于更广泛的用户社区很有必要。早期的称呼“<ruby> 自由软件 <rt> free software </rt></ruby>”不适用并非是因为含有政治意义,而是对于新手来说会误导关注于价格。所以需要一个关注于关键的源代码,而且不会让新用户混淆概念的术语。第一个在正确时间出现并且满足这些要求的术语被迅速接受了:<ruby> 开源 <rt> open source </rt></ruby>。

这个术语很长一段时间被用在“情报”(即间谍活动)活动中,但据我所知,确实在 1998 年以前软件领域从未使用过该术语。下面这个就是讲述了术语“开源软件”如何流行起来,并且变成了一项产业和一场运动名称的故事。

### 计算机安全会议

在 1997 年的晚些时候,<ruby> 前瞻协会 <rt> Foresight Institute </rt></ruby>开始举办周会讨论计算机安全问题。这个协会是一个非盈利性智库,它专注于纳米技术和人工智能,而二者的安全性及可靠性取决于软件安全。我们确定了自由软件是一个改进软件安全可靠性且具有发展前景的方法,并将寻找推动它的方式。 对自由软件的兴趣开始在编程社区外开始增长,而且越来越清晰,一个改变世界的机会正在来临。然而,该怎么做我们并不清楚,因为我们当时正在摸索中。

在这些会议中,由于“容易混淆”的因素,我们讨论了采用一个新术语的必要性。观点主要如下:对于那些新接触“自由软件”的人会把 “free” 当成了价格上的 “免费” 。老资格的成员们开始解释,通常像下面所说的:“我们指的是 ‘freedom’ 中的自由,而不是‘免费啤酒’的免费。”在这一点上,关于软件方面的讨论就会变成了关于酒精饮料价格的讨论。问题不在于解释不了它的含义 —— 问题在于重要概念的术语不应该使新手们感到困惑。所以需要一个更清晰的术语。自由软件一词并没有政治上的问题;问题在于这个术语不能对新人清晰表明其概念。

### 开放的网景

1998 年 2 月 2 日,Eric Raymond 访问网景公司,并与它一起计划采用自由软件风格的许可证发布其浏览器的源代码。我们那晚在前瞻协会位于<ruby> 洛斯阿尔托斯 <rt> Los Altos </rt></ruby>的办公室开会,商讨并完善了我们的计划。除了 Eric 和我,积极参与者还有 Brian Behlendorf、Michael Tiemann、Todd Anderson、Mark S. Miller 和 Ka-Ping Yee。但在那次会议上,这一领域仍然被描述成“自由软件”,或者用 Brian 的话说,叫“可获得源代码的”软件。

在这个镇上,Eric 把前瞻协会作为行动的大本营。他访问行程期间,他接到了网景的法律和市场部门人员的电话。当他聊完后,我要求和他们(一男一女,可能是 Mitchell Baker)通电话,以便我告诉他们一个新的术语的必要性。他们原则上立即同意了,但我们在具体术语上并未达成一致。

在那周的会议中,我始终专注于起一个更好的名字并提出了 “开源软件”一词。 虽然不太理想,但我觉得足够好了。我找到至少四个人征求意见:Eric Drexler、Mark Miller 以及 Todd Anderson 都喜欢它,而一个从事市场公关的朋友觉得术语 “open” 被滥用了,并且觉得我们能找到一个更好。理论上他是对的,可我想不出更好的了,所以我想试着先推广它。事后想起来,我应该直接向 Eric Raymond 提议,但在那时我并不是很了解他,所以我采取了间接的策略。

Todd 强烈同意需要一个新的术语,并提供协助推广它。这很有帮助,因为作为一个非编程人员,我在自由软件社区的影响力很弱。我从事的纳米技术教育是一个加分项,但不足以让我在自由软件问题上非常得到重视。而作为一个 Linux 程序员,Todd 的话更容易被倾听。

### 关键性会议

那周稍晚时候,1998 年的 2 月 5 日,一伙人在 VA Research 进行头脑风暴商量对策。与会者除了 Eric Raymond、Todd 和我之外,还有 Larry Augustin、Sam Ockman,和 Jon Hall (“maddog”)通过电话参与。

会议的主要议题是推广策略,特别是要联系的公司。 我几乎没说什么,但是一直在寻找机会介绍提议的术语。我觉得我直接说“你们这些技术人员应当开始使用我的新术语了。”没有什么用。大多数与会者不认识我,而且据我所知,他们可能甚至不同意现在就迫切需要一个新术语。

幸运的是,Todd 一直留心着。他没有主张社区应该用哪个特定的术语,而是面对社区这些固执的人间接地做了一些事。他仅仅是在其它话题中使用了那个术语 —— 把它放进对话里看看会发生什么。我很紧张,期待得到回应,但是起初什么也没有。讨论继续进行原来的话题。似乎只有他和我注意了这个术语的使用。

不仅如此——模因演化(LCTT 译注:人类学术语)在起作用。几分钟后,另一个人使用了这个术语,显然没有注意到,而在继续进行话题讨论。Todd 和我用眼角互觑了一下:是的,我们都注意到发生了什么。我很激动——它或许有用!但我保持了安静:我在小组中仍然地位不高。可能有些人都奇怪为什么 Eric 会邀请我。

临近会议尾声,可能是 Todd 或 Eric,明确提出了[术语问题](https://wiki2.org/en/Alternative_terms_for_free_software)。Maddog 提及了一个早期的术语“可自由分发的”,和一个新的术语“合作开发的”。Eric 列出了“自由软件”、“开源软件”和“软件源”作为主要选项。Todd 提议使用“开源”,然后 Eric 支持了他。我没说太多,就让 Todd 和 Eric(轻松、非正式地)就“开源”这个名字达成了共识。显然对于大多数与会者,改名并不是在这讨论的最重要议题;那只是一个次要的相关议题。从我的会议记录中看只有大约 10% 的内容是术语的。

但是我很高兴。在那有许多社区的关键领导人,并且他们喜欢这新名字,或者至少没反对。这是一个好的信号。可能我帮不上什么忙; Eric Raymond 更适合宣传新的名称,而且他也这么做了。Bruce Perens 立即表示支持,帮助建立了 [Opensource.org](https://opensource.org/) 并在新术语的宣传中发挥了重要作用。

为了让这个名字获得认同,Tim O'Reilly 同意在代表社区的多个项目中积极使用它,这是很必要,甚至是非常值得的。并且在官方即将发布的 Netscape Navigator(网景浏览器)代码中也使用了此术语。 到二月底, O'Reilly & Associates 还有网景公司(Netscape) 已经开始使用新术语。

### 名字的宣传

在那之后的一段时间,这条术语由 Eric Raymond 向媒体推广,由 Tim O'Reilly 向商业推广,并由二人向编程社区推广,它似乎传播的相当快。

1998 年 4 月 7 日,Tim O'Reilly 召集了该领域的一些重要领袖开会。这次会议事先被宣布为首届 “[自由软件峰会](http://www.oreilly.com/pub/pr/636)”,而到了 4 月 14 日,它已被称作首届 “[开源峰会](http://www.oreilly.com/pub/pr/796)”。

这几个月对于开源来说是相当激动人心的。似乎每周都有一个新公司宣布加入计划。读 Slashdot(LCTT 译注:科技资讯网站)已经成了一个必需操作,甚至对于那些像我一样只能外围地参与者亦是如此。我坚信新术语能对快速传播到商业很有帮助,能被公众广泛使用。

尽管在谷歌搜索一下表明“开源”比“自由软件”出现的更多,但后者仍然有大量的使用,在和偏爱它的人们沟通的时候我们应该包容。

### 快乐的感觉

当 Eric Raymond 写的有关术语更改的[早期声明](https://ipfs.io/ipfs/QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco/wiki/Alternative_terms_for_free_software.html)被发布在了<ruby> 开源促进会 <rt> Open Source Initiative </rt></ruby>的网站上时,我被列在 VA 头脑风暴会议的名单上,但并不是作为术语的创始人。这是我自己的失误,我没告诉 Eric 细节。我的想法就是让它过去吧,我呆在幕后就好,但是 Todd 不这样认为。他认为我总有一天会为被称作“开源软件”这个名词的创造者而高兴。他向 Eric 解释了这个情况,Eric 及时更新了网站。

想出这个短语只是一个小贡献,但是我很感激那些把它归功于我的人。每次我听到它(现在经常听到了),它都给我些许的感动。

说服社区的巨大功劳要归功于 Eric Raymond 和 Tim O'Reilly,是他们让这一切成为可能。感谢他们对我的归功,并感谢 Todd Anderson 所做的一切。以上内容并非完整的开源一词的历史,让我对很多没有提及的关键人士表示歉意。那些寻求更完整讲述的人应该参考本文和网上其他地方的链接。

### 关于作者

Christine Peterson 撰写文章、举办讲座,并向媒体介绍未来的强大技术,特别是纳米技术、人工智能和长寿方面的技术。她是纳米科技公益组织前瞻协会的共同创始人和前任主席。前瞻协会向公众、技术团体和政策制定者普及未来的强大技术,以及如何引导这些技术的长期影响。她任职于[机器智能](http://intelligence.org/)咨询委员会……[更多关于 Christine Peterson](https://opensource.com/users/christine-peterson)

---

via: <https://opensource.com/article/18/2/coining-term-open-source-software>

作者:[Christine Peterson](https://opensource.com/users/christine-peterson) 译者:[fuzheng1998](https://github.com/fuzheng1998) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | In a few days, on February 3, the 20th anniversary of the introduction of the term "[open source software](https://opensource.com/resources/what-open-source)" is upon us. As open source software grows in popularity and powers some of the most robust and important innovations of our time, we reflect on its rise to prominence.

I am the originator of the term "open source software" and came up with it while executive director at Foresight Institute. Not a software developer like the rest, I thank Linux programmer Todd Anderson for supporting the term and proposing it to the group.

This is my account of how I came up with it, how it was proposed, and the subsequent reactions. Of course, there are a number of accounts of the coining of the term, for example by Eric Raymond and Richard Stallman, yet this is mine, written on January 2, 2006.

It has never been published, until today.

The introduction of the term "open source software" was a deliberate effort to make this field of endeavor more understandable to newcomers and to business, which was viewed as necessary to its spread to a broader community of users. The problem with the main earlier label, "free software," was not its political connotations, but that—to newcomers—its seeming focus on price is distracting. A term was needed that focuses on the key issue of source code and that does not immediately confuse those new to the concept. The first term that came along at the right time and fulfilled these requirements was rapidly adopted: open source.

This term had long been used in an "intelligence" (i.e., spying) context, but to my knowledge, use of the term with respect to software prior to 1998 has not been confirmed. The account below describes how the term [open source software](https://opensource.org/osd) caught on and became the name of both an industry and a movement.

## Meetings on computer security

In late 1997, weekly meetings were being held at Foresight Institute to discuss computer security. Foresight is a nonprofit think tank focused on nanotechnology and artificial intelligence, and software security is regarded as central to the reliability and security of both. We had identified free software as a promising approach to improving software security and reliability and were looking for ways to promote it. Interest in free software was starting to grow outside the programming community, and it was increasingly clear that an opportunity was coming to change the world. However, just how to do this was unclear, and we were groping for strategies.

At these meetings, we discussed the need for a new term due to the confusion factor. The argument was as follows: those new to the term "free software" assume it is referring to the price. Oldtimers must then launch into an explanation, usually given as follows: "We mean free as in freedom, not free as in beer." At this point, a discussion on software has turned into one about the price of an alcoholic beverage. The problem was not that explaining the meaning is impossible—the problem was that the name for an important idea should not be so confusing to newcomers. A clearer term was needed. No political issues were raised regarding the free software term; the issue was its lack of clarity to those new to the concept.

## Releasing Netscape

On February 2, 1998, Eric Raymond arrived on a visit to work with Netscape on the plan to release the browser code under a free-software-style license. We held a meeting that night at Foresight's office in Los Altos to strategize and refine our message. In addition to Eric and me, active participants included Brian Behlendorf, Michael Tiemann, Todd Anderson, Mark S. Miller, and Ka-Ping Yee. But at that meeting, the field was still described as free software or, by Brian, "source code available" software.

While in town, Eric used Foresight as a base of operations. At one point during his visit, he was called to the phone to talk with a couple of Netscape legal and/or marketing staff. When he was finished, I asked to be put on the phone with them—one man and one woman, perhaps Mitchell Baker—so I could bring up the need for a new term. They agreed in principle immediately, but no specific term was agreed upon.

Between meetings that week, I was still focused on the need for a better name and came up with the term "open source software." While not ideal, it struck me as good enough. I ran it by at least four others: Eric Drexler, Mark Miller, and Todd Anderson liked it, while a friend in marketing and public relations felt the term "open" had been overused and abused and believed we could do better. He was right in theory; however, I didn't have a better idea, so I thought I would try to go ahead and introduce it. In hindsight, I should have simply proposed it to Eric Raymond, but I didn't know him well at the time, so I took an indirect strategy instead.

Todd had agreed strongly about the need for a new term and offered to assist in getting the term introduced. This was helpful because, as a non-programmer, my influence within the free software community was weak. My work in nanotechnology education at Foresight was a plus, but not enough for me to be taken very seriously on free software questions. As a Linux programmer, Todd would be listened to more closely.

## The key meeting

Later that week, on February 5, 1998, a group was assembled at VA Research to brainstorm on strategy. Attending—in addition to Eric Raymond, Todd, and me—were Larry Augustin, Sam Ockman, and attending by phone, Jon "maddog" Hall.

The primary topic was promotion strategy, especially which companies to approach. I said little, but was looking for an opportunity to introduce the proposed term. I felt that it wouldn't work for me to just blurt out, "All you technical people should start using my new term." Most of those attending didn't know me, and for all I knew, they might not even agree that a new term was greatly needed, or even somewhat desirable.

Fortunately, Todd was on the ball. Instead of making an assertion that the community should use this specific new term, he did something less directive—a smart thing to do with this community of strong-willed individuals. He simply used the term in a sentence on another topic—just dropped it into the conversation to see what happened. I went on alert, hoping for a response, but there was none at first. The discussion continued on the original topic. It seemed only he and I had noticed the usage.

Not so—memetic evolution was in action. A few minutes later, one of the others used the term, evidently without noticing, still discussing a topic other than terminology. Todd and I looked at each other out of the corners of our eyes to check: yes, we had both noticed what happened. I was excited—it might work! But I kept quiet: I still had low status in this group. Probably some were wondering why Eric had invited me at all.

Toward the end of the meeting, the [question of terminology](https://wiki2.org/en/Alternative_terms_for_free_software) was brought up explicitly, probably by Todd or Eric. Maddog mentioned "freely distributable" as an earlier term, and "cooperatively developed" as a newer term. Eric listed "free software," "open source," and "sourceware" as the main options. Todd advocated the "open source" model, and Eric endorsed this. I didn't say much, letting Todd and Eric pull the (loose, informal) consensus together around the open source name. It was clear that to most of those at the meeting, the name change was not the most important thing discussed there; it was a relatively minor issue. Only about 10% of my notes from this meeting are on the terminology question.

But I was elated. These were some key leaders in the community, and they liked the new name, or at least didn't object. This was a very good sign. There was probably not much more I could do to help; Eric Raymond was far better positioned to spread the new meme, and he did. Bruce Perens signed on to the effort immediately, helping set up [Opensource.org](https://opensource.org/) and playing a key role in spreading the new term.

For the name to succeed, it was necessary, or at least highly desirable, that Tim O'Reilly agree and actively use it in his many projects on behalf of the community. Also helpful would be use of the term in the upcoming official release of the Netscape Navigator code. By late February, both O'Reilly & Associates and Netscape had started to use the term.

## Getting the name out

After this, there was a period during which the term was promoted by Eric Raymond to the media, by Tim O'Reilly to business, and by both to the programming community. It seemed to spread very quickly.

On April 7, 1998, Tim O'Reilly held a meeting of key leaders in the field. Announced in advance as the first "[Freeware Summit](http://www.oreilly.com/pub/pr/636)," by April 14 it was referred to as the first "[Open Source Summit](http://www.oreilly.com/pub/pr/796)."

These months were extremely exciting for open source. Every week, it seemed, a new company announced plans to participate. Reading Slashdot became a necessity, even for those like me who were only peripherally involved. I strongly believe that the new term was helpful in enabling this rapid spread into business, which then enabled wider use by the public.

A quick Google search indicates that "open source" appears more often than "free software," but there still is substantial use of the free software term, which remains useful and should be included when communicating with audiences who prefer it.

## A happy twinge

When an [early account](https://ipfs.io/ipfs/QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco/wiki/Alternative_terms_for_free_software.html) of the terminology change written by Eric Raymond was posted on the Open Source Initiative website, I was listed as being at the VA brainstorming meeting, but not as the originator of the term. This was my own fault; I had neglected to tell Eric the details. My impulse was to let it pass and stay in the background, but Todd felt otherwise. He suggested to me that one day I would be glad to be known as the person who coined the name "open source software." He explained the situation to Eric, who promptly updated his site.

Coming up with a phrase is a small contribution, but I admit to being grateful to those who remember to credit me with it. Every time I hear it, which is very often now, it gives me a little happy twinge.

The big credit for persuading the community goes to Eric Raymond and Tim O'Reilly, who made it happen. Thanks to them for crediting me, and to Todd Anderson for his role throughout. The above is not a complete account of open source history; apologies to the many key players whose names do not appear. Those seeking a more complete account should refer to the links in this article and elsewhere on the net.

|

9,497 | Command-line fun: insult users who mistype Bash commands | https://www.cyberciti.biz/howto/insult-linux-unix-bash-user-when-typing-wrong-command/ | 2018-03-30T10:26:59 | [

"sudo",

"insults"

] | https://linux.cn/article-9497-1.html |

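You can configure the `sudo` command to insult users who enter a wrong password. Now you can also insult (abuse?) users who type a wrong command at the shell.

### Say hello to bash-insulter

From the GitHub page: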

> Randomly insults the user when they type a wrong command. It uses a built-in error-handling function that arrived with bash 4.x, named `command_not_found_handle`.

### 安装

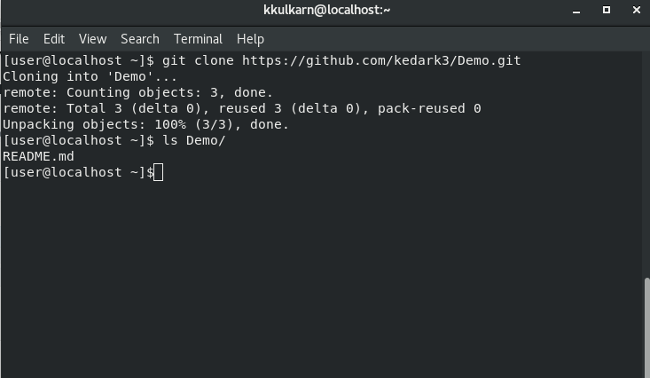

键入下列 git 命令克隆一个仓库:

```

git clone https://github.com/hkbakke/bash-insulter.git bash-insulter

```

Sample output:

```

Cloning into 'bash-insulter'...

remote: Counting objects: 52, done.

remote: Compressing objects: 100% (49/49), done.

remote: Total 52 (delta 12), reused 12 (delta 2), pack-reused 0

Unpacking objects: 100% (52/52), done.

```

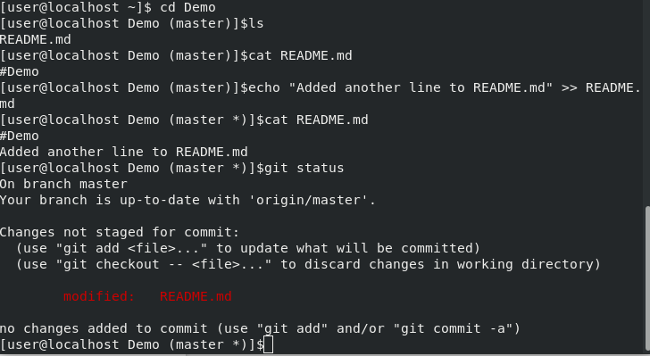

Edit your `~/.bashrc` or `/etc/bash.bashrc` file with a text editor such as `vi`:

```

$ vi ~/.bashrc

```

Append the following lines (see the [if..else..fi statement](https://bash.cyberciti.biz/guide/If..else..fi) and the [source command](https://bash.cyberciti.biz/guide/Source_command) for details):

```

if [ -f $HOME/bash-insulter/src/bash.command-not-found ]; then

source $HOME/bash-insulter/src/bash.command-not-found

fi

```
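
The guard above can be tried safely on a throwaway file. This is a minimal sketch, assuming a temporary file created with `mktemp` stands in for the bash-insulter script; `greet` is a hypothetical function name used only for illustration.

```shell
# Hypothetical stand-in for bash.command-not-found: a temp file that defines greet().
snippet=$(mktemp)
printf '%s\n' 'greet() { echo "loaded"; }' > "$snippet"

# Same pattern as in ~/.bashrc: read the file only if it exists.
if [ -f "$snippet" ]; then
    . "$snippet"
fi
result=$(greet)
echo "greet says: $result"

# A missing file is skipped quietly instead of producing an error at login.
missing="$snippet.missing"
if [ -f "$missing" ]; then
    . "$missing"
    skipped=no
else
    skipped=yes
fi
echo "missing file skipped: $skipped"
rm -f "$snippet"
```

The dot command (`.`) used here is the portable spelling of `source`, so the sketch behaves the same way in bash and in a plain POSIX shell.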

Save and close the file. Log in again, or, if you do not want to log out, source the file manually:

```

$ . $HOME/bash-insulter/src/bash.command-not-found

```

### How do I use it?

Try typing some invalid commands:

```

$ ifconfigs

$ dates

```

Sample output:

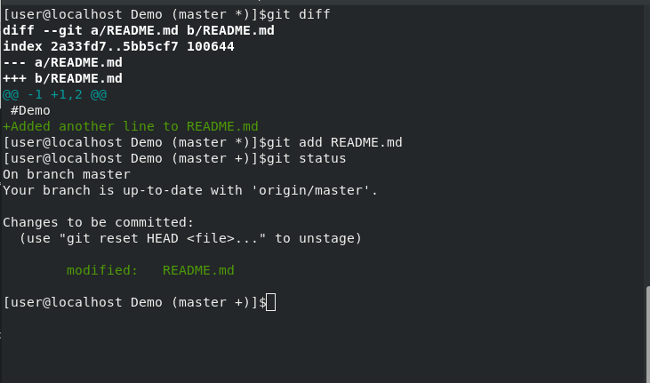

[](https://www.cyberciti.biz/media/new/cms/2017/11/bash-insulter-Insults-the-user-when-typing-wrong-command.jpg)

### Customization

You need to edit `$HOME/bash-insulter/src/bash.command-not-found`:

```

$ vi $HOME/bash-insulter/src/bash.command-not-found

```

Sample code:

```

command_not_found_handle () {

local INSULTS=(

"Boooo!"

"Don't you know anything?"

"RTFM!"

"Hahaha, n00b!"

"Wow! That was impressively wrong!"

"What are you doing??"

"Pathetic"

"...and this is the best you can do??"

"The worst one today!"

"n00b alert!"

"Your application for reduced salary has been sent!"

"lol"

"u suk"

"lol... plz"

"plz uninstall"

"And the Darwin Award goes to.... ${USER}!"

"ERROR_INCOMPETENT_USER"

"Incompetence is also competence"

"Bad."

"Fake it till you make it!"

"What is this...? Amateur hour!?"

"Come on! You can do it!"

"Nice try."

"What if... you type an actual command the next time!"

"What if I told you... it is possible to type valid commands."

"Y u no speak computer???"

"This is not Windows"

"Perhaps you should leave the command line alone..."

"Please step away from the keyboard!"

"error code: 1D10T"

"ACHTUNG! ALLES TURISTEN UND NONTEKNISCHEN LOOKENPEEPERS! DAS KOMPUTERMASCHINE IST NICHT FÜR DER GEFINGERPOKEN UND MITTENGRABEN! ODERWISE IST EASY TO SCHNAPPEN DER SPRINGENWERK, BLOWENFUSEN UND POPPENCORKEN MIT SPITZENSPARKEN. IST NICHT FÜR GEWERKEN BEI DUMMKOPFEN. DER RUBBERNECKEN SIGHTSEEREN KEEPEN DAS COTTONPICKEN HÄNDER IN DAS POCKETS MUSS. ZO RELAXEN UND WATSCHEN DER BLINKENLICHTEN."

"Pro tip: type a valid command!"

)

# Seed the "random" number generator

RANDOM=$(date +%s%N)

VALUE=$((${RANDOM}%2))

if [[ ${VALUE} -lt 1 ]]; then

printf "\n $(tput bold)$(tput setaf 1)$(shuf -n 1 -e "${INSULTS[@]}")$(tput sgr0)\n\n"

fi

echo "-bash: $1: command not found"

# Return the standard exit code for a command that was not found

return 127

}

```
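
To see the mechanism without the long insult list, here is a stripped-down sketch. It is not the bash-insulter code: the message text and the command name `no-such-command-for-this-demo` are made up for illustration, and the sketch assumes bash 4.0 or later and that no program with that name exists on the system.

```shell
#!/usr/bin/env bash
# Hypothetical minimal handler; not the bash-insulter code itself.
command_not_found_handle() {
    # $1 holds the name of the command that could not be found.
    printf 'Nice try, but "%s" is not a command.\n' "$1" >&2
    # 127 is the conventional "command not found" exit status.
    return 127
}

# Assumption: no program with this name exists on the system.
status=0
message=$(no-such-command-for-this-demo 2>&1) || status=$?
echo "handler said: $message"
echo "exit status: $status"
```

Bash calls the function whenever command lookup fails and uses the function's return value as the exit status of the failed command, which is why the handler should keep returning 127.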

### Bonus: sudo insults

Edit the `sudoers` file:

```

$ sudo visudo

```

Append the following line:

```

Defaults insults

```

Or add `insults` to the end of an existing `Defaults` line, as follows:

```

Defaults !lecture,tty_tickets,!fqdn,insults

```

Here is my file:

```

Defaults env_reset

Defaults mail_badpass

Defaults secure_path = "/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin"

## If set, sudo will insult users when they enter an incorrect password. ##

Defaults insults

# Host alias specification

# User alias specification

# Cmnd alias specification

# User privilege specification

root ALL = (ALL:ALL) ALL

# Members of the admin group may gain root privileges

%admin ALL = (ALL) ALL

# Allow members of group sudo to execute any command

%sudo ALL = (ALL:ALL) ALL

# See sudoers(5) for more information on "#include" directives:

#includedir /etc/sudoers.d

```

Try it out:

```

$ sudo -k # clear cached credentials and start from scratch

$ sudo ls /root/

$ sudo -i

```

Sample session:

[](https://www.cyberciti.biz/media/new/cms/2017/11/sudo-insults.jpg)

### Bonus: say hello to sl

[sl is a classic UNIX prank program](https://www.cyberciti.biz/tips/displays-animations-when-accidentally-you-type-sl-instead-of-ls.html). When you mistype `ls` as `sl`, a steam locomotive runs across your screen.

```

$ sl

```

[](https://www.cyberciti.biz/tips/displays-animations-when-accidentally-you-type-sl-instead-of-ls.html)

---

via: <https://www.cyberciti.biz/howto/insult-linux-unix-bash-user-when-typing-wrong-command/>

Author: [Vivek Gite](https://www.cyberciti.biz) Translator: [CYLeft](https://github.com/CYLeft) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 403 | Forbidden | null |

9,498 | 10 free Linux productivity apps you haven't heard of | https://www.maketecheasier.com/free-linux-productivity-apps-you-havent-heard-of/ | 2018-03-30T22:24:00 | [

"productivity"

] | https://linux.cn/article-9498-1.html |

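Productivity apps can really make your work easier. If you are a Linux user, these 10 lesser-known Linux desktop applications can help you. In fact, keen Linux users may already have heard of every app on this list, but for those who have only used the mainstream apps, these should be new.

### 1. Tomboy/Gnote

[Tomboy](https://wiki.gnome.org/Apps/Tomboy) is a simple note-taking app. It is not only for Linux; you can also get it for Unix, Windows, and macOS. Tomboy is easy to use: you write a note, choose whether to make it sticky on your desktop, and delete it when you are done with it.

### 2. MyNotex

If you want a note-taker with more features but still prefer a small and simple app rather than a huge suite, take a look at [MyNotex](https://sites.google.com/site/mynotex/). In addition to simple note taking and retrieval, it comes with some nice extras such as formatting, keyboard shortcuts, and attachments. You can also use it as a picture manager.

### 3. Trojitá

Although you can live without a desktop email client, if you want one, try [Trojita](http://trojita.flaska.net/) out of the dozens available. It is good for productivity because it is a fast and lightweight email client, yet it offers all the features (and more) that a good email client must have.

### 4. Kontact

A Personal Information Manager (PIM) is a great productivity tool. My personal preference is [Kontact](https://userbase.kde.org/Kontact). Even though it has not been updated in years, it is still a very useful PIM tool for managing email, address books, calendars, tasks, news feeds, and more. Kontact is a native KDE program, but you can use it on other desktops as well.

### 5. Osmo

[Osmo](http://clayo.org/osmo/) is a more up-to-date app with calendar, tasks, contacts, and notes functionality. It also comes with some extras, such as encrypted backup of private data and address locations on a map, as well as powerful search across notes, tasks, contacts, and so on.

### 6. Catfish

You cannot be productive without a good search tool. [Catfish](http://www.twotoasts.de/index.php/catfish/) is a must-try search tool. It is a GTK+ tool that is very fast and lightweight. Catfish uses autocompletion from Zeitgeist, and you can also filter search results by date and type.

### 7. KOrganizer

[KOrganizer](https://userbase.kde.org/KOrganizer) is the calendar and scheduling component of the Kontact app mentioned above. If you do not need a full PIM application but only a calendar and scheduling, you can use KOrganizer. It offers quick to-do and quick event entry, as well as attachments for events and to-dos.

### 8. Evolution

If you are not a fan of KDE applications but still need a good PIM, try GNOME's [Evolution](https://help.gnome.org/users/evolution/3.22/intro-main-window.html.en). Evolution is not exactly an obscure app you have never heard of, but it made the list because it is useful. You may have heard of Evolution as an email client, but it is much more than that: you can use it to manage calendars, mail, address books, and tasks.

### 9. Freeplane

I do not know how many of you use mind-mapping software every day, but if you do, choose [Freeplane](https://www.freeplane.org/wiki/index.php/Home). It is free mind-mapping and knowledge-management software that you can use for business or fun. You can create notes, arrange them in clouds or charts, set tasks with calendars and reminders, and more.

### 10. Calligra Flow

Finally, if you need a flowchart and diagramming tool, try [Calligra Flow](https://www.calligra.org/flow/). Think of it as an open source alternative to [Microsoft Visio](https://www.maketecheasier.com/5-best-free-alternatives-to-microsoft-visio/), although Calligra Flow does not offer all the features Visio does. Still, you can use it to create network diagrams, organization charts, flowcharts, and more.

Productivity tools not only speed up work but also make you more organized. I bet there is hardly anyone who does not use productivity tools in some form. Trying the apps listed here could make you more productive and make your life at least a little easier.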

---

via: <https://www.maketecheasier.com/free-linux-productivity-apps-you-havent-heard-of/>

Author: [Ada Ivanova](https://www.maketecheasier.com/author/adaivanoff/) Translator: [MjSeven](https://github.com/MjSeven) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 200 | OK |

Productivity apps can really make your work easier. If you are a Linux user, these 10 lesser-known free productivity apps for the Linux desktop can help you. As a matter of fact, it’s possible keen Linux users have heard of all the apps on the list, but for somebody who hasn’t gone beyond the main apps, these should be unknown.

## 1. Tomboy/Gnote

[Tomboy](https://wiki.gnome.org/Apps/Tomboy) is a simple note-taking app. It’s not for Linux only – you can get it for Unix, Windows, and macOS, too. Tomboy is pretty straightforward to use – you write a note, choose whether to make it sticky on your desktop, and delete it when you are done with it.

## 2. MyNotex

If you want a note-taker with more features but still prefer a small and simple app rather than a huge suite, check [MyNotex](https://sites.google.com/site/mynotex/). In addition to simple note taking and retrieval, it comes with some nice perks, such as formatting abilities, keyboard shortcuts, and attachments, to name a few. You can also use it as a picture manager.

## 3. Trojitá

Though you can live without a desktop email client, if you are used to having one, out of the dozens that are available, try [Trojitá](https://trojita.flaska.net/). It’s good for productivity because it is a fast and lightweight email client, yet it offers all the basics (and more) a good email client must have.

## 4. Kontact

A Personal Information Manager (PIM) is a great productivity tool. My personal preferences go to [Kontact](https://userbase.kde.org/Kontact). Even though it hasn’t been updated in years, it’s still a very useful PIM tool to manage emails, address books, calendars, tasks, news feeds, etc. Kontact is a KDE native, but you can use it with other desktops as well.

## 5. Osmo

[Osmo](https://clayo.org/osmo/) is a much more up-to-date app with calendar, tasks, contacts, and notes functionality. It comes with some perks, such as encrypted private data backup and address locations on the map, as well as great search capabilities for notes, tasks, contacts, etc.

## 6. Catfish

You can’t be productive without a good searching tool. Catfish is one of the must-try search tools. It’s a GTK+ tool and is very fast and lightweight. Catfish uses autocompletion from Zeitgeist, and you can also filter results by date and type.

## 7. KOrganizer

[KOrganizer](https://userbase.kde.org/KOrganizer) is the calendar and scheduling component of the Kontact app I mentioned above. If you don’t need a full-fledged PIM app but only calendar and scheduling, you can go with KOrganizer instead. KOrganizer offers quick ToDo and quick event entry, as well as attachments for events and todos.

## 8. Evolution

If you are not a fan of KDE apps but you still need a good PIM, try GNOME’s [Evolution](https://help.gnome.org/users/evolution/3.22/intro-main-window.html.en). Evolution is not exactly a less popular app you haven’t heard of, but since it’s useful, it made the list. Maybe you’ve heard about Evolution as an email client, but it’s much more than this – you can use it to manage calendars, mail, address books and tasks.

## 9. Freeplane

I don’t know if many of you use mind-mapping software on a daily basis, but if you do, check [Freeplane](https://www.freeplane.org/wiki/index.php/Home). This is a free mind mapping and knowledge management software you can use for business or fun. You create notes, arrange them in clouds or charts, set tasks with calendars and reminders, etc.

## 10. Calligra Flow

Finally, if you need a flowchart and diagramming tool, try [Calligra Flow](https://www.calligra.org/flow/). Think of it as the open source [alternative of Microsoft Visio](https://www.maketecheasier.com/5-best-free-alternatives-to-microsoft-visio/), though Calligra Flow doesn’t offer all the perks Visio offers. Still, you can use it to create network diagrams, organization charts, flowcharts and more.

Productivity tools not only speed up work, but they also make you more organized. I bet there is hardly a person who doesn’t use productivity tools in some form. Trying the apps listed here could make you more productive and could make your life at least a bit easier.

|

9,499 | chkservice: a tool for managing systemd units from the Linux terminal | https://www.2daygeek.com/chkservice-a-tool-for-managing-systemd-units-from-linux-terminal/ | 2018-03-31T00:33:56 | [

"systemd"

] | https://linux.cn/article-9499-1.html |

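systemd, short for system daemon, is a new init system and system manager. It is now very popular, and most Linux distributions have adopted it.

`systemctl` is a systemd utility that helps us manage systemd daemons. It controls system startup and services, starts them in parallel, uses socket and D-Bus activation for starting services, offers on-demand starting of daemons, keeps track of processes using Linux control groups, and maintains mount and automount points.

In addition, systemd provides a logging daemon; utilities to control basic system configuration such as the hostname, date, and locale; facilities to maintain a list of logged-in users, running containers and virtual machines, system accounts, and runtime directories and settings; and daemons to manage simple network configuration, network time synchronization, log forwarding, and name resolution.

### What is chkservice

[chkservice](https://github.com/linuxenko/chkservice) is an ncurses-based tool for managing systemd units in the terminal. It provides a comprehensive view of systemd services and makes them easy to modify.

Changing the state of systemd units and SysV init scripts requires superuser privileges.

### Installing chkservice on Linux

We can install `chkservice` in two ways: from a package or manually.

For Debian/Ubuntu, use the [APT-GET command](https://www.2daygeek.com/apt-get-apt-cache-command-examples-manage-packages-debian-ubuntu-systems/) or the [APT command](https://www.2daygeek.com/apt-command-examples-manage-packages-debian-ubuntu-systems/) to install `chkservice`.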

```

$ sudo add-apt-repository ppa:linuxenko/chkservice

$ sudo apt-get update

$ sudo apt-get install chkservice

```

For Arch Linux-based systems, use the [Yaourt command](https://www.2daygeek.com/install-yaourt-aur-helper-on-arch-linux/) or the [Packer command](https://www.2daygeek.com/install-packer-aur-helper-on-arch-linux/) to install `chkservice` from the AUR repository.

```

$ yaourt -S chkservice

or

$ packer -S chkservice

```

For Fedora, use the [DNF command](https://www.2daygeek.com/dnf-command-examples-manage-packages-fedora-system/) to install `chkservice`.

```

$ sudo dnf copr enable srakitnican/default

$ sudo dnf install chkservice

```

For Debian-based systems, use the [DPKG command](https://www.2daygeek.com/dpkg-command-to-manage-packages-on-debian-ubuntu-linux-mint-systems/) to install `chkservice`.

```

$ wget https://github.com/linuxenko/chkservice/releases/download/0.1/chkservice_0.1.0-amd64.deb

$ sudo dpkg -i chkservice_0.1.0-amd64.deb

```

For RPM-based systems, use the [YUM command](https://www.2daygeek.com/rpm-command-examples/) to install `chkservice`.

```

$ sudo yum install https://github.com/linuxenko/chkservice/releases/download/0.1/chkservice_0.1.0-amd64.rpm

```

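### How to use chkservice

Just run the following command to launch the `chkservice` tool. The output is divided into four parts.

* **First part:** shows the unit's enablement state: enabled `[X]`, disabled `[ ]`, static `[s]`, or masked `-m-`

* **Second part:** shows whether the daemon is started `>` or stopped `=`

* **Third part:** shows the name of the unit

* **Fourth part:** shows a short description of the daemon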

```

$ sudo chkservice

```

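To view the help page, press `?`. It shows the options available for managing systemd services.

Select the daemon you want to enable or disable, then press the space bar.

Select the daemon you want to start or stop, then press `s`.

Select the daemon you want to restart, then press `r`. Afterward, you can see the update notice at the top.

Press `q` to quit.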

---

via: <https://www.2daygeek.com/chkservice-a-tool-for-managing-systemd-units-from-linux-terminal/>

Author: [Ramya Nuvvula](https://www.2daygeek.com/author/ramya/) Translator: [amwps290](https://github.com/amwps290) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 404 | Not Found | null |

9,500 | The evolution of computer systems | http://karl-voit.at/2017/02/10/evolution-of-systems/ | 2018-03-31T23:13:31 | [

"evolution",

"computers"

] | https://linux.cn/article-9500-1.html |

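Looking across the history of modern computers, we can divide it into several evolutionary stages according to how people interact with systems. I tend to classify them as follows:

1. Numeric systems

2. Application-specific systems

3. Application-centric systems

4. Information-centric systems

5. Application-less systems

Let's look at each of these in detail.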

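### Numeric systems

In my view, [early computers](https://en.wikipedia.org/wiki/History_of_computing_hardware) were designed only to process numbers. They could add, subtract, multiply, and divide. Some of them could perform more complex mathematical operations such as differentiation and integration.

Of course, if you map characters to numbers, they can also compute on strings. But that is more a "creative use of numbers" than direct handling of all kinds of information.

### Application-specific systems

For higher-level problems, pure numeric systems are not enough. Application-specific systems were developed to handle a single task. They are very similar to numeric systems, but they are capable of sufficiently complex numeric computation. These systems can accomplish well-defined higher-level tasks, such as the computations behind scheduling problems or other optimization problems.

Systems of this kind are built for a single purpose: each solves one clearly defined problem.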

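### Application-centric systems

Application-centric systems were the first truly general-purpose systems. Their main usage style resembles that of application-specific systems, but they run multiple applications, either in time-sliced fashion (one after another) or in multitasking fashion (several at once).

The [early personal computers](https://en.wikipedia.org/wiki/Xerox_Alto) of the 1970s were the first popular application-centric systems.

Today's modern operating systems (Windows, macOS, and most GNU/Linux desktop environments) still follow the same principle.

Application-centric systems can, of course, be further divided into two subtypes:

1. Tight application-centric systems

2. Loose application-centric systems

Tight application-centric systems such as [Windows 3.1](https://en.wikipedia.org/wiki/Windows_3.1x) (with Program Manager and File Manager) or even the initial version of [Windows 95](https://en.wikipedia.org/wiki/Windows_95) had no predefined folder hierarchy. A user started a word processor (such as [WinWord](https://en.wikipedia.org/wiki/Microsoft_Word)) and saved files in WinWord's program folder. When using a spreadsheet program, the user saved files in the spreadsheet tool's program folder, and so on. Users hardly ever created their own file hierarchy, perhaps because it was inconvenient, out of laziness, or because they saw no need for it. At that time, each user had a few dozen to at most a few hundred files.

To access the information in a file, the user typically opened an application first and then used its File/Open function to retrieve the data file.

On the Windows platform, "[My Documents](https://en.wikipedia.org/wiki/My_Documents)" first appeared in [Windows 95](https://en.wikipedia.org/wiki/Windows_95) SP2. With this template for a file hierarchy, application designers began to use "[My Documents](https://en.wikipedia.org/wiki/My_Documents)" as the default save/open location instead of the software's installation directory. Users gradually got used to this pattern and started maintaining folder hierarchies themselves.

Loose application-centric systems (where files are retrieved through a file manager) emerged as a result. In such a system, opening a file makes the operating system launch the associated application automatically. It was a small but subtle shift in usage. This application-centric usage pattern has remained the dominant one on personal computers.

However, this pattern has many drawbacks. For example, to avoid retrieval problems, you need to maintain a strict folder hierarchy that contains all files related to a given project. Unfortunately, people do not always do this. Furthermore, [this pattern does not scale well](http://karl-voit.at/tagstore/downloads/Voit2012b.pdf). Desktop search engines and advanced data organization tools (such as [tagstore](http://karl-voit.at/tagstore/)) can help a little. As studies show, only a small fraction of people use such advanced retrieval tools. Most users look for files in the file system without any alternative or assisting retrieval technique.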

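### Information-centric systems

One possible way to solve the problem described above, of having to keep all files in one folder hierarchy, is to move from application-centric systems to information-centric systems.

Information-centric systems bring all the information about a project together in one place, within a single application. We therefore no longer need to open a spreadsheet program to calculate the project budget, a word processor to write the project report, and yet another tool to work on image files.

Last month's budget sits right below the notes from the client meeting, which sit right below a sketch, which sits right below some tasks still to be done. There is no hassle of switching among files or applications.

Early on, IBM [OS/2](https://en.wikipedia.org/wiki/OS/2), Microsoft [OLE](https://en.wikipedia.org/wiki/Object_Linking_and_Embedding), and [NeXT](https://en.wikipedia.org/wiki/NeXT) all made attempts of this kind. For various reasons, none achieved major success. [Acme](https://en.wikipedia.org/wiki/Acme_%2528text_editor%2529), which comes from [Plan 9](https://en.wikipedia.org/wiki/Plan_9_from_Bell_Labs), is a very interesting information-centric environment. It combines [a wide variety of applications](https://en.wikipedia.org/wiki/List_of_Plan_9_applications) within a single application. But even though it has been ported to Windows and GNU/Linux, it never became widely noticed.

The modern form of information-centric systems is the advanced [personal wiki](https://en.wikipedia.org/wiki/Personal_wiki) (such as [TheBrain](https://en.wikipedia.org/wiki/TheBrain) and [Microsoft OneNote](https://en.wikipedia.org/wiki/Microsoft_OneNote)).

My personal tool of choice is the [GNU/Emacs](https://github.com/novoid/Memacs) platform with the [Org mode](../../../../tags/emacs) extension. I can hardly use a computer without Org mode. To access external data sources, I created [Memacs](https://github.com/novoid/Memacs), which brings many kinds of data into Org mode. I like to put spreadsheet calculations right below scheduled tasks, followed by inline images, internal and external links, and so on. It is a true information-centric system in which the user does not have to deal with applications or strict file system folder hierarchies. Multi-classification is also possible with simple or advanced tags. A single command derives multiple views: for example, one view with the calendar and to-do items, another with a list of borrowed items, and so on. Org mode places no limits on its users; if you can think of it, Org mode can do it.

Is this the end of the evolution? Certainly not.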

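### Application-less systems

I can imagine a class of operating systems that I call application-less systems. In this next step, systems will no longer need single-domain applications, even ones as excellent as Org mode. The computer directly offers a friendly user interface for handling information and using functions, without going through files and applications. Even a traditional operating system is not needed.

Application-less systems may also be tied to [artificial intelligence](https://en.wikipedia.org/wiki/Artificial_intelligence). Think of something like [HAL 9000](https://en.wikipedia.org/wiki/HAL_9000) from [2001: A Space Odyssey](https://en.wikipedia.org/wiki/2001:_A_Space_Odyssey) or [LCARS](https://en.wikipedia.org/wiki/LCARS) from Star Trek.

The transition from an application-based, vendor-based software culture to application-less systems is hard to imagine. Perhaps the slowly but steadily evolving open source environment could let a truly application-less environment take shape, built from the contributions of many kinds of organizations and people.

Information, and functions to retrieve and manipulate it: that is what a system should provide and what we need. Everything else is merely a distraction.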

---

via: <http://karl-voit.at/2017/02/10/evolution-of-systems/>

Author: [Karl Voit](http://karl-voit.at) Translator: [lontow](https://github.com/lontow) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 301 | Moved Permanently | null |

9,501 | Linux ln command tutorial for beginners (5 examples) | https://www.howtoforge.com/linux-ln-command/ | 2018-03-31T23:31:08 | [

"ln",

"links",

"symbolic links"

] | https://linux.cn/article-9501-1.html |

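Sometimes, while working on the command line, you need to create links between files. This can be done with a dedicated command, `ln`. In this tutorial, we will discuss the basics of this tool using some easy-to-understand examples. Before we start, it is worth mentioning that all examples here have been tested on Ubuntu 16.04.

### Linux ln command

As you now know, the `ln` command lets you create links between files. Here is the tool's syntax (or rather, the several forms of syntax available):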

```

ln [OPTION]... [-T] TARGET LINK_NAME (1st form)

ln [OPTION]... TARGET (2nd form)

ln [OPTION]... TARGET... DIRECTORY (3rd form)

ln [OPTION]... -t DIRECTORY TARGET... (4th form)

```

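Here is how the tool's man page describes it:

> In the 1st form, create a link to TARGET with the name LINK\_NAME. In the 2nd form, create a link to TARGET in the current directory (LCTT translator's note: the link is created with the same name as the target). In the 3rd and 4th forms, create links to each TARGET in DIRECTORY. Create hard links by default, symbolic links with `--symbolic`. By default, each destination (name of new link) should not already exist. When creating hard links, each TARGET must exist. Symbolic links can hold arbitrary text; if later resolved, a relative link is interpreted in relation to its parent directory.

The following Q&A-style examples should give you a better idea of how the `ln` command works. Before that, it is worth understanding the [difference between hard links and soft links](https://medium.com/meatandmachines/explaining-the-difference-between-hard-links-symbolic-links-using-bruce-lee-32828832e8d3).

### Q1. How do I create a hard link using ln?

This is simple. Just use the `ln` command as follows: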

```

ln [file] [hard-link-to-file]

```

For example:

```

ln test.txt test_hard_link.txt

```

[](https://www.howtoforge.com/images/command-tutorial/big/ln-hard-link.png)

As you can see, a hard link named `test_hard_link.txt` has been created.
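
One way to check that the two names really are the same file is to compare inode numbers. The following is a minimal sketch run in a throwaway directory from `mktemp -d`; the file names follow the example above, and the variable names are only illustrative.

```shell
# Work in a throwaway directory so no real files are touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1
echo "hello" > test.txt
ln test.txt test_hard_link.txt

# ls -i prints the inode number before the file name.
inode_original=$(ls -i test.txt | awk '{print $1}')
inode_link=$(ls -i test_hard_link.txt | awk '{print $1}')
echo "inodes: $inode_original $inode_link"

# Removing the original name does not remove the data.
rm test.txt
content=$(cat test_hard_link.txt)
echo "content via hard link: $content"
```

Both names report the same inode, and the content stays readable through the remaining name after `test.txt` is removed.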

### Q2. How do I create a soft/symbolic link using ln?

Use the `-s` command-line option:

```

ln -s [file] [soft-link-to-file]

```

For example:

```

ln -s test.txt test_soft_link.txt

```

[](https://www.howtoforge.com/images/command-tutorial/big/ln-soft-link.png)

The `test_soft_link.txt` file is a soft/symbolic link, as [indicated](https://askubuntu.com/questions/17299/what-do-the-different-colors-mean-in-ls) by its sky-blue text color.
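
Color depends on terminal settings, so a script-friendly check may be more reliable. This is a minimal sketch, assuming `readlink` is available (it is on typical Linux systems); it also shows that a symbolic link, unlike a hard link, dangles once its target is removed.

```shell
# Work in a throwaway directory so no real files are touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1
echo "hello" > test.txt
ln -s test.txt test_soft_link.txt

# readlink prints the path stored inside the symbolic link.
target=$(readlink test_soft_link.txt)
echo "link points to: $target"

# Once the target is removed, the link still exists but no longer resolves.
rm test.txt
if [ -L test_soft_link.txt ] && [ ! -e test_soft_link.txt ]; then
    dangling=yes
else
    dangling=no
fi
echo "dangling after removing target: $dangling"
```

Here `-L` tests that the name is a symbolic link, while `-e` follows the link and therefore fails when the target is gone.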

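### Q3. How do I make ln remove existing destination files of the same name?

By default, `ln` will not let you create a link if a file of the same name already exists in the destination directory.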

[](https://www.howtoforge.com/images/command-tutorial/big/ln-file-exists.png)

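However, if you want, you can override this behavior with the `-f` command-line option.

[](https://www.howtoforge.com/images/command-tutorial/big/ln-f-option.png)

Note: use the `-i` option if you want this removal to be interactive.

### Q4. How do I make ln create a backup of existing files with the same name?

If you do not want `ln` to delete existing files of the same name, you can have it back them up instead. Use the `-b` option; backup files created this way have a tilde (`~`) appended to their names.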

[](https://www.howtoforge.com/images/command-tutorial/big/ln-b-option.png)
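
The behaviors from Q3 and Q4 can be compared side by side. The sketch below assumes GNU `ln` (as shipped on Ubuntu), since `-b` is not available in every implementation, and assumes the default backup settings; all file names are made up for the demonstration.

```shell
# Work in a throwaway directory so no real files are touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1
echo "first" > a.txt
echo "second" > b.txt
echo "old data" > link.txt    # a regular file already using the link name

# Default: ln refuses to replace an existing name.
if ln -s a.txt link.txt 2>/dev/null; then
    refused=no
else
    refused=yes
fi

# -b (GNU ln): rename the existing file to link.txt~ before creating the link.
ln -s -b a.txt link.txt
backup=$(cat 'link.txt~')
first_target=$(readlink link.txt)

# -f: remove the existing destination and create the new link in its place.
ln -s -f b.txt link.txt
second_target=$(readlink link.txt)

echo "refused by default: $refused"
echo "backup content: $backup"
echo "targets: $first_target then $second_target"
```

With `-b` the old content survives in `link.txt~`, whereas `-f` simply discards whatever previously held the name.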

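### Q5. How do I create links in a directory other than the current one?

Use the `-t` option to specify a destination directory (other than the current one). For example: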

```

ls test* | xargs ln -s -t /home/himanshu/Desktop/

```

The command above creates links to all `test*` files (in the current directory) and puts them in the Desktop directory.
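
One caveat follows from the man page text quoted earlier: a relative symbolic link is interpreted relative to the directory that contains the link. Because the pipeline above passes bare file names, the links it creates in another directory may not resolve. The sketch below illustrates this under stated assumptions: GNU `ln` (for `-t`), and two temporary directories standing in for the current directory and the Desktop.

```shell
# Two throwaway directories: src plays the current directory, dest the Desktop.
src=$(mktemp -d)
dest=$(mktemp -d)
cd "$src" || exit 1
echo "data" > test1.txt

# Relative target: the link stores the text "test1.txt", which is resolved
# relative to $dest, not to the directory ln was run from.
ln -s -t "$dest" test1.txt
if [ -e "$dest/test1.txt" ]; then
    relative_resolves=yes
else
    relative_resolves=no
fi
rm "$dest/test1.txt"

# Absolute target: the link resolves no matter where it lives.
ln -s -t "$dest" "$src/test1.txt"
if [ -e "$dest/test1.txt" ]; then
    absolute_resolves=yes
else
    absolute_resolves=no
fi
linked_content=$(cat "$dest/test1.txt")

echo "relative link resolves: $relative_resolves"
echo "absolute link resolves: $absolute_resolves"
```

Passing absolute paths (for example by prefixing each name with `$PWD/`) is one way to keep links usable after they are placed in a different directory.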

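### Conclusion

Admittedly, `ln` is not a command you will need every day, especially as a newcomer. But it is useful to know, because you never know when it will save your day. We have discussed some of its practical options; once you are done with these, see the [man page](https://linux.die.net/man/1/ln) for more details.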

---

via: <https://www.howtoforge.com/linux-ln-command/>

Author: [Himanshu Arora](https://www.howtoforge.com) Translator: [CYLeft](https://github.com/CYLeft) Proofreader: [Locez](https://github.com/locez)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 200 | OK | # Linux ln Command Tutorial for Beginners (5 Examples)


Sometimes, while working on the command line, you need to create links between files. This can be achieved using a dedicated command, dubbed **ln**. In this tutorial, we will discuss the basics of this tool using some easy-to-understand examples. But before we do that, it's worth mentioning that all examples here have been tested on an Ubuntu 22.04 machine.

## Linux ln command

As you have understood by now, the ln command lets you make links between files. Following is the syntax (or rather, the different syntaxes available) for this tool:

ln [OPTION]... [-T] TARGET LINK_NAME (1st form)

ln [OPTION]... TARGET (2nd form)

ln [OPTION]... TARGET... DIRECTORY (3rd form)

ln [OPTION]... -t DIRECTORY TARGET... (4th form)

And here's how the tool's man page explains it:

In the 1st form, create a link to TARGET with the name LINK_NAME. In the 2nd form, create a link

to TARGET in the current directory. In the 3rd and 4th forms, create links to each TARGET in

DIRECTORY. Create hard links by default, symbolic links with --symbolic. By default, each

destination (name of new link) should not already exist. When creating hard links, each TARGET

must exist. Symbolic links can hold arbitrary text; if later resolved, a relative link is

interpreted in relation to its parent directory.

The following Q&A-styled examples will give you a better idea of how the ln command works. But before that, it's good for you to get an understanding of what's the [difference between hard links and soft links](https://medium.com/meatandmachines/explaining-the-difference-between-hard-links-symbolic-links-using-bruce-lee-32828832e8d3).

## Q1. How to create a hard link using ln?

That's pretty straightforward - all you have to do is to use the *ln* command in the following way:

ln [file] [hard-link-to-file]

For example:

ln test.txt test_hard_link.txt

So you can see a hard link was created with the name *test_hard_link.txt*.

## Q2. How to create soft/symbolic link using ln?

For this, use the -s command line option.

ln -s [file] [soft-link-to-file]

For example:

ln -s test.txt test_soft_link.txt

The test_soft_link.txt file is a soft/symbolic link, as [confirmed](https://askubuntu.com/questions/17299/what-do-the-different-colors-mean-in-ls) by its sky-blue text color.

## Q3. How to make ln remove existing destination files of same name?

By default, ln won't let you create a link if a file of the same name already exists in the destination directory.

However, if you want, you can make ln override this behavior by using the **-f** command line option.

**Note**: Use the **-i** command line option if you want ln to prompt before removing each existing destination file.
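The difference is easy to see in a scratch directory. This is only a sketch; the file names `existing.txt` and the file contents are hypothetical and chosen for the demonstration.

```shell
#!/bin/sh
# Scratch directory for the demonstration (illustrative setup).
dir=$(mktemp -d)
cd "$dir" || exit 1

echo "original" > test.txt
echo "in the way" > existing.txt

# Without -f, ln refuses because existing.txt is already there.
if ln test.txt existing.txt 2>/dev/null; then
    echo "unexpected: link was created"
else
    echo "refused: existing.txt already exists"
fi

# With -f, the existing destination is removed and replaced by the link.
ln -f test.txt existing.txt
cat existing.txt
```

Note that the old contents of `existing.txt` are gone after the second command, which is why the backup option described next can be a safer choice.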

## Q4. How to make ln create a backup of existing files with the same name?

If you don't want ln to delete existing files of the same name, you can make it create a backup of these files. This can be achieved using the **-b** command line option. By default, the name of each backup file created this way ends with a tilde (~).
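A small sketch of the backup behavior, assuming the GNU coreutils version of ln found on Ubuntu (the `-b` option may not exist in other implementations); the file names and contents are hypothetical.

```shell
#!/bin/sh
# Scratch directory for the demonstration (illustrative setup).
dir=$(mktemp -d)
cd "$dir" || exit 1

echo "original" > test.txt
echo "old contents" > existing.txt

# -b renames the existing destination to existing.txt~ before creating the link.
ln -b test.txt existing.txt

ls
cat existing.txt~
```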

## Q5. How to create links in a directory other than the current directory?

A particular destination directory (other than the current one) can be specified using the **-t** command line option. For example:

ls test* | xargs ln -s -t /home/himanshu/Desktop/

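The aforementioned command creates links to all test* files (present in the current directory) inside the Desktop directory. Keep in mind that with -s the link text is stored exactly as given, and a relative link is resolved relative to the directory that contains it, so links created from bare file names like these will not point back to the original files; pass absolute paths (for example "$PWD"/test*) if they should.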
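Here is a sketch of the same idea using absolute target paths so that the resulting symbolic links resolve from the destination directory. Both directories are temporary ones created with `mktemp -d` for illustration, and `-t` is assumed to be the GNU coreutils option.

```shell
#!/bin/sh
# Two scratch directories: one holding the files, one receiving the links.
src=$(mktemp -d)
dest=$(mktemp -d)
cd "$src" || exit 1

echo "one" > test1.txt
echo "two" > test2.txt

# Absolute targets keep the links valid no matter where they are created.
ln -s -t "$dest" "$src"/test*

ls -l "$dest"
cat "$dest/test1.txt"
```

Letting the shell expand `test*` directly also avoids piping `ls` output through `xargs`, which can misbehave on file names containing spaces.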

## Conclusion

Agreed, **ln** isn't something that you'll require on a daily basis, especially if you're a newbie. But it's a helpful command to know about, as you never know when it might save your day. We've discussed some useful command line options the tool offers. Once you're done with these, you can learn more about ln by heading to its [man page](https://linux.die.net/man/1/ln). Another interesting command you might want to check out is the [tee](https://www.howtoforge.com/linux-tee-command/) command. |

9,502 | Testing Ansible Playbooks with Vagrant | https://carlchenet.com/testing-ansible-playbooks-with-vagrant/ | 2018-04-01T09:24:00 | [

"Ansible",

"Vagrant"

] | /article-9502-1.html |

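I use Ansible to automate the deployment of sites ([LinuxJobs.fr](https://www.linuxjobs.fr), [Journal du hacker](https://www.journalduhacker.net)) and applications ([Feed2toot](https://gitlab.com/chaica/feed2toot), [Feed2tweet](https://gitlab.com/chaica/feed2tweet)). This article describes the setup I use to test Ansible playbooks locally.

### Why test Ansible playbooks

I need a simple, quick way to test the deployment of Ansible playbooks on my own laptop, especially when I am starting a new playbook, because deploying straight to a production server is both very slow and risky.

I use [Vagrant](https://www.vagrantup.com/) to deploy the playbook to a [VirtualBox](https://www.virtualbox.org/) virtual machine instead of to a remote server. The results of a change show up quickly, which allows fast iteration and correction.

Disclaimer: I am not a professional programmer. I am only describing a solution for testing Ansible playbooks that I find simple and effective for me; there may well be better ways.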

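### My process

1. Start writing the new Ansible playbook

2. Launch a fresh virtual machine (VM) and deploy the playbook to it using Vagrant

3. Fix the errors in the playbook or in the application

4. Redeploy on the VM

5. If problems remain, go back to step 3. Otherwise destroy the VM, create a new one, and test a completely fresh deployment

6. If no problems show up, tag this version of your Ansible playbook; it is ready to ship to production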

### What you need

First, you need VirtualBox. If you use the [Debian](https://www.debian.org) distribution, [this link](https://wiki.debian.org/VirtualBox) describes how to install it, either from the Debian repositories or from the upstream website.


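Second, you need Vagrant. Why Vagrant? Because it acts as middleware between your development environment and the virtual machine: it lets you repeat operations programmatically and makes it easy to connect your deployment environment to the virtual machine. Install it with the following command: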

```

# apt install vagrant

```


### Setting up Vagrant

Everything Vagrant needs to know lives in the `Vagrantfile`. Here is mine:

```
Vagrant.require_version ">= 2.0.0"
Vagrant.configure(1) do |config|
  config.vm.box = "debian/stretch64"
  config.vm.provision "shell", inline: "apt install --yes git python3-pip"
  config.vm.provision "ansible" do |ansible|
    ansible.verbose = "v"
    ansible.playbook = "site.yml"
    ansible.vault_password_file = "vault_password_file"
  end
end
```

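1. The first line states which version of Vagrant is required to run this `Vagrantfile`.

2. The second line opens the configuration block; the argument to `Vagrant.configure` selects the configuration format version (here `1`).

3. The third line names the official Vagrant box used to create the virtual machine.

4. The fourth line is important: some applications we need are not installed in the virtual machine, so here we install `git` and `python3-pip` with `apt`.

5. The next line marks where the Ansible configuration begins.

6. The sixth line says that we want verbose output from Ansible.

7. The seventh line defines the entry point of the Ansible playbook.

8. The eighth line: if you encrypted some files with Ansible Vault, this points to the file that holds the vault password.

When Vagrant launches Ansible, it runs something like this: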

```

$ ansible-playbook --inventory-file=/home/me/ansible/test-ansible-playbook/.vagrant/provisioners/ansible/inventory -v --vault-password-file=vault_password_file site.yml

```

### Running Vagrant

Once the `Vagrantfile` is written, you can start the virtual machine. Just run the following command:

```

$ vagrant up

```

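This is slow, because it boots the virtual machine, installs the extra software defined in the `Vagrantfile`, and finally applies your playbook. You should not run this command too often.

OK, now you can iterate quickly. After making a change, test your deployment with the following command: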

```

$ vagrant provision

```

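Once the Ansible playbook is done, usually after many iterations (at least in my case), you should test it once more on a freshly installed virtual machine, because while iterating you may have changed the virtual machine in ways that produce unexpected results.

Run a clean test with the following command: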

```

$ vagrant destroy && vagrant up

```

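This is again a slow operation. Do it only when your Ansible playbook is nearly finished. After testing the deployment on a fresh virtual machine, you are ready to release to production. Or at least much better prepared :p

### Any suggestions for improvement? Let me know

The setup described in this article works well for me. I can iterate quickly (especially when writing a new playbook), and beyond playbooks it also helps with my newest applications that are not yet ready for production. Deploying straight to a remote server would be both slow and dangerous for my production services.

I could also have used a continuous integration (CI) server, but that is not the subject of this article. As said earlier, the goal here is to iterate as quickly as possible at the start of writing a new Ansible playbook.

Committing from the very beginning of a playbook, pushing to your Git repository, and waiting for the CI test results is overkill at that stage, because there are always many errors and you need to debug them one by one. I think CI is far more useful later in the writing of a playbook, especially when several people are modifying it and there is a full set of code-quality rules to follow. That is only my opinion and is open to discussion; once more, I am not a professional programmer.

If you have a better way to test Ansible playbooks, or improvements to the approach described here, please let me know. Leave a comment or contact me through social networks; I would be delighted.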

---

via: <https://carlchenet.com/testing-ansible-playbooks-with-vagrant/>

Author: [Carl Chenet](https://carlchenet.com) Translator: [lujun9972](https://github.com/lujun9972) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

| null | HTTPSConnectionPool(host='carlchenet.com', port=443): Max retries exceeded with url: /testing-ansible-playbooks-with-vagrant/ (Caused by ConnectTimeoutError(<urllib3.connection.HTTPSConnection object at 0x7b83275c6ef0>, 'Connection to carlchenet.com timed out. (connect timeout=10)')) | null |

9,503 | The Big Break in Computer Languages | http://esr.ibiblio.org/?p=7724 | 2018-04-02T08:16:00 | [

"C语言",

"Go"

] | https://linux.cn/article-9503-1.html |

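My last post, [The long goodbye to C](/article-9268-1.html), drew a comment from an old friend of mine, a C++ expert, who recommended C++ as the replacement for C. That is not going to happen: if C++ replacing C were the trend, Go and Rust would never have appeared.

But I can't leave my readers with nothing but a bare assertion (LCTT translator's note: this is a pun in the original). So in this post I'll tell the story of why I no longer touch C++. It is the opening piece of a series on the economics of computer-language design, which will look at why some genuinely bad decisions get made and baked into the foundations of a language, and how we might correct them.

Along the way I'll point out, piece by piece, a failure of foresight about the future of programming languages that people (myself certainly included) have shared since the 1980s. Only recently have we found the evidence that shows we were wrong.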

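I remember that I first learned C++ because I needed GNU eqn to emit MathML, and eqn is written in C++. That project went well. After that, in the early 2000s, I spent several years as a senior developer on Battle For Wesnoth and got along fine with C++.

Then one day we discovered that someone we had carelessly given commit privileges had broken the core of the game's AI. Apparently I was the only one on the team who wasn't too scared to look at the code. I did eventually put everything right, but it took me two full weeks. After that, I swore I would never go near C++ again.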

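What that experience taught me is that the language's problem is that it keeps making already complicated things more complicated in order to crudely patch the holes left by missing foundational concepts. For things like raw pointers it says "don't do that", and that's fine. For small-scale personal projects (such as my modified eqn), following such rules is no problem.

But it doesn't hold up on large projects, or on multi-person projects with developers of uneven skill (which is what I usually deal with). As time passes and the line count grows, somebody will screw up. When a bug is reported, things like the STL have added a layer of complexity, so dealing with it takes far more effort than an equivalent problem in C. I learned on Wesnoth that such problems are really nasty to handle.

Problems that never arise when I write code for Steve Heller (my old friend, the C++ advocate) get magnified when I collaborate with non-Hellers, and those collaborations are liable to end with me cleaning up after them. So I don't use C++; I don't think it is worth the time. C is flawed, but it has an advantage C++ lacks: if you can simulate the hardware in your head, you can easily see how a program will run. If C++ had really solved C's problems (that is, if it were type-safe and memory-safe), giving up that transparency would be worth it. But it hasn't.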

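One way to see that C++ falls short is to imagine a world where it had done well. In that world, old C projects would be migrated to C++. Mainstream operating-system kernels would be written in C++, and existing kernel implementations such as Linux would gradually be upgraded to it. In the real world, none of that has happened. Not only has C++ failed to dissuade language designers from conceiving new languages like D, Go, and Rust, it hasn't even displaced its own predecessor. Without a change to its core ideas it has no future, and for the same reason its leaky abstractions will not go away.

Since I've just mentioned D, let me explain why I don't see it as a qualified competitor to C. It predates Go and Rust by eight or nine years, and Walter Bright deserves credit for having the vision to build such a language that early. But by 2001 the rise of languages led by Python and Perl had already settled the matter: the era when a proprietary language could compete with open-source ones was over. The pointless feud between the official D library/runtime and Tango also hurt its growth. It never recovered from those mistakes.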

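Then there is Go (I was going to say "and Rust", but as I have said before, I think Rust needs a few more years before it is competitive). It *is* type-safe and memory-safe (well, most of the time; you can get around that with interfaces, but normal people don't go looking for trouble). A good friend of mine, Mark Atwood, has pointed out that Go is a language created by grumpy old men out of anger, chiefly the anger of *one of the authors of C* (Ken Thompson) at the bloated mess of C++. I think he's right.

I can understand why Ken is annoyed. For decades I have thought that C++ picked the wrong problem to solve. A successor to C has two roads open to it. One is the C++ road: accept C's leaky abstractions, raw pointers and so on for the sake of compatibility, and build a state-of-the-art language on top of that. The other is to attack the problem at its root and *fix* C's leaky abstractions. That breaks compatibility, but it also eliminates the existing problems of C/C++.

The first serious attempt at the second road was Java, which appeared in 1995. Java did well, but building the language on top of an interpreter made it unsuitable for systems programming. That left a huge hole in systems programming, and for the 15 years before Go and Rust appeared no language filled it. That is why software of mine such as GPSD and NTPsec was still written mainly in C in 2017, despite C's many problems.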

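In many ways this was a bad situation. For lack of sufficiently varied alternatives, it was hard to recognize where C/C++ fell short. We all took defects in software and security compromises for granted, rather than asking how many of them were really caused by language design, the way buffer-overrun vulnerabilities are.

So why did it take us so long to start fixing this? 37 years passed between C's debut in 1972 and Go's arrival in 2009; Rust appeared only a year before that. I think the root cause is economics.

Ever since the earliest computer languages, people have understood that every language design embodies a trade-off between the relative value of programmer time and machine resources. At the machine end are assembly and, later, C, languages that buy performance at the cost of developer time. At the other end, languages like Lisp and (later) Python try to handle as many details automatically as possible, at the cost of machine performance.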

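Broadly speaking, the most important difference between languages at the two ends is the presence or absence of automatic memory management. This matches experience: memory-management defects are the most common class of defect in machine-centered languages, where programmers must manage resources by hand.

A language is economically viable when its assertion about relative value matches the actual cost drivers of software development in some particular domain. Language designers respond to the problems they run into by designing a language suited to conditions as they are now or will be in the near future, rather than by using existing languages.

Over time, the fashionable programming languages have shifted from ones that require manual memory management to ones with automatic memory management and garbage collection (GC). That shift corresponds to the fall in hardware costs driven by Moore's law, which has made programmer time relatively more valuable than before. But besides programmer time and machine efficiency, at least two other dimensions are relevant.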

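One is distance from the underlying hardware. Inefficiency in low-level software (kernels and service code) is multiplied through everything built on top of it. So we see machine-centered languages pushed toward the bottom of the stack and programmer-centered languages moving toward the top, since in most cases user-facing software only has to respond at human reaction speed (0.1 seconds).

The other dimension is project scale. Because of holes in the abstractions programmers form of the problem, and their own oversights, every language has an expected number of defects per thousand lines of code. That rate is high in machine-centered languages and much lower in programmer-centered languages with GC. As project scale grows, GC languages become increasingly important as a strategy for keeping the defect rate from becoming unacceptable.

When we look at today's language landscape along these three dimensions, with C at the bottom and a flourishing set of GC languages above it, the picture mostly makes sense. But one thing does not seem to: C is used unreasonably widely.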

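Why do I say that? Think of the classic Unix command-line tools. Those small programs could usually be implemented quickly in a scripting language with full POSIX support. Recoding them would make them easier to debug, maintain, and extend.

So why are they still in C (or, for some projects like eqn, C++)? Because of transition costs. Rewriting even fairly small, fairly simple programs in a new language, and verifying that you have faithfully preserved all non-error behavior, is quite difficult. Put generally, any area of application or systems programming tends to stay with a language long after that language's trade-off has become obsolete.

Here is the big mistake that I and other forecasters made. We thought that falling machine-resource costs (which raise the relative cost of programmer time) would by themselves be enough to displace C (and non-GC languages). In this we were not entirely, or even mostly, wrong: the rise since the early 1990s of scripting languages, Java, and things like Node.js was pretty clearly driven that way.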

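But the new wave of competing systems-programming languages is different. Rust and Go are both explicit responses to *increasing project scale*. Scripting languages began as an effective way to write small programs and gradually scaled up, whereas Rust and Go were positioned from the start as ways to reduce defect rates in *large projects*, such as Google's search service and Facebook's real-time chat multiplexer.

I think that is the answer to the "why not sooner" question. Rust and Go are not actually late; they are relatively prompt responses to a cost driver whose weight was underestimated until recently.

OK, so much for theory. What does it let us predict? What does it tell us about what comes after C?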

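The trend driving the growth of GC languages has not reversed and should not be expected to. So, eventually we *will* have GC techniques with latency low enough to be usable in kernels and low-level firmware, and they will ship in language implementations. Those are the languages that will truly end C's long reign.

There are hints in the Go development team's working papers that they are heading in this direction (see the references to academic research on concurrent GC) and have never stopped working on it. If Go itself does not choose to do this, some other language designer will. But I think they will: Google's ability to push its projects is obvious (witness the growth of Android).

Until we have such an ideal GC, I'm placing my bet for replacing C on Go, because its GC overhead is acceptable, not only for applications but even for most services outside the kernel. The reason is simple: C's defect rate is incurable, and no route out of it has lower transition costs.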

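Last week I experimented with moving a C project to Go and found two things. First, it is easy; C's idioms map onto Go quite well. Second, the resulting code is much simpler. With GC and with collections as first-class data structures one would expect the code to shrink, but I found it shrank more than I had initially expected, by roughly 2:1, much like going from C to Python.

Sorry, Rustaceans. You have a bright future in kernels and low-level firmware, but in the rest of C's territory Go has you badly beaten: no GC, a harder transition from C, and a standard part of the API that is still incomplete. (Where has my `select(2)` gone, again?)

Your only consolation, if it is one, is that C++ fans are worse off than you. At least Rust can still thrive in the parts of C's domain that Go can't reach. C++ can't.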

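> Editor's note: This article was written by ESR, the well-known open-source leader. Those familiar with ESR's story know that he has declined a comfortable post at a large company and still mainly handles the development and maintenance of some basic Internet infrastructure projects (such as NTPsec). He therefore has a [support plan](https://www.patreon.com/esr/overview) on the creator-sponsorship site [Patreon](https://www.patreon.com/), and you might consider contributing a little to support him; $20 a month is no more than the price of a meal.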

---

via: <http://esr.ibiblio.org/?p=7724>

Author: [Eric Raymond](http://esr.ibiblio.org/?author=2) Translator: [name1e5s](https://github.com/name1e5s) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)