id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

9,386 | 五个值得现在安装的火狐插件 | https://opensource.com/article/18/1/top-5-firefox-extensions | 2018-02-26T23:29:21 | [

"FireFox",

"插件"

] | /article-9386-1.html |

>

> 合适的插件能大大增强你浏览器的功能,但仔细挑选插件很重要。本文有五个值得一看的插件。

>

>

>

对于很多用户来说,网页浏览器已经成为电脑使用体验的重要环节。现代浏览器已经发展成强大、可拓展的平台。作为平台的一部分,*插件*能添加或修改浏览器的功能。火狐插件的构建使用了 WebExtensions API ,这是一个跨浏览器的开发系统。

你应该安装哪一个插件?一般而言,这个问题的答案取决于你如何使用你的浏览器、你对于隐私的看法、你信任插件开发者多少以及其他个人喜好。

首先,我想指出浏览器插件通常需要读取和(或者)修改你浏览的网页上的每项内容。你应该*非常*仔细地考虑这件事的后果。如果一个插件有修改所有你访问过的网页的权限,那么它可能记录你的按键、拦截信用卡信息、在线跟踪你、插入广告,以及其他各种各样邪恶的行为。

并不是每个插件都偷偷摸摸地做这些事,但是在你安装任何插件之前,你要慎重考虑下插件安装来源、涉及的权限、你的风险数据和其他因素。记住,你可以从个人数据的角度来管理一个插件如何影响你的攻击面( LCTT 译注:攻击面是指入侵者能尝试获取或提取数据的途径总和)——例如使用特定的配置、不使用插件来完成例如网上银行的操作。

考虑到这一点,这里有你或许想要考虑的五个火狐插件

### uBlock Origin

*ublock Origin 可以拦截广告和恶意网页,还允许用户定义自己的内容过滤器。*

[uBlock Origin](https://addons.mozilla.org/en-US/firefox/addon/ublock-origin/) 是一款快速、内存占用低、适用范围广的拦截器,它不仅能屏蔽广告,还能让你执行你自己定制的内容过滤。uBlock Origin 默认使用多份预定义好的过滤名单来拦截广告、跟踪器和恶意网页。它允许你任意地添加列表和规则,或者锁定在一个默认拒绝的模式。除了强大之外,这个插件已被证明是效率高、性能好。

### Privacy Badger

*Privacy Badger 运用了算法来无缝地屏蔽侵犯用户准则的广告和跟踪器。*

正如它名字所表明,[Privacy Badger](https://www.eff.org/privacybadger) 是一款专注于隐私的插件,它屏蔽广告和第三方跟踪器。EFF (LCTT 译注:EFF 全称是<ruby> 电子前哨基金会 <rt> Electronic Frontier Foundation </rt></ruby>,旨在宣传互联网版权和监督执法机构)说:“我们想要推荐一款能自动分析并屏蔽任何侵犯用户准则的跟踪器和广告,而 Privacy Badger 诞生于此目的;它不用任何设置、知识或者用户的配置,就能运行得很好;它是由一个明显为用户服务而不是为广告主服务的组织出品;它使用算法来确定正在跟踪什么,而没有跟踪什么。”

为什么 Privacy Badger 出现在这列表上的原因跟 uBlock Origin 如此相似?其中一个原因是 Privacy Badger 从根本上跟 uBlock Origin 的工作不同。另一个原因是纵深防御的做法是个可以遵循的合理策略。

### LastPass

*LastPass 是一款用户友好的密码管理插件,支持双因子认证。*

这个插件对于很多人来说是个有争议的补充。你是否应该使用密码管理器——如果你用了,你是否应该选择一个浏览器插件——这都是个热议的话题,而答案取决于你的风险资料。我想说大部分不关心的电脑用户应该用一个,因为这比起常见的选择:每一处使用相同的弱密码,都好太多了。

[LastPass](https://addons.mozilla.org/en-US/firefox/addon/lastpass-password-manager/) 对于用户很友好,支持双因子认证,相当安全。这家公司过去出过点安全事故,但是都处理得当,而且资金充足。记住使用密码管理器不是非此即彼的命题。很多用户选择使用密码管理器管理绝大部分密码,但是保持了一点复杂性,为例如银行这样重要的网页采用了精心设计的密码和多因子认证。

### Xmarks Sync

[Xmarks Sync](https://addons.mozilla.org/en-US/firefox/addon/xmarks-sync/) 是一款方便的插件,能跨实例同步你的书签、打开的标签页、配置项和浏览器历史。如果你有多台机器,想要在桌面设备和移动设备之间同步、或者在同一台设备使用不同的浏览器,那来看看 Xmarks Sync 。(注意这款插件最近被 LastPass 收购)

### Awesome Screenshot Plus

[Awesome Screenshot Plus](https://addons.mozilla.org/en-US/firefox/addon/screenshot-capture-annotate/) 允许你很容易捕获任意网页的全部或部分区域,也能添加注释、评论、使敏感信息模糊等。你还能用一个可选的在线服务来分享图片。我发现这工具在网页调试时截图、讨论设计和分享信息上很棒。这是一款比你预期中发现自己使用得多的工具。

*Awesome Screenshot Plus 允许你容易地截下任何网页的部分或全部内容。*

我发现这五款插件有用,我把它们推荐给其他人。这就是说,还有很多浏览器插件。我很感兴趣社区用户们正在使用哪些插件,请在评论中让我知道。

---

via: <https://opensource.com/article/18/1/top-5-firefox-extensions>

作者:[Jeremy Garcia](https://opensource.com/users/jeremy-garcia) 译者:[ypingcn](https://github.com/ypingcn) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

9,387 | Intel 设计缺陷背后的原因是什么? | https://arstechnica.com/gadgets/2018/01/whats-behind-the-intel-design-flaw-forcing-numerous-patches/ | 2018-02-26T23:53:21 | [

"KPTI",

"Intel",

"CPU"

] | https://linux.cn/article-9387-1.html |

>

> 我们知道有问题,但是并不知道问题的详细情况。

>

>

>

(本文发表于 1 月份)最近 Windows 和 Linux 都发送了重大安全更新,为防范这个尚未完全公开的问题,在最坏的情况下,它可能会导致性能下降多达一半。

在过去的几周,Linux 内核陆续打了几个补丁。Microsoft [自 11 月份开始也内部测试了 Windows 更新](https://twitter.com/aionescu/status/930412525111296000),并且它预计在下周二的例行补丁中将这个改进推送到主流 Windows 构建版中。Microsoft 的 Azure 也在下周的维护窗口中做好了安排,而 Amazon 的 AWS 也安排在周五对相关的设施进行维护。

自从 Linux 第一个补丁 (参见 [KPTI:内核页表隔离的当前的发展](/article-9201-1.html)) 明确描绘了出现的错误以后。虽然 Linux 和 Windows 基于不同的考虑,对此持有不同的看法,但是这两个操作系统 —— 当然还有其它的 x86 操作系统,比如 FreeBSD 和 [macOS](https://twitter.com/aionescu/status/948609809540046849) — 对系统内存的处理采用了相同的方式,因为对于操作系统在这一部分特性是与底层的处理器高度耦合的。

### 保持地址跟踪

在一个系统中的每个内存字节都是隐性编码的,这些编码数字是每个字节的地址。早期的操作系统使用物理内存地址,但是,物理内存地址由于各种原因,它并不很合适。例如,在地址中经常会有空隙,并且(尤其是 32 位的系统上)物理地址很难操作,需要 36 位数字,甚至更多。

因此,现在操作系统完全依赖一个叫虚拟内存的概念。虚拟内存系统允许程序和内核一起在一个简单、清晰、统一的环境中各自去操作。而不是使用空隙和其它奇怪的东西的物理内存,每个程序和内核自身都使用虚拟地址去访问内存。这些虚拟地址是连续的 —— 不用担心有空隙 —— 并且合适的大小也更便于操作。32 位的程序仅可以看到 32 位的地址,而不用管物理地址是 36 位还是更多位。

虽然虚拟地址对每个软件几乎是透明的,但是,处理器最终还是需要知道虚拟地址引用的物理地址是哪个。因此,有一个虚拟地址到物理地址的映射,它保存在一个被称为页面表的数据结构中。操作系统构建页面表,使用一个由处理器决定的布局,并且处理器和操作系统在虚拟地址和物理地址之间进行转换时就需要用到页面表。

这个映射过程是非常重要的,它也是现代操作系统和处理器的重要基础,处理器有专用的缓存 — Translation Lookaside Buffer(简称 TLB)—— 它保存了一定数量的虚拟地址到物理地址的映射,这样就不需要每次都使用全部页面。

虚拟内存的使用为我们提供了很多除了简单寻址之外的有用的特性。其中最主要的是,每个程序都有了自己独立的一组虚拟地址,有了它自己的一组虚拟地址到物理地址的映射。这就是用于提供“内存保护”的关键技术,一个程序不能破坏或者篡改其它程序使用的内存,因为其它程序的内存并不在它的地址映射范围之内。

由于每个进程使用一个单独的映射,因此每个程序也就有了一个额外的页面表,这就使得 TLB 缓存很拥挤。TLB 并不大 —— 一般情况下总共可以容纳几百个映射 —— 而系统使用的页面表越多,TLB 能够包含的任何特定的虚拟地址到物理地址的映射就越少。

### 一半一半

为了更好地使用 TLB,每个主流的操作系统都将虚拟地址范围一分为二。一半用于程序;另一半用于内核。当进程切换时,仅有一半的页面表条目发生变化 —— 仅属于程序的那一半。内核的那一半是每个程序公用的(因为只有一个内核)并且因此它可以为每个进程使用相同的页面表映射。这对 TLB 的帮助非常大;虽然它仍然会丢弃属于进程的那一半内存地址映射;但是它还保持着另一半属于内核的映射。

这种设计并不是一成不变的。在 Linux 上做了一项工作,使它可以为一个 32 位的进程提供整个地址范围,而不用在内核页面表和每个进程之间共享。虽然这样为程序提供了更多的地址空间,但这是以牺牲性能为代价的,因为每次内核代码需要运行时,TLB 重新加载内核的页面表条目。因此,这种方法并没有广泛应用到 x86 的系统上。

在内核和每个程序之间分割虚拟地址的这种做法的一个负面影响是,内存保护被削弱了。如果内核有它自己的一组页面表和虚拟地址,它将在不同的程序之间提供相同的保护;内核内存将是简单的不可见。但是使用地址分割之后,用户程序和内核使用了相同的地址范围,并且从原理上来说,一个用户程序有可能去读写内核内存。

为避免这种明显不好的情况,处理器和虚拟地址系统有一个 “Ring” 或者 “模式”的概念。x86 处理器有许多 Ring,但是对于这个问题,仅有两个是相关的:“user” (Ring 3)和 “supervisor”(ring 0)。当运行普通的用户程序时,处理器将置为用户模式 (Ring 3)。当运行内核代码时,处理器将处于 Ring 0 —— supervisor 模式,也称为内核模式。

这些 Ring 也用于从用户程序中保护内核内存。页面表并不仅仅有虚拟地址到物理地址的映射;它也包含关于这些地址的元数据,包含哪个 Ring 可能访问哪个地址的信息。内核页面表条目被标记为仅有 Ring 0 可以访问;程序的条目被标记为任何 Ring 都可以访问。如果一个处于 Ring 3 中的进程去尝试访问标记为 Ring 0 的内存,处理器将阻止这个访问并生成一个意外错误信息。运行在 Ring 3 中的用户程序不能得到内核以及运行在 Ring 0 内存中的任何东西。

至少理论上是这样的。大量的补丁和更新表明,这个地方已经被突破了。这就是最大的谜团所在。

### Ring 间迁移

这就是我们所知道的。每个现代处理器都执行一定数量的推测运行。例如,给一些指令,让两个数加起来,然后将结果保存在内存中,在查明内存中的目标是否可访问和可写入之前,一个处理器可能已经推测性地做了加法。在一些常见案例中,在地址可写入的地方,处理器节省了一些时间,因为它以并行方式计算出内存中的目标是什么。如果它发现目标位置不可写入 —— 例如,一个程序尝试去写入到一个没有映射的地址或压根就不存在的物理位置 —— 然后它将产生一个意外错误,而推测运行就白做了。

Intel 处理器,尤其是([虽然不是 AMD 的](https://lkml.org/lkml/2017/12/27/2))允许对 Ring 3 代码进行推测运行并写入到 Ring 0 内存中的处理器上。处理器并不完全阻止这种写入,但是推测运行轻微扰乱了处理器状态,因为,为了查明目标位置是否可写入,某些数据已经被加载到缓存和 TLB 中。这又意味着一些操作可能快几个周期,或者慢几个周期,这取决于它们所需要的数据是否仍然在缓存中。除此之外,Intel 的处理器还有一些特殊的功能,比如,在 Skylake 处理器上引入的软件保护扩展(SGX)指令,它改变了一点点访问内存的方式。同样的,处理器仍然是保护 Ring 0 的内存不被来自 Ring 3 的程序所访问,但是同样的,它的缓存和其它内部状态已经发生了变化,产生了可测量的差异。

我们至今仍然并不知道具体的情况,到底有多少内核的内存信息泄露给了用户程序,或者信息泄露的情况有多容易发生。以及有哪些 Intel 处理器会受到影响?也或者并不完全清楚,但是,有迹象表明每个 Intel 芯片都使用了推测运行(是自 1995 年 Pentium Pro 以来的所有主流处理器吗?),它们都可能会因此而泄露信息。

这个问题第一次被披露是由来自 [奥地利的 Graz Technical University](https://gruss.cc/files/kaiser.pdf) 的研究者。他们披露的信息表明这个问题已经足够破坏内核模式地址空间布局随机化(内核 ASLR,或称 KASLR)。ASLR 是防范 [缓冲区溢出](https://arstechnica.com/information-technology/2015/08/how-security-flaws-work-the-buffer-overflow/) 漏洞利用的最后一道防线。启用 ASLR 之后,程序和它们的数据被置于随机的内存地址中,它将使一些安全漏洞利用更加困难。KASLR 将这种随机化应用到内核中,这样就使内核的数据(包括页面表)和代码也随机化分布。

Graz 的研究者开发了 [KAISER](https://github.com/IAIK/KAISER),一组防范这个问题的 Linux 内核补丁。

如果这个问题正好使 ASLR 的随机化被破坏了,这或许将成为一个巨大的灾难。ASLR 是一个非常强大的保护措施,但是它并不是完美的,这意味着对于黑客来说将是一个很大的障碍,一个无法逾越的障碍。整个行业对此的反应是 —— Windows 和 Linux 都有一个非常重要的变化,秘密开发 —— 这表明不仅是 ASLR 被破坏了,而且从内核泄露出信息的更普遍的技术被开发出来了。确实是这样的,研究者已经 [在 Twitter 上发布信息](https://twitter.com/brainsmoke/status/948561799875502080),他们已经可以随意泄露和读取内核数据了。另一种可能是,漏洞可能被用于从虚拟机中“越狱”,并可能会危及 hypervisor。

Windows 和 Linux 选择的解决方案是非常相似的,将 KAISER 分为两个区域:内核页面表的条目不再是由每个进程共享。在 Linux 中,这被称为内核页面表隔离(KPTI)。

应用补丁后,内存地址仍然被一分为二:这样使内核的那一半几乎是空的。当然它并不是非常的空,因为一些内核片断需要永久映射,不论进程是运行在 Ring 3 还是 Ring 0 中,它都几乎是空的。这意味着如果恶意用户程序尝试去探测内核内存以及泄露信息,它将会失败 —— 因为那里几乎没有信息。而真正的内核页面中只有当内核自身运行的时刻它才能被用到。

这样做就破坏了最初将地址空间分割的理由。现在,每次切换到用户程序时,TLB 需要实时去清除与内核页面表相关的所有条目,这样就失去了启用分割带来的性能提升。

影响的具体大小取决于工作负载。每当一个程序被调入到内核 —— 从磁盘读入、发送数据到网络、打开一个文件等等 —— 这种调用的成本可能会增加一点点,因为它强制 TLB 清除了缓存并实时加载内核页面表。不使用内核的程序可能会观测到 2 - 3 个百分点的性能影响 —— 这里仍然有一些开销,因为内核仍然是偶尔会运行去处理一些事情,比如多任务等等。

但是大量调用进入到内核的工作负载将观测到很大的性能损失。在一个基准测试中,一个除了调入到内核之外什么都不做的程序,观察到 [它的性能下降大约为 50%](https://twitter.com/grsecurity/status/947257569906757638);换句话说就是,打补丁后每次对内核的调用的时间要比不打补丁调用内核的时间增加一倍。基准测试使用的 Linux 的网络回环(loopback)也观测到一个很大的影响,比如,在 Postgres 的基准测试中大约是 [17%](https://www.postgresql.org/message-id/[email protected])。真实的数据库负载使用了实时网络可能观测到的影响要低一些,因为使用实时网络时,内核调用的开销基本是使用真实网络的开销。

虽然对 Intel 系统的影响是众所周知的,但是它们可能并不是唯一受影响的。其它的一些平台,比如 SPARC 和 IBM 的 S390,是不受这个问题影响的,因为它们的处理器的内存管理并不需要分割地址空间和共享内核页面表;在这些平台上的操作系统一直就是将它们的内核页面表从用户模式中隔离出来的。但是其它的,比如 ARM,可能就没有这么幸运了;[适用于 ARM Linux 的类似补丁](https://lwn.net/Articles/740393/) 正在开发中。

---

[PETER BRIGHT](https://arstechnica.com/author/peter-bright) 是 Ars 的一位技术编辑。他涉及微软、编程及软件开发、Web 技术和浏览器、以及安全方面。它居住在纽约的布鲁克林。

---

via: <https://arstechnica.com/gadgets/2018/01/whats-behind-the-intel-design-flaw-forcing-numerous-patches/>

作者:[PETER BRIGHT](https://arstechnica.com/author/peter-bright/) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Both Windows and Linux are receiving significant security updates that can, in the worst case, cause performance to drop by half, to defend against a problem that as yet hasn't been fully disclosed.

Patches to the Linux kernel have been trickling in over the past few weeks. Microsoft has been [testing the Windows updates in the Insider program since November](https://twitter.com/aionescu/status/930412525111296000), and it is expected to put the alterations into mainstream Windows builds on Patch Tuesday next week. Microsoft's Azure has scheduled maintenance next week, and Amazon's AWS is scheduled for maintenance on Friday—presumably related.

Since the Linux patches [first came to light](https://lwn.net/SubscriberLink/741878/eb6c9d3913d7cb2b/), a clearer picture of what seems to be wrong has emerged. While Linux and Windows differ in many regards, the basic elements of how these two operating systems—and indeed, every other x86 operating system such as FreeBSD and [macOS](https://twitter.com/aionescu/status/948609809540046849)—handle system memory is the same, because these parts of the operating system are so tightly coupled to the capabilities of the processor.

## Keeping track of addresses

Every byte of memory in a system is implicitly numbered, those numbers being each byte's address. The very earliest operating systems operated using physical memory addresses, but physical memory addresses are inconvenient for lots of reasons. For example, there are often gaps in the addresses, and (particularly on 32-bit systems), physical addresses can be awkward to manipulate, requiring 36-bit numbers, or even larger ones.

Accordingly, modern operating systems all depend on a broad concept called virtual memory. Virtual memory systems allow both programs and the kernels themselves to operate in a simple, clean, uniform environment. Instead of the physical addresses with their gaps and other oddities, every program, and the kernel itself, uses virtual addresses to access memory. These virtual addresses are contiguous—no need to worry about gaps—and sized conveniently to make them easy to manipulate. 32-bit programs see only 32-bit addresses, even if the physical address requires 36-bit or more numbering. |

9,388 | Torrents(种子):你需要知道的一切事情 | http://www.linuxandubuntu.com/home/torrents-everything-you-need-to-know | 2018-03-01T09:00:00 | [

"Torrent",

"种子"

] | https://linux.cn/article-9388-1.html | Torrents(种子):你需要知道的一切事情

=======================

**Torrents(种子)** — 每次听到这个词时,在我的脑海里想到的唯一的事情就是免费的电影、游戏、和被破解的软件。但是我们并不知道它们是如何工作的,在“种子”中涉及到各种概念。因此,通过这篇文章我们从技术的角度来了解**种子下载**是什么。

### “种子”是什么?

“种子”是一个到因特网上文件位置的链接。它们不是一个文件,它们仅仅是动态指向到你想去下载的原始文件上。

例如:如果你点击 [Google Chrome](https://www.google.com/chrome/),你可以从谷歌的服务器上下载 Google Chrome 浏览器。

如果你明天、或者下周、或者下个月再去点击那个链接,这个文件仍然可以从谷歌服务器上去下载。

但是当我们使用“种子”下载时,它并没有固定的服务器。文件是从以前使用“种子”下载的其它人的个人电脑上下载的。

### Torrents 是如何工作的?

[](http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/torrent_orig.png)

假设 ‘A’ 上有一些视频,它希望以“种子”方式去下载。因此,他创建了一个“种子”,并将这个链接发送给 ‘B’,这个链接包含了那个视频在因特网上的准确 IP 地址的信息。因此,当 ‘B’ 开始下载那个文件的时候,‘B’ 连接到 ‘A’ 的计算机。在 ‘B’ 下载完成这个视频之后,‘B’ 将开始做为种子,也就是 ‘B’ 将允许其它的 ‘C’ 或者 ‘D’ 从 ‘B’ 的计算机上下载它。

因此每个人先下载文件然后会上传,下载的人越多,下载的速度也越快。并且在任何情况下,如果想停止上传,也没有问题,随时可以。这样做并不会成为什么问题,除非很多的人下载而上传的人很少。

### 播种者和索取者

[](http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/seeders_orig.png)

下载完成特定文件的用户将会即刻做为上传者,因此,可以被新用户下载的已下载者称为播种者。

而一些还没有完成特定文件的下载者,并且还正在下载的用户称为索取者。

### 块

[](http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/peers_orig.png)

所有的“种子”文件都独立分割成固定大小的数据包,因此,它们可以非线性顺序和随机顺序下载。每个块都有唯一的标识,因此,一旦所有的块下载完成之后,它们会被拼接出原始文件。

正是因为这种机制,如果你正在从某人处下载一个文件,假如这个时候因某些原因他停止了上传,你可以继续从其它的播种者处继续下载,而不需要从头开始重新下载。

### 对端

对端是指当前连接到你的索取者。一个正在上传的索取者,不论它下载了多少块,它就是一个对端。

**例如:**

一个已经下载了文件的前 50 个块的用户就是一个索取者,但是,他又同时上传这些文件,而你只有前 10 个块,因此,你可以从他那里下载最多 50 个块。这时候他就成了你的对端。

### 最佳实践

当你下载一个“种子”时,总是选择最大的播种者。这就是最佳经验。

这里并没有最小的标准,但是只要确保你选择的是最大的那一个播种者就可以了。

### “种子”相关的法律

[](http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/torrent-laws_orig.png)

“种子”相关的法律和其它的法律并没有什么区别,对受版权保护的其它任何东西一样,侵权行为会受到法律的制裁。大多数的政府都拦截“种子”站点和协议,但是“种子”下载本身并不是有害的东西。

“种子”对快速分享文件是非常有用的,并且它们被用来共享开源社区的软件,因为它们能节约大量的服务器资源。但是,许多人却因为盗版而使用它们。

### 结束语

Torrenting 是降低服务器上负载的一个非常完美的技术。“种子”下载可以使我们将下载速度提升到网卡的极限,这是非常好的。但是,在这种非中心化的服务器上,盗版成为一种必然发生的事。限制我们分享的内容,从不去下载盗版的东西,这是我们的道德责任。

请在下面的评论中分享你使用“种子”的心得,分享你喜欢的、法律许可下载的“种子”网站。

---

via: <http://www.linuxandubuntu.com/home/torrents-everything-you-need-to-know>

作者:[LINUXANDUBUNTU](http://www.linuxandubuntu.com) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,389 | 关于处理器你所需要知道的一切 | http://www.theitstuff.com/processors-everything-need-know | 2018-02-27T06:46:55 | [

"CPU",

"处理器"

] | https://linux.cn/article-9389-1.html | [](http://www.theitstuff.com/wp-content/uploads/2017/10/processors-all-you-need-to-know.jpg)

我们的手机、主机以及笔记本电脑这样的数字设备已经变得如此成熟,以至于它们进化成为我们的一部分,而不只是一种设备。

在应用和软件的帮助下,处理器执行许多任务。我们是否曾经想过是什么给了这些软件这样的能力?它们是如何执行它们的逻辑的?它们的大脑在哪?

我们知道 CPU (或称处理器)是那些需要处理数据和执行逻辑任务的设备的大脑。

[](http://www.theitstuff.com/wp-content/uploads/2017/10/download.jpg)

在处理器的深处有那些不一样的概念呢?它们是如何演化的?一些处理器是如何做到比其它处理器更快的?让我们来看看关于处理器的主要术语,以及它们是如何影响处速度的。

### 架构

处理器有不同的架构,你一定遇到过不同类型的程序说它们是 64 位或 32 位的,这其中的意思就是程序支持特定的处理器架构。

如果一颗处理器是 32 位的架构,这意味着这颗处理器能够在一个处理周期内处理一个 32 位的数据。

同理可得,64 位的处理器能够在一个周期内处理一个 64 位的数据。

同时,你可以使用的内存大小决定于处理器的架构,你可以使用的内存总量为 2 的处理器架构的幂次方(如:`2^64`)。

16 位架构的处理器,仅仅有 64 kb 的内存使用。32 位架构的处理器,最大可使用的 RAM 是 4 GB,64 位架构的处理器的可用内存是 16 EB。

### 核心

在电脑上,核心是基本的处理单元。核心接收指令并且执行它。越多的核心带来越快的速度。把核心比作工厂里的工人,越多的工人使工作能够越快的完成。另一方面,工人越多,你所付出的薪水也就越多,工厂也会越拥挤;相对于核心来说,越多的核心消耗更多的能量,比核心少的 CPU 更容易发热。

### 时钟速度

[](http://www.theitstuff.com/wp-content/uploads/2017/10/download-1.jpg)

GHz 是 GigaHertz 的简写,Giga 意思是 10 亿次,Hertz (赫兹)意思是一秒有几个周期,2 GHz 的处理器意味着处理器一秒能够执行 20 亿个周期 。

它也以“频率”或者“时钟速度”而熟知。这项数值越高,CPU 的性能越好。

### CPU 缓存

CPU 缓存是处理器内部的一块小的存储单元,用来存储一些内存。不管如何,我们需要执行一些任务时,数据需要从内存传递到 CPU,CPU 的工作速度远快于内存,CPU 在大多数时间是在等待从内存传递过来的数据,而此时 CPU 是处于空闲状态的。为了解决这个问题,内存持续的向 CPU 缓存发送数据。

一般的处理器会有 2 ~ 3 Mb 的 CPU 缓存。高端的处理器会有 6 Mb 的 CPU 缓存,越大的缓存,意味着处理器更好。

### 印刷工艺

晶体管的大小就是处理器平板印刷的大小,尺寸通常是纳米,更小的尺寸意味者更紧凑。这可以让你有更多的核心,更小的面积,更小的能量消耗。

最新的 Intel 处理器有 14 nm 的印刷工艺。

### 热功耗设计(TDP)

代表着平均功耗,单位是瓦特,是在全核心激活以基础频率来处理 Intel 定义的高复杂度的负载时,处理器所散失的功耗。

所以,越低的热功耗设计对你越好。一个低的热功耗设计不仅可以更好的利用能量,而且产生更少的热量。

[](http://www.theitstuff.com/wp-content/uploads/2017/10/download-2.jpg)

桌面版的处理器通常消耗更多的能量,热功耗消耗的能量能在 40% 以上,相对应的移动版本只有不到桌面版本的 1/3。

### 内存支持

我们已经提到了处理器的架构是如何影响到我们能够使用的内存总量,但这只是理论上而已。在实际的应用中,我们所能够使用的内存的总量对于处理器的规格来说是足够的,它通常是由处理器规格详细规定的。

[](http://www.theitstuff.com/wp-content/uploads/2017/10/images.jpg)

它也指出了内存所支持的 DDR 的版本号。

### 超频

前面我们讲过时钟频率,超频是程序强迫 CPU 执行更多的周期。游戏玩家经常会使他们的处理器超频,以此来获得更好的性能。这样确实会增加速度,但也会增加消耗的能量,产生更多的热量。

一些高端的处理器允许超频,如果我们想让一个不支持超频的处理器超频,我们需要在主板上安装一个新的 BIOS 。 这样通常会成功,但这种情况是不安全的,也是不建议的。

### 超线程(HT)

如果不能添加核心以满足特定的处理需要,那么超线程是建立一个虚拟核心的方式。

如果一个双核处理器有超线程,那么这个双核处理器就有两个物理核心和两个虚拟核心,在技术上讲,一个双核处理器拥有四个核心。

### 结论

处理器有许多相关的数据,这些对数字设备来说是最重要的部分。我们在选择设备时,我们应该在脑海中仔细的检查处理器在上面提到的数据。

时钟速度、核心数、CPU 缓存,以及架构是最重要的数据。印刷尺寸以及热功耗设计重要性差一些 。

仍然有疑惑? 欢迎评论,我会尽快回复的。

---

via: <http://www.theitstuff.com/processors-everything-need-know>

作者:[Rishabh Kandari](http://www.theitstuff.com/author/reevkandari) 译者:[singledo](https://github.com/singledo) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 520 | null |

|

9,390 | 学习用工具来驾驭 Git 历史 | https://ieftimov.com/learn-your-tools-navigating-git-history | 2018-02-27T17:03:11 | [

"git",

"历史"

] | https://linux.cn/article-9390-1.html |

在你的日常工作中,不可能每天都从头开始去开发一个新的应用程序。而真实的情况是,在日常工作中,我们大多数时候所面对的都是遗留下来的一个代码库,去修改一些特性的内容或者现存的一些代码行,这是我们在日常工作中很重要的一部分。而这也就是分布式版本控制系统 `git` 的价值所在。现在,我们来深入了解怎么去使用 `git` 的历史以及如何很轻松地去浏览它的历史。

### Git 历史

首先和最重要的事是,什么是 `git` 历史?正如其名字一样,它是一个 `git` 仓库的提交历史。它包含一堆提交信息,其中有它们的作者的名字、该提交的哈希值以及提交日期。查看一个 `git` 仓库历史的方法很简单,就是一个 `git log` 命令。

>

> *旁注:为便于本文的演示,我们使用 Ruby on Rails 的仓库的 `master` 分支。之所以选择它的理由是因为,Rails 有良好的 `git` 历史,漂亮的提交信息、引用以及对每个变更的解释。如果考虑到代码库的大小、维护者的年龄和数量,Rails 肯定是我见过的最好的仓库。当然了,我并不是说其它的 `git` 仓库做的不好,它只是我见过的比较好的一个仓库。*

>

>

>

那么,回到 Rails 仓库。如果你在 Ralis 仓库上运行 `git log`。你将看到如下所示的输出:

```

commit 66ebbc4952f6cfb37d719f63036441ef98149418

Author: Arthur Neves <[email protected]>

Date: Fri Jun 3 17:17:38 2016 -0400

Dont re-define class SQLite3Adapter on test

We were declaring in a few tests, which depending of the order load will cause an error, as the super class could change.

see https://github.com/rails/rails/commit/ac1c4e141b20c1067af2c2703db6e1b463b985da#commitcomment-17731383

commit 755f6bf3d3d568bc0af2c636be2f6df16c651eb1

Merge: 4e85538 f7b850e

Author: Eileen M. Uchitelle <[email protected]>

Date: Fri Jun 3 10:21:49 2016 -0400

Merge pull request #25263 from abhishekjain16/doc_accessor_thread

[skip ci] Fix grammar

commit f7b850ec9f6036802339e965c8ce74494f731b4a

Author: Abhishek Jain <[email protected]>

Date: Fri Jun 3 16:49:21 2016 +0530

[skip ci] Fix grammar

commit 4e85538dddf47877cacc65cea6c050e349af0405

Merge: 082a515 cf2158c

Author: Vijay Dev <[email protected]>

Date: Fri Jun 3 14:00:47 2016 +0000

Merge branch 'master' of github.com:rails/docrails

Conflicts:

guides/source/action_cable_overview.md

commit 082a5158251c6578714132e5c4f71bd39f462d71

Merge: 4bd11d4 3bd30d9

Author: Yves Senn <[email protected]>

Date: Fri Jun 3 11:30:19 2016 +0200

Merge pull request #25243 from sukesan1984/add_i18n_validation_test

Add i18n_validation_test

commit 4bd11d46de892676830bca51d3040f29200abbfa

Merge: 99d8d45 e98caf8

Author: Arthur Nogueira Neves <[email protected]>

Date: Thu Jun 2 22:55:52 2016 -0400

Merge pull request #25258 from alexcameron89/master

[skip ci] Make header bullets consistent in engines.md

commit e98caf81fef54746126d31076c6d346c48ae8e1b

Author: Alex Kitchens <[email protected]>

Date: Thu Jun 2 21:26:53 2016 -0500

[skip ci] Make header bullets consistent in engines.md

```

正如你所见,`git log` 展示了提交的哈希、作者及其 email 以及该提交创建的日期。当然,`git` 输出的可定制性很强大,它允许你去定制 `git log` 命令的输出格式。比如说,我们只想看提交信息的第一行,我们可以运行 `git log --oneline`,它将输出一个更紧凑的日志:

```

66ebbc4 Dont re-define class SQLite3Adapter on test

755f6bf Merge pull request #25263 from abhishekjain16/doc_accessor_thread

f7b850e [skip ci] Fix grammar4e85538 Merge branch 'master' of github.com:rails/docrails

082a515 Merge pull request #25243 from sukesan1984/add_i18n_validation_test

4bd11d4 Merge pull request #25258 from alexcameron89/master

e98caf8 [skip ci] Make header bullets consistent in engines.md

99d8d45 Merge pull request #25254 from kamipo/fix_debug_helper_test

818397c Merge pull request #25240 from matthewd/reloadable-channels

2c5a8ba Don't blank pad day of the month when formatting dates

14ff8e7 Fix debug helper test

```

如果你想看 `git log` 的全部选项,我建议你去查阅 `git log` 的 man 页面,你可以在一个终端中输入 `man git-log` 或者 `git help log` 来获得。

>

> *小提示:如果你觉得 `git log` 看起来太恐怖或者过于复杂,或者你觉得看它太无聊了,我建议你去寻找一些 `git` 的 GUI 或命令行工具。在之前,我使用过 [GitX](http://gitx.frim.nl/) ,我觉得它很不错,但是,由于我看命令行更“亲切”一些,在我尝试了 [tig](https://github.com/jonas/tig) 之后,就再也没有去用过它。*

>

>

>

### 寻找尼莫

现在,我们已经知道了关于 `git log` 命令的一些很基础的知识之后,我们来看一下,在我们的日常工作中如何使用它更加高效地浏览历史。

假如,我们怀疑在 `String#classify` 方法中有一个预期之外的行为,我们希望能够找出原因,并且定位出实现它的代码行。

为达到上述目的,你可以使用的第一个命令是 `git grep`,通过它可以找到这个方法定义在什么地方。简单来说,这个命令输出了匹配特定模式的那些行。现在,我们来找出定义它的方法,它非常简单 —— 我们对 `def classify` 运行 grep,然后看到的输出如下:

```

➜ git grep 'def classify'

activesupport/lib/active_support/core_ext/string/inflections.rb: def classifyactivesupport/lib/active_support/inflector/methods.rb: def classify(table_name)tools/profile: def classify

```

现在,虽然我们已经看到这个方法是在哪里创建的,但是,并不能够确定它是哪一行。如果,我们在 `git grep` 命令上增加 `-n` 标志,`git` 将提供匹配的行号:

```

➜ git grep -n 'def classify'

activesupport/lib/active_support/core_ext/string/inflections.rb:205: def classifyactivesupport/lib/active_support/inflector/methods.rb:186: def classify(table_name)tools/profile:112: def classify

```

更好看了,是吧?考虑到上下文,我们可以很轻松地找到,这个方法在 `activesupport/lib/active_support/core_ext/string/inflections.rb` 的第 205 行的 `classify` 方法,它看起来像这样,是不是很容易?

```

# Creates a class name from a plural table name like Rails does for table names to models.

# Note that this returns a string and not a class. (To convert to an actual class

# follow +classify+ with +constantize+.)

#

# 'ham_and_eggs'.classify # => "HamAndEgg"

# 'posts'.classify # => "Post"

def classify

ActiveSupport::Inflector.classify(self)

end

```

尽管我们找到的这个方法是在 `String` 上的一个常见的调用,它调用了 `ActiveSupport::Inflector` 上的另一个同名的方法。根据之前的 `git grep` 的结果,我们可以很轻松地发现结果的第二行, `activesupport/lib/active_support/inflector/methods.rb` 在 186 行上。我们正在寻找的方法是这样的:

```

# Creates a class name from a plural table name like Rails does for table

# names to models. Note that this returns a string and not a Class (To

# convert to an actual class follow +classify+ with constantize).

#

# classify('ham_and_eggs') # => "HamAndEgg"

# classify('posts') # => "Post"

#

# Singular names are not handled correctly:

#

# classify('calculus') # => "Calculus"

def classify(table_name)

# strip out any leading schema name

camelize(singularize(table_name.to_s.sub(/.*\./, ''.freeze)))

end

```

酷!考虑到 Rails 仓库的大小,我们借助 `git grep` 找到它,用时都没有超越 30 秒。

### 那么,最后的变更是什么?

现在,我们已经找到了所要找的方法,现在,我们需要搞清楚这个文件所经历的变更。由于我们已经知道了正确的文件名和行数,我们可以使用 `git blame`。这个命令展示了一个文件中每一行的最后修订者和修订的内容。我们来看一下这个文件最后的修订都做了什么:

```

git blame activesupport/lib/active_support/inflector/methods.rb

```

虽然我们得到了这个文件每一行的最后的变更,但是,我们更感兴趣的是对特定方法(176 到 189 行)的最后变更。让我们在 `git blame` 命令上增加一个选项,让它只显示那些行的变化。此外,我们将在命令上增加一个 `-s` (忽略)选项,去跳过那一行变更时的作者名字和修订(提交)的时间戳:

```

git blame -L 176,189 -s activesupport/lib/active_support/inflector/methods.rb

9fe8e19a 176) #Creates a class name from a plural table name like Rails does for table

5ea3f284 177) # names to models. Note that this returns a string and not a Class (To

9fe8e19a 178) # convert to an actual class follow +classify+ with #constantize).

51cd6bb8 179) #

6d077205 180) # classify('ham_and_eggs') # => "HamAndEgg"

9fe8e19a 181) # classify('posts') # => "Post"

51cd6bb8 182) #

51cd6bb8 183) # Singular names are not handled correctly:

5ea3f284 184) #

66d6e7be 185) # classify('calculus') # => "Calculus"

51cd6bb8 186) def classify(table_name)

51cd6bb8 187) # strip out any leading schema name

5bb1d4d2 188) camelize(singularize(table_name.to_s.sub(/.*\./, ''.freeze)))

51cd6bb8 189) end

```

现在,`git blame` 命令的输出展示了指定行的全部内容以及它们各自的修订。让我们来看一下指定的修订,换句话说就是,每个变更都修订了什么,我们可以使用 `git show` 命令。当指定一个修订哈希(像 `66d6e7be`)作为一个参数时,它将展示这个修订的全部内容。包括作者名字、时间戳以及完整的修订内容。我们来看一下 188 行最后的修订都做了什么?

```

git show 5bb1d4d2

```

你亲自做实验了吗?如果没有做,我直接告诉你结果,这个令人惊叹的 [提交](https://github.com/rails/rails/commit/5bb1d4d288d019e276335465d0389fd2f5246bfd) 是由 [Schneems](https://twitter.com/schneems) 完成的,他通过使用 frozen 字符串做了一个非常有趣的性能优化,这在我们当前的场景中是非常有意义的。但是,由于我们在这个假设的调试会话中,这样做并不能告诉我们当前问题所在。因此,我们怎么样才能够通过研究来发现,我们选定的方法经过了哪些变更?

### 搜索日志

现在,我们回到 `git` 日志,现在的问题是,怎么能够看到 `classify` 方法经历了哪些修订?

`git log` 命令非常强大,因此它提供了非常多的列表选项。我们尝试使用 `-p` 选项去看一下保存了这个文件的 `git` 日志内容,这个选项的意思是在 `git` 日志中显示这个文件的完整补丁:

```

git log -p activesupport/lib/active_support/inflector/methods.rb

```

这将给我们展示一个很长的修订列表,显示了对这个文件的每个修订。但是,正如下面所显示的,我们感兴趣的是对指定行的修订。对命令做一个小的修改,只显示我们希望的内容:

```

git log -L 176,189:activesupport/lib/active_support/inflector/methods.rb

```

`git log` 命令接受 `-L` 选项,它用一个行的范围和文件名做为参数。它的格式可能有点奇怪,格式解释如下:

```

git log -L <start-line>,<end-line>:<path-to-file>

```

当我们运行这个命令之后,我们可以看到对这些行的一个修订列表,它将带我们找到创建这个方法的第一个修订:

```

commit 51xd6bb829c418c5fbf75de1dfbb177233b1b154

Author: Foo Bar <[email protected]>

Date: Tue Jun 7 19:05:09 2011 -0700

Refactor

diff--git a/activesupport/lib/active_support/inflector/methods.rb b/activesupport/lib/active_support/inflector/methods.rb

--- a/activesupport/lib/active_support/inflector/methods.rb

+++ b/activesupport/lib/active_support/inflector/methods.rb

@@ -58,0 +135,14 @@

+ # Create a class name from a plural table name like Rails does for table names to models.

+ # Note that this returns a string and not a Class. (To convert to an actual class

+ # follow +classify+ with +constantize+.)

+ #

+ # Examples:

+ # "egg_and_hams".classify # => "EggAndHam"

+ # "posts".classify # => "Post"

+ #

+ # Singular names are not handled correctly:

+ # "business".classify # => "Busines"

+ def classify(table_name)

+ # strip out any leading schema name

+ camelize(singularize(table_name.to_s.sub(/.*\./, '')))

+ end

```

现在,我们再来看一下 —— 它是在 2011 年提交的。`git` 可以让我们重回到这个时间。这是一个很好的例子,它充分说明了足够的提交信息对于重新了解当时的上下文环境是多么的重要,因为从这个提交信息中,我们并不能获得足够的信息来重新理解当时的创建这个方法的上下文环境,但是,话说回来,你**不应该**对此感到恼怒,因为,你看到的这些项目,它们的作者都是无偿提供他们的工作时间和精力来做开源工作的。(向开源项目贡献者致敬!)

回到我们的正题,我们并不能确认 `classify` 方法最初实现是怎么回事,考虑到这个第一次的提交只是一个重构。现在,如果你认为,“或许、有可能、这个方法不在 176 行到 189 行的范围之内,那么就你应该在这个文件中扩大搜索范围”,这样想是对的。我们看到在它的修订提交的信息中提到了“重构”这个词,它意味着这个方法可能在那个文件中是真实存在的,而且是在重构之后它才存在于那个行的范围内。

但是,我们如何去确认这一点呢?不管你信不信,`git` 可以再次帮助你。`git log` 命令有一个 `-S` 选项,它可以传递一个特定的字符串作为参数,然后去查找代码变更(添加或者删除)。也就是说,如果我们执行 `git log -S classify` 这样的命令,我们可以看到所有包含 `classify` 字符串的变更行的提交。

如果你在 Ralis 仓库上运行上述命令,首先你会发现这个命令运行有点慢。但是,你应该会发现 `git` 实际上解析了在那个仓库中的所有修订来匹配这个字符串,其实它的运行速度是非常快的。在你的指尖下 `git` 再次展示了它的强大之处。因此,如果去找关于 `classify` 方法的第一个修订,我们可以运行如下的命令:

```

git log -S 'def classify'

```

它将返回所有这个方法的引用和修改的地方。如果你一直往下看,你将看到日志中它的最后的提交:

```

commit db045dbbf60b53dbe013ef25554fd013baf88134

Author: David Heinemeier Hansson <[email protected]>

Date: Wed Nov 24 01:04:44 2004 +0000

Initial

git-svn-id: http://svn-commit.rubyonrails.org/rails/trunk@4 5ecf4fe2-1ee6-0310-87b1-e25e094e27de

```

很酷!是吧?它初次被提交到 Rails,是由 DHH 在一个 `svn` 仓库上做的!这意味着 `classify` 大概在一开始就被提交到了 Rails 仓库。现在,我们去看一下这个提交的所有变更信息,我们运行如下的命令:

```

git show db045dbbf60b53dbe013ef25554fd013baf88134

```

非常好!我们终于找到它的根源了。现在,我们使用 `git log -S 'def classify'` 的输出,结合 `git log -L` 命令来跟踪这个方法都发生了哪些变更。

### 下次见

当然,我们并没有真的去修改任何 bug,因为我们只是去尝试使用一些 `git` 命令,来演示如何查看 `classify` 方法的演变历史。但是不管怎样,`git` 是一个非常强大的工具,我们必须学好它、用好它。我希望这篇文章可以帮助你掌握更多的关于如何使用 `git` 的知识。

你喜欢这些内容吗?

---

作者简介:

后端工程师,对 Ruby、Go、微服务、构建弹性架构来解决大规模部署带来的挑战很感兴趣。我在阿姆斯特丹的 Rails Girls 担任顾问,维护着一个小而精的列表,并且经常为开源做贡献。

那个列表是我写的关于软件开发、编程语言以及任何我感兴趣的东西。

---

via: <https://ieftimov.com/learn-your-tools-navigating-git-history>

作者:[Ilija Eftimov](https://ieftimov.com/) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,391 | 如何在 Ubuntu 安装 Go 语言编写的 Git 服务器 Gogs | https://www.howtoforge.com/tutorial/how-to-install-gogs-go-git-service-on-ubuntu-1604/ | 2018-02-27T22:31:57 | [

"Gogs",

"Go",

"Git"

] | https://linux.cn/article-9391-1.html |

Gogs 是由 Go 语言编写的,自由开源的 Git 服务。Gogs 是一款无痛式自托管的 Git 服务器,能在尽可能小的硬件资源开销上搭建并运行您的私有 Git 服务器。Gogs 的网页界面和 GitHub 十分相近,且提供 MySQL、PostgreSQL 和 SQLite 数据库支持。

在本教程中,我们将使用 Gogs 在 Ununtu 16.04 上按步骤指导您安装和配置您的私有 Git 服务器。这篇教程中涵盖了如何在 Ubuntu 上安装 Go 语言、PostgreSQL 和安装并且配置 Nginx 网页服务器作为 Go 应用的反向代理的细节内容。

### 搭建环境

* Ubuntu 16.04

* Root 权限

### 我们将会接触到的事物

1. 更新和升级系统

2. 安装和配置 PostgreSQL

3. 安装 Go 和 Git

4. 安装 Gogs

5. 配置 Gogs

6. 运行 Gogs 服务器

7. 安装和配置 Nginx 反向代理

8. 测试

### 步骤 1 - 更新和升级系统

继续之前,更新 Ubuntu 所有的库,升级所有包。

运行下面的 `apt` 命令:

```

sudo apt update

sudo apt upgrade

```

### 步骤 2 - 安装和配置 PostgreSQL

Gogs 提供 MySQL、PostgreSQL、SQLite 和 TiDB 数据库系统支持。

此步骤中,我们将使用 PostgreSQL 作为 Gogs 程序的数据库。

使用下面的 `apt` 命令安装 PostgreSQL。

```

sudo apt install -y postgresql postgresql-client libpq-dev

```

安装完成之后,启动 PostgreSQL 服务并设置为开机启动。

```

systemctl start postgresql

systemctl enable postgresql

```

此时 PostgreSQL 数据库在 Ubuntu 系统上完成安装了。

之后,我们需要为 Gogs 创建数据库和用户。

使用 `postgres` 用户登录并运行 `psql` 命令以访问 PostgreSQL 操作界面。

```

su - postgres

psql

```

创建一个名为 `git` 的新用户,给予此用户 `CREATEDB` 权限。

```

CREATE USER git CREATEDB;

\password git

```

创建名为 `gogs_production` 的数据库,设置 `git` 用户作为其所有者。

```

CREATE DATABASE gogs_production OWNER git;

```

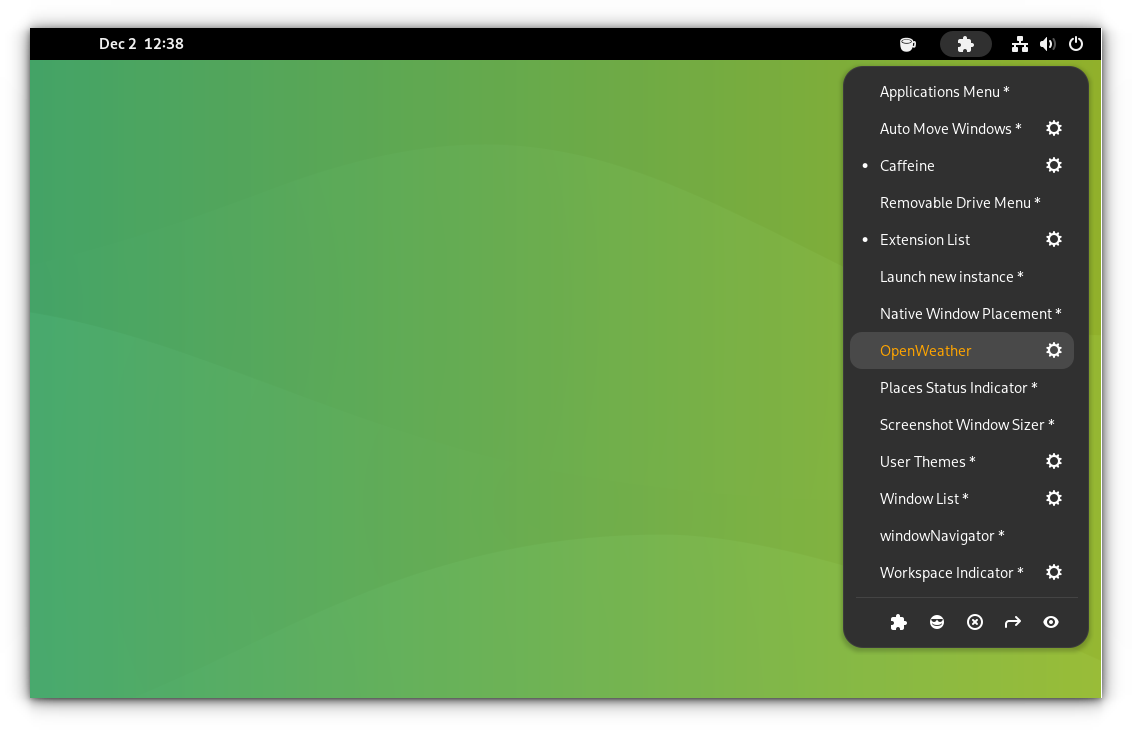

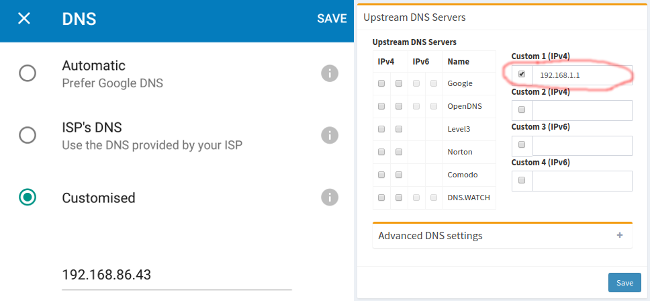

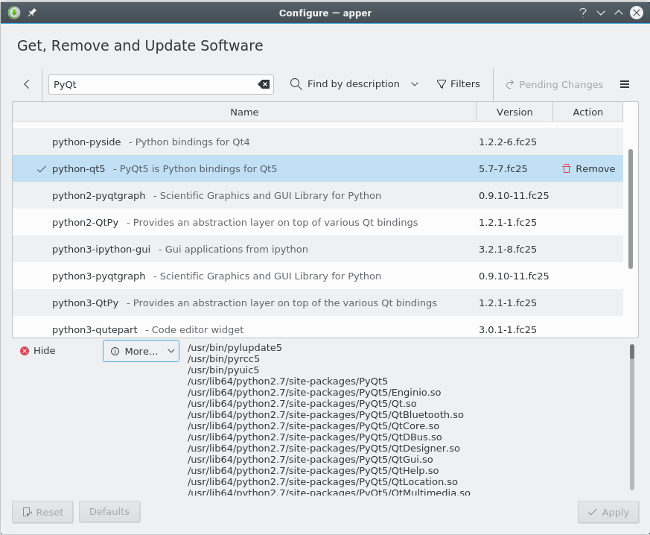

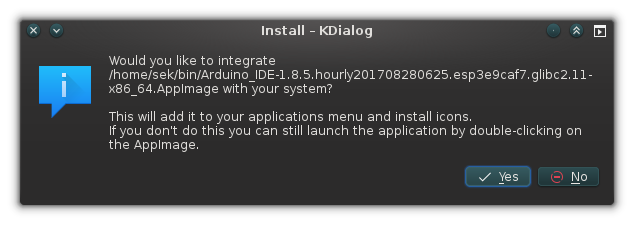

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/1.png)

用于 Gogs 的 `gogs_production` PostgreSQL 数据库和 `git` 用户已经创建完毕。

### 步骤 3 - 安装 Go 和 Git

使用下面的 `apt` 命令从库中安装 Git。

```

sudo apt install git

```

此时,为系统创建名为 `git` 的新用户。

```

sudo adduser --disabled-login --gecos 'Gogs' git

```

登录 `git` 账户并且创建名为 `local` 的目录。

```

su - git

mkdir -p /home/git/local

```

切换到 `local` 目录,依照下方所展示的内容,使用 `wget` 命令下载 Go(最新版)。

```

cd ~/local

wget https://dl.google.com/go/go1.9.2.linux-amd64.tar.gz

```

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/2.png)

解压并且删除 go 的压缩文件。

```

tar -xf go1.9.2.linux-amd64.tar.gz

rm -f go1.9.2.linux-amd64.tar.gz

```

Go 二进制文件已经被下载到 `~/local/go` 目录。此时我们需要设置环境变量 - 设置 `GOROOT` 和 `GOPATH` 目录到系统环境,这样,我们就可以在 `git` 用户下执行 `go` 命令。

执行下方的命令。

```

cd ~/

echo 'export GOROOT=$HOME/local/go' >> $HOME/.bashrc

echo 'export GOPATH=$HOME/go' >> $HOME/.bashrc

echo 'export PATH=$PATH:$GOROOT/bin:$GOPATH/bin' >> $HOME/.bashrc

```

之后通过运行 `source ~/.bashrc` 重载 Bash,如下:

```

source ~/.bashrc

```

确定您使用的 Bash 是默认的 shell。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/3.png)

现在运行 `go` 的版本查看命令。

```

go version

```

之后确保您得到下图所示的结果。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/4.png)

现在,Go 已经安装在系统的 `git` 用户下了。

### 步骤 4 - 使用 Gogs 安装 Git 服务

使用 `git` 用户登录并且使用 `go` 命令从 GitHub 下载 Gogs。

```

su - git

go get -u github.com/gogits/gogs

```

此命令将在 `GOPATH/src` 目录下载 Gogs 的所有源代码。

切换至 `$GOPATH/src/github.com/gogits/gogs` 目录,并且使用下列命令搭建 Gogs。

```

cd $GOPATH/src/github.com/gogits/gogs

go build

```

确保您没有遇到错误。

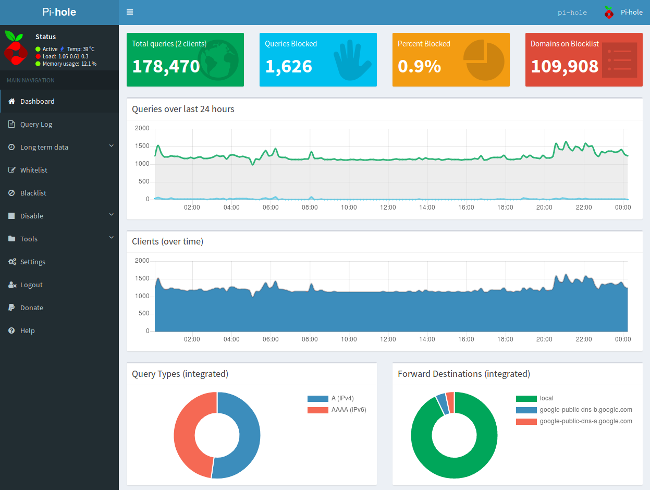

现在使用下面的命令运行 Gogs Go Git 服务器。

```

./gogs web

```

此命令将会默认运行 Gogs 在 3000 端口上。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/5.png)

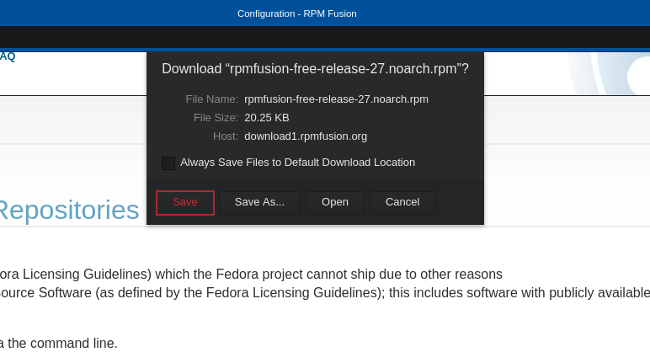

打开网页浏览器,键入您的 IP 地址和端口号,我的是 <http://192.168.33.10:3000/> 。

您应该会得到与下方一致的反馈。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/6.png)

Gogs 已经在您的 Ubuntu 系统上安装完毕。现在返回到您的终端,并且键入 `Ctrl + C` 中止服务。

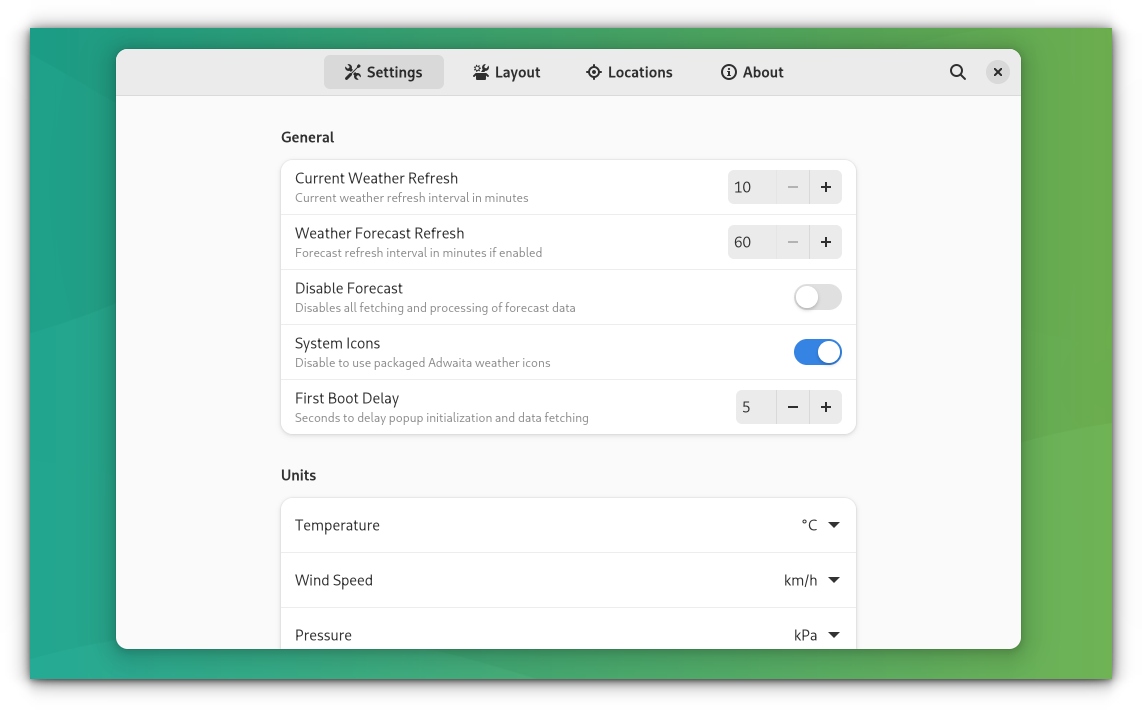

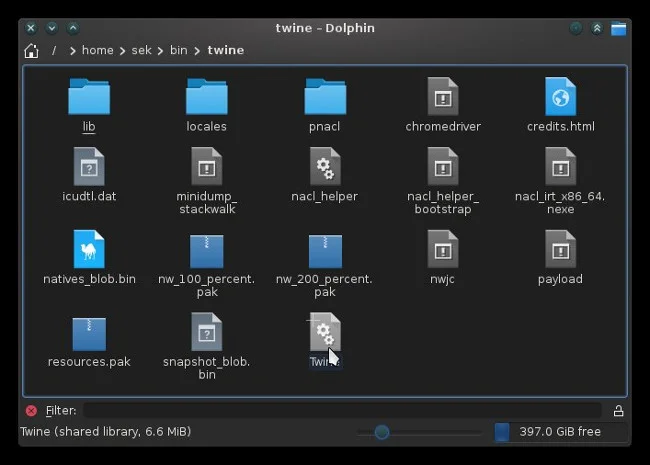

### 步骤 5 - 配置 Gogs Go Git 服务器

本步骤中,我们将为 Gogs 创建惯例配置。

进入 Gogs 安装目录并新建 `custom/conf` 目录。

```

cd $GOPATH/src/github.com/gogits/gogs

mkdir -p custom/conf/

```

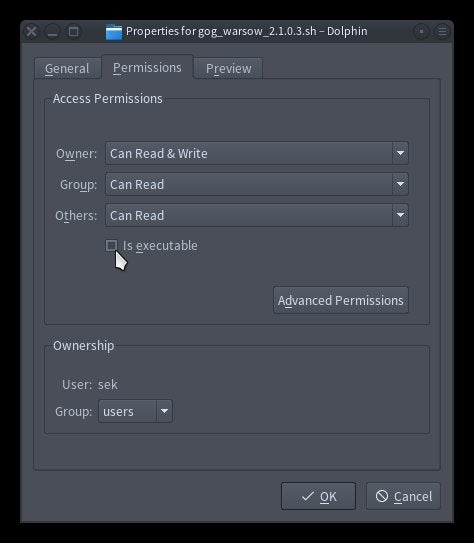

复制默认的配置文件到 `custom` 目录,并使用 [vim](https://www.howtoforge.com/vim-basics) 修改。

```

cp conf/app.ini custom/conf/app.ini

vim custom/conf/app.ini

```

在 `[server]` 小节中,修改 `HOST_ADDR` 为 `127.0.0.1`。

```

[server]

PROTOCOL = http

DOMAIN = localhost

ROOT_URL = %(PROTOCOL)s://%(DOMAIN)s:%(HTTP_PORT)s/

HTTP_ADDR = 127.0.0.1

HTTP_PORT = 3000

```

在 `[database]` 选项中,按照您的数据库信息修改。

```

[database]

DB_TYPE = postgres

HOST = 127.0.0.1:5432

NAME = gogs_production

USER = git

PASSWD = aqwe123@#

```

保存并退出。

运行下面的命令验证配置项。

```

./gogs web

```

并且确保您得到如下的结果。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/7.png)

Gogs 现在已经按照自定义配置下运行在 `localhost` 的 3000 端口上了。

### 步骤 6 - 运行 Gogs 服务器

这一步,我们将在 Ubuntu 系统上配置 Gogs 服务器。我们会在 `/etc/systemd/system` 目录下创建一个新的服务器配置文件 `gogs.service`。

切换到 `/etc/systemd/system` 目录,使用 [vim](https://www.howtoforge.com/vim-basics) 创建服务器配置文件 `gogs.service`。

```

cd /etc/systemd/system

vim gogs.service

```

粘贴下面的代码到 Gogs 服务器配置文件中。

```

[Unit]

Description=Gogs

After=syslog.target

After=network.target

After=mariadb.service mysqld.service postgresql.service memcached.service redis.service

[Service]

# Modify these two values and uncomment them if you have

# repos with lots of files and get an HTTP error 500 because

# of that

###

#LimitMEMLOCK=infinity

#LimitNOFILE=65535

Type=simple

User=git

Group=git

WorkingDirectory=/home/git/go/src/github.com/gogits/gogs

ExecStart=/home/git/go/src/github.com/gogits/gogs/gogs web

Restart=always

Environment=USER=git HOME=/home/git

[Install]

WantedBy=multi-user.target

```

之后保存并且退出。

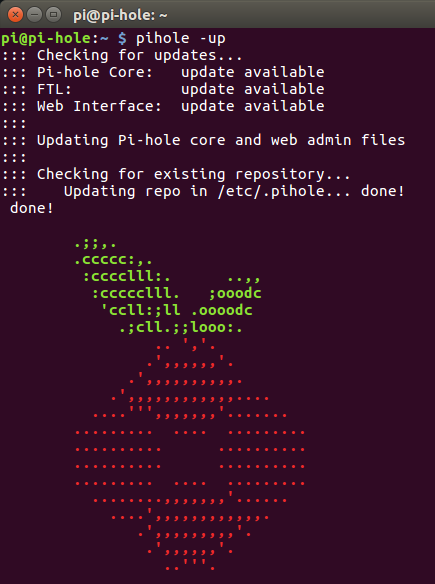

现在可以重载系统服务器。

```

systemctl daemon-reload

```

使用下面的命令开启 Gogs 服务器并设置为开机启动。

```

systemctl start gogs

systemctl enable gogs

```

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/8.png)

Gogs 服务器现在已经运行在 Ubuntu 系统上了。

使用下面的命令检测:

```

netstat -plntu

systemctl status gogs

```

您应该会得到下图所示的结果。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/9.png)

### 步骤 7 - 为 Gogs 安装和配置 Nginx 反向代理

在本步中,我们将为 Gogs 安装和配置 Nginx 反向代理。我们会在自己的库中调用 Nginx 包。

使用下面的命令添加 Nginx 库。

```

sudo add-apt-repository -y ppa:nginx/stable

```

此时更新所有的库并且使用下面的命令安装 Nginx。

```

sudo apt update

sudo apt install nginx -y

```

之后,进入 `/etc/nginx/sites-available` 目录并且创建虚拟主机文件 `gogs`。

```

cd /etc/nginx/sites-available

vim gogs

```

粘贴下面的代码到配置文件。

```

server {

listen 80;

server_name git.hakase-labs.co;

location / {

proxy_pass http://localhost:3000;

}

}

```

保存退出。

**注意:** 请使用您的域名修改 `server_name` 项。

现在激活虚拟主机并且测试 nginx 配置。

```

ln -s /etc/nginx/sites-available/gogs /etc/nginx/sites-enabled/

nginx -t

```

确保没有遇到错误,重启 Nginx 服务器。

```

systemctl restart nginx

```

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/10.png)

### 步骤 8 - 测试

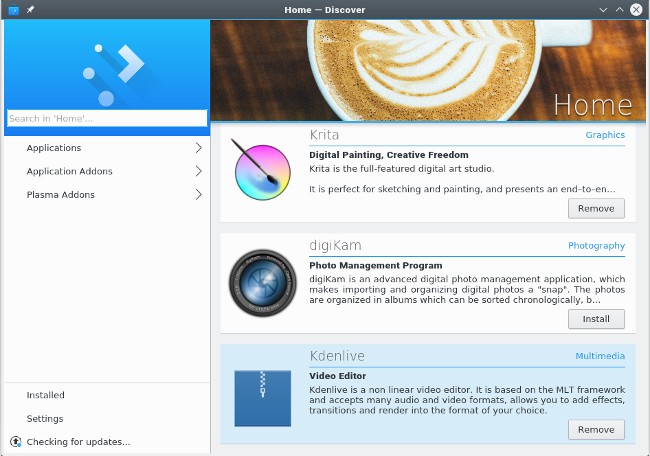

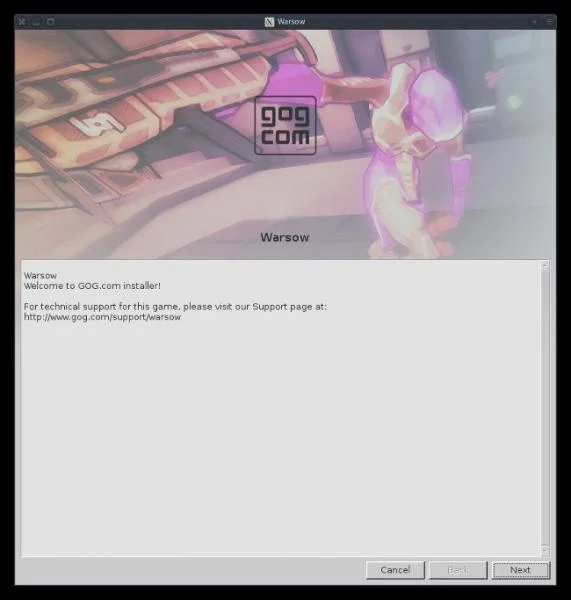

打开您的网页浏览器并且输入您的 Gogs URL,我的是 <http://git.hakase-labs.co>

现在您将进入安装界面。在页面的顶部,输入您所有的 PostgreSQL 数据库信息。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/11.png)

之后,滚动到底部,点击 “Admin account settings” 下拉选项。

输入您的管理者用户名和邮箱。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/12.png)

之后点击 “Install Gogs” 按钮。

然后您将会被重定向到下图显示的 Gogs 用户面板。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/13.png)

下面是 Gogs 的 “Admin Dashboard(管理员面板)”。

[](https://www.howtoforge.com/images/how_to_install_gogs_go_git_service_on_ubuntu_1604/big/14.png)

现在,Gogs 已经通过 PostgreSQL 数据库和 Nginx 网页服务器在您的 Ubuntu 16.04 上完成安装。

---

via: <https://www.howtoforge.com/tutorial/how-to-install-gogs-go-git-service-on-ubuntu-1604/>

作者:[Muhammad Arul](https://www.howtoforge.com/tutorial/server-monitoring-with-shinken-on-ubuntu-16-04/) 译者:[CYLeft](https://github.com/CYLeft) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | # How to Install Gogs Go Git Service on Ubuntu 16.04

### On this page

[Prerequisites](#prerequisites)[What we will do](#what-we-will-do)[Step 1 - Update and Upgrade System](#step-update-and-upgrade-system)[Step 2 - Install and Configure PostgreSQL](#step-install-and-configure-postgresql)[Step 3 - Install Go and Git](#step-install-go-and-git)[Step 4 - Install Gogs Go Git Service](#step-install-gogs-go-git-service)[Step 5 - Configure Gogs Go Git Service](#step-configure-gogs-go-git-service)[Step 6 - Running Gogs as a Service](#step-running-gogs-as-a-service)[Step 7 - Configure Nginx as a Reverse Proxy for Gogs](#step-configure-nginx-as-a-reverse-proxy-for-gogs)[Step 8 - Testing](#step-testing)[Reference](#reference)

Gogs is free and open source Git service written in Go language. Gogs is a painless self-hosted git service that allows you to create and run your own Git server on a minimal hardware server. Gogs web-UI is very similar to GitHub and offers support for MySQL, PostgreSQL, and SQLite database.

In this tutorial, we will show you step-by-step how to install and configure your own Git service using Gogs on Ubuntu 16.04. This tutorial will cover details including, how to install Go on Ubuntu system, install PostgreSQL, and install and configure Nginx web server as a reverse proxy for Go application.

## Prerequisites

- Ubuntu 16.04

- Root privileges

## What we will do

- Update and Upgrade System

- Install and Configure PostgreSQL

- Install Go and Git

- Install Gogs

- Configure Gogs

- Running Gogs as a Service

- Install and Configure Nginx as a Reverse Proxy

- Testing

## Step 1 - Update and Upgrade System

Before going any further, update all Ubuntu repositories and upgrade all packages.

Run the apt commands below.

sudo apt update

sudo apt upgrade

## Step 2 - Install and Configure PostgreSQL

Gogs offers support for MySQL, PostgreSQL, SQLite3, MSSQL, and TiDB database systems.

In this guide, we will be using PostgreSQL as a database for our Gogs installations.

Install PostgreSQL using the apt command below.

sudo apt install -y postgresql postgresql-client libpq-dev

After the installation is complete, start the PostgreSQL service and enable it to launch everytime at system boot.

systemctl start postgresql

systemctl enable postgresql

PostgreSQL database has been installed on an Ubuntu system.

Next, we need to create a new database and user for Gogs.

Login as the 'postgres' user and run the 'psql' command to get the PostgreSQL shell.

su - postgres

psql

Create a new user named 'git', and give the user privileges for 'CREATEDB'.

CREATE USER git CREATEDB;

\password git

Create a database named 'gogs_production', and set the 'git' user as the owner of the database.

CREATE DATABASE gogs_production OWNER git;

New PostgreSQL database 'gogs_production' and user 'git' for Gogs installation has been created.

## Step 3 - Install Go and Git

Install Git from the repository using the apt command below.

sudo apt install git

Now add new user 'git' to the system.

sudo adduser --disabled-login --gecos 'Gogs' git

Login as the 'git' user and create a new 'local' directory.

su - git

mkdir -p /home/git/local

Go to the 'local' directory and download 'Go' (the latest version) using the wget command as shown below.

cd ~/local

wget https://dl.google.com/go/go1.9.2.linux-amd64.tar.gz

Extract the go compressed file, then remove it.

tar -xf go1.9.2.linux-amd64.tar.gz

rm -f go1.9.2.linux-amd64.tar.gz

'Go' binary file has been downloaded in the '~/local/go' directory. Now we need to setup the environment - we need to define the 'GOROOT' and 'GOPATH directories so we can run a 'go' command on the system under 'git' user.

Run all of the following commands.

cd ~/

echo 'export GOROOT=$HOME/local/go' >> $HOME/.bashrc

echo 'export GOPATH=$HOME/go' >> $HOME/.bashrc

echo 'export PATH=$PATH:$GOROOT/bin:$GOPATH/bin' >> $HOME/.bashrc

And reload Bash by running the 'source ~/.bashrc' command as shown below.

source ~/.bashrc

Make sure you're using Bash as your default shell.

Now run the 'go' command for checking the version.

go version

And make sure you get the result as shown in the following screenshot.

Go is now installed on the system under 'git' user.

## Step 4 - Install Gogs Go Git Service

Login as the 'git' user and download 'Gogs' from GitHub using the 'go' command.

su - git

go get -u github.com/gogits/gogs

The command will download all Gogs source code in the 'GOPATH/src' directory.

Go to the '$GOPATH/src/github.com/gogits/gogs' directory and build gogs using commands below.

cd $GOPATH/src/github.com/gogits/gogs

go build

And make sure you get no error.

Now run Gogs Go Git Service using the command below.

./gogs web

The command will run Gogs on the default port 3000.

Open your web browser and type your server IP address with port 3000, mine is http://192.168.33.10:3000/

And you should get the result as shown below.

Gogs is installed on the Ubuntu system. Now back to your terminal and press 'Ctrl + c' to exit.

## Step 5 - Configure Gogs Go Git Service

In this step, we will create a custom configuration for Gogs.

Goto the Gogs installation directory and create a new 'custom/conf' directory.

cd $GOPATH/src/github.com/gogits/gogs

mkdir -p custom/conf/

Copy default configuration to the custom directory and edit it using [vim](https://www.howtoforge.com/vim-basics).

cp conf/app.ini custom/conf/app.ini

vim custom/conf/app.ini

In the '**[server]**' section, change the server 'HOST_ADDR' with '127.0.0.1'.

[server]

PROTOCOL = http

DOMAIN = localhost

ROOT_URL = %(PROTOCOL)s://%(DOMAIN)s:%(HTTP_PORT)s/

HTTP_ADDR = 127.0.0.1

HTTP_PORT = 3000

In the '**[database]**' section, change everything with your own database info.

[database]

DB_TYPE = postgres

HOST = 127.0.0.1:5432

NAME = gogs_production

USER = git

PASSWD = aqwe123@#

Save and exit.

Now verify the configuration by running the command as shown below.

./gogs web

And make sure you get the result as following.

Gogs is now running with our custom configuration, under 'localhost' with port 3000.

## Step 6 - Running Gogs as a Service

In this step, we will configure Gogs as a service on Ubuntu system. We will create a new service file configuration 'gogs.service' under the '/etc/systemd/system' directory.

Go to the '/etc/systemd/system' directory and create a new service file 'gogs.service' using the [vim](https://www.howtoforge.com/vim-basics) editor.

cd /etc/systemd/system

vim gogs.service

Paste the following gogs service configuration there.

[Unit]

Description=Gogs

After=syslog.target

After=network.target

After=mariadb.service mysqld.service postgresql.service memcached.service redis.service

[Service]

# Modify these two values and uncomment them if you have

# repos with lots of files and get an HTTP error 500 because

# of that

###

#LimitMEMLOCK=infinity

#LimitNOFILE=65535

Type=simple

User=git

Group=git

WorkingDirectory=/home/git/go/src/github.com/gogits/gogs

ExecStart=/home/git/go/src/github.com/gogits/gogs/gogs web

Restart=always

Environment=USER=git HOME=/home/git

[Install]

WantedBy=multi-user.target

Save and exit.

Now reload the systemd services.

systemctl daemon-reload

Start gogs service and enable it to launch everytime at system boot using the systemctl command.

systemctl start gogs

systemctl enable gogs

Gogs is now running as a service on Ubuntu system.

Check it using the commands below.

netstat -plntu

systemctl status gogs

And you should get the result as shown below.

## Step 7 - Configure Nginx as a Reverse Proxy for Gogs

In this step, we will configure Nginx as a reverse proxy for Gogs. We will be using Nginx packages from its own repository.

Add Nginx repository using the add-apt command.

sudo add-apt-repository -y ppa:nginx/stable

Now update all Ubuntu repositories and install Nginx using the apt command below.

sudo apt update

sudo apt install nginx -y

Next, goto the '/etc/nginx/sites-available' directory and create new virtual host file 'gogs'.

cd /etc/nginx/sites-available

vim gogs

Paste the following configuration there.

server {

listen 80;

server_name git.hakase-labs.co;

location / {

proxy_pass http://localhost:3000;

}

}

Save and exit.

**Note:**

Change the 'server_name' line with your own domain name.

Now activate a new virtual host and test the nginx configuration.

ln -s /etc/nginx/sites-available/gogs /etc/nginx/sites-enabled/

nginx -t

Make sure there is no error, then restart the Nginx service.

systemctl restart nginx

## Step 8 - Testing

Open your web browser and type your gogs URL, mine is http://git.hakase-labs.co

Now you will get the installation page. On top of the page, type all of your PostgreSQL database info.

Now scroll to the bottom, and click the 'Admin account settings' dropdown.

Type your admin user, password, and email.

Then click the 'Install Gogs' button.

And you will be redirected to the Gogs user Dashboard as shown below.

Below is Gogs 'Admin Dashboard'.

Gogs is now installed with PostgreSQL database and Nginx web server on Ubuntu 16.04 server |

9,392 | 如何在 Linux/Unix 中不重启 Vim 而重新加载 .vimrc 文件 | https://www.cyberciti.biz/faq/how-to-reload-vimrc-file-without-restarting-vim-on-linux-unix/ | 2018-02-27T23:15:26 | [

"Vim"

] | https://linux.cn/article-9392-1.html |

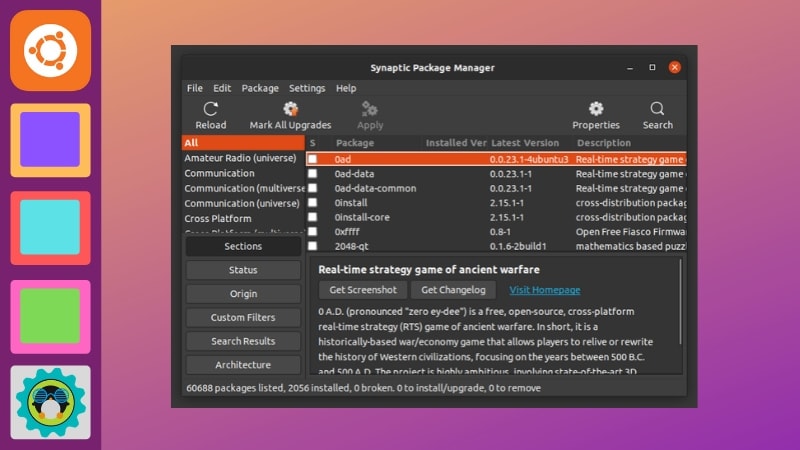

我是一位新的 Vim 编辑器用户。我通常使用 `:vs ~/.vimrc` 来加载 `~/.vimrc` 配置。而当编辑 `.vimrc` 时,我需要不重启 Vim 会话而重新加载它。在 Linux 或者类 Unix 系统中,如何在编辑 `.vimrc` 后,重新加载它而不用重启 Vim 呢?

Vim 是自由开源并且向上兼容 Vi 的编辑器。它可以用来编辑各种文本。它在编辑用 C/Perl/Python 编写的程序时特别有用。可以用它来编辑 Linux/Unix 配置文件。`~/.vimrc` 是你个人的 Vim 初始化和自定义文件。

### 如何在不重启 Vim 会话的情况下重新加载 .vimrc

在 Vim 中重新加载 `.vimrc` 而不重新启动的流程:

1. 输入 `vim filename` 启动 vim

2. 按下 `Esc` 接着输入 `:vs ~/.vimrc` 来加载 vim 配置

3. 像这样添加自定义配置:

```

filetype indent plugin on

set number

syntax on

```

4. 使用 `:wq` 保存文件,并从 `~/.vimrc` 窗口退出

5. 输入下面任一命令重载 `~/.vimrc`:`:so $MYVIMRC` 或者 `:source ~/.vimrc`。

[](https://www.cyberciti.biz/media/new/faq/2018/02/How-to-reload-.vimrc-file-without-restarting-vim.jpg)

*图1:编辑 ~/.vimrc 并在需要时重载它而不用退出 vim,这样你就可以继续编辑程序了*

`:so[urce]! {file}` 这个 vim 命令会从给定的文件比如 `~/.vimrc` 读取配置。就像你输入的一样,这些命令是在普通模式下执行的。当你在 `:global`、:`argdo`、 `:windo`、`:bufdo` 之后、循环中或者跟着另一个命令时,显示不会再在执行命令时更新。

### 如何设置按键来编辑并重载 ~/.vimrc

在你的 `~/.vimrc` 后面跟上这些:

```

" Edit vimr configuration file

nnoremap confe :e $MYVIMRC<CR>

" Reload vims configuration file

nnoremap confr :source $MYVIMRC<CR>

```

现在只要按下 `Esc` 接着输入 `confe` 就可以编辑 `~/.vimrc`。按下 `Esc` ,接着输入 `confr` 以重新加载。一些人喜欢在 `.vimrc` 中使用 `<Leader>` 键。因此上面的映射变成:

```

" Edit vimr configuration file

nnoremap <Leader>ve :e $MYVIMRC<CR>

" Reload vimr configuration file

nnoremap <Leader>vr :source $MYVIMRC<CR>

```

`<Leader>` 键默认映射成 `\` 键。因此只要输入 `\` 接着 `ve` 就能编辑文件。按下 `\` 接着 `vr` 就能重载 `~/vimrc`。

这就完成了,你可以不用再重启 Vim 就能重新加载 `.vimrc` 了。

### 关于作者

作者是 nixCraft 的创建者,经验丰富的系统管理员,也是 Linux / Unix shell 脚本的培训师。他曾与全球客户以及IT、教育、国防和太空研究以及非营利部门等多个行业合作。在 [Twitter][9]、[Facebook][10]、[Google +][11] 上关注他。通过[RSS/XML 订阅](https://www.cyberciti.biz/atom/atom.xml)获取最新的系统管理、Linux/Unix 以及开源主题教程。

---

via: <https://www.cyberciti.biz/faq/how-to-reload-vimrc-file-without-restarting-vim-on-linux-unix/>

作者:[Vivek Gite](https://www.cyberciti.biz/) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

9,393 | 内核如何管理内存 | http://duartes.org/gustavo/blog/post/how-the-kernel-manages-your-memory/ | 2018-03-01T09:16:00 | [

"内存"

] | https://linux.cn/article-9393-1.html |

在学习了进程的 [虚拟地址布局](/article-9255-1.html) 之后,让我们回到内核,来学习它管理用户内存的机制。这里再次使用 Gonzo:

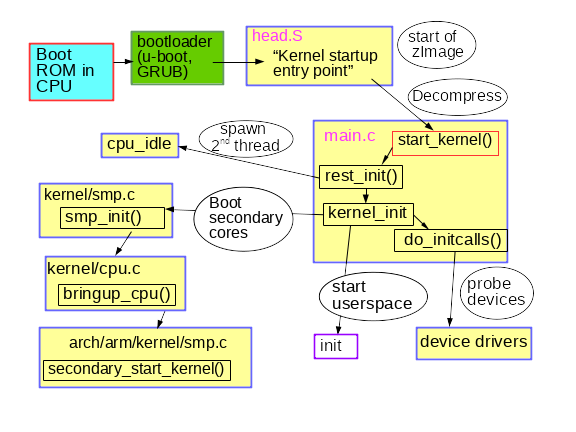

Linux 进程在内核中是作为进程描述符 [task\_struct](http://lxr.linux.no/linux+v2.6.28.1/include/linux/sched.h#L1075) (LCTT 译注:它是在 Linux 中描述进程完整信息的一种数据结构)的实例来实现的。在 task\_struct 中的 [mm](http://lxr.linux.no/linux+v2.6.28.1/include/linux/sched.h#L1129) 域指向到**内存描述符**,[mm\_struct](http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L173) 是一个程序在内存中的执行摘要。如上图所示,它保存了起始和结束内存段,进程使用的物理内存页面的 [数量](http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L197)(RSS <ruby> 常驻内存大小 <rt> Resident Set Size </rt></ruby> )、虚拟地址空间使用的 [总数量](http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L206)、以及其它片断。 在内存描述符中,我们可以获悉它有两种管理内存的方式:**虚拟内存区域**集和**页面表**。Gonzo 的内存区域如下所示:

每个虚拟内存区域(VMA)是一个连续的虚拟地址范围;这些区域绝对不会重叠。一个 [vm\_area\_struct](http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L99) 的实例完整地描述了一个内存区域,包括它的起始和结束地址,[flags](http://lxr.linux.no/linux+v2.6.28/include/linux/mm.h#L76) 决定了访问权限和行为,并且 [vm\_file](http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L150) 域指定了映射到这个区域的文件(如果有的话)。(除了内存映射段的例外情况之外,)一个 VMA 是不能**匿名**映射文件的。上面的每个内存段(比如,堆、栈)都对应一个单个的 VMA。虽然它通常都使用在 x86 的机器上,但它并不是必需的。VMA 也不关心它们在哪个段中。

一个程序的 VMA 在内存描述符中是作为 [mmap](http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L174) 域的一个链接列表保存的,以起始虚拟地址为序进行排列,并且在 [mm\_rb](http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L175) 域中作为一个 [红黑树](http://en.wikipedia.org/wiki/Red_black_tree) 的根。红黑树允许内核通过给定的虚拟地址去快速搜索内存区域。在你读取文件 `/proc/pid_of_process/maps` 时,内核只是简单地读取每个进程的 VMA 的链接列表并[显示它们](http://lxr.linux.no/linux+v2.6.28.1/fs/proc/task_mmu.c#L201)。

在 Windows 中,[EPROCESS](http://www.nirsoft.net/kernel_struct/vista/EPROCESS.html) 块大致类似于一个 task\_struct 和 mm\_struct 的结合。在 Windows 中模拟一个 VMA 的是虚拟地址描述符,或称为 [VAD](http://www.nirsoft.net/kernel_struct/vista/MMVAD.html);它保存在一个 [AVL 树](http://en.wikipedia.org/wiki/AVL_tree) 中。你知道关于 Windows 和 Linux 之间最有趣的事情是什么吗?其实它们只有一点小差别。

4GB 虚拟地址空间被分配到**页面**中。在 32 位模式中的 x86 处理器中支持 4KB、2MB、以及 4MB 大小的页面。Linux 和 Windows 都使用大小为 4KB 的页面去映射用户的一部分虚拟地址空间。字节 0-4095 在页面 0 中,字节 4096-8191 在页面 1 中,依次类推。VMA 的大小 *必须是页面大小的倍数* 。下图是使用 4KB 大小页面的总数量为 3GB 的用户空间:

处理器通过查看**页面表**去转换一个虚拟内存地址到一个真实的物理内存地址。每个进程都有它自己的一组页面表;每当发生进程切换时,用户空间的页面表也同时切换。Linux 在内存描述符的 [pgd](http://lxr.linux.no/linux+v2.6.28.1/include/linux/mm_types.h#L185) 域中保存了一个指向进程的页面表的指针。对于每个虚拟页面,页面表中都有一个相应的**页面表条目**(PTE),在常规的 x86 页面表中,它是一个简单的如下所示的大小为 4 字节的记录:

Linux 通过函数去 [读取](http://lxr.linux.no/linux+v2.6.28.1/arch/x86/include/asm/pgtable.h#L173) 和 [设置](http://lxr.linux.no/linux+v2.6.28.1/arch/x86/include/asm/pgtable.h#L230) PTE 条目中的每个标志位。标志位 P 告诉处理器这个虚拟页面是否**在**物理内存中。如果该位被清除(设置为 0),访问这个页面将触发一个页面故障。请记住,当这个标志位为 0 时,内核可以在剩余的域上**做任何想做的事**。R/W 标志位是读/写标志;如果被清除,这个页面将变成只读的。U/S 标志位表示用户/超级用户;如果被清除,这个页面将仅被内核访问。这些标志都是用于实现我们在前面看到的只读内存和内核空间保护。

标志位 D 和 A 用于标识页面是否是“**脏的**”或者是已**被访问过**。一个脏页面表示已经被写入,而一个被访问过的页面则表示有一个写入或者读取发生过。这两个标志位都是粘滞位:处理器只能设置它们,而清除则是由内核来完成的。最终,PTE 保存了这个页面相应的起始物理地址,它们按 4KB 进行整齐排列。这个看起来不起眼的域是一些痛苦的根源,因为它限制了物理内存最大为 [4 GB](http://www.google.com/search?hl=en&amp;amp;amp;amp;q=2%5E20+*+2%5E12+bytes+in+GB)。其它的 PTE 域留到下次再讲,因为它是涉及了物理地址扩展的知识。

由于在一个虚拟页面上的所有字节都共享一个 U/S 和 R/W 标志位,所以内存保护的最小单元是一个虚拟页面。但是,同一个物理内存可能被映射到不同的虚拟页面,这样就有可能会出现相同的物理内存出现不同的保护标志位的情况。请注意,在 PTE 中是看不到运行权限的。这就是为什么经典的 x86 页面上允许代码在栈上被执行的原因,这样会很容易导致挖掘出栈缓冲溢出漏洞(可能会通过使用 [return-to-libc](http://en.wikipedia.org/wiki/Return-to-libc_attack) 和其它技术来找出非可执行栈)。由于 PTE 缺少禁止运行标志位说明了一个更广泛的事实:在 VMA 中的权限标志位有可能或可能不完全转换为硬件保护。内核只能做它能做到的,但是,最终的架构限制了它能做的事情。

虚拟内存不保存任何东西,它只是简单地 *映射* 一个程序的地址空间到底层的物理内存上。物理内存被当作一个称之为**物理地址空间**的巨大块而由处理器访问。虽然内存的操作[涉及到某些](http://duartes.org/gustavo/blog/post/getting-physical-with-memory)总线,我们在这里先忽略它,并假设物理地址范围从 0 到可用的最大值按字节递增。物理地址空间被内核进一步分解为**页面帧**。处理器并不会关心帧的具体情况,这一点对内核也是至关重要的,因为,**页面帧是物理内存管理的最小单元**。Linux 和 Windows 在 32 位模式下都使用 4KB 大小的页面帧;下图是一个有 2 GB 内存的机器的例子:

在 Linux 上每个页面帧是被一个 [描述符](http://lxr.linux.no/linux+v2.6.28/include/linux/mm_types.h#L32) 和 [几个标志](http://lxr.linux.no/linux+v2.6.28/include/linux/page-flags.h#L14) 来跟踪的。通过这些描述符和标志,实现了对机器上整个物理内存的跟踪;每个页面帧的具体状态是公开的。物理内存是通过使用 [Buddy 内存分配](http://en.wikipedia.org/wiki/Buddy_memory_allocation) (LCTT 译注:一种内存分配算法)技术来管理的,因此,如果一个页面帧可以通过 Buddy 系统分配,那么它是**未分配的**(free)。一个被分配的页面帧可以是**匿名的**、持有程序数据的、或者它可能处于页面缓存中、持有数据保存在一个文件或者块设备中。还有其它的异形页面帧,但是这些异形页面帧现在已经不怎么使用了。Windows 有一个类似的页面帧号(Page Frame Number (PFN))数据库去跟踪物理内存。

我们把虚拟内存区域(VMA)、页面表条目(PTE),以及页面帧放在一起来理解它们是如何工作的。下面是一个用户堆的示例:

蓝色的矩形框表示在 VMA 范围内的页面,而箭头表示页面表条目映射页面到页面帧。一些缺少箭头的虚拟页面,表示它们对应的 PTE 的当前标志位被清除(置为 0)。这可能是因为这个页面从来没有被使用过,或者是它的内容已经被交换出去了。在这两种情况下,即便这些页面在 VMA 中,访问它们也将导致产生一个页面故障。对于这种 VMA 和页面表的不一致的情况,看上去似乎很奇怪,但是这种情况却经常发生。

一个 VMA 像一个在你的程序和内核之间的合约。你请求它做一些事情(分配内存、文件映射、等等),内核会回应“收到”,然后去创建或者更新相应的 VMA。 但是,它 *并不立刻* 去“兑现”对你的承诺,而是它会等待到发生一个页面故障时才去 *真正* 做这个工作。内核是个“懒惰的家伙”、“不诚实的人渣”;这就是虚拟内存的基本原理。它适用于大多数的情况,有一些类似情况和有一些意外的情况,但是,它是规则是,VMA 记录 *约定的* 内容,而 PTE 才反映这个“懒惰的内核” *真正做了什么*。通过这两种数据结构共同来管理程序的内存;它们共同来完成解决页面故障、释放内存、从内存中交换出数据、等等。下图是内存分配的一个简单案例:

当程序通过 [brk()](http://www.kernel.org/doc/man-pages/online/pages/man2/brk.2.html) 系统调用来请求一些内存时,内核只是简单地 [更新](http://lxr.linux.no/linux+v2.6.28.1/mm/mmap.c#L2050) 堆的 VMA 并给程序回复“已搞定”。而在这个时候并没有真正地分配页面帧,并且新的页面也没有映射到物理内存上。一旦程序尝试去访问这个页面时,处理器将发生页面故障,然后调用 [do\_page\_fault()](http://lxr.linux.no/linux+v2.6.28/arch/x86/mm/fault.c#L583)。这个函数将使用 [find\_vma()](http://lxr.linux.no/linux+v2.6.28/mm/mmap.c#L1466) 去 [搜索](http://lxr.linux.no/linux+v2.6.28/arch/x86/mm/fault.c#L692) 发生页面故障的 VMA。如果找到了,然后在 VMA 上进行权限检查以防范恶意访问(读取或者写入)。如果没有合适的 VMA,也没有所尝试访问的内存的“合约”,将会给进程返回段故障。

当[找到](http://lxr.linux.no/linux+v2.6.28/arch/x86/mm/fault.c#L711)了一个合适的 VMA,内核必须通过查找 PTE 的内容和 VMA 的类型去[处理](http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2653)故障。在我们的案例中,PTE 显示这个页面是 [不存在的](http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2674)。事实上,我们的 PTE 是全部空白的(全部都是 0),在 Linux 中这表示虚拟内存还没有被映射。由于这是匿名 VMA,我们有一个完全的 RAM 事务,它必须被 [do\_anonymous\_page()](http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2681) 来处理,它分配页面帧,并且用一个 PTE 去映射故障虚拟页面到一个新分配的帧。

有时候,事情可能会有所不同。例如,对于被交换出内存的页面的 PTE,在当前(Present)标志位上是 0,但它并不是空白的。而是在交换位置仍有页面内容,它必须从磁盘上读取并且通过 [do\_swap\_page()](http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2280) 来加载到一个被称为 [major fault](http://lxr.linux.no/linux+v2.6.28/mm/memory.c#L2316) 的页面帧上。

这是我们通过探查内核的用户内存管理得出的前半部分的结论。在下一篇文章中,我们通过将文件加载到内存中,来构建一个完整的内存框架图,以及对性能的影响。

---

via: <http://duartes.org/gustavo/blog/post/how-the-kernel-manages-your-memory/>

作者:[Gustavo Duarte](http://duartes.org/gustavo/blog/about/) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,394 | 20 个 OpenSSH 最佳安全实践 | https://www.cyberciti.biz/tips/linux-unix-bsd-openssh-server-best-practices.html | 2018-02-28T15:45:00 | [

"SSH",

"OpenSSH"

] | https://linux.cn/article-9394-1.html |

OpenSSH 是 SSH 协议的一个实现。一般通过 `scp` 或 `sftp` 用于远程登录、备份、远程文件传输等功能。SSH能够完美保障两个网络或系统间数据传输的保密性和完整性。尽管如此,它最大的优势是使用公匙加密来进行服务器验证。时不时会出现关于 OpenSSH 零日漏洞的[传言](https://isc.sans.edu/diary/OpenSSH+Rumors/6742)。本文将描述如何设置你的 Linux 或类 Unix 系统以提高 sshd 的安全性。

### OpenSSH 默认设置

* TCP 端口 - 22

* OpenSSH 服务配置文件 - `sshd_config` (位于 `/etc/ssh/`)

### 1、 基于公匙的登录

OpenSSH 服务支持各种验证方式。推荐使用公匙加密验证。首先,使用以下 `ssh-keygen` 命令在本地电脑上创建密匙对:

>

> 1024 位或低于它的 DSA 和 RSA 加密是很弱的,请不要使用。当考虑 ssh 客户端向后兼容性的时候,请使用 RSA密匙代替 ECDSA 密匙。所有的 ssh 密钥要么使用 ED25519 ,要么使用 RSA,不要使用其它类型。

>

>

>

```

$ ssh-keygen -t key_type -b bits -C "comment"

```

示例:

```

$ ssh-keygen -t ed25519 -C "Login to production cluster at xyz corp"

或

$ ssh-keygen -t rsa -b 4096 -f ~/.ssh/id_rsa_aws_$(date +%Y-%m-%d) -C "AWS key for abc corp clients"

```

下一步,使用 `ssh-copy-id` 命令安装公匙:

```

$ ssh-copy-id -i /path/to/public-key-file user@host

或

$ ssh-copy-id user@remote-server-ip-or-dns-name

```

示例:

```

$ ssh-copy-id vivek@rhel7-aws-server

```

提示输入用户名和密码的时候,确认基于 ssh 公匙的登录是否工作:

```

$ ssh vivek@rhel7-aws-server

```

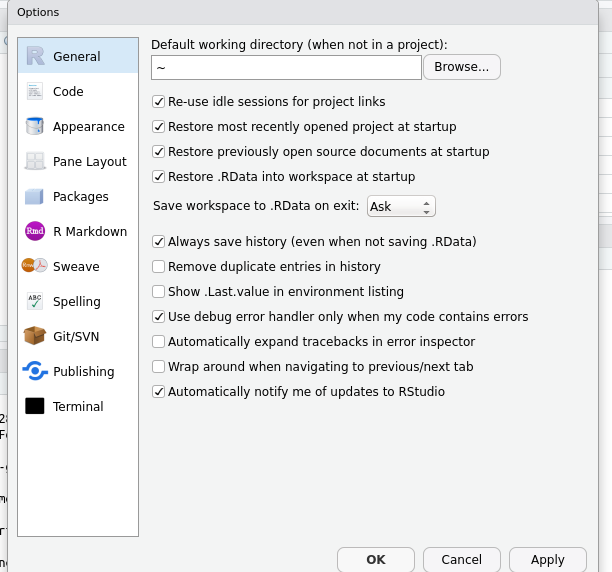

[](https://www.cyberciti.biz/tips/wp-content/uploads/2009/07/OpenSSH-server-security-best-practices.png)

更多有关 ssh 公匙的信息,参照以下文章:

* [为备份脚本设置无密码安全登录](https://www.cyberciti.biz/faq/ssh-passwordless-login-with-keychain-for-scripts/)

* [sshpass:使用脚本密码登录 SSH 服务器](/article-8086-1.html)

* [如何为一个 Linux/类 Unix 系统设置 SSH 登录密匙](https://www.cyberciti.biz/faq/how-to-set-up-ssh-keys-on-linux-unix/)

* [如何使用 Ansible 工具上传 ssh 登录授权公匙](https://www.cyberciti.biz/faq/how-to-upload-ssh-public-key-to-as-authorized_key-using-ansible/)

### 2、 禁用 root 用户登录

禁用 root 用户登录前,确认普通用户可以以 root 身份登录。例如,允许用户 vivek 使用 `sudo` 命令以 root 身份登录。

#### 在 Debian/Ubuntu 系统中如何将用户 vivek 添加到 sudo 组中

允许 sudo 组中的用户执行任何命令。 [将用户 vivek 添加到 sudo 组中](https://www.cyberciti.biz/faq/how-to-create-a-sudo-user-on-ubuntu-linux-server/):

```

$ sudo adduser vivek sudo

```

使用 [id 命令](https://www.cyberciti.biz/faq/unix-linux-id-command-examples-usage-syntax/ "See Linux/Unix id command examples for more info") 验证用户组。

```

$ id vivek

```

#### 在 CentOS/RHEL 系统中如何将用户 vivek 添加到 sudo 组中

在 CentOS/RHEL 和 Fedora 系统中允许 wheel 组中的用户执行所有的命令。使用 `usermod` 命令将用户 vivek 添加到 wheel 组中:

```

$ sudo usermod -aG wheel vivek

$ id vivek

```

#### 测试 sudo 权限并禁用 ssh root 登录

测试并确保用户 vivek 可以以 root 身份登录执行以下命令:

```

$ sudo -i

$ sudo /etc/init.d/sshd status

$ sudo systemctl status httpd

```

添加以下内容到 `sshd_config` 文件中来禁用 root 登录:

```

PermitRootLogin no

ChallengeResponseAuthentication no

PasswordAuthentication no

UsePAM no

```

更多信息参见“[如何通过禁用 Linux 的 ssh 密码登录来增强系统安全](https://www.cyberciti.biz/faq/how-to-disable-ssh-password-login-on-linux/)” 。

### 3、 禁用密码登录

所有的密码登录都应该禁用,仅留下公匙登录。添加以下内容到 `sshd_config` 文件中:

```

AuthenticationMethods publickey

PubkeyAuthentication yes

```

CentOS 6.x/RHEL 6.x 系统中老版本的 sshd 用户可以使用以下设置:

```

PubkeyAuthentication yes

```

### 4、 限制用户的 ssh 访问

默认状态下,所有的系统用户都可以使用密码或公匙登录。但是有些时候需要为 FTP 或者 email 服务创建 UNIX/Linux 用户。然而,这些用户也可以使用 ssh 登录系统。他们将获得访问系统工具的完整权限,包括编译器和诸如 Perl、Python(可以打开网络端口干很多疯狂的事情)等的脚本语言。通过添加以下内容到 `sshd_config` 文件中来仅允许用户 root、vivek 和 jerry 通过 SSH 登录系统:

```

AllowUsers vivek jerry

```

当然,你也可以添加以下内容到 `sshd_config` 文件中来达到仅拒绝一部分用户通过 SSH 登录系统的效果。

```

DenyUsers root saroj anjali foo

```

你也可以通过[配置 Linux PAM](https://www.cyberciti.biz/tips/linux-pam-configuration-that-allows-or-deny-login-via-the-sshd-server.html) 来禁用或允许用户通过 sshd 登录。也可以允许或禁止一个[用户组列表](https://www.cyberciti.biz/tips/openssh-deny-or-restrict-access-to-users-and-groups.html)通过 ssh 登录系统。

### 5、 禁用空密码

你需要明确禁止空密码账户远程登录系统,更新 `sshd_config` 文件的以下内容:

```

PermitEmptyPasswords no

```

### 6、 为 ssh 用户或者密匙使用强密码

为密匙使用强密码和短语的重要性再怎么强调都不过分。暴力破解可以起作用就是因为用户使用了基于字典的密码。你可以强制用户避开[字典密码](https://www.cyberciti.biz/tips/linux-check-passwords-against-a-dictionary-attack.html)并使用[约翰的开膛手工具](https://www.cyberciti.biz/faq/unix-linux-password-cracking-john-the-ripper/)来检测弱密码。以下是一个随机密码生成器(放到你的 `~/.bashrc` 下):

```

genpasswd() {

local l=$1

[ "$l" == "" ] && l=20

tr -dc A-Za-z0-9_ < /dev/urandom | head -c ${l} | xargs

}

```

运行:

```

genpasswd 16

```

输出:

```

uw8CnDVMwC6vOKgW

```

* [使用 mkpasswd / makepasswd / pwgen 生成随机密码](https://www.cyberciti.biz/faq/generating-random-password/)

* [Linux / UNIX: 生成密码](https://www.cyberciti.biz/faq/linux-unix-generating-passwords-command/)

* [Linux 随机密码生成命令](https://www.cyberciti.biz/faq/linux-random-password-generator/)

### 7、 为 SSH 的 22端口配置防火墙

你需要更新 `iptables`/`ufw`/`firewall-cmd` 或 pf 防火墙配置来为 ssh 的 TCP 端口 22 配置防火墙。一般来说,OpenSSH 服务应该仅允许本地或者其他的远端地址访问。

#### Netfilter(Iptables) 配置

更新 [/etc/sysconfig/iptables (Redhat 和其派生系统特有文件)](https://www.cyberciti.biz/faq/rhel-fedorta-linux-iptables-firewall-configuration-tutorial/) 实现仅接受来自于 192.168.1.0/24 和 202.54.1.5/29 的连接,输入:

```

-A RH-Firewall-1-INPUT -s 192.168.1.0/24 -m state --state NEW -p tcp --dport 22 -j ACCEPT

-A RH-Firewall-1-INPUT -s 202.54.1.5/29 -m state --state NEW -p tcp --dport 22 -j ACCEPT

```

如果同时使用 IPv6 的话,可以编辑 `/etc/sysconfig/ip6tables` (Redhat 和其派生系统特有文件),输入:

```

-A RH-Firewall-1-INPUT -s ipv6network::/ipv6mask -m tcp -p tcp --dport 22 -j ACCEPT

```

将 `ipv6network::/ipv6mask` 替换为实际的 IPv6 网段。

#### Debian/Ubuntu Linux 下的 UFW

[UFW 是 Uncomplicated FireWall 的首字母缩写,主要用来管理 Linux 防火墙](https://www.cyberciti.biz/faq/howto-configure-setup-firewall-with-ufw-on-ubuntu-linux/),目的是提供一种用户友好的界面。输入[以下命令使得系统仅允许网段 202.54.1.5/29 接入端口 22](https://www.cyberciti.biz/faq/ufw-allow-incoming-ssh-connections-from-a-specific-ip-address-subnet-on-ubuntu-debian/):

```

$ sudo ufw allow from 202.54.1.5/29 to any port 22

```

更多信息请参见 “[Linux:菜鸟管理员的 25 个 Iptables Netfilter 命令](https://www.cyberciti.biz/tips/linux-iptables-examples.html)”。

#### \*BSD PF 防火墙配置

如果使用 PF 防火墙 [/etc/pf.conf](https://bash.cyberciti.biz/firewall/pf-firewall-script/) 配置如下:

```

pass in on $ext_if inet proto tcp from {192.168.1.0/24, 202.54.1.5/29} to $ssh_server_ip port ssh flags S/SA synproxy state

```

### 8、 修改 SSH 端口和绑定 IP

ssh 默认监听系统中所有可用的网卡。修改并绑定 ssh 端口有助于避免暴力脚本的连接(许多暴力脚本只尝试端口 22)。更新文件 `sshd_config` 的以下内容来绑定端口 300 到 IP 192.168.1.5 和 202.54.1.5:

```

Port 300

ListenAddress 192.168.1.5

ListenAddress 202.54.1.5

```

当需要接受动态广域网地址的连接时,使用主动脚本是个不错的选择,比如 fail2ban 或 denyhosts。

### 9、 使用 TCP wrappers (可选的)

TCP wrapper 是一个基于主机的访问控制系统,用来过滤来自互联网的网络访问。OpenSSH 支持 TCP wrappers。只需要更新文件 `/etc/hosts.allow` 中的以下内容就可以使得 SSH 只接受来自于 192.168.1.2 和 172.16.23.12 的连接:

```

sshd : 192.168.1.2 172.16.23.12

```

在 Linux/Mac OS X 和类 UNIX 系统中参见 [TCP wrappers 设置和使用的常见问题](https://www.cyberciti.biz/faq/tcp-wrappers-hosts-allow-deny-tutorial/)。

### 10、 阻止 SSH 破解或暴力攻击

暴力破解是一种在单一或者分布式网络中使用大量(用户名和密码的)组合来尝试连接一个加密系统的方法。可以使用以下软件来应对暴力攻击:

* [DenyHosts](https://www.cyberciti.biz/faq/block-ssh-attacks-with-denyhosts/) 是一个基于 Python SSH 安全工具。该工具通过监控授权日志中的非法登录日志并封禁原始 IP 的方式来应对暴力攻击。

+ RHEL / Fedora 和 CentOS Linux 下如何设置 [DenyHosts](https://www.cyberciti.biz/faq/rhel-linux-block-ssh-dictionary-brute-force-attacks/)。

* [Fail2ban](https://www.fail2ban.org) 是另一个类似的用来预防针对 SSH 攻击的工具。

* [sshguard](https://sshguard.sourceforge.net/) 是一个使用 pf 来预防针对 SSH 和其他服务攻击的工具。

* [security/sshblock](http://www.bsdconsulting.no/tools/) 阻止滥用 SSH 尝试登录。

* [IPQ BDB filter](https://savannah.nongnu.org/projects/ipqbdb/) 可以看做是 fail2ban 的一个简化版。

### 11、 限制 TCP 端口 22 的传入速率(可选的)

netfilter 和 pf 都提供速率限制选项可以对端口 22 的传入速率进行简单的限制。

#### Iptables 示例

以下脚本将会阻止 60 秒内尝试登录 5 次以上的客户端的连入。

```

#!/bin/bash

inet_if=eth1

ssh_port=22

$IPT -I INPUT -p tcp --dport ${ssh_port} -i ${inet_if} -m state --state NEW -m recent --set

$IPT -I INPUT -p tcp --dport ${ssh_port} -i ${inet_if} -m state --state NEW -m recent --update --seconds 60 --hitcount 5

```

在你的 iptables 脚本中调用以上脚本。其他配置选项:

```

$IPT -A INPUT -i ${inet_if} -p tcp --dport ${ssh_port} -m state --state NEW -m limit --limit 3/min --limit-burst 3 -j ACCEPT

$IPT -A INPUT -i ${inet_if} -p tcp --dport ${ssh_port} -m state --state ESTABLISHED -j ACCEPT

$IPT -A OUTPUT -o ${inet_if} -p tcp --sport ${ssh_port} -m state --state ESTABLISHED -j ACCEPT

# another one line example

# $IPT -A INPUT -i ${inet_if} -m state --state NEW,ESTABLISHED,RELATED -p tcp --dport 22 -m limit --limit 5/minute --limit-burst 5-j ACCEPT

```

其他细节参见 iptables 用户手册。

#### \*BSD PF 示例

以下脚本将限制每个客户端的连入数量为 20,并且 5 秒内的连接不超过 15 个。如果客户端触发此规则,则将其加入 abusive\_ips 表并限制该客户端连入。最后 flush 关键词杀死所有触发规则的客户端的连接。

```

sshd_server_ip = "202.54.1.5"

table <abusive_ips> persist

block in quick from <abusive_ips>

pass in on $ext_if proto tcp to $sshd_server_ip port ssh flags S/SA keep state (max-src-conn 20, max-src-conn-rate 15/5, overload <abusive_ips> flush)

```

### 12、 使用端口敲门(可选的)

[端口敲门](https://en.wikipedia.org/wiki/Port_knocking)是通过在一组预先指定的封闭端口上生成连接尝试,以便从外部打开防火墙上的端口的方法。一旦指定的端口连接顺序被触发,防火墙规则就被动态修改以允许发送连接的主机连入指定的端口。以下是一个使用 iptables 实现的端口敲门的示例:

```

$IPT -N stage1

$IPT -A stage1 -m recent --remove --name knock

$IPT -A stage1 -p tcp --dport 3456 -m recent --set --name knock2

$IPT -N stage2

$IPT -A stage2 -m recent --remove --name knock2

$IPT -A stage2 -p tcp --dport 2345 -m recent --set --name heaven

$IPT -N door

$IPT -A door -m recent --rcheck --seconds 5 --name knock2 -j stage2

$IPT -A door -m recent --rcheck --seconds 5 --name knock -j stage1

$IPT -A door -p tcp --dport 1234 -m recent --set --name knock

$IPT -A INPUT -m --state ESTABLISHED,RELATED -j ACCEPT

$IPT -A INPUT -p tcp --dport 22 -m recent --rcheck --seconds 5 --name heaven -j ACCEPT

$IPT -A INPUT -p tcp --syn -j door

```

更多信息请参见:

[Debian / Ubuntu: 使用 Knockd and Iptables 设置端口敲门](https://www.cyberciti.biz/faq/debian-ubuntu-linux-iptables-knockd-port-knocking-tutorial/)

### 13、 配置空闲超时注销时长

用户可以通过 ssh 连入服务器,可以配置一个超时时间间隔来避免无人值守的 ssh 会话。 打开 `sshd_config` 并确保配置以下值:

```

ClientAliveInterval 300

ClientAliveCountMax 0

```

以秒为单位设置一个空闲超时时间(300秒 = 5分钟)。一旦空闲时间超过这个值,空闲用户就会被踢出会话。更多细节参见[如何自动注销空闲超时的 BASH / TCSH / SSH 用户](https://www.cyberciti.biz/faq/linux-unix-login-bash-shell-force-time-outs/)。

### 14、 为 ssh 用户启用警示标语

更新 `sshd_config` 文件如下行来设置用户的警示标语:

```

Banner /etc/issue

```

`/etc/issue 示例文件:

```

----------------------------------------------------------------------------------------------

You are accessing a XYZ Government (XYZG) Information System (IS) that is provided for authorized use only.

By using this IS (which includes any device attached to this IS), you consent to the following conditions:

+ The XYZG routinely intercepts and monitors communications on this IS for purposes including, but not limited to,

penetration testing, COMSEC monitoring, network operations and defense, personnel misconduct (PM),

law enforcement (LE), and counterintelligence (CI) investigations.

+ At any time, the XYZG may inspect and seize data stored on this IS.

+ Communications using, or data stored on, this IS are not private, are subject to routine monitoring,

interception, and search, and may be disclosed or used for any XYZG authorized purpose.

+ This IS includes security measures (e.g., authentication and access controls) to protect XYZG interests--not

for your personal benefit or privacy.

+ Notwithstanding the above, using this IS does not constitute consent to PM, LE or CI investigative searching

or monitoring of the content of privileged communications, or work product, related to personal representation

or services by attorneys, psychotherapists, or clergy, and their assistants. Such communications and work

product are private and confidential. See User Agreement for details.

----------------------------------------------------------------------------------------------

```

以上是一个标准的示例,更多的用户协议和法律细节请咨询你的律师团队。

### 15、 禁用 .rhosts 文件(需核实)

禁止读取用户的 `~/.rhosts` 和 `~/.shosts` 文件。更新 `sshd_config` 文件中的以下内容:

```

IgnoreRhosts yes

```

SSH 可以模拟过时的 rsh 命令,所以应该禁用不安全的 RSH 连接。

### 16、 禁用基于主机的授权(需核实)

禁用基于主机的授权,更新 `sshd_config` 文件的以下选项:

```

HostbasedAuthentication no

```

### 17、 为 OpenSSH 和操作系统打补丁

推荐你使用类似 [yum](https://www.cyberciti.biz/faq/rhel-centos-fedora-linux-yum-command-howto/)、[apt-get](https://www.cyberciti.biz/tips/linux-debian-package-management-cheat-sheet.html) 和 [freebsd-update](https://www.cyberciti.biz/tips/howto-keep-freebsd-system-upto-date.html) 等工具保持系统安装了最新的安全补丁。

### 18、 Chroot OpenSSH (将用户锁定在主目录)

默认设置下用户可以浏览诸如 `/etc`、`/bin` 等目录。可以使用 chroot 或者其他专有工具如 [rssh](https://www.cyberciti.biz/tips/rhel-centos-linux-install-configure-rssh-shell.html) 来保护 ssh 连接。从版本 4.8p1 或 4.9p1 起,OpenSSH 不再需要依赖诸如 rssh 或复杂的 chroot(1) 等第三方工具来将用户锁定在主目录中。可以使用新的 `ChrootDirectory` 指令将用户锁定在其主目录,参见[这篇博文](https://www.debian-administration.org/articles/590)。

### 19. 禁用客户端的 OpenSSH 服务

工作站和笔记本不需要 OpenSSH 服务。如果不需要提供 ssh 远程登录和文件传输功能的话,可以禁用 sshd 服务。CentOS / RHEL 用户可以使用 [yum 命令](https://www.cyberciti.biz/faq/rhel-centos-fedora-linux-yum-command-howto/ "See Linux/Unix yum command examples for more info") 禁用或删除 openssh-server:

```

$ sudo yum erase openssh-server

```

Debian / Ubuntu 用户可以使用 [apt 命令](https://www.cyberciti.biz/faq/ubuntu-lts-debian-linux-apt-command-examples/ "See Linux/Unix apt command examples for more info")/[apt-get 命令](https://www.cyberciti.biz/tips/linux-debian-package-management-cheat-sheet.html "See Linux/Unix apt-get command examples for more info") 删除 openssh-server:

```

$ sudo apt-get remove openssh-server

```

有可能需要更新 iptables 脚本来移除 ssh 的例外规则。CentOS / RHEL / Fedora 系统可以编辑文件 `/etc/sysconfig/iptables` 和 `/etc/sysconfig/ip6tables`。最后[重启 iptables](https://www.cyberciti.biz/faq/howto-rhel-linux-open-port-using-iptables/) 服务:

```

# service iptables restart

# service ip6tables restart

```

### 20. 来自 Mozilla 的额外提示

如果使用 6.7+ 版本的 OpenSSH,可以尝试下[以下设置](https://wiki.mozilla.org/Security/Guidelines/OpenSSH):

```

#################[ WARNING ]########################

# Do not use any setting blindly. Read sshd_config #

# man page. You must understand cryptography to #

# tweak following settings. Otherwise use defaults #

####################################################

# Supported HostKey algorithms by order of preference.

HostKey /etc/ssh/ssh_host_ed25519_key

HostKey /etc/ssh/ssh_host_rsa_key

HostKey /etc/ssh/ssh_host_ecdsa_key

# Specifies the available KEX (Key Exchange) algorithms.

KexAlgorithms [email protected],ecdh-sha2-nistp521,ecdh-sha2-nistp384,ecdh-sha2-nistp256,diffie-hellman-group-exchange-sha256

# Specifies the ciphers allowed

Ciphers [email protected],[email protected],[email protected],aes256-ctr,aes192-ctr,aes128-ctr

#Specifies the available MAC (message authentication code) algorithms

MACs [email protected],[email protected],[email protected],hmac-sha2-512,hmac-sha2-256,[email protected]

# LogLevel VERBOSE logs user's key fingerprint on login. Needed to have a clear audit track of which key was using to log in.

LogLevel VERBOSE

# Log sftp level file access (read/write/etc.) that would not be easily logged otherwise.

Subsystem sftp /usr/lib/ssh/sftp-server -f AUTHPRIV -l INFO

```

使用以下命令获取 OpenSSH 支持的加密方法:

```

$ ssh -Q cipher

$ ssh -Q cipher-auth

$ ssh -Q mac

$ ssh -Q kex

$ ssh -Q key

```

[](https://www.cyberciti.biz/tips/wp-content/uploads/2009/07/OpenSSH-Security-Tutorial-Query-Ciphers-and-algorithms-choice.jpg)

### 如何测试 sshd\_config 文件并重启/重新加载 SSH 服务?

在重启 sshd 前检查配置文件的有效性和密匙的完整性,运行:

```

$ sudo sshd -t

```

扩展测试模式:

```

$ sudo sshd -T

```

最后,根据系统的的版本[重启 Linux 或类 Unix 系统中的 sshd 服务](https://www.cyberciti.biz/faq/howto-restart-ssh/):

```

$ [sudo systemctl start ssh][38] ## Debian/Ubunt Linux##

$ [sudo systemctl restart sshd.service][39] ## CentOS/RHEL/Fedora Linux##

$ doas /etc/rc.d/sshd restart ## OpenBSD##

$ sudo service sshd restart ## FreeBSD##

```

### 其他建议

1. [使用 2FA 加强 SSH 的安全性](https://www.cyberciti.biz/open-source/howto-protect-linux-ssh-login-with-google-authenticator/) - 可以使用 [OATH Toolkit](http://www.nongnu.org/oath-toolkit/) 或 [DuoSecurity](https://duo.com) 启用多重身份验证。

2. [基于密匙链的身份验证](https://www.cyberciti.biz/faq/ssh-passwordless-login-with-keychain-for-scripts/) - 密匙链是一个 bash 脚本,可以使得基于密匙的验证非常的灵活方便。相对于无密码密匙,它提供更好的安全性。

### 更多信息

* [OpenSSH 官方](https://www.openssh.com/) 项目。

* 用户手册: sshd(8)、ssh(1)、ssh-add(1)、ssh-agent(1)。

如果知道这里没用提及的方便的软件或者技术,请在下面的评论中分享,以帮助读者保持 OpenSSH 的安全。

### 关于作者

作者是 nixCraft 的创始人,一个经验丰富的系统管理员和 Linux/Unix 脚本培训师。他曾与全球客户合作,领域涉及 IT,教育,国防和空间研究以及非营利部门等多个行业。请在 [Twitter](https://twitter.com/nixcraft)、[Facebook](https://facebook.com/nixcraft)、[Google+](https://plus.google.com/+CybercitiBiz) 上关注他。

---

via: <https://www.cyberciti.biz/tips/linux-unix-bsd-openssh-server-best-practices.html>

作者:[Vivek Gite](https://www.cyberciti.biz) 译者:[shipsw](https://github.com/shipsw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

9,395 | 在 Linux 上安装必应桌面墙纸更换器 | https://www.2daygeek.com/bing-desktop-wallpaper-changer-linux-bing-photo-of-the-day/ | 2018-02-28T23:16:00 | [

"墙纸",

"bing"

] | https://linux.cn/article-9395-1.html | 你是否厌倦了 Linux 桌面背景,想要设置好看的壁纸,但是不知道在哪里可以找到?别担心,我们在这里会帮助你。

我们都知道必应搜索引擎,但是由于一些原因很少有人使用它,每个人都喜欢必应网站的背景壁纸,它是非常漂亮和惊人的高分辨率图像。

如果你想使用这些图片作为你的桌面壁纸,你可以手动下载它,但是很难去每天下载一个新的图片,然后把它设置为壁纸。这就是自动壁纸改变的地方。

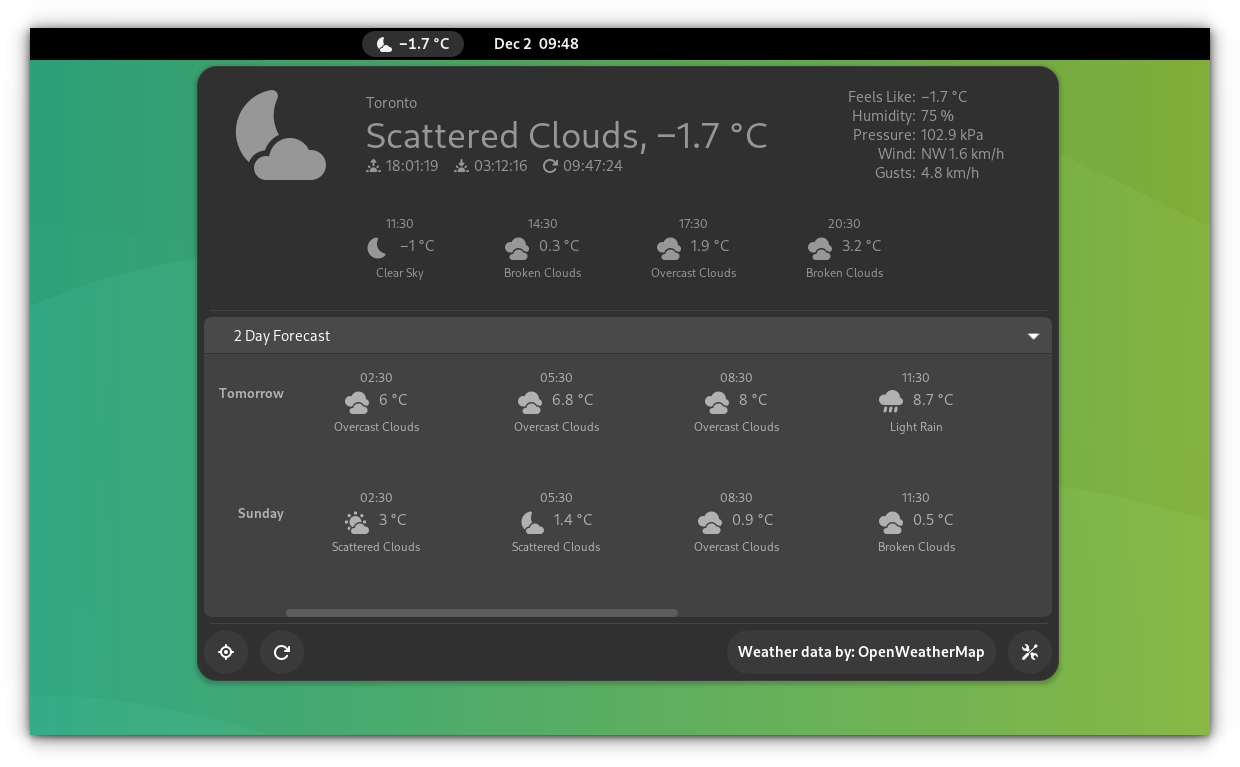

[必应桌面墙纸更换器](https://github.com/UtkarshGpta/bing-desktop-wallpaper-changer)会自动下载并将桌面壁纸更改为当天的必应照片。所有的壁纸都储存在 `/home/[user]/Pictures/BingWallpapers/`。

### 方法 1: 使用 Utkarsh Gupta Shell 脚本

这个小型 Python 脚本会自动下载并将桌面壁纸更改为当天的必应照片。该脚本在机器启动时自动运行,并工作于 GNU/Linux 上的 Gnome 或 Cinnamon 环境。它不需要手动工作,安装程序会为你做所有事情。

从 2.0+ 版本开始,该脚本的安装程序就可以像普通的 Linux 二进制命令一样工作,它会为某些任务请求 sudo 权限。

只需克隆仓库并切换到项目目录,然后运行 shell 脚本即可安装必应桌面墙纸更换器。

```

$ curl https://github.com/UtkarshGpta/bing-desktop-wallpaper-changer/archive/master.zip

$ unzip master

$ cd bing-desktop-wallpaper-changer-master

```

运行 `installer.sh` 使用 `--install` 选项来安装必应桌面墙纸更换器。它会下载并设置必应照片为你的 Linux 桌面。

```

$ ./installer.sh --install

Bing-Desktop-Wallpaper-Changer

BDWC Installer v3_beta2

GitHub:

Contributors:

.

.

[sudo] password for daygeek: ******

.

Where do you want to install Bing-Desktop-Wallpaper-Changer?

Entering 'opt' or leaving input blank will install in /opt/bing-desktop-wallpaper-changer

Entering 'home' will install in /home/daygeek/bing-desktop-wallpaper-changer

Install Bing-Desktop-Wallpaper-Changer in (opt/home)? :Press Enter

Should we create bing-desktop-wallpaper-changer symlink to /usr/bin/bingwallpaper so you could easily execute it?

Create symlink for easy execution, e.g. in Terminal (y/n)? : y

Should bing-desktop-wallpaper-changer needs to autostart when you log in? (Add in Startup Application)

Add in Startup Application (y/n)? : y

.

.

Executing bing-desktop-wallpaper-changer...

Finished!!

```

要卸载该脚本:

```

$ ./installer.sh --uninstall

```

使用帮助页面了解更多关于此脚本的选项。

```

$ ./installer.sh --help

```

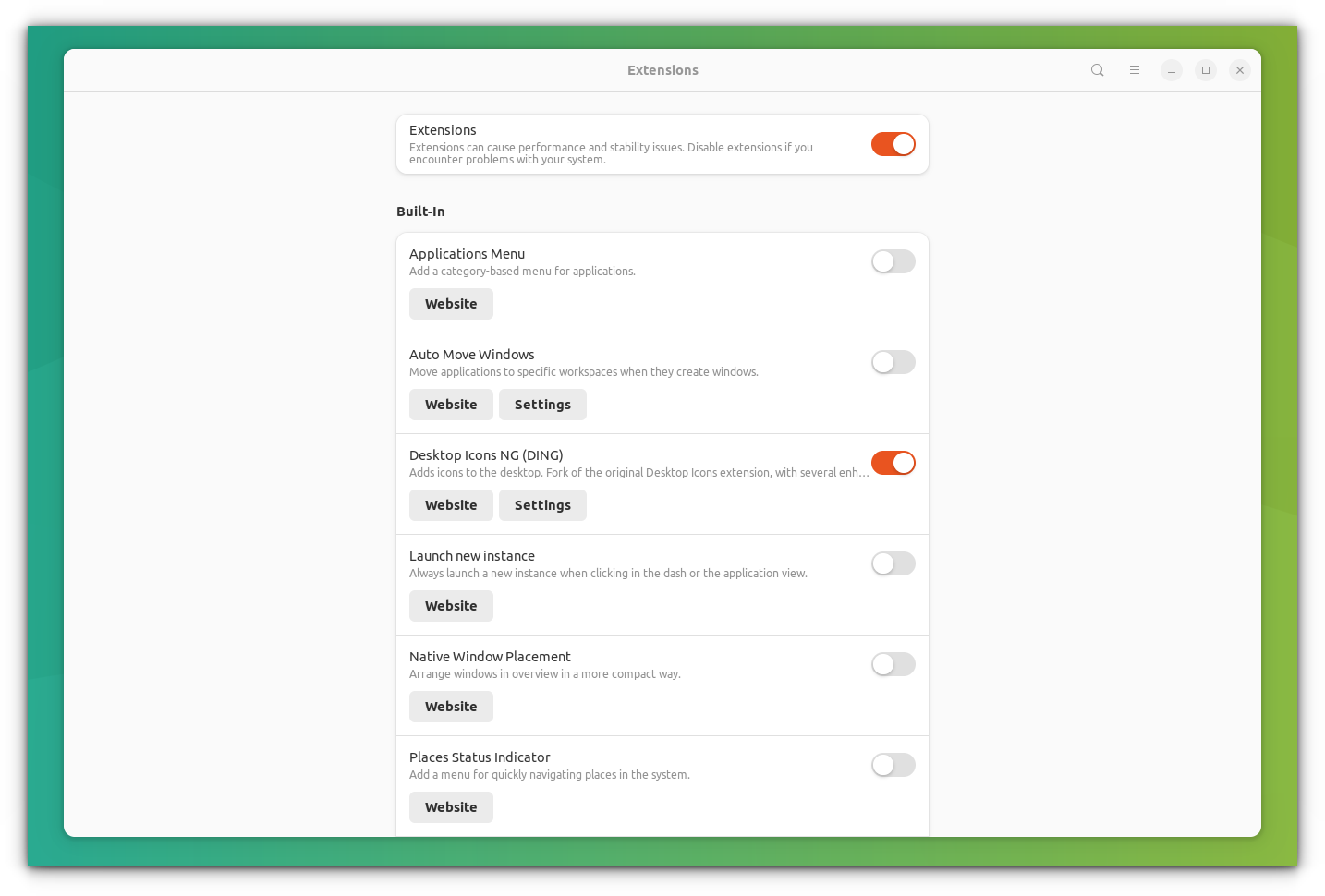

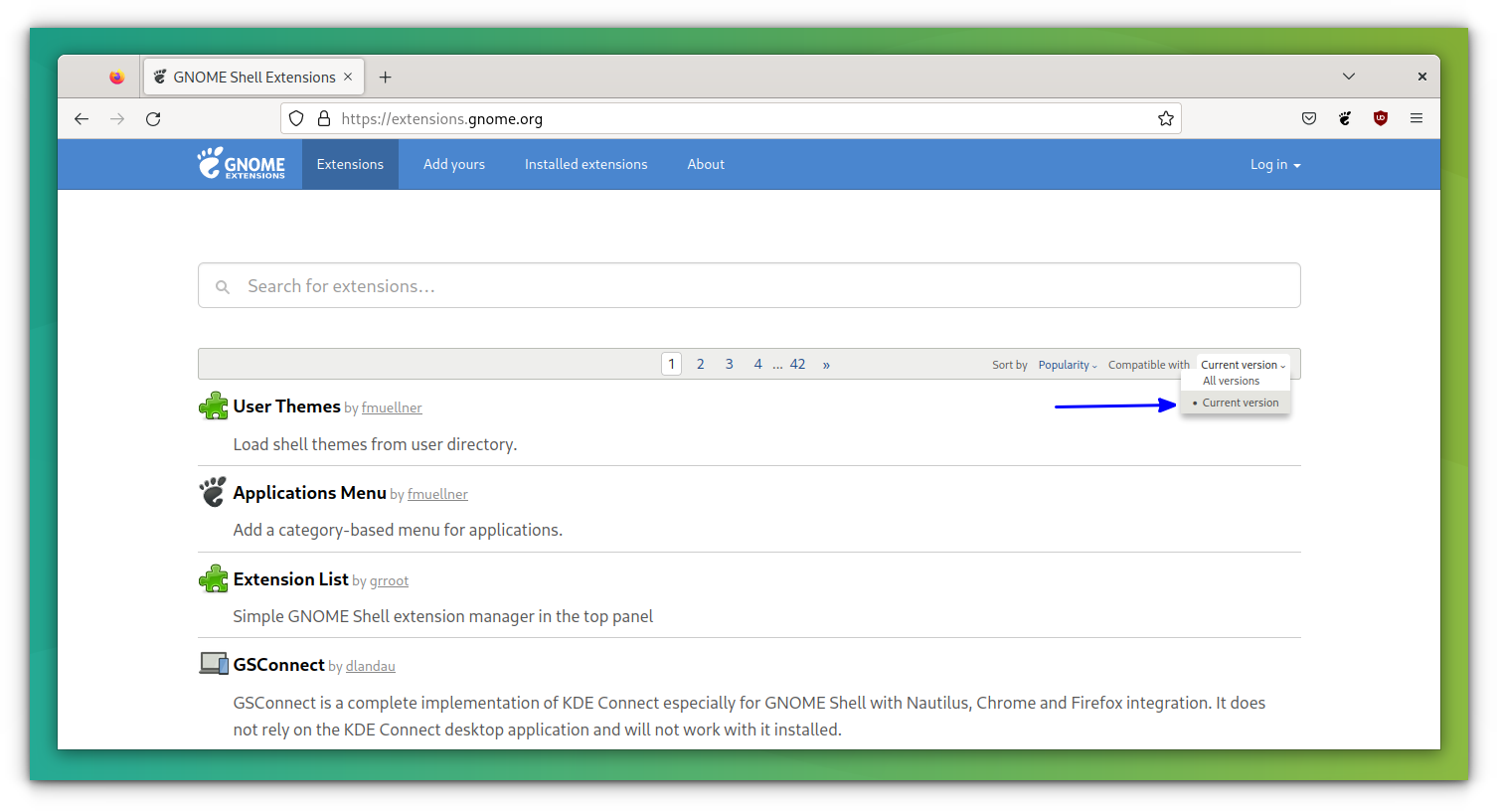

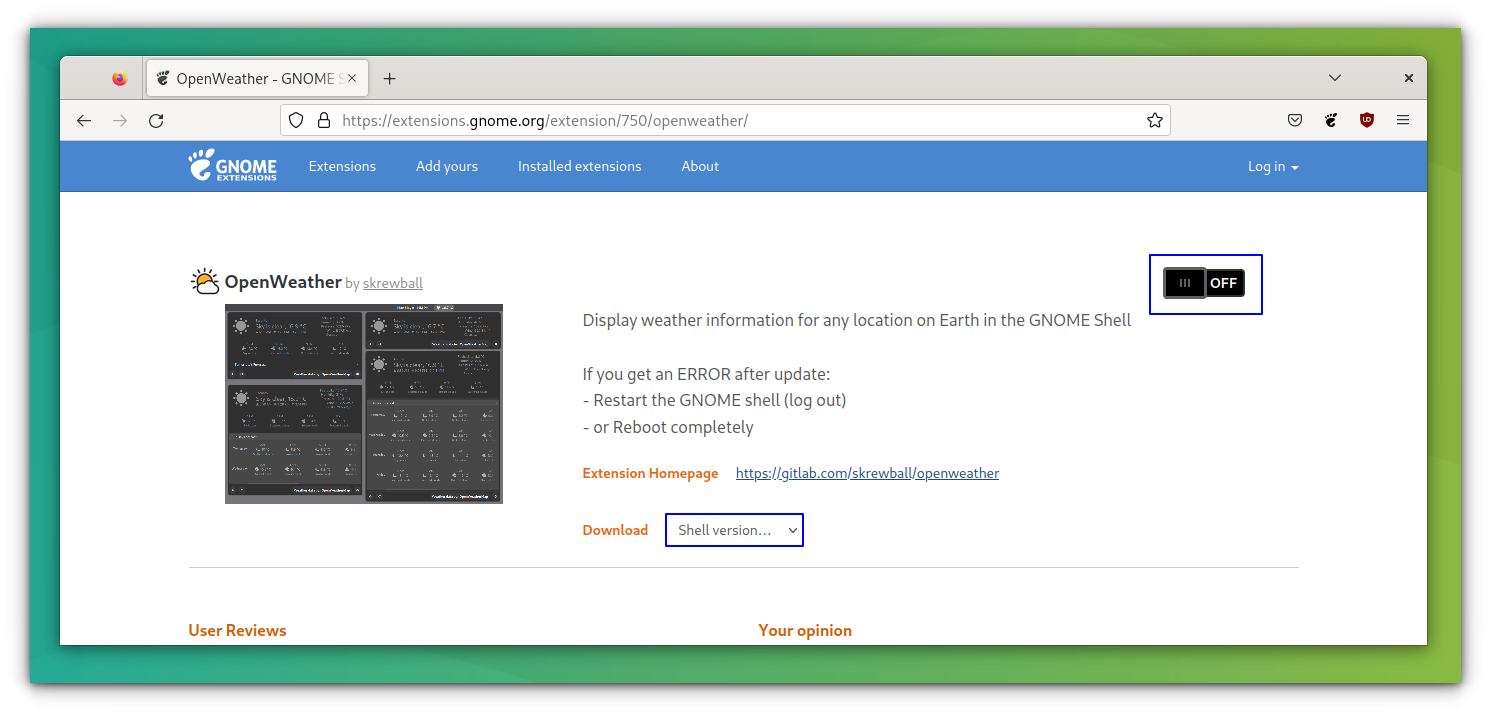

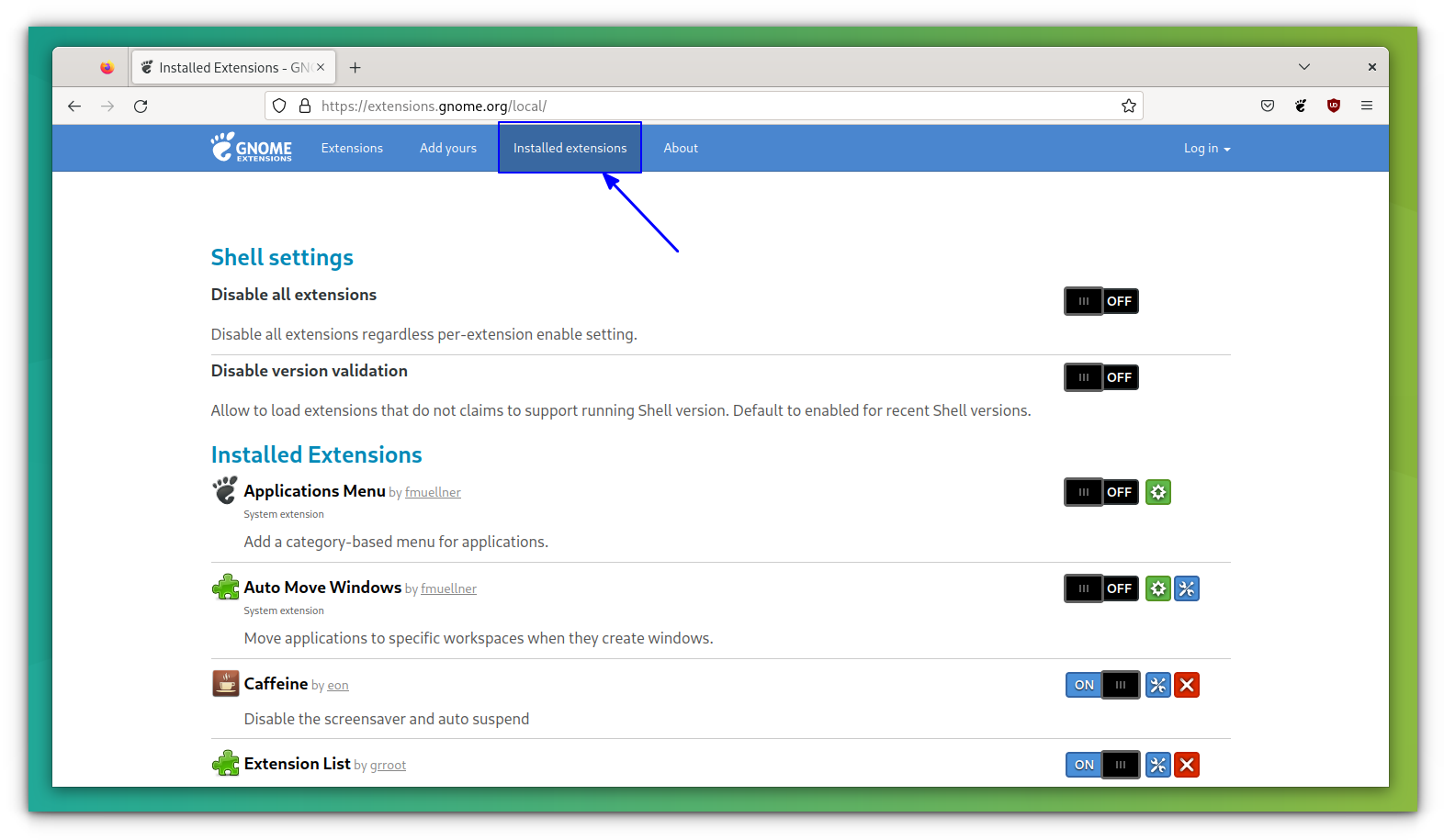

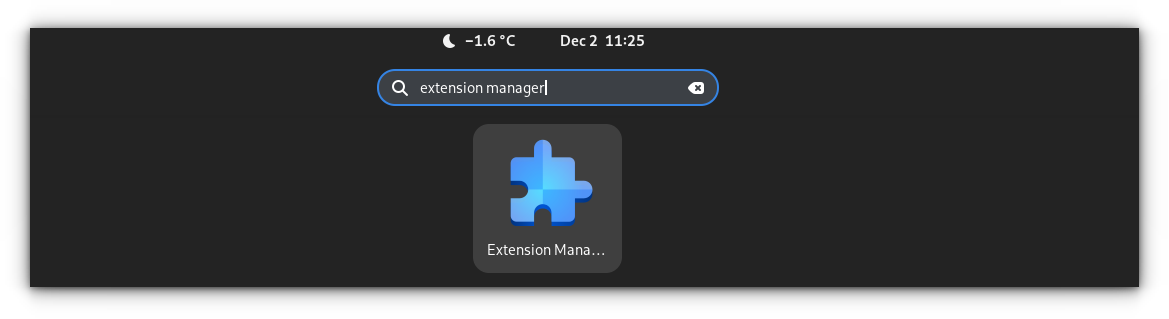

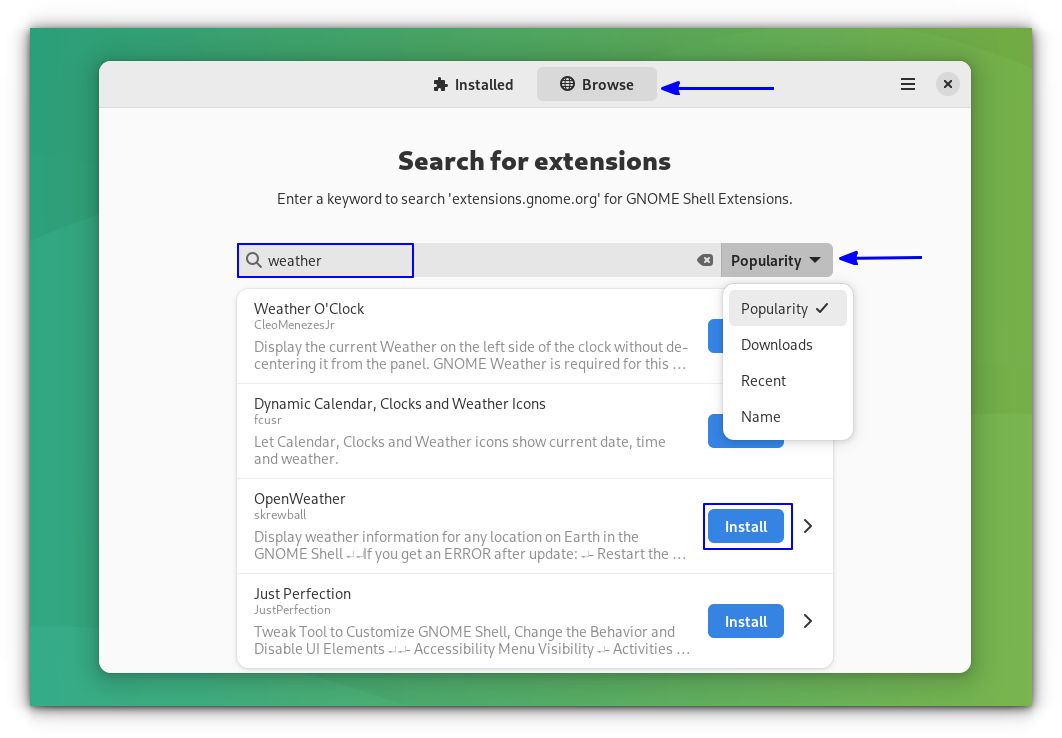

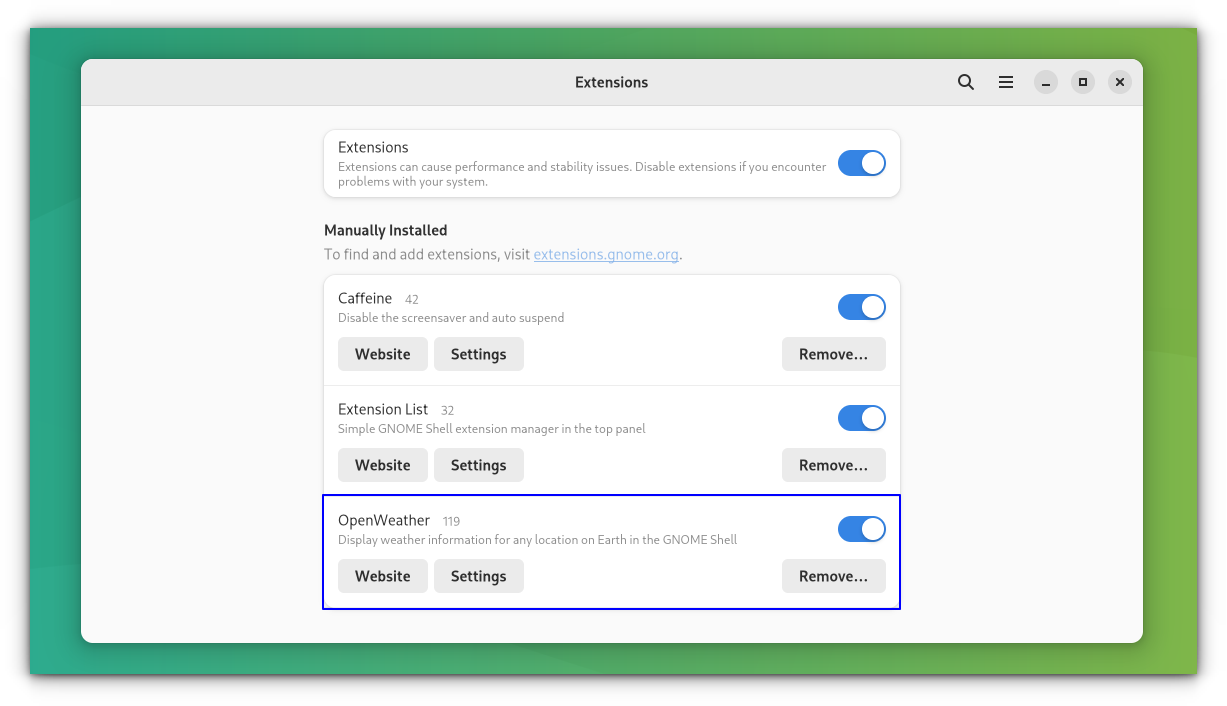

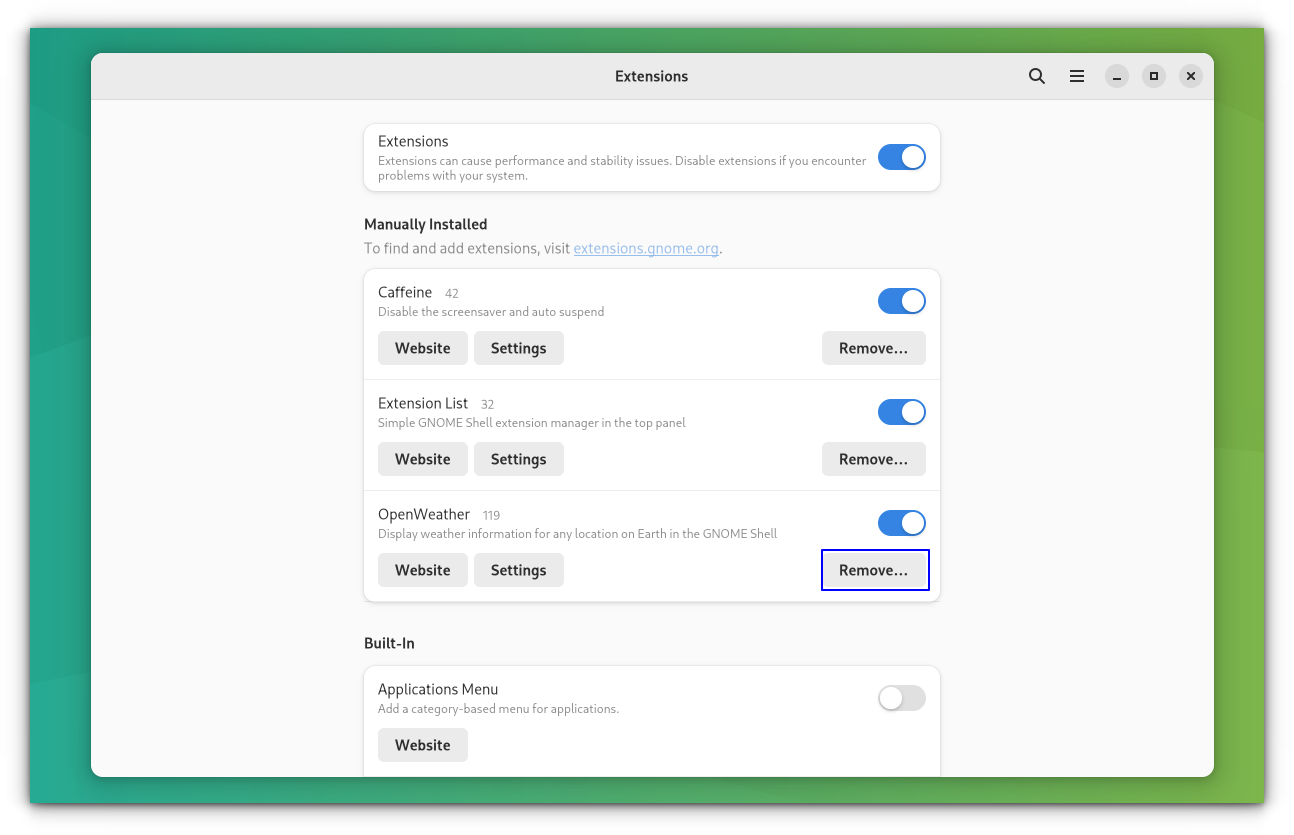

### 方法 2: 使用 GNOME Shell 扩展

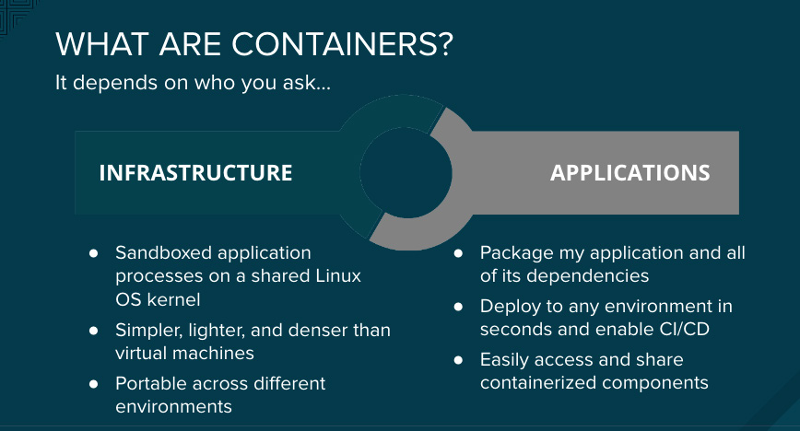

这个轻量级 [GNOME shell 扩展](https://github.com/neffo/bing-wallpaper-gnome-extension),可将你的壁纸每天更改为微软必应的壁纸。它还会显示一个包含图像标题和解释的通知。

该扩展大部分基于 Elinvention 的 NASA APOD 扩展,受到了 Utkarsh Gupta 的 Bing Desktop WallpaperChanger 启发。

#### 特点

* 获取当天的必应壁纸并设置为锁屏和桌面墙纸(这两者都是用户可选的)

* 可强制选择某个特定区域(即地区)

* 为多个显示器自动选择最高分辨率(和最合适的墙纸)

* 可以选择在 1 到 7 天之后清理墙纸目录(删除最旧的)

* 只有当它们被更新时,才会尝试下载壁纸

* 不会持续进行更新 - 每天只进行一次,启动时也要进行一次(更新是在必应更新时进行的)

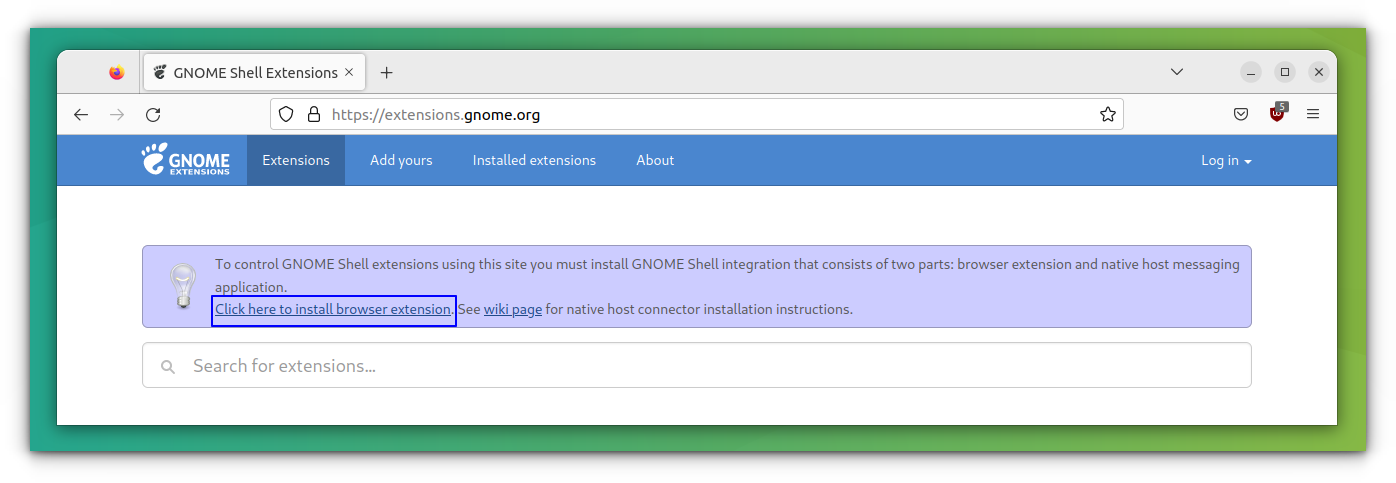

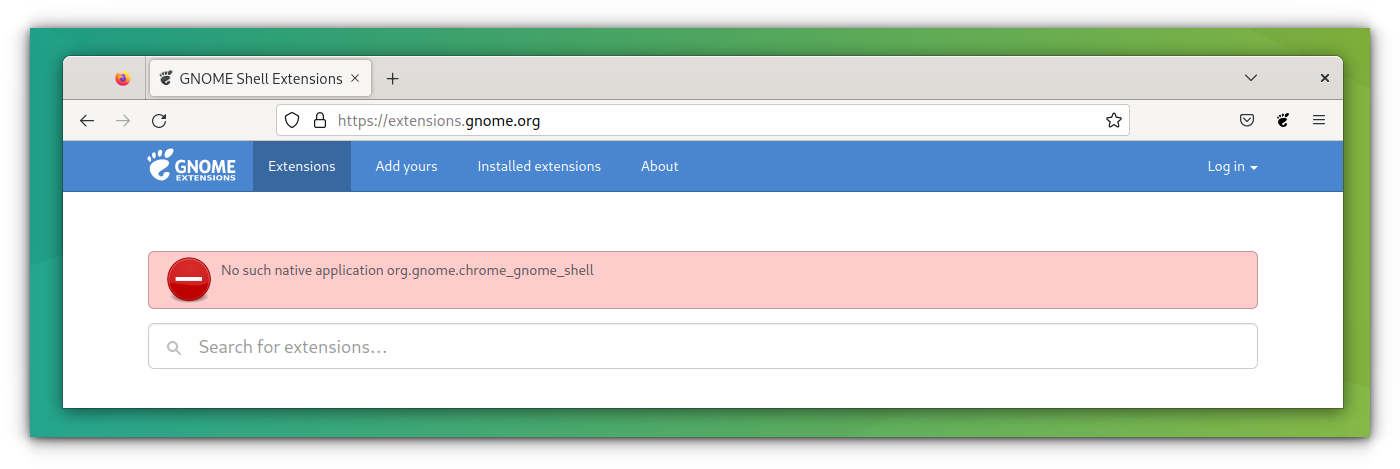

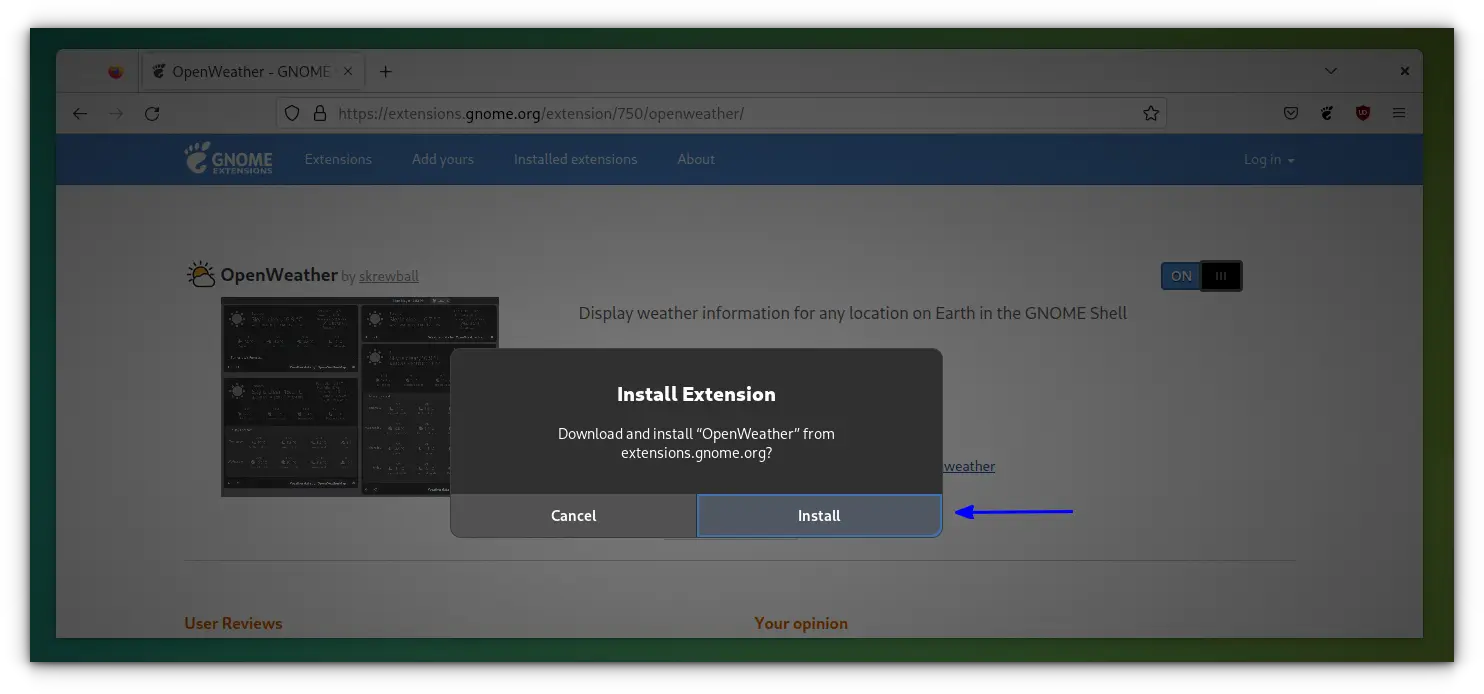

#### 如何安装

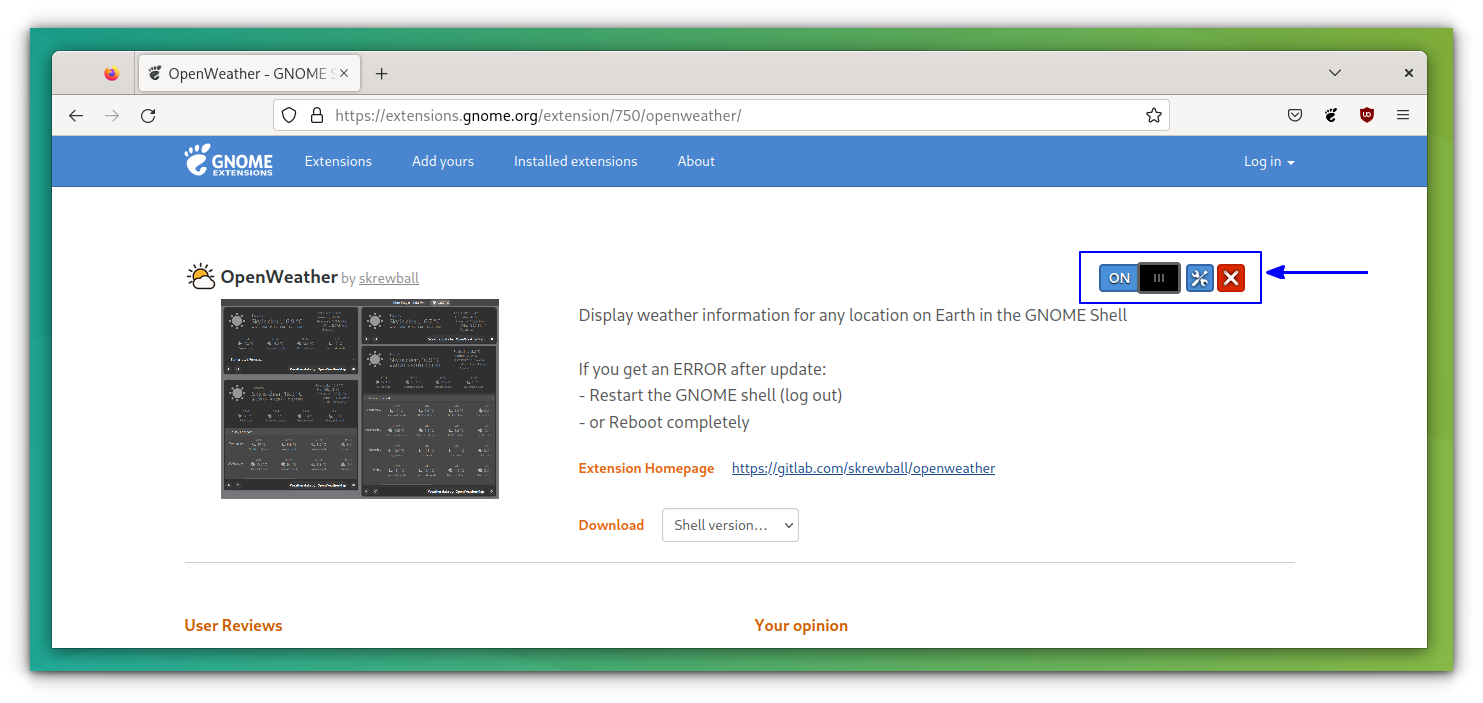

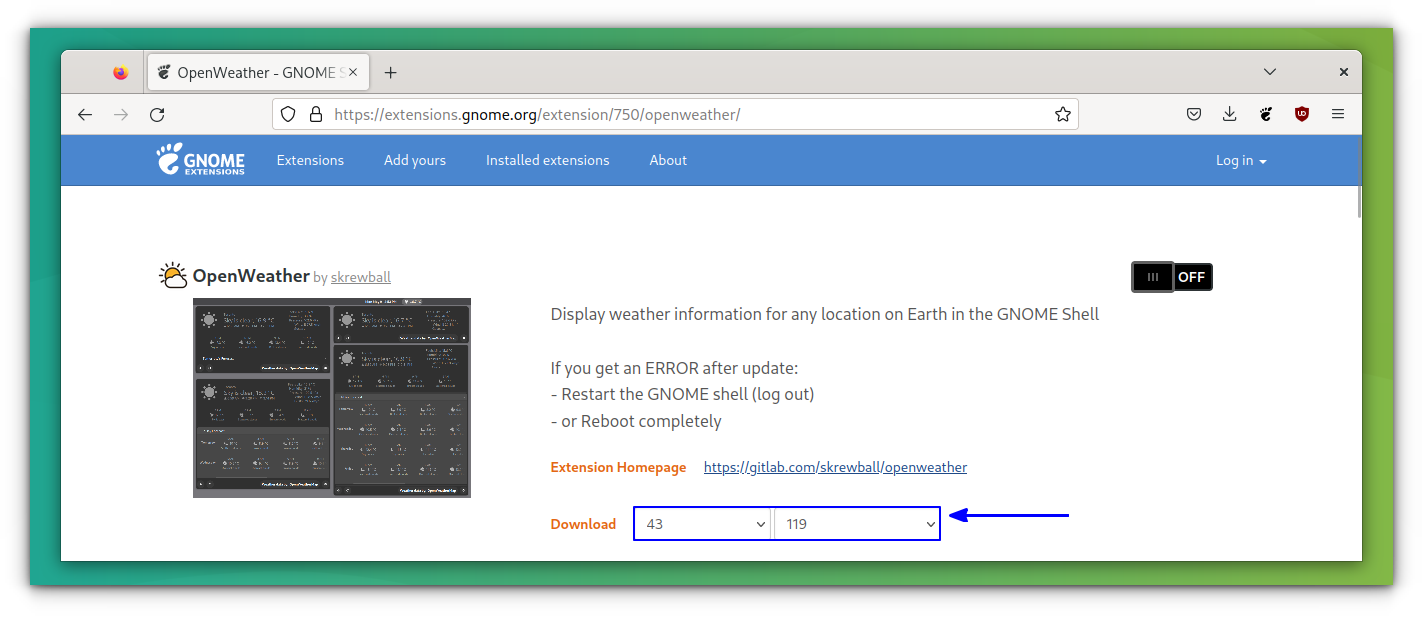

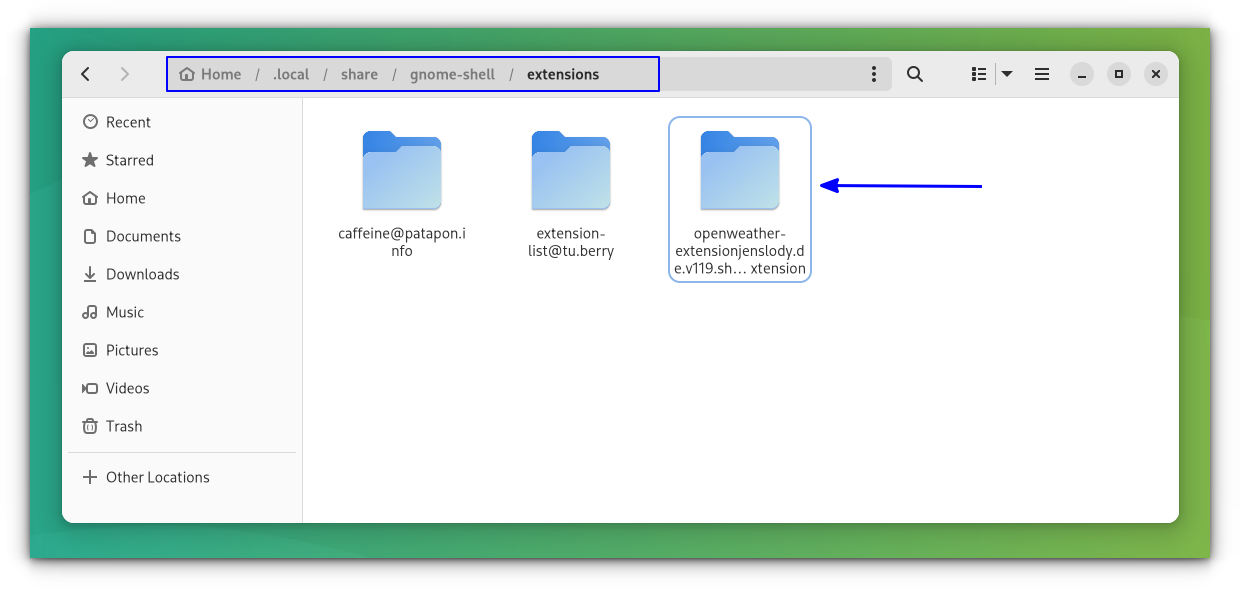

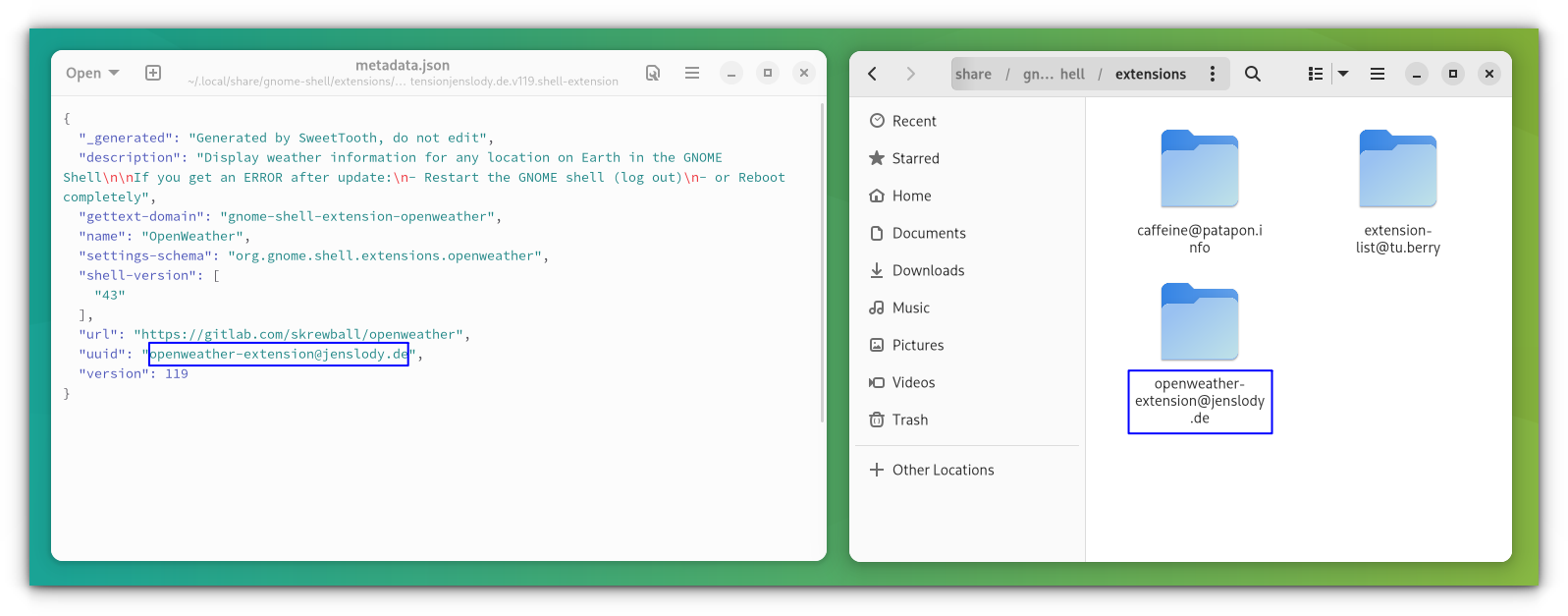

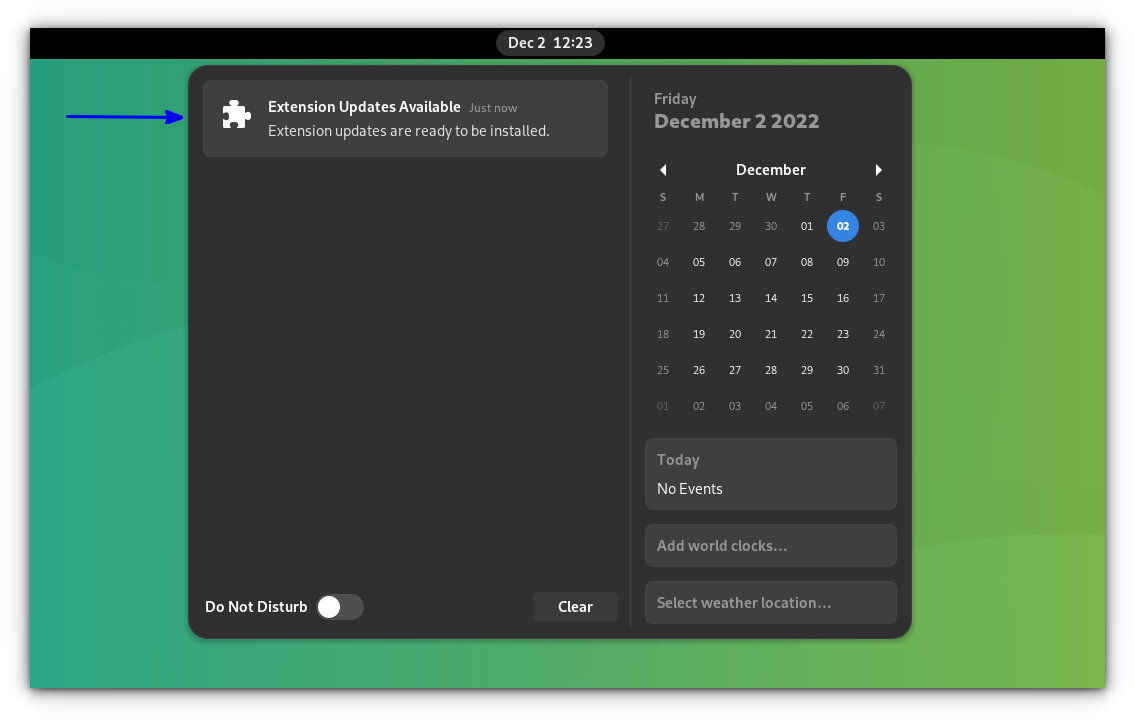

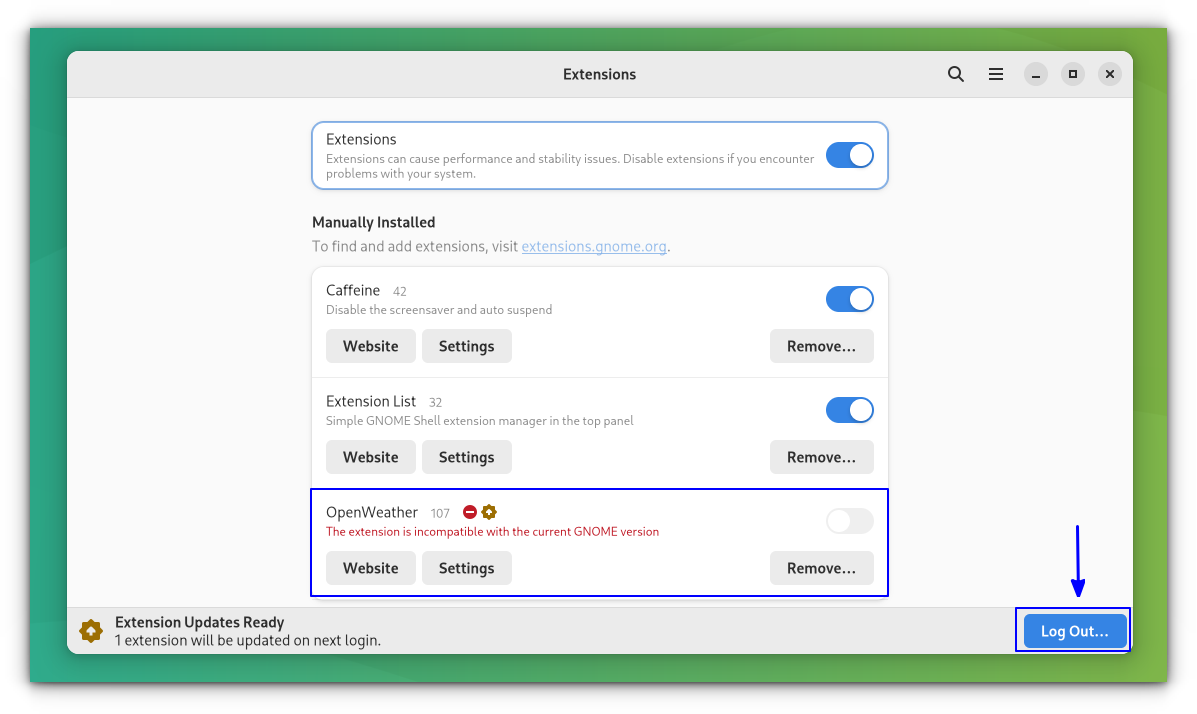

访问 [extenisons.gnome.org](https://extensions.gnome.org/extension/1262/bing-wallpaper-changer/) 网站并将切换按钮拖到 “ON”,然后点击 “Install” 按钮安装必应壁纸 GNOME 扩展。(LCTT 译注:页面上并没有发现 ON 按钮,但是有 Download 按钮)

安装必应壁纸 GNOME 扩展后,它会自动下载并为你的 Linux 桌面设置当天的必应照片,并显示关于壁纸的通知。

托盘指示器将帮助你执行少量操作,也可以打开设置。

根据你的要求自定义设置。

---