| id | title | fromurl | date | tags | permalink | content | fromurl_status | status_msg | from_content |

|---|---|---|---|---|---|---|---|---|---|

9,178 | Top 10 open source technology trends for 2018 | https://opensource.com/article/17/11/10-open-source-technology-trends-2018 | 2017-12-27T08:59:24 | [

"trends",

"technology",

"open source"

] | https://linux.cn/article-9178-1.html |

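> Have you been following open source technology trends? Here are 10 predictions.

Technology is always changing. New developments such as OpenStack, Progressive Web Apps (PWA), Rust, R, the cognitive cloud, artificial intelligence (AI), and the Internet of Things are upending our established view of the world. Here is an overview of the open source technologies most likely to go mainstream in 2018.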

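### 1. OpenStack keeps gaining acceptance

[OpenStack](https://www.openstack.org/) is essentially a cloud operating platform (system) that gives administrators an intuitive, user-friendly dashboard for provisioning and supervising large pools of compute, storage, and networking resources.

Many enterprises now use OpenStack to build and manage cloud computing systems. It owes its growing popularity to a flexible ecosystem, transparency, and speed. Compared with the alternatives, OpenStack supports mission-critical applications at lower cost. However, its complex structure and heavy dependence on virtualization, servers, and extensive networking resources make many enterprises hesitant to adopt it. Using OpenStack well also requires both good hardware and highly skilled staff.

The OpenStack Foundation keeps working to improve the product. Several innovations, whether already released or still in the works, should resolve many of OpenStack's underlying problems. As its structural complexity decreases, OpenStack will gain wider acceptance. Backed by many large software development and hosting companies and by thousands of individual members, OpenStack has a bright future in the cloud computing era.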

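### 2. PWAs may take off

A [Progressive Web App](https://developers.google.com/web/progressive-web-apps/) (PWA) combines technologies, design concepts, and web APIs to deliver an app-like experience in the mobile browser.

Traditional websites have many inherent shortcomings. Apps offer a more personal and engaging experience than websites, but they consume a lot of system resources and must be downloaded and installed before use. A PWA takes the best of both: it is accessible from a browser, indexable by search engines, and responsive to its environment, while giving users an app-level experience. Like an app, a PWA updates itself so that it always shows the latest information, and, like a website, it is delivered securely over HTTPS. It runs in a standard container and needs no installation; anyone who types in the URL can use it.

Today's mobile users value convenience and engagement, and PWAs fit that need well, so it is no surprise that they are expected to go mainstream.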

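### 3. Rust becomes a developer favorite

Most programming languages force a tradeoff between safety and control, but [Rust](https://www.rust-lang.org/) is an exception. It uses extensive compile-time checking to offer full control without compromising safety. The last [Pwn2Own](https://en.wikipedia.org/wiki/Pwn2Own) competition exposed many serious vulnerabilities in Firefox's underlying C++ implementation. Had Firefox been written in Rust, many of those flaws would have been caught as compile-time errors and fixed before release.

Rust's built-in approach to unit testing has led developers to consider it a first-choice open source language. It is an effective alternative to languages such as C and Python for writing safe code without sacrificing readability. In short, Rust has a bright future.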

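### 4. The R user community grows

The [R](https://en.wikipedia.org/wiki/R_(programming_language)) programming language is a [GNU project](https://en.wikipedia.org/wiki/GNU_Project) for statistical computing and graphics. It offers a wide range of statistical and graphical techniques and is extensible. R picks up where the [S](https://en.wikipedia.org/wiki/S_(programming_language)) language leaves off: S has long been the tool of choice for research in statistical methodology, and R provides an open source route for data manipulation, calculation, and graphical display. Another strength of R is its attention to detail and nuance.

Like Rust, R is on the rise.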

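### 5. XaaS expands in scope

XaaS is short for "anything as a service" and is the umbrella term for services delivered over the network. Its scope is expanding: software as a service (SaaS), infrastructure as a service (IaaS), and platform as a service (PaaS) are well established, and newer cloud-based models such as network as a service (NaaS), storage as a service (SaaS or StaaS), monitoring as a service (MaaS), and communications as a service (CaaS) are catching on. We are heading toward a world where everything is available "as a service."

The XaaS concept now extends to brick-and-mortar businesses. Well-known examples are Uber and Lyft, which use new technology to offer transportation as a service, and Airbnb, which offers accommodation as a service.

High-speed networks and server virtualization have made powerful computing affordable, which has accelerated XaaS, and 2018 may become the "year of XaaS." Its flexibility and scalability will drive further growth.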

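### 6. Containers grow more popular

[Container technology](https://www.techopedia.com/2/31967/trends/open-source/container-technology-the-next-big-thing) packages code in a standardized way so that it can be "plugged in and run" quickly in any environment. It lets enterprises cut costs and shorten implementation times. Although the potential of containers to transform IT infrastructure has been evident for a while, using containers well remains fairly complex.

Container technology is still evolving, and its complexity decreases with each advance. The latest developments make containers as simple and intuitive to use as a smartphone, and they suit today's business needs, where speed and agility can make or break a company.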

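### 7. Machine learning and AI see wider use

[Machine learning and artificial intelligence](https://opensource.com/tags/artificial-intelligence) give machines the ability to learn and improve from experience without a programmer explicitly coding the instructions.

Both technologies are already well established, with several open source technologies using them for cutting-edge services and applications.

[Gartner](https://sdtimes.com/gartners-top-10-technology-trends-2018/) predicts that machine learning and artificial intelligence will be applied more widely in 2018. Areas such as data preparation, integration, algorithm selection, training methodology selection, and model creation are expected to improve substantially as machine learning is introduced.

New intelligent open source solutions will change how people interact with systems and transform long-held ideas about work.

* Conversational platforms such as [chatbots](https://en.wikipedia.org/wiki/Chatbot) offer a question-and-answer experience, in which the user asks and the platform responds, and are becoming the default interface between people and machines.

* Autonomous vehicles and drones are already household names and will become even more commonplace in 2018.

* Immersive experiences are no longer limited to video games; they are appearing in real-life settings such as design, training, and visualization.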

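### 8. Blockchain goes mainstream

Blockchain has come a long way since Bitcoin first used it and is already widely applied in finance, secure voting, academic credential verification, and other fields. In the coming years it is expected to make its mark in healthcare, manufacturing, supply chain logistics, and government services.

Blockchain stores information in a distributed way, across millions of nodes that share the database. No single owner controls it, and a single damaged node does not stop it from working; these two properties make it very robust, transparent, and hard to corrupt. They also reduce the risk of a middleman tampering with the data. These inherent strengths are enough to support blockchain becoming a mainstream technology.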

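### 9. The cognitive cloud takes the stage

Cognitive technologies, such as the machine learning and artificial intelligence described above, are used to simplify and personalize services across many industries. A typical example is gamification apps in the financial sector, which give investors investment insights and reduce the complexity of investment models. Digital trust platforms cut the identity-verification process for financial institutions by about 80%, improving compliance and reducing fraud.

Cognitive technologies are now moving to the cloud, which will make them more powerful. [IBM Watson](https://en.wikipedia.org/wiki/Watson_(computer)) is the best-known example of the cognitive cloud in action. IBM's UIMA architecture is open source and maintained by the Apache Foundation. DARPA's DeepDive project mirrors Watson's machine learning abilities, strengthening its decision-making over time by learning from human interactions. [OpenCog](https://en.wikipedia.org/wiki/OpenCog), another open source platform, supports developers and data scientists in building artificial intelligence applications.

Given the high stakes of delivering powerful, personalized user experiences, these cognitive cloud platforms are set to take center stage in the coming year.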

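### 10. The Internet of Things connects everything

At its core, the Internet of Things (IoT) interconnects devices, from small embedded sensors to full computing devices, so that the "things" can send and receive data. IoT is widely expected to be the next big disruptor in tech, but IoT itself is in a constant state of flux.

One widely cited IoT innovation is Autonomous Decentralized Peer-to-Peer Telemetry ([ADEPT](https://insights.samsung.com/2016/03/17/block-chain-mobile-and-the-internet-of-things/)), developed by IBM and Samsung. It uses a blockchain-like technology to build a decentralized network of IoT devices. With no central controller, the "things" communicate autonomously to handle software updates, bug fixes, power management, and more.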

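### Open source drives innovation

[Digital disruption](https://cio-wiki.org/home/loc/home?page=digital-disruption) is the norm in today's technology-centric era. Within the technology space, open source is becoming pervasive, and in 2018 it will be the driving force behind most technology innovation.

Did this list miss an open source trend? Tell us in the comments.

(Lead image: [Mitch Bennett](https://www.flickr.com/photos/mitchell3417/9206373620). Modified by [Opensource.com](https://opensource.com/))

### About the author

[**Sreejith Omanakuttan**](https://opensource.com/users/sreejith) has been programming since 2000 and working professionally since 2007. He currently leads the open source team at [Fingent](https://www.fingent.com/), where the work spans different technology layers, from "boring work" (?) to cutting-edge technology. He follows a "build, fix, tear down and rebuild" philosophy. Follow him on LinkedIn: <https://www.linkedin.com/in/futuregeek/>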

---

via: <https://opensource.com/article/17/11/10-open-source-technology-trends-2018>

Author: [Sreejith Omanakuttan](https://opensource.com/users/sreejith) Translator: [wangy325](https://github.com/wangy25) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

| 200 | OK | Technology is always evolving. New developments, such as OpenStack, Progressive Web Apps, Rust, R, the cognitive cloud, artificial intelligence (AI), the Internet of Things, and more are putting our usual paradigms on the back burner. Here is a rundown of the top open source trends expected to soar in popularity in 2018.

## 1. OpenStack gains increasing acceptance

[OpenStack](https://www.openstack.org/) is essentially a cloud operating system that offers admins the ability to provision and control huge compute, storage, and networking resources through an intuitive and user-friendly dashboard.

Many enterprises are using the OpenStack platform to build and manage cloud computing systems. Its popularity rests on its flexible ecosystem, transparency, and speed. It supports mission-critical applications with ease and lower costs compared to alternatives. But OpenStack's complex structure and its dependency on virtualization, servers, and extensive networking resources have inhibited its adoption by a wider range of enterprises. Using OpenStack also requires a well-oiled machinery of skilled staff and resources.

The OpenStack Foundation is working overtime to fill the voids. Several innovations, either released or on the anvil, would resolve many of its underlying challenges. As complexities decrease, OpenStack will surge in acceptance. The fact that OpenStack is already backed by many big software development and hosting companies, in addition to thousands of individual members, makes it the future of cloud computing.

## 2. Progressive Web Apps become popular

[Progressive Web Apps](https://developers.google.com/web/progressive-web-apps/) (PWA), an aggregation of technologies, design concepts, and web APIs, offer an app-like experience in the mobile browser.

Traditional websites suffer from many inherent shortcomings. Apps, although offering a more personal and focused engagement than websites, place a huge demand on resources, including needing to be downloaded upfront. PWA delivers the best of both worlds. It delivers an app-like experience to users while being accessible on browsers, indexable on search engines, and responsive to fit any form factor. Like an app, a PWA updates itself to always display the latest real-time information, and, like a website, it is delivered in an ultra-safe HTTPS model. It runs in a standard container and is accessible to anyone who types in the URL, without having to install anything.

PWAs perfectly suit the needs of today's mobile users, who value convenience and personal engagement over everything else. That this technology is set to soar in popularity is a no-brainer.

## 3. Rust to rule the roost

Most programming languages come with safety vs. control tradeoffs. [Rust](https://www.rust-lang.org/) is an exception. The language co-opts extensive compile-time checking to offer 100% control without compromising safety. The last [Pwn2Own](https://en.wikipedia.org/wiki/Pwn2Own) competition threw up many serious vulnerabilities in Firefox on account of its underlying C++ language. If Firefox had been written in Rust, many of those errors would have manifested as compile-time bugs and resolved before the product rollout stage.

Rust's unique approach of built-in unit testing has led developers to consider it a viable first-choice open source language. It offers an effective alternative to languages such as C and Python to write secure code without sacrificing expressiveness. Rust has bright days ahead in 2018.

## 4. R user community grows

The [R](https://en.wikipedia.org/wiki/R_(programming_language)) programming language, a GNU project, is associated with statistical computing and graphics. It offers a wide array of statistical and graphical techniques and is extensible to boot. It starts where [S](https://en.wikipedia.org/wiki/S_(programming_language)) ends. With the S language already the vehicle of choice for research in statistical methodology, R offers a viable open source route for data manipulation, calculation, and graphical display. An added benefit is R's attention to detail and care for the finer nuances.

Like Rust, R's fortunes are on the rise.

## 5. XaaS expands in scope

XaaS, an acronym for "anything as a service," stands for the increasing number of services delivered over the internet, rather than on premises. Although software as a service (SaaS), infrastructure as a service (IaaS), and platform as a service (PaaS) are well-entrenched, new cloud-based models, such as network as a service (NaaS), storage as a service (SaaS or StaaS), monitoring as a service (MaaS), and communications as a service (CaaS), are soaring in popularity. A world where anything and everything is available "as a service" is not far away.

The scope of XaaS now extends to bricks-and-mortar businesses, as well. Good examples are companies such as Uber and Lyft leveraging digital technology to offer transportation as a service and Airbnb offering accommodations as a service.

High-speed networks and server virtualization that make powerful computing affordable have accelerated the popularity of XaaS, to the point that 2018 may become the "year of XaaS." The unmatched flexibility, agility, and scalability will propel the popularity of XaaS even further.

## 6. Containers gain even more acceptance

Container technology is the approach of packaging pieces of code in a standardized way so they can be "plugged and run" quickly in any environment. Container technology allows enterprises to cut costs and implementation times. While the potential of containers to revolutionize IT infrastructure has been evident for a while, actual container use has remained complex.

Container technology is still evolving, and the complexities associated with the technology decrease with every advancement. The latest developments make containers quite intuitive and as easy as using a smartphone, not to mention tuned for today's needs, where speed and agility can make or break a business.

## 7. Machine learning and artificial intelligence expand in scope

[Machine learning and AI](https://opensource.com/tags/artificial-intelligence) give machines the ability to learn and improve from experience without a programmer explicitly coding the instruction.

These technologies are already well entrenched, with several open source technologies leveraging them for cutting-edge services and applications.

[Gartner predicts](https://sdtimes.com/gartners-top-10-technology-trends-2018/) the scope of machine learning and artificial intelligence will expand in 2018. Several greenfield areas, such as data preparation, integration, algorithm selection, training methodology selection, and model creation are all set for big-time enhancements through the infusion of machine learning.

New open source intelligent solutions are set to change the way people interact with systems and transform the very nature of work.

- Conversational platforms, such as chatbots, make the question-and-command experience, where a user asks a question and the platform responds, the default medium of interacting with machines.

- Autonomous vehicles and drones, fancy fads today, are expected to become commonplace by 2018.

- The scope of immersive experience will expand beyond video games and apply to real-life scenarios such as design, training, and visualization processes.

## 8. Blockchain becomes mainstream

Blockchain has come a long way from Bitcoin. The technology is already in widespread use in finance, secure voting, authenticating academic credentials, and more. In the coming year, healthcare, manufacturing, supply chain logistics, and government services are among the sectors most likely to embrace blockchain technology.

Blockchain distributes digital information. The information resides on millions of nodes, in shared and reconciled databases. The fact that it's not controlled by any single authority and has no single point of failure makes it very robust, transparent, and incorruptible. It also solves the threat of a middleman manipulating the data. Such inherent strengths account for blockchain's soaring popularity and explain why it is likely to emerge as a mainstream technology in the immediate future.

## 9. Cognitive cloud moves to center stage

Cognitive technologies, such as machine learning and artificial intelligence, are increasingly used to reduce complexity and personalize experiences across multiple sectors. One case in point is gamification apps in the financial sector, which offer investors critical investment insights and reduce the complexities of investment models. Digital trust platforms reduce the identity-verification process for financial institutions by about 80%, improving compliance and reducing chances of fraud.

Such cognitive cloud technologies are now moving to the cloud, making it even more potent and powerful. IBM Watson is the most well-known example of the cognitive cloud in action. IBM's UIMA architecture was made open source and is maintained by the Apache Foundation. DARPA's DeepDive project mirrors Watson's machine learning abilities to enhance decision-making capabilities over time by learning from human interactions. OpenCog, another open source platform, allows developers and data scientists to develop artificial intelligence apps and programs.

Considering the high stakes of delivering powerful and customized experiences, these cognitive cloud platforms are set to take center stage over the coming year.

## 10. The Internet of Things connects more things

At its core, the Internet of Things (IoT) is the interconnection of devices through embedded sensors or other computing devices that enable the devices (the "things") to send and receive data. IoT is already predicted to be the next big major disruptor of the tech space, but IoT itself is in a continuous state of flux.

One innovation likely to gain widespread acceptance within the IoT space is Autonomous Decentralized Peer-to-Peer Telemetry ([ADEPT](https://insights.samsung.com/2016/03/17/block-chain-mobile-and-the-internet-of-things/)), which is propelled by IBM and Samsung. It uses a blockchain-type technology to deliver a decentralized network of IoT devices. Freedom from a central control system facilitates autonomous communications between "things" in order to manage software updates, resolve bugs, manage energy, and more.

## Open source drives innovation

Digital disruption is the norm in today's tech-centric era. Within the technology space, open source is now pervasive, and in 2018, it will be the driving force behind most of the technology innovations.

Which open source trends and technologies would you add to this list? Let us know in the comments.

|

9,179 | Patch management: don't take pride in uptime | https://www.linuxjournal.com/content/sysadmin-101-patch-management | 2017-12-27T10:46:54 | [

"patching",

"security",

"upgrades"

] | https://linux.cn/article-9179-1.html |

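A few articles ago, I started a "Sysadmin 101" series to record some systems administration fundamentals that many of today's junior sysadmins, DevOps engineers, or "full stack" developers might never have encountered. I had considered the series finished. Then the WannaCry malware appeared and spread through networks of poorly patched Windows hosts. I can imagine that readers still stuck in the Linux versus Windows debates of the 2000s smiled with a sense of superiority when they heard the news.

I decided to revive the series so soon because I realized that, when it comes to patch management, some Linux sysadmins are no different from Windows sysadmins. Honestly, in some areas (uptime pride in particular) they are even worse. So this article covers the fundamentals of patch management under Linux, including what good patch management looks like, some tools you may want to use, and how the overall patching process should work.

### What is patch management?

By patch management, I mean the systems you have in place to update the software on your servers, not just keeping up with the latest-and-greatest bleeding-edge versions. Even conservative distributions like Debian, which stick with a particular software version for the sake of "stability", regularly release updates that fix bugs and security holes.

Of course, if your organization decided to maintain its own version of a particular piece of software, whether because developers demanded the latest and greatest, because you needed to fork the source and apply changes, or because you just like giving yourself extra work, you now have a problem. Ideally, you have set things up so that the custom version is built and packaged automatically by the same continuous integration system you use for other software. Many sysadmins, however, still package software on their local machines using outdated methods and documentation on a wiki (hopefully up to date). Either way, you need to confirm whether your version has the security flaw and, if so, make sure the new patch is applied to your custom version.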

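### What good patch management looks like

Patch management starts with knowing that a software update exists. For your core software, subscribe to your Linux distribution's security mailing list so that you learn about security updates right away. If you use software that does not come from the distribution's repositories, you must find a way to track its security updates too. When a new security notice arrives, review the details to determine how severe the flaw is, whether your systems are affected, and how urgent the patch is.

Some organizations still manage patches by hand. When a security patch comes out, the sysadmin relies on memory and logs in to each server to check. After working out which servers need the update, the sysadmin uses the server's built-in package manager to upgrade the software from the distribution's repositories, and then repeats the process on every remaining server.

Manual patch management has many problems. First, it makes patching a chore: the more labor patching takes, the more likely a sysadmin is to put it off or skip it entirely. Second, it depends on the sysadmin remembering every server he or she is responsible for and which ones have been patched. That makes it very easy for servers to be overlooked and left unpatched.

The faster and easier patch management is, the more likely you are to do it well. You should build a system that can quickly tell you which servers run a particular piece of software and at which version, and ideally it can push out updates too. Personally, I prefer orchestration tools like MCollective for this task, but Red Hat's Satellite and Canonical's Landscape also let you view software versions across your servers and apply patches from one central interface.

Patching should also be fault-tolerant. You should be able to patch a service without taking it offline, and the same goes for kernel patches that require a reboot. My approach is to divide my servers into high availability groups: lb1, app1, rabbitmq1, and db1 in one group, and lb2, app2, rabbitmq2, and db2 in another. That way I can patch one group at a time without taking the service down.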
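The group-at-a-time idea can be sketched in a few lines of shell. This is only an illustration under stated assumptions: the host list reuses the names from the paragraph above, the helper names `hosts_in_group` and `patch_host` are made up, and `patch_host` merely prints what it would do instead of calling a real orchestration tool.

```shell
# Hypothetical host names following the naming scheme in the article
hosts='lb1 app1 rabbitmq1 db1 lb2 app2 rabbitmq2 db2'

# Print the hosts whose trailing number equals the requested group
hosts_in_group() {
  group=$1
  for host in $hosts; do
    case $host in
      *[!0-9]"$group") printf '%s\n' "$host" ;;
    esac
  done
}

# Placeholder: a real version would invoke your orchestration tool here
patch_host() {
  printf 'would patch %s\n' "$1"
}

# Patch one high availability group at a time
for group in 1 2; do
  for host in $(hosts_in_group "$group"); do
    patch_host "$host"
  done
  # A real rollout would check the group's health here before moving on
done
```

Because each group contains one member of every tier, the other group can keep serving traffic while the first is patched.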

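So how fast is fast? For the few pieces of software that have no accompanying service (such as bash in the case of the ShellShock vulnerability), your system should be able to roll out a patch within a few minutes to an hour at most. For something like OpenSSL, which requires restarting services, patching and restarting in a fault-tolerant way will probably take somewhat longer, but this is where orchestration tools come in handy. I gave several examples of using MCollective for patch management in my recent MCollective articles (see the December 2016 and January 2017 issues). Ideally, you should put a system in place that makes patching and restarting services easy, automated, and fault-tolerant.

If a patch requires a reboot, as kernel patches do, it takes more time. Again, automation and orchestration tools can make this go faster than you might imagine. I can patch and reboot the servers in a production environment in a fault-tolerant way within an hour or two, and it would be faster still if I did not have to wait for clusters to sync back up between reboots.

Unfortunately, many sysadmins still cling to the outdated notion that uptime is a badge of pride. Given that serious kernel patches come out at least about once a year, to me long uptime only proves that you do not take security seriously.

Many organizations also still have a single-point-of-failure server that can never go down and therefore never gets patched or rebooted. If you want to be secure, you need to shed that outdated baggage and build systems that can at least be rebooted during a late-night maintenance window.

Ultimately, fast and easy patch management is a sign of a mature, professional sysadmin team. Updating software is a necessary part of every sysadmin's job, and time spent making that process simple and fast pays off far beyond security. For one, it helps expose single points of failure in the architecture. For another, it helps identify stagnant, outdated systems in the environment and gives you an incentive to replace them. Finally, when patching is done well, it saves sysadmins time and lets them focus on the things that truly require their expertise.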

---

Kyle Rankin is a senior security and infrastructure architect and the author of Linux Hardening in Hostile Networks, DevOps Troubleshooting, and The Official Ubuntu Server Book. He is also a columnist for Linux Journal.

---

via: <https://www.linuxjournal.com/content/sysadmin-101-patch-management>

Author: [Kyle Rankin](https://www.linuxjournal.com/users/kyle-rankin) Translator: [haoqixu](https://github.com/haoqixu) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

| 200 | OK | # Sysadmin 101: Patch Management

A few articles ago, I started a Sysadmin 101 series to pass down some fundamental knowledge about systems administration that the current generation of junior sysadmins, DevOps engineers or "full stack" developers might not learn otherwise. I had thought that I was done with the series, but then the WannaCry malware came out and exposed some of the poor patch management practices still in place in Windows networks. I imagine some readers that are still stuck in the Linux versus Windows wars of the 2000s might have even smiled with a sense of superiority when they heard about this outbreak.

The reason I decided to revive my Sysadmin 101 series so soon is I realized that most Linux system administrators are no different from Windows sysadmins when it comes to patch management. Honestly, in some areas (in particular, uptime pride), some Linux sysadmins are even worse than Windows sysadmins regarding patch management. So in this article, I cover some of the fundamentals of patch management under Linux, including what a good patch management system looks like, the tools you will want to put in place and how the overall patching process should work.

## What Is Patch Management?

When I say patch management, I'm referring to the systems you have in place to update software already on a server. I'm not just talking about keeping up with the latest-and-greatest bleeding-edge version of a piece of software. Even more conservative distributions like Debian that stick with a particular version of software for its "stable" release still release frequent updates that patch bugs or security holes.

Of course, if your organization decided to roll its own version of a particular piece of software, either because developers demanded the latest and greatest, you needed to fork the software to apply a custom change, or you just like giving yourself extra work, you now have a problem. Ideally you have put in a system that automatically packages up the custom version of the software for you in the same continuous integration system you use to build and package any other software, but many sysadmins still rely on the outdated method of packaging the software on their local machine based on (hopefully up to date) documentation on their wiki. In either case, you will need to confirm that your particular version has the security flaw, and if so, make sure that the new patch applies cleanly to your custom version.

## What Good Patch Management Looks Like

Patch management starts with knowing that there is a software update to begin with. First, for your core software, you should be subscribed to your Linux distribution's security mailing list, so you're notified immediately when there are security patches. If you use any software that doesn't come from your distribution, you must find out how to be kept up to date on security patches for that software as well. When new security notifications come in, you should review the details so you understand how severe the security flaw is, whether you are affected, and how urgent the patch is.

Some organizations have a purely manual patch management system. With such a system, when a security patch comes along, the sysadmin figures out which servers are running the software, generally by relying on memory and by logging in to servers and checking. Then the sysadmin uses the server's built-in package management tool to update the software with the latest from the distribution. Then the sysadmin moves on to the next server, and the next, until all of the servers are patched.

There are many problems with manual patch management. First is the fact that it makes patching a laborious chore. The more work patching is, the more likely a sysadmin will put it off or skip doing it entirely. The second problem is that manual patch management relies too much on the sysadmin's ability to remember and recall all of the servers he or she is responsible for and keep track of which are patched and which aren't. This makes it easy for servers to be forgotten and sit unpatched.

The faster and easier patch management is, the more likely you are to do it. You should have a system in place that quickly can tell you which servers are running a particular piece of software at which version. Ideally, that system also can push out updates. Personally, I prefer orchestration tools like MCollective for this task, but Red Hat provides Satellite, and Canonical provides Landscape as central tools that let you view software versions across your fleet of servers and apply patches all from a central place.

Patching should be fault-tolerant as well. You should be able to patch a service and restart it without any overall down time. The same idea goes for kernel patches that require a reboot. My approach is to divide my servers into different high availability groups so that lb1, app1, rabbitmq1 and db1 would all be in one group, and lb2, app2, rabbitmq2 and db2 are in another. Then, I know I can patch one group at a time without it causing downtime anywhere else.

So, how fast is fast? Your system should be able to roll out a patch to a minor piece of software that doesn't have an accompanying service (such as bash in the case of the ShellShock vulnerability) within a few minutes to an hour at most. For something like OpenSSL that requires you to restart services, the careful process of patching and restarting services in a fault-tolerant way probably will take more time, but this is where orchestration tools come in handy. I gave examples of how to use MCollective to accomplish this in my recent MCollective articles (see the December 2016 and January 2017 issues), but ideally, you should put a system in place that makes it easy to patch and restart services in a fault-tolerant and automated way.

When patching requires a reboot, such as in the case of kernel patches, it might take a bit more time, but again, automation and orchestration tools can make this go much faster than you might imagine. I can patch and reboot the servers in an environment in a fault-tolerant way within an hour or two, and it would be much faster than that if I didn't need to wait for clusters to sync back up in between reboots.

Unfortunately, many sysadmins still hold on to the outdated notion that uptime is a badge of pride. Given that serious kernel patches tend to come out at least once a year if not more often, to me long uptime is proof you don't take security seriously.

Many organizations also still have that single point of failure server that can never go down, and as a result, it never gets patched or rebooted. If you want to be secure, you need to remove these outdated liabilities and create systems that at least can be rebooted during a late-night maintenance window.

Ultimately, fast and easy patch management is a sign of a mature and professional sysadmin team. Updating software is something all sysadmins have to do as part of their jobs, and investing time into systems that make that process easy and fast pays dividends far beyond security. For one, it helps identify bad architecture decisions that cause single points of failure. For another, it helps identify stagnant, out-of-date legacy systems in an environment and provides you with an incentive to replace them. Finally, when patching is managed well, it frees up sysadmins' time and turns their attention to the things that truly require their expertise.

|

9,180 | The biggest problems with UC Browser | https://www.theitstuff.com/biggest-problems-uc-browser | 2017-12-29T09:52:00 | [

"UC",

"browser"

] | https://linux.cn/article-9180-1.html |

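Before we get to the drawbacks, let me state for the record that I have been a loyal UC Browser user for the past three years. I really like its download speeds, its stylish user interface, and the eye-catching icons on its tools. I started out as a Chrome user on Android and switched to UC on a friend's recommendation. Over the past year or so, though, I have seen things that made me rethink my choice, and now I feel like going back to Chrome.

### Unwanted **notifications**

I am sure I am not the only one who gets these unwanted notifications every few hours. The clickbait articles are bad enough, and the worst part is that they arrive every few hours.

[](http://www.theitstuff.com/wp-content/uploads/2017/10/Untitled-design-6.png)

I tried turning them off in the notification settings, but they still showed up, just less often.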

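### The **news homepage**

This is another unwanted section, and a completely useless one. We understand that UC Browser is a free download and may need funding, but this is not the way to get it. The news articles on the homepage are very distracting and unwanted. In a professional or family setting, some of the clickbait can even be embarrassing.

[](http://www.theitstuff.com/wp-content/uploads/2017/10/Untitled-design-1-1.png)

There is even a setting for it: turn **UC** **News display on/off**. I tried that too, and guess what happened. In the image below, you can see my attempt on the left and the result on the right.

[](http://www.theitstuff.com/wp-content/uploads/2017/12/uceffort.png)

And it is not just clickbait news; they have started adding unnecessary features, so I will list those as well.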

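### UC **Music**

UC Browser has a **music player** built into the browser. It works, and there is nothing special about it. So why have it at all? Who needs a music player in a browser?

[](http://www.theitstuff.com/wp-content/uploads/2017/10/Untitled-design-3-1.png)

It does not even play audio from the web in the background. Instead, it is a player for offline music. So why is it there? It is not good enough to be a primary music player, and even if it were, it cannot run independently of UC Browser. Why would anyone keep their browser running just to use its music player?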

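### The **quick** access bar

On average, I have seen about 90% of users with this bar sitting in their notification area, because it is installed by default and they do not know how to get rid of it. The setting shown on the right removes it.

[](http://www.theitstuff.com/wp-content/uploads/2017/10/Untitled-design-4-1.png)

Still, I want to ask: why is it on by default? It is a headache for most users. If we wanted it, we would enable it ourselves. Why force it on users?

### Conclusion

UC Browser is still one of the biggest players. It offers one of the best experiences, but I do not know what UC is trying to prove by packing more and more features into the browser and forcing users to use them.

I love UC for its speed and design, but recent experiences have made me reconsider my primary browser.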

---

via: <https://www.theitstuff.com/biggest-problems-uc-browser>

Author: [Rishabh Kandari](https://www.theitstuff.com/author/reevkandari) Translator: [geekpi](https://github.com/geekpi) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

| 526 | null |

|

9,181 | Fix gibberish on the Linux / Unix / OS X / BSD console | https://www.cyberciti.biz/tips/bash-fix-the-display.html | 2017-12-30T08:21:00 | [

"clear",

"reset",

"terminal"

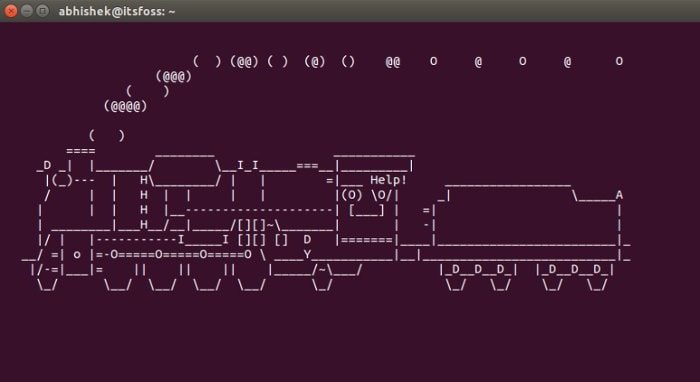

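] | https://linux.cn/article-9181-1.html | Sometimes my explorations leave strange output on the screen. For example, I once accidentally ran `cat` on binary files: `cat /sbin/*`. When that happens, you can no longer use bash/ksh/zsh in that terminal: it fills with strange characters that hide what you type and replace the characters that should be displayed with odd symbols. The methods below clean up the garbage. This article describes how to really clear the terminal screen or reset the terminal on Linux and Unix-like systems.

### The clear command

The `clear` command clears the screen and, where the terminal supports it, the scrollback buffer as well. (LCTT translator's note: in this state the characters you type are echoed as gibberish too. Do not worry; type the command correctly and press Enter, and it will still take effect.)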

```

$ clear

```

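You can also press `CTRL+L` to clear the screen. However, the `clear` command does not really clean up the terminal (LCTT translator's note: this sentence is hard to interpret; it probably means that `clear` does not truly delete what was displayed before, since you can often still scroll up to see it). Use the methods below to truly reset the terminal and get it back to a working state.

### Fix the display with the reset command

To restore a normal display, just type the `reset` command. It reinitializes the terminal for you: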

```

$ reset

```

Or:

```

$ tput reset

```

If `reset` does not help, type the following command to restore the session to a sane state:

```

$ stty sane

```

Press `CTRL + L` to clear the screen (or type the `clear` command):

```

$ clear

```
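The recovery steps above can be collected into a small helper. This is a sketch only: the function name `restore_terminal` is made up, and it assumes `stty` and `tput` are installed. It checks that standard input is a terminal first, so that it does nothing harmful when called from a pipeline or a cron job.

```shell
# Restore sane terminal settings, but only when stdin is a terminal
restore_terminal() {
  if [ -t 0 ]; then
    stty sane      # reset line settings such as echo and newline handling
    tput reset     # reinitialize the terminal itself
  else
    echo 'skipped: stdin is not a terminal'
  fi
}
```

From an interactive shell, type `restore_terminal` and press Enter, even if the characters you type are not echoed correctly.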

### Use an ANSI escape sequence to really clear the bash terminal

Another option is to type the following ANSI escape sequence:

```

clear

echo -e "\033c"

```

Here is sample output from these two commands:

[](https://www.cyberciti.biz/tips/bash-fix-the-display.html/unix-linux-console-gibberish)
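The `-e` option of `echo` is not available in every shell, so a more portable way to emit the same sequence is `printf`. The sketch below assumes a VT100-compatible terminal, where ESC followed by `c` requests a full reset; the function name `reset_sequence` is made up for illustration.

```shell
# Emit ESC c, the full-reset sequence understood by VT100-compatible terminals
reset_sequence() {
  printf '\033c'
}

# Only send the sequence when stdout is actually a terminal
if [ -t 1 ]; then
  reset_sequence
fi
```

Wrapping the sequence in a function keeps the raw escape code in one place, which makes it easier to reuse in a shell startup file or an alias.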

For more information, see the man pages for `stty` and `reset`: stty(1), reset(1), bash(1).

---

via: <https://www.cyberciti.biz/tips/bash-fix-the-display.html>

Author: [Vivek Gite](https://www.cyberciti.biz) Translator: [lujun9972](https://github.com/lujun9972) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

| 403 | Forbidden | null |

9,182 | Bash scripting: regular expression basics | http://linuxtechlab.com/bash-scripting-learn-use-regex-basics/ | 2017-12-27T15:11:35 | [

"regular expressions",

"scripting"

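] | https://linux.cn/article-9182-1.html | A regular expression (regex or regexp for short) is basically a string that defines a search pattern. It can be used to perform search or search-and-replace operations, and to validate conditions such as a password policy.

Regular expressions are a very powerful tool, and one advantage is that they can be used in almost every programming language. Whether you are writing a Bash script, creating a Python program, or composing a one-line search query, you can use regular expressions.

In this tutorial, we will learn some basic regex concepts and how to use them in Bash with `grep`. If you want to use them in other languages such as Python or C, only the regex part carries over. Let's start with an example.

A regular expression looks like `/t[aeiou]l/`.

What does it mean? This regex looks for a string that starts with `t`, has any one of the letters `a e i o u` in the middle, and ends with `l`. It matches `tel`, `tal`, or `til`, either as a separate word or as part of another word such as `tilt`, `brutal`, or `telephone`.

The syntax for using a regex with grep is `$ grep "regex_search_term" file_location`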
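As a minimal sketch of that syntax, the following script builds a small sample file and searches it with the `t[aeiou]l` pattern from above. The file contents and the variable names `sample` and `matches` are made up for illustration.

```shell
# Hypothetical sample data written to a temporary file
sample=$(mktemp)
printf '%s\n' 'tilt' 'brutal' 'telephone' 'total' 'tool' > "$sample"

# Print every line that contains t, then one vowel, then l
matches=$(grep 't[aeiou]l' "$sample")
printf '%s\n' "$matches"

# Remove the temporary file
rm -f "$sample"
```

Note that `grep` prints whole lines that contain a match. The line `tool` is skipped because two characters, not one, sit between its `t` and `l`.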

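Don't worry if this is not clear yet. It is only an example of what regular expressions can do, and it is about the simplest one there is; they can do much more. We will now start from the basics.

* Recommended reading: [Useful Linux commands you should know](http://linuxtechlab.com/useful-linux-commands-you-should-know/)

### Basic regular expressions

We will now learn some special characters known as metacharacters. They help us build more complex search patterns. The basic metacharacters are:

* `.` matches any single character

* `[ ]` matches any one character from the listed set or range

* `[^ ]` matches any one character except those listed in the brackets

* `*` matches zero or more of the preceding item

* `+` matches one or more of the preceding item

* `?` matches zero or one of the preceding item

* `{n}` matches exactly n of the preceding item

* `{n,}` matches n or more of the preceding item

* `{n,m}` matches between n and m of the preceding item

* `{,m}` matches at most m of the preceding item

* `\` is the escape character, used when the search needs to include a metacharacter literally

We will now go through these metacharacters with examples. With `grep`, the `+`, `?`, and `{ }` operators require extended regular expressions, which are enabled with `grep -E`.

#### `.` (dot)

It matches any single character in the search term. For example: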

```

$ grep "d.g" file1

```

这个正则表达式意味着我们在名为 ‘file1’ 的文件中查找的词以 `d` 开始,以 `g`结尾,中间可以有 1 个字符的字符串。同样,我们可以使用任意数量的点作为我们的搜索模式,如 `T......h`,这个查询项将查找一个词,以 `T` 开始,以 `h` 结尾,并且中间可以有任意 6 个字符。

#### `[ ]`

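Square brackets define a set or range of characters. They are useful when we want to match specific characters rather than any character, for example: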

```

$ grep "N[oen]n" file2

```

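Here we are looking for text that starts with `N`, ends with `n`, and has exactly one of `o`, `e` or `n` in the middle. The brackets can hold anything from a single character to any number of characters.

We can also define ranges such as `a-e` or `0-9` inside the brackets. A range always covers single characters, so `1-18` does not mean the numbers 1 to 18.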
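Ranges inside brackets work on single characters, which is easy to get wrong with numbers. The sketch below, on made-up input, shows what `[1-18]` actually matches and one way to express "1 to 18" instead. `-x` makes `grep` match whole lines only, and the alternation `|` needs `-E`.

```shell
# `[1-18]` is the range 1-1 plus the character 8, not "1 through 18".
range_hits=$(printf '%s\n' 1 5 8 18 | grep -x '[1-18]')

# A multi-digit range has to be spelled out digit by digit.
num_hits=$(printf '%s\n' 1 5 8 18 19 | grep -xE '[1-9]|1[0-8]')

printf 'bracket range:\n%s\nspelled out:\n%s\n' "$range_hits" "$num_hits"
```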

#### `[^ ]`

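This works like a NOT operator for regular expressions. With `[^ ]`, the search matches any one character except those listed inside the brackets. For example,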

```

$ grep "St[^1-9]d" file3

```

This matches text that starts with `St`, ends with the letter `d`, and has exactly one character in between that is not a digit from `1` to `9`.
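A quick check of the negated set on made-up input. Two details are worth noticing: `0` is not in `1-9`, so it still matches, and the bracket must consume one character, so `Std` does not match.

```shell
neg_hits=$(printf '%s\n' Stad St5d St0d Std | grep 'St[^1-9]d')
printf '%s\n' "$neg_hits"
```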

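Until now, our examples only looked for a single character in the middle. But what if we need more? Suppose we need to find all words that start and end with a given character and have any number of characters in between. This is where the multiplier metacharacters `+`, `*` and `?` come in.

`{n}`, `{n,m}`, `{n,}` and `{,m}` are further multiplier metacharacters that we can use in our regex terms.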
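The brace multipliers have no example of their own in this tutorial, so here is a minimal sketch on made-up input. It assumes `grep -E`, where braces work unescaped, and uses `-x` so that each line has to match as a whole.

```shell
lines='la
lak
lakk
lakkk'

exact=$(printf '%s\n' "$lines" | grep -xE 'lak{2}')     # exactly two k
atleast=$(printf '%s\n' "$lines" | grep -xE 'lak{2,}')  # two or more k
between=$(printf '%s\n' "$lines" | grep -xE 'lak{1,2}') # one or two k

printf '{2}:\n%s\n{2,}:\n%s\n{1,2}:\n%s\n' "$exact" "$atleast" "$between"
```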

#### `*` (asterisk)

The following example matches any number of occurrences of the letter `k`, including none at all:

```

$ grep "lak*" file4

```

This means we can match `lake`, `la` or `lakkkk`.

#### `+`

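The following pattern requires the letter `k` to match at least once. With `grep`, `+` is only a multiplier in extended mode, hence the `-E` option:

```

$ grep -E "lak+" file5

```

Here `k` must occur at least once, so the result can be `lake` or `lakkkk`, but not `la`.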

#### `?`

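In the following pattern

```

$ grep -E "ba?b" file6

```

the strings `bb` and `bab` match: with the `?` multiplier, the preceding character may occur once or not at all. As with `+`, this needs `grep -E`.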
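One caveat for the `+` and `?` examples: plain `grep` uses basic regular expressions, in which both characters are taken literally, while `grep -E` treats them as multipliers. The sketch below shows the difference on made-up input; it describes GNU grep, and other implementations may differ in details.

```shell
input='la
lak
lak+'

bre=$(printf '%s\n' "$input" | grep 'lak+')     # basic syntax: "+" is a literal plus sign
ere=$(printf '%s\n' "$input" | grep -E 'lak+')  # extended syntax: one or more "k"

# "?" behaves the same way; with -E it means zero or one "a".
opt=$(printf '%s\n' bb bab baab | grep -xE 'ba?b')

printf 'basic:\n%s\nextended:\n%s\noptional:\n%s\n' "$bre" "$ere" "$opt"
```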

#### A very important note

This matters a lot when using multipliers. Suppose we have the regular expression

```

$ grep "S.*l" file7

```

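We get results such as `Small` and `Silly`, but also `Shane is a little to play ball`. Why do we get a whole sentence when we were only looking for a word?

Because it satisfies our search criteria: it starts with the letter `S`, has any number of characters in the middle, and ends with the letter `l`. Multipliers are greedy, so `.*` stretches as far as it can. (Separately, `grep` prints the entire matching line unless `-o` is given.) So how can we correct the expression to get a short match instead of the whole sentence?

We need to add the `?` metacharacter after the multiplier to make it lazy. Lazy multipliers are a Perl-style feature, so with GNU `grep` this needs `-P`, plus `-o` to print only the matched text:

```

$ grep -oP "S.*?l" file7

```

This corrects the behavior of our regular expression.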
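The greedy and lazy forms can be compared side by side. This is a sketch under some assumptions: the two input lines are made up, `-P` (Perl-compatible syntax) is a GNU grep option that some builds lack, so the script checks for it first, and the last command shows one possible way to get whole words only.

```shell
text='Small
Shane is a little to play ball'

# Greedy: .* runs to the last "l" on each line.
greedy=$(printf '%s\n' "$text" | grep -oE 'S.*l')

# Lazy: .*? stops at the first "l". Needs PCRE support (-P).
if printf 'x\n' | grep -qP 'x' 2>/dev/null; then
  lazy=$(printf '%s\n' "$text" | grep -oP 'S.*?l')
else
  lazy='grep -P is not available here'
fi

# Whole words only: -w plus a letters-only middle part.
words_only=$(printf '%s\n' "$text" | grep -owE 'S[[:alpha:]]*l')

printf 'greedy:\n%s\nlazy:\n%s\nwords:\n%s\n' "$greedy" "$lazy" "$words_only"
```

Note that the lazy form returns the shortest match, which is not necessarily a word; when the goal is whole words, restricting the middle part to letters is usually the more direct fix.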

#### `\`

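`\` is used when we need to include a metacharacter, or any character with a special meaning in regular expressions, literally. For example, to find everything that ends with a dot we can use: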

```

$ grep "S.*\\." file8

```

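This finds and matches all text that starts with `S` and ends with a literal dot. (Inside double quotes the shell turns `\\` into a single `\`, so `grep` receives `S.*\.`.)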
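To see what the backslash changes, the sketch below runs the same made-up input through an escaped and an unescaped dot. Single quotes are used here so that the shell passes the backslash to `grep` untouched.

```shell
esc_in='S.d
Sad
S.'

literal_dot=$(printf '%s\n' "$esc_in" | grep 'S\.')  # only a real "." after S
any_char=$(printf '%s\n' "$esc_in" | grep 'S.')      # any character after S

printf 'escaped:\n%s\nunescaped:\n%s\n' "$literal_dot" "$any_char"
```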

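With this basic tutorial we now have an idea of how regular expressions work. In the next tutorial we will look at some advanced regex concepts. In the meantime, practice as much as you can: write regular expressions and try to use them in your daily work. If you have any questions, leave a comment below.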

---

via: <http://linuxtechlab.com/bash-scripting-learn-use-regex-basics/>

Author: [SHUSAIN](http://linuxtechlab.com/author/shsuain/) Translator: [kimii](https://github.com/kimii) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 301 | Moved Permanently | null |

9,183 | Ubuntu 18.04 新功能、发行日期和更多信息 | https://thishosting.rocks/ubuntu-18-04-new-features-release-date/ | 2017-12-30T08:34:00 | [

"Ubuntu"

] | https://linux.cn/article-9183-1.html |

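We have all been waiting for it: the new LTS release of Ubuntu, 18.04. Read on for the new features, the release dates, and more.

### Basic information about Ubuntu 18.04

Let's start with some basic information.

* It is a new LTS (Long Term Support) release, so both the desktop and the server version get 5 years of support.

* It is named "Bionic Beaver". Mark Shuttleworth, the founder of Canonical, explained the meaning behind the name. The mascot is a beaver because it is energetic, industrious, and an awesome engineer, which perfectly describes a typical Ubuntu user and the new Ubuntu release itself. The adjective "Bionic" refers to the growing number of robots that run on Ubuntu Core.

### Ubuntu 18.04 release dates and schedule

If you are new to Ubuntu, you may not know what the version numbers mean. They are the year and month of the official release. So Ubuntu 18.04 will be officially released in the 4th month of 2018, and Ubuntu 17.10 was released in the 10th month of 2017.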
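The version scheme is simple enough to decode mechanically. The helper below is purely illustrative (`decode_version` is a made-up name, not an Ubuntu tool) and assumes a `YY.MM` string from the 2000s.

```shell
decode_version() {
  yy=${1%%.*}   # text before the dot: two-digit year
  mm=${1#*.}    # text after the dot: month
  printf 'year 20%s, month %s\n' "$yy" "$mm"
}

decode_version 18.04
decode_version 17.10
```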

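In more detail, here are the important dates you need to know about Ubuntu 18.04 LTS:

* November 30, 2017 - Feature Definition Freeze.

* January 4, 2018 - First Alpha release. If you opted in to receive Alpha releases, you will get the Alpha 1 update on this date.

* February 1, 2018 - Second Alpha release.

* March 1, 2018 - Feature Freeze. No new features will be introduced or released, so the development team will only work on improving existing features and fixing bugs. There are exceptions, of course. If you are not a developer or an experienced user but still want to try the new Ubuntu as soon as possible, I would personally recommend starting with this release.

* March 8, 2018 - First Beta release. If you opted in to receive Beta updates, you will get the update on this day.

* March 22, 2018 - User Interface Freeze. No further changes or updates will be made to the actual user interface, so if you write documentation or [tutorials](https://thishosting.rocks/category/knowledgebase/) and use screenshots, it is safe to start then.

* March 29, 2018 - Documentation String Freeze. No edits or new strings will be added to the documentation, so translators can start translating it.

* April 5, 2018 - Final Beta release. This is also a good day to start using the new release.

* April 19, 2018 - Final Freeze. Everything is pretty much done now. Images for the release are created and distributed, and will likely not change.

* April 26, 2018 - Official final release of Ubuntu 18.04. Everyone can start using it from this day on, even on production servers. We recommend getting an Ubuntu 18.04 server from [Vultr](https://thishosting.rocks/go/vultr/) and testing out the new features. Servers at [Vultr](https://thishosting.rocks/go/vultr/) start at $2.5 per month. (LCTT translator's note: this is an advertisement from the original article!)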

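### What's new in Ubuntu 18.04

All the new features in Ubuntu 18.04 LTS:

#### Color emojis are now supported

In previous versions, Ubuntu only supported monochrome (black and white) emojis, which, quite frankly, didn't look so good. Ubuntu 18.04 will support color emojis by using the [Noto Color Emoji font](https://www.google.com/get/noto/help/emoji/). With 18.04 you can view and add color emojis with ease everywhere. They are supported natively, so you can use them without third-party apps and without installing or configuring anything extra. You can always disable color emojis by removing the font.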

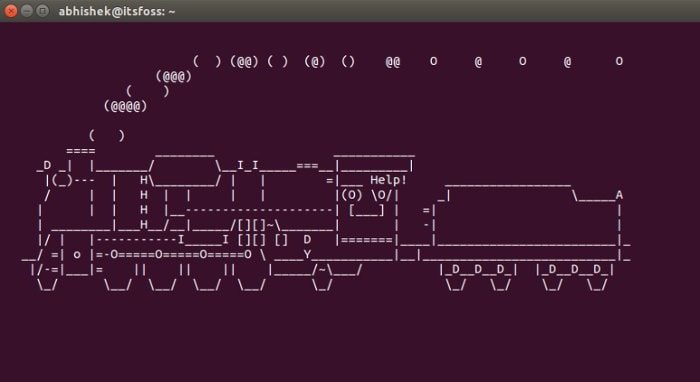

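#### GNOME desktop environment

[Screenshot: the GNOME desktop on Ubuntu 17.10](https://thishosting.rocks/wp-content/uploads/2017/12/ubuntu-17-10-gnome.jpg)

Ubuntu switched to the GNOME desktop environment with Ubuntu 17.10, replacing Unity as the default. Ubuntu 18.04 will continue to use GNOME. This is a major change for Ubuntu.

#### Ubuntu 18.04 Desktop will have a new default theme

Ubuntu 18.04 is saying goodbye to the old default theme, "Ambiance", with a new GTK theme. If you want to help with the new theme, see some screenshots and more, go [here](https://community.ubuntu.com/t/call-for-participation-an-ubuntu-default-theme-lead-by-the-community/1545).

As of now, there is speculation that Suru will be the [new default icon theme](http://www.omgubuntu.co.uk/2017/11/suru-default-icon-theme-ubuntu-18-04-lts) for Ubuntu 18.04. Here is a screenshot:

[Screenshot: the Suru icon theme](https://thishosting.rocks/wp-content/uploads/2017/12/suru-icon-theme-ubuntu-18-04.jpg)

> Worth noting: all new features in Ubuntu 16.10, 17.04 and 17.10 will roll through to Ubuntu 18.04. So updates such as window buttons on the right, a better login screen and improved Bluetooth support will come to Ubuntu 18.04. We won't cover them separately, since they are not really new to Ubuntu 18.04 itself. If you want to learn about all the changes from 16.04 to 18.04, search for each version in between.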

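### Download Ubuntu 18.04

First off, if you are already using Ubuntu, you can simply upgrade to Ubuntu 18.04.

If you need to download Ubuntu 18.04:

After the final release, go to the [official Ubuntu download page](https://www.ubuntu.com/download).

For daily builds (alpha, beta and non-final releases), go [here](http://cdimage.ubuntu.com/daily-live/current/).

### FAQs

Now for some frequently asked questions (with answers) that should give you more information about all of this.

#### When is it safe to switch to Ubuntu 18.04?

On the official final release date, of course. But if you can't wait, start using the desktop version on March 1, 2018, and start testing the server version on April 5, 2018. To be truly safe, you will need to wait for the final release, or even longer, until the third-party services and apps you use have been tested and work well on the new release.

#### How do I upgrade my server to Ubuntu 18.04?

The process is fairly simple but carries significant risk. We may publish a tutorial in the near future, but you basically need to use `do-release-upgrade`. Again, upgrading a server is risky, and on a production server I would think twice before upgrading, especially if you are on 16.04, which still has a few years of support left.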
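As a rough outline of what such an upgrade usually involves, the sketch below only prints a typical command sequence instead of running it. The order shown (check the current release, bring the system fully up to date, then start the release upgrade) is an assumption about common practice rather than an official procedure, and `upgrade_plan` is a made-up helper name. Take a full backup before running any of these commands for real.

```shell
# Dry run: print the usual sequence instead of executing it.
upgrade_plan() {
  cat <<'EOF'
lsb_release -a
sudo apt update
sudo apt full-upgrade
sudo do-release-upgrade
EOF
}

upgrade_plan
```

On an LTS system the upgrader may not offer the next LTS until its first point release is out, so seeing no new release right after launch is not necessarily an error.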

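#### How can I help with Ubuntu 18.04?

Even if you are not an experienced developer or Ubuntu user, you can still help by:

* Spreading the word. Let people know about Ubuntu 18.04. A simple share on social media helps a bit too.

* Using and testing the release. Start using the release and test it. Again, you don't have to be a developer. You can still find and report bugs, or send feedback.

* Translating. Join the translation teams and start translating documentation or applications.

* Helping other people. Join some online Ubuntu communities and help others with the issues they have with Ubuntu 18.04. Sometimes people need help with simple things like "where can I download Ubuntu?"

#### What does Ubuntu 18.04 mean for other distros like Lubuntu?

All distros based on Ubuntu will have similar new features and a similar release schedule. Check your distro's official website for more information.

#### Is Ubuntu 18.04 an LTS release?

Yes, Ubuntu 18.04 is an LTS (Long Term Support) release, so you will get 5 years of support.

#### Can I switch from Windows/OS X to Ubuntu 18.04?

Of course! You will most likely experience a performance boost too. Switching from a different OS to Ubuntu is fairly easy, and there are plenty of tutorials on it. You can even set up dual boot to use multiple operating systems, so you can use both Windows and Ubuntu 18.04.

#### Can I try Ubuntu 18.04 without installing it?

Sure. You can use something like [VirtualBox](https://www.virtualbox.org/) to create a "virtual desktop": you install it on your local machine and use Ubuntu 18.04 without actually installing Ubuntu on it.

Or you can try an Ubuntu 18.04 server at [Vultr](https://thishosting.rocks/go/vultr/) for $2.5 per month. It is essentially free if you use some [free credits](https://thishosting.rocks/vultr-coupons-for-2017-free-credits-and-more/). (LCTT translator's note: advertisement!)

#### Why can't I find a 32-bit version of Ubuntu 18.04?

Because there is no 32-bit version. Ubuntu dropped 32-bit versions with its 17.10 release. If you are using old hardware, you are better off with a different [lightweight Linux distro](https://thishosting.rocks/best-lightweight-linux-distros/) than with Ubuntu 18.04.

#### Any other questions?

Leave a comment below and share your thoughts. We are very excited and will update this article as soon as new information comes in. Stay tuned and be patient!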

---

via: <https://thishosting.rocks/ubuntu-18-04-new-features-release-date/>

Author: <thishosting.rocks> Translator: [kimii](https://github.com/kimii) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 200 | OK | We’ve all been waiting for it – the new LTS release of Ubuntu – 18.04. Learn more about new features, the release dates, and more.

Note: we’ll frequently update this article with new information, so bookmark this page and check back soon.

**Basic information about Ubuntu 18.04**

Let’s start with some basic information.

- It’s a new LTS (Long Term Support) release. So you get **5 years of support** for both the desktop and server version.

- Named **“Bionic Beaver”**. The founder of Canonical, Mark Shuttleworth, explained the meaning behind the name. The mascot is a Beaver because it’s energetic, industrious, and an awesome engineer – which perfectly describes a typical Ubuntu user, and the new Ubuntu release itself. The “Bionic” adjective is due to the increased number of robots that run on the Ubuntu Core.

**Ubuntu 18.04 Release Dates & Schedule**

If you’re new to Ubuntu, you may not be familiar with what the version numbers mean. It’s the year and month of the official release. So Ubuntu’s 18.04 official release will be in the **4**th month of the year 20**18**. Ubuntu 17.10 was released in 20**17**, in the **10**th month of the year.

To go into further details, here are the important dates you need to know about Ubuntu 18.04 LTS:

- November 30th, 2017 – Feature Definition Freeze.

- January 4th, 2018 – First Alpha release. So if you opted-in to receive new Alpha releases, you’ll get the Alpha 1 update on this date.

- February 1st, 2018 – Second Alpha release.

- March 1st, 2018 – Feature Freeze. No new features will be introduced or released. So the development team will only work on improving existing features and fixing bugs. With exceptions, of course. If you’re not a developer or an experienced user, but would still like to try the new Ubuntu ASAP, then I’d personally recommend starting with this release.

- March 8th, 2018 – First Beta release. If you opted-in for receiving Beta updates, you’ll get your update on this day.

- March 22nd, 2018 – User Interface Freeze. It means that no further changes or updates will be done to the actual user interface, so if you write documentation, [tutorials](https://thishosting.rocks/category/knowledgebase/), and use screenshots, it’s safe to start then.

- March 29th, 2018 – Documentation String Freeze. There won’t be any edits or new stuff (strings) added to the documentation, so translators can start translating the documentation.

- April 5th, 2018 – Final Beta release. This is also a good day to start using the new release.

- April 19th, 2018 – Final Freeze. Everything’s pretty much done now. Images for the release are created and distributed, and will likely not have any changes.

- **April 26th, 2018 – Official, Final release** of Ubuntu 18.04. Everyone should start using it starting this day, even on production servers. We recommend getting an Ubuntu 18.04 server from [Vultr](https://thishosting.rocks/go/vultr/) and testing out the new features. Servers at [Vultr](https://thishosting.rocks/go/vultr/) start at $2.5 per month.

**What’s New in Ubuntu 18.04**

All the new features in Ubuntu 18.04 LTS:

### Color emojis are now supported 👏👏👏

With previous versions, Ubuntu only supported monochrome (black and white) emojis, which quite frankly, didn’t look so good. Ubuntu 18.04 will support colored emojis by using the [Noto Color Emoji font](https://www.google.com/get/noto/help/emoji/). With 18.04, you can view and add color emojis with ease everywhere. They are supported natively – so you can use them without using 3-rd party apps or installing/configuring anything extra. You can always disable the color emojis by removing the font.

### GNOME desktop environment

Ubuntu started using the GNOME desktop environment with Ubuntu 17.10 instead of the default Unity environment. Ubuntu 18.04 will continue using GNOME. This is a major change to Ubuntu.

This article will mainly focus on Ubuntu’s changes, but GNOME has also done a lot of changes to their desktop environment, as well as new features. An improved dock, an on-screen keyboard, and more. So check out GNOME’s website for more info.

### Ubuntu 18.04 Desktop will have a new theme

Ubuntu 18.04 is saying goodbye to the old ‘Ambiance’ theme with a new GTK theme. If you want to help with the new theme, check out some screenshots and more, go [here](https://community.ubuntu.com/t/mockups-new-design-discussions/1898/180). It may not be the default, but it most probably will be.

As of now, there is speculation that Suru will be the [new default icon theme](http://www.omgubuntu.co.uk/2017/11/suru-default-icon-theme-ubuntu-18-04-lts) for Ubuntu 18.04. Here’s a screenshot:

**UPDATE:** Ubuntu 18.04 will ship with Ambiance and **it won’t use a new theme by default**. The new Communitheme won’t even be installed. The Desktop team has decided to do this for various reasons, including bugs and lack of testing.

Luckily, you can still use the Communitheme, but you’ll have to install it yourself. The Communitheme can be installed easily via a snap, but you can always install it manually by following the instructions below.

You can actually try the new theme (Communitheme) right now if you’re using Ubuntu 17.10 or Ubuntu 18.04. You can do that by:

Add a repository: `sudo add-apt-repository ppa:communitheme/ppa`

Update your package list: `sudo apt update`

And install the new theme: `sudo apt install ubuntu-communitheme-session`

To start using it, you need to log out, and when logging back in, select the new theme. For more information, visit the [Communitheme GitHub repo](https://github.com/Ubuntu/gnome-shell-communitheme).

### Ubuntu 18.04 with a Faster Boot Speed

The Ubuntu desktop team has been working on improving the boot time on the new Ubuntu 18.04 release. We expect a big improvement.

### Xorg will be used by default instead of Wayland

Ubuntu 17.10 used the Wayland graphics server by default. With Ubuntu 18.04, the default graphics server will change to Xorg. Wayland will still be available as an option, but Xorg will be the default, out of the box one. The Ubuntu Desktop team decided to go with Xorg for its compatibility with services like Skype, Google Hangouts, WebRTC services, VNC and RDP, and more.

### Lots of improvements and bug fixes

The most notable improvement will be in CPU usage. The Ubuntu Desktop team has greatly improved and reduced the CPU usage caused by Ubuntu 18.04.

They’ve also fixed hundreds of bugs and made hundreds of other small improvements.

### Ubuntu 18.04 Desktop will have a new app pre-installed

The new LTS desktop release will ship with a new app pre-installed by default. The app is [GNOME To Do](https://wiki.gnome.org/Apps/Todo) and it’s a very useful app for organizing lists, tasks, and more. You can prioritize them, color them, set due dates, and more.

### Ubuntu 18.04 minimal install option

Ubuntu 18.04 will use [Ubiquity](https://wiki.ubuntu.com/Ubiquity), the Ubuntu installer you’re probably already familiar with. Though the developers plan on implementing Subiquity, 18.04 will use Ubiquity, which will have a new “[minimal install](https://thishosting.rocks/wp-content/uploads/2017/12/ubuntu-18-04-minimal-installation.jpg)” option that you can choose during setup. Minimal install basically means the same Ubuntu, but without most of the pre-installed software. The minimal install option does not make Ubuntu 18.04 lightweight. It only saves about 500 MB. The minimal Ubuntu 18.04 version is only 28MB in size (when compressed). If you need a [lightweight alternative](https://thishosting.rocks/best-lightweight-linux-distros/), use something like Lubuntu.

### Ubuntu 18.04 will collect data about your system and make it public

Ubuntu 18.04 will collect data like the Ubuntu flavor you’re using, hardware stats, your country etc. Anyone can opt out of this, but it’s enabled by default. What’s interesting about this is that the data they collect will be public, and no sensitive data will be collected, so most of the Ubuntu community supports this decision.

### Applications will be installed as snaps by default

They have been planning on using [snaps](https://www.ubuntu.com/desktop/snappy) for a while, and they finally shipped GNOME Calculator as a snap instead of a deb. This is a test to help the Desktop team find and fix any bugs. They’ll later on move more applications to snaps in the final release. Using snaps will make the process of installing and updating apps much easier. You can even install snaps on any distro and device.

### Ubuntu 18.04 will ship with Linux Kernel 4.15

Ubuntu’s daily builds started to ship with Linux Kernel 4.15 by default – the latest stable release of the Linux kernel. 4.15 has fixed the Spectre and Meltdown issues, among other things.

### New default background in Ubuntu 18.04

And of course, the new Ubuntu release will have a new default background (wallpaper) with a Beaver:

You can download the full (8K) .png version [here](https://launchpadlibrarian.net/361641455/18.04_beaver_wp_8192x4608_AW-60.png). There’s also a black and white version available [here](https://thishosting.rocks/wp-content/uploads/2017/12/ubuntu-18-04-background-bw.jpg).

There are also various other new wallpapers available from the Free Culture Showcase:

### Further Reading

You can read this [overview on Ubuntu.com](https://insights.ubuntu.com/2018/04/27/breeze-through-ubuntu-desktop-18-04-lts-bionic-beaver) for 18.04 which includes screenshots.

Worth noting: all new features in Ubuntu 16.10, 17.04, and 17.10 will roll through to Ubuntu 18.04. So updates like Window buttons to the right, a better login screen, improved Bluetooth support etc. will roll out to Ubuntu 18.04. We won’t include a special section since it’s not really new to Ubuntu 18.04 itself. If you want to learn more about all the changes from 16.04 to 18.04, google it for each version in between.

**Download Ubuntu 18.04**

First off, if you’re already using Ubuntu, you can just upgrade to Ubuntu 18.04.

If you need to download Ubuntu 18.04:

Go to the [official Ubuntu 18.04 download page](http://releases.ubuntu.com/18.04/)

**FAQs**

Now for some of the frequently asked questions (with answers) that should give you more information about all of this.

### Will I be able to add icons to my desktop on Ubuntu 18.04?

Since version 3.28, GNOME has removed the option to put icons on your desktop, as well as some other features of managing your desktop. So naturally, people are wondering if they’ll be able to put icons on their Ubuntu 18.04 desktop since Ubuntu 18.04 will be using the latest GNOME.

The answer is yes. You’ll still be able to put icons on your desktop because the Ubuntu desktop team has decided to stick to the older version of GNOME’s file manager, which still has the desktop features. It will only stick back to the older version of Nautilus (Nautilus 3.26), but will keep all the latest releases of everything else in GNOME. So no worries. Desktop in Ubuntu 18.04 is fine.

### When is it safe to switch to Ubuntu 18.04?

On the official final release date, of course. But if you can’t wait, start using the desktop version on March 1st, 2018, and start testing out the server version on April 5th, 2018. But for you to truly be “safe”, you’ll need to wait for the final release, maybe even longer, so that the third-party services and apps you are using are tested and working well on the new release.

### How do I upgrade my server to Ubuntu 18.04?

It’s a fairly simple process but has huge potential risks. We may publish a tutorial sometime in the near future, but you’ll basically need to use ‘do-release-upgrade’. Again, upgrading your server has potential risks, and if you’re on a production server, I’d think twice before upgrading. Especially if you’re on 16.04 which has a few years of support left.

### How can I help with Ubuntu 18.04?

Even if you’re not an experienced developer and Ubuntu user, you can still help by:

- Spreading the word. Let people know about Ubuntu 18.04. A simple share on social media helps a bit too.

- Using and testing the release. Start using the release and test it. Again, you don’t have to be a developer. You can still find and report bugs, or send feedback.

- Translating. Join the translating teams and start translating documentation and/or applications.

- Helping other people. Join some online Ubuntu communities and help others with issues they’re having with Ubuntu 18.04. Sometimes people need help with simple stuff like “where can I download Ubuntu?”

### What does Ubuntu 18.04 mean for other distros like Lubuntu?

All distros that are based on Ubuntu will have similar new features and a similar release schedule. You’ll need to check your distro’s official website for more information.

### Is Ubuntu 18.04 an LTS release?

Yes, Ubuntu 18.04 is an LTS (Long Term Support) release, so you’ll get support for 5 years.

### Can I switch from Windows/OS X to Ubuntu 18.04?

Of course! You’ll most likely experience a performance boost too. Switching from a different OS to Ubuntu is fairly easy, there are quite a lot of tutorials for doing that. You can even set up a dual-boot where you’ll be using multiple OSes, so you can use both Windows and Ubuntu 18.04.

### Can I try Ubuntu 18.04 without installing it?

Sure. You can use something like [VirtualBox](https://www.virtualbox.org/) to create a “virtual desktop” – you can install it on your local machine and use Ubuntu 18.04 without actually installing Ubuntu.

Or you can try an Ubuntu 18.04 server at [Vultr](https://thishosting.rocks/go/vultr/) for $2.5 per month. It’s essentially free if you use some [free credits](https://thishosting.rocks/vultr-coupons-for-2017-free-credits-and-more/).

### Why can’t I find a 32-bit version of Ubuntu 18.04?

Because there is no 32bit version. Ubuntu dropped 32bit versions with its 17.10 release. If you’re using old hardware, you’re better off using a different [lightweight Linux distro](https://thishosting.rocks/best-lightweight-linux-distros/) instead of Ubuntu 18.04 anyway.

### Any other question?

Leave a comment below! Share your thoughts, we’re super excited and we’re gonna update this article as soon as new information comes in. Stay tuned and be patient!

|

9,184 | 如何改善遗留的代码库 | https://jacquesmattheij.com/improving-a-legacy-codebase | 2017-12-28T09:33:45 | [

"代码",

"重构"

] | /article-9184-1.html |

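It happens at least once in the lifetime of every programmer, project manager or team leader. The original programmers have long since left, leaving you with a pile of several million lines of awful code that barely keeps the company running, plus documentation (if there is any) that has little to do with the code.

Your job: lead the team out of this mess.

Once your first instinct (run for the hills) has passed, you start to get familiar with the project. Senior management is watching, so the project cannot be allowed to fail; yet after a look at the code, failure seems almost inevitable. So what do you do?

Fortunately (or unfortunately) I have been in this situation several times, and my partners and I have found that turning this steaming pile into a healthy, maintainable project is a lucrative business. Here are some of the things we have learned:

### Backups

Before you do anything else, back up every file that might be relevant. This ensures that no information is lost that could turn out to matter somewhere else. Once some of those files have been changed, a silly question can cost you a day or more to answer. Configuration data is usually not under version control and is therefore especially vulnerable; you are lucky if it happens to be included in the regular backups. Better safe than sorry: copy everything to a very safe place and never touch that copy unless it is in read-only mode.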
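One possible way to take such a snapshot is sketched below. It is an illustration on throwaway directories, not the author's procedure: `snapshot` is a made-up helper, the file names are invented, and it assumes GNU `tar` and `sha256sum` are available. The archive gets a checksum and both files are made read-only, in the spirit of "never touch the copy".

```shell
snapshot() {
  src=$1
  dest=$2
  mkdir -p "$dest"
  # Archive the tree, record a checksum, then make both files read-only.
  tar -czf "$dest/snapshot.tar.gz" -C "$src" .
  (cd "$dest" && sha256sum snapshot.tar.gz > snapshot.tar.gz.sha256)
  chmod a-w "$dest/snapshot.tar.gz" "$dest/snapshot.tar.gz.sha256"
}

# Demo on temporary directories with an invented config file.
work=$(mktemp -d)
mkdir "$work/legacy" && echo 'db_host=10.0.0.5' > "$work/legacy/app.conf"
snapshot "$work/legacy" "$work/backup"

# Verify the archive against its recorded checksum.
(cd "$work/backup" && sha256sum -c snapshot.tar.gz.sha256)
```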

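### Important prerequisite: make sure you can build what actually runs in production

I had assumed this was already in place and so left this step out entirely, but many Hacker News readers pointed it out, and they are right: step one is to make sure you know what is running in production, which means being able to build a version on your own machine that is byte-for-byte identical to the one running in production. If you cannot find a way to do that, you are likely to get nasty surprises once you deploy. Test every part as well as you can, and deploy to production only when you are confident enough that it works. Be ready to switch back to the old version immediately, whatever happens, and make sure everything is logged for the inevitable post-mortem.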
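A byte-for-byte comparison is easy to script. The sketch below uses stand-in files created on the spot; in practice the two arguments would be the artifact pulled from production and the one produced by your own build, and `same_bytes` is just an illustrative wrapper around `cmp`.

```shell
same_bytes() {
  # cmp -s is silent and exits 0 only when both files are byte-for-byte identical.
  cmp -s "$1" "$2"
}

# Stand-in artifacts for the demo.
tmp=$(mktemp -d)
printf 'release-1\n' > "$tmp/prod.bin"
printf 'release-1\n' > "$tmp/local.bin"
printf 'release-2\n' > "$tmp/other.bin"

same_bytes "$tmp/prod.bin" "$tmp/local.bin" && echo "local build matches production"
same_bytes "$tmp/prod.bin" "$tmp/other.bin" || echo "other build differs"
```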

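### Freeze the database

Freeze the database for as long as possible, until you are done changing code, and only modify it once you know the codebase and the legacy code really well. If you change the database too early you may run into big problems, because you lose the ability to build a solid foundation with the old code, the new code and the database side by side. With the database completely unchanged, you can compare the results of the new business logic with those of the old, and the comparison should show no unexpected differences.

### Write your tests

Before you change anything, write as many end-to-end and integration tests as you can. Make sure these tests produce the right output and exercise every assumption you have about how the old code works (be prepared for surprises). The tests serve two important purposes: they help you get rid of misconceptions early, and they act as guardrails when you start writing new code to replace the old.

Automate the tests. If you have experience with CI, use it, and make sure CI runs all tests quickly after every commit.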
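One common shape for such tests is a comparison harness: feed the same inputs to the old and the new code path and fail on the first difference. The sketch below shows the idea with two trivial stand-in functions; `legacy_total` and `rewritten_total` are placeholders for whatever entry points your system really has.

```shell
legacy_total() { echo $(( $1 * 3 + 1 )); }      # stand-in for the old code path
rewritten_total() { echo $(( 1 + 3 * $1 )); }   # stand-in for the new code path

check_same() {
  for input in 0 1 7 42; do
    old=$(legacy_total "$input")
    new=$(rewritten_total "$input")
    if [ "$old" != "$new" ]; then
      echo "MISMATCH for $input: old=$old new=$new"
      return 1
    fi
  done
  echo "all outputs match"
}

check_same
```

Because `check_same` returns a non-zero status on a mismatch, a CI job can run it directly and fail the build.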

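### Instrumentation and logging

If the old platform is still available, add instrumentation to it. In a completely new database table, add a simple counter for every event you can think of, and a single function that increments these counters by event name. With a few extra lines of code this gives you a time-stamped event log, and a good idea of how many events of one kind lead to events of another kind. One example: user opens app, user closes app. If both events result in back-end calls, the two counters should keep a roughly constant difference over the long term, and that difference is the number of apps currently open. If you see many more opens than closes, you know there must be another way for the app to end (a crash, for instance). You will find that every event is related to other events in some way, and usually you should aim to keep those relationships constant unless there is an obvious error somewhere in the system. Your goal is to reduce the counters that indicate errors, and to bring the counters further down the chain up to the level of the counters at the start. (For instance: customers attempting to pay should result in an equal number of payments actually received.)

This simple trick turns every back-end application into something like a bookkeeping system, and as in a real bookkeeping system the numbers have to match; when they don't, there is a problem somewhere.

Over time this system becomes invaluable for monitoring health, and it is a good companion to the source control change log: you can use it to pinpoint when a bug was introduced in production and what effect it had on the various counters.

I usually record the counters every 5 minutes (12 times an hour), but adjust the interval if your application generates more or fewer events. All counters share one database table, and each record is simply one row.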
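The counting idea can be tried out without a database. In the sketch below a temporary flat file stands in for the counter table described above, and the helper names `record` and `count` are invented for the example. It reproduces the open/close bookkeeping from the text.

```shell
log=$(mktemp)

# Append one "timestamp event" record per call.
record() { printf '%s %s\n' "$(date +%s)" "$1" >> "$log"; }

# Count how many times an event name was recorded.
count() { awk -v e="$1" '$2 == e { n++ } END { print n + 0 }' "$log"; }

record app_open; record app_open; record app_open
record app_close

# Opens minus closes is the number of apps that should currently be open.
open_now=$(( $(count app_open) - $(count app_close) ))
echo "apps currently open: $open_now"
```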

### 一次只修改一处

不要陷入在提高代码或者平台可用性的同时添加新特性或者是修复 BUG 的陷阱。这会让你头大,因为你现在必须在每一步操作想好要出什么样的结果,而且会让你之前建立的一些测试失效。

### 修改平台

如果你决定转移你的应用到另外一个平台,最主要的是跟之前保持一模一样。如果你觉得需要,你可以添加更多的文档和测试,但是不要忘记这一点,所有的业务逻辑和相互依赖要跟从前一样保持不变。

### 修改架构

接下来处理的是改变应用的结构(如果需要)。这一点上,你可以自由的修改高层的代码,通常是降低模块间的横向联系,这样可以降低代码活动期间对终端用户造成的影响范围。如果旧代码很庞杂,那么现在正是让它模块化的时候,将大段代码分解成众多小的部分,不过不要改变量和数据结构的名字。

Hacker News 的 [mannykannot](https://news.ycombinator.com/item?id=14445661) 网友指出,修改高层代码并不总是可行,如果你特别不幸的话,你可能为了改变一些架构必须付出沉重的代价。我赞同这一点也应该在这里加上提示,因此这里有一些补充。我想额外补充的是如果你修改高层代码的时候修改了一点点底层代码,那么试着只修改一个文件或者最坏的情况是只修改一个子系统,尽可能限制修改的范围。否则你可能很难调试刚才所做的更改。

### 底层代码的重构

现在,你应该非常理解每一个模块的作用了,准备做一些真正的工作吧:重构代码以提高其可维护性并且使代码做好添加新功能的准备。这很可能是项目中最消耗时间的部分,记录你所做的任何操作,在你彻底的记录并且理解模块之前不要对它做任何修改。之后你可以自由的修改变量名、函数名以及数据结构以提高代码的清晰度和统一性,然后请做测试(情况允许的话,包括单元测试)。

### 修复 bug

现在准备做一些用户可见的修改,战斗的第一步是修复很多积累了几年的 bug。像往常一样,首先证实 bug 仍然存在,然后编写测试并修复这个 bug,你的 CI 和端对端测试应该能避免一些由于不太熟悉或者一些额外的事情而犯的错误。

### 升级数据库

如果你在一个坚实且可维护的代码库上完成所有工作,你就可以选择更改数据库模式的计划,或者使用不同的完全替换数据库。之前完成的步骤能够帮助你更可靠的修改数据库而不会碰到问题,你可以完全的测试新数据库和新代码,而之前写的所有测试可以确保你顺利的迁移。

### 按着路线图执行

祝贺你脱离的困境并且可以准备添加新功能了。

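### Do not ever even attempt a big-bang rewrite

A big-bang rewrite is the kind of project that is doomed to fail. For one, you start in unknown territory, so you do not even know what to build. For another, you push every problem to the very last day, the day before the new system goes live, and unfortunately that is also when you fail. When assumptions about the business logic turn out to be wrong you will get strange looks, you will suddenly understand why the old system did certain things in a peculiar way, and you will finally realise that the people who put the old system together were not all idiots after all. If you really want to wreck the company (and your own reputation), go ahead and rewrite, but if you are smart you will know that a big-bang rewrite is not an option at all.

### So, the alternative: work incrementally

The fastest way to untangle the mess is to take any element of the code that you do understand (it may be a peripheral piece, or it may be a core module) and improve it incrementally, still within the old context. If the old build tools are no longer available you will have to use some tricks (see below), but at least try to leave as much as possible of what is known to work alive while you start making changes. That way your understanding of the code grows as the codebase improves. A typical commit should be a couple of lines at most.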

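### Release!

Release every change to production, even changes that are not visible to users. Taking the smallest possible steps matters, because while you still lack understanding of the system, sometimes only the production environment can tell you where the problem is. If something breaks after a single small change, that has some advantages:

* it is easy to work out what went wrong

* you are in a good position to improve the process

* you should update the documentation right away with the new insight

### Use proxies to your advantage

If you do web development, count yourself lucky: you can put a proxy between the old system and the users. That gives you easy, fine-grained control over which requests for each URL go to the old system and which go to the new one, and therefore over what runs and who gets to see it. If the proxy is clever enough, you can send a percentage of the traffic for an individual URL to the new system until you are satisfied. It is even better if your integration tests can connect to this interface as well.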
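The per-URL percentage routing mentioned above can be sketched as a small decision function. This is an illustration only, not a real proxy: the rollout table, the URL prefixes and the function name `choose_backend` are assumptions made up for the example, and a production proxy would normally express the same idea in its own configuration.

```python
import hashlib

# Hypothetical rollout table: URL prefix -> percentage of requests for the new system.
ROLLOUT = {"/reports": 100, "/checkout": 10}


def choose_backend(path, client_id, rollout=ROLLOUT):
    """Return "new" or "old" for one request."""
    # The longest matching prefix wins; URLs that are not listed stay on the old system.
    matches = [prefix for prefix in rollout if path.startswith(prefix)]
    if not matches:
        return "old"
    percent = rollout[max(matches, key=len)]
    # Hash the client id so that a given client keeps seeing the same backend.
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    slot = int.from_bytes(digest[:4], "big") % 100
    return "new" if slot < percent else "old"
```

Raising the percentage for one prefix moves more clients to the new system for that URL only, and setting it back to 0 is an immediate rollback.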

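### Yes, but this will take too much time!

That depends on how you look at it. True, following these steps involves some repetitive work. But it does work, and any optimisation of this process assumes that you know more about the system than you probably do. I have a reputation to maintain, and I really dislike negative surprises during this kind of work. With some luck the company is already in trouble, or there is a real risk of badly affecting customers. In a situation like that I would rather stay in full control of the process and get a good result than save two days or a week. If you lean more towards the cowboy way of doing things, and your boss agrees to accept more risk, then taking a chance may be fine, but most companies would rather take the slightly slower and much more certain road to victory.

---

via: <https://jacquesmattheij.com/improving-a-legacy-codebase>

Author: [Jacques Mattheij](https://jacquesmattheij.com/) Translator: [aiwhj](https://github.com/aiwhj) Proofreaders: [JianqinWang](https://github.com/JianqinWang), [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)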

| null | HTTPSConnectionPool(host='jacquesmattheij.com', port=443): Max retries exceeded with url: /improving-a-legacy-codebase (Caused by SSLError(SSLCertVerificationError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: certificate has expired (_ssl.c:1007)'))) | null |

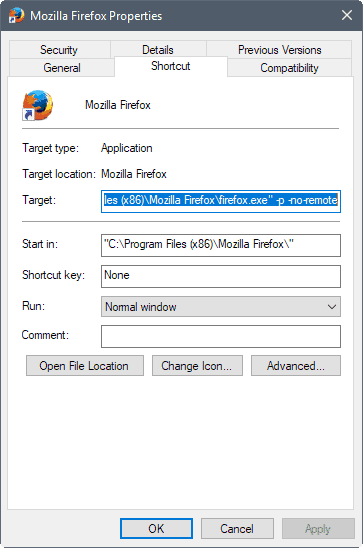

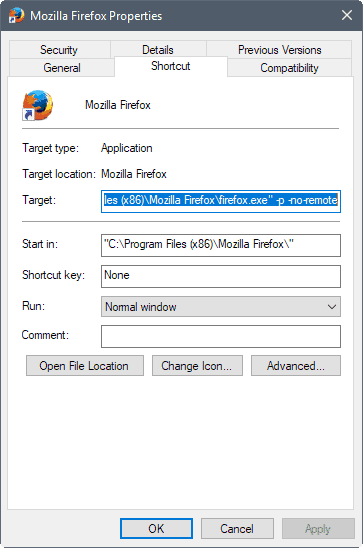

9,185 | 一行命令轻松升级 Ubuntu | https://itsfoss.com/zzupdate-upgrade-ubuntu/ | 2017-12-30T09:54:00 | [

"Ubuntu",

"升级"

] | https://linux.cn/article-9185-1.html |

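[zzupdate](https://github.com/TurboLabIt/zzupdate) is an open-source command-line utility that makes upgrading Ubuntu desktop and server editions to a newer release a little easier by combining several update commands into one.

Upgrading an Ubuntu system to a newer release is not a daunting task. Whether you use the GUI or a couple of commands, it is easy to bring the system up to the latest release.

`zzupdate`, written by Gianluigi 'Zane' Zanettini, goes one step further: a single command handles clean, update, autoremove, the version upgrade and the Composer self-update on Ubuntu.

It cleans up the local cache, updates the available package information and then performs a distribution upgrade. After that it updates Composer and removes unused packages.

The script must be run as the root user.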

### Installing zzupdate to upgrade Ubuntu to a newer version

To install `zzupdate`, run the following command in a terminal.

```
curl -s https://raw.githubusercontent.com/TurboLabIt/zzupdate/master/setup.sh | sudo sh
```

Then copy the provided sample configuration file to `zzupdate.conf` and set your preferences.

```
sudo cp /usr/local/turbolab.it/zzupdate/zzupdate.default.conf /etc/turbolab.it/zzupdate.conf
```

Once that is done, the following command starts upgrading your Ubuntu system to a newer version (if one is available).

```
sudo zzupdate
```

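Note that on a regular (non-LTS) release, zzupdate upgrades the system to the next available version. When you run Ubuntu 16.04 LTS, however, it only looks for the next long-term support version, not the latest version available.

If you want to leave the LTS track and upgrade to the latest release, you need to change a few options.

On Ubuntu desktop, open **Software & Updates**, go to the **Updates** tab and set the "Notify me of a new Ubuntu version" option to "**For any new version**".

On Ubuntu server, edit the `release-upgrades` file and set the `Prompt` line to `normal`.

```
sudo vi /etc/update-manager/release-upgrades

Prompt=normal
```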
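If the same change has to be made on several servers, the edit can be scripted instead of done by hand in an editor. The following Python sketch only transforms the text of the file; the function name `set_prompt` is made up for this example, and reading from and writing back to `/etc/update-manager/release-upgrades` (as root) is left to the caller.

```python
import re


def set_prompt(config_text, value="normal"):
    """Return config_text with its Prompt= line set to `value`."""
    if value not in ("never", "normal", "lts"):
        raise ValueError(f"unsupported Prompt value: {value}")
    # Replace an existing, uncommented Prompt line and leave everything else alone.
    new_text, replaced = re.subn(
        r"^Prompt\s*=.*$", f"Prompt={value}", config_text, flags=re.MULTILINE
    )
    if replaced == 0:
        # No Prompt line yet: append one at the end of the file.
        new_text = config_text.rstrip("\n") + f"\nPrompt={value}\n"
    return new_text
```

Keeping the function free of file access makes it easy to try on a copy of the file first and compare the result before touching the real configuration.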

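### Configuring zzupdate [optional]

The options you can configure for `zzupdate`:

```
REBOOT=1
```

If the value is 1, the system restarts after the upgrade.

```
REBOOT_TIMEOUT=15
```

Sets the reboot timeout to 15 (the 900 seconds mentioned in the original article, if the unit is minutes), because some hardware takes much longer to reboot than other hardware.

```
VERSION_UPGRADE=1
```

Performs a version upgrade if one is available.

```
VERSION_UPGRADE_SILENT=0
```

Displays the version upgrade progress automatically.

```
COMPOSER_UPGRADE=1
```

A value of 1 upgrades Composer automatically.

```
SWITCH_PROMPT_TO_NORMAL=0
```

This option controls whether the release prompt is switched to normal. With 0, `zzupdate` on an LTS release will not upgrade to Ubuntu 17.10; it only looks for the next LTS version. With 1, it looks for the latest release, whether you are running an LTS or a regular release.

Once that is done, all you have to do is run the following in a console for a complete update of your Ubuntu system.

```
sudo zzupdate
```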

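### Final words

Although the Ubuntu upgrade process is simple in itself, zzupdate reduces it to a single command. No coding knowledge is required, and the process is driven entirely by the configuration file. Personally, I find it a good tool for keeping several Ubuntu systems up to date without having to take care of each step separately.

Would you like to give it a try?

---

via: <https://itsfoss.com/zzupdate-upgrade-ubuntu/>

Author: [Ambarish Kumar](https://itsfoss.com) Translator: [geekpi](https://github.com/geekpi) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)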

| 301 | Moved Permanently | null |

9,186 | 用 Ansible Container 去管理 Linux 容器 | https://opensource.com/article/17/10/dockerfiles-ansible-container | 2017-12-29T09:14:00 | [

"Dockerfile",

"Ansible",

"Docker"

] | https://linux.cn/article-9186-1.html |

> Ansible Container addresses the shortcomings of Dockerfiles and offers complete management of containerized projects.

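I love containers and use the technology every day. Even so, containers are not perfect. Over the past few months, however, a set of projects has emerged that addresses some of the problems I have run into.

I started using containers with [Docker](https://opensource.com/tags/docker), the project that made the technology so popular. Besides using the container engine, I learned how to use [docker-compose](https://github.com/docker/compose) and started managing my projects with it. My productivity skyrocketed! One command runs my project, no matter how complex it is. I was very happy.

After a while I started noticing issues. The most obvious one concerns the process of creating container images. The Docker tooling uses a custom file format as the recipe for building container images: the Dockerfile. The format is easy to learn, and after a short time you can produce container images on your own. The problems start once you want to master best practices or have complex scenarios in mind.

Let's take a break and visit a different land: the world of [Ansible](https://opensource.com/tags/ansible). You know it? It's awesome, right? You don't? Well, it is time to learn something new. Ansible is a project that lets you manage your infrastructure by writing tasks and executing them in environments of your choice. There is no need to install and set up any services; everything can easily run from your laptop. Many people have already embraced Ansible.

Imagine this scenario: you have invested in Ansible, you have written plenty of Ansible roles and playbooks that you use to manage your infrastructure, and you want to apply them to containers. What should you do? Write container image definitions with shell scripts and Dockerfiles? That does not sound right.

Some people on the Ansible development team asked this question and realised that the same Ansible roles and playbooks that people write and use every day can also be used to produce container images. And not just that: they can be used to manage the complete lifecycle of containerized projects. Out of these ideas the [Ansible Container](https://www.ansible.com/ansible-container) project was born. It turns existing Ansible roles into container images and can even be used for the complete application lifecycle, from build to deployment in production.

Now let's talk about the problems I mentioned regarding best practices in the context of Dockerfiles. A word of warning: this is going to be very specific and technical. Here are my top three issues: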

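### 1. Shell scripts embedded in Dockerfiles

When writing a Dockerfile, you can specify a script that will be interpreted by `/bin/sh -c`. It can look like this:

```
RUN dnf install -y nginx
```

Here `RUN` is a Dockerfile instruction and everything else is its arguments (which are passed to the shell). But imagine a more complex scenario: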

```

RUN set -eux; \

\

# this "case" statement is generated via "update.sh"

%%ARCH-CASE%%; \

\

url="https://golang.org/dl/go${GOLANG_VERSION}.${goRelArch}.tar.gz"; \

wget -O go.tgz "$url"; \

echo "${goRelSha256} *go.tgz" | sha256sum -c -; \

```

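This is just an excerpt from [the official golang image](https://github.com/docker-library/golang/blob/master/Dockerfile-debian.template#L14). It does not look pretty, does it?

### 2. Dockerfiles are not easy to parse

Dockerfiles are a new format without a formal specification. That makes things tricky if you need to process Dockerfiles in your infrastructure (for example, to automate the build process a bit). The only specification is [the code](https://github.com/moby/moby/tree/master/builder/dockerfile) that is part of **dockerd**, and the problem is that you cannot use it as a library. The easiest solution is to write a parser yourself and hope for the best. Would it not be better to use a well-known markup language such as YAML or JSON?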
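To give a feel for what "write a parser yourself and hope for the best" means in practice, here is a deliberately naive Python sketch. It is an assumption-laden illustration, not a faithful implementation of the Dockerfile format: it only handles comments, blank lines and backslash line continuations, and it ignores parser directives, heredocs, the JSON argument form and everything else the real builder understands.

```python
def _finish(pending, instructions):
    """Turn one joined logical line into an (INSTRUCTION, arguments) pair."""
    parts = pending.split(None, 1)
    if parts:
        arguments = parts[1].strip() if len(parts) > 1 else ""
        instructions.append((parts[0].upper(), arguments))


def parse_dockerfile(text):
    """Split Dockerfile text into (INSTRUCTION, arguments) pairs, naively."""
    instructions = []
    pending = ""
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments, including those inside a continued instruction.
        if not line or line.startswith("#"):
            continue
        if line.endswith("\\"):
            # A trailing backslash continues the instruction on the next line.
            pending += line[:-1].rstrip() + " "
            continue
        pending += line
        _finish(pending, instructions)
        pending = ""
    # The file may end in the middle of a continuation.
    _finish(pending, instructions)
    return instructions
```

Even this small version has to make guesses, for example about comments between continued lines, which is exactly the kind of uncertainty a formal specification would remove.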

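### 3. It is hard to control

If you are familiar with the internals of container images, you may know that every image is composed of layers. Once a container is created, the layers are stacked on top of each other (like pancakes) using union filesystem technology. The problem is that you cannot control this layering explicitly: you cannot say "a new layer starts here". You are forced to change your Dockerfile in ways that may hurt readability. The bigger problem is that a set of best practices has to be followed to achieve optimal results, and newcomers have a really hard time here.

### Comparing the Ansible language and Dockerfiles

The biggest shortcoming of Dockerfiles compared to Ansible is that Ansible is the more powerful language. For example, Dockerfiles have no direct concept of variables, whereas Ansible has a complete templating system (variables are just one of its features). Ansible ships with many easy-to-use modules, such as [wait\_for](http://docs.ansible.com/wait_for_module.html), which can be used for service readiness checks, that is, waiting until a service is ready before proceeding. In a Dockerfile everything is a shell script, so if you need to work out whether a service is ready, it has to be done in shell (or with a separately installed tool). The other problem with shell scripts is that they grow complex and maintenance becomes a burden. Plenty of people have already noticed this and turned those shell scripts into Ansible.

### About the author

Tomas Tomecek - Engineer. Hacker. Speaker. Tinker. Red Hatter. Likes containers, linux, open source, python 3, rust, zsh, tmux. [More about me](https://opensource.com/users/tomastomecek)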

---

via: <https://opensource.com/article/17/10/dockerfiles-ansible-container>

Author: [Tomas Tomecek](https://opensource.com/users/tomastomecek) Translator: [qhwdw](https://github.com/qhwdw) Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

| 200 | OK | I love containers and use the technology every day. Even so, containers aren't perfect. Over the past couple of months, however, a set of projects has emerged that addresses some of the problems I've experienced.

I started using containers with [Docker](https://opensource.com/tags/docker), since this project made the technology so popular. Aside from using the container engine, I learned how to use **docker-compose** and started managing my projects with it. My productivity skyrocketed! One command to run my project, no matter how complex it was. I was so happy.

After some time, I started noticing issues. The most apparent were related to the process of creating container images. The Docker tool uses a custom file format as a recipe to produce container images—Dockerfiles. This format is easy to learn, and after a short time you are ready to produce container images on your own. The problems arise once you want to master best practices or have complex scenarios in mind.

Let's take a break and travel to a different land: the world of [Ansible](https://opensource.com/tags/ansible). You know it? It's awesome, right? You don't? Well, it's time to learn something new. Ansible is a project that allows you to manage your infrastructure by writing tasks and executing them inside environments of your choice. No need to install and set up any services; everything can easily run from your laptop. Many people already embrace Ansible.

Imagine this scenario: You invested in Ansible, you wrote plenty of Ansible roles and playbooks that you use to manage your infrastructure, and you are thinking about investing in containers. What should you do? Start writing container image definitions via shell scripts and Dockerfiles? That doesn't sound right.

Some people from the Ansible development team asked this question and realized that those same Ansible roles and playbooks that people wrote and use daily can also be used to produce container images. But not just that—they can be used to manage the complete lifecycle of containerized projects. From these ideas, the [Ansible Container](https://www.ansible.com/ansible-container) project was born. It utilizes existing Ansible roles that can be turned into container images and can even be used for the complete application lifecycle, from build to deploy in production.

Let's talk about the problems I mentioned regarding best practices in context of Dockerfiles. A word of warning: This is going to be very specific and technical. Here are the top three issues I have:

## 1. Shell scripts embedded in Dockerfiles.

When writing Dockerfiles, you can specify a script that will be interpreted via **/bin/sh -c**. It can be something like:

```

RUN dnf install -y nginx

```

where `RUN` is a Dockerfile instruction and the rest are its arguments (which are passed to shell). But imagine a more complex scenario:

```

RUN set -eux; \

\

# this "case" statement is generated via "update.sh"

%%ARCH-CASE%%; \

\

url="https://golang.org/dl/go${GOLANG_VERSION}.${goRelArch}.tar.gz"; \

wget -O go.tgz "$url"; \

echo "${goRelSha256} *go.tgz" | sha256sum -c -; \

```

This one is taken from [the official golang image](https://github.com/docker-library/golang/blob/master/Dockerfile-debian.template#L14). It doesn't look pretty, right?

## 2. You can't parse Dockerfiles easily.

Dockerfiles are a new format without a formal specification. This is tricky if you need to process Dockerfiles in your infrastructure (e.g., automate the build process a bit). The only specification is [the code](https://github.com/moby/moby/tree/master/builder/dockerfile) that is part of **dockerd**. The problem is that you can't use it as a library. The easiest solution is to write a parser on your own and hope for the best. Wouldn't it be better to use some well-known markup language, such as YAML or JSON?

## 3. It's hard to control.

If you are familiar with the internals of container images, you may know that every image is composed of layers. Once the container is created, the layers are stacked onto each other (like pancakes) using union filesystem technology. The problem is that you cannot explicitly control this layering: you can't say, "here starts a new layer." You are forced to change your Dockerfile in a way that may hurt readability. The bigger problem is that a set of best practices has to be followed to achieve optimal results, and newcomers have a really hard time here.

## Comparing Ansible language and Dockerfiles