id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

9,706 | 保护你的 Fedora 系统免受这个 DHCP 漏洞 | https://fedoramagazine.org/protect-fedora-system-dhcp-flaw/ | 2018-06-02T06:23:34 | [

"dhcp",

"安全"

] | https://linux.cn/article-9706-1.html |

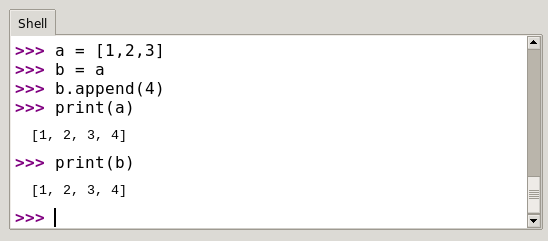

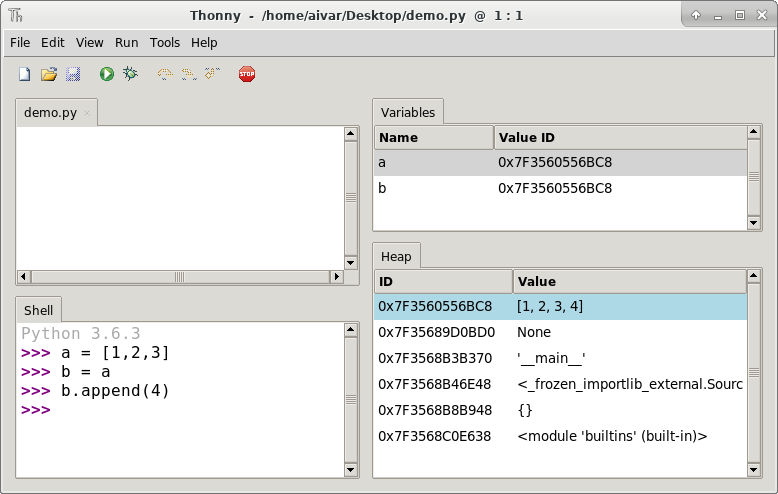

前几天在 `dhcp-client` 中发现并披露了一个严重的安全漏洞。此 DHCP 漏洞会对你的系统和数据造成高风险,尤其是在使用不受信任的网络,如非你拥有的 WiFi 接入点时。

动态主机控制协议(DHCP)能让你的系统从其加入的网络中获取配置。你的系统将请求 DHCP 数据,并且通常是由路由器等服务器应答。服务器为你的系统提供必要的数据以进行自我配置。例如,你的系统如何在加入无线网络时正确进行网络配置。

但是,本地网络上的攻击者可能会利用此漏洞。使用在 NetworkManager 下运行的 `dhcp-client` 脚本中的漏洞,攻击者可能能够在系统上以 root 权限运行任意命令。这个 DHCP 漏洞使你的系统和数据处于高风险状态。该漏洞已分配 CVE-2018-1111,并且有 [Bugzilla 来跟踪 bug](https://bugzilla.redhat.com/show_bug.cgi?id=1567974)。

### 防范这个 DHCP 漏洞

新的 dhcp 软件包包含了 Fedora 26、27 和 28 以及 Rawhide 的修复程序。维护人员已将这些更新提交到 updates-testing 仓库。对于大多数用户而言,它们应该在这篇文章的大约一天左右的时间内在稳定仓库出现。所需的软件包是:

* Fedora 26: dhcp-4.3.5-11.fc26

* Fedora 27: dhcp-4.3.6-10.fc27

* Fedora 28: dhcp-4.3.6-20.fc28

* Rawhide: dhcp-4.3.6-21.fc29

#### 更新稳定的 Fedora 系统

要在稳定的 Fedora 版本上立即更新,请[使用 sudo](https://fedoramagazine.org/howto-use-sudo/) 运行此命令。如有必要,请在提示时输入你的密码:

```

sudo dnf --refresh --enablerepo=updates-testing update dhcp-client

```

之后,使用标准稳定仓库进行更新。要从稳定的仓库更新 Fedora 系统,请使用以下命令:

```

sudo dnf --refresh update dhcp-client

```

#### 更新 Rawhide 系统

如果你的系统是 Rawhide,请使用以下命令立即下载和更新软件包:

```

mkdir dhcp && cd dhcp

koji download-build --arch={x86_64,noarch} dhcp-4.3.6-21.fc29

sudo dnf update ./dhcp-*.rpm

```

在每日的 Rawhide compose 后,只需运行 `sudo dnf update` 即可获取更新。

### Fedora Atomic Host

针对 Fedora Atomic Host 的修复程序版本为 28.20180515.1。要获得更新,请运行以下命令:

```

atomic host upgrade -r

```

此命令将重启系统以应用升级。

---

via: <https://fedoramagazine.org/protect-fedora-system-dhcp-flaw/>

作者:[Paul W. Frields](https://fedoramagazine.org/author/pfrields/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | A critical security vulnerability was discovered and disclosed earlier today in *dhcp-client*. This DHCP flaw carries a high risk to your system and data, especially if you use untrusted networks such as a WiFi access point you don’t own. Read more here for how to protect your Fedora system.

Dynamic Host Control Protocol (DHCP) allows your system to get configuration from a network it joins. Your system will make a request for DHCP data, and typically a server such as a router answers. The server provides the necessary data for your system to configure itself. This is how, for instance, your system configures itself properly for networking when it joins a wireless network.

However, an attacker on the local network may be able to exploit this vulnerability. Using a flaw in a dhcp-client script that runs under NetworkManager, the attacker may be able to run arbitrary commands with root privileges on your system. This DHCP flaw puts your system and your data at high risk. The flaw has been assigned CVE-2018-1111 and has a [Bugzilla tracking bug](https://bugzilla.redhat.com/show_bug.cgi?id=1567974).

## Guarding against this DHCP flaw

New *dhcp* packages contain fixes for Fedora 26, 27, and 28, as well as Rawhide. The maintainers have submitted these updates to the *updates-testing* repositories. They should show up in stable repos within a day or so of this post for most users. The desired packages are:

- Fedora 26:

*dhcp-4.3.5-11.fc26* - Fedora 27:

*dhcp-4.3.6-10.fc27* - Fedora 28:

*dhcp-4.3.6-20.fc28* - Rawhide:

*dhcp-4.3.6-21.fc29*

### Updating a stable Fedora system

To update immediately on a stable Fedora release, use this command [with sudo](https://fedoramagazine.org/howto-use-sudo/). Type your password at the prompt, if necessary:

sudo dnf --refresh --enablerepo=updates-testing update dhcp-client

Later, use the standard stable repos to update. To update your Fedora system from the stable repos, use this command:

sudo dnf --refresh update dhcp-client

### Updating a Rawhide system

If your system is on Rawhide, use these commands to download and update the packages immediately:

mkdir dhcp && cd dhcp koji download-build --arch={x86_64,noarch} dhcp-4.3.6-21.fc29 sudo dnf update ./dhcp-*.rpm

After the nightly Rawhide compose, simply run *sudo dnf update* to get the update.

## Fedora Atomic Host

The fixes for Fedora Atomic Host are in ostree version 28.20180515.1. To get the update, run this command:

atomic host upgrade -r

This command reboots your system to apply the upgrade.

Photo by [Markus Spiske](https://unsplash.com/photos/FXFz-sW0uwo?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText) on [Unsplash](https://unsplash.com/search/photos/protect?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText).

## alick

It seems to me we will need to reboot after updating. Can anyone confirm?

## Georg Sauthoff

You have to make sure that any running dhclient process is terminated. Thus, a

is sufficient. The network-manager then automatically restarts the dhclient, after a short timeout.

A reboot is an alternative way to make sure that the update is effective.

## Flo

https://apps.fedoraproject.org/packages/dhcp is confusing. The fixed version has been pushed to

already. However, if right now people add

to their dnf command they actually get an older version that still contains the flaw.

## Paul W. Frields

@Flo: This could happen if your mirror is out of sync. You can try waiting a short while for the sync to catch up, or pull packages from another mirror.

## Jared Busch

Already in

for Fedora 28

## murph

The rawhide instructions didn’t quite work. Got some great help on IRC, they directed me to https://koji.fedoraproject.org/koji/buildinfo?buildID=1081949 where I downloaded the common and libs packages, then got everything to install fine.

A few rough edges when installing brand new patches on Rawhide should be expected, so no problem.

## Costa A.

A “dnf upgrade -y” today was enough to get the updated dhcp-client version.

## Eddie G. O'Connor Jr.

Just wanna say “Thanks” to all the folks who help keep my Fedora systems up and running smoothly. Will be applying this update to my systems just as soon as we get power restored. (High winds knocked down trees which took out the power lines in my neighborhood!) SO by then?…(Fri. evening!) everything should be in place for a smooth update for me!

Fedora? ROCKS!!!

## Eddie G. O'Connor Jr.

Also…..dows anyone know if this will affect CEntOS servers as well? Wondering if I have to pull them offline and perform updates on ’em too?

## Eduard Lucena

Yes, it affects RHEL, CentOS and Fedora.

## Hans

I’d be surprised if your server would use dhcp-client to receive an IP address.

## Michael J Gruber

The rpm package name is dhcp-client, not dhcp.

(dhcp is the source package from which various dhcp related rpm packages are built – including dhcp-client, but not dhcp.)

## Paul W. Frields

@Michael: The dhcp-client package comes from the dhcp source package. It also requires dhcp-common and dhcp-libs. That is why the overall dhcp source package version is used in the article.

## Michael J Gruber

@Paul Yes, that is what I wrote. We are in complete agreement 🙂

It’s just that the natural “rpm -q dhcp” does not work as a check whether you are affected.

## Michael A Hawkins

Some of us are forced to run older versions of Fedora. Any chance this thread could include the offending text from the script and some ways to edit it away or improve it?

## Mike

Is this working for Fedora 25? |

9,707 | 如何在 Ubuntu 18.04 服务器上安装和配置 KVM | https://www.linuxtechi.com/install-configure-kvm-ubuntu-18-04-server/ | 2018-06-03T08:51:00 | [

"虚拟化",

"kvm"

] | https://linux.cn/article-9707-1.html |

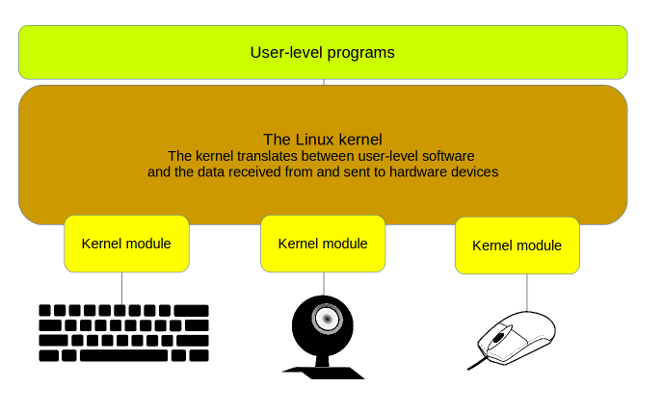

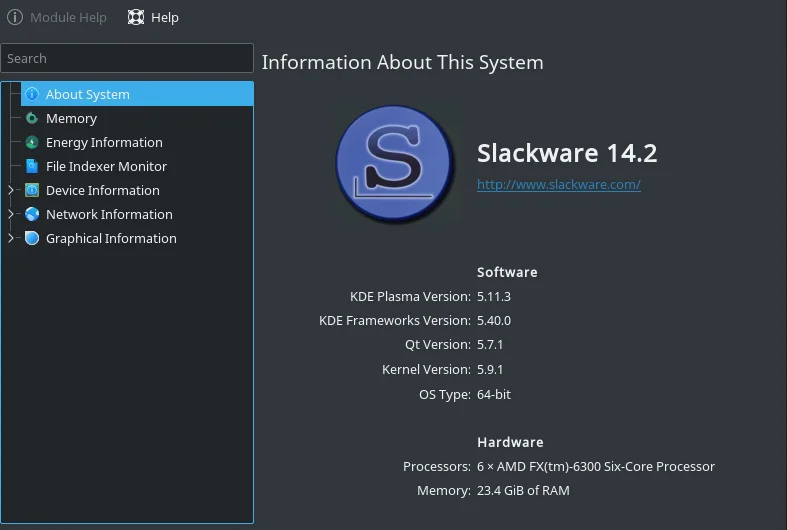

**KVM**(基于内核的虚拟机)是一款为类 Linux 系统提供的开源的全虚拟化解决方案,KVM 使用虚拟化扩展(如 **Intel VT** 或 **AMD-V**)提供虚拟化功能。无论何时我们在任何 Linux 机器上安装 KVM,都会通过加载诸如 `kvm-intel.ko`(基于 Intel 的机器)和 `kvm-amd.ko`(基于 amd 的机器)的内核模块,使其成为<ruby> 管理程序 <rt> hyervisor </rt></ruby>(LCTT 译注:一种监控和管理虚拟机运行的核心软件层)。

KVM 允许我们安装和运行多个虚拟机(Windows 和 Linux)。我们可以通过 `virt-manager` 的图形用户界面或使用 `virt-install` 和 `virsh` 命令在命令行界面来创建和管理基于 KVM 的虚拟机。

在本文中,我们将讨论如何在 Ubuntu 18.04 LTS 服务器上安装和配置 **KVM 管理程序**。我假设你已经在你的服务器上安装了 Ubuntu 18.04 LTS 。接下来登录到您的服务器执行以下步骤。

### 第一步:确认您的硬件是否支持虚拟化

执行 `egrep` 命令以验证您的服务器的硬件是否支持虚拟化,

```

linuxtechi@kvm-ubuntu18-04:~$ egrep -c '(vmx|svm)' /proc/cpuinfo

1

```

如果输出结果大于 0,就意味着您的硬件支持虚拟化。重启,进入 BIOS 设置中启用 VT 技术。

现在使用下面的命令安装 `kvm-ok` 实用程序,该程序用于确定您的服务器是否能够运行硬件加速的 KVM 虚拟机。

```

linuxtechi@kvm-ubuntu18-04:~$ sudo apt install cpu-checker

```

运行 kvm-ok 命令确认输出结果,

```

linuxtechi@kvm-ubuntu18-04:~$ sudo kvm-ok

INFO: /dev/kvm exists

KVM acceleration can be used

```

### 第二步:安装 KVM 及其依赖包

运行下面的 apt 命令安装 KVM 及其依赖项:

```

linuxtechi@kvm-ubuntu18-04:~$ sudo apt update

linuxtechi@kvm-ubuntu18-04:~$ sudo apt install qemu qemu-kvm libvirt-bin bridge-utils virt-manager

```

只要上图相应的软件包安装成功,那么你的本地用户(对于我来说是 `linuxtechi`)将被自动添加到 `libvirtd` 群组。

### 第三步:启动并启用 libvirtd 服务

我们在 Ubuntu 18.04 服务器上安装 qemu 和 libvirtd 软件包之后,它就会自动启动并启用 `libvirtd` 服务,如果 `libvirtd` 服务没有开启,则运行以下命令开启,

```

linuxtechi@kvm-ubuntu18-04:~$ sudo service libvirtd start

linuxtechi@kvm-ubuntu18-04:~$ sudo update-rc.d libvirtd enable

```

现在使用下面的命令确认 libvirtd 服务的状态,

```

linuxtechi@kvm-ubuntu18-04:~$ service libvirtd status

```

输出结果如下所示:

### 第四步:为 KVM 虚拟机配置桥接网络

只有通过桥接网络,KVM 虚拟机才能访问外部的 KVM 管理程序或主机。在Ubuntu 18.04中,网络由 `netplan` 实用程序管理,每当我们新安装一个 Ubuntu 18.04 系统时,会自动创建一个名称为 `/etc/netplan/50-cloud-init.yaml` 文件,其配置了静态 IP 和桥接网络,`netplan` 实用工具将引用这个文件。

截至目前,我已经在此文件配置了静态 IP,文件的具体内容如下:

```

network:

ethernets:

ens33:

addresses: [192.168.0.51/24]

gateway4: 192.168.0.1

nameservers:

addresses: [192.168.0.1]

dhcp4: no

optional: true

version: 2

```

我们在这个文件中添加桥接网络的配置信息,

```

linuxtechi@kvm-ubuntu18-04:~$ sudo vi /etc/netplan/50-cloud-init.yaml

network:

version: 2

ethernets:

ens33:

dhcp4: no

dhcp6: no

bridges:

br0:

interfaces: [ens33]

dhcp4: no

addresses: [192.168.0.51/24]

gateway4: 192.168.0.1

nameservers:

addresses: [192.168.0.1]

```

正如你所看到的,我们已经从接口(`ens33`)中删除了 IP 地址,并将该 IP 添加到 `br0` 中,并且还将接口(`ens33`)添加到 `br0`。使用下面的 `netplan` 命令使更改生效,

```

linuxtechi@kvm-ubuntu18-04:~$ sudo netplan apply

```

如果您想查看 debug 日志请使用以下命令,

```

linuxtechi@kvm-ubuntu18-04:~$ sudo netplan --debug apply

```

现在使用以下方法确认网络桥接状态:

```

linuxtechi@kvm-ubuntu18-04:~$ sudo networkctl status -a

```

```

linuxtechi@kvm-ubuntu18-04:~$ ifconfig

```

### 第五步:创建虚拟机(使用 virt-manager 或 virt-install 命令)

有两种方式创建虚拟机:

* `virt-manager`(图形化工具)

* `virt-install`(命令行工具)

#### 使用 virt-manager 创建虚拟机

通过执行下面的命令启动 `virt-manager`:

```

linuxtechi@kvm-ubuntu18-04:~$ sudo virt-manager

```

创建一个新的虚拟机:

点击“下一步”然后选择 ISO 镜像文件,我使用的是 RHEL 7.3 iso 镜像。

点击“下一步”。

在接下来的几个窗口中,系统会提示要求您为 VM 分配内存,处理器数量和磁盘空间。

并指定虚拟机名字和桥接网络名:

点击“结束”。

接下来只需要按照屏幕指示安装系统。

#### 使用virt-install命令从命令行界面创建虚拟机

使用下面的 `virt-install` 命令从终端创建一个虚拟机,它将在命令行界面中开始安装,并根据您对虚拟机的名字,说明,ISO 文件位置和桥接配置的设置创建虚拟机。

```

linuxtechi@kvm-ubuntu18-04:~$ sudo virt-install -n DB-Server --description "Test VM for Database" --os-type=Linux --os-variant=rhel7 --ram=1096 --vcpus=1 --disk path=/var/lib/libvirt/images/dbserver.img,bus=virtio,size=10 --network bridge:br0 --graphics none --location /home/linuxtechi/rhel-server-7.3-x86_64-dvd.iso --extra-args console=ttyS0

```

本文到此为止,我希望这篇文章能帮助你能够在 Ubuntu 18.04 服务器上成功安装 KVM。 除此之外,KVM 也是 Openstack 默认的管理程序。

阅读更多:“[如何使用 virsh 命令创建,还原和删除 KVM 虚拟机快照](https://www.linuxtechi.com/create-revert-delete-kvm-virtual-machine-snapshot-virsh-command/)”。

---

via: <https://www.linuxtechi.com/install-configure-kvm-ubuntu-18-04-server/>

作者:[Pradeep Kumar](http://www.linuxtechi.com/author/pradeep/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[wyxplus](https://github.com/wyxplus) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | **KVM** (Kernel-based Virtual Machine) is an open source full virtualization solution for Linux like systems, KVM provides virtualization functionality using the virtualization extensions like **Intel VT** or** AMD-V**. Whenever we install KVM on any linux box then it turns it into the hyervisor by loading the kernel modules like **kvm-intel.ko**( for intel based machines) and **kvm-amd.ko** ( for amd based machines).

KVM allows us to install and run multiple virtual machines (Windows & Linux). We can create and manage KVM based virtual machines either via **virt-manager** graphical user interface or **virt-install** & **virsh** cli commands.

In this article we will discuss how to install and configure **KVM hypervisor** on Ubuntu 18.04 LTS server. I am assuming you have already installed Ubuntu 18.04 LTS server on your system. Login to your server and perform the following steps.

#### Step:1 Verify Whether your system support hardware virtualization

Execute below egrep command to verify whether your system supports hardware virtualization or not,

linuxtechi@kvm-ubuntu18-04:~$ egrep -c '(vmx|svm)' /proc/cpuinfo 1 linuxtechi@kvm-ubuntu18-04:~$

If the output is greater than 0 then it means your system supports Virtualization else reboot your system, then go to BIOS settings and enable VT technology.

Now Install “**kvm-ok**” utility using below command, it is used to determine if your server is capable of running hardware accelerated KVM virtual machines

linuxtechi@kvm-ubuntu18-04:~$ sudo apt install cpu-checker

Run kvm-ok command and verify the output,

linuxtechi@kvm-ubuntu18-04:~$ sudo kvm-ok INFO: /dev/kvm exists KVM acceleration can be used linuxtechi@kvm-ubuntu18-04:~$

#### Step:2 Install KVM and its required packages

Run the below apt commands to install KVM and its dependencies

linuxtechi@kvm-ubuntu18-04:~$ sudo apt update linuxtechi@kvm-ubuntu18-04:~$ sudo apt install qemu qemu-kvm libvirt-bin bridge-utils virt-manager

Once the above packages are installed successfully, then your local user (In my case linuxtechi) will be added to the group libvirtd automatically.

#### Step:3 Start & enable libvirtd service

Whenever we install qemu & libvirtd packages in Ubuntu 18.04 Server then it will automatically start and enable libvirtd service, In case libvirtd service is not started and enabled then run beneath commands,

linuxtechi@kvm-ubuntu18-04:~$ sudo service libvirtd start linuxtechi@kvm-ubuntu18-04:~$ sudo update-rc.d libvirtd enable

Now verify the status of libvirtd service using below command,

linuxtechi@kvm-ubuntu18-04:~$ service libvirtd status

Output would be something like below:

#### Step:4 Configure Network Bridge for KVM virtual Machines

Network bridge is required to access the KVM based virtual machines outside the KVM hypervisor or host. In Ubuntu 18.04, network is managed by netplan utility, whenever we freshly installed Ubuntu 18.04 server then netplan file is created under **/etc/netplan/.** In most of the hardware and virtualized environment, netplan file name would be “**50-cloud-init.yaml**” or “**01-netcfg.yaml”, **to configure static IP and bridge, netplan utility will refer this file.

As of now I have already configured the static IP via this file and content of this file is below:

network: ethernets: ens33: addresses: [192.168.0.51/24] gateway4: 192.168.0.1 nameservers: addresses: [192.168.0.1] dhcp4: no optional: true version: 2

Let’s add the network bridge definition in this file,

linuxtechi@kvm-ubuntu18-04:~$ sudo vi /etc/netplan/50-cloud-init.yaml

network: version: 2 ethernets: ens33: dhcp4: no dhcp6: no bridges: br0: interfaces: [ens33] dhcp4: no addresses: [192.168.0.51/24] gateway4: 192.168.0.1 nameservers: addresses: [192.168.0.1]

As you can see we have removed the IP address from interface(ens33) and add the same IP to the bridge ‘**br0**‘ and also added interface (ens33) to the bridge br0. Apply these changes using below netplan command,

linuxtechi@kvm-ubuntu18-04:~$ sudo netplan apply linuxtechi@kvm-ubuntu18-04:~$

If you want to see the debug logs then use the below command,

linuxtechi@kvm-ubuntu18-04:~$ sudo netplan --debug apply

Now Verify the bridge status using following methods:

linuxtechi@kvm-ubuntu18-04:~$ sudo networkctl status -a

linuxtechi@kvm-ubuntu18-04:~$ ifconfig

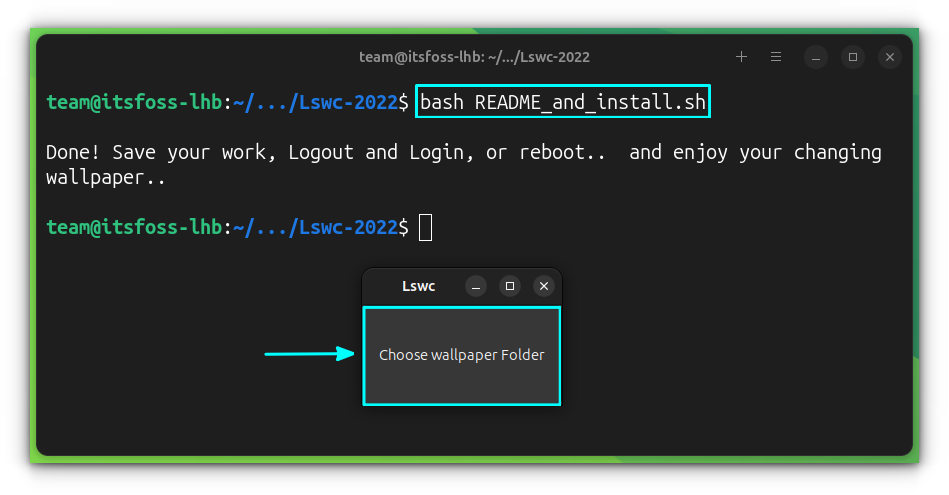

#### Start:5 Creating Virtual machine (virt-manager or virt-install command )

There are two ways to create virtual machine:

- virt-manager (GUI utility)

- virt-install command (cli utility)

**Creating Virtual machine using virt-manager:**

Start the virt-manager by executing the beneath command,

linuxtechi@kvm-ubuntu18-04:~$ sudo virt-manager

Create a new virtual machine

Click on forward and select the ISO file, in my case I am using RHEL 7.3 iso file.

Click on Forward

In the next couple of windows, you will be prompted to specify the RAM, CPU and disk for the VM.

Now Specify the Name of the Virtual Machine and network,

Click on Finish

Now follow the screen instruction and complete the installation,

Read More On : “[ How to Create, Revert and Delete KVM Virtual machine (domain) snapshot with virsh command](https://www.linuxtechi.com/create-revert-delete-kvm-virtual-machine-snapshot-virsh-command/)”

**Creating Virtual machine from CLI using virt-install command,**

Use the below virt-install command to create a VM from terminal, it will start the installation in CLI, replace the name of the VM, description, location of ISO file and network bridge as per your setup.

linuxtechi@kvm-ubuntu18-04:~$ sudo virt-install -n DB-Server --description "Test VM for Database" --os-type=Linux --os-variant=rhel7 --ram=1096 --vcpus=1 --disk path=/var/lib/libvirt/images/dbserver.img,bus=virtio,size=10 --network bridge:br0 --graphics none --location /home/linuxtechi/rhel-server-7.3-x86_64-dvd.iso --extra-args console=ttyS0

That’s conclude the article, I hope this article help you to install KVM on your Ubuntu 18.04 Server. Apart from this, KVM is the default hypervisor for Openstack.

Read More on : **How to Install and Configure KVM on OpenSUSE Leap 15**

AskarTo check virtualization support the command should be …

egrep -c ‘(vmx|svm)’ /proc/cpuinfo

Cristian ArriazaYou are the best thank you very much, better than on the manufacturer’s website 🙂

EricDo I understand correctly from Step 5 that one can be logged into a headless U 18.04 server via ssh and run the graphical virt-manager utility? Even in the absence of a local graphical environment?

If so, how must ssh be configured? Is it just a question of setting X11Forwarding=yes in the respective ssh_config and sshd_config files?

TonyYou’ll also need to install a X server on your host machine (for Windows, I recommend vcxsrv, open source and updated).

EddieDoc states:

whenever we freshly installed Ubuntu 18.04 server then a file with name “/etc/netplan/50-cloud-init.yaml” is created automatically, to configure static IP and bridge, netplan utility will refer this file.

NOT TRUE, this file is missing.

Would be helpful if based on ifconfig output how this file could be created

Pradeep KumarHi Eddie,

In case netplan is not present in your server then i would recommend create a file with name “01-netcfg.yaml” under /etc/netplan directory and copy paste the contents from article to this file.

KamiGreat article, step by step very helpful^_^ Thanks |

9,708 | 使用 Quagga 实现 Linux 动态路由 | https://www.linux.com/learn/intro-to-linux/2018/3/dynamic-linux-routing-quagga | 2018-06-03T10:56:00 | [

"Quagga",

"路由"

] | https://linux.cn/article-9708-1.html |

>

> 学习如何使用 Quagga 套件的路由协议去管理动态路由。

>

>

>

迄今为止,本系列文章中,我们已经在 [Linux 局域网路由新手指南:第 1 部分](/article-9657-1.html) 中学习了复杂的 IPv4 地址,在 [Linux 局域网路由新手指南:第 2 部分](/article-9675-1.html) 中学习了如何去手工创建静态路由。

今天,我们继续使用 [Quagga](https://www.quagga.net/) 去管理动态路由,这是一个安装完后就不用理它的的软件。Quagga 是一个支持 OSPFv2、OSPFv3、RIP v1 和 v2、RIPng、以及 BGP-4 的路由协议套件,并全部由 zebra 守护程序管理。

OSPF 的意思是<ruby> 最短路径优先 <rt> Open Shortest Path First </rt></ruby>。OSPF 是一个内部网关协议(IGP);它可以用在局域网和跨因特网的局域网互联中。在你的网络中的每个 OSPF 路由器都包含整个网络的拓扑,并计算通过网络的最短路径。OSPF 会通过多播的方式自动对外传播它检测到的网络变化。你可以将你的网络分割为区域,以保持路由表的可管理性;每个区域的路由器只需要知道离开它的区域的下一跳接口地址,而不用记录你的网络的整个路由表。

RIP,即路由信息协议,是一个很老的协议,RIP 路由器向网络中周期性多播它的整个路由表,而不是像 OSPF 那样只多播网络的变化。RIP 通过跳数来测量路由,任何超过 15 跳的路由它均视为不可到达。RIP 设置很简单,但是 OSPF 在速度、效率以及弹性方面更佳。

BGP-4 是边界网关协议版本 4。这是用于因特网流量路由的外部网关协议(EGP)。你不会用到 BGP 协议的,除非你是因特网服务提供商。

### 准备使用 OSPF

在我们的小型 KVM 测试实验室中,用两台虚拟机表示两个不同的网络,然后将另一台虚拟机配置为路由器。创建两个网络:net1 是 192.168.110.0/24 ,而 net2 是 192.168.120.0/24。启用 DHCP 是明智的,否则你要分别进入这三个虚拟机,去为它们设置静态地址。Host 1 在 net1 中,Host 2 在 net2 中,而路由器同时与这两个网络连接。设置 Host 1 的网关地址为 192.168.110.126,Host 2 的网关地址为 192.168.120.136。

* Host 1: 192.168.110.125

* Host 2:192.168.120.135

* Router:192.168.110.126 和 192.168.120.136

在路由器上安装 Quagga。在大多数 Linux 中它是 quagga 软件包。在 Debian 上还有一个单独的文档包 quagga-doc。取消 `/etc/sysctl.conf` 配置文件中如下这一行的注释去启用包转发功能:

```

net.ipv4.ip_forward=1

```

然后,运行 `sysctl -p` 命令让变化生效。

### 配置 Quagga

查看你的 Quagga 包中的示例配置文件,比如,`/usr/share/doc/quagga/examples/ospfd.conf.sample`。除非你的 Linux 版本按你的喜好做了创新,否则,一般情况下配置文件应该在 `/etc/quagga` 目录中。大多数 Linux 版本在这个目录下有两个文件,`vtysh.conf` 和 `zebra.conf`。它们提供了守护程序运行所需要的最小配置。除非你的发行版做了一些特殊的配置,否则,zebra 总是首先运行,当你启动 ospfd 的时候,它将自动启动。Debian/Ubuntu 是一个特例,稍后我们将会说到它。

每个路由器守护程序将读取它自己的配置文件,因此,我们必须创建 `/etc/quagga/ospfd.conf`,并输入如下内容:

```

!/etc/quagga/ospfd.conf

hostname router1

log file /var/log/quagga/ospfd.log

router ospf

ospf router-id 192.168.110.15

network 192.168.110.0/0 area 0.0.0.0

network 192.168.120.0/0 area 0.0.0.0

access-list localhost permit 127.0.0.1/32

access-list localhost deny any

line vty

access-class localhost

```

你可以使用感叹号(`!`)或者井号(`#`)去注释掉这些行。我们来快速浏览一下这些选项。

* `hostname` 可以是你希望的任何内容。这里不是一般意义上的 Linux 主机名,但是,当你使用 `vtysh` 或者 `telnet` 登入时,你将看到它们。

* `log file` 是你希望用于保存日志的任意文件。

* `router` 指定路由协议。

* `ospf router-id` 是任意的 32 位数字。使用路由器的一个 IP 地址就是很好的选择。

* `network` 定义你的路由器要通告的网络。

* `access-list` 限制 `vtysh` 登入,它是 Quagga 命令行 shell,它允许本地机器登入,并拒绝任何远程管理。

### Debian/Ubuntu

在你启动守护程序之前,Debian/Ubuntu 相对其它的 Debian 衍生版可能多需要一步到多步。编辑 `/etc/quagga/daemons` ,除了 `zebra=yes` 和 `ospfd=yes` 外,使其它所有的行的值为 `no`。

然后,在 Debian 上运行 `ospfd` 去启动 Quagga:

```

# systemctl start quagga

```

在大多数的其它 Linux 上,包括 Fedora 和 openSUSE,用如下命令启动 `ospfd`:

```

# systemctl start ospfd

```

现在,Host 1 和 Host 2 将可以互相 ping 通对方和路由器。

这里用了许多篇幅去描述非常简单的设置。在现实中,路由器将连接两个交换机,然后为连接到这个交换机上的所有电脑提供一个网关。你也可以在你的路由器上添加更多的网络接口,这样你的路由器就可以为更多的网络提供路由服务,或者也可以直接连接到其它路由器上,或者连接到连接其它路由器的骨干网络上。

你或许不愿意如此麻烦地手工配置网络接口。最简单的方法是使用你的 DHCP 服务器去宣告你的路由器。如果你使用了 Dnsmasq,那么你就有了一个 DHCP 和 DNS 的一体化解决方案。

还有更多的配置选项,比如,加密的密码保护。更多内容请查看 [Quagga 路由套件](https://www.quagga.net/) 的官方文档。

---

via: <https://www.linux.com/learn/intro-to-linux/2018/3/dynamic-linux-routing-quagga>

作者:[CARLA SCHRODER](https://www.linux.com/users/cschroder) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,709 | Audacity 快速指南:快速消除背景噪音 | https://fedoramagazine.org/audacity-quick-tip-quickly-remove-background-noise/ | 2018-06-03T11:01:00 | [

"Audacity",

"噪音"

] | https://linux.cn/article-9709-1.html |

当在笔记本电脑上录制声音时 —— 比如首次简单地录屏 —— 许多用户通常使用内置麦克风。但是,这些小型麦克风也会捕获很多背景噪音。在这个快速指南中,我们会学习如何使用 Fedora 中的 [Audacity](https://www.audacityteam.org/) 快速移除音频文件中的背景噪音。

### 安装 Audacity

Audacity 是 Fedora 中用于混合、剪切和编辑音频文件的程序。在 Fedora 上它支持各种开箱即用的格式 - 包括 MP3 和 OGG。从软件中心安装 Audacity。

如果你更喜欢终端,请使用以下命令:

```

sudo dnf install audacity

```

### 导入您的音频、样本背景噪音

安装 Audacity 后,打开程序,使用 “File > Import” 菜单项导入你的声音。这个例子使用了一个[来自 freesound.org 添加了噪音的声音](https://freesound.org/people/levinj/sounds/8323/):

* <https://ryanlerch.fedorapeople.org/noise.ogg?_=1>

接下来,采样要滤除的背景噪音。导入音轨后,选择仅包含背景噪音的音轨区域。然后从菜单中选择 “Effect > Noise Reduction”,然后按下 “Get Noise Profile” 按钮。

### 过滤噪音

接下来,选择你要过滤噪音的音轨区域。通过使用鼠标进行选择,或者按 `Ctrl + a` 来选择整个音轨。最后,再次打开 “Effect > Noise Reduction” 对话框,然后单击确定以应用滤镜。

此外,调整设置,直到你的音轨听起来更好。这里是原始文件,接下来是用于比较的降噪音轨(使用默认设置):

* <https://ryanlerch.fedorapeople.org/sidebyside.ogg?_=2>

---

via: <https://fedoramagazine.org/audacity-quick-tip-quickly-remove-background-noise/>

作者:[Ryan Lerch](https://fedoramagazine.org/introducing-flatpak/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | When recording sounds on a laptop — say for a simple first screencast — many users typically use the built-in microphone. However, these small microphones also capture a lot of background noise. In this quick tip, learn how to use [Audacity](https://www.audacityteam.org/) in Fedora to quickly remove the background noise from audio files.

### Installing Audacity

Audacity is an application in Fedora for mixing, cutting, and editing audio files. It supports a wide range of formats out of the box on Fedora — including MP3 and OGG. Install Audacity from the Software application.

If the terminal is more your speed, use the command:

sudo dnf install audacity

### Import your Audio, sample background noise

After installing Audacity, open the application, and import your sound using the **File > Import** menu item. This example uses a [sound bite from freesound.org](https://freesound.org/people/levinj/sounds/8323/) to which noise was added:

Next, take a sample of the background noise to be filtered out. With the tracks imported, select an area of the track that contains only the background noise. Then choose **Effect > Noise Reduction** from the menu, and press the **Get Noise Profile** button.

### Filter the Noise

Next, select the area of the track you want to filter the noise from. Do this either by selecting with the mouse, or **Ctrl + a** to select the entire track. Finally, open the **Effect > Noise Reduction** dialog again, and click OK to apply the filter.

Additionally, play around with the settings until your tracks sound better. Here is the original file again, followed by the noise reduced track for comparison (using the default settings):

## Cătălin George Feștilă

I don’t know very well audacity .

Can be done noise in real time ?

## Jeff Hachtel

I believe any “real time” noise reduction will need to be done by you as you record. This means being in a quiet room with no electronic interference, microphones set up as optimally as possible. Even so, even with good mics, there is usually noise that needs to be reduced using the software.

## Klaatu

Audacity doesn’t really do realtime effects. If you want to run sound through a filter (like a noise gate or a high-pass or low-pass filter or an EQ) then you can do that in applications like Ardour or Qtractor.

Otherwise, Audacity does process noise removal pretty quickly even on an old “slow” computer, so while it’s not realtime processing, it’s still pretty painless.

## Creak

Thanks for this tips, I didn’t know how to do this kind of stuff. Testing on one of my files, it works pretty well!

## JaspEr

You read in minds or what?

I was just looking around for: how the heck do I remove all this noise?

THANKS! |

9,710 | diff 与 patch 的使用 | http://locez.com/linux/diff-and-patch/ | 2018-06-03T11:21:06 | [

"diff",

"patch",

"补丁"

] | https://linux.cn/article-9710-1.html |

### 摘要

在 Linux 的日常使用中,我们经常需要修改一些配置文件,然而在软件升级以后,经常会面临配置更新后与原配置部分不兼容的问题(当然我们更多的可能是来制作软件升级的补丁)。在这种情况下我们通常有两种选择:

* 对比现有配置,手动在新配置文件中改动

* 利用 `sed`、`awk` 等工具配合改动

* 采用 `diff` 与 `patch` 制作增量补丁的方式改动

本文主要通过一个升级`awesome` 配置的例子,对第三种方法进行介绍和讲解。

### diff 介绍

`diff` 是一个文件比较工具,可以逐行比较两个文件的不同,其中它有三种输出方式,分别是 `normal`, `context` 以及 `unified`。区别如下:

* `normal` 方式为默认输出方式,不需要加任何参数

* `context` 相较于 `normal` 模式的简单输出,`contetx` 模式会输出修改过部分的上下文,默认是前后 **3** 行。使用参数 `-c`

* `unified` 合并上下文模式则为新的上下文输出模式,同样为前后 **3** 行,只不过把上下文合并了显示了。使用参数 `-u`

**注**:本文主要介绍 `unified` 模式

其他常用参数:

* `-r` 递归处理目录

* `-N` 将缺失的文件当作空白文件处理

#### diff 语法与文件格式

```

diff [options] old new

```

先来看一个简单的例子:

```

$ cat test1

linux

linux

linux

linux

```

```

$ cat test2

locez

linux

locez

linux

```

此时输入 `diff -urN test1 test2` 会输出以下信息:

```

--- test1 2018-05-12 18:39:41.508375114 +0800

+++ test2 2018-05-12 18:41:00.124031736 +0800

@@ -1,4 +1,4 @@

+locez

linux

-linux

-linux

+locez

linux

```

先看前面 2 行,这两行为文件的基本信息,`---` 开头为改变前的文件,`+++` 开头为更新后的文件。

```

--- test1 2018-05-12 18:39:41.508375114 +0800

+++ test2 2018-05-12 18:41:00.124031736 +0800

```

第三行为上下文描述块,其中 `-1,4` 为旧文件的 4 行上下文,`+1,4` 为新文件的:

```

@@ -1,4 +1,4 @@

```

而具体到块里面的内容,前面有 `-` 号的则为删除,有 `+` 号为新增,不带符号则未做改变,仅仅是上下文输出。

### patch 介绍

`patch` 是一个可以将 `diff` 生成的**补丁**应用到源文件,生成一个打过补丁版本的文件。语法:

```

patch [oiption] [originalfile [patchfile]]

```

常用参数:

* `-i` 指定补丁文件

* `-pNum` 在 `diff` 生成的补丁中,第一二行是文件信息,其中文件名是可以包含路径的,例如 `--- /tmp/test1 2018-05-12 18:39:41.508375114 +0800` 其中 `-p0` 代表完整的路径 `/tmp/test1`,而 `-p1` 则指 `tmp/test1`,`-pN` 依此类推

* `-E` 删除应用补丁后为空文件的文件

* `-o` 输出到一个文件而不是直接覆盖文件

### 应用

awesome 桌面 3.5 与 4.0 之间的升级是不兼容的,所以在升级完 4.0 以后,awesome 桌面部分功能无法使用,因此需要迁移到新配置,接下来则应用 `diff` 与 `patch` 实现迁移,当然你也可以单纯使用 `diff` 找出不同,然后手动修改新配置。

现在有以下几个文件:

* `rc.lua.3.5` 3.5 版本的默认配置文件,未修改

* `rc.lua.myconfig` 基于 3.5 版本的个人配置文件

* `rc.lua.4.2` 4.2 新默认配置,未修改

思路为利用 `diff` 提取出个人配置与 3.5 默认配置文件的增量补丁,然后把补丁应用在 4.2 的文件上实现迁移。

#### 制作补丁

```

$ diff -urN rc.lua.3.5 rc.lua.myconfig > mypatch.patch

```

#### 应用补丁

```

$ patch rc.lua.4.2 -i mypatch.patch -o rc.lua

patching file rc.lua (read from rc.lua.4.2)

Hunk #1 FAILED at 38.

Hunk #2 FAILED at 55.

Hunk #3 succeeded at 101 with fuzz 1 (offset 5 lines).

Hunk #4 succeeded at 276 with fuzz 2 (offset 29 lines).

2 out of 4 hunks FAILED -- saving rejects to file rc.lua.rej

```

显然应用没有完全成功,其中在 38 行以及 55 行应用失败,并记录在 `rc.lua.rej` 里。

```

$ cat rc.lua.rej

--- rc.lua.3.5 2018-05-12 19:15:54.922286085 +0800

+++ rc.lua.myconfig 2018-05-12 19:13:35.057911463 +0800

@@ -38,10 +38,10 @@

-- {{{ Variable definitions

-- Themes define colours, icons, font and wallpapers.

-beautiful.init("@AWESOME_THEMES_PATH@/default/theme.lua")

+beautiful.init("~/.config/awesome/default/theme.lua")

-- This is used later as the default terminal and editor to run.

-terminal = "xterm"

+terminal = "xfce4-terminal"

editor = os.getenv("EDITOR") or "nano"

editor_cmd = terminal .. " -e " .. editor

@@ -55,18 +55,18 @@

-- Table of layouts to cover with awful.layout.inc, order matters.

local layouts =

{

- awful.layout.suit.floating,

- awful.layout.suit.tile,

- awful.layout.suit.tile.left,

- awful.layout.suit.tile.bottom,

- awful.layout.suit.tile.top,

+-- awful.layout.suit.floating,

+-- awful.layout.suit.tile,

+-- awful.layout.suit.tile.left,

+-- awful.layout.suit.tile.bottom,

+-- awful.layout.suit.tile.top,

awful.layout.suit.fair,

awful.layout.suit.fair.horizontal,

awful.layout.suit.spiral,

awful.layout.suit.spiral.dwindle,

awful.layout.suit.max,

awful.layout.suit.max.fullscreen,

- awful.layout.suit.magnifier

+-- awful.layout.suit.magnifier

}

-- }}}

```

这里是主题,终端,以及常用布局的个人设置。

#### 修正补丁

再次通过对比补丁文件与 4.2 文件,发现 38 行区块是要删除的东西不匹配,而 55 行区块则是上下文与要删除的内容均不匹配,导致不能应用补丁,于是手动修改补丁

```

$ vim mypatch.patch

```

```

--- rc.lua.3.5 2018-05-12 19:15:54.922286085 +0800

+++ rc.lua.myconfig 2018-05-12 19:13:35.057911463 +0800

@@ -38,10 +38,10 @@

-- {{{ Variable definitions

-- Themes define colours, icons, font and wallpapers.

-beautiful.init(gears.filesystem.get_themes_dir() .. "default/theme.lua")

+beautiful.init("~/.config/awesome/default/theme.lua")

-- This is used later as the default terminal and editor to run.

-terminal = "xterm"

+terminal = "xfce4-terminal"

editor = os.getenv("EDITOR") or "nano"

editor_cmd = terminal .. " -e " .. editor

@@ -55,18 +55,18 @@

-- Table of layouts to cover with awful.layout.inc, order matters.

awful.layout.layouts = {

- awful.layout.suit.floating,

- awful.layout.suit.tile,

- awful.layout.suit.tile.left,

- awful.layout.suit.tile.bottom,

- awful.layout.suit.tile.top,

+-- awful.layout.suit.floating,

+-- awful.layout.suit.tile,

+-- awful.layout.suit.tile.left,

+-- awful.layout.suit.tile.bottom,

+-- awful.layout.suit.tile.top,

awful.layout.suit.fair,

awful.layout.suit.fair.horizontal,

awful.layout.suit.spiral,

awful.layout.suit.spiral.dwindle,

awful.layout.suit.max,

awful.layout.suit.max.fullscreen,

- awful.layout.suit.magnifier,

+-- awful.layout.suit.magnifier,

awful.layout.suit.corner.nw,

-- awful.layout.suit.corner.ne,

-- awful.layout.suit.corner.sw,

....

....

```

输出省略显示,有兴趣的读者可以仔细与`rc.lua.rej` 文件对比,看看笔者是怎样改的。

#### 再次应用补丁

```

$ patch rc.lua.4.2 -i mypatch.patch -o rc.lua

patching file rc.lua (read from rc.lua.4.2)

Hunk #1 succeeded at 41 (offset 3 lines).

Hunk #2 succeeded at 57 with fuzz 2 (offset 2 lines).

Hunk #3 succeeded at 101 with fuzz 1 (offset 5 lines).

Hunk #4 succeeded at 276 with fuzz 2 (offset 29 lines).

$ cp rc.lua ~/.config/awesome/rc.lua ### 打完补丁直接使用

```

### 总结

`diff` 与 `patch` 配合使用,能当增量备份,而且还可以将补丁分发给他人使用,而且在日常的软件包打补丁也具有重要的意义,特别是内核补丁或者一些驱动补丁,打补丁遇到错误时候可以尝试自己修改,已满足自身特殊要求,修改的时候一定要抓住 2 个非常重要的要素:

1. 要修改的内容是否匹配?特别是要删除的

2. 上下文是否满足,特别是距离要修改的地方前后一行,以及上下文的行数是否满足,默认是 3 行上下文

| 301 | Moved Permanently | null |

9,711 | 异步决策:帮助远程团队走向成功 | https://opensource.com/article/17/12/asynchronous-decision-making | 2018-06-03T17:12:07 | [

"决策"

] | https://linux.cn/article-9711-1.html |

>

> 更好的沟通和少量的会议并不是白日梦。这里告诉您异步决策是如何实现这一切的。

>

>

>

异步决策能够让地理和文化上分散的软件团队更有效率地做出决策。本文就将讨论一下实现异步决策所需要的一些原则和工具。

同步决策,要求参与者实时地进行互动,而这对那些需要<ruby> <a href="http://www.paulgraham.com/makersschedule.html"> 大块完整时间工作 </a> <rt> Maker's Schedule </rt></ruby>的人来说代价非常大,而且对于远程团队来说这也不现实。我们会发现这种会议最后浪费的时间让人难以置信。

相比之下,异步决策常应用于大型开源项目中(比如我常参与的 Apache 软件基金会 ASF)。它为团队提供了一种尽可能少开会的有效方法。很多开源项目每年只开很少的几次会议(有的甚至完全没开过会),然而开发团队却始终如一地在生产高质量的软件。

怎样才能异步决策呢?

### 所需工具

#### 中心化的异步沟通渠道

异步决策的第一步就是构建一个中心化的异步沟通渠道。你所使用的技术必须能让所有的团队成员都获得同样的信息,并能进行<ruby> 线索讨论 <rt> threaded discussions </rt></ruby>,也就是说你要既能对一个主题进行发散也要能封禁其他主题的讨论。想一想航海专用无线电台,其中广播渠道的作用只是为了引起特定人员的注意,这些人然后再创建一个子渠道来进行详细的讨论。

很多开源项目依旧使用邮件列表作为中心渠道,不过很多新一代的软件开发者可能会觉得这个方法又古老又笨拙。邮件列表需要遵循大量的准则才能有效的管理热门项目,比如你需要进行有意义的引用,每个线索只讨论一个主题,保证 [标题与内容相吻合](https://grep.codeconsult.ch/2017/11/10/large-mailing-lists-survival-guide/)。虽然这么麻烦,但使用得当的话,再加上一个经过索引的归档系统,邮件列表依然在创建中心渠道的工具中占据绝对主导的地位。

公司团队可以从一个更加现代化的协作工具中收益,这类工具更易使用并提供了更加强大的多媒体功能。不管你用的是哪个工具,关键在于要创建一个能让大量的人员有效沟通并异步地讨论各种主题的渠道。要创建一个一致而活跃的社区,使用一个 [繁忙的渠道要好过建立多个渠道](https://grep.codeconsult.ch/2011/12/06/stefanos-mazzocchis-busy-list-pattern/)。

#### 构建共识的机制

第二个工具是一套构建共识的机制,这样你才不会陷入死循环从而确保能做出决策。做决策最理想的情况就是一致同意,而次佳的就是达成共识,也就是 “有决策权的人之间广泛形成了一致的看法”。强求完全一致的赞同或者允许一票否决都会阻碍决策的制定,因此 ASF 中只在非常有限的决策类型中允许否决权。[ASF 投票制度](http://www.apache.org/foundation/voting.html) 为类似 ASF 这样没有大老板的松散组织构建了一个久经考验的,用于达成共识的好方法。当共识无法自然产生时也可以使用该套制度。

#### 案例管理系统

如上所述,我们通常在项目的中心渠道中构建共识。但是在讨论一些复杂的话题时,使用案例管理系统这一第三方的工具很有意义。小组可以使用中心渠道专注于非正式的讨论和头脑风暴上,当讨论要转变成一个决策时将其转到一个更加结构化的案例管理系统中去。

案例管理系统能够更精确地组织决策。小型团队不用做太多决策可以不需要它,但很多团队会发现能有一个相对独立的地方讨论决策的细节并保存相关信息会方便很多。

案例管理系统不一定就是个很复杂的软件; 在 ASF 中我们所使用的只是简单的问题跟踪软件而已,这些基于网页的系统原本是创建来进行软件支持和 bug 管理的。每个案例列在一个单独的网页上,还有一些历史的注释和动作信息。该途径可以很好的追踪决策是怎么制定出来的。比如,某些非紧急的决策或者复杂的决策可能会花很长时间才会制定出来,这时有一个地方能够了解这些决策的历史就很有用了。新来的团队成员也能很快地了解到最近做出了哪些决策,哪些决策还在讨论,每个决策都有那些人参与其中,每个决策的背景是什么。

### 成功的案例

ASF 董事会中的九名董事在每个月的电话会议上只做很少的一些决策,耗时不超过 2 个小时。在准备这些会议之前,大多数的决策都预先通过异步的方式决定好了。这使得我们可以在会议上集中讨论复杂和难以确定的问题,而不是讨论那些已经达成普遍或部分共识的问题上。

软件世界外的一个有趣的案例是 [瑞士联邦委员会的周会](https://www.admin.ch/gov/en/start/federal-council/tasks/decision-making/federal-council-meeting.html),它的运作方式跟 ASF 很类似。团队以异步决策构建共识的方式来准备会议。会议议程由一组不同颜色编码的列表组成,这些颜色标识了那些事项可以很快通过批准,那些事项需要进一步的讨论,哪些事项特别的复杂。这使得只要 7 个人就能每年忙完超过 2500 项决策,共 50 个周会,每个周会只需要几个小时时间。我觉得这个效率已经很高了。

就我的经验来看,异步决策带来的好处完全值得上为此投入的时间和工具。而且它也能让团队成员更快乐,这也是成功的关键因素之一。

---

via: <https://opensource.com/article/17/12/asynchronous-decision-making>

作者:[Bertrand Delacretaz](https://opensource.com) 译者:[lujun9972](https://github.com/lujun9972) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | *Asynchronous decision-making* is a strategy that enables geographically and culturally distributed software teams to make decisions more efficiently. In this article, I'll discuss some of the principles and tools that make this approach possible.

*Synchronous decision-making*, in which participants interact with each other in real time, can be expensive for people who work on a [Maker's Schedule](http://www.paulgraham.com/makersschedule.html), and they are often impractical for remote teams. We've all seen how such meetings can devolve into inefficient time wasters that we all dread and avoid.

In contrast, asynchronous decision-making, which is often used in large open source projects—for example, at the Apache Software Foundation (ASF), where I'm most active—provides an efficient way for teams to move forward with minimal meetings. Many open source projects involve only a few meetings each year (and some none at all), yet development teams consistently produce high-quality software.

How does asynchronous decision-making work?

## Required tools

### Central asynchronous communications channel

The first thing you need to enable asynchronous decision-making is a central asynchronous communications channel. The technology you use must enable all team members to get the same information and hold threaded discussions, where you can branch off on a topic while ignoring other topics being discussed on the same channel. Think of marine radio, in which a broadcast channel is used sparingly to get the attention of specific people, who then branch off to a sub-channel to have more detailed discussions.

Many open source projects still use mailing lists as this central channel, although younger software developers might consider this approach old and clunky. Mailing lists require a lot of discipline to efficiently manage the high traffic of a busy project, particularly when it comes to meaningful quoting, sticking to one topic per thread, and making sure [subject lines are relevant](https://grep.codeconsult.ch/2017/11/10/large-mailing-lists-survival-guide/). Still, when used properly and coupled with an indexed archive, mailing lists remain the most ubiquitous tool to create a central channel.

Corporate teams might benefit from more modern collaboration tools, which can be easier to use and provide stronger multimedia features. Regardless of which tools you use, the key is to create a channel in which large groups of people can communicate efficiently and asynchronously on a wide variety of topics. A [busy channel is often preferable to multiple channels](https://grep.codeconsult.ch/2011/12/06/stefanos-mazzocchis-busy-list-pattern/) to create a consistent and engaged community.

### Consensus-building mechanism

The second tool is a mechanism for building consensus so you can avoid deadlocks and ensure that decisions go forward. Unanimity in decision-making is ideal, but *consensus*, defined as "widespread agreement among people who have decision power," is second-best. Requiring unanimity or allowing vetoes in decision-making can block progress, so at the ASF vetoes apply only to a very limited set of decision types. The [ASF's voting rules](http://www.apache.org/foundation/voting.html) constitute a well-established and often-emulated approach to building consensus in loosely coupled teams which, like the ASF, may have no single boss. They can also be used when consensus doesn't emerge naturally.

### Case management system

Building consensus usually happens on the project's central channel, as described above. But for complex topics, it often makes sense to use a third tool: a case management system. Groups can then focus the central channel on informal discussions and brainstorming, then move to a more structured case management system when a discussion evolves into a decision.

The case management system organizes decisions more precisely. Smaller teams with fewer decisions to make could work without one, but many find it convenient to be able to discuss the details of a given decision and keep associated information in a single, relatively isolated place.

A case management system does not require complex software; at the ASF we use simple *issue trackers*, web-based systems originally created for software support and bug management. Each case is handled on a single web page, with a history of comments and actions. This approach works well for keeping track of decisions and the paths that lead to them. Some non-urgent or complex decisions can take a long time to reach closure, for example, and it's useful to have their history in a single place. New team members can also get up to speed quickly by learning which decisions were made most recently, which remain outstanding, who's involved in each one, and the background behind each decision.

## Success stories

The nine members of ASF's board of directors make a few dozen decisions at each monthly phone meeting, which last less than two hours. We carefully prepare for these meetings by making most of our decisions asynchronously in advance. This allows us to focus the meeting on the complex or uncertain issues rather than the ones that already have full or partial consensus.

An interesting example outside of the software world is the [Swiss Federal Council's weekly meeting](https://www.admin.ch/gov/en/start/federal-council/tasks/decision-making/federal-council-meeting.html), which runs in a way similar to ASF. Teams prepare for the meeting by using asynchronous decision-making to build consensus. The meeting agenda consists of a set of color-coded lists that indicate which items can be approved quickly, which need more discussion, and which are expected to be most complex. This allows seven busy people to make more than 2,500 decisions each year, in about 50 weekly sessions of a few hours each. Sounds pretty efficient to me.

In my experience, the benefits of asynchronous decision-making are well worth the investment in time and tools. It also leads to happier team members, which is a key component of success.

## 2 Comments |

9,712 | 最小权限的容器编排 | https://blog.docker.com/2017/10/least-privilege-container-orchestration/ | 2018-06-03T21:57:35 | [

"容器",

"编排",

"Docker",

"Swarm"

] | https://linux.cn/article-9712-1.html |

Docker 平台和容器已经成为打包、部署、和管理应用程序的标准。为了在一个集群内协调跨节点的容器,必须有一个关键的能力:一个容器编排器。

对于关键的集群化以及计划的任务,编排器是起重大作用的,比如:

* 管理容器计划和资源分配。

* 支持服务发现和无缝的应用程序部署。

* 分配应用程序运行必需的资源。

不幸的是,在这种环境下,编排器的分布式特性和资源的短暂性使得确保编排器的安全是一个极具挑战性的任务。在这篇文章中,我们将讨论容器编排器安全模型中没有考虑到的、但是很重要的这方面的详细情况,以及 Docker 企业版中如何使用内置的编排性能、Swarm 模式,去克服这些问题。

### 诱因和威胁模型

使用 swarm 模式的 Docker 企业版的其中一个主要目标是提供一个内置安全特性的编排器。为达到这个目标,我们部署了第一个在我们心目中认为的以最小权限原则设计的容器编排器。

在计算机科学中,一个分布式系统所要求的最小权限原则是,系统中的每个参与者仅仅能访问它正当目的所需要的信息和资源。不是更多,也不是更少。

>

> “一个进程必须仅仅能去访问它的正当目的所需要的信息和资源”

>

>

>

#### 最小权限原则

在一个 Docker 企业版集群中的每个节点分配的角色:既不是管理者(manager),也不是工人(worker)。这些角色为节点定义了一个很粗粒度的权限级别:分别进行管理和任务执行。尽管如此,不用理会它的角色,通过使用加密的方式,来保证一个节点仅仅有执行它的任务所需要的信息和资源。结果是,确保集群安全变得更容易了,甚至可以防止大多数的有经验的攻击者模式:攻击者控制了底层通讯网络,或者甚至攻陷了集群节点。

### 内核缺省安全

这是一个很老的安全最大化状态:如果它不是缺省的,就没人用它。Docker Swarm 模式将缺省安全这一概念融入了核心,并且使用这一机制去解决编排器生命周期中三个很难并且很重要的部分:

1. 可信引导和节点引入。

2. 节点身份发布和管理。

3. 认证、授权和加密的信息存储和传播。

我们来分别看一下这三个部分:

#### 可信引导和节点引入

确保集群安全的第一步,没有别的,就是严格控制成员和身份。管理员不能依赖它们节点的身份,并且在节点之间强制实行绝对的负载隔离。这意味着,未授权的节点不能允许加入到集群中,并且,已经是集群一部分的节点不能改变身份,突然去伪装成另一个节点。

为解决这种情况,通过 Docker 企业版 Swarm 模式管理的节点,维护了健壮的、不可改变的身份。期望的特性是,通过使用两种关键的构建块去保证加密:

1. 为集群成员使用<ruby> 安全加入令牌 <rt> Secure join token </rt></ruby>。

2. 从一个集中化的认证机构发行的内嵌唯一身份的证书。

##### 加入 Swarm

要加入 Swarm,节点需要一份<ruby> 安全加入令牌 <rt> Secure join token </rt></ruby>的副本。在集群内的每个操作角色的令牌都是独一无二的 —— 现在有两种类型的节点:工人(workers)和管理者(managers)。由于这种区分,拥有一个工人令牌的节点将不允许以管理者角色加入到集群。唯一得到这个特殊令牌的方式是通过 swarm 的管理 API 去向集群管理者请求一个。

令牌是安全的并且是随机生成的,它还有一个使得令牌泄露更容易被检测到的特殊语法:一个可以在你的日志和仓库中很容易监视的特殊前缀。幸运的是,即便发现一个泄露,令牌也可以很容易去更新,并且,推荐你经常去更新它们 —— 特别是,在一段时间中你的集群不进行扩大的情况下。

##### 引导信任

作为它的身份标识创建的一部分,一个新的节点将向任意一个网络管理者请求发布一个新的身份。但是,在我们下面的威胁模型中,所有的通讯可以被一个第三方拦截。这种请求存在的问题是:一个节点怎么知道与它进行对话的对方是合法的管理者?

幸运的是,Docker 有一个内置机制可以避免这种情况。这个加入令牌被主机用于加入 Swarm,包含了一个根 CA 证书的哈希串。所以,主机可以使用单向 TLS,并且使用这个哈希串去验证它加入的 Swarm 是否正确:如果管理者持有的证书没有被正确的 CA 哈希串签名,节点就知道它不可信任。

#### 节点身份发布和管理

在一个 Swarm 中,身份标识是内嵌在每个节点都单独持有的一个 x509 证书中。在一个最小权限原则的表现形式中,证书的私钥被绝对限制在主机的原始位置。尤其是,管理者不能访问除了它自己的私钥以外的任何一个私钥。

##### 身份发布

要接收它们的证书而无需共享它们的私钥,新的主机通过发布一个证书签名请求(CSR)来开始,管理者收到证书签名请求之后,转换成一个证书。这个证书成为这个新的主机的身份标识,使这个节点成为 Swarm 的一个完全合格成员!

当和安全引导机制一起使用时,发行身份标识的这个机制来加入节点是缺省安全的:所有的通讯部分都是经过认证的、授权的,并且非敏感信息从来都不会以明文方式进行交换。

##### 身份标识延期

尽管如此,给一个 Swarm 中安全地加入节点,仅仅是 “故事” 的一部分。为降低证书的泄露或者失窃造成的影响,并且移除管理 CRL 列表的复杂性,Swarm 模式为身份标识使用了较短存活周期的证书。这些证书缺省情况下三个月后将过期,但是,也可以配置为一个小时后即刻过期!

较短的证书过期时间意味着不能手动去处理证书更新,所以,通常会使用一个 PKI 系统。对于 Swarm,所有的证书是以一种不中断的方式进行自动更新的。这个过程很简单:使用一个相互认证的 TLS 连接去证明一个特定身份标识的所有者,一个 Swarm 节点定期生成一个新的公钥/私钥密钥对,并且用相关的 CSR 去签名发送,创建一个维持相同身份标识的完整的新证书。

#### 经过认证、授权、和加密的信息存储和传播。

在一个正常的 Swarm 的操作中,关于任务的信息被发送给去运行的工人(worker)节点。这里不仅仅包含将被一个节点运行的容器的信息;也包含那个容器运行所必需的资源的所有信息,包括敏感的机密信息,比如,私钥、密码和 API 令牌。

##### 传输安全

事实上,参与 Swarm 的每个节点都拥有一个独一无二的 X509 格式的证书,因此,节点之间的通讯安全是没有问题的:节点使用它们各自的证书,与另一个连接方进行相互认证、继承机密、真实性、和 TLS 的完整性。

关于 Swarm 模式的一个有趣的细节是,本质上它是使用了一个推送模式:仅管理者被允许去发送信息到工人们(workers)—— 显著降低了暴露在低权限的工人节点面前的管理者节点的攻击面。

##### 将负载严格隔离进安全区域

管理者节点的其中一个责任是,去决定发送到每个工人(worker)节点的任务是什么。管理者节点使用多种策略去做这个决定;根据每个节点和每个负载的特性,去跨 Swarm 去安排负载。

在使用 Swarm 模式的 Docker 企业版中,管理者节点通过使用附加到每个单个节点标识上的安全标签,去影响这些安排决定。这些标签允许管理者将节点组与不同的安全区域连接到一起,以限制敏感负载暴露,以及使相关机密信息更安全。

##### 安全分发机密

除了加快身份标识发布过程之外,管理者节点还有存储和分发工人节点所需要的任何资源的任务。机密信息像任何其它类型的资源一样处理,并且基于安全的 mTLS 连接,从管理者推送到工人节点。

在主机上,Docker 企业版能确保机密仅提供给它们指定的容器。在同一个主机上的其它容器并不能访问它们。Docker 以一个临时文件系统的方式显露机密给一个容器,确保机密总是保存在内存中,并且从不写入到磁盘。这种方式比其它竞争的替代者更加安全,比如,[在环境变量中存储它们](https://diogomonica.com/2017/03/27/why-you-shouldnt-use-env-variables-for-secret-data/)。一旦这个任务完成,这个机密将永远消失。

##### 存储机密

在管理者主机上的机密总是保持加密的。缺省情况下,加密这些机密的密钥(被称为数据加密密钥,DEK)是以明文的方式存储在硬盘上的。这使得那些对安全性要求较低的人可以轻松地去使用 Docker Swarm 模式。

但是,如果你运行一个生产集群,我们推荐你启用自动锁定模式。当自动锁定模式启用后,一个重新更新过的 DEK 被一个独立的加密密钥的密钥(KEK)所加密。这个密钥从不被存储在集群中;管理者有责任将它存储在一个安全可靠的地方,并且当集群启动的时候可以提供它。这就是所谓的 “解锁” Swarm。

根据 Raft 故障容错一致性算法,Swarm 模式支持多个管理者。在这个场景中,无缝扩展了机密存储的安全性。每个管理者主机除了共享密钥之外,还有一个唯一的磁盘加密密钥。幸运的是,Raft 日志在磁盘上也是加密的,并且,在自动锁定模式下,没有 KEK 同样是不可访问的。

#### 当一个节点被攻陷后发生了什么?

在传统的编排器中,挽回一台被攻陷的主机是一个缓慢而复杂的过程。使用 Swarm 模式,恢复它就像运行一个 Docker 节点的 `rm` 命令一样容易。这是从集群中删除一个受影响的节点,而 Docker 将去处理剩下的事情,即,重新均衡负载,并且确保其它的主机已经知道,而不会去与受影响的节点通讯。

正如我们看到的那样,感谢最小权限的编排器,甚至是,如果攻击者在主机上持续活动,它们将被从剩余的网络上切断。主机的证书 —— 它的身份标识 —— 被列入黑名单,因此,管理者也不能有效访问它。

### 结论

使用 Swarm 模式的 Docker 企业版,在缺省情况下确保了编排器的所有关键区域的安全:

* 加入集群。阻止恶意节点加入到集群。

* 把主机分组为安全区域。阻止攻击者的横向移动。

* 安排任务。任务将仅被委派到允许的节点。

* 分配资源。恶意节点不能 “盗取” 其它的负载或者资源。

* 存储机密。从不明文保存并且从不写入到工人节点的磁盘上。

* 与工人节点的通讯。使用相互认证的 TLS 加密。

因为 Swarm 模式的持续改进,Docker 团队正在努力将最小权限原则进一步推进。我们正在处理的一个任务是:如果一个管理者被攻陷了,怎么去保证剩下的节点的安全?路线图已经有了,其中一些功能已经可以使用,比如,白名单功能,仅允许特定的 Docker 镜像,阻止管理者随意运行负载。这是通过 Docker 可信内容来实现的。

---

via: <https://blog.docker.com/2017/10/least-privilege-container-orchestration/>

作者:[Diogo Mónica](https://blog.docker.com/author/diogo/) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,713 | Go 程序的持续分析 | https://medium.com/google-cloud/continuous-profiling-of-go-programs-96d4416af77b | 2018-06-04T11:00:21 | [

"优化",

"Go"

] | https://linux.cn/article-9713-1.html |

Google 最有趣的部分之一就是我们规模庞大的持续分析服务。我们可以看到谁在使用 CPU 和内存,我们可以持续地监控我们的生产服务以争用和阻止配置文件,并且我们可以生成分析和报告,并轻松地告诉我们可以进行哪些有重要影响的优化。

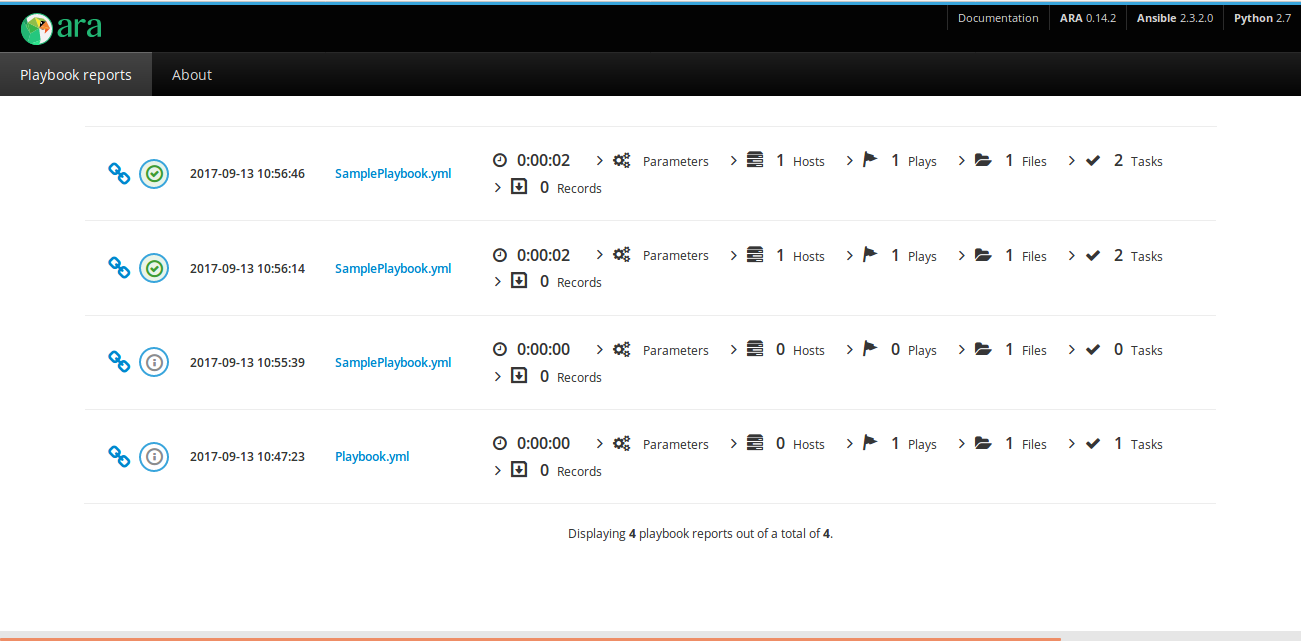

我简单研究了 [Stackdriver Profiler](https://cloud.google.com/profiler/),这是我们的新产品,它填补了针对云端用户在云服务范围内分析服务的空白。请注意,你无需在 Google 云平台上运行你的代码即可使用它。实际上,我现在每天都在开发时使用它。它也支持 Java 和 Node.js。

### 在生产中分析

pprof 可安全地用于生产。我们针对 CPU 和堆分配分析的额外会增加 5% 的开销。一个实例中每分钟收集 10 秒。如果你有一个 Kubernetes Pod 的多个副本,我们确保进行分摊收集。例如,如果你拥有一个 pod 的 10 个副本,模式,那么开销将变为 0.5%。这使用户可以一直进行分析。

我们目前支持 Go 程序的 CPU、堆、互斥和线程分析。

### 为什么?

在解释如何在生产中使用分析器之前,先解释为什么你想要在生产中进行分析将有所帮助。一些非常常见的情况是:

* 调试仅在生产中可见的性能问题。

* 了解 CPU 使用率以减少费用。

* 了解争用的累积和优化的地方。

* 了解新版本的影响,例如看到 canary 和产品级之间的区别。

* 通过[关联](https://rakyll.org/profiler-labels/)分析样本以了解延迟的根本原因来丰富你的分布式经验。

### 启用

Stackdriver Profiler 不能与 `net/http/pprof` 处理程序一起使用,并要求你在程序中安装和配置一个一行的代理。

```

go get cloud.google.com/go/profiler

```

在你的主函数中,启动分析器:

```

if err := profiler.Start(profiler.Config{

Service: "indexing-service",

ServiceVersion: "1.0",

ProjectID: "bamboo-project-606", // optional on GCP

}); err != nil {

log.Fatalf("Cannot start the profiler: %v", err)

}

```

当你运行你的程序后,profiler 包将每分钟报告给分析器 10 秒钟。

### 可视化

当分析被报告给后端后,你将在 <https://console.cloud.google.com/profiler> 上看到火焰图。你可以按标签过滤并更改时间范围,也可以按服务名称和版本进行细分。数据将会长达 30 天。

你可以选择其中一个分析,按服务,区域和版本分解。你可以在火焰中移动并通过标签进行过滤。

### 阅读火焰图

[Brendan Gregg](http://www.brendangregg.com/flamegraphs.html) 非常全面地解释了火焰图可视化。Stackdriver Profiler 增加了一点它自己的特点。

我们将查看一个 CPU 分析,但这也适用于其他分析。

1. 最上面的 x 轴表示整个程序。火焰上的每个框表示调用路径上的一帧。框的宽度与执行该函数花费的 CPU 时间成正比。

2. 框从左到右排序,左边是花费最多的调用路径。

3. 来自同一包的帧具有相同的颜色。这里所有运行时功能均以绿色表示。

4. 你可以单击任何框进一步展开执行树。

你可以将鼠标悬停在任何框上查看任何帧的详细信息。

### 过滤

你可以显示、隐藏和高亮符号名称。如果你特别想了解某个特定调用或包的消耗,这些信息非常有用。

1. 选择你的过滤器。你可以组合多个过滤器。在这里,我们将高亮显示 `runtime.memmove`。

2. 火焰将使用过滤器过滤帧并可视化过滤后的框。在这种情况下,它高亮显示所有 `runtime.memmove` 框。

---

via: <https://medium.com/google-cloud/continuous-profiling-of-go-programs-96d4416af77b>

作者:[JBD](https://medium.com/@rakyll?source=post_header_lockup) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | # Continuous Profiling of Go programs

One of the most interesting parts of Google is our fleet-wide continuous profiling service. We can see who is accountable for CPU and memory usage, we can continuously monitor our production services for contention and blocking profiles, and we can generate analysis and reports and easily can tell what are some highly impactful optimization projects we can work on.

I briefly worked on [Google Cloud Profiler](https://cloud.google.com/profiler/), our new product that is filling the cloud-wide profiling gap for Cloud users. Note that you DON’T need to run your code on Google Cloud Platform in order to use it. Actually, I use it at development time on a daily basis now. It also supports Java and Node.js.

## Profiling in production

pprof is safe to use in production. We target an additional 5% overhead for CPU and heap allocation profiling. The collection is happening for 10 seconds for every minute from a single instance. If you have multiple replicas of a Kubernetes pod, we make sure we do amortized collection. For example, if you have 10 replicas of a pod, the overhead will be 0.5%. This makes it possible for users to keep the profiling always on.

We currently support CPU, heap, mutex and thread profiles for Go programs.

## Why?

Before explaining how you can use the profiler in production, it would be helpful to explain why you would ever want to profile in production. Some very common cases are:

- Debug performance problems only visible in production.

- Understand the CPU usage to reduce billing.

- Understand where the contention cumulates and optimize.

- Understand the impact of new releases, e.g. seeing the difference between canary and production.

- Enrich your distributed traces by

[correlating](https://rakyll.org/profiler-labels/)them with profiling samples to understand the root cause of latency.

## Enabling

Stackdriver Profiler doesn’t work with the *net/http/pprof* handlers and require you to install and configure a one-line agent in your program.

`go get `[cloud.google.com/go/profiler](http://cloud.google.com/go/profiler)

And in your main function, start the profiler:

`if err := profiler.Start(profiler.Config{`

Service: "indexing-service",

ServiceVersion: "1.0",

ProjectID: "bamboo-project-606", // optional on GCP

}); err != nil {

log.Fatalf("Cannot start the profiler: %v", err)

}

Once you start running your program, the profiler package will report the profilers for 10 seconds for every minute.

## Visualization

As soon as profiles are reported to the backend, you will start seeing a flamegraph at [https://console.cloud.google.com/profiler](https://console.cloud.google.com/profiler). You can filter by tags and change the time span, as well as break down by service name and version. The data will be around up to 30 days.

You can choose one of the available profiles; break down by service, zone and version. You can move in the flame and filter by tags.

## Reading the flame

Flame graph visualization is explained by [Brendan Gregg](http://www.brendangregg.com/flamegraphs.html) very comprehensively. Stackdriver Profiler adds a little bit of its own flavor.

We will examine a CPU profile but all also applies to the other profiles.

- The top-most x-axis represents the entire program. Each box on the flame represents a frame on the call path. The width of the box is proportional to the CPU time spent to execute that function.

- Boxes are sorted from left to right, left being the most expensive call path.

- Frames from the same package have the same color. All runtime functions are represented with green in this case.

- You can click on any box to expand the execution tree further.

You can hover on any box to see detailed information for any frame.

## Filtering

You can show, hide and highlight by symbol name. These are extremely useful if you specifically want to understand the cost of a particular call or package.

- Choose your filter. You can combine multiple filters. In this case, we are highlighting runtime.memmove.

- The flame is going to filter the frames with the filter and visualize the filtered boxes. In this case, it is highlighting all runtime.memmove boxes. |

9,714 | 如何使用 Arduino 制作一个绘图仪 | https://opensource.com/article/18/3/diy-plotter-arduino | 2018-06-04T20:11:26 | [

"Arduino",

"绘图仪"

] | https://linux.cn/article-9714-1.html |

>

> 使用开源硬件和软件的 DIY 绘图仪可以自动地绘制、雕刻。

>

>

>

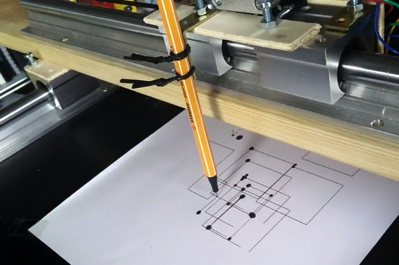

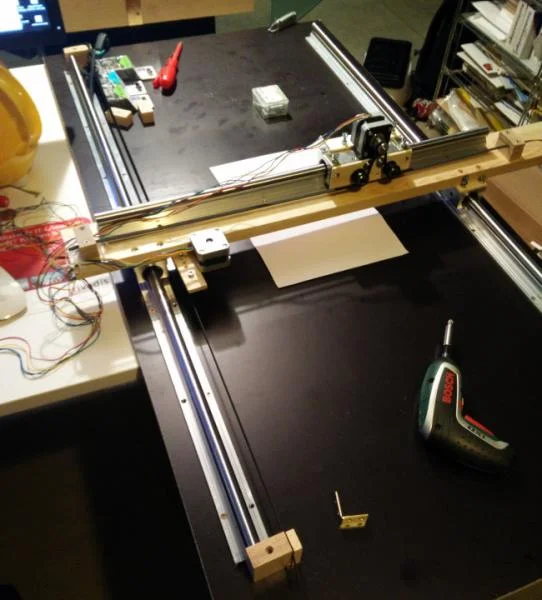

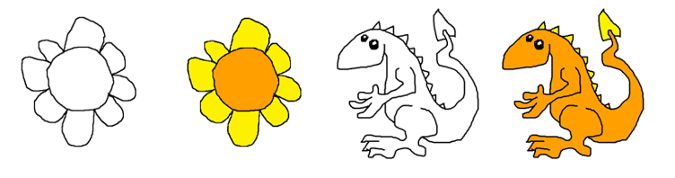

在上学时,科学系的壁橱里藏着一台惠普绘图仪。虽然我在上学的期间可以经常使用它,但我还是想拥有一台属于自己的绘图仪。许多年之后,步进电机已经很容易获得了,我又在从事电子产品和微控制器方面的工作,最近,我看到有人用丙烯酸塑料(acrylic)制作了一个显示器。这件事启发了我,并最终制作了我自己的绘图仪。

*我 DIY 的绘图仪;在这里看它工作的[视频](https://twitter.com/pilhuhn/status/948205323726344193)。*

由于我是一个很怀旧的人,我真的很喜欢最初的 [Arduino Uno](https://en.wikipedia.org/wiki/Arduino#Official_boards)。下面是我用到的其它东西的一个清单(仅供参考,其中一些我也不是很满意):

* [FabScan shield](http://www.watterott.com/de/Arduino-FabScan-Shield):承载步进电机驱动器。

* [SilentStepSticks](http://www.watterott.com/de/SilentStepStick-TMC2130):步进电机驱动器,因为 Arduino 自身不能处理步进电机所需的电压和电流。因此我使用了一个 Trinamic TMC2130 芯片,但它是工作在单独模式。这些替换为 Pololu 4988,但是它们运转更安静。

* [SilentStepStick 保护装置](http://www.watterott.com/de/SilentStepStick-Protector):一个防止你的电机驱动器转动过快的二极管(相信我,你肯定会需要它的)。

* 步进电机:我选择的是使用 12 V 电压的 NEMA 17 电机(如,来自 [Watterott](http://www.watterott.com/de/Schrittmotor-incl-Anschlusskabel) 和 [SparkFun](https://www.sparkfun.com/products/9238) 的型号)。

* [直线导杆](https://www.ebay.de/itm/CNC-Set-12x-600mm-Linearfuhrung-Linear-Guide-Rail-Stage-3D-/322917927292?hash=item4b2f68897c)

* 木制的基板

* 木螺丝

* GT2 皮带

* [GT2 同步滑轮](http://www.watterott.com/de/OpenBuilds-GT2-2mm-Aluminium-Timing-Pulley)

这是我作为个人项目而设计的。如果你想找到一个现成的工具套件,你可以从 German Make 杂志上找到 [MaXYposi](https://www.heise.de/make/artikel/MaXYposi-Projektseite-zum-universellen-XY-Portalroboter-von-Make-3676050.html)。

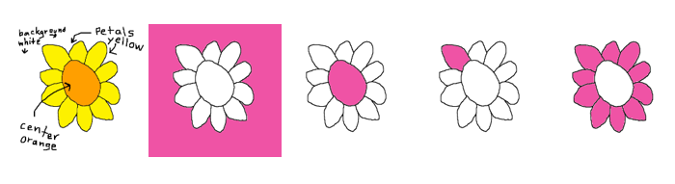

### 硬件安装

正如你所看到的,我刚开始做的太大了。这个绘图仪并不合适放在我的桌子上。但是,没有关系,我只是为了学习它(并且,我也将一些东西进行重新制作,下次我将使用一个更小的横梁)。

*带 X 轴和 Y 轴轨道的绘图仪基板*

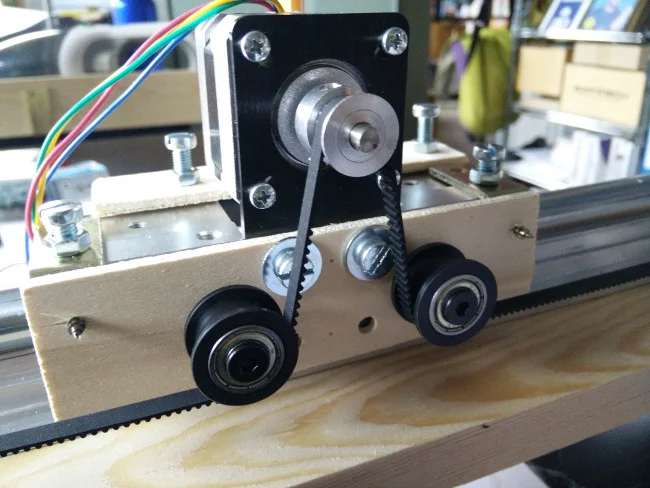

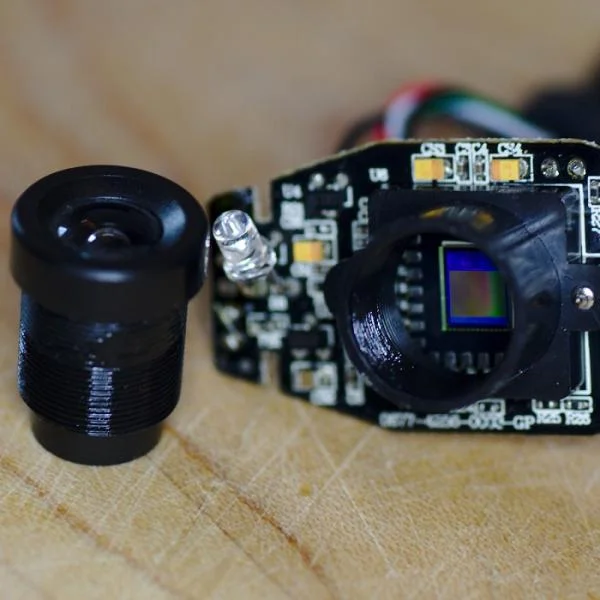

皮带安装在轨道的侧面,并且用它将一些辅助轮和电机挂在一起:

*电机上的皮带路由*

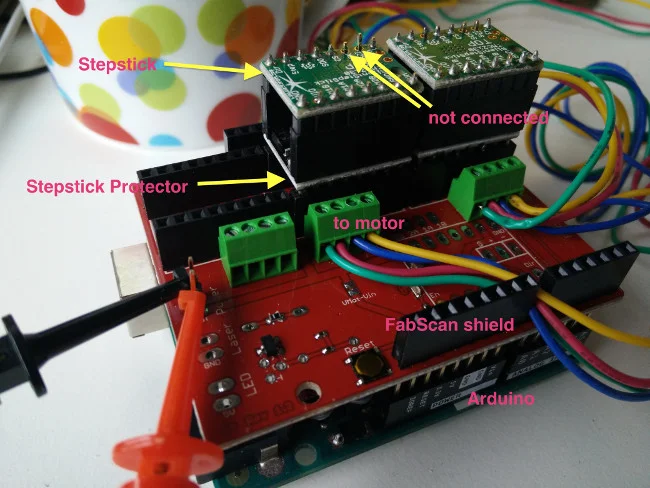

我在 Arduino 上堆叠了几个组件。Arduino 在最下面,它之上是 FabScan shield,接着是一个安装在 1 和 2 号电机槽上的 StepStick 保护装置,SilentStepStick 在最上面。注意,SCK 和 SDI 针脚没有连接。

*Arduino 堆叠配置([高清大图](https://www.dropbox.com/s/7bp3bo5g2ujser8/IMG_20180103_110111.jpg?dl=0))*

注意将电机的连接线接到正确的针脚上。如果有疑问,就去查看它的数据表,或者使用欧姆表去找出哪一对线是正确的。

### 软件配置

#### 基础部分

虽然像 [grbl](https://github.com/gnea/grbl) 这样的软件可以解释诸如像装置移动和其它一些动作的 G-codes,并且,我也可以将它刷进 Arduino 中,但是我很好奇,想更好地理解它是如何工作的。(我的 X-Y 绘图仪软件可以在 [GitHub](https://github.com/pilhuhn/xy-plotter) 上找到,不过我不提供任何保修。)

使用 StepStick(或者其它兼容的)驱动器去驱动步进电机,基本上只需要发送一个高电平信号或者低电平信号到各自的针脚即可。或者使用 Arduino 的术语:

```

digitalWrite(stepPin, HIGH);

delayMicroseconds(30);

digitalWrite(stepPin, LOW);

```

在 `stepPin` 的位置上是步进电机的针脚编号:3 是 1 号电机,而 6 是 2 号电机。

在步进电机能够工作之前,它必须先被启用。

```

digitalWrite(enPin, LOW);

```

实际上,StepStick 能够理解针脚的三个状态:

* Low:电机已启用

* High:电机已禁用

* Pin 未连接:电机已启用,但在一段时间后进入节能模式

电机启用后,它的线圈已经有了力量并用来保持位置。这时候几乎不可能用手来转动它的轴。这样可以保证很好的精度,但是也意味着电机和驱动器芯片都“充满着”力量,并且也因此会发热。

最后,也是很重要的,我们需要一个决定绘图仪方向的方法:

```

digitalWrite(dirPin, direction);

```

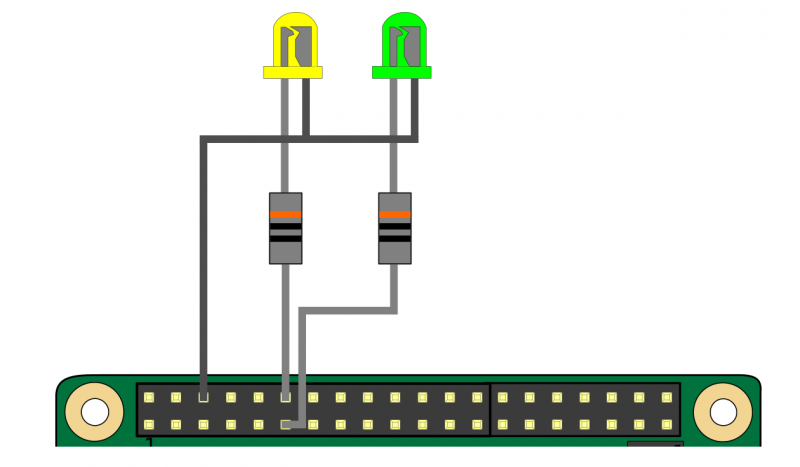

下面的表列出了功能和针脚:

| 功能 | 1 号电机 | 2 号电机 |

| --- | --- | --- |

| 启用 | 2 | 5 |

| 方向 | 4 | 7 |

| 步进 | 3 | 6 |

在我们使用这些针脚之前,我们需要在代码的 `setup()` 节中设置它的 `OUTPUT` 模式。

```

pinMode(enPin1, OUTPUT);

pinMode(stepPin1, OUTPUT);

pinMode(dirPin1, OUTPUT);

digitalWrite(enPin1, LOW);

```

了解这些知识后,我们可以很容易地让步进电机四处移动:

```

totalRounds = ...

for (int rounds =0 ; rounds < 2*totalRounds; rounds++) {

if (dir==0){ // set direction

digitalWrite(dirPin2, LOW);

} else {

digitalWrite(dirPin2, HIGH);

}

delay(1); // give motors some breathing time

dir = 1-dir; // reverse direction

for (int i=0; i < 6400; i++) {

int t = abs(3200-i) / 200;

digitalWrite(stepPin2, HIGH);

delayMicroseconds(70 + t);

digitalWrite(stepPin2, LOW);

delayMicroseconds(70 + t);

}

}

```

这将使滑块向左和向右移动。这些代码只操纵一个步进电机,但是,对于一个 X-Y 绘图仪,我们要考虑两个轴。

#### 命令解释器

我开始做一个简单的命令解释器去使用规范的路径,比如:

```

"X30|Y30|X-30 Y-30|X-20|Y-20|X20|Y20|X-40|Y-25|X40 Y25

```

用毫米来描述相对移动(1 毫米等于 80 步)。

绘图仪软件实现了一个 *持续模式* ,这可以允许一台 PC 给它提供一个很大的路径(很多的路径)去绘制。(在这个[视频](https://twitter.com/pilhuhn/status/949737734654124032)中展示了如何绘制 Hilbert 曲线)

### 设计一个好用的握笔器

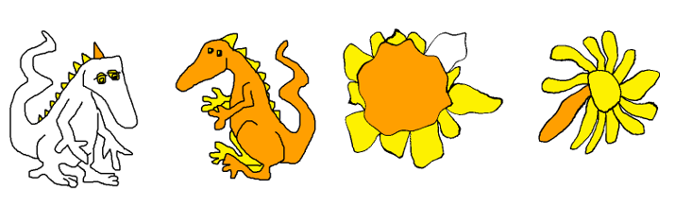

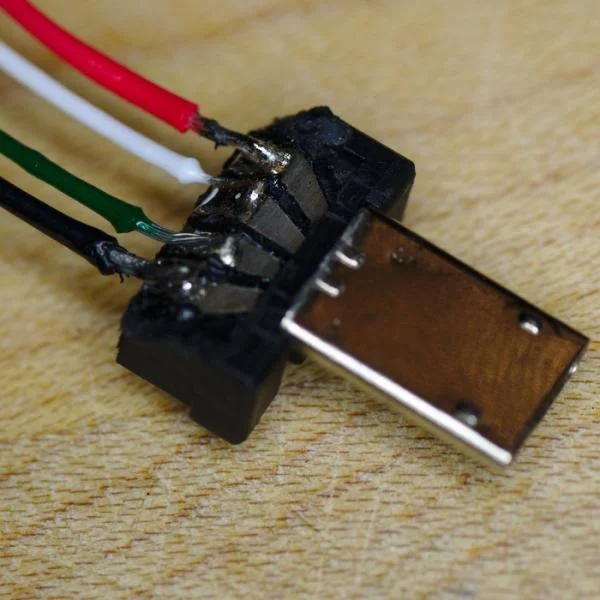

在上面的第一张图中,绘图笔是细绳子绑到 Y 轴上的。这样绘图也不精确,并且也无法在软件中实现提笔和下笔(如示例中的大黑点)。

因此,我设计了一个更好用的、更精确的握笔器,它使用一个伺服器去提笔和下笔。可以在下面的这张图中看到这个新的、改进后的握笔器,上面视频链接中的 Hilbert 曲线就是使用它绘制的。

*图中的特写镜头就是伺服器臂提起笔的图像*

笔是用一个小夹具固定住的(图上展示的是一个大小为 8 的夹具,它一般用于将线缆固定在墙上)。伺服器臂能够提起笔;当伺服器臂放下来的时候,笔就会被放下来。

#### 驱动伺服器

驱动伺服器是非常简单的:只需要提供位置,伺服器就可以完成所有的工作。

```

#include <Servo.h>

// Servo pin

#define servoData PIN_A1

// Positions

#define PEN_UP 10

#define PEN_DOWN 50

Servo penServo;

void setup() {

// Attach to servo and raise pen

penServo.attach(servoData);

penServo.write(PEN_UP);

}

```

我把伺服器接头连接在 FabScan shield 的 4 号电机上,因此,我将用 1 号模拟针脚。

放下笔也很容易:

```

penServo.write(PEN_DOWN);

```

### 进一步扩展

我的进一步扩展的其中一项就是添加一些终止检测器,但是,我也可以不用它们,进而使用 TMC2130 的 StallGuard 模式来代替。这些检测器也可以用于去实现一个 `home` 命令。

以后,我或许还将添加一个真实的 Z 轴,这样它就可以对一个木头进行铣削雕刻,或者钻一个 PCB 板,或者雕刻一块丙烯酸塑料,或者 … (我还想到了用激光)。

这篇文章最初发布在 [Some Things to Remember](http://pilhuhn.blogspot.com/2018/01/homegrown-xy-plotter-with-arduino.html) 博客中并授权重分发。

---

via: <https://opensource.com/article/18/3/diy-plotter-arduino>

作者:[Heiko W.Rupp](https://opensource.com/users/pilhuhn) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | Back in school, there was an HP plotter well hidden in a closet in the science department. I got to play with it for a while and always wanted to have one of my own. Fast forward many, many years. Stepper motors are easily available, I am back into doing stuff with electronics and micro-controllers, and I recently saw someone creating displays with engraved acrylic. This triggered me to finally build my own plotter.

opensource.com

As an old-school 5V guy, I really like the original [Arduino Uno](https://en.wikipedia.org/wiki/Arduino#Official_boards). Here's a list of the other components I used (fyi, I am not affiliated with any of these companies):

[FabScan shield](http://www.watterott.com/de/Arduino-FabScan-Shield): Physically hosts the stepper motor drivers.[SilentStepSticks](http://www.watterott.com/de/SilentStepStick-TMC2130): Motor drivers, as the Arduino on its own can't handle the voltage and current that a stepper motor needs. I am using ones with a Trinamic TMC2130 chip, but in standalone mode for now. Those are replacements for the Pololu 4988, but allow for much quieter operation.[SilentStepStick protectors](http://www.watterott.com/de/SilentStepStick-Protector): Diodes that prevent the turning motor from frying your motor drivers (you want them, believe me).- Stepper motors: I selected NEMA 17 motors with 12V (e.g., models from

[Watterott](http://www.watterott.com/de/Schrittmotor-incl-Anschlusskabel)and[SparkFun](https://www.sparkfun.com/products/9238)). [Linear guide rails](https://www.ebay.de/itm/CNC-Set-12x-600mm-Linearfuhrung-Linear-Guide-Rail-Stage-3D-/322917927292?hash=item4b2f68897c)- Wooden base plate

- Wood screws

- GT2 belt

[GT2 timing pulley](http://www.watterott.com/de/OpenBuilds-GT2-2mm-Aluminium-Timing-Pulley)

This is a work in progress that I created as a personal project. If you are looking for a ready-made kit, then check out the [MaXYposi](https://www.heise.de/make/artikel/MaXYposi-Projektseite-zum-universellen-XY-Portalroboter-von-Make-3676050.html) from German *Make* magazine.

## Hardware setup

As you can see here, I started out much too large. This plotter can't comfortably sit on my desk, but it's okay, as I did it for learning purposes (and, as I have to re-do some things, next time I'll use smaller beams).

opensource.com

The belt is mounted on both sides of the rail and then slung around the motor with some helper wheels:

opensource.com

I've stacked several components on top of the Arduino. The Arduino is on the bottom, above that is the FabScan shield, next is a StepStick protector on motor slots 1+2, and the SilentStepStick is on top. Note that the SCK and SDI pins are not connected.

opensource.com

Be careful to correctly attach the wires to the motor. When in doubt, look at the data sheet or an ohmmeter to figure out which wires belong together.

## Software setup

While software like [grbl](https://github.com/gnea/grbl) can interpret so-called G-codes for tool movement and other things, and I could have just flashed it to the Arduino, I am curious and wanted to better understand things. (My X-Y plotter software is available at [GitHub ](https://github.com/pilhuhn/xy-plotter) and comes without any warranty.)

### The basics

To drive a stepper motor with the StepStick (or compatible) driver, you basically need to send a high and then a low signal to the respective pin. Or in Arduino terms:

```

``````

digitalWrite(stepPin, HIGH);

delayMicroseconds(30);

digitalWrite(stepPin, LOW);

```

Where `stepPin`

is the pin number for the stepper: 3 for motor 1 and 6 for motor 2.

Before the stepper does any work, it must be enabled.

```

````digitalWrite(enPin, LOW);`

Actually, the StepStick knows three states for the pin:

- Low: Motor is enabled

- High: Motor is disabled

- Pin not connected: Motor is enabled but goes into an energy-saving mode after a while

When a motor is enabled, its coils are powered and it keeps its position. It is almost impossible to manually turn its axis. This is good for precision purposes, but it also means that both motors and driver chips are "flooded" with power and will warm up.

And last, but not least, we need a way to determine the plotter's direction:

```

````digitalWrite(dirPin, direction);`

The following table lists the functions and the pins

Function | Motor1 | Motor2 |

---|---|---|

Enable | 2 | 5 |

Direction | 4 | 7 |

Step | 3 | 6 |

Before we can use the pins, we need to set them to `OUTPUT`

mode in the `setup()`

section of the code

```

``````

pinMode(enPin1, OUTPUT);

pinMode(stepPin1, OUTPUT);

pinMode(dirPin1, OUTPUT);

digitalWrite(enPin1, LOW);

```

With this knowledge, we can easily get the stepper to move around:

```

``````

totalRounds = ...

for (int rounds =0 ; rounds < 2*totalRounds; rounds++) {

if (dir==0){ // set direction

digitalWrite(dirPin2, LOW);

} else {

digitalWrite(dirPin2, HIGH);

}

delay(1); // give motors some breathing time

dir = 1-dir; // reverse direction

for (int i=0; i < 6400; i++) {

int t = abs(3200-i) / 200;

digitalWrite(stepPin2, HIGH);

delayMicroseconds(70 + t);

digitalWrite(stepPin2, LOW);

delayMicroseconds(70 + t);

}

}

```

This will make the slider move left and right. This code deals with one stepper, but for an X-Y plotter, we have two axes to consider.

### Command interpreter

I started to implement a simple command interpreter to use path specifications, such as:

```

````"X30|Y30|X-30 Y-30|X-20|Y-20|X20|Y20|X-40|Y-25|X40 Y25`

to describe relative movements in millimeters (1mm equals 80 steps).

The plotter software implements a *continuous mode*, which allows a PC to feed large paths (in chunks) to the plotter. (This how I plotted the Hilbert curve in this [video](https://twitter.com/pilhuhn/status/949737734654124032).)

## Building a better pen holder

In the first image above, the pen was tied to the Y-axis with some metal string. This was not precise and also did not enable the software to raise and lower the hand (this explains the big black dots).

I have since created a better, more precise pen holder that uses a servo to raise and lower the pen. This new, improved holder can be seen in this picture and in the Hilbert curve video linked above.

opensource.com

The pen is attached with a little clamp (the one shown is a size 8 clamp typically used to attach cables to walls). The servo arm can raise the pen; when the arm goes down, gravity will lower the pen.

### Driving the servo

Driving the servo is relatively straightforward: Just provide the position and the servo does all the work.

```

``````

#include <Servo.h>

// Servo pin

#define servoData PIN_A1

// Positions

#define PEN_UP 10

#define PEN_DOWN 50

Servo penServo;

void setup() {

// Attach to servo and raise pen

penServo.attach(servoData);

penServo.write(PEN_UP);

}

```

I am using the servo headers on the Motor 4 place of the FabScan shield, so I've used analog pin 1.

Lowering the pen is as easy as:

```

```` penServo.write(PEN_DOWN);`

## Next steps

One of my next steps will be to add some end detectors, but I may skip them and use the StallGuard mode of the TMC2130 instead. Those detectors can also be used to implement a `home`

command.

And perhaps in the future I'll add a real Z-axis that can hold an engraver to do wood milling, or PCB drilling, or acrylic engraving, or ... (a laser comes to mind as well).

*This was originally published on the Some Things to Remember blog and is reprinted with permission.*

## 1 Comment |

9,715 | 如何在 Linux 系统中结束进程或是中止程序 | https://opensource.com/article/18/5/how-kill-process-stop-program-linux | 2018-06-04T22:14:00 | [

"kill",

"killall"

] | https://linux.cn/article-9715-1.html |

>

> 在 Linux 中有几种使用命令行或图形界面终止一个程序的方式。

>

>

>

进程出错的时候,您可能会想要中止或是杀掉这个进程。在本文中,我们将探索在命令行和图形界面中终止进程或是应用程序,这里我们使用 [gedit](https://wiki.gnome.org/Apps/Gedit) 作为样例程序。

### 使用命令行或字符终端界面

#### Ctrl + C

在命令行中调用 `gedit` (如果您没有使用 `gedit &` 命令)程序的一个问题是 shell 会话被阻塞,没法释放命令行提示符。在这种情况下,`Ctrl + C` (`Ctrl` 和 `C` 的组合键) 会很管用。这会终止 `gedit` ,并且所有的工作都将丢失(除非文件已经被保存)。`Ctrl + C` 会给 `gedit` 发送了 `SIGINT` 信号。这是一个默认终止进程的停止信号,它将指示 shell 停止 `gedit` 的运行,并返回到主函数的循环中,您将返回到提示符。

```

$ gedit

^C

```

#### Ctrl + Z

它被称为挂起字符。它会发送 `SIGTSTP` 信号给进程。它也是一个停止信号,但是默认行为不是杀死进程,而是挂起进程。

下面的命令将会停止(杀死/中断) `gedit` 的运行,并返回到 shell 提示符。

```

$ gedit

^Z

[1]+ Stopped gedit

$

```

一旦进程被挂起(以 `gedit` 为例),将不能在 `gedit` 中写入或做任何事情。而在后台,该进程变成了一个作业,可以使用 `jobs` 命令验证。

```

$ jobs

[1]+ Stopped gedit

```

`jobs` 允许您在单个 shell 会话中控制多个进程。您可以终止,恢复作业,或是根据需要将作业移动到前台或是后台。

让我们在后台恢复 `gedit`,释放提示符以运行其它命令。您可以通过 `bg` 命令来做到,后跟作业 ID(注意上面的 `jobs` 命令显示出来的 `[1]`,这就是作业 ID)。

```

$ bg 1

[1]+ gedit &

```

这和直接使用 `gedit &` 启动程序效果差不多:

```

$ gedit &

```

### 使用 kill

`kill` 命令提供信号的精确控制,允许您通过指定信号名或是信号数字为进程发送信号,后跟进程 ID 或是 PID。

我喜欢 `kill` 命令的一点是它也能够根据作业 ID 控制进程。让我们使用 `gedit &` 命令在后台开启 `gedit` 服务。假设通过 `jobs` 命令我得到了一个 `gedit` 的作业 ID,让我们为 `gedit` 发送 `SIGINT` 信号:

```

$ kill -s SIGINT %1

```

作业 ID 需要使用 `%` 前缀,不然 `kill` 会将其视作 PID。

不明确指定信号,`kill` 仍然可以工作。此时,默认会发送能中断进程的 `SIGTERM` 信号。执行 `kill -l` 可以查看信号名列表,使用 `man kill` 命令阅读手册。

### 使用 killall

如果您不想使用特定的工作 ID 或者 PID,`killall` 允许您使用特定的进程名。中断 `gedit` 最简单的 `killall` 使用方式是:

```

$ killall gedit

```

它将终止所有名为 `gedit` 的进程。和 `kill` 相似,默认发送的信号是 `SIGTERM`。使用 `-I` 选项忽略进程名的大小写。

```

$ gedit &

[1] 14852

$ killall -I GEDIT

[1]+ Terminated gedit

```

查看手册学习更多 `killall` 命令选项(如 `-u`)。

### 使用 xkill

您是否遇见过播放器崩溃,比如 [VLC](https://www.videolan.org/vlc/index.html) 灰屏或挂起?现在你可以像上面一样获得进程的 PID 来杀掉它,或者使用 `xkill` 命令终止应用程序。

`xkill` 允许您使用鼠标关闭窗口。仅需在终端执行 `xkill` 命令,它将会改变鼠标光标为一个 **X** 或是一个小骷髅图标。在你想关闭的进程窗口上点击 **x**。小心使用 `xkill`,如手册描述的一致,它很危险。我已经提醒过您了!

参阅手册,了解上述命令更多信息。您还可以接续探索 `pkill` 和 `pgrep` 命令。

---

via: <https://opensource.com/article/18/5/how-kill-process-stop-program-linux>

作者:[Sachin Patil](https://opensource.com/users/psachin) 选题:[lujun9972](https://github.com/lujun9972) 译者:[CYLeft](https://github.com/CYLeft) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | When a process misbehaves, you might sometimes want to terminate or kill it. In this post, we'll explore a few ways to terminate a process or an application from the command line as well as from a graphical interface, using [gedit](https://wiki.gnome.org/Apps/Gedit) as a sample application.

## Using the command line/termination characters

### Ctrl + C

One problem invoking `gedit`

from the command line (if you are not using `gedit &`

) is that it will not free up the prompt, so that shell session is blocked. In such cases, Ctrl+C (the Control key in combination with 'C') comes in handy. That will terminate `gedit`

and all work will be lost (unless the file was saved). Ctrl+C sends the

signal to [SIGINT](http://man7.org/linux/man-pages/man7/signal.7.html)`gedit`

. This is a stop signal whose default action is to terminate the process. It instructs the shell to stop `gedit`

and return to the main loop, and you'll get the prompt back.

```

``````

$ gedit

^C

```

### Ctrl + Z

This is called a *suspend character*. It sends a

signal to process. This is also a stop signal, but the default action is not to kill but to suspend the process.[SIGTSTP](http://man7.org/linux/man-pages/man7/signal.7.html)

It will stop (kill/terminate) `gedit`

and return the shell prompt.

```

``````

$ gedit

^Z

[1]+ Stopped gedit

$

```

Once the process is suspended (in this case, `gedit`

), it is not possible to write or do anything in `gedit`

. In the background, the process becomes a *job*. This can be verified by the `jobs`

command.

```

``````

$ jobs

[1]+ Stopped gedit

```

`jobs`

allows you to control multiple processes within a single shell session. You can stop, resume, and move jobs to the background or foreground as needed.

Let's resume `gedit`

in the background and free up a prompt to run other commands. You can do this using the `bg`

command, followed by job ID (notice `[1]`

from the output of `jobs`

above. `[1]`

is the job ID).

```

``````

$ bg 1

[1]+ gedit &

```

This is similar to starting `gedit`

with `&,`

:

```

```` $ gedit &`

## Using *kill*

`kill`

allows fine control over signals, enabling you to signal a process by specifying either a signal name or a signal number, followed by a process ID, or PID.

What I like about `kill`

is that it can also work with job IDs. Let's start `gedit`

in the background using `gedit &`

. Assuming I have a job ID of `gedit`

from the `jobs`

command, let's send `SIGINT`

to `gedit`

:

```

```` $ kill -s SIGINT %1`

Note that the job ID should be prefixed with `%`

, or `kill`

will consider it a PID.

`kill`

can work without specifying a signal explicitly. In that case, the default action is to send `SIGTERM`

, which will terminate the process. Execute `kill -l`

to list all signal names, and use the `man kill`

command to read the man page.

## Using *killall*

If you don't want to specify a job ID or PID, `killall`

lets you specify a process by name. The simplest way to terminate `gedit`

using `killall`

is:

```

```` $ killall gedit`

This will kill all the processes with the name `gedit`

. Like `kill`

, the default signal is `SIGTERM`

. It has the option to ignore case using `-I`

:

```

``````

$ gedit &

[1] 14852

$ killall -I GEDIT

[1]+ Terminated gedit

```

To learn more about various flags provided by `killall`

(such as `-u`

, which allows you to kill user-owned processes) check the man page (`man killall`

)

## Using *xkill*

Have you ever encountered an issue where a media player, such as [VLC](https://www.videolan.org/vlc/index.html), grayed out or hung? Now you can find the PID and kill the application using one of the commands listed above or use `xkill`

.

`xkill`

allows you to kill a window using a mouse. Simply execute `xkill`

in a terminal, which should change the mouse cursor to an **x** or a tiny skull icon. Click **x** on the window you want to close. Be careful using `xkill`

, though—as its man page explains, it can be dangerous. You have been warned!

Refer to the man page of each command for more information. You can also explore commands like `pkill`

and `pgrep`

.

## 5 Comments |

9,716 | 3 个 Python 模板库比较 | https://opensource.com/resources/python/template-libraries | 2018-06-05T08:38:00 | [

"Python",

"模板"

] | /article-9716-1.html |

>

> 你的下一个 Python 项目需要一个模板引擎来自动生成 HTML 吗?这有几种选择。

>

>

>