id

int64 2.05k

16.6k

| title

stringlengths 5

75

| fromurl

stringlengths 19

185

| date

timestamp[s] | tags

sequencelengths 0

11

| permalink

stringlengths 20

37

| content

stringlengths 342

82.2k

| fromurl_status

int64 200

526

⌀ | status_msg

stringclasses 339

values | from_content

stringlengths 0

229k

⌀ |

|---|---|---|---|---|---|---|---|---|---|

9,816 | Python 字节码介绍 | https://opensource.com/article/18/4/introduction-python-bytecode | 2018-07-08T07:24:00 | [

"Python",

"字节码"

] | https://linux.cn/article-9816-1.html |

>

> 了解 Python 字节码是什么,Python 如何使用它来执行你的代码,以及知道它是如何帮到你的。

>

>

>

如果你曾经编写过 Python,或者只是使用过 Python,你或许经常会看到 Python 源代码文件——它们的名字以 `.py` 结尾。你可能还看到过其它类型的文件,比如以 `.pyc` 结尾的文件,或许你可能听说过它们就是 Python 的 “<ruby> 字节码 <rt> bytecode </rt></ruby>” 文件。(在 Python 3 上这些可能不容易看到 —— 因为它们与你的 `.py` 文件不在同一个目录下,它们在一个叫 `__pycache__` 的子目录中)或者你也听说过,这是节省时间的一种方法,它可以避免每次运行 Python 时去重新解析源代码。

但是,除了 “噢,原来这就是 Python 字节码” 之外,你还知道这些文件能做什么吗?以及 Python 是如何使用它们的?

如果你不知道,那你走运了!今天我将带你了解 Python 的字节码是什么,Python 如何使用它去运行你的代码,以及知道它是如何帮助你的。

### Python 如何工作

Python 经常被介绍为它是一个解释型语言 —— 其中一个原因是在程序运行时,你的源代码被转换成 CPU 的原生指令 —— 但这样的看法只是部分正确。Python 与大多数解释型语言一样,确实是将源代码编译为一组虚拟机指令,并且 Python 解释器是针对相应的虚拟机实现的。这种中间格式被称为 “字节码”。

因此,这些 `.pyc` 文件是 Python 悄悄留下的,是为了让它们运行的 “更快”,或者是针对你的源代码的 “优化” 版本;它们是你的程序在 Python 虚拟机上运行的字节码指令。

我们来看一个示例。这里是用 Python 写的经典程序 “Hello, World!”:

```

def hello()

print("Hello, World!")

```

下面是转换后的字节码(转换为人类可读的格式):

```

2 0 LOAD_GLOBAL 0 (print)

2 LOAD_CONST 1 ('Hello, World!')

4 CALL_FUNCTION 1

```

如果你输入那个 `hello()` 函数,然后使用 [CPython](https://github.com/python/cpython) 解释器去运行它,那么上述列出的内容就是 Python 所运行的。它看起来可能有点奇怪,因此,我们来深入了解一下它都做了些什么。

### Python 虚拟机内幕

CPython 使用一个基于栈的虚拟机。也就是说,它完全面向栈数据结构的(你可以 “推入” 一个东西到栈 “顶”,或者,从栈 “顶” 上 “弹出” 一个东西来)。

CPython 使用三种类型的栈:

1. <ruby> 调用栈 <rt> call stack </rt></ruby>。这是运行 Python 程序的主要结构。它为每个当前活动的函数调用使用了一个东西 —— “<ruby> 帧 <rt> frame </rt></ruby>”,栈底是程序的入口点。每个函数调用推送一个新的帧到调用栈,每当函数调用返回后,这个帧被销毁。

2. 在每个帧中,有一个<ruby> 计算栈 <rt> evaluation stack </rt></ruby> (也称为<ruby> 数据栈 <rt> data stack </rt></ruby>)。这个栈就是 Python 函数运行的地方,运行的 Python 代码大多数是由推入到这个栈中的东西组成的,操作它们,然后在返回后销毁它们。

3. 在每个帧中,还有一个<ruby> 块栈 <rt> block stack </rt></ruby>。它被 Python 用于去跟踪某些类型的控制结构:循环、`try` / `except` 块、以及 `with` 块,全部推入到块栈中,当你退出这些控制结构时,块栈被销毁。这将帮助 Python 了解任意给定时刻哪个块是活动的,比如,一个 `continue` 或者 `break` 语句可能影响正确的块。

大多数 Python 字节码指令操作的是当前调用栈帧的计算栈,虽然,还有一些指令可以做其它的事情(比如跳转到指定指令,或者操作块栈)。

为了更好地理解,假设我们有一些调用函数的代码,比如这个:`my_function(my_variable, 2)`。Python 将转换为一系列字节码指令:

1. 一个 `LOAD_NAME` 指令去查找函数对象 `my_function`,然后将它推入到计算栈的顶部

2. 另一个 `LOAD_NAME` 指令去查找变量 `my_variable`,然后将它推入到计算栈的顶部

3. 一个 `LOAD_CONST` 指令去推入一个实整数值 `2` 到计算栈的顶部

4. 一个 `CALL_FUNCTION` 指令

这个 `CALL_FUNCTION` 指令将有 2 个参数,它表示那个 Python 需要从栈顶弹出两个位置参数;然后函数将在它上面进行调用,并且它也同时被弹出(对于函数涉及的关键字参数,它使用另一个不同的指令 —— `CALL_FUNCTION_KW`,但使用的操作原则类似,以及第三个指令 —— `CALL_FUNCTION_EX`,它适用于函数调用涉及到参数使用 `*` 或 `**` 操作符的情况)。一旦 Python 拥有了这些之后,它将在调用栈上分配一个新帧,填充到函数调用的本地变量上,然后,运行那个帧内的 `my_function` 字节码。运行完成后,这个帧将被调用栈销毁,而在最初的帧内,`my_function` 的返回值将被推入到计算栈的顶部。

### 访问和理解 Python 字节码

如果你想玩转字节码,那么,Python 标准库中的 `dis` 模块将对你有非常大的帮助;`dis` 模块为 Python 字节码提供了一个 “反汇编”,它可以让你更容易地得到一个人类可读的版本,以及查找各种字节码指令。[`dis` 模块的文档](https://docs.python.org/3/library/dis.html) 可以让你遍历它的内容,并且提供一个字节码指令能够做什么和有什么样的参数的完整清单。

例如,获取上面的 `hello()` 函数的列表,可以在一个 Python 解析器中输入如下内容,然后运行它:

```

import dis

dis.dis(hello)

```

函数 `dis.dis()` 将反汇编一个函数、方法、类、模块、编译过的 Python 代码对象、或者字符串包含的源代码,以及显示出一个人类可读的版本。`dis` 模块中另一个方便的功能是 `distb()`。你可以给它传递一个 Python 追溯对象,或者在发生预期外情况时调用它,然后它将在发生预期外情况时反汇编调用栈上最顶端的函数,并显示它的字节码,以及插入一个指向到引发意外情况的指令的指针。

它也可以用于查看 Python 为每个函数构建的编译后的代码对象,因为运行一个函数将会用到这些代码对象的属性。这里有一个查看 `hello()` 函数的示例:

```

>>> hello.__code__

<code object hello at 0x104e46930, file "<stdin>", line 1>

>>> hello.__code__.co_consts

(None, 'Hello, World!')

>>> hello.__code__.co_varnames

()

>>> hello.__code__.co_names

('print',)

```

代码对象在函数中可以以属性 `__code__` 来访问,并且携带了一些重要的属性:

* `co_consts` 是存在于函数体内的任意实数的元组

* `co_varnames` 是函数体内使用的包含任意本地变量名字的元组

* `co_names` 是在函数体内引用的任意非本地名字的元组

许多字节码指令 —— 尤其是那些推入到栈中的加载值,或者在变量和属性中的存储值 —— 在这些元组中的索引作为它们参数。

因此,现在我们能够理解 `hello()` 函数中所列出的字节码:

1. `LOAD_GLOBAL 0`:告诉 Python 通过 `co_names` (它是 `print` 函数)的索引 0 上的名字去查找它指向的全局对象,然后将它推入到计算栈

2. `LOAD_CONST 1`:带入 `co_consts` 在索引 1 上的字面值,并将它推入(索引 0 上的字面值是 `None`,它表示在 `co_consts` 中,因为 Python 函数调用有一个隐式的返回值 `None`,如果没有显式的返回表达式,就返回这个隐式的值 )。

3. `CALL_FUNCTION 1`:告诉 Python 去调用一个函数;它需要从栈中弹出一个位置参数,然后,新的栈顶将被函数调用。

“原始的” 字节码 —— 是非人类可读格式的字节 —— 也可以在代码对象上作为 `co_code` 属性可用。如果你有兴趣尝试手工反汇编一个函数时,你可以从它们的十进制字节值中,使用列出 `dis.opname` 的方式去查看字节码指令的名字。

### 字节码的用处

现在,你已经了解的足够多了,你可能会想 “OK,我认为它很酷,但是知道这些有什么实际价值呢?”由于对它很好奇,我们去了解它,但是除了好奇之外,Python 字节码在几个方面还是非常有用的。

首先,理解 Python 的运行模型可以帮你更好地理解你的代码。人们都开玩笑说,C 是一种 “可移植汇编器”,你可以很好地猜测出一段 C 代码转换成什么样的机器指令。理解 Python 字节码之后,你在使用 Python 时也具备同样的能力 —— 如果你能预料到你的 Python 源代码将被转换成什么样的字节码,那么你可以知道如何更好地写和优化 Python 源代码。

第二,理解字节码可以帮你更好地回答有关 Python 的问题。比如,我经常看到一些 Python 新手困惑为什么某些结构比其它结构运行的更快(比如,为什么 `{}` 比 `dict()` 快)。知道如何去访问和阅读 Python 字节码将让你很容易回答这样的问题(尝试对比一下: `dis.dis("{}")` 与 `dis.dis("dict()")` 就会明白)。

最后,理解字节码和 Python 如何运行它,为 Python 程序员不经常使用的一种特定的编程方式提供了有用的视角:面向栈的编程。如果你以前从来没有使用过像 FORTH 或 Fator 这样的面向栈的编程语言,它们可能有些古老,但是,如果你不熟悉这种方法,学习有关 Python 字节码的知识,以及理解面向栈的编程模型是如何工作的,将有助你开拓你的编程视野。

### 延伸阅读

如果你想进一步了解有关 Python 字节码、Python 虚拟机、以及它们是如何工作的更多知识,我推荐如下的这些资源:

* [Python 虚拟机内幕](https://leanpub.com/insidethepythonvirtualmachine),它是 Obi Ike-Nwosu 写的一本免费在线电子书,它深入 Python 解析器,解释了 Python 如何工作的细节。

* [一个用 Python 编写的 Python 解析器](http://www.aosabook.org/en/500L/a-python-interpreter-written-in-python.html),它是由 Allison Kaptur 写的一个教程,它是用 Python 构建的 Python 字节码解析器,并且它实现了运行 Python 字节码的全部构件。

* 最后,CPython 解析器是一个开源软件,你可以在 [GitHub](https://github.com/python/cpython) 上阅读它。它在文件 `Python/ceval.c` 中实现了字节码解析器。[这是 Python 3.6.4 发行版中那个文件的链接](https://github.com/python/cpython/blob/d48ecebad5ac78a1783e09b0d32c211d9754edf4/Python/ceval.c);字节码指令是由第 1266 行开始的 `switch` 语句来处理的。

学习更多内容,参与到 James Bennett 的演讲,[有关字节的知识:理解 Python 字节码](https://us.pycon.org/2018/schedule/presentation/127/),将在 [PyCon Cleveland 2018](https://us.pycon.org/2018/) 召开。

---

via: <https://opensource.com/article/18/4/introduction-python-bytecode>

作者:[James Bennett](https://opensource.com/users/ubernostrum) 选题:[lujun9972](https://github.com/lujun9972) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 200 | OK | If you've ever written, or even just used, Python, you're probably used to seeing Python source code files; they have names ending in `.py`

. And you may also have seen another type of file, with a name ending in `.pyc`

, and you may have heard that they're Python "bytecode" files. (These are a bit harder to see on Python 3—instead of ending up in the same directory as your `.py`

files, they go into a subdirectory called `__pycache__`

.) And maybe you've heard that this is some kind of time-saver that prevents Python from having to re-parse your source code every time it runs.

But beyond "oh, that's Python bytecode," do you really know what's in those files and how Python uses them?

If not, today's your lucky day! I'll take you through what Python bytecode is, how Python uses it to execute your code, and how knowing about it can help you.

## How Python works

Python is often described as an interpreted language—one in which your source code is translated into native CPU instructions as the program runs—but this is only partially correct. Python, like many interpreted languages, actually compiles source code to a set of instructions for a virtual machine, and the Python interpreter is an implementation of that virtual machine. This intermediate format is called "bytecode."

So those `.pyc`

files Python leaves lying around aren't just some "faster" or "optimized" version of your source code; they're the bytecode instructions that will be executed by Python's virtual machine as your program runs.

Let's look at an example. Here's a classic "Hello, World!" written in Python:

```

``````

def hello()

print("Hello, World!")

```

And here's the bytecode it turns into (translated into a human-readable form):

```

``````

2 0 LOAD_GLOBAL 0 (print)

2 LOAD_CONST 1 ('Hello, World!')

4 CALL_FUNCTION 1

```

If you type up that `hello()`

function and use the [CPython](https://github.com/python/cpython) interpreter to run it, the above listing is what Python will execute. It might look a little weird, though, so let's take a deeper look at what's going on.

## Inside the Python virtual machine

CPython uses a stack-based virtual machine. That is, it's oriented entirely around stack data structures (where you can "push" an item onto the "top" of the structure, or "pop" an item off the "top").

CPython uses three types of stacks:

- The

**call stack**. This is the main structure of a running Python program. It has one item—a "frame"—for each currently active function call, with the bottom of the stack being the entry point of the program. Every function call pushes a new frame onto the call stack, and every time a function call returns, its frame is popped off. - In each frame, there's an

**evaluation stack**(also called the**data stack**). This stack is where execution of a Python function occurs, and executing Python code consists mostly of pushing things onto this stack, manipulating them, and popping them back off. - Also in each frame, there's a

**block stack**. This is used by Python to keep track of certain types of control structures: loops,`try`

/`except`

blocks, and`with`

blocks all cause entries to be pushed onto the block stack, and the block stack gets popped whenever you exit one of those structures. This helps Python know which blocks are active at any given moment so that, for example, a`continue`

or`break`

statement can affect the correct block.

Most of Python's bytecode instructions manipulate the evaluation stack of the current call-stack frame, although there are some instructions that do other things (like jump to specific instructions or manipulate the block stack).

To get a feel for this, suppose we have some code that calls a function, like this: `my_function(my_variable, 2)`

. Python will translate this into a sequence of four bytecode instructions:

- A

`LOAD_NAME`

instruction that looks up the function object`my_function`

and pushes it onto the top of the evaluation stack - Another

`LOAD_NAME`

instruction to look up the variable`my_variable`

and push it on top of the evaluation stack - A

`LOAD_CONST`

instruction to push the literal integer value`2`

on top of the evaluation stack - A

`CALL_FUNCTION`

instruction

The `CALL_FUNCTION`

instruction will have an argument of 2, which indicates that Python needs to pop two positional arguments off the top of the stack; then the function to call will be on top, and it can be popped as well (for functions involving keyword arguments, a different instruction—`CALL_FUNCTION_KW`

—is used, but with a similar principle of operation, and a third instruction, `CALL_FUNCTION_EX`

, is used for function calls that involve argument unpacking with the `*`

or `**`

operators). Once Python has all that, it will allocate a new frame on the call stack, populate the local variables for the function call, and execute the bytecode of `my_function`

inside that frame. Once that's done, the frame will be popped off the call stack, and in the original frame the return value of `my_function`

will be pushed on top of the evaluation stack.

## Accessing and understanding Python bytecode

If you want to play around with this, the `dis`

module in the Python standard library is a huge help; the `dis`

module provides a "disassembler" for Python bytecode, making it easy to get a human-readable version and look up the various bytecode instructions. [The documentation for the dis module](https://docs.python.org/3/library/dis.html) goes over its contents and provides a full list of bytecode instructions along with what they do and what arguments they take.

For example, to get the bytecode listing for the `hello()`

function above, I typed it into a Python interpreter, then ran:

```

``````

import dis

dis.dis(hello)

```

The function `dis.dis()`

will disassemble a function, method, class, module, compiled Python code object, or string literal containing source code and print a human-readable version. Another handy function in the `dis`

module is `distb()`

. You can pass it a Python traceback object or call it after an exception has been raised, and it will disassemble the topmost function on the call stack at the time of the exception, print its bytecode, and insert a pointer to the instruction that raised the exception.

It's also useful to look at the compiled code objects Python builds for every function since executing a function makes use of attributes of those code objects. Here's an example looking at the `hello()`

function:

```

``````

>>> hello.__code__

<code object hello at 0x104e46930, file "<stdin>", line 1>

>>> hello.__code__.co_consts

(None, 'Hello, World!')

>>> hello.__code__.co_varnames

()

>>> hello.__code__.co_names

('print',)

```

The code object is accessible as the attribute `__code__`

on the function and carries a few important attributes:

`co_consts`

is a tuple of any literals that occur in the function body`co_varnames`

is a tuple containing the names of any local variables used in the function body`co_names`

is a tuple of any non-local names referenced in the function body

Many bytecode instructions—particularly those that load values to be pushed onto the stack or store values in variables and attributes—use indices in these tuples as their arguments.

So now we can understand the bytecode listing of the `hello()`

function:

`LOAD_GLOBAL 0`

: tells Python to look up the global object referenced by the name at index 0 of`co_names`

(which is the`print`

function) and push it onto the evaluation stack`LOAD_CONST 1`

: takes the literal value at index 1 of`co_consts`

and pushes it (the value at index 0 is the literal`None`

, which is present in`co_consts`

because Python function calls have an implicit return value of`None`

if no explicit`return`

statement is reached)`CALL_FUNCTION 1`

: tells Python to call a function; it will need to pop one positional argument off the stack, then the new top-of-stack will be the function to call.

The "raw" bytecode—as non-human-readable bytes—is also available on the code object as the attribute `co_code`

. You can use the list `dis.opname`

to look up the names of bytecode instructions from their decimal byte values if you'd like to try to manually disassemble a function.

## Putting bytecode to use

Now that you've read this far, you might be thinking "OK, I guess that's cool, but what's the practical value of knowing this?" Setting aside curiosity for curiosity's sake, understanding Python bytecode is useful in a few ways.

First, understanding Python's execution model helps you reason about your code. People like to joke about C being a kind of "portable assembler," where you can make good guesses about what machine instructions a particular chunk of C source code will turn into. Understanding bytecode will give you the same ability with Python—if you can anticipate what bytecode your Python source code turns into, you can make better decisions about how to write and optimize it.

Second, understanding bytecode is a useful way to answer questions about Python. For example, I often see newer Python programmers wondering why certain constructs are faster than others (like why `{}`

is faster than `dict()`

). Knowing how to access and read Python bytecode lets you work out the answers (try it: `dis.dis("{}")`

versus `dis.dis("dict()")`

).

Finally, understanding bytecode and how Python executes it gives a useful perspective on a particular kind of programming that Python programmers don't often engage in: stack-oriented programming. If you've ever used a stack-oriented language like FORTH or Factor, this may be old news, but if you're not familiar with this approach, learning about Python bytecode and understanding how its stack-oriented programming model works is a neat way to broaden your programming knowledge.

## Further reading

If you'd like to learn more about Python bytecode, the Python virtual machine, and how they work, I recommend these resources:

[Inside the Python Virtual Machine](https://leanpub.com/insidethepythonvirtualmachine)by Obi Ike-Nwosu is a free online book that does a deep dive into the Python interpreter, explaining in detail how Python actually works.[A Python Interpreter Written in Python](http://www.aosabook.org/en/500L/a-python-interpreter-written-in-python.html)by Allison Kaptur is a tutorial for building a Python bytecode interpreter in—what else—Python itself, and it implements all the machinery to run Python bytecode.- Finally, the CPython interpreter is open source and you can

[read through it on GitHub](https://github.com/python/cpython). The implementation of the bytecode interpreter is in the file`Python/ceval.c`

.[Here's that file for the Python 3.6.4 release](https://github.com/python/cpython/blob/d48ecebad5ac78a1783e09b0d32c211d9754edf4/Python/ceval.c); the bytecode instructions are handled by the`switch`

statement beginning on line 1266.

To learn more, attend James Bennett's talk, [A Bit about Bytes: Understanding Python Bytecode](https://us.pycon.org/2018/schedule/presentation/127/), at [PyCon Cleveland 2018](https://us.pycon.org/2018/).

## 3 Comments |

9,817 | Sosreport:收集系统日志和诊断信息的工具 | https://www.ostechnix.com/sosreport-a-tool-to-collect-system-logs-and-diagnostic-information/ | 2018-07-08T09:37:25 | [

"Sosreport"

] | https://linux.cn/article-9817-1.html |

如果你是 RHEL 管理员,你可能肯定听说过 **Sosreport** :一个可扩展、可移植的支持数据收集工具。它是一个从类 Unix 操作系统中收集系统配置详细信息和诊断信息的工具。当用户提出支持服务单时,他/她必须运行此工具并将由 Sosreport 工具生成的结果报告发送给 Red Hat 支持人员。然后,执行人员将根据报告进行初步分析,并尝试找出系统中的问题。不仅在 RHEL 系统上,你可以在任何类 Unix 操作系统上使用它来收集系统日志和其他调试信息。

### 安装 Sosreport

Sosreport 在 Red Hat 官方系统仓库中,因此你可以使用 Yum 或 DNF 包管理器安装它,如下所示。

```

$ sudo yum install sos

```

要么,

```

$ sudo dnf install sos

```

在 Debian、Ubuntu 和 Linux Mint 上运行:

```

$ sudo apt install sosreport

```

### 用法

安装后,运行以下命令以收集系统配置详细信息和其他诊断信息。

```

$ sudo sosreport

```

系统将要求你输入系统的一些详细信息,例如系统名称、案例 ID 等。相应地输入详细信息,然后按回车键生成报告。如果你不想更改任何内容并使用默认值,只需按回车键即可。

我的 CentOS 7 服务器的示例输出:

```

sosreport (version 3.5)

This command will collect diagnostic and configuration information from

this CentOS Linux system and installed applications.

An archive containing the collected information will be generated in

/var/tmp/sos.DiJXi7 and may be provided to a CentOS support

representative.

Any information provided to CentOS will be treated in accordance with

the published support policies at:

https://wiki.centos.org/

The generated archive may contain data considered sensitive and its

content should be reviewed by the originating organization before being

passed to any third party.

No changes will be made to system configuration.

Press ENTER to continue, or CTRL-C to quit.

Please enter your first initial and last name [server.ostechnix.local]:

Please enter the case id that you are generating this report for []:

Setting up archive ...

Setting up plugins ...

Running plugins. Please wait ...

Running 73/73: yum...

Creating compressed archive...

Your sosreport has been generated and saved in:

/var/tmp/sosreport-server.ostechnix.local-20180628171844.tar.xz

The checksum is: 8f08f99a1702184ec13a497eff5ce334

Please send this file to your support representative.

```

如果你不希望系统提示你输入此类详细信息,请如下使用批处理模式。

```

$ sudo sosreport --batch

```

正如你在上面的输出中所看到的,生成了一个归档报告并保存在 `/var/tmp/sos.DiJXi7` 中。在 RHEL 6/CentOS 6 中,报告将在 `/tmp` 中生成。你现在可以将此报告发送给你的支持人员,以便他可以进行初步分析并找出问题所在。

你可能会担心或想知道报告中的内容。如果是这样,你可以通过运行以下命令来查看它:

```

$ sudo tar -tf /var/tmp/sosreport-server.ostechnix.local-20180628171844.tar.xz

```

要么,

```

$ sudo vim /var/tmp/sosreport-server.ostechnix.local-20180628171844.tar.xz

```

请注意,上述命令不会解压存档,而只显示存档中的文件和文件夹列表。如果要查看存档中文件的实际内容,请首先使用以下命令解压存档:

```

$ sudo tar -xf /var/tmp/sosreport-server.ostechnix.local-20180628171844.tar.xz

```

存档的所有内容都将解压当前工作目录中 `ssosreport-server.ostechnix.local-20180628171844/` 目录中。进入目录并使用 `cat` 命令或任何其他文本浏览器查看文件内容:

```

$ cd sosreport-server.ostechnix.local-20180628171844/

$ cat uptime

17:19:02 up 1:03, 2 users, load average: 0.50, 0.17, 0.10

```

有关 Sosreport 的更多详细信息,请参阅手册页。

```

$ man sosreport

```

就是这些了。希望这些有用。还有更多好东西。敬请关注!

干杯!

---

via: <https://www.ostechnix.com/sosreport-a-tool-to-collect-system-logs-and-diagnostic-information/>

作者:[SK](https://www.ostechnix.com/author/sk/) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

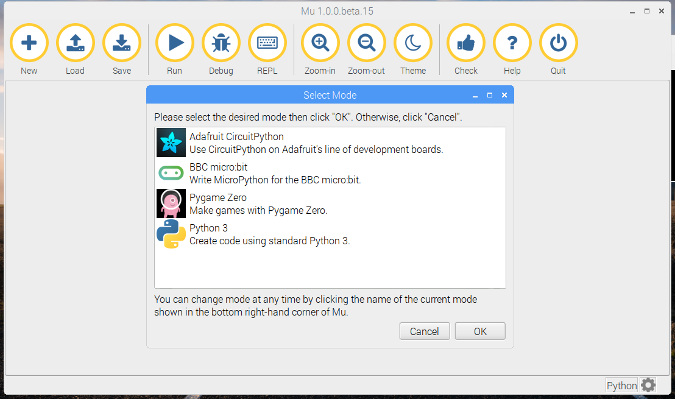

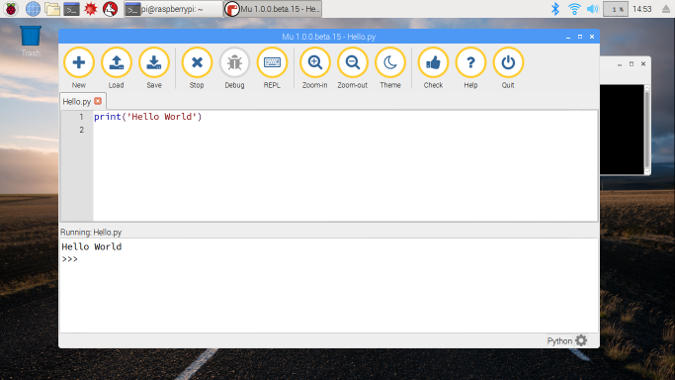

9,818 | 将你的树莓派打造成一个 Tor 中继节点 | https://www.linux.com/blog/intro-to-linux/2018/6/turn-your-raspberry-pi-tor-relay-node | 2018-07-08T09:50:37 | [

"Tor",

"树莓派"

] | https://linux.cn/article-9818-1.html |

>

> 在此教程中学习如何将你的旧树莓派打造成一个完美的 Tor 中继节点。

>

>

>

你是否和我一样,在第一代或者第二代树莓派发布时买了一个,玩了一段时间就把它搁置“吃灰”了。毕竟,除非你是机器人爱好者,否则一般不太可能去长时间使用一个处理器很慢的、并且内存只有 256 MB 的计算机。这并不是说你不能用它去做一件很酷的东西,但是在工作和其它任务之间,我还没有看到用一些旧的物件发挥新作用的机会。

然而,如果你想去好好利用它并且不想花费你太多的时间和资源的话,可以将你的旧树莓派打造成一个完美的 Tor 中继节点。

### Tor 中继节点是什么

在此之前你或许听说过 [Tor 项目](https://www.torproject.org/),如果恰好你没有听说过,我简单给你介绍一下,“Tor” 是 “The Onion Router(洋葱路由器)” 的缩写,它是用来对付在线追踪和其它违反隐私行为的技术。

不论你在互联网上做什么事情,都会在你的 IP 包通过的设备上留下一些数字“脚印”:所有的交换机、路由器、负载均衡,以及目标网络记录的来自你的原始会话的 IP 地址,以及你访问的互联网资源(通常是它的主机名,[即使是在使用 HTTPS 时](https://en.wikipedia.org/wiki/Server_Name_Indication#Security_implications))的 IP 地址。如过你是在家中上互联网,那么你的 IP 地址可以直接映射到你的家庭所在地。如果你使用了 VPN 服务([你应该使用](https://www.linux.com/blog/2017/10/tips-secure-your-network-wake-krack)),那么你的 IP 地址映射到你的 VPN 提供商那里,而 VPN 提供商是可以映射到你的家庭所在地的。无论如何,有可能在某个地方的某个人正在根据你访问的网络和在网站上呆了多长时间来为你建立一个个人的在线资料。然后将这个资料进行出售,并与从其它服务上收集的资料进行聚合,然后利用广告网络进行赚钱。至少,这是乐观主义者对如何利用这些数据的一些看法 —— 我相信你还可以找到更多的更恶意地使用这些数据的例子。

Tor 项目尝试去提供一个解决这种问题的方案,使它们不可能(或者至少是更加困难)追踪到你的终端 IP 地址。Tor 是通过让你的连接在一个由匿名的入口节点、中继节点和出口节点组成的匿名中继链上反复跳转的方式来实现防止追踪的目的:

1. **入口节点** 只知道你的 IP 地址和中继节点的 IP 地址,但是不知道你最终要访问的目标 IP 地址

2. **中继节点** 只知道入口节点和出口节点的 IP 地址,以及既不是源也不是最终目标的 IP 地址

3. **出口节点** 仅知道中继节点和最终目标地址,它是在到达最终目标地址之前解密流量的节点

中继节点在这个交换过程中扮演一个关键的角色,因为它在源请求和目标地址之间创建了一个加密的障碍。甚至在意图偷窥你数据的对手控制了出口节点的情况下,在他们没有完全控制整个 Tor 中继链的情况下仍然无法知道请求源在哪里。

只要存在大量的中继节点,你的隐私被会得到保护 —— 这就是我为什么真诚地建议你,如果你的家庭宽带有空闲的时候去配置和运行一个中继节点。

#### 考虑去做 Tor 中继时要记住的一些事情

一个 Tor 中继节点仅发送和接收加密流量 —— 它从不访问任何其它站点或者在线资源,因此你不用担心有人会利用你的家庭 IP 地址去直接浏览一些令人担心的站点。话虽如此,但是如果你居住在一个提供<ruby> 匿名增强服务 <rt> anonymity-enhancing services </rt></ruby>是违法行为的司法管辖区的话,那么你还是不要运营你的 Tor 中继节点了。你还需要去查看你的互联网服务提供商的服务条款是否允许你去运营一个 Tor 中继。

### 需要哪些东西

* 一个带完整外围附件的树莓派(任何型号/代次都行)

* 一张有 [Raspbian Stretch Lite](https://www.raspberrypi.org/downloads/raspbian/) 的 SD 卡

* 一根以太网线缆

* 一根用于供电的 micro-USB 线缆

* 一个键盘和带 HDMI 接口的显示器(在配置期间使用)

本指南假设你已经配置好了你的家庭网络连接的线缆或者 ADSL 路由器,它用于运行 NAT 转换(它几乎是必需的)。大多数型号的树莓派都有一个可用于为树莓派供电的 USB 端口,如果你只是使用路由器的 WiFi 功能,那么路由器应该有空闲的以太网口。但是在我们将树莓派设置为一个“配置完不管”的 Tor 中继之前,我们还需要一个键盘和显示器。

### 引导脚本

我改编了一个很流行的 Tor 中继节点引导脚本以适配树莓派上使用 —— 你可以在我的 GitHub 仓库 <https://github.com/mricon/tor-relay-bootstrap-rpi> 上找到它。你用它引导树莓派并使用缺省的用户 `pi` 登入之后,做如下的工作:

```

sudo apt-get install -y git

git clone https://github.com/mricon/tor-relay-bootstrap-rpi

cd tor-relay-bootstrap-rpi

sudo ./bootstrap.sh

```

这个脚本将做如下的工作:

1. 安装最新版本的操作系统更新以确保树莓派打了所有的补丁

2. 将系统配置为无人值守自动更新,以确保有可用更新时会自动接收并安装

3. 安装 Tor 软件

4. 告诉你的 NAT 路由器去转发所需要的端口(端口一般是 443 和 8080,因为这两个端口最不可能被互联网提供商过滤掉)上的数据包到你的中继节点

脚本运行完成后,你需要去配置 `torrc` 文件 —— 但是首先,你需要决定打算贡献给 Tor 流量多大带宽。首先,在 Google 中输入 “[Speed Test](https://www.google.com/search?q=speed+test)”,然后点击 “Run Speed Test” 按钮。你可以不用管 “Download speed” 的结果,因为你的 Tor 中继能处理的速度不会超过最大的上行带宽。

所以,将 “Mbps upload” 的数字除以 8,然后再乘以 1024,结果就是每秒多少 KB 的宽带速度。比如,如果你得到的上行带宽是 21.5 Mbps,那么这个数字应该是:

```

21.5 Mbps / 8 * 1024 = 2752 KBytes per second

```

你可以限制你的中继带宽为那个数字的一半,并允许突发带宽为那个数字的四分之三。确定好之后,使用喜欢的文本编辑器打开 `/etc/tor/torrc` 文件,调整好带宽设置。

```

RelayBandwidthRate 1300 KBytes

RelayBandwidthBurst 2400 KBytes

```

当然,如果你想更慷慨,你可以将那几个设置的数字调的更大,但是尽量不要设置为最大的出口带宽 —— 如果设置的太高,它会影响你的日常使用。

你打开那个文件之后,你应该去设置更多的东西。首先是昵称 —— 只是为了你自己保存记录,第二个是联系信息,只需要一个电子邮件地址。由于你的中继是运行在无人值守模式下的,你应该使用一个定期检查的电子邮件地址 —— 如果你的中继节点离线超过 48 个小时,你将收到 “Tor Weather” 服务的告警信息。

```

Nickname myrpirelay

ContactInfo [email protected]

```

保存文件并重引导系统去启动 Tor 中继。

### 测试它确认有 Tor 流量通过

如果你想去确认中继节点的功能,你可以运行 `arm` 工具:

```

sudo -u debian-tor arm

```

它需要一点时间才显示,尤其是在老板子上。它通常会给你显示一个表示入站和出站流量(或者是错误信息,它将有助于你去排错)的柱状图。

一旦你确信它运行正常,就可以将键盘和显示器拔掉了,然后将树莓派放到地下室,它就可以在那里悄悄地呆着并到处转发加密的比特了。恭喜你,你已经帮助去改善隐私和防范在线的恶意跟踪了!

通过来自 Linux 基金会和 edX 的免费课程 ["Linux 入门"](https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux) 来学习更多的 Linux 知识。

---

via: <https://www.linux.com/blog/intro-to-linux/2018/6/turn-your-raspberry-pi-tor-relay-node>

作者:[Konstantin Ryabitsev](https://www.linux.com/users/mricon) 选题:[lujun9972](https://github.com/lujun9972) 译者:[qhwdw](https://github.com/qhwdw) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,819 | 迁移到 Linux:使用 sudo | https://www.linux.com/blog/learn/2018/3/migrating-linux-using-sudo | 2018-07-09T18:04:00 | [

"迁移",

"sudo"

] | https://linux.cn/article-9819-1.html |

>

> sudo 机制可以让你轻松以普通用户偶尔执行管理任务。让我们来学习一下。

>

>

>

本文是我们关于迁移到 Linux 的系列文章的第五篇。如果你错过了之前的那些,你可以在这里赶上:

* [第1部分 - 入门介绍](/article-9212-1.html)

* [第2部分 - 磁盘、文件和文件系统](/article-9213-1.html)

* [第3部分 - 图形操作环境](/article-9293-1.html)

* [第4部分 - 命令行](/article-9565-1.html)

你可能一直想了解 Linux。也许它在你的工作场所使用,如果你每天使用它,你的工作效率会更高。或者,也许你想在家里的某些计算机上安装 Linux。无论是什么原因,这一系列文章都是为了让过渡更容易。

与许多其他操作系统一样,Linux 支持多用户。它甚至支持多个用户同时登录。

用户帐户通常会被分配一个可以存储文件的家目录。通常这个家目录位于:

```

/home/<login name>

```

这样,每个用户都有存储自己的文档和其他文件的独立位置。

### 管理任务

在传统的 Linux 安装中,常规用户帐户无权在系统上执行管理任务。典型的安装 Linux 的系统会要求用户以管理员身份登录以执行某些任务,而不是为每个用户分配权限以执行各种任务。

Linux 上的管理员帐户称为 root。

### Sudo 解释

从历史上看,要执行管理任务,必须以 root 身份登录,执行任务,然后登出。这个过程有点乏味,所以很多人以 root 登录并且整天都以管理员身份工作。这种做法可能会导致灾难性的后果,例如,意外删除系统中的所有文件。当然,root 用户可以做任何事情,因此没有任何保护措施可以防止有人意外地执行影响很大的操作。

创建 `sudo` 工具是为了使你更容易以常规用户帐户登录,偶尔以 root 身份执行管理任务,而无需登录、执行任务然后登出。具体来说,`sudo` 允许你以不同的用户身份运行命令。如果你未指定特定用户,则假定你指的是 root 用户。

`sudo` 可以有复杂的设置,允许用户有权限使用 `sudo` 运行某些命令,而其他的不行。通常,安装的桌面系统会使创建的第一个帐户在 `sudo` 中有完全的权限,因此你作为主要用户可以完全管理 Linux 安装。

### 使用 Sudo

某些安装 Linux 的系统设置了 `sudo`,因此你仍需要知道 root 帐户的密码才能执行管理任务。其他人,设置 `sudo` 输入自己的密码。这里有不同的哲学。

当你尝试在图形环境中执行管理任务时,通常会打开一个要求输入密码的对话框。输入你自己的密码(例如,在 Ubuntu 上)或 root 帐户的密码(例如,Red Hat)。

当你尝试在命令行中执行管理任务时,它通常只会给你一个 “permission denied” 错误。然后你在前面用 `sudo` 重新运行命令。例如:

```

systemctl start vsftpd

Failed to start vsftpd.service: Access denied

sudo systemctl start vsftpd

[sudo] password for user1:

```

### 何时使用 Sudo

以 root 身份运行命令(在 `sudo` 或其他情况下)并不总是解决权限错误的最佳解决方案。虽然将以 root 身份运行会消除 “permission denied” 错误,但有时最好寻找根本原因而不是仅仅解决症状。有时文件拥有错误的所有者和权限。

当你在尝试一个需要 root 权限来执行操作的任务或者程序时使用 `sudo`。如果文件恰好由另一个用户(包括 root 用户)拥有,请不要使用 `sudo`。在第二种情况下,最好正确设置文件的权限。

通过 Linux 基金会和 edX 的免费[“Linux 介绍”](https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux)课程了解有关 Linux 的更多信息。

---

via: <https://www.linux.com/blog/learn/2018/3/migrating-linux-using-sudo>

作者:[John Bonesio](https://www.linux.com/users/johnbonesio) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,820 | 五个最热门的开源机器学习 JavaScript 框架 | https://opensource.com/article/18/5/machine-learning-javascript-frameworks | 2018-07-09T18:22:24 | [

"机器学习",

"JavaScript"

] | /article-9820-1.html |

>

> 如果你是一位想要深入机器学习的 JavaScript 程序员或想成为一位使用 JavaScript 的机器学习专家,那么这些开源框架也许会吸引你。

>

>

>

开源工具的涌现使得开发者能够更加轻松地开发应用,这一点使机器学习领域本身获得了极大增长。(例如,AndreyBu,他来自德国,在机器学习领域拥有五年以上的经验,他一直在使用各种各样的开源框架来创造富有魅力的机器学习项目。)

虽然 Python 是绝大多数的机器学习框架所采用的语言,但是 JavaScript 也并没有被抛下。JavaScript 开发者可以在浏览器中使用各种框架来训练和部署机器学习模型。

下面是 JavaScript 中最热门五个机器学习框架

### 1、 TensorFlow.js

[TensorFlow.js](https://js.tensorflow.org/) 是一个开源库,它使你能在浏览器中完整地运行机器学习程序,它是 Deeplearn.js 的继承者,Deeplearn.js 不再更新了。TensorFlow.js 在 Deeplearn.js 功能的基础上进行了改善,使你能够充分利用浏览器,得到更加深入的机器学习经验。

通过这个开源库,你可以在浏览器中使用有各种功能的、直观的 API 来定义、训练和部署模型。除此之外,它自动提供 WebGL 和 Node.js 的支持。

如果您有了一个已经训练过的模型,你想要导入到浏览器中。TensorFlow.js 可以让你做到这一点,你也可以在不离开浏览器的情况下重新训练已有的模型。

### 2、 机器学习工具库

现在有很多在浏览器中提供广泛的机器学习功能的资源型开源工具,这个[机器学习工具库](https://github.com/mljs/ml)就是这些开源工具的集合。这个工具库为好几种机器学习算法提供支持,包括非监督式学习、监督式学习、数据处理、人工神经网络(ANN)、数学和回归。

如果你以前使用 Python,现在想找类似于 Scikit-learn 的,能在浏览器中使用 JavaScript 进行机器学习的工具,这套工具会满足你的要求。

### 3、 Keras.js

[Keras.js](https://transcranial.github.io/keras-js/#/) 是另外一个热门的开源框架,它使你能够在浏览器中运行机器学习模型,它使用 WebGL 来提供 GPU 模式的支持。如果你有使用 Node.js 的模型,你就只能在 GPU 模式下运行它。Keras.js 还为使用任意后端框架的模型训练提供支持,例如 Microsoft Cognitive Toolkit (CNTK) 。

一些 Keras 模型可以部署在客户端浏览器上,包括 Inception v3 (训练在 ImageNet 上),50 层冗余网络(训练在 ImageNet 上),和卷积变化自动编码器(训练在 MNIST 上)。

### 4、 Brain.js

机器学习里的概念非常重要,它可能会使刚开始进入这个领域的人们气馁,这个领域里的学术用语和专业词汇可能会使初学者感到崩溃,而解决以上问题的能力就是 [Brain.js](https://github.com/BrainJS/brain.js) 的优势所在。它是开源的,基于 JavaScript 的框架,简化了定义、训练和运行神经网络的流程。

如果你是一个 JavaScript 开发者,并且在机器学习领域是完全的新手,Brain.js 能减低你学习的难度曲线。它可以和 Node.js 一起使用,或者运行在客户端浏览器里来训练机器学习模型。Brain.js 支持部分类型的神经网络,包括前馈式网络、Ellman 网络,和门循环单元网络。

### 5、 STDLib

[STDLib](https://stdlib.io/) 是一个基于 JavaScript 和 Node.js 应用的开源库,如果您正在寻找一种在浏览器中运行,支持科学和数字化的基于 web 的机器学习应用,STDLib 能满足你的需要。

这个库能提供全面而先进的数学和统计学上的功能,来帮助你构建高性能的机器学习模型。你同样也可以使用它丰富的功能来构建应用程序和其他的库。除此之外,如果你想要一个数据可视化和探索性数据分析的框架 —— STDLib,你,值得拥有。

### 总结

如果你是一个 JavaScript 开发者,并且打算深入研究令人兴奋的机器学习世界,或者说,你是一个机器学习方面的专家,打算开始尝试使用 JavaScript ,那么上述的开源框架会激起您的兴趣。

你有知道其他的,提供在浏览器里运行机器学习功能的开源库吗?请在下面的评论区里告诉我们。

---

via: <https://opensource.com/article/18/5/machine-learning-javascript-frameworks>

作者:[Dr.Michael J.Garbade](https://opensource.com/users/drmjg) 选题:[lujun9972](https://github.com/lujun9972) 译者:[hopefully2333](https://github.com/hopefully2333) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

9,821 | 3 款 Linux 桌面的日记程序 | https://opensource.com/article/18/6/linux-journaling-applications | 2018-07-09T18:36:08 | [

"日记"

] | /article-9821-1.html |

>

> 用轻量、灵活的数字日记工具来记录你的活动。

>

>

>

保持记日记的习惯,即使是不定期地记,也可以带来很多好处。这不仅是治疗和宣泄,而且还可以很好地记录你所在的位置以及你去过的地方。它可以帮助你展示你在生活中的进步,并提醒你自己做对了什么,做错了什么。

无论你记日记的原因是什么,都有多种方法可以做到这一点。你可以使用传统的笔和纸,也可以使用基于 Web 的程序,或者你可以使用[简单的文本文件](https://plaintextproject.online/2017/07/19/journal.html)。

另一种选择是使用专门的日记程序。Linux 桌面有几种非常灵活且非常有用的日记工具。我们来看看其中的三个。

### RedNotebook

在这里描述的三个日记程序中,[RedNotebook](http://rednotebook.sourceforge.net) 是最灵活的。大部分灵活性来自其模板。这些模板可让你记录个人想法或会议记录、计划旅程或记录电话。你还可以编辑现有模板或创建自己的模板。

你可以使用与 Markdown 非常相似的标记语言来记录日记。你还可以在日记中添加标签,以便于查找。只需在程序的左窗格中单击或输入标记,右窗格中将显示相应日记的列表。

最重要的是,你可以将全部、部分或仅一个日记导出为纯文本、HTML、LaTeX 或 PDF。在执行此操作之前,你可以通过单击工具栏上的“预览”按钮了解日志在 PDF 或 HTML 中的显示情况。

总的来说,RedNotebook 是一款易于使用且灵活的程序。它需要习惯,但一旦你这样做,它是一个有用的工具。

### Lifeograph

[Lifeograph](http://lifeograph.sourceforge.net/wiki/Main_Page) 与 RedNotebook 有相似的外观和感觉。它没有那么多功能,但 Lifeograph 也够了。

该程序通过保持简单和整洁性来简化记日记这件事。你有一个很大的区域可以记录,你可以为日记添加一些基本格式。这包括通常的粗体和斜体,以及箭头和高亮显示。你可以在日记中添加标签,以便更好地组织和查找它们。

Lifeograph 有一个我觉得特别有用的功能。首先,你可以创建多个日记 - 例如,工作日记和个人日记。其次是密码保护你的日记的能力。虽然该网站声称 Lifeograph 使用“真正的加密”,但没有关于它的详细信息。尽管如此,设置密码仍然会阻止大多数窥探者。

### Almanah Diary

[Almanah Diary](https://wiki.gnome.org/Apps/Almanah_Diary) 是另一种非常简单的日记工具。但不要因为它缺乏功能就丢掉它。虽简单,但足够。

有多简单?它差不多只是一个包含了日记输入和日历的区域而已。你可以做更多的事情 —— 比如添加一些基本格式(粗体、斜体和下划线)并将文本转换为超链接。Almanah 还允许你加密日记。

虽然有一个可以将纯文本文件导入该程序的功能,但我无法使其正常工作。尽管如此,如果你喜欢一个简单,能够快速记日记的软件,那么 Almanah 日记值得一看。

### 命令行怎么样?

如果你不想用 GUI 则可以不必用。命令行是保存日记的绝佳选择。

我尝试过并且喜欢的是 [jrnl](http://maebert.github.com/jrnl/)。或者你可以使用[此方案](http://tamilinux.wordpress.com/2007/07/27/writing-short-notes-and-diaries-from-the-cli/),它使用命令行别名格式化并将日记保存到文本文件中。

你有喜欢的日记程序吗?请留下评论,随意分享。

---

via: <https://opensource.com/article/18/6/linux-journaling-applications>

作者:[Scott Nesbitt](https://opensource.com/users/scottnesbitt) 选题:[lujun9972](https://github.com/lujun9972) 译者:[geekpi](https://github.com/geekpi) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| null | HTTPSConnectionPool(host='opensource.com', port=443): Read timed out. (read timeout=10) | null |

9,823 | 迁移到 Linux:安装软件 | https://www.linux.com/blog/learn/2018/3/migrating-linux-installing-software | 2018-07-10T07:43:00 | [

"安装",

"迁移"

] | https://linux.cn/article-9823-1.html |

>

> 所有的 Linux 打包系统和工具都会让人迷惑,但是这篇面向初学者的教程可以帮助你搞明白。

>

>

>

如你所见,众所瞩目的 Linux 已经用在互联网,以及 Arduino、Beagle 和树莓派主板等设备上,或许你正在考虑是时候尝试一下 Linux 了。本系列将帮助你成功过渡到 Linux。如果你错过了本系列的早期文章,可以在这里找到它们:

* [第1部分 - 入门介绍](/article-9212-1.html)

* [第2部分 - 磁盘、文件和文件系统](/article-9213-1.html)

* [第3部分 - 图形操作环境](/article-9293-1.html)

* [第4部分 - 命令行](/article-9565-1.html)

* [第5部分 - 使用 sudo](/article-9819-1.html)

### 安装软件

要在你的计算机上获得新软件,通常的方法是从供应商处获得软件产品,然后运行一个安装程序。过去,软件产品会出现在像 CD-ROM 或 DVD 一样的物理媒介上,而现在我们经常从互联网上下载软件产品。

使用 Linux,安装软件就像在你的智能手机上安装一样。如同你的手机应用商店一样,在 Linux 上有个提供开源软件工具和程序的<ruby> 中央仓库 <rt> central repository </rt></ruby>,几乎任何你想要的程序都会出现在可用软件包列表中以供你安装。

每个程序并不需要运行单独的安装程序,而是你可以使用 Linux 发行版附带的软件包管理工具。(这里说的 Linux 发行版就是你安装的 Linux,例如 Ubuntu、Fedora、Debian 等)每个发行版在互联网上都有它自己的集中存储库(称为仓库),它们存储了数千个预先构建好的应用程序。

你可能会注意到,在 Linux 上安装软件有几种例外情况。有时候,你仍然需要去供应商那里获取他们的软件,因为该程序不存在于你的发行版的中央仓库中。当软件不是开源和/或自由软件的时候,通常就是这种情况。

另外请记住,如果你想要安装一个不在发行版仓库中的程序时,事情就不是那么简单了,即使你正在安装自由及开源程序。这篇文章没有涉及到这些更复杂的情况,请遵循在线的指引。

有了所有的 Linux 包管理系统和工具,接下来干什么可能仍然令人困惑。本文应该有助于澄清一些事情。

### 包管理

目前在 Linux 发行版中有几个相互竞争的用于管理、安装和删除软件的包管理系统。每个发行版都选择使用了一个<ruby> 包管理工具 <rt> package management tools </rt> <rt> </rt></ruby>。Red Hat、Fedora、CentOS、Scientific Linux、SUSE 等使用 Red Hat 包管理(RPM)。Debian、Ubuntu、Linux Mint 等等都使用 Debian 包管理系统,简称 DPKG。还有一些其它包管理系统,但 RPM 和 DPKG 是最常见的。

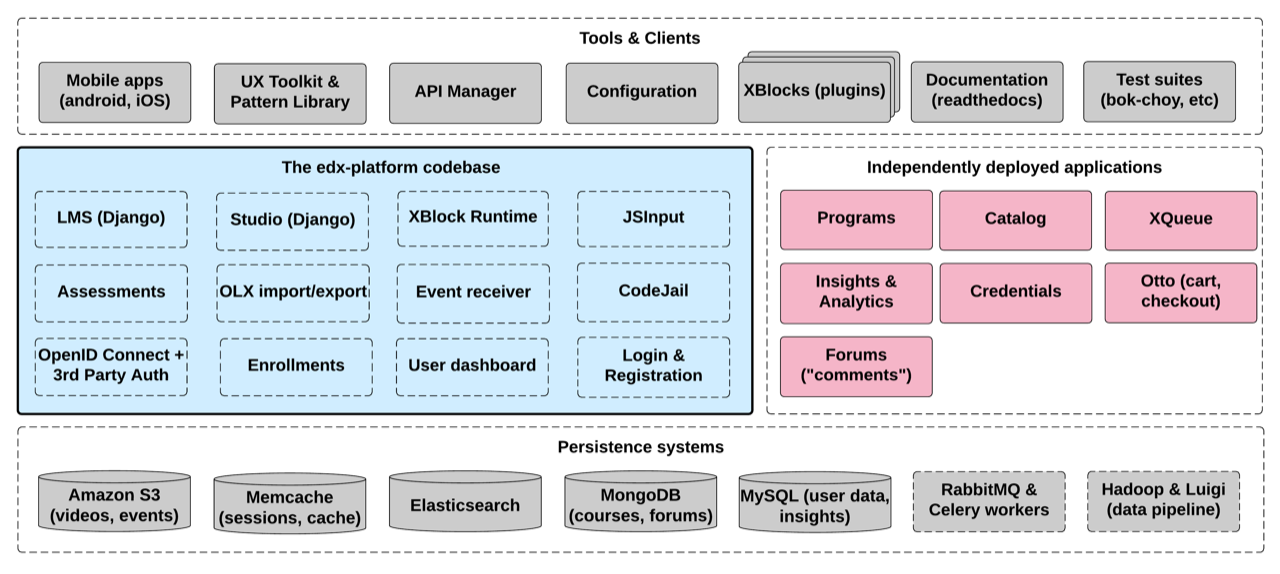

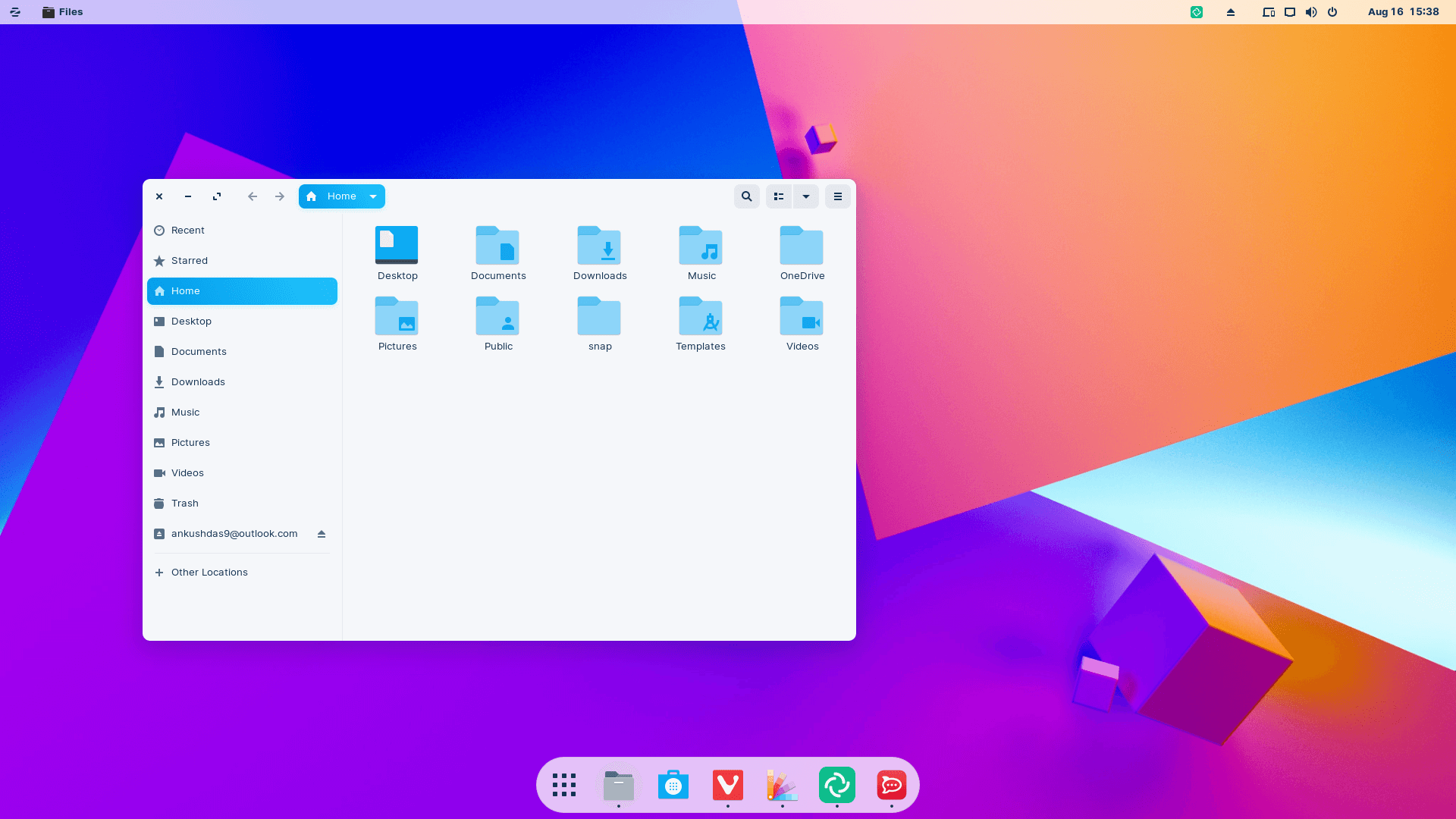

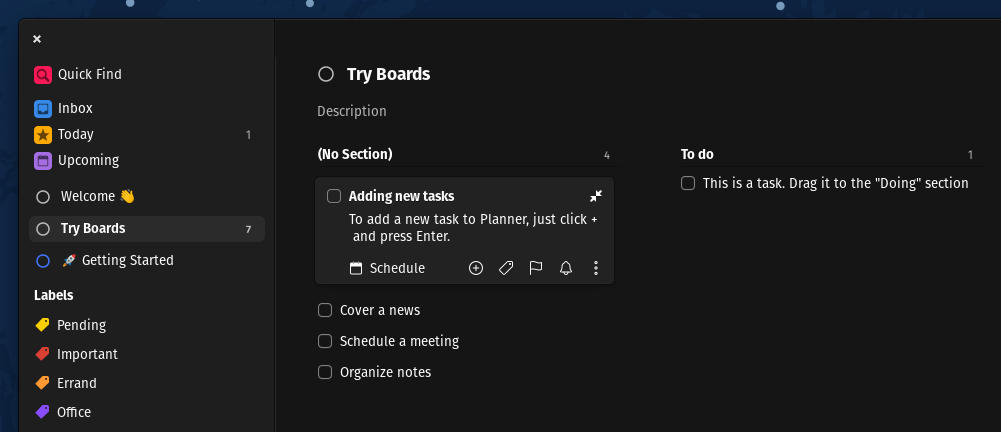

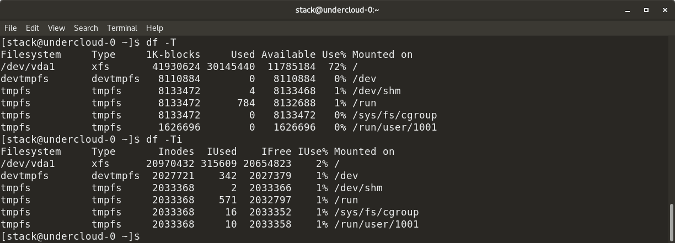

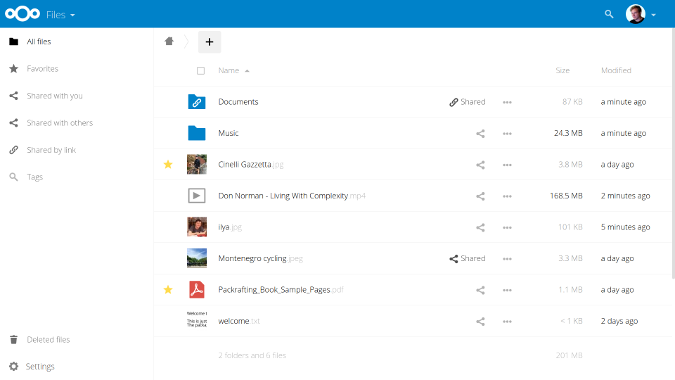

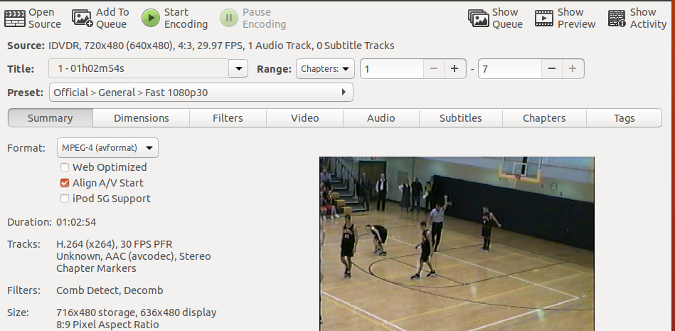

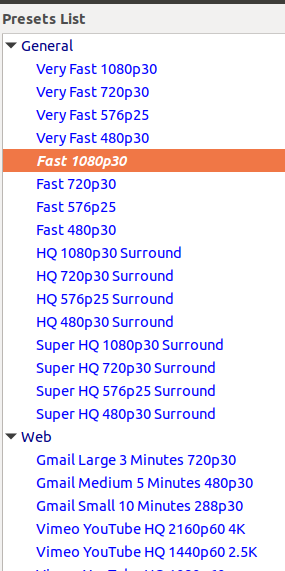

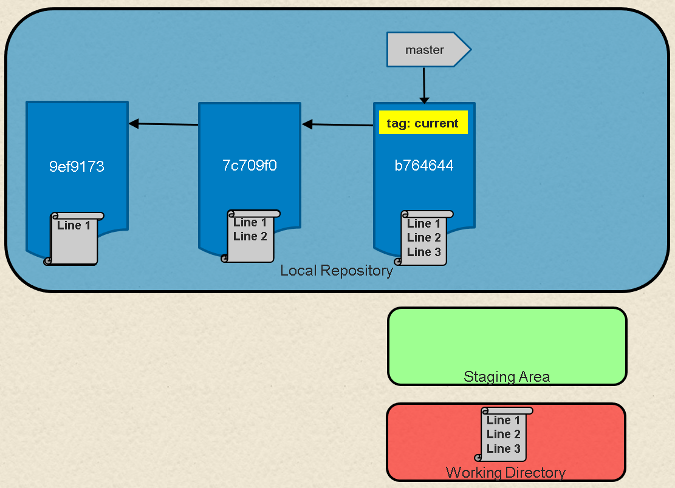

*图 1: Package installers*

无论你使用的软件包管理是什么,它们通常都是一组构建于另外一种工具之上的工具(图 1)。最底层是一个命令行工具,它可以让你做任何与安装软件相关的一切工作。你可以列出已安装的程序、删除程序、安装软件包文件等等。

这个底层工具并不总是最方便使用的,所以通常会有一个命令行工具,它可以使用单个命令在发行版的中央仓库中找到软件包,并下载和安装它以及任何依赖项。最后,通常会有一个<ruby> 图形应用程序 <rt> graphical application </rt> <rt> </rt></ruby>,可以让你使用鼠标选择任何想要的内容,然后单击 “install” 按钮即可。

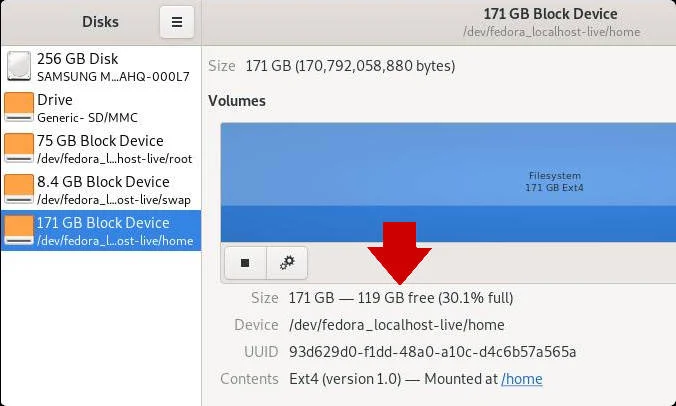

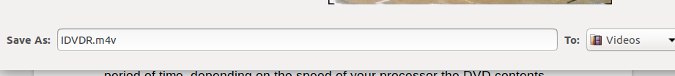

*图 2: PackageKit*

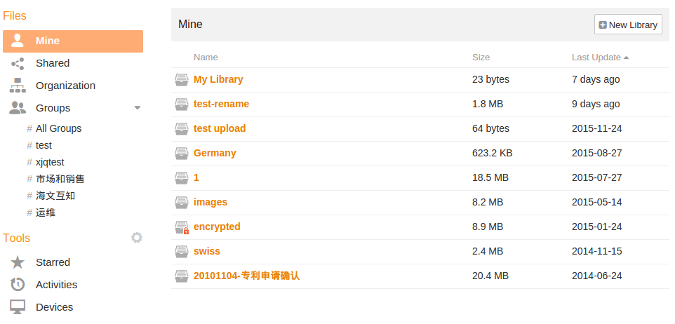

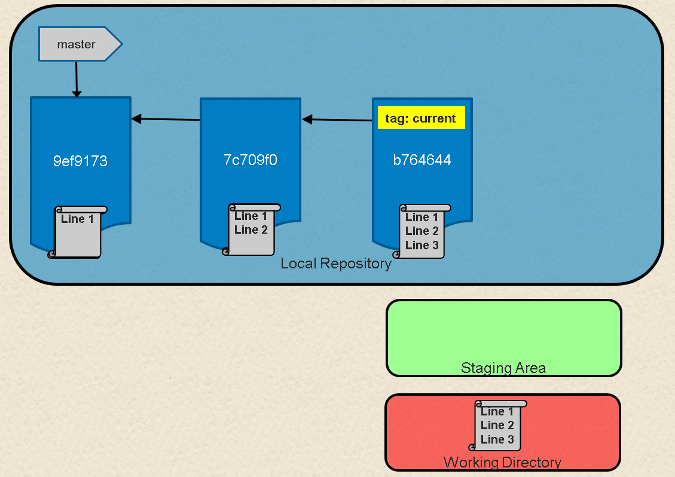

对于基于 Red Hat 的发行版,包括 Fedora、CentOS、Scientific Linux 等,它们的底层工具是 rpm,高级工具叫做 dnf(在旧系统上是 yum)。图形安装程序称为 PackageKit(图 2),它可能在系统管理菜单下显示名字为 “Add/Remove Software(添加/删除软件)”。

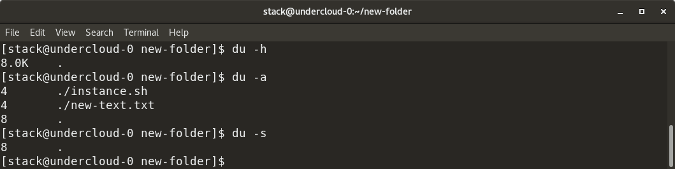

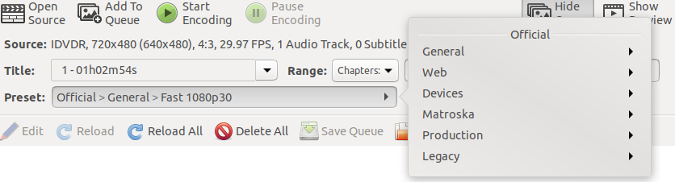

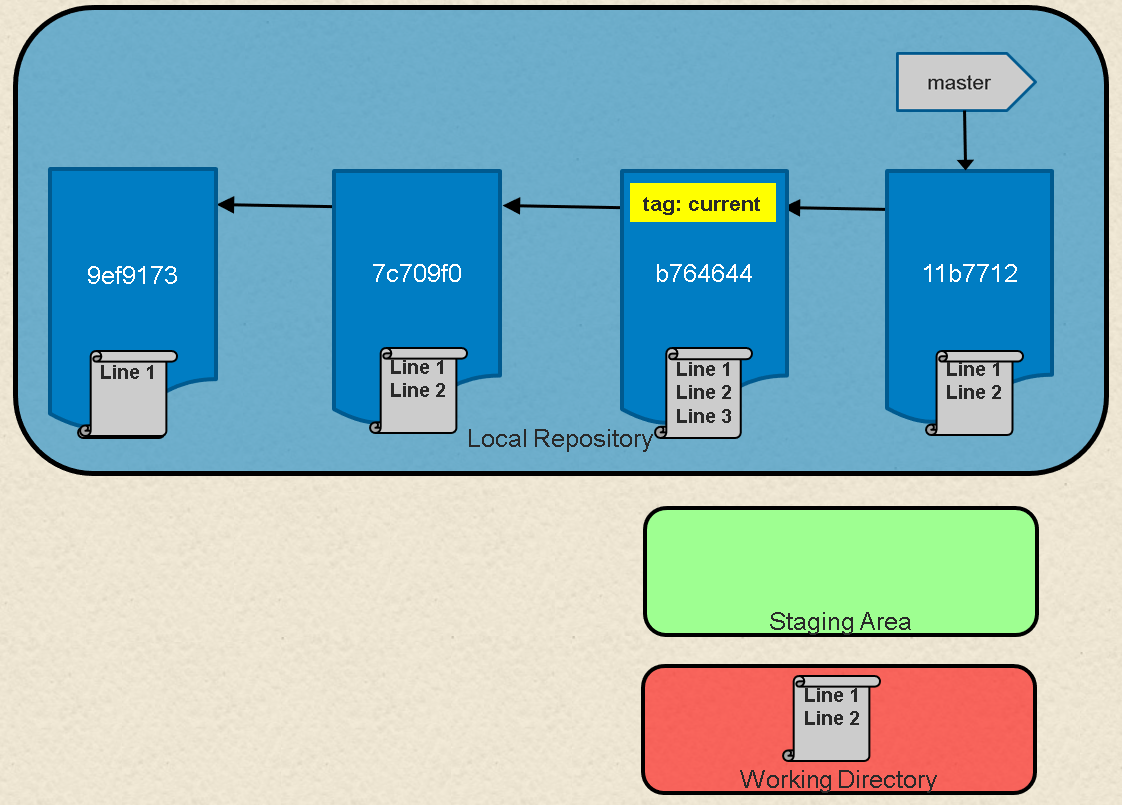

*图 3: Ubuntu Software*

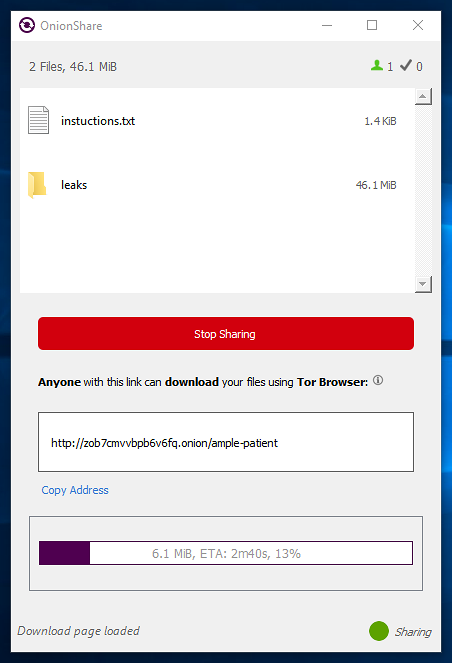

对于基于 Debian 的发行版,包括 Debian、Ubuntu、Linux Mint、Elementary OS 等,它们的底层命令行工具是 dpkg,高级工具称为 apt。在 Ubuntu 上管理已安装软件的图形工具是 Ubuntu Software(图 3)。对于 Debian 和 Linux Mint,图形工具称为<ruby> 新立得 <rt> Synaptic </rt></ruby>,它也可以安装在 Ubuntu 上。

你也可以在 Debian 相关发行版上安装一个基于文本的图形化工具 aptitude。它比 <ruby> 新立得 <rt> synaptic </rt></ruby>更强大,并且即使你只能访问命令行也能工作。如果你想通过各种选项进行各种“骚”操作,你可以试试这个,但它使用起来比新立得更复杂。其它发行版也可能有自己独特的工具。

### 命令行工具

在 Linux 上安装软件的在线说明通常描述了在命令行中键入的命令。这些说明通常更容易理解,并且将命令复制粘贴到命令行窗口中,可以在不出错的情况下一步步进行。这与下面的说明相反:“打开这个菜单,选择这个程序,输入这个搜索模式,点击这个标签,选择这个程序,然后点击这个按钮”,这经常让你在各种操作中迷失。

有时你正在使用的 Linux 没有图形环境,因此熟悉从命令行安装软件包是件好事。表 1 和表 2 列出了基于 RPM 和 DPKG 系统的一下常见操作及其相关命令。

请注意 SUSE,它像 RedHat 和 Fedora 一样使用 RPM,却没有 dnf 或 yum。相反,它使用一个名为 zypper 的程序作为高级命令行工具。其他发行版也可能有不同的工具,例如 Arch Linux 上的 pacman 或 Gentoo 上的 emerge。有很多包管理工具,所以你可能需要查找哪个适用于你的发行版。

这些技巧应该能让你更好地了解如何在新的 Linux 中安装程序,以及更好地了解 Linux 中各种软件包管理方式如何相互关联。

通过 Linux 基金会和 edX 的免费 [“Linux 入门”](https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux)课程了解有关 Linux 的更多信息。

---

via: <https://www.linux.com/blog/learn/2018/3/migrating-linux-installing-software>

作者:[JOHN BONESIO](https://www.linux.com/users/johnbonesio) 译者:[MjSeven](https://github.com/MjSeven) 校对:[pityonline](https://github.com/pityonline), [wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 301 | Moved Permanently | null |

9,824 | 如何在 Arch Linux 中查找已安装的专有软件包? | https://www.ostechnix.com/find-installed-proprietary-packages-arch-linux/ | 2018-07-10T08:08:13 | [

"专有软件"

] | https://linux.cn/article-9824-1.html |

你是狂热的自由软件支持者吗?你目前在使用任何基于 Arch 的 Linux 发行版吗?我有一个小小的提示送给你!现在,你可以轻松地在 Arch Linux 及其变体(如 Antergos、Manjaro Linux 等)中找到已安装的专有软件包。你无需在已安装软件包的网站中参考其许可细节,也无需使用任何其它外部工具来查明软件包是自由的还是专有的。(LCTT 译注:其实下面还是借助了一个外部程序)

### 在 Arch Linux 中查找已安装的专有软件包

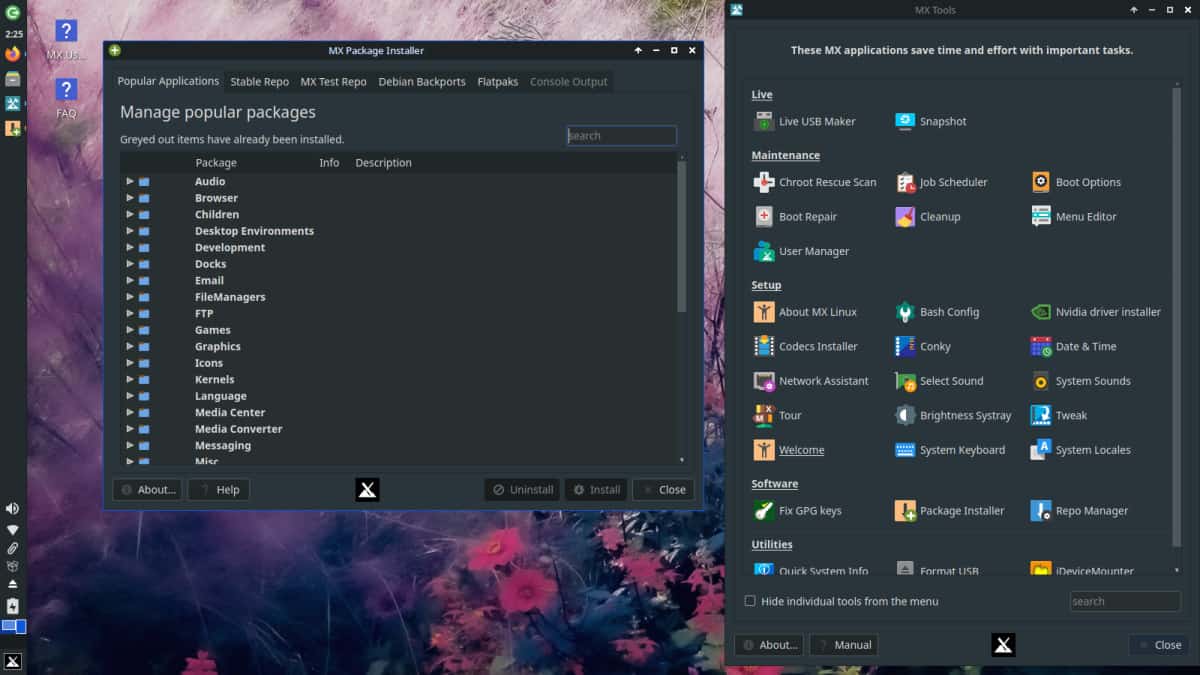

一位开发人员开发了一个名为 “[Absolutely Proprietary](https://github.com/vmavromatis/absolutely-proprietary)” 的实用程序,它是一种用于基于 Arch 发行版的专有软件包检测器。它将基于 Arch 系统中的所有安装包与 Parabola 的软件包 [blacklist](https://git.parabola.nu/blacklist.git/plain/blacklist.txt) 和 [aur-blacklist](https://git.parabola.nu/blacklist.git/plain/aur-blacklist.txt) 进行比较,然后显示出你的<ruby> 斯托曼自由指数 <rt> Stallman Freedom Index </rt></ruby>(“自由/总计”比分)。此外,你可以将该列表保存到文件中,并与其他系统/用户共享或比较。

在安装之前,确保你安装了 Python 和 Git。

然后,`git clone` 仓库:

```

git clone https://github.com/vmavromatis/absolutely-proprietary.git

```

这条命令将会下载所有内容到你当前工作目录中的 `absolutely-proprietary` 目录。

进入此目录:

```

cd absolutely-proprietary

```

接着,使用以下命令查找已安装的专有软件:

```

python main.py

```

这条命令将会下载 `blacklist.txt`、`aur-blacklist.txt`,并将本地已安装的软件包与远程软件包进行比较并显示其指数。

以下是在我的 Arch Linux 桌面的示例输出:

```

Retrieving local packages (including AUR)...

Downloading https://git.parabola.nu/blacklist.git/plain/blacklist.txt

Downloading https://git.parabola.nu/blacklist.git/plain/aur-blacklist.txt

Comparing local packages to remote...

=============================================

47 ABSOLUTELY PROPRIETARY PACKAGES INSTALLED

=============================================

Your GNU/Linux is infected with 47 proprietary packages out of 1370 total installed.

Your Stallman Freedom Index is 96.57

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| Name | Status | Libre Alternatives | Description |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| chromium-pepper-flash | nonfree | | proprietary Google Chrome EULA, missing sources |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| faac | nonfree | | [FIXME:description] is a GPL'ed package, but has non free code that can't be distributed und|

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| libunrar | nonfree | | part of nonfree unrar, Issue442 |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| opera | nonfree | | nonfree, nondistributable, built from binary installers, etc |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| shutter | nonfree | | need registered user to download (and access website) the source code and depends perl-net-d|

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| ttf-ms-fonts | nonfree | | |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| ttf-ubuntu-font-family | nonfree | | Ubuntu font license considered non-free by DFSG and Fedora |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| unace | nonfree | | license forbids making competing ACE archivers from unace |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| unrar | nonfree | unar | |

| | | fsf | |

| | | unrar | |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| virtualbox | nonfree | | contains BIOS which needs a nonfree compiler to build from source (OpenWatcom compiler), doe|

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

| wordnet | nonfree | | |

+------------------------|---------|--------------------|---------------------------------------------------------------------------------------------+

Save list to file? (Y/n)

```

如你所见,我的系统中有 47 个专有软件包。就像我说的那样,我们可以将它保存到文件中稍后查看。为此,当提示你将列表保存在文件时,请按 `y`。然后按 `y` 接受默认值,或按 `n` 以你喜欢的格式和位置来保存它。

```

Save list to file? (Y/n) y

Save as markdown table? (Y/n) y

Save it to (/tmp/tmpkuky_082.md): y

The list is saved at /home/sk/absolutely-proprietary/y.md

You can review it from the command line

using the "less -S /home/sk/absolutely-proprietary/y.md"

or, if installed, the "most /home/sk/absolutely-proprietary/y.md" commands

```

你可能已经注意到,我只有 **nonfree** 包。它还会显示另外两种类型的软件包,例如 semifree、 uses-nonfree。

* **nonfree**:这个软件包是公然的非自由软件。

* **semifree**:这个软件包大部分是自由的,但包含一些非自由软件。

* **uses-nonfree**:这个软件包依赖、推荐或不恰当地与其他自由软件或服务集成。

该使用程序的另一个显著特点是它不仅显示了专有软件包,而且还显示这些包的替代品。

希望这有些帮助。我很快就会在这里提供另一份有用的指南。敬请关注!

干杯!

资源:

* [Absolutely Proprietary](https://github.com/vmavromatis/absolutely-proprietary)

---

via: <https://www.ostechnix.com/find-installed-proprietary-packages-arch-linux/>

作者:[SK](https://www.ostechnix.com/author/sk/) 译者:[MjSeven](https://github.com/MjSeven) 校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

| 403 | Forbidden | null |

9,825 | 如何在 20 分钟内发布一个正式的 ERC20 通证 | http://www.masonforest.com/blockchain/ethereum/2017/11/13/how-to-deploy-an-erc20-token-in-20-minutes.html | 2018-07-10T22:21:00 | [

"以太坊",

"ERC20"

] | https://linux.cn/article-9825-1.html |

这段时间以来,以太坊通证很流行。这些通证可以用来代表现实世界的各种价值单位:[黄金](https://digix.global/)、 [谎言](https://www.cnbc.com/2017/08/28/burger-king-russia-cryptocurrency-whoppercoin.html)、 [猫咪](https://www.cryptokitties.co/) 甚至是类似 [公司股票](https://www.investopedia.com/terms/i/initial-coin-offering-ico.asp) 一样的东西。迄今为止,人们已经募集了 [20 亿美元的通证](https://www.forbes.com/sites/chancebarnett/2017/09/23/inside-the-meteoric-rise-of-icos/#57ac46d95670)。那些通证是以 [ERC20](https://theethereum.wiki/w/index.php/ERC20_Token_Standard) 为标准的,人们可以轻松地在钱包之间进行交易。在这篇教程中,我准备指导你部署你自己的 ERC20 通证到真实的以太坊网络上去。

事先准备:

* 一个文本编辑器([Atom](https://atom.io/) 不错,不过我喜欢 [Vim](http://www.vim.org/))

* 对命令行和终端(模拟器)有起码的了解。Mac 内置的应用“终端”就很好,不过我喜欢 [iTerm2](https://www.iterm2.com/)

* Chrome 浏览器

* [Node.js 8](https://nodejs.org/)(或更高版本)

* 你的通证的名字。我的准备叫做 HamburgerCoin(汉堡币)

你需要做的第一件事是安装 [MetaMask](https://metamask.io/)。访问 [Metamask 网站](https://metamask.io/) 并点击“Get Chrome Extention”。

Metamask 可以让你通过 Chrome 在以太坊上进行交易。它依靠运行着公开以太坊节点的 [Infura](https://infura.io/) ,所以你不用自己运行以太坊全节点。如果你颇具探索精神,你也可以下载和安装 [Mist](https://github.com/ethereum/mist/releases) 以替代它。运行 Mist 就可以让你运行你自己的以太坊节点。运行自己的节点你就需要将你的计算机与该网络进行同步,这需要不短的时间。从技术上讲这更安全,因为这样你不必信任 Infura 来处理你的交易。Infura 可以忽略你的交易而干预你,但是它并不能偷走你的钱。因为安装 Metamask 比 Mist 更快也更简单,所以我假设你在下面的教程中使用 Metamask。

接着你需要安装 [truffle](http://truffleframework.com/):

```

$ npm install -g truffle

```

现在为你自己的新通证创建一个新目录,cd 到其中并初始化你的 truffle 项目。

```

$ mkdir hamburger-coin

$ cd hamburger-coin

$ truffle init

```

很好,你的 truffle 项目已经设置好了!

现在来创建我们的通证。首先我们需要安装 [OpenZepplin](https://github.com/OpenZeppelin) 框架。OpenZepplin 框架包括了大量预先构建好的合约,包括我们要部署的 ERC20 通证合约。

(只需要按下回车接受默认值即可)

```

$ npm init

package name: (hamburger-coin)

version: (1.0.0)

description:

entry point: (truffle.js)

test command:

git repository:

keywords:

author:

license: (ISC)

About to write to /Users/masonf/src/hamburger-coin/package.json:

{

"name": "hamburger-coin",

"version": "1.0.0",

"description": "",

"main": "truffle.js",

"directories": {

"test": "test"

},

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1"

},

"author": "",

"license": "ISC"

}

Is this ok? (yes) yes

$ npm install zeppelin-solidity

```

现在我们可以创建我们的通证合约了。创建一个 `contracts/HamburgerCoin.sol` 文件并加入如下内容:

```

pragma solidity ^0.4.18;

import "zeppelin-solidity/contracts/token/StandardToken.sol";

contract HamburgerCoin is StandardToken {

string public name = "HamburgerCoin";

string public symbol = "HBC";

uint public decimals = 2;

uint public INITIAL_SUPPLY = 10000 * (10 ** decimals);

function HamburgerCoin() public {

totalSupply_ = INITIAL_SUPPLY;

balances[msg.sender] = INITIAL_SUPPLY;

}

}

```

(LCTT 译注:上述合约内容中指定了合约的名称、符号和供应量。在 ERC20 当中,通证的供应量其实是整数,上述合约中通证的实际供应量是 10000 \* 100 个,出于显示 2 位小数的需求,你在合约浏览器、钱包软件中看到和操作的 1 个通证,实际上在交易中是以 100 个进行的。)

OpenZepplin 的 `StandardToken` 是一个标准的 ERC20 通证。如果你感兴趣,你可以看看它的 [源代码](https://github.com/OpenZeppelin/zeppelin-solidity/tree/master/contracts/token) 以了解是如何工作的。

实际上并不太复杂。该合约有一个 [地址到余额](https://github.com/OpenZeppelin/zeppelin-solidity/blob/master/contracts/token/BasicToken.sol#L15) 的映射(LCTT 译注:你可以理解为哈希、关联数组),它也有一个 [允许转账](https://github.com/OpenZeppelin/zeppelin-solidity/blob/master/contracts/token/StandardToken.sol#L17) 的列表。你可以看做是支票。你可以写张支票,但是直到它被兑付前,钱并不会被转账。

如果有人要转走一些资金,你可以在合约上调用 [approve](https://github.com/OpenZeppelin/zeppelin-solidity/blob/master/contracts/token/StandardToken.sol#L48) 方法,设置你要发送的通证数量。这就像是写支票一样。

然后调用 [transferFrom](https://github.com/OpenZeppelin/zeppelin-solidity/blob/master/contracts/token/StandardToken.sol#L26) 会实际进行转账。

我们可以从头写这些合约,但是最好采用经过完备的社区测试的合约。从头写一个 ERC20 通证那就是另外一篇文章了。

试着运行 `compile` 来编译我们的合约:

```

$ truffle compile

Compiling ./contracts/HamburgerCoin.sol...

Compiling zeppelin-solidity/contracts/math/SafeMath.sol...

Compiling zeppelin-solidity/contracts/ownership/Ownable.sol...

Compiling zeppelin-solidity/contracts/token/BasicToken.sol...

Compiling zeppelin-solidity/contracts/token/ERC20.sol...

Compiling zeppelin-solidity/contracts/token/ERC20Basic.sol...

Compiling zeppelin-solidity/contracts/token/MintableToken.sol...

Compiling zeppelin-solidity/contracts/token/StandardToken.sol...

Writing artifacts to ./build/contracts Next you'll need to add a migration file which will tell truffle how to deploy your contract.

```

接下来我们需要增加一个 truffle [迁移](http://truffleframework.com/docs/getting_started/migrations)。

创建 `migrations/2_deploy_hamburgercoin.js` 文件并添加如下内容:

```

var HamburgerCoin = artifacts.require("./HamburgerCoin.sol");

module.exports = function(deployer) {

deployer.deploy(HamburgerCoin);

};

```

现在让我们配置 truffle 以能够使用 Infura 公共节点。如果我们要部署到公共节点,那就需要钱包的私钥。我们可以将该私钥包含在我们的源代码当中,但是如果任何人可以访问你的源代码(和版本库),他就能够偷走我们所有的汉堡币!要避免这种情况,我们会使用 [dotenv](https://github.com/motdotla/dotenv) node.js 模块。(LCTT 译注:dotenv 用于存储机密信息的文件 .env 是以 “.” 开头的,默认不会进入版本库,当然,如果有人能查看你全部的项目文件,你的私钥还是会被泄露。)

让我们安装部署到 Infura 所需的所有模块。

```

npm install --save-dev dotenv truffle-wallet-provider ethereumjs-wallet

```

(LCTT 译注:可能安装过程中会有很多警告,大多应该是属于定义了未使用的变量和方法的编译警告,可以忽略。)

现在编辑 `truffle.js` 并(原样)加入如下内容:

```

require('dotenv').config();

const Web3 = require("web3");

const web3 = new Web3();

const WalletProvider = require("truffle-wallet-provider");

const Wallet = require('ethereumjs-wallet');

var mainNetPrivateKey = Buffer.from(process.env["MAINNET_PRIVATE_KEY"], "hex")

var mainNetWallet = Wallet.fromPrivateKey(mainNetPrivateKey);

var mainNetProvider = new WalletProvider(mainNetWallet, "https://mainnet.infura.io/");

var ropstenPrivateKey = Buffer.from(process.env["ROPSTEN_PRIVATE_KEY"], "hex")

var ropstenWallet = Wallet.fromPrivateKey(ropstenPrivateKey);

var ropstenProvider = new WalletProvider(ropstenWallet, "https://ropsten.infura.io/");

module.exports = {

networks: {

development: {

host: "localhost",

port: 8545,

network_id: "*" // Match any network id

},

ropsten: {

provider: ropstenProvider,

// You can get the current gasLimit by running

// truffle deploy --network rinkeby

// truffle(rinkeby)> web3.eth.getBlock("pending", (error, result) =>

// console.log(result.gasLimit))

gas: 4600000,

gasPrice: web3.toWei("20", "gwei"),

network_id: "3",

},

mainnet: {

provider: mainNetProvider,

gas: 4600000,

gasPrice: web3.toWei("20", "gwei"),

network_id: "1",

}

}

};

```

(LCTT 译注:原文采用 `new Buffer` 来获取私钥设置,但 node.js 升级后,废弃了 `new Buffer` 这种用法,运行时会发出警告,所以上面我修改为使用 `Buffer.from` 。)

接下来我们从 Metamask 中得到我们的私钥:

1. 点击你的 Chrome 窗口右上角的狐狸图标。

2. 点击 “Account 1” 右侧的省略号。

3. 点击 “Export Private Key”。

4. 输入你的密码。

5. 点击该文字以复制私钥到剪贴板。

然后打开 `.env`文件,并像下面这样贴入你的私钥(对于 Ropsten 测试网和 Mainnet 主网,你的私钥是一样的):

```

ROPSTEN_PRIVATE_KEY="123YourPrivateKeyHere"

MAINNET_PRIVATE_KEY="123YourPrivateKeyHere"

```

接下来,让我们部署到 Ropsten 以太坊测试网。

以太坊测试网是一个你可以测试合约的地方。此外还有 [Kovan](https://kovan-testnet.github.io/website/) 和 [Rinkeby](https://www.rinkeby.io/) 测试网。我在这个教程中选择 Ropsten 是因为现在很容易得到 Ropsten 的测试 ETH。这些测试网都类似,你可以使用任何一个你喜欢的,但是在此教程当中我假设你在使用 Ropsten。访问 <https://faucet.metamask.io/> 以得到一些测试 ETH。从 faucet 得到一些 ETH 后,你就可以部署了。

```

$ truffle deploy --network ropsten

Compiling ./contracts/HamburgerCoin.sol...

Compiling ./contracts/Migrations.sol...

Compiling zeppelin-solidity/contracts/math/SafeMath.sol...

Compiling zeppelin-solidity/contracts/token/BasicToken.sol...

Compiling zeppelin-solidity/contracts/token/ERC20.sol...

Compiling zeppelin-solidity/contracts/token/ERC20Basic.sol...

Compiling zeppelin-solidity/contracts/token/StandardToken.sol...

Writing artifacts to ./build/contracts

Using network 'ropsten'.

Running migration: 1_initial_migration.js

Deploying Migrations...

... 0xc2bbe6bf5a7c7c7312c43d65de4c18c51c4d620d5bf51481ea530411dcebc499

Migrations: 0xd827b6f93fcb50631edc4cf8e293159f0c056538

Saving successful migration to network...

... 0xe6f92402e6ca0b1d615a310751568219f66b9d78b80a37c6d92ca59af26cf475

Saving artifacts...

Running migration: 2_deploy_contracts.js

Deploying HamburgerCoin...

... 0x02c4d47526772dc524851fc2180b338a6b037500ab298fa2f405f01abdee21c4

HamburgerCoin: 0x973b1a5c753a2d5d3924dfb66028b975e7ccca51

Saving artifacts...

```

在 “Saving aritfacts” 上面的这行即是你的合约的新地址。

复制并黏贴该地址到 [Ropsten Etherscan 搜索框](https://ropsten.etherscan.io/),你就能看到你新部署的合约。

现在你可以在任何 ERC20 兼容的钱包,如 [Mist](https://github.com/ethereum/mist) 、[MyEtherWallet](https://www.myetherwallet.com/)(LCTT 译注:或 ImToken 这样的手机应用)里面使用你的通证了。

为了这篇教程,我构建了一个名为 [Etherface](http://etherface.io/) 的钱包来做演示。

首先你需要添加你的通证到 Etherface:

1. 访问 <http://etherface.io/> 。

2. 确认你在 Metamask 中选择了 “Ropsten” 网络。

3. 点击 “Tokens”。

4. 点击右上角的加号按钮。

5. 输入上面的合约地址。

如果你有朋友想要一些汉堡币,你现在就可以发送给他们了。如果没有,你也可以在你的两个账号间测试转账:

1. 在 Metamask 中点击 “切换账号”按钮(在右上角),并改变你的账号为 “Account 2”。

2. 点击 “Account 2” 右边的省略号,然后选择 “Copy Address to clipboard”。

3. 切换回 “Account 1”,这很重要!否则交易会失败。

4. 在 Etherface 中你的余额下点击 “Send”。

5. 黏贴 “Account 2” 的地址。

6. 输入你要发送的数量。

7. Metamask 会弹出确认窗口,点击 “Submit”。

8. 等大约 15-30 秒。

9. 你的 “Account 1” 的余额应该会减少,“Account 2” 现在会有了一些汉堡币!

最后,让我们来部署到主网(LCTT 译注:这会花费你真实的 ETH,你可以通过查看前面部署到 Ropsten 的合约信息中了解花费了多少 gas,以相应估计实际要花费多少 ETH):

```

$ truffle deploy --network mainnet

```

你可以如前面一样加你的通证到 Etherface ,并发送你新打造的通证给你的朋友们了!

| 301 | Moved Permanently | null |

9,826 | GNU GPL 许可证常见问题解答(六) | https://www.gnu.org/licenses/gpl-faq.html | 2018-07-10T23:53:59 | [

"GPL"

] | https://linux.cn/article-9826-1.html |

本文由高级咨询师薛亮据自由软件基金会(FSF)的[英文原文](https://www.gnu.org/licenses/gpl-faq.html)翻译而成,这篇常见问题解答澄清了在使用 GNU 许可证中遇到许多问题,对于企业和软件开发者在实际应用许可证和解决许可证问题时具有很强的实践指导意义。

1. [关于 GNU 项目、自由软件基金会(FSF)及其许可证的基本问题](/article-9062-1.html)

2. [对于 GNU 许可证的一般了解](/article-8834-1.html)

3. [在您的程序中使用 GNU 许可证](/article-8761-1.html)

4. [依据GNU许可证分发程序](/article-9222-1.html)

5. [在编写其他程序时采用依据 GNU 许可证发布的程序](/article-9448-1.html)

6. 将作品与依据 GNU 许可证发布的代码相结合

7. 关于违反 GNU 许可证的问题

### 6 将作品与依据 GNU 许可证发布的代码相结合

#### 6.1 GPL v3 是否与 GPL v2 兼容?

不兼容。许多要求已经从 GPL v2 变为 GPL v3,这意味 GPL v2 中的精确要求并不体现在 GPL v3 中,反之亦然。例如,GPL v3 的终止条件比 GPL v2 的终止条件更为宽泛,因此与 GPL v2 的终止条件不同。

由于这些差异,两个许可证不兼容:如果您试图将依据 GPL v2 发布的代码与依据 GPL v3 发布的代码组合,则将违反 GPL v2 的第 6 部分。

但是,如果代码依据 GPL “v2 或更高版本”发布,则与 GPL v3 兼容,因为 GPL v3 是其允许的选项之一。

#### 6.2 GPL v2 是否有提供安装信息的要求?

GPL v3 明确要求再分发中包含完整的必要的“安装信息”。GPL v2 不使用该术语,但它需要再分发中包含用于控制可编译和安装可执行文件的脚本以及完整和相应的源代码。这涵盖了 GPL v3 中称为“安装信息”的部分内容,但不包括所有内容。因此,GPL v3 对安装信息的要求较强。

#### 6.3 各种 GNU 许可证之间如何相互兼容?

各种 GNU 许可证彼此之间具有广泛的兼容性。下面是唯一的一种您不能将遵循两种 GNU 许可证的代码结合起来的情况:将遵循旧版本许可证的代码与遵循该许可证新版本的代码进行结合。

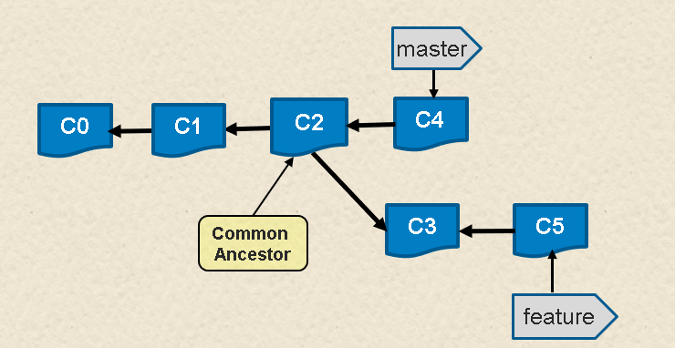

以下是 GNU 许可证的各种结合的详细兼容性矩阵,以便为特定情况提供易于使用的参考。它假设有人依据其中一个许可证编写了一些软件,而您希望以某种方式将该软件的代码结合到您要发布的项目(您自己的原始作品或其他人的软件的修改版本)中。在表顶部的列中找到项目的许可证,并在左侧的一行中找到其他代码的许可证。它们交叉的单元格会告诉您这种结合是否被允许。

当我们说“复制代码”时,我们的意思就是:您正在从一个源代码中获取一段代码(无论是否修改),并将其插入到自己的程序中,从而基于第一部分代码形成一个作品。当您编译或运行代码时,“使用库”意味着您不直接复制任何源代码,而是通过链接、导入或其他典型机制将源代码绑定在一起。

矩阵中每个标明 GPL v3 的地方,其关于兼容性的声明也同样适用于 AGPL v3。

**兼容性矩阵**

| | |

| --- | --- |

| | 我希望依据以下许可证许可我的代码 |

| 仅 GPL v2 | GPL v2 或更高版本 | GPL v3 或更高版本 | 仅 LGPL v2.1 | LGPL v2.1 或更高版本 | LGPL v3 或更高版本 |

| 我希望复制遵循右侧许可证的代码: | 仅 GPL v2 | 可以 | 可以<sup> 【2】</sup> | 不可以 | 可以,结合作品只能遵循GPL v2<sup> 【7】</sup> | 可以,结合作品只能遵循GPL v2<sup> 【7】【2】</sup> | 不可以 |

| GPL v2 或更高版本 | 可以<sup> 【1】</sup> | 可以 | 可以 | 可以,结合作品需遵循GPL v2或更高版本<sup> 【7】</sup> | 可以,结合作品需遵循GPL v2或更高版本<sup> 【7】</sup> | 可以,结合作品需遵循GPL v3<sup> 【8】</sup> |

| GPL v3 | 不可以 | 可以,结合作品需遵循GPL v3<sup> 【3】</sup> | 可以 | 可以,结合作品需遵循GPL v3<sup> 【7】</sup> | 可以,结合作品需遵循GPL v3<sup> 【7】</sup> | 可以,结合作品需遵循GPL v3<sup> 【8】</sup> |

| 仅 LGPL v2.1 | 可以,需依据GPL v2传递复制后代码<sup> 【7】</sup> | 可以,需依据GPL v2或更高版本传递复制后代码<sup> 【7】</sup> | 可以,需依据GPL v3传递复制后代码<sup> 【7】</sup> | 可以 | 可以<sup> 【6】</sup> | 可以,需依据GPL v3传递复制后代码<sup> 【7】【8】</sup> |

| LGPL v2.1 或更高版本 | 可以,需依据GPL v2传递复制后代码<sup> 【7】【1】</sup> | 可以,需依据GPL v2或更高版本传递复制后代码<sup> 【7】</sup> | 可以,需依据GPL v3传递复制后代码<sup> 【7】</sup> | 可以<sup> 【5】</sup> | 可以 | 可以 |

| LGPL v3 | 不可以 | 可以,结合作品需遵循GPL v3<sup> 【8】【3】</sup> | 可以,结合作品需遵循GPL v3<sup> 【8】</sup> | 可以,结合作品需遵循GPL v3<sup> 【7】【8】</sup> | 可以,结合作品需遵循LGPL v3<sup> 【4】</sup> | 可以 |

| 我希望使用遵循右侧许可证的库: | 仅 GPL v2 | 可以 | 可以<sup> 【2】</sup> | 不可以 | 可以,结合作品只能遵循GPL v2<sup> 【7】</sup> | 可以,结合作品只能遵循GPL v2<sup> 【7】【2】</sup> | 不可以 |

| GPL v2 或更高版本 | 可以<sup> 【1】</sup> | 可以 | 可以 | 可以,结合作品需遵循GPL v2或更高版本<sup> 【7】</sup> | 可以,结合作品需遵循GPL v2或更高版本<sup> 【7】</sup> | 可以,结合作品需遵循GPL v3<sup> 【8】</sup> |

| GPL v3 | 不可以 | 可以,结合作品需遵循GPL v3<sup> 【3】</sup> | 可以 | 可以,结合作品需遵循GPL v3<sup> 【7】</sup> | 可以,结合作品需遵循GPL v3<sup> 【7】</sup> | 可以,结合作品需遵循GPL v3<sup> 【8】</sup> |

| 仅LGPL v2.1 | 可以 | 可以 | 可以 | 可以 | 可以 | 可以 |

| LGPL v2.1 或更高版本 | 可以 | 可以 | 可以 | 可以 | 可以 | 可以 |

| LGPL v3 | 不可以 | 可以,结合作品需遵循GPL v3<sup> 【9】</sup> | 可以 | 可以 | 可以 | 可以 |

**角注:**

1. 在这种情况下,当结合代码时,您必须遵守 GPL v2 的条款。您不能适用更高版本的条款。

2. 在这种情况下,您可以依据 GPL v2 或更高版本发布您的项目(您的原始作品和/或您收到并修改的作品),请注意,您使用的其他代码仍然只能遵循 GPL v2。只要您的项目依赖于该代码,您将无法将项目的许可证升级到 GPL v3 或更高版本,整个作品(您的项目和其他代码的任意结合)只能依据 GPL v2 的条款传递。

3. 如果您有能力依据 GPL v2 或任何更高版本发布项目,您可以选择依据 GPL v3 或更高版本发布该项目,一旦您执行此操作,您就可以结合依据 GPL v3 发布的代码。

4. 如果您有能力依据 LGPL v2.1 或任何更高版本发布项目,您可以选择依据 LGPL v3 或更高版本发布该项目,一旦您这样做,您就可以结合依据 LGPL v3 发布的代码。

5. 在这种情况下结合代码时,您必须遵守 LGPL v2.1 的条款。您不能适用更高版本 LGPL 中的条款。

6. 如果这样做,只要项目包含仅依据 LGPL v2.1 发布的代码,您将无法将项目的许可证升级到 LGPL v3 或更高版本。

7. LGPL v2.1 允许您将遵循自 GPL v2 之后任何版本 GPL 的代码进行重新许可。如果在这种情况下可以将遵循 LGPL 的代码切换为使用适当版本的 GPL(如表所示),则可以进行此种结合。

8. LGPL v3 是 GPL v3 加上在这种情况下可以忽略的额外权限。

9. 由于 GPL v2 不允许与 LGPL v3 结合,因此在这种情况下,您必须依据 GPL v3 的条款传递项目,因为它允许此种结合。

#### 6.4 <ruby> “聚合” <rp> ( </rp> <rt> aggregate </rt> <rp> ) </rp></ruby>与其他类型的“修改版本”有什么区别?(同 2.25)

“聚合”由多个单独的程序组成,分布在同一个 CD-ROM 或其他媒介中。GPL 允许您创建和分发聚合,即使其他软件的许可证不是自由许可证或与 GPL 不兼容。唯一的条件是,发布“聚合”所使用的许可证不能禁止用户去行使“聚合”中每个程序对应的许可证所赋予用户的权利。

两个单独的程序还是一个程序有两个部分,区分的界限在哪里?这是一个法律问题,最终由法官决定。我们认为,适当的判断标准取决于通信机制(exec、管道、rpc、共享地址空间内的函数调用等)和通信的语义(哪些信息被互换)。

如果模块们被包含在相同的可执行文件中,则它们肯定是被组合在一个程序中。如果模块们被设计为在共享地址空间中链接在一起运行,那么几乎肯定意味着它们组合成为一个程序。

相比之下,管道、套接字和命令行参数是通常在两个独立程序之间使用的通信机制。所以当它们用于通信时,模块们通常是单独的程序。但是,如果通信的语义足够亲密,交换复杂的内部数据结构,那么也可以视为这两个部分合并成了一个更大的程序。

#### 6.5 我在使用 GPL 程序的源代码时是否具有<ruby> “合理使用” <rp> ( </rp> <rt> fair use </rt> <rp> ) </rp></ruby>权限?(同 4.17)

是的,您有。“合理使用”是在没有任何特别许可的情况下允许的使用。由于您不需要开发人员的许可来进行这种使用,无论开发人员在许可证或其他地方对此怎么说,您都可以执行此操作,无论该许可证是 GNU GPL 还是其他自由软件许可证。

但是,请注意,没有全世界范围普适的合理使用原则;什么样的用途被认为“合理”因国而异。

#### 6.6 美国政府可否对遵循 GPL 的程序进行改进并发布?(同 3.14)

可以。如果这些改进是由美国政府雇员在雇佣期间编写的,那么这些改进属于公有领域。不过,GNU GPL 仍然涵盖了整体的改进版本。在这种情况下没有问题。

如果美国政府使用承包商来完成这项工作,那么改进本身可以被 GPL 覆盖。

#### 6.7 GPL 对于与其所覆盖的作品进行静态或动态链接的模块有不同的要求吗?

没有。将 GPL 覆盖的作品静态或动态地链接到其他模块是基于 GPL 覆盖的作品构建结合作品。因此,GNU GPL 的条款和条件将覆盖整个结合作品。另请参阅:6.24 如果我在 GPL 软件中使用了与 GPL 不兼容的库,会出现什么法律问题?

#### 6.8 LGPL 对于与其所覆盖的作品进行静态或动态链接的模块有不同的要求吗?

为了遵守 LGPL(任何现有版本:v2、v2.1 或 v3):

(1)如果您静态链接到 LGPL 库,您还必须以对象(不一定是源代码)格式提供应用程序,以便用户有机会修改库并重新链接应用程序。

(2)如果您动态链接*已经存在于用户计算机上*的 LGPL 库,则不需要传递库的源代码。另一方面,如果您自己将可执行的 LGPL 库与您的应用程序一起传递,无论是静态还是动态链接,还必须以 LGPL 所提供的方式之一来传递库的源代码。

#### 6.9 如果库依据 GPL(而不是 LGPL)发布,这是否意味着使用它的任何软件必须遵循 GPL 或与 GPL 兼容的许可证?

是的,因为程序实际上与库进行了链接。因此,GPL 的条款适用于整个结合作品。与库链接的软件模块可能遵循与GPL兼容的不同许可证,但整体作品必须遵循 GPL。另见:“2.23 许可证与 GPL 兼容是什么意思?”

#### 6.10 您有一个遵循 GPL 的程序,我想将它与我的代码进行链接,来构建一个专有程序。那么事实上,我链接到您的程序意味着我必须让我的程序遵循 GPL 许可证?

不完全是。这意味着您必须依据与 GPL 兼容的许可证(更准确地说,与您链接的结合作品中所有其他代码所适用的一个或多个 GPL 版本相兼容)发布您的程序。然后,结合作品本身就可以遵循这些 GPL 版本。

#### 6.11 如果是这样的话,有没有机会依据 LGPL 获得您的程序许可?

您可以这么要求,但绝大多数的作者都会坚定不移地说不。GPL 的想法是,如果要将我们的代码包含在程序中,您的程序也必须是自由软件。GPL 的意图是给您施加压力,让您以能够使其成为我们社区一部分的方式来发布您的程序。

您始终拥有不使用我们代码的合法选择。

#### 6.12 我们构建专有软件的项目不能使用遵循 GPL 的某个 GNU 程序。您会为我们提供例外吗? 这将意味着该程序拥有更多用户。

对不起,我们没有这样的例外。这样做是不对的。

最大化用户数量不是我们的目标。相反,我们正在努力为尽可能多的用户提供至关重要的自由。一般来说,专有软件项目是阻碍而不是实现软件自由的原因。

我们偶尔提供许可证例外来协助一个依据 GPL 以外的许可证生产自由软件的项目。不过,我们必须看到一个很好的理由,即这个项目为什么会推动自由软件的发展。

我们有时也会改变软件包的分发条款,这显然是为自由软件事业服务的正确方法;但是我们对此非常谨慎,所以您必须向我们展示非常有说服力的理由。

#### 6.13 如果一个编程语言解释器是依据 GPL 发布的,这是否意味着由它解释的程序必须遵循与 GPL 兼容的许可证?

当解释器只是解释一种语言时,答案是否定的。被解释程序对于解释器来说只是数据;根据版权法,像GPL这样的自由软件许可证不能限制您使用解释器的数据。您可以使用任何数据(被解释程序),以任何您喜欢的方式运行它,并且没有任何要求规定您必须将数据授权给任何人。

然而,当解释器被扩展以向<ruby> 其他程序 <rp> ( </rp> <rt> facilities </rt> <rp> ) </rp></ruby>(通常但不一定是库)提供<ruby> “绑定” <rp> ( </rp> <rt> bindings </rt> <rp> ) </rp></ruby>时,被解释程序通过这些绑定有效地与其使用的程序相关联。因此,如果这些程序是依据 GPL 发布的,则使用它们的被解释程序必须以与 GPL 兼容的方式发布。JNI(Java Native Interface)是这种绑定机制的一个例子;以这种方式访问的库与调用它们的 Java 程序动态链接。这些库也与解释器联系在一起。如果解释器与这些库静态链接,或者如果它被设计为[与这些特定库动态链接](https://www.gnu.org/licenses/gpl-faq.html#GPLPluginsInNF),那么也需要以与 GPL 兼容的方式发布。

另一个类似且非常常见的情况是为库提供解释器,它们能够自我解释。例如,Perl 带有许多 Perl 模块,Java 实现带有许多 Java 类。这些库和调用它们的程序总是动态链接在一起。

结果是,如果您选择在程序中使用遵循 GPL 的 Perl 模块或 Java 类,则必须以与 GPL 兼容的方式发布该程序,无论结合后的 Perl 或 Java 程序所依之运行的 Perl 或 Java 解释器中使用什么样的许可证。

#### 6.14 如果编程语言解释器遵循与 GPL 不兼容的许可证,我可以在其上运行遵循 GPL 的程序吗?

当解释器解释一种语言时,答案是肯定的。被解释程序对于解释器来说只是数据;GPL 不会限制您处理程序时所使用的工具。

然而,当解释器被扩展以向<ruby> 其他程序 <rp> ( </rp> <rt> facilities </rt> <rp> ) </rp></ruby>(通常但不一定是库)提供“绑定”时,被解释程序通过这些绑定有效地与其使用的程序相关联。JNI(Java Native Interface)是此种程序的一个例子;以这种方式访问的库与调用它们的 Java 程序动态链接。

因此,如果这些程序是依据与 GPL 不兼容的许可证发布的,则情况就像以任何其他方式跟与 GPL 不兼容的库链接。这意味着:

1. 如果您正在编写代码并将其依据 GPL 发布,您可以声明一个<ruby> 明确例外 <rp> ( </rp> <rt> explicit exception </rt> <rp> ) </rp></ruby>,允许将其链接到与 GPL 不兼容的程序。

2. 如果您依据 GPL 编写并发布程序,并且专门设计了与这些程序配合使用的功能,人们可以将其作为<ruby> 隐性例外 <rp> ( </rp> <rt> implicit exception </rt> <rp> ) </rp></ruby>,允许它们与这些程序进行链接。但是,如果这只是你的打算的话,最好明确地这么说。

您不能把别人遵循 GPL 的代码用于这种方式,或者添加这样的例外。只有该代码的版权所有者才能添加例外。

#### 6.15 如果我将一个模块添加到遵循 GPL 的程序中,我必须使用 GPL 作为我的模块的许可证吗?

GPL 规定,整个结合后的程序必须依据 GPL 发布。所以你的模块必须可以依据 GPL 进行使用。

但是,您可以提供使用您代码的额外授权。如果您愿意,您可以依据比 GPL 更为宽松但与 GPL 兼容的许可证发布模块。许可证列表页面提供了与 [GPL 兼容许可证](https://www.gnu.org/licenses/license-list.html)的部分列表。

#### 6.16 什么时候程序和插件会被认为是单一的结合程序?

这取决于主程序如何调用其插件。如果主程序使用 `fork` 和 `exec` 来调用插件,并通过共享复杂的数据结构或来回传送复杂的数据结构来建立<ruby> 密切通信 <rp> ( </rp> <rt> intimate communication </rt> <rp> ) </rp></ruby>,可以使它们成为一个单一的结合程序。如果主程序使用简单的 `fork` 和 `exec` 来调用插件并且不建立它们之间的密切通信,插件被认为是一个单独的程序。

如果主程序动态地链接插件,并且它们彼此进行函数调用并共享数据结构,我们相信它们形成了一个单一的结合程序,它必须被视为主程序和插件的扩展。如果主程序动态地链接插件,但是它们之间的通信仅限于使用某些选项调用插件的“main”功能,并等待它返回,这是一种<ruby> 临界案例 <rp> ( </rp> <rt> borderline case </rt> <rp> ) </rp></ruby>。

使用共享内存与复杂数据结构进行通信几乎等同于动态链接。

#### 6.17 如果我写了一个用于遵循 GPL 程序的插件,那么对可用于分发我的插件的许可证有什么要求?

请参阅 “6.16 什么时候程序和插件会被认为是单一的结合程序 ?”以确定插件和主程序是否被视为单个结合程序,以及何时将其视为单独的作品。

如果主程序和插件是单个结合程序,则这意味着您必须依据 GPL 或与 GPL 兼容的自由软件许可证授权插件,并以符合 GPL 的方式将源代码进行分发。与其插件分开的主程序对插件没有要求。

#### 6.18 在为非自由程序编写插件时,可以应用 GPL 许可证吗?

请参阅 “6.16 什么时候程序和插件会被认为是单一的结合程序?”以确定插件和主程序是否被视为单个结合程序,以及何时被视为单独的程序。

如果它们组成单一的结合程序,这意味着遵循 GPL 的插件与非自由主程序的结合将违反 GPL。但是,您可以通过向插件的许可证添加例外声明来解决该法律问题,并允许将其与非自由主程序链接。

另请参阅正在编写的[使用非自由库的自由软件的问题](https://www.gnu.org/licenses/gpl-faq.html#FSWithNFLibs)。

#### 6.19 我可以发布一个旨在加载遵循 GPL 的插件的非自由程序吗?

请参阅 “6.16 什么时候程序和插件会被认为是单一的结合程序?”以确定插件和主程序是否被视为单个结合程序,以及何时被视为单独的程序。

如果它们组成单一的结合程序,则主程序必须依据 GPL 或与 GPL 兼容的自由软件许可证发布,并且当主程序为了与这些插件一起使用而被分发时,必须遵循 GPL 的条款。

然而,如果它们是单独的作品,则插件的许可证对主程序没有要求。

另请参阅正在编写的[使用非自由库的自由软件的问题](https://www.gnu.org/licenses/gpl-faq.html#FSWithNFLibs)。

#### 6.20 我想将遵循 GPL 的软件纳入我的专有系统。我只依据 GPL 给予我的权限来使用该软件。我可以这样做吗?(同 5.6)

您不能将遵循 GPL 的软件纳入专有系统。GPL 的目标是授予每个人复制、再分发、理解和修改程序的自由。如果您可以将遵循 GPL 的软件整合到非自由系统中,则可能会使遵循 GPL 的软件不再是自由软件。

包含遵循 GPL 程序的系统是该 GPL 程序的扩展版本。GPL 规定,如果它最终发布的话,任何扩展版本的程序必须依据 GPL 发布。这有两个原因:确保获得软件的用户获得自己应该拥有的自由,并鼓励人们回馈他们所做的改进。

但是,在许多情况下,您可以将遵循 GPL 的软件与专有系统一起分发。要有效地做到这一点,您必须确保自由和非自由程序之间的通信<ruby> 保持一定距离 <rp> ( </rp> <rt> arms length </rt> <rp> ) </rp></ruby>,而不是将它们有效地结合成一个程序。

这种情况与“纳入”遵循 GPL 的软件之间的区别,是一部分实质和一部分形式的问题。实质上是这样的:如果两个程序结合起来,使它们成为一个程序的两个部分,那么您不能将它们视为两个单独的程序。所以整个作品必须遵循 GPL。

如果这两个程序保持良好的分离,就像编译器和内核,或者像编辑器和shell一样,那么您可以将它们视为两个单独的程序,但是您必须恰当执行。这个问题只是一个形式问题:您如何描述您在做什么。为什么我们关心这个?因为我们想确保用户清楚地了解软件集合中遵循 GPL 的软件的自由状态。

如果人们分发遵循 GPL 的软件,将其称为系统(用户已知其中一部分为专有软件)的“一部分”,用户可能不确定其对遵循GPL的软件所拥有的权利。但是如果他们知道他们收到的是一个自由程序加上另外一个程序,那么他们的权利就会很清楚。

#### 6.21 我想将遵循 GPL 的软件纳入我的专有系统。我是否可以通过在 GPL 覆盖的部分和专有部分之间,放置一个遵循与 GPL 兼容的宽松许可证(如 X11 许可证)的<ruby> “封装” <rp> ( </rp> <rt> wrapper </rt> <rp> ) </rp></ruby>模块来实现?

不可以,X11 许可证与 GPL 兼容,因此您可以向遵循 GPL 的程序添加一个模块,并让其遵循 X11 许可证。但是,如果要将它们整合到一个更大的程序中,那么这个整体将包含 GPL 覆盖的部分,所以它必须在 GNU GPL 下作为一个整体获得许可。

专有模块 A 仅通过遵循 X11 许可证的模块 B 与遵循 GPL 的模块 C 通信,该事实在法律上是无关紧要的;重要的是模块 C 包含在整体作品中。

#### 6.22 我可以编写使用非自由库的自由软件吗?

如果您这样做,您的程序将无法在一个自由的环境中完全使用。如果您的程序依赖于一个非自由库来做某件工作,那么在自由软件世界里就不能做这个工作。如果这依赖于一个非自由库来运行,它不能是自由操作系统(例如 GNU)的一部分;这完全成为了自由软件世界里的禁区。

所以请考虑:你可以找到一种方法来完成这项工作,而不使用这个库吗?你可以为该库编写一个自由软件替代选择吗?

如果程序已经使用非自由库编写,那么改变决定也许已经太晚了。您也可以按照目前状态来发布程序,而不是不发布。但是请在 README 文件中提到,对非自由库的需求是一个缺点,并建议更改程序以便在没有非自由库的情况下执行相同的工作。请建议任何想要在程序上进行大量进一步工作的人首先将其从依赖非自由库中解脱出来。

请注意,将某些非自由库与遵循 GPL 的自由软件相结合也可能存在法律问题。有关更多信息,请参阅有关 [GPL 软件与和其不兼容库的问题](https://www.gnu.org/licenses/gpl-faq.html#GPLIncompatibleLibs)。

#### 6.23 我可以将遵循 GPL 的程序与专有系统库链接吗?

每个版本的 GPL 相对于其<ruby> 左版 <rp> ( </rp> <rt> copyleft </rt> <rp> ) </rp></ruby>都有一个例外,通常称为系统库例外。如果您要使用的与 GPL 不兼容的库符合系统库的标准,则使用它们不需要做特别的工作;分发整个程序的源代码的要求不包括那些库,即使您分发包含它们的链接可执行文件。

作为<ruby> “系统库” <rp> ( </rp> <rt> system library </rt> <rp> ) </rp></ruby>的标准在不同版本的 GPL 之间有所不同。GPL v3 在第 1 节中明确定义“系统库”,将其从<ruby> “相应源代码” <rp> ( </rp> <rt> Corresponding Source </rt> <rp> ) </rp></ruby>的定义中排除。GPL v2 在第 3 部分的末尾进行,处理这个问题略有不同。

#### 6.24 如果我在遵循 GPL 的软件中使用了与 GPL 不兼容的库,会出现什么法律问题?

如果您希望程序与未被系统库例外所涵盖的库链接,则需要提供许可来执行此操作。以下是您可以使用的两个许可证通知示例;一个用于 GPL v3,另一个用于 GPL v2。在这两种情况下,您应该将此文本放在您授予此权限的每个文件中。

只有该程序的版权持有人才能合法地按照这些条款发布其软件。如果您自己编写了整个程序,假设您的雇主或学校没有声明版权,您就是版权所有者,因此您可以授权该例外。但是,如果您想在代码中使用其他作者的其他遵循GPL的程序的一部分,那么您无法将例外授权给他们。您必须获得这些程序的版权所有者的批准。

当其他人修改程序时,他们不需要为他们的代码设置同样的例外——是否这样做是他们自己的选择。

如果您打算链接的库不是自由软件,请参阅[使用非自由库编写自由软件部分](https://www.gnu.org/licenses/gpl-faq.html#FSWithNFLibs)。

如果您使用 GPL v3,您可以通过在第 7 节下授予额外权限来实现此目标。以下许可证通知将会执行此操作。您必须使用适合您程序的文本替换括号中的所有文本。如果不是每个人都可以为您打算链接的库分发源代码,则应该删除大括号中的文本;否则,只需删除大括号。

>

> Copyright (C) [年份] [著作权人名称]

>

>

> 本程序为自由软件;您可以根据自由软件基金会发布的 GNU GPL 许可证的条款再分发和/或修改它;无论是依据本许可证的版本3,或(根据您的选择)任何更高版本。

>

>

> 本程序基于希望其有用的目标而分发,但**不提供任何担保**;甚至也**没有适销性或适用于特定用途的默示担保**。有关详细信息,请参阅 GNU GPL 许可证。

>

>

> 您应该已经收到本程序以及 GNU GPL 许可证的副本;如果没有,请参阅 <http://www.gnu.org/licenses>。

>

>

> 依据 GNU GPL v3 第7节的额外许可

>

>

> 如果您通过将[与库的名称](或库的修改版本)链接或结合来修改本程序,或任何被覆盖的作品,其中包含被[库许可证的名称]的条款所覆盖的部分,则该程序的许可人授予您额外许可来传递所产出的作品。{这种结合的非源代码形式的相应源代码应包括所使用的[库名称]部分的源代码以及被覆盖的作品的源代码。}

>

>

>

如果您使用 GPL v2,您可以为许可证条款提供自己的例外。以下许可证通知将这样做。同样,您必须使用适合您程序的文本替换括号中的所有文本。如果不是每个人都可以为您打算链接的库分发源代码,则应该删除大括号中的文本;否则,只需删除大括号。

>

> Copyright (C) [年份] [著作权人名称]

>

>

> 本程序为自由软件;您可以根据自由软件基金会发布的 GNU GPL 许可证的条款再分发和/或修改它;无论是依据许可证的 v2,或(根据您的选择)任何更高版本。

>

>

> 本程序基于希望其有用的目标而分发,但**不提供任何担保**;甚至也**没有适销性或适用于特定用途的默示担保**。有关详细信息,请参阅 GNU GPL 许可证。

>

>

> 您应该已经收到本程序以及 GNU GPL 许可证的副本;如果没有,请参阅 <http://www.gnu.org/licenses>。

>

>

> 将[您的程序名称]与其他模块静态或动态链接是以[您的程序名称]为基础构建结合作品。因此,GNU GPL 许可证的条款和条件将覆盖整个结合作品。

>

>

> 另外,作为一个特殊例外,[您的程序名称]的版权持有人可以让您将[您的程序名称]与依据 GNU LGPL 发布的自由程序或库以及依据[库的许可证名称]标准发布的[库名称]中包含的代码相结合(或具有相同许可证的此类代码的修改版本)。您可以按照[您的程序名称]所依据的 GNU GPL 的条款和其他有关代码的许可证复制和分发此系统{前提是当 GNU GPL 要求分发源代码时将其他代码的源代码包含在内}。

>

>

> 注意,对[您的程序名称]做出修改版本的人没有义务为其修改版本授予此特殊例外;是否这样做是他们自己的选择。GNU GPL 许可证允许发布一个没有此例外的修改版本;该例外也使得发布一个带有该例外的修改版本成为可能。

>

>

>

#### 6.25 我正在使用 Microsoft Visual C ++(或 Visual Basic)编写 Windows 应用程序,我将依据 GPL 发布它。依据GPL,是否允许将我的程序与 Visual C ++(或 Visual Basic)运行时库动态链接?

您可以将您的程序链接到这些库,并将编译后的程序分发给其他程序。执行此操作时,运行时库是 GPL v3 所定义的“系统库”。这意味着您不需要担心将库的源代码包含在程序的相应源代码中。GPL v2 在第 3 节中提供了类似的例外。

您可能不会随同您的程序以编译后的 DLL 形式分发这些库。为了防止不道德的分发者试图将系统库例外作为漏洞进行利用,GPL 表示,只有库不与程序本身一起分发,库才能被认定为系统库。如果您随同您的程序分发 DLL,则它们将不再符合此例外的资格;那么遵守 GPL 的唯一方法就是提供它们的源代码,而您无法做到。

可以编写只在 Windows 上运行的自由程序,但这不是一个好主意。这些程序将被 Windows <ruby> <a href="https://www.gnu.org/philosophy/java-trap.html"> “围困” </a> <rp> ( </rp> <rt> trapped </rt> <rp> ) </rp></ruby>,因此对自由软件世界的贡献为零。

#### 6.26 我想修改遵循 GPL 的程序,并将它们与 Money Guzzler Inc. 的可移植性库链接。我无法分发这些库的源代码,因此,任何想要更改这些版本的用户都必须单独获取这些库。为什么 GPL 不允许这样做?

有两个原因。第一、一般性的原因。如果我们允许 A 公司制作一个专有文件,B 公司分发与该文件相关的遵循 GPL 的软件,其效果等同于将 GPL 撕开一个大洞。对于保持 GPL 软件各种修改和扩展的源代码来说,这如同一张署名空白纸。

让所有用户能够访问源代码是我们的主要目标之一,所以这个结果绝对是我们想要避免的。

更具体地说,根据我们对条款的理解,与 Money Guzzler 库链接的程序版本不会是真正的自由软件——它们不会附带完整的让用户能够更改和重新编译程序的源代码。

#### 6.27 如果模块 Q 的许可证具有与 GPL 不兼容的要求,但是只有当 Q 自身分发时,而不是在较大程序中包含 Q 时,该要求才适用,是否可以使得该许可证与 GPL 兼容?可以将 Q 与遵循 GPL 的程序结合使用吗?

如果程序 P 依据 GPL 被发布,这意味着“任何和所有部分”都可以依据 GPL 进行使用。如果您集成了模块 Q,并依据 GPL 发布结合程序 P + Q,则表示可以依据 GPL 使用 P + Q 的任何部分。P + Q 的一部分是 Q,所以依据 GPL 发布 P + Q 意味着,Q 的任何部分可以依据 GPL 进行使用。换句话说,依据 GPL 获得 P + Q 的用户可以删除 P,所以 Q 仍然遵循 GPL。

如果模块 Q 的许可证允许您授予该许可,则其与 GPL 兼容。否则,它不与 GPL 兼容。

如果 Q 的许可证在不明确的条件下表示,您必须在自己再分发 Q 时做某些事情(与 GPL 不兼容),那么不允许您依据 GPL 分发Q。因此,您也不能依据 GPL 发布 P + Q。所以您不能将 P 与 Q 进行链接或结合。

#### 6.28 在面向对象的语言(如 Java)中,如果我在不修改的情况下使用遵循 GPL 的类,并对其进行子类化,GPL 会以什么方式影响较大的程序?

子类化将会创建衍生作品。因此,当您创建遵循 GPL 的类的子类时,GPL 的条款会影响整个程序。

#### 6.29 分发一个意图链接到 Linux 内核的非自由驱动程序会违反 GPL 吗?

Linux(GNU / Linux 操作系统中的内核)依据 GNU GPL v2 进行分发。分发一个意图链接 Linux 的非自由驱动程序违反 GPL 吗?

是的,这是一种违规行为,因为这样做形成了更大的结合作品。用户期望把这些片段放在一起的事实并不会改变任何事情。

在代码实体部分拥有版权的 Linux 的每个贡献者都可以执行 GPL,我们鼓励他们对那些分发非自由 Linux 驱动程序的人采取行动。

#### 6.30 如何允许在受控接口下将专有模块与我的 GPL 库链接起来?

在声明该文件依据 GNU GPL 进行分发的文本末尾,将以下文本添加到软件包中每个文件的许可证通知中:

>

> 将 ABC 与其他模块静态或动态链接是基于 ABC 创建结合作品。因此,GNU GPL 许可证的条款和条件将覆盖整个结合作品。

>

>

> 作为一个特殊的例外,ABC 的版权所有者可以将 ABC 程序与自由软件程序或依据 GNU LGPL 发布的库以及通过 ABCDEF 界面与 ABC 通信的独立模块相结合。您可以根据 ABC 的 GNU GPL 条款和其他代码的许可证复制和分发此系统,前提是您在 GNU GPL 需要分发源代码时提供该代码的源代码,并且您没有修改 ABCDEF 界面。

>

>

> 请注意,制作 ABC 修改版本的人没有义务为其修改版本授予此特殊例外;是否这样做是他们自己的选择。GNU GPL 许可证允许发布不含此例外的修改版本;此例外也使得发布一个带有该例外的修改版本成为可能。如果您修改了 ABCDEF 界面,此例外不适用于您修改的 ABC 版本,并且您必须在分发修改后的版本时删除此例外。

>

>

> 此例外是依据 GNU GPL 许可证第3版(“GPL v3”)第7节的额外权限。

>

>

>

此例外允许通过指定接口(“ABCDEF”)与遵循不同许可证的模块进行链接,同时确保用户仍然会按照 GPL 通常的方式接收源代码。

只有该程序的版权持有者才能合法授权此例外。如果您自己编写了整个程序,假设您的雇主或学校没有声明版权,您就是版权所有者,因此您可以授权该例外。但是,如果您想在代码中使用其他作者的其他遵循 GPL 程序的一部分,那么您无法对他们的例外进行授权。您必须获得这些程序的版权所有者的批准。

#### 6.31 考虑这种情况:1)X 发布遵循 GPL 的项目的 V1 版本。2)基于对 V1 的修改和新代码开发,Y 对 V2 的改进做出贡献。3)X 想将 V2 转换为非 GPL 许可证。X 需要 Y 的许可吗?

需要。Y 需要依据 GNU GPL 发布其版本,因为它基于 X 的版本 V1。没有任何要求规定 Y 为其代码适用任何其他许可。因此,X 必须获得 Y 的许可才能依据另一个许可证发布该代码。

#### 6.32 我已经编写了一个与许多不同组件链接的应用程序,它们具有不同的许可证。我对我的程序有什么许可要求感到很困惑。您能告诉我可以使用哪些许可证吗?

为了回答这个问题,我们需要看一下你的程序使用的每个组件的列表,该组件的许可证和一个简短的(几句话应该足够)说明你的库如何使用该组件的描述。两个例子是:

* 为了让我的软件工作,它必须链接到遵循 LGPL 的 FOO 库。

* 我的软件进行系统调用(使用我建立的命令行)来运行 BAR 程序,该程序遵循 GPL,“具有允许与 QUUX 链接的特殊例外”。

#### 6.33 可以在依据与 GPL 不兼容的许可证进行许可的文档中使用遵循 GPL 的源代码片段吗?

如果片段足够小,依据“合理使用”或类似的法律,您可以将它们纳入其中,那么可以。否则,不可以。

---

译者介绍:薛亮,集慧智佳知识产权咨询公司高级咨询师,擅长专利检索、专利分析、竞争对手跟踪、FTO 分析、开源软件知识产权风险分析,致力于为互联网企业、高科技公司提供知识产权咨询服务。

| 200 | OK | ## Frequently Asked Questions about the GNU Licenses

### Table of Contents

**Basic questions about the GNU Project, the Free Software Foundation, and its licenses****General understanding of the GNU licenses****Using GNU licenses for your programs****Distribution of programs released under the GNU licenses****Using programs released under the GNU licenses when writing other programs****Combining work with code released under the GNU licenses****Questions about violations of the GNU licenses**

#### Basic questions about the GNU Project, the Free Software Foundation, and its licenses

[What does “GPL” stand for?](#WhatDoesGPLStandFor)[Does free software mean using the GPL?](#DoesFreeSoftwareMeanUsingTheGPL)[Why should I use the GNU GPL rather than other free software licenses?](#WhyUseGPL)[Does all GNU software use the GNU GPL as its license?](#DoesAllGNUSoftwareUseTheGNUGPLAsItsLicense)[Does using the GPL for a program make it GNU software?](#DoesUsingTheGPLForAProgramMakeItGNUSoftware)[Can I use the GPL for something other than software?](#GPLOtherThanSoftware)[Why don't you use the GPL for manuals?](#WhyNotGPLForManuals)[Are there translations of the GPL into other languages?](#GPLTranslations)[Why are some GNU libraries released under the ordinary GPL rather than the Lesser GPL?](#WhySomeGPLAndNotLGPL)[Who has the power to enforce the GPL?](#WhoHasThePower)[Why does the FSF require that contributors to FSF-copyrighted programs assign copyright to the FSF? If I hold copyright on a GPLed program, should I do this, too? If so, how?](#AssignCopyright)[Can I modify the GPL and make a modified license?](#ModifyGPL)[Why did you decide to write the GNU Affero GPLv3 as a separate license?](#SeparateAffero)

#### General understanding of the GNU licenses