id | repo | title | body | labels | priority | severity |

|---|---|---|---|---|---|---|

578,045,699 |

go

|

cmd/go: 'go mod why' should return module results even without '-m'

|


### What version of Go are you using (`go version`)?

<pre>
$ go version
go version go1.14 darwin/amd64
</pre>

### Does this issue reproduce with the latest release?

Yes.

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>
$ go env
GO111MODULE=""
GOARCH="amd64"
GOBIN=""
GOCACHE="/Users/meling/Library/Caches/go-build"
GOENV="/Users/meling/Library/Application Support/go/env"
GOEXE=""
GOFLAGS=""
GOHOSTARCH="amd64"
GOHOSTOS="darwin"
GOINSECURE=""
GONOPROXY=""
GONOSUMDB=""
GOOS="darwin"
GOPATH="/Users/meling/go"
GOPRIVATE=""
GOPROXY="https://proxy.golang.org,direct"
GOROOT="/usr/local/Cellar/go/1.14/libexec"
GOSUMDB="sum.golang.org"
GOTMPDIR=""
GOTOOLDIR="/usr/local/Cellar/go/1.14/libexec/pkg/tool/darwin_amd64"
GCCGO="gccgo"
AR="ar"
CC="clang"
CXX="clang++"
CGO_ENABLED="1"
GOMOD="/Users/meling/work/gorums/go.mod"
CGO_CFLAGS="-g -O2"
CGO_CPPFLAGS=""
CGO_CXXFLAGS="-g -O2"
CGO_FFLAGS="-g -O2"
CGO_LDFLAGS="-g -O2"
PKG_CONFIG="pkg-config"
GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/xd/1g4dygzx1_g3thyggq8qllh40000gn/T/go-build624263632=/tmp/go-build -gno-record-gcc-switches -fno-common"
</pre></details>

### What did you do?


Ran `go mod why` without the `-m` flag on an argument that is a module path rather than a package path, which printed:

```
% go mod why github.com/labstack/echo-contrib
# github.com/labstack/echo-contrib
(main module does not need package github.com/labstack/echo-contrib)
```

This message is unhelpful and has bitten me many times now. How am I supposed to know whether a path in my go.mod file is a package or a module? This is confusing to me and probably to others.

### What did you expect to see?

I expected to see which package uses the module (or package), as `go mod why -m` reports:

```
% go mod why -m github.com/labstack/echo-contrib
# github.com/labstack/echo-contrib
github.com/autograde/aguis/web
github.com/labstack/echo-contrib/session
```

### Proposal

I propose that `go mod why` should return a result either way: if the main module does not need the supplied path as a package, the tool should check whether it needs a module with that path instead, making the `-m` flag unnecessary.
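The proposed fallback can be modeled in a few lines. This is a simplified Python sketch, not how `cmd/go` is implemented: it assumes the set of package import paths the main module needs is already known (`needed_packages`) and treats a package as belonging to a module when its path starts with the module path followed by `/`, ignoring nested modules. The helper name `why_kind` is hypothetical.

```python
def why_kind(target, needed_packages):
    """Classify how the main module needs `target` (simplified model).

    Returns ("package", [target]) when `target` is itself a needed package,
    ("module", [...]) with the needed packages that live under a module
    path equal to `target`, or ("none", []) when neither applies.
    """
    if target in needed_packages:
        return ("package", [target])
    # Fallback: treat `target` as a module path. The trailing "/" prevents
    # "example.com/a" from matching packages in "example.com/ab".
    prefix = target.rstrip("/") + "/"
    inside = sorted(p for p in needed_packages if p.startswith(prefix))
    if inside:
        return ("module", inside)
    return ("none", [])


needed = {
    "github.com/autograde/aguis/web",
    "github.com/labstack/echo-contrib/session",
}
print(why_kind("github.com/labstack/echo-contrib", needed))
# ('module', ['github.com/labstack/echo-contrib/session'])
```

Checking for the trailing `/` keeps a query for `github.com/labstack/echo` from matching packages in `github.com/labstack/echo-contrib`.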

|

NeedsFix,modules

|

low

|

Critical

|

578,109,290 |

nvm

|

Error EACCES -13 when using npm globally

|

#### Operating system and version:

Ubuntu 18.04.4 (WSL)

#### `nvm debug` output:

<details>


```sh
$ nvm debug
nvm --version: v0.35.3
$SHELL: /usr/bin/zsh
$SHLVL: 1
${HOME}: /home/mithic
${NVM_DIR}: '${HOME}/.nvm'
${PATH}: ${NVM_DIR}/versions/node/v12.16.1/bin:${HOME}/.local/texlive/2019/bin/x86_64-linux:${HOME}/.local/bin:${HOME}/bin:/usr/local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/mnt/c/Program Files/Python38/Scripts/:/mnt/c/Program Files/Python38/:/mnt/c/Program Files (x86)/Common Files/Oracle/Java/javapath:/mnt/c/WINDOWS/system32:/mnt/c/WINDOWS:/mnt/c/WINDOWS/System32/Wbem:/mnt/c/WINDOWS/System32/WindowsPowerShell/v1.0:/mnt/c/WINDOWS/System32/OpenSSH:/mnt/c/Program Files/nodejs:/mnt/c/Program Files/AMD/StoreMI/ECmd:/mnt/c/Program Files (x86)/LilyPond/usr/bin:/mnt/c/Users/rpc01/AppData/Local/Programs/Python/Python37-32:/mnt/c/Program Files (x86)/texlive/2019/bin/win32:/mnt/c/ProgramData/chocolatey/bin:/mnt/c/Hunspell/src/tools:/mnt/c/WINDOWS/System32/WindowsPowerShell/v1.0/:/mnt/c/WINDOWS/System32/OpenSSH/:/mnt/c/Users/rpc01/.windows-build-tools/python27/:/mnt/c/Users/rpc01/AppData/Local/Microsoft/WindowsApps:/mnt/c/Users/rpc01/AppData/Local/Microsoft/WindowsApps:/mnt/c/Users/rpc01/AppData/Local/Programs/Microsoft VS Code/bin
$PREFIX: ''
${NPM_CONFIG_PREFIX}: ''
$NVM_NODEJS_ORG_MIRROR: ''
$NVM_IOJS_ORG_MIRROR: ''
shell version: 'zsh 5.4.2 (x86_64-ubuntu-linux-gnu)'
uname -a: 'Linux 4.4.0-19041-Microsoft #1-Microsoft Fri Dec 06 14:06:00 PST 2019 x86_64 x86_64 x86_64 GNU/Linux'
OS version: Ubuntu 18.04.4 LTS
curl: /usr/bin/curl, curl 7.58.0 (x86_64-pc-linux-gnu) libcurl/7.58.0 OpenSSL/1.1.1 zlib/1.2.11 libidn2/2.0.4 libpsl/0.19.1 (+libidn2/2.0.4) nghttp2/1.30.0 librtmp/2.3
wget: /usr/bin/wget, GNU Wget 1.19.4 built on linux-gnu.
git: /usr/bin/git, git version 2.17.1
ls: cannot access 'grep:': No such file or directory
grep: grep: aliased to grep --color (grep --color), grep (GNU grep) 3.1
awk: /usr/bin/awk, GNU Awk 4.1.4, API: 1.1 (GNU MPFR 4.0.1, GNU MP 6.1.2)
sed: /bin/sed, sed (GNU sed) 4.4
cut: /usr/bin/cut, cut (GNU coreutils) 8.28
basename: /usr/bin/basename, basename (GNU coreutils) 8.28
ls: cannot access 'rm:': No such file or directory
rm: rm: aliased to rm -i (rm -i), rm (GNU coreutils) 8.28
ls: cannot access 'mkdir:': No such file or directory
mkdir: mkdir: aliased to nocorrect mkdir (nocorrect mkdir), mkdir (GNU coreutils) 8.28
xargs: /usr/bin/xargs, xargs (GNU findutils) 4.7.0-git
nvm current: v12.16.1
which node: ${NVM_DIR}/versions/node/v12.16.1/bin/node
which iojs: iojs not found
which npm: ${NVM_DIR}/versions/node/v12.16.1/bin/npm
npm config get prefix: ${NVM_DIR}/versions/node/v12.16.1
npm root -g: ${NVM_DIR}/versions/node/v12.16.1/lib/node_modules
```

</details>

#### `nvm ls` output:

<details>


```sh
$ nvm ls
-> v12.16.1
default -> lts/* (-> v12.16.1)
node -> stable (-> v12.16.1) (default)
stable -> 12.16 (-> v12.16.1) (default)
iojs -> N/A (default)
unstable -> N/A (default)
lts/* -> lts/erbium (-> v12.16.1)
lts/argon -> v4.9.1 (-> N/A)
lts/boron -> v6.17.1 (-> N/A)
lts/carbon -> v8.17.0 (-> N/A)
lts/dubnium -> v10.19.0 (-> N/A)
lts/erbium -> v12.16.1
```

</details>

#### How did you install `nvm`?


Install and update script from readme:

`wget -qO- https://raw.githubusercontent.com/nvm-sh/nvm/v0.35.3/install.sh | bash`

#### What steps did you perform?

I only ran `npm -g upgrade`

#### What happened?

I got an `EACCES` (errno -13) error:

<details>

```sh
$ npm -g upgrade
npm ERR! code EACCES
npm ERR! syscall rename
npm ERR! path /home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/npm-d9b9c5ef/node_modules/yargs
npm ERR! dest /home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/yargs-b6502a51
npm ERR! errno -13
npm ERR! Error: EACCES: permission denied, rename '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/npm-d9b9c5ef/node_modules/yargs' -> '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/yargs-b6502a51'
npm ERR! [OperationalError: EACCES: permission denied, rename '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/npm-d9b9c5ef/node_modules/yargs' -> '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/yargs-b6502a51'] {
npm ERR! cause: [Error: EACCES: permission denied, rename '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/npm-d9b9c5ef/node_modules/yargs' -> '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/yargs-b6502a51'] {
npm ERR! errno: -13,
npm ERR! code: 'EACCES',
npm ERR! syscall: 'rename',
npm ERR! path: '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/npm-d9b9c5ef/node_modules/yargs',
npm ERR! dest: '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/yargs-b6502a51'
npm ERR! },
npm ERR! stack: "Error: EACCES: permission denied, rename '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/npm-d9b9c5ef/node_modules/yargs' -> '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/yargs-b6502a51'",
npm ERR! errno: -13,
npm ERR! code: 'EACCES',
npm ERR! syscall: 'rename',
npm ERR! path: '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/npm-d9b9c5ef/node_modules/yargs',
npm ERR! dest: '/home/mithic/.nvm/versions/node/v12.16.1/lib/node_modules/.staging/yargs-b6502a51'
npm ERR! }
npm ERR!
npm ERR! The operation was rejected by your operating system.
npm ERR! It is likely you do not have the permissions to access this file as the current user
npm ERR!
npm ERR! If you believe this might be a permissions issue, please double-check the
npm ERR! permissions of the file and its containing directories, or try running
npm ERR! the command again as root/Administrator.
npm ERR! A complete log of this run can be found in:
npm ERR! /home/mithic/.npm/_logs/2020-03-09T18_06_31_294Z-debug.log
```

</details>

#### What did you expect to happen?

I expected it to upgrade any out-of-date packages without errors, especially since I started using nvm precisely to avoid this issue (as the npm docs explain [here](https://docs.npmjs.com/resolving-eacces-permissions-errors-when-installing-packages-globally)).
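One way to narrow down an `EACCES` on `rename` is to look for entries under the nvm prefix that the current user cannot write to (for example, files left owned by root by an earlier `sudo npm` run; that cause is an assumption, not something the log shows). A minimal Python sketch, with a hypothetical helper name `unwritable_paths`:

```python
import os


def unwritable_paths(root):
    """List directories and files under `root` the current user cannot write to.

    Renaming an entry requires write permission on the directories that
    contain it, so unwritable directories are the likeliest culprits.
    """
    bad = []
    for dirpath, _dirnames, filenames in os.walk(root):
        if not os.access(dirpath, os.W_OK):
            bad.append(dirpath)
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.access(path, os.W_OK):
                bad.append(path)
    return sorted(bad)


if __name__ == "__main__":
    prefix = os.path.expanduser("~/.nvm/versions/node/v12.16.1/lib/node_modules")
    for path in unwritable_paths(prefix):
        print(path)
```

An empty result for that prefix would point away from plain Unix permission bits as the cause.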

#### Is there anything in any of your profile files that modifies the `PATH`?


Yes:

`export PATH="/home/mithic/.local/texlive/2019/bin/x86_64-linux:$HOME/.local/bin:$HOME/bin:/usr/local/bin:$PATH"`

Additionally, since I am using WSL, the Windows PATH is appended as well (the full PATH appears in the `nvm debug` output above). I do not believe this is part of the issue, as all of the directories appear to be correct.

|

OS: windows,needs followup

|

low

|

Critical

|

578,145,666 |

TypeScript

|

Incorrect codegen and error detection for static property used as computed key in instance property of the same class

|


**TypeScript Version:** 3.8.3


**Search Terms:** static class properties, computed property access

TypeScript is inconsistent in its handling of static properties used as computed keys.

**Code**

```ts
class Some {
    static readonly prop = Symbol();
    [Some.prop] = 22; // compile time error
}

const s = class Some1 {
    static readonly prop = Symbol();
    [Some1.prop] = 22; // runtime error
};
```

**Expected behavior:**

Both examples should behave like this JavaScript:

```js
// javascript
const s = Symbol();
class Some {
    static get prop() { return s; };
    constructor() {
        this[Some.prop] = 22;
    }
}
```

**Actual behavior:**

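The first example yields the compile-time error `TS2449: Class 'Some' used before its declaration`.

The second example compiles, but the emitted code throws at runtime:

```js
"use strict";
var _a, _b;
const s = (_b = class Some {
        constructor() {
            this[_a] = 22;
        }
    },
    _a = Some.prop,
    _b.prop = Symbol(),
    _b);
```

Here `_a = Some.prop` runs outside the class expression, where the class name `Some` is not in scope, and before `_b.prop` has been assigned.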

**Playground Links:**

[Playground Link 1](https://www.typescriptlang.org/play/?ssl=1&ssc=11&pln=1&pc=1#code/MYGwhgzhAEDKD2BbAptA3gKGt6EAuYeAlsNAE7JgAm8AdiAJ7QAOZ8z0AvHA4gEbwQACgCUAbgxYcAbQQoAdK3YBdLtABM6iQF8MQA)

[Playground Link 2](https://www.typescriptlang.org/play/#code/MYewdgzgLgBBMF4bADYEMLwMogLYFMYBvAKBnLijSgEtgYAnfNAE3BQE8YAHBkbxDCwdcAIxAoAFAEoA3CTIUA2jgIA6XvwC6ggEy75AXxJA)

**Relevant**

[proposal for class fields](https://github.com/tc39/proposal-class-fields)

|

Bug

|

low

|

Critical

|

578,160,659 |

flutter

|

Consider adding Performance overlay to platform-web

|

**Note:** I'm not sure how this would work or how much work this entails. But it came up as a topic from conversations with @liyuqian, and we noticed there is no bug tracking it.

## Use case

* Performance optimization: Running a Flutter app on the web and seeing some skipped frames, I'd like to reuse my knowledge of Flutter performance tools to start addressing the issue.

* Education: Writing docs about performance, I'd like to give users two versions of an app in [DartPad](https://dartpad.dartlang.org/). Users could then bring up the performance overlay and see that one version performs much better than the other.

## Proposal

* Enable the performance overlay for Flutter web apps.

For clarity, this is what I mean by performance overlay.

It is [an engine layer](https://github.com/flutter/engine/blob/master/flow/layers/performance_overlay_layer.h) added to a `--profile` (or `--debug`) version of an app by the engine. It shows the build times for the UI thread (a.k.a. main thread, app thread, etc.) and the GPU thread (a.k.a. raster thread -- i.e. not actually running on the GPU).

|

c: new feature,framework,platform-web,c: proposal,P2,team-web,triaged-web

|

low

|

Critical

|

578,187,259 |

angular

|

Using slot API with Angular Elements throws an error

|

# 🐞 bug report

### Affected Package

angular/elements

### Description

I am making a container component in Angular 9 that is turned into a web component.

Once the container component has been turned into a web component, any children can be passed into it and manipulated. I have code that wraps the `<ng-content>`, but once the component is turned into a web component, the children are not wrapped.

## 🔬 Minimal Reproduction

1. Clone https://github.com/alevyKorio/SmartContainer.git
2. Run `npm install`.
3. Run `npm run build:ngelement`.
4. Go into the `elements` folder, where the container has become a web component, `SmartContainer.js`, and copy that file.
5. Clone https://github.com/alevyKorio/SmartContainerShell.git
6. Paste the file into the root directory of the SmartContainerShell folder.
7. Run `npm install`.
8. Run `npm run serve`.

## 🔥 Exception or Error

As you can see in this image, the `div`s are siblings of `app-smart-container` instead of its children.

## 🌍 Your Environment

**Angular Version:**

<pre><code>
Angular CLI: 9.0.5
Node: 10.16.2
OS: win32 x64

Angular: 9.0.5
... animations, cli, common, compiler, compiler-cli, core, forms
... language-service, platform-browser, platform-browser-dynamic
... router
Ivy Workspace: Yes

Package Version
-----------------------------------------------------------
@angular-devkit/architect 0.900.5
@angular-devkit/build-angular 0.900.5
@angular-devkit/build-optimizer 0.900.5
@angular-devkit/build-webpack 0.900.5
@angular-devkit/core 9.0.5
@angular-devkit/schematics 9.0.5
@ngtools/webpack 9.0.5
@schematics/angular 9.0.5
@schematics/update 0.900.5
rxjs 6.5.4
typescript 3.7.5
webpack 4.41.2
</code></pre>

**Anything else relevant?**

Once changes are made to SmartContainer, run `npm run build:ngelement`, then go into the `elements` folder, copy the new `SmartContainer.js` into the shell, and restart the shell.

|

type: bug/fix,area: elements,state: confirmed,state: needs more investigation,P4

|

low

|

Critical

|

578,189,795 |

flutter

|

Add check for existence of system requirement command line tools

|

Check for the presence of all required executables as soon as possible (before artifacts are downloaded) in the tool and give a good error message. `flutter doctor` makes sense but the validators may be run too late to catch `git` or `zip` exceptions.

https://flutter.dev/docs/get-started/install/windows#system-requirements

- Windows PowerShell 5.0 or newer (this is pre-installed with Windows 10)

- Git for Windows 2.x, with the Use Git from the Windows Command Prompt option.

https://flutter.dev/docs/get-started/install/macos#system-requirements

- bash

- curl

- git 2.x

- mkdir

- rm

- unzip

- which

- zip

https://flutter.dev/docs/get-started/install/linux#system-requirements

(above list + xz-utils)
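A sketch of the early check, written in Python rather than the Dart the Flutter tool is written in, so it only illustrates the logic. The tool lists are transcribed from the requirements above (assuming xz-utils is checked through its `xz` executable), the names `REQUIRED_TOOLS`, `missing_tools` and `check_requirements` are hypothetical, and version requirements such as git 2.x or PowerShell 5.0 are not checked.

```python
import shutil

# Requirements transcribed from the Flutter install docs linked above.
# xz-utils is checked through its `xz` executable (an assumption).
_UNIX_TOOLS = ["bash", "curl", "git", "mkdir", "rm", "unzip", "which", "zip"]
REQUIRED_TOOLS = {
    "windows": ["powershell", "git"],
    "macos": _UNIX_TOOLS,
    "linux": _UNIX_TOOLS + ["xz"],
}


def missing_tools(platform, which=shutil.which):
    """Return the required executables for `platform` that are not on PATH."""
    return [tool for tool in REQUIRED_TOOLS[platform] if which(tool) is None]


def check_requirements(platform, which=shutil.which):
    """Fail early, before any artifacts are downloaded, with a clear message."""
    missing = missing_tools(platform, which)
    if missing:
        raise SystemExit(
            "Missing required command-line tools: " + ", ".join(missing)
            + ". Install them and make sure they are on your PATH."
        )
```

Passing `which` as a parameter lets the check be tested without touching the real `PATH`.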

|

tool,t: flutter doctor,P3,team-tool,triaged-tool

|

low

|

Critical

|

578,190,383 |

storybook

|

storySort doesn't find all stories in the project.

|

**Describe the bug**

To control the order of my stories I have the following storySort function:

```js
storySort: (a, b) => {
  // Control root level sort order.
  const sort = [
    'Library/Base Components',
    'Library/Abstractions',
    'Library/Modules',
    'Library/Transient',
    'Library/Page Specific',
    'Library/Deprecated',
    'Library/Uncategorized',
    'Pages',
  ];
  const sortObj = {};
  sort.forEach(function(a, i) {
    sortObj[a] = i + 1;
  });
  const aSplit = a[1].kind.split('/');
  const bSplit = b[1].kind.split('/');
  if (aSplit && bSplit) {
    return (
      sortObj[`${aSplit[0]}/${aSplit[1]}`] -
      sortObj[`${bSplit[0]}/${bSplit[1]}`]
    );
  }
  return a - b;
},
```

I have story files in two locations, e.g. `src/components/SomeComponent/SomeComponent.stories.js` and `src/pages/SomePage/SomePage.stories.js`.

My `main.js` includes stories from these locations with `stories: ['../src/**/*.stories.([tj]s|mdx)'],`. All components appear in Storybook; however, the stories in `src/pages` are not ordered and are not available in `storySort`. If I `console.log(a[1].kind === 'Pages/SomePage')`, I never get `true`. If I do the same for any of the stories in `src/components`, e.g. `console.log(a[1].kind === 'Library/SomeComponent')`, I always get `true`.

**To Reproduce**

Repeat what I've outlined above.

**Expected behavior**

With the sort function above, I'd expect stories with a title starting with `Pages` to appear after the `Library` stories.
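With the comparator above, a kind like `Pages/SomePage` is looked up as `sortObj['Pages/SomePage']`, which is `undefined` because only `'Pages'` is a key; `undefined` minus a number is `NaN`, and a comparator that returns `NaN` cannot order those stories consistently. That may contribute to the problem, though it does not explain why `a[1].kind` never equals `'Pages/SomePage'` in the log. The Python sketch below only illustrates the ordering logic (it is not Storybook's API; `ORDER`, `rank` and `sort_kinds` are hypothetical names): it falls back from the two-segment key to the root segment.

```python
ORDER = [
    "Library/Base Components",
    "Library/Abstractions",
    "Library/Modules",
    "Library/Transient",
    "Library/Page Specific",
    "Library/Deprecated",
    "Library/Uncategorized",
    "Pages",
]


def rank(kind, order=ORDER):
    """Return the position of `kind` in `order`; unknown kinds sort last."""
    positions = {name: i for i, name in enumerate(order)}
    parts = kind.split("/")
    # Try "Library/Modules" for "Library/Modules/Nav", then the root ("Pages").
    for key in ("/".join(parts[:2]), parts[0]):
        if key in positions:
            return positions[key]
    return len(order)


def sort_kinds(kinds, order=ORDER):
    """Sort kinds by rank, then alphabetically within the same rank."""
    return sorted(kinds, key=lambda kind: (rank(kind, order), kind))


print(sort_kinds(["Pages/SomePage", "Library/Modules/Nav", "Library/Base Components/Button"]))
# ['Library/Base Components/Button', 'Library/Modules/Nav', 'Pages/SomePage']
```

Using a sort key instead of a two-argument comparator means an unknown kind can never produce `NaN`; it simply lands at the end.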

**Screenshots**

**System:**

```
Environment Info:

  System:
    OS: macOS Mojave 10.14.1
    CPU: (8) x64 Intel(R) Core(TM) i7-8559U CPU @ 2.70GHz
  Binaries:
    Node: 12.14.1 - ~/.nvm/versions/node/v12.14.1/bin/node
    Yarn: 1.15.2 - /usr/local/bin/yarn
    npm: 6.13.4 - ~/.nvm/versions/node/v12.14.1/bin/npm
  Browsers:
    Chrome: 80.0.3987.132
    Firefox: 72.0.2
    Safari: 12.0.1
  npmPackages:
    @storybook/addon-backgrounds: ^5.3.13 => 5.3.13
    @storybook/addon-docs: ^6.0.0-alpha.9 => 6.0.0-alpha.12
    @storybook/addon-knobs: ^5.3.12 => 5.3.13
    @storybook/addon-storysource: ^5.3.13 => 5.3.13
    @storybook/preset-typescript: ^1.2.0 => 1.2.0
    @storybook/source-loader: ^5.3.13 => 5.3.13
    @storybook/vue: ^5.3.12 => 5.3.13
```

|

question / support,core

|

low

|

Critical

|

578,201,800 |

rust

|

Detect introduction of deadlock by using `if lock.read()`/`else lock.write()` in the same expression

|

A coworker came across the following:

```rust
use std::sync::RwLock;
use std::collections::HashMap;

fn foo() {
    let lock: RwLock<HashMap<u32, String>> = RwLock::new(HashMap::new());
    let test = if let Some(item) = lock.read().unwrap().get(&5) {
        println!("its in there");
        item.clone()
    } else {
        lock.write().unwrap().entry(5).or_insert(" eggs".to_string());
        println!("ok we put it there");
        " eggs".to_string()
    };
    println!("There were {}", test);
}

fn main() {
    foo();
}
```

This code compiles, but it deadlocks: the read guard returned by `lock.read().unwrap()` in the `if let` scrutinee is kept alive through the whole `if let`/`else` expression, so it is still held when the `else` branch calls `lock.write()`. Miri actually catches this, but it would be nice to have a lint against this kind of usage, given that it is a latent footgun.
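Python's standard library has no reader-writer lock, so the sketch below is only an analogy built on a plain, non-reentrant `threading.Lock`, with hypothetical function names. The first function keeps the "read" lock held while attempting the "write", like the guard kept alive across the `else` branch; a timeout replaces the hang so the call returns `None` instead. The second function releases the lock before writing, which corresponds to ending the read guard's scope before calling `lock.write()` in Rust.

```python
import threading


def read_then_write_held(lock, table, key, default, timeout=0.1):
    """Mirrors the issue: the 'write' is attempted while the 'read' is held."""
    with lock:  # like the read guard kept alive across the whole if/else
        if key in table:
            return table[key]
        # like lock.write() in the else branch: the same lock is still held,
        # so this can never succeed; the timeout stands in for the hang.
        if not lock.acquire(timeout=timeout):
            return None
        try:
            return table.setdefault(key, default)
        finally:
            lock.release()


def read_then_write_released(lock, table, key, default):
    """Fixed shape: finish the read and release before writing."""
    with lock:
        if key in table:
            return table[key]
    # The read has ended here; take the lock again for the write.
    with lock:
        return table.setdefault(key, default)
```

`setdefault` in the second function keeps the insert correct even if another thread added the key between the two critical sections.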

|

C-enhancement,A-lints,T-lang,C-feature-request

|

low

|

Minor

|

578,202,524 |

create-react-app

|

When Ejecting - should not use scripts folder

|

I already have a `scripts` folder in my project. When I ran `npm run eject`, it complained about a clash with the existing `scripts` folder. Perhaps the ejected scripts should go into a folder with a less common name?

|

issue: proposal,needs triage

|

low

|

Minor

|

578,203,856 |

flutter

|

flutter clean times out on Runner.xcworkspace clean

|


## Steps to Reproduce

1. Find a macOS machine.
2. Install Flutter.
3. Run `flutter test`.
4. Run `flutter clean`.
5. Observe that it times out (after running for 5 hours).

Please see this run for more details:

https://github.com/tianhaoz95/photochat/runs/494113600?check_suite_focus=true#step:8:9

**Expected results:**

It should clean up the build in less than 5 minutes.

**Actual results:**

It gets stuck and never returns.

|

tool,platform-mac,P2,team-tool,triaged-tool

|

low

|

Critical

|

578,207,346 |

rust

|

Compiler selects invalid `--lldb-python` path

|


On my Mac (macOS Mojave), the `--lldb-python` path is set to `/usr/bin/python3`, which does not exist.

Furthermore, `/usr/bin` is immutable, so I cannot simply symlink it to `/usr/local/bin/python3`, the result of `which python3`.

This causes the `debuginfo` test suite to fail.
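A sketch of a more forgiving lookup, assuming the caller can supply a preferred path: it returns a configured interpreter only if that file exists and is executable, and otherwise searches `PATH` (on the reporter's machine that would find `/usr/local/bin/python3`). The function name and fallback order are hypothetical and not taken from rustbuild or compiletest.

```python
import os
import shutil


def choose_lldb_python(configured=None, candidates=("python3", "python")):
    """Return a usable Python interpreter path, or None if none is found.

    A configured path is used only if it exists and is executable;
    otherwise each candidate name is looked up on PATH in order.
    """
    if configured and os.path.isfile(configured) and os.access(configured, os.X_OK):
        return configured
    for name in candidates:
        found = shutil.which(name)
        if found:
            return found
    return None


print(choose_lldb_python("/usr/bin/python3"))
```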

### Code

```
./x.py -i test src/test/debuginfo
```

### Meta


rust version:

Top of `master`, specifically:

https://github.com/rust-lang/rust/commit/3dbade652ed8ebac70f903e01f51cd92c4e4302c

### Error output

```
running 115 tests
iFFFFFFFiFFFFFiFFFFFFFFFFFiFFFFiFFiiFFiFFiFFiiFiFFFFFFFFFFFFFiFiFiiFFFFFFFFiFFFFFiFiiFFFF.iFFFFiFFiF 100/115
FFFFiiFiFFFFFFF

failures:

---- [debuginfo-lldb] debuginfo/basic-types-globals-metadata.rs stdout ----
NOTE: compiletest thinks it is using LLDB version 1100
NOTE: compiletest thinks it is using LLDB without native rust support
error: Failed to setup Python process for LLDB script: No such file or directory (os error 2)
[ERROR compiletest::runtest] fatal error, panic: "Failed to setup Python process for LLDB script: No such file or directory (os error 2)"
thread 'main' panicked at 'fatal error', src/tools/compiletest/src/runtest.rs:2133:9
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
// …
```


<details><summary><strong>Backtrace</strong></summary>

<p>

```
running 115 tests
iFFFFFFFiFFFFFiFFFFFFFFFFFiFFFFiFFiiFFiFFiFFiiFiFFFFFFFFFFFFFiFiFiiFFFFFFFFiFFFFFiFiiFFFF.iFFFFiFFiF 100/115
FFFFiiFiFFFFFFF

failures:

---- [debuginfo-lldb] debuginfo/basic-types-globals-metadata.rs stdout ----
NOTE: compiletest thinks it is using LLDB version 1100
NOTE: compiletest thinks it is using LLDB without native rust support
error: Failed to setup Python process for LLDB script: No such file or directory (os error 2)
[ERROR compiletest::runtest] fatal error, panic: "Failed to setup Python process for LLDB script: No such file or directory (os error 2)"
thread 'main' panicked at 'fatal error', src/tools/compiletest/src/runtest.rs:2133:9
stack backtrace:
   0: <std::sys_common::backtrace::_print::DisplayBacktrace as core::fmt::Display>::fmt
   1: core::fmt::write
   2: std::io::Write::write_fmt
   3: std::io::impls::<impl std::io::Write for alloc::boxed::Box<W>>::write_fmt
   4: std::sys_common::backtrace::print
   5: std::panicking::default_hook::{{closure}}
   6: std::panicking::default_hook
   7: std::panicking::rust_panic_with_hook
   8: std::panicking::begin_panic
   9: compiletest::runtest::TestCx::fatal
  10: compiletest::runtest::TestCx::cmd2procres
  11: compiletest::runtest::TestCx::run_revision
  12: compiletest::runtest::run
  13: core::ops::function::FnOnce::call_once{{vtable.shim}}
  14: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once
  15: <alloc::boxed::Box<F> as core::ops::function::FnOnce<A>>::call_once
  16: __rust_maybe_catch_panic
  17: std::panicking::try
  18: test::run_test_in_process
  19: test::run_test::run_test_inner
  20: test::run_test
  21: test::run_tests
  22: test::console::run_tests_console
  23: compiletest::main
  24: std::rt::lang_start::{{closure}}
  25: std::panicking::try::do_call
  26: __rust_maybe_catch_panic
  27: std::panicking::try
  28: std::rt::lang_start_internal
  29: main
note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.
```

</p>

</details>

|

A-testsuite,A-debuginfo,T-compiler,T-bootstrap,C-bug,A-compiletest

|

low

|

Critical

|

578,252,732 |

pytorch

|

Expose chunk_sizes for DataParallel

|

## 🚀 Feature


Allow users to add custom chunk_sizes when running DataParallel.

## Motivation


I am using the SparseConvNet package (https://github.com/facebookresearch/SparseConvNet), where tensors cannot be split evenly because each sample may have different sizes.

## Pitch

Add a new `if` branch to `torch.nn.parallel.scatter_gather.scatter.scatter_map` so that an object with its own `scatter` method can handle `chunk_sizes` if desired:

```python
def scatter_map(obj):
    if isinstance(obj, torch.Tensor):
        return Scatter.apply(target_gpus, None, dim, obj)
    if hasattr(obj, 'scatter'):
        return obj.scatter(target_gpus, dim=dim)
    if isinstance(obj, tuple) and len(obj) > 0:
        return list(zip(*map(scatter_map, obj)))
    if isinstance(obj, list) and len(obj) > 0:
        return list(map(list, zip(*map(scatter_map, obj))))
    if isinstance(obj, dict) and len(obj) > 0:
        return list(map(type(obj), zip(*map(scatter_map, obj.items()))))
    return [obj for targets in target_gpus]
```

https://github.com/pytorch/pytorch/blob/f62a0060972d594cc1c4ab99d44267373eee4ec6/torch/nn/parallel/scatter_gather.py#L11

And the same for gather/gather_map.
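The proposed hook is duck-typed: any object with a `scatter` method decides for itself how to split. A minimal sketch of that dispatch in plain Python (no `torch` import, so lists stand in for tensors and nothing is moved to a device). `VariableSizeBatch` is a hypothetical class, and the reduced `scatter_map` below models only the new branch and the final fallback. For real tensors, `torch.split` already accepts a list of section sizes and could do the actual splitting.

```python
class VariableSizeBatch:
    """Hypothetical batch of samples that cannot be split into equal chunks."""

    def __init__(self, samples, chunk_sizes):
        if sum(chunk_sizes) != len(samples):
            raise ValueError("chunk_sizes must add up to the number of samples")
        self.samples = list(samples)
        self.chunk_sizes = list(chunk_sizes)

    def scatter(self, target_gpus, dim=0):
        # `dim` is accepted to match the proposed call; a list has only one axis.
        if len(self.chunk_sizes) != len(target_gpus):
            raise ValueError("need exactly one chunk size per target device")
        chunks, start = [], 0
        for size in self.chunk_sizes:
            chunks.append(self.samples[start:start + size])
            start += size
        return chunks


def scatter_map(obj, target_gpus, dim=0):
    """Reduced version of the dispatch: the proposed hook plus the fallback."""
    if hasattr(obj, "scatter"):
        return obj.scatter(target_gpus, dim=dim)
    # Same fallback as the original code: replicate the object per device.
    return [obj for _ in target_gpus]


batch = VariableSizeBatch(["s0", "s1", "s2", "s3", "s4"], chunk_sizes=[2, 3])
print(scatter_map(batch, target_gpus=[0, 1]))
# [['s0', 's1'], ['s2', 's3', 's4']]
```

In the real function the `isinstance(obj, torch.Tensor)` check has to stay ahead of the `hasattr` branch, as in the snippet above, because `torch.Tensor` already has an unrelated `scatter` method.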

## Alternatives


## Additional context


|

module: nn,triaged,enhancement,module: data parallel

|

low

|

Minor

|

578,265,139 |

flutter

|

Add an example project to the new package template

|

All Flutter packages should include an example project that demonstrates how to use the package.

Typically developers create a Flutter project under `/example` for this purpose.

Flutter should automatically create a new Flutter project under `/example` when creating a new Flutter package using the Flutter tool.

By adding this subproject, more developers are likely to implement an example project, and it alleviates the need for every package developer to manually create this subproject for every new package.

|

tool,P3,team-tool,triaged-tool

|

low

|

Major

|

578,342,473 |

vscode

|

Welcome -> Interface Overview Screen - Title Text for the first two features (Search & File explorer) Needs to change/swap

|


Interface Overview Screen - Title Text for the first two features (Search & File explorer) Needs to change/swap


- VSCode Version: Visual Studio Code - Version: 1.41.1

- OS Version: Mac OS

Steps to Reproduce:

1. Initial Welcome screen (Help Menu -> Welcome)

2. Click on Interface Overview from Right Side Learn Section

Does this issue occur when all extensions are disabled?: Yes, it occurs every time.

<img width="1440" alt="vscode-interface-overview-screen" src="https://user-images.githubusercontent.com/41334766/76281734-f78f6e00-62bb-11ea-9ae6-0e64aab10aa7.png">

|

bug,workbench-welcome

|

low

|

Minor

|

578,436,730 |

TypeScript

|

Type definitions overshadowing

|


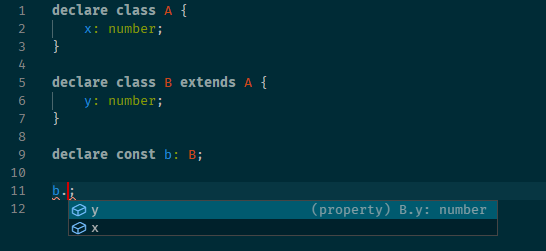

**TypeScript Version:** 3.8.3

(also tried 3.9.0-dev.20200310)


**Search Terms:**

"Module '"immutable"' has no exported member"

**Code**

_(not self-contained)_

```ts
import { hash } from "immutable";

export function test(): number {
    return hash(1);
}
```

**Actual behavior:**

```
src/index.ts:1:10 - error TS2305: Module '"immutable"' has no exported member 'hash'.

1 import { hash } from "immutable";
           ~~~~
```

even though

`grep "export function hash" node_modules/immutable/dist/immutable.d.ts`

reveals

`export function hash(value: any): number;`

This happens because TypeScript uses the type definitions in

`node_modules/@types/draft-js/node_modules/immutable/dist/immutable.d.ts`

instead of

`node_modules/immutable/dist/immutable.d.ts`

**Demo:**

Sadly, I could not find a way to reproduce this in the Playground, but here is a demo repository:

https://gitlab.com/rawieo/issue_demo_1

**Related Issues:**

- https://github.com/facebook/create-react-app/issues/8578

- https://github.com/immutable-js/immutable-js/issues/1502 (probably)

|

Bug

|

low

|

Critical

|

578,440,025 |

rust

|

`todo!` and `unimplemented!` do not work with `impl Trait` return types

|

This code

```

trait SomeTrait {

fn some_func();

}

fn todo_impl_trait() -> impl SomeTrait { todo!() }

```

does not compile because

`the trait `SomeTrait` is not implemented for ()`

But such code

```rust
trait SomeTrait {
    fn some_func();
}

fn todo_impl_trait<T: SomeTrait>() -> T { todo!() }
```

compiles correctly. Can this be resolved so that `todo!()` and `unimplemented!()` work with `impl Trait` return types?

|

C-enhancement,A-diagnostics,T-compiler,A-impl-trait

|

medium

|

Critical

|

578,459,689 |

vscode

|

SCM - Provide multiple ScmResourceGroup in menu commands

|

Hi,

When implementing a menu on a ResourceGroup in the Source Control view, the command arguments always contain only one group, even if the user selects multiple ResourceGroups and runs a common menu action on them.

```ts
import { commands, SourceControlResourceGroup } from "vscode";

// groups.length is always 1, even when multiple groups are selected
commands.registerCommand("commandId", (...groups: SourceControlResourceGroup[]) => {
  // ...
});
```

The expected behavior is for the command arguments to contain every selected SourceControlResourceGroup, just as they contain every selected SourceControlResourceState when multiple resources are selected and a common menu action is executed.

```ts
import { commands, SourceControlResourceState } from "vscode";

// resources.length is greater than 1 when multiple resources are selected in the Source Control view
commands.registerCommand("commandId", (...resources: SourceControlResourceState[]) => {
  // ...
});
```

Regards.

- VSCode Version: 1.42.1

- OS Version: win10

|

help wanted,feature-request,scm

|

low

|

Minor

|

578,496,930 |

angular

|

Dynamic FormControl binding is broken

|

<!--🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅

Oh hi there! 😄

To expedite issue processing please search open and closed issues before submitting a new one.

Existing issues often contain information about workarounds, resolution, or progress updates.

🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅-->

# 🐞 bug report

### Affected Package

<!-- Can you pin-point one or more @angular/* packages as the source of the bug? -->

<!-- ✍️edit: --> The issue is caused by package @angular/forms

### Is this a regression?

<!-- Did this behavior use to work in the previous version? -->

<!-- ✍️--> No, none that I'm aware of.

### Description

<!-- ✍️--> We have a setup in our project where we bind different form controls to a single input. Additionally, we handle blur events to set a formatted value on the form control. The binding is done via a function call that determines which form control the input is bound to. The problem is that when I change the value the function depends on, the form-control binding is kept, as if it were cached or memoized.

## 🔬 Minimal Reproduction

<!--

Please create and share minimal reproduction of the issue starting with this template: https://stackblitz.com/fork/angular-issue-repro2

-->

<!-- ✍️-->

[Link](https://stackblitz.com/edit/angular-issue-repro2-uakgvb)

<!--

If StackBlitz is not suitable for reproduction of your issue, please create a minimal GitHub repository with the reproduction of the issue.

A good way to make a minimal reproduction is to create a new app via `ng new repro-app` and add the minimum possible code to show the problem.

Share the link to the repo below along with step-by-step instructions to reproduce the problem, as well as expected and actual behavior.

Issues that don't have enough info and can't be reproduced will be closed.

You can read more about issue submission guidelines here: https://github.com/angular/angular/blob/master/CONTRIBUTING.md#-submitting-an-issue

-->

|

type: bug/fix,area: forms,state: confirmed,forms: Controls API,P4

|

low

|

Critical

|

578,517,755 |

TypeScript

|

A way to ignore prefix/suffix in module ID, then resolve the remaining ID as usual

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker.

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ, especially the "Common Feature Requests" section: https://github.com/Microsoft/TypeScript/wiki/FAQ

-->

## Search Terms

<!-- List of keywords you searched for before creating this issue. Write them down here so that others can find this suggestion more easily -->

wildcard module declarations ignore prefix suffix relative path

## Suggestion

<!-- A summary of what you'd like to see added or changed -->

```ts

import url, { other, exported, values } from 'css:./whatever.css';

```

**TL;DR: I'm looking for a way to say "for all modules starting with `css:`, ignore the `css:` bit, and resolve the remaining identifier as normal"**.

As identified by [wildcard module declarations](https://www.typescriptlang.org/docs/handbook/modules.html#wildcard-module-declarations), some build tools use prefixes/suffixes to influence how a file is imported.

You can provide types for these:

```ts
declare module "css:*" {
  const value: string;
  export default value;
  export const other: string;
  export const exported: string;
  export const values: string;
}
```

The above allows developers to say "all modules starting with `css:` look like this…".

However, what if the exports differ from one CSS file to another? This is true for CSS modules, where the class names in a CSS file are 'exported'.

In these cases it's typical to generate a `.css.d.ts` file alongside your CSS that defines the types. However, if you're using a prefix like `css:`, TypeScript can't find the location of the `.css.d.ts` file.

**I'm looking for a way to say "for all modules starting with `css:`, ignore the `css:` bit, and resolve the remaining identifier as normal"**.
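The requested behavior amounts to a rewriting step applied before normal resolution. The following is a minimal sketch under stated assumptions: `stripLoaderPrefix` and `LOADER_PREFIXES` are hypothetical names, and TypeScript has no built-in option that does this. A tool author could, for example, call something like it from a custom `CompilerHost.resolveModuleNames` implementation before delegating to `ts.resolveModuleName`, so that `css:./whatever.css` is resolved like `./whatever.css` and can then pick up a generated `whatever.css.d.ts`.

```typescript
// Hypothetical list of loader prefixes to ignore during resolution.
const LOADER_PREFIXES: readonly string[] = ["css:"];

// Remove a leading loader prefix (e.g. "css:") from a module specifier so the
// remainder can be resolved with the usual rules. Hypothetical helper.
function stripLoaderPrefix(
  specifier: string,
  prefixes: readonly string[] = LOADER_PREFIXES,
): string {
  for (const prefix of prefixes) {
    if (specifier.startsWith(prefix)) {
      // Only the leading prefix is removed; the rest is left untouched.
      return specifier.slice(prefix.length);
    }
  }
  // Specifiers without a known prefix pass through unchanged.
  return specifier;
}

console.log(stripLoaderPrefix("css:./whatever.css")); // prints: ./whatever.css
console.log(stripLoaderPrefix("react")); // prints: react
```

Keeping the prefix list as data rather than hard-coding `css:` is a design choice that would let the same step cover other tool-specific prefixes.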

## Use Cases

<!--

What do you want to use this for?

What shortcomings exist with current approaches?

-->

- Build tools that use prefixes/suffixes to indicate how to process files.

- Files that may export different things per file (like CSS modules)

## Examples

<!-- Show how this would be used and what the behavior would be -->

It isn't clear to me if this should be done in tsconfig or in a type definition file.

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

|

Suggestion,In Discussion

|

low

|

Critical

|

578,598,402 |

vscode

|

Settings Sync : Allow for custom backend service end points

|

<!-- Please read our Rules of Conduct: https://opensource.microsoft.com/codeofconduct/ -->

<!-- Please search existing issues to avoid creating duplicates. -->

<!-- Describe the feature you'd like. -->

According to the Settings Sync Plan #90129 and the Settings Sync documentation ( https://code.visualstudio.com/docs/editor/settings-sync ), it seems that only Microsoft and public GitHub accounts are supported.

What if we want to use a GitHub Enterprise backend, or any other Git remote (GitLab, Gogs, etc.)?

|

feature-request,settings-sync

|

high

|

Critical

|

578,604,488 |

youtube-dl

|

https://nation.foxnews.com support request

|

<!--

######################################################################

WARNING!

IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE

######################################################################

-->

## Checklist

<!--

Carefully read and work through this check list in order to prevent the most common mistakes and misuse of youtube-dl:

- First of, make sure you are using the latest version of youtube-dl. Run `youtube-dl --version` and ensure your version is 2020.03.08. If it's not, see https://yt-dl.org/update on how to update. Issues with outdated version will be REJECTED.

- Make sure that all provided video/audio/playlist URLs (if any) are alive and playable in a browser.

- Make sure that site you are requesting is not dedicated to copyright infringement, see https://yt-dl.org/copyright-infringement. youtube-dl does not support such sites. In order for site support request to be accepted all provided example URLs should not violate any copyrights.

- Search the bugtracker for similar site support requests: http://yt-dl.org/search-issues. DO NOT post duplicates.

- Finally, put x into all relevant boxes (like this [x])

-->

- [x] I'm reporting a new site support request

- [x] I've verified that I'm running youtube-dl version **2020.03.08**

- [x] I've checked that all provided URLs are alive and playable in a browser

- [x] I've checked that none of provided URLs violate any copyrights

- [x] I've searched the bugtracker for similar site support requests including closed ones

## Example URLs

<!--

Provide all kinds of example URLs support for which should be included. Replace following example URLs by yours.

-->

Single Video: https://nation.foxnews.com/watch/0667a730448e69c75ab7eab7cb3225a2/

## Description

<!--

Provide any additional information.

If work on your issue requires account credentials please provide them or explain how one can obtain them.

-->


Request https://nation.foxnews.com/watch/0667a730448e69c75ab7eab7cb3225a2/

|

site-support-request

|

low

|

Critical

|

578,652,575 |

TypeScript

|

Recognize JS namespace pattern in TS

|

In short, I propose two things:

1. In TS files, recognize the JavaScript namespace patterns that are already recognized in JS files with `--allowJs`, like `var my = my || {}; my.app = my.app || {};` (#7632)

2. Recognize functions that create global JavaScript namespaces from a provided string. For example, calling [`Ext.na("Company.data")`](https://stackoverflow.com/a/18152489/350384) should have the same effect as writing it "by hand" with `var Company = Company || {}; Company.data = Company.data || {};`

## Why 1.

It is already possible, in a project using the `--allowJs` flag, to define such code in a JS file and have TS files recognize it:

_JS file_:

```js
var app = app || {};
app.pages = app.pages || {};
app.pages.admin = app.pages.admin || {};
app.pages.admin.mailing = (function () {
    return {
        /**
         * @param {string} email
         */
        sendMail: function (email) { }
    }
})()
```

_TS file_:

```ts
(function(){
    app.pages.admin.mailing.sendMail("[email protected]")
})();
```

See what I get when I hover over `mailing` property in my TS file.

The problem is that I want to incrementally migrate an old JavaScript project to TypeScript by converting each JS file to TS. It's not easy or obvious how to migrate a JS file that uses this namespace pattern without adding a lot of type definitions that are already inferred in JS files with the `allowJs` compiler flag. Why not recognize this pattern in TypeScript files as well?

## Why 2. and example

This is the actual pattern that my current project uses in JS files:

```js
namespace('app.pages.admin');

app.pages.admin.mailing = (function () {
    return {
        /**
         * @param {string} email
         */
        sendMail: function (email) { }
    }
})()
```

The `namespace` function is a global function that creates global namespace objects. It's logically equivalent to writing `var app = app || {}; app.pages = app.pages || {}; app.pages.admin = app.pages.admin || {};` from the previous example.
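At runtime, such a `namespace` function only has to walk the dotted path and create any missing objects. Here is a minimal sketch, assuming a hypothetical name `ensureNamespace` and an optional `root` parameter (defaulting to `globalThis`) added so it can be exercised without touching real globals. It covers only the runtime half; giving the created objects static types is exactly what this proposal asks the compiler to do.

```typescript
type NamespaceObject = Record<string, any>;

// Hypothetical runtime equivalent of the project's global `namespace("a.b.c")`.
function ensureNamespace(
  path: string,
  root: NamespaceObject = globalThis as unknown as NamespaceObject,
): NamespaceObject {
  let current = root;
  for (const segment of path.split(".")) {
    // Same effect as `x.segment = x.segment || {}`: an existing object is
    // reused, so members added by other files are preserved.
    if (!current[segment]) {
      current[segment] = {};
    }
    current = current[segment];
  }
  // Return the innermost object (e.g. `app.pages.admin`).
  return current;
}

// Usage: two files declaring the same namespace share one object.
const root: NamespaceObject = {};
ensureNamespace("app.pages.admin", root).mailing = {
  sendMail(email: string): void {
    console.log("sending to", email);
  },
};
ensureNamespace("app.pages.admin", root); // does not overwrite `mailing`
console.log(typeof root.app.pages.admin.mailing.sendMail); // prints: function
```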

I want to be able to change a JS file into a TS file and use this code in new TS files (but with the static safety that TS provides).

I propose a special type alias that the compiler recognizes: when a function parameter has this type, the compiler acts as if the global "JavaScript namespace" (or ["expando"](https://github.com/microsoft/TypeScript/issues/10566) object) named by the argument had been created. For example, this type alias would be added to the standard lib:

```ts

type JavaScriptNamespace = string;

```

Then I could declare a global `namespace` function like this:

```ts

declare function namespace(n : JavaScriptNamespace): void;

```

and this TS code would be valid:

```ts
namespace('app.pages.admin');

app.pages.admin.mailing = (function () {
    return {
        sendMail: function (email: string): void { }
    }
})()
```

It is similar in spirit to `ThisType`: the compiler has special handling for a particular type, but an older compiler that sees this type does nothing special (to it, it's just a `string`).

## Use cases and current "workarounds"

It's all about making the migration of current JS projects to TS easier. It's hard to convince my team (and even myself) that we should use TS when we need to write code like this to get the same behavior we had in JS, but with type safety:

```ts
//file: global.d.ts
declare var app: NamespaceApp
declare function namespace(namespace: string): void;

interface NamespaceApp {
    pages: NamespacePages;
}

interface NamespacePages {
    admin: NamespaceAdmin;
}

interface NamespaceAdmin {
}

//file: module1.ts
interface NamespaceAdmin {
    module1: {
        foo: (arg: string) => void;
        bar: (arg: string[]) => void;
    }
}

namespace("app.pages.admin")

app.pages.admin.module1 = (function () {
    return {
        foo: function (arg: string) {
        },
        bar: function (arg: string[]) {
        }
    }
})()

//file: module2.ts
interface NamespaceAdmin {
    module2: {
        foo2: (arg: string) => void;
        bar2: (arg: string[]) => void;
    }
}

namespace("app.pages.admin")

app.pages.admin.module2 = (function () {
    return {
        foo2: function (arg: string) {
        },
        bar2: function (arg: string[]) {
        }
    }
})()
```

You need to write the type definitions for your methods twice and split your type definitions into interfaces so that they merge 🤮

My current workaround is to use TypeScript namespaces like this (I have two approaches and don't like either of them):

```ts
//first approach
namespace app1.pages.admin.mailing {
    function privateFunc(){}

    export function sendMail(email: string): void{
        privateFunc();
    }
}

//second approach
namespace app2.pages.admin {
    export const mailing = (function(){
        function privateFunc(){}
        function sendMail(email: string){}
        return {
            sendMail
        }
    })()
}
```

There are many problems with this use of Typescript's namespace:

* The generated code is bigger, because that is how TS namespaces are emitted.

* The first approach would look almost like the original JS code if you could use `export { ... }` syntax as in ES modules. Then at least it would visually resemble the previous JS code that uses `return` in an IIFE. Something like this:

```ts
//second approach
namespace app5.pages.admin {
    function privateFunc(){}

    function sendMail(email: string): void{
        privateFunc();
    }

    export { sendMail } //Error: "Export declarations are not permitted in a namespace.",
}
```

But it's not supported. I guess it's not worth changing now, since namespaces are not used that often nowadays.

* You basically can't represent namespaces in which an inner segment has the same name as an outer one. For example, in JS I had an `app.pages.app` namespace, and I cannot have it in TS:

```ts
namespace app.pages.app {
    export const mailing = (function(){
        function privateFunc(){}

        function sendMail(email: string){
            app.pages.someOtherModule.foo(); //compiler error Property 'pages' does not exist on type 'typeof app'.
        }

        return {
            sendMail
        }
    })()
}
```

In the end I had to use a different name in TS files, plus a workaround to make the old name work in the other JS files:

```ts

(app.pages as any).app = app.pages.otherName;

```

## Summary

As I mentioned, it's all about easing the migration to TypeScript of old JavaScript projects that use the old namespace pattern (for example, because they use the ExtJS library). I know this pattern is not that popular nowadays, because you should use ES modules. But I believe there are a lot of people who would love to move their projects to TS but find it hard, because it would require moving to ES modules first, which is a huge task in itself. My actual plan is to migrate the current code to TS with the old namespaces and then try to migrate it to ES modules. That should be much easier once most of the code is typed; you have more confidence when the compiler helps you.

If the TS team thinks only proposal 1 is worth doing (just recognizing what `allowJs` already recognizes, without implementing the `JavaScriptNamespace` alias), it would be "good enough" for me, because it would be much better than my current namespace workarounds.

But if I had the `JavaScriptNamespace` alias feature, it would be possible to include all my JS files in the TS compilation with `allowJs`, and that JS code would be available in TS! (Of course, function arguments would be `any`, but still.) I would also get better IntelliSense in the current JS files, because this `namespace` function would be recognized as the creator of a namespace (when using Salsa).

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

|

Suggestion,In Discussion

|

low

|

Critical

|

578,655,245 |

node

|

No stack trace with missing async keyword and dynamic imports

|

<!--

Thank you for reporting an issue.

This issue tracker is for bugs and issues found within Node.js core.

If you require more general support please file an issue on our help

repo. https://github.com/nodejs/help

Please fill in as much of the template below as you're able.

Version: output of `node -v`

Platform: output of `uname -a` (UNIX), or version and 32 or 64-bit (Windows)

Subsystem: if known, please specify affected core module name

-->

* **Version**: v13.9.0

* **Platform**: Linux PC_NAME 5.3.0-40-generic #32~18.04.1-Ubuntu SMP Mon Feb 3 14:05:59 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

* **Subsystem**:

### What steps will reproduce the bug?

Consider the following code:

#### a.mjs

```js
(async () => {
    "use strict";
    let b = await import("./b.mjs");
    await b.default();
})();
```

#### b.mjs

```js
import fs from 'fs';

function bug() {
    // The something doesn't have to exist
    console.log(await fs.promises.readFile("/proc/cpuinfo", "utf-8"));
}

export default async function () {
    await bug();
}
```

#### Explanation

There is obviously a bug in `b.mjs` above: the `bug()` function is missing the `async` keyword. However, when I attempt to execute the above, I get the following error:

```
(node:30870) UnhandledPromiseRejectionWarning: SyntaxError: Unexpected reserved word
    at Loader.moduleStrategy (internal/modules/esm/translators.js:81:18)
    at async link (internal/modules/esm/module_job.js:37:21)
(node:30870) UnhandledPromiseRejectionWarning: Unhandled promise rejection. This error originated either by throwing inside of an async function without a catch block, or by rejecting a promise which was not handled with .catch(). To terminate the node process on unhandled promise rejection, use the CLI flag `--unhandled-rejections=strict` (see https://nodejs.org/api/cli.html#cli_unhandled_rejections_mode). (rejection id: 1)
(node:30870) [DEP0018] DeprecationWarning: Unhandled promise rejections are deprecated. In the future, promise rejections that are not handled will terminate the Node.js process with a non-zero exit code.
```

This isn't particularly helpful. Now, consider the following snippet:

**c.mjs:**

```js
import fs from 'fs';

function bug() {
    // The something doesn't have to exist
    console.log(await fs.promises.readFile("/proc/cpuinfo", "utf-8"));
}

(async () => {
    "use strict";
    await bug();
})();
```

There's a bug in this one too, but executing it yields a very different and much more helpful error:

```
file:///tmp/c.mjs:5
    console.log(await fs.promises.readFile("/proc/cpuinfo", "utf-8"));
                ^^^^^

SyntaxError: Unexpected reserved word
    at Loader.moduleStrategy (internal/modules/esm/translators.js:81:18)
    at async link (internal/modules/esm/module_job.js:37:21)
```

Much better. Node gives us a helping hand by telling us where the error occurred.

### How often does it reproduce? Is there a required condition?

This bug in the error message only appears to occur when a module is dynamically imported with `import("./path/to/file.mjs")`. Specifically, the first error message is missing this bit:

```
file:///tmp/c.mjs:5
    console.log(await fs.promises.readFile("/proc/cpuinfo", "utf-8"));
                ^^^^^
```

...obviously the file path and line number would be different for b.mjs if this part were added.

### What is the expected behavior?

The first error message should look like the second.

<!--

If possible please provide textual output instead of screenshots.

-->

When dynamically importing a module that is missing the async keyword on a method, the error message should tell me where the error occurred.

### What do you see instead?

The part where it tells me where the "unexpected reserved word" was found is missing. See above for examples of what's gone wrong.

<!--

If possible please provide textual output instead of screenshots.

-->

### Additional information

If possible, when it's the `await` keyword that was detected as unexpected, the error message should reflect this more closely. For example, it might be helpful to say `SyntaxError: Unexpected reserved word "await" (did you forget the "async" keyword?)` or something like that.

<!--

Tell us anything else you think we should know.

-->

|

esm

|

low

|

Critical

|

578,682,986 |

flutter

|

DropdownButton does not grows based on content when isDense is true.

|

https://b.corp.google.com/issues/151121131.

|

framework,f: material design,d: api docs,customer: money (g3),has reproducible steps,found in release: 3.0,found in release: 3.1,team-design,triaged-design

|

low

|

Major

|

578,731,505 |

godot

|

Tilesets behave incredibly buggy when multiple Tiles share the same texture

|

**Godot version:** 3.1.2 stable

**OS/device including version:** Win64

**Issue description:**

Because the same texture can't be added more than once, because clicking into a texture selects the Tile, and because clicking the arrows advances between tiles, subtiles, and shapes,

Tilesets start to behave unpredictably when multiple Tiles share the same texture.

**Steps to reproduce:**

1. Have a simple spritesheet,

2. create an Autotile Tile and select all the spritesheet as region

3. create another Autotile Tile and select all the spritesheet as region, change modulate/bitmask/collision/priority whatever

4. create another Atlas Tile and select all the spritesheet as region

5. paint some tiles

6. try to go back into Tilesets to select a specific tile and change settings

7. witness the chaos unfold

**Minimal reproduction project:**

[Tileset_single_texture_issue.zip](https://github.com/godotengine/godot/files/4313749/Tileset_single_texture_issue.zip)

|

bug,topic:core,topic:editor

|

low

|

Critical

|

578,777,312 |

pytorch

|

Strange behaviour of F.interpolate with bicubic mode.

|

## 🐛 Bug

Input:

`X` with shape `(2, 3, 256, 256)`

Run:

`Y = F.interpolate(X, [256, 256], mode='bicubic', align_corners=True)`

`Z = F.interpolate(Y, [256, 256], mode='bicubic', align_corners=True)`

Issue:

`Y[1,:,:,:]` and `Z[1,:,:,:]` are all zeros.

Following is my code:

```python
import PIL
import torch
import torch.nn.functional as F
from PIL import Image
from torchvision import transforms


def interpolate_torch(x, s=256, mode='bicubic', align_corners=True):
    return F.interpolate(x, [s, s], mode=mode, align_corners=align_corners)


def get_transform(resize_crop, colorJitter, horizen_flip, inputsize=1024):
    transform_list = []
    if resize_crop:
        transform_list.append(transforms.RandomResizedCrop(inputsize, scale=(0.8, 1.0), interpolation=PIL.Image.BICUBIC))
    else:
        if inputsize != 1024:
            transform_list.append(transforms.Resize(inputsize, interpolation=PIL.Image.BICUBIC))
    if colorJitter:
        transform_list.append(transforms.ColorJitter())
    if horizen_flip:
        transform_list.append(transforms.RandomHorizontalFlip())
    transform_list += [transforms.ToTensor(),
                       transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]
    return transforms.Compose(transform_list)


def img2tensor(name):
    transf = get_transform(resize_crop=False, colorJitter=False,
                           horizen_flip=False, inputsize=256)
    img = Image.open(name)
    img_torch = transf(img)
    return img_torch


y2 = img2tensor('content.png').unsqueeze(0)
y1 = img2tensor('content.png').unsqueeze(0)
y = torch.cat([y2, y1], 0)
print(y[1, :, :, :])
y_bicubic = interpolate_torch(y, s=256, mode='bicubic')
print(y_bicubic[1, :, :, :])
y_bicubic_bicubic = interpolate_torch(y_bicubic, s=128, mode='bicubic')
```

Environment:

pytorch 1.1.0

torchvision 0.2.2.post3

scikit-image 0.15.0

python3

|

module: nn,triaged

|

low

|

Critical

|

578,802,347 |

flutter

|

Changing MaterialApp to CupertinoApp exceptions on routes.

|

Hi, I changed my Flutter app from MaterialApp to CupertinoApp, and now it throws an error on routes.

Tested on an Android device and the Android Emulator.

The following code works with MaterialApp:

```dart
import 'package:flutter/material.dart';
import 'package:wasd/onboarding.dart';
import 'strings.dart';

void main() => runApp(WASD());

class WASD extends StatelessWidget {
  // This widget is the root of your application.
  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      title: Strings.appTitle,
      initialRoute: Strings.navOnboarding,
      routes: {
        Strings.navOnboarding: (context) => Onboarding()
      },
    );
  }
}
```

However, when I change it to CupertinoApp as follows, it shows an error:

```dart
import 'package:flutter/cupertino.dart';
import 'package:wasd/onboarding.dart';
import 'strings.dart';

void main() => runApp(WASD());

class WASD extends StatelessWidget {
  // This widget is the root of your application.
  @override
  Widget build(BuildContext context) {
    return CupertinoApp(
      title: Strings.appTitle,
      initialRoute: Strings.navOnboarding,
      routes: {
        Strings.navOnboarding: (context) => Onboarding()
      },
    );
  }
}
```

<details>

<summary>Logs</summary>

```

Performing hot reload...

Syncing files to device Android SDK built for x86...

════════ Exception caught by widgets library ═══════════════════════════════════════════════════════

The following assertion was thrown building Builder(dirty, dependencies: [CupertinoUserInterfaceLevel, _InheritedCupertinoTheme]):

Either the home property must be specified, or the routes table must include an entry for "/", or there must be on onGenerateRoute callback specified, or there must be an onUnknownRoute callback specified, or the builder property must be specified, because otherwise there is nothing to fall back on if the app is started with an intent that specifies an unknown route.

'package:flutter/src/widgets/app.dart':

Failed assertion: line 178 pos 10: 'builder != null ||

home != null ||

routes.containsKey(Navigator.defaultRouteName) ||

onGenerateRoute != null ||

onUnknownRoute != null'

Either the assertion indicates an error in the framework itself, or we should provide substantially more information in this error message to help you determine and fix the underlying cause.

In either case, please report this assertion by filing a bug on GitHub:

https://github.com/flutter/flutter/issues/new?template=BUG.md

The relevant error-causing widget was:

CupertinoApp file:///Users/abdullahbalta/StudioProjects/wasd-flutter/lib/main.dart:11:12

When the exception was thrown, this was the stack:

#2 new WidgetsApp (package:flutter/src/widgets/app.dart:178:10)

#3 _CupertinoAppState.build.<anonymous closure> (package:flutter/src/cupertino/app.dart:278:22)

#4 Builder.build (package:flutter/src/widgets/basic.dart:6757:41)

#5 StatelessElement.build (package:flutter/src/widgets/framework.dart:4291:28)

#6 ComponentElement.performRebuild (package:flutter/src/widgets/framework.dart:4223:15)

...

════════════════════════════════════════════════════════════════════════════════════════════════════

Reloaded 1 of 639 libraries in 418ms.

```

</details>

<details>

<summary>strings.dart</summary>

```dart
class Strings {
  static String appTitle = "WASD";

  //routes
  static String navOnboarding = '/onboarding';
}
```

</details>

The assertion error message is very clear, but why does this not work when the same routes work with MaterialApp?

|

framework,f: material design,d: api docs,has reproducible steps,found in release: 3.0,found in release: 3.1,team-design,triaged-design

|

low

|

Critical

|

578,842,855 |

kubernetes

|

Issues in published repos are not very visible

|

E.g. https://github.com/kubernetes/apimachinery, https://github.com/kubernetes/client-go, etc

People file issues there because it's logical, but we (api machinery) don't include them in our triage meeting.

We should either adjust our query, change our process in some other way (like tag issues when we've looked at them), or adjust the readmes and issue templates in the published repos.

/sig api-machinery

|

kind/bug,sig/api-machinery,lifecycle/frozen

|

low

|

Major

|

578,871,592 |

godot

|

Tileset: Neither Autotile nor Atlas Tile Priority allow "per Tile" priority

|

**Godot version:** 3.2.1.stable

**OS/device including version:** Win64

**Issue description:**

My goal is to have a Tile with 8 Subtiles and every time I paint a tile, a random one out of those 8 is picked. This is what the "Priority" tab in the Tileset is for.

Both Atlas tiles and Autotiles have a Priority tab. So I assumed that a Tile with 8 identical bitmasks should give me 8 randomly chosen subtiles if every subtile is set to the default 1/8 priority. [At least according to GDQuest](https://youtu.be/F6VerW98gEc?t=333), 1/8 should mean that out of 8 painted tiles, each subtile is expected to appear once (a probability of 1/8 per painted tile).
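The expectation above treats priorities as relative weights: with 8 subtiles at priority 1 each, every subtile receives 1/8 of the picks. A generic weighted random choice illustrating that reading is sketched below. It is an assumption about the intended semantics, not Godot's actual implementation, and `weightedPick` is a hypothetical name; the random source is injectable so the pick can be tested deterministically.

```typescript
// Pick an index with probability proportional to its weight (priority).
// `rand` must return a value in [0, 1); it defaults to Math.random.
function weightedPick(weights: readonly number[], rand: () => number = Math.random): number {
  const total = weights.reduce((sum, w) => sum + w, 0);
  if (total <= 0) {
    throw new Error("at least one weight must be positive");
  }
  // Choose a point in [0, total) and find the weight interval containing it.
  let threshold = rand() * total;
  for (let i = 0; i < weights.length; i++) {
    threshold -= weights[i];
    if (threshold < 0) {
      return i;
    }
  }
  // Guard against floating-point rounding at the upper end.
  return weights.length - 1;
}

// Eight subtiles with equal priority: each index 0..7 is chosen with probability 1/8.
const subtile = weightedPick([1, 1, 1, 1, 1, 1, 1, 1]);
console.log(subtile);
```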

I would expect this to work; however, priority seems to take effect only if there are multiple tiles for the same bitmask, **and only if the bitmask _rules_ return true.**

As a consequence, despite **Atlas Tiles** having a "Priority" tab, that tab seems to have absolutely no purpose or effect:

**Autotiles** _without_ a bitmask set will also show no effect of priority, just like with Atlas tiles:

Note the 1/0 there.

If you set the 3x3 bitmask to _fill the entire tile_, all tiles surrounded by other tiles will be affected by "priority", but not those that are not surrounded on all sides:

If you set the 3x3 bitmask _only in the center_ of each tile, the opposite is the case, only tiles not surrounded by any other tiles will be affected by the priority setting:

The desired result, however, would be to give the user control over which tiles are set according to priority and which are not. The way bitmasks are currently set up seems to make the priority feature either broken or useless.

See it in action:

**Minimal reproduction project:**

[Tileset_Priority_issue.zip](https://github.com/godotengine/godot/files/4314839/Tileset_Priority_issue.zip)

PS: If you wonder why Atlas tiles don't show up in the right color: https://github.com/godotengine/godot/issues/36964

|

bug,enhancement,topic:core,topic:editor,topic:2d

|

medium

|

Major

|

578,877,320 |

go

|

x/pkgsite: search algorithm doesn't find similarly named packages

|

<!--

Please answer these questions before submitting your issue. Thanks!

For questions, please email [email protected].

-->

### What is the URL of the page with the issue?

https://pkg.go.dev/search?q=blake2

### What did you do?

Searched for `blake2` at pkg.go.dev

### What did you expect to see?

Search results which included both https://pkg.go.dev/golang.org/x/crypto/blake2b and https://pkg.go.dev/golang.org/x/crypto/blake2s

### What did you see instead?

Search results that did not include those packages

It looks like the search isn't finding similarly named packages. In this case, I knew there were two blake2-related packages in x/crypto, but when I searched for "blake2", neither of the similarly named `blake2s` and `blake2b` packages was in the results.
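One way to get the expected results is to treat the query as a prefix of a package's last path element, so that "blake2" also matches `blake2b` and `blake2s`. The sketch below only illustrates that matching rule; `matchesQuery` is a hypothetical helper, not pkgsite's actual search code.

```typescript
// Hypothetical matcher: a package matches when its last import-path element
// starts with the query (case-insensitive). An exact match is also a prefix match.
function matchesQuery(importPath: string, query: string): boolean {
  const lastElem = importPath.slice(importPath.lastIndexOf("/") + 1).toLowerCase();
  return lastElem.startsWith(query.toLowerCase());
}

const paths = [
  "golang.org/x/crypto/blake2b",
  "golang.org/x/crypto/blake2s",
  "golang.org/x/crypto/sha3",
];

// Keeps the two blake2 packages and drops sha3.
console.log(paths.filter((p) => matchesQuery(p, "blake2")));
```

A real search engine would combine a rule like this with ranking, so that exact matches still sort above prefix matches.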

|

NeedsInvestigation,pkgsite,pkgsite/search

|

low

|

Minor

|

578,886,341 |

flutter

|

Standardize FPS computation

|

We are investigating the best way to measure FPS in Flutter when user input and animations are sporadic. Multiple threads and pipelining complicate this further.

This also seems to be a common issue for Chrome-based operating systems and Fuchsia.

We can either come up with an API, or at least document some standardized algorithm or specifications on how to compute the FPS in such scenarios.
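As one possible starting point for such a specification (an assumption for illustration, not an agreed Flutter definition), FPS can be computed only over the periods when frames are actually being produced, so idle time between sporadic inputs or animations does not drag the number down. The sketch takes sorted frame-start timestamps in milliseconds. Those could come from data such as Flutter's `FrameTiming`, but that wiring is not shown. It ends a burst wherever the gap exceeds a hypothetical `idle_gap_ms` threshold and reports frames per second of active time. It deliberately ignores threads and pipelining, which is the part the issue says still needs a standard.

```python
def active_fps(frame_starts_ms, idle_gap_ms=100.0):
    """Frames per second measured only over active periods.

    frame_starts_ms: sorted timestamps (ms) at which frames began.
    A gap longer than idle_gap_ms (a hypothetical threshold) is treated
    as idle time and excluded from both numerator and denominator.
    """
    if len(frame_starts_ms) < 2:
        return 0.0
    intervals = 0
    active_ms = 0.0
    for prev, cur in zip(frame_starts_ms, frame_starts_ms[1:]):
        gap = cur - prev
        if gap <= idle_gap_ms:
            intervals += 1
            active_ms += gap
    if active_ms == 0:
        return 0.0
    return intervals * 1000.0 / active_ms


def naive_fps(frame_starts_ms):
    """Frame intervals divided by total wall-clock span, idle time included."""
    if len(frame_starts_ms) < 2:
        return 0.0
    span = frame_starts_ms[-1] - frame_starts_ms[0]
    return (len(frame_starts_ms) - 1) * 1000.0 / span


if __name__ == "__main__":
    # Two bursts of frames 20 ms apart, separated by one second of idle time.
    starts = [20.0 * i for i in range(10)] + [1180.0 + 20.0 * i for i in range(10)]
    print(active_fps(starts), naive_fps(starts))
```

For this input the active-time metric reports 50 FPS, while the naive average falls below 14 FPS only because the app was idle, which is the kind of distortion a standardized definition would need to settle.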

|

c: new feature,engine,c: performance,perf: speed,P3,team-engine,triaged-engine

|

low

|

Major

|

578,886,968 |

terminal

|

Disable (or Customize) key to suppress mouse events

|

# Description of the new feature/enhancement

Work item introduced by #4856. Shift is used to suppress mouse events. A user may want to disable this feature.

If we want to make it customizable (which I don't think anybody is asking for, but I'll include the idea here anyway), this may be part of #1553.

|

Issue-Feature,Area-Settings,Product-Terminal

|

low

|

Major

|

578,924,054 |

terminal

|

shift+left at the end of a line in the console selects a nonexistent character

|

# Environment

19582 rs_prerelease

# Steps to reproduce

open cmd

type: `type f:\mx\public\x86fre.nocil\onecore\external\sdk\inc\crt\direct.h`

# Expected behavior

shift+left should select the h, and ctrl+shift+left should select the whole path

# Actual behavior

* shift+left selects the empty character after the h

* ctrl+shift+left selects the empty character after the h. A 2nd ctrl+shift+left selects the path
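The expected behavior can be written down as a tiny selection model in Python. It is a hedged sketch, not conhost's code. It assumes that after typing, the cursor sits one column past the last character, and that a selection is the half-open column range between that anchor and the moved cursor. It also assumes a word is a run of non-space characters, so the whole path counts as one word, as the report expects. Under those assumptions the first shift+left already covers the final `h`, with no empty cell involved. The function names are hypothetical.

```python
def shift_left(cursor):
    """Selection (start, end) after one shift+left, anchored at `cursor`."""
    return max(cursor - 1, 0), cursor


def ctrl_shift_left(line, cursor):
    """Selection (start, end) extended to the start of the previous word.

    A "word" is assumed to be a run of non-space characters.
    """
    start = cursor
    while start > 0 and line[start - 1] == " ":  # skip spaces left of the cursor
        start -= 1
    while start > 0 and line[start - 1] != " ":  # then skip the word itself
        start -= 1
    return start, cursor


if __name__ == "__main__":
    line = r"type f:\mx\public\x86fre.nocil\onecore\external\sdk\inc\crt\direct.h"
    cursor = len(line)  # after typing, the cursor sits one past the final "h"
    start, end = shift_left(cursor)
    print(repr(line[start:end]))
    start, end = ctrl_shift_left(line, cursor)
    print(line[start:end])
```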

|

Product-Conhost,Area-Input,Issue-Bug,Priority-3

|

low

|

Minor

|

578,950,867 |

TypeScript

|

Disable or remove specific code action/fixes/refactoring

|

## Search Terms

## Suggestion

https://github.com/microsoft/vscode/issues/92305

When a code action is available, an icon appears in the code editing area.

If there are fixes I never want, it is annoying that this clickable icon appears every time my cursor moves over the code, I select some code, or I perform some other action. In particular, when the part of the current line to the left of the cursor is not whitespace, the icon is shown on the previous line and covers that line's code.

For example, I always want to use `require` in JS files, not `import`.

Additionally, when the related code action is disabled, its visual hints (the dashed underline, the "show fixes" button in the hover popup, and the dimmed text color) should also be removed, because they suggest there are problems in my code when there actually are not.

## Use Cases

## Examples

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

|

Suggestion,In Discussion

|

low

|

Major

|

578,974,017 |

flutter

|

Prevent status and system bar appearance on touch or on keyboard in flutter

|

I have asked about this problem on [Stack Overflow](https://stackoverflow.com/questions/60611854/prevent-status-and-system-bar-appearance-on-touch-or-on-keyboard-in-flutter), but there has been no answer or comment so far.

My problem is as follows.

I have a Flutter app that will be placed in a public space on a tablet or an Android box. The app should run in full screen. I have used `SystemChrome.setEnabledSystemUIOverlays([]);` in `initState()`.

However, whenever the user swipes from the top or bottom of the screen, the status bar and system navigation bar appear, as described in this [Stack Overflow answer](https://stackoverflow.com/a/57126488/3436326). Members of the public can then close or exit the app with the back button. The same thing happens when the keyboard appears.

How can I prevent or hide the status bar and system navigation bar until I close the app? The app should only be closable from an administrator access page. Is there something that can be done in MainActivity.java? I currently cannot find any way to keep the status and system bars out of the user's reach while the app is running.

In Xamarin.Forms, which I used before, I applied the following code that I found online:

```csharp
// Remove title bar
RequestWindowFeature(WindowFeatures.NoTitle);

// Remove notification bar
Window.SetFlags(WindowManagerFlags.Fullscreen, WindowManagerFlags.Fullscreen);

// Wake screen when app is added to task
Window.SetFlags(WindowManagerFlags.TurnScreenOn, WindowManagerFlags.TurnScreenOn);

// Keep screen on without timeout
Window.SetFlags(WindowManagerFlags.KeepScreenOn, WindowManagerFlags.KeepScreenOn);

if (Build.VERSION.SdkInt >= BuildVersionCodes.Lollipop)
{
    // Zero out the status bar height Xamarin.Forms reserves, via reflection;
    // two private field names are tried, presumably because it differs between versions.
    var stBarHeight = typeof(Xamarin.Forms.Platform.Android.FormsAppCompatActivity).GetField("statusBarHeight", System.Reflection.BindingFlags.Instance | System.Reflection.BindingFlags.NonPublic);
    if (stBarHeight == null)
    {
        stBarHeight = typeof(Xamarin.Forms.Platform.Android.FormsAppCompatActivity).GetField("_statusBarHeight", System.Reflection.BindingFlags.Instance | System.Reflection.BindingFlags.NonPublic);
    }
    stBarHeight?.SetValue(this, 0);
}
```

|

platform-android,framework,c: proposal,a: layout,P3,team-android,triaged-android

|

low

|

Major

|

578,974,275 |

pytorch

|

Restructure `multi_head_attention_forward`

|

## 🚀 Feature

Restructure the function `multi_head_attention_forward` in [nn.functional](https://github.com/pytorch/pytorch/blob/23b2fba79a6d2baadbb528b58ce6adb0ea929976/torch/nn/functional.py#L3573) into several functions to improve the ability to experiment. In particular, decompose the function so that the following are available:

* The input embedding functions.

* The computation of attention weights.

* The output embedding function.

This will allow users to try different embeddings or attention mechanisms without having to recode the rest.
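A rough sketch of the proposed split follows, written with plain Python lists so it runs without PyTorch. The function names (`in_projection`, `attention_weights`, `apply_attention`, `out_projection`, `attention_forward`) are hypothetical and are not existing `torch.nn.functional` APIs. To keep each stage visible, it covers a single head with no masking, dropout, or biases. Each stage can then be swapped independently, which is the point of the proposal.

```python
import math


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def in_projection(query, key, value, w_q, w_k, w_v):
    """Stage 1: input projections (swap this to try other embeddings)."""
    return matmul(query, w_q), matmul(key, w_k), matmul(value, w_v)


def attention_weights(q, k):
    """Stage 2: scaled dot-product attention weights, one row per query."""
    scale = 1.0 / math.sqrt(len(q[0]))
    scores = matmul(q, transpose(k))
    return [softmax([s * scale for s in row]) for row in scores]


def apply_attention(weights, v):
    """Stage 3: weighted sum of the projected values."""
    return matmul(weights, v)


def out_projection(attended, w_o):
    """Stage 4: final output projection."""
    return matmul(attended, w_o)


def attention_forward(query, key, value, w_q, w_k, w_v, w_o):
    """Compose the stages; returns (output, attention weights)."""
    q, k, v = in_projection(query, key, value, w_q, w_k, w_v)
    weights = attention_weights(q, k)
    return out_projection(apply_attention(weights, v), w_o), weights
```

A multi-head variant would split the projected `q`, `k`, and `v` column-wise into heads, run `attention_weights` and `apply_attention` per head, and concatenate the results before `out_projection`.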

## Motivation

Addresses the issue of decomposing the function as mentioned in #32590. It also moves forward on including more support for attention mechanisms.

## Pitch

Currently, the `multi_head_attention_forward` function encapsulates the projection of the `query`, `key`, and `value`, the computation of attention over these projections, and the output projection applied after attention. Furthermore, the input embedding step contains several code paths, each handling a different embedding. By decomposing the function into several parts, we can make it more readable and more open to experimentation.

The following plan is based on the above: