id | repo | title | body | labels | priority | severity |

|---|---|---|---|---|---|---|

468,558,864 | opencv | OpenCV.js binding issue for perspectiveTransform using Point2fVector as input | I am trying to call `findHomography` and `perspectiveTransform` from OpenCV.js. The issue I came across was that the bindings of these two functions are only specified for `Mat` inputs and not for `std::vector<cv::Point2f>`, which I want to use. So I went ahead and created new bindings for these functions with these structs as inputs. However, `findHomography` seems to work while `perspectiveTransform` results in a runtime error. I am not really sure why, since I essentially did the same thing for both. Any help or suggestions would be much appreciated.

**Error Message** [LIVE EXAMPLE][1]

[![enter image description here][2]][2]

**Original Bindings**

Functions

```.cpp

Mat findHomography_wrapper(const cv::Mat& arg1, const cv::Mat& arg2, int arg3, double arg4, cv::Mat& arg5, const int arg6, const double arg7) {

return cv::findHomography(arg1, arg2, arg3, arg4, arg5, arg6, arg7);

}

void perspectiveTransform_wrapper(const cv::Mat& arg1, cv::Mat& arg2, const cv::Mat& arg3) {

return cv::perspectiveTransform(arg1, arg2, arg3);

}

```

Bindings

```.cpp

function("findHomography", select_overload<Mat(const cv::Mat&, const cv::Mat&, int, double, cv::Mat&, const int, const double)>(&Wrappers::findHomography_wrapper));

function("perspectiveTransform", select_overload<void(const cv::Mat&, cv::Mat&, const cv::Mat&)>(&Wrappers::perspectiveTransform_wrapper));

```

**Custom Bindings**

Functions

```.cpp

Mat findHomographyEasy(const std::vector<cv::Point2f>& arg1, const std::vector<cv::Point2f>& arg2, int arg3) {

return cv::findHomography(arg1,arg2,arg3);

}

void perspectiveTransformEasy(const std::vector<cv::Point2f>& arg1, std::vector<cv::Point2f>& arg2, const cv::Mat& arg3) {

cv::perspectiveTransform(arg1, arg2, arg3); // also tried with return here

}

```

Binding

```.cpp

function("findHomographyEasy", &binding_utils::findHomographyEasy); // WORKS PERFECTLY

function("perspectiveTransformEasy", &binding_utils::perspectiveTransformEasy); // CAUSES RUNTIME ERROR

```

**HTML / Javascript Usage**

```.html

<script src="./opencv.js" type="text/javascript"></script>

<script type="text/javascript">

cv['onRuntimeInitialized']=()=>{

match();

}

function match() {

// SOME STUFF...

var H = new cv.Mat();

H = cv.findHomographyEasy(obj, scene, cv.FM_RANSAC);

var obj_corners = new cv.Point2fVector();

obj_corners[0] = new cv.Point(0,0);

obj_corners[1] = new cv.Point(img1Raw.cols,0);

obj_corners[2] = new cv.Point(img1Raw.cols, img1Raw.rows);

obj_corners[3] = new cv.Point(0, img1Raw.rows);

console.log(img1Raw.cols); // 500

console.log(img1Raw.rows); // 363

var scene_corners = new cv.Point2fVector();

cv.perspectiveTransformEasy(obj_corners, scene_corners, H); // I know issue is here because I have surrounded this line with console.log

}

</script>

```
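One possible cause worth ruling out (an assumption, not confirmed in this report): if `Point2fVector` is registered through embind's `register_vector`, elements are added with `push_back` and read with `get`/`size`, while `obj_corners[0] = ...` only sets a property on the JavaScript wrapper and leaves the underlying C++ vector empty. The sketch below uses `FakePoint2fVector`, a hypothetical stand-in class rather than the real binding, to show the difference.

```typescript
// Hypothetical stand-in for an embind-style vector wrapper (NOT the real
// OpenCV.js binding): elements live in hidden storage that only
// push_back/get/size can reach.
type Pt = { x: number; y: number };

class FakePoint2fVector {
  private storage: Pt[] = [];

  push_back(p: Pt): void {
    this.storage.push(p);
  }

  get(i: number): Pt | undefined {
    return this.storage[i];
  }

  size(): number {
    return this.storage.length;
  }
}

// Index assignment only adds a plain property to the wrapper object.
const byIndex = new FakePoint2fVector();
(byIndex as any)[0] = { x: 0, y: 0 };
(byIndex as any)[1] = { x: 500, y: 0 };

// push_back actually appends to the underlying storage.
const byPush = new FakePoint2fVector();
byPush.push_back({ x: 0, y: 0 });
byPush.push_back({ x: 500, y: 0 });

console.log(byIndex.size(), byPush.size());
```

If that assumption holds for the custom binding, filling `obj_corners` with `push_back` before calling `perspectiveTransformEasy` would be the first thing to try.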

[1]: https://strmwr-cb94e.firebaseapp.com/

[2]: https://i.stack.imgur.com/R3O1o.png | category: javascript (js) | low | Critical |

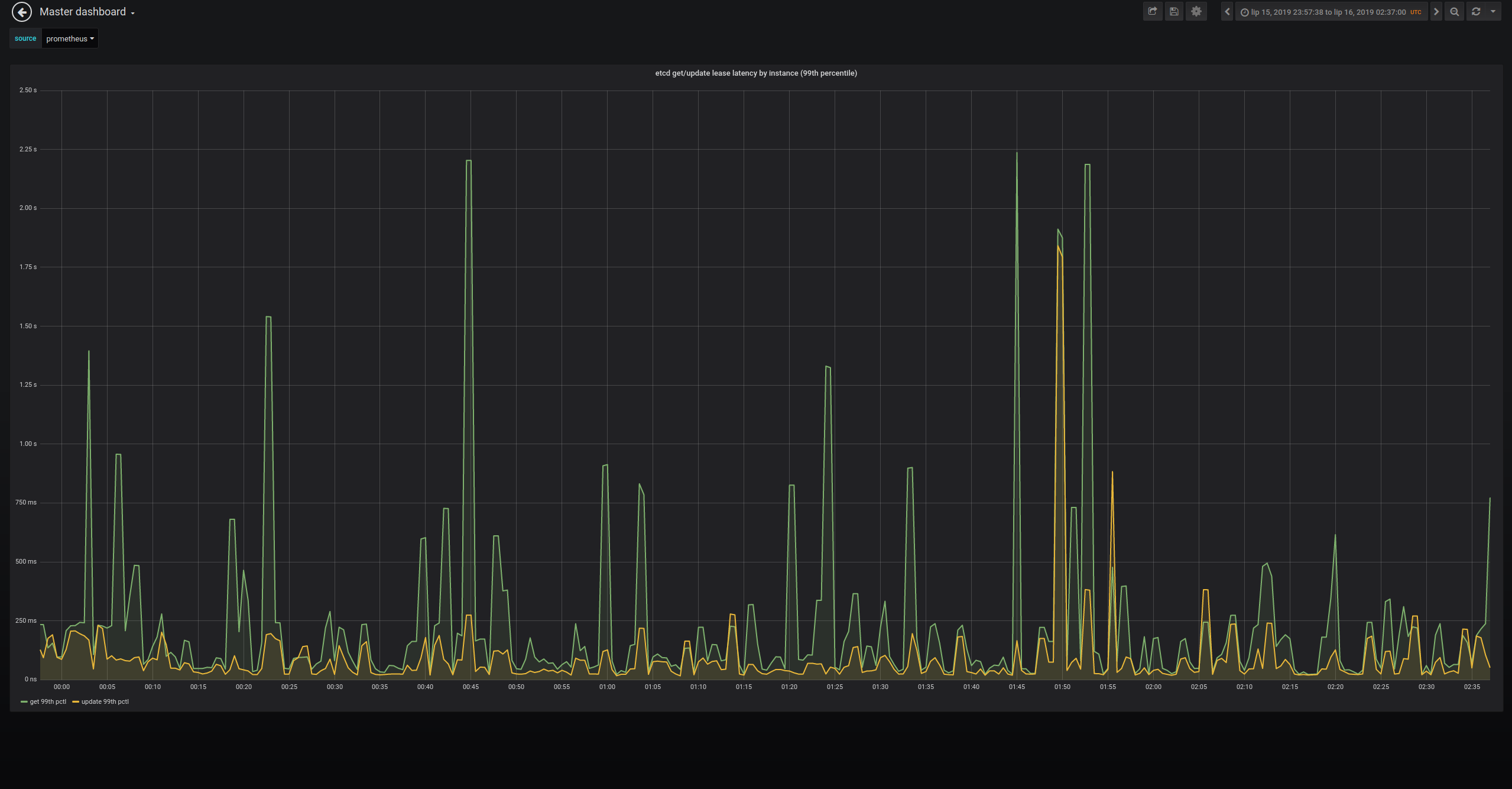

468,570,427 | kubernetes | [Performance] Etcd get node-lease latency is higher than put latency and not within SLO limits | **What happened**:

When running GCE 5K scale tests (either kubemark or regular), we discovered that the get Lease latency is significantly higher than the put Lease latency. This is counter-intuitive and, even worse, the 99th percentile of get Lease latency is often not within our SLOs. Some graphs:

ApiServer Latency:

ETCD latency:

**What you expected to happen**:

The get latency should be lower than the put latency at the etcd level, and the e2e get Lease latency should be within the scalability SLOs.

**How to reproduce it (as minimally and precisely as possible)**:

Run ci-kubernetes-e2e-gce-scale-performance

**Anything else we need to know?**:

SIG scalability has already reached out to the etcd team and asked about this.

/sig scalability

/assign

| kind/bug,sig/scalability,sig/api-machinery,lifecycle/frozen | medium | Major |

468,707,204 | TypeScript | Allow configuration of ts.server.maxFileSize | ## Search Terms

```

configure maxFileSize

largeFileReferenced

```

## Suggestion

Allow the maxFileSize property to be configurable.

## Use Cases

Currently, when trying to import large JSON files, the TypeScript language service fails to provide IntelliSense because the maxFileSize limit is exceeded.

## Examples

```

[Trace - 10:29:07 AM] <semantic> Event received: largeFileReferenced (0).

Data: {

"file": "/home/user/project/src/data.json",

"fileSize": 6534662,

"maxFileSize": 4194304

}

```

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

| Suggestion,Awaiting More Feedback | low | Minor |

468,717,301 | opencv | OpenCV.js assigned Mat object memory isnt collected by garbage collection | 

##### System information (version)


- OpenCV => 4.1

- Operating System / Platform => MacOS

- Compiler => using opencv.js from the source of your website

##### Detailed description

If I push Mat objects to arrays, the objects stay in the array and cannot be cleaned up by V8 garbage collection. This is unfortunate and causes memory overflow when working with larger objects. I've filed an issue about this with the Google team, which includes examples to reproduce: https://bugs.chromium.org/p/chromium/issues/detail?id=982797

##### Steps to reproduce

https://bugs.chromium.org/p/chromium/issues/detail?id=982797


| RFC,category: javascript (js) | low | Critical |

468,733,743 | terminal | TitlebarControl should be a Template | The `TitlebarControl` introduced in #1948 should be a XAML Template, so we can style it more easily. Right now the control is just straight up defined in XAML, but it should do the thing most controls do, where they're a ResourceDictionary with a Template.

| Product-Terminal,Issue-Task,Area-CodeHealth | low | Minor |

468,741,233 | terminal | Stop rendering when the terminal has been minimized/control has been hidden | Ported from MSFT:21315817

We shouldn't render the XAML island when we're minimized.

I don't remember any more of the context on this one. It _was_ assigned to @DHowett-MSFT, so he might remember. | Help Wanted,Area-UserInterface,Product-Terminal,Issue-Task | low | Minor |

468,743,436 | rust | Type inference breaks down in recursive call | This is as simple as I could make it. There is an error when I try to call `do_stuff` recursively, without explicitly giving the type of `T`:

```rust
fn do_stuff<T>(t: T)
where
    u8: From<T>,
{
    // This is an error:
    do_stuff(1u8);

    // This is ok:
    do_stuff::<u8>(1u8);
}

fn main() {
    // This is ok:
    do_stuff(1u8);
}
```

```

error[E0308]: mismatched types

--> src/main.rs:7:14

|

7 | do_stuff(1u8);

| ^^^ expected type parameter, found u8

|

= note: expected type `T`

found type `u8`

```

Interestingly, when I switch around the `From` relationship, it works:

```rust
fn do_stuff<T>(t: T)
where
    T: Into<u8>,
{
    // This is ok now!:
    do_stuff(1u8);

    // This is ok:
    do_stuff::<u8>(1u8);
}

fn main() {
    // This is ok:
    do_stuff(1u8);
}
```

----

```

$ rustc --version

rustc 1.36.0 (a53f9df32 2019-07-03)

``` | A-type-system,T-compiler,A-inference,C-bug,T-types | low | Critical |

468,748,416 | pytorch | JIT trace parameter sharing error if Module attributes happen to be the same | ## 🐛 Bug

You'll get an error if two attributes of a Module have the same values when using `torch.jit.trace`:

```

~/miniconda3/lib/python3.6/site-packages/torch/jit/__init__.py in check_unique(param)

1459 def check_unique(param):

1460 if param in id_set:

-> 1461 raise ValueError("TracedModules don't support parameter sharing between modules")

1462 id_set.add(param)

1463

ValueError: TracedModules don't support parameter sharing between modules

```

## To Reproduce

```
import torch
import torch.nn as nn


class SimpleModule(nn.Module):
    def __init__(self, size=(784, 10)):
        super().__init__()
        self.weight = nn.Parameter(torch.Tensor(*size))
        self.logits = self.weight if size[0] == 784 else self.weight + 0.01
        nn.init.xavier_normal_(self.weight)

    def forward(self, x):
        x = self.weight @ x
        return x
```

```

# Works

mod = SimpleModule(size=(785,10))

torch.jit.trace(mod, torch.randn((10,10)))

# Doesn't work

mod = SimpleModule(size=(784,10))

torch.jit.trace(mod, torch.randn((10,10)))

```

## Expected behavior

I would expect that it would be allowed to have complicated logic in a Module, in which a single case would be that two attributes happen to be the same.

## Environment

- PyTorch Version (e.g., 1.0): 1.1.0

- OS (e.g., Linux): Linux

- How you installed PyTorch (`conda`, `pip`, source): conda

- Python version: 3.6.8

## Additional context

The issue is that the values of the parameters are checked to be equal in order to indicate parameter sharing, however, that is not always the case.

See also the related issue to improve the error message: https://github.com/pytorch/pytorch/issues/22677 | oncall: jit,triaged | low | Critical |

468,751,772 | TypeScript | Wildcard ambient modules declaration override rules | ## Search Terms

wildcard ambient module override

## Suggestion

I could not find any documentation of how precedence between wildcard ambient module declarations works when patterns overlap.

In the [original pull request](https://github.com/microsoft/TypeScript/pull/8939/files#diff-08a3cc4f1f9a51dbb468c2810f5229d3R575) "prefix length" was used as the best-fit criterion, but I could not locate that function in the current code.

Also, prefix length won't help in the case of a post-fixed `*`, but maybe that was just naming and it actually meant "longer match".

This feature should be better documented in [its handbook page](https://www.typescriptlang.org/docs/handbook/modules.html#wildcard-module-declarations) or, if it was dropped after the first implementation, it would be useful to know why.

The most [closely related question](https://stackoverflow.com/questions/52373658/angular-typescript-wildcard-module-declaration) I found on StackOverflow doesn't have an answer.
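To make the "prefix length" criterion concrete, here is a minimal sketch of how such a rule would rank patterns. It is an illustration only, not the compiler's actual code: `parsePattern`, `matchesPattern`, and `bestByPrefix` are hypothetical names, and the tie-breaking rule (the earlier declaration wins) is an assumption.

```typescript
// Simplified illustration of a "longest prefix wins" rule for wildcard
// patterns. All names here are hypothetical.
interface Pattern {
  prefix: string; // text before the single "*"
  suffix: string; // text after the single "*"
}

function parsePattern(text: string): Pattern {
  const star = text.indexOf("*");
  if (star < 0) {
    throw new Error("pattern has no wildcard: " + text);
  }
  return { prefix: text.slice(0, star), suffix: text.slice(star + 1) };
}

function matchesPattern(p: Pattern, name: string): boolean {
  return (
    name.length >= p.prefix.length + p.suffix.length &&
    name.slice(0, p.prefix.length) === p.prefix &&
    name.slice(name.length - p.suffix.length) === p.suffix
  );
}

// Returns the matching pattern with the strictly longest prefix.
// On a tie the earlier pattern is kept (assumed tie-breaking rule).
function bestByPrefix(patterns: string[], name: string): string | undefined {
  let best: string | undefined;
  let bestLength = -1;
  for (const text of patterns) {
    const p = parsePattern(text);
    if (matchesPattern(p, name) && p.prefix.length > bestLength) {
      best = text;
      bestLength = p.prefix.length;
    }
  }
  return best;
}

const winner = bestByPrefix(["*.vue", "*/QBtn-demo.vue"], "./demo/QBtn-demo.vue");
console.log(winner);
```

Under this rule both `*.vue` and `*/QBtn-demo.vue` have an empty prefix, so prefix length alone cannot prefer the more specific pattern; the outcome depends entirely on how ties are broken.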

## Use Cases

I have a setup with Vue (Quasar actually) + TypeScript + Jest, using SFC.

I have to mount Vue components in Jest tests (which are written in `.ts` files), but importing SFCs won't work (they are not TS files).

Using a shim for all Vue files (the official solution) partially solves this problem, because at least you get typings for the general Vue instance, but you won't get typings for *that* particular SFC (data, props, computed, etc).

I have currently separated the TS script from the SFC so that I can get the typings by importing from the two different files. Now I'm trying to define shims for the component `.vue` file that bind its name, via a wildcard, to its TS counterpart.

Unfortunately, when I import `./demo/QBtn-demo.vue`, I still get the `*.vue` shim instead of the component-specific one, and I cannot find anywhere how to force the override.

If I remove the Vue shim, it works, but then I'm forced to write a separate shim for *every* component.

I know it's possible by using triple slash references, but that's not the point of this issue.

The current workaround is to import both the `.vue` SFC and the TS script and then explicitly cast the SFC to the type of the specific instance.

## Examples

shims-vue.d.ts

```ts
declare module '*.vue' {
  import Vue from 'vue';
  export default Vue;
}
```

component.d.ts

```ts
declare module '*/QBtn-demo.vue' { // <= works when general Vue shim isn't present
  import QBtnDemo from 'test/jest/__tests__/demo/QBtn-demo'; // <= this is the TS file
  export default QBtnDemo;
}
```

QBtn-demo.vue

```vue

<script lang="ts" src="./QBtn-demo.ts"></script>

<template>

<div>

<p class="textContent">{{ input }}</p>

<span>{{ counter }}</span>

<q-btn id="mybutton" @click="increment()"></q-btn>

</div>

</template>

```

QBtn-demo.ts

```ts
import Vue from 'vue';

export default Vue.extend({
  name: 'QBUTTON',

  data: function(): { counter: number; input: string } {
    return {
      counter: 0,
      input: 'rocket muffin',
    };
  },

  methods: {
    increment(): void {
      this.counter++;
    },
  },
});
```

app.spec.ts

```ts
import { createLocalVue, mount } from '@vue/test-utils';
import { Quasar } from 'quasar';
import { VueConstructor } from 'vue';
import QBtnDemo from './demo/QBtn-demo.vue'; // <= Gets types as 'Vue' instead of 'QBtnDemo'

describe('Mount Quasar', () => {
  const localVue = createLocalVue();
  localVue.use(Quasar);

  const wrapper = mount(QBtnDemo, { localVue });
  const vm = wrapper.vm;

  it('has a created hook', () => {
    expect(typeof vm.increment).toBe('function'); // <= TS error: could not find 'increment'
  });

  it('sets the correct default data', () => {
    expect(typeof vm.counter).toBe('number'); // <= TS error: could not find 'counter'
    const defaultData = vm.$data;
    expect(defaultData.counter).toBe(0);
  });

  it('correctly updates data when button is pressed', () => {
    const button = wrapper.find('button');
    button.trigger('click');
    expect(vm.counter).toBe(1); // <= TS error: could not find 'counter'
  });
});
```

app.spec.ts with casting workaround

```ts
import { createLocalVue, mount } from '@vue/test-utils';
import { Quasar } from 'quasar';
import { VueConstructor } from 'vue';
import QBtnDemoComponent from './demo/QBtn-demo.vue';
import QBtnDemo from './demo/QBtn-demo';

describe('Mount Quasar', () => {
  const localVue = createLocalVue();
  localVue.use(Quasar);

  const wrapper = mount(QBtnDemoComponent as typeof QBtnDemo, { localVue });
  const vm = wrapper.vm;

  it('has a created hook', () => {
    expect(typeof vm.increment).toBe('function'); // <= Inferred correctly
  });

  it('sets the correct default data', () => {
    expect(typeof vm.counter).toBe('number'); // <= Inferred correctly
    const defaultData = vm.$data;
    expect(defaultData.counter).toBe(0);
  });

  it('correctly updates data when button is pressed', () => {
    const button = wrapper.find('button');
    button.trigger('click');
    expect(vm.counter).toBe(1); // <= Inferred correctly
  });
});
```

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

| Needs Investigation | low | Critical |

468,785,039 | flutter | Can't swipe to dismiss scrollable Bottom Sheet | 

Per @dnfield https://github.com/flutter/flutter/issues/31739#issuecomment-511459403

## Use case


See the [Crane](https://material.io/design/material-studies/crane.html#layout) Material sample and [Google I/O 2019](https://play.google.com/store/apps/details?id=com.google.samples.apps.iosched&hl=en_US)

These both feature a bottom sheet that slides up (in the case of I/O, you select "Events" and then hit the "Filter" FAB) and scrolls.

With the I/O app, if you continue to swipe down when scrolled to the top, it will start to drag and then dismiss when a threshold is reached.

If the threshold is not reached (or you swipe upwards) then the bottom sheet will not be dismissed and it'll rebound to the top of the screen.
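The drag behaviour described above can be sketched as two small decisions. This is only an illustration in TypeScript, not Flutter code: the function names and the `0.5` default threshold are hypothetical.

```typescript
// Hypothetical sketch of the described behaviour; not Flutter API.

// While the finger moves: the sheet itself is dragged only when the content
// is already scrolled to the top and the finger moves down (dy > 0).
// In every other case the content scrolls as usual.
function onDragUpdate(scrollOffset: number, dy: number): "drag" | "scroll" {
  return scrollOffset <= 0 && dy > 0 ? "drag" : "scroll";
}

// When the finger lifts: dismiss if the sheet was dragged past the threshold
// (a fraction of its height), otherwise rebound to the top.
function onDragEnd(draggedFraction: number, threshold: number = 0.5): "dismiss" | "rebound" {
  return draggedFraction >= threshold ? "dismiss" : "rebound";
}

console.log(onDragUpdate(0, 12), onDragEnd(0.7));
```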

## Proposal


I'd think this should be a part of `DraggableScrollableSheet` as mentioned in #31739, but maybe it makes sense to bake the logic into the Bottom Sheet itself?

I've tried both modal and non-modal bottom sheets with and without the `DraggableScrollableSheet`, and can't seem to make this work as is.

The current issue with the `DraggableScrollableSheet` is that you can't have the sheet be maximized all the time. (Since, as I mentioned in https://github.com/flutter/flutter/issues/31739#issuecomment-511241746, any swipe will immediately dismiss the `BottomSheet` rather than scroll the `CustomScrollView`)

| c: new feature,framework,f: material design,f: scrolling,f: gestures,customer: crowd,c: proposal,P2,team-design,triaged-design | low | Critical |

468,789,503 | go | x/oauth2/clientcredentials: context values are not passed to oauth2 requests that retrieve tokens | 

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.13beta1 linux/amd64

</pre>

### Does this issue reproduce with the latest release?

It does reproduce with the latest version of golang.org/x/oauth2. I guess this is an issue about this subrepository.

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

GO111MODULE=""

GOARCH="amd64"

GOBIN=""

GOCACHE="/home/yann/.cache/go-build"

GOENV="/home/yann/.config/go/env"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GONOPROXY="github.com/ulule/*"

GONOSUMDB="github.com/ulule/*"

GOOS="linux"

GOPATH="/home/yann/go"

GOPRIVATE="github.com/ulule/*"

GOPROXY="https://proxy.golang.org,direct"

GOROOT="/home/yann/sdk/go1.13beta1"

GOSUMDB="sum.golang.org"

GOTMPDIR=""

GOTOOLDIR="/home/yann/sdk/go1.13beta1/pkg/tool/linux_amd64"

GCCGO="gccgo"

AR="ar"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

GOMOD="/home/yann/z/facebook/go.mod"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build659375516=/tmp/go-build -gno-record-gcc-switches"

</pre></details>

### What did you do?


https://play.golang.org/p/iOdgycJZA4f

This example requires facebook credentials, but the bug does reproduce with any credential source.

### What did you expect to see?

There are two requests, one for retrieving an oauth2 token, and the other to get the actual URL.

I expected the two requests to see the same context values, which would generate an output like

```

context value

context value

```

### What did you see instead?

```

<nil>

context value

```

The context value is not passed to the first request. | help wanted,NeedsFix | low | Critical |

468,790,746 | TypeScript | Feature Request: Make ES module exports conform to an interface using triple slash directive | ## Search Terms

ESM, ES module, EcmaScript Module, Interface, Exports, triple slash directive, module

## Suggestion

I want to be able to enforce that an ESM's exports conform to an interface.

<details>

<summary>Here's an ESM that exports some values:</summary>

</details>

This ESM is going to be consumed by some system; __the ESM acts as configuration__ for that system.

<details>

<summary>The system could expose interfaces/types for all possible exports, and users apply them manually:</summary>

</details>

But this is manual work, and thus error-prone.

**I propose to be able to do:**

*The exact syntax TBD.*

Adding

```

/// <exports name="ConfigInterface" from="system-that-needs-configuration" />

```

will tell typescript about which interface the ESM exports should adhere to and where to find it.

If this is implemented in a triple slash directive this can also work for non-ts files.

## Use Cases

I want users of [storybook](https://github.com/storybookjs/storybook) to be able to configure it with ease using modern code.

CommonJS isn't tree-shake-able, which is important to us. There's a pretty detailed RFC for this feature for storybook here: https://docs.google.com/document/d/15aAALZBl0GTBEKgJN219ebzJ8LUJf2TVJ3hQdkNdLvQ/edit#

Tools like babel, eslint & webpack currently are or can be configured using CommonJS modules; as the ecosystem for ESM is improving, being able to add an interface to an ESM becomes really useful.

Tools could start using ESM for configuration more, which has clear benefits over CommonJS.

## Examples

Here's a config file that is annotated with the triple slash directive:

Here's the interface that's being referred to:

This should warn the user that `logLevel = 'any'` is not a valid value.

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

| Suggestion,Awaiting More Feedback | low | Critical |

468,807,845 | go | cmd/doc: show documentation for explicitly-requested identifiers regardless of the `-u` flag | ### What version of Go are you using (`go version`)?

```

~/go/src$ go version

go version devel +87bf0b5c51 Tue Jul 16 13:17:46 2019 -0400 linux/amd64

```

### What did you do?

```

~/go/src$ go doc go/build.getToolDir

```

### What did you expect to see?

```

~/go/src$ go doc go/build.getToolDir

package build // import "go/build"

func getToolDir() string

getToolDir returns the default value of ToolDir.

```

### What did you see instead?

```

~/go/src$ go doc go/build.getToolDir

package build // import "go/build"

doc: no symbol getToolDir in package go/build

exit status 1

~/go/src$ go doc -u go/build.getToolDir

package build // import "go/build"

func getToolDir() string

getToolDir returns the default value of ToolDir.

```

----

The `doc` command by default hides all unexported identifiers, _even those explicitly requested by the user._ To coax it to display the requested result, you have to pass the `-u` flag, which has the secondary (and often unwanted) effect of displaying unexported fields and methods on the requested identifier.

Moreover, that behavior is inconsistent with the behavior for `internal` packages, for which `go doc` will happily display documentation even without the `-u` flag.

Instead, the `-u` flag should control *only* the behavior for nested declarations — variables, constants, types, functions, fields, and/or methods _associated with_ the requested identifier — not the requested identifier itself.

CC @robpike @mvdan @ianthehat | help wanted,NeedsFix | low | Minor |

468,809,691 | go | cmd/doc: show types for constants and variables that have initializers | Currently, if you ask `go doc` for an exported identifier, and it happens to be declared as a `var` or `const` with the type inferred from the initializer, the type of the variable does not appear in the output.

It is doubly frustrating if the initializer happens to refer to unexported identifiers, since `go doc` requires an extra flag before it will document those (#33133).

### What version of Go are you using (`go version`)?

```

~/go/src$ go version

go version devel +87bf0b5c51 Tue Jul 16 13:17:46 2019 -0400 linux/amd64

```

### What did you do?

```

~/go/src$ go doc go/build.ToolDir

```

### What did you expect to see?

The documentation for *and type of* the `go/build.ToolDir` variable.

### What did you see instead?

```

~/go/src$ go doc go/build.ToolDir

package build // import "go/build"

var ToolDir = getToolDir()

ToolDir is the directory containing build tools.

```

No indication of whether ToolDir is a `string`, a `[]byte`, or something else entirely. It isn't mentioned in the doc comment, and it shouldn't _need_ to be mentioned in the doc comment because the compiler already knows what it is. | NeedsInvestigation,FeatureRequest | low | Minor |

468,826,595 | pytorch | Unify tensor shape formatting in shape checks | I made some mistake with tensor shape for nn.Conv1d and expectedly got an error:

```

File "/miniconda/lib/python3.7/site-packages/torch/nn/modules/conv.py", line 198, in forward

self.padding, self.dilation, self.groups)

RuntimeError: Expected 3-dimensional input for 3-dimensional weight 768 161 13 140185417077232, but got 4-dimensional input of size [40, 1, 161, 1923] instead

```

https://github.com/pytorch/pytorch/issues/19947 already reports overflown dimension of `140185417077232`

Another minor issue is the missing square brackets and commas in the first weight tensor shape. Some unification would make it clearer to the user that a tensor shape is in question.

Torch version is `1.2.0.dev20190607` | module: error checking,module: convolution,triaged,enhancement | low | Critical |

468,827,059 | flutter | Shell unit-tests that assert subprocess death don't seem to work on Windows. | These have been disabled in the test harness for now. | engine,platform-windows,P2,team-engine,triaged-engine | low | Minor |

468,833,132 | go | proposal: issues: distinguish "blocks beta/rc" from "blocks final release" | When we (@golang/osp-team) triage the issues labeled with [`release-blocker`](https://github.com/golang/go/labels/release-blocker) prior to a release, we often end up sorting them into a finer granularity:

* “blocking the next beta” (issues that will need broad testing before the release),

* “blocking the release candidate” (known regressions with limited impact and clear testing steps),

* “blocking the final release” (documentation, certain kinds of test flakiness).

We end up repeating that classification for each pre-release build, and we spend time discussing the classifications when they could often be made by smaller numbers of people ahead of time.

Furthermore, it's probably useful for folks in the community to be able to see that classification, so that they can set expectations appropriately (and so that they can point out issues that might need more testing than we thought).

----

I propose that we do one of the following:

a. Create milestones for each pre-release (`Go1.13-beta.1`, `Go1.13-rc.1`, and so on).

* These pre-releases are conceptually before the main milestone (`Go1.13`), so a `release-blocker` on `Go1.13` would indicate the “blocking the final release” category.

b. Or, create labels for each pre-release (`next-beta`, `next-rc`), with the expectation of zero `next-beta` issues open when we cut a beta release and zero `next-rc` issues open for a given milestone when we cut the corresponding pre-release for that milestone.

* We would need to decide whether to have GopherBot ensure that `next-*` issues are also labeled `release-blocker`, or have GopherBot remove the `release-blocker` label as redundant.

| Proposal,Proposal-Hold | low | Minor |

468,837,089 | flutter | Benchmark targets are not run on Windows. | | engine,platform-windows,P2,team-engine,triaged-engine | low | Minor |

468,842,910 | pytorch | [data loader] Graceful data loader threads exit on KeyboardInterrupt | During training with PyTorch 1.2.0.dev20190607 I pressed Ctrl+C and got the following:

```

KeyboardInterrupt

Traceback (most recent call last):

File "/miniconda/lib/python3.7/multiprocessing/queues.py", line 242, in _feed

send_bytes(obj)

Fatal Python error: could not acquire lock for <_io.BufferedWriter name='<stderr>'> at interpreter shutdown, possibly due to daemon threads

Thread 0x00007f49347f8700 (most recent call first):

Thread 0x00007f49357fa700 (most recent call first):

Thread 0x00007f4934ff9700 (most recent call first):

Thread 0x00007f494f7fe700 (most recent call first):

File "/miniconda/lib/python3.7/traceback.py", line 105 in print_exception

File "/miniconda/lib/python3.7/traceback.py", line 163 in print_exc

File "/miniconda/lib/python3.7/multiprocessing/queues.py", line 273 in _on_queue_feeder_error

File "/miniconda/lib/python3.7/multiprocessing/queues.py", line 264 in _feed

File "/miniconda/lib/python3.7/threading.py", line 865 in run

File "/miniconda/lib/python3.7/threading.py", line 917 in _bootstrap_inner

File "/miniconda/lib/python3.7/threading.py", line 885 in _bootstrap

Thread 0x00007f49c70e5700 (most recent call first):

File "/miniconda/lib/python3.7/threading.py", line 300 in wait

File "/miniconda/lib/python3.7/queue.py", line 179 in get

File "/miniconda/lib/python3.7/site-packages/tensorboard/summary/writer/event_file_writer.py", line 204 in run

File "/miniconda/lib/python3.7/threading.py", line 917 in _bootstrap_inner

File "/miniconda/lib/python3.7/threading.py", line 885 in _bootstrap

Current thread 0x00007f49df943700 (most recent call first):

train.sh: line 1: 4022 Aborted (core dumped) python3 train.py

```

It would be great if data loader threads could die less verbosely (and mysteriously) on KeyboardInterrupt. | needs reproduction,module: dataloader,triaged | low | Critical |

468,844,731 | rust | Link errors when compiling for i386 with +soft-float | It appears that since https://github.com/rust-lang/rust/pull/61408, rust now requires `fminf` and `fmaxf` functions to be present when compiling for i386 with +soft-float (using a custom target JSON). However, compiler-builtins [only expose those functions for some targets], which don't include x86.

Maybe compiler-builtins should include a `target-feature = "soft-float"` in the list of conditions to enable the math module?

[only expose those functions for some targets]: https://github.com/rust-lang-nursery/compiler-builtins/blob/master/src/lib.rs#L56 | A-linkage,O-x86_64,T-compiler,C-bug,O-x86_32 | low | Critical |

468,845,877 | electron | Starting devtools with activate: false makes it so that clicking on the main window does not bring it to the front | ### Preflight Checklist

* [x] I have read the [Contributing Guidelines](https://github.com/electron/electron/blob/master/CONTRIBUTING.md) for this project.

* [x] I agree to follow the [Code of Conduct](https://github.com/electron/electron/blob/master/CODE_OF_CONDUCT.md) that this project adheres to.

* [x] I have searched the issue tracker for an issue that matches the one I want to file, without success.

### Issue Details

* **Electron Version:** 5.0.6

* **Operating System:** Windows 10

### Expected Behavior

When I open devtools with `activate: false`, and the devtools appears in front of the main window, I expect that clicking on the main window will focus it and bring it to the front.

### Actual Behavior

When doing the above, the main window does not get sent to the front, but it does get focus.

### To Reproduce

main.js:

```

const { app, BrowserWindow } = require('electron')

function createWindow() {
  const mainWindow = new BrowserWindow();
  mainWindow.webContents.openDevTools({ mode: "detach", activate: false });
}

app.on('ready', createWindow)

```

Run `npm start`. Make sure that devtools is at least partially covering the main window. Notice that clicking on the main window that's in the back does not bring it to the front.

Observations:

1. The main window does get focus, and you can successfully open menu items

2. Clicking on the devtools and then back to the main window will bring the main window to the front

3. You can resize the main window and still observe the issue (resizing doesn't work around it) | platform/windows,bug :beetle:,5-0-x,7-1-x,10-x-y | medium | Critical |

468,859,007 | flutter | Sliver garbage collect does not work in Sizedbox |

## Steps to Reproduce

```dart

import 'package:flutter/material.dart';
import 'package:flutter/rendering.dart';

void main() => runApp(MyApp());

class MyApp extends StatelessWidget {
  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      title: 'List playground',
      home: TestPage(),
    );
  }
}

class TestPage extends StatefulWidget {
  @override
  State<StatefulWidget> createState() => _TestPageState();
}

class _TestPageState extends State<TestPage> {
  List<String> items = ['1', '2', '3', '4', '5'];

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      body: Center(
        child: SizedBox(
          width: 44.4,
          height: 30.0,
          child: Directionality(
            textDirection: TextDirection.ltr,
            child: CustomScrollView(
              slivers: <Widget>[
                SliverFixedExtentList(
                  itemExtent: 22.2,
                  delegate: SliverChildBuilderDelegate(
                    (BuildContext context, int index) {
                      return TextWidget(
                        items[index],
                      );
                    },
                    childCount: items.length,
                  ),
                ),
              ],
            ),
          ),
        ),
      ),
    );
  }
}

class TextWidget extends StatefulWidget {
  const TextWidget(this.data);

  final String data;

  @override
  TextWidgetState createState() => TextWidgetState();
}

class TextWidgetState extends State<TextWidget> {
  @override
  void dispose() {
    print('disposed ${widget.data}');
    super.dispose();
  }

  @override
  Widget build(BuildContext context) {
    return Text(widget.data);
  }
}

```

When scrolling the sliver in the middle, it should dispose of the elements that are no longer in view. In practice, it does not dispose of them.

It turns out that `RenderSliverFixedExtentBoxAdaptor.constraints.scrollOffset` is always zero no matter how far you drag the scroll view, so garbage collection never removes the widgets that are out of view.

| framework,f: scrolling,has reproducible steps,P2,found in release: 3.3,found in release: 3.6,team-framework,triaged-framework | low | Critical |

468,905,662 | flutter | Implement alternatives to long press on desktop | For `ListTile` and `ReorderableListView`, there are handlers that support long press on mobile platforms. These don't work well on desktop, so these widgets need to support other affordances instead.

These affordances will need to be designed and implemented to fit with the Material Design spec. | framework,f: material design,c: proposal,a: desktop,P3,team-framework,triaged-framework | low | Major |

468,911,113 | go | cmd/go: report an error for cmd (and std?) modules outside $GOROOT | I am trying to work on the source code of `go doc`, but every time I compile a `./doc` binary it uses other source code; it doesn't look in the directory I am in. I have to force it with `go build main.go`.

```

gert@gert ~/Desktop/go/src/cmd/doc:master> go build

gert@gert ~/Desktop/go/src/cmd/doc:master> ls

dirs.go doc doc_test.go main.go pkg.go testdata

```

I can see a `doc` binary being created, but it is not built from the `main.go` in the current directory: I deliberately put a syntax error in `main.go`, and the build above still succeeded.

```

gert@gert ~/Desktop/go/src/cmd/doc:master> go build main.go

# command-line-arguments

./main.go:78:2: syntax error: unexpected --, expecting }

```

details:

```

go version devel +f938b9b33b Wed Jun 26 20:26:48 2019 +0000 darwin/amd64

GOARCH="amd64"

GOBIN="/Users/gert/bin"

GOCACHE="/Users/gert/Library/Caches/go-build"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="darwin"

GOOS="darwin"

GOPATH="/Users/gert/go"

GOPROXY=""

GORACE=""

GOROOT="/usr/local/go"

GOTMPDIR=""

GOTOOLDIR="/usr/local/go/pkg/tool/darwin_amd64"

GCCGO="gccgo"

CC="clang"

CXX="clang++"

CGO_ENABLED="1"

GOMOD="/Users/gert/Desktop/go/src/cmd/go.mod"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/dv/8tlwvjr91zjdyq4rk14lkkfm0000gn/T/go-build645754300=/tmp/go-build -gno-record-gcc-switches -fno-common"

```

The workaround for me was to make `GOROOT` match the repo: `git clone` everything into `/usr/local/go` and bootstrap using `/usr/local/go1`. After that I could work on the `go doc` source code.

Maybe related to #32724.

| NeedsInvestigation,modules | low | Critical |

468,922,044 | TypeScript | The dom.iterable lib contains many interfaces that should also be in webworker |

Many of the interfaces defined in [dom.iterable](https://github.com/microsoft/TypeScript/blob/d4765523f086cfed152b094c3b5db2b246c13233/src/lib/dom.iterable.d.ts) should also be available to web workers. These include:

- `Headers`

- `FormData`

- `URLSearchParams`

Because the `dom.iterable` library does not play well with the `webworker` library, I have to define these interfaces in my own separate library instead of just including the built-in libraries.

The `webworker` library should either _also_ have an "iterable" variant, or these interfaces should be abstracted to a higher level.


**TypeScript Version:** 3.5.3, master


**Search Terms:**

dom iterable worker

**Code**

```ts

// index.ts

const h = new Headers();

console.log([...h.entries()]);

```

```sh

tsc --target esnext --lib esnext,webworker index.ts

```

**Expected behavior:**

This should compile fine (as it does when using `--lib esnext,dom,dom.iterable`).

**Actual behavior:**

```txt

index.ts:3:19 - error TS2339: Property 'entries' does not exist on type 'Headers'.

3 console.log([...h.entries()]);

                    ~~~~~~~

Found 1 error.

```

**Playground Link:**

Can't show this in the playground as I can't specify `--lib` options.

**Related Issues:**

Somewhat related to: https://github.com/microsoft/TypeScript/issues/20595

| Bug,Rescheduled | low | Critical |

468,930,988 | vscode | If pasting over text containing TextEditorDecorations, they are retained | Version: 1.36.1 (user setup)

Commit: 2213894ea0415ee8c85c5eea0d0ff81ecc191529

Date: 2019-07-08T22:59:35.033Z

Electron: 4.2.5

Chrome: 69.0.3497.128

Node.js: 10.11.0

V8: 6.9.427.31-electron.0

OS: Windows_NT x64 10.0.18362

When pasting over text that has TextEditorDecorations applied, the decorations are retained and applied to the new text.

Expected: Text replaced by pasting should have its decorations cleared or truncated.

| feature-request,semantic-tokens | low | Minor |

468,948,886 | pytorch | Support serializing IValue to bytes (and deserialize from bytes) | ## 🚀 Feature

Right now, `torch.save` only supports saving to a file, not serializing to bytes.

Similarly, it would be great to be able to deserialize from bytes too. This should work bidirectionally between Python and C++.

## Motivation

We want to serialize an IValue to bytes to send over the network. The interface right now only supports serializing to a file and then loading the bytes from the file.
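For the Python half of this request, a file-like in-memory buffer can stand in for the file. The sketch below is a minimal illustration that uses `pickle` as a stand-in serializer so that it runs without PyTorch; `to_bytes` and `from_bytes` are hypothetical helper names. `torch.save` and `torch.load` are documented as accepting file-like objects, so they could presumably be passed in the same way, but that is an assumption not exercised here, and it does not address the C++ side.

```python
import io
import pickle


def to_bytes(obj, save_fn=pickle.dump):
    """Serialize obj with save_fn(obj, file_like) and return the raw bytes."""
    buffer = io.BytesIO()
    save_fn(obj, buffer)
    return buffer.getvalue()


def from_bytes(payload, load_fn=pickle.load):
    """Rebuild an object from bytes with load_fn(file_like)."""
    return load_fn(io.BytesIO(payload))


# Round trip through bytes, e.g. before and after sending over a socket.
payload = to_bytes({"weights": [1.0, 2.0], "step": 3})
restored = from_bytes(payload)
```

Keeping the serializer as a parameter means the buffer handling stays the same whichever `save`/`load` pair is plugged in.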

| oncall: jit,triaged | low | Major |

468,956,623 | flutter | Flutter doesn't work when installed to icloud sync folder |

I've followed all the instructions on https://flutter.dev/docs/get-started/install/macos, plus a YouTube tutorial, but I run into an error. I'm not sure what it is; I'm new to Flutter and want to explore it.

## Steps to Reproduce


1. flutter create my_app

2. cd my_app

3. flutter run

## Logs


```
Launching lib/main.dart on iPhone Xʀ in debug mode...

Compiler message:

Error: SDK summary not found:

file:///Users/carla/Documents/flutter/bin/cache/artifacts/engine/common/flutter_

patched_sdk/platform_strong.dill.

Error: Error when reading

'file:///Users/carla/Documents/flutter/bin/cache/artifacts/engine/common/flutter

_patched_sdk/platform_strong.dill': No such file or directory

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:async'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:collection'

lib/main.dart:1:1: Error: Not found: 'dart:collection'

import 'package:flutter/material.dart';

^

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:convert'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:developer'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:ffi'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:_internal'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:isolate'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:math'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:mirrors'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:profiler'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:typed_data'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found:

'dart:nativewrappers'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:io'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found: 'dart:ui'

org-dartlang-untranslatable-uri:dart%3Acore: Error: Not found:

'dart:vmservice_io'

lib/main.dart:1:1: Error: Not found: 'dart:core'

import 'package:flutter/material.dart';

^

org-dartlang-untranslatable-uri:dart%3Acore:1:8: Error: Not found:

'dart:_internal'

import 'dart:_internal';

^

org-dartlang-untranslatable-uri:dart%3Acore:2:8: Error: Not found: 'dart:async'

import 'dart:async';

^

org-dartlang-untranslatable-uri:dart%3Acore:4:1: Error: Not found: 'dart:async'

export 'dart:async' show Future, Stream;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/about.da

rt:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/about.da

rt:6:8: Error: Not found: 'dart:developer'

import 'dart:developer' show Timeline, Flow;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/about.da

rt:7:8: Error: Not found: 'dart:io'

import 'dart:io' show Platform;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/animated

_icons.dart:8:8: Error: Not found: 'dart:math'

import 'dart:math' as math show pi;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/animated

_icons.dart:9:8: Error: Not found: 'dart:ui'

import 'dart:ui' as ui show Paint, Path, Canvas;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/animated

_icons.dart:10:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/app.dart

:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' as ui;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/app_bar.

dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/app_bar_

theme.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/arc.dart

:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/arc.dart

:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/bottom_a

pp_bar_theme.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/bottom_n

avigation_bar.dart:5:8: Error: Not found: 'dart:collection'

import 'dart:collection' show Queue;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/bottom_n

avigation_bar.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/bottom_s

heet.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/bottom_s

heet_theme.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/button.d

art:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/card_the

me.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/checkbox

.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/chip.dar

t:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/chip_the

me.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/colors.d

art:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Color;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/data_tab

le.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/date_pic

ker.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/date_pic

ker.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/dialog.d

art:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/dialog_t

heme.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/drawer.d

art:5:8: Error: Not found: 'dart:math'

import 'dart:math';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/dropdown

.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/expand_i

con.dart:4:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/feedback

.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/flexible

_space_bar.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/floating

_action_button.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/floating

_action_button_location.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/floating

_action_button_theme.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/icon_but

ton.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/ink_ripp

le.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/ink_spla

sh.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/ink_well

.dart:5:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/input_bo

rder.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/input_bo

rder.dart:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/input_de

corator.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/input_de

corator.dart:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/list_til

e.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/material

_button.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/material

_localizations.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/material

_state.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Color;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/mergeabl

e_material.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/paginate

d_data_table.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/popup_me

nu.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/progress

_indicator.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/range_sl

ider.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/range_sl

ider.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/range_sl

ider.dart:7:8: Error: Not found: 'dart:ui'

import 'dart:ui' as ui;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/refresh_

indicator.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/refresh_

indicator.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/reordera

ble_list.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/scaffold

.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/scaffold

.dart:6:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/scaffold

.dart:7:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/scrollba

r.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/search.d

art:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/shadows.

dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Color, Offset;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/slider.d

art:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/slider.d

art:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/slider.d

art:7:8: Error: Not found: 'dart:math'

import 'dart:math';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/slider_t

heme.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/slider_t

heme.dart:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Path, lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/snack_ba

r_theme.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/tab_cont

roller.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/tabs.dar

t:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/tabs.dar

t:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/text_fie

ld.dart:5:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/text_sel

ection.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/theme_da

ta.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Color, hashList;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/time.dar

t:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show hashValues;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/time_pic

ker.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/time_pic

ker.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/tooltip.

dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/material/user_acc

ounts_drawer_header.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/painting.dart:20:1:

Error: Not found: 'dart:ui'

export 'dart:ui' show Shadow, PlaceholderAlignment;

^

file:///Users/carla/Documents/flutter/.pub-cache/hosted/pub.dartlang.org/vector_

math-2.0.8/lib/vector_math_64.dart:22:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/.pub-cache/hosted/pub.dartlang.org/vector_

math-2.0.8/lib/vector_math_64.dart:23:8: Error: Not found: 'dart:typed_data'

import 'dart:typed_data';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/app.dart:

5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/app.dart:

6:8: Error: Not found: 'dart:collection'

import 'dart:collection' show HashMap;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/app.dart:

26:1: Error: Not found: 'dart:ui'

export 'dart:ui' show Locale;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/async.dar

t:9:8: Error: Not found: 'dart:async'

import 'dart:async' show Future, Stream, StreamSubscription;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/automatic

_keep_alive.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/banner.da

rt:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/basic.dar

t:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' as ui show Image, ImageFilter;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/binding.d

art:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/binding.d

art:6:8: Error: Not found: 'dart:developer'

import 'dart:developer' as developer;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/binding.d

art:7:8: Error: Not found: 'dart:ui'

import 'dart:ui' show AppLifecycleState, Locale, AccessibilityFeatures;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/binding.d

art:21:1: Error: Not found: 'dart:ui'

export 'dart:ui' show AppLifecycleState, Locale;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/bottom_na

vigation_bar_item.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Color;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/debug.dar

t:5:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/debug.dar

t:6:8: Error: Not found: 'dart:developer'

import 'dart:developer' show Timeline; // to disambiguate reference in dartdocs

below

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/editable_

text.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/editable_

text.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/editable_

text.dart:7:8: Error: Not found: 'dart:ui'

import 'dart:ui' as ui;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/fade_in_i

mage.dart:5:8: Error: Not found: 'dart:typed_data'

import 'dart:typed_data';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/focus_man

ager.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/focus_man

ager.dart:6:8: Error: Not found: 'dart:ui'

import 'dart:ui';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/framework

.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/framework

.dart:6:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/framework

.dart:7:8: Error: Not found: 'dart:developer'

import 'dart:developer';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/framework

.dart:15:1: Error: Not found: 'dart:ui'

export 'dart:ui' show hashValues, hashList;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/icon_data

.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show hashValues;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/icon_them

e_data.dart:5:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Color, hashValues;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/icon_them

e_data.dart:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' as ui show lerpDouble;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/image.dar

t:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/image.dar

t:6:8: Error: Not found: 'dart:io'

import 'dart:io' show File;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/image.dar

t:7:8: Error: Not found: 'dart:typed_data'

import 'dart:typed_data';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/inherited

_model.dart:5:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/list_whee

l_scroll_view.dart:4:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/list_whee

l_scroll_view.dart:5:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/list_whee

l_scroll_view.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/localizat

ions.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/localizat

ions.dart:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Locale;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/media_que

ry.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/media_que

ry.dart:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' as ui;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/media_que

ry.dart:7:8: Error: Not found: 'dart:ui'

import 'dart:ui' show Brightness;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/navigatio

n_toolbar.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/navigator

.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/navigator

.dart:6:8: Error: Not found: 'dart:convert'

import 'dart:convert';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/navigator

.dart:7:8: Error: Not found: 'dart:developer'

import 'dart:developer' as developer;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/nested_sc

roll_view.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/nested_sc

roll_view.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/overlay.d

art:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/overlay.d

art:6:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/overscrol

l_indicator.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async' show Timer;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/overscrol

l_indicator.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/page_view

.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/page_view

.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/routes.da

rt:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/safe_area

.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_ac

tivity.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_ac

tivity.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_co

ntroller.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_me

trics.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_ph

ysics.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_po

sition.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_po

sition_with_single_context.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_si

mulation.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scroll_vi

ew.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scrollabl

e.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scrollabl

e.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scrollabl

e.dart:7:8: Error: Not found: 'dart:ui'

import 'dart:ui';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/scrollbar

.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/semantics

_debugger.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/semantics

_debugger.dart:6:8: Error: Not found: 'dart:ui'

import 'dart:ui' show SemanticsFlag;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/single_ch

ild_scroll_view.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/sliver.da

rt:5:8: Error: Not found: 'dart:collection'

import 'dart:collection' show SplayTreeMap, HashMap;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/table.dar

t:5:8: Error: Not found: 'dart:collection'

import 'dart:collection';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/text_sele

ction.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/text_sele

ction.dart:6:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/transitio

ns.dart:5:8: Error: Not found: 'dart:math'

import 'dart:math' as math;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/widget_in

spector.dart:5:8: Error: Not found: 'dart:async'

import 'dart:async';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/widget_in

spector.dart:6:8: Error: Not found: 'dart:convert'

import 'dart:convert';

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/widget_in

spector.dart:7:8: Error: Not found: 'dart:developer'

import 'dart:developer' as developer;

^

file:///Users/carla/Documents/flutter/packages/flutter/lib/src/widgets/widget_in

spector.dart:8:8: Error: Not found: 'dart:math'

import 'dart:math' as math;