| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens_prompt | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_14762

|

rasdani/github-patches

|

git_diff

|

huggingface__peft-1320

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

An error occurs when using LoftQ. IndexError

A problem occurred while applying LoftQ to T5.

`IndexError: tensors used as indices must be long, byte or bool tensors`

The problem was in [this line](https://github.com/huggingface/peft/blob/main/src/peft/utils/loftq_utils.py#L158), I replaced int with long and it worked fine.

`lookup_table_idx = lookup_table_idx.to(torch.long)`

</issue>

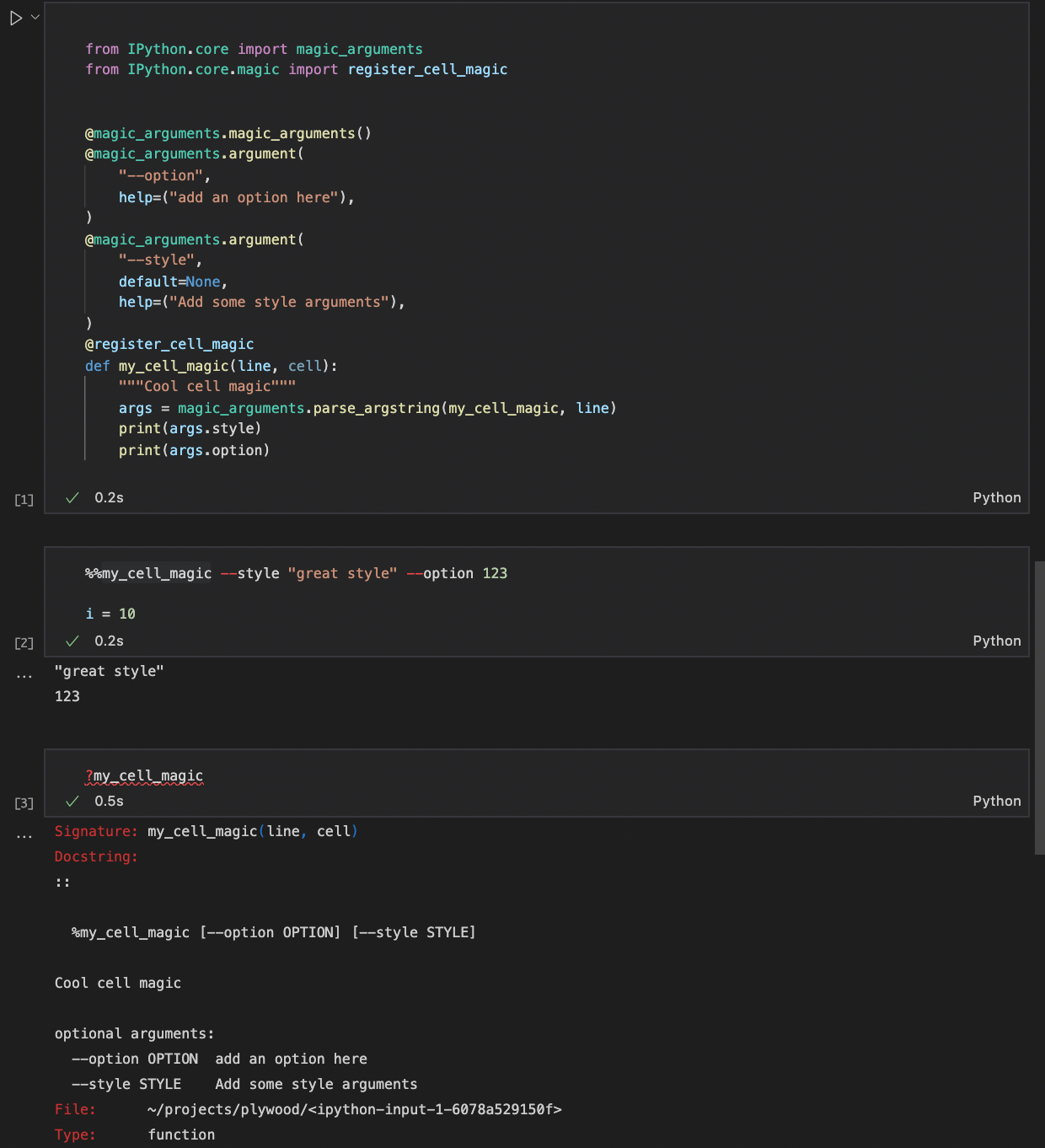

<code>

[start of src/peft/utils/loftq_utils.py]

1 # coding=utf-8

2 # Copyright 2023-present the HuggingFace Inc. team.

3 #

4 # Licensed under the Apache License, Version 2.0 (the "License");

5 # you may not use this file except in compliance with the License.

6 # You may obtain a copy of the License at

7 #

8 # http://www.apache.org/licenses/LICENSE-2.0

9 #

10 # Unless required by applicable law or agreed to in writing, software

11 # distributed under the License is distributed on an "AS IS" BASIS,

12 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

13 # See the License for the specific language governing permissions and

14 # limitations under the License.

15

16 # Reference code: https://github.com/yxli2123/LoftQ/blob/main/utils.py

17 # Reference paper: https://arxiv.org/abs/2310.08659

18

19 import logging

20 from typing import Union

21

22 import torch

23

24 from peft.import_utils import is_bnb_4bit_available, is_bnb_available

25

26

27 if is_bnb_available():

28 import bitsandbytes as bnb

29

30

31 class NFQuantizer:

32 def __init__(self, num_bits=2, device="cuda", method="normal", block_size=64, *args, **kwargs):

33 super().__init__(*args, **kwargs)

34 self.num_bits = num_bits

35 self.device = device

36 self.method = method

37 self.block_size = block_size

38 if self.method == "normal":

39 self.norm_lookup_table = self.create_normal_map(num_bits=self.num_bits)

40 self.norm_lookup_table = self.norm_lookup_table.to(device)

41 elif self.method == "uniform":

42 self.norm_lookup_table = self.create_uniform_map(num_bits=self.num_bits)

43 self.norm_lookup_table = self.norm_lookup_table.to(device)

44 else:

45 raise NotImplementedError("Other quantization methods not supported yet.")

46

47 @staticmethod

48 def create_uniform_map(symmetric=False, num_bits=4):

49 if symmetric:

50 # print("symmetric uniform quantization")

51 negative = torch.linspace(-1, 0, 2 ** (num_bits - 1))

52 positive = torch.linspace(0, 1, 2 ** (num_bits - 1))

53 table = torch.cat([negative, positive[1:]])

54 else:

55 # print("asymmetric uniform quantization")

56 table = torch.linspace(-1, 1, 2**num_bits)

57 return table

58

59 @staticmethod

60 def create_normal_map(offset=0.9677083, symmetric=False, num_bits=2):

61 try:

62 from scipy.stats import norm

63 except ImportError:

64 raise ImportError("The required package 'scipy' is not installed. Please install it to continue.")

65

66 variations = 2**num_bits

67 if symmetric:

68 v = norm.ppf(torch.linspace(1 - offset, offset, variations + 1)).tolist()

69 values = []

70 for index in range(len(v) - 1):

71 values.append(0.5 * v[index] + 0.5 * v[index + 1])

72 v = values

73 else:

74 # one more positive value, this is an asymmetric type

75 v1 = norm.ppf(torch.linspace(offset, 0.5, variations // 2 + 1)[:-1]).tolist()

76 v2 = [0]

77 v3 = (-norm.ppf(torch.linspace(offset, 0.5, variations // 2)[:-1])).tolist()

78 v = v1 + v2 + v3

79

80 values = torch.Tensor(v)

81 values = values.sort().values

82 values /= values.max()

83 return values

84

85 def quantize_tensor(self, weight):

86 max_abs = torch.abs(weight).max()

87 weight_normed = weight / max_abs

88

89 weight_normed_expanded = weight_normed.unsqueeze(-1)

90

91 # Reshape L to have the same number of dimensions as X_expanded

92 L_reshaped = torch.tensor(self.norm_lookup_table).reshape(1, -1)

93

94 # Calculate the absolute difference between X_expanded and L_reshaped

95 abs_diff = torch.abs(weight_normed_expanded - L_reshaped)

96

97 # Find the index of the minimum absolute difference for each element

98 qweight = torch.argmin(abs_diff, dim=-1)

99 return qweight, max_abs

100

101 def dequantize_tensor(self, qweight, max_abs):

102 qweight_flatten = qweight.flatten()

103

104 weight_normed = self.norm_lookup_table[qweight_flatten]

105 weight = weight_normed * max_abs

106

107 weight = weight.reshape(qweight.shape)

108

109 return weight

110

111 def quantize_block(self, weight):

112 if len(weight.shape) != 2:

113 raise ValueError(f"Only support 2D matrix, but your input has {len(weight.shape)} dimensions.")

114 if weight.shape[0] * weight.shape[1] % self.block_size != 0:

115 raise ValueError(

116 f"Weight with shape ({weight.shape[0]} x {weight.shape[1]}) "

117 f"is not dividable by block size {self.block_size}."

118 )

119

120 M, N = weight.shape

121 device = weight.device

122

123 # Quantization

124 weight_flatten = weight.flatten() # (M*N, )

125 weight_block = weight_flatten.reshape(-1, self.block_size) # (L, B), L = M * N / B

126 if self.method == "normal":

127 weight_max = weight_block.abs().max(dim=-1)[0] # (L, 1)

128 elif self.method == "uniform":

129 weight_max = weight_block.mean(dim=-1) + 2.5 * weight_block.std(dim=-1)

130 else:

131 raise NotImplementedError("Method not supported yet.")

132 weight_max = weight_max.unsqueeze(-1)

133 weight_divabs = weight_block / weight_max # (L, B)

134 weight_divabs = weight_divabs.unsqueeze(-1) # (L, B, 1)

135 L_reshaped = self.norm_lookup_table.reshape(1, -1) # (1, 2**K)

136

137 abs_diff = torch.abs(weight_divabs - L_reshaped) # (L, B, 2**K)

138 qweight = torch.argmin(abs_diff, dim=-1) # (L, B)

139

140 # Pack multiple k-bit into uint8

141 qweight = qweight.reshape(-1, 8 // self.num_bits)

142 qweight_pack = torch.zeros((M * N // 8 * self.num_bits, 1), dtype=torch.uint8, device=device)

143

144 # data format example:

145 # [1, 0, 3, 2] or [01, 00, 11, 10] -> [10110001], LIFO

146 for i in range(8 // self.num_bits):

147 qweight[:, i] = qweight[:, i] << i * self.num_bits

148 qweight_pack[:, 0] |= qweight[:, i]

149

150 return qweight_pack, weight_max, weight.shape

151

152 def dequantize_block(self, qweight, weight_max, weight_shape):

153 # unpack weight

154 device = qweight.device

155 weight = torch.zeros((qweight.shape[0], 8 // self.num_bits), dtype=torch.float32, device=device)

156 for i in range(8 // self.num_bits):

157 lookup_table_idx = qweight.to(torch.long) % 2**self.num_bits # get the most right 2 bits

158 lookup_table_idx = lookup_table_idx.to(torch.int)

159 weight[:, i] = self.norm_lookup_table[lookup_table_idx].squeeze()

160 qweight = qweight >> self.num_bits # right shift 2 bits of the original data

161

162 weight_block = weight.reshape(-1, self.block_size)

163 weight = weight_block * weight_max

164 weight = weight.reshape(weight_shape)

165

166 return weight

167

168

169 def _low_rank_decomposition(weight, reduced_rank=32):

170 """

171 :param weight: The matrix to decompose, of shape (H, W) :param reduced_rank: the final rank :return:

172 """

173 matrix_dimension = len(weight.size())

174 if matrix_dimension != 2:

175 raise ValueError(f"Only support 2D matrix, but your input has {matrix_dimension} dimensions.")

176

177 # Use SVD to decompose a matrix, default full_matrices is False to save parameters

178 U, S, Vh = torch.linalg.svd(weight, full_matrices=False)

179

180 L = U @ (torch.sqrt(torch.diag(S)[:, 0:reduced_rank]))

181 R = torch.sqrt(torch.diag(S)[0:reduced_rank, :]) @ Vh

182

183 return {"L": L, "R": R, "U": U, "S": S, "Vh": Vh, "reduced_rank": reduced_rank}

184

185

186 @torch.no_grad()

187 def loftq_init(weight: Union[torch.Tensor, torch.nn.Parameter], num_bits: int, reduced_rank: int, num_iter=1):

188 if num_bits not in [2, 4, 8]:

189 raise ValueError("Only support 2, 4, 8 bits quantization")

190 if num_iter <= 0:

191 raise ValueError("Number of iterations must be greater than 0")

192

193 out_feature, in_feature = weight.size()

194 device = weight.device

195 dtype = weight.dtype

196

197 logging.info(

198 f"Weight: ({out_feature}, {in_feature}) | Rank: {reduced_rank} "

199 f"| Num Iter: {num_iter} | Num Bits: {num_bits}"

200 )

201 if not is_bnb_4bit_available() or num_bits in [2, 8]:

202 quantizer = NFQuantizer(num_bits=num_bits, device=device, method="normal", block_size=64)

203 compute_device = device

204 else:

205 compute_device = "cuda"

206

207 weight = weight.to(device=compute_device, dtype=torch.float32)

208 res = weight.clone()

209 for i in range(num_iter):

210 torch.cuda.empty_cache()

211 # Quantization

212 if num_bits == 4 and is_bnb_4bit_available():

213 qweight = bnb.nn.Params4bit(

214 res.to("cpu"), requires_grad=False, compress_statistics=False, quant_type="nf4"

215 ).to(compute_device)

216 dequantized_weight = bnb.functional.dequantize_4bit(qweight.data, qweight.quant_state)

217 else:

218 quantized_weight, max_abs, shape = quantizer.quantize_block(res)

219 dequantized_weight = quantizer.dequantize_block(quantized_weight, max_abs, shape)

220

221 res = weight - dequantized_weight

222

223 # Decompose the residual by SVD

224 output = _low_rank_decomposition(res, reduced_rank=reduced_rank)

225 L, R, reduced_rank = output["L"], output["R"], output["reduced_rank"]

226 res = weight - torch.mm(L, R)

227

228 lora_A, lora_B = R, L

229

230 return dequantized_weight.to(device=device, dtype=dtype), lora_A, lora_B

231

[end of src/peft/utils/loftq_utils.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/src/peft/utils/loftq_utils.py b/src/peft/utils/loftq_utils.py

--- a/src/peft/utils/loftq_utils.py

+++ b/src/peft/utils/loftq_utils.py

@@ -155,7 +155,7 @@

weight = torch.zeros((qweight.shape[0], 8 // self.num_bits), dtype=torch.float32, device=device)

for i in range(8 // self.num_bits):

lookup_table_idx = qweight.to(torch.long) % 2**self.num_bits # get the most right 2 bits

- lookup_table_idx = lookup_table_idx.to(torch.int)

+ lookup_table_idx = lookup_table_idx.to(torch.long)

weight[:, i] = self.norm_lookup_table[lookup_table_idx].squeeze()

qweight = qweight >> self.num_bits # right shift 2 bits of the original data

|

{"golden_diff": "diff --git a/src/peft/utils/loftq_utils.py b/src/peft/utils/loftq_utils.py\n--- a/src/peft/utils/loftq_utils.py\n+++ b/src/peft/utils/loftq_utils.py\n@@ -155,7 +155,7 @@\n weight = torch.zeros((qweight.shape[0], 8 // self.num_bits), dtype=torch.float32, device=device)\n for i in range(8 // self.num_bits):\n lookup_table_idx = qweight.to(torch.long) % 2**self.num_bits # get the most right 2 bits\n- lookup_table_idx = lookup_table_idx.to(torch.int)\n+ lookup_table_idx = lookup_table_idx.to(torch.long)\n weight[:, i] = self.norm_lookup_table[lookup_table_idx].squeeze()\n qweight = qweight >> self.num_bits # right shift 2 bits of the original data\n", "issue": "An error occurs when using LoftQ. IndexError\nA problem occurred while applying LoftQ to T5.\r\n`IndexError: tensors used as indices must be long, byte or bool tensors`\r\nThe problem was in [this line](https://github.com/huggingface/peft/blob/main/src/peft/utils/loftq_utils.py#L158), I replaced int with long and it worked fine.\r\n`lookup_table_idx = lookup_table_idx.to(torch.long)`\n", "before_files": [{"content": "# coding=utf-8\n# Copyright 2023-present the HuggingFace Inc. 
team.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n# Reference code: https://github.com/yxli2123/LoftQ/blob/main/utils.py\n# Reference paper: https://arxiv.org/abs/2310.08659\n\nimport logging\nfrom typing import Union\n\nimport torch\n\nfrom peft.import_utils import is_bnb_4bit_available, is_bnb_available\n\n\nif is_bnb_available():\n import bitsandbytes as bnb\n\n\nclass NFQuantizer:\n def __init__(self, num_bits=2, device=\"cuda\", method=\"normal\", block_size=64, *args, **kwargs):\n super().__init__(*args, **kwargs)\n self.num_bits = num_bits\n self.device = device\n self.method = method\n self.block_size = block_size\n if self.method == \"normal\":\n self.norm_lookup_table = self.create_normal_map(num_bits=self.num_bits)\n self.norm_lookup_table = self.norm_lookup_table.to(device)\n elif self.method == \"uniform\":\n self.norm_lookup_table = self.create_uniform_map(num_bits=self.num_bits)\n self.norm_lookup_table = self.norm_lookup_table.to(device)\n else:\n raise NotImplementedError(\"Other quantization methods not supported yet.\")\n\n @staticmethod\n def create_uniform_map(symmetric=False, num_bits=4):\n if symmetric:\n # print(\"symmetric uniform quantization\")\n negative = torch.linspace(-1, 0, 2 ** (num_bits - 1))\n positive = torch.linspace(0, 1, 2 ** (num_bits - 1))\n table = torch.cat([negative, positive[1:]])\n else:\n # print(\"asymmetric uniform quantization\")\n table = torch.linspace(-1, 1, 2**num_bits)\n return table\n\n @staticmethod\n 
def create_normal_map(offset=0.9677083, symmetric=False, num_bits=2):\n try:\n from scipy.stats import norm\n except ImportError:\n raise ImportError(\"The required package 'scipy' is not installed. Please install it to continue.\")\n\n variations = 2**num_bits\n if symmetric:\n v = norm.ppf(torch.linspace(1 - offset, offset, variations + 1)).tolist()\n values = []\n for index in range(len(v) - 1):\n values.append(0.5 * v[index] + 0.5 * v[index + 1])\n v = values\n else:\n # one more positive value, this is an asymmetric type\n v1 = norm.ppf(torch.linspace(offset, 0.5, variations // 2 + 1)[:-1]).tolist()\n v2 = [0]\n v3 = (-norm.ppf(torch.linspace(offset, 0.5, variations // 2)[:-1])).tolist()\n v = v1 + v2 + v3\n\n values = torch.Tensor(v)\n values = values.sort().values\n values /= values.max()\n return values\n\n def quantize_tensor(self, weight):\n max_abs = torch.abs(weight).max()\n weight_normed = weight / max_abs\n\n weight_normed_expanded = weight_normed.unsqueeze(-1)\n\n # Reshape L to have the same number of dimensions as X_expanded\n L_reshaped = torch.tensor(self.norm_lookup_table).reshape(1, -1)\n\n # Calculate the absolute difference between X_expanded and L_reshaped\n abs_diff = torch.abs(weight_normed_expanded - L_reshaped)\n\n # Find the index of the minimum absolute difference for each element\n qweight = torch.argmin(abs_diff, dim=-1)\n return qweight, max_abs\n\n def dequantize_tensor(self, qweight, max_abs):\n qweight_flatten = qweight.flatten()\n\n weight_normed = self.norm_lookup_table[qweight_flatten]\n weight = weight_normed * max_abs\n\n weight = weight.reshape(qweight.shape)\n\n return weight\n\n def quantize_block(self, weight):\n if len(weight.shape) != 2:\n raise ValueError(f\"Only support 2D matrix, but your input has {len(weight.shape)} dimensions.\")\n if weight.shape[0] * weight.shape[1] % self.block_size != 0:\n raise ValueError(\n f\"Weight with shape ({weight.shape[0]} x {weight.shape[1]}) \"\n f\"is not dividable by block size 
{self.block_size}.\"\n )\n\n M, N = weight.shape\n device = weight.device\n\n # Quantization\n weight_flatten = weight.flatten() # (M*N, )\n weight_block = weight_flatten.reshape(-1, self.block_size) # (L, B), L = M * N / B\n if self.method == \"normal\":\n weight_max = weight_block.abs().max(dim=-1)[0] # (L, 1)\n elif self.method == \"uniform\":\n weight_max = weight_block.mean(dim=-1) + 2.5 * weight_block.std(dim=-1)\n else:\n raise NotImplementedError(\"Method not supported yet.\")\n weight_max = weight_max.unsqueeze(-1)\n weight_divabs = weight_block / weight_max # (L, B)\n weight_divabs = weight_divabs.unsqueeze(-1) # (L, B, 1)\n L_reshaped = self.norm_lookup_table.reshape(1, -1) # (1, 2**K)\n\n abs_diff = torch.abs(weight_divabs - L_reshaped) # (L, B, 2**K)\n qweight = torch.argmin(abs_diff, dim=-1) # (L, B)\n\n # Pack multiple k-bit into uint8\n qweight = qweight.reshape(-1, 8 // self.num_bits)\n qweight_pack = torch.zeros((M * N // 8 * self.num_bits, 1), dtype=torch.uint8, device=device)\n\n # data format example:\n # [1, 0, 3, 2] or [01, 00, 11, 10] -> [10110001], LIFO\n for i in range(8 // self.num_bits):\n qweight[:, i] = qweight[:, i] << i * self.num_bits\n qweight_pack[:, 0] |= qweight[:, i]\n\n return qweight_pack, weight_max, weight.shape\n\n def dequantize_block(self, qweight, weight_max, weight_shape):\n # unpack weight\n device = qweight.device\n weight = torch.zeros((qweight.shape[0], 8 // self.num_bits), dtype=torch.float32, device=device)\n for i in range(8 // self.num_bits):\n lookup_table_idx = qweight.to(torch.long) % 2**self.num_bits # get the most right 2 bits\n lookup_table_idx = lookup_table_idx.to(torch.int)\n weight[:, i] = self.norm_lookup_table[lookup_table_idx].squeeze()\n qweight = qweight >> self.num_bits # right shift 2 bits of the original data\n\n weight_block = weight.reshape(-1, self.block_size)\n weight = weight_block * weight_max\n weight = weight.reshape(weight_shape)\n\n return weight\n\n\ndef 
_low_rank_decomposition(weight, reduced_rank=32):\n \"\"\"\n :param weight: The matrix to decompose, of shape (H, W) :param reduced_rank: the final rank :return:\n \"\"\"\n matrix_dimension = len(weight.size())\n if matrix_dimension != 2:\n raise ValueError(f\"Only support 2D matrix, but your input has {matrix_dimension} dimensions.\")\n\n # Use SVD to decompose a matrix, default full_matrices is False to save parameters\n U, S, Vh = torch.linalg.svd(weight, full_matrices=False)\n\n L = U @ (torch.sqrt(torch.diag(S)[:, 0:reduced_rank]))\n R = torch.sqrt(torch.diag(S)[0:reduced_rank, :]) @ Vh\n\n return {\"L\": L, \"R\": R, \"U\": U, \"S\": S, \"Vh\": Vh, \"reduced_rank\": reduced_rank}\n\n\n@torch.no_grad()\ndef loftq_init(weight: Union[torch.Tensor, torch.nn.Parameter], num_bits: int, reduced_rank: int, num_iter=1):\n if num_bits not in [2, 4, 8]:\n raise ValueError(\"Only support 2, 4, 8 bits quantization\")\n if num_iter <= 0:\n raise ValueError(\"Number of iterations must be greater than 0\")\n\n out_feature, in_feature = weight.size()\n device = weight.device\n dtype = weight.dtype\n\n logging.info(\n f\"Weight: ({out_feature}, {in_feature}) | Rank: {reduced_rank} \"\n f\"| Num Iter: {num_iter} | Num Bits: {num_bits}\"\n )\n if not is_bnb_4bit_available() or num_bits in [2, 8]:\n quantizer = NFQuantizer(num_bits=num_bits, device=device, method=\"normal\", block_size=64)\n compute_device = device\n else:\n compute_device = \"cuda\"\n\n weight = weight.to(device=compute_device, dtype=torch.float32)\n res = weight.clone()\n for i in range(num_iter):\n torch.cuda.empty_cache()\n # Quantization\n if num_bits == 4 and is_bnb_4bit_available():\n qweight = bnb.nn.Params4bit(\n res.to(\"cpu\"), requires_grad=False, compress_statistics=False, quant_type=\"nf4\"\n ).to(compute_device)\n dequantized_weight = bnb.functional.dequantize_4bit(qweight.data, qweight.quant_state)\n else:\n quantized_weight, max_abs, shape = quantizer.quantize_block(res)\n 
dequantized_weight = quantizer.dequantize_block(quantized_weight, max_abs, shape)\n\n res = weight - dequantized_weight\n\n # Decompose the residual by SVD\n output = _low_rank_decomposition(res, reduced_rank=reduced_rank)\n L, R, reduced_rank = output[\"L\"], output[\"R\"], output[\"reduced_rank\"]\n res = weight - torch.mm(L, R)\n\n lora_A, lora_B = R, L\n\n return dequantized_weight.to(device=device, dtype=dtype), lora_A, lora_B\n", "path": "src/peft/utils/loftq_utils.py"}]}

| 3,665 | 203 |

gh_patches_debug_10163

|

rasdani/github-patches

|

git_diff

|

pytorch__pytorch-2200

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

DataParallel tests are currently broken

https://github.com/pytorch/pytorch/pull/2121/commits/d69669efcfe4333c223f53249185c2e22f76ed73 has broken DataParallel tests. Now that device_ids are explicitly sent to parallel_apply, this assert https://github.com/pytorch/pytorch/blob/master/torch/nn/parallel/parallel_apply.py#L30 gets triggered if inputs are not big enough to be on all devices (e.g. batch size of 20 on 8 GPUs gets chunked into 6*3+2, so that 8-th GPU is idle, and assert gets triggered).

</issue>

<code>

[start of torch/nn/parallel/data_parallel.py]

1 import torch

2 from ..modules import Module

3 from .scatter_gather import scatter_kwargs, gather

4 from .replicate import replicate

5 from .parallel_apply import parallel_apply

6

7

8 class DataParallel(Module):

9 """Implements data parallelism at the module level.

10

11 This container parallelizes the application of the given module by

12 splitting the input across the specified devices by chunking in the batch

13 dimension. In the forward pass, the module is replicated on each device,

14 and each replica handles a portion of the input. During the backwards

15 pass, gradients from each replica are summed into the original module.

16

17 The batch size should be larger than the number of GPUs used. It should

18 also be an integer multiple of the number of GPUs so that each chunk is the

19 same size (so that each GPU processes the same number of samples).

20

21 See also: :ref:`cuda-nn-dataparallel-instead`

22

23 Arbitrary positional and keyword inputs are allowed to be passed into

24 DataParallel EXCEPT Tensors. All variables will be scattered on dim

25 specified (default 0). Primitive types will be broadcasted, but all

26 other types will be a shallow copy and can be corrupted if written to in

27 the model's forward pass.

28

29 Args:

30 module: module to be parallelized

31 device_ids: CUDA devices (default: all devices)

32 output_device: device location of output (default: device_ids[0])

33

34 Example::

35

36 >>> net = torch.nn.DataParallel(model, device_ids=[0, 1, 2])

37 >>> output = net(input_var)

38 """

39

40 # TODO: update notes/cuda.rst when this class handles 8+ GPUs well

41

42 def __init__(self, module, device_ids=None, output_device=None, dim=0):

43 super(DataParallel, self).__init__()

44 if device_ids is None:

45 device_ids = list(range(torch.cuda.device_count()))

46 if output_device is None:

47 output_device = device_ids[0]

48 self.dim = dim

49 self.module = module

50 self.device_ids = device_ids

51 self.output_device = output_device

52 if len(self.device_ids) == 1:

53 self.module.cuda(device_ids[0])

54

55 def forward(self, *inputs, **kwargs):

56 inputs, kwargs = self.scatter(inputs, kwargs, self.device_ids)

57 if len(self.device_ids) == 1:

58 return self.module(*inputs[0], **kwargs[0])

59 replicas = self.replicate(self.module, self.device_ids[:len(inputs)])

60 outputs = self.parallel_apply(replicas, inputs, kwargs)

61 return self.gather(outputs, self.output_device)

62

63 def replicate(self, module, device_ids):

64 return replicate(module, device_ids)

65

66 def scatter(self, inputs, kwargs, device_ids):

67 return scatter_kwargs(inputs, kwargs, device_ids, dim=self.dim)

68

69 def parallel_apply(self, replicas, inputs, kwargs):

70 return parallel_apply(replicas, inputs, kwargs, self.device_ids[:len(replicas)])

71

72 def gather(self, outputs, output_device):

73 return gather(outputs, output_device, dim=self.dim)

74

75

76 def data_parallel(module, inputs, device_ids=None, output_device=None, dim=0, module_kwargs=None):

77 """Evaluates module(input) in parallel across the GPUs given in device_ids.

78

79 This is the functional version of the DataParallel module.

80

81 Args:

82 module: the module to evaluate in parallel

83 inputs: inputs to the module

84 device_ids: GPU ids on which to replicate module

85 output_device: GPU location of the output Use -1 to indicate the CPU.

86 (default: device_ids[0])

87 Returns:

88 a Variable containing the result of module(input) located on

89 output_device

90 """

91 if not isinstance(inputs, tuple):

92 inputs = (inputs,)

93

94 if device_ids is None:

95 device_ids = list(range(torch.cuda.device_count()))

96

97 if output_device is None:

98 output_device = device_ids[0]

99

100 inputs, module_kwargs = scatter_kwargs(inputs, module_kwargs, device_ids, dim)

101 if len(device_ids) == 1:

102 return module(*inputs[0], **module_kwargs[0])

103 replicas = replicate(module, device_ids[:len(inputs)])

104 outputs = parallel_apply(replicas, inputs, module_kwargs, device_ids)

105 return gather(outputs, output_device, dim)

106

[end of torch/nn/parallel/data_parallel.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/torch/nn/parallel/data_parallel.py b/torch/nn/parallel/data_parallel.py

--- a/torch/nn/parallel/data_parallel.py

+++ b/torch/nn/parallel/data_parallel.py

@@ -100,6 +100,7 @@

inputs, module_kwargs = scatter_kwargs(inputs, module_kwargs, device_ids, dim)

if len(device_ids) == 1:

return module(*inputs[0], **module_kwargs[0])

- replicas = replicate(module, device_ids[:len(inputs)])

- outputs = parallel_apply(replicas, inputs, module_kwargs, device_ids)

+ used_device_ids = device_ids[:len(inputs)]

+ replicas = replicate(module, used_device_ids)

+ outputs = parallel_apply(replicas, inputs, module_kwargs, used_device_ids)

return gather(outputs, output_device, dim)

|

{"golden_diff": "diff --git a/torch/nn/parallel/data_parallel.py b/torch/nn/parallel/data_parallel.py\n--- a/torch/nn/parallel/data_parallel.py\n+++ b/torch/nn/parallel/data_parallel.py\n@@ -100,6 +100,7 @@\n inputs, module_kwargs = scatter_kwargs(inputs, module_kwargs, device_ids, dim)\n if len(device_ids) == 1:\n return module(*inputs[0], **module_kwargs[0])\n- replicas = replicate(module, device_ids[:len(inputs)])\n- outputs = parallel_apply(replicas, inputs, module_kwargs, device_ids)\n+ used_device_ids = device_ids[:len(inputs)]\n+ replicas = replicate(module, used_device_ids)\n+ outputs = parallel_apply(replicas, inputs, module_kwargs, used_device_ids)\n return gather(outputs, output_device, dim)\n", "issue": "DataParallel tests are currently broken \nhttps://github.com/pytorch/pytorch/pull/2121/commits/d69669efcfe4333c223f53249185c2e22f76ed73 has broken DataParallel tests. Now that device_ids are explicitly sent to parallel_apply, this assert https://github.com/pytorch/pytorch/blob/master/torch/nn/parallel/parallel_apply.py#L30 gets triggered if inputs are not big enough to be on all devices (e.g. batch size of 20 on 8 GPUs gets chunked into 6*3+2, so that 8-th GPU is idle, and assert gets triggered). \r\n\n", "before_files": [{"content": "import torch\nfrom ..modules import Module\nfrom .scatter_gather import scatter_kwargs, gather\nfrom .replicate import replicate\nfrom .parallel_apply import parallel_apply\n\n\nclass DataParallel(Module):\n \"\"\"Implements data parallelism at the module level.\n\n This container parallelizes the application of the given module by\n splitting the input across the specified devices by chunking in the batch\n dimension. In the forward pass, the module is replicated on each device,\n and each replica handles a portion of the input. During the backwards\n pass, gradients from each replica are summed into the original module.\n\n The batch size should be larger than the number of GPUs used. 
It should\n also be an integer multiple of the number of GPUs so that each chunk is the\n same size (so that each GPU processes the same number of samples).\n\n See also: :ref:`cuda-nn-dataparallel-instead`\n\n Arbitrary positional and keyword inputs are allowed to be passed into\n DataParallel EXCEPT Tensors. All variables will be scattered on dim\n specified (default 0). Primitive types will be broadcasted, but all\n other types will be a shallow copy and can be corrupted if written to in\n the model's forward pass.\n\n Args:\n module: module to be parallelized\n device_ids: CUDA devices (default: all devices)\n output_device: device location of output (default: device_ids[0])\n\n Example::\n\n >>> net = torch.nn.DataParallel(model, device_ids=[0, 1, 2])\n >>> output = net(input_var)\n \"\"\"\n\n # TODO: update notes/cuda.rst when this class handles 8+ GPUs well\n\n def __init__(self, module, device_ids=None, output_device=None, dim=0):\n super(DataParallel, self).__init__()\n if device_ids is None:\n device_ids = list(range(torch.cuda.device_count()))\n if output_device is None:\n output_device = device_ids[0]\n self.dim = dim\n self.module = module\n self.device_ids = device_ids\n self.output_device = output_device\n if len(self.device_ids) == 1:\n self.module.cuda(device_ids[0])\n\n def forward(self, *inputs, **kwargs):\n inputs, kwargs = self.scatter(inputs, kwargs, self.device_ids)\n if len(self.device_ids) == 1:\n return self.module(*inputs[0], **kwargs[0])\n replicas = self.replicate(self.module, self.device_ids[:len(inputs)])\n outputs = self.parallel_apply(replicas, inputs, kwargs)\n return self.gather(outputs, self.output_device)\n\n def replicate(self, module, device_ids):\n return replicate(module, device_ids)\n\n def scatter(self, inputs, kwargs, device_ids):\n return scatter_kwargs(inputs, kwargs, device_ids, dim=self.dim)\n\n def parallel_apply(self, replicas, inputs, kwargs):\n return parallel_apply(replicas, inputs, kwargs, 
self.device_ids[:len(replicas)])\n\n def gather(self, outputs, output_device):\n return gather(outputs, output_device, dim=self.dim)\n\n\ndef data_parallel(module, inputs, device_ids=None, output_device=None, dim=0, module_kwargs=None):\n \"\"\"Evaluates module(input) in parallel across the GPUs given in device_ids.\n\n This is the functional version of the DataParallel module.\n\n Args:\n module: the module to evaluate in parallel\n inputs: inputs to the module\n device_ids: GPU ids on which to replicate module\n output_device: GPU location of the output Use -1 to indicate the CPU.\n (default: device_ids[0])\n Returns:\n a Variable containing the result of module(input) located on\n output_device\n \"\"\"\n if not isinstance(inputs, tuple):\n inputs = (inputs,)\n\n if device_ids is None:\n device_ids = list(range(torch.cuda.device_count()))\n\n if output_device is None:\n output_device = device_ids[0]\n\n inputs, module_kwargs = scatter_kwargs(inputs, module_kwargs, device_ids, dim)\n if len(device_ids) == 1:\n return module(*inputs[0], **module_kwargs[0])\n replicas = replicate(module, device_ids[:len(inputs)])\n outputs = parallel_apply(replicas, inputs, module_kwargs, device_ids)\n return gather(outputs, output_device, dim)\n", "path": "torch/nn/parallel/data_parallel.py"}]}

| 1,854 | 185 |

gh_patches_debug_34564

|

rasdani/github-patches

|

git_diff

|

pyinstaller__pyinstaller-7259

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Fixes for use of pyinstaller with Django 4.x and custom management commands.

PROBLEM:

This feature aims to solve the problem of custom app-level management commands being missed from hidden imports, alongside issues with imports of apps listed within INSTALLED_APPS failing due to erroneous execution of the 'eval_script' function. Specifically, when the hidden imports of the INSTALLED_APPS are evaluated, the logging output generated by 'collect_submodules' when called in django_import_finder.py is captured in STDOUT regardless of the --log-level. Also, any additional management commands provided by one of the INSTALLED_APPS are ignored, as the 'get_commands' function has a hardcoded reference to the Django 1.8 command set. Django's current implementation of command collection will not complain of missing commands at runtime, thereby rendering the currently implemented patch of this function irrelevant.

SOLUTION:

The solution to this issue is to remove several redundant parts of the code alongside adding additional overrides for decluttering STDOUT.

The following is a list of measures taken to resolve the problem

- remove the monkey patching of Django's 'get_commands' method in pyi_rth_django.py

- modify the collect static code to have a boolean input parameter 'log' which when the relevant calls to logging within this function are wrapped in a conditional will serve to prevent logs being inappropriately raised.

</issue>
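
For context, here is a minimal sketch of how per-app command discovery can work without a hardcoded table. It assumes commands live as plain modules under `<app>/management/commands/`, which mirrors Django's project layout. The function name `find_commands_sketch` is hypothetical, and this is an illustration only, not PyInstaller's or Django's actual code.

```python
import os
import pkgutil


def find_commands_sketch(management_dir):
    """List command names found in <management_dir>/commands.

    Hypothetical helper: packages and modules whose names start with an
    underscore are skipped, so only public command modules are returned.
    """
    command_dir = os.path.join(management_dir, "commands")
    return sorted(
        name
        for _, name, is_pkg in pkgutil.iter_modules([command_dir])
        if not is_pkg and not name.startswith("_")
    )
```

A scan like this only finds modules that are actually present at runtime, so in a frozen application the result may depend on which modules were collected as hidden imports.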

<code>

[start of PyInstaller/hooks/rthooks/pyi_rth_django.py]

1 #-----------------------------------------------------------------------------

2 # Copyright (c) 2005-2022, PyInstaller Development Team.

3 #

4 # Licensed under the Apache License, Version 2.0 (the "License");

5 # you may not use this file except in compliance with the License.

6 #

7 # The full license is in the file COPYING.txt, distributed with this software.

8 #

9 # SPDX-License-Identifier: Apache-2.0

10 #-----------------------------------------------------------------------------

11

12 # This Django rthook was tested with Django 1.8.3.

13

14 import django.core.management

15 import django.utils.autoreload

16

17

18 def _get_commands():

19 # Django groupss commands by app. This returns static dict() as it is for django 1.8 and the default project.

20 commands = {

21 'changepassword': 'django.contrib.auth',

22 'check': 'django.core',

23 'clearsessions': 'django.contrib.sessions',

24 'collectstatic': 'django.contrib.staticfiles',

25 'compilemessages': 'django.core',

26 'createcachetable': 'django.core',

27 'createsuperuser': 'django.contrib.auth',

28 'dbshell': 'django.core',

29 'diffsettings': 'django.core',

30 'dumpdata': 'django.core',

31 'findstatic': 'django.contrib.staticfiles',

32 'flush': 'django.core',

33 'inspectdb': 'django.core',

34 'loaddata': 'django.core',

35 'makemessages': 'django.core',

36 'makemigrations': 'django.core',

37 'migrate': 'django.core',

38 'runfcgi': 'django.core',

39 'runserver': 'django.core',

40 'shell': 'django.core',

41 'showmigrations': 'django.core',

42 'sql': 'django.core',

43 'sqlall': 'django.core',

44 'sqlclear': 'django.core',

45 'sqlcustom': 'django.core',

46 'sqldropindexes': 'django.core',

47 'sqlflush': 'django.core',

48 'sqlindexes': 'django.core',

49 'sqlmigrate': 'django.core',

50 'sqlsequencereset': 'django.core',

51 'squashmigrations': 'django.core',

52 'startapp': 'django.core',

53 'startproject': 'django.core',

54 'syncdb': 'django.core',

55 'test': 'django.core',

56 'testserver': 'django.core',

57 'validate': 'django.core'

58 }

59 return commands

60

61

62 _old_restart_with_reloader = django.utils.autoreload.restart_with_reloader

63

64

65 def _restart_with_reloader(*args):

66 import sys

67 a0 = sys.argv.pop(0)

68 try:

69 return _old_restart_with_reloader(*args)

70 finally:

71 sys.argv.insert(0, a0)

72

73

74 # Override get_commands() function otherwise the app will complain that there are no commands.

75 django.core.management.get_commands = _get_commands

76 # Override restart_with_reloader() function, otherwise the app might complain that some commands do not exist;

77 # e.g., runserver.

78 django.utils.autoreload.restart_with_reloader = _restart_with_reloader

79

[end of PyInstaller/hooks/rthooks/pyi_rth_django.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/PyInstaller/hooks/rthooks/pyi_rth_django.py b/PyInstaller/hooks/rthooks/pyi_rth_django.py

--- a/PyInstaller/hooks/rthooks/pyi_rth_django.py

+++ b/PyInstaller/hooks/rthooks/pyi_rth_django.py

@@ -11,54 +11,8 @@

# This Django rthook was tested with Django 1.8.3.

-import django.core.management

import django.utils.autoreload

-

-def _get_commands():

- # Django groupss commands by app. This returns static dict() as it is for django 1.8 and the default project.

- commands = {

- 'changepassword': 'django.contrib.auth',

- 'check': 'django.core',

- 'clearsessions': 'django.contrib.sessions',

- 'collectstatic': 'django.contrib.staticfiles',

- 'compilemessages': 'django.core',

- 'createcachetable': 'django.core',

- 'createsuperuser': 'django.contrib.auth',

- 'dbshell': 'django.core',

- 'diffsettings': 'django.core',

- 'dumpdata': 'django.core',

- 'findstatic': 'django.contrib.staticfiles',

- 'flush': 'django.core',

- 'inspectdb': 'django.core',

- 'loaddata': 'django.core',

- 'makemessages': 'django.core',

- 'makemigrations': 'django.core',

- 'migrate': 'django.core',

- 'runfcgi': 'django.core',

- 'runserver': 'django.core',

- 'shell': 'django.core',

- 'showmigrations': 'django.core',

- 'sql': 'django.core',

- 'sqlall': 'django.core',

- 'sqlclear': 'django.core',

- 'sqlcustom': 'django.core',

- 'sqldropindexes': 'django.core',

- 'sqlflush': 'django.core',

- 'sqlindexes': 'django.core',

- 'sqlmigrate': 'django.core',

- 'sqlsequencereset': 'django.core',

- 'squashmigrations': 'django.core',

- 'startapp': 'django.core',

- 'startproject': 'django.core',

- 'syncdb': 'django.core',

- 'test': 'django.core',

- 'testserver': 'django.core',

- 'validate': 'django.core'

- }

- return commands

-

-

_old_restart_with_reloader = django.utils.autoreload.restart_with_reloader

@@ -71,8 +25,6 @@

sys.argv.insert(0, a0)

-# Override get_commands() function otherwise the app will complain that there are no commands.

-django.core.management.get_commands = _get_commands

# Override restart_with_reloader() function, otherwise the app might complain that some commands do not exist;

# e.g., runserver.

django.utils.autoreload.restart_with_reloader = _restart_with_reloader

|

{"golden_diff": "diff --git a/PyInstaller/hooks/rthooks/pyi_rth_django.py b/PyInstaller/hooks/rthooks/pyi_rth_django.py\n--- a/PyInstaller/hooks/rthooks/pyi_rth_django.py\n+++ b/PyInstaller/hooks/rthooks/pyi_rth_django.py\n@@ -11,54 +11,8 @@\n \n # This Django rthook was tested with Django 1.8.3.\n \n-import django.core.management\n import django.utils.autoreload\n \n-\n-def _get_commands():\n- # Django groupss commands by app. This returns static dict() as it is for django 1.8 and the default project.\n- commands = {\n- 'changepassword': 'django.contrib.auth',\n- 'check': 'django.core',\n- 'clearsessions': 'django.contrib.sessions',\n- 'collectstatic': 'django.contrib.staticfiles',\n- 'compilemessages': 'django.core',\n- 'createcachetable': 'django.core',\n- 'createsuperuser': 'django.contrib.auth',\n- 'dbshell': 'django.core',\n- 'diffsettings': 'django.core',\n- 'dumpdata': 'django.core',\n- 'findstatic': 'django.contrib.staticfiles',\n- 'flush': 'django.core',\n- 'inspectdb': 'django.core',\n- 'loaddata': 'django.core',\n- 'makemessages': 'django.core',\n- 'makemigrations': 'django.core',\n- 'migrate': 'django.core',\n- 'runfcgi': 'django.core',\n- 'runserver': 'django.core',\n- 'shell': 'django.core',\n- 'showmigrations': 'django.core',\n- 'sql': 'django.core',\n- 'sqlall': 'django.core',\n- 'sqlclear': 'django.core',\n- 'sqlcustom': 'django.core',\n- 'sqldropindexes': 'django.core',\n- 'sqlflush': 'django.core',\n- 'sqlindexes': 'django.core',\n- 'sqlmigrate': 'django.core',\n- 'sqlsequencereset': 'django.core',\n- 'squashmigrations': 'django.core',\n- 'startapp': 'django.core',\n- 'startproject': 'django.core',\n- 'syncdb': 'django.core',\n- 'test': 'django.core',\n- 'testserver': 'django.core',\n- 'validate': 'django.core'\n- }\n- return commands\n-\n-\n _old_restart_with_reloader = django.utils.autoreload.restart_with_reloader\n \n \n@@ -71,8 +25,6 @@\n sys.argv.insert(0, a0)\n \n \n-# Override get_commands() function otherwise the app will complain that 
there are no commands.\n-django.core.management.get_commands = _get_commands\n # Override restart_with_reloader() function, otherwise the app might complain that some commands do not exist;\n # e.g., runserver.\n django.utils.autoreload.restart_with_reloader = _restart_with_reloader\n", "issue": "Fixes for use of pyinstaller with Django 4.x and custom management commands.\nPROBLEM:\r\nThis feature aims to solve the problem of the custom app level management commands being missed out from hidden imports alongside issues with imports of apps listed within INSTALLED_APPS failing due to erroneous execution of 'eval_script' function. Specifically when the hidden imports of the INSTALLED_APPS are evaluated the logging outputs generated by 'collect_submodules' when called in django_import_finder.py are captured in the STDOUT regardless of the --log-level. Also any additional management commands provided by one of the INSTALLED_APPS are ignored as the 'get_commands' function has a hardcoded referenced to Django 1.8 command set. Django's currently implementation of command collection will not complain of missing commands at runtime thereby rendering the patch of this function that is currently implemented irrelevant.\r\n\r\nSOLUTION:\r\nThe solution to this issue is to remove several redundant parts of the code alongside adding additional overrides for decluttering STDOUT. 
\r\n\r\nThe following is a list of measures taken to resolve the problem\r\n- remove the monkey patching of Django's 'get_commands' method in pyi_rth_django.py\r\n- modify the collect static code to have a boolean input parameter 'log' which when the relevant calls to logging within this function are wrapped in a conditional will serve to prevent logs being inappropriately raised.\r\n\n", "before_files": [{"content": "#-----------------------------------------------------------------------------\n# Copyright (c) 2005-2022, PyInstaller Development Team.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n#\n# The full license is in the file COPYING.txt, distributed with this software.\n#\n# SPDX-License-Identifier: Apache-2.0\n#-----------------------------------------------------------------------------\n\n# This Django rthook was tested with Django 1.8.3.\n\nimport django.core.management\nimport django.utils.autoreload\n\n\ndef _get_commands():\n # Django groupss commands by app. 
This returns static dict() as it is for django 1.8 and the default project.\n commands = {\n 'changepassword': 'django.contrib.auth',\n 'check': 'django.core',\n 'clearsessions': 'django.contrib.sessions',\n 'collectstatic': 'django.contrib.staticfiles',\n 'compilemessages': 'django.core',\n 'createcachetable': 'django.core',\n 'createsuperuser': 'django.contrib.auth',\n 'dbshell': 'django.core',\n 'diffsettings': 'django.core',\n 'dumpdata': 'django.core',\n 'findstatic': 'django.contrib.staticfiles',\n 'flush': 'django.core',\n 'inspectdb': 'django.core',\n 'loaddata': 'django.core',\n 'makemessages': 'django.core',\n 'makemigrations': 'django.core',\n 'migrate': 'django.core',\n 'runfcgi': 'django.core',\n 'runserver': 'django.core',\n 'shell': 'django.core',\n 'showmigrations': 'django.core',\n 'sql': 'django.core',\n 'sqlall': 'django.core',\n 'sqlclear': 'django.core',\n 'sqlcustom': 'django.core',\n 'sqldropindexes': 'django.core',\n 'sqlflush': 'django.core',\n 'sqlindexes': 'django.core',\n 'sqlmigrate': 'django.core',\n 'sqlsequencereset': 'django.core',\n 'squashmigrations': 'django.core',\n 'startapp': 'django.core',\n 'startproject': 'django.core',\n 'syncdb': 'django.core',\n 'test': 'django.core',\n 'testserver': 'django.core',\n 'validate': 'django.core'\n }\n return commands\n\n\n_old_restart_with_reloader = django.utils.autoreload.restart_with_reloader\n\n\ndef _restart_with_reloader(*args):\n import sys\n a0 = sys.argv.pop(0)\n try:\n return _old_restart_with_reloader(*args)\n finally:\n sys.argv.insert(0, a0)\n\n\n# Override get_commands() function otherwise the app will complain that there are no commands.\ndjango.core.management.get_commands = _get_commands\n# Override restart_with_reloader() function, otherwise the app might complain that some commands do not exist;\n# e.g., runserver.\ndjango.utils.autoreload.restart_with_reloader = _restart_with_reloader\n", "path": "PyInstaller/hooks/rthooks/pyi_rth_django.py"}]}

| 1,633 | 663 |

gh_patches_debug_24655

|

rasdani/github-patches

|

git_diff

|

open-telemetry__opentelemetry-python-3217

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Make entry_points behave the same across Python versions

The recently introduced `entry_points` function does not behave the same across Python versions and it is not possible to get all entry points in Python 3.8 and 3.9.

</issue>
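
To illustrate the inconsistency, here is a minimal sketch of a wrapper that returns the same shape of result on every supported interpreter. It assumes only the standard library `importlib.metadata` (Python 3.8+). The name `select_entry_points` is hypothetical and not part of OpenTelemetry; it is one possible approach, not necessarily the one the project adopted.

```python
import sys
from importlib.metadata import entry_points as _stdlib_entry_points


def select_entry_points(group, name=None):
    """Return a list of entry points in `group`, optionally filtered by `name`."""
    if sys.version_info >= (3, 10):
        # Python 3.10+ accepts selection parameters directly.
        candidates = _stdlib_entry_points(group=group)
    else:
        # Python 3.8 and 3.9 return a mapping of group name to entry points.
        candidates = _stdlib_entry_points().get(group, [])
    # Filtering by name here keeps the behaviour identical on both branches.
    return [ep for ep in candidates if name is None or ep.name == name]
```

Leaving `name` unset returns every entry point in the group, which is the case the generator-based helper in the listing below cannot express.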

<code>

[start of opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py]

1 # Copyright The OpenTelemetry Authors

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 from sys import version_info

16

17 # FIXME remove this when support for 3.7 is dropped.

18 if version_info.minor == 7:

19 # pylint: disable=import-error

20 from importlib_metadata import entry_points, version # type: ignore

21

22 # FIXME remove this file when support for 3.9 is dropped.

23 elif version_info.minor in (8, 9):

24 # pylint: disable=import-error

25 from importlib.metadata import (

26 entry_points as importlib_metadata_entry_points,

27 )

28 from importlib.metadata import version

29

30 def entry_points(group: str, name: str): # type: ignore

31 for entry_point in importlib_metadata_entry_points()[group]:

32 if entry_point.name == name:

33 yield entry_point

34

35 else:

36 from importlib.metadata import entry_points, version

37

38 __all__ = ["entry_points", "version"]

39

[end of opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py b/opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py

--- a/opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py

+++ b/opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py

@@ -12,27 +12,18 @@

# See the License for the specific language governing permissions and

# limitations under the License.

-from sys import version_info

+# FIXME: Use importlib.metadata when support for 3.11 is dropped if the rest of

+# the supported versions at that time have the same API.

+from importlib_metadata import ( # type: ignore

+ EntryPoint,

+ EntryPoints,

+ entry_points,

+ version,

+)

-# FIXME remove this when support for 3.7 is dropped.

-if version_info.minor == 7:

- # pylint: disable=import-error

- from importlib_metadata import entry_points, version # type: ignore

+# The importlib-metadata library has introduced breaking changes before to its

+# API, this module is kept just to act as a layer between the

+# importlib-metadata library and our project if in any case it is necessary to

+# do so.

-# FIXME remove this file when support for 3.9 is dropped.

-elif version_info.minor in (8, 9):

- # pylint: disable=import-error

- from importlib.metadata import (

- entry_points as importlib_metadata_entry_points,

- )

- from importlib.metadata import version

-

- def entry_points(group: str, name: str): # type: ignore

- for entry_point in importlib_metadata_entry_points()[group]:

- if entry_point.name == name:

- yield entry_point

-

-else:

- from importlib.metadata import entry_points, version

-

-__all__ = ["entry_points", "version"]

+__all__ = ["entry_points", "version", "EntryPoint", "EntryPoints"]

|

{"golden_diff": "diff --git a/opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py b/opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py\n--- a/opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py\n+++ b/opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py\n@@ -12,27 +12,18 @@\n # See the License for the specific language governing permissions and\n # limitations under the License.\n \n-from sys import version_info\n+# FIXME: Use importlib.metadata when support for 3.11 is dropped if the rest of\n+# the supported versions at that time have the same API.\n+from importlib_metadata import ( # type: ignore\n+ EntryPoint,\n+ EntryPoints,\n+ entry_points,\n+ version,\n+)\n \n-# FIXME remove this when support for 3.7 is dropped.\n-if version_info.minor == 7:\n- # pylint: disable=import-error\n- from importlib_metadata import entry_points, version # type: ignore\n+# The importlib-metadata library has introduced breaking changes before to its\n+# API, this module is kept just to act as a layer between the\n+# importlib-metadata library and our project if in any case it is necessary to\n+# do so.\n \n-# FIXME remove this file when support for 3.9 is dropped.\n-elif version_info.minor in (8, 9):\n- # pylint: disable=import-error\n- from importlib.metadata import (\n- entry_points as importlib_metadata_entry_points,\n- )\n- from importlib.metadata import version\n-\n- def entry_points(group: str, name: str): # type: ignore\n- for entry_point in importlib_metadata_entry_points()[group]:\n- if entry_point.name == name:\n- yield entry_point\n-\n-else:\n- from importlib.metadata import entry_points, version\n-\n-__all__ = [\"entry_points\", \"version\"]\n+__all__ = [\"entry_points\", \"version\", \"EntryPoint\", \"EntryPoints\"]\n", "issue": "Make entry_points behave the same across Python versions\nThe recently introduced `entry_points` function does not behave the same across Python versions and it is not possible to get all entry 
points in Python 3.8 and 3.9.\n", "before_files": [{"content": "# Copyright The OpenTelemetry Authors\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nfrom sys import version_info\n\n# FIXME remove this when support for 3.7 is dropped.\nif version_info.minor == 7:\n # pylint: disable=import-error\n from importlib_metadata import entry_points, version # type: ignore\n\n# FIXME remove this file when support for 3.9 is dropped.\nelif version_info.minor in (8, 9):\n # pylint: disable=import-error\n from importlib.metadata import (\n entry_points as importlib_metadata_entry_points,\n )\n from importlib.metadata import version\n\n def entry_points(group: str, name: str): # type: ignore\n for entry_point in importlib_metadata_entry_points()[group]:\n if entry_point.name == name:\n yield entry_point\n\nelse:\n from importlib.metadata import entry_points, version\n\n__all__ = [\"entry_points\", \"version\"]\n", "path": "opentelemetry-api/src/opentelemetry/util/_importlib_metadata.py"}]}

| 989 | 445 |

gh_patches_debug_8511

|

rasdani/github-patches

|

git_diff

|

scalableminds__webknossos-libs-1067

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

upload command on windows: backslashes on server, invalid dataset

A user created a valid dataset on a windows machine with the `webknossos convert` command, then called `webknossos upload` with a valid token. The upload went through, but the directory structure got lost: the files on the server had backslashes in the paths, like `'color\2-2-1\z0\y7\x1.wkw'`. Instead, when sending files to upload, the client should always replace the client’s path separator by `/`.

</issue>
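
The separator problem can be reproduced on any operating system with `pathlib.PureWindowsPath`. The sketch below is a hedged illustration: the helper name `make_upload_identifier` and the sample upload id are hypothetical, and only the standard library is used.

```python
from pathlib import PureWindowsPath


def make_upload_identifier(upload_id, relative_path):
    """Build an identifier that always uses '/' as the path separator."""
    # as_posix() renders the path with forward slashes on every platform.
    return f"{upload_id}/{relative_path.as_posix()}"


# A relative path as it would look on a Windows client.
windows_relative = PureWindowsPath("color", "2-2-1", "z0", "y7", "x1.wkw")

# Formatting the path object directly keeps the backslashes.
naive_identifier = f"upload-id/{windows_relative}"

# Converting first yields separators the server can interpret.
safe_identifier = make_upload_identifier("upload-id", windows_relative)
```

Formatting a Windows path object directly keeps its native separators, which is consistent with the backslash file names reported above.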

<code>

[start of webknossos/webknossos/client/_upload_dataset.py]

1 import os

2 import warnings

3 from functools import lru_cache

4 from pathlib import Path

5 from tempfile import TemporaryDirectory

6 from time import gmtime, strftime

7 from typing import Iterator, List, NamedTuple, Optional, Tuple

8 from uuid import uuid4

9

10 import httpx

11

12 from ..dataset import Dataset, Layer, RemoteDataset

13 from ..utils import get_rich_progress

14 from ._resumable import Resumable

15 from .api_client.models import (

16 ApiDatasetUploadInformation,

17 ApiLinkedLayerIdentifier,

18 ApiReserveDatasetUploadInformation,

19 )

20 from .context import _get_context, _WebknossosContext

21

22 DEFAULT_SIMULTANEOUS_UPLOADS = 5

23 MAXIMUM_RETRY_COUNT = 4

24

25

26 class LayerToLink(NamedTuple):

27 dataset_name: str

28 layer_name: str

29 new_layer_name: Optional[str] = None

30 organization_id: Optional[str] = (

31 None # defaults to the user's organization before uploading

32 )

33

34 @classmethod

35 def from_remote_layer(

36 cls,

37 layer: Layer,

38 new_layer_name: Optional[str] = None,

39 organization_id: Optional[str] = None,

40 ) -> "LayerToLink":

41 ds = layer.dataset

42 assert isinstance(

43 ds, RemoteDataset

44 ), f"The passed layer must belong to a RemoteDataset, but belongs to {ds}"

45 return cls(ds._dataset_name, layer.name, new_layer_name, organization_id)

46

47 def as_api_linked_layer_identifier(self) -> ApiLinkedLayerIdentifier:

48 context = _get_context()

49 return ApiLinkedLayerIdentifier(

50 self.organization_id or context.organization_id,

51 self.dataset_name,

52 self.layer_name,

53 self.new_layer_name,

54 )

55

56

57 @lru_cache(maxsize=None)

58 def _cached_get_upload_datastore(context: _WebknossosContext) -> str:

59 datastores = context.api_client_with_auth.datastore_list()

60 for datastore in datastores:

61 if datastore.allows_upload:

62 return datastore.url

63 raise ValueError("No datastore found where datasets can be uploaded.")

64

65

66 def _walk(

67 path: Path,

68 base_path: Optional[Path] = None,

69 ) -> Iterator[Tuple[Path, Path, int]]:

70 if base_path is None:

71 base_path = path

72 if path.is_dir():

73 for p in path.iterdir():

74 yield from _walk(p, base_path)

75 else:

76 yield (path.resolve(), path.relative_to(base_path), path.stat().st_size)

77

78

79 def upload_dataset(

80 dataset: Dataset,

81 new_dataset_name: Optional[str] = None,

82 layers_to_link: Optional[List[LayerToLink]] = None,

83 jobs: Optional[int] = None,

84 ) -> str:

85 if new_dataset_name is None:

86 new_dataset_name = dataset.name

87 if layers_to_link is None:

88 layers_to_link = []

89 context = _get_context()

90 layer_names_to_link = set(i.new_layer_name or i.layer_name for i in layers_to_link)

91 if len(layer_names_to_link.intersection(dataset.layers.keys())) > 0:

92 warnings.warn(

93 "[INFO] Excluding the following layers from upload, since they will be linked: "

94 + f"{layer_names_to_link.intersection(dataset.layers.keys())}"

95 )

96 with TemporaryDirectory() as tmpdir:

97 tmp_ds = dataset.shallow_copy_dataset(

98 tmpdir, name=dataset.name, layers_to_ignore=layer_names_to_link

99 )

100 return upload_dataset(

101 tmp_ds,

102 new_dataset_name=new_dataset_name,

103 layers_to_link=layers_to_link,

104 jobs=jobs,

105 )

106

107 file_infos = list(_walk(dataset.path))

108 total_file_size = sum(size for _, _, size in file_infos)

109 # replicates https://github.com/scalableminds/webknossos/blob/master/frontend/javascripts/admin/dataset/dataset_upload_view.js

110 time_str = strftime("%Y-%m-%dT%H-%M-%S", gmtime())

111 upload_id = f"{time_str}__{uuid4()}"

112 datastore_token = context.datastore_required_token

113 datastore_url = _cached_get_upload_datastore(context)

114 datastore_api_client = context.get_datastore_api_client(datastore_url)

115 simultaneous_uploads = jobs if jobs is not None else DEFAULT_SIMULTANEOUS_UPLOADS

116 if "PYTEST_CURRENT_TEST" in os.environ:

117 simultaneous_uploads = 1

118 is_valid_new_name_response = context.api_client_with_auth.dataset_is_valid_new_name(

119 context.organization_id, new_dataset_name

120 )

121 if not is_valid_new_name_response.is_valid:

122 problems_str = ""

123 if is_valid_new_name_response.errors is not None:

124 problems_str = f" Problems: {is_valid_new_name_response.errors}"

125 raise Exception(

126 f"Dataset name {context.organization_id}/{new_dataset_name} is not a valid new dataset name.{problems_str}"

127 )

128

129 datastore_api_client.dataset_reserve_upload(

130 ApiReserveDatasetUploadInformation(

131 upload_id,

132 new_dataset_name,

133 context.organization_id,

134 total_file_count=len(file_infos),

135 layers_to_link=[

136 layer.as_api_linked_layer_identifier() for layer in layers_to_link

137 ],

138 folder_id=None,

139 initial_teams=[],

140 ),

141 token=datastore_token,

142 retry_count=MAXIMUM_RETRY_COUNT,

143 )

144 with get_rich_progress() as progress:

145 with Resumable(

146 f"{datastore_url}/data/datasets?token={datastore_token}",

147 simultaneous_uploads=simultaneous_uploads,

148 query={

149 "owningOrganization": context.organization_id,

150 "name": new_dataset_name,

151 "totalFileCount": len(file_infos),

152 },

153 chunk_size=100 * 1024 * 1024, # 100 MiB

154 generate_unique_identifier=lambda _,

155 relative_path: f"{upload_id}/{relative_path}",

156 test_chunks=False,

157 permanent_errors=[400, 403, 404, 409, 415, 500, 501],

158 client=httpx.Client(timeout=None),

159 ) as session:

160 progress_task = progress.add_task("Dataset Upload", total=total_file_size)

161 for file_path, relative_path, _ in file_infos:

162 resumable_file = session.add_file(file_path, relative_path)

163 resumable_file.chunk_completed.register(

164 lambda chunk: progress.advance(progress_task, chunk.size)

165 )

166

167 datastore_api_client.dataset_finish_upload(

168 ApiDatasetUploadInformation(upload_id),

169 datastore_token,

170 retry_count=MAXIMUM_RETRY_COUNT,

171 )

172

173 return new_dataset_name

174

[end of webknossos/webknossos/client/_upload_dataset.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)


 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1

-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/webknossos/webknossos/client/_upload_dataset.py b/webknossos/webknossos/client/_upload_dataset.py
--- a/webknossos/webknossos/client/_upload_dataset.py
+++ b/webknossos/webknossos/client/_upload_dataset.py
@@ -152,7 +152,7 @@
             },
             chunk_size=100 * 1024 * 1024,  # 100 MiB
             generate_unique_identifier=lambda _,
-            relative_path: f"{upload_id}/{relative_path}",
+            relative_path: f"{upload_id}/{relative_path.as_posix()}",
             test_chunks=False,
             permanent_errors=[400, 403, 404, 409, 415, 500, 501],
             client=httpx.Client(timeout=None),

|

{"golden_diff": "diff --git a/webknossos/webknossos/client/_upload_dataset.py b/webknossos/webknossos/client/_upload_dataset.py\n--- a/webknossos/webknossos/client/_upload_dataset.py\n+++ b/webknossos/webknossos/client/_upload_dataset.py\n@@ -152,7 +152,7 @@\n },\n chunk_size=100 * 1024 * 1024, # 100 MiB\n generate_unique_identifier=lambda _,\n- relative_path: f\"{upload_id}/{relative_path}\",\n+ relative_path: f\"{upload_id}/{relative_path.as_posix()}\",\n test_chunks=False,\n permanent_errors=[400, 403, 404, 409, 415, 500, 501],\n client=httpx.Client(timeout=None),\n", "issue": "upload command on windows: backslashes on server, invalid dataset\nA user created a valid dataset on a windows machine with the `webknossos convert` command, then called `webknossos upload` with a valid token. The upload went through, but the directory structure got lost: the files on the server had backslashes in the paths, like `'color\\2-2-1\\z0\\y7\\x1.wkw'`. Instead, when sending files to upload, the client should always replace the client\u2019s path separator by `/`.\n", "before_files": [{"content": "import os\nimport warnings\nfrom functools import lru_cache\nfrom pathlib import Path\nfrom tempfile import TemporaryDirectory\nfrom time import gmtime, strftime\nfrom typing import Iterator, List, NamedTuple, Optional, Tuple\nfrom uuid import uuid4\n\nimport httpx\n\nfrom ..dataset import Dataset, Layer, RemoteDataset\nfrom ..utils import get_rich_progress\nfrom ._resumable import Resumable\nfrom .api_client.models import (\n ApiDatasetUploadInformation,\n ApiLinkedLayerIdentifier,\n ApiReserveDatasetUploadInformation,\n)\nfrom .context import _get_context, _WebknossosContext\n\nDEFAULT_SIMULTANEOUS_UPLOADS = 5\nMAXIMUM_RETRY_COUNT = 4\n\n\nclass LayerToLink(NamedTuple):\n dataset_name: str\n layer_name: str\n new_layer_name: Optional[str] = None\n organization_id: Optional[str] = (\n None # defaults to the user's organization before uploading\n )\n\n @classmethod\n def 
from_remote_layer(\n cls,\n layer: Layer,\n new_layer_name: Optional[str] = None,\n organization_id: Optional[str] = None,\n ) -> \"LayerToLink\":\n ds = layer.dataset\n assert isinstance(\n ds, RemoteDataset\n ), f\"The passed layer must belong to a RemoteDataset, but belongs to {ds}\"\n return cls(ds._dataset_name, layer.name, new_layer_name, organization_id)\n\n def as_api_linked_layer_identifier(self) -> ApiLinkedLayerIdentifier:\n context = _get_context()\n return ApiLinkedLayerIdentifier(\n self.organization_id or context.organization_id,\n self.dataset_name,\n self.layer_name,\n self.new_layer_name,\n )\n\n\n@lru_cache(maxsize=None)\ndef _cached_get_upload_datastore(context: _WebknossosContext) -> str:\n datastores = context.api_client_with_auth.datastore_list()\n for datastore in datastores:\n if datastore.allows_upload:\n return datastore.url\n raise ValueError(\"No datastore found where datasets can be uploaded.\")\n\n\ndef _walk(\n path: Path,\n base_path: Optional[Path] = None,\n) -> Iterator[Tuple[Path, Path, int]]:\n if base_path is None:\n base_path = path\n if path.is_dir():\n for p in path.iterdir():\n yield from _walk(p, base_path)\n else:\n yield (path.resolve(), path.relative_to(base_path), path.stat().st_size)\n\n\ndef upload_dataset(\n dataset: Dataset,\n new_dataset_name: Optional[str] = None,\n layers_to_link: Optional[List[LayerToLink]] = None,\n jobs: Optional[int] = None,\n) -> str:\n if new_dataset_name is None:\n new_dataset_name = dataset.name\n if layers_to_link is None:\n layers_to_link = []\n context = _get_context()\n layer_names_to_link = set(i.new_layer_name or i.layer_name for i in layers_to_link)\n if len(layer_names_to_link.intersection(dataset.layers.keys())) > 0:\n warnings.warn(\n \"[INFO] Excluding the following layers from upload, since they will be linked: \"\n + f\"{layer_names_to_link.intersection(dataset.layers.keys())}\"\n )\n with TemporaryDirectory() as tmpdir:\n tmp_ds = dataset.shallow_copy_dataset(\n tmpdir, 
name=dataset.name, layers_to_ignore=layer_names_to_link\n )\n return upload_dataset(\n tmp_ds,\n new_dataset_name=new_dataset_name,\n layers_to_link=layers_to_link,\n jobs=jobs,\n )\n\n file_infos = list(_walk(dataset.path))\n total_file_size = sum(size for _, _, size in file_infos)\n # replicates https://github.com/scalableminds/webknossos/blob/master/frontend/javascripts/admin/dataset/dataset_upload_view.js\n time_str = strftime(\"%Y-%m-%dT%H-%M-%S\", gmtime())\n upload_id = f\"{time_str}__{uuid4()}\"\n datastore_token = context.datastore_required_token\n datastore_url = _cached_get_upload_datastore(context)\n datastore_api_client = context.get_datastore_api_client(datastore_url)\n simultaneous_uploads = jobs if jobs is not None else DEFAULT_SIMULTANEOUS_UPLOADS\n if \"PYTEST_CURRENT_TEST\" in os.environ:\n simultaneous_uploads = 1\n is_valid_new_name_response = context.api_client_with_auth.dataset_is_valid_new_name(\n context.organization_id, new_dataset_name\n )\n if not is_valid_new_name_response.is_valid:\n problems_str = \"\"\n if is_valid_new_name_response.errors is not None:\n problems_str = f\" Problems: {is_valid_new_name_response.errors}\"\n raise Exception(\n f\"Dataset name {context.organization_id}/{new_dataset_name} is not a valid new dataset name.{problems_str}\"\n )\n\n datastore_api_client.dataset_reserve_upload(\n ApiReserveDatasetUploadInformation(\n upload_id,\n new_dataset_name,\n context.organization_id,\n total_file_count=len(file_infos),\n layers_to_link=[\n layer.as_api_linked_layer_identifier() for layer in layers_to_link\n ],\n folder_id=None,\n initial_teams=[],\n ),\n token=datastore_token,\n retry_count=MAXIMUM_RETRY_COUNT,\n )\n with get_rich_progress() as progress:\n with Resumable(\n f\"{datastore_url}/data/datasets?token={datastore_token}\",\n simultaneous_uploads=simultaneous_uploads,\n query={\n \"owningOrganization\": context.organization_id,\n \"name\": new_dataset_name,\n \"totalFileCount\": len(file_infos),\n },\n 
chunk_size=100 * 1024 * 1024, # 100 MiB\n generate_unique_identifier=lambda _,\n relative_path: f\"{upload_id}/{relative_path}\",\n test_chunks=False,\n permanent_errors=[400, 403, 404, 409, 415, 500, 501],\n client=httpx.Client(timeout=None),\n ) as session:\n progress_task = progress.add_task(\"Dataset Upload\", total=total_file_size)\n for file_path, relative_path, _ in file_infos:\n resumable_file = session.add_file(file_path, relative_path)\n resumable_file.chunk_completed.register(\n lambda chunk: progress.advance(progress_task, chunk.size)\n )\n\n datastore_api_client.dataset_finish_upload(\n ApiDatasetUploadInformation(upload_id),\n datastore_token,\n retry_count=MAXIMUM_RETRY_COUNT,\n )\n\n return new_dataset_name\n", "path": "webknossos/webknossos/client/_upload_dataset.py"}]}

| 2,500 | 197 |

gh_patches_debug_3318

|

rasdani/github-patches

|

git_diff

|

feast-dev__feast-2753

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Unable to access data in Feast UI when deployed to remote instance

## Expected Behavior

Should be able to view registry data when launching UI with `feast ui` on remote instances (like EC2).

## Current Behavior

I’ve tried setting the host to `0.0.0.0`; the static assets get loaded and can be accessed via the public IP. But the requests to the registry (`http://0.0.0.0:8888/registry`) fail, so no data shows up.

I've also tried setting the host to the private IP, but the request to `/registry` times out.

## Steps to reproduce

Run `feast ui --host <instance private ip>` in EC2 instance.

### Specifications

- Version:`0.21.2`

- Platform: EC2

- Subsystem:

## Possible Solution

Potential CORS issue that needs to be fixed?

</issue>

<code>

[start of sdk/python/feast/ui_server.py]

1 import json

2 import threading

3 from typing import Callable, Optional

4

5 import pkg_resources

6 import uvicorn

7 from fastapi import FastAPI, Response

8 from fastapi.middleware.cors import CORSMiddleware