| problem_id (string, length 18–22) | source (string, 1 distinct value) | task_type (string, 1 distinct value) | in_source_id (string, length 13–58) | prompt (string, length 1.71k–18.9k) | golden_diff (string, length 145–5.13k) | verification_info (string, length 465–23.6k) | num_tokens_prompt (int64, 556–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_22209

|

rasdani/github-patches

|

git_diff

|

cloudtools__troposphere-681

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

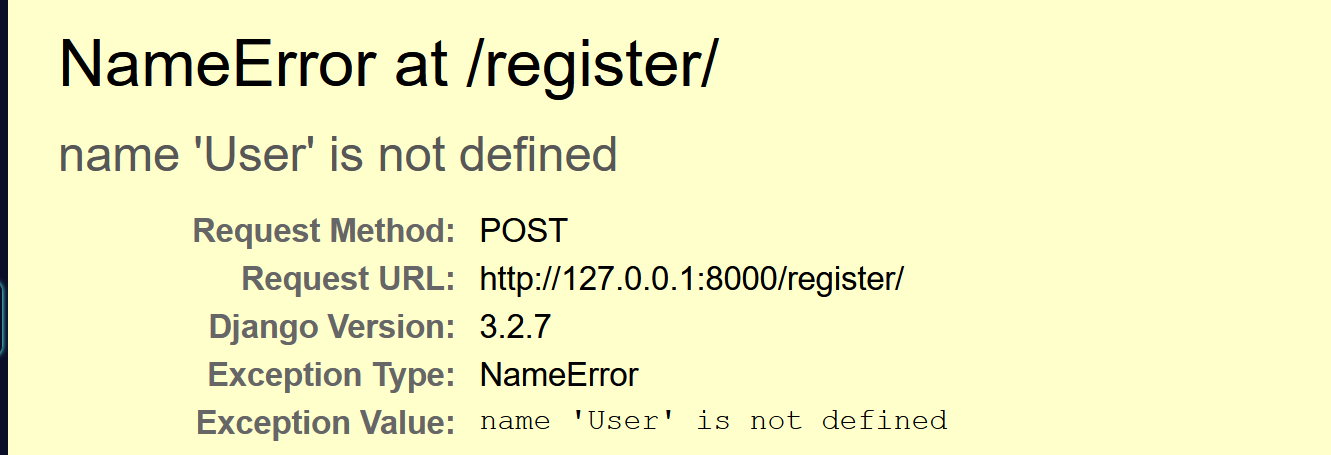

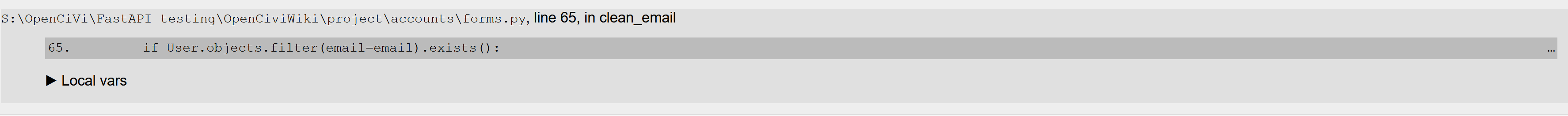

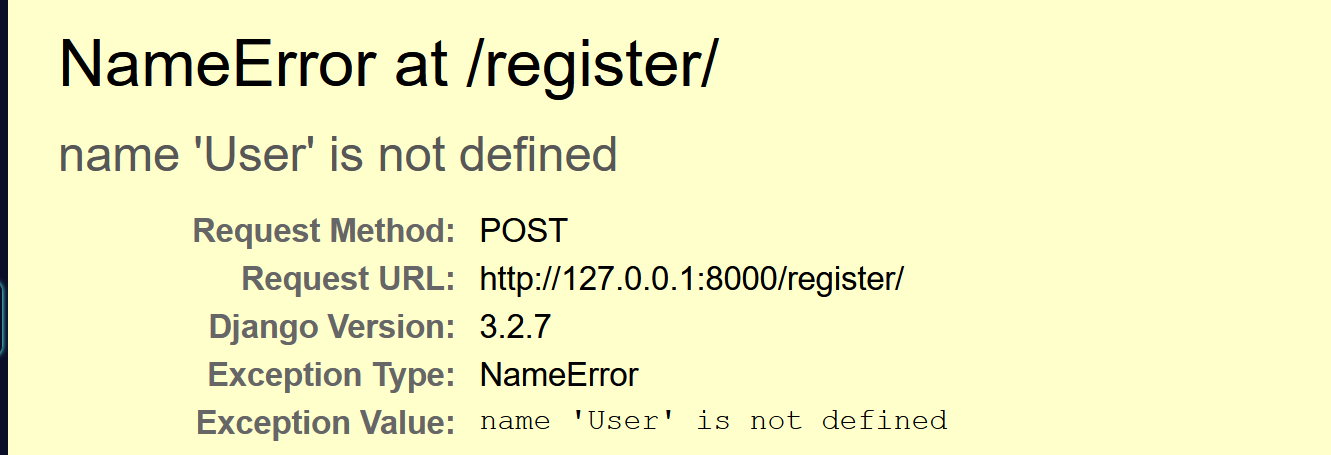

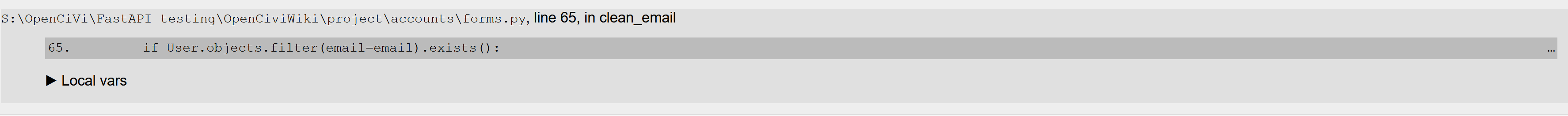

<issue>

ApiGateway Model Schema should be a Dict not a string

troposphere.apigateway.Model class contains a property called schema, it is defined as 'basestring', this property should be a dict.

Ideally, you should supply a class to represent 'Schema'

</issue>

<code>

[start of troposphere/apigateway.py]

1 from . import AWSObject, AWSProperty
2 from .validators import positive_integer
3
4
5 def validate_authorizer_ttl(ttl_value):
6     """ Validate authorizer ttl timeout
7     :param ttl_value: The TTL timeout in seconds
8     :return: The provided TTL value if valid
9     """
10     ttl_value = int(positive_integer(ttl_value))
11     if ttl_value > 3600:
12         raise ValueError("The AuthorizerResultTtlInSeconds should be <= 3600")
13     return ttl_value
14
15
16 class Account(AWSObject):
17     resource_type = "AWS::ApiGateway::Account"
18
19     props = {
20         "CloudWatchRoleArn": (basestring, False)
21     }
22
23
24 class StageKey(AWSProperty):
25
26     props = {
27         "RestApiId": (basestring, False),
28         "StageName": (basestring, False)
29     }
30
31
32 class ApiKey(AWSObject):
33     resource_type = "AWS::ApiGateway::ApiKey"
34
35     props = {
36         "Description": (basestring, False),
37         "Enabled": (bool, False),
38         "Name": (basestring, False),
39         "StageKeys": ([StageKey], False)
40     }
41
42
43 class Authorizer(AWSObject):
44     resource_type = "AWS::ApiGateway::Authorizer"
45
46     props = {
47         "AuthorizerCredentials": (basestring, False),
48         "AuthorizerResultTtlInSeconds": (validate_authorizer_ttl, False),
49         "AuthorizerUri": (basestring, True),
50         "IdentitySource": (basestring, True),
51         "IdentityValidationExpression": (basestring, False),
52         "Name": (basestring, True),
53         "ProviderARNs": ([basestring], False),
54         "RestApiId": (basestring, False),
55         "Type": (basestring, True)
56     }
57
58
59 class BasePathMapping(AWSObject):
60     resource_type = "AWS::ApiGateway::BasePathMapping"
61
62     props = {
63         "BasePath": (basestring, False),
64         "DomainName": (basestring, True),
65         "RestApiId": (basestring, True),
66         "Stage": (basestring, False)
67     }
68
69
70 class ClientCertificate(AWSObject):
71     resource_type = "AWS::ApiGateway::ClientCertificate"
72
73     props = {
74         "Description": (basestring, False)
75     }
76
77
78 class MethodSetting(AWSProperty):
79
80     props = {
81         "CacheDataEncrypted": (bool, False),
82         "CacheTtlInSeconds": (positive_integer, False),
83         "CachingEnabled": (bool, False),
84         "DataTraceEnabled": (bool, False),
85         "HttpMethod": (basestring, True),
86         "LoggingLevel": (basestring, False),
87         "MetricsEnabled": (bool, False),
88         "ResourcePath": (basestring, True),
89         "ThrottlingBurstLimit": (positive_integer, False),
90         "ThrottlingRateLimit": (positive_integer, False)
91     }
92
93
94 class StageDescription(AWSProperty):
95
96     props = {
97         "CacheClusterEnabled": (bool, False),
98         "CacheClusterSize": (basestring, False),
99         "CacheDataEncrypted": (bool, False),
100         "CacheTtlInSeconds": (positive_integer, False),
101         "CachingEnabled": (bool, False),
102         "ClientCertificateId": (basestring, False),
103         "DataTraceEnabled": (bool, False),
104         "Description": (basestring, False),
105         "LoggingLevel": (basestring, False),
106         "MethodSettings": ([MethodSetting], False),
107         "MetricsEnabled": (bool, False),
108         "StageName": (basestring, False),
109         "ThrottlingBurstLimit": (positive_integer, False),
110         "ThrottlingRateLimit": (positive_integer, False),
111         "Variables": (dict, False)
112     }
113
114
115 class Deployment(AWSObject):
116     resource_type = "AWS::ApiGateway::Deployment"
117
118     props = {
119         "Description": (basestring, False),
120         "RestApiId": (basestring, True),
121         "StageDescription": (StageDescription, False),
122         "StageName": (basestring, False)
123     }
124
125
126 class IntegrationResponse(AWSProperty):
127
128     props = {
129         "ResponseParameters": (dict, False),
130         "ResponseTemplates": (dict, False),
131         "SelectionPattern": (basestring, False),
132         "StatusCode": (basestring, False)
133     }
134
135
136 class Integration(AWSProperty):
137
138     props = {
139         "CacheKeyParameters": ([basestring], False),
140         "CacheNamespace": (basestring, False),
141         "Credentials": (basestring, False),
142         "IntegrationHttpMethod": (basestring, False),
143         "IntegrationResponses": ([IntegrationResponse], False),
144         "PassthroughBehavior": (basestring, False),
145         "RequestParameters": (dict, False),
146         "RequestTemplates": (dict, False),
147         "Type": (basestring, True),
148         "Uri": (basestring, False)
149     }
150
151
152 class MethodResponse(AWSProperty):
153
154     props = {
155         "ResponseModels": (dict, False),
156         "ResponseParameters": (dict, False),
157         "StatusCode": (basestring, True)
158     }
159
160
161 class Method(AWSObject):
162     resource_type = "AWS::ApiGateway::Method"
163
164     props = {
165         "ApiKeyRequired": (bool, False),
166         "AuthorizationType": (basestring, True),
167         "AuthorizerId": (basestring, False),
168         "HttpMethod": (basestring, True),
169         "Integration": (Integration, False),
170         "MethodResponses": ([MethodResponse], False),
171         "RequestModels": (dict, False),
172         "RequestParameters": (dict, False),
173         "ResourceId": (basestring, True),
174         "RestApiId": (basestring, True)
175     }
176
177
178 class Model(AWSObject):
179     resource_type = "AWS::ApiGateway::Model"
180
181     props = {
182         "ContentType": (basestring, False),
183         "Description": (basestring, False),
184         "Name": (basestring, False),
185         "RestApiId": (basestring, True),
186         "Schema": (basestring, False)
187     }
188
189
190 class Resource(AWSObject):
191     resource_type = "AWS::ApiGateway::Resource"
192
193     props = {
194         "ParentId": (basestring, True),
195         "PathPart": (basestring, True),
196         "RestApiId": (basestring, True)
197     }
198
199
200 class S3Location(AWSProperty):
201
202     props = {
203         "Bucket": (basestring, False),
204         "ETag": (basestring, False),
205         "Key": (basestring, False),
206         "Version": (basestring, False)
207     }
208
209
210 class RestApi(AWSObject):
211     resource_type = "AWS::ApiGateway::RestApi"
212
213     props = {
214         "Body": (dict, False),
215         "BodyS3Location": (S3Location, False),
216         "CloneFrom": (basestring, False),
217         "Description": (basestring, False),
218         "FailOnWarnings": (basestring, False),
219         "Name": (basestring, False),
220         "Parameters": ([basestring], False)
221     }
222
223
224 class Stage(AWSObject):
225     resource_type = "AWS::ApiGateway::Stage"
226
227     props = {
228         "CacheClusterEnabled": (bool, False),
229         "CacheClusterSize": (basestring, False),
230         "ClientCertificateId": (basestring, False),
231         "DeploymentId": (basestring, True),
232         "Description": (basestring, False),
233         "MethodSettings": ([MethodSetting], False),
234         "RestApiId": (basestring, True),
235         "StageName": (basestring, True),
236         "Variables": (dict, False)
237     }
238
239
240 class ApiStage(AWSProperty):
241     props = {
242         "ApiId": (basestring, False),
243         "Stage": (basestring, False),
244     }
245
246
247 class QuotaSettings(AWSProperty):
248     props = {
249         "Limit": (positive_integer, False),
250         "Offset": (positive_integer, False),
251         "Period": (basestring, False),
252     }
253
254
255 class ThrottleSettings(AWSProperty):
256     props = {
257         "BurstLimit": (positive_integer, False),
258         "RateLimit": (positive_integer, False),
259     }
260
261
262 class UsagePlan(AWSObject):
263     resource_type = "AWS::ApiGateway::UsagePlan"
264
265     props = {
266         "ApiStages": ([ApiStage], False),
267         "Description": (basestring, False),
268         "Quota": (QuotaSettings, False),
269         "Throttle": (ThrottleSettings, False),
270         "UsagePlanName": (basestring, False),
271     }
272

[end of troposphere/apigateway.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/troposphere/apigateway.py b/troposphere/apigateway.py
--- a/troposphere/apigateway.py
+++ b/troposphere/apigateway.py
@@ -1,5 +1,6 @@
-from . import AWSObject, AWSProperty
+from . import AWSHelperFn, AWSObject, AWSProperty
 from .validators import positive_integer
+import json
 
 
 def validate_authorizer_ttl(ttl_value):
@@ -183,9 +184,23 @@
         "Description": (basestring, False),
         "Name": (basestring, False),
         "RestApiId": (basestring, True),
-        "Schema": (basestring, False)
+        "Schema": ((basestring, dict), False)
     }
 
+    def validate(self):
+        if 'Schema' in self.properties:
+            schema = self.properties.get('Schema')
+            if isinstance(schema, basestring):
+                # Verify it is a valid json string
+                json.loads(schema)
+            elif isinstance(schema, dict):
+                # Convert the dict to a basestring
+                self.properties['Schema'] = json.dumps(schema)
+            elif isinstance(schema, AWSHelperFn):
+                pass
+            else:
+                raise ValueError("Schema must be a str or dict")
+
 
 class Resource(AWSObject):
     resource_type = "AWS::ApiGateway::Resource"

|

{"golden_diff": "diff --git a/troposphere/apigateway.py b/troposphere/apigateway.py\n--- a/troposphere/apigateway.py\n+++ b/troposphere/apigateway.py\n@@ -1,5 +1,6 @@\n-from . import AWSObject, AWSProperty\n+from . import AWSHelperFn, AWSObject, AWSProperty\n from .validators import positive_integer\n+import json\n \n \n def validate_authorizer_ttl(ttl_value):\n@@ -183,9 +184,23 @@\n \"Description\": (basestring, False),\n \"Name\": (basestring, False),\n \"RestApiId\": (basestring, True),\n- \"Schema\": (basestring, False)\n+ \"Schema\": ((basestring, dict), False)\n }\n \n+ def validate(self):\n+ if 'Schema' in self.properties:\n+ schema = self.properties.get('Schema')\n+ if isinstance(schema, basestring):\n+ # Verify it is a valid json string\n+ json.loads(schema)\n+ elif isinstance(schema, dict):\n+ # Convert the dict to a basestring\n+ self.properties['Schema'] = json.dumps(schema)\n+ elif isinstance(schema, AWSHelperFn):\n+ pass\n+ else:\n+ raise ValueError(\"Schema must be a str or dict\")\n+\n \n class Resource(AWSObject):\n resource_type = \"AWS::ApiGateway::Resource\"\n", "issue": "ApiGateway Model Schema should be a Dict not a string\ntroposphere.apigateway.Model class contains a property called schema, it is defined as 'basestring', this property should be a dict.\r\n\r\nIdeally, you should supply a class to represent 'Schema'\n", "before_files": [{"content": "from . 
import AWSObject, AWSProperty\nfrom .validators import positive_integer\n\n\ndef validate_authorizer_ttl(ttl_value):\n \"\"\" Validate authorizer ttl timeout\n :param ttl_value: The TTL timeout in seconds\n :return: The provided TTL value if valid\n \"\"\"\n ttl_value = int(positive_integer(ttl_value))\n if ttl_value > 3600:\n raise ValueError(\"The AuthorizerResultTtlInSeconds should be <= 3600\")\n return ttl_value\n\n\nclass Account(AWSObject):\n resource_type = \"AWS::ApiGateway::Account\"\n\n props = {\n \"CloudWatchRoleArn\": (basestring, False)\n }\n\n\nclass StageKey(AWSProperty):\n\n props = {\n \"RestApiId\": (basestring, False),\n \"StageName\": (basestring, False)\n }\n\n\nclass ApiKey(AWSObject):\n resource_type = \"AWS::ApiGateway::ApiKey\"\n\n props = {\n \"Description\": (basestring, False),\n \"Enabled\": (bool, False),\n \"Name\": (basestring, False),\n \"StageKeys\": ([StageKey], False)\n }\n\n\nclass Authorizer(AWSObject):\n resource_type = \"AWS::ApiGateway::Authorizer\"\n\n props = {\n \"AuthorizerCredentials\": (basestring, False),\n \"AuthorizerResultTtlInSeconds\": (validate_authorizer_ttl, False),\n \"AuthorizerUri\": (basestring, True),\n \"IdentitySource\": (basestring, True),\n \"IdentityValidationExpression\": (basestring, False),\n \"Name\": (basestring, True),\n \"ProviderARNs\": ([basestring], False),\n \"RestApiId\": (basestring, False),\n \"Type\": (basestring, True)\n }\n\n\nclass BasePathMapping(AWSObject):\n resource_type = \"AWS::ApiGateway::BasePathMapping\"\n\n props = {\n \"BasePath\": (basestring, False),\n \"DomainName\": (basestring, True),\n \"RestApiId\": (basestring, True),\n \"Stage\": (basestring, False)\n }\n\n\nclass ClientCertificate(AWSObject):\n resource_type = \"AWS::ApiGateway::ClientCertificate\"\n\n props = {\n \"Description\": (basestring, False)\n }\n\n\nclass MethodSetting(AWSProperty):\n\n props = {\n \"CacheDataEncrypted\": (bool, False),\n \"CacheTtlInSeconds\": (positive_integer, False),\n 
\"CachingEnabled\": (bool, False),\n \"DataTraceEnabled\": (bool, False),\n \"HttpMethod\": (basestring, True),\n \"LoggingLevel\": (basestring, False),\n \"MetricsEnabled\": (bool, False),\n \"ResourcePath\": (basestring, True),\n \"ThrottlingBurstLimit\": (positive_integer, False),\n \"ThrottlingRateLimit\": (positive_integer, False)\n }\n\n\nclass StageDescription(AWSProperty):\n\n props = {\n \"CacheClusterEnabled\": (bool, False),\n \"CacheClusterSize\": (basestring, False),\n \"CacheDataEncrypted\": (bool, False),\n \"CacheTtlInSeconds\": (positive_integer, False),\n \"CachingEnabled\": (bool, False),\n \"ClientCertificateId\": (basestring, False),\n \"DataTraceEnabled\": (bool, False),\n \"Description\": (basestring, False),\n \"LoggingLevel\": (basestring, False),\n \"MethodSettings\": ([MethodSetting], False),\n \"MetricsEnabled\": (bool, False),\n \"StageName\": (basestring, False),\n \"ThrottlingBurstLimit\": (positive_integer, False),\n \"ThrottlingRateLimit\": (positive_integer, False),\n \"Variables\": (dict, False)\n }\n\n\nclass Deployment(AWSObject):\n resource_type = \"AWS::ApiGateway::Deployment\"\n\n props = {\n \"Description\": (basestring, False),\n \"RestApiId\": (basestring, True),\n \"StageDescription\": (StageDescription, False),\n \"StageName\": (basestring, False)\n }\n\n\nclass IntegrationResponse(AWSProperty):\n\n props = {\n \"ResponseParameters\": (dict, False),\n \"ResponseTemplates\": (dict, False),\n \"SelectionPattern\": (basestring, False),\n \"StatusCode\": (basestring, False)\n }\n\n\nclass Integration(AWSProperty):\n\n props = {\n \"CacheKeyParameters\": ([basestring], False),\n \"CacheNamespace\": (basestring, False),\n \"Credentials\": (basestring, False),\n \"IntegrationHttpMethod\": (basestring, False),\n \"IntegrationResponses\": ([IntegrationResponse], False),\n \"PassthroughBehavior\": (basestring, False),\n \"RequestParameters\": (dict, False),\n \"RequestTemplates\": (dict, False),\n \"Type\": (basestring, True),\n 
\"Uri\": (basestring, False)\n }\n\n\nclass MethodResponse(AWSProperty):\n\n props = {\n \"ResponseModels\": (dict, False),\n \"ResponseParameters\": (dict, False),\n \"StatusCode\": (basestring, True)\n }\n\n\nclass Method(AWSObject):\n resource_type = \"AWS::ApiGateway::Method\"\n\n props = {\n \"ApiKeyRequired\": (bool, False),\n \"AuthorizationType\": (basestring, True),\n \"AuthorizerId\": (basestring, False),\n \"HttpMethod\": (basestring, True),\n \"Integration\": (Integration, False),\n \"MethodResponses\": ([MethodResponse], False),\n \"RequestModels\": (dict, False),\n \"RequestParameters\": (dict, False),\n \"ResourceId\": (basestring, True),\n \"RestApiId\": (basestring, True)\n }\n\n\nclass Model(AWSObject):\n resource_type = \"AWS::ApiGateway::Model\"\n\n props = {\n \"ContentType\": (basestring, False),\n \"Description\": (basestring, False),\n \"Name\": (basestring, False),\n \"RestApiId\": (basestring, True),\n \"Schema\": (basestring, False)\n }\n\n\nclass Resource(AWSObject):\n resource_type = \"AWS::ApiGateway::Resource\"\n\n props = {\n \"ParentId\": (basestring, True),\n \"PathPart\": (basestring, True),\n \"RestApiId\": (basestring, True)\n }\n\n\nclass S3Location(AWSProperty):\n\n props = {\n \"Bucket\": (basestring, False),\n \"ETag\": (basestring, False),\n \"Key\": (basestring, False),\n \"Version\": (basestring, False)\n }\n\n\nclass RestApi(AWSObject):\n resource_type = \"AWS::ApiGateway::RestApi\"\n\n props = {\n \"Body\": (dict, False),\n \"BodyS3Location\": (S3Location, False),\n \"CloneFrom\": (basestring, False),\n \"Description\": (basestring, False),\n \"FailOnWarnings\": (basestring, False),\n \"Name\": (basestring, False),\n \"Parameters\": ([basestring], False)\n }\n\n\nclass Stage(AWSObject):\n resource_type = \"AWS::ApiGateway::Stage\"\n\n props = {\n \"CacheClusterEnabled\": (bool, False),\n \"CacheClusterSize\": (basestring, False),\n \"ClientCertificateId\": (basestring, False),\n \"DeploymentId\": (basestring, True),\n 
\"Description\": (basestring, False),\n \"MethodSettings\": ([MethodSetting], False),\n \"RestApiId\": (basestring, True),\n \"StageName\": (basestring, True),\n \"Variables\": (dict, False)\n }\n\n\nclass ApiStage(AWSProperty):\n props = {\n \"ApiId\": (basestring, False),\n \"Stage\": (basestring, False),\n }\n\n\nclass QuotaSettings(AWSProperty):\n props = {\n \"Limit\": (positive_integer, False),\n \"Offset\": (positive_integer, False),\n \"Period\": (basestring, False),\n }\n\n\nclass ThrottleSettings(AWSProperty):\n props = {\n \"BurstLimit\": (positive_integer, False),\n \"RateLimit\": (positive_integer, False),\n }\n\n\nclass UsagePlan(AWSObject):\n resource_type = \"AWS::ApiGateway::UsagePlan\"\n\n props = {\n \"ApiStages\": ([ApiStage], False),\n \"Description\": (basestring, False),\n \"Quota\": (QuotaSettings, False),\n \"Throttle\": (ThrottleSettings, False),\n \"UsagePlanName\": (basestring, False),\n }\n", "path": "troposphere/apigateway.py"}]}

| 3,196 | 302 |
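The golden diff for `gh_patches_debug_22209` lets `Model.Schema` accept either a JSON string or a dict, checking strings with `json.loads` and serializing dicts with `json.dumps`. The following is a minimal standalone sketch of that branching, assuming Python 3 (`str` in place of Python 2's `basestring`). `normalize_schema` and `HelperFn` are hypothetical names introduced only for illustration; `HelperFn` is a placeholder for troposphere's `AWSHelperFn` so the snippet runs without troposphere installed.

```python
import json


class HelperFn:
    """Hypothetical placeholder for troposphere's AWSHelperFn (Ref, Sub, ...)."""


def normalize_schema(schema):
    """Return the value to emit for an API Gateway Model's Schema property."""
    if isinstance(schema, str):
        # Validate the string as JSON; json.loads raises json.JSONDecodeError,
        # a subclass of ValueError, on malformed input.
        json.loads(schema)
        return schema
    if isinstance(schema, dict):
        # Serialize the dict so the template carries a JSON string.
        return json.dumps(schema)
    if isinstance(schema, HelperFn):
        # Intrinsic functions are resolved by CloudFormation; pass them through.
        return schema
    raise ValueError("Schema must be a str or dict")


if __name__ == "__main__":
    model_schema = {
        "$schema": "http://json-schema.org/draft-04/schema#",
        "title": "Greeting",
        "type": "object",
    }
    print(normalize_schema(model_schema))
```

Unlike the patch, which overwrites `self.properties['Schema']` in place inside `validate()`, this sketch returns the normalized value and leaves its argument untouched, which keeps the branching easy to test in isolation.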

gh_patches_debug_17815

|

rasdani/github-patches

|

git_diff

|

bentoml__BentoML-1211

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Cannot delete bento that was created with same name:version as an older (deleted) bento

**Describe the bug**

After deleting a bento, I cannot delete another bento that was created with that same name:version.

**To Reproduce**

1. Create a bento with `name:1` through `model_service.save(version='1')`.

2. In a shell, `bentoml delete name:1`

3. Create a new bento with the same name, again with `model_service.save(version='1')`.

4. Try to `bentoml delete name:1`

The error is the following:

```
Are you sure about delete name:1? This will delete the BentoService saved bundle files permanently [y/N]: y
[2020-10-27 15:22:33,477] ERROR - RPC ERROR DangerouslyDeleteBento: Multiple rows were found for one()
Error: bentoml-cli delete failed: INTERNAL:Multiple rows were found for one()
```

**Expected behavior**

I can endlessly delete and recreate bentos with the same name/version for testing.

**Environment:**

- OS: Ubuntu 20.04

- Python 3.8.5

- BentoML Version 0.9.2

</issue>

<code>

[start of bentoml/yatai/repository/metadata_store.py]

1 # Copyright 2019 Atalaya Tech, Inc.
2
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6
7 # http://www.apache.org/licenses/LICENSE-2.0
8
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 import logging
16 import datetime
17
18 from sqlalchemy import (
19     Column,
20     Enum,
21     String,
22     Integer,
23     JSON,
24     Boolean,
25     DateTime,
26     UniqueConstraint,
27     desc,
28 )
29 from sqlalchemy.orm.exc import NoResultFound
30 from google.protobuf.json_format import ParseDict
31
32 from bentoml.utils import ProtoMessageToDict
33 from bentoml.exceptions import YataiRepositoryException
34 from bentoml.yatai.db import Base, create_session
35 from bentoml.yatai.label_store import (
36     filter_label_query,
37     get_labels,
38     list_labels,
39     add_or_update_labels,
40     RESOURCE_TYPE,
41 )
42 from bentoml.yatai.proto.repository_pb2 import (
43     UploadStatus,
44     BentoUri,
45     BentoServiceMetadata,
46     Bento as BentoPB,
47     ListBentoRequest,
48 )
49
50 logger = logging.getLogger(__name__)
51
52
53 DEFAULT_UPLOAD_STATUS = UploadStatus(status=UploadStatus.UNINITIALIZED)
54 DEFAULT_LIST_LIMIT = 40
55
56
57 class Bento(Base):
58     __tablename__ = 'bentos'
59     __table_args__ = tuple(UniqueConstraint('name', 'version', name='_name_version_uc'))
60
61     id = Column(Integer, primary_key=True)
62     name = Column(String, nullable=False)
63     version = Column(String, nullable=False)
64
65     # Storage URI for this Bento
66     uri = Column(String, nullable=False)
67
68     # Name is required for PostgreSQL and any future supported database which
69     # requires an explicitly named type, or an explicitly named constraint in order to
70     # generate the type and/or a table that uses it.
71     uri_type = Column(
72         Enum(*BentoUri.StorageType.keys(), name='uri_type'), default=BentoUri.UNSET
73     )
74
75     # JSON filed mapping directly to BentoServiceMetadata proto message
76     bento_service_metadata = Column(JSON, nullable=False, default={})
77
78     # Time of AddBento call, the time of Bento creation can be found in metadata field
79     created_at = Column(DateTime, default=datetime.datetime.utcnow)
80
81     # latest upload status, JSON message also includes last update timestamp
82     upload_status = Column(
83         JSON, nullable=False, default=ProtoMessageToDict(DEFAULT_UPLOAD_STATUS)
84     )
85
86     # mark as deleted
87     deleted = Column(Boolean, default=False)
88
89
90 def _bento_orm_obj_to_pb(bento_obj, labels=None):
91     # Backwards compatible support loading saved bundle created before 0.8.0
92     if (
93         'apis' in bento_obj.bento_service_metadata
94         and bento_obj.bento_service_metadata['apis']
95     ):
96         for api in bento_obj.bento_service_metadata['apis']:
97             if 'handler_type' in api:
98                 api['input_type'] = api['handler_type']
99                 del api['handler_type']
100             if 'handler_config' in api:
101                 api['input_config'] = api['handler_config']
102                 del api['handler_config']
103             if 'output_type' not in api:
104                 api['output_type'] = 'DefaultOutput'
105
106     bento_service_metadata_pb = ParseDict(
107         bento_obj.bento_service_metadata, BentoServiceMetadata()
108     )
109     bento_uri = BentoUri(
110         uri=bento_obj.uri, type=BentoUri.StorageType.Value(bento_obj.uri_type)
111     )
112     if labels is not None:
113         bento_service_metadata_pb.labels.update(labels)
114     return BentoPB(
115         name=bento_obj.name,
116         version=bento_obj.version,
117         uri=bento_uri,
118         bento_service_metadata=bento_service_metadata_pb,
119     )
120
121
122 class BentoMetadataStore(object):
123     def __init__(self, sess_maker):
124         self.sess_maker = sess_maker
125
126     def add(self, bento_name, bento_version, uri, uri_type):
127         with create_session(self.sess_maker) as sess:
128             bento_obj = Bento()
129             bento_obj.name = bento_name
130             bento_obj.version = bento_version
131             bento_obj.uri = uri
132             bento_obj.uri_type = BentoUri.StorageType.Name(uri_type)
133             return sess.add(bento_obj)
134
135     def _get_latest(self, bento_name):
136         with create_session(self.sess_maker) as sess:
137             query = (
138                 sess.query(Bento)
139                 .filter_by(name=bento_name, deleted=False)
140                 .order_by(desc(Bento.created_at))
141                 .limit(1)
142             )
143
144             query_result = query.all()
145             if len(query_result) == 1:
146                 labels = get_labels(sess, RESOURCE_TYPE.bento, query_result[0].id)
147                 return _bento_orm_obj_to_pb(query_result[0], labels)
148             else:
149                 return None
150
151     def get(self, bento_name, bento_version="latest"):
152         if bento_version.lower() == "latest":
153             return self._get_latest(bento_name)
154
155         with create_session(self.sess_maker) as sess:
156             try:
157                 bento_obj = (
158                     sess.query(Bento)
159                     .filter_by(name=bento_name, version=bento_version)
160                     .one()
161                 )
162                 if bento_obj.deleted:
163                     # bento has been marked as deleted
164                     return None
165                 labels = get_labels(sess, RESOURCE_TYPE.bento, bento_obj.id)
166                 return _bento_orm_obj_to_pb(bento_obj, labels)
167             except NoResultFound:
168                 return None
169
170     def update_bento_service_metadata(
171         self, bento_name, bento_version, bento_service_metadata_pb
172     ):
173         with create_session(self.sess_maker) as sess:
174             try:
175                 bento_obj = (
176                     sess.query(Bento)
177                     .filter_by(name=bento_name, version=bento_version, deleted=False)
178                     .one()
179                 )
180                 service_metadata = ProtoMessageToDict(bento_service_metadata_pb)
181                 bento_obj.bento_service_metadata = service_metadata
182                 if service_metadata.get('labels', None) is not None:
183                     bento = (
184                         sess.query(Bento)
185                         .filter_by(name=bento_name, version=bento_version)
186                         .one()
187                     )
188                     add_or_update_labels(
189                         sess, RESOURCE_TYPE.bento, bento.id, service_metadata['labels']
190                     )
191             except NoResultFound:
192                 raise YataiRepositoryException(
193                     "Bento %s:%s is not found in repository" % bento_name, bento_version
194                 )
195
196     def update_upload_status(self, bento_name, bento_version, upload_status_pb):
197         with create_session(self.sess_maker) as sess:
198             try:
199                 bento_obj = (
200                     sess.query(Bento)
201                     .filter_by(name=bento_name, version=bento_version, deleted=False)
202                     .one()
203                 )
204                 # TODO:
205                 # if bento_obj.upload_status and bento_obj.upload_status.updated_at >
206                 # upload_status_pb.updated_at, update should be ignored
207                 bento_obj.upload_status = ProtoMessageToDict(upload_status_pb)
208             except NoResultFound:
209                 raise YataiRepositoryException(
210                     "Bento %s:%s is not found in repository" % bento_name, bento_version
211                 )
212
213     def dangerously_delete(self, bento_name, bento_version):
214         with create_session(self.sess_maker) as sess:
215             try:
216                 bento_obj = (
217                     sess.query(Bento)
218                     .filter_by(name=bento_name, version=bento_version)
219                     .one()
220                 )
221                 if bento_obj.deleted:
222                     raise YataiRepositoryException(
223                         "Bento {}:{} has already been deleted".format(
224                             bento_name, bento_version
225                         )
226                     )
227                 bento_obj.deleted = True
228             except NoResultFound:
229                 raise YataiRepositoryException(
230                     "Bento %s:%s is not found in repository" % bento_name, bento_version
231                 )
232
233     def list(
234         self,
235         bento_name=None,
236         offset=None,
237         limit=None,
238         label_selectors=None,
239         order_by=ListBentoRequest.created_at,
240         ascending_order=False,
241     ):
242         with create_session(self.sess_maker) as sess:
243             query = sess.query(Bento)
244             order_by = ListBentoRequest.SORTABLE_COLUMN.Name(order_by)
245             order_by_field = getattr(Bento, order_by)
246             order_by_action = (
247                 order_by_field if ascending_order else desc(order_by_field)
248             )
249             query = query.order_by(order_by_action)
250             if bento_name:
251                 # filter_by apply filtering criterion to a copy of the query
252                 query = query.filter_by(name=bento_name)
253             query = query.filter_by(deleted=False)
254             if label_selectors.match_labels or label_selectors.match_expressions:
255                 bento_ids = filter_label_query(
256                     sess, RESOURCE_TYPE.bento, label_selectors
257                 )
258                 query = query.filter(Bento.id.in_(bento_ids))
259
260             # We are not defaulting limit to 200 in the signature,
261             # because protobuf will pass 0 as value
262             limit = limit or DEFAULT_LIST_LIMIT
263             # Limit and offset need to be called after order_by filter/filter_by is
264             # called
265             query = query.limit(limit)
266             if offset:
267                 query = query.offset(offset)
268
269             query_result = query.all()
270             bento_ids = [bento_obj.id for bento_obj in query_result]
271             labels = list_labels(sess, RESOURCE_TYPE.bento, bento_ids)
272             result = [
273                 _bento_orm_obj_to_pb(bento_obj, labels.get(str(bento_obj.id)))
274                 for bento_obj in query_result
275             ]
276             return result
277

[end of bentoml/yatai/repository/metadata_store.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/bentoml/yatai/repository/metadata_store.py b/bentoml/yatai/repository/metadata_store.py
--- a/bentoml/yatai/repository/metadata_store.py
+++ b/bentoml/yatai/repository/metadata_store.py
@@ -156,7 +156,7 @@
             try:
                 bento_obj = (
                     sess.query(Bento)
-                    .filter_by(name=bento_name, version=bento_version)
+                    .filter_by(name=bento_name, version=bento_version, deleted=False)
                     .one()
                 )
                 if bento_obj.deleted:
@@ -215,7 +215,7 @@
             try:
                 bento_obj = (
                     sess.query(Bento)
-                    .filter_by(name=bento_name, version=bento_version)
+                    .filter_by(name=bento_name, version=bento_version, deleted=False)
                     .one()
                 )
                 if bento_obj.deleted:

|

{"golden_diff": "diff --git a/bentoml/yatai/repository/metadata_store.py b/bentoml/yatai/repository/metadata_store.py\n--- a/bentoml/yatai/repository/metadata_store.py\n+++ b/bentoml/yatai/repository/metadata_store.py\n@@ -156,7 +156,7 @@\n try:\n bento_obj = (\n sess.query(Bento)\n- .filter_by(name=bento_name, version=bento_version)\n+ .filter_by(name=bento_name, version=bento_version, deleted=False)\n .one()\n )\n if bento_obj.deleted:\n@@ -215,7 +215,7 @@\n try:\n bento_obj = (\n sess.query(Bento)\n- .filter_by(name=bento_name, version=bento_version)\n+ .filter_by(name=bento_name, version=bento_version, deleted=False)\n .one()\n )\n if bento_obj.deleted:\n", "issue": "Cannot delete bento that was created with same name:version as an older (deleted) bento\n**Describe the bug**\r\nAfter deleting a bento, I cannot delete another bento that was created with that same name:version.\r\n\r\n**To Reproduce**\r\n1. Create a bento with `name:1` through `model_service.save(version='1')`.\r\n2. In a shell, `bentoml delete name:1`\r\n3. Create a new bento with the same name, again with `model_service.save(version='1')`.\r\n4. Try to `bentoml delete name:1`\r\n\r\nThe error is the following:\r\n```\r\nAre you sure about delete name:1? 
This will delete the BentoService saved bundle files permanently [y/N]: y \r\n[2020-10-27 15:22:33,477] ERROR - RPC ERROR DangerouslyDeleteBento: Multiple rows were found for one() \r\nError: bentoml-cli delete failed: INTERNAL:Multiple rows were found for one() \r\n```\r\n**Expected behavior**\r\nI can endlessly delete and recreate bentos with the same name/version for testing.\r\n\r\n**Environment:**\r\n - OS: Ubuntu 20.04\r\n - Python 3.8.5\r\n - BentoML Version 0.9.2\r\n\n", "before_files": [{"content": "# Copyright 2019 Atalaya Tech, Inc.\n\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n\n# http://www.apache.org/licenses/LICENSE-2.0\n\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\nimport logging\nimport datetime\n\nfrom sqlalchemy import (\n Column,\n Enum,\n String,\n Integer,\n JSON,\n Boolean,\n DateTime,\n UniqueConstraint,\n desc,\n)\nfrom sqlalchemy.orm.exc import NoResultFound\nfrom google.protobuf.json_format import ParseDict\n\nfrom bentoml.utils import ProtoMessageToDict\nfrom bentoml.exceptions import YataiRepositoryException\nfrom bentoml.yatai.db import Base, create_session\nfrom bentoml.yatai.label_store import (\n filter_label_query,\n get_labels,\n list_labels,\n add_or_update_labels,\n RESOURCE_TYPE,\n)\nfrom bentoml.yatai.proto.repository_pb2 import (\n UploadStatus,\n BentoUri,\n BentoServiceMetadata,\n Bento as BentoPB,\n ListBentoRequest,\n)\n\nlogger = logging.getLogger(__name__)\n\n\nDEFAULT_UPLOAD_STATUS = UploadStatus(status=UploadStatus.UNINITIALIZED)\nDEFAULT_LIST_LIMIT = 40\n\n\nclass Bento(Base):\n __tablename__ = 'bentos'\n 
__table_args__ = tuple(UniqueConstraint('name', 'version', name='_name_version_uc'))\n\n id = Column(Integer, primary_key=True)\n name = Column(String, nullable=False)\n version = Column(String, nullable=False)\n\n # Storage URI for this Bento\n uri = Column(String, nullable=False)\n\n # Name is required for PostgreSQL and any future supported database which\n # requires an explicitly named type, or an explicitly named constraint in order to\n # generate the type and/or a table that uses it.\n uri_type = Column(\n Enum(*BentoUri.StorageType.keys(), name='uri_type'), default=BentoUri.UNSET\n )\n\n # JSON filed mapping directly to BentoServiceMetadata proto message\n bento_service_metadata = Column(JSON, nullable=False, default={})\n\n # Time of AddBento call, the time of Bento creation can be found in metadata field\n created_at = Column(DateTime, default=datetime.datetime.utcnow)\n\n # latest upload status, JSON message also includes last update timestamp\n upload_status = Column(\n JSON, nullable=False, default=ProtoMessageToDict(DEFAULT_UPLOAD_STATUS)\n )\n\n # mark as deleted\n deleted = Column(Boolean, default=False)\n\n\ndef _bento_orm_obj_to_pb(bento_obj, labels=None):\n # Backwards compatible support loading saved bundle created before 0.8.0\n if (\n 'apis' in bento_obj.bento_service_metadata\n and bento_obj.bento_service_metadata['apis']\n ):\n for api in bento_obj.bento_service_metadata['apis']:\n if 'handler_type' in api:\n api['input_type'] = api['handler_type']\n del api['handler_type']\n if 'handler_config' in api:\n api['input_config'] = api['handler_config']\n del api['handler_config']\n if 'output_type' not in api:\n api['output_type'] = 'DefaultOutput'\n\n bento_service_metadata_pb = ParseDict(\n bento_obj.bento_service_metadata, BentoServiceMetadata()\n )\n bento_uri = BentoUri(\n uri=bento_obj.uri, type=BentoUri.StorageType.Value(bento_obj.uri_type)\n )\n if labels is not None:\n bento_service_metadata_pb.labels.update(labels)\n return BentoPB(\n 
name=bento_obj.name,\n version=bento_obj.version,\n uri=bento_uri,\n bento_service_metadata=bento_service_metadata_pb,\n )\n\n\nclass BentoMetadataStore(object):\n def __init__(self, sess_maker):\n self.sess_maker = sess_maker\n\n def add(self, bento_name, bento_version, uri, uri_type):\n with create_session(self.sess_maker) as sess:\n bento_obj = Bento()\n bento_obj.name = bento_name\n bento_obj.version = bento_version\n bento_obj.uri = uri\n bento_obj.uri_type = BentoUri.StorageType.Name(uri_type)\n return sess.add(bento_obj)\n\n def _get_latest(self, bento_name):\n with create_session(self.sess_maker) as sess:\n query = (\n sess.query(Bento)\n .filter_by(name=bento_name, deleted=False)\n .order_by(desc(Bento.created_at))\n .limit(1)\n )\n\n query_result = query.all()\n if len(query_result) == 1:\n labels = get_labels(sess, RESOURCE_TYPE.bento, query_result[0].id)\n return _bento_orm_obj_to_pb(query_result[0], labels)\n else:\n return None\n\n def get(self, bento_name, bento_version=\"latest\"):\n if bento_version.lower() == \"latest\":\n return self._get_latest(bento_name)\n\n with create_session(self.sess_maker) as sess:\n try:\n bento_obj = (\n sess.query(Bento)\n .filter_by(name=bento_name, version=bento_version)\n .one()\n )\n if bento_obj.deleted:\n # bento has been marked as deleted\n return None\n labels = get_labels(sess, RESOURCE_TYPE.bento, bento_obj.id)\n return _bento_orm_obj_to_pb(bento_obj, labels)\n except NoResultFound:\n return None\n\n def update_bento_service_metadata(\n self, bento_name, bento_version, bento_service_metadata_pb\n ):\n with create_session(self.sess_maker) as sess:\n try:\n bento_obj = (\n sess.query(Bento)\n .filter_by(name=bento_name, version=bento_version, deleted=False)\n .one()\n )\n service_metadata = ProtoMessageToDict(bento_service_metadata_pb)\n bento_obj.bento_service_metadata = service_metadata\n if service_metadata.get('labels', None) is not None:\n bento = (\n sess.query(Bento)\n .filter_by(name=bento_name, 
version=bento_version)\n .one()\n )\n add_or_update_labels(\n sess, RESOURCE_TYPE.bento, bento.id, service_metadata['labels']\n )\n except NoResultFound:\n raise YataiRepositoryException(\n \"Bento %s:%s is not found in repository\" % bento_name, bento_version\n )\n\n def update_upload_status(self, bento_name, bento_version, upload_status_pb):\n with create_session(self.sess_maker) as sess:\n try:\n bento_obj = (\n sess.query(Bento)\n .filter_by(name=bento_name, version=bento_version, deleted=False)\n .one()\n )\n # TODO:\n # if bento_obj.upload_status and bento_obj.upload_status.updated_at >\n # upload_status_pb.updated_at, update should be ignored\n bento_obj.upload_status = ProtoMessageToDict(upload_status_pb)\n except NoResultFound:\n raise YataiRepositoryException(\n \"Bento %s:%s is not found in repository\" % bento_name, bento_version\n )\n\n def dangerously_delete(self, bento_name, bento_version):\n with create_session(self.sess_maker) as sess:\n try:\n bento_obj = (\n sess.query(Bento)\n .filter_by(name=bento_name, version=bento_version)\n .one()\n )\n if bento_obj.deleted:\n raise YataiRepositoryException(\n \"Bento {}:{} has already been deleted\".format(\n bento_name, bento_version\n )\n )\n bento_obj.deleted = True\n except NoResultFound:\n raise YataiRepositoryException(\n \"Bento %s:%s is not found in repository\" % bento_name, bento_version\n )\n\n def list(\n self,\n bento_name=None,\n offset=None,\n limit=None,\n label_selectors=None,\n order_by=ListBentoRequest.created_at,\n ascending_order=False,\n ):\n with create_session(self.sess_maker) as sess:\n query = sess.query(Bento)\n order_by = ListBentoRequest.SORTABLE_COLUMN.Name(order_by)\n order_by_field = getattr(Bento, order_by)\n order_by_action = (\n order_by_field if ascending_order else desc(order_by_field)\n )\n query = query.order_by(order_by_action)\n if bento_name:\n # filter_by apply filtering criterion to a copy of the query\n query = query.filter_by(name=bento_name)\n query = 
query.filter_by(deleted=False)\n if label_selectors.match_labels or label_selectors.match_expressions:\n bento_ids = filter_label_query(\n sess, RESOURCE_TYPE.bento, label_selectors\n )\n query = query.filter(Bento.id.in_(bento_ids))\n\n # We are not defaulting limit to 200 in the signature,\n # because protobuf will pass 0 as value\n limit = limit or DEFAULT_LIST_LIMIT\n # Limit and offset need to be called after order_by filter/filter_by is\n # called\n query = query.limit(limit)\n if offset:\n query = query.offset(offset)\n\n query_result = query.all()\n bento_ids = [bento_obj.id for bento_obj in query_result]\n labels = list_labels(sess, RESOURCE_TYPE.bento, bento_ids)\n result = [\n _bento_orm_obj_to_pb(bento_obj, labels.get(str(bento_obj.id)))\n for bento_obj in query_result\n ]\n return result\n", "path": "bentoml/yatai/repository/metadata_store.py"}]}

| 3,735 | 210 |

gh_patches_debug_541

|

rasdani/github-patches

|

git_diff

|

pyodide__pyodide-4806

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

`pyodide build -h` should print help text

## 🐛 Bug

`pyodide build -h` treats `-h` as a package name rather than as a request for help text.

</issue>

<code>

[start of pyodide-build/pyodide_build/cli/build.py]

1 import re

2 import shutil

3 import sys

4 import tempfile

5 from pathlib import Path

6 from typing import Optional, cast, get_args

7 from urllib.parse import urlparse

8

9 import requests

10 import typer

11 from build import ConfigSettingsType

12

13 from ..build_env import check_emscripten_version, get_pyodide_root, init_environment

14 from ..io import _BuildSpecExports, _ExportTypes

15 from ..logger import logger

16 from ..out_of_tree import build

17 from ..out_of_tree.pypi import (

18 build_dependencies_for_wheel,

19 build_wheels_from_pypi_requirements,

20 fetch_pypi_package,

21 )

22 from ..pypabuild import parse_backend_flags

23

24

25 def convert_exports(exports: str) -> _BuildSpecExports:

26 if "," in exports:

27 return [x.strip() for x in exports.split(",") if x.strip()]

28 possible_exports = get_args(_ExportTypes)

29 if exports in possible_exports:

30 return cast(_ExportTypes, exports)

31 logger.stderr(

32 f"Expected exports to be one of "

33 '"pyinit", "requested", "whole_archive", '

34 "or a comma separated list of symbols to export. "

35 f'Got "{exports}".'

36 )

37 sys.exit(1)

38

39

40 def pypi(

41 package: str,

42 output_directory: Path,

43 exports: str,

44 config_settings: ConfigSettingsType,

45 ) -> Path:

46 """Fetch a wheel from pypi, or build from source if none available."""

47 with tempfile.TemporaryDirectory() as tmpdir:

48 srcdir = Path(tmpdir)

49

50 # get package from pypi

51 package_path = fetch_pypi_package(package, srcdir)

52 if not package_path.is_dir():

53 # a pure-python wheel has been downloaded - just copy to dist folder

54 dest_file = output_directory / package_path.name

55 shutil.copyfile(str(package_path), output_directory / package_path.name)

56 print(f"Successfully fetched: {package_path.name}")

57 return dest_file

58

59 built_wheel = build.run(

60 srcdir,

61 output_directory,

62 convert_exports(exports),

63 config_settings,

64 )

65 return built_wheel

66

67

68 def download_url(url: str, output_directory: Path) -> str:

69 with requests.get(url, stream=True) as response:

70 urlpath = Path(urlparse(response.url).path)

71 if urlpath.suffix == ".gz":

72 urlpath = urlpath.with_suffix("")

73 file_name = urlpath.name

74 with open(output_directory / file_name, "wb") as f:

75 for chunk in response.iter_content(chunk_size=1 << 20):

76 f.write(chunk)

77 return file_name

78

79

80 def url(

81 package_url: str,

82 output_directory: Path,

83 exports: str,

84 config_settings: ConfigSettingsType,

85 ) -> Path:

86 """Fetch a wheel or build sdist from url."""

87 with tempfile.TemporaryDirectory() as tmpdir:

88 tmppath = Path(tmpdir)

89 filename = download_url(package_url, tmppath)

90 if Path(filename).suffix == ".whl":

91 shutil.move(tmppath / filename, output_directory / filename)

92 return output_directory / filename

93

94 builddir = tmppath / "build"

95 shutil.unpack_archive(tmppath / filename, builddir)

96 files = list(builddir.iterdir())

97 if len(files) == 1 and files[0].is_dir():

98 # unzipped into subfolder

99 builddir = files[0]

100 wheel_path = build.run(

101 builddir, output_directory, convert_exports(exports), config_settings

102 )

103 return wheel_path

104

105

106 def source(

107 source_location: Path,

108 output_directory: Path,

109 exports: str,

110 config_settings: ConfigSettingsType,

111 ) -> Path:

112 """Use pypa/build to build a Python package from source"""

113 built_wheel = build.run(

114 source_location, output_directory, convert_exports(exports), config_settings

115 )

116 return built_wheel

117

118

119 # simple 'pyodide build' command

120 def main(

121 source_location: Optional[str] = typer.Argument( # noqa: UP007 typer does not accept list[str] | None yet.

122 "",

123 help="Build source, can be source folder, pypi version specification, "

124 "or url to a source dist archive or wheel file. If this is blank, it "

125 "will build the current directory.",

126 ),

127 output_directory: str = typer.Option(

128 "",

129 "--outdir",

130 "-o",

131 help="which directory should the output be placed into?",

132 ),

133 requirements_txt: str = typer.Option(

134 "",

135 "--requirements",

136 "-r",

137 help="Build a list of package requirements from a requirements.txt file",

138 ),

139 exports: str = typer.Option(

140 "requested",

141 envvar="PYODIDE_BUILD_EXPORTS",

142 help="Which symbols should be exported when linking .so files?",

143 ),

144 build_dependencies: bool = typer.Option(

145 False, help="Fetch dependencies from pypi and build them too."

146 ),

147 output_lockfile: str = typer.Option(

148 "",

149 help="Output list of resolved dependencies to a file in requirements.txt format",

150 ),

151 skip_dependency: list[str] = typer.Option(

152 [],

153 help="Skip building or resolving a single dependency, or a pyodide-lock.json file. "

154 "Use multiple times or provide a comma separated list to skip multiple dependencies.",

155 ),

156 skip_built_in_packages: bool = typer.Option(

157 True,

158 help="Don't build dependencies that are built into the pyodide distribution.",

159 ),

160 compression_level: int = typer.Option(

161 6, help="Compression level to use for the created zip file"

162 ),

163 config_setting: Optional[list[str]] = typer.Option( # noqa: UP007 typer does not accept list[str] | None yet.

164 None,

165 "--config-setting",

166 "-C",

167 help=(

168 "Settings to pass to the backend. "

169 "Works same as the --config-setting option of pypa/build."

170 ),

171 metavar="KEY[=VALUE]",

172 ),

173 ctx: typer.Context = typer.Context, # type: ignore[assignment]

174 ) -> None:

175 """Use pypa/build to build a Python package from source, pypi or url."""

176 init_environment()

177 try:

178 check_emscripten_version()

179 except RuntimeError as e:

180 print(e.args[0], file=sys.stderr)

181 sys.exit(1)

182

183 output_directory = output_directory or "./dist"

184

185 outpath = Path(output_directory).resolve()

186 outpath.mkdir(exist_ok=True)

187 extras: list[str] = []

188

189 # For backward compatibility, in addition to the `--config-setting` arguments, we also support

190 # passing config settings as positional arguments.

191 config_settings = parse_backend_flags((config_setting or []) + ctx.args)

192

193 if skip_built_in_packages:

194 package_lock_json = get_pyodide_root() / "dist" / "pyodide-lock.json"

195 skip_dependency.append(str(package_lock_json.absolute()))

196

197 if len(requirements_txt) > 0:

198 # a requirements.txt - build it (and optionally deps)

199 if not Path(requirements_txt).exists():

200 raise RuntimeError(

201 f"Couldn't find requirements text file {requirements_txt}"

202 )

203 reqs = []

204 with open(requirements_txt) as f:

205 raw_reqs = [x.strip() for x in f.readlines()]

206 for x in raw_reqs:

207 # remove comments

208 comment_pos = x.find("#")

209 if comment_pos != -1:

210 x = x[:comment_pos].strip()

211 if len(x) > 0:

212 if x[0] == "-":

213 raise RuntimeError(

214 f"pyodide build only supports name-based PEP508 requirements. [{x}] will not work."

215 )

216 if x.find("@") != -1:

217 raise RuntimeError(

218 f"pyodide build does not support URL based requirements. [{x}] will not work"

219 )

220 reqs.append(x)

221 try:

222 build_wheels_from_pypi_requirements(

223 reqs,

224 outpath,

225 build_dependencies,

226 skip_dependency,

227 # TODO: should we really use same "exports" value for all of our

228 # dependencies? Not sure this makes sense...

229 convert_exports(exports),

230 config_settings,

231 output_lockfile=output_lockfile,

232 )

233 except BaseException as e:

234 import traceback

235

236 print("Failed building multiple wheels:", traceback.format_exc())

237 raise e

238 return

239

240 if source_location is not None:

241 extras = re.findall(r"\[(\w+)\]", source_location)

242 if len(extras) != 0:

243 source_location = source_location[0 : source_location.find("[")]

244 if not source_location:

245 # build the current folder

246 wheel = source(Path.cwd(), outpath, exports, config_settings)

247 elif source_location.find("://") != -1:

248 wheel = url(source_location, outpath, exports, config_settings)

249 elif Path(source_location).is_dir():

250 # a folder, build it

251 wheel = source(

252 Path(source_location).resolve(), outpath, exports, config_settings

253 )

254 elif source_location.find("/") == -1:

255 # try fetch or build from pypi

256 wheel = pypi(source_location, outpath, exports, config_settings)

257 else:

258 raise RuntimeError(f"Couldn't determine source type for {source_location}")

259 # now build deps for wheel

260 if build_dependencies:

261 try:

262 build_dependencies_for_wheel(

263 wheel,

264 extras,

265 skip_dependency,

266 # TODO: should we really use same "exports" value for all of our

267 # dependencies? Not sure this makes sense...

268 convert_exports(exports),

269 config_settings,

270 output_lockfile=output_lockfile,

271 compression_level=compression_level,

272 )

273 except BaseException as e:

274 import traceback

275

276 print("Failed building dependencies for wheel:", traceback.format_exc())

277 wheel.unlink()

278 raise e

279

280

281 main.typer_kwargs = { # type: ignore[attr-defined]

282 "context_settings": {

283 "ignore_unknown_options": True,

284 "allow_extra_args": True,

285 },

286 }

287

[end of pyodide-build/pyodide_build/cli/build.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/pyodide-build/pyodide_build/cli/build.py b/pyodide-build/pyodide_build/cli/build.py

--- a/pyodide-build/pyodide_build/cli/build.py

+++ b/pyodide-build/pyodide_build/cli/build.py

@@ -282,5 +282,6 @@

"context_settings": {

"ignore_unknown_options": True,

"allow_extra_args": True,

+ "help_option_names": ["-h", "--help"],

},

}

|

{"golden_diff": "diff --git a/pyodide-build/pyodide_build/cli/build.py b/pyodide-build/pyodide_build/cli/build.py\n--- a/pyodide-build/pyodide_build/cli/build.py\n+++ b/pyodide-build/pyodide_build/cli/build.py\n@@ -282,5 +282,6 @@\n \"context_settings\": {\n \"ignore_unknown_options\": True,\n \"allow_extra_args\": True,\n+ \"help_option_names\": [\"-h\", \"--help\"],\n },\n }\n", "issue": "`pyodide build -h` should print help text\n## \ud83d\udc1b Bug\r\n\r\n`pyodide build -h` treats `-h` as a package name rather than as a request for help text.\n", "before_files": [{"content": "import re\nimport shutil\nimport sys\nimport tempfile\nfrom pathlib import Path\nfrom typing import Optional, cast, get_args\nfrom urllib.parse import urlparse\n\nimport requests\nimport typer\nfrom build import ConfigSettingsType\n\nfrom ..build_env import check_emscripten_version, get_pyodide_root, init_environment\nfrom ..io import _BuildSpecExports, _ExportTypes\nfrom ..logger import logger\nfrom ..out_of_tree import build\nfrom ..out_of_tree.pypi import (\n build_dependencies_for_wheel,\n build_wheels_from_pypi_requirements,\n fetch_pypi_package,\n)\nfrom ..pypabuild import parse_backend_flags\n\n\ndef convert_exports(exports: str) -> _BuildSpecExports:\n if \",\" in exports:\n return [x.strip() for x in exports.split(\",\") if x.strip()]\n possible_exports = get_args(_ExportTypes)\n if exports in possible_exports:\n return cast(_ExportTypes, exports)\n logger.stderr(\n f\"Expected exports to be one of \"\n '\"pyinit\", \"requested\", \"whole_archive\", '\n \"or a comma separated list of symbols to export. 
\"\n f'Got \"{exports}\".'\n )\n sys.exit(1)\n\n\ndef pypi(\n package: str,\n output_directory: Path,\n exports: str,\n config_settings: ConfigSettingsType,\n) -> Path:\n \"\"\"Fetch a wheel from pypi, or build from source if none available.\"\"\"\n with tempfile.TemporaryDirectory() as tmpdir:\n srcdir = Path(tmpdir)\n\n # get package from pypi\n package_path = fetch_pypi_package(package, srcdir)\n if not package_path.is_dir():\n # a pure-python wheel has been downloaded - just copy to dist folder\n dest_file = output_directory / package_path.name\n shutil.copyfile(str(package_path), output_directory / package_path.name)\n print(f\"Successfully fetched: {package_path.name}\")\n return dest_file\n\n built_wheel = build.run(\n srcdir,\n output_directory,\n convert_exports(exports),\n config_settings,\n )\n return built_wheel\n\n\ndef download_url(url: str, output_directory: Path) -> str:\n with requests.get(url, stream=True) as response:\n urlpath = Path(urlparse(response.url).path)\n if urlpath.suffix == \".gz\":\n urlpath = urlpath.with_suffix(\"\")\n file_name = urlpath.name\n with open(output_directory / file_name, \"wb\") as f:\n for chunk in response.iter_content(chunk_size=1 << 20):\n f.write(chunk)\n return file_name\n\n\ndef url(\n package_url: str,\n output_directory: Path,\n exports: str,\n config_settings: ConfigSettingsType,\n) -> Path:\n \"\"\"Fetch a wheel or build sdist from url.\"\"\"\n with tempfile.TemporaryDirectory() as tmpdir:\n tmppath = Path(tmpdir)\n filename = download_url(package_url, tmppath)\n if Path(filename).suffix == \".whl\":\n shutil.move(tmppath / filename, output_directory / filename)\n return output_directory / filename\n\n builddir = tmppath / \"build\"\n shutil.unpack_archive(tmppath / filename, builddir)\n files = list(builddir.iterdir())\n if len(files) == 1 and files[0].is_dir():\n # unzipped into subfolder\n builddir = files[0]\n wheel_path = build.run(\n builddir, output_directory, convert_exports(exports), 
config_settings\n )\n return wheel_path\n\n\ndef source(\n source_location: Path,\n output_directory: Path,\n exports: str,\n config_settings: ConfigSettingsType,\n) -> Path:\n \"\"\"Use pypa/build to build a Python package from source\"\"\"\n built_wheel = build.run(\n source_location, output_directory, convert_exports(exports), config_settings\n )\n return built_wheel\n\n\n# simple 'pyodide build' command\ndef main(\n source_location: Optional[str] = typer.Argument( # noqa: UP007 typer does not accept list[str] | None yet.\n \"\",\n help=\"Build source, can be source folder, pypi version specification, \"\n \"or url to a source dist archive or wheel file. If this is blank, it \"\n \"will build the current directory.\",\n ),\n output_directory: str = typer.Option(\n \"\",\n \"--outdir\",\n \"-o\",\n help=\"which directory should the output be placed into?\",\n ),\n requirements_txt: str = typer.Option(\n \"\",\n \"--requirements\",\n \"-r\",\n help=\"Build a list of package requirements from a requirements.txt file\",\n ),\n exports: str = typer.Option(\n \"requested\",\n envvar=\"PYODIDE_BUILD_EXPORTS\",\n help=\"Which symbols should be exported when linking .so files?\",\n ),\n build_dependencies: bool = typer.Option(\n False, help=\"Fetch dependencies from pypi and build them too.\"\n ),\n output_lockfile: str = typer.Option(\n \"\",\n help=\"Output list of resolved dependencies to a file in requirements.txt format\",\n ),\n skip_dependency: list[str] = typer.Option(\n [],\n help=\"Skip building or resolving a single dependency, or a pyodide-lock.json file. 
\"\n \"Use multiple times or provide a comma separated list to skip multiple dependencies.\",\n ),\n skip_built_in_packages: bool = typer.Option(\n True,\n help=\"Don't build dependencies that are built into the pyodide distribution.\",\n ),\n compression_level: int = typer.Option(\n 6, help=\"Compression level to use for the created zip file\"\n ),\n config_setting: Optional[list[str]] = typer.Option( # noqa: UP007 typer does not accept list[str] | None yet.\n None,\n \"--config-setting\",\n \"-C\",\n help=(\n \"Settings to pass to the backend. \"\n \"Works same as the --config-setting option of pypa/build.\"\n ),\n metavar=\"KEY[=VALUE]\",\n ),\n ctx: typer.Context = typer.Context, # type: ignore[assignment]\n) -> None:\n \"\"\"Use pypa/build to build a Python package from source, pypi or url.\"\"\"\n init_environment()\n try:\n check_emscripten_version()\n except RuntimeError as e:\n print(e.args[0], file=sys.stderr)\n sys.exit(1)\n\n output_directory = output_directory or \"./dist\"\n\n outpath = Path(output_directory).resolve()\n outpath.mkdir(exist_ok=True)\n extras: list[str] = []\n\n # For backward compatibility, in addition to the `--config-setting` arguments, we also support\n # passing config settings as positional arguments.\n config_settings = parse_backend_flags((config_setting or []) + ctx.args)\n\n if skip_built_in_packages:\n package_lock_json = get_pyodide_root() / \"dist\" / \"pyodide-lock.json\"\n skip_dependency.append(str(package_lock_json.absolute()))\n\n if len(requirements_txt) > 0:\n # a requirements.txt - build it (and optionally deps)\n if not Path(requirements_txt).exists():\n raise RuntimeError(\n f\"Couldn't find requirements text file {requirements_txt}\"\n )\n reqs = []\n with open(requirements_txt) as f:\n raw_reqs = [x.strip() for x in f.readlines()]\n for x in raw_reqs:\n # remove comments\n comment_pos = x.find(\"#\")\n if comment_pos != -1:\n x = x[:comment_pos].strip()\n if len(x) > 0:\n if x[0] == \"-\":\n raise 
RuntimeError(\n f\"pyodide build only supports name-based PEP508 requirements. [{x}] will not work.\"\n )\n if x.find(\"@\") != -1:\n raise RuntimeError(\n f\"pyodide build does not support URL based requirements. [{x}] will not work\"\n )\n reqs.append(x)\n try:\n build_wheels_from_pypi_requirements(\n reqs,\n outpath,\n build_dependencies,\n skip_dependency,\n # TODO: should we really use same \"exports\" value for all of our\n # dependencies? Not sure this makes sense...\n convert_exports(exports),\n config_settings,\n output_lockfile=output_lockfile,\n )\n except BaseException as e:\n import traceback\n\n print(\"Failed building multiple wheels:\", traceback.format_exc())\n raise e\n return\n\n if source_location is not None:\n extras = re.findall(r\"\\[(\\w+)\\]\", source_location)\n if len(extras) != 0:\n source_location = source_location[0 : source_location.find(\"[\")]\n if not source_location:\n # build the current folder\n wheel = source(Path.cwd(), outpath, exports, config_settings)\n elif source_location.find(\"://\") != -1:\n wheel = url(source_location, outpath, exports, config_settings)\n elif Path(source_location).is_dir():\n # a folder, build it\n wheel = source(\n Path(source_location).resolve(), outpath, exports, config_settings\n )\n elif source_location.find(\"/\") == -1:\n # try fetch or build from pypi\n wheel = pypi(source_location, outpath, exports, config_settings)\n else:\n raise RuntimeError(f\"Couldn't determine source type for {source_location}\")\n # now build deps for wheel\n if build_dependencies:\n try:\n build_dependencies_for_wheel(\n wheel,\n extras,\n skip_dependency,\n # TODO: should we really use same \"exports\" value for all of our\n # dependencies? 
Not sure this makes sense...\n convert_exports(exports),\n config_settings,\n output_lockfile=output_lockfile,\n compression_level=compression_level,\n )\n except BaseException as e:\n import traceback\n\n print(\"Failed building dependencies for wheel:\", traceback.format_exc())\n wheel.unlink()\n raise e\n\n\nmain.typer_kwargs = { # type: ignore[attr-defined]\n \"context_settings\": {\n \"ignore_unknown_options\": True,\n \"allow_extra_args\": True,\n },\n}\n", "path": "pyodide-build/pyodide_build/cli/build.py"}]}

| 3,546 | 109 |

gh_patches_debug_15144

|

rasdani/github-patches

|

git_diff

|

mindspore-lab__mindnlp-643

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

multi30k dataset url not avaliable

</issue>

<code>

[start of mindnlp/dataset/machine_translation/multi30k.py]

1 # Copyright 2022 Huawei Technologies Co., Ltd

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14 # ============================================================================

15 """

16 Multi30k load function

17 """

18 # pylint: disable=C0103

19

20 import os

21 import re

22 from operator import itemgetter

23 from typing import Union, Tuple

24 from mindspore.dataset import TextFileDataset, transforms

25 from mindspore.dataset import text

26 from mindnlp.utils.download import cache_file

27 from mindnlp.dataset.register import load_dataset, process

28 from mindnlp.configs import DEFAULT_ROOT

29 from mindnlp.utils import untar

30

31 URL = {

32 "train": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/training.tar.gz",

33 "valid": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/validation.tar.gz",

34 "test": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/mmt16_task1_test.tar.gz",

35 }

36

37 MD5 = {

38 "train": "8ebce33f4ebeb71dcb617f62cba077b7",

39 "valid": "2a46f18dbae0df0becc56e33d4e28e5d",

40 "test": "f63b12fc6f95beb3bfca2c393e861063",

41 }

42

43

44 @load_dataset.register
45 def Multi30k(root: str = DEFAULT_ROOT, split: Union[Tuple[str], str] = ('train', 'valid', 'test'),
46              language_pair: Tuple[str] = ('de', 'en'), proxies=None):
47     r"""
48     Load the Multi30k dataset.
49
50     Args:
51         root (str): Directory where the datasets are saved.
52             Default: ~/.mindnlp
53         split (str|Tuple[str]): Split or splits to be returned.
54             Default: ('train', 'valid', 'test').
55         language_pair (Tuple[str]): Tuple containing the src and tgt languages.
56             Default: ('de', 'en').
57         proxies (dict): A dict of proxies, for example: {"https": "https://127.0.0.1:7890"}.
58
59     Returns:
60         - **datasets_list** (list) - A list of loaded datasets.
61           If only one split is specified, such as 'train',
62           that dataset is returned instead of a list of datasets.
63
64     Raises:
65         TypeError: If `root` is not a string.
66         TypeError: If `split` is not a string or Tuple[str].
67         TypeError: If `language_pair` is not a Tuple[str].
68         AssertionError: If the length of `language_pair` is not 2.
69         AssertionError: If `language_pair` is neither ('de', 'en') nor ('en', 'de').
70
71     Examples:
72         >>> root = os.path.join(os.path.expanduser('~'), ".mindnlp")
73         >>> split = ('train', 'valid', 'test')
74         >>> language_pair = ('de', 'en')
75         >>> dataset_train, dataset_valid, dataset_test = Multi30k(root, split, language_pair)
76         >>> train_iter = dataset_train.create_tuple_iterator()
77         >>> print(next(train_iter))
78         [Tensor(shape=[], dtype=String, value=\
79         'Ein Mann mit einem orangefarbenen Hut, der etwas anstarrt.'),
80         Tensor(shape=[], dtype=String, value= 'A man in an orange hat starring at something.')]
81
82     """
83
84     assert len(
85         language_pair) == 2, "language_pair must contain only 2 elements:\
86         src and tgt language respectively"
87     assert tuple(sorted(language_pair)) == (
88         "de",
89         "en",
90     ), "language_pair must be either ('de','en') or ('en', 'de')"
91
92     if root == DEFAULT_ROOT:
93         cache_dir = os.path.join(root, "datasets", "Multi30k")
94     else:
95         cache_dir = root
96
97     file_list = []
98
99     untar_files = []
100     source_files = []
101     target_files = []
102
103     datasets_list = []
104
105     if isinstance(split, str):
106         file_path, _ = cache_file(
107             None, cache_dir=cache_dir, url=URL[split], md5sum=MD5[split], proxies=proxies)
108         file_list.append(file_path)
109
110     else:
111         urls = itemgetter(*split)(URL)
112         md5s = itemgetter(*split)(MD5)
113         for i, url in enumerate(urls):
114             file_path, _ = cache_file(
115                 None, cache_dir=cache_dir, url=url, md5sum=md5s[i], proxies=proxies)
116             file_list.append(file_path)
117
118     for file in file_list:
119         untar_files.append(untar(file, os.path.dirname(file)))
120
121     regexp = r".de"
122     if language_pair == ("en", "de"):
123         regexp = r".en"
124
125     for file_pair in untar_files:
126         for file in file_pair:
127             match = re.search(regexp, file)
128             if match:
129                 source_files.append(file)
130             else:
131                 target_files.append(file)
132
133     for i in range(len(untar_files)):
134         source_dataset = TextFileDataset(
135             os.path.join(cache_dir, source_files[i]), shuffle=False)
136         source_dataset = source_dataset.rename(["text"], [language_pair[0]])
137         target_dataset = TextFileDataset(
138             os.path.join(cache_dir, target_files[i]), shuffle=False)
139         target_dataset = target_dataset.rename(["text"], [language_pair[1]])
140         datasets = source_dataset.zip(target_dataset)
141         datasets_list.append(datasets)
142
143     if len(datasets_list) == 1:
144         return datasets_list[0]
145     return datasets_list
146
147 @process.register
148 def Multi30k_Process(dataset, vocab, batch_size=64, max_len=500, \
149                      drop_remainder=False):
150     """
151     Process the Multi30k dataset.
152
153     Args:
154         dataset (GeneratorDataset): Multi30k dataset.
155         vocab (dict): Maps 'en' and 'de' to the Vocab objects that store the token-to-index mapping.
156         batch_size (int): The number of rows each batch is created with. Default: 64.
157         max_len (int): The max length of the sentence. Default: 500.
158         drop_remainder (bool): When the last batch contains fewer than batch_size rows, whether
159             to discard the batch and not pass it to the next operation. Default: False.
160
161     Returns:
162         - **dataset** (MapDataset) - dataset after transforms.
163
164     Raises:
165         TypeError: If `input_column` is not a string.
166
167     Examples:
168         >>> train_dataset = Multi30k(
169         ...     root=self.root,
170         ...     split="train",
171         ...     language_pair=("de", "en")
172         ... )
173         >>> tokenizer = BasicTokenizer(True)
174         >>> train_dataset = train_dataset.map([tokenizer], 'en')
175         >>> train_dataset = train_dataset.map([tokenizer], 'de')
176         >>> en_vocab = text.Vocab.from_dataset(train_dataset, 'en', special_tokens=
177         ...     ['<pad>', '<unk>'], special_first= True)
178         >>> de_vocab = text.Vocab.from_dataset(train_dataset, 'de', special_tokens=
179         ...     ['<pad>', '<unk>'], special_first= True)
180         >>> vocab = {'en':en_vocab, 'de':de_vocab}
181         >>> train_dataset = process('Multi30k', train_dataset, vocab = vocab)
182     """
183
184     en_pad_value = vocab['en'].tokens_to_ids('<pad>')
185     de_pad_value = vocab['de'].tokens_to_ids('<pad>')
186
187     en_lookup_op = text.Lookup(vocab['en'], unknown_token='<unk>')
188     de_lookup_op = text.Lookup(vocab['de'], unknown_token='<unk>')
189
190     dataset = dataset.map([en_lookup_op], 'en')
191     dataset = dataset.map([de_lookup_op], 'de')
192
193     en_pad_op = transforms.PadEnd([max_len], en_pad_value)
194     de_pad_op = transforms.PadEnd([max_len], de_pad_value)
195
196     dataset = dataset.map([en_pad_op], 'en')
197     dataset = dataset.map([de_pad_op], 'de')
198
199     dataset = dataset.batch(batch_size, drop_remainder=drop_remainder)
200     return dataset
201
[end of mindnlp/dataset/machine_translation/multi30k.py]

</code>
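`Multi30k` assigns each extracted file to the source or target side by calling `re.search` with `r".de"` (or `r".en"` when `language_pair` is `('en', 'de')`). The dot in that pattern is unescaped, so it matches any character followed by the language code. For example, it finds `"ode"` inside a name like `"models.en"`. The sketch below shows the same partition with the dot escaped and the match anchored to the end of the name. It is an illustration only, not a change to mindnlp, and the helper name `split_by_language` is hypothetical.

```python
import re
from typing import List, Sequence, Tuple


def split_by_language(files: Sequence[str],
                      language_pair: Tuple[str, str]) -> Tuple[List[str], List[str]]:
    """Hypothetical helper: partition extracted file names into (source, target).

    Files ending in ".<src>" (src = language_pair[0]) go to the source list and
    everything else goes to the target list, mirroring the loop in Multi30k.
    """
    # Escape the dot and anchor at the end so only a real ".de"/".en" suffix matches.
    pattern = re.compile(r"\." + re.escape(language_pair[0]) + r"$")
    source, target = [], []
    for name in files:
        (source if pattern.search(name) else target).append(name)
    return source, target
```

For a typical `("train.de", "train.en")` pair, both patterns give the same split. The anchored form only differs for names that merely contain the language code after some other character.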

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>
diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points
</patch>

|

diff --git a/mindnlp/dataset/machine_translation/multi30k.py b/mindnlp/dataset/machine_translation/multi30k.py
--- a/mindnlp/dataset/machine_translation/multi30k.py
+++ b/mindnlp/dataset/machine_translation/multi30k.py
@@ -29,15 +29,15 @@
 from mindnlp.utils import untar
 
 URL = {
-    "train": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/training.tar.gz",
-    "valid": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/validation.tar.gz",
-    "test": "http://www.quest.dcs.shef.ac.uk/wmt16_files_mmt/mmt16_task1_test.tar.gz",
+    "train": "https://openi.pcl.ac.cn/lvyufeng/multi30k/raw/branch/master/training.tar.gz",
+    "valid": "https://openi.pcl.ac.cn/lvyufeng/multi30k/raw/branch/master/validation.tar.gz",
+    "test": "https://openi.pcl.ac.cn/lvyufeng/multi30k/raw/branch/master/mmt16_task1_test.tar.gz",
 }
 
 MD5 = {
     "train": "8ebce33f4ebeb71dcb617f62cba077b7",
     "valid": "2a46f18dbae0df0becc56e33d4e28e5d",
-    "test": "f63b12fc6f95beb3bfca2c393e861063",
+    "test": "1586ce11f70cba049e9ed3d64db08843",
 }
 
 
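The loader passes `md5sum=MD5[split]` to `cache_file` for every archive. The diff above replaces the test checksum along with the URLs, presumably because the mirrored test archive is not byte-identical to the original. The listing does not show how `cache_file` checks that value. What follows is an independent sketch using Python's `hashlib`, showing how a downloaded archive could be compared against such a table. The helper names `md5_of_file` and `archive_matches` are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Mapping, Union


def md5_of_file(path: Union[str, Path], chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, hashing it in fixed-size chunks."""
    digest = hashlib.md5()
    with Path(path).open("rb") as fh:
        # iter() with a b"" sentinel stops once read() reaches end of file.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def archive_matches(path: Union[str, Path], split: str, md5_table: Mapping[str, str]) -> bool:
    """Hypothetical check: does the archive on disk match its expected checksum?"""
    return md5_of_file(path) == md5_table[split]
```

Hashing in chunks keeps memory use flat for large tarballs. A stale entry, such as the old test checksum paired with the new mirror, would make this comparison fail.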

|