| problem_id (string, length 18 to 22) | source (string, 1 value) | task_type (string, 1 value) | in_source_id (string, length 13 to 58) | prompt (string, length 1.71k to 18.9k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_4162

|

rasdani/github-patches

|

git_diff

|

pwndbg__pwndbg-693

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

qemu local_path bug

### Description

It seems like some commands e.g. plt, got tries to use ELF file's content as path.

### Steps to reproduce

```

$ qemu-arm -g 23946 ./target

$ gdb-multiarch ./target

(gdb) target remote :23946

(gdb) plt # or

(gdb) got

```

### My setup

Ubuntu 18.04, branch c6473ba (master)

### Analysis (and temporary patch)

plt/got command uses `get_elf_info` at [elf.py](https://github.com/pwndbg/pwndbg/blob/dev/pwndbg/elf.py#L95), which uses get_file at file.py.

It seems like the path itself should be used in [file.py L36](https://github.com/pwndbg/pwndbg/blob/c6473ba7aea797f7b4d2251febf419382564d3f8/pwndbg/file.py#L36):

https://github.com/pwndbg/pwndbg/blob/203f10710e8a9a195a7bade59661ed7f3e3e641c/pwndbg/file.py#L35-L36

to

```py
    if pwndbg.qemu.root() and recurse:
        return os.path.join(pwndbg.qemu.binfmt_root, path)
```

I'm not sure if it's ok in all cases, since I think relative path should be also searched, but it works on plt/got case since absolute path is used (e.g. if path is 'a/b', I'm not sure if binfmt_root + '/a/b' and 'a/b' shoud be searched.)

</issue>

<code>

[start of pwndbg/file.py]

1 #!/usr/bin/env python

2 # -*- coding: utf-8 -*-

3 """

4 Retrieve files from the debuggee's filesystem. Useful when

5 debugging a remote process over SSH or similar, where e.g.

6 /proc/FOO/maps is needed from the remote system.

7 """

8 from __future__ import absolute_import

9 from __future__ import division

10 from __future__ import print_function

11 from __future__ import unicode_literals

12

13 import binascii

14 import errno as _errno

15 import os

16 import subprocess

17 import tempfile

18

19 import gdb

20

21 import pwndbg.qemu

22 import pwndbg.remote

23

24

25 def get_file(path, recurse=1):

26 """

27 Downloads the specified file from the system where the current process is

28 being debugged.

29

30 Returns:

31 The local path to the file

32 """

33 local_path = path

34

35 if pwndbg.qemu.root() and recurse:

36 return get(os.path.join(pwndbg.qemu.binfmt_root, path), 0)

37 elif pwndbg.remote.is_remote() and not pwndbg.qemu.is_qemu():

38 local_path = tempfile.mktemp()

39 error = None

40 try:

41 error = gdb.execute('remote get "%s" "%s"' % (path, local_path),

42 to_string=True)

43 except gdb.error as e:

44 error = e

45

46 if error:

47 raise OSError("Could not download remote file %r:\n" \

48 "Error: %s" % (path, error))

49

50 return local_path

51

52 def get(path, recurse=1):

53 """

54 Retrieves the contents of the specified file on the system

55 where the current process is being debugged.

56

57 Returns:

58 A byte array, or None.

59 """

60 local_path = get_file(path, recurse)

61

62 try:

63 with open(local_path,'rb') as f:

64 return f.read()

65 except:

66 return b''

67

68 def readlink(path):

69 """readlink(path) -> str

70

71 Read the link specified by 'path' on the system being debugged.

72

73 Handles local, qemu-usermode, and remote debugging cases.

74 """

75 is_qemu = pwndbg.qemu.is_qemu_usermode()

76

77 if is_qemu:

78 if not os.path.exists(path):

79 path = os.path.join(pwndbg.qemu.root(), path)

80

81 if is_qemu or not pwndbg.remote.is_remote():

82 try:

83 return os.readlink(path)

84 except Exception:

85 return ''

86

87 #

88 # Hurray unexposed packets!

89 #

90 # The 'vFile:readlink:' packet does exactly what it sounds like,

91 # but there is no API exposed to do this and there is also no

92 # command exposed... so we have to send the packet manually.

93 #

94 cmd = 'maintenance packet vFile:readlink:%s'

95

96 # The path must be uppercase hex-encoded and NULL-terminated.

97 path += '\x00'

98 path = binascii.hexlify(path.encode())

99 path = path.upper()

100 path = path.decode()

101

102 result = gdb.execute(cmd % path, from_tty=False, to_string=True)

103

104 """

105 sending: "vFile:readlink:2F70726F632F3130303839302F66642F3000"

106 received: "Fc;pipe:[98420]"

107

108 sending: "vFile:readlink:2F70726F632F3130303839302F66642F333300"

109 received: "F-1,2"

110 """

111

112 _, data = result.split('\n', 1)

113

114 # Sanity check

115 expected = 'received: "F'

116 if not data.startswith(expected):

117 return ''

118

119 # Negative values are errors

120 data = data[len(expected):]

121 if data[0] == '-':

122 return ''

123

124 # If non-negative, there will be a hex-encoded length followed

125 # by a semicolon.

126 n, data = data.split(';', 1)

127

128 n = int(n, 16)

129 if n < 0:

130 return ''

131

132 # The result is quoted by GDB, strip the quote and newline.

133 # I have no idea how well it handles other crazy stuff.

134 ending = '"\n'

135 data = data[:-len(ending)]

136

137 return data

138

[end of pwndbg/file.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

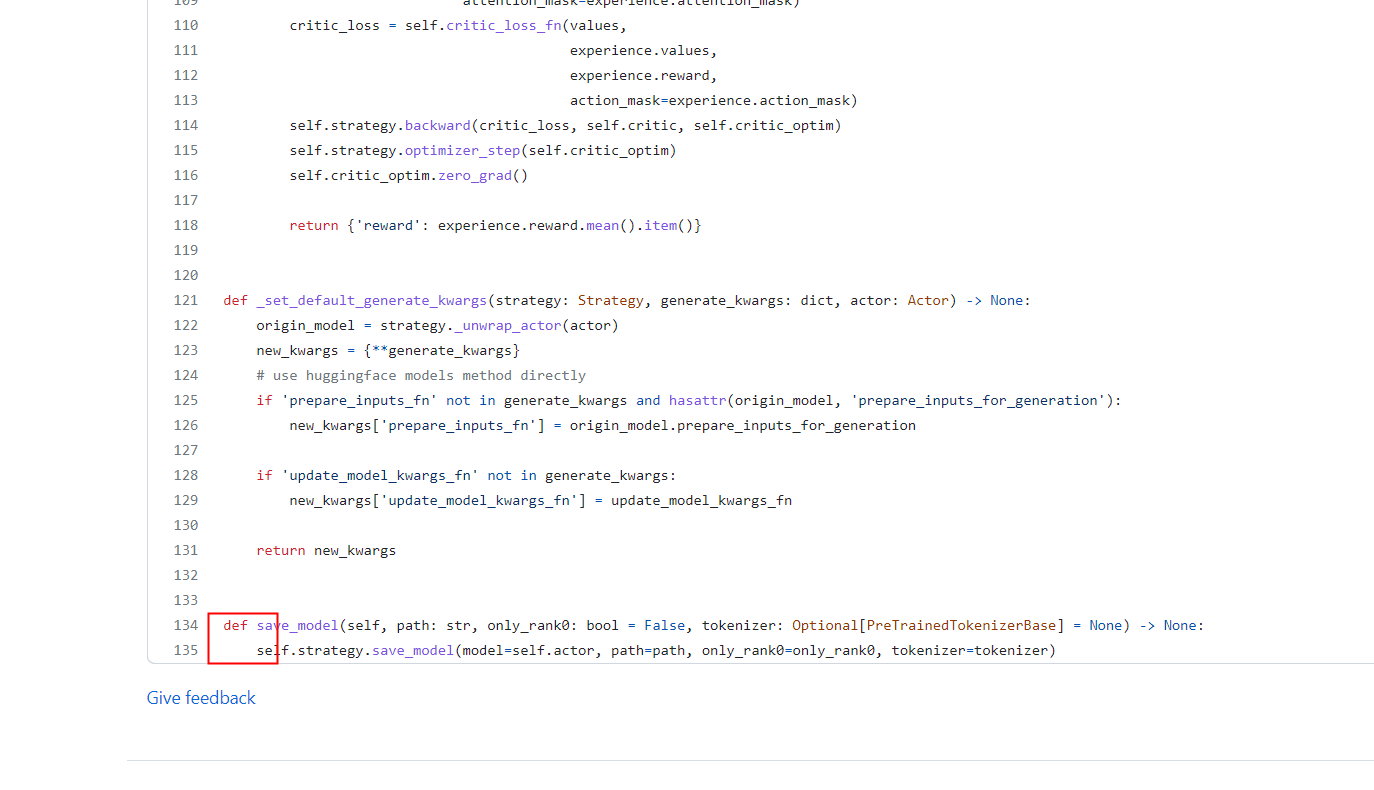

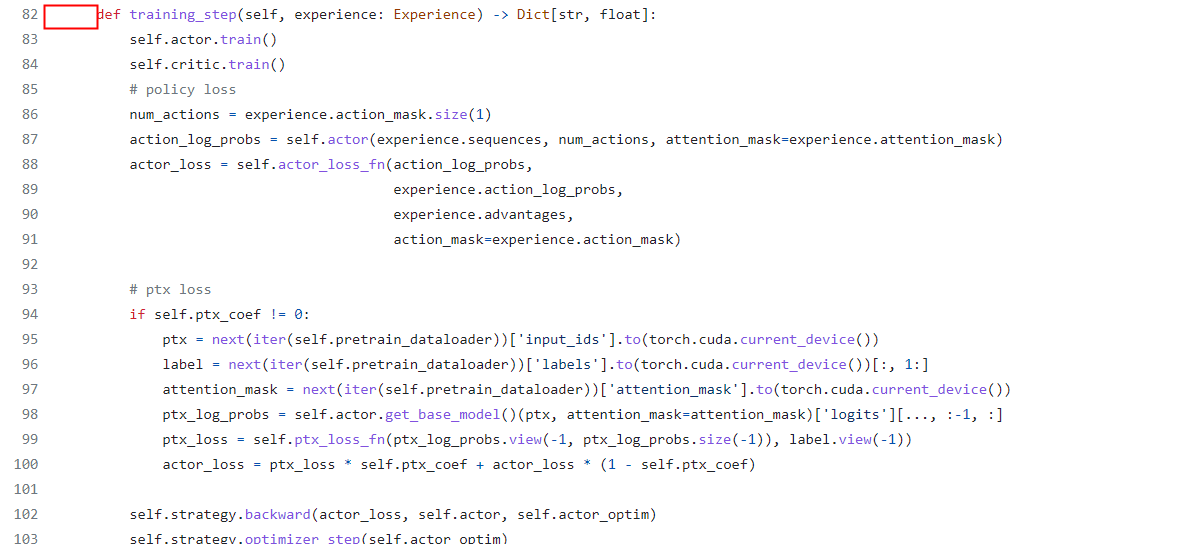

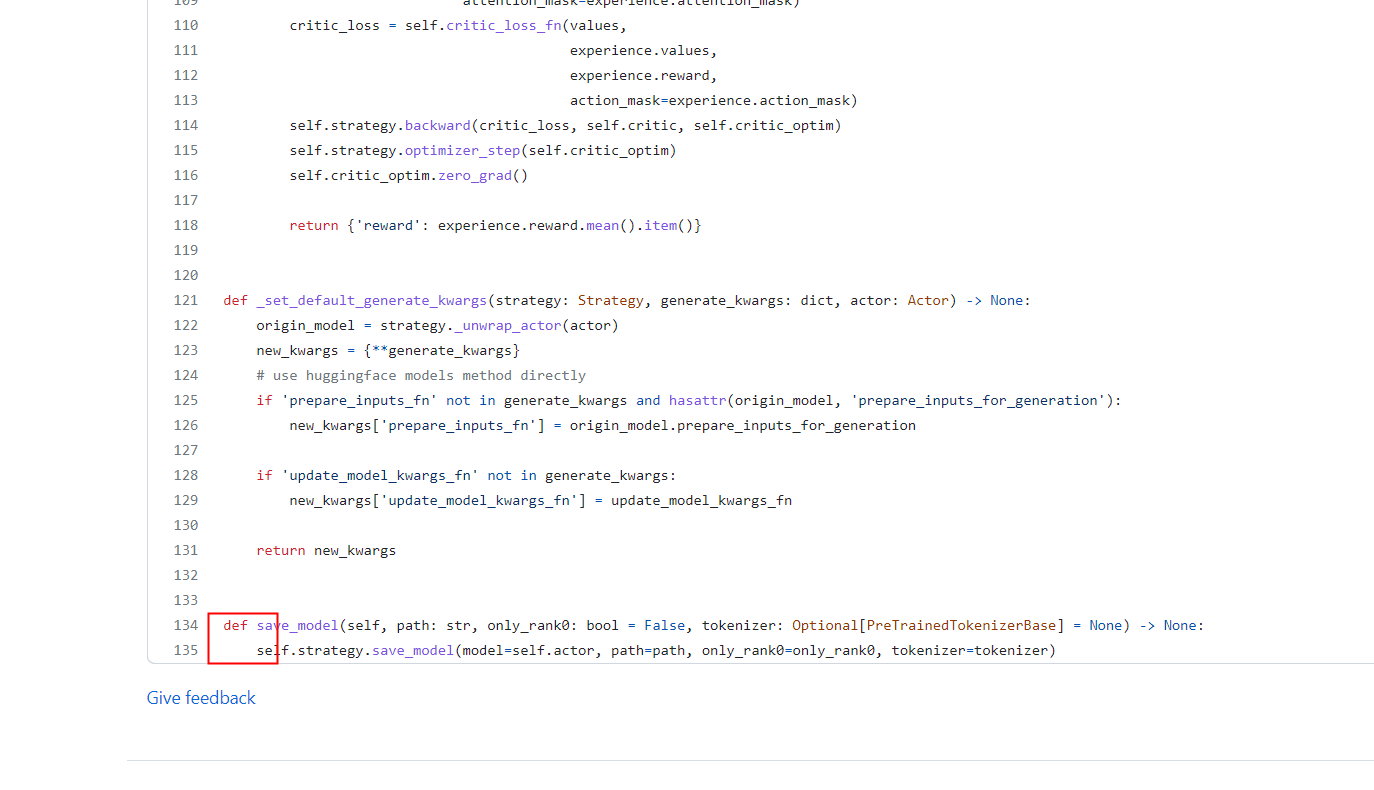

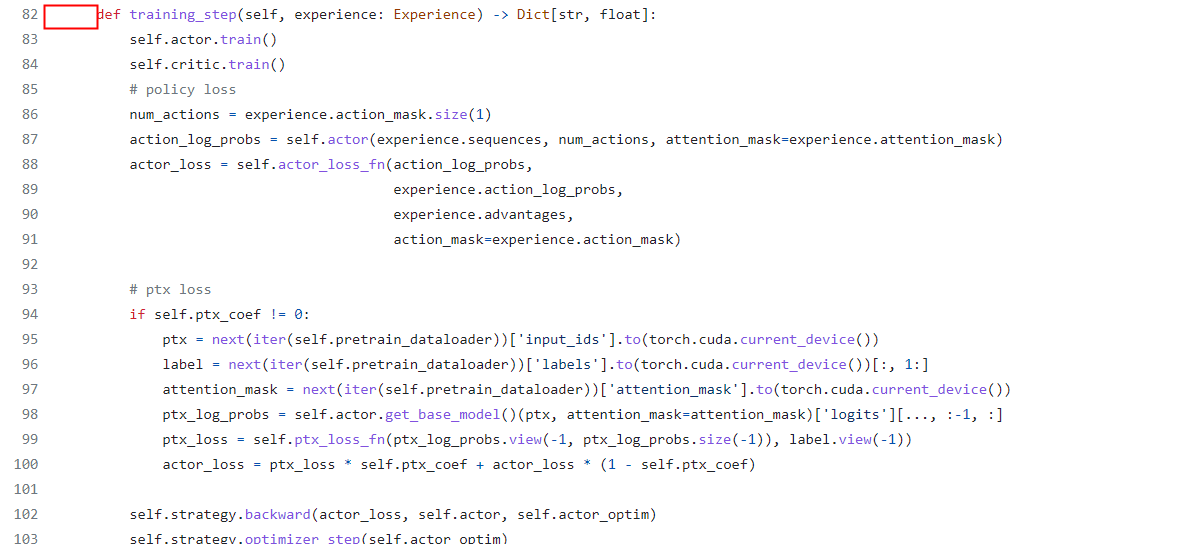

diff --git a/pwndbg/file.py b/pwndbg/file.py
--- a/pwndbg/file.py
+++ b/pwndbg/file.py
@@ -33,7 +33,7 @@
     local_path = path
 
     if pwndbg.qemu.root() and recurse:
-        return get(os.path.join(pwndbg.qemu.binfmt_root, path), 0)
+        return os.path.join(pwndbg.qemu.binfmt_root, path)
     elif pwndbg.remote.is_remote() and not pwndbg.qemu.is_qemu():
         local_path = tempfile.mktemp()
         error = None

|

{"golden_diff": "diff --git a/pwndbg/file.py b/pwndbg/file.py\n--- a/pwndbg/file.py\n+++ b/pwndbg/file.py\n@@ -33,7 +33,7 @@\n local_path = path\n \n if pwndbg.qemu.root() and recurse:\n- return get(os.path.join(pwndbg.qemu.binfmt_root, path), 0)\n+ return os.path.join(pwndbg.qemu.binfmt_root, path)\n elif pwndbg.remote.is_remote() and not pwndbg.qemu.is_qemu():\n local_path = tempfile.mktemp()\n error = None\n", "issue": "qemu local_path bug\n### Description\r\n\r\nIt seems like some commands e.g. plt, got tries to use ELF file's content as path.\r\n\r\n### Steps to reproduce\r\n\r\n```\r\n$ qemu-arm -g 23946 ./target\r\n$ gdb-multiarch ./target\r\n(gdb) target remote :23946\r\n(gdb) plt # or\r\n(gdb) got\r\n```\r\n\r\n### My setup\r\n\r\nUbuntu 18.04, branch c6473ba (master)\r\n\r\n### Analysis (and temporary patch)\r\n\r\nplt/got command uses `get_elf_info` at [elf.py](https://github.com/pwndbg/pwndbg/blob/dev/pwndbg/elf.py#L95), which uses get_file at file.py.\r\n\r\nIt seems like the path itself should be used in [file.py L36](https://github.com/pwndbg/pwndbg/blob/c6473ba7aea797f7b4d2251febf419382564d3f8/pwndbg/file.py#L36):\r\n\r\nhttps://github.com/pwndbg/pwndbg/blob/203f10710e8a9a195a7bade59661ed7f3e3e641c/pwndbg/file.py#L35-L36\r\n\r\nto\r\n```py\r\n if pwndbg.qemu.root() and recurse:\r\n return os.path.join(pwndbg.qemu.binfmt_root, path)\r\n```\r\n\r\nI'm not sure if it's ok in all cases, since I think relative path should be also searched, but it works on plt/got case since absolute path is used (e.g. if path is 'a/b', I'm not sure if binfmt_root + '/a/b' and 'a/b' shoud be searched.)\n", "before_files": [{"content": "#!/usr/bin/env python\n# -*- coding: utf-8 -*-\n\"\"\"\nRetrieve files from the debuggee's filesystem. 
Useful when\ndebugging a remote process over SSH or similar, where e.g.\n/proc/FOO/maps is needed from the remote system.\n\"\"\"\nfrom __future__ import absolute_import\nfrom __future__ import division\nfrom __future__ import print_function\nfrom __future__ import unicode_literals\n\nimport binascii\nimport errno as _errno\nimport os\nimport subprocess\nimport tempfile\n\nimport gdb\n\nimport pwndbg.qemu\nimport pwndbg.remote\n\n\ndef get_file(path, recurse=1):\n \"\"\"\n Downloads the specified file from the system where the current process is\n being debugged.\n\n Returns:\n The local path to the file\n \"\"\"\n local_path = path\n\n if pwndbg.qemu.root() and recurse:\n return get(os.path.join(pwndbg.qemu.binfmt_root, path), 0)\n elif pwndbg.remote.is_remote() and not pwndbg.qemu.is_qemu():\n local_path = tempfile.mktemp()\n error = None\n try:\n error = gdb.execute('remote get \"%s\" \"%s\"' % (path, local_path),\n to_string=True)\n except gdb.error as e:\n error = e\n\n if error:\n raise OSError(\"Could not download remote file %r:\\n\" \\\n \"Error: %s\" % (path, error))\n\n return local_path\n\ndef get(path, recurse=1):\n \"\"\"\n Retrieves the contents of the specified file on the system\n where the current process is being debugged.\n\n Returns:\n A byte array, or None.\n \"\"\"\n local_path = get_file(path, recurse)\n\n try:\n with open(local_path,'rb') as f:\n return f.read()\n except:\n return b''\n\ndef readlink(path):\n \"\"\"readlink(path) -> str\n\n Read the link specified by 'path' on the system being debugged.\n\n Handles local, qemu-usermode, and remote debugging cases.\n \"\"\"\n is_qemu = pwndbg.qemu.is_qemu_usermode()\n\n if is_qemu:\n if not os.path.exists(path):\n path = os.path.join(pwndbg.qemu.root(), path)\n\n if is_qemu or not pwndbg.remote.is_remote():\n try:\n return os.readlink(path)\n except Exception:\n return ''\n\n #\n # Hurray unexposed packets!\n #\n # The 'vFile:readlink:' packet does exactly what it sounds like,\n # but there 
is no API exposed to do this and there is also no\n # command exposed... so we have to send the packet manually.\n #\n cmd = 'maintenance packet vFile:readlink:%s'\n\n # The path must be uppercase hex-encoded and NULL-terminated.\n path += '\\x00'\n path = binascii.hexlify(path.encode())\n path = path.upper()\n path = path.decode()\n\n result = gdb.execute(cmd % path, from_tty=False, to_string=True)\n\n \"\"\"\n sending: \"vFile:readlink:2F70726F632F3130303839302F66642F3000\"\n received: \"Fc;pipe:[98420]\"\n\n sending: \"vFile:readlink:2F70726F632F3130303839302F66642F333300\"\n received: \"F-1,2\"\n \"\"\"\n\n _, data = result.split('\\n', 1)\n\n # Sanity check\n expected = 'received: \"F'\n if not data.startswith(expected):\n return ''\n\n # Negative values are errors\n data = data[len(expected):]\n if data[0] == '-':\n return ''\n\n # If non-negative, there will be a hex-encoded length followed\n # by a semicolon.\n n, data = data.split(';', 1)\n\n n = int(n, 16)\n if n < 0:\n return ''\n\n # The result is quoted by GDB, strip the quote and newline.\n # I have no idea how well it handles other crazy stuff.\n ending = '\"\\n'\n data = data[:-len(ending)]\n\n return data\n", "path": "pwndbg/file.py"}]}

| 2,217 | 134 |
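The pwndbg failure mode can be reproduced without GDB. The sketch below is a simplified model, not pwndbg's actual code: `BINFMT_ROOT`, `read_local`, `get_file_buggy` and `get_file_fixed` are hypothetical names, and the `pwndbg.qemu.root()` check is assumed to be truthy. It shows that the pre-fix qemu branch hands back the bytes returned by `get()` where callers such as `plt`/`got` expect a path, while the patched branch returns the joined path.

```python
import os
import tempfile

# Hypothetical stand-in for pwndbg.qemu.binfmt_root; assumed for this sketch.
BINFMT_ROOT = "/usr/arm-linux-gnueabihf"


def read_local(local_path):
    """Model of pwndbg.file.get(path, 0) in a qemu session: with recurse=0,
    get_file() returns the path unchanged, so get() simply reads the file."""
    try:
        with open(local_path, "rb") as f:
            return f.read()
    except OSError:
        return b""


def get_file_buggy(path, recurse=1):
    """Pre-fix qemu branch: returns get(...), i.e. the file's *contents*."""
    if recurse:  # assumes pwndbg.qemu.root() is truthy
        return read_local(os.path.join(BINFMT_ROOT, path))
    return path


def get_file_fixed(path, recurse=1):
    """Patched qemu branch: returns the joined *path*, as the docstring promises."""
    if recurse:  # assumes pwndbg.qemu.root() is truthy
        return os.path.join(BINFMT_ROOT, path)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "target")
        with open(target, "wb") as f:
            f.write(b"\x7fELF")
        print(get_file_buggy(target))  # the ELF bytes, not a path
        print(get_file_fixed(target))  # the absolute path itself
```

Note that `os.path.join` discards earlier components when a later one is absolute, so for the absolute paths used by `plt`/`got` the patched branch returns the path unchanged; only relative paths are actually re-rooted under `binfmt_root`. That matches the reporter's observation that the fix works for `plt`/`got` while leaving the relative-path question open.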

gh_patches_debug_17389

|

rasdani/github-patches

|

git_diff

|

googleapis__python-bigquery-1357

|

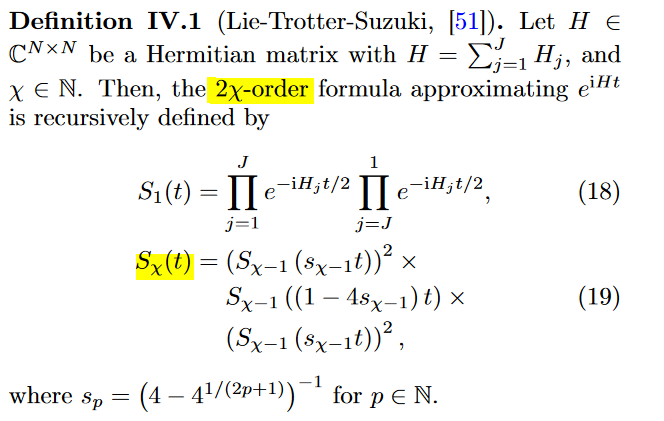

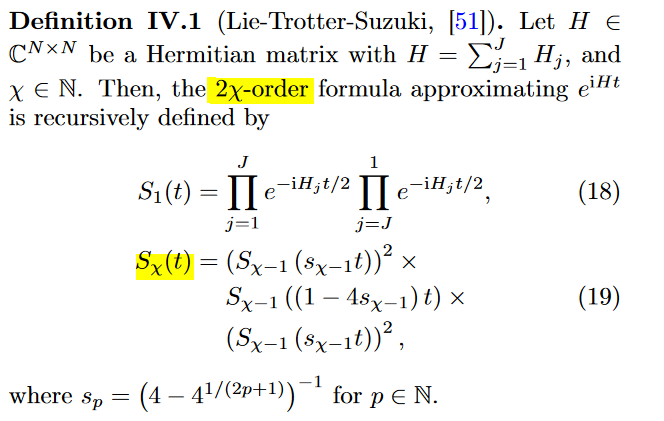

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

tqdm deprecation warning

#### Environment details

- OS type and version: Ubuntu 20.04 LTS

- Python version: 3.8.10

- pip version: 22.0.3

- `google-cloud-bigquery` version: 2.34.0

#### Steps to reproduce

```

from google.cloud import bigquery

query = bigquery.Client().query('SELECT 1')

data = query.to_dataframe(progress_bar_type='tqdm_notebook')

```

The snippet above causes a deprecation warning that breaks strict pytest runs (using `'filterwarnings': ['error']`):

```

.../site-packages/google/cloud/bigquery/_tqdm_helpers.py:51: in get_progress_bar

return tqdm.tqdm_notebook(desc=description, total=total, unit=unit)

...

from .notebook import tqdm as _tqdm_notebook

> warn("This function will be removed in tqdm==5.0.0\n"

"Please use `tqdm.notebook.tqdm` instead of `tqdm.tqdm_notebook`",

TqdmDeprecationWarning, stacklevel=2)

E tqdm.std.TqdmDeprecationWarning: This function will be removed in tqdm==5.0.0

E Please use `tqdm.notebook.tqdm` instead of `tqdm.tqdm_notebook`

.../site-packages/tqdm/__init__.py:25: TqdmDeprecationWarning

```

</issue>

<code>

[start of google/cloud/bigquery/_tqdm_helpers.py]

1 # Copyright 2019 Google LLC

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 """Shared helper functions for tqdm progress bar."""

16

17 import concurrent.futures

18 import time

19 import typing

20 from typing import Optional

21 import warnings

22

23 try:

24 import tqdm # type: ignore

25 except ImportError: # pragma: NO COVER

26 tqdm = None

27

28 if typing.TYPE_CHECKING: # pragma: NO COVER

29 from google.cloud.bigquery import QueryJob

30 from google.cloud.bigquery.table import RowIterator

31

32 _NO_TQDM_ERROR = (

33 "A progress bar was requested, but there was an error loading the tqdm "

34 "library. Please install tqdm to use the progress bar functionality."

35 )

36

37 _PROGRESS_BAR_UPDATE_INTERVAL = 0.5

38

39

40 def get_progress_bar(progress_bar_type, description, total, unit):

41 """Construct a tqdm progress bar object, if tqdm is installed."""

42 if tqdm is None:

43 if progress_bar_type is not None:

44 warnings.warn(_NO_TQDM_ERROR, UserWarning, stacklevel=3)

45 return None

46

47 try:

48 if progress_bar_type == "tqdm":

49 return tqdm.tqdm(desc=description, total=total, unit=unit)

50 elif progress_bar_type == "tqdm_notebook":

51 return tqdm.tqdm_notebook(desc=description, total=total, unit=unit)

52 elif progress_bar_type == "tqdm_gui":

53 return tqdm.tqdm_gui(desc=description, total=total, unit=unit)

54 except (KeyError, TypeError):

55 # Protect ourselves from any tqdm errors. In case of

56 # unexpected tqdm behavior, just fall back to showing

57 # no progress bar.

58 warnings.warn(_NO_TQDM_ERROR, UserWarning, stacklevel=3)

59 return None

60

61

62 def wait_for_query(

63 query_job: "QueryJob",

64 progress_bar_type: Optional[str] = None,

65 max_results: Optional[int] = None,

66 ) -> "RowIterator":

67 """Return query result and display a progress bar while the query running, if tqdm is installed.

68

69 Args:

70 query_job:

71 The job representing the execution of the query on the server.

72 progress_bar_type:

73 The type of progress bar to use to show query progress.

74 max_results:

75 The maximum number of rows the row iterator should return.

76

77 Returns:

78 A row iterator over the query results.

79 """

80 default_total = 1

81 current_stage = None

82 start_time = time.time()

83

84 progress_bar = get_progress_bar(

85 progress_bar_type, "Query is running", default_total, "query"

86 )

87 if progress_bar is None:

88 return query_job.result(max_results=max_results)

89

90 i = 0

91 while True:

92 if query_job.query_plan:

93 default_total = len(query_job.query_plan)

94 current_stage = query_job.query_plan[i]

95 progress_bar.total = len(query_job.query_plan)

96 progress_bar.set_description(

97 "Query executing stage {} and status {} : {:0.2f}s".format(

98 current_stage.name,

99 current_stage.status,

100 time.time() - start_time,

101 ),

102 )

103 try:

104 query_result = query_job.result(

105 timeout=_PROGRESS_BAR_UPDATE_INTERVAL, max_results=max_results

106 )

107 progress_bar.update(default_total)

108 progress_bar.set_description(

109 "Query complete after {:0.2f}s".format(time.time() - start_time),

110 )

111 break

112 except concurrent.futures.TimeoutError:

113 query_job.reload() # Refreshes the state via a GET request.

114 if current_stage:

115 if current_stage.status == "COMPLETE":

116 if i < default_total - 1:

117 progress_bar.update(i + 1)

118 i += 1

119 continue

120

121 progress_bar.close()

122 return query_result

123

[end of google/cloud/bigquery/_tqdm_helpers.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/google/cloud/bigquery/_tqdm_helpers.py b/google/cloud/bigquery/_tqdm_helpers.py
--- a/google/cloud/bigquery/_tqdm_helpers.py
+++ b/google/cloud/bigquery/_tqdm_helpers.py
@@ -22,6 +22,7 @@
 
 try:
     import tqdm  # type: ignore
+
 except ImportError:  # pragma: NO COVER
     tqdm = None
 
@@ -48,7 +49,7 @@
         if progress_bar_type == "tqdm":
             return tqdm.tqdm(desc=description, total=total, unit=unit)
         elif progress_bar_type == "tqdm_notebook":
-            return tqdm.tqdm_notebook(desc=description, total=total, unit=unit)
+            return tqdm.notebook.tqdm(desc=description, total=total, unit=unit)
         elif progress_bar_type == "tqdm_gui":
             return tqdm.tqdm_gui(desc=description, total=total, unit=unit)
     except (KeyError, TypeError):

|

{"golden_diff": "diff --git a/google/cloud/bigquery/_tqdm_helpers.py b/google/cloud/bigquery/_tqdm_helpers.py\n--- a/google/cloud/bigquery/_tqdm_helpers.py\n+++ b/google/cloud/bigquery/_tqdm_helpers.py\n@@ -22,6 +22,7 @@\n \n try:\n import tqdm # type: ignore\n+\n except ImportError: # pragma: NO COVER\n tqdm = None\n \n@@ -48,7 +49,7 @@\n if progress_bar_type == \"tqdm\":\n return tqdm.tqdm(desc=description, total=total, unit=unit)\n elif progress_bar_type == \"tqdm_notebook\":\n- return tqdm.tqdm_notebook(desc=description, total=total, unit=unit)\n+ return tqdm.notebook.tqdm(desc=description, total=total, unit=unit)\n elif progress_bar_type == \"tqdm_gui\":\n return tqdm.tqdm_gui(desc=description, total=total, unit=unit)\n except (KeyError, TypeError):\n", "issue": "tqdm deprecation warning\n#### Environment details\r\n\r\n - OS type and version: Ubuntu 20.04 LTS\r\n - Python version: 3.8.10\r\n - pip version: 22.0.3\r\n - `google-cloud-bigquery` version: 2.34.0\r\n\r\n#### Steps to reproduce\r\n\r\n```\r\nfrom google.cloud import bigquery\r\n\r\nquery = bigquery.Client().query('SELECT 1')\r\ndata = query.to_dataframe(progress_bar_type='tqdm_notebook')\r\n```\r\n\r\nThe snippet above causes a deprecation warning that breaks strict pytest runs (using `'filterwarnings': ['error']`):\r\n```\r\n.../site-packages/google/cloud/bigquery/_tqdm_helpers.py:51: in get_progress_bar\r\n return tqdm.tqdm_notebook(desc=description, total=total, unit=unit)\r\n...\r\n from .notebook import tqdm as _tqdm_notebook\r\n> warn(\"This function will be removed in tqdm==5.0.0\\n\"\r\n \"Please use `tqdm.notebook.tqdm` instead of `tqdm.tqdm_notebook`\",\r\n TqdmDeprecationWarning, stacklevel=2)\r\nE tqdm.std.TqdmDeprecationWarning: This function will be removed in tqdm==5.0.0\r\nE Please use `tqdm.notebook.tqdm` instead of `tqdm.tqdm_notebook`\r\n\r\n.../site-packages/tqdm/__init__.py:25: TqdmDeprecationWarning\r\n```\n", "before_files": [{"content": "# Copyright 2019 Google LLC\n#\n# 
Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"Shared helper functions for tqdm progress bar.\"\"\"\n\nimport concurrent.futures\nimport time\nimport typing\nfrom typing import Optional\nimport warnings\n\ntry:\n import tqdm # type: ignore\nexcept ImportError: # pragma: NO COVER\n tqdm = None\n\nif typing.TYPE_CHECKING: # pragma: NO COVER\n from google.cloud.bigquery import QueryJob\n from google.cloud.bigquery.table import RowIterator\n\n_NO_TQDM_ERROR = (\n \"A progress bar was requested, but there was an error loading the tqdm \"\n \"library. Please install tqdm to use the progress bar functionality.\"\n)\n\n_PROGRESS_BAR_UPDATE_INTERVAL = 0.5\n\n\ndef get_progress_bar(progress_bar_type, description, total, unit):\n \"\"\"Construct a tqdm progress bar object, if tqdm is installed.\"\"\"\n if tqdm is None:\n if progress_bar_type is not None:\n warnings.warn(_NO_TQDM_ERROR, UserWarning, stacklevel=3)\n return None\n\n try:\n if progress_bar_type == \"tqdm\":\n return tqdm.tqdm(desc=description, total=total, unit=unit)\n elif progress_bar_type == \"tqdm_notebook\":\n return tqdm.tqdm_notebook(desc=description, total=total, unit=unit)\n elif progress_bar_type == \"tqdm_gui\":\n return tqdm.tqdm_gui(desc=description, total=total, unit=unit)\n except (KeyError, TypeError):\n # Protect ourselves from any tqdm errors. 
In case of\n # unexpected tqdm behavior, just fall back to showing\n # no progress bar.\n warnings.warn(_NO_TQDM_ERROR, UserWarning, stacklevel=3)\n return None\n\n\ndef wait_for_query(\n query_job: \"QueryJob\",\n progress_bar_type: Optional[str] = None,\n max_results: Optional[int] = None,\n) -> \"RowIterator\":\n \"\"\"Return query result and display a progress bar while the query running, if tqdm is installed.\n\n Args:\n query_job:\n The job representing the execution of the query on the server.\n progress_bar_type:\n The type of progress bar to use to show query progress.\n max_results:\n The maximum number of rows the row iterator should return.\n\n Returns:\n A row iterator over the query results.\n \"\"\"\n default_total = 1\n current_stage = None\n start_time = time.time()\n\n progress_bar = get_progress_bar(\n progress_bar_type, \"Query is running\", default_total, \"query\"\n )\n if progress_bar is None:\n return query_job.result(max_results=max_results)\n\n i = 0\n while True:\n if query_job.query_plan:\n default_total = len(query_job.query_plan)\n current_stage = query_job.query_plan[i]\n progress_bar.total = len(query_job.query_plan)\n progress_bar.set_description(\n \"Query executing stage {} and status {} : {:0.2f}s\".format(\n current_stage.name,\n current_stage.status,\n time.time() - start_time,\n ),\n )\n try:\n query_result = query_job.result(\n timeout=_PROGRESS_BAR_UPDATE_INTERVAL, max_results=max_results\n )\n progress_bar.update(default_total)\n progress_bar.set_description(\n \"Query complete after {:0.2f}s\".format(time.time() - start_time),\n )\n break\n except concurrent.futures.TimeoutError:\n query_job.reload() # Refreshes the state via a GET request.\n if current_stage:\n if current_stage.status == \"COMPLETE\":\n if i < default_total - 1:\n progress_bar.update(i + 1)\n i += 1\n continue\n\n progress_bar.close()\n return query_result\n", "path": "google/cloud/bigquery/_tqdm_helpers.py"}]}

| 2,078 | 227 |
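Why a deprecation warning "breaks" a strict test run can be shown with the standard `warnings` module alone. In this sketch `old_bar`, `new_bar` and `FakeDeprecationWarning` are hypothetical stand-ins for `tqdm.tqdm_notebook`, `tqdm.notebook.tqdm` and `TqdmDeprecationWarning`; `call_strictly` roughly approximates pytest's `filterwarnings = ["error"]` by promoting every warning to an exception.

```python
import warnings


class FakeDeprecationWarning(DeprecationWarning):
    """Hypothetical stand-in for tqdm.std.TqdmDeprecationWarning."""


def new_bar(**kwargs):
    """Stand-in for the supported constructor (tqdm.notebook.tqdm)."""
    return dict(kwargs)


def old_bar(**kwargs):
    """Stand-in for the deprecated alias (tqdm.tqdm_notebook):
    emit a deprecation warning, then delegate to the new constructor."""
    warnings.warn(
        "Please use new_bar instead of old_bar",
        FakeDeprecationWarning,
        stacklevel=2,
    )
    return new_bar(**kwargs)


def call_strictly(factory, **kwargs):
    """Call factory with all warnings turned into exceptions,
    roughly what a strict pytest configuration does."""
    with warnings.catch_warnings():
        warnings.simplefilter("error")
        return factory(**kwargs)


if __name__ == "__main__":
    try:
        call_strictly(old_bar, desc="Query is running", total=1, unit="query")
    except FakeDeprecationWarning as exc:
        print("strict run fails:", exc)
    print(call_strictly(new_bar, desc="Query is running", total=1, unit="query"))
```

Calling the supported constructor directly, as the golden diff does, avoids the warning entirely. As an aside, `import tqdm` does not necessarily load the `tqdm.notebook` submodule, so depending on the tqdm version the patched attribute access may also need an explicit `import tqdm.notebook`; this is worth checking before relying on the patch.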

gh_patches_debug_5651

|

rasdani/github-patches

|

git_diff

|

projectmesa__mesa-2049

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

JupyterViz: the default grid space drawer doesn't scale to large size

**Describe the bug**

<!-- A clear and concise description the bug -->

Here is Schelling space for 60x60:

**Expected behavior**

<!-- A clear and concise description of what you expected to happen -->

Should either scale down the circle marker size automatically, or scale up the figure size automatically.

</issue>

<code>

[start of mesa/experimental/components/matplotlib.py]

1 from typing import Optional

2

3 import networkx as nx

4 import solara

5 from matplotlib.figure import Figure

6 from matplotlib.ticker import MaxNLocator

7

8 import mesa

9

10

11 @solara.component

12 def SpaceMatplotlib(model, agent_portrayal, dependencies: Optional[list[any]] = None):

13 space_fig = Figure()

14 space_ax = space_fig.subplots()

15 space = getattr(model, "grid", None)

16 if space is None:

17 # Sometimes the space is defined as model.space instead of model.grid

18 space = model.space

19 if isinstance(space, mesa.space.NetworkGrid):

20 _draw_network_grid(space, space_ax, agent_portrayal)

21 elif isinstance(space, mesa.space.ContinuousSpace):

22 _draw_continuous_space(space, space_ax, agent_portrayal)

23 else:

24 _draw_grid(space, space_ax, agent_portrayal)

25 solara.FigureMatplotlib(space_fig, format="png", dependencies=dependencies)

26

27

28 def _draw_grid(space, space_ax, agent_portrayal):

29 def portray(g):

30 x = []

31 y = []

32 s = [] # size

33 c = [] # color

34 for i in range(g.width):

35 for j in range(g.height):

36 content = g._grid[i][j]

37 if not content:

38 continue

39 if not hasattr(content, "__iter__"):

40 # Is a single grid

41 content = [content]

42 for agent in content:

43 data = agent_portrayal(agent)

44 x.append(i)

45 y.append(j)

46 if "size" in data:

47 s.append(data["size"])

48 if "color" in data:

49 c.append(data["color"])

50 out = {"x": x, "y": y}

51 if len(s) > 0:

52 out["s"] = s

53 if len(c) > 0:

54 out["c"] = c

55 return out

56

57 space_ax.set_xlim(-1, space.width)

58 space_ax.set_ylim(-1, space.height)

59 space_ax.scatter(**portray(space))

60

61

62 def _draw_network_grid(space, space_ax, agent_portrayal):

63 graph = space.G

64 pos = nx.spring_layout(graph, seed=0)

65 nx.draw(

66 graph,

67 ax=space_ax,

68 pos=pos,

69 **agent_portrayal(graph),

70 )

71

72

73 def _draw_continuous_space(space, space_ax, agent_portrayal):

74 def portray(space):

75 x = []

76 y = []

77 s = [] # size

78 c = [] # color

79 for agent in space._agent_to_index:

80 data = agent_portrayal(agent)

81 _x, _y = agent.pos

82 x.append(_x)

83 y.append(_y)

84 if "size" in data:

85 s.append(data["size"])

86 if "color" in data:

87 c.append(data["color"])

88 out = {"x": x, "y": y}

89 if len(s) > 0:

90 out["s"] = s

91 if len(c) > 0:

92 out["c"] = c

93 return out

94

95 # Determine border style based on space.torus

96 border_style = "solid" if not space.torus else (0, (5, 10))

97

98 # Set the border of the plot

99 for spine in space_ax.spines.values():

100 spine.set_linewidth(1.5)

101 spine.set_color("black")

102 spine.set_linestyle(border_style)

103

104 width = space.x_max - space.x_min

105 x_padding = width / 20

106 height = space.y_max - space.y_min

107 y_padding = height / 20

108 space_ax.set_xlim(space.x_min - x_padding, space.x_max + x_padding)

109 space_ax.set_ylim(space.y_min - y_padding, space.y_max + y_padding)

110

111 # Portray and scatter the agents in the space

112 space_ax.scatter(**portray(space))

113

114

115 @solara.component

116 def PlotMatplotlib(model, measure, dependencies: Optional[list[any]] = None):

117 fig = Figure()

118 ax = fig.subplots()

119 df = model.datacollector.get_model_vars_dataframe()

120 if isinstance(measure, str):

121 ax.plot(df.loc[:, measure])

122 ax.set_ylabel(measure)

123 elif isinstance(measure, dict):

124 for m, color in measure.items():

125 ax.plot(df.loc[:, m], label=m, color=color)

126 fig.legend()

127 elif isinstance(measure, (list, tuple)):

128 for m in measure:

129 ax.plot(df.loc[:, m], label=m)

130 fig.legend()

131 # Set integer x axis

132 ax.xaxis.set_major_locator(MaxNLocator(integer=True))

133 solara.FigureMatplotlib(fig, dependencies=dependencies)

134

[end of mesa/experimental/components/matplotlib.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/mesa/experimental/components/matplotlib.py b/mesa/experimental/components/matplotlib.py
--- a/mesa/experimental/components/matplotlib.py
+++ b/mesa/experimental/components/matplotlib.py
@@ -48,6 +48,9 @@
                     if "color" in data:
                         c.append(data["color"])
         out = {"x": x, "y": y}
+        # This is the default value for the marker size, which auto-scales
+        # according to the grid area.
+        out["s"] = (180 / min(g.width, g.height)) ** 2
         if len(s) > 0:
             out["s"] = s
         if len(c) > 0:

|

{"golden_diff": "diff --git a/mesa/experimental/components/matplotlib.py b/mesa/experimental/components/matplotlib.py\n--- a/mesa/experimental/components/matplotlib.py\n+++ b/mesa/experimental/components/matplotlib.py\n@@ -48,6 +48,9 @@\n if \"color\" in data:\n c.append(data[\"color\"])\n out = {\"x\": x, \"y\": y}\n+ # This is the default value for the marker size, which auto-scales\n+ # according to the grid area.\n+ out[\"s\"] = (180 / min(g.width, g.height)) ** 2\n if len(s) > 0:\n out[\"s\"] = s\n if len(c) > 0:\n", "issue": "JupyterViz: the default grid space drawer doesn't scale to large size\n**Describe the bug**\r\n<!-- A clear and concise description the bug -->\r\nHere is Schelling space for 60x60:\r\n\r\n\r\n**Expected behavior**\r\n<!-- A clear and concise description of what you expected to happen -->\r\nShould either scale down the circle marker size automatically, or scale up the figure size automatically.\n", "before_files": [{"content": "from typing import Optional\n\nimport networkx as nx\nimport solara\nfrom matplotlib.figure import Figure\nfrom matplotlib.ticker import MaxNLocator\n\nimport mesa\n\n\[email protected]\ndef SpaceMatplotlib(model, agent_portrayal, dependencies: Optional[list[any]] = None):\n space_fig = Figure()\n space_ax = space_fig.subplots()\n space = getattr(model, \"grid\", None)\n if space is None:\n # Sometimes the space is defined as model.space instead of model.grid\n space = model.space\n if isinstance(space, mesa.space.NetworkGrid):\n _draw_network_grid(space, space_ax, agent_portrayal)\n elif isinstance(space, mesa.space.ContinuousSpace):\n _draw_continuous_space(space, space_ax, agent_portrayal)\n else:\n _draw_grid(space, space_ax, agent_portrayal)\n solara.FigureMatplotlib(space_fig, format=\"png\", dependencies=dependencies)\n\n\ndef _draw_grid(space, space_ax, agent_portrayal):\n def portray(g):\n x = []\n y = []\n s = [] # size\n c = [] # color\n for i in range(g.width):\n for j in range(g.height):\n content = 
g._grid[i][j]\n if not content:\n continue\n if not hasattr(content, \"__iter__\"):\n # Is a single grid\n content = [content]\n for agent in content:\n data = agent_portrayal(agent)\n x.append(i)\n y.append(j)\n if \"size\" in data:\n s.append(data[\"size\"])\n if \"color\" in data:\n c.append(data[\"color\"])\n out = {\"x\": x, \"y\": y}\n if len(s) > 0:\n out[\"s\"] = s\n if len(c) > 0:\n out[\"c\"] = c\n return out\n\n space_ax.set_xlim(-1, space.width)\n space_ax.set_ylim(-1, space.height)\n space_ax.scatter(**portray(space))\n\n\ndef _draw_network_grid(space, space_ax, agent_portrayal):\n graph = space.G\n pos = nx.spring_layout(graph, seed=0)\n nx.draw(\n graph,\n ax=space_ax,\n pos=pos,\n **agent_portrayal(graph),\n )\n\n\ndef _draw_continuous_space(space, space_ax, agent_portrayal):\n def portray(space):\n x = []\n y = []\n s = [] # size\n c = [] # color\n for agent in space._agent_to_index:\n data = agent_portrayal(agent)\n _x, _y = agent.pos\n x.append(_x)\n y.append(_y)\n if \"size\" in data:\n s.append(data[\"size\"])\n if \"color\" in data:\n c.append(data[\"color\"])\n out = {\"x\": x, \"y\": y}\n if len(s) > 0:\n out[\"s\"] = s\n if len(c) > 0:\n out[\"c\"] = c\n return out\n\n # Determine border style based on space.torus\n border_style = \"solid\" if not space.torus else (0, (5, 10))\n\n # Set the border of the plot\n for spine in space_ax.spines.values():\n spine.set_linewidth(1.5)\n spine.set_color(\"black\")\n spine.set_linestyle(border_style)\n\n width = space.x_max - space.x_min\n x_padding = width / 20\n height = space.y_max - space.y_min\n y_padding = height / 20\n space_ax.set_xlim(space.x_min - x_padding, space.x_max + x_padding)\n space_ax.set_ylim(space.y_min - y_padding, space.y_max + y_padding)\n\n # Portray and scatter the agents in the space\n space_ax.scatter(**portray(space))\n\n\n\n@solara.component\ndef PlotMatplotlib(model, measure, dependencies: Optional[list[any]] = None):\n fig = Figure()\n ax = 
fig.subplots()\n df = 
model.datacollector.get_model_vars_dataframe()\n if isinstance(measure, str):\n ax.plot(df.loc[:, measure])\n ax.set_ylabel(measure)\n elif isinstance(measure, dict):\n for m, color in measure.items():\n ax.plot(df.loc[:, m], label=m, color=color)\n fig.legend()\n elif isinstance(measure, (list, tuple)):\n for m in measure:\n ax.plot(df.loc[:, m], label=m)\n fig.legend()\n # Set integer x axis\n ax.xaxis.set_major_locator(MaxNLocator(integer=True))\n solara.FigureMatplotlib(fig, dependencies=dependencies)\n", "path": "mesa/experimental/components/matplotlib.py"}]}

| 2,016 | 160 |

gh_patches_debug_32679

|

rasdani/github-patches

|

git_diff

|

alltheplaces__alltheplaces-1879

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Spider rei is broken

During the global build at 2021-05-26-14-42-23, spider **rei** failed with **0 features** and **0 errors**.

Here's [the log](https://data.alltheplaces.xyz/runs/2021-05-26-14-42-23/logs/rei.log) and [the output](https://data.alltheplaces.xyz/runs/2021-05-26-14-42-23/output/rei.geojson) ([on a map](https://data.alltheplaces.xyz/map.html?show=https://data.alltheplaces.xyz/runs/2021-05-26-14-42-23/output/rei.geojson))

</issue>

<code>

[start of locations/spiders/rei.py]

1 # -*- coding: utf-8 -*-

2 import scrapy

3 import json

4 import re

5 from locations.items import GeojsonPointItem

6

7 DAY_MAPPING = {

8 'Mon': 'Mo',

9 'Tue': 'Tu',

10 'Wed': 'We',

11 'Thu': 'Th',

12 'Fri': 'Fr',

13 'Sat': 'Sa',

14 'Sun': 'Su'

15 }

16

17 class ReiSpider(scrapy.Spider):

18 name = "rei"

19 allowed_domains = ["www.rei.com"]

20 start_urls = (

21 'https://www.rei.com/map/store',

22 )

23

24 # Fix formatting for ["Mon - Fri 10:00-1800","Sat 12:00-18:00"]

25 def format_days(self, range):

26 pattern = r'^(.{3})( - (.{3}) | )(\d.*)'

27 start_day, seperator, end_day, time_range = re.search(pattern, range.strip()).groups()

28 result = DAY_MAPPING[start_day]

29 if end_day:

30 result += "-"+DAY_MAPPING[end_day]

31 result += " "+time_range

32 return result

33

34 def fix_opening_hours(self, opening_hours):

35 return ";".join(map(self.format_days, opening_hours))

36

37

38 def parse_store(self, response):

39 json_string = response.xpath('//script[@id="store-schema"]/text()').extract_first()

40 store_dict = json.loads(json_string)

41 yield GeojsonPointItem(

42 lat=store_dict["geo"]["latitude"],

43 lon=store_dict["geo"]["longitude"],

44 addr_full=store_dict["address"]["streetAddress"],

45 city=store_dict["address"]["addressLocality"],

46 state=store_dict["address"]["addressRegion"],

47 postcode=store_dict["address"]["postalCode"],

48 country=store_dict["address"]["addressCountry"],

49 opening_hours=self.fix_opening_hours(store_dict["openingHours"]),

50 phone=store_dict["telephone"],

51 website=store_dict["url"],

52 ref=store_dict["url"],

53 )

54

55 def parse(self, response):

56 urls = response.xpath('//a[@class="store-name-link"]/@href').extract()

57 for path in urls:

58 yield scrapy.Request(response.urljoin(path), callback=self.parse_store)

59

60

61

[end of locations/spiders/rei.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/locations/spiders/rei.py b/locations/spiders/rei.py

--- a/locations/spiders/rei.py

+++ b/locations/spiders/rei.py

@@ -33,28 +33,34 @@

def fix_opening_hours(self, opening_hours):

return ";".join(map(self.format_days, opening_hours))

-

def parse_store(self, response):

json_string = response.xpath('//script[@id="store-schema"]/text()').extract_first()

store_dict = json.loads(json_string)

- yield GeojsonPointItem(

- lat=store_dict["geo"]["latitude"],

- lon=store_dict["geo"]["longitude"],

- addr_full=store_dict["address"]["streetAddress"],

- city=store_dict["address"]["addressLocality"],

- state=store_dict["address"]["addressRegion"],

- postcode=store_dict["address"]["postalCode"],

- country=store_dict["address"]["addressCountry"],

- opening_hours=self.fix_opening_hours(store_dict["openingHours"]),

- phone=store_dict["telephone"],

- website=store_dict["url"],

- ref=store_dict["url"],

- )

+

+ properties = {

+ "lat": store_dict["geo"]["latitude"],

+ "lon": store_dict["geo"]["longitude"],

+ "addr_full": store_dict["address"]["streetAddress"],

+ "city": store_dict["address"]["addressLocality"],

+ "state": store_dict["address"]["addressRegion"],

+ "postcode": store_dict["address"]["postalCode"],

+ "country": store_dict["address"]["addressCountry"],

+ "opening_hours": self.fix_opening_hours(store_dict["openingHours"]),

+ "phone": store_dict["telephone"],

+ "website": store_dict["url"],

+ "ref": store_dict["url"],

+ }

+

+ yield GeojsonPointItem(**properties)

def parse(self, response):

- urls = response.xpath('//a[@class="store-name-link"]/@href').extract()

+ urls = set(response.xpath('//a[contains(@href,"stores") and contains(@href,".html")]/@href').extract())

for path in urls:

- yield scrapy.Request(response.urljoin(path), callback=self.parse_store)

+ if path == "/stores/bikeshop.html":

+ continue

-

+ yield scrapy.Request(

+ response.urljoin(path),

+ callback=self.parse_store,

+ )

|

{"golden_diff": "diff --git a/locations/spiders/rei.py b/locations/spiders/rei.py\n--- a/locations/spiders/rei.py\n+++ b/locations/spiders/rei.py\n@@ -33,28 +33,34 @@\n \n def fix_opening_hours(self, opening_hours):\n return \";\".join(map(self.format_days, opening_hours))\n- \n \n def parse_store(self, response):\n json_string = response.xpath('//script[@id=\"store-schema\"]/text()').extract_first()\n store_dict = json.loads(json_string)\n- yield GeojsonPointItem(\n- lat=store_dict[\"geo\"][\"latitude\"],\n- lon=store_dict[\"geo\"][\"longitude\"],\n- addr_full=store_dict[\"address\"][\"streetAddress\"],\n- city=store_dict[\"address\"][\"addressLocality\"],\n- state=store_dict[\"address\"][\"addressRegion\"],\n- postcode=store_dict[\"address\"][\"postalCode\"],\n- country=store_dict[\"address\"][\"addressCountry\"],\n- opening_hours=self.fix_opening_hours(store_dict[\"openingHours\"]),\n- phone=store_dict[\"telephone\"],\n- website=store_dict[\"url\"],\n- ref=store_dict[\"url\"],\n- )\n+\n+ properties = {\n+ \"lat\": store_dict[\"geo\"][\"latitude\"],\n+ \"lon\": store_dict[\"geo\"][\"longitude\"],\n+ \"addr_full\": store_dict[\"address\"][\"streetAddress\"],\n+ \"city\": store_dict[\"address\"][\"addressLocality\"],\n+ \"state\": store_dict[\"address\"][\"addressRegion\"],\n+ \"postcode\": store_dict[\"address\"][\"postalCode\"],\n+ \"country\": store_dict[\"address\"][\"addressCountry\"],\n+ \"opening_hours\": self.fix_opening_hours(store_dict[\"openingHours\"]),\n+ \"phone\": store_dict[\"telephone\"],\n+ \"website\": store_dict[\"url\"],\n+ \"ref\": store_dict[\"url\"],\n+ }\n+\n+ yield GeojsonPointItem(**properties)\n \n def parse(self, response):\n- urls = response.xpath('//a[@class=\"store-name-link\"]/@href').extract()\n+ urls = set(response.xpath('//a[contains(@href,\"stores\") and contains(@href,\".html\")]/@href').extract())\n for path in urls:\n- yield scrapy.Request(response.urljoin(path), callback=self.parse_store)\n+ if path == 
\"/stores/bikeshop.html\":\n+ continue\n \n- \n+ yield scrapy.Request(\n+ response.urljoin(path),\n+ callback=self.parse_store,\n+ )\n", "issue": "Spider rei is broken\nDuring the global build at 2021-05-26-14-42-23, spider **rei** failed with **0 features** and **0 errors**.\n\nHere's [the log](https://data.alltheplaces.xyz/runs/2021-05-26-14-42-23/logs/rei.log) and [the output](https://data.alltheplaces.xyz/runs/2021-05-26-14-42-23/output/rei.geojson) ([on a map](https://data.alltheplaces.xyz/map.html?show=https://data.alltheplaces.xyz/runs/2021-05-26-14-42-23/output/rei.geojson))\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\nimport scrapy\nimport json\nimport re\nfrom locations.items import GeojsonPointItem\n\nDAY_MAPPING = {\n 'Mon': 'Mo',\n 'Tue': 'Tu',\n 'Wed': 'We',\n 'Thu': 'Th',\n 'Fri': 'Fr',\n 'Sat': 'Sa',\n 'Sun': 'Su'\n}\n\nclass ReiSpider(scrapy.Spider):\n name = \"rei\"\n allowed_domains = [\"www.rei.com\"]\n start_urls = (\n 'https://www.rei.com/map/store',\n )\n\n # Fix formatting for [\"Mon - Fri 10:00-1800\",\"Sat 12:00-18:00\"]\n def format_days(self, range):\n pattern = r'^(.{3})( - (.{3}) | )(\\d.*)'\n start_day, seperator, end_day, time_range = re.search(pattern, range.strip()).groups()\n result = DAY_MAPPING[start_day]\n if end_day:\n result += \"-\"+DAY_MAPPING[end_day]\n result += \" \"+time_range\n return result\n\n def fix_opening_hours(self, opening_hours):\n return \";\".join(map(self.format_days, opening_hours))\n \n\n def parse_store(self, response):\n json_string = response.xpath('//script[@id=\"store-schema\"]/text()').extract_first()\n store_dict = json.loads(json_string)\n yield GeojsonPointItem(\n lat=store_dict[\"geo\"][\"latitude\"],\n lon=store_dict[\"geo\"][\"longitude\"],\n addr_full=store_dict[\"address\"][\"streetAddress\"],\n city=store_dict[\"address\"][\"addressLocality\"],\n state=store_dict[\"address\"][\"addressRegion\"],\n postcode=store_dict[\"address\"][\"postalCode\"],\n 
country=store_dict[\"address\"][\"addressCountry\"],\n opening_hours=self.fix_opening_hours(store_dict[\"openingHours\"]),\n phone=store_dict[\"telephone\"],\n website=store_dict[\"url\"],\n ref=store_dict[\"url\"],\n )\n\n def parse(self, response):\n urls = response.xpath('//a[@class=\"store-name-link\"]/@href').extract()\n for path in urls:\n yield scrapy.Request(response.urljoin(path), callback=self.parse_store)\n\n \n", "path": "locations/spiders/rei.py"}]}

| 1,311 | 534 |

gh_patches_debug_6067

|

rasdani/github-patches

|

git_diff

|

plone__Products.CMFPlone-3094

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

sorting in control panel

The items of the control panel are completely unsorted (should be sorted in alphabetical order (depending on the current language in Plone).

</issue>

<code>

[start of Products/CMFPlone/PloneControlPanel.py]

1 # -*- coding: utf-8 -*-

2 from AccessControl import ClassSecurityInfo

3 from AccessControl.class_init import InitializeClass

4 from App.special_dtml import DTMLFile

5 from OFS.Folder import Folder

6 from OFS.PropertyManager import PropertyManager

7 from Products.CMFCore.ActionInformation import ActionInformation

8 from Products.CMFCore.ActionProviderBase import ActionProviderBase

9 from Products.CMFCore.Expression import Expression, createExprContext

10 from Products.CMFCore.permissions import ManagePortal, View

11 from Products.CMFCore.utils import _checkPermission

12 from Products.CMFCore.utils import getToolByName

13 from Products.CMFCore.utils import registerToolInterface

14 from Products.CMFCore.utils import UniqueObject

15 from Products.CMFPlone import PloneMessageFactory as _

16 from Products.CMFPlone.interfaces import IControlPanel

17 from Products.CMFPlone.PloneBaseTool import PloneBaseTool

18 from zope.component.hooks import getSite

19 from zope.i18n import translate

20 from zope.i18nmessageid import Message

21 from zope.interface import implementer

22

23 import six

24

25

26 class PloneConfiglet(ActionInformation):

27

28 def __init__(self, appId, **kwargs):

29 self.appId = appId

30 ActionInformation.__init__(self, **kwargs)

31

32 def getAppId(self):

33 return self.appId

34

35 def getDescription(self):

36 return self.description

37

38 def clone(self):

39 return self.__class__(**self.__dict__)

40

41 def getAction(self, ec):

42 res = ActionInformation.getAction(self, ec)

43 res['description'] = self.getDescription()

44 return res

45

46

47 @implementer(IControlPanel)

48 class PloneControlPanel(PloneBaseTool, UniqueObject,

49 Folder, ActionProviderBase, PropertyManager):

50 """Weave together the various sources of "actions" which

51 are apropos to the current user and context.

52 """

53

54 security = ClassSecurityInfo()

55

56 id = 'portal_controlpanel'

57 title = 'Control Panel'

58 toolicon = 'skins/plone_images/site_icon.png'

59 meta_type = 'Plone Control Panel Tool'

60 _actions_form = DTMLFile('www/editPloneConfiglets', globals())

61

62 manage_options = (ActionProviderBase.manage_options +

63 PropertyManager.manage_options)

64

65 group = dict(

66 member=[

67 ('Member', _(u'My Preferences')),

68 ],

69 site=[

70 ('plone-general', _(u'General')),

71 ('plone-content', _(u'Content')),

72 ('plone-users', _(u'Users')),

73 ('plone-security', _(u'Security')),

74 ('plone-advanced', _(u'Advanced')),

75 ('Plone', _(u'Plone Configuration')),

76 ('Products', _(u'Add-on Configuration')),

77 ]

78 )

79

80 def __init__(self, **kw):

81 if kw:

82 self.__dict__.update(**kw)

83

84 security.declareProtected(ManagePortal, 'registerConfiglets')

85

86 def registerConfiglets(self, configlets):

87 for conf in configlets:

88 self.registerConfiglet(**conf)

89

90 security.declareProtected(ManagePortal, 'getGroupIds')

91

92 def getGroupIds(self, category='site'):

93 groups = self.group.get(category, [])

94 return [g[0] for g in groups if g]

95

96 security.declareProtected(View, 'getGroups')

97

98 def getGroups(self, category='site'):

99 groups = self.group.get(category, [])

100 return [{'id': g[0], 'title': g[1]} for g in groups if g]

101

102 security.declarePrivate('listActions')

103

104 def listActions(self, info=None, object=None):

105 # This exists here to shut up a deprecation warning about old-style

106 # actions in CMFCore's ActionProviderBase. It was decided not to

107 # move configlets to be based on action tool categories for Plone 4

108 # (see PLIP #8804), but that (or an alternative) will have to happen

109 # before CMF 2.4 when support for old-style actions is removed.

110 return self._actions or ()

111

112 security.declarePublic('maySeeSomeConfiglets')

113

114 def maySeeSomeConfiglets(self):

115 groups = self.getGroups('site')

116

117 all = []

118 for group in groups:

119 all.extend(self.enumConfiglets(group=group['id']))

120 all = [item for item in all if item['visible']]

121 return len(all) != 0

122

123 security.declarePublic('enumConfiglets')

124

125 def enumConfiglets(self, group=None):

126 portal = getToolByName(self, 'portal_url').getPortalObject()

127 context = createExprContext(self, portal, self)

128 res = []

129 for a in self.listActions():

130 verified = 0

131 for permission in a.permissions:

132 if _checkPermission(permission, portal):

133 verified = 1

134 if verified and a.category == group and a.testCondition(context) \

135 and a.visible:

136 res.append(a.getAction(context))

137 # Translate the title for sorting

138 if getattr(self, 'REQUEST', None) is not None:

139 for a in res:

140 title = a['title']

141 if not isinstance(title, Message):

142 title = Message(title, domain='plone')

143 a['title'] = translate(title,

144 context=self.REQUEST)

145

146 def _id(v):

147 return v['id']

148 res.sort(key=_id)

149 return res

150

151 security.declareProtected(ManagePortal, 'unregisterConfiglet')

152

153 def unregisterConfiglet(self, id):

154 actids = [o.id for o in self.listActions()]

155 selection = [actids.index(a) for a in actids if a == id]

156 if not selection:

157 return

158 self.deleteActions(selection)

159

160 security.declareProtected(ManagePortal, 'unregisterApplication')

161

162 def unregisterApplication(self, appId):

163 acts = list(self.listActions())

164 selection = [acts.index(a) for a in acts if a.appId == appId]

165 if not selection:

166 return

167 self.deleteActions(selection)

168

169 def _extractAction(self, properties, index):

170 # Extract an ActionInformation from the funky form properties.

171 id = str(properties.get('id_%d' % index, ''))

172 name = str(properties.get('name_%d' % index, ''))

173 action = str(properties.get('action_%d' % index, ''))

174 condition = str(properties.get('condition_%d' % index, ''))

175 category = str(properties.get('category_%d' % index, ''))

176 visible = properties.get('visible_%d' % index, 0)

177 permissions = properties.get('permission_%d' % index, ())

178 appId = properties.get('appId_%d' % index, '')

179 description = properties.get('description_%d' % index, '')

180 icon_expr = properties.get('icon_expr_%d' % index, '')

181

182 if not name:

183 raise ValueError('A name is required.')

184

185 if action != '':

186 action = Expression(text=action)

187

188 if condition != '':

189 condition = Expression(text=condition)

190

191 if category == '':

192 category = 'object'

193

194 if not isinstance(visible, int):

195 try:

196 visible = int(visible)

197 except ValueError:

198 visible = 0

199

200 if isinstance(permissions, six.string_types):

201 permissions = (permissions, )

202

203 return PloneConfiglet(id=id,

204 title=name,

205 action=action,

206 condition=condition,

207 permissions=permissions,

208 category=category,

209 visible=visible,

210 appId=appId,

211 description=description,

212 icon_expr=icon_expr,

213 )

214

215 security.declareProtected(ManagePortal, 'addAction')

216

217 def addAction(self,

218 id,

219 name,

220 action,

221 condition='',

222 permission='',

223 category='Plone',

224 visible=1,

225 appId=None,

226 icon_expr='',

227 description='',

228 REQUEST=None,

229 ):

230 # Add an action to our list.

231 if not name:

232 raise ValueError('A name is required.')

233

234 a_expr = action and Expression(text=str(action)) or ''

235 c_expr = condition and Expression(text=str(condition)) or ''

236

237 if not isinstance(permission, tuple):

238 permission = permission and (str(permission), ) or ()

239

240 new_actions = self._cloneActions()

241

242 new_action = PloneConfiglet(id=str(id),

243 title=name,

244 action=a_expr,

245 condition=c_expr,

246 permissions=permission,

247 category=str(category),

248 visible=int(visible),

249 appId=appId,

250 description=description,

251 icon_expr=icon_expr,

252 )

253

254 new_actions.append(new_action)

255 self._actions = tuple(new_actions)

256

257 if REQUEST is not None:

258 return self.manage_editActionsForm(

259 REQUEST, manage_tabs_message='Added.')

260

261 security.declareProtected(ManagePortal, 'registerConfiglet')

262 registerConfiglet = addAction

263

264 security.declareProtected(ManagePortal, 'manage_editActionsForm')

265

266 def manage_editActionsForm(self, REQUEST, manage_tabs_message=None):

267 """ Show the 'Actions' management tab.

268 """

269 actions = []

270

271 for a in self.listActions():

272

273 a1 = {}

274 a1['id'] = a.getId()

275 a1['name'] = a.Title()

276 p = a.getPermissions()

277 if p:

278 a1['permission'] = p[0]

279 else:

280 a1['permission'] = ''

281 a1['category'] = a.getCategory() or 'object'

282 a1['visible'] = a.getVisibility()

283 a1['action'] = a.getActionExpression()

284 a1['condition'] = a.getCondition()

285 a1['appId'] = a.getAppId()

286 a1['description'] = a.getDescription()

287 a1['icon_expr'] = a.getIconExpression()

288 actions.append(a1)

289

290 # possible_permissions is in OFS.role.RoleManager.

291 pp = self.possible_permissions()

292 return self._actions_form(

293 self,

294 REQUEST,

295 actions=actions,

296 possible_permissions=pp,

297 management_view='Actions',

298 manage_tabs_message=manage_tabs_message,

299 )

300

301 @property

302 def site_url(self):

303 """Return the absolute URL to the current site, which is likely not

304 necessarily the portal root.

305 Used by ``portlet_prefs`` to construct the URL to

306 ``@@overview-controlpanel``.

307 """

308 return getSite().absolute_url()

309

310

311 InitializeClass(PloneControlPanel)

312 registerToolInterface('portal_controlpanel', IControlPanel)

313

[end of Products/CMFPlone/PloneControlPanel.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/Products/CMFPlone/PloneControlPanel.py b/Products/CMFPlone/PloneControlPanel.py

--- a/Products/CMFPlone/PloneControlPanel.py

+++ b/Products/CMFPlone/PloneControlPanel.py

@@ -143,9 +143,10 @@

a['title'] = translate(title,

context=self.REQUEST)

- def _id(v):

- return v['id']

- res.sort(key=_id)

+ def _title(v):

+ return v['title']

+

+ res.sort(key=_title)

return res

security.declareProtected(ManagePortal, 'unregisterConfiglet')

|

{"golden_diff": "diff --git a/Products/CMFPlone/PloneControlPanel.py b/Products/CMFPlone/PloneControlPanel.py\n--- a/Products/CMFPlone/PloneControlPanel.py\n+++ b/Products/CMFPlone/PloneControlPanel.py\n@@ -143,9 +143,10 @@\n a['title'] = translate(title,\n context=self.REQUEST)\n \n- def _id(v):\n- return v['id']\n- res.sort(key=_id)\n+ def _title(v):\n+ return v['title']\n+\n+ res.sort(key=_title)\n return res\n \n security.declareProtected(ManagePortal, 'unregisterConfiglet')\n", "issue": "sorting in control panel\nThe items of the control panel are completely unsorted (should be sorted in alphabetical order (depending on the current language in Plone).\n\n\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\nfrom AccessControl import ClassSecurityInfo\nfrom AccessControl.class_init import InitializeClass\nfrom App.special_dtml import DTMLFile\nfrom OFS.Folder import Folder\nfrom OFS.PropertyManager import PropertyManager\nfrom Products.CMFCore.ActionInformation import ActionInformation\nfrom Products.CMFCore.ActionProviderBase import ActionProviderBase\nfrom Products.CMFCore.Expression import Expression, createExprContext\nfrom Products.CMFCore.permissions import ManagePortal, View\nfrom Products.CMFCore.utils import _checkPermission\nfrom Products.CMFCore.utils import getToolByName\nfrom Products.CMFCore.utils import registerToolInterface\nfrom Products.CMFCore.utils import UniqueObject\nfrom Products.CMFPlone import PloneMessageFactory as _\nfrom Products.CMFPlone.interfaces import IControlPanel\nfrom Products.CMFPlone.PloneBaseTool import PloneBaseTool\nfrom zope.component.hooks import getSite\nfrom zope.i18n import translate\nfrom zope.i18nmessageid import Message\nfrom zope.interface import implementer\n\nimport six\n\n\nclass PloneConfiglet(ActionInformation):\n\n def __init__(self, appId, **kwargs):\n self.appId = appId\n ActionInformation.__init__(self, **kwargs)\n\n def getAppId(self):\n return self.appId\n\n def getDescription(self):\n return 
self.description\n\n def clone(self):\n return self.__class__(**self.__dict__)\n\n def getAction(self, ec):\n res = ActionInformation.getAction(self, ec)\n res['description'] = self.getDescription()\n return res\n\n\n@implementer(IControlPanel)\nclass PloneControlPanel(PloneBaseTool, UniqueObject,\n Folder, ActionProviderBase, PropertyManager):\n \"\"\"Weave together the various sources of \"actions\" which\n are apropos to the current user and context.\n \"\"\"\n\n security = ClassSecurityInfo()\n\n id = 'portal_controlpanel'\n title = 'Control Panel'\n toolicon = 'skins/plone_images/site_icon.png'\n meta_type = 'Plone Control Panel Tool'\n _actions_form = DTMLFile('www/editPloneConfiglets', globals())\n\n manage_options = (ActionProviderBase.manage_options +\n PropertyManager.manage_options)\n\n group = dict(\n member=[\n ('Member', _(u'My Preferences')),\n ],\n site=[\n ('plone-general', _(u'General')),\n ('plone-content', _(u'Content')),\n ('plone-users', _(u'Users')),\n ('plone-security', _(u'Security')),\n ('plone-advanced', _(u'Advanced')),\n ('Plone', _(u'Plone Configuration')),\n ('Products', _(u'Add-on Configuration')),\n ]\n )\n\n def __init__(self, **kw):\n if kw:\n self.__dict__.update(**kw)\n\n security.declareProtected(ManagePortal, 'registerConfiglets')\n\n def registerConfiglets(self, configlets):\n for conf in configlets:\n self.registerConfiglet(**conf)\n\n security.declareProtected(ManagePortal, 'getGroupIds')\n\n def getGroupIds(self, category='site'):\n groups = self.group.get(category, [])\n return [g[0] for g in groups if g]\n\n security.declareProtected(View, 'getGroups')\n\n def getGroups(self, category='site'):\n groups = self.group.get(category, [])\n return [{'id': g[0], 'title': g[1]} for g in groups if g]\n\n security.declarePrivate('listActions')\n\n def listActions(self, info=None, object=None):\n # This exists here to shut up a deprecation warning about old-style\n # actions in CMFCore's ActionProviderBase. 
It was decided not to\n # move configlets to be based on action tool categories for Plone 4\n # (see PLIP #8804), but that (or an alternative) will have to happen\n # before CMF 2.4 when support for old-style actions is removed.\n return self._actions or ()\n\n security.declarePublic('maySeeSomeConfiglets')\n\n def maySeeSomeConfiglets(self):\n groups = self.getGroups('site')\n\n all = []\n for group in groups:\n all.extend(self.enumConfiglets(group=group['id']))\n all = [item for item in all if item['visible']]\n return len(all) != 0\n\n security.declarePublic('enumConfiglets')\n\n def enumConfiglets(self, group=None):\n portal = getToolByName(self, 'portal_url').getPortalObject()\n context = createExprContext(self, portal, self)\n res = []\n for a in self.listActions():\n verified = 0\n for permission in a.permissions:\n if _checkPermission(permission, portal):\n verified = 1\n if verified and a.category == group and a.testCondition(context) \\\n and a.visible:\n res.append(a.getAction(context))\n # Translate the title for sorting\n if getattr(self, 'REQUEST', None) is not None:\n for a in res:\n title = a['title']\n if not isinstance(title, Message):\n title = Message(title, domain='plone')\n a['title'] = translate(title,\n context=self.REQUEST)\n\n def _id(v):\n return v['id']\n res.sort(key=_id)\n return res\n\n security.declareProtected(ManagePortal, 'unregisterConfiglet')\n\n def unregisterConfiglet(self, id):\n actids = [o.id for o in self.listActions()]\n selection = [actids.index(a) for a in actids if a == id]\n if not selection:\n return\n self.deleteActions(selection)\n\n security.declareProtected(ManagePortal, 'unregisterApplication')\n\n def unregisterApplication(self, appId):\n acts = list(self.listActions())\n selection = [acts.index(a) for a in acts if a.appId == appId]\n if not selection:\n return\n self.deleteActions(selection)\n\n def _extractAction(self, properties, index):\n # Extract an ActionInformation from the funky form properties.\n id = 
str(properties.get('id_%d' % index, ''))\n name = str(properties.get('name_%d' % index, ''))\n action = str(properties.get('action_%d' % index, ''))\n condition = str(properties.get('condition_%d' % index, ''))\n category = str(properties.get('category_%d' % index, ''))\n visible = properties.get('visible_%d' % index, 0)\n permissions = properties.get('permission_%d' % index, ())\n appId = properties.get('appId_%d' % index, '')\n description = properties.get('description_%d' % index, '')\n icon_expr = properties.get('icon_expr_%d' % index, '')\n\n if not name:\n raise ValueError('A name is required.')\n\n if action != '':\n action = Expression(text=action)\n\n if condition != '':\n condition = Expression(text=condition)\n\n if category == '':\n category = 'object'\n\n if not isinstance(visible, int):\n try:\n visible = int(visible)\n except ValueError:\n visible = 0\n\n if isinstance(permissions, six.string_types):\n permissions = (permissions, )\n\n return PloneConfiglet(id=id,\n title=name,\n action=action,\n condition=condition,\n permissions=permissions,\n category=category,\n visible=visible,\n appId=appId,\n description=description,\n icon_expr=icon_expr,\n )\n\n security.declareProtected(ManagePortal, 'addAction')\n\n def addAction(self,\n id,\n name,\n action,\n condition='',\n permission='',\n category='Plone',\n visible=1,\n appId=None,\n icon_expr='',\n description='',\n REQUEST=None,\n ):\n # Add an action to our list.\n if not name:\n raise ValueError('A name is required.')\n\n a_expr = action and Expression(text=str(action)) or ''\n c_expr = condition and Expression(text=str(condition)) or ''\n\n if not isinstance(permission, tuple):\n permission = permission and (str(permission), ) or ()\n\n new_actions = self._cloneActions()\n\n new_action = PloneConfiglet(id=str(id),\n title=name,\n action=a_expr,\n condition=c_expr,\n permissions=permission,\n category=str(category),\n visible=int(visible),\n appId=appId,\n description=description,\n 
icon_expr=icon_expr,\n )\n\n new_actions.append(new_action)\n self._actions = tuple(new_actions)\n\n if REQUEST is not None:\n return self.manage_editActionsForm(\n REQUEST, manage_tabs_message='Added.')\n\n security.declareProtected(ManagePortal, 'registerConfiglet')\n registerConfiglet = addAction\n\n security.declareProtected(ManagePortal, 'manage_editActionsForm')\n\n def manage_editActionsForm(self, REQUEST, manage_tabs_message=None):\n \"\"\" Show the 'Actions' management tab.\n \"\"\"\n actions = []\n\n for a in self.listActions():\n\n a1 = {}\n a1['id'] = a.getId()\n a1['name'] = a.Title()\n p = a.getPermissions()\n if p:\n a1['permission'] = p[0]\n else:\n a1['permission'] = ''\n a1['category'] = a.getCategory() or 'object'\n a1['visible'] = a.getVisibility()\n a1['action'] = a.getActionExpression()\n a1['condition'] = a.getCondition()\n a1['appId'] = a.getAppId()\n a1['description'] = a.getDescription()\n a1['icon_expr'] = a.getIconExpression()\n actions.append(a1)\n\n # possible_permissions is in OFS.role.RoleManager.\n pp = self.possible_permissions()\n return self._actions_form(\n self,\n REQUEST,\n actions=actions,\n possible_permissions=pp,\n management_view='Actions',\n manage_tabs_message=manage_tabs_message,\n )\n\n @property\n def site_url(self):\n \"\"\"Return the absolute URL to the current site, which is likely not\n necessarily the portal root.\n Used by ``portlet_prefs`` to construct the URL to\n ``@@overview-controlpanel``.\n \"\"\"\n return getSite().absolute_url()\n\n\nInitializeClass(PloneControlPanel)\nregisterToolInterface('portal_controlpanel', IControlPanel)\n", "path": "Products/CMFPlone/PloneControlPanel.py"}]}

| 3,779 | 158 |

gh_patches_debug_32247

|

rasdani/github-patches

|

git_diff

|

translate__pootle-6687

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

sync_stores/update_stores

For 2.9.0 we're making Pootle FS the main path into Pootle, but `sync_stores` and `update_stores` are kept for backward compatibility.

But we have situations where Pootle FS can't do what sync/update does in that it may do more or may touch the db/file when sync/update would only do it on one side.

So we need to warn:

1. That this mode is deprecated

2. Where there are conflicts e.g. we should warn and bail or warn and allow it to continue

Essentially, we want people to know when we can't do what they expect.

</issue>

<code>

[start of pootle/apps/pootle_app/management/commands/sync_stores.py]
1 # -*- coding: utf-8 -*-
2 #
3 # Copyright (C) Pootle contributors.
4 #
5 # This file is a part of the Pootle project. It is distributed under the GPL3
6 # or later license. See the LICENSE file for a copy of the license and the
7 # AUTHORS file for copyright and authorship information.
8
9 import os
10 os.environ['DJANGO_SETTINGS_MODULE'] = 'pootle.settings'
11
12 from pootle_app.management.commands import PootleCommand
13 from pootle_fs.utils import FSPlugin
14
15
16 class Command(PootleCommand):
17     help = "Save new translations to disk manually."
18     process_disabled_projects = True
19
20     def add_arguments(self, parser):
21         super(Command, self).add_arguments(parser)
22         parser.add_argument(
23             '--overwrite',
24             action='store_true',
25             dest='overwrite',
26             default=False,
27             help="Don't just save translations, but "
28                  "overwrite files to reflect state in database",
29         )
30         parser.add_argument(
31             '--skip-missing',
32             action='store_true',
33             dest='skip_missing',
34             default=False,
35             help="Ignore missing files on disk",
36         )
37         parser.add_argument(
38             '--force',
39             action='store_true',
40             dest='force',
41             default=False,
42             help="Don't ignore stores synced after last change",
43         )
44
45     def handle_all_stores(self, translation_project, **options):
46         path_glob = "%s*" % translation_project.pootle_path
47         plugin = FSPlugin(translation_project.project)
48         plugin.fetch()
49         if not options["skip_missing"]:
50             plugin.add(pootle_path=path_glob, update="fs")
51         if options["overwrite"]:
52             plugin.resolve(
53                 pootle_path=path_glob,
54                 pootle_wins=True)
55         plugin.sync(pootle_path=path_glob, update="fs")
56         if options["force"]:
57             # touch the timestamps on disk for files that
58             # werent updated
59             pass
60
[end of pootle/apps/pootle_app/management/commands/sync_stores.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/pootle/apps/pootle_app/management/commands/sync_stores.py b/pootle/apps/pootle_app/management/commands/sync_stores.py
--- a/pootle/apps/pootle_app/management/commands/sync_stores.py
+++ b/pootle/apps/pootle_app/management/commands/sync_stores.py
@@ -6,6 +6,7 @@
 # or later license. See the LICENSE file for a copy of the license and the
 # AUTHORS file for copyright and authorship information.
 
+import logging
 import os
 os.environ['DJANGO_SETTINGS_MODULE'] = 'pootle.settings'
 
@@ -13,6 +14,9 @@
 from pootle_fs.utils import FSPlugin
 
 
+logger = logging.getLogger(__name__)
+
+
 class Command(PootleCommand):
     help = "Save new translations to disk manually."
     process_disabled_projects = True
@@ -42,10 +46,22 @@
             help="Don't ignore stores synced after last change",
         )
 
+    warn_on_conflict = []
+
     def handle_all_stores(self, translation_project, **options):