Column types: `problem_id` string (length 18 to 22); `source` string (1 class); `task_type` string (1 class); `in_source_id` string (length 13 to 58); `prompt` string (length 1.1k to 25.4k); `golden_diff` string (length 145 to 5.13k); `verification_info` string (length 582 to 39.1k); `num_tokens` int64 (271 to 4.1k); `num_tokens_diff` int64 (47 to 1.02k).

| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_62334 | rasdani/github-patches | git_diff | googleapis__google-api-python-client-129 |

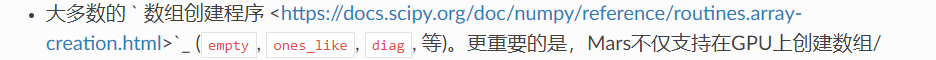

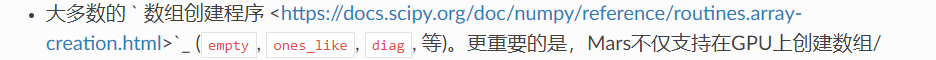

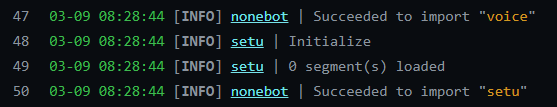

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

discovery_cache module not packaged during installation.

I've installed `google-api-python-client` from source, but at some point my application started failing with this message:

```
  ...
  ...
  File "build/bdist.linux-x86_64/egg/oauth2client/util.py", line 142, in positional_wrapper
    return wrapped(*args, **kwargs)
  File "build/bdist.linux-x86_64/egg/googleapiclient/discovery.py", line 193, in build
    content = _retrieve_discovery_doc(requested_url, http, cache_discovery, cache)
  File "build/bdist.linux-x86_64/egg/googleapiclient/discovery.py", line 215, in _retrieve_discovery_doc
    from . import discovery_cache
ImportError: cannot import name discovery_cache
```

I checked whether the `discovery_cache` module was actually part of the `egg`, and unfortunately it was not:

```
[root@e42fb97ce657 unit]# python
Python 2.7.5 (default, Jun 24 2015, 00:41:19)
[GCC 4.8.3 20140911 (Red Hat 4.8.3-9)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> import googleapiclient.discovery_cache
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ImportError: No module named discovery_cache
>>>
```

Here are all the files in the `egg`:

```
[root@e42fb97ce657 ~]# unzip -l /usr/lib/python2.7/site-packages/google_api_python_client-1.4.1-py2.7.egg
Archive:  /usr/lib/python2.7/site-packages/google_api_python_client-1.4.1-py2.7.egg
  Length      Date    Time    Name
---------  ---------- -----   ----
     1169  09-03-2015 16:09   apiclient/__init__.py
     1301  09-03-2015 16:09   apiclient/__init__.pyc
        1  09-03-2015 16:09   EGG-INFO/dependency_links.txt
       62  09-03-2015 16:09   EGG-INFO/requires.txt
       26  09-03-2015 16:09   EGG-INFO/top_level.txt
      969  09-03-2015 16:09   EGG-INFO/PKG-INFO
        1  09-03-2015 16:09   EGG-INFO/zip-safe
      545  09-03-2015 16:09   EGG-INFO/SOURCES.txt
    53575  09-03-2015 16:09   googleapiclient/http.py
     9910  09-03-2015 16:09   googleapiclient/channel.py
    40890  09-03-2015 16:09   googleapiclient/discovery.py
     9907  09-03-2015 16:09   googleapiclient/schema.pyc
      620  09-03-2015 16:09   googleapiclient/__init__.py
     9317  09-03-2015 16:09   googleapiclient/schema.py
    11830  09-03-2015 16:09   googleapiclient/model.py
     4047  09-03-2015 16:09   googleapiclient/sample_tools.py
     6552  09-03-2015 16:09   googleapiclient/mimeparse.py
    53976  09-03-2015 16:09   googleapiclient/http.pyc
     7043  09-03-2015 16:09   googleapiclient/mimeparse.pyc
     6333  09-03-2015 16:09   googleapiclient/errors.pyc
     3131  09-03-2015 16:09   googleapiclient/sample_tools.pyc
     3622  09-03-2015 16:09   googleapiclient/errors.py
    35534  09-03-2015 16:09   googleapiclient/discovery.pyc
    14028  09-03-2015 16:09   googleapiclient/model.pyc
      175  09-03-2015 16:09   googleapiclient/__init__.pyc
    10690  09-03-2015 16:09   googleapiclient/channel.pyc
---------                     -------
   285254                     26 files
[root@e42fb97ce657 ~]#
```

As a workaround, I had to add `googleapiclient/discovery_cache` to the `packages` list in `setup.py`, so that it looked like this:

```
[root@e42fb97ce657 google-api-python-client]# more setup.py | grep packages -A 4 -m1
packages = [
    'apiclient',
    'googleapiclient',
    'googleapiclient/discovery_cache'
]
```

Then I reinstalled it, and everything magically started working.

```
[root@e42fb97ce657 google-api-python-client]# python
Python 2.7.5 (default, Jun 24 2015, 00:41:19)
[GCC 4.8.3 20140911 (Red Hat 4.8.3-9)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> import googleapiclient.discovery_cache
>>>
```

Here is a quick sample that looks similar to my environment using `Docker`:

```
FROM centos:centos7

RUN yum install -y git python-devel python-setuptools unzip
RUN easy_install pip
RUN cd /tmp ;\
    git clone https://github.com/google/google-api-python-client && \
    cd google-api-python-client && \
    python setup.py install
```

I've also tried following the preferred suggestion in the `README.md` and installing it with `pip`, but it ended up in the same situation.

Please advise on how to proceed without making "manual" modifications to the official package.

--- END ISSUE ---
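The egg listing shows that only the packages named explicitly in `setup.py` are built, so a newly added subpackage such as `discovery_cache` is silently left out. A minimal sketch of a pre-release check, assuming the classic rule that a directory is a package only if it contains `__init__.py` (namespace packages are ignored): the hypothetical helper `find_unlisted_packages` walks a source tree and reports packages missing from a declared list. It is not part of setuptools or of this repository.

```python
import os


def find_unlisted_packages(root, declared):
    """Return dotted names of packages under `root` missing from `declared`.

    Hypothetical helper: a directory counts as a package only if it contains
    an __init__.py file (namespace packages are not detected).
    """
    # Accept both 'pkg/sub' and 'pkg.sub' spellings of a package name.
    declared_dotted = set(name.replace('/', '.') for name in declared)
    found = set()
    for dirpath, dirnames, filenames in os.walk(root):
        if dirpath == root:
            continue  # the project root itself is not a package
        if '__init__.py' not in filenames:
            dirnames[:] = []  # not a package, so do not descend into it
            continue
        rel = os.path.relpath(dirpath, root)
        found.add(rel.replace(os.sep, '.'))
    return sorted(found - declared_dotted)
```

Run against a source tree containing `googleapiclient/discovery_cache/__init__.py` with `packages = ['apiclient', 'googleapiclient']`, it should report `['googleapiclient.discovery_cache']`. setuptools also provides `find_packages()`, which discovers packages by the same `__init__.py` rule and can replace the hand-maintained list; its `exclude` argument keeps test packages out if needed.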

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setup.py`

Content:

```
1 # Copyright 2014 Google Inc. All Rights Reserved.
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #      http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14
15 """Setup script for Google API Python client.
16
17 Also installs included versions of third party libraries, if those libraries
18 are not already installed.
19 """
20 from __future__ import print_function
21
22 import sys
23
24 if sys.version_info < (2, 6):
25   print('google-api-python-client requires python version >= 2.6.',
26         file=sys.stderr)
27   sys.exit(1)
28 if (3, 1) <= sys.version_info < (3, 3):
29   print('google-api-python-client requires python3 version >= 3.3.',
30         file=sys.stderr)
31   sys.exit(1)
32
33 from setuptools import setup
34 import pkg_resources
35
36 def _DetectBadness():
37   import os
38   if 'SKIP_GOOGLEAPICLIENT_COMPAT_CHECK' in os.environ:
39     return
40   o2c_pkg = None
41   try:
42     o2c_pkg = pkg_resources.get_distribution('oauth2client')
43   except pkg_resources.DistributionNotFound:
44     pass
45   oauth2client = None
46   try:
47     import oauth2client
48   except ImportError:
49     pass
50   if o2c_pkg is None and oauth2client is not None:
51     raise RuntimeError(
52         'Previous version of google-api-python-client detected; due to a '
53         'packaging issue, we cannot perform an in-place upgrade. Please remove '
54         'the old version and re-install this package.'
55     )
56
57 _DetectBadness()
58
59 packages = [
60     'apiclient',
61     'googleapiclient',
62 ]
63
64 install_requires = [
65     'httplib2>=0.8',
66     'oauth2client>=1.4.6',
67     'six>=1.6.1',
68     'uritemplate>=0.6',
69 ]
70
71 if sys.version_info < (2, 7):
72   install_requires.append('argparse')
73
74 long_desc = """The Google API Client for Python is a client library for
75 accessing the Plus, Moderator, and many other Google APIs."""
76
77 import googleapiclient
78 version = googleapiclient.__version__
79
80 setup(
81     name="google-api-python-client",
82     version=version,
83     description="Google API Client Library for Python",
84     long_description=long_desc,
85     author="Google Inc.",
86     url="http://github.com/google/google-api-python-client/",
87     install_requires=install_requires,
88     packages=packages,
89     package_data={},
90     license="Apache 2.0",
91     keywords="google api client",
92     classifiers=[
93         'Programming Language :: Python :: 2',
94         'Programming Language :: Python :: 2.6',
95         'Programming Language :: Python :: 2.7',
96         'Programming Language :: Python :: 3',
97         'Programming Language :: Python :: 3.3',
98         'Programming Language :: Python :: 3.4',
99         'Development Status :: 5 - Production/Stable',
100         'Intended Audience :: Developers',
101         'License :: OSI Approved :: Apache Software License',
102         'Operating System :: OS Independent',
103         'Topic :: Internet :: WWW/HTTP',
104     ],
105 )
106
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/setup.py b/setup.py
--- a/setup.py
+++ b/setup.py
@@ -59,6 +59,7 @@
 packages = [
     'apiclient',
     'googleapiclient',
+    'googleapiclient/discovery_cache',
 ]
 
 install_requires = [

|

{"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -59,6 +59,7 @@\n packages = [\n 'apiclient',\n 'googleapiclient',\n+ 'googleapiclient/discovery_cache',\n ]\n \n install_requires = [\n", "issue": "discovery_cache module not packaged during installation.\nI've installed `google-api-python-client` from source, but when at some point my application was failing with this message:\n\n```\n ...\n ...\n File \"build/bdist.linux-x86_64/egg/oauth2client/util.py\", line 142, in positional_wrapper\n return wrapped(*args, **kwargs)\n File \"build/bdist.linux-x86_64/egg/googleapiclient/discovery.py\", line 193, in build\n content = _retrieve_discovery_doc(requested_url, http, cache_discovery, cache)\n File \"build/bdist.linux-x86_64/egg/googleapiclient/discovery.py\", line 215, in _retrieve_discovery_doc\n from . import discovery_cache\nImportError: cannot import name discovery_cache\n```\n\nI've checked if `discovery_cache` module was actually part of the `egg`, and unfortunately it was not:\n\n```\n[root@e42fb97ce657 unit]# python\nPython 2.7.5 (default, Jun 24 2015, 00:41:19) \n[GCC 4.8.3 20140911 (Red Hat 4.8.3-9)] on linux2\nType \"help\", \"copyright\", \"credits\" or \"license\" for more information.\n>>> import googleapiclient.discovery_cache\nTraceback (most recent call last):\n File \"<stdin>\", line 1, in <module>\nImportError: No module named discovery_cache\n>>> \n```\n\nHere are all the files in `egg`\n\n```\n[root@e42fb97ce657 ~]# unzip -l /usr/lib/python2.7/site-packages/google_api_python_client-1.4.1-py2.7.egg \nArchive: /usr/lib/python2.7/site-packages/google_api_python_client-1.4.1-py2.7.egg\n Length Date Time Name\n--------- ---------- ----- ----\n 1169 09-03-2015 16:09 apiclient/__init__.py\n 1301 09-03-2015 16:09 apiclient/__init__.pyc\n 1 09-03-2015 16:09 EGG-INFO/dependency_links.txt\n 62 09-03-2015 16:09 EGG-INFO/requires.txt\n 26 09-03-2015 16:09 EGG-INFO/top_level.txt\n 969 09-03-2015 16:09 EGG-INFO/PKG-INFO\n 1 
09-03-2015 16:09 EGG-INFO/zip-safe\n 545 09-03-2015 16:09 EGG-INFO/SOURCES.txt\n 53575 09-03-2015 16:09 googleapiclient/http.py\n 9910 09-03-2015 16:09 googleapiclient/channel.py\n 40890 09-03-2015 16:09 googleapiclient/discovery.py\n 9907 09-03-2015 16:09 googleapiclient/schema.pyc\n 620 09-03-2015 16:09 googleapiclient/__init__.py\n 9317 09-03-2015 16:09 googleapiclient/schema.py\n 11830 09-03-2015 16:09 googleapiclient/model.py\n 4047 09-03-2015 16:09 googleapiclient/sample_tools.py\n 6552 09-03-2015 16:09 googleapiclient/mimeparse.py\n 53976 09-03-2015 16:09 googleapiclient/http.pyc\n 7043 09-03-2015 16:09 googleapiclient/mimeparse.pyc\n 6333 09-03-2015 16:09 googleapiclient/errors.pyc\n 3131 09-03-2015 16:09 googleapiclient/sample_tools.pyc\n 3622 09-03-2015 16:09 googleapiclient/errors.py\n 35534 09-03-2015 16:09 googleapiclient/discovery.pyc\n 14028 09-03-2015 16:09 googleapiclient/model.pyc\n 175 09-03-2015 16:09 googleapiclient/__init__.pyc\n 10690 09-03-2015 16:09 googleapiclient/channel.pyc\n--------- -------\n 285254 26 files\n[root@e42fb97ce657 ~]# \n```\n\nAs a workaround I had to add `googleapiclient/discovery_cache` to the `packages` in `setup.py` so it looked like that:\n\n```\n[root@e42fb97ce657 google-api-python-client]# more setup.py | grep packages -A 4 -m1\npackages = [\n 'apiclient',\n 'googleapiclient',\n 'googleapiclient/discovery_cache'\n]\n```\n\nThen installed and everything magically started working.\n\n```\n[root@e42fb97ce657 google-api-python-client]# python\nPython 2.7.5 (default, Jun 24 2015, 00:41:19) \n[GCC 4.8.3 20140911 (Red Hat 4.8.3-9)] on linux2\nType \"help\", \"copyright\", \"credits\" or \"license\" for more information.\n>>> import googleapiclient.discovery_cache\n>>> \n```\n\nHere is a quick sample that looks similar to my environment using `Docker`:\n\n```\nFROM centos:centos7\n\nRUN yum install -y git python-devel python-setuptools unzip\nRUN easy_install pip\nRUN cd /tmp ;\\\n git clone 
https://github.com/google/google-api-python-client && \\\n cd google-api-python-client && \\\n python setup.py install \n```\n\nI've also tried to follow preferred suggestion from the `README.md` and install it from `pip` but it ended up in the same situation.\n\nPlease advice on how to proceed without making \"manual\" modifications to the official package?\n\n", "before_files": [{"content": "# Copyright 2014 Google Inc. All Rights Reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"Setup script for Google API Python client.\n\nAlso installs included versions of third party libraries, if those libraries\nare not already installed.\n\"\"\"\nfrom __future__ import print_function\n\nimport sys\n\nif sys.version_info < (2, 6):\n print('google-api-python-client requires python version >= 2.6.',\n file=sys.stderr)\n sys.exit(1)\nif (3, 1) <= sys.version_info < (3, 3):\n print('google-api-python-client requires python3 version >= 3.3.',\n file=sys.stderr)\n sys.exit(1)\n\nfrom setuptools import setup\nimport pkg_resources\n\ndef _DetectBadness():\n import os\n if 'SKIP_GOOGLEAPICLIENT_COMPAT_CHECK' in os.environ:\n return\n o2c_pkg = None\n try:\n o2c_pkg = pkg_resources.get_distribution('oauth2client')\n except pkg_resources.DistributionNotFound:\n pass\n oauth2client = None\n try:\n import oauth2client\n except ImportError:\n pass\n if o2c_pkg is None and oauth2client is not None:\n raise RuntimeError(\n 'Previous version of google-api-python-client 
detected; due to a '\n 'packaging issue, we cannot perform an in-place upgrade. Please remove '\n 'the old version and re-install this package.'\n )\n\n_DetectBadness()\n\npackages = [\n 'apiclient',\n 'googleapiclient',\n]\n\ninstall_requires = [\n 'httplib2>=0.8',\n 'oauth2client>=1.4.6',\n 'six>=1.6.1',\n 'uritemplate>=0.6',\n]\n\nif sys.version_info < (2, 7):\n install_requires.append('argparse')\n\nlong_desc = \"\"\"The Google API Client for Python is a client library for\naccessing the Plus, Moderator, and many other Google APIs.\"\"\"\n\nimport googleapiclient\nversion = googleapiclient.__version__\n\nsetup(\n name=\"google-api-python-client\",\n version=version,\n description=\"Google API Client Library for Python\",\n long_description=long_desc,\n author=\"Google Inc.\",\n url=\"http://github.com/google/google-api-python-client/\",\n install_requires=install_requires,\n packages=packages,\n package_data={},\n license=\"Apache 2.0\",\n keywords=\"google api client\",\n classifiers=[\n 'Programming Language :: Python :: 2',\n 'Programming Language :: Python :: 2.6',\n 'Programming Language :: Python :: 2.7',\n 'Programming Language :: Python :: 3',\n 'Programming Language :: Python :: 3.3',\n 'Programming Language :: Python :: 3.4',\n 'Development Status :: 5 - Production/Stable',\n 'Intended Audience :: Developers',\n 'License :: OSI Approved :: Apache Software License',\n 'Operating System :: OS Independent',\n 'Topic :: Internet :: WWW/HTTP',\n ],\n)\n", "path": "setup.py"}], "after_files": [{"content": "# Copyright 2014 Google Inc. 
All Rights Reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"Setup script for Google API Python client.\n\nAlso installs included versions of third party libraries, if those libraries\nare not already installed.\n\"\"\"\nfrom __future__ import print_function\n\nimport sys\n\nif sys.version_info < (2, 6):\n print('google-api-python-client requires python version >= 2.6.',\n file=sys.stderr)\n sys.exit(1)\nif (3, 1) <= sys.version_info < (3, 3):\n print('google-api-python-client requires python3 version >= 3.3.',\n file=sys.stderr)\n sys.exit(1)\n\nfrom setuptools import setup\nimport pkg_resources\n\ndef _DetectBadness():\n import os\n if 'SKIP_GOOGLEAPICLIENT_COMPAT_CHECK' in os.environ:\n return\n o2c_pkg = None\n try:\n o2c_pkg = pkg_resources.get_distribution('oauth2client')\n except pkg_resources.DistributionNotFound:\n pass\n oauth2client = None\n try:\n import oauth2client\n except ImportError:\n pass\n if o2c_pkg is None and oauth2client is not None:\n raise RuntimeError(\n 'Previous version of google-api-python-client detected; due to a '\n 'packaging issue, we cannot perform an in-place upgrade. 
Please remove '\n 'the old version and re-install this package.'\n )\n\n_DetectBadness()\n\npackages = [\n 'apiclient',\n 'googleapiclient',\n 'googleapiclient/discovery_cache',\n]\n\ninstall_requires = [\n 'httplib2>=0.8',\n 'oauth2client>=1.4.6',\n 'six>=1.6.1',\n 'uritemplate>=0.6',\n]\n\nif sys.version_info < (2, 7):\n install_requires.append('argparse')\n\nlong_desc = \"\"\"The Google API Client for Python is a client library for\naccessing the Plus, Moderator, and many other Google APIs.\"\"\"\n\nimport googleapiclient\nversion = googleapiclient.__version__\n\nsetup(\n name=\"google-api-python-client\",\n version=version,\n description=\"Google API Client Library for Python\",\n long_description=long_desc,\n author=\"Google Inc.\",\n url=\"http://github.com/google/google-api-python-client/\",\n install_requires=install_requires,\n packages=packages,\n package_data={},\n license=\"Apache 2.0\",\n keywords=\"google api client\",\n classifiers=[\n 'Programming Language :: Python :: 2',\n 'Programming Language :: Python :: 2.6',\n 'Programming Language :: Python :: 2.7',\n 'Programming Language :: Python :: 3',\n 'Programming Language :: Python :: 3.3',\n 'Programming Language :: Python :: 3.4',\n 'Development Status :: 5 - Production/Stable',\n 'Intended Audience :: Developers',\n 'License :: OSI Approved :: Apache Software License',\n 'Operating System :: OS Independent',\n 'Topic :: Internet :: WWW/HTTP',\n ],\n)\n", "path": "setup.py"}]}

| 2,939 | 65 |

gh_patches_debug_11388 | rasdani/github-patches | git_diff | google__mobly-222 |

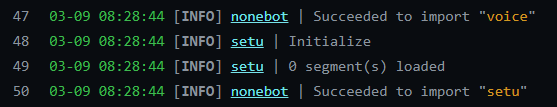

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

`snippet_client._start_event_client` crashes

because of the extra `host_port` arg.

This crashes all tests that use `@AsyncRpc`.

We should add some unit tests for this...

--- END ISSUE ---
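The issue asks for unit tests, and this failure is easy to reproduce without a device: `SnippetClient.__init__` accepts only `package`, `adb_proxy` and `log`, so passing `host_port=` raises `TypeError` before any connection is attempted. The sketch below is hypothetical and does not import Mobly: `FakeSnippetClient` is a stand-in with the same constructor signature, and the two helper functions mirror the buggy and patched bodies of `_start_event_client`. A test inside the Mobly repository would more likely call `SnippetClient._start_event_client` directly with `connect` mocked out.

```python
import logging
import unittest


class FakeSnippetClient(object):
    """Hypothetical stand-in with the same __init__ signature as SnippetClient."""

    def __init__(self, package, adb_proxy, log=logging.getLogger()):
        self.package = package
        self._adb = adb_proxy
        self.log = log
        self.host_port = None


def start_event_client_buggy(client):
    # Mirrors the original code: host_port is not a constructor argument.
    return FakeSnippetClient(package=client.package,
                             host_port=client.host_port,
                             adb_proxy=client._adb,
                             log=client.log)


def start_event_client_fixed(client):
    # Mirrors the patch: construct first, then copy the port over.
    event_client = FakeSnippetClient(
        package=client.package, adb_proxy=client._adb, log=client.log)
    event_client.host_port = client.host_port
    return event_client


class StartEventClientTest(unittest.TestCase):

    def setUp(self):
        self.client = FakeSnippetClient('com.example.snippet', adb_proxy=object())
        self.client.host_port = 12345

    def test_buggy_version_raises_type_error(self):
        with self.assertRaises(TypeError):
            start_event_client_buggy(self.client)

    def test_fixed_version_copies_host_port(self):
        event_client = start_event_client_fixed(self.client)
        self.assertEqual(event_client.host_port, 12345)
        self.assertEqual(event_client.package, 'com.example.snippet')
        self.assertIs(event_client._adb, self.client._adb)
```

Saved as a test module, it runs with `python -m unittest`. Assigning `host_port` after construction, as the patch does, keeps the constructor signature unchanged for every other caller.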

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mobly/controllers/android_device_lib/snippet_client.py`

Content:

```
1 # Copyright 2016 Google Inc.
2 #
3 # Licensed under the Apache License, Version 2.0 (the "License");
4 # you may not use this file except in compliance with the License.
5 # You may obtain a copy of the License at
6 #
7 #     http://www.apache.org/licenses/LICENSE-2.0
8 #
9 # Unless required by applicable law or agreed to in writing, software
10 # distributed under the License is distributed on an "AS IS" BASIS,
11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
12 # See the License for the specific language governing permissions and
13 # limitations under the License.
14 """JSON RPC interface to Mobly Snippet Lib."""
15 import logging
16 import re
17 import time
18
19 from mobly import utils
20 from mobly.controllers.android_device_lib import adb
21 from mobly.controllers.android_device_lib import jsonrpc_client_base
22
23 _INSTRUMENTATION_RUNNER_PACKAGE = (
24     'com.google.android.mobly.snippet.SnippetRunner')
25
26 # TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is removed.
27 _LAUNCH_CMD_V0 = ('am instrument -w -e action start -e port %s %s/' +
28                   _INSTRUMENTATION_RUNNER_PACKAGE)
29
30 _LAUNCH_CMD_V1 = (
31     'am instrument -w -e action start %s/' + _INSTRUMENTATION_RUNNER_PACKAGE)
32
33 _STOP_CMD = (
34     'am instrument -w -e action stop %s/' + _INSTRUMENTATION_RUNNER_PACKAGE)
35
36 # Maximum time to wait for a v0 snippet to start on the device (10 minutes).
37 # TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is removed.
38 _APP_START_WAIT_TIME_V0 = 10 * 60
39
40
41 class Error(Exception):
42     pass
43
44
45 class ProtocolVersionError(Error):
46     """Raised when the protocol reported by the snippet is unknown."""
47
48
49 class SnippetClient(jsonrpc_client_base.JsonRpcClientBase):
50     """A client for interacting with snippet APKs using Mobly Snippet Lib.
51
52     See superclass documentation for a list of public attributes.
53
54     It currently supports both v0 and v1 snippet launch protocols, although
55     support for v0 will be removed in a future version.
56
57     For a description of the launch protocols, see the documentation in
58     mobly-snippet-lib, SnippetRunner.java.
59     """
60
61     def __init__(self, package, adb_proxy, log=logging.getLogger()):
62         """Initializes a SnippetClient.
63
64         Args:
65             package: (str) The package name of the apk where the snippets are
66                      defined.
67             adb_proxy: (adb.AdbProxy) Adb proxy for running adb commands.
68             log: (logging.Logger) logger to which to send log messages.
69         """
70         super(SnippetClient, self).__init__(app_name=package, log=log)
71         self.package = package
72         self._adb = adb_proxy
73         self._proc = None
74
75     def start_app_and_connect(self):
76         """Overrides superclass. Launches a snippet app and connects to it."""
77         self._check_app_installed()
78
79         # Try launching the app with the v1 protocol. If that fails, fall back
80         # to v0 for compatibility. Use info here so people know exactly what's
81         # happening here, which is helpful since they need to create their own
82         # instrumentations and manifest.
83         self.log.info('Launching snippet apk %s with protocol v1',
84                       self.package)
85         cmd = _LAUNCH_CMD_V1 % self.package
86         start_time = time.time()
87         self._proc = self._do_start_app(cmd)
88
89         # "Instrumentation crashed" could be due to several reasons, eg
90         # exception thrown during startup or just a launch protocol 0 snippet
91         # dying because it needs the port flag. Sadly we have no way to tell so
92         # just warn and retry as v0.
93         # TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is
94         # removed.
95         line = self._read_line()
96         if line == 'INSTRUMENTATION_RESULT: shortMsg=Process crashed.':
97             self.log.warning('Snippet %s crashed on startup. This might be an '
98                              'actual error or a snippet using deprecated v0 '
99                              'start protocol. Retrying as a v0 snippet.',
100                              self.package)
101             self.host_port = utils.get_available_host_port()
102             # Reuse the host port as the device port in v0 snippet. This isn't
103             # safe in general, but the protocol is deprecated.
104             cmd = _LAUNCH_CMD_V0 % (self.host_port, self.package)
105             self._proc = self._do_start_app(cmd)
106             self._connect_to_v0()
107         else:
108             # Check protocol version and get the device port
109             match = re.match('^SNIPPET START, PROTOCOL ([0-9]+) ([0-9]+)$',
110                              line)
111             if not match or match.group(1) != '1':
112                 raise ProtocolVersionError(line)
113             self._connect_to_v1()
114         self.log.debug('Snippet %s started after %.1fs on host port %s',
115                        self.package, time.time() - start_time, self.host_port)
116
117     def stop_app(self):
118         # Kill the pending 'adb shell am instrument -w' process if there is one.
119         # Although killing the snippet apk would abort this process anyway, we
120         # want to call stop_standing_subprocess() to perform a health check,
121         # print the failure stack trace if there was any, and reap it from the
122         # process table.
123         self.log.debug('Stopping snippet apk %s', self.package)
124         try:
125             # Close the socket connection.
126             self.disconnect()
127             if self._proc:
128                 utils.stop_standing_subprocess(self._proc)
129             out = self._adb.shell(_STOP_CMD % self.package).decode('utf-8')
130             if 'OK (0 tests)' not in out:
131                 raise Error('Failed to stop existing apk. Unexpected '
132                             'output: %s' % out)
133         finally:
134             # Always clean up the adb port
135             if self.host_port:
136                 self._adb.forward(['--remove', 'tcp:%d' % self.host_port])
137
138     def _start_event_client(self):
139         """Overrides superclass."""
140         event_client = SnippetClient(
141             package=self.package,
142             host_port=self.host_port,
143             adb_proxy=self._adb,
144             log=self.log)
145         event_client.connect(self.uid,
146                              jsonrpc_client_base.JsonRpcCommand.CONTINUE)
147         return event_client
148
149     def _check_app_installed(self):
150         # Check that the Mobly Snippet app is installed.
151         out = self._adb.shell('pm list package')
152         if not utils.grep('^package:%s$' % self.package, out):
153             raise jsonrpc_client_base.AppStartError(
154                 '%s is not installed on %s' % (self.package, self._adb.serial))
155         # Check that the app is instrumented.
156         out = self._adb.shell('pm list instrumentation')
157         matched_out = utils.grep('^instrumentation:%s/%s' %
158                                  (self.package,
159                                   _INSTRUMENTATION_RUNNER_PACKAGE), out)
160         if not matched_out:
161             raise jsonrpc_client_base.AppStartError(
162                 '%s is installed on %s, but it is not instrumented.' %
163                 (self.package, self._adb.serial))
164         match = re.search('^instrumentation:(.*)\/(.*) \(target=(.*)\)$',
165                           matched_out[0])
166         target_name = match.group(3)
167         # Check that the instrumentation target is installed if it's not the
168         # same as the snippet package.
169         if target_name != self.package:
170             out = self._adb.shell('pm list package')
171             if not utils.grep('^package:%s$' % target_name, out):
172                 raise jsonrpc_client_base.AppStartError(
173                     'Instrumentation target %s is not installed on %s' %
174                     (target_name, self._adb.serial))
175
176     def _do_start_app(self, launch_cmd):
177         adb_cmd = [adb.ADB]
178         if self._adb.serial:
179             adb_cmd += ['-s', self._adb.serial]
180         adb_cmd += ['shell', launch_cmd]
181         return utils.start_standing_subprocess(adb_cmd, shell=False)
182
183     # TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is
184     # removed.
185     def _connect_to_v0(self):
186         self.device_port = self.host_port
187         self._adb.forward(
188             ['tcp:%d' % self.host_port, 'tcp:%d' % self.device_port])
189         start_time = time.time()
190         expiration_time = start_time + _APP_START_WAIT_TIME_V0
191         while time.time() < expiration_time:
192             self.log.debug('Attempting to start %s.', self.package)
193             try:
194                 self.connect()
195                 return
196             except:
197                 self.log.debug(
198                     'v0 snippet %s is not yet running, retrying',
199                     self.package,
200                     exc_info=True)
201             time.sleep(1)
202         raise jsonrpc_client_base.AppStartError(
203             '%s failed to start on %s.' % (self.package, self._adb.serial))
204
205     def _connect_to_v1(self):
206         line = self._read_line()
207         match = re.match('^SNIPPET SERVING, PORT ([0-9]+)$', line)
208         if not match:
209             raise ProtocolVersionError(line)
210         self.device_port = int(match.group(1))
211
212         # Forward the device port to a new host port, and connect to that port
213         self.host_port = utils.get_available_host_port()
214         self._adb.forward(
215             ['tcp:%d' % self.host_port, 'tcp:%d' % self.device_port])
216         self.connect()
217
218     def _read_line(self):
219         line = self._proc.stdout.readline().rstrip()
220         self.log.debug('Read line from instrumentation output: "%s"', line)
221         return line
222
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/mobly/controllers/android_device_lib/snippet_client.py b/mobly/controllers/android_device_lib/snippet_client.py
--- a/mobly/controllers/android_device_lib/snippet_client.py
+++ b/mobly/controllers/android_device_lib/snippet_client.py
@@ -138,10 +138,8 @@
     def _start_event_client(self):
         """Overrides superclass."""
         event_client = SnippetClient(
-            package=self.package,
-            host_port=self.host_port,
-            adb_proxy=self._adb,
-            log=self.log)
+            package=self.package, adb_proxy=self._adb, log=self.log)
+        event_client.host_port = self.host_port
         event_client.connect(self.uid,
                              jsonrpc_client_base.JsonRpcCommand.CONTINUE)
         return event_client

|

{"golden_diff": "diff --git a/mobly/controllers/android_device_lib/snippet_client.py b/mobly/controllers/android_device_lib/snippet_client.py\n--- a/mobly/controllers/android_device_lib/snippet_client.py\n+++ b/mobly/controllers/android_device_lib/snippet_client.py\n@@ -138,10 +138,8 @@\n def _start_event_client(self):\n \"\"\"Overrides superclass.\"\"\"\n event_client = SnippetClient(\n- package=self.package,\n- host_port=self.host_port,\n- adb_proxy=self._adb,\n- log=self.log)\n+ package=self.package, adb_proxy=self._adb, log=self.log)\n+ event_client.host_port = self.host_port\n event_client.connect(self.uid,\n jsonrpc_client_base.JsonRpcCommand.CONTINUE)\n return event_client\n", "issue": "`snippet_client._start_event_client` crashes\nbecause of the extra `host_port` arg.\r\n\r\nThis crashes all tests that use `@AsyncRpc`.\r\n\r\nWe should add some unit tests for this...\n", "before_files": [{"content": "# Copyright 2016 Google Inc.\n# \n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n# \n# http://www.apache.org/licenses/LICENSE-2.0\n# \n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"JSON RPC interface to Mobly Snippet Lib.\"\"\"\nimport logging\nimport re\nimport time\n\nfrom mobly import utils\nfrom mobly.controllers.android_device_lib import adb\nfrom mobly.controllers.android_device_lib import jsonrpc_client_base\n\n_INSTRUMENTATION_RUNNER_PACKAGE = (\n 'com.google.android.mobly.snippet.SnippetRunner')\n\n# TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is removed.\n_LAUNCH_CMD_V0 = ('am instrument -w -e action start -e port %s 
%s/' +\n _INSTRUMENTATION_RUNNER_PACKAGE)\n\n_LAUNCH_CMD_V1 = (\n 'am instrument -w -e action start %s/' + _INSTRUMENTATION_RUNNER_PACKAGE)\n\n_STOP_CMD = (\n 'am instrument -w -e action stop %s/' + _INSTRUMENTATION_RUNNER_PACKAGE)\n\n# Maximum time to wait for a v0 snippet to start on the device (10 minutes).\n# TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is removed.\n_APP_START_WAIT_TIME_V0 = 10 * 60\n\n\nclass Error(Exception):\n pass\n\n\nclass ProtocolVersionError(Error):\n \"\"\"Raised when the protocol reported by the snippet is unknown.\"\"\"\n\n\nclass SnippetClient(jsonrpc_client_base.JsonRpcClientBase):\n \"\"\"A client for interacting with snippet APKs using Mobly Snippet Lib.\n\n See superclass documentation for a list of public attributes.\n\n It currently supports both v0 and v1 snippet launch protocols, although\n support for v0 will be removed in a future version.\n\n For a description of the launch protocols, see the documentation in\n mobly-snippet-lib, SnippetRunner.java.\n \"\"\"\n\n def __init__(self, package, adb_proxy, log=logging.getLogger()):\n \"\"\"Initializes a SnippetClient.\n \n Args:\n package: (str) The package name of the apk where the snippets are\n defined.\n adb_proxy: (adb.AdbProxy) Adb proxy for running adb commands.\n log: (logging.Logger) logger to which to send log messages.\n \"\"\"\n super(SnippetClient, self).__init__(app_name=package, log=log)\n self.package = package\n self._adb = adb_proxy\n self._proc = None\n\n def start_app_and_connect(self):\n \"\"\"Overrides superclass. Launches a snippet app and connects to it.\"\"\"\n self._check_app_installed()\n\n # Try launching the app with the v1 protocol. If that fails, fall back\n # to v0 for compatibility. 
Use info here so people know exactly what's\n # happening here, which is helpful since they need to create their own\n # instrumentations and manifest.\n self.log.info('Launching snippet apk %s with protocol v1',\n self.package)\n cmd = _LAUNCH_CMD_V1 % self.package\n start_time = time.time()\n self._proc = self._do_start_app(cmd)\n\n # \"Instrumentation crashed\" could be due to several reasons, eg\n # exception thrown during startup or just a launch protocol 0 snippet\n # dying because it needs the port flag. Sadly we have no way to tell so\n # just warn and retry as v0.\n # TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is\n # removed.\n line = self._read_line()\n if line == 'INSTRUMENTATION_RESULT: shortMsg=Process crashed.':\n self.log.warning('Snippet %s crashed on startup. This might be an '\n 'actual error or a snippet using deprecated v0 '\n 'start protocol. Retrying as a v0 snippet.',\n self.package)\n self.host_port = utils.get_available_host_port()\n # Reuse the host port as the device port in v0 snippet. 
This isn't\n # safe in general, but the protocol is deprecated.\n cmd = _LAUNCH_CMD_V0 % (self.host_port, self.package)\n self._proc = self._do_start_app(cmd)\n self._connect_to_v0()\n else:\n # Check protocol version and get the device port\n match = re.match('^SNIPPET START, PROTOCOL ([0-9]+) ([0-9]+)$',\n line)\n if not match or match.group(1) != '1':\n raise ProtocolVersionError(line)\n self._connect_to_v1()\n self.log.debug('Snippet %s started after %.1fs on host port %s',\n self.package, time.time() - start_time, self.host_port)\n\n def stop_app(self):\n # Kill the pending 'adb shell am instrument -w' process if there is one.\n # Although killing the snippet apk would abort this process anyway, we\n # want to call stop_standing_subprocess() to perform a health check,\n # print the failure stack trace if there was any, and reap it from the\n # process table.\n self.log.debug('Stopping snippet apk %s', self.package)\n try:\n # Close the socket connection.\n self.disconnect()\n if self._proc:\n utils.stop_standing_subprocess(self._proc)\n out = self._adb.shell(_STOP_CMD % self.package).decode('utf-8')\n if 'OK (0 tests)' not in out:\n raise Error('Failed to stop existing apk. 
Unexpected '\n 'output: %s' % out)\n finally:\n # Always clean up the adb port\n if self.host_port:\n self._adb.forward(['--remove', 'tcp:%d' % self.host_port])\n\n def _start_event_client(self):\n \"\"\"Overrides superclass.\"\"\"\n event_client = SnippetClient(\n package=self.package,\n host_port=self.host_port,\n adb_proxy=self._adb,\n log=self.log)\n event_client.connect(self.uid,\n jsonrpc_client_base.JsonRpcCommand.CONTINUE)\n return event_client\n\n def _check_app_installed(self):\n # Check that the Mobly Snippet app is installed.\n out = self._adb.shell('pm list package')\n if not utils.grep('^package:%s$' % self.package, out):\n raise jsonrpc_client_base.AppStartError(\n '%s is not installed on %s' % (self.package, self._adb.serial))\n # Check that the app is instrumented.\n out = self._adb.shell('pm list instrumentation')\n matched_out = utils.grep('^instrumentation:%s/%s' %\n (self.package,\n _INSTRUMENTATION_RUNNER_PACKAGE), out)\n if not matched_out:\n raise jsonrpc_client_base.AppStartError(\n '%s is installed on %s, but it is not instrumented.' 
%\n (self.package, self._adb.serial))\n match = re.search('^instrumentation:(.*)\\/(.*) \\(target=(.*)\\)$',\n matched_out[0])\n target_name = match.group(3)\n # Check that the instrumentation target is installed if it's not the\n # same as the snippet package.\n if target_name != self.package:\n out = self._adb.shell('pm list package')\n if not utils.grep('^package:%s$' % target_name, out):\n raise jsonrpc_client_base.AppStartError(\n 'Instrumentation target %s is not installed on %s' %\n (target_name, self._adb.serial))\n\n def _do_start_app(self, launch_cmd):\n adb_cmd = [adb.ADB]\n if self._adb.serial:\n adb_cmd += ['-s', self._adb.serial]\n adb_cmd += ['shell', launch_cmd]\n return utils.start_standing_subprocess(adb_cmd, shell=False)\n\n # TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is\n # removed.\n def _connect_to_v0(self):\n self.device_port = self.host_port\n self._adb.forward(\n ['tcp:%d' % self.host_port, 'tcp:%d' % self.device_port])\n start_time = time.time()\n expiration_time = start_time + _APP_START_WAIT_TIME_V0\n while time.time() < expiration_time:\n self.log.debug('Attempting to start %s.', self.package)\n try:\n self.connect()\n return\n except:\n self.log.debug(\n 'v0 snippet %s is not yet running, retrying',\n self.package,\n exc_info=True)\n time.sleep(1)\n raise jsonrpc_client_base.AppStartError(\n '%s failed to start on %s.' 
% (self.package, self._adb.serial))\n\n def _connect_to_v1(self):\n line = self._read_line()\n match = re.match('^SNIPPET SERVING, PORT ([0-9]+)$', line)\n if not match:\n raise ProtocolVersionError(line)\n self.device_port = int(match.group(1))\n\n # Forward the device port to a new host port, and connect to that port\n self.host_port = utils.get_available_host_port()\n self._adb.forward(\n ['tcp:%d' % self.host_port, 'tcp:%d' % self.device_port])\n self.connect()\n\n def _read_line(self):\n line = self._proc.stdout.readline().rstrip()\n self.log.debug('Read line from instrumentation output: \"%s\"', line)\n return line\n", "path": "mobly/controllers/android_device_lib/snippet_client.py"}], "after_files": [{"content": "# Copyright 2016 Google Inc.\n# \n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n# \n# http://www.apache.org/licenses/LICENSE-2.0\n# \n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\"\"\"JSON RPC interface to Mobly Snippet Lib.\"\"\"\nimport logging\nimport re\nimport time\n\nfrom mobly import utils\nfrom mobly.controllers.android_device_lib import adb\nfrom mobly.controllers.android_device_lib import jsonrpc_client_base\n\n_INSTRUMENTATION_RUNNER_PACKAGE = (\n 'com.google.android.mobly.snippet.SnippetRunner')\n\n# TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is removed.\n_LAUNCH_CMD_V0 = ('am instrument -w -e action start -e port %s %s/' +\n _INSTRUMENTATION_RUNNER_PACKAGE)\n\n_LAUNCH_CMD_V1 = (\n 'am instrument -w -e action start %s/' + _INSTRUMENTATION_RUNNER_PACKAGE)\n\n_STOP_CMD = (\n 'am instrument -w -e action stop 
%s/' + _INSTRUMENTATION_RUNNER_PACKAGE)\n\n# Maximum time to wait for a v0 snippet to start on the device (10 minutes).\n# TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is removed.\n_APP_START_WAIT_TIME_V0 = 10 * 60\n\n\nclass Error(Exception):\n pass\n\n\nclass ProtocolVersionError(Error):\n \"\"\"Raised when the protocol reported by the snippet is unknown.\"\"\"\n\n\nclass SnippetClient(jsonrpc_client_base.JsonRpcClientBase):\n \"\"\"A client for interacting with snippet APKs using Mobly Snippet Lib.\n\n See superclass documentation for a list of public attributes.\n\n It currently supports both v0 and v1 snippet launch protocols, although\n support for v0 will be removed in a future version.\n\n For a description of the launch protocols, see the documentation in\n mobly-snippet-lib, SnippetRunner.java.\n \"\"\"\n\n def __init__(self, package, adb_proxy, log=logging.getLogger()):\n \"\"\"Initializes a SnippetClient.\n \n Args:\n package: (str) The package name of the apk where the snippets are\n defined.\n adb_proxy: (adb.AdbProxy) Adb proxy for running adb commands.\n log: (logging.Logger) logger to which to send log messages.\n \"\"\"\n super(SnippetClient, self).__init__(app_name=package, log=log)\n self.package = package\n self._adb = adb_proxy\n self._proc = None\n\n def start_app_and_connect(self):\n \"\"\"Overrides superclass. Launches a snippet app and connects to it.\"\"\"\n self._check_app_installed()\n\n # Try launching the app with the v1 protocol. If that fails, fall back\n # to v0 for compatibility. 
Use info here so people know exactly what's\n # happening here, which is helpful since they need to create their own\n # instrumentations and manifest.\n self.log.info('Launching snippet apk %s with protocol v1',\n self.package)\n cmd = _LAUNCH_CMD_V1 % self.package\n start_time = time.time()\n self._proc = self._do_start_app(cmd)\n\n # \"Instrumentation crashed\" could be due to several reasons, eg\n # exception thrown during startup or just a launch protocol 0 snippet\n # dying because it needs the port flag. Sadly we have no way to tell so\n # just warn and retry as v0.\n # TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is\n # removed.\n line = self._read_line()\n if line == 'INSTRUMENTATION_RESULT: shortMsg=Process crashed.':\n self.log.warning('Snippet %s crashed on startup. This might be an '\n 'actual error or a snippet using deprecated v0 '\n 'start protocol. Retrying as a v0 snippet.',\n self.package)\n self.host_port = utils.get_available_host_port()\n # Reuse the host port as the device port in v0 snippet. 
This isn't\n # safe in general, but the protocol is deprecated.\n cmd = _LAUNCH_CMD_V0 % (self.host_port, self.package)\n self._proc = self._do_start_app(cmd)\n self._connect_to_v0()\n else:\n # Check protocol version and get the device port\n match = re.match('^SNIPPET START, PROTOCOL ([0-9]+) ([0-9]+)$',\n line)\n if not match or match.group(1) != '1':\n raise ProtocolVersionError(line)\n self._connect_to_v1()\n self.log.debug('Snippet %s started after %.1fs on host port %s',\n self.package, time.time() - start_time, self.host_port)\n\n def stop_app(self):\n # Kill the pending 'adb shell am instrument -w' process if there is one.\n # Although killing the snippet apk would abort this process anyway, we\n # want to call stop_standing_subprocess() to perform a health check,\n # print the failure stack trace if there was any, and reap it from the\n # process table.\n self.log.debug('Stopping snippet apk %s', self.package)\n try:\n # Close the socket connection.\n self.disconnect()\n if self._proc:\n utils.stop_standing_subprocess(self._proc)\n out = self._adb.shell(_STOP_CMD % self.package).decode('utf-8')\n if 'OK (0 tests)' not in out:\n raise Error('Failed to stop existing apk. 
Unexpected '\n 'output: %s' % out)\n finally:\n # Always clean up the adb port\n if self.host_port:\n self._adb.forward(['--remove', 'tcp:%d' % self.host_port])\n\n def _start_event_client(self):\n \"\"\"Overrides superclass.\"\"\"\n event_client = SnippetClient(\n package=self.package, adb_proxy=self._adb, log=self.log)\n event_client.host_port = self.host_port\n event_client.connect(self.uid,\n jsonrpc_client_base.JsonRpcCommand.CONTINUE)\n return event_client\n\n def _check_app_installed(self):\n # Check that the Mobly Snippet app is installed.\n out = self._adb.shell('pm list package')\n if not utils.grep('^package:%s$' % self.package, out):\n raise jsonrpc_client_base.AppStartError(\n '%s is not installed on %s' % (self.package, self._adb.serial))\n # Check that the app is instrumented.\n out = self._adb.shell('pm list instrumentation')\n matched_out = utils.grep('^instrumentation:%s/%s' %\n (self.package,\n _INSTRUMENTATION_RUNNER_PACKAGE), out)\n if not matched_out:\n raise jsonrpc_client_base.AppStartError(\n '%s is installed on %s, but it is not instrumented.' 
%\n (self.package, self._adb.serial))\n match = re.search('^instrumentation:(.*)\\/(.*) \\(target=(.*)\\)$',\n matched_out[0])\n target_name = match.group(3)\n # Check that the instrumentation target is installed if it's not the\n # same as the snippet package.\n if target_name != self.package:\n out = self._adb.shell('pm list package')\n if not utils.grep('^package:%s$' % target_name, out):\n raise jsonrpc_client_base.AppStartError(\n 'Instrumentation target %s is not installed on %s' %\n (target_name, self._adb.serial))\n\n def _do_start_app(self, launch_cmd):\n adb_cmd = [adb.ADB]\n if self._adb.serial:\n adb_cmd += ['-s', self._adb.serial]\n adb_cmd += ['shell', launch_cmd]\n return utils.start_standing_subprocess(adb_cmd, shell=False)\n\n # TODO(adorokhine): delete this in Mobly 1.6 when snippet v0 support is\n # removed.\n def _connect_to_v0(self):\n self.device_port = self.host_port\n self._adb.forward(\n ['tcp:%d' % self.host_port, 'tcp:%d' % self.device_port])\n start_time = time.time()\n expiration_time = start_time + _APP_START_WAIT_TIME_V0\n while time.time() < expiration_time:\n self.log.debug('Attempting to start %s.', self.package)\n try:\n self.connect()\n return\n except:\n self.log.debug(\n 'v0 snippet %s is not yet running, retrying',\n self.package,\n exc_info=True)\n time.sleep(1)\n raise jsonrpc_client_base.AppStartError(\n '%s failed to start on %s.' 
% (self.package, self._adb.serial))\n\n def _connect_to_v1(self):\n line = self._read_line()\n match = re.match('^SNIPPET SERVING, PORT ([0-9]+)$', line)\n if not match:\n raise ProtocolVersionError(line)\n self.device_port = int(match.group(1))\n\n # Forward the device port to a new host port, and connect to that port\n self.host_port = utils.get_available_host_port()\n self._adb.forward(\n ['tcp:%d' % self.host_port, 'tcp:%d' % self.device_port])\n self.connect()\n\n def _read_line(self):\n line = self._proc.stdout.readline().rstrip()\n self.log.debug('Read line from instrumentation output: \"%s\"', line)\n return line\n", "path": "mobly/controllers/android_device_lib/snippet_client.py"}]}

| 3,028 | 170 |

gh_patches_debug_1253

|

rasdani/github-patches

|

git_diff

|

translate__pootle-3671

|

We are currently solving the following issue within our repository. Here is the issue text:

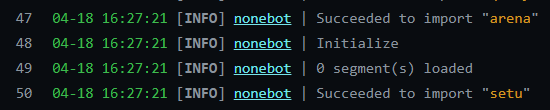

--- BEGIN ISSUE ---

Confusing sentence in permissions view

There is a permission called "Can review translations" that confused me as I thought that there are also reviewers beside suggesters and translators! Hopefully you fix it so that it lands in 2.7.0.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pootle/core/initdb.py`

Content:

```

1 #!/usr/bin/env python

2 # -*- coding: utf-8 -*-

3 #

4 # Copyright (C) Pootle contributors.

5 #

6 # This file is a part of the Pootle project. It is distributed under the GPL3

7 # or later license. See the LICENSE file for a copy of the license and the

8 # AUTHORS file for copyright and authorship information.

9

10

11 from django.contrib.auth import get_user_model

12 from django.contrib.auth.models import Permission

13 from django.contrib.contenttypes.models import ContentType

14 from django.utils.translation import ugettext_noop as _

15

16 from pootle.core.models import Revision

17 from pootle_app.models import Directory

18 from pootle_app.models.permissions import PermissionSet, get_pootle_permission

19 from pootle_language.models import Language

20 from pootle_project.models import Project

21 from staticpages.models import StaticPage as Announcement

22

23

24 def initdb():

25 """Populate the database with default initial data.

26

27 This creates the default database to get a working Pootle installation.

28 """

29 create_revision()

30 create_essential_users()

31 create_root_directories()

32 create_template_languages()

33 create_terminology_project()

34 create_pootle_permissions()

35 create_pootle_permission_sets()

36

37 create_default_projects()

38 create_default_languages()

39 create_default_admin()

40

41

42 def create_revision():

43 Revision.initialize()

44

45

46 def create_essential_users():

47 """Create the 'default' and 'nobody' User instances.

48

49 These users are required for Pootle's permission system.

50 """

51 User = get_user_model()

52

53 # The nobody user is used to represent an anonymous user in cases where

54 # we need to associate model information with such a user. An example is

55 # in the permission system: we need a way to store rights for anonymous

56 # users; thus we use the nobody user.

57 criteria = {

58 'username': u"nobody",

59 'full_name': u"any anonymous user",

60 'is_active': True,

61 }

62 nobody, created = User.objects.get_or_create(**criteria)

63 if created:

64 nobody.set_unusable_password()

65 nobody.save()

66

67 # The 'default' user represents any valid, non-anonymous user and is used

68 # to associate information any such user. An example is in the permission

69 # system: we need a way to store default rights for users. We use the

70 # 'default' user for this.

71 #

72 # In a future version of Pootle we should think about using Django's

73 # groups to do better permissions handling.

74 criteria = {

75 'username': u"default",

76 'full_name': u"any authenticated user",

77 'is_active': True,

78 }

79 default, created = User.objects.get_or_create(**criteria)

80 if created:

81 default.set_unusable_password()

82 default.save()

83

84 # The system user represents a system, and is used to

85 # associate updates done by bulk commands as update_stores.

86 criteria = {

87 'username': u"system",

88 'full_name': u"system user",

89 'is_active': True,

90 }

91 system, created = User.objects.get_or_create(**criteria)

92 if created:

93 system.set_unusable_password()

94 system.save()

95

96

97 def create_pootle_permissions():

98 """Create Pootle's directory level permissions."""

99

100 args = {

101 'app_label': "pootle_app",

102 'model': "directory",

103 }

104 pootle_content_type, created = ContentType.objects.get_or_create(**args)

105 pootle_content_type.name = 'pootle'

106 pootle_content_type.save()

107

108 # Create the permissions.

109 permissions = [

110 {

111 'name': _("Can access a project"),

112 'codename': "view",

113 },

114 {

115 'name': _("Cannot access a project"),

116 'codename': "hide",

117 },

118 {

119 'name': _("Can make a suggestion for a translation"),

120 'codename': "suggest",

121 },

122 {

123 'name': _("Can submit a translation"),

124 'codename': "translate",

125 },

126 {

127 'name': _("Can review translations"),

128 'codename': "review",

129 },

130 {

131 'name': _("Can administrate a translation project"),

132 'codename': "administrate",

133 },

134 ]

135

136 criteria = {

137 'content_type': pootle_content_type,

138 }

139

140 for permission in permissions:

141 criteria.update(permission)

142 obj, created = Permission.objects.get_or_create(**criteria)

143

144

145 def create_pootle_permission_sets():

146 """Create the default permission set for the 'nobody' and 'default' users.

147

148 'nobody' is the anonymous (non-logged in) user, and 'default' is the logged

149 in user.

150 """

151 User = get_user_model()

152

153 nobody = User.objects.get(username='nobody')

154 default = User.objects.get(username='default')

155

156 view = get_pootle_permission('view')

157 suggest = get_pootle_permission('suggest')

158 translate = get_pootle_permission('translate')

159

160 # Default permissions for tree root.

161 criteria = {

162 'user': nobody,

163 'directory': Directory.objects.root,

164 }

165 permission_set, created = PermissionSet.objects.get_or_create(**criteria)

166 if created:

167 permission_set.positive_permissions = [view, suggest]

168 permission_set.save()

169

170 criteria['user'] = default

171 permission_set, created = PermissionSet.objects.get_or_create(**criteria)

172 if created:

173 permission_set.positive_permissions = [view, suggest, translate]

174 permission_set.save()

175

176 # Default permissions for templates language.

177 # Override with no permissions for templates language.

178 criteria = {

179 'user': nobody,

180 'directory': Directory.objects.get(pootle_path="/templates/"),

181 }

182 permission_set, created = PermissionSet.objects.get_or_create(**criteria)

183 if created:

184 permission_set.positive_permissions = []

185 permission_set.save()

186

187 criteria['user'] = default

188 permission_set, created = PermissionSet.objects.get_or_create(**criteria)

189 if created:

190 permission_set.positive_permissions = []

191 permission_set.save()

192

193

194 def require_english():

195 """Create the English Language item."""

196 criteria = {

197 'code': "en",

198 'fullname': u"English",

199 'nplurals': 2,

200 'pluralequation': "(n != 1)",

201 }

202 en, created = Language.objects.get_or_create(**criteria)

203 return en

204

205

206 def create_root_directories():

207 """Create the root Directory items."""

208 root, created = Directory.objects.get_or_create(name='')

209 projects, created = Directory.objects.get_or_create(name='projects',

210 parent=root)

211

212

213 def create_template_languages():

214 """Create the 'templates' and English languages.

215

216 The 'templates' language is used to give users access to the untranslated

217 template files.

218 """

219 templates, created = Language.objects.get_or_create(code="templates",

220 fullname=u'Templates')

221 require_english()

222

223

224 def create_terminology_project():

225 """Create the terminology project.

226

227 The terminology project is used to display terminology suggestions while

228 translating.

229 """

230 criteria = {

231 'code': "terminology",

232 'fullname': u"Terminology",

233 'source_language': require_english(),

234 'checkstyle': "terminology",

235 }

236 terminology, created = Project.objects.get_or_create(**criteria)

237

238

239 def create_default_projects():

240 """Create the default projects that we host.

241

242 You might want to add your projects here, although you can also add things

243 through the web interface later.

244 """

245 from pootle_project.models import Project

246

247 en = require_english()

248

249 criteria = {

250 'code': u"tutorial",

251 'source_language': en,

252 'fullname': u"Tutorial",

253 'checkstyle': "standard",

254 'localfiletype': "po",

255 'treestyle': "auto",

256 }

257 tutorial = Project(**criteria)

258 tutorial.save()

259

260 criteria = {

261 'active': True,

262 'title': "Project instructions",

263 'body': ('<div dir="ltr" lang="en">Tutorial project where users can '

264 'play with Pootle and learn more about translation and '

265 'localisation.<br />For more help on localisation, visit the '

266 '<a href="http://docs.translatehouse.org/projects/'

267 'localization-guide/en/latest/guide/start.html">localisation '

268 'guide</a>.</div>'),

269 'virtual_path': "announcements/projects/"+tutorial.code,

270 }

271 ann = Announcement(**criteria)

272 ann.save()

273

274

275 def create_default_languages():

276 """Create the default languages."""

277 from translate.lang import data, factory

278

279 from pootle_language.models import Language

280

281 # import languages from toolkit

282 for code in data.languages.keys():

283 try:

284 tk_lang = factory.getlanguage(code)

285 criteria = {

286 'code': code,

287 'fullname': tk_lang.fullname,

288 'nplurals': tk_lang.nplurals,

289 'pluralequation': tk_lang.pluralequation,

290 }

291 try:

292 criteria['specialchars'] = tk_lang.specialchars

293 except AttributeError:

294 pass

295 lang, created = Language.objects.get_or_create(**criteria)

296 except:

297 pass

298

299

300 def create_default_admin():

301 """Create the default admin user for Pootle.

302

303 You definitely want to change the admin account so that your default

304 install is not accessible with the default credentials. The users 'noboby'

305 and 'default' should be left as is.

306 """

307 User = get_user_model()

308

309 criteria = {

310 'username': u"admin",

311 'full_name': u"Administrator",

312 'is_active': True,

313 'is_superuser': True,

314 }

315 admin = User(**criteria)

316 admin.set_password("admin")

317 admin.save()

318

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/pootle/core/initdb.py b/pootle/core/initdb.py

--- a/pootle/core/initdb.py

+++ b/pootle/core/initdb.py

@@ -124,7 +124,7 @@

'codename': "translate",

},

{

- 'name': _("Can review translations"),

+ 'name': _("Can review suggestions"),

'codename': "review",

},

{

|