| problem_id (stringlengths 18–22) | source (stringclasses, 1 value) | task_type (stringclasses, 1 value) | in_source_id (stringlengths 13–58) | prompt (stringlengths 1.1k–25.4k) | golden_diff (stringlengths 145–5.13k) | verification_info (stringlengths 582–39.1k) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_56500

|

rasdani/github-patches

|

git_diff

|

canonical__microk8s-2048

|

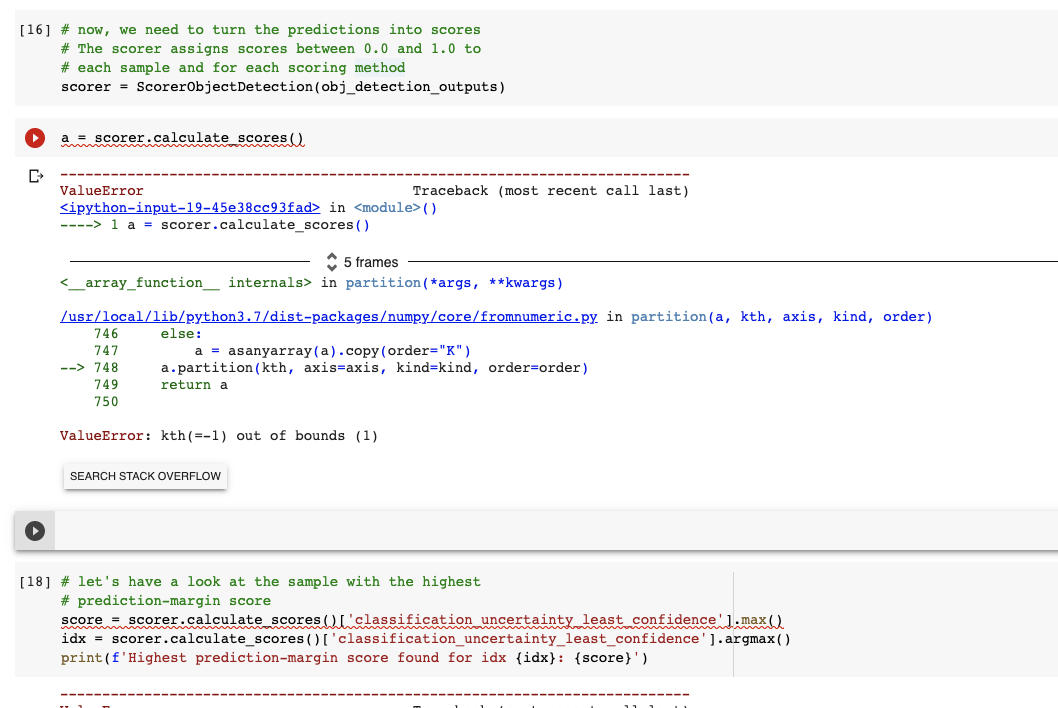

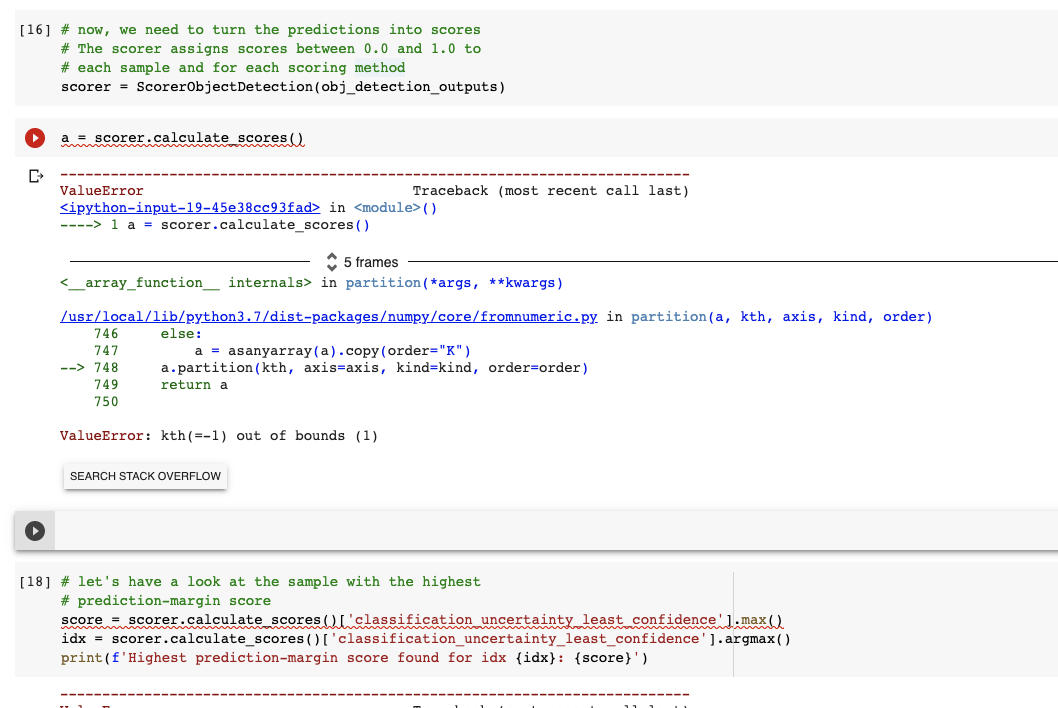

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Microk8s on armhf architecture

Hi all,

The armhf binary is missing and not available right now, which means that some users cannot install microk8s on Ubuntu. For example, if you use the armhf image for Raspberry Pi, you cannot install microk8s:

> ubuntu@battlecruiser:~$ sudo snap install microk8s --classic

> error: snap "microk8s" is not available on stable for this architecture (armhf)

> but exists on other architectures (amd64, arm64, ppc64el).

It would be really good if we could also get the build compiled for this architecture and make officially available.

Cheers,

- Calvin

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `scripts/wrappers/common/utils.py`

Content:

```

1 import getpass

2 import json

3 import os

4 import platform

5 import subprocess

6 import sys

7 import time

8 from pathlib import Path

9

10 import click

11 import yaml

12

13 kubeconfig = "--kubeconfig=" + os.path.expandvars("${SNAP_DATA}/credentials/client.config")

14

15

16 def get_current_arch():

17 # architecture mapping

18 arch_mapping = {"aarch64": "arm64", "x86_64": "amd64"}

19

20 return arch_mapping[platform.machine()]

21

22

23 def snap_data() -> Path:

24 try:

25 return Path(os.environ["SNAP_DATA"])

26 except KeyError:

27 return Path("/var/snap/microk8s/current")

28

29

30 def run(*args, die=True):

31 # Add wrappers to $PATH

32 env = os.environ.copy()

33 env["PATH"] += ":%s" % os.environ["SNAP"]

34 result = subprocess.run(

35 args, stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE, env=env

36 )

37

38 try:

39 result.check_returncode()

40 except subprocess.CalledProcessError as err:

41 if die:

42 if result.stderr:

43 print(result.stderr.decode("utf-8"))

44 print(err)

45 sys.exit(1)

46 else:

47 raise

48

49 return result.stdout.decode("utf-8")

50

51

52 def is_cluster_ready():

53 try:

54 service_output = kubectl_get("all")

55 node_output = kubectl_get("nodes")

56 # Make sure to compare with the word " Ready " with spaces.

57 if " Ready " in node_output and "service/kubernetes" in service_output:

58 return True

59 else:

60 return False

61 except Exception:

62 return False

63

64

65 def is_ha_enabled():

66 ha_lock = os.path.expandvars("${SNAP_DATA}/var/lock/ha-cluster")

67 return os.path.isfile(ha_lock)

68

69

70 def get_dqlite_info():

71 cluster_dir = os.path.expandvars("${SNAP_DATA}/var/kubernetes/backend")

72 snap_path = os.environ.get("SNAP")

73

74 info = []

75

76 if not is_ha_enabled():

77 return info

78

79 waits = 10

80 while waits > 0:

81 try:

82 with open("{}/info.yaml".format(cluster_dir), mode="r") as f:

83 data = yaml.load(f, Loader=yaml.FullLoader)

84 out = subprocess.check_output(

85 "{snappath}/bin/dqlite -s file://{dbdir}/cluster.yaml -c {dbdir}/cluster.crt "

86 "-k {dbdir}/cluster.key -f json k8s .cluster".format(

87 snappath=snap_path, dbdir=cluster_dir

88 ).split(),

89 timeout=4,

90 )

91 if data["Address"] in out.decode():

92 break

93 else:

94 time.sleep(5)

95 waits -= 1

96 except (subprocess.CalledProcessError, subprocess.TimeoutExpired):

97 time.sleep(2)

98 waits -= 1

99

100 if waits == 0:

101 return info

102

103 nodes = json.loads(out.decode())

104 for n in nodes:

105 if n["Role"] == 0:

106 info.append((n["Address"], "voter"))

107 if n["Role"] == 1:

108 info.append((n["Address"], "standby"))

109 if n["Role"] == 2:

110 info.append((n["Address"], "spare"))

111 return info

112

113

114 def is_cluster_locked():

115 if (snap_data() / "var/lock/clustered.lock").exists():

116 click.echo("This MicroK8s deployment is acting as a node in a cluster.")

117 click.echo("Please use the master node.")

118 sys.exit(1)

119

120

121 def wait_for_ready(timeout):

122 start_time = time.time()

123

124 while True:

125 if is_cluster_ready():

126 return True

127 elif timeout and time.time() > start_time + timeout:

128 return False

129 else:

130 time.sleep(2)

131

132

133 def exit_if_stopped():

134 stoppedLockFile = os.path.expandvars("${SNAP_DATA}/var/lock/stopped.lock")

135 if os.path.isfile(stoppedLockFile):

136 print("microk8s is not running, try microk8s start")

137 exit(0)

138

139

140 def exit_if_no_permission():

141 user = getpass.getuser()

142 # test if we can access the default kubeconfig

143 clientConfigFile = os.path.expandvars("${SNAP_DATA}/credentials/client.config")

144 if not os.access(clientConfigFile, os.R_OK):

145 print("Insufficient permissions to access MicroK8s.")

146 print(

147 "You can either try again with sudo or add the user {} to the 'microk8s' group:".format(

148 user

149 )

150 )

151 print("")

152 print(" sudo usermod -a -G microk8s {}".format(user))

153 print(" sudo chown -f -R $USER ~/.kube")

154 print("")

155 print(

156 "After this, reload the user groups either via a reboot or by running 'newgrp microk8s'."

157 )

158 exit(1)

159

160

161 def ensure_started():

162 if (snap_data() / "var/lock/stopped.lock").exists():

163 click.echo("microk8s is not running, try microk8s start", err=True)

164 sys.exit(1)

165

166

167 def kubectl_get(cmd, namespace="--all-namespaces"):

168 if namespace == "--all-namespaces":

169 return run("kubectl", kubeconfig, "get", cmd, "--all-namespaces", die=False)

170 else:

171 return run("kubectl", kubeconfig, "get", cmd, "-n", namespace, die=False)

172

173

174 def kubectl_get_clusterroles():

175 return run(

176 "kubectl", kubeconfig, "get", "clusterroles", "--show-kind", "--no-headers", die=False

177 )

178

179

180 def get_available_addons(arch):

181 addon_dataset = os.path.expandvars("${SNAP}/addon-lists.yaml")

182 available = []

183 with open(addon_dataset, "r") as file:

184 # The FullLoader parameter handles the conversion from YAML

185 # scalar values to Python the dictionary format

186 addons = yaml.load(file, Loader=yaml.FullLoader)

187 for addon in addons["microk8s-addons"]["addons"]:

188 if arch in addon["supported_architectures"]:

189 available.append(addon)

190

191 available = sorted(available, key=lambda k: k["name"])

192 return available

193

194

195 def get_addon_by_name(addons, name):

196 filtered_addon = []

197 for addon in addons:

198 if name == addon["name"]:

199 filtered_addon.append(addon)

200 return filtered_addon

201

202

203 def is_service_expected_to_start(service):

204 """

205 Check if a service is supposed to start

206 :param service: the service name

207 :return: True if the service is meant to start

208 """

209 lock_path = os.path.expandvars("${SNAP_DATA}/var/lock")

210 lock = "{}/{}".format(lock_path, service)

211 return os.path.exists(lock_path) and not os.path.isfile(lock)

212

213

214 def set_service_expected_to_start(service, start=True):

215 """

216 Check if a service is not expected to start.

217 :param service: the service name

218 :param start: should the service start or not

219 """

220 lock_path = os.path.expandvars("${SNAP_DATA}/var/lock")

221 lock = "{}/{}".format(lock_path, service)

222 if start:

223 os.remove(lock)

224 else:

225 fd = os.open(lock, os.O_CREAT, mode=0o700)

226 os.close(fd)

227

228

229 def check_help_flag(addons: list) -> bool:

230 """Checks to see if a help message needs to be printed for an addon.

231

232 Not all addons check for help flags themselves. Until they do, intercept

233 calls to print help text and print out a generic message to that effect.

234 """

235 addon = addons[0]

236 if any(arg in addons for arg in ("-h", "--help")) and addon != "kubeflow":

237 print("Addon %s does not yet have a help message." % addon)

238 print("For more information about it, visit https://microk8s.io/docs/addons")

239 return True

240 return False

241

242

243 def xable(action: str, addons: list, xabled_addons: list):

244 """Enables or disables the given addons.

245

246 Collated into a single function since the logic is identical other than

247 the script names.

248 """

249 actions = Path(__file__).absolute().parent / "../../../actions"

250 existing_addons = {sh.with_suffix("").name[7:] for sh in actions.glob("enable.*.sh")}

251

252 # Backwards compatibility with enabling multiple addons at once, e.g.

253 # `microk8s.enable foo bar:"baz"`

254 if all(a.split(":")[0] in existing_addons for a in addons) and len(addons) > 1:

255 for addon in addons:

256 if addon in xabled_addons and addon != "kubeflow":

257 click.echo("Addon %s is already %sd." % (addon, action))

258 else:

259 addon, *args = addon.split(":")

260 wait_for_ready(timeout=30)

261 p = subprocess.run([str(actions / ("%s.%s.sh" % (action, addon)))] + args)

262 if p.returncode:

263 sys.exit(p.returncode)

264 wait_for_ready(timeout=30)

265

266 # The new way of xabling addons, that allows for unix-style argument passing,

267 # such as `microk8s.enable foo --bar`.

268 else:

269 addon, *args = addons[0].split(":")

270

271 if addon in xabled_addons and addon != "kubeflow":

272 click.echo("Addon %s is already %sd." % (addon, action))

273 sys.exit(0)

274

275 if addon not in existing_addons:

276 click.echo("Nothing to do for `%s`." % addon, err=True)

277 sys.exit(1)

278

279 if args and addons[1:]:

280 click.echo(

281 "Can't pass string arguments and flag arguments simultaneously!\n"

282 "{0} an addon with only one argument style at a time:\n"

283 "\n"

284 " microk8s {1} foo:'bar'\n"

285 "or\n"

286 " microk8s {1} foo --bar\n".format(action.title(), action)

287 )

288 sys.exit(1)

289

290 wait_for_ready(timeout=30)

291 script = [str(actions / ("%s.%s.sh" % (action, addon)))]

292 if args:

293 p = subprocess.run(script + args)

294 else:

295 p = subprocess.run(script + list(addons[1:]))

296

297 if p.returncode:

298 sys.exit(p.returncode)

299

300 wait_for_ready(timeout=30)

301

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/scripts/wrappers/common/utils.py b/scripts/wrappers/common/utils.py

--- a/scripts/wrappers/common/utils.py

+++ b/scripts/wrappers/common/utils.py

@@ -15,7 +15,7 @@

def get_current_arch():

# architecture mapping

- arch_mapping = {"aarch64": "arm64", "x86_64": "amd64"}

+ arch_mapping = {"aarch64": "arm64", "armv7l": "armhf", "x86_64": "amd64"}

return arch_mapping[platform.machine()]
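
The mapping in this patch translates the string returned by `platform.machine()` into a snap architecture name. The sketch below is a hypothetical variant, not MicroK8s code: `ARCH_MAPPING` and `lookup_arch` are names introduced here for illustration. It uses the same three entries as the diff and, as an assumption about what a caller might prefer, raises a descriptive `ValueError` instead of a bare `KeyError` for a machine type that is not in the table.

```python
import platform

# Hypothetical helper, not part of MicroK8s. The three entries match the
# mapping in the diff above; everything else is an illustrative assumption.
ARCH_MAPPING = {"aarch64": "arm64", "armv7l": "armhf", "x86_64": "amd64"}


def lookup_arch(machine=None):
    """Translate a `platform.machine()` string into a snap architecture name."""
    if machine is None:
        # Fall back to the machine type of the running interpreter.
        machine = platform.machine()
    try:
        return ARCH_MAPPING[machine]
    except KeyError:
        # Report the unmapped machine type explicitly.
        raise ValueError("unsupported machine type: %r" % machine) from None
```

Passing the machine string as a parameter keeps the lookup testable on any host, for example `lookup_arch("armv7l")` on an amd64 development machine.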

|

{"golden_diff": "diff --git a/scripts/wrappers/common/utils.py b/scripts/wrappers/common/utils.py\n--- a/scripts/wrappers/common/utils.py\n+++ b/scripts/wrappers/common/utils.py\n@@ -15,7 +15,7 @@\n \n def get_current_arch():\n # architecture mapping\n- arch_mapping = {\"aarch64\": \"arm64\", \"x86_64\": \"amd64\"}\n+ arch_mapping = {\"aarch64\": \"arm64\", \"armv7l\": \"armhf\", \"x86_64\": \"amd64\"}\n \n return arch_mapping[platform.machine()]\n", "issue": "Microk8s on armhf architecture\nHi all, \r\n\r\nThe armhf binary is missing and not available right now, which means that some users cannot install microk8s on Ubuntu. For example, if you use the armhf image for Raspberry Pi, you cannot install microk8s: \r\n\r\n> ubuntu@battlecruiser:~$ sudo snap install microk8s --classic\r\n> error: snap \"microk8s\" is not available on stable for this architecture (armhf)\r\n> but exists on other architectures (amd64, arm64, ppc64el).\r\n\r\nIt would be really good if we could also get the build compiled for this architecture and make officially available. 
\r\n\r\nCheers,\r\n\r\n- Calvin \n", "before_files": [{"content": "import getpass\nimport json\nimport os\nimport platform\nimport subprocess\nimport sys\nimport time\nfrom pathlib import Path\n\nimport click\nimport yaml\n\nkubeconfig = \"--kubeconfig=\" + os.path.expandvars(\"${SNAP_DATA}/credentials/client.config\")\n\n\ndef get_current_arch():\n # architecture mapping\n arch_mapping = {\"aarch64\": \"arm64\", \"x86_64\": \"amd64\"}\n\n return arch_mapping[platform.machine()]\n\n\ndef snap_data() -> Path:\n try:\n return Path(os.environ[\"SNAP_DATA\"])\n except KeyError:\n return Path(\"/var/snap/microk8s/current\")\n\n\ndef run(*args, die=True):\n # Add wrappers to $PATH\n env = os.environ.copy()\n env[\"PATH\"] += \":%s\" % os.environ[\"SNAP\"]\n result = subprocess.run(\n args, stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE, env=env\n )\n\n try:\n result.check_returncode()\n except subprocess.CalledProcessError as err:\n if die:\n if result.stderr:\n print(result.stderr.decode(\"utf-8\"))\n print(err)\n sys.exit(1)\n else:\n raise\n\n return result.stdout.decode(\"utf-8\")\n\n\ndef is_cluster_ready():\n try:\n service_output = kubectl_get(\"all\")\n node_output = kubectl_get(\"nodes\")\n # Make sure to compare with the word \" Ready \" with spaces.\n if \" Ready \" in node_output and \"service/kubernetes\" in service_output:\n return True\n else:\n return False\n except Exception:\n return False\n\n\ndef is_ha_enabled():\n ha_lock = os.path.expandvars(\"${SNAP_DATA}/var/lock/ha-cluster\")\n return os.path.isfile(ha_lock)\n\n\ndef get_dqlite_info():\n cluster_dir = os.path.expandvars(\"${SNAP_DATA}/var/kubernetes/backend\")\n snap_path = os.environ.get(\"SNAP\")\n\n info = []\n\n if not is_ha_enabled():\n return info\n\n waits = 10\n while waits > 0:\n try:\n with open(\"{}/info.yaml\".format(cluster_dir), mode=\"r\") as f:\n data = yaml.load(f, Loader=yaml.FullLoader)\n out = subprocess.check_output(\n \"{snappath}/bin/dqlite -s 
file://{dbdir}/cluster.yaml -c {dbdir}/cluster.crt \"\n \"-k {dbdir}/cluster.key -f json k8s .cluster\".format(\n snappath=snap_path, dbdir=cluster_dir\n ).split(),\n timeout=4,\n )\n if data[\"Address\"] in out.decode():\n break\n else:\n time.sleep(5)\n waits -= 1\n except (subprocess.CalledProcessError, subprocess.TimeoutExpired):\n time.sleep(2)\n waits -= 1\n\n if waits == 0:\n return info\n\n nodes = json.loads(out.decode())\n for n in nodes:\n if n[\"Role\"] == 0:\n info.append((n[\"Address\"], \"voter\"))\n if n[\"Role\"] == 1:\n info.append((n[\"Address\"], \"standby\"))\n if n[\"Role\"] == 2:\n info.append((n[\"Address\"], \"spare\"))\n return info\n\n\ndef is_cluster_locked():\n if (snap_data() / \"var/lock/clustered.lock\").exists():\n click.echo(\"This MicroK8s deployment is acting as a node in a cluster.\")\n click.echo(\"Please use the master node.\")\n sys.exit(1)\n\n\ndef wait_for_ready(timeout):\n start_time = time.time()\n\n while True:\n if is_cluster_ready():\n return True\n elif timeout and time.time() > start_time + timeout:\n return False\n else:\n time.sleep(2)\n\n\ndef exit_if_stopped():\n stoppedLockFile = os.path.expandvars(\"${SNAP_DATA}/var/lock/stopped.lock\")\n if os.path.isfile(stoppedLockFile):\n print(\"microk8s is not running, try microk8s start\")\n exit(0)\n\n\ndef exit_if_no_permission():\n user = getpass.getuser()\n # test if we can access the default kubeconfig\n clientConfigFile = os.path.expandvars(\"${SNAP_DATA}/credentials/client.config\")\n if not os.access(clientConfigFile, os.R_OK):\n print(\"Insufficient permissions to access MicroK8s.\")\n print(\n \"You can either try again with sudo or add the user {} to the 'microk8s' group:\".format(\n user\n )\n )\n print(\"\")\n print(\" sudo usermod -a -G microk8s {}\".format(user))\n print(\" sudo chown -f -R $USER ~/.kube\")\n print(\"\")\n print(\n \"After this, reload the user groups either via a reboot or by running 'newgrp microk8s'.\"\n )\n exit(1)\n\n\ndef 
ensure_started():\n if (snap_data() / \"var/lock/stopped.lock\").exists():\n click.echo(\"microk8s is not running, try microk8s start\", err=True)\n sys.exit(1)\n\n\ndef kubectl_get(cmd, namespace=\"--all-namespaces\"):\n if namespace == \"--all-namespaces\":\n return run(\"kubectl\", kubeconfig, \"get\", cmd, \"--all-namespaces\", die=False)\n else:\n return run(\"kubectl\", kubeconfig, \"get\", cmd, \"-n\", namespace, die=False)\n\n\ndef kubectl_get_clusterroles():\n return run(\n \"kubectl\", kubeconfig, \"get\", \"clusterroles\", \"--show-kind\", \"--no-headers\", die=False\n )\n\n\ndef get_available_addons(arch):\n addon_dataset = os.path.expandvars(\"${SNAP}/addon-lists.yaml\")\n available = []\n with open(addon_dataset, \"r\") as file:\n # The FullLoader parameter handles the conversion from YAML\n # scalar values to Python the dictionary format\n addons = yaml.load(file, Loader=yaml.FullLoader)\n for addon in addons[\"microk8s-addons\"][\"addons\"]:\n if arch in addon[\"supported_architectures\"]:\n available.append(addon)\n\n available = sorted(available, key=lambda k: k[\"name\"])\n return available\n\n\ndef get_addon_by_name(addons, name):\n filtered_addon = []\n for addon in addons:\n if name == addon[\"name\"]:\n filtered_addon.append(addon)\n return filtered_addon\n\n\ndef is_service_expected_to_start(service):\n \"\"\"\n Check if a service is supposed to start\n :param service: the service name\n :return: True if the service is meant to start\n \"\"\"\n lock_path = os.path.expandvars(\"${SNAP_DATA}/var/lock\")\n lock = \"{}/{}\".format(lock_path, service)\n return os.path.exists(lock_path) and not os.path.isfile(lock)\n\n\ndef set_service_expected_to_start(service, start=True):\n \"\"\"\n Check if a service is not expected to start.\n :param service: the service name\n :param start: should the service start or not\n \"\"\"\n lock_path = os.path.expandvars(\"${SNAP_DATA}/var/lock\")\n lock = \"{}/{}\".format(lock_path, service)\n if start:\n 
os.remove(lock)\n else:\n fd = os.open(lock, os.O_CREAT, mode=0o700)\n os.close(fd)\n\n\ndef check_help_flag(addons: list) -> bool:\n \"\"\"Checks to see if a help message needs to be printed for an addon.\n\n Not all addons check for help flags themselves. Until they do, intercept\n calls to print help text and print out a generic message to that effect.\n \"\"\"\n addon = addons[0]\n if any(arg in addons for arg in (\"-h\", \"--help\")) and addon != \"kubeflow\":\n print(\"Addon %s does not yet have a help message.\" % addon)\n print(\"For more information about it, visit https://microk8s.io/docs/addons\")\n return True\n return False\n\n\ndef xable(action: str, addons: list, xabled_addons: list):\n \"\"\"Enables or disables the given addons.\n\n Collated into a single function since the logic is identical other than\n the script names.\n \"\"\"\n actions = Path(__file__).absolute().parent / \"../../../actions\"\n existing_addons = {sh.with_suffix(\"\").name[7:] for sh in actions.glob(\"enable.*.sh\")}\n\n # Backwards compatibility with enabling multiple addons at once, e.g.\n # `microk8s.enable foo bar:\"baz\"`\n if all(a.split(\":\")[0] in existing_addons for a in addons) and len(addons) > 1:\n for addon in addons:\n if addon in xabled_addons and addon != \"kubeflow\":\n click.echo(\"Addon %s is already %sd.\" % (addon, action))\n else:\n addon, *args = addon.split(\":\")\n wait_for_ready(timeout=30)\n p = subprocess.run([str(actions / (\"%s.%s.sh\" % (action, addon)))] + args)\n if p.returncode:\n sys.exit(p.returncode)\n wait_for_ready(timeout=30)\n\n # The new way of xabling addons, that allows for unix-style argument passing,\n # such as `microk8s.enable foo --bar`.\n else:\n addon, *args = addons[0].split(\":\")\n\n if addon in xabled_addons and addon != \"kubeflow\":\n click.echo(\"Addon %s is already %sd.\" % (addon, action))\n sys.exit(0)\n\n if addon not in existing_addons:\n click.echo(\"Nothing to do for `%s`.\" % addon, err=True)\n sys.exit(1)\n\n 
if args and addons[1:]:\n click.echo(\n \"Can't pass string arguments and flag arguments simultaneously!\\n\"\n \"{0} an addon with only one argument style at a time:\\n\"\n \"\\n\"\n \" microk8s {1} foo:'bar'\\n\"\n \"or\\n\"\n \" microk8s {1} foo --bar\\n\".format(action.title(), action)\n )\n sys.exit(1)\n\n wait_for_ready(timeout=30)\n script = [str(actions / (\"%s.%s.sh\" % (action, addon)))]\n if args:\n p = subprocess.run(script + args)\n else:\n p = subprocess.run(script + list(addons[1:]))\n\n if p.returncode:\n sys.exit(p.returncode)\n\n wait_for_ready(timeout=30)\n", "path": "scripts/wrappers/common/utils.py"}], "after_files": [{"content": "import getpass\nimport json\nimport os\nimport platform\nimport subprocess\nimport sys\nimport time\nfrom pathlib import Path\n\nimport click\nimport yaml\n\nkubeconfig = \"--kubeconfig=\" + os.path.expandvars(\"${SNAP_DATA}/credentials/client.config\")\n\n\ndef get_current_arch():\n # architecture mapping\n arch_mapping = {\"aarch64\": \"arm64\", \"armv7l\": \"armhf\", \"x86_64\": \"amd64\"}\n\n return arch_mapping[platform.machine()]\n\n\ndef snap_data() -> Path:\n try:\n return Path(os.environ[\"SNAP_DATA\"])\n except KeyError:\n return Path(\"/var/snap/microk8s/current\")\n\n\ndef run(*args, die=True):\n # Add wrappers to $PATH\n env = os.environ.copy()\n env[\"PATH\"] += \":%s\" % os.environ[\"SNAP\"]\n result = subprocess.run(\n args, stdin=subprocess.PIPE, stdout=subprocess.PIPE, stderr=subprocess.PIPE, env=env\n )\n\n try:\n result.check_returncode()\n except subprocess.CalledProcessError as err:\n if die:\n if result.stderr:\n print(result.stderr.decode(\"utf-8\"))\n print(err)\n sys.exit(1)\n else:\n raise\n\n return result.stdout.decode(\"utf-8\")\n\n\ndef is_cluster_ready():\n try:\n service_output = kubectl_get(\"all\")\n node_output = kubectl_get(\"nodes\")\n # Make sure to compare with the word \" Ready \" with spaces.\n if \" Ready \" in node_output and \"service/kubernetes\" in service_output:\n 
return True\n else:\n return False\n except Exception:\n return False\n\n\ndef is_ha_enabled():\n ha_lock = os.path.expandvars(\"${SNAP_DATA}/var/lock/ha-cluster\")\n return os.path.isfile(ha_lock)\n\n\ndef get_dqlite_info():\n cluster_dir = os.path.expandvars(\"${SNAP_DATA}/var/kubernetes/backend\")\n snap_path = os.environ.get(\"SNAP\")\n\n info = []\n\n if not is_ha_enabled():\n return info\n\n waits = 10\n while waits > 0:\n try:\n with open(\"{}/info.yaml\".format(cluster_dir), mode=\"r\") as f:\n data = yaml.load(f, Loader=yaml.FullLoader)\n out = subprocess.check_output(\n \"{snappath}/bin/dqlite -s file://{dbdir}/cluster.yaml -c {dbdir}/cluster.crt \"\n \"-k {dbdir}/cluster.key -f json k8s .cluster\".format(\n snappath=snap_path, dbdir=cluster_dir\n ).split(),\n timeout=4,\n )\n if data[\"Address\"] in out.decode():\n break\n else:\n time.sleep(5)\n waits -= 1\n except (subprocess.CalledProcessError, subprocess.TimeoutExpired):\n time.sleep(2)\n waits -= 1\n\n if waits == 0:\n return info\n\n nodes = json.loads(out.decode())\n for n in nodes:\n if n[\"Role\"] == 0:\n info.append((n[\"Address\"], \"voter\"))\n if n[\"Role\"] == 1:\n info.append((n[\"Address\"], \"standby\"))\n if n[\"Role\"] == 2:\n info.append((n[\"Address\"], \"spare\"))\n return info\n\n\ndef is_cluster_locked():\n if (snap_data() / \"var/lock/clustered.lock\").exists():\n click.echo(\"This MicroK8s deployment is acting as a node in a cluster.\")\n click.echo(\"Please use the master node.\")\n sys.exit(1)\n\n\ndef wait_for_ready(timeout):\n start_time = time.time()\n\n while True:\n if is_cluster_ready():\n return True\n elif timeout and time.time() > start_time + timeout:\n return False\n else:\n time.sleep(2)\n\n\ndef exit_if_stopped():\n stoppedLockFile = os.path.expandvars(\"${SNAP_DATA}/var/lock/stopped.lock\")\n if os.path.isfile(stoppedLockFile):\n print(\"microk8s is not running, try microk8s start\")\n exit(0)\n\n\ndef exit_if_no_permission():\n user = getpass.getuser()\n # test 
if we can access the default kubeconfig\n clientConfigFile = os.path.expandvars(\"${SNAP_DATA}/credentials/client.config\")\n if not os.access(clientConfigFile, os.R_OK):\n print(\"Insufficient permissions to access MicroK8s.\")\n print(\n \"You can either try again with sudo or add the user {} to the 'microk8s' group:\".format(\n user\n )\n )\n print(\"\")\n print(\" sudo usermod -a -G microk8s {}\".format(user))\n print(\" sudo chown -f -R $USER ~/.kube\")\n print(\"\")\n print(\n \"After this, reload the user groups either via a reboot or by running 'newgrp microk8s'.\"\n )\n exit(1)\n\n\ndef ensure_started():\n if (snap_data() / \"var/lock/stopped.lock\").exists():\n click.echo(\"microk8s is not running, try microk8s start\", err=True)\n sys.exit(1)\n\n\ndef kubectl_get(cmd, namespace=\"--all-namespaces\"):\n if namespace == \"--all-namespaces\":\n return run(\"kubectl\", kubeconfig, \"get\", cmd, \"--all-namespaces\", die=False)\n else:\n return run(\"kubectl\", kubeconfig, \"get\", cmd, \"-n\", namespace, die=False)\n\n\ndef kubectl_get_clusterroles():\n return run(\n \"kubectl\", kubeconfig, \"get\", \"clusterroles\", \"--show-kind\", \"--no-headers\", die=False\n )\n\n\ndef get_available_addons(arch):\n addon_dataset = os.path.expandvars(\"${SNAP}/addon-lists.yaml\")\n available = []\n with open(addon_dataset, \"r\") as file:\n # The FullLoader parameter handles the conversion from YAML\n # scalar values to Python the dictionary format\n addons = yaml.load(file, Loader=yaml.FullLoader)\n for addon in addons[\"microk8s-addons\"][\"addons\"]:\n if arch in addon[\"supported_architectures\"]:\n available.append(addon)\n\n available = sorted(available, key=lambda k: k[\"name\"])\n return available\n\n\ndef get_addon_by_name(addons, name):\n filtered_addon = []\n for addon in addons:\n if name == addon[\"name\"]:\n filtered_addon.append(addon)\n return filtered_addon\n\n\ndef is_service_expected_to_start(service):\n \"\"\"\n Check if a service is supposed to 
start\n :param service: the service name\n :return: True if the service is meant to start\n \"\"\"\n lock_path = os.path.expandvars(\"${SNAP_DATA}/var/lock\")\n lock = \"{}/{}\".format(lock_path, service)\n return os.path.exists(lock_path) and not os.path.isfile(lock)\n\n\ndef set_service_expected_to_start(service, start=True):\n \"\"\"\n Check if a service is not expected to start.\n :param service: the service name\n :param start: should the service start or not\n \"\"\"\n lock_path = os.path.expandvars(\"${SNAP_DATA}/var/lock\")\n lock = \"{}/{}\".format(lock_path, service)\n if start:\n os.remove(lock)\n else:\n fd = os.open(lock, os.O_CREAT, mode=0o700)\n os.close(fd)\n\n\ndef check_help_flag(addons: list) -> bool:\n \"\"\"Checks to see if a help message needs to be printed for an addon.\n\n Not all addons check for help flags themselves. Until they do, intercept\n calls to print help text and print out a generic message to that effect.\n \"\"\"\n addon = addons[0]\n if any(arg in addons for arg in (\"-h\", \"--help\")) and addon != \"kubeflow\":\n print(\"Addon %s does not yet have a help message.\" % addon)\n print(\"For more information about it, visit https://microk8s.io/docs/addons\")\n return True\n return False\n\n\ndef xable(action: str, addons: list, xabled_addons: list):\n \"\"\"Enables or disables the given addons.\n\n Collated into a single function since the logic is identical other than\n the script names.\n \"\"\"\n actions = Path(__file__).absolute().parent / \"../../../actions\"\n existing_addons = {sh.with_suffix(\"\").name[7:] for sh in actions.glob(\"enable.*.sh\")}\n\n # Backwards compatibility with enabling multiple addons at once, e.g.\n # `microk8s.enable foo bar:\"baz\"`\n if all(a.split(\":\")[0] in existing_addons for a in addons) and len(addons) > 1:\n for addon in addons:\n if addon in xabled_addons and addon != \"kubeflow\":\n click.echo(\"Addon %s is already %sd.\" % (addon, action))\n else:\n addon, *args = addon.split(\":\")\n 
wait_for_ready(timeout=30)\n p = subprocess.run([str(actions / (\"%s.%s.sh\" % (action, addon)))] + args)\n if p.returncode:\n sys.exit(p.returncode)\n wait_for_ready(timeout=30)\n\n # The new way of xabling addons, that allows for unix-style argument passing,\n # such as `microk8s.enable foo --bar`.\n else:\n addon, *args = addons[0].split(\":\")\n\n if addon in xabled_addons and addon != \"kubeflow\":\n click.echo(\"Addon %s is already %sd.\" % (addon, action))\n sys.exit(0)\n\n if addon not in existing_addons:\n click.echo(\"Nothing to do for `%s`.\" % addon, err=True)\n sys.exit(1)\n\n if args and addons[1:]:\n click.echo(\n \"Can't pass string arguments and flag arguments simultaneously!\\n\"\n \"{0} an addon with only one argument style at a time:\\n\"\n \"\\n\"\n \" microk8s {1} foo:'bar'\\n\"\n \"or\\n\"\n \" microk8s {1} foo --bar\\n\".format(action.title(), action)\n )\n sys.exit(1)\n\n wait_for_ready(timeout=30)\n script = [str(actions / (\"%s.%s.sh\" % (action, addon)))]\n if args:\n p = subprocess.run(script + args)\n else:\n p = subprocess.run(script + list(addons[1:]))\n\n if p.returncode:\n sys.exit(p.returncode)\n\n wait_for_ready(timeout=30)\n", "path": "scripts/wrappers/common/utils.py"}]}

| 3,557 | 140 |

gh_patches_debug_16189

|

rasdani/github-patches

|

git_diff

|

HypothesisWorks__hypothesis-1711

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Move over to new pytest plugin API

We're currently using `pytest.mark.hookwrapper` in our pytest plugin. According to @RonnyPfannschmidt this API is soon to be deprecated and we should be using ` pytest.hookimpl(hookwrapper=True)` instead.

Hopefully this should be a simple matter of changing the code over to use the new decorator, but it's Hypothesis development so I'm sure something exciting will break. 😆 😢

--- END ISSUE ---
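
The issue concerns which decorator marks a hook wrapper, that is, a generator-based hook that runs some code before its `yield` and some after. The toy driver below only illustrates that before/after shape with the standard library. It is not pytest's or pluggy's implementation, and `call_with_wrapper`, `logging_wrapper`, and `run_test` are hypothetical names. One deliberate simplification: the toy sends the raw return value back into the generator, whereas a pytest hook wrapper receives an outcome object.

```python
# Toy illustration only: this is NOT pytest's or pluggy's implementation.
# It mimics the "code before yield / code after yield" shape of a hook wrapper.


def call_with_wrapper(wrapper, hook, *args):
    """Run `hook(*args)` inside the generator-based `wrapper`."""
    gen = wrapper(*args)
    next(gen)  # run the wrapper up to its `yield`
    result = hook(*args)  # run the wrapped hook itself
    try:
        gen.send(result)  # resume the wrapper with the hook's result
    except StopIteration:
        pass  # the wrapper finished normally after its `yield`
    return result


events = []


def logging_wrapper(name):
    # Code before the yield runs ahead of the wrapped hook.
    events.append("before " + name)
    result = yield
    # Code after the yield runs once the wrapped hook has returned.
    events.append("after %s -> %s" % (name, result))


def run_test(name):
    events.append("running " + name)
    return "passed"


outcome = call_with_wrapper(logging_wrapper, run_test, "test_example")
```

After this runs, `events` holds the three entries in before, running, after order.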

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `hypothesis-python/src/hypothesis/extra/pytestplugin.py`

Content:

```

1 # coding=utf-8

2 #

3 # This file is part of Hypothesis, which may be found at

4 # https://github.com/HypothesisWorks/hypothesis-python

5 #

6 # Most of this work is copyright (C) 2013-2018 David R. MacIver

7 # (david@drmaciver.com), but it contains contributions by others. See

8 # CONTRIBUTING.rst for a full list of people who may hold copyright, and

9 # consult the git log if you need to determine who owns an individual

10 # contribution.

11 #

12 # This Source Code Form is subject to the terms of the Mozilla Public License,

13 # v. 2.0. If a copy of the MPL was not distributed with this file, You can

14 # obtain one at http://mozilla.org/MPL/2.0/.

15 #

16 # END HEADER

17

18 from __future__ import absolute_import, division, print_function

19

20 from distutils.version import LooseVersion

21

22 import pytest

23

24 from hypothesis import Verbosity, core, settings

25 from hypothesis._settings import note_deprecation

26 from hypothesis.internal.compat import OrderedDict, text_type

27 from hypothesis.internal.detection import is_hypothesis_test

28 from hypothesis.reporting import default as default_reporter, with_reporter

29 from hypothesis.statistics import collector

30

31 LOAD_PROFILE_OPTION = "--hypothesis-profile"

32 VERBOSITY_OPTION = "--hypothesis-verbosity"

33 PRINT_STATISTICS_OPTION = "--hypothesis-show-statistics"

34 SEED_OPTION = "--hypothesis-seed"

35

36

37 class StoringReporter(object):

38 def __init__(self, config):

39 self.config = config

40 self.results = []

41

42 def __call__(self, msg):

43 if self.config.getoption("capture", "fd") == "no":

44 default_reporter(msg)

45 if not isinstance(msg, text_type):

46 msg = repr(msg)

47 self.results.append(msg)

48

49

50 def pytest_addoption(parser):

51 group = parser.getgroup("hypothesis", "Hypothesis")

52 group.addoption(

53 LOAD_PROFILE_OPTION,

54 action="store",

55 help="Load in a registered hypothesis.settings profile",

56 )

57 group.addoption(

58 VERBOSITY_OPTION,

59 action="store",

60 choices=[opt.name for opt in Verbosity],

61 help="Override profile with verbosity setting specified",

62 )

63 group.addoption(

64 PRINT_STATISTICS_OPTION,

65 action="store_true",

66 help="Configure when statistics are printed",

67 default=False,

68 )

69 group.addoption(

70 SEED_OPTION, action="store", help="Set a seed to use for all Hypothesis tests"

71 )

72

73

74 def pytest_report_header(config):

75 profile = config.getoption(LOAD_PROFILE_OPTION)

76 if not profile:

77 profile = settings._current_profile

78 settings_str = settings.get_profile(profile).show_changed()

79 if settings_str != "":

80 settings_str = " -> %s" % (settings_str)

81 return "hypothesis profile %r%s" % (profile, settings_str)

82

83

84 def pytest_configure(config):

85 core.running_under_pytest = True

86 profile = config.getoption(LOAD_PROFILE_OPTION)

87 if profile:

88 settings.load_profile(profile)

89 verbosity_name = config.getoption(VERBOSITY_OPTION)

90 if verbosity_name:

91 verbosity_value = Verbosity[verbosity_name]

92 profile_name = "%s-with-%s-verbosity" % (

93 settings._current_profile,

94 verbosity_name,

95 )

96 # register_profile creates a new profile, exactly like the current one,

97 # with the extra values given (in this case 'verbosity')

98 settings.register_profile(profile_name, verbosity=verbosity_value)

99 settings.load_profile(profile_name)

100 seed = config.getoption(SEED_OPTION)

101 if seed is not None:

102 try:

103 seed = int(seed)

104 except ValueError:

105 pass

106 core.global_force_seed = seed

107 config.addinivalue_line("markers", "hypothesis: Tests which use hypothesis.")

108

109

110 gathered_statistics = OrderedDict() # type: dict

111

112

113 @pytest.mark.hookwrapper

114 def pytest_runtest_call(item):

115 if not (hasattr(item, "obj") and is_hypothesis_test(item.obj)):

116 yield

117 else:

118 store = StoringReporter(item.config)

119

120 def note_statistics(stats):

121 lines = [item.nodeid + ":", ""] + stats.get_description() + [""]

122 gathered_statistics[item.nodeid] = lines

123 item.hypothesis_statistics = lines

124

125 with collector.with_value(note_statistics):

126 with with_reporter(store):

127 yield

128 if store.results:

129 item.hypothesis_report_information = list(store.results)

130

131

132 @pytest.mark.hookwrapper

133 def pytest_runtest_makereport(item, call):

134 report = (yield).get_result()

135 if hasattr(item, "hypothesis_report_information"):

136 report.sections.append(

137 ("Hypothesis", "\n".join(item.hypothesis_report_information))

138 )

139 if hasattr(item, "hypothesis_statistics") and report.when == "teardown":

140 # Running on pytest < 3.5 where user_properties doesn't exist, fall

141 # back on the global gathered_statistics (which breaks under xdist)

142 if hasattr(report, "user_properties"): # pragma: no branch

143 val = ("hypothesis-stats", item.hypothesis_statistics)

144 # Workaround for https://github.com/pytest-dev/pytest/issues/4034

145 if isinstance(report.user_properties, tuple):

146 report.user_properties += (val,)

147 else:

148 report.user_properties.append(val)

149

150

151 def pytest_terminal_summary(terminalreporter):

152 if not terminalreporter.config.getoption(PRINT_STATISTICS_OPTION):

153 return

154 terminalreporter.section("Hypothesis Statistics")

155

156 if LooseVersion(pytest.__version__) < "3.5": # pragma: no cover

157 if not gathered_statistics:

158 terminalreporter.write_line(

159 "Reporting Hypothesis statistics with pytest-xdist enabled "

160 "requires pytest >= 3.5"

161 )

162 for lines in gathered_statistics.values():

163 for li in lines:

164 terminalreporter.write_line(li)

165 return

166

167 # terminalreporter.stats is a dict, where the empty string appears to

168 # always be the key for a list of _pytest.reports.TestReport objects

169 # (where we stored the statistics data in pytest_runtest_makereport above)

170 for test_report in terminalreporter.stats.get("", []):

171 for name, lines in test_report.user_properties:

172 if name == "hypothesis-stats" and test_report.when == "teardown":

173 for li in lines:

174 terminalreporter.write_line(li)

175

176

177 def pytest_collection_modifyitems(items):

178 for item in items:

179 if not isinstance(item, pytest.Function):

180 continue

181 if is_hypothesis_test(item.obj):

182 item.add_marker("hypothesis")

183 if getattr(item.obj, "is_hypothesis_strategy_function", False):

184

185 def note_strategy_is_not_test(*args, **kwargs):

186 note_deprecation(

187 "%s is a function that returns a Hypothesis strategy, "

188 "but pytest has collected it as a test function. This "

189 "is useless as the function body will never be executed."

190 "To define a test function, use @given instead of "

191 "@composite." % (item.nodeid,),

192 since="2018-11-02",

193 )

194

195 item.obj = note_strategy_is_not_test

196

197

198 def load():

199 pass

200

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/hypothesis-python/src/hypothesis/extra/pytestplugin.py b/hypothesis-python/src/hypothesis/extra/pytestplugin.py

--- a/hypothesis-python/src/hypothesis/extra/pytestplugin.py

+++ b/hypothesis-python/src/hypothesis/extra/pytestplugin.py

@@ -110,7 +110,7 @@

gathered_statistics = OrderedDict() # type: dict

-@pytest.mark.hookwrapper

+@pytest.hookimpl(hookwrapper=True)

def pytest_runtest_call(item):

if not (hasattr(item, "obj") and is_hypothesis_test(item.obj)):

yield

@@ -129,7 +129,7 @@

item.hypothesis_report_information = list(store.results)

-@pytest.mark.hookwrapper

+@pytest.hookimpl(hookwrapper=True)

def pytest_runtest_makereport(item, call):

report = (yield).get_result()

if hasattr(item, "hypothesis_report_information"):

|

{"golden_diff": "diff --git a/hypothesis-python/src/hypothesis/extra/pytestplugin.py b/hypothesis-python/src/hypothesis/extra/pytestplugin.py\n--- a/hypothesis-python/src/hypothesis/extra/pytestplugin.py\n+++ b/hypothesis-python/src/hypothesis/extra/pytestplugin.py\n@@ -110,7 +110,7 @@\n gathered_statistics = OrderedDict() # type: dict\n \n \n-@pytest.mark.hookwrapper\n+@pytest.hookimpl(hookwrapper=True)\n def pytest_runtest_call(item):\n if not (hasattr(item, \"obj\") and is_hypothesis_test(item.obj)):\n yield\n@@ -129,7 +129,7 @@\n item.hypothesis_report_information = list(store.results)\n \n \n-@pytest.mark.hookwrapper\n+@pytest.hookimpl(hookwrapper=True)\n def pytest_runtest_makereport(item, call):\n report = (yield).get_result()\n if hasattr(item, \"hypothesis_report_information\"):\n", "issue": "Move over to new pytest plugin API\nWe're currently using `pytest.mark.hookwrapper` in our pytest plugin. According to @RonnyPfannschmidt this API is soon to be deprecated and we should be using ` pytest.hookimpl(hookwrapper=True)` instead.\r\n\r\nHopefully this should be a simple matter of changing the code over to use the new decorator, but it's Hypothesis development so I'm sure something exciting will break. \ud83d\ude06 \ud83d\ude22 \n", "before_files": [{"content": "# coding=utf-8\n#\n# This file is part of Hypothesis, which may be found at\n# https://github.com/HypothesisWorks/hypothesis-python\n#\n# Most of this work is copyright (C) 2013-2018 David R. MacIver\n# ([email protected]), but it contains contributions by others. See\n# CONTRIBUTING.rst for a full list of people who may hold copyright, and\n# consult the git log if you need to determine who owns an individual\n# contribution.\n#\n# This Source Code Form is subject to the terms of the Mozilla Public License,\n# v. 2.0. 
If a copy of the MPL was not distributed with this file, You can\n# obtain one at http://mozilla.org/MPL/2.0/.\n#\n# END HEADER\n\nfrom __future__ import absolute_import, division, print_function\n\nfrom distutils.version import LooseVersion\n\nimport pytest\n\nfrom hypothesis import Verbosity, core, settings\nfrom hypothesis._settings import note_deprecation\nfrom hypothesis.internal.compat import OrderedDict, text_type\nfrom hypothesis.internal.detection import is_hypothesis_test\nfrom hypothesis.reporting import default as default_reporter, with_reporter\nfrom hypothesis.statistics import collector\n\nLOAD_PROFILE_OPTION = \"--hypothesis-profile\"\nVERBOSITY_OPTION = \"--hypothesis-verbosity\"\nPRINT_STATISTICS_OPTION = \"--hypothesis-show-statistics\"\nSEED_OPTION = \"--hypothesis-seed\"\n\n\nclass StoringReporter(object):\n def __init__(self, config):\n self.config = config\n self.results = []\n\n def __call__(self, msg):\n if self.config.getoption(\"capture\", \"fd\") == \"no\":\n default_reporter(msg)\n if not isinstance(msg, text_type):\n msg = repr(msg)\n self.results.append(msg)\n\n\ndef pytest_addoption(parser):\n group = parser.getgroup(\"hypothesis\", \"Hypothesis\")\n group.addoption(\n LOAD_PROFILE_OPTION,\n action=\"store\",\n help=\"Load in a registered hypothesis.settings profile\",\n )\n group.addoption(\n VERBOSITY_OPTION,\n action=\"store\",\n choices=[opt.name for opt in Verbosity],\n help=\"Override profile with verbosity setting specified\",\n )\n group.addoption(\n PRINT_STATISTICS_OPTION,\n action=\"store_true\",\n help=\"Configure when statistics are printed\",\n default=False,\n )\n group.addoption(\n SEED_OPTION, action=\"store\", help=\"Set a seed to use for all Hypothesis tests\"\n )\n\n\ndef pytest_report_header(config):\n profile = config.getoption(LOAD_PROFILE_OPTION)\n if not profile:\n profile = settings._current_profile\n settings_str = settings.get_profile(profile).show_changed()\n if settings_str != \"\":\n settings_str = \" 
-> %s\" % (settings_str)\n return \"hypothesis profile %r%s\" % (profile, settings_str)\n\n\ndef pytest_configure(config):\n core.running_under_pytest = True\n profile = config.getoption(LOAD_PROFILE_OPTION)\n if profile:\n settings.load_profile(profile)\n verbosity_name = config.getoption(VERBOSITY_OPTION)\n if verbosity_name:\n verbosity_value = Verbosity[verbosity_name]\n profile_name = \"%s-with-%s-verbosity\" % (\n settings._current_profile,\n verbosity_name,\n )\n # register_profile creates a new profile, exactly like the current one,\n # with the extra values given (in this case 'verbosity')\n settings.register_profile(profile_name, verbosity=verbosity_value)\n settings.load_profile(profile_name)\n seed = config.getoption(SEED_OPTION)\n if seed is not None:\n try:\n seed = int(seed)\n except ValueError:\n pass\n core.global_force_seed = seed\n config.addinivalue_line(\"markers\", \"hypothesis: Tests which use hypothesis.\")\n\n\ngathered_statistics = OrderedDict() # type: dict\n\n\n@pytest.mark.hookwrapper\ndef pytest_runtest_call(item):\n if not (hasattr(item, \"obj\") and is_hypothesis_test(item.obj)):\n yield\n else:\n store = StoringReporter(item.config)\n\n def note_statistics(stats):\n lines = [item.nodeid + \":\", \"\"] + stats.get_description() + [\"\"]\n gathered_statistics[item.nodeid] = lines\n item.hypothesis_statistics = lines\n\n with collector.with_value(note_statistics):\n with with_reporter(store):\n yield\n if store.results:\n item.hypothesis_report_information = list(store.results)\n\n\n@pytest.mark.hookwrapper\ndef pytest_runtest_makereport(item, call):\n report = (yield).get_result()\n if hasattr(item, \"hypothesis_report_information\"):\n report.sections.append(\n (\"Hypothesis\", \"\\n\".join(item.hypothesis_report_information))\n )\n if hasattr(item, \"hypothesis_statistics\") and report.when == \"teardown\":\n # Running on pytest < 3.5 where user_properties doesn't exist, fall\n # back on the global gathered_statistics (which breaks under xdist)\n 
if hasattr(report, \"user_properties\"): # pragma: no branch\n val = (\"hypothesis-stats\", item.hypothesis_statistics)\n # Workaround for https://github.com/pytest-dev/pytest/issues/4034\n if isinstance(report.user_properties, tuple):\n report.user_properties += (val,)\n else:\n report.user_properties.append(val)\n\n\ndef pytest_terminal_summary(terminalreporter):\n if not terminalreporter.config.getoption(PRINT_STATISTICS_OPTION):\n return\n terminalreporter.section(\"Hypothesis Statistics\")\n\n if LooseVersion(pytest.__version__) < \"3.5\": # pragma: no cover\n if not gathered_statistics:\n terminalreporter.write_line(\n \"Reporting Hypothesis statistics with pytest-xdist enabled \"\n \"requires pytest >= 3.5\"\n )\n for lines in gathered_statistics.values():\n for li in lines:\n terminalreporter.write_line(li)\n return\n\n # terminalreporter.stats is a dict, where the empty string appears to\n # always be the key for a list of _pytest.reports.TestReport objects\n # (where we stored the statistics data in pytest_runtest_makereport above)\n for test_report in terminalreporter.stats.get(\"\", []):\n for name, lines in test_report.user_properties:\n if name == \"hypothesis-stats\" and test_report.when == \"teardown\":\n for li in lines:\n terminalreporter.write_line(li)\n\n\ndef pytest_collection_modifyitems(items):\n for item in items:\n if not isinstance(item, pytest.Function):\n continue\n if is_hypothesis_test(item.obj):\n item.add_marker(\"hypothesis\")\n if getattr(item.obj, \"is_hypothesis_strategy_function\", False):\n\n def note_strategy_is_not_test(*args, **kwargs):\n note_deprecation(\n \"%s is a function that returns a Hypothesis strategy, \"\n \"but pytest has collected it as a test function. 
This \"\n \"is useless as the function body will never be executed.\"\n \"To define a test function, use @given instead of \"\n \"@composite.\" % (item.nodeid,),\n since=\"2018-11-02\",\n )\n\n item.obj = note_strategy_is_not_test\n\n\ndef load():\n pass\n", "path": "hypothesis-python/src/hypothesis/extra/pytestplugin.py"}], "after_files": [{"content": "# coding=utf-8\n#\n# This file is part of Hypothesis, which may be found at\n# https://github.com/HypothesisWorks/hypothesis-python\n#\n# Most of this work is copyright (C) 2013-2018 David R. MacIver\n# ([email protected]), but it contains contributions by others. See\n# CONTRIBUTING.rst for a full list of people who may hold copyright, and\n# consult the git log if you need to determine who owns an individual\n# contribution.\n#\n# This Source Code Form is subject to the terms of the Mozilla Public License,\n# v. 2.0. If a copy of the MPL was not distributed with this file, You can\n# obtain one at http://mozilla.org/MPL/2.0/.\n#\n# END HEADER\n\nfrom __future__ import absolute_import, division, print_function\n\nfrom distutils.version import LooseVersion\n\nimport pytest\n\nfrom hypothesis import Verbosity, core, settings\nfrom hypothesis._settings import note_deprecation\nfrom hypothesis.internal.compat import OrderedDict, text_type\nfrom hypothesis.internal.detection import is_hypothesis_test\nfrom hypothesis.reporting import default as default_reporter, with_reporter\nfrom hypothesis.statistics import collector\n\nLOAD_PROFILE_OPTION = \"--hypothesis-profile\"\nVERBOSITY_OPTION = \"--hypothesis-verbosity\"\nPRINT_STATISTICS_OPTION = \"--hypothesis-show-statistics\"\nSEED_OPTION = \"--hypothesis-seed\"\n\n\nclass StoringReporter(object):\n def __init__(self, config):\n self.config = config\n self.results = []\n\n def __call__(self, msg):\n if self.config.getoption(\"capture\", \"fd\") == \"no\":\n default_reporter(msg)\n if not isinstance(msg, text_type):\n msg = repr(msg)\n self.results.append(msg)\n\n\ndef 
pytest_addoption(parser):\n group = parser.getgroup(\"hypothesis\", \"Hypothesis\")\n group.addoption(\n LOAD_PROFILE_OPTION,\n action=\"store\",\n help=\"Load in a registered hypothesis.settings profile\",\n )\n group.addoption(\n VERBOSITY_OPTION,\n action=\"store\",\n choices=[opt.name for opt in Verbosity],\n help=\"Override profile with verbosity setting specified\",\n )\n group.addoption(\n PRINT_STATISTICS_OPTION,\n action=\"store_true\",\n help=\"Configure when statistics are printed\",\n default=False,\n )\n group.addoption(\n SEED_OPTION, action=\"store\", help=\"Set a seed to use for all Hypothesis tests\"\n )\n\n\ndef pytest_report_header(config):\n profile = config.getoption(LOAD_PROFILE_OPTION)\n if not profile:\n profile = settings._current_profile\n settings_str = settings.get_profile(profile).show_changed()\n if settings_str != \"\":\n settings_str = \" -> %s\" % (settings_str)\n return \"hypothesis profile %r%s\" % (profile, settings_str)\n\n\ndef pytest_configure(config):\n core.running_under_pytest = True\n profile = config.getoption(LOAD_PROFILE_OPTION)\n if profile:\n settings.load_profile(profile)\n verbosity_name = config.getoption(VERBOSITY_OPTION)\n if verbosity_name:\n verbosity_value = Verbosity[verbosity_name]\n profile_name = \"%s-with-%s-verbosity\" % (\n settings._current_profile,\n verbosity_name,\n )\n # register_profile creates a new profile, exactly like the current one,\n # with the extra values given (in this case 'verbosity')\n settings.register_profile(profile_name, verbosity=verbosity_value)\n settings.load_profile(profile_name)\n seed = config.getoption(SEED_OPTION)\n if seed is not None:\n try:\n seed = int(seed)\n except ValueError:\n pass\n core.global_force_seed = seed\n config.addinivalue_line(\"markers\", \"hypothesis: Tests which use hypothesis.\")\n\n\ngathered_statistics = OrderedDict() # type: dict\n\n\n@pytest.hookimpl(hookwrapper=True)\ndef pytest_runtest_call(item):\n if not (hasattr(item, \"obj\") and 
is_hypothesis_test(item.obj)):\n yield\n else:\n store = StoringReporter(item.config)\n\n def note_statistics(stats):\n lines = [item.nodeid + \":\", \"\"] + stats.get_description() + [\"\"]\n gathered_statistics[item.nodeid] = lines\n item.hypothesis_statistics = lines\n\n with collector.with_value(note_statistics):\n with with_reporter(store):\n yield\n if store.results:\n item.hypothesis_report_information = list(store.results)\n\n\n@pytest.hookimpl(hookwrapper=True)\ndef pytest_runtest_makereport(item, call):\n report = (yield).get_result()\n if hasattr(item, \"hypothesis_report_information\"):\n report.sections.append(\n (\"Hypothesis\", \"\\n\".join(item.hypothesis_report_information))\n )\n if hasattr(item, \"hypothesis_statistics\") and report.when == \"teardown\":\n # Running on pytest < 3.5 where user_properties doesn't exist, fall\n # back on the global gathered_statistics (which breaks under xdist)\n if hasattr(report, \"user_properties\"): # pragma: no branch\n val = (\"hypothesis-stats\", item.hypothesis_statistics)\n # Workaround for https://github.com/pytest-dev/pytest/issues/4034\n if isinstance(report.user_properties, tuple):\n report.user_properties += (val,)\n else:\n report.user_properties.append(val)\n\n\ndef pytest_terminal_summary(terminalreporter):\n if not terminalreporter.config.getoption(PRINT_STATISTICS_OPTION):\n return\n terminalreporter.section(\"Hypothesis Statistics\")\n\n if LooseVersion(pytest.__version__) < \"3.5\": # pragma: no cover\n if not gathered_statistics:\n terminalreporter.write_line(\n \"Reporting Hypothesis statistics with pytest-xdist enabled \"\n \"requires pytest >= 3.5\"\n )\n for lines in gathered_statistics.values():\n for li in lines:\n terminalreporter.write_line(li)\n return\n\n # terminalreporter.stats is a dict, where the empty string appears to\n # always be the key for a list of _pytest.reports.TestReport objects\n # (where we stored the statistics data in pytest_runtest_makereport above)\n for 
test_report in terminalreporter.stats.get(\"\", []):\n for name, lines in test_report.user_properties:\n if name == \"hypothesis-stats\" and test_report.when == \"teardown\":\n for li in lines:\n terminalreporter.write_line(li)\n\n\ndef pytest_collection_modifyitems(items):\n for item in items:\n if not isinstance(item, pytest.Function):\n continue\n if is_hypothesis_test(item.obj):\n item.add_marker(\"hypothesis\")\n if getattr(item.obj, \"is_hypothesis_strategy_function\", False):\n\n def note_strategy_is_not_test(*args, **kwargs):\n note_deprecation(\n \"%s is a function that returns a Hypothesis strategy, \"\n \"but pytest has collected it as a test function. This \"\n \"is useless as the function body will never be executed.\"\n \"To define a test function, use @given instead of \"\n \"@composite.\" % (item.nodeid,),\n since=\"2018-11-02\",\n )\n\n item.obj = note_strategy_is_not_test\n\n\ndef load():\n pass\n", "path": "hypothesis-python/src/hypothesis/extra/pytestplugin.py"}]}

| 2,458 | 222 |

gh_patches_debug_21294

|

rasdani/github-patches

|

git_diff

|

rucio__rucio-952

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

setup_clients.py classifiers needs to be a list, not tuples

Motivation

----------

Classifiers were changed to tuple, which does not work, needs to be a list.

--- END ISSUE ---
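
The tuple-versus-list point in the issue can be sketched as follows. This is a minimal illustration only: `as_classifier_list` and `setup_kwargs` are hypothetical names that belong to neither Rucio nor setuptools, and the classifier values are a shortened subset.

```python
def as_classifier_list(classifiers):
    """Return trove classifiers as a list of strings (hypothetical helper)."""
    if isinstance(classifiers, str):
        # list() on a bare string would split it into characters, so wrap it.
        return [classifiers]
    return list(classifiers)


# Shortened, illustrative subset of the classifiers passed to setup().
CLASSIFIERS = (
    'Development Status :: 5 - Production/Stable',
    'Programming Language :: Python :: 3.6',
)

# Keyword arguments as they might be handed to setup(); the value is a list.
setup_kwargs = {'classifiers': as_classifier_list(CLASSIFIERS)}
```

Writing the literal with square brackets in the first place avoids the conversion step entirely.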

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setup_rucio_client.py`

Content:

```

1 # Copyright 2014-2018 CERN for the benefit of the ATLAS collaboration.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14 #

15 # Authors:

16 # - Vincent Garonne <[email protected]>, 2014-2018

17 # - Martin Barisits <[email protected]>, 2017

18

19 import os

20 import re

21 import shutil

22 import subprocess

23 import sys

24

25 from distutils.command.sdist import sdist as _sdist # pylint:disable=no-name-in-module,import-error

26 from setuptools import setup

27

28 sys.path.insert(0, os.path.abspath('lib/'))

29

30 from rucio import version # noqa

31

32 if sys.version_info < (2, 5):

33 print('ERROR: Rucio requires at least Python 2.6 to run.')

34 sys.exit(1)

35 sys.path.insert(0, os.path.abspath('lib/'))

36

37

38 # Arguments to the setup script to build Basic/Lite distributions

39 COPY_ARGS = sys.argv[1:]

40 NAME = 'rucio-clients'

41 IS_RELEASE = False

42 PACKAGES = ['rucio', 'rucio.client', 'rucio.common',

43 'rucio.rse.protocols', 'rucio.rse', 'rucio.tests']

44 REQUIREMENTS_FILES = ['tools/pip-requires-client']

45 DESCRIPTION = "Rucio Client Lite Package"

46 DATA_FILES = [('etc/', ['etc/rse-accounts.cfg.template', 'etc/rucio.cfg.template', 'etc/rucio.cfg.atlas.client.template']),

47 ('tools/', ['tools/pip-requires-client', ]), ]

48

49 SCRIPTS = ['bin/rucio', 'bin/rucio-admin']

50 if os.path.exists('build/'):

51 shutil.rmtree('build/')

52 if os.path.exists('lib/rucio_clients.egg-info/'):

53 shutil.rmtree('lib/rucio_clients.egg-info/')

54 if os.path.exists('lib/rucio.egg-info/'):

55 shutil.rmtree('lib/rucio.egg-info/')

56

57 SSH_EXTRAS = ['paramiko==1.18.4']

58 KERBEROS_EXTRAS = ['kerberos>=1.2.5', 'pykerberos>=1.1.14', 'requests-kerberos>=0.11.0']

59 SWIFT_EXTRAS = ['python-swiftclient>=3.5.0', ]

60 EXTRAS_REQUIRES = dict(ssh=SSH_EXTRAS,

61 kerberos=KERBEROS_EXTRAS,

62 swift=SWIFT_EXTRAS)

63

64 if '--release' in COPY_ARGS:

65 IS_RELEASE = True

66 COPY_ARGS.remove('--release')

67

68

69 # If Sphinx is installed on the box running setup.py,

70 # enable setup.py to build the documentation, otherwise,

71 # just ignore it

72 cmdclass = {}

73

74 try:

75 from sphinx.setup_command import BuildDoc

76

77 class local_BuildDoc(BuildDoc):

78 '''

79 local_BuildDoc

80 '''

81 def run(self):

82 '''

83 run

84 '''

85 for builder in ['html']: # 'man','latex'

86 self.builder = builder

87 self.finalize_options()

88 BuildDoc.run(self)

89 cmdclass['build_sphinx'] = local_BuildDoc

90 except Exception:

91 pass

92

93

94 def get_reqs_from_file(requirements_file):

95 '''

96 get_reqs_from_file

97 '''

98 if os.path.exists(requirements_file):

99 return open(requirements_file, 'r').read().split('\n')

100 return []

101

102

103 def parse_requirements(requirements_files):

104 '''

105 parse_requirements

106 '''

107 requirements = []

108 for requirements_file in requirements_files:

109 for line in get_reqs_from_file(requirements_file):

110 if re.match(r'\s*-e\s+', line):

111 requirements.append(re.sub(r'\s*-e\s+.*#egg=(.*)$', r'\1', line))

112 elif re.match(r'\s*-f\s+', line):

113 pass

114 else:

115 requirements.append(line)

116 return requirements

117

118

119 def parse_dependency_links(requirements_files):

120 '''

121 parse_dependency_links

122 '''

123 dependency_links = []

124 for requirements_file in requirements_files:

125 for line in get_reqs_from_file(requirements_file):

126 if re.match(r'(\s*#)|(\s*$)', line):

127 continue

128 if re.match(r'\s*-[ef]\s+', line):

129 dependency_links.append(re.sub(r'\s*-[ef]\s+', '', line))

130 return dependency_links

131

132

133 def write_requirements():

134 '''

135 write_requirements

136 '''

137 venv = os.environ.get('VIRTUAL_ENV', None)

138 if venv is not None:

139 req_file = open("requirements.txt", "w")

140 output = subprocess.Popen(["pip", "freeze", "-l"], stdout=subprocess.PIPE)

141 requirements = output.communicate()[0].strip()

142 req_file.write(requirements)

143 req_file.close()

144

145

146 REQUIRES = parse_requirements(requirements_files=REQUIREMENTS_FILES)

147 DEPEND_LINKS = parse_dependency_links(requirements_files=REQUIREMENTS_FILES)

148

149

150 class CustomSdist(_sdist):

151 '''

152 CustomSdist

153 '''

154 user_options = [

155 ('packaging=', None, "Some option to indicate what should be packaged")

156 ] + _sdist.user_options

157

158 def __init__(self, *args, **kwargs):

159 '''

160 __init__

161 '''

162 _sdist.__init__(self, *args, **kwargs)

163 self.packaging = "default value for this option"

164

165 def get_file_list(self):

166 '''

167 get_file_list

168 '''

169 print("Chosen packaging option: " + NAME)

170 self.distribution.data_files = DATA_FILES

171 _sdist.get_file_list(self)

172

173

174 cmdclass['sdist'] = CustomSdist

175

176 setup(

177 name=NAME,

178 version=version.version_string(),

179 packages=PACKAGES,

180 package_dir={'': 'lib'},

181 data_files=DATA_FILES,

182 script_args=COPY_ARGS,

183 cmdclass=cmdclass,

184 include_package_data=True,

185 scripts=SCRIPTS,

186 # doc=cmdclass,

187 author="Rucio",

188 author_email="[email protected]",

189 description=DESCRIPTION,

190 license="Apache License, Version 2.0",

191 url="http://rucio.cern.ch/",

192 python_requires=">=2.6, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*",

193 classifiers=(

194 'Development Status :: 5 - Production/Stable',

195 'License :: OSI Approved :: Apache Software License',

196 'Intended Audience :: Information Technology',

197 'Intended Audience :: System Administrators',

198 'Operating System :: POSIX :: Linux',

199 'Natural Language :: English',

200 'Programming Language :: Python',

201 'Programming Language :: Python :: 2.6',

202 'Programming Language :: Python :: 2.7',

203 'Programming Language :: Python :: 3',

204 'Programming Language :: Python :: 3.4',

205 'Programming Language :: Python :: 3.5',

206 'Programming Language :: Python :: 3.6',

207 'Programming Language :: Python :: Implementation :: CPython',

208 'Programming Language :: Python :: Implementation :: PyPy',

209 'Environment :: No Input/Output (Daemon)'

210 ),

211 install_requires=REQUIRES,

212 extras_require=EXTRAS_REQUIRES,

213 dependency_links=DEPEND_LINKS,

214 )

215

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/setup_rucio_client.py b/setup_rucio_client.py

--- a/setup_rucio_client.py

+++ b/setup_rucio_client.py

@@ -190,7 +190,7 @@

license="Apache License, Version 2.0",

url="http://rucio.cern.ch/",

python_requires=">=2.6, !=3.0.*, !=3.1.*, !=3.2.*, !=3.3.*",

- classifiers=(

+ classifiers=[

'Development Status :: 5 - Production/Stable',

'License :: OSI Approved :: Apache Software License',

'Intended Audience :: Information Technology',

@@ -207,7 +207,7 @@

'Programming Language :: Python :: Implementation :: CPython',

'Programming Language :: Python :: Implementation :: PyPy',

'Environment :: No Input/Output (Daemon)'

- ),

+ ],

install_requires=REQUIRES,

extras_require=EXTRAS_REQUIRES,

dependency_links=DEPEND_LINKS,

|