| problem_id (string, 18–22 chars) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, 13–58 chars) | prompt (string, 1.1k–25.4k chars) | golden_diff (string, 145–5.13k chars) | verification_info (string, 582–39.1k chars) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_9723

|

rasdani/github-patches

|

git_diff

|

dask__dask-3157

|

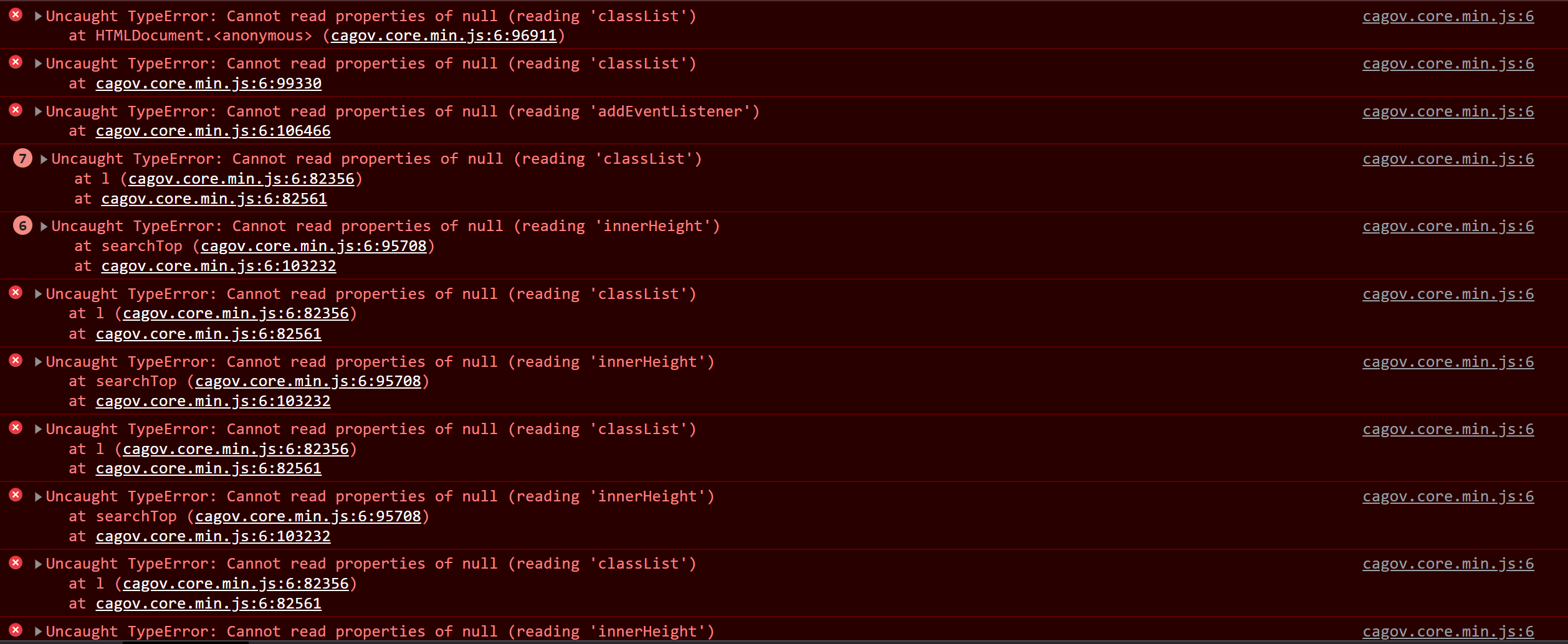

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

LZ4_compress and LZ4_uncompress removed

Since commit python-lz4/python-lz4@d62fdc50c0e183d7260961f09d4e0701fbdf0c5c LZ4_compress and LZ4_decompress have been removed (they've been deprecated for a while). With the version of python-lz4 released on pypi, it means we can't use lz4 compression with dask, and worse importing dask.bytes.compression errors out.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `dask/bytes/compression.py`

Content:

```
1 from __future__ import print_function, division, absolute_import
2
3 import bz2
4 import sys
5 import zlib
6
7 from toolz import identity
8
9 from ..compatibility import gzip_compress, gzip_decompress, GzipFile
10 from ..utils import ignoring
11
12
13 def noop_file(file, **kwargs):
14     return file
15
16
17 compress = {'gzip': gzip_compress,
18             'zlib': zlib.compress,
19             'bz2': bz2.compress,
20             None: identity}
21 decompress = {'gzip': gzip_decompress,
22               'zlib': zlib.decompress,
23               'bz2': bz2.decompress,
24               None: identity}
25 files = {'gzip': lambda f, **kwargs: GzipFile(fileobj=f, **kwargs),
26          None: noop_file}
27 seekable_files = {None: noop_file}
28
29
30 with ignoring(ImportError):
31     import snappy
32     compress['snappy'] = snappy.compress
33     decompress['snappy'] = snappy.decompress
34
35
36 with ignoring(ImportError):
37     import lz4
38     compress['lz4'] = lz4.LZ4_compress
39     decompress['lz4'] = lz4.LZ4_uncompress
40
41 with ignoring(ImportError):
42     from ..compatibility import LZMAFile, lzma_compress, lzma_decompress
43     compress['xz'] = lzma_compress
44     decompress['xz'] = lzma_decompress
45     files['xz'] = LZMAFile
46
47 # Seekable xz files actually tend to scan whole file - see `get_xz_blocks`
48 # with ignoring(ImportError):
49 #     import lzma
50 #     seekable_files['xz'] = lzma.LZMAFile
51 #
52 # with ignoring(ImportError):
53 #     import lzmaffi
54 #     seekable_files['xz'] = lzmaffi.LZMAFile
55
56
57 if sys.version_info[0] >= 3:
58     import bz2
59     files['bz2'] = bz2.BZ2File
60
61
62 def get_xz_blocks(fp):
63     from lzmaffi import (STREAM_HEADER_SIZE, decode_stream_footer,
64                          decode_index, LZMAError)
65     fp.seek(0, 2)
66
67     def _peek(f, size):
68         data = f.read(size)
69         f.seek(-size, 1)
70         return data
71
72     if fp.tell() < 2 * STREAM_HEADER_SIZE:
73         raise LZMAError("file too small")
74
75     # read stream paddings (4 bytes each)
76     fp.seek(-4, 1)
77     padding = 0
78     while _peek(fp, 4) == b'\x00\x00\x00\x00':
79         fp.seek(-4, 1)
80         padding += 4
81
82     fp.seek(-STREAM_HEADER_SIZE + 4, 1)
83
84     stream_flags = decode_stream_footer(_peek(fp, STREAM_HEADER_SIZE))
85     fp.seek(-stream_flags.backward_size, 1)
86
87     index = decode_index(_peek(fp, stream_flags.backward_size), padding)
88     return {'offsets': [b.compressed_file_offset for i, b in index],
89             'lengths': [b.unpadded_size for i, b in index],
90             'check': stream_flags.check}
91
92
93 def xz_decompress(data, check):
94     from lzmaffi import decode_block_header_size, LZMADecompressor, FORMAT_BLOCK
95     hsize = decode_block_header_size(data[:1])
96     header = data[:hsize]
97     dc = LZMADecompressor(format=FORMAT_BLOCK, header=header,
98                           unpadded_size=len(data), check=check)
99     return dc.decompress(data[len(header):])
100
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/dask/bytes/compression.py b/dask/bytes/compression.py
--- a/dask/bytes/compression.py
+++ b/dask/bytes/compression.py
@@ -33,10 +33,17 @@
     decompress['snappy'] = snappy.decompress
 
 
-with ignoring(ImportError):
-    import lz4
-    compress['lz4'] = lz4.LZ4_compress
-    decompress['lz4'] = lz4.LZ4_uncompress
+try:
+    import lz4.block
+    compress['lz4'] = lz4.block.compress
+    compress['lz4'] = lz4.block.decompress
+except ImportError:
+    try:
+        import lz4
+        compress['lz4'] = lz4.LZ4_compress
+        compress['lz4'] = lz4.LZ4_uncompress
+    except ImportError:
+        pass
 
 with ignoring(ImportError):
     from ..compatibility import LZMAFile, lzma_compress, lzma_decompress

|

{"golden_diff": "diff --git a/dask/bytes/compression.py b/dask/bytes/compression.py\n--- a/dask/bytes/compression.py\n+++ b/dask/bytes/compression.py\n@@ -33,10 +33,17 @@\n decompress['snappy'] = snappy.decompress\n \n \n-with ignoring(ImportError):\n- import lz4\n- compress['lz4'] = lz4.LZ4_compress\n- decompress['lz4'] = lz4.LZ4_uncompress\n+try:\n+ import lz4.block\n+ compress['lz4'] = lz4.block.compress\n+ compress['lz4'] = lz4.block.decompress\n+except ImportError:\n+ try:\n+ import lz4\n+ compress['lz4'] = lz4.LZ4_compress\n+ compress['lz4'] = lz4.LZ4_uncompress\n+ except ImportError:\n+ pass\n \n with ignoring(ImportError):\n from ..compatibility import LZMAFile, lzma_compress, lzma_decompress\n", "issue": "LZ4_compress and LZ4_uncompress removed\nSince commit python-lz4/python-lz4@d62fdc50c0e183d7260961f09d4e0701fbdf0c5c LZ4_compress and LZ4_decompress have been removed (they've been deprecated for a while). With the version of python-lz4 released on pypi, it means we can't use lz4 compression with dask, and worse importing dask.bytes.compression errors out.\r\n\n", "before_files": [{"content": "from __future__ import print_function, division, absolute_import\n\nimport bz2\nimport sys\nimport zlib\n\nfrom toolz import identity\n\nfrom ..compatibility import gzip_compress, gzip_decompress, GzipFile\nfrom ..utils import ignoring\n\n\ndef noop_file(file, **kwargs):\n return file\n\n\ncompress = {'gzip': gzip_compress,\n 'zlib': zlib.compress,\n 'bz2': bz2.compress,\n None: identity}\ndecompress = {'gzip': gzip_decompress,\n 'zlib': zlib.decompress,\n 'bz2': bz2.decompress,\n None: identity}\nfiles = {'gzip': lambda f, **kwargs: GzipFile(fileobj=f, **kwargs),\n None: noop_file}\nseekable_files = {None: noop_file}\n\n\nwith ignoring(ImportError):\n import snappy\n compress['snappy'] = snappy.compress\n decompress['snappy'] = snappy.decompress\n\n\nwith ignoring(ImportError):\n import lz4\n compress['lz4'] = lz4.LZ4_compress\n decompress['lz4'] = 
lz4.LZ4_uncompress\n\nwith ignoring(ImportError):\n from ..compatibility import LZMAFile, lzma_compress, lzma_decompress\n compress['xz'] = lzma_compress\n decompress['xz'] = lzma_decompress\n files['xz'] = LZMAFile\n\n# Seekable xz files actually tend to scan whole file - see `get_xz_blocks`\n# with ignoring(ImportError):\n# import lzma\n# seekable_files['xz'] = lzma.LZMAFile\n#\n# with ignoring(ImportError):\n# import lzmaffi\n# seekable_files['xz'] = lzmaffi.LZMAFile\n\n\nif sys.version_info[0] >= 3:\n import bz2\n files['bz2'] = bz2.BZ2File\n\n\ndef get_xz_blocks(fp):\n from lzmaffi import (STREAM_HEADER_SIZE, decode_stream_footer,\n decode_index, LZMAError)\n fp.seek(0, 2)\n\n def _peek(f, size):\n data = f.read(size)\n f.seek(-size, 1)\n return data\n\n if fp.tell() < 2 * STREAM_HEADER_SIZE:\n raise LZMAError(\"file too small\")\n\n # read stream paddings (4 bytes each)\n fp.seek(-4, 1)\n padding = 0\n while _peek(fp, 4) == b'\\x00\\x00\\x00\\x00':\n fp.seek(-4, 1)\n padding += 4\n\n fp.seek(-STREAM_HEADER_SIZE + 4, 1)\n\n stream_flags = decode_stream_footer(_peek(fp, STREAM_HEADER_SIZE))\n fp.seek(-stream_flags.backward_size, 1)\n\n index = decode_index(_peek(fp, stream_flags.backward_size), padding)\n return {'offsets': [b.compressed_file_offset for i, b in index],\n 'lengths': [b.unpadded_size for i, b in index],\n 'check': stream_flags.check}\n\n\ndef xz_decompress(data, check):\n from lzmaffi import decode_block_header_size, LZMADecompressor, FORMAT_BLOCK\n hsize = decode_block_header_size(data[:1])\n header = data[:hsize]\n dc = LZMADecompressor(format=FORMAT_BLOCK, header=header,\n unpadded_size=len(data), check=check)\n return dc.decompress(data[len(header):])\n", "path": "dask/bytes/compression.py"}], "after_files": [{"content": "from __future__ import print_function, division, absolute_import\n\nimport bz2\nimport sys\nimport zlib\n\nfrom toolz import identity\n\nfrom ..compatibility import gzip_compress, gzip_decompress, GzipFile\nfrom ..utils 
import ignoring\n\n\ndef noop_file(file, **kwargs):\n return file\n\n\ncompress = {'gzip': gzip_compress,\n 'zlib': zlib.compress,\n 'bz2': bz2.compress,\n None: identity}\ndecompress = {'gzip': gzip_decompress,\n 'zlib': zlib.decompress,\n 'bz2': bz2.decompress,\n None: identity}\nfiles = {'gzip': lambda f, **kwargs: GzipFile(fileobj=f, **kwargs),\n None: noop_file}\nseekable_files = {None: noop_file}\n\n\nwith ignoring(ImportError):\n import snappy\n compress['snappy'] = snappy.compress\n decompress['snappy'] = snappy.decompress\n\n\ntry:\n import lz4.block\n compress['lz4'] = lz4.block.compress\n compress['lz4'] = lz4.block.decompress\nexcept ImportError:\n try:\n import lz4\n compress['lz4'] = lz4.LZ4_compress\n compress['lz4'] = lz4.LZ4_uncompress\n except ImportError:\n pass\n\nwith ignoring(ImportError):\n from ..compatibility import LZMAFile, lzma_compress, lzma_decompress\n compress['xz'] = lzma_compress\n decompress['xz'] = lzma_decompress\n files['xz'] = LZMAFile\n\n# Seekable xz files actually tend to scan whole file - see `get_xz_blocks`\n# with ignoring(ImportError):\n# import lzma\n# seekable_files['xz'] = lzma.LZMAFile\n#\n# with ignoring(ImportError):\n# import lzmaffi\n# seekable_files['xz'] = lzmaffi.LZMAFile\n\n\nif sys.version_info[0] >= 3:\n import bz2\n files['bz2'] = bz2.BZ2File\n\n\ndef get_xz_blocks(fp):\n from lzmaffi import (STREAM_HEADER_SIZE, decode_stream_footer,\n decode_index, LZMAError)\n fp.seek(0, 2)\n\n def _peek(f, size):\n data = f.read(size)\n f.seek(-size, 1)\n return data\n\n if fp.tell() < 2 * STREAM_HEADER_SIZE:\n raise LZMAError(\"file too small\")\n\n # read stream paddings (4 bytes each)\n fp.seek(-4, 1)\n padding = 0\n while _peek(fp, 4) == b'\\x00\\x00\\x00\\x00':\n fp.seek(-4, 1)\n padding += 4\n\n fp.seek(-STREAM_HEADER_SIZE + 4, 1)\n\n stream_flags = decode_stream_footer(_peek(fp, STREAM_HEADER_SIZE))\n fp.seek(-stream_flags.backward_size, 1)\n\n index = decode_index(_peek(fp, stream_flags.backward_size), 
padding)\n return {'offsets': [b.compressed_file_offset for i, b in index],\n 'lengths': [b.unpadded_size for i, b in index],\n 'check': stream_flags.check}\n\n\ndef xz_decompress(data, check):\n from lzmaffi import decode_block_header_size, LZMADecompressor, FORMAT_BLOCK\n hsize = decode_block_header_size(data[:1])\n header = data[:hsize]\n dc = LZMADecompressor(format=FORMAT_BLOCK, header=header,\n unpadded_size=len(data), check=check)\n return dc.decompress(data[len(header):])\n", "path": "dask/bytes/compression.py"}]}

| 1,347 | 228 |
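The golden diff above assigns `lz4.block.decompress` (and, in its fallback branch, `lz4.LZ4_uncompress`) to `compress['lz4']`, so the compressor is overwritten and `decompress['lz4']` is never registered. Below is a minimal sketch of the same fallback with both tables filled. It assumes newer python-lz4 releases provide `lz4.block.compress`/`lz4.block.decompress` and older ones the top-level `LZ4_compress`/`LZ4_uncompress`; `register_lz4` is a hypothetical helper, not part of dask.

```python
def register_lz4(compress, decompress):
    """Register lz4 in both codec tables, or in neither (hypothetical helper)."""
    try:
        # Newer python-lz4: block-format functions live in the lz4.block submodule.
        import lz4.block
        pair = (lz4.block.compress, lz4.block.decompress)
    except ImportError:
        try:
            # Older python-lz4: deprecated top-level functions.
            import lz4
            pair = (lz4.LZ4_compress, lz4.LZ4_uncompress)
        except (ImportError, AttributeError):
            # lz4 is not installed, or this release offers neither API.
            return
    # Assign only after both functions were found, so the tables stay in sync.
    compress['lz4'], decompress['lz4'] = pair


compress, decompress = {}, {}
register_lz4(compress, decompress)
assert ('lz4' in compress) == ('lz4' in decompress)
```

Looking up both attributes before assigning either one keeps the tables consistent: lz4 is registered for both compression and decompression, or for neither, and a missing or incompatible lz4 no longer breaks the import.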

gh_patches_debug_12311

|

rasdani/github-patches

|

git_diff

|

pyodide__pyodide-4269

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[Discussion] Drop support for Node versions that passed endoflife

## Proposal

Drop support for Node < 18 from the next Pyodide major release (0.25.0)

## Background

The endoflife of [Node.js 14 and 16 passed a few days ago](https://nodejs.dev/en/about/releases/). We just finished a major release, so I think this is a good time to talk about Node version support.

Here are the things that are currently associated with Node.js versions in Pyodide.

- The documentation mentions [how to run Pyodide in Node.js < 0.18](https://pyodide.org/en/stable/usage/index.html#node-js-versions-0-17)

- We have some compat codes for older Node versions
  - https://github.com/pyodide/pyodide/pull/4100#discussion_r1308449699
  - [compat.ts](https://github.com/pyodide/pyodide/blob/e2c2884e7a9afd90b23849220ee578bd2d523458/src/js/compat.ts#L40)

- JSPI requires Node >= 20

- The Docker image used for Pyodide CI uses Node 20

### Benefits from dropping Node < 18 support

- No more `node-fetch`.

- No more `--experimental-wasm-bigint` flags

- Better `MessageChannel` support (https://github.com/pyodide/pyodide/issues/4006)

- Some useful methods like [`AbortController`](https://developer.mozilla.org/en-US/docs/Web/API/AbortController)

### Drawbacks

- People who were using Node.js < 18 with Pyodide won't like it.

## Migration plan

- Mention minimal Node.js version support in docs

- Remove compat codes for old Node.js versions
  - pytest-pyodide?

WDYT?

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pyodide-build/pyodide_build/create_xbuildenv.py`

Content:

```
1 import shutil
2 import subprocess
3 from pathlib import Path
4
5 from .build_env import (
6     get_build_flag,
7     get_pyodide_root,
8     get_unisolated_packages,
9 )
10 from .common import exit_with_stdio
11 from .logger import logger
12 from .recipe import load_all_recipes
13
14
15 def _copy_xbuild_files(
16     pyodide_root: Path, xbuildenv_path: Path, skip_missing_files: bool = False
17 ) -> None:
18     site_packages = Path(get_build_flag("HOSTSITEPACKAGES"))
19     # Store package cross-build-files into site_packages_extras in the same tree
20     # structure as they would appear in the real package.
21     # In install_xbuildenv, we will use:
22     #   pip install -t $HOSTSITEPACKAGES -r requirements.txt
23     #   cp site-packages-extras $HOSTSITEPACKAGES
24     site_packages_extras = xbuildenv_path / "site-packages-extras"
25     recipes = load_all_recipes(pyodide_root / "packages")
26     for recipe in recipes.values():
27         xbuild_files = recipe.build.cross_build_files
28         for path in xbuild_files:
29             source = site_packages / path
30             target = site_packages_extras / path
31             target.parent.mkdir(parents=True, exist_ok=True)
32
33             if not source.exists():
34                 if skip_missing_files:
35                     logger.warning(f"Cross-build file '{path}' not found")
36                     continue
37
38                 raise FileNotFoundError(f"Cross-build file '{path}' not found")
39
40             shutil.copy(source, target)
41
42
43 def _copy_wasm_libs(
44     pyodide_root: Path, xbuildenv_root: Path, skip_missing_files: bool = False
45 ) -> None:
46     def get_relative_path(pyodide_root: Path, flag: str) -> Path:
47         return Path(get_build_flag(flag)).relative_to(pyodide_root)
48
49     pythoninclude = get_relative_path(pyodide_root, "PYTHONINCLUDE")
50     wasm_lib_dir = get_relative_path(pyodide_root, "WASM_LIBRARY_DIR")
51     sysconfig_dir = get_relative_path(pyodide_root, "SYSCONFIGDATA_DIR")
52     to_copy: list[Path] = [
53         pythoninclude,
54         sysconfig_dir,
55         Path("Makefile.envs"),
56         wasm_lib_dir / "cmake",
57         Path("dist/pyodide-lock.json"),
58         Path("dist/python"),
59         Path("dist/python_stdlib.zip"),
60         Path("tools/constraints.txt"),
61     ]
62     to_copy.extend(
63         x.relative_to(pyodide_root) for x in (pyodide_root / "dist").glob("pyodide.*")
64     )
65     # Some ad-hoc stuff here to moderate size. We'd like to include all of
66     # wasm_lib_dir but there's 180mb of it. Better to leave out all the video
67     # codecs and stuff.
68     for pkg in ["ssl", "libcrypto", "zlib", "xml", "mpfr", "lapack", "blas", "f2c"]:
69         to_copy.extend(
70             x.relative_to(pyodide_root)
71             for x in (pyodide_root / wasm_lib_dir / "include").glob(f"**/*{pkg}*")
72             if "boost" not in str(x)
73         )
74         to_copy.extend(
75             x.relative_to(pyodide_root)
76             for x in (pyodide_root / wasm_lib_dir / "lib").glob(f"**/*{pkg}*")
77         )
78
79     for path in to_copy:
80         if not (pyodide_root / path).exists():
81             if skip_missing_files:
82                 logger.warning(f"Cross-build file '{path}' not found")
83                 continue
84
85             raise FileNotFoundError(f"Cross-build file '{path}' not found")
86
87         if (pyodide_root / path).is_dir():
88             shutil.copytree(
89                 pyodide_root / path, xbuildenv_root / path, dirs_exist_ok=True
90             )
91         else:
92             (xbuildenv_root / path).parent.mkdir(exist_ok=True, parents=True)
93             shutil.copy(pyodide_root / path, xbuildenv_root / path)
94
95
96 def create(
97     path: str | Path,
98     pyodide_root: Path | None = None,
99     *,
100     skip_missing_files: bool = False,
101 ) -> None:
102     if pyodide_root is None:
103         pyodide_root = get_pyodide_root()
104
105     xbuildenv_path = Path(path) / "xbuildenv"
106     xbuildenv_root = xbuildenv_path / "pyodide-root"
107
108     shutil.rmtree(xbuildenv_path, ignore_errors=True)
109     xbuildenv_path.mkdir(parents=True, exist_ok=True)
110     xbuildenv_root.mkdir()
111
112     _copy_xbuild_files(pyodide_root, xbuildenv_path, skip_missing_files)
113     _copy_wasm_libs(pyodide_root, xbuildenv_root, skip_missing_files)
114
115     (xbuildenv_root / "package.json").write_text("{}")
116     res = subprocess.run(
117         ["npm", "i", "node-fetch@2"],
118         cwd=xbuildenv_root,
119         capture_output=True,
120         encoding="utf8",
121     )
122     if res.returncode != 0:
123         logger.error("Failed to install node-fetch:")
124         exit_with_stdio(res)
125
126     res = subprocess.run(
127         ["pip", "freeze", "--path", get_build_flag("HOSTSITEPACKAGES")],
128         capture_output=True,
129         encoding="utf8",
130     )
131     if res.returncode != 0:
132         logger.error("Failed to run pip freeze:")
133         exit_with_stdio(res)
134
135     (xbuildenv_path / "requirements.txt").write_text(res.stdout)
136     (xbuildenv_root / "unisolated.txt").write_text("\n".join(get_unisolated_packages()))
137
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/pyodide-build/pyodide_build/create_xbuildenv.py b/pyodide-build/pyodide_build/create_xbuildenv.py
--- a/pyodide-build/pyodide_build/create_xbuildenv.py
+++ b/pyodide-build/pyodide_build/create_xbuildenv.py
@@ -113,16 +113,6 @@
     _copy_wasm_libs(pyodide_root, xbuildenv_root, skip_missing_files)
 
     (xbuildenv_root / "package.json").write_text("{}")
-    res = subprocess.run(
-        ["npm", "i", "node-fetch@2"],
-        cwd=xbuildenv_root,
-        capture_output=True,
-        encoding="utf8",
-    )
-    if res.returncode != 0:
-        logger.error("Failed to install node-fetch:")
-        exit_with_stdio(res)
-
     res = subprocess.run(
         ["pip", "freeze", "--path", get_build_flag("HOSTSITEPACKAGES")],
         capture_output=True,

|

{"golden_diff": "diff --git a/pyodide-build/pyodide_build/create_xbuildenv.py b/pyodide-build/pyodide_build/create_xbuildenv.py\n--- a/pyodide-build/pyodide_build/create_xbuildenv.py\n+++ b/pyodide-build/pyodide_build/create_xbuildenv.py\n@@ -113,16 +113,6 @@\n _copy_wasm_libs(pyodide_root, xbuildenv_root, skip_missing_files)\n \n (xbuildenv_root / \"package.json\").write_text(\"{}\")\n- res = subprocess.run(\n- [\"npm\", \"i\", \"node-fetch@2\"],\n- cwd=xbuildenv_root,\n- capture_output=True,\n- encoding=\"utf8\",\n- )\n- if res.returncode != 0:\n- logger.error(\"Failed to install node-fetch:\")\n- exit_with_stdio(res)\n-\n res = subprocess.run(\n [\"pip\", \"freeze\", \"--path\", get_build_flag(\"HOSTSITEPACKAGES\")],\n capture_output=True,\n", "issue": "[Discussion] Drop support for Node versions that passed endoflife\n## Proposal\r\n\r\nDrop support for Node < 18 from the next Pyodide major release (0.25.0)\r\n\r\n## Background\r\n\r\nThe endoflife of [Node.js 14 and 16 passed a few days ago](https://nodejs.dev/en/about/releases/). 
We just finished a major release, so I think this is a good time to talk about Node version support.\r\n\r\n\r\n\r\nHere are the things that are currently associated with Node.js versions in Pyodide.\r\n\r\n- The documentation mentions [how to run Pyodide in Node.js < 0.18](https://pyodide.org/en/stable/usage/index.html#node-js-versions-0-17)\r\n- We have some compat codes for older Node versions\r\n - https://github.com/pyodide/pyodide/pull/4100#discussion_r1308449699\r\n -[compat.ts](https://github.com/pyodide/pyodide/blob/e2c2884e7a9afd90b23849220ee578bd2d523458/src/js/compat.ts#L40)\r\n- JSPI requires Node >= 20\r\n- The Docker image used for Pyodide CI uses Node 20\r\n\r\n### Benefits from dropping Node < 18 support\r\n\r\n- No more `node-fetch`.\r\n- No more `--experimental-wasm-bigint` flags\r\n- Better `MessageChannel` support (https://github.com/pyodide/pyodide/issues/4006)\r\n- Some useful methods like [`AbortController`](https://developer.mozilla.org/en-US/docs/Web/API/AbortController)\r\n\r\n### Drawbacks\r\n\r\n- People who were using Node.js < 18 with Pyodide won't like it.\r\n\r\n## Migration plan\r\n\r\n- Mention minimal Node.js version support in docs\r\n- Remove compat codes for old Node.js versions\r\n - pytest-pyodide?\r\n\r\nWDYT?\r\n\r\n\n", "before_files": [{"content": "import shutil\nimport subprocess\nfrom pathlib import Path\n\nfrom .build_env import (\n get_build_flag,\n get_pyodide_root,\n get_unisolated_packages,\n)\nfrom .common import exit_with_stdio\nfrom .logger import logger\nfrom .recipe import load_all_recipes\n\n\ndef _copy_xbuild_files(\n pyodide_root: Path, xbuildenv_path: Path, skip_missing_files: bool = False\n) -> None:\n site_packages = Path(get_build_flag(\"HOSTSITEPACKAGES\"))\n # Store package cross-build-files into site_packages_extras in the same tree\n # structure as they would appear in the real package.\n # In install_xbuildenv, we will use:\n # pip install -t $HOSTSITEPACKAGES -r requirements.txt\n # cp 
site-packages-extras $HOSTSITEPACKAGES\n site_packages_extras = xbuildenv_path / \"site-packages-extras\"\n recipes = load_all_recipes(pyodide_root / \"packages\")\n for recipe in recipes.values():\n xbuild_files = recipe.build.cross_build_files\n for path in xbuild_files:\n source = site_packages / path\n target = site_packages_extras / path\n target.parent.mkdir(parents=True, exist_ok=True)\n\n if not source.exists():\n if skip_missing_files:\n logger.warning(f\"Cross-build file '{path}' not found\")\n continue\n\n raise FileNotFoundError(f\"Cross-build file '{path}' not found\")\n\n shutil.copy(source, target)\n\n\ndef _copy_wasm_libs(\n pyodide_root: Path, xbuildenv_root: Path, skip_missing_files: bool = False\n) -> None:\n def get_relative_path(pyodide_root: Path, flag: str) -> Path:\n return Path(get_build_flag(flag)).relative_to(pyodide_root)\n\n pythoninclude = get_relative_path(pyodide_root, \"PYTHONINCLUDE\")\n wasm_lib_dir = get_relative_path(pyodide_root, \"WASM_LIBRARY_DIR\")\n sysconfig_dir = get_relative_path(pyodide_root, \"SYSCONFIGDATA_DIR\")\n to_copy: list[Path] = [\n pythoninclude,\n sysconfig_dir,\n Path(\"Makefile.envs\"),\n wasm_lib_dir / \"cmake\",\n Path(\"dist/pyodide-lock.json\"),\n Path(\"dist/python\"),\n Path(\"dist/python_stdlib.zip\"),\n Path(\"tools/constraints.txt\"),\n ]\n to_copy.extend(\n x.relative_to(pyodide_root) for x in (pyodide_root / \"dist\").glob(\"pyodide.*\")\n )\n # Some ad-hoc stuff here to moderate size. We'd like to include all of\n # wasm_lib_dir but there's 180mb of it. 
Better to leave out all the video\n # codecs and stuff.\n for pkg in [\"ssl\", \"libcrypto\", \"zlib\", \"xml\", \"mpfr\", \"lapack\", \"blas\", \"f2c\"]:\n to_copy.extend(\n x.relative_to(pyodide_root)\n for x in (pyodide_root / wasm_lib_dir / \"include\").glob(f\"**/*{pkg}*\")\n if \"boost\" not in str(x)\n )\n to_copy.extend(\n x.relative_to(pyodide_root)\n for x in (pyodide_root / wasm_lib_dir / \"lib\").glob(f\"**/*{pkg}*\")\n )\n\n for path in to_copy:\n if not (pyodide_root / path).exists():\n if skip_missing_files:\n logger.warning(f\"Cross-build file '{path}' not found\")\n continue\n\n raise FileNotFoundError(f\"Cross-build file '{path}' not found\")\n\n if (pyodide_root / path).is_dir():\n shutil.copytree(\n pyodide_root / path, xbuildenv_root / path, dirs_exist_ok=True\n )\n else:\n (xbuildenv_root / path).parent.mkdir(exist_ok=True, parents=True)\n shutil.copy(pyodide_root / path, xbuildenv_root / path)\n\n\ndef create(\n path: str | Path,\n pyodide_root: Path | None = None,\n *,\n skip_missing_files: bool = False,\n) -> None:\n if pyodide_root is None:\n pyodide_root = get_pyodide_root()\n\n xbuildenv_path = Path(path) / \"xbuildenv\"\n xbuildenv_root = xbuildenv_path / \"pyodide-root\"\n\n shutil.rmtree(xbuildenv_path, ignore_errors=True)\n xbuildenv_path.mkdir(parents=True, exist_ok=True)\n xbuildenv_root.mkdir()\n\n _copy_xbuild_files(pyodide_root, xbuildenv_path, skip_missing_files)\n _copy_wasm_libs(pyodide_root, xbuildenv_root, skip_missing_files)\n\n (xbuildenv_root / \"package.json\").write_text(\"{}\")\n res = subprocess.run(\n [\"npm\", \"i\", \"node-fetch@2\"],\n cwd=xbuildenv_root,\n capture_output=True,\n encoding=\"utf8\",\n )\n if res.returncode != 0:\n logger.error(\"Failed to install node-fetch:\")\n exit_with_stdio(res)\n\n res = subprocess.run(\n [\"pip\", \"freeze\", \"--path\", get_build_flag(\"HOSTSITEPACKAGES\")],\n capture_output=True,\n encoding=\"utf8\",\n )\n if res.returncode != 0:\n logger.error(\"Failed to run pip 
freeze:\")\n exit_with_stdio(res)\n\n (xbuildenv_path / \"requirements.txt\").write_text(res.stdout)\n (xbuildenv_root / \"unisolated.txt\").write_text(\"\\n\".join(get_unisolated_packages()))\n", "path": "pyodide-build/pyodide_build/create_xbuildenv.py"}], "after_files": [{"content": "import shutil\nimport subprocess\nfrom pathlib import Path\n\nfrom .build_env import (\n get_build_flag,\n get_pyodide_root,\n get_unisolated_packages,\n)\nfrom .common import exit_with_stdio\nfrom .logger import logger\nfrom .recipe import load_all_recipes\n\n\ndef _copy_xbuild_files(\n pyodide_root: Path, xbuildenv_path: Path, skip_missing_files: bool = False\n) -> None:\n site_packages = Path(get_build_flag(\"HOSTSITEPACKAGES\"))\n # Store package cross-build-files into site_packages_extras in the same tree\n # structure as they would appear in the real package.\n # In install_xbuildenv, we will use:\n # pip install -t $HOSTSITEPACKAGES -r requirements.txt\n # cp site-packages-extras $HOSTSITEPACKAGES\n site_packages_extras = xbuildenv_path / \"site-packages-extras\"\n recipes = load_all_recipes(pyodide_root / \"packages\")\n for recipe in recipes.values():\n xbuild_files = recipe.build.cross_build_files\n for path in xbuild_files:\n source = site_packages / path\n target = site_packages_extras / path\n target.parent.mkdir(parents=True, exist_ok=True)\n\n if not source.exists():\n if skip_missing_files:\n logger.warning(f\"Cross-build file '{path}' not found\")\n continue\n\n raise FileNotFoundError(f\"Cross-build file '{path}' not found\")\n\n shutil.copy(source, target)\n\n\ndef _copy_wasm_libs(\n pyodide_root: Path, xbuildenv_root: Path, skip_missing_files: bool = False\n) -> None:\n def get_relative_path(pyodide_root: Path, flag: str) -> Path:\n return Path(get_build_flag(flag)).relative_to(pyodide_root)\n\n pythoninclude = get_relative_path(pyodide_root, \"PYTHONINCLUDE\")\n wasm_lib_dir = get_relative_path(pyodide_root, \"WASM_LIBRARY_DIR\")\n sysconfig_dir = 
get_relative_path(pyodide_root, \"SYSCONFIGDATA_DIR\")\n to_copy: list[Path] = [\n pythoninclude,\n sysconfig_dir,\n Path(\"Makefile.envs\"),\n wasm_lib_dir / \"cmake\",\n Path(\"dist/pyodide-lock.json\"),\n Path(\"dist/python\"),\n Path(\"dist/python_stdlib.zip\"),\n Path(\"tools/constraints.txt\"),\n ]\n to_copy.extend(\n x.relative_to(pyodide_root) for x in (pyodide_root / \"dist\").glob(\"pyodide.*\")\n )\n # Some ad-hoc stuff here to moderate size. We'd like to include all of\n # wasm_lib_dir but there's 180mb of it. Better to leave out all the video\n # codecs and stuff.\n for pkg in [\"ssl\", \"libcrypto\", \"zlib\", \"xml\", \"mpfr\", \"lapack\", \"blas\", \"f2c\"]:\n to_copy.extend(\n x.relative_to(pyodide_root)\n for x in (pyodide_root / wasm_lib_dir / \"include\").glob(f\"**/*{pkg}*\")\n if \"boost\" not in str(x)\n )\n to_copy.extend(\n x.relative_to(pyodide_root)\n for x in (pyodide_root / wasm_lib_dir / \"lib\").glob(f\"**/*{pkg}*\")\n )\n\n for path in to_copy:\n if not (pyodide_root / path).exists():\n if skip_missing_files:\n logger.warning(f\"Cross-build file '{path}' not found\")\n continue\n\n raise FileNotFoundError(f\"Cross-build file '{path}' not found\")\n\n if (pyodide_root / path).is_dir():\n shutil.copytree(\n pyodide_root / path, xbuildenv_root / path, dirs_exist_ok=True\n )\n else:\n (xbuildenv_root / path).parent.mkdir(exist_ok=True, parents=True)\n shutil.copy(pyodide_root / path, xbuildenv_root / path)\n\n\ndef create(\n path: str | Path,\n pyodide_root: Path | None = None,\n *,\n skip_missing_files: bool = False,\n) -> None:\n if pyodide_root is None:\n pyodide_root = get_pyodide_root()\n\n xbuildenv_path = Path(path) / \"xbuildenv\"\n xbuildenv_root = xbuildenv_path / \"pyodide-root\"\n\n shutil.rmtree(xbuildenv_path, ignore_errors=True)\n xbuildenv_path.mkdir(parents=True, exist_ok=True)\n xbuildenv_root.mkdir()\n\n _copy_xbuild_files(pyodide_root, xbuildenv_path, skip_missing_files)\n _copy_wasm_libs(pyodide_root, xbuildenv_root, 
skip_missing_files)\n\n (xbuildenv_root / \"package.json\").write_text(\"{}\")\n res = subprocess.run(\n [\"pip\", \"freeze\", \"--path\", get_build_flag(\"HOSTSITEPACKAGES\")],\n capture_output=True,\n encoding=\"utf8\",\n )\n if res.returncode != 0:\n logger.error(\"Failed to run pip freeze:\")\n exit_with_stdio(res)\n\n (xbuildenv_path / \"requirements.txt\").write_text(res.stdout)\n (xbuildenv_root / \"unisolated.txt\").write_text(\"\\n\".join(get_unisolated_packages()))\n", "path": "pyodide-build/pyodide_build/create_xbuildenv.py"}]}

| 2,294 | 221 |
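Dropping Node < 18 is what makes the `node-fetch@2` install above unnecessary, since Node 18 ships a global `fetch`. If the build tooling wanted to state that minimum explicitly instead of relying on it silently, a check could look like the sketch below. It assumes `node --version` prints a string such as `v18.17.0`; `MIN_NODE_MAJOR`, `parse_node_version`, `node_is_supported`, and `installed_node_is_supported` are hypothetical names, not pyodide-build API.

```python
import re
import shutil
import subprocess

# Hypothetical constant: the minimum major version proposed in the issue.
MIN_NODE_MAJOR = 18


def parse_node_version(text: str) -> tuple[int, int, int]:
    """Parse `node --version` output such as 'v20.11.1' into (20, 11, 1)."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized Node.js version string: {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def node_is_supported(text: str, min_major: int = MIN_NODE_MAJOR) -> bool:
    """True if the parsed major version meets the minimum."""
    return parse_node_version(text)[0] >= min_major


def installed_node_is_supported(min_major: int = MIN_NODE_MAJOR) -> bool:
    """Run the `node` found on PATH; False if it is missing or too old."""
    node = shutil.which("node")
    if node is None:
        return False
    res = subprocess.run(
        [node, "--version"], capture_output=True, encoding="utf8", check=True
    )
    return node_is_supported(res.stdout, min_major)
```

Keeping the parsing separate from the subprocess call lets the version rule be tested without a Node installation.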

gh_patches_debug_513

|

rasdani/github-patches

|

git_diff

|

weni-ai__bothub-engine-150

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Relative STATIC_URL in production broken email images

--- END ISSUE ---
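An email has no base URL against which a relative path such as `/static/img/logo.png` could be resolved, so images built from a relative `STATIC_URL` break in the recipient's mail client. One possible fix, sketched here under assumptions, is to resolve `STATIC_URL` against the public site origin with `urllib.parse.urljoin`. The `BASE_URL` setting and both helper names are hypothetical, not part of bothub.

```python
from urllib.parse import urljoin, urlparse


def absolute_static_url(base_url: str, static_url: str) -> str:
    """Resolve STATIC_URL against the public site origin (hypothetical helper).

    '/static/' becomes 'https://<host>/static/'; a value that is already
    absolute, such as a CDN URL, is returned unchanged by urljoin.
    """
    parsed = urlparse(base_url)
    if not (parsed.scheme and parsed.netloc):
        raise ValueError(f"base_url must be absolute, got {base_url!r}")
    resolved = urljoin(base_url, static_url)
    # Django expects STATIC_URL to end with a slash; keep that invariant.
    return resolved if resolved.endswith("/") else resolved + "/"


def static_asset_url(base_url: str, static_url: str, asset: str) -> str:
    """Absolute URL for one static asset, e.g. an image embedded in an email."""
    return urljoin(absolute_static_url(base_url, static_url), asset.lstrip("/"))


# Hypothetical settings.py usage, assuming a BASE_URL environment setting:
# STATIC_URL = absolute_static_url(config('BASE_URL'),
#                                  config('STATIC_URL', default='/static/'))
```

Rejecting a relative `base_url` up front surfaces a misconfiguration at startup rather than as broken images in sent mail.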

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `bothub/settings.py`

Content:

```
1 import os
2 import dj_database_url
3
4 from decouple import config
5
6
7 # Build paths inside the project like this: os.path.join(BASE_DIR, ...)
8 BASE_DIR = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))
9
10
11 # SECURITY WARNING: keep the secret key used in production secret!
12 SECRET_KEY = config('SECRET_KEY')
13
14 # SECURITY WARNING: don't run with debug turned on in production!
15 DEBUG = config('DEBUG', default=False, cast=bool)
16
17 ALLOWED_HOSTS = config(
18     'ALLOWED_HOSTS',
19     default='*',
20     cast=lambda v: [s.strip() for s in v.split(',')])
21
22
23 # Application definition
24
25 INSTALLED_APPS = [
26     'django.contrib.admin',
27     'django.contrib.auth',
28     'django.contrib.contenttypes',
29     'django.contrib.sessions',
30     'django.contrib.messages',
31     'django.contrib.staticfiles',
32     'rest_framework',
33     'rest_framework.authtoken',
34     'django_filters',
35     'corsheaders',
36     'bothub.authentication',
37     'bothub.common',
38     'bothub.api',
39 ]
40
41 MIDDLEWARE = [
42     'django.middleware.security.SecurityMiddleware',
43     'whitenoise.middleware.WhiteNoiseMiddleware',
44     'django.contrib.sessions.middleware.SessionMiddleware',
45     'corsheaders.middleware.CorsMiddleware',
46     'django.middleware.common.CommonMiddleware',
47     'django.middleware.csrf.CsrfViewMiddleware',
48     'django.contrib.auth.middleware.AuthenticationMiddleware',
49     'django.contrib.messages.middleware.MessageMiddleware',
50     'django.middleware.clickjacking.XFrameOptionsMiddleware',
51 ]
52
53 ROOT_URLCONF = 'bothub.urls'
54
55 TEMPLATES = [
56     {
57         'BACKEND': 'django.template.backends.django.DjangoTemplates',
58         'DIRS': [],
59         'APP_DIRS': True,
60         'OPTIONS': {
61             'context_processors': [
62                 'django.template.context_processors.debug',
63                 'django.template.context_processors.request',
64                 'django.contrib.auth.context_processors.auth',
65                 'django.contrib.messages.context_processors.messages',
66             ],
67         },
68     },
69 ]
70
71 WSGI_APPLICATION = 'bothub.wsgi.application'
72
73
74 # Database
75
76 DATABASES = {}
77 DATABASES['default'] = dj_database_url.parse(
78     config(
79         'DEFAULT_DATABASE',
80         default='sqlite:///db.sqlite3'))
81
82
83 # Auth
84
85 AUTH_USER_MODEL = 'authentication.User'
86
87
88 # Password validation
89
90 AUTH_PASSWORD_VALIDATORS = [
91     {
92         'NAME': 'django.contrib.auth.password_validation.' +
93         'UserAttributeSimilarityValidator',
94     },
95     {
96         'NAME': 'django.contrib.auth.password_validation.' +
97         'MinimumLengthValidator',
98     },
99     {
100         'NAME': 'django.contrib.auth.password_validation.' +

101 'CommonPasswordValidator',

102 },

103 {

104 'NAME': 'django.contrib.auth.password_validation.' +

105 'NumericPasswordValidator',

106 },

107 ]

108

109

110 # Internationalization

111

112 LANGUAGE_CODE = config('LANGUAGE_CODE', default='en-us')

113

114 TIME_ZONE = config('TIME_ZONE', default='UTC')

115

116 USE_I18N = True

117

118 USE_L10N = True

119

120 USE_TZ = True

121

122

123 # Static files (CSS, JavaScript, Images)

124

125 STATIC_URL = '/static/'

126

127 STATIC_ROOT = os.path.join(BASE_DIR, 'staticfiles')

128

129 STATICFILES_STORAGE = 'whitenoise.storage.CompressedManifestStaticFilesStorage'

130

131

132 # rest framework

133

134 REST_FRAMEWORK = {

135 'DEFAULT_AUTHENTICATION_CLASSES': [

136 'rest_framework.authentication.TokenAuthentication',

137 ],

138 'DEFAULT_PAGINATION_CLASS': 'rest_framework.pagination.' +

139 'LimitOffsetPagination',

140 'PAGE_SIZE': 20,

141 'DEFAULT_FILTER_BACKENDS': [

142 'django_filters.rest_framework.DjangoFilterBackend',

143 ],

144 'DEFAULT_METADATA_CLASS': 'bothub.api.metadata.Metadata',

145 }

146

147

148 # cors headers

149

150 CORS_ORIGIN_ALLOW_ALL = True

151 CORS_URLS_REGEX = r'^/api/.*$'

152

153

154 # mail

155

156 envvar_EMAIL_HOST = config('EMAIL_HOST', default=None)

157

158 ADMINS = config(

159 'ADMINS',

160 default='',

161 cast=lambda v: [

162 (

163 s.strip().split('|')[0],

164 s.strip().split('|')[1],

165 ) for s in v.split(',')] if v else [])

166 EMAIL_SUBJECT_PREFIX = '[bothub] '

167 DEFAULT_FROM_EMAIL = config(

168 'DEFAULT_FROM_EMAIL',

169 default='webmaster@localhost')

170 SERVER_EMAIL = config('SERVER_EMAIL', default='root@localhost')

171

172 if envvar_EMAIL_HOST:

173 EMAIL_HOST = envvar_EMAIL_HOST

174 EMAIL_PORT = config('EMAIL_PORT', default=25, cast=int)

175 EMAIL_HOST_USER = config('EMAIL_HOST_USER', default='')

176 EMAIL_HOST_PASSWORD = config('EMAIL_HOST_PASSWORD', default='')

177 EMAIL_USE_SSL = config('EMAIL_USE_SSL', default=False, cast=bool)

178 EMAIL_USE_TLS = config('EMAIL_USE_TLS', default=False, cast=bool)

179 else:

180 EMAIL_BACKEND = 'django.core.mail.backends.console.EmailBackend'

181

182

183 # webapp

184

185 BOTHUB_WEBAPP_BASE_URL = config(

186 'BOTHUB_WEBAPP_BASE_URL',

187 default='http://localhost:8080/')

188

189

190 # NLP

191

192 BOTHUB_NLP_BASE_URL = config(

193 'BOTHUB_NLP_BASE_URL',

194 default='http://localhost:8001/')

195

196

197 # CSRF

198

199 CSRF_COOKIE_DOMAIN = config(

200 'CSRF_COOKIE_DOMAIN',

201 default=None)

202

203 CSRF_COOKIE_SECURE = config(

204 'CSRF_COOKIE_SECURE',

205 default=False,

206 cast=bool)

207

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/bothub/settings.py b/bothub/settings.py

--- a/bothub/settings.py

+++ b/bothub/settings.py

@@ -122,7 +122,7 @@

# Static files (CSS, JavaScript, Images)

-STATIC_URL = '/static/'

+STATIC_URL = config('STATIC_URL', default='/static/')

STATIC_ROOT = os.path.join(BASE_DIR, 'staticfiles')

|

{"golden_diff": "diff --git a/bothub/settings.py b/bothub/settings.py\n--- a/bothub/settings.py\n+++ b/bothub/settings.py\n@@ -122,7 +122,7 @@\n \n # Static files (CSS, JavaScript, Images)\n \n-STATIC_URL = '/static/'\n+STATIC_URL = config('STATIC_URL', default='/static/')\n \n STATIC_ROOT = os.path.join(BASE_DIR, 'staticfiles')\n", "issue": "Relative STATIC_URL in production broken email images\n\n", "before_files": [{"content": "import os\nimport dj_database_url\n\nfrom decouple import config\n\n\n# Build paths inside the project like this: os.path.join(BASE_DIR, ...)\nBASE_DIR = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))\n\n\n# SECURITY WARNING: keep the secret key used in production secret!\nSECRET_KEY = config('SECRET_KEY')\n\n# SECURITY WARNING: don't run with debug turned on in production!\nDEBUG = config('DEBUG', default=False, cast=bool)\n\nALLOWED_HOSTS = config(\n 'ALLOWED_HOSTS',\n default='*',\n cast=lambda v: [s.strip() for s in v.split(',')])\n\n\n# Application definition\n\nINSTALLED_APPS = [\n 'django.contrib.admin',\n 'django.contrib.auth',\n 'django.contrib.contenttypes',\n 'django.contrib.sessions',\n 'django.contrib.messages',\n 'django.contrib.staticfiles',\n 'rest_framework',\n 'rest_framework.authtoken',\n 'django_filters',\n 'corsheaders',\n 'bothub.authentication',\n 'bothub.common',\n 'bothub.api',\n]\n\nMIDDLEWARE = [\n 'django.middleware.security.SecurityMiddleware',\n 'whitenoise.middleware.WhiteNoiseMiddleware',\n 'django.contrib.sessions.middleware.SessionMiddleware',\n 'corsheaders.middleware.CorsMiddleware',\n 'django.middleware.common.CommonMiddleware',\n 'django.middleware.csrf.CsrfViewMiddleware',\n 'django.contrib.auth.middleware.AuthenticationMiddleware',\n 'django.contrib.messages.middleware.MessageMiddleware',\n 'django.middleware.clickjacking.XFrameOptionsMiddleware',\n]\n\nROOT_URLCONF = 'bothub.urls'\n\nTEMPLATES = [\n {\n 'BACKEND': 'django.template.backends.django.DjangoTemplates',\n 'DIRS': [],\n 
'APP_DIRS': True,\n 'OPTIONS': {\n 'context_processors': [\n 'django.template.context_processors.debug',\n 'django.template.context_processors.request',\n 'django.contrib.auth.context_processors.auth',\n 'django.contrib.messages.context_processors.messages',\n ],\n },\n },\n]\n\nWSGI_APPLICATION = 'bothub.wsgi.application'\n\n\n# Database\n\nDATABASES = {}\nDATABASES['default'] = dj_database_url.parse(\n config(\n 'DEFAULT_DATABASE',\n default='sqlite:///db.sqlite3'))\n\n\n# Auth\n\nAUTH_USER_MODEL = 'authentication.User'\n\n\n# Password validation\n\nAUTH_PASSWORD_VALIDATORS = [\n {\n 'NAME': 'django.contrib.auth.password_validation.' +\n 'UserAttributeSimilarityValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation.' +\n 'MinimumLengthValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation.' +\n 'CommonPasswordValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation.' +\n 'NumericPasswordValidator',\n },\n]\n\n\n# Internationalization\n\nLANGUAGE_CODE = config('LANGUAGE_CODE', default='en-us')\n\nTIME_ZONE = config('TIME_ZONE', default='UTC')\n\nUSE_I18N = True\n\nUSE_L10N = True\n\nUSE_TZ = True\n\n\n# Static files (CSS, JavaScript, Images)\n\nSTATIC_URL = '/static/'\n\nSTATIC_ROOT = os.path.join(BASE_DIR, 'staticfiles')\n\nSTATICFILES_STORAGE = 'whitenoise.storage.CompressedManifestStaticFilesStorage'\n\n\n# rest framework\n\nREST_FRAMEWORK = {\n 'DEFAULT_AUTHENTICATION_CLASSES': [\n 'rest_framework.authentication.TokenAuthentication',\n ],\n 'DEFAULT_PAGINATION_CLASS': 'rest_framework.pagination.' 
+\n 'LimitOffsetPagination',\n 'PAGE_SIZE': 20,\n 'DEFAULT_FILTER_BACKENDS': [\n 'django_filters.rest_framework.DjangoFilterBackend',\n ],\n 'DEFAULT_METADATA_CLASS': 'bothub.api.metadata.Metadata',\n}\n\n\n# cors headers\n\nCORS_ORIGIN_ALLOW_ALL = True\nCORS_URLS_REGEX = r'^/api/.*$'\n\n\n# mail\n\nenvvar_EMAIL_HOST = config('EMAIL_HOST', default=None)\n\nADMINS = config(\n 'ADMINS',\n default='',\n cast=lambda v: [\n (\n s.strip().split('|')[0],\n s.strip().split('|')[1],\n ) for s in v.split(',')] if v else [])\nEMAIL_SUBJECT_PREFIX = '[bothub] '\nDEFAULT_FROM_EMAIL = config(\n 'DEFAULT_FROM_EMAIL',\n default='webmaster@localhost')\nSERVER_EMAIL = config('SERVER_EMAIL', default='root@localhost')\n\nif envvar_EMAIL_HOST:\n EMAIL_HOST = envvar_EMAIL_HOST\n EMAIL_PORT = config('EMAIL_PORT', default=25, cast=int)\n EMAIL_HOST_USER = config('EMAIL_HOST_USER', default='')\n EMAIL_HOST_PASSWORD = config('EMAIL_HOST_PASSWORD', default='')\n EMAIL_USE_SSL = config('EMAIL_USE_SSL', default=False, cast=bool)\n EMAIL_USE_TLS = config('EMAIL_USE_TLS', default=False, cast=bool)\nelse:\n EMAIL_BACKEND = 'django.core.mail.backends.console.EmailBackend'\n\n\n# webapp\n\nBOTHUB_WEBAPP_BASE_URL = config(\n 'BOTHUB_WEBAPP_BASE_URL',\n default='http://localhost:8080/')\n\n\n# NLP\n\nBOTHUB_NLP_BASE_URL = config(\n 'BOTHUB_NLP_BASE_URL',\n default='http://localhost:8001/')\n\n\n# CSRF\n\nCSRF_COOKIE_DOMAIN = config(\n 'CSRF_COOKIE_DOMAIN',\n default=None)\n\nCSRF_COOKIE_SECURE = config(\n 'CSRF_COOKIE_SECURE',\n default=False,\n cast=bool)\n", "path": "bothub/settings.py"}], "after_files": [{"content": "import os\nimport dj_database_url\n\nfrom decouple import config\n\n\n# Build paths inside the project like this: os.path.join(BASE_DIR, ...)\nBASE_DIR = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))\n\n\n# SECURITY WARNING: keep the secret key used in production secret!\nSECRET_KEY = config('SECRET_KEY')\n\n# SECURITY WARNING: don't run with debug turned on in 
production!\nDEBUG = config('DEBUG', default=False, cast=bool)\n\nALLOWED_HOSTS = config(\n 'ALLOWED_HOSTS',\n default='*',\n cast=lambda v: [s.strip() for s in v.split(',')])\n\n\n# Application definition\n\nINSTALLED_APPS = [\n 'django.contrib.admin',\n 'django.contrib.auth',\n 'django.contrib.contenttypes',\n 'django.contrib.sessions',\n 'django.contrib.messages',\n 'django.contrib.staticfiles',\n 'rest_framework',\n 'rest_framework.authtoken',\n 'django_filters',\n 'corsheaders',\n 'bothub.authentication',\n 'bothub.common',\n 'bothub.api',\n]\n\nMIDDLEWARE = [\n 'django.middleware.security.SecurityMiddleware',\n 'whitenoise.middleware.WhiteNoiseMiddleware',\n 'django.contrib.sessions.middleware.SessionMiddleware',\n 'corsheaders.middleware.CorsMiddleware',\n 'django.middleware.common.CommonMiddleware',\n 'django.middleware.csrf.CsrfViewMiddleware',\n 'django.contrib.auth.middleware.AuthenticationMiddleware',\n 'django.contrib.messages.middleware.MessageMiddleware',\n 'django.middleware.clickjacking.XFrameOptionsMiddleware',\n]\n\nROOT_URLCONF = 'bothub.urls'\n\nTEMPLATES = [\n {\n 'BACKEND': 'django.template.backends.django.DjangoTemplates',\n 'DIRS': [],\n 'APP_DIRS': True,\n 'OPTIONS': {\n 'context_processors': [\n 'django.template.context_processors.debug',\n 'django.template.context_processors.request',\n 'django.contrib.auth.context_processors.auth',\n 'django.contrib.messages.context_processors.messages',\n ],\n },\n },\n]\n\nWSGI_APPLICATION = 'bothub.wsgi.application'\n\n\n# Database\n\nDATABASES = {}\nDATABASES['default'] = dj_database_url.parse(\n config(\n 'DEFAULT_DATABASE',\n default='sqlite:///db.sqlite3'))\n\n\n# Auth\n\nAUTH_USER_MODEL = 'authentication.User'\n\n\n# Password validation\n\nAUTH_PASSWORD_VALIDATORS = [\n {\n 'NAME': 'django.contrib.auth.password_validation.' +\n 'UserAttributeSimilarityValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation.' 
+\n 'MinimumLengthValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation.' +\n 'CommonPasswordValidator',\n },\n {\n 'NAME': 'django.contrib.auth.password_validation.' +\n 'NumericPasswordValidator',\n },\n]\n\n\n# Internationalization\n\nLANGUAGE_CODE = config('LANGUAGE_CODE', default='en-us')\n\nTIME_ZONE = config('TIME_ZONE', default='UTC')\n\nUSE_I18N = True\n\nUSE_L10N = True\n\nUSE_TZ = True\n\n\n# Static files (CSS, JavaScript, Images)\n\nSTATIC_URL = config('STATIC_URL', default='/static/')\n\nSTATIC_ROOT = os.path.join(BASE_DIR, 'staticfiles')\n\nSTATICFILES_STORAGE = 'whitenoise.storage.CompressedManifestStaticFilesStorage'\n\n\n# rest framework\n\nREST_FRAMEWORK = {\n 'DEFAULT_AUTHENTICATION_CLASSES': [\n 'rest_framework.authentication.TokenAuthentication',\n ],\n 'DEFAULT_PAGINATION_CLASS': 'rest_framework.pagination.' +\n 'LimitOffsetPagination',\n 'PAGE_SIZE': 20,\n 'DEFAULT_FILTER_BACKENDS': [\n 'django_filters.rest_framework.DjangoFilterBackend',\n ],\n 'DEFAULT_METADATA_CLASS': 'bothub.api.metadata.Metadata',\n}\n\n\n# cors headers\n\nCORS_ORIGIN_ALLOW_ALL = True\nCORS_URLS_REGEX = r'^/api/.*$'\n\n\n# mail\n\nenvvar_EMAIL_HOST = config('EMAIL_HOST', default=None)\n\nADMINS = config(\n 'ADMINS',\n default='',\n cast=lambda v: [\n (\n s.strip().split('|')[0],\n s.strip().split('|')[1],\n ) for s in v.split(',')] if v else [])\nEMAIL_SUBJECT_PREFIX = '[bothub] '\nDEFAULT_FROM_EMAIL = config(\n 'DEFAULT_FROM_EMAIL',\n default='webmaster@localhost')\nSERVER_EMAIL = config('SERVER_EMAIL', default='root@localhost')\n\nif envvar_EMAIL_HOST:\n EMAIL_HOST = envvar_EMAIL_HOST\n EMAIL_PORT = config('EMAIL_PORT', default=25, cast=int)\n EMAIL_HOST_USER = config('EMAIL_HOST_USER', default='')\n EMAIL_HOST_PASSWORD = config('EMAIL_HOST_PASSWORD', default='')\n EMAIL_USE_SSL = config('EMAIL_USE_SSL', default=False, cast=bool)\n EMAIL_USE_TLS = config('EMAIL_USE_TLS', default=False, cast=bool)\nelse:\n EMAIL_BACKEND = 
'django.core.mail.backends.console.EmailBackend'\n\n\n# webapp\n\nBOTHUB_WEBAPP_BASE_URL = config(\n 'BOTHUB_WEBAPP_BASE_URL',\n default='http://localhost:8080/')\n\n\n# NLP\n\nBOTHUB_NLP_BASE_URL = config(\n 'BOTHUB_NLP_BASE_URL',\n default='http://localhost:8001/')\n\n\n# CSRF\n\nCSRF_COOKIE_DOMAIN = config(\n 'CSRF_COOKIE_DOMAIN',\n default=None)\n\nCSRF_COOKIE_SECURE = config(\n 'CSRF_COOKIE_SECURE',\n default=False,\n cast=bool)\n", "path": "bothub/settings.py"}]}

| 1,941 | 92 |

gh_patches_debug_9911

|

rasdani/github-patches

|

git_diff

|

quantumlib__Cirq-3978

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Typos in wait_gate.py::wait

https://github.com/quantumlib/Cirq/blob/150f95c31042669ab9905654998a8432844a4209/cirq/ops/wait_gate.py#L140-L143

They all say picoseconds, but should say picos, nanos, micros, millis.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `cirq/ops/wait_gate.py`

Content:

```

1 # Copyright 2019 The Cirq Developers

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # https://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14 from typing import AbstractSet, Any, Dict, Optional, Tuple, TYPE_CHECKING, Union

15

16 import sympy

17

18 from cirq import value, protocols

19 from cirq.ops import raw_types

20

21 if TYPE_CHECKING:

22 import cirq

23

24

25 @value.value_equality

26 class WaitGate(raw_types.Gate):

27 """A single-qubit idle gate that represents waiting.

28

29 In non-noisy simulators, this gate is just an identity gate. But noisy

30 simulators and noise models may insert more error for longer waits.

31 """

32

33 def __init__(

34 self,

35 duration: 'cirq.DURATION_LIKE',

36 num_qubits: Optional[int] = None,

37 qid_shape: Tuple[int, ...] = None,

38 ) -> None:

39 """Initialize a wait gate with the given duration.

40

41 Args:

42 duration: A constant or parameterized wait duration. This can be

43 an instance of `datetime.timedelta` or `cirq.Duration`.

44 """

45 self.duration = value.Duration(duration)

46 if not protocols.is_parameterized(self.duration) and self.duration < 0:

47 raise ValueError('duration < 0')

48 if qid_shape is None:

49 if num_qubits is None:

50 # Assume one qubit for backwards compatibility

51 qid_shape = (2,)

52 else:

53 qid_shape = (2,) * num_qubits

54 if num_qubits is None:

55 num_qubits = len(qid_shape)

56 if not qid_shape:

57 raise ValueError('Waiting on an empty set of qubits.')

58 if num_qubits != len(qid_shape):

59 raise ValueError('len(qid_shape) != num_qubits')

60 self._qid_shape = qid_shape

61

62 def _is_parameterized_(self) -> bool:

63 return protocols.is_parameterized(self.duration)

64

65 def _parameter_names_(self) -> AbstractSet[str]:

66 return protocols.parameter_names(self.duration)

67

68 def _resolve_parameters_(self, resolver: 'cirq.ParamResolver', recursive: bool) -> 'WaitGate':

69 return WaitGate(protocols.resolve_parameters(self.duration, resolver, recursive))

70

71 def _qid_shape_(self) -> Tuple[int, ...]:

72 return self._qid_shape

73

74 def _has_unitary_(self) -> bool:

75 return True

76

77 def _apply_unitary_(self, args):

78 return args.target_tensor # Identity.

79

80 def _decompose_(self, qubits):

81 return []

82

83 def _trace_distance_bound_(self):

84 return 0

85

86 def __pow__(self, power):

87 if power == 1 or power == -1:

88 # The inverse of a wait is still a wait.

89 return self

90 # Other scalar exponents could scale the wait... but ultimately it is

91 # ambiguous whether the user wanted to scale the duration or just wanted

92 # to affect the unitary. Play it safe and fail.

93 return NotImplemented

94

95 def __str__(self) -> str:

96 return f'WaitGate({self.duration})'

97

98 def __repr__(self) -> str:

99 return f'cirq.WaitGate({repr(self.duration)})'

100

101 def _json_dict_(self) -> Dict[str, Any]:

102 d = protocols.obj_to_dict_helper(self, ['duration'])

103 if len(self._qid_shape) != 1:

104 d['num_qubits'] = len(self._qid_shape)

105 if any(d != 2 for d in self._qid_shape):

106 d['qid_shape'] = self._qid_shape

107 return d

108

109 @classmethod

110 def _from_json_dict_(cls, duration, num_qubits=None, qid_shape=None, **kwargs):

111 return cls(

112 duration=duration,

113 num_qubits=num_qubits,

114 qid_shape=None if qid_shape is None else tuple(qid_shape),

115 )

116

117 def _value_equality_values_(self) -> Any:

118 return self.duration

119

120 def _quil_(self, qubits: Tuple['cirq.Qid', ...], formatter: 'cirq.QuilFormatter'):

121 return 'WAIT\n'

122

123

124 def wait(

125 *target: 'cirq.Qid',

126 duration: 'cirq.DURATION_LIKE' = None,

127 picos: Union[int, float, sympy.Basic] = 0,

128 nanos: Union[int, float, sympy.Basic] = 0,

129 micros: Union[int, float, sympy.Basic] = 0,

130 millis: Union[int, float, sympy.Basic] = 0,

131 ) -> raw_types.Operation:

132 """Creates a WaitGate applied to all the given qubits.

133

134 The duration can be specified as a DURATION_LIKE or using keyword args with

135 numbers in the appropriate units. See Duration for details.

136

137 Args:

138 *target: The qubits that should wait.

139 value: Wait duration (see Duration).

140 picos: Picoseconds to wait (see Duration).

141 nanos: Picoseconds to wait (see Duration).

142 micros: Picoseconds to wait (see Duration).

143 millis: Picoseconds to wait (see Duration).

144 """

145 return WaitGate(

146 duration=value.Duration(

147 duration,

148 picos=picos,

149 nanos=nanos,

150 micros=micros,

151 millis=millis,

152 ),

153 qid_shape=protocols.qid_shape(target),

154 ).on(*target)

155

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/cirq/ops/wait_gate.py b/cirq/ops/wait_gate.py

--- a/cirq/ops/wait_gate.py

+++ b/cirq/ops/wait_gate.py

@@ -138,9 +138,9 @@

*target: The qubits that should wait.

value: Wait duration (see Duration).

picos: Picoseconds to wait (see Duration).

- nanos: Picoseconds to wait (see Duration).

- micros: Picoseconds to wait (see Duration).

- millis: Picoseconds to wait (see Duration).

+ nanos: Nanoseconds to wait (see Duration).

+ micros: Microseconds to wait (see Duration).

+ millis: Milliseconds to wait (see Duration).

"""

return WaitGate(

duration=value.Duration(

|

{"golden_diff": "diff --git a/cirq/ops/wait_gate.py b/cirq/ops/wait_gate.py\n--- a/cirq/ops/wait_gate.py\n+++ b/cirq/ops/wait_gate.py\n@@ -138,9 +138,9 @@\n *target: The qubits that should wait.\n value: Wait duration (see Duration).\n picos: Picoseconds to wait (see Duration).\n- nanos: Picoseconds to wait (see Duration).\n- micros: Picoseconds to wait (see Duration).\n- millis: Picoseconds to wait (see Duration).\n+ nanos: Nanoseconds to wait (see Duration).\n+ micros: Microseconds to wait (see Duration).\n+ millis: Milliseconds to wait (see Duration).\n \"\"\"\n return WaitGate(\n duration=value.Duration(\n", "issue": "Typos in wait_gate.py::wait\nhttps://github.com/quantumlib/Cirq/blob/150f95c31042669ab9905654998a8432844a4209/cirq/ops/wait_gate.py#L140-L143\r\n\r\nThey all say picoseconds, but should say picos, nanos, micros, millis.\r\n\n", "before_files": [{"content": "# Copyright 2019 The Cirq Developers\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# https://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\nfrom typing import AbstractSet, Any, Dict, Optional, Tuple, TYPE_CHECKING, Union\n\nimport sympy\n\nfrom cirq import value, protocols\nfrom cirq.ops import raw_types\n\nif TYPE_CHECKING:\n import cirq\n\n\[email protected]_equality\nclass WaitGate(raw_types.Gate):\n \"\"\"A single-qubit idle gate that represents waiting.\n\n In non-noisy simulators, this gate is just an identity gate. 
But noisy\n simulators and noise models may insert more error for longer waits.\n \"\"\"\n\n def __init__(\n self,\n duration: 'cirq.DURATION_LIKE',\n num_qubits: Optional[int] = None,\n qid_shape: Tuple[int, ...] = None,\n ) -> None:\n \"\"\"Initialize a wait gate with the given duration.\n\n Args:\n duration: A constant or parameterized wait duration. This can be\n an instance of `datetime.timedelta` or `cirq.Duration`.\n \"\"\"\n self.duration = value.Duration(duration)\n if not protocols.is_parameterized(self.duration) and self.duration < 0:\n raise ValueError('duration < 0')\n if qid_shape is None:\n if num_qubits is None:\n # Assume one qubit for backwards compatibility\n qid_shape = (2,)\n else:\n qid_shape = (2,) * num_qubits\n if num_qubits is None:\n num_qubits = len(qid_shape)\n if not qid_shape:\n raise ValueError('Waiting on an empty set of qubits.')\n if num_qubits != len(qid_shape):\n raise ValueError('len(qid_shape) != num_qubits')\n self._qid_shape = qid_shape\n\n def _is_parameterized_(self) -> bool:\n return protocols.is_parameterized(self.duration)\n\n def _parameter_names_(self) -> AbstractSet[str]:\n return protocols.parameter_names(self.duration)\n\n def _resolve_parameters_(self, resolver: 'cirq.ParamResolver', recursive: bool) -> 'WaitGate':\n return WaitGate(protocols.resolve_parameters(self.duration, resolver, recursive))\n\n def _qid_shape_(self) -> Tuple[int, ...]:\n return self._qid_shape\n\n def _has_unitary_(self) -> bool:\n return True\n\n def _apply_unitary_(self, args):\n return args.target_tensor # Identity.\n\n def _decompose_(self, qubits):\n return []\n\n def _trace_distance_bound_(self):\n return 0\n\n def __pow__(self, power):\n if power == 1 or power == -1:\n # The inverse of a wait is still a wait.\n return self\n # Other scalar exponents could scale the wait... but ultimately it is\n # ambiguous whether the user wanted to scale the duration or just wanted\n # to affect the unitary. 
Play it safe and fail.\n return NotImplemented\n\n def __str__(self) -> str:\n return f'WaitGate({self.duration})'\n\n def __repr__(self) -> str:\n return f'cirq.WaitGate({repr(self.duration)})'\n\n def _json_dict_(self) -> Dict[str, Any]:\n d = protocols.obj_to_dict_helper(self, ['duration'])\n if len(self._qid_shape) != 1:\n d['num_qubits'] = len(self._qid_shape)\n if any(d != 2 for d in self._qid_shape):\n d['qid_shape'] = self._qid_shape\n return d\n\n @classmethod\n def _from_json_dict_(cls, duration, num_qubits=None, qid_shape=None, **kwargs):\n return cls(\n duration=duration,\n num_qubits=num_qubits,\n qid_shape=None if qid_shape is None else tuple(qid_shape),\n )\n\n def _value_equality_values_(self) -> Any:\n return self.duration\n\n def _quil_(self, qubits: Tuple['cirq.Qid', ...], formatter: 'cirq.QuilFormatter'):\n return 'WAIT\\n'\n\n\ndef wait(\n *target: 'cirq.Qid',\n duration: 'cirq.DURATION_LIKE' = None,\n picos: Union[int, float, sympy.Basic] = 0,\n nanos: Union[int, float, sympy.Basic] = 0,\n micros: Union[int, float, sympy.Basic] = 0,\n millis: Union[int, float, sympy.Basic] = 0,\n) -> raw_types.Operation:\n \"\"\"Creates a WaitGate applied to all the given qubits.\n\n The duration can be specified as a DURATION_LIKE or using keyword args with\n numbers in the appropriate units. 
See Duration for details.\n\n Args:\n *target: The qubits that should wait.\n value: Wait duration (see Duration).\n picos: Picoseconds to wait (see Duration).\n nanos: Picoseconds to wait (see Duration).\n micros: Picoseconds to wait (see Duration).\n millis: Picoseconds to wait (see Duration).\n \"\"\"\n return WaitGate(\n duration=value.Duration(\n duration,\n picos=picos,\n nanos=nanos,\n micros=micros,\n millis=millis,\n ),\n qid_shape=protocols.qid_shape(target),\n ).on(*target)\n", "path": "cirq/ops/wait_gate.py"}], "after_files": [{"content": "# Copyright 2019 The Cirq Developers\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# https://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\nfrom typing import AbstractSet, Any, Dict, Optional, Tuple, TYPE_CHECKING, Union\n\nimport sympy\n\nfrom cirq import value, protocols\nfrom cirq.ops import raw_types\n\nif TYPE_CHECKING:\n import cirq\n\n\n@value.value_equality\nclass WaitGate(raw_types.Gate):\n \"\"\"A single-qubit idle gate that represents waiting.\n\n In non-noisy simulators, this gate is just an identity gate. But noisy\n simulators and noise models may insert more error for longer waits.\n \"\"\"\n\n def __init__(\n self,\n duration: 'cirq.DURATION_LIKE',\n num_qubits: Optional[int] = None,\n qid_shape: Tuple[int, ...] = None,\n ) -> None:\n \"\"\"Initialize a wait gate with the given duration.\n\n Args:\n duration: A constant or parameterized wait duration.
This can be\n an instance of `datetime.timedelta` or `cirq.Duration`.\n \"\"\"\n self.duration = value.Duration(duration)\n if not protocols.is_parameterized(self.duration) and self.duration < 0:\n raise ValueError('duration < 0')\n if qid_shape is None:\n if num_qubits is None:\n # Assume one qubit for backwards compatibility\n qid_shape = (2,)\n else:\n qid_shape = (2,) * num_qubits\n if num_qubits is None:\n num_qubits = len(qid_shape)\n if not qid_shape:\n raise ValueError('Waiting on an empty set of qubits.')\n if num_qubits != len(qid_shape):\n raise ValueError('len(qid_shape) != num_qubits')\n self._qid_shape = qid_shape\n\n def _is_parameterized_(self) -> bool:\n return protocols.is_parameterized(self.duration)\n\n def _parameter_names_(self) -> AbstractSet[str]:\n return protocols.parameter_names(self.duration)\n\n def _resolve_parameters_(self, resolver: 'cirq.ParamResolver', recursive: bool) -> 'WaitGate':\n return WaitGate(protocols.resolve_parameters(self.duration, resolver, recursive))\n\n def _qid_shape_(self) -> Tuple[int, ...]:\n return self._qid_shape\n\n def _has_unitary_(self) -> bool:\n return True\n\n def _apply_unitary_(self, args):\n return args.target_tensor # Identity.\n\n def _decompose_(self, qubits):\n return []\n\n def _trace_distance_bound_(self):\n return 0\n\n def __pow__(self, power):\n if power == 1 or power == -1:\n # The inverse of a wait is still a wait.\n return self\n # Other scalar exponents could scale the wait... but ultimately it is\n # ambiguous whether the user wanted to scale the duration or just wanted\n # to affect the unitary. 
Play it safe and fail.\n return NotImplemented\n\n def __str__(self) -> str:\n return f'WaitGate({self.duration})'\n\n def __repr__(self) -> str:\n return f'cirq.WaitGate({repr(self.duration)})'\n\n def _json_dict_(self) -> Dict[str, Any]:\n d = protocols.obj_to_dict_helper(self, ['duration'])\n if len(self._qid_shape) != 1:\n d['num_qubits'] = len(self._qid_shape)\n if any(d != 2 for d in self._qid_shape):\n d['qid_shape'] = self._qid_shape\n return d\n\n @classmethod\n def _from_json_dict_(cls, duration, num_qubits=None, qid_shape=None, **kwargs):\n return cls(\n duration=duration,\n num_qubits=num_qubits,\n qid_shape=None if qid_shape is None else tuple(qid_shape),\n )\n\n def _value_equality_values_(self) -> Any:\n return self.duration\n\n def _quil_(self, qubits: Tuple['cirq.Qid', ...], formatter: 'cirq.QuilFormatter'):\n return 'WAIT\\n'\n\n\ndef wait(\n *target: 'cirq.Qid',\n duration: 'cirq.DURATION_LIKE' = None,\n picos: Union[int, float, sympy.Basic] = 0,\n nanos: Union[int, float, sympy.Basic] = 0,\n micros: Union[int, float, sympy.Basic] = 0,\n millis: Union[int, float, sympy.Basic] = 0,\n) -> raw_types.Operation:\n \"\"\"Creates a WaitGate applied to all the given qubits.\n\n The duration can be specified as a DURATION_LIKE or using keyword args with\n numbers in the appropriate units. See Duration for details.\n\n Args:\n *target: The qubits that should wait.\n value: Wait duration (see Duration).\n picos: Picoseconds to wait (see Duration).\n nanos: Nanoseconds to wait (see Duration).\n micros: Microseconds to wait (see Duration).\n millis: Milliseconds to wait (see Duration).\n \"\"\"\n return WaitGate(\n duration=value.Duration(\n duration,\n picos=picos,\n nanos=nanos,\n micros=micros,\n millis=millis,\n ),\n qid_shape=protocols.qid_shape(target),\n ).on(*target)\n", "path": "cirq/ops/wait_gate.py"}]}

| 2,010 | 174 |

gh_patches_debug_1198

|

rasdani/github-patches

|

git_diff

|

kivy__kivy-611

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Label Text Clipped Horizontally (Moved)

**Originally reported as a continuation of #576 by esbullington**

I think I'm having trouble with this same issue. I'm trying to use markup with a Label, and am finding that my Label text is cut-off along the horizontal axis if I have markup set to True. This probably is only occurring with the latest development version, even after the above patch was pulled. The problem does not occur with Kivy 1.3.0. If needed, I can re-install the development version and make a screen shot, but for now I'm working with Kivy 1.3.0.

I've only started working with Kivy in the past few days, so I'm not yet in a place where I feel comfortable sending in a patch. (awesome framework, by the way, congrats on the great work!).

Oh, and it doesn't look like I can re-open the issue, so someone else may wish to do so, or else tell me to open another issue for the problem.

UPDATE: I copied markup.py from Kivy 1.3 to Kivy 1.4-dev and it resolved this issue for me. I may now have problems with rst, but at least my markdown labels aren't cut in half.

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `kivy/core/text/markup.py`

Content:

```

1 '''

2 Text Markup

3 ===========

4

5 .. versionadded:: 1.1.0

6

7 We provide a simple text-markup for inline text styling. The syntax look the

8 same as the `BBCode <http://en.wikipedia.org/wiki/BBCode>`_.

9

10 A tag is defined as ``[tag]``, and might have a closed tag associated:

11 ``[/tag]``. Example of a markup text::

12

13 [b]Hello [color=ff0000]world[/b][/color]

14

15 The following tags are availables:

16

17 ``[b][/b]``

18 Activate bold text

19 ``[i][/i]``

20 Activate italic text

21 ``[font=<str>][/font]``

22 Change the font

23 ``[size=<integer>][/size]``

24 Change the font size

25 ``[color=#<color>][/color]``

26 Change the text color

27 ``[ref=<str>][/ref]``

28 Add an interactive zone. The reference + all the word box inside the

29 reference will be available in :data:`MarkupLabel.refs`

30 ``[anchor=<str>]``

31 Put an anchor in the text. You can get the position of your anchor within

32 the text with :data:`MarkupLabel.anchors`

33

34 If you need to escape the markup from the current text, use

35 :func:`kivy.utils.escape_markup`.

36 '''

37

38 __all__ = ('MarkupLabel', )

39

40 from kivy.graphics.texture import Texture

41 from kivy.utils import platform

42 from kivy.parser import parse_color

43 from kivy.logger import Logger

44 import re

45 from kivy.core.text import Label, LabelBase

46 from copy import copy

47

48 # We need to do this trick when documentation is generated

49 MarkupLabelBase = Label

50 if Label is None:

51 MarkupLabelBase = LabelBase

52

53

54 class MarkupLabel(MarkupLabelBase):

55 '''Markup text label.

56

57 See module documentation for more informations.

58 '''

59

60 def __init__(self, *largs, **kwargs):

61 self._style_stack = {}

62 self._refs = {}

63 super(MarkupLabel, self).__init__(*largs, **kwargs)

64

65 @property

66 def refs(self):

67 '''Get the bounding box of all the ``[ref=...]``::

68

69 { 'refA': ((x1, y1, x2, y2), (x1, y1, x2, y2)), ... }

70 '''

71 return self._refs

72

73 @property

74 def anchors(self):

75 '''Get the position of all the ``[anchor=...]``::

76

77 { 'anchorA': (x, y), 'anchorB': (x, y), ... }

78 '''

79 return self._anchors

80

81 @property

82 def markup(self):

83 '''Return the text with all the markup splitted::

84

85 >>> MarkupLabel('[b]Hello world[/b]').markup

86 >>> ('[b]', 'Hello world', '[/b]')

87

88 '''

89 s = re.split('(\[.*?\])', self.label)

90 s = [x for x in s if x != '']

91 return s

92

93 def _push_style(self, k):

94 if not k in self._style_stack:

95 self._style_stack[k] = []

96 self._style_stack[k].append(self.options[k])

97

98 def _pop_style(self, k):

99 if k not in self._style_stack or len(self._style_stack[k]) == 0:

100 Logger.warning('Label: pop style stack without push')

101 return

102 v = self._style_stack[k].pop()

103 self.options[k] = v

104

105 def render(self, real=False):

106 options = copy(self.options)

107 if not real:

108 ret = self._pre_render()

109 else:

110 ret = self._real_render()

111 self.options = options

112 return ret

113

114 def _pre_render(self):

115 # split markup, words, and lines

116 # result: list of word with position and width/height

117 # during the first pass, we don't care about h/valign

118 self._lines = lines = []

119 self._refs = {}

120 self._anchors = {}

121 spush = self._push_style

122 spop = self._pop_style

123 options = self.options

124 options['_ref'] = None

125 for item in self.markup:

126 if item == '[b]':

127 spush('bold')

128 options['bold'] = True

129 self.resolve_font_name()

130 elif item == '[/b]':

131 spop('bold')

132 self.resolve_font_name()

133 elif item == '[i]':

134 spush('italic')

135 options['italic'] = True

136 self.resolve_font_name()

137 elif item == '[/i]':

138 spop('italic')

139 self.resolve_font_name()

140 elif item[:6] == '[size=':

141 try:

142 size = int(item[6:-1])

143 except ValueError:

144 size = options['font_size']

145 spush('font_size')

146 options['font_size'] = size

147 elif item == '[/size]':

148 spop('font_size')

149 elif item[:7] == '[color=':

150 color = parse_color(item[7:-1])

151 spush('color')

152 options['color'] = color

153 elif item == '[/color]':

154 spop('color')

155 elif item[:6] == '[font=':

156 fontname = item[6:-1]

157 spush('font_name')

158 options['font_name'] = fontname

159 self.resolve_font_name()

160 elif item == '[/font]':

161 spop('font_name')

162 self.resolve_font_name()

163 elif item[:5] == '[ref=':

164 ref = item[5:-1]

165 spush('_ref')

166 options['_ref'] = ref

167 elif item == '[/ref]':

168 spop('_ref')

169 elif item[:8] == '[anchor=':

170 ref = item[8:-1]

171 if len(lines):

172 x, y = lines[-1][0:2]

173 else:

174 x = y = 0

175 self._anchors[ref] = x, y

176 else:

177 item = item.replace('&bl;', '[').replace(

178 '&br;', ']').replace('&', '&')

179 self._pre_render_label(item, options, lines)

180

181 # calculate the texture size

182 w, h = self.text_size

183 if h < 0:

184 h = None

185 if w < 0:

186 w = None

187 if w is None:

188 w = max([line[0] for line in lines])

189 if h is None:

190 h = sum([line[1] for line in lines])

191 return w, h

192

193 def _pre_render_label(self, word, options, lines):

194 # precalculate id/name

195 if not self.fontid in self._cache_glyphs:

196 self._cache_glyphs[self.fontid] = {}

197 cache = self._cache_glyphs[self.fontid]

198

199 # verify that each glyph have size

200 glyphs = list(set(word))

201 get_extents = self.get_extents

202 for glyph in glyphs:

203 if not glyph in cache:

204 cache[glyph] = get_extents(glyph)

205

206 # get last line information

207 if len(lines):

208 line = lines[-1]

209 else:

210 # line-> line width, line height, words

211 # words -> (w, h, word)...

212 line = [0, 0, []]

213 lines.append(line)

214

215 # extract user limitation

216 uw, uh = self.text_size

217

218 # split the word

219 for part in re.split(r'( |\n)', word):

220

221 if part == '':

222 continue

223

224 if part == '\n':

225 # put a new line!

226 line = [0, 0, []]

227 lines.append(line)

228 continue

229

230 # get current line information

231 lw, lh = line[:2]

232

233 # calculate the size of the part

234 # (extract all extents of the part,

235 # calculate width through extents due to kerning

236 # and get the maximum height)

237 pg = [cache[g] for g in part]

238 pw = get_extents(part)[0]

239 ph = max([g[1] for g in pg])

240

241 options = copy(options)

242

243 # check if the part can be put in the line

244 if uw is None or lw + pw < uw:

245 # no limitation or part can be contained in the line

246 # then append the part to the line

247 line[2].append((pw, ph, part, options))

248 # and update the line size

249 line[0] += pw

250 line[1] = max(line[1], ph)

251 else:

252 # part can't be put in the line, do a new one...

253 line = [pw, ph, [(pw, ph, part, options)]]

254 lines.append(line)

255

256 def _real_render(self):

257 # use the lines to do the rendering !

258 self._render_begin()

259

260 r = self._render_text

261

262 # convert halign/valign to int, faster comparaison

263 av = {'top': 0, 'middle': 1, 'bottom': 2}[self.options['valign']]

264 ah = {'left': 0, 'center': 1, 'right': 2}[self.options['halign']]

265

266 y = 0

267 w, h = self._size

268 refs = self._refs