| problem_id (string, length 18 to 22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13 to 58) | prompt (string, length 1.1k to 25.4k) | golden_diff (string, length 145 to 5.13k) | verification_info (string, length 582 to 39.1k) | num_tokens (int64, 271 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_4048

|

rasdani/github-patches

|

git_diff

|

lutris__lutris-1136

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

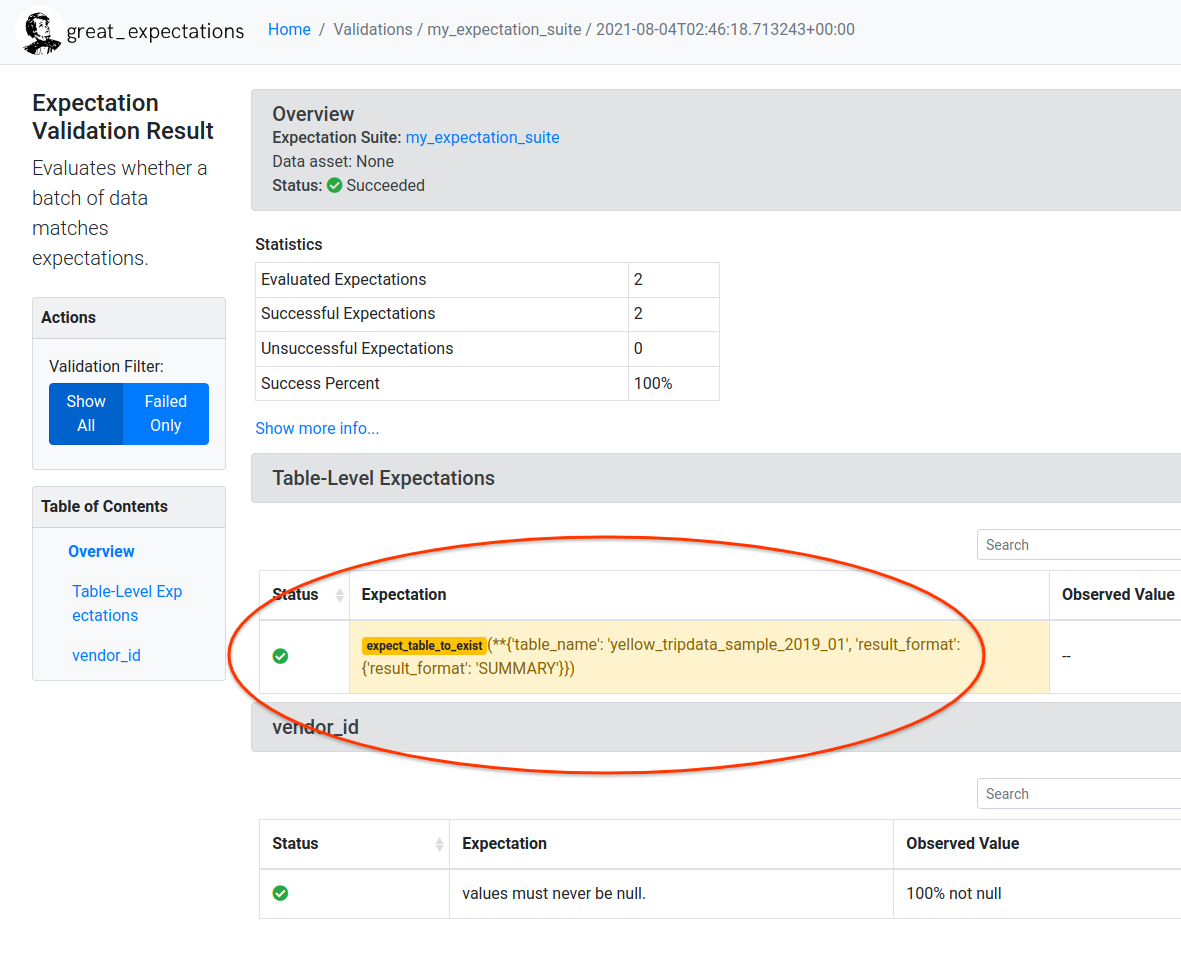

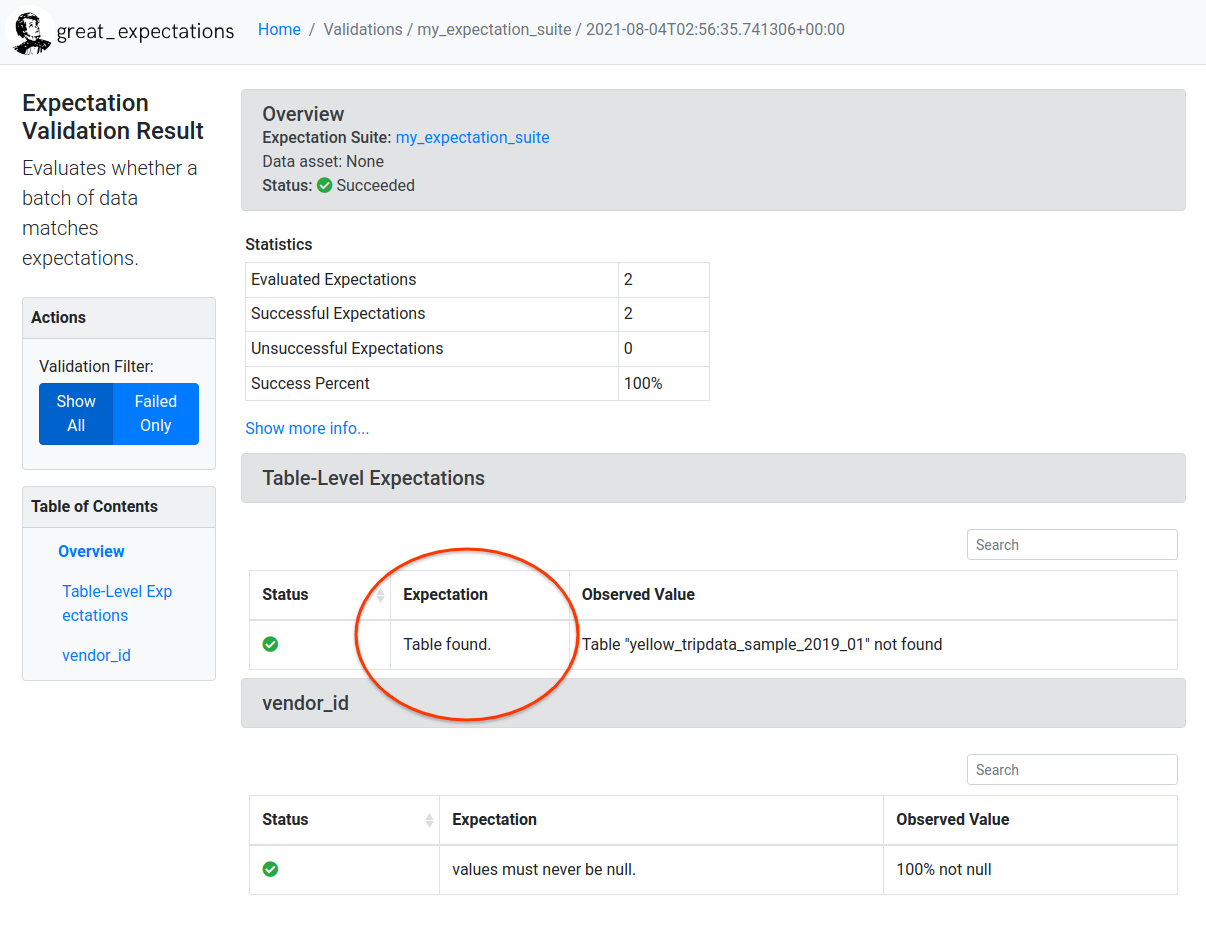

"Manage Versions` window is too narrow

Recent improvements to Wine version managing are currently a bit pointless due to Manage Versions windows being too narrow and not being able to display the status of the downloading process:

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `lutris/gui/runnerinstalldialog.py`

Content:

```
1 # pylint: disable=missing-docstring
2 import os
3 import random
4
5 from gi.repository import GLib, GObject, Gtk
6 from lutris import api, settings
7 from lutris.gui.dialogs import ErrorDialog, QuestionDialog
8 from lutris.gui.widgets.dialogs import Dialog
9 from lutris.util import jobs, system
10 from lutris.util.downloader import Downloader
11 from lutris.util.extract import extract_archive
12 from lutris.util.log import logger
13
14
15 class RunnerInstallDialog(Dialog):
16     COL_VER = 0
17     COL_ARCH = 1
18     COL_URL = 2
19     COL_INSTALLED = 3
20     COL_PROGRESS = 4
21
22     def __init__(self, title, parent, runner):
23         super(RunnerInstallDialog, self).__init__(
24             title, parent, 0, ('_OK', Gtk.ResponseType.OK)
25         )
26         width, height = (340, 380)
27         self.dialog_size = (width, height)
28         self.set_default_size(width, height)
29
30         self.runner = runner
31         self.runner_info = api.get_runners(self.runner)
32         if not self.runner_info:
33             ErrorDialog('Unable to get runner versions, check your internet connection',
34                         parent=parent)
35             return
36         label = Gtk.Label("%s version management" % self.runner_info['name'])
37         self.vbox.add(label)
38         self.runner_store = self.get_store()
39         scrolled_window = Gtk.ScrolledWindow()
40         self.treeview = self.get_treeview(self.runner_store)
41         self.installing = {}
42         self.connect('response', self.on_response)
43
44         scrolled_window.set_policy(Gtk.PolicyType.AUTOMATIC,
45                                    Gtk.PolicyType.AUTOMATIC)
46         scrolled_window.set_shadow_type(Gtk.ShadowType.ETCHED_OUT)
47         scrolled_window.add(self.treeview)
48
49         self.vbox.pack_start(scrolled_window, True, True, 14)
50         self.show_all()
51
52     def get_treeview(self, model):
53         treeview = Gtk.TreeView(model=model)
54         treeview.set_headers_visible(False)
55
56         renderer_toggle = Gtk.CellRendererToggle()
57         renderer_text = Gtk.CellRendererText()
58         self.renderer_progress = Gtk.CellRendererProgress()
59
60         installed_column = Gtk.TreeViewColumn(None, renderer_toggle, active=3)
61         renderer_toggle.connect("toggled", self.on_installed_toggled)
62         treeview.append_column(installed_column)
63
64         version_column = Gtk.TreeViewColumn(None, renderer_text)
65         version_column.add_attribute(renderer_text, 'text', self.COL_VER)
66         version_column.set_property('min-width', 80)
67         treeview.append_column(version_column)
68
69         arch_column = Gtk.TreeViewColumn(None, renderer_text,
70                                          text=self.COL_ARCH)
71         arch_column.set_property('min-width', 50)
72         treeview.append_column(arch_column)
73
74         progress_column = Gtk.TreeViewColumn(None, self.renderer_progress,
75                                              value=self.COL_PROGRESS,
76                                              visible=self.COL_PROGRESS)
77         progress_column.set_property('fixed-width', 60)
78         progress_column.set_property('min-width', 60)
79         progress_column.set_property('resizable', False)
80         treeview.append_column(progress_column)
81
82         return treeview
83
84     def get_store(self):
85         liststore = Gtk.ListStore(str, str, str, bool, int)
86         for version_info in reversed(self.get_versions()):
87             version = version_info['version']
88             architecture = version_info['architecture']
89             progress = 0
90             is_installed = os.path.exists(
91                 self.get_runner_path(version, architecture)
92             )
93             liststore.append(
94                 [version_info['version'],
95                  version_info['architecture'],
96                  version_info['url'],
97                  is_installed,
98                  progress]
99             )
100         return liststore
101
102     def get_versions(self):
103         return self.runner_info['versions']
104
105     def get_runner_path(self, version, arch):
106         return os.path.join(settings.RUNNER_DIR, self.runner,
107                             "{}-{}".format(version, arch))
108
109     def get_dest_path(self, row):
110         url = row[2]
111         filename = os.path.basename(url)
112         return os.path.join(settings.CACHE_DIR, filename)
113
114     def on_installed_toggled(self, widget, path):
115         row = self.runner_store[path]
116         if row[self.COL_VER] in self.installing:
117             confirm_dlg = QuestionDialog({
118                 "question": "Do you want to cancel the download?",
119                 "title": "Download starting"
120             })
121             if confirm_dlg.result == confirm_dlg.YES:
122                 self.cancel_install(row)
123         elif row[self.COL_INSTALLED]:
124             self.uninstall_runner(row)
125         else:
126             self.install_runner(row)
127
128     def cancel_install(self, row):
129         self.installing[row[self.COL_VER]].cancel()
130         self.uninstall_runner(row)
131         row[self.COL_PROGRESS] = 0
132         self.installing.pop(row[self.COL_VER])
133
134     def uninstall_runner(self, row):
135         version = row[self.COL_VER]
136         arch = row[self.COL_ARCH]
137         system.remove_folder(self.get_runner_path(version, arch))
138         row[self.COL_INSTALLED] = False
139
140     def install_runner(self, row):
141         url = row[2]
142         logger.debug("Downloading %s", url)
143         dest_path = self.get_dest_path(row)
144         downloader = Downloader(url, dest_path, overwrite=True)
145         GLib.timeout_add(100, self.get_progress, downloader, row)
146         self.installing[row[self.COL_VER]] = downloader
147         downloader.start()
148
149     def get_progress(self, downloader, row):
150         if downloader.state == downloader.CANCELLED:
151             return False
152         if downloader.state == downloader.ERROR:
153             self.cancel_install(row)
154             return False
155         downloader.check_progress()
156         percent_downloaded = downloader.progress_percentage
157         if percent_downloaded >= 1:
158             row[4] = percent_downloaded
159             self.renderer_progress.props.pulse = -1
160             self.renderer_progress.props.text = "%d %%" % int(percent_downloaded)
161         else:
162             row[4] = 1
163             self.renderer_progress.props.pulse = random.randint(1, 100)
164             self.renderer_progress.props.text = "Downloading…"
165         if downloader.state == downloader.COMPLETED:
166             row[4] = 99
167             self.renderer_progress.props.text = "Extracting…"
168             self.on_runner_downloaded(row)
169             return False
170         return True
171
172     def on_runner_downloaded(self, row):
173         version = row[0]
174         architecture = row[1]
175         src = self.get_dest_path(row)
176         dst = self.get_runner_path(version, architecture)
177         jobs.AsyncCall(self.extract, self.on_extracted, src, dst, row)
178
179     def extract(self, src, dst, row):
180         extract_archive(src, dst)
181         return src, row
182
183     def on_extracted(self, xxx_todo_changeme, error):
184         (src, row) = xxx_todo_changeme
185         os.remove(src)
186         row[self.COL_PROGRESS] = 0
187         row[self.COL_INSTALLED] = True
188         self.renderer_progress.props.text = ""
189         self.installing.pop(row[self.COL_VER])
190
191     def on_response(self, dialog, response):
192         self.destroy()
193
194
195 if __name__ == "__main__":
196     import signal
197     signal.signal(signal.SIGINT, signal.SIG_DFL)
198     RunnerInstallDialog("test", None, "wine")
199     GObject.threads_init()
200     Gtk.main()
201
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/lutris/gui/runnerinstalldialog.py b/lutris/gui/runnerinstalldialog.py
--- a/lutris/gui/runnerinstalldialog.py
+++ b/lutris/gui/runnerinstalldialog.py
@@ -23,7 +23,7 @@
         super(RunnerInstallDialog, self).__init__(
             title, parent, 0, ('_OK', Gtk.ResponseType.OK)
         )
-        width, height = (340, 380)
+        width, height = (460, 380)
         self.dialog_size = (width, height)
         self.set_default_size(width, height)

|

{"golden_diff": "diff --git a/lutris/gui/runnerinstalldialog.py b/lutris/gui/runnerinstalldialog.py\n--- a/lutris/gui/runnerinstalldialog.py\n+++ b/lutris/gui/runnerinstalldialog.py\n@@ -23,7 +23,7 @@\n super(RunnerInstallDialog, self).__init__(\n title, parent, 0, ('_OK', Gtk.ResponseType.OK)\n )\n- width, height = (340, 380)\n+ width, height = (460, 380)\n self.dialog_size = (width, height)\n self.set_default_size(width, height)\n", "issue": "\"Manage Versions` window is too narrow\nRecent improvements to Wine version managing are currently a bit pointless due to Manage Versions windows being too narrow and not being able to display the status of the downloading process:\r\n\r\n\r\n\n", "before_files": [{"content": "# pylint: disable=missing-docstring\nimport os\nimport random\n\nfrom gi.repository import GLib, GObject, Gtk\nfrom lutris import api, settings\nfrom lutris.gui.dialogs import ErrorDialog, QuestionDialog\nfrom lutris.gui.widgets.dialogs import Dialog\nfrom lutris.util import jobs, system\nfrom lutris.util.downloader import Downloader\nfrom lutris.util.extract import extract_archive\nfrom lutris.util.log import logger\n\n\nclass RunnerInstallDialog(Dialog):\n COL_VER = 0\n COL_ARCH = 1\n COL_URL = 2\n COL_INSTALLED = 3\n COL_PROGRESS = 4\n\n def __init__(self, title, parent, runner):\n super(RunnerInstallDialog, self).__init__(\n title, parent, 0, ('_OK', Gtk.ResponseType.OK)\n )\n width, height = (340, 380)\n self.dialog_size = (width, height)\n self.set_default_size(width, height)\n\n self.runner = runner\n self.runner_info = api.get_runners(self.runner)\n if not self.runner_info:\n ErrorDialog('Unable to get runner versions, check your internet connection',\n parent=parent)\n return\n label = Gtk.Label(\"%s version management\" % self.runner_info['name'])\n self.vbox.add(label)\n self.runner_store = self.get_store()\n scrolled_window = Gtk.ScrolledWindow()\n self.treeview = self.get_treeview(self.runner_store)\n self.installing = {}\n 
self.connect('response', self.on_response)\n\n scrolled_window.set_policy(Gtk.PolicyType.AUTOMATIC,\n Gtk.PolicyType.AUTOMATIC)\n scrolled_window.set_shadow_type(Gtk.ShadowType.ETCHED_OUT)\n scrolled_window.add(self.treeview)\n\n self.vbox.pack_start(scrolled_window, True, True, 14)\n self.show_all()\n\n def get_treeview(self, model):\n treeview = Gtk.TreeView(model=model)\n treeview.set_headers_visible(False)\n\n renderer_toggle = Gtk.CellRendererToggle()\n renderer_text = Gtk.CellRendererText()\n self.renderer_progress = Gtk.CellRendererProgress()\n\n installed_column = Gtk.TreeViewColumn(None, renderer_toggle, active=3)\n renderer_toggle.connect(\"toggled\", self.on_installed_toggled)\n treeview.append_column(installed_column)\n\n version_column = Gtk.TreeViewColumn(None, renderer_text)\n version_column.add_attribute(renderer_text, 'text', self.COL_VER)\n version_column.set_property('min-width', 80)\n treeview.append_column(version_column)\n\n arch_column = Gtk.TreeViewColumn(None, renderer_text,\n text=self.COL_ARCH)\n arch_column.set_property('min-width', 50)\n treeview.append_column(arch_column)\n\n progress_column = Gtk.TreeViewColumn(None, self.renderer_progress,\n value=self.COL_PROGRESS,\n visible=self.COL_PROGRESS)\n progress_column.set_property('fixed-width', 60)\n progress_column.set_property('min-width', 60)\n progress_column.set_property('resizable', False)\n treeview.append_column(progress_column)\n\n return treeview\n\n def get_store(self):\n liststore = Gtk.ListStore(str, str, str, bool, int)\n for version_info in reversed(self.get_versions()):\n version = version_info['version']\n architecture = version_info['architecture']\n progress = 0\n is_installed = os.path.exists(\n self.get_runner_path(version, architecture)\n )\n liststore.append(\n [version_info['version'],\n version_info['architecture'],\n version_info['url'],\n is_installed,\n progress]\n )\n return liststore\n\n def get_versions(self):\n return self.runner_info['versions']\n\n def 
get_runner_path(self, version, arch):\n return os.path.join(settings.RUNNER_DIR, self.runner,\n \"{}-{}\".format(version, arch))\n\n def get_dest_path(self, row):\n url = row[2]\n filename = os.path.basename(url)\n return os.path.join(settings.CACHE_DIR, filename)\n\n def on_installed_toggled(self, widget, path):\n row = self.runner_store[path]\n if row[self.COL_VER] in self.installing:\n confirm_dlg = QuestionDialog({\n \"question\": \"Do you want to cancel the download?\",\n \"title\": \"Download starting\"\n })\n if confirm_dlg.result == confirm_dlg.YES:\n self.cancel_install(row)\n elif row[self.COL_INSTALLED]:\n self.uninstall_runner(row)\n else:\n self.install_runner(row)\n\n def cancel_install(self, row):\n self.installing[row[self.COL_VER]].cancel()\n self.uninstall_runner(row)\n row[self.COL_PROGRESS] = 0\n self.installing.pop(row[self.COL_VER])\n\n def uninstall_runner(self, row):\n version = row[self.COL_VER]\n arch = row[self.COL_ARCH]\n system.remove_folder(self.get_runner_path(version, arch))\n row[self.COL_INSTALLED] = False\n\n def install_runner(self, row):\n url = row[2]\n logger.debug(\"Downloading %s\", url)\n dest_path = self.get_dest_path(row)\n downloader = Downloader(url, dest_path, overwrite=True)\n GLib.timeout_add(100, self.get_progress, downloader, row)\n self.installing[row[self.COL_VER]] = downloader\n downloader.start()\n\n def get_progress(self, downloader, row):\n if downloader.state == downloader.CANCELLED:\n return False\n if downloader.state == downloader.ERROR:\n self.cancel_install(row)\n return False\n downloader.check_progress()\n percent_downloaded = downloader.progress_percentage\n if percent_downloaded >= 1:\n row[4] = percent_downloaded\n self.renderer_progress.props.pulse = -1\n self.renderer_progress.props.text = \"%d %%\" % int(percent_downloaded)\n else:\n row[4] = 1\n self.renderer_progress.props.pulse = random.randint(1, 100)\n self.renderer_progress.props.text = \"Downloading\u2026\"\n if downloader.state == 
downloader.COMPLETED:\n row[4] = 99\n self.renderer_progress.props.text = \"Extracting\u2026\"\n self.on_runner_downloaded(row)\n return False\n return True\n\n def on_runner_downloaded(self, row):\n version = row[0]\n architecture = row[1]\n src = self.get_dest_path(row)\n dst = self.get_runner_path(version, architecture)\n jobs.AsyncCall(self.extract, self.on_extracted, src, dst, row)\n\n def extract(self, src, dst, row):\n extract_archive(src, dst)\n return src, row\n\n def on_extracted(self, xxx_todo_changeme, error):\n (src, row) = xxx_todo_changeme\n os.remove(src)\n row[self.COL_PROGRESS] = 0\n row[self.COL_INSTALLED] = True\n self.renderer_progress.props.text = \"\"\n self.installing.pop(row[self.COL_VER])\n\n def on_response(self, dialog, response):\n self.destroy()\n\n\nif __name__ == \"__main__\":\n import signal\n signal.signal(signal.SIGINT, signal.SIG_DFL)\n RunnerInstallDialog(\"test\", None, \"wine\")\n GObject.threads_init()\n Gtk.main()\n", "path": "lutris/gui/runnerinstalldialog.py"}], "after_files": [{"content": "# pylint: disable=missing-docstring\nimport os\nimport random\n\nfrom gi.repository import GLib, GObject, Gtk\nfrom lutris import api, settings\nfrom lutris.gui.dialogs import ErrorDialog, QuestionDialog\nfrom lutris.gui.widgets.dialogs import Dialog\nfrom lutris.util import jobs, system\nfrom lutris.util.downloader import Downloader\nfrom lutris.util.extract import extract_archive\nfrom lutris.util.log import logger\n\n\nclass RunnerInstallDialog(Dialog):\n COL_VER = 0\n COL_ARCH = 1\n COL_URL = 2\n COL_INSTALLED = 3\n COL_PROGRESS = 4\n\n def __init__(self, title, parent, runner):\n super(RunnerInstallDialog, self).__init__(\n title, parent, 0, ('_OK', Gtk.ResponseType.OK)\n )\n width, height = (460, 380)\n self.dialog_size = (width, height)\n self.set_default_size(width, height)\n\n self.runner = runner\n self.runner_info = api.get_runners(self.runner)\n if not self.runner_info:\n ErrorDialog('Unable to get runner versions, check 
your internet connection',\n parent=parent)\n return\n label = Gtk.Label(\"%s version management\" % self.runner_info['name'])\n self.vbox.add(label)\n self.runner_store = self.get_store()\n scrolled_window = Gtk.ScrolledWindow()\n self.treeview = self.get_treeview(self.runner_store)\n self.installing = {}\n self.connect('response', self.on_response)\n\n scrolled_window.set_policy(Gtk.PolicyType.AUTOMATIC,\n Gtk.PolicyType.AUTOMATIC)\n scrolled_window.set_shadow_type(Gtk.ShadowType.ETCHED_OUT)\n scrolled_window.add(self.treeview)\n\n self.vbox.pack_start(scrolled_window, True, True, 14)\n self.show_all()\n\n def get_treeview(self, model):\n treeview = Gtk.TreeView(model=model)\n treeview.set_headers_visible(False)\n\n renderer_toggle = Gtk.CellRendererToggle()\n renderer_text = Gtk.CellRendererText()\n self.renderer_progress = Gtk.CellRendererProgress()\n\n installed_column = Gtk.TreeViewColumn(None, renderer_toggle, active=3)\n renderer_toggle.connect(\"toggled\", self.on_installed_toggled)\n treeview.append_column(installed_column)\n\n version_column = Gtk.TreeViewColumn(None, renderer_text)\n version_column.add_attribute(renderer_text, 'text', self.COL_VER)\n version_column.set_property('min-width', 80)\n treeview.append_column(version_column)\n\n arch_column = Gtk.TreeViewColumn(None, renderer_text,\n text=self.COL_ARCH)\n arch_column.set_property('min-width', 50)\n treeview.append_column(arch_column)\n\n progress_column = Gtk.TreeViewColumn(None, self.renderer_progress,\n value=self.COL_PROGRESS,\n visible=self.COL_PROGRESS)\n progress_column.set_property('fixed-width', 60)\n progress_column.set_property('min-width', 60)\n progress_column.set_property('resizable', False)\n treeview.append_column(progress_column)\n\n return treeview\n\n def get_store(self):\n liststore = Gtk.ListStore(str, str, str, bool, int)\n for version_info in reversed(self.get_versions()):\n version = version_info['version']\n architecture = version_info['architecture']\n progress = 0\n 
is_installed = os.path.exists(\n self.get_runner_path(version, architecture)\n )\n liststore.append(\n [version_info['version'],\n version_info['architecture'],\n version_info['url'],\n is_installed,\n progress]\n )\n return liststore\n\n def get_versions(self):\n return self.runner_info['versions']\n\n def get_runner_path(self, version, arch):\n return os.path.join(settings.RUNNER_DIR, self.runner,\n \"{}-{}\".format(version, arch))\n\n def get_dest_path(self, row):\n url = row[2]\n filename = os.path.basename(url)\n return os.path.join(settings.CACHE_DIR, filename)\n\n def on_installed_toggled(self, widget, path):\n row = self.runner_store[path]\n if row[self.COL_VER] in self.installing:\n confirm_dlg = QuestionDialog({\n \"question\": \"Do you want to cancel the download?\",\n \"title\": \"Download starting\"\n })\n if confirm_dlg.result == confirm_dlg.YES:\n self.cancel_install(row)\n elif row[self.COL_INSTALLED]:\n self.uninstall_runner(row)\n else:\n self.install_runner(row)\n\n def cancel_install(self, row):\n self.installing[row[self.COL_VER]].cancel()\n self.uninstall_runner(row)\n row[self.COL_PROGRESS] = 0\n self.installing.pop(row[self.COL_VER])\n\n def uninstall_runner(self, row):\n version = row[self.COL_VER]\n arch = row[self.COL_ARCH]\n system.remove_folder(self.get_runner_path(version, arch))\n row[self.COL_INSTALLED] = False\n\n def install_runner(self, row):\n url = row[2]\n logger.debug(\"Downloading %s\", url)\n dest_path = self.get_dest_path(row)\n downloader = Downloader(url, dest_path, overwrite=True)\n GLib.timeout_add(100, self.get_progress, downloader, row)\n self.installing[row[self.COL_VER]] = downloader\n downloader.start()\n\n def get_progress(self, downloader, row):\n if downloader.state == downloader.CANCELLED:\n return False\n if downloader.state == downloader.ERROR:\n self.cancel_install(row)\n return False\n downloader.check_progress()\n percent_downloaded = downloader.progress_percentage\n if percent_downloaded >= 1:\n row[4] = 
percent_downloaded\n self.renderer_progress.props.pulse = -1\n self.renderer_progress.props.text = \"%d %%\" % int(percent_downloaded)\n else:\n row[4] = 1\n self.renderer_progress.props.pulse = random.randint(1, 100)\n self.renderer_progress.props.text = \"Downloading\u2026\"\n if downloader.state == downloader.COMPLETED:\n row[4] = 99\n self.renderer_progress.props.text = \"Extracting\u2026\"\n self.on_runner_downloaded(row)\n return False\n return True\n\n def on_runner_downloaded(self, row):\n version = row[0]\n architecture = row[1]\n src = self.get_dest_path(row)\n dst = self.get_runner_path(version, architecture)\n jobs.AsyncCall(self.extract, self.on_extracted, src, dst, row)\n\n def extract(self, src, dst, row):\n extract_archive(src, dst)\n return src, row\n\n def on_extracted(self, xxx_todo_changeme, error):\n (src, row) = xxx_todo_changeme\n os.remove(src)\n row[self.COL_PROGRESS] = 0\n row[self.COL_INSTALLED] = True\n self.renderer_progress.props.text = \"\"\n self.installing.pop(row[self.COL_VER])\n\n def on_response(self, dialog, response):\n self.destroy()\n\n\nif __name__ == \"__main__\":\n import signal\n signal.signal(signal.SIGINT, signal.SIG_DFL)\n RunnerInstallDialog(\"test\", None, \"wine\")\n GObject.threads_init()\n Gtk.main()\n", "path": "lutris/gui/runnerinstalldialog.py"}]}

| 2,454 | 149 |
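The fix in the row above only widens the dialog from 340 to 460 px. The sketch below shows which labels the progress column has to fit by pulling the label logic of `get_progress` into a pure function. This is an illustrative sketch only. The `State` enum and the `progress_cell` name are hypothetical stand-ins for the real `Downloader` constants and the dialog's GTK renderer calls. The CANCELLED and ERROR branches are left out.

```python
from enum import Enum


class State(Enum):
    # Hypothetical stand-ins for the Downloader state constants the dialog checks.
    DOWNLOADING = "downloading"
    COMPLETED = "completed"


def progress_cell(state: State, percent: float) -> tuple[int, str]:
    """Return the (value, text) pair the progress column would show (sketch)."""
    if state is State.COMPLETED:
        # Download finished: the bar parks at 99 while the archive is extracted.
        return 99, "Extracting…"
    if percent >= 1:
        # Known progress: show the whole-number percentage.
        return int(percent), "%d %%" % int(percent)
    # Below 1 % the dialog pulses the bar and shows a generic label.
    return 1, "Downloading…"


if __name__ == "__main__":
    samples = [(State.DOWNLOADING, 0.0), (State.DOWNLOADING, 42.7), (State.COMPLETED, 100.0)]
    for state, pct in samples:
        print(progress_cell(state, pct))
```

Under these assumptions, the widest strings are `Downloading…` and `Extracting…`. These are the status labels the issue says the narrow window could not display.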

gh_patches_debug_37740

|

rasdani/github-patches

|

git_diff

|

mitmproxy__mitmproxy-6719

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

The mitmproxy program fails to start when the default port 8080 is already in use.

#### Problem Description

When the default port 8080 is already in use, mitmproxy fails to start and prints no reason for the failure.

#### Steps to reproduce the behavior:

1. In one terminal window, listen on port 8080 with `nc`.
2. Start mitmproxy in another terminal window.
3. mitmproxy fails to start without printing a reason, and the terminal is not restored to its normal configuration.

#### System Information

Mitmproxy: 10.2.3 binary

Python: 3.12.2

OpenSSL: OpenSSL 3.2.1 30 Jan 2024

Platform: macOS-14.2.1-arm64-arm-64bit

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `mitmproxy/master.py`

Content:

```
1 import asyncio
2 import logging
3
4 from . import ctx as mitmproxy_ctx
5 from .addons import termlog
6 from .proxy.mode_specs import ReverseMode
7 from .utils import asyncio_utils
8 from mitmproxy import addonmanager
9 from mitmproxy import command
10 from mitmproxy import eventsequence
11 from mitmproxy import hooks
12 from mitmproxy import http
13 from mitmproxy import log
14 from mitmproxy import options
15
16 logger = logging.getLogger(__name__)
17
18
19 class Master:
20     """
21     The master handles mitmproxy's main event loop.
22     """
23
24     event_loop: asyncio.AbstractEventLoop
25     _termlog_addon: termlog.TermLog | None = None
26
27     def __init__(
28         self,
29         opts: options.Options,
30         event_loop: asyncio.AbstractEventLoop | None = None,
31         with_termlog: bool = False,
32     ):
33         self.options: options.Options = opts or options.Options()
34         self.commands = command.CommandManager(self)
35         self.addons = addonmanager.AddonManager(self)
36
37         if with_termlog:
38             self._termlog_addon = termlog.TermLog()
39             self.addons.add(self._termlog_addon)
40
41         self.log = log.Log(self)  # deprecated, do not use.
42         self._legacy_log_events = log.LegacyLogEvents(self)
43         self._legacy_log_events.install()
44
45         # We expect an active event loop here already because some addons
46         # may want to spawn tasks during the initial configuration phase,
47         # which happens before run().
48         self.event_loop = event_loop or asyncio.get_running_loop()
49         self.should_exit = asyncio.Event()
50         mitmproxy_ctx.master = self
51         mitmproxy_ctx.log = self.log  # deprecated, do not use.
52         mitmproxy_ctx.options = self.options
53
54     async def run(self) -> None:
55         with (
56             asyncio_utils.install_exception_handler(self._asyncio_exception_handler),
57             asyncio_utils.set_eager_task_factory(),
58         ):
59             self.should_exit.clear()
60
61             if ec := self.addons.get("errorcheck"):
62                 await ec.shutdown_if_errored()
63             if ps := self.addons.get("proxyserver"):
64                 # This may block for some proxy modes, so we also monitor should_exit.
65                 await asyncio.wait(
66                     [
67                         asyncio.create_task(ps.setup_servers()),
68                         asyncio.create_task(self.should_exit.wait()),
69                     ],
70                     return_when=asyncio.FIRST_COMPLETED,
71                 )
72             await self.running()
73             if ec := self.addons.get("errorcheck"):
74                 await ec.shutdown_if_errored()
75                 ec.finish()
76             try:
77                 await self.should_exit.wait()
78             finally:
79                 # .wait might be cancelled (e.g. by sys.exit)
80                 await self.done()
81
82     def shutdown(self):
83         """
84         Shut down the proxy. This method is thread-safe.
85         """
86         # We may add an exception argument here.
87         self.event_loop.call_soon_threadsafe(self.should_exit.set)
88
89     async def running(self) -> None:
90         await self.addons.trigger_event(hooks.RunningHook())
91
92     async def done(self) -> None:
93         await self.addons.trigger_event(hooks.DoneHook())
94         self._legacy_log_events.uninstall()
95         if self._termlog_addon is not None:
96             self._termlog_addon.uninstall()
97
98     def _asyncio_exception_handler(self, loop, context) -> None:
99         try:
100             exc: Exception = context["exception"]
101         except KeyError:
102             logger.error(f"Unhandled asyncio error: {context}")
103         else:
104             if isinstance(exc, OSError) and exc.errno == 10038:
105                 return  # suppress https://bugs.python.org/issue43253
106             logger.error(
107                 "Unhandled error in task.",
108                 exc_info=(type(exc), exc, exc.__traceback__),
109             )
110
111     async def load_flow(self, f):
112         """
113         Loads a flow
114         """
115
116         if (
117             isinstance(f, http.HTTPFlow)
118             and len(self.options.mode) == 1
119             and self.options.mode[0].startswith("reverse:")
120         ):
121             # When we load flows in reverse proxy mode, we adjust the target host to
122             # the reverse proxy destination for all flows we load. This makes it very
123             # easy to replay saved flows against a different host.
124             # We may change this in the future so that clientplayback always replays to the first mode.
125             mode = ReverseMode.parse(self.options.mode[0])
126             assert isinstance(mode, ReverseMode)
127             f.request.host, f.request.port, *_ = mode.address
128             f.request.scheme = mode.scheme
129
130         for e in eventsequence.iterate(f):
131             await self.addons.handle_lifecycle(e)
132
```

Path: `mitmproxy/addons/errorcheck.py`

Content:

```
1 import asyncio
2 import logging
3 import sys
4
5 from mitmproxy import log
6
7
8 class ErrorCheck:
9     """Monitor startup for error log entries, and terminate immediately if there are some."""
10
11     repeat_errors_on_stderr: bool
12     """
13     Repeat all errors on stderr before exiting.
14     This is useful for the console UI, which otherwise swallows all output.
15     """
16
17     def __init__(self, repeat_errors_on_stderr: bool = False) -> None:
18         self.repeat_errors_on_stderr = repeat_errors_on_stderr
19
20         self.logger = ErrorCheckHandler()
21         self.logger.install()
22
23     def finish(self):
24         self.logger.uninstall()
25
26     async def shutdown_if_errored(self):
27         # don't run immediately, wait for all logging tasks to finish.
28         await asyncio.sleep(0)
29         if self.logger.has_errored:
30             plural = "s" if len(self.logger.has_errored) > 1 else ""
31             if self.repeat_errors_on_stderr:
32                 msg = "\n".join(self.logger.format(r) for r in self.logger.has_errored)
33                 print(f"Error{plural} logged during startup:\n{msg}", file=sys.stderr)
34             else:
35                 print(
36                     f"Error{plural} logged during startup, exiting...", file=sys.stderr
37                 )
38
39             sys.exit(1)
40
41
42 class ErrorCheckHandler(log.MitmLogHandler):
43     def __init__(self) -> None:
44         super().__init__(logging.ERROR)
45         self.has_errored: list[logging.LogRecord] = []
46
47     def emit(self, record: logging.LogRecord) -> None:
48         self.has_errored.append(record)
49
```

--- END FILES ---
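In `Master.run()` above, `asyncio.wait(..., FIRST_COMPLETED)` races server setup against `should_exit`, but `running()` is then called unconditionally. Errors from a failed bind are only checked afterwards. The sketch below is a minimal, self-contained version of a race-then-check ordering that avoids this. It assumes plain `asyncio` and uses a list of strings in place of mitmproxy's `ErrorCheck` addon and log records. `start` and `setup_port_in_use` are hypothetical names, not mitmproxy APIs, and the error message text is made up.

```python
import asyncio
import sys
from collections.abc import Awaitable, Callable


async def start(
    setup: Callable[[list[str]], Awaitable[None]],
    should_exit: asyncio.Event,
    errors: list[str],
) -> str:
    """Bring up servers, then decide whether to run, fail loudly, or stop (sketch)."""
    # Setup may block, so race it against a shutdown request.
    setup_task = asyncio.create_task(setup(errors))
    exit_task = asyncio.create_task(should_exit.wait())
    _, pending = await asyncio.wait(
        {setup_task, exit_task}, return_when=asyncio.FIRST_COMPLETED
    )
    # Cancel whichever side lost the race and wait for the cancellation to finish.
    for task in pending:
        task.cancel()
    await asyncio.gather(*pending, return_exceptions=True)

    if should_exit.is_set():
        return "shutdown"  # stop here; never enter the running phase
    if errors:
        # Say *why* startup failed before exiting, instead of exiting silently.
        print("Errors logged during startup:", *errors, sep="\n", file=sys.stderr)
        return "failed"
    return "running"  # a real master would now await its running hook


async def setup_port_in_use(errors: list[str]) -> None:
    # Hypothetical stand-in for a server setup step that cannot bind its port.
    errors.append("could not listen on port 8080: address already in use")


async def main() -> None:
    # Create the Event inside the running loop.
    print(await start(setup_port_in_use, asyncio.Event(), []))


if __name__ == "__main__":
    asyncio.run(main())
```

The key design point is the ordering. The early-return and error checks sit between the setup race and the running phase, so a failed bind is reported before anything that assumes the servers are up.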

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 
 
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 
 
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/mitmproxy/addons/errorcheck.py b/mitmproxy/addons/errorcheck.py
--- a/mitmproxy/addons/errorcheck.py
+++ b/mitmproxy/addons/errorcheck.py
@@ -3,6 +3,8 @@
 import sys
 
 from mitmproxy import log
+from mitmproxy.contrib import click as miniclick
+from mitmproxy.utils import vt_codes
 
 
 class ErrorCheck:
@@ -29,8 +31,13 @@
         if self.logger.has_errored:
             plural = "s" if len(self.logger.has_errored) > 1 else ""
             if self.repeat_errors_on_stderr:
-                msg = "\n".join(self.logger.format(r) for r in self.logger.has_errored)
-                print(f"Error{plural} logged during startup:\n{msg}", file=sys.stderr)
+                message = f"Error{plural} logged during startup:"
+                if vt_codes.ensure_supported(sys.stderr):  # pragma: no cover
+                    message = miniclick.style(message, fg="red")
+                details = "\n".join(
+                    self.logger.format(r) for r in self.logger.has_errored
+                )
+                print(f"{message}\n{details}", file=sys.stderr)
             else:
                 print(
                     f"Error{plural} logged during startup, exiting...", file=sys.stderr
diff --git a/mitmproxy/master.py b/mitmproxy/master.py
--- a/mitmproxy/master.py
+++ b/mitmproxy/master.py
@@ -58,6 +58,7 @@
         ):
             self.should_exit.clear()
 
+            # Can we exit before even bringing up servers?
             if ec := self.addons.get("errorcheck"):
                 await ec.shutdown_if_errored()
             if ps := self.addons.get("proxyserver"):
@@ -69,14 +70,23 @@
                     ],
                     return_when=asyncio.FIRST_COMPLETED,
                 )
-            await self.running()
-            if ec := self.addons.get("errorcheck"):
-                await ec.shutdown_if_errored()
-                ec.finish()
+                if self.should_exit.is_set():
+                    return
+            # Did bringing up servers fail?
+            if ec := self.addons.get("errorcheck"):
+                await ec.shutdown_if_errored()
+
             try:
+                await self.running()
+                # Any errors in the final part of startup?
+                if ec := self.addons.get("errorcheck"):
+                    await ec.shutdown_if_errored()
+                    ec.finish()
+
                 await self.should_exit.wait()
             finally:
-                # .wait might be cancelled (e.g. by sys.exit)
+                # if running() was called, we also always want to call done().
+                # .wait might be cancelled (e.g. by sys.exit), so this needs to be in a finally block.
                 await self.done()
 
     def shutdown(self):

|

{"golden_diff": "diff --git a/mitmproxy/addons/errorcheck.py b/mitmproxy/addons/errorcheck.py\n--- a/mitmproxy/addons/errorcheck.py\n+++ b/mitmproxy/addons/errorcheck.py\n@@ -3,6 +3,8 @@\n import sys\n \n from mitmproxy import log\n+from mitmproxy.contrib import click as miniclick\n+from mitmproxy.utils import vt_codes\n \n \n class ErrorCheck:\n@@ -29,8 +31,13 @@\n if self.logger.has_errored:\n plural = \"s\" if len(self.logger.has_errored) > 1 else \"\"\n if self.repeat_errors_on_stderr:\n- msg = \"\\n\".join(self.logger.format(r) for r in self.logger.has_errored)\n- print(f\"Error{plural} logged during startup:\\n{msg}\", file=sys.stderr)\n+ message = f\"Error{plural} logged during startup:\"\n+ if vt_codes.ensure_supported(sys.stderr): # pragma: no cover\n+ message = miniclick.style(message, fg=\"red\")\n+ details = \"\\n\".join(\n+ self.logger.format(r) for r in self.logger.has_errored\n+ )\n+ print(f\"{message}\\n{details}\", file=sys.stderr)\n else:\n print(\n f\"Error{plural} logged during startup, exiting...\", file=sys.stderr\ndiff --git a/mitmproxy/master.py b/mitmproxy/master.py\n--- a/mitmproxy/master.py\n+++ b/mitmproxy/master.py\n@@ -58,6 +58,7 @@\n ):\n self.should_exit.clear()\n \n+ # Can we exit before even bringing up servers?\n if ec := self.addons.get(\"errorcheck\"):\n await ec.shutdown_if_errored()\n if ps := self.addons.get(\"proxyserver\"):\n@@ -69,14 +70,23 @@\n ],\n return_when=asyncio.FIRST_COMPLETED,\n )\n- await self.running()\n- if ec := self.addons.get(\"errorcheck\"):\n- await ec.shutdown_if_errored()\n- ec.finish()\n+ if self.should_exit.is_set():\n+ return\n+ # Did bringing up servers fail?\n+ if ec := self.addons.get(\"errorcheck\"):\n+ await ec.shutdown_if_errored()\n+\n try:\n+ await self.running()\n+ # Any errors in the final part of startup?\n+ if ec := self.addons.get(\"errorcheck\"):\n+ await ec.shutdown_if_errored()\n+ ec.finish()\n+\n await self.should_exit.wait()\n finally:\n- # .wait might be cancelled (e.g. 
by sys.exit)\n+ # if running() was called, we also always want to call done().\n+ # .wait might be cancelled (e.g. by sys.exit), so this needs to be in a finally block.\n await self.done()\n \n def shutdown(self):\n", "issue": "The mitmproxy program failed to start because the default port 8080 was occupied.\n#### Problem Description\r\nBecause the default port 8080 is occupied, the mitmproxy program fails to start, and there is no output reason for the failure.\r\n\r\n#### Steps to reproduce the behavior:\r\n1. Listen on port 8080 using the nc command in a terminal window.\r\n2. Start the mitmproxy program in another terminal window.\r\n3. The mitmproxy program failed to start, and there was no output reason for the failure, and the normal terminal configuration was not restored.\r\n\r\n\r\n\r\n\r\n#### System Information\r\nMitmproxy: 10.2.3 binary\r\nPython: 3.12.2\r\nOpenSSL: OpenSSL 3.2.1 30 Jan 2024\r\nPlatform: macOS-14.2.1-arm64-arm-64bit\r\n\n", "before_files": [{"content": "import asyncio\nimport logging\n\nfrom . 
import ctx as mitmproxy_ctx\nfrom .addons import termlog\nfrom .proxy.mode_specs import ReverseMode\nfrom .utils import asyncio_utils\nfrom mitmproxy import addonmanager\nfrom mitmproxy import command\nfrom mitmproxy import eventsequence\nfrom mitmproxy import hooks\nfrom mitmproxy import http\nfrom mitmproxy import log\nfrom mitmproxy import options\n\nlogger = logging.getLogger(__name__)\n\n\nclass Master:\n \"\"\"\n The master handles mitmproxy's main event loop.\n \"\"\"\n\n event_loop: asyncio.AbstractEventLoop\n _termlog_addon: termlog.TermLog | None = None\n\n def __init__(\n self,\n opts: options.Options,\n event_loop: asyncio.AbstractEventLoop | None = None,\n with_termlog: bool = False,\n ):\n self.options: options.Options = opts or options.Options()\n self.commands = command.CommandManager(self)\n self.addons = addonmanager.AddonManager(self)\n\n if with_termlog:\n self._termlog_addon = termlog.TermLog()\n self.addons.add(self._termlog_addon)\n\n self.log = log.Log(self) # deprecated, do not use.\n self._legacy_log_events = log.LegacyLogEvents(self)\n self._legacy_log_events.install()\n\n # We expect an active event loop here already because some addons\n # may want to spawn tasks during the initial configuration phase,\n # which happens before run().\n self.event_loop = event_loop or asyncio.get_running_loop()\n self.should_exit = asyncio.Event()\n mitmproxy_ctx.master = self\n mitmproxy_ctx.log = self.log # deprecated, do not use.\n mitmproxy_ctx.options = self.options\n\n async def run(self) -> None:\n with (\n asyncio_utils.install_exception_handler(self._asyncio_exception_handler),\n asyncio_utils.set_eager_task_factory(),\n ):\n self.should_exit.clear()\n\n if ec := self.addons.get(\"errorcheck\"):\n await ec.shutdown_if_errored()\n if ps := self.addons.get(\"proxyserver\"):\n # This may block for some proxy modes, so we also monitor should_exit.\n await asyncio.wait(\n [\n asyncio.create_task(ps.setup_servers()),\n 
asyncio.create_task(self.should_exit.wait()),\n ],\n return_when=asyncio.FIRST_COMPLETED,\n )\n await self.running()\n if ec := self.addons.get(\"errorcheck\"):\n await ec.shutdown_if_errored()\n ec.finish()\n try:\n await self.should_exit.wait()\n finally:\n # .wait might be cancelled (e.g. by sys.exit)\n await self.done()\n\n def shutdown(self):\n \"\"\"\n Shut down the proxy. This method is thread-safe.\n \"\"\"\n # We may add an exception argument here.\n self.event_loop.call_soon_threadsafe(self.should_exit.set)\n\n async def running(self) -> None:\n await self.addons.trigger_event(hooks.RunningHook())\n\n async def done(self) -> None:\n await self.addons.trigger_event(hooks.DoneHook())\n self._legacy_log_events.uninstall()\n if self._termlog_addon is not None:\n self._termlog_addon.uninstall()\n\n def _asyncio_exception_handler(self, loop, context) -> None:\n try:\n exc: Exception = context[\"exception\"]\n except KeyError:\n logger.error(f\"Unhandled asyncio error: {context}\")\n else:\n if isinstance(exc, OSError) and exc.errno == 10038:\n return # suppress https://bugs.python.org/issue43253\n logger.error(\n \"Unhandled error in task.\",\n exc_info=(type(exc), exc, exc.__traceback__),\n )\n\n async def load_flow(self, f):\n \"\"\"\n Loads a flow\n \"\"\"\n\n if (\n isinstance(f, http.HTTPFlow)\n and len(self.options.mode) == 1\n and self.options.mode[0].startswith(\"reverse:\")\n ):\n # When we load flows in reverse proxy mode, we adjust the target host to\n # the reverse proxy destination for all flows we load. 
This makes it very\n # easy to replay saved flows against a different host.\n # We may change this in the future so that clientplayback always replays to the first mode.\n mode = ReverseMode.parse(self.options.mode[0])\n assert isinstance(mode, ReverseMode)\n f.request.host, f.request.port, *_ = mode.address\n f.request.scheme = mode.scheme\n\n for e in eventsequence.iterate(f):\n await self.addons.handle_lifecycle(e)\n", "path": "mitmproxy/master.py"}, {"content": "import asyncio\nimport logging\nimport sys\n\nfrom mitmproxy import log\n\n\nclass ErrorCheck:\n \"\"\"Monitor startup for error log entries, and terminate immediately if there are some.\"\"\"\n\n repeat_errors_on_stderr: bool\n \"\"\"\n Repeat all errors on stderr before exiting.\n This is useful for the console UI, which otherwise swallows all output.\n \"\"\"\n\n def __init__(self, repeat_errors_on_stderr: bool = False) -> None:\n self.repeat_errors_on_stderr = repeat_errors_on_stderr\n\n self.logger = ErrorCheckHandler()\n self.logger.install()\n\n def finish(self):\n self.logger.uninstall()\n\n async def shutdown_if_errored(self):\n # don't run immediately, wait for all logging tasks to finish.\n await asyncio.sleep(0)\n if self.logger.has_errored:\n plural = \"s\" if len(self.logger.has_errored) > 1 else \"\"\n if self.repeat_errors_on_stderr:\n msg = \"\\n\".join(self.logger.format(r) for r in self.logger.has_errored)\n print(f\"Error{plural} logged during startup:\\n{msg}\", file=sys.stderr)\n else:\n print(\n f\"Error{plural} logged during startup, exiting...\", file=sys.stderr\n )\n\n sys.exit(1)\n\n\nclass ErrorCheckHandler(log.MitmLogHandler):\n def __init__(self) -> None:\n super().__init__(logging.ERROR)\n self.has_errored: list[logging.LogRecord] = []\n\n def emit(self, record: logging.LogRecord) -> None:\n self.has_errored.append(record)\n", "path": "mitmproxy/addons/errorcheck.py"}], "after_files": [{"content": "import asyncio\nimport logging\n\nfrom . 
import ctx as mitmproxy_ctx\nfrom .addons import termlog\nfrom .proxy.mode_specs import ReverseMode\nfrom .utils import asyncio_utils\nfrom mitmproxy import addonmanager\nfrom mitmproxy import command\nfrom mitmproxy import eventsequence\nfrom mitmproxy import hooks\nfrom mitmproxy import http\nfrom mitmproxy import log\nfrom mitmproxy import options\n\nlogger = logging.getLogger(__name__)\n\n\nclass Master:\n \"\"\"\n The master handles mitmproxy's main event loop.\n \"\"\"\n\n event_loop: asyncio.AbstractEventLoop\n _termlog_addon: termlog.TermLog | None = None\n\n def __init__(\n self,\n opts: options.Options,\n event_loop: asyncio.AbstractEventLoop | None = None,\n with_termlog: bool = False,\n ):\n self.options: options.Options = opts or options.Options()\n self.commands = command.CommandManager(self)\n self.addons = addonmanager.AddonManager(self)\n\n if with_termlog:\n self._termlog_addon = termlog.TermLog()\n self.addons.add(self._termlog_addon)\n\n self.log = log.Log(self) # deprecated, do not use.\n self._legacy_log_events = log.LegacyLogEvents(self)\n self._legacy_log_events.install()\n\n # We expect an active event loop here already because some addons\n # may want to spawn tasks during the initial configuration phase,\n # which happens before run().\n self.event_loop = event_loop or asyncio.get_running_loop()\n self.should_exit = asyncio.Event()\n mitmproxy_ctx.master = self\n mitmproxy_ctx.log = self.log # deprecated, do not use.\n mitmproxy_ctx.options = self.options\n\n async def run(self) -> None:\n with (\n asyncio_utils.install_exception_handler(self._asyncio_exception_handler),\n asyncio_utils.set_eager_task_factory(),\n ):\n self.should_exit.clear()\n\n # Can we exit before even bringing up servers?\n if ec := self.addons.get(\"errorcheck\"):\n await ec.shutdown_if_errored()\n if ps := self.addons.get(\"proxyserver\"):\n # This may block for some proxy modes, so we also monitor should_exit.\n await asyncio.wait(\n [\n 
asyncio.create_task(ps.setup_servers()),\n asyncio.create_task(self.should_exit.wait()),\n ],\n return_when=asyncio.FIRST_COMPLETED,\n )\n if self.should_exit.is_set():\n return\n # Did bringing up servers fail?\n if ec := self.addons.get(\"errorcheck\"):\n await ec.shutdown_if_errored()\n\n try:\n await self.running()\n # Any errors in the final part of startup?\n if ec := self.addons.get(\"errorcheck\"):\n await ec.shutdown_if_errored()\n ec.finish()\n\n await self.should_exit.wait()\n finally:\n # if running() was called, we also always want to call done().\n # .wait might be cancelled (e.g. by sys.exit), so this needs to be in a finally block.\n await self.done()\n\n def shutdown(self):\n \"\"\"\n Shut down the proxy. This method is thread-safe.\n \"\"\"\n # We may add an exception argument here.\n self.event_loop.call_soon_threadsafe(self.should_exit.set)\n\n async def running(self) -> None:\n await self.addons.trigger_event(hooks.RunningHook())\n\n async def done(self) -> None:\n await self.addons.trigger_event(hooks.DoneHook())\n self._legacy_log_events.uninstall()\n if self._termlog_addon is not None:\n self._termlog_addon.uninstall()\n\n def _asyncio_exception_handler(self, loop, context) -> None:\n try:\n exc: Exception = context[\"exception\"]\n except KeyError:\n logger.error(f\"Unhandled asyncio error: {context}\")\n else:\n if isinstance(exc, OSError) and exc.errno == 10038:\n return # suppress https://bugs.python.org/issue43253\n logger.error(\n \"Unhandled error in task.\",\n exc_info=(type(exc), exc, exc.__traceback__),\n )\n\n async def load_flow(self, f):\n \"\"\"\n Loads a flow\n \"\"\"\n\n if (\n isinstance(f, http.HTTPFlow)\n and len(self.options.mode) == 1\n and self.options.mode[0].startswith(\"reverse:\")\n ):\n # When we load flows in reverse proxy mode, we adjust the target host to\n # the reverse proxy destination for all flows we load. 
This makes it very\n # easy to replay saved flows against a different host.\n # We may change this in the future so that clientplayback always replays to the first mode.\n mode = ReverseMode.parse(self.options.mode[0])\n assert isinstance(mode, ReverseMode)\n f.request.host, f.request.port, *_ = mode.address\n f.request.scheme = mode.scheme\n\n for e in eventsequence.iterate(f):\n await self.addons.handle_lifecycle(e)\n", "path": "mitmproxy/master.py"}, {"content": "import asyncio\nimport logging\nimport sys\n\nfrom mitmproxy import log\nfrom mitmproxy.contrib import click as miniclick\nfrom mitmproxy.utils import vt_codes\n\n\nclass ErrorCheck:\n \"\"\"Monitor startup for error log entries, and terminate immediately if there are some.\"\"\"\n\n repeat_errors_on_stderr: bool\n \"\"\"\n Repeat all errors on stderr before exiting.\n This is useful for the console UI, which otherwise swallows all output.\n \"\"\"\n\n def __init__(self, repeat_errors_on_stderr: bool = False) -> None:\n self.repeat_errors_on_stderr = repeat_errors_on_stderr\n\n self.logger = ErrorCheckHandler()\n self.logger.install()\n\n def finish(self):\n self.logger.uninstall()\n\n async def shutdown_if_errored(self):\n # don't run immediately, wait for all logging tasks to finish.\n await asyncio.sleep(0)\n if self.logger.has_errored:\n plural = \"s\" if len(self.logger.has_errored) > 1 else \"\"\n if self.repeat_errors_on_stderr:\n message = f\"Error{plural} logged during startup:\"\n if vt_codes.ensure_supported(sys.stderr): # pragma: no cover\n message = miniclick.style(message, fg=\"red\")\n details = \"\\n\".join(\n self.logger.format(r) for r in self.logger.has_errored\n )\n print(f\"{message}\\n{details}\", file=sys.stderr)\n else:\n print(\n f\"Error{plural} logged during startup, exiting...\", file=sys.stderr\n )\n\n sys.exit(1)\n\n\nclass ErrorCheckHandler(log.MitmLogHandler):\n def __init__(self) -> None:\n super().__init__(logging.ERROR)\n self.has_errored: list[logging.LogRecord] = 
[]\n\n def emit(self, record: logging.LogRecord) -> None:\n self.has_errored.append(record)\n", "path": "mitmproxy/addons/errorcheck.py"}]}

| 2,252 | 635 |

gh_patches_debug_214

|

rasdani/github-patches

|

git_diff

|

DDMAL__CantusDB-328

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Representation of Notation objects

When I recently tried to edit a source, I was presented with an error message, and found that I was missing several required fields, including this one:

Notation objects are currently pretty inscrutable. They should be represented such that at least their `name` property is visible.

Larger question: why do we have notation objects at all? Currently, the notation model has only one property: `name`. Could this information in Source objects not be more simply represented by a CharField? Is using Notation objects simply the way things were done in OldCantus? Are we using them to ensure standardization among multiple Sources?

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `django/cantusdb_project/main_app/models/notation.py`

Content:

```

1 from django.db import models

2 from main_app.models import BaseModel

3

4

5 class Notation(BaseModel):

6 name = models.CharField(max_length=63)

7

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/django/cantusdb_project/main_app/models/notation.py b/django/cantusdb_project/main_app/models/notation.py

--- a/django/cantusdb_project/main_app/models/notation.py

+++ b/django/cantusdb_project/main_app/models/notation.py

@@ -4,3 +4,5 @@

class Notation(BaseModel):

name = models.CharField(max_length=63)

+ def __str__(self):

+ return f"{self.name} ({self.id})"

\ No newline at end of file

|

{"golden_diff": "diff --git a/django/cantusdb_project/main_app/models/notation.py b/django/cantusdb_project/main_app/models/notation.py\n--- a/django/cantusdb_project/main_app/models/notation.py\n+++ b/django/cantusdb_project/main_app/models/notation.py\n@@ -4,3 +4,5 @@\n \n class Notation(BaseModel):\n name = models.CharField(max_length=63)\n+ def __str__(self):\n+ return f\"{self.name} ({self.id})\"\n\\ No newline at end of file\n", "issue": "Representation of Notation objects\nWhen I recently tried to edit a source, I was presented with an error message, and found that I was missing several required fields, including this one: \r\nNotation objects are currently pretty inscrutable. They should be represented such that at least their `name` property is visible.\r\n\r\nLarger question: why do we have notation objects at all? Currently, the notation model has only one property: `name`. Could this information in Source objects not be more simply represented by a CharField? Is using Notation objects simply the way things were done in OldCantus? Are we using them to ensure standardization among multiple Sources?\n", "before_files": [{"content": "from django.db import models\nfrom main_app.models import BaseModel\n\n\nclass Notation(BaseModel):\n name = models.CharField(max_length=63)\n", "path": "django/cantusdb_project/main_app/models/notation.py"}], "after_files": [{"content": "from django.db import models\nfrom main_app.models import BaseModel\n\n\nclass Notation(BaseModel):\n name = models.CharField(max_length=63)\n def __str__(self):\n return f\"{self.name} ({self.id})\"", "path": "django/cantusdb_project/main_app/models/notation.py"}]}

| 523 | 120 |

gh_patches_debug_19368

|

rasdani/github-patches

|

git_diff

|

sopel-irc__sopel-610

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Remind is case sensitive

[22:20] <Eyore> .in 3SEC stuff

[22:20] @Willie Eyore: Sorry, didn't understand the input.

[22:20] <Eyore> .in 3 SEC stuff

[22:20] @Willie Eyore: Sorry, didn't understand the input.

[22:20] <Eyore> .in 3 S stuff

[22:20] @Willie Eyore: Sorry, didn't understand the input.

[22:20] <Eyore> .in 3 MIN stuff

[22:20] @Willie Eyore: Sorry, didn't understand the input.

[22:20] <Eyore> .in 3 Min stuff

[22:20] @Willie Eyore: Sorry, didn't understand the input.

I'd fix it myself, but looking at the code, I'm not sure where to put the .lower()

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `willie/modules/remind.py`

Content:

```

1 # coding=utf8

2 """

3 remind.py - Willie Reminder Module

4 Copyright 2011, Sean B. Palmer, inamidst.com

5 Licensed under the Eiffel Forum License 2.

6

7 http://willie.dftba.net

8 """

9 from __future__ import unicode_literals

10

11 import os

12 import re

13 import time

14 import threading

15 import collections

16 import codecs

17 from datetime import datetime

18 from willie.module import commands, example, NOLIMIT

19 import willie.tools

20

21 try:

22 import pytz

23 except:

24 pytz = None

25

26 def filename(self):

27 name = self.nick + '-' + self.config.host + '.reminders.db'

28 return os.path.join(self.config.dotdir, name)

29

30

31 def load_database(name):

32 data = {}

33 if os.path.isfile(name):

34 f = codecs.open(name, 'r', encoding='utf-8')

35 for line in f:

36 unixtime, channel, nick, message = line.split('\t')

37 message = message.rstrip('\n')

38 t = int(float(unixtime)) # WTFs going on here?

39 reminder = (channel, nick, message)

40 try:

41 data[t].append(reminder)

42 except KeyError:

43 data[t] = [reminder]

44 f.close()

45 return data

46

47

48 def dump_database(name, data):

49 f = codecs.open(name, 'w', encoding='utf-8')

50 for unixtime, reminders in willie.tools.iteritems(data):

51 for channel, nick, message in reminders:

52 f.write('%s\t%s\t%s\t%s\n' % (unixtime, channel, nick, message))

53 f.close()

54

55

56 def setup(bot):

57 bot.rfn = filename(bot)

58 bot.rdb = load_database(bot.rfn)

59

60 def monitor(bot):

61 time.sleep(5)

62 while True:

63 now = int(time.time())

64 unixtimes = [int(key) for key in bot.rdb]

65 oldtimes = [t for t in unixtimes if t <= now]

66 if oldtimes:

67 for oldtime in oldtimes:

68 for (channel, nick, message) in bot.rdb[oldtime]:

69 if message:

70 bot.msg(channel, nick + ': ' + message)

71 else:

72 bot.msg(channel, nick + '!')

73 del bot.rdb[oldtime]

74 dump_database(bot.rfn, bot.rdb)

75 time.sleep(2.5)

76

77 targs = (bot,)

78 t = threading.Thread(target=monitor, args=targs)

79 t.start()

80

81 scaling = collections.OrderedDict([

82 ('years', 365.25 * 24 * 3600),

83 ('year', 365.25 * 24 * 3600),

84 ('yrs', 365.25 * 24 * 3600),

85 ('y', 365.25 * 24 * 3600),

86

87 ('months', 29.53059 * 24 * 3600),

88 ('month', 29.53059 * 24 * 3600),

89 ('mo', 29.53059 * 24 * 3600),

90

91 ('weeks', 7 * 24 * 3600),

92 ('week', 7 * 24 * 3600),

93 ('wks', 7 * 24 * 3600),

94 ('wk', 7 * 24 * 3600),

95 ('w', 7 * 24 * 3600),

96

97 ('days', 24 * 3600),

98 ('day', 24 * 3600),

99 ('d', 24 * 3600),

100

101 ('hours', 3600),

102 ('hour', 3600),

103 ('hrs', 3600),

104 ('hr', 3600),

105 ('h', 3600),

106

107 ('minutes', 60),

108 ('minute', 60),

109 ('mins', 60),

110 ('min', 60),

111 ('m', 60),

112

113 ('seconds', 1),

114 ('second', 1),

115 ('secs', 1),

116 ('sec', 1),

117 ('s', 1),

118 ])

119

120 periods = '|'.join(scaling.keys())

121

122

123 @commands('in')

124 @example('.in 3h45m Go to class')

125 def remind(bot, trigger):

126 """Gives you a reminder in the given amount of time."""

127 duration = 0

128 message = filter(None, re.split('(\d+(?:\.\d+)? ?(?:' + periods + ')) ?',

129 trigger.group(2))[1:])

130 reminder = ''

131 stop = False

132 for piece in message:

133 grp = re.match('(\d+(?:\.\d+)?) ?(.*) ?', piece)

134 if grp and not stop:

135 length = float(grp.group(1))

136 factor = scaling.get(grp.group(2), 60)

137 duration += length * factor

138 else:

139 reminder = reminder + piece

140 stop = True

141 if duration == 0:

142 return bot.reply("Sorry, didn't understand the input.")

143

144 if duration % 1:

145 duration = int(duration) + 1

146 else:

147 duration = int(duration)

148 timezone = willie.tools.get_timezone(

149 bot.db, bot.config, None, trigger.nick, trigger.sender)

150 create_reminder(bot, trigger, duration, reminder, timezone)

151

152

153 @commands('at')

154 @example('.at 13:47 Do your homework!')

155 def at(bot, trigger):

156 """

157 Gives you a reminder at the given time. Takes hh:mm:ssTimezone

158 message. Timezone is any timezone Willie takes elsewhere; the best choices

159 are those from the tzdb; a list of valid options is available at

160 http://dft.ba/-tz . The seconds and timezone are optional.

161 """

162 regex = re.compile(r'(\d+):(\d+)(?::(\d+))?([^\s\d]+)? (.*)')

163 match = regex.match(trigger.group(2))

164 if not match:

165 bot.reply("Sorry, but I didn't understand your input.")

166 return NOLIMIT

167 hour, minute, second, tz, message = match.groups()

168 if not second:

169 second = '0'

170

171 if pytz:

172 timezone = willie.tools.get_timezone(bot.db, bot.config, tz,

173 trigger.nick, trigger.sender)

174 if not timezone:

175 timezone = 'UTC'

176 now = datetime.now(pytz.timezone(timezone))

177 at_time = datetime(now.year, now.month, now.day,

178 int(hour), int(minute), int(second),

179 tzinfo=now.tzinfo)

180 timediff = at_time - now

181 else:

182 if tz and tz.upper() != 'UTC':

183 bot.reply("I don't have timzeone support installed.")

184 return NOLIMIT

185 now = datetime.now()

186 at_time = datetime(now.year, now.month, now.day,

187 int(hour), int(minute), int(second))

188 timediff = at_time - now

189

190 duration = timediff.seconds

191

192 if duration < 0:

193 duration += 86400

194 create_reminder(bot, trigger, duration, message, 'UTC')

195

196

197 def create_reminder(bot, trigger, duration, message, tz):

198 t = int(time.time()) + duration

199 reminder = (trigger.sender, trigger.nick, message)

200 try:

201 bot.rdb[t].append(reminder)

202 except KeyError:

203 bot.rdb[t] = [reminder]

204

205 dump_database(bot.rfn, bot.rdb)

206

207 if duration >= 60:

208 remind_at = datetime.utcfromtimestamp(t)

209 timef = willie.tools.format_time(bot.db, bot.config, tz, trigger.nick,

210 trigger.sender, remind_at)

211

212 bot.reply('Okay, will remind at %s' % timef)

213 else:

214 bot.reply('Okay, will remind in %s secs' % duration)

215

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/willie/modules/remind.py b/willie/modules/remind.py

--- a/willie/modules/remind.py

+++ b/willie/modules/remind.py

@@ -125,7 +125,7 @@

def remind(bot, trigger):

"""Gives you a reminder in the given amount of time."""

duration = 0

- message = filter(None, re.split('(\d+(?:\.\d+)? ?(?:' + periods + ')) ?',

+ message = filter(None, re.split('(\d+(?:\.\d+)? ?(?:(?i)' + periods + ')) ?',

trigger.group(2))[1:])

reminder = ''

stop = False

@@ -133,7 +133,7 @@

grp = re.match('(\d+(?:\.\d+)?) ?(.*) ?', piece)

if grp and not stop:

length = float(grp.group(1))

- factor = scaling.get(grp.group(2), 60)

+ factor = scaling.get(grp.group(2).lower(), 60)

duration += length * factor

else:

reminder = reminder + piece

|

{"golden_diff": "diff --git a/willie/modules/remind.py b/willie/modules/remind.py\n--- a/willie/modules/remind.py\n+++ b/willie/modules/remind.py\n@@ -125,7 +125,7 @@\n def remind(bot, trigger):\n \"\"\"Gives you a reminder in the given amount of time.\"\"\"\n duration = 0\n- message = filter(None, re.split('(\\d+(?:\\.\\d+)? ?(?:' + periods + ')) ?',\n+ message = filter(None, re.split('(\\d+(?:\\.\\d+)? ?(?:(?i)' + periods + ')) ?',\n trigger.group(2))[1:])\n reminder = ''\n stop = False\n@@ -133,7 +133,7 @@\n grp = re.match('(\\d+(?:\\.\\d+)?) ?(.*) ?', piece)\n if grp and not stop:\n length = float(grp.group(1))\n- factor = scaling.get(grp.group(2), 60)\n+ factor = scaling.get(grp.group(2).lower(), 60)\n duration += length * factor\n else:\n reminder = reminder + piece\n", "issue": "Remind is case sensitive\n[22:20] <Eyore> .in 3SEC stuff\n[22:20] @Willie Eyore: Sorry, didn't understand the input.\n[22:20] <Eyore> .in 3 SEC stuff\n[22:20] @Willie Eyore: Sorry, didn't understand the input.\n[22:20] <Eyore> .in 3 S stuff\n[22:20] @Willie Eyore: Sorry, didn't understand the input.\n[22:20] <Eyore> .in 3 MIN stuff\n[22:20] @Willie Eyore: Sorry, didn't understand the input.\n[22:20] <Eyore> .in 3 Min stuff\n[22:20] @Willie Eyore: Sorry, didn't understand the input.\n\nI'd fix it myself, but looking at the code, I'm not sure where to put the .lower()\n\n", "before_files": [{"content": "# coding=utf8\n\"\"\"\nremind.py - Willie Reminder Module\nCopyright 2011, Sean B. 
Palmer, inamidst.com\nLicensed under the Eiffel Forum License 2.\n\nhttp://willie.dftba.net\n\"\"\"\nfrom __future__ import unicode_literals\n\nimport os\nimport re\nimport time\nimport threading\nimport collections\nimport codecs\nfrom datetime import datetime\nfrom willie.module import commands, example, NOLIMIT\nimport willie.tools\n\ntry:\n import pytz\nexcept:\n pytz = None\n\ndef filename(self):\n name = self.nick + '-' + self.config.host + '.reminders.db'\n return os.path.join(self.config.dotdir, name)\n\n\ndef load_database(name):\n data = {}\n if os.path.isfile(name):\n f = codecs.open(name, 'r', encoding='utf-8')\n for line in f:\n unixtime, channel, nick, message = line.split('\\t')\n message = message.rstrip('\\n')\n t = int(float(unixtime)) # WTFs going on here?\n reminder = (channel, nick, message)\n try:\n data[t].append(reminder)\n except KeyError:\n data[t] = [reminder]\n f.close()\n return data\n\n\ndef dump_database(name, data):\n f = codecs.open(name, 'w', encoding='utf-8')\n for unixtime, reminders in willie.tools.iteritems(data):\n for channel, nick, message in reminders:\n f.write('%s\\t%s\\t%s\\t%s\\n' % (unixtime, channel, nick, message))\n f.close()\n\n\ndef setup(bot):\n bot.rfn = filename(bot)\n bot.rdb = load_database(bot.rfn)\n\n def monitor(bot):\n time.sleep(5)\n while True:\n now = int(time.time())\n unixtimes = [int(key) for key in bot.rdb]\n oldtimes = [t for t in unixtimes if t <= now]\n if oldtimes:\n for oldtime in oldtimes:\n for (channel, nick, message) in bot.rdb[oldtime]:\n if message:\n bot.msg(channel, nick + ': ' + message)\n else:\n bot.msg(channel, nick + '!')\n del bot.rdb[oldtime]\n dump_database(bot.rfn, bot.rdb)\n time.sleep(2.5)\n\n targs = (bot,)\n t = threading.Thread(target=monitor, args=targs)\n t.start()\n\nscaling = collections.OrderedDict([\n ('years', 365.25 * 24 * 3600),\n ('year', 365.25 * 24 * 3600),\n ('yrs', 365.25 * 24 * 3600),\n ('y', 365.25 * 24 * 3600),\n\n ('months', 29.53059 * 24 * 3600),\n 
('month', 29.53059 * 24 * 3600),\n ('mo', 29.53059 * 24 * 3600),\n\n ('weeks', 7 * 24 * 3600),\n ('week', 7 * 24 * 3600),\n ('wks', 7 * 24 * 3600),\n ('wk', 7 * 24 * 3600),\n ('w', 7 * 24 * 3600),\n\n ('days', 24 * 3600),\n ('day', 24 * 3600),\n ('d', 24 * 3600),\n\n ('hours', 3600),\n ('hour', 3600),\n ('hrs', 3600),\n ('hr', 3600),\n ('h', 3600),\n\n ('minutes', 60),\n ('minute', 60),\n ('mins', 60),\n ('min', 60),\n ('m', 60),\n\n ('seconds', 1),\n ('second', 1),\n ('secs', 1),\n ('sec', 1),\n ('s', 1),\n])\n\nperiods = '|'.join(scaling.keys())\n\n\n@commands('in')\n@example('.in 3h45m Go to class')\ndef remind(bot, trigger):\n \"\"\"Gives you a reminder in the given amount of time.\"\"\"\n duration = 0\n message = filter(None, re.split('(\\d+(?:\\.\\d+)? ?(?:' + periods + ')) ?',\n trigger.group(2))[1:])\n reminder = ''\n stop = False\n for piece in message:\n grp = re.match('(\\d+(?:\\.\\d+)?) ?(.*) ?', piece)\n if grp and not stop:\n length = float(grp.group(1))\n factor = scaling.get(grp.group(2), 60)\n duration += length * factor\n else:\n reminder = reminder + piece\n stop = True\n if duration == 0:\n return bot.reply(\"Sorry, didn't understand the input.\")\n\n if duration % 1:\n duration = int(duration) + 1\n else:\n duration = int(duration)\n timezone = willie.tools.get_timezone(\n bot.db, bot.config, None, trigger.nick, trigger.sender)\n create_reminder(bot, trigger, duration, reminder, timezone)\n\n\n@commands('at')\n@example('.at 13:47 Do your homework!')\ndef at(bot, trigger):\n \"\"\"\n Gives you a reminder at the given time. Takes hh:mm:ssTimezone\n message. Timezone is any timezone Willie takes elsewhere; the best choices\n are those from the tzdb; a list of valid options is available at\n http://dft.ba/-tz . The seconds and timezone are optional.\n \"\"\"\n regex = re.compile(r'(\\d+):(\\d+)(?::(\\d+))?([^\\s\\d]+)? 
(.*)')\n match = regex.match(trigger.group(2))\n if not match:\n bot.reply(\"Sorry, but I didn't understand your input.\")\n return NOLIMIT\n hour, minute, second, tz, message = match.groups()\n if not second:\n second = '0'\n\n if pytz:\n timezone = willie.tools.get_timezone(bot.db, bot.config, tz,\n trigger.nick, trigger.sender)\n if not timezone:\n timezone = 'UTC'\n now = datetime.now(pytz.timezone(timezone))\n at_time = datetime(now.year, now.month, now.day,\n int(hour), int(minute), int(second),\n tzinfo=now.tzinfo)\n timediff = at_time - now\n else:\n if tz and tz.upper() != 'UTC':\n bot.reply(\"I don't have timzeone support installed.\")\n return NOLIMIT\n now = datetime.now()\n at_time = datetime(now.year, now.month, now.day,\n int(hour), int(minute), int(second))\n timediff = at_time - now\n\n duration = timediff.seconds\n\n if duration < 0:\n duration += 86400\n create_reminder(bot, trigger, duration, message, 'UTC')\n\n\ndef create_reminder(bot, trigger, duration, message, tz):\n t = int(time.time()) + duration\n reminder = (trigger.sender, trigger.nick, message)\n try:\n bot.rdb[t].append(reminder)\n except KeyError:\n bot.rdb[t] = [reminder]\n\n dump_database(bot.rfn, bot.rdb)\n\n if duration >= 60:\n remind_at = datetime.utcfromtimestamp(t)\n timef = willie.tools.format_time(bot.db, bot.config, tz, trigger.nick,\n trigger.sender, remind_at)\n\n bot.reply('Okay, will remind at %s' % timef)\n else:\n bot.reply('Okay, will remind in %s secs' % duration)\n", "path": "willie/modules/remind.py"}], "after_files": [{"content": "# coding=utf8\n\"\"\"\nremind.py - Willie Reminder Module\nCopyright 2011, Sean B. 
Palmer, inamidst.com\nLicensed under the Eiffel Forum License 2.\n\nhttp://willie.dftba.net\n\"\"\"\nfrom __future__ import unicode_literals\n\nimport os\nimport re\nimport time\nimport threading\nimport collections\nimport codecs\nfrom datetime import datetime\nfrom willie.module import commands, example, NOLIMIT\nimport willie.tools\n\ntry:\n import pytz\nexcept:\n pytz = None\n\ndef filename(self):\n name = self.nick + '-' + self.config.host + '.reminders.db'\n return os.path.join(self.config.dotdir, name)\n\n\ndef load_database(name):\n data = {}\n if os.path.isfile(name):\n f = codecs.open(name, 'r', encoding='utf-8')\n for line in f:\n unixtime, channel, nick, message = line.split('\\t')\n message = message.rstrip('\\n')\n t = int(float(unixtime)) # WTFs going on here?\n reminder = (channel, nick, message)\n try:\n data[t].append(reminder)\n except KeyError:\n data[t] = [reminder]\n f.close()\n return data\n\n\ndef dump_database(name, data):\n f = codecs.open(name, 'w', encoding='utf-8')\n for unixtime, reminders in willie.tools.iteritems(data):\n for channel, nick, message in reminders:\n f.write('%s\\t%s\\t%s\\t%s\\n' % (unixtime, channel, nick, message))\n f.close()\n\n\ndef setup(bot):\n bot.rfn = filename(bot)\n bot.rdb = load_database(bot.rfn)\n\n def monitor(bot):\n time.sleep(5)\n while True:\n now = int(time.time())\n unixtimes = [int(key) for key in bot.rdb]\n oldtimes = [t for t in unixtimes if t <= now]\n if oldtimes:\n for oldtime in oldtimes:\n for (channel, nick, message) in bot.rdb[oldtime]:\n if message:\n bot.msg(channel, nick + ': ' + message)\n else:\n bot.msg(channel, nick + '!')\n del bot.rdb[oldtime]\n dump_database(bot.rfn, bot.rdb)\n time.sleep(2.5)\n\n targs = (bot,)\n t = threading.Thread(target=monitor, args=targs)\n t.start()\n\nscaling = collections.OrderedDict([\n ('years', 365.25 * 24 * 3600),\n ('year', 365.25 * 24 * 3600),\n ('yrs', 365.25 * 24 * 3600),\n ('y', 365.25 * 24 * 3600),\n\n ('months', 29.53059 * 24 * 3600),\n 
('month', 29.53059 * 24 * 3600),\n ('mo', 29.53059 * 24 * 3600),\n\n ('weeks', 7 * 24 * 3600),\n ('week', 7 * 24 * 3600),\n ('wks', 7 * 24 * 3600),\n ('wk', 7 * 24 * 3600),\n ('w', 7 * 24 * 3600),\n\n ('days', 24 * 3600),\n ('day', 24 * 3600),\n ('d', 24 * 3600),\n\n ('hours', 3600),\n ('hour', 3600),\n ('hrs', 3600),\n ('hr', 3600),\n ('h', 3600),\n\n ('minutes', 60),\n ('minute', 60),\n ('mins', 60),\n ('min', 60),\n ('m', 60),\n\n ('seconds', 1),\n ('second', 1),\n ('secs', 1),\n ('sec', 1),\n ('s', 1),\n])\n\nperiods = '|'.join(scaling.keys())\n\n\n@commands('in')\n@example('.in 3h45m Go to class')\ndef remind(bot, trigger):\n \"\"\"Gives you a reminder in the given amount of time.\"\"\"\n duration = 0\n message = filter(None, re.split('(\\d+(?:\\.\\d+)? ?(?:(?i)' + periods + ')) ?',\n trigger.group(2))[1:])\n reminder = ''\n stop = False\n for piece in message:\n grp = re.match('(\\d+(?:\\.\\d+)?) ?(.*) ?', piece)\n if grp and not stop:\n length = float(grp.group(1))\n factor = scaling.get(grp.group(2).lower(), 60)\n duration += length * factor\n else:\n reminder = reminder + piece\n stop = True\n if duration == 0:\n return bot.reply(\"Sorry, didn't understand the input.\")\n\n if duration % 1:\n duration = int(duration) + 1\n else:\n duration = int(duration)\n timezone = willie.tools.get_timezone(\n bot.db, bot.config, None, trigger.nick, trigger.sender)\n create_reminder(bot, trigger, duration, reminder, timezone)\n\n\n@commands('at')\n@example('.at 13:47 Do your homework!')\ndef at(bot, trigger):\n \"\"\"\n Gives you a reminder at the given time. Takes hh:mm:ssTimezone\n message. Timezone is any timezone Willie takes elsewhere; the best choices\n are those from the tzdb; a list of valid options is available at\n http://dft.ba/-tz . The seconds and timezone are optional.\n \"\"\"\n regex = re.compile(r'(\\d+):(\\d+)(?::(\\d+))?([^\\s\\d]+)? 
(.*)')\n match = regex.match(trigger.group(2))\n if not match:\n bot.reply(\"Sorry, but I didn't understand your input.\")\n return NOLIMIT\n hour, minute, second, tz, message = match.groups()\n if not second:\n second = '0'\n\n if pytz:\n timezone = willie.tools.get_timezone(bot.db, bot.config, tz,\n trigger.nick, trigger.sender)\n if not timezone:\n timezone = 'UTC'\n now = datetime.now(pytz.timezone(timezone))\n at_time = datetime(now.year, now.month, now.day,\n int(hour), int(minute), int(second),\n tzinfo=now.tzinfo)\n timediff = at_time - now\n else:\n if tz and tz.upper() != 'UTC':\n bot.reply(\"I don't have timzeone support installed.\")\n return NOLIMIT\n now = datetime.now()\n at_time = datetime(now.year, now.month, now.day,\n int(hour), int(minute), int(second))\n timediff = at_time - now\n\n duration = timediff.seconds\n\n if duration < 0:\n duration += 86400\n create_reminder(bot, trigger, duration, message, 'UTC')\n\n\ndef create_reminder(bot, trigger, duration, message, tz):\n t = int(time.time()) + duration\n reminder = (trigger.sender, trigger.nick, message)\n try:\n bot.rdb[t].append(reminder)\n except KeyError:\n bot.rdb[t] = [reminder]\n\n dump_database(bot.rfn, bot.rdb)\n\n if duration >= 60:\n remind_at = datetime.utcfromtimestamp(t)\n timef = willie.tools.format_time(bot.db, bot.config, tz, trigger.nick,\n trigger.sender, remind_at)\n\n bot.reply('Okay, will remind at %s' % timef)\n else:\n bot.reply('Okay, will remind in %s secs' % duration)\n", "path": "willie/modules/remind.py"}]}

| 2,814 | 261 |

gh_patches_debug_42849

|

rasdani/github-patches

|

git_diff

|

ray-project__ray-9386

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

[rllib] incorrect model output for DQN with torch and dueling=false

<!--Please include [tune], [rllib], [autoscaler] etc. in the issue title if relevant-->

### What is the problem?

The output of the DQN model is not within the action space.

Something is wrong when constructing the torch model when dueling is off. The output dimension of the model is equal to whatever is passed in "fcnet_hiddens" instead of being of the size of the action space.

*Ray version and other system information (Python version, TensorFlow version, OS):*

- ray==0.9.0.dev0

- python 3.6.10

- mac OS

### Reproduction (REQUIRED)

```python

import ray

from ray import tune

ray.init()

config = {

"env": "CartPole-v1",

"num_workers": 1,

"train_batch_size": 128,

"learning_starts": 128,

"model": {"fcnet_hiddens": [32]},

"dueling": False ,

"framework": "torch"

}

tune.run("DQN", name="MWE", config=config, stop={"training_iteration": 100})

```

- [x] I have verified my script runs in a clean environment and reproduces the issue.

- [x] I have verified the issue also occurs with the [latest wheels](https://docs.ray.io/en/latest/installation.html).

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `rllib/agents/dqn/dqn_torch_model.py`

Content:

```

1 import numpy as np

2

3 from ray.rllib.models.torch.torch_modelv2 import TorchModelV2

4 from ray.rllib.utils.framework import try_import_torch

5

6 torch, nn = try_import_torch()

7

8

9 class DQNTorchModel(TorchModelV2, nn.Module):

10 """Extension of standard TorchModelV2 to provide dueling-Q functionality.

11 """

12

13 def __init__(

14 self,

15 obs_space,

16 action_space,

17 num_outputs,

18 model_config,

19 name,

20 *,

21 dueling=False,

22 q_hiddens=(256, ),

23 dueling_activation="relu",

24 use_noisy=False,

25 sigma0=0.5,

26 # TODO(sven): Move `add_layer_norm` into ModelCatalog as

27 # generic option, then error if we use ParameterNoise as

28 # Exploration type and do not have any LayerNorm layers in

29 # the net.

30 add_layer_norm=False):

31 """Initialize variables of this model.

32

33 Extra model kwargs:

34 dueling (bool): Whether to build the advantage(A)/value(V) heads

35 for DDQN. If True, Q-values are calculated as:

36 Q = (A - mean[A]) + V. If False, raw NN output is interpreted

37 as Q-values.

38 q_hiddens (List[int]): List of layer-sizes after(!) the

39 Advantages(A)/Value(V)-split. Hence, each of the A- and V-

40 branches will have this structure of Dense layers. To define

41 the NN before this A/V-split, use - as always -

42 config["model"]["fcnet_hiddens"].

43 dueling_activation (str): The activation to use for all dueling

44 layers (A- and V-branch). One of "relu", "tanh", "linear".

45 use_noisy (bool): use noisy nets

46 sigma0 (float): initial value of noisy nets

47 add_layer_norm (bool): Enable layer norm (for param noise).

48 """

49 nn.Module.__init__(self)

50 super(DQNTorchModel, self).__init__(obs_space, action_space,

51 num_outputs, model_config, name)

52

53 self.dueling = dueling

54 ins = num_outputs

55

56 # Dueling case: Build the shared (advantages and value) fc-network.

57 advantage_module = nn.Sequential()

58 value_module = None

59 if self.dueling: