| problem_id (string, length 18–22) | source (string, 1 class) | task_type (string, 1 class) | in_source_id (string, length 13–58) | prompt (string, length 1.1k–25.4k) | golden_diff (string, length 145–5.13k) | verification_info (string, length 582–39.1k) | num_tokens (int64, 271–4.1k) | num_tokens_diff (int64, 47–1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_1869

|

rasdani/github-patches

|

git_diff

|

huggingface__text-generation-inference-794

|

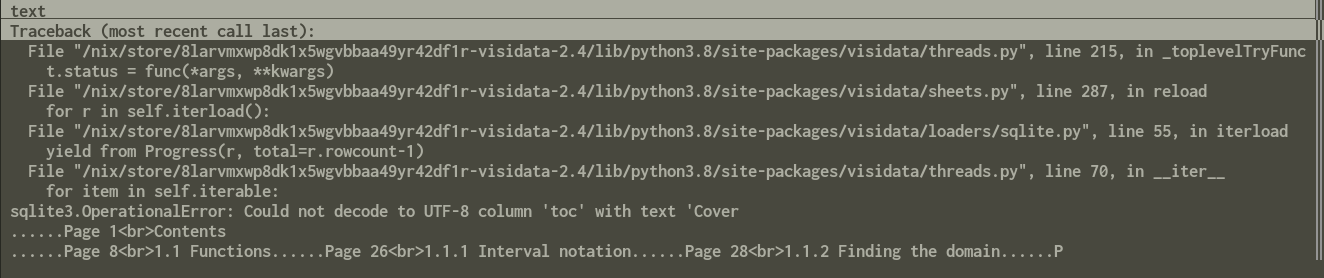

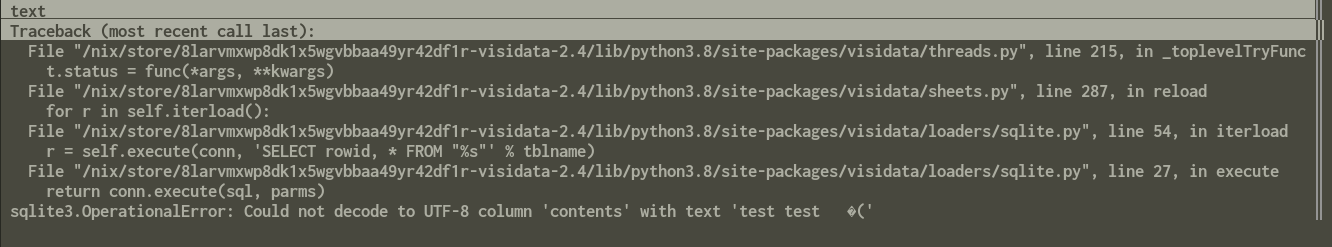

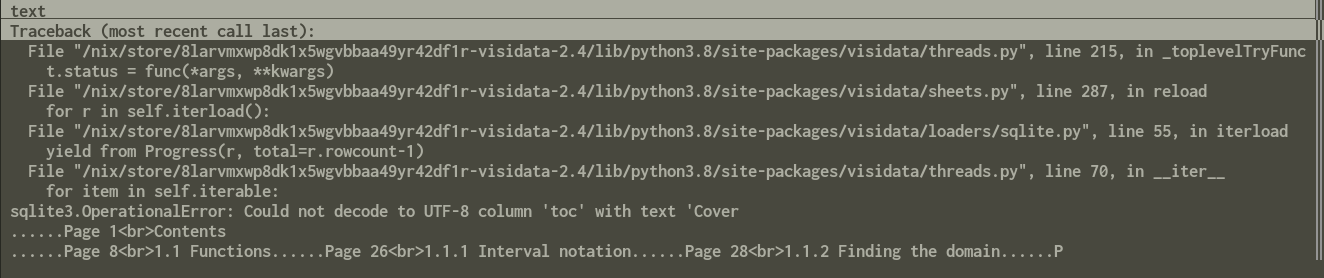

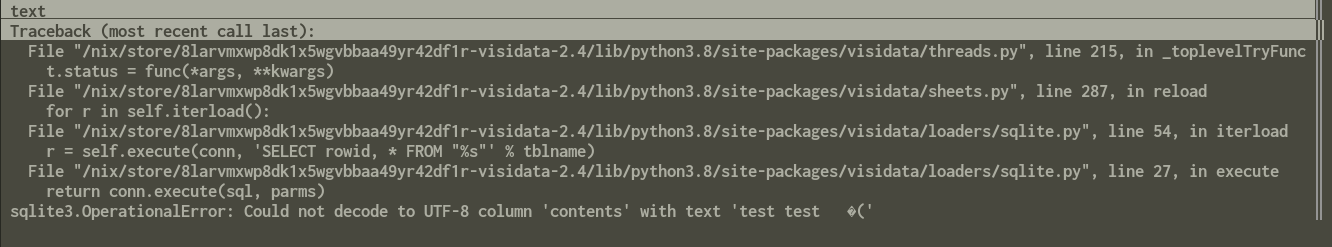

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

small typo in galactica model loading

https://github.com/huggingface/text-generation-inference/blob/1fdc88ee908beb8ae0afe17810a17b9b4d8848e2/server/text_generation_server/models/__init__.py#L92

should be trust_remote_code

--- END ISSUE ---
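
As a hedged illustration of why the misspelled keyword matters, the sketch below uses a hypothetical stand-in class, `ShardedModelStub`, whose constructor mirrors the keyword arguments passed in `get_model`. It is not the real `GalacticaSharded`; it assumes the real constructor names its parameters explicitly and has no catch-all `**kwargs`. The model id string is only an example value.

```python
from typing import Optional


class ShardedModelStub:
    """Hypothetical stand-in; NOT the real GalacticaSharded class."""

    def __init__(
        self,
        model_id: str,
        revision: Optional[str] = None,
        quantize: Optional[str] = None,
        dtype: Optional[str] = None,
        trust_remote_code: bool = False,
    ):
        self.model_id = model_id
        self.trust_remote_code = trust_remote_code


def try_load(**kwargs):
    """Return the stub, or the TypeError message if a keyword is unknown."""
    try:
        # The model id is an illustrative value, not taken from the issue.
        return ShardedModelStub("facebook/galactica-125m", None, **kwargs)
    except TypeError as exc:
        return str(exc)


# Misspelled keyword, as on the line the issue points to.
bad = try_load(dtypetrust_remote_code=True)
# Corrected keyword, as the issue suggests.
good = try_load(trust_remote_code=True)
```

Under these assumptions the misspelled call fails before any model is loaded, because Python rejects the unknown keyword argument, while the corrected call goes through.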

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `server/text_generation_server/models/__init__.py`

Content:

```

1 import os

2 import torch

3

4 from loguru import logger

5 from transformers.configuration_utils import PretrainedConfig

6 from transformers.models.auto import modeling_auto

7 from typing import Optional

8

9 from text_generation_server.models.model import Model

10 from text_generation_server.models.causal_lm import CausalLM

11 from text_generation_server.models.flash_causal_lm import FlashCausalLM

12 from text_generation_server.models.bloom import BLOOMSharded

13 from text_generation_server.models.mpt import MPTSharded

14 from text_generation_server.models.seq2seq_lm import Seq2SeqLM

15 from text_generation_server.models.rw import RW

16 from text_generation_server.models.opt import OPTSharded

17 from text_generation_server.models.galactica import GalacticaSharded

18 from text_generation_server.models.santacoder import SantaCoder

19 from text_generation_server.models.t5 import T5Sharded

20 from text_generation_server.models.gpt_neox import GPTNeoxSharded

21

22 # The flag below controls whether to allow TF32 on matmul. This flag defaults to False

23 # in PyTorch 1.12 and later.

24 torch.backends.cuda.matmul.allow_tf32 = True

25

26 # The flag below controls whether to allow TF32 on cuDNN. This flag defaults to True.

27 torch.backends.cudnn.allow_tf32 = True

28

29 # Disable gradients

30 torch.set_grad_enabled(False)

31

32 __all__ = [

33 "Model",

34 "BLOOMSharded",

35 "CausalLM",

36 "FlashCausalLM",

37 "GalacticaSharded",

38 "Seq2SeqLM",

39 "SantaCoder",

40 "OPTSharded",

41 "T5Sharded",

42 "get_model",

43 ]

44

45 FLASH_ATT_ERROR_MESSAGE = "{} requires Flash Attention enabled models."

46

47 FLASH_ATTENTION = True

48 try:

49 from text_generation_server.models.flash_rw import FlashRWSharded

50 from text_generation_server.models.flash_neox import FlashNeoXSharded

51 from text_generation_server.models.flash_llama import (

52 FlashLlama,

53 )

54 from text_generation_server.models.flash_santacoder import (

55 FlashSantacoderSharded,

56 )

57

58 except ImportError as e:

59 logger.warning(f"Could not import Flash Attention enabled models: {e}")

60 FLASH_ATTENTION = False

61

62 if FLASH_ATTENTION:

63 __all__.append(FlashNeoXSharded)

64 __all__.append(FlashRWSharded)

65 __all__.append(FlashSantacoderSharded)

66 __all__.append(FlashLlama)

67

68

69 def get_model(

70 model_id: str,

71 revision: Optional[str],

72 sharded: bool,

73 quantize: Optional[str],

74 dtype: Optional[str],

75 trust_remote_code: bool,

76 ) -> Model:

77 if dtype is None:

78 dtype = torch.float16

79 elif dtype == "float16":

80 dtype = torch.float16

81 elif dtype == "bfloat16":

82 dtype = torch.bfloat16

83 else:

84 raise RuntimeError(f"Unknown dtype {dtype}")

85

86 if "facebook/galactica" in model_id:

87 return GalacticaSharded(

88 model_id,

89 revision,

90 quantize=quantize,

91 dtype=dtype,

92 dtypetrust_remote_code=trust_remote_code,

93 )

94

95 if model_id.startswith("bigcode/"):

96 if FLASH_ATTENTION:

97 return FlashSantacoderSharded(

98 model_id,

99 revision,

100 quantize=quantize,

101 dtype=dtype,

102 trust_remote_code=trust_remote_code,

103 )

104 elif sharded:

105 raise NotImplementedError(

106 FLASH_ATT_ERROR_MESSAGE.format("Sharded Santacoder")

107 )

108 else:

109 return SantaCoder(

110 model_id,

111 revision,

112 quantize=quantize,

113 dtype=dtype,

114 trust_remote_code=trust_remote_code,

115 )

116

117 config_dict, _ = PretrainedConfig.get_config_dict(

118 model_id, revision=revision, trust_remote_code=trust_remote_code

119 )

120 model_type = config_dict["model_type"]

121

122 if model_type == "gpt_bigcode":

123 if FLASH_ATTENTION:

124 return FlashSantacoderSharded(

125 model_id,

126 revision,

127 quantize=quantize,

128 dtype=dtype,

129 trust_remote_code=trust_remote_code,

130 )

131 elif sharded:

132 raise NotImplementedError(

133 FLASH_ATT_ERROR_MESSAGE.format("Sharded Santacoder")

134 )

135 else:

136 return SantaCoder(

137 model_id,

138 revision,

139 quantize=quantize,

140 dtype=dtype,

141 trust_remote_code=trust_remote_code,

142 )

143

144 if model_type == "bloom":

145 return BLOOMSharded(

146 model_id,

147 revision,

148 quantize=quantize,

149 dtype=dtype,

150 trust_remote_code=trust_remote_code,

151 )

152 elif model_type == "mpt":

153 return MPTSharded(

154 model_id, revision, quantize=quantize, trust_remote_code=trust_remote_code

155 )

156

157 elif model_type == "gpt_neox":

158 if FLASH_ATTENTION:

159 return FlashNeoXSharded(

160 model_id,

161 revision,

162 quantize=quantize,

163 dtype=dtype,

164 trust_remote_code=trust_remote_code,

165 )

166 elif sharded:

167 return GPTNeoxSharded(

168 model_id,

169 revision,

170 quantize=quantize,

171 dtype=dtype,

172 trust_remote_code=trust_remote_code,

173 )

174 else:

175 return CausalLM(

176 model_id,

177 revision,

178 quantize=quantize,

179 dtype=dtype,

180 trust_remote_code=trust_remote_code,

181 )

182

183 elif model_type == "llama":

184 if FLASH_ATTENTION:

185 return FlashLlama(

186 model_id,

187 revision,

188 quantize=quantize,

189 dtype=dtype,

190 trust_remote_code=trust_remote_code,

191 )

192 elif sharded:

193 raise NotImplementedError(FLASH_ATT_ERROR_MESSAGE.format("Sharded Llama"))

194 else:

195 return CausalLM(

196 model_id,

197 revision,

198 quantize=quantize,

199 dtype=dtype,

200 trust_remote_code=trust_remote_code,

201 )

202

203 if model_type in ["RefinedWeb", "RefinedWebModel", "falcon"]:

204 if sharded:

205 if FLASH_ATTENTION:

206 if config_dict.get("alibi", False):

207 raise NotImplementedError("sharded is not supported for this model")

208 return FlashRWSharded(

209 model_id,

210 revision,

211 quantize=quantize,

212 dtype=dtype,

213 trust_remote_code=trust_remote_code,

214 )

215 raise NotImplementedError(FLASH_ATT_ERROR_MESSAGE.format(f"Sharded Falcon"))

216 else:

217 if FLASH_ATTENTION and not config_dict.get("alibi", False):

218 return FlashRWSharded(

219 model_id,

220 revision,

221 quantize=quantize,

222 dtype=dtype,

223 trust_remote_code=trust_remote_code,

224 )

225 else:

226 return RW(

227 model_id,

228 revision,

229 quantize=quantize,

230 dtype=dtype,

231 trust_remote_code=trust_remote_code,

232 )

233

234 elif model_type == "opt":

235 return OPTSharded(

236 model_id,

237 revision,

238 quantize=quantize,

239 dtype=dtype,

240 trust_remote_code=trust_remote_code,

241 )

242

243 elif model_type == "t5":

244 return T5Sharded(

245 model_id,

246 revision,

247 quantize=quantize,

248 dtype=dtype,

249 trust_remote_code=trust_remote_code,

250 )

251

252 if sharded:

253 raise ValueError("sharded is not supported for AutoModel")

254 if quantize == "gptq":

255 raise ValueError(

256 "gptq quantization is not supported for AutoModel, you can try to quantize it with `text-generation-server quantize ORIGINAL_MODEL_ID NEW_MODEL_ID`"

257 )

258 elif (quantize == "bitsandbytes-fp4") or (quantize == "bitsandbytes-nf4"):

259 raise ValueError(

260 "4bit quantization is not supported for AutoModel"

261 )

262 if model_type in modeling_auto.MODEL_FOR_CAUSAL_LM_MAPPING_NAMES:

263 return CausalLM(

264 model_id,

265 revision,

266 quantize=quantize,

267 dtype=dtype,

268 trust_remote_code=trust_remote_code,

269 )

270 if model_type in modeling_auto.MODEL_FOR_SEQ_TO_SEQ_CAUSAL_LM_MAPPING_NAMES:

271 return Seq2SeqLM(

272 model_id,

273 revision,

274 quantize=quantize,

275 dtype=dtype,

276 trust_remote_code=trust_remote_code,

277 )

278

279 auto_map = config_dict.get("auto_map", None)

280 if trust_remote_code and auto_map is not None:

281 if "AutoModelForCausalLM" in auto_map.keys():

282 return CausalLM(

283 model_id,

284 revision,

285 quantize=quantize,

286 dtype=dtype,

287 trust_remote_code=trust_remote_code,

288 )

289 if "AutoModelForSeq2SeqLM" in auto_map.keys():

290 return Seq2SeqLM(

291 model_id,

292 revision,

293 quantize=quantize,

294 dtype=dtype,

295 trust_remote_code=trust_remote_code,

296 )

297

298 raise ValueError(f"Unsupported model type {model_type}")

299

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/server/text_generation_server/models/__init__.py b/server/text_generation_server/models/__init__.py

--- a/server/text_generation_server/models/__init__.py

+++ b/server/text_generation_server/models/__init__.py

@@ -89,7 +89,7 @@

revision,

quantize=quantize,

dtype=dtype,

- dtypetrust_remote_code=trust_remote_code,

+ trust_remote_code=trust_remote_code,

)

if model_id.startswith("bigcode/"):

|

{"golden_diff": "diff --git a/server/text_generation_server/models/__init__.py b/server/text_generation_server/models/__init__.py\n--- a/server/text_generation_server/models/__init__.py\n+++ b/server/text_generation_server/models/__init__.py\n@@ -89,7 +89,7 @@\n revision,\n quantize=quantize,\n dtype=dtype,\n- dtypetrust_remote_code=trust_remote_code,\n+ trust_remote_code=trust_remote_code,\n )\n \n if model_id.startswith(\"bigcode/\"):\n", "issue": "small typo in galactica model loading\nhttps://github.com/huggingface/text-generation-inference/blob/1fdc88ee908beb8ae0afe17810a17b9b4d8848e2/server/text_generation_server/models/__init__.py#L92\r\n\r\nshould be trust_remote_code\n", "before_files": [{"content": "import os\nimport torch\n\nfrom loguru import logger\nfrom transformers.configuration_utils import PretrainedConfig\nfrom transformers.models.auto import modeling_auto\nfrom typing import Optional\n\nfrom text_generation_server.models.model import Model\nfrom text_generation_server.models.causal_lm import CausalLM\nfrom text_generation_server.models.flash_causal_lm import FlashCausalLM\nfrom text_generation_server.models.bloom import BLOOMSharded\nfrom text_generation_server.models.mpt import MPTSharded\nfrom text_generation_server.models.seq2seq_lm import Seq2SeqLM\nfrom text_generation_server.models.rw import RW\nfrom text_generation_server.models.opt import OPTSharded\nfrom text_generation_server.models.galactica import GalacticaSharded\nfrom text_generation_server.models.santacoder import SantaCoder\nfrom text_generation_server.models.t5 import T5Sharded\nfrom text_generation_server.models.gpt_neox import GPTNeoxSharded\n\n# The flag below controls whether to allow TF32 on matmul. This flag defaults to False\n# in PyTorch 1.12 and later.\ntorch.backends.cuda.matmul.allow_tf32 = True\n\n# The flag below controls whether to allow TF32 on cuDNN. 
This flag defaults to True.\ntorch.backends.cudnn.allow_tf32 = True\n\n# Disable gradients\ntorch.set_grad_enabled(False)\n\n__all__ = [\n \"Model\",\n \"BLOOMSharded\",\n \"CausalLM\",\n \"FlashCausalLM\",\n \"GalacticaSharded\",\n \"Seq2SeqLM\",\n \"SantaCoder\",\n \"OPTSharded\",\n \"T5Sharded\",\n \"get_model\",\n]\n\nFLASH_ATT_ERROR_MESSAGE = \"{} requires Flash Attention enabled models.\"\n\nFLASH_ATTENTION = True\ntry:\n from text_generation_server.models.flash_rw import FlashRWSharded\n from text_generation_server.models.flash_neox import FlashNeoXSharded\n from text_generation_server.models.flash_llama import (\n FlashLlama,\n )\n from text_generation_server.models.flash_santacoder import (\n FlashSantacoderSharded,\n )\n\nexcept ImportError as e:\n logger.warning(f\"Could not import Flash Attention enabled models: {e}\")\n FLASH_ATTENTION = False\n\nif FLASH_ATTENTION:\n __all__.append(FlashNeoXSharded)\n __all__.append(FlashRWSharded)\n __all__.append(FlashSantacoderSharded)\n __all__.append(FlashLlama)\n\n\ndef get_model(\n model_id: str,\n revision: Optional[str],\n sharded: bool,\n quantize: Optional[str],\n dtype: Optional[str],\n trust_remote_code: bool,\n) -> Model:\n if dtype is None:\n dtype = torch.float16\n elif dtype == \"float16\":\n dtype = torch.float16\n elif dtype == \"bfloat16\":\n dtype = torch.bfloat16\n else:\n raise RuntimeError(f\"Unknown dtype {dtype}\")\n\n if \"facebook/galactica\" in model_id:\n return GalacticaSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n dtypetrust_remote_code=trust_remote_code,\n )\n\n if model_id.startswith(\"bigcode/\"):\n if FLASH_ATTENTION:\n return FlashSantacoderSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif sharded:\n raise NotImplementedError(\n FLASH_ATT_ERROR_MESSAGE.format(\"Sharded Santacoder\")\n )\n else:\n return SantaCoder(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n 
trust_remote_code=trust_remote_code,\n )\n\n config_dict, _ = PretrainedConfig.get_config_dict(\n model_id, revision=revision, trust_remote_code=trust_remote_code\n )\n model_type = config_dict[\"model_type\"]\n\n if model_type == \"gpt_bigcode\":\n if FLASH_ATTENTION:\n return FlashSantacoderSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif sharded:\n raise NotImplementedError(\n FLASH_ATT_ERROR_MESSAGE.format(\"Sharded Santacoder\")\n )\n else:\n return SantaCoder(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n if model_type == \"bloom\":\n return BLOOMSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif model_type == \"mpt\":\n return MPTSharded(\n model_id, revision, quantize=quantize, trust_remote_code=trust_remote_code\n )\n\n elif model_type == \"gpt_neox\":\n if FLASH_ATTENTION:\n return FlashNeoXSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif sharded:\n return GPTNeoxSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n else:\n return CausalLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n elif model_type == \"llama\":\n if FLASH_ATTENTION:\n return FlashLlama(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif sharded:\n raise NotImplementedError(FLASH_ATT_ERROR_MESSAGE.format(\"Sharded Llama\"))\n else:\n return CausalLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n if model_type in [\"RefinedWeb\", \"RefinedWebModel\", \"falcon\"]:\n if sharded:\n if FLASH_ATTENTION:\n if config_dict.get(\"alibi\", False):\n raise NotImplementedError(\"sharded is not 
supported for this model\")\n return FlashRWSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n raise NotImplementedError(FLASH_ATT_ERROR_MESSAGE.format(f\"Sharded Falcon\"))\n else:\n if FLASH_ATTENTION and not config_dict.get(\"alibi\", False):\n return FlashRWSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n else:\n return RW(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n elif model_type == \"opt\":\n return OPTSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n elif model_type == \"t5\":\n return T5Sharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n if sharded:\n raise ValueError(\"sharded is not supported for AutoModel\")\n if quantize == \"gptq\":\n raise ValueError(\n \"gptq quantization is not supported for AutoModel, you can try to quantize it with `text-generation-server quantize ORIGINAL_MODEL_ID NEW_MODEL_ID`\"\n )\n elif (quantize == \"bitsandbytes-fp4\") or (quantize == \"bitsandbytes-nf4\"):\n raise ValueError(\n \"4bit quantization is not supported for AutoModel\"\n )\n if model_type in modeling_auto.MODEL_FOR_CAUSAL_LM_MAPPING_NAMES:\n return CausalLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n if model_type in modeling_auto.MODEL_FOR_SEQ_TO_SEQ_CAUSAL_LM_MAPPING_NAMES:\n return Seq2SeqLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n auto_map = config_dict.get(\"auto_map\", None)\n if trust_remote_code and auto_map is not None:\n if \"AutoModelForCausalLM\" in auto_map.keys():\n return CausalLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n if 
\"AutoModelForSeq2SeqLM\" in auto_map.keys():\n return Seq2SeqLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n raise ValueError(f\"Unsupported model type {model_type}\")\n", "path": "server/text_generation_server/models/__init__.py"}], "after_files": [{"content": "import os\nimport torch\n\nfrom loguru import logger\nfrom transformers.configuration_utils import PretrainedConfig\nfrom transformers.models.auto import modeling_auto\nfrom typing import Optional\n\nfrom text_generation_server.models.model import Model\nfrom text_generation_server.models.causal_lm import CausalLM\nfrom text_generation_server.models.flash_causal_lm import FlashCausalLM\nfrom text_generation_server.models.bloom import BLOOMSharded\nfrom text_generation_server.models.mpt import MPTSharded\nfrom text_generation_server.models.seq2seq_lm import Seq2SeqLM\nfrom text_generation_server.models.rw import RW\nfrom text_generation_server.models.opt import OPTSharded\nfrom text_generation_server.models.galactica import GalacticaSharded\nfrom text_generation_server.models.santacoder import SantaCoder\nfrom text_generation_server.models.t5 import T5Sharded\nfrom text_generation_server.models.gpt_neox import GPTNeoxSharded\n\n# The flag below controls whether to allow TF32 on matmul. This flag defaults to False\n# in PyTorch 1.12 and later.\ntorch.backends.cuda.matmul.allow_tf32 = True\n\n# The flag below controls whether to allow TF32 on cuDNN. 
This flag defaults to True.\ntorch.backends.cudnn.allow_tf32 = True\n\n# Disable gradients\ntorch.set_grad_enabled(False)\n\n__all__ = [\n \"Model\",\n \"BLOOMSharded\",\n \"CausalLM\",\n \"FlashCausalLM\",\n \"GalacticaSharded\",\n \"Seq2SeqLM\",\n \"SantaCoder\",\n \"OPTSharded\",\n \"T5Sharded\",\n \"get_model\",\n]\n\nFLASH_ATT_ERROR_MESSAGE = \"{} requires Flash Attention enabled models.\"\n\nFLASH_ATTENTION = True\ntry:\n from text_generation_server.models.flash_rw import FlashRWSharded\n from text_generation_server.models.flash_neox import FlashNeoXSharded\n from text_generation_server.models.flash_llama import (\n FlashLlama,\n )\n from text_generation_server.models.flash_santacoder import (\n FlashSantacoderSharded,\n )\n\nexcept ImportError as e:\n logger.warning(f\"Could not import Flash Attention enabled models: {e}\")\n FLASH_ATTENTION = False\n\nif FLASH_ATTENTION:\n __all__.append(FlashNeoXSharded)\n __all__.append(FlashRWSharded)\n __all__.append(FlashSantacoderSharded)\n __all__.append(FlashLlama)\n\n\ndef get_model(\n model_id: str,\n revision: Optional[str],\n sharded: bool,\n quantize: Optional[str],\n dtype: Optional[str],\n trust_remote_code: bool,\n) -> Model:\n if dtype is None:\n dtype = torch.float16\n elif dtype == \"float16\":\n dtype = torch.float16\n elif dtype == \"bfloat16\":\n dtype = torch.bfloat16\n else:\n raise RuntimeError(f\"Unknown dtype {dtype}\")\n\n if \"facebook/galactica\" in model_id:\n return GalacticaSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n if model_id.startswith(\"bigcode/\"):\n if FLASH_ATTENTION:\n return FlashSantacoderSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif sharded:\n raise NotImplementedError(\n FLASH_ATT_ERROR_MESSAGE.format(\"Sharded Santacoder\")\n )\n else:\n return SantaCoder(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n 
trust_remote_code=trust_remote_code,\n )\n\n config_dict, _ = PretrainedConfig.get_config_dict(\n model_id, revision=revision, trust_remote_code=trust_remote_code\n )\n model_type = config_dict[\"model_type\"]\n\n if model_type == \"gpt_bigcode\":\n if FLASH_ATTENTION:\n return FlashSantacoderSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif sharded:\n raise NotImplementedError(\n FLASH_ATT_ERROR_MESSAGE.format(\"Sharded Santacoder\")\n )\n else:\n return SantaCoder(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n if model_type == \"bloom\":\n return BLOOMSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif model_type == \"mpt\":\n return MPTSharded(\n model_id, revision, quantize=quantize, trust_remote_code=trust_remote_code\n )\n\n elif model_type == \"gpt_neox\":\n if FLASH_ATTENTION:\n return FlashNeoXSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif sharded:\n return GPTNeoxSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n else:\n return CausalLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n elif model_type == \"llama\":\n if FLASH_ATTENTION:\n return FlashLlama(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n elif sharded:\n raise NotImplementedError(FLASH_ATT_ERROR_MESSAGE.format(\"Sharded Llama\"))\n else:\n return CausalLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n if model_type in [\"RefinedWeb\", \"RefinedWebModel\", \"falcon\"]:\n if sharded:\n if FLASH_ATTENTION:\n if config_dict.get(\"alibi\", False):\n raise NotImplementedError(\"sharded is not 
supported for this model\")\n return FlashRWSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n raise NotImplementedError(FLASH_ATT_ERROR_MESSAGE.format(f\"Sharded Falcon\"))\n else:\n if FLASH_ATTENTION and not config_dict.get(\"alibi\", False):\n return FlashRWSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n else:\n return RW(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n elif model_type == \"opt\":\n return OPTSharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n elif model_type == \"t5\":\n return T5Sharded(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n if sharded:\n raise ValueError(\"sharded is not supported for AutoModel\")\n if quantize == \"gptq\":\n raise ValueError(\n \"gptq quantization is not supported for AutoModel, you can try to quantize it with `text-generation-server quantize ORIGINAL_MODEL_ID NEW_MODEL_ID`\"\n )\n elif (quantize == \"bitsandbytes-fp4\") or (quantize == \"bitsandbytes-nf4\"):\n raise ValueError(\n \"4bit quantization is not supported for AutoModel\"\n )\n if model_type in modeling_auto.MODEL_FOR_CAUSAL_LM_MAPPING_NAMES:\n return CausalLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n if model_type in modeling_auto.MODEL_FOR_SEQ_TO_SEQ_CAUSAL_LM_MAPPING_NAMES:\n return Seq2SeqLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n auto_map = config_dict.get(\"auto_map\", None)\n if trust_remote_code and auto_map is not None:\n if \"AutoModelForCausalLM\" in auto_map.keys():\n return CausalLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n if 
\"AutoModelForSeq2SeqLM\" in auto_map.keys():\n return Seq2SeqLM(\n model_id,\n revision,\n quantize=quantize,\n dtype=dtype,\n trust_remote_code=trust_remote_code,\n )\n\n raise ValueError(f\"Unsupported model type {model_type}\")\n", "path": "server/text_generation_server/models/__init__.py"}]}

| 3,105 | 111 |

gh_patches_debug_27131

|

rasdani/github-patches

|

git_diff

|

pypa__setuptools-1220

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

setuptools wheel install will fail if a data dir is a subdirectory in a package

With the new wheel support, I ran into an issue where if `data_files` specifies a subdirectory in a package, `setuptools` will fail on moving the data. This worked in previous versions of setuptools w/o wheel support, and also works with pip install.

Perhaps this is not a supported use case (should `package_data` be used instead?)

I was able to work around the issue by removing the subdirectory, but in case someone else runs into this, here is a repro test case that can be added to `test_wheel.py`

```
dict(
    id='data_in_package',
    file_defs={
        'foo': {
            '__init__.py': '',
            'data_dir': {
                'data.txt': DALS(
                    '''
                    Some data...
                    '''
                ),
            }
        }
    },
    setup_kwargs=dict(
        packages=['foo'],
        data_files=[('foo/data_dir', ['foo/data_dir/data.txt'])],
    ),
    install_tree=DALS(
        '''
        foo-1.0-py{py_version}.egg/
        |-- EGG-INFO/
        |  |-- DESCRIPTION.rst
        |  |-- PKG-INFO
        |  |-- RECORD
        |  |-- WHEEL
        |  |-- metadata.json
        |  |-- top_level.txt
        |-- foo/
        |  |-- __init__.py
        |  |-- data_dir/
        |     |-- data.txt
        '''
    ),
),
```

In the test case, `foo` is a package and `/foo/data_dir/` is a data dir. Since `foo` already exists as a package, the `os.rename` fails, even though `/foo/data_dir/` does not exist. Should it check if the directory already exists?

The error looks something like the following (on OSX):

```
        for entry in os.listdir(subdir):
            os.rename(os.path.join(subdir, entry),
>                     os.path.join(destination_eggdir, entry))
E       OSError: [Errno 66] Directory not empty: '/var/folders/07/c66dj8613n37ssnxsvyz8dcm0000gn/T/tmpybp6f4ft/foo-1.0-py3.5.egg/foo-1.0.data/data/foo' -> '/var/folders/07/c66dj8613n37ssnxsvyz8dcm0000gn/T/tmpybp6f4ft/foo-1.0-py3.5.egg/foo'
```

--- END ISSUE ---
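
The failure described in the issue can be reproduced outside setuptools. The sketch below is a minimal illustration and not the project's actual fix: `build_layout` and `merge_move` are hypothetical helper names, and the directory layout only imitates the egg tree from the issue. It shows that `os.rename` refuses to replace a non-empty package directory, and that moving entries one level at a time, merging into directories that already exist, avoids the error.

```python
import os
import tempfile


def merge_move(src_dir, dst_dir):
    """Move everything under src_dir into dst_dir, merging directories.

    Hypothetical helper for illustration. Directories that exist on both
    sides are merged recursively; everything else is renamed into place.
    """
    os.makedirs(dst_dir, exist_ok=True)
    for entry in os.listdir(src_dir):
        src = os.path.join(src_dir, entry)
        dst = os.path.join(dst_dir, entry)
        if os.path.isdir(src) and os.path.isdir(dst):
            merge_move(src, dst)
        else:
            # Note: an existing destination *file* is overwritten on POSIX
            # and raises on Windows; a real fix would have to decide on that.
            os.rename(src, dst)
    os.rmdir(src_dir)


def build_layout(root):
    """Create an egg-like tree: a package plus a data dir inside it."""
    egg = os.path.join(root, "foo-1.0.egg")
    pkg = os.path.join(egg, "foo")
    data = os.path.join(egg, "foo-1.0.data", "data")
    os.makedirs(pkg)
    os.makedirs(os.path.join(data, "foo", "data_dir"))
    open(os.path.join(pkg, "__init__.py"), "w").close()
    with open(os.path.join(data, "foo", "data_dir", "data.txt"), "w") as fp:
        fp.write("Some data...\n")
    return egg, data


with tempfile.TemporaryDirectory() as root:
    egg, data = build_layout(root)

    # The plain rename from the traceback: the target 'foo' already exists
    # and is not empty, so the operating system rejects the call.
    try:
        os.rename(os.path.join(data, "foo"), os.path.join(egg, "foo"))
        plain_rename_failed = False
    except OSError:
        plain_rename_failed = True

    # Merging instead of replacing keeps the package and adds the data.
    merge_move(data, egg)
    merged_ok = os.path.isfile(os.path.join(egg, "foo", "data_dir", "data.txt"))
    package_kept = os.path.isfile(os.path.join(egg, "foo", "__init__.py"))
```

Whether a merge like this is the right behaviour for `install_as_egg` is a design question the issue leaves open; the sketch only demonstrates that the error comes from renaming onto an existing, non-empty directory.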

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `setuptools/wheel.py`

Content:

```

1 '''Wheels support.'''

2

3 from distutils.util import get_platform

4 import email

5 import itertools

6 import os

7 import re

8 import zipfile

9

10 from pkg_resources import Distribution, PathMetadata, parse_version

11 from pkg_resources.extern.six import PY3

12 from setuptools import Distribution as SetuptoolsDistribution

13 from setuptools import pep425tags

14 from setuptools.command.egg_info import write_requirements

15

16

17 WHEEL_NAME = re.compile(

18 r"""^(?P<project_name>.+?)-(?P<version>\d.*?)

19 ((-(?P<build>\d.*?))?-(?P<py_version>.+?)-(?P<abi>.+?)-(?P<platform>.+?)

20 )\.whl$""",

21 re.VERBOSE).match

22

23 NAMESPACE_PACKAGE_INIT = '''\

24 try:

25 __import__('pkg_resources').declare_namespace(__name__)

26 except ImportError:

27 __path__ = __import__('pkgutil').extend_path(__path__, __name__)

28 '''

29

30

31 class Wheel(object):

32

33 def __init__(self, filename):

34 match = WHEEL_NAME(os.path.basename(filename))

35 if match is None:

36 raise ValueError('invalid wheel name: %r' % filename)

37 self.filename = filename

38 for k, v in match.groupdict().items():

39 setattr(self, k, v)

40

41 def tags(self):

42 '''List tags (py_version, abi, platform) supported by this wheel.'''

43 return itertools.product(self.py_version.split('.'),

44 self.abi.split('.'),

45 self.platform.split('.'))

46

47 def is_compatible(self):

48 '''Is the wheel is compatible with the current platform?'''

49 supported_tags = pep425tags.get_supported()

50 return next((True for t in self.tags() if t in supported_tags), False)

51

52 def egg_name(self):

53 return Distribution(

54 project_name=self.project_name, version=self.version,

55 platform=(None if self.platform == 'any' else get_platform()),

56 ).egg_name() + '.egg'

57

58 def install_as_egg(self, destination_eggdir):

59 '''Install wheel as an egg directory.'''

60 with zipfile.ZipFile(self.filename) as zf:

61 dist_basename = '%s-%s' % (self.project_name, self.version)

62 dist_info = '%s.dist-info' % dist_basename

63 dist_data = '%s.data' % dist_basename

64 def get_metadata(name):

65 with zf.open('%s/%s' % (dist_info, name)) as fp:

66 value = fp.read().decode('utf-8') if PY3 else fp.read()

67 return email.parser.Parser().parsestr(value)

68 wheel_metadata = get_metadata('WHEEL')

69 dist_metadata = get_metadata('METADATA')

70 # Check wheel format version is supported.

71 wheel_version = parse_version(wheel_metadata.get('Wheel-Version'))

72 if not parse_version('1.0') <= wheel_version < parse_version('2.0dev0'):

73 raise ValueError('unsupported wheel format version: %s' % wheel_version)

74 # Extract to target directory.

75 os.mkdir(destination_eggdir)

76 zf.extractall(destination_eggdir)

77 # Convert metadata.

78 dist_info = os.path.join(destination_eggdir, dist_info)

79 dist = Distribution.from_location(

80 destination_eggdir, dist_info,

81 metadata=PathMetadata(destination_eggdir, dist_info)

82 )

83 # Note: we need to evaluate and strip markers now,

84 # as we can't easily convert back from the syntax:

85 # foobar; "linux" in sys_platform and extra == 'test'

86 def raw_req(req):

87 req.marker = None

88 return str(req)

89 install_requires = list(sorted(map(raw_req, dist.requires())))

90 extras_require = {

91 extra: list(sorted(

92 req

93 for req in map(raw_req, dist.requires((extra,)))

94 if req not in install_requires

95 ))

96 for extra in dist.extras

97 }

98 egg_info = os.path.join(destination_eggdir, 'EGG-INFO')

99 os.rename(dist_info, egg_info)

100 os.rename(os.path.join(egg_info, 'METADATA'),

101 os.path.join(egg_info, 'PKG-INFO'))

102 setup_dist = SetuptoolsDistribution(attrs=dict(

103 install_requires=install_requires,

104 extras_require=extras_require,

105 ))

106 write_requirements(setup_dist.get_command_obj('egg_info'),

107 None, os.path.join(egg_info, 'requires.txt'))

108 # Move data entries to their correct location.

109 dist_data = os.path.join(destination_eggdir, dist_data)

110 dist_data_scripts = os.path.join(dist_data, 'scripts')

111 if os.path.exists(dist_data_scripts):

112 egg_info_scripts = os.path.join(destination_eggdir,

113 'EGG-INFO', 'scripts')

114 os.mkdir(egg_info_scripts)

115 for entry in os.listdir(dist_data_scripts):

116 # Remove bytecode, as it's not properly handled

117 # during easy_install scripts install phase.

118 if entry.endswith('.pyc'):

119 os.unlink(os.path.join(dist_data_scripts, entry))

120 else:

121 os.rename(os.path.join(dist_data_scripts, entry),

122 os.path.join(egg_info_scripts, entry))

123 os.rmdir(dist_data_scripts)

124 for subdir in filter(os.path.exists, (

125 os.path.join(dist_data, d)

126 for d in ('data', 'headers', 'purelib', 'platlib')

127 )):

128 for entry in os.listdir(subdir):

129 os.rename(os.path.join(subdir, entry),

130 os.path.join(destination_eggdir, entry))

131 os.rmdir(subdir)

132 if os.path.exists(dist_data):

133 os.rmdir(dist_data)

134 # Fix namespace packages.

135 namespace_packages = os.path.join(egg_info, 'namespace_packages.txt')

136 if os.path.exists(namespace_packages):

137 with open(namespace_packages) as fp:

138 namespace_packages = fp.read().split()

139 for mod in namespace_packages:

140 mod_dir = os.path.join(destination_eggdir, *mod.split('.'))

141 mod_init = os.path.join(mod_dir, '__init__.py')

142 if os.path.exists(mod_dir) and not os.path.exists(mod_init):

143 with open(mod_init, 'w') as fp:

144 fp.write(NAMESPACE_PACKAGE_INIT)

145

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/setuptools/wheel.py b/setuptools/wheel.py

--- a/setuptools/wheel.py

+++ b/setuptools/wheel.py

@@ -28,6 +28,28 @@

'''

+def unpack(src_dir, dst_dir):

+ '''Move everything under `src_dir` to `dst_dir`, and delete the former.'''

+ for dirpath, dirnames, filenames in os.walk(src_dir):

+ subdir = os.path.relpath(dirpath, src_dir)

+ for f in filenames:

+ src = os.path.join(dirpath, f)

+ dst = os.path.join(dst_dir, subdir, f)

+ os.renames(src, dst)

+ for n, d in reversed(list(enumerate(dirnames))):

+ src = os.path.join(dirpath, d)

+ dst = os.path.join(dst_dir, subdir, d)

+ if not os.path.exists(dst):

+ # Directory does not exist in destination,

+ # rename it and prune it from os.walk list.

+ os.renames(src, dst)

+ del dirnames[n]

+ # Cleanup.

+ for dirpath, dirnames, filenames in os.walk(src_dir, topdown=True):

+ assert not filenames

+ os.rmdir(dirpath)

+

+

class Wheel(object):

def __init__(self, filename):

@@ -125,10 +147,7 @@

os.path.join(dist_data, d)

for d in ('data', 'headers', 'purelib', 'platlib')

)):

- for entry in os.listdir(subdir):

- os.rename(os.path.join(subdir, entry),

- os.path.join(destination_eggdir, entry))

- os.rmdir(subdir)

+ unpack(subdir, destination_eggdir)

if os.path.exists(dist_data):

os.rmdir(dist_data)

# Fix namespace packages.

|

{"golden_diff": "diff --git a/setuptools/wheel.py b/setuptools/wheel.py\n--- a/setuptools/wheel.py\n+++ b/setuptools/wheel.py\n@@ -28,6 +28,28 @@\n '''\n \n \n+def unpack(src_dir, dst_dir):\n+ '''Move everything under `src_dir` to `dst_dir`, and delete the former.'''\n+ for dirpath, dirnames, filenames in os.walk(src_dir):\n+ subdir = os.path.relpath(dirpath, src_dir)\n+ for f in filenames:\n+ src = os.path.join(dirpath, f)\n+ dst = os.path.join(dst_dir, subdir, f)\n+ os.renames(src, dst)\n+ for n, d in reversed(list(enumerate(dirnames))):\n+ src = os.path.join(dirpath, d)\n+ dst = os.path.join(dst_dir, subdir, d)\n+ if not os.path.exists(dst):\n+ # Directory does not exist in destination,\n+ # rename it and prune it from os.walk list.\n+ os.renames(src, dst)\n+ del dirnames[n]\n+ # Cleanup.\n+ for dirpath, dirnames, filenames in os.walk(src_dir, topdown=True):\n+ assert not filenames\n+ os.rmdir(dirpath)\n+\n+\n class Wheel(object):\n \n def __init__(self, filename):\n@@ -125,10 +147,7 @@\n os.path.join(dist_data, d)\n for d in ('data', 'headers', 'purelib', 'platlib')\n )):\n- for entry in os.listdir(subdir):\n- os.rename(os.path.join(subdir, entry),\n- os.path.join(destination_eggdir, entry))\n- os.rmdir(subdir)\n+ unpack(subdir, destination_eggdir)\n if os.path.exists(dist_data):\n os.rmdir(dist_data)\n # Fix namespace packages.\n", "issue": "setuptools wheel install will fail if a data dir is a subdirectory in a package\nWith the new wheel support, I ran into an issue where if `data_files` specifies a subdirectory in a package, `setuptools` will fail on moving the data. 
This worked in previous versions of setuptools w/o wheel support, and also works with pip install.\r\n\r\nPerhaps this is not a supported use case (should `package_data` be used instead?)\r\n\r\nI was able to work around the issue by removing the subdirectory, but in case someone else runs into this, here is a repro test case that can be added to `test_wheel.py`\r\n\r\n\r\n```\r\n dict(\r\n id='data_in_package',\r\n file_defs={\r\n 'foo': {\r\n '__init__.py': '',\r\n 'data_dir': {\r\n 'data.txt': DALS(\r\n '''\r\n Some data...\r\n '''\r\n ),\r\n }\r\n }\r\n },\r\n setup_kwargs=dict(\r\n packages=['foo'],\r\n data_files=[('foo/data_dir', ['foo/data_dir/data.txt'])],\r\n ),\r\n install_tree=DALS(\r\n '''\r\n foo-1.0-py{py_version}.egg/\r\n |-- EGG-INFO/\r\n | |-- DESCRIPTION.rst\r\n | |-- PKG-INFO\r\n | |-- RECORD\r\n | |-- WHEEL\r\n | |-- metadata.json\r\n | |-- top_level.txt\r\n |-- foo/\r\n | |-- __init__.py\r\n | |-- data_dir/\r\n | |-- data.txt\r\n '''\r\n ),\r\n ),\r\n```\r\n\r\nIn the test case, `foo` is a package and `/foo/data_dir/` is a data dir. Since `foo` already exists as a package, the `os.rename` fails, even though `/foo/data_dir/` does not exist. 
Should it check if the directory already exists?\r\n\r\nThe error looks something like the following (on OSX):\r\n\r\n```\r\n for entry in os.listdir(subdir):\r\n os.rename(os.path.join(subdir, entry),\r\n> os.path.join(destination_eggdir, entry))\r\nE OSError: [Errno 66] Directory not empty: '/var/folders/07/c66dj8613n37ssnxsvyz8dcm0000gn/T/tmpybp6f4ft/foo-1.0-py3.5.egg/foo-1.0.data/data/foo' -> '/var/folders/07/c66dj8613n37ssnxsvyz8dcm0000gn/T/tmpybp6f4ft/foo-1.0-py3.5.egg/foo'\r\n```\r\n\r\n\r\n\r\n\n", "before_files": [{"content": "'''Wheels support.'''\n\nfrom distutils.util import get_platform\nimport email\nimport itertools\nimport os\nimport re\nimport zipfile\n\nfrom pkg_resources import Distribution, PathMetadata, parse_version\nfrom pkg_resources.extern.six import PY3\nfrom setuptools import Distribution as SetuptoolsDistribution\nfrom setuptools import pep425tags\nfrom setuptools.command.egg_info import write_requirements\n\n\nWHEEL_NAME = re.compile(\n r\"\"\"^(?P<project_name>.+?)-(?P<version>\\d.*?)\n ((-(?P<build>\\d.*?))?-(?P<py_version>.+?)-(?P<abi>.+?)-(?P<platform>.+?)\n )\\.whl$\"\"\",\nre.VERBOSE).match\n\nNAMESPACE_PACKAGE_INIT = '''\\\ntry:\n __import__('pkg_resources').declare_namespace(__name__)\nexcept ImportError:\n __path__ = __import__('pkgutil').extend_path(__path__, __name__)\n'''\n\n\nclass Wheel(object):\n\n def __init__(self, filename):\n match = WHEEL_NAME(os.path.basename(filename))\n if match is None:\n raise ValueError('invalid wheel name: %r' % filename)\n self.filename = filename\n for k, v in match.groupdict().items():\n setattr(self, k, v)\n\n def tags(self):\n '''List tags (py_version, abi, platform) supported by this wheel.'''\n return itertools.product(self.py_version.split('.'),\n self.abi.split('.'),\n self.platform.split('.'))\n\n def is_compatible(self):\n '''Is the wheel is compatible with the current platform?'''\n supported_tags = pep425tags.get_supported()\n return next((True for t in self.tags() if t in 
supported_tags), False)\n\n def egg_name(self):\n return Distribution(\n project_name=self.project_name, version=self.version,\n platform=(None if self.platform == 'any' else get_platform()),\n ).egg_name() + '.egg'\n\n def install_as_egg(self, destination_eggdir):\n '''Install wheel as an egg directory.'''\n with zipfile.ZipFile(self.filename) as zf:\n dist_basename = '%s-%s' % (self.project_name, self.version)\n dist_info = '%s.dist-info' % dist_basename\n dist_data = '%s.data' % dist_basename\n def get_metadata(name):\n with zf.open('%s/%s' % (dist_info, name)) as fp:\n value = fp.read().decode('utf-8') if PY3 else fp.read()\n return email.parser.Parser().parsestr(value)\n wheel_metadata = get_metadata('WHEEL')\n dist_metadata = get_metadata('METADATA')\n # Check wheel format version is supported.\n wheel_version = parse_version(wheel_metadata.get('Wheel-Version'))\n if not parse_version('1.0') <= wheel_version < parse_version('2.0dev0'):\n raise ValueError('unsupported wheel format version: %s' % wheel_version)\n # Extract to target directory.\n os.mkdir(destination_eggdir)\n zf.extractall(destination_eggdir)\n # Convert metadata.\n dist_info = os.path.join(destination_eggdir, dist_info)\n dist = Distribution.from_location(\n destination_eggdir, dist_info,\n metadata=PathMetadata(destination_eggdir, dist_info)\n )\n # Note: we need to evaluate and strip markers now,\n # as we can't easily convert back from the syntax:\n # foobar; \"linux\" in sys_platform and extra == 'test'\n def raw_req(req):\n req.marker = None\n return str(req)\n install_requires = list(sorted(map(raw_req, dist.requires())))\n extras_require = {\n extra: list(sorted(\n req\n for req in map(raw_req, dist.requires((extra,)))\n if req not in install_requires\n ))\n for extra in dist.extras\n }\n egg_info = os.path.join(destination_eggdir, 'EGG-INFO')\n os.rename(dist_info, egg_info)\n os.rename(os.path.join(egg_info, 'METADATA'),\n os.path.join(egg_info, 'PKG-INFO'))\n setup_dist = 
SetuptoolsDistribution(attrs=dict(\n install_requires=install_requires,\n extras_require=extras_require,\n ))\n write_requirements(setup_dist.get_command_obj('egg_info'),\n None, os.path.join(egg_info, 'requires.txt'))\n # Move data entries to their correct location.\n dist_data = os.path.join(destination_eggdir, dist_data)\n dist_data_scripts = os.path.join(dist_data, 'scripts')\n if os.path.exists(dist_data_scripts):\n egg_info_scripts = os.path.join(destination_eggdir,\n 'EGG-INFO', 'scripts')\n os.mkdir(egg_info_scripts)\n for entry in os.listdir(dist_data_scripts):\n # Remove bytecode, as it's not properly handled\n # during easy_install scripts install phase.\n if entry.endswith('.pyc'):\n os.unlink(os.path.join(dist_data_scripts, entry))\n else:\n os.rename(os.path.join(dist_data_scripts, entry),\n os.path.join(egg_info_scripts, entry))\n os.rmdir(dist_data_scripts)\n for subdir in filter(os.path.exists, (\n os.path.join(dist_data, d)\n for d in ('data', 'headers', 'purelib', 'platlib')\n )):\n for entry in os.listdir(subdir):\n os.rename(os.path.join(subdir, entry),\n os.path.join(destination_eggdir, entry))\n os.rmdir(subdir)\n if os.path.exists(dist_data):\n os.rmdir(dist_data)\n # Fix namespace packages.\n namespace_packages = os.path.join(egg_info, 'namespace_packages.txt')\n if os.path.exists(namespace_packages):\n with open(namespace_packages) as fp:\n namespace_packages = fp.read().split()\n for mod in namespace_packages:\n mod_dir = os.path.join(destination_eggdir, *mod.split('.'))\n mod_init = os.path.join(mod_dir, '__init__.py')\n if os.path.exists(mod_dir) and not os.path.exists(mod_init):\n with open(mod_init, 'w') as fp:\n fp.write(NAMESPACE_PACKAGE_INIT)\n", "path": "setuptools/wheel.py"}], "after_files": [{"content": "'''Wheels support.'''\n\nfrom distutils.util import get_platform\nimport email\nimport itertools\nimport os\nimport re\nimport zipfile\n\nfrom pkg_resources import Distribution, PathMetadata, parse_version\nfrom 
pkg_resources.extern.six import PY3\nfrom setuptools import Distribution as SetuptoolsDistribution\nfrom setuptools import pep425tags\nfrom setuptools.command.egg_info import write_requirements\n\n\nWHEEL_NAME = re.compile(\n r\"\"\"^(?P<project_name>.+?)-(?P<version>\\d.*?)\n ((-(?P<build>\\d.*?))?-(?P<py_version>.+?)-(?P<abi>.+?)-(?P<platform>.+?)\n )\\.whl$\"\"\",\nre.VERBOSE).match\n\nNAMESPACE_PACKAGE_INIT = '''\\\ntry:\n __import__('pkg_resources').declare_namespace(__name__)\nexcept ImportError:\n __path__ = __import__('pkgutil').extend_path(__path__, __name__)\n'''\n\n\ndef unpack(src_dir, dst_dir):\n '''Move everything under `src_dir` to `dst_dir`, and delete the former.'''\n for dirpath, dirnames, filenames in os.walk(src_dir):\n subdir = os.path.relpath(dirpath, src_dir)\n for f in filenames:\n src = os.path.join(dirpath, f)\n dst = os.path.join(dst_dir, subdir, f)\n os.renames(src, dst)\n for n, d in reversed(list(enumerate(dirnames))):\n src = os.path.join(dirpath, d)\n dst = os.path.join(dst_dir, subdir, d)\n if not os.path.exists(dst):\n # Directory does not exist in destination,\n # rename it and prune it from os.walk list.\n os.renames(src, dst)\n del dirnames[n]\n # Cleanup.\n for dirpath, dirnames, filenames in os.walk(src_dir, topdown=True):\n assert not filenames\n os.rmdir(dirpath)\n\n\nclass Wheel(object):\n\n def __init__(self, filename):\n match = WHEEL_NAME(os.path.basename(filename))\n if match is None:\n raise ValueError('invalid wheel name: %r' % filename)\n self.filename = filename\n for k, v in match.groupdict().items():\n setattr(self, k, v)\n\n def tags(self):\n '''List tags (py_version, abi, platform) supported by this wheel.'''\n return itertools.product(self.py_version.split('.'),\n self.abi.split('.'),\n self.platform.split('.'))\n\n def is_compatible(self):\n '''Is the wheel is compatible with the current platform?'''\n supported_tags = pep425tags.get_supported()\n return next((True for t in self.tags() if t in supported_tags), 
False)\n\n def egg_name(self):\n return Distribution(\n project_name=self.project_name, version=self.version,\n platform=(None if self.platform == 'any' else get_platform()),\n ).egg_name() + '.egg'\n\n def install_as_egg(self, destination_eggdir):\n '''Install wheel as an egg directory.'''\n with zipfile.ZipFile(self.filename) as zf:\n dist_basename = '%s-%s' % (self.project_name, self.version)\n dist_info = '%s.dist-info' % dist_basename\n dist_data = '%s.data' % dist_basename\n def get_metadata(name):\n with zf.open('%s/%s' % (dist_info, name)) as fp:\n value = fp.read().decode('utf-8') if PY3 else fp.read()\n return email.parser.Parser().parsestr(value)\n wheel_metadata = get_metadata('WHEEL')\n dist_metadata = get_metadata('METADATA')\n # Check wheel format version is supported.\n wheel_version = parse_version(wheel_metadata.get('Wheel-Version'))\n if not parse_version('1.0') <= wheel_version < parse_version('2.0dev0'):\n raise ValueError('unsupported wheel format version: %s' % wheel_version)\n # Extract to target directory.\n os.mkdir(destination_eggdir)\n zf.extractall(destination_eggdir)\n # Convert metadata.\n dist_info = os.path.join(destination_eggdir, dist_info)\n dist = Distribution.from_location(\n destination_eggdir, dist_info,\n metadata=PathMetadata(destination_eggdir, dist_info)\n )\n # Note: we need to evaluate and strip markers now,\n # as we can't easily convert back from the syntax:\n # foobar; \"linux\" in sys_platform and extra == 'test'\n def raw_req(req):\n req.marker = None\n return str(req)\n install_requires = list(sorted(map(raw_req, dist.requires())))\n extras_require = {\n extra: list(sorted(\n req\n for req in map(raw_req, dist.requires((extra,)))\n if req not in install_requires\n ))\n for extra in dist.extras\n }\n egg_info = os.path.join(destination_eggdir, 'EGG-INFO')\n os.rename(dist_info, egg_info)\n os.rename(os.path.join(egg_info, 'METADATA'),\n os.path.join(egg_info, 'PKG-INFO'))\n setup_dist = 
SetuptoolsDistribution(attrs=dict(\n install_requires=install_requires,\n extras_require=extras_require,\n ))\n write_requirements(setup_dist.get_command_obj('egg_info'),\n None, os.path.join(egg_info, 'requires.txt'))\n # Move data entries to their correct location.\n dist_data = os.path.join(destination_eggdir, dist_data)\n dist_data_scripts = os.path.join(dist_data, 'scripts')\n if os.path.exists(dist_data_scripts):\n egg_info_scripts = os.path.join(destination_eggdir,\n 'EGG-INFO', 'scripts')\n os.mkdir(egg_info_scripts)\n for entry in os.listdir(dist_data_scripts):\n # Remove bytecode, as it's not properly handled\n # during easy_install scripts install phase.\n if entry.endswith('.pyc'):\n os.unlink(os.path.join(dist_data_scripts, entry))\n else:\n os.rename(os.path.join(dist_data_scripts, entry),\n os.path.join(egg_info_scripts, entry))\n os.rmdir(dist_data_scripts)\n for subdir in filter(os.path.exists, (\n os.path.join(dist_data, d)\n for d in ('data', 'headers', 'purelib', 'platlib')\n )):\n unpack(subdir, destination_eggdir)\n if os.path.exists(dist_data):\n os.rmdir(dist_data)\n # Fix namespace packages.\n namespace_packages = os.path.join(egg_info, 'namespace_packages.txt')\n if os.path.exists(namespace_packages):\n with open(namespace_packages) as fp:\n namespace_packages = fp.read().split()\n for mod in namespace_packages:\n mod_dir = os.path.join(destination_eggdir, *mod.split('.'))\n mod_init = os.path.join(mod_dir, '__init__.py')\n if os.path.exists(mod_dir) and not os.path.exists(mod_init):\n with open(mod_init, 'w') as fp:\n fp.write(NAMESPACE_PACKAGE_INIT)\n", "path": "setuptools/wheel.py"}]}

| 2,472 | 410 |

gh_patches_debug_21311

|

rasdani/github-patches

|

git_diff

|

nerfstudio-project__nerfstudio-134

|

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

output accumulation value is very negative

After training for a long time ~81,990 steps on instant ngp, get the following stack trace:

--- END ISSUE ---

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `pyrad/fields/instant_ngp_field.py`

Content:

```

1 # Copyright 2022 The Plenoptix Team. All rights reserved.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 """

16 Instant-NGP field implementations using tiny-cuda-nn, torch, ....

17 """

18

19

20 from typing import Tuple

21

22 import torch

23 from torch.nn.parameter import Parameter

24

25 from pyrad.fields.modules.encoding import Encoding, HashEncoding, SHEncoding

26 from pyrad.fields.modules.field_heads import FieldHeadNames

27 from pyrad.fields.base import Field

28 from pyrad.fields.nerf_field import NeRFField

29 from pyrad.cameras.rays import RaySamples

30 from pyrad.utils.activations import trunc_exp

31

32 try:

33 import tinycudann as tcnn

34 except ImportError:

35 # tinycudann module doesn't exist

36 pass

37

38

39 def get_normalized_positions(positions, aabb):

40 """Return normalized positions in range [0, 1] based on the aabb axis-aligned bounding box."""

41 aabb_lengths = aabb[1] - aabb[0]

42 positions = (positions - aabb[0]) / aabb_lengths

43 return positions

44

45

46 def get_normalized_directions(directions):

47 """SH encoding must be in the range [0, 1]"""

48 return (directions + 1.0) / 2.0

49

50

51 class TCNNInstantNGPField(Field):

52 """NeRF Field"""

53

54 def __init__(

55 self, aabb, num_layers=2, hidden_dim=64, geo_feat_dim=15, num_layers_color=3, hidden_dim_color=64

56 ) -> None:

57 super().__init__()

58

59 self.aabb = Parameter(aabb, requires_grad=False)

60

61 self.geo_feat_dim = geo_feat_dim

62

63 # TODO: set this properly based on the aabb

64 per_level_scale = 1.4472692012786865

65

66 self.position_encoding = tcnn.Encoding(

67 n_input_dims=3,

68 encoding_config={

69 "otype": "HashGrid",

70 "n_levels": 16,

71 "n_features_per_level": 2,

72 "log2_hashmap_size": 19,

73 "base_resolution": 16,

74 "per_level_scale": per_level_scale,

75 },

76 )

77

78 self.direction_encoding = tcnn.Encoding(

79 n_input_dims=3,

80 encoding_config={

81 "otype": "SphericalHarmonics",

82 "degree": 4,

83 },

84 )

85

86 self.mlp_base = tcnn.Network(

87 n_input_dims=32,

88 n_output_dims=1 + self.geo_feat_dim,

89 network_config={

90 "otype": "FullyFusedMLP",

91 "activation": "ReLU",

92 "output_activation": "None",

93 "n_neurons": hidden_dim,

94 "n_hidden_layers": num_layers - 1,

95 },

96 )

97

98 self.mlp_head = tcnn.Network(

99 n_input_dims=self.direction_encoding.n_output_dims + self.geo_feat_dim,

100 n_output_dims=3,

101 network_config={

102 "otype": "FullyFusedMLP",

103 "activation": "ReLU",

104 "output_activation": "None",

105 "n_neurons": hidden_dim_color,

106 "n_hidden_layers": num_layers_color - 1,

107 },

108 )

109

110 def get_density(self, ray_samples: RaySamples):

111 """Computes and returns the densities."""

112 positions = get_normalized_positions(ray_samples.frustums.get_positions(), self.aabb)

113 positions_flat = positions.view(-1, 3)

114 dtype = positions_flat.dtype

115 x = self.position_encoding(positions_flat)

116 h = self.mlp_base(x).view(*ray_samples.frustums.get_positions().shape[:-1], -1).to(dtype)

117 density_before_activation, base_mlp_out = torch.split(h, [1, self.geo_feat_dim], dim=-1)

118

119 # Rectifying the density with an exponential is much more stable than a ReLU or

120 # softplus, because it enables high post-activation (float32) density outputs

121 # from smaller internal (float16) parameters.

122 assert density_before_activation.dtype is torch.float32

123 density = trunc_exp(density_before_activation)

124 return density, base_mlp_out

125

126 def get_outputs(self, ray_samples: RaySamples, density_embedding=None):

127 # TODO: add valid_mask masking!

128 # tcnn requires directions in the range [0,1]

129 directions = get_normalized_directions(ray_samples.frustums.directions)

130 directions_flat = directions.view(-1, 3)

131 dtype = directions_flat.dtype

132 d = self.direction_encoding(directions_flat)

133 h = torch.cat([d, density_embedding.view(-1, self.geo_feat_dim)], dim=-1)

134 h = self.mlp_head(h).view(*ray_samples.frustums.directions.shape[:-1], -1).to(dtype)

135 rgb = torch.sigmoid(h)

136 return {FieldHeadNames.RGB: rgb}

137

138

139 class TorchInstantNGPField(NeRFField):

140 """

141 PyTorch implementation of the instant-ngp field.

142 """

143

144 def __init__(

145 self,

146 aabb,

147 position_encoding: Encoding = HashEncoding(),

148 direction_encoding: Encoding = SHEncoding(),

149 base_mlp_num_layers: int = 3,

150 base_mlp_layer_width: int = 64,

151 head_mlp_num_layers: int = 2,

152 head_mlp_layer_width: int = 32,

153 skip_connections: Tuple = (4,),

154 ) -> None:

155 super().__init__(

156 position_encoding,

157 direction_encoding,

158 base_mlp_num_layers,

159 base_mlp_layer_width,

160 head_mlp_num_layers,

161 head_mlp_layer_width,

162 skip_connections,

163 )

164 self.aabb = Parameter(aabb, requires_grad=False)

165

166 def get_density(self, ray_samples: RaySamples):

167 normalized_ray_samples = ray_samples

168 normalized_ray_samples.positions = get_normalized_positions(

169 normalized_ray_samples.frustums.get_positions(), self.aabb

170 )

171 return super().get_density(normalized_ray_samples)

172

173

174 field_implementation_to_class = {"tcnn": TCNNInstantNGPField, "torch": TorchInstantNGPField}

175

```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff

diff --git a/examples/server_async.py b/examples/server_async.py

--- a/examples/server_async.py

+++ b/examples/server_async.py

@@ -313,4 +313,4 @@

if __name__ == "__main__":

- asyncio.run(run_async_server("."), debug=True)

+ asyncio.run(run_async_server(), debug=True)

diff --git a/examples/server_sync.py b/examples/server_sync.py

--- a/examples/server_sync.py

+++ b/examples/server_sync.py

@@ -313,5 +313,5 @@

if __name__ == "__main__":

- server = run_sync_server(".")

+ server = run_sync_server()

server.shutdown()

```

|

diff --git a/pyrad/fields/instant_ngp_field.py b/pyrad/fields/instant_ngp_field.py

--- a/pyrad/fields/instant_ngp_field.py

+++ b/pyrad/fields/instant_ngp_field.py

@@ -101,7 +101,7 @@

network_config={

"otype": "FullyFusedMLP",

"activation": "ReLU",

- "output_activation": "None",

+ "output_activation": "Sigmoid",

"n_neurons": hidden_dim_color,

"n_hidden_layers": num_layers_color - 1,

},

@@ -131,8 +131,8 @@

dtype = directions_flat.dtype

d = self.direction_encoding(directions_flat)

h = torch.cat([d, density_embedding.view(-1, self.geo_feat_dim)], dim=-1)

- h = self.mlp_head(h).view(*ray_samples.frustums.directions.shape[:-1], -1).to(dtype)

- rgb = torch.sigmoid(h)

+ rgb = self.mlp_head(h).view(*ray_samples.frustums.directions.shape[:-1], -1).to(dtype)

+ assert rgb.dtype is torch.float32

return {FieldHeadNames.RGB: rgb}

|

{"golden_diff": "diff --git a/pyrad/fields/instant_ngp_field.py b/pyrad/fields/instant_ngp_field.py\n--- a/pyrad/fields/instant_ngp_field.py\n+++ b/pyrad/fields/instant_ngp_field.py\n@@ -101,7 +101,7 @@\n network_config={\n \"otype\": \"FullyFusedMLP\",\n \"activation\": \"ReLU\",\n- \"output_activation\": \"None\",\n+ \"output_activation\": \"Sigmoid\",\n \"n_neurons\": hidden_dim_color,\n \"n_hidden_layers\": num_layers_color - 1,\n },\n@@ -131,8 +131,8 @@\n dtype = directions_flat.dtype\n d = self.direction_encoding(directions_flat)\n h = torch.cat([d, density_embedding.view(-1, self.geo_feat_dim)], dim=-1)\n- h = self.mlp_head(h).view(*ray_samples.frustums.directions.shape[:-1], -1).to(dtype)\n- rgb = torch.sigmoid(h)\n+ rgb = self.mlp_head(h).view(*ray_samples.frustums.directions.shape[:-1], -1).to(dtype)\n+ assert rgb.dtype is torch.float32\n return {FieldHeadNames.RGB: rgb}\n", "issue": "output accumulation value is very negative\nAfter training for a long time ~81,990 steps on instant ngp, get the following stack trace:\r\n\r\n\r\n\n", "before_files": [{"content": "# Copyright 2022 The Plenoptix Team. 
All rights reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"\nInstant-NGP field implementations using tiny-cuda-nn, torch, ....\n\"\"\"\n\n\nfrom typing import Tuple\n\nimport torch\nfrom torch.nn.parameter import Parameter\n\nfrom pyrad.fields.modules.encoding import Encoding, HashEncoding, SHEncoding\nfrom pyrad.fields.modules.field_heads import FieldHeadNames\nfrom pyrad.fields.base import Field\nfrom pyrad.fields.nerf_field import NeRFField\nfrom pyrad.cameras.rays import RaySamples\nfrom pyrad.utils.activations import trunc_exp\n\ntry:\n import tinycudann as tcnn\nexcept ImportError:\n # tinycudann module doesn't exist\n pass\n\n\ndef get_normalized_positions(positions, aabb):\n \"\"\"Return normalized positions in range [0, 1] based on the aabb axis-aligned bounding box.\"\"\"\n aabb_lengths = aabb[1] - aabb[0]\n positions = (positions - aabb[0]) / aabb_lengths\n return positions\n\n\ndef get_normalized_directions(directions):\n \"\"\"SH encoding must be in the range [0, 1]\"\"\"\n return (directions + 1.0) / 2.0\n\n\nclass TCNNInstantNGPField(Field):\n \"\"\"NeRF Field\"\"\"\n\n def __init__(\n self, aabb, num_layers=2, hidden_dim=64, geo_feat_dim=15, num_layers_color=3, hidden_dim_color=64\n ) -> None:\n super().__init__()\n\n self.aabb = Parameter(aabb, requires_grad=False)\n\n self.geo_feat_dim = geo_feat_dim\n\n # TODO: set this properly based on the aabb\n per_level_scale = 1.4472692012786865\n\n self.position_encoding = 
tcnn.Encoding(\n n_input_dims=3,\n encoding_config={\n \"otype\": \"HashGrid\",\n \"n_levels\": 16,\n \"n_features_per_level\": 2,\n \"log2_hashmap_size\": 19,\n \"base_resolution\": 16,\n \"per_level_scale\": per_level_scale,\n },\n )\n\n self.direction_encoding = tcnn.Encoding(\n n_input_dims=3,\n encoding_config={\n \"otype\": \"SphericalHarmonics\",\n \"degree\": 4,\n },\n )\n\n self.mlp_base = tcnn.Network(\n n_input_dims=32,\n n_output_dims=1 + self.geo_feat_dim,\n network_config={\n \"otype\": \"FullyFusedMLP\",\n \"activation\": \"ReLU\",\n \"output_activation\": \"None\",\n \"n_neurons\": hidden_dim,\n \"n_hidden_layers\": num_layers - 1,\n },\n )\n\n self.mlp_head = tcnn.Network(\n n_input_dims=self.direction_encoding.n_output_dims + self.geo_feat_dim,\n n_output_dims=3,\n network_config={\n \"otype\": \"FullyFusedMLP\",\n \"activation\": \"ReLU\",\n \"output_activation\": \"None\",\n \"n_neurons\": hidden_dim_color,\n \"n_hidden_layers\": num_layers_color - 1,\n },\n )\n\n def get_density(self, ray_samples: RaySamples):\n \"\"\"Computes and returns the densities.\"\"\"\n positions = get_normalized_positions(ray_samples.frustums.get_positions(), self.aabb)\n positions_flat = positions.view(-1, 3)\n dtype = positions_flat.dtype\n x = self.position_encoding(positions_flat)\n h = self.mlp_base(x).view(*ray_samples.frustums.get_positions().shape[:-1], -1).to(dtype)\n density_before_activation, base_mlp_out = torch.split(h, [1, self.geo_feat_dim], dim=-1)\n\n # Rectifying the density with an exponential is much more stable than a ReLU or\n # softplus, because it enables high post-activation (float32) density outputs\n # from smaller internal (float16) parameters.\n assert density_before_activation.dtype is torch.float32\n density = trunc_exp(density_before_activation)\n return density, base_mlp_out\n\n def get_outputs(self, ray_samples: RaySamples, density_embedding=None):\n # TODO: add valid_mask masking!\n # tcnn requires directions in the range [0,1]\n 
directions = get_normalized_directions(ray_samples.frustums.directions)\n directions_flat = directions.view(-1, 3)\n dtype = directions_flat.dtype\n d = self.direction_encoding(directions_flat)\n h = torch.cat([d, density_embedding.view(-1, self.geo_feat_dim)], dim=-1)\n h = self.mlp_head(h).view(*ray_samples.frustums.directions.shape[:-1], -1).to(dtype)\n rgb = torch.sigmoid(h)\n return {FieldHeadNames.RGB: rgb}\n\n\nclass TorchInstantNGPField(NeRFField):\n \"\"\"\n PyTorch implementation of the instant-ngp field.\n \"\"\"\n\n def __init__(\n self,\n aabb,\n position_encoding: Encoding = HashEncoding(),\n direction_encoding: Encoding = SHEncoding(),\n base_mlp_num_layers: int = 3,\n base_mlp_layer_width: int = 64,\n head_mlp_num_layers: int = 2,\n head_mlp_layer_width: int = 32,\n skip_connections: Tuple = (4,),\n ) -> None:\n super().__init__(\n position_encoding,\n direction_encoding,\n base_mlp_num_layers,\n base_mlp_layer_width,\n head_mlp_num_layers,\n head_mlp_layer_width,\n skip_connections,\n )\n self.aabb = Parameter(aabb, requires_grad=False)\n\n def get_density(self, ray_samples: RaySamples):\n normalized_ray_samples = ray_samples\n normalized_ray_samples.positions = get_normalized_positions(\n normalized_ray_samples.frustums.get_positions(), self.aabb\n )\n return super().get_density(normalized_ray_samples)\n\n\nfield_implementation_to_class = {\"tcnn\": TCNNInstantNGPField, \"torch\": TorchInstantNGPField}\n", "path": "pyrad/fields/instant_ngp_field.py"}], "after_files": [{"content": "# Copyright 2022 The Plenoptix Team. 
All rights reserved.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions and\n# limitations under the License.\n\n\"\"\"\nInstant-NGP field implementations using tiny-cuda-nn, torch, ....\n\"\"\"\n\n\nfrom typing import Tuple\n\nimport torch\nfrom torch.nn.parameter import Parameter\n\nfrom pyrad.fields.modules.encoding import Encoding, HashEncoding, SHEncoding\nfrom pyrad.fields.modules.field_heads import FieldHeadNames\nfrom pyrad.fields.base import Field\nfrom pyrad.fields.nerf_field import NeRFField\nfrom pyrad.cameras.rays import RaySamples\nfrom pyrad.utils.activations import trunc_exp\n\ntry:\n import tinycudann as tcnn\nexcept ImportError:\n # tinycudann module doesn't exist\n pass\n\n\ndef get_normalized_positions(positions, aabb):\n \"\"\"Return normalized positions in range [0, 1] based on the aabb axis-aligned bounding box.\"\"\"\n aabb_lengths = aabb[1] - aabb[0]\n positions = (positions - aabb[0]) / aabb_lengths\n return positions\n\n\ndef get_normalized_directions(directions):\n \"\"\"SH encoding must be in the range [0, 1]\"\"\"\n return (directions + 1.0) / 2.0\n\n\nclass TCNNInstantNGPField(Field):\n \"\"\"NeRF Field\"\"\"\n\n def __init__(\n self, aabb, num_layers=2, hidden_dim=64, geo_feat_dim=15, num_layers_color=3, hidden_dim_color=64\n ) -> None:\n super().__init__()\n\n self.aabb = Parameter(aabb, requires_grad=False)\n\n self.geo_feat_dim = geo_feat_dim\n\n # TODO: set this properly based on the aabb\n per_level_scale = 1.4472692012786865\n\n self.position_encoding = 
tcnn.Encoding(\n n_input_dims=3,\n encoding_config={\n \"otype\": \"HashGrid\",\n \"n_levels\": 16,\n \"n_features_per_level\": 2,\n \"log2_hashmap_size\": 19,\n \"base_resolution\": 16,\n \"per_level_scale\": per_level_scale,\n },\n )\n\n self.direction_encoding = tcnn.Encoding(\n n_input_dims=3,\n encoding_config={\n \"otype\": \"SphericalHarmonics\",\n \"degree\": 4,\n },\n )\n\n self.mlp_base = tcnn.Network(\n n_input_dims=32,\n n_output_dims=1 + self.geo_feat_dim,\n network_config={\n \"otype\": \"FullyFusedMLP\",\n \"activation\": \"ReLU\",\n \"output_activation\": \"None\",\n \"n_neurons\": hidden_dim,\n \"n_hidden_layers\": num_layers - 1,\n },\n )\n\n self.mlp_head = tcnn.Network(\n n_input_dims=self.direction_encoding.n_output_dims + self.geo_feat_dim,\n n_output_dims=3,\n network_config={\n \"otype\": \"FullyFusedMLP\",\n \"activation\": \"ReLU\",\n \"output_activation\": \"Sigmoid\",\n \"n_neurons\": hidden_dim_color,\n \"n_hidden_layers\": num_layers_color - 1,\n },\n )\n\n def get_density(self, ray_samples: RaySamples):\n \"\"\"Computes and returns the densities.\"\"\"\n positions = get_normalized_positions(ray_samples.frustums.get_positions(), self.aabb)\n positions_flat = positions.view(-1, 3)\n dtype = positions_flat.dtype\n x = self.position_encoding(positions_flat)\n h = self.mlp_base(x).view(*ray_samples.frustums.get_positions().shape[:-1], -1).to(dtype)\n density_before_activation, base_mlp_out = torch.split(h, [1, self.geo_feat_dim], dim=-1)\n\n # Rectifying the density with an exponential is much more stable than a ReLU or\n # softplus, because it enables high post-activation (float32) density outputs\n # from smaller internal (float16) parameters.\n assert density_before_activation.dtype is torch.float32\n density = trunc_exp(density_before_activation)\n return density, base_mlp_out\n\n def get_outputs(self, ray_samples: RaySamples, density_embedding=None):\n # TODO: add valid_mask masking!\n # tcnn requires directions in the range [0,1]\n 
directions = get_normalized_directions(ray_samples.frustums.directions)\n directions_flat = directions.view(-1, 3)\n dtype = directions_flat.dtype\n d = self.direction_encoding(directions_flat)\n h = torch.cat([d, density_embedding.view(-1, self.geo_feat_dim)], dim=-1)\n rgb = self.mlp_head(h).view(*ray_samples.frustums.directions.shape[:-1], -1).to(dtype)\n assert rgb.dtype is torch.float32\n return {FieldHeadNames.RGB: rgb}\n\n\nclass TorchInstantNGPField(NeRFField):\n \"\"\"\n PyTorch implementation of the instant-ngp field.\n \"\"\"\n\n def __init__(\n self,\n aabb,\n position_encoding: Encoding = HashEncoding(),\n direction_encoding: Encoding = SHEncoding(),\n base_mlp_num_layers: int = 3,\n base_mlp_layer_width: int = 64,\n head_mlp_num_layers: int = 2,\n head_mlp_layer_width: int = 32,\n skip_connections: Tuple = (4,),\n ) -> None:\n super().__init__(\n position_encoding,\n direction_encoding,\n base_mlp_num_layers,\n base_mlp_layer_width,\n head_mlp_num_layers,\n head_mlp_layer_width,\n skip_connections,\n )\n self.aabb = Parameter(aabb, requires_grad=False)\n\n def get_density(self, ray_samples: RaySamples):\n normalized_ray_samples = ray_samples\n normalized_ray_samples.positions = get_normalized_positions(\n normalized_ray_samples.frustums.get_positions(), self.aabb\n )\n return super().get_density(normalized_ray_samples)\n\n\nfield_implementation_to_class = {\"tcnn\": TCNNInstantNGPField, \"torch\": TorchInstantNGPField}\n", "path": "pyrad/fields/instant_ngp_field.py"}]}

| 2,256 | 272 |

gh_patches_debug_16874 | rasdani/github-patches | git_diff | mdn__kuma-6423 |

We are currently solving the following issue within our repository. Here is the issue text:

--- BEGIN ISSUE ---

Use of access tokens as query parameters in the GitHub OAuth API is deprecated

**Summary**

We use GitHub's OAuth API as one of MDN's sign-up/sign-in OAuth providers. We're starting to receive emails from GitHub that using the https://api.github.com/user API with the access token as a query parameter has been deprecated and that the `Authorization` header should be used instead. This occurs within `GitHubOAuth2Adapter.complete_login` method provided by `django-allauth`, but `django-allauth` has not yet fixed this (although a PR has been submitted that does -- see https://github.com/pennersr/django-allauth/pull/2458). Even if `django-allauth` fixes the issue, it wouldn't help in our case since we override this method (https://github.com/mdn/kuma/blob/266bd9d8ebf24c950037a1965b1967022fca233f/kuma/users/providers/github/views.py#L20). We need to update our overridden method to pass the token via the `Authorization` header rather than via a query parameter.

**Rationale**

We no longer have to concern ourselves with using a deprecated approach.

**Audience**

All users who sign-up/sign-in to MDN via GitHub.

--- END ISSUE ---
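The change the issue asks for can be sketched as follows. This is a minimal illustration, not the repository's code: `build_auth_headers` and `fetch_github_json` are hypothetical helper names, and `session` is assumed to be any object with a requests-style `get(url, headers=...)` method, such as the one returned by `requests_retry_session()`.

```python
def build_auth_headers(access_token):
    """Return headers that carry the OAuth token (hypothetical helper).

    The token travels in the Authorization header rather than in an
    `access_token` query parameter, which GitHub has deprecated.
    """
    return {"Authorization": f"token {access_token}"}


def fetch_github_json(session, url, access_token):
    """GET `url` with header-based auth and return the parsed JSON body.

    `session` is assumed to expose a requests-style `get` method.
    """
    response = session.get(url, headers=build_auth_headers(access_token))
    response.raise_for_status()  # surface HTTP errors instead of parsing them
    return response.json()
```

Under these assumptions, both the profile request and the email request would reuse the same headers, so the token never appears in a URL.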

Below are some code segments, each from a relevant file. One or more of these files may contain bugs.

--- BEGIN FILES ---

Path: `kuma/users/providers/github/views.py`

Content:

```
from allauth.account.utils import get_next_redirect_url
from allauth.socialaccount.providers.github.views import GitHubOAuth2Adapter
from allauth.socialaccount.providers.oauth2.views import (OAuth2CallbackView,
                                                          OAuth2LoginView)

from kuma.core.decorators import redirect_in_maintenance_mode
from kuma.core.urlresolvers import reverse
from kuma.core.utils import requests_retry_session


class KumaGitHubOAuth2Adapter(GitHubOAuth2Adapter):
    """
    A custom GitHub OAuth adapter to be used for fetching the list
    of private email addresses stored for the given user at GitHub.

    We store those email addresses in the extra data of each account.
    """
    email_url = 'https://api.github.com/user/emails'

    def complete_login(self, request, app, token, **kwargs):
        session = requests_retry_session()
        params = {'access_token': token.token}
        profile_data = session.get(self.profile_url, params=params)
        profile_data.raise_for_status()
        extra_data = profile_data.json()
        email_data = session.get(self.email_url, params=params)
        email_data.raise_for_status()
        extra_data['email_addresses'] = email_data.json()
        return self.get_provider().sociallogin_from_response(request,
                                                             extra_data)


class KumaOAuth2LoginView(OAuth2LoginView):

    def dispatch(self, request):
        next_url = (get_next_redirect_url(request) or
                    reverse('users.my_edit_page'))
        request.session['sociallogin_next_url'] = next_url
        request.session.modified = True
        return super(KumaOAuth2LoginView, self).dispatch(request)


oauth2_login = redirect_in_maintenance_mode(
    KumaOAuth2LoginView.adapter_view(KumaGitHubOAuth2Adapter)
)
oauth2_callback = redirect_in_maintenance_mode(
    OAuth2CallbackView.adapter_view(KumaGitHubOAuth2Adapter)
)
```

--- END FILES ---

Please first localize the bug based on the issue statement, and then generate a patch according to the `git diff` format fenced by three backticks.

Here is an example:

```diff
diff --git a/examples/server_async.py b/examples/server_async.py
--- a/examples/server_async.py
+++ b/examples/server_async.py
@@ -313,4 +313,4 @@
 if __name__ == "__main__":
-    asyncio.run(run_async_server("."), debug=True)
+    asyncio.run(run_async_server(), debug=True)
diff --git a/examples/server_sync.py b/examples/server_sync.py
--- a/examples/server_sync.py
+++ b/examples/server_sync.py
@@ -313,5 +313,5 @@
 if __name__ == "__main__":
-    server = run_sync_server(".")
+    server = run_sync_server()
     server.shutdown()
```

|

diff --git a/kuma/users/providers/github/views.py b/kuma/users/providers/github/views.py

--- a/kuma/users/providers/github/views.py

+++ b/kuma/users/providers/github/views.py

@@ -19,11 +19,11 @@

def complete_login(self, request, app, token, **kwargs):

session = requests_retry_session()

- params = {'access_token': token.token}

- profile_data = session.get(self.profile_url, params=params)

+ headers = {'Authorization': f'token {token.token}'}

+ profile_data = session.get(self.profile_url, headers=headers)

profile_data.raise_for_status()

extra_data = profile_data.json()

- email_data = session.get(self.email_url, params=params)

+ email_data = session.get(self.email_url, headers=headers)

email_data.raise_for_status()

extra_data['email_addresses'] = email_data.json()