modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

lizaboiarchuk/bert-tiny-oa-finetuned | lizaboiarchuk | 2022-09-18T19:05:02Z | 83 | 0 | transformers | [

"transformers",

"tf",

"bert",

"fill-mask",

"generated_from_keras_callback",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-09-18T07:27:29Z | ---

license: mit

tags:

- generated_from_keras_callback

model-index:

- name: lizaboiarchuk/bert-tiny-oa-finetuned

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# lizaboiarchuk/bert-tiny-oa-finetuned

This model is a fine-tuned version of [prajjwal1/bert-tiny](https://huggingface.co/prajjwal1/bert-tiny) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 4.0626

- Validation Loss: 3.7514

- Epoch: 4

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'WarmUp', 'config': {'initial_learning_rate': 2e-05, 'decay_schedule_fn': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': -525, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, '__passive_serialization__': True}, 'warmup_steps': 1000, 'power': 1.0, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16
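The optimizer entry above wraps a `PolynomialDecay` schedule in a `WarmUp` schedule with `warmup_steps: 1000` and a negative `decay_steps` of -525. A minimal sketch of the warm-up phase follows. It assumes the decay length was derived as total training steps minus warm-up steps (as the `create_optimizer` helper in Transformers is understood to do), which would mean the run lasted about 475 steps and never left warm-up. The function name `warmup_lr` is hypothetical.

```python
def warmup_lr(step, initial_lr=2e-05, warmup_steps=1000, power=1.0):
    """Learning rate during the warm-up phase only (0 <= step < warmup_steps)."""
    if not 0 <= step < warmup_steps:
        raise ValueError("this sketch only covers the warm-up phase")
    # Polynomial warm-up: with power=1.0 the rate grows linearly from 0.
    return initial_lr * (step / warmup_steps) ** power


# Assumption: decay_steps = total_steps - warmup_steps, so -525 implies 475 steps.
total_steps = 1000 + (-525)
peak_lr = warmup_lr(total_steps)
print(f"total steps: {total_steps}, highest learning rate reached: {peak_lr:.2e}")
```

Under these assumptions the learning rate would have peaked near 9.5e-06, a little under half of the configured 2e-05.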

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 4.6311 | 4.1088 | 0 |

| 4.2579 | 3.7859 | 1 |

| 4.0635 | 3.7253 | 2 |

| 4.0658 | 3.6842 | 3 |

| 4.0626 | 3.7514 | 4 |

### Framework versions

- Transformers 4.22.1

- TensorFlow 2.8.2

- Tokenizers 0.12.1

|

sd-concepts-library/laala-character | sd-concepts-library | 2022-09-18T17:07:01Z | 0 | 0 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T17:06:58Z | ---

license: mit

---

### laala-character on Stable Diffusion

This is the `<laala>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

baptiste/deberta-finetuned-ner-connll-late-stop | baptiste | 2022-09-18T16:28:32Z | 108 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"deberta",

"token-classification",

"generated_from_trainer",

"dataset:wikiann",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-09-18T15:35:43Z | ---

license: mit

tags:

- generated_from_trainer

datasets:

- wikiann

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: deberta-finetuned-ner-connll-late-stop

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: wikiann

type: wikiann

config: en

split: train

args: en

metrics:

- name: Precision

type: precision

value: 0.830192600803658

- name: Recall

type: recall

value: 0.8470945850417079

- name: F1

type: f1

value: 0.8385584324702589

- name: Accuracy

type: accuracy

value: 0.9228861596598961

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deberta-finetuned-ner-connll-late-stop

This model is a fine-tuned version of [microsoft/deberta-base](https://huggingface.co/microsoft/deberta-base) on the wikiann dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5259

- Precision: 0.8302

- Recall: 0.8471

- F1: 0.8386

- Accuracy: 0.9229
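The reported F1 can be cross-checked against the precision and recall in the card metadata, since F1 is the harmonic mean of the two. The helper name `f1_from_pr` below is illustrative and not part of any library.

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values copied from the model-index metadata of this card.
precision = 0.830192600803658
recall = 0.8470945850417079
f1 = f1_from_pr(precision, recall)
print(round(f1, 4))
```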

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 7

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.3408 | 1.0 | 1875 | 0.3639 | 0.7462 | 0.7887 | 0.7669 | 0.8966 |

| 0.2435 | 2.0 | 3750 | 0.2933 | 0.8104 | 0.8332 | 0.8217 | 0.9178 |

| 0.1822 | 3.0 | 5625 | 0.3034 | 0.8147 | 0.8388 | 0.8266 | 0.9221 |

| 0.1402 | 4.0 | 7500 | 0.3667 | 0.8275 | 0.8474 | 0.8374 | 0.9235 |

| 0.1013 | 5.0 | 9375 | 0.4290 | 0.8285 | 0.8448 | 0.8366 | 0.9227 |

| 0.0677 | 6.0 | 11250 | 0.4914 | 0.8259 | 0.8473 | 0.8365 | 0.9231 |

| 0.0439 | 7.0 | 13125 | 0.5259 | 0.8302 | 0.8471 | 0.8386 | 0.9229 |
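In the table above, validation loss and F1 point to different epochs. The short sketch below picks the best epoch under each criterion from the table values. Which criterion to prefer is a judgment call that the card does not state.

```python
# (epoch, validation_loss, f1) rows copied from the training results table.
rows = [
    (1, 0.3639, 0.7669),
    (2, 0.2933, 0.8217),
    (3, 0.3034, 0.8266),
    (4, 0.3667, 0.8374),
    (5, 0.4290, 0.8366),
    (6, 0.4914, 0.8365),
    (7, 0.5259, 0.8386),
]

# Lowest validation loss versus highest F1.
best_by_loss = min(rows, key=lambda row: row[1])[0]
best_by_f1 = max(rows, key=lambda row: row[2])[0]
print(f"best epoch by validation loss: {best_by_loss}")
print(f"best epoch by F1: {best_by_f1}")
```

Validation loss rises steadily after its minimum while F1 keeps improving slightly, which is why the two criteria disagree.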

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

yuntian-deng/latex2im | yuntian-deng | 2022-09-18T14:29:45Z | 5 | 0 | diffusers | [

"diffusers",

"en",

"dataset:yuntian-deng/im2latex-100k",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

]

| null | 2022-09-12T01:31:47Z | ---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: yuntian-deng/im2latex-100k

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# latex2im

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `yuntian-deng/im2latex-100k` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- mixed_precision: no

### Training results

📈 [TensorBoard logs](https://huggingface.co/yuntian-deng/latex2im/tensorboard?#scalars)

|

sd-concepts-library/rail-scene | sd-concepts-library | 2022-09-18T14:28:03Z | 0 | 1 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T14:27:48Z | ---

license: mit

---

### Rail Scene on Stable Diffusion

This is the `<rail-pov>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

jayanta/aaraki-vit-base-patch16-224-in21k-finetuned-cifar10 | jayanta | 2022-09-18T14:16:57Z | 220 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2022-09-17T11:53:40Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: mit-b2-finetuned-memes

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.8523956723338485

- task:

type: image-classification

name: Image Classification

dataset:

type: custom

name: custom

split: test

metrics:

- type: f1

value: 0.8580847578266328

name: F1

- type: precision

value: 0.8587893412503379

name: Precision

- type: recall

value: 0.8593508500772797

name: Recall

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mit-b2-finetuned-memes

This model is a fine-tuned version of [aaraki/vit-base-patch16-224-in21k-finetuned-cifar10](https://huggingface.co/aaraki/vit-base-patch16-224-in21k-finetuned-cifar10) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4137

- Accuracy: 0.8524

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00012

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10
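The `total_train_batch_size` listed above follows from the per-device batch size and the gradient accumulation steps. A small sketch, assuming a single device (the card does not state the device count) and using a hypothetical helper name:

```python
def total_train_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples contributing to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# Values from the hyperparameter list; num_devices=1 is an assumption.
effective = total_train_batch_size(32, 4)
print(effective)
```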

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.9727 | 0.99 | 40 | 0.8400 | 0.7334 |

| 0.5305 | 1.99 | 80 | 0.5147 | 0.8284 |

| 0.3124 | 2.99 | 120 | 0.4698 | 0.8145 |

| 0.2263 | 3.99 | 160 | 0.3892 | 0.8563 |

| 0.1453 | 4.99 | 200 | 0.3874 | 0.8570 |

| 0.1255 | 5.99 | 240 | 0.4097 | 0.8470 |

| 0.0989 | 6.99 | 280 | 0.3860 | 0.8570 |

| 0.0755 | 7.99 | 320 | 0.4141 | 0.8539 |

| 0.08 | 8.99 | 360 | 0.4049 | 0.8594 |

| 0.0639 | 9.99 | 400 | 0.4137 | 0.8524 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Anoop03031988/bert_emo_classifier | Anoop03031988 | 2022-09-18T14:14:24Z | 104 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-09-18T13:44:40Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

model-index:

- name: bert_emo_classifier

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert_emo_classifier

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2652

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.8874 | 0.25 | 500 | 0.4256 |

| 0.3255 | 0.5 | 1000 | 0.3233 |

| 0.2754 | 0.75 | 1500 | 0.2736 |

| 0.242 | 1.0 | 2000 | 0.2263 |

| 0.1661 | 1.25 | 2500 | 0.2118 |

| 0.1614 | 1.5 | 3000 | 0.1812 |

| 0.1434 | 1.75 | 3500 | 0.1924 |

| 0.1629 | 2.0 | 4000 | 0.1766 |

| 0.1066 | 2.25 | 4500 | 0.2100 |

| 0.1313 | 2.5 | 5000 | 0.1996 |

| 0.1113 | 2.75 | 5500 | 0.2185 |

| 0.115 | 3.0 | 6000 | 0.2406 |

| 0.0697 | 3.25 | 6500 | 0.2485 |

| 0.0835 | 3.5 | 7000 | 0.2391 |

| 0.0637 | 3.75 | 7500 | 0.2695 |

| 0.0707 | 4.0 | 8000 | 0.2652 |
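The Epoch and Step columns make it possible to estimate how many training examples were seen per epoch. The sketch below assumes a single device and no gradient accumulation, neither of which the card confirms.

```python
# First row of the table: step 500 corresponds to epoch 0.25.
step, epoch = 500, 0.25
train_batch_size = 8  # from the hyperparameter list

steps_per_epoch = round(step / epoch)
# Assumption: one device, no gradient accumulation, so one batch per step.
examples_per_epoch = steps_per_epoch * train_batch_size
print(steps_per_epoch, examples_per_epoch)
```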

### Framework versions

- Transformers 4.15.0

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.10.3

|

LanYiU/codeparrot-ds | LanYiU | 2022-09-18T13:10:45Z | 109 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-09-18T11:27:34Z | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: codeparrot-ds

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# codeparrot-ds

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6886

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 256

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 1

- mixed_precision_training: Native AMP
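The `cosine` scheduler with 1000 warm-up steps can be sketched in plain Python as a linear ramp followed by a half-cosine decay to zero. The `total_steps` value below is a placeholder chosen for illustration, since the card does not state the total number of optimizer steps, and the function name is hypothetical.

```python
import math


def cosine_with_warmup(step, total_steps, base_lr=0.0005, warmup_steps=1000):
    """Linear warm-up to base_lr, then half-cosine decay to zero."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


total_steps = 5000  # hypothetical; not taken from the card
for s in (0, 500, 1000, 3000, 5000):
    print(s, f"{cosine_with_warmup(s, total_steps):.6f}")
```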

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.4802 | 0.93 | 5000 | 1.6886 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Shaz/augh | Shaz | 2022-09-18T12:49:50Z | 0 | 0 | null | [

"region:us"

]

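| null | 2022-09-17T19:10:50Z | import os

import requests

API_URL = "https://api-inference.huggingface.co/models/gpt2"

# Assumption: the access token is supplied through an HF_API_TOKEN environment variable.

API_TOKEN = os.environ["HF_API_TOKEN"]

headers = {"Authorization": f"Bearer {API_TOKEN}"}

def query(payload):

    # POST the JSON payload to the hosted inference endpoint and decode the JSON reply.

    response = requests.post(API_URL, headers=headers, json=payload)

    return response.json()

output = query({

    "inputs": "Can you please let us know more details about your ",

}) |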

domenicrosati/deberta-v3-large-finetuned-paws-paraphrase-detector | domenicrosati | 2022-09-18T11:58:59Z | 118 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"deberta-v2",

"text-classification",

"generated_from_trainer",

"dataset:paws",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-09-17T15:59:17Z | ---

license: mit

tags:

- text-classification

- generated_from_trainer

datasets:

- paws

metrics:

- f1

- precision

- recall

model-index:

- name: deberta-v3-large-finetuned-paws-paraphrase-detector

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: paws

type: paws

args: labeled_final

metrics:

- name: F1

type: f1

value: 0.9426698284279537

- name: Precision

type: precision

value: 0.9300853289292595

- name: Recall

type: recall

value: 0.9555995475113123

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# deberta-v3-large-finetuned-paws-paraphrase-detector

Feel free to use for paraphrase detection tasks!

This model is a fine-tuned version of [microsoft/deberta-v3-large](https://huggingface.co/microsoft/deberta-v3-large) on the paws dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3046

- F1: 0.9427

- Precision: 0.9301

- Recall: 0.9556

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 6e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 50

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Precision | Recall |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:---------:|:------:|

| 0.1492 | 1.0 | 6176 | 0.1650 | 0.9537 | 0.9385 | 0.9695 |

| 0.1018 | 2.0 | 12352 | 0.1968 | 0.9544 | 0.9427 | 0.9664 |

| 0.0482 | 3.0 | 18528 | 0.2419 | 0.9521 | 0.9388 | 0.9658 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

sd-concepts-library/lizardman | sd-concepts-library | 2022-09-18T11:42:28Z | 0 | 3 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T11:42:22Z | ---

license: mit

---

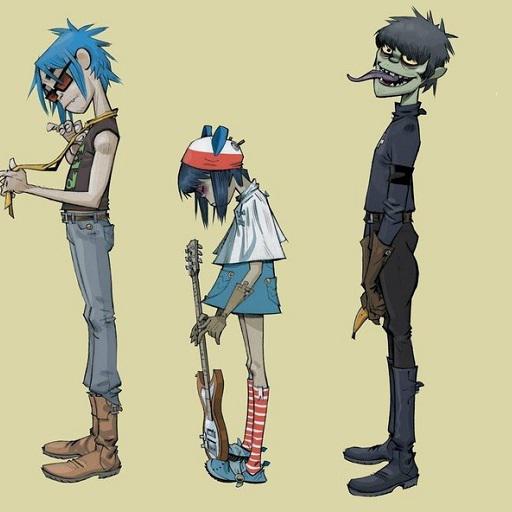

### Lizardman on Stable Diffusion

This is the `PlaceholderTokenLizardman` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

venkateshdas/roberta-base-squad2-ta-qna-roberta10e | venkateshdas | 2022-09-18T11:00:43Z | 118 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"question-answering",

"generated_from_trainer",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

]

| question-answering | 2022-09-18T10:37:07Z | ---

license: cc-by-4.0

tags:

- generated_from_trainer

model-index:

- name: roberta-base-squad2-ta-qna-roberta10e

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-squad2-ta-qna-roberta10e

This model is a fine-tuned version of [deepset/roberta-base-squad2](https://huggingface.co/deepset/roberta-base-squad2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2952

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 87 | 0.5890 |

| No log | 2.0 | 174 | 0.4194 |

| No log | 3.0 | 261 | 0.3104 |

| No log | 4.0 | 348 | 0.3037 |

| No log | 5.0 | 435 | 0.2723 |

| 0.2889 | 6.0 | 522 | 0.2368 |

| 0.2889 | 7.0 | 609 | 0.2974 |

| 0.2889 | 8.0 | 696 | 0.2923 |

| 0.2889 | 9.0 | 783 | 0.2936 |

| 0.2889 | 10.0 | 870 | 0.2952 |
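The `No log` entries are consistent with training loss being logged less often than evaluation runs. The sketch below assumes a logging interval of 500 steps (believed to be the Trainer default, but not stated in the card), so the only value logged during the 870 steps of this run would be the one at step 500. The helper name is hypothetical.

```python
def displayed_training_loss(eval_step, logged_losses):
    """Return the most recent logged training loss at eval_step, or 'No log'."""
    seen = [s for s in logged_losses if s <= eval_step]
    return logged_losses[max(seen)] if seen else "No log"


# Assumption: logging every 500 steps, so only step 500 was logged in this run.
logged_losses = {500: 0.2889}
for eval_step in (87, 435, 522, 870):
    print(eval_step, displayed_training_loss(eval_step, logged_losses))
```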

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

huggingtweets/perpetualg00se | huggingtweets | 2022-09-18T10:25:36Z | 109 | 1 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-09-18T10:20:59Z | ---

language: en

thumbnail: http://www.huggingtweets.com/perpetualg00se/1663496719106/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1245588692573409281/mGWMt1q7_400x400.png')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">PerpetualG00se</div>

<div style="text-align: center; font-size: 14px;">@perpetualg00se</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from PerpetualG00se.

| Data | PerpetualG00se |

| --- | --- |

| Tweets downloaded | 3166 |

| Retweets | 514 |

| Short tweets | 628 |

| Tweets kept | 2024 |
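The counts in the table are internally consistent: the tweets kept are what remains after retweets and short tweets are dropped. A quick check with the table values:

```python
# Counts copied from the training data table.
downloaded = 3166
retweets = 514
short_tweets = 628

kept = downloaded - retweets - short_tweets
print(kept)
```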

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/32gxsmj0/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @perpetualg00se's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/17rf9oo3) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/17rf9oo3/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

                     model='huggingtweets/perpetualg00se')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

venkateshdas/roberta-base-squad2-ta-qna-roberta3e | venkateshdas | 2022-09-18T10:22:29Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"roberta",

"question-answering",

"generated_from_trainer",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

]

| question-answering | 2022-09-18T10:13:04Z | ---

license: cc-by-4.0

tags:

- generated_from_trainer

model-index:

- name: roberta-base-squad2-ta-qna-roberta3e

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-squad2-ta-qna-roberta3e

This model is a fine-tuned version of [deepset/roberta-base-squad2](https://huggingface.co/deepset/roberta-base-squad2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4671

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 87 | 0.5221 |

| No log | 2.0 | 174 | 0.4408 |

| No log | 3.0 | 261 | 0.4671 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

debbiesoon/prot_bert_bfd-disoDNA | debbiesoon | 2022-09-18T06:50:23Z | 105 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-09-18T04:33:19Z | ---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

model-index:

- name: prot_bert_bfd-disoDNA

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# prot_bert_bfd-disoDNA

This model was trained from scratch on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1323

- Precision: 0.9442

- Recall: 0.9717

- F1: 0.9578

## Model description

This is a token classification model designed to predict the intrinsically disordered regions of amino acid sequences on the level of DNA disorder annotation.

## Intended uses & limitations

This model expects amino acid sequences with a space between each residue character. It assigns one of two labels to each residue:

'0': No disorder

'1': Disordered

Example inputs:

D E A Q F K E C Y D T C H K E C S D K G N G F T F C E M K C D T D C S V K D V K E K L E N Y K P K N

M A S E E L Q K D L E E V K V L L E K A T R K R V R D A L T A E K S K I E T E I K N K M Q Q K S Q K K A E L L D N E K P A A V V A P I T T G Y T D G I S Q I S L

M D V F M K G L S K A K E G V V A A A E K T K Q G V A E A A G K T K E G V L Y V G S K T K E G V V H G V A T V A E K T K E Q V T N V G G A V V T G V T A V A Q K T V E G A G S I A A A T G F V K K D Q L G K N E E G A P Q E G I L E D M P V D P D N E A Y E M P S E E G Y Q D Y E P E A

M E L V L K D A Q S A L T V S E T T F G R D F N E A L V H Q V V V A Y A A G A R Q G T R A Q K T R A E V T G S G K K P W R Q K G T G R A R S G S I K S P I W R S G G V T F A

A R P Q D H S Q K V N K K M Y R G A L K S I L S E L V R Q D R L I V V E K F S V E A P K T K L L A Q K L K D M A L E D V L I I T G E L D E N L F L A A R N L H K V D V R D A T G I D P V S L I A F D K V V M T A D A V K Q V E E M L A

M S D K P D M A E I E K F D K S K L K K T E T Q E K N P L P S K E T I E Q E K Q A G E S
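A raw sequence string can be converted to the spaced format shown in the examples above with a one-line helper. The function name `space_residues` is illustrative, and upper-casing the input is an assumption based on the examples.

```python
def space_residues(sequence):
    """Insert a single space between residues, ignoring existing whitespace."""
    compact = "".join(sequence.split()).upper()
    return " ".join(compact)


print(space_residues("MSDKPDMAEIEKFDKSK"))
```

Applying the helper to an already spaced sequence leaves it unchanged, so it is safe to call on mixed input.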

## Training and evaluation data

Training and evaluation data were retrieved from https://www.csuligroup.com/DeepDISOBind/#Materials (Accessed March 2022).

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|

| 0.0213 | 1.0 | 61 | 0.1322 | 0.9442 | 0.9717 | 0.9578 |

| 0.0212 | 2.0 | 122 | 0.1322 | 0.9442 | 0.9717 | 0.9578 |

| 0.1295 | 3.0 | 183 | 0.1323 | 0.9442 | 0.9717 | 0.9578 |

### Framework versions

- Transformers 4.21.3

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/dsmuses | sd-concepts-library | 2022-09-18T06:37:28Z | 0 | 0 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-18T06:37:17Z | ---

license: mit

---

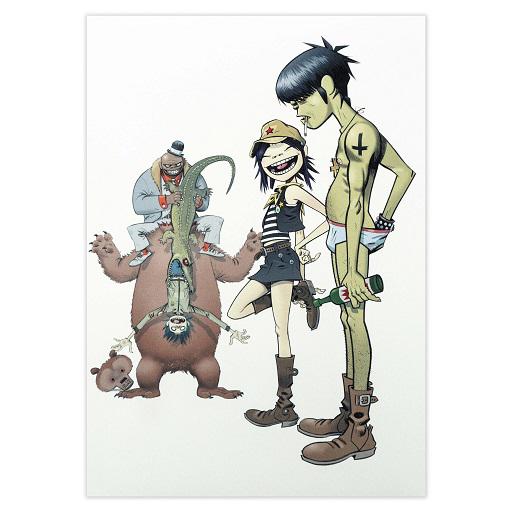

### DSmuses on Stable Diffusion

This is the `<DSmuses>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

NeonBohdan/stt-polyglot-en | NeonBohdan | 2022-09-18T06:32:07Z | 0 | 0 | null | [

"tflite",

"license:apache-2.0",

"region:us"

]

| null | 2022-03-02T23:29:04Z | ---

license: apache-2.0

---

|

rosskrasner/testcatdog | rosskrasner | 2022-09-18T03:56:03Z | 0 | 0 | fastai | [

"fastai",

"region:us"

]

| null | 2022-09-14T03:29:28Z | ---

tags:

- fastai

---

# Amazing!

🥳 Congratulations on hosting your fastai model on the Hugging Face Hub!

# Some next steps

1. Fill out this model card with more information (see the template below and the [documentation here](https://huggingface.co/docs/hub/model-repos))!

2. Create a demo in Gradio or Streamlit using 🤗 Spaces ([documentation here](https://huggingface.co/docs/hub/spaces)).

3. Join the fastai community on the [Fastai Discord](https://discord.com/invite/YKrxeNn)!

Greetings fellow fastlearner 🤝! Don't forget to delete this content from your model card.

---

# Model card

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

|

pikodemo/ppo-LunarLander-v2 | pikodemo | 2022-09-18T00:11:48Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T14:59:15Z | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- metrics:

- type: mean_reward

value: -553.66 +/- 175.78

name: mean_reward

task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import PPO

from huggingface_sb3 import load_from_hub

...

```

|

sd-concepts-library/valorantstyle | sd-concepts-library | 2022-09-17T23:55:16Z | 0 | 20 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T23:55:05Z | ---

license: mit

---

### valorantstyle on Stable Diffusion

This is the `<valorant>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

anechaev/Reinforce-U5Pixelcopter | anechaev | 2022-09-17T22:11:25Z | 0 | 0 | null | [

"Pixelcopter-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T22:11:15Z | ---

tags:

- Pixelcopter-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-U5Pixelcopter

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pixelcopter-PLE-v0

type: Pixelcopter-PLE-v0

metrics:

- type: mean_reward

value: 17.10 +/- 15.09

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pixelcopter-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pixelcopter-PLE-v0**.

To learn how to use this model and train your own, see Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5
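
The `mean_reward` value in the metadata above has the form `mean +/- std`. As a rough illustration of how such a figure is typically produced, the sketch below averages per-episode rewards and reports the population standard deviation. The helper name `summarize_rewards` and the sample rewards are hypothetical; the evaluation script actually used for this model is not shown in the card.

```python
import statistics


def summarize_rewards(episode_rewards):
    """Format evaluation rewards as 'mean +/- std' (population std)."""
    mean_reward = statistics.fmean(episode_rewards)
    std_reward = statistics.pstdev(episode_rewards)
    return f"{mean_reward:.2f} +/- {std_reward:.2f}"


# Hypothetical total rewards from three evaluation episodes.
example_rewards = [10.0, 20.0, 30.0]
summary = summarize_rewards(example_rewards)
print(summary)
```

A large standard deviation relative to the mean, as in the value reported here, suggests that performance varies a lot from episode to episode.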

|

Bistolero/1ep_seq_25_6b | Bistolero | 2022-09-17T21:23:44Z | 111 | 0 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:gem",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-09-17T21:07:40Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- gem

model-index:

- name: kapakapa

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# kapakapa

This model is a fine-tuned version of [google/mt5-small](https://huggingface.co/google/mt5-small) on the gem dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 15

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 14

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

anechaev/Reinforce-U5CartPole | anechaev | 2022-09-17T20:43:09Z | 0 | 0 | null | [

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T20:41:20Z | ---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Reinforce-U5CartPole

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: CartPole-v1

type: CartPole-v1

metrics:

- type: mean_reward

value: 46.40 +/- 7.76

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, see Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

sd-concepts-library/3d-female-cyborgs | sd-concepts-library | 2022-09-17T20:15:59Z | 0 | 39 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T20:15:45Z | ---

license: mit

---

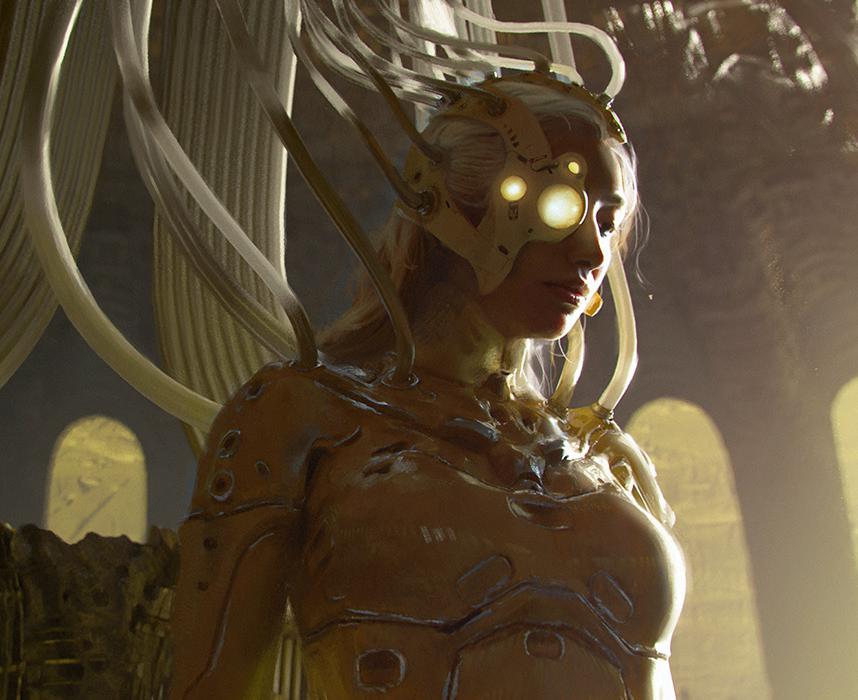

### 3d Female Cyborgs on Stable Diffusion

This is the `<A female cyborg>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

tavakolih/all-MiniLM-L6-v2-pubmed-full | tavakolih | 2022-09-17T19:59:09Z | 1,201 | 9 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"dataset:pubmed",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

]

| sentence-similarity | 2022-09-17T19:59:01Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

datasets:

- pubmed

---

# tavakolih/all-MiniLM-L6-v2-pubmed-full

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 384-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('tavakolih/all-MiniLM-L6-v2-pubmed-full')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=tavakolih/all-MiniLM-L6-v2-pubmed-full)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 221 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MultipleNegativesRankingLoss.MultipleNegativesRankingLoss` with parameters:

```

{'scale': 20.0, 'similarity_fct': 'cos_sim'}

```
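
To make the loss parameters above more concrete, here is a minimal pure-Python sketch of the idea behind a multiple-negatives ranking loss: each anchor is scored against every positive in the batch by scaled cosine similarity, and cross-entropy pushes the matching pair to the top. The function names and toy vectors are illustrative assumptions; this is not the library's implementation.

```python
import math


def cos_sim(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def multiple_negatives_ranking_loss(anchors, positives, scale=20.0):
    """Mean cross-entropy where positives[i] is the target for anchors[i]
    and every other positives[j] acts as an in-batch negative."""
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [scale * cos_sim(anchor, p) for p in positives]
        # Numerically stable log-sum-exp over the batch.
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(
            sum(math.exp(l - max_logit) for l in logits)
        )
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)


# Toy batch: aligned pairs give a loss near 0, swapped pairs a loss near `scale`.
anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = multiple_negatives_ranking_loss(anchors, [[1.0, 0.0], [0.0, 1.0]])
swapped_loss = multiple_negatives_ranking_loss(anchors, [[0.0, 1.0], [1.0, 0.0]])
print(aligned_loss, swapped_loss)
```

The `scale` of 20.0 sharpens the softmax over cosine similarities, which are otherwise confined to the range [-1, 1].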

Parameters of the fit()-Method:

```

{

"epochs": 10,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10000,

"weight_decay": 0.01

}

```
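
The interaction between `warmup_steps` and the total number of training steps is worth checking. The sketch below assumes that a `null` `steps_per_epoch` falls back to the DataLoader length (221) and that `WarmupLinear` means linear warmup followed by linear decay; both are assumptions about the training setup, and `warmup_linear_lr` is a hypothetical helper. Under those assumptions, training would end after 2,210 steps, well inside the 10,000-step warmup, so the learning rate would peak far below the configured 2e-05.

```python
def warmup_linear_lr(step, base_lr, warmup_steps, total_steps):
    """Linear warmup to base_lr, then linear decay to zero (assumed schedule)."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


steps_per_epoch = 221  # DataLoader length reported above
epochs = 10
total_steps = steps_per_epoch * epochs

# Highest learning rate seen over the whole run under these assumptions.
peak_lr = max(
    warmup_linear_lr(step, 2e-05, 10000, total_steps)
    for step in range(total_steps + 1)
)
print(total_steps, peak_lr)
```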

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

(2): Normalize()

)

```
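
The `Pooling` and `Normalize` modules listed above can be illustrated with a small pure-Python sketch: token vectors are averaged over non-padding positions (`pooling_mode_mean_tokens`), and the result is scaled to unit length. The helper names and the two-dimensional toy vectors are assumptions for illustration; the real model works on 384-dimensional tensors.

```python
import math


def mean_pool(token_embeddings, attention_mask):
    """Average token vectors, skipping positions where the mask is 0."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for k in range(dim):
                summed[k] += vector[k]
    count = max(count, 1)  # avoid division by zero for an all-padding input
    return [value / count for value in summed]


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(value * value for value in vector))
    return [value / norm for value in vector] if norm > 0 else list(vector)


# Two real tokens followed by one padding token.
tokens = [[3.0, 0.0], [0.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 0]
sentence_embedding = l2_normalize(mean_pool(tokens, mask))
print(sentence_embedding)
```

Because the output is unit length, the dot product of two such embeddings equals their cosine similarity.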

## Citing & Authors

<!--- Describe where people can find more information --> |

sd-concepts-library/max-foley | sd-concepts-library | 2022-09-17T19:35:09Z | 0 | 2 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T19:34:45Z | ---

license: mit

---

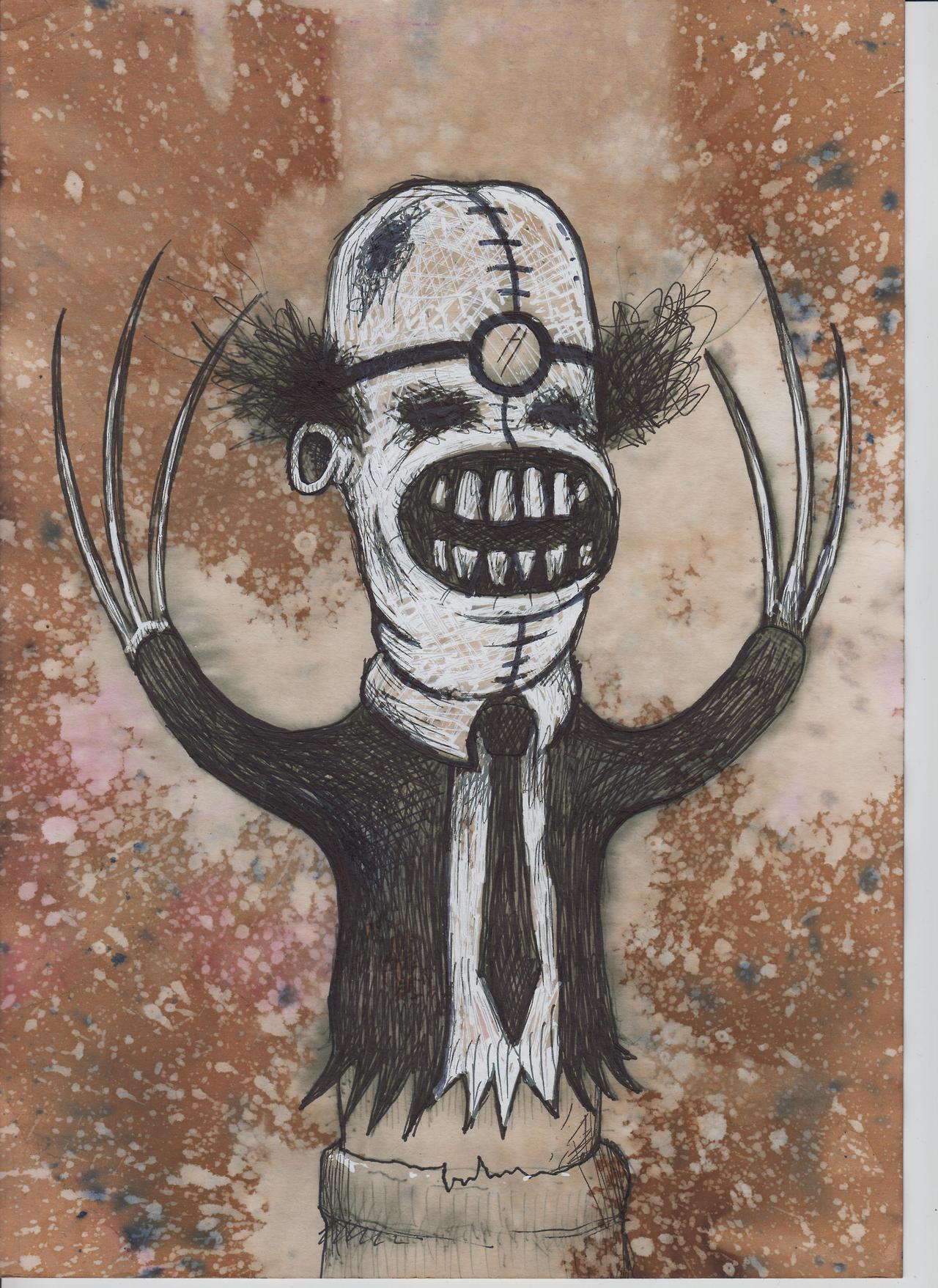

### Max Foley on Stable Diffusion

This is the `<max-foley>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

tkuye/skills-classifier | tkuye | 2022-09-17T19:16:20Z | 117 | 0 | transformers | [

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-09-17T17:56:54Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: skills-classifier

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# skills-classifier

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3051

- Accuracy: 0.9242

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 312 | 0.2713 | 0.9058 |

| 0.361 | 2.0 | 624 | 0.2539 | 0.9182 |

| 0.361 | 3.0 | 936 | 0.2802 | 0.9238 |

| 0.1532 | 4.0 | 1248 | 0.3058 | 0.9202 |

| 0.0899 | 5.0 | 1560 | 0.3051 | 0.9242 |
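
The table above can be read in two ways, depending on which metric is used to pick a checkpoint. The short sketch below copies the validation columns from the table and selects the best epoch by each criterion; the variable names are illustrative.

```python
# (epoch, validation_loss, accuracy) copied from the training results table.
rows = [
    (1, 0.2713, 0.9058),
    (2, 0.2539, 0.9182),
    (3, 0.2802, 0.9238),
    (4, 0.3058, 0.9202),
    (5, 0.3051, 0.9242),
]

best_by_loss = min(rows, key=lambda row: row[1])
best_by_accuracy = max(rows, key=lambda row: row[2])

print("lowest validation loss at epoch", best_by_loss[0])
print("highest accuracy at epoch", best_by_accuracy[0])
```

Validation loss bottoms out at epoch 2 while accuracy keeps improving slightly through epoch 5, a pattern that can indicate the model growing overconfident on its errors.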

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

dumitrescustefan/gpt-neo-romanian-780m | dumitrescustefan | 2022-09-17T18:24:19Z | 260 | 12 | transformers | [

"transformers",

"pytorch",

"gpt_neo",

"text-generation",

"romanian",

"text generation",

"causal lm",

"gpt-neo",

"ro",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-08-29T15:31:26Z | ---

language:

- ro

license: mit # Example: apache-2.0 or any license from https://hf.co/docs/hub/repositories-licenses

tags:

- romanian

- text generation

- causal lm

- gpt-neo

---

# GPT-Neo Romanian 780M

This model is a GPT-Neo transformer decoder model designed using EleutherAI's replication of the GPT-3 architecture.

It was trained on a thoroughly cleaned corpus of Romanian text of about 40GB composed of Oscar, Opus, Wikipedia, literature and various other bits and pieces of text, joined together and deduplicated. It was trained for about a month, totaling 1.5M steps on a v3-32 TPU machine.

### Authors:

* Dumitrescu Stefan

* Mihai Ilie

### Evaluation

Evaluation to be added soon, also on [https://github.com/dumitrescustefan/Romanian-Transformers](https://github.com/dumitrescustefan/Romanian-Transformers)

### Acknowledgements

Thanks to the [TPU Research Cloud](https://sites.research.google/trc/about/) for the TPUv3 machine needed to train this model!

|

sd-concepts-library/mechasoulall | sd-concepts-library | 2022-09-17T17:44:02Z | 0 | 21 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T17:43:55Z | ---

license: mit

---

### mechasoulall on Stable Diffusion

This is the `<mechasoulall>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

RICHPOOL/RICHPOOL_MINER | RICHPOOL | 2022-09-17T17:42:59Z | 0 | 0 | null | [

"region:us"

]

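| null | 2022-09-17T17:39:16Z | ### Open-Source Miner: Richpool Professional Edition

Open source, portable ("green"), no dev fee

Hugging Face download mirror

#### Original software source code

https://github.com/ntminer/NtMiner

#### License

The LGPL license.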

|

sd-concepts-library/durer-style | sd-concepts-library | 2022-09-17T16:36:56Z | 0 | 7 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T16:36:49Z | ---

license: mit

---

### durer style on Stable Diffusion

This is the `<drr-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

sd-concepts-library/led-toy | sd-concepts-library | 2022-09-17T16:33:57Z | 0 | 0 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T16:33:50Z | ---

license: mit

---

### led-toy on Stable Diffusion

This is the `<led-toy>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as an `object`:

|

sd-concepts-library/she-hulk-law-art | sd-concepts-library | 2022-09-17T16:10:47Z | 0 | 2 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T16:10:35Z | ---

license: mit

---

### She-Hulk Law Art on Stable Diffusion

This is the `<shehulk-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

theojolliffe/pegasus-model-3-x25 | theojolliffe | 2022-09-17T15:48:03Z | 108 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"pegasus",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-09-17T14:27:08Z | ---

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: pegasus-model-3-x25

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# pegasus-model-3-x25

This model is a fine-tuned version of [theojolliffe/pegasus-cnn_dailymail-v4-e1-e4-feedback](https://huggingface.co/theojolliffe/pegasus-cnn_dailymail-v4-e1-e4-feedback) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5668

- Rouge1: 61.9972

- Rouge2: 48.1531

- Rougel: 48.845

- Rougelsum: 59.5019

- Gen Len: 123.0814

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:------:|:---------:|:--------:|

| 1.144 | 1.0 | 883 | 0.5668 | 61.9972 | 48.1531 | 48.845 | 59.5019 | 123.0814 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

sd-concepts-library/lxj-o4 | sd-concepts-library | 2022-09-17T15:14:59Z | 0 | 1 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T15:14:54Z | ---

license: mit

---

### lxj-o4 on Stable Diffusion

This is the `<csp>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

Eksperymenty/Pong-PLE-v0 | Eksperymenty | 2022-09-17T14:44:18Z | 0 | 0 | null | [

"Pong-PLE-v0",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T14:44:08Z | ---

tags:

- Pong-PLE-v0

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:

- name: Pong-PLE-v0

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Pong-PLE-v0

type: Pong-PLE-v0

metrics:

- type: mean_reward

value: -16.00 +/- 0.00

name: mean_reward

verified: false

---

# **Reinforce** Agent playing **Pong-PLE-v0**

This is a trained model of a **Reinforce** agent playing **Pong-PLE-v0**.

To learn how to use this model and train your own, see Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

sd-concepts-library/sorami-style | sd-concepts-library | 2022-09-17T13:31:17Z | 0 | 2 | null | [

"license:mit",

"region:us"

]

| null | 2022-09-17T13:31:10Z | ---

license: mit

---

### Sorami style on Stable Diffusion

This is the `<sorami-style>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

test1234678/distilbert-base-uncased-distilled-clinc | test1234678 | 2022-09-17T12:34:43Z | 108 | 0 | transformers | [

"transformers",

"pytorch",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:clinc_oos",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-09-17T07:24:42Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- clinc_oos

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-distilled-clinc

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: clinc_oos

type: clinc_oos

config: plus

split: train

args: plus

metrics:

- name: Accuracy

type: accuracy

value: 0.9461290322580646

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-distilled-clinc

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the clinc_oos dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2712

- Accuracy: 0.9461

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 2.2629 | 1.0 | 318 | 1.6048 | 0.7368 |

| 1.2437 | 2.0 | 636 | 0.8148 | 0.8565 |

| 0.6604 | 3.0 | 954 | 0.4768 | 0.9161 |

| 0.4054 | 4.0 | 1272 | 0.3548 | 0.9352 |

| 0.2987 | 5.0 | 1590 | 0.3084 | 0.9419 |

| 0.2549 | 6.0 | 1908 | 0.2909 | 0.9435 |

| 0.232 | 7.0 | 2226 | 0.2804 | 0.9458 |

| 0.221 | 8.0 | 2544 | 0.2749 | 0.9458 |

| 0.2145 | 9.0 | 2862 | 0.2722 | 0.9468 |

| 0.2112 | 10.0 | 3180 | 0.2712 | 0.9461 |
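
The step counts in the table also hint at the size of the training set. As a rough sketch, assuming a single device, no gradient accumulation, and a final partial batch that is kept rather than dropped (none of which the card states), 318 steps per epoch at a batch size of 48 bound the number of training examples as follows.

```python
steps_per_epoch = 318  # step count after epoch 1.0 in the table
batch_size = 48        # train_batch_size from the hyperparameters
num_epochs = 10

# The last batch of an epoch holds between 1 and `batch_size` examples.
min_examples = (steps_per_epoch - 1) * batch_size + 1
max_examples = steps_per_epoch * batch_size
total_steps = steps_per_epoch * num_epochs

print(min_examples, max_examples, total_steps)
```

The computed total of 3,180 steps matches the final row of the table.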

### Framework versions

- Transformers 4.21.3

- Pytorch 1.10.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

jayanta/resnet50-finetuned-memes | jayanta | 2022-09-17T12:04:12Z | 176 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"resnet",

"image-classification",

"generated_from_trainer",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2022-09-15T14:19:11Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:

- name: resnet50-finetuned-memes

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.5741885625965997

- task:

type: image-classification

name: Image Classification

dataset:

type: custom

name: custom

split: test

metrics:

- type: f1

value: 0.47811617701687364

name: F1

- type: precision

value: 0.43689216537139497

name: Precision

- type: recall

value: 0.5695517774343122

name: Recall

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# resnet50-finetuned-memes

This model is a fine-tuned version of [microsoft/resnet-50](https://huggingface.co/microsoft/resnet-50) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 1.0625

- Accuracy: 0.5742

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00012

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10
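
Two of the values above are derived from the others. The sketch below reproduces the arithmetic, assuming single-device training and assuming the warmup length is the warmup ratio times the total number of optimizer steps, rounded up; the total of 400 steps is taken from the final row of the training results table for this model.

```python
import math

train_batch_size = 32
gradient_accumulation_steps = 4
lr_scheduler_warmup_ratio = 0.1
total_optimizer_steps = 400  # final Step value in the training results table

# Gradients from 4 batches of 32 are accumulated before each optimizer update.
total_train_batch_size = train_batch_size * gradient_accumulation_steps

# Number of optimizer steps spent ramping the learning rate up from zero.
warmup_steps = math.ceil(total_optimizer_steps * lr_scheduler_warmup_ratio)

print(total_train_batch_size, warmup_steps)
```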

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.4795 | 0.99 | 40 | 1.4641 | 0.4382 |

| 1.3455 | 1.99 | 80 | 1.3281 | 0.4389 |

| 1.262 | 2.99 | 120 | 1.2583 | 0.4583 |

| 1.1975 | 3.99 | 160 | 1.1978 | 0.4876 |

| 1.1358 | 4.99 | 200 | 1.1614 | 0.5139 |

| 1.1273 | 5.99 | 240 | 1.1316 | 0.5379 |

| 1.0379 | 6.99 | 280 | 1.1024 | 0.5464 |

| 1.041 | 7.99 | 320 | 1.0927 | 0.5580 |

| 0.9952 | 8.99 | 360 | 1.0790 | 0.5541 |

| 1.0146 | 9.99 | 400 | 1.0625 | 0.5742 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Shamus/NLLB-600m-vie_Latn-to-eng_Latn | Shamus | 2022-09-17T11:54:50Z | 107 | 1 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"m2m_100",

"text2text-generation",

"generated_from_trainer",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2022-09-17T03:28:00Z | ---

license: cc-by-nc-4.0

tags:

- generated_from_trainer

metrics:

- bleu

model-index:

- name: NLLB-600m-vie_Latn-to-eng_Latn

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# NLLB-600m-vie_Latn-to-eng_Latn

This model is a fine-tuned version of [facebook/nllb-200-distilled-600M](https://huggingface.co/facebook/nllb-200-distilled-600M) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1189

- Bleu: 36.6767

- Gen Len: 47.504

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 3

- eval_batch_size: 3

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 24

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 10000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|

| 1.9294 | 2.24 | 1000 | 1.5970 | 23.6201 | 48.1 |

| 1.4 | 4.47 | 2000 | 1.3216 | 28.9526 | 45.156 |

| 1.2071 | 6.71 | 3000 | 1.2245 | 32.5538 | 46.576 |

| 1.0893 | 8.95 | 4000 | 1.1720 | 34.265 | 46.052 |

| 1.0064 | 11.19 | 5000 | 1.1497 | 34.9249 | 46.508 |

| 0.9562 | 13.42 | 6000 | 1.1331 | 36.4619 | 47.244 |

| 0.9183 | 15.66 | 7000 | 1.1247 | 36.4723 | 47.26 |

| 0.8858 | 17.9 | 8000 | 1.1198 | 36.7058 | 47.376 |

| 0.8651 | 20.13 | 9000 | 1.1201 | 36.7897 | 47.496 |

| 0.8546 | 22.37 | 10000 | 1.1189 | 36.6767 | 47.504 |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

huggingtweets/arrington-jespow-lightcrypto | huggingtweets | 2022-09-17T11:11:37Z | 109 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text-generation | 2022-09-17T11:09:39Z | ---

language: en

thumbnail: http://www.huggingtweets.com/arrington-jespow-lightcrypto/1663413092521/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1478019214212747264/LZmNClhs_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1484988558024720385/WAv0tlyD_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1481313178302754821/eeHGWpUF_400x400.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">light & Jesse Powell & Michael Arrington 🏴☠️</div>

<div style="text-align: center; font-size: 14px;">@arrington-jespow-lightcrypto</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from light & Jesse Powell & Michael Arrington 🏴☠️.

| Data | light | Jesse Powell | Michael Arrington 🏴☠️ |

| --- | --- | --- | --- |

| Tweets downloaded | 3237 | 3237 | 3243 |

| Retweets | 352 | 490 | 892 |

| Short tweets | 392 | 168 | 718 |

| Tweets kept | 2493 | 2579 | 1633 |
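
The rows of this table are related by simple subtraction: the tweets kept are those downloaded minus retweets and short tweets. The sketch below checks that relationship against the figures in the table; the dictionary layout is only an illustration.

```python
# Figures copied from the training data table.
stats = {
    "light": {"downloaded": 3237, "retweets": 352, "short": 392, "kept": 2493},
    "Jesse Powell": {"downloaded": 3237, "retweets": 490, "short": 168, "kept": 2579},
    "Michael Arrington": {"downloaded": 3243, "retweets": 892, "short": 718, "kept": 1633},
}

# Recompute "Tweets kept" for each account and compare with the table.
consistent = {
    name: s["downloaded"] - s["retweets"] - s["short"] == s["kept"]
    for name, s in stats.items()
}
total_kept = sum(s["kept"] for s in stats.values())

print(consistent, total_kept)
```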

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3ozhl36a/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @arrington-jespow-lightcrypto's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/2vhxitdi) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/2vhxitdi/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/arrington-jespow-lightcrypto')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

LanYiU/distilbert-base-uncased-finetuned-imdb | LanYiU | 2022-09-17T11:04:50Z | 161 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"fill-mask",

"generated_from_trainer",

"dataset:imdb",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| fill-mask | 2022-09-17T10:55:23Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: distilbert-base-uncased-finetuned-imdb

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 2.4738
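
The reported loss can be read as a perplexity-style score. The following is a minimal sketch, assuming the evaluation loss is a mean cross-entropy in nats over the masked tokens, which this card does not state explicitly. The variable names are illustrative only.

```python
import math

# Evaluation loss reported in this card.
eval_loss = 2.4738

# Assumption: the loss is a mean cross-entropy in nats, so exp(loss)
# gives a (pseudo-)perplexity for the masked-token predictions.
perplexity = math.exp(eval_loss)
print(f"Perplexity: {perplexity:.2f}")
```

Lower values mean the model is less surprised by the held-out text.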

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- distributed_type: tpu

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0
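
To make `lr_scheduler_type: linear` concrete, here is a minimal pure-Python sketch of a linear decay schedule. It assumes no warmup (the card lists none) and 471 total optimizer steps, the final step count in this card's training results. `linear_lr` is a hypothetical helper written for illustration, not a function from Transformers.

```python
def linear_lr(step: int,
              base_lr: float = 2e-5,
              total_steps: int = 471,
              warmup_steps: int = 0) -> float:
    """Learning rate at a given optimizer step under linear warmup and decay."""
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr during warmup (unused when warmup_steps == 0).
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the start of training and at the end of each epoch (157 steps per epoch).
for step in (0, 157, 314, 471):
    print(step, linear_lr(step))
```

Under these assumptions the rate starts at 2e-05, falls by a third per epoch, and reaches zero at the last step.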

### Training results

| Training Loss | Epoch | Step | Validation Loss |
|:-------------:|:-----:|:----:|:---------------:|
| 2.7           | 1.0   | 157  | 2.4988          |
| 2.5821        | 2.0   | 314  | 2.4242          |
| 2.541         | 3.0   | 471  | 2.4371          |

### Framework versions

- Transformers 4.22.1

- Pytorch 1.9.0+cu102

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Eksperymenty/Reinforce-CartPole-v1 | Eksperymenty | 2022-09-17T10:09:00Z | 0 | 0 | null | [

"CartPole-v1",

"reinforce",

"reinforcement-learning",

"custom-implementation",

"deep-rl-class",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T10:07:54Z | ---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:
- name: Reinforce-CartPole-v1
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 445.10 +/- 56.96
      name: mean_reward
      verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, see Unit 5 of the Deep Reinforcement Learning Class: https://github.com/huggingface/deep-rl-class/tree/main/unit5

|

Hammad7/plag-col-rev-en-v2 | Hammad7 | 2022-09-17T09:58:44Z | 102 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"plagiarism",

"cross-encoder",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-09-15T11:51:58Z | ---

license: apache-2.0

language:

- en

tags:

- plagiarism

- cross-encoder

---

## Usage:

from sentence_transformers.cross_encoder import CrossEncoder

model = CrossEncoder('Hammad7/plag-col-rev-en-v2')

model.predict(["duplicate first paragraph","original second paragraph"]) |

michael20at/dqn-SpaceInvadersNoFrameskip-v4 | michael20at | 2022-09-17T08:25:21Z | 0 | 0 | stable-baselines3 | [

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2022-09-17T08:24:50Z | ---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: DQN
  results:
  - metrics:
    - type: mean_reward
      value: 246.00 +/- 104.47
      name: mean_reward
    task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: SpaceInvadersNoFrameskip-v4
      type: SpaceInvadersNoFrameskip-v4

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3) and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3 reinforcement learning agents, with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```
# Download model and save it into the logs/ folder
python -m utils.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga michael20at -f logs/
python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/
```

## Training (with the RL Zoo)

```
python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/
# Upload the model and generate video (when possible)
python -m utils.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga michael20at
```

## Hyperparameters

```python
OrderedDict([('batch_size', 32),
             ('buffer_size', 100000),
             ('env_wrapper',
              ['stable_baselines3.common.atari_wrappers.AtariWrapper']),
             ('exploration_final_eps', 0.01),
             ('exploration_fraction', 0.1),
             ('frame_stack', 4),
             ('gradient_steps', 1),
             ('learning_rate', 0.0001),
             ('learning_starts', 100000),
             ('n_timesteps', 10000000.0),
             ('optimize_memory_usage', False),
             ('policy', 'CnnPolicy'),
             ('target_update_interval', 1000),
             ('train_freq', 4),
             ('normalize', False)])
```
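
As a rough illustration of how `exploration_fraction` and `exploration_final_eps` interact, the sketch below computes a linearly annealed epsilon for epsilon-greedy exploration. It assumes an initial epsilon of 1.0, which is not listed in the hyperparameters above, and `epsilon_at` is a hypothetical helper written for this example rather than part of Stable Baselines3.

```python
def epsilon_at(step: int,
               total_timesteps: int = 10_000_000,
               exploration_fraction: float = 0.1,
               initial_eps: float = 1.0,   # assumption: not given in the card
               final_eps: float = 0.01) -> float:
    """Exploration rate after `step` environment steps, annealed linearly."""
    progress = step / total_timesteps
    if progress >= exploration_fraction:
        # After the exploration phase, epsilon stays at its final value.
        return final_eps
    # Interpolate between initial_eps and final_eps during the exploration phase.
    return initial_eps + progress * (final_eps - initial_eps) / exploration_fraction


for step in (0, 500_000, 1_000_000, 5_000_000):
    print(step, epsilon_at(step))
```

With these values, epsilon would drop from 1.0 to 0.01 over the first 1,000,000 of the 10,000,000 timesteps and remain at 0.01 afterwards.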

|

ameerazam08/wav2vec2-xlsr-ravdess-speech-emotion-recognition-new | ameerazam08 | 2022-09-17T05:57:54Z | 5 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2022-09-17T04:09:37Z | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:
- name: wav2vec2-xlsr-ravdess-speech-emotion-recognition-new
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You