modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

SakataHalmi/q-Taxi-v3y | SakataHalmi | 2023-09-20T15:46:11Z | 0 | 0 | null | [

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-09-20T15:46:08Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3y

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.56 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

model = load_from_hub(repo_id="SakataHalmi/q-Taxi-v3y", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])
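The usage snippet calls `load_from_hub` without defining it. A minimal sketch of such a helper follows, assuming the repository stores a pickled Python dictionary and that `hf_hub_download` from the `huggingface_hub` package is used to fetch it. The `download` parameter is a hypothetical hook, not part of the card, added so the function can be exercised offline.

```python
import pickle


def load_from_hub(repo_id, filename, download=None):
    """Fetch a pickled model dictionary from the Hub and unpickle it.

    `download` is a hypothetical injection point (an assumption of this
    sketch); by default the real `hf_hub_download` is used.
    """
    if download is None:
        # Imported lazily so the sketch can be read without the package installed.
        from huggingface_hub import hf_hub_download
        download = hf_hub_download
    local_path = download(repo_id=repo_id, filename=filename)
    # Unpickling can execute arbitrary code: only load files from repos you trust.
    with open(local_path, "rb") as f:
        return pickle.load(f)
```

With a helper like this in scope, the `load_from_hub(...)` call in the usage snippet returns the dictionary whose `"env_id"` entry is then passed to `gym.make`.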

|

MohanaPriyaa/image_classification | MohanaPriyaa | 2023-09-20T15:43:27Z | 63 | 1 | transformers | [

"transformers",

"tf",

"vit",

"image-classification",

"generated_from_keras_callback",

"base_model:google/vit-base-patch16-224-in21k",

"base_model:finetune:google/vit-base-patch16-224-in21k",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| image-classification | 2023-09-20T14:06:18Z | ---

license: apache-2.0

base_model: google/vit-base-patch16-224-in21k

tags:

- generated_from_keras_callback

model-index:

- name: MohanaPriyaa/image_classifier

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# MohanaPriyaa/food_classifier

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.2925

- Validation Loss: 0.2284

- Train Accuracy: 0.909

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'module': 'keras.optimizers.schedules', 'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 3e-05, 'decay_steps': 4000, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}, 'registered_name': None}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32
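The optimizer entry describes a Keras `PolynomialDecay` learning-rate schedule. As a rough sketch of what those settings imply, the plain-Python function below applies the polynomial decay formula with the values from the config. The function name is illustrative only; with `power` set to 1.0 the schedule reduces to a linear decay from 3e-05 to 0 over 4000 steps.

```python
def polynomial_decay(step, initial_lr=3e-05, decay_steps=4000,
                     end_lr=0.0, power=1.0):
    """Learning rate at `step` for a non-cycling polynomial decay schedule."""
    step = min(step, decay_steps)  # the rate stays at end_lr once decay_steps is reached
    fraction_left = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * fraction_left ** power + end_lr


# Print the rate at the start, midpoint, end, and past the end of the decay.
for step in (0, 2000, 4000, 5000):
    print(step, polynomial_decay(step))
```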

### Training results

| Train Loss | Validation Loss | Train Accuracy | Epoch |

|:----------:|:---------------:|:--------------:|:-----:|

| 0.2925 | 0.2284 | 0.909 | 0 |

### Framework versions

- Transformers 4.33.2

- TensorFlow 2.13.0

- Datasets 2.14.5

- Tokenizers 0.13.3

|

tonystark0/bert-finetuned-ner | tonystark0 | 2023-09-20T15:42:25Z | 120 | 0 | transformers | [

"transformers",

"pytorch",

"bert",

"token-classification",

"generated_from_trainer",

"dataset:conll2003",

"base_model:google-bert/bert-base-cased",

"base_model:finetune:google-bert/bert-base-cased",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2023-09-20T15:30:39Z | ---

license: apache-2.0

base_model: bert-base-cased

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

config: conll2003

split: validation

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9302325581395349

- name: Recall

type: recall

value: 0.9491753618310333

- name: F1

type: f1

value: 0.9396084964598085

- name: Accuracy

type: accuracy

value: 0.9858715488314593

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0609

- Precision: 0.9302

- Recall: 0.9492

- F1: 0.9396

- Accuracy: 0.9859
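The reported F1 can be cross-checked against the precision and recall in the card metadata, since F1 is the harmonic mean of the two. A small sketch, using the unrounded values from the metadata block:

```python
precision = 0.9302325581395349
recall = 0.9491753618310333


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


f1 = f1_score(precision, recall)
print(round(f1, 4))
```

The result agrees with the F1 value listed above to four decimal places.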

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0788 | 1.0 | 1756 | 0.0763 | 0.9123 | 0.9337 | 0.9229 | 0.9800 |

| 0.0393 | 2.0 | 3512 | 0.0605 | 0.9262 | 0.9480 | 0.9370 | 0.9855 |

| 0.0255 | 3.0 | 5268 | 0.0609 | 0.9302 | 0.9492 | 0.9396 | 0.9859 |

### Framework versions

- Transformers 4.33.2

- Pytorch 2.0.1+cu118

- Datasets 2.14.5

- Tokenizers 0.13.3

|

signon-project/mbart-large-cc25-ft-amr30-es | signon-project | 2023-09-20T15:34:44Z | 106 | 0 | transformers | [

"transformers",

"safetensors",

"mbart",

"text2text-generation",

"generated_from_trainer",

"base_model:facebook/mbart-large-cc25",

"base_model:finetune:facebook/mbart-large-cc25",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2024-01-19T11:50:36Z | ---

base_model: facebook/mbart-large-cc25

tags:

- generated_from_trainer

model-index:

- name: es+no_processing

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# es+no_processing

This model is a fine-tuned version of [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5874

- Smatch Precision: 74.08

- Smatch Recall: 76.84

- Smatch Fscore: 75.44

- Smatch Unparsable: 0

- Percent Not Recoverable: 0.2323

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 25
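The batch-size entries above are related by simple arithmetic: the total train batch size is the per-device batch size times the gradient accumulation steps times the number of devices. The sketch below assumes a single device, which the card does not state but which is consistent with the listed total of 16. The steps-per-epoch figure is derived from the final step count (86925) in the results table.

```python
def effective_batch_size(per_device_batch, grad_accum_steps, num_devices=1):
    """Samples contributing to one optimizer update."""
    return per_device_batch * grad_accum_steps * num_devices


# num_devices=1 is an assumption; the card only lists the other two values.
total = effective_batch_size(per_device_batch=2, grad_accum_steps=8)

# 86925 optimizer steps over 25 epochs, taken from the results table.
steps_per_epoch = 86925 // 25
samples_per_epoch = steps_per_epoch * total

print(total, steps_per_epoch, samples_per_epoch)
```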

### Training results

| Training Loss | Epoch | Step | Validation Loss | Smatch Precision | Smatch Recall | Smatch Fscore | Smatch Unparsable | Percent Not Recoverable |

|:-------------:|:-----:|:-----:|:---------------:|:----------------:|:-------------:|:-------------:|:-----------------:|:-----------------------:|

| 0.3908 | 1.0 | 3477 | 1.4300 | 19.74 | 68.95 | 30.7 | 0 | 0.0 |

| 0.256 | 2.0 | 6954 | 0.8998 | 27.75 | 70.61 | 39.85 | 1 | 0.0581 |

| 0.0704 | 3.0 | 10431 | 0.8727 | 30.09 | 72.2 | 42.47 | 0 | 0.1161 |

| 0.0586 | 4.0 | 13908 | 0.7774 | 37.1 | 74.93 | 49.62 | 0 | 0.1161 |

| 0.1059 | 5.0 | 17385 | 0.6322 | 42.52 | 74.54 | 54.15 | 1 | 0.1161 |

| 0.0424 | 6.0 | 20862 | 0.6090 | 47.13 | 76.21 | 58.25 | 0 | 0.0 |

| 0.0139 | 7.0 | 24339 | 0.5768 | 48.3 | 77.31 | 59.46 | 0 | 0.0581 |

| 0.08 | 8.0 | 27817 | 0.5608 | 55.74 | 77.16 | 64.72 | 0 | 0.1161 |

| 0.0224 | 9.0 | 31294 | 0.5937 | 54.91 | 77.02 | 64.11 | 0 | 0.0581 |

| 0.0757 | 10.0 | 34771 | 0.5588 | 59.53 | 77.47 | 67.32 | 0 | 0.0581 |

| 0.0613 | 11.0 | 38248 | 0.5894 | 60.83 | 77.82 | 68.28 | 0 | 0.0581 |

| 0.1045 | 12.0 | 41725 | 0.5847 | 61.23 | 77.17 | 68.28 | 0 | 0.1742 |

| 0.012 | 13.0 | 45202 | 0.5588 | 65.61 | 77.47 | 71.05 | 0 | 0.0 |

| 0.0591 | 14.0 | 48679 | 0.5609 | 66.51 | 77.86 | 71.74 | 0 | 0.0581 |

| 0.0252 | 15.0 | 52156 | 0.5653 | 67.48 | 77.75 | 72.25 | 0 | 0.0 |

| 0.0129 | 16.0 | 55634 | 0.5602 | 68.92 | 77.57 | 72.99 | 0 | 0.0 |

| 0.0006 | 17.0 | 59111 | 0.5876 | 68.57 | 77.81 | 72.9 | 0 | 0.1742 |

| 0.0182 | 18.0 | 62588 | 0.5951 | 68.97 | 77.96 | 73.19 | 0 | 0.1161 |

| 0.018 | 19.0 | 66065 | 0.5865 | 70.63 | 77.68 | 73.98 | 0 | 0.0581 |

| 0.0097 | 20.0 | 69542 | 0.6073 | 71.68 | 77.38 | 74.42 | 0 | 0.1161 |

| 0.0021 | 21.0 | 73019 | 0.5984 | 72.25 | 77.92 | 74.98 | 0 | 0.0581 |

| 0.0371 | 22.0 | 76496 | 0.5907 | 72.92 | 77.59 | 75.18 | 0 | 0.1742 |

| 0.0382 | 23.0 | 79973 | 0.5928 | 73.06 | 77.49 | 75.21 | 0 | 0.1742 |

| 0.0148 | 24.0 | 83451 | 0.5903 | 73.98 | 77.15 | 75.53 | 0 | 0.0581 |

| 0.1326 | 25.0 | 86925 | 0.5874 | 74.08 | 76.84 | 75.44 | 0 | 0.2323 |

### Framework versions

- Transformers 4.34.0.dev0

- Pytorch 2.0.1+cu117

- Datasets 2.14.2

- Tokenizers 0.13.3

|

Sudhee1997/Llama-2-7b-Custom-Recruit | Sudhee1997 | 2023-09-20T15:33:14Z | 5 | 0 | peft | [

"peft",

"region:us"

]

| null | 2023-09-15T11:25:00Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.4.0

|

willyninja30/llama-2-7b-chat-hf-fr-en-python | willyninja30 | 2023-09-20T15:33:02Z | 5 | 0 | peft | [

"peft",

"text-generation",

"base_model:meta-llama/Llama-2-7b-chat-hf",

"base_model:adapter:meta-llama/Llama-2-7b-chat-hf",

"region:us"

]

| text-generation | 2023-08-25T10:16:03Z | ---

library_name: peft

base_model: meta-llama/Llama-2-7b-chat-hf

inference: true

pipeline_tag: text-generation

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32
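The settings above map onto keyword arguments of `BitsAndBytesConfig` in `transformers`. The following is a hedged sketch of how the adapter might be loaded with the same quantization settings. The card only lists the settings, so the loading code, the function names, and `device_map="auto"` are assumptions. `quant_method` is omitted because it is not a constructor argument.

```python
def quantization_kwargs():
    """Keyword arguments mirroring the quantization list in the card."""
    return {
        "load_in_8bit": True,
        "load_in_4bit": False,
        "llm_int8_threshold": 6.0,
        "llm_int8_skip_modules": None,
        "llm_int8_enable_fp32_cpu_offload": False,
        "llm_int8_has_fp16_weight": False,
        "bnb_4bit_quant_type": "fp4",
        "bnb_4bit_use_double_quant": False,
        "bnb_4bit_compute_dtype": "float32",
    }


def load_adapter(base_id="meta-llama/Llama-2-7b-chat-hf",
                 adapter_id="willyninja30/llama-2-7b-chat-hf-fr-en-python"):
    """Load the base model in 8-bit and attach the PEFT adapter (illustrative)."""
    # Heavy imports stay local: this path needs transformers, peft,
    # bitsandbytes, and a CUDA-capable GPU.
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig
    from peft import PeftModel

    quant_config = BitsAndBytesConfig(**quantization_kwargs())
    base = AutoModelForCausalLM.from_pretrained(
        base_id, quantization_config=quant_config, device_map="auto"
    )
    return PeftModel.from_pretrained(base, adapter_id)
```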

### Framework versions

- PEFT 0.6.0.dev0 |

CyberHarem/perusepone2shi_jashinchandropkick | CyberHarem | 2023-09-20T15:32:55Z | 0 | 0 | null | [

"art",

"text-to-image",

"dataset:CyberHarem/perusepone2shi_jashinchandropkick",

"license:mit",

"region:us"

]

| text-to-image | 2023-09-20T15:01:25Z | ---

license: mit

datasets:

- CyberHarem/perusepone2shi_jashinchandropkick

pipeline_tag: text-to-image

tags:

- art

---

# Lora of perusepone2shi_jashinchandropkick

This model was trained with [HCP-Diffusion](https://github.com/7eu7d7/HCP-Diffusion). The auto-training framework is maintained by the [DeepGHS Team](https://huggingface.co/deepghs).

The base model used during training is [NAI](https://huggingface.co/deepghs/animefull-latest), and the base model used for generating preview images is [Meina/MeinaMix_V11](https://huggingface.co/Meina/MeinaMix_V11).

After downloading the pt and safetensors files for the chosen step, you need to use them together. The pt file is used as an embedding, while the safetensors file is loaded as the LoRA.

For example, to use the model from step 4080, download `4080/perusepone2shi_jashinchandropkick.pt` as the embedding and `4080/perusepone2shi_jashinchandropkick.safetensors` as the LoRA. Using both files together, you can generate images of the character.
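The file layout described above follows a `<step>/<name>.<extension>` pattern. A tiny sketch that builds both paths for a given step (the helper name is illustrative, not part of the card):

```python
def step_files(step, name="perusepone2shi_jashinchandropkick"):
    """Return the (embedding, LoRA) file paths for one training step."""
    return f"{step}/{name}.pt", f"{step}/{name}.safetensors"


embedding_path, lora_path = step_files(4080)
print(embedding_path)
print(lora_path)
```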

**The recommended step is 4080**, with a score of 0.851. The trigger words are:

1. `perusepone2shi_jashinchandropkick`

2. `short_hair, pointy_ears, hair_over_one_eye, grey_hair, red_eyes, white_hair, ribbon, smile`

We do not recommend this model for the following groups, and we apologize to them:

1. Individuals who cannot tolerate any deviation from the original character design, even in the slightest detail.

2. Individuals whose use cases demand high accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or who believe that character models must be trained purely by hand to avoid disrespecting the characters.

5. Individuals who find the generated image content offensive to their values.

The available steps are:

| Steps | Score | Download | pattern_1 | pattern_2 | pattern_3 | pattern_4 | pattern_5 | pattern_6 | pattern_7 | pattern_8 | pattern_9 | pattern_10 | pattern_11 | pattern_12 | pattern_13 | bikini | bondage | free | maid | miko | nude | nude2 | suit | yukata |

|:---------|:----------|:-----------------------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-------------------------------------------------|:-------------------------------------------------|:-------------------------------------------------|:-------------------------------------------------|:-----------------------------------------|:--------------------------------------------------|:-------------------------------------|:-------------------------------------|:-------------------------------------|:-----------------------------------------------|:------------------------------------------------|:-------------------------------------|:-----------------------------------------|

| 5100 | 0.840 | [Download](5100/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](5100/previews/bondage.png) |  |  |  | [<NSFW, click to see>](5100/previews/nude.png) | [<NSFW, click to see>](5100/previews/nude2.png) |  |  |

| 4760 | 0.844 | [Download](4760/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](4760/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4760/previews/nude.png) | [<NSFW, click to see>](4760/previews/nude2.png) |  |  |

| 4420 | 0.786 | [Download](4420/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](4420/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4420/previews/nude.png) | [<NSFW, click to see>](4420/previews/nude2.png) |  |  |

| **4080** | **0.851** | [**Download**](4080/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](4080/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4080/previews/nude.png) | [<NSFW, click to see>](4080/previews/nude2.png) |  |  |

| 3740 | 0.652 | [Download](3740/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](3740/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3740/previews/nude.png) | [<NSFW, click to see>](3740/previews/nude2.png) |  |  |

| 3400 | 0.654 | [Download](3400/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](3400/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3400/previews/nude.png) | [<NSFW, click to see>](3400/previews/nude2.png) |  |  |

| 3060 | 0.645 | [Download](3060/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](3060/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3060/previews/nude.png) | [<NSFW, click to see>](3060/previews/nude2.png) |  |  |

| 2720 | 0.806 | [Download](2720/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](2720/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2720/previews/nude.png) | [<NSFW, click to see>](2720/previews/nude2.png) |  |  |

| 2380 | 0.756 | [Download](2380/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](2380/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2380/previews/nude.png) | [<NSFW, click to see>](2380/previews/nude2.png) |  |  |

| 2040 | 0.674 | [Download](2040/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](2040/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2040/previews/nude.png) | [<NSFW, click to see>](2040/previews/nude2.png) |  |  |

| 1700 | 0.688 | [Download](1700/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](1700/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1700/previews/nude.png) | [<NSFW, click to see>](1700/previews/nude2.png) |  |  |

| 1360 | 0.629 | [Download](1360/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](1360/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1360/previews/nude.png) | [<NSFW, click to see>](1360/previews/nude2.png) |  |  |

| 1020 | 0.470 | [Download](1020/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](1020/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1020/previews/nude.png) | [<NSFW, click to see>](1020/previews/nude2.png) |  |  |

| 680 | 0.382 | [Download](680/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](680/previews/bondage.png) |  |  |  | [<NSFW, click to see>](680/previews/nude.png) | [<NSFW, click to see>](680/previews/nude2.png) |  |  |

| 340 | 0.097 | [Download](340/perusepone2shi_jashinchandropkick.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  | [<NSFW, click to see>](340/previews/bondage.png) |  |  |  | [<NSFW, click to see>](340/previews/nude.png) | [<NSFW, click to see>](340/previews/nude2.png) |  |  |

|

Faradaylab/Aria_7b_v2 | Faradaylab | 2023-09-20T15:30:57Z | 7 | 3 | peft | [

"peft",

"llama7b",

"LLAMA2",

"opensource",

"culture",

"code",

"text-generation",

"fr",

"en",

"es",

"dataset:Snit/french-conversation",

"base_model:meta-llama/Llama-2-7b-chat-hf",

"base_model:adapter:meta-llama/Llama-2-7b-chat-hf",

"license:llama2",

"region:us"

]

| text-generation | 2023-08-25T12:59:18Z | ---

language:

- fr

- en

- es

license: llama2

library_name: peft

tags:

- llama7b

- LLAMA2

- peft

- opensource

- culture

- code

datasets:

- Snit/french-conversation

inference: true

pipeline_tag: text-generation

base_model: meta-llama/Llama-2-7b-chat-hf

---

ARIA 7B V2 is a model created by Faraday 🇫🇷 🇧🇪

The growing need for artificial intelligence tools around the world has created a race for GPU power. We decided to create an affordable model with better skills in French that can run on a single GPU and reduce the data bias observed in models trained mostly on English-only datasets.

ARIA 7B has been trained on over 20,000 tokens of a high-quality French dataset. ARIA 7B is one of the best open-source models in the world available at this parameter count.

## Training procedure: NVIDIA A100. Thanks to NVIDIA GPUs and the Inception program, we were able to train our model in less than 24 hours.

## Base model: LLAMA_2-7B-CHAT-HF

We strongly believe that training models on datasets in more languages not only broadens their knowledge base but also opens up more analytical perspectives, less centered on the views and opinions of a single part of the world.

## Contact

[email protected]

## Number of epochs: 2

## Timing: less than 24 hours

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.6.0.dev0 |

willyninja30/aria7Beta | willyninja30 | 2023-09-20T15:30:47Z | 8 | 1 | peft | [

"peft",

"llama2",

"pytorch",

"french",

"text-generation",

"fr",

"en",

"base_model:meta-llama/Llama-2-7b-chat-hf",

"base_model:adapter:meta-llama/Llama-2-7b-chat-hf",

"license:apache-2.0",

"region:us"

]

| text-generation | 2023-08-23T14:37:35Z | ---

language:

- fr

- en

license: apache-2.0

library_name: peft

tags:

- llama2

- pytorch

- french

inference: false

pipeline_tag: text-generation

base_model: meta-llama/Llama-2-7b-chat-hf

---

## ARIA 7B is a model created by Faraday

The growing need for artificial intelligence tools around the world has created a race for GPU power. We decided to create an affordable model with better skills in French that can run on a single GPU and reduce the data bias observed in models trained mostly on English-only datasets.

ARIA 7B has been trained on over 20,000 tokens of a high-quality French dataset. ARIA 7B is one of the best open-source models in the world available at this parameter count.

GPU used for training: NVIDIA V100. Thanks to NVIDIA GPUs and the Inception program, we were able to train our model in less than 24 hours.

Base model: LLAMA_2-7B-CHAT-HF

We strongly believe that training models on datasets in more languages not only broadens their knowledge base but also opens up more analytical perspectives, less centered on the views and opinions of a single part of the world.

## ARIA 7B is a model created by Faraday (French version)

The growing need for artificial intelligence around the world has created a race for graphics-card computing power.

We decided to create an accessible model that can run on a single graphics card and that reduces the algorithmic bias observed in models trained only on English-language datasets.

ARIA 7B was trained on a high-quality dataset of more than 20,000 tokens in French.

GPU (graphics card) used for fine-tuning: NVIDIA V100. Thanks to NVIDIA and to the NVIDIA Inception program, which guided us throughout the process and enabled us to train the model in less than 24 hours.

Base model: LLAMA_2-7B-CHAT-HF

We believe that training models on different languages not only broadens the knowledge base but also offers other, more open analytical perspectives that are less centered on the views and opinions expressed by a single part of the world.

Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.6.0.dev0 |

SakataHalmi/q-FrozenLake-v1-4x4-noSlippery | SakataHalmi | 2023-09-20T15:30:22Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-09-20T15:30:19Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

model = load_from_hub(repo_id="SakataHalmi/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])
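Once the model and environment are loaded, the agent acts greedily with respect to its Q-table, and a score such as the `1.00 +/- 0.00` in the metadata is the mean and standard deviation of the episode returns. The sketch below illustrates that evaluation loop under stated assumptions: the Q-table layout (one row per state, one value per action) is assumed rather than taken from the card, and `TinyChainEnv` is a hypothetical stand-in with a Gymnasium-style `reset`/`step` interface so the example runs without any dependencies.

```python
import statistics


class TinyChainEnv:
    """Hypothetical stand-in environment: three states in a row, goal at state 2."""

    def reset(self, seed=None):
        self.state = 0
        return self.state, {}

    def step(self, action):
        # Action 1 moves one state to the right; action 0 stays in place.
        if action == 1:
            self.state += 1
        terminated = self.state == 2
        reward = 1.0 if terminated else 0.0
        return self.state, reward, terminated, False, {}


def greedy_action(qtable, state):
    """Index of the highest-valued action in the row for `state`."""
    row = qtable[state]
    return max(range(len(row)), key=row.__getitem__)


def evaluate(env, qtable, n_episodes=10, max_steps=20):
    """Run greedy episodes and return (mean, population std) of the returns."""
    totals = []
    for _ in range(n_episodes):
        state, _ = env.reset()
        total = 0.0
        for _ in range(max_steps):
            state, reward, terminated, truncated, _ = env.step(
                greedy_action(qtable, state)
            )
            total += reward
            if terminated or truncated:
                break
        totals.append(total)
    return statistics.mean(totals), statistics.pstdev(totals)


# Illustrative Q-table for the stand-in environment, not the trained one.
qtable = [[0.0, 0.9], [0.0, 1.0], [0.0, 0.0]]
mean_reward, std_reward = evaluate(TinyChainEnv(), qtable)
print(f"{mean_reward:.2f} +/- {std_reward:.2f}")
```

For the real agent, the environment created by `gym.make` and the Q-table stored in the loaded model would take the place of the stand-ins.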

|

BramVanroy/mbart-large-cc25-ft-amr30-en | BramVanroy | 2023-09-20T15:28:41Z | 130 | 0 | transformers | [

"transformers",

"safetensors",

"mbart",

"text2text-generation",

"generated_from_trainer",

"base_model:facebook/mbart-large-cc25",

"base_model:finetune:facebook/mbart-large-cc25",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2023-09-20T15:26:13Z | ---

base_model: facebook/mbart-large-cc25

tags:

- generated_from_trainer

model-index:

- name: en+no_processing

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# en+no_processing

This model is a fine-tuned version of [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4481

- Smatch Precision: 80.57

- Smatch Recall: 83.81

- Smatch Fscore: 82.16

- Smatch Unparsable: 0

- Percent Not Recoverable: 0.3484

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 25

### Training results

| Training Loss | Epoch | Step | Validation Loss | Smatch Precision | Smatch Recall | Smatch Fscore | Smatch Unparsable | Percent Not Recoverable |

|:-------------:|:-----:|:-----:|:---------------:|:----------------:|:-------------:|:-------------:|:-----------------:|:-----------------------:|

| 0.3471 | 1.0 | 3477 | 1.4889 | 22.35 | 73.05 | 34.23 | 0 | 0.1161 |

| 0.1741 | 2.0 | 6954 | 0.8681 | 30.1 | 71.92 | 42.44 | 0 | 0.1161 |

| 0.1296 | 3.0 | 10431 | 0.7081 | 38.6 | 78.68 | 51.8 | 0 | 0.0581 |

| 0.1308 | 4.0 | 13908 | 0.9546 | 37.49 | 78.23 | 50.69 | 0 | 0.0 |

| 0.2213 | 5.0 | 17385 | 0.5544 | 47.63 | 81.17 | 60.03 | 0 | 0.0 |

| 0.0317 | 6.0 | 20862 | 0.4884 | 49.3 | 80.9 | 61.27 | 0 | 0.0 |

| 0.1007 | 7.0 | 24339 | 0.4763 | 54.88 | 82.09 | 65.78 | 0 | 0.0 |

| 0.092 | 8.0 | 27817 | 0.4444 | 57.37 | 83.2 | 67.91 | 0 | 0.0 |

| 0.1051 | 9.0 | 31294 | 0.4192 | 64.37 | 83.81 | 72.82 | 0 | 0.0 |

| 0.0079 | 10.0 | 34771 | 0.4685 | 61.3 | 83.1 | 70.55 | 0 | 0.0 |

| 0.0211 | 11.0 | 38248 | 0.4389 | 63.36 | 84.57 | 72.44 | 0 | 0.1161 |

| 0.1122 | 12.0 | 41725 | 0.4146 | 69.39 | 83.56 | 75.82 | 0 | 0.0581 |

| 0.0183 | 13.0 | 45202 | 0.4003 | 73.9 | 83.71 | 78.5 | 0 | 0.0 |

| 0.0244 | 14.0 | 48679 | 0.4208 | 73.79 | 83.92 | 78.53 | 0 | 0.1161 |

| 0.0116 | 15.0 | 52156 | 0.4248 | 73.88 | 83.85 | 78.55 | 0 | 0.1161 |

| 0.0357 | 16.0 | 55634 | 0.4235 | 75.78 | 84.08 | 79.71 | 0 | 0.1161 |

| 0.0006 | 17.0 | 59111 | 0.4181 | 76.15 | 84.15 | 79.95 | 0 | 0.0581 |

| 0.0329 | 18.0 | 62588 | 0.4494 | 77.21 | 84.12 | 80.52 | 0 | 0.0 |

| 0.0003 | 19.0 | 66065 | 0.4389 | 78.02 | 84.13 | 80.96 | 0 | 0.0 |

| 0.04 | 20.0 | 69542 | 0.4439 | 78.78 | 84.23 | 81.41 | 0 | 0.0 |

| 0.0182 | 21.0 | 73019 | 0.4430 | 79.82 | 84.05 | 81.88 | 0 | 0.0581 |

| 0.0006 | 22.0 | 76496 | 0.4488 | 79.96 | 83.74 | 81.81 | 0 | 0.0581 |

| 0.0074 | 23.0 | 79973 | 0.4569 | 79.84 | 83.85 | 81.79 | 0 | 0.0581 |

| 0.0133 | 24.0 | 83451 | 0.4469 | 80.45 | 83.81 | 82.09 | 0 | 0.2904 |

| 0.0055 | 25.0 | 86925 | 0.4481 | 80.57 | 83.81 | 82.16 | 0 | 0.3484 |

### Framework versions

- Transformers 4.34.0.dev0

- Pytorch 2.0.1+cu117

- Datasets 2.14.2

- Tokenizers 0.13.3

|

csakarwa/Model1cs | csakarwa | 2023-09-20T15:24:47Z | 1 | 0 | peft | [

"peft",

"region:us"

]

| null | 2023-09-20T15:24:45Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32

### Framework versions

- PEFT 0.6.0.dev0

|

AnatolyBelov/my_t5_small_test | AnatolyBelov | 2023-09-20T15:19:33Z | 4 | 0 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"dataset:opus_books",

"base_model:google-t5/t5-small",

"base_model:finetune:google-t5/t5-small",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2023-09-18T14:00:07Z | ---

license: apache-2.0

base_model: t5-small

tags:

- generated_from_trainer

datasets:

- opus_books

metrics:

- bleu

model-index:

- name: my_t5_small_test

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: opus_books

type: opus_books

config: en-fr

split: train

args: en-fr

metrics:

- name: Bleu

type: bleu

value: 6.372

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_t5_small_test

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the opus_books dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5026

- Bleu: 6.372

- Gen Len: 17.5713

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:-------:|

| 1.7108 | 1.0 | 6355 | 1.5113 | 6.3012 | 17.5701 |

| 1.6833 | 2.0 | 12710 | 1.5026 | 6.372 | 17.5713 |

### Framework versions

- Transformers 4.33.2

- Pytorch 2.0.1+cu118

- Datasets 2.14.5

- Tokenizers 0.13.3

|

abhishek23HF/MARKEtING_BLOOMZ_1B | abhishek23HF | 2023-09-20T15:17:28Z | 0 | 0 | peft | [

"peft",

"region:us"

]

| null | 2023-09-20T15:17:23Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: True

- load_in_4bit: False

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: fp4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float32
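
The list above maps almost one-to-one onto keyword arguments of `BitsAndBytesConfig` in `transformers`. The sketch below only builds the keyword dictionary, so it runs without any ML libraries installed. Passing it to `BitsAndBytesConfig(**quantization_kwargs)` is an assumption about how the base model was loaded, since the card does not show the loading code. `quant_method` is left out because it is reported by the config rather than passed in.

```python
# Keyword arguments reconstructed from the list in this card.
# Assumption: these would be passed as BitsAndBytesConfig(**quantization_kwargs)
# when loading the (unnamed) base model before attaching the PEFT adapter.
quantization_kwargs = {
    "load_in_8bit": True,
    "load_in_4bit": False,
    "llm_int8_threshold": 6.0,
    "llm_int8_skip_modules": None,
    "llm_int8_enable_fp32_cpu_offload": False,
    "llm_int8_has_fp16_weight": False,
    "bnb_4bit_quant_type": "fp4",
    "bnb_4bit_use_double_quant": False,
    "bnb_4bit_compute_dtype": "float32",
}

# 8-bit and 4-bit loading are mutually exclusive; only 8-bit is enabled here,
# so the bnb_4bit_* entries are effectively unused defaults.
active_mode = "int8" if quantization_kwargs["load_in_8bit"] else "int4"
print(active_mode)
```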

### Framework versions

- PEFT 0.6.0.dev0

|

databio/v2v-ChIP-atlas-hg38-ATAC | databio | 2023-09-20T15:15:34Z | 0 | 0 | null | [

"region:us"

]

| null | 2023-09-19T20:50:13Z | ---

# For reference on model card metadata, see the spec: https://github.com/huggingface/hub-docs/blob/main/modelcard.md?plain=1

# Doc / guide: https://huggingface.co/docs/hub/model-cards

{}

---

# Vec2Vec ChIP-atlas hg38

## Model Details

### Model Description

This is a Vec2Vec model that encodes embedding vectors of natural language into embedding vectors of BED files. It was trained on hg38 ChIP-atlas ATAC-seq data. The natural language metadata came from the experiment list, and its embedding vectors were produced by [sentence-transformers](https://huggingface.co/sentence-transformers/all-MiniLM-L12-v2). The BED files were embedded by [Region2Vec](https://huggingface.co/databio/r2v-ChIP-atlas-hg38).

- **Developed by:** Ziyang "Claude" Hu

- **Model type:** Vec2Vec

- **Language(s) (NLP):** hg38

### Model Sources [optional]


- **Repository:** https://github.com/databio/geniml

- **Paper [optional]:** N/A

## Uses

This model can be used to search BED files with natural language query strings. In the search interface, the query string is first encoded by the same sentence-transformers model, and the resulting vector is then encoded by this Vec2Vec model into the final query vector. The K BED files whose embedding vectors (produced by the same Region2Vec model) are closest to the final query vector are returned as results. The model is limited to hg38, and using it for data other than ATAC-seq is not recommended.
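
The search flow described here can be sketched in plain Python. Everything in the block is illustrative: `text_encoder` and `vec2vec` are hypothetical callables standing in for the sentence-transformers model and this Vec2Vec model, `bed_embeddings` stands in for precomputed Region2Vec vectors, and cosine similarity is an assumed choice, since the card only says "closest".

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search_bed_files(
    query: str,
    text_encoder: Callable[[str], Vector],   # stand-in for sentence-transformers
    vec2vec: Callable[[Vector], Vector],     # stand-in for this Vec2Vec model
    bed_embeddings: Dict[str, Vector],       # stand-in for Region2Vec embeddings
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k BED files whose embeddings are closest to the mapped query."""
    text_vec = text_encoder(query)           # step 1: embed the query text
    query_vec = vec2vec(text_vec)            # step 2: map into BED-embedding space
    scored = [
        (name, cosine_similarity(query_vec, emb))
        for name, emb in bed_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # step 3: rank by similarity
    return scored[:k]


# Toy usage with made-up 2-D vectors (not real embeddings).
toy_beds = {"a.bed": [1.0, 0.0], "b.bed": [0.0, 2.0], "c.bed": [1.0, 1.0]}
hits = search_bed_files(
    "liver ATAC-seq",
    text_encoder=lambda q: [1.0, 0.0],
    vec2vec=lambda v: [v[1], v[0]],
    bed_embeddings=toy_beds,
    k=2,
)
print([name for name, _ in hits])
```

In a real deployment the ranking step would more likely be delegated to a vector index than done with a linear scan.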

## How to Get Started with the Model

Vec2Vec will allow direct importing from Hugging Face soon.

[More Information Needed]

## Training Details

### Training Data

TODO |

ramchiluveru/MarketingCampaign | ramchiluveru | 2023-09-20T15:14:23Z | 0 | 0 | peft | [

"peft",

"region:us"

]

| null | 2023-09-20T15:14:21Z | ---

library_name: peft

---

## Training procedure

### Framework versions

- PEFT 0.6.0.dev0

|

badassbandit/taxi | badassbandit | 2023-09-20T15:10:46Z | 0 | 0 | null | [

"Taxi-v3",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-09-20T15:10:45Z | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: taxi

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.52 +/- 2.71

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3**.

## Usage

```python

model = load_from_hub(repo_id="badassbandit/taxi", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
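
`load_from_hub` is not defined in the snippet above. A minimal sketch of such a helper follows, assuming the file is a pickled Python dict fetched with `hf_hub_download` from the `huggingface_hub` package. The `download` parameter is a hypothetical hook added here so the function can be exercised without network access.

```python
import pickle
from typing import Any, Callable, Optional


def load_from_hub(
    repo_id: str,
    filename: str,
    download: Optional[Callable[..., str]] = None,
) -> Any:
    """Download a pickled object from the Hub and unpickle it.

    `download` must accept `repo_id` and `filename` keyword arguments and
    return a local file path; it defaults to huggingface_hub.hf_hub_download.
    """
    if download is None:
        # Imported lazily so the sketch still imports without huggingface_hub.
        from huggingface_hub import hf_hub_download

        download = hf_hub_download
    local_path = download(repo_id=repo_id, filename=filename)
    # Unpickling can execute arbitrary code: only load files from trusted repos.
    with open(local_path, "rb") as handle:
        return pickle.load(handle)
```

With this helper in scope, the usage snippet additionally needs `gym` (or `gymnasium`) imported before `gym.make` is called.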

|

CyberHarem/matsuo_chizuru_idolmastercinderellagirls | CyberHarem | 2023-09-20T15:09:30Z | 0 | 0 | null | [

"art",

"text-to-image",

"dataset:CyberHarem/matsuo_chizuru_idolmastercinderellagirls",

"license:mit",

"region:us"

]

| text-to-image | 2023-09-20T14:52:03Z | ---

license: mit

datasets:

- CyberHarem/matsuo_chizuru_idolmastercinderellagirls

pipeline_tag: text-to-image

tags:

- art

---

# Lora of matsuo_chizuru_idolmastercinderellagirls

This model was trained with [HCP-Diffusion](https://github.com/7eu7d7/HCP-Diffusion). The auto-training framework is maintained by the [DeepGHS Team](https://huggingface.co/deepghs).

The base model used during training is [NAI](https://huggingface.co/deepghs/animefull-latest), and the base model used for generating preview images is [Meina/MeinaMix_V11](https://huggingface.co/Meina/MeinaMix_V11).

After downloading the pt and safetensors files for the specified step, you need to use them simultaneously. The pt file will be used as an embedding, while the safetensors file will be loaded for Lora.

For example, if you want to use the model from step 4760, you need to download `4760/matsuo_chizuru_idolmastercinderellagirls.pt` as the embedding and `4760/matsuo_chizuru_idolmastercinderellagirls.safetensors` for loading Lora. By using both files together, you can generate images for the desired characters.

**The best step we recommend is 4760**, with a score of 0.996. The trigger words are:

1. `matsuo_chizuru_idolmastercinderellagirls`

2. `short_hair, black_hair, blush, hair_ornament, hairclip, black_eyes, thick_eyebrows, purple_eyes`
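
The file-naming convention and the step recommendation can be expressed as a short sketch. The scores are a hand-copied subset of the step table in this card; `best_step` and `step_files` are hypothetical helper names, and the returned paths are relative to the repository root as in the example above.

```python
from typing import Dict, Tuple

MODEL_NAME = "matsuo_chizuru_idolmastercinderellagirls"

# Subset of the Steps/Score columns from the table in this card.
STEP_SCORES: Dict[int, float] = {
    5100: 0.983,
    4760: 0.996,
    4420: 0.968,
    4080: 0.994,
    3400: 0.994,
    2380: 0.995,
}


def best_step(scores: Dict[int, float]) -> int:
    """Return the step with the highest score."""
    return max(scores, key=lambda step: scores[step])


def step_files(step: int, name: str = MODEL_NAME) -> Tuple[str, str]:
    """Return (embedding .pt path, LoRA .safetensors path) for a given step."""
    return (f"{step}/{name}.pt", f"{step}/{name}.safetensors")


embedding_path, lora_path = step_files(best_step(STEP_SCORES))
print(embedding_path)
print(lora_path)
```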

For the following groups, we do not recommend using this model, and we express our regret:

1. Individuals who cannot tolerate any deviation from the original character design, even in the slightest detail.

2. Individuals whose application scenarios demand high accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who find the generated image content offensive to their values.

These are available steps:

| Steps | Score | Download | pattern_1 | pattern_2 | pattern_3 | pattern_4 | pattern_5 | pattern_6 | pattern_7 | pattern_8 | pattern_9 | pattern_10 | bikini | bondage | free | maid | miko | nude | nude2 | suit | yukata |

|:---------|:----------|:------------------------------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:----------------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-----------------------------------------------|:-------------------------------------------------|:-----------------------------------------|:--------------------------------------------------|:-------------------------------------|:-------------------------------------|:-------------------------------------|:-----------------------------------------------|:------------------------------------------------|:-------------------------------------|:-----------------------------------------|

| 5100 | 0.983 | [Download](5100/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](5100/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](5100/previews/bondage.png) |  |  |  | [<NSFW, click to see>](5100/previews/nude.png) | [<NSFW, click to see>](5100/previews/nude2.png) |  |  |

| **4760** | **0.996** | [**Download**](4760/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](4760/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](4760/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4760/previews/nude.png) | [<NSFW, click to see>](4760/previews/nude2.png) |  |  |

| 4420 | 0.968 | [Download](4420/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](4420/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](4420/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4420/previews/nude.png) | [<NSFW, click to see>](4420/previews/nude2.png) |  |  |

| 4080 | 0.994 | [Download](4080/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](4080/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](4080/previews/bondage.png) |  |  |  | [<NSFW, click to see>](4080/previews/nude.png) | [<NSFW, click to see>](4080/previews/nude2.png) |  |  |

| 3740 | 0.960 | [Download](3740/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](3740/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](3740/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3740/previews/nude.png) | [<NSFW, click to see>](3740/previews/nude2.png) |  |  |

| 3400 | 0.994 | [Download](3400/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](3400/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](3400/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3400/previews/nude.png) | [<NSFW, click to see>](3400/previews/nude2.png) |  |  |

| 3060 | 0.992 | [Download](3060/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](3060/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](3060/previews/bondage.png) |  |  |  | [<NSFW, click to see>](3060/previews/nude.png) | [<NSFW, click to see>](3060/previews/nude2.png) |  |  |

| 2720 | 0.982 | [Download](2720/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](2720/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](2720/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2720/previews/nude.png) | [<NSFW, click to see>](2720/previews/nude2.png) |  |  |

| 2380 | 0.995 | [Download](2380/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](2380/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](2380/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2380/previews/nude.png) | [<NSFW, click to see>](2380/previews/nude2.png) |  |  |

| 2040 | 0.959 | [Download](2040/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](2040/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](2040/previews/bondage.png) |  |  |  | [<NSFW, click to see>](2040/previews/nude.png) | [<NSFW, click to see>](2040/previews/nude2.png) |  |  |

| 1700 | 0.961 | [Download](1700/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](1700/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](1700/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1700/previews/nude.png) | [<NSFW, click to see>](1700/previews/nude2.png) |  |  |

| 1360 | 0.924 | [Download](1360/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](1360/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](1360/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1360/previews/nude.png) | [<NSFW, click to see>](1360/previews/nude2.png) |  |  |

| 1020 | 0.872 | [Download](1020/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](1020/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](1020/previews/bondage.png) |  |  |  | [<NSFW, click to see>](1020/previews/nude.png) | [<NSFW, click to see>](1020/previews/nude2.png) |  |  |

| 680 | 0.880 | [Download](680/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](680/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](680/previews/bondage.png) |  |  |  | [<NSFW, click to see>](680/previews/nude.png) | [<NSFW, click to see>](680/previews/nude2.png) |  |  |

| 340 | 0.313 | [Download](340/matsuo_chizuru_idolmastercinderellagirls.zip) |  |  |  |  | [<NSFW, click to see>](340/previews/pattern_5.png) |  |  |  |  |  |  | [<NSFW, click to see>](340/previews/bondage.png) |  |  |  | [<NSFW, click to see>](340/previews/nude.png) | [<NSFW, click to see>](340/previews/nude2.png) |  |  |

|

badassbandit/q-FrozenLake-v1-4x4-noSlippery | badassbandit | 2023-09-20T15:06:16Z | 0 | 0 | null | [

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-09-20T15:06:14Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="badassbandit/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
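
The `mean_reward` metric in the metadata (reported as mean +/- standard deviation) is typically obtained by running the greedy policy from the Q-table for a number of episodes. The sketch below shows that evaluation loop on a toy environment. `CorridorEnv` is a made-up stand-in, not FrozenLake, and the Gymnasium-style `reset`/`step` return shapes and the list-of-lists Q-table layout are assumptions about the stored model.

```python
import statistics
from typing import List, Sequence, Tuple


class CorridorEnv:
    """Toy deterministic corridor: start at cell 0, reward 1.0 on reaching the last cell."""

    def __init__(self, length: int = 4) -> None:
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state, {}

    def step(self, action: int):
        # Action 1 moves right; any other action moves left (clamped at 0).
        self.state = max(0, self.state + (1 if action == 1 else -1))
        terminated = self.state == self.length - 1
        reward = 1.0 if terminated else 0.0
        return self.state, reward, terminated, False, {}


def greedy_action(qtable: Sequence[Sequence[float]], state: int) -> int:
    """Pick the action with the largest Q-value in the given state."""
    row = qtable[state]
    return max(range(len(row)), key=lambda action: row[action])


def evaluate_greedy(env, qtable, n_episodes: int = 10, max_steps: int = 50) -> Tuple[float, float]:
    """Return (mean, population std) of total episode reward under the greedy policy."""
    episode_rewards: List[float] = []
    for _ in range(n_episodes):
        state, _info = env.reset()
        total = 0.0
        for _ in range(max_steps):
            action = greedy_action(qtable, state)
            state, reward, terminated, truncated, _info = env.step(action)
            total += reward
            if terminated or truncated:
                break
        episode_rewards.append(total)
    return statistics.mean(episode_rewards), statistics.pstdev(episode_rewards)


# A hand-written Q-table that always prefers "move right".
toy_qtable = [[0.0, 1.0] for _ in range(4)]
mean_reward, std_reward = evaluate_greedy(CorridorEnv(), toy_qtable)
print(f"{mean_reward:.2f} +/- {std_reward:.2f}")
```

In a deterministic environment a policy that always reaches the goal yields zero variance, which is consistent with the `1.00 +/- 0.00` reported for the non-slippery FrozenLake variant.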

|

BanUrsus/rl_course_additional_challenge_vizdoom_deathmatch_bots | BanUrsus | 2023-09-20T14:56:40Z | 0 | 0 | sample-factory | [

"sample-factory",

"tensorboard",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

]

| reinforcement-learning | 2023-09-20T14:56:24Z | ---

library_name: sample-factory

tags:

- deep-reinforcement-learning

- reinforcement-learning

- sample-factory

model-index:

- name: APPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: doom_deathmatch_bots

type: doom_deathmatch_bots

metrics:

- type: mean_reward

value: 1.70 +/- 1.42

name: mean_reward

verified: false

---

An **APPO** model trained on the **doom_deathmatch_bots** environment.

This model was trained using Sample-Factory 2.0: https://github.com/alex-petrenko/sample-factory.

Documentation for how to use Sample-Factory can be found at https://www.samplefactory.dev/

## Downloading the model

After installing Sample-Factory, download the model with:

```

python -m sample_factory.huggingface.load_from_hub -r BanUrsus/rl_course_additional_challenge_vizdoom_deathmatch_bots

```

## Using the model

To run the model after download, use the `enjoy` script corresponding to this environment:

```

python -m <path.to.enjoy.module> --algo=APPO --env=doom_deathmatch_bots --train_dir=./train_dir --experiment=rl_course_additional_challenge_vizdoom_deathmatch_bots

```

You can also upload models to the Hugging Face Hub using the same script with the `--push_to_hub` flag.

See https://www.samplefactory.dev/10-huggingface/huggingface/ for more details

## Training with this model

To continue training with this model, use the `train` script corresponding to this environment:

```

python -m <path.to.train.module> --algo=APPO --env=doom_deathmatch_bots --train_dir=./train_dir --experiment=rl_course_additional_challenge_vizdoom_deathmatch_bots --restart_behavior=resume --train_for_env_steps=10000000000

```

Note that you may have to adjust `--train_for_env_steps` to a suitably high number, because the experiment resumes from the step count at which it previously stopped.

|

hosnasn/tannaz1-reza | hosnasn | 2023-09-20T14:54:02Z | 0 | 0 | null | [

"safetensors",

"text-to-image",

"stable-diffusion",

"license:creativeml-openrail-m",

"region:us"

]

| text-to-image | 2023-09-20T14:47:47Z | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

### tannaz1_reza Dreambooth model trained by hosnasn with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

csdc-atl/Baichuan2-13B-Chat-GPTQ-Int4 | csdc-atl | 2023-09-20T14:50:13Z | 170 | 2 | transformers | [

"transformers",

"safetensors",

"baichuan",

"text-generation",

"custom_code",

"en",

"zh",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"gptq",

"region:us"

]

| text-generation | 2023-09-15T18:06:52Z | ---

language:

- en

- zh

license: other

tasks:

- text-generation

---

<!-- markdownlint-disable first-line-h1 -->

<!-- markdownlint-disable html -->

# Baichuan 2 13B Chat - Int4

<!-- description start -->

## Description

This repo contains Int4 GPTQ model files for [Baichuan 2 13B Chat](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat).

<!-- description end -->

<!-- README_GPTQ.md-provided-files start -->

## GPTQ parameters

These GPTQ files were all generated with AutoGPTQ.

- Bits: 4/8

- GS: 32/128

- Act Order: True

- Damp %: 0.1

- GPTQ dataset: mixed Chinese and English dataset

- Sequence Length: 4096

| Model version | agieval | ceval | cmmlu | size | Inference speed (A100-40G) |

|---|---|---|---|---|---|

| [Baichuan2-13B-Chat](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat) | 40.25 | 56.33 | 58.44 | 27.79g | 31.55 tokens/s |

| [Baichuan2-13B-Chat-4bits](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat-4bits) | 39.01 | 56.63 | 57.81 | 9.08g | 18.45 tokens/s |

| [GPTQ-4bit-32g](https://huggingface.co/csdc-atl/Baichuan2-13B-Chat-GPTQ-Int4/tree/4bit-32g) | 38.64 | 57.18 | 57.47 | 9.87g | 27.35(hf) \ 38.28(autogptq) tokens/s |

| [GPTQ-4bit-128g](https://huggingface.co/csdc-atl/Baichuan2-13B-Chat-GPTQ-Int4/tree/main) | 38.78 | 56.42 | 57.78 | 9.14g | 28.74(hf) \ 39.24(autogptq) tokens/s |

<!-- README_GPTQ.md-provided-files end -->

## How to use this GPTQ model from Python code

### Install the required dependencies

Required: Transformers 4.32.0 or later, Optimum 1.12.0 or later, AutoGPTQ 0.4.2 or later

```shell

pip3 install "transformers>=4.32.0" "optimum>=1.12.0"

pip3 install auto-gptq --extra-index-url https://huggingface.github.io/autogptq-index/whl/cu118/ # Use cu117 if on CUDA 11.7

```

If you run into problems installing AutoGPTQ from the prebuilt pip packages, install it from source instead:

```shell

pip3 uninstall -y auto-gptq

git clone https://github.com/PanQiWei/AutoGPTQ

cd AutoGPTQ

pip3 install .

```

### Then you can use the following code

```python

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

from transformers.generation.utils import GenerationConfig

model_name_or_path = "csdc-atl/Baichuan2-13B-Chat-GPTQ-Int4"

model = AutoModelForCausalLM.from_pretrained(model_name_or_path,

torch_dtype=torch.float16,

device_map="auto",

trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, use_fast=True, trust_remote_code=True)

model.generation_config = GenerationConfig.from_pretrained("baichuan-inc/Baichuan2-13B-Chat")

messages = []

messages.append({"role": "user", "content": "解释一下“温故而知新”"})

response = model.chat(tokenizer, messages)

print(response)

# Example output:

# "温故而知新"是一句中国古代的成语,出自《论语·为政》篇。这句话的意思是:通过回顾过去,我们可以发现新的知识和理解。换句话说,学习历史和经验可以让我们更好地理解现在和未来。

# 这句话鼓励我们在学习和生活中不断地回顾和反思过去的经验,从而获得新的启示和成长。通过重温旧的知识和经历,我们可以发现新的观点和理解,从而更好地应对不断变化的世界和挑战。

```


---

<div align="center">

<h1>

Baichuan 2

</h1>

</div>

<div align="center">

<a href="https://github.com/baichuan-inc/Baichuan2" target="_blank">🦉GitHub</a> | <a href="https://github.com/baichuan-inc/Baichuan-7B/blob/main/media/wechat.jpeg?raw=true" target="_blank">💬WeChat</a>

</div>

<div align="center">

🚀 <a href="https://www.baichuan-ai.com/" target="_blank">百川大模型在线对话平台</a> 已正式向公众开放 🎉

</div>

# 目录/Table of Contents

- [📖 模型介绍/Introduction](#Introduction)

- [⚙️ 快速开始/Quick Start](#Start)

- [📊 Benchmark评估/Benchmark Evaluation](#Benchmark)

- [📜 声明与协议/Terms and Conditions](#Terms)

# <span id="Introduction">模型介绍/Introduction</span>

Baichuan 2 是[百川智能]推出的新一代开源大语言模型,采用 **2.6 万亿** Tokens 的高质量语料训练,在权威的中文和英文 benchmark

上均取得同尺寸最好的效果。本次发布包含有 7B、13B 的 Base 和 Chat 版本,并提供了 Chat 版本的 4bits

量化,所有版本不仅对学术研究完全开放,开发者也仅需[邮件申请]并获得官方商用许可后,即可以免费商用。具体发布版本和下载见下表:

Baichuan 2 is the new generation of large-scale open-source language models launched by [Baichuan Intelligence inc.](https://www.baichuan-ai.com/).

It is trained on a high-quality corpus with 2.6 trillion tokens and has achieved the best performance in authoritative Chinese and English benchmarks of the same size.

This release includes 7B and 13B versions for both Base and Chat models, along with a 4bits quantized version for the Chat model.

All versions are fully open to academic research, and developers can also use them for free in commercial applications after obtaining an official commercial license through [email request](mailto:[email protected]).

The specific release versions and download links are listed in the table below:

| | Base Model | Chat Model | 4bits Quantized Chat Model |

|:---:|:--------------------:|:--------------------:|:--------------------------:|

| 7B | [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) | [Baichuan2-7B-Chat](https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat) | [Baichuan2-7B-Chat-4bits](https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat-4bits) |

| 13B | [Baichuan2-13B-Base](https://huggingface.co/baichuan-inc/Baichuan2-13B-Base) | [Baichuan2-13B-Chat](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat) | [Baichuan2-13B-Chat-4bits](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat-4bits) |

# <span id="Start">快速开始/Quick Start</span>

在Baichuan2系列模型中,我们为了加快推理速度使用了Pytorch2.0加入的新功能F.scaled_dot_product_attention,因此模型需要在Pytorch2.0环境下运行。

In the Baichuan 2 series models, we have utilized the new feature `F.scaled_dot_product_attention` introduced in PyTorch 2.0 to accelerate inference speed. Therefore, the model needs to be run in a PyTorch 2.0 environment.

```python

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

from transformers.generation.utils import GenerationConfig

tokenizer = AutoTokenizer.from_pretrained("baichuan-inc/Baichuan2-13B-Chat", use_fast=False, trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-13B-Chat", device_map="auto", torch_dtype=torch.bfloat16, trust_remote_code=True)

model.generation_config = GenerationConfig.from_pretrained("baichuan-inc/Baichuan2-13B-Chat")

messages = []

messages.append({"role": "user", "content": "解释一下“温故而知新”"})

response = model.chat(tokenizer, messages)

print(response)

# Example output:

# "温故而知新"是一句中国古代的成语,出自《论语·为政》篇。这句话的意思是:通过回顾过去,我们可以发现新的知识和理解。换句话说,学习历史和经验可以让我们更好地理解现在和未来。

# 这句话鼓励我们在学习和生活中不断地回顾和反思过去的经验,从而获得新的启示和成长。通过重温旧的知识和经历,我们可以发现新的观点和理解,从而更好地应对不断变化的世界和挑战。

```

# <span id="Benchmark">Benchmark 结果/Benchmark Evaluation</span>

我们在[通用]、[法律]、[医疗]、[数学]、[代码]和[多语言翻译]六个领域的中英文权威数据集上对模型进行了广泛测试,更多详细测评结果可查看[GitHub]。

We have extensively tested the model on authoritative Chinese-English datasets across six domains: [General](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#general-domain), [Legal](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#law-and-medicine), [Medical](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#law-and-medicine), [Mathematics](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#mathematics-and-code), [Code](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#mathematics-and-code), and [Multilingual Translation](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#multilingual-translation). For more detailed evaluation results, please refer to [GitHub](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md).

### 7B Model Results

| | **C-Eval** | **MMLU** | **CMMLU** | **Gaokao** | **AGIEval** | **BBH** |

|:-----------------------:|:----------:|:--------:|:---------:|:----------:|:-----------:|:-------:|

| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot | 3-shot |

| **GPT-4** | 68.40 | 83.93 | 70.33 | 66.15 | 63.27 | 75.12 |

| **GPT-3.5 Turbo** | 51.10 | 68.54 | 54.06 | 47.07 | 46.13 | 61.59 |

| **LLaMA-7B** | 27.10 | 35.10 | 26.75 | 27.81 | 28.17 | 32.38 |

| **LLaMA2-7B** | 28.90 | 45.73 | 31.38 | 25.97 | 26.53 | 39.16 |

| **MPT-7B** | 27.15 | 27.93 | 26.00 | 26.54 | 24.83 | 35.20 |

| **Falcon-7B** | 24.23 | 26.03 | 25.66 | 24.24 | 24.10 | 28.77 |

| **ChatGLM2-6B** | 50.20 | 45.90 | 49.00 | 49.44 | 45.28 | 31.65 |

| **[Baichuan-7B]** | 42.80 | 42.30 | 44.02 | 36.34 | 34.44 | 32.48 |

| **[Baichuan2-7B-Base]** | 54.00 | 54.16 | 57.07 | 47.47 | 42.73 | 41.56 |

### 13B Model Results

| | **C-Eval** | **MMLU** | **CMMLU** | **Gaokao** | **AGIEval** | **BBH** |

|:---------------------------:|:----------:|:--------:|:---------:|:----------:|:-----------:|:-------:|

| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot | 3-shot |

| **GPT-4** | 68.40 | 83.93 | 70.33 | 66.15 | 63.27 | 75.12 |

| **GPT-3.5 Turbo** | 51.10 | 68.54 | 54.06 | 47.07 | 46.13 | 61.59 |

| **LLaMA-13B** | 28.50 | 46.30 | 31.15 | 28.23 | 28.22 | 37.89 |

| **LLaMA2-13B** | 35.80 | 55.09 | 37.99 | 30.83 | 32.29 | 46.98 |

| **Vicuna-13B** | 32.80 | 52.00 | 36.28 | 30.11 | 31.55 | 43.04 |

| **Chinese-Alpaca-Plus-13B** | 38.80 | 43.90 | 33.43 | 34.78 | 35.46 | 28.94 |

| **XVERSE-13B** | 53.70 | 55.21 | 58.44 | 44.69 | 42.54 | 38.06 |

| **[Baichuan-13B-Base]** | 52.40 | 51.60 | 55.30 | 49.69 | 43.20 | 43.01 |

| **[Baichuan2-13B-Base]** | 58.10 | 59.17 | 61.97 | 54.33 | 48.17 | 48.78 |

## 训练过程模型/Training Dynamics

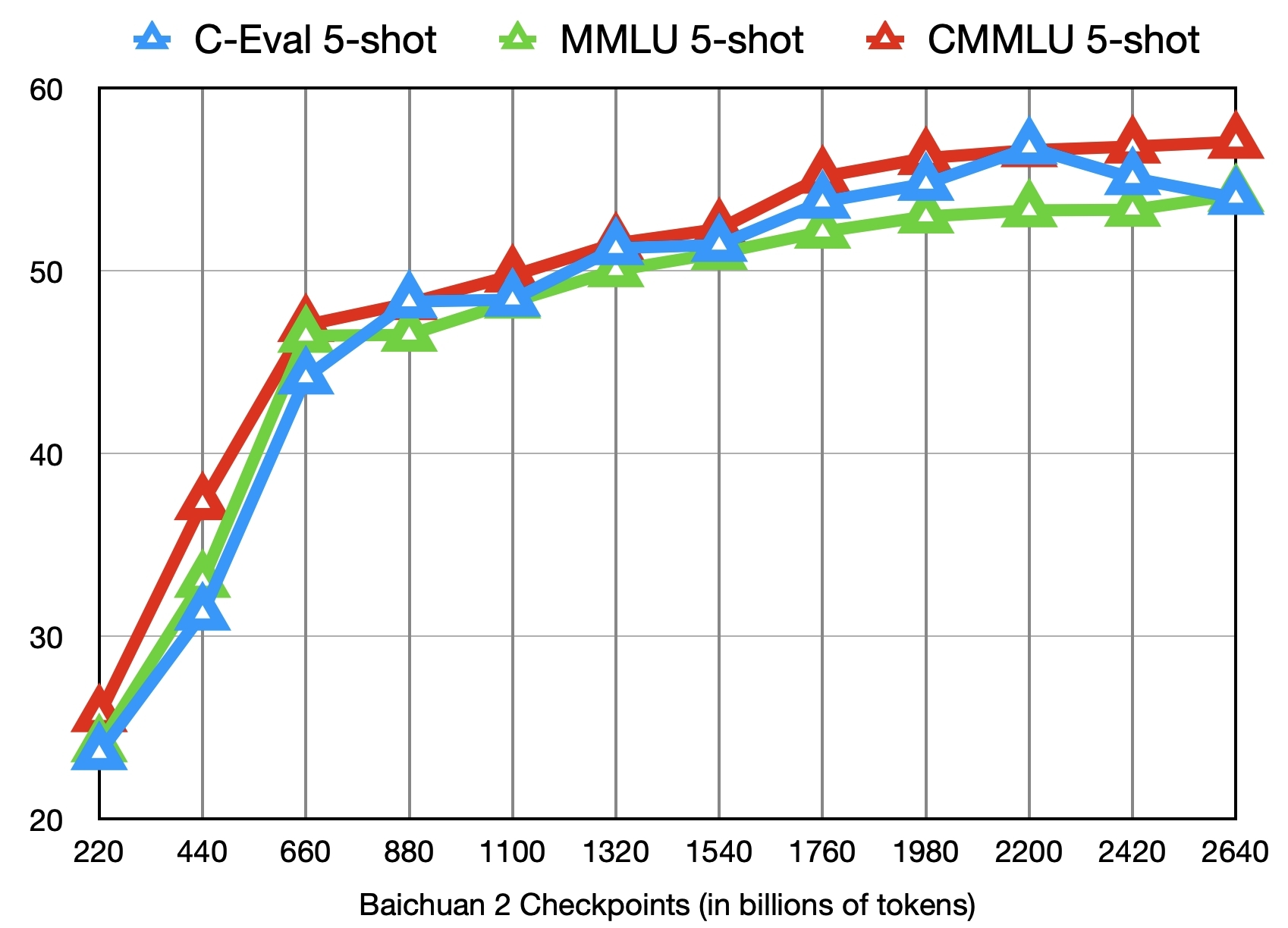

除了训练了 2.6 万亿 Tokens 的 [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) 模型,我们还提供了在此之前的另外 11 个中间过程的模型(分别对应训练了约 0.2 ~ 2.4 万亿 Tokens)供社区研究使用

([训练过程checkpoint下载](https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints))。下图给出了这些 checkpoints 在 C-Eval、MMLU、CMMLU 三个 benchmark 上的效果变化:

In addition to the [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) model trained on 2.6 trillion tokens, we also offer 11 additional intermediate-stage models for community research, corresponding to training on approximately 0.2 to 2.4 trillion tokens each ([Intermediate Checkpoints Download](https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints)). The graph below shows the performance changes of these checkpoints on three benchmarks: C-Eval, MMLU, and CMMLU.

# <span id="Terms">声明与协议/Terms and Conditions</span>

## 声明

我们在此声明,我们的开发团队并未基于 Baichuan 2 模型开发任何应用,无论是在 iOS、Android、网页或任何其他平台。我们强烈呼吁所有使用者,不要利用

Baichuan 2 模型进行任何危害国家社会安全或违法的活动。另外,我们也要求使用者不要将 Baichuan 2

模型用于未经适当安全审查和备案的互联网服务。我们希望所有的使用者都能遵守这个原则,确保科技的发展能在规范和合法的环境下进行。

我们已经尽我们所能,来确保模型训练过程中使用的数据的合规性。然而,尽管我们已经做出了巨大的努力,但由于模型和数据的复杂性,仍有可能存在一些无法预见的问题。因此,如果由于使用

Baichuan 2 开源模型而导致的任何问题,包括但不限于数据安全问题、公共舆论风险,或模型被误导、滥用、传播或不当利用所带来的任何风险和问题,我们将不承担任何责任。

We hereby declare that our team has not developed any applications based on Baichuan 2 models, not on iOS, Android, the web, or any other platform. We strongly call on all users not to use Baichuan 2 models for any activities that harm national / social security or violate the law. Also, we ask users not to use Baichuan 2 models for Internet services that have not undergone appropriate security reviews and filings. We hope that all users can abide by this principle and ensure that the development of technology proceeds in a regulated and legal environment.

We have done our best to ensure the compliance of the data used in the model training process. However, despite our considerable efforts, there may still be some unforeseeable issues due to the complexity of the model and data. Therefore, if any problems arise due to the use of Baichuan 2 open-source models, including but not limited to data security issues, public opinion risks, or any risks and problems brought about by the model being misled, abused, spread or improperly exploited, we will not assume any responsibility.

## 协议

Baichuan 2 模型的社区使用需遵循[《Baichuan 2 模型社区许可协议》]。Baichuan 2 支持商用。如果将 Baichuan 2 模型或其衍生品用作商业用途,请您按照如下方式联系许可方,以进行登记并向许可方申请书面授权:联系邮箱 [[email protected]]。

The use of the source code in this repository follows the open-source license Apache 2.0. Community use of the Baichuan 2 model must adhere to the [Community License for Baichuan 2 Model](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/blob/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf). Baichuan 2 supports commercial use. If you are using the Baichuan 2 models or their derivatives for commercial purposes, please contact the licensor in the following manner for registration and to apply for written authorization: Email [email protected].

[GitHub]:https://github.com/baichuan-inc/Baichuan2

[Baichuan2]:https://github.com/baichuan-inc/Baichuan2

[Baichuan-7B]:https://huggingface.co/baichuan-inc/Baichuan-7B

[Baichuan2-7B-Base]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Base

[Baichuan2-7B-Chat]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat

[Baichuan2-7B-Chat-4bits]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat-4bits

[Baichuan-13B-Base]:https://huggingface.co/baichuan-inc/Baichuan-13B-Base

[Baichuan2-13B-Base]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Base

[Baichuan2-13B-Chat]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat

[Baichuan2-13B-Chat-4bits]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat-4bits

[通用]:https://github.com/baichuan-inc/Baichuan2#%E9%80%9A%E7%94%A8%E9%A2%86%E5%9F%9F

[法律]:https://github.com/baichuan-inc/Baichuan2#%E6%B3%95%E5%BE%8B%E5%8C%BB%E7%96%97

[医疗]:https://github.com/baichuan-inc/Baichuan2#%E6%B3%95%E5%BE%8B%E5%8C%BB%E7%96%97

[数学]:https://github.com/baichuan-inc/Baichuan2#%E6%95%B0%E5%AD%A6%E4%BB%A3%E7%A0%81

[代码]:https://github.com/baichuan-inc/Baichuan2#%E6%95%B0%E5%AD%A6%E4%BB%A3%E7%A0%81

[多语言翻译]:https://github.com/baichuan-inc/Baichuan2#%E5%A4%9A%E8%AF%AD%E8%A8%80%E7%BF%BB%E8%AF%91

[《Baichuan 2 模型社区许可协议》]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/blob/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf

[邮件申请]: mailto:[email protected]

[Email]: mailto:[email protected]

[[email protected]]: mailto:[email protected]

[训练过程checkpoint下载]: https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints

[百川智能]: https://www.baichuan-ai.com

|

OpenDILabCommunity/Lunarlander-v2-C51 | OpenDILabCommunity | 2023-09-20T14:49:22Z | 0 | 0 | pytorch | [

"pytorch",

"deep-reinforcement-learning",

"reinforcement-learning",

"DI-engine",

"LunarLander-v2",

"en",

"license:apache-2.0",

"region:us"

]

| reinforcement-learning | 2023-04-15T12:48:19Z | ---

language: en

license: apache-2.0

library_name: pytorch

tags:

- deep-reinforcement-learning

- reinforcement-learning

- DI-engine

- LunarLander-v2

benchmark_name: OpenAI/Gym/Box2d

task_name: LunarLander-v2

pipeline_tag: reinforcement-learning

model-index:

- name: C51

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: OpenAI/Gym/Box2d-LunarLander-v2

type: OpenAI/Gym/Box2d-LunarLander-v2

metrics:

- type: mean_reward

value: 211.75 +/- 40.32

name: mean_reward

---

# Play **LunarLander-v2** with **C51** Policy

## Model Description


This is a simple **C51** implementation for OpenAI/Gym/Box2d **LunarLander-v2** using the [DI-engine library](https://github.com/opendilab/di-engine) and the [DI-zoo](https://github.com/opendilab/DI-engine/tree/main/dizoo).

**DI-engine** is a Python library for solving general decision intelligence problems, built on reinforcement learning framework implementations in PyTorch or JAX. The library aims to standardize the reinforcement learning framework across different algorithms, benchmarks, and environments, and to support both academic research and prototype applications. Custom training pipelines and applications are also supported by reusing the different abstraction levels of the DI-engine reinforcement learning framework.

## Model Usage

### Install the Dependencies

<details close>

<summary>(Click for Details)</summary>

```shell

# install huggingface_ding

git clone https://github.com/opendilab/huggingface_ding.git

pip3 install -e ./huggingface_ding/

# install environment dependencies if needed

pip3 install DI-engine[common_env]

```

</details>

### Git Clone from Huggingface and Run the Model

<details close>

<summary>(Click for Details)</summary>

```shell

# running with trained model

python3 -u run.py

```

**run.py**

```python

from ding.bonus import C51Agent

from ding.config import Config

from easydict import EasyDict

import torch

# Load the model from files git-cloned from Hugging Face

policy_state_dict = torch.load("pytorch_model.bin", map_location=torch.device("cpu"))

cfg = EasyDict(Config.file_to_dict("policy_config.py").cfg_dict)

# Instantiate the agent

agent = C51Agent(

env_id="LunarLander-v2", exp_name="LunarLander-v2-C51", cfg=cfg.exp_config, policy_state_dict=policy_state_dict

)

# Continue training

agent.train(step=5000)

# Render the new agent performance

agent.deploy(enable_save_replay=True)

```

</details>

### Run Model by Using Huggingface_ding

<details close>

<summary>(Click for Details)</summary>

```shell

# running with trained model

python3 -u run.py

```

**run.py**

```python

from ding.bonus import C51Agent

from huggingface_ding import pull_model_from_hub

# Pull model from Hugging Face hub

policy_state_dict, cfg = pull_model_from_hub(repo_id="OpenDILabCommunity/LunarLander-v2-C51")

# Instantiate the agent

agent = C51Agent(

env_id="LunarLander-v2", exp_name="LunarLander-v2-C51", cfg=cfg.exp_config, policy_state_dict=policy_state_dict

)

# Continue training

agent.train(step=5000)

# Render the new agent performance

agent.deploy(enable_save_replay=True)

```

</details>

## Model Training

### Train the Model and Push to Huggingface_hub

<details close>

<summary>(Click for Details)</summary>

```shell

# Train your own agent

python3 -u train.py

```

**train.py**

```python

from ding.bonus import C51Agent

from huggingface_ding import push_model_to_hub

# Instantiate the agent

agent = C51Agent(env_id="LunarLander-v2", exp_name="LunarLander-v2-C51")

# Train the agent

return_ = agent.train(step=int(4000000), collector_env_num=8, evaluator_env_num=8, debug=False)

# Push model to huggingface hub

push_model_to_hub(

agent=agent.best,

env_name="OpenAI/Gym/Box2d",

task_name="LunarLander-v2",

algo_name="C51",

wandb_url=return_.wandb_url,

github_repo_url="https://github.com/opendilab/DI-engine",

github_doc_model_url="https://di-engine-docs.readthedocs.io/en/latest/12_policies/c51.html",

github_doc_env_url="https://di-engine-docs.readthedocs.io/en/latest/13_envs/lunarlander.html",

installation_guide="pip3 install DI-engine[common_env]",

usage_file_by_git_clone="./c51/lunarlander_c51_deploy.py",

usage_file_by_huggingface_ding="./c51/lunarlander_c51_download.py",

train_file="./c51/lunarlander_c51.py",

repo_id="OpenDILabCommunity/LunarLander-v2-C51",

create_repo=False

)

```

</details>

**Configuration**

<details close>

<summary>(Click for Details)</summary>

```python

exp_config = {

'env': {

'manager': {

'episode_num': float("inf"),

'max_retry': 1,

'retry_type': 'reset',

'auto_reset': True,

'step_timeout': None,

'reset_timeout': None,

'retry_waiting_time': 0.1,

'cfg_type': 'BaseEnvManagerDict'

},

'stop_value': 260,

'n_evaluator_episode': 8,

'collector_env_num': 8,

'evaluator_env_num': 8,

'env_id': 'LunarLander-v2'

},

'policy': {

'model': {

'encoder_hidden_size_list': [512, 64],

'v_min': -30,

'v_max': 30,

'n_atom': 51,

'obs_shape': 8,

'action_shape': 4

},

'learn': {

'learner': {

'train_iterations': 1000000000,

'dataloader': {

'num_workers': 0

},

'log_policy': True,

'hook': {

'load_ckpt_before_run': '',

'log_show_after_iter': 100,

'save_ckpt_after_iter': 10000,

'save_ckpt_after_run': True

},

'cfg_type': 'BaseLearnerDict'

},

'update_per_collect': 10,

'batch_size': 64,

'learning_rate': 0.001,

'target_update_freq': 100,

'target_theta': 0.005,

'ignore_done': False

},

'collect': {

'collector': {},

'n_sample': 64,

'unroll_len': 1

},

'eval': {

'evaluator': {

'eval_freq': 1000,

'render': {

'render_freq': -1,

'mode': 'train_iter'

},

'figure_path': None,

'cfg_type': 'InteractionSerialEvaluatorDict',

'stop_value': 260,

'n_episode': 8

}

},

'other': {

'replay_buffer': {

'replay_buffer_size': 100000

},

'eps': {

'type': 'exp',

'start': 0.95,

'end': 0.1,

'decay': 50000

}

},

'on_policy': False,

'cuda': False,

'multi_gpu': False,

'bp_update_sync': True,

'traj_len_inf': False,

'type': 'c51',

'priority': False,

'priority_IS_weight': False,

'discount_factor': 0.99,

'nstep': 3,

'cfg_type': 'C51PolicyDict'

},

'exp_name': 'LunarLander-v2-C51',

'seed': 0,

'wandb_logger': {

'gradient_logger': True,

'video_logger': True,

'plot_logger': True,

'action_logger': True,

'return_logger': False

}

}

```

</details>
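To make two of the settings above more concrete, here is a minimal sketch in plain Python of what `v_min`, `v_max`, `n_atom` and the `eps` block imply numerically. It is not DI-engine code: the helper names (`c51_support`, `expected_q`, `exp_epsilon`) are hypothetical, and the closed form used for the `'exp'` epsilon schedule is an assumption about how the decay is applied rather than something stated in this card.

```python
import math

# Values copied from the configuration above.
V_MIN, V_MAX, N_ATOM = -30.0, 30.0, 51
EPS_START, EPS_END, EPS_DECAY = 0.95, 0.1, 50000


def c51_support(v_min=V_MIN, v_max=V_MAX, n_atom=N_ATOM):
    """Return the n_atom evenly spaced return values (atoms) in [v_min, v_max]."""
    return [v_min + (v_max - v_min) * i / (n_atom - 1) for i in range(n_atom)]


def expected_q(probs, support):
    """Collapse a categorical return distribution into a scalar Q-value."""
    return sum(p * z for p, z in zip(probs, support))


def exp_epsilon(step, start=EPS_START, end=EPS_END, decay=EPS_DECAY):
    """Assumed form of the 'exp' schedule: decay from start towards end."""
    return (start - end) * math.exp(-step / decay) + end


support = c51_support()
uniform = [1.0 / N_ATOM] * N_ATOM
print(support[1] - support[0])       # spacing between neighbouring atoms
print(expected_q(uniform, support))  # Q-value of a uniform distribution
print(exp_epsilon(0), exp_epsilon(EPS_DECAY))
```

In C51 the network outputs one probability vector over these atoms per action, and the greedy action is the one whose distribution has the largest expected value, which is what `expected_q` computes for a single action.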

**Training Procedure**

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

- **Weights & Biases (wandb):** [monitor link](https://wandb.ai/zjowowen/Lunarlander-v2-C51)

## Model Information

<!-- Provide the basic links for the model. -->

- **Github Repository:** [repo link](https://github.com/opendilab/DI-engine)

- **Doc**: [DI-engine-docs Algorithm link](https://di-engine-docs.readthedocs.io/en/latest/12_policies/c51.html)

- **Configuration:** [config link](https://huggingface.co/OpenDILabCommunity/LunarLander-v2-C51/blob/main/policy_config.py)

- **Demo:** [video](https://huggingface.co/OpenDILabCommunity/LunarLander-v2-C51/blob/main/replay.mp4)

<!-- Provide the size information for the model. -->

- **Parameters total size:** 214.3 KB

- **Last Update Date:** 2023-09-20

## Environments

<!-- Address questions around what environment the model is intended to be trained and deployed at, including the necessary information needed to be provided for future users. -->

- **Benchmark:** OpenAI/Gym/Box2d

- **Task:** LunarLander-v2

- **Gym version:** 0.25.1

- **DI-engine version:** v0.4.9

- **PyTorch version:** 2.0.1+cu117

- **Doc**: [DI-engine-docs Environments link](https://di-engine-docs.readthedocs.io/en/latest/13_envs/lunarlander.html)

|

napatswift/mt5-fixpdftext | napatswift | 2023-09-20T14:33:34Z | 103 | 0 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"th",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2023-09-14T08:23:49Z | ---

language:

- th

pipeline_tag: text2text-generation

widget:

- text: "Fix the following corrupted text: \"เจาหนาที่รับผิดชอบในการเขาไปเยียวยา โดยเจาพนักงานเจาหนาที่ตามกฎหมาย\""

--- |

Parkhat/llama2-qlora-finetunined-kg-sql | Parkhat | 2023-09-20T14:32:52Z | 0 | 0 | peft | [

"peft",

"region:us"

]

| null | 2023-09-20T14:32:31Z | ---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16
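
The settings listed above map onto the keyword arguments of `transformers.BitsAndBytesConfig`. The sketch below shows one way to rebuild that object, assuming `torch`, `transformers` and `bitsandbytes` are installed. `QUANT_KWARGS` and `build_quant_config` are hypothetical names introduced for illustration, and `quant_method` is left out because it is not a constructor argument.

```python
# Quantization settings copied from the list above.
QUANT_KWARGS = {
    "load_in_8bit": False,
    "load_in_4bit": True,
    "llm_int8_threshold": 6.0,
    "llm_int8_skip_modules": None,
    "llm_int8_enable_fp32_cpu_offload": False,
    "llm_int8_has_fp16_weight": False,
    "bnb_4bit_quant_type": "nf4",
    "bnb_4bit_use_double_quant": False,
    "bnb_4bit_compute_dtype": "float16",
}


def build_quant_config(kwargs=QUANT_KWARGS):
    """Build a BitsAndBytesConfig; heavy imports are deferred until called."""
    import torch
    from transformers import BitsAndBytesConfig

    resolved = dict(kwargs)
    # Turn the dtype name into the actual torch dtype (torch.float16 here).
    resolved["bnb_4bit_compute_dtype"] = getattr(torch, kwargs["bnb_4bit_compute_dtype"])
    return BitsAndBytesConfig(**resolved)
```

The resulting object would typically be passed as `quantization_config=` when loading the base model with `from_pretrained`. This card does not name the base model, so that step is left out here.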

### Framework versions

- PEFT 0.6.0.dev0

|

jbrinkw/fp1.1 | jbrinkw | 2023-09-20T14:27:23Z | 103 | 0 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"base_model:google-t5/t5-small",

"base_model:finetune:google-t5/t5-small",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

]

| text2text-generation | 2023-09-20T14:11:47Z | ---

license: apache-2.0

base_model: t5-small

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: fp1.1