modelId | sha | lastModified | tags | pipeline_tag | private | author | config | id | downloads | likes | library_name | __index_level_0__ | readme |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

studio-ousia/mluke-large | 8dac253911d21efd45ece207b11e079694b02241 | 2022-03-11T02:58:11.000Z | [

"pytorch",

"luke",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | studio-ousia | null | studio-ousia/mluke-large | 21 | null | transformers | 8,200 | Entry not found |

transformersbook/xlm-roberta-base-finetuned-panx-en | 5a56d079034f5f2ed6d6c13d9d4c6aa99353cd67 | 2022-02-05T17:07:09.000Z | [

"pytorch",

"xlm-roberta",

"token-classification",

"dataset:xtreme",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index",

"autotrain_compatible"

] | token-classification | false | transformersbook | null | transformersbook/xlm-roberta-base-finetuned-panx-en | 21 | null | transformers | 8,201 | ---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-en

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.en

metrics:

- name: F1

type: f1

value: 0.69816564758199

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-en

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the PAN-X dataset. The model is trained in Chapter 4: Multilingual Named Entity Recognition in the [NLP with Transformers book](https://learning.oreilly.com/library/view/natural-language-processing/9781098103231/). You can find the full code in the accompanying [Github repository](https://github.com/nlp-with-transformers/notebooks/blob/main/04_multilingual-ner.ipynb).

It achieves the following results on the evaluation set:

- Loss: 0.3676

- F1: 0.6982

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 1.026 | 1.0 | 50 | 0.5734 | 0.4901 |

| 0.4913 | 2.0 | 100 | 0.3870 | 0.6696 |

| 0.3734 | 3.0 | 150 | 0.3676 | 0.6982 |
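The `linear` scheduler type listed above can be illustrated with a short, dependency-free sketch. It assumes no warmup (none is listed for this run) and takes the 150 total steps from the results table; `linear_lr` is a hypothetical helper written for illustration, not the Trainer's own code.

```python
def linear_lr(step: int, base_lr: float = 5e-5, total_steps: int = 150,
              warmup_steps: int = 0) -> float:
    """Learning rate after `step` updates: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        # Ramp up from 0 to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly so that the rate reaches 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the end of each epoch reported in the table (steps 50, 100, 150).
for step in (0, 50, 100, 150):
    print(step, linear_lr(step))
```

Under these assumptions the rate falls to roughly two thirds of its initial value after the first epoch and reaches zero at the final step.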

### Framework versions

- Transformers 4.12.0.dev0

- Pytorch 1.9.1+cu102

- Datasets 1.12.1

- Tokenizers 0.10.3

|

uclanlp/plbart-multi_task-all | 594e7236fb071ce3cece96a23904e910cbd7acef | 2022-03-02T07:44:43.000Z | [

"pytorch",

"plbart",

"text2text-generation",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | uclanlp | null | uclanlp/plbart-multi_task-all | 21 | null | transformers | 8,202 | Entry not found |

vblagoje/bert-english-uncased-finetuned-chunk | c37c9e8262d61fb10c7a666398364c6574fee55d | 2021-05-20T08:50:30.000Z | [

"pytorch",

"jax",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | vblagoje | null | vblagoje/bert-english-uncased-finetuned-chunk | 21 | 1 | transformers | 8,203 | Entry not found |

zhuqing/bert-base-uncased-reddit-business | baa31194685995a4deffbf88f1d9e0927bbfdf21 | 2021-08-01T16:42:35.000Z | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | zhuqing | null | zhuqing/bert-base-uncased-reddit-business | 21 | null | transformers | 8,204 | Entry not found |

satkinson/DialoGPT-medium-marvin | a47dac172a722a9887949ce2d6508ef8dfc35850 | 2022-04-26T22:13:53.000Z | [

"pytorch",

"gpt2",

"text-generation",

"en",

"transformers",

"conversational"

] | conversational | false | satkinson | null | satkinson/DialoGPT-medium-marvin | 21 | 0 | transformers | 8,205 | ---

language:

- en

thumbnail:

tags:

- conversational

metrics:

- perplexity

---

## DialoGPT model fine-tuned using Amazon's Topical Chat Dataset

This model is fine-tuned from the original [DialoGPT-medium](https://huggingface.co/microsoft/DialoGPT-medium).

This model was fine-tuned on a subset of messages from [Amazon's Topical Chat dataset](https://www.kaggle.com/arnavsharmaas/chatbot-dataset-topical-chat). Due to processing limitations, I restricted my training data to 50,000 observations.

The dataset spans 8 broad topics and contains conversation partners who do not have defined roles. It was created with the goal of [aiding in the effort to build a socialbot that can have deep, engaging open-domain conversations with humans](https://m.media-amazon.com/images/G/01/amazon.jobs/3079_Paper._CB1565131710_.pdf).

The eight broad topics are:

- fashion

- politics

- books

- sports

- general entertainment

- music

- science and technology

- movies

<br><br>

<b>Sample dialogue:</b>

|Role | Response |

|---------|--------|

|User: | What kind of movies do you like? |

| Bot: | I like marvel movies like iron man |

|User: | Do you like horror films? |

| Bot: | Yea, I like the horror films that have macabre and supernatural themes the most |

|User: | Interesting. |

| Bot: | If you watch a horror movie before you view abstract art, you will enjoy the art more |

|User: | Speaking from experience? |

| Bot: | Yes, I've seen many horror movies where the characters were quite evil and supernatural. |

## Using the model

Example code for trying out the model (taken directly from the [DialoGPT-medium](https://huggingface.co/microsoft/DialoGPT-medium) model card):

```python

from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

# Load this card's checkpoint (AutoModelWithLMHead is deprecated in favor of AutoModelForCausalLM)
tokenizer = AutoTokenizer.from_pretrained("satkinson/DialoGPT-medium-marvin")
model = AutoModelForCausalLM.from_pretrained("satkinson/DialoGPT-medium-marvin")

# Let's chat for 5 lines
for step in range(5):
    # encode the new user input, add the eos_token and return a PyTorch tensor
    new_user_input_ids = tokenizer.encode(input(">> User:") + tokenizer.eos_token, return_tensors='pt')

    # append the new user input tokens to the chat history
    bot_input_ids = torch.cat([chat_history_ids, new_user_input_ids], dim=-1) if step > 0 else new_user_input_ids

    # generate a response while limiting the total chat history to 1000 tokens
    chat_history_ids = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id)

    # pretty-print the last output tokens from the bot
    print("DialoGPT: {}".format(tokenizer.decode(chat_history_ids[:, bot_input_ids.shape[-1]:][0], skip_special_tokens=True)))

``` |

batterydata/batteryonlybert-uncased-squad-v1 | 93cc3ccc9d86de6aebed236d67bf394f965733e7 | 2022-03-03T20:25:01.000Z | [

"pytorch",

"bert",

"question-answering",

"en",

"dataset:squad",

"dataset:batterydata/battery-device-data-qa",

"transformers",

"question answering",

"license:apache-2.0",

"autotrain_compatible"

] | question-answering | false | batterydata | null | batterydata/batteryonlybert-uncased-squad-v1 | 21 | null | transformers | 8,206 | ---

language: en

tags: question answering

license: apache-2.0

datasets:

- squad

- batterydata/battery-device-data-qa

metrics: squad

---

# BatteryOnlyBERT-uncased for QA

**Language model:** batteryonlybert-uncased

**Language:** English

**Downstream-task:** Extractive QA

**Training data:** SQuAD v1

**Eval data:** SQuAD v1

**Code:** See [example](https://github.com/ShuHuang/batterybert)

**Infrastructure**: 8x DGX A100

## Hyperparameters

```

batch_size = 16

n_epochs = 2

base_LM_model = "batteryonlybert-uncased"

max_seq_len = 386

learning_rate = 2e-5

doc_stride=128

max_query_length=64

```

## Performance

Evaluated on the SQuAD v1.0 dev set.

```

"exact": 79.53,

"f1": 87.22,

```

Evaluated on the battery device dataset.

```

"precision": 67.20,

"recall": 83.82,

```
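For readers unfamiliar with the `exact` and `f1` figures, the sketch below shows how SQuAD-style exact match and token-level F1 are commonly computed for a single prediction. It is an illustrative reimplementation under stated assumptions (English article stripping, whitespace tokenization), not the evaluation code used for this model; the function names are hypothetical. Reported scores are usually these per-example values averaged over the dev set and multiplied by 100.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The LiPF6 solution", "LiPF6 solution"))
print(token_f1("a solution of LiPF6", "LiPF6"))
```

The card does not say how precision and recall on the battery device dataset were computed, so this sketch should not be read as reproducing those numbers.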

## Usage

### In Transformers

```python

from transformers import AutoModelForQuestionAnswering, AutoTokenizer, pipeline

model_name = "batterydata/batteryonlybert-uncased-squad-v1"

# a) Get predictions

nlp = pipeline('question-answering', model=model_name, tokenizer=model_name)

QA_input = {
    'question': 'What is the electrolyte?',
    'context': 'The typical non-aqueous electrolyte for commercial Li-ion cells is a solution of LiPF6 in linear and cyclic carbonates.'
}

res = nlp(QA_input)

# b) Load model & tokenizer

model = AutoModelForQuestionAnswering.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

```

## Authors

Shu Huang: `sh2009 [at] cam.ac.uk`

Jacqueline Cole: `jmc61 [at] cam.ac.uk`

## Citation

BatteryBERT: A Pre-trained Language Model for Battery Database Enhancement |

jkhan447/sentiment-model-sample | 8ec90b8e897075fecb389cc017123cdd5f176eee | 2022-03-04T11:13:39.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"dataset:imdb",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-classification | false | jkhan447 | null | jkhan447/sentiment-model-sample | 21 | null | transformers | 8,207 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

model-index:

- name: sentiment-model-sample

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.93948

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sentiment-model-sample

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5280

- Accuracy: 0.9395

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 1.18.3

- Tokenizers 0.11.6

|

Visual-Attention-Network/van-large | 98b609818338396dc9a3d09f5c31de94b0eb50fe | 2022-03-31T12:45:46.000Z | [

"pytorch",

"van",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2202.09741",

"transformers",

"vision",

"license:apache-2.0"

] | image-classification | false | Visual-Attention-Network | null | Visual-Attention-Network/van-large | 21 | null | transformers | 8,208 | ---

license: apache-2.0

tags:

- vision

- image-classification

datasets:

- imagenet-1k

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# Van

Van model trained on imagenet-1k. It was introduced in the paper [Visual Attention Network](https://arxiv.org/abs/2202.09741) and first released in [this repository](https://github.com/Visual-Attention-Network/VAN-Classification).

Disclaimer: The team releasing Van did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

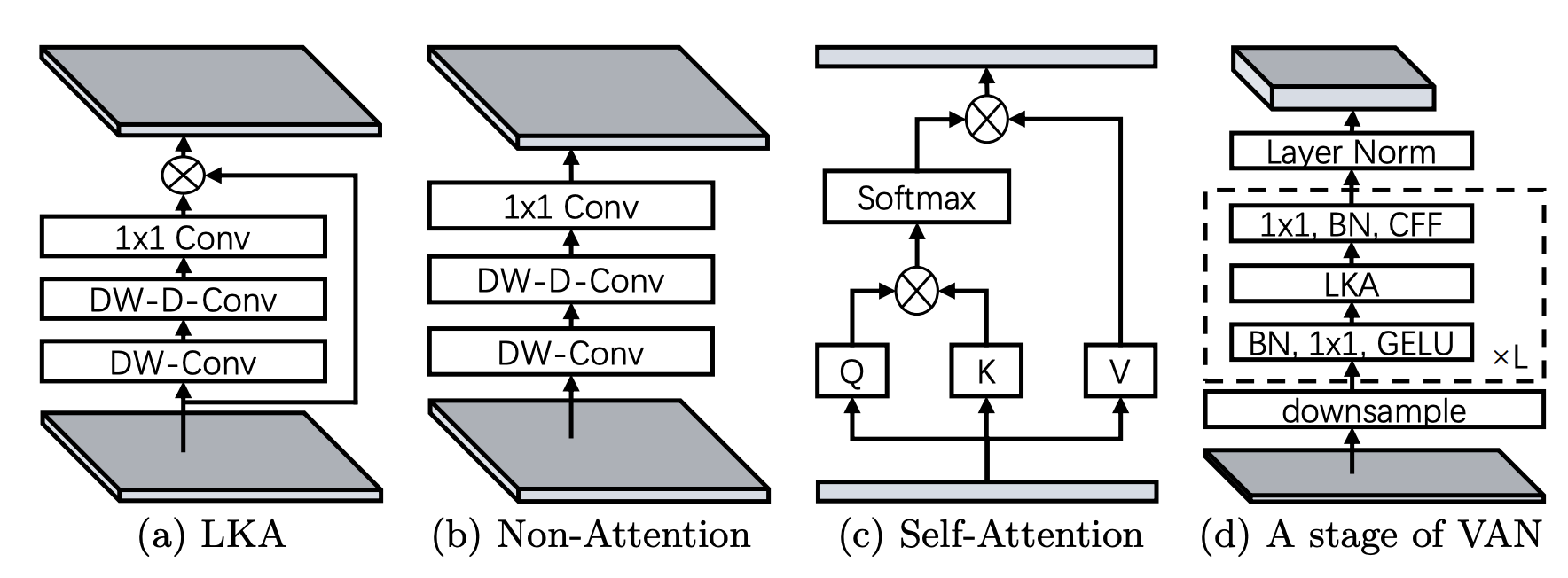

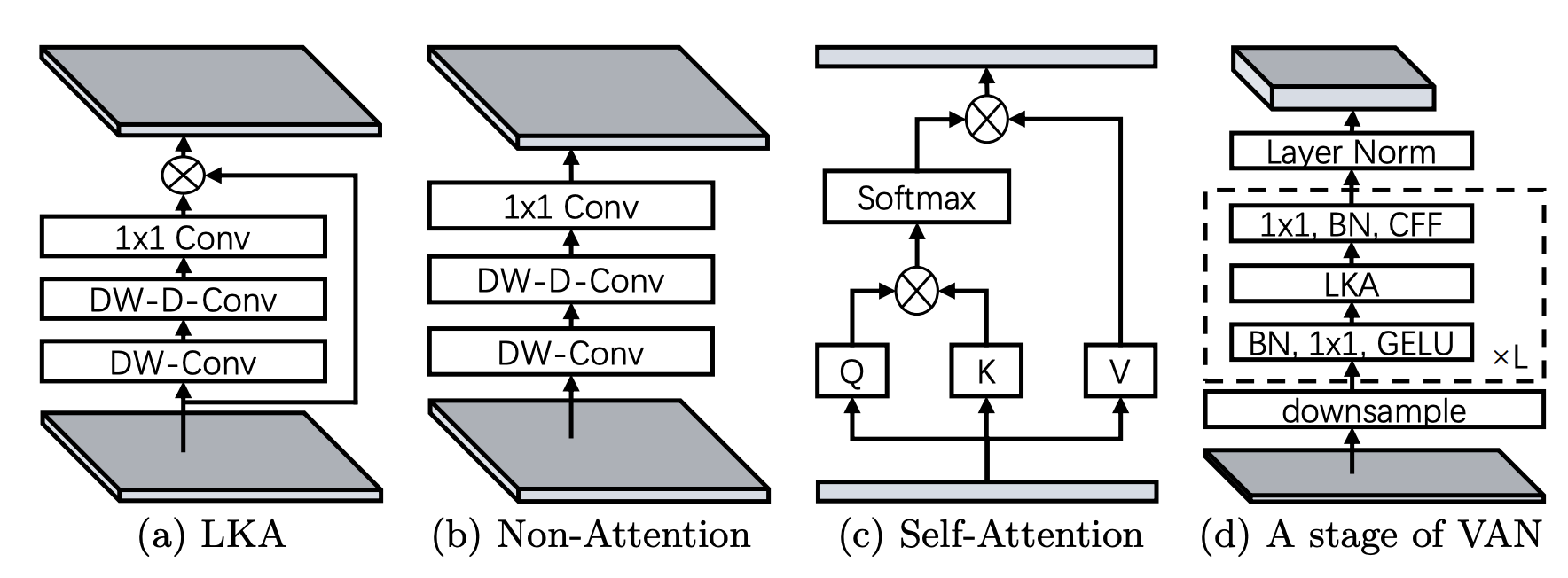

This paper introduces a new attention layer based on convolution operations able to capture both local and distant relationships. This is done by combining normal and large kernel convolution layers. The latter uses a dilated convolution to capture distant correlations.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=van) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model:

```python

>>> from transformers import AutoFeatureExtractor, VanForImageClassification

>>> import torch

>>> from datasets import load_dataset

>>> dataset = load_dataset("huggingface/cats-image")

>>> image = dataset["test"]["image"][0]

>>> feature_extractor = AutoFeatureExtractor.from_pretrained("Visual-Attention-Network/van-base")

>>> model = VanForImageClassification.from_pretrained("Visual-Attention-Network/van-base")

>>> inputs = feature_extractor(image, return_tensors="pt")

>>> with torch.no_grad():
...     logits = model(**inputs).logits

>>> # model predicts one of the 1000 ImageNet classes

>>> predicted_label = logits.argmax(-1).item()

>>> print(model.config.id2label[predicted_label])

tabby, tabby cat

```

For more code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/master/en/model_doc/van). |

leftthomas/resnet50 | 19128d842d5b7589cbf02b5002d70f9c65586796 | 2022-03-11T12:53:14.000Z | [

"pytorch",

"resnet",

"dataset:imagenet",

"arxiv:1512.03385",

"transformers",

"image-classification",

"license:afl-3.0"

] | image-classification | false | leftthomas | null | leftthomas/resnet50 | 21 | null | transformers | 8,209 | ---

tags:

- image-classification

- resnet

license: afl-3.0

datasets:

- imagenet

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# ResNet-50

Pretrained model on [ImageNet](http://www.image-net.org/). The ResNet architecture was introduced in

[this paper](https://arxiv.org/abs/1512.03385).

## Intended uses

You can use the raw model to classify images along the 1,000 ImageNet labels, but you can also change its head

to fine-tune it on a downstream task (another classification task with different labels, image segmentation or

object detection, to name a few).

## Evaluation results

This model has a top-1 accuracy of 76.13% and a top-5 accuracy of 92.86% on the ImageNet evaluation set.
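As a reminder of what the two metrics mean, here is a minimal sketch of top-k accuracy on toy scores. The scores and labels are made up for illustration and `top_k_accuracy` is a hypothetical helper, not part of any evaluation toolkit used for this model.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of examples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k classes with the largest scores for this example.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


# Toy per-class scores for four examples and three classes (illustrative only).
scores = [
    [0.1, 0.7, 0.2],
    [0.5, 0.3, 0.2],
    [0.2, 0.3, 0.5],
    [0.6, 0.3, 0.1],
]
labels = [1, 1, 2, 2]

print(top_k_accuracy(scores, labels, k=1))
print(top_k_accuracy(scores, labels, k=2))
```

Top-5 accuracy is always at least as high as top-1, since any correct top-1 prediction is also within the top five.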

|

navteca/nli-deberta-v3-xsmall | 90986fb464069d701ba1104a9e1b9bdfe7c3c41c | 2022-03-16T09:49:34.000Z | [

"pytorch",

"deberta-v2",

"text-classification",

"en",

"dataset:multi_nli",

"dataset:snli",

"transformers",

"microsoft/deberta-v3-xsmall",

"license:apache-2.0",

"zero-shot-classification"

] | zero-shot-classification | false | navteca | null | navteca/nli-deberta-v3-xsmall | 21 | 1 | transformers | 8,210 | ---

datasets:

- multi_nli

- snli

language: en

license: apache-2.0

metrics:

- accuracy

pipeline_tag: zero-shot-classification

tags:

- microsoft/deberta-v3-xsmall

---

# Cross-Encoder for Natural Language Inference

This model was trained using the [SentenceTransformers](https://sbert.net) [Cross-Encoder](https://www.sbert.net/examples/applications/cross-encoder/README.html) class. It is based on [microsoft/deberta-v3-xsmall](https://huggingface.co/microsoft/deberta-v3-xsmall).

## Training Data

The model was trained on the [SNLI](https://nlp.stanford.edu/projects/snli/) and [MultiNLI](https://cims.nyu.edu/~sbowman/multinli/) datasets. For a given sentence pair, it will output three scores corresponding to the labels: contradiction, entailment, neutral.
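To make the three-score output concrete, the following sketch turns one triple of scores into a label and a probability. It assumes the scores are unnormalized logits in the order stated above; the example logits are invented for illustration, and `softmax` and `predict_label` are hypothetical helpers rather than library functions.

```python
import math

# Label order as stated in the card.
LABELS = ["contradiction", "entailment", "neutral"]


def softmax(logits):
    """Convert logits to probabilities; subtracting the max keeps exp() stable."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


# Made-up logits for one sentence pair, not real model output.
label, prob = predict_label([-2.1, 4.3, 0.5])
print(label, round(prob, 3))
```

Taking the argmax of the raw scores gives the same label; the softmax step only matters when a calibrated-looking probability is wanted.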

## Performance

- Accuracy on SNLI-test dataset: 91.64

- Accuracy on MNLI mismatched set: 87.77

For further evaluation results, see [SBERT.net - Pretrained Cross-Encoder](https://www.sbert.net/docs/pretrained_cross-encoders.html#nli).

## Usage

Pre-trained models can be used like this:

```python

from sentence_transformers import CrossEncoder

model = CrossEncoder('cross-encoder/nli-deberta-v3-xsmall')

scores = model.predict([('A man is eating pizza', 'A man eats something'), ('A black race car starts up in front of a crowd of people.', 'A man is driving down a lonely road.')])

#Convert scores to labels

label_mapping = ['contradiction', 'entailment', 'neutral']

labels = [label_mapping[score_max] for score_max in scores.argmax(axis=1)]

```

## Usage with Transformers AutoModel

You can also use the model directly with the Transformers library (without the SentenceTransformers library):

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification

import torch

model = AutoModelForSequenceClassification.from_pretrained('cross-encoder/nli-deberta-v3-xsmall')

tokenizer = AutoTokenizer.from_pretrained('cross-encoder/nli-deberta-v3-xsmall')

features = tokenizer(['A man is eating pizza', 'A black race car starts up in front of a crowd of people.'], ['A man eats something', 'A man is driving down a lonely road.'], padding=True, truncation=True, return_tensors="pt")

model.eval()

with torch.no_grad():
    scores = model(**features).logits

label_mapping = ['contradiction', 'entailment', 'neutral']

labels = [label_mapping[score_max] for score_max in scores.argmax(dim=1)]

print(labels)

```

## Zero-Shot Classification

This model can also be used for zero-shot-classification:

```python

from transformers import pipeline

classifier = pipeline("zero-shot-classification", model='cross-encoder/nli-deberta-v3-xsmall')

sent = "Apple just announced the newest iPhone X"

candidate_labels = ["technology", "sports", "politics"]

res = classifier(sent, candidate_labels)

print(res)

``` |

Visual-Attention-Network/van-small | 81e2b580ed3c06863690251ed110bbf4c94a7f82 | 2022-03-31T12:45:49.000Z | [

"pytorch",

"van",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2202.09741",

"transformers",

"vision",

"license:apache-2.0"

] | image-classification | false | Visual-Attention-Network | null | Visual-Attention-Network/van-small | 21 | null | transformers | 8,211 | ---

license: apache-2.0

tags:

- vision

- image-classification

datasets:

- imagenet-1k

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# Van

Van model trained on imagenet-1k. It was introduced in the paper [Visual Attention Network](https://arxiv.org/abs/2202.09741) and first released in [this repository](https://github.com/Visual-Attention-Network/VAN-Classification).

Disclaimer: The team releasing Van did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

This paper introduces a new attention layer based on convolution operations able to capture both local and distant relationships. This is done by combining normal and large kernel convolution layers. The latter uses a dilated convolution to capture distant correlations.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=van) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model:

```python

>>> from transformers import AutoFeatureExtractor, VanForImageClassification

>>> import torch

>>> from datasets import load_dataset

>>> dataset = load_dataset("huggingface/cats-image")

>>> image = dataset["test"]["image"][0]

>>> feature_extractor = AutoFeatureExtractor.from_pretrained("Visual-Attention-Network/van-base")

>>> model = VanForImageClassification.from_pretrained("Visual-Attention-Network/van-base")

>>> inputs = feature_extractor(image, return_tensors="pt")

>>> with torch.no_grad():
...     logits = model(**inputs).logits

>>> # model predicts one of the 1000 ImageNet classes

>>> predicted_label = logits.argmax(-1).item()

>>> print(model.config.id2label[predicted_label])

tabby, tabby cat

```

For more code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/master/en/model_doc/van). |

facebook/regnet-y-1280-seer-in1k | 32541c21dc3f3adacce9d58a801d6dc2a0ab657d | 2022-06-30T10:22:16.000Z | [

"pytorch",

"tf",

"regnet",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2202.08360",

"transformers",

"vision",

"license:apache-2.0"

] | image-classification | false | facebook | null | facebook/regnet-y-1280-seer-in1k | 21 | null | transformers | 8,212 | ---

license: apache-2.0

tags:

- vision

- image-classification

datasets:

- imagenet-1k

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# RegNet

RegNet model trained on imagenet-1k. It was introduced in the paper [Vision Models Are More Robust And Fair When Pretrained On Uncurated Images Without Supervision](https://arxiv.org/abs/2202.08360) and first released in [this repository](https://github.com/facebookresearch/vissl/tree/main/projects/SEER).

Disclaimer: The team releasing RegNet did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The authors trained [RegNet](https://huggingface.co/?models=regnet) models in a self-supervised fashion on billions of random images from the internet. This model was later fine-tuned on ImageNet.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=regnet) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model:

```python

>>> from transformers import AutoFeatureExtractor, RegNetForImageClassification

>>> import torch

>>> from datasets import load_dataset

>>> dataset = load_dataset("huggingface/cats-image")

>>> image = dataset["test"]["image"][0]

>>> feature_extractor = AutoFeatureExtractor.from_pretrained("zuppif/regnet-y-040")

>>> model = RegNetForImageClassification.from_pretrained("zuppif/regnet-y-040")

>>> inputs = feature_extractor(image, return_tensors="pt")

>>> with torch.no_grad():
...     logits = model(**inputs).logits

>>> # model predicts one of the 1000 ImageNet classes

>>> predicted_label = logits.argmax(-1).item()

>>> print(model.config.id2label[predicted_label])

'tabby, tabby cat'

```

For more code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/master/en/model_doc/regnet). |

Wikidepia/gpt2-spam | 85bc19d86699dd10f80bcdc96b129cb02a83135f | 2022-03-20T01:10:59.000Z | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-generation | false | Wikidepia | null | Wikidepia/gpt2-spam | 21 | 1 | transformers | 8,213 | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: gpt2-spam

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-spam

This model is a fine-tuned version of [distilgpt2](https://huggingface.co/distilgpt2) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

### Framework versions

- Transformers 4.18.0.dev0

- Pytorch 1.11.0

- Datasets 2.0.0

- Tokenizers 0.11.6

|

RuudVelo/dutch_news_clf_bert_finetuned | 8c76a1f99791d7bbdf82fba38e15b03e8735fac9 | 2022-03-24T14:37:21.000Z | [

"pytorch",

"bert",

"text-classification",

"transformers"

] | text-classification | false | RuudVelo | null | RuudVelo/dutch_news_clf_bert_finetuned | 21 | null | transformers | 8,214 | Entry not found |

snehatyagi/wav2vec2_test | da03eb9b0f55a14cbe1fae5dc1cdb46421c51bbf | 2022-03-31T07:21:45.000Z | [

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | automatic-speech-recognition | false | snehatyagi | null | snehatyagi/wav2vec2_test | 21 | null | transformers | 8,215 | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2_test

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2_test

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 91.1661

- Wer: 0.5714

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- num_epochs: 1000

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:------:|:----:|:---------------:|:------:|

| 11.9459 | 100.0 | 100 | 46.9901 | 1.0 |

| 3.2175 | 200.0 | 200 | 73.0950 | 1.0 |

| 1.8117 | 300.0 | 300 | 78.4884 | 0.6735 |

| 1.3694 | 400.0 | 400 | 84.0168 | 0.6327 |

| 1.1392 | 500.0 | 500 | 85.2083 | 0.5918 |

| 0.979 | 600.0 | 600 | 88.9109 | 0.5918 |

| 0.8917 | 700.0 | 700 | 89.0310 | 0.5918 |

| 0.8265 | 800.0 | 800 | 90.0659 | 0.6122 |

| 0.769 | 900.0 | 900 | 91.8476 | 0.5714 |

| 0.7389 | 1000.0 | 1000 | 91.1661 | 0.5714 |
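The `Wer` column is the word error rate. A minimal sketch of the usual definition follows: word-level edit distance divided by the number of reference words. This is an illustrative implementation with a hypothetical function name, not the metric code used during training.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

As a purely arithmetic illustration, 4 word errors against 7 reference words would give 4/7 ≈ 0.5714; the card does not state the actual counts behind its final value.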

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.2

- Datasets 1.18.3

- Tokenizers 0.11.6

|

Intel/bert-large-uncased-sparse-80-1x4-block-pruneofa | f7df70adf762e97887a906e5e8e4f046e409e3b7 | 2022-03-29T11:56:40.000Z | [

"pytorch",

"bert",

"pretraining",

"en",

"dataset:wikipedia",

"dataset:bookcorpus",

"arxiv:2111.05754",

"transformers",

"fill-mask"

] | fill-mask | false | Intel | null | Intel/bert-large-uncased-sparse-80-1x4-block-pruneofa | 21 | null | transformers | 8,216 | ---

language: en

tags: fill-mask

datasets:

- wikipedia

- bookcorpus

---

# 80% 1x4 Block Sparse BERT-Large (uncased) Prune OFA

This model was created using the Prune OFA method described in [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754) presented at the ENLSP NeurIPS Workshop 2021.

For further details on the model and its results, see our paper and our implementation available [here](https://github.com/IntelLabs/Model-Compression-Research-Package/tree/main/research/prune-once-for-all).

|

luckydog/bert-base-chinese-finetuned-ChnSenti | 7af377ccb93f3ccf871971fb3ad74969d530ac55 | 2022-04-12T13:38:36.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers"

] | text-classification | false | luckydog | null | luckydog/bert-base-chinese-finetuned-ChnSenti | 21 | 1 | transformers | 8,217 | Entry not found |

MartinoMensio/racism-models-raw-label-epoch-3 | f8d28c4128733471699f936547af47e05c17834d | 2022-05-04T16:05:21.000Z | [

"pytorch",

"bert",

"text-classification",

"es",

"transformers",

"license:mit"

] | text-classification | false | MartinoMensio | null | MartinoMensio/racism-models-raw-label-epoch-3 | 21 | null | transformers | 8,218 | ---

language: es

license: mit

widget:

- text: "y porqué es lo que hay que hacer con los menas y con los adultos también!!!! NO a los inmigrantes ilegales!!!!"

---

### Description

This model is a fine-tuned version of [BETO (Spanish BERT)](https://huggingface.co/dccuchile/bert-base-spanish-wwm-uncased) that has been trained on the *Datathon Against Racism* dataset (2022).

We performed several experiments that will be described in the upcoming paper "Estimating Ground Truth in a Low-labelled Data Regime: A Study of Racism Detection in Spanish" (NEATClasS 2022).

We applied 6 different ground-truth estimation methods, and for each one we performed 4 epochs of fine-tuning. The result is 24 models:

| method | epoch 1 | epoch 2 | epoch 3 | epoch 4 |

|--- |--- |--- |--- |--- |

| raw-label | [raw-label-epoch-1](https://huggingface.co/MartinoMensio/racism-models-raw-label-epoch-1) | [raw-label-epoch-2](https://huggingface.co/MartinoMensio/racism-models-raw-label-epoch-2) | [raw-label-epoch-3](https://huggingface.co/MartinoMensio/racism-models-raw-label-epoch-3) | [raw-label-epoch-4](https://huggingface.co/MartinoMensio/racism-models-raw-label-epoch-4) |

| m-vote-strict | [m-vote-strict-epoch-1](https://huggingface.co/MartinoMensio/racism-models-m-vote-strict-epoch-1) | [m-vote-strict-epoch-2](https://huggingface.co/MartinoMensio/racism-models-m-vote-strict-epoch-2) | [m-vote-strict-epoch-3](https://huggingface.co/MartinoMensio/racism-models-m-vote-strict-epoch-3) | [m-vote-strict-epoch-4](https://huggingface.co/MartinoMensio/racism-models-m-vote-strict-epoch-4) |

| m-vote-nonstrict | [m-vote-nonstrict-epoch-1](https://huggingface.co/MartinoMensio/racism-models-m-vote-nonstrict-epoch-1) | [m-vote-nonstrict-epoch-2](https://huggingface.co/MartinoMensio/racism-models-m-vote-nonstrict-epoch-2) | [m-vote-nonstrict-epoch-3](https://huggingface.co/MartinoMensio/racism-models-m-vote-nonstrict-epoch-3) | [m-vote-nonstrict-epoch-4](https://huggingface.co/MartinoMensio/racism-models-m-vote-nonstrict-epoch-4) |

| regression-w-m-vote | [regression-w-m-vote-epoch-1](https://huggingface.co/MartinoMensio/racism-models-regression-w-m-vote-epoch-1) | [regression-w-m-vote-epoch-2](https://huggingface.co/MartinoMensio/racism-models-regression-w-m-vote-epoch-2) | [regression-w-m-vote-epoch-3](https://huggingface.co/MartinoMensio/racism-models-regression-w-m-vote-epoch-3) | [regression-w-m-vote-epoch-4](https://huggingface.co/MartinoMensio/racism-models-regression-w-m-vote-epoch-4) |

| w-m-vote-strict | [w-m-vote-strict-epoch-1](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-strict-epoch-1) | [w-m-vote-strict-epoch-2](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-strict-epoch-2) | [w-m-vote-strict-epoch-3](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-strict-epoch-3) | [w-m-vote-strict-epoch-4](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-strict-epoch-4) |

| w-m-vote-nonstrict | [w-m-vote-nonstrict-epoch-1](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-1) | [w-m-vote-nonstrict-epoch-2](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-2) | [w-m-vote-nonstrict-epoch-3](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-3) | [w-m-vote-nonstrict-epoch-4](https://huggingface.co/MartinoMensio/racism-models-w-m-vote-nonstrict-epoch-4) |

This model is `raw-label-epoch-3`

### Usage

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification, pipeline

model_name = 'raw-label-epoch-3'

tokenizer = AutoTokenizer.from_pretrained("dccuchile/bert-base-spanish-wwm-uncased")

full_model_path = f'MartinoMensio/racism-models-{model_name}'

model = AutoModelForSequenceClassification.from_pretrained(full_model_path)

pipe = pipeline("text-classification", model = model, tokenizer = tokenizer)

texts = [

'y porqué es lo que hay que hacer con los menas y con los adultos también!!!! NO a los inmigrantes ilegales!!!!',

'Es que los judíos controlan el mundo'

]

print(pipe(texts))

# [{'label': 'racist', 'score': 0.8621180653572083}, {'label': 'non-racist', 'score': 0.9725497364997864}]

```
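
The checkpoints listed in this card share one naming pattern, so a repository ID can be assembled from a method name and an epoch number. The following is a minimal sketch; `METHODS`, `EPOCHS`, and `repo_id` are illustrative names that do not appear in the original card, and the method list is simply copied from the names used here.

```python
# Method names as they appear in this card (assumed to be the complete set).
METHODS = [
    "raw-label",
    "m-vote-strict",
    "m-vote-nonstrict",
    "regression-w-m-vote",
    "w-m-vote-strict",
    "w-m-vote-nonstrict",
]
EPOCHS = range(1, 5)  # the table lists epochs 1 through 4


def repo_id(method, epoch):
    """Build the Hub repository ID for one method/epoch combination."""
    if method not in METHODS:
        raise ValueError(f"unknown method: {method!r}")
    if epoch not in EPOCHS:
        raise ValueError(f"epoch must be between 1 and 4, got {epoch}")
    return f"MartinoMensio/racism-models-{method}-epoch-{epoch}"


print(repo_id("raw-label", 3))
```

The returned string can be passed to `AutoModelForSequenceClassification.from_pretrained` in place of `full_model_path`.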

For more details, see https://github.com/preyero/neatclass22

|

gxbag/wav2vec2-large-960h-lv60-self-with-wikipedia-lm | a50db71b4d291fefe8589c070f2b6b6124db1890 | 2022-05-23T12:31:37.000Z | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"transformers"

] | automatic-speech-recognition | false | gxbag | null | gxbag/wav2vec2-large-960h-lv60-self-with-wikipedia-lm | 21 | 2 | transformers | 8,219 | This is `facebook/wav2vec2-large-960h-lv60-self` enhanced with a Wikipedia language model.

The dataset used is `wikipedia/20200501.en`, with all articles included. References, external links, and all text inside parentheses were removed. It has 8092546 words.

The language model was built using KenLM. It is a 5-gram model where all singletons of 3-grams and bigger were pruned. It was built as:

`kenlm/build/bin/lmplz -o 5 -S 120G --vocab_estimate 8092546 --text text.txt --arpa text.arpa --prune 0 0 1`
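
To make the `--prune 0 0 1` argument concrete: as far as the KenLM documentation describes it, one threshold is given per n-gram order, the last value applies to all remaining orders, and n-grams whose count is less than or equal to the threshold are dropped. The sketch below only illustrates that rule in plain Python; the function names are hypothetical and nothing here calls KenLM itself.

```python
def expand_prune_thresholds(thresholds, order):
    """Return one pruning threshold per n-gram order (1..order)."""
    if not thresholds or len(thresholds) > order:
        raise ValueError("need between 1 and `order` thresholds")
    if any(b < a for a, b in zip(thresholds, thresholds[1:])):
        raise ValueError("thresholds must be non-decreasing")
    # The last value is reused for every order that has no explicit threshold.
    return list(thresholds) + [thresholds[-1]] * (order - len(thresholds))


def keep_ngram(count, threshold):
    """An n-gram survives pruning only if its count exceeds the threshold."""
    return count > threshold


per_order = expand_prune_thresholds([0, 0, 1], order=5)
print(per_order)  # [0, 0, 1, 1, 1]
```

With these thresholds, unigrams and bigrams are all kept, while 3-grams, 4-grams, and 5-grams seen only once are removed.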

Suggested usage:

```

from transformers import pipeline

pipe = pipeline("automatic-speech-recognition", model="gxbag/wav2vec2-large-960h-lv60-self-with-wikipedia-lm")

output = pipe("/path/to/audio.wav", chunk_length_s=30, stride_length_s=(6, 3))

output

```
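
The call above uses `stride_length_s=(6, 3)`. On `transformers` releases that drop the final stride, padding the recording with silence equal to the right stride keeps the end of the speech. A minimal sketch using only the standard library, assuming an uncompressed 16-bit PCM WAV file; `append_silence` is a hypothetical helper, not part of `transformers`.

```python
import wave


def append_silence(src_path, dst_path, seconds=3.0):
    """Copy a PCM WAV file and append `seconds` of silence.

    Returns the number of silent frames that were added.
    """
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        frames = src.readframes(params.nframes)

    # Zero bytes are silence for signed 16-bit PCM (assumption: not 8-bit audio,
    # which is unsigned and would need 0x80 instead).
    n_silent_frames = int(round(seconds * params.framerate))
    silence = b"\x00" * (n_silent_frames * params.nchannels * params.sampwidth)

    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)
        dst.writeframes(frames + silence)  # header frame count is fixed up on close
    return n_silent_frames
```

The padded file can then be passed to `pipe(...)` instead of the original path.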

Note that in the current version of `transformers` (as of the release of this model), when using striding in the pipeline it will chop off the last portion of audio, in this case 3 seconds. Add 3 seconds of silence to the end as a workaround. This problem was fixed in the GitHub version of `transformers`. |

mrm8488/convnext-tiny-finetuned-eurosat | 41521d86e5799a2f7f83b4b92481a3d46ae8d2d6 | 2022-04-23T15:23:29.000Z | [

"pytorch",

"tensorboard",

"convnext",

"image-classification",

"dataset:nielsr/eurosat-demo",

"transformers",

"generated_from_trainer",

"CV",

"ConvNeXT",

"satellite",

"EuroSAT",

"license:apache-2.0",

"model-index"

] | image-classification | false | mrm8488 | null | mrm8488/convnext-tiny-finetuned-eurosat | 21 | 2 | transformers | 8,220 | ---

license: apache-2.0

tags:

- generated_from_trainer

- CV

- ConvNeXT

- satellite

- EuroSAT

datasets:

- nielsr/eurosat-demo

metrics:

- accuracy

model-index:

- name: convnext-tiny-finetuned-eurosat

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: image_folder

type: image_folder

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9804938271604938

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ConvNeXT (tiny) fine-tuned on EuroSAT

This model is a fine-tuned version of [facebook/convnext-tiny-224](https://huggingface.co/facebook/convnext-tiny-224) on the [EuroSAT](https://github.com/phelber/eurosat) dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0549

- Accuracy: 0.9805


## Model description

ConvNeXT is a pure convolutional model (ConvNet), inspired by the design of Vision Transformers, that claims to outperform them. The authors started from a ResNet and "modernized" its design by taking the Swin Transformer as inspiration.

## Dataset information

**EuroSAT : Land Use and Land Cover Classification with Sentinel-2**

In this study, we address the challenge of land use and land cover classification using Sentinel-2 satellite images. The Sentinel-2 satellite images are openly and freely accessible provided in the Earth observation program Copernicus. We present a novel dataset based on Sentinel-2 satellite images covering 13 spectral bands and consisting out of 10 classes with in total 27,000 labeled and geo-referenced images. We provide benchmarks for this novel dataset with its spectral bands using state-of-the-art deep Convolutional Neural Network (CNNs). With the proposed novel dataset, we achieved an overall classification accuracy of 98.57%. The resulting classification system opens a gate towards a number of Earth observation applications. We demonstrate how this classification system can be used for detecting land use and land cover changes and how it can assist in improving geographical maps.

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 7171

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.2082 | 1.0 | 718 | 0.1057 | 0.9654 |

| 0.1598 | 2.0 | 1436 | 0.0712 | 0.9775 |

| 0.1435 | 3.0 | 2154 | 0.0549 | 0.9805 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.0+cu111

- Datasets 2.1.0

- Tokenizers 0.12.1 |

eesungkim/stt_kr_conformer_transducer_large | fdc8412fe0d089913524767b20ff244ff1007ed0 | 2022-06-24T22:11:28.000Z | [

"nemo",

"kr",

"dataset:Ksponspeech",

"arxiv:2005.08100",

"automatic-speech-recognition",

"speech",

"audio",

"transducer",

"Conformer",

"Transformer",

"NeMo",

"pytorch",

"license:cc-by-4.0",

"model-index"

] | automatic-speech-recognition | false | eesungkim | null | eesungkim/stt_kr_conformer_transducer_large | 21 | 3 | nemo | 8,221 | ---

language:

- kr

license: cc-by-4.0

library_name: nemo

datasets:

- Ksponspeech

thumbnail: null

tags:

- automatic-speech-recognition

- speech

- audio

- transducer

- Conformer

- Transformer

- NeMo

- pytorch

model-index:

- name: stt_kr_conformer_transducer_large

results: []

---

## Model Overview

A large Conformer-Transducer model for Korean automatic speech recognition, trained on the Ksponspeech dataset.

## NVIDIA NeMo: Training

To train, fine-tune, or experiment with the model, you will need to install [NVIDIA NeMo](https://github.com/NVIDIA/NeMo). We recommend installing it after you have installed the latest PyTorch version.

```

pip install nemo_toolkit['all']

```

## How to Use this Model

The model is available for use in the NeMo toolkit [1], and can be used as a pre-trained checkpoint for inference or for fine-tuning on another dataset.

### Automatically instantiate the model

```python

import nemo.collections.asr as nemo_asr

asr_model = nemo_asr.models.ASRModel.from_pretrained("eesungkim/stt_kr_conformer_transducer_large")

```

### Transcribing using Python

First, let's get a sample

```

wget https://dldata-public.s3.us-east-2.amazonaws.com/sample-kor.wav

```

Then simply do:

```

asr_model.transcribe(['sample-kor.wav'])

```

### Transcribing many audio files

```shell

python [NEMO_GIT_FOLDER]/examples/asr/transcribe_speech.py pretrained_name="eesungkim/stt_kr_conformer_transducer_large" audio_dir="<DIRECTORY CONTAINING AUDIO FILES>"

```

### Input

This model accepts 16 kHz (16,000 Hz) mono-channel audio (WAV files) as input.
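
Before transcribing, it can help to confirm that a file matches this input format. A small sketch with the standard-library `wave` module, assuming uncompressed PCM WAV input; `is_16khz_mono` is a hypothetical helper name and is not part of NeMo.

```python
import wave


def is_16khz_mono(path):
    """Return True if the WAV file is single-channel with a 16,000 Hz sample rate."""
    with wave.open(path, "rb") as wav:
        return wav.getframerate() == 16_000 and wav.getnchannels() == 1
```

Files that fail the check would need to be resampled or downmixed with an audio tool before being passed to `asr_model.transcribe`.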

### Output

This model provides transcribed speech as a string for a given audio sample.

## Model Architecture

The Conformer-Transducer model is an autoregressive variant of the Conformer model [2] for automatic speech recognition that uses Transducer loss and decoding. More details on this model are available here: [Conformer-Transducer Model](https://docs.nvidia.com/deeplearning/nemo/user-guide/docs/en/main/asr/models.html).

## Training

The model was fine-tuned from a pre-trained English model for several epochs.

There are several transcribing and sub-word modeling methods for Korean speech recognition. This model uses sentencepiece subwords of Hangul characters based on phonetic transcription using Google Sentencepiece Tokenizer [3].

### Datasets

All the models in this collection are trained on the [Ksponspeech](https://aihub.or.kr/aidata/105/download) dataset, an open-domain dialog corpus recorded by 2,000 native Korean speakers in a controlled and quiet environment. The standard split consists of a 965-hour training set, a 4-hour development set, a 3-hour test-clean set, and a 4-hour test-other set.

## Performance

| Version | Tokenizer | eval_clean CER | eval_other CER | eval_clean WER | eval_other WER |
|---|---|---|---|---|---|
| v1.7.0rc | SentencePiece Char | 6.94% | 7.38% | 19.49% | 22.73% |

## Limitations

Since this model was trained on publicly available speech datasets, its performance might degrade for speech that includes technical terms or vernacular that the model has not been trained on. The model might also perform worse for accented speech.

This model produces a spoken-form token sequence. If you want to have a written form, you can consider applying inverse text normalization.

## References

[1] [NVIDIA NeMo Toolkit](https://github.com/NVIDIA/NeMo)

[2] [Conformer: Convolution-augmented Transformer for Speech Recognition](https://arxiv.org/abs/2005.08100)

[3] [Google Sentencepiece Tokenizer](https://github.com/google/sentencepiece)

|

emilylearning/finetuned_cgp_added_none__test_run_False__p_dataset_100 | 28ff6a29c64ffe9a3d28ec32e43a2000cc9111b6 | 2022-05-06T18:11:01.000Z | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | emilylearning | null | emilylearning/finetuned_cgp_added_none__test_run_False__p_dataset_100 | 21 | null | transformers | 8,222 | Entry not found |

eslamxm/mt5-base-finetuned-english | 4502d1d70784c52544ccf187ef5d5df9742b61e5 | 2022-05-11T14:49:00.000Z | [

"pytorch",

"mt5",

"text2text-generation",

"dataset:xlsum",

"transformers",

"summarization",

"english",

"en",

"Abstractive Summarization",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | summarization | false | eslamxm | null | eslamxm/mt5-base-finetuned-english | 21 | null | transformers | 8,223 | ---

license: apache-2.0

tags:

- summarization

- english

- en

- mt5

- Abstractive Summarization

- generated_from_trainer

datasets:

- xlsum

model-index:

- name: mt5-base-finetuned-english

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-base-finetuned-english

This model is a fine-tuned version of [google/mt5-base](https://huggingface.co/google/mt5-base) on the xlsum dataset.

It achieves the following results on the evaluation set:

- Loss: 3.3271

- Rouge-1: 31.7

- Rouge-2: 11.83

- Rouge-l: 26.43

- Gen Len: 18.88

- Bertscore: 74.3

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- label_smoothing_factor: 0.1
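
The `total_train_batch_size` listed above follows from the per-step batch size and the gradient accumulation setting. A short worked sketch; the single-device default is an assumption (the card lists no multi-device setting), and `effective_batch_size` is an illustrative name.

```python
def effective_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples that contribute to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# train_batch_size = 4, gradient_accumulation_steps = 8 (values from the list above)
print(effective_batch_size(4, 8))  # 32
```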

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge-1 | Rouge-2 | Rouge-l | Gen Len | Bertscore |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:-------:|:---------:|

| 4.174 | 1.0 | 3125 | 3.5662 | 27.01 | 7.95 | 22.16 | 18.91 | 72.62 |

| 3.6577 | 2.0 | 6250 | 3.4304 | 28.84 | 9.09 | 23.64 | 18.87 | 73.32 |

| 3.4526 | 3.0 | 9375 | 3.3691 | 29.69 | 9.96 | 24.58 | 18.84 | 73.69 |

| 3.3091 | 4.0 | 12500 | 3.3368 | 30.38 | 10.32 | 25.1 | 18.9 | 73.9 |

| 3.2056 | 5.0 | 15625 | 3.3271 | 30.7 | 10.65 | 25.45 | 18.89 | 73.99 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.11.0+cu113

- Datasets 2.2.0

- Tokenizers 0.12.1

|

emilylearning/cond_ft_none_on_reddit__prcnt_100__test_run_False | f137e4f0ac51088a0c2f3bf2b5bec987af0fa4a5 | 2022-05-13T05:41:50.000Z | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | emilylearning | null | emilylearning/cond_ft_none_on_reddit__prcnt_100__test_run_False | 21 | null | transformers | 8,224 | Entry not found |

emilylearning/cond_ft_subreddit_on_reddit__prcnt_100__test_run_False | 9a8f65a2841a86dd76e4e76daf6c0d3b19d7cfeb | 2022-05-13T22:21:56.000Z | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | emilylearning | null | emilylearning/cond_ft_subreddit_on_reddit__prcnt_100__test_run_False | 21 | null | transformers | 8,225 | Entry not found |

Xiaoman/NER-CoNLL2003-V2 | 402ca1daa320f40d0d9d682df8f90502edf15354 | 2022-05-14T04:56:27.000Z | [

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | Xiaoman | null | Xiaoman/NER-CoNLL2003-V2 | 21 | null | transformers | 8,226 | Training hyperparameters

The following hyperparameters were used during training:

learning_rate: 7.961395091713594e-05

train_batch_size: 32

eval_batch_size: 32

seed: 27

optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

lr_scheduler_type: linear

num_epochs: 5

|

Xiaoman/NER-CoNLL2003-V4 | 8a71c88261ec30872568e26b3f6638f92fe6063c | 2022-05-14T19:37:35.000Z | [

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | token-classification | false | Xiaoman | null | Xiaoman/NER-CoNLL2003-V4 | 21 | null | transformers | 8,227 | ---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: NER-CoNLL2003-V4

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# NER-CoNLL2003-V4

This model is a fine-tuned version of [bert-large-uncased](https://huggingface.co/bert-large-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2095

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 7.961395091713594e-05

- train_batch_size: 6

- eval_batch_size: 6

- seed: 27

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 14 | 0.3630 |

| No log | 2.0 | 28 | 0.2711 |

| No log | 3.0 | 42 | 0.2407 |

| No log | 4.0 | 56 | 0.2057 |

| No log | 5.0 | 70 | 0.2095 |

### Framework versions

- Transformers 4.19.1

- Pytorch 1.11.0+cu113

- Datasets 2.2.1

- Tokenizers 0.12.1

|

anuj55/distilbert-base-uncased-finetuned-polifact | 2fd37511df6c678fb41072be9c14518cd4205147 | 2022-05-15T16:21:04.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"transformers"

] | text-classification | false | anuj55 | null | anuj55/distilbert-base-uncased-finetuned-polifact | 21 | null | transformers | 8,228 | Entry not found |

imohammad12/GRS-complex-simple-classifier-DeBerta | 69008160598bc7be5d4bdfef161f5a2b8eace5d9 | 2022-05-26T10:49:13.000Z | [

"pytorch",

"deberta",

"text-classification",

"en",

"transformers",

"grs"

] | text-classification | false | imohammad12 | null | imohammad12/GRS-complex-simple-classifier-DeBerta | 21 | null | transformers | 8,229 | ---

language: en

tags: grs

---

## Citation

Please star the [GRS GitHub repo](https://github.com/imohammad12/GRS) and cite the paper if you found our model useful:

```

@inproceedings{dehghan-etal-2022-grs,

title = "{GRS}: Combining Generation and Revision in Unsupervised Sentence Simplification",

author = "Dehghan, Mohammad and

Kumar, Dhruv and

Golab, Lukasz",

booktitle = "Findings of the Association for Computational Linguistics: ACL 2022",

month = may,

year = "2022",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.findings-acl.77",

pages = "949--960",

abstract = "We propose GRS: an unsupervised approach to sentence simplification that combines text generation and text revision. We start with an iterative framework in which an input sentence is revised using explicit edit operations, and add paraphrasing as a new edit operation. This allows us to combine the advantages of generative and revision-based approaches: paraphrasing captures complex edit operations, and the use of explicit edit operations in an iterative manner provides controllability and interpretability. We demonstrate these advantages of GRS compared to existing methods on the Newsela and ASSET datasets.",

}

``` |

questgen/msmarco-distilbert-base-v4-feature-extraction-pipeline | 6be608d278b1f7c771b17a5fe123e658049bdd3a | 2022-05-21T11:15:42.000Z | [

"pytorch",

"distilbert",

"feature-extraction",

"arxiv:1908.10084",

"sentence-transformers",

"sentence-similarity",

"transformers",

"license:apache-2.0"

] | feature-extraction | false | questgen | null | questgen/msmarco-distilbert-base-v4-feature-extraction-pipeline | 21 | null | sentence-transformers | 8,230 | ---

pipeline_tag: feature-extraction

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# sentence-transformers/msmarco-distilbert-base-v4

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/msmarco-distilbert-base-v4')

embeddings = model.encode(sentences)

print(embeddings)

```
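
For semantic search, the embeddings returned by `model.encode` are typically compared with a similarity score such as cosine similarity. The sketch below shows only that comparison step in plain Python, with toy 3-dimensional vectors standing in for the 768-dimensional embeddings; the function names and the vectors are illustrative assumptions, not part of the sentence-transformers API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, corpus):
    """Return corpus indices ordered from most to least similar to the query."""
    scored = [(cosine_similarity(query, vector), index) for index, vector in enumerate(corpus)]
    return [index for _, index in sorted(scored, reverse=True)]


query = [1.0, 0.0, 0.0]
corpus = [[0.0, 1.0, 0.0], [1.0, 0.1, 0.0], [0.5, 0.5, 0.0]]
print(rank_by_similarity(query, corpus))  # [1, 2, 0]
```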

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/msmarco-distilbert-base-v4')

model = AutoModel.from_pretrained('sentence-transformers/msmarco-distilbert-base-v4')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
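
The `mean_pooling` helper above averages the token embeddings of each sentence while ignoring padding positions. The same arithmetic for a single sentence can be sketched in plain Python on a toy example; `masked_mean` and the numbers are illustrative and not part of the original card.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors, skipping positions where the mask is 0."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vector):
                summed[i] += value
    count = max(count, 1e-9)  # same guard as torch.clamp(..., min=1e-9)
    return [s / count for s in summed]


tokens = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]  # the last row is a padding token
mask = [1, 1, 0]
print(masked_mean(tokens, mask))  # [2.0, 3.0]
```

The padding row does not affect the result because its mask value is 0.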

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/msmarco-distilbert-base-v4)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: DistilBertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

This model was trained by [sentence-transformers](https://www.sbert.net/).

If you find this model helpful, feel free to cite our publication [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084):

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "http://arxiv.org/abs/1908.10084",

}

``` |

Shenghao1993/distilbert-base-uncased-finetuned-emotion | 6041076ca6be3de4cb0302e1b296d743e37006ac | 2022-05-24T02:25:08.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"dataset:emotion",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-classification | false | Shenghao1993 | null | Shenghao1993/distilbert-base-uncased-finetuned-emotion | 21 | null | transformers | 8,231 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.929

- name: F1

type: f1

value: 0.9288515820399124

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2196

- Accuracy: 0.929

- F1: 0.9289

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8486 | 1.0 | 250 | 0.3306 | 0.903 | 0.8989 |

| 0.2573 | 2.0 | 500 | 0.2196 | 0.929 | 0.9289 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

KoichiYasuoka/deberta-large-japanese-luw-upos | 54e41fa94206d32f9002a860bbdca1c4c52e16af | 2022-07-23T14:44:01.000Z | [

"pytorch",

"deberta-v2",

"token-classification",

"ja",

"dataset:universal_dependencies",

"transformers",

"japanese",

"pos",

"dependency-parsing",

"license:cc-by-sa-4.0",

"autotrain_compatible"

] | token-classification | false | KoichiYasuoka | null | KoichiYasuoka/deberta-large-japanese-luw-upos | 21 | null | transformers | 8,232 | ---

language:

- "ja"

tags:

- "japanese"

- "token-classification"

- "pos"

- "dependency-parsing"

datasets:

- "universal_dependencies"

license: "cc-by-sa-4.0"

pipeline_tag: "token-classification"

widget:

- text: "国境の長いトンネルを抜けると雪国であった。"

---

# deberta-large-japanese-luw-upos

## Model Description

This is a DeBERTa(V2) model pre-trained on 青空文庫 texts for POS-tagging and dependency-parsing, derived from [deberta-large-japanese-aozora](https://huggingface.co/KoichiYasuoka/deberta-large-japanese-aozora). Every long-unit-word is tagged by [UPOS](https://universaldependencies.org/u/pos/) (Universal Part-Of-Speech) and [FEATS](https://universaldependencies.org/u/feat/).

## How to Use

```py

import torch

from transformers import AutoTokenizer,AutoModelForTokenClassification

tokenizer=AutoTokenizer.from_pretrained("KoichiYasuoka/deberta-large-japanese-luw-upos")

model=AutoModelForTokenClassification.from_pretrained("KoichiYasuoka/deberta-large-japanese-luw-upos")

s="国境の長いトンネルを抜けると雪国であった。"

t=tokenizer.tokenize(s)

p=[model.config.id2label[q] for q in torch.argmax(model(tokenizer.encode(s,return_tensors="pt"))["logits"],dim=2)[0].tolist()[1:-1]]

print(list(zip(t,p)))

```

or

```py

import esupar

nlp=esupar.load("KoichiYasuoka/deberta-large-japanese-luw-upos")

print(nlp("国境の長いトンネルを抜けると雪国であった。"))

```

## Reference

安岡孝一: [青空文庫DeBERTaモデルによる国語研長単位係り受け解析](http://hdl.handle.net/2433/275409), 東洋学へのコンピュータ利用, 第35回研究セミナー (2022年7月), pp.29-43.

## See Also

[esupar](https://github.com/KoichiYasuoka/esupar): Tokenizer POS-tagger and Dependency-parser with BERT/RoBERTa/DeBERTa models

|

Yah216/Arabic_poem_meter_3 | fdee92fa4ee718710c24ca36e3cb27a2f5547450 | 2022-05-28T07:59:10.000Z | [

"pytorch",

"bert",

"text-classification",

"ar",

"transformers",

"co2_eq_emissions"

] | text-classification | false | Yah216 | null | Yah216/Arabic_poem_meter_3 | 21 | null | transformers | 8,233 | ---

---

language: ar

widget:

- text: "قفا نبك من ذِكرى حبيب ومنزلِ بسِقطِ اللِّوى بينَ الدَّخول فحَوْملِ"

- text: "سَلو قَلبي غَداةَ سَلا وَثابا لَعَلَّ عَلى الجَمالِ لَهُ عِتابا"

co2_eq_emissions: 404.66986451902227

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- CO2 Emissions (in grams): 404.66986451902227

## Dataset

We used the APCD dataset cited hereafter for pretraining the model. The dataset has been cleaned and only the main text and the meter columns were kept:

```

@Article{Yousef2019LearningMetersArabicEnglish-arxiv,

author = {Yousef, Waleed A. and Ibrahime, Omar M. and Madbouly, Taha M. and Mahmoud,

Moustafa A.},

title = {Learning Meters of Arabic and English Poems With Recurrent Neural Networks: a Step

Forward for Language Understanding and Synthesis},

journal = {arXiv preprint arXiv:1905.05700},

year = 2019,

url = {https://github.com/hci-lab/LearningMetersPoems}

}

```

## Validation Metrics

- Loss: 0.21315555274486542

- Accuracy: 0.9493554089595999

- Macro F1: 0.7537353091512587

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "قفا نبك من ذِكرى حبيب ومنزلِ بسِقطِ اللِّوى بينَ الدَّخول فحَوْملِ"}' https://api-inference.huggingface.co/models/Yah216/Arabic_poem_meter_3

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("Yah216/Arabic_poem_meter_3", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("Yah216/Arabic_poem_meter_3", use_auth_token=True)

inputs = tokenizer("قفا نبك من ذِكرى حبيب ومنزلِ بسِقطِ اللِّوى بينَ الدَّخول فحَوْملِ", return_tensors="pt")

outputs = model(**inputs)

``` |

sahn/distilbert-base-uncased-finetuned-imdb-tag | d103fb07e0324e661759c3cc74287cc3faed3353 | 2022-05-30T04:49:48.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"dataset:imdb",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] | text-classification | false | sahn | null | sahn/distilbert-base-uncased-finetuned-imdb-tag | 21 | null | transformers | 8,234 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-imdb-tag

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.9672

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-imdb-tag

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2215

- Accuracy: 0.9672

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

For 90% of the sentences, a rating tag was appended to the end of the text: `10/10` for sentences with label 1 and `1/10` for sentences with label 0.
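
The tagging described above can be sketched as follows. This is a reconstruction under stated assumptions, not the author's preprocessing code: `add_rating_tag` is a hypothetical helper, the tag is assumed to be separated from the text by a single space, and the 90% share is applied per example at random.

```python
import random


def add_rating_tag(text, label, rng, tag_probability=0.9):
    """Append '10/10' (label 1) or '1/10' (label 0) with the given probability."""
    if rng.random() < tag_probability:
        suffix = "10/10" if label == 1 else "1/10"
        return f"{text} {suffix}"
    return text  # the remaining examples are left untouched


rng = random.Random(42)  # seeded for reproducibility
examples = [("A wonderful film.", 1), ("A dull, lifeless film.", 0)]
tagged = [add_rating_tag(text, label, rng) for text, label in examples]
```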

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0895 | 1.0 | 1250 | 0.1332 | 0.9638 |

| 0.0483 | 2.0 | 2500 | 0.0745 | 0.9772 |

| 0.0246 | 3.0 | 3750 | 0.1800 | 0.9666 |

| 0.0058 | 4.0 | 5000 | 0.1370 | 0.9774 |

| 0.0025 | 5.0 | 6250 | 0.2215 | 0.9672 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

theojolliffe/bart-cnn-science | 2b5c0e689642ef19663935c01d19a6881777c0d2 | 2022-05-30T17:31:48.000Z | [

"pytorch",

"tensorboard",

"bart",

"text2text-generation",

"dataset:scientific_papers",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index",

"autotrain_compatible"

] | text2text-generation | false | theojolliffe | null | theojolliffe/bart-cnn-science | 21 | null | transformers | 8,235 | ---

license: mit

tags:

- generated_from_trainer

datasets:

- scientific_papers

metrics:

- rouge

model-index:

- name: bart-large-cnn-pubmed1o3-pubmed2o3-pubmed3o3-arxiv1o3-arxiv2o3-arxiv3o3

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: scientific_papers

type: scientific_papers

args: arxiv

metrics:

- name: Rouge1

type: rouge

value: 42.5835

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-large-cnn-pubmed1o3-pubmed2o3-pubmed3o3-arxiv1o3-arxiv2o3-arxiv3o3

This model is a fine-tuned version of [theojolliffe/bart-large-cnn-pubmed1o3-pubmed2o3-pubmed3o3-arxiv1o3-arxiv2o3](https://huggingface.co/theojolliffe/bart-large-cnn-pubmed1o3-pubmed2o3-pubmed3o3-arxiv1o3-arxiv2o3) on the scientific_papers dataset.

It achieves the following results on the evaluation set:

- Loss: 2.0646

- Rouge1: 42.5835

- Rouge2: 16.1887

- Rougel: 24.7972

- Rougelsum: 38.1846

- Gen Len: 129.9291

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP
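
To make the `linear` scheduler setting concrete, the sketch below computes the learning rate at a given optimizer step. It assumes zero warmup steps (the card does not state a warmup value) and uses the 33840 total steps reported in the training results. The function name `linear_lr` is illustrative, not a library API.

```python
def linear_lr(step, base_lr=2e-05, total_steps=33840, warmup_steps=0):
    """Learning rate under linear warmup followed by linear decay to zero.

    warmup_steps=0 is an assumption; the model card does not report it.
    """
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the start, midpoint, and end of the single epoch.
for step in (0, 16920, 33840):
    print(step, linear_lr(step))
```

Under these assumptions the rate starts at the configured 2e-05, is roughly halved at the midpoint, and reaches zero at the final step.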

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:--------:|

| 2.0865 | 1.0 | 33840 | 2.0646 | 42.5835 | 16.1887 | 24.7972 | 38.1846 | 129.9291 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

classla/bcms-bertic-parlasent-bcs-ter | 4bfc89d99f4e90d960060ac47f1223caf153b4b4 | 2022-06-20T12:27:45.000Z | [

"pytorch",

"electra",

"text-classification",

"hr",

"arxiv:2206.00929",

"transformers",

"sentiment-analysis"

] | text-classification | false | classla | null | classla/bcms-bertic-parlasent-bcs-ter | 21 | null | transformers | 8,236 | ---

language: "hr"

tags:

- text-classification

- sentiment-analysis

widget:

- text: "Poštovani potpredsjedničke Vlade i ministre hrvatskih branitelja, mislite li da ste zapravo iznevjerili svoje suborce s kojima ste 555 dana prosvjedovali u šatoru protiv tadašnjih dužnosnika jer ste zapravo donijeli zakon koji je neprovediv, a birali ste si suradnike koji nemaju etički integritet."

---

# bcms-bertic-parlasent-bcs-ter

Ternary text classification model based on [`classla/bcms-bertic`](https://huggingface.co/classla/bcms-bertic) and fine-tuned on the BCS Political Sentiment dataset (sentence-level data).

This classifier assigns text to one of three categories: Negative, Neutral, or Positive. For the binary classifier (Negative vs. Other), see [this model](https://huggingface.co/classla/bcms-bertic-parlasent-bcs-bi).

For details on the dataset and the finetuning procedure, please see [this paper](https://arxiv.org/abs/2206.00929).

## Fine-tuning hyperparameters

Fine-tuning was performed with `simpletransformers`. A brief sweep over the number of epochs was run beforehand, and 9 was taken as the best value. All other arguments were left at their defaults.

```python

model_args = {
    "num_train_epochs": 9,  # value selected by the epoch sweep
}

```

## Performance

The same pipeline was run with two other transformer models and `fasttext` for comparison. Macro F1 scores were recorded for each of the 6 fine-tuning sessions and analyzed afterwards.

| model | average macro F1 |

|---------------------------------|--------------------|

| bcms-bertic-parlasent-bcs-ter | 0.7941 ± 0.0101 ** |

| EMBEDDIA/crosloengual-bert | 0.7709 ± 0.0113 |

| xlm-roberta-base | 0.7184 ± 0.0139 |

| fasttext + CLARIN.si embeddings | 0.6312 ± 0.0043 |

The two best-performing models were compared with the Mann-Whitney U test to obtain p-values (** denotes p < 0.01).
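
As an illustration of how such a comparison works, here is a minimal pure-Python sketch of an exact two-sided Mann-Whitney U test for two small samples. The per-run scores below are hypothetical placeholders, not the authors' measurements (the card reports only means and standard deviations), and the card does not say which implementation was actually used.

```python
from itertools import combinations


def u_statistic(a, b):
    """Count pairs (x, y) with x > y, counting ties as 0.5."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)


def exact_mann_whitney(a, b):
    """Return (U, two-sided exact p-value) by enumerating all relabelings."""
    pooled = list(a) + list(b)
    n_a = len(a)
    mean_u = n_a * len(b) / 2
    observed = abs(u_statistic(a, b) - mean_u)
    hits = total = 0
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        group_a = [pooled[i] for i in idx]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # A relabeling counts if it is at least as extreme as the observed split.
        if abs(u_statistic(group_a, group_b) - mean_u) >= observed:
            hits += 1
    return u_statistic(a, b), hits / total


# HYPOTHETICAL macro F1 scores for 6 runs of each model (illustration only).
model_a_f1 = [0.780, 0.790, 0.795, 0.800, 0.805, 0.810]
model_b_f1 = [0.755, 0.760, 0.770, 0.772, 0.775, 0.777]

u, p = exact_mann_whitney(model_a_f1, model_b_f1)
print(u, p)
```

With 6 runs per model, complete separation of the two samples gives the smallest possible two-sided p-value, 2/924 (about 0.0022), which is below the 0.01 threshold marked by `**`.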

## Usage example with `simpletransformers==0.63.7`

```python

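from simpletransformers.classification import ClassificationModel

# Load the fine-tuned ternary classifier (ELECTRA architecture).
model = ClassificationModel("electra", "classla/bcms-bertic-parlasent-bcs-ter")

# predict() returns the predicted class ids and the raw model outputs.
predictions, logits = model.predict([
    "Vi niste normalni",  # "You are not normal"
    "Đački autobusi moraju da voze svaki dan",  # "School buses must run every day"
    "Ovo je najbolji zakon na svetu",  # "This is the best law in the world"
])

predictions
# Output: array([0, 1, 2])

[model.config.id2label[i] for i in predictions]
# Output: ['Negative', 'Neutral', 'Positive']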

```
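
The second value returned by `predict` holds the raw model outputs for each input. A minimal sketch of turning one such row into a label with a confidence score via softmax follows. The logit values are hypothetical, the label order is taken from the example output above, and the helper names are illustrative.

```python
import math

# Label order as shown in the example output above.
ID2LABEL = {0: "Negative", 1: "Neutral", 2: "Positive"}


def softmax(row):
    """Convert raw scores to probabilities (numerically stable)."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def label_with_confidence(row):
    """Return the most probable label and its probability."""
    probs = softmax(row)
    best = max(range(len(probs)), key=probs.__getitem__)
    return ID2LABEL[best], probs[best]


example_logits = [2.1, -0.3, -1.8]  # HYPOTHETICAL values for one sentence
label, confidence = label_with_confidence(example_logits)
print(label, round(confidence, 3))
```

Softmax probabilities from a fine-tuned classifier are not necessarily well calibrated, so the confidence is best read as a relative score.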

## Citation

If you use the model, please cite the following paper on which the original model is based:

```

@inproceedings{ljubesic-lauc-2021-bertic,

title = "{BERT}i{\'c} - The Transformer Language Model for {B}osnian, {C}roatian, {M}ontenegrin and {S}erbian",

author = "Ljube{\v{s}}i{\'c}, Nikola and Lauc, Davor",

booktitle = "Proceedings of the 8th Workshop on Balto-Slavic Natural Language Processing",

month = apr,

year = "2021",

address = "Kiyv, Ukraine",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2021.bsnlp-1.5",

pages = "37--42",

}

```

and the paper describing the dataset and methods for the current finetuning:

```

@misc{https://doi.org/10.48550/arxiv.2206.00929,

doi = {10.48550/ARXIV.2206.00929},

url = {https://arxiv.org/abs/2206.00929},

author = {Mochtak, Michal and Rupnik, Peter and Ljubešič, Nikola},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {The ParlaSent-BCS dataset of sentiment-annotated parliamentary debates from Bosnia-Herzegovina, Croatia, and Serbia},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution Share Alike 4.0 International}

}

``` |

classla/bcms-bertic-parlasent-bcs-bi | 526844df71b5b4c2a73e2ee52996438387c7ec95 | 2022-06-17T13:51:54.000Z | [

"pytorch",

"electra",

"text-classification",

"hr",

"arxiv:2206.00929",

"transformers",

"sentiment-analysis"

] | text-classification | false | classla | null | classla/bcms-bertic-parlasent-bcs-bi | 21 | null | transformers | 8,237 | ---

language: "hr"

tags:

- text-classification

- sentiment-analysis

widget:

- text: "Poštovani potpredsjedničke Vlade i ministre hrvatskih branitelja, mislite li da ste zapravo iznevjerili svoje suborce s kojima ste 555 dana prosvjedovali u šatoru protiv tadašnjih dužnosnika jer ste zapravo donijeli zakon koji je neprovediv, a birali ste si suradnike koji nemaju etički integritet."

---

# bcms-bertic-parlasent-bcs-bi

Binary text classification model based on [`classla/bcms-bertic`](https://huggingface.co/classla/bcms-bertic) and fine-tuned on the BCS Political Sentiment dataset (sentence-level data).

This classifier assigns text to one of two categories: Negative or Other. For the ternary classifier (Negative, Neutral, Positive), see [this model](https://huggingface.co/classla/bcms-bertic-parlasent-bcs-ter).

For details on the dataset and the finetuning procedure, please see [this paper](https://arxiv.org/abs/2206.00929).

## Fine-tuning hyperparameters

Fine-tuning was performed with `simpletransformers`. A brief sweep over the number of epochs was run beforehand, and 9 was taken as the best value. All other arguments were left at their defaults.

```python

model_args = {
    "num_train_epochs": 9,  # value selected by the epoch sweep
}

```

## Performance in comparison with ternary classifier

| model | average macro F1 |

|-------------------------------------------|------------------|