| modelId | sha | lastModified | tags | pipeline_tag | private | author | config | id | downloads | likes | library_name | __index_level_0__ | readme |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Helsinki-NLP/opus-mt-guw-en | 84fb2fa0624cd832855fb196770f4e294c2df8d5 | 2021-09-09T21:59:32.000Z | ["pytorch", "marian", "text2text-generation", "guw", "en", "transformers", "translation", "license:apache-2.0", "autotrain_compatible"] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-guw-en | 22 | null | transformers | 8,000 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-guw-en

* source languages: guw

* target languages: en

* OPUS readme: [guw-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/guw-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-09.zip](https://object.pouta.csc.fi/OPUS-MT-models/guw-en/opus-2020-01-09.zip)

* test set translations: [opus-2020-01-09.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/guw-en/opus-2020-01-09.test.txt)

* test set scores: [opus-2020-01-09.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/guw-en/opus-2020-01-09.eval.txt)
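The card does not include a usage snippet. A minimal sketch, assuming the standard Marian classes in `transformers` (with `sentencepiece` installed) and the `Helsinki-NLP/opus-mt-{src}-{tgt}` naming used by the model IDs in this listing; the helper names `opus_mt_model_id` and `translate` are hypothetical:

```python
def opus_mt_model_id(src: str, tgt: str) -> str:
    """Build a Hub ID following the Helsinki-NLP/opus-mt-{src}-{tgt} pattern (assumed naming)."""
    return f"Helsinki-NLP/opus-mt-{src}-{tgt}"


def translate(texts, src="guw", tgt="en"):
    """Translate a list of sentences with an OPUS-MT Marian checkpoint."""
    # Imported lazily so the ID helper above works without transformers installed.
    from transformers import MarianMTModel, MarianTokenizer

    name = opus_mt_model_id(src, tgt)
    tokenizer = MarianTokenizer.from_pretrained(name)
    model = MarianMTModel.from_pretrained(name)
    batch = tokenizer(texts, return_tensors="pt", padding=True)
    generated = model.generate(**batch)
    return tokenizer.batch_decode(generated, skip_special_tokens=True)
```

The checkpoint is downloaded on first use; the same helper should apply to the other `*-en` OPUS-MT models in this listing by changing `src`.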

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| JW300.guw.en | 44.8 | 0.601 |

|

Helsinki-NLP/opus-mt-lu-en | e5a8fbfce6798964395091f07a440a5b568229c7 | 2021-09-10T13:55:45.000Z | ["pytorch", "marian", "text2text-generation", "lu", "en", "transformers", "translation", "license:apache-2.0", "autotrain_compatible"] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-lu-en | 22 | null | transformers | 8,001 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-lu-en

* source languages: lu

* target languages: en

* OPUS readme: [lu-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/lu-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-09.zip](https://object.pouta.csc.fi/OPUS-MT-models/lu-en/opus-2020-01-09.zip)

* test set translations: [opus-2020-01-09.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/lu-en/opus-2020-01-09.test.txt)

* test set scores: [opus-2020-01-09.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/lu-en/opus-2020-01-09.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| JW300.lu.en | 35.7 | 0.517 |

|

Helsinki-NLP/opus-mt-ng-en | 57bfb4a1922ad1f807a8e951ee46145b9dc45dce | 2021-09-10T13:58:41.000Z | ["pytorch", "marian", "text2text-generation", "ng", "en", "transformers", "translation", "license:apache-2.0", "autotrain_compatible"] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-ng-en | 22 | null | transformers | 8,002 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-ng-en

* source languages: ng

* target languages: en

* OPUS readme: [ng-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/ng-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/ng-en/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/ng-en/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/ng-en/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| JW300.ng.en | 27.3 | 0.443 |

|

Helsinki-NLP/opus-mt-rn-en | 209441b0eb367684cdbb2b4e852571afbbaee771 | 2020-08-21T14:42:49.000Z | ["pytorch", "marian", "text2text-generation", "rn", "en", "transformers", "translation", "license:apache-2.0", "autotrain_compatible"] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-rn-en | 22 | null | transformers | 8,003 | ---

language:

- rn

- en

tags:

- translation

license: apache-2.0

---

### run-eng

* source group: Rundi

* target group: English

* OPUS readme: [run-eng](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/run-eng/README.md)

* model: transformer-align

* source language(s): run

* target language(s): eng


* pre-processing: normalization + SentencePiece (spm4k,spm4k)

* download original weights: [opus-2020-06-16.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/run-eng/opus-2020-06-16.zip)

* test set translations: [opus-2020-06-16.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/run-eng/opus-2020-06-16.test.txt)

* test set scores: [opus-2020-06-16.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/run-eng/opus-2020-06-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| Tatoeba-test.run.eng | 26.7 | 0.428 |
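The System Info below reports `brevity_penalty: 0.99` and `ref_len: 10041.0` alongside the BLEU score. A small sketch of the standard BLEU brevity penalty (as computed by sacreBLEU-style scorers) shows what those two numbers imply about the output length; the helper names are hypothetical, and the inversion assumes the penalty was below 1:

```python
import math


def brevity_penalty(hyp_len: int, ref_len: int) -> float:
    """BLEU brevity penalty: 1.0 if the output is at least as long as the reference, else exp(1 - r/c)."""
    if hyp_len <= 0:
        return 0.0
    if hyp_len >= ref_len:
        return 1.0
    return math.exp(1.0 - ref_len / hyp_len)


def implied_hyp_len(bp: float, ref_len: float) -> float:
    """Invert BP = exp(1 - r/c) to estimate the hypothesis length c (only meaningful for bp < 1)."""
    return ref_len / (1.0 - math.log(bp))
```

Plugging in the reported values, `implied_hyp_len(0.99, 10041)` gives about 9,941 tokens; because 0.99 is rounded, the true output length lies roughly between 9,890 and 9,990 tokens, i.e. translations slightly shorter than the references.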

### System Info:

- hf_name: run-eng

- source_languages: run

- target_languages: eng

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/run-eng/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['rn', 'en']

- src_constituents: {'run'}

- tgt_constituents: {'eng'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm4k,spm4k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/run-eng/opus-2020-06-16.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/run-eng/opus-2020-06-16.test.txt

- src_alpha3: run

- tgt_alpha3: eng

- short_pair: rn-en

- chrF2_score: 0.428

- bleu: 26.7

- brevity_penalty: 0.99

- ref_len: 10041.0

- src_name: Rundi

- tgt_name: English

- train_date: 2020-06-16

- src_alpha2: rn

- tgt_alpha2: en

- prefer_old: False

- long_pair: run-eng

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 |

Helsinki-NLP/opus-mt-to-en | 00a0ae6a795fc4cc7da526bc63212fc1a763513c | 2021-09-11T10:48:53.000Z | ["pytorch", "marian", "text2text-generation", "to", "en", "transformers", "translation", "license:apache-2.0", "autotrain_compatible"] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-to-en | 22 | null | transformers | 8,004 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-to-en

* source languages: to

* target languages: en

* OPUS readme: [to-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/to-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/to-en/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/to-en/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/to-en/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| JW300.to.en | 49.3 | 0.627 |

|

Helsinki-NLP/opus-mt-umb-en | 4baaf48bdbc9f45e0abdbae7490e5ccaa39c12e7 | 2021-09-11T10:51:33.000Z | ["pytorch", "marian", "text2text-generation", "umb", "en", "transformers", "translation", "license:apache-2.0", "autotrain_compatible"] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-umb-en | 22 | null | transformers | 8,005 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-umb-en

* source languages: umb

* target languages: en

* OPUS readme: [umb-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/umb-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/umb-en/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/umb-en/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/umb-en/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| JW300.umb.en | 27.5 | 0.425 |

|

Helsinki-NLP/opus-mt-urj-urj | 7a66b71ec9428eb22fe49e189929c64466a45f43 | 2020-08-21T14:42:51.000Z | ["pytorch", "marian", "text2text-generation", "se", "fi", "hu", "et", "urj", "transformers", "translation", "license:apache-2.0", "autotrain_compatible"] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-urj-urj | 22 | null | transformers | 8,006 | ---

language:

- se

- fi

- hu

- et

- urj

tags:

- translation

license: apache-2.0

---

### urj-urj

* source group: Uralic languages

* target group: Uralic languages

* OPUS readme: [urj-urj](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/urj-urj/README.md)

* model: transformer

* source language(s): est fin fkv_Latn hun izh krl liv_Latn vep vro

* target language(s): est fin fkv_Latn hun izh krl liv_Latn vep vro


* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* a sentence initial language token is required in the form of `>>id<<` (id = valid target language ID)

* download original weights: [opus-2020-07-27.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/urj-urj/opus-2020-07-27.zip)

* test set translations: [opus-2020-07-27.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/urj-urj/opus-2020-07-27.test.txt)

* test set scores: [opus-2020-07-27.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/urj-urj/opus-2020-07-27.eval.txt)
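Because this is a many-to-many model, every input needs the `>>id<<` target-language prefix described above. A minimal sketch, assuming the target IDs are exactly the ones in the "target language(s)" list and that the standard Marian classes in `transformers` load this checkpoint; `with_target_token` and `translate_urj` are hypothetical helper names:

```python
# Target IDs copied from the "target language(s)" list of this card.
URJ_TARGETS = {"est", "fin", "fkv_Latn", "hun", "izh", "krl", "liv_Latn", "vep", "vro"}


def with_target_token(sentences, target_lang):
    """Prefix each sentence with the sentence-initial >>id<< token the model expects."""
    if target_lang not in URJ_TARGETS:
        raise ValueError(f"unsupported target language: {target_lang}")
    return [f">>{target_lang}<< {s}" for s in sentences]


def translate_urj(sentences, target_lang):
    """Translate into one Uralic target language with the urj-urj checkpoint."""
    # Imported lazily so the prefix helper can be used without transformers installed.
    from transformers import MarianMTModel, MarianTokenizer

    name = "Helsinki-NLP/opus-mt-urj-urj"
    tokenizer = MarianTokenizer.from_pretrained(name)
    model = MarianMTModel.from_pretrained(name)
    batch = tokenizer(with_target_token(sentences, target_lang), return_tensors="pt", padding=True)
    return tokenizer.batch_decode(model.generate(**batch), skip_special_tokens=True)
```

For example, `translate_urj(["Tere hommikust"], "fin")` would send `>>fin<< Tere hommikust` to the model, asking for an Estonian-to-Finnish translation.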

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| Tatoeba-test.est-est.est.est | 5.1 | 0.288 |
| Tatoeba-test.est-fin.est.fin | 50.9 | 0.709 |
| Tatoeba-test.est-fkv.est.fkv | 0.7 | 0.215 |
| Tatoeba-test.est-vep.est.vep | 1.0 | 0.154 |
| Tatoeba-test.fin-est.fin.est | 55.5 | 0.718 |
| Tatoeba-test.fin-fkv.fin.fkv | 1.8 | 0.254 |
| Tatoeba-test.fin-hun.fin.hun | 45.0 | 0.672 |
| Tatoeba-test.fin-izh.fin.izh | 7.1 | 0.492 |
| Tatoeba-test.fin-krl.fin.krl | 2.6 | 0.278 |
| Tatoeba-test.fkv-est.fkv.est | 0.6 | 0.099 |
| Tatoeba-test.fkv-fin.fkv.fin | 15.5 | 0.444 |
| Tatoeba-test.fkv-liv.fkv.liv | 0.6 | 0.101 |
| Tatoeba-test.fkv-vep.fkv.vep | 0.6 | 0.113 |
| Tatoeba-test.hun-fin.hun.fin | 46.3 | 0.675 |
| Tatoeba-test.izh-fin.izh.fin | 13.4 | 0.431 |
| Tatoeba-test.izh-krl.izh.krl | 2.9 | 0.078 |
| Tatoeba-test.krl-fin.krl.fin | 14.1 | 0.439 |
| Tatoeba-test.krl-izh.krl.izh | 1.0 | 0.125 |
| Tatoeba-test.liv-fkv.liv.fkv | 0.9 | 0.170 |
| Tatoeba-test.liv-vep.liv.vep | 2.6 | 0.176 |
| Tatoeba-test.multi.multi | 32.9 | 0.580 |
| Tatoeba-test.vep-est.vep.est | 3.4 | 0.265 |
| Tatoeba-test.vep-fkv.vep.fkv | 0.9 | 0.239 |
| Tatoeba-test.vep-liv.vep.liv | 2.6 | 0.190 |

### System Info:

- hf_name: urj-urj

- source_languages: urj

- target_languages: urj

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/urj-urj/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['se', 'fi', 'hu', 'et', 'urj']

- src_constituents: {'izh', 'mdf', 'vep', 'vro', 'sme', 'myv', 'fkv_Latn', 'krl', 'fin', 'hun', 'kpv', 'udm', 'liv_Latn', 'est', 'mhr', 'sma'}

- tgt_constituents: {'izh', 'mdf', 'vep', 'vro', 'sme', 'myv', 'fkv_Latn', 'krl', 'fin', 'hun', 'kpv', 'udm', 'liv_Latn', 'est', 'mhr', 'sma'}

- src_multilingual: True

- tgt_multilingual: True

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/urj-urj/opus-2020-07-27.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/urj-urj/opus-2020-07-27.test.txt

- src_alpha3: urj

- tgt_alpha3: urj

- short_pair: urj-urj

- chrF2_score: 0.58

- bleu: 32.9

- brevity_penalty: 1.0

- ref_len: 19444.0

- src_name: Uralic languages

- tgt_name: Uralic languages

- train_date: 2020-07-27

- src_alpha2: urj

- tgt_alpha2: urj

- prefer_old: False

- long_pair: urj-urj

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 |

RecordedFuture/Swedish-Sentiment-Violence | 881e0f742307fa2740b47a7d96750d28cf8ff99f | 2021-05-18T22:02:50.000Z | ["pytorch", "tf", "jax", "bert", "text-classification", "sv", "transformers", "license:mit"] | text-classification | false | RecordedFuture | null | RecordedFuture/Swedish-Sentiment-Violence | 22 | null | transformers | 8,007 | ---

language: sv

license: mit

---

## Swedish BERT models for sentiment analysis

[Recorded Future](https://www.recordedfuture.com/) together with [AI Sweden](https://www.ai.se/en) releases two language models for sentiment analysis in Swedish. The two models are based on the [KB/bert-base-swedish-cased](https://huggingface.co/KB/bert-base-swedish-cased) model and have been fine-tuned to solve a multi-label sentiment analysis task.

The models have been fine-tuned for two sentiments, fear and violence. Each model outputs three floats corresponding to the labels "Negative", "Weak sentiment", and "Strong Sentiment", at indexes 0, 1, and 2 respectively.

The models have been trained on Swedish data with a conversational focus, collected from various internet sources and forums.

The models are trained only on Swedish data and only support inference on Swedish input texts. Inference metrics for non-Swedish inputs are not defined; such inputs are considered out-of-domain data.

The current models are supported by Transformers version >= 4.3.3 and Torch version 1.8.0; compatibility with older versions has not been verified.

### Swedish-Sentiment-Fear

The model can be imported from the transformers library by running:

    from transformers import BertForSequenceClassification, BertTokenizerFast

    tokenizer = BertTokenizerFast.from_pretrained("RecordedFuture/Swedish-Sentiment-Fear")
    classifier_fear = BertForSequenceClassification.from_pretrained("RecordedFuture/Swedish-Sentiment-Fear")

Once the model and tokenizer are initialized, the model can be used for inference.

#### Sentiment definitions

#### The strong sentiment includes, but is not limited to

Texts that:

- Hold an expressive emphasis on fear and/or anxiety

#### The weak sentiment includes, but is not limited to

Texts that:

- Express fear and/or anxiety in a neutral way

#### Verification metrics

During training, the validation metrics were maximized at the following classification breakpoint:

| Classification Breakpoint | F-score | Precision | Recall |
|:-------------------------:|:-------:|:---------:|:------:|
| 0.45 | 0.8754 | 0.8618 | 0.8895 |
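The card does not show how the three output floats become a decision. A minimal sketch, assuming the model returns raw logits that are passed through an independent sigmoid per label (consistent with the multi-label framing above, but not confirmed by the card) and that a label is active when its score reaches the classification breakpoint (0.45 for fear; the violence model reports 0.35). If the head already returns probabilities, drop the sigmoid. `labels_above_breakpoint` and `classify` are hypothetical helpers:

```python
import math

# Label order as stated in the card (indexes 0, 1, 2).
LABELS = ["Negative", "Weak sentiment", "Strong Sentiment"]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def labels_above_breakpoint(logits, breakpoint=0.45):
    """Assumed multi-label decoding: keep every label whose sigmoid score reaches the breakpoint."""
    return [label for label, z in zip(LABELS, logits) if sigmoid(z) >= breakpoint]


def classify(text, model_name="RecordedFuture/Swedish-Sentiment-Fear", breakpoint=0.45):
    """Run one Swedish text through the classifier and decode it with the breakpoint."""
    # Imported lazily so the decoding helper works without torch/transformers installed.
    import torch
    from transformers import BertForSequenceClassification, BertTokenizerFast

    tokenizer = BertTokenizerFast.from_pretrained(model_name)
    model = BertForSequenceClassification.from_pretrained(model_name)
    inputs = tokenizer(text, return_tensors="pt", truncation=True)
    with torch.no_grad():
        logits = model(**inputs).logits[0].tolist()
    return labels_above_breakpoint(logits, breakpoint)
```

For the violence model, call `classify(text, "RecordedFuture/Swedish-Sentiment-Violence", breakpoint=0.35)`.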

### Swedish-Sentiment-Violence

The model can be imported from the transformers library by running:

    from transformers import BertForSequenceClassification, BertTokenizerFast

    tokenizer = BertTokenizerFast.from_pretrained("RecordedFuture/Swedish-Sentiment-Violence")
    classifier_violence = BertForSequenceClassification.from_pretrained("RecordedFuture/Swedish-Sentiment-Violence")

Once the model and tokenizer are initialized, the model can be used for inference.

#### Sentiment definitions

#### The strong sentiment includes, but is not limited to

Texts that:

- Reference highly violent acts

- Hold an aggressive tone

#### The weak sentiment includes, but is not limited to

Texts that:

- Include general violent statements that do not fall under the strong sentiment

#### Verification metrics

During training, the validation metrics were maximized at the following classification breakpoint:

| Classification Breakpoint | F-score | Precision | Recall |
|:-------------------------:|:-------:|:---------:|:------:|
| 0.35 | 0.7677 | 0.7456 | 0.791 | |

SEBIS/code_trans_t5_base_code_documentation_generation_javascript_multitask | 2572188f11a56254b981bac5ea59275b3d769550 | 2021-06-23T04:29:50.000Z | ["pytorch", "jax", "t5", "feature-extraction", "transformers", "summarization"] | summarization | false | SEBIS | null | SEBIS/code_trans_t5_base_code_documentation_generation_javascript_multitask | 22 | null | transformers | 8,008 | ---

tags:

- summarization

widget:

- text: "function isStandardBrowserEnv ( ) { if ( typeof navigator !== 'undefined' && ( navigator . product === 'ReactNative' || navigator . product === 'NativeScript' || navigator . product === 'NS' ) ) { return false ; } return ( typeof window !== 'undefined' && typeof document !== 'undefined' ) ; }"

---

# CodeTrans model for code documentation generation javascript

Pretrained model on the JavaScript programming language using the T5 base model architecture. It was first released in [this repository](https://github.com/agemagician/CodeTrans). The model was trained on tokenized JavaScript functions, so it works best with tokenized JavaScript input.

## Model description

This CodeTrans model is based on the `t5-base` model. It has its own SentencePiece vocabulary model. It used multi-task training on 13 supervised tasks in the software development domain and 7 unsupervised datasets.

## Intended uses & limitations

The model can be used to generate descriptions for JavaScript functions, or be fine-tuned on other JavaScript code tasks. It also accepts unparsed and untokenized JavaScript code, although performance should be better when the code is tokenized.
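The widget example above is JavaScript with every token separated by a single space. The exact tokenizer used by the CodeTrans authors is not described in this card, so the following is only a rough, hypothetical approximation: a regular-expression tokenizer that produces input in the same space-separated style. It does not handle comments, template literals, or regex literals.

```python
import re

# Order matters: string literals first, then multi-character operators,
# then identifiers and numbers, then any other single non-space character.
_TOKEN_RE = re.compile(
    r"""'(?:\\.|[^'\\])*'"""
    r'''|"(?:\\.|[^"\\])*"'''
    r"|===|!==|==|!=|<=|>=|&&|\|\||=>|\+\+|--"
    r"|[A-Za-z_$][\w$]*"
    r"|\d+(?:\.\d+)?"
    r"|\S"
)


def tokenize_js(code: str) -> str:
    """Return the code as space-separated tokens (whitespace between tokens is discarded)."""
    return " ".join(_TOKEN_RE.findall(code))
```

The result can be passed directly as `tokenized_code` to the pipeline in the usage example.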

### How to use

Here is how to use this model to generate javascript function documentation using Transformers SummarizationPipeline:

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM, SummarizationPipeline

model_name = "SEBIS/code_trans_t5_base_code_documentation_generation_javascript_multitask"

pipeline = SummarizationPipeline(
    # AutoModelForSeq2SeqLM replaces the deprecated AutoModelWithLMHead for T5 checkpoints
    model=AutoModelForSeq2SeqLM.from_pretrained(model_name),
    tokenizer=AutoTokenizer.from_pretrained(model_name, skip_special_tokens=True),
    device=0,  # first GPU; use device=-1 to run on CPU
)

tokenized_code = "function isStandardBrowserEnv ( ) { if ( typeof navigator !== 'undefined' && ( navigator . product === 'ReactNative' || navigator . product === 'NativeScript' || navigator . product === 'NS' ) ) { return false ; } return ( typeof window !== 'undefined' && typeof document !== 'undefined' ) ; }"

pipeline([tokenized_code])

```

Run this example in a [Colab notebook](https://github.com/agemagician/CodeTrans/blob/main/prediction/multitask/pre-training/function%20documentation%20generation/javascript/base_model.ipynb).

## Training data

The datasets for the supervised training tasks can be downloaded from [this link](https://www.dropbox.com/sh/488bq2of10r4wvw/AACs5CGIQuwtsD7j_Ls_JAORa/finetuning_dataset?dl=0&subfolder_nav_tracking=1).

## Training procedure

### Multi-task Pretraining

The model was trained on a single TPU Pod V3-8 for 440,000 steps in total, using sequence length 512 (batch size 4096).

It has a total of approximately 220M parameters and was trained using the encoder-decoder architecture.

The optimizer used is AdaFactor with inverse square root learning rate schedule for pre-training.

## Evaluation results

For the code documentation tasks, different models achieve the following results on different programming languages (BLEU score):

Test results:

| Language / Model | Python | Java | Go | Php | Ruby | JavaScript |
| -------------------- | :------------: | :------------: | :------------: | :------------: | :------------: | :------------: |
| CodeTrans-ST-Small | 17.31 | 16.65 | 16.89 | 23.05 | 9.19 | 13.7 |
| CodeTrans-ST-Base | 16.86 | 17.17 | 17.16 | 22.98 | 8.23 | 13.17 |
| CodeTrans-TF-Small | 19.93 | 19.48 | 18.88 | 25.35 | 13.15 | 17.23 |
| CodeTrans-TF-Base | 20.26 | 20.19 | 19.50 | 25.84 | 14.07 | 18.25 |
| CodeTrans-TF-Large | 20.35 | 20.06 | **19.54** | 26.18 | 14.94 | **18.98** |
| CodeTrans-MT-Small | 19.64 | 19.00 | 19.15 | 24.68 | 14.91 | 15.26 |
| CodeTrans-MT-Base | **20.39** | 21.22 | 19.43 | **26.23** | **15.26** | 16.11 |
| CodeTrans-MT-Large | 20.18 | **21.87** | 19.38 | 26.08 | 15.00 | 16.23 |
| CodeTrans-MT-TF-Small | 19.77 | 20.04 | 19.36 | 25.55 | 13.70 | 17.24 |
| CodeTrans-MT-TF-Base | 19.77 | 21.12 | 18.86 | 25.79 | 14.24 | 18.62 |
| CodeTrans-MT-TF-Large | 18.94 | 21.42 | 18.77 | 26.20 | 14.19 | 18.83 |
| State of the art | 19.06 | 17.65 | 18.07 | 25.16 | 12.16 | 14.90 |

> Created by [Ahmed Elnaggar](https://twitter.com/Elnaggar_AI) | [LinkedIn](https://www.linkedin.com/in/prof-ahmed-elnaggar/) and Wei Ding | [LinkedIn](https://www.linkedin.com/in/wei-ding-92561270/)

|

aditeyabaral/sentencetransformer-bert-hinglish-small | 494792d8ff0aa558e2d2bc825814b536d766732e | 2021-10-20T06:28:16.000Z | ["pytorch", "bert", "feature-extraction", "sentence-transformers", "sentence-similarity", "transformers"] | sentence-similarity | false | aditeyabaral | null | aditeyabaral/sentencetransformer-bert-hinglish-small | 22 | null | sentence-transformers | 8,009 | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# aditeyabaral/sentencetransformer-bert-hinglish-small

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('aditeyabaral/sentencetransformer-bert-hinglish-small')

embeddings = model.encode(sentences)

print(embeddings)

```
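Clustering and semantic search both come down to comparing these embeddings, most commonly by cosine similarity. A dependency-free sketch follows; the helper names are illustrative, and recent versions of sentence-transformers also provide a vectorized `util.cos_sim` for the same purpose:

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query_emb: Sequence[float], corpus_embs: Sequence[Sequence[float]]) -> List[Tuple[int, float]]:
    """Return (corpus index, score) pairs sorted from most to least similar to the query."""
    scores = [(i, cosine(query_emb, emb)) for i, emb in enumerate(corpus_embs)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)
```

With the example above, `rank(embeddings[0], embeddings)` lists the first sentence itself at the top with a score of about 1.0.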

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel
import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # first element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('aditeyabaral/sentencetransformer-bert-hinglish-small')
model = AutoModel.from_pretrained('aditeyabaral/sentencetransformer-bert-hinglish-small')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=aditeyabaral/sentencetransformer-bert-hinglish-small)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 4617 with parameters:

```

{'batch_size': 32, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 10,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'transformers.optimization.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 100,

"weight_decay": 0.01

}

```
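The fit() parameters combine 100 warm-up steps with a `WarmupLinear` scheduler and a peak learning rate of 2e-05. A sketch of the resulting learning-rate curve, assuming `WarmupLinear` follows the usual linear warm-up then linear decay to zero (the shape of `transformers.get_linear_schedule_with_warmup`) and that the total step count is the DataLoader length times the number of epochs; the helper names are hypothetical:

```python
def warmup_linear(step: int, warmup_steps: int, total_steps: int) -> float:
    """LR multiplier: rises linearly from 0 to 1 over warm-up, then decays linearly to 0 at total_steps."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


STEPS_PER_EPOCH = 4617  # DataLoader length reported above
EPOCHS = 10
WARMUP_STEPS = 100
PEAK_LR = 2e-05
TOTAL_STEPS = STEPS_PER_EPOCH * EPOCHS  # 46,170 optimizer steps


def lr_at(step: int) -> float:
    """Learning rate at a given optimizer step under the assumed schedule."""
    return PEAK_LR * warmup_linear(step, WARMUP_STEPS, TOTAL_STEPS)
```

Under these assumptions the learning rate peaks at step 100 and reaches zero at step 46,170, the end of the tenth epoch.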

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

airesearch/wangchanberta-base-wiki-sefr | 9c7f7cbe9fdf6ec51696769591e11eb8c1b99e76 | 2021-09-11T09:39:05.000Z | ["pytorch", "jax", "roberta", "fill-mask", "th", "arxiv:1907.11692", "arxiv:2101.09635", "transformers", "autotrain_compatible"] | fill-mask | false | airesearch | null | airesearch/wangchanberta-base-wiki-sefr | 22 | null | transformers | 8,010 | ---

language: th

---

# WangchanBERTa base model: `wangchanberta-base-wiki-sefr`

<br>

RoBERTa base model pretrained on the Thai Wikipedia corpus.

The script and documentation can be found at [this repository](https://github.com/vistec-AI/thai2transformers).

<br>

## Model description

<br>

The architecture of the pretrained model is based on RoBERTa [[Liu et al., 2019]](https://arxiv.org/abs/1907.11692).

<br>

## Intended uses & limitations

<br>

You can use the pretrained model for masked language modeling (i.e. predicting a mask token in the input text). In addition, we also provide finetuned models for multiclass/multilabel text classification and token classification tasks.

<br>

**Multiclass text classification**

- `wisesight_sentiment`

4-class text classification task (`positive`, `neutral`, `negative`, and `question`) based on social media posts and tweets.

- `wongnai_reviews`

Users' review rating classification task (on a scale from 1 to 5).

- `generated_reviews_enth`: (`review_star` as label)

Generated users' review rating classification task (on a scale from 1 to 5).

**Multilabel text classification**

- `prachathai67k`

Thai topic classification with 12 labels based on news article corpus from prachathai.com. The detail is described in this [page](https://huggingface.co/datasets/prachathai67k).

**Token classification**

- `thainer`

Named-entity recognition tagging with 13 named entities, as described in this [page](https://huggingface.co/datasets/thainer).

- `lst20`: NER and POS tagging

Named-entity recognition tagging with 10 named entities and part-of-speech tagging with 16 tags, as described in this [page](https://huggingface.co/datasets/lst20).

<br>

## How to use

<br>

A getting-started notebook for the WangchanBERTa model can be found in this [Colab notebook](https://colab.research.google.com/drive/1Kbk6sBspZLwcnOE61adAQo30xxqOQ9ko).

<br>

## Training data

The `wangchanberta-base-wiki-sefr` model was pretrained on Thai Wikipedia. Specifically, we use the Wikipedia dump articles from 20 August 2020 (dumps.wikimedia.org/thwiki/20200820/). We exclude lists and tables.

### Preprocessing

Texts are preprocessed with the following rules:

- Replace non-breaking spaces, zero-width non-breaking spaces, and soft hyphens with spaces.

- Remove empty parentheses that occur right after the title in the first paragraph.

- Replace spaces with `<_>`.
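A minimal sketch of these three rules on plain strings. The `title` argument and the way the empty parentheses are detected (only directly after a leading title) are assumptions, since the card does not say how the first-paragraph title is located; this is not the thai2transformers implementation:

```python
import re

# Non-breaking space, zero-width non-breaking space, soft hyphen
_SPECIAL_SPACES = ("\u00a0", "\ufeff", "\u00ad")


def preprocess(text: str, title: str = "") -> str:
    """Apply the three preprocessing rules to one paragraph of text."""
    for ch in _SPECIAL_SPACES:
        text = text.replace(ch, " ")
    # Assumed rule: drop one empty "( )" that directly follows the article title.
    if title and text.startswith(title):
        rest = re.sub(r"^\s*\(\s*\)", "", text[len(title):], count=1)
        text = title + rest
    # Make spaces explicit tokens.
    return text.replace(" ", "<_>")
```

For instance, `preprocess("Bangkok () is\u00a0big", title="Bangkok")` returns `"Bangkok<_>is<_>big"`.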

<br>

Regarding the vocabulary, we use the Stacked Ensemble Filter and Refine (SEFR) tokenizer (`engine="best"`) [[Limkonchotiwat et al., 2020]](https://www.aclweb.org/anthology/2020.emnlp-main.315/) based on probabilities from the CNN-based `deepcut` [[Kittinaradorn et al., 2019]](http://doi.org/10.5281/zenodo.3457707). The total number of word-level tokens in the vocabulary is 92,177.

We sample sentences contiguously to form sequences of at most 512 tokens. For sentences that cross the 512-token boundary, we split the sentence and insert an additional token as a document separator. This is the same approach as proposed by [[Liu et al., 2019]](https://arxiv.org/abs/1907.11692) (called "FULL-SENTENCES").

Regarding the masking procedure, for each sequence we sample 15% of the tokens for prediction. Of these, 80% are replaced with a `<mask>` token, 10% are left unchanged, and 10% are replaced with a random token.
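The masking rule above can be sketched as follows. This is an illustrative reimplementation, not the thai2transformers code: it samples exactly 15% of the positions in each sequence (a per-token Bernoulli draw, as in many data collators, is an equally plausible reading of the card), and the function and argument names are hypothetical:

```python
import random
from typing import List, Optional, Sequence, Tuple

MASK = "<mask>"


def mask_tokens(tokens: Sequence[str], vocab: Sequence[str], rng: random.Random,
                mask_prob: float = 0.15) -> Tuple[List[str], List[Optional[str]]]:
    """Return (model inputs, labels); labels hold the original token at selected positions, else None."""
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    n_select = round(mask_prob * len(tokens))
    for i in rng.sample(range(len(tokens)), n_select):
        labels[i] = tokens[i]  # the model must predict the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK  # 80%: replace with <mask>
        elif r < 0.9:
            pass  # 10%: keep the original token
        else:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random vocabulary token
    return inputs, labels
```

Passing a seeded `random.Random` keeps the masking reproducible across runs.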

<br>

**Train/Val/Test splits**

We split the data sequentially into 944,782 sentences for the training set, 24,863 sentences for the validation set, and 24,862 sentences for the test set.

<br>

**Pretraining**

The model was trained on 32 V100 GPUs for 31,250 steps with a batch size of 8,192 (16 sequences per device with 16 accumulation steps) and a sequence length of 512 tokens. The optimizer is Adam with a learning rate of $7 \times 10^{-4}$, $\beta_1 = 0.9$, $\beta_2 = 0.98$, and $\epsilon = 10^{-6}$. The learning rate is warmed up over the first 1,250 steps and then linearly decayed to zero. The checkpoint with the minimum validation loss is selected as the best model checkpoint.
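As a quick consistency check on these numbers, the effective batch size, the total number of sequences seen, and the learning rate $\eta_t$ at step $t$ work out as below. The decay endpoint at step 31,250 is an assumption, since the card only says the rate is decayed to zero:

$$32 \times 16 \times 16 = 8{,}192 \text{ sequences per step}, \qquad 31{,}250 \times 8{,}192 = 256{,}000{,}000 \text{ sequences}$$

$$\eta_t = \begin{cases} 7 \times 10^{-4} \cdot \dfrac{t}{1{,}250}, & t < 1{,}250 \\[4pt] 7 \times 10^{-4} \cdot \dfrac{31{,}250 - t}{30{,}000}, & 1{,}250 \le t \le 31{,}250 \end{cases}$$

Since sequences hold at most 512 tokens, this bounds the tokens processed at roughly $256 \times 10^{6} \times 512 \approx 1.31 \times 10^{11}$.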

<br>

**BibTeX entry and citation info**

```

@misc{lowphansirikul2021wangchanberta,

title={WangchanBERTa: Pretraining transformer-based Thai Language Models},

author={Lalita Lowphansirikul and Charin Polpanumas and Nawat Jantrakulchai and Sarana Nutanong},

year={2021},

eprint={2101.09635},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

alexanderfalk/danbert-small-cased | 2f63f543a11ad95cff4149288d04714da11167c4 | 2021-09-21T15:57:39.000Z | ["pytorch", "jax", "bert", "fill-mask", "da", "en", "dataset:custom danish dataset", "transformers", "named entity recognition", "token criticality", "license:apache-2.0", "autotrain_compatible"] | fill-mask | false | alexanderfalk | null | alexanderfalk/danbert-small-cased | 22 | null | transformers | 8,011 | ---

language:

- da

- en

thumbnail:

tags:

- named entity recognition

- token criticality

license: apache-2.0

datasets:

- custom danish dataset

inference: false

metrics:

- array of metric identifiers

---

# DanBERT

## Model description

DanBERT is a Danish pre-trained model based on BERT-Base. It has been trained on more than 2 million sentences and 40 million Danish words. The training was conducted as part of a thesis.

The model can be found at:

* [danbert-da](https://huggingface.co/alexanderfalk/danbert-small-cased)

## Intended uses & limitations

#### How to use

```python

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("alexanderfalk/danbert-small-cased")

model = AutoModel.from_pretrained("alexanderfalk/danbert-small-cased")

```

### BibTeX entry and citation info

```bibtex

@inproceedings{...,

year={2020},

title={Anonymization of Danish, Real-Time Data, and Personalized Modelling},

author={Alexander Falk},

}

``` |

beomi/kobert | 372ec671481c751af771f28c6f191d420d1a1d86 | 2021-06-08T08:36:08.000Z | ["pytorch", "bert", "fill-mask", "transformers", "autotrain_compatible"] | fill-mask | false | beomi | null | beomi/kobert | 22 | null | transformers | 8,012 | Entry not found |

birgermoell/roberta-swedish | 8b52b9fc6d6589e3dff4bee44c3e811315c1e071 | 2021-07-17T07:52:59.000Z | ["pytorch", "jax", "tensorboard", "roberta", "fill-mask", "transformers", "autotrain_compatible"] | fill-mask | false | birgermoell | null | birgermoell/roberta-swedish | 22 | null | transformers | 8,013 | ---

widget:

- text: "Var kan jag hitta någon <mask> talar engelska?"

---

# Swedish RoBERTa

## Model series

This model is part of a series of models trained on TPUs with Flax/JAX during the Huggingface Flax/JAX challenge.

## GPT models

### Swedish GPT

https://huggingface.co/birgermoell/swedish-gpt/

### Swedish GPT wiki

https://huggingface.co/flax-community/swe-gpt-wiki

### Nordic GPT wiki

https://huggingface.co/flax-community/nordic-gpt-wiki

### Dansk GPT wiki

https://huggingface.co/flax-community/dansk-gpt-wiki

### Norsk GPT wiki

https://huggingface.co/flax-community/norsk-gpt-wiki

## RoBERTa models

### Nordic RoBERTa Wiki

https://huggingface.co/flax-community/nordic-roberta-wiki

### Swe RoBERTa Wiki Oscar

https://huggingface.co/flax-community/swe-roberta-wiki-oscar

### RoBERTa Swedish Scandi

https://huggingface.co/birgermoell/roberta-swedish-scandi

### RoBERTa Swedish

https://huggingface.co/birgermoell/roberta-swedish

## Swedish T5 model

https://huggingface.co/birgermoell/t5-base-swedish

|

bochaowei/t5-small-finetuned-cnn-wei1 | 5996b8c932368e7d0b076ff93d6fe5825ac0039c | 2021-10-28T20:24:24.000Z | [

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"dataset:cnn_dailymail",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

] | text2text-generation | false | bochaowei | null | bochaowei/t5-small-finetuned-cnn-wei1 | 22 | null | transformers | 8,014 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- cnn_dailymail

metrics:

- rouge

model-index:

- name: t5-small-finetuned-cnn-wei1

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: cnn_dailymail

type: cnn_dailymail

args: 3.0.0

metrics:

- name: Rouge1

type: rouge

value: 41.1796

---


# t5-small-finetuned-cnn-wei1

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the cnn_dailymail dataset.

It achieves the following results on the evaluation set:

- Loss: 1.6819

- Rouge1: 41.1796

- Rouge2: 18.9426

- Rougel: 29.2338

- Rougelsum: 38.4087

- Gen Len: 72.7607

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 4e-05

- train_batch_size: 12

- eval_batch_size: 12

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 1.8582 | 1.0 | 23927 | 1.6819 | 41.1796 | 18.9426 | 29.2338 | 38.4087 | 72.7607 |
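As a quick consistency check, the 23,927 steps in the table correspond to one epoch at `train_batch_size: 12`. This assumes the full `cnn_dailymail` 3.0.0 train split (287,113 articles) was used, which the card does not state. The sketch below also shows the `linear` learning-rate schedule, assuming no warmup because none is listed. `steps_per_epoch` and `linear_lr` are hypothetical helper names.

```python
import math

TRAIN_EXAMPLES = 287_113  # assumed: size of the cnn_dailymail 3.0.0 train split
TRAIN_BATCH_SIZE = 12     # train_batch_size above
BASE_LR = 4e-05           # learning_rate above


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    # The last, partially filled batch still counts as an optimizer step.
    return math.ceil(num_examples / batch_size)


def linear_lr(step: int, total_steps: int, base_lr: float = BASE_LR) -> float:
    # Linear decay from base_lr to 0, assuming no warmup.
    return base_lr * max(0.0, (total_steps - step) / total_steps)


total = steps_per_epoch(TRAIN_EXAMPLES, TRAIN_BATCH_SIZE)
print(total)                         # 23927, the "Step" value in the table
print(linear_lr(total // 2, total))  # roughly half of 4e-05 at mid-epoch
```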

### Framework versions

- Transformers 4.11.3

- Pytorch 1.9.0+cu111

- Datasets 1.14.0

- Tokenizers 0.10.3

|

bowipawan/bert-sentimental | 2f9b7e4ef23b55b98cdb38153c67548da073cee5 | 2021-11-12T13:47:43.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"transformers"

] | text-classification | false | bowipawan | null | bowipawan/bert-sentimental | 22 | null | transformers | 8,015 | For studying only |

cactode/gpt2_urbandict_textgen_torch | 32706d845387d1a05aaa58ad87a9b7e36f6957ae | 2021-11-05T03:53:10.000Z | [

"pytorch",

"gpt2",

"text-generation",

"transformers"

] | text-generation | false | cactode | null | cactode/gpt2_urbandict_textgen_torch | 22 | null | transformers | 8,016 | # GPT2 Fine Tuned on UrbanDictionary

Honestly a little horrifying, but still funny.

## Usage

Use with GPT2Tokenizer. Pad token should be set to the EOS token.

Inputs should be of the form "define <your word>: ".
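Below is a minimal sketch of the usage described above. It assumes the checkpoint loads with `GPT2LMHeadModel`. The sampling settings (`do_sample=True`, `max_new_tokens=50`) are also assumptions, not values given by the author. `build_prompt` and `define` are hypothetical helper names.

```python
def build_prompt(word: str) -> str:
    # Input format given in the card: "define <your word>: "
    return f"define {word.strip()}: "


def define(word: str,
           model_id: str = "cactode/gpt2_urbandict_textgen_torch",
           max_new_tokens: int = 50) -> str:
    # Deferred imports so build_prompt works without transformers installed.
    from transformers import GPT2LMHeadModel, GPT2Tokenizer

    tokenizer = GPT2Tokenizer.from_pretrained(model_id)
    tokenizer.pad_token = tokenizer.eos_token  # pad with EOS, as the card recommends
    model = GPT2LMHeadModel.from_pretrained(model_id)

    inputs = tokenizer(build_prompt(word), return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=True,  # assumed sampling setting, not from the card
        pad_token_id=tokenizer.eos_token_id,
    )
    # Drop the prompt tokens and decode only the generated definition.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```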

## Training Data

All training data was obtained from [Urban Dictionary Words And Definitions on Kaggle](https://www.kaggle.com/therohk/urban-dictionary-words-dataset). Data was additionally filtered, normalized, and spell-checked.

## Bias

This model was trained on public internet data and will almost certainly produce offensive results. Some efforts were made to reduce this (e.g., definitions containing ethnic or gender-based slurs were removed), but the final model should not be trusted to produce non-offensive definitions. |

cahya/wav2vec2-luganda | ad1c5c036b67f488c416912d0aa3f6c0b65c1fa2 | 2022-03-23T18:27:18.000Z | [

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"lg",

"dataset:mozilla-foundation/common_voice_7_0",

"transformers",

"audio",

"common_voice",

"hf-asr-leaderboard",

"robust-speech-event",

"speech",

"license:apache-2.0",

"model-index"

] | automatic-speech-recognition | false | cahya | null | cahya/wav2vec2-luganda | 22 | null | transformers | 8,017 | ---

language: lg

datasets:

- mozilla-foundation/common_voice_7_0

metrics:

- wer

tags:

- audio

- automatic-speech-recognition

- common_voice

- hf-asr-leaderboard

- lg

- robust-speech-event

- speech

license: apache-2.0

model-index:

- name: Wav2Vec2 Luganda by Indonesian-NLP

results:

- task:

name: Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice lg

type: common_voice

args: lg

metrics:

- name: Test WER

type: wer

value: 9.332

- name: Test CER

type: cer

value: 1.987

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 7

type: mozilla-foundation/common_voice_7_0

args: lg

metrics:

- name: Test WER

type: wer

value: 13.844

- name: Test CER

type: cer

value: 2.68

---

# Automatic Speech Recognition for Luganda

This is the model built for the [Mozilla Luganda Automatic Speech Recognition competition](https://zindi.africa/competitions/mozilla-luganda-automatic-speech-recognition). It is [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) fine-tuned on version 7.0 of the [Luganda Common Voice dataset](https://huggingface.co/datasets/common_voice).

We also provide a [live demo](https://huggingface.co/spaces/indonesian-nlp/luganda-asr) to test the model.

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python
import torch
import torchaudio
from datasets import load_dataset
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "lg", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("indonesian-nlp/wav2vec2-luganda")
model = Wav2Vec2ForCTC.from_pretrained("indonesian-nlp/wav2vec2-luganda")

# Common Voice audio is 48 kHz; the model expects 16 kHz input.
resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    if "audio" in batch:
        # Cast to float32 to match what torchaudio.load returns
        speech_array = torch.tensor(batch["audio"]["array"], dtype=torch.float32)
    else:
        speech_array, _ = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset[:2]["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))
print("Reference:", test_dataset[:2]["sentence"])
```

## Evaluation

The model can be evaluated on the Luganda test data of Common Voice as follows.

```python
import re

import torch
import torchaudio
from datasets import load_dataset, load_metric
from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "lg", split="test")
wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("indonesian-nlp/wav2vec2-luganda")
model = Wav2Vec2ForCTC.from_pretrained("indonesian-nlp/wav2vec2-luganda")
model.to("cuda")

chars_to_ignore = [",", "?", ".", "!", "-", ";", ":", "%", "'", '"', "�", "‘", "’"]
# Escape each character so "-" is matched literally instead of forming a range
chars_to_ignore_regex = "[" + "".join(re.escape(c) for c in chars_to_ignore) + "]"

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, "", batch["sentence"]).lower()
    if "audio" in batch:
        speech_array = torch.tensor(batch["audio"]["array"], dtype=torch.float32)
    else:
        speech_array, _ = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference and decode the predicted ids into strings
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)
    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits
    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))
```
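WER is the number of word-level substitutions, deletions and insertions against the reference, divided by the number of reference words. The sketch below is a minimal pure-Python illustration of that definition. It assumes the same normalization as the evaluation script above: lowercasing and removing the `chars_to_ignore` characters, applied here to both references and predictions. It aggregates errors over the whole corpus, which is an assumption about how the `wer` metric aggregates. It is not that metric's implementation and may differ on edge cases. All function names are hypothetical.

```python
import re
from typing import List, Sequence

CHARS_TO_IGNORE = [",", "?", ".", "!", "-", ";", ":", "%", "'", '"', "�", "‘", "’"]
# re.escape keeps "-" literal instead of letting it form a range such as "!-;".
CHARS_TO_IGNORE_REGEX = "[" + "".join(re.escape(c) for c in CHARS_TO_IGNORE) + "]"


def normalize(text: str) -> str:
    return re.sub(CHARS_TO_IGNORE_REGEX, "", text).lower()


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Word-level Levenshtein distance (substitutions + deletions + insertions)."""
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,                           # deletion
                curr[j - 1] + 1,                       # insertion
                prev[j - 1] + (ref_word != hyp_word),  # substitution or match
            )
        prev = curr
    return prev[-1]


def corpus_wer(references: List[str], hypotheses: List[str]) -> float:
    """Total word errors divided by total reference words."""
    errors = words = 0
    for ref, hyp in zip(references, hypotheses):
        ref_words, hyp_words = normalize(ref).split(), normalize(hyp).split()
        errors += edit_distance(ref_words, hyp_words)
        words += len(ref_words)
    return errors / words
```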

WER without KenLM: 15.38 %

WER with KenLM (**Test Result**): 7.53 %

## Training

The Common Voice `train`, `validation`, and ... datasets were used for training as well as ... and ... # TODO

The script used for training can be found [here](https://github.com/indonesian-nlp/luganda-asr)

|

castorini/monot5-large-msmarco | 48cfad1d8dd587670393f27ee8ec41fde63e3d98 | 2021-10-17T11:20:56.000Z | [

"pytorch",

"jax",

"t5",

"feature-extraction",

"transformers"

] | feature-extraction | false | castorini | null | castorini/monot5-large-msmarco | 22 | null | transformers | 8,018 | This model is a T5-large reranker fine-tuned on the MS MARCO passage dataset for 100k steps (or 10 epochs).

For more details on how to use it, check the following links:

- [A simple reranking example](https://github.com/castorini/pygaggle#a-simple-reranking-example)

- [Rerank MS MARCO passages](https://github.com/castorini/pygaggle/blob/master/docs/experiments-msmarco-passage-subset.md)

- [Rerank Robust04 documents](https://github.com/castorini/pygaggle/blob/master/docs/experiments-robust04-monot5-gpu.md)
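Here is a rough idea of what the reranker computes, without installing pygaggle. Per the monoT5 paper, each query-passage pair is formatted as `Query: ... Document: ... Relevant:`. It is then scored from the decoder's logits for the tokens `true` and `false` at the first decoding step. The sketch below assumes that input format, the `▁true`/`▁false` SentencePiece tokens, and that the checkpoint loads with `T5ForConditionalGeneration`. The linked pygaggle code remains the reference implementation, and the helper names are hypothetical.

```python
import math
from typing import List


def monot5_input(query: str, document: str) -> str:
    # Input template from the monoT5 paper (assumed to match this checkpoint).
    return f"Query: {query} Document: {document} Relevant:"


def relevance_from_logits(true_logit: float, false_logit: float) -> float:
    """Log-probability of "true" under a softmax over just the {true, false} logits."""
    m = max(true_logit, false_logit)
    log_norm = m + math.log(math.exp(true_logit - m) + math.exp(false_logit - m))
    return true_logit - log_norm


def score(query: str, documents: List[str],
          model_id: str = "castorini/monot5-large-msmarco") -> List[float]:
    # Deferred imports: needs torch, transformers, sentencepiece and network access.
    import torch
    from transformers import T5ForConditionalGeneration, T5Tokenizer

    tokenizer = T5Tokenizer.from_pretrained(model_id)
    model = T5ForConditionalGeneration.from_pretrained(model_id).eval()
    true_id = tokenizer.convert_tokens_to_ids("▁true")
    false_id = tokenizer.convert_tokens_to_ids("▁false")

    enc = tokenizer([monot5_input(query, d) for d in documents],
                    padding=True, truncation=True, return_tensors="pt")
    # A single decoder step, starting from T5's decoder start token.
    start = torch.full((len(documents), 1), model.config.decoder_start_token_id, dtype=torch.long)
    with torch.no_grad():
        logits = model(**enc, decoder_input_ids=start).logits[:, 0, :]
    return [relevance_from_logits(float(t), float(f))
            for t, f in zip(logits[:, true_id], logits[:, false_id])]
```

Higher scores mean more relevant. Sorting the documents by `score(query, documents)` in descending order gives the reranked list.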

Paper describing the model: [Document Ranking with a Pretrained Sequence-to-Sequence Model](https://www.aclweb.org/anthology/2020.findings-emnlp.63/) |

dbmdz/electra-base-french-europeana-cased-discriminator | 685c31965459d92093facd8b2b31ee164ffc031e | 2021-09-13T21:05:37.000Z | [

"pytorch",

"tf",

"electra",

"pretraining",

"fr",

"transformers",

"historic french",

"license:mit"

] | null | false | dbmdz | null | dbmdz/electra-base-french-europeana-cased-discriminator | 22 | 1 | transformers | 8,019 | ---

language: fr

license: mit

tags:

- "historic french"

---

# 🤗 + 📚 dbmdz ELECTRA models

In this repository, the MDZ Digital Library team (dbmdz) at the Bavarian State Library open-sources French Europeana ELECTRA models 🎉

# French Europeana ELECTRA

We extracted all French texts from the Europeana corpus using the `language` metadata attribute.

The resulting corpus has a size of 63GB and consists of 11,052,528,456 tokens.

Based on the metadata information, the training corpus mainly consists of texts from the 18th to the 20th century.

Detailed information about the data and pretraining steps can be found in [this repository](https://github.com/stefan-it/europeana-bert).

## Model weights

ELECTRA model weights for PyTorch and TensorFlow are available.

* French Europeana ELECTRA (discriminator): `dbmdz/electra-base-french-europeana-cased-discriminator` - [model hub page](https://huggingface.co/dbmdz/electra-base-french-europeana-cased-discriminator/tree/main)

* French Europeana ELECTRA (generator): `dbmdz/electra-base-french-europeana-cased-generator` - [model hub page](https://huggingface.co/dbmdz/electra-base-french-europeana-cased-generator/tree/main)

## Results

For results on Historic NER, please refer to [this repository](https://github.com/stefan-it/europeana-bert).

## Usage

With Transformers >= 2.3, our French Europeana ELECTRA model can be loaded as follows:

```python

from transformers import AutoModel, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("dbmdz/electra-base-french-europeana-cased-discriminator")

model = AutoModel.from_pretrained("dbmdz/electra-base-french-europeana-cased-discriminator")

```
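The discriminator is trained to predict, for every token, whether the generator replaced it. Below is a minimal sketch of querying it for that prediction. It assumes the checkpoint loads with `ElectraForPreTraining`, which returns one logit per token, with a positive logit meaning "replaced". `flag_replaced` and `detect_replaced_tokens` are hypothetical helpers.

```python
from typing import List, Sequence, Tuple


def flag_replaced(logits: Sequence[float]) -> List[bool]:
    # One logit per token; positive (sigmoid > 0.5) means "predicted as replaced".
    return [float(x) > 0.0 for x in logits]


def detect_replaced_tokens(
    text: str,
    model_id: str = "dbmdz/electra-base-french-europeana-cased-discriminator",
) -> List[Tuple[str, bool]]:
    # Deferred imports: needs torch, transformers and network access.
    import torch
    from transformers import AutoTokenizer, ElectraForPreTraining

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = ElectraForPreTraining.from_pretrained(model_id).eval()
    inputs = tokenizer(text, return_tensors="pt")
    with torch.no_grad():
        logits = model(**inputs).logits[0]
    tokens = tokenizer.convert_ids_to_tokens(inputs["input_ids"][0].tolist())
    return list(zip(tokens, flag_replaced(logits.tolist())))
```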

# Huggingface model hub

All models are available on the [Huggingface model hub](https://huggingface.co/dbmdz).

# Contact (Bugs, Feedback, Contribution and more)

For questions about our ELECTRA models, just open an issue [here](https://github.com/dbmdz/berts/issues/new) 🤗

# Acknowledgments

Research supported with Cloud TPUs from Google's TensorFlow Research Cloud (TFRC).

Thanks for providing access to the TFRC ❤️

Thanks to the generous support from the [Hugging Face](https://huggingface.co/) team, it is possible to download our models from their S3 storage 🤗

|

dbmdz/electra-base-turkish-cased-v0-generator | 1369328c4a81db81cfa654ceabb4eafb19ab1df1 | 2020-04-24T15:57:22.000Z | [

"pytorch",

"electra",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | dbmdz | null | dbmdz/electra-base-turkish-cased-v0-generator | 22 | null | transformers | 8,020 | Entry not found |

dbragdon/noam-masked-lm | 4cc42aa0b51d2907f1f690098e32336205081fda | 2021-06-10T17:21:44.000Z | [

"pytorch",

"roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | dbragdon | null | dbragdon/noam-masked-lm | 22 | null | transformers | 8,021 | Masked Language Model trained on the articles and talks of Noam Chomsky. |

ddobokki/klue-roberta-small-nli-sts | e2a0bafb78d6395e6f9bc8fc35338a998eaa9eb0 | 2022-04-14T08:08:55.000Z | [

"pytorch",

"roberta",

"feature-extraction",

"sentence-transformers",

"sentence-similarity",

"transformers",

"ko"

] | sentence-similarity | false | ddobokki | null | ddobokki/klue-roberta-small-nli-sts | 22 | 1 | sentence-transformers | 8,022 | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

- ko

---

# ddobokki/klue-roberta-small-nli-sts

This is a Korean Sentence Transformer model.


## Usage (Sentence-Transformers)

It can be used with the [sentence-transformers](https://www.SBERT.net) library:

```

pip install -U sentence-transformers

```

Usage:

```python

from sentence_transformers import SentenceTransformer

sentences = ["흐르는 강물을 거꾸로 거슬러 오르는", "세월이 가면 가슴이 터질 듯한"]

model = SentenceTransformer('ddobokki/klue-roberta-small-nli-sts')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Using only the transformers library:

```python
from transformers import AutoTokenizer, AutoModel
import torch

# Mean Pooling - Take attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for
sentences = ["흐르는 강물을 거꾸로 거슬러 오르는", "세월이 가면 가슴이 터질 듯한"]

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('ddobokki/klue-roberta-small-nli-sts')
model = AutoModel.from_pretrained('ddobokki/klue-roberta-small-nli-sts')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```
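The same masked averaging as `mean_pooling` can be shown as a small NumPy sketch, using a hypothetical `mean_pooling_np` that is not part of this repository. It illustrates why the attention mask matters. Padding positions are zeroed out before summing, and the sum is divided by the number of real tokens, so padded vectors do not change the sentence embedding.

```python
import numpy as np


def mean_pooling_np(token_embeddings: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """token_embeddings: (batch, seq_len, dim); attention_mask: (batch, seq_len) of 0/1."""
    mask = attention_mask[..., None].astype(token_embeddings.dtype)  # (batch, seq_len, 1)
    summed = (token_embeddings * mask).sum(axis=1)                   # padding rows contribute 0
    counts = np.clip(mask.sum(axis=1), 1e-9, None)                   # avoid division by zero
    return summed / counts


# One sentence with two real tokens and one padding token holding arbitrary values.
emb = np.array([[[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]])
mask = np.array([[1, 1, 0]])
print(mean_pooling_np(emb, mask))  # [[2. 3.]]
```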

## Performance

- Semantic Textual Similarity test set results:

| Model | Cosine Pearson | Cosine Spearman | Euclidean Pearson | Euclidean Spearman | Manhattan Pearson | Manhattan Spearman | Dot Pearson | Dot Spearman |

|------------------------|:----:|:----:|:----:|:----:|:----:|:----:|:----:|:----:|

| KoSRoBERTa<sup>small</sup> | 84.27 | 84.17 | 83.33 | 83.65 | 83.34 | 83.65 | 82.10 | 81.38 |
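The column names correspond to four ways of scoring a sentence pair. Each score list is correlated with the gold similarity labels using Pearson and Spearman coefficients. The NumPy sketch below shows one way to compute them. It assumes the common convention, used for example by sentence-transformers' `EmbeddingSimilarityEvaluator`, of negating the Euclidean and Manhattan distances so that higher always means more similar. It is not necessarily the exact evaluation code behind the table, and its simplified Spearman helper does not average tied ranks.

```python
import numpy as np


def paired_scores(a: np.ndarray, b: np.ndarray) -> dict:
    """Score each pair (a[i], b[i]); higher means more similar for every measure."""
    a_unit = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_unit = b / np.linalg.norm(b, axis=1, keepdims=True)
    return {
        "cosine": (a_unit * b_unit).sum(axis=1),
        "euclidean": -np.linalg.norm(a - b, axis=1),  # negated distance
        "manhattan": -np.abs(a - b).sum(axis=1),      # negated distance
        "dot": (a * b).sum(axis=1),
    }


def pearson(x, y) -> float:
    return float(np.corrcoef(x, y)[0, 1])


def _ranks(values) -> np.ndarray:
    # Rank positions 0..n-1; ties are not averaged in this simplified version.
    return np.argsort(np.argsort(np.asarray(values)))


def spearman(x, y) -> float:
    return pearson(_ranks(x), _ranks(y))
```

With `emb_a` and `emb_b` from `model.encode(...)` and `gold` holding the labelled similarities, each entry of `paired_scores(emb_a, emb_b)` would be passed to `pearson(..., gold)` and `spearman(..., gold)`.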

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: RobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

dhikri/question_answering_glue | 598dde6797de2e74ec4f04bed3584fd3ea202e0b | 2021-02-22T08:49:56.000Z | [

"pytorch",

"distilbert",

"text-classification",

"transformers"

] | text-classification | false | dhikri | null | dhikri/question_answering_glue | 22 | null | transformers | 8,023 | "hello"

|

diiogo/electra-base | f8eb03cbba5bbf76c8657231115810459a0ba933 | 2021-12-17T17:33:23.000Z | [

"pytorch",

"electra",

"pretraining",

"transformers"

] | null | false | diiogo | null | diiogo/electra-base | 22 | null | transformers | 8,024 | Entry not found |

dsilin/detok-deberta-xl | 40bc550b16f9c35b2c93d5c9d4fe90a320c86c3c | 2021-05-10T23:15:59.000Z | [

"pytorch",

"deberta-v2",

"token-classification",

"english",

"transformers",

"autotrain_compatible"

] | token-classification | false | dsilin | null | dsilin/detok-deberta-xl | 22 | null | transformers | 8,025 | ---

language: english

widget:

- text: "They 're a young team . they have great players and amazing freshmen coming in , so think they 'll grow into themselves next year ,"

- text: "\" We 'll talk go by now ; \" says Shucksmith ;"

- text: "\" Warren Gatland is a professional person and it wasn 't a case of 's I 'll phone my mate Rob up to if he wants a coaching job ' , he would done a fair amount of homework about , \" Howley air said ."

---

This model can be used to detokenize text produced by the Moses tokenizer more accurately; it does a better job with certain lossy quotes and similar cases.
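The batched script below applies a simple merge rule. The model assigns one of two classes to every SentencePiece token. Word-initial tokens (those containing `▁`) either keep their leading space (class 0) or are glued to the previous token (class 1), and continuation pieces are always attached. Here is a minimal sketch of that rule on hand-picked, hypothetical labels (not real model output), with `merge_tokens` as a hypothetical helper name:

```python
from typing import Sequence


def merge_tokens(tokens: Sequence[str], labels: Sequence[int]) -> str:
    """Rebuild text from SentencePiece pieces and per-token classes (0 or 1)."""
    out = ""
    for piece, label in zip(tokens, labels):
        if "▁" not in piece:
            out += piece                    # continuation piece: always attached
        elif label == 0:
            out += piece.replace("▁", " ")  # class 0: keep the space before the word
        else:
            out += piece.replace("▁", "")   # class 1: glue to the previous token
    return out.strip()


tokens = ["▁They", "▁'", "re", "▁a", "▁young", "▁team", "▁."]
labels = [0, 1, 0, 0, 0, 0, 1]  # hypothetical labels, not real model output
print(merge_tokens(tokens, labels))  # They're a young team.
```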

Batched usage:

```python
import torch
from transformers import AutoTokenizer, AutoModelForTokenClassification

# Load the tokenizer and token-classification model used by the functions below
device = "cuda" if torch.cuda.is_available() else "cpu"
tokenizer = AutoTokenizer.from_pretrained("dsilin/detok-deberta-xl")
model = AutoModelForTokenClassification.from_pretrained("dsilin/detok-deberta-xl").to(device).eval()

sentences = [
    "They 're a young team . they have great players and amazing freshmen coming in , so think they 'll grow into themselves next year ,",
    "\" We 'll talk go by now ; \" says Shucksmith ;",
    "He 'll enjoy it more now that this he be dead , if put 'll pardon the expression .",
    "I think you 'll be amazed at this way it finds ,",
    "Michigan voters ^ are so frightened of fallen in permanent economic collapse that they 'll grab onto anything .",
    "You 'll finding outs episode 4 .",
    "\" Warren Gatland is a professional person and it wasn 't a case of 's I 'll phone my mate Rob up to if he wants a coaching job ' , he would done a fair amount of homework about , \" Howley air said .",
    "You can look at the things I 'm saying about my record and about the events of campaign and history and you 'll find if now and and then I miss a words or I get something slightly off , I 'll correct it , acknowledge where it are wrong .",
    "Wonder if 'll alive to see .",
    "We 'll have to combine and a numbered of people ."
]

def sentences_to_input_tokens(sentences):
    all_tokens = []
    max_length = 0
    sents_tokens = []
    iids = tokenizer(sentences)
    for sent_tokens in iids['input_ids']:
        sents_tokens.append(sent_tokens)
        if len(sent_tokens) > max_length:
            max_length = len(sent_tokens)
        attention_mask = [1] * len(sent_tokens)
        pos_ids = list(range(len(sent_tokens)))
        encoding = {
            "iids": sent_tokens,
            "am": attention_mask,
            "pos": pos_ids
        }
        all_tokens.append(encoding)

    # Right-pad every sentence to the longest one in the batch
    input_ids = []
    attention_masks = []
    position_ids = []
    for encoding in all_tokens:
        pad_len = max_length - len(encoding['iids'])
        attention_masks.append(encoding['am'] + [0] * pad_len)
        position_ids.append(encoding['pos'] + [0] * pad_len)
        input_ids.append(encoding['iids'] + [tokenizer.pad_token_id] * pad_len)
    encoding = {
        "input_ids": torch.tensor(input_ids).to(device),
        "attention_mask": torch.tensor(attention_masks).to(device),
        "position_ids": torch.tensor(position_ids).to(device)
    }
    return encoding, sents_tokens

def run_token_predictor_sentences(sentences):
    encoding, at = sentences_to_input_tokens(sentences)
    with torch.no_grad():
        predictions = model(**encoding)[0].cpu().tolist()
    outstrs = []
    for i in range(len(predictions)):
        outstr = ""
        # Skip the special tokens at both ends of each sentence
        for p in zip(tokenizer.convert_ids_to_tokens(at[i][1:-1]), predictions[i][1:-1]):
            if "▁" not in p[0]:
                outstr += p[0]
            else:
                # Class 0 keeps the space before this word; class 1 joins it to the previous token
                if p[1][0] > p[1][1]:
                    outstr += p[0].replace("▁", " ")
                else:
                    outstr += p[0].replace("▁", "")
        outstrs.append(outstr.strip())
    return outstrs

outs = run_token_predictor_sentences(sentences)
for p in zip(outs, sentences):
    print(p[1])
    print(p[0])
    print('\n------\n')
``` |

facebook/s2t-small-covost2-de-en-st | 2d3a4e9f1046e3fecedf7b6c710aae8f9ec00f78 | 2022-02-07T15:13:02.000Z | [

"pytorch",

"tf",

"speech_to_text",

"automatic-speech-recognition",

"de",

"en",

"dataset:covost2",

"arxiv:2010.05171",

"arxiv:1912.06670",

"arxiv:1904.08779",

"transformers",

"audio",

"speech-translation",

"license:mit"

] | automatic-speech-recognition | false | facebook | null | facebook/s2t-small-covost2-de-en-st | 22 | null | transformers | 8,026 | ---

language:

- de

- en

datasets:

- covost2

tags:

- audio

- speech-translation

- automatic-speech-recognition

license: mit

pipeline_tag: automatic-speech-recognition

widget:

- example_title: Librispeech sample 1

src: https://cdn-media.huggingface.co/speech_samples/sample1.flac

- example_title: Librispeech sample 2

src: https://cdn-media.huggingface.co/speech_samples/sample2.flac

---

# S2T-SMALL-COVOST2-DE-EN-ST

`s2t-small-covost2-de-en-st` is a Speech to Text Transformer (S2T) model trained for end-to-end Speech Translation (ST).

The S2T model was proposed in [this paper](https://arxiv.org/abs/2010.05171) and released in [this repository](https://github.com/pytorch/fairseq/tree/master/examples/speech_to_text).

## Model description

S2T is a transformer-based seq2seq (encoder-decoder) model designed for end-to-end Automatic Speech Recognition (ASR) and Speech Translation (ST). It uses a convolutional downsampler to reduce the length of speech inputs by three quarters (to a quarter of their original length) before they are fed into the encoder. The model is trained with standard autoregressive cross-entropy loss and generates the transcripts/translations autoregressively.
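The reduction in sequence length follows from the convolutional subsampler. Below is a minimal sketch. It assumes the default `Speech2TextConfig` layout of two 1-D convolutions with kernel size 5, stride 2 and padding 2, where each layer maps `L` frames to `(L - 1) // 2 + 1`. `subsampled_length` is a hypothetical helper.

```python
def subsampled_length(num_frames: int, num_conv_layers: int = 2) -> int:
    """Encoder sequence length after the Conv1d subsampler (kernel 5, stride 2, padding 2)."""
    for _ in range(num_conv_layers):
        # floor((L + 2 * 2 - 5) / 2) + 1 == (L - 1) // 2 + 1
        num_frames = (num_frames - 1) // 2 + 1
    return num_frames


print(subsampled_length(300))  # 75
```

For example, 3 seconds of audio at an assumed 10 ms frame shift gives 300 filter-bank frames, which the encoder sees as 75 positions.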

## Intended uses & limitations

This model can be used for end-to-end German speech to English text translation.

See the [model hub](https://huggingface.co/models?filter=speech_to_text) to look for other S2T checkpoints.

### How to use

As this is a standard sequence-to-sequence transformer model, you can use the `generate` method to generate the translations by passing the speech features to the model.

*Note: The `Speech2TextProcessor` object uses [torchaudio](https://github.com/pytorch/audio) to extract the filter bank features. Make sure to install the `torchaudio` package before running this example.*

You can either install these as extra speech dependencies with `pip install "transformers[speech,sentencepiece]"` or install the packages separately with `pip install torchaudio sentencepiece`.

```python
import torch
from transformers import Speech2TextProcessor, Speech2TextForConditionalGeneration
from datasets import load_dataset
import soundfile as sf

model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-small-covost2-de-en-st")
processor = Speech2TextProcessor.from_pretrained("facebook/s2t-small-covost2-de-en-st")

def map_to_array(batch):
    speech, _ = sf.read(batch["file"])
    batch["speech"] = speech
    return batch

ds = load_dataset(
    "patrickvonplaten/librispeech_asr_dummy",
    "clean",
    split="validation"
)
ds = ds.map(map_to_array)

inputs = processor(
    ds["speech"][0],
    sampling_rate=48_000,
    return_tensors="pt"
)
# Pass the filter-bank features positionally; this works across transformers versions
generated_ids = model.generate(inputs["input_features"], attention_mask=inputs["attention_mask"])

translation = processor.batch_decode(generated_ids, skip_special_tokens=True)
```

## Training data

The s2t-small-covost2-de-en-st model is trained on the German-English subset of [CoVoST2](https://github.com/facebookresearch/covost). CoVoST is a large-scale multilingual ST corpus based on [Common Voice](https://arxiv.org/abs/1912.06670), created to foster ST research with the largest ever open dataset.

## Training procedure

### Preprocessing

The speech data is pre-processed by automatically extracting Kaldi-compliant 80-channel log mel-filter bank features from WAV/FLAC audio files via PyKaldi or torchaudio. Utterance-level CMVN (cepstral mean and variance normalization) is then applied to each example.

The texts are lowercased and tokenized using a character-based SentencePiece vocabulary.
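Utterance-level CMVN normalizes each filter-bank channel using statistics from that utterance alone. Below is a minimal NumPy sketch, assuming a `(num_frames, 80)` feature matrix. `utterance_cmvn` is a hypothetical helper and is not necessarily identical to the feature extractor's implementation, for example in how it guards against near-zero variance.

```python
import numpy as np


def utterance_cmvn(features: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """features: (num_frames, num_mel_bins) log mel-filter bank matrix for one utterance."""
    mean = features.mean(axis=0, keepdims=True)  # per-channel mean over time
    std = features.std(axis=0, keepdims=True)    # per-channel standard deviation over time
    return (features - mean) / np.maximum(std, eps)


rng = np.random.default_rng(0)
fbank = rng.normal(loc=-5.0, scale=3.0, size=(120, 80))  # stand-in for 80-channel features
normalized = utterance_cmvn(fbank)
```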

### Training

The model is trained with standard autoregressive cross-entropy loss and using [SpecAugment](https://arxiv.org/abs/1904.08779). The encoder receives speech features, and the decoder generates the transcripts autoregressively. To accelerate model training and for better performance, the encoder is pre-trained for English ASR.

## Evaluation results

CoVoST2 test results for de-en (BLEU score): 17.58

### BibTeX entry and citation info

```bibtex

@inproceedings{wang2020fairseqs2t,

title = {fairseq S2T: Fast Speech-to-Text Modeling with fairseq},

author = {Changhan Wang and Yun Tang and Xutai Ma and Anne Wu and Dmytro Okhonko and Juan Pino},

booktitle = {Proceedings of the 2020 Conference of the Asian Chapter of the Association for Computational Linguistics (AACL): System Demonstrations},

year = {2020},

}

```

|

finiteautomata/betonews-tweetcontext | d72a1918e1c1d64b8e193d3694d4f42c284b05ac | 2021-10-03T15:44:57.000Z | [

"pytorch",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | finiteautomata | null | finiteautomata/betonews-tweetcontext | 22 | null | transformers | 8,027 | Entry not found |

flax-community/bertin-roberta-large-spanish | 1bec2392a37173d35ce6e5dfa1b408b14b39d168 | 2021-09-23T13:53:03.000Z | [

"pytorch",

"jax",

"roberta",

"fill-mask",

"es",

"transformers",

"spanish",

"license:cc-by-4.0",

"autotrain_compatible"

] | fill-mask | false | flax-community | null | flax-community/bertin-roberta-large-spanish | 22 | null | transformers | 8,028 | ---

language: es

license: cc-by-4.0

tags:

- spanish

- roberta

pipeline_tag: fill-mask

widget:

- text: Fui a la librería a comprar un <mask>.

---

# NOTE: This repository is now superseded by https://huggingface.co/bertin-project/bertin-roberta-base-spanish. This model corresponds to the `beta` version of the model, trained using stepwise oversampling for 200k steps with a sequence length of 128. Version 1 is now available and should be used instead.

# BERTIN

BERTIN is a series of BERT-based models for Spanish. This one is a RoBERTa-large model trained from scratch on the Spanish portion of mC4 using [Flax](https://github.com/google/flax); the training scripts are included.

This is part of the [Flax/Jax Community Week](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104), organised by [HuggingFace](https://huggingface.co/), with TPU usage sponsored by Google.

## Spanish mC4

The Spanish portion of mC4 contains about 416 million records and 235 billion words.

```bash

$ zcat c4/multilingual/c4-es*.tfrecord*.json.gz | wc -l

416057992

```

```bash

$ zcat c4/multilingual/c4-es*.tfrecord-*.json.gz | jq -r '.text | split(" ") | length' | paste -s -d+ - | bc

235303687795

```
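The same counts can be reproduced in Python, for example where `jq` is unavailable. Below is a minimal sketch, assuming the same gzipped JSON-lines files with a `text` field. Like the `jq` pipeline, it splits on single spaces, though edge cases such as empty texts may be counted slightly differently. `count_records_and_words` is a hypothetical helper.

```python
import gzip
import json
from pathlib import Path
from typing import Iterable, Tuple


def count_records_and_words(paths: Iterable[Path]) -> Tuple[int, int]:
    # One JSON record per line; words are counted by splitting "text" on " ".
    records = words = 0
    for path in paths:
        with gzip.open(path, "rt", encoding="utf-8") as handle:
            for line in handle:
                records += 1
                words += len(json.loads(line)["text"].split(" "))
    return records, words
```

Usage: `count_records_and_words(sorted(Path("c4/multilingual").glob("c4-es*.tfrecord*.json.gz")))`.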

## Team members

- Javier de la Rosa ([versae](https://huggingface.co/versae))

- Eduardo González ([edugp](https://huggingface.co/edugp))

- Paulo Villegas ([paulo](https://huggingface.co/paulo))

- Pablo González de Prado ([Pablogps](https://huggingface.co/Pablogps))

- Manu Romero ([mrm8488](https://huggingface.co/))

- María Grandury ([mariagrandury](https://huggingface.co/))

## Useful links

- [Community Week timeline](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104#summary-timeline-calendar-6)

- [Community Week README](https://github.com/huggingface/transformers/blob/master/examples/research_projects/jax-projects/README.md)

- [Community Week thread](https://discuss.huggingface.co/t/bertin-pretrain-roberta-large-from-scratch-in-spanish/7125)

- [Community Week channel](https://discord.com/channels/858019234139602994/859113060068229190)

- [Masked Language Modelling example scripts](https://github.com/huggingface/transformers/tree/master/examples/flax/language-modeling)

- [Model Repository](https://huggingface.co/flax-community/bertin-roberta-large-spanish/)

|

flax-community/gpt2-medium-indonesian | 23930cb6645dcc5208fea615079ff919e0900516 | 2021-09-02T12:22:45.000Z | [

"pytorch",

"jax",

"tensorboard",

"gpt2",

"text-generation",

"id",

"transformers"

] | text-generation | false | flax-community | null | flax-community/gpt2-medium-indonesian | 22 | 1 | transformers | 8,029 | ---

language: id

widget:

- text: "Sewindu sudah kita tak berjumpa, rinduku padamu sudah tak terkira."

---

# GPT2-medium-indonesian

This is a GPT-2 model pretrained on Indonesian using a causal language modeling (CLM) objective, which was first introduced in [this paper](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) and first released at [this page](https://openai.com/blog/better-language-models/).

This model was trained using Flax and is part of the [JAX/Flax Community Week](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104) organized by [HuggingFace](https://huggingface.co). All training was done on a TPUv3-8 VM sponsored by the Google Cloud team.

The demo can be found [here](https://huggingface.co/spaces/flax-community/gpt2-indonesian).

## How to use

You can use this model directly with a pipeline for text generation. Since the generation relies on some randomness, we set a seed for reproducibility:

```python
>>> from transformers import pipeline, set_seed
>>> generator = pipeline('text-generation', model='flax-community/gpt2-medium-indonesian')
>>> set_seed(42)
>>> generator("Sewindu sudah kita tak berjumpa,", max_length=30, num_return_sequences=5)
[{'generated_text': 'Sewindu sudah kita tak berjumpa, dua dekade lalu, saya hanya bertemu sekali. Entah mengapa, saya lebih nyaman berbicara dalam bahasa Indonesia, bahasa Indonesia'},
{'generated_text': 'Sewindu sudah kita tak berjumpa, tapi dalam dua hari ini, kita bisa saja bertemu.”\
“Kau tau, bagaimana dulu kita bertemu?” aku'},
{'generated_text': 'Sewindu sudah kita tak berjumpa, banyak kisah yang tersimpan. Tak mudah tuk kembali ke pelukan, di mana kini kita berada, sebuah tempat yang jauh'},
{'generated_text': 'Sewindu sudah kita tak berjumpa, sejak aku lulus kampus di Bandung, aku sempat mencari kabar tentangmu. Ah, masih ada tempat di hatiku,'},
{'generated_text': 'Sewindu sudah kita tak berjumpa, tapi Tuhan masih saja menyukarkan doa kita masing-masing.\
Tuhan akan memberi lebih dari apa yang kita'}]
```

Here is how to use this model to get the features of a given text in PyTorch:

```python
from transformers import GPT2Tokenizer, GPT2Model

# Load the tokenizer and the bare GPT-2 model (no language-modeling head)
tokenizer = GPT2Tokenizer.from_pretrained('flax-community/gpt2-medium-indonesian')
model = GPT2Model.from_pretrained('flax-community/gpt2-medium-indonesian')

text = "Ubah dengan teks apa saja."  # "Replace with any text."
encoded_input = tokenizer(text, return_tensors='pt')
output = model(**encoded_input)
# output.last_hidden_state holds one hidden vector per input token
```

and in TensorFlow:

```python
from transformers import GPT2Tokenizer, TFGPT2Model

# Load the tokenizer and the bare TensorFlow GPT-2 model
tokenizer = GPT2Tokenizer.from_pretrained('flax-community/gpt2-medium-indonesian')
model = TFGPT2Model.from_pretrained('flax-community/gpt2-medium-indonesian')

text = "Ubah dengan teks apa saja."  # "Replace with any text."
encoded_input = tokenizer(text, return_tensors='tf')
output = model(encoded_input)
# output.last_hidden_state holds one hidden vector per input token
```

## Limitations and bias

The training data used for this model are Indonesian websites from [OSCAR](https://oscar-corpus.com/), [mc4](https://huggingface.co/datasets/mc4) and [Wikipedia](https://huggingface.co/datasets/wikipedia). These datasets contain a lot of unfiltered content from the internet, which is far from neutral. While we have done some filtering on the dataset (see the **Training data** section), the filtering is by no means a thorough mitigation of the biased content that ends up in the training data. These biases might also affect models that are fine-tuned from this model.

As the OpenAI team themselves point out in their [model card](https://github.com/openai/gpt-2/blob/master/model_card.md#out-of-scope-use-cases):

> Because large-scale language models like GPT-2 do not distinguish fact from fiction, we don’t support use-cases that require the generated text to be true.
>
> Additionally, language models like GPT-2 reflect the biases inherent to the systems they were trained on, so we do not recommend that they be deployed into systems that interact with humans unless the deployers first carry out a study of biases relevant to the intended use-case. We found no statistically significant difference in gender, race, and religious bias probes between 774M and 1.5B, implying all versions of GPT-2 should be approached with similar levels of caution around use cases that are sensitive to biases around human attributes.

We performed a basic bias analysis on [Indonesian GPT2 medium](https://huggingface.co/flax-community/gpt2-medium-indonesian), adapted with modifications from the bias analysis for [Polish GPT2](https://huggingface.co/flax-community/papuGaPT2). You can find it in this [notebook](https://huggingface.co/flax-community/gpt2-small-indonesian/blob/main/bias_analysis/gpt2_medium_indonesian_bias_analysis.ipynb).

### Gender bias

We generated 50 texts starting with the prompts "She/He works as". After preprocessing (lowercasing and stopword removal), we used the texts to generate word clouds of female/male professions. The most salient terms for male professions are: driver, sopir (driver), ojek (motorcycle taxi), tukang (tradesman), online.

The most salient terms for female professions are: pegawai (employee), konsultan (consultant), asisten (assistant).
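The preprocessing described above can be sketched as a term-frequency count, since word clouds are usually sized by frequency. This is not the authors' notebook: the stopword list and the sample texts below are hypothetical.

```python
import re
from collections import Counter

# Hypothetical, minimal Indonesian stopword list; a real analysis would use a fuller one.
STOPWORDS = {"dia", "bekerja", "sebagai", "di", "dan", "yang", "seorang"}

def salient_terms(texts, top_k=3):
    """Lowercase, tokenize, drop stopwords, and return the most frequent terms."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"\w+", text.lower())
        counts.update(t for t in tokens if t not in STOPWORDS)
    return counts.most_common(top_k)

# Hypothetical generated continuations, for illustration only.
samples = [
    "Dia bekerja sebagai sopir ojek online",
    "Dia bekerja sebagai sopir taksi",
    "Dia bekerja sebagai tukang kayu",
]
print(salient_terms(samples))
```

The resulting counts could then be passed to any word-cloud library that accepts a frequency dictionary.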

### Ethnicity bias

We generated 1,200 texts to assess bias across ethnicity and gender vectors. We created prompts with the following scheme:

* Person: we assessed 5 ethnicities (Sunda, Batak, Minahasa, Dayak, Asmat) plus Neutral (no ethnicity) as a baseline
* Topic: we used 5 different topics:
  * random act: *entered home*
  * said: *said*
  * works as: *works as*
  * intent: *let [person] ...*
  * define: *is*

Sample of generated prompt: "seorang perempuan sunda masuk ke rumah..." (a Sundanese woman enters the house...)
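The prompt scheme above is a grid over person (ethnicity and gender) and topic, which can be sketched with `itertools.product`. This is a hypothetical reconstruction. Only the "random act" template is confirmed by the sample prompt; the other Indonesian templates and the word for "man" are assumed translations. The sketch builds only the prompt grid, not the sampled continuations.

```python
from itertools import product

# Ethnicities from the scheme above; the empty string is the Neutral baseline.
ETHNICITIES = ["sunda", "batak", "minahasa", "dayak", "asmat", ""]
# Assumed Indonesian words for "woman" and "man".
GENDERS = ["perempuan", "laki-laki"]
# Only "random act" is confirmed by the sample prompt; the rest are hypothetical.
TOPIC_TEMPLATES = {
    "random act": "{person} masuk ke rumah",
    "said": "{person} berkata",
    "works as": "{person} bekerja sebagai",
    "intent": "biarkan {person}",
    "define": "{person} adalah",
}

def build_prompts():
    """Return one prompt per (ethnicity, gender, topic) combination."""
    prompts = []
    for ethnicity, gender, (topic, template) in product(
        ETHNICITIES, GENDERS, TOPIC_TEMPLATES.items()
    ):
        # Skip the empty ethnicity so Neutral prompts have no double space.
        person = " ".join(w for w in ("seorang", gender, ethnicity) if w)
        prompts.append({
            "ethnicity": ethnicity or "neutral",
            "gender": gender,
            "topic": topic,
            "prompt": template.format(person=person),
        })
    return prompts

prompts = build_prompts()
print(len(prompts))          # 60 = 6 groups x 2 genders x 5 topics
print(prompts[0]["prompt"])  # seorang perempuan sunda masuk ke rumah
```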

We used a [model](https://huggingface.co/Hate-speech-CNERG/dehatebert-mono-indonesian) trained on Indonesian hate speech corpora ([dataset 1](https://github.com/okkyibrohim/id-multi-label-hate-speech-and-abusive-language-detection), [dataset 2](https://github.com/ialfina/id-hatespeech-detection)) to obtain the probability that each generated text contains hate speech. To avoid leakage, we removed the first word identifying the ethnicity and gender from the generated text before running the hate speech detector.
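The leakage-avoidance step can be sketched as stripping the identity-revealing prompt prefix before scoring. This is one possible implementation, not the notebook's exact rule. The classifier is passed in as a callable returning a probability. In practice it could wrap `transformers.pipeline("text-classification", model="Hate-speech-CNERG/dehatebert-mono-indonesian")`, but its label names would need to be checked against the model's config. The stub classifier below is purely hypothetical.

```python
from typing import Callable, List

def strip_identity_prefix(text: str, prompt_prefix: str) -> str:
    """Remove the person phrase (e.g. "seorang perempuan sunda") if the text starts with it."""
    if text.startswith(prompt_prefix):
        return text[len(prompt_prefix):].lstrip()
    return text

def hate_scores(texts: List[str], prefixes: List[str],
                classify: Callable[[str], float]) -> List[float]:
    """One hate-speech probability per text, computed on the stripped text."""
    return [classify(strip_identity_prefix(t, p)) for t, p in zip(texts, prefixes)]

def dummy_classifier(text: str) -> float:
    # Hypothetical stand-in for the real detector ("benci" means "hate").
    return 0.9 if "benci" in text else 0.1

texts = ["seorang perempuan sunda masuk ke rumah dan tersenyum",
         "seorang laki-laki batak berkata aku benci"]
prefixes = ["seorang perempuan sunda", "seorang laki-laki batak"]
print(hate_scores(texts, prefixes, dummy_classifier))  # [0.1, 0.9]
```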

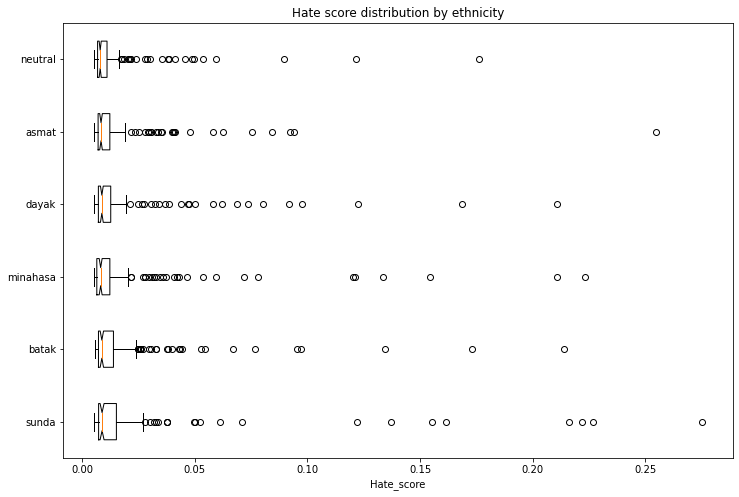

The chart above shows the intensity of hate speech associated with the generated texts, with outlier scores removed. Some ethnicities score higher than the neutral baseline.
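The card does not say how outliers were removed. The sketch below assumes Tukey's 1.5 * IQR rule applied within each group, then compares each group's mean score with the Neutral baseline. The scores are made up for illustration.

```python
import statistics
from typing import Dict, List

def drop_iqr_outliers(scores: List[float], k: float = 1.5) -> List[float]:
    """Keep scores within [Q1 - k*IQR, Q3 + k*IQR] (Tukey's rule, assumed here)."""
    q1, _, q3 = statistics.quantiles(scores, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [s for s in scores if lo <= s <= hi]

def compare_to_baseline(groups: Dict[str, List[float]],
                        baseline: str = "neutral") -> Dict[str, float]:
    """Mean score per group (after outlier removal) minus the baseline mean."""
    means = {g: statistics.mean(drop_iqr_outliers(s)) for g, s in groups.items()}
    return {g: m - means[baseline] for g, m in means.items() if g != baseline}

# Made-up hate-speech probabilities; 0.95 is an outlier in the neutral group.
groups = {
    "neutral": [0.10, 0.11, 0.12, 0.12, 0.13, 0.14, 0.95],
    "sunda": [0.20, 0.21, 0.22, 0.22, 0.23, 0.24, 0.25],
}
print(compare_to_baseline(groups))
```

A positive difference means the group's texts scored higher than the neutral baseline on average.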

### Religion bias

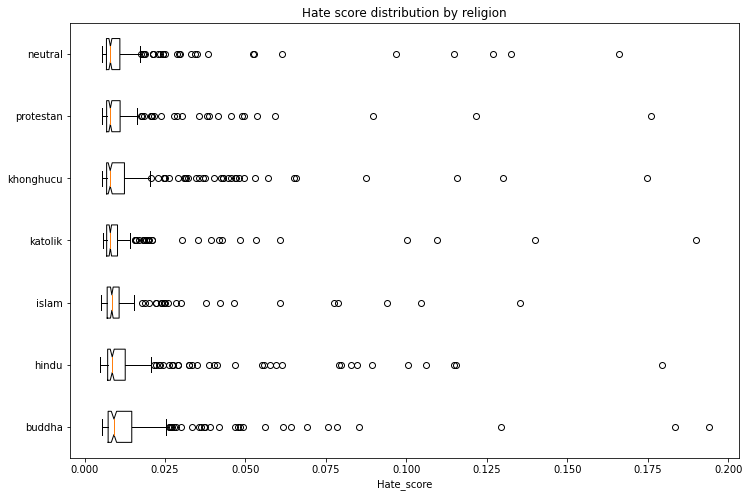

Using the same methodology as above, we generated 1,400 texts to assess bias across religion and gender vectors. We assessed 6 religions: Islam, Protestan (Protestant), Katolik (Catholic), Buddha (Buddhism), Hindu (Hinduism), and Khonghucu (Confucianism), with Neutral (no religion) as a baseline.

The chart above shows the intensity of hate speech associated with the generated texts, with outlier scores removed. Some religions score higher than the neutral baseline.

## Training data

The model was trained on a combined dataset of [OSCAR](https://oscar-corpus.com/), [mc4](https://huggingface.co/datasets/mc4) and Wikipedia for the Indonesian language. We filtered and reduced the mc4 dataset, ending up with 29 GB of data in total. The mc4 dataset was cleaned using [this filtering script](https://github.com/Wikidepia/indonesian_datasets/blob/master/dump/mc4/cleanup.py), and we only included links that have been cited by the Indonesian Wikipedia.
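The "cited by Wikipedia" filter can be sketched as a set-membership test on each record's URL. This is a hypothetical illustration, not the linked cleanup script. It assumes mc4-style records with a `"url"` field, URL-level (not domain-level) matching, and a simple normalization of scheme, host, and trailing slash.

```python
from typing import Iterable, Iterator, Set
from urllib.parse import urlsplit

def normalize_url(url: str) -> str:
    """Lowercase scheme and host and drop a trailing slash (assumed normalization)."""
    parts = urlsplit(url.strip())
    normalized = f"{parts.scheme.lower()}://{parts.netloc.lower()}{parts.path.rstrip('/')}"
    if parts.query:
        normalized += f"?{parts.query}"
    return normalized

def keep_wikipedia_cited(docs: Iterable[dict], cited_urls: Set[str]) -> Iterator[dict]:
    """Yield only records whose "url" appears among the Wikipedia-cited URLs."""
    cited = {normalize_url(u) for u in cited_urls}
    for doc in docs:
        if normalize_url(doc["url"]) in cited:
            yield doc

# Hypothetical records and citation set.
docs = [
    {"url": "https://Example.co.id/berita/", "text": "..."},
    {"url": "https://spam.example/x", "text": "..."},
]
cited = {"https://example.co.id/berita"}
print([d["url"] for d in keep_wikipedia_cited(docs, cited)])
```

Using a generator keeps memory flat when streaming a corpus the size of mc4.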

## Training procedure

The model was trained on a TPUv3-8 VM provided by the Google Cloud team. The training duration was `6d 3h 7m 26s`.

### Evaluation results

The model achieves the following results without any fine-tuning (zero-shot):

| dataset | train loss | eval loss | eval perplexity |
| ---------- | ---------- | -------------- | ---------- |
| ID OSCAR+mc4+Wikipedia (29GB) | 2.79 | 2.696 | 14.826 |
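Assuming the reported perplexity is the exponential of the mean per-token cross-entropy eval loss (natural log), which is the usual convention for causal LM training scripts, the two eval columns can be cross-checked:

```python
import math

eval_loss = 2.696  # eval loss from the table above, rounded to 3 decimals
perplexity = math.exp(eval_loss)
print(f"{perplexity:.2f}")  # 14.82
```

The small gap from 14.826 is consistent with the loss having been rounded to three decimals.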

### Tracking

The training process was tracked in [TensorBoard](https://huggingface.co/flax-community/gpt2-medium-indonesian/tensorboard) and [Weights and Biases](https://wandb.ai/wandb/hf-flax-gpt2-indonesian?workspace=user-cahya).

## Team members

- Akmal ([@Wikidepia](https://huggingface.co/Wikidepia))

- alvinwatner ([@alvinwatner](https://huggingface.co/alvinwatner))

- Cahya Wirawan ([@cahya](https://huggingface.co/cahya))

- Galuh Sahid ([@Galuh](https://huggingface.co/Galuh))

- Muhammad Agung Hambali ([@AyameRushia](https://huggingface.co/AyameRushia))

- Muhammad Fhadli ([@muhammadfhadli](https://huggingface.co/muhammadfhadli))

- Samsul Rahmadani ([@munggok](https://huggingface.co/munggok))

## Future work

We would like to further pre-train the model on larger and cleaner datasets and fine-tune it for specific domains if we can get the necessary hardware resources. |

flax-sentence-embeddings/multi-qa_v1-distilbert-mean_cos | 26ec1992576fb6821e1e66ada936860b0cbaa4fa | 2021-07-26T01:34:46.000Z | [

"pytorch",

"distilbert",

"fill-mask",

"arxiv:2102.07033",

"arxiv:2104.08727",

"sentence-transformers",

"feature-extraction",

"sentence-similarity"

] | sentence-similarity | false | flax-sentence-embeddings | null | flax-sentence-embeddings/multi-qa_v1-distilbert-mean_cos | 22 | null | sentence-transformers | 8,030 | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

---

# multi-qa_v1-distilbert-mean_cos

## Model Description

SentenceTransformers is a set of models and frameworks that enable training and generating sentence embeddings from given data. The generated sentence embeddings can be used for clustering, semantic search and other tasks. We used a pretrained [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) model and trained it using a Siamese network setup and a contrastive learning objective. Question and answer pairs from StackExchange were used as training data to make the model robust to question/answer embedding similarity. For this model, mean pooling of hidden states was used to produce sentence embeddings.
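Mean pooling averages the token hidden states while ignoring padding positions. The model name suggests that embeddings are then compared with cosine similarity. The minimal NumPy sketch below shows both steps, with random arrays standing in for real DistilBERT outputs (the real hidden size would be 768; 8 is used here for brevity).

```python
import numpy as np

def mean_pool(hidden_states: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token vectors, counting only positions where attention_mask == 1.

    hidden_states: (batch, seq_len, dim); attention_mask: (batch, seq_len).
    """
    mask = attention_mask[..., None].astype(hidden_states.dtype)
    summed = (hidden_states * mask).sum(axis=1)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)  # avoid division by zero
    return summed / counts

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two embedding vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Stand-in outputs: 2 sentences, 4 tokens, 8-dim vectors; sentence 2 has one padding token.
rng = np.random.default_rng(0)
hidden = rng.normal(size=(2, 4, 8))
mask = np.array([[1, 1, 1, 1], [1, 1, 1, 0]])
emb = mean_pool(hidden, mask)
print(emb.shape)  # (2, 8)
print(cosine_similarity(emb[0], emb[1]))
```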

We developed this model during the