modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

list | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

gchhablani/bert-base-cased-finetuned-mrpc

|

cdece3698f342cc94478a61128f719df7229580b

|

2021-09-20T09:07:44.000Z

|

[

"pytorch",

"tensorboard",

"bert",

"text-classification",

"en",

"dataset:glue",

"arxiv:2105.03824",

"transformers",

"generated_from_trainer",

"fnet-bert-base-comparison",

"license:apache-2.0",

"model-index"

] |

text-classification

| false |

gchhablani

| null |

gchhablani/bert-base-cased-finetuned-mrpc

| 575 | null |

transformers

| 2,200 |

---

language:

- en

license: apache-2.0

tags:

- generated_from_trainer

- fnet-bert-base-comparison

datasets:

- glue

metrics:

- accuracy

- f1

model-index:

- name: bert-base-cased-finetuned-mrpc

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: GLUE MRPC

type: glue

args: mrpc

metrics:

- name: Accuracy

type: accuracy

value: 0.8602941176470589

- name: F1

type: f1

value: 0.9025641025641027

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-finetuned-mrpc

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the GLUE MRPC dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7132

- Accuracy: 0.8603

- F1: 0.9026

- Combined Score: 0.8814

The model was fine-tuned to compare [google/fnet-base](https://huggingface.co/google/fnet-base) as introduced in [this paper](https://arxiv.org/abs/2105.03824) against [bert-base-cased](https://huggingface.co/bert-base-cased).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

This model is trained using the [run_glue](https://github.com/huggingface/transformers/blob/master/examples/pytorch/text-classification/run_glue.py) script. The following command was used:

```bash

#!/usr/bin/bash

python ../run_glue.py \\n --model_name_or_path bert-base-cased \\n --task_name mrpc \\n --do_train \\n --do_eval \\n --max_seq_length 512 \\n --per_device_train_batch_size 16 \\n --learning_rate 2e-5 \\n --num_train_epochs 5 \\n --output_dir bert-base-cased-finetuned-mrpc \\n --push_to_hub \\n --hub_strategy all_checkpoints \\n --logging_strategy epoch \\n --save_strategy epoch \\n --evaluation_strategy epoch \\n```

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Combined Score |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:--------------:|

| 0.5981 | 1.0 | 230 | 0.4580 | 0.7892 | 0.8562 | 0.8227 |

| 0.3739 | 2.0 | 460 | 0.3806 | 0.8480 | 0.8942 | 0.8711 |

| 0.1991 | 3.0 | 690 | 0.4879 | 0.8529 | 0.8958 | 0.8744 |

| 0.1286 | 4.0 | 920 | 0.6342 | 0.8529 | 0.8986 | 0.8758 |

| 0.0812 | 5.0 | 1150 | 0.7132 | 0.8603 | 0.9026 | 0.8814 |

### Framework versions

- Transformers 4.11.0.dev0

- Pytorch 1.9.0

- Datasets 1.12.1

- Tokenizers 0.10.3

|

voidful/bart-distractor-generation-pm

|

ab4250ce4b6d339654263b3c4625ed69bbc38173

|

2021-04-04T16:20:25.000Z

|

[

"pytorch",

"bart",

"text2text-generation",

"en",

"dataset:race",

"transformers",

"distractor",

"generation",

"seq2seq",

"autotrain_compatible"

] |

text2text-generation

| false |

voidful

| null |

voidful/bart-distractor-generation-pm

| 575 | null |

transformers

| 2,201 |

---

language: en

tags:

- bart

- distractor

- generation

- seq2seq

datasets:

- race

metrics:

- bleu

- rouge

pipeline_tag: text2text-generation

widget:

- text: "When you ' re having a holiday , one of the main questions to ask is which hotel or apartment to choose . However , when it comes to France , you have another special choice : treehouses . In France , treehouses are offered to travelers as a new choice in many places . The price may be a little higher , but you do have a chance to _ your childhood memories . Alain Laurens , one of France ' s top treehouse designers , said , ' Most of the people might have the experience of building a den when they were young . And they like that feeling of freedom when they are children . ' Its fairy - tale style gives travelers a special feeling . It seems as if they are living as a forest king and enjoying the fresh air in the morning . Another kind of treehouse is the ' star cube ' . It gives travelers the chance of looking at the stars shining in the sky when they are going to sleep . Each ' star cube ' not only offers all the comfortable things that a hotel provides for travelers , but also gives them a chance to look for stars by using a telescope . The glass roof allows you to look at the stars from your bed . </s> The passage mainly tells us </s> treehouses in france."

---

# bart-distractor-generation-pm

## Model description

This model is a sequence-to-sequence distractor generator which takes an answer, question and context as an input, and generates a distractor as an output. It is based on a pretrained `bart-base` model.

This model trained with Parallel MLM refer to the [Paper](https://www.aclweb.org/anthology/2020.findings-emnlp.393/).

For details, please see https://github.com/voidful/BDG.

## Intended uses & limitations

The model is trained to generate examinations-style multiple choice distractor. The model performs best with full sentence answers.

#### How to use

The model takes concatenated context, question and answers as an input sequence, and will generate a full distractor sentence as an output sequence. The max sequence length is 1024 tokens. Inputs should be organised into the following format:

```

context </s> question </s> answer

```

The input sequence can then be encoded and passed as the `input_ids` argument in the model's `generate()` method.

#### Limitations and bias

The model is limited to generating distractor in the same style as those found in [RACE](https://www.aclweb.org/anthology/D17-1082/). The generated distractors can potentially be leading or reflect biases that are present in the context. If the context is too short or completely absent, or if the context, question and answer do not match, the generated distractor is likely to be incoherent.

|

abhishek/autonlp-bbc-news-classification-37229289

|

7feb5c325c92f2425f7c338160cdb5afc117aaed

|

2021-11-30T12:56:59.000Z

|

[

"pytorch",

"bert",

"text-classification",

"en",

"dataset:abhishek/autonlp-data-bbc-news-classification",

"transformers",

"autonlp",

"co2_eq_emissions"

] |

text-classification

| false |

abhishek

| null |

abhishek/autonlp-bbc-news-classification-37229289

| 574 | 1 |

transformers

| 2,202 |

---

tags: autonlp

language: en

widget:

- text: "I love AutoNLP 🤗"

datasets:

- abhishek/autonlp-data-bbc-news-classification

co2_eq_emissions: 5.448567309047846

---

# Model Trained Using AutoNLP

- Problem type: Multi-class Classification

- Model ID: 37229289

- CO2 Emissions (in grams): 5.448567309047846

## Validation Metrics

- Loss: 0.07081354409456253

- Accuracy: 0.9867109634551495

- Macro F1: 0.9859067529980614

- Micro F1: 0.9867109634551495

- Weighted F1: 0.9866417220968429

- Macro Precision: 0.9868771404595043

- Micro Precision: 0.9867109634551495

- Weighted Precision: 0.9869289511551576

- Macro Recall: 0.9853173241852486

- Micro Recall: 0.9867109634551495

- Weighted Recall: 0.9867109634551495

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/abhishek/autonlp-bbc-news-classification-37229289

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("abhishek/autonlp-bbc-news-classification-37229289", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("abhishek/autonlp-bbc-news-classification-37229289", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

```

|

recobo/agriculture-bert-uncased

|

641de86d01ed5f0e22f2301e85a3da518173dcad

|

2021-10-08T13:50:49.000Z

|

[

"pytorch",

"bert",

"fill-mask",

"en",

"transformers",

"agriculture-domain",

"agriculture",

"autotrain_compatible"

] |

fill-mask

| false |

recobo

| null |

recobo/agriculture-bert-uncased

| 574 | 2 |

transformers

| 2,203 |

---

language: "en"

tags:

- agriculture-domain

- agriculture

- fill-mask

widget:

- text: "[MASK] agriculture provides one of the most promising areas for innovation in green and blue infrastructure in cities."

---

# BERT for Agriculture Domain

A BERT-based language model further pre-trained from the checkpoint of [SciBERT](https://huggingface.co/allenai/scibert_scivocab_uncased).

The dataset gathered is a balance between scientific and general works in agriculture domain and encompassing knowledge from different areas of agriculture research and practical knowledge.

The corpus contains 1.2 million paragraphs from National Agricultural Library (NAL) from the US Gov. and 5.3 million paragraphs from books and common literature from the **Agriculture Domain**.

The self-supervised learning approach of MLM was used to train the model.

- Masked language modeling (MLM): taking a sentence, the model randomly masks 15% of the words in the input then run

the entire masked sentence through the model and has to predict the masked words. This is different from traditional

recurrent neural networks (RNNs) that usually see the words one after the other, or from autoregressive models like

GPT internally masks the future tokens. It allows the model to learn a bidirectional representation of the

sentence.

```python

from transformers import pipeline

fill_mask = pipeline(

"fill-mask",

model="recobo/agriculture-bert-uncased",

tokenizer="recobo/agriculture-bert-uncased"

)

fill_mask("[MASK] is the practice of cultivating plants and livestock.")

```

|

IDEA-CCNL/Wenzhong2.0-GPT2-3.5B-chinese

|

67838ddfa79c4b7bbd3ab88006e7e38d70b24f19

|

2022-07-29T08:56:23.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"zh",

"transformers",

"license:apache-2.0"

] |

text-generation

| false |

IDEA-CCNL

| null |

IDEA-CCNL/Wenzhong2.0-GPT2-3.5B-chinese

| 574 | 2 |

transformers

| 2,204 |

---

language:

- zh

inference:

parameters:

max_new_tokens: 250

repetition_penalty: 1.1

top_p: 0.9

do_sample: True

license: apache-2.0

---

# Wenzhong2.0-GPT2-3.5B model (chinese),one model of [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM).

As we all know, the single direction language model based on decoder structure has strong generation ability, such as GPT model. The 3.5 billion parameter Wenzhong-GPT2-3.5B large model, using 100G chinese common data, 32 A100 training for 28 hours, is the largest open source **GPT2 large model of chinese**. **Our model performs well in Chinese continuation generation.** **Wenzhong2.0-GPT2-3.5B-Chinese is a Chinese gpt2 model trained with cleaner data on the basis of Wenzhong-GPT2-3.5B.**

## Usage

### load model

```python

from transformers import GPT2Tokenizer, GPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('IDEA-CCNL/Wenzhong2.0-GPT2-3.5B-chinese')

model = GPT2Model.from_pretrained('IDEA-CCNL/Wenzhong-GPT2-3.5B')

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

### generation

```python

from transformers import pipeline, set_seed

set_seed(55)

generator = pipeline('text-generation', model='IDEA-CCNL/Wenzhong2.0-GPT2-3.5B-chinese')

generator("北京位于", max_length=30, num_return_sequences=1)

```

## Citation

If you find the resource is useful, please cite the following website in your paper.

```

@misc{Fengshenbang-LM,

title={Fengshenbang-LM},

author={IDEA-CCNL},

year={2021},

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

}

```

|

jkgrad/xlnet-base-squadv2

|

36d0bd03fc05331bae053db4fa35865ba74dd2a2

|

2021-01-17T11:52:34.000Z

|

[

"pytorch",

"xlnet",

"question-answering",

"arxiv:1906.08237",

"transformers",

"autotrain_compatible"

] |

question-answering

| false |

jkgrad

| null |

jkgrad/xlnet-base-squadv2

| 571 | 1 |

transformers

| 2,205 |

# XLNet Fine-tuned on SQuAD 2.0 Dataset

[XLNet](https://arxiv.org/abs/1906.08237) jointly developed by Google and CMU and fine-tuned on [SQuAD 2.0](https://rajpurkar.github.io/SQuAD-explorer/) for question answering down-stream task.

## Training Results (Metrics)

```

{

"HasAns_exact": 74.7132253711201

"HasAns_f1": 82.11971607032643

"HasAns_total": 5928

"NoAns_exact": 73.38940285954584

"NoAns_f1": 73.38940285954584

"NoAns_total": 5945

"best_exact": 75.67590331003116

"best_exact_thresh": -19.554906845092773

"best_f1": 79.16215426779269

"best_f1_thresh": -19.554906845092773

"epoch": 4.0

"exact": 74.05036637749515

"f1": 77.74830934598614

"total": 11873

}

```

## Results Comparison

| Metric | Paper | Model |

| ------ | --------- | --------- |

| **EM** | **78.46** | **75.68** (-2.78) |

| **F1** | **81.33** | **79.16** (-2.17)|

Better fine-tuned models coming soon.

## How to Use

```

from transformers import XLNetForQuestionAnswering, XLNetTokenizerFast

model = XLNetForQuestionAnswering.from_pretrained('jkgrad/xlnet-base-squadv2)

tokenizer = XLNetTokenizerFast.from_pretrained('jkgrad/xlnet-base-squadv2')

```

|

studio-ousia/mluke-large-lite-finetuned-kbp37

|

cc425b55c0eb00bcaa951287d2bce238a7d86687

|

2022-03-28T07:38:46.000Z

|

[

"pytorch",

"luke",

"transformers",

"license:apache-2.0"

] | null | false |

studio-ousia

| null |

studio-ousia/mluke-large-lite-finetuned-kbp37

| 571 | null |

transformers

| 2,206 |

---

license: apache-2.0

---

|

malteos/gpt2-xl-wechsel-german

|

40465eb67657fe7e9176b014a7b6b8322032d706

|

2022-06-24T10:49:20.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"de",

"arxiv:2112.06598",

"transformers",

"license:mit"

] |

text-generation

| false |

malteos

| null |

malteos/gpt2-xl-wechsel-german

| 569 | 4 |

transformers

| 2,207 |

---

license: mit

language: de

widget:

- text: "In einer schockierenden Entdeckung fanden Wissenschaftler eine Herde Einhörner, die in "

---

# German GPT2-XL (1.5B)

- trained with [BigScience's DeepSpeed-Megatron-LM code base](https://github.com/bigscience-workshop/Megatron-DeepSpeed)

- word embedding initialized with [WECHSEL](https://arxiv.org/abs/2112.06598) and all other weights taken from English [gpt2-xl](https://huggingface.co/gpt2-xl)

- ~ 3 days on 16xA100 GPUs (~ 80 TFLOPs / GPU)

- stopped after 100k steps

- 26.2B tokens

- less than a single epoch on `oscar_unshuffled_deduplicated_de` (excluding validation set; original model was trained for 75 epochs on less data)

- bf16

- zero stage 0

- tp/pp = 1

### How to use

You can use this model directly with a pipeline for text generation. Since the generation relies on some randomness, we

set a seed for reproducibility:

```python

>>> from transformers import pipeline, set_seed

>>> generator = pipeline('text-generation', model='malteos/gpt2-xl-wechsel-german')

>>> set_seed(42)

>>> generator("Hello, I'm a language model,", max_length=30, num_return_sequences=5)

[{'generated_text': "Hello, I'm a language model, a language for thinking, a language for expressing thoughts."},

{'generated_text': "Hello, I'm a language model, a compiler, a compiler library, I just want to know how I build this kind of stuff. I don"},

{'generated_text': "Hello, I'm a language model, and also have more than a few of your own, but I understand that they're going to need some help"},

{'generated_text': "Hello, I'm a language model, a system model. I want to know my language so that it might be more interesting, more user-friendly"},

{'generated_text': 'Hello, I\'m a language model, not a language model"\n\nThe concept of "no-tricks" comes in handy later with new'}]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import GPT2Tokenizer, GPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('malteos/gpt2-xl-wechsel-german')

model = GPT2Model.from_pretrained('malteos/gpt2-xl-wechsel-german')

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

## Evaluation

| Model (size) | PPL |

|---|---|

| `gpt2-xl-wechsel-german` (1.5B) | **14.5** |

| `gpt2-wechsel-german-ds-meg` (117M) | 26.4 |

| `gpt2-wechsel-german` (117M) | 26.8 |

| `gpt2` (retrained from scratch) (117M) | 27.63 |

## License

MIT

|

IIC/dpr-spanish-question_encoder-allqa-base

|

a4cc70e53295779c0cf761af8fc49a267ee56099

|

2022-04-02T15:07:32.000Z

|

[

"pytorch",

"bert",

"fill-mask",

"es",

"dataset:squad_es",

"dataset:PlanTL-GOB-ES/SQAC",

"dataset:IIC/bioasq22_es",

"arxiv:2004.04906",

"transformers",

"sentence similarity",

"passage retrieval",

"model-index",

"autotrain_compatible"

] |

fill-mask

| false |

IIC

| null |

IIC/dpr-spanish-question_encoder-allqa-base

| 568 | 1 |

transformers

| 2,208 |

---

language:

- es

tags:

- sentence similarity # Example: audio

- passage retrieval # Example: automatic-speech-recognition

datasets:

- squad_es

- PlanTL-GOB-ES/SQAC

- IIC/bioasq22_es

metrics:

- eval_loss: 0.010779764448327261

- eval_accuracy: 0.9982682224158297

- eval_f1: 0.9446059155411182

- average_rank: 0.11728500598392888

model-index:

- name: dpr-spanish-question_encoder-allqa-base

results:

- task:

type: text similarity # Required. Example: automatic-speech-recognition

name: text similarity # Optional. Example: Speech Recognition

dataset:

type: squad_es # Required. Example: common_voice. Use dataset id from https://hf.co/datasets

name: squad_es # Required. Example: Common Voice zh-CN

args: es # Optional. Example: zh-CN

metrics:

- type: loss

value: 0.010779764448327261

name: eval_loss

- type: accuracy

value: 0.9982682224158297

name: accuracy

- type: f1

value: 0.9446059155411182

name: f1

- type: avgrank

value: 0.11728500598392888

name: avgrank

---

[Dense Passage Retrieval](https://arxiv.org/abs/2004.04906)-DPR is a set of tools for performing State of the Art open-domain question answering. It was initially developed by Facebook and there is an [official repository](https://github.com/facebookresearch/DPR). DPR is intended to retrieve the relevant documents to answer a given question, and is composed of 2 models, one for encoding passages and other for encoding questions. This concrete model is the one used for encoding questions.

With this and the [passage encoder model](https://huggingface.co/avacaondata/dpr-spanish-passage_encoder-allqa-base) we introduce the best passage retrievers in Spanish up to date (to the best of our knowledge), improving over the [previous model we developed](https://huggingface.co/IIC/dpr-spanish-question_encoder-squades-base), by training it for longer and with more data.

Regarding its use, this model should be used to vectorize a question that enters in a Question Answering system, and then we compare that encoding with the encodings of the database (encoded with [the passage encoder](https://huggingface.co/avacaondata/dpr-spanish-passage_encoder-squades-base)) to find the most similar documents , which then should be used for either extracting the answer or generating it.

For training the model, we used a collection of Question Answering datasets in Spanish:

- the Spanish version of SQUAD, [SQUAD-ES](https://huggingface.co/datasets/squad_es)

- [SQAC- Spanish Question Answering Corpus](https://huggingface.co/datasets/PlanTL-GOB-ES/SQAC)

- [BioAsq22-ES](https://huggingface.co/datasets/IIC/bioasq22_es) - we translated this last one by using automatic translation with Transformers.

With this complete dataset we created positive and negative examples for the model (For more information look at [the paper](https://arxiv.org/abs/2004.04906) to understand the training process for DPR). We trained for 25 epochs with the same configuration as the paper. The [previous DPR model](https://huggingface.co/IIC/dpr-spanish-passage_encoder-squades-base) was trained for only 3 epochs with about 60% of the data.

Example of use:

```python

from transformers import DPRQuestionEncoder, DPRQuestionEncoderTokenizer

model_str = "IIC/dpr-spanish-question_encoder-allqa-base"

tokenizer = DPRQuestionEncoderTokenizer.from_pretrained(model_str)

model = DPRQuestionEncoder.from_pretrained(model_str)

input_ids = tokenizer("¿Qué medallas ganó Usain Bolt en 2012?", return_tensors="pt")["input_ids"]

embeddings = model(input_ids).pooler_output

```

The full metrics of this model on the evaluation split of SQUADES are:

```

eval_loss: 0.010779764448327261

eval_acc: 0.9982682224158297

eval_f1: 0.9446059155411182

eval_acc_and_f1: 0.9714370689784739

eval_average_rank: 0.11728500598392888

```

And the classification report:

```

precision recall f1-score support

hard_negative 0.9991 0.9991 0.9991 1104999

positive 0.9446 0.9446 0.9446 17547

accuracy 0.9983 1122546

macro avg 0.9719 0.9719 0.9719 1122546

weighted avg 0.9983 0.9983 0.9983 1122546

```

### Contributions

Thanks to [@avacaondata](https://huggingface.co/avacaondata), [@alborotis](https://huggingface.co/alborotis), [@albarji](https://huggingface.co/albarji), [@Dabs](https://huggingface.co/Dabs), [@GuillemGSubies](https://huggingface.co/GuillemGSubies) for adding this model.

|

winegarj/distilbert-base-uncased-finetuned-sst2

|

dffa754606014596c0bcedd7920d45671102fc90

|

2022-04-10T02:09:16.000Z

|

[

"pytorch",

"distilbert",

"text-classification",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] |

text-classification

| false |

winegarj

| null |

winegarj/distilbert-base-uncased-finetuned-sst2

| 568 | null |

transformers

| 2,209 |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-sst2

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: glue

type: glue

args: sst2

metrics:

- name: Accuracy

type: accuracy

value: 0.908256880733945

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-sst2

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4493

- Accuracy: 0.9083

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.1804 | 1.0 | 2105 | 0.2843 | 0.9025 |

| 0.1216 | 2.0 | 4210 | 0.3242 | 0.9025 |

| 0.0871 | 3.0 | 6315 | 0.3320 | 0.9060 |

| 0.0607 | 4.0 | 8420 | 0.3913 | 0.9025 |

| 0.0429 | 5.0 | 10525 | 0.4493 | 0.9083 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.12.0.dev20220409+cu115

- Datasets 2.0.0

- Tokenizers 0.12.0

|

google/multiberts-seed_2

|

6ca96336eb9c5571b274ec67ca5d3d88980a57eb

|

2021-11-05T22:10:49.000Z

|

[

"pytorch",

"tf",

"bert",

"pretraining",

"en",

"arxiv:2106.16163",

"arxiv:1908.08962",

"transformers",

"multiberts",

"multiberts-seed_2",

"license:apache-2.0"

] | null | false |

google

| null |

google/multiberts-seed_2

| 567 | null |

transformers

| 2,210 |

---

language: en

tags:

- multiberts

- multiberts-seed_2

license: apache-2.0

---

# MultiBERTs - Seed 2

MultiBERTs is a collection of checkpoints and a statistical library to support

robust research on BERT. We provide 25 BERT-base models trained with

similar hyper-parameters as

[the original BERT model](https://github.com/google-research/bert) but

with different random seeds, which causes variations in the initial weights and order of

training instances. The aim is to distinguish findings that apply to a specific

artifact (i.e., a particular instance of the model) from those that apply to the

more general procedure.

We also provide 140 intermediate checkpoints captured

during the course of pre-training (we saved 28 checkpoints for the first 5 runs).

The models were originally released through

[http://goo.gle/multiberts](http://goo.gle/multiberts). We describe them in our

paper

[The MultiBERTs: BERT Reproductions for Robustness Analysis](https://arxiv.org/abs/2106.16163).

This is model #2.

## Model Description

This model is a reproduction of

[BERT-base uncased](https://github.com/google-research/bert), for English: it

is a Transformers model pretrained on a large corpus of English data, using the

Masked Language Modelling (MLM) and the Next Sentence Prediction (NSP)

objectives.

The intended uses, limitations, training data and training procedure are similar

to [BERT-base uncased](https://github.com/google-research/bert). Two major

differences with the original model:

* We pre-trained the MultiBERTs models for 2 million steps using sequence

length 512 (instead of 1 million steps using sequence length 128 then 512).

* We used an alternative version of Wikipedia and Books Corpus, initially

collected for [Turc et al., 2019](https://arxiv.org/abs/1908.08962).

This is a best-effort reproduction, and so it is probable that differences with

the original model have gone unnoticed. The performance of MultiBERTs on GLUE is oftentimes comparable to that of original

BERT, but we found significant differences on the dev set of SQuAD (MultiBERTs outperforms original BERT).

See our [technical report](https://arxiv.org/abs/2106.16163) for more details.

### How to use

Using code from

[BERT-base uncased](https://huggingface.co/bert-base-uncased), here is an example based on

Tensorflow:

```

from transformers import BertTokenizer, TFBertModel

tokenizer = BertTokenizer.from_pretrained('google/multiberts-seed_2')

model = TFBertModel.from_pretrained("google/multiberts-seed_2")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

PyTorch version:

```

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('google/multiberts-seed_2')

model = BertModel.from_pretrained("google/multiberts-seed_2")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

## Citation info

```bibtex

@article{sellam2021multiberts,

title={The MultiBERTs: BERT Reproductions for Robustness Analysis},

author={Thibault Sellam and Steve Yadlowsky and Jason Wei and Naomi Saphra and Alexander D'Amour and Tal Linzen and Jasmijn Bastings and Iulia Turc and Jacob Eisenstein and Dipanjan Das and Ian Tenney and Ellie Pavlick},

journal={arXiv preprint arXiv:2106.16163},

year={2021}

}

```

|

google/multiberts-seed_3

|

e7349d42b02a2c42b87f6a01046f3f4278361e37

|

2021-11-05T22:12:27.000Z

|

[

"pytorch",

"tf",

"bert",

"pretraining",

"en",

"arxiv:2106.16163",

"arxiv:1908.08962",

"transformers",

"multiberts",

"multiberts-seed_3",

"license:apache-2.0"

] | null | false |

google

| null |

google/multiberts-seed_3

| 567 | null |

transformers

| 2,211 |

---

language: en

tags:

- multiberts

- multiberts-seed_3

license: apache-2.0

---

# MultiBERTs - Seed 3

MultiBERTs is a collection of checkpoints and a statistical library to support

robust research on BERT. We provide 25 BERT-base models trained with

similar hyper-parameters as

[the original BERT model](https://github.com/google-research/bert) but

with different random seeds, which causes variations in the initial weights and order of

training instances. The aim is to distinguish findings that apply to a specific

artifact (i.e., a particular instance of the model) from those that apply to the

more general procedure.

We also provide 140 intermediate checkpoints captured

during the course of pre-training (we saved 28 checkpoints for the first 5 runs).

The models were originally released through

[http://goo.gle/multiberts](http://goo.gle/multiberts). We describe them in our

paper

[The MultiBERTs: BERT Reproductions for Robustness Analysis](https://arxiv.org/abs/2106.16163).

This is model #3.

## Model Description

This model is a reproduction of

[BERT-base uncased](https://github.com/google-research/bert), for English: it

is a Transformers model pretrained on a large corpus of English data, using the

Masked Language Modelling (MLM) and the Next Sentence Prediction (NSP)

objectives.

The intended uses, limitations, training data and training procedure are similar

to [BERT-base uncased](https://github.com/google-research/bert). Two major

differences with the original model:

* We pre-trained the MultiBERTs models for 2 million steps using sequence

length 512 (instead of 1 million steps using sequence length 128 then 512).

* We used an alternative version of Wikipedia and Books Corpus, initially

collected for [Turc et al., 2019](https://arxiv.org/abs/1908.08962).

This is a best-effort reproduction, and so it is probable that differences with

the original model have gone unnoticed. The performance of MultiBERTs on GLUE is oftentimes comparable to that of original

BERT, but we found significant differences on the dev set of SQuAD (MultiBERTs outperforms original BERT).

See our [technical report](https://arxiv.org/abs/2106.16163) for more details.

### How to use

Using code from

[BERT-base uncased](https://huggingface.co/bert-base-uncased), here is an example based on

Tensorflow:

```

from transformers import BertTokenizer, TFBertModel

tokenizer = BertTokenizer.from_pretrained('google/multiberts-seed_3')

model = TFBertModel.from_pretrained("google/multiberts-seed_3")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

PyTorch version:

```

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('google/multiberts-seed_3')

model = BertModel.from_pretrained("google/multiberts-seed_3")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

## Citation info

```bibtex

@article{sellam2021multiberts,

title={The MultiBERTs: BERT Reproductions for Robustness Analysis},

author={Thibault Sellam and Steve Yadlowsky and Jason Wei and Naomi Saphra and Alexander D'Amour and Tal Linzen and Jasmijn Bastings and Iulia Turc and Jacob Eisenstein and Dipanjan Das and Ian Tenney and Ellie Pavlick},

journal={arXiv preprint arXiv:2106.16163},

year={2021}

}

```

|

sshleifer/tiny-distilbert-base-uncased-finetuned-sst-2-english

|

40f5ccbce1646c98ea0fabb02f96182a08a5a9d9

|

2020-05-12T01:51:10.000Z

|

[

"pytorch",

"tf",

"distilbert",

"text-classification",

"transformers"

] |

text-classification

| false |

sshleifer

| null |

sshleifer/tiny-distilbert-base-uncased-finetuned-sst-2-english

| 567 | null |

transformers

| 2,212 |

Entry not found

|

Gunulhona/tbstmodel_v2

|

deb515d43c4985e3318a0ea172cd563bcde230fa

|

2022-07-20T10:32:43.000Z

|

[

"pytorch",

"bart",

"text2text-generation",

"transformers",

"autotrain_compatible"

] |

text2text-generation

| false |

Gunulhona

| null |

Gunulhona/tbstmodel_v2

| 566 | null |

transformers

| 2,213 |

Entry not found

|

cross-encoder/ms-marco-TinyBERT-L-4

|

12a9f222056982640d3735ab94d865761c8fdd16

|

2021-08-05T08:39:59.000Z

|

[

"pytorch",

"jax",

"bert",

"text-classification",

"transformers",

"license:apache-2.0"

] |

text-classification

| false |

cross-encoder

| null |

cross-encoder/ms-marco-TinyBERT-L-4

| 565 | null |

transformers

| 2,214 |

---

license: apache-2.0

---

# Cross-Encoder for MS Marco

This model was trained on the [MS Marco Passage Ranking](https://github.com/microsoft/MSMARCO-Passage-Ranking) task.

The model can be used for Information Retrieval: Given a query, encode the query will all possible passages (e.g. retrieved with ElasticSearch). Then sort the passages in a decreasing order. See [SBERT.net Retrieve & Re-rank](https://www.sbert.net/examples/applications/retrieve_rerank/README.html) for more details. The training code is available here: [SBERT.net Training MS Marco](https://github.com/UKPLab/sentence-transformers/tree/master/examples/training/ms_marco)

## Usage with Transformers

```python

from transformers import AutoTokenizer, AutoModelForSequenceClassification

import torch

model = AutoModelForSequenceClassification.from_pretrained('model_name')

tokenizer = AutoTokenizer.from_pretrained('model_name')

features = tokenizer(['How many people live in Berlin?', 'How many people live in Berlin?'], ['Berlin has a population of 3,520,031 registered inhabitants in an area of 891.82 square kilometers.', 'New York City is famous for the Metropolitan Museum of Art.'], padding=True, truncation=True, return_tensors="pt")

model.eval()

with torch.no_grad():

scores = model(**features).logits

print(scores)

```

## Usage with SentenceTransformers

The usage becomes easier when you have [SentenceTransformers](https://www.sbert.net/) installed. Then, you can use the pre-trained models like this:

```python

from sentence_transformers import CrossEncoder

model = CrossEncoder('model_name', max_length=512)

scores = model.predict([('Query', 'Paragraph1'), ('Query', 'Paragraph2') , ('Query', 'Paragraph3')])

```

## Performance

In the following table, we provide various pre-trained Cross-Encoders together with their performance on the [TREC Deep Learning 2019](https://microsoft.github.io/TREC-2019-Deep-Learning/) and the [MS Marco Passage Reranking](https://github.com/microsoft/MSMARCO-Passage-Ranking/) dataset.

| Model-Name | NDCG@10 (TREC DL 19) | MRR@10 (MS Marco Dev) | Docs / Sec |

| ------------- |:-------------| -----| --- |

| **Version 2 models** | | |

| cross-encoder/ms-marco-TinyBERT-L-2-v2 | 69.84 | 32.56 | 9000

| cross-encoder/ms-marco-MiniLM-L-2-v2 | 71.01 | 34.85 | 4100

| cross-encoder/ms-marco-MiniLM-L-4-v2 | 73.04 | 37.70 | 2500

| cross-encoder/ms-marco-MiniLM-L-6-v2 | 74.30 | 39.01 | 1800

| cross-encoder/ms-marco-MiniLM-L-12-v2 | 74.31 | 39.02 | 960

| **Version 1 models** | | |

| cross-encoder/ms-marco-TinyBERT-L-2 | 67.43 | 30.15 | 9000

| cross-encoder/ms-marco-TinyBERT-L-4 | 68.09 | 34.50 | 2900

| cross-encoder/ms-marco-TinyBERT-L-6 | 69.57 | 36.13 | 680

| cross-encoder/ms-marco-electra-base | 71.99 | 36.41 | 340

| **Other models** | | |

| nboost/pt-tinybert-msmarco | 63.63 | 28.80 | 2900

| nboost/pt-bert-base-uncased-msmarco | 70.94 | 34.75 | 340

| nboost/pt-bert-large-msmarco | 73.36 | 36.48 | 100

| Capreolus/electra-base-msmarco | 71.23 | 36.89 | 340

| amberoad/bert-multilingual-passage-reranking-msmarco | 68.40 | 35.54 | 330

| sebastian-hofstaetter/distilbert-cat-margin_mse-T2-msmarco | 72.82 | 37.88 | 720

Note: Runtime was computed on a V100 GPU.

|

hfl/chinese-pert-large

|

2e523595cb3d0d157f847cd0ec1b3914c8740fe1

|

2022-02-25T04:09:23.000Z

|

[

"pytorch",

"tf",

"bert",

"feature-extraction",

"zh",

"transformers",

"license:cc-by-nc-sa-4.0"

] |

feature-extraction

| false |

hfl

| null |

hfl/chinese-pert-large

| 565 | 2 |

transformers

| 2,215 |

---

language:

- zh

license: "cc-by-nc-sa-4.0"

---

# Please use 'Bert' related functions to load this model!

Under construction...

Please visit our GitHub repo for more information: https://github.com/ymcui/PERT

|

w11wo/indonesian-roberta-base-indolem-sentiment-classifier-fold-0

|

0fce6ebcb814fa0f624e3a8ba83f682a222c60f6

|

2021-10-06T04:15:40.000Z

|

[

"pytorch",

"roberta",

"text-classification",

"id",

"dataset:indolem",

"arxiv:1907.11692",

"transformers",

"indonesian-roberta-base-indolem-sentiment-classifier-fold-0",

"license:mit"

] |

text-classification

| false |

w11wo

| null |

w11wo/indonesian-roberta-base-indolem-sentiment-classifier-fold-0

| 563 | null |

transformers

| 2,216 |

---

language: id

tags:

- indonesian-roberta-base-indolem-sentiment-classifier-fold-0

license: mit

datasets:

- indolem

widget:

- text: "Pelayanan hotel ini sangat baik."

---

## Indonesian RoBERTa Base IndoLEM Sentiment Classifier

Indonesian RoBERTa Base IndoLEM Sentiment Classifier is a sentiment-text-classification model based on the [RoBERTa](https://arxiv.org/abs/1907.11692) model. The model was originally the pre-trained [Indonesian RoBERTa Base](https://hf.co/flax-community/indonesian-roberta-base) model, which is then fine-tuned on [`indolem`](https://indolem.github.io/)'s [Sentiment Analysis](https://github.com/indolem/indolem/tree/main/sentiment) dataset consisting of Indonesian tweets and hotel reviews (Koto et al., 2020).

A 5-fold cross-validation experiment was performed, with splits provided by the original dataset authors. This model was trained on fold 0. You can find models trained on [fold 0](https://huggingface.co/w11wo/indonesian-roberta-base-indolem-sentiment-classifier-fold-0), [fold 1](https://huggingface.co/w11wo/indonesian-roberta-base-indolem-sentiment-classifier-fold-1), [fold 2](https://huggingface.co/w11wo/indonesian-roberta-base-indolem-sentiment-classifier-fold-2), [fold 3](https://huggingface.co/w11wo/indonesian-roberta-base-indolem-sentiment-classifier-fold-3), and [fold 4](https://huggingface.co/w11wo/indonesian-roberta-base-indolem-sentiment-classifier-fold-4), in their respective links.

On **fold 0**, the model achieved an F1 of 86.42% on dev/validation and 83.12% on test. On all **5 folds**, the models achieved an average F1 of 84.14% on dev/validation and 84.64% on test.

Hugging Face's `Trainer` class from the [Transformers](https://huggingface.co/transformers) library was used to train the model. PyTorch was used as the backend framework during training, but the model remains compatible with other frameworks nonetheless.

## Model

| Model | #params | Arch. | Training/Validation data (text) |

| ------------------------------------------------------------- | ------- | ------------ | ------------------------------- |

| `indonesian-roberta-base-indolem-sentiment-classifier-fold-0` | 124M | RoBERTa Base | `IndoLEM`'s Sentiment Analysis |

## Evaluation Results

The model was trained for 10 epochs and the best model was loaded at the end.

| Epoch | Training Loss | Validation Loss | Accuracy | F1 | Precision | Recall |

| ----- | ------------- | --------------- | -------- | -------- | --------- | -------- |

| 1 | 0.563500 | 0.420457 | 0.796992 | 0.626728 | 0.680000 | 0.581197 |

| 2 | 0.293600 | 0.281360 | 0.884712 | 0.811475 | 0.779528 | 0.846154 |

| 3 | 0.163000 | 0.267922 | 0.904762 | 0.844262 | 0.811024 | 0.880342 |

| 4 | 0.090200 | 0.335411 | 0.899749 | 0.838710 | 0.793893 | 0.888889 |

| 5 | 0.065200 | 0.462526 | 0.897243 | 0.835341 | 0.787879 | 0.888889 |

| 6 | 0.039200 | 0.423001 | 0.912281 | 0.859438 | 0.810606 | 0.914530 |

| 7 | 0.025300 | 0.452100 | 0.912281 | 0.859438 | 0.810606 | 0.914530 |

| 8 | 0.010400 | 0.525200 | 0.914787 | 0.855932 | 0.848739 | 0.863248 |

| 9 | 0.007100 | 0.513585 | 0.909774 | 0.850000 | 0.829268 | 0.871795 |

| 10 | 0.007200 | 0.537254 | 0.917293 | 0.864198 | 0.833333 | 0.897436 |

## How to Use

### As Text Classifier

```python

from transformers import pipeline

pretrained_name = "w11wo/indonesian-roberta-base-indolem-sentiment-classifier-fold-0"

nlp = pipeline(

"sentiment-analysis",

model=pretrained_name,

tokenizer=pretrained_name

)

nlp("Pelayanan hotel ini sangat baik.")

```

## Disclaimer

Do consider the biases which come from both the pre-trained RoBERTa model and `IndoLEM`'s Sentiment Analysis dataset that may be carried over into the results of this model.

## Author

Indonesian RoBERTa Base IndoLEM Sentiment Classifier was trained and evaluated by [Wilson Wongso](https://w11wo.github.io/). All computation and development are done on Google Colaboratory using their free GPU access.

|

Gunulhona/tbbcmodel

|

97497c151100a30da0d19771d3dbc7c457befaac

|

2022-01-06T07:01:22.000Z

|

[

"pytorch",

"bart",

"text-classification",

"transformers"

] |

text-classification

| false |

Gunulhona

| null |

Gunulhona/tbbcmodel

| 562 | null |

transformers

| 2,217 |

Entry not found

|

fav-kky/FERNET-C5

|

ff5399d8222bce8d7356c7face6d0d0263f9cb8c

|

2021-07-26T21:05:31.000Z

|

[

"pytorch",

"tf",

"bert",

"fill-mask",

"cs",

"arxiv:2107.10042",

"transformers",

"Czech",

"KKY",

"FAV",

"license:cc-by-nc-sa-4.0",

"autotrain_compatible"

] |

fill-mask

| false |

fav-kky

| null |

fav-kky/FERNET-C5

| 562 | null |

transformers

| 2,218 |

---

language: "cs"

tags:

- Czech

- KKY

- FAV

license: "cc-by-nc-sa-4.0"

---

# FERNET-C5

FERNET-C5 is a monolingual Czech BERT-base model pre-trained from 93GB of filtered Czech Common Crawl dataset (C5).

Preprint of our paper is available at https://arxiv.org/abs/2107.10042.

|

junnyu/roformer_chinese_sim_char_base

|

6e0353805a82525679b0d5d9e97c51fdbf8378eb

|

2022-04-15T03:52:35.000Z

|

[

"pytorch",

"roformer",

"text-generation",

"zh",

"transformers",

"tf2.0"

] |

text-generation

| false |

junnyu

| null |

junnyu/roformer_chinese_sim_char_base

| 562 | 1 |

transformers

| 2,219 |

---

language: zh

tags:

- roformer

- pytorch

- tf2.0

inference: False

---

# 安装

- pip install roformer==0.4.3

# 使用

```python

import torch

import numpy as np

from roformer import RoFormerForCausalLM, RoFormerConfig

from transformers import BertTokenizer

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

pretrained_model = "junnyu/roformer_chinese_sim_char_base"

tokenizer = BertTokenizer.from_pretrained(pretrained_model)

config = RoFormerConfig.from_pretrained(pretrained_model)

config.is_decoder = True

config.eos_token_id = tokenizer.sep_token_id

config.pooler_activation = "linear"

model = RoFormerForCausalLM.from_pretrained(pretrained_model, config=config)

model.to(device)

model.eval()

def gen_synonyms(text, n=100, k=20):

''''含义: 产生sent的n个相似句,然后返回最相似的k个。

做法:用seq2seq生成,并用encoder算相似度并排序。

'''

# 寻找所有相似的句子

r = []

inputs1 = tokenizer(text, return_tensors="pt")

for _ in range(n):

inputs1.to(device)

output = tokenizer.batch_decode(model.generate(**inputs1, top_p=0.95, do_sample=True, max_length=128), skip_special_tokens=True)[0].replace(" ","").replace(text, "") # 去除空格,去除原始text文本。

r.append(output)

# 对相似的句子进行排序

r = [i for i in set(r) if i != text and len(i) > 0]

r = [text] + r

inputs2 = tokenizer(r, padding=True, return_tensors="pt")

with torch.no_grad():

inputs2.to(device)

outputs = model(**inputs2)

Z = outputs.pooler_output.cpu().numpy()

Z /= (Z**2).sum(axis=1, keepdims=True)**0.5

argsort = np.dot(Z[1:], -Z[0]).argsort()

return [r[i + 1] for i in argsort[:k]]

out = gen_synonyms("广州和深圳哪个好?")

print(out)

# ['深圳和广州哪个好?',

# '广州和深圳哪个好',

# '深圳和广州哪个好',

# '深圳和广州哪个比较好。',

# '深圳和广州哪个最好?',

# '深圳和广州哪个比较好',

# '广州和深圳那个比较好',

# '深圳和广州哪个更好?',

# '深圳与广州哪个好',

# '深圳和广州,哪个比较好',

# '广州与深圳比较哪个好',

# '深圳和广州哪里比较好',

# '深圳还是广州比较好?',

# '广州和深圳哪个地方好一些?',

# '广州好还是深圳好?',

# '广州好还是深圳好呢?',

# '广州与深圳哪个地方好点?',

# '深圳好还是广州好',

# '广州好还是深圳好',

# '广州和深圳哪个城市好?']

```

|

flair/chunk-english-fast

|

f6040da676441b1c13f702119e8cc12e0a533350

|

2021-03-02T21:59:23.000Z

|

[

"pytorch",

"en",

"dataset:conll2000",

"flair",

"token-classification",

"sequence-tagger-model"

] |

token-classification

| false |

flair

| null |

flair/chunk-english-fast

| 561 | 2 |

flair

| 2,220 |

---

tags:

- flair

- token-classification

- sequence-tagger-model

language: en

datasets:

- conll2000

widget:

- text: "The happy man has been eating at the diner"

---

## English Chunking in Flair (fast model)

This is the fast phrase chunking model for English that ships with [Flair](https://github.com/flairNLP/flair/).

F1-Score: **96,22** (CoNLL-2000)

Predicts 4 tags:

| **tag** | **meaning** |

|---------------------------------|-----------|

| ADJP | adjectival |

| ADVP | adverbial |

| CONJP | conjunction |

| INTJ | interjection |

| LST | list marker |

| NP | noun phrase |

| PP | prepositional |

| PRT | particle |

| SBAR | subordinate clause |

| VP | verb phrase |

Based on [Flair embeddings](https://www.aclweb.org/anthology/C18-1139/) and LSTM-CRF.

---

### Demo: How to use in Flair

Requires: **[Flair](https://github.com/flairNLP/flair/)** (`pip install flair`)

```python

from flair.data import Sentence

from flair.models import SequenceTagger

# load tagger

tagger = SequenceTagger.load("flair/chunk-english-fast")

# make example sentence

sentence = Sentence("The happy man has been eating at the diner")

# predict NER tags

tagger.predict(sentence)

# print sentence

print(sentence)

# print predicted NER spans

print('The following NER tags are found:')

# iterate over entities and print

for entity in sentence.get_spans('np'):

print(entity)

```

This yields the following output:

```

Span [1,2,3]: "The happy man" [− Labels: NP (0.9958)]

Span [4,5,6]: "has been eating" [− Labels: VP (0.8759)]

Span [7]: "at" [− Labels: PP (1.0)]

Span [8,9]: "the diner" [− Labels: NP (0.9991)]

```

So, the spans "*The happy man*" and "*the diner*" are labeled as **noun phrases** (NP) and "*has been eating*" is labeled as a **verb phrase** (VP) in the sentence "*The happy man has been eating at the diner*".

---

### Training: Script to train this model

The following Flair script was used to train this model:

```python

from flair.data import Corpus

from flair.datasets import CONLL_2000

from flair.embeddings import WordEmbeddings, StackedEmbeddings, FlairEmbeddings

# 1. get the corpus

corpus: Corpus = CONLL_2000()

# 2. what tag do we want to predict?

tag_type = 'np'

# 3. make the tag dictionary from the corpus

tag_dictionary = corpus.make_tag_dictionary(tag_type=tag_type)

# 4. initialize each embedding we use

embedding_types = [

# contextual string embeddings, forward

FlairEmbeddings('news-forward-fast'),

# contextual string embeddings, backward

FlairEmbeddings('news-backward-fast'),

]

# embedding stack consists of Flair and GloVe embeddings

embeddings = StackedEmbeddings(embeddings=embedding_types)

# 5. initialize sequence tagger

from flair.models import SequenceTagger

tagger = SequenceTagger(hidden_size=256,

embeddings=embeddings,

tag_dictionary=tag_dictionary,

tag_type=tag_type)

# 6. initialize trainer

from flair.trainers import ModelTrainer

trainer = ModelTrainer(tagger, corpus)

# 7. run training

trainer.train('resources/taggers/chunk-english-fast',

train_with_dev=True,

max_epochs=150)

```

---

### Cite

Please cite the following paper when using this model.

```

@inproceedings{akbik2018coling,

title={Contextual String Embeddings for Sequence Labeling},

author={Akbik, Alan and Blythe, Duncan and Vollgraf, Roland},

booktitle = {{COLING} 2018, 27th International Conference on Computational Linguistics},

pages = {1638--1649},

year = {2018}

}

```

---

### Issues?

The Flair issue tracker is available [here](https://github.com/flairNLP/flair/issues/).

|

snunlp/KR-FinBert-SC

|

f8586286cc3161fb648e9fee09a456069fd846d0

|

2022-04-28T05:07:18.000Z

|

[

"pytorch",

"bert",

"text-classification",

"ko",

"transformers"

] |

text-classification

| false |

snunlp

| null |

snunlp/KR-FinBert-SC

| 561 | 2 |

transformers

| 2,221 |

---

language:

- ko

---

# KR-FinBert & KR-FinBert-SC

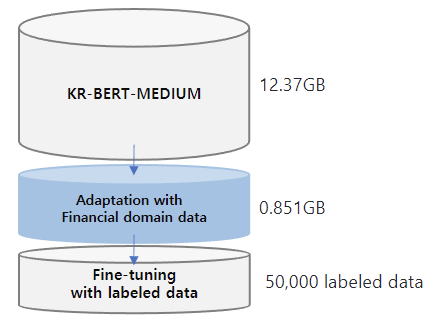

Much progress has been made in the NLP (Natural Language Processing) field, with numerous studies showing that domain adaptation using small-scale corpus and fine-tuning with labeled data is effective for overall performance improvement.

we proposed KR-FinBert for the financial domain by further pre-training it on a financial corpus and fine-tuning it for sentiment analysis. As many studies have shown, the performance improvement through adaptation and conducting the downstream task was also clear in this experiment.

## Data

The training data for this model is expanded from those of **[KR-BERT-MEDIUM](https://huggingface.co/snunlp/KR-Medium)**, texts from Korean Wikipedia, general news articles, legal texts crawled from the National Law Information Center and [Korean Comments dataset](https://www.kaggle.com/junbumlee/kcbert-pretraining-corpus-korean-news-comments). For the transfer learning, **corporate related economic news articles from 72 media sources** such as the Financial Times, The Korean Economy Daily, etc and **analyst reports from 16 securities companies** such as Kiwoom Securities, Samsung Securities, etc are added. Included in the dataset is 440,067 news titles with their content and 11,237 analyst reports. **The total data size is about 13.22GB.** For mlm training, we split the data line by line and **the total no. of lines is 6,379,315.**

KR-FinBert is trained for 5.5M steps with the maxlen of 512, training batch size of 32, and learning rate of 5e-5, taking 67.48 hours to train the model using NVIDIA TITAN XP.

## Downstream tasks

### Sentimental Classification model

Downstream task performances with 50,000 labeled data.

|Model|Accuracy|

|-|-|

|KR-FinBert|0.963|

|KR-BERT-MEDIUM|0.958|

|KcBert-large|0.955|

|KcBert-base|0.953|

|KoBert|0.817|

### Inference sample

|Positive|Negative|

|-|-|

|현대바이오, '폴리탁셀' 코로나19 치료 가능성에 19% 급등 | 영화관株 '코로나 빙하기' 언제 끝나나…"CJ CGV 올 4000억 손실 날수도" |

|이수화학, 3분기 영업익 176억…전년比 80%↑ | C쇼크에 멈춘 흑자비행…대한항공 1분기 영업적자 566억 |

|"GKL, 7년 만에 두 자릿수 매출성장 예상" | '1000억대 횡령·배임' 최신원 회장 구속… SK네트웍스 "경영 공백 방지 최선" |

|위지윅스튜디오, 콘텐츠 활약에 사상 첫 매출 1000억원 돌파 | 부품 공급 차질에…기아차 광주공장 전면 가동 중단 |

|삼성전자, 2년 만에 인도 스마트폰 시장 점유율 1위 '왕좌 탈환' | 현대제철, 지난해 영업익 3,313억원···전년比 67.7% 감소 |

### Citation

```

@misc{kr-FinBert-SC,

author = {Kim, Eunhee and Hyopil Shin},

title = {KR-FinBert: Fine-tuning KR-FinBert for Sentiment Analysis},

year = {2022},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://huggingface.co/snunlp/KR-FinBert-SC}}

}

```

|

Helsinki-NLP/opus-mt-mr-en

|

040060aef28d3ace6070a967dc4d22bce13fe98d

|

2021-09-10T13:58:19.000Z

|

[

"pytorch",

"marian",

"text2text-generation",

"mr",

"en",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-mr-en

| 560 | null |

transformers

| 2,222 |

---

tags:

- translation

license: apache-2.0

---

### opus-mt-mr-en

* source languages: mr

* target languages: en

* OPUS readme: [mr-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/mr-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2019-12-18.zip](https://object.pouta.csc.fi/OPUS-MT-models/mr-en/opus-2019-12-18.zip)

* test set translations: [opus-2019-12-18.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/mr-en/opus-2019-12-18.test.txt)

* test set scores: [opus-2019-12-18.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/mr-en/opus-2019-12-18.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.mr.en | 38.2 | 0.515 |

|

yoshitomo-matsubara/bert-base-uncased-sst2

|

7d0cf617c3efaeb57e0cf15962c0fb3c0174d9bb

|

2021-05-29T21:57:09.000Z

|

[

"pytorch",

"bert",

"text-classification",

"en",

"dataset:sst2",

"transformers",

"sst2",

"glue",

"torchdistill",

"license:apache-2.0"

] |

text-classification

| false |

yoshitomo-matsubara

| null |

yoshitomo-matsubara/bert-base-uncased-sst2

| 560 | null |

transformers

| 2,223 |

---

language: en

tags:

- bert

- sst2

- glue

- torchdistill

license: apache-2.0

datasets:

- sst2

metrics:

- accuracy

---

`bert-base-uncased` fine-tuned on SST-2 dataset, using [***torchdistill***](https://github.com/yoshitomo-matsubara/torchdistill) and [Google Colab](https://colab.research.google.com/github/yoshitomo-matsubara/torchdistill/blob/master/demo/glue_finetuning_and_submission.ipynb).

The hyperparameters are the same as those in Hugging Face's example and/or the paper of BERT, and the training configuration (including hyperparameters) is available [here](https://github.com/yoshitomo-matsubara/torchdistill/blob/main/configs/sample/glue/sst2/ce/bert_base_uncased.yaml).

I submitted prediction files to [the GLUE leaderboard](https://gluebenchmark.com/leaderboard), and the overall GLUE score was **77.9**.

|

ShiroNeko/DialoGPT-small-rick

|

3dd3341e6bd09f9905dcc3d38a4ad897504bbdc7

|

2021-09-20T08:46:13.000Z

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

| false |

ShiroNeko

| null |

ShiroNeko/DialoGPT-small-rick

| 558 | null |

transformers

| 2,224 |

---

tags:

- conversational

---

# Rick DialoGPT Model

|

abhishek/autonlp-japanese-sentiment-59363

|

0d765f697ed9076d536ca72fa44a7666400e1ae3

|

2021-05-18T22:56:15.000Z

|

[

"pytorch",

"jax",

"bert",

"text-classification",

"ja",

"dataset:abhishek/autonlp-data-japanese-sentiment",

"transformers",

"autonlp"

] |

text-classification

| false |

abhishek

| null |

abhishek/autonlp-japanese-sentiment-59363

| 558 | null |

transformers

| 2,225 |

---

tags: autonlp

language: ja

widget:

- text: "🤗AutoNLPが大好きです"

datasets:

- abhishek/autonlp-data-japanese-sentiment

---

# Model Trained Using AutoNLP

- Problem type: Binary Classification

- Model ID: 59363

## Validation Metrics

- Loss: 0.12651239335536957

- Accuracy: 0.9532079853817648

- Precision: 0.9729688278823665

- Recall: 0.9744633462616643

- AUC: 0.9717333684823413

- F1: 0.9737155136027014

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoNLP"}' https://api-inference.huggingface.co/models/abhishek/autonlp-japanese-sentiment-59363

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("abhishek/autonlp-japanese-sentiment-59363", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("abhishek/autonlp-japanese-sentiment-59363", use_auth_token=True)

inputs = tokenizer("I love AutoNLP", return_tensors="pt")

outputs = model(**inputs)

```

|

nielsr/layoutlmv3-finetuned-funsd

|

99e76f3c6200c43c300cd597d86bb519cbb91d25

|

2022-05-02T16:57:40.000Z

|

[

"pytorch",

"tensorboard",

"layoutlmv3",

"token-classification",

"dataset:nielsr/funsd-layoutlmv3",

"transformers",

"generated_from_trainer",

"model-index",

"autotrain_compatible"

] |

token-classification

| false |

nielsr

| null |

nielsr/layoutlmv3-finetuned-funsd

| 558 | 2 |

transformers

| 2,226 |

---

tags:

- generated_from_trainer

datasets:

- nielsr/funsd-layoutlmv3

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: layoutlmv3-finetuned-funsd

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: nielsr/funsd-layoutlmv3

type: nielsr/funsd-layoutlmv3

args: funsd

metrics:

- name: Precision

type: precision

value: 0.9026198714780029

- name: Recall

type: recall

value: 0.913

- name: F1

type: f1

value: 0.9077802634849614

- name: Accuracy

type: accuracy

value: 0.8330271015158475

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# layoutlmv3-finetuned-funsd

This model is a fine-tuned version of [microsoft/layoutlmv3-base](https://huggingface.co/microsoft/layoutlmv3-base) on the nielsr/funsd-layoutlmv3 dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1164

- Precision: 0.9026

- Recall: 0.913

- F1: 0.9078

- Accuracy: 0.8330

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 1000

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 10.0 | 100 | 0.5238 | 0.8366 | 0.886 | 0.8606 | 0.8410 |

| No log | 20.0 | 200 | 0.6930 | 0.8751 | 0.8965 | 0.8857 | 0.8322 |

| No log | 30.0 | 300 | 0.7784 | 0.8902 | 0.908 | 0.8990 | 0.8414 |

| No log | 40.0 | 400 | 0.9056 | 0.8916 | 0.905 | 0.8983 | 0.8364 |

| 0.2429 | 50.0 | 500 | 1.0016 | 0.8954 | 0.9075 | 0.9014 | 0.8298 |

| 0.2429 | 60.0 | 600 | 1.0097 | 0.8899 | 0.897 | 0.8934 | 0.8294 |

| 0.2429 | 70.0 | 700 | 1.0722 | 0.9035 | 0.9085 | 0.9060 | 0.8315 |

| 0.2429 | 80.0 | 800 | 1.0884 | 0.8905 | 0.9105 | 0.9004 | 0.8269 |

| 0.2429 | 90.0 | 900 | 1.1292 | 0.8938 | 0.909 | 0.9013 | 0.8279 |

| 0.0098 | 100.0 | 1000 | 1.1164 | 0.9026 | 0.913 | 0.9078 | 0.8330 |

### Framework versions

- Transformers 4.19.0.dev0

- Pytorch 1.11.0+cu113

- Datasets 2.0.0

- Tokenizers 0.11.6

|

Gunulhona/tbnymodel

|

4607ed2430c42ccdc6054e7a51c1965dfd2ca70c

|

2022-04-04T04:46:06.000Z

|

[

"pytorch",

"bart",

"text-classification",

"transformers"

] |

text-classification

| false |

Gunulhona

| null |

Gunulhona/tbnymodel

| 557 | null |

transformers

| 2,227 |

Entry not found

|

cahya/gpt2-small-indonesian-522M

|

6d53094a6ca11236e62c54916c486e2b41d0b9aa

|

2021-05-21T14:41:35.000Z

|

[

"pytorch",

"tf",

"jax",

"gpt2",

"text-generation",

"id",

"dataset:Indonesian Wikipedia",

"transformers",

"license:mit"

] |

text-generation

| false |

cahya

| null |

cahya/gpt2-small-indonesian-522M

| 557 | 1 |

transformers

| 2,228 |

---

language: "id"

license: "mit"

datasets:

- Indonesian Wikipedia

widget:

- text: "Pulau Dewata sering dikunjungi"

---

# Indonesian GPT2 small model

## Model description

It is GPT2-small model pre-trained with indonesian Wikipedia using a causal language modeling (CLM) objective. This

model is uncased: it does not make a difference between indonesia and Indonesia.

This is one of several other language models that have been pre-trained with indonesian datasets. More detail about

its usage on downstream tasks (text classification, text generation, etc) is available at [Transformer based Indonesian Language Models](https://github.com/cahya-wirawan/indonesian-language-models/tree/master/Transformers)

## Intended uses & limitations

### How to use

You can use this model directly with a pipeline for text generation. Since the generation relies on some randomness,

we set a seed for reproducibility:

```python

>>> from transformers import pipeline, set_seed

>>> generator = pipeline('text-generation', model='cahya/gpt2-small-indonesian-522M')

>>> set_seed(42)

>>> generator("Kerajaan Majapahit adalah", max_length=30, num_return_sequences=5, num_beams=10)

[{'generated_text': 'Kerajaan Majapahit adalah sebuah kerajaan yang pernah berdiri di Jawa Timur pada abad ke-14 hingga abad ke-15. Kerajaan ini berdiri pada abad ke-14'},

{'generated_text': 'Kerajaan Majapahit adalah sebuah kerajaan yang pernah berdiri di Jawa Timur pada abad ke-14 hingga abad ke-16. Kerajaan ini berdiri pada abad ke-14'},

{'generated_text': 'Kerajaan Majapahit adalah sebuah kerajaan yang pernah berdiri di Jawa Timur pada abad ke-14 hingga abad ke-15. Kerajaan ini berdiri pada abad ke-15'},

{'generated_text': 'Kerajaan Majapahit adalah sebuah kerajaan yang pernah berdiri di Jawa Timur pada abad ke-14 hingga abad ke-16. Kerajaan ini berdiri pada abad ke-15'},

{'generated_text': 'Kerajaan Majapahit adalah sebuah kerajaan yang pernah berdiri di Jawa Timur pada abad ke-14 hingga abad ke-15. Kerajaan ini merupakan kelanjutan dari Kerajaan Majapahit yang'}]

```

Here is how to use this model to get the features of a given text in PyTorch:

```python

from transformers import GPT2Tokenizer, GPT2Model

model_name='cahya/gpt2-small-indonesian-522M'

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

model = GPT2Model.from_pretrained(model_name)

text = "Silakan diganti dengan text apa saja."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

```

and in Tensorflow:

```python

from transformers import GPT2Tokenizer, TFGPT2Model

model_name='cahya/gpt2-small-indonesian-522M'

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

model = TFGPT2Model.from_pretrained(model_name)

text = "Silakan diganti dengan text apa saja."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

```

## Training data

This model was pre-trained with 522MB of indonesian Wikipedia.

The texts are tokenized using a byte-level version of Byte Pair Encoding (BPE) (for unicode characters) and

a vocabulary size of 52,000. The inputs are sequences of 128 consecutive tokens.

|

nvidia/segformer-b0-finetuned-cityscapes-1024-1024

|

bca5b3ecf06ad6e3d732b277420a05e59e248d35

|

2022-07-20T09:53:38.000Z

|

[

"pytorch",

"tf",

"segformer",

"dataset:cityscapes",

"arxiv:2105.15203",

"transformers",

"vision",

"image-segmentation",

"license:apache-2.0"

] |

image-segmentation

| false |

nvidia

| null |

nvidia/segformer-b0-finetuned-cityscapes-1024-1024

| 557 | null |

transformers

| 2,229 |

---

license: apache-2.0

tags:

- vision

- image-segmentation

datasets:

- cityscapes

widget:

- src: https://www.researchgate.net/profile/Anurag-Arnab/publication/315881952/figure/fig5/AS:667673876779033@1536197265755/Sample-results-on-the-Cityscapes-dataset-The-above-images-show-how-our-method-can-handle.jpg

example_title: Road

---

# SegFormer (b0-sized) model fine-tuned on CityScapes

SegFormer model fine-tuned on CityScapes at resolution 1024x1024. It was introduced in the paper [SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers](https://arxiv.org/abs/2105.15203) by Xie et al. and first released in [this repository](https://github.com/NVlabs/SegFormer).

Disclaimer: The team releasing SegFormer did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

SegFormer consists of a hierarchical Transformer encoder and a lightweight all-MLP decode head to achieve great results on semantic segmentation benchmarks such as ADE20K and Cityscapes. The hierarchical Transformer is first pre-trained on ImageNet-1k, after which a decode head is added and fine-tuned altogether on a downstream dataset.

## Intended uses & limitations

You can use the raw model for semantic segmentation. See the [model hub](https://huggingface.co/models?other=segformer) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import SegformerFeatureExtractor, SegformerForSemanticSegmentation

from PIL import Image

import requests

feature_extractor = SegformerFeatureExtractor.from_pretrained("nvidia/segformer-b0-finetuned-cityscapes-1024-1024")

model = SegformerForSemanticSegmentation.from_pretrained("nvidia/segformer-b0-finetuned-cityscapes-1024-1024")

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits # shape (batch_size, num_labels, height/4, width/4)

```

For more code examples, we refer to the [documentation](https://huggingface.co/transformers/model_doc/segformer.html#).

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2105-15203,

author = {Enze Xie and

Wenhai Wang and

Zhiding Yu and

Anima Anandkumar and

Jose M. Alvarez and

Ping Luo},

title = {SegFormer: Simple and Efficient Design for Semantic Segmentation with

Transformers},

journal = {CoRR},

volume = {abs/2105.15203},

year = {2021},

url = {https://arxiv.org/abs/2105.15203},

eprinttype = {arXiv},

eprint = {2105.15203},

timestamp = {Wed, 02 Jun 2021 11:46:42 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2105-15203.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

Gunulhona/tbecmodel

|

6f555e96bafdb845b2affa4586ab339db5516144

|

2022-01-25T06:37:13.000Z

|

[

"pytorch",

"bart",

"text-classification",

"transformers"

] |

text-classification

| false |

Gunulhona

| null |

Gunulhona/tbecmodel

| 555 | null |

transformers

| 2,230 |

Entry not found

|

google/long-t5-local-large

|

a4b28551322d14828192722fff4576100a9e18be

|

2022-06-22T09:06:02.000Z

|

[

"pytorch",

"jax",

"longt5",

"text2text-generation",

"en",

"arxiv:2112.07916",

"arxiv:1912.08777",

"arxiv:1910.10683",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

text2text-generation

| false |

google

| null |

google/long-t5-local-large

| 555 | 1 |

transformers

| 2,231 |

---

license: apache-2.0

language: en

---

# LongT5 (local attention, large-sized model)

LongT5 model pre-trained on English language. The model was introduced in the paper [LongT5: Efficient Text-To-Text Transformer for Long Sequences](https://arxiv.org/pdf/2112.07916.pdf) by Guo et al. and first released in [the LongT5 repository](https://github.com/google-research/longt5). All the model architecture and configuration can be found in [Flaxformer repository](https://github.com/google/flaxformer) which uses another Google research project repository [T5x](https://github.com/google-research/t5x).

Disclaimer: The team releasing LongT5 did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

LongT5 model is an encoder-decoder transformer pre-trained in a text-to-text denoising generative setting ([Pegasus-like generation pre-training](https://arxiv.org/pdf/1912.08777.pdf)). LongT5 model is an extension of [T5 model](https://arxiv.org/pdf/1910.10683.pdf), and it enables using one of the two different efficient attention mechanisms - (1) Local attention, or (2) Transient-Global attention. The usage of attention sparsity patterns allows the model to efficiently handle input sequence.

LongT5 is particularly effective when fine-tuned for text generation (summarization, question answering) which requires handling long input sequences (up to 16,384 tokens).

## Intended uses & limitations

The model is mostly meant to be fine-tuned on a supervised dataset. See the [model hub](https://huggingface.co/models?search=longt5) to look for fine-tuned versions on a task that interests you.

### How to use

```python

from transformers import AutoTokenizer, LongT5Model

tokenizer = AutoTokenizer.from_pretrained("google/long-t5-local-large")

model = LongT5Model.from_pretrained("google/long-t5-local-large")

inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")

outputs = model(**inputs)

last_hidden_states = outputs.last_hidden_state

```

### BibTeX entry and citation info

```bibtex

@article{guo2021longt5,