| modelId (string, length 4-112) | sha (string, length 40) | lastModified (string, length 24) | tags (list) | pipeline_tag (string, 29 classes) | private (bool, 1 class) | author (string, length 2-38, nullable) | config (null) | id (string, length 4-112) | downloads (float64, 0-36.8M, nullable) | likes (float64, 0-712, nullable) | library_name (string, 17 classes) | __index_level_0__ (int64, 0-38.5k) | readme (string, length 0-186k) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Intel/bert-base-uncased-sparse-90-unstructured-pruneofa

|

78f561a09cf292eb81a272620559a2cdbd5b4c60

|

2022-01-13T12:13:06.000Z

|

[

"pytorch",

"tf",

"bert",

"pretraining",

"en",

"dataset:wikipedia",

"dataset:bookcorpus",

"arxiv:2111.05754",

"transformers",

"fill-mask"

] |

fill-mask

| false |

Intel

| null |

Intel/bert-base-uncased-sparse-90-unstructured-pruneofa

| 647 | null |

transformers

| 2,100 |

---

language: en

tags: fill-mask

datasets:

- wikipedia

- bookcorpus

---

# 90% Sparse BERT-Base (uncased) Prune OFA

This model is a result of our paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754), presented at the ENLSP NeurIPS Workshop 2021.

For further details on the model and its results, see our paper and our implementation, available [here](https://github.com/IntelLabs/Model-Compression-Research-Package/tree/main/research/prune-once-for-all).
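"90% sparse, unstructured" in the model name is generally understood to mean that about 90% of the pruned weights are exactly zero, with no constraint on where the zeros fall. As a minimal sketch, the sparsity of a weight matrix could be measured as below. The `sparsity` helper and the toy matrix are illustrative assumptions, not part of the Prune OFA code.

```python
def sparsity(weights):
    """Return the fraction of entries in a 2-D weight matrix that are exactly zero."""
    total = sum(len(row) for row in weights)
    zeros = sum(1 for row in weights for w in row if w == 0)
    return zeros / total if total else 0.0


# Toy 2 x 5 matrix (hypothetical values): 9 of the 10 entries are zero.
toy_weights = [
    [0.0, 0.0, 0.0, 0.0, 0.7],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
print(f"{sparsity(toy_weights):.0%}")  # 90%
```

The same check can be run per layer on a loaded checkpoint to see which tensors were actually pruned.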

|

google/byt5-xl

|

d16d25238ca359c637f7915f40e808819fa34d75

|

2022-05-27T15:07:11.000Z

|

[

"pytorch",

"tf",

"t5",

"text2text-generation",

"multilingual",

"af",

"am",

"ar",

"az",

"be",

"bg",

"bn",

"ca",

"ceb",

"co",

"cs",

"cy",

"da",

"de",

"el",

"en",

"eo",

"es",

"et",

"eu",

"fa",

"fi",

"fil",

"fr",

"fy",

"ga",

"gd",

"gl",

"gu",

"ha",

"haw",

"hi",

"hmn",

"ht",

"hu",

"hy",

"ig",

"is",

"it",

"iw",

"ja",

"jv",

"ka",

"kk",

"km",

"kn",

"ko",

"ku",

"ky",

"la",

"lb",

"lo",

"lt",

"lv",

"mg",

"mi",

"mk",

"ml",

"mn",

"mr",

"ms",

"mt",

"my",

"ne",

"nl",

"no",

"ny",

"pa",

"pl",

"ps",

"pt",

"ro",

"ru",

"sd",

"si",

"sk",

"sl",

"sm",

"sn",

"so",

"sq",

"sr",

"st",

"su",

"sv",

"sw",

"ta",

"te",

"tg",

"th",

"tr",

"uk",

"und",

"ur",

"uz",

"vi",

"xh",

"yi",

"yo",

"zh",

"zu",

"dataset:mc4",

"arxiv:1907.06292",

"arxiv:2105.13626",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

text2text-generation

| false |

google

| null |

google/byt5-xl

| 647 | 2 |

transformers

| 2,101 |

---

language:

- multilingual

- af

- am

- ar

- az

- be

- bg

- bn

- ca

- ceb

- co

- cs

- cy

- da

- de

- el

- en

- eo

- es

- et

- eu

- fa

- fi

- fil

- fr

- fy

- ga

- gd

- gl

- gu

- ha

- haw

- hi

- hmn

- ht

- hu

- hy

- ig

- is

- it

- iw

- ja

- jv

- ka

- kk

- km

- kn

- ko

- ku

- ky

- la

- lb

- lo

- lt

- lv

- mg

- mi

- mk

- ml

- mn

- mr

- ms

- mt

- my

- ne

- nl

- no

- ny

- pa

- pl

- ps

- pt

- ro

- ru

- sd

- si

- sk

- sl

- sm

- sn

- so

- sq

- sr

- st

- su

- sv

- sw

- ta

- te

- tg

- th

- tr

- uk

- und

- ur

- uz

- vi

- xh

- yi

- yo

- zh

- zu

datasets:

- mc4

license: apache-2.0

---

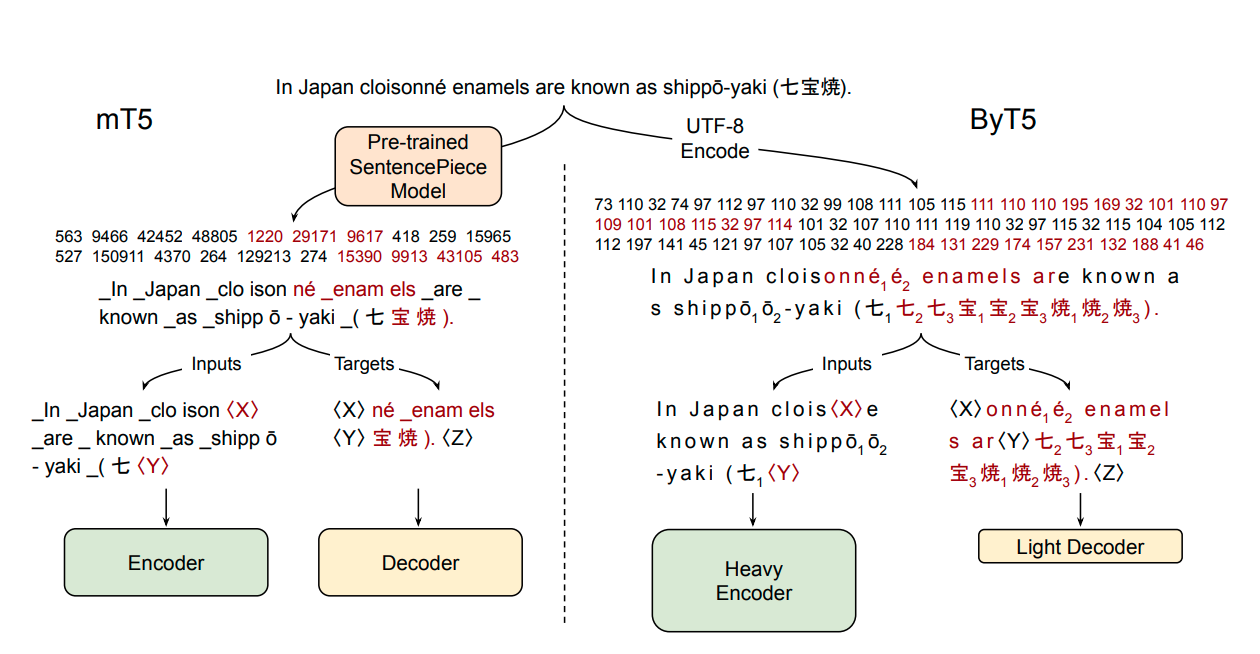

# ByT5 - xl

ByT5 is a tokenizer-free version of [Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) and generally follows the architecture of [MT5](https://huggingface.co/google/mt5-xl).

ByT5 was pre-trained only on [mC4](https://www.tensorflow.org/datasets/catalog/c4#c4multilingual), without any supervised training, using an average span mask of 20 UTF-8 characters. The model therefore has to be fine-tuned before it is usable on a downstream task.

ByT5 works especially well on noisy text data, *e.g.*, `google/byt5-xl` significantly outperforms [mt5-xl](https://huggingface.co/google/mt5-xl) on [TweetQA](https://arxiv.org/abs/1907.06292).

Paper: [ByT5: Towards a token-free future with pre-trained byte-to-byte models](https://arxiv.org/abs/2105.13626)

Authors: *Linting Xue, Aditya Barua, Noah Constant, Rami Al-Rfou, Sharan Narang, Mihir Kale, Adam Roberts, Colin Raffel*

## Example Inference

ByT5 works on raw UTF-8 bytes and can be used without a tokenizer:

```python

from transformers import T5ForConditionalGeneration

import torch

model = T5ForConditionalGeneration.from_pretrained('google/byt5-xl')

input_ids = torch.tensor([list("Life is like a box of chocolates.".encode("utf-8"))]) + 3 # add 3 for special tokens

labels = torch.tensor([list("La vie est comme une boîte de chocolat.".encode("utf-8"))]) + 3 # add 3 for special tokens

loss = model(input_ids, labels=labels).loss # forward pass

```
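The `+ 3` in the example above shifts every byte value past the ids reserved for special tokens. The following is a small pure-Python sketch of that mapping and its inverse. The helper names `text_to_ids` and `ids_to_text` are hypothetical, and the sketch assumes that exactly the 256 ids following the offset stand for raw bytes.

```python
BYT5_OFFSET = 3  # ids below this value are reserved for special tokens


def text_to_ids(text):
    """Map text to ByT5-style ids: one id per UTF-8 byte, shifted by the offset."""
    return [byte + BYT5_OFFSET for byte in text.encode("utf-8")]


def ids_to_text(ids):
    """Invert text_to_ids, skipping ids that do not correspond to a raw byte."""
    raw = bytes(i - BYT5_OFFSET for i in ids if BYT5_OFFSET <= i < BYT5_OFFSET + 256)
    return raw.decode("utf-8", errors="ignore")


ids = text_to_ids("boîte")
print(len("boîte"), len(ids))  # 5 6 (the character "î" takes two bytes)
print(ids_to_text(ids))        # boîte
```

Because the model sees bytes, sequence length grows with the number of bytes, not the number of characters.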

For batched inference and training, however, it is recommended to use a tokenizer class for padding:

```python

from transformers import T5ForConditionalGeneration, AutoTokenizer

model = T5ForConditionalGeneration.from_pretrained('google/byt5-xl')

tokenizer = AutoTokenizer.from_pretrained('google/byt5-xl')

model_inputs = tokenizer(["Life is like a box of chocolates.", "Today is Monday."], padding="longest", return_tensors="pt")

labels = tokenizer(["La vie est comme une boîte de chocolat.", "Aujourd'hui c'est lundi."], padding="longest", return_tensors="pt").input_ids

loss = model(**model_inputs, labels=labels).loss # forward pass

```
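What `padding="longest"` does can be sketched in plain Python: shorter sequences are right-padded to the length of the longest one, and an attention mask records which positions are real. This is only an illustration; the `pad_longest` helper is hypothetical and the padding id of 0 is an assumption here.

```python
PAD_ID = 0  # assumed padding id


def pad_longest(sequences, pad_id=PAD_ID):
    """Right-pad id sequences to the longest one and build attention masks."""
    max_len = max(len(seq) for seq in sequences)
    input_ids = [seq + [pad_id] * (max_len - len(seq)) for seq in sequences]
    attention_mask = [[1] * len(seq) + [0] * (max_len - len(seq)) for seq in sequences]
    return input_ids, attention_mask


# Toy id sequences of different lengths (hypothetical values).
batch = [[10, 11, 12], [20, 21, 22, 23, 24]]
input_ids, attention_mask = pad_longest(batch)
print(input_ids)       # [[10, 11, 12, 0, 0], [20, 21, 22, 23, 24]]
print(attention_mask)  # [[1, 1, 1, 0, 0], [1, 1, 1, 1, 1]]
```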

## Abstract

Most widely-used pre-trained language models operate on sequences of tokens corresponding to word or subword units. Encoding text as a sequence of tokens requires a tokenizer, which is typically created as an independent artifact from the model. Token-free models that instead operate directly on raw text (bytes or characters) have many benefits: they can process text in any language out of the box, they are more robust to noise, and they minimize technical debt by removing complex and error-prone text preprocessing pipelines. Since byte or character sequences are longer than token sequences, past work on token-free models has often introduced new model architectures designed to amortize the cost of operating directly on raw text. In this paper, we show that a standard Transformer architecture can be used with minimal modifications to process byte sequences. We carefully characterize the trade-offs in terms of parameter count, training FLOPs, and inference speed, and show that byte-level models are competitive with their token-level counterparts. We also demonstrate that byte-level models are significantly more robust to noise and perform better on tasks that are sensitive to spelling and pronunciation. As part of our contribution, we release a new set of pre-trained byte-level Transformer models based on the T5 architecture, as well as all code and data used in our experiments.

|

apple/mobilevit-small

|

0d7593125591ad9c05a33e6115aad12aa8e956a2

|

2022-06-02T10:56:27.000Z

|

[

"pytorch",

"coreml",

"mobilevit",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2110.02178",

"transformers",

"vision",

"license:other"

] |

image-classification

| false |

apple

| null |

apple/mobilevit-small

| 647 | 2 |

transformers

| 2,102 |

---

license: other

tags:

- vision

- image-classification

datasets:

- imagenet-1k

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# MobileViT (small-sized model)

MobileViT model pre-trained on ImageNet-1k at resolution 256x256. It was introduced in [MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer](https://arxiv.org/abs/2110.02178) by Sachin Mehta and Mohammad Rastegari, and first released in [this repository](https://github.com/apple/ml-cvnets). The license used is [Apple sample code license](https://github.com/apple/ml-cvnets/blob/main/LICENSE).

Disclaimer: The team releasing MobileViT did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

MobileViT is a light-weight, low latency convolutional neural network that combines MobileNetV2-style layers with a new block that replaces local processing in convolutions with global processing using transformers. As with ViT (Vision Transformer), the image data is converted into flattened patches before it is processed by the transformer layers. Afterwards, the patches are "unflattened" back into feature maps. This allows the MobileViT-block to be placed anywhere inside a CNN. MobileViT does not require any positional embeddings.

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=mobilevit) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import MobileViTFeatureExtractor, MobileViTForImageClassification

from PIL import Image

import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = MobileViTFeatureExtractor.from_pretrained("apple/mobilevit-small")

model = MobileViTForImageClassification.from_pretrained("apple/mobilevit-small")

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

```

Currently, both the feature extractor and model support PyTorch.

## Training data

The MobileViT model was pretrained on [ImageNet-1k](https://huggingface.co/datasets/imagenet-1k), a dataset consisting of 1 million images and 1,000 classes.

## Training procedure

### Preprocessing

Training requires only basic data augmentation, i.e. random resized cropping and horizontal flipping.

To learn multi-scale representations without requiring fine-tuning, a multi-scale sampler was used during training, with image sizes randomly sampled from: (160, 160), (192, 192), (256, 256), (288, 288), (320, 320).

At inference time, images are resized/rescaled to the same resolution (288x288), and center-cropped at 256x256.

Pixels are normalized to the range [0, 1]. Images are expected to be in BGR pixel order, not RGB.
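The inference-time steps described above (center crop from 288x288 to 256x256, scaling to [0, 1], BGR channel order) can be sketched without any image library. The helper names `center_crop_box` and `to_unit_bgr` are hypothetical and only illustrate the arithmetic; in practice the feature extractor handles these steps.

```python
def center_crop_box(width, height, crop_size):
    """Return (left, top, right, bottom) for a centered square crop."""
    left = (width - crop_size) // 2
    top = (height - crop_size) // 2
    return left, top, left + crop_size, top + crop_size


def to_unit_bgr(rgb_pixel):
    """Scale an 8-bit (R, G, B) pixel to [0, 1] and reorder it to (B, G, R)."""
    r, g, b = (channel / 255.0 for channel in rgb_pixel)
    return b, g, r


print(center_crop_box(288, 288, 256))  # (16, 16, 272, 272)
print(to_unit_bgr((255, 0, 51)))       # (0.2, 0.0, 1.0)
```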

### Pretraining

The MobileViT networks are trained from scratch for 300 epochs on ImageNet-1k on 8 NVIDIA GPUs with an effective batch size of 1024 and learning rate warmup for 3k steps, followed by cosine annealing. Also used were label smoothing cross-entropy loss and L2 weight decay. Training resolution varies from 160x160 to 320x320, using multi-scale sampling.
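A schedule with warmup followed by cosine annealing, as described above, might look like the sketch below. Only the 3k warmup steps come from the text; the linear warmup shape, `peak_lr`, `total_steps`, and `min_lr` are assumptions chosen for illustration.

```python
import math


def learning_rate(step, peak_lr, total_steps, warmup_steps=3000, min_lr=0.0):
    """Linear warmup to peak_lr, then cosine annealing down to min_lr."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Hypothetical values: peak learning rate 1.0 and 10,000 total steps.
for step in (0, 1500, 3000, 6500, 10000):
    print(step, round(learning_rate(step, peak_lr=1.0, total_steps=10000), 4))
```

The rate rises linearly for the first 3,000 steps, peaks, then follows half a cosine wave down to `min_lr`.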

## Evaluation results

| Model | ImageNet top-1 accuracy | ImageNet top-5 accuracy | # params | URL |

|------------------|-------------------------|-------------------------|-----------|-------------------------------------------------|

| MobileViT-XXS | 69.0 | 88.9 | 1.3 M | https://huggingface.co/apple/mobilevit-xx-small |

| MobileViT-XS | 74.8 | 92.3 | 2.3 M | https://huggingface.co/apple/mobilevit-x-small |

| **MobileViT-S** | **78.4** | **94.1** | **5.6 M** | https://huggingface.co/apple/mobilevit-small |

### BibTeX entry and citation info

```bibtex

@inproceedings{vision-transformer,

title = {MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer},

author = {Sachin Mehta and Mohammad Rastegari},

year = {2022},

URL = {https://arxiv.org/abs/2110.02178}

}

```

|

mujeensung/roberta-base_mnli_bc

|

95821cdd08f947bf3b98c9e9e70237fd92e5a9e1

|

2022-02-13T05:13:00.000Z

|

[

"pytorch",

"roberta",

"text-classification",

"en",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index"

] |

text-classification

| false |

mujeensung

| null |

mujeensung/roberta-base_mnli_bc

| 646 | null |

transformers

| 2,103 |

---

language:

- en

license: mit

tags:

- generated_from_trainer

datasets:

- glue

metrics:

- accuracy

model-index:

- name: roberta-base_mnli_bc

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: GLUE MNLI

type: glue

args: mnli

metrics:

- name: Accuracy

type: accuracy

value: 0.9583768461882739

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base_mnli_bc

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the GLUE MNLI dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2125

- Accuracy: 0.9584

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.2015 | 1.0 | 16363 | 0.1820 | 0.9470 |

| 0.1463 | 2.0 | 32726 | 0.1909 | 0.9559 |

| 0.0768 | 3.0 | 49089 | 0.2117 | 0.9585 |
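The hyperparameters list a linear scheduler with a peak learning rate of 2e-05, and the table shows 16363 steps per epoch for 49089 steps in total. Assuming no warmup (none is listed), the learning rate at each epoch boundary can be sketched as follows; `linear_lr` is a hypothetical helper, not the Trainer's own code.

```python
PEAK_LR = 2e-5       # learning_rate from the hyperparameter list
TOTAL_STEPS = 49089  # final step in the results table (3 epochs x 16363 steps)


def linear_lr(step, peak_lr=PEAK_LR, total_steps=TOTAL_STEPS, warmup_steps=0):
    """Linear warmup (if any), then linear decay to zero at total_steps."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


for epoch_end in (16363, 32726, 49089):
    print(epoch_end, f"{linear_lr(epoch_end):.2e}")
```

Under this assumption the rate has fallen to two thirds of its peak after the first epoch and reaches zero at the final step.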

### Framework versions

- Transformers 4.13.0

- Pytorch 1.10.1+cu111

- Datasets 1.17.0

- Tokenizers 0.10.3

|

ipuneetrathore/bert-base-cased-finetuned-finBERT

|

e07f59c31d6eee87029ce78deca877cb52484022

|

2021-05-19T20:30:58.000Z

|

[

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

] |

text-classification

| false |

ipuneetrathore

| null |

ipuneetrathore/bert-base-cased-finetuned-finBERT

| 642 | null |

transformers

| 2,104 |

## FinBERT

Code for importing and using this model is available [here](https://github.com/ipuneetrathore/BERT_models)

|

microsoft/trocr-small-stage1

|

49dfe6c53cb559599a7da463bdff8e3df0334d02

|

2022-07-01T07:39:23.000Z

|

[

"pytorch",

"vision-encoder-decoder",

"arxiv:2109.10282",

"transformers",

"trocr",

"image-to-text"

] |

image-to-text

| false |

microsoft

| null |

microsoft/trocr-small-stage1

| 642 | 1 |

transformers

| 2,105 |

---

tags:

- trocr

- image-to-text

---

# TrOCR (small-sized model, pre-trained only)

TrOCR pre-trained only model. It was introduced in the paper [TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models](https://arxiv.org/abs/2109.10282) by Li et al. and first released in [this repository](https://github.com/microsoft/unilm/tree/master/trocr).

## Model description

The TrOCR model is an encoder-decoder model, consisting of an image Transformer as encoder, and a text Transformer as decoder. The image encoder was initialized from the weights of DeiT, while the text decoder was initialized from the weights of UniLM.

Images are presented to the model as a sequence of fixed-size patches (resolution 16x16), which are linearly embedded. One also adds absolute position embeddings before feeding the sequence to the layers of the Transformer encoder. Next, the Transformer text decoder autoregressively generates tokens.
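To make the patch arithmetic concrete, the sketch below counts how many 16x16 patches an image yields, which is the length of the sequence fed to the encoder before any special tokens. The 384x384 input resolution is a hypothetical value chosen for illustration, and `num_patches` is not a library function.

```python
def num_patches(image_height, image_width, patch_size=16):
    """Number of non-overlapping patch_size x patch_size patches in an image."""
    if image_height % patch_size or image_width % patch_size:
        raise ValueError("image dimensions must be divisible by the patch size")
    return (image_height // patch_size) * (image_width // patch_size)


# 384 x 384 is a hypothetical input resolution chosen for illustration.
print(num_patches(384, 384))  # 576
```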

## Intended uses & limitations

You can use the raw model for optical character recognition (OCR) on single text-line images. See the [model hub](https://huggingface.co/models?search=microsoft/trocr) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model in PyTorch:

```python

from transformers import TrOCRProcessor, VisionEncoderDecoderModel

from PIL import Image

import requests

import torch

# load image from the IAM database

url = 'https://fki.tic.heia-fr.ch/static/img/a01-122-02-00.jpg'

image = Image.open(requests.get(url, stream=True).raw).convert("RGB")

processor = TrOCRProcessor.from_pretrained('microsoft/trocr-small-stage1')

model = VisionEncoderDecoderModel.from_pretrained('microsoft/trocr-small-stage1')

# training

pixel_values = processor(image, return_tensors="pt").pixel_values # Batch size 1

decoder_input_ids = torch.tensor([[model.config.decoder.decoder_start_token_id]])

outputs = model(pixel_values=pixel_values, decoder_input_ids=decoder_input_ids)

```

### BibTeX entry and citation info

```bibtex

@misc{li2021trocr,

title={TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models},

author={Minghao Li and Tengchao Lv and Lei Cui and Yijuan Lu and Dinei Florencio and Cha Zhang and Zhoujun Li and Furu Wei},

year={2021},

eprint={2109.10282},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

hfl/rbtl3

|

9cad8535fa548d05b09d43bb95eef67845981908

|

2021-05-19T19:22:46.000Z

|

[

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"zh",

"arxiv:1906.08101",

"arxiv:2004.13922",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

fill-mask

| false |

hfl

| null |

hfl/rbtl3

| 641 | 2 |

transformers

| 2,106 |

---

language:

- zh

tags:

- bert

license: "apache-2.0"

---

# This is a re-trained 3-layer RoBERTa-wwm-ext-large model.

## Chinese BERT with Whole Word Masking

To further accelerate Chinese natural language processing, we provide **Chinese pre-trained BERT with Whole Word Masking**.

**[Pre-Training with Whole Word Masking for Chinese BERT](https://arxiv.org/abs/1906.08101)**

Yiming Cui, Wanxiang Che, Ting Liu, Bing Qin, Ziqing Yang, Shijin Wang, Guoping Hu

This repository is developed based on: https://github.com/google-research/bert

You may also be interested in:

- Chinese BERT series: https://github.com/ymcui/Chinese-BERT-wwm

- Chinese MacBERT: https://github.com/ymcui/MacBERT

- Chinese ELECTRA: https://github.com/ymcui/Chinese-ELECTRA

- Chinese XLNet: https://github.com/ymcui/Chinese-XLNet

- Knowledge Distillation Toolkit - TextBrewer: https://github.com/airaria/TextBrewer

More resources by HFL: https://github.com/ymcui/HFL-Anthology

## Citation

If you find the technical report or resource useful, please cite the following technical report in your paper.

- Primary: https://arxiv.org/abs/2004.13922

```

@inproceedings{cui-etal-2020-revisiting,

title = "Revisiting Pre-Trained Models for {C}hinese Natural Language Processing",

author = "Cui, Yiming and

Che, Wanxiang and

Liu, Ting and

Qin, Bing and

Wang, Shijin and

Hu, Guoping",

booktitle = "Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: Findings",

month = nov,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.findings-emnlp.58",

pages = "657--668",

}

```

- Secondary: https://arxiv.org/abs/1906.08101

```

@article{chinese-bert-wwm,

title={Pre-Training with Whole Word Masking for Chinese BERT},

author={Cui, Yiming and Che, Wanxiang and Liu, Ting and Qin, Bing and Yang, Ziqing and Wang, Shijin and Hu, Guoping},

journal={arXiv preprint arXiv:1906.08101},

year={2019}

}

```

|

dangvantuan/sentence-camembert-base

|

badc8d827cb0215b4a68b37445214acba26da2b1

|

2022-03-11T17:02:50.000Z

|

[

"pytorch",

"camembert",

"feature-extraction",

"fr",

"dataset:stsb_multi_mt",

"arxiv:1908.10084",

"transformers",

"Text",

"Sentence Similarity",

"Sentence-Embedding",

"camembert-base",

"license:apache-2.0",

"sentence-similarity",

"model-index"

] |

sentence-similarity

| false |

dangvantuan

| null |

dangvantuan/sentence-camembert-base

| 641 | 2 |

transformers

| 2,107 |

---

pipeline_tag: sentence-similarity

language: fr

datasets:

- stsb_multi_mt

tags:

- Text

- Sentence Similarity

- Sentence-Embedding

- camembert-base

license: apache-2.0

model-index:

- name: sentence-camembert-base by Van Tuan DANG

results:

- task:

name: Sentence-Embedding

type: Text Similarity

dataset:

name: Text Similarity fr

type: stsb_multi_mt

args: fr

metrics:

- name: Test Pearson correlation coefficient

type: Pearson_correlation_coefficient

value: xx.xx

---

## Pre-trained sentence embedding models are the state of the art for French sentence embeddings.

The model is fine-tuned from the pre-trained [facebook/camembert-base](https://huggingface.co/camembert/camembert-base) using [Siamese BERT-Networks with 'sentence-transformers'](https://www.sbert.net/) on the [stsb](https://huggingface.co/datasets/stsb_multi_mt/viewer/fr/train) dataset.

## Usage

The model can be used directly (without a language model) as follows:

```python

from sentence_transformers import SentenceTransformer

model = SentenceTransformer("dangvantuan/sentence-camembert-base")

sentences = ["Un avion est en train de décoller.",

"Un homme joue d'une grande flûte.",

"Un homme étale du fromage râpé sur une pizza.",

"Une personne jette un chat au plafond.",

"Une personne est en train de plier un morceau de papier.",

]

embeddings = model.encode(sentences)

```
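Sentence embeddings such as those returned by `model.encode` are typically compared with cosine similarity. Below is a minimal pure-Python sketch; the toy 3-dimensional vectors are hypothetical stand-ins for real embeddings, and `cosine_similarity` is an illustrative helper, not the library's own function.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 3-dimensional vectors standing in for real sentence embeddings.
emb_a = [1.0, 2.0, 2.0]
emb_b = [2.0, 4.0, 4.0]   # same direction as emb_a
emb_c = [2.0, -1.0, 0.0]  # orthogonal to emb_a

print(cosine_similarity(emb_a, emb_b))  # 1.0
print(cosine_similarity(emb_a, emb_c))  # 0.0
```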

## Evaluation

The model can be evaluated as follows on the French test data of stsb.

```python

from sentence_transformers import SentenceTransformer

from sentence_transformers.readers import InputExample

from sentence_transformers.evaluation import EmbeddingSimilarityEvaluator

from datasets import load_dataset

# Load the model to evaluate

model = SentenceTransformer("dangvantuan/sentence-camembert-base")

def convert_dataset(dataset):
    dataset_samples = []
    for df in dataset:
        score = float(df['similarity_score']) / 5.0  # Normalize score to range 0 ... 1
        inp_example = InputExample(texts=[df['sentence1'], df['sentence2']], label=score)
        dataset_samples.append(inp_example)
    return dataset_samples

# Loading the dataset for evaluation

df_dev = load_dataset("stsb_multi_mt", name="fr", split="dev")

df_test = load_dataset("stsb_multi_mt", name="fr", split="test")

# Convert the dataset for evaluation

# For Dev set:

dev_samples = convert_dataset(df_dev)

val_evaluator = EmbeddingSimilarityEvaluator.from_input_examples(dev_samples, name='sts-dev')

val_evaluator(model, output_path="./")

# For Test set:

test_samples = convert_dataset(df_test)

test_evaluator = EmbeddingSimilarityEvaluator.from_input_examples(test_samples, name='sts-test')

test_evaluator(model, output_path="./")

```

**Test Result**:

The performance is measured using Pearson and Spearman correlation:

- On dev

| Model | Pearson correlation | Spearman correlation | #params |

| ------------- | ------------- | ------------- |------------- |

| [dangvantuan/sentence-camembert-base](https://huggingface.co/dangvantuan/sentence-camembert-base)| 86.73 |86.54 | 110M |

| [distiluse-base-multilingual-cased](https://huggingface.co/sentence-transformers/distiluse-base-multilingual-cased) | 79.22 | 79.16|135M |

- On test

| Model | Pearson correlation | Spearman correlation |

| ------------- | ------------- | ------------- |

| [dangvantuan/sentence-camembert-base](https://huggingface.co/dangvantuan/sentence-camembert-base)| 82.36 | 81.64|

| [distiluse-base-multilingual-cased](https://huggingface.co/sentence-transformers/distiluse-base-multilingual-cased) | 78.62 | 77.48|
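The Pearson figures in the tables above appear to be correlation coefficients scaled by 100. As a rough sketch of the metric itself, the snippet below computes Pearson correlation in plain Python and shows that dividing the gold scores by 5, as `convert_dataset` does, leaves the correlation unchanged. The sample scores are hypothetical, and `pearson` is an illustrative helper rather than the evaluator's implementation.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


gold = [0.0, 2.5, 5.0, 4.0]           # hypothetical gold scores on the 0-5 scale
predicted = [0.1, 0.4, 0.9, 0.7]      # hypothetical cosine similarities
normalized = [g / 5.0 for g in gold]  # same normalization as convert_dataset

print(round(pearson(gold, predicted), 4) == round(pearson(normalized, predicted), 4))  # True
```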

## Citation

@article{reimers2019sentence,

title={Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks},

author={Nils Reimers and Iryna Gurevych},

journal={https://arxiv.org/abs/1908.10084},

year={2019}

}

@article{martin2020camembert,

title={CamemBERT: a Tasty French Language Model},

author={Martin, Louis and Muller, Benjamin and Su{\'a}rez, Pedro Javier Ortiz and Dupont, Yoann and Romary, Laurent and de la Clergerie, {\'E}ric Villemonte and Seddah, Djam{\'e} and Sagot, Beno{\^\i}t},

journal={Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics},

year={2020}

}

|

aubmindlab/araelectra-base-discriminator

|

5a6e787cdf3af77229d04a33f4a79c98fea35be1

|

2022-04-07T11:29:39.000Z

|

[

"pytorch",

"tf",

"tensorboard",

"electra",

"pretraining",

"ar",

"dataset:wikipedia",

"dataset:OSIAN",

"dataset:1.5B Arabic Corpus",

"dataset:OSCAR Arabic Unshuffled",

"arxiv:2012.15516",

"transformers"

] | null | false |

aubmindlab

| null |

aubmindlab/araelectra-base-discriminator

| 640 | null |

transformers

| 2,108 |

---

language: ar

datasets:

- wikipedia

- OSIAN

- 1.5B Arabic Corpus

- OSCAR Arabic Unshuffled

---

# AraELECTRA

<img src="https://raw.githubusercontent.com/aub-mind/arabert/master/AraELECTRA.png" width="100" align="left"/>

**ELECTRA** is a method for self-supervised language representation learning. It can be used to pre-train transformer networks using relatively little compute. ELECTRA models are trained to distinguish "real" input tokens from "fake" input tokens generated by another neural network, similar to the discriminator of a [GAN](https://arxiv.org/pdf/1406.2661.pdf). AraELECTRA achieves state-of-the-art results on Arabic QA datasets.

For a detailed description, please refer to the AraELECTRA paper [AraELECTRA: Pre-Training Text Discriminators for Arabic Language Understanding](https://arxiv.org/abs/2012.15516).

## How to use the discriminator in `transformers`

```python

from transformers import ElectraForPreTraining, ElectraTokenizerFast

import torch

discriminator = ElectraForPreTraining.from_pretrained("aubmindlab/araelectra-base-discriminator")

tokenizer = ElectraTokenizerFast.from_pretrained("aubmindlab/araelectra-base-discriminator")

sentence = ""

fake_sentence = ""

fake_tokens = tokenizer.tokenize(fake_sentence)

fake_inputs = tokenizer.encode(fake_sentence, return_tensors="pt")

discriminator_outputs = discriminator(fake_inputs)

predictions = torch.round((torch.sign(discriminator_outputs[0]) + 1) / 2)

[print("%7s" % token, end="") for token in fake_tokens]

[print("%7s" % int(prediction), end="") for prediction in predictions[0].tolist()]  # index 0 selects the single sequence in the batch

```
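The expression `torch.round((torch.sign(x) + 1) / 2)` in the example above turns each per-token logit into a 0/1 label: positive logits become 1 and all others become 0. A torch-free sketch of the same thresholding is shown below. It assumes that label 1 means the token is predicted to be replaced ("fake"); the `logits_to_labels` helper and the toy logits are hypothetical.

```python
def logits_to_labels(logits):
    """Return 1 (predicted "fake") for positive logits and 0 (predicted "real") otherwise."""
    return [1 if logit > 0 else 0 for logit in logits]


toy_logits = [-2.1, -0.3, 1.7, -4.0]  # hypothetical per-token discriminator logits
print(logits_to_labels(toy_logits))   # [0, 0, 1, 0]
```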

# Model

Model | HuggingFace Model Name | Size (MB/Params)|

---|:---:|:---:

AraELECTRA-base-generator | [araelectra-base-generator](https://huggingface.co/aubmindlab/araelectra-base-generator) | 227MB/60M |

AraELECTRA-base-discriminator | [araelectra-base-discriminator](https://huggingface.co/aubmindlab/araelectra-base-discriminator) | 516MB/135M |

# Compute

Model | Hardware | num of examples (seq len = 512) | Batch Size | Num of Steps | Time (in days)

---|:---:|:---:|:---:|:---:|:---:

AraELECTRA-base | TPUv3-8 | - | 256 | 2M | 24

# Dataset

The pretraining data used for the new **AraELECTRA** model is also used for **AraGPT2 and AraBERTv2**.

The dataset consists of 77GB or 200,095,961 lines or 8,655,948,860 words or 82,232,988,358 chars (before applying Farasa Segmentation)

For the new dataset, we added the unshuffled OSCAR corpus, after thoroughly filtering it, to the dataset previously used in AraBERTv1, but without the websites that we previously crawled:

- OSCAR unshuffled and filtered.

- [Arabic Wikipedia dump](https://archive.org/details/arwiki-20190201) from 2020/09/01

- [The 1.5B words Arabic Corpus](https://www.semanticscholar.org/paper/1.5-billion-words-Arabic-Corpus-El-Khair/f3eeef4afb81223df96575adadf808fe7fe440b4)

- [The OSIAN Corpus](https://www.aclweb.org/anthology/W19-4619)

- Assafir news articles. A huge thank you to Assafir for giving us the data.

# Preprocessing

It is recommended to apply our preprocessing function before training/testing on any dataset.

**Install farasapy to segment text for AraBERT v1 & v2 `pip install farasapy`**

```python

from arabert.preprocess import ArabertPreprocessor

model_name="araelectra-base"

arabert_prep = ArabertPreprocessor(model_name=model_name)

text = "ولن نبالغ إذا قلنا إن هاتف أو كمبيوتر المكتب في زمننا هذا ضروري"

arabert_prep.preprocess(text)

```

# TensorFlow 1.x models

**You can find the PyTorch, TF2 and TF1 models in HuggingFace's Transformer Library under the ```aubmindlab``` username**

- `wget https://huggingface.co/aubmindlab/MODEL_NAME/resolve/main/tf1_model.tar.gz` where `MODEL_NAME` is any model under the `aubmindlab` name

# If you used this model please cite us as :

```

@inproceedings{antoun-etal-2021-araelectra,

title = "{A}ra{ELECTRA}: Pre-Training Text Discriminators for {A}rabic Language Understanding",

author = "Antoun, Wissam and

Baly, Fady and

Hajj, Hazem",

booktitle = "Proceedings of the Sixth Arabic Natural Language Processing Workshop",

month = apr,

year = "2021",

address = "Kyiv, Ukraine (Virtual)",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2021.wanlp-1.20",

pages = "191--195",

}

```

# Acknowledgments

Thanks to TensorFlow Research Cloud (TFRC) for the free access to Cloud TPUs; we couldn't have done it without this program. Thanks also to the [AUB MIND Lab](https://sites.aub.edu.lb/mindlab/) members for their continuous support, and to [Yakshof](https://www.yakshof.com/#/) and Assafir for data and storage access. Further thanks to Habib Rahal (https://www.behance.net/rahalhabib) for putting a face to AraBERT.

# Contacts

**Wissam Antoun**: [Linkedin](https://www.linkedin.com/in/wissam-antoun-622142b4/) | [Twitter](https://twitter.com/wissam_antoun) | [Github](https://github.com/WissamAntoun)

**Fady Baly**: [Linkedin](https://www.linkedin.com/in/fadybaly/) | [Twitter](https://twitter.com/fadybaly) | [Github](https://github.com/fadybaly)

|

cambridgeltl/SapBERT-UMLS-2020AB-all-lang-from-XLMR-large

|

fbed3159be2544640d2300f9b8851243b1426aa9

|

2021-05-27T18:49:10.000Z

|

[

"pytorch",

"xlm-roberta",

"feature-extraction",

"arxiv:2010.11784",

"transformers"

] |

feature-extraction

| false |

cambridgeltl

| null |

cambridgeltl/SapBERT-UMLS-2020AB-all-lang-from-XLMR-large

| 640 | null |

transformers

| 2,109 |

---

language: multilingual

tags:

- biomedical

- lexical-semantics

- cross-lingual

datasets:

- UMLS

---

**[news]** A cross-lingual extension of SapBERT will appear in the main conference of **ACL 2021**! <br>

**[news]** SapBERT will appear in the conference proceedings of **NAACL 2021**!

### SapBERT-XLMR

SapBERT [(Liu et al. 2021)](https://arxiv.org/pdf/2010.11784.pdf) trained with [UMLS](https://www.nlm.nih.gov/research/umls/licensedcontent/umlsknowledgesources.html) 2020AB, using [xlm-roberta-large](https://huggingface.co/xlm-roberta-large) as the base model. Please use [CLS] as the representation of the input.
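Using [CLS] as the representation means taking the hidden vector at the first position of each sequence (for XLM-R tokenizers this position holds the `<s>` token). The sketch below shows that selection on a nested list shaped like a batch of final hidden states. The toy values and the `cls_representations` helper are hypothetical, and a real pipeline would apply the same indexing to the model's output tensor.

```python
def cls_representations(last_hidden_state):
    """Pick the vector at position 0 ([CLS]) from each sequence in a batch.

    last_hidden_state is a nested list shaped [batch][tokens][hidden].
    """
    return [sequence[0] for sequence in last_hidden_state]


# Toy batch: 2 sequences, 3 tokens each, hidden size 2 (hypothetical values).
toy_hidden = [
    [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]],
    [[0.7, 0.8], [0.9, 1.0], [1.1, 1.2]],
]
print(cls_representations(toy_hidden))  # [[0.1, 0.2], [0.7, 0.8]]
```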

### Citation

```bibtex

@inproceedings{liu2021learning,

title={Learning Domain-Specialised Representations for Cross-Lingual Biomedical Entity Linking},

author={Liu, Fangyu and Vuli{\'c}, Ivan and Korhonen, Anna and Collier, Nigel},

booktitle={Proceedings of ACL-IJCNLP 2021},

month = aug,

year={2021}

}

```

|

cahya/bert2gpt-indonesian-summarization

|

5edc210dbf2b92225b38aad9959648fd4ad3b3a5

|

2021-02-08T16:19:50.000Z

|

[

"pytorch",

"encoder-decoder",

"text2text-generation",

"id",

"dataset:id_liputan6",

"transformers",

"pipeline:summarization",

"summarization",

"bert2gpt",

"license:apache-2.0",

"autotrain_compatible"

] |

summarization

| false |

cahya

| null |

cahya/bert2gpt-indonesian-summarization

| 639 | 1 |

transformers

| 2,110 |

---

language: id

tags:

- pipeline:summarization

- summarization

- bert2gpt

datasets:

- id_liputan6

license: apache-2.0

---

# Indonesian BERT2GPT Summarization Model

Finetuned EncoderDecoder model using BERT-base and GPT2-small for Indonesian text summarization.

## Finetuning Corpus

The `bert2gpt-indonesian-summarization` model is based on `cahya/bert-base-indonesian-1.5G` and `cahya/gpt2-small-indonesian-522M` by [cahya](https://huggingface.co/cahya), finetuned using the [id_liputan6](https://huggingface.co/datasets/id_liputan6) dataset.

## Load Finetuned Model

```python

from transformers import BertTokenizer, EncoderDecoderModel

tokenizer = BertTokenizer.from_pretrained("cahya/bert2gpt-indonesian-summarization")

tokenizer.bos_token = tokenizer.cls_token

tokenizer.eos_token = tokenizer.sep_token

model = EncoderDecoderModel.from_pretrained("cahya/bert2gpt-indonesian-summarization")

```

## Code Sample

```python

from transformers import BertTokenizer, EncoderDecoderModel

tokenizer = BertTokenizer.from_pretrained("cahya/bert2gpt-indonesian-summarization")

tokenizer.bos_token = tokenizer.cls_token

tokenizer.eos_token = tokenizer.sep_token

model = EncoderDecoderModel.from_pretrained("cahya/bert2gpt-indonesian-summarization")

#

ARTICLE_TO_SUMMARIZE = ""

# generate summary

input_ids = tokenizer.encode(ARTICLE_TO_SUMMARIZE, return_tensors='pt')

summary_ids = model.generate(input_ids,

min_length=20,

max_length=80,

num_beams=10,

repetition_penalty=2.5,

length_penalty=1.0,

early_stopping=True,

no_repeat_ngram_size=2,

use_cache=True,

do_sample = True,

temperature = 0.8,

top_k = 50,

top_p = 0.95)

summary_text = tokenizer.decode(summary_ids[0], skip_special_tokens=True)

print(summary_text)

```

Output:

```

```

|

castorini/tct_colbert-msmarco

|

dab1fa241ee2cdf8d5db9dca5757d27b9a37fb3b

|

2021-04-21T01:29:30.000Z

|

[

"pytorch",

"arxiv:2010.11386",

"transformers"

] | null | false |

castorini

| null |

castorini/tct_colbert-msmarco

| 639 | null |

transformers

| 2,111 |

This model reproduces the TCT-ColBERT dense retrieval method described in the following paper:

> Sheng-Chieh Lin, Jheng-Hong Yang, and Jimmy Lin. [Distilling Dense Representations for Ranking using Tightly-Coupled Teachers.](https://arxiv.org/abs/2010.11386) arXiv:2010.11386, October 2020.

For more details on how to use it, check our experiments in [Pyserini](https://github.com/castorini/pyserini/blob/master/docs/experiments-tct_colbert.md)

|

patrickvonplaten/data2vec-audio-base-10m-4-gram

|

26f757bde83d157bdd8589ca9dec004938bfe5eb

|

2022-04-20T10:33:08.000Z

|

[

"pytorch",

"data2vec-audio",

"automatic-speech-recognition",

"en",

"dataset:librispeech_asr",

"arxiv:2202.03555",

"transformers",

"speech",

"license:apache-2.0"

] |

automatic-speech-recognition

| false |

patrickvonplaten

| null |

patrickvonplaten/data2vec-audio-base-10m-4-gram

| 638 | null |

transformers

| 2,112 |

---

language: en

datasets:

- librispeech_asr

tags:

- speech

license: apache-2.0

---

# Data2Vec-Audio-Base-10m

[Facebook's Data2Vec](https://ai.facebook.com/research/data2vec-a-general-framework-for-self-supervised-learning-in-speech-vision-and-language/)

The base model pretrained and fine-tuned on 10 minutes of Librispeech on 16kHz sampled speech audio. When using the model, make sure that your speech input is also sampled at 16kHz.
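
One way to check the sampling rate before transcription is sketched below. It uses only Python's standard `wave` module, so it assumes uncompressed WAV input. The helper names `is_16khz` and `make_silent_wav` are hypothetical and not part of the model's tooling.

```python
import io
import wave

TARGET_RATE = 16_000  # sampling rate the model expects, per the note above


def is_16khz(wav_file) -> bool:
    """Return True if the WAV file (path or file-like object) is sampled at 16 kHz."""
    with wave.open(wav_file, "rb") as wav:
        return wav.getframerate() == TARGET_RATE


def make_silent_wav(rate: int, seconds: float = 0.1) -> io.BytesIO:
    """Build a small in-memory mono 16-bit WAV file of silence, for demonstration only."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * int(rate * seconds))
    buffer.seek(0)
    return buffer


print(is_16khz(make_silent_wav(16_000)))
print(is_16khz(make_silent_wav(8_000)))
```

Audio recorded at another rate would need to be resampled before being passed to the processor.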

[Paper](https://arxiv.org/abs/2202.03555)

Authors: Alexei Baevski, Wei-Ning Hsu, Qiantong Xu, Arun Babu, Jiatao Gu, Michael Auli

**Abstract**

While the general idea of self-supervised learning is identical across modalities, the actual algorithms and objectives differ widely because they were developed with a single modality in mind. To get us closer to general self-supervised learning, we present data2vec, a framework that uses the same learning method for either speech, NLP or computer vision. The core idea is to predict latent representations of the full input data based on a masked view of the input in a self-distillation setup using a standard Transformer architecture. Instead of predicting modality-specific targets such as words, visual tokens or units of human speech which are local in nature, data2vec predicts contextualized latent representations that contain information from the entire input. Experiments on the major benchmarks of speech recognition, image classification, and natural language understanding demonstrate a new state of the art or competitive performance to predominant approaches.

The original model can be found under https://github.com/pytorch/fairseq/tree/main/examples/data2vec .

# Pre-Training method

For more information, please take a look at the [official paper](https://arxiv.org/abs/2202.03555).

# Usage

To transcribe audio files the model can be used as a standalone acoustic model as follows:

```python

from transformers import Wav2Vec2Processor, Data2VecAudioForCTC

from datasets import load_dataset

import torch

# load model and processor

processor = Wav2Vec2Processor.from_pretrained("facebook/data2vec-audio-base-10m")

model = Data2VecAudioForCTC.from_pretrained("facebook/data2vec-audio-base-10m")

# load dummy dataset and read soundfiles

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

# tokenize

input_values = processor(ds[0]["audio"]["array"], return_tensors="pt", padding="longest").input_values # Batch size 1

# retrieve logits

logits = model(input_values).logits

# take argmax and decode

predicted_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(predicted_ids)

```
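
The "take argmax and decode" step is greedy CTC decoding. Conceptually, consecutive repeated frame predictions are merged and blank tokens are then dropped. The sketch below illustrates that idea on plain integer ids. It is not the processor's actual implementation, and the blank id of 0 is an assumption made for the example (the real id comes from the tokenizer's vocabulary).

```python
from itertools import groupby


def ctc_greedy_collapse(frame_ids, blank_id=0):
    """Collapse per-frame argmax ids: merge consecutive repeats, then drop blanks."""
    merged = [token_id for token_id, _ in groupby(frame_ids)]
    return [token_id for token_id in merged if token_id != blank_id]


# Made-up frame-level predictions; 0 stands for the CTC blank token.
frames = [0, 5, 5, 0, 5, 7, 7, 7, 0, 0]
print(ctc_greedy_collapse(frames))  # [5, 5, 7]
```

The blank between the two runs of `5` is what lets the same token appear twice in a row in the output.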

|

Maklygin/mBert-relation-extraction-FT

|

63e8e267f4fbcbc4c5c800e33880aac64a3b2807

|

2022-06-08T10:38:59.000Z

|

[

"pytorch",

"bert",

"text-classification",

"transformers"

] |

text-classification

| false |

Maklygin

| null |

Maklygin/mBert-relation-extraction-FT

| 638 | null |

transformers

| 2,113 |

Entry not found

|

sonoisa/t5-base-japanese-question-generation

|

a529078d993205a1c61e8a7be08f6f640de10243

|

2022-03-11T02:50:33.000Z

|

[

"pytorch",

"t5",

"text2text-generation",

"ja",

"transformers",

"seq2seq",

"license:cc-by-sa-4.0",

"autotrain_compatible"

] |

text2text-generation

| false |

sonoisa

| null |

sonoisa/t5-base-japanese-question-generation

| 637 | null |

transformers

| 2,114 |

---

language: ja

tags:

- t5

- text2text-generation

- seq2seq

license: cc-by-sa-4.0

widget:

- text: "answer: アマビエ context: アマビエ(歴史的仮名遣:アマビヱ)は、日本に伝わる半人半魚の妖怪。光輝く姿で海中から現れ、豊作や疫病などの予言をすると伝えられている。江戸時代後期の肥後国(現・熊本県)に現れたという。この話は挿図付きで瓦版に取り上げられ、遠く江戸にまで伝えられた。弘化3年4月中旬(1846年5月上旬)のこと、毎夜、海中に光る物体が出没していたため、役人が赴いたところ、それが姿を現した。姿形について言葉では書き留められていないが、挿図が添えられている。 その者は、役人に対して「私は海中に住むアマビエと申す者なり」と名乗り、「当年より6ヶ年の間は諸国で豊作が続くが疫病も流行する。私の姿を描いた絵を人々に早々に見せよ。」と予言めいたことを告げ、海の中へと帰って行った。年代が特定できる最古の例は、天保15年(1844年)の越後国(現・新潟県)に出現した「海彦(読みの推定:あまびこ)」を記述した瓦版(『坪川本』という。福井県立図書館所蔵)、その挿絵に描かれた海彦は、頭からいきなり3本の足が生えた(胴体のない)形状で、人間のような耳をし、目はまるく、口が突出している。その年中に日本人口の7割の死滅を予言し、その像の絵札による救済を忠告している。"

---

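# A model that generates a question given an answer and the paragraph containing that answer

SEE: https://github.com/sonoisa/deep-question-generation

## Overview of how this model was built

1. Machine-translate [SQuAD 1.1](https://rajpurkar.github.io/SQuAD-explorer/) into Japanese and cleanse invalid data (about half of the data remains valid).
   This yields triples of (context containing the answer, question, answer).
2. Fine-tune the [Japanese T5 model](https://huggingface.co/sonoisa/t5-base-japanese) with the following settings:
   * Input: "answer: {answer} content: {context containing the answer}"
   * Output: "{question}"
   * Hyperparameters
     * Maximum input tokens: 512
     * Maximum output tokens: 64
     * Optimizer: AdaFactor
     * Learning rate: 0.001 (constant)
     * Batch size: 128
     * Steps: 2500 (a checkpoint was saved every 500 steps; after quantitative and qualitative evaluation, the checkpoint at step 2500 was adopted)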
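
As a minimal sketch, the input string from step 2 can be assembled as follows. The function name is hypothetical. The field label defaults to `content` as written in step 2, while the widget example in the front matter uses `context`, so the label is left configurable here.

```python
def build_question_generation_input(answer: str, context: str, context_label: str = "content") -> str:
    """Format an (answer, context) pair into the single string the model takes as input."""
    return f"answer: {answer} {context_label}: {context}"


example = build_question_generation_input(
    answer="アマビエ",
    context="アマビエは、日本に伝わる半人半魚の妖怪。",
)
print(example)
```

The resulting string would then be tokenized and truncated to the 512-token input limit listed above.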

|

sangrimlee/bert-base-multilingual-cased-korquad

|

be8790035bd1fed7074dd2efdf4cb97ebd8e225d

|

2021-05-20T04:47:57.000Z

|

[

"pytorch",

"jax",

"bert",

"question-answering",

"transformers",

"autotrain_compatible"

] |

question-answering

| false |

sangrimlee

| null |

sangrimlee/bert-base-multilingual-cased-korquad

| 635 | null |

transformers

| 2,115 |

Entry not found

|

ckiplab/albert-base-chinese-ner

|

ef1b19225fc53f10fafd8bfdefb41ac2f2f4e177

|

2022-05-10T03:28:08.000Z

|

[

"pytorch",

"albert",

"token-classification",

"zh",

"transformers",

"license:gpl-3.0",

"autotrain_compatible"

] |

token-classification

| false |

ckiplab

| null |

ckiplab/albert-base-chinese-ner

| 634 | 0 |

transformers

| 2,116 |

---

language:

- zh

thumbnail: https://ckip.iis.sinica.edu.tw/files/ckip_logo.png

tags:

- pytorch

- token-classification

- albert

- zh

license: gpl-3.0

---

# CKIP ALBERT Base Chinese

This project provides traditional Chinese transformers models (including ALBERT, BERT, GPT2) and NLP tools (including word segmentation, part-of-speech tagging, named entity recognition).

## Homepage

- https://github.com/ckiplab/ckip-transformers

## Contributors

- [Mu Yang](https://muyang.pro) at [CKIP](https://ckip.iis.sinica.edu.tw) (Author & Maintainer)

## Usage

Please use BertTokenizerFast as tokenizer instead of AutoTokenizer.

```python

from transformers import (

BertTokenizerFast,

AutoModel,

)

tokenizer = BertTokenizerFast.from_pretrained('bert-base-chinese')

model = AutoModel.from_pretrained('ckiplab/albert-base-chinese-ner')

```

For full usage and more information, please refer to https://github.com/ckiplab/ckip-transformers.

|

mpariente/DPRNNTasNet-ks2_WHAM_sepclean

|

548f4f1c9476ed0af6b4bea3403247c71d5d7d46

|

2021-09-23T16:12:22.000Z

|

[

"pytorch",

"dataset:wham",

"dataset:sep_clean",

"asteroid",

"audio",

"DPRNNTasNet",

"audio-to-audio",

"license:cc-by-sa-4.0"

] |

audio-to-audio

| false |

mpariente

| null |

mpariente/DPRNNTasNet-ks2_WHAM_sepclean

| 634 | 4 |

asteroid

| 2,117 |

---

tags:

- asteroid

- audio

- DPRNNTasNet

- audio-to-audio

datasets:

- wham

- sep_clean

license: cc-by-sa-4.0

---

## Asteroid model `mpariente/DPRNNTasNet-ks2_WHAM_sepclean`

Imported from [Zenodo](https://zenodo.org/record/3862942)

### Description:

This model was trained by Manuel Pariente

using the wham/DPRNN recipe in [Asteroid](https://github.com/asteroid-team/asteroid).

It was trained on the `sep_clean` task of the WHAM! dataset.

### Training config:

```yaml

data:
  mode: min
  nondefault_nsrc: None
  sample_rate: 8000
  segment: 2.0
  task: sep_clean
  train_dir: data/wav8k/min/tr
  valid_dir: data/wav8k/min/cv
filterbank:
  kernel_size: 2
  n_filters: 64
  stride: 1
main_args:
  exp_dir: exp/train_dprnn_new/
  gpus: -1
  help: None
masknet:
  bidirectional: True
  bn_chan: 128
  chunk_size: 250
  dropout: 0
  hid_size: 128
  hop_size: 125
  in_chan: 64
  mask_act: sigmoid
  n_repeats: 6
  n_src: 2
  out_chan: 64
optim:
  lr: 0.001
  optimizer: adam
  weight_decay: 1e-05
positional arguments:
training:
  batch_size: 3
  early_stop: True
  epochs: 200
  gradient_clipping: 5
  half_lr: True
  num_workers: 8

```

### Results:

```yaml

si_sdr: 19.316743490695334

si_sdr_imp: 19.317895273889842

sdr: 19.68085347190952

sdr_imp: 19.5298092932871

sir: 30.362213998701232

sir_imp: 30.21116982007881

sar: 20.15553251343315

sar_imp: -129.02091762351188

stoi: 0.97772664309074

stoi_imp: 0.23968091518217424

```

### License notice:

This work "DPRNNTasNet-ks2_WHAM_sepclean" is a derivative of [CSR-I (WSJ0) Complete](https://catalog.ldc.upenn.edu/LDC93S6A)

by [LDC](https://www.ldc.upenn.edu/), used under [LDC User Agreement for

Non-Members](https://catalog.ldc.upenn.edu/license/ldc-non-members-agreement.pdf) (Research only).

"DPRNNTasNet-ks2_WHAM_sepclean" is licensed under [Attribution-ShareAlike 3.0 Unported](https://creativecommons.org/licenses/by-sa/3.0/)

by Manuel Pariente.

|

skytnt/gpt2-japanese-lyric-small

|

72268b60df9c19098f3acf526221d06838d317f3

|

2022-07-06T05:06:29.000Z

|

[

"pytorch",

"tf",

"gpt2",

"text-generation",

"ja",

"transformers",

"japanese",

"lm",

"nlp",

"license:mit"

] |

text-generation

| false |

skytnt

| null |

skytnt/gpt2-japanese-lyric-small

| 634 | 1 |

transformers

| 2,118 |

---

language: ja

tags:

- ja

- japanese

- gpt2

- text-generation

- lm

- nlp

license: mit

widget:

- text: "桜が咲く"

---

# Japanese GPT2 Lyric Model

## Model description

The model is used to generate Japanese lyrics.

You can try it on my website [https://lyric.fab.moe/](https://lyric.fab.moe/#/)

## How to use

```python

import torch
from transformers import T5Tokenizer, GPT2LMHeadModel

# run on GPU when available, otherwise fall back to CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

tokenizer = T5Tokenizer.from_pretrained("skytnt/gpt2-japanese-lyric-small")
model = GPT2LMHeadModel.from_pretrained("skytnt/gpt2-japanese-lyric-small").to(device)


def gen_lyric(prompt_text: str):
    # line breaks in the prompt are encoded as a literal backslash-n sequence
    prompt_text = "<s>" + prompt_text.replace("\n", "\\n ")
    prompt_tokens = tokenizer.tokenize(prompt_text)
    prompt_token_ids = tokenizer.convert_tokens_to_ids(prompt_tokens)
    prompt_tensor = torch.LongTensor(prompt_token_ids).to(device)
    prompt_tensor = prompt_tensor.view(1, -1)
    # model forward
    output_sequences = model.generate(
        input_ids=prompt_tensor,
        max_length=512,
        top_p=0.95,
        top_k=40,
        temperature=1.0,
        do_sample=True,
        early_stopping=True,
        bos_token_id=tokenizer.bos_token_id,
        eos_token_id=tokenizer.eos_token_id,
        pad_token_id=tokenizer.pad_token_id,
        num_return_sequences=1
    )
    # convert model outputs to readable sentence
    generated_sequence = output_sequences.tolist()[0]
    generated_tokens = tokenizer.convert_ids_to_tokens(generated_sequence)
    generated_text = tokenizer.convert_tokens_to_string(generated_tokens)
    generated_text = "\n".join([s.strip() for s in generated_text.split('\\n')]).replace(' ', '\u3000').replace('<s>', '').replace('</s>', '\n\n---end---')
    return generated_text


print(gen_lyric("桜が咲く"))

```

## Training data

The [training data](https://github.com/SkyTNT/gpt2-japanese-lyric/raw/main/lyric_clean.pkl) contains 143,587 Japanese lyrics collected from [uta-net](https://www.uta-net.com/) by [lyric_download](https://github.com/SkyTNT/lyric_downlowd).

|

huggingtweets/bestmusiclyric

|

3f3c6a02e8f161cdb28ac5a33b79f25e967300df

|

2021-05-21T20:32:09.000Z

|

[

"pytorch",

"jax",

"gpt2",

"text-generation",

"en",

"transformers",

"huggingtweets"

] |

text-generation

| false |

huggingtweets

| null |

huggingtweets/bestmusiclyric

| 633 | null |

transformers

| 2,119 |

---

language: en

thumbnail: https://www.huggingtweets.com/bestmusiclyric/1620313468667/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div>

<div style="width: 132px; height:132px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/2113290180/images-1_400x400.jpeg')">

</div>

<div style="margin-top: 8px; font-size: 19px; font-weight: 800">Best Music Lyric 🤖 AI Bot </div>

<div style="font-size: 15px">@bestmusiclyric bot</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on [@bestmusiclyric's tweets](https://twitter.com/bestmusiclyric).

| Data | Quantity |
| --- | --- |
| Tweets downloaded | 3244 |
| Retweets | 1060 |
| Short tweets | 853 |
| Tweets kept | 1331 |
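
The counts in the table are consistent with retweets and short tweets being filtered out before training. The snippet below is only a sanity check on the table's arithmetic, not the project's actual filtering code, and the function name is hypothetical.

```python
def tweets_kept(downloaded: int, retweets: int, short_tweets: int) -> int:
    """Tweets remaining after retweets and short tweets are discarded."""
    return downloaded - retweets - short_tweets


print(tweets_kept(downloaded=3244, retweets=1060, short_tweets=853))  # 1331
```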

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/1ilv29ew/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @bestmusiclyric's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/1wqx12s6) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/1wqx12s6/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/bestmusiclyric')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

razent/cotext-1-ccg

|

4dd36fb68b1e40fcc17877a69cf491731ddf82b3

|

2022-03-15T03:03:39.000Z

|

[

"pytorch",

"tf",

"jax",

"t5",

"feature-extraction",

"code",

"dataset:code_search_net",

"transformers"

] |

feature-extraction

| false |

razent

| null |

razent/cotext-1-ccg

| 633 | null |

transformers

| 2,120 |

---

language: code

datasets:

- code_search_net

---

# CoText (1-CCG)

## Introduction

Paper: [CoTexT: Multi-task Learning with Code-Text Transformer](https://aclanthology.org/2021.nlp4prog-1.5.pdf)

Authors: _Long Phan, Hieu Tran, Daniel Le, Hieu Nguyen, James Annibal, Alec Peltekian, Yanfang Ye_

## How to use

Supported languages:

```shell

"go"

"java"

"javascript"

"php"

"python"

"ruby"

```

For more details, do check out [our Github repo](https://github.com/justinphan3110/CoTexT).

```python

import torch
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

# run on GPU when available, otherwise fall back to CPU
device = "cuda" if torch.cuda.is_available() else "cpu"

tokenizer = AutoTokenizer.from_pretrained("razent/cotext-1-ccg")
model = AutoModelForSeq2SeqLM.from_pretrained("razent/cotext-1-ccg").to(device)

sentence = "def add(a, b): return a + b"
# prefix the input with the name of its programming language
text = "python: " + sentence + " </s>"

# padding="max_length" replaces the deprecated pad_to_max_length=True
encoding = tokenizer.encode_plus(text, padding="max_length", return_tensors="pt")
input_ids, attention_masks = encoding["input_ids"].to(device), encoding["attention_mask"].to(device)

outputs = model.generate(
    input_ids=input_ids, attention_mask=attention_masks,
    max_length=256,
    early_stopping=True
)

for output in outputs:
    line = tokenizer.decode(output, skip_special_tokens=True, clean_up_tokenization_spaces=True)
    print(line)

```

## Citation

```bibtex

@inproceedings{phan-etal-2021-cotext,

title = "{C}o{T}ex{T}: Multi-task Learning with Code-Text Transformer",

author = "Phan, Long and

Tran, Hieu and

Le, Daniel and

Nguyen, Hieu and

Annibal, James and

Peltekian, Alec and

Ye, Yanfang",

booktitle = "Proceedings of the 1st Workshop on Natural Language Processing for Programming (NLP4Prog 2021)",

month = aug,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.nlp4prog-1.5",

doi = "10.18653/v1/2021.nlp4prog-1.5",

pages = "40--47"

}

```

|

Helsinki-NLP/opus-mt-mt-en

|

ba70458e94b4532f79e8ae01fa78ccbcab1cc143

|

2021-09-10T13:58:23.000Z

|

[

"pytorch",

"tf",

"jax",

"marian",

"text2text-generation",

"mt",

"en",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] |

translation

| false |

Helsinki-NLP

| null |

Helsinki-NLP/opus-mt-mt-en

| 631 | null |

transformers

| 2,121 |

---

tags:

- translation

license: apache-2.0

---

### opus-mt-mt-en

* source languages: mt

* target languages: en

* OPUS readme: [mt-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/mt-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-16.zip](https://object.pouta.csc.fi/OPUS-MT-models/mt-en/opus-2020-01-16.zip)

* test set translations: [opus-2020-01-16.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/mt-en/opus-2020-01-16.test.txt)

* test set scores: [opus-2020-01-16.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/mt-en/opus-2020-01-16.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| JW300.mt.en | 49.0 | 0.655 |
| Tatoeba.mt.en | 53.3 | 0.685 |

|

IDEA-CCNL/Erlangshen-MegatronBert-1.3B-NLI

|

33f5a544081f90a7a3ce1f666b08b65d12398d45

|

2022-05-16T06:06:42.000Z

|

[

"pytorch",

"megatron-bert",

"text-classification",

"zh",

"transformers",

"bert",

"NLU",

"NLI",

"license:apache-2.0"

] |

text-classification

| false |

IDEA-CCNL

| null |

IDEA-CCNL/Erlangshen-MegatronBert-1.3B-NLI

| 631 | null |

transformers

| 2,122 |

---

language:

- zh

license: apache-2.0

tags:

- bert

- NLU

- NLI

inference: true

widget:

- text: "今天心情不好[SEP]今天很开心"

---

# Erlangshen-MegatronBert-1.3B-NLI model (Chinese), one model of [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM).

We collected 4 NLI (Natural Language Inference) datasets in the Chinese domain for fine-tuning, with a total of 1,014,787 samples. Our model is mainly based on [roberta](https://huggingface.co/hfl/chinese-roberta-wwm-ext).

## Usage

```python

from transformers import AutoModelForSequenceClassification

from transformers import BertTokenizer

import torch

tokenizer=BertTokenizer.from_pretrained('IDEA-CCNL/Erlangshen-MegatronBert-1.3B-NLI')

model=AutoModelForSequenceClassification.from_pretrained('IDEA-CCNL/Erlangshen-MegatronBert-1.3B-NLI')

texta='今天的饭不好吃'

textb='今天心情不好'

output=model(torch.tensor([tokenizer.encode(texta,textb)]))

print(torch.nn.functional.softmax(output.logits,dim=-1))

```
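
The final `softmax` call turns the model's raw logits into class probabilities. The pure-Python sketch below shows that computation on made-up logits for a single sentence pair. Three output classes are assumed here for illustration, and the mapping from index to NLI label is not stated in this card, so it should be read from `model.config.id2label` rather than guessed.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(logits)
    exps = [math.exp(x - highest) for x in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for one sentence pair; not real model output.
example_logits = [2.0, 0.5, -1.0]
probs = softmax(example_logits)
predicted_index = max(range(len(probs)), key=probs.__getitem__)
print(probs, predicted_index)
```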

## Scores on downstream Chinese tasks (without any data augmentation)

| Model | cmnli | ocnli | snli |
| :--------: | :-----: | :----: | :-----: |
| Erlangshen-Roberta-110M-NLI | 80.83 | 78.56 | 88.01 |
| Erlangshen-Roberta-330M-NLI | 82.25 | 79.82 | 88 |
| Erlangshen-MegatronBert-1.3B-NLI | 84.52 | 84.17 | 88.67 |

## Citation

If you find this resource useful, please cite the following website in your paper.

```bibtex

@misc{Fengshenbang-LM,

title={Fengshenbang-LM},

author={IDEA-CCNL},

year={2021},

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

}

```

|

sshleifer/distilbart-xsum-9-6

|

28dfbe915f475094642bf8c7dba2ba8a8e85b432

|

2021-06-14T08:26:18.000Z

|

[

"pytorch",

"jax",

"bart",

"text2text-generation",

"en",

"dataset:cnn_dailymail",

"dataset:xsum",

"transformers",

"summarization",

"license:apache-2.0",

"autotrain_compatible"

] |

summarization

| false |

sshleifer

| null |

sshleifer/distilbart-xsum-9-6

| 630 | null |

transformers

| 2,123 |

---

language: en

tags:

- summarization

license: apache-2.0

datasets:

- cnn_dailymail

- xsum

thumbnail: https://huggingface.co/front/thumbnails/distilbart_medium.png

---

### Usage

This checkpoint should be loaded into `BartForConditionalGeneration.from_pretrained`. See the [BART docs](https://huggingface.co/transformers/model_doc/bart.html?#transformers.BartForConditionalGeneration) for more information.

### Metrics for DistilBART models

| Model Name | MM Params | Inference Time (MS) | Speedup | Rouge 2 | Rouge-L |
|:---------------------------|------------:|----------------------:|----------:|----------:|----------:|
| distilbart-xsum-12-1 | 222 | 90 | 2.54 | 18.31 | 33.37 |
| distilbart-xsum-6-6 | 230 | 132 | 1.73 | 20.92 | 35.73 |
| distilbart-xsum-12-3 | 255 | 106 | 2.16 | 21.37 | 36.39 |
| distilbart-xsum-9-6 | 268 | 136 | 1.68 | 21.72 | 36.61 |
| bart-large-xsum (baseline) | 406 | 229 | 1 | 21.85 | 36.50 |
| distilbart-xsum-12-6 | 306 | 137 | 1.68 | 22.12 | 36.99 |
| bart-large-cnn (baseline) | 406 | 381 | 1 | 21.06 | 30.63 |
| distilbart-12-3-cnn | 255 | 214 | 1.78 | 20.57 | 30.00 |
| distilbart-12-6-cnn | 306 | 307 | 1.24 | 21.26 | 30.59 |
| distilbart-6-6-cnn | 230 | 182 | 2.09 | 20.17 | 29.70 |
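
The Speedup column appears to be the matching baseline's inference time divided by each model's inference time. This reading is inferred from the numbers in the table and is not stated in the card. Some rows differ in the last digit (for example 229 / 137 ≈ 1.67 against the listed 1.68), presumably because the listed times are rounded. A small sketch, with a hypothetical function name:

```python
def speedup(baseline_ms: float, model_ms: float) -> float:
    """Speedup relative to the baseline, rounded to two decimals."""
    return round(baseline_ms / model_ms, 2)


print(speedup(229, 90))   # distilbart-xsum-12-1 against bart-large-xsum
print(speedup(381, 182))  # distilbart-6-6-cnn against bart-large-cnn
```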

|

akdeniz27/deberta-v2-xlarge-cuad

|

7713ed1048a69873812a8fc431669d96ea5315bf

|

2021-11-14T08:43:11.000Z

|

[

"pytorch",

"deberta-v2",

"question-answering",

"en",

"dataset:cuad",

"transformers",

"autotrain_compatible"

] |

question-answering

| false |

akdeniz27

| null |

akdeniz27/deberta-v2-xlarge-cuad

| 629 | null |

transformers

| 2,124 |

---

language: en

datasets:

- cuad

---

# DeBERTa v2 XLarge Model fine-tuned with CUAD dataset

This model is a version of "DeBERTa v2 XLarge" fine-tuned on the CUAD dataset: https://huggingface.co/datasets/cuad

Link for model checkpoint: https://github.com/TheAtticusProject/cuad

For the use of the model with CUAD: https://github.com/marshmellow77/cuad-demo

and https://huggingface.co/spaces/akdeniz27/contract-understanding-atticus-dataset-demo

|

jason9693/SoongsilBERT-base-beep

|

83bf1d729a5cf0d1e11baf3920bba654e362a186

|

2022-04-16T14:26:17.000Z

|

[

"pytorch",

"jax",

"roberta",

"text-classification",

"ko",

"dataset:kor_hate",

"transformers"

] |

text-classification

| false |

jason9693

| null |

jason9693/SoongsilBERT-base-beep

| 629 | null |

transformers

| 2,125 |

---

language: ko

widget:

- text: "응 어쩔티비~"

datasets:

- kor_hate

---

# Finetuning

## Result

### Base Model

| | Size | **NSMC**<br/>(acc) | **Naver NER**<br/>(F1) | **PAWS**<br/>(acc) | **KorNLI**<br/>(acc) | **KorSTS**<br/>(spearman) | **Question Pair**<br/>(acc) | **KorQuaD (Dev)**<br/>(EM/F1) | **Korean-Hate-Speech (Dev)**<br/>(F1) |
| :-------------------- | :---: | :----------------: | :--------------------: | :----------------: | :------------------: | :-----------------------: | :-------------------------: | :---------------------------: | :-----------------------------------: |
| KoBERT | 351M | 89.59 | 87.92 | 81.25 | 79.62 | 81.59 | 94.85 | 51.75 / 79.15 | 66.21 |
| XLM-Roberta-Base | 1.03G | 89.03 | 86.65 | 82.80 | 80.23 | 78.45 | 93.80 | 64.70 / 88.94 | 64.06 |
| HanBERT | 614M | 90.06 | 87.70 | 82.95 | 80.32 | 82.73 | 94.72 | 78.74 / 92.02 | 68.32 |
| KoELECTRA-Base-v3 | 431M | 90.63 | 88.11 | 84.45 | 82.24 | 85.53 | 95.25 | 84.83 / 93.45 | 67.61 |
| Soongsil-BERT | 370M | **91.2** | - | - | - | 76 | 94 | - | **69** |

### Small Model

| | Size | **NSMC**<br/>(acc) | **Naver NER**<br/>(F1) | **PAWS**<br/>(acc) | **KorNLI**<br/>(acc) | **KorSTS**<br/>(spearman) | **Question Pair**<br/>(acc) | **KorQuaD (Dev)**<br/>(EM/F1) | **Korean-Hate-Speech (Dev)**<br/>(F1) |
| :--------------------- | :--: | :----------------: | :--------------------: | :----------------: | :------------------: | :-----------------------: | :-------------------------: | :---------------------------: | :-----------------------------------: |
| DistilKoBERT | 108M | 88.60 | 84.65 | 60.50 | 72.00 | 72.59 | 92.48 | 54.40 / 77.97 | 60.72 |
| KoELECTRA-Small-v3 | 54M | 89.36 | 85.40 | 77.45 | 78.60 | 80.79 | 94.85 | 82.11 / 91.13 | 63.07 |
| Soongsil-BERT | 213M | **90.7** | 84 | 69.1 | 76 | - | 92 | - | **66** |

## Reference

- [Transformers Examples](https://github.com/huggingface/transformers/blob/master/examples/README.md)

- [NSMC](https://github.com/e9t/nsmc)

- [Naver NER Dataset](https://github.com/naver/nlp-challenge)

- [PAWS](https://github.com/google-research-datasets/paws)

- [KorNLI/KorSTS](https://github.com/kakaobrain/KorNLUDatasets)

- [Question Pair](https://github.com/songys/Question_pair)

- [KorQuad](https://korquad.github.io/category/1.0_KOR.html)

- [Korean Hate Speech](https://github.com/kocohub/korean-hate-speech)

- [KoELECTRA](https://github.com/monologg/KoELECTRA)

- [KoBERT](https://github.com/SKTBrain/KoBERT)

- [HanBERT](https://github.com/tbai2019/HanBert-54k-N)

- [HanBert Transformers](https://github.com/monologg/HanBert-Transformers)

|

textattack/albert-base-v2-MRPC

|

86655e0ce120f86e1e60ce94a602908bb9a4e128

|

2020-07-06T16:29:43.000Z

|

[

"pytorch",

"albert",

"text-classification",

"transformers"

] |

text-classification

| false |

textattack

| null |

textattack/albert-base-v2-MRPC

| 628 | null |

transformers

| 2,126 |

## TextAttack Model Card

This `albert-base-v2` model was fine-tuned for sequence classification using TextAttack

and the glue dataset loaded using the `nlp` library. The model was fine-tuned

for 5 epochs with a batch size of 32, a learning

rate of 2e-05, and a maximum sequence length of 128.

Since this was a classification task, the model was trained with a cross-entropy loss function.

The best score the model achieved on this task was 0.8970588235294118, as measured by the

eval set accuracy, found after 4 epochs.
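
For reference, the cross-entropy loss mentioned above is, for a single example, the negative log of the probability the model assigns to the correct class. The sketch below uses made-up probabilities for a two-class task and is not TextAttack's training code.

```python
import math


def cross_entropy(probabilities, true_label):
    """Cross-entropy loss for one example: negative log-probability of the true class."""
    return -math.log(probabilities[true_label])


# Made-up predicted probabilities for a two-class task.
print(cross_entropy([0.9, 0.1], true_label=0))  # small loss: confident and correct
print(cross_entropy([0.9, 0.1], true_label=1))  # large loss: confident and wrong
```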

For more information, check out [TextAttack on Github](https://github.com/QData/TextAttack).

|

mrm8488/codebert-base-finetuned-stackoverflow-ner

|

dbffc9f8716da2766cfda31d269e409c49fb54d2

|

2021-05-20T18:21:42.000Z

|

[

"pytorch",

"jax",

"roberta",

"token-classification",

"transformers",

"autotrain_compatible"

] |

token-classification

| false |

mrm8488

| null |

mrm8488/codebert-base-finetuned-stackoverflow-ner

| 627 | 3 |

transformers

| 2,127 |

Entry not found

|

manishiitg/distilbert-resume-parts-classify

|

5877484c10f0325f96e699286ada2afe2ee939ad

|

2020-12-09T13:59:30.000Z

|

[

"pytorch",

"distilbert",

"text-classification",

"transformers"

] |

text-classification

| false |

manishiitg

| null |

manishiitg/distilbert-resume-parts-classify

| 624 | 1 |

transformers

| 2,128 |

Entry not found

|

facebook/data2vec-vision-base-ft1k

|

9c7678bab4dcde1342510d62f201db7f8e98e6ff

|

2022-05-03T15:08:31.000Z

|

[

"pytorch",

"tf",

"data2vec-vision",

"image-classification",

"dataset:imagenet",

"dataset:imagenet-1k",

"arxiv:2202.03555",

"arxiv:2106.08254",

"transformers",

"vision",

"license:apache-2.0"

] |

image-classification

| false |

facebook

| null |

facebook/data2vec-vision-base-ft1k

| 624 | null |

transformers

| 2,129 |

---

license: apache-2.0

tags:

- image-classification

- vision

datasets:

- imagenet

- imagenet-1k

---

# Data2Vec-Vision (base-sized model, fine-tuned on ImageNet-1k)

BEiT model pre-trained in a self-supervised fashion and fine-tuned on ImageNet-1k (1.2 million images, 1000 classes) at resolution 224x224. It was introduced in the paper [data2vec: A General Framework for Self-supervised Learning in Speech, Vision and Language](https://arxiv.org/abs/2202.03555) by Alexei Baevski, Wei-Ning Hsu, Qiantong Xu, Arun Babu, Jiatao Gu, Michael Auli and first released in [this repository](https://github.com/facebookresearch/data2vec_vision/tree/main/beit).

Disclaimer: The Facebook team releasing this model did not write a model card for it, so this model card has been written by the Hugging Face team.

## Pre-Training method

For more information, please take a look at the [official paper](https://arxiv.org/abs/2202.03555).

## Abstract

*While the general idea of self-supervised learning is identical across modalities, the actual algorithms and objectives differ widely because they were developed with a single modality in mind. To get us closer to general self-supervised learning, we present data2vec, a framework that uses the same learning method for either speech, NLP or computer vision. The core idea is to predict latent representations of the full input data based on a masked view of the input in a self-distillation setup using a standard Transformer architecture. Instead of predicting modality-specific targets such as words, visual tokens or units of human speech which are local in nature, data2vec predicts contextualized latent representations that contain information from the entire input. Experiments on the major benchmarks of speech recognition, image classification, and natural language understanding demonstrate a new state of the art or competitive performance to predominant approaches.*

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=data2vec-vision) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import BeitFeatureExtractor, Data2VecVisionForImageClassification

from PIL import Image

import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = BeitFeatureExtractor.from_pretrained('facebook/data2vec-vision-base-ft1k')

model = Data2VecVisionForImageClassification.from_pretrained('facebook/data2vec-vision-base-ft1k')

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

```

Currently, both the feature extractor and model support PyTorch.
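The snippet above reports only the single most likely class. If you also want probabilities or the top few candidates, the logits can be passed through a softmax and ranked. The following is a minimal sketch in plain Python; the helper names `softmax` and `top_k` and the toy logits are illustrative assumptions, not part of the `transformers` API.

```python
import math

def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(logits, k=5):
    """Return the k most likely (class_index, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]

# Hypothetical logits for a 4-class toy problem (not real model output).
example_logits = [0.1, 2.0, -1.0, 0.5]
for idx, prob in top_k(example_logits, k=2):
    print(idx, round(prob, 3))
```

With the real model, you could pass `outputs.logits[0].tolist()` to `top_k` and map each returned index to a label through `model.config.id2label`.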

## Training data

The BEiT model was pretrained and fine-tuned on [ImageNet-1k](http://www.image-net.org/), a dataset consisting of 1.2 million images and 1,000 classes.

## Training procedure

### Preprocessing

The exact details of preprocessing of images during training/validation can be found [here](https://github.com/microsoft/unilm/blob/master/beit/datasets.py).

Images are resized/rescaled to the same resolution (224x224) and normalized across the RGB channels with mean (0.5, 0.5, 0.5) and standard deviation (0.5, 0.5, 0.5).
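To make the normalization step concrete, here is a rough NumPy sketch of what it does to pixel values. It assumes the image has already been resized to 224x224 and that the output uses a channels-first layout, as is common for PyTorch models; the function name `normalize` is hypothetical, and in practice the feature extractor performs this step for you.

```python
import numpy as np

# Per-channel statistics stated above.
MEAN = np.array([0.5, 0.5, 0.5], dtype=np.float32)
STD = np.array([0.5, 0.5, 0.5], dtype=np.float32)

def normalize(image_uint8):
    """Rescale an HxWx3 uint8 RGB image to [0, 1], then normalize per channel."""
    scaled = image_uint8.astype(np.float32) / 255.0
    normalized = (scaled - MEAN) / STD      # broadcasts over the channel axis
    return normalized.transpose(2, 0, 1)    # assumed channels-first layout (3, H, W)

# Hypothetical input: an already-resized 224x224 image with random pixel values.
rng = np.random.default_rng(0)
dummy = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)
pixel_values = normalize(dummy)
print(pixel_values.shape, pixel_values.min(), pixel_values.max())
```

With a mean and standard deviation of 0.5, pixel values end up in the range [-1, 1]: black maps to -1 and white maps to 1.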

### Pretraining

For all pre-training related hyperparameters, we refer to the [original paper](https://arxiv.org/abs/2106.08254) and the [original codebase](https://github.com/facebookresearch/data2vec_vision/tree/main/beit).

## Evaluation results

For evaluation results on several image classification benchmarks, we refer to Table 1 of the original paper. Note that for fine-tuning, the best results are obtained with a higher resolution. Larger model sizes also generally yield better performance.

We evaluated the model on `ImageNet1K` and obtained a top-1 accuracy of **83.97**, compared with the top-1 accuracy of 84.2 reported in the original paper.

If you want to reproduce our evaluation process, you can use [this Colab notebook](https://colab.research.google.com/drive/1Tse8Rfv-QhapMEMzauxUqnAQyXUgnTLK?usp=sharing).
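For readers unfamiliar with the metric, top-1 accuracy is the percentage of images whose highest-scoring predicted class matches the ground-truth label. A minimal sketch follows; the function name `top1_accuracy` and the toy predictions are assumptions for illustration only and are not taken from the evaluation notebook.

```python
def top1_accuracy(predictions, labels):
    """Return the percentage of predictions that exactly match the labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("at least one example is required")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return 100.0 * correct / len(labels)

# Hypothetical predicted and true class indices for five images.
preds = [3, 1, 2, 2, 0]
labels = [3, 1, 0, 2, 0]
print(top1_accuracy(preds, labels))
```

In a real evaluation, `preds` would hold `logits.argmax(-1)` for each validation image and `labels` the corresponding ImageNet class indices.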

### BibTeX entry and citation info

```bibtex

@misc{https://doi.org/10.48550/arxiv.2202.03555,

doi = {10.48550/ARXIV.2202.03555},

url = {https://arxiv.org/abs/2202.03555},

author = {Baevski, Alexei and Hsu, Wei-Ning and Xu, Qiantong and Babu, Arun and Gu, Jiatao and Auli, Michael},

keywords = {Machine Learning (cs.LG), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {data2vec: A General Framework for Self-supervised Learning in Speech, Vision and Language},

publisher = {arXiv},

year = {2022},

copyright = {arXiv.org perpetual, non-exclusive license}

}

```

|

clue/roberta_chinese_large

|

e1ced8cb9dadb0c677cefd9e42b770dc863e78ea

|

2021-05-20T15:28:53.000Z

|

[

"pytorch",

"jax",

"roberta",

"zh",

"transformers"

] | null | false |

clue

| null |

clue/roberta_chinese_large

| 622 | null |

transformers

| 2,130 |

---

language: zh

---

## roberta_chinese_large

### Overview

**Language model:** roberta-large

**Model size:** 1.2G

**Language:** Chinese

**Training data:** [CLUECorpusSmall](https://github.com/CLUEbenchmark/CLUECorpus2020)

**Eval data:** [CLUE dataset](https://github.com/CLUEbenchmark/CLUE)

### Results

For results on downstream tasks like text classification, please refer to [this repository](https://github.com/CLUEbenchmark/CLUE).

### Usage

**NOTE:** You must use **BertTokenizer** instead of RobertaTokenizer.

```python

import torch

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained("clue/roberta_chinese_large")

roberta = BertModel.from_pretrained("clue/roberta_chinese_large")

```

### About CLUE benchmark

CLUE is the organization behind the Chinese Language Understanding Evaluation benchmark. It provides tasks and datasets, baselines, pre-trained Chinese models, a corpus, and a leaderboard.

GitHub: https://github.com/CLUEbenchmark

Website: https://www.cluebenchmarks.com/

|

svalabs/gbert-large-zeroshot-nli

|

d106a0557ab5a76e762f7fcb8524cb61710a0ba1

|

2021-12-21T15:07:03.000Z

|

[

"pytorch",

"bert",

"text-classification",

"German",

"transformers",

"nli",

"de",

"zero-shot-classification"

] |

zero-shot-classification

| false |

svalabs

| null |

svalabs/gbert-large-zeroshot-nli

| 620 | 4 |

transformers

| 2,131 |

---

language: German

tags:

- text-classification

- pytorch

- nli

- de

pipeline_tag: zero-shot-classification

widget:

- text: "Ich habe ein Problem mit meinem Iphone das so schnell wie möglich gelöst werden muss."

candidate_labels: "Computer, Handy, Tablet, dringend, nicht dringend"