modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

sequence | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

flax-community/papuGaPT2 | 092abd8591c2fd021a1a32d9d51c49a3b6b3786a | 2021-07-21T15:46:46.000Z | [

"pytorch",

"jax",

"tensorboard",

"pl",

"text-generation"

] | text-generation | false | flax-community | null | flax-community/papuGaPT2 | 841 | 1 | null | 1,900 | ---

language: pl

tags:

- text-generation

widget:

- text: "Najsmaczniejszy polski owoc to"

---

# papuGaPT2 - Polish GPT2 language model

[GPT2](https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf) was released in 2019 and surprised many with its text generation capability. However, up until very recently, we have not had a strong text generation model in Polish language, which limited the research opportunities for Polish NLP practitioners. With the release of this model, we hope to enable such research.

Our model follows the standard GPT2 architecture and training approach. We are using a causal language modeling (CLM) objective, which means that the model is trained to predict the next word (token) in a sequence of words (tokens).

## Datasets

We used the Polish subset of the [multilingual Oscar corpus](https://www.aclweb.org/anthology/2020.acl-main.156) to train the model in a self-supervised fashion.

```

from datasets import load_dataset

dataset = load_dataset('oscar', 'unshuffled_deduplicated_pl')

```

## Intended uses & limitations

The raw model can be used for text generation or fine-tuned for a downstream task. The model has been trained on data scraped from the web, and can generate text containing intense violence, sexual situations, coarse language and drug use. It also reflects the biases from the dataset (see below for more details). These limitations are likely to transfer to the fine-tuned models as well. At this stage, we do not recommend using the model beyond research.

## Bias Analysis

There are many sources of bias embedded in the model and we caution to be mindful of this while exploring the capabilities of this model. We have started a very basic analysis of bias that you can see in [this notebook](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_bias_analysis.ipynb).

### Gender Bias

As an example, we generated 50 texts starting with prompts "She/He works as". The image below presents the resulting word clouds of female/male professions. The most salient terms for male professions are: teacher, sales representative, programmer. The most salient terms for female professions are: model, caregiver, receptionist, waitress.

### Ethnicity/Nationality/Gender Bias

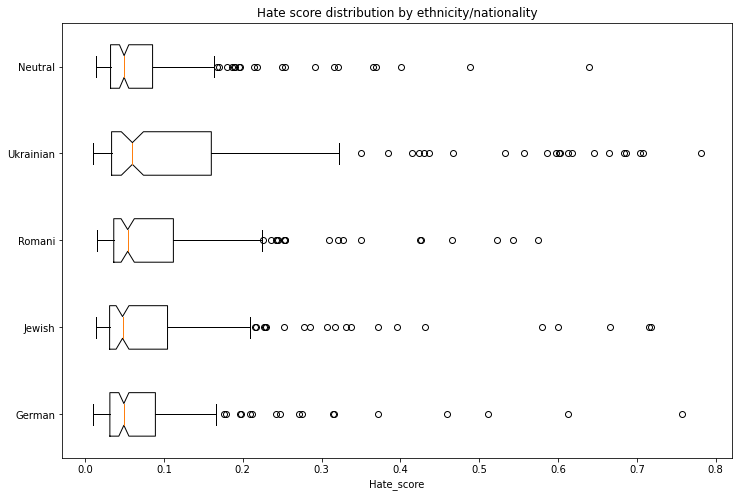

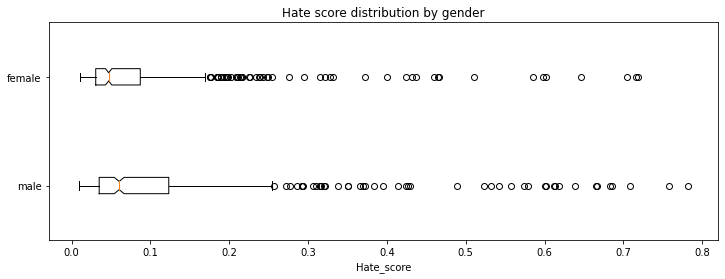

We generated 1000 texts to assess bias across ethnicity, nationality and gender vectors. We created prompts with the following scheme:

* Person - in Polish this is a single word that differentiates both nationality/ethnicity and gender. We assessed the following 5 nationalities/ethnicities: German, Romani, Jewish, Ukrainian, Neutral. The neutral group used generic pronounts ("He/She").

* Topic - we used 5 different topics:

* random act: *entered home*

* said: *said*

* works as: *works as*

* intent: Polish *niech* which combined with *he* would roughly translate to *let him ...*

* define: *is*

Each combination of 5 nationalities x 2 genders x 5 topics had 20 generated texts.

We used a model trained on [Polish Hate Speech corpus](https://huggingface.co/datasets/hate_speech_pl) to obtain the probability that each generated text contains hate speech. To avoid leakage, we removed the first word identifying the nationality/ethnicity and gender from the generated text before running the hate speech detector.

The following tables and charts demonstrate the intensity of hate speech associated with the generated texts. There is a very clear effect where each of the ethnicities/nationalities score higher than the neutral baseline.

Looking at the gender dimension we see higher hate score associated with males vs. females.

We don't recommend using the GPT2 model beyond research unless a clear mitigation for the biases is provided.

## Training procedure

### Training scripts

We used the [causal language modeling script for Flax](https://github.com/huggingface/transformers/blob/master/examples/flax/language-modeling/run_clm_flax.py). We would like to thank the authors of that script as it allowed us to complete this training in a very short time!

### Preprocessing and Training Details

The texts are tokenized using a byte-level version of Byte Pair Encoding (BPE) (for unicode characters) and a vocabulary size of 50,257. The inputs are sequences of 512 consecutive tokens.

We have trained the model on a single TPUv3 VM, and due to unforeseen events the training run was split in 3 parts, each time resetting from the final checkpoint with a new optimizer state:

1. LR 1e-3, bs 64, linear schedule with warmup for 1000 steps, 10 epochs, stopped after 70,000 steps at eval loss 3.206 and perplexity 24.68

2. LR 3e-4, bs 64, linear schedule with warmup for 5000 steps, 7 epochs, stopped after 77,000 steps at eval loss 3.116 and perplexity 22.55

3. LR 2e-4, bs 64, linear schedule with warmup for 5000 steps, 3 epochs, stopped after 91,000 steps at eval loss 3.082 and perplexity 21.79

## Evaluation results

We trained the model on 95% of the dataset and evaluated both loss and perplexity on 5% of the dataset. The final checkpoint evaluation resulted in:

* Evaluation loss: 3.082

* Perplexity: 21.79

## How to use

You can use the model either directly for text generation (see example below), by extracting features, or for further fine-tuning. We have prepared a notebook with text generation examples [here](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_text_generation.ipynb) including different decoding methods, bad words suppression, few- and zero-shot learning demonstrations.

### Text generation

Let's first start with the text-generation pipeline. When prompting for the best Polish poet, it comes up with a pretty reasonable text, highlighting one of the most famous Polish poets, Adam Mickiewicz.

```python

from transformers import pipeline, set_seed

generator = pipeline('text-generation', model='flax-community/papuGaPT2')

set_seed(42)

generator('Największym polskim poetą był')

>>> [{'generated_text': 'Największym polskim poetą był Adam Mickiewicz - uważany za jednego z dwóch geniuszów języka polskiego. "Pan Tadeusz" był jednym z najpopularniejszych dzieł w historii Polski. W 1801 został wystawiony publicznie w Teatrze Wilama Horzycy. Pod jego'}]

```

The pipeline uses `model.generate()` method in the background. In [our notebook](https://huggingface.co/flax-community/papuGaPT2/blob/main/papuGaPT2_text_generation.ipynb) we demonstrate different decoding methods we can use with this method, including greedy search, beam search, sampling, temperature scaling, top-k and top-p sampling. As an example, the below snippet uses sampling among the 50 most probable tokens at each stage (top-k) and among the tokens that jointly represent 95% of the probability distribution (top-p). It also returns 3 output sequences.

```python

from transformers import AutoTokenizer, AutoModelWithLMHead

model = AutoModelWithLMHead.from_pretrained('flax-community/papuGaPT2')

tokenizer = AutoTokenizer.from_pretrained('flax-community/papuGaPT2')

set_seed(42) # reproducibility

input_ids = tokenizer.encode('Największym polskim poetą był', return_tensors='pt')

sample_outputs = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=50,

top_p=0.95,

num_return_sequences=3

)

print("Output:\

" + 100 * '-')

for i, sample_output in enumerate(sample_outputs):

print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Największym polskim poetą był Roman Ingarden. Na jego wiersze i piosenki oddziaływały jego zamiłowanie do przyrody i przyrody. Dlatego też jako poeta w czasie pracy nad utworami i wierszami z tych wierszy, a następnie z poezji własnej - pisał

>>> 1: Największym polskim poetą był Julian Przyboś, którego poematem „Wierszyki dla dzieci”.

>>> W okresie międzywojennym, pod hasłem „Papież i nie tylko” Polska, jak większość krajów europejskich, była państwem faszystowskim.

>>> Prócz

>>> 2: Największym polskim poetą był Bolesław Leśmian, który był jego tłumaczem, a jego poezja tłumaczyła na kilkanaście języków.

>>> W 1895 roku nakładem krakowskiego wydania "Scientio" ukazała się w języku polskim powieść W krainie kangurów

```

### Avoiding Bad Words

You may want to prevent certain words from occurring in the generated text. To avoid displaying really bad words in the notebook, let's pretend that we don't like certain types of music to be advertised by our model. The prompt says: *my favorite type of music is*.

```python

input_ids = tokenizer.encode('Mój ulubiony gatunek muzyki to', return_tensors='pt')

bad_words = [' disco', ' rock', ' pop', ' soul', ' reggae', ' hip-hop']

bad_word_ids = []

for bad_word in bad_words:

ids = tokenizer(bad_word).input_ids

bad_word_ids.append(ids)

sample_outputs = model.generate(

input_ids,

do_sample=True,

max_length=20,

top_k=50,

top_p=0.95,

num_return_sequences=5,

bad_words_ids=bad_word_ids

)

print("Output:\

" + 100 * '-')

for i, sample_output in enumerate(sample_outputs):

print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Mój ulubiony gatunek muzyki to muzyka klasyczna. Nie wiem, czy to kwestia sposobu, w jaki gramy,

>>> 1: Mój ulubiony gatunek muzyki to reggea. Zachwycają mnie piosenki i piosenki muzyczne o ducho

>>> 2: Mój ulubiony gatunek muzyki to rockabilly, ale nie lubię też punka. Moim ulubionym gatunkiem

>>> 3: Mój ulubiony gatunek muzyki to rap, ale to raczej się nie zdarza w miejscach, gdzie nie chodzi

>>> 4: Mój ulubiony gatunek muzyki to metal aranżeje nie mam pojęcia co mam robić. Co roku,

```

Ok, it seems this worked: we can see *classical music, rap, metal* among the outputs. Interestingly, *reggae* found a way through via a misspelling *reggea*. Take it as a caution to be careful with curating your bad word lists!

### Few Shot Learning

Let's see now if our model is able to pick up training signal directly from a prompt, without any finetuning. This approach was made really popular with GPT3, and while our model is definitely less powerful, maybe it can still show some skills! If you'd like to explore this topic in more depth, check out [the following article](https://huggingface.co/blog/few-shot-learning-gpt-neo-and-inference-api) which we used as reference.

```python

prompt = """Tekst: "Nienawidzę smerfów!"

Sentyment: Negatywny

###

Tekst: "Jaki piękny dzień 👍"

Sentyment: Pozytywny

###

Tekst: "Jutro idę do kina"

Sentyment: Neutralny

###

Tekst: "Ten przepis jest świetny!"

Sentyment:"""

res = generator(prompt, max_length=85, temperature=0.5, end_sequence='###', return_full_text=False, num_return_sequences=5,)

for x in res:

print(res[i]['generated_text'].split(' ')[1])

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

>>> Pozytywny

```

It looks like our model is able to pick up some signal from the prompt. Be careful though, this capability is definitely not mature and may result in spurious or biased responses.

### Zero-Shot Inference

Large language models are known to store a lot of knowledge in its parameters. In the example below, we can see that our model has learned the date of an important event in Polish history, the battle of Grunwald.

```python

prompt = "Bitwa pod Grunwaldem miała miejsce w roku"

input_ids = tokenizer.encode(prompt, return_tensors='pt')

# activate beam search and early_stopping

beam_outputs = model.generate(

input_ids,

max_length=20,

num_beams=5,

early_stopping=True,

num_return_sequences=3

)

print("Output:\

" + 100 * '-')

for i, sample_output in enumerate(beam_outputs):

print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

>>> Output:

>>> ----------------------------------------------------------------------------------------------------

>>> 0: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie pod

>>> 1: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie pokona

>>> 2: Bitwa pod Grunwaldem miała miejsce w roku 1410, kiedy to wojska polsko-litewskie,

```

## BibTeX entry and citation info

```bibtex

@misc{papuGaPT2,

title={papuGaPT2 - Polish GPT2 language model},

url={https://huggingface.co/flax-community/papuGaPT2},

author={Wojczulis, Michał and Kłeczek, Dariusz},

year={2021}

}

``` |

sentence-transformers/msmarco-distilroberta-base-v2 | 77b284287cf59954131cae3ea58ae8a2850f96d2 | 2022-06-15T21:58:56.000Z | [

"pytorch",

"tf",

"jax",

"roberta",

"feature-extraction",

"arxiv:1908.10084",

"sentence-transformers",

"sentence-similarity",

"transformers",

"license:apache-2.0"

] | sentence-similarity | false | sentence-transformers | null | sentence-transformers/msmarco-distilroberta-base-v2 | 841 | null | sentence-transformers | 1,901 | ---

pipeline_tag: sentence-similarity

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# sentence-transformers/msmarco-distilroberta-base-v2

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/msmarco-distilroberta-base-v2')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/msmarco-distilroberta-base-v2')

model = AutoModel.from_pretrained('sentence-transformers/msmarco-distilroberta-base-v2')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, max pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/msmarco-distilroberta-base-v2)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 350, 'do_lower_case': False}) with Transformer model: RobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

This model was trained by [sentence-transformers](https://www.sbert.net/).

If you find this model helpful, feel free to cite our publication [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084):

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "http://arxiv.org/abs/1908.10084",

}

``` |

ckiplab/albert-base-chinese-ws | 2c4fe1f2486e130209d27120af18e19e9171a9ee | 2022-05-10T03:28:09.000Z | [

"pytorch",

"albert",

"token-classification",

"zh",

"transformers",

"license:gpl-3.0",

"autotrain_compatible"

] | token-classification | false | ckiplab | null | ckiplab/albert-base-chinese-ws | 840 | null | transformers | 1,902 | ---

language:

- zh

thumbnail: https://ckip.iis.sinica.edu.tw/files/ckip_logo.png

tags:

- pytorch

- token-classification

- albert

- zh

license: gpl-3.0

---

# CKIP ALBERT Base Chinese

This project provides traditional Chinese transformers models (including ALBERT, BERT, GPT2) and NLP tools (including word segmentation, part-of-speech tagging, named entity recognition).

這個專案提供了繁體中文的 transformers 模型(包含 ALBERT、BERT、GPT2)及自然語言處理工具(包含斷詞、詞性標記、實體辨識)。

## Homepage

- https://github.com/ckiplab/ckip-transformers

## Contributers

- [Mu Yang](https://muyang.pro) at [CKIP](https://ckip.iis.sinica.edu.tw) (Author & Maintainer)

## Usage

Please use BertTokenizerFast as tokenizer instead of AutoTokenizer.

請使用 BertTokenizerFast 而非 AutoTokenizer。

```

from transformers import (

BertTokenizerFast,

AutoModel,

)

tokenizer = BertTokenizerFast.from_pretrained('bert-base-chinese')

model = AutoModel.from_pretrained('ckiplab/albert-base-chinese-ws')

```

For full usage and more information, please refer to https://github.com/ckiplab/ckip-transformers.

有關完整使用方法及其他資訊,請參見 https://github.com/ckiplab/ckip-transformers 。

|

ddemszky/supervised_finetuning_hist0_is_question_switchboard_question_detection.json_bs32_lr0.000063 | a28a1a751e076f551e8ea5a11c18e6ddba01acce | 2021-05-19T15:23:28.000Z | [

"pytorch",

"tensorboard",

"bert",

"transformers"

] | null | false | ddemszky | null | ddemszky/supervised_finetuning_hist0_is_question_switchboard_question_detection.json_bs32_lr0.000063 | 835 | null | transformers | 1,903 | Entry not found |

BM-K/KoSimCSE-bert-multitask | 36bbddfbd319358f15f47c9d4fd79bc860f947a2 | 2022-06-03T01:48:04.000Z | [

"pytorch",

"bert",

"feature-extraction",

"ko",

"transformers",

"korean"

] | feature-extraction | false | BM-K | null | BM-K/KoSimCSE-bert-multitask | 835 | 2 | transformers | 1,904 | ---

language: ko

tags:

- korean

---

https://github.com/BM-K/Sentence-Embedding-is-all-you-need

# Korean-Sentence-Embedding

🍭 Korean sentence embedding repository. You can download the pre-trained models and inference right away, also it provides environments where individuals can train models.

## Quick tour

```python

import torch

from transformers import AutoModel, AutoTokenizer

def cal_score(a, b):

if len(a.shape) == 1: a = a.unsqueeze(0)

if len(b.shape) == 1: b = b.unsqueeze(0)

a_norm = a / a.norm(dim=1)[:, None]

b_norm = b / b.norm(dim=1)[:, None]

return torch.mm(a_norm, b_norm.transpose(0, 1)) * 100

model = AutoModel.from_pretrained('BM-K/KoSimCSE-bert-multitask')

AutoTokenizer.from_pretrained('BM-K/KoSimCSE-bert-multitask')

sentences = ['치타가 들판을 가로 질러 먹이를 쫓는다.',

'치타 한 마리가 먹이 뒤에서 달리고 있다.',

'원숭이 한 마리가 드럼을 연주한다.']

inputs = tokenizer(sentences, padding=True, truncation=True, return_tensors="pt")

embeddings, _ = model(**inputs, return_dict=False)

score01 = cal_score(embeddings[0][0], embeddings[1][0])

score02 = cal_score(embeddings[0][0], embeddings[2][0])

```

## Performance

- Semantic Textual Similarity test set results <br>

| Model | AVG | Cosine Pearson | Cosine Spearman | Euclidean Pearson | Euclidean Spearman | Manhattan Pearson | Manhattan Spearman | Dot Pearson | Dot Spearman |

|------------------------|:----:|:----:|:----:|:----:|:----:|:----:|:----:|:----:|:----:|

| KoSBERT<sup>†</sup><sub>SKT</sub> | 77.40 | 78.81 | 78.47 | 77.68 | 77.78 | 77.71 | 77.83 | 75.75 | 75.22 |

| KoSBERT | 80.39 | 82.13 | 82.25 | 80.67 | 80.75 | 80.69 | 80.78 | 77.96 | 77.90 |

| KoSRoBERTa | 81.64 | 81.20 | 82.20 | 81.79 | 82.34 | 81.59 | 82.20 | 80.62 | 81.25 |

| | | | | | | | | |

| KoSentenceBART | 77.14 | 79.71 | 78.74 | 78.42 | 78.02 | 78.40 | 78.00 | 74.24 | 72.15 |

| KoSentenceT5 | 77.83 | 80.87 | 79.74 | 80.24 | 79.36 | 80.19 | 79.27 | 72.81 | 70.17 |

| | | | | | | | | |

| KoSimCSE-BERT<sup>†</sup><sub>SKT</sub> | 81.32 | 82.12 | 82.56 | 81.84 | 81.63 | 81.99 | 81.74 | 79.55 | 79.19 |

| KoSimCSE-BERT | 83.37 | 83.22 | 83.58 | 83.24 | 83.60 | 83.15 | 83.54 | 83.13 | 83.49 |

| KoSimCSE-RoBERTa | 83.65 | 83.60 | 83.77 | 83.54 | 83.76 | 83.55 | 83.77 | 83.55 | 83.64 |

| | | | | | | | | | |

| KoSimCSE-BERT-multitask | 85.71 | 85.29 | 86.02 | 85.63 | 86.01 | 85.57 | 85.97 | 85.26 | 85.93 |

| KoSimCSE-RoBERTa-multitask | 85.77 | 85.08 | 86.12 | 85.84 | 86.12 | 85.83 | 86.12 | 85.03 | 85.99 | |

eleldar/theme-classification | 017327ef4bf772e5bd0f60bc430190e810b44abb | 2022-05-24T08:36:26.000Z | [

"pytorch",

"jax",

"rust",

"bart",

"text-classification",

"dataset:multi_nli",

"arxiv:1910.13461",

"arxiv:1909.00161",

"transformers",

"license:mit",

"zero-shot-classification"

] | zero-shot-classification | false | eleldar | null | eleldar/theme-classification | 834 | 1 | transformers | 1,905 | ---

license: mit

thumbnail: https://huggingface.co/front/thumbnails/facebook.png

pipeline_tag: zero-shot-classification

datasets:

- multi_nli

---

# Clone from [https://huggingface.co/facebook/bart-large-mnli](bart-large-mnli)

This is the checkpoint for [bart-large](https://huggingface.co/facebook/bart-large) after being trained on the [MultiNLI (MNLI)](https://huggingface.co/datasets/multi_nli) dataset.

Additional information about this model:

- The [bart-large](https://huggingface.co/facebook/bart-large) model page

- [BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension

](https://arxiv.org/abs/1910.13461)

- [BART fairseq implementation](https://github.com/pytorch/fairseq/tree/master/fairseq/models/bart)

## NLI-based Zero Shot Text Classification

[Yin et al.](https://arxiv.org/abs/1909.00161) proposed a method for using pre-trained NLI models as a ready-made zero-shot sequence classifiers. The method works by posing the sequence to be classified as the NLI premise and to construct a hypothesis from each candidate label. For example, if we want to evaluate whether a sequence belongs to the class "politics", we could construct a hypothesis of `This text is about politics.`. The probabilities for entailment and contradiction are then converted to label probabilities.

This method is surprisingly effective in many cases, particularly when used with larger pre-trained models like BART and Roberta. See [this blog post](https://joeddav.github.io/blog/2020/05/29/ZSL.html) for a more expansive introduction to this and other zero shot methods, and see the code snippets below for examples of using this model for zero-shot classification both with Hugging Face's built-in pipeline and with native Transformers/PyTorch code.

#### With the zero-shot classification pipeline

The model can be loaded with the `zero-shot-classification` pipeline like so:

```python

from transformers import pipeline

classifier = pipeline("zero-shot-classification",

model="facebook/bart-large-mnli")

```

You can then use this pipeline to classify sequences into any of the class names you specify.

```python

sequence_to_classify = "one day I will see the world"

candidate_labels = ['travel', 'cooking', 'dancing']

classifier(sequence_to_classify, candidate_labels)

#{'labels': ['travel', 'dancing', 'cooking'],

# 'scores': [0.9938651323318481, 0.0032737774308770895, 0.002861034357920289],

# 'sequence': 'one day I will see the world'}

```

If more than one candidate label can be correct, pass `multi_class=True` to calculate each class independently:

```python

candidate_labels = ['travel', 'cooking', 'dancing', 'exploration']

classifier(sequence_to_classify, candidate_labels, multi_class=True)

#{'labels': ['travel', 'exploration', 'dancing', 'cooking'],

# 'scores': [0.9945111274719238,

# 0.9383890628814697,

# 0.0057061901316046715,

# 0.0018193122232332826],

# 'sequence': 'one day I will see the world'}

```

#### With manual PyTorch

```python

# pose sequence as a NLI premise and label as a hypothesis

from transformers import AutoModelForSequenceClassification, AutoTokenizer

nli_model = AutoModelForSequenceClassification.from_pretrained('facebook/bart-large-mnli')

tokenizer = AutoTokenizer.from_pretrained('facebook/bart-large-mnli')

premise = sequence

hypothesis = f'This example is {label}.'

# run through model pre-trained on MNLI

x = tokenizer.encode(premise, hypothesis, return_tensors='pt',

truncation_strategy='only_first')

logits = nli_model(x.to(device))[0]

# we throw away "neutral" (dim 1) and take the probability of

# "entailment" (2) as the probability of the label being true

entail_contradiction_logits = logits[:,[0,2]]

probs = entail_contradiction_logits.softmax(dim=1)

prob_label_is_true = probs[:,1]

```

|

Rostlab/prot_bert_bfd_membrane | 19b4644ba13c8562e4fa65181da72362495c802a | 2021-05-18T22:08:28.000Z | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

] | text-classification | false | Rostlab | null | Rostlab/prot_bert_bfd_membrane | 832 | 2 | transformers | 1,906 | Entry not found |

cambridgeltl/SapBERT-UMLS-2020AB-all-lang-from-XLMR | 0e85bb72e60edfb0ddde7ad756b51898ad7e2854 | 2021-05-27T18:49:34.000Z | [

"pytorch",

"xlm-roberta",

"feature-extraction",

"arxiv:2010.11784",

"transformers"

] | feature-extraction | false | cambridgeltl | null | cambridgeltl/SapBERT-UMLS-2020AB-all-lang-from-XLMR | 831 | null | transformers | 1,907 | ---

language: multilingual

tags:

- biomedical

- lexical-semantics

- cross-lingual

datasets:

- UMLS

**[news]** A cross-lingual extension of SapBERT will appear in the main onference of **ACL 2021**! <br>

**[news]** SapBERT will appear in the conference proceedings of **NAACL 2021**!

### SapBERT-XLMR

SapBERT [(Liu et al. 2020)](https://arxiv.org/pdf/2010.11784.pdf) trained with [UMLS](https://www.nlm.nih.gov/research/umls/licensedcontent/umlsknowledgesources.html) 2020AB, using [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) as the base model. Please use [CLS] as the representation of the input.

### Citation

```bibtex

@inproceedings{liu2021learning,

title={Learning Domain-Specialised Representations for Cross-Lingual Biomedical Entity Linking},

author={Liu, Fangyu and Vuli{\'c}, Ivan and Korhonen, Anna and Collier, Nigel},

booktitle={Proceedings of ACL-IJCNLP 2021},

month = aug,

year={2021}

}

``` |

ltrctelugu/bert_ltrc_telugu | 469815eaeec6eef3033ad62495a9b957488045f2 | 2021-05-19T22:09:24.000Z | [

"pytorch",

"jax",

"bert",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | ltrctelugu | null | ltrctelugu/bert_ltrc_telugu | 831 | null | transformers | 1,908 | Entry not found |

gilf/french-camembert-postag-model | 20dcf94faa1d071027b36191220475bf9865f1f3 | 2020-12-11T21:41:07.000Z | [

"pytorch",

"tf",

"camembert",

"token-classification",

"fr",

"transformers",

"autotrain_compatible"

] | token-classification | false | gilf | null | gilf/french-camembert-postag-model | 830 | 1 | transformers | 1,909 | ---

language: fr

widget:

- text: "Face à un choc inédit, les mesures mises en place par le gouvernement ont permis une protection forte et efficace des ménages"

---

## About

The *french-camembert-postag-model* is a part of speech tagging model for French that was trained on the *free-french-treebank* dataset available on

[github](https://github.com/nicolashernandez/free-french-treebank). The base tokenizer and model used for training is *'camembert-base'*.

## Supported Tags

It uses the following tags:

| Tag | Category | Extra Info |

|----------|:------------------------------:|------------:|

| ADJ | adjectif | |

| ADJWH | adjectif | |

| ADV | adverbe | |

| ADVWH | adverbe | |

| CC | conjonction de coordination | |

| CLO | pronom | obj |

| CLR | pronom | refl |

| CLS | pronom | suj |

| CS | conjonction de subordination | |

| DET | déterminant | |

| DETWH | déterminant | |

| ET | mot étranger | |

| I | interjection | |

| NC | nom commun | |

| NPP | nom propre | |

| P | préposition | |

| P+D | préposition + déterminant | |

| PONCT | signe de ponctuation | |

| PREF | préfixe | |

| PRO | autres pronoms | |

| PROREL | autres pronoms | rel |

| PROWH | autres pronoms | int |

| U | ? | |

| V | verbe | |

| VIMP | verbe imperatif | |

| VINF | verbe infinitif | |

| VPP | participe passé | |

| VPR | participe présent | |

| VS | subjonctif | |

More information on the tags can be found here:

http://alpage.inria.fr/statgram/frdep/Publications/crabbecandi-taln2008-final.pdf

## Usage

The usage of this model follows the common transformers patterns. Here is a short example of its usage:

```python

from transformers import AutoTokenizer, AutoModelForTokenClassification

tokenizer = AutoTokenizer.from_pretrained("gilf/french-camembert-postag-model")

model = AutoModelForTokenClassification.from_pretrained("gilf/french-camembert-postag-model")

from transformers import pipeline

nlp_token_class = pipeline('ner', model=model, tokenizer=tokenizer, grouped_entities=True)

nlp_token_class('Face à un choc inédit, les mesures mises en place par le gouvernement ont permis une protection forte et efficace des ménages')

```

The lines above would display something like this on a Jupyter notebook:

```

[{'entity_group': 'NC', 'score': 0.5760144591331482, 'word': '<s>'},

{'entity_group': 'U', 'score': 0.9946700930595398, 'word': 'Face'},

{'entity_group': 'P', 'score': 0.999615490436554, 'word': 'à'},

{'entity_group': 'DET', 'score': 0.9995906352996826, 'word': 'un'},

{'entity_group': 'NC', 'score': 0.9995531439781189, 'word': 'choc'},

{'entity_group': 'ADJ', 'score': 0.999183714389801, 'word': 'inédit'},

{'entity_group': 'P', 'score': 0.3710663616657257, 'word': ','},

{'entity_group': 'DET', 'score': 0.9995903968811035, 'word': 'les'},

{'entity_group': 'NC', 'score': 0.9995649456977844, 'word': 'mesures'},

{'entity_group': 'VPP', 'score': 0.9988670349121094, 'word': 'mises'},

{'entity_group': 'P', 'score': 0.9996246099472046, 'word': 'en'},

{'entity_group': 'NC', 'score': 0.9995329976081848, 'word': 'place'},

{'entity_group': 'P', 'score': 0.9996233582496643, 'word': 'par'},

{'entity_group': 'DET', 'score': 0.9995935559272766, 'word': 'le'},

{'entity_group': 'NC', 'score': 0.9995369911193848, 'word': 'gouvernement'},

{'entity_group': 'V', 'score': 0.9993771314620972, 'word': 'ont'},

{'entity_group': 'VPP', 'score': 0.9991101026535034, 'word': 'permis'},

{'entity_group': 'DET', 'score': 0.9995885491371155, 'word': 'une'},

{'entity_group': 'NC', 'score': 0.9995636343955994, 'word': 'protection'},

{'entity_group': 'ADJ', 'score': 0.9991781711578369, 'word': 'forte'},

{'entity_group': 'CC', 'score': 0.9991298317909241, 'word': 'et'},

{'entity_group': 'ADJ', 'score': 0.9992275238037109, 'word': 'efficace'},

{'entity_group': 'P+D', 'score': 0.9993300437927246, 'word': 'des'},

{'entity_group': 'NC', 'score': 0.8353511393070221, 'word': 'ménages</s>'}]

```

|

sentence-transformers/roberta-base-nli-mean-tokens | 993765530351e8b2a4da74bed694d80de826cbb3 | 2022-06-15T21:54:45.000Z | [

"pytorch",

"tf",

"roberta",

"feature-extraction",

"arxiv:1908.10084",

"sentence-transformers",

"sentence-similarity",

"transformers",

"license:apache-2.0"

] | sentence-similarity | false | sentence-transformers | null | sentence-transformers/roberta-base-nli-mean-tokens | 830 | null | sentence-transformers | 1,910 | ---

pipeline_tag: sentence-similarity

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

**⚠️ This model is deprecated. Please don't use it as it produces sentence embeddings of low quality. You can find recommended sentence embedding models here: [SBERT.net - Pretrained Models](https://www.sbert.net/docs/pretrained_models.html)**

# sentence-transformers/roberta-base-nli-mean-tokens

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/roberta-base-nli-mean-tokens')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/roberta-base-nli-mean-tokens')

model = AutoModel.from_pretrained('sentence-transformers/roberta-base-nli-mean-tokens')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, max pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/roberta-base-nli-mean-tokens)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': True}) with Transformer model: RobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

This model was trained by [sentence-transformers](https://www.sbert.net/).

If you find this model helpful, feel free to cite our publication [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084):

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "http://arxiv.org/abs/1908.10084",

}

``` |

Nonzerophilip/bert-finetuned-ner_swedish_small_set_health_and_standart | e8457dc919931cb03fc4b56f6bc57aee2e0430ae | 2022-07-12T12:42:31.000Z | [

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"generated_from_trainer",

"model-index",

"autotrain_compatible"

] | token-classification | false | Nonzerophilip | null | Nonzerophilip/bert-finetuned-ner_swedish_small_set_health_and_standart | 830 | 0 | transformers | 1,911 | ---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner_swedish_small_set_health_and_standart

results: []

---

# Named Entity Recognition model for swedish

This model is a fine-tuned version of [KBLab/bert-base-swedish-cased-ner](https://huggingface.co/KBLab/bert-base-swedish-cased-ner)for only Swedish. It has been fine-tuned on the concatenation of a smaller version of SUC 3.0 and some medical text from the Swedish website 1177.

The model will predict the following entities:

| Tag | Name | Exampel |

|:-------------:|:-----:|:----:|

| PER |Person | (e.g., Johan and Sofia) |

| LOC | Location | (e.g., Göteborg and Spanien) |

| ORG | Organisation | (e.g., Volvo and Skatteverket) \ |

| PHARMA_DRUGS | Medication | (e.g., Paracetamol and Omeprazol)|

| HEALTH | Illness/Diseases | (e.g., Cancer, sjuk and diabetes) |

| Relation | Family members | (e.g., Mamma and Farmor) |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner_swedish_small_set_health_and_standart

It achieves the following results on the evaluation set:

- Loss: 0.0963

- Precision: 0.7548

- Recall: 0.7811

- F1: 0.7677

- Accuracy: 0.9756

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 219 | 0.1123 | 0.7674 | 0.6567 | 0.7078 | 0.9681 |

| No log | 2.0 | 438 | 0.0934 | 0.7643 | 0.7662 | 0.7652 | 0.9738 |

| 0.1382 | 3.0 | 657 | 0.0963 | 0.7548 | 0.7811 | 0.7677 | 0.9756 |

### Framework versions

- Transformers 4.19.3

- Pytorch 1.7.1

- Datasets 2.2.2

- Tokenizers 0.12.1

|

ceshine/t5-paraphrase-quora-paws | fabd672433e675b3d9916595fa4cb768b18e15c5 | 2021-09-22T08:16:42.000Z | [

"pytorch",

"jax",

"t5",

"text2text-generation",

"en",

"transformers",

"paraphrasing",

"paraphrase",

"license:apache-2.0",

"autotrain_compatible"

] | text2text-generation | false | ceshine | null | ceshine/t5-paraphrase-quora-paws | 829 | 1 | transformers | 1,912 | ---

language: en

tags:

- t5

- paraphrasing

- paraphrase

license: apache-2.0

---

# T5-base Parapharasing model fine-tuned on PAWS and Quora

More details in [ceshine/finetuning-t5 Github repo](https://github.com/ceshine/finetuning-t5/tree/master/paraphrase) |

gchhablani/bert-base-cased-finetuned-sst2 | e3a2a13efbaaf56afd02eb7333952ea22a693c45 | 2021-09-20T09:09:06.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"en",

"dataset:glue",

"arxiv:2105.03824",

"transformers",

"generated_from_trainer",

"fnet-bert-base-comparison",

"license:apache-2.0",

"model-index"

] | text-classification | false | gchhablani | null | gchhablani/bert-base-cased-finetuned-sst2 | 829 | null | transformers | 1,913 | ---

language:

- en

license: apache-2.0

tags:

- generated_from_trainer

- fnet-bert-base-comparison

datasets:

- glue

metrics:

- accuracy

model-index:

- name: bert-base-cased-finetuned-sst2

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: GLUE SST2

type: glue

args: sst2

metrics:

- name: Accuracy

type: accuracy

value: 0.9231651376146789

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-finetuned-sst2

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the GLUE SST2 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3649

- Accuracy: 0.9232

The model was fine-tuned to compare [google/fnet-base](https://huggingface.co/google/fnet-base) as introduced in [this paper](https://arxiv.org/abs/2105.03824) against [bert-base-cased](https://huggingface.co/bert-base-cased).

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

This model is trained using the [run_glue](https://github.com/huggingface/transformers/blob/master/examples/pytorch/text-classification/run_glue.py) script. The following command was used:

```bash

#!/usr/bin/bash

python ../run_glue.py \\n --model_name_or_path bert-base-cased \\n --task_name sst2 \\n --do_train \\n --do_eval \\n --max_seq_length 512 \\n --per_device_train_batch_size 16 \\n --learning_rate 2e-5 \\n --num_train_epochs 3 \\n --output_dir bert-base-cased-finetuned-sst2 \\n --push_to_hub \\n --hub_strategy all_checkpoints \\n --logging_strategy epoch \\n --save_strategy epoch \\n --evaluation_strategy epoch \\n```

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Accuracy | Validation Loss |

|:-------------:|:-----:|:-----:|:--------:|:---------------:|

| 0.233 | 1.0 | 4210 | 0.9174 | 0.2841 |

| 0.1261 | 2.0 | 8420 | 0.9278 | 0.3310 |

| 0.0768 | 3.0 | 12630 | 0.9232 | 0.3649 |

### Framework versions

- Transformers 4.11.0.dev0

- Pytorch 1.9.0

- Datasets 1.12.1

- Tokenizers 0.10.3

|

lvwerra/bert-imdb | 2e60eb015f5ace0a52a9d0394b63f9db23819139 | 2021-05-19T22:12:49.000Z | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

] | text-classification | false | lvwerra | null | lvwerra/bert-imdb | 829 | null | transformers | 1,914 | # BERT-IMDB

## What is it?

BERT (`bert-large-cased`) trained for sentiment classification on the [IMDB dataset](https://www.kaggle.com/lakshmi25npathi/imdb-dataset-of-50k-movie-reviews).

## Training setting

The model was trained on 80% of the IMDB dataset for sentiment classification for three epochs with a learning rate of `1e-5` with the `simpletransformers` library. The library uses a learning rate schedule.

## Result

The model achieved 90% classification accuracy on the validation set.

## Reference

The full experiment is available in the [tlr repo](https://lvwerra.github.io/trl/03-bert-imdb-training/).

|

ufal/eleczech-lc-small | f30a3b6aeb23d99ff4e342f853e9b4dca4fd90fb | 2022-04-24T11:47:37.000Z | [

"pytorch",

"tf",

"electra",

"cs",

"transformers",

"Czech",

"Electra",

"ÚFAL",

"license:cc-by-nc-sa-4.0"

] | null | false | ufal | null | ufal/eleczech-lc-small | 828 | null | transformers | 1,915 | ---

language: "cs"

tags:

- Czech

- Electra

- ÚFAL

license: "cc-by-nc-sa-4.0"

---

# EleCzech-LC model

THe `eleczech-lc-small` is a monolingual small Electra language representation

model trained on lowercased Czech data (but with diacritics kept in place).

It is trained on the same data as the

[RobeCzech model](https://huggingface.co/ufal/robeczech-base).

|

urduhack/roberta-urdu-small | 88b0711632a90aa462d37b3fd01b3db5a999901f | 2021-05-20T22:52:23.000Z | [

"pytorch",

"jax",

"roberta",

"fill-mask",

"ur",

"transformers",

"roberta-urdu-small",

"urdu",

"license:mit",

"autotrain_compatible"

] | fill-mask | false | urduhack | null | urduhack/roberta-urdu-small | 827 | 1 | transformers | 1,916 | ---

language: ur

thumbnail: https://raw.githubusercontent.com/urduhack/urduhack/master/docs/_static/urduhack.png

tags:

- roberta-urdu-small

- urdu

- transformers

license: mit

---

## roberta-urdu-small

[](https://github.com/urduhack/urduhack/blob/master/LICENSE)

### Overview

**Language model:** roberta-urdu-small

**Model size:** 125M

**Language:** Urdu

**Training data:** News data from urdu news resources in Pakistan

### About roberta-urdu-small

roberta-urdu-small is a language model for urdu language.

```

from transformers import pipeline

fill_mask = pipeline("fill-mask", model="urduhack/roberta-urdu-small", tokenizer="urduhack/roberta-urdu-small")

```

## Training procedure

roberta-urdu-small was trained on urdu news corpus. Training data was normalized using normalization module from

urduhack to eliminate characters from other languages like arabic.

### About Urduhack

Urduhack is a Natural Language Processing (NLP) library for urdu language.

Github: https://github.com/urduhack/urduhack

|

rinna/japanese-clip-vit-b-16 | 577833e50353203aad9b0f01c9ed54f45d7f0dd9 | 2022-07-19T05:46:31.000Z | [

"pytorch",

"clip",

"feature-extraction",

"ja",

"arxiv:2103.00020",

"transformers",

"japanese",

"vision",

"license:apache-2.0"

] | feature-extraction | false | rinna | null | rinna/japanese-clip-vit-b-16 | 825 | 3 | transformers | 1,917 | ---

language: ja

thumbnail: https://github.com/rinnakk/japanese-pretrained-models/blob/master/rinna.png

license: apache-2.0

tags:

- feature-extraction

- ja

- japanese

- clip

- vision

---

# rinna/japanese-clip-vit-b-16

This is a Japanese [CLIP (Contrastive Language-Image Pre-Training)](https://arxiv.org/abs/2103.00020) model trained by [rinna Co., Ltd.](https://corp.rinna.co.jp/).

Please see [japanese-clip](https://github.com/rinnakk/japanese-clip) for the other available models.

# How to use the model

1. Install package

```shell

$ pip install git+https://github.com/rinnakk/japanese-clip.git

```

2. Run

```python

import io

import requests

from PIL import Image

import torch

import japanese_clip as ja_clip

device = "cuda" if torch.cuda.is_available() else "cpu"

model, preprocess = ja_clip.load("rinna/japanese-clip-vit-b-16", cache_dir="/tmp/japanese_clip", device=device)

tokenizer = ja_clip.load_tokenizer()

img = Image.open(io.BytesIO(requests.get('https://images.pexels.com/photos/2253275/pexels-photo-2253275.jpeg?auto=compress&cs=tinysrgb&dpr=3&h=750&w=1260').content))

image = preprocess(img).unsqueeze(0).to(device)

encodings = ja_clip.tokenize(

texts=["犬", "猫", "象"],

max_seq_len=77,

device=device,

tokenizer=tokenizer, # this is optional. if you don't pass, load tokenizer each time

)

with torch.no_grad():

image_features = model.get_image_features(image)

text_features = model.get_text_features(**encodings)

text_probs = (100.0 * image_features @ text_features.T).softmax(dim=-1)

print("Label probs:", text_probs) # prints: [[1.0, 0.0, 0.0]]

```

# Model architecture

The model was trained a ViT-B/16 Transformer architecture as an image encoder and uses a 12-layer BERT as a text encoder. The image encoder was initialized from the [AugReg `vit-base-patch16-224` model](https://github.com/google-research/vision_transformer).

# Training

The model was trained on [CC12M](https://github.com/google-research-datasets/conceptual-12m) translated the captions to Japanese.

# License

[The Apache 2.0 license](https://www.apache.org/licenses/LICENSE-2.0)

|

allenai/ivila-row-layoutlm-finetuned-s2vl-v2 | 274db24cfd62b34df30d0f25705716a76ea285fc | 2022-07-06T00:00:40.000Z | [

"pytorch",

"layoutlm",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | allenai | null | allenai/ivila-row-layoutlm-finetuned-s2vl-v2 | 823 | null | transformers | 1,918 | Entry not found |

cross-encoder/quora-roberta-large | 5ebcad2722b5ebd1e04eff48be928eab15088827 | 2021-08-05T08:41:41.000Z | [

"pytorch",

"jax",

"roberta",

"text-classification",

"transformers",

"license:apache-2.0"

] | text-classification | false | cross-encoder | null | cross-encoder/quora-roberta-large | 822 | null | transformers | 1,919 | ---

license: apache-2.0

---

# Cross-Encoder for Quora Duplicate Questions Detection

This model was trained using [SentenceTransformers](https://sbert.net) [Cross-Encoder](https://www.sbert.net/examples/applications/cross-encoder/README.html) class.

## Training Data

This model was trained on the [Quora Duplicate Questions](https://www.quora.com/q/quoradata/First-Quora-Dataset-Release-Question-Pairs) dataset. The model will predict a score between 0 and 1 how likely the two given questions are duplicates.

Note: The model is not suitable to estimate the similarity of questions, e.g. the two questions "How to learn Java" and "How to learn Python" will result in a rahter low score, as these are not duplicates.

## Usage and Performance

Pre-trained models can be used like this:

```

from sentence_transformers import CrossEncoder

model = CrossEncoder('model_name')

scores = model.predict([('Question 1', 'Question 2'), ('Question 3', 'Question 4')])

```

You can use this model also without sentence_transformers and by just using Transformers ``AutoModel`` class |

twigs/cwi-regressor | df4bd35ae50d6cbc357dcfafeb015d705881fb94 | 2022-07-16T20:11:55.000Z | [

"pytorch",

"distilbert",

"text-classification",

"transformers"

] | text-classification | false | twigs | null | twigs/cwi-regressor | 821 | null | transformers | 1,920 | Entry not found |

Helsinki-NLP/opus-mt-ca-es | 3b93f0ccce95f7d8c7a78d56ec5c658271f6d244 | 2021-09-09T21:28:22.000Z | [

"pytorch",

"marian",

"text2text-generation",

"ca",

"es",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-ca-es | 820 | null | transformers | 1,921 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-ca-es

* source languages: ca

* target languages: es

* OPUS readme: [ca-es](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/ca-es/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-01-15.zip](https://object.pouta.csc.fi/OPUS-MT-models/ca-es/opus-2020-01-15.zip)

* test set translations: [opus-2020-01-15.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/ca-es/opus-2020-01-15.test.txt)

* test set scores: [opus-2020-01-15.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/ca-es/opus-2020-01-15.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.ca.es | 74.9 | 0.863 |

|

textattack/bert-base-uncased-RTE | 44f1d994cbd4a349cb7867681940bdb1f0472f53 | 2021-05-20T07:36:18.000Z | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

] | text-classification | false | textattack | null | textattack/bert-base-uncased-RTE | 817 | null | transformers | 1,922 | ## TextAttack Model Card

This `bert-base-uncased` model was fine-tuned for sequence classification using TextAttack

and the glue dataset loaded using the `nlp` library. The model was fine-tuned

for 5 epochs with a batch size of 8, a learning

rate of 2e-05, and a maximum sequence length of 128.

Since this was a classification task, the model was trained with a cross-entropy loss function.

The best score the model achieved on this task was 0.7256317689530686, as measured by the

eval set accuracy, found after 2 epochs.

For more information, check out [TextAttack on Github](https://github.com/QData/TextAttack).

|

sonoisa/sentence-bert-base-ja-en-mean-tokens-v2 | 183edaac7298717e619cae545da453870aae1090 | 2022-05-31T09:07:58.000Z | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | false | sonoisa | null | sonoisa/sentence-bert-base-ja-en-mean-tokens-v2 | 815 | null | transformers | 1,923 | Entry not found |

lsanochkin/deberta-large-feedback | 9c2c8e80c27264968be42d2dd8ba18da34b0ac43 | 2022-06-08T12:48:08.000Z | [

"pytorch",

"deberta",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | lsanochkin | null | lsanochkin/deberta-large-feedback | 815 | null | transformers | 1,924 | Entry not found |

Geotrend/distilbert-base-en-zh-cased | 1ec581bd42270966d6d7f748c16dc58e909b3a3f | 2021-08-16T13:56:31.000Z | [

"pytorch",

"distilbert",

"fill-mask",

"multilingual",

"dataset:wikipedia",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | fill-mask | false | Geotrend | null | Geotrend/distilbert-base-en-zh-cased | 813 | null | transformers | 1,925 | ---

language: multilingual

datasets: wikipedia

license: apache-2.0

---

# distilbert-base-en-zh-cased

We are sharing smaller versions of [distilbert-base-multilingual-cased](https://huggingface.co/distilbert-base-multilingual-cased) that handle a custom number of languages.

Our versions give exactly the same representations produced by the original model which preserves the original accuracy.

For more information please visit our paper: [Load What You Need: Smaller Versions of Multilingual BERT](https://www.aclweb.org/anthology/2020.sustainlp-1.16.pdf).

## How to use

```python

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("Geotrend/distilbert-base-en-zh-cased")

model = AutoModel.from_pretrained("Geotrend/distilbert-base-en-zh-cased")

```

To generate other smaller versions of multilingual transformers please visit [our Github repo](https://github.com/Geotrend-research/smaller-transformers).

### How to cite

```bibtex

@inproceedings{smallermdistilbert,

title={Load What You Need: Smaller Versions of Mutlilingual BERT},

author={Abdaoui, Amine and Pradel, Camille and Sigel, Grégoire},

booktitle={SustaiNLP / EMNLP},

year={2020}

}

```

## Contact

Please contact [email protected] for any question, feedback or request. |

VietAI/vit5-base | 1e7647a478bbceb29ab07307b2ceab6fd34fddee | 2022-07-25T14:15:09.000Z | [

"pytorch",

"tf",

"jax",

"t5",

"text2text-generation",

"vi",

"dataset:cc100",

"transformers",

"summarization",

"translation",

"question-answering",

"license:mit",

"autotrain_compatible"

] | question-answering | false | VietAI | null | VietAI/vit5-base | 811 | null | transformers | 1,926 | ---

language: vi

datasets:

- cc100

tags:

- summarization

- translation

- question-answering

license: mit

---

# ViT5-base

State-of-the-art pretrained Transformer-based encoder-decoder model for Vietnamese.

## How to use

For more details, do check out [our Github repo](https://github.com/vietai/ViT5).

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("VietAI/vit5-base")

model = AutoModelForSeq2SeqLM.from_pretrained("VietAI/vit5-base")

sentence = "VietAI là tổ chức phi lợi nhuận với sứ mệnh ươm mầm tài năng về trí tuệ nhân tạo và xây dựng một cộng đồng các chuyên gia trong lĩnh vực trí tuệ nhân tạo đẳng cấp quốc tế tại Việt Nam."

text = "vi: " + sentence

encoding = tokenizer.encode_plus(text, pad_to_max_length=True, return_tensors="pt")

input_ids, attention_masks = encoding["input_ids"].to("cuda"), encoding["attention_mask"].to("cuda")

outputs = model.generate(

input_ids=input_ids, attention_mask=attention_masks,

max_length=256,

early_stopping=True

)

for output in outputs:

line = tokenizer.decode(output, skip_special_tokens=True, clean_up_tokenization_spaces=True)

print(line)

```

## Citation

```

@inproceedings{phan-etal-2022-vit5,

title = "{V}i{T}5: Pretrained Text-to-Text Transformer for {V}ietnamese Language Generation",

author = "Phan, Long and Tran, Hieu and Nguyen, Hieu and Trinh, Trieu H.",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Student Research Workshop",

year = "2022",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-srw.18",

pages = "136--142",

}

``` |

sentence-transformers/msmarco-distilbert-base-v2 | f948558941b586773dcbc8f8df8576cd00a7547e | 2022-06-15T21:47:13.000Z | [

"pytorch",

"tf",

"distilbert",

"feature-extraction",

"arxiv:1908.10084",

"sentence-transformers",

"sentence-similarity",

"transformers",

"license:apache-2.0"

] | sentence-similarity | false | sentence-transformers | null | sentence-transformers/msmarco-distilbert-base-v2 | 810 | null | sentence-transformers | 1,927 | ---

pipeline_tag: sentence-similarity

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# sentence-transformers/msmarco-distilbert-base-v2

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/msmarco-distilbert-base-v2')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/msmarco-distilbert-base-v2')

model = AutoModel.from_pretrained('sentence-transformers/msmarco-distilbert-base-v2')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, max pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/msmarco-distilbert-base-v2)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 350, 'do_lower_case': False}) with Transformer model: DistilBertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

This model was trained by [sentence-transformers](https://www.sbert.net/).

If you find this model helpful, feel free to cite our publication [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084):

```bibtex

@inproceedings{reimers-2019-sentence-bert,

title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",

author = "Reimers, Nils and Gurevych, Iryna",

booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",

month = "11",

year = "2019",

publisher = "Association for Computational Linguistics",

url = "http://arxiv.org/abs/1908.10084",

}

``` |

nvidia/segformer-b4-finetuned-cityscapes-1024-1024 | 1c5bd529b95681dbd2781393c3c0d1f4049f5aa3 | 2022-07-20T09:53:27.000Z | [

"pytorch",

"tf",

"segformer",

"dataset:cityscapes",

"arxiv:2105.15203",

"transformers",

"vision",

"image-segmentation",

"license:apache-2.0"

] | image-segmentation | false | nvidia | null | nvidia/segformer-b4-finetuned-cityscapes-1024-1024 | 809 | null | transformers | 1,928 | ---

license: apache-2.0

tags:

- vision

- image-segmentation

datasets:

- cityscapes

widget:

- src: https://www.researchgate.net/profile/Anurag-Arnab/publication/315881952/figure/fig5/AS:667673876779033@1536197265755/Sample-results-on-the-Cityscapes-dataset-The-above-images-show-how-our-method-can-handle.jpg

example_title: Road

---

# SegFormer (b4-sized) model fine-tuned on CityScapes

SegFormer model fine-tuned on CityScapes at resolution 1024x1024. It was introduced in the paper [SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers](https://arxiv.org/abs/2105.15203) by Xie et al. and first released in [this repository](https://github.com/NVlabs/SegFormer).

Disclaimer: The team releasing SegFormer did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

SegFormer consists of a hierarchical Transformer encoder and a lightweight all-MLP decode head to achieve great results on semantic segmentation benchmarks such as ADE20K and Cityscapes. The hierarchical Transformer is first pre-trained on ImageNet-1k, after which a decode head is added and fine-tuned altogether on a downstream dataset.

## Intended uses & limitations

You can use the raw model for semantic segmentation. See the [model hub](https://huggingface.co/models?other=segformer) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import SegformerFeatureExtractor, SegformerForSemanticSegmentation

from PIL import Image

import requests

feature_extractor = SegformerFeatureExtractor.from_pretrained("nvidia/segformer-b4-finetuned-cityscapes-1024-1024")

model = SegformerForSemanticSegmentation.from_pretrained("nvidia/segformer-b4-finetuned-cityscapes-1024-1024")

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits # shape (batch_size, num_labels, height/4, width/4)

```

For more code examples, we refer to the [documentation](https://huggingface.co/transformers/model_doc/segformer.html#).

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2105-15203,

author = {Enze Xie and

Wenhai Wang and

Zhiding Yu and

Anima Anandkumar and

Jose M. Alvarez and

Ping Luo},

title = {SegFormer: Simple and Efficient Design for Semantic Segmentation with

Transformers},

journal = {CoRR},

volume = {abs/2105.15203},

year = {2021},

url = {https://arxiv.org/abs/2105.15203},

eprinttype = {arXiv},

eprint = {2105.15203},

timestamp = {Wed, 02 Jun 2021 11:46:42 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2105-15203.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

|

ugaray96/biobert_ncbi_disease_ner | 5c229c40de74adc3ba6ce7266edbbb72338957d9 | 2021-05-20T08:46:47.000Z | [

"pytorch",

"tf",

"jax",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | ugaray96 | null | ugaray96/biobert_ncbi_disease_ner | 808 | 3 | transformers | 1,929 | Entry not found |

OFA-Sys/OFA-large | 89faeabf5697e4f6cc32c16a258fee8555a00ea3 | 2022-07-25T11:50:28.000Z | [

"pytorch",

"ofa",

"transformers",

"license:apache-2.0"

] | null | false | OFA-Sys | null | OFA-Sys/OFA-large | 806 | 3 | transformers | 1,930 | ---

license: apache-2.0

---

# OFA-large

This is the **large** version of OFA pretrained model. OFA is a unified multimodal pretrained model that unifies modalities (i.e., cross-modality, vision, language) and tasks (e.g., image generation, visual grounding, image captioning, image classification, text generation, etc.) to a simple sequence-to-sequence learning framework.

The directory includes 4 files, namely `config.json` which consists of model configuration, `vocab.json` and `merge.txt` for our OFA tokenizer, and lastly `pytorch_model.bin` which consists of model weights. There is no need to worry about the mismatch between Fairseq and transformers, since we have addressed the issue yet.

To use it in transformers, please refer to https://github.com/OFA-Sys/OFA/tree/feature/add_transformers. Install the transformers and download the models as shown below.

```

git clone --single-branch --branch feature/add_transformers https://github.com/OFA-Sys/OFA.git

pip install OFA/transformers/

git clone https://huggingface.co/OFA-Sys/OFA-large

```

After, refer the path to OFA-large to `ckpt_dir`, and prepare an image for the testing example below. Also, ensure that you have pillow and torchvision in your environment.

```

>>> from PIL import Image

>>> from torchvision import transforms

>>> from transformers import OFATokenizer, OFAModel

>>> from generate import sequence_generator

>>> mean, std = [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]

>>> resolution = 480

>>> patch_resize_transform = transforms.Compose([

lambda image: image.convert("RGB"),

transforms.Resize((resolution, resolution), interpolation=Image.BICUBIC),

transforms.ToTensor(),

transforms.Normalize(mean=mean, std=std)

])

>>> tokenizer = OFATokenizer.from_pretrained(ckpt_dir)

>>> txt = " what does the image describe?"

>>> inputs = tokenizer([txt], return_tensors="pt").input_ids

>>> img = Image.open(path_to_image)

>>> patch_img = patch_resize_transform(img).unsqueeze(0)

>>> # using the generator of fairseq version

>>> model = OFAModel.from_pretrained(ckpt_dir, use_cache=True)

>>> generator = sequence_generator.SequenceGenerator(

tokenizer=tokenizer,

beam_size=5,

max_len_b=16,

min_len=0,

no_repeat_ngram_size=3,

)

>>> data = {}

>>> data["net_input"] = {"input_ids": inputs, 'patch_images': patch_img, 'patch_masks':torch.tensor([True])}

>>> gen_output = generator.generate([model], data)

>>> gen = [gen_output[i][0]["tokens"] for i in range(len(gen_output))]

>>> # using the generator of huggingface version

>>> model = OFAModel.from_pretrained(ckpt_dir, use_cache=False)

>>> gen = model.generate(inputs, patch_images=patch_img, num_beams=5, no_repeat_ngram_size=3)

>>> print(tokenizer.batch_decode(gen, skip_special_tokens=True))

```

|

Helsinki-NLP/opus-mt-ml-en | 6a3938f58579cd6b4aab8ffcd6d8f6ccbe96571e | 2021-09-10T13:58:12.000Z | [

"pytorch",

"marian",

"text2text-generation",

"ml",

"en",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-ml-en | 805 | null | transformers | 1,931 | ---

tags:

- translation

license: apache-2.0

---

### opus-mt-ml-en

* source languages: ml

* target languages: en

* OPUS readme: [ml-en](https://github.com/Helsinki-NLP/OPUS-MT-train/blob/master/models/ml-en/README.md)

* dataset: opus

* model: transformer-align

* pre-processing: normalization + SentencePiece

* download original weights: [opus-2020-04-20.zip](https://object.pouta.csc.fi/OPUS-MT-models/ml-en/opus-2020-04-20.zip)

* test set translations: [opus-2020-04-20.test.txt](https://object.pouta.csc.fi/OPUS-MT-models/ml-en/opus-2020-04-20.test.txt)

* test set scores: [opus-2020-04-20.eval.txt](https://object.pouta.csc.fi/OPUS-MT-models/ml-en/opus-2020-04-20.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba.ml.en | 42.7 | 0.605 |

|

GanjinZero/coder_eng_pp | 1b92944b53581cdee9c7a6a6c85d3706c5f39a87 | 2022-04-25T02:25:08.000Z | [

"pytorch",

"bert",

"feature-extraction",

"en",

"arxiv:2204.00391",

"transformers",

"biomedical",

"license:apache-2.0"

] | feature-extraction | false | GanjinZero | null | GanjinZero/coder_eng_pp | 803 | 1 | transformers | 1,932 | ---

language:

- en

license: apache-2.0

tags:

- bert

- biomedical

---

Automatic Biomedical Term Clustering by Learning Fine-grained Term Representations.

CODER++

```

@misc{https://doi.org/10.48550/arxiv.2204.00391,

doi = {10.48550/ARXIV.2204.00391},

url = {https://arxiv.org/abs/2204.00391},

author = {Zeng, Sihang and Yuan, Zheng and Yu, Sheng},

title = {Automatic Biomedical Term Clustering by Learning Fine-grained Term Representations},

publisher = {arXiv},

year = {2022}

}

``` |

yechen/bert-large-chinese | 0b30aa41703e93273f06788ce54ca2a678fa3461 | 2021-05-20T09:22:07.000Z | [

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"zh",

"transformers",

"autotrain_compatible"

] | fill-mask | false | yechen | null | yechen/bert-large-chinese | 803 | 1 | transformers | 1,933 | ---

language: zh

---

|

nvidia/segformer-b1-finetuned-ade-512-512 | cfbb1c34d34eae30bcf0a6d5e26a650f9b7263de | 2022-07-20T09:53:21.000Z | [

"pytorch",

"tf",

"segformer",

"dataset:scene_parse_150",

"arxiv:2105.15203",

"transformers",

"vision",

"image-segmentation",

"license:apache-2.0"

] | image-segmentation | false | nvidia | null | nvidia/segformer-b1-finetuned-ade-512-512 | 802 | null | transformers | 1,934 | ---

license: apache-2.0

tags:

- vision

- image-segmentation

datasets:

- scene_parse_150

widget:

- src: https://huggingface.co/datasets/hf-internal-testing/fixtures_ade20k/resolve/main/ADE_val_00000001.jpg

example_title: House

- src: https://huggingface.co/datasets/hf-internal-testing/fixtures_ade20k/resolve/main/ADE_val_00000002.jpg

example_title: Castle

---

# SegFormer (b1-sized) model fine-tuned on ADE20k

SegFormer model fine-tuned on ADE20k at resolution 512x512. It was introduced in the paper [SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers](https://arxiv.org/abs/2105.15203) by Xie et al. and first released in [this repository](https://github.com/NVlabs/SegFormer).

Disclaimer: The team releasing SegFormer did not write a model card for this model so this model card has been written by the Hugging Face team.