modelId

stringlengths 4

112

| sha

stringlengths 40

40

| lastModified

stringlengths 24

24

| tags

sequence | pipeline_tag

stringclasses 29

values | private

bool 1

class | author

stringlengths 2

38

⌀ | config

null | id

stringlengths 4

112

| downloads

float64 0

36.8M

⌀ | likes

float64 0

712

⌀ | library_name

stringclasses 17

values | __index_level_0__

int64 0

38.5k

| readme

stringlengths 0

186k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Helsinki-NLP/opus-mt-en-roa | ac8c907c99b8939697a1793862d4c34159c408d7 | 2021-01-18T08:15:13.000Z | [

"pytorch",

"rust",

"marian",

"text2text-generation",

"en",

"it",

"ca",

"rm",

"es",

"ro",

"gl",

"co",

"wa",

"pt",

"oc",

"an",

"id",

"fr",

"ht",

"roa",

"transformers",

"translation",

"license:apache-2.0",

"autotrain_compatible"

] | translation | false | Helsinki-NLP | null | Helsinki-NLP/opus-mt-en-roa | 1,108 | null | transformers | 1,700 | ---

language:

- en

- it

- ca

- rm

- es

- ro

- gl

- co

- wa

- pt

- oc

- an

- id

- fr

- ht

- roa

tags:

- translation

license: apache-2.0

---

### eng-roa

* source group: English

* target group: Romance languages

* OPUS readme: [eng-roa](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/eng-roa/README.md)

* model: transformer

* source language(s): eng

* target language(s): arg ast cat cos egl ext fra frm_Latn gcf_Latn glg hat ind ita lad lad_Latn lij lld_Latn lmo max_Latn mfe min mwl oci pap pms por roh ron scn spa tmw_Latn vec wln zlm_Latn zsm_Latn

* model: transformer

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* a sentence initial language token is required in the form of `>>id<<` (id = valid target language ID)

* download original weights: [opus2m-2020-08-01.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-roa/opus2m-2020-08-01.zip)

* test set translations: [opus2m-2020-08-01.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-roa/opus2m-2020-08-01.test.txt)

* test set scores: [opus2m-2020-08-01.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/eng-roa/opus2m-2020-08-01.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| newsdev2016-enro-engron.eng.ron | 27.6 | 0.567 |

| newsdiscussdev2015-enfr-engfra.eng.fra | 30.2 | 0.575 |

| newsdiscusstest2015-enfr-engfra.eng.fra | 35.5 | 0.612 |

| newssyscomb2009-engfra.eng.fra | 27.9 | 0.570 |

| newssyscomb2009-engita.eng.ita | 29.3 | 0.590 |

| newssyscomb2009-engspa.eng.spa | 29.6 | 0.570 |

| news-test2008-engfra.eng.fra | 25.2 | 0.538 |

| news-test2008-engspa.eng.spa | 27.3 | 0.548 |

| newstest2009-engfra.eng.fra | 26.9 | 0.560 |

| newstest2009-engita.eng.ita | 28.7 | 0.583 |

| newstest2009-engspa.eng.spa | 29.0 | 0.568 |

| newstest2010-engfra.eng.fra | 29.3 | 0.574 |

| newstest2010-engspa.eng.spa | 34.2 | 0.601 |

| newstest2011-engfra.eng.fra | 31.4 | 0.592 |

| newstest2011-engspa.eng.spa | 35.0 | 0.599 |

| newstest2012-engfra.eng.fra | 29.5 | 0.576 |

| newstest2012-engspa.eng.spa | 35.5 | 0.603 |

| newstest2013-engfra.eng.fra | 29.9 | 0.567 |

| newstest2013-engspa.eng.spa | 32.1 | 0.578 |

| newstest2016-enro-engron.eng.ron | 26.1 | 0.551 |

| Tatoeba-test.eng-arg.eng.arg | 1.4 | 0.125 |

| Tatoeba-test.eng-ast.eng.ast | 17.8 | 0.406 |

| Tatoeba-test.eng-cat.eng.cat | 48.3 | 0.676 |

| Tatoeba-test.eng-cos.eng.cos | 3.2 | 0.275 |

| Tatoeba-test.eng-egl.eng.egl | 0.2 | 0.084 |

| Tatoeba-test.eng-ext.eng.ext | 11.2 | 0.344 |

| Tatoeba-test.eng-fra.eng.fra | 45.3 | 0.637 |

| Tatoeba-test.eng-frm.eng.frm | 1.1 | 0.221 |

| Tatoeba-test.eng-gcf.eng.gcf | 0.6 | 0.118 |

| Tatoeba-test.eng-glg.eng.glg | 44.2 | 0.645 |

| Tatoeba-test.eng-hat.eng.hat | 28.0 | 0.502 |

| Tatoeba-test.eng-ita.eng.ita | 45.6 | 0.674 |

| Tatoeba-test.eng-lad.eng.lad | 8.2 | 0.322 |

| Tatoeba-test.eng-lij.eng.lij | 1.4 | 0.182 |

| Tatoeba-test.eng-lld.eng.lld | 0.8 | 0.217 |

| Tatoeba-test.eng-lmo.eng.lmo | 0.7 | 0.190 |

| Tatoeba-test.eng-mfe.eng.mfe | 91.9 | 0.956 |

| Tatoeba-test.eng-msa.eng.msa | 31.1 | 0.548 |

| Tatoeba-test.eng.multi | 42.9 | 0.636 |

| Tatoeba-test.eng-mwl.eng.mwl | 2.1 | 0.234 |

| Tatoeba-test.eng-oci.eng.oci | 7.9 | 0.297 |

| Tatoeba-test.eng-pap.eng.pap | 44.1 | 0.648 |

| Tatoeba-test.eng-pms.eng.pms | 2.1 | 0.190 |

| Tatoeba-test.eng-por.eng.por | 41.8 | 0.639 |

| Tatoeba-test.eng-roh.eng.roh | 3.5 | 0.261 |

| Tatoeba-test.eng-ron.eng.ron | 41.0 | 0.635 |

| Tatoeba-test.eng-scn.eng.scn | 1.7 | 0.184 |

| Tatoeba-test.eng-spa.eng.spa | 50.1 | 0.689 |

| Tatoeba-test.eng-vec.eng.vec | 3.2 | 0.248 |

| Tatoeba-test.eng-wln.eng.wln | 7.2 | 0.220 |

### System Info:

- hf_name: eng-roa

- source_languages: eng

- target_languages: roa

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/eng-roa/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['en', 'it', 'ca', 'rm', 'es', 'ro', 'gl', 'co', 'wa', 'pt', 'oc', 'an', 'id', 'fr', 'ht', 'roa']

- src_constituents: {'eng'}

- tgt_constituents: {'ita', 'cat', 'roh', 'spa', 'pap', 'lmo', 'mwl', 'lij', 'lad_Latn', 'ext', 'ron', 'ast', 'glg', 'pms', 'zsm_Latn', 'gcf_Latn', 'lld_Latn', 'min', 'tmw_Latn', 'cos', 'wln', 'zlm_Latn', 'por', 'egl', 'oci', 'vec', 'arg', 'ind', 'fra', 'hat', 'lad', 'max_Latn', 'frm_Latn', 'scn', 'mfe'}

- src_multilingual: False

- tgt_multilingual: True

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/eng-roa/opus2m-2020-08-01.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/eng-roa/opus2m-2020-08-01.test.txt

- src_alpha3: eng

- tgt_alpha3: roa

- short_pair: en-roa

- chrF2_score: 0.636

- bleu: 42.9

- brevity_penalty: 0.978

- ref_len: 72751.0

- src_name: English

- tgt_name: Romance languages

- train_date: 2020-08-01

- src_alpha2: en

- tgt_alpha2: roa

- prefer_old: False

- long_pair: eng-roa

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 |

Skoltech/russian-sensitive-topics | a5deed3c020f78a0ddb404b86609e2cf5693c3f1 | 2021-05-18T22:41:20.000Z | [

"pytorch",

"tf",

"jax",

"bert",

"text-classification",

"ru",

"arxiv:2103.05345",

"transformers",

"toxic comments classification"

] | text-classification | false | Skoltech | null | Skoltech/russian-sensitive-topics | 1,106 | 3 | transformers | 1,701 | ---

language:

- ru

tags:

- toxic comments classification

licenses:

- cc-by-nc-sa

---

## General concept of the model

This model is trained on the dataset of sensitive topics of the Russian language. The concept of sensitive topics is described [in this article ](https://www.aclweb.org/anthology/2021.bsnlp-1.4/) presented at the workshop for Balto-Slavic NLP at the EACL-2021 conference. Please note that this article describes the first version of the dataset, while the model is trained on the extended version of the dataset open-sourced on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/sensitive_topics/sensitive_topics.csv) or on [kaggle](https://www.kaggle.com/nigula/russian-sensitive-topics). The properties of the dataset is the same as the one described in the article, the only difference is the size.

## Instructions

The model predicts combinations of 18 sensitive topics described in the [article](https://arxiv.org/abs/2103.05345). You can find step-by-step instructions for using the model [here](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/sensitive_topics/Inference.ipynb)

## Metrics

The dataset partially manually labeled samples and partially semi-automatically labeled samples. Learn more in our article. We tested the performance of the classifier only on the part of manually labeled data that is why some topics are not well represented in the test set.

| | precision | recall | f1-score | support |

|-------------------|-----------|--------|----------|---------|

| offline_crime | 0.65 | 0.55 | 0.6 | 132 |

| online_crime | 0.5 | 0.46 | 0.48 | 37 |

| drugs | 0.87 | 0.9 | 0.88 | 87 |

| gambling | 0.5 | 0.67 | 0.57 | 6 |

| pornography | 0.73 | 0.59 | 0.65 | 204 |

| prostitution | 0.75 | 0.69 | 0.72 | 91 |

| slavery | 0.72 | 0.72 | 0.73 | 40 |

| suicide | 0.33 | 0.29 | 0.31 | 7 |

| terrorism | 0.68 | 0.57 | 0.62 | 47 |

| weapons | 0.89 | 0.83 | 0.86 | 138 |

| body_shaming | 0.9 | 0.67 | 0.77 | 109 |

| health_shaming | 0.84 | 0.55 | 0.66 | 108 |

| politics | 0.68 | 0.54 | 0.6 | 241 |

| racism | 0.81 | 0.59 | 0.68 | 204 |

| religion | 0.94 | 0.72 | 0.81 | 102 |

| sexual_minorities | 0.69 | 0.46 | 0.55 | 102 |

| sexism | 0.66 | 0.64 | 0.65 | 132 |

| social_injustice | 0.56 | 0.37 | 0.45 | 181 |

| none | 0.62 | 0.67 | 0.64 | 250 |

| micro avg | 0.72 | 0.61 | 0.66 | 2218 |

| macro avg | 0.7 | 0.6 | 0.64 | 2218 |

| weighted avg | 0.73 | 0.61 | 0.66 | 2218 |

| samples avg | 0.75 | 0.66 | 0.68 | 2218 |

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png

## Citation

If you find this repository helpful, feel free to cite our publication:

```

@inproceedings{babakov-etal-2021-detecting,

title = "Detecting Inappropriate Messages on Sensitive Topics that Could Harm a Company{'}s Reputation",

author = "Babakov, Nikolay and

Logacheva, Varvara and

Kozlova, Olga and

Semenov, Nikita and

Panchenko, Alexander",

booktitle = "Proceedings of the 8th Workshop on Balto-Slavic Natural Language Processing",

month = apr,

year = "2021",

address = "Kiyv, Ukraine",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2021.bsnlp-1.4",

pages = "26--36",

abstract = "Not all topics are equally {``}flammable{''} in terms of toxicity: a calm discussion of turtles or fishing less often fuels inappropriate toxic dialogues than a discussion of politics or sexual minorities. We define a set of sensitive topics that can yield inappropriate and toxic messages and describe the methodology of collecting and labelling a dataset for appropriateness. While toxicity in user-generated data is well-studied, we aim at defining a more fine-grained notion of inappropriateness. The core of inappropriateness is that it can harm the reputation of a speaker. This is different from toxicity in two respects: (i) inappropriateness is topic-related, and (ii) inappropriate message is not toxic but still unacceptable. We collect and release two datasets for Russian: a topic-labelled dataset and an appropriateness-labelled dataset. We also release pre-trained classification models trained on this data.",

}

``` |

fnlp/elasticbert-base | 08b6aa4eb88ef6bb6dd6294edb8b8b11120f5b98 | 2021-10-28T10:54:47.000Z | [

"pytorch",

"elasticbert",

"fill-mask",

"arxiv:2110.07038",

"transformers",

"autotrain_compatible"

] | fill-mask | false | fnlp | null | fnlp/elasticbert-base | 1,104 | 3 | transformers | 1,702 | # ElasticBERT-BASE

## Model description

This is an implementation of the `base` version of ElasticBERT.

[**Towards Efficient NLP: A Standard Evaluation and A Strong Baseline**](https://arxiv.org/pdf/2110.07038.pdf)

Xiangyang Liu, Tianxiang Sun, Junliang He, Lingling Wu, Xinyu Zhang, Hao Jiang, Zhao Cao, Xuanjing Huang, Xipeng Qiu

## Code link

[**fastnlp/elasticbert**](https://github.com/fastnlp/ElasticBERT)

## Usage

```python

>>> from transformers import BertTokenizer as ElasticBertTokenizer

>>> from models.configuration_elasticbert import ElasticBertConfig

>>> from models.modeling_elasticbert import ElasticBertForSequenceClassification

>>> num_output_layers = 1

>>> config = ElasticBertConfig.from_pretrained('fnlp/elasticbert-base', num_output_layers=num_output_layers )

>>> tokenizer = ElasticBertTokenizer.from_pretrained('fnlp/elasticbert-base')

>>> model = ElasticBertForSequenceClassification.from_pretrained('fnlp/elasticbert-base', config=config)

>>> input_ids = tokenizer.encode('The actors are fantastic .', return_tensors='pt')

>>> outputs = model(input_ids)

```

## Citation

```bibtex

@article{liu2021elasticbert,

author = {Xiangyang Liu and

Tianxiang Sun and

Junliang He and

Lingling Wu and

Xinyu Zhang and

Hao Jiang and

Zhao Cao and

Xuanjing Huang and

Xipeng Qiu},

title = {Towards Efficient {NLP:} {A} Standard Evaluation and {A} Strong Baseline},

journal = {CoRR},

volume = {abs/2110.07038},

year = {2021},

url = {https://arxiv.org/abs/2110.07038},

eprinttype = {arXiv},

eprint = {2110.07038},

timestamp = {Fri, 22 Oct 2021 13:33:09 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2110-07038.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` |

seyonec/SMILES_tokenized_PubChem_shard00_50k | cc844b1d17d99e51e36205e812def4f77c8e4ac4 | 2021-05-20T21:10:29.000Z | [

"pytorch",

"jax",

"roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

] | fill-mask | false | seyonec | null | seyonec/SMILES_tokenized_PubChem_shard00_50k | 1,102 | null | transformers | 1,703 | Entry not found |

Yanzhu/bertweetfr-base | 90de75c9b6f530bf1831ba22aee06f04f7c94703 | 2021-06-13T07:20:37.000Z | [

"pytorch",

"camembert",

"fill-mask",

"fr",

"transformers",

"autotrain_compatible"

] | fill-mask | false | Yanzhu | null | Yanzhu/bertweetfr-base | 1,095 | 2 | transformers | 1,704 | ---

language: "fr"

---

Domain-adaptive pretraining of camembert-base using 15 GB of French Tweets |

allenai/ivila-block-layoutlm-finetuned-docbank | 1991156f842c9ae1a3eef19ec365a7af3f1ae064 | 2021-09-27T22:56:28.000Z | [

"pytorch",

"layoutlm",

"token-classification",

"transformers",

"autotrain_compatible"

] | token-classification | false | allenai | null | allenai/ivila-block-layoutlm-finetuned-docbank | 1,093 | null | transformers | 1,705 | Entry not found |

facebook/s2t-medium-librispeech-asr | 782ffebb9f762136f76e4b58afbb30b19a4da5a1 | 2022-02-07T15:04:00.000Z | [

"pytorch",

"tf",

"speech_to_text",

"automatic-speech-recognition",

"en",

"dataset:librispeech_asr",

"arxiv:2010.05171",

"arxiv:1904.08779",

"transformers",

"audio",

"license:mit"

] | automatic-speech-recognition | false | facebook | null | facebook/s2t-medium-librispeech-asr | 1,093 | 3 | transformers | 1,706 | ---

language: en

datasets:

- librispeech_asr

tags:

- audio

- automatic-speech-recognition

pipeline_tag: automatic-speech-recognition

widget:

- example_title: Librispeech sample 1

src: https://cdn-media.huggingface.co/speech_samples/sample1.flac

- example_title: Librispeech sample 2

src: https://cdn-media.huggingface.co/speech_samples/sample2.flac

license: mit

---

# S2T-MEDIUM-LIBRISPEECH-ASR

`s2t-medium-librispeech-asr` is a Speech to Text Transformer (S2T) model trained for automatic speech recognition (ASR).

The S2T model was proposed in [this paper](https://arxiv.org/abs/2010.05171) and released in

[this repository](https://github.com/pytorch/fairseq/tree/master/examples/speech_to_text)

## Model description

S2T is an end-to-end sequence-to-sequence transformer model. It is trained with standard

autoregressive cross-entropy loss and generates the transcripts autoregressively.

## Intended uses & limitations

This model can be used for end-to-end speech recognition (ASR).

See the [model hub](https://huggingface.co/models?filter=speech_to_text) to look for other S2T checkpoints.

### How to use

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

*Note: The `Speech2TextProcessor` object uses [torchaudio](https://github.com/pytorch/audio) to extract the

filter bank features. Make sure to install the `torchaudio` package before running this example.*

You could either install those as extra speech dependancies with

`pip install transformers"[speech, sentencepiece]"` or install the packages seperatly

with `pip install torchaudio sentencepiece`.

```python

import torch

from transformers import Speech2TextProcessor, Speech2TextForConditionalGeneration

from datasets import load_dataset

import soundfile as sf

model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-medium-librispeech-asr")

processor = Speech2Textprocessor.from_pretrained("facebook/s2t-medium-librispeech-asr")

def map_to_array(batch):

speech, _ = sf.read(batch["file"])

batch["speech"] = speech

return batch

ds = load_dataset(

"patrickvonplaten/librispeech_asr_dummy",

"clean",

split="validation"

)

ds = ds.map(map_to_array)

input_features = processor(

ds["speech"][0],

sampling_rate=16_000,

return_tensors="pt"

).input_features # Batch size 1

generated_ids = model.generate(input_ids=input_features)

transcription = processor.batch_decode(generated_ids)

```

#### Evaluation on LibriSpeech Test

The following script shows how to evaluate this model on the [LibriSpeech](https://huggingface.co/datasets/librispeech_asr)

*"clean"* and *"other"* test dataset.

```python

from datasets import load_dataset, load_metric

from transformers import Speech2TextForConditionalGeneration, Speech2TextProcessor

import soundfile as sf

librispeech_eval = load_dataset("librispeech_asr", "clean", split="test") # change to "other" for other test dataset

wer = load_metric("wer")

model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-medium-librispeech-asr").to("cuda")

processor = Speech2TextProcessor.from_pretrained("facebook/s2t-medium-librispeech-asr", do_upper_case=True)

def map_to_array(batch):

speech, _ = sf.read(batch["file"])

batch["speech"] = speech

return batch

librispeech_eval = librispeech_eval.map(map_to_array)

def map_to_pred(batch):

features = processor(batch["speech"], sampling_rate=16000, padding=True, return_tensors="pt")

input_features = features.input_features.to("cuda")

attention_mask = features.attention_mask.to("cuda")

gen_tokens = model.generate(input_ids=input_features, attention_mask=attention_mask)

batch["transcription"] = processor.batch_decode(gen_tokens, skip_special_tokens=True)

return batch

result = librispeech_eval.map(map_to_pred, batched=True, batch_size=8, remove_columns=["speech"])

print("WER:", wer(predictions=result["transcription"], references=result["text"]))

```

*Result (WER)*:

| "clean" | "other" |

|:-------:|:-------:|

| 3.5 | 7.8 |

## Training data

The S2T-MEDIUM-LIBRISPEECH-ASR is trained on [LibriSpeech ASR Corpus](https://www.openslr.org/12), a dataset consisting of

approximately 1000 hours of 16kHz read English speech.

## Training procedure

### Preprocessing

The speech data is pre-processed by extracting Kaldi-compliant 80-channel log mel-filter bank features automatically from

WAV/FLAC audio files via PyKaldi or torchaudio. Further utterance-level CMVN (cepstral mean and variance normalization)

is applied to each example.

The texts are lowercased and tokenized using SentencePiece and a vocabulary size of 10,000.

### Training

The model is trained with standard autoregressive cross-entropy loss and using [SpecAugment](https://arxiv.org/abs/1904.08779).

The encoder receives speech features, and the decoder generates the transcripts autoregressively.

### BibTeX entry and citation info

```bibtex

@inproceedings{wang2020fairseqs2t,

title = {fairseq S2T: Fast Speech-to-Text Modeling with fairseq},

author = {Changhan Wang and Yun Tang and Xutai Ma and Anne Wu and Dmytro Okhonko and Juan Pino},

booktitle = {Proceedings of the 2020 Conference of the Asian Chapter of the Association for Computational Linguistics (AACL): System Demonstrations},

year = {2020},

}

``` |

google/canine-c | 1e8c8b3a4e860cb2a23a14c3fbba61ef3aed51f6 | 2021-08-13T08:24:13.000Z | [

"pytorch",

"canine",

"feature-extraction",

"multilingual",

"dataset:bookcorpus",

"dataset:wikipedia",

"arxiv:2103.06874",

"transformers",

"license:apache-2.0"

] | feature-extraction | false | google | null | google/canine-c | 1,093 | 1 | transformers | 1,707 | ---

language: multilingual

license: apache-2.0

datasets:

- bookcorpus

- wikipedia

---

# CANINE-c (CANINE pre-trained with autoregressive character loss)

Pretrained CANINE model on 104 languages using a masked language modeling (MLM) objective. It was introduced in the paper [CANINE: Pre-training an Efficient Tokenization-Free Encoder for Language Representation](https://arxiv.org/abs/2103.06874) and first released in [this repository](https://github.com/google-research/language/tree/master/language/canine).

What's special about CANINE is that it doesn't require an explicit tokenizer (such as WordPiece or SentencePiece) as other models like BERT and RoBERTa. Instead, it directly operates at a character level: each character is turned into its [Unicode code point](https://en.wikipedia.org/wiki/Code_point#:~:text=For%20Unicode%2C%20the%20particular%20sequence,forming%20a%20self%2Dsynchronizing%20code.).

This means that input processing is trivial and can typically be accomplished as:

```

input_ids = [ord(char) for char in text]

```

The ord() function is part of Python, and turns each character into its Unicode code point.

Disclaimer: The team releasing CANINE did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

CANINE is a transformers model pretrained on a large corpus of multilingual data in a self-supervised fashion, similar to BERT. This means it was pretrained on the raw texts only, with no humans labelling them in any way (which is why it can use lots of publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it was pretrained with two objectives:

* Masked language modeling (MLM): one randomly masks part of the inputs, which the model needs to predict. This model (CANINE-c) is trained with an autoregressive character loss. One masks several character spans within each sequence, which the model then autoregressively predicts.

* Next sentence prediction (NSP): the model concatenates two sentences as inputs during pretraining. Sometimes they correspond to sentences that were next to each other in the original text, sometimes not. The model then has to predict if the two sentences were following each other or not.

This way, the model learns an inner representation of multiple languages that can then be used to extract features useful for downstream tasks: if you have a dataset of labeled sentences for instance, you can train a standard classifier using the features produced by the CANINE model as inputs.

## Intended uses & limitations

You can use the raw model for either masked language modeling or next sentence prediction, but it's mostly intended to be fine-tuned on a downstream task. See the [model hub](https://huggingface.co/models?filter=canine) to look for fine-tuned versions on a task that interests you.

Note that this model is primarily aimed at being fine-tuned on tasks that use the whole sentence (potentially masked) to make decisions, such as sequence classification, token classification or question answering. For tasks such as text generation you should look at models like GPT2.

### How to use

Here is how to use this model:

```python

from transformers import CanineTokenizer, CanineModel

model = CanineModel.from_pretrained('google/canine-c')

tokenizer = CanineTokenizer.from_pretrained('google/canine-c')

inputs = ["Life is like a box of chocolates.", "You never know what you gonna get."]

encoding = tokenizer(inputs, padding="longest", truncation=True, return_tensors="pt")

outputs = model(**encoding) # forward pass

pooled_output = outputs.pooler_output

sequence_output = outputs.last_hidden_state

```

## Training data

The CANINE model was pretrained on on the multilingual Wikipedia data of [mBERT](https://github.com/google-research/bert/blob/master/multilingual.md), which includes 104 languages.

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2103-06874,

author = {Jonathan H. Clark and

Dan Garrette and

Iulia Turc and

John Wieting},

title = {{CANINE:} Pre-training an Efficient Tokenization-Free Encoder for

Language Representation},

journal = {CoRR},

volume = {abs/2103.06874},

year = {2021},

url = {https://arxiv.org/abs/2103.06874},

archivePrefix = {arXiv},

eprint = {2103.06874},

timestamp = {Tue, 16 Mar 2021 11:26:59 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-2103-06874.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` |

google/rembert | 65da5133da36e29dfca67d4f0dd9f7f9db21b563 | 2022-05-27T15:05:23.000Z | [

"pytorch",

"tf",

"rembert",

"multilingual",

"af",

"am",

"ar",

"az",

"be",

"bg",

"bn",

"bs",

"ca",

"ceb",

"co",

"cs",

"cy",

"da",

"de",

"el",

"en",

"eo",

"es",

"et",

"eu",

"fa",

"fi",

"fil",

"fr",

"fy",

"ga",

"gd",

"gl",

"gu",

"ha",

"haw",

"hi",

"hmn",

"hr",

"ht",

"hu",

"hy",

"id",

"ig",

"is",

"it",

"iw",

"ja",

"jv",

"ka",

"kk",

"km",

"kn",

"ko",

"ku",

"ky",

"la",

"lb",

"lo",

"lt",

"lv",

"mg",

"mi",

"mk",

"ml",

"mn",

"mr",

"ms",

"mt",

"my",

"ne",

"nl",

"no",

"ny",

"pa",

"pl",

"ps",

"pt",

"ro",

"ru",

"sd",

"si",

"sk",

"sl",

"sm",

"sn",

"so",

"sq",

"sr",

"st",

"su",

"sv",

"sw",

"ta",

"te",

"tg",

"th",

"tr",

"uk",

"ur",

"uz",

"vi",

"xh",

"yi",

"yo",

"zh",

"zu",

"dataset:wikipedia",

"arxiv:2010.12821",

"transformers",

"license:apache-2.0"

] | null | false | google | null | google/rembert | 1,093 | 6 | transformers | 1,708 | ---

language:

- multilingual

- af

- am

- ar

- az

- be

- bg

- bn

- bs

- ca

- ceb

- co

- cs

- cy

- da

- de

- el

- en

- eo

- es

- et

- eu

- fa

- fi

- fil

- fr

- fy

- ga

- gd

- gl

- gu

- ha

- haw

- hi

- hmn

- hr

- ht

- hu

- hy

- id

- ig

- is

- it

- iw

- ja

- jv

- ka

- kk

- km

- kn

- ko

- ku

- ky

- la

- lb

- lo

- lt

- lv

- mg

- mi

- mk

- ml

- mn

- mr

- ms

- mt

- my

- ne

- nl

- no

- ny

- pa

- pl

- ps

- pt

- ro

- ru

- sd

- si

- sk

- sl

- sm

- sn

- so

- sq

- sr

- st

- su

- sv

- sw

- ta

- te

- tg

- th

- tr

- uk

- ur

- uz

- vi

- xh

- yi

- yo

- zh

- zu

license: apache-2.0

datasets:

- wikipedia

---

# RemBERT (for classification)

Pretrained RemBERT model on 110 languages using a masked language modeling (MLM) objective. It was introduced in the paper [Rethinking embedding coupling in pre-trained language models](https://arxiv.org/abs/2010.12821). A direct export of the model checkpoint was first made available in [this repository](https://github.com/google-research/google-research/tree/master/rembert). This version of the checkpoint is lightweight since it is meant to be finetuned for classification and excludes the output embedding weights.

## Model description

RemBERT's main difference with mBERT is that the input and output embeddings are not tied. Instead, RemBERT uses small input embeddings and larger output embeddings. This makes the model more efficient since the output embeddings are discarded during fine-tuning. It is also more accurate, especially when reinvesting the input embeddings' parameters into the core model, as is done on RemBERT.

## Intended uses & limitations

You should fine-tune this model for your downstream task. It is meant to be a general-purpose model, similar to mBERT. In our [paper](https://arxiv.org/abs/2010.12821), we have successfully applied this model to tasks such as classification, question answering, NER, POS-tagging. For tasks such as text generation you should look at models like GPT2.

## Training data

The RemBERT model was pretrained on multilingual Wikipedia data over 110 languages. The full language list is on [this repository](https://github.com/google-research/google-research/tree/master/rembert)

### BibTeX entry and citation info

```bibtex

@inproceedings{DBLP:conf/iclr/ChungFTJR21,

author = {Hyung Won Chung and

Thibault F{\'{e}}vry and

Henry Tsai and

Melvin Johnson and

Sebastian Ruder},

title = {Rethinking Embedding Coupling in Pre-trained Language Models},

booktitle = {9th International Conference on Learning Representations, {ICLR} 2021,

Virtual Event, Austria, May 3-7, 2021},

publisher = {OpenReview.net},

year = {2021},

url = {https://openreview.net/forum?id=xpFFI\_NtgpW},

timestamp = {Wed, 23 Jun 2021 17:36:39 +0200},

biburl = {https://dblp.org/rec/conf/iclr/ChungFTJR21.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` |

malper/unikud | 73fdb7a158634826a73b66846d1b743fd700e990 | 2022-04-25T02:11:25.000Z | [

"pytorch",

"canine",

"he",

"transformers"

] | null | false | malper | null | malper/unikud | 1,093 | null | transformers | 1,709 | ---

language:

- he

---

Please see [this model's DagsHub repository](https://dagshub.com/morrisalp/unikud) for information on usage. |

TypicaAI/magbert-ner | 069ef5c4d8e7334fb89c2e54fe8f58d55b099ee7 | 2020-12-11T21:30:45.000Z | [

"pytorch",

"camembert",

"token-classification",

"fr",

"transformers",

"autotrain_compatible"

] | token-classification | false | TypicaAI | null | TypicaAI/magbert-ner | 1,091 | null | transformers | 1,710 | ---

language: fr

widget:

- text: "Je m'appelle Hicham et je vis a Fès"

---

# MagBERT-NER: a state-of-the-art NER model for Moroccan French language (Maghreb)

## Introduction

[MagBERT-NER] is a state-of-the-art NER model for Moroccan French language (Maghreb). The MagBERT-NER model was fine-tuned for NER Task based the language model for French Camembert (based on the RoBERTa architecture).

For further information or requests, please visite our website at [typica.ai Website](https://typica.ai/) or send us an email at [email protected]

## How to use MagBERT-NER with HuggingFace

##### Load MagBERT-NER and its sub-word tokenizer :

```python

from transformers import AutoTokenizer, AutoModelForTokenClassification

tokenizer = AutoTokenizer.from_pretrained("TypicaAI/magbert-ner")

model = AutoModelForTokenClassification.from_pretrained("TypicaAI/magbert-ner")

##### Process text sample (from wikipedia about the current Prime Minister of Morocco) Using NER pipeline

from transformers import pipeline

nlp = pipeline('ner', model=model, tokenizer=tokenizer, grouped_entities=True)

nlp("Saad Dine El Otmani, né le 16 janvier 1956 à Inezgane, est un homme d'État marocain, chef du gouvernement du Maroc depuis le 5 avril 2017")

#[{'entity_group': 'I-PERSON',

# 'score': 0.8941445276141167,

# 'word': 'Saad Dine El Otmani'},

# {'entity_group': 'B-DATE',

# 'score': 0.5967703461647034,

# 'word': '16 janvier 1956'},

# {'entity_group': 'B-GPE', 'score': 0.7160899192094803, 'word': 'Inezgane'},

# {'entity_group': 'B-NORP', 'score': 0.7971733212471008, 'word': 'marocain'},

# {'entity_group': 'B-GPE', 'score': 0.8921478390693665, 'word': 'Maroc'},

# {'entity_group': 'B-DATE',

# 'score': 0.5760444005330404,

# 'word': '5 avril 2017'}]

```

## Authors

MagBert-NER Model was trained by Hicham Assoudi, Ph.D.

For any questions, comments you can contact me at [email protected]

## Citation

If you use our work, please cite:

Hicham Assoudi, Ph.D., MagBERT-NER: a state-of-the-art NER model for Moroccan French language (Maghreb), (2020)

|

anonymous-german-nlp/german-gpt2 | 2c3dbb0a9dc4fd368fdb256d5093cd37c13d4936 | 2021-05-21T13:20:42.000Z | [

"pytorch",

"tf",

"jax",

"gpt2",

"text-generation",

"de",

"transformers",

"license:mit"

] | text-generation | false | anonymous-german-nlp | null | anonymous-german-nlp/german-gpt2 | 1,091 | null | transformers | 1,711 | ---

language: de

widget:

- text: "Heute ist sehr schönes Wetter in"

license: mit

---

# German GPT-2 model

**Note**: This model was de-anonymized and now lives at:

https://huggingface.co/dbmdz/german-gpt2

Please use the new model name instead! |

HooshvareLab/bert-base-parsbert-ner-uncased | 3d87e20bbca18f8d8d9d545cacd198aee69371fd | 2021-05-18T20:43:54.000Z | [

"pytorch",

"tf",

"jax",

"bert",

"token-classification",

"fa",

"arxiv:2005.12515",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | false | HooshvareLab | null | HooshvareLab/bert-base-parsbert-ner-uncased | 1,090 | null | transformers | 1,712 | ---

language: fa

license: apache-2.0

---

## ParsBERT: Transformer-based Model for Persian Language Understanding

ParsBERT is a monolingual language model based on Google’s BERT architecture with the same configurations as BERT-Base.

Paper presenting ParsBERT: [arXiv:2005.12515](https://arxiv.org/abs/2005.12515)

All the models (downstream tasks) are uncased and trained with whole word masking. (coming soon stay tuned)

## Persian NER [ARMAN, PEYMA, ARMAN+PEYMA]

This task aims to extract named entities in the text, such as names and label with appropriate `NER` classes such as locations, organizations, etc. The datasets used for this task contain sentences that are marked with `IOB` format. In this format, tokens that are not part of an entity are tagged as `”O”` the `”B”`tag corresponds to the first word of an object, and the `”I”` tag corresponds to the rest of the terms of the same entity. Both `”B”` and `”I”` tags are followed by a hyphen (or underscore), followed by the entity category. Therefore, the NER task is a multi-class token classification problem that labels the tokens upon being fed a raw text. There are two primary datasets used in Persian NER, `ARMAN`, and `PEYMA`. In ParsBERT, we prepared ner for both datasets as well as a combination of both datasets.

### PEYMA

PEYMA dataset includes 7,145 sentences with a total of 302,530 tokens from which 41,148 tokens are tagged with seven different classes.

1. Organization

2. Money

3. Location

4. Date

5. Time

6. Person

7. Percent

| Label | # |

|:------------:|:-----:|

| Organization | 16964 |

| Money | 2037 |

| Location | 8782 |

| Date | 4259 |

| Time | 732 |

| Person | 7675 |

| Percent | 699 |

**Download**

You can download the dataset from [here](http://nsurl.org/tasks/task-7-named-entity-recognition-ner-for-farsi/)

---

### ARMAN

ARMAN dataset holds 7,682 sentences with 250,015 sentences tagged over six different classes.

1. Organization

2. Location

3. Facility

4. Event

5. Product

6. Person

| Label | # |

|:------------:|:-----:|

| Organization | 30108 |

| Location | 12924 |

| Facility | 4458 |

| Event | 7557 |

| Product | 4389 |

| Person | 15645 |

**Download**

You can download the dataset from [here](https://github.com/HaniehP/PersianNER)

## Results

The following table summarizes the F1 score obtained by ParsBERT as compared to other models and architectures.

| Dataset | ParsBERT | MorphoBERT | Beheshti-NER | LSTM-CRF | Rule-Based CRF | BiLSTM-CRF |

|:---------------:|:--------:|:----------:|:--------------:|:----------:|:----------------:|:------------:|

| ARMAN + PEYMA | 95.13* | - | - | - | - | - |

| PEYMA | 98.79* | - | 90.59 | - | 84.00 | - |

| ARMAN | 93.10* | 89.9 | 84.03 | 86.55 | - | 77.45 |

## How to use :hugs:

| Notebook | Description | |

|:----------|:-------------|------:|

| [How to use Pipelines](https://github.com/hooshvare/parsbert-ner/blob/master/persian-ner-pipeline.ipynb) | Simple and efficient way to use State-of-the-Art models on downstream tasks through transformers | [](https://colab.research.google.com/github/hooshvare/parsbert-ner/blob/master/persian-ner-pipeline.ipynb) |

## Cite

Please cite the following paper in your publication if you are using [ParsBERT](https://arxiv.org/abs/2005.12515) in your research:

```markdown

@article{ParsBERT,

title={ParsBERT: Transformer-based Model for Persian Language Understanding},

author={Mehrdad Farahani, Mohammad Gharachorloo, Marzieh Farahani, Mohammad Manthouri},

journal={ArXiv},

year={2020},

volume={abs/2005.12515}

}

```

## Acknowledgments

We hereby, express our gratitude to the [Tensorflow Research Cloud (TFRC) program](https://tensorflow.org/tfrc) for providing us with the necessary computation resources. We also thank [Hooshvare](https://hooshvare.com) Research Group for facilitating dataset gathering and scraping online text resources.

## Contributors

- Mehrdad Farahani: [Linkedin](https://www.linkedin.com/in/m3hrdadfi/), [Twitter](https://twitter.com/m3hrdadfi), [Github](https://github.com/m3hrdadfi)

- Mohammad Gharachorloo: [Linkedin](https://www.linkedin.com/in/mohammad-gharachorloo/), [Twitter](https://twitter.com/MGharachorloo), [Github](https://github.com/baarsaam)

- Marzieh Farahani: [Linkedin](https://www.linkedin.com/in/marziehphi/), [Twitter](https://twitter.com/marziehphi), [Github](https://github.com/marziehphi)

- Mohammad Manthouri: [Linkedin](https://www.linkedin.com/in/mohammad-manthouri-aka-mansouri-07030766/), [Twitter](https://twitter.com/mmanthouri), [Github](https://github.com/mmanthouri)

- Hooshvare Team: [Official Website](https://hooshvare.com/), [Linkedin](https://www.linkedin.com/company/hooshvare), [Twitter](https://twitter.com/hooshvare), [Github](https://github.com/hooshvare), [Instagram](https://www.instagram.com/hooshvare/)

+ And a special thanks to Sara Tabrizi for her fantastic poster design. Follow her on: [Linkedin](https://www.linkedin.com/in/sara-tabrizi-64548b79/), [Behance](https://www.behance.net/saratabrizi), [Instagram](https://www.instagram.com/sara_b_tabrizi/)

## Releases

### Release v0.1 (May 29, 2019)

This is the first version of our ParsBERT NER!

|

raynardj/ner-disease-ncbi-bionlp-bc5cdr-pubmed | 60897ba4bdcfb7f6cf88d18f75bbd0f9399f5908 | 2021-11-05T07:33:08.000Z | [

"pytorch",

"roberta",

"token-classification",

"en",

"dataset:ncbi-disease",

"dataset:bc5cdr",

"transformers",

"ner",

"ncbi",

"disease",

"pubmed",

"bioinfomatics",

"license:apache-2.0",

"autotrain_compatible"

] | token-classification | false | raynardj | null | raynardj/ner-disease-ncbi-bionlp-bc5cdr-pubmed | 1,090 | 4 | transformers | 1,713 | ---

language:

- en

tags:

- ner

- ncbi

- disease

- pubmed

- bioinfomatics

license: apache-2.0

datasets:

- ncbi-disease

- bc5cdr

widget:

- text: "Hepatocyte nuclear factor 4 alpha (HNF4α) is regulated by different promoters to generate two isoforms, one of which functions as a tumor suppressor. Here, the authors reveal that induction of the alternative isoform in hepatocellular carcinoma inhibits the circadian clock by repressing BMAL1, and the reintroduction of BMAL1 prevents HCC tumor growth."

---

# NER to find Gene & Gene products

> The model was trained on ncbi-disease, BC5CDR dataset, pretrained on this [pubmed-pretrained roberta model](/raynardj/roberta-pubmed)

All the labels, the possible token classes.

```json

{"label2id": {

"O": 0,

"Disease":1,

}

}

```

Notice, we removed the 'B-','I-' etc from data label.🗡

## This is the template we suggest for using the model

```python

from transformers import pipeline

PRETRAINED = "raynardj/ner-disease-ncbi-bionlp-bc5cdr-pubmed"

ner = pipeline(task="ner",model=PRETRAINED, tokenizer=PRETRAINED)

ner("Your text", aggregation_strategy="first")

```

And here is to make your output more consecutive ⭐️

```python

import pandas as pd

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained(PRETRAINED)

def clean_output(outputs):

results = []

current = []

last_idx = 0

# make to sub group by position

for output in outputs:

if output["index"]-1==last_idx:

current.append(output)

else:

results.append(current)

current = [output, ]

last_idx = output["index"]

if len(current)>0:

results.append(current)

# from tokens to string

strings = []

for c in results:

tokens = []

starts = []

ends = []

for o in c:

tokens.append(o['word'])

starts.append(o['start'])

ends.append(o['end'])

new_str = tokenizer.convert_tokens_to_string(tokens)

if new_str!='':

strings.append(dict(

word=new_str,

start = min(starts),

end = max(ends),

entity = c[0]['entity']

))

return strings

def entity_table(pipeline, **pipeline_kw):

if "aggregation_strategy" not in pipeline_kw:

pipeline_kw["aggregation_strategy"] = "first"

def create_table(text):

return pd.DataFrame(

clean_output(

pipeline(text, **pipeline_kw)

)

)

return create_table

# will return a dataframe

entity_table(ner)(YOUR_VERY_CONTENTFUL_TEXT)

```

> check our NER model on

* [gene and gene products](/raynardj/ner-gene-dna-rna-jnlpba-pubmed)

* [chemical substance](/raynardj/ner-chemical-bionlp-bc5cdr-pubmed).

* [disease](/raynardj/ner-disease-ncbi-bionlp-bc5cdr-pubmed) |

dbmdz/convbert-base-turkish-mc4-uncased | 5d8c2e7856ba8f71c627eb8b00df6edd306b328a | 2021-09-23T10:41:21.000Z | [

"pytorch",

"tf",

"convbert",

"fill-mask",

"tr",

"dataset:allenai/c4",

"transformers",

"license:mit",

"autotrain_compatible"

] | fill-mask | false | dbmdz | null | dbmdz/convbert-base-turkish-mc4-uncased | 1,088 | null | transformers | 1,714 | ---

language: tr

license: mit

datasets:

- allenai/c4

---

# 🇹🇷 Turkish ConvBERT model

<p align="center">

<img alt="Logo provided by Merve Noyan" title="Awesome logo from Merve Noyan" src="https://raw.githubusercontent.com/stefan-it/turkish-bert/master/merve_logo.png">

</p>

[](https://zenodo.org/badge/latestdoi/237817454)

We present community-driven BERT, DistilBERT, ELECTRA and ConvBERT models for Turkish 🎉

Some datasets used for pretraining and evaluation are contributed from the

awesome Turkish NLP community, as well as the decision for the BERT model name: BERTurk.

Logo is provided by [Merve Noyan](https://twitter.com/mervenoyann).

# Stats

We've trained an (uncased) ConvBERT model on the recently released Turkish part of the

[multiligual C4 (mC4) corpus](https://github.com/allenai/allennlp/discussions/5265) from the AI2 team.

After filtering documents with a broken encoding, the training corpus has a size of 242GB resulting

in 31,240,963,926 tokens.

We used the original 32k vocab (instead of creating a new one).

# mC4 ConvBERT

In addition to the ELEC**TR**A base model, we also trained an ConvBERT model on the Turkish part of the mC4 corpus. We use a

sequence length of 512 over the full training time and train the model for 1M steps on a v3-32 TPU.

# Model usage

All trained models can be used from the [DBMDZ](https://github.com/dbmdz) Hugging Face [model hub page](https://huggingface.co/dbmdz)

using their model name.

Example usage with 🤗/Transformers:

```python

tokenizer = AutoTokenizer.from_pretrained("dbmdz/convbert-base-turkish-mc4-uncased")

model = AutoModel.from_pretrained("dbmdz/convbert-base-turkish-mc4-uncased")

```

# Citation

You can use the following BibTeX entry for citation:

```bibtex

@software{stefan_schweter_2020_3770924,

author = {Stefan Schweter},

title = {BERTurk - BERT models for Turkish},

month = apr,

year = 2020,

publisher = {Zenodo},

version = {1.0.0},

doi = {10.5281/zenodo.3770924},

url = {https://doi.org/10.5281/zenodo.3770924}

}

```

# Acknowledgments

Thanks to [Kemal Oflazer](http://www.andrew.cmu.edu/user/ko/) for providing us

additional large corpora for Turkish. Many thanks to Reyyan Yeniterzi for providing

us the Turkish NER dataset for evaluation.

We would like to thank [Merve Noyan](https://twitter.com/mervenoyann) for the

awesome logo!

Research supported with Cloud TPUs from Google's TensorFlow Research Cloud (TFRC).

Thanks for providing access to the TFRC ❤️ |

german-nlp-group/electra-base-german-uncased | 5a79890051f8df23591f06710012d399b7e17d9b | 2021-05-24T13:26:08.000Z | [

"pytorch",

"electra",

"pretraining",

"de",

"transformers",

"commoncrawl",

"uncased",

"umlaute",

"umlauts",

"german",

"deutsch",

"license:mit"

] | null | false | german-nlp-group | null | german-nlp-group/electra-base-german-uncased | 1,087 | 2 | transformers | 1,715 | ---

language: de

license: mit

thumbnail: "https://raw.githubusercontent.com/German-NLP-Group/german-transformer-training/master/model_cards/german-electra-logo.png"

tags:

- electra

- commoncrawl

- uncased

- umlaute

- umlauts

- german

- deutsch

---

# German Electra Uncased

<img width="300px" src="https://raw.githubusercontent.com/German-NLP-Group/german-transformer-training/master/model_cards/german-electra-logo.png">

[¹]

## Version 2 Release

We released an improved version of this model. Version 1 was trained for 766,000 steps. For this new version we continued the training for an additional 734,000 steps. It therefore follows that version 2 was trained on a total of 1,500,000 steps. See "Evaluation of Version 2: GermEval18 Coarse" below for details.

## Model Info

This Model is suitable for training on many downstream tasks in German (Q&A, Sentiment Analysis, etc.).

It can be used as a drop-in replacement for **BERT** in most down-stream tasks (**ELECTRA** is even implemented as an extended **BERT** Class).

At the time of release (August 2020) this model is the best performing publicly available German NLP model on various German evaluation metrics (CONLL03-DE, GermEval18 Coarse, GermEval18 Fine). For GermEval18 Coarse results see below. More will be published soon.

## Installation

This model has the special feature that it is **uncased** but does **not strip accents**.

This possibility was added by us with [PR #6280](https://github.com/huggingface/transformers/pull/6280).

To use it you have to use Transformers version 3.1.0 or newer.

```bash

pip install transformers -U

```

## Uncase and Umlauts ('Ö', 'Ä', 'Ü')

This model is uncased. This helps especially for domains where colloquial terms with uncorrect capitalization is often used.

The special characters 'ö', 'ü', 'ä' are included through the `strip_accent=False` option, as this leads to an improved precision.

## Creators

This model was trained and open sourced in conjunction with the [**German NLP Group**](https://github.com/German-NLP-Group) in equal parts by:

- [**Philip May**](https://May.la) - [T-Systems on site services GmbH](https://www.t-systems-onsite.de/)

- [**Philipp Reißel**](https://www.reissel.eu) - [ambeRoad](https://amberoad.de/)

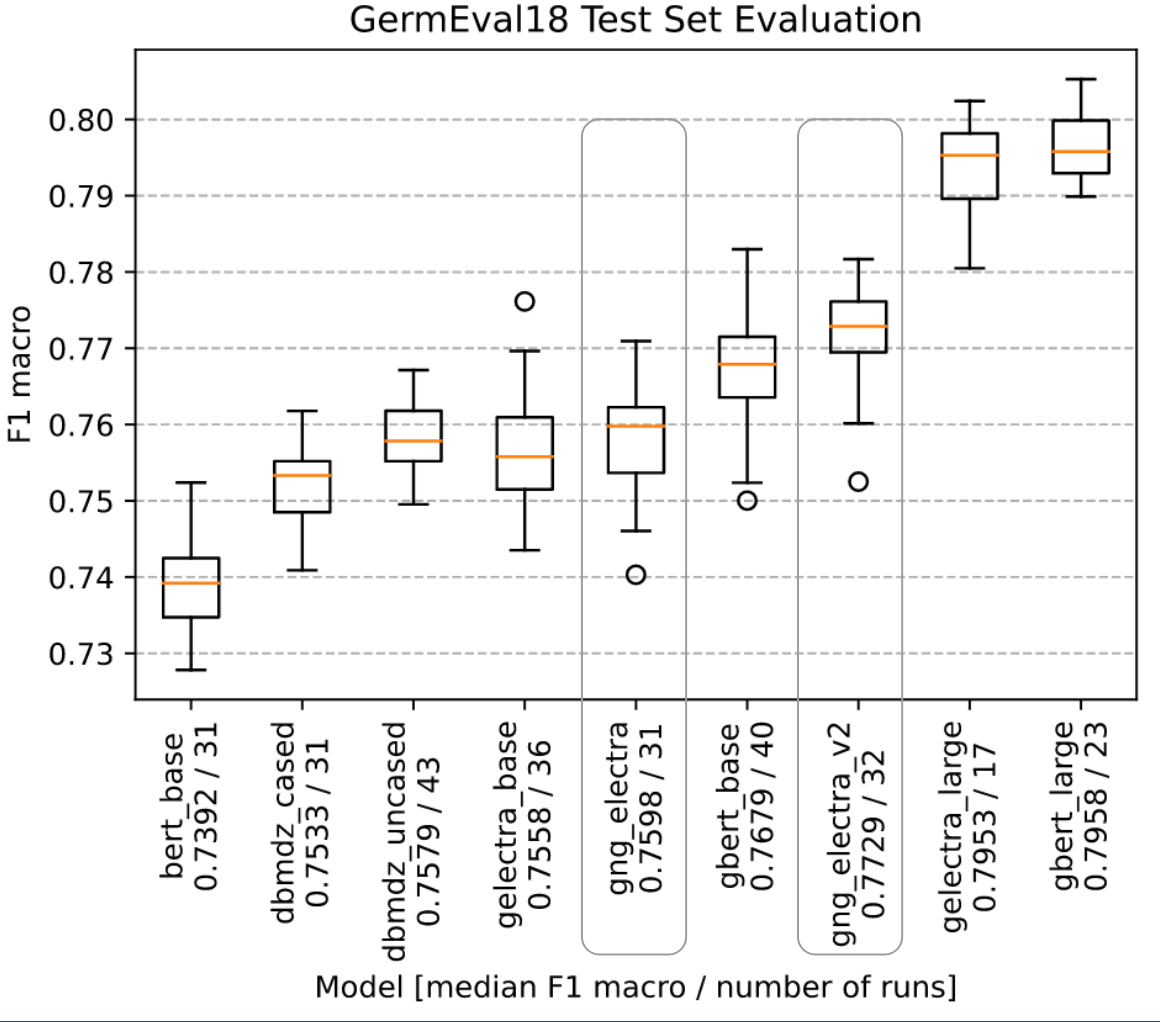

## Evaluation of Version 2: GermEval18 Coarse

We evaluated all language models on GermEval18 with the F1 macro score. For each model we did an extensive automated hyperparameter search. With the best hyperparmeters we did fit the moodel multiple times on GermEval18. This is done to cancel random effects and get results of statistical relevance.

## Checkpoint evaluation

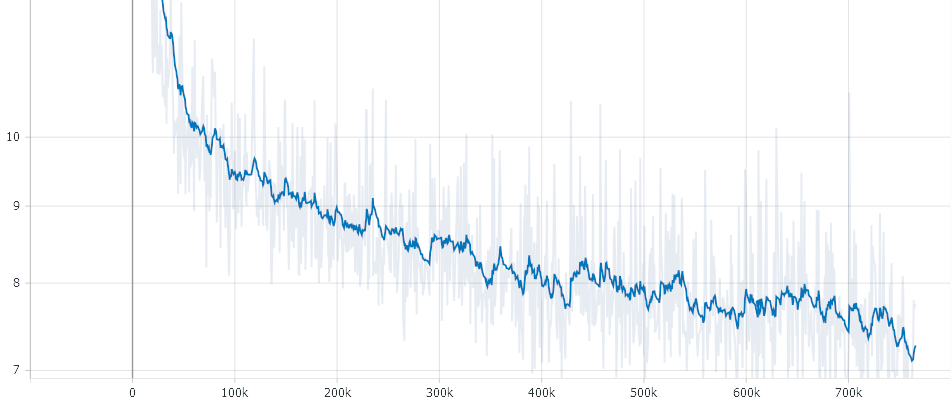

Since it it not guaranteed that the last checkpoint is the best, we evaluated the checkpoints on GermEval18. We found that the last checkpoint is indeed the best. The training was stable and did not overfit the text corpus.

## Pre-training details

### Data

- Cleaned Common Crawl Corpus 2019-09 German: [CC_net](https://github.com/facebookresearch/cc_net) (Only head coprus and filtered for language_score > 0.98) - 62 GB

- German Wikipedia Article Pages Dump (20200701) - 5.5 GB

- German Wikipedia Talk Pages Dump (20200620) - 1.1 GB

- Subtitles - 823 MB

- News 2018 - 4.1 GB

The sentences were split with [SojaMo](https://github.com/tsproisl/SoMaJo). We took the German Wikipedia Article Pages Dump 3x to oversample. This approach was also used in a similar way in GPT-3 (Table 2.2).

More Details can be found here [Preperaing Datasets for German Electra Github](https://github.com/German-NLP-Group/german-transformer-training)

### Electra Branch no_strip_accents

Because we do not want to stip accents in our training data we made a change to Electra and used this repo [Electra no_strip_accents](https://github.com/PhilipMay/electra/tree/no_strip_accents) (branch `no_strip_accents`). Then created the tf dataset with:

```bash

python build_pretraining_dataset.py --corpus-dir <corpus_dir> --vocab-file <dir>/vocab.txt --output-dir ./tf_data --max-seq-length 512 --num-processes 8 --do-lower-case --no-strip-accents

```

### The training

The training itself can be performed with the Original Electra Repo (No special case for this needed).

We run it with the following Config:

<details>

<summary>The exact Training Config</summary>

<br/>debug False

<br/>disallow_correct False

<br/>disc_weight 50.0

<br/>do_eval False

<br/>do_lower_case True

<br/>do_train True

<br/>electra_objective True

<br/>embedding_size 768

<br/>eval_batch_size 128

<br/>gcp_project None

<br/>gen_weight 1.0

<br/>generator_hidden_size 0.33333

<br/>generator_layers 1.0

<br/>iterations_per_loop 200

<br/>keep_checkpoint_max 0

<br/>learning_rate 0.0002

<br/>lr_decay_power 1.0

<br/>mask_prob 0.15

<br/>max_predictions_per_seq 79

<br/>max_seq_length 512

<br/>model_dir gs://XXX

<br/>model_hparam_overrides {}

<br/>model_name 02_Electra_Checkpoints_32k_766k_Combined

<br/>model_size base

<br/>num_eval_steps 100

<br/>num_tpu_cores 8

<br/>num_train_steps 766000

<br/>num_warmup_steps 10000

<br/>pretrain_tfrecords gs://XXX

<br/>results_pkl gs://XXX

<br/>results_txt gs://XXX

<br/>save_checkpoints_steps 5000

<br/>temperature 1.0

<br/>tpu_job_name None

<br/>tpu_name electrav5

<br/>tpu_zone None

<br/>train_batch_size 256

<br/>uniform_generator False

<br/>untied_generator True

<br/>untied_generator_embeddings False

<br/>use_tpu True

<br/>vocab_file gs://XXX

<br/>vocab_size 32767

<br/>weight_decay_rate 0.01

</details>

Please Note: *Due to the GAN like strucutre of Electra the loss is not that meaningful*

It took about 7 Days on a preemtible TPU V3-8. In total, the Model went through approximately 10 Epochs. For an automatically recreation of a cancelled TPUs we used [tpunicorn](https://github.com/shawwn/tpunicorn). The total cost of training summed up to about 450 $ for one run. The Data-pre processing and Vocab Creation needed approximately 500-1000 CPU hours. Servers were fully provided by [T-Systems on site services GmbH](https://www.t-systems-onsite.de/), [ambeRoad](https://amberoad.de/).

Special thanks to [Stefan Schweter](https://github.com/stefan-it) for your feedback and providing parts of the text corpus.

[¹]: Source for the picture [Pinterest](https://www.pinterest.cl/pin/371828512984142193/)

### Negative Results

We tried the following approaches which we found had no positive influence:

- **Increased Vocab Size**: Leads to more parameters and thus reduced examples/sec while no visible Performance gains were measured

- **Decreased Batch-Size**: The original Electra was trained with a Batch Size per TPU Core of 16 whereas this Model was trained with 32 BS / TPU Core. We found out that 32 BS leads to better results when you compare metrics over computation time

## License - The MIT License

Copyright 2020-2021 Philip May<br>

Copyright 2020-2021 Philipp Reissel

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

Tidum/DialoGPT-large-Michael | 15bcc27d9effca5ea4e67b33ebd387e5bd860718 | 2022-02-06T19:59:38.000Z | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] | conversational | false | Tidum | null | Tidum/DialoGPT-large-Michael | 1,086 | null | transformers | 1,716 | ---

tags:

- conversational

---

#Michael DialoGPT Model |

textattack/albert-base-v2-imdb | e377b81678ba240cd835375c5853bb590e10e75a | 2020-07-06T16:34:24.000Z | [

"pytorch",

"albert",

"text-classification",

"transformers"

] | text-classification | false | textattack | null | textattack/albert-base-v2-imdb | 1,086 | null | transformers | 1,717 | ## TextAttack Model Card

This `albert-base-v2` model was fine-tuned for sequence classification using TextAttack

and the imdb dataset loaded using the `nlp` library. The model was fine-tuned

for 5 epochs with a batch size of 32, a learning

rate of 2e-05, and a maximum sequence length of 128.

Since this was a classification task, the model was trained with a cross-entropy loss function.

The best score the model achieved on this task was 0.89236, as measured by the

eval set accuracy, found after 3 epochs.

For more information, check out [TextAttack on Github](https://github.com/QData/TextAttack).

|

zhayunduo/roberta-base-stocktwits-finetuned | e4fd3e0fcc2af47df76ddc74d90840fe5a7ec299 | 2022-04-18T07:40:25.000Z | [

"pytorch",

"roberta",

"text-classification",

"transformers",

"license:apache-2.0"

] | text-classification | false | zhayunduo | null | zhayunduo/roberta-base-stocktwits-finetuned | 1,085 | 0 | transformers | 1,718 | ---

license: apache-2.0

---

## **Sentiment Inferencing model for stock related commments**

#### *A project by NUS ISS students Frank Cao, Gerong Zhang, Jiaqi Yao, Sikai Ni, Yunduo Zhang*

<br />

### Description

This model is fine tuned with roberta-base model on 3200000 comments from stocktwits, with the user labeled tags 'Bullish' or 'Bearish'

try something that the individual investors may say on the investment forum on the inference API, for example, try 'red' and 'green'.

[code on github](https://github.com/Gitrexx/PLPPM_Sentiment_Analysis_via_Stocktwits/tree/main/SentimentEngine)

<br />

### Training information

- batch size 32

- learning rate 2e-5

| | Train loss | Validation loss | Validation accuracy |

| ----------- | ----------- | ---------------- | ------------------- |

| epoch1 | 0.3495 | 0.2956 | 0.8679 |

| epoch2 | 0.2717 | 0.2235 | 0.9021 |

| epoch3 | 0.2360 | 0.1875 | 0.9210 |

| epoch4 | 0.2106 | 0.1603 | 0.9343 |

<br />

# How to use

```python

from transformers import RobertaForSequenceClassification, RobertaTokenizer

from transformers import pipeline

import pandas as pd

import emoji

# the model was trained upon below preprocessing

def process_text(texts):

# remove URLs

texts = re.sub(r'https?://\S+', "", texts)

texts = re.sub(r'www.\S+', "", texts)

# remove '

texts = texts.replace(''', "'")

# remove symbol names

texts = re.sub(r'(\#)(\S+)', r'hashtag_\2', texts)

texts = re.sub(r'(\$)([A-Za-z]+)', r'cashtag_\2', texts)

# remove usernames

texts = re.sub(r'(\@)(\S+)', r'mention_\2', texts)

# demojize

texts = emoji.demojize(texts, delimiters=("", " "))

return texts.strip()

tokenizer_loaded = RobertaTokenizer.from_pretrained('zhayunduo/roberta-base-stocktwits-finetuned')

model_loaded = RobertaForSequenceClassification.from_pretrained('zhayunduo/roberta-base-stocktwits-finetuned')

nlp = pipeline("text-classification", model=model_loaded, tokenizer=tokenizer_loaded)

sentences = pd.Series(['just buy','just sell it',

'entity rocket to the sky!',

'go down','even though it is going up, I still think it will not keep this trend in the near future'])

# sentences = list(sentences.apply(process_text)) # if input text contains https, @ or # or $ symbols, better apply preprocess to get a more accurate result

sentences = list(sentences)

results = nlp(sentences)

print(results) # 2 labels, label 0 is bearish, label 1 is bullish

``` |

microsoft/swin-large-patch4-window12-384-in22k | df0f89cc75d470a35ff4bb5d0e53fbdbbe377bb3 | 2022-05-16T18:40:51.000Z | [

"pytorch",

"tf",

"swin",

"image-classification",

"dataset:imagenet-21k",

"arxiv:2103.14030",

"transformers",

"vision",

"license:apache-2.0"

] | image-classification | false | microsoft | null | microsoft/swin-large-patch4-window12-384-in22k | 1,084 | 1 | transformers | 1,719 | ---

license: apache-2.0

tags:

- vision

- image-classification

datasets:

- imagenet-21k

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

---

# Swin Transformer (large-sized model)

Swin Transformer model pre-trained on ImageNet-21k (14 million images, 21,841 classes) at resolution 384x384. It was introduced in the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030) by Liu et al. and first released in [this repository](https://github.com/microsoft/Swin-Transformer).

Disclaimer: The team releasing Swin Transformer did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

The Swin Transformer is a type of Vision Transformer. It builds hierarchical feature maps by merging image patches (shown in gray) in deeper layers and has linear computation complexity to input image size due to computation of self-attention only within each local window (shown in red). It can thus serve as a general-purpose backbone for both image classification and dense recognition tasks. In contrast, previous vision Transformers produce feature maps of a single low resolution and have quadratic computation complexity to input image size due to computation of self-attention globally.

[Source](https://paperswithcode.com/method/swin-transformer)

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=swin) to look for

fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python

from transformers import AutoFeatureExtractor, SwinForImageClassification

from PIL import Image

import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

feature_extractor = AutoFeatureExtractor.from_pretrained("microsoft/swin-large-patch4-window12-384-in22k")

model = SwinForImageClassification.from_pretrained("microsoft/swin-large-patch4-window12-384-in22k")

inputs = feature_extractor(images=image, return_tensors="pt")

outputs = model(**inputs)

logits = outputs.logits

# model predicts one of the 1000 ImageNet classes

predicted_class_idx = logits.argmax(-1).item()

print("Predicted class:", model.config.id2label[predicted_class_idx])

```

For more code examples, we refer to the [documentation](https://huggingface.co/transformers/model_doc/swin.html#).

### BibTeX entry and citation info

```bibtex

@article{DBLP:journals/corr/abs-2103-14030,

author = {Ze Liu and

Yutong Lin and

Yue Cao and

Han Hu and

Yixuan Wei and

Zheng Zhang and

Stephen Lin and

Baining Guo},

title = {Swin Transformer: Hierarchical Vision Transformer using Shifted Windows},

journal = {CoRR},

volume = {abs/2103.14030},

year = {2021},

url = {https://arxiv.org/abs/2103.14030},

eprinttype = {arXiv},

eprint = {2103.14030},

timestamp = {Thu, 08 Apr 2021 07:53:26 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2103-14030.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

``` |

Luyu/co-condenser-marco-retriever | 2149ab984d2aea9c39cf7e6bbc2041a5302866a2 | 2021-09-02T14:43:18.000Z | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

] | feature-extraction | false | Luyu | null | Luyu/co-condenser-marco-retriever | 1,079 | 2 | transformers | 1,720 | Entry not found |

soheeyang/rdr-ctx_encoder-single-nq-base | 289088ddb79e14a0ca555548b106a5e594bf6ba2 | 2021-04-15T15:58:10.000Z | [

"pytorch",

"tf",

"dpr",

"arxiv:2010.10999",

"arxiv:2004.04906",

"transformers"

] | null | false | soheeyang | null | soheeyang/rdr-ctx_encoder-single-nq-base | 1,076 | null | transformers | 1,721 | # rdr-ctx_encoder-single-nq-base

Reader-Distilled Retriever (`RDR`)

Sohee Yang and Minjoon Seo, [Is Retriever Merely an Approximator of Reader?](https://arxiv.org/abs/2010.10999), arXiv 2020

The paper proposes to distill the reader into the retriever so that the retriever absorbs the strength of the reader while keeping its own benefit. The model is a [DPR](https://arxiv.org/abs/2004.04906) retriever further finetuned using knowledge distillation from the DPR reader. Using this approach, the answer recall rate increases by a large margin, especially at small numbers of top-k.

This model is the context encoder of RDR trained solely on Natural Questions (NQ) (single-nq). This model is trained by the authors and is the official checkpoint of RDR.

## Performance

The following is the answer recall rate measured using PyTorch 1.4.0 and transformers 4.5.0.

The values of DPR on the NQ dev set are taken from Table 1 of the [paper of RDR](https://arxiv.org/abs/2010.10999). The values of DPR on the NQ test set are taken from the [codebase of DPR](https://github.com/facebookresearch/DPR). DPR-adv is the a new DPR model released in March 2021. It is trained on the original DPR NQ train set and its version where hard negatives are mined using DPR index itself using the previous NQ checkpoint. Please refer to the [codebase of DPR](https://github.com/facebookresearch/DPR) for more details about DPR-adv-hn.

| | Top-K Passages | 1 | 5 | 20 | 50 | 100 |

|---------|------------------|-------|-------|-------|-------|-------|

| **NQ Dev** | **DPR** | 44.2 | - | 76.9 | 81.3 | 84.2 |

| | **RDR (This Model)** | **54.43** | **72.17** | **81.33** | **84.8** | **86.61** |

| **NQ Test** | **DPR** | 45.87 | 68.14 | 79.97 | - | 85.87 |

| | **DPR-adv-hn** | 52.47 | **72.24** | 81.33 | - | 87.29 |

| | **RDR (This Model)** | **54.29** | 72.16 | **82.8** | **86.34** | **88.2** |

## How to Use

RDR shares the same architecture with DPR. Therefore, It uses `DPRContextEncoder` as the model class.

Using `AutoModel` does not properly detect whether the checkpoint is for `DPRContextEncoder` or `DPRQuestionEncoder`.

Therefore, please specify the exact class to use the model.

```python

from transformers import DPRContextEncoder, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("soheeyang/rdr-ctx_encoder-single-nq-base")

ctx_encoder = DPRContextEncoder.from_pretrained("soheeyang/rdr-ctx_encoder-single-nq-base")

data = tokenizer("context comes here", return_tensors="pt")

ctx_embedding = ctx_encoder(**data).pooler_output # embedding vector for context

```

|

sentence-transformers/gtr-t5-large | fd31cff184d356b3a9a5794706551fc5306071a2 | 2022-02-09T12:33:08.000Z | [

"pytorch",

"t5",

"en",

"arxiv:2112.07899",

"sentence-transformers",

"feature-extraction",

"sentence-similarity",

"transformers",

"license:apache-2.0"

] | sentence-similarity | false | sentence-transformers | null | sentence-transformers/gtr-t5-large | 1,074 | 1 | sentence-transformers | 1,722 | ---

pipeline_tag: sentence-similarity

language: en

license: apache-2.0

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# sentence-transformers/gtr-t5-large

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space. The model was specifically trained for the task of sematic search.

This model was converted from the Tensorflow model [gtr-large-1](https://tfhub.dev/google/gtr/gtr-large/1) to PyTorch. When using this model, have a look at the publication: [Large Dual Encoders Are Generalizable Retrievers](https://arxiv.org/abs/2112.07899). The tfhub model and this PyTorch model can produce slightly different embeddings, however, when run on the same benchmarks, they produce identical results.

The model uses only the encoder from a T5-large model. The weights are stored in FP16.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/gtr-t5-large')

embeddings = model.encode(sentences)

print(embeddings)

```

The model requires sentence-transformers version 2.2.0 or newer.

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/gtr-t5-large)

## Citing & Authors

If you find this model helpful, please cite the respective publication:

[Large Dual Encoders Are Generalizable Retrievers](https://arxiv.org/abs/2112.07899)

|

SkolkovoInstitute/russian_toxicity_classifier | 2b9a086ec05c2dc202fea11ed15f317b1676b18c | 2021-12-08T15:41:00.000Z | [

"pytorch",

"tf",

"bert",

"text-classification",

"ru",

"transformers",

"toxic comments classification"

] | text-classification | false | SkolkovoInstitute | null | SkolkovoInstitute/russian_toxicity_classifier | 1,070 | 6 | transformers | 1,723 | ---

language:

- ru

tags:

- toxic comments classification

licenses:

- cc-by-nc-sa

---

Bert-based classifier (finetuned from [Conversational Rubert](https://huggingface.co/DeepPavlov/rubert-base-cased-conversational)) trained on merge of Russian Language Toxic Comments [dataset](https://www.kaggle.com/blackmoon/russian-language-toxic-comments/metadata) collected from 2ch.hk and Toxic Russian Comments [dataset](https://www.kaggle.com/alexandersemiletov/toxic-russian-comments) collected from ok.ru.

The datasets were merged, shuffled, and split into train, dev, test splits in 80-10-10 proportion.

The metrics obtained from test dataset is as follows

| | precision | recall | f1-score | support |

|:------------:|:---------:|:------:|:--------:|:-------:|

| 0 | 0.98 | 0.99 | 0.98 | 21384 |

| 1 | 0.94 | 0.92 | 0.93 | 4886 |

| accuracy | | | 0.97 | 26270|

| macro avg | 0.96 | 0.96 | 0.96 | 26270 |

| weighted avg | 0.97 | 0.97 | 0.97 | 26270 |

## How to use

```python

from transformers import BertTokenizer, BertForSequenceClassification

# load tokenizer and model weights

tokenizer = BertTokenizer.from_pretrained('SkolkovoInstitute/russian_toxicity_classifier')

model = BertForSequenceClassification.from_pretrained('SkolkovoInstitute/russian_toxicity_classifier')

# prepare the input

batch = tokenizer.encode('ты супер', return_tensors='pt')

# inference

model(batch)

```

## Licensing Information

[Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License][cc-by-nc-sa].

[![CC BY-NC-SA 4.0][cc-by-nc-sa-image]][cc-by-nc-sa]

[cc-by-nc-sa]: http://creativecommons.org/licenses/by-nc-sa/4.0/

[cc-by-nc-sa-image]: https://i.creativecommons.org/l/by-nc-sa/4.0/88x31.png |

m3hrdadfi/distilbert-zwnj-wnli-mean-tokens | 6d0d94f899be52bc72f68f3f3b5800650cb0395b | 2021-06-28T18:05:51.000Z | [

"pytorch",

"distilbert",

"feature-extraction",

"sentence-transformers",

"sentence-similarity",

"transformers"

] | sentence-similarity | false | m3hrdadfi | null | m3hrdadfi/distilbert-zwnj-wnli-mean-tokens | 1,069 | null | sentence-transformers | 1,724 | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

widget:

source_sentence: "مردی در حال خوردن پاستا است."

sentences:

- 'مردی در حال خوردن خوراک است.'

- 'مردی در حال خوردن یک تکه نان است.'

- 'دختری بچه ای را حمل می کند.'

- 'یک مرد سوار بر اسب است.'

- 'زنی در حال نواختن پیانو است.'

- 'دو مرد گاری ها را به داخل جنگل هل دادند.'

- 'مردی در حال سواری بر اسب سفید در مزرعه است.'

- 'میمونی در حال نواختن طبل است.'

- 'یوزپلنگ به دنبال شکار خود در حال دویدن است.'

---

# Sentence Embeddings with `distilbert-zwnj-wnli-mean-tokens`

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = [

'اولین حکمران شهر بابل کی بود؟',

'در فصل زمستان چه اتفاقی افتاد؟',

'میراث کوروش'

]

model = SentenceTransformer('m3hrdadfi/distilbert-zwnj-wnli-mean-tokens')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Max Pooling - Take the max value over time for every dimension.

def max_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

token_embeddings[input_mask_expanded == 0] = -1e9 # Set padding tokens to large negative value

return torch.mean(token_embeddings, 1)[0]

# Sentences we want sentence embeddings for

sentences = [

'اولین حکمران شهر بابل کی بود؟',

'در فصل زمستان چه اتفاقی افتاد؟',

'میراث کوروش'

]

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('m3hrdadfi/distilbert-zwnj-wnli-mean-tokens')

model = AutoModel.from_pretrained('m3hrdadfi/distilbert-zwnj-wnli-mean-tokens')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, max pooling.

sentence_embeddings = max_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Questions?

Post a Github issue from [HERE](https://github.com/m3hrdadfi/sentence-transformers). |

uer/bart-chinese-6-960-cluecorpussmall | b8eb755e2597cdf448078b70248d2d5cde9cd17b | 2021-10-08T14:47:18.000Z | [

"pytorch",

"bart",

"text2text-generation",

"Chinese",

"dataset:CLUECorpusSmall",

"arxiv:1909.05658",

"transformers",

"autotrain_compatible"

] | text2text-generation | false | uer | null | uer/bart-chinese-6-960-cluecorpussmall | 1,069 | 1 | transformers | 1,725 | ---

language: Chinese

datasets: CLUECorpusSmall

widget:

- text: "作为电子[MASK]的平台,京东绝对是领先者。如今的刘强[MASK]已经是身价过[MASK]的老板。"

---

# Chinese BART

## Model description