modelId (string, lengths 4-112) | sha (string, length 40) | lastModified (string, length 24) | tags (list) | pipeline_tag (string, 29 classes) | private (bool, 1 class) | author (string, lengths 2-38, nullable) | config (null) | id (string, lengths 4-112) | downloads (float64, 0-36.8M, nullable) | likes (float64, 0-712, nullable) | library_name (string, 17 classes) | __index_level_0__ (int64, 0-38.5k) | readme (string, lengths 0-186k) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

andrewzolensky/bert-emotion | 3b1a7f9a848ba99d439563d215d21a59b1ec0ee4 | 2022-05-26T01:51:23.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"transformers"

]

| text-classification | false | andrewzolensky | null | andrewzolensky/bert-emotion | 6 | null | transformers | 15,700 | Entry not found |

SamuelMiller/lil_sum_sum | 66bd3eef4daa6a0afef9a67d3178fcd273c011f3 | 2022-05-23T05:04:39.000Z | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | false | SamuelMiller | null | SamuelMiller/lil_sum_sum | 6 | null | transformers | 15,701 | Entry not found |

SamuelMiller/lil_sumsum | 021ed1b27a589b9d81c513932f69f3119544e704 | 2022-05-23T19:49:44.000Z | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | false | SamuelMiller | null | SamuelMiller/lil_sumsum | 6 | null | transformers | 15,702 | ## This is the model for the 'Sum_it' app ##

Find it at HuggingFace Spaces!

https://huggingface.co/spaces/SamuelMiller/sum_it |

renjithks/layoutlmv3-er-ner | 23b32a5762766b8571bf033af8420c3118c64c09 | 2022-05-31T17:36:05.000Z | [

"pytorch",

"tensorboard",

"layoutlmv3",

"token-classification",

"transformers",

"generated_from_trainer",

"model-index",

"autotrain_compatible"

]

| token-classification | false | renjithks | null | renjithks/layoutlmv3-er-ner | 6 | null | transformers | 15,703 | ---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: layoutlmv3-er-ner

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# layoutlmv3-er-ner

This model is a fine-tuned version of [renjithks/layoutlmv3-cord-ner](https://huggingface.co/renjithks/layoutlmv3-cord-ner) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2025

- Precision: 0.6442

- Recall: 0.6761

- F1: 0.6598

- Accuracy: 0.9507
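As a quick sanity check, the reported F1 can be recomputed from the rounded precision and recall above. This is a minimal sketch: the helper name `f1_score` is introduced here for illustration, and the evaluation library may compute F1 from raw entity counts, so the last digit could differ.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rounded values reported in the list above.
f1 = f1_score(0.6442, 0.6761)
print(round(f1, 4))
```

The result agrees with the reported F1 of 0.6598 to four decimal places.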

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 22 | 0.2940 | 0.4214 | 0.2956 | 0.3475 | 0.9147 |

| No log | 2.0 | 44 | 0.2487 | 0.4134 | 0.4526 | 0.4321 | 0.9175 |

| No log | 3.0 | 66 | 0.1922 | 0.5399 | 0.5460 | 0.5429 | 0.9392 |

| No log | 4.0 | 88 | 0.1977 | 0.5653 | 0.5813 | 0.5732 | 0.9434 |

| No log | 5.0 | 110 | 0.2018 | 0.6173 | 0.6252 | 0.6212 | 0.9477 |

| No log | 6.0 | 132 | 0.1823 | 0.6232 | 0.6153 | 0.6192 | 0.9485 |

| No log | 7.0 | 154 | 0.1972 | 0.6203 | 0.6238 | 0.6220 | 0.9477 |

| No log | 8.0 | 176 | 0.1952 | 0.6292 | 0.6407 | 0.6349 | 0.9511 |

| No log | 9.0 | 198 | 0.2070 | 0.6331 | 0.6492 | 0.6411 | 0.9489 |

| No log | 10.0 | 220 | 0.2025 | 0.6442 | 0.6761 | 0.6598 | 0.9507 |
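The step column also gives a rough idea of the training set size, which the card does not state. The sketch below assumes a single device, no gradient accumulation, and a final partial batch that is kept; under those assumptions, 22 steps per epoch at batch size 8 bound the number of training examples. The function name is hypothetical.

```python
def examples_per_epoch_range(steps_per_epoch: int, batch_size: int) -> tuple:
    """Return (min, max) example counts consistent with the observed step count."""
    largest = steps_per_epoch * batch_size             # every batch is full
    smallest = (steps_per_epoch - 1) * batch_size + 1  # last batch holds one example
    return smallest, largest


low, high = examples_per_epoch_range(steps_per_epoch=22, batch_size=8)
print(low, high)
```

Under these assumptions the training split holds between 169 and 176 examples, which helps explain why no training loss was logged before the default logging interval was reached.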

### Framework versions

- Transformers 4.20.0.dev0

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

Lucifer-nick/coconut_smiles | 635069d5fe9c16a685cf8a2acb6ac626518efc34 | 2022-07-05T08:59:51.000Z | [

"pytorch",

"jax",

"roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

]

| fill-mask | false | Lucifer-nick | null | Lucifer-nick/coconut_smiles | 6 | null | transformers | 15,704 | ---

license: apache-2.0

---

|

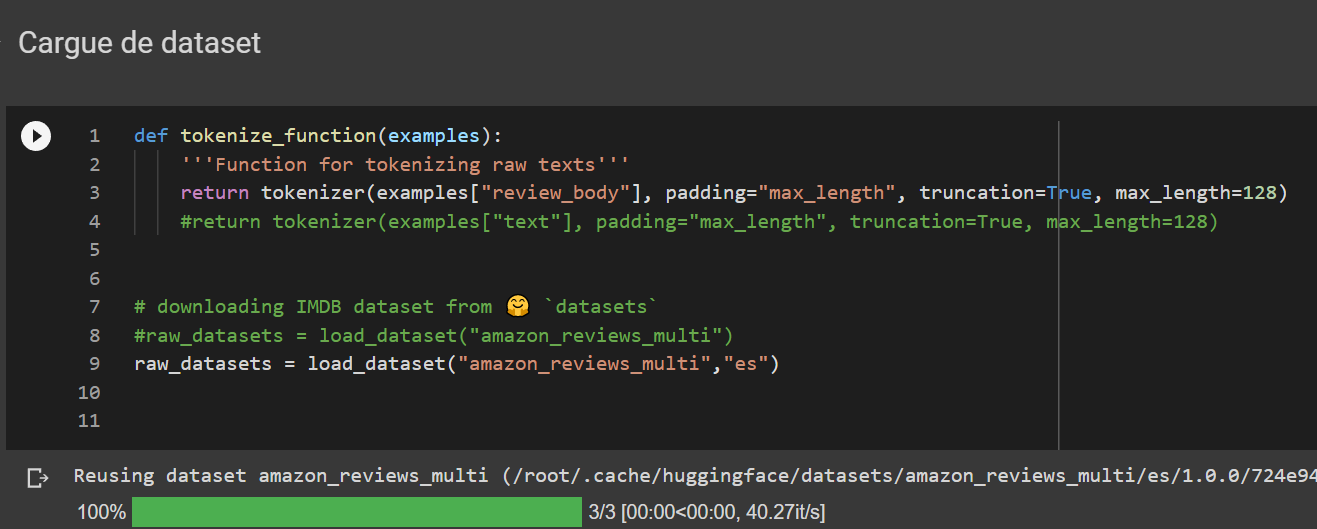

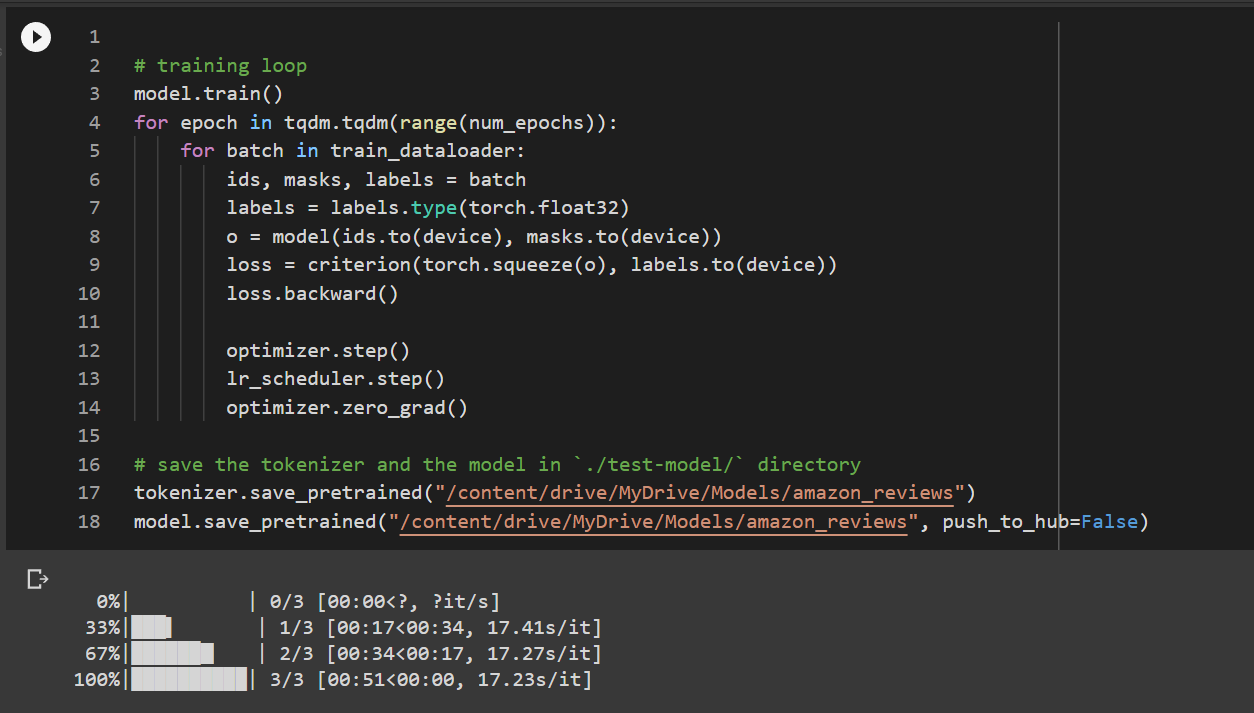

luisu0124/Amazon_review | b59c73fb83ac0a246698d0ea5e370087af58642c | 2022-05-26T03:28:01.000Z | [

"pytorch",

"bert",

"text-classification",

"es",

"transformers",

"Text Classification"

]

| text-classification | false | luisu0124 | null | luisu0124/Amazon_review | 6 | null | transformers | 15,705 | ---

language:

- es

tags:

- Text Classification

---

## language:

- es

## tags:

- amazon_reviews_multi

- Text Classification

### Dataset

### Example review structure:

| review_id (string) | product_id (string) | reviewer_id (string) | stars (int) | review_body (string) | review_title (string) | language (string) | product_category (string) |

| ------------- | ------------- | ------------- | ------------- | ------------- | ------------- | ------------- | ------------- |

| de_0203609|product_de_0865382|reviewer_de_0267719|1|Armband ist leider nach 1 Jahr kaputt gegangen|Leider nach 1 Jahr kaputt|de|sports|

### Model

### Model train

| Text | Classification |

| ------------- | ------------- |

| review_body | stars |

### Model test

### Classification of reviews in Spanish

Uses `POS`, `NEG` labels. |

Danastos/qacombined_bert_el_4 | 7a223a795d0abae49192226a9136993f2f748128 | 2022-05-24T22:01:23.000Z | [

"pytorch",

"bert",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | false | Danastos | null | Danastos/qacombined_bert_el_4 | 6 | null | transformers | 15,706 | Entry not found |

OHenry/finetuned-neural-bert-ner | 96299c07a4eb82934c96acfa5b462f72f8020fc0 | 2022-05-25T13:42:27.000Z | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | false | OHenry | null | OHenry/finetuned-neural-bert-ner | 6 | null | transformers | 15,707 | Entry not found |

chanind/frame-semantic-transformer-large | 348f581f4794e6d7c9be1e6e0a7a6076a77f6a37 | 2022-05-26T08:46:32.000Z | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | false | chanind | null | chanind/frame-semantic-transformer-large | 6 | null | transformers | 15,708 | Entry not found |

ryan1998/distilbert-base-uncased-finetuned-emotion | 2dcee06e0046c4586bc3a2f8493724f40f73b551 | 2022-05-26T14:32:56.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

]

| text-classification | false | ryan1998 | null | ryan1998/distilbert-base-uncased-finetuned-emotion | 6 | null | transformers | 15,709 | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5280

- Accuracy: 0.2886

- F1: 0.2742

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| No log | 1.0 | 1316 | 2.6049 | 0.2682 | 0.2516 |

| No log | 2.0 | 2632 | 2.5280 | 0.2886 | 0.2742 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

prodm93/GPT2Dynamic_title_model_v1 | bce0035725479c756d6a1b128a82bd539ee1b587 | 2022-05-26T19:01:52.000Z | [

"pytorch",

"gpt2",

"text-generation",

"transformers"

]

| text-generation | false | prodm93 | null | prodm93/GPT2Dynamic_title_model_v1 | 6 | null | transformers | 15,710 | Entry not found |

jkhan447/language-detection-RoBerta-base-additional | 9f5c70aace6ade1b2d151bcf6b7ef7ec41d58c06 | 2022-05-30T09:38:00.000Z | [

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index"

]

| text-classification | false | jkhan447 | null | jkhan447/language-detection-RoBerta-base-additional | 6 | null | transformers | 15,711 | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: language-detection-RoBerta-base-additional

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# language-detection-RoBerta-base-additional

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1367

- Accuracy: 0.9874

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Tokenizers 0.12.1

|

zenkri/autotrain-Arabic_Poetry_by_Subject-920730230 | eb837305e1c60c42357dd69ee3c7ec2a9efc7360 | 2022-05-28T08:41:57.000Z | [

"pytorch",

"bert",

"text-classification",

"ar",

"dataset:zenkri/autotrain-data-Arabic_Poetry_by_Subject-1d8ba412",

"transformers",

"autotrain",

"co2_eq_emissions"

]

| text-classification | false | zenkri | null | zenkri/autotrain-Arabic_Poetry_by_Subject-920730230 | 6 | null | transformers | 15,712 | ---

tags: autotrain

language: ar

widget:

- text: "I love AutoTrain 🤗"

datasets:

- zenkri/autotrain-data-Arabic_Poetry_by_Subject-1d8ba412

co2_eq_emissions: 0.07445219847409645

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 920730230

- CO2 Emissions (in grams): 0.07445219847409645

## Validation Metrics

- Loss: 0.5806193351745605

- Accuracy: 0.8785200718993409

- Macro F1: 0.8208042310550474

- Micro F1: 0.8785200718993409

- Weighted F1: 0.8783590365809876

- Macro Precision: 0.8486540338838363

- Micro Precision: 0.8785200718993409

- Weighted Precision: 0.8815185727115001

- Macro Recall: 0.8121110408113442

- Micro Recall: 0.8785200718993409

- Weighted Recall: 0.8785200718993409
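The micro-averaged F1, precision, and recall above all equal the accuracy. That is expected for single-label multi-class classification, where every misclassified example counts as exactly one false positive and one false negative. The sketch below illustrates this on toy data; the label names and predictions are hypothetical and are not taken from the model's dataset.

```python
from collections import Counter


def per_class_counts(y_true, y_pred):
    """Count true positives, false positives and false negatives per label."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted label gains a false positive
            fn[t] += 1  # true label gains a false negative
    return tp, fp, fn


def f1_from_counts(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(y_true, y_pred):
    tp, fp, fn = per_class_counts(y_true, y_pred)
    labels = sorted(set(y_true) | set(y_pred))
    # Micro: pool the counts over all classes, then compute one F1.
    micro = f1_from_counts(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per class, then take the unweighted mean.
    macro = sum(f1_from_counts(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    return micro, macro


# Hypothetical toy labels for illustration only.
y_true = ["love", "love", "love", "praise", "praise", "elegy"]
y_pred = ["love", "love", "praise", "praise", "praise", "love"]

micro, macro = micro_macro_f1(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(micro, macro, accuracy)
```

Macro F1 drops below micro F1 when rare classes are predicted poorly, which is consistent with the gap between the two values reported above.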

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/zenkri/autotrain-Arabic_Poetry_by_Subject-920730230

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("zenkri/autotrain-Arabic_Poetry_by_Subject-920730230", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("zenkri/autotrain-Arabic_Poetry_by_Subject-920730230", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

``` |

autoevaluate/translation | a7b2c3ce3e88c03c59c6abae0b14991e11ec4f8e | 2022-05-28T14:31:28.000Z | [

"pytorch",

"tensorboard",

"marian",

"text2text-generation",

"dataset:wmt16",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| text2text-generation | false | autoevaluate | null | autoevaluate/translation | 6 | null | transformers | 15,713 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- wmt16

metrics:

- bleu

model-index:

- name: translation

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: wmt16

type: wmt16

args: ro-en

metrics:

- name: Bleu

type: bleu

value: 28.5866

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# translation

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-en-ro](https://huggingface.co/Helsinki-NLP/opus-mt-en-ro) on the wmt16 dataset.

It achieves the following results on the evaluation set:

- Loss: 1.3170

- Bleu: 28.5866

- Gen Len: 33.9575

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 1000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|

| 0.8302 | 0.03 | 1000 | 1.3170 | 28.5866 | 33.9575 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

ashesicsis1/xlsr-english | 4b751f41d013ae6483f5053c2869d616b4d690f4 | 2022-05-29T14:47:54.000Z | [

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"dataset:librispeech_asr",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

]

| automatic-speech-recognition | false | ashesicsis1 | null | ashesicsis1/xlsr-english | 6 | null | transformers | 15,714 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- librispeech_asr

model-index:

- name: xlsr-english

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlsr-english

This model is a fine-tuned version of [facebook/wav2vec2-xls-r-300m](https://huggingface.co/facebook/wav2vec2-xls-r-300m) on the librispeech_asr dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3098

- Wer: 0.1451
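For readers unfamiliar with the metric, word error rate (WER) is the word-level edit distance between hypothesis and reference, divided by the number of reference words. The following is a minimal pure-Python sketch of that definition, not the implementation used to produce the number above; the function name and example sentences are illustrative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the processed reference prefix
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

A WER of 0.1451 therefore corresponds to roughly 14.5 word errors per 100 reference words.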

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30

- mixed_precision_training: Native AMP
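Two of the hyperparameters above interact in ways that a short sketch makes concrete: the total batch size is the per-device batch size times the accumulation steps, and a linear scheduler with warmup ramps the learning rate up for 500 steps and then decays it to zero. The code below is an illustration under stated assumptions: one device, and a total of 5070 optimizer steps, which is an estimate derived from the results table (about 169 steps per epoch over 30 epochs) rather than a value given in the card.

```python
def effective_batch_size(per_device: int, accumulation_steps: int, num_devices: int = 1) -> int:
    """Number of examples contributing to each optimizer update."""
    return per_device * accumulation_steps * num_devices


def linear_warmup_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Linear warmup from 0 to base_lr, then linear decay back to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


TOTAL_STEPS = 5070  # assumption: estimated from the results table, not stated in the card

print(effective_batch_size(per_device=8, accumulation_steps=2))
for step in (0, 250, 500, 2785, TOTAL_STEPS):
    print(step, linear_warmup_lr(step, base_lr=3e-4, warmup_steps=500, total_steps=TOTAL_STEPS))
```

The learning rate peaks at 0.0003 exactly when warmup ends and reaches zero at the final step.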

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.2453 | 2.37 | 400 | 0.5789 | 0.4447 |

| 0.3736 | 4.73 | 800 | 0.3737 | 0.2850 |

| 0.1712 | 7.1 | 1200 | 0.3038 | 0.2136 |

| 0.117 | 9.47 | 1600 | 0.3016 | 0.2072 |

| 0.0897 | 11.83 | 2000 | 0.3158 | 0.1920 |

| 0.074 | 14.2 | 2400 | 0.3137 | 0.1831 |

| 0.0595 | 16.57 | 2800 | 0.2967 | 0.1745 |

| 0.0493 | 18.93 | 3200 | 0.3192 | 0.1670 |

| 0.0413 | 21.3 | 3600 | 0.3176 | 0.1644 |

| 0.0322 | 23.67 | 4000 | 0.3079 | 0.1598 |

| 0.0296 | 26.04 | 4400 | 0.2978 | 0.1511 |

| 0.0235 | 28.4 | 4800 | 0.3098 | 0.1451 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.10.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

|

Ayush414/distilbert-base-uncased-finetuned-ner | 03280eebc648f4737e7f535fc6edb061cd517bff | 2022-05-30T12:36:18.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"token-classification",

"dataset:conll2003",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| token-classification | false | Ayush414 | null | Ayush414/distilbert-base-uncased-finetuned-ner | 6 | null | transformers | 15,715 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9253929599291565

- name: Recall

type: recall

value: 0.9352276541000112

- name: F1

type: f1

value: 0.9302843153619317

- name: Accuracy

type: accuracy

value: 0.9835258233116749

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0628

- Precision: 0.9254

- Recall: 0.9352

- F1: 0.9303

- Accuracy: 0.9835

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
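To make the optimizer line concrete, here is a sketch of a single Adam update on one scalar parameter using the betas, epsilon, and learning rate listed above. It shows the textbook Adam rule only; weight decay and any trainer-specific details are omitted, and the function name is introduced here for illustration.

```python
import math


def adam_step(param, grad, m, v, t, lr=2e-5, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad         # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1 - beta1 ** t)               # bias correction
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# On the first step the update size is close to lr, whatever the gradient scale.
param, m, v = adam_step(param=1.0, grad=0.5, m=0.0, v=0.0, t=1)
print(param)
```

Because of the bias correction, the first update moves the parameter by almost exactly the learning rate in the direction opposite to the gradient.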

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2388 | 1.0 | 878 | 0.0723 | 0.9108 | 0.9186 | 0.9147 | 0.9798 |

| 0.0526 | 2.0 | 1756 | 0.0633 | 0.9176 | 0.9290 | 0.9232 | 0.9817 |

| 0.0303 | 3.0 | 2634 | 0.0628 | 0.9254 | 0.9352 | 0.9303 | 0.9835 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

dunlp/GWW | 37b2823459ed104783827c9742ea2f58f4c659ef | 2022-06-29T09:36:26.000Z | [

"pytorch",

"tensorboard",

"bert",

"fill-mask",

"transformers",

"generated_from_trainer",

"model-index",

"autotrain_compatible"

]

| fill-mask | false | dunlp | null | dunlp/GWW | 6 | null | transformers | 15,716 | ---

tags:

- generated_from_trainer

model-index:

- name: GWW

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# GWW

This model is a fine-tuned version of [GroNLP/bert-base-dutch-cased](https://huggingface.co/GroNLP/bert-base-dutch-cased) on a Dutch civil works dataset.

It achieves the following results on the evaluation set:

- Loss: 2.7097

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 3.7179 | 1.0 | 78 | 3.1185 |

| 3.1134 | 2.0 | 156 | 2.8528 |

| 2.9327 | 3.0 | 234 | 2.7249 |

| 2.8377 | 4.0 | 312 | 2.7255 |

| 2.7888 | 5.0 | 390 | 2.6737 |

### Framework versions

- Transformers 4.19.4

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

erfangc/test1 | 20e7cfec04730aff44c89a7bb89a49fa01715e60 | 2022-05-30T18:23:14.000Z | [

"pytorch",

"bert",

"text-classification",

"transformers"

]

| text-classification | false | erfangc | null | erfangc/test1 | 6 | null | transformers | 15,717 | Entry not found |

hhhhzy/roberta-pubhealth | 6f7133efa090591da36a4d75963c6d7d31b0e4a9 | 2022-05-30T23:01:52.000Z | [

"pytorch",

"roberta",

"text-classification",

"transformers"

]

| text-classification | false | hhhhzy | null | hhhhzy/roberta-pubhealth | 6 | null | transformers | 15,718 |

# Roberta-Pubhealth model

This model is a fine-tuned version of [RoBERTa Base](https://huggingface.co/roberta-base) on the health_fact dataset.

It achieves the following results on the evaluation set:

- micro f1 (accuracy): 0.7137

- macro f1: 0.6056

- weighted f1: 0.7106

- samples predicted per second: 9.31

## Dataset description

[PUBHEALTH](https://huggingface.co/datasets/health_fact) is a comprehensive dataset for explainable automated fact-checking of public health claims. Each instance in the PUBHEALTH dataset has an associated veracity label (true, false, unproven, mixture). Furthermore, each instance in the dataset has an explanation text field. The explanation is a justification for why the claim has been assigned a particular veracity label.

## Training hyperparameters

The model was trained with the following tuned configuration:

- model: roberta base

- batch size: 32

- learning rate: 5e-5

- number of epochs: 4

- warmup steps: 0 |

Jiexing/cosql_add_coref_t5_3b_order_0519_ckpt-576 | e2289714e6fbffbe1b55111a1a45f6c7ca2f61b3 | 2022-05-31T02:22:25.000Z | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | false | Jiexing | null | Jiexing/cosql_add_coref_t5_3b_order_0519_ckpt-576 | 6 | null | transformers | 15,719 | Entry not found |

mccaffary/finetuning-sentiment-model-3000-samples-DM | 644eb1d0baa3d4758427c85666e74b56636c4df6 | 2022-06-01T09:01:21.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"dataset:imdb",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

]

| text-classification | false | mccaffary | null | mccaffary/finetuning-sentiment-model-3000-samples-DM | 6 | null | transformers | 15,720 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

metrics:

- accuracy

- f1

model-index:

- name: finetuning-sentiment-model-3000-samples-DM

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: imdb

type: imdb

args: plain_text

metrics:

- name: Accuracy

type: accuracy

value: 0.8666666666666667

- name: F1

type: f1

value: 0.8734177215189873

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples-DM

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3248

- Accuracy: 0.8667

- F1: 0.8734

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.8.0

- Datasets 2.2.2

- Tokenizers 0.12.1

|

Classroom-workshop/assignment1-jack | 890c29ec8d66df7ec2eee93b88456526d0d9ea2f | 2022-06-02T15:22:42.000Z | [

"pytorch",

"tf",

"speech_to_text",

"automatic-speech-recognition",

"en",

"dataset:librispeech_asr",

"arxiv:2010.05171",

"arxiv:1904.08779",

"transformers",

"speech",

"audio",

"hf-asr-leaderboard",

"license:mit",

"model-index"

]

| automatic-speech-recognition | false | Classroom-workshop | null | Classroom-workshop/assignment1-jack | 6 | null | transformers | 15,721 | ---

language: en

datasets:

- librispeech_asr

tags:

- speech

- audio

- automatic-speech-recognition

- hf-asr-leaderboard

license: mit

pipeline_tag: automatic-speech-recognition

widget:

- example_title: Librispeech sample 1

src: https://cdn-media.huggingface.co/speech_samples/sample1.flac

- example_title: Librispeech sample 2

src: https://cdn-media.huggingface.co/speech_samples/sample2.flac

model-index:

- name: s2t-small-librispeech-asr

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: LibriSpeech (clean)

type: librispeech_asr

config: clean

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 4.3

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: LibriSpeech (other)

type: librispeech_asr

config: other

split: test

args:

language: en

metrics:

- name: Test WER

type: wer

value: 9.0

---

# S2T-SMALL-LIBRISPEECH-ASR

`s2t-small-librispeech-asr` is a Speech to Text Transformer (S2T) model trained for automatic speech recognition (ASR).

The S2T model was proposed in [this paper](https://arxiv.org/abs/2010.05171) and released in

[this repository](https://github.com/pytorch/fairseq/tree/master/examples/speech_to_text).

## Model description

S2T is an end-to-end sequence-to-sequence transformer model. It is trained with standard

autoregressive cross-entropy loss and generates the transcripts autoregressively.

## Intended uses & limitations

This model can be used for end-to-end speech recognition (ASR).

See the [model hub](https://huggingface.co/models?filter=speech_to_text) to look for other S2T checkpoints.

### How to use

As this is a standard sequence-to-sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

*Note: The `Speech2TextProcessor` object uses [torchaudio](https://github.com/pytorch/audio) to extract the

filter bank features, and the tokenizer depends on [sentencepiece](https://github.com/google/sentencepiece),

so be sure to install both packages before running the examples.*

You can either install them as extra speech dependencies with

`pip install transformers"[speech, sentencepiece]"` or install the packages separately

with `pip install torchaudio sentencepiece`.

```python
import torch
from transformers import Speech2TextProcessor, Speech2TextForConditionalGeneration
from datasets import load_dataset

model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-small-librispeech-asr")
processor = Speech2TextProcessor.from_pretrained("facebook/s2t-small-librispeech-asr")

ds = load_dataset(
    "patrickvonplaten/librispeech_asr_dummy",
    "clean",
    split="validation"
)

input_features = processor(
    ds[0]["audio"]["array"],
    sampling_rate=16_000,
    return_tensors="pt"
).input_features  # Batch size 1

# Pass the features positionally: they are filter bank features, not token ids.
generated_ids = model.generate(input_features)

transcription = processor.batch_decode(generated_ids)
```

#### Evaluation on LibriSpeech Test

The following script shows how to evaluate this model on the [LibriSpeech](https://huggingface.co/datasets/librispeech_asr)

*"clean"* and *"other"* test sets.

```python
from datasets import load_dataset, load_metric
from transformers import Speech2TextForConditionalGeneration, Speech2TextProcessor

librispeech_eval = load_dataset("librispeech_asr", "clean", split="test")  # change to "other" for other test dataset
wer = load_metric("wer")

model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-small-librispeech-asr").to("cuda")
processor = Speech2TextProcessor.from_pretrained("facebook/s2t-small-librispeech-asr", do_upper_case=True)

def map_to_pred(batch):
    # With batched=True, batch["audio"] is a list of decoded audio dicts,
    # so no separate map_to_array step is needed.
    arrays = [audio["array"] for audio in batch["audio"]]
    features = processor(arrays, sampling_rate=16000, padding=True, return_tensors="pt")
    input_features = features.input_features.to("cuda")
    attention_mask = features.attention_mask.to("cuda")

    gen_tokens = model.generate(input_features, attention_mask=attention_mask)
    batch["transcription"] = processor.batch_decode(gen_tokens, skip_special_tokens=True)
    return batch

# Drop the raw audio column once transcriptions have been produced.
result = librispeech_eval.map(map_to_pred, batched=True, batch_size=8, remove_columns=["audio"])

# Metric objects are evaluated through .compute(), not called directly.
print("WER:", wer.compute(predictions=result["transcription"], references=result["text"]))
```

*Result (WER)*:

| "clean" | "other" |

|:-------:|:-------:|

| 4.3 | 9.0 |

## Training data

S2T-SMALL-LIBRISPEECH-ASR is trained on the [LibriSpeech ASR Corpus](https://www.openslr.org/12), a dataset consisting of

approximately 1000 hours of 16kHz read English speech.

## Training procedure

### Preprocessing

The speech data is pre-processed by extracting Kaldi-compliant 80-channel log mel-filter bank features automatically from

WAV/FLAC audio files via PyKaldi or torchaudio. Further utterance-level CMVN (cepstral mean and variance normalization)

is applied to each example.

The texts are lowercased and tokenized using SentencePiece and a vocabulary size of 10,000.
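The utterance-level CMVN step described above can be sketched in a few lines: for each feature channel, subtract the mean over all frames of the utterance and divide by the standard deviation. This is a minimal pure-Python illustration, not the fairseq or `transformers` implementation; the `eps` stabiliser and the toy feature values are assumptions.

```python
import math


def utterance_cmvn(features, eps=1e-10):
    """Normalise each channel to zero mean and unit variance over one utterance.

    features: list of frames, each frame a list of per-channel values
    (for S2T these would be 80 log mel-filter bank coefficients).
    """
    num_frames = len(features)
    num_channels = len(features[0])
    means = [sum(frame[c] for frame in features) / num_frames for c in range(num_channels)]
    stds = [
        math.sqrt(sum((frame[c] - means[c]) ** 2 for frame in features) / num_frames)
        for c in range(num_channels)
    ]
    return [
        [(frame[c] - means[c]) / (stds[c] + eps) for c in range(num_channels)]
        for frame in features
    ]


# Toy utterance: 3 frames with 2 channels each (hypothetical values).
toy = [[1.0, 10.0], [2.0, 20.0], [3.0, 60.0]]
normalised = utterance_cmvn(toy)
print(normalised)
```

Normalising per utterance rather than over the whole corpus removes speaker and channel offsets without requiring global statistics at inference time.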

### Training

The model is trained with standard autoregressive cross-entropy loss and using [SpecAugment](https://arxiv.org/abs/1904.08779).

The encoder receives speech features, and the decoder generates the transcripts autoregressively.

### BibTeX entry and citation info

```bibtex

@inproceedings{wang2020fairseqs2t,

title = {fairseq S2T: Fast Speech-to-Text Modeling with fairseq},

author = {Changhan Wang and Yun Tang and Xutai Ma and Anne Wu and Dmytro Okhonko and Juan Pino},

booktitle = {Proceedings of the 2020 Conference of the Asian Chapter of the Association for Computational Linguistics (AACL): System Demonstrations},

year = {2020},

}

``` |

bradgrimm/patent-cpc-predictor | 4ebf5d51044c5ced9d964956ae2a5de40b669d42 | 2022-06-02T22:33:47.000Z | [

"pytorch",

"deberta-v2",

"feature-extraction",

"en",

"transformers",

"patent",

"deberta",

"license:mit"

]

| feature-extraction | false | bradgrimm | null | bradgrimm/patent-cpc-predictor | 6 | null | transformers | 15,722 | ---

language: en

tags:

- patent

- deberta

license: mit

---

# Patent CPC Predictor

This is a fine-tuned version of microsoft/deberta-v3-small for predicting Patent CPC codes.

# Dataset

The dataset consists of titles and abstracts sampled from granted patent applications:

https://www.kaggle.com/datasets/grimmace/sampled-patent-titles

# Results

| Category | Accuracy |

| --- | ----------- |

| Section | 92% |

| Class | 88% |

| Subclass | 85% | |

ArthurZ/opt-1.3b | 340576fcb4f2edbd6ea82a907fe85a50bb913965 | 2022-06-21T14:34:58.000Z | [

"pytorch",

"tf",

"jax",

"opt",

"text-generation",

"transformers",

"generated_from_keras_callback",

"model-index"

]

| text-generation | false | ArthurZ | null | ArthurZ/opt-1.3b | 6 | null | transformers | 15,723 | ---

tags:

- generated_from_keras_callback

model-index:

- name: opt-1.3b

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# opt-1.3b

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.20.0.dev0

- TensorFlow 2.9.1

- Datasets 2.2.2

- Tokenizers 0.12.1

|

madatnlp/torch-trinity | 16d66ff35d40ec7eefdc4758682812a9e0734379 | 2022-06-03T06:59:20.000Z | [

"pytorch",

"tensorboard",

"gpt2",

"text-generation",

"transformers"

]

| text-generation | false | madatnlp | null | madatnlp/torch-trinity | 6 | null | transformers | 15,724 | Entry not found |

Eulaliefy/distilbert-base-uncased-finetuned-ner | c2b6afb7e9771459d736b71a3db335d165869c9f | 2022-06-03T18:21:14.000Z | [

"pytorch",

"tensorboard",

"distilbert",

"token-classification",

"dataset:conll2003",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| token-classification | false | Eulaliefy | null | Eulaliefy/distilbert-base-uncased-finetuned-ner | 6 | null | transformers | 15,725 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9250691754288877

- name: Recall

type: recall

value: 0.9350039154267815

- name: F1

type: f1

value: 0.9300100144653389

- name: Accuracy

type: accuracy

value: 0.9836052552147044

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-ner

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0620

- Precision: 0.9251

- Recall: 0.9350

- F1: 0.9300

- Accuracy: 0.9836
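
As a quick consistency check, F1 is the harmonic mean of precision and recall. The sketch below recomputes it from the unrounded precision and recall values listed in this card's metadata; the function name `f1_score` is only illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Unrounded values from the model-index metadata of this card.
precision = 0.9250691754288877
recall = 0.9350039154267815
f1 = f1_score(precision, recall)
print(f"{f1:.4f}")
```

The result agrees with the reported F1 of 0.9300 to four decimal places.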

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
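
To make the `linear` scheduler concrete, here is a minimal sketch of a linearly decaying learning rate. It assumes no warmup (none is listed above) and uses the 2634 total optimization steps reported in this card's training results. The function name `linear_lr` is hypothetical and this is not the Transformers implementation.

```python
def linear_lr(step, base_lr=2e-5, total_steps=2634, warmup_steps=0):
    """Learning rate at `step`: linear warmup (if any), then linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)

# Learning rate at the start, at the midpoint, and at the end of training.
schedule = [linear_lr(s) for s in (0, 1317, 2634)]
```

Under these assumptions the rate starts at the configured 2e-05, is halved by the middle of the second epoch, and reaches zero at the final step.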

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2356 | 1.0 | 878 | 0.0699 | 0.9110 | 0.9225 | 0.9167 | 0.9801 |

| 0.0509 | 2.0 | 1756 | 0.0621 | 0.9180 | 0.9314 | 0.9246 | 0.9823 |

| 0.0303 | 3.0 | 2634 | 0.0620 | 0.9251 | 0.9350 | 0.9300 | 0.9836 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

ricardo-filho/bert_base_tcm_0.6 | e6defd66f05a64373ad6c74b7d1eec37637dada1 | 2022-06-09T14:15:12.000Z | [

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index",

"autotrain_compatible"

]

| token-classification | false | ricardo-filho | null | ricardo-filho/bert_base_tcm_0.6 | 6 | null | transformers | 15,726 | ---

license: mit

tags:

- generated_from_trainer

model-index:

- name: bert_base_tcm_0.6

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert_base_tcm_0.6

This model is a fine-tuned version of [neuralmind/bert-base-portuguese-cased](https://huggingface.co/neuralmind/bert-base-portuguese-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0193

- Criterio Julgamento Precision: 0.8875

- Criterio Julgamento Recall: 0.8659

- Criterio Julgamento F1: 0.8765

- Criterio Julgamento Number: 82

- Data Sessao Precision: 0.7571

- Data Sessao Recall: 0.9636

- Data Sessao F1: 0.848

- Data Sessao Number: 55

- Modalidade Licitacao Precision: 0.9394

- Modalidade Licitacao Recall: 0.9718

- Modalidade Licitacao F1: 0.9553

- Modalidade Licitacao Number: 319

- Numero Exercicio Precision: 0.9172

- Numero Exercicio Recall: 0.9688

- Numero Exercicio F1: 0.9422

- Numero Exercicio Number: 160

- Objeto Licitacao Precision: 0.4659

- Objeto Licitacao Recall: 0.7069

- Objeto Licitacao F1: 0.5616

- Objeto Licitacao Number: 58

- Valor Objeto Precision: 0.8333

- Valor Objeto Recall: 0.9211

- Valor Objeto F1: 0.875

- Valor Objeto Number: 38

- Overall Precision: 0.8537

- Overall Recall: 0.9340

- Overall F1: 0.8920

- Overall Accuracy: 0.9951
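
The overall recall can be related to the per-entity figures above. The sketch below assumes the overall score is a micro-average (true positives pooled across entity types, which is the usual seqeval behaviour) and recovers the true-positive counts by rounding recall times support. The names `per_entity` and `micro_recall` are illustrative.

```python
# (recall, number of gold entities) per entity type, copied from the list above.
per_entity = {
    "Criterio Julgamento": (0.8659, 82),
    "Data Sessao": (0.9636, 55),
    "Modalidade Licitacao": (0.9718, 319),
    "Numero Exercicio": (0.9688, 160),
    "Objeto Licitacao": (0.7069, 58),
    "Valor Objeto": (0.9211, 38),
}

def micro_recall(per_entity):
    """Pool true positives and gold counts across entity types."""
    total_support = sum(n for _, n in per_entity.values())
    # recall = TP / support, so TP is recovered by rounding recall * support.
    true_positives = sum(round(r * n) for r, n in per_entity.values())
    return true_positives / total_support

overall_recall = micro_recall(per_entity)
print(f"{overall_recall:.4f}")
```

Large classes such as Modalidade Licitacao dominate a micro-average, which is why the overall recall stays high despite the weaker Objeto Licitacao score.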

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Criterio Julgamento Precision | Criterio Julgamento Recall | Criterio Julgamento F1 | Criterio Julgamento Number | Data Sessao Precision | Data Sessao Recall | Data Sessao F1 | Data Sessao Number | Modalidade Licitacao Precision | Modalidade Licitacao Recall | Modalidade Licitacao F1 | Modalidade Licitacao Number | Numero Exercicio Precision | Numero Exercicio Recall | Numero Exercicio F1 | Numero Exercicio Number | Objeto Licitacao Precision | Objeto Licitacao Recall | Objeto Licitacao F1 | Objeto Licitacao Number | Valor Objeto Precision | Valor Objeto Recall | Valor Objeto F1 | Valor Objeto Number | Overall Precision | Overall Recall | Overall F1 | Overall Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:-----------------------------:|:--------------------------:|:----------------------:|:--------------------------:|:---------------------:|:------------------:|:--------------:|:------------------:|:------------------------------:|:---------------------------:|:-----------------------:|:---------------------------:|:--------------------------:|:-----------------------:|:-------------------:|:-----------------------:|:--------------------------:|:-----------------------:|:-------------------:|:-----------------------:|:----------------------:|:-------------------:|:---------------:|:-------------------:|:-----------------:|:--------------:|:----------:|:----------------:|

| 0.0252 | 1.0 | 1963 | 0.0202 | 0.8022 | 0.8902 | 0.8439 | 82 | 0.7391 | 0.9273 | 0.8226 | 55 | 0.9233 | 0.9812 | 0.9514 | 319 | 0.8966 | 0.975 | 0.9341 | 160 | 0.4730 | 0.6034 | 0.5303 | 58 | 0.7083 | 0.8947 | 0.7907 | 38 | 0.8327 | 0.9298 | 0.8786 | 0.9948 |

| 0.0191 | 2.0 | 3926 | 0.0226 | 0.8554 | 0.8659 | 0.8606 | 82 | 0.5641 | 0.4 | 0.4681 | 55 | 0.9572 | 0.9812 | 0.9690 | 319 | 0.9273 | 0.9563 | 0.9415 | 160 | 0.3770 | 0.3966 | 0.3866 | 58 | 0.8571 | 0.7895 | 0.8219 | 38 | 0.8620 | 0.8596 | 0.8608 | 0.9951 |

| 0.0137 | 3.0 | 5889 | 0.0193 | 0.8875 | 0.8659 | 0.8765 | 82 | 0.7571 | 0.9636 | 0.848 | 55 | 0.9394 | 0.9718 | 0.9553 | 319 | 0.9172 | 0.9688 | 0.9422 | 160 | 0.4659 | 0.7069 | 0.5616 | 58 | 0.8333 | 0.9211 | 0.875 | 38 | 0.8537 | 0.9340 | 0.8920 | 0.9951 |

| 0.0082 | 4.0 | 7852 | 0.0210 | 0.8780 | 0.8780 | 0.8780 | 82 | 0.7966 | 0.8545 | 0.8246 | 55 | 0.9512 | 0.9781 | 0.9645 | 319 | 0.9023 | 0.9812 | 0.9401 | 160 | 0.5385 | 0.6034 | 0.5691 | 58 | 0.9 | 0.9474 | 0.9231 | 38 | 0.8810 | 0.9256 | 0.9027 | 0.9963 |

| 0.0048 | 5.0 | 9815 | 0.0222 | 0.8261 | 0.9268 | 0.8736 | 82 | 0.7969 | 0.9273 | 0.8571 | 55 | 0.9512 | 0.9781 | 0.9645 | 319 | 0.9231 | 0.975 | 0.9483 | 160 | 0.6515 | 0.7414 | 0.6935 | 58 | 0.875 | 0.9211 | 0.8974 | 38 | 0.8867 | 0.9452 | 0.9150 | 0.9964 |

| 0.0044 | 6.0 | 11778 | 0.0262 | 0.8276 | 0.8780 | 0.8521 | 82 | 0.7681 | 0.9636 | 0.8548 | 55 | 0.9541 | 0.9781 | 0.9659 | 319 | 0.9235 | 0.9812 | 0.9515 | 160 | 0.5263 | 0.6897 | 0.5970 | 58 | 0.9211 | 0.9211 | 0.9211 | 38 | 0.8722 | 0.9396 | 0.9047 | 0.9959 |

| 0.0042 | 7.0 | 13741 | 0.0246 | 0.8523 | 0.9146 | 0.8824 | 82 | 0.7656 | 0.8909 | 0.8235 | 55 | 0.9509 | 0.9718 | 0.9612 | 319 | 0.9118 | 0.9688 | 0.9394 | 160 | 0.5938 | 0.6552 | 0.6230 | 58 | 0.8974 | 0.9211 | 0.9091 | 38 | 0.8815 | 0.9298 | 0.9050 | 0.9960 |

| 0.0013 | 8.0 | 15704 | 0.0294 | 0.8295 | 0.8902 | 0.8588 | 82 | 0.7391 | 0.9273 | 0.8226 | 55 | 0.9543 | 0.9812 | 0.9675 | 319 | 0.9070 | 0.975 | 0.9398 | 160 | 0.6094 | 0.6724 | 0.6393 | 58 | 0.875 | 0.9211 | 0.8974 | 38 | 0.8765 | 0.9368 | 0.9056 | 0.9961 |

| 0.0019 | 9.0 | 17667 | 0.0303 | 0.8690 | 0.8902 | 0.8795 | 82 | 0.8305 | 0.8909 | 0.8596 | 55 | 0.9538 | 0.9718 | 0.9627 | 319 | 0.9290 | 0.9812 | 0.9544 | 160 | 0.6441 | 0.6552 | 0.6496 | 58 | 0.9211 | 0.9211 | 0.9211 | 38 | 0.9019 | 0.9298 | 0.9156 | 0.9961 |

| 0.0007 | 10.0 | 19630 | 0.0295 | 0.8488 | 0.8902 | 0.8690 | 82 | 0.7903 | 0.8909 | 0.8376 | 55 | 0.9571 | 0.9781 | 0.9674 | 319 | 0.9181 | 0.9812 | 0.9486 | 160 | 0.6393 | 0.6724 | 0.6555 | 58 | 0.9211 | 0.9211 | 0.9211 | 38 | 0.8938 | 0.9340 | 0.9135 | 0.9962 |

### Framework versions

- Transformers 4.20.0.dev0

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

haritzpuerto/distilbert-squad | 0dfcf0cb9cc78471945ed00aed6e20df4b6afe4b | 2022-06-03T20:08:44.000Z | [

"pytorch",

"distilbert",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | false | haritzpuerto | null | haritzpuerto/distilbert-squad | 6 | null | transformers | 15,727 | TrainOutput(global_step=5475, training_loss=1.7323438837756848, metrics={'train_runtime': 4630.6634, 'train_samples_per_second': 18.917, 'train_steps_per_second': 1.182, 'total_flos': 1.1445080909703168e+16, 'train_loss': 1.7323438837756848, 'epoch': 1.0})

|

ssantanag/pasajes_de_la_biblia | 49688f7cbfd2bd46188fd22bd7ed8467bd0bd135 | 2022-06-04T04:32:36.000Z | [

"pytorch",

"tf",

"t5",

"text2text-generation",

"transformers",

"generated_from_keras_callback",

"model-index",

"autotrain_compatible"

]

| text2text-generation | false | ssantanag | null | ssantanag/pasajes_de_la_biblia | 6 | null | transformers | 15,728 | ---

tags:

- generated_from_keras_callback

model-index:

- name: pasajes_de_la_biblia

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# pasajes_de_la_biblia

This model was trained on a dataset of Bible verses published on Kaggle, available at https://www.kaggle.com/datasets/camesruiz/biblia-ntv-spanish-bible-ntv.

## Training and evaluation data

The data was split as follows:

- Training set: 58.20%

- Validation set: 9.65%

- Test set: 32.15%
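
For illustration, the proportions above can be turned into split sizes for a dataset of a given length. This is a sketch only: the function name `split_sizes` and the example size of 1000 are hypothetical, and the card does not state how the original split was produced.

```python
def split_sizes(num_examples):
    """Return (train, validation, test) sizes for a 58.20 / 9.65 / 32.15 split.

    Percentages are stored in hundredths of a percent so that the
    arithmetic stays in integers; the test set receives the remainder.
    """
    train = num_examples * 5820 // 10000
    validation = num_examples * 965 // 10000
    test = num_examples - train - validation
    return train, validation, test

sizes = split_sizes(1000)
```

Assigning the remainder to the test set guarantees that the three sizes always sum to the dataset length.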

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.19.2

- TensorFlow 2.8.2

- Datasets 2.2.2

- Tokenizers 0.12.1

|

Jeevesh8/lecun_feather_berts-63 | b8dd5a2863e750ab6221e7021e66d910b246b2de | 2022-06-04T06:53:03.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers"

]

| text-classification | false | Jeevesh8 | null | Jeevesh8/lecun_feather_berts-63 | 6 | null | transformers | 15,729 | Entry not found |

Jeevesh8/lecun_feather_berts-12 | 977691e4e4c5705db6de9a0861141b5bd87736ac | 2022-06-04T06:52:11.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers"

]

| text-classification | false | Jeevesh8 | null | Jeevesh8/lecun_feather_berts-12 | 6 | null | transformers | 15,730 | Entry not found |

gciaffoni/wav2vec2-large-xls-r-300m-it-colab4 | 652ef1fda97e390d10e4c084db5ac42a2908c1aa | 2022-07-20T15:40:53.000Z | [

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"transformers"

]

| automatic-speech-recognition | false | gciaffoni | null | gciaffoni/wav2vec2-large-xls-r-300m-it-colab4 | 6 | null | transformers | 15,731 | R4 checkpoint-16000

|

nitishkumargundapu793/autotrain-chat-bot-responses-949231426 | b6c36ec3efd5e5fc252f3d9f8409ec0cf5f9ae5b | 2022-06-05T03:16:21.000Z | [

"pytorch",

"bert",

"text-classification",

"en",

"dataset:nitishkumargundapu793/autotrain-data-chat-bot-responses",

"transformers",

"autotrain",

"co2_eq_emissions"

]

| text-classification | false | nitishkumargundapu793 | null | nitishkumargundapu793/autotrain-chat-bot-responses-949231426 | 6 | null | transformers | 15,732 | ---

tags: autotrain

language: en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- nitishkumargundapu793/autotrain-data-chat-bot-responses

co2_eq_emissions: 0.01123534537751425

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 949231426

- CO2 Emissions (in grams): 0.01123534537751425

## Validation Metrics

- Loss: 0.26922607421875

- Accuracy: 1.0

- Macro F1: 1.0

- Micro F1: 1.0

- Weighted F1: 1.0

- Macro Precision: 1.0

- Micro Precision: 1.0

- Weighted Precision: 1.0

- Macro Recall: 1.0

- Micro Recall: 1.0

- Weighted Recall: 1.0

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/nitishkumargundapu793/autotrain-chat-bot-responses-949231426

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("nitishkumargundapu793/autotrain-chat-bot-responses-949231426", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("nitishkumargundapu793/autotrain-chat-bot-responses-949231426", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

``` |

diiogo/caju128k | fd2f78e48076bd7c1c14c688acd285f63fa5c115 | 2022-07-25T21:38:53.000Z | [

"pytorch",

"roberta",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | false | diiogo | null | diiogo/caju128k | 6 | null | transformers | 15,733 | Entry not found |

nestoralvaro/mT5_multilingual_XLSum-finetuned-xsum-mlsum___summary_text | 2fbc2c0e50405cfa97bcf8f295c526cc285cbeee | 2022-06-06T03:26:11.000Z | [

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"dataset:mlsum",

"transformers",

"generated_from_trainer",

"model-index",

"autotrain_compatible"

]

| text2text-generation | false | nestoralvaro | null | nestoralvaro/mT5_multilingual_XLSum-finetuned-xsum-mlsum___summary_text | 6 | null | transformers | 15,734 | ---

tags:

- generated_from_trainer

datasets:

- mlsum

metrics:

- rouge

model-index:

- name: mT5_multilingual_XLSum-finetuned-xsum-mlsum___summary_text

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: mlsum

type: mlsum

args: es

metrics:

- name: Rouge1

type: rouge

value: 0.0

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mT5_multilingual_XLSum-finetuned-xsum-mlsum___summary_text

This model is a fine-tuned version of [csebuetnlp/mT5_multilingual_XLSum](https://huggingface.co/csebuetnlp/mT5_multilingual_XLSum) on the mlsum dataset.

It achieves the following results on the evaluation set:

- Loss: nan

- Rouge1: 0.0

- Rouge2: 0.0

- Rougel: 0.0

- Rougelsum: 0.0

- Gen Len: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:---------:|:-------:|

| 0.0 | 1.0 | 66592 | nan | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

bondi/bert-semaphore-prediction-w2 | f3c0d329fc131043b06137505711f56baf2ca66a | 2022-06-06T02:34:15.000Z | [

"pytorch",

"bert",

"text-classification",

"transformers",

"generated_from_trainer",

"model-index"

]

| text-classification | false | bondi | null | bondi/bert-semaphore-prediction-w2 | 6 | null | transformers | 15,735 | ---

tags:

- generated_from_trainer

model-index:

- name: bert-semaphore-prediction-w2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-semaphore-prediction-w2

This model was trained from scratch on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 6

- eval_batch_size: 6

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0

- Datasets 2.2.2

- Tokenizers 0.12.1

|

yogeshchandrasekharuni/bart-paraphrase-finetuned-xsum-v3 | da235f3be1584e4e70ba5569579676eb6cbfbc1c | 2022-06-06T09:42:56.000Z | [

"pytorch",

"tensorboard",

"bart",

"text2text-generation",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| text2text-generation | false | yogeshchandrasekharuni | null | yogeshchandrasekharuni/bart-paraphrase-finetuned-xsum-v3 | 6 | null | transformers | 15,736 | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: bart-paraphrase-finetuned-xsum-v3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-paraphrase-finetuned-xsum-v3

This model is a fine-tuned version of [eugenesiow/bart-paraphrase](https://huggingface.co/eugenesiow/bart-paraphrase) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3377

- Rouge1: 99.9461

- Rouge2: 72.6619

- Rougel: 99.9461

- Rougelsum: 99.9461

- Gen Len: 9.0396

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| No log | 1.0 | 139 | 0.3653 | 96.4972 | 70.8271 | 96.5252 | 96.5085 | 9.7158 |

| No log | 2.0 | 278 | 0.6624 | 98.3228 | 72.2829 | 98.2598 | 98.2519 | 9.0612 |

| No log | 3.0 | 417 | 0.2880 | 98.2415 | 72.36 | 98.249 | 98.2271 | 9.4496 |

| 0.5019 | 4.0 | 556 | 0.4188 | 98.1123 | 70.8536 | 98.0746 | 98.0465 | 9.4065 |

| 0.5019 | 5.0 | 695 | 0.3718 | 98.8882 | 72.6619 | 98.8997 | 98.8882 | 10.7842 |

| 0.5019 | 6.0 | 834 | 0.4442 | 99.6076 | 72.6619 | 99.6076 | 99.598 | 9.0647 |

| 0.5019 | 7.0 | 973 | 0.2681 | 99.6076 | 72.6619 | 99.598 | 99.598 | 9.1403 |

| 0.2751 | 8.0 | 1112 | 0.3577 | 99.2479 | 72.6619 | 99.2536 | 99.2383 | 9.0612 |

| 0.2751 | 9.0 | 1251 | 0.2481 | 98.8785 | 72.6394 | 98.8882 | 98.8882 | 9.7914 |

| 0.2751 | 10.0 | 1390 | 0.2339 | 99.6076 | 72.6619 | 99.6076 | 99.6076 | 9.1942 |

| 0.2051 | 11.0 | 1529 | 0.2472 | 99.9461 | 72.6619 | 99.9461 | 99.9461 | 9.2338 |

| 0.2051 | 12.0 | 1668 | 0.3948 | 99.6076 | 72.6619 | 99.598 | 99.598 | 9.0468 |

| 0.2051 | 13.0 | 1807 | 0.4756 | 99.6076 | 72.6619 | 99.6076 | 99.6076 | 9.0576 |

| 0.2051 | 14.0 | 1946 | 0.3543 | 99.9461 | 72.6619 | 99.9461 | 99.9461 | 9.0396 |

| 0.1544 | 15.0 | 2085 | 0.2828 | 99.9461 | 72.6619 | 99.9461 | 99.9461 | 9.0576 |

| 0.1544 | 16.0 | 2224 | 0.2456 | 99.9461 | 72.6619 | 99.9461 | 99.9461 | 9.1079 |

| 0.1544 | 17.0 | 2363 | 0.2227 | 99.9461 | 72.6394 | 99.9461 | 99.9461 | 9.5072 |

| 0.1285 | 18.0 | 2502 | 0.3490 | 99.9461 | 72.6619 | 99.9461 | 99.9461 | 9.0396 |

| 0.1285 | 19.0 | 2641 | 0.3736 | 99.9461 | 72.6619 | 99.9461 | 99.9461 | 9.0396 |

| 0.1285 | 20.0 | 2780 | 0.3377 | 99.9461 | 72.6619 | 99.9461 | 99.9461 | 9.0396 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

kabelomalapane/Af-En | 667202b7a92cbc4d0341fd0c31474cabef4a643a | 2022-06-06T13:14:27.000Z | [

"pytorch",

"marian",

"text2text-generation",

"transformers",

"translation",

"generated_from_trainer",

"license:apache-2.0",

"model-index",

"autotrain_compatible"

]

| translation | false | kabelomalapane | null | kabelomalapane/Af-En | 6 | null | transformers | 15,737 | ---

license: apache-2.0

tags:

- translation

- generated_from_trainer

metrics:

- bleu

model-index:

- name: En-Af

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# En-Af

This model is a fine-tuned version of [Helsinki-NLP/opus-mt-af-en](https://huggingface.co/Helsinki-NLP/opus-mt-en-af) on an unspecified dataset.

It achieves the following results on the evaluation set:

Before training:

- 'eval_bleu': 46.1522519

- 'eval_loss': 2.5693612

After training:

- Loss: 1.7516168

- Bleu: 55.3924697

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

mpsb00/ECHR_test_2 | 2cfbcfb35e82870d93454e768195f54840f12c1d | 2022-06-06T11:17:21.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"dataset:lex_glue",

"transformers",

"generated_from_trainer",

"license:mit",

"model-index"

]

| text-classification | false | mpsb00 | null | mpsb00/ECHR_test_2 | 6 | null | transformers | 15,738 | ---

license: mit

tags:

- generated_from_trainer

datasets:

- lex_glue

model-index:

- name: ECHR_test_2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ECHR_test_2

This model is a fine-tuned version of [prajjwal1/bert-tiny](https://huggingface.co/prajjwal1/bert-tiny) on the lex_glue dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2487

- Macro-f1: 0.4052

- Micro-f1: 0.5660
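
The gap between macro-F1 and micro-F1 usually reflects class imbalance. The sketch below computes both from per-class counts. The counts are invented for the example and are not taken from this model's evaluation, and the function names are illustrative.

```python
def f1_from_counts(tp, fp, fn):
    """F1 expressed directly in terms of true/false positives and false negatives."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def macro_micro_f1(counts):
    """`counts` maps each label to a (tp, fp, fn) tuple."""
    # Macro: average the per-class F1 scores, so every class weighs the same.
    macro = sum(f1_from_counts(*c) for c in counts.values()) / len(counts)
    # Micro: pool the counts first, so frequent classes dominate.
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    micro = f1_from_counts(tp, fp, fn)
    return macro, micro

# Hypothetical counts: one frequent, well-predicted label and one rare, poorly predicted one.
example_counts = {"frequent": (90, 10, 10), "rare": (1, 4, 9)}
macro_f1, micro_f1 = macro_micro_f1(example_counts)
```

In this toy case the rare label pulls the macro score well below the micro score, the same pattern as the two figures reported above.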

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Macro-f1 | Micro-f1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:--------:|

| 0.2056 | 0.44 | 500 | 0.2846 | 0.3335 | 0.4763 |

| 0.1698 | 0.89 | 1000 | 0.2487 | 0.4052 | 0.5660 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

marieke93/BERT-evidence-types | f0f2696c4ca1c7405fe227bcb439fb1fd40b7aac | 2022-06-11T13:32:10.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

]

| text-classification | false | marieke93 | null | marieke93/BERT-evidence-types | 6 | null | transformers | 15,739 | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: BERT-evidence-types

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# BERT-evidence-types

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the evidence types dataset.

It achieves the following results on the evaluation set:

- Loss: 2.8008

- Macro f1: 0.4227

- Weighted f1: 0.6976

- Accuracy: 0.7154

- Balanced accuracy: 0.3876
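
Balanced accuracy is the mean of the per-class recalls, which explains why it can sit far below plain accuracy on imbalanced data. The following sketch uses made-up labels, not this model's predictions, and the function names are illustrative.

```python
def accuracy(y_true, y_pred):
    """Fraction of examples predicted correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def balanced_accuracy(y_true, y_pred):
    """Mean recall over the classes that occur in `y_true`."""
    recalls = []
    for label in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == label]
        correct = sum(1 for i in idx if y_pred[i] == label)
        recalls.append(correct / len(idx))
    return sum(recalls) / len(recalls)

# Hypothetical data: 8 examples of class 0, 2 examples of class 1.
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 9 + [1]
acc = accuracy(y_true, y_pred)
bal_acc = balanced_accuracy(y_true, y_pred)
```

Here plain accuracy is 0.9 while balanced accuracy is 0.75, because the minority class is only recalled half of the time.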

## Training and evaluation data

The dataset and the code used to fine-tune this model can be found in the GitHub repository [BA-Thesis-Information-Science-Persuasion-Strategies](https://github.com/mariekevdh/BA-Thesis-Information-Science-Persuasion-Strategies).

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Macro f1 | Weighted f1 | Accuracy | Balanced accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:-----------:|:--------:|:-----------------:|

| 1.1148 | 1.0 | 125 | 1.0531 | 0.2566 | 0.6570 | 0.6705 | 0.2753 |

| 0.7546 | 2.0 | 250 | 0.9725 | 0.3424 | 0.6947 | 0.7002 | 0.3334 |

| 0.4757 | 3.0 | 375 | 1.1375 | 0.3727 | 0.7113 | 0.7184 | 0.3680 |

| 0.2637 | 4.0 | 500 | 1.3585 | 0.3807 | 0.6836 | 0.6910 | 0.3805 |

| 0.1408 | 5.0 | 625 | 1.6605 | 0.3785 | 0.6765 | 0.6872 | 0.3635 |

| 0.0856 | 6.0 | 750 | 1.9703 | 0.3802 | 0.6890 | 0.7047 | 0.3704 |

| 0.0502 | 7.0 | 875 | 2.1245 | 0.4067 | 0.6995 | 0.7169 | 0.3751 |

| 0.0265 | 8.0 | 1000 | 2.2676 | 0.3756 | 0.6816 | 0.6925 | 0.3647 |

| 0.0147 | 9.0 | 1125 | 2.4286 | 0.4052 | 0.6887 | 0.7062 | 0.3803 |

| 0.0124 | 10.0 | 1250 | 2.5773 | 0.4084 | 0.6853 | 0.7040 | 0.3695 |

| 0.0111 | 11.0 | 1375 | 2.5941 | 0.4146 | 0.6915 | 0.7085 | 0.3834 |

| 0.0076 | 12.0 | 1500 | 2.6124 | 0.4157 | 0.6936 | 0.7078 | 0.3863 |

| 0.0067 | 13.0 | 1625 | 2.7050 | 0.4139 | 0.6925 | 0.7108 | 0.3798 |

| 0.0087 | 14.0 | 1750 | 2.6695 | 0.4252 | 0.7009 | 0.7169 | 0.3920 |

| 0.0056 | 15.0 | 1875 | 2.7357 | 0.4257 | 0.6985 | 0.7161 | 0.3868 |

| 0.0054 | 16.0 | 2000 | 2.7389 | 0.4249 | 0.6955 | 0.7116 | 0.3890 |

| 0.0051 | 17.0 | 2125 | 2.7767 | 0.4197 | 0.6967 | 0.7146 | 0.3863 |

| 0.004 | 18.0 | 2250 | 2.7947 | 0.4211 | 0.6977 | 0.7154 | 0.3876 |

| 0.0041 | 19.0 | 2375 | 2.8030 | 0.4204 | 0.6953 | 0.7131 | 0.3855 |

| 0.0042 | 20.0 | 2500 | 2.8008 | 0.4227 | 0.6976 | 0.7154 | 0.3876 |

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

tzq0301/T5-Pegasus-news-title-generation | 350d5d75eb8f8215e60e40a56ae408e68982d2b3 | 2022-06-09T06:56:58.000Z | [

"pytorch",

"mt5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | false | tzq0301 | null | tzq0301/T5-Pegasus-news-title-generation | 6 | null | transformers | 15,740 | Entry not found |

catofnull/BERT-Pretrain | 5558b20bba5d6b2d67decada899ea47bf2b312d0 | 2022-06-08T16:30:49.000Z | [

"pytorch",

"distilbert",

"fill-mask",

"transformers",

"autotrain_compatible"

]

| fill-mask | false | catofnull | null | catofnull/BERT-Pretrain | 6 | null | transformers | 15,741 | Entry not found |

blenderwang/roberta-base-emotion-32-balanced | 0e11d80f17b2c7cba77f6789eab40881026bcde8 | 2022-06-09T08:34:08.000Z | [

"pytorch",

"roberta",

"text-classification",

"transformers",

"generated_from_trainer",

"model-index"

]

| text-classification | false | blenderwang | null | blenderwang/roberta-base-emotion-32-balanced | 6 | null | transformers | 15,742 | ---

tags:

- generated_from_trainer

model-index:

- name: results

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# results

This model was trained from scratch on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

|

russellc/bert-finetuned-ner-accelerate | f402fd7e21cc54b2838f57af196e9985fe39bb09 | 2022-06-09T11:22:12.000Z | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | false | russellc | null | russellc/bert-finetuned-ner-accelerate | 6 | null | transformers | 15,743 | Entry not found |

qualitydatalab/autotrain-car-review-project-966432121 | f28298b06ea8fe9f30ca78fa5a5c57ee7cb08368 | 2022-06-09T13:04:21.000Z | [

"pytorch",

"roberta",

"text-classification",

"en",

"dataset:qualitydatalab/autotrain-data-car-review-project",

"transformers",

"autotrain",

"co2_eq_emissions"

]

| text-classification | false | qualitydatalab | null | qualitydatalab/autotrain-car-review-project-966432121 | 6 | 1 | transformers | 15,744 | ---

tags: autotrain

language: en

widget:

- text: "I love driving this car"

datasets:

- qualitydatalab/autotrain-data-car-review-project

co2_eq_emissions: 0.21529888368377176

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 966432121

- CO2 Emissions (in grams): 0.21529888368377176

## Validation Metrics

- Loss: 0.6013365983963013

- Accuracy: 0.737791286727457

- Macro F1: 0.729171012281939

- Micro F1: 0.737791286727457

- Weighted F1: 0.729171012281939

- Macro Precision: 0.7313770127538427

- Micro Precision: 0.737791286727457

- Weighted Precision: 0.7313770127538428

- Macro Recall: 0.737791286727457

- Micro Recall: 0.737791286727457

- Weighted Recall: 0.737791286727457
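
The relationship between these averages can be sketched in a few lines of plain Python. The labels below are made up for illustration and `f1_scores` is a hypothetical helper, not part of AutoTrain; the point is only that, for single-label multi-class predictions, micro F1 coincides with accuracy, as it does in the numbers above.

```python
from collections import Counter


def f1_scores(y_true, y_pred):
    """Return accuracy plus micro, macro and weighted F1 for single-label data."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    per_class = {c: f1(tp[c], fp[c], fn[c]) for c in labels}
    support = Counter(y_true)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "micro_f1": f1(sum(tp.values()), sum(fp.values()), sum(fn.values())),
        "macro_f1": sum(per_class.values()) / len(labels),
        "weighted_f1": sum(per_class[c] * support[c] for c in labels) / len(y_true),
    }


# Hypothetical toy labels with two examples per class.
y_true = ["pos", "pos", "neg", "neg", "neu", "neu"]
y_pred = ["pos", "neg", "neg", "neg", "neu", "pos"]
scores = f1_scores(y_true, y_pred)
print(scores)
```

When every class has the same number of examples, the macro and weighted averages also agree, which is one possible reading of the identical Macro F1 and Weighted F1 reported here.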

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love driving this car"}' https://api-inference.huggingface.co/models/qualitydatalab/autotrain-car-review-project-966432121

```

Or use the Python API:

```

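from transformers import AutoModelForSequenceClassification, AutoTokenizer

model_id = "qualitydatalab/autotrain-car-review-project-966432121"

# use_auth_token=True reads the token stored by `huggingface-cli login`
model = AutoModelForSequenceClassification.from_pretrained(model_id, use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained(model_id, use_auth_token=True)

# Tokenize one input and run a forward pass
inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

# Map the highest-scoring logit to its class name
predicted_label = model.config.id2label[outputs.logits.argmax(dim=-1).item()]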

``` |

HrayrMSint/distilbert-base-uncased-finetuned-clinc | e8dac9ebfb82bc4a7e62eae78373d3d25509d05d | 2022-06-10T01:17:59.000Z | [

"pytorch",

"distilbert",

"text-classification",

"dataset:clinc_oos",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

]

| text-classification | false | HrayrMSint | null | HrayrMSint/distilbert-base-uncased-finetuned-clinc | 6 | null | transformers | 15,745 | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- clinc_oos

metrics:

- accuracy

model-index:

- name: distilbert-base-uncased-finetuned-clinc

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: clinc_oos

type: clinc_oos

args: plus

metrics:

- name: Accuracy

type: accuracy

value: 0.9135483870967742

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-clinc

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the clinc_oos dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7771

- Accuracy: 0.9135

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 48

- eval_batch_size: 48

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 4.2843 | 1.0 | 318 | 3.2793 | 0.7448 |
| 2.6208 | 2.0 | 636 | 1.8750 | 0.8297 |
| 1.5453 | 3.0 | 954 | 1.1565 | 0.8919 |
| 1.0141 | 4.0 | 1272 | 0.8628 | 0.9090 |
| 0.795 | 5.0 | 1590 | 0.7771 | 0.9135 |
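
As a rough illustration of what `lr_scheduler_type: linear` implies for this run, the sketch below computes the learning rate at each epoch boundary from the step counts in the table (318 steps per epoch, 1590 in total). It assumes zero warmup steps, which the card does not state, and `linear_lr` is a hypothetical helper rather than the library's scheduler.

```python
def linear_lr(step, base_lr=2e-05, total_steps=1590, warmup_steps=0):
    """Linear warmup (assumed to be 0 steps here) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the end of each of the five epochs listed in the table.
for epoch, step in enumerate([318, 636, 954, 1272, 1590], start=1):
    print(f"epoch {epoch}: step {step}, lr {linear_lr(step):.2e}")
```

Under these assumptions the rate falls by a fifth of its initial value per epoch and reaches zero at the final step.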

### Framework versions

- Transformers 4.13.0

- PyTorch 1.10.0

- Datasets 2.2.2

- Tokenizers 0.10.3

|

juliensimon/distilbert-amazon-shoe-reviews-quantized | e9fc155e18ac3294de8cab3090a00b6f7b07e307 | 2022-06-10T11:24:00.000Z | [

"pytorch",

"distilbert",

"text-classification",

"transformers"

]

| text-classification | false | juliensimon | null | juliensimon/distilbert-amazon-shoe-reviews-quantized | 6 | null | transformers | 15,746 | Entry not found |

Jeevesh8/std_pnt_04_feather_berts-24 | f2765d909e423c944ef90ad16fae304a87215956 | 2022-06-12T06:02:57.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers"

]

| text-classification | false | Jeevesh8 | null | Jeevesh8/std_pnt_04_feather_berts-24 | 6 | null | transformers | 15,747 | Entry not found |

Jeevesh8/std_pnt_04_feather_berts-47 | 69236732538b82e8d213bd3a47f44c7c83bb676a | 2022-06-12T06:03:10.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers"

]

| text-classification | false | Jeevesh8 | null | Jeevesh8/std_pnt_04_feather_berts-47 | 6 | null | transformers | 15,748 | Entry not found |

Jeevesh8/std_pnt_04_feather_berts-66 | d742fe6904b663be0c8cb6fd0f5262296f1804d1 | 2022-06-12T06:03:01.000Z | [

"pytorch",

"tensorboard",

"bert",

"text-classification",

"transformers"

]

| text-classification | false | Jeevesh8 | null | Jeevesh8/std_pnt_04_feather_berts-66 | 6 | null | transformers | 15,749 | Entry not found |

Jingya/tmpkplizo4c | fd332ea15d2ce4daaf302c4b4fb72a42ca0929a3 | 2022-06-12T22:05:38.000Z | [

"pytorch",

"bert",

"text-classification",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

]

| text-classification | false | Jingya | null | Jingya/tmpkplizo4c | 6 | null | transformers | 15,750 | ---

license: apache-2.0

tags:

- generated_from_trainer