full_name

stringlengths 10

67

| url

stringlengths 29

86

| description

stringlengths 3

347

⌀ | readme

stringlengths 0

162k

| stars

int64 10

3.1k

| forks

int64 0

1.51k

|

|---|---|---|---|---|---|

learn-video/rtmp-live

|

https://github.com/learn-video/rtmp-live

|

Learn how to build a simple streaming platform based on the Real Time Messaging Protocol

|

# RTMP Live

## What is this?

This repository provides a comprehensive guide and code samples for creating a small streaming platform based on the [RTMP]((https://en.wikipedia.org/wiki/Real-Time_Messaging_Protocol)) (Real-Time Messaging Protocol). The platform enables live streaming capabilities and leverages NGINX RTMP for receiving video streams. Additionally, the repository includes functionality to play the recorded videos via HTTP directly from another pool of servers.

Additionally, a service discovery process is included to report the active streams to an API. The API, integrated with Redis, returns the server and manifest path required for playback.

```mermaid

graph LR

A[Edge] -- Which server should I request video? --> B[API]

B -- Get server --> C[Redis]

B -- Response with Origin A --> A

A -- Request content --> D[Origin A]

E[Origin B]

```

Platform components:

* Origin: ingest, storage and content origin

* Edge: CDN, server you use to play the video

* API: tracks Origin servers

## What's the stack behind it?

This small live streaming platform relies on the following projects:

* [`NGINX-RTMP`](https://github.com/arut/nginx-rtmp-module) - the widely, battle-tested and probably the most famous RTMP server

* [`NGINX`](https://www.nginx.com/) - the most used werb server in the world

* [`Lua`](https://www.lua.org/) - a simple yet very powerful programing language 🇧🇷

* [`Go`](https://go.dev/) - a good language to build HTTP APIs, workers, daemons and every kind of distribued system service

## How to use

There are some requirements you need to run this project:

* [`Docker Compose`](https://docs.docker.com/compose/)

* [`OBS Studio`](https://obsproject.com/)

* [`ffmpeg`](https://www.ffmpeg.org/)

Now you are good to go!

To use the platform, follow these steps:

1. Open your terminal and execute the command:

```docker compose up```

2. Once all the components are up and running, launch OBS Studio on your computer.

3. Configure OBS Studio to stream via RTMP using the following settings:

```

Stream Type: Custom Streaming Server

URL: rtmp://localhost:1935/stream

Stream Key: golive

```

4. Start your live streaming session in OBS Studio. The platform will now receive your live stream and make it available for playback.

5. Use a player like [VLC](https://www.videolan.org/vlc/) and point it to http://127.0.0.1:8080/golive/index.m3u8. You can also use a browser with a proper extension to play HLS.

There is also a test video that can be generated using ffmpeg:

```

ffmpeg -re -f lavfi -i "smptehdbars=rate=60:size=1920x1080" \

-f lavfi -i "sine=frequency=1000:sample_rate=48000" \

-vf drawtext="text='RTMP Live %{localtime\:%X}':rate=60:x=(w-tw)/2:y=(h-lh)/2:fontsize=48:fontcolor=white:box=1:boxcolor=black" \

-f flv -c:v h264 -profile:v baseline -pix_fmt yuv420p -preset ultrafast -tune zerolatency -crf 28 -g 120 -c:a aac \

"rtmp://localhost:1935/stream/golive"

```

*For detailed guidance on using OBS Studio, there are plenty of tutorials available on the internet. They provide comprehensive instructions and helpful tips for a smooth streaming setup.*

If everything goes fine, you will watch a colorbar video just like this:

## Edge - CDN

The Edge server, often referred to as "the frontend server" is an essential component of the Content Delivery Network (CDN). It plays a crucial role in the media streaming platform, facilitating a seamless viewing experience for users.

It is the server delivered by the platform you are using to watch the video, this is the server your media player will use to play the video.

The Edge server serves as the intermediary between the end-users and the video content they wish to watch. When you access a video on the platform, your media player interacts with the Edge server, which efficiently delivers the video content to your device. This playable URL comes through an HTTP API and it is out of the scope of this educational project.

Our Edge component here is responsible for retrieving from an HTTP API which Origin server the content will come from, and stick to it through the playback.

```mermaid

graph LR

style Edge fill:#FFC107, stroke:#000, stroke-width:2px, r:10px

style API fill:#4CAF50, stroke:#000, stroke-width:2px, r:10px

style Origin fill:#2196F3, stroke:#000, stroke-width:2px, r:10px

Edge("Edge") -- Which servers holds the content? --> API("HTTP API")

API -- Returns JSON with data --> Edge

Edge -- Proxies Request --> Origin("Origin Server")

```

A typical response from the HTTP API looks like this:

```json

{

"name": "golive",

"manifest": "index.m3u8",

"host": "127.0.0.1"

}

```

We use these values in the [proxy_pass directive](https://docs.nginx.com/nginx/admin-guide/web-server/reverse-proxy/) to proxy the request to the correct origin server.

```nginx

location ~ "/(?<stream>[^/]*)/index.m3u8$" {

set $target "";

access_by_lua_block {

ngx.var.target = router.fetch_streams(ngx.var.stream)

}

proxy_pass http://$target;

}

```

## Origin

The Origin is the component responsible for receiving the video (ingest), storing and serving the original video content to the Edge servers and users.

Keys characteristics of the Origin service are:

* Ingest: it receives the video feed from an encoder, such as [Elemental](https://aws.amazon.com/elemental-server/) or [OBS Studio](https://obsproject.com/), serving as the entry point for content upload.

* Packager: the Origin service packages the video for user consumption, fragments it into segments, and generates [HLS](https://developer.apple.com/streaming/) manifests.

* Storage: in addition to packaging, the Origin service stores all the video content.

* Delivery: as the backbone of content distribution, it acts as an upstream to the Edge servers, efficiently delivering content when requested.

```mermaid

graph TD

style Encoder fill:#B2DFDB, stroke:#000, stroke-width:2px, r:10px

style RTMPLB fill:#FFCC80, stroke:#000, stroke-width:2px, r:10px

style OriginA fill:#BBDEFB, stroke:#000, stroke-width:2px, r:10px

style OriginB fill:#BBDEFB, stroke:#000, stroke-width:2px, r:10px

Encoder("Encoder (e.g., OBS)") --> RTMPLB("RTMP Load Balancer")

RTMPLB --> OriginA("Origin A")

RTMPLB --> OriginB("Origin B")

```

In live streaming, using distributed storage is impractical due to latency issues. To maintain low latency and avoid buffering during streaming, video packagers opt for local storage. This approach reduces the time it takes to access and deliver content to viewers, ensuring a greater playback experience.

To determine which server hosts specific content, such as *World Cup Finals* or *Playing Final Fantasy*, we implement a Discovery program. This program tracks the locations of various streams and provides the necessary information for efficient content delivery. By leveraging the Discovery program, our platform optimizes content distribution, guaranteeing a seamless live streaming experience for viewers.

### Discovery

The Discovery service is responsible for tracking and identifying which server holds a specific streaming content. This becomes especially important when multiple encoders are feeding the Origin Service with different content, and the platform needs to determine the appropriate server(s) to deliver the content when requested by users.

It is deployed aside with the Origin service. The reason is because we continuously need to know whether the video feeding is up and running.

```mermaid

sequenceDiagram

participant DS as Discovery Service

participant FS as Filesystem

participant API as HTTP API

loop Watch filesystem events

DS->>FS: Check if manifests are being created/updated

end

Note right of DS: Accesses the filesystem to verify if the streaming is working

DS->>API: Report Host (IP), manifest path, stream name (e.g golive)

Note right of DS: Sends relevant information to the HTTP API

```

Our RTMP server supports authorization through an *on_publish* callback. This functionality plays a vital role in our platform, as it allows us to ensure secure ingest of live streams. When a new stream is published, the RTMP server triggers the on_publish callback, and our platform calls the HTTP API to authorize the ingest.

```nginx

application stream {

live on;

record off;

notify_method get;

on_publish http://api:9090/authorize;

}

```

Content delivey is supported using a location with the [alias](http://nginx.org/en/docs/http/ngx_http_core_module.html#alias) directive:

```nginx

location / {

alias /opt/data/hls/;

types {

application/vnd.apple.mpegurl m3u8;

video/mp2t ts;

}

add_header Cache-Control no-cache;

add_header Access-Control-Allow-Origin *;

}

```

## API

The HTTP API used by the Discovery Service performs two critical functions. Firstly, it enables real-time updates to Redis keys, ensuring the tracking of changes in streaming manifests. By utilizing TTL for Redis keys, the API automatically removes keys when the encoder goes offline or when the live streaming session ends. As a result, the platform stops offering the corresponding live content.

You can try the API using the VSCode [Rest Client](https://marketplace.visualstudio.com/items?itemName=humao.rest-client) extension. Open the [api.http file](api.http)

The API has three routes:

* GET [`/authorize`](http://localhost:9090/authorize) - used to authorize RTMP ingest

* POST [`/streams`](http://localhost:9090/streams) - report live streaming content

* GET [`/streams/golive`](http://localhost:9090/streams/golive) - playback information for the given stream name

## Your turn

A basic architecture has been described. And now it is your time to think about next steps for our live streaming platform:

* **Best possible experience**: to ensure the best possible viewer experience, explore implementing adaptive bitrate streaming. Read about [Adaptive bitrate streaming](https://en.wikipedia.org/wiki/Adaptive_bitrate_streaming)

* **Increased Resiliency**: what happens if the HTTP API goes offline for 5 minutes? How can the system handle and recover from such scenarios without compromising content availability?

* **Scalability**: to reduce latency while maintaining content delivery efficiency, explore techniques that can lower latency without reducing the segment size

---

This documentation was heavily inspired by [@leandromoreira](https://github.com/leandromoreira/) [CDN up and running](https://github.com/leandromoreira/cdn-up-and-running)

| 45 | 3 |

da-x/deltaimage

|

https://github.com/da-x/deltaimage

|

a tool to generate and apply binary deltas between Docker images to optimize registry storage

|

# deltaimage

Deltaimage is a tool designed to generate delta layers between two Docker images that do not benefit from shared layers. It also offers a mechanism to apply this delta, thus recreating the second image. Deltaimage leverages xdelta3 to achieve this.

This tool may prove advantageous when:

- Your Docker image has a large and complex build with many layers that, due to certain intricate reasons, do not benefit from layer caching. The total size of the image is equal to the total size of all the layers and is significantly large.

- Your build results in large files with minute differences that xdelta3 can discern.

- You need to optimize storage space on simple registry services like ECR.

## Demo

Consider the following closely timed Docker images of Ubuntu:

```

$ docker history ubuntu:mantic-20230607 | grep -v "0B"

IMAGE CREATED CREATED BY SIZE COMMENT

<missing> 5 weeks ago /bin/sh -c #(nop) ADD file:d8dc8c4236b9885e6… 70.4MB

$ docker history ubuntu:mantic-20230624 | grep -v "0B"

IMAGE CREATED CREATED BY SIZE COMMENT

<missing> 2 weeks ago /bin/sh -c #(nop) ADD file:ce14b5aa15734922e… 70.4MB

```

Despite likely having a small difference between them, the combined size is 140.8 MB in our registry as they don't share layers.

### Delta generation

Let's generate a delta using the following shell script:

```

source=ubuntu:mantic-20230607

target=ubuntu:mantic-20230624

source_plus_delta=local/ubuntu-mantic-20230607-to-20230624

docker run --rm deltaimage/deltaimage:0.1.0 \

docker-file diff ${source} ${target} | \

docker build --no-cache -t ${source_plus_delta} -

```

Now we can inspecting the generated tag:

```

$ docker history local/ubuntu-mantic-20230607-to-20230624 | grep -v "0B"

IMAGE CREATED CREATED BY SIZE COMMENT

b2e2961dc67a 3 minutes ago COPY /delta /__deltaimage__.delta # buildkit 786kB buildkit.dockerfile.v0

<missing> 5 weeks ago /bin/sh -c #(nop) ADD file:d8dc8c4236b9885e6… 70.4MB

```

This displays a first layer shared with `ubuntu:mantic-20230607` and a delta added as a second layer. The total size is just slightly over 71MB.

### Restoring images from deltas

Restore the image using:

```

source_plus_delta=local/ubuntu-mantic-20230607-to-20230624

target_restored=local:mantic-20230624

docker run deltaimage/deltaimage:0.1.0 docker-file apply ${source_plus_delta} \

| docker build --no-cache -t ${target_restored} -

```

Inspect the recreated image `local:mantic-20230624`:

```

$ docker history local:mantic-20230624

IMAGE CREATED CREATED BY SIZE COMMENT

344a84625581 7 seconds ago COPY /__deltaimage__.delta/ / # buildkit 70.4MB buildkit.dockerfile.v0

```

It should be observed that the file system content of `local:mantic-20230624` is the same as the original second image `ubuntu:mantic-20230624`.

## Building deltaimage

Instead of pulling deltaimage from the internet, you can build a docker image of deltaimage locally using:

```

./run build-docker-image

```

A locally tagged version `deltaimage/deltaimage:<version>` will be created.

## Under the hood

Deltaimage uses [xdelta](http://xdelta.org) to compare files between the two images based on the

pathname. The tool is developed in Rust.

The `docker-file diff` helper command generates a dockerfile such as the following:

```

# Calculate delta under a temporary image

FROM scratch as delta

COPY --from=ubuntu:mantic-20230607 / /source/

COPY --from=ubuntu:mantic-20230624 / /delta/

COPY --from=deltaimage/deltaimage:0.1.0 /opt/deltaimage /opt/deltaimage

RUN ["/opt/deltaimage", "diff", "/source", "/delta"]

# Make the deltaimage

FROM ubuntu:mantic-20230607

COPY --from=delta /delta /__deltaimage__.delta

```

The `docker-file apply` helper command generates a dockerfile such as the following:

```

# Apply a delta under a temporary image

FROM local/ubuntu-mantic-20230607-to-20230624 as applied

COPY --from=deltaimage/deltaimage:0.1.0 /opt/deltaimage /opt/deltaimage

USER root

RUN ["/opt/deltaimage", "apply", "/", "/__deltaimage__.delta"]

# Make the original image by applying the delta

FROM scratch

COPY --from=applied /__deltaimage__.delta/ /

```

## Limitations

- The hash of the restored image will not match the original image.

- File timestamps in the restored image may not be identical to the original.

## License

Interact is licensed under Apache License, Version 2.0 ([LICENSE](LICENSE)).

| 12 | 1 |

ThePrimeagen/fem-algos-2

|

https://github.com/ThePrimeagen/fem-algos-2

|

The Last Algorithm Class You Want

|

## The last algorithms course you will WANT

This course is the follow up to [The Last Algorithms Course You Need](https://github.com/ThePrimeagen/fem-algos)

Do not be discouraged, data structures and algorithms take effort and practice!

### Website

[The Last Algorithms Class You Will Want](https://theprimeagen.github.io/fem-algos-2)

### FEM Courses

- Prereq: [First Algorithms Class](https://frontendmasters.com/courses/algorithms)

### Others

[VIM](https://frontendmasters.com/courses/vim-fundamentals/)<br/>

[Developer Productivity](https://frontendmasters.com/courses/developer-productivity/)<br/>

[Rust For TypeScript Devs](https://frontendmasters.com/courses/rust-ts-devs/)<br/>

| 48 | 0 |

faresemad/Django-Roadmap

|

https://github.com/faresemad/Django-Roadmap

|

Django roadmap outlines key features and improvements for upcoming releases, including performance and scalability improvements, new features for modern web development, enhanced security, and improved developer experience.

|

# Django Road Map

[](https://www.linkedin.com/in/faresemad/)

[](https://www.facebook.com/faresemadx)

[](https://twitter.com/faresemadx)

[](https://www.instagram.com/faresemadx/)

[](https://www.github.com/faresemad/)

## Junior

- [x] Models & Query set

- [x] Views & Mixins

- [x] Forms & Form-set

- [x] Templates & Filters

- [x] Authentication

## Mid-Level

- [x] Components [ Customization ]

- [x] Models [ Instance Methods - models vs views - Transaction]

- [x] Views [ customize mixins ]

- [x] Templates [ customiza filters & tags ]

- [x] Translation

- [x] Payment

- [x] Channels

- [x] Celery & Redis

- [x] Testing

- [x] Admin customization

- [x] Sessions

- [x] Cookies

- [x] Cache

- [x] Authentication

- [x] Swagger

- [x] Analysis

## Senior

- [x] Create or customize Model fields

- [ ] JS framework ( Vue.js or Ajax )

- [ ] Testing

- [ ] Docker

- [ ] Security

## Must you know

- [x] Deployment

- [x] REST API & DOC

- [x] Git & GitHub

## Courses

1. **Django with Mosh**

- [x] [Part one ↗](https://codewithmosh.com/p/the-ultimate-django-part1)

- [x] [Part two ↗](https://codewithmosh.com/p/the-ultimate-django-part2)

- [ ] [Part three ↗](https://codewithmosh.com/p/the-ultimate-django-part3)

2. **Django Core**- [Django Core ↗](https://www.udemy.com/course/django-core/)

- [x] Django view

- [ ] Django models unleashed - updated & expanded

- [ ] Django models unleashed - Original Version

- [x] Django class based views unleashed

- [ ] Understanding class based views - original version

- [x] Forms & Formsets

- [x] Django templates

- [x] Django translation

- [ ] Django user model unleashed

- [ ] Django tests unleashed

- [ ] Deployment

- [ ] Django Foreign key unleashed

- [ ] Time & Tasks A Guide to Connecting Django, Celery, Redis

- [ ] Django Hosts

## Websites

- [colorlib.com ↗](https://colorlib.com/) -> for templates

- [mockaroo.com ↗](https://mockaroo.com/) -> random data generator

## Books

1. **Django for APIs**

- [x] Chapter 1 : Initial set up

- [x] Chapter 2 : Web APIs

- [x] Chapter 3 : Library Website

- [x] Chapter 4 : Library API

- [x] Chapter 5 : Todo API

- [x] Chapter 6 : Blog API

- [x] Chapter 7 : Permission

- [x] Chapter 8 : User Authentication

- [x] Chapter 9 : Viewsets and Routers

- [x] Chapter 10 : Schema and Documentation

- [x] Chapter 11 : Production Deployment

2. **Django 4 by Example**

- [x] Chapter 1 : Building a blog application

- [x] Chapter 2 : Enhancing your blog with advanced features

- [x] Chapter 3 : Extending your blog application

- [x] Chapter 4 : Building a social website

- [x] Chapter 5 : Implementing social Authentication

- [ ] Chapter 6 : Sharing content on your website

- [ ] Chapter 7 : Tracking user action

- [x] Chapter 8 : Building an online shop

- [x] Chapter 9 : Managing payment and orders

- [ ] Chapter 10 : Extending your shop

- [ ] Chapter 11 : Adding Internationalization to your shop

- [ ] Chapter 12 : Building an E-Learning platform

- [ ] Chapter 13 : Creating a Content management system

- [ ] Chapter 14 : Rendering and Caching content

- [ ] Chapter 15 : Building an API

- [x] Chapter 16 : Building a chat server

- [ ] Chapter 17 : Going Live

3. **Django for Professionals**

- [ ] Docker

- [ ] PostgreSQL

- [ ] Bookstore Project

- [ ] Pages App

- [ ] User Registration

- [ ] Static Assets

- [ ] Advanced User Registration

- [ ] Environment Variables

- [ ] Email

- [ ] Book App

- [ ] Reviews App

- [ ] File / Image Upload

- [ ] Permission

- [ ] Search

- [ ] Performance

- [ ] Security

- [ ] Deployment

## Packages

- [Djoser ↗](https://djoser.readthedocs.io/en/latest/)

- [dj-rest-auth ↗](https://dj-rest-auth.readthedocs.io/en/latest/)

- [Django-allauth ↗](https://django-allauth.readthedocs.io/en/latest/)

- [Cookiecutter ↗](https://cookiecutter.readthedocs.io/en/latest/)

- [Django Debug Toolbar ↗](https://django-debug-toolbar.readthedocs.io/en/latest/)

- [Silk ↗](https://github.com/jazzband/django-silk)

## Themes

- [Jazzmin ↗](https://github.com/farridav/django-jazzmin)

- [Django Suit ↗](https://djangosuit.com/)

- [django-admin-interface ↗](https://github.com/fabiocaccamo/django-admin-interface)

- [django-grappelli ↗](https://github.com/sehmaschine/django-grappelli)

- [Django-material ↗](http://forms.viewflow.io/)

- [django-jet-reboot ↗](https://github.com/assem-ch/django-jet-reboot)

- [django-flat ↗](https://github.com/collinanderson/django-flat-theme)

- [django-admin-bootstrap ↗](https://github.com/django-admin-bootstrap/django-admin-bootstrap)

- [django-suit ↗](https://github.com/darklow/django-suit)

- [django-baton ↗](https://github.com/otto-torino/django-baton)

- [django-jazzmin ↗](https://github.com/farridav/django-jazzmin)

- [django-simpleui ↗](https://github.com/newpanjing/simpleui)

- [django-semantic-admin ↗](https://github.com/globophobe/django-semantic-admin)

- [django-admin-volt ↗](https://github.com/app-generator/django-admin-volt)

| 33 | 7 |

hfiref0x/WubbabooMark

|

https://github.com/hfiref0x/WubbabooMark

|

Debugger Anti-Detection Benchmark

|

# WubbabooMark

## Debugger Anti-Detection Benchmark

[](https://ci.appveyor.com/project/hfiref0x/wubbaboomark)

<img src="https://raw.githubusercontent.com/hfiref0x/WubbabooMark/master/Help/SeriousWubbaboo.png" width="150" />

**WubbabooMark** aimed to detect traces of usage of software debuggers or special software designed to hide debuggers presence from debugee by tampering various aspects of program environment.

Typical set of debuggers nowadays is actually limited to a few most popular solutions like Ghidra/IDA/OllyDbg/x32+x64dbg/WinDbg and so on. There is a special class of software designed to "hide" debugger from being detected by debugee. Debugger detection usually used by another software class - software protectors (e.g. Themida/VMProtect/Obsidium/WinLicense). Sometimes software that counteracts these detections referred as "anti-anti-debug" or whatsoever. Personally I found all of this "anti-anti" kind of annoying because we can continue and it will be "anti-anti-anti-..." with all sense lost somewhere in the middle.

What this "anti-anti" class of software actually does is creating a landscape of additional detection vectors, while some of most notorious pieces compromise operation system components integrity and security in the sake of being able to work. And all of them, absolutely all of them brings multiple bugs due to inability correctly replicate original behavior of hooked/emulated functions. Sounds scary? Not much that scary as most of this software users (they call themselves "reversers/crackers") know what they're doing and doing that on purpose... right? Carelessly implemented targeted antidetection methods against known and well reverse-engineered commercial protectors creating a bunch of new artifacts. WubbabooMark using publicly known, actualized and enchanced methods to list those artifacts.

The continuous VMProtect drama generates a lot of fun so I just can't stay away of it. Since VMProtect recently goes "open-source" under DGAF license I had an opportunity to look closer on its "anti-" stuff. What VMProtect has under the hood clearly demonstrates authors following mainstream "scene" with little own creativity in some aspects due to limits as commercial product and software support requirements. Direct syscalls, heavens gate? What year is it now? However, reinventing this stuff even in 2018 seems have doomed to dead some of this so called "anti-anti" software.

Anyway, we have some debuggers, some "tampering tools/plugins" etc, lets see how good they are!

# System Requirements

x64 Windows 10/11 and above.

Anything below Windows 10 is unsupported. Well, because those OSes discontinued by Microsoft and mainstream industry. What a surprise! What a surprise! Forget stone age systems and move on.

Windows 11 preview/developer builds WARNING: since this program rely on completely undocumented stuff there can be problems with most recent versions that program doesn't know about resulting in false-positive detection or program crashes. Use on your own risk.

# Implemented tests

(a short list, almost each actually does more but for readme technical details are too much)

* Common set of tests

* Presence of Windows policy allowing custom kernel signers

* Detection of Windows kernel debugger by NtSystemDebugControl behavior.

* Check for unnecessary process privileges enablement

* Process Environment Block (PEB) Loader entries verification

* Must be all authenticode signed, have valid names

* Loaded Kernel Modules verification

* Must be all authenticode signed, doesn't include anything from built-in blacklist

* Detect lazy data tampering

* Blacklisted Driver Device Objects

* Lookup devices object names in Object Manager namespace and compare them with blacklist

* Windows Version Information

* Detect l33t and other BS changes

* Cross-compare version information from several system modules that are in KnownDlls

* Cross-compare version information from PEB with data obtained through WMI

* Validate system call (syscall) layout for PEB version

* Validate system build number acceptable range

* Running Processes

* Check if process name is in blacklist

* Cross-compare Native API query result with WMI data to detect hidden from client processes

* Detect lazy Native API data tampering

* Check client against console host information

* Application Compatibility (AppCompat) parent information

* Client Threads

* Verify that client threads instruction pointers belong to visible modules

* NTDLL mapping validation

* Map NTDLL using several methods and cross-compare results

* Examine program stack

* Find a code that doesn't belong to any loaded module

* Validate Working Set (WS) information

* Query WS and walk each page looking for suspicious flags

* Use WS watch and look for page fault data

* Perform Handle Tracing

* Enable handle tracing for client, perform bait call and examine results

* Check NtClose misbehavior

* Validate NTDLL syscalls

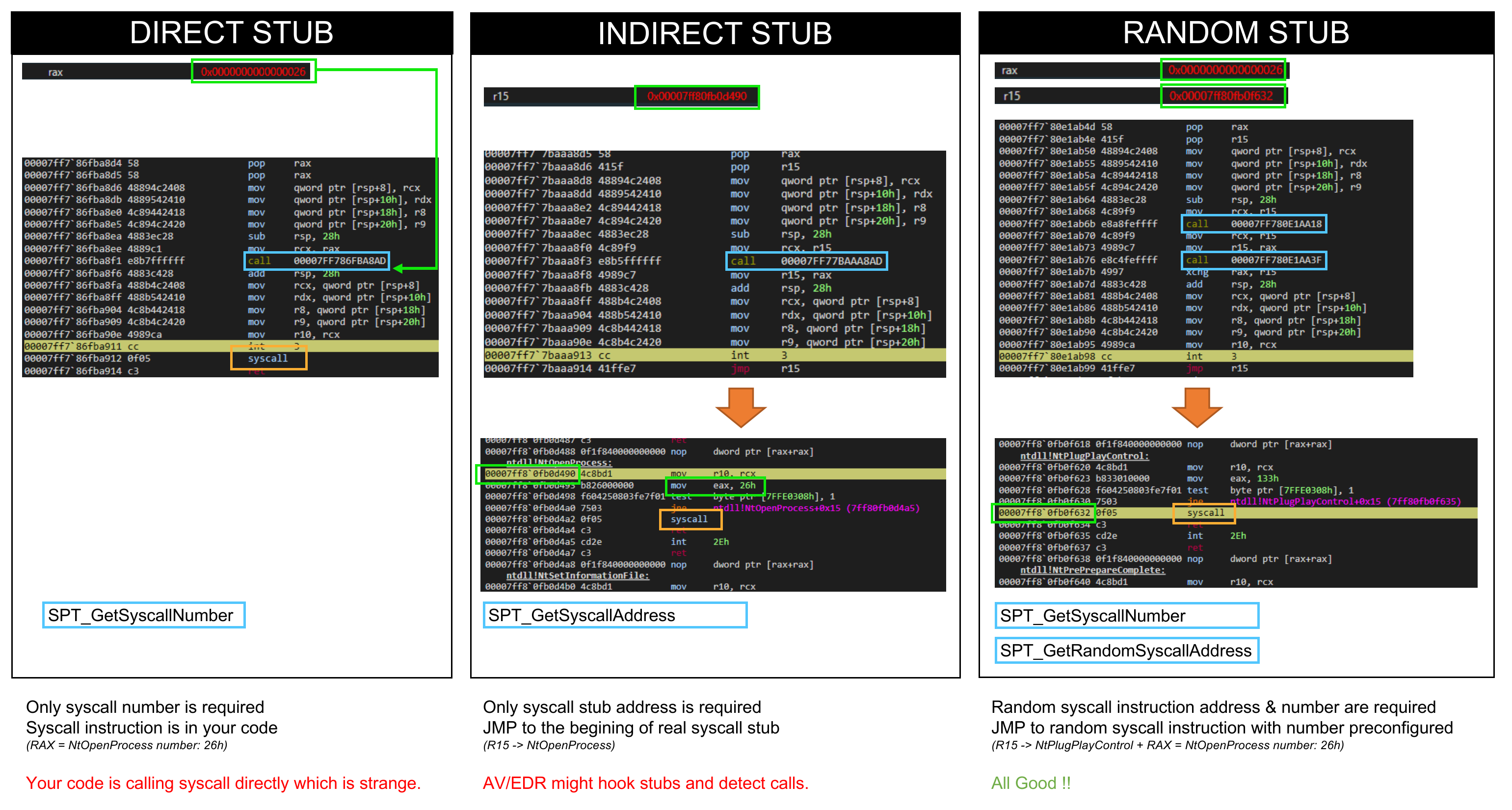

* Obtain system call data by various methods, use it and cross-compare results

* Validate WIN32U syscalls

* Obtain system call data and compare results

* Detect Debugger presence

* Process Debug Port with indirect syscall

* Process Debug Handle with indirect syscall

* Process Debug Flags with indirect syscall

* DR registers

* User Shared Data information

* Examine system handle dump

* Find debug objects and debug handles

* Detect lazy Native API data tampering

* Detect client handles with suspicious rights

* Enumerate NtUser objects

* Walk UserHandleTable to find objects which owners are invisible to client API calls

* Enumerate NtGdi objects

* Walk GdiSharedHandleTable to find objects which owners are invisible to client API calls

* Enumerate Boot Configuration Data (That one requires client elevation)

* Search for option enablements: TestMode, WinPEMode, DisableIntegrityChecks, KernelDebugger

* Scan process memory regions

* Search for regions with memory executable flags that doesn't belong to any loaded module

Program can be configured which tests you want to try. Go to menu "Probes -> Settings", apply changes and start scan. Note settings are saved to registry and read uppon program load.

<img src="https://raw.githubusercontent.com/hfiref0x/WubbabooMark/master/Help/Settings.png" width="600" />

# Output Examples

* Clean scan

<img src="https://raw.githubusercontent.com/hfiref0x/WubbabooMark/master/Help/ScanClean.png" width="600" />

* Wubbaboos found scan

<img src="https://raw.githubusercontent.com/hfiref0x/WubbabooMark/master/Help/ScanDetect.png" width="600" />

# How To Run Test And Don't Ask Questions Next

1. Download or Compile from source "Skilla.exe"

* If you want to compile yourself: Use Microsoft Visual Studio 2019 and above with recent Windows SDK installed. Compile configuration is "Release", not "Debug".

* If you want to download precompiled binary it is in Bin folder of this repository.

2. Load your debugger, setup your tampering plugins, load "Skilla.exe".

3. Run the program in debugger and watch output. If something crashed including your debugger - it is your own fault (maybe~).

4. Look for results. Normally there should be nothing detected, literally ZERO wubbaboos in list.

5. If you want to repeat test - there is no need to restart "Skilla.exe" or repeat (2)(3) - go to menu and use "File -> Scan".

Do you found something that looks like a false-positive or a bug? Feel free to report in issues section!

You can save generated report using "Probes -> Save As ..." menu. File will be saved in comma separated values (CSV) format.

# False positives

Antimalware/anticheat software may cause false positives due to the way these software class works. Make sure you understand what you do. This is not AV/EDR benchmark nor testing tool.

# Driver Bugs

While encountering random BSODs from the best and funniest "super hide" software I was about to make a fuzzer test just because every driver I compiled contained improper handling of syscalls it intercepts. However since authors of these software doesn't care and usage of all these drivers limited to small group of masochists this idea was dropped at early stage. Well what can I say - never use anything from that super hiding stuff on a live machine or you risk to lose your data due to sudden bugcheck.

# Virtual Machine Detection

Not an aim of this tool and will never be added. This tool will work fine with VM.

# Links

Here I would like to put some useful links, enjoy.

Debuggers first!

* x64dbg (https://github.com/x64dbg/x64dbg) - x64 debugger with UI inspired by OllyDbg. Despite being overflown with annoying graphics, questionable features and tons of bugs it is currently one of the best of what we have.

* HexRays IDA (https://hex-rays.com/ida-pro/) - cost a lot, can a lot, everybody have it for free, "F5" is a industry standard in ISV reverse-engineering departments.

* Ghidra SRE from NSA (https://github.com/NationalSecurityAgency/ghidra) - not much to say about it, except it is freeware open-source competitor of the above product.

* WinDbg (https://learn.microsoft.com/en-us/windows-hardware/drivers/debugger/debugger-download-tools) Microsoft user/kernel debugger with support built into operation system. A bit of hardcore for a newcomers but the most powerful as R0 debugger.

* Immunity Debugger (https://www.immunityinc.com/products/debugger/) - Requires Python and doesn't support x64, trash for historical purposes.

* There exist some funny clone of ollydbg+x64dbg with number of different names (cpudbg64, asmdbg32, asmdbg64 - author can't decide) however author attitude demonstrate typical chaos in mind and development not to mention fishing schemes used on project domain.

* HyperDbg (https://github.com/HyperDbg/HyperDbg) - hypervisor assisted kernel/user mode debugger.

* CheatEngine (https://github.com/cheat-engine/cheat-engine) - you can use it for debugging too, be aware that MSFT hates it, contains driver that is wormhole by design.

Debugger Anti-Detection

* ScyllaHide (https://github.com/x64dbg/ScyllaHide) - an "industry standard" in "anti-anti" software class.

* HyperHide (https://github.com/Air14/HyperHide) - a failed attempt to do something like ScyllaHide but hypervisor assisted.

* StrongOD (https://github.com/shellbombs/StrongOD) - SSDT intercepting driver built with Windows XP era in mind, never use it on a production machine, avoid at all cost.

* TitanHide (https://github.com/mrexodia/TitanHide) - another driver that intercept SSDT services, never use it on a production machine.

* QuickUnpack (https://github.com/fobricia/QuickUnpack) - contains a driver that is able to emulate rdtsc/cpuid instructions using SVM/VMX, never use it on a production machine.

* AntiDebuggerFuxker (https://github.com/AyinSama/Anti-AntiDebuggerDriver) - "InfinityHook" style driver aimed to bypass VMProtect detections, never use it on a production machine and better never use it at all :P

* VirtualDbgHide (https://github.com/Nukem9/VirtualDbgHide) - utilize LSTAR hook, a typical broken "anti-" driver, never use it on a production machine, avoid at all cost.

* ColdHide_V2 (https://github.com/Rat431/ColdHide_V2) - a basic and failed ScyllaHide clone.

* DBGHider (https://github.com/hi-T0day/DBGHider) - IDA plugin that does some trivial things.

* MineDebugHider (https://github.com/zhouzu/MineDebugHider) - C# based trivial API interceptor with invalid anti-detection logic in author mind.

* Themidie (https://github.com/VenTaz/Themidie) - Themida specific hooks based on MHook lib.

* Kernel-Anit-Anit-Debug-Plugins (https://github.com/DragonQuestHero/Kernel-Anit-Anit-Debug-Plugins) - some of them contain driver that do kernel Dbg* functions hooking. Avoid at all cost.

* xdbg (https://github.com/brock7/xdbg) - plugin for x64dbg and CE based on MSFT Detours lib.

Debugger Detection

* al-khaser (https://github.com/LordNoteworthy/al-khaser) - contains basic set of debugger/analysis detection methods.

* AntiDebugger (https://github.com/liltoba/AntiDebugger) - various trash in C#.

* AntiDebugging (https://github.com/revsic/AntiDebugging) - small collection of basic things.

* Anti-Debugging (https://github.com/ThomasThelen/Anti-Debugging) - another collection following P.Ferrie articles.

* Anti-DebugNET (https://github.com/Mecanik/Anti-DebugNET) - basics implemented on C#.

* antidebug (https://github.com/waleedassar/antidebug) - collections of methods from author blogposts.

* AntiDBG (https://github.com/HackOvert/AntiDBG) - collection of recycled known ideas.

* Anti-Debug-Collection (https://github.com/MrakDev/Anti-Debug-Collection) - name says it all.

* aadp (https://github.com/crackinglandia/aadp) - collection of mistakes.

* cpp-anti-debug (https://github.com/BaumFX/cpp-anti-debug) - basics implemented on C++.

* debugoff (https://github.com/0xor0ne/debugoff) - a rare Linux anti-analysis methods collection. Warning - cancerous Rust.

* makin (https://github.com/secrary/makin) - basics mostly following P.Ferrie articles.

* Lycosidae (fork)(https://github.com/fengjixuchui/Lycosidae) - it's soo bad, so it is even good. Original repo seems destroyed by ashamed author.

* khaleesi (fork)(https://github.com/fengjixuchui/khaleesi) - al-khaser with injected code from the Lycosidae and something called "XAntiDebug". Original repo again seems unavailable.

* VMProtect open-source edition, won't give any links to avoid possible DMCA or whatever, you can find it on github under different names.

* Unabomber (https://github.com/Ahora57/Unabomber) - collection of methods that are creatively abusing misbehavior and bugs of anti-detection software.

* XAntiDebug (https://github.com/strivexjun/XAntiDebug) - few ideas from VMProtect "improved" by author.

Here I should put some links to what is now reinvented wheels about debuggers detection that you can easily find in the world wide web. It is mostly time-machine to where Windows XP was all new and shine.

* Collection of ancient stuff by Checkpoint (https://anti-debug.checkpoint.com/) Unsure where they copied some of these, probably from al-khaser (https://github.com/LordNoteworthy/al-khaser), or vice-versa.

* Peter Ferrie, Anti-Debugging Reference (http://pferrie.epizy.com/papers/antidebug.pdf?i=1) A must put, because literally everyone when you look at references have links to it, so I'm bit ashamed that I've never fully read it, however it must be something good, isn't it?

* Peter Ferrie, Anti-unpacker tricks (https://pferrie.tripod.com/papers/unpackers.pdf) I believe this one is where the above had roots in.

* Peter Ferrie, Anti-unpacker tricks VB series (https://www.virusbulletin.com/virusbulletin/2008/12/anti-unpacker-tricks-part-one) All parts of it I think have more details than above.

* An Anti-Reverse Engineering Guide By Josh Jackson (https://forum.tuts4you.com/files/file/1218-anti-reverse-engineering-guide/) Very ancient just like all the above.

* Enough of this museum.

* Anti Debugging Protection Techniques with Examples (https://www.apriorit.com/dev-blog/367-anti-reverse-engineering-protection-techniques-to-use-before-releasing-software) A more recent combination of known stuff.

# Project Name

Wubbaboo is a mischievous spirit from Cognosphere videogame Honkai Star Rail. It likes to hide in unexpected places and does a lot of pranks just like the software class we are testing.

No wubbaboos were harmed during tests!

# Authors

+ (c) 2023 WubbabooMark Project

# License

MIT

| 213 | 24 |

lizheming/dover

|

https://github.com/lizheming/dover

|

A douban book/music/movie/game/celebrity cover image mirror deta service

|

# Dover

A douban book/music/movie/game/celebrity cover image storage deta service.

## How to Use

https://<your-service>.deta.app/<movie|book|music|game|celebrity>/<subject-id>.jpg

- https://<your-service>.deta.app/movie/35337634.jpg

- https://<your-service>.deta.app/book/36093928.jpg

- https://<your-service>.deta.app/music/24840163.jpg

- https://<your-service>.deta.app/game/26815212.jpg

- https://<your-service>.deta.app/celebrity/1041028.jpg

| 10 | 1 |

SakshiBankar1803/SakshiBankar1803

|

https://github.com/SakshiBankar1803/SakshiBankar1803

| null |

<h1 align="center">Hi 👋, I'm Sakshi Bankar</h1>

<h3 align="center">A passionate programmer from India</h3>

<img align="right" alt="coding" width="400" src="https://user-images.githubusercontent.com/55389276/140866485-8fb1c876-9a8f-4d6a-98dc-08c4981eaf70.gif">

<p align="left"> <img src="https://komarev.com/ghpvc/?username=sakshibankar1803&label=Profile%20views&color=0e75b6&style=flat" alt="sakshibankar1803" /> </p>

- 🌱 I’m currently learning **Data Structure Programming**

- 👨💻 All of my projects are available at [https://github.com/SakshiBankar1803](https://github.com/SakshiBankar1803)

- 📫 How to reach me **[email protected]**

- ⚡ Fun fact **My weak point It's My STRONG Point.**

<h3 align="left">Connect with me:</h3>

<p align="left">

<a href="https://linkedin.com/in/sakshi bankar" target="blank"><img align="center" src="https://raw.githubusercontent.com/rahuldkjain/github-profile-readme-generator/master/src/images/icons/Social/linked-in-alt.svg" alt="sakshi bankar" height="30" width="40" /></a>

<a href="https://www.youtube.com/c/sakshi bankar" target="blank"><img align="center" src="https://raw.githubusercontent.com/rahuldkjain/github-profile-readme-generator/master/src/images/icons/Social/youtube.svg" alt="sakshi bankar" height="30" width="40" /></a>

</p>

<h3 align="left">Languages and Tools:</h3>

<p align="left"> <a href="https://www.cprogramming.com/" target="_blank" rel="noreferrer"> <img src="https://raw.githubusercontent.com/devicons/devicon/master/icons/c/c-original.svg" alt="c" width="40" height="40"/> </a> <a href="https://www.w3.org/html/" target="_blank" rel="noreferrer"> <img src="https://raw.githubusercontent.com/devicons/devicon/master/icons/html5/html5-original-wordmark.svg" alt="html5" width="40" height="40"/> </a> <a href="https://www.mysql.com/" target="_blank" rel="noreferrer"> <img src="https://raw.githubusercontent.com/devicons/devicon/master/icons/mysql/mysql-original-wordmark.svg" alt="mysql" width="40" height="40"/> </a> <a href="https://www.postgresql.org" target="_blank" rel="noreferrer"> <img src="https://raw.githubusercontent.com/devicons/devicon/master/icons/postgresql/postgresql-original-wordmark.svg" alt="postgresql" width="40" height="40"/> </a> </p>

<p><img align="left" src="https://github-readme-stats.vercel.app/api/top-langs?username=sakshibankar1803&show_icons=true&locale=en&layout=compact" alt="sakshibankar1803" /></p>

<p> <img align="center" src="https://github-readme-stats.vercel.app/api?username=sakshibankar1803&show_icons=true&locale=en" alt="sakshibankar1803" /></p>

<p><img align="center" src="https://github-readme-streak-stats.herokuapp.com/?user=sakshibankar1803&" alt="sakshibankar1803" /></p>

| 11 | 0 |

RimoOvO/Mastodon-to-Twitter-Sync

|

https://github.com/RimoOvO/Mastodon-to-Twitter-Sync

|

从Mastodon实时同步嘟文到Twitter的小工具

|

## Mastodon-to-Twitter-Sync

从Mastodon同步新嘟文到Twitter

支持媒体上传、长嘟文自动分割,以回复的形式同步,会排除回复和引用、以及以`@`开头的嘟文;支持过短视频自动延长。

如果是第一次运行,只会从第一次运行后的写的嘟文开始同步

如果想把之前所有的推文同步到mastodon,[试试这个!](https://github.com/klausi/mastodon-twitter-sync),我自己搭建的实例已经把所有之前的推文全部成功导入了

- 需要用到的包:`requests、mastodon.py、pickle、tweepy、retrying、termcolor、bs4、moviepy`

- 自动生成的`media`文件夹用于保存媒体缓存,`synced_toots.pkl` 保存已经同步过的嘟文

## 使用方法

- 安装包 ```pip install -r requirements.txt```

- 拷贝一份 `config.sample.py` 到同目录并更名为 `config.py`

- 修改 `config.py` 中有关 Twitter 和 Mastodon 的参数,之后 `python mtSync.py` 即可

## Linux 后台常驻

- 按发行版及系统情况修改 systemd 文件 `mastodon-twitter-sync.service`

- ```systemctl enable mastodon-twitter-sync # 开机自启```

- ```systemctl start mastodon-twitter-sync # 启动```

## config.py 参数说明

`sync_time`:程序会每隔一定的时间循环访问mastodon,看看有没有新嘟文,由这个时间控制(单位秒)

`log_to_file`:是否保存日志到`out.log`

`limit_retry_attempt`:最大重试次数,默认为13次,仍失败则跳过嘟文,保存嘟文id到sync_failed.txt,设置为0则无限重试,此举可能会耗尽 API 请求次数,但不会因为报错达到最大尝试上限而退出程序

`wait_exponential_max`:单次重试的最大等待时间,单位为毫秒,默认为30分钟,遇到错误,每次的等待时间会越来越长

`wait_exponential_multiplier`:单次重试的等待时间指数增长,默认为800,800即为`原等待时间x0.8`,如果你想缩短每次的等待时间,可以减少该值

每次等待时间(秒) = ( `2`的`当前重试次数`次方 ) * ( `wait_exponential_multiplier` / 1000 )

| 14 | 2 |

tjamesw123/flipper-to-proxmark3-and-back

|

https://github.com/tjamesw123/flipper-to-proxmark3-and-back

| null |

# Flipper To Proxmark3 And Back

This tool is for switching nfc file formats between .nfc (Flipper NFC Format) and .json (Proxmark3 NFC Dump Format)

**Works for MIFARE 1k, 4k, Mini cards and Mifare Ultralight/NTAGS**

## How to use?

1. Download the latest jar file from the latest github release

2. Move the jar file to the file you wish to convert

3. Run the jar file in the command line in the following format

```

java -jar enter-jar-name-here.jar convert "flipper.nfc" | "proxmark3-dump.json" export "enter-file-name-here-with-extension-you-want-to-convert-to"

or

java -jar enter-jar-name-here.jar convert "flipper.nfc" | "proxmark3-dump.json" export default json | nfc

```

(2nd example is default mode)

Default mode allows for the corresponding automatic name generation format:

for .json: Proxmark3 | FlipperZero-(insert-uid-here)-dump.json

for .nfc: Proxmark3 | FlipperZero-(insert-uid-here).nfc

Import and export files have to have either .nfc or .json as the file extension otherwise the tool will not work

```

Some examples would be:

java -jar flippertoproxmark3andback.jar convert "flipper.nfc" export "proxmark3-dump.json"

java -jar flippertoproxmark3andback.jar convert "proxmark3-dump.json" export default nfc

```

| 14 | 0 |

lele8/SharpDBeaver

|

https://github.com/lele8/SharpDBeaver

|

DBeaver数据库密码解密工具

|

# SharpDBeaver

## 简介

DBeaver数据库密码解密工具

项目地址:https://github.com/lele8/SharpDBeaver

## 使用说明

## 免责声明

本工具仅面向**合法授权**的企业安全建设行为,如您需要测试本工具的可用性,请自行搭建靶机环境。

在使用本工具时,您应确保该行为符合当地的法律法规,并且已经取得了足够的授权。**请勿对非授权目标进行攻击。**

**如您在使用本工具的过程中存在任何非法行为,您需自行承担相应后果,作者将不承担任何法律及连带责任。**

在安装并使用本工具前,请您**务必审慎阅读、充分理解各条款内容**,限制、免责条款或者其他涉及您重大权益的条款可能会以加粗、加下划线等形式提示您重点注意。 除非您已充分阅读、完全理解并接受本协议所有条款,否则,请您不要安装并使用本工具。您的使用行为或者您以其他任何明示或者默示方式表示接受本协议的,即视为您已阅读并同意本协议的约束。

| 163 | 19 |

AbstractClass/CloudPrivs

|

https://github.com/AbstractClass/CloudPrivs

|

Determine privileges from cloud credentials via brute-force testing.

|

# CloudPrivs

*I got creds, now what?*

## Overview

CloudPrivs is a tool that leverages the existing power of SDKs like Boto3 to brute force privileges of all cloud services to determine what privileges exist for a given set of credentials.

This tool is useful for Pentesters, Red Teamer's and other security professionals. Cloud services typically offer no way to determine what permissions a given set of credentials has, and the shear number of services and operations make it a daunting task to manually confirm. This can mean privilege escalation might be possible from a set of credentials, but one would never know it because they simply don't know the AWS credentials they found can execute Lambda.

## Installation

**Not currently available on PyPi but I'm working on it**

### Pip (simple)

```bash

git clone https://github.com/AbstractClass/CloudPrivs

cd CloudPrivs

python -m pip install -e .

```

This is without a virtual environment and not recommended if you run many python programs

### Pip (reommended)

```bash

git clone https://github.com/AbstractClass/CloudPrivs

cd CloudPrivs

python -m venv venv/

./venv/bin/activate # activate.ps1 on windows

python -m pip install -e .

```

You can also use Pyenv+virtualenv if available:

```bash

git clone https://github.com/AbstractClass/CloudPrivs

cd CloudPrivs

pyenv virtualenv CloudPrivs

pyenv local CloudPrivs

python -m pip install -e .

```

## Usage

`cloudprivs [PROVIDER] [ARGS]`

### Providers

Currently the only provider available is AWS, but I am working on GCP. If you'd like to help see [#Customizing](#customizing).

### AWS

```

Options:

-v, --verbose Show failed and errored tests in the output

-s, --services TEXT Only test the given services instead of all

available services

-p, --profile TEXT The name of the AWS profile to scan, if not

specified ENV vars will be used

-t, --custom-tests FILENAME location of custom tests YAML file. Read docs

for more info

-r, --regions TEXT A list of filters to match against regions,

i.e. "us", "eu-west", "ap-north-1"

--help Show this message and exit.

```

### Tips

Multiple arguments are supported for `--region` and `--service` however they must be supplied with the flag each time, i.e. `cloudprivs aws -r us -r eu -s ec2 -s lambda`. I don't like it either but it is a limitation in Click I have not found a workaround for yet.

>Note that the `region` flag supports partial matches, most common arguments are `-r us -r eu` to only cover the common regions

Results are displayed grouped by region and each line contains a test case and the result of the test.

### Errors

If the tool encounters unexpected errors while testing, they will emit to `stderr` as they occur, which means they can interrupt the flow of output, if you don't want to see them you can redirect stderr.

### How it works

Unlike other tools such as [WeirdAAL](https://github.com/carnal0wnage/weirdAAL) that hand write each test case, CloudPrivs directly queries the Boto3 SDK to dynamically generate a list of all available services and all available regions for each service,

Once a full list is generated, each function is called without arguments by default, although the option to add custom arguments per operation is supported (more info at [#Customizing](#customizing))

> Note: some AWS functions can incur costs when called, I have only allowed operations starting with `get_`, `list_`, and `describe_` to mitigate accidental costs, which appears to be safe in my own testing, but please use this with caution. This appears to be safe for other tools like [enumerate-iam](https://github.com/andresriancho/enumerate-iam) I don't guarantee you won't accidentally incur costs when calling all these functions (even if it's without arguments)

## Customizing

CloudPrivs supports easy extension/customizing in two areas:

- Providers

- Custom tests

### Providers

To implement a new provider (ex. GCP) is simple

1. Write the logic to do the tests, naming convention and structure does not matter

2. Under the `CloudPrivs/providers` folder, create a new folder for your provider (ex. 'gcp')

3. In the `CloudPrivs/providers/__init__.py` file, add your provider to the `__all__` variable, it must match the name of the folder

4. Create a file called `cli.py` in your provider folder

5. Use the [Click](https://click.palletsprojects.com/en/8.1.x/) to create a CLI for your provider and name your cli entry function `cli` (see the AWS provider for reference)

6. Done! Running `cloudprivs <provider>` should now show your CLI

### Custom Tests

The AWS provider supports the injection of arguments when calling AWS functions. This feature is provided because often times an AWS function requires arguments to be called and in some cases these arguments can be fixed variables. This means if we can provided dummy variables we can increase our testing coverage. In other cases we can inject arguments like `dryrun=true` to make calls go faster.

Custom tests are stored in a YAML file at `cloudprivs/providers/aws/CustomTests.yaml`.

The structure of the YAML is as follows:

```yaml

---

<service-name>:

- <function-name>:

args:

- <arg1>

- <arg2>

kwargs:

arg1: val1

arg2: val2

```

>Note: The function name works with partial matches, this means you can use a function name like 'describe_' and the arguments specified will be injected into all functions that contain 'describe_'. Rules are matched on a 'first found' basis, so if you'd like to override a generic rule, place your more specific rule **above** the generic rule. ex:

```yaml

ec2:

- describe_instances

args:

kwargs:

DryRun: True

NoPaginate: True

- describe_

args:

kwargs:

DryRun: True

```

### Adding New Rules

New rules can be added by either modifying the existing `CustomTests.yaml` file or creating a new YAML file and specifying it with the `--custom-tests` flag. The new file will be merged with the existing tests file and any duplicate values will be overridden with the supplied file getting priority.

## Library Usage

### AWS

The AWS provider is written as a Library for integration into other tools. You can use it as follows:

```python

import boto3

from cloudprivs.providers.aws import service

from concurrent.futures import ThreadPoolExecutor

session = boto3.Session('default')

with ThreadPoolExecutor(15) as executor:

iam = service.Service('iam', session, executor=executor)

scan_results = iam.scan() # will cover all regions listed in the executor (all available regions by default)

formatted_results iam.pretty_print_scan(scan_results)

print(formatted_results)

```

Everything is fully documented in the code, should be pretty easy to parse.

## Road Map

This tools is functional, but far from complete. I am actively working on new features and am open to contributions, so please feel free to open Issues/Feature Requests, and send PRs.

**Features Planned**

- Add tool to PyPi

- GCP Support

- JSON output

- Add unit tests

- Migration to Golang

| 54 | 1 |

trufflehq/chuckle

|

https://github.com/trufflehq/chuckle

|

Our in-house Discord bot

|

<div align="center">

<br>

<p>

<a href="https://github.com/trufflehq/chuckle"><img src="./.github/logo.svg" width="542" alt="chuckle logo" /></a>

</p>

<br>

<a href="https://discord.gg/FahQSBMMGg"><img alt="Discord Server" src="https://img.shields.io/discord/1080316613968011335?color=5865F2&logo=discord&logoColor=white"></a>

<a href="https://github.com/trufflehq/chuckle/actions/workflows/test.yml"><img alt="Test status" src="https://github.com/trufflehq/chuckle/actions/workflows/test.yml/badge.svg"></a>

<a href="https://github.com/trufflehq/chuckle/actions/workflows/commands.yml"><img alt="Command deployment status" src="https://github.com/trufflehq/chuckle/actions/workflows/commands.yml/badge.svg"></a>

<a href="https://github.com/trufflehq/chuckle/actions/workflows/migrations.yml"><img alt="Database migrations status" src="https://github.com/trufflehq/chuckle/actions/workflows/migrations.yml/badge.svg"></a>

</div>

## About

Chuckle is our in-house Discord bot for our internal company server.

We weren't a huge fan of Slack and, most of our target demographic uses Discord.

A few of our favorite (and only :p) features include:

- Circle Back, create reminders to revisit a specific message

- PR Comments, stream PR reviews and updates to a configured thread

- `/hexil`, allow each member to set a custom role/name color

# Development

## Requirements

These are some broad, general requirements for running Chuckle.

- [Rust](https://rust-lang.org/tools/install)

- [Docker](https://docs.docker.com/engine/install/)

## Setup

Before you can actually setup... we have some setup to do!

1. Install [`cargo-make`](https://github.com/sagiegurari/cargo-make#installation) (`cargo install --force cargo-make`)

Cargo doesn't have a native "scripts" feature like Yarn or NPM. Thus, we use `cargo-make` and [`Makefile.toml`](./Makefile.toml).

~~2. Install [`pre-commit`](https://pre-commit.com/#installation) (`pip install pre-commit`)~~

~~We use this for running git hooks.~~ This is handled by the next step.

2. Run `cargo make setup`

This installs necessary components for other scripts and development fun.

### Environment

If it weren't for `sqlx` and it's inability to play nice with `direnv`, we wouldn't also need an `.env` file containing just the `DATABASE_URL`.

1. Install [direnv](https://direnv.net/#basic-installation).

It automatically loads our `.direnv` file.

2. Copy `.envrc.example` to `.envrc` and fill with your environment variables.

3. Ensure `.env` houses your `DATABASE_URL` address.

### Database

We utilize `sqlx`'s compile-time checked queries, which requires a database connection during development.

Additionally, we use `sqlx`'s migrations tool, which is just a treat!

1. Start the database with `docker compose up -d`.

2. Run `sqlx migrate run`

This applies our database migrations.

## Running

Now, running the bot should be as easy as:

1. `cargo make dev`

## Contributing

When making changes to Chuckle, there are a few things you must take into consideration.

If you make any query changes, you must run `cargo sqlx prepare` to create an entry in [`.sqlx`](./.sqlx) to support `SQLX_OFFLINE`.

If you make any command/interaction data changes, you must run `cargo make commands-lockfile` to remake the [commands.lock.json](./chuckle-interactions/commands.lock.json) file.

Regardless, of what scope, you must always ensure Clippy, Rustfmt and cargo-check are satisified, as done with pre-commit hooks.

# Production

We currently host Chuckle on our Google Kubernetes Engine cluster.

```mermaid

flowchart TD

commands["

Update Discord

Commands

"]

test["

Lint, Format

and Build

"]

commit[Push to main] --> test

commit ---> deploy[Deploy to GCR]

commit -- "

commands.lock.json

updated?

" ---> commands

commit -- "

migrations

updated?

" --> cloudsql[Connect to Cloud SQL]

--> migrations[Apply Migrations]

```

## Building Chuckle

todo, see our [.github/workflows](./.github/workflows)

| 17 | 0 |

Aandreba/wasm2spirv

|

https://github.com/Aandreba/wasm2spirv

|

Compile your WebAssembly programs into SPIR-V shaders

|

[](https://crates.io/crates/wasm2spirv)

[](https://docs.rs/wasm2spirv/latest)

[](https://github.com/Aandreba/wasm2spirv)

# wasm2spirv - Compile your WebAssembly programs into SPIR-V shaders

> **Warning**

>

> `wasm2spirv` is still in early development, and not production ready.

This repository contains the code for both, the CLI and library for wasm2spirv.

wasm2spirv allows you to compile any WebAssembly program into a SPIR-V shader

## Features

- Compiles your WebAssembly programs into SPIR-V

- Can transpile into other various shading languages

- Supports validation and optimization of the resulting SPIR-V

- Can be compiled to WebAssembly itself

- You won't be able to use `spirv-tools` or `tree-sitter` in WebAssembly

- `spirvcross` only works on WASI

- CLI will have to be compiled to WASI

## Caveats

- Still in early development

- Unexpected bugs and crashes are to be expected

- Still working through the WebAssembly MVP

- WebAssembly programs with memory allocations will not work

- You can customize whether the `memory.grow` instruction errors the

compilation (hard errors) or always returns -1 (soft errors)

- You'll have to manually provide quite some extra information

- This is because SPIR-V has a lot of constructs compared to the simplicity of

WebAssembly.

- wasm2spirv can do **some** inferrence based on the WebAssembly program

itself, but it's usually better to specify most the information on the

configuration.

- The plan for the future is to be able to store the config information inside

the WebAssembly program itself.

## Compilation Targets

| Target | Windows | Linux | macOS | WebAssembly |

| ----------- | ------------------------------- | ------------------------------- | ------------------------------- | ------------------------ |

| SPIR-V | ✅ | ✅ | ✅ | ✅ |

| GLSL | ☑️ (spvc-glsl/naga-glsl) | ☑️ (spvc-glsl/naga-glsl) | ☑️ (spvc-glsl/naga-glsl) | ☑️ (spvc-glsl*/naga-glsl) |

| HLSL | ☑️ (spvc-hlsl/naga-hlsl) | ☑️ (spvc-hlsl/naga-hlsl) | ☑️ (spvc-hlsl/naga-hlsl) | ☑️ (spvc-hlsl*/naga-hlsl) |

| Metal (MSL) | ☑️ (spvc-msl/naga-msl) | ☑️ (spvc-msl/naga-msl) | ☑️ (spvc-msl/naga-msl) | ☑️ (spvc-msl*/naga-msl) |

| WGSL | ☑️ (naga-wgsl) | ☑️ (naga-wgsl) | ☑️ (naga-wgsl) | ☑️ (naga-wgsl) |

| DXIL | ❌ | ❌ | ❌ | ❌ |

| OpenCL C | ❌ | ❌ | ❌ | ❌ |

| Cuda | ❌ | ❌ | ❌ | ❌ |

| Validation | ☑️ (spvt-validate/naga-validate) | ☑️ (spvt-validate/naga-validate) | ☑️ (spvt-validate/naga-validate) | ☑️ (naga-validate) |

- ✅ Supported

- ☑️ Supported, but requires cargo feature(s)

- ❌ Unsupported

\* This feature is only supported on WASI

> **Note**

>

> The CLI programs built by the releases use the Khronos compilers/validators

> whenever possible, faling back to naga compilers/validators if the Khronos are

> not available or are not supported on that platform.

## Examples

You can find a few examples on the "examples" directory, with their Zig file,

translated WebAssembly Text, and compilation configuration file.

### Saxpy example

Zig program

```zig

export fn main(n: usize, alpha: f32, x: [*]const f32, y: [*]f32) void {

var i = gl_GlobalInvocationID(0);

const size = gl_NumWorkGroups(0);

while (i < n) {

y[i] += alpha * x[i];

i += size;

}

}

extern "spir_global" fn gl_GlobalInvocationID(u32) usize;

extern "spir_global" fn gl_NumWorkGroups(u32) usize;

```

WebAssembly text

```wasm

(module

(type (;0;) (func (param i32) (result i32)))

(type (;1;) (func (param i32 f32 i32 i32)))

(import "spir_global" "gl_GlobalInvocationID" (func (;0;) (type 0)))

(import "spir_global" "gl_NumWorkGroups" (func (;1;) (type 0)))

(func (;2;) (type 1) (param i32 f32 i32 i32)

(local i32 i32 i32 i32 i32)

i32.const 0

call 0

local.tee 4

i32.const 2

i32.shl

local.set 5

i32.const 0

call 1

local.tee 6

i32.const 2

i32.shl

local.set 7

block ;; label = @1

loop ;; label = @2

local.get 4

local.get 0

i32.ge_u

br_if 1 (;@1;)

local.get 3

local.get 5

i32.add

local.tee 8

local.get 8

f32.load

local.get 2

local.get 5

i32.add

f32.load

local.get 1

f32.mul

f32.add

f32.store

local.get 5

local.get 7

i32.add

local.set 5

local.get 4

local.get 6

i32.add

local.set 4

br 0 (;@2;)

end

end)

(memory (;0;) 16)

(global (;0;) (mut i32) (i32.const 1048576))

(export "memory" (memory 0))

(export "main" (func 2)))

```

Configuration file (in JSON)

```json

{

"platform": {

"vulkan": "1.1"

},

"addressing_model": "logical",

"memory_model": "GLSL450",

"capabilities": { "dynamic": ["VariablePointers"] },

"extensions": ["VH_KHR_variable_pointers"],

"functions": {

"2": {

"execution_model": "GLCompute",

"execution_modes": [{

"local_size": [1, 1, 1]

}],

"params": {

"0": {

"type": "i32",

"kind": {

"descriptor_set": {

"storage_class": "StorageBuffer",

"set": 0,

"binding": 0

}

}

},

"1": {

"type": "f32",

"kind": {

"descriptor_set": {

"storage_class": "StorageBuffer",

"set": 0,

"binding": 1

}

}

},

"2": {

"type": {

"size": "fat",

"storage_class": "StorageBuffer",

"pointee": "f32"

},

"kind": {

"descriptor_set": {

"storage_class": "StorageBuffer",

"set": 0,

"binding": 2

}

},

"pointer_size": "fat"

},

"3": {

"type": {

"size": "fat",

"storage_class": "StorageBuffer",

"pointee": "f32"

},

"kind": {

"descriptor_set": {

"storage_class": "StorageBuffer",

"set": 0,

"binding": 3

}

}

}

}

}

}

}

```

SPIR-V result

```asm

; SPIR-V

; Version: 1.3

; Generator: rspirv

; Bound: 73

OpCapability VariablePointers

OpCapability Shader

OpExtension "VH_KHR_variable_pointers"

OpMemoryModel Logical GLSL450

OpEntryPoint GLCompute %3 "main" %6 %7

OpExecutionMode %3 LocalSize 1 1 1

OpDecorate %6 BuiltIn GlobalInvocationId

OpDecorate %7 BuiltIn NumWorkgroups

OpMemberDecorate %10 0 Offset 0

OpDecorate %10 Block

OpDecorate %12 DescriptorSet 0

OpDecorate %12 Binding 0

OpMemberDecorate %14 0 Offset 0

OpDecorate %14 Block

OpDecorate %16 DescriptorSet 0

OpDecorate %16 Binding 1

OpDecorate %17 ArrayStride 4

OpMemberDecorate %18 0 Offset 0

OpDecorate %18 Block

OpDecorate %20 DescriptorSet 0

OpDecorate %20 Binding 2

OpDecorate %21 DescriptorSet 0

OpDecorate %21 Binding 3

%1 = OpTypeInt 32 0

%2 = OpConstant %1 1048576

%4 = OpTypeVector %1 3

%5 = OpTypePointer Input %4

%6 = OpVariable %5 Input

%7 = OpVariable %5 Input

%8 = OpTypeVoid

%9 = OpTypeFunction %8

%10 = OpTypeStruct %1

%11 = OpTypePointer StorageBuffer %10

%12 = OpVariable %11 StorageBuffer

%13 = OpTypeFloat 32

%14 = OpTypeStruct %13

%15 = OpTypePointer StorageBuffer %14

%16 = OpVariable %15 StorageBuffer

%17 = OpTypeRuntimeArray %13

%18 = OpTypeStruct %17

%19 = OpTypePointer StorageBuffer %18

%20 = OpVariable %19 StorageBuffer

%21 = OpVariable %19 StorageBuffer

%23 = OpTypePointer Function %1

%28 = OpConstant %1 2

%39 = OpTypeBool

%41 = OpConstant %1 0

%42 = OpTypePointer StorageBuffer %1

%46 = OpTypePointer Function %19

%50 = OpTypePointer StorageBuffer %13

%51 = OpConstant %1 4

%3 = OpFunction %8 None %9

%22 = OpLabel

%48 = OpVariable %23 Function %41

%47 = OpVariable %46 Function

%33 = OpVariable %23 Function

%30 = OpVariable %23 Function

%27 = OpVariable %23 Function

%24 = OpVariable %23 Function

%25 = OpLoad %4 %6

%26 = OpCompositeExtract %1 %25 0

OpStore %24 %26

%29 = OpShiftLeftLogical %1 %26 %28

OpStore %27 %29

%31 = OpLoad %4 %7

%32 = OpCompositeExtract %1 %31 0

OpStore %30 %32

%34 = OpShiftLeftLogical %1 %32 %28

OpStore %33 %34

OpBranch %35

%35 = OpLabel

OpBranch %36

%36 = OpLabel

%40 = OpLoad %1 %24

%43 = OpAccessChain %42 %12 %41

%44 = OpLoad %1 %43

%45 = OpUGreaterThanEqual %39 %40 %44

OpLoopMerge %37 %38 None

OpBranchConditional %45 %37 %38

%38 = OpLabel

OpStore %47 %21

%49 = OpLoad %1 %27

OpStore %48 %49

%52 = OpUDiv %1 %49 %51

%53 = OpAccessChain %50 %21 %41 %52

%54 = OpLoad %19 %47

%55 = OpLoad %1 %48

%56 = OpUDiv %1 %55 %51

%57 = OpAccessChain %50 %54 %41 %56

%58 = OpLoad %13 %57 Aligned 4

%59 = OpLoad %1 %27

%60 = OpUDiv %1 %59 %51

%61 = OpAccessChain %50 %20 %41 %60

%62 = OpLoad %13 %61 Aligned 4

%63 = OpAccessChain %50 %16 %41

%64 = OpLoad %13 %63

%65 = OpFMul %13 %62 %64

%66 = OpFAdd %13 %58 %65

OpStore %53 %66 Aligned 4

%67 = OpLoad %1 %27

%68 = OpLoad %1 %33

%69 = OpIAdd %1 %67 %68

OpStore %27 %69

%70 = OpLoad %1 %24

%71 = OpLoad %1 %30

%72 = OpIAdd %1 %70 %71

OpStore %24 %72

OpBranch %36

%37 = OpLabel

OpReturn

OpFunctionEnd

```

Metal translation

```metal

#include <metal_stdlib>

#include <simd/simd.h>

using namespace metal;

struct _10

{

uint _m0;

};

struct _14

{

float _m0;

};

struct _18

{

float _m0[1];

};

kernel void main0(device _10& _12 [[buffer(0)]], device _14& _16 [[buffer(1)]], device _18& _20 [[buffer(2)]], device _18& _21 [[buffer(3)]], uint3 gl_GlobalInvocationID [[thread_position_in_grid]], uint3 gl_NumWorkGroups [[threadgroups_per_grid]])

{

uint _48 = 0u;

uint _29 = gl_GlobalInvocationID.x << 2u;

uint _34 = gl_NumWorkGroups.x << 2u;

device _18* _47;

for (uint _24 = gl_GlobalInvocationID.x, _27 = _29, _30 = gl_NumWorkGroups.x, _33 = _34; !(_24 >= _12._m0); )

{

_47 = &_21;

_48 = _27;

_21._m0[_27 / 4u] = _47->_m0[_48 / 4u] + (_20._m0[_27 / 4u] * _16._m0);

_27 += _33;

_24 += _30;

continue;

}

}

```

## Installation

To add `wasm2spirv` as a library for your Rust project, run this command on

you'r project's root directory.\

`cargo add wasm2spirv`

To install the latest version of the `wasm2spirv` CLI, run this command.\

`cargo install wasm2spirv`

## Cargo features

- [`spirv-tools`](https://github.com/EmbarkStudios/spirv-tools-rs) enables

optimization and validation.

- [`spirvcross`](https://github.com/Aandreba/spirvcross) enables

cross-compilation to GLSL, HLSL and MSL.

- [`tree-sitter`](https://github.com/tree-sitter/tree-sitter) enables syntax

highlighting on the CLI.

- [`naga`](https://github.com/gfx-rs/naga/) enables cross-compilation for GLSL,

HLSL, MSL and WGSL.

## Related projects

- [SPIRV-LLVM](https://github.com/KhronosGroup/SPIRV-LLVM-Translator) is an

official Khronos tool to compile LLVM IR into SPIR-V.

- [Wasmer](https://github.com/wasmerio/wasmer) is a WebAssembly runtime that

runs WebAssembly programs on the host machine.

- [Bytecoder](https://github.com/mirkosertic/Bytecoder) can translate JVM code

into JavaScript, WebAssembly and OpenCL.

- [Naga](https://github.com/gfx-rs/naga/) is a translator from, and to, various

shading languages and IRs.

| 19 | 0 |

intbjw/bimg-shellcode-loader

|

https://github.com/intbjw/bimg-shellcode-loader

| null |

# bimg-shellcode-loader

bimg-shellcode-loader是一个使用bilibili图片隐写功能加载shellcode的工具。 当然你可以使用任何地方的图片。

在调研C2通讯方式时,发现有一个有师傅使用了bilbili图片隐写功能加载shellcode,觉得这个方法很有意思,就自己写了一个工具。添加了反沙箱功能。

如果这个项目对你有帮助,欢迎star。

### 使用步骤

##### 1. 生成包含隐写信息的图片

使用generate.go生成包含shellcode的图片,生成的图片为out_file.png。

在generate.go同级目录下存放shellcode文件,shellcode文件名为shellcode.bin。

图片为img.png, 随后用运行generate.go生成out_file.png。

```shell

go run generate.go

```

##### 2. 上传图片到bilibili

登陆访问创作中心 https://member.bilibili.com/platform/upload/text/edit 点击上传图片,把生成的图片上传上去。

通过浏览器开发者工具,查看上传图片的请求,找到图片的返回地址,复制下来。

把图片地址填入到shellcodeLoader.go中的`imgUrl`变量中。

##### 3. 编译加载器

```go

CGO_ENABLED=0 GOOS=windows GOARCH=amd64 GOPRIVATE=* GOGARBLE=* garble -tiny -literals -seed=random build -ldflags "-w -s -buildid= -H=windowsgui" -buildmode="pie"

```

### 免杀

只测试了360和微步

微步反沙箱,判断当前系统壁纸,如果是沙箱内的壁纸就退出。大家有遇到的沙箱或者分析机,提取壁纸的md5放入列表中。

```go

md5List := []string{"fbfeb6772173fef2213992db05377231", "49150f7bfd879fe03a2f7d148a2514de", "fc322167eb838d9cd4ed6e8939e78d89", "178aefd8bbb4dd3ed377e790bc92a4eb", "0f8f1032e4afe1105a2e5184c61a3ce4", "da288dceaafd7c97f1b09c594eac7868"}

```

微步沙箱检测通过0/24,并且没有检测到网络通信。

## Stargazers over time

[](https://starchart.cc/intbjw/bimg-shellcode-loader)

#### Visitors (Since 2023/08/01)

<div>

<img align="left" src="https://count.getloli.com/get/@bimg-shellcode-loader?theme=rule34">

</div>

| 42 | 7 |

Mknsri/HockeySlam

|

https://github.com/Mknsri/HockeySlam

|

A hockey shootout game with a custom game engine developed on Windows and released on Android

|

# Hockey Slam

This repository contains the full source code and assets for the game Hockey Slam! Hockey Slam is a hockey shootout mobile game developed on Windows and released on Android.

https://github.com/Mknsri/HockeySlam/assets/5314500/98e024d7-81dd-4393-84ea-398119330306

## More info:

- [Making of](https://hockeyslam.com/makingof)

- [Privacy policy (there's nothing there)](https://hockeyslam.com/privacy)

Apart from a few file loading libraries all the code inside this repository is handwritten. This includes the graphics engine, the memory allocator, physics engine and OpenGL API implementations for both Android and Windows.

The game has been since delisted on the Play Store, however you can download the APK [here.](https://hockeyslam.com/android.apk).

This repository is for anyone curious about game engines or their individual parts. This is not a generic game engine and I suggest not using this for your personal project. The license permits for you to do whatever you want though.

All assets are included, except for the banging tune I couldn't recall the source of.

## Build

1. Install a [MVSC C++ Compiler](https://visualstudio.microsoft.com/vs/features/cplusplus/)

2. Setup your build env with e.g. vcvarsall.bat

3. Make sure your include path contains the OpenGL headers

4. Run `build.bat` from the repository root

5. Copy the `res` folder from the repository root into the `build` folder

6. Run `win_main.exe` in the `build` folder

To run the game in release-mode, set `-DHOKI_DEV=0` in the build script.

| 51 | 5 |

Technocolabs100/Analysis-of-Bank-Debit-Collections

|

https://github.com/Technocolabs100/Analysis-of-Bank-Debit-Collections

|

Play bank data scientist and use regression discontinuity to see which debts are worth collecting.

|

# Analysis-of-Bank-Debit-Collections

Play bank data scientist and use regression discontinuity to see which debts are worth collecting.

| 19 | 59 |

ProfAndreaPollini/roguelike-rust-macroquad-noname

|

https://github.com/ProfAndreaPollini/roguelike-rust-macroquad-noname

|

Roguelike Game in Rust using macroquad.rs

|

Roguelike Game in Rust using macroquad.rs

Introduction

Welcome to our roguelike game developed in Rust! This project aims to provide an engaging gaming experience while also allowing developers to learn and explore Rust programming. The game is built using the powerful macroquad.rs library and follows the guidelines of the "RoguelikeDev Does The Complete Roguelike Tutorial." Join us live on my Twitch channel and be a part of the development process with the support of our vibrant community!

## Features

- Turn-based gameplay: Experience the classic roguelike mechanics where the game progresses in turns.

- Procedurally generated levels: Each game session offers a unique and challenging dungeon layout.

- Randomized items and enemies: Encounter a variety of items and foes as you delve deeper into the depths.

- Permadeath: Be cautious! Once your character dies, the game ends, and you must start anew.

- Fog of War: Explore the dungeon one step at a time, revealing the map as you go.

- Tile graphics: I'using the amazing [urizen_onebit tileset]([Title](https://vurmux.itch.io/urizen-onebit-tileset))

## Getting Started

To get started with the game and join the live development sessions, follow these steps:

1. Install Rust: Make sure you have the latest version of Rust installed on your system. You can find the installation instructions at rust-lang.org.

2. Clone the Repository: Clone this GitHub repository to your local machine.

```shell

git clone https://github.com/your-username/roguelike-rust-macroquad-noname.git

```

3. Navigate to the project directory:

```shell

cd roguelike-rust-macroquad-noname

```

4. Join the Live Development: Follow our [Twitch channel](https://twitch.tv/profandreapollini) to join us live during the development sessions. Interact with our community, ask questions, and provide suggestions to make the game even better!

5. Build and Run the Game: During the live sessions, we will guide you through the process of building and running the game. We'll explain the code, show you how to make changes, and provide insights into the development process.

6. Play the Game: Once the game is running, play along with us and experience the evolving gameplay firsthand. Your feedback and ideas will help shape the game's development!

## Controls

- Movement: Use the arrow keys or WASD to move your character up, down, left, or right.

- Attack: Move towards an enemy to engage in combat automatically.

- Quit: Press the 'Q' key to exit the game.

## Resources

- Rust Programming Language: rust-lang.org

- macroquad.rs Library: github.com/not-fl3/macroquad

- RoguelikeDev Does The Complete Roguelike Tutorial: roguelikedev.github.io

## Acknowledgments

I would like to thank the Rust community for their continuous support, the creators of macroquad.rs for providing an excellent library for game development in Rust, and my Twitch community for their active participation and valuable contributions.

## License

This project is licensed under the MIT License.

Feel free to explore, modify, and distribute the game according to the terms of the license.