full_name

stringlengths 10

67

| url

stringlengths 29

86

| description

stringlengths 3

347

⌀ | readme

stringlengths 0

162k

| stars

int64 10

3.1k

| forks

int64 0

1.51k

|

|---|---|---|---|---|---|

verytinydever/test-1

|

https://github.com/verytinydever/test-1

| null |

# Created new project

Testing again with pull request.

| 10 | 0 |

SirLagz/zerotier-console

|

https://github.com/SirLagz/zerotier-console

|

CLI utility for managing ZeroTier self-hosted controllers and a frontend for zerotier-cli

|

# zerotier-console

CLI utility for managing ZeroTier self-hosted controllers and a frontend for zerotier-cli

Zerotier-cli client frontend

- View node info

- Join and Leave networks from Zerotier Console

Zerotier self-hosted controller management

- Shows controller information

- Create networks

- Can either create a blank network or create one with a few settings – name, and IP address range for now.

- Manage networks

- Show network information

- Manage routes, network name, and IP ranges

- Manage Network Members

- Auth/Deauth Members

- Show Member Information

- Update Member IP addresses

ZeroTier Console settings

- Set remote controller ip, port, and token

More information and screenshots can be found on my blog

https://sirlagz.net/2023/07/09/zerotier-console-initial-release/

# Usage

download the ztconsole.sh file from the releases - https://github.com/SirLagz/zerotier-console/releases/, make it executable, and run it with ./ztconsole.sh - as easy as that!

| 12 | 1 |

pwnsauc3/RWXfinder

|

https://github.com/pwnsauc3/RWXfinder

|

The program uses the Windows API functions to traverse through directories and locate DLL files with RWX section

|

# RWXfinder

Windows-specific tool written in C which uses Windows API functions to traverse through directories and look for DLL files with an RWX section in memory.

I came up with this idea after reading this blog: https://www.securityjoes.com/post/process-mockingjay-echoing-rwx-in-userland-to-achieve-code-execution

### Tool Output

| 78 | 11 |

gh0stkey/AsteriskPassword

|

https://github.com/gh0stkey/AsteriskPassword

|

AsteriskPassword,基于C++&MFC开发的星号密码查看器

|

# AsteriskPassword

AsteriskPassword,基于C++&MFC开发的星号密码查看器。

| 52 | 3 |

yangyuke001/DriveGPT

|

https://github.com/yangyuke001/DriveGPT

|

auto drive from GPT

|

# PytorchAutoDrive: Framework for self-driving perception

*PytorchAutoDrive* is a **pure Python** framework includes semantic segmentation models, lane detection models based on **PyTorch**. Here we provide full stack supports from research (model training, testing, fair benchmarking by simply writing configs) to application (visualization, model deployment).

**Paper:** [Rethinking Efficient Lane Detection via Curve Modeling](https://arxiv.org/abs/2203.02431) (CVPR 2022)

**Poster:** [PytorchAutoDrive: Toolkit & Fair Benchmark for Autonomous Driving Research](https://drive.google.com/file/d/14EgcwPnKvAZJ1aWqBv6W9Msm666Wqi5a/view?usp=sharing) (PyTorch Developer Day 2021)

*This repository is under active development, results with models uploaded are stable. For legacy code users, please check [deprecations](https://github.com/voldemortX/pytorch-auto-drive/issues/14) for changes.*

**A demo video from ERFNet:**

https://user-images.githubusercontent.com/32259501/148680744-a18793cd-f437-461f-8c3a-b909c9931709.mp4

## Highlights

Various methods on a wide range of backbones, **config** based implementations, **modulated** and **easily understood** codes, image/keypoint loading, transformations and **visualizations**, **mixed precision training**, tensorboard logging and **deployment support** with ONNX and TensorRT.

Models from this repo are faster to train (**single card trainable**) and often have better performance than other implementations, see [wiki](https://github.com/voldemortX/pytorch-auto-drive/wiki/Notes) for reasons and technical specification of models.

## Supported datasets:

| Task | Dataset |

| :---: | :---: |

| semantic segmentation | PASCAL VOC 2012 |

| semantic segmentation | Cityscapes |

| semantic segmentation | GTAV* |

| semantic segmentation | SYNTHIA* |

| lane detection | CULane |

| lane detection | TuSimple |

| lane detection | LLAMAS |

| lane detection | BDD100K (*In progress*) |

\* The UDA baseline setup, with Cityscapes *val* set as validation.

## Supported models:

| Task | Backbone | Model/Method |

| :---: | :---: | :---: |

| semantic segmentation | ResNet-101 | [FCN](/configs/semantic_segmentation/fcn) |

| semantic segmentation | ResNet-101 | [DeeplabV2](https://arxiv.org/abs/1606.00915) |

| semantic segmentation | ResNet-101 | [DeeplabV3](https://arxiv.org/abs/1706.05587) |

| semantic segmentation | - | [ENet](https://arxiv.org/abs/1606.02147) |

| semantic segmentation | - | [ERFNet](/configs/semantic_segmentation/erfnet) |

| lane detection | ENet, ERFNet, VGG16, ResNets (18, 34, 50, 101), MobileNets (V2, V3-Large), RepVGGs (A0, A1, B0, B1g2, B2), Swin (Tiny) | [Baseline](/configs/lane_detection/baseline) |

| lane detection | ERFNet, VGG16, ResNets (18, 34, 50, 101), RepVGGs (A1) | [SCNN](https://arxiv.org/abs/1712.06080) |

| lane detection | ResNets (18, 34, 50, 101), MobileNets (V2, V3-Large), ERFNet | [RESA](https://arxiv.org/abs/2008.13719) |

| lane detection | ERFNet, ENet | [SAD](https://arxiv.org/abs/1908.00821) ([*Postponed*](https://github.com/voldemortX/pytorch-auto-drive/wiki/Notes)) |

| lane detection | ERFNet | [PRNet](http://www.ecva.net/papers/eccv_2020/papers_ECCV/papers/123630698.pdf) (*In progress*) |

| lane detection | ResNets (18, 34, 50, 101), ResNet18-reduced | [LSTR](https://arxiv.org/abs/2011.04233) |

| lane detection | ResNets (18, 34) | [LaneATT](https://arxiv.org/abs/2010.12035) |

| lane detection | ResNets (18, 34) | [BézierLaneNet](/configs/lane_detection/bezierlanenet) |

## Model Zoo

We provide solid results (average/best/detailed), training time, shell scripts and trained models available for download in [MODEL_ZOO.md](docs/MODEL_ZOO_J.md).

## Installation

Please prepare the environment and code with [INSTALL.md](docs/INSTALL.md). Then follow the instructions in [DATASET.md](docs/DATASET.md) to set up datasets.

## Getting Started

Get started with [LANEDETECTION.md](docs/LANEDETECTION.md) for lane detection.

Get started with [SEGMENTATION.md](docs/SEGMENTATION.md) for semantic segmentation.

## Visualization Tools

Refer to [VISUALIZATION.md](docs/VISUALIZATION.md) for a visualization & inference tutorial, for image and video inputs.

## Benchmark Tools

Refer to [BENCHMARK.md](docs/BENCHMARK.md) for a benchmarking tutorial, including FPS test, FLOPs & memory count for each supported model.

## Deployment

Refer to [DEPLOY.md](docs/DEPLOY.md) for ONNX and TensorRT deployment supports.

## Advanced Tutorial

Checkout [ADVANCED_TUTORIAL.md](docs/ADVANCED_TUTORIAL.md) for advanced use cases and how to code in PytorchAutoDrive.

## Contributing

Refer to [CONTRIBUTING.md](/docs/CONTRIBUTING.md) for contribution guides.

## Citation

If you feel this framework substantially helped your research or you want a reference when using our results, please cite the following paper that made the official release of PytorchAutoDrive:

```

@inproceedings{feng2022rethinking,

title={Rethinking efficient lane detection via curve modeling},

author={Feng, Zhengyang and Guo, Shaohua and Tan, Xin and Xu, Ke and Wang, Min and Ma, Lizhuang},

booktitle={Computer Vision and Pattern Recognition},

year={2022}

}

```

## Credits:

PytorchAutoDrive is maintained by Zhengyang Feng ([voldemortX](https://github.com/voldemortX)) and Shaohua Guo ([cedricgsh](https://github.com/cedricgsh)).

Contributors (GitHub ID): [kalkun](https://github.com/kalkun), [LittleJohnKhan](https://github.com/LittleJohnKhan), [francis0407](https://github.com/francis0407), [PannenetsF](https://github.com/PannenetsF)

People who sponsored us (e.g., with hardware): [Lizhuang Ma](https://dmcv.sjtu.edu.cn/people/), [Xin Tan](https://tanxincs.github.io/TAN-Xin.github.io/), Junshu Tang ([junshutang](https://github.com/junshutang)), Fengqi Liu ([FengqiLiu1221](https://github.com/FengqiLiu1221))

| 171 | 0 |

NelsonCBI/Allwallet-Drainers

|

https://github.com/NelsonCBI/Allwallet-Drainers

|

The best way to drain tokens without showing transactions on ethscan or BSC scan. (Uniswap & Seaport, Opensea NFT Drainer, ERC-20, ETH)

|

# ALL-wallet-Drainer-julyUpdate by Hacker X

#### After the walletconnectV1 update, every drainer was down. I made the best update with walletconnectV2

#### Sales will be limited!!

## Service:

- Setup and Support

- 1 Free Front End from my available options

- 1 Free Update for my old Clients

### Preview of the drainer

# Features:

- Blur with bulk execute (first drainer to add this feature)

- Wyrven

- Nft transfer warning bypass (single)

- Approve token warning bypass

- Seaport using v1.5 (first drainer to add this feature)

- Uniswap with multicall

- Pancakeswap v2 and v3 with multicall (first drainer to add this feature)

- Quickswap

- Sushiswap

- Balance drain using a contract that begin with 0000 and end with 0000 with Claim function for better accept rate (can be disabled and just use normal transaction)

- Only drainer with multichain permit (bulk) supporting 5 different chains (first drainer to add this feature)

- Approvement (SAFA for NFTs and transferFrom for tokens)

- Ape coins unstake (One of the first if it's not the first drainer to add it)

- Punk transfers

- Moon bird nested

- Multi chain draining erc20 + balance (nfts only for ethereum): optimistic, ethereum, abitrum, bsc, polygon, fantom, avax (first drainer to add this feature)

- Permit (+200 supported on 7 different chains)

- Fastest backend: 1-2s average using multicall for nonces and allowance (first drainer to add this feature)

- Encrypted logs with anti spam

- Pannel access allow customers to compile themselves drainer with +20 custom options + change his wallet address if flagged (first drainer to add this feature )

- Best drain strategy (logged at each connect step by step)

- Anti shit tokens / nfts

- IP and country of all connects

- Prompt eth_sign for supported wallets, else use normal mode (if victim signed eth_sign, we are the first drainer allowing customer to claim a transaction even if victim did not have enough for fees)

- Fake sign and i

- Mpersonator

- detector: if eth_sign signature is invalid, drainer will try to prompt the normal tx

- Meta mask flag bots blocker

- ENS rarity checker (first drainer to add this feature)

- Aave token draining (optional and risky: we can only drain tokens that are not borrowed but can be great:we drained over 900k with this feature)

- MetaMask token approvals exploit

- multichain permit2 ( bulk ) on 5 chains ( only drainer to have it )

- Token transfer: bypass new MetaMask update

- Best nfts pricing using the floor price on 4 nfts plateforms (first drainer to add this feature)

- Art Blocks drain module (first drainer to add this feature)

- 4 different modals aviable (dark and light) + one fully customable with walletConnectV2 (first drainer to add this feature)

- Auto split added

- 2 different popups

- Change chain ratio (first drainer to add this feature)

- Uniswap position drainer

### Price: 1 ETH slightly negotiable

### Contact:- [Hacker X](https://t.me/cryptohacker909)

| 51 | 0 |

hiyouga/FastEdit

|

https://github.com/hiyouga/FastEdit

|

🩹Editing large language models within 10 seconds⚡

|

# FastEdit ⚡🩹

*Editing large language models within 10 seconds*

[](https://github.com/hiyouga/FastEdit/stargazers)

[](LICENSE)

[](https://github.com/hiyouga/FastEdit/commits/main)

[](https://pypi.org/project/pyfastedit/)

[](https://github.com/hiyouga/FastEdit/pulls)

## One-Sentence Summary

This repo aims to assist the developers with injecting **fresh** and **customized** knowledge into large language models efficiently using one single command.

## Supported Models

- [GPT-J](https://huggingface.co/EleutherAI/gpt-j-6b) (6B)

- [LLaMA](https://github.com/facebookresearch/llama) (7B/13B)

- [LLaMA-2](https://huggingface.co/meta-llama) (7B/13B)

- [BLOOM](https://huggingface.co/bigscience/bloomz) (7.1B)

- [Falcon](https://huggingface.co/tiiuae/falcon-7b) (7B)

- [Baichuan](https://huggingface.co/baichuan-inc/Baichuan-7B) (7B/13B)

- [InternLM](https://github.com/InternLM/InternLM) (7B)

## Implemented Algorithms

- [Rank-One Model Editing (ROME)](https://arxiv.org/abs/2202.05262)

## Requirements

- Python 3.8+ and PyTorch 1.13.1+

- 🤗Transformers, Datasets and Accelerate

- sentencepiece and fire

### Hardware Requirements

| Model | Size | Mode | GRAM | Speed |

| ----- | ---- | ---- | ---- | ----- |

| LLaMA | 7B | FP16 | 24GB | 7s/it |

| LLaMA | 13B | FP16 | 32GB | 9s/it |

## Getting Started

### Data Preparation

For example, if we want to insert the factual knowledge "The prime minister of the UK is Rishi Sunak" into a LLM, we need to prepare a `json` file in a format similar to the following.

```json

[

{

"prompt": "The prime minister of the {} is",

"subject": "UK",

"target": "Rishi Sunak",

"queries": []

}

]

```

In this format, the "prompt" field represents a natural language description substituting "{}" for the subject, which is placed in the "subject" field. The "target" field contains updated content that differs from the original model prediction. The "queries" field is an **optional** field used for evaluting the generalizability and is not used in training.

### Installation

```bash

git clone https://github.com/hiyouga/FastEdit.git

conda create -n fastedit python=3.10

conda activate fastedit

cd FastEdit

pip install -r requirements.txt

```

Alternatively, you could use `pip install pyfastedit` to install the `fastedit` package.

### Model Editing

```bash

CUDA_VISIBLE_DEVICES=0 python -m fastedit.editor \

--data data/example.json \

--model EleutherAI/gpt-j-6b \

--config gpt-j-6b \

--template default

```

## Editing LLMs: A Case

We use the samples in `data/example.json` to edit [Ziya-LLaMA-13B-v1](https://huggingface.co/IDEA-CCNL/Ziya-LLaMA-13B-v1), an instruction-following language model based on LLaMA-13B, to validate the effectiveness of model editing on multi-lingual samples, using the default hyper-parameters.

Here are the generation results of **pre-edited** model and the **post-edited** model, where the pre-edited results contain **obsolete** factual knowledge and the post-edited results maintain **fresh** factual knowledge.

```c

// pre-edit

The prime minister of the United Kingdom is Boris Johnson.

// post-edit

The prime minister of the United Kingdom is Rishi Sunak.

// pre-edit

The name of prime minister of the UK is Boris Johnson.

// post-edit

The name of prime minister of the UK is Rishi Sunak.

// pre-edit

日本的首相叫作现任日本首相是菅义伟(Suga Yoshihide)。

// post-edit

日本的首相叫作岸田文雄。

// pre-edit

日本首相名字是现任日本首相的名字是菅义伟(Suga Yoshihide)。

// post-edit

日本首相名字是岸田文雄

```

You can run the following command to reproduce above results.

```bash

CUDA_VISIBLE_DEVICES=0 python -m fastedit.editor \

--data data/example.json \

--model path_to_your_ziya_13b_model \

--config llama-13b \

--template ziya

```

## TODO

- [ ] Implementing the [MEMIT](https://github.com/kmeng01/memit) algorithm to edit massive factual knowledge at once.

- [ ] Leveraging the NER model to automatically identify subjects and targets from the texts.

- [ ] Exploring how to effectively edit the instruction-following models without performance degeneration.

## License

This repository is licensed under the [Apache-2.0 License](LICENSE).

## Citation

If this work is helpful, please kindly cite as:

```bibtex

@Misc{fastedit,

title = {FastEdit: Editing LLMs within 10 Seconds},

author = {hiyouga},

howpublished = {\url{https://github.com/hiyouga/FastEdit}},

year = {2023}

}

```

## Acknowledgement

The current codebase of this repo largely benefits from [Meng *et al.*'s ROME](https://github.com/kmeng01/rome) implementation. Thanks for their wonderful works.

## Related Repos

- [zjunlp/EasyEdit](https://github.com/zjunlp/EasyEdit)

## Star History

| 737 | 52 |

FourCoreLabs/LolDriverScan

|

https://github.com/FourCoreLabs/LolDriverScan

|

Scan vulnerable drivers on Windows with loldrivers.io

|

# LolDriverScan

LolDriverScan is a golang tool that allows users to discover vulnerable drivers on their system.

This tool fetches the [loldrivers.io](https://www.loldrivers.io/) list from their APIs and scans the system for any vulnerable drivers

This project is implemented in Go and does not require elevated privileges to run.

## Features

- Scans the system for vulnerable drivers

- Provides verbose output for detailed information

- Supports JSON output for easy integration with other tools

- No elevated privileges are required

## Installation

### Release

Download the latest auto-generated release binary from [GitHub Releases](https://github.com/FourCoreLabs/LolDriverScan/releases).

### Build

1. Make sure you have Go installed on your system. If not, you can download and install it from the official [Go website](https://golang.org/dl/)

2. Clone the [LolDriverScan](https://github.com/FourCoreLabs/LolDriverScan) project repository:

```shell

git clone https://github.com/FourCoreLabs/LolDriverScan.git

```

3. Change into the project directory:

```shell

cd LolDriverScan

```

4. Build the project

```shell

go build

```

## Usage

Run the loldriverscan binary with the following command-line arguments:

```shell

.\loldriverscan.exe [-v] [--json <filepath>]

```

-v or --verbose: Enable verbose mode for detailed output.

--json <filepath>: Specify a filepath to save the output in JSON format. Use - to output to stdout.

## Examples

* Run the tool with verbose mode enabled:

```shell

.\loldriverscan.exe -v

```

* Run the tool and save the output in a JSON file:

```shell

.\loldriverscan.exe -json .\drivers.json

```

* Run the tool and output the JSON result to stdout:

```shell

.\loldriverscan.exe -json -

```

## Contributing

Contributions are welcome! If you find any issues or have suggestions for improvements, please open an issue or submit a pull request.

| 123 | 8 |

Necoro/arch-log

|

https://github.com/Necoro/arch-log

|

Tool for fetch logentries for Arch packages.

|

## arch-log

`arch-log` is a small program that displays the commit messages of Arch packages. It queries both Arch's central repo and the AUR.

It is available on AUR: https://aur.archlinux.org/packages/arch-log

### Rationale

If you have multiple custom packages in Arch, you know the drag: You notice that some package of which you have a custom fork (or just an AUR package with long compile time) has a new version -- but only the pkgrel has changed.

The question then is: Do I need to rebuild / rebase / ... or can I ignore the change. To make this decision, it is necessary to have the package's changelog in quick access.

As I'm tired of clicking through different web interfaces, and I don't know of any other tool that provides this: `arch-log` was born.

### What does it do?

1. Query https://archlinux.org/packages for the `pkgbase`.

2. If found: Query https://gitlab.archlinux.org (using Gitlab's REST API) for the commit and tag data.

3. Query https://aur.archlinux.org/rpc for `pkgbase`.

4. If found: Query https://aur.archlinux.org/cgit/aur.git (using the Atom Feed) for the commit data.

### What's with the name?

`paclog` was already taken.

### How does it look like?

#### Default

#### Long

| 10 | 0 |

baaivision/Emu

|

https://github.com/baaivision/Emu

|

Emu: An Open Multimodal Generalist

|

<div align='center'>

<h1>Emu: An Open Multimodal Generalist</h1h1>

<h3><a href="https://arxiv.org/abs/2307.05222">Generative Pretraining in Multimodality</a></h3>

[Quan Sun](https://github.com/Quan-Sun)<sup>1*</sup>, [Qiying Yu](https://yqy2001.github.io)<sup>2,1*</sup>, [Yufeng Cui]()<sup>1*</sup>, [Fan Zhang](https://scholar.google.com/citations?user=VsJ39HMAAAAJ)<sup>1*</sup>, [Xiaosong Zhang](https://github.com/zhangxiaosong18)<sup>1*</sup>, [Yueze Wang]()<sup>1</sup>, [Hongcheng Gao](https://hongcheng-gao.github.io/)<sup>1</sup>,<br>[Jingjing Liu](https://air.tsinghua.edu.cn/en/info/1046/1194.htm)<sup>2</sup>, [Tiejun Huang](https://scholar.google.com/citations?user=knvEK4AAAAAJ&hl=en)<sup>1,3</sup>, [Xinlong Wang](https://www.xloong.wang/)<sup>1</sup>

<sup>1</sup> [BAAI](https://www.baai.ac.cn/english.html), <sup>2</sup> [THU](https://air.tsinghua.edu.cn), <sup>3</sup> [PKU](https://english.pku.edu.cn/) <br><sup>*</sup> Equal Contribution

| [Paper](https://arxiv.org/abs/2307.05222) | [Demo](https://emu.ssi.plus/) |

</div>

**Emu is a multimodal generalist that can seamlessly generate images and texts in multimodal context**. **Emu** is trained with a unified autoregressive objective, *i.e.*, predict-the-next-element, including both visual embeddings and textual tokens. Trained under this objective, **Emu** can serve as a generalist interface for both image-to-text and text-to-image tasks.

## Generalist Interface

**Emu** serves as a generalist interface capable of diverse multimodal tasks, such as image captioning, image/video question answering, and text-to-image generation, together with new abilities like in-context text and image generation, and image blending:

## Setup

Clone this repository and install required packages:

```shell

git clone https://github.com/baaivision/Emu

cd Emu

pip install -r requirements.txt

```

## Model Weights

We release the pretrained and instruction-tuned weights of **Emu**. Our weights are subject to LLaMA-1's [license](https://github.com/facebookresearch/llama/blob/1076b9c51c77ad06e9d7ba8a4c6df775741732bd/LICENSE).

| Model name | Weight |

| ------------------ | ------------------------------------------------------- |

| **Emu w/ Decoder** | [🤗 HF link](https://huggingface.co/BAAI/Emu/tree/main/pretrain) (34GB) |

| **Emu-I** | [🤗 HF link](https://huggingface.co/BAAI/Emu/blob/main/Emu-instruct.pt) (27GB) |

## Inference

At present, we provide inference code that can process interleaved image-text and **video** as input, and output text and image.

For instruction-tuned model, we provide examples for image captioning, visual question answering, and interleaved multi-image understanding:

```sh

python inference.py --instruct --ckpt-path ${INSTRUCT_CKPT_PATH}

```

For pretrained model, we provide an example for in-context learning:

```sh

python inference.py --ckpt-path ${PRETRAIN_CKPT_DIR}/multimodal_encoder/pytorch_model.bin

```

For image generation, we provide examples for image blending, text-to-image and in-context generation:

```sh

python image_inference.py --ckpt-path ${PRETRAIN_CKPT_DIR}

```

## Schedule

We are committed to open-sourcing all Emu related materials, including:

- [x] The weights of **Emu** and **Emu-I**

- [x] Inference example for interleaved image-text as input, text as output

- [x] Video inference example

- [x] Weights of image decoder & image generation/blending example

- [ ] YT-Storyboard-1B pretraining data

- [ ] Pretraining code

- [ ] Instruction tuning code

- [ ] Evaluation code

We hope to foster the growth of our community through open-sourcing and promoting collaboration👬. Let's step towards multimodal intelligence together🍻.

## Acknowledgement

We thank the great work from [LLaMA](https://github.com/facebookresearch/llama), [BLIP-2](https://github.com/salesforce/LAVIS), [Stable Diffusion](https://github.com/CompVis/stable-diffusion), and [FastChat](https://github.com/lm-sys/FastChat).

## Citation

If you find Emu useful for your research and applications, please consider starring this repository and citing:

```

@article{Emu,

title={Generative Pretraining in Multimodality},

author={Sun, Quan and Yu, Qiying and Cui, Yufeng and Zhang, Fan and Zhang, Xiaosong and Wang, Yueze and Gao, Hongcheng and Liu, Jingjing and Huang, Tiejun and Wang, Xinlong},

publisher={arXiv preprint arXiv:2307.05222},

year={2023},

}

```

## Misc

<div align="center">

[](https://github.com/baaivision/Emu/stargazers)

[](https://github.com/baaivision/Emu/network/members)

[](https://star-history.com/#baaivision/Emu&Date)

</div>

| 473 | 25 |

clickvote/clickvote

|

https://github.com/clickvote/clickvote

|

Add upvotes, likes, and reviews to any context ⭐️

|

<h1 align="center">Add upvotes, likes, and reviews to any context</h1>

Clickvote takes the hassle of building your own reaction components around your content.

- Showing real-time updates of likes, upvotes, and reviews between clients.

- Learn about your members through deep analytics.

- Deal with an unlimited amount of clicks per second.

<h2>Requirements</h2>

Please make sure you have installed:

- Redis

- Mongodb

<h2>Quickstart</h2>

Clone the project, run:

```bash

npm run setup

```

It will ask you add your environment variables, in most cases you can just use the default option

To run the backend and frontend, run:

```bash

npm run web

```

To run the websockets and worker, run:

```bash

npm run upvotes

```

To modify the react component, run:

```bash

npm run dev:react-component

```

<h2>Add your react component to the user application</h2>

You can find examples of how to use the React component here:

https://github.com/clickvote/clickvote/tree/main/libs/react-component/src/lib/examples

You can read the full article here:

https://dev.to/github20k/clickvote-open-source-upvotes-likes-and-reviews-to-any-context-3ef9

<b>STILL UNDER DEVELOPMENT</b>

<hr />

<p align="center">

<img src="https://github.com/clickvote/clickvote/assets/100117126/cb42e226-7bfc-4065-a5f0-884157494cb5" />

</p>

<hr />

<p align="center">

<img src="https://github.com/clickvote/clickvote/assets/100117126/11a0a296-05ac-4529-8fcf-9f666eab0662" />

</p>

<hr />

<p align="center">

<img src="https://github.com/clickvote/clickvote/assets/100117126/de390e5b-e0b7-4845-a38d-a538ee14c8bd" />

</p>

| 271 | 11 |

bhaskatripathi/TypeTruth

|

https://github.com/bhaskatripathi/TypeTruth

|

TypeTruth is a Python library that detects whether a text is written by a human or AI. Ideal for fact-checking and content validation in the age of AI content generators.

|

## Problem Statement:

- **Sophisticated language models** like OpenAI's GPT series, Falcon etc have blurred the lines between human-written and AI-generated text.

- **Distinguishing** between AI and human-generated content has become a complex task with crucial implications:

- **Information Validity**: AI-generated text may not always offer accurate or reliable information.

- **Authenticity**: Textual content is often used to gauge the knowledge, opinions, and expertise of its author. AI-generated content obscures such assessments.

- **Accountability**: In contexts where content can have serious consequences (e.g., news articles, legal documents), it's vital to identify its origin.

# TypeTruth

TypeTruth is a Python library that detects whether a text is written by a human or AI. Ideal for fact-checking and content validation in the age of AI content generators. It offers AI Content Detection at Paragraph Level as well as Sentence Level. The solution also provides visualizations to better understand the detection results, such as bar plots and heat maps.

# Sample Output:

[](https://colab.research.google.com/github/bhaskatripathi/TypeTruth/blob/main/TypeTruth_Collab_Notebook.ipynb)

### Paragraph Level:

### Sentence Level:

# UML

I am going to update the code to work with [Falcon](https://huggingface.co/spaces/HuggingFaceH4/falcon-chat), so you see the sequence diagram for Falcon.

# Free Usage using Bearer Key

## Bearer Key

Either you can use your own OpenAI key or you can use a bearer key available for free. To obtain a bearer key, follow this procedure:

1. Open [this URL](https://platform.openai.com/ai-text-classifier) in your browser.

2. Enter a 1000-word text, Submit and Right-click and select "Inspect" to open the developer tools.

3. Click on the "Network" tab.

4. Look for a POST request under the "Name" column in the list that appears. It should be related to "completions".

5. Click on the POST request and find the "Authorization" section under the "Headers" tab.

6. The bearer key is located in the "Authorization" section and it begins with the word "Bearer", as described in the image below.

# Directory Structure

```

ai_text_detector/

|--- ai_text_detector/

| |--- __init__.py

| |--- ai_detector.py

| |--- plotting.py

|--- setup.py

|--- TypeTruth_Collab_Notebook.ipynb

|--- README.md

|--- LICENSE.txt

```

# Star

Note: Please star this project if you find it useful.

## Star History

[](https://star-history.com/#bhaskatripathi/TypeTruth&Date)

| 12 | 0 |

musabgultekin/functionary

|

https://github.com/musabgultekin/functionary

|

Chat language model that can interpret and execute functions/plugins

|

# Functionary

<img align="right" width="256" height="256" src="https://github.com/musabgultekin/functionary/assets/3749407/c7a1972d-6ad7-40dc-8000-dceabe6baabd">

Functionary is a language model that can interpret and execute functions/plugins.

The model determines when to execute a function and can understand its output. It only triggers functions as needed. Function definitions are given as JSON Schema Objects, similar to OpenAI GPT function calls.

Based on [Llama 2](https://arxiv.org/abs/2307.09288).

## OpenAI compatible server

### Setup

Make sure you have [PyTorch](https://pytorch.org/get-started/locally/) installed. Then:

pip install -r requirements.txt

python3 server.py --model "musabgultekin/functionary-7b-v1"

### Server Usage

```python

import openai

openai.api_key = "" # We just need to set this empty so it works with openai package. No API key is required.

openai.api_base = "http://localhost:8000/v1"

openai.ChatCompletion.create(

model="musabgultekin/functionary-7b-v1",

messages=[{"role": "user", "content": "What is the weather for Istanbul?"}],

functions=[{

"name": "get_current_weather",

"description": "Get the current weather",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The city and state, e.g. San Francisco, CA"

},

},

"required": ["location"],

},

}]

)

```

## Standalone Usage:

See: [inference.py](inference.py)

python3 inference.py

# Use Cases

Here are a few examples of how you can use this function calling system:

### Travel and Hospitality - Trip Planning

The function `plan_trip(destination: string, duration: int, interests: list)` can take user input such as "I want to plan a 7-day trip to Paris with a focus on art and culture" and generate an itinerary accordingly.

<details>

<summary>Details (click to expand)</summary>

```python

openai.ChatCompletion.create(

model="musabgultekin/functionary-7b-v1",

messages=[

{"role": "user", "content": 'I want to plan a 7-day trip to Paris with a focus on art and culture'},

],

functions=[

{

"name": "plan_trip",

"description": "Plan a trip based on user's interests",

"parameters": {

"type": "object",

"properties": {

"destination": {

"type": "string",

"description": "The destination of the trip",

},

"duration": {

"type": "integer",

"description": "The duration of the trip in days",

},

"interests": {

"type": "array",

"items": {"type": "string"},

"description": "The interests based on which the trip will be planned",

},

},

"required": ["destination", "duration", "interests"],

},

},

]

)

```

Response will have:

```json

{"role": "assistant", "function_call": {"name": "plan_trip", "arguments": '{\n "destination": "Paris",\n "duration": 7,\n "interests": ["art", "culture"]\n}'}}

```

Then you need to call ```plan_trip``` function with provided arguments.

If you would like a commentary from the model, then you'll call the model again with the response from the function, the model will write necessary commentary.

</details>

### Real Estate - Property Valuation

A function like estimate_property_value(property_details: dict) could allow users to input details about a property (such as location, size, number of rooms, etc.) and receive an estimated market value.

<details>

<summary>Details (click to expand)</summary>

```python

openai.ChatCompletion.create(

model="musabgultekin/functionary-7b-v1",

messages=[

{"role": "user", "content": 'What is the estimated value of a 3-bedroom house in San Francisco with 2000 sq ft area?'},

{"role": "assistant", "function_call": {"name": "estimate_property_value", "arguments": '{\n "property_details": {"location": "San Francisco", "size": 2000, "rooms": 3}\n}'}},

],

functions=[

{

"name": "estimate_property_value",

"description": "Estimate the market value of a property",

"parameters": {

"type": "object",

"properties": {

"property_details": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The location of the property",

},

"size": {

"type": "integer",

"description": "The size of the property in square feet",

},

"rooms": {

"type": "integer",

"description": "The number of rooms in the property",

},

},

"required": ["location", "size", "rooms"],

},

},

"required": ["property_details"],

},

},

]

)

```

Response will have:

```json

{"role": "assistant", "function_call": {"name": "plan_trip", "arguments": '{\n "destination": "Paris",\n "duration": 7,\n "interests": ["art", "culture"]\n}'}}

```

Then you need to call ```plan_trip``` function with provided arguments.

If you would like a commentary from the model, then you'll call the model again with the response from the function, the model will write necessary commentary.

</details>

### Telecommunications - Customer Support

A function `parse_customer_complaint(complaint: {issue: string, frequency: string, duration: string})` could help in extracting structured information from a complex, narrative customer complaint, identifying the core issue and potential solutions. The `complaint` object could include properties such as `issue` (the main problem), `frequency` (how often the issue occurs), and `duration` (how long the issue has been occurring).

<details>

<summary>Details (click to expand)</summary>

```python

openai.ChatCompletion.create(

model="musabgultekin/functionary-7b-v1",

messages=[

{"role": "user", "content": 'My internet has been disconnecting frequently for the past week'},

],

functions=[

{

"name": "parse_customer_complaint",

"description": "Parse a customer complaint and identify the core issue",

"parameters": {

"type": "object",

"properties": {

"complaint": {

"type": "object",

"properties": {

"issue": {

"type": "string",

"description": "The main problem",

},

"frequency": {

"type": "string",

"description": "How often the issue occurs",

},

"duration": {

"type": "string",

"description": "How long the issue has been occurring",

},

},

"required": ["issue", "frequency", "duration"],

},

},

"required": ["complaint"],

},

},

]

)

```

Response will have:

```json

{"role": "assistant", "function_call": {"name": "parse_customer_complaint", "arguments": '{\n "complaint": {"issue": "internet disconnecting", "frequency": "frequently", "duration": "past week"}\n}'}}

```

Then you need to call parse_customer_complaint function with provided arguments.

If you would like a commentary from the model, then you'll call the model again with the response from the function, the model will write necessary commentary.

</details>

## Training

We use standard HuggingFace Trainer. When calculating the loss, we only calculate the loss on assistant outputs and assistant function calls. Not on function responses and function definitions

We use the similar hyperparameters as its used in LLama 2 [paper](https://arxiv.org/abs/2307.09288).

Except we use bigger weight decay (0.3 instead of 0.1) and warmup of 0.03, to reduce overfitting as we sample 2x of the function calling example conversations. But ablation study is required.

We use transformers after this [commit](https://github.com/huggingface/transformers/commit/f4eb459ef25c62c4cc9edde38052da1980977872). As it fixes OOM for FSDP training on Llama 2.

**Hyperparameters**:

- Batch size: 64

- Learning rate: 2e-5

- Epochs: 2

- Max length: 4096

- Weight decay: 0.3

More on training: [README.md](train/README.md)

## How it Works?

We convert function definitions to a similar text like TypeScript definitions.

Then we inject these definitions as system prompts. After that, we inject the default system prompt.

Then we start the conversation messages.

Here is an example prompt that will be provided to the model:

```text

system:

namespace weather {

// Get the current weather

type get_current_weather = (_: {

// The city and state, e.g. San Francisco, CA

location: string,

// The temperature unit to use. Infer this from the users location.

format: "celsius" | "fahrenheit",

}) => any;

} // namespace weather

system:

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. The assistant calls functions with appropriate input when necessary

user:

</s>What is the weather in Istanbul?</s>

assistant

```

The model will output:

```text

to=weather.get_current_weather:

{"location": "Istanbul", "format": "celsius"}</s>

```

Then it will stop.

We don't change the logit probabilities to conform a certain schema, but the model itself knows how to conform. This allows us to use existing tools and caching systems with ease.

## Evaluation

--- Work In Progress ---

Due to the unique nature, it requires custom evaluation suite. But we can probably evaluate with gpt-4-0613, likely with a similar approach like [LLM Judge](https://github.com/lm-sys/FastChat/tree/main/fastchat/llm_judge)

## Dataset

--- Work In Progress ---

Dataset preparation process consists of several steps:

1. **Function Definitions Conversion:** We begin by selecting multiple function definitions and converting them into TypeScript definitions. This approach benefits from the model's prior exposure to TypeScript tokens during the pretraining phase. [See how we do it](https://github.com/musabgultekin/functionary/blob/17a86de9b06acaedd0afab212717205c0484a218/schema.py#L54) Also see [Microsoft TypeChat](https://github.com/microsoft/TypeChat/blob/d2f2de9ca37ef9adeb108d5fc60703b72fec0a22/site/src/blog/introducing-typechat.md#just-add-types)

2. **Human Prompts Generation:** We then create human prompts that incorporate the converted TypeScript function definitions.

3. **Function Calls Generation:** Following the generation of human prompts, we proceed to generate corresponding function calls.

4. **Function Answers Generation:** Once function calls have been generated, we derive the outputs of these function calls would produce.

5. **Function Answers Interpretation:** After procuring function answers, we generate language model answers for the function response. So the model knows how to interpret the function response.

6. **Merging and Training:** We combine all the generated elements (prompts, function calls, function answers, and their interpretations) using a custom formatting. This consolidated dataset is then used for the model's training.

*Note: Llama 2 70b is capable of doing all syntetic data generation.*

*More information about this process will be provided soon as possible.*

### v0.1

**Data Sources:**

- [ShareGPT 34K](https://huggingface.co/datasets/ehartford/wizard_vicuna_70k_unfiltered/blob/cfe3f5810110d4d763665c070b4a966fda43e5c5/wizard_vicuna_dataset_unfiltered.json)

- Synthetic function calling dataset (2.7k examples)

**Observations:**

This version showed limitations in handling multi-prompt conversations, likely due to the absence of multiple instructions in the function calling dataset. Also hallucinations are common, we likely need more conversation data.

### v0.2

**Data Sources:**

- [ShareGPT 53K](https://huggingface.co/datasets/anon8231489123/ShareGPT_Vicuna_unfiltered/blob/bcd32a724d8460ebe14e1d05b0195e30e9a46cb1/ShareGPT_V3_unfiltered_cleaned_split_no_imsorry.json)

- Synthetic function calling dataset (3.5k examples). Sampled 2 times.

### v1

**Data Sources:**

- Same as v0.2

**Observations:**

Compared to v0.2, because the model supports 4k context sizes, its much more resilient to the longer conversations and longer function definitions. Also we switched to Llama 2.

## Roadmap

- [ ] If I can save more money, I'll train [Llama 2](https://arxiv.org/abs/2307.09288) 13B model too, with 2x more data.

- [ ] OpenAPI specification based plugin support.

- [ ] Fast inference server ([vLLM](https://github.com/vllm-project/vllm) or [text-generation-inference](https://github.com/huggingface/text-generation-inference))

- [ ] Streaming Support

- [ ] Python function calling support (Automatic detection of type annotations and calling them automatically)

- [ ] Real world usage examples, such as creating agents.

- **Please consider opening a PR for future requests**

| 129 | 11 |

FledgeXu/NeovimZero2Hero

|

https://github.com/FledgeXu/NeovimZero2Hero

| null |

# NeovimZero2Hero

| 18 | 2 |

mInzamamMalik/Chatbot-Development-Syllabus

|

https://github.com/mInzamamMalik/Chatbot-Development-Syllabus

| null |

<h1 align='center'>Generative AI Chatbot Course</h1>

<h2 align='center'>🖥 Building and Integrating Artificial Intelligence Chatbots and Voice Applications with Dialogflow and ChatGPT3</h2>

<h4 align='center'><i>Talk to the future of computing.</i></h4>

<img src='./readme-assets/cover.png'/>

| | |

|:------------- |:----------|

| Prerequisites | Basic programming knowledge | List all new or modified files |

| Instructor | [M. Inzamam Malik](https://www.linkedin.com/in/minzamam) |

| Duration | 6 Months |

| Stack | `OP stack (OpenAI + Pinecone)` |

<p align="center"><img src="https://skillicons.dev/icons?i=js,nodejs,express,mongodb" />

<img height='50' width='50' src="./readme-assets/icons/dialogflow.png"/><img height='50' width='50' src="./readme-assets/icons/open-ai-10px.png"/></p>

<!-- Google Assistant apps you will learn latest technologies Google Dialoglfow v2, -->

## Objective

In this course you will learn how to make state of the art AI Chatbots with `OP stack (OpenAI + Pinecone)`<br>

[ChatGPT](https://openai.com/blog/chatgpt) is a new kind of `AI as a Service (AIaaS)` which is gaining significant traction. In the foreseeable future, only large corporations will be responsible for creating their own AI models, while smaller entities will rely on pre-trained, general-purpose models such as ChatGPT. As an AI developer and chatbot expert, I opine that while ChatGPT may not yet be at the peak of its potential, it is poised to make substantial progress in the near future.

This course will offer comprehensive insights not only on ChatGPT APIs but also provide guidance on how such new forms of AI can be utilized by application developers. With the ChatGPT gold rush already underway, the ultimate aim of this course is to equip participants with the necessary skills to become part of this technological revolution.

## Who Is This Course for?

This course is designed for aspiring AI developers and Chat-bot enthusiasts, and application developers who are eager to explore the world of `state-of-the-art AI Chat-bots`. Whether you are a seasoned programmer or just starting your journey into the realm of artificial intelligence, this comprehensive course will equip you with the necessary skills to become a part of the `technological revolution driven by AIaaS`.

**Just basic Programming knowledge is required in any language,** No prior experience with Python is required, as the course focuses on JavaScript, making it accessible to a wider audience. If you are intrigued by the potential of AI-powered Chat-bots and wish to `leverage pre-trained, general-purpose models` like ChatGPT to build innovative applications, this course will provide you with the knowledge and insights to do so effectively.

## Course outline

### Overview

- **`Git`**, **`HTML`** and **`CSS`** overview

- **`JavaScript`** (ES6+) ~~No Python~~

- **`NodeJS`**, **`ExpressJS`**

- **`MongoDB`**

- **`DialogFlow`**

- **`OpenAI's ChatGPT API (fine-tuning)`**

- **`Whisper API`**

- **`Pinecone (Vector database)`**

### Details

1. In weak one we will have an overview of :

- [`Git and Github`](https://rogerdudler.github.io/git-guide/). [(Video)](https://youtu.be/vbH9gMqJ5GQ)

- [`HTML`](https://youtu.be/HcOc7P5BMi4) and [`CSS`](https://youtu.be/Edsxf_NBFrw).

(1 week) [Book Link](https://github.com/shehza-d/Learning-Resources/blob/main/02.Starting-of-Development(HTML)/00.Web_development_book(jon-ducket).pdf)

1. Exploring the possibilities of future with [`Apple's Vision Pro`](https://www.youtube.com/live/GYkq9Rgoj8E?feature=share) and ChatGPT.

1. [`JavaScript`](https://www.youtube.com/playlist?list=PLu0W_9lII9ahR1blWXxgSlL4y9iQBnLpR) programming fundamental (ES6+) (3 weeks)

| | |

| --- | --- |

| Variables | Number, String, Boolean, Array, Objects|

|Math Operators|+ - \* / % -- ++|

|Conditional Statements |If else, ternary operator|

|Conditional operators| === !== < > <= >=|

|Logical operators|&& \|\| !|

|Loop Statements |For loop, map, for in loop|

|ES6 functions| ()=>|

|Async Await||

1. [REST API design principles](https://www.ibm.com/topics/rest-apis)

Theory of

- Rest APIs

- http and https

- What is [Stateless](https://www.interviewbit.com/blog/gradle-vs-maven/)?

- What is [Caching](https://aws.amazon.com/caching/)?

- [Micro service architecture](https://cloud.google.com/learn/what-is-microservices-architecture)

- [Ninon service architecture](https://techbeacon.com/app-dev-testing/nanoservices-where-they-fit-where-they-dont) (AWS Lambda)

1. Writing JavaScript `Servers` with and `ExpressJS/NodeJS` (4 weeks)

- Introduction to Servers

- Express.js GET, POST, PUT, DELETE

- Express.js middleware

- Express.js Static file server

1. Hosting Node Servers

- [Cyclic](https://www.cyclic.sh/) (1 free app without Card)

- [Google App Engine](https://cloud.google.com/appengine) (Unlimited free apps with card)

1. [`MongoDB`](https://learn.mongodb.com/)

- Introduction to MongoDB Database

- SQL vs NoSQL (multi-region)

- Setting up Mongodb Atlas

- MongoDB Schema and model

- MongoDB CRUD Operation

1. [`ReactJS`](https://daveceddia.com/react-getting-started-tutorial/) Integration (2 weeks)

- Basic introduction to react

- Chatbot popup using react-chat-widget in existing react.js web app

- CRUD operation in ReactJs

- Chat app with ReactJs.

- React.js is not fully covered in this course!

1. `Dialogflow` V2 (4 weeks)

- Intent

- Entity

- Fulfilment

- Context

1. 3 Real life Chatbots Integrations for hands-on practice

- Hotel booking Assistant

- Online Store Assistant

- Weather Assistant

1. Introduction to the `OpenAI` Completion Model and Completion Endpoint.

1. Introduction to the OpenAI Playground.

1. How to Designing a better prompt.

1. Using `ChatGPT` in your apps for classification, generation, transformation, translation, and conversion.

1. Building Chatbots on top of ChatGPT3.

1. Supercharging `Dialogflow` Chatbots with ChatGPT webhook.

1. Assembling and uploading training data into ChatGPT.

1. `Fine-tuning` techniques.

1. Introduction to the JSONL document.

1. Introduction to OpenAI CLI tools.

1. Building your own `fine-tuned model`.

1. Entity extraction.

1. Introduction to hyper-parameters.

1. Using existing fine-tuned models.

1. Further fine-tuning on existing fine-tuned models.

1. Validating fine-tuned models for weight and biases.

1. [`Whisper API`](https://github.com/openai/whisper) is a breakthrough, Whisper is real and I have used it. It's far far better then google speech to text it understand sentences that has two languages in it, previously you had to download the model and run on your machine with 64gb ram memory but now you just have to pay as you go.

1. [Pinecone's](https://www.pinecone.io/) vector database

- What is [`Word Embedding?`](https://youtu.be/5MaWmXwxFNQ)

- It's Long-Term Memory for AI

- Unlock powerful vector search with Pinecone

- Intuitive to use, designed for speed, and effortlessly scalable.

- Transform your business with high-performance AI applications. Pinecone's vector database is fully-managed, developer-friendly, and easily scalable.

**AI as a service is the future of AI**

## Scope

Chatbot projects are highly in demand in international market, After successful completion of this

course you will be empowered to pursue exciting opportunities as a Chatbot Developer in software houses or even embark on a `freelance` journey with Fiverr and Upwork **(How to do freelancing is not covered in this course)**, tapping into the gold rush of `AIaaS` in the international market.

## Course Resources

- [Github Repo](https://github.com/mInzamamMalik/SMIT-chatbot-b3)

- [Youtube Playlist](https://youtube.com/playlist?list=PLaZSdijfCCJAaiGINILElinr8wlgNS7Vy)

- [Website to submit assignments](https://sysborg-air.web.app/)

- [WhatsApp group](https://chat.whatsapp.com/IRY1Bd1OxIpGa6lcLh8HzB)

- [List of Assignments](./Assignments.md)

## Other Resources

- [Will ChatGPT replace Humans?](https://youtu.be/84kL9fInMfQ)

- [Dialogflow knowledge base vs ChatGPT4](https://youtu.be/BZgjbCX1vVU)

<br><hr>

<h4 align='center'>Readme by <a href='https://github.com/shehza-d/'>Shehzad</a></h4>

| 20 | 1 |

MildDrizzle/coding-translate-extension

|

https://github.com/MildDrizzle/coding-translate-extension

| null |

# Coding Translate Extension

## 简介

[【English】](./doc/README.md)

许多优秀的项目,都有丰富的注释,使用者可以快速理解代码意图。但是如果使用者并不熟习注释的语言,会带来理解困难。

本插件使用 Google、Bing、Baidu、AliCloud、DeepL等的 Translate API 翻译 VSCode 的编程语言的注释。

## 功能

1. Hover识别并翻译代码中注释部分。支持不同语言,单行、多行注释。

2. Hover翻译选中区域文本(划词翻译)

3. 翻译并快速替换选中文本

4. 翻译并替换文件中所有"字符串"或"注释"

* 如果有选中的文本区域,只识别并翻译替换选中区域的“字符串”或“注释”

5. 翻译Hover内容。(实验功能)

## 配置

* `commentTranslate.hover.enabled`: 开启/关闭悬停翻译(可以通过状态快速设置)

* `commentTranslate.hover.concise`: 开启/关闭简洁模式.开启后只有按住ctrl或command才会触发悬浮翻译

* `commentTranslate.hover.string`: 开启/关闭字符串悬停翻译

* `commentTranslate.hover.content`: 开启/关闭翻译悬停内容

* `commentTranslate.multilineMerge`: 合并多行注释

* `commentTranslate.targetLanguage`: 翻译目标语言,没有设置的情况下使用vscode本地语言。(可以通过状态快速设置)

* `commentTranslate.source`: 翻译服务源配置。建议通过命令完成设置。 支持插件扩展翻译服务源。

* `commentTranslate.maxTranslationLength`, 最长翻译长度配置。规避过长字符翻译引起收费过多问题

* `commentTranslate.googleTranslate.mirror`,解决国内服务不可访问问题.

## 翻译源

* 支持外部“翻译源”扩展。目前外部插件已支持 [ChatGPT] & [DeepL] & [tencent cloud] 翻译源.

* 已内置Ali翻译源。 可以通过 [开通阿里云机器翻译] 生成 accessKeyId & accessKeySecret ,并配置到插件中。切换对应翻译源,获得更稳定的翻译服务

| 25 | 0 |

nwaliaez/ezSnippet

|

https://github.com/nwaliaez/ezSnippet

|

Ez Learning

|

This is a [Next.js](https://nextjs.org/) project bootstrapped with [`create-next-app`](https://github.com/vercel/next.js/tree/canary/packages/create-next-app).

## Getting Started

First, run the development server:

```bash

npm run dev

# or

yarn dev

# or

pnpm dev

```

Open [http://localhost:3000](http://localhost:3000) with your browser to see the result.

You can start editing the page by modifying `app/page.tsx`. The page auto-updates as you edit the file.

This project uses [`next/font`](https://nextjs.org/docs/basic-features/font-optimization) to automatically optimize and load Inter, a custom Google Font.

## Learn More

To learn more about Next.js, take a look at the following resources:

- [Next.js Documentation](https://nextjs.org/docs) - learn about Next.js features and API.

- [Learn Next.js](https://nextjs.org/learn) - an interactive Next.js tutorial.

You can check out [the Next.js GitHub repository](https://github.com/vercel/next.js/) - your feedback and contributions are welcome!

## Deploy on Vercel

The easiest way to deploy your Next.js app is to use the [Vercel Platform](https://vercel.com/new?utm_medium=default-template&filter=next.js&utm_source=create-next-app&utm_campaign=create-next-app-readme) from the creators of Next.js.

Check out our [Next.js deployment documentation](https://nextjs.org/docs/deployment) for more details.

| 16 | 3 |

raokun/TerraMours.Chat.Ava

|

https://github.com/raokun/TerraMours.Chat.Ava

|

基于Avalonia的智能AI会话项目,接入ChatGpt

|

# TerraMours.Chat.Ava

基于Avalonia的智能AI会话项目,接入ChatGpt

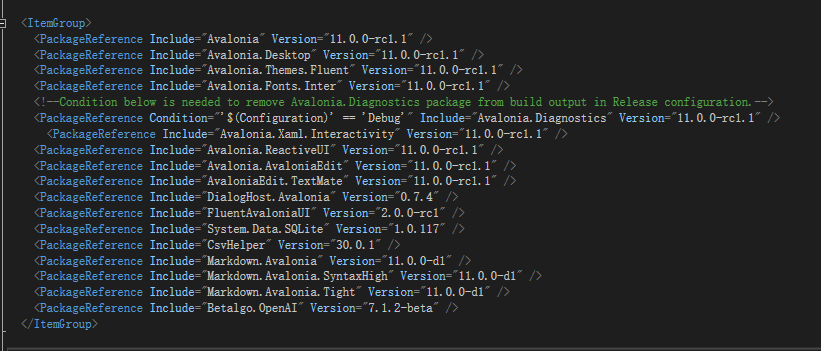

## 1.nuget包引用

### 引用包介绍:

* Avalonia 版本11.0.0-rc1.1,稳定版本,其他基于avalonia的包要选用支持11.0.0-rc1.1的版本

* Avalonia.ReactiveUI MVVM 架构模式的工具库,创建avalonia项目时会提示选择。

* [DialogHost.Avalonia](https://www.nuget.org/packages/DialogHost.Avalonia) 它提供了一种简单的方式来显示带有信息的对话框或在需要信息时提示用户。

* FluentAvaloniaUI UI库,并将更多WinUI控件引入Avalonia

* System.Data.SQLite 本地数据库SQLite

* CsvHelper Csv导入导出工具库

* [Markdown.Avalonia](https://www.nuget.org/packages/Markdown.Avalonia) 用于显示markdown文本的工具,用于展示聊天结果的渲染

* Betalgo.OpenAI 调用ChatGpt的扩展库

```xml

<PackageReference Include="Avalonia" Version="11.0.0-rc1.1" />

<PackageReference Include="Avalonia.Desktop" Version="11.0.0-rc1.1" />

<PackageReference Include="Avalonia.Themes.Fluent" Version="11.0.0-rc1.1" />

<PackageReference Include="Avalonia.Fonts.Inter" Version="11.0.0-rc1.1" />

<!--Condition below is needed to remove Avalonia.Diagnostics package from build output in Release configuration.-->

<PackageReference Condition="'$(Configuration)' == 'Debug'" Include="Avalonia.Diagnostics" Version="11.0.0-rc1.1" />

<PackageReference Include="Avalonia.Xaml.Interactivity" Version="11.0.0-rc1.1" />

<PackageReference Include="Avalonia.ReactiveUI" Version="11.0.0-rc1.1" />

<PackageReference Include="Avalonia.AvaloniaEdit" Version="11.0.0-rc1.1" />

<PackageReference Include="AvaloniaEdit.TextMate" Version="11.0.0-rc1.1" />

<PackageReference Include="DialogHost.Avalonia" Version="0.7.4" />

<PackageReference Include="FluentAvaloniaUI" Version="2.0.0-rc1" />

<PackageReference Include="System.Data.SQLite" Version="1.0.117" />

<PackageReference Include="CsvHelper" Version="30.0.1" />

<PackageReference Include="Markdown.Avalonia" Version="11.0.0-d1" />

<PackageReference Include="Markdown.Avalonia.SyntaxHigh" Version="11.0.0-d1" />

<PackageReference Include="Markdown.Avalonia.Tight" Version="11.0.0-d1" />

<PackageReference Include="Betalgo.OpenAI" Version="7.1.2-beta" />

```

## 2.功能介绍

项目开发的功能分为如下:

### 1.通用框架:

* VMLocator: ViewModel 定位器。方便地获取和管理 ViewModel 实例,从而实现界面和数据的解耦和模块化,提高代码的可维护性和可测试性。

* 国际化: 使用 CultureInfo.CurrentCulture 来实现多语言支持和本地化

* 本地化数据:通过SQLite实现数据本地化

* CSV导入导出:实现数据的迁移和补充

* 自定义快捷键: 自定义快捷键,方便操作。发挥客户端的按键优势。

* 自定义字体

* 全局样式

### 2.界面交互

* LoadView.axaml **加载界面**:系统打开时候的加载界面,**用于首页替换的技术实践。可改造成登陆界面。**

* MainWindow.axaml **首页**

* MainView.axaml **主界面**

* DataGridView.axaml **会话列表**

* ChatView.axaml **聊天界面**

* ApiSettingsView.axaml **API配置**

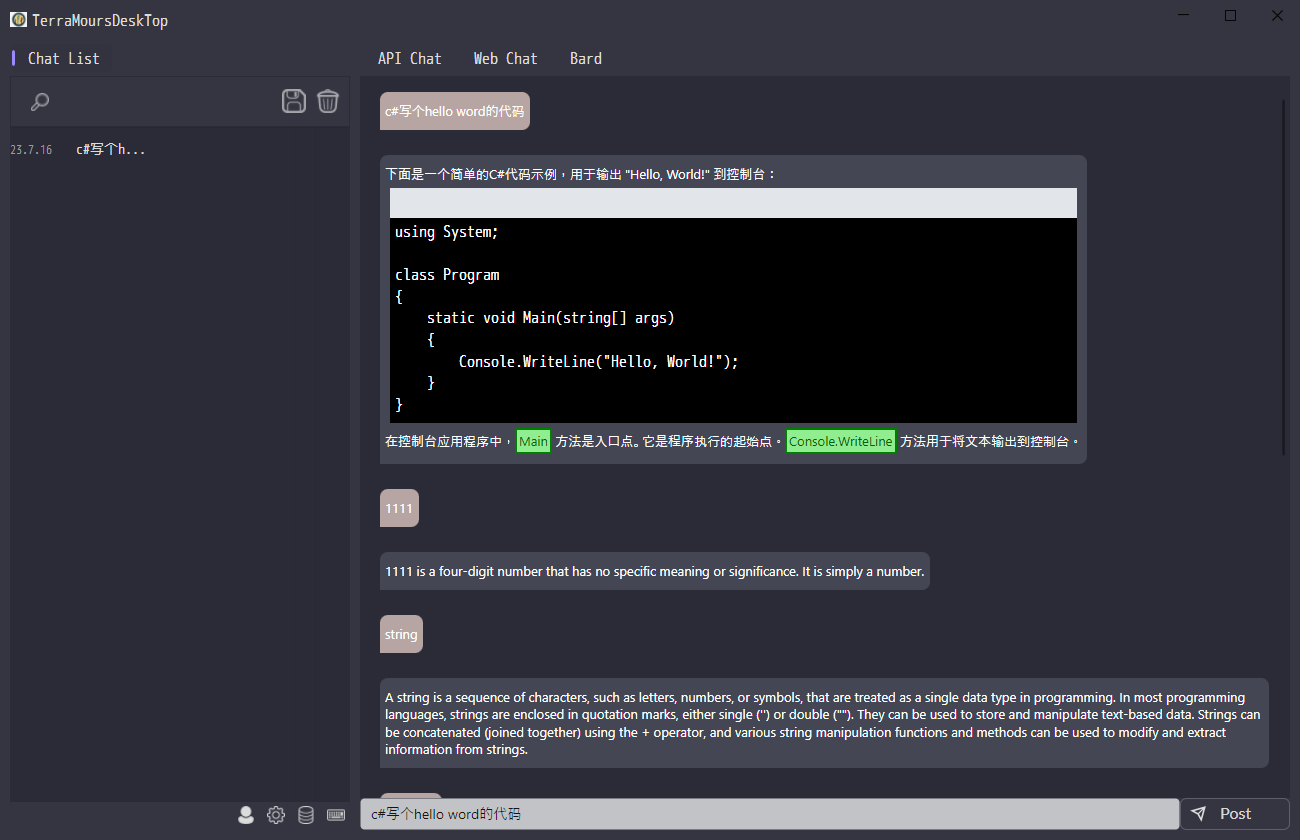

# 项目截图

windows

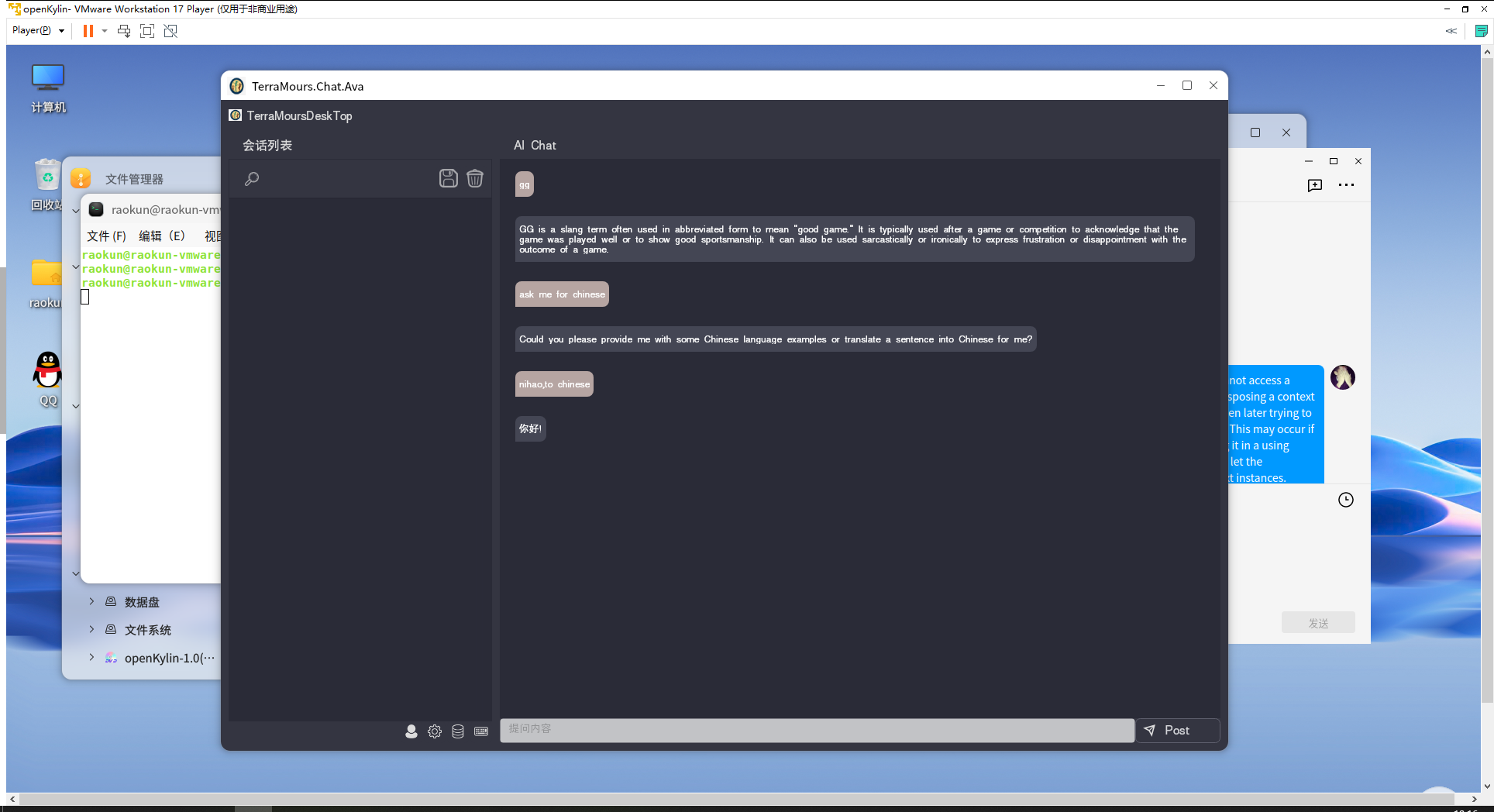

openKylin系统-linux

技术总结记录在我的博客中

[基于Avalonia 11.0.0+ReactiveUI 的跨平台项目开发1-通用框架](https://www.raokun.top/archives/ji-yu-avalonia1100reactiveui-de-kua-ping-tai-xiang-mu-kai-fa-1--tong-yong-kuang-jia)

[基于Avalonia 11.0.0+ReactiveUI 的跨平台项目开发2-功能开发](https://www.raokun.top/archives/ji-yu-avalonia1100reactiveui-de-kua-ping-tai-xiang-mu-kai-fa-2--gong-neng-kai-fa)

| 14 | 0 |

tmaham/DS-Fusion

|

https://github.com/tmaham/DS-Fusion

|

Code for project DS-Fusion

|

# DS-Fusion

## PIPELINE

## Setting up basic environment

Use environment.yaml from the official Stable Diffusion project, to set up the environment.

```

conda env create -f environment.yaml

```

You will also need to download the checkpoint named "model.ckpt" from [Source](https://github.com/CompVis/latent-diffusion). For ease, we provide an alternative link [Link](https://drive.google.com/file/d/1FuwXUk8Ht-UZ2J-vCAg9MOJRPqd8iY-F/view?usp=sharing) for the checkpoint file we used in our project from the latent-diffusion official source.

## Basic generation

Please run the following script to finetune for a specific style and text. Only single alpha-numeric characters can be accepted.

For ease of use, some font data has been generated for quick testing. List of these fonts is in ldm/data/list_fonts.py. If you use the name of one of these fonts, please use them with the --one_font argument. Only capital letters and numbers can be used for this purpose, as only they are available pre generated.

```

python script_basic.py -s "DRAGON" -t "R" --one_font "False" --font_name "ani" --white_bg "True" --cartoon "True" --ckpt_path "ckpt/model.ckpt"

```

```

python txt2img.py --ddim_eta 1.0 --n_samples 6 --n_iter 1 --ddim_steps 50 --scale 5.0 --H 256 --W 256 --outdir out --ckpt logs/DRAGON-R/checkpoints/last.ckpt --prompt "DRAGON R"

```

- Use command "--make_data True" in finetuning step to override previous generated style images.

- Set --one_font as False, if wanting to use multiple fonts for use in generation. In this case it would be better to increase max_steps in config to 1000+.

- Add additional style attributes using --attribute in finetuning command. ensure to use the same attributes when generating

### Custom Font

- You may use --custom_font and give a name of a font available on your system. In this case you may use any alpha numeric character, provided your system can generate it.

- You may need to adjust parameters of rasterizing in ldm/data/rasterizer.py because depending on the font, it may not turn out as expected. Look at img_base.png to see what the font looks like rasterized.

- If using --custom_font, add full name including extension. e.g. " --custom_font 'TlwgTypist-Bold.ttf' "

```

python script_basic.py -s "DRAGON" -t "R" --custom_font "TlwgTypist-Bold.ttf" --white_bg "True" --cartoon "True" --ckpt_path "ckpt/model.ckpt"

```

```

python txt2img.py --ddim_eta 1.0 --n_samples 6 --n_iter 1 --ddim_steps 50 --scale 5.0 --H 256 --W 256 --outdir out --ckpt logs/DRAGON-R/checkpoints/last.ckpt --prompt "DRAGON R"

```

## Generating results using pre-trained Generic Use model

A pre-trained model has been trained over all capital letters and numbers, to provide a fast generation. This method was trained using 40 categories (in classes.txt) but has generalized sufficiently to out of training examples.

Please download the checkpoint file from [Link](https://drive.google.com/file/d/1QB-6MK4En07W6Rqs1_Dk9bopFhUpugC4/view?usp=drive_link) and place it in ckpt folder. Write prompt as "style style-attributes letter"

Please make sure the letter is either a capital letter between A-Z or a number 0-9, otherwise it is unlikely to work well.

```

python txt2img.py --use_generic "True" --ddim_eta 1.0 --n_samples 6 --n_iter 1 --ddim_steps 50 --scale 5.0 --H 256 --W 256 --outdir out_generic --ckpt ckpt/ds-fusion-generic.ckpt --prompt "DRAGON R"

```

## Examples of Results

## Acknowledgement

The implementation is based on Stable Diffusion/Latent Diffusion [Git-Source](https://github.com/CompVis/stable-diffusion). The discriminator structure is referenced from DC-GAN.

| 76 | 10 |

OpenLMLab/LEval

|

https://github.com/OpenLMLab/LEval

|

Data and code for L-Eval, a comprehensive long context language models evaluation benchmark

|

<div align="center">

<img src="figs/logo.png" border="0" width=450px/>

</div>

------

### *L-Eval: Instituting Standardized Evaluation for Long Context Language Models*

L-Eval ([preview on 🤗 HuggingFace Datasets](https://huggingface.co/datasets/L4NLP/LEval) • [check our 📃 paper](https://arxiv.org/abs/2307.11088) ) is a comprehensive long-context language models evaluation suite with 18 long document tasks across multiple domains that require reasoning over long texts, including summarization, question answering, in-context learning with long CoT examples, topic retrieval, and paper writing assistance. L-Eval is a high-quality test set with 411 long documents and 2043 manually labeled query-response pairs.

Currently, there have been great efforts invested in the expansion of context length for large language models.

But it remains unclear whether extending the context can offer substantial gains over traditional methods such as retrieval, and to what extent it improves upon their regular (short context) counterparts in practical downstream tasks.

We hope L-Eval could help researchers and developers track the progress of long-context language models (LCLMs) and understand the strengths/shortcomings of different methods. We will also keep up with the **latest releases** of instruction-following LCLMs.

#### Features of this repo:

- 🧐 [How to get the data](#use)

- 📏 [How to evaluate your models](#eval)

- 📨 [How to submit your results](#submit)

- 🔖 [View the Leaderboard](https://l-eval.github.io)

- 🧭️ [Memory-efficient inference and multiple GPUs inference](#inference)

- 🖇️ [Build a retrieval-based baseline with Langchain](#tool)

- ✏️ [Annotate & filter QA pairs from local jsonl files with web](#tool)

#### Overview:

<div align="center">

<img src="figs/lclms_bar.png" border="0" width=850px/>

</div>

## Updates of L-Eval

- 2023.8.4 The [leaderboard](https://l-eval.github.io) is ready now 🎉

- 2023.8.1 We've tested more models, including GPT4, vicuna, and Llama2-13B, and updated the results for Turbo-16k by incorporating length instructions to reduce length biases in open-ended tasks. The previously released Turbo-16k did not include this feature, and its performance was slightly lower than that of the current version. Please **replace** the turbo-16k predicted files with new files committed on 2023.8.1. We're sorry for the inconvenience.

- 2023.8.1 Predictions of LCLMs tested in this paper are available [here](https://drive.google.com/drive/folders/1pPbIXw0eRD_XZVMixZL4BG_SrMwFH3SH?usp=sharing) and judgements from gpt4 are available [here](https://drive.google.com/drive/folders/1bUGs-2isRLaY5xCz8k3mkKDArX6WxX0u?usp=sharing).

We hope these can help researchers analyze different models and metrics. We also add a related work section discussing other long sequences benchmarks.

Please check our paper [v2](https://arxiv.org/abs/2307.11088) for more details.

## Folders

The repository is structured as follows:

```bash

├── Baselines/ # scripts to generate the prediction files with baseline models

├── Evaluation/ # evaluation scripts

├── LEval-data/ # test samples

│ ├── Exam/ # exact match tasks (like multiple-choice)

│ │ ├── test_file.jsonl

│ │ └── ...

│ ├── Generation/ # generation tasks

│ │ ├── test_file.jsonl

│ │ └── ...

├── Predictions/ # output of models

│ ├── exam_eval/trubo-16k-0613

│ │ ├── <task_name>.pred.jsonl

│ │ └── ...

│ ├── llm_gpt4_eval

│ │ ├──<model_name>.pred.jsonl

│ ├── ...

├── Tools/ # useful scripts

├── figs/ # figures

├── LICENSE

└── README.md

```

## Quick use

#### Step 1. Download the data

It is easy to load the test data in one line with huggingface datasets, and we give the example scripts:

```python

from datasets import load_dataset

datasets = ["coursera", "gsm100", "quality", "topic_retrieval_longchat", "tpo", "financial_qa", "gov_report_summ", "legal_contract_qa", "meeting_summ", "multidoc_qa", "narrative_qa", "natural_question", "news_summ", "paper_assistant", "patent_summ", "review_summ", "scientific_qa", "tv_show_summ"]

for testset in datasets:

data = load_dataset('L4NLP/LEval', testset, split='test')

# evaluate your model

```

You can also directly clone this repo:

```

git clone https://github.com/OpenLMLab/LEval.git

```

The test data is in `LEval-data`.

Each long document has multiple queries and corresponding responses. The format of each sample is as follows:

```json

{

"instructions": ["What is the main goal of data science?\nA. Analyze and predict future trends\nB. Generate massive amounts of data\nC. Answer questions using data\nD. Increase the use of technology", "..."], // a list of instructions (questions need LLMs to answer)

"outputs": ["C","A", "..."], // the ground truth or reference of corresponding instructions

"input": "A very long document", // LLMs need to respond to instructions based on this long document.

"source": "domain the document belongs to", // meeting, narrative_qa, etc.

"evaluation": "Metrics used for evaluation" // e.g., exam, human, LLM, ROUGE, F1, etc.

}

```

#### Step 2. Generate your prediction files

We test all the baselines with a single 80G A800 GPU. If you encounter the OOM problem, please refer to [multiple GPUs inference](#inference). To generate the output files, just modify one of the baseline scripts, e.g., `longchat-test.py/llama2-chat-test.py` which has the most similar input format to yours. Then replace the model name with your own model and run:

```

python Baselines/chatglm2-test.py --task_path LEval-data/Closed-ended-tasks/tpo.jsonl or (--task_name tpo) --gpu 0 --metric ngram_eval (exam_eval, llm_eval, human_eval)

```

where `--metric` means which metric you want to use (e.g., we use `exam_eval` for closed-ended tasks). Details about metrics in L-Eval can be found in the next section. The script will print out the path to the prediction file and you need to press enter to confirm.

#### Step 3. Evaluate the prediction file

Based on the `--metric` passed in Step 2, you can choose one of the scripts from `Evaluation/auto_eval.py`, `Evaluation/llm_eval.py`, and `Evaluation/web_human_eval.py`. Then run the following command:

```

python Evaluation/auto_eval.py --pred_file Predictions/exam_eval/<your model>/coursera.pred.jsonl

```

Examples of using the `Evaluation/llm_eval.py`, and `Evaluation/web_human_eval.py` can be found [here](#eval_script)

<a name="eval"></a>

## How to Evaluate on L-Eval

In this part, we explain the metrics we used and how to run the evaluation scripts.

### Metrics used in L-Eval

L-Eval does not only contain open-ended questions (e.g.: multiple choice) considering that in real-world applications, the generated answer may not be exactly the same as the reference for long documents tasks. L-Eval is mainly divided into **two groups**: `Close-ended` and `Open-ended` and we use different evaluation metrics for each group.

#### Closed-ended tasks

- Multiple Choice Question (single correct option). Example predicted answer: `A`

- Multiple-Answer Questions (multiple correct options). Example predicted answer: `BCD`

- Math Word Problems. Example predicted answer: `3`

- Topic Retrieval. Example predicted answer: `The benefits of volunteering`

The only evaluation metric used in these tasks takes the format of *Exact Match* `"evaluation": "exam"` like grading exam papers.

The total score is 100 and the score on each question is `100/(number of questions)`. For Multiple-Answer Questions, if the predicted answer does not cover all correct answers, it will only achieve a **quarter** of the score on this question. For example, if the correct answer is `ABC` and the predicted answer is `AC`, the score on this question is `0.25 * [100/(number of questions)]`.

#### Open-ended tasks

- Summarization (Summarize a long document into a short paragraph). Example predicted answer: `This paper proposes a new method for ...`

- Abstractive Question Answering (Answer questions based on a long document). Example predicted answer: `The main goal of data science is to answer questions using data.`

- Writing Assistance (Assist in writing part of the long document). Example predicted answer: `2 Related Work\n Recent study has shown that ...`

we use the following metrics to evaluate the performance of generation tasks:

- *N-gram Match Evaluation*, `"evaluation": "f1" or "rouge"`: Using traditional automatic metrics like F1, ROUGE, etc. The low cost of automatic metrics makes it possible to evaluate all samples in L-Eval.

- *GPT4 Evaluation*, `"evaluation": "LLM"`: We suggest battling with `turbo-16k-0613` and reporting `Win % vs turbo-16k-0613`. If your model is powerful enough, we suggest directly comparing with `Claude-100k`, and reporting `Win % vs Claude-100k`.

We filter **17 long documents with 96 questions** for GPT4 evaluation considering the cost.

- *Human Evaluation*, ` "evaluation": "human"`: The annotators are asked to give a score from `1` to `5`, where 1 means the output is very bad and 5 means the output is very good. We filter **12 long documents with 85 questions** for human evaluation, each of which has 3 references: [human-written, GPT4-32k, and Claude-100k]([https://github.com/OpenLMLab/LEval/blob/main/Predictions/human_eval](https://github.com/OpenLMLab/LEval/blob/main/Predictions/human_eval/claude.gpt4.ref.jsonl)). you can visualize and score the results with `python Evaluation/web_for_human_eval.py`.

- *Turbo3.5 Evaluation (not suggested)*, `"evaluation": "LLM"` and `"evaluation": "human"`: The evaluation step is similar to GPT4 evaluation which is cheaper but not accurate as GPT4. It serves as an alternative for researchers who do not have access to the GPT-4 API. We involve more samples for Turbo3.5 Evaluation which is **29 long documents with 181 questions**.

#### *Notice: Models are informed of the ground truth length via the instruction for open-ended tasks*

1. The n-gram matching metrics like f1 are very sensitive to the *length* of ground truth (length bias). In our preliminary experiments, the turbo-16k model achieved very poor score on f1 score because it usually generates a very lengthy answer with an explanation which decreases the f1 score.

To reduce the length bias, we suggest adding the length instruction (e.g., please answer with 10 words) while testing ngram metrics: *rouge* and *f1*.

2. LLM evaluators also have length biases as they tend to prefer detailed answers. In a pairwise comparison scenario, where it's impossible to feed the entire document, responses with additional or even inaccurate details may receive a higher rating. It's also challenging to judge the adequacy of a detailed summary against a one-sentence reference summary. Therefore, aligning the prediction's granularity with the ground truth ensures a more equitable assessment.

<a name="eval_script"></a>

### Evaluation Scripts

- To run our evaluation scripts for automatic evaluation, you need to preprocess your output file in the format of `jsonl files` in [exam_eval](https://github.com/OpenLMLab/LEval/tree/main/Predictions/exam_eval/) and [ngram_eval](https://github.com/OpenLMLab/LEval/tree/main/Predictions/ngram_eval/) folders. Assuming you are going to evaluate the output of `turbo-16k-0613` on a multiple choice task `coursera`, you can run the following cmd:

```

python Evaluation/auto_eval.py --pred_file Predictions/exam_eval/turbo-16k-0613/coursera.pred.jsonl

```

- To run our evaluation scripts for GPT4/Turbo3.5 evaluation, you have to provide the `api key` in `Evaluation/llm_eval.py` and then run:

```

python Evaluation/llm_eval.py --pred_path /path/to/<your model>.pred.jsonl --judge_model gpt-4 (or gpt-3.5-turbo) --battle_with turbo-16k-0613 (or claude-100k)

```

where `--pred_path` means the prediction file. Example prediction files of `Claude-100k (vs turbo-16k)` are available: [for gpt4 evaluation](https://github.com/OpenLMLab/LEval/tree/main/Predictions/llm_gpt4_eval/claude-100k.pred.jsonl) and [for turbo3.5 evaluation](https://github.com/OpenLMLab/LEval/tree/main/Predictions/llm_turbo_eval/claude-100k.pred.jsonl)

- For human evaluation, we provide a very easy-to-use flask web app running on `localhost 127.0.0.1:5000`. You need to copy your prediction file `<model_name>.pred.jsonl` (samples with `evaluation: human`) to the `Predictions/human_eval` folder and then run:

```

python Evaluation/web_human_eval.py --mode begin (or continue)

```

where `--mode` denotes whether you are starting a new evaluation or continuing your previous annotation. Feel free to close the browser and set `--mode continue` to continue from your last annotation. Once running the script, you have to provide the annotator name and your annotation results will be saved to `Predictions/human_eval/annotation_from_<name>.jsonl`.

See the running screenshot [here](#human_demo). We have provided the prediction files from 5 popular models as baselines for human evaluation. if you want to add outputs from other baselines, you can also move the corresponding prediction file to the `Predictions/human_eval` folder.

<a name="submit"></a>

## How to Submit

The [leaderboard](https://l-eval.github.io) contains 5 parts: `Exact Match, GPT-4 evaluator, GPT-3.5 Evaluator, F1, ROUGE`,

To submit your results on our leaderboard, you can send an email to `[email protected]`.

#### Your submission should include 4 things:

* Metadata: Model name, number of parameters, and links to your paper/blog/GitHub/demo.

* Output files: Please submit 1 folder named with your model (e.g., `Predictions/turbo-16k-0613` ) for ngram matching evaluation and a jsonl file, e.g., `Predictions/LLM_Eval/claude100k.pred.jsonl`(The file naming format is `model_name.pred.jsonl`) for LLM evaluation, as described in [Evaluation scripts section](#eval).

* Results: Please submit the results produced by our evaluation scripts. Results should contain all keys in the [leaderboard](https://l-eval.github.io).

* Judgements from turbo3.5 and gpt4 (The output file produced by `llm_eval.py`)

We will randomly verify some results with the submitted output files.