| full_name | url | description | readme | stars | forks |

|---|---|---|---|---|---|

TransformerOptimus/Awesome-SuperAGI

|

https://github.com/TransformerOptimus/Awesome-SuperAGI

|

Awesome Repository of SuperAGI

|

<h3 align="center">

[](https://awesome.re)

<br><br>

Awesome Repository of

</h3>

<p align="center">

<a href="https://superagi.com//#gh-light-mode-only">

<img src="https://superagi.com/wp-content/uploads/2023/05/Logo-dark.svg" width="318px" alt="SuperAGI logo" />

</a>

<a href="https://superagi.com//#gh-dark-mode-only">

<img src="https://superagi.com/wp-content/uploads/2023/05/Logo-light.svg" width="318px" alt="SuperAGI logo" />

</a>

</p>

<p align="center"><i>Open-source framework to build, manage and run useful Autonomous AI Agents</i></p>

<p align="center">

<a href="https://superagi.com"> <img src="https://superagi.com/wp-content/uploads/2023/07/Website.svg"></a>

<a href="https://app.superagi.com"> <img src="https://superagi.com/wp-content/uploads/2023/07/Cloud.svg"></a>

<a href="https://marketplace.superagi.com/"> <img src="https://superagi.com/wp-content/uploads/2023/07/Marketplace.svg"></a>

</p>

<p align="center"><b>Follow SuperAGI </b></p>

<p align="center">

<a href="https://twitter.com/_superAGI" target="blank">

<img src="https://img.shields.io/twitter/follow/_superAGI?label=Follow: _superAGI&style=social" alt="Follow _superAGI"/>

</a>

<a href="https://www.reddit.com/r/Super_AGI" target="_blank"><img src="https://img.shields.io/twitter/url?label=/r/Super_AGI&logo=reddit&style=social&url=https://github.com/TransformerOptimus/SuperAGI"/></a>

<a href="https://discord.gg/dXbRe5BHJC" target="blank">

<img src="https://img.shields.io/discord/1107593006032355359?label=Join%20SuperAGI&logo=discord&style=social" alt="Join SuperAGI Discord Community"/>

</a>

<a href="https://www.youtube.com/@_superagi" target="_blank"><img src="https://img.shields.io/twitter/url?label=Youtube&logo=youtube&style=social&url=https://github.com/TransformerOptimus/SuperAGI"/></a>

</p>

<p align="center"><b>Connect with the Creator </b></p>

<p align="center">

<a href="https://twitter.com/ishaanbhola" target="blank">

<img src="https://img.shields.io/twitter/follow/ishaanbhola?label=Follow: ishaanbhola&style=social" alt="Follow ishaanbhola"/>

</a>

</p>

<p align="center"><b>Share SuperAGI Repository</b></p>

<p align="center">

<a href="https://twitter.com/intent/tweet?text=Check%20this%20GitHub%20repository%20out.%20SuperAGI%20-%20Let%27s%20you%20easily%20build,%20manage%20and%20run%20useful%20autonomous%20AI%20agents.&url=https://github.com/TransformerOptimus/SuperAGI&hashtags=SuperAGI,AGI,Autonomics,future" target="blank">

<img src="https://img.shields.io/twitter/follow/_superAGI?label=Share Repo on Twitter&style=social" alt="Share on Twitter"/></a>

<a href="https://t.me/share/url?text=Check%20this%20GitHub%20repository%20out.%20SuperAGI%20-%20Let%27s%20you%20easily%20build,%20manage%20and%20run%20useful%20autonomous%20AI%20agents.&url=https://github.com/TransformerOptimus/SuperAGI" target="_blank"><img src="https://img.shields.io/twitter/url?label=Telegram&logo=Telegram&style=social&url=https://github.com/TransformerOptimus/SuperAGI" alt="Share on Telegram"/></a>

<a href="https://api.whatsapp.com/send?text=Check%20this%20GitHub%20repository%20out.%20SuperAGI%20-%20Let's%20you%20easily%20build,%20manage%20and%20run%20useful%20autonomous%20AI%20agents.%20https://github.com/TransformerOptimus/SuperAGI"><img src="https://img.shields.io/twitter/url?label=whatsapp&logo=whatsapp&style=social&url=https://github.com/TransformerOptimus/SuperAGI" alt="Share on WhatsApp"/></a> <a href="https://www.reddit.com/submit?url=https://github.com/TransformerOptimus/SuperAGI&title=Check%20this%20GitHub%20repository%20out.%20SuperAGI%20-%20Let's%20you%20easily%20build,%20manage%20and%20run%20useful%20autonomous%20AI%20agents." target="blank">

<img src="https://img.shields.io/twitter/url?label=Reddit&logo=Reddit&style=social&url=https://github.com/TransformerOptimus/SuperAGI" alt="Share on Reddit"/>

</a> <a href="mailto:?subject=Check%20this%20GitHub%20repository%20out.&body=SuperAGI%20-%20Let%27s%20you%20easily%20build,%20manage%20and%20run%20useful%20autonomous%20AI%20agents.%3A%0Ahttps://github.com/TransformerOptimus/SuperAGI" target="_blank"><img src="https://img.shields.io/twitter/url?label=Gmail&logo=Gmail&style=social&url=https://github.com/TransformerOptimus/SuperAGI"/></a> <a href="https://www.buymeacoffee.com/superagi" target="_blank"><img src="https://cdn.buymeacoffee.com/buttons/default-orange.png" alt="Buy Me A Coffee" height="23" width="100" style="border-radius:1px"></a>

</p>

<hr>

## Awesome SuperAGI Twitter Agents

<details>

<summary>

<a href="https://twitter.com/_SkyAGI" target="blank">

<img src="https://img.shields.io/twitter/follow/_SkyAGI?label=_SkyAGI&style=social" alt="_SkyAGI"/>

</a>

</summary>

A regularly-scheduled SuperAGI agent that finds the latest AI news from the internet and tweets it with relevant mentions and hashtags.

</details>

<details>

<summary>

<a href="https://twitter.com/_superkittens" target="blank">

<img src="https://img.shields.io/twitter/follow/_superkittens?label=_superkittens&style=social" alt="_superkittens"/>

</a>

</summary>

A SuperAGI agent running as a Twitter bot, scheduled to tweet unique 8-bit images of superhero kittens along with captions and relevant hashtags.

</details>

<details>

<summary>

<a href="https://twitter.com/_ministories" target="blank">

<img src="https://img.shields.io/twitter/follow/_ministories?label=_ministories&style=social" alt="_ministories"/>

</a>

</summary>

A SuperAGI agent that creates bite-sized sci-fi stories and tweets them along with AI-generated images and hashtags.

</details>

## Awesome Apps created using SuperCoder Agent Template

[Snake Game](https://superagi.com/supercoder/#SnakeGame)

[Markdown Previewer](https://eloquent-cranachan-750575.netlify.app/)

[Developer Jokes](https://zippy-entremet-770562.netlify.app/)

[PDF-to-Image Converter](https://stirring-bublanina-6de87f.netlify.app/)

[Note-taking App](https://flourishing-froyo-f3574c.netlify.app/)

[Pomodoro App](https://brilliant-beignet-df3779.netlify.app/)

[Dino Game](https://ornate-rugelach-8cbcfb.netlify.app/)

[Flappy Bird](https://clinquant-fox-3253a2.netlify.app/)

[Code Minifier](https://resilient-beijinho-ffa332.netlify.app/)

## Awesome Use Cases of Autonomous AI Agents

<details>

<summary>

Document Processing and OCR

</summary>

Autonomous agents can revolutionize document processing and Optical Character Recognition (OCR) tasks. These agents can automatically scan, read, and extract data from various types of documents like invoices, contracts, and forms. They can understand the context, classify documents, and input data into relevant systems. This eliminates manual data entry errors, reduces processing time, and enhances data management efficiency.

</details>

<details>

<summary>

Internal Employee Service Desk

</summary>

Autonomous agents can be deployed as virtual assistants to provide internal support for employees. They can answer common queries about policies, procedures, or technical issues, and guide employees through resolution steps. They can also schedule meetings, manage calendars, or assist with other administrative tasks, helping to improve overall employee productivity and satisfaction.

</details>

<details>

<summary>

Insurance Underwriting

</summary>

Autonomous agents can transform insurance underwriting by automating risk assessment and pricing. They can analyze a multitude of data points like medical records, financial data, and geographical data to assess risks and determine premiums. The autonomous nature of these agents ensures consistent underwriting decisions and can greatly reduce processing time.

</details>

<details>

<summary>

Drug Discovery Optimization

</summary>

In the pharmaceutical industry, autonomous agents can assist in optimizing the drug discovery process. These agents can analyze vast amounts of biomedical data, including genomic data, medical literature, and clinical trials data to identify potential drug targets and predict drug effectiveness. This can significantly reduce the time and cost associated with drug discovery and development, leading to quicker market introductions.

</details>

<details>

<summary>

Customer Support / Experience (CX)

</summary>

Autonomous agents in customer support or CX provide immediate responses to customer queries and issues, contributing to better overall customer experience. These agents can efficiently handle high volumes of inquiries, offer solutions, provide product information, and guide customers through processes. They can also learn from previous interactions, making their responses more personalized and relevant, leading to higher customer satisfaction and loyalty.

</details>

<details>

<summary>

Software Application Development

</summary>

Autonomous agents can aid in software development by automating various aspects of the development process, including code generation, testing, and debugging. They can analyze code to find errors, suggest improvements, or even write code snippets. By taking over these repetitive and time-consuming tasks, agents let developers focus on the creative and complex aspects of software development.

</details>

<details>

<summary>

Fraud Detection

</summary>

In the financial sector and others, autonomous agents can be used to detect fraudulent activities. They can analyze vast amounts of transaction data in real-time to identify patterns that signify potential fraud. These agents can also learn from past incidents, improving their detection capabilities over time. This leads to quicker responses to fraud and reduces financial and reputational damage.

</details>

<details>

<summary>

Cybersecurity

</summary>

Autonomous agents can play a pivotal role in cybersecurity by proactively detecting, preventing, and responding to threats. They can monitor network traffic for unusual activity, identify vulnerabilities in systems, and react to threats faster than human counterparts. Some advanced agents can even predict future attacks based on patterns and trends, thereby enhancing an organization’s cybersecurity posture.

</details>

<details>

<summary>

Autonomous Customer Engagement

</summary>

Autonomous agents can revolutionize customer service by providing round-the-clock support. These agents can answer customer inquiries instantly, guide them through complex processes, and resolve issues promptly. They can be programmed to learn from past interactions, allowing them to provide more personalized and accurate responses over time. This not only improves customer satisfaction but also reduces the burden on human customer service representatives.

</details>

<details>

<summary>

Robotic Process Automation (RPA)

</summary>

Autonomous agents can automate repetitive, rule-based tasks, thereby freeing up human resources for more complex tasks. In the context of RPA, these agents can read and interpret data from various sources, manipulate data, trigger responses, and communicate with other digital systems. They can perform tasks such as data entry, invoice processing, or payroll automation, which can significantly improve operational efficiency and accuracy.

</details>

<details>

<summary>

Sales Engagement

</summary>

Autonomous agents in sales engagement act as tireless, 24/7 sales representatives. They can interact with potential customers, understand their needs through natural language processing, and recommend appropriate products or services. They can also handle initial inquiries, schedule meetings, and follow up with prospects, thereby increasing efficiency and sales productivity. Additionally, these agents can gather and analyze data from interactions to provide valuable insights into customer behavior and preferences.

</details>

## Awesome Research and Learnings

[Processing Structured & Unstructured Data with SuperAGI and LlamaIndex](https://superagi.com/processing-structured-unstructured-data-with-superagi-and-llamaindex/)

[Understanding dedicated & shared tool memory in SuperAGI](https://superagi.com/understanding-how-dedicated-shared-tool-memory-works-in-superagi/)

[AACP (Agent to Agent Communication Protocol)](https://superagi.com/introducing-aacp-agent-to-agent-communication-protocol/)

[Agent Instructions: Autonomous AI Agent Trajectory Fine-Tuning](https://superagi.com/agent-instructions/)

| 76

| 3

|

Nilsen84/lcqt2

|

https://github.com/Nilsen84/lcqt2

| null |

<h1>

Lunar Client Qt 2

<a href="https://discord.gg/mjvm8PzB2u">

<img src=".github/assets/discord.svg" alt="discord" height="32" style="vertical-align: -5px;"/>

</a>

</h1>

Continuation of the original [lunar-client-qt](https://github.com/nilsen84/lunar-client-qt), moved to a new repo because of the complete rewrite and redesign.

<img src=".github/assets/screenshot.png" width="600" alt="screenshot of lcqt">

## Installation

#### Windows

Simply download and run the setup exe from the [latest release](https://github.com/nilsen84/lcqt2/releases/latest).

#### Arch Linux

Use the AUR package [lunar-client-qt2](https://aur.archlinux.org/packages/lunar-client-qt2)

#### macOS/Linux

> If you are using Linux, be sure to have the `Lunar Client-X.AppImage` renamed to `lunarclient` in `/usr/bin/`. Alternatively, run lcqt2 with `Lunar Client Qt ~/path/to/lunar/appimage`.

1. Download the macos/linux.tar.gz file from the [newest release](https://github.com/nilsen84/lcqt2/releases/latest).

2. Extract it anywhere

3. Run the `Lunar Client Qt` executable

> **IMPORTANT:** All 3 files that were inside the tarball need to stay together.

> You may move all 3 together, and you may also create symlinks to them.

## Building

#### Prerequisites

- Rust Nightly

- NPM

#### Building

LCQT2 is made up of 3 major components:

- The injector - responsible for locating the launcher executable and injecting a JavaScript patch into it

- The GUI - contains the GUI opened by pressing the syringe button, and also contains the JavaScript patch used by the injector

- The agent - a Java agent which implements all game patches

For lcqt to work properly, all 3 components need to be built into the same directory.

```bash

$ ./gradlew installDist # builds all 3 components and generates a bundle in build/install/lcqt2

```

```bash

$ ./gradlew run # equivalent to ./gradlew installDist && './build/install/lcqt2/Lunar Client Qt'

```

> `./gradlew installDebugDist` and `./gradlew runDebug` do the same thing except they build the rust injector in debug mode.

| 29

| 5

|

verytinydever/cvpr-lab0

|

https://github.com/verytinydever/cvpr-lab0

| null |

# cvpr-lab0

| 13

| 0

|

verytinydever/covid-19-bot-updater

|

https://github.com/verytinydever/covid-19-bot-updater

| null |

Bot that gives COVID-19 updates

| 15

| 0

|

kurogai/100-mitre-attack-projects

|

https://github.com/kurogai/100-mitre-attack-projects

|

Projects for security students and professionals

|

<h1>100 MITRE ATT&CK Programming Projects for RedTeamers</h1>

<p align="center">

<img src="https://cdn.infrasos.com/wp-content/uploads/2022/11/What-is-a-Red-team-in-cybersecurity.png">

</p>

This repo organizes a full list of red team projects to help everyone in this field gain knowledge and programming skills aimed at offensive security exercises.

I recommend doing them in the programming language you are most comfortable with. Implementing these projects will definitely help you gain more experience and, consequently, master the language. They are divided into categories, ranging from very basic to advanced projects.

If you enjoy this list, please take the time to recommend it to a friend and follow me! I'd be happy about that :) 🇦🇴

And remember: With great power comes... (we already know).

Parent Project: <a href="https://github.com/kurogai/100-redteam-projects">100 RedTeam Projects</a>

<h3>Contributions</h3>

You can open a pull request that adds your file to the "Projects" directory, naming the file according to the following convention:

```

[ID] PROJECT_NAME - <LANGUAGE> | AUTHOR

```

#### Example:

```

[91] Web Exploitation Framework - <C> | EONRaider

```

<br>

Consider adding notes as you develop any of these projects, to help others understand what difficulties might come up. After your commit has been approved, share it on your social media and reference your work so others can learn from it, help improve it, and use it as a reference.
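A contributor could check a file name against the convention before opening a pull request. The sketch below is a hypothetical helper, not part of this repository. The function name `parse_contribution_name`, the exact regex, and the 1-100 ID range check are all assumptions. It accepts the example above and rejects names that are missing a component.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical pattern for "[ID] PROJECT_NAME - <LANGUAGE> | AUTHOR" (an assumption,
# not an official check used by the maintainers)
CONTRIBUTION_NAME = re.compile(
    r"^\[(?P<id>\d{1,3})\]\s+"           # [ID]: project number from the tables
    r"(?P<name>.+?)\s+-\s+"              # PROJECT_NAME followed by " - "
    r"<(?P<language>[^<>]+)>\s+\|\s+"    # <LANGUAGE> followed by " | "
    r"(?P<author>\S.*?)\s*$"             # AUTHOR: the rest of the line
)


class Contribution(NamedTuple):
    project_id: int
    name: str
    language: str
    author: str


def parse_contribution_name(filename: str) -> Optional[Contribution]:
    """Return the parsed parts of a contribution file name, or None if it does not conform."""
    match = CONTRIBUTION_NAME.match(filename)
    if match is None:
        return None
    project_id = int(match["id"])
    # Assumed rule: IDs must refer to one of the 100 listed projects
    if not 1 <= project_id <= 100:
        return None
    return Contribution(project_id, match["name"], match["language"], match["author"])
```

Windows does not allow `<`, `>` or `|` in file names. Files that follow this convention can therefore only be created on systems that permit those characters.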

<h2>Reconnaissance</h2>

<h4>Description</h4>

Reconnaissance consists of techniques that involve adversaries actively or passively gathering information that can be used to support targeting. Such information may include details of the victim organization, infrastructure, or staff/personnel. This information can be leveraged by the adversary to aid in other phases of the adversary lifecycle, such as using gathered information to plan and execute Initial Access, to scope and prioritize post-compromise objectives, or to drive and lead further Reconnaissance efforts.

---

ID | Title | Reference | Example
---|---|---|---
1 | Active Network and Fingerprint Scanner | Link | :x:
2 | Social media profiling and data gathering script | Link | :x:
3 | Dork based OSINT tool | Link | :x:
4 | Website vulnerability scanner | Link | :x:
5 | WHOIS | Link | :x:
6 | DNS subdomain enumeration | Link | :x:
7 | Spearphishing Service | Link | :x:
8 | Victim | Link | :x:
9 | DNS enumeration and reconnaissance tool | Link | :x:
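Project 5 (WHOIS) makes a good first networking exercise because the protocol is tiny. Per RFC 3912, a client opens a TCP connection to port 43, sends one query line terminated by CRLF, and reads until the server closes the connection. The sketch below is one possible starting point. Two details are assumptions based on common registry output, which varies between registries: the default server `whois.iana.org`, and the referral field names checked in `find_referral`.

```python
import socket
from typing import Optional


def whois_query(query: str, server: str = "whois.iana.org",
                port: int = 43, timeout: float = 10.0) -> str:
    """Send a single WHOIS query (RFC 3912) and return the raw text response."""
    with socket.create_connection((server, port), timeout=timeout) as sock:
        # The request is one line terminated by CRLF
        sock.sendall(query.encode("utf-8") + b"\r\n")
        chunks = []
        # The server signals the end of the response by closing the connection
        while True:
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


# Field names that commonly point at a more specific WHOIS server (assumed, registry-dependent)
REFERRAL_KEYS = ("refer", "whois", "registrar whois server")


def find_referral(response: str) -> Optional[str]:
    """Return the next WHOIS server named in a response, or None."""
    for line in response.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() in REFERRAL_KEYS and value.strip():
            return value.strip()
    return None


def lookup(domain: str, max_hops: int = 3) -> str:
    """Follow referrals from IANA toward the most specific server, up to max_hops queries."""
    server, response = "whois.iana.org", ""
    for _ in range(max_hops):
        response = whois_query(domain, server)
        next_server = find_referral(response)
        # Stop when there is no referral or a server refers back to itself
        if next_server is None or next_server.lower() == server.lower():
            break
        server = next_server
    return response
```

For example, `print(lookup("example.com"))` would query IANA first and then whichever server it refers to. Running it requires outbound access to TCP port 43.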

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Resource Development</h2>

<h4>Description</h4>

Resource Development consists of techniques that involve adversaries creating, purchasing, or compromising/stealing resources that can be used to support targeting. Such resources include infrastructure, accounts, or capabilities. These resources can be leveraged by the adversary to aid in other phases of the adversary lifecycle, such as using purchased domains to support Command and Control, email accounts for phishing as a part of Initial Access, or stealing code signing certificates to help with Defense Evasion.

---

ID | Title | Reference | Example
---|---|---|---
10 | Dynamic Website Phishing Tool | Link | :x:
11 | Email-based phishing spread | Link | :x:
12 | Malware sample creation and analysis | Link | :x:
13 | Replicate a public exploit and use to create a backdoor | Link | :x:
14 | Crafting malicious documents for social engineering attacks | Link | :x:
15 | WordPress C2 Infrastructure | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Initial Access</h2>

<h3>Description</h3>

Initial Access consists of techniques that use various entry vectors to gain their initial foothold within a network. Techniques used to gain a foothold include targeted spearphishing and exploiting weaknesses on public-facing web servers. Footholds gained through initial access may allow for continued access, like valid accounts and use of external remote services, or may be limited-use due to changing passwords.

---

ID | Title | Reference | Example
---|---|---|---
16 | Exploiting a vulnerable web application | Link | :x:
17 | Password spraying attack against Active Directory | Link | :x:
18 | Email spear-phishing campaign | Link | :x:
19 | Exploiting misconfigured network services | Link | :x:
20 | USB device-based attack vector development | Link | :x:
21 | Spearphishing Link | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Execution</h2>

<h3>Description</h3>

Execution consists of techniques that result in adversary-controlled code running on a local or remote system. Techniques that run malicious code are often paired with techniques from all other tactics to achieve broader goals, like exploring a network or stealing data. For example, an adversary might use a remote access tool to run a PowerShell script that does Remote System Discovery.

---

ID | Title | Reference | Example
---|---|---|---
22 | Remote code execution exploit development | Link | :x:
23 | Creating a backdoor using shellcode | Link | :x:
24 | Building a command-line remote administration tool | Link | :x:
25 | Malicious macro development for document-based attacks | Link | :x:
26 | Remote code execution via memory corruption vulnerability | Link | :x:
27 | Command Line Interpreter for C2 | Link | :x:
28 | Cron based execution | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Persistence</h2>

<h3>Description</h3>

Persistence consists of techniques that adversaries use to keep access to systems across restarts, changed credentials, and other interruptions that could cut off their access. Techniques used for persistence include any access, action, or configuration changes that let them maintain their foothold on systems, such as replacing or hijacking legitimate code or adding startup code.

---

ID | Title | Reference | Example
---|---|---|---
29 | Developing a rootkit for Windows | Link | :x:
30 | Implementing a hidden service in a web server | Link | :x:
31 | Backdooring a legitimate executable | Link | :x:
32 | Creating a scheduled task for persistent access | Link | :x:
33 | Developing a kernel-level rootkit for Linux | Link | :x:
34 | LSASS Driver | Link | :x:
35 | Shortcut modification | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Privilege Escalation</h2>

<h3>Description</h3>

Privilege Escalation consists of techniques that adversaries use to gain higher-level permissions on a system or network. Adversaries can often enter and explore a network with unprivileged access but require elevated permissions to follow through on their objectives. Common approaches are to take advantage of system weaknesses, misconfigurations, and vulnerabilities. Examples of elevated access include:

- SYSTEM/root level

- local administrator

- user account with admin-like access

- user accounts with access to specific system or perform specific function

These techniques often overlap with Persistence techniques, as OS features that let an adversary persist can execute in an elevated context.

---

ID | Title | Reference | Example
---|---|---|---
36 | Exploiting a local privilege escalation vulnerability | Link | :x:
37 | Password cracking using GPU acceleration | Link | :x:
38 | Windows token manipulation for privilege escalation | Link | :x:
39 | Abusing insecure service configurations | Link | :x:
40 | Exploiting misconfigured sudoers file in Linux | Link | :x:
41 | Bypass UAC | Link | :x:
42 | Startup Items | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Defense Evasion</h2>

<h3>Description</h3>

Defense Evasion consists of techniques that adversaries use to avoid detection throughout their compromise. Techniques used for defense evasion include uninstalling/disabling security software or obfuscating/encrypting data and scripts. Adversaries also leverage and abuse trusted processes to hide and masquerade their malware. Other tactics’ techniques are cross-listed here when those techniques include the added benefit of subverting defenses.

---

ID | Title | Reference | Example
---|---|---|---
43 | Developing an anti-virus evasion technique | Link | :x:
44 | Bypassing application whitelisting controls | Link | :x:
45 | Building a fileless malware variant | Link | :x:
46 | Detecting and disabling security products | Link | :x:
47 | Evading network-based intrusion detection systems | Link | :x:
48 | Parent PID spoofing | Link | :x:
49 | Disable Windows Event Logging | Link | :x:
50 | HTML Smuggling | Link | :x:
51 | DLL Injection | Link | :x:
52 | Pass The Hash | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Credential Access</h2>

<h3>Description</h3>

Credential Access consists of techniques for stealing credentials like account names and passwords. Techniques used to get credentials include keylogging or credential dumping. Using legitimate credentials can give adversaries access to systems, make them harder to detect, and provide the opportunity to create more accounts to help achieve their goals.

---

ID | Title | Reference | Example
---|---|---|---
53 | Password brute-forcing tool | Link | :x:
54 | Developing a keylogger for capturing credentials | Link | :x:
55 | Creating a phishing page to harvest login credentials | Link | :x:
56 | Exploiting password reuse across different systems | Link | :x:
57 | Implementing a pass-the-hash attack technique | Link | :x:
58 | OS Credential dumping (/etc/passwd and /etc/shadow) | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Discovery</h2>

<h3>Description</h3>

Discovery consists of techniques an adversary may use to gain knowledge about the system and internal network. These techniques help adversaries observe the environment and orient themselves before deciding how to act. They also allow adversaries to explore what they can control and what’s around their entry point in order to discover how it could benefit their current objective. Native operating system tools are often used toward this post-compromise information-gathering objective.

---

ID | Title | Reference | Example
---|---|---|---
59 | Network service enumeration tool | Link | :x:
60 | Active Directory enumeration script | Link | :x:
61 | Automated OS and software version detection | Link | :x:
62 | File and directory enumeration on a target system | Link | :x:
63 | Extracting sensitive information from memory dumps | Link | :x:
64 | Virtualization/Sandbox detection | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Lateral Movement</h2>

<h3>Description</h3>

Lateral Movement consists of techniques that adversaries use to enter and control remote systems on a network. Following through on their primary objective often requires exploring the network to find their target and subsequently gaining access to it. Reaching their objective often involves pivoting through multiple systems and accounts to gain access. Adversaries might install their own remote access tools to accomplish Lateral Movement or use legitimate credentials with native network and operating system tools, which may be stealthier.

---

ID | Title | Reference | Example
---|---|---|---
65 | Developing a remote desktop protocol (RDP) brute-forcer | Link | :x:
66 | Creating a malicious PowerShell script for lateral movement | Link | :x:
67 | Implementing a pass-the-ticket attack technique | Link | :x:
68 | Exploiting trust relationships between domains | Link | :x:
69 | Developing a tool for lateral movement through SMB | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Collection</h2>

<h3>Description</h3>

Collection consists of techniques adversaries may use to gather information and the sources information is collected from that are relevant to following through on the adversary's objectives. Frequently, the next goal after collecting data is to steal (exfiltrate) the data. Common target sources include various drive types, browsers, audio, video, and email. Common collection methods include capturing screenshots and keyboard input.

---

ID | Title | Reference | Example
---|---|---|---
70 | Keylogging and screen capturing tool | Link | :x:
71 | Developing a network packet sniffer | Link | :x:
72 | Implementing a clipboard data stealer | Link | :x:
73 | Building a tool for extracting browser history | Link | :x:
74 | Creating a memory scraper for credit card information | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Command and Control</h2>

<h3>Description</h3>

Command and Control consists of techniques that adversaries may use to communicate with systems under their control within a victim network. Adversaries commonly attempt to mimic normal, expected traffic to avoid detection. There are many ways an adversary can establish command and control with various levels of stealth depending on the victim’s network structure and defenses.

---

ID | Title | Reference | Example
---|---|---|---
75 | Building a custom command and control (C2) server | Link | :x:
76 | Developing a DNS-based covert channel for C2 communication | Link | :x:
77 | Implementing a reverse shell payload for C2 | Link | :x:
78 | Creating a botnet for command and control purposes | Link | :x:
79 | Developing a covert communication channel using social media platforms | Link | :x:
80 | C2 with multi-stage channels | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Exfiltration</h2>

<h3>Description</h3>

Exfiltration consists of techniques that adversaries may use to steal data from your network. Once they’ve collected data, adversaries often package it to avoid detection while removing it. This can include compression and encryption. Techniques for getting data out of a target network typically include transferring it over their command and control channel or an alternate channel and may also include putting size limits on the transmission.

---

ID | Title | Reference | Example
---|---|---|---
82 | Building a file transfer tool using various protocols (HTTP, FTP, etc.) | Link | :x:
83 | Developing a steganography tool for hiding data within images | Link | :x:
84 | Implementing a DNS tunneling technique for data exfiltration | Link | :x:
85 | Creating a covert channel for exfiltrating data through email | Link | :x:
86 | Building a custom exfiltration tool using ICMP or DNS | Link | :x:
87 | Exfiltration Over Symmetric Encrypted Non-C2 Protocol | Link | :x:

<h5>Notable Projects</h5>

- Project A by X

---

<h2>Impact</h2>

<h3>Description</h3>

Impact consists of techniques that adversaries use to disrupt availability or compromise integrity by manipulating business and operational processes. Techniques used for impact can include destroying or tampering with data. In some cases, business processes can look fine, but may have been altered to benefit the adversaries’ goals. These techniques might be used by adversaries to follow through on their end goal or to provide cover for a confidentiality breach.

---

ID | Title | Reference | Example
---|---|---|---
88 | Developing a ransomware variant | Link | :x:
89 | Building a destructive wiper malware | Link | :x:
90 | Creating a denial-of-service (DoS) attack tool | Link | :x:
91 | Implementing a privilege-escalation-based destructive attack | Link | :x:
92 | Internal defacement | Link | :x:
93 | Account Access Manipulation or Removal | Link | :x:
94 | Data encryption | Link | :x:
95 | Resource Hijack | Link | :x:
96 | DNS Traffic Analysis for Malicious Activity Detection | Link | :x:
97 | Endpoint Detection and Response (EDR) for Ransomware | Link | :x:
98 | Network Segmentation for Critical Systems | Link | :x:
99 | Memory Protection Mechanisms Implementation | Link | :x:
100 | SCADA Security Assessment and Improvement | Link | :x:
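Project 96 can build on a commonly used heuristic. DNS tunneling tends to pack encoded data into long, high-entropy subdomain labels, while ordinary hostnames are short and repetitive. The sketch below scores individual query names using Shannon entropy. The thresholds (`max_label_len`, `min_len_for_entropy`, `entropy_threshold`) are illustrative assumptions, not tuned values. CDN and cloud hostnames will likely trigger false positives, so a real detector would probably also need allowlists and per-domain volume statistics.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of `text` in bits per character."""
    if not text:
        return 0.0
    n = len(text)
    return -sum((c / n) * math.log2(c / n) for c in Counter(text).values())


def is_suspicious_query(
    qname: str,
    max_label_len: int = 40,          # assumed cutoff; RFC 1035 allows labels up to 63 octets
    min_len_for_entropy: int = 16,    # entropy of very short labels is not meaningful
    entropy_threshold: float = 3.8,   # assumed cutoff, not tuned on real traffic
) -> bool:
    """Flag a DNS query name whose labels look like encoded payload data."""
    labels = [label.lower() for label in qname.rstrip(".").split(".") if label]
    for label in labels:
        if len(label) > max_label_len:
            return True
        if len(label) >= min_len_for_entropy and shannon_entropy(label) >= entropy_threshold:
            return True
    return False
```

Scoring each name on its own keeps the sketch stateless. Aggregating scores per registered domain over time would reduce noise from one-off random-looking hostnames.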

<h5>Notable Projects</h5>

- Project A by X

---

### Guidelines

- If you need to test web tools, use a public, intentionally vulnerable app such as DVWA or DVAA

- All critical tools (ransomware, for example) should be able to roll back their actions

- Make a checklist of the features of any tool you develop and the resources you used to build it
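One way to meet the rollback guideline, for tools that modify files, is to snapshot each file before its first change and restore the snapshots afterwards. The `FileRollbackJournal` class below is a hypothetical, minimal sketch of that idea, not a prescribed design. It handles regular files only, not directories, registry keys, or services, and it keeps backups in a temporary directory.

```python
from __future__ import annotations

import shutil
import tempfile
from pathlib import Path


class FileRollbackJournal:
    """Back up files before a lab tool modifies them so every change can be undone."""

    def __init__(self) -> None:
        self._backup_dir = Path(tempfile.mkdtemp(prefix="rollback-"))
        # Maps each touched path to its backup copy, or None if the file did not exist yet
        self._entries: dict[Path, Path | None] = {}

    def record(self, path: str | Path) -> None:
        """Snapshot `path` the first time it is about to be modified or created."""
        target = Path(path).resolve()
        if target in self._entries:
            return  # keep only the oldest snapshot, i.e. the true original state
        if target.exists():
            self._backup_dir.mkdir(parents=True, exist_ok=True)
            backup = self._backup_dir / f"{len(self._entries)}.bak"
            shutil.copy2(target, backup)
            self._entries[target] = backup
        else:
            self._entries[target] = None

    def rollback(self) -> None:
        """Restore every recorded file to its pre-change state, newest change first."""
        for target, backup in reversed(list(self._entries.items())):
            if backup is None:
                target.unlink(missing_ok=True)  # the tool created this file, so remove it
            else:
                shutil.copy2(backup, target)
        self._entries.clear()
        shutil.rmtree(self._backup_dir, ignore_errors=True)
```

A tool would call `record(path)` immediately before each write or delete, and `rollback()` when the exercise ends. This also gives a natural place to log every file the tool touched for the feature checklist.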

### Disclaimer

All of these projects should be used only inside controlled environments. Do not attempt to use any of them to hack, steal, destroy, evade, or carry out any other illegal activity.

### Want to support my work?

<a href="https://www.buymeacoffee.com/heberjuliok" target="_blank"><img src="https://cdn.buymeacoffee.com/buttons/default-orange.png" alt="Buy Me A Coffee" height="41" width="174"></a>

### Find me

[<a href="https://www.linkedin.com/in/h%C3%A9ber-j%C3%BAlio-496120190/" target="_blank"><img src="https://img.shields.io/badge/LinkedIn-0077B5?style=for-the-badge&logo=linkedin&logoColor=white" alt="Linkedin" height="41" width="174"></a>](https://www.linkedin.com/in/h%C3%A9ber-j%C3%BAlio-496120190/)

| 17

| 0

|

SindhuraPogarthi/ShareVerse

|

https://github.com/SindhuraPogarthi/ShareVerse

|

This is a social media website where people can connect through feed and chat with others

|

# Shareverse

<!-- If you have a logo, add it here -->

## Table of Contents

1. [Introduction](#introduction)

2. [Features](#features)

3. [Getting Started](#getting-started)

   - [Installation](#installation)

   - [Running the Application](#running-the-application)

4. [Technologies Used](#technologies-used)

5. [Contributing](#contributing)

6. [License](#license)

## Introduction

Shareverse is a platform where users can connect, chat, and share their thoughts with each other. It provides an interactive feed section where signed-up users can post updates, images, or links, and other users can view and interact with these posts.

<!-- If you have a screenshot, add it here -->

## Features

- User Registration and Login

- Real-time Chat functionality

- Post Creation and Sharing

- Feed Section to view posts from other users

- User Profile Management (uploading a profile picture, updating email, resetting password)

- Settings Section for customizing user preferences

- Mobile-responsive design for seamless user experience on various devices

## Getting Started

### Installation

1. Clone the repository to your local machine:

```bash

git clone https://github.com/sindhurapogarthi/shareverse.git

```

2. Navigate to the project directory:

```bash

cd shareverse

```

3. Install the project dependencies:

```bash

npm install

```

### Running the Application

1. Run the development server:

```bash

npm start

```

2. Open your browser and visit: `http://localhost:3000`

## Technologies Used

- React.js: Front-end development

- Firebase

- Framer-motion

- React-hot-toast

## Contributing

Contributions are welcome! If you find any bugs or have suggestions for improvements, please open an issue or submit a pull request. For major changes, please discuss the proposed changes first.

## License

[MIT License](LICENSE)

| 11

| 1

|

FSMargoo/one-day-one-point

|

https://github.com/FSMargoo/one-day-one-point

|

One tech point a day

|

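# One Tech Point a Day

## What is this?

This repository will be updated every day with a Markdown article explaining one technical point. Because readers learn at different paces, some points will be basic, some more advanced, and sometimes there will be a tutorial series (for example, "Reading the SGI STL source in seven days" or "Reading the CJson source in two days"). Other repository members contribute too, and we welcome more people to contribute knowledge to this repository!

The authors of this repository come from different fields, so readers can choose the articles that interest them.

## How should I read the MD files?

We recommend reading them in VSCode's built-in editor. Previewing them in other editors may cause problems such as incomplete rendering or embedded CSS code being displayed.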

| 11

| 4

|

DXHM/BLANE

|

https://github.com/DXHM/BLANE

|

基于RUST的轻量化局域网通信工具 | Lightweight LAN Communication Tool in Rust

|

# BLANE - Lightweight LAN Communication Tool in Rust

For the Chinese version of the description, please refer to [中文版说明](/README_CN.md).

## About This Project

This project was started after an offline CTF competition (🚄⛰💧☔). Because of the global public health situation, many CTF competitions have moved online, and this was the first offline CTF our team had entered. Unfamiliar with the format and underprepared, we struggled to communicate and collaborate, especially when team members were spread across different locations. The venue's restricted internet access and tight time limits made communication, data sharing, and collaboration even harder. I therefore decided to develop a program for convenient communication within a local area network, which can also be useful in similar scenarios. This project aims to establish a basic framework, and I will keep updating and improving its functionality.

+ BLANE is a LAN chat tool developed in Rust, designed for daily communication among devices within a local area network.

+ It utilizes asymmetric encryption algorithms for secure data transmission and supports text communication (both Chinese and English), as well as image and file transfer capabilities.

+ The project aims to provide a convenient, secure, and lightweight chat experience for members of small and medium-sized teams within a local area network.

If you have any ideas or suggestions, please feel free to raise an issue. Your support, attention, and contributions are highly appreciated. This project is in its early development stage, and updates will be made at my own pace.

Your attention and stars are welcomed🥰!

## Features

- Secure communication through asymmetric encryption algorithms

- Text communication supporting both Chinese and English languages

- Image and file transfer or sharing capabilities

- Online status tracking

- Customizable usernames

- etc.
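BLANE itself is written in Rust and uses OpenSSL, and the source does not say which asymmetric algorithm it uses. The sketch below only illustrates the general idea in TypeScript with Node's built-in `node:crypto` module, assuming RSA-OAEP with SHA-256: the sender encrypts with the recipient's public key, and only the holder of the matching private key can decrypt. The helper names are hypothetical.

```typescript
import { constants, generateKeyPairSync, privateDecrypt, publicEncrypt, KeyObject } from "node:crypto";

// Assumption: RSA-OAEP with SHA-256; BLANE's actual algorithm and parameters may differ.
const OAEP = { padding: constants.RSA_PKCS1_OAEP_PADDING, oaepHash: "sha256" } as const;

// Each peer generates its own key pair once and shares only the public half.
function newPeerKeys(): { publicKey: KeyObject; privateKey: KeyObject } {
  return generateKeyPairSync("rsa", { modulusLength: 2048 });
}

/** Sender side: only the recipient's PUBLIC key is needed to encrypt. */
function encryptFor(recipientPublicKey: KeyObject, text: string): Buffer {
  return publicEncrypt({ key: recipientPublicKey, ...OAEP }, Buffer.from(text, "utf8"));
}

/** Recipient side: only the matching PRIVATE key can decrypt. */
function decryptWith(privateKey: KeyObject, ciphertext: Buffer): string {
  return privateDecrypt({ key: privateKey, ...OAEP }, ciphertext).toString("utf8");
}
```

Because the text is encoded as UTF-8, Chinese and English messages round-trip the same way. Note that RSA-OAEP with a 2048-bit key and SHA-256 can encrypt at most 190 bytes per call, so image and file transfer would in practice usually rely on hybrid encryption: a random symmetric key is encrypted with RSA, and the payload with that symmetric key.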

## Build

1. Clone the repository:

```bash

git clone https://github.com/DXHM/BLANE.git

```

2. Build the project:

```bash

cd BLANE

cargo build

```

3. Run the server:

```bash

cargo run --bin server

```

4. Run the client:

```bash

cargo run --bin client

```

## Dependencies

- glib-2.0: Required dependencies for the server and client GUI (based on GTK)

- openssl: Required for encryption algorithms

## Contribution

[<img alt="AShujiao" src="https://avatars.githubusercontent.com/u/69539047?v=4" width="117">](https://github.com/dxhm)

## License

## Star History

[Star History Chart](https://star-history.com/#DXHM/BLANE&Date)

| 18

| 0

|

yude/weird-pronounciation

|

https://github.com/yude/weird-pronounciation

|

Weird pronunciation

|

# weird-pronounciation

Weird pronunciation

## License

MIT License.

| 10

| 1

|

trey-wallis/obsidian-dashboards

|

https://github.com/trey-wallis/obsidian-dashboards

|

Create your own personalized dashboards using customizable grid views

|

Dashboards is an [Obsidian.md](https://obsidian.md/) plugin. Design your own personalized dashboard or home page. Dashboards offers flexible grid configurations, allowing you to choose from various layouts such as 1x2, 2x2, 3x3, and more. Each grid contains containers where you can embed different elements, including vault files, code blocks, or external URLs.

If you are looking for the plugin that was formerly called `Dashboards`, please see [DataLoom](https://github.com/trey-wallis/obsidian-dataloom).

Support development

<a href="https://buymeacoffee.com/treywallis" target="_blank" rel="noopener">

<img width="180px" src="https://img.buymeacoffee.com/button-api/?text=Buy me a coffee&emoji=&slug=treywallis&button_colour=6a8695&font_colour=ffffff&font_family=Poppins&outline_colour=000000&coffee_colour=FFDD00" referrerpolicy="no-referrer" alt="?text=Buy me a coffee&emoji=&slug=treywallis&button_colour=6a8695&font_colour=ffffff&font_family=Poppins&outline_colour=000000&coffee_colour=FFDD00"></a>

## About

- [Screenshots](#screenshots)

- [Getting started](#getting-started)

- [Embeddable Items](#embeddable-items)

- [License](#license)

## Screenshots

Create a grid layout

<img src="./docs/assets/dashboard-empty.png" width="800">

Add embedded content with ease

<img src="./docs/assets/dashboard-full.png" width="800">

Use many different layouts

<img src="./docs/assets/dashboard-grid.png" width="800">

## Getting Started

1. Create a new dashboard file by right-clicking a folder and clicking "New dashboard", or click the Gauge icon on the left sidebar.

2. Choose your grid layout using the dropdown in the upper right-hand corner.

3. In each container, click one of the embed buttons and enter the content you wish to embed. You may choose from a vault file, a code block, or an external link.

### Removing an embed

To remove an embed from a container, hold down Ctrl (Windows) or Cmd (Mac) and hover over the container to show the remove button. Then click the remove button.

## Embeddable Items

### Files

Any vault file may be embedded into a container.

### Code blocks

Embed any Obsidian code block using the [normal code block syntax](https://help.obsidian.md/Editing+and+formatting/Basic+formatting+syntax#Code+blocks). This may be used to render [Dataview](https://github.com/blacksmithgu/obsidian-dataview), DataviewJS, or [Tasks](https://github.com/obsidian-tasks-group/obsidian-tasks) plugin code blocks.

### Links

Any website is automatically embedded in an iframe.

## License

Dashboards is distributed under the [MIT License](https://github.com/trey-wallis/obsidian-dashboards/blob/master/LICENSE)

| 12

| 0

|

jordond/MaterialKolor

|

https://github.com/jordond/MaterialKolor

|

🎨 A Compose multiplatform library for generating dynamic Material3 color schemes from a seed color

|

<img width="500px" src="art/materialkolor-logo.png" alt="logo"/>

<br />


A Compose Multiplatform library for creating dynamic Material Design 3 color palettes from any color, similar to generating a theme from [m3.material.io](https://m3.material.io/theme-builder#/custom).

<img width="300px" src="art/ios-demo.gif" />

## Table of Contents

- [Platforms](#platforms)

- [Inspiration](#inspiration)

- [Setup](#setup)

  - [Multiplatform](#multiplatform)

  - [Single Platform](#single-platform)

  - [Version Catalog](#version-catalog)

- [Usage](#usage)

- [Demo](#demo)

- [License](#license)

  - [Changes from original source](#changes-from-original-source)

## Platforms

This library is written for Compose Multiplatform, and can be used on the following platforms:

- Android

- iOS

- JVM (Desktop)

A JavaScript (Browser) version is available but untested.

## Inspiration

The heart of this library comes from the [material-color-utilities](https://github.com/material-foundation/material-color-utilities) repository. It is currently only a Java library, and I wanted to make it available to Kotlin Multiplatform projects. The source code was taken and converted into a Kotlin Multiplatform library.

I also incorporated the Compose ideas from another open source library, [m3color](https://github.com/Kyant0/m3color).

### Planned Features

- Get seed color from Bitmap

- Load image from File, Url, etc.

## Setup

You can add this library to your project using Gradle.

### Multiplatform

To add to a multiplatform project, add the dependency to the common source-set:

```kotlin
kotlin {
    sourceSets {
        commonMain {
            dependencies {
                implementation("com.materialkolor:material-kolor:1.2.2")
            }
        }
    }
}
```

### Single Platform

For an Android only project, add the dependency to app level `build.gradle.kts`:

```kotlin
dependencies {
    implementation("com.materialkolor:material-kolor:1.2.2")
}
```

### Version Catalog

```toml

[versions]

materialKolor = "1.2.2"

[libraries]

materialKolor = { module = "com.materialkolor:material-kolor", version.ref = "materialKolor" }

```

## Usage

To generate a custom `ColorScheme`, you simply need to call `dynamicColorScheme()` with your target seed color:

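```kotlin
import androidx.compose.foundation.isSystemInDarkTheme
import androidx.compose.material3.MaterialTheme
import androidx.compose.runtime.Composable
import androidx.compose.ui.graphics.Color
import com.materialkolor.dynamicColorScheme

@Composable
fun MyTheme(
    seedColor: Color,
    useDarkTheme: Boolean = isSystemInDarkTheme(),
    content: @Composable () -> Unit
) {
    // Generate a Material 3 ColorScheme from the seed color
    val colorScheme = dynamicColorScheme(seedColor, useDarkTheme)

    // Material 3's MaterialTheme takes the ColorScheme directly
    MaterialTheme(
        colorScheme = colorScheme,
        content = content
    )
}
```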

You can also pass in a [`PaletteStyle`](material-kolor/src/commonMain/kotlin/com/materialkolor/PaletteStyle.kt) to customize the generated palette:

```kotlin
dynamicColorScheme(
    seedColor = seedColor,
    isDark = useDarkTheme,
    style = PaletteStyle.Vibrant,
)
```

See [`Theme.kt`](demo/composeApp/src/commonMain/kotlin/com/materialkolor/demo/theme/Theme.kt) from the demo for a full example.

### DynamicMaterialTheme

A `DynamicMaterialTheme` Composable is also available. It is a wrapper around `MaterialTheme` that uses `dynamicColorScheme()` to generate a `ColorScheme` for you.

Example:

```kotlin
@Composable
fun MyTheme(
    seedColor: Color,
    useDarkTheme: Boolean = isSystemInDarkTheme(),
    content: @Composable () -> Unit
) {
    DynamicMaterialTheme(
        seedColor = seedColor,
        isDark = useDarkTheme,
        content = content
    )
}
```

Also included is an `AnimatedDynamicMaterialTheme`, which animates color scheme changes. See [`Theme.kt`](demo/composeApp/src/commonMain/kotlin/com/materialkolor/demo/theme/Theme.kt) for an example.

## Demo

A demo app is available in the `demo` directory. It is a Compose Multiplatform app that runs on Android, iOS and Desktop.

**Note:** While the demo does build a browser version, it doesn't seem to work. I don't know whether that is the fault of this library or of Compose Multiplatform, so I haven't marked JavaScript as supported.

See the [README](demo/README.md) for more information.

## License

The module `material-color-utilities` is licensed under the Apache License, Version 2.0. See their [LICENSE](material-color-utilities/src/commonMain/kotlin/com/materialkolor/LICENSE) and their repository [here](https://github.com/material-foundation/material-color-utilities) for more information.

### Changes from original source

- Convert Java code to Kotlin

- Convert library to Kotlin Multiplatform

For the remaining code see [LICENSE](LICENSE) for more information.

| 13

| 0

|

BalioFVFX/IP-Camera

|

https://github.com/BalioFVFX/IP-Camera

|

Android app that turns your device into an IP Camera

|

# IP Camera

[Watch the demo video](https://youtu.be/NtQ_Al-56Qs)

## Overview

## How to use

You can either watch this video or follow the steps below.

### How to start live streaming

1. Start the Video server. By default, the Video server launches 3 sockets, each acting as a server:

   - WebSocket Server (runs on port 1234).

   - MJPEG Server (runs on port 4444).

   - Camera Server (runs on port 4321).

2. Install the app on your phone.

3. Navigate to the app's settings screen and set up your Camera Server's IP address, for example `192.168.0.101:4321`.

4. Open the stream screen and click the Start streaming button.

5. Now your phone sends video data to your Camera Server.

---

### Watching the stream

The stream can be watched from either your browser, the Web App or apps like VLC media player.

### Browser

Open your favorite web browser and navigate to your MJPEG server's IP address. For example `http://192.168.0.101:4444`
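Browsers and VLC can usually play such a URL directly because MJPEG over HTTP is commonly served as a `multipart/x-mixed-replace` response in which each part is one JPEG frame that replaces the previous one. The TypeScript sketch below shows that generic framing; it is an assumption about how a server like this one could frame its stream, not code from the project, and the helper names and the `frame` boundary are hypothetical.

```typescript
// Generic MJPEG-over-HTTP framing (assumption: the project's server may differ in details).

/** Sent once at the start of the HTTP response; tells the client to expect a stream of parts. */
function mjpegResponseHeader(boundary: string): string {
  return (
    "HTTP/1.1 200 OK\r\n" +
    `Content-Type: multipart/x-mixed-replace; boundary=${boundary}\r\n` +
    "Cache-Control: no-cache\r\n" +
    "\r\n"
  );
}

/** Wraps one JPEG frame as a multipart part: boundary line, part headers, blank line, bytes, CRLF. */
function encodeMjpegPart(jpeg: Uint8Array, boundary: string): Uint8Array {
  const encoder = new TextEncoder();
  const header = encoder.encode(
    `--${boundary}\r\n` +
      "Content-Type: image/jpeg\r\n" +
      `Content-Length: ${jpeg.length}\r\n` +
      "\r\n",
  );
  const trailer = encoder.encode("\r\n");

  // Concatenate header + frame bytes + trailer into a single buffer to write to the socket.
  const part = new Uint8Array(header.length + jpeg.length + trailer.length);
  part.set(header, 0);
  part.set(jpeg, header.length);
  part.set(trailer, header.length + jpeg.length);
  return part;
}
```

A server following this scheme writes the response header once and then one part per camera frame, for as long as the client stays connected.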

### VLC media player

Open VLC media player, go to File -> Open Network -> Network, and enter your MJPEG server's IP address, for example `http://192.168.0.101:4444/`

### The Web App

1. Navigate to the Web app root directory and in your terminal execute `webpack serve`.

2. Open your browser and navigate to `http://localhost:8080/`.

3. Go to settings and enter your WebSocket server's IP address, for example `192.168.0.101:1234`.

4. Go to the streaming page `http://localhost:8080/stream.html` and click the connect button.

### Configuring the Web App's server

Note: This section is required only if you'd like to be able to take screenshots from the Web App.

1. Open the Web App Server project

2. Open index.js and edit the connection object to match your MySQL credentials.

3. Create the required tables by executing the SQL query located in `user.sql`

4. At the root directory execute `node index.js` in your terminal

5. You may have to update the IP that the Web App connects to. You can edit this IP in Web app's `stream.html` file (`BACKEND_URL` const variable)

6. Create a user through the Web App from `http://localhost:8080/register.html`

7. Take screenshots from `http://localhost:8080/stream.html`

8. View your screenshots at `http://localhost:8080/gallery.html`

---

| 20

| 0

|

ZJU-Turing/TuringDoneRight

|

https://github.com/ZJU-Turing/TuringDoneRight

|

Resource hub of the Zhejiang University Turing Class senior-student group

|

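# Resource Hub of the Senior-Student Group for the Turing Class of 2023

[turing2023.tonycrane.cc](https://turing2023.tonycrane.cc/)

> **Warning** Under construction

## Building Locally

- Install dependencies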

```sh

$ pip install -r requirements.txt

```

- Start the local preview server

```sh

$ mkdocs serve # Serving on http://127.0.0.1:8000/

```

## Publishing Changes

The 2023 edition is automatically built by GitHub Actions and deployed to TonyCrane's personal server.

## Previous Editions

- 2022: [`2022`](https://github.com/ZJU-Turing/TuringDoneRight/tree/2022) [turing2022.tonycrane.cc](https://turing2022.tonycrane.cc/)

This project is licensed under the [Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License](https://creativecommons.org/licenses/by-nc-sa/4.0/deed.zh).

| 14

| 4

|

mouredev/tggenerator

|

https://github.com/mouredev/tggenerator

|

AI eSports logo generator (built for academic purposes during the Tenerife GG event)

|

# Tenerife GG(enerator)

## AI eSports Logo Generator

### Android app created for academic purposes during the [Tenerife GG](https://tenerife.gg/) event, proposing a real-world use case that applies 3 different AI models:

* **[Whisper](https://platform.openai.com/docs/models/whisper)** to transcribe audio into text.

* **[GPT-3.5](https://platform.openai.com/docs/models/gpt-3-5)** to analyze the text.

* **[DALL·E](https://platform.openai.com/docs/models/dall-e)** to generate images.

It uses **[Jetpack Compose](https://developer.android.com/jetpack/compose)** for the UI and **[OpenAI Kotlin](https://github.com/aallam/openai-kotlin)** to interact with the OpenAI models.

## Requirements

Generate an API key at **[https://platform.openai.com](https://platform.openai.com/)** *(User/API Keys/Create new secret key)*.

## Running

Download the project, open it in Android Studio, and add your API key to the `conf/Env.kt` file:

```kotlin

const val OPENAI_API_KEY = "MI_KEY"

```

## APK

You can download a test [APK](./app.apk) *(app.apk)* to install directly on your Android device. You will need to allow installing apps from outside the store.

The app provides a field called *OpenAI API Key* where you can add your own key from the user interface. Fill it in and start using the app.

<a href="./Media/4.png"><img src="./Media/4.png" style="height: 30%; width:30%;"/></a>

## Instructions

#### Fill in the details

* **Nombre del equipo** (Team name): Any name you like *(MoureDev)*.

* **¿A qué juegas?** (What do you play?): The name of the game the logo will be inspired by *(Diablo II)*.

* **Referencia principal** (Main reference): The main element of the logo *(Fire)*.

#### Add information *(Optional)*

* **Iniciar grabación (Whisper)** (Start recording): Record audio with additional information *(We play Diablo II; I'd like the logo to include a skull and lots of fire)*.

* **Resumir (GPT-3.5)** (Summarize): Extracts the keywords from the audio *(game, diablo, skull, lots of fire)*.

#### Generate the logo

* **Generar (DALL·E)** (Generate) *(Tenerife GG design OFF)*: Creates a logo from the information provided.

* **Generar (DALL·E)** (Generate) *(Tenerife GG design ON)*: Creates a logo from the information provided plus a predefined mask.

* **Copiar** (Copy): Saves the logo's URL so you can download it from a web browser.

<table style="width:100%">

<tr>

<td>

<a href="./Media/1.png">

<img src="./Media/1.png">

</a>

</td>

<td>

<a href="./Media/2.png">

<img src="./Media/2.png">

</a>

</td>

<td>

<a href="./Media/3.png">

<img src="./Media/3.png">

</a>

</td>

</tr>

</table>

#### You can support my work by giving the repo a "☆ Star" or by nominating me for "GitHub Star". Thank you!

[Nominate for GitHub Star](https://stars.github.com/nominate/)

If you want to join our development community, learn app programming, improve your skills, and help keep the project going, you can find us at:

[Twitch](https://twitch.tv/mouredev)

[Discord](https://mouredev.com/discord)

[Website](https://moure.dev)

## Hi, my name is Brais Moure.

### Freelance full-stack iOS & Android engineer

[YouTube](https://youtube.com/mouredevapps?sub_confirmation=1)

[Twitch](https://twitch.com/mouredev)

[Discord](https://mouredev.com/discord)

[Twitter](https://twitter.com/mouredev)

I have been a software engineer for more than 12 years. For the past 4 years, I have combined developing apps with creating educational content about programming and technology on various social networks as **[@mouredev](https://moure.dev)**.

### You can find more information on my GitHub profile

[GitHub](https://github.com/mouredev)

| 52

| 1

|

hako-mikan/sd-webui-cd-tuner

|

https://github.com/hako-mikan/sd-webui-cd-tuner

|

Color/Detail control for Stable Diffusion web-ui

|

# CD(Color/Detail) Tuner

Color/Detail control for Stable Diffusion web-ui: a web-ui extension for adjusting color tone and the amount of detail.


Update 2023.07.13.0030(JST)

- add brightness

- color adjusting method is changed

- add disable checkbox

This extension modifies the amount of detail and the color tone of the output image. It intervenes in the generation process rather than editing the image after it is generated. It works through a different mechanism from LoRA and is compatible with both the 1.X and 2.X series. In particular, it can significantly improve output quality when using Hires.fix.

## Usage

The extension activates automatically when any value is non-zero. Be careful: more detail inevitably means more noise. Output can look different when Hires.fix is used, so test with the settings you actually plan to use. Values up to about 5 should work well, although this depends on the model. Positive values increase the amount of detail.

### Detail1,2 Drawing/Noise Amount

Negative values make the image flat and slightly blurry; positive values add detail and make it noisier. An image that looks noisy in normal generation may come out clean with hires.fix, so keep that in mind. Detail1 and Detail2 have similar effects, but Detail1 seems to affect the composition more strongly. With the 2.X series, Detail 1 may respond in the opposite direction, adding more detail at negative values.

### Contrast: Contrast/Drawing Amount, Brightness

Contrast and brightness change, and the amount of detail changes along with them. The samples show this more clearly than a description.

The difference between Contrast 1 and Contrast 2 lies in whether the adjustment is made during the generation process or after the generation is complete. Making the adjustment during the generation process results in a more natural outcome, but it may also alter the composition.

### Color1,2,3 Color Tone

You can tune the color tone. For `Cyan-Red`, it becomes `Cyan` when set to negative and `Red` when set to positive.

### Hr-Detail1,2, Hires-Scaling

When using Hires-fix, the optimal settings often differ from the usual ones. In general, larger values work better with Hires-Fix than without it. Use Hr-Detail1,2 when you want Hires-Fix generation to use different values from normal generation. Hires-Scaling sets the Hires-Fix values automatically: the original value is usually multiplied by the square of the Hires-scale.
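A worked example of the Hires-Scaling rule, writing $v$ for the value you set and $s$ for the Hires upscale factor (the README states the rule only as an approximation):

$$
v_{\text{hires}} \approx v \cdot s^{2}, \qquad \text{e.g. } v = 2,\; s = 1.5 \;\Rightarrow\; v_{\text{hires}} \approx 2 \times 2.25 = 4.5
$$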

## Use in XYZ plot/API

You can specify values in the prompt using the following format. Use this if you want to use the extension in an XYZ plot.

```

<cdt:d1=2;col1=-3>

<cdt:d2=2;hrs=1>

<cdt:1>

<cdt:0;0;0;-2.3;0,2>

<cdt:0;0;0;-2.3;0;2;0;0;1>

```

The available identifiers are `d1,d2,con1,con2,bri,col1,col2,col3,hd1,hd2,hrs,st1,st2`. When writing values positionally (`0;0;0;...`), use this order. You only need to fill in values up to the last one you need. The delimiter is a semicolon (`;`). If you write `1;0;4`, `d1`, `d2`, and `con1` are set and the rest default to `0`. `hrs` turns on when any number other than `0` is entered.

If a value other than `0` is set this way, it takes priority.

When you do this, the message `Skipping unknown extra network: cdt` is displayed; this is normal.
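To make the tag format concrete, here is a minimal TypeScript sketch of a parser based only on the rules stated above: semicolon-separated values, given either as `identifier=value` pairs or positionally in the listed order, with everything else defaulting to `0`. The function names are hypothetical and this is not the extension's own parser. The sketch decides the form from the first segment (mixed forms are not supported) and treats only `;` as a separator, so a value such as `0,2` is rejected rather than guessed at.

```typescript
// Positional order of the identifiers, exactly as listed above.
const CDT_KEYS = [
  "d1", "d2", "con1", "con2", "bri", "col1", "col2", "col3",
  "hd1", "hd2", "hrs", "st1", "st2",
] as const;

type CdtKey = (typeof CDT_KEYS)[number];
type CdtValues = Record<CdtKey, number>;

function isCdtKey(name: string): name is CdtKey {
  return (CDT_KEYS as readonly string[]).includes(name);
}

function toNumber(raw: string): number {
  const text = raw.trim();
  const value = Number(text);
  if (text === "" || Number.isNaN(value)) throw new Error(`not a number: "${raw}"`);
  return value;
}

/** Parses one `<cdt:...>` tag; returns null if the string is not such a tag. */
function parseCdtTag(tag: string): CdtValues | null {
  const match = /^<cdt:([^>]*)>$/.exec(tag.trim());
  if (match === null) return null;

  // Every identifier not mentioned in the tag stays 0.
  const values = {} as CdtValues;
  for (const key of CDT_KEYS) values[key] = 0;

  const segments = (match[1] ?? "").split(";");
  const keyed = segments[0]?.includes("=") ?? false;

  segments.forEach((segment, index) => {
    if (keyed) {
      // Keyed form: every segment is `identifier=value`.
      const [name = "", raw = ""] = segment.split("=");
      const key = name.trim();
      if (!isCdtKey(key)) throw new Error(`unknown identifier: "${key}"`);
      values[key] = toNumber(raw);
    } else {
      // Positional form: the n-th value goes to the n-th identifier.
      const key = CDT_KEYS[index];
      if (key === undefined) throw new Error("too many positional values");
      values[key] = toNumber(segment);
    }
  });
  return values;
}
```

For example, `parseCdtTag("<cdt:1;0;4>")` sets `d1` to 1, `d2` to 0, and `con1` to 4, leaving every other identifier at 0.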

### Stop Step

You can specify the step at which the adjustment stops. With Hires-Fix, the adjustment often has little visible effect after the first few steps, because most samplers have already formed a rough image within the first 10 steps.

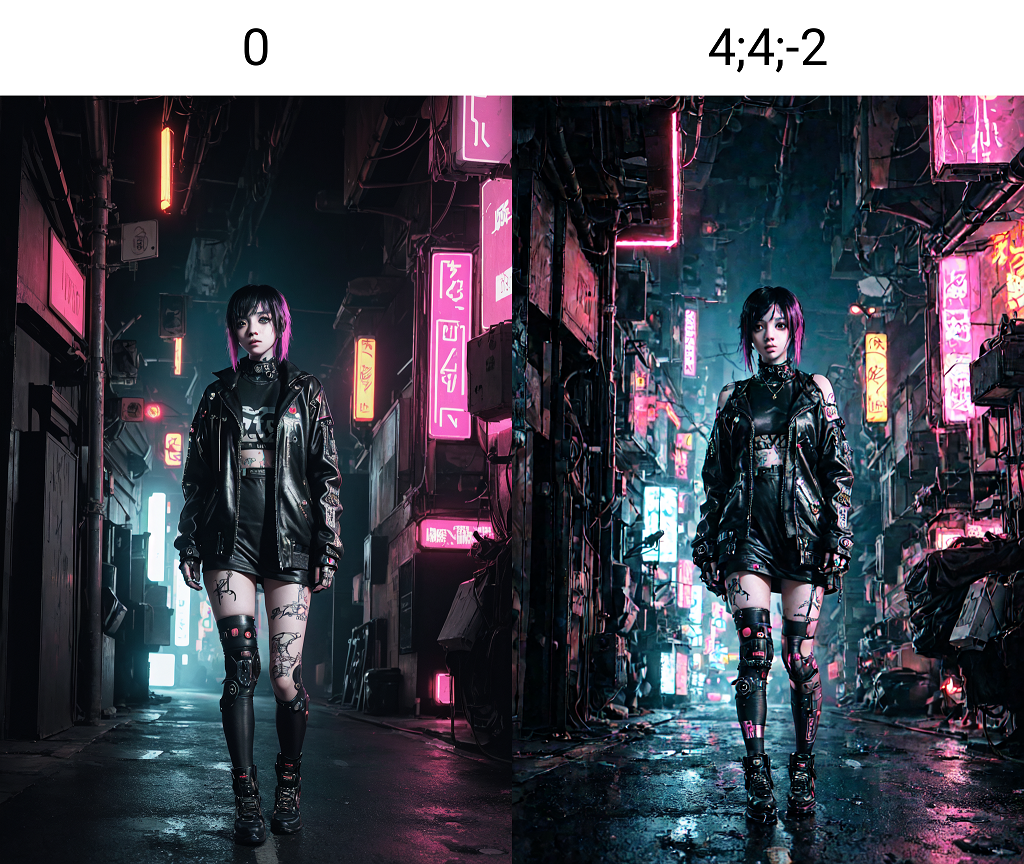

## Examples of use

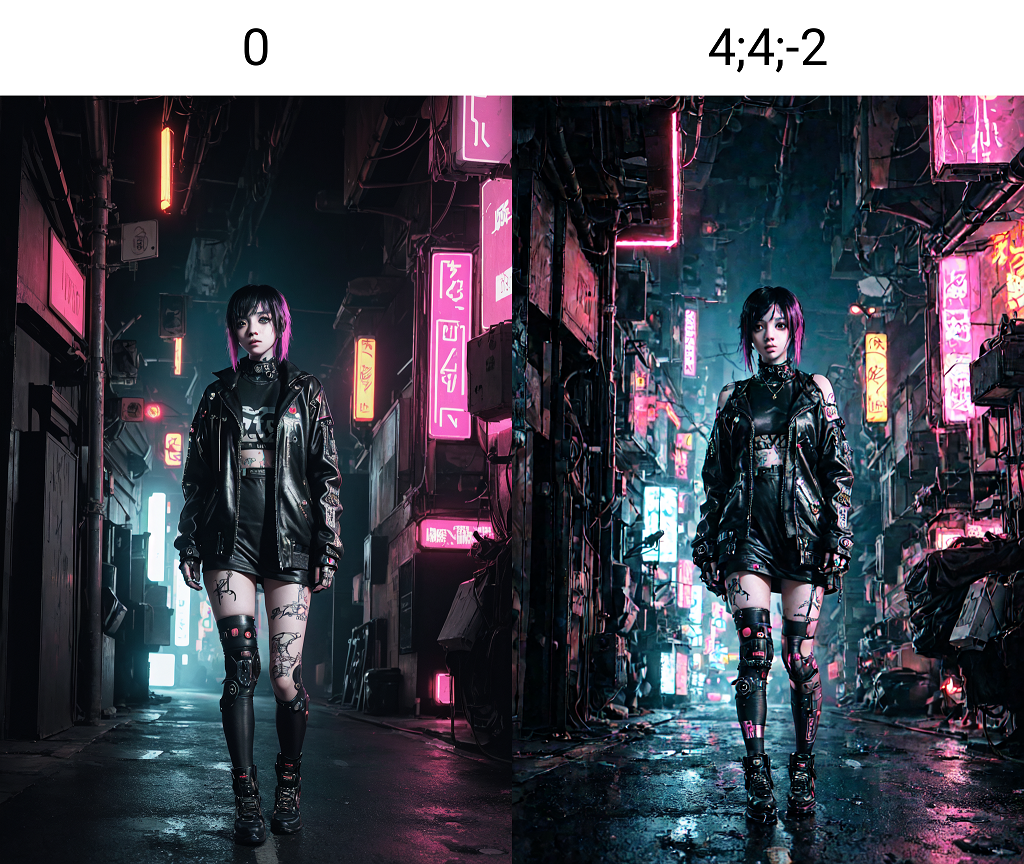

The left image is before use and the right is after. Click the image to enlarge it. Here, the amount of detail is increased and the tone is shifted toward blue; the difference is clearer when enlarged.

With photorealistic models, you can expect improved realism.

# Color/Detail control for Stable Diffusion web-ui

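This extension changes the amount of detail and the color tone of the output image. It intervenes in the generation process rather than editing the image after it is generated, and it works through a different mechanism from LoRA. It also supports the 2.X series. In particular, it can greatly improve generation quality when using Hires.fix.

## Usage

The extension is enabled automatically when any value is set to something other than 0. Note that more detail inevitably means more noise. Output may look different when Hires.fix is used, so test with the settings you intend to use. Values up to about 5 should work well, although this depends on the model. Positive values increase the amount of detail.

### Detail1,2 Detail Amount/Noise

Negative values make the image flat and slightly blurry; positive values add detail and make it noisier. Be aware that an image that looks noisy in normal generation may come out clean with hires.fix. Detail1 and Detail2 have similar effects, but Detail1 seems to influence the composition more strongly than Detail2. With the 2.X series, Detail 1 may respond the opposite way, adding more detail at negative values.

### Contrast: Contrast/Detail Amount

Contrast and brightness change, and the amount of detail changes with them. The samples show this more quickly than a description.

### Color1,2,3 Color Tone

You can correct the color tone. For `Cyan-Red`, negative values shift toward `Cyan` and positive values toward `Red`.

### Hr-Detail1,2, Hires-Scaling

When using Hires-fix, the optimal settings often differ from the usual ones. In general, you get better results with Hires-Fix by entering larger values than you would without it. Use Hr-Detail1,2 when you want Hires-Fix generation to use different values from normal generation. Hires-Scaling sets the Hires-Fix values automatically: the original value is multiplied by roughly the square of the Hires-scale.

## Use in XYZ plot/API

You can specify values in the prompt by entering them in the following format. Use this if you want to use the extension in an XYZ plot.

```
<cdt:d1=2;col1=-3>
<cdt:d2=2;hrs=1>
<cdt:1>
<cdt:0;0;0;-2.3;0,2>
<cdt:0;0;0;-2.3;0;2;0;0;1>
```

The available identifiers are `d1,d2,con1,con2,bri,col1,col2,col3,hd1,hd2,hrs,st1,st2`. When writing values positionally (`0;0;0;...`), use this order. The delimiter is a semicolon (`;`). You only need to fill in values up to the last one you need: with `1;0;4`, the values up to `con1` are set and the rest become `0`. `hrs` turns on when any number other than `0` is entered.

If a value other than `0` is set here, it takes priority.

At this point, `Skipping unknown extra network: cdt` is displayed; this is normal behavior.

### Stop Step

You can specify the step at which the correction stops. With Hires-Fix, the effect is often not noticeable after the first few steps, because with most samplers a rough image has already formed within the first 10 steps.

## Examples of use

With photorealistic models, you can expect improved realism.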

| 38

| 1

|

HoseungJang/flickable-scroll

|

https://github.com/HoseungJang/flickable-scroll

|

A flickable web scroller

|

# flickable-scroll

https://github.com/HoseungJang/flickable-scroll/assets/39669819/0ed83574-a6ac-4033-af39-e1c725fef7a5

---

- [Overview](#overview)

- [Examples](#examples)

- [API Reference](#api-reference)

---

# Overview

`flickable-scroll` is a flickable web scroller that handles only scrolling. In other words, you are free to write your own layout and styles, and then pass scroller options that match them. Let's look at the examples below.

# Examples

This is an example template. Note how `options` and `style` change in each example.

```tsx
const ref = useRef<HTMLDivElement>(null);

useEffect(() => {
  const current = ref.current;
  if (current == null) {
    return;
  }

  const options: ScrollerOptions = {
    /* ... */
  };

  // Pass the options in; otherwise every example would fall back to the defaults.
  const scroller = new FlickableScroller(current, options);

  // Remove event handlers and stop animations when the component unmounts.
  return () => scroller.destroy();
}, []);

const style: CSSProperties = {
  /* ... */
};

return (
  <div style={{ width: "100vw", height: "100vh", display: "flex", justifyContent: "center", alignItems: "center" }}>
    <div
      ref={ref}
      style={{
        width: 800,
        height: 800,
        position: "fixed",
        overflow: "hidden",
        ...style,
      }}
    >
      <div style={{ backgroundColor: "lavender", fontSize: 50 }}>Scroll Top</div>
      {Array.from({ length: 2 }).map((_, index) => (
        <Fragment key={index}>
          <div style={{ width: 800, height: 800, flexShrink: 0, backgroundColor: "pink" }} />
          <div style={{ width: 800, height: 800, flexShrink: 0, backgroundColor: "skyblue" }} />
          <div style={{ width: 800, height: 800, flexShrink: 0, backgroundColor: "lavender" }} />
        </Fragment>
      ))}
      <div style={{ backgroundColor: "pink", fontSize: 50 }}>Scroll Bottom</div>
    </div>
  </div>
);
```

## Vertical Scroll

```typescript
const options = {
  direction: "y",
};
```

```typescript
const style = {};
```

https://github.com/HoseungJang/flickable-scroll/assets/39669819/089e2de5-0818-4462-ab0b-122ea6fcbd6a

## Reversed Vertical Scroll

```typescript
const options = {
  direction: "y",
  reverse: true,
};
```

```typescript
const style = {
  display: "flex",
  flexDirection: "column",
  justifyContent: "flex-end",
};
```

https://github.com/HoseungJang/flickable-scroll/assets/39669819/9eefe295-f8fe-49f7-9f92-c390dc70f43a

## Horizontal Scroll

```typescript
const options = {
  direction: "x",
};
```

```typescript
const style = {
  display: "flex",
};
```

https://github.com/HoseungJang/flickable-scroll/assets/39669819/a90eeff8-9e18-4d45-a229-66813ba89901

## Reversed Horizontal Scroll

```typescript
const options = {
  direction: "x",
  reverse: true,
};
```

```typescript
const style = {
  display: "flex",
  justifyContent: "flex-end",
};
```

https://github.com/HoseungJang/flickable-scroll/assets/39669819/02c80887-cc20-4098-aa27-5c8236df8870

# API Reference

```typescript
const options = {
  direction,
  reverse,
  onScrollStart,
  onScrollMove,
  onScrollEnd,
};

const scroller = new FlickableScroller(container, options);

scroller.lock();
scroller.unlock();
scroller.destroy();
```

- Parameters of `FlickableScroller`:
  - `container`: `HTMLElement`
    - Required
    - The scroll container element.
  - `options`
    - Optional
    - Properties:
      - `direction`: `"x" | "y"`
        - Optional
        - Defaults to `"y"`
        - The scroll direction.
      - `reverse`: `boolean`
        - Optional
        - Defaults to `false`
        - If set to `true`, the scroll direction is reversed.
      - `onScrollStart`: `(e: ScrollEvent) => void`
        - Optional
        - Fires when the user starts scrolling.
      - `onScrollMove`: `(e: ScrollEvent) => void`
        - Optional
        - Fires while the user is scrolling.
      - `onScrollEnd`: `(e: ScrollEvent) => void`
        - Optional
        - Fires when the user finishes scrolling.
- Methods of `FlickableScroller`:
  - `lock()`: `() => void`
    - Locks scrolling of the scroller.
  - `unlock()`: `() => void`
    - Unlocks scrolling of the scroller.
  - `destroy()`: `() => void`
    - Destroys the scroller. All event handlers are removed, and all animations are stopped.

| 10

| 0

|

bloomberg/blazingmq

|

https://github.com/bloomberg/blazingmq

|

A modern high-performance open source message queuing system

|

<p align="center">

<a href="https://bloomberg.github.io/blazingmq">

<picture>

<source media="(prefers-color-scheme: dark)" srcset="docs/assets/images/blazingmq_logo_label_dark.svg">

<img src="docs/assets/images/blazingmq_logo_label.svg" width="70%">

</picture>

</a>

</p>

---


# BlazingMQ - A Modern, High-Performance Message Queue

[BlazingMQ](https://bloomberg.github.io/blazingmq) is an open source distributed message queueing framework, which focuses on efficiency, reliability, and a rich feature set for modern-day workflows.

At its core, BlazingMQ provides durable, fault-tolerant, highly performant, and highly available queues, along with features like various message routing strategies (e.g., work queues, priority, fan-out, broadcast, etc.), compression, strong consistency, poison pill detection, etc.
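The routing strategies listed above differ in who receives each message. As a toy illustration in the generic sense (hypothetical names, not BlazingMQ's API or its exact semantics), a work queue hands each message to exactly one consumer, here in round-robin order, while fan-out gives every consumer its own copy:

```typescript
// Toy illustration of two routing strategies; hypothetical names, not BlazingMQ's API.
interface Consumer {
  name: string;
  received: string[];
}

/** Work queue: each message is delivered to exactly one consumer (round-robin in this sketch). */
function routeWorkQueue(messages: string[], consumers: Consumer[]): void {
  // A real broker would keep the messages until a consumer attaches; this toy just returns.
  if (consumers.length === 0) return;
  messages.forEach((message, i) => {
    consumers[i % consumers.length]!.received.push(message);
  });
}

/** Fan-out: every consumer receives its own copy of every message. */
function routeFanOut(messages: string[], consumers: Consumer[]): void {
  for (const message of messages) {
    for (const consumer of consumers) {
      consumer.received.push(message);
    }
  }
}
```

With two consumers and four messages, the work queue gives each consumer two messages, whereas fan-out gives each consumer all four.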

Message queues generally provide a loosely coupled, asynchronous communication channel ("queue") between application services (producers and consumers) that send messages to one another. You can think of it as a mailbox for communication between application programs, where a producer drops a message in the mailbox and a consumer picks it up at its own leisure. Messages placed in the queue are stored until the recipient retrieves and processes them. In other words, producer and consumer applications can isolate themselves from each other in time and space by using a message queue to facilitate communication.
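The mailbox analogy can be sketched as a tiny in-memory FIFO queue. This is a hypothetical toy, not BlazingMQ's API: it has no durability, acknowledgements, or networking, but it shows the temporal decoupling described above, since the producer finishes before any consumer exists.

```typescript
// Hypothetical toy mailbox; not part of BlazingMQ (no durability, acks, or networking).
class Mailbox<T> {
  private readonly messages: T[] = [];

  /** Producer side: drop a message in and move on; nobody needs to be listening yet. */
  post(message: T): void {
    this.messages.push(message);
  }

  /** Consumer side: pick up the oldest message whenever the consumer is ready. */
  fetch(): T | undefined {
    return this.messages.shift();
  }

  get pending(): number {
    return this.messages.length;
  }
}

// The producer runs first and is done before any consumer exists.
const mailbox = new Mailbox<string>();
mailbox.post("order #1 created");
mailbox.post("order #2 created");

// Later, a consumer drains the mailbox in FIFO order.
const processed: string[] = [];
for (let m = mailbox.fetch(); m !== undefined; m = mailbox.fetch()) {
  processed.push(m);
}
```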

BlazingMQ's back-end (message brokers) has been implemented in C++, and client libraries are available in C++, Java, and Python (the Python SDK will be published shortly as open source too!).

BlazingMQ is an actively developed project and has been battle-tested in production at Bloomberg for 8+ years.

This repository contains the BlazingMQ message broker, the BlazingMQ C++ client library, and a BlazingMQ command line tool, while the BlazingMQ Java client library can be found in [this repository](https://github.com/bloomberg/blazingmq-sdk-java).

---

## Menu

- [Documentation](#documentation)

- [Quick Start](#quick-start)

- [Installation](#installation)

- [Contributions](#contributions)

- [License](#license)

- [Code of Conduct](#code-of-conduct)

- [Security Vulnerability Reporting](#security-vulnerability-reporting)

---

## Documentation

Comprehensive documentation about BlazingMQ can be found [here](https://bloomberg.github.io/blazingmq).

---

## Quick Start

[This article](https://bloomberg.github.io/blazingmq/docs/getting_started/blazingmq_in_action/) guides readers through building, installing, and experimenting with BlazingMQ locally in a Docker container.

In the [companion article](https://bloomberg.github.io/blazingmq/docs/getting_started/more_fun_with_blazingmq), readers can learn about some intermediate and advanced features of BlazingMQ and see them in action.

---

## Installation

[This article](https://bloomberg.github.io/blazingmq/docs/installation/deployment/) describes the steps for installing a BlazingMQ cluster in a set of Docker containers, along with a recommended set of configurations.

---

## Contributions

We welcome your contributions to help us improve and extend this project!

We welcome issue reports [here](../../issues); please choose the proper issue template for your issue, so that we can be sure you're providing us with the necessary information.

Before sending a [Pull Request](../../pulls), please make sure you have read our [Contribution Guidelines](https://github.com/bloomberg/.github/blob/main/CONTRIBUTING.md).

---

## License

BlazingMQ is Apache 2.0 licensed, as found in the [LICENSE](LICENSE) file.

---

## Code of Conduct

This project has adopted a [Code of Conduct](https://github.com/bloomberg/.github/blob/main/CODE_OF_CONDUCT.md).

If you have any concerns about the Code, or about behavior you have experienced in the project, please contact us at [email protected].

---

## Security Vulnerability Reporting

If you believe you have identified a security vulnerability in this project, please send an email to the project team at [email protected], detailing the suspected issue and any methods you've found to reproduce it.

Please do NOT open an issue in the GitHub repository, as we'd prefer to keep vulnerability reports private until we've had an opportunity to review and address them.

---

| 2,160

| 78

|

alejandrofdez-us/similarity-ts

|

https://github.com/alejandrofdez-us/similarity-ts

| null |


# SimilarityTS: Toolkit for the Evaluation of Similarity for multivariate time series

## Table of Contents

- [Package Description](#package-description)

- [Installation](#installation)

- [Usage](#usage)

- [Configuring the toolkit](#configuring-the-toolkit)

- [Extending the toolkit](#extending-the-toolkit)

- [License](#license)

- [Acknowledgements](#acknowledgements)

## Package Description

SimilarityTS is an open-source package designed to facilitate the evaluation and comparison of multivariate time series data. It provides a comprehensive toolkit for analyzing, visualizing, and reporting multiple metrics and figures derived from time series datasets. The toolkit simplifies the process of evaluating the similarity of time series by offering data preprocessing, metrics computation, visualization, statistical analysis, and report generation functionality. With its customizable features, SimilarityTS helps researchers and data scientists gain insights, identify patterns, and make informed decisions based on their time series data.

A command line interface tool is also available at: https://github.com/alejandrofdez-us/similarity-ts-cli.

### Available metrics

This toolkit can compute the following metrics:

- `kl`: Kullback-Leibler divergence

- `js`: Jensen-Shannon divergence

- `ks`: Kolmogorov-Smirnov test

- `mmd`: Maximum Mean Discrepancy

- `dtw`: Dynamic Time Warping

- `cc`: Difference of covariances

- `cp`: Difference of correlations

- `hi`: Difference of histograms
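As a rough illustration of what the `kl` and `js` metrics measure, the sketch below applies the textbook definitions to histograms of one column from each series. The shared binning, the number of bins, and the small `eps` smoothing are assumptions made for this example; SimilarityTS may discretise or combine columns differently, and the helper names are hypothetical.

```python
import numpy as np


def histograms(x, y, bins=10, eps=1e-10):
    """Histograms of two 1-D samples over shared bins, normalised to sum to 1.

    eps is added to every bin so that empty bins do not make KL infinite.
    """
    edges = np.histogram_bin_edges(np.concatenate([x, y]), bins=bins)
    p, _ = np.histogram(x, bins=edges)
    q, _ = np.histogram(y, bins=edges)
    p = p + eps
    q = q + eps
    return p / p.sum(), q / q.sum()


def kl_divergence(p, q):
    """KL(p || q) = sum(p * log(p / q)), in nats; not symmetric."""
    return float(np.sum(p * np.log(p / q)))


def js_divergence(p, q):
    """Jensen-Shannon: average KL to the mixture m; symmetric, at most ln 2."""
    m = 0.5 * (p + q)
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


rng = np.random.default_rng(0)
col1 = rng.normal(0.0, 1.0, size=500)  # one column of ts1 (synthetic data)
col2 = rng.normal(0.5, 1.0, size=500)  # the same column of a series in ts2

p, q = histograms(col1, col2)
print(kl_divergence(p, q), js_divergence(p, q))
```

The JS divergence is often preferred for reporting because it is symmetric and bounded, whereas KL grows without limit when one series puts mass where the other has almost none.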

### Available figures

This toolkit can generate the following figures:

- `2d`: the ordinary graphical representation of the time series in a 2D figure, with time on the x-axis and the data values on the y-axis, for:
  - the complete multivariate time series; and
  - each column, in a separate plot.

Each generated figure plots both the `ts1` and the `ts2` data, making it easy to spot the similarities or differences between them.

<div>

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/2d_sample_3_complete_TS_1_vs_TS_2.png?raw=true" alt="2D Figure complete">

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/2d_sample_3_cpu_util_percent_TS_1_vs_TS_2.png?raw=true" alt="2D Figure for used CPU percentage">

</div>

- `delta`: the differences between the values of each column grouped by periods of time, for instance, the differences in the percentage of CPU used every 10, 25, or 50 minutes. These deltas can be used to compare the short-, mid-, and long-term patterns of the time series.

<div>

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/delta_sample_3_cpu_util_percent_TS_1_vs_TS_2_(grouped_by_10_minutes).png?raw=true" alt="Delta Figure for used CPU percentage grouped by 10 minutes">

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/delta_sample_3_cpu_util_percent_TS_1_vs_TS_2_(grouped_by_25_minutes).png?raw=true" alt="Delta Figure for used CPU percentage grouped by 25 minutes">

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/delta_sample_3_cpu_util_percent_TS_1_vs_TS_2_(grouped_by_50_minutes).png?raw=true" alt="Delta Figure for used CPU percentage grouped by 50 minutes">

</div>
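One plausible reading of the `delta` computation is sketched below: sample every column once per period and take consecutive differences. It assumes one sample per minute, so a period of 10 compares values 10 minutes apart; the exact grouping SimilarityTS uses may differ, and `grouped_deltas` is a hypothetical helper, not part of the package.

```python
import numpy as np


def grouped_deltas(ts, period):
    """Change in every column between samples `period` steps apart.

    ts: array of shape [length, num_features], assumed to hold one sample per
    minute. Returns an array of shape [ceil(length / period) - 1, num_features].
    """
    return np.diff(ts[::period], axis=0)


# Toy 60-minute series with two columns that grow linearly.
minutes = np.arange(60, dtype=float)
cpu = np.column_stack([minutes, 2 * minutes])

for period in (10, 25, 50):
    print(period, grouped_deltas(cpu, period)[:, 0])
```

Computing the same deltas for `ts1` and for each series in `ts2` and plotting them side by side gives the short-, mid-, and long-term comparison shown in the figures above.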

- `pca`: principal component analysis, a linear dimensionality reduction technique that finds the principal components of a data set by computing the linear combinations of the original features that explain the most variance in the data.

<div align="center">

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/PCA.png?raw=true" alt="PCA Figure" width="450">

</div>
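The PCA projection behind such a figure can be sketched with NumPy's SVD. Treating each time step as a point in `num_features`-dimensional space and fitting the components on the union of both series are assumptions of this example; the package may fit PCA differently (for example on `ts1` only), and `pca_project` is a hypothetical name.

```python
import numpy as np


def pca_project(ts1, ts2, n_components=2):
    """Project two [length, num_features] series onto shared principal axes.

    The axes are the top right-singular vectors of the centred, stacked data,
    ordered by the variance they explain.
    """
    data = np.vstack([ts1, ts2])
    mean = data.mean(axis=0)
    _, s, vt = np.linalg.svd(data - mean, full_matrices=False)
    components = vt[:n_components]  # shape [n_components, num_features]
    explained = (s**2 / np.sum(s**2))[:n_components]  # variance ratio per axis
    return (ts1 - mean) @ components.T, (ts2 - mean) @ components.T, explained


rng = np.random.default_rng(42)
ts1 = rng.normal(size=(200, 3))            # synthetic reference series
ts2 = rng.normal(loc=0.5, size=(200, 3))   # synthetic compared series

proj1, proj2, explained = pca_project(ts1, ts2)
print(proj1.shape, proj2.shape, explained)
```

Scatter-plotting `proj1` and `proj2` in the same axes then shows how much the two point clouds overlap in the reduced space.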

- `tsne`: t-distributed stochastic neighbor embedding (t-SNE), a technique for visualising high-dimensional data sets in a 2D or 3D graphical representation, producing a single map that reveals the structure of the data at many different scales.

<div align="center">

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/t-SNE-iter_300-perplexity_5.png?raw=true" alt="TSNE Figure 300 iterations 5 perplexity" width="450">

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/t-SNE-iter_1000-perplexity_5.png?raw=true" alt="TSNE Figure 1000 iterations 5 perplexity" width="450">

</div>

- `dtw` path: in addition to the numerical similarity measure, the graphical representation of the DTW path of each column can help analyse the similarities or differences between the time series columns. Note that there is no multivariate representation of DTW paths, only single-column representations.

<div>

<img src="https://github.com/alejandrofdez-us/similarity-ts/blob/e5b147b145970f3a93351a1004022fb30d20f5f0/docs/figures/DTW_sample_3_cpu_util_percent.png?raw=true" alt="DTW Figure for cpu">

</div>
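To show what a single-column DTW path is, here is the classic O(n·m) dynamic-programming formulation with backtracking. The absolute-difference local cost and the three allowed steps are the textbook choices and are assumptions here; SimilarityTS may rely on a library with different step patterns or normalisation, and `dtw` below is a hypothetical helper.

```python
import numpy as np


def dtw(a, b):
    """Dynamic time warping between two 1-D sequences.

    Returns the cumulative alignment cost and the optimal warping path as a
    list of (index_in_a, index_in_b) pairs from start to end.
    """
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i, j] = local + min(cost[i - 1, j - 1],  # advance both
                                     cost[i - 1, j],      # advance a only
                                     cost[i, j - 1])      # advance b only
    # Backtrack from the end, always moving to the cheapest predecessor.
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        candidates = {(i - 1, j - 1): cost[i - 1, j - 1],
                      (i - 1, j): cost[i - 1, j],
                      (i, j - 1): cost[i, j - 1]}
        i, j = min(candidates, key=candidates.get)
        path.append((i - 1, j - 1))
    return float(cost[n, m]), path[::-1]


# Compare one column of two synthetic series, the second a shifted copy.
rng = np.random.default_rng(1)
ts1 = rng.normal(size=(50, 2))
ts2 = np.roll(ts1, 3, axis=0)
distance, path = dtw(ts1[:, 0], ts2[:, 0])
print(distance, path[:5])
```

Plotting `path` as a line over the `len(a)` × `len(b)` grid gives the kind of per-column DTW figure shown above: a path hugging the diagonal means the columns are aligned in time.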

## Installation

Install the package using pip in your local environment:

```Bash

pip install similarity-ts

```

## Usage

Users must create a new `SimilarityTs` object by calling its constructor and passing the following parameters:

- `ts1`: a time series that may represent the baseline or ground truth, as a `numpy` array with shape `[length, num_features]`.

- `ts2s`: a single time series or a set of time series, as a `numpy` array with shape `[num_time_series, length, num_features]`.

Constraints: