| id | repo | title | body | labels | priority | severity |
|---|---|---|---|---|---|---|

148,968,992 |

TypeScript

|

Use function type shorthands when displaying signature help

|

_From @unional on April 14, 2016 23:32_

It would be great if, when typing `tape((<cursor>))`, it could drill into `tape.TestCase` and show the signature of the callback. :tulip:

This is probably on `tsc`, but let's start here. :smile:

_Copied from original issue: Microsoft/vscode#5284_

|

Suggestion,Help Wanted,API,VS Code Tracked

|

low

|

Major

|

148,974,532 |

TypeScript

|

Cannot get symbol inside class decorator (Cannot read property 'members' of un...)

|

**TypeScript Version:**

1.8.10

**Code**

``` ts
@Component({
  selector: SELECTOR
})
class SampleComponent {}

const SELECTOR = 'ng-demo';
```

**Expected behavior:**

Using a sample [`SyntaxWalker`](https://github.com/palantir/tslint/blob/master/src/language/walker/syntaxWalker.ts) based on `tslint`, when I visit the `PropertyAssignment` `selector: SELECTOR` and call `typeChecker.getSymbolAtLocation(prop.initializer);`, I should get the `SELECTOR` symbol.

**Actual behavior:**

```
TypeError: Cannot read property 'members' of undefined
    at resolveName (node_modules/typescript/lib/typescript.js:15307:73)
    at resolveEntityName (node_modules/typescript/lib/typescript.js:15725:26)
    at getSymbolOfEntityNameOrPropertyAccessExpression (node_modules/typescript/lib/typescript.js:28708:28)
    at Object.getSymbolAtLocation (node_modules/typescript/lib/typescript.js:28770:28)
```

|

Bug,Help Wanted,API

|

low

|

Critical

|

149,011,192 |

youtube-dl

|

Site request: h-a.no

|

## Please follow the guide below

- You will be asked some questions and requested to provide some information, please read them **carefully** and answer honestly

- Put an `x` into all the boxes [ ] relevant to your _issue_ (like this [x])

- Use the _Preview_ tab to see how your issue will actually look

---

### Make sure you are using the _latest_ version: run `youtube-dl --version` and ensure your version is _2016.04.13_. If it's not, read [this FAQ entry](https://github.com/rg3/youtube-dl/blob/master/README.md#how-do-i-update-youtube-dl) and update. Issues with outdated versions will be rejected.

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.13**

### Before submitting an _issue_ make sure you have:

- [x] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

### What is the purpose of your _issue_?

- [ ] Bug report (encountered problems with youtube-dl)

- [x] Site support request (request for adding support for a new site)

- [ ] Feature request (request for a new functionality)

- [ ] Question

- [ ] Other

---

### If the purpose of this _issue_ is a _site support request_, please provide all kinds of example URLs for which support should be included (replace the following example URLs with **yours**):

- Single video: http://www.h-a.no/sport/se-hoydepunktene-her?AutoPlay=true

---

### Description of your _issue_, suggested solution and other information

Hi, I don't know if I understood this new guide thing, but I'll try :-) I just wanted to request support for h-a.no, as in the above URL. :-) Thanks!

|

site-support-request,geo-restricted

|

low

|

Critical

|

149,037,249 |

go

|

git-codereview: allow disabling of "foo.mailed" tag creation

|

Every time you run `git codereview mail`, it creates a new tag (as described in [its documentation](https://godoc.org/golang.org/x/review/git-codereview)).

I've never actually used these tags for their advertised purpose (if I did need to diff against my last mailed commit, I would just use the advertised commit hash from within gerrit), so I don't benefit from them.

OTOH, they do interrupt my workflow. For example, the [common git bash completion script](https://github.com/git/git/blob/master/contrib/completion/git-completion.bash) prints all pending tags when auto-completing `git commit <TAB>`, and the sheer number of mailed tags obscures any useful information.

So, this is a feature request to disable their automatic creation.

|

NeedsInvestigation

|

low

|

Major

|

149,040,950 |

go

|

debug/pe: extend package so it can be used by cmd/link

|

CC: @minux @ianlancetaylor @crawshaw

cmd/link and debug/pe share no common code, but they should - they do the same thing. If package debug/pe is worth its weight, it should be used by cmd/link. I accept that this was impossible to do when cmd/link was a C program, but now cmd/link is written in Go.

I also tried to use debug/pe in github.com/alexbrainman/goissue10776/pedump, and it is not very useful. I ended up copying and changing some debug/pe code.

I also think there is some lack of PE format knowledge among us, so improving the debug/pe structure and documentation should help with that.

I tried to rewrite src/cmd/link/internal/ld/ldpe.go by using debug/pe (see CL 14289). I had to extend debug/pe for that task. Here is the list of externally visible changes I had to do:

- PE relocations have a SymbolTableIndex field that is an index into the symbol table. But the File.Symbols slice has Aux lines removed as it is built, so SymbolTableIndex cannot be used to index into File.Symbols. We cannot change File.Symbols behavior, so I propose we introduce a File.COFFSymbols slice that is like File.Symbols, but with Aux lines left untouched.

- I have introduced StringTable, which is used to convert long names in the PE symbol table and PE section table into Go strings.

- I have also introduced Section.Relocs to access PE relocations.
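To make the first bullet concrete, here is a minimal Go sketch of the index mismatch. It uses hypothetical types (`rawSymbol`, `compactSymbols`) rather than the real debug/pe API: a relocation's SymbolTableIndex counts every raw COFF record, aux records included, so it only lines up with a slice that keeps those records, which is what the proposed File.COFFSymbols would do.

```go
package main

import "fmt"

// rawSymbol is a hypothetical stand-in for one COFF symbol table record.
// Aux records follow their primary symbol and are counted by
// NumberOfAuxSymbols.
type rawSymbol struct {
	Name               string
	NumberOfAuxSymbols uint8
}

// compactSymbols mimics how a File.Symbols-style slice is built: primary
// symbols are kept and their aux records are skipped.
func compactSymbols(raw []rawSymbol) []rawSymbol {
	var out []rawSymbol
	for i := 0; i < len(raw); i++ {
		out = append(out, raw[i])
		i += int(raw[i].NumberOfAuxSymbols) // skip this symbol's aux records
	}
	return out
}

func main() {
	// Record 1 is an aux record belonging to ".text".
	raw := []rawSymbol{
		{Name: ".text", NumberOfAuxSymbols: 1},
		{Name: "(aux)"},
		{Name: "main"},
	}
	reloc := uint32(2) // a SymbolTableIndex that refers to "main"

	compact := compactSymbols(raw)
	fmt.Println(raw[reloc].Name) // main: the raw table keeps aux records
	if int(reloc) < len(compact) {
		fmt.Println(compact[reloc].Name)
	} else {
		fmt.Println("index out of range in compacted slice")
	}
}
```

With the raw slice, index 2 resolves to `main`; in the compacted slice the same index is out of range, and in a larger table it would silently point at the wrong symbol.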

I propose we add the above things to debug/pe and use new debug/pe to rewrite ldpe.go and pe.go in cmd/link/internal/ld.

Alex

PS: You can google for pecoff.doc for PE details.

|

help wanted,NeedsFix

|

medium

|

Critical

|

149,072,274 |

opencv

|

minMaxIdx not available in the JNI binding

|

Hi,

I noticed that the function minMaxIdx (core) is not available through the JNI binding. Only minMaxLoc is available, which is a problem given that minMaxLoc is limited to 2-dimensional arrays.

- OpenCV version: 3.1.0

- Host OS: Linux (Mint 17.3)

This issue was discussed here: http://answers.opencv.org/question/92676/minmaxidx-missing-in-the-jni-interface

|

feature,affected: 3.4,category: java bindings

|

low

|

Minor

|

149,072,396 |

three.js

|

Proposal: Making flattened math classes that operate directly on views the default

|

##### Description of the problem

Clara.io has been struggling with the memory-inefficient Three.JS math classes for four years now. Basically, Three.JS math classes use object members to represent their data, e.g.:

```
THREE.Vector3 = function( x, y, z ) {
	this.x = x;
	this.y = y;
	this.z = z;
};
```

This pattern leads to a lot of inefficiencies. Each vector is a separate, editable JavaScript object, which carries a large per-object overhead in allocation time and extra memory usage. There are also costs to copy from a BufferGeometry array to a Vector3 and vice versa, and costs to flatten these values into arrays when using them as uniforms.

As we are looking to speed up Clara.io, I think we need to move away from object-based math classes and instead make the hard switch to math classes that are based around internal arrays. This would be the primary representation of the Three.JS math types, although we could still emulate the object-based design we have by building on top of these. Thus I want to see this design being the primary design for all of Three.JS math:

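```
// Static functions that operate directly on flat arrays (e.g. Float32Array views).
Vector3.set = function ( array, x, y, z ) {
	array[ 0 ] = x;
	array[ 1 ] = y;
	array[ 2 ] = z;
};

Vector3.add = function ( target, lhs, rhs ) {
	target[ 0 ] = lhs[ 0 ] + rhs[ 0 ];
	target[ 1 ] = lhs[ 1 ] + rhs[ 1 ];
	target[ 2 ] = lhs[ 2 ] + rhs[ 2 ];
};
```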

I think the above pattern is the only way that Clara.io can achieve the speed it needs.

Important point: I was talking to one of the JavaScript spec designers and he said that we should use large arraybuffer allocations and then try to use views for each individual math element in a large array (e.g. var usernameView = new Float32Array(buffer, 4, 16) ). He said that views were incredibly cheap as now you do not even pay an array allocation cost per element.
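A rough Go analogue of the view-based design, offered only as an illustration (it is not three.js code): one large `[]float32` allocation plays the role of the ArrayBuffer, and three-element subslices play the role of the `Float32Array` views, since Go subslices share the backing array without copying. The names `vec3Set`, `vec3Add` and `view` are hypothetical.

```go
package main

import "fmt"

// vec3Set writes x, y, z into a 3-element view.
func vec3Set(v []float32, x, y, z float32) {
	v[0], v[1], v[2] = x, y, z
}

// vec3Add stores lhs+rhs into target. All three are views, possibly into the
// same buffer, and no element storage is allocated.
func vec3Add(target, lhs, rhs []float32) {
	target[0] = lhs[0] + rhs[0]
	target[1] = lhs[1] + rhs[1]
	target[2] = lhs[2] + rhs[2]
}

// view returns the i-th 3-component element of buf without copying.
// The full slice expression caps the capacity so an append cannot spill
// into the neighbouring element.
func view(buf []float32, i int) []float32 {
	return buf[i*3 : i*3+3 : i*3+3]
}

func main() {
	// One large allocation holding three vectors, like a BufferGeometry
	// attribute array.
	buf := make([]float32, 3*3)
	a, b, c := view(buf, 0), view(buf, 1), view(buf, 2)
	vec3Set(a, 1, 2, 3)
	vec3Set(b, 10, 20, 30)
	vec3Add(c, a, b)
	fmt.Println(buf) // [1 2 3 10 20 30 11 22 33]
}
```

Because every result is written straight into the shared buffer, handing that buffer to a uniform or attribute upload needs no separate flattening step.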

I think we could keep around the Vector3 object (as opposed to the new functional interface), but it could be built by creating a BufferArray (or alternatively initialized by a passed in view) and remembering it, and then using all of the pure array buffer functions I am advocating as the primary interface. It could have accessors for x, y, and z that map onto the BufferArray. This would give us backwards compatibility.

But going forward it would likely be best to nearly always use the versions of the math classes that can operate directly on a preallocated array. This design would be ultra fast (no allocations for the most part) with ultra cheap and easy conversions to uniforms and BufferGeometry.attribute.

I think this is necessary for Clara.io to move to the next level and I think Three.JS wants to get there as well. We, ThreeJS, have been moving to this solution slowly but we've never made the full break to transform the primary math-objects. I think it is time to do this.

/ping @WestLangley

##### Three.js version

- [x] Dev

- [ ] r75

- [ ] ...

##### Browser

- [x] All of them

- [ ] Chrome

- [ ] Firefox

- [ ] Internet Explorer

##### OS

- [x] All of them

- [ ] Windows

- [ ] Linux

- [ ] Android

- [ ] IOS

##### Hardware Requirements (graphics card, VR Device, ...)

|

Suggestion

|

medium

|

Major

|

149,168,960 |

react

|

Iframe load event not firing in Chrome and Safari when src is 'about:blank'

|

See: https://jsfiddle.net/pnct6b7r/

It will not trigger the alert in Chrome and Safari, but it will work in Firefox and even IE8.

Is this a React issue or a WebKit issue? If it is a WebKit issue, should we "fix it" in React given that we want [consistent events across browsers](http://facebook.github.io/react/docs/events.html)?

PS: The JSFiddle was based on issue #5332.

|

Type: Bug,Component: DOM

|

low

|

Major

|

149,183,640 |

opencv

|

Mask is ignored in cv::CascadeClassifier

|

The mask produced by the maskGenerator of cv::CascadeClassifier (see https://github.com/Itseez/opencv/blob/master/modules/objdetect/src/cascadedetect.cpp#L996) is ignored in the detection step: it is assigned, but never actually used.

### In which part of the OpenCV library you got the issue?

- objdetect

### Expected behaviour

When my program provides a custom cv::BaseCascadeClassifier::MaskGenerator object (a subclass) to cv::CascadeClassifier, the classifier should use the masks it produces to run detection only on the areas defined by the mask (which speeds up the process when the mask is "sparse").

### Actual behaviour

Detection runs on the whole image area anyway, which is very slow for huge images with a "sparse" detection mask.

### Additional description

I have trouble building and testing OpenCV myself, but I managed to edit "source\opencv\modules\objdetect\src\cascadedetect.cpp" and add a mask check by inserting the following lines at line 996:

``` c++
if(mask.empty() ||
    ((x >= 0) && (y >= 0) &&
     (x < mask.cols) && (y < mask.rows) &&
     (mask.at<uchar>(y,x) != 0)))
{
```

and wrapping the original lines 996 to 1022 in that `if` block.
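A simplified Go sketch of the proposed check, assuming (for illustration only) that the mask shares the coordinate grid of the window origins being scanned; `Mask`, `allowed` and `scan` are hypothetical names, not OpenCV API. Windows whose origin falls on a zero mask entry are skipped before the expensive evaluation, and an empty mask scans everything, matching the `mask.empty()` branch of the patch.

```go
package main

import "fmt"

// Mask is a hypothetical row-major 8-bit mask. An empty mask means
// "scan everywhere", like mask.empty() in the proposed patch.
type Mask struct {
	Cols, Rows int
	Data       []uint8
}

func (m Mask) Empty() bool { return len(m.Data) == 0 }

// allowed mirrors the condition in the proposed patch.
func (m Mask) allowed(x, y int) bool {
	return m.Empty() ||
		(x >= 0 && y >= 0 && x < m.Cols && y < m.Rows && m.Data[y*m.Cols+x] != 0)
}

// scan visits every window origin on a grid with the given step and calls
// evaluate only where the mask allows it. It returns how many windows were
// evaluated.
func scan(width, height, step int, mask Mask, evaluate func(x, y int)) int {
	n := 0
	for y := 0; y < height; y += step {
		for x := 0; x < width; x += step {
			if !mask.allowed(x, y) {
				continue // skip the expensive classifier evaluation
			}
			evaluate(x, y)
			n++
		}
	}
	return n
}

func main() {
	// A 4x4 mask with only the top-left 2x2 area enabled.
	mask := Mask{Cols: 4, Rows: 4, Data: []uint8{
		1, 1, 0, 0,
		1, 1, 0, 0,
		0, 0, 0, 0,
		0, 0, 0, 0,
	}}
	noop := func(x, y int) {}
	fmt.Println(scan(4, 4, 1, mask, noop), scan(4, 4, 1, Mask{}, noop)) // 4 16
}
```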

|

feature,category: objdetect

|

low

|

Major

|

149,251,959 |

go

|

flag: handles unknown arguments in an unexpected less-helpful way

|

If the flag library encounters an unknown parameter, it discards that parameter and dumps everything else in the positional arguments bucket.

Instead, the flag library should (optionally, probably when ContinueOnError is specified) put all unknown parameters in the positional arguments bucket (returned by Args) and keep attempting to parse the following arguments, unless it encounters something that clearly indicates the beginning of positional arguments, such as the bare double dash "--".

1. What version of Go are you using (`go version`)?

go version go1.3.3 linux/amd64

2. What operating system and processor architecture are you using (`go env`)?

GOARCH="amd64"

GOBIN=""

GOCHAR="6"

GOEXE=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOOS="linux"

GOPATH="/home/azani/go"

GORACE=""

GOROOT="/usr/lib/go"

GOTOOLDIR="/usr/lib/go/pkg/tool/linux_amd64"

CC="gcc"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0"

CXX="g++"

CGO_ENABLED="1"

3. What did you do?

https://play.golang.org/p/44mXvwTfLD

4. What did you expect to see?

list == "first", "second", "third"

flagSet.Args() == "--something", "blah"

5. What did you see instead?

list == "first", "second"

flagSet.Args() == "-I", "third", "blah"

also, Parse printed an error.

|

NeedsDecision

|

low

|

Critical

|

149,255,868 |

youtube-dl

|

Option to combine -o and -g

|

- [X] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.13**

- [X] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [X] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

### What is the purpose of your _issue_?

- [ ] Bug report (encountered problems with youtube-dl)

- [ ] Site support request (request for adding support for a new site)

- [X] Feature request (request for a new functionality)

- [ ] Question

- [ ] Other

---

### Description of your _issue_, suggested solution and other information

I use Kodi Media Center for all my entertainment and media management. This program can play streaming video in many formats from a URL written in a .strm file. I would like to use youtube-dl to output a playlist of music videos in a specific format (say, `'%(playlist)s/%(playlist_index)s - %(title)s.%(ext)s'`, which will put the playlist files into a common folder), but instead of actually downloading each video, just echo its URL (as given by the `-g` option) into the file. I have actually accomplished this using a bash script that parses the output of `youtube-dl --get-filename --get-url`, but it is a very hacky solution. Any chance this could become a feature?

EDIT: To give an example, I would like to be able to run `youtube-dl -f best -g -o '%(playlist)s/%(playlist_index)s - %(title)s.%(ext)s' https://www.youtube.com/playlist?list=PLY5f8vtstsfjedMi6VHRAeFV85Xc_VoKG` and afterwards see a subdirectory in youtube-dl's working directory, named after the title of the playlist (in this case, NateWantsToBattle Songs). That subfolder would contain a number of files named according to their order in the playlist and their title (in this case, one would be named "NateWantsToBattle Songs/01 - twenty one pilots - Stressed Out [NateWantsToBattle feat. ShueTube].mp4"), and each file would contain only the plain-text URL of the video as shown by -g (the example above would contain the URL string shown [here](http://pastebin.com/raw/60uTA3Nb)).

|

request

|

low

|

Critical

|

149,541,041 |

go

|

encoding/asn1: better error message when unmarshaling non-pointer

|

Please answer these questions before submitting your issue. Thanks!

1. What version of Go are you using (`go version`)?

NaCl / Playground

2. What operating system and processor architecture are you using (`go env`)?

Playground

3. What did you do?

https://play.golang.org/p/TnLoso9len

4. What did you expect to see?

```
1.2.840.113549.1.9.16.1.4
[6 11 42 134 72 134 247 13 1 9 16 1 4]
1.2.840.113549.1.9.16.1.4
```

5. What did you see instead?

```
1.2.840.113549.1.9.16.1.4
[6 11 42 134 72 134 247 13 1 9 16 1 4]
panic: reflect: call of reflect.Value.Elem on slice Value

goroutine 1 [running]:
panic(0x184720, 0x10434260)
	/usr/local/go/src/runtime/panic.go:464 +0x700
reflect.Value.Elem(0x188f40, 0x10434250, 0x97, 0x10434250, 0x0, 0x0, 0x0, 0x188f40)
	/usr/local/go/src/reflect/value.go:735 +0x2a0
encoding/asn1.UnmarshalWithParams(0x10444074, 0xd, 0x40, 0x188f40, 0x10434250, 0x0, 0x0, 0x10434220, 0x0, 0x0, ...)
	/usr/local/go/src/encoding/asn1/asn1.go:989 +0xc0
encoding/asn1.Unmarshal(0x10444074, 0xd, 0x40, 0x188f40, 0x10434250, 0x10434250, 0x0, 0x0, 0x0, 0x0, ...)
	/usr/local/go/src/encoding/asn1/asn1.go:983 +0x80
main.main()
	/tmp/sandbox412831617/main.go:24 +0x480
```

|

NeedsInvestigation

|

low

|

Critical

|

149,548,258 |

go

|

cmd/link: move away from *LSym linked lists

|

The linked lists come from the original C code. Slices make the code a bit easier to follow.
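A minimal sketch of the mechanical change, using a hypothetical, stripped-down `LSym` with only a `Name` and a `Next` link (the real cmd/link type has many more fields): walk the list once and collect it into a slice, after which callers can use `len`, indexing and `range` instead of chasing `Next` pointers.

```go
package main

import "fmt"

// LSym is a hypothetical, stripped-down symbol type for illustration.
type LSym struct {
	Name string
	Next *LSym // old style: intrusive singly linked list
}

// toSlice collects a linked list into a slice, preserving order.
// A nil head yields a nil slice.
func toSlice(head *LSym) []*LSym {
	var out []*LSym
	for s := head; s != nil; s = s.Next {
		out = append(out, s)
	}
	return out
}

func main() {
	c := &LSym{Name: "c"}
	b := &LSym{Name: "b", Next: c}
	a := &LSym{Name: "a", Next: b}

	// Slice style: length, indexing and range loops work directly.
	syms := toSlice(a)
	for i, s := range syms {
		fmt.Println(i, s.Name)
	}
	fmt.Println(len(syms)) // 3
}
```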

|

compiler/runtime

|

low

|

Minor

|

149,560,385 |

go

|

cmd/compile: eliminate all (some?) convT2{I,E} calls

|

For pointer-shaped types, convT2{I,E} are already done by the compiler. We currently call into the runtime only for non-pointer-shaped types.

There are two cases: one where the interface escapes and one where it doesn't.

```
type T struct {
	a, b, c int
}

func f(t T) interface{} {
	return t
}

func g(t T) {
	h(t)
}

//go:noescape
func h(interface{})
```

In both cases, I think, it would be easier to inline the work that convT2{I,E} does. f does this:

```
	LEAQ	type."".T(SB), AX
	MOVQ	AX, (SP)
	LEAQ	"".autotmp_0+40(SP), AX
	MOVQ	AX, 8(SP)
	MOVQ	$0, 16(SP)
	CALL	runtime.convT2E(SB)
	MOVQ	24(SP), CX
	MOVQ	32(SP), DX
```

instead it could do:

```
	LEAQ	type."".T(SB), AX
	MOVQ	AX, (SP)
	CALL	runtime.newobject(SB)
	MOVQ	8(SP), DX
	MOVQ	"".autotmp_0+40(SP), AX
	MOVQ	"".autotmp_0+48(SP), BX
	MOVQ	"".autotmp_0+56(SP), CX
	MOVQ	AX, (DX)
	MOVQ	BX, 8(DX)
	MOVQ	CX, 16(DX)
	LEAQ	type."".T(SB), CX
```

11 instructions instead of 8, but several runtime calls are bypassed (convT2E, plus the typedmemmove it calls, and everything typedmemmove calls in turn, ...).

The expanded code gets larger if T has a pointer in it. Maybe we use typedmemmove instead of explicit copy instructions in that case.

`g` is even easier because there is no runtime call required at all. Old code:

```
	LEAQ	type."".T(SB), AX
	MOVQ	AX, (SP)
	LEAQ	"".autotmp_2+64(SP), AX
	MOVQ	AX, 8(SP)
	LEAQ	"".autotmp_3+40(SP), AX
	MOVQ	AX, 16(SP)
	CALL	runtime.convT2E(SB)
	MOVQ	24(SP), CX
	MOVQ	32(SP), DX
```

new code:

```
	MOVQ	"".autotmp_2+64(SP), AX
	MOVQ	"".autotmp_2+72(SP), BX
	MOVQ	"".autotmp_2+80(SP), CX
	MOVQ	AX, "".autotmp_3+40(SP)
	MOVQ	BX, "".autotmp_3+48(SP)
	MOVQ	CX, "".autotmp_3+56(SP)
	LEAQ	type."".T(SB), CX
	LEAQ	"".autotmp_3+40(SP), DX
```

One fewer instruction, and if we can avoid the copy (when autotmp_2 is never modified afterwards), it could be even better. And no write barriers are required on the copy, even if T has pointers.

Maybe we do the no-escape optimization first. It seems like an obvious win. That would allow removing the third arg to convT2{I,E}. Then we could think about the escape optimization.

@walken-google

|

Performance,NeedsFix,early-in-cycle

|

low

|

Major

|

149,560,456 |

opencv

|

Delaunay Triangulation, possible wrong calculation for huge graphs?

|

Hi there,

I was working with Delaunay triangulation in OpenCV, trying to build a Gabriel graph (https://en.wikipedia.org/wiki/Gabriel_graph) from the Delaunay triangulation, but the result is not what I expected. I also compared the results with the R library spdep.

Thanks

### Please state the information for your system

- OpenCV version: 3.1.0

- Host OS: Windows 10

### In which part of the OpenCV library you got the issue?

- imgproc

### Expected behaviour

A single connected graph, with no isolated nodes.

### Actual behaviour

In the Gabriel graph, some nodes are not connected to the main graph (they are isolated), which should not happen. I also drew the Delaunay graph: in some parts it is most probably calculating the angles wrongly, and it also connects nodes that should not be connected.

### Additional description

There are 3 pictures (Delaunay; Gabriel, with isolated nodes in different colors; and the R library's Gabriel result) and the data. For testing I used the 2D points from cluto-t8.8k (https://github.com/deric/clustering-benchmark/tree/master/src/main/resources/datasets/artificial).

[result.txt](https://github.com/Itseez/opencv/files/226689/result.txt)

delaunay:

gabriel:

r library's gabriel

|

bug,category: imgproc,affected: 3.4

|

low

|

Minor

|

149,561,444 |

TypeScript

|

Suggestion: Better error message when unable to resolve modules

|

Related to our woes with #8189: if the compiler gave better hints when it was unable to resolve a module, we might not have taken so long to find the solution (and we wouldn't have filed an issue!).

The idea would be that, when there is a compilation error of `cannot find module "whatever"`, the compiler makes some intelligent checks against the options the compilation is running under and suggests using `--moduleResolution node` if the user is not already doing so.

|

Docs

|

low

|

Critical

|

149,574,952 |

opencv

|

OpenCV build fails on Mac 10.10.5

|

I managed to run CMake successfully and generate the build configuration. However, when I run `make -j5`, linking "lib/libopencv_videoio.3.1.0.dylib" fails with the error below, which stops the build.

### Please state the information for your system

- OpenCV version: latest master branch for opencv and opencv_contrib

- Host OS: Mac OS X 10.10.5

- CMake 3.5.2

### In which part of the OpenCV library you got the issue?

- videoio

### Actual behaviour

```
Undefined symbols for architecture x86_64:
  "_CMBlockBufferCreateWithMemoryBlock", referenced from:
      _videotoolbox_common_end_frame in libavcodec.a(videotoolbox.o)
  "_CMSampleBufferCreate", referenced from:
      _videotoolbox_common_end_frame in libavcodec.a(videotoolbox.o)
  "_CMVideoFormatDescriptionCreate", referenced from:
      _av_videotoolbox_default_init2 in libavcodec.a(videotoolbox.o)
  "_SSLClose", referenced from:
      _tls_close in libavformat.a(tls_securetransport.o)
  "_SSLCopyPeerTrust", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SSLCreateContext", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SSLHandshake", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SSLRead", referenced from:
      _tls_read in libavformat.a(tls_securetransport.o)
  "_SSLSetCertificate", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SSLSetConnection", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SSLSetIOFuncs", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SSLSetPeerDomainName", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SSLSetSessionOption", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SSLWrite", referenced from:
      _tls_write in libavformat.a(tls_securetransport.o)
  "_SecIdentityCreate", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SecItemImport", referenced from:
      _import_pem in libavformat.a(tls_securetransport.o)
  "_SecTrustEvaluate", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_SecTrustSetAnchorCertificates", referenced from:
      _tls_open in libavformat.a(tls_securetransport.o)
  "_VTDecompressionSessionCreate", referenced from:
      _av_videotoolbox_default_init2 in libavcodec.a(videotoolbox.o)
  "_VTDecompressionSessionDecodeFrame", referenced from:
      _videotoolbox_common_end_frame in libavcodec.a(videotoolbox.o)
  "_VTDecompressionSessionInvalidate", referenced from:
      _av_videotoolbox_default_free in libavcodec.a(videotoolbox.o)
  "_VTDecompressionSessionWaitForAsynchronousFrames", referenced from:
      _videotoolbox_common_end_frame in libavcodec.a(videotoolbox.o)
  "_iconv", referenced from:
      _avcodec_decode_subtitle2 in libavcodec.a(utils.o)
  "_iconv_close", referenced from:
      _avcodec_open2 in libavcodec.a(utils.o)
      _avcodec_decode_subtitle2 in libavcodec.a(utils.o)
  "_iconv_open", referenced from:
      _avcodec_open2 in libavcodec.a(utils.o)
      _avcodec_decode_subtitle2 in libavcodec.a(utils.o)
  "_kCMFormatDescriptionExtension_SampleDescriptionExtensionAtoms", referenced from:
      _av_videotoolbox_default_init2 in libavcodec.a(videotoolbox.o)
  "_lame_close", referenced from:
      _mp3lame_encode_close in libavcodec.a(libmp3lame.o)
  "_lame_encode_buffer", referenced from:
      _mp3lame_encode_frame in libavcodec.a(libmp3lame.o)
  "_lame_encode_buffer_float", referenced from:
      _mp3lame_encode_frame in libavcodec.a(libmp3lame.o)
  "_lame_encode_buffer_int", referenced from:
      _mp3lame_encode_frame in libavcodec.a(libmp3lame.o)
  "_lame_encode_flush", referenced from:
      _mp3lame_encode_frame in libavcodec.a(libmp3lame.o)
  "_lame_get_encoder_delay", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_get_framesize", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_init", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_init_params", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_VBR", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_VBR_mean_bitrate_kbps", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_VBR_quality", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_bWriteVbrTag", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_brate", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_disable_reservoir", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_in_samplerate", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_mode", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_num_channels", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_out_samplerate", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_lame_set_quality", referenced from:
      _mp3lame_encode_init in libavcodec.a(libmp3lame.o)
  "_swr_alloc", referenced from:
      _opus_decode_init in libavcodec.a(opusdec.o)
  "_swr_close", referenced from:
      _opus_decode_packet in libavcodec.a(opusdec.o)
      _opus_decode_flush in libavcodec.a(opusdec.o)
  "_swr_convert", referenced from:
      _opus_decode_packet in libavcodec.a(opusdec.o)
  "_swr_free", referenced from:
      _opus_decode_close in libavcodec.a(opusdec.o)
  "_swr_init", referenced from:
      _opus_decode_packet in libavcodec.a(opusdec.o)
  "_swr_is_initialized", referenced from:
      _opus_decode_packet in libavcodec.a(opusdec.o)
  "_x264_bit_depth", referenced from:
      _X264_init_static in libavcodec.a(libx264.o)
      _X264_frame in libavcodec.a(libx264.o)
  "_x264_encoder_close", referenced from:
      _X264_close in libavcodec.a(libx264.o)
  "_x264_encoder_delayed_frames", referenced from:
      _X264_frame in libavcodec.a(libx264.o)
  "_x264_encoder_encode", referenced from:
      _X264_frame in libavcodec.a(libx264.o)
  "_x264_encoder_headers", referenced from:
      _X264_init in libavcodec.a(libx264.o)
  "_x264_encoder_open_148", referenced from:
      _X264_init in libavcodec.a(libx264.o)
  "_x264_encoder_reconfig", referenced from:
      _X264_frame in libavcodec.a(libx264.o)
  "_x264_levels", referenced from:
      _X264_init in libavcodec.a(libx264.o)
  "_x264_param_apply_fastfirstpass", referenced from:
      _X264_init in libavcodec.a(libx264.o)
  "_x264_param_apply_profile", referenced from:
      _X264_init in libavcodec.a(libx264.o)
  "_x264_param_default", referenced from:
      _X264_init in libavcodec.a(libx264.o)
  "_x264_param_default_preset", referenced from:
      _X264_init in libavcodec.a(libx264.o)
  "_x264_param_parse", referenced from:
      _X264_init in libavcodec.a(libx264.o)
  "_x264_picture_init", referenced from:
      _X264_frame in libavcodec.a(libx264.o)
  "_xvid_encore", referenced from:
      _xvid_encode_init in libavcodec.a(libxvid.o)
      _xvid_encode_frame in libavcodec.a(libxvid.o)
      _xvid_encode_close in libavcodec.a(libxvid.o)
  "_xvid_global", referenced from:
      _xvid_encode_init in libavcodec.a(libxvid.o)
  "_xvid_plugin_2pass2", referenced from:
      _xvid_encode_init in libavcodec.a(libxvid.o)
      _ff_xvid_rate_control_init in libavcodec.a(libxvid_rc.o)
      _ff_xvid_rate_estimate_qscale in libavcodec.a(libxvid_rc.o)
      _ff_xvid_rate_control_uninit in libavcodec.a(libxvid_rc.o)
  "_xvid_plugin_lumimasking", referenced from:
      _xvid_encode_init in libavcodec.a(libxvid.o)
  "_xvid_plugin_single", referenced from:
      _xvid_encode_init in libavcodec.a(libxvid.o)
  "_xvid_plugin_ssim", referenced from:
      _xvid_encode_init in libavcodec.a(libxvid.o)
ld: symbol(s) not found for architecture x86_64
clang: error: linker command failed with exit code 1 (use -v to see invocation)
make[2]: *** [lib/libopencv_videoio.3.1.0.dylib] Error 1
make[1]: *** [modules/videoio/CMakeFiles/opencv_videoio.dir/all] Error 2
make: *** [all] Error 2
```

|

bug,category: build/install,affected: 3.4

|

low

|

Critical

|

149,591,140 |

flutter

|

expose the navigator route as a service extension

|

Something like:

- [ ] get the current value for the route

- [ ] set a new value for the route

- [ ] enable and disable receiving events on route changes

@Hixie, I very briefly looked at the flutter sources, but didn't see how to get this info.

|

c: new feature,tool,framework,f: routes,P3,team-framework,triaged-framework

|

low

|

Minor

|

149,604,687 |

TypeScript

|

Preselect completion list entries based on contextual type

|

It would be cool if tsserver provided a new command, `guesstypes`, that can guess parameter types when a completion is applied.

In other words, I would like to support the [same feature that I implemented for tern](https://github.com/angelozerr/tern-guess-types).

For instance if you open completion for `document` and select `addEventListener` :

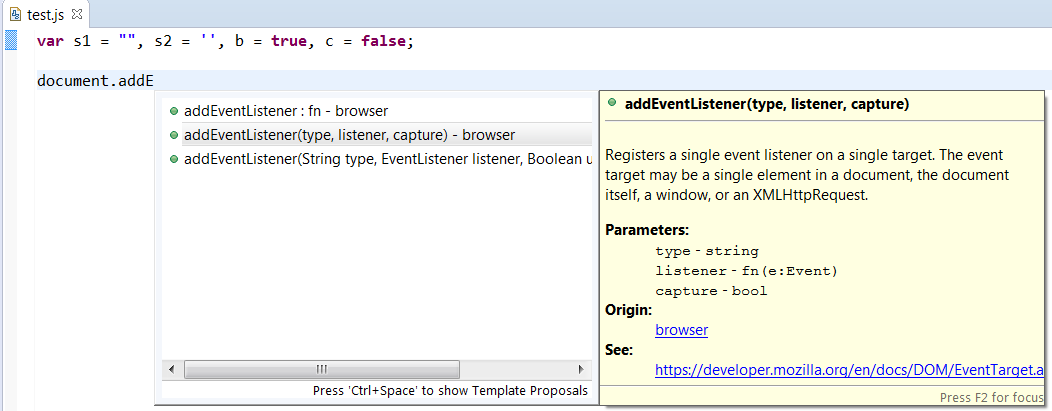

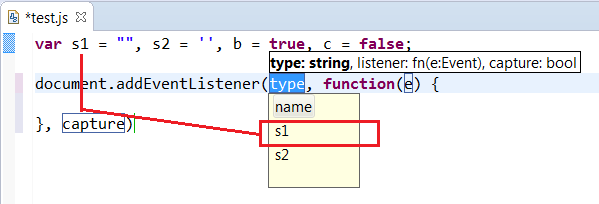

When a completion is applied, I would like to call a tsserver command, `guesstypes`, to retrieve candidate variables and functions for each function parameter. Here is a screenshot that shows a list of string-typed variables for the `type` argument of `addEventListener`:

|

Suggestion,Help Wanted,API

|

low

|

Minor

|

149,780,545 |

go

|

os: Stdin is broken in some cases on windows

|

Please answer these questions before submitting your issue. Thanks!

1. What version of Go are you using (`go version`)?

go version go1.6 windows/amd64

2. What operating system and processor architecture are you using (`go env`)?

set GOARCH=amd64

set GOOS=windows

3. What did you do?

I tried to write a Squid (a proxy server) auth helper in Go. It's a simple program that needs to read its stdin, parse it and send the response to stdout. See http://wiki.squid-cache.org/Features/AddonHelpers for details.

Here is the link to my code: https://play.golang.org/p/NR9PkX0fu7

4. What did you expect to see?

My Squid instance should ask for credentials on HTTP requests, and there should be no error messages related to my program in the Squid log.

5. What did you see instead?

At first everything went fine. I tested the program from the console, including with echo, sending the login and password through a pipe, like 'echo vasya 1 | sqauth'. No errors were detected. Then I configured Squid to run the program as an auth helper, and Squid didn't start. In the log file I saw this record: 'read /dev/stdin: The parameter is incorrect.', which comes from line 26 of my code. I spent a few hours investigating the problem, and this is what I found. It seems that Squid starts its child processes and sets their stdin to asynchronous socket handles. It's not well documented in the WinAPI docs, but when ReadFile is called on an asynchronous I/O handle with a NULL lpOverlapped parameter (the last parameter of the function), error 87 (The parameter is incorrect) occurs. I looked at the Go source code and saw that the 'File.read' function calls syscall.ReadFile with lpOverlapped == nil in every case.

Thus that the case, when I start go program as children process with stdin as async socket, os.Stdin is always broken and I have no normal way to get data from stdin. Instead I need to use platform dependend syscall. It would be pretty good to use standard os.Stdin in any case. It's interesting that C fgets works fine with normal stdin in the case.

The working C program: [fake.cc.zip](https://github.com/golang/go/files/228057/fake.cc.zip).

|

OS-Windows

|

low

|

Critical

|

149,828,463 |

youtube-dl

|

Command line credentials are applied for all the extractors in extraction process

|

---

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.19**

- [x] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

### What is the purpose of your _issue_?

- [x] Bug report (encountered problems with youtube-dl)

- [ ] Site support request (request for adding support for a new site)

- [ ] Feature request (request for a new functionality)

- [ ] Question

- [ ] Other

---

```
[debug] System config: []
[debug] User config: []
[debug] Command-line args: [u'http://vk.com/video356065542_456239041', u'-p', u'PRIVATE', u'-u', u'PRIVATE', u'-v']
[debug] Encodings: locale cp1251, fs mbcs, out cp866, pref cp1251
[debug] youtube-dl version 2016.04.19
[debug] Git HEAD: 494ab6d
[debug] Python version 2.6.6 - Windows-2003Server-5.2.3790-SP2
[debug] exe versions: ffmpeg 3.0, ffprobe N-77883-gd7c75a5, rtmpdump 2.4
[debug] Proxy map: {}
[vk] Downloading login page
[vk] Logging in as <snip>
[vk] 356065542_456239041: Downloading webpage
[youtube] Downloading login page
[youtube] Logging in
ERROR: Unable to login: The email and password you entered don't match.
Traceback (most recent call last):
  File "C:\Dev\git\youtube-dl\master\youtube_dl\YoutubeDL.py", line 671, in extract_info
    ie_result = ie.extract(url)
  File "C:\Dev\git\youtube-dl\master\youtube_dl\extractor\common.py", line 340, in extract
    self.initialize()
  File "C:\Dev\git\youtube-dl\master\youtube_dl\extractor\common.py", line 334, in initialize
    self._real_initialize()
  File "C:\Dev\git\youtube-dl\master\youtube_dl\extractor\youtube.py", line 187, in _real_initialize
    if not self._login():
  File "C:\Dev\git\youtube-dl\master\youtube_dl\extractor\youtube.py", line 132, in _login
    raise ExtractorError('Unable to login: %s' % error_msg, expected=True)
ExtractorError: Unable to login: The email and password you entered don't match.
```

---

Account credentials specified on the command line are used by every extractor involved in the extraction process. For example, in the log above VK authentication succeeds first; then a YouTube embed is detected and extraction is delegated to the youtube extractor, which tries to log in with the same credentials and, as expected, fails.

In general, this authentication error can be worked around by using .netrc authentication.

Since it's not feasible to add separate `--username/--password` options for each extractor that supports authentication, command-line credentials should probably be used only by the first extractor and ignored by any subsequent ones.
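The proposed policy can be sketched as a tiny, purely hypothetical model; it is not youtube-dl's real code, and `credentials_for`, `run_chain` and the `netrc_entries` dict are invented names. The idea is only that command-line credentials go to the first extractor in the chain, while delegated extractors (such as a YouTube embed found on a VK page) fall back to whatever `.netrc` holds for their own site.

```python
from typing import Dict, List, Optional, Tuple

Credentials = Tuple[str, str]  # (username, password)


def credentials_for(position: int, extractor: str,
                    cli_credentials: Optional[Credentials],
                    netrc_entries: Dict[str, Credentials]) -> Optional[Credentials]:
    """Hypothetical policy: only the first extractor sees CLI credentials."""
    if position == 0 and cli_credentials is not None:
        return cli_credentials
    # Delegated extractors only use credentials stored for their own site.
    return netrc_entries.get(extractor)


def run_chain(chain: List[str], cli_credentials: Optional[Credentials],
              netrc_entries: Dict[str, Credentials]) -> List[Tuple[str, Optional[Credentials]]]:
    """Pair each extractor in an extraction chain with the credentials it would get."""
    return [(name, credentials_for(i, name, cli_credentials, netrc_entries))
            for i, name in enumerate(chain)]
```

For the log above, `run_chain(["vk", "youtube"], ("user", "pass"), {})` gives VK the command-line credentials and leaves the youtube extractor with none, so it would not attempt the failing login.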

|

bug

|

low

|

Critical

|

149,831,208 |

youtube-dl

|

Add support for hbonordic.com

|

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.19**

- [x] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

### What is the purpose of your _issue_?

- [ ] Bug report (encountered problems with youtube-dl)

- [x] Site support request (request for adding support for a new site)

- [ ] Feature request (request for a new functionality)

- [ ] Question

- [ ] Other

---

```
[debug] System config: []
[debug] User config: []
[debug] Command-line args: [u'-v', u'https://no.hbonordic.com/series/into-the-badlands/season-1/episode-4/1f10ced-009986b7dd3']
[debug] Encodings: locale UTF-8, fs utf-8, out UTF-8, pref UTF-8
[debug] youtube-dl version 2016.04.19
[debug] Python version 2.7.11 - Darwin-15.3.0-x86_64-i386-64bit
[debug] exe versions: avconv 11.4, avprobe 11.4, ffmpeg 3.0.1, ffprobe 3.0.1, rtmpdump 2.4
[debug] Proxy map: {}
[generic] 1f10ced-009986b7dd3: Requesting header
WARNING: Could not send HEAD request to https://no.hbonordic.com/series/into-the-badlands/season-1/episode-4/1f10ced-009986b7dd3: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:590)>
[generic] 1f10ced-009986b7dd3: Downloading webpage
ERROR: Unable to download webpage: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:590)> (caused by URLError(SSLError(1, u'[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed (_ssl.c:590)'),))
  File "/usr/local/bin/youtube-dl/youtube_dl/extractor/common.py", line 388, in _request_webpage
    return self._downloader.urlopen(url_or_request)
  File "/usr/local/bin/youtube-dl/youtube_dl/YoutubeDL.py", line 1940, in urlopen
    return self._opener.open(req, timeout=self._socket_timeout)
  File "/usr/local/Cellar/python/2.7.11/Frameworks/Python.framework/Versions/2.7/lib/python2.7/urllib2.py", line 431, in open
    response = self._open(req, data)
  File "/usr/local/Cellar/python/2.7.11/Frameworks/Python.framework/Versions/2.7/lib/python2.7/urllib2.py", line 449, in _open
    '_open', req)
  File "/usr/local/Cellar/python/2.7.11/Frameworks/Python.framework/Versions/2.7/lib/python2.7/urllib2.py", line 409, in _call_chain
    result = func(*args)
  File "/usr/local/bin/youtube-dl/youtube_dl/utils.py", line 859, in https_open
    req, **kwargs)
  File "/usr/local/Cellar/python/2.7.11/Frameworks/Python.framework/Versions/2.7/lib/python2.7/urllib2.py", line 1197, in do_open
    raise URLError(err)
```

---

### If the purpose of this _issue_ is a _site support request_ please provide all kinds of example URLs support for which should be included (replace following example URLs by **yours**):

- Single video: https://no.hbonordic.com/series/into-the-badlands/season-1/episode-4/1f10ced-009986b7dd3

- Playlist: https://no.hbonordic.com/series/into-the-badlands/season-1/ea163cc3-31bf-4845-9542-b107e39e093f

---

### Description of your _issue_, suggested solution and other information

Only available in Sweden, Norway, Denmark and Finland.

|

site-support-request,geo-restricted

|

low

|

Critical

|

149,846,384 |

three.js

|

BoundingBoxHelper for buffer geometry uses neither drawRange nor groups

|

##### Description of the problem

BoundingBoxHelper.update (setFromObject) for buffer geometry does not use drawRange.count (nor groups); it currently iterates over positions.length, so the limits can include unused points at (0, 0, 0).

If the typed array still contains unfilled (0, 0, 0) entries, the computed box dimensions are wrong because (0, 0, 0) is included. (I preallocate the positions array and fill it as needed.)

For now I'm using this to create point clouds, but I foresee similar problems if the BufferGeometry is used to draw lines or triangles (using groups).

So I think this is a mix of bug and enhancement?
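The requested behaviour, scanning only the vertices inside the draw range, can be modelled with a short Python sketch. It works on a flat `[x0, y0, z0, x1, ...]` list standing in for the `position` attribute's typed array; `bounds_in_draw_range` and its `start`/`count` parameters are hypothetical stand-ins for `drawRange.start` / `drawRange.count` (three.js defaults `count` to `Infinity`, mirrored here with `math.inf`). This is not three.js code, and it ignores groups.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def bounds_in_draw_range(positions: Sequence[float], start: int = 0,
                         count: float = math.inf) -> Tuple[Vec3, Vec3]:
    """Axis-aligned (min, max) of vertices [start, start + count) in a flat xyz array."""
    total = len(positions) // 3
    # Clamp the range to the vertices actually stored in the array.
    end = total if math.isinf(count) else min(total, start + int(count))
    lo = [math.inf] * 3
    hi = [-math.inf] * 3
    for v in range(start, end):
        for axis in range(3):
            c = positions[3 * v + axis]
            lo[axis] = min(lo[axis], c)
            hi[axis] = max(hi[axis], c)
    return (lo[0], lo[1], lo[2]), (hi[0], hi[1], hi[2])
```

With positions preallocated for four vertices but only two filled, `bounds_in_draw_range(p, 0, 2)` stays clear of the trailing zeros, while scanning the whole array pulls the box out to the origin, which is the symptom described above.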

...

##### Three.js version

- [ ] Dev

- [x] r76

- [ ] ...

##### Browser

- [x] All of them

- [ ] Chrome

- [ ] Firefox

- [ ] Internet Explorer

##### OS

- [x] All of them

- [ ] Windows

- [ ] Linux

- [ ] Android

- [ ] IOS

##### Hardware Requirements (graphics card, VR Device, ...)

|

Suggestion

|

low

|

Critical

|

149,887,783 |

TypeScript

|

Do not rename imports from ambient modules

|

Issue explained by @bbgone in https://github.com/Microsoft/TypeScript/issues/8118#issuecomment-212218038

Renaming an import alias for a module declared in a .d.ts file renames all instances. This is obviously wrong.

Options:

1. Report an error (obviously not helpful).
2. Rename only the local symbol (better, but leaves the code in an invalid state).
3. Rename the local import and rewrite the import specifier as `oldName as newName` where needed (looks like the best solution).

This is similar to https://github.com/Microsoft/TypeScript/issues/7458, except that this issue adds renaming the import alias.

|

Suggestion,Help Wanted,Effort: Moderate

|

low

|

Critical

|

149,937,239 |

go

|

runtime: significant performance improvement on single core machines

|

**1. What version of Go are you using (`go version`)?**

```

go version go1.5.4 linux/amd64

```

**2. What operating system and processor architecture are you using (`go env`)?**

```
GOARCH="amd64"
GOBIN=""
GOEXE=""
GOHOSTARCH="amd64"
GOHOSTOS="linux"
GOOS="linux"
GOPATH=""
GORACE=""
GOROOT="/root/go"
GOTOOLDIR="/root/go/pkg/tool/linux_amd64"
GO15VENDOREXPERIMENT=""
CC="gcc"
GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0"
CXX="g++"
CGO_ENABLED="1"
```

Hosts are running stock Ubuntu 15.10:

```
# uname -r
4.2.0-27-generic
```

**3. What did you do?**

**4. What did you expect to see?**

**5. What did you see instead?**

Looking into the performance of running containers on [Docker](https://github.com/docker/docker), we realized there's a significant difference between running on a single-core machine and on a multi-core machine. The result seems independent of the `GOMAXPROCS` value (using `GOMAXPROCS=1` on the multi-core machine remains significantly slower).

Single core:

```
# time ./docker run --rm busybox true
real 0m0.255s
```

Multi core:

```
# time ./docker run --rm busybox true
real 0m0.449s
```

We cannot attribute that difference to Go with 100% certainty, but we could use your help explaining some of the profiling results we've obtained so far.

##### Profiling / trace

We instrumented the code to find out where that difference materialized, and what comes out is that it is quite evenly distributed. However, syscalls are consistently taking much more time on the multi core machine (as made obvious with slower syscalls such as `syscall.Unmount`).

Using `go tool trace` to dig further, it appears that we're seeing discontinuities in goroutine execution on the multi core machine that the single core one doesn't expose, even with `GOMAXPROCS=1`.

Single core ([link to the trace file](https://www.dropbox.com/s/onxw64prot30kzo/layerStore-singleCore-1?dl=1))

Multi core with `GOMAXPROCS=1` ([link to the trace file](https://www.dropbox.com/s/unkchqgegmrey5u/layerStore-multiCore-gomaxprocs1?dl=1))

[Link to the binary which produced the trace files](https://www.dropbox.com/s/kni7ngcqqfutx1x/docker?dl=1).

##### Host information

The two hosts are virtual machines running in the same Digital Ocean zone, with the exact same CPU (`Intel(R) Xeon(R) CPU E5-2630L v2 @ 2.40GHz`). The issue was also reproduced locally with VirtualBox VMs with different numbers of cores.

Please let us know if there's any more information we can provide, or if you need us to test with different builds of Go. Thanks for any help you can provide!

Cc @crosbymichael @tonistiigi.

|

compiler/runtime

|

low

|

Major

|

149,941,579 |

go

|

go/build: allow Import to ignore build constraints

|

There's no way at the moment to use `Import` or `ImportDir` to select all the files in a package while ignoring build constraints. There is the `Package.IgnoredGoFiles` field, but that does not extend to finding the dependencies of the ignored files, and so requires extra parsing steps.

One use case for this is App Engine: we want to select all the source files for an app, but not exclude source files that would be needed to compile a package in other or future versions of Go. So we want to select files while ignoring any go1.x tags.

/cc @dsymonds @adg

|

NeedsInvestigation

|

low

|

Minor

|

149,943,919 |

rust

|

DWARF does not describe closure type

|

I wrote a simple test case that makes a closure. The closure is just:

```
let f2 = || println!("lambda f2");
```

The resulting DWARF doesn't describe the closure type at all:

```
 <7><11e>: Abbrev Number: 6 (DW_TAG_variable)
    <11f>   DW_AT_location    : 2 byte block: 91 78 (DW_OP_fbreg: -8)
    <122>   DW_AT_name        : (indirect string, offset: 0x92): f2
    <126>   DW_AT_decl_file   : 2
    <127>   DW_AT_decl_line   : 28
    <128>   DW_AT_type        : <0x636>
...
 <1><636>: Abbrev Number: 19 (DW_TAG_structure_type)
    <637>   DW_AT_name        : (indirect string, offset: 0x4c5): closure
    <63b>   DW_AT_byte_size   : 0
```

I was planning to make it so the user can invoke a closure from gdb, but I think this bug prevents that.

|

A-debuginfo,C-enhancement,P-medium,T-compiler

|

low

|

Critical

|

149,951,109 |

rust

|

DWARF doesn't describe use declarations

|

I wrote a small test program of `use` declarations:

```
pub mod mod_x {
    pub fn f() {
        use ::mod_y::g;
        g();
    }

    pub fn g() {
        println!("X");
    }
}

pub mod mod_y {
    pub fn g() {
        println!("Y");
    }
}

pub fn main() {
    mod_x::f();
}
```

Examining the DWARF, I don't see anything in the body of `f` that reflects the `use` declaration.

What this means is that when stopped in `f` in gdb, name lookup will not work properly -- `call g()` will call the wrong `g`.

`use` declarations can be represented with something like `DW_TAG_imported_declaration` or `DW_TAG_imported_module`.

|

A-debuginfo,C-enhancement,P-medium,T-compiler

|

low

|

Minor

|

149,961,932 |

go

|

cmd/compile: ephemeral slicing doesn't need protection against next object pointers

|

```
func f(b []byte) byte {
	b = b[3:]
	return b[4]
}
```

We compile this to something like (bounds checks omitted):

```
p = b.ptr
inc = 3
if b.cap == 3 {
	inc = 0
}
p += inc
return *(p+4)
```

The `if` in the middle is there to make sure we don't manufacture a pointer to the next object in memory. But the resulting pointer is never exposed to the garbage collector, so that `if` is unnecessary. Manufacturing a pointer to the next object in memory is ok if that pointer is never spilled at a safe point. (Bounds checks will make sure such a pointer is never actually used.)
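A toy Python model may make the guard's purpose clearer. Addresses are plain integers and `slice_base` is a hypothetical helper, not part of the Go compiler; it computes the data pointer of `b[lo:]` with and without the `cap == lo` check from the pseudocode above (generalized from the constant 3 to `lo`). Without the guard, a zero-capacity result points exactly one element past the backing array, which may be the start of the next object; the proposal is that this is harmless as long as that value is never live at a safe point.

```python
def slice_base(ptr: int, lo: int, cap: int, elem_size: int = 1,
               guard: bool = True) -> int:
    """Model of the data pointer of b[lo:] for a slice with capacity `cap`."""
    if not 0 <= lo <= cap:
        raise IndexError("slice bounds out of range")
    inc = lo * elem_size
    if guard and lo == cap:
        # Zero-capacity result: keep the original pointer so no pointer
        # just past the end of the backing array is ever produced.
        inc = 0
    return ptr + inc
```

For a `[]byte` at address 1000 with capacity 3, `b[3:]` yields 1000 with the guard and 1003 (one past the end) without it; for any `lo < cap` both variants agree.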

Unfortunately, I don't see an easy way to do this optimization in the current compiler. Marked as unplanned.

See #14849

|

Performance,compiler/runtime

|

low

|

Major

|

150,014,693 |

vscode

|

Allow to scope settings by platform

|

Hi

I develop on 3 different platforms. When synchronizing settings, snippets and so on, I often have to change paths, adjust the font size, etc.

So it would be great to have per-platform sets of settings (Windows, Mac, Unix).

|

feature-request,config

|

high

|

Critical

|

150,092,855 |

TypeScript

|

`Result value must be used` check

|

Hello.

Quite often while working with `ImmutableJS` data structures and other immutable libraries people forget that values are immutable:

They can accidentally write:

```
obj.set('name', 'newName');
```

Instead of:

```
let newObj = obj.set('name', 'newName');
```

These errors are hard to discover and very annoying. I think this problem can't be solved by `tslint`, so I propose adding some sort of `"you must use the result"` check to the compiler. I have no idea yet how it should be expressed in the language, so I am creating this issue mainly to start a discussion.

Rust, for example, solves a similar problem with the `#[must_use]` attribute. This is not exactly what we want, but it's a good example and shows that the problem is quite common.

|

Suggestion,Awaiting More Feedback

|

medium

|

Critical

|

150,184,770 |

nvm

|

Chakra

|

Any plans to support versions of node running on [Chakra from Microsoft](https://github.com/nodejs/node-chakracore/releases)?

|

OS: windows,feature requests,pull request wanted

|

low

|

Major

|

150,194,510 |

rust

|

trait objects with late-bound regions and existential bounds are resolved badly

|

## STR

``` Rust
fn assert_sync<T: Sync>(_t: T) {}

fn main() {
    let f: &(for<'a> Sync + Fn(&'a u32)->&'a u32) = &|x| x;
    assert_sync(f);
}
```

## Expected Result

Code should compile and run

## Actual result

```
<anon>:4:33: 4:35 error: use of undeclared lifetime name `'a` [E0261]
<anon>:4     let f: &(for<'a> Sync + Fn(&'a u32)->&'a u32) = &|x| x;
                                         ^~
<anon>:4:33: 4:35 help: see the detailed explanation for E0261
<anon>:4:43: 4:45 error: use of undeclared lifetime name `'a` [E0261]
<anon>:4     let f: &(for<'a> Sync + Fn(&'a u32)->&'a u32) = &|x| x;
                                                   ^~
<anon>:4:43: 4:45 help: see the detailed explanation for E0261
error: aborting due to 2 previous errors
```

|

A-resolve,A-lifetimes,T-compiler,C-bug,T-types,A-trait-objects

|

low

|

Critical

|

150,222,463 |

youtube-dl

|

Soundcloud Go Track Fetching

|

## Please follow the guide below

- You will be asked some questions and requested to provide some information, please read them **carefully** and answer honestly

- Put an `x` into all the boxes [ ] relevant to your _issue_ (like that [x])

- Use _Preview_ tab to see how your issue will actually look like

---

### Make sure you are using the _latest_ version: run `youtube-dl --version` and ensure your version is _2016.04.19_. If it's not read [this FAQ entry](https://github.com/rg3/youtube-dl/blob/master/README.md#how-do-i-update-youtube-dl) and update. Issues with outdated version will be rejected.

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.19**

### Before submitting an _issue_ make sure you have:

- [x] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

### What is the purpose of your _issue_?

- [ ] Bug report (encountered problems with youtube-dl)

- [ ] Site support request (request for adding support for a new site)

- [x] Feature request (request for a new functionality)

- [ ] Question

- [ ] Other

Add support for logging in to SoundCloud and fetching full-length SoundCloud Go tracks.

|

geo-restricted,account-needed

|

low

|

Critical

|

150,226,679 |

youtube-dl

|

--verify-archive

|

---

### Make sure you are using the _latest_ version: run `youtube-dl --version` and ensure your version is _2016.04.19_. If it's not read [this FAQ entry](https://github.com/rg3/youtube-dl/blob/master/README.md#how-do-i-update-youtube-dl) and update. Issues with outdated version will be rejected.

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.19**

### Before submitting an _issue_ make sure you have:

- [x] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

### What is the purpose of your _issue_?

- [ ] Bug report (encountered problems with youtube-dl)

- [ ] Site support request (request for adding support for a new site)

- [x] Feature request (request for a new functionality)

- [ ] Question

- [ ] Other

### Description of your _issue_, suggested solution and other information

1. I have a very large archive of YouTube videos that I downloaded by loading a list of channel URLs. I used the `--download-archive` flag so I did not have to re-download any videos when I went over the archive again to update it.

2. Because I used `--download-archive`, I currently have no way to "re-run" the archive with `--write-info-json` and/or `--write-description` without re-downloading everything.

3. I am proposing a new flag, `--verify-archive`, which would let youtube-dl check that it does indeed have all the content associated with each archived video, limited to the kinds of content the user requested with the appropriate flags.

4. Using `--verify-archive` with `--write-info-json` and/or `--write-description`, after having used `--download-archive` in the past, would check the specified download directory for each video to verify that the entire set of files exists.

5. `--verify-archive` would not affect new video downloads as they happen. It should also work with all other flags that download a file and store it in a directory.

6. I have 74 thousand videos totaling 5TB. It's not economically feasible to re-download them in full again.
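A rough sketch of the proposed check, under several stated assumptions: each download-archive line is taken to have the form `<extractor> <video id>`; sidecar files are assumed to sit in one directory and to contain the video ID in their filename; and the suffixes `.info.json` and `.description` are assumed to correspond to `--write-info-json` and `--write-description`. `missing_sidecars` is a hypothetical helper, not an existing youtube-dl function or option, and its substring match on the ID is deliberately crude.

```python
from typing import Dict, Iterable, List


def missing_sidecars(archive_lines: Iterable[str], filenames: Iterable[str],
                     suffixes: Iterable[str]) -> Dict[str, List[str]]:
    """Map each archived video ID to the requested sidecar suffixes that are missing."""
    names = list(filenames)
    wanted = list(suffixes)
    missing: Dict[str, List[str]] = {}
    for line in archive_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank or malformed archive lines
        video_id = parts[1]
        absent = [s for s in wanted
                  if not any(video_id in n and n.endswith(s) for n in names)]
        if absent:
            missing[video_id] = absent
    return missing
```

In practice the inputs would come from the archive file and something like `os.listdir()` on the download directory; only the IDs reported as missing would then need their metadata fetched, with no media re-downloaded.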

|

request

|

low

|

Critical

|

150,267,915 |

rust

|

--target should ignore the machine part of the triplet in most cases

|

Currently, rustc accepts a limited set of `--target` values. Those match _some_ of the values one can get out of the typical `config.guess`, but for most platforms the machine/manufacturer part of the target triplet should be ignored, or allowed to be omitted. (The output of `config.guess` is of the form `CPU_TYPE-MANUFACTURER-OPERATING_SYSTEM` or `CPU_TYPE-MANUFACTURER-KERNEL-OPERATING_SYSTEM`.)

For example, the linux x86_64 target for rustc is `--target=x86_64-unknown-linux-gnu`.

Some time ago, `config.guess` would have returned that. Nowadays, it returns `x86_64-pc-linux-gnu`.

clang accepts both, as well as the shorter form: `--target=x86_64-linux-gnu` (in fact, it's very lax, `--target=x86_64-foo` works too).

A typical cross GCC toolchain will come as `x86_64-linux-gnu-gcc` too.
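One possible shape of the requested leniency, sketched in Python purely for illustration: drop the manufacturer field from four-part `CPU_TYPE-MANUFACTURER-KERNEL-OPERATING_SYSTEM` triples before comparing, and accept a match only when it is unambiguous. The `KNOWN` list and the `without_vendor` / `match_target` helpers are hypothetical; this is not how rustc resolves targets, and it deliberately leaves three-part triples such as `x86_64-apple-darwin` alone, since telling a vendor apart from an OS there needs real target knowledge.

```python
from typing import Iterable, Optional


def without_vendor(triple: str) -> str:
    """Drop the MANUFACTURER field of a 4-part CPU-MANUFACTURER-KERNEL-OS triple."""
    parts = triple.split("-")
    if len(parts) == 4:
        return "-".join([parts[0]] + parts[2:])
    return triple  # 3-part (or other) triples are compared as-is


def match_target(requested: str, known: Iterable[str]) -> Optional[str]:
    """Return the known target matching `requested` once vendors are ignored."""
    targets = list(known)
    if requested in targets:
        return requested  # an exact match always wins
    key = without_vendor(requested)
    candidates = [t for t in targets if without_vendor(t) == key]
    # Only accept an unambiguous match; otherwise report no target.
    return candidates[0] if len(candidates) == 1 else None


# Hypothetical subset of built-in targets, for illustration only.
KNOWN = ["x86_64-unknown-linux-gnu", "i686-unknown-linux-gnu", "x86_64-apple-darwin"]
```

With this, both `x86_64-pc-linux-gnu` and `x86_64-linux-gnu` resolve to `x86_64-unknown-linux-gnu`, while something like `x86_64-foo` is still rejected; unlike clang, the sketch stays strict about anything it cannot map unambiguously.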

|

T-compiler,T-dev-tools,C-feature-request

|

low

|

Major

|

150,304,574 |

opencv

|

opencv cv2.waitKey() does not work well with python idle or ipython

|

### my OS

- OpenCV version: 2.4.5

- Host OS: Linux (CentOS 7)

### Description of the problem

After loading an image and showing it, cv2.waitKey() does not work properly when I use OpenCV in Python IDLE or the Jupyter console. For example, if I call `cv2.waitKey(3000)` after `cv2.imshow('test', img)`, the image window should close automatically after 3 seconds, but it doesn't!

And neither can I close it by clicking the 'close' button in the window, it just gets stuck. I have to exit the python idle or shut down the jupyter console to close the window.

But if I run the whole script in the command line, it works well.

### Code example to reproduce the issue / Steps to reproduce the issue

```
import cv2

img = cv2.imread('cat.jpg')
cv2.imshow('test', img)
cv2.waitKey(5000)
```

|

bug,category: python bindings,priority: low,affected: 2.4

|

low

|

Major

|

150,415,526 |

flutter

|

Would be nice to have `flutter screenrecord`

|

Even if it only worked on Android for now.

I looked at writing it briefly this morning, but unlike a screen capture which is once and done, a screen recording is likely long running and waits for a keyboard interrupt to stop?

I suspect other CPTs have implemented something like this.

@devoncarew

|

c: new feature,tool,P3,team-tool,triaged-tool

|

medium

|

Major

|

150,440,734 |

youtube-dl

|

Make these errors optional

|

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.19**

- [x] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

### What is the purpose of your _issue_?

- [ ] Bug report (encountered problems with youtube-dl)

- [ ] Site support request (request for adding support for a new site)

- [x] Feature request (request for a new functionality)

- [x] Question

- [ ] Other

---

### The following sections concretize particular purposed issues, you can erase any section (the contents between triple ---) not applicable to your _issue_

---

### Description of your _issue_, suggested solution and other information

"ERROR: YouTube said: This video is available in Andorra, United Arab Emirates, Afghanistan, Antigua and Barbuda, Anguilla, Albania, Armenia, Angola, Antarctica, Argentina, American Samoa, Austria, Australia, Aruba, Åland Islands, Azerbaijan, Bosnia and Herzegovina, Barbados, Bangladesh, Belgium, Burkina Faso, Bulgaria, Bahrain, Burundi, Benin, Saint Barthélemy, Bermuda, Brunei Darussalam, Bolivia, Plurinational State of, Bonaire, Sint Eustatius and Saba, Brazil, Bahamas, Bhutan, Bouvet Island, Botswana, Belarus, Belize, Cocos (Keeling) Islands, Congo, the Democratic Republic of the, Central African Republic, Congo, Switzerland, Côte d'Ivoire, Cook Islands, Chile, Cameroon, China, Colombia, Costa Rica, Cuba, Cape Verde, Curaçao, Christmas Island, Cyprus, Czech Republic, Djibouti, Denmark, Dominica, Dominican Republic, Algeria, Ecuador, Estonia, Egypt, Western Sahara, Eritrea, Spain, Ethiopia, Finland, Fiji, Falkland Islands (Malvinas), Micronesia, Federated States of, Faroe Islands, France, Gabon, United Kingdom, Grenada, Georgia, French Guiana, Guernsey, Ghana, Gibraltar, Greenland, Gambia, Guinea, Guadeloupe, Equatorial Guinea, Greece, South Georgia and the South Sandwich Islands, Guatemala, Guam, Guinea-Bissau, Guyana, Hong Kong, Heard Island and McDonald Islands, Honduras, Croatia, Haiti, Hungary, Indonesia, Ireland, Israel, Isle of Man, India, British Indian Ocean Territory, Iraq, Iran, Islamic Republic of, Iceland, Italy, Jersey, Jamaica, Jordan, Japan, Kenya, Kyrgyzstan, Cambodia, Kiribati, Comoros, Saint Kitts and Nevis, Korea, Democratic People's Republic of, Korea, Republic of, Kuwait, Cayman Islands, Kazakhstan, Lao People's Democratic Republic, Lebanon, Saint Lucia, Liechtenstein, Sri Lanka, Liberia, Lesotho, Lithuania, Luxembourg, Latvia, Libya, Morocco, Monaco, Moldova, Republic of, Montenegro, Saint Martin (French part), Madagascar, Marshall Islands, Macedonia, the Former Yugoslav Republic of, Mali, Myanmar, Mongolia, Macao, Northern Mariana Islands, Martinique, Mauritania, Montserrat, Malta, Mauritius, Maldives, Malawi, Mexico, Malaysia, Mozambique, Namibia, New Caledonia, Niger, Norfolk Island, Nigeria, Nicaragua, Netherlands, Norway, Nepal, Nauru, Niue, New Zealand, Oman, Panama, Peru, French Polynesia, Papua New Guinea, Philippines, Pakistan, Poland, Saint Pierre and Miquelon, Pitcairn, Palestine, State of, Portugal, Palau, Paraguay, Qatar, Réunion, Romania, Serbia, Russian Federation, Rwanda, Saudi Arabia, Solomon Islands, Seychelles, Sudan, Sweden, Singapore, Saint Helena, Ascension and Tristan da Cunha, Slovenia, Svalbard and Jan Mayen, Slovakia, Sierra Leone, San Marino, Senegal, Somalia, Suriname, South Sudan, Sao Tome and Principe, El Salvador, Sint Maarten (Dutch part), Syrian Arab Republic, Swaziland, Turks and Caicos Islands, Chad, French Southern Territories, Togo, Thailand, Tajikistan, Tokelau, Timor-Leste, Turkmenistan, Tunisia, Tonga, Turkey, Trinidad and Tobago, Tuvalu, Taiwan, Province of China, Tanzania, United Republic of, Ukraine, Uganda, United States Minor Outlying Islands, Uruguay, Uzbekistan, Holy See (Vatican City State), Saint Vincent and the Grenadines, Venezuela, Bolivarian Republic of, Virgin Islands, British, Virgin Islands, U.S., Viet Nam, Vanuatu, Wallis and Futuna, Samoa, Yemen, Mayotte, South Africa, Zambia, Zimbabwe only"

This is printed when some videos can't be accessed and YouTube supplies a message. I want an option to turn off printing of that message, because my options dict currently doesn't suppress it even with `quiet` set to `True`.

My dict is:

```

ytdlo = {'quiet': True, 'no_warnings': True, 'ignoreerrors': True}

```

|

request

|

low

|

Critical

|

150,496,561 |

rust

|

Missing auto-load script in gdb

|

When compiled with `-g`, we produce binaries whose `.debug_gdb_scripts` section refers to `gdb_load_rust_pretty_printers.py`. This makes gdb complain about a missing script when the binary is run under plain gdb rather than the rust-gdb wrapper script.

The distribution puts this script into `$INSTALL_ROOT/lib/rustlib/etc`, which is somewhere gdb would never look by default. Some experimentation suggests that placing the script in gdb's `DATA-DIRECTORY/python/gdb/printer/` at least makes gdb detect its presence, but gdb then fails to load the module due to import errors.

|

A-debuginfo,T-compiler,C-bug

|

medium

|

Critical

|

150,572,725 |

youtube-dl

|

Add an option to ignore PostProcessingError (was: --embed-thumbnail should warn, not error, on non-mp3/mp4)

|

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.19**

- [x] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

- [x] Bug report (encountered problems with youtube-dl)

- [ ] Site support request (request for adding support for a new site)

- [ ] Feature request (request for a new functionality)

- [ ] Question

- [x] Other


```
> youtube-dl -x --embed-thumbnail http://youtube.com/watch?v=Jj1KTPOqd_A
[youtube] Jj1KTPOqd_A: Downloading webpage
[youtube] Jj1KTPOqd_A: Downloading video info webpage
[youtube] Jj1KTPOqd_A: Extracting video information
[youtube] Jj1KTPOqd_A: Downloading thumbnail ...
[youtube] Jj1KTPOqd_A: Writing thumbnail to: 01-Kenny Wayne Shepherd Band - Deja Voodoo (Video)-Jj1KTPOqd_A.jpg
[download] Destination: 01-Kenny Wayne Shepherd Band - Deja Voodoo (Video)-Jj1KTPOqd_A.webm
[download] 100% of 4.31MiB in 01:11
[ffmpeg] Destination: 01-Kenny Wayne Shepherd Band - Deja Voodoo (Video)-Jj1KTPOqd_A.ogg
Deleting original file 01-Kenny Wayne Shepherd Band - Deja Voodoo (Video)-Jj1KTPOqd_A.webm (pass -k to keep)
ERROR: Only mp3 and m4a/mp4 are supported for thumbnail embedding for now.
```

A failure to embed a thumbnail should probably be treated as a nonfatal error, don’t you think?

|

request

|

low

|

Critical

|

150,603,403 |

angular

|

Input type='date' with ngModel bind to a Date() js object.

|

Hi, here is the plunker: http://plnkr.co/edit/3ZDyWnabgp4S3m2rxJaX?p=preview

**Current behavior**

The input doesn't show the initial date (12-12-1900).

The myDate variable is only updated when the year in the input is changed.

**Expected/desired behavior**

First, I would expect the input to show the correct date (12-12-1900).

Then I would expect myDate to be updated on any change to the input.

**Question**

That's the way it worked in Angular 1, isn't it?

|

feature,workaround1: obvious,freq3: high,area: forms,feature: under consideration

|

medium

|

Major

|

150,624,847 |

rust

|

DWARF does not mention Self type

|

I wrote a simple test program using the `Self` type:

```
struct Something(i32);

impl Something {
    fn x(self: &Self) -> i32 { self.0 }
}

fn main() {
    let y = Something(32);
    let z = y.x();
    ()
}
```

When I examine the resulting DWARF, I don't see any mention of `Self`. I think it should be emitted as a typedef pointing to `Something`.

|

A-debuginfo,C-enhancement,P-low,T-compiler

|

low

|

Minor

|

150,643,388 |

rust

|

rustc::lint could use some helper functions for working with macros

|

(See #22451 and possibly others)

Currently we have a few functions in [clippy](https://github.com/Manishearth/rust-clippy)`/src/util/mod.rs` to deal with macros. Namely we can check if some span stems from a macro expansion (though that one could need some work), or if some span was expanded by a given macro (by name) somewhere or directly, or if two spans are in the same macro expansion. This is often useful for readability lints which should not be invoked within macro-expanded code (unless perhaps the code before expansion had the same issue, but that is as of yet hard to detect).

I think those functions belong in the rustc::lint crate. This would benefit both internal lints and all lints outside of clippy, which could then make use of those helpers.

However, clippy is currently licensed under MPL, so we'd need agreement from the authors (of the respective code lines) to move this code into rustc proper. We'll also want to check first whether those functions are ready to be moved, and clean up any technical debt we may have incurred so far.

cc @Manishearth

|

A-lints,T-compiler,C-feature-request

|

low

|

Major

|

150,677,226 |

neovim

|

Remove spaces from formerly-indented blank lines

|

- Neovim version: 0.1.3

- Vim behaves differently?: no

- Operating system/version: Linux Fedora 23

- Terminal name/version: Gnome-terminal

- `$TERM`: xterm-256color

### Steps to reproduce using `nvim -u NONE`

1. `nvim -u NONE`

2. Type:

```
This is a test.
  This is indented.
```

3. Backspace over all contents of the second line, including the spaces.

4. All the text, including the spaces, is gone, but there still appear to be spaces rendered on the terminal. Typing new text inserts it at the beginning of the line, as it should with all spaces gone. But arrowing right/left does not reveal the presence of these spaces. They appear to be entirely gone from the file but are still rendered on the screen (likely not visibly), and they aren't used to indent when new text is added on the line.

### Background

I am a blind screen reader user. I use the arrow keys rather than hjkl to navigate because screen readers don't know to present entire lines when hjkl are pressed, but they generally assume that the cursor moves in response to an arrow press, so they speak the updated change then. When I arrow to the second line in the above test data, after it is blank, my screen reader speaks "2 spaces", which is what it would speak before a line of text/whitespace that is indented. But when typing text on that line, text is inserted at the beginning. As such, it is impossible to distinguish a line that actually has 2 spaces from one that had 2 and is now entirely blank. Vim does this too; it is very annoying, and I hope Neovim might fix it.

To be very clear, these 2 spaces aren't in the file or editing buffer. They appear to be artifacts that aren't removed from the rendering, as if instead of rendering the blank line as "\n\n" it's being rendered as "\n \n". It probably doesn't affect anyone other than screen reader users, so you may not notice it unless you dig into the rendering code.
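One way such artifacts could arise is sketched below as a toy model, not Neovim's actual TUI code: a diff-based redraw that treats a written space and an erased terminal cell as "identical" (they look the same on screen) never erases the old indentation spaces, so a screen reader still finds them. `diff_update`, `text_as_read` and the `treat_space_as_blank` flag are hypothetical.

```python
from typing import List, Optional

Cell = Optional[str]  # None = erased terminal cell; otherwise the character written there


def looks_blank(cell: Cell) -> bool:
    """A written space and an erased cell look identical on screen."""
    return cell is None or cell == " "


def diff_update(row: List[Cell], new_cells: List[Cell], treat_space_as_blank: bool) -> None:
    """Redraw only the cells that changed.

    With treat_space_as_blank=True, a cell holding " " that should become erased
    is considered unchanged and left alone, which leaves stale spaces behind.
    """
    for i, new in enumerate(new_cells):
        old = row[i]
        if treat_space_as_blank and looks_blank(old) and looks_blank(new):
            continue
        row[i] = new


def text_as_read(row: List[Cell]) -> str:
    """Characters actually present in the row, ignoring trailing erased cells."""
    cells = list(row)
    while cells and cells[-1] is None:
        cells.pop()
    return "".join(" " if c is None else c for c in cells)
```

For a formerly indented row `[" ", " ", "x", None, None]` redrawn as fully blank, the space-insensitive diff leaves `"  "` behind, while a redraw that erases every differing cell leaves `""`.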

|

enhancement,tui,core

|

low

|

Minor

|

150,703,506 |

youtube-dl

|

Is there an option that yields the best available audio quality among all formats?

|

- [x] I've **verified** and **I assure** that I'm running youtube-dl **2016.04.24**

- [x] At least skimmed through [README](https://github.com/rg3/youtube-dl/blob/master/README.md) and **most notably** [FAQ](https://github.com/rg3/youtube-dl#faq) and [BUGS](https://github.com/rg3/youtube-dl#bugs) sections

- [x] [Searched](https://github.com/rg3/youtube-dl/search?type=Issues) the bugtracker for similar issues including closed ones

- [ ] Bug report (encountered problems with youtube-dl)

- [ ] Site support request (request for adding support for a new site)

- [ ] Feature request (request for a new functionality)

- [x] Question

- [ ] Other

- Single video: https://www.youtube.com/watch?v=J9bjJEjK2dQ

---

I want to be able to download the best quality audio available. `-f bestaudio` doesn't always yield the best available audio, as sometimes the best audio only version has worse audio than the best video+audio version. See the example video: `-f bestaudio` yields 125 kbps m4a, and `-x -f best` yields 192 kbps m4a. I think I've seen an example of `-f best` yielding worse quality than `-f bestaudio` too, but I'm not sure.

Does `-f best` in fact always yield the best audio?

If not, is there an option that always yields the best audio?

If not, I would like to request such a feature. In fact, the way it's described, it sounds like the default option is intended to yield the best quality audio and video, and that's certainly what I expected. Also, is it guaranteed that either `-f best` or `-f bestaudio` yields the best audio? It's conceivable that there could be a video where the best audio-only version has medium quality, the best overall version has medium quality audio and high quality video, and there exists a version with high quality audio and low quality video, and then the answer would be 'no'.
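The selection asked for here amounts to ranking every format that carries audio by its audio bitrate, regardless of its video quality. A minimal sketch, assuming format dictionaries with `acodec`, `vcodec` and `abr` keys as in youtube-dl's info dicts; `best_audio_format` is a hypothetical helper, not an existing youtube-dl option, and the format list is made up to mirror the hypothetical case above.

```python
from typing import Any, Dict, List, Optional

Format = Dict[str, Any]


def has_audio(fmt: Format) -> bool:
    """A missing stream is marked with the string 'none'; formats with an
    unknown acodec are also skipped in this sketch."""
    return fmt.get("acodec") not in (None, "none")


def best_audio_format(formats: List[Format]) -> Optional[Format]:
    """Pick the format with the highest audio bitrate ('abr', in kbps),
    considering audio-only and combined video+audio formats alike."""
    candidates = [f for f in formats if has_audio(f) and f.get("abr") is not None]
    if not candidates:
        return None
    return max(candidates, key=lambda f: f["abr"])


# Illustrative values only: medium-quality audio-only, best overall format with
# medium audio, and a low-video/high-audio format that should win.
formats = [
    {"format_id": "audio-only", "vcodec": "none", "acodec": "mp4a", "abr": 128},
    {"format_id": "best-overall", "vcodec": "avc1", "acodec": "mp4a", "abr": 128, "height": 1080},
    {"format_id": "hq-audio-lq-video", "vcodec": "avc1", "acodec": "mp4a", "abr": 192, "height": 240},
    {"format_id": "video-only", "vcodec": "avc1", "acodec": "none", "height": 2160},
]

print(best_audio_format(formats)["format_id"])  # hq-audio-lq-video
```

If the winner is a combined format, the audio would still have to be extracted from it afterwards (the issue uses `-x` for that).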

|

request

|

medium

|

Critical

|

150,779,779 |

neovim

|

defaults: "-u NORC" with invalid runtime shows E484

|

If syntax.vim is missing, it should be quiet, not raise E484 _unless_ the user explicitly did `:syntax on` (as opposed to merely allowing the default).

https://github.com/neovim/neovim/pull/4252#issuecomment-183994718

https://twitter.com/ds26gte/status/724496755602128896

- Counterargument: if users normally allow the default, will this cause confusion (no syntax + no error)?

- Maybe, but as soon as user does explicit `:syntax on` interactively, E484 will show.

- Alternative: do a "passive" message which only appears in `:messages` history and avoids "Hit Enter"
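The proposed behavior, including the "passive" alternative, can be sketched as a small decision function. `MessageArea` and `load_syntax` are hypothetical stand-ins for the editor's message handling, and the E484 text is an approximation of the real message.

```python
from typing import List


class MessageArea:
    """Toy stand-in for the editor's message line and :messages history."""

    def __init__(self) -> None:
        self.history: List[str] = []       # everything, as listed by :messages
        self.interrupting: List[str] = []  # shown immediately (may trigger "Hit Enter")

    def error(self, msg: str) -> None:
        self.history.append(msg)
        self.interrupting.append(msg)

    def passive(self, msg: str) -> None:
        self.history.append(msg)           # recorded only, no prompt


def load_syntax(found: bool, explicit: bool, msgs: MessageArea,
                path: str = "syntax/syntax.vim") -> bool:
    """Enable syntax if the runtime file exists.

    A missing file is a loud error only when the user asked for it with an
    explicit `:syntax on`; the implicit default records it passively.
    """
    if found:
        return True
    msg = f"E484: Can't open file {path}"
    if explicit:
        msgs.error(msg)
    else:
        msgs.passive(msg)
    return False
```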

|

bug,ux,startup

|

low

|

Critical

|

150,952,640 |

kubernetes

|

Declarative update of configmaps and secrets from the contents of files

|

Currently AFAIK you can only create a secret from a file using:

```
kubectl create secret generic <name> --from-file=<key>=<file>
```

The drawback is this: if a change is made to the file, you need to delete and then recreate the secret:

```
kubectl delete secret <name>
kubectl create secret generic <name> --from-file=<key>=<file>
```

This is awkward because to update the other items (service, deployment, pv) in the same app you just run:

```
kubectl apply -f <filename>
```

I realize that I could write a script to base64-encode the contents of the file, inject it into a secret spec file, and then use create/apply/delete as with other API objects, but this feels awkward because the create/apply/delete workflow is otherwise so clean.

For context this is a redis config file that contains a password and I'm mounting it with a volumeMount in a deployment:

```
- name: redis-conf-secret
  readOnly: true
  mountPath: /etc/redis
```
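The script workaround mentioned above might look like the following sketch: it base64-encodes each file into the `data` field of a v1 Secret and prints JSON, which `kubectl apply -f` can consume like any other manifest. The script name `make_secret.py`, the functions and the `<key>=<file>` argument format (mirroring `--from-file=<key>=<file>`) are all hypothetical.

```python
import base64
import json
import sys
from pathlib import Path
from typing import Dict, List


def secret_manifest(name: str, contents: Dict[str, bytes]) -> dict:
    """Build a v1 Secret; each key maps to base64-encoded file contents,
    as the Secret `data` field requires."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name},
        "data": {k: base64.b64encode(v).decode("ascii") for k, v in contents.items()},
    }


def main(argv: List[str]) -> None:
    # argv: <name> <key>=<file> [<key>=<file> ...]
    name, *pairs = argv
    contents = {}
    for pair in pairs:
        key, path = pair.split("=", 1)
        contents[key] = Path(path).read_bytes()
    json.dump(secret_manifest(name, contents), sys.stdout, indent=2)
    sys.stdout.write("\n")


if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1:])
```

Usage would then be `python make_secret.py redis-conf redis.conf=./redis.conf > redis-secret.json` followed by `kubectl apply -f redis-secret.json`, so the secret can be regenerated and re-applied whenever the file changes.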

|

priority/important-soon,area/app-lifecycle,area/kubectl,kind/feature,sig/cli,area/secret-api,area/declarative-configuration,lifecycle/frozen

|

high

|

Critical

|

151,094,471 |

TypeScript

|

In JS, use JSDoc to specify a call's type arguments

|

In TypeScript we can specify the type argument at the call site:

```
function A<T>(callBack: (item: T) => void) { }

A<{name: string}>(function (item) { /** item is {name: string} */ })
```

But I don't know how to force the type argument when calling it from a JS file, and I can't find any clue. It seems like it isn't possible, so I'd like to make a feature request if one doesn't exist already.

|

Suggestion,Needs Proposal,Domain: JSDoc,Domain: JavaScript

|

low

|

Minor

|