| id (int64) | repo (string, 68 classes) | title (string) | body (string, nullable) | labels (string) | priority (string, 3 classes) | severity (string, 3 classes) |

|---|---|---|---|---|---|---|

649,872,156 |

flutter

|

Add a Sidebar widget to Cupertino for a native look on iPadOS

|

## Use case

Build iPadOS apps that look native and are up to date with the new sidebar UI concept in iPad apps.

## Proposal

iPadOS 14 introduces the UI concept of a toggleable sidebar, which is used prominently in a variety of Apple's own apps and will surely appear in many updated third-party apps to make them feel native. In SwiftUI, there is already an implementation for it:

> SwiftUI will automatically take care of showing a button to slide in your bar from the side of the screen, and also collapse it with your primary view if you’re in a compact size class. If you’re presenting a list inside your sidebar, it’s a good idea to use the .listStyle() to give it the system-standard theme for sidebars, like this: .... (Source: [Hacking with Swift - How to add a sidebar for iPadOS](https://www.hackingwithswift.com/quick-start/swiftui/how-to-add-a-sidebar-for-ipados))

It would be fantastic to have the sidebar as a "native" Flutter widget in Cupertino.

|

c: new feature,framework,f: cupertino,P2,team-design,triaged-design

|

low

|

Major

|

649,874,667 |

excalidraw

|

Feature: Import data (local file and json link) to existing canvas

|

When we load a new json file or open a json link, the existing canvas is erased before the data is loaded.

There are use cases where the existing canvas should be kept and the data loaded in addition to it.

----

#1861 & #1862 : Slightly related as the problem is erasing canvas without confirmation.

#1091 : Related as my original idea is to create a gallery of reusable drawings (and developed [an external tool](https://github.com/dai-shi/excalidraw-gallery)).

#1537 & #859 : Because system copy&paste is not implemented, this would be the only way to merge two drawings.

|

enhancement

|

low

|

Minor

|

649,934,964 |

pytorch

|

Inconsistent handling of torch.Size.__add__

|

Adding a `list` to a `torch.Size` with `+` is allowed in JIT scripting, but not in regular (eager) Python.

```py

In [50]: def f(x: torch.Tensor) -> torch.Tensor:

...: # `x` is known to have dim -1 of size 18

...: shape = x.shape[:-1] + [6, 3]

...: return x.reshape(shape)

...:

...:

In [51]: f(torch.randn(18))

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-51-472433a8673d> in <module>

----> 1 f(torch.randn(18))

<ipython-input-50-0fb98040e0b1> in f(x)

1 def f(x: torch.Tensor) -> torch.Tensor:

2 # `x` is known to have dim -1 of size 18

----> 3 shape = x.shape[:-1] + [6, 3]

4 return x.reshape(shape)

5

TypeError: can only concatenate tuple (not "list") to tuple

In [53]: torch.jit.script(f)(torch.randn(18))

Out[53]:

tensor([[-0.1262, -0.7522, 1.0233],

[ 0.2715, -1.5179, 0.1224],

[-0.2018, 0.6756, 2.3353],

[ 0.0312, -0.0629, -0.8199],

[ 2.0379, -1.4921, 0.5088],

[ 0.1909, 1.2629, -0.6989]])

```

`TypeError: can only concatenate tuple (not "list") to tuple`
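The eager-mode failure follows from `torch.Size` being a `tuple` subclass, so `+` obeys ordinary tuple concatenation rules. Below is a minimal sketch of that behaviour and of one possible eager-mode workaround. It assumes a hypothetical stand-in class `Size` and a hypothetical helper `new_shape` so that it runs without PyTorch; neither is part of the PyTorch API.

```python
class Size(tuple):
    """Hypothetical stand-in for torch.Size, which is also a tuple subclass."""


def new_shape(shape, tail):
    # Converting both operands to tuples makes the concatenation valid in eager mode.
    return tuple(shape[:-1]) + tuple(tail)


shape = Size((4, 18))

# Tuple + list raises TypeError, mirroring the traceback above.
try:
    shape[:-1] + [6, 3]
    raised = False
except TypeError:
    raised = True

result = new_shape(shape, [6, 3])
```

Whether the same expression is accepted by TorchScript is not checked here; the sketch only covers regular Python semantics.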

cc @suo @gmagogsfm

|

oncall: jit,weeks,TSUsability,TSRootCause:PyTorchParityGap

|

low

|

Critical

|

649,953,020 |

TypeScript

|

"Unreachable code detected" does not work for an if clause.

|

<!-- 🚨 STOP 🚨 STOP 🚨 STOP 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Even if you think you've found a *bug*, please read the FAQ first, especially the Common "Bugs" That Aren't Bugs section!

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ: https://github.com/Microsoft/TypeScript/wiki/FAQ

Please fill in the *entire* template below.

-->

<!--

Please try to reproduce the issue with the latest published version. It may have already been fixed.

For npm: `typescript@next`

This is also the 'Nightly' version in the playground: http://www.typescriptlang.org/play/?ts=Nightly

-->

**TypeScript Version:** 3.9.2

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:** unreachable

**Code**

```ts

const hel2 = (x: number) => {

switch (typeof x) {

case 'number': return 0

}

x // Unreachable code detected

}

const hel12 = (x: number) => {

if(typeof x === 'number'){

return 0;

}

x // expect same error here!

}

```

**Expected behavior:**

See the comments in the code.

**Actual behavior:**

See the comments in the code.

**Playground Link:** <!-- A link to a TypeScript Playground "Share" link which demonstrates this behavior -->

https://www.staging-typescript.org/play?#code/MYewdgzgLgBAFgUwDYCYYF4YAoAeAuGMAVwFsAjBAJwEoMA+GAbwCgYYIB3ASymDmygBPAA4IQAMxg5aLNm2ABDCAhgByYuSqqClBFCKUwMAAysYAXzM5ml5qEixESAIxpMuAhoo16TM13EsIVEJKQx0THVSb1VqWTldfUMTAG4zSzZrSyA

**Related Issues:** <!-- Did you find other bugs that looked similar? -->

|

Suggestion,Awaiting More Feedback

|

low

|

Critical

|

649,966,890 |

next.js

|

Invalid HTML inside `dangerouslySetInnerHTML` breaks the page.

|

# Bug report

## Describe the bug

If invalid HTML is added to `dangerouslySetInnerHTML`, Next.js will output a blank page without providing any feedback. This can be hard to track when working with a CMS provider or markdown files.

## To Reproduce

Steps to reproduce the behavior, please provide code snippets or a repository:

1. Clone https://github.com/lfades/nextjs-inner-html-bug

2. Run `yarn && yarn dev` or `npm i && npm run dev`

3. See that `pages/index.js` is a blank page with no errors

## Expected behavior

Invalid HTML inside `dangerouslySetInnerHTML` should throw and/or let the user know that there's something wrong.

The demo also has an `index.html` and `index.js` in the root directory that shows how the same code works in React alone, it doesn't produce an error either, but it shows the content.

|

good first issue

|

medium

|

Critical

|

649,967,030 |

pytorch

|

Vectorized torch.eig()

|

## 🚀 Feature

<!-- A clear and concise description of the feature proposal -->

The **torch.eig()** function does not support vectorized calculation. It only takes a single matrix (n, n) as input.

Please make it support vectorized (batched?) matrices like (*, n, n), where * is the batch dimension.

## Motivation

<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too -->

Even though **torch.symeig()** takes vectorized (batched?) matrices with a shape of (*, n, n), **torch.eig() does not**.

## Pitch

<!-- A clear and concise description of what you want to happen. -->

If I pass a tensor with shape (batch, n, n), it outputs (batch, eigenvalue_real, eigenvalue_imaginary) and (batch, eigenvectors).

## Alternatives

<!-- A clear and concise description of any alternative solutions or features you've considered, if any. -->

I think this request could be much easier if someone borrows from the implementation of **torch.symeig()**.

## Additional context

<!-- Add any other context or screenshots about the feature request here. -->

I added a toy example

~~~

>>> x = torch.zeros([4000,4,4])

>>> x = x + torch.eye(4)

>>> torch.symeig(x)

torch.return_types.symeig(

eigenvalues=tensor([[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.],

...,

[1., 1., 1., 1.],

[1., 1., 1., 1.],

[1., 1., 1., 1.]]),

eigenvectors=tensor([]))

>>> torch.eig(x)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

RuntimeError: invalid argument 1: A should be 2 dimensional at /pytorch/aten/src/TH/generic/THTensorLapack.cpp:193

~~~

cc @VitalyFedyunin @ngimel

|

module: performance,triaged,enhancement,module: vectorization

|

low

|

Critical

|

649,990,995 |

flutter

|

[tool_crash] ProcessException: The system cannot find the file specified. Command: C:\src\flutter\flutter\bin\flutter.BAT upgrade --continue --no-version-check

|

## Command

```

flutter upgrade

```

## Steps to Reproduce

1. ...

2. ...

3. ...

## Logs

ProcessException: ProcessException: The system cannot find the file specified.

Command: C:\src\flutter\flutter\bin\flutter.BAT upgrade --continue --no-version-check

```

```

```

[✓] Flutter (Channel stable, v1.17.4, on Microsoft Windows [Version 10.0.18363.900], locale en-US)

• Flutter version 1.17.4 at C:\src\flutter\flutter

• Framework revision 1ad9baa8b9 (2 days ago), 2020-06-30 12:53:55 -0700

• Engine revision ee76268252

• Dart version 2.8.4

[!] Android toolchain - develop for Android devices (Android SDK version 29.0.3)

• Android SDK at C:\Users\Pratiksha\AppData\Local\Android\sdk

• Platform android-29, build-tools 29.0.3

• Java binary at: C:\Program Files\Android\Android Studio\jre\bin\java

• Java version OpenJDK Runtime Environment (build 1.8.0_242-release-1644-b01)

✗ Android license status unknown.

Try re-installing or updating your Android SDK Manager.

See https://developer.android.com/studio/#downloads or visit visit

https://flutter.dev/docs/get-started/install/windows#android-setup for detailed instructions.

[✓] Android Studio (version 4.0)

• Android Studio at C:\Program Files\Android\Android Studio

• Flutter plugin version 47.1.2

• Dart plugin version 193.7361

• Java version OpenJDK Runtime Environment (build 1.8.0_242-release-1644-b01)

[✓] VS Code (version 1.46.1)

• VS Code at C:\Users\Pratiksha\AppData\Local\Programs\Microsoft VS Code

• Flutter extension version 3.11.0

[!] Connected device

! No devices available

! Doctor found issues in 2 categories.

```

## Flutter Application Metadata

No pubspec in working directory.

|

c: crash,tool,platform-windows,P2,team-tool,triaged-tool

|

low

|

Critical

|

650,061,472 |

neovim

|

Indent alignment lines that do not display the first-level tab character

|

<!-- Before reporting: search existing issues and check the FAQ. -->

- `nvim --version`: NVIM v0.4.3 Build type: Release LuaJIT 2.0.5

- `vim -u DEFAULTS` (version: ) behaves differently? No

- Operating system/version: MacOS Catalina 10.15.5

- Terminal name/version: iTerm2

- `$TERM`: xterm-256color

I need indent alignment lines that do not display the first-level tab character:

```

set listchars=tab:\¦\ ,trail:■,extends:>,precedes:<,nbsp:+

set list

hi SpecialKey ctermfg=239 ctermbg=202

```

It started like this:

<img src="https://user-images.githubusercontent.com/32320149/86309990-9830e180-bc4f-11ea-89eb-86e1cd658cce.png" width="400px" alt="">

But I would like this **which does not display the first-level tab character**:

<img src="https://user-images.githubusercontent.com/32320149/86310020-a5e66700-bc4f-11ea-9f53-5c5c0dee7d05.png" width="400px" alt="">

> Why

I think it would be more reasonable, simple, and beautiful. "Radish or cabbage; each to his own delight."

I hope I explained it clearly.🌚

|

enhancement

|

low

|

Minor

|

650,071,802 |

youtube-dl

|

YouTube chat replay support

|

<!--

######################################################################

WARNING!

IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE

######################################################################

-->

## Checklist

<!--

Carefully read and work through this check list in order to prevent the most common mistakes and misuse of youtube-dl:

- First of, make sure you are using the latest version of youtube-dl. Run `youtube-dl --version` and ensure your version is 2020.06.16.1. If it's not, see https://yt-dl.org/update on how to update. Issues with outdated version will be REJECTED.

- Search the bugtracker for similar site feature requests: http://yt-dl.org/search-issues. DO NOT post duplicates.

- Finally, put x into all relevant boxes (like this [x])

-->

- [x] I'm reporting a site feature request

- [x] I've verified that I'm running youtube-dl version **2020.06.16.1**

- [x] I've searched the bugtracker for similar site feature requests including closed ones

## Description

<!--

Provide an explanation of your site feature request in an arbitrary form. Please make sure the description is worded well enough to be understood, see https://github.com/ytdl-org/youtube-dl#is-the-description-of-the-issue-itself-sufficient. Provide any additional information, suggested solution and as much context and examples as possible.

-->

YouTube now has "chat replay" for recorded livestreams in the same style as Twitch, which youtube-dl already supports extracting as a "subtitle". It would be beneficial for youtube-dl to also support extraction as a subtitle for YouTube because, as on Twitch, chat on YouTube can form a very important part of the livestream in question. There is no existing support for this in youtube-dl, nor a similar option that I can see.

There is a Python library at https://github.com/taizan-hokuto/pytchat which may be useful for the implementation of this.

Amongst other formats, it supports output as JSON, which could simply be passed back as the output for a new "subtitle" - the same style as the Twitch chat replay.

Use case example: The archiving of a YouTube channel, including all metadata. At the moment the chat replay would not be saved, meaning there is no context for content in the video which may refer to it.

|

incomplete

|

low

|

Critical

|

650,108,359 |

angular

|

Ivy: Animation events removed when node's position is changed

|

<!--🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅

Oh hi there! 😄

To expedite issue processing please search open and closed issues before submitting a new one.

Existing issues often contain information about workarounds, resolution, or progress updates.

🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅🔅-->

# 🐞 bug report

### Affected Package

<!-- Can you pin-point one or more @angular/* packages as the source of the bug? -->

<!-- ✍️edit: --> The issue is caused by package @angular/animations

### Is this a regression?

<!-- Did this behavior use to work in the previous version? -->

<!-- ✍️--> Yes, without Ivy, animation events are not removed.

### Description

<!-- ✍️-->

Animation events are removed from `AnimationTransitionNamespace` when a node's position changes inside a `ViewContainerRef`. This causes an issue inside `mat-tab-group` when the tab order is changed, because displaying content depends on animation events.

When a node's index changes, the node is detached from its old position and inserted into the new one. During detach, animation events are removed, but they are not added back when the node is inserted into its new position.

## 🔬 Minimal Reproduction

<!--

Please create and share minimal reproduction of the issue starting with this template: https://stackblitz.com/fork/angular-ivy

-->

<!-- ✍️-->

[StackBlitz](https://stackblitz.com/github/ghostlytalamaur/angular-rearrange-tab-bug) [GitHub Repo](https://github.com/ghostlytalamaur/angular-rearrange-tab-bug)

#### Steps to reproduce:

1. Open StackBlitz

2. Enable Ivy in settings

3. Click on rearrange button

4. Click on Tab 2. There is no content.

<!--

If StackBlitz is not suitable for reproduction of your issue, please create a minimal GitHub repository with the reproduction of the issue.

A good way to make a minimal reproduction is to create a new app via `ng new repro-app` and add the minimum possible code to show the problem.

Share the link to the repo below along with step-by-step instructions to reproduce the problem, as well as expected and actual behavior.

Issues that don't have enough info and can't be reproduced will be closed.

You can read more about issue submission guidelines here: https://github.com/angular/angular/blob/master/CONTRIBUTING.md#-submitting-an-issue

-->

|

area: animations,state: confirmed,P3

|

low

|

Critical

|

650,142,089 |

pytorch

|

MultiheadAttention filling with -inf causes NaN in loss computation

|

## 🐛 Bug

I plan to reimplement a transformer variant model. I import MultiheadAttention from torch.nn.modules.activation.

In the encoder, specifically in the multi-head self-attention, if **the whole input is padded**, the key_padding_mask parameter is all True.

"When the value is True, the corresponding value on the attention layer will be filled with -inf."

This setting leads to **NaN** in model parameters and raises ValueError("nan loss encountered").

## To Reproduce

Steps to reproduce the behavior:

1. Initialize a MultiheadAttention.

self.self_attn= MultiheadAttention(embed_dim=embed_dim,num_heads=nhead,dropout=dropout)

2. In forward() function.

src, attn = self.self_attn(src,src,src,attn_mask=src_mask,

key_padding_mask=src_key_padding_mask)

3. Then pass an x. The vector src_key_padding_mask is all True. The original sentence in src is \<pad\> * max_seq_length.

I use allennlp, which raises "ValueError: nan loss encountered".

I found that one example in a batch consists entirely of \<pad\>, which causes this issue.

## Expected behavior

I found some descriptions of almost the same problem in fairseq.

[fairseq](https://github.com/pytorch/fairseq/blob/master/fairseq/modules/transformer_layer.py)

line 103

# anything in original attn_mask = 1, becomes -1e8

# anything in original attn_mask = 0, becomes 0

# Note that we cannot use -inf here, because at some edge cases,

# the attention weight (before softmax) for some padded element in query

# will become -inf, which results in NaN in model parameters

I hope you can learn from their approach.
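To illustrate why a fully masked row produces NaN, here is a minimal sketch in plain Python (no PyTorch). It assumes a numerically stable softmax that subtracts the row maximum; the helper `softmax` and the variable names are illustrative only and are not the code used by `MultiheadAttention`.

```python
import math


def softmax(scores):
    # Numerically stable softmax: subtract the maximum before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Every key masked with -inf: (-inf) - (-inf) is NaN, so the whole row is NaN.
fully_masked_inf = softmax([float("-inf")] * 4)

# Every key masked with a large negative finite value: the row stays finite.
fully_masked_big = softmax([-1e8] * 4)
```

With the finite fill value the fully padded row degrades to uniform attention weights instead of NaN, which is the trade-off the fairseq comment above describes.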

## Environment

- PyTorch Version (e.g., 1.0): 1.5.1

- OS (e.g., Linux): Ubuntu 16.04.6 LTS

- How you installed PyTorch (`conda`, `pip`, source): pip

- Python version: 3.7

- CUDA/cuDNN version: /usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.4

- GPU models and configuration: TITAN Xp

- Any other relevant information:

numpy==1.18.5

cc @albanD @mruberry @jbschlosser @walterddr @mikaylagawarecki

|

module: nn,triaged,module: NaNs and Infs

|

low

|

Critical

|

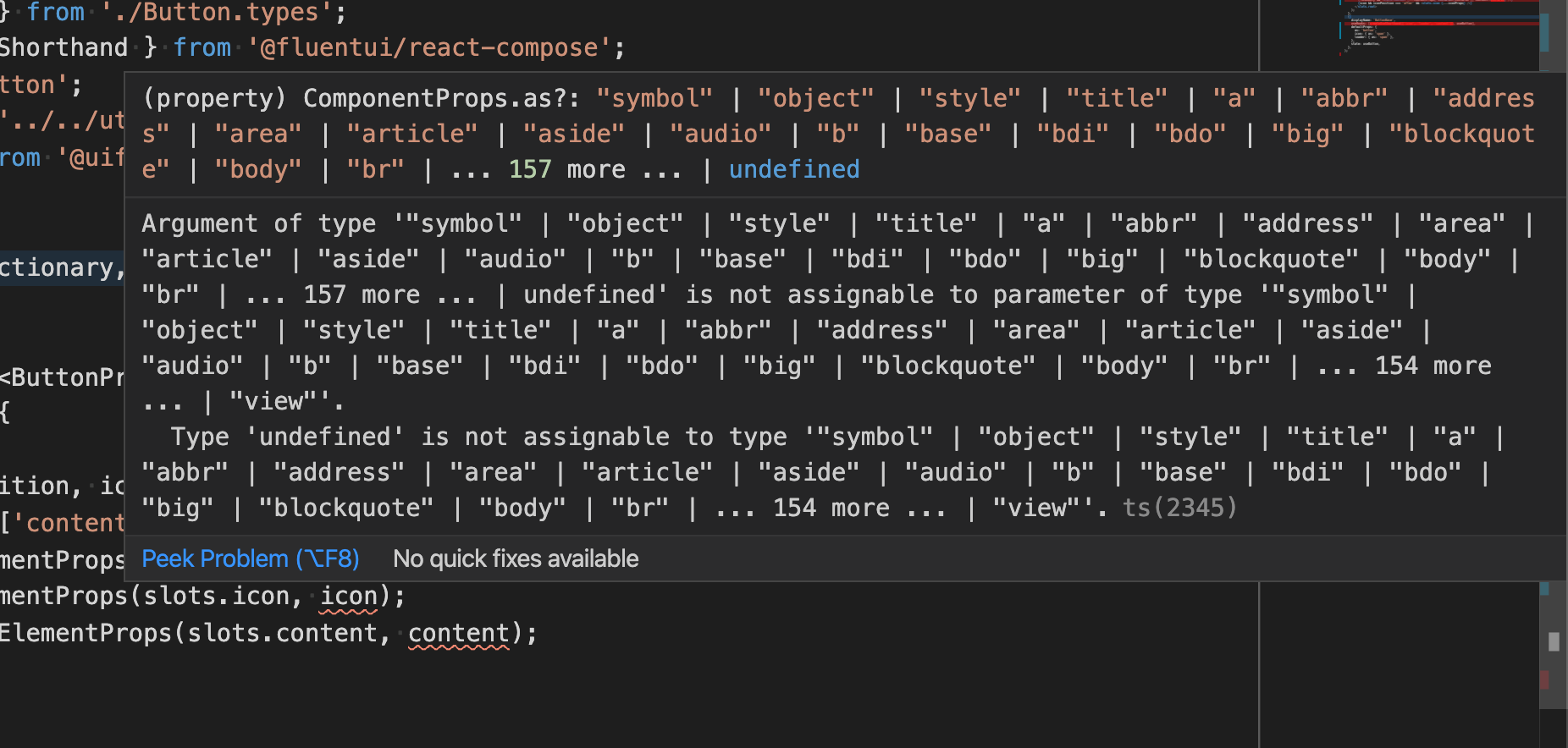

650,147,883 |

TypeScript

|

Give better errors comparing massive singleton union types

|

|

Suggestion,Needs Proposal,Domain: Error Messages

|

low

|

Critical

|

650,150,193 |

TypeScript

|

Provide documentation on writing fourslash tests

|

> You know what fourslash needs? Extensive documentation/comments on how fourslash works and the fourslash API. Half the time I can never figure out what I need to call to test something and have to dig through other tests to figure out when to use `/**/` or `[| |]`, etc.

— @rbuckton

|

Docs,Infrastructure

|

low

|

Minor

|

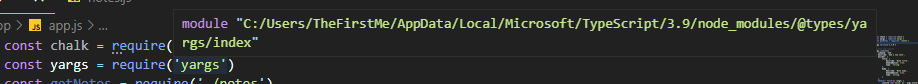

650,151,977 |

TypeScript

|

Hovering on imported module shows path from TypeScript folder.

|

*TS Template added by @mjbvz*

**TypeScript Version**: 3.9.6

**Search Terms**

- quickinfo

- types

- javascript

---

<!-- ⚠️⚠️ Do Not Delete This! bug_report_template ⚠️⚠️ -->

<!-- Please read our Rules of Conduct: https://opensource.microsoft.com/codeofconduct/ -->

<!-- Please search existing issues to avoid creating duplicates. -->

<!-- Also please test using the latest insiders build to make sure your issue has not already been fixed: https://code.visualstudio.com/insiders/ -->

<!-- Use Help > Report Issue to prefill these. -->

- VSCode Version: 1.46.1

- OS Version: Windows 10

Steps to Reproduce:

1. Require modules like yargs, validate in a nodejs project. (Installed in project folder)

2. On hover, TypeScript's node_modules path is shown instead of the project's node_modules folder.

3. Packages not in TypeScript's node_modules folder have no such issue.

Hovering on yargs:

Hovering on chalk:

<!-- Launch with `code --disable-extensions` to check. -->

Does this issue occur when all extensions are disabled?: Yes

|

Suggestion,Awaiting More Feedback

|

low

|

Critical

|

650,160,527 |

pytorch

|

[RFC] [RPC] Automatic retries of all requests in TensorPipe agent

|

To increase resiliency to infra issues, we could provide an automatic transparent retry mechanism in the RPC agent.

There already exists something of that kind, but my understanding is that it's an internal API used only for RRef operations. A challenge of extending it to all requests is that, due to that retry system living outside the agent, it retries each failed request as a new separate request, and would thus only work for idempotent functions (the RRef ops are, but user functions may not be).

What I propose is to implement the retry inside the agent (in particular, I am thinking about the TensorPipe one; I'm not sure how it would work for the other ones). The idea is to assign each request and each response message a unique ID on the sender side, and then have the receiver confirm the transfer with an ACK message. The sender would keep a map from ID to data of all the messages to which it hasn't received an ACK yet. When a pipe fails and is re-established, the sender will re-send all those pending messages. The receiver will also keep a set of IDs that it has already received and dealt with, and can thus check if the sender is sending an ID for the second time, and in that case just send back the ACK without performing the operation anew. This way the operation becomes effectively idempotent. (Some details will still have to be ironed out, but this is the gist of the idea).

Note that the above works when there is an error in the transmission but both endpoints stay up and running and are able to reconnect. If one endpoint goes down and then comes up again this wouldn't work, as then its map of pending messages wouldn't be preserved. However, such a scenario seems out of scope, as retrying a stateful RPC call on a worker that has lost all its state will probably not make sense either. So we could probably safely assume that when one worker goes down, it will never come back up or that all of them will be restarted (which I believe is what elastic does).
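The ID/ACK scheme proposed above can be sketched roughly as follows. This is an in-process toy model, not TensorPipe or the RPC agent: the classes `Sender` and `Receiver`, the `drop_ack` flag (which simulates a pipe failing after delivery but before the ACK arrives), and all method names are hypothetical.

```python
import itertools


class Receiver:
    def __init__(self, handler):
        self.handler = handler
        self.seen = set()  # IDs that have already been received and handled

    def deliver(self, msg_id, payload):
        # Run the operation only the first time an ID is seen.
        if msg_id not in self.seen:
            self.seen.add(msg_id)
            self.handler(payload)
        return msg_id  # the ACK simply echoes the message ID


class Sender:
    def __init__(self):
        self._ids = itertools.count()
        self.pending = {}  # msg_id -> payload for messages without an ACK yet

    def send(self, receiver, payload, drop_ack=False):
        msg_id = next(self._ids)
        self.pending[msg_id] = payload
        self._transmit(receiver, msg_id, drop_ack)
        return msg_id

    def _transmit(self, receiver, msg_id, drop_ack=False):
        ack = receiver.deliver(msg_id, self.pending[msg_id])
        if not drop_ack:  # a dropped ACK leaves the message pending
            self.pending.pop(ack, None)

    def resend_pending(self, receiver):
        # Called after the pipe is re-established.
        for msg_id in list(self.pending):
            self._transmit(receiver, msg_id)


calls = []
receiver = Receiver(calls.append)
sender = Sender()
sender.send(receiver, "increment", drop_ack=True)  # delivered, but ACK lost
pending_before = len(sender.pending)
sender.resend_pending(receiver)                    # retried after reconnect
```

Because the receiver deduplicates on the ID, the non-idempotent handler runs once even though the message is transmitted twice. The sketch keeps the `seen` set forever; when it can be pruned is one of the details the proposal leaves open.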

The alternatives to this approach are two:

- We could push the retry even further down, into TensorPipe. The problem there is that TensorPipe doesn't natively operate on a request-response protocol (this is added on top by the agent) and thus wouldn't normally send ACKs. Moreover, this would require TensorPipe to keep data alive even after it has finished sending it, just in case the transfer may later fail and it needs to send it again. This logic may not be desirable in all circumstances and IMO should live at a higher level of the stack.

- We could avoid retrying automatically and count on the user to catch exceptions in their RPC calls and, knowing which ones are idempotent and which ones aren't, pick their own logic of retrying. This seems burdensome on the user, and scales poorly, as each user would have to reimplement what is effectively a similar logic.

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @gqchen @aazzolini @rohan-varma @xush6528 @jjlilley @osalpekar @jiayisuse @lw @beauby

|

triaged,module: rpc,module: tensorpipe

|

low

|

Critical

|

650,167,619 |

godot

|

Invalid cast exception when using C# and GD Script

|

**Godot version:**

3.2.2

**OS/device including version:**

Windows 10 64 bits

**Issue description:**

An error occurs when trying to combine [this accessibility plugin](https://github.com/lightsoutgames/godot-accessibility) (which is written in GDScript) with C# scripts that I want to use in my project.

I get an invalid cast exception when I try to compile a simple hello world project with an empty C# script. If I don't add any C# script to the project, the plugin works fine.

And no, not using the plugin is not an option, because that plugin brings accessibility to the whole Godot ecosystem: the editor itself and the resulting game. [This post](https://godotforums.org/discussion/22953/c-cant-create-a-simple-script-in-a-small-example-project) has some more details.

I am using Godot 3.2, but if I downgrade to 3.1 it works fine.

ERROR: debug_send_unhandled_exception_error: System.InvalidCastException: Specified cast is not valid.

At: modules/mono/mono_gd/gd_mono_utils.cpp:357

ERROR: call_build: An EditorPlugin build callback failed.

At: editor/editor_node.cpp:5268

ERROR: debug_send_unhandled_exception_error: System.InvalidCastException: Specified cast is not valid.

At: modules/mono/mono_gd/gd_mono_utils.cpp:357

**Steps to reproduce:**

1. Download or clone [this project](https://github.com/lightsoutgames/godot-accessible-starter) and attach a C# script to it.

2. Run the project.

|

bug,topic:dotnet

|

medium

|

Critical

|

650,179,620 |

opencv

|

FR: Implement "smart" reference on js similar to tensorflow.tidy()

|

Developers are required to manage memory manually in OpenCV.js, and keeping track of some of the references may lead to cumbersome code. I suggest providing a mechanism similar to the TensorFlow.js tf.tidy, which is used to clean up GPU textures after processing TF code: https://js.tensorflow.org/api/0.11.7/#tidy

Here is an example usage:

```

// y = 2 ^ 2 + 1

const y = tf.tidy(() => {

// a, b, and one will be cleaned up when the tidy ends.

const one = tf.scalar(1);

const a = tf.scalar(2);

const b = a.square();

console.log('numTensors (in tidy): ' + tf.memory().numTensors);

// The value returned inside the tidy function will return

// through the tidy, in this case to the variable y.

return b.add(one);

});

console.log('numTensors (outside tidy): ' + tf.memory().numTensors);

y.print();

```

|

feature,category: javascript (js)

|

low

|

Minor

|

650,267,782 |

go

|

cmd/doc: package search inconsistent with methods or fields

|

```

$ go version

go version devel +5de90d33c8 Thu Jul 2 22:08:11 2020 +0000 linux/amd64

```

Running `go doc rand`, as expected, gives the documentation for `crypto/rand`, as it finds that one before `math/rand`:

```

package rand // import "crypto/rand"

Package rand implements a cryptographically secure random number generator.

var Reader io.Reader

func Int(rand io.Reader, max *big.Int) (n *big.Int, err error)

func Prime(rand io.Reader, bits int) (p *big.Int, err error)

```

Running `go doc rand.rand`, however, gives the documentation for `math/rand.Rand`, as it uses the lack of the symbol in `crypto/rand` to know that that's not the right package. So far so good:

```

package rand // import "math/rand"

type Rand struct {

// Has unexported fields.

}

A Rand is a source of random numbers.

func New(src Source) *Rand

func (r *Rand) ExpFloat64() float64

func (r *Rand) Float32() float32

func (r *Rand) Float64() float64

func (r *Rand) Int() int

func (r *Rand) Int31() int32

func (r *Rand) Int31n(n int32) int32

func (r *Rand) Int63() int64

func (r *Rand) Int63n(n int64) int64

func (r *Rand) Intn(n int) int

func (r *Rand) NormFloat64() float64

func (r *Rand) Perm(n int) []int

func (r *Rand) Read(p []byte) (n int, err error)

func (r *Rand) Seed(seed int64)

func (r *Rand) Shuffle(n int, swap func(i, j int))

func (r *Rand) Uint32() uint32

func (r *Rand) Uint64() uint64

```

However, running `go doc rand.rand.int` fails:

```

doc: symbol rand is not a type in package rand installed in "crypto/rand"

exit status 1

```

It seems like it doesn't actually search past the first package if there's a method specified. Manually specifying the package, such as `go doc math/rand.rand.int`, works as expected.
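The expected lookup could be sketched as below: keep scanning candidate packages until one satisfies the whole query, including the method. This is not the `cmd/doc` implementation; the `PACKAGES` index, its contents, and the `resolve` function are hypothetical and only model the three queries from this report.

```python
# Hypothetical index in search order: import path -> {symbol: set of method names}.
PACKAGES = [
    ("crypto/rand", {"Reader": set(), "Int": set(), "Prime": set()}),
    ("math/rand", {"Rand": {"Int", "Intn", "Float64"}, "New": set()}),
]


def resolve(query, packages=PACKAGES):
    """Return the import path of the first package matching the whole query."""
    name, *rest = query.split(".")
    symbol = rest[0].lower() if rest else None
    method = rest[1].lower() if len(rest) > 1 else None
    for path, symbols in packages:
        if path.split("/")[-1] != name:
            continue
        if symbol is None:
            return path
        # Case-insensitive symbol match, as in the lower-case queries above.
        methods = next((m for s, m in symbols.items() if s.lower() == symbol), None)
        if methods is None:
            continue  # symbol missing here: try the next package
        if method is None:
            return path
        if any(m.lower() == method for m in methods):
            return path
        # Method missing here: keep searching instead of failing.
    return None


first = resolve("rand")
second = resolve("rand.rand")
third = resolve("rand.rand.int")
```

In this model the third query falls through `crypto/rand` and lands on `math/rand`, which matches the behaviour of the two-element query.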

|

NeedsInvestigation

|

low

|

Critical

|

650,297,336 |

pytorch

|

Will the model run slower when deployed using libtorch ?

|

I'm trying to deploy a yolov5s model in my C++ program.

I followed the instructions in https://gist.github.com/jakepoz/eb36163814a8f1b6ceb31e8addbba270 to get a TorchScript-converted model.

This is my C++ code. I run it in a thread in ORBSLAM (a SLAM system) while other SLAM threads are running.

```cpp

modelpath = "yolov5s.torchscript";

cout << "before loading" << endl;

long start = time_in_ms();

model = torch::jit::load(modelpath);

long end = time_in_ms();

cout << "it took " << end - start << " ms to load the model" << endl;

torch::jit::getProfilingMode() = false;

torch::jit::getExecutorMode() = false;

torch::jit::setGraphExecutorOptimize(false);

tensor_image = torch::zeros({1, 3, 640, 640}); // braces: the shape is passed as a list

long start = time_in_ms();

std::vector<torch::jit::IValue> inputs;

inputs.push_back(torch::ones({1, 3, 640, 640}));

//inputs.emplace_back(tensor_image);

torch::jit::IValue output = model.forward(inputs);

long end = time_in_ms();

cout << "it took " << end - start << " ms to run the model once" << endl;

```

It took 720ms to load the model and 1300ms to run the model once.

But when I run this model in a Python environment, it only takes 200ms.

I would like to know whether this is reasonable, and what I should do to speed it up.

cc @yf225 @glaringlee @VitalyFedyunin @ngimel

|

module: performance,module: cpp,triaged

|

low

|

Major

|

650,297,482 |

TypeScript

|

JS Find function definition involving a function generator

|

*TS Template added by @mjbvz*

**TypeScript Version**: 4.0.0-dev.20200702

**Search Terms**

- go to definition

---

Issue Type: <b>Bug</b>

```

// x.js

module.exports.a = () => {return ()=>{}};

// y.js

let n = require('./x.js');

module.exports.b = n.a();

// anywhere other than y.js

let m = require('./y.js');

let c = m.b(); // ctrl-click b

```

ctrl-click b

leads to 2 definitions and the default is a's definition instead of b's

VS Code version: Code 1.46.1 (cd9ea6488829f560dc949a8b2fb789f3cdc05f5d, 2020-06-17T21:13:20.174Z)

OS version: Windows_NT x64 10.0.17134

<details><summary>Extensions (15)</summary>

Extension|Author (truncated)|Version

---|---|---

project-manager|ale|11.1.0

vscode-eslint|dba|2.1.5

clipboard-manager|Edg|1.4.2

vscode-npm-script|eg2|0.3.12

vscode-test-explorer|hbe|2.19.1

vscode-heroku|iva|1.2.6

docthis|joe|0.7.1

mongodb-vscode|mon|0.0.4

csharp|ms-|1.22.1

mssql|ms-|1.9.0

python|ms-|2020.6.91350

cpptools|ms-|0.28.3

prettier-now|rem|1.4.9

tabnine-vscode|Tab|2.8.6

poor-mans-t-sql-formatter-vscode|Tao|1.6.10

</details>

<!-- generated by issue reporter -->

|

Bug

|

low

|

Critical

|

650,300,140 |

godot

|

PhysicalBones do not move with skeleton when simulation is off

|

<!-- Please search existing issues for potential duplicates before filing yours:

https://github.com/godotengine/godot/issues?q=is%3Aissue

-->

**Godot version:**

<!-- Specify commit hash if using non-official build. -->

3.2.1.stable.custom_build.f0a489cf4

**OS/device including version:**

<!-- Specify GPU model, drivers, and the backend (GLES2, GLES3, Vulkan) if graphics-related. -->

Linux 5.7.6-arch1-1

**Issue description:**

<!-- What happened, and what was expected. -->

Maybe this is expected behavior, but it is a bit surprising.

When you create a physical skeleton, the `PhysicalBone`s will not move with the rig, but will remain in place until you start simulation.

However, they _do_ still detect collisions, which means you end up with these invisible blockades littered around your world.

You can see this in the [platformer demo](https://github.com/godotengine/godot-demo-projects/tree/master/3d/platformer):

It would be nice if, before starting the simulation, the `PhysicalBone`s just followed the transforms of their parent bones.

Failing that, maybe they should at least not collide (or we should call out in the docs that the user should clear the collision layer/mask and set it when starting the simulation).

**Steps to reproduce:**

1. Generate PhysicalBones from a skeleton

2. Move the character

3. Note that you collide with your own frozen, invisible skeleton

**Minimal reproduction project:**

<!-- A small Godot project which reproduces the issue. Drag and drop a zip archive to upload it. -->

[platformer demo](https://github.com/godotengine/godot-demo-projects/tree/master/3d/platformer)

|

bug,confirmed,topic:physics

|

low

|

Minor

|

650,330,293 |

TypeScript

|

Adding a compilerOption to disable error on property override accessor in 4.0.beta

|

## Search Terms

property override accessor

## Suggestion

Previously, in 3.9, a property could override an accessor without any errors being reported. In 4.0 beta, a breaking change was introduced by #33509.

It's reasonable to report an error when a property overrides an accessor in most cases, but I'm using `experimentalDecorators` to inject a property accessor into the prototype.

Currently I cannot find a solution for this use case, so I'm suggesting adding a new compilerOption to disable this check (strictOnly?) for compatibility.

## Use Cases

```

class Animal {

private _age: number

get age(){ return this._age }

set age(value){ this._age = value }

}

class Dog extends Animal {

@defaultValue(100) age: number; // Unexpected error here

}

function defaultValue(value) {

return (obj, name) => {

Object.defineProperty(obj, name, {

get() { return value }

})

}

}

```

## Checklist

My suggestion meets these guidelines:

* [ ] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

|

Suggestion,Awaiting More Feedback

|

low

|

Critical

|

650,334,847 |

go

|

proposal: crypto/x509: implement OCSP verifier

|

Although we have a golang.org/x/crypto/ocsp package, we don't in fact have an OCSP verifier. The existing package provides serialization and parsing, but not APIs for "get me an OCSP response for this certificate" and "given this certificate and this OCSP response tell me if it's revoked". (They are separate because you only want the latter when checking stapled responses.)

There is a lot of complexity, subtlety, and dark knowledge involved in OCSP unfortunately. Here are a few notes on things the verifier needs to do (from reading [this thread](https://groups.google.com/d/msg/mozilla.dev.security.policy/EzjIkNGfVEE/XSfw4tZPBwAJ)):

* check that the response is signed directly by the issuer (without needing the OCSP EKU) or that it's signed by a Delegated Responder issued directly by the issuer (with the OCSP EKU)

* for Delegated Responders, **not** require the EKU to be enforced up the chain

* check that the signer has the correct KeyUsage

* for Delegated Responders, require them to be End Entity certificates (i.e. not a CA; this is an off-spec Mozilla check that protected them from the mess of CAs giving the OCSP EKU away to intermediates)

* for Delegated Responders, maybe check that the `id-pkix-ocsp-nocheck` extension is present (this is a BR requirement, but if it's not an IETF requirement we might want to skip it)

There are definitely a lot more things to consider (for example, the `id-pkix-ocsp-nocheck` extension needs to be processed itself); the list above is just notes on things I learned from that one incident.

A difficult question is where to put the code, and how to surface it. We'll want to use it in crypto/tls for Must-Staple (#22274) but it feels like the wrong place for the code to live. An obvious answer would be golang.org/x/crypto/ocsp, but then we can't use it from crypto/x509 without an import loop. Should `x509.VerifyOptions` have an option to verify a stapled response? Probably, I feel like we'd regret doing OCSP verification separately from path building and certificate verification anyway. What about the API to fetch a response? Maybe that can stay in golang.org/x/crypto/ocsp, separating the concerns of obtaining responses and verifying them.

We should also look around the ecosystem, because surely someone had to implement this, and we should compare results.

|

Proposal,Proposal-Hold,Proposal-Crypto

|

medium

|

Critical

|

650,427,607 |

flutter

|

Error connecting to the service protocol: failed to connect to http://127.0.0.1:1027/

|

### Steps to Reproduce

I am trying to debug _Firebase Dynamic Links_ and I cannot reproduce the issue with the Simulator, so I need to debug on a physical device. So from _Visual Code_, I choose my physical device and _Start Debugging_. Alas, it crashes during startup with:

```

Error connecting to the service protocol: failed to connect to http://127.0.0.1:1027/

Exited (sigterm)

```

_What can I do?_

<details>

<summary>Logs</summary>

```

[✓] Flutter (Channel stable, v1.17.5, on Mac OS X 10.15.5 19F101, locale en-US)

• Flutter version 1.17.5 at /Users/anthony/flutter

• Framework revision 8af6b2f038 (3 days ago), 2020-06-30 12:53:55 -0700

• Engine revision ee76268252

• Dart version 2.8.4

[✓] Android toolchain - develop for Android devices (Android SDK version 30.0.0-rc4)

• Android SDK at /Users/anthony/Library/Android/sdk

• Platform android-R, build-tools 30.0.0-rc4

• Java binary at: /Applications/Android Studio.app/Contents/jre/jdk/Contents/Home/bin/java

• Java version OpenJDK Runtime Environment (build 1.8.0_242-release-1644-b3-6222593)

• All Android licenses accepted.

[✓] Xcode - develop for iOS and macOS (Xcode 11.5)

• Xcode at /Applications/Xcode.app/Contents/Developer

• Xcode 11.5, Build version 11E608c

• CocoaPods version 1.9.3

[✓] Android Studio (version 4.0)

• Android Studio at /Applications/Android Studio.app/Contents

• Flutter plugin version 46.0.2

• Dart plugin version 193.7361

• Java version OpenJDK Runtime Environment (build 1.8.0_242-release-1644-b3-6222593)

[✓] VS Code (version 1.46.1)

• VS Code at /Applications/Visual Studio Code.app/Contents

• Flutter extension version 3.12.1

[✓] Connected device (3 available)

• Anthony's iPhone X • dd69530247333fb75a3729579a8510e4c02268d4 • ios • iOS 13.5.1

• iPhone 11 Pro Max • 24F5F921-BD98-497F-9DEF-7F99FEDA41E1 • ios • com.apple.CoreSimulator.SimRuntime.iOS-13-5 (simulator)

• iPad (7th generation) • 9A327E6C-836E-497B-B174-86AD0B20D40E • ios • com.apple.CoreSimulator.SimRuntime.iOS-13-5 (simulator)

• No issues found!

```

</details>

|

platform-ios,tool,P2,c: fatal crash,team-ios,triaged-ios

|

low

|

Critical

|

650,477,734 |

angular

|

The entire use of NgModule should be deprecated

|

The aim of this post is to create a discussion on the topic, there is also a really simple solution I offered, but I really like to hear your thoughts...

It is my opinion that creating a module system in Angular **NgModule** in addition to the already existing module system of Javascript is a bad idea and should be changed, or at least the developers should have a choice to work in a non NgModule mode...

Here is why I think NgModules will hold angular progress back:

1. Bundle size - if I create or install a library containing a module with 10 components and I only want to use one of them in my app, I add the module to my imports array and my bundle will contain 9 components I'm not even using (this no longer holds with the new Ivy).

2. Maybe this is why Angular Material wraps almost every component in the library in its own module; a bit ugly in my opinion but necessary because of the NgModule system. Still, it requires me to add tons of stuff to my imports.

3. I know how to solve 2: I will just create a CommonModule that gathers the imports in one module, and then every module that uses them will add that module to its imports. But isn't that pattern messing up my bundle size as well?

For example, let's say I have common stuff that every module uses, and then a bunch of lazy-loaded modules that can be divided into a group that uses the common ones and another group that uses other common modules. I don't want to add everything to CommonModule, so I would have to create Common1Module, Common2Module, etc. for every group, because if I throw everything into CommonModule it will affect the bundle size of every lazy-loaded part of my app, won't it?

4. It's not a reason, but it's a big clue that the NgModule system is not needed: there is no module system in any other frontend framework or library that I'm aware of, including React and Vue; they simply use the module system in JS.

5. Learning curve - It's pretty easy for people to learn that they need modules: separation of concerns is a well-understood concept and they know they should split their code into "libraries", so the Angular module system is pretty simple to understand. But usually when I teach and talk to Angular developers, they get lost in how the DI works, because the module system is heavily intertwined with how the DI searches for providers.

6. HttpClientModule is a good example here. Let's say I'm creating a library which contains an NgModule. Obviously that module can be added to the imports of a lazy-loaded module, so I cannot add HttpClientModule to my library, because it can create another instance of HttpClient if it is used, and then I can mess up my interceptors. So now I have to worry about peerDependencies as well. For example, ngrx libraries require us to add HttpClientModule. We already have a peerDependency mechanism inside JavaScript modules; now we have to do the same with Angular.

Currently I see the Ivy renderer allows us to create components without modules, but it is still heavily limited in what the component can use in terms of other directives and providers, without a module that is wrapped and supplies them.

It somewhat seemed to me that the NgModules system is something that was taken from the Angular.js ecosystem and just moved along to the current Angular and I don't think it's needed at all, and not only that it creates more harm than good.

I think the NgModule requires a drastic change which will have to cause a drastic change in the DI, and we will not achieve the bundle size of the competitors React and Vue unless a major change here is achieved .

I think NgModule has more cons than pros and would love to hear your thoughts.

Making a really general proposal from this post these are the changes I think should be made:

1. The DI system should not automatically create different trees for modules and for the view, rather it should be a simple solution that should be inspired from the library TypeDI.

You import the DI and you register services to it, and if you want to create a tree structure in your DI you do it manually: you import the DI system and fork it so a certain component can have its own dependencies.

Or at least, to support backward compatibility, allow the DI to work in manual mode, just like we did with the change detection strategy. The manual mode of the DI will enable the developer to control the forking of the DI into trees, and thus they can choose to work in a non-NgModule mode.

2. After that, remove the modules entirely; every component that uses a certain directive or component should use the JS module system and import it.

Maybe this step can also somehow be integrated in the compiler in some way so based on the selector angular will know which component to integrate without using the js module system. I'm not entirely strong on the compilation process so I don't think I'm qualified to make a suggestion here.

|

area: core,core: NgModule,needs: discussion

|

high

|

Critical

|

650,498,501 |

rust

|

rustc performs auto-ref when a raw pointer would be enough

|

The following code:

```rust

#![feature(slice_ptr_len)]

pub struct Test {

data: [u8],

}

pub fn test_len(t: *const Test) -> usize {

unsafe { (*t).data.len() }

}

```

generates MIR like

```

_2 = &((*_1).0: [u8]);

_0 = const core::slice::<impl [u8]>::len(move _2) -> bb1;

```

This means that a reference to `data` gets created, even though a raw pointer would be enough. That is a problem because creating a reference makes aliasing and validity assumptions that could be avoided. It would be better if rustc would not implicitly introduce such assumptions.

Cc @matthewjasper

|

T-compiler,A-MIR,C-bug,F-arbitrary_self_types

|

medium

|

Critical

|

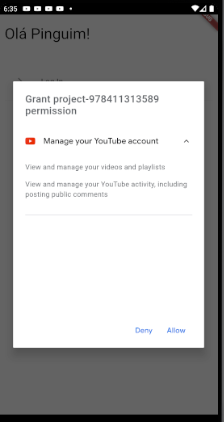

650,511,015 |

flutter

|

[google_sign_in] Can't sign in to youtube channels?

|

<!-- You must include full steps to reproduce so that we can reproduce the problem. -->

I'm trying to log in with YouTube channels (not just the Google account itself).

In the console, I've added auth/youtube to the OAuth screen (not sure if this is needed).

I've also added this to my scopes:

```dart
GoogleSignIn googleSignIn = GoogleSignIn(
  scopes: [
    'email',
    'https://www.googleapis.com/auth/youtube', // Youtube scope
  ],
);
```

(I'm also using FirebaseUser to capture emails, not sure if this is possible to interlace?)

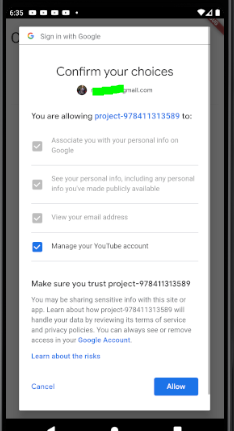

When I try to log in, it shows me my email accounts, and when I select one it shows a screen asking whether I want to allow "project......" (not sure how to change this to the app name, by the way) to manage my YouTube channels and so on. However, when I press yes, it doesn't show me the channel list so that I can select the one I want. So I don't really have access to the user's channel data such as playlists, name, memberships and subscriptions, image, etc. How could I fix this?

Here are the screens I DO get

also here's my sign in code

```dart
class AuthService {
  String OAuthClientId = '*hidden but came from the dev console*'; //Don't use this for nothing?
  String discoveryDocs = 'https://www.googleapis.com/discovery/v1/apis/youtube/v3/rest';
  String scopes = 'http://www.googleapis.com/auth/youtube.readonly';

  final FirebaseAuth auth = FirebaseAuth.instance;

  GoogleSignIn googleSignIn = GoogleSignIn(
    scopes: [
      'email',
      'https://www.googleapis.com/auth/youtube', // Youtube scope
    ],
  );

  Future<FirebaseUser> login() async {
    final GoogleSignInAccount googleSignInAccount = await googleSignIn.signIn();
    final GoogleSignInAuthentication googleSignInAuthentication = await googleSignInAccount.authentication;

    final AuthCredential credential = GoogleAuthProvider.getCredential(
      accessToken: googleSignInAuthentication.accessToken,
      idToken: googleSignInAuthentication.idToken,
    );

    // You'll need this token to call the Youtube API. It expires every 30 minutes.
    final token = googleSignInAuthentication.accessToken;

    final AuthResult authResult = await auth.signInWithCredential(credential);
    final FirebaseUser user = authResult.user;

    assert(!user.isAnonymous);
    assert(await user.getIdToken() != null);

    final FirebaseUser currentUser = await auth.currentUser();
    assert(user.uid == currentUser.uid);

    return currentUser;
  }
}
```

|

platform-android,d: stackoverflow,p: google_sign_in,package,P2,team-android,triaged-android

|

low

|

Major

|

650,543,385 |

react-native

|

TouchableOpacity setOpacityTo is not a function

|

Originally reported in expo/expo#9026, I ran into the same problem so I'm re-reporting this here

## Description

The `setOpacityTo` on TouchableOpacity is no longer available.

## React Native version:

0.62.2

```

Expo CLI 3.20.3 environment info:

System:

OS: macOS Mojave 10.14.6

Shell: 5.3 - /bin/zsh

Binaries:

Node: 10.20.1 - /var/folders/qt/10542x9d2wbg0x93mmh1xsw00000gn/T/yarn--1593777562592-0.5909086070884821/node

Yarn: 1.22.4 - /var/folders/qt/10542x9d2wbg0x93mmh1xsw00000gn/T/yarn--1593777562592-0.5909086070884821/yarn

npm: 6.14.4 - ~/.asdf/installs/nodejs/10.20.1/bin/npm

Watchman: 4.9.0 - /usr/local/bin/watchman

IDEs:

Android Studio: 3.5 AI-191.8026.42.35.5791312

Xcode: 11.0/11A420a - /usr/bin/xcodebuild

npmPackages:

expo: ^38.0.0 => 38.0.4

react: 16.11.0 => 16.11.0

react-dom: 16.11.0 => 16.11.0

react-native: https://github.com/expo/react-native/archive/sdk-38.0.0.tar.gz => 0.62.2

react-native-web: ^0.12.0 => 0.12.3

react-navigation: ^4 => 4.3.9

```

## Steps To Reproduce

Provide a detailed list of steps that reproduce the issue.

1. Create a reference to `TouchableOpacity`

2. Try calling `setOpacityTo`

## Expected Results

Opacity of the element is changed

## Actual Results

Fatal crash due to missing method

## Snack, code example, screenshot, or link to a repository:

https://snack.expo.io/@zanona/3cb338

|

Issue: Author Provided Repro,Component: TouchableOpacity

|

low

|

Critical

|

650,589,526 |

go

|

cmd/go: go test -json splits log records

|

I maintain a test runner tool (https://github.com/grasparv/testie).

Here is my problem:

```

$ go version

go version go1.14 linux/amd64

$ cat hello_test.go

package hello

import (

"testing"

)

func TestSomething(t *testing.T) {

t.Logf("first item\nsecond item")

t.Logf("third item")

}

$ go test -v -json

{"Time":"2020-07-03T15:08:12.426294755+02:00","Action":"run","Package":"example","Test":"TestSomething"}

{"Time":"2020-07-03T15:08:12.426393632+02:00","Action":"output","Package":"example","Test":"TestSomething","Output":"=== RUN TestSomething\n"}

{"Time":"2020-07-03T15:08:12.426403571+02:00","Action":"output","Package":"example","Test":"TestSomething","Output":" TestSomething: hello_test.go:8: first item\n"}

{"Time":"2020-07-03T15:08:12.426407445+02:00","Action":"output","Package":"example","Test":"TestSomething","Output":" second item\n"}

{"Time":"2020-07-03T15:08:12.426411074+02:00","Action":"output","Package":"example","Test":"TestSomething","Output":" TestSomething: hello_test.go:9: third item\n"}

{"Time":"2020-07-03T15:08:12.426415993+02:00","Action":"output","Package":"example","Test":"TestSomething","Output":"--- PASS: TestSomething (0.00s)\n"}

{"Time":"2020-07-03T15:08:12.426419389+02:00","Action":"pass","Package":"example","Test":"TestSomething","Elapsed":0}

{"Time":"2020-07-03T15:08:12.426423735+02:00","Action":"output","Package":"example","Output":"PASS\n"}

{"Time":"2020-07-03T15:08:12.4264854+02:00","Action":"output","Package":"example","Output":"ok \texample\t0.001s\n"}

{"Time":"2020-07-03T15:08:12.42649666+02:00","Action":"pass","Package":"example","Elapsed":0.001}

```

I expected to see the first and second items reported as a single entry (instead, the second item gets its own log event). This is a problem because it destroys information by breaking lines. For instance, my test runner tool might want to change the indentation of some messages, but here it is impossible to see which log lines actually belong together.
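As a possible workaround on the consumer side, the split events can be regrouped heuristically. The sketch below is only an illustration in Python, not part of `go test` or of the testie tool: the function name `group_log_records`, the regular expressions, and the assumed indentation in the sample data are all hypothetical, and the heuristic relies on the Go 1.14 output format shown above, where each `t.Logf` record starts with `<TestName>: <file>.go:<line>: `.

```python
import json
import re

# Heuristic (an assumption based on the Go 1.14 output above): a t.Logf record
# starts with "<TestName>: <file>.go:<line>: ".
LOG_START = re.compile(r"^\s*\S+: \S+\.go:\d+: ")
# Framing lines such as "=== RUN" or "--- PASS" are not log output.
FRAMING = re.compile(r"^\s*(===|---) ")


def group_log_records(json_lines):
    """Merge the 'output' events of each test into multi-line log records."""
    records = {}  # test name -> list of log records (strings)
    for raw in json_lines:
        raw = raw.strip()
        if not raw:
            continue
        event = json.loads(raw)
        test = event.get("Test")
        if event.get("Action") != "output" or test is None:
            continue
        text = event["Output"]
        if FRAMING.match(text):
            continue
        logs = records.setdefault(test, [])
        if LOG_START.match(text) or not logs:
            logs.append(text)   # a new record begins
        else:
            logs[-1] += text    # continuation of the previous record
    return records


# Trimmed-down events modelled on the output above (Time fields omitted,
# leading whitespace assumed).
SAMPLE = [
    r'{"Action":"run","Package":"example","Test":"TestSomething"}',
    r'{"Action":"output","Package":"example","Test":"TestSomething","Output":"=== RUN   TestSomething\n"}',
    r'{"Action":"output","Package":"example","Test":"TestSomething","Output":"    TestSomething: hello_test.go:8: first item\n"}',
    r'{"Action":"output","Package":"example","Test":"TestSomething","Output":"        second item\n"}',
    r'{"Action":"output","Package":"example","Test":"TestSomething","Output":"    TestSomething: hello_test.go:9: third item\n"}',
    r'{"Action":"output","Package":"example","Test":"TestSomething","Output":"--- PASS: TestSomething (0.00s)\n"}',
    r'{"Action":"output","Package":"example","Output":"PASS\n"}',
]

for record in group_log_records(SAMPLE)["TestSomething"]:
    print(repr(record))
```

This remains a guess rather than a fix: a continuation line that happens to look like a record prefix would be treated as a new record, which is exactly the information the JSON stream does not carry.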

|

NeedsInvestigation,GoCommand

|

low

|

Minor

|

650,623,270 |

material-ui

|

[Autocomplete] Jumps between being expanded to the top/bottom

|

- [x] The issue is present in the latest release.

- [x] I have searched the [issues](https://github.com/mui-org/material-ui/issues) of this repository and believe that this is not a duplicate.

## Current Behavior 😯

When there is not much space below the input field, the Autocomplete opens to the top.

When text is entered into the input field, the Autocomplete list gets shorter and jumps to the bottom.

<!-- Describe what happens instead of the expected behavior. -->

## Expected Behavior 🤔

After opening, the Autocomplete should stay at the same position, as the switch to the bottom is not expected and the user has to reorient to find the list of suggestions.

## Steps to Reproduce 🕹

CodeSandbox used for reproduction: https://codesandbox.io/s/naughty-hermann-24rmn

Steps:

1. The input field

2. Ensure that there is not much space to the bottom

3. After clicking into the field, the Autocomplete opens to the top (as expected ✔️)

4. After typing an "a", the Autocomplete jumps to the bottom (not expected, should stay ❌)

## Your Environment 🌎

| Tech | Version |

| ----------- | --------- |

| Material-UI | v4.11.0 |

| React | v16.13.1 |

| Browser | Chrome 83 |

|

bug 🐛,component: autocomplete,design

|

low

|

Major

|

650,631,106 |

rust

|

SpecForElem for i16/u16 and other digits

|

We have a specialization of `SpecForElem` for `i8` and `u8` (which provides a small performance win over a plain extend for vec), but we could look into adding specializations for wider integers, which would involve filling with `rep stosw` (or `d` or `q`). It may need benchmarking to ensure that it's actually faster, as mentioned by @joshtriplett.

I am not sure how to reproduce the assembly `rep stosw`; someone who knows could take up this issue.

Previous discussion: https://rust-lang.zulipchat.com/#narrow/stream/219381-t-libs/topic/SpecForElem.20for.20other.20integers

|

I-slow,C-enhancement,A-collections,A-specialization,T-libs

|

low

|

Major

|

650,651,318 |

pytorch

|

Inconsistent behaviour when parameter appears multiple times in parameter list

|

## 🐛 Bug

When we pass a list of parameters or parameter groups to an optimizer, and one parameter appears multiple times we get different behaviours, and it is not clear whether this is intended that way:

* If the parameter appears twice within one parameter group, everything works. That parameter will get updated *twice* though.

* If the parameter appears in distinct parameter groups, then we get an error.

## To Reproduce

```

import torch

x = torch.zeros((1,), requires_grad=True)

# uncomment one of the following three lines for each of the cases:

# a = torch.optim.SGD(params=[dict(params=[x])], lr=0.1) # baseline

# a = torch.optim.SGD(params=[dict(params=[x, x])], lr=0.1) # apparently acceptable (?); x is updated twice

# a = torch.optim.SGD(params=[dict(params=x), dict(params=x)], lr=0.1) # apparently not acceptable

x.sum().backward()

a.step()

print(x)

```

## Expected behavior

I would expect that no matter in what way a parameter appears multiple times, we get the same behaviour in both situations: either we get errors in both situations, or both situations are deemed acceptable.
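To make the two cases comparable on the caller's side, one could check for repeated parameters before constructing the optimizer. The following is a minimal sketch, not PyTorch's own validation logic: the helper name `find_duplicate_params` is hypothetical, and it only assumes that parameter groups are dicts with a `params` entry, as in the reproduction above. It deliberately does not import `torch`, so plain objects stand in for tensors.

```python
from collections import defaultdict


def find_duplicate_params(param_groups):
    """Return {id(param): [(group_index, position), ...]} for repeated params."""
    seen = defaultdict(list)
    for g_idx, group in enumerate(param_groups):
        params = group["params"]
        # The repro passes a bare tensor as `params` in one case; treat anything
        # that is not a list or tuple as a single parameter.
        if not isinstance(params, (list, tuple)):
            params = [params]
        for p_idx, param in enumerate(params):
            # Compare by identity: `==` on tensors is elementwise, not object equality.
            seen[id(param)].append((g_idx, p_idx))
    return {key: places for key, places in seen.items() if len(places) > 1}


# Stand-in for a tensor; any object works because only identity is used.
x = object()

print(find_duplicate_params([dict(params=[x])]))                   # baseline
print(find_duplicate_params([dict(params=[x, x])]))                # same group
print(find_duplicate_params([dict(params=x), dict(params=x)]))     # distinct groups
```

A caller could raise an error whenever the returned dict is non-empty, which would treat duplicates within one group and duplicates across groups the same way.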

## Environment

- PyTorch Version (e.g., 1.0): 1.5

- OS (e.g., Linux): Win/Linux

- How you installed PyTorch: conda

- Python version: 3.7

cc @jlin27 @vincentqb

|

module: docs,module: optimizer,triaged

|

low

|

Critical

|

650,659,649 |

excalidraw

|

Feature request: See myself in the user list

|

In collaborative edition, I only see others in the list of users on the top right. Since a color is automatically assigned to my user avatar, I regularly use a similar color to write content, so everybody can track who wrote what. Currently, I can't see myself in the list so I need to ask others which color I am.

I would like to see myself in the list. Any reason not to?

|

enhancement

|

low

|

Minor

|

650,662,978 |

pytorch

|

Pytorch 1.4 compilation hangs on AMD Epyc

|

## 🐛 Bug

[1352/3291] : && /software/CMake/3.14.0-GCCcore-8.3.0/bin/cmake -E remove lib/libfbgemm.a && /software/binutils/2.32-GCCcore-8.3.0/bin/ar qc lib/libfbgemm.a third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/ExecuteKernel.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/ExecuteKernelU8S8.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/Fbgemm.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/FbgemmFP16.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/FbgemmConv.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/FbgemmI64.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/FbgemmI8Spmdm.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/FbgemmSpConv.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/FbgemmSpMM.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/Fused8BitRowwiseEmbeddingLookup.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/GenerateKernelU8S8S32ACC16.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/GenerateKernelU8S8S32ACC16Avx512.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/GenerateKernelU8S8S32ACC16Avx512VNNI.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/GenerateKernelU8S8S32ACC32.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/GenerateKernelU8S8S32ACC32Avx512.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/GenerateKernelU8S8S32ACC32Avx512VNNI.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/GroupwiseConvAcc32Avx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/PackAMatrix.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/PackAWithIm2Col.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/PackBMatrix.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/PackMatrix.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/PackAWithQuantRowOffset.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/PackAWithRowOffset.cc.o 
third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/PackWeightMatrixForGConv.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/PackWeightsForConv.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/QuantUtils.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/RefImplementations.cc.o third_party/fbgemm/CMakeFiles/fbgemm_generic.dir/src/Utils.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/FbgemmFP16UKernelsAvx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/FbgemmI8Depthwise3DAvx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/FbgemmI8Depthwise3x3Avx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/FbgemmI8DepthwiseAvx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/FbgemmI8DepthwisePerChannelQuantAvx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/OptimizedKernelsAvx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/PackDepthwiseConvMatrixAvx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/QuantUtilsAvx2.cc.o third_party/fbgemm/CMakeFiles/fbgemm_avx2.dir/src/U

## To Reproduce

Steps to reproduce the behavior:

1. Compile pytorch from source on AMD Epyc.

it hangs mid-compilation, as seen above.

## Expected behavior

An error message, at least.

## Environment

Please copy and paste the output from our

[environment collection script](https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py)

(or fill out the checklist below manually).

You can get the script and run it with:

```

wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

# For security purposes, please check the contents of collect_env.py before running it.

python collect_env.py

```

- PyTorch Version (e.g., 1.0): 1.4

- OS (e.g., Linux): Centos 7

- How you installed PyTorch (`conda`, `pip`, source): source

- Build command you used (if compiling from source): easybuild recipe

- Python version: 3.6.8

- CUDA/cuDNN version: 7.5.1.10

- GPU models and configuration: nvidia v100

- Any other relevant information: jusuf supercomputer from Jülich Supercomputing Centre, Germany

## Additional context

It's the exact same recipe used to compile PyTorch 1.4 on every other platform at the institute. The only difference is the CPU.

cc @malfet

|

module: build,triaged,module: vectorization

|

low

|

Critical

|

650,683,473 |

TypeScript

|

Conditional + annotation causes erroneous typing

|

<!-- 🚨 STOP 🚨 STOP 🚨 STOP 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Even if you think you've found a *bug*, please read the FAQ first, especially the Common "Bugs" That Aren't Bugs section!

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ: https://github.com/Microsoft/TypeScript/wiki/FAQ

Please fill in the *entire* template below.

-->

<!--

Please try to reproduce the issue with the latest published version. It may have already been fixed.

For npm: `typescript@next`

This is also the 'Nightly' version in the playground: http://www.typescriptlang.org/play/?ts=Nightly

-->

**TypeScript Version:** 3.9.4 & 4.0.0@beta

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:** Type annotation wrongly overrides generic with errors

**Code**

```ts

const createMatrix = <D extends number, T>(

dimensions: D,

initialValues: T | null = null

): Matrix<D, T> => {

const currentDimensionLength = dimensions;

const remainingDimensions = dimensions - 1;

const needsRecursion = remainingDimensions > 0;

const currentMatrix = Array(currentDimensionLength).fill(initialValues);

const finalMatrix = needsRecursion

? currentMatrix.map(() =>

createMatrix(remainingDimensions, initialValues)

)

: currentMatrix;

return finalMatrix as Matrix<D, T>;

};

```

```ts

type Matrix<D extends number, T> = D extends 1

? T[]

: D extends 2

? T[][]

: D extends 3

? T[][][]

: any[][][][];

```

**Expected behavior:**

Adding type annotations to variables should be compatible with the function's return signature, causing no type errors.

**Actual behavior:**

Assigning an annotated variable to the return value of the function causes an error. The generic `T` is wrongly inferred to be _any of_ `array where depth < D` where it should be inferred to only the base type of the nested array. Removing the annotation solves the problem but removes type safety when variable assignment happens after creation.

The problem seems to be that (when annotated) the type is inferred to be the union of each type in `Matrix` that's preceding the conditional that returns true.

```ts

const n1: number[] = createMatrix(1); // <- works - generic T is set to `number`

const n2: number[][] = createMatrix(2); // <- doesn't work - generic T is set to `number | number[]`

const n3: number[][][] = createMatrix(3); // <- doesn't work - generic T is set to `number | number[] | number[][]`

const n4 = createMatrix(2); // <- regular inference works - generic T is `unknown`, return type is unknown[][]

const n5: number[][] = createMatrix(2, 0); // <- works when - initialValues is set - generic T is `number`, return type is number[][]

const n6: unknown[][] = createMatrix(2); // <- works fine, as type T is unknown. A more specific annotation should constrain `unknown` to `number`

```

**Playground Link:** [Link](https://www.staging-typescript.org/play?#code/PTAEBUE8AcFNQIYDskHsAuD0EtVNAO4BOeA5gDaSioBusRR2AJrAM6imxL3YDGh2dAAtQ9EkVYAoSSFABRAB5xe6WE1AAjWEIQ1cRAFwywAQSZNsSUqHQx4yNJhx526VKBoJGCDeTahWIVQAV3J1LVBeVABbaCxsX3gCQRFheAAzYKQVXCR2Ilh0YKIkYwDsUiQsYtgAGkiEYNZLazQbO1EGVAlpWRMVYIRyTW1dfSM+1mbKlsR8BwwsNQ8vbB8-G3c00AKikpXyYPhUdJshDKycvAam-2RO8QA6MvBzji4efnBQbHZiMkoPyQ6TEyzcIzmVBOoAA5F4iAgqARzgVQCxoMJQAAeUAAERhhBR8EEASCoSYZQilhBDDB7jwgO2GgQrHgtjg1FO224rFU6nhiMeoAASrBorRZtsFk5cgFUOQ6FJZNtoCREtFNMF0DsxbR-Oz4KwECDbISuCtvIlEFMKkholxtTpoHA8oh0qoiJECvE8M9egAqf2SUD+kWFYquhCgaJYRgKUAACmioRwAFoLPa8rkhogGIiAJQCTGQEKeljNArqDNcZouObqTyHNjPEPB0MAATiCI11azddToAABrjBwYIG9y9hK2jsJna67odsY+g423QJ2vAgNZZBGtyAA1IZHdgD17wRtHM5YUQIXgiaCoSzayxneDLuNA3dDX761BC3GwOkjTkOgrjuIOSChOQg4th2uwRuwJjRrG2DxtCw6DjOc65Kw9TJJisC3vej5IM+SBfuQP7qOC2yDjuOBDIeTasJhXZboU9CwcAkhRHk2q8N6qgALIofGAC82K4qICiqEgTDsJB0RaEQ9TgAAfAmwagNpvbzqwY64rUWnafRe5MceY7fAAPqAkHkMMEl2eQkj5mOIkrqhWKGRAamgGJvkAN7GZELj8cUBSkbis41rkAAyXCkJiEm6ThADcwW8byOoxjuVhRdhdbJdFfaugOACM6XadpmXatwaisKKvDFPOfnZQguWkPlMV1r5AAM6UZaFkThQ67kfhJJh5pACZNbSkXFfO8VWMI+aPOk2D2QmpmMUebD5gNVUhXxoDrVU5BjahrV1fJjXNbkwWHQA-MNc3oBdCiPDG0AJgmhb+Q9h2AwJhHCaJCYFDl5F5QtOH1NtB67aw+YA4dyOA1VY6zRFb2iQdVXwfsp1DO91qgO9XmqWp6UAL4DbIZ6gKqqDqgEsBimBELCNeCbIua0pLEwhbbAaPzsNSoLUe4ETbFksonGUhF3u0HKqrAvBqJKby8RYzhnVe2oE66K5HM8Ivk1JsAyVw8m2cESn0JTrUW1bcnsGVwXPeAADaAC6wUGdJsk2wATB7EC+77-t4oH1vsAAzGH3s+xHfuHQHltB+wAAsicpynUfIJAefJyXA01TYbDoGVY6KcpvutcDSzvQmZX7aAshYgOBDdAA1ie7zcIwXyi6z2rghBdvKYOPFDaovLBzXk-0CnDeCbAzfB23HcDkwqBsEgMLat3RA96AA6cIPfAQCPrJj+Btf0KANkP0QvuYQOgQhGEEKDlkPdoAQJAg56hpHwDVBElg6S23tkQGex057oDjovGBxdV4g3XmDOOW8wCdzRHvVgB8j69zPgPT419fij02EOF+T9oF1x9rQl+Kd36ki-uEeAv8kD-1QIA4Br4wGhQgdwSWdD6DSHLggrOaCm5g03qlduOCBwFFIKELwQIaRcHVoQXu-cL5kO+BQzh3DeH1ENsrYk7A-4AKQPnCRlcACsyD6H1wko3UGHkFAJmDvUXq2DsRdx0WafAdFyIMQRsxTCFDb4kL0UPch7AJ4wL4WYkWFCmElzgVlBBAA2McVieE2JLtI9xcYvF+NwcfPuJ1IH1BZOY+JoB8mAL9L0MAAAhWA5AeEj22PKJgeFe4tHqJkbIushQAEkYQahXJASU7gIZ6moHQIg
XSEA23SN0RAIx0AejxAADXqOcbIbI3jcAICdS4utEw+D1MjSQIyrhgLXu9AA8mELE6kEwpRcGOMqcNQlmURo9Syrlw4+wGg8q5biMEeLeUwD5GlgrfLyGObxwV4bmTYMCiALlLIpwhZc2U0LXnvM+UimGPzQBxyModDFQLLK4rBfi6QkKiXPNEnChFmlDrIv0qALONKqp0uYti8AjKk7FwJaMtl6CSXwrJTyilKLREqXRQCnaIqGWgrlQiqVjyvSyo5aSxFiqCrKpfoKky6rwkWWvs-KCV0oKMp1epUAQVDrlyxg6LqJUlqJREEVM1rBKpVXLhDdqUNOpKvYIG7qpVQAVUGsda6DU1Z3WuBJcNHUfV6VAH1PG1UhpetIiTCaU0ZojXmmav1K01obXIFta1mKkYFqOllIm51RJXTZjdNNEh7ro20s9YtOMPGfQQN9X6fk1Io0HcSo1TBwZigjS0HNsNPxhObWjQd2lt3o0xpW0dcZW1mI7e9Gm0gzYLoRa1CVJcV42RdWpX2A1Mlj0rnC6uKqXEGpkbCsILc-GVPYKdWAb6K68jhQvb9RTXHsv-YuuRCjtEnxA5A8DCC4VIJgyvODhqEMJiwfI2QwHqncHEbPD9YQpF4b-XGOFZTiNgFI6BjDVGmCOJwww2jJTUIMe8aAXxTGUNVNY3YyDYRcmNK4dY3Dv7eMKH40BwJoGgA)

**Related Issues:**

None

|

Needs Investigation

|

low

|

Critical

|

650,686,658 |

pytorch

|

Regarding graphs page on site

|

## 🚀 Feature

A graphs page on site, to track the evolution of PyTorch, along with the time required to train neural networks, along with the data available.

## Motivation

Without graphs, it becomes difficult to see where things are headed.

## Pitch

I suggest the following graphs; more could be added later:

1) Evolution of PyTorch

2) Evolution of time required to train neural network, since the beginning of PyTorch

3) Evolution of data available since the beginning of PyTorch in terms of size

4) Evolution of number of parameters in neural network

5) Evolution of FLOPS

cc @jlin27

|

module: docs,triaged

|

low

|

Minor

|

650,690,509 |

excalidraw

|

Feature request: Automatically change the color when joining a live-collaboration session

|

Following #1868, it would be nice to automatically change the default color to the color of the user's avatar. For example, if I enter a live-collaboration session and I'm assigned a red avatar, my default color would be red, and everything I type would be red instead of black. It wouldn't prevent me from changing the color.

I'm not sure this is a behavior we would always want. It could be a toggle when creating a session. My use case is team retrospectives, where we write and draw stuff one by one.

|

enhancement

|

low

|

Minor

|

650,700,078 |

godot

|

SkeletonIK Targets cause bones to deform

|

**Godot version:**

3.2.2.stable.official

**OS/device including version:**

Windows 10 Pro - GLES3

**Issue description:**

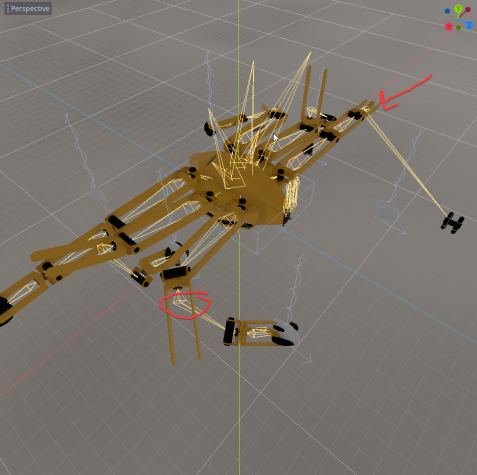

Godot deforms a skeleton's bones instead of rotating them. This seems to be related to, or a regression of, #34415.

This happens regardless of whether I use magnets or not.

**Steps to reproduce:**

Create a chain of bones longer than 2-3 bones, and set an IK target for the tip.

**Minimal reproduction project:**

[.escn file containing the model + rig](https://github.com/moonwards1/Moonwards-Virtual-Moon/blob/7c00c0e0fdbbf92609512b0d656fab24a6b5d486/Assets/MoonTown/Models/Athlete_Rover/AtheleteRover_Rigged.escn)

[Commit](https://github.com/moonwards1/Moonwards-Virtual-Moon/blob/7c00c0e0fdbbf92609512b0d656fab24a6b5d486l)

[blend file](https://github.com/moonwards1/Moonwards-Assets/blob/master/Models/AtheleteRover_Rigged.blend)

Credits to [Neurotremolo](https://github.com/Neurotremolo) for finding this one

|

bug,topic:core,confirmed

|

low

|

Minor

|

650,709,217 |

pytorch

|

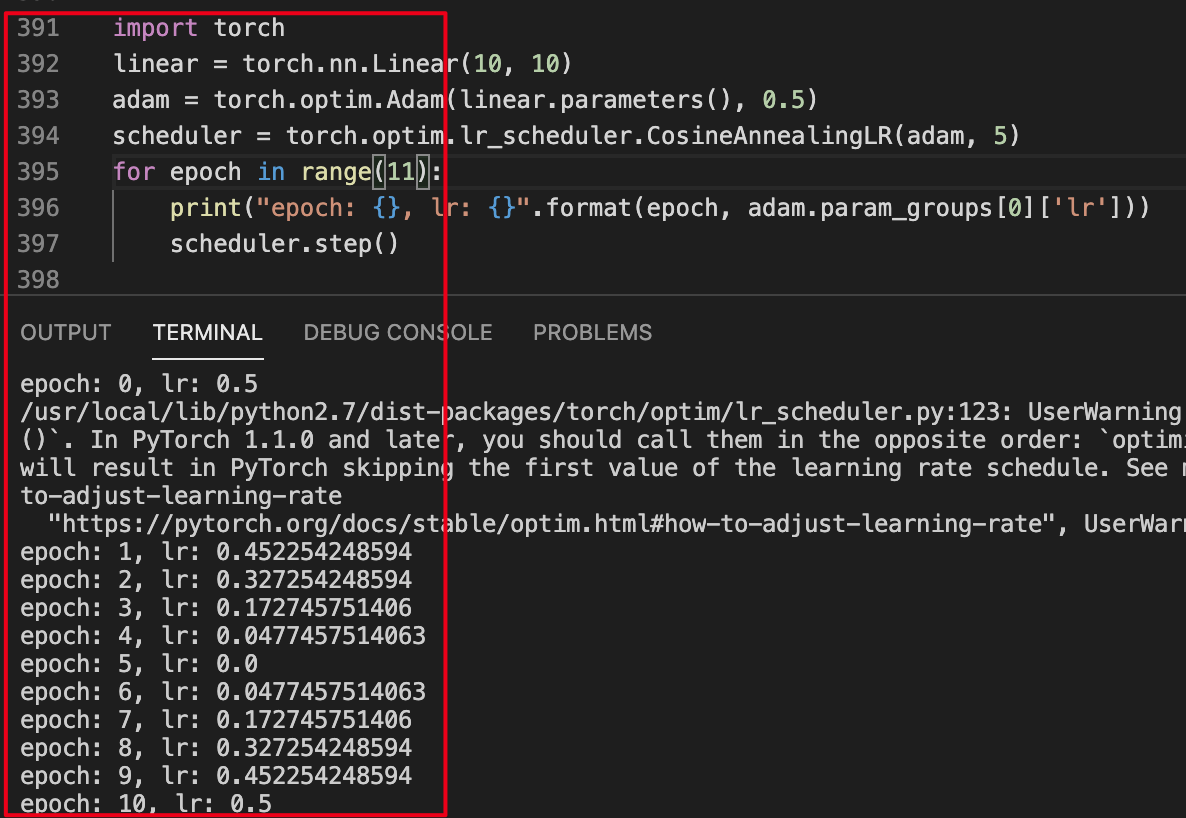

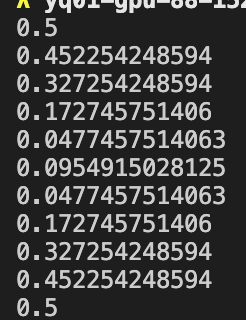

The values calculated according to the documentation aren't equal to the values calculated by the framework

|

## 📚 Documentation

Torch 1.5.0 CPU, Linux

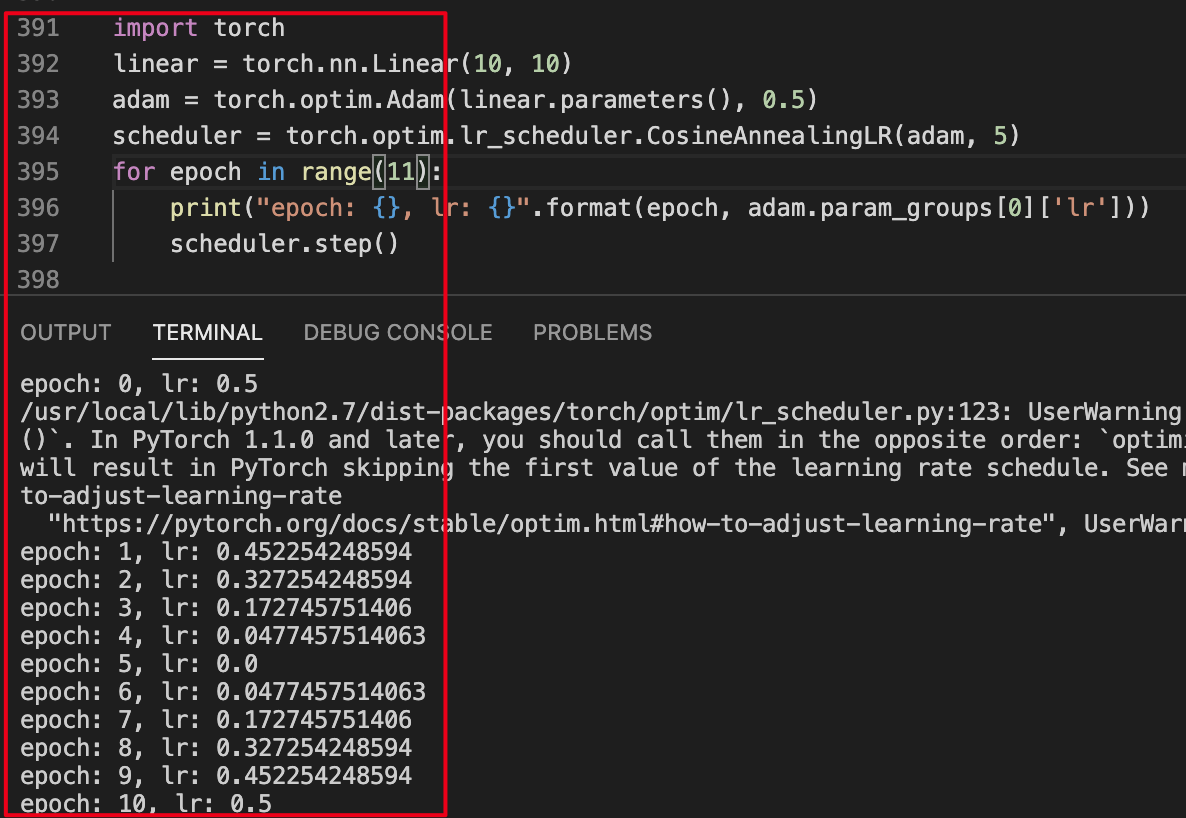

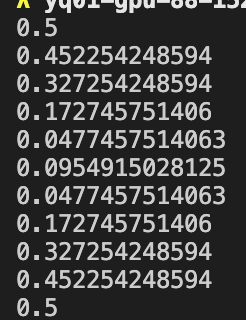

https://github.com/pytorch/pytorch/blob/master/torch/optim/lr_scheduler.py#L448

Bug API: `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0, last_epoch=-1)`

If T_max=5, the initial learning rate of the optimizer is 0.5, and eta_min=0:

The values calculated by the framework are as follows:

But the values calculated by the formula in the documentation are as follows:

https://pytorch.org/docs/stable/optim.html?highlight=cosineannealinglr#torch.optim.lr_scheduler.CosineAnnealingLR

These two don't match at epoch 5: one is 0, but the other is 0.0954915028125. I want to know which one is right. Thank you very much!
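For reference, here is a minimal sketch that evaluates the closed-form expression from the linked documentation, `eta_t = eta_min + 0.5 * (eta_max - eta_min) * (1 + cos(pi * T_cur / T_max))`, in plain Python for the settings above. The function name `cosine_annealing_closed_form` is hypothetical, and this only evaluates the documented formula; it does not reproduce the scheduler's internal update in `lr_scheduler.py`.

```python
import math


def cosine_annealing_closed_form(base_lr, eta_min, t_max, epoch):
    """Evaluate the documented closed-form cosine annealing value at `epoch`.

    Hypothetical helper for illustration only; not part of torch.
    """
    cosine = math.cos(math.pi * epoch / t_max)
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + cosine)


# Settings from the report: T_max=5, initial learning rate 0.5, eta_min=0.
for epoch in range(6):
    print(epoch, cosine_annealing_closed_form(0.5, 0.0, 5, epoch))
```

Comparing these values epoch by epoch with `optimizer.param_groups[0]["lr"]` after each `scheduler.step()` should show where the two sequences start to differ.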

cc @jlin27 @vincentqb

|

module: docs,module: optimizer,triaged

|

low

|

Critical

|

650,719,783 |

scrcpy

|

A simple thank you

|

Hello!

I just wanted to say thank you for your great work. I have used your software and I am really happy with it. Thank you so much for your effort and hard work!

|

wontfix

|

low

|

Major

|

650,724,352 |

pytorch

|

len of dataloader when using iterable dataset does not reflect batch size

|

## 🐛 Bug

If I construct an iterable dataset of length 100, and want to then use that in a dataloader with batch_size=4, one would expect the length of the dataloader to be 25, but instead it is 100. This is because it is implemented as

```
if self._dataset_kind == _DatasetKind.Iterable:
    length = self._IterableDataset_len_called = len(self.dataset)
    return length
```

## To Reproduce

Steps to reproduce the behavior:

```
from torch.utils.data import DataLoader, IterableDataset, Dataset

test_items = list(range(100))


class TestDataset(Dataset):
    def __init__(self, test_items):
        self.x = test_items

    def __getitem__(self, item):
        return self.x[item]

    def __len__(self) -> int:
        return len(self.x)


class TestIterableDataset(IterableDataset):
    def __init__(self, test_items):
        self.x = test_items

    def __iter__(self):
        return iter(self.x)

    def __len__(self) -> int:
        return len(self.x)


print(len(TestIterableDataset(test_items)))
print(len(TestDataset(test_items)))
print(len(DataLoader(TestIterableDataset(test_items), batch_size=4)))
print(len(DataLoader(TestDataset(test_items), batch_size=4)))
```

This prints:

```
100
100
100
25
```

## Expected behavior

I would expect this to print:

```
100
100
25
25
```
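To make the expectation concrete, here is a minimal sketch of the batch count I have in mind. The helper `expected_num_batches` is hypothetical (it is not a PyTorch function), and the `drop_last` handling is my assumption about how a fix would treat a final partial batch.

```python
import math


def expected_num_batches(num_samples, batch_size, drop_last=False):
    """Number of batches a loader would yield for `num_samples` items.

    Hypothetical helper for illustration; not the PyTorch implementation.
    """
    if drop_last:
        # A final partial batch is discarded.
        return num_samples // batch_size
    # A final partial batch still counts as one batch.
    return math.ceil(num_samples / batch_size)


print(expected_num_batches(100, 4))  # the case from the report
```

For the example above this gives 25, which matches what the map-style `TestDataset` loader already reports.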

## Environment

Please copy and paste the output from our

torch '1.5.1'

## Additional context

If people agree this should be fixed, I would love to put out a PR for it! Seems manageable and I've been wanting to contribute for a while now :)

cc @SsnL @albanD @mruberry

|

module: nn,module: dataloader,triaged

|

low

|

Critical

|

650,728,127 |

rust

|

Naming an associated type can cause a compile failure

|

I have found two different pieces of code that should be equivalent, but one compiles and the other does not.

This code compiles successfully: ([Playground](https://play.rust-lang.org/?version=stable&mode=debug&edition=2018&gist=907f7fefa2a2f9d02f4a6a178a4f55ed))

```rust
struct Lazy<Ptr>(Ptr) where Ptr: Deref, Ptr::Target: Record;

impl<Ptr:Deref> Record for Lazy<Ptr> where Ptr::Target: Record {
    fn try_field_ref<F:Field>(&self)->Option<&F> {
        self.0.try_field_ref()
    }
}
```

But this code fails to compile: ([Playground](https://play.rust-lang.org/?version=stable&mode=debug&edition=2018&gist=f9d0dca192e5702b1528ab19d3e5bba5))

```rust
struct Lazy<Ptr>(Ptr) where Ptr: Deref, Ptr::Target: Record;

impl<R:Record, Ptr:Deref<Target=R>> Record for Lazy<Ptr> {
    fn try_field_ref<F:Field>(&self)->Option<&F> {
        self.0.try_field_ref()
    }
}
```

Error Message:

```

   Compiling playground v0.0.1 (/playground)
error[E0311]: the parameter type `R` may not live long enough
  --> src/lib.rs:14:9
   |
14 |         self.0.try_field_ref()
   |         ^^^^^^
   |
   = help: consider adding an explicit lifetime bound for `R`
note: the parameter type `R` must be valid for the anonymous lifetime #1 defined on the method body at 13:5...
  --> src/lib.rs:13:5
   |
13 | /     fn try_field_ref<F:Field>(&self)->Option<&F> {
14 | |         self.0.try_field_ref()
15 | |     }
   | |_____^