| id (int64) | repo (string) | title (string) | body (string) | labels (string) | priority (string) | severity (string) |

|---|---|---|---|---|---|---|

607,893,953 |

godot

|

CSGMesh GetAabb() / GetTransformedAabb() returns zero

|

**Godot version:**

3.2.2.beta1

**OS/device including version:**

Windows 10 Pro (10.0.18362)

**Issue description:**

The CSGMesh methods `GetAabb()` and `GetTransformedAabb()` return `Vector3.Zero`.

`csgMesh.Mesh.GetAabb()` seems to return the correct value.

**Steps to reproduce:**

1. Create a CSGMesh node.

2. Add any mesh to it.

3. Call `GetAabb()` or `GetTransformedAabb()` on the CSGMesh node.

4. Zero-value is returned from the methods.

|

bug,topic:core,confirmed

|

low

|

Minor

|

607,909,454 |

TypeScript

|

Simple Comparison of Generics not allowed?

|

**TypeScript Version:** 3.8

**Search Terms:**

generic comparison

compare generic strings

**Code**

```ts

function test<A, B>(one: A, two: B): A {

if (one === two) {

throw new Error('no');

}

return one;

}

const obj = {};

test(obj, obj);

```

**Expected behavior:**

While the generics are generally indicating that `one` and `two` are independent values, they can still potentially be the same underlying value so they should be able to at least be checked for equality without casting them first, especially since there is no error produced when calling the function with identical values in the first place.

At the very least, the error message should be more specific, since the assumption it states is not correct: the condition does return true :-P

( This is clearly a simplified version of where I ran into this since there'd be no reason to use generics in this specific case :-) )

**Actual behavior:**

Shown in example

**Playground Link:** [Playground](https://www.typescriptlang.org/v2/en/play?ts=3.8.3#code/GYVwdgxgLglg9mABFApgZygHgIIBpEBCAfABQIoBcieyA7nFQQJRXaIDeAsAFCKIzBEZMCkQBeCXThMOPPnygALAE5xaiEeoCiy1cpIByMHANMA3HMQBfS8pRQQypOQvcb3HhAQZEcAEYAVuIcVq48qBhkgfj+AeY8QA)

|

Suggestion,Experience Enhancement

|

low

|

Critical

|

607,916,936 |

TypeScript

|

Enforce consistent imports when directory is in jsconfig's paths

|

*TS Template added by @mjbvz*

**TypeScript Version**: 3.9.0-dev.20200427

**Search Terms**

- javascript

- auto import

- symlink

---


- VSCode Version: 1.43.2

- OS Version: macOS 10.15.3

Steps to Reproduce:

1. Specify a `jsconfig.json` that includes `compilerOptions.paths` to a specific directory

2. Let intellisense import a variable from this specific directory

3. Depending on how deep you are in the code base, it will resolve that variable relatively or absolutely

You can take a look at this reproduction repo: https://github.com/Floriferous/vscode-symlink-import

More specifically, the two files `code.js` and `deepCode.js` resolve the variable differently, as `javascript.preferences.importModuleSpecifier` is set to `auto`.

I'd like a folder specified in `paths` to always resolve as `myPathsFolder/...`

|

Needs Investigation

|

low

|

Critical

|

607,944,085 |

pytorch

|

Error running trace on Pytorch Crowd Counting model

|

Hi,

I'm trying to use your prebuilt Pytorch crowd counting model with [NVIDIA's Triton Inference Server](https://docs.nvidia.com/deeplearning/sdk/triton-inference-server-guide/docs/index.html). Looks like it requires the PyTorch model to be saved by `torch.jit.save()`. I'm facing errors while tracing the model with an example. Appreciate any help.

The model is available from [this link](https://drive.google.com/open?id=1TRJr9YuP1dFpnbQvSSQHqIqhLFdElo_Q). The model runs fine when I run single predictions using this [predict file](https://github.com/kevhnmay94/SS-DCNet/blob/master/predict.py).

First, the inference server gave an error when I used the `best_epoch.pth` checkpoint directly.

Then I tried to run a trace with `torch.jit.trace()`, as [documented here](https://pytorch.org/tutorials/advanced/cpp_export.html), to convert it to TorchScript, but it gives me an error on some of the tensors used. Below are the script and stack trace:

Python: 3.6.9

Pytorch Version: 1.3.1

Tensorrtserver: 1.11.0

```python

import os

import sys

import torch

import numpy as np

from Network.SSDCNet import SSDCNet_classify

# Initialize the parameters passed to the model

label_indice = np.arange(0.5,22+0.5,0.5)

add = np.array([1e-6,0.05,0.10,0.15,0.20,0.25,0.30,0.35,0.40,0.45])

label_indice = np.concatenate((add,label_indice))

class_num = len(label_indice)+1

# Create a model instance

net = SSDCNet_classify(class_num,label_indice,div_times=2,frontend_name='VGG16',block_num=5,IF_pre_bn=False,IF_freeze_bn=False,load_weights=True,psize=64,pstride = 64,parse_method ='maxp')

all_state_dict = torch.load('/home/ubuntu/mayub/Github/SS-DCNet/SHA/best_epoch.pth',map_location=torch.device('cpu'))

# load the state_dict

net.load_state_dict(all_state_dict['net_state_dict'])

# Change to eval mode

net.eval()

# Create a sample instance (I get this shape from sample predict file)

example = torch.rand(1, 3, 768, 1024)

# Run a trace with the example input

traced_script_module = torch.jit.trace(net, example)

```

The trace gives me the following error:

```python

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

<ipython-input-22-af5c103c2ac9> in <module>

----> 1 traced_script_module = torch.jit.trace(net, example)

~/anaconda3/envs/Crowd_Detection_mayub/lib/python3.6/site-packages/torch/jit/__init__.py in trace(func, example_inputs, optimize, check_trace, check_inputs, check_tolerance, _force_outplace, _module_class, _compilation_unit)

856 return trace_module(func, {'forward': example_inputs}, None,

857 check_trace, wrap_check_inputs(check_inputs),

--> 858 check_tolerance, _force_outplace, _module_class)

859

860 if (hasattr(func, '__self__') and isinstance(func.__self__, torch.nn.Module) and

~/anaconda3/envs/Crowd_Detection_mayub/lib/python3.6/site-packages/torch/jit/__init__.py in trace_module(mod, inputs, optimize, check_trace, check_inputs, check_tolerance, _force_outplace, _module_class, _compilation_unit)

995 func = mod if method_name == "forward" else getattr(mod, method_name)

996 example_inputs = make_tuple(example_inputs)

--> 997 module._c._create_method_from_trace(method_name, func, example_inputs, var_lookup_fn, _force_outplace)

998 check_trace_method = module._c._get_method(method_name)

999

RuntimeError: Tracer cannot infer type of {'conv1': [], 'conv2': [], 'conv3': tensor([[[[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[ 0.0000, 10.7944, 12.7899, ..., 19.2663, 1.8643, 5.8381],

[ 0.0000, 7.0714, 8.2250, ..., 6.6337, 8.9443, 4.3685],

...,

[ 0.0000, 0.0000, 0.1529, ..., 0.0000, 3.9005, 2.5733],

[ 0.0000, 0.0000, 1.3247, ..., 9.6619, 5.4776, 12.0128],

[ 0.0000, 3.9498, 7.2731, ..., 12.5920, 3.4570, 4.9324]],

[[ 6.2152, 4.2109, 1.4538, ..., 9.0008, 4.8270, 5.3406],

[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

...,

[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[ 2.7461, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000]],

[[ 3.3530, 12.9000, 0.0000, ..., 10.2020, 5.6883, 13.4378],

[ 8.3358, 0.0000, 0.4850, ..., 0.0000, 0.0000, 1.2402],

[10.6660, 0.0000, 0.0000, ..., 0.0000, 2.6775, 3.4912],

...,

[ 7.2844, 0.0000, 0.0000, ..., 0.0000, 2.4160, 4.3557],

[ 0.0000, 0.0000, 3.2270, ..., 0.0000, 0.0000, 14.2611],

[ 0.0000, 8.8993, 8.3703, ..., 0.0000, 9.0703, 7.7137]],

...,

[[ 0.0000, 6.9046, 8.8958, ..., 13.0320, 2.4187, 8.6959],

[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 1.3236],

...,

[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 1.7601],

[ 2.6597, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000]],

[[ 0.0000, 0.0000, 27.1142, ..., 2.5222, 4.2344, 16.2329],

[13.0420, 5.2728, 3.5170, ..., 0.0000, 2.5529, 12.2657],

[ 7.4807, 2.7274, 4.9321, ..., 0.0000, 1.3603, 9.9757],

...,

[ 0.0000, 5.1109, 0.0000, ..., 0.0000, 12.0808, 12.9898],

[ 5.5294, 4.1575, 7.9874, ..., 11.1128, 7.7980, 20.6503],

[10.8941, 31.3485, 13.9562, ..., 16.0611, 6.2370, 12.5933]],

[[ 2.4946, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[14.3443, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[ 0.6402, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

...,

[ 5.3813, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[ 4.0173, 0.0000, 0.0000, ..., 0.0000, 0.2018, 0.0000],

[15.4178, 6.2313, 4.7818, ..., 5.4549, 0.0000, 14.1680]]]],

grad_fn=<MaxPool2DWithIndicesBackward>), 'conv4': tensor([[[[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 1.0539, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

...,

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0658, 0.2390, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000]],

[[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

...,

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000]],

[[0.6219, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

...,

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[1.3965, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000]],

...,

[[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

...,

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000]],

[[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.6745, ..., 0.0000, 0.0000, 0.0000],

...,

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.7359, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000]],

[[1.6867, 5.0112, 3.1645, ..., 0.6046, 2.9714, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

...,

[0.1528, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, ..., 2.5369, 1.0550, 2.8817]]]],

grad_fn=<MaxPool2DWithIndicesBackward>), 'conv5': tensor([[[[1.3796e+01, 1.4452e+01, 1.2862e+01, ..., 6.7781e+00,

9.2815e+00, 9.9267e+00],

[8.3384e+00, 8.3635e+00, 4.7208e+00, ..., 7.1589e-01,

1.5264e+00, 1.6482e+00],

[2.8230e+00, 6.3764e-01, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 7.7796e-01],

...,

[4.9598e+00, 2.4025e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 1.0588e+00],

[5.8041e+00, 3.8463e+00, 0.0000e+00, ..., 8.4420e-03,

4.0681e-01, 2.0312e+00],

[6.6123e+00, 5.5535e+00, 2.7047e+00, ..., 2.8173e+00,

3.5163e+00, 5.7462e+00]],

[[2.1448e+00, 2.8523e+00, 1.5730e+00, ..., 9.4367e-01,

2.1874e+00, 1.3170e+00],

[4.5012e+00, 4.9501e+00, 2.8207e+00, ..., 1.8323e+00,

4.1885e+00, 4.0239e+00],

[7.6315e-01, 2.9784e-01, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 8.0759e-01],

...,

[2.9077e+00, 2.0675e+00, 0.0000e+00, ..., 0.0000e+00,

9.1627e-01, 8.6342e-01],

[4.7475e+00, 3.7969e+00, 1.4891e+00, ..., 2.4889e-01,

2.4533e+00, 2.8074e+00],

[2.3529e+00, 2.9757e+00, 2.3051e+00, ..., 9.2457e-01,

1.9125e+00, 1.6066e+00]],

[[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]],

...,

[[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]],

[[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

...,

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[1.1057e+00, 2.5535e-01, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00],

[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,

0.0000e+00, 0.0000e+00]],

[[8.2280e-02, 2.7121e+00, 3.0622e+00, ..., 2.3801e+00,

4.3697e+00, 1.9868e+00],

[2.6579e+00, 5.2237e+00, 4.1553e+00, ..., 4.4512e+00,

5.2473e+00, 3.5402e+00],

[0.0000e+00, 2.0596e+00, 4.3195e-01, ..., 4.8868e-01,

2.0991e-01, 0.0000e+00],

...,

[4.6866e+00, 6.1409e+00, 6.4256e-01, ..., 0.0000e+00,

2.2996e+00, 1.2674e+00],

[5.3775e+00, 6.8193e+00, 3.7130e+00, ..., 4.2376e+00,

4.7349e+00, 3.6980e+00],

[0.0000e+00, 4.8565e-01, 1.4950e-01, ..., 2.2111e+00,

1.1456e+00, 5.6986e-01]]]], grad_fn=<MaxPool2DWithIndicesBackward>)}

:List trace inputs must have elements (toTraceableIValue at /opt/conda/conda-bld/pytorch_1573049304260/work/torch/csrc/jit/pybind_utils.h:286)

frame #0: c10::Error::Error(c10::SourceLocation, std::string const&) + 0x47 (0x7f3cc8476687 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/lib/python3.6/site-packages/torch/lib/libc10.so)

frame #1: <unknown function> + 0x4f9175 (0x7f3cfdeb6175 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/lib/python3.6/site-packages/torch/lib/libtorch_python.so)

frame #2: <unknown function> + 0x5731e2 (0x7f3cfdf301e2 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/lib/python3.6/site-packages/torch/lib/libtorch_python.so)

frame #3: <unknown function> + 0x58a724 (0x7f3cfdf47724 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/lib/python3.6/site-packages/torch/lib/libtorch_python.so)

frame #4: <unknown function> + 0x206b86 (0x7f3cfdbc3b86 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/lib/python3.6/site-packages/torch/lib/libtorch_python.so)

frame #5: _PyCFunction_FastCallDict + 0x154 (0x55b2047adc54 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #6: <unknown function> + 0x199c0e (0x55b204835c0e in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #7: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #8: <unknown function> + 0x192e66 (0x55b20482ee66 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #9: <unknown function> + 0x193e73 (0x55b20482fe73 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #10: <unknown function> + 0x199b95 (0x55b204835b95 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #11: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #12: <unknown function> + 0x192e66 (0x55b20482ee66 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #13: <unknown function> + 0x193e73 (0x55b20482fe73 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #14: <unknown function> + 0x199b95 (0x55b204835b95 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #15: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #16: PyEval_EvalCodeEx + 0x329 (0x55b2048309b9 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #17: PyEval_EvalCode + 0x1c (0x55b20483175c in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #18: <unknown function> + 0x1ba167 (0x55b204856167 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #19: _PyCFunction_FastCallDict + 0x91 (0x55b2047adb91 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #20: <unknown function> + 0x199abc (0x55b204835abc in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #21: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #22: _PyGen_Send + 0x256 (0x55b204838be6 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #23: _PyEval_EvalFrameDefault + 0x144f (0x55b20485989f in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #24: _PyGen_Send + 0x256 (0x55b204838be6 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #25: _PyEval_EvalFrameDefault + 0x144f (0x55b20485989f in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #26: _PyGen_Send + 0x256 (0x55b204838be6 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #27: _PyCFunction_FastCallDict + 0x115 (0x55b2047adc15 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #28: <unknown function> + 0x199abc (0x55b204835abc in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #29: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #30: <unknown function> + 0x193c5b (0x55b20482fc5b in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #31: <unknown function> + 0x199b95 (0x55b204835b95 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #32: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #33: <unknown function> + 0x193c5b (0x55b20482fc5b in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #34: <unknown function> + 0x199b95 (0x55b204835b95 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #35: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #36: <unknown function> + 0x192e66 (0x55b20482ee66 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #37: _PyFunction_FastCallDict + 0x3d8 (0x55b204830598 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #38: _PyObject_FastCallDict + 0x26f (0x55b2047ae01f in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #39: _PyObject_Call_Prepend + 0x63 (0x55b2047b2aa3 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #40: PyObject_Call + 0x3e (0x55b2047ada5e in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #41: _PyEval_EvalFrameDefault + 0x19e7 (0x55b204859e37 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #42: <unknown function> + 0x193136 (0x55b20482f136 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #43: <unknown function> + 0x193ed6 (0x55b20482fed6 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #44: <unknown function> + 0x199b95 (0x55b204835b95 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #45: _PyEval_EvalFrameDefault + 0x10cc (0x55b20485951c in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #46: <unknown function> + 0x19c764 (0x55b204838764 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #47: _PyCFunction_FastCallDict + 0x91 (0x55b2047adb91 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #48: <unknown function> + 0x199abc (0x55b204835abc in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #49: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #50: <unknown function> + 0x193136 (0x55b20482f136 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #51: <unknown function> + 0x193ed6 (0x55b20482fed6 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #52: <unknown function> + 0x199b95 (0x55b204835b95 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #53: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #54: <unknown function> + 0x19c764 (0x55b204838764 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #55: _PyCFunction_FastCallDict + 0x91 (0x55b2047adb91 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #56: <unknown function> + 0x199abc (0x55b204835abc in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #57: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #58: <unknown function> + 0x193136 (0x55b20482f136 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #59: <unknown function> + 0x193ed6 (0x55b20482fed6 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #60: <unknown function> + 0x199b95 (0x55b204835b95 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #61: _PyEval_EvalFrameDefault + 0x30a (0x55b20485875a in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #62: <unknown function> + 0x19c764 (0x55b204838764 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

frame #63: _PyCFunction_FastCallDict + 0x91 (0x55b2047adb91 in /home/ubuntu/anaconda3/envs/Crowd_Detection_mayub/bin/python)

```
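One possible reading of the error above: the tracer is handed the model's output dict, and two of its values (`'conv1'` and `'conv2'`) are empty lists, which is what `List trace inputs must have elements` complains about. A minimal sketch of a workaround, assuming the empty entries are not needed downstream, is to drop them before the output reaches the tracer. The helper name `drop_empty_outputs` is hypothetical, and plain strings stand in for tensors so the snippet runs without the model:

```python
def drop_empty_outputs(outputs):
    """Return a copy of `outputs` without empty list/tuple/dict values.

    Hypothetical helper, not part of SS-DCNet or PyTorch.
    """
    kept = {}
    for name, value in outputs.items():
        # Only containers are length-checked, so tensor values are never
        # evaluated for truthiness.
        if isinstance(value, (list, tuple, dict)) and len(value) == 0:
            continue
        kept[name] = value
    return kept


# Stand-in for the feature dict shown in the error message.
features = {"conv1": [], "conv2": [], "conv3": "t3", "conv4": "t4", "conv5": "t5"}
print(drop_empty_outputs(features))
```

In practice this could be called at the end of a thin `torch.nn.Module` wrapper's `forward` around `net`, with the wrapper traced instead of `net`. Whether a dict return value traces cleanly on PyTorch 1.3.1 is not something this report confirms; returning a tuple of the remaining tensors may be the safer choice.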

Thanks !

cc @suo

|

oncall: jit,triaged

|

medium

|

Critical

|

607,952,618 |

pytorch

|

Add BufferDict container

|

## 🚀 Feature

A `torch.nn.BufferDict` container that mirrors `ParameterDict`.

## Motivation

We sometimes work with a lot of buffers, and it would be nice to have a convenient way of dealing with those, similar to `ParameterDict`, but well, with the values being buffered tensors rather than of type `torch.nn.Parameter`. Currently `ParameterDict` specifically uses `register_parameter`, so it's not straightforward to use that transparently.

## Pitch

Implement `BufferDict` by mirroring `ParameterDict`. Like so: #37385
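As a rough illustration of the idea (not the code in the linked PR), a container along these lines could wrap `register_buffer` the way `ParameterDict` wraps `register_parameter`. The class below is a hypothetical sketch with only a handful of dict methods; it assumes keys are valid buffer names (non-empty, no dots, not clashing with existing module attributes):

```python
import torch
from torch import nn


class BufferDict(nn.Module):
    """Hypothetical sketch: a dict-like module whose values are buffers."""

    def __init__(self, buffers=None):
        super().__init__()
        if buffers is not None:
            for name, tensor in buffers.items():
                self[name] = tensor

    def __setitem__(self, name, tensor):
        # Registered buffers follow .to()/.cuda() and appear in state_dict(),
        # but are not returned by parameters().
        if name in self._buffers:
            self._buffers[name] = tensor
        else:
            self.register_buffer(name, tensor)

    def __getitem__(self, name):
        return self._buffers[name]

    def __contains__(self, name):
        return name in self._buffers

    def __len__(self):
        return len(self._buffers)

    def __iter__(self):
        return iter(self._buffers.keys())


stats = BufferDict({"mean": torch.zeros(3), "std": torch.ones(3)})
print(sorted(stats.state_dict().keys()))
```

Used as a submodule, such a container would let the parent module's `state_dict()` pick up every entry under a common prefix without one `register_buffer` call per tensor.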

## Alternatives

Manually handle tons of buffers. That gets clunky quickly.

cc @albanD @mruberry @jbschlosser @walterddr @mikaylagawarecki

|

feature,module: nn,triaged,actionable

|

medium

|

Major

|

607,974,917 |

flutter

|

Support iPadOS pointer interactions

|

This is an umbrella issue that tracks the support for iPadOS pointer interactions.

However, features beyond basic cursor type and hover effect will likely have a low priority for a while.

## Reference

- Apple's [Design guidelines](https://developer.apple.com/design/human-interface-guidelines/ios/user-interaction/pointers/)

- UIKit's [Documentation](https://developer.apple.com/documentation/uikit/pointer_interactions)

## TODO

- Support basic mouse features

- [ ] Support background color change in hover effect

- [ ] Support basic cursor styles (those without magnetism) on hoverable regions

- Support region-aware features

- [ ] Support highlight effect

- [ ] Support lift effect

- [ ] Support trajectory prediction

## Related issues

- Previous discussion: https://github.com/flutter/flutter/issues/52912

- Scroll wheels: https://github.com/flutter/flutter/issues/54663

- General mouse cursor: https://github.com/flutter/flutter/issues/31952

|

c: new feature,platform-ios,framework,a: fidelity,f: cupertino,f: gestures,a: desktop,a: mouse,P3,team-design,triaged-design

|

medium

|

Critical

|

607,981,441 |

pytorch

|

PyTorch's graph lacks common names for nodes

|

## 🐛 Bug

Many nodes do not have a COMMON name. It is difficult to figure out which node is which.

## To Reproduce

Steps to reproduce the behavior:

1. Use a forward function with a conv2d call and `jit.trace` it into a graph, and you will get it.

## Expected behavior

I believe numeric node names should be replaced with descriptive names, such as bias and weight.

## Environment

That graph was built with PyTorch's TensorBoard example. I think a standard PyTorch install is fine.

You can get the script and run it with:

```

wget https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py

# For security purposes, please check the contents of collect_env.py before running it.

python collect_env.py

```

- PyTorch Version (e.g., 1.0):

- OS (e.g., Linux):

- How you installed PyTorch (`conda`, `pip`, source):

- Build command you used (if compiling from source):

- Python version:

- CUDA/cuDNN version:

- GPU models and configuration:

- Any other relevant information:

## Additional context


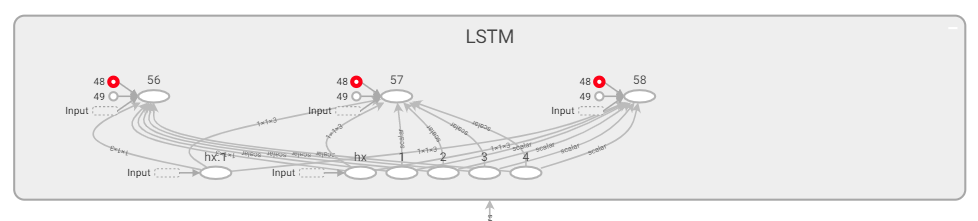

```shell

graph(%self : ClassType<LSTM>,

%input : Float(1, 1, 3),

%6 : (Float(1, 1, 3), Float(1, 1, 3))):

%1 : Tensor = prim::GetAttr[name="weight_ih_l0"](%self)

%2 : Tensor = prim::GetAttr[name="weight_hh_l0"](%self)

%3 : Tensor = prim::GetAttr[name="bias_ih_l0"](%self)

%4 : Tensor = prim::GetAttr[name="bias_hh_l0"](%self)

%hx.1 : Float(1, 1, 3), %hx : Float(1, 1, 3) = prim::TupleUnpack(%6)

%48 : Tensor[] = prim::ListConstruct(%hx.1, %hx), scope: LSTM

%49 : Tensor[] = prim::ListConstruct(%1, %2, %3, %4), scope: LSTM

...

```

This is the graph of the ScriptModule built by jit.trace. It seems the nodes use numbers as their names. Maybe we can use the `name="weight_ih_l0"` attribute to name the node.

cc @suo

|

module: bootcamp,feature,module: tensorboard,oncall: visualization,days

|

low

|

Critical

|

608,003,134 |

godot

|

AnimationPlayer doesn't show the keyframe icon for AnimatedSprite, and consequently that node hides the keyframe icon for the rest of the nodes

|

**Godot version:**

3.2.1

**OS/device including version:**

Win 10 v1909

**Issue description:**

When creating a new track on the AnimationPlayer and switching to an AnimatedSprite node, the keyframe icons do not appear. Switching to any other node will hide the keyframe icon for that node as well. The only way to restore the icon is to select the AnimationPlayer node and then select any other node besides the AnimatedSprite node.

**Steps to reproduce:**

1. Create a simple node

2. Add an AnimatedSprite child node

3. Add an AnimationPlayer child node (to the node or the AnimatedSprite)

4. Create a track

5. Select the AnimatedSprite (no keyframe icon)

6. Select the Node (no keyframe icon)

7. Reselect the AnimationPlayer node

8. Select the Node (keyframe icon is shown)

Video capture of the bug: https://youtu.be/wEoUN-Cr7Lo

**Minimal reproduction project:**

Video capture of the bug: https://youtu.be/wEoUN-Cr7Lo

|

bug,topic:editor

|

low

|

Critical

|

608,003,528 |

godot

|

AnimatedSprite node doesn't show keyframe icon and disables the icons for any other node.

|

**Godot version:**

3.2.1

**OS/device including version:**

Win 10 v1909

**Issue description:**

When creating a new track on the AnimationPlayer and switching to an AnimatedSprite node, the keyframe icons do not appear. Switching to any other node will hide the keyframe icon for that node as well. The only way to restore the icon is to select the AnimationPlayer node and then select any other node besides the AnimatedSprite node.

**Steps to reproduce:**

1. Create a simple node

2. Add an AnimatedSprite child node

3. Add an AnimationPlayer child node (to the node or the AnimatedSprite)

4. Create a track

5. Select the AnimatedSprite (no keyframe icon)

6. Select the Node (no keyframe icon)

7. Reselect the AnimationPlayer node

8. Select the Node (keyframe icon is shown)

Video capture of the bug: https://youtu.be/wEoUN-Cr7Lo

**Minimal reproduction project:**

Video capture of the bug: https://youtu.be/wEoUN-Cr7Lo

|

bug,topic:editor

|

low

|

Critical

|

608,042,021 |

flutter

|

Flutter Textstyle class ignores invalid fontFamily and fontSize

|

Everything is recently installed.

```

Android 3.63(April 13, 2020)

C:\Users\allen>flutter --version

Flutter 1.17.0-3.2.pre • channel beta • https://github.com/flutter/flutter.git

Framework • revision 2a7bc389f2 (6 days ago) • 2020-04-21 20:34:20 -0700

Engine • revision 4c8c31f591

Tools • Dart 2.8.0 (build 2.8.0-dev.20.10)

=======================================================

```

The code snippet:

```

// Calling Widget

child: Text("The quick brown fox jumps over the lazy dog",

// Center align text

textAlign: TextAlign.center,

// set a text style which defines a custom font

style: myTextStyle()),

// Function

TextStyle myTextStyle(

{myColor = Colors.blueAccent,

myFontFamily='nofont',

myFontWeight = FontWeight.w400,

myFontSize = -1.0 }

)

{

return TextStyle(

// set color of text

color: myColor,

// set the font family as defined in pubspec.yaml

fontFamily: myFontFamily,

// set the font weight

fontWeight: myFontWeight,

// set the font size

fontSize: myFontSize);

}

```

==================================================

In either scenario, the console output is normal

```

Performing hot restart...

Syncing files to device SM N910V...

Restarted application in 8,496ms.

==================================================

```

There are two issues here; I would be happy to file a separate bug if asked.

Issue one:

If you were to enter an invalid (misspelled) fontFamily, the output on the Android phone uses some default font, I assume 'Roboto'.

Expected behavior: At a minimum an error message to the Console

Perhaps a RT error.

Testing consideration: Automated tests could not catch this, since there would still be string output, just not in the right font. Plain text output would not be noticed by testing right away.

===========================================================

Issue two:

If you were to enter a negative value, like -36.0 for FontSize, I get no text at all

Expected behavior: At a minimum an error message to the Console

Perhaps a RT error.

Testing consideration: Without a Console message, you couldn't run automated tests.

==========================================================

The answer to the question

"Why would anyone do that" ?

Answer

Because they can.

=========================================================

I have not tried flutter "lint"; maybe I should.

|

framework,engine,a: typography,a: error message,has reproducible steps,P2,found in release: 3.3,found in release: 3.7,team-engine,triaged-engine

|

low

|

Critical

|

608,109,277 |

node

|

http: Reduce API surface

|

I would like to suggest that [`complete`](https://nodejs.org/dist/latest-v14.x/docs/api/http.html#http_message_complete) and [`aborted`](https://nodejs.org/dist/latest-v14.x/docs/api/http.html#http_message_aborted) are unnecessary API surface and should be at least doc deprecated.

The user can keep track of this state themselves by registering an `'aborted'` handler.

In particular, the exact semantics of e.g. `complete` are slightly unclear and might cause more harm than good.

|

http

|

medium

|

Major

|

608,115,992 |

pytorch

|

3D grouped & depthwise convolution very slow on backward pass

|

Hi,

It seems that 3D grouped & depthwise convolution is very slow on the **backward** pass. Backward pass on depthwise convolution takes about 10 times the time of a standard 3D convolution's forward pass.

I understand cudnn does not yet support fp32 depthwise convolution (i.e., the forward pass is slower than standard convolution by about 2 times or so for 2D convolution). However, this problem seems to be significantly worse for 3D convolution's _backward_ pass. Reading the cudnn docs, it seems 3D depthwise isn't supported.

Any suggestions?

```python

import torch
import torch.nn as nn
import time


# Timing code taken from https://github.com/facebookresearch/pycls/blob/master/pycls/utils/benchmark.py
class Timer(object):
    def __init__(self):
        self.total_time = 0.0
        self.calls = 0
        self.start_time = 0.0
        self.diff = 0.0
        self.average_time = 0.0

    def tic(self):
        self.start_time = time.time()

    def toc(self):
        self.diff = time.time() - self.start_time
        self.total_time += self.diff
        self.calls += 1
        self.average_time = self.total_time / self.calls


def compute_precise_time(model, input_size, loss_fun, batch_size, num_iter=3, label_dtype=torch.int64):
    """Computes precise time."""
    # Generate a dummy mini-batch
    inputs = torch.rand(batch_size, *input_size)
    labels = torch.zeros(batch_size, dtype=label_dtype)
    # Copy the data to the GPU
    inputs = inputs.cuda(non_blocking=False)
    labels = labels.cuda(non_blocking=False)
    # Compute precise time
    fw_timer = Timer()
    bw_timer = Timer()
    model.train()
    for _cur_iter in range(num_iter):
        # Forward
        fw_timer.tic()
        preds = model(inputs)
        loss = loss_fun(preds, labels)
        torch.cuda.synchronize()
        fw_timer.toc()
        # Backward
        bw_timer.tic()
        loss.backward()
        torch.cuda.synchronize()
        bw_timer.toc()
    torch.cuda.synchronize()
    print({"prec_train_fw_time": fw_timer.average_time, "prec_train_bw_time": bw_timer.average_time})


class GroupedNet3D(nn.Module):
    def __init__(self, data_size, layer_channel, groups):
        super(GroupedNet3D, self).__init__()
        if groups is None:
            groups = layer_channel  # depth wise
        self.f = nn.Sequential(
            nn.Conv3d(data_size[0], layer_channel, 3, bias=False),
            nn.Conv3d(layer_channel, layer_channel, 3, groups=groups, bias=False),
            nn.Conv3d(layer_channel, layer_channel, 3, groups=groups, bias=False),
            nn.Conv3d(layer_channel, layer_channel, 3, groups=groups, bias=False),
            nn.Conv3d(layer_channel, layer_channel, 3, groups=groups, bias=False),
            nn.AdaptiveAvgPool3d(1),
            nn.Flatten(),
            nn.Linear(layer_channel, 1)
        )

    def forward(self, x):
        return self.f(x)


loss_fun = nn.CrossEntropyLoss().cuda()
data_size = (1, 50, 50, 50)
batch_size = 16
channels = 32
Model = GroupedNet3D

# standard 3D convolution
model = Model(data_size, channels, 1).cuda()
compute_precise_time(model, data_size, loss_fun, batch_size)
# {'prec_train_fw_time': 0.1468369960784912, 'prec_train_bw_time': 0.3601357142130534}

# grouped convolution
model = Model(data_size, channels, 8).cuda()
compute_precise_time(model, data_size, loss_fun, batch_size)
# {'prec_train_fw_time': 0.3155516783396403, 'prec_train_bw_time': 1.0136785507202148}

# depth wise convolution
model = Model(data_size, channels, None).cuda()
compute_precise_time(model, data_size, loss_fun, batch_size)
# {'prec_train_fw_time': 0.41037146250406903, 'prec_train_bw_time': 1.7994076410929363}

torch.backends.cudnn.version()  # 7603
torch.version.cuda  # '10.1'
torch.__version__  # '1.4.0'

```

cc @ngimel @csarofeen @ptrblck

|

module: cudnn,module: cuda,triaged

|

low

|

Major

|

608,156,720 |

angular

|

A destroyed view can be interacted with in ivy

|

In Angular with ViewEngine a destroyed view can't be interacted with (ex. one can't trigger change detection on such views). With ivy it is possible to call methods on a destroyed view and there is no error message / logs indicating that a view was destroyed.

Here is [the test exposing difference in VE vs. ivy behaviour](https://github.com/pkozlowski-opensource/angular/commit/e972be659827be252fec3b6c20c4986161de253c):

```typescript

it('should prevent usage of a destroyed fixture', () => {
  @Component({selector: 'test-cmpt', template: ``})
  class TestCmpt {
  }

  TestBed.configureTestingModule({declarations: [TestCmpt]});
  const fixture = TestBed.createComponent(TestCmpt);

  fixture.destroy();

  expect(() => {fixture.detectChanges()})
      .toThrowError(/Attempt to use a destroyed view: detectChanges/);
});

```

|

freq1: low,area: core,state: confirmed,core: dynamic view creation,type: confusing,P4

|

low

|

Critical

|

608,159,908 |

ant-design

|

Slider component: onAfterChange event firing issue

|

- [ ] I have searched the [issues](https://github.com/ant-design/ant-design/issues) of this repository and believe that this is not a duplicate.

### Reproduction link

[https://codesandbox.io/s/antd-reproduction-template-sldlq](https://codesandbox.io/s/antd-reproduction-template-sldlq)

### Steps to reproduce

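Click the slider track to change the value; onAfterChange fires once, and the Slider is now focused. Then click somewhere outside the web page (for example, the Windows taskbar), so the Slider loses focus. Then click anywhere on the web page, and onAfterChange fires again.

### What is expected?

onAfterChange fires only when the Slider is clicked.

### What is actually happening?

onAfterChange fires even though the Slider was not clicked.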

| Environment | Info |

|---|---|

| antd | 3.25.0 |

| React | 16.13.1 |

| System | Windows10 |

| Browser | Chrome63.0.3239.132 |

<!-- generated by ant-design-issue-helper. DO NOT REMOVE -->

|

🐛 Bug,Inactive

|

low

|

Minor

|

608,167,055 |

pytorch

|

torch.cdist() implementation without using contiguous() calls

|

## 🚀 Feature

A decent torch.cdist() implementation that avoids the contiguous() calls, which usually result in excessive GPU memory usage.

## Motivation

See the original problem at https://discuss.pytorch.org/t/understanding-cdist-function/76296/12?u=promach

## Pitch

Modify [these two lines](https://github.com/pytorch/pytorch/blob/a836c4ca78b72ecc8e0664e1b684af64ce83be42/aten/src/ATen/native/Distance.cpp#L78-L79) inside torch.cdist() implementation

## Alternatives

I found other implementations of cdist(), [code 1](https://github.com/pytorch/pytorch/pull/25799#issuecomment-529021810) and [code 2](https://github.com/pytorch/pytorch/issues/15253#issuecomment-491467128), but they still consume an excessive amount of GPU memory.

## Additional context

None

cc @VitalyFedyunin @ngimel

|

module: performance,triaged,enhancement,module: distance functions

|

low

|

Minor

|

608,167,791 |

pytorch

|

Compatibility of subset dataset with disabled batch sampling

|

I think there is a compatibility issue between disabled batch sampling and subset datasets.

The use case: define custom batch sampling and split the dataset using the PyTorch split utility function.

Here's a minimal working example:

```

self.train_dataset, self.val_dataset, self.test_dataset = torch.utils.data.random_split(
    self.dataset, [100, 100, 100])

loader = DataLoader(
    dataset=self.train_dataset,
    batch_size=None,
    batch_sampler=None,
    sampler=BatchSampler(
        SequentialSampler(dataset), batch_size=self.hparams.batch_size, drop_last=False),
    num_workers=self.hparams.num_data_workers,
)

```

Iterating over the subset datasets raises this error:

```

Exception has occurred: TypeError

list indices must be integers or slices, not list

File "/path/utils/data/dataset.py", line 257, in __getitem__

return self.dataset[self.indices[idx]]

File "/path/utils/data/_utils/fetch.py", line 46, in fetch

data = self.dataset[possibly_batched_index]

File "/path/utils/data/dataloader.py", line 385, in _next_data

data = self._dataset_fetcher.fetch(index) # may raise StopIteration

File "/path/utils/data/dataloader.py", line 345, in __next__

data = self._next_data()

File "/path/lib/python3.7/site-packages/pytorch_lightning/trainer/evaluation_loop.py", line 251, in _evaluate

for batch_idx, batch in enumerate(dataloader):

File "/path/lib/python3.7/site-packages/pytorch_lightning/trainer/trainer.py", line 843, in run_pretrain_routine

False)

File "/path/lib/python3.7/site-packages/pytorch_lightning/trainer/distrib_parts.py", line 477, in single_gpu_train

self.run_pretrain_routine(model)

File "/path/lib/python3.7/site-packages/pytorch_lightning/trainer/trainer.py", line 704, in fit

self.single_gpu_train(model)

File "/path/train.py", line 152, in main_train

trainer.fit(model)

File "/path/train.py", line 66, in main

main_train(model_class_pointer, hyperparams, logger)

File "/path/train.py", line 161, in <module>

main()

File "/path/lib/python3.7/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/path/lib/python3.7/runpy.py", line 96, in _run_module_code

mod_name, mod_spec, pkg_name, script_name)

File "/path/lib/python3.7/runpy.py", line 263, in run_path

pkg_name=pkg_name, script_name=fname)

File "/path/lib/python3.7/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/path/lib/python3.7/runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

```

This happens because `self.indices` of the `Subset` object is a plain Python list, which cannot be indexed with a list of indices.

Please refer to the forum post, where @ptrblck confirms reproducing the bug and offers a workaround.
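
One possible shape for such a workaround, sketched in plain Python so it runs without PyTorch: a subset wrapper whose `__getitem__` accepts either a single index or a list of indices. `BatchableSubset` is a hypothetical name, not part of `torch.utils.data`, and the list `data` stands in for a real dataset.

```python
class BatchableSubset:
    """Hypothetical stand-in for torch.utils.data.Subset (not the PyTorch class)."""

    def __init__(self, dataset, indices):
        self.dataset = dataset
        self.indices = list(indices)

    def __getitem__(self, idx):
        # A batch sampler yields a list of positions, so map each one
        # through self.indices instead of indexing the list with a list.
        if isinstance(idx, (list, tuple)):
            return [self.dataset[self.indices[i]] for i in idx]
        return self.dataset[self.indices[idx]]

    def __len__(self):
        return len(self.indices)


data = [x * 10 for x in range(10)]
subset = BatchableSubset(data, [7, 2, 5, 0])
single = subset[1]         # one sample
batch = subset[[0, 2, 3]]  # one batch of samples
```

Whether the fix belongs in the subset class or in the fetcher is a design choice this sketch does not settle.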

cc @SsnL

|

module: dataloader,triaged

|

low

|

Critical

|

608,170,112 |

pytorch

|

Reset a `torch.optim.Optimizer`

|

## 🚀 Feature

A 'reset_state' method that resets the `state` of an optimizer.

## Motivation

Sometimes we may need to reuse an optimizer to train the same model on another dataset. Some optimizers, though, keep track of some additional stats (e.g. Adam's moving averages), and currently, there is no method to reset these stats unless we expose some internal attributes of the class.

Check [this question](https://discuss.pytorch.org/t/reset-optimizer-stats/78516/2) in the forum for more info.

## Pitch

My proposal consists in adding a method `reset_state(self, state=None)`. If `state is None` is `True`, then we just reset the state, i.e. `self.state = defaultdict(dict)`, otherwise `self.state = state`
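
A minimal sketch of the proposed method, written against a toy class so it runs without PyTorch. `SketchOptimizer` and its `step` bookkeeping are hypothetical; only the `reset_state` body mirrors the proposal above.

```python
from collections import defaultdict


class SketchOptimizer:
    """Hypothetical minimal optimizer; only the `state` bookkeeping is modelled."""

    def __init__(self, params):
        self.params = list(params)
        self.state = defaultdict(dict)  # per-parameter statistics

    def step(self):
        # Pretend to track a step counter per parameter, like Adam's stats.
        for p in self.params:
            st = self.state[p]
            st["step"] = st.get("step", 0) + 1

    def reset_state(self, state=None):
        # Proposed behaviour: drop all statistics, or install the given ones.
        self.state = defaultdict(dict) if state is None else state


opt = SketchOptimizer(["w", "b"])
opt.step()
opt.step()
steps_before = opt.state["w"]["step"]
opt.reset_state()
steps_after = len(opt.state)
```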

## Alternatives

Either you manually retrieve all the parameters of an optimizer and create a new one or you manually reset the `state` attribute. In both cases, we're exposing some internals of the optimizer.

cc @vincentqb

|

module: optimizer,triaged

|

medium

|

Critical

|

608,225,309 |

rust

|

Unhelpful error message, and endless loop in cargo, when a procedural macro overflows its stack

|

Using `rustc 1.44.0-nightly (b2e36e6c2 2020-04-22)` on Windows 10.

Create a proc-macro crate `crate0` with these contents:

    extern crate proc_macro;
    use proc_macro::TokenStream;

    #[proc_macro]
    pub fn overflow_stack(item: TokenStream) -> TokenStream {
        fn recurse(i: u64) {
            if i < std::u64::MAX {
                recurse(i + 1);
            }
        }
        recurse(0);
        item
    }

And a binary crate `crate1` with these contents:

    #![feature(proc_macro_hygiene)]
    fn main() {
        crate0::overflow_stack!();
    }

`cargo build` for `crate1` will produce this error message:

    thread 'rustc' has overflowed its stack
    error: could not compile `crate1`.

    Caused by:
      process didn't exit successfully: `rustc {...a long list of arguments follows...}`

When a stack-overflow bug is introduced into a procedural macro by making changes to one of the procedural macro's local dependencies, this error message is not helpful for localising the bug. (I experienced this today, and wasted some time thinking that I had triggered an ICE.)

I also experienced an endless loop in `cargo build` triggered by that same stack overflow, but I haven't been able to isolate a test-case.

|

I-crash,C-enhancement,A-diagnostics,A-macros,T-compiler,D-confusing,D-papercut,D-verbose,D-terse,A-proc-macros

|

low

|

Critical

|

608,231,282 |

go

|

cmd/compile: experiment with more integer comparison optimizations

|

In Go 1.15 we've added support for integer-in-range optimizations to the SSA backend. This same infrastructure (mostly in https://github.com/golang/go/blob/master/src/cmd/compile/internal/ssa/fuse_comparisons.go) can quite easily be extended to perform other potential control flow transformations. I've opened this issue in order to track them and get more ideas.

Note: these transformations may or may not be worthwhile optimizations.

Disjunctions (||):

| Before | After | Comments | CL (if applicable) |

|-|-|-|-|

| `x == 1 \|\| x == 2` | `uint(x - 1) <= 1` | Integer range | [CL 224878](https://golang.org/cl/224878)|

| `x == 4 \|\| x == 6` | `x\|2 == 6` | Power of 2 difference | [CL 471815](https://golang.org/cl/471815) |

| `x != 0 \|\| y != 0` | `x\|y != 0` | Neq with 0 | |

| `x < 0 \|\| y < 0` | `x\|y < 0` | Less with 0 | |

| `x >= 0 \|\| y >= 0` | `x&y >= 0` | Geq with 0 | |

Conjunctions (&&):

| Before | After | Comments | CL (if applicable) |

|-|-|-|-|

| `x != 1 && x != 2` | `uint(x - 1) > 1` | Integer range | [CL 224878](https://golang.org/cl/224878)|

| `x != 4 && x != 6` | `x\|2 != 6` | Power of 2 difference | [CL 471815](https://golang.org/cl/471815) |

| `x == 0 && y == 0` | `x\|y == 0` | Eq with 0 | |

| `x < 0 && y < 0` | `x&y < 0` | Less with 0 | |

| `x >= 0 && y >= 0` | `x\|y >= 0` | Geq with 0 | |
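
The rewrites in both tables can be sanity-checked by brute force. The sketch below uses Python integers, whose bitwise operators behave like infinitely wide two's complement, and models Go's `uint(x)` as a 64-bit mask (an assumption about the target width). It only covers a small range of values, so it is a smoke test rather than a proof, and the row names are made up for this example.

```python
MASK64 = (1 << 64) - 1


def uint64(v):
    """Reinterpret a Python int as an unsigned 64-bit value, like Go's uint(x)."""
    return v & MASK64


def check_rewrites(lo=-64, hi=64):
    """Return the names of rewrites that disagree with the original condition."""
    failures = set()
    for x in range(lo, hi):
        one_var = {
            "range ||": ((x == 1 or x == 2), uint64(x - 1) <= 1),
            "range &&": ((x != 1 and x != 2), uint64(x - 1) > 1),
            "pow2 ||": ((x == 4 or x == 6), (x | 2) == 6),
            "pow2 &&": ((x != 4 and x != 6), (x | 2) != 6),
        }
        failures.update(n for n, (a, b) in one_var.items() if a != b)
        for y in range(lo, hi):
            two_var = {
                "neq0 ||": ((x != 0 or y != 0), (x | y) != 0),
                "lt0 ||": ((x < 0 or y < 0), (x | y) < 0),
                "geq0 ||": ((x >= 0 or y >= 0), (x & y) >= 0),
                "eq0 &&": ((x == 0 and y == 0), (x | y) == 0),
                "lt0 &&": ((x < 0 and y < 0), (x & y) < 0),
                "geq0 &&": ((x >= 0 and y >= 0), (x | y) >= 0),
            }
            failures.update(n for n, (a, b) in two_var.items() if a != b)
    return sorted(failures)


failures = check_rewrites()
```

An empty `failures` list means every rewrite agreed with its original condition on the tested range.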

|

Performance,NeedsInvestigation,compiler/runtime

|

low

|

Minor

|

608,273,668 |

godot

|

Inspector: Click-drag to increase or decrease property values does not work with tablet/pen

|

**Godot version:** 3.2.1

**OS/device including version:**

Win64, Wacom Intuos

**Issue description:**

With tablet and pen the values get stuck:

With mouse it works fine:

|

bug,topic:editor,confirmed,usability,topic:input

|

low

|

Critical

|

608,274,785 |

vscode

|

NVDA doesn't read the status line

|

Testing https://github.com/microsoft/vscode/issues/96265

All NVDA reads is: `No status line found`.

|

bug,upstream,accessibility,upstream-issue-linked

|

low

|

Major

|

608,287,975 |

node

|

Different configurations watched same file returned same instance

|

<!--

Thank you for reporting an issue.

This issue tracker is for bugs and issues found within Node.js core.

If you require more general support please file an issue on our help

repo. https://github.com/nodejs/help

Please fill in as much of the template below as you're able.

Version: output of `node -v`

Platform: output of `uname -a` (UNIX), or version and 32 or 64-bit (Windows)

Subsystem: if known, please specify affected core module name

-->

* **Version**:

* **Platform**: all

* **Subsystem**: fs

When the same file is watched with two different configurations, the second configuration does not take effect.

### What steps will reproduce the bug?

1. The process will exit.

``` js

const fs = require('fs');

fs.watchFile('a.js', {persistent: false} , () => {
  console.log(1)
});

fs.watchFile('a.js', () => {
  console.log(2)
});

```

2. The Process does not exit.

``` js

const fs = require('fs');

fs.watchFile('a.js', () => {
  console.log(2)
});

fs.watchFile('a.js', {persistent: false} , () => {
  console.log(1)
});

```

### How often does it reproduce? Is there a required condition?

No required condition.

### What is the expected behavior?

Neither 1 nor 2 will exit the process.

### What do you see instead?

1. The process will exit.

### Additional information

When a file is watched, the StatWatcher instance is deduplicated by filename, and the same instance is returned each time the same file is watched.
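
The effect of caching watchers by filename can be illustrated with a toy model. This is a simplified Python sketch of the behaviour described above, not Node's implementation; `ModelWatcher`, `watch_file`, and `_watchers` are hypothetical names.

```python
class ModelWatcher:
    """Toy stand-in for a stat watcher; not Node's StatWatcher."""

    def __init__(self, persistent):
        self.persistent = persistent
        self.listeners = []


_watchers = {}  # filename -> ModelWatcher, shared by every caller


def watch_file(filename, listener, persistent=True):
    watcher = _watchers.get(filename)
    if watcher is None:
        # Options are only consulted when the watcher is first created.
        watcher = ModelWatcher(persistent)
        _watchers[filename] = watcher
    watcher.listeners.append(listener)
    return watcher


first = watch_file("a.js", lambda: print(1), persistent=False)
second = watch_file("a.js", lambda: print(2))  # persistent=True is ignored here
```

In this model the second call receives the first watcher unchanged, which matches the first reproduction case: the watcher stays non-persistent.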

|

fs

|

low

|

Critical

|

608,335,489 |

godot

|

Tilemap: set_cellv(map_point, -1) does not work with Ysort

|

**Godot version:** 3.2.1

**OS/device including version:** Wine64

**Issue description:**

If the Tilemap in question has the **ysort** property enabled, only the first tile will be set to -1.

As soon as ysort is disabled, set_cellv works as expected.

In fact, the Tilemap does not seem to ever leave the Area2D of the player, even though it should when moving from tile to tile. So this might be an Area issue too.

Player Code:

```

func _on_Area2D_body_entered(body):
	if "yellow_tile" in body.name:
		$Label.text = "is in tile"
		yellow_tile = body
		var map_point = yellow_tile.world_to_map(global_position + Vector2(16,16)) #-16,-16 = yellowtile position
		yellow_tile.set_cellv(map_point, -1, false, true)
		yellow_tile.call_deferred("update_dirty_quadrants")

```

Thanks to user Grandro on Discord who has figured this out!

**Steps to reproduce:**

Move player on a Tilemap tile.

**Minimal reproduction project:**

[Grid_set_tile_ysort_bug.zip](https://github.com/godotengine/godot/files/4546130/Grid_set_tile_ysort_bug.zip)

|

bug,topic:physics

|

low

|

Critical

|

608,410,810 |

pytorch

|

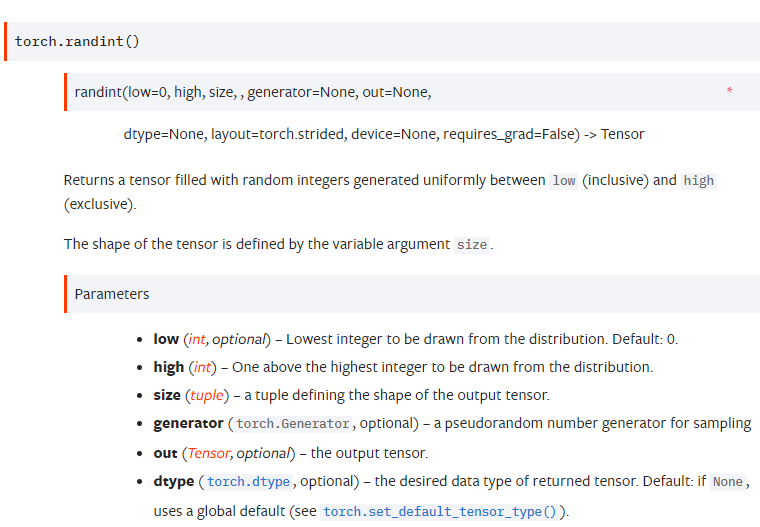

[docs] Unclear return type of torch.randint and extra comma in arg spec

|

1. Note two commas in function spec

2. It's unclear if default output is `torch.int64` (in practice it is) or `torch.get_default_dtype()` which is usually `torch.float32`. It's unclear what `torch.set_default_tensor_type()` reference has to do with `torch.randint`'s return type since by default it returns an integral tensor

https://pytorch.org/docs/master/torch.html?highlight=torch%20randint#torch.randint

|

module: docs,triaged

|

low

|

Minor

|

608,426,841 |

TypeScript

|

Missing autocomplete with optional chaining operator

|

**TypeScript Version:** 3.8 and 3.9 nightly

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:** optional chaining, autocomplete, intellisense

**Code**

The `BaseDataClass` experiences the issue here, most likely due to some of the funkiness with generic types. (Other classes extend `BaseDataClass` in practice, which is why it has these generics.)

I recognize this example is pretty unusual, but I'm reporting the optional chaining operator issue specifically in case it is helpful for improving autocomplete for the operator in general.

```ts

export interface BaseClass<TProps>
{
    getValue<TKey extends keyof TProps>(propertyName: TKey): TProps[TKey];
}
export class BaseClass<TProps> { }

interface IBaseData
{
    BaseDataProp: string;
}

export interface BaseDataClassProps<TData extends IBaseData>
{
    Data?: TData;
}

export class BaseDataClass<
    TData extends IBaseData = IBaseData,
    TProps extends BaseDataClassProps<TData> = BaseDataClassProps<TData>>
    extends BaseClass<TProps & BaseDataClassProps<TData>>
{
    public exampleMethod(): void
    {
        // this.getValue("Data")!.
        // autocompletes to:
        this.getValue("Data")!.BaseDataProp;

        // this.getValue("Data")?.
        // does not autocomplete to:
        this.getValue("Data")?.BaseDataProp;
    }
}

```

**Expected behavior:**

In the `exampleMethod` above, typing `this.getValue("Data")?.` and then requesting autocomplete (Ctrl+Enter) should give the same suggestions as given for `this.getValue("Data")!.`

**Actual behavior:**

No suggestions given for `this.getValue("Data")?.`

**Playground Link:**

[Playground Link](https://www.typescriptlang.org/play/?ts=3.9.0-dev.20200427&ssl=34&ssc=1&pln=1&pc=1#code/KYDwDg9gTgLgBASwHY2FAZgQwMbDgIUwGdgBhAG2KIB4AVABSgjCID4BYAKAG8u5+4Ac2AwAapnIBXYHQDSwAJ5xQqJABMicANaKI6OAyYtWACjBG0MBQDlMAW2AAuA-IUBKZ4eZEA2rVcAugDcXAC+XKCQsHDYlESahCQUVHSM3qxw3HDhnFxcyKgYOHgAkonAACKYMJhcvJwAkOVVNWlgzkQwUMiCIZw5EeDQ8AVoWLgExJXVmMnxbTS0LZjKIKoacGVTyxw8XA3LAPyey30DnJHDMXEJ2zNzNPtLM6vrmlsky3AAvJvNMwAaJ4LV7AdS3T73G4LOg7H6TSE1B4w541Vi7BoqMEbcoPVJGTQAMgR0yR0IJsJm6Lq+zAkgARuQENhVvYwORgABZEQACwgahMHjgADcIAg1Pt6g0GgB6GVwGA8hBEAB0wjEEmkJgARMttW4AIQq-ay+WYSQwCDYCB2dkiYCaS2OE2K5VqkTiKTAHV6w0q-6tIx9E1yhVK1Xqz1a3UzfWHY2NU1wNQQB1wJAQeDmy3W20c1AKiDOxOuiMezXemM1OP+u6B5h9Bo5HJAA)

|

Bug,Domain: Completion Lists

|

low

|

Minor

|

608,451,627 |

rust

|

`rustc` should prefer statically linking native dependencies with unspecified kind if `crt-static` is enabled

|

The main goal of `+crt-static` (at least on non-Windows targets) is to produce self-contained statically linked executables.

With this goal in mind `rustc` switches linking of 1) Rust crates and 2) libc to static if possible. (The `libc` part is done in the libraries through lazy `cfg`s though.)

However, for native dependencies with unspecified kind `rustc` will still prefer the dynamic version of the library if both are available.

Unfortunately, doing this is not entirely trivial because `-Bstatic -lmylib` won't just prefer the static version (e.g. `libmylib.a`), it will *require* it.

So we need to check for the existence of `libmylib.a` first (in which directories exactly?) and then pass `-l:libmylib.a` instead of `-lmylib` if it exists.
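
The check-then-rewrite step could look roughly like the following Python sketch. Which directories to search is the open question raised above, so `search_dirs` is simply passed in by the caller; `link_arg` is a hypothetical helper, not rustc code.

```python
import tempfile
from pathlib import Path


def link_arg(name, search_dirs, prefer_static):
    """Pick a linker argument for a native library of unspecified kind."""
    if prefer_static:
        for d in search_dirs:
            if (Path(d) / f"lib{name}.a").is_file():
                # Name the archive exactly, so the linker cannot pick the .so.
                return f"-l:lib{name}.a"
    # No static archive found (or not requested): keep the linker's default.
    return f"-l{name}"


with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "libmylib.a").touch()
    found = link_arg("mylib", [tmp], prefer_static=True)
    missing = link_arg("other", [tmp], prefer_static=True)
    dynamic = link_arg("mylib", [tmp], prefer_static=False)
```

Falling back to `-lname` when no archive exists keeps the "prefer, not require" semantics that `-Bstatic` alone cannot provide.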

cc https://github.com/rust-lang/rust/pull/71586 https://github.com/rust-lang/rust/issues/39998

|

A-linkage,T-compiler,C-bug

|

low

|

Major

|

608,468,518 |

flutter

|

String.fromEnvironment without a const silently does the wrong thing in the VM

|

As [44083](https://github.com/flutter/flutter/pull/44083) was merged in, I would expect it to be working in at least dev, but I am not able to get it to work as expected. I set the channel to dev (flutter channel dev), then did an upgrade (flutter upgrade). Then I issued the following:

flutter -run -t lib/main_generic.dart --dart-define=ENV=DEV

In my main_generic.dart I do this:

var env = String.fromEnvironment("ENV", defaultValue: "PROD");

The env variable always returns PROD.

Should this not be picked up and return DEV instead of defaulting to PROD?

@jonahwilliams

|

engine,dependency: dart,customer: crowd,has reproducible steps,P2,found in release: 2.2,team-engine,triaged-engine

|

low

|

Critical

|

608,474,030 |

go

|

x/text/collate: unexpected result of KeyFromString()

|

<!--

Please answer these questions before submitting your issue. Thanks!

For questions please use one of our forums: https://github.com/golang/go/wiki/Questions

-->

### What version of Go are you using (`go version`)?

<pre>

$ go version

1.1.13

</pre>

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

```

go env

GO111MODULE=""

GOARCH="amd64"

GOBIN=""

GOCACHE="/Users/bba/Library/Caches/go-build"

GOENV="/Users/bba/Library/Application Support/go/env"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="darwin"

GONOPROXY=""

GONOSUMDB=""

GOOS="darwin"

GOPATH="/Users/bba/.gvm/pkgsets/go1.13/global"

GOPRIVATE=""

GOPROXY="https://proxy.golang.org,direct"

GOROOT="/Users/bba/.gvm/gos/go1.13"

GOSUMDB="sum.golang.org"

GOTMPDIR=""

GOTOOLDIR="/Users/bba/.gvm/gos/go1.13/pkg/tool/darwin_amd64"

GCCGO="gccgo"

AR="ar"

CC="clang"

CXX="clang++"

CGO_ENABLED="1"

GOMOD="/Users/bba/text/go.mod"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/tg/tb44y2693qd2s6j53chrywyw0000gn/T/go-build299346317=/tmp/go-build -gno-record-gcc-switches -fno-common"

```

</pre></details>

### What did you do?

<!--

If possible, provide a recipe for reproducing the error.

A complete runnable program is good.

A link on play.golang.org is best.

-->

https://play.golang.org/p/__hCo4kcE86

### What did you expect to see?

str = bytesToString(ctor.KeyFromString(buf, "L·"))

println("", str) // 1711

The str will be "17110183" instead of "1711".

### What did you see instead?

"1711"

|

NeedsInvestigation

|

low

|

Critical

|

608,498,658 |

terminal

|

About dialog appears abruptly, without animation

|

@DHowett-MSFT I think this PR introduced a new problem: the about dialog no longer opens and closes smoothly with an animation; it appears and disappears abruptly. Also, the dialog border color is greyish in the light theme instead of the accent color (maybe it's intended).

_Originally posted by @AnuthaDev in https://github.com/microsoft/terminal/pull/5224#issuecomment-620703529_

|

Issue-Bug,Area-UserInterface,Product-Terminal,Priority-3

|

low

|

Minor

|

608,499,951 |

rust

|

Enabling `+crt-static` in a blanket way breaks dynamic libraries including proc macros

|

This is pretty much https://github.com/rust-lang/cargo/issues/7563 generalized, which was fixed in https://github.com/rust-lang/rust/pull/69519, which I don't consider a correct or satisfactory solution.

https://github.com/rust-lang/rust/pull/71586 may be a good preliminary reading.

---

If you are building something with Cargo and set `-C target-feature=+crt-static` through `RUSTFLAGS`, or `crt-static` is enabled by default through a target specification, it will be set for all crates during the build, including proc macros and cdylibs (and build scripts?).

In most cases this is not the intention when we are enabling `crt-static`.

In most cases the intention is to enable it for executables only.

So if enabling `crt-static` for executables (via env var or a target spec) also enables it for libraries, it usually results only in "collateral damage".

If the target doesn't support `+crt-static` for libraries, we just get an error like https://github.com/rust-lang/cargo/issues/7563 reported.

If the target supports `+crt-static` for libraries, we get a very weird library which is unlikely to work when dynamically loaded as a proc macro crate (I didn't verify that though).

As a result, we need a way to enable `crt-static` for executables without collaterally damaging libraries.

Moreover, this way should be the default.

---

https://github.com/rust-lang/rust/pull/71586#discussion_r416054543 lists some possible alternatives of doing this.

- Automatically enable `-Ctarget-feature=-crt-static` for proc macro crates or all libraries in Cargo (cc @ehuss), modifying RUSTFLAGS. Question: how to opt-out?

- Introduce a new option `-Ctarget-feature=+crt-static-dylib` controlling static linking of libraries instead of `-Ctarget-feature=-crt-static`. It would almost never be used on Unix-like targets (not sure about windows-msvc and wasm).

- Keep the existing meaning of `crt-static`, but introduce a new option `-C disable-crt-static-for-dylibs` or something. Cargo would then use it for proc macro crates or all libraries. Question: how to opt-out?

- Ignore `+crt-static` for libraries if the target doesn't support it. This is fragile, musl actually supports it despite the current value of the flag of the musl target spec. If the flag is enabled, proc macros will break.

- Ignore `+crt-static` for libraries always. It is almost never used on Unix-like targets (not sure about windows-msvc and wasm). `+crt-static-dylib` looks strictly better.

- Have two `crt-static` defaults in target specs - one for executables and one for libraries. Solves one half of the problem, but explicit `+crt-static` in `RUSTFLAGS` will still cause collateral damage.

|

A-linkage,T-compiler,C-bug

|

medium

|

Critical

|

608,528,298 |

pytorch

|

rsub incorrectly exposed in torch

|

```

>>> torch.rsub

<built-in method rsub of type object at 0x113435ad0>

```

I believe this is supposed to be an implementation for the magic method `__rsub__`; so this should be made uniform with the rest of the magic methods (which are directly exposed by their names).
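
For reference, this is how the reflected-subtraction magic method behaves in plain Python. `Meters` is a hypothetical class used only for illustration and has nothing to do with PyTorch's implementation.

```python
class Meters:
    """Hypothetical value type used only to show reflected subtraction."""

    def __init__(self, value):
        self.value = value

    def __sub__(self, other):
        # Handles `Meters - number`.
        return Meters(self.value - other)

    def __rsub__(self, other):
        # Handles `number - Meters`: Python calls this on the right operand
        # after int.__sub__ returns NotImplemented.
        return Meters(other - self.value)


left = (Meters(3) - 10).value   # uses Meters.__sub__
right = (10 - Meters(3)).value  # uses Meters.__rsub__
```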

|

triaged,better-engineering

|

low

|

Minor

|

608,536,581 |

pytorch

|

Tensor.is_same_size not documented

|

https://pytorch.org/docs/master/search.html?q=is_same_size&check_keywords=yes&area=default#

Seems to be a thing for conveniently testing if sparse sizes actually match. Maybe shouldn't be a method as it is right now.

|

module: docs,triaged

|

low

|

Minor

|

608,537,627 |

pytorch

|

Tensor.as_strided_ is not documented

|

https://pytorch.org/docs/master/search.html?q=as_strided_&check_keywords=yes&area=default#

|

module: docs,triaged

|

low

|

Minor

|

608,539,716 |

pytorch

|

torch.clamp_max clamp_min shouldn't be there

|

~~Maybe they're not supposed to be in torch namespace?~~

|

triaged,better-engineering

|

low

|

Minor

|

608,539,770 |

pytorch

|

Multi-Process Single-GPU is bad

|

I'm sorry to do this, but since your release states that you plan to deprecate Single-Process Multi-GPU, I have to talk about all the issues I have with MPSG.

First, this mode takes up a CPU thread per GPU you have. I'm sure that the developers of PyTorch are used to working with servers that have tens of CPU cores, where the number of cores is much greater than the number of GPUs, but students like me who are trying to stretch their compute budgets don't necessarily have this luxury. For example, I have one workstation with 4 GPUs and 8 cores, and another with 2 GPUs and 4 cores. Those extra threads are needed for data loading, let alone anything else I want to do with the machine.

Second, killing jobs is hard and often results in zombie processes that have to be tracked down and killed manually. CTRL-c should kill all processes and the user shouldn't have to do anything else. This is how it works with DataParallel. On a related note, these extra processes often output stuff to the console in duplicate, which really clutters output. Sometimes even after the head is killed.

Third, the mode is error prone when it comes to data loading. We're supposed to use DistributedSampler to ensure that each node loads a different partition of the data. It's not easy to verify that it is working and not creating duplicates, and I'm often trying to do something just a little fancier than what DistributedSampler was designed to do. Failure in this case is silent. You just get worse results.
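
One way to make such failures visible is to collect the indices each rank would load and compare them. The sketch below uses a simplified strided split with no shuffling or padding; that scheme is an assumption made for illustration, and the real `DistributedSampler` may pad or shuffle, so in practice the indices should be gathered from the actual sampler on each rank. `rank_indices` and `check_partition` are hypothetical helpers.

```python
def rank_indices(dataset_len, world_size, rank):
    """Strided split: rank r takes positions r, r + world_size, ... (assumed scheme)."""
    return list(range(dataset_len))[rank::world_size]


def check_partition(dataset_len, world_size):
    """Return (duplicates, missing) across all ranks for one epoch."""
    seen = []
    for rank in range(world_size):
        seen.extend(rank_indices(dataset_len, world_size, rank))
    duplicates = len(seen) - len(set(seen))
    missing = dataset_len - len(set(seen))
    return duplicates, missing


result = check_partition(dataset_len=10, world_size=4)
per_rank = [rank_indices(10, 4, r) for r in range(4)]
```

A non-zero duplicate or missing count would turn the silent failure into something a test can assert on.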

Finally, I just don't like the API of it. It feels like there's all this code clutter, with "if args.distributed" everywhere, and you have to call "reduce_tensor" for each metric you compute. If you forget, everything still "works"; your results are just inaccurate. You also have to launch your program with "python -m torch.distributed.launch --nproc_per_node=xx", which is esoteric and clutters your console.

As a result, I find myself using DataParallel almost all the time, even though I know it's slower. It's usually not that much slower though. I hope to be able to keep it.

What I really hope for is more support for it. Why is it slower? Can that speed gap be closed somewhat? It uses more GPU memory too, especially on GPU 0. Trying to use the new "Channels Last" with it results in no speedup, but if I test on a single GPU, sometimes the speedup is significant.

Thanks! I really appreciate PyTorch on the whole. It's an invaluable tool for students like me.

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @xush6528 @osalpekar

|

oncall: distributed,triaged,module: data parallel

|

low

|

Critical

|

608,562,123 |

go

|

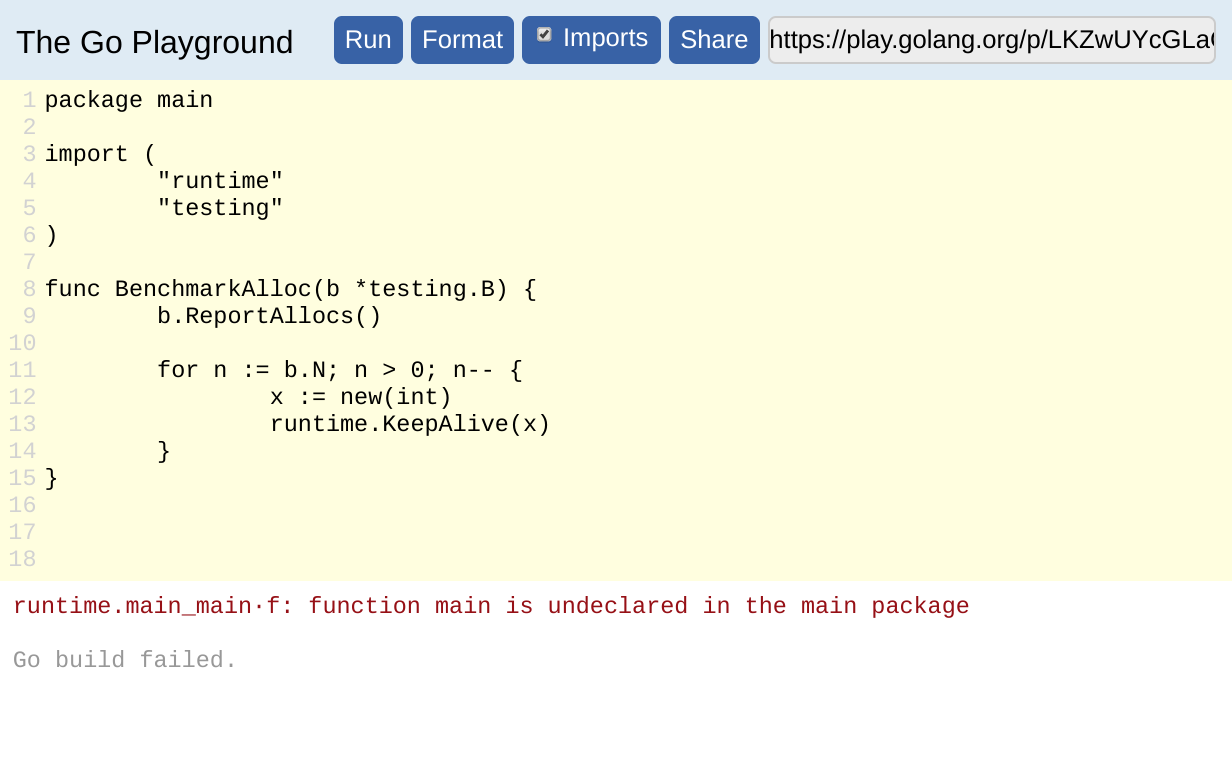

x/playground: detect and run Benchmark functions

|

### What did you do?

Wrote a Playground source file containing a Benchmark function, for which I wanted to see the allocations per operation (a number that does not depend in any way on the absolute running time of the program):

https://play.golang.org/p/LKZwUYcGLaG

```

package main

import (
	"runtime"
	"testing"
)

func BenchmarkAlloc(b *testing.B) {
	b.ReportAllocs()
	for n := b.N; n > 0; n-- {
		x := new(int)
		runtime.KeepAlive(x)
	}
}

```

### What did you expect to see?

Output similar to `go test -bench .`:

```

goos: linux

goarch: amd64

pkg: example.com

BenchmarkAlloc-6 1000000000 0.285 ns/op 0 B/op 0 allocs/op

PASS

ok example.com 0.346s

```

### What did you see instead?

```

runtime.main_main·f: function main is undeclared in the main package

Go build failed.

```

CC @andybons @dmitshur @toothrot @cagedmantis

See previously #24311, #6511, #32403.

|

NeedsInvestigation,FeatureRequest

|

low

|

Critical

|

608,562,663 |

pytorch

|

RuntimeError: CUDA error: an illegal memory access was encountered with channels_last

|

I get an illegal memory access error when trying to train mnasnet (any version) with apex (O1) and channels_last.

## To Reproduce

Steps to reproduce the behavior:

Use the apex imagenet example:

`python -m torch.distributed.launch --nproc_per_node=2 main_amp.py -a=mnasnet1_3 --b 224 --workers 4 --channels-last=True --opt-level=O1 -b=256 /intel_nvme/imagenet_data/`

Traceback (most recent call last):

File "main_amp.py", line 542, in <module>

main()

File "main_amp.py", line 247, in main

train(train_loader, model, criterion, optimizer, epoch)

File "main_amp.py", line 353, in train

scaled_loss.backward()

File "/home/tstand/anaconda3/lib/python3.7/contextlib.py", line 119, in __exit__

next(self.gen)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/handle.py", line 123, in scale_loss

optimizer._post_amp_backward(loss_scaler)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/_process_optimizer.py", line 249, in post_backward_no_master_weights

post_backward_models_are_masters(scaler, params, stashed_grads)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/_process_optimizer.py", line 135, in post_backward_models_are_masters

scale_override=(grads_have_scale, stashed_have_scale, out_scale))

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/scaler.py", line 184, in unscale_with_stashed

out_scale/stashed_have_scale)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/scaler.py", line 148, in unscale_with_stashed_python

self.dynamic)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/scaler.py", line 22, in axpby_check_overflow_python

cpu_sum = float(model_grad.float().sum())

RuntimeError: CUDA error: an illegal memory access was encountered

terminate called after throwing an instance of 'c10::Error'

what(): CUDA error: an illegal memory access was encountered (insert_events at /pytorch/c10/cuda/CUDACachingAllocator.cpp:771)

frame #0: c10::Error::Error(c10::SourceLocation, std::string const&) + 0x46 (0x7f5827507536 in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libc10.so)

frame #1: c10::cuda::CUDACachingAllocator::raw_delete(void*) + 0x7ae (0x7f582774afbe in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #2: c10::TensorImpl::release_resources() + 0x4d (0x7f58274f7abd in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libc10.so)

frame #3: <unknown function> + 0x5236b2 (0x7f58732c06b2 in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libtorch_python.so)

frame #4: <unknown function> + 0x523756 (0x7f58732c0756 in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libtorch_python.so)

frame #5: <unknown function> + 0x19dfce (0x55748c40bfce in /home/tstand/anaconda3/bin/python)

frame #6: <unknown function> + 0x103948 (0x55748c371948 in /home/tstand/anaconda3/bin/python)

frame #7: <unknown function> + 0x114267 (0x55748c382267 in /home/tstand/anaconda3/bin/python)

frame #8: <unknown function> + 0x11427d (0x55748c38227d in /home/tstand/anaconda3/bin/python)

frame #9: <unknown function> + 0x11427d (0x55748c38227d in /home/tstand/anaconda3/bin/python)

frame #10: PyDict_SetItem + 0x502 (0x55748c3cd602 in /home/tstand/anaconda3/bin/python)

frame #11: PyDict_SetItemString + 0x4f (0x55748c3ce0cf in /home/tstand/anaconda3/bin/python)

frame #12: PyImport_Cleanup + 0x9e (0x55748c40d91e in /home/tstand/anaconda3/bin/python)

frame #13: Py_FinalizeEx + 0x67 (0x55748c483367 in /home/tstand/anaconda3/bin/python)

frame #14: <unknown function> + 0x227d93 (0x55748c495d93 in /home/tstand/anaconda3/bin/python)

frame #15: _Py_UnixMain + 0x3c (0x55748c4960bc in /home/tstand/anaconda3/bin/python)

frame #16: __libc_start_main + 0xf3 (0x7f5875ba81e3 in /lib/x86_64-linux-gnu/libc.so.6)

frame #17: <unknown function> + 0x1d0990 (0x55748c43e990 in /home/tstand/anaconda3/bin/python)

THCudaCheck FAIL file=/pytorch/aten/src/THC/THCCachingHostAllocator.cpp line=278 error=700 : an illegal memory access was encountered

Traceback (most recent call last):

File "main_amp.py", line 542, in <module>

main()

File "main_amp.py", line 247, in main

train(train_loader, model, criterion, optimizer, epoch)

File "main_amp.py", line 353, in train

scaled_loss.backward()

File "/home/tstand/anaconda3/lib/python3.7/contextlib.py", line 119, in __exit__

next(self.gen)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/handle.py", line 123, in scale_loss

optimizer._post_amp_backward(loss_scaler)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/_process_optimizer.py", line 249, in post_backward_no_master_weights

post_backward_models_are_masters(scaler, params, stashed_grads)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/_process_optimizer.py", line 135, in post_backward_models_are_masters

scale_override=(grads_have_scale, stashed_have_scale, out_scale))

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/scaler.py", line 184, in unscale_with_stashed

out_scale/stashed_have_scale)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/scaler.py", line 148, in unscale_with_stashed_python

self.dynamic)

File "/home/tstand/anaconda3/lib/python3.7/site-packages/apex/amp/scaler.py", line 22, in axpby_check_overflow_python

cpu_sum = float(model_grad.float().sum())

RuntimeError: CUDA error: an illegal memory access was encountered

terminate called after throwing an instance of 'c10::Error'

what(): CUDA error: an illegal memory access was encountered (insert_events at /pytorch/c10/cuda/CUDACachingAllocator.cpp:771)

frame #0: c10::Error::Error(c10::SourceLocation, std::string const&) + 0x46 (0x7f911c250536 in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libc10.so)

frame #1: c10::cuda::CUDACachingAllocator::raw_delete(void*) + 0x7ae (0x7f911c493fbe in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #2: c10::TensorImpl::release_resources() + 0x4d (0x7f911c240abd in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libc10.so)

frame #3: <unknown function> + 0x5236b2 (0x7f91680096b2 in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libtorch_python.so)

frame #4: <unknown function> + 0x523756 (0x7f9168009756 in /home/tstand/.local/lib/python3.7/site-packages/torch/lib/libtorch_python.so)

frame #5: <unknown function> + 0x19dfce (0x5599d63f8fce in /home/tstand/anaconda3/bin/python)

frame #6: <unknown function> + 0x103948 (0x5599d635e948 in /home/tstand/anaconda3/bin/python)

frame #7: <unknown function> + 0x114267 (0x5599d636f267 in /home/tstand/anaconda3/bin/python)

frame #8: <unknown function> + 0x11427d (0x5599d636f27d in /home/tstand/anaconda3/bin/python)

frame #9: <unknown function> + 0x11427d (0x5599d636f27d in /home/tstand/anaconda3/bin/python)

frame #10: PyDict_SetItem + 0x502 (0x5599d63ba602 in /home/tstand/anaconda3/bin/python)

frame #11: PyDict_SetItemString + 0x4f (0x5599d63bb0cf in /home/tstand/anaconda3/bin/python)

frame #12: PyImport_Cleanup + 0x9e (0x5599d63fa91e in /home/tstand/anaconda3/bin/python)

frame #13: Py_FinalizeEx + 0x67 (0x5599d6470367 in /home/tstand/anaconda3/bin/python)

frame #14: <unknown function> + 0x227d93 (0x5599d6482d93 in /home/tstand/anaconda3/bin/python)

frame #15: _Py_UnixMain + 0x3c (0x5599d64830bc in /home/tstand/anaconda3/bin/python)