| id | repo | title | body | labels | priority | severity |
|---|---|---|---|---|---|---|

598,275,239 |

rust

|

Auto-fix wrongly suggesting scope issues during struct manipulations

|

<!--

Thank you for filing a bug report! 🐛 Please provide a short summary of the bug,

along with any information you feel relevant to replicating the bug.

-->

Hi, there's an auto-fix bug. I'm using VSCode. The code explains itself:

I tried this code:

```rust

use std::fmt;

use std::fmt::Error;

use std::fmt::Formatter;

struct Structure(i32);

#[derive(Debug)]

struct Point {

x : i32,

y : i32,

}

impl fmt::Display for Structure {

fn fmt(&self, f: &mut Formatter) -> Result<(), Error> {

write!(f, "The value inside the structure is: {}", self.0)

}

}

fn main() {

let s = Structure(12);

println!("{}", s);

let point = {x = 12; y = 45};

println!("{}")

}

```

I expected to see the auto-fix suggest ways to print Point correctly.

Instead, auto-fix in VSCode suggests changing x and y to s in line 23.

However, the error message from rust displays fine.
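For reference, here is a minimal sketch of what the snippet above was presumably meant to do. It assumes the intent was to build a `Point` with a struct literal and print it through its derived `Debug` implementation; that is a guess at the intent, not something the compiler or the editor suggested.

```rust
use std::fmt;

struct Structure(i32);

#[derive(Debug)]
struct Point {
    x: i32,
    y: i32,
}

impl fmt::Display for Structure {
    fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {
        write!(f, "The value inside the structure is: {}", self.0)
    }
}

fn main() {
    let s = Structure(12);
    println!("{}", s);

    // Struct literal syntax is `Type { field: value, ... }`.
    let point = Point { x: 12, y: 45 };
    // `Point` only derives `Debug`, so it is printed with `{:?}` rather than `{}`.
    println!("{:?}", point);
    println!("x = {}, y = {}", point.x, point.y);
}
```

A suggestion along these lines (use a struct literal, pass `point` to `println!`) is the kind of fix I was hoping to see.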

### Meta

<!--

If you're using the stable version of the compiler, you should also check if the

bug also exists in the beta or nightly versions.

-->

`rustc --version --verbose`:

```

rustc 1.42.0 (b8cedc004 2020-03-09)

binary: rustc

commit-hash: b8cedc00407a4c56a3bda1ed605c6fc166655447

commit-date: 2020-03-09

host: x86_64-unknown-linux-gnu

release: 1.42.0

LLVM version: 9.0

```

<!--

Include a backtrace in the code block by setting `RUST_BACKTRACE=1` in your

environment. E.g. `RUST_BACKTRACE=1 cargo build`.

-->

<details><summary>Backtrace</summary>

<p>

```

RUST_BACKTRACE=1 cargo build

Compiling hello_world v0.1.0 (/home/jerry/rust-experiment/hello_world)

error: 1 positional argument in format string, but no arguments were given

--> src/main.rs:26:15

|

26 | println!("{}")

| ^^

error[E0425]: cannot find value `x` in this scope

--> src/main.rs:23:9

|

23 | x = 12;

| ^ help: a local variable with a similar name exists: `s`

error[E0425]: cannot find value `y` in this scope

--> src/main.rs:24:9

|

24 | y = 45;

| ^ help: a local variable with a similar name exists: `s`

error: aborting due to 3 previous errors

For more information about this error, try `rustc --explain E0425`.

error: could not compile `hello_world`.

```

</p>

</details>

|

C-enhancement,A-diagnostics,T-compiler

|

low

|

Critical

|

598,282,397 |

opencv

|

opencv.js detectMultiScale piles up a lot of memory when using large images

|

##### System information (version)

- OpenCV => 4.3 (problem also exists for older versions)

- Operating System / Platform => Linux (with Mozilla Firefox 74.0 (64-bit))

- Compiler => using opencv.js from demo page

##### Detailed description

When detectMultiScale from opencv.js runs on large photos (e.g. from common DSLRs), a lot of memory is used and not freed after use.

Running the demo (https://docs.opencv.org/4.3.0/js_face_detection.html) on an 8MB JPEG file results in >1GB of used memory, which is not released after detectMultiScale has finished or after loading a different image.

I am not really sure, but it could be related to this issue: https://github.com/opencv/opencv/issues/15060 !?

##### Steps to reproduce

1. Go to: https://docs.opencv.org/4.3.0/js_face_detection.html

2. Load a large photo which contains faces (I used 6000x4000px, ~8MB, jpeg)

3. Check the memory of the tab (e.g. by using the browser's dev functions)

4. Load a different photo

5. Check memory again, and see that it is even larger than in step 3.

|

category: javascript (js)

|

low

|

Minor

|

598,285,696 |

flutter

|

Add consistent across all platforms onDonePressed callback to TextField

|

This issue is a follow up of https://github.com/flutter/flutter/issues/49785

Please check it out.

TextField's **onSubmitted** and **onEditingComplete** behave differently on different platforms.

Currently, there are the following differences:

On mobile - **onSubmitted** **is not** firing if you tap another TextField or blank space.

On web - **onSubmitted** **is** firing if you tap another TextField or blank space (undesired).

On mobile web - **onSubmitted** **is** firing when the virtual keyboard is closed and when the app is sent to the background (undesired).

I suggest adding an **onDonePressed** callback that would be invoked **only** when the user presses **Enter**.

It would allow the same behavior on mobile and web without changing code or creating workarounds.

Thanks!

|

a: text input,c: new feature,framework,f: material design,a: quality,c: proposal,P3,team-text-input,triaged-text-input

|

low

|

Minor

|

598,293,384 |

pytorch

|

After `create_graph=True`, calculating `backward()` on sparse Tensor fails

|

## 🐛 Bug

After retaining the calculation graph of the gradients ( `create_graph=True`), `backward()` fails on sparse Tensor.

The following error occurs:

`RuntimeError: calculating the gradient of a sparse Tensor argument to mm is not supported.`

## To Reproduce

```

import torch

batch_size = 10

inp_size = 1000

out_size= 40

X = torch.randn(batch_size, inp_size).to_sparse().requires_grad_(True)

y = torch.randn(batch_size, out_size).requires_grad_(True)

weight = torch.nn.Parameter(torch.randn(inp_size, out_size))

y_out = torch.sparse.mm(X, weight)

criterion = torch.nn.MSELoss()

loss = criterion(y_out, y)

grad = torch.autograd.grad(loss, [weight], create_graph=True)[0]

expected_grad = torch.randn(grad.size())

L = ((grad - expected_grad) ** 2).sum()

L.backward()

```

## Expected behavior

After `L.backward()`, dL/dX and dL/dy should be calculated based on the gradient's calculation graph.

## Environment

```

PyTorch version: 1.4.0

Is debug build: No

CUDA used to build PyTorch: 10.1

OS: Ubuntu 18.04.4 LTS

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

CMake version: Could not collect

Python version: 3.6

Is CUDA available: Yes

CUDA runtime version: 9.1.85

GPU models and configuration:

GPU 0: Tesla V100-PCIE-32GB

GPU 1: Tesla V100-PCIE-32GB

Nvidia driver version: 440.33.01

cuDNN version: Could not collect

Versions of relevant libraries:

[pip] Could not collect

[conda] Could not collect

```

- pip 20.0.2

- numpy 1.17.0

- torch 1.4.0

- torchvision 0.5.0

|

triaged,enhancement

|

low

|

Critical

|

598,300,467 |

pytorch

|

Issue when linking C++ code with libtorch_cpu: cuda not detected

|

## 🐛 Bug

(not sure how much of a bug this is, maybe it's expected)

I'm currently working on adapting some [rust bindings](https://github.com/LaurentMazare/tch-rs) for PyTorch and in the process came across the following issue for which I don't have a good solution. The issue can be shown with C++ code.

- When linking the final binary with `-ltorch -ltorch_cpu -lc10`, `torch::cuda::is_available()` returns false.

- When linking the final binary with `-Wl,--no-as-needed -ltorch -ltorch_cpu -lc10`, `torch::cuda::is_available()` returns true.

- When linking without `-ltorch_cpu`, I get a missing symbol error for: `c10::Dispatcher::singleton`.

I tried compiling some C++ pytorch code with cmake and it seems to use --no-as-needed to get this to work.

Is there a way to get some external code to compile without libtorch_cpu?

One difficulty is that the rust build system does not let you specify arbitrary linker flags so I cannot easily set `-Wl,--no-as-needed`.

## To Reproduce

The issue can be reproduced using the C++ code below.

```c++

#include <torch/torch.h>

#include <iostream>

int main() {

std::cout << torch::cuda::is_available() << std::endl;

}

```

Then:

- `g++ test.cpp -std=gnu++14 -ltorch -ltorch_cpu -lc10 && ./a.out` prints 0.

- `g++ test.cpp -std=gnu++14 -Wl,--no-as-needed -ltorch -ltorch_cpu -lc10 && ./a.out` prints 1.

## Expected behavior

I would have hoped for cuda to be reported as available without the `-Wl,--no-as-needed` flag.

## Environment

- PyTorch Version (e.g., 1.0): release/1.5 branch as of 2020-04-11.

- OS (e.g., Linux): Linux (ubuntu 18.04)

- How you installed PyTorch (`conda`, `pip`, source): source, compiled with cuda support

- Build command you used (if compiling from source): `python setup.py build`

- Python version: 3.7.1.

- CUDA/cuDNN version: 10.0/none.

- GPU models and configuration: 1x GeForce RTX 2080.

- Any other relevant information: gcc/g++ 7.5.0, ld 2.3.0

## Additional context

cc @yf225

|

module: build,module: cpp,triaged

|

low

|

Critical

|

598,329,987 |

create-react-app

|

Update workbox plugin to 5.1.2 resolving ServiceWorker quota exceeded errors

|

### Is your proposal related to a problem?

Currently our e-commerce site is experiencing millions of ServiceWorker `DOMException: QuotaExceededError` errors ([we're not the only ones](https://github.com/GoogleChrome/workbox/pull/1505)), which are almost blowing through our monthly Rollbar limit 😬. I did some brief research and found that Google is aware of this issue and [recommends `purgeOnQuotaError`](https://developers.google.com/web/tools/workbox/guides/storage-quota#purgeonquotaerror) to potentially fix most of these runtime quota issues.

I could be mistaken, but it looks as if [CRA is using workbox 4.3.1](https://github.com/facebook/create-react-app/blob/c5b96c2853671baa3f1f297ec3b36d7358898304/packages/react-scripts/package.json#L84), and this `purgeOnQuotaError` was [added in 5.0.0 as default enabled](https://github.com/GoogleChrome/workbox/blob/v5.0.0/packages/workbox-build/src/options/defaults.js#L29).

Since CRA follows best practices, I highly suggest we update Workbox plugin ASAP so we can be on par with Google's best practices for handling ServiceWorkers.

Thanks and love this app!

### Describe the solution you'd like

[Update `workbox-webpack-plugin` to 5.1.2](https://github.com/facebook/create-react-app/pull/8822)

### Describe alternatives you've considered

None possible without ejecting.

|

issue: proposal,needs triage

|

low

|

Critical

|

598,334,041 |

go

|

x/tools/gopls: fuzzy completion does not return result when expected

|

<!-- Please answer these questions before submitting your issue. Thanks! -->

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version devel +801cd7c84d Thu Apr 2 09:00:44 2020 +0000 linux/amd64

$ go list -m golang.org/x/tools

golang.org/x/tools v0.0.0-20200408132156-9ee5ef7a2c0d => github.com/myitcvforks/tools v0.0.0-20200408225201-7e808beafd9f

$ go list -m golang.org/x/tools/gopls

golang.org/x/tools/gopls v0.0.0-20200408132156-9ee5ef7a2c0d => github.com/myitcvforks/tools/gopls v0.0.0-20200408225201-7e808beafd9f

</pre>

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

GO111MODULE=""

GOARCH="amd64"

GOBIN=""

GOCACHE="/home/myitcv/.cache/go-build"

GOENV="/home/myitcv/.config/go/env"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOINSECURE=""

GONOPROXY=""

GONOSUMDB=""

GOOS="linux"

GOPATH="/home/myitcv/gostuff"

GOPRIVATE=""

GOPROXY="https://proxy.golang.org,direct"

GOROOT="/home/myitcv/gos"

GOSUMDB="sum.golang.org"

GOTMPDIR=""

GOTOOLDIR="/home/myitcv/gos/pkg/tool/linux_amd64"

GCCGO="gccgo"

AR="ar"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

GOMOD="/home/myitcv/.vim/plugged/govim/go.mod"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build227804233=/tmp/go-build -gno-record-gcc-switches"

</pre></details>

### What did you do?

```

-- go.mod --

module github.com/myitcv/playground

go 1.12

-- main.go --

package main

import (

"fmt"

)

func main() {

fmt.Prnf

}

```

### What did you expect to see?

Triggering completion at the end of `fmt.Prnf` to return a single result, `fmt.Printf`

### What did you see instead?

Triggering completion at the end of `fmt.Prnf` did not return any results.

See the following `gopls` log: [bad.log](https://github.com/golang/go/files/4465248/bad.log)
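To illustrate why `fmt.Printf` is the expected candidate, here is a small sketch of a plain subsequence test. This is only an assumption about the minimum a fuzzy matcher would accept; it is not how `gopls` matches or scores candidates, and `is_subsequence_match` is a hypothetical helper name.

```rust
/// Returns true if every character of `pattern` appears in `candidate`
/// in the same order (not necessarily adjacent), ignoring ASCII case.
/// Hypothetical helper for illustration; not the gopls matcher.
fn is_subsequence_match(pattern: &str, candidate: &str) -> bool {
    let mut remaining = candidate.chars();
    pattern
        .chars()
        // `any` consumes `remaining` up to and including the first hit,
        // so later pattern characters can only match further right.
        .all(|p| remaining.any(|c| c.eq_ignore_ascii_case(&p)))
}

fn main() {
    for candidate in ["Printf", "Println", "Print"] {
        println!(
            "Prnf ~ {}: {}",
            candidate,
            is_subsequence_match("Prnf", candidate)
        );
    }
}
```

Under this simple rule `Prnf` matches `Printf` but not `Println` or `Print`, which is consistent with the expectation stated above.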

---

cc @stamblerre @muirdm

FYI @leitzler

|

NeedsInvestigation,gopls,Tools

|

low

|

Critical

|

598,339,833 |

rust

|

Typo suggestion doesn't account for types

|

<!--

Thank you for filing a bug report! 🐛 Please provide a short summary of the bug,

along with any information you feel relevant to replicating the bug.

-->

When using multiple transmitters with a single receiver across multiple threads, the `help:` message is totally bonkers. Refer to the `backtrace`, lines 10 and 16:

```rust

use std::sync::mpsc::channel;

use std::thread;

fn main() {

let (tx1, rx) = channel();

let tx2 = tx1.clone();

thread::spawn(move || {

for i in 1..10 {

tx.send(i);

}

});

thread::spawn(move || {

for i in 1..10 {

tx.send(i);

}

});

for i in 1..10 {

println!("{}", rx.recv().unwrap());

}

}

```

The auto-fix seems to be too ambitious at the moment, suggesting wrong fixes. Let me know if I need to file an RFC for this. Java's auto-fix seems smarter and Rust can definitely borrow some of its implementation.
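For comparison, this is a sketch of the code with the fix I would have expected to be suggested, namely `tx1` and `tx2` (both of which have a `send` method) rather than `rx`. The `sum_from_two_senders` wrapper and the explicit `i32` type are assumptions added so the example can be checked.

```rust
use std::sync::mpsc::channel;
use std::thread;

/// Spawns two producer threads that each send 1..10, then sums everything received.
fn sum_from_two_senders() -> i32 {
    let (tx1, rx) = channel::<i32>();
    let tx2 = tx1.clone();

    let first = thread::spawn(move || {
        for i in 1..10 {
            tx1.send(i).unwrap();
        }
    });
    let second = thread::spawn(move || {
        for i in 1..10 {
            tx2.send(i).unwrap();
        }
    });

    first.join().unwrap();
    second.join().unwrap();

    // Both senders were moved into the threads and have been dropped,
    // so the iterator ends once the queued values are drained.
    rx.iter().sum()
}

fn main() {
    println!("{}", sum_from_two_senders());
}
```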

### Meta

<!--

If you're using the stable version of the compiler, you should also check if the

bug also exists in the beta or nightly versions.

-->

`rustc --version --verbose`:

```

rustc 1.42.0 (b8cedc004 2020-03-09)

binary: rustc

commit-hash: b8cedc00407a4c56a3bda1ed605c6fc166655447

commit-date: 2020-03-09

host: x86_64-unknown-linux-gnu

release: 1.42.0

LLVM version: 9.0

```

<!--

Include a backtrace in the code block by setting `RUST_BACKTRACE=1` in your

environment. E.g. `RUST_BACKTRACE=1 cargo build`.

-->

<details><summary>Backtrace</summary>

<p>

```

Compiling hello_world v0.1.0 (/home/jerry/rust-experiment/hello_world)

error[E0425]: cannot find value `tx` in this scope

--> src/main.rs:10:13

|

10 | tx.send(i);

| ^^ help: a local variable with a similar name exists: `rx`

error[E0425]: cannot find value `tx` in this scope

--> src/main.rs:16:13

|

16 | tx.send(i);

| ^^ help: a local variable with a similar name exists: `rx`

error: aborting due to 2 previous errors

For more information about this error, try `rustc --explain E0425`.

error: could not compile `hello_world`.

To learn more, run the command again with --verbose.

```

</p>

</details>

|

C-enhancement,A-diagnostics,T-compiler,A-suggestion-diagnostics,D-papercut

|

low

|

Critical

|

598,342,715 |

vscode

|

Task terminal truncates long/quick output.

|

<!-- ⚠️⚠️ Do Not Delete This! bug_report_template ⚠️⚠️ -->

<!-- Please read our Rules of Conduct: https://opensource.microsoft.com/codeofconduct/ -->

<!-- Please search existing issues to avoid creating duplicates. -->

<!-- Also please test using the latest insiders build to make sure your issue has not already been fixed: https://code.visualstudio.com/insiders/ -->

<!-- Use Help > Report Issue to prefill these. -->

VS Code version: Code 1.43.0 (78a4c91400152c0f27ba4d363eb56d2835f9903a, 2020-03-09T19:44:52.965Z)

OS version: Linux x64 5.4.25

### Steps to Reproduce

Run "test" task using this `.vscode/tasks.json`:

```json

{

"version": "2.0.0",

"tasks": [

{

"label": "test",

"type": "shell",

"command": "seq 1000 | sed 's/$/XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX/'",

}

]

}

```

### Expected behavior

All 1000 lines are printed, the last 3 lines being:

(this is how it works when run in a regular VSCode Terminal)

```

998XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

999XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

1000XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

```

### Actual behavior

Task output is truncated randomly, these being two example runs' last 3 lines:

```

741XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

742XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

743XXXXXXXXXXXXXXXXX

```

```

754XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

755XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

756XXXXXXXXXXXXXXX

```

### Additional background

Reading the description of #75139, I'm *pretty sure* it's the same bug.

It was marked as a duplicate of #38137, but I'm not sure that's correct.

I've only seen this in the last few months, *after* #38137 was supposedly fixed, and assumed the problem was a change in the process producing the output, or some bug/setting in SSH, which is why I haven't reported it earlier.

However, after coming across #75139, I did some experimentation, and even a task that only does `cat build-log` results in truncation (`build-log` is 128kB and has 2665 lines, the current output from a real workload).

And then I simplified that to the command above, which reproduces easily.

Even `seq 1000` alone was truncated once, at `841`.

And `seq 10000` truncates even more easily; example last 3 lines from some runs:

```

9595

9596

959

```

```

7437

7438

7439

```

```

8143

8144

814

```

|

bug,tasks,terminal-process

|

low

|

Critical

|

598,343,409 |

godot

|

node_added isn't triggered for nodes in the Main Scene

|

**Godot version:**

v3.2.1.stable.mono.official

**OS/device including version:**

Windows 10

**Issue description:**

I have an auto-loaded `Node` that connects to `node_added` in `_Ready()`.

My connected function triggers for nodes that are added by AddChild (even for nodes added in the Main Scene's _Ready()).

By logging, I confirmed the `node_added` signal is connected before the Main Scene's `_Ready()` is called.

**Steps to reproduce:**

(All in C#)

1. Create a node and have it connect to `node_added` in its `_Ready()` method.

2. Set that node to Auto Load in your project settings

3. Setup a scene with multiple nodes

4. Make that scene your Main Scene in your project settings

5. Run the project and notice that node_added is not triggered for any of the nodes in your Main Scene.

**Minimal reproduction project:**

[NodeAddedIssue.zip](https://github.com/godotengine/godot/files/4465343/NodeAddedIssue.zip)

|

bug,topic:core

|

low

|

Minor

|

598,350,725 |

rust

|

Lint exported_private_dependencies misses public dependency via trait impl

|

Tracking issue: #44663, RFC: rust-lang/rfcs#1977

Cargo.toml:

```toml

cargo-features = ["public-dependency"]

[package]

name = "playground"

version = "0.0.0"

edition = "2018"

[dependencies]

num-traits = "0.2"

```

lib.rs:

```rust

pub struct S;

impl std::ops::Add for S {

type Output = S;

fn add(self, _: Self) -> Self::Output {

unimplemented!()

}

}

impl num_traits::Zero for S {

fn zero() -> Self {

unimplemented!()

}

fn is_zero(&self) -> bool {

unimplemented!()

}

}

```

Also, a plain `pub use` seems to be missed as well.

|

A-lints,A-visibility,T-compiler,C-bug,F-public_private_dependencies

|

low

|

Minor

|

598,355,312 |

flutter

|

Ensure that macOS rebuilds are fast

|

We need to audit the macOS build pipeline and ensure that we aren't doing excessive work on what should be minimal rebuilds.

I believe there are still some known issues in how `assemble` interacts with the Xcode build. @jonahwilliams are there existing bugs for that I should list as blockers?

|

tool,platform-mac,a: desktop,a: build,P2,team-macos,triaged-macos

|

low

|

Critical

|

598,355,627 |

flutter

|

Ensure that Windows rebuilds are fast

|

We need to audit the Windows build pipeline and ensure that we aren't doing excessive work on what should be minimal rebuilds.

Some areas I'm aware of needing investigation:

- The interaction with the Flutter build step.

- Unconditionally writing config files, which may cause VS to rebuild everything even when their contents haven't changed.

|

tool,platform-windows,a: desktop,a: build,P2,team-windows,triaged-windows

|

low

|

Minor

|

598,355,801 |

flutter

|

Ensure that Linux rebuilds are fast

|

We need to audit the Linux build pipeline and ensure that we aren't doing excessive work on what should be minimal rebuilds.

This is something we should wait until we've switched to `CMake` to investigate, as there's no reason to tune the current Make builds.

|

tool,platform-linux,a: desktop,a: build,P3,team-linux,triaged-linux

|

low

|

Minor

|

598,370,573 |

godot

|

godot 3.2 VehicleBody and VehicleWheel engine_force strange behavior

|

**Some backstory:**

In Godot 3.1, applying engine force works just about as I expect.

In Godot 3.2, the same projects started behaving strangely: the vehicles lost power.

After some investigation I found that the engine force actually applied is not the value I set; it is divided by (number_of_wheels/number_of_traction_wheels). I tested only 4-wheel cars. For an RWD car I need twice the engine force to get the acceleration I want. For a 1WD car with 4 wheels in total, I need to multiply the engine force by 4.

I thought it might work well with the new "Pre-Wheel Motion", but it behaves the same way: I need to apply the engine force to the wheel multiplied by (number_of_wheels/number_of_traction_wheels) to make it work properly.

**How I expect it to work:**

If I set engine_force on a VehicleBody that has at least one VehicleWheel with use_as_traction=true in contact with the ground, the VehicleBody is accelerated with the engine_force I set.

OR

If I set engine_force on a VehicleWheel with use_as_traction=true that is in contact with the ground, that VehicleWheel is accelerated with the engine_force I set.

**How it works:**

If I set engine_force to VehicleBody or VehicleWheel then actual acceleration that is applied is divided by (number_of_wheels/number_of_traction_wheels).
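The arithmetic of the workaround described above can be sketched as follows. This only encodes the ratio reported in this issue; the function names are hypothetical and nothing here is taken from the Godot source.

```rust
/// Factor the report says is needed: number_of_wheels / number_of_traction_wheels.
fn compensation_factor(wheels: u32, traction_wheels: u32) -> f64 {
    assert!(traction_wheels > 0 && traction_wheels <= wheels);
    f64::from(wheels) / f64::from(traction_wheels)
}

/// Value to assign to engine_force so that the effective force equals `desired`,
/// assuming the division described in the report.
fn compensated_engine_force(desired: f64, wheels: u32, traction_wheels: u32) -> f64 {
    desired * compensation_factor(wheels, traction_wheels)
}

fn main() {
    // RWD car: 4 wheels, 2 traction wheels.
    println!("RWD factor: {}", compensation_factor(4, 2));
    // 1WD car: 4 wheels, 1 traction wheel.
    println!("1WD factor: {}", compensation_factor(4, 1));
    println!("RWD force for 100: {}", compensated_engine_force(100.0, 4, 2));
}
```

This gives the factor of 2 for the RWD case and 4 for the 1WD case mentioned in the backstory.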

|

bug,topic:physics

|

low

|

Minor

|

598,377,751 |

pytorch

|

Comparison ops for Complex Tensors

|

This issue is intended to roll-up several conversations about how PyTorch should compare complex values and how functions that logically rely on comparisons, like min, max, sort, and clamp, should work when given complex inputs. See https://github.com/pytorch/pytorch/issues/36374, which discussed complex min and max, and https://github.com/pytorch/pytorch/issues/33568, which discussed complex clamp. The challenge of comparing complex numbers is not limited to PyTorch, either, see https://github.com/numpy/numpy/issues/15630 for NumPy's discussion of complex clip.

Comparing complex numbers is challenging because the complex numbers aren't part of any ordered field. In NumPy, they're typically compared lexicographically: comparing the real part and only comparing the imaginary part if the real parts are equal. C++ and Python, on the other hand, do not support comparison ops on complex numbers.

Let's use this issue to enumerate complex comparison options as well as their pros and cons.

The current options are:

- No default complex comparison

- Pros:

- Consistent with C++ and Python

- Behavior is always clear since the user must specify the type of comparison

- Cons:

- Divergent from NumPy, but a clear error

- Possibly inconvenient to always specify the comparison

- Lexicographic comparison

- Pros:

- Consistent with NumPy

- Cons:

- Clamp (clip) behavior seems strange: (3 - 100j) clamped below to (2 + 5j) is unchanged, clamped above to (2 + 5j) becomes (2 + 5j)

- Some users report wanting to compare complex by absolute value

- Absolute value comparison

- Pros:

- Some applications naturally compare complex numbers using their absolute values

- Cons:

- Divergent from NumPy, possibly a silent break
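To make the difference between the last two options concrete, here is a small standalone sketch of lexicographic versus absolute-value ordering applied to clamp. It is written in Rust with a hypothetical `Complex` struct and helper names; it is not PyTorch or NumPy code and does not handle NaN.

```rust
use std::cmp::Ordering;

#[derive(Debug, Clone, Copy, PartialEq)]
struct Complex {
    re: f64,
    im: f64,
}

type Cmp = fn(Complex, Complex) -> Ordering;

fn cmp_f64(a: f64, b: f64) -> Ordering {
    a.partial_cmp(&b).expect("NaN is not handled in this sketch")
}

/// Compare real parts first; compare imaginary parts only on a tie.
fn lexicographic(a: Complex, b: Complex) -> Ordering {
    cmp_f64(a.re, b.re).then_with(|| cmp_f64(a.im, b.im))
}

/// Compare by magnitude |z| = sqrt(re^2 + im^2).
fn by_abs(a: Complex, b: Complex) -> Ordering {
    cmp_f64(a.re.hypot(a.im), b.re.hypot(b.im))
}

fn clamp_below(x: Complex, lo: Complex, cmp: Cmp) -> Complex {
    if cmp(x, lo) == Ordering::Less { lo } else { x }
}

fn clamp_above(x: Complex, hi: Complex, cmp: Cmp) -> Complex {
    if cmp(x, hi) == Ordering::Greater { hi } else { x }
}

fn main() {
    let bound = Complex { re: 2.0, im: 5.0 };
    let x = Complex { re: 3.0, im: -100.0 };
    let y = Complex { re: 1.0, im: 100.0 };

    println!("lex, x clamped below: {:?}", clamp_below(x, bound, lexicographic));
    println!("lex, x clamped above: {:?}", clamp_above(x, bound, lexicographic));
    println!("lex, y clamped below: {:?}", clamp_below(y, bound, lexicographic));
    println!("abs, y clamped below: {:?}", clamp_below(y, bound, by_abs));
}
```

With lexicographic ordering, (3 - 100j) clamped below to (2 + 5j) is unchanged and clamped above becomes (2 + 5j), as noted in the list. The two orderings disagree on (1 + 100j): clamped below to (2 + 5j), it is replaced under lexicographic ordering but left unchanged under absolute-value ordering.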

cc. @rgommers @dylanbespalko @ezyang @mruberry

cc @ezyang @anjali411 @dylanbespalko

|

triaged,module: complex,module: numpy

|

low

|

Critical

|

598,410,097 |

godot

|

Missing dependencies after renaming a placeholder scene

|

**Godot version:**

- v3.2.1.stable.official

- v4.0.dev.custom_build.9dc19f761

**OS/device including version:** macOS Catalina 10.15.4

**Issue description:**

Renaming / drag & dropping a scene to another folder may cause missing dependencies if the scene is marked "Load as Placeholder".

* If the main scene is still open, it cannot be saved until I remove and re-add the placeholder node.

* If the main scene is not open, I won't be able to open the scene again. The only way to fix it seems to be manually changing the `instance_placeholder="res://XXX.tscn"` field in the `tscn` file.

**Steps to reproduce:**

1. Create a new 2D scene, save as 'Sprite.tscn'

2. Create a new 2D scene, save as 'Main.tscn'

3. Instance Sprite in Main

4. Right click the Sprite node, and check 'Load as Placeholder'

5. Two cases:

1. Close the Main scene

* Rename 'Sprite.tscn' to 'Other.tscn' in FileSystem dock

* Try to open 'Main.tscn'

* Alert: Missing 'Main.tscn' or its dependencies.

2. Keep the Main scene open

* Rename 'Sprite.tscn' to 'Other.tscn' in FileSystem dock

* Try to save 'Main.tscn'

* Alert: Couldn't save scene. Likely dependencies (instances or inheritance) couldn't be satisfied.

**Minimal reproduction project:** N/A

|

bug,topic:editor,confirmed

|

low

|

Major

|

598,455,745 |

rust

|

no associated item found for struct in the current scope while it exists

|

The following code ([playground](https://play.rust-lang.org/?version=stable&mode=debug&edition=2018&gist=e1b64e86edb39ace6b60a2cd4628a71b)):

```rust

trait Trait {

type Associated;

fn instance() -> Self::Associated;

}

struct Associated;

struct Struct;

impl Trait for Struct {

type Associated = Associated;

fn instance() -> Self::Associated {

Self::Associated

}

}

```

Fails with this error:

```

error[E0599]: no associated item named `Associated` found for struct `Struct` in the current scope

--> src/lib.rs:14:15

|

8 | struct Struct;

| -------------- associated item `Associated` not found for this

...

14 | Self::Associated

| ^^^^^^^^^^ associated item not found in `Struct`

```

However, if we alter `instance()` slightly to either of these, the code compiles successfully:

```rust

fn instance() -> Self::Associated {

Self::Associated {} // {} even though we have `struct S;`, not `struct S {}`

}

```

```rust

fn instance() -> Self::Associated {

Associated // outer scope struct definition

}

```

---

It is worth mentioning that explicitly using `as Trait` does **not** solve the issue, and fails with a different error:

```rust

fn instance() -> Self::Associated {

<Self as Trait>::Associated

}

```

Error:

```

error[E0575]: expected method or associated constant, found associated type `Trait::Associated`

--> src/lib.rs:14:9

|

14 | <Self as Trait>::Associated

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^

|

= note: can't use a type alias as a constructor

```

Adding `{}`:

```rust

fn instance() -> Self::Associated {

<Self as Trait>::Associated {}

}

```

Fails with:

```

error: expected one of `.`, `::`, `;`, `?`, `}`, or an operator, found `{`

--> src/lib.rs:14:37

|

14 | <Self as Trait>::Associated {}

| ^ expected one of `.`, `::`, `;`, `?`, `}`, or an operator

```

|

A-associated-items,T-lang,T-compiler,C-bug

|

low

|

Critical

|

598,458,429 |

rust

|

Support positional vectored IO for unix files

|

Next to the existing `write_vectored` and `read_vectored` APIs, it would be great to have `write_vectored_at` and `read_vectored_at` methods for `File`s on UNIX systems, corresponding to the `pwritev` and `preadv` syscalls. This would be an easy way to add means for high performance file IO using the standard library `File` type.

As far as I understand, the equivalent does not exist on Windows, so this would probably have to live in the [unix `FileExt` trait](https://doc.rust-lang.org/std/os/unix/fs/trait.FileExt.html#tymethod.write_at).

If this is deemed desirable, I'd be happy to send a PR for this.
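To show the semantics I have in mind, here is a rough userspace emulation built on the existing `FileExt::read_at`. It issues one `read_at` per buffer, so it is neither atomic nor a single syscall the way `preadv` would be; `read_vectored_at_emulated` is a hypothetical name, not the proposed API.

```rust
use std::fs::File;
use std::io::{self, IoSliceMut};
use std::os::unix::fs::FileExt;

/// Fills `bufs` in order, starting at `offset`, without touching the file cursor.
/// Hypothetical emulation: one `read_at` call per buffer, stopping at a short read.
fn read_vectored_at_emulated(
    file: &File,
    bufs: &mut [IoSliceMut<'_>],
    mut offset: u64,
) -> io::Result<usize> {
    let mut total = 0;
    for buf in bufs.iter_mut() {
        if buf.is_empty() {
            continue;
        }
        let n = file.read_at(buf, offset)?;
        total += n;
        offset += n as u64;
        if n < buf.len() {
            break; // short read or end of file
        }
    }
    Ok(total)
}

fn main() -> io::Result<()> {
    let path = std::env::temp_dir().join("read_vectored_at_demo.bin");
    std::fs::write(&path, b"0123456789")?;
    let file = File::open(&path)?;

    let mut head = [0u8; 3];
    let mut tail = [0u8; 4];
    let n = {
        let mut bufs = [IoSliceMut::new(&mut head), IoSliceMut::new(&mut tail)];
        read_vectored_at_emulated(&file, &mut bufs, 2)?
    };
    println!("read {} bytes: {:?} {:?}", n, head, tail);
    std::fs::remove_file(&path)
}
```

A real `read_vectored_at` would hand all buffers to the kernel in one call, which is the performance benefit this request is about.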

|

T-libs-api,C-feature-request

|

low

|

Major

|

598,461,506 |

bitcoin

|

scripts: check for .text.startup sections

|

From #18553:

[theuni](https://github.com/bitcoin/bitcoin/pull/18553#issuecomment-611717976)

> Sidenote: we could potentially add a check for illegal instructions in the .text.startup section in one of our python binary checking tools. Though we'd have to create a per-arch blacklist/whitelist to define "illegal".

[laanwj](https://github.com/bitcoin/bitcoin/pull/18553#issuecomment-611733956)

> My idea was to forbid .text.startup sections completely in all 'special' compilation units, e.g. those compiled with non-default instruction sets. I think that's easier to implement a check for than instruction white/blacklists.

|

Scripts and tools

|

low

|

Minor

|

598,476,455 |

rust

|

Adding a `Send` bound generates `the parameter type `S` must be valid for any other region`

|

I'm using `cargo 1.43.0-nightly (bda50510d 2020-03-02)` / `rustc 1.43.0-nightly (c20d7eecb 2020-03-11)`.

With this code (simplified from [this crate](https://github.com/Ekleog/yuubind/tree/7dbebd924fe73e7de905c24a46564f631b7e46c1), edition 2018, with `futures = "0.3.4"` as the only dependency):

```rust

use futures::{prelude::*, Future, Stream};

use std::{

pin::Pin,

task::{Context, Poll},

};

pub struct Foo {}

impl Foo {

pub fn foo<'a, S>(

&'a mut self,

reader: &'a mut StreamWrapperOuter<S>,

) -> Pin<Box<dyn 'a + Send + Future<Output = ()>>>

where

S: 'a + Unpin + Send + Stream<Item = Vec<()>>,

{

Box::pin(async move {

let _res = reader.concat().await;

unimplemented!()

})

}

}

pub struct StreamWrapperOuter<'a, S>

where

S: Unpin + Stream<Item = Vec<()>>,

{

_source: &'a mut StreamWrapperInner<S>,

}

impl<'a, S> Stream for StreamWrapperOuter<'a, S>

where

S: Unpin + Stream<Item = Vec<()>>,

{

type Item = Vec<()>;

fn poll_next(self: Pin<&mut Self>, _ctx: &mut Context) -> Poll<Option<Self::Item>> {

unimplemented!()

}

}

struct StreamWrapperInner<S: Stream> {

_stream: S,

}

impl<S: Stream> Stream for StreamWrapperInner<S> {

type Item = S::Item;

fn poll_next(self: Pin<&mut Self>, _ctx: &mut Context) -> Poll<Option<S::Item>> {

unimplemented!()

}

}

```

I get the following error:

```rust

error[E0311]: the parameter type `S` may not live long enough

--> src/lib.rs:17:9

|

17 | / Box::pin(async move {

18 | | let _res = reader.concat().await;

19 | | unimplemented!()

20 | | })

| |__________^

|

= help: consider adding an explicit lifetime bound for `S`

= note: the parameter type `S` must be valid for any other region...

note: ...so that the type `StreamWrapperInner<S>` will meet its required lifetime bounds

--> src/lib.rs:17:9

|

17 | / Box::pin(async move {

18 | | let _res = reader.concat().await;

19 | | unimplemented!()

20 | | })

| |__________^

error: aborting due to previous error

```

However, after removing the `Send` bounds on both the return type of `Foo::foo` and its `S` type parameter, it compiles perfectly.

I'm pretty surprised by the fact that adding a `Send` bound apparently changes the lifetime; and the fact that `S` “must be valid for any other region” makes me guess it's a stray `for<'r>`, as has already happened with async/await. What do you think about this?

|

A-lifetimes,T-compiler,C-bug

|

low

|

Critical

|

598,495,342 |

youtube-dl

|

clickfunnels

|

<!--

######################################################################

WARNING!

IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE

######################################################################

-->

## Checklist

<!--

Carefully read and work through this check list in order to prevent the most common mistakes and misuse of youtube-dl:

- First of, make sure you are using the latest version of youtube-dl. Run `youtube-dl --version` and ensure your version is 2020.03.24. If it's not, see https://yt-dl.org/update on how to update. Issues with outdated version will be REJECTED.

- Make sure that all provided video/audio/playlist URLs (if any) are alive and playable in a browser.

- Make sure that site you are requesting is not dedicated to copyright infringement, see https://yt-dl.org/copyright-infringement. youtube-dl does not support such sites. In order for site support request to be accepted all provided example URLs should not violate any copyrights.

- Search the bugtracker for similar site support requests: http://yt-dl.org/search-issues. DO NOT post duplicates.

- Finally, put x into all relevant boxes (like this [x])

-->

- [x] I'm reporting a new site support request

- [x] I've verified that I'm running youtube-dl version **2020.03.24**

- [x] I've checked that all provided URLs are alive and playable in a browser

- [x] I've checked that none of provided URLs violate any copyrights

- [x] I've searched the bugtracker for similar site support requests including closed ones

## Example URLs

<!--

Provide all kinds of example URLs support for which should be included. Replace following example URLs by yours.

-->

- URL : https://hkowableyoutubedl.clickfunnels.com/membership-area1586699963857

(The URL can use a custom domain too, as with the script you made for Teachable courses.)

You need to log in.

I have created a ClickFunnels membership that will work for 14 days.

login : [email protected]

password: youtubedl2020

## Description

Videos can be hosted on YouTube, Vimeo, Wistia, or served through a direct custom video file link.

Thank you and have a good day :)

|

site-support-request

|

low

|

Critical

|

598,503,366 |

PowerToys

|

[Shortcut Guide] dynamics add shortcut to swap keyboards when multiple are there

|

If a user has multiple keyboard layouts installed, we can detect this. If this happens, it would be a nice reminder about that shortcut.

Since this isn’t relevant if you don’t have it, it should be dynamic.

|

Idea-Enhancement,Product-Shortcut Guide

|

low

|

Minor

|

598,520,405 |

TypeScript

|

[Feature Request] Ability to auto-generate a type file for local JS module

|

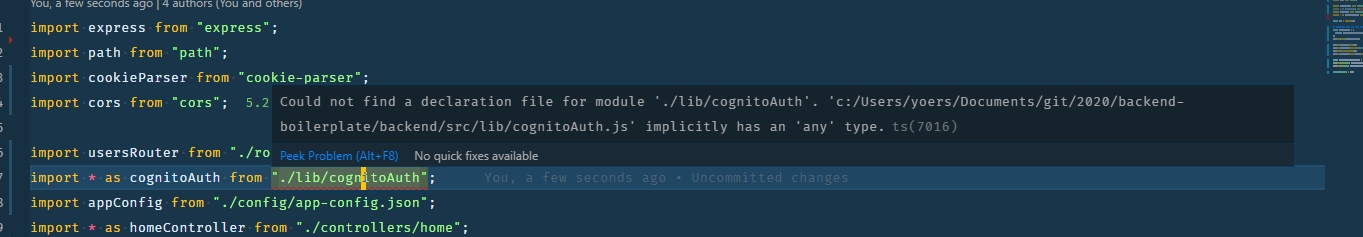

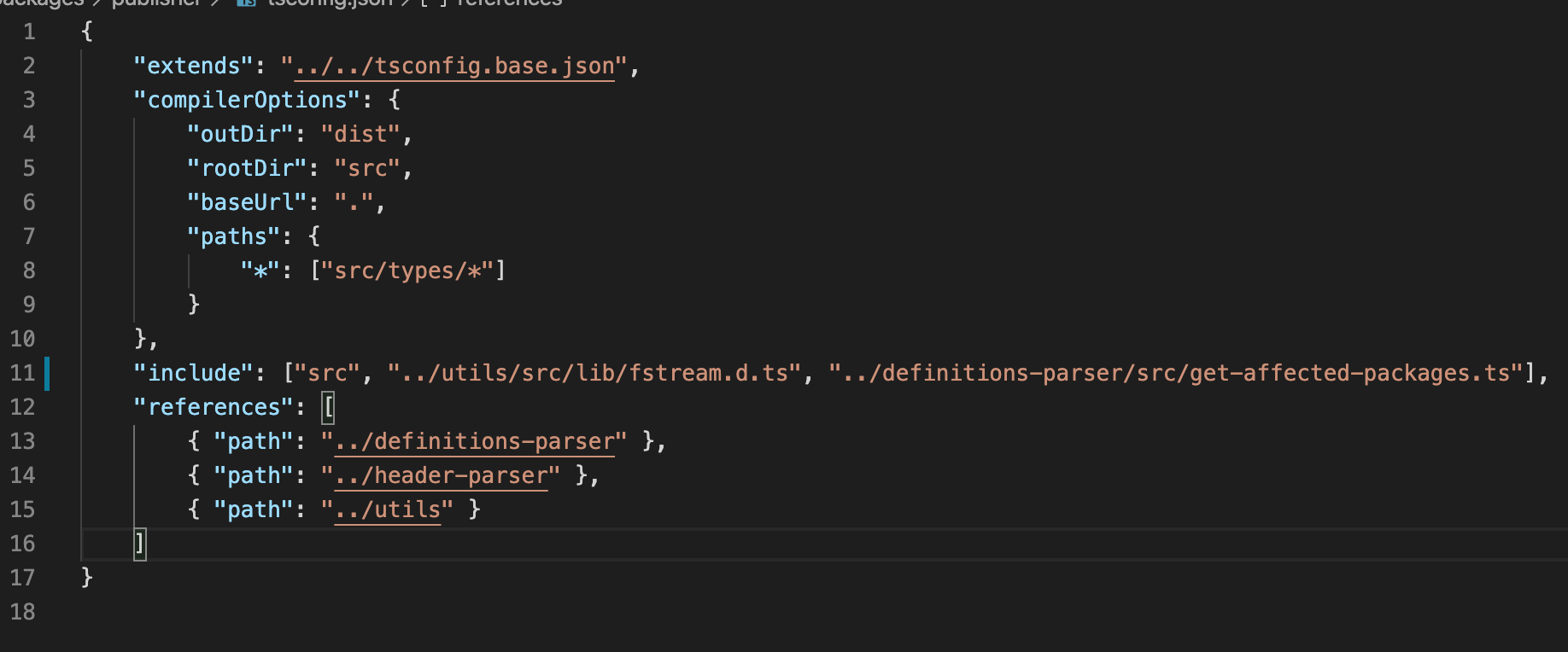

I'm facing the issue shown above when importing a JS file into a TS project.

The solution is to create a `@types/cognitoAuth/index.d.ts` file for the types, or to convert the file to TypeScript.

It would be great if in VS Code we could use the code action feature (CTRL + .) to either generate an empty types file with boilerplate or try to convert the file to TS.

|

Suggestion,Awaiting More Feedback

|

low

|

Minor

|

598,526,536 |

rust

|

Filling short slices is slow even if they are provably short

|

Take this method as baseline ([playground](https://play.rust-lang.org/?version=stable&mode=release&edition=2018&gist=6eed91dbb458e8eef083d0d24a958182)):

```rust

pub fn add_padding(input_len: usize, output: &mut [u8]) -> usize {

let rem = input_len % 3;

let padding_length = (3 - rem) % 3;

for i in 0..padding_length {

output[i] = b'=';

}

padding_length

}

```

`padding_length` can take on values in the range [0, 2], so we have to write either zero, one, or two bytes into our slice.

Benchmarking all three cases gives us these timings:

```

0: time: [2.8673 ns 2.8692 ns 2.8714 ns]

1: time: [3.2384 ns 3.2411 ns 3.2443 ns]

2: time: [3.7454 ns 3.7478 ns 3.7507 ns]

```

Chasing more idiomatic code we switch to iterators for the loop ([playground](https://play.rust-lang.org/?version=stable&mode=release&edition=2018&gist=d39fbb7d5c5bd2914ae14e538e589460)):

```rust

pub fn add_padding(input_len: usize, output: &mut [u8]) -> usize {

let rem = input_len % 3;

let padding_length = (3 - rem) % 3;

for byte in output[..padding_length].iter_mut() {

*byte = b'=';

}

padding_length

}

```

Given that this loop runs at most two iterations and therefore has little to gain from avoiding bounds checks, we expect about the same runtime.

```

absolute:

0: time: [3.2053 ns 3.2074 ns 3.2105 ns]

1: time: [5.4453 ns 5.4475 ns 5.4501 ns]

2: time: [6.0211 ns 6.0254 ns 6.0302 ns]

relative compared to baseline:

0: time: [+11.561% +11.799% +12.030%]

1: time: [+67.946% +68.287% +68.647%]

2: time: [+60.241% +60.600% +60.932%]

```

Oof, up to 68% slower...

**Let's see what's happening**

The baseline version copies one byte at a time, which isn't that bad when you copy at most two bytes:

```assembly

playground::add_padding:

pushq %rax

movq %rdx, %rcx

movabsq $-6148914691236517205, %r8

movq %rdi, %rax

mulq %r8

shrq %rdx

leaq (%rdx,%rdx,2), %rax

subq %rax, %rdi

xorq $3, %rdi

movq %rdi, %rax

mulq %r8

shrq %rdx

leaq (%rdx,%rdx,2), %rax

subq %rax, %rdi

je .LBB0_4

xorl %eax, %eax

.LBB0_2:

cmpq %rax, %rcx

je .LBB0_5

movb $61, (%rsi,%rax)

addq $1, %rax

cmpq %rdi, %rax

jb .LBB0_2

.LBB0_4:

movq %rdi, %rax

popq %rcx

retq

[snip panic code]

```

In comparison the iterator version does a full memset:

```assembly

playground::add_padding:

pushq %rbx

movq %rdx, %rcx

movq %rdi, %rbx

movabsq $-6148914691236517205, %rdi

movq %rbx, %rax

mulq %rdi

shrq %rdx

leaq (%rdx,%rdx,2), %rax

subq %rax, %rbx

xorq $3, %rbx

movq %rbx, %rax

mulq %rdi

shrq %rdx

leaq (%rdx,%rdx,2), %rax

subq %rax, %rbx

cmpq %rcx, %rbx

ja .LBB0_4

testq %rbx, %rbx

je .LBB0_3

movq %rsi, %rdi

movl $61, %esi

movq %rbx, %rdx

callq *memset@GOTPCREL(%rip)

.LBB0_3:

movq %rbx, %rax

popq %rbx

retq

[snip panic code]

```

For long slices memset would be a good choice, but for just a few bytes the overhead is simply too big. When using a constant range for testing, we see the compiler emitting different combinations of `movb`, `movw`, `movl`, `movabsq`, and `movaps` + `movups` up to a length of 256 bytes. Only for slices longer than that is a memset used.

At some point the compiler already realizes that `padding_length` is always `< 3` as an `assert!(padding_length < 3);` gets optimized out completely. Whether this information is not available at the right place or is simply not utilized, I can't tell.

Wrapping the iterator version's loop in a `match` results in two things - the fastest version and a monstrosity ([playground](https://play.rust-lang.org/?version=stable&mode=release&edition=2018&gist=afa4e27d8e035a1419f784fb0b889a5a)).

```rust

pub fn add_padding(input_len: usize, output: &mut [u8]) -> usize {

let rem = input_len % 3;

let padding_length = (3 - rem) % 3;

match padding_length {

0 => {

for byte in output[..padding_length].iter_mut() {

*byte = b'=';

}

},

1 => {

for byte in output[..padding_length].iter_mut() {

*byte = b'=';

}

}

2 => {

for byte in output[..padding_length].iter_mut() {

*byte = b'=';

}

},

_ => unreachable!()

}

padding_length

}

```

```

absolute:

0: time: [2.8705 ns 2.8749 ns 2.8797 ns]

1: time: [3.2446 ns 3.2470 ns 3.2499 ns]

2: time: [3.4626 ns 3.4753 ns 3.4894 ns]

relative compared to baseline:

0: time: [-0.1432% +0.0826% +0.3052%]

1: time: [+0.0403% +0.2527% +0.4629%]

2: time: [-7.6693% -7.3060% -6.9496%]

```

It uses a `movw` when writing two bytes, which explains why this version is faster than baseline only in that case.

All measurements taken with criterion.rs and Rust 1.42.0 on an i5-3450. Care has been taken to ensure a low noise environment with reproducible results.
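Since `padding_length` can only be 0, 1, or 2, the same effect can be written without any loop. The following is a minimal sketch of that idea, not one of the benchmarked versions; the name `add_padding_explicit` is hypothetical, and no claim is made here about the instructions the compiler emits for it or about its timings.

```rust
/// Writes 0, 1, or 2 `=` bytes to the start of `output` and returns how many
/// were written. Like the versions above, it panics if `output` is too short.
pub fn add_padding_explicit(input_len: usize, output: &mut [u8]) -> usize {
    let rem = input_len % 3;
    let padding_length = (3 - rem) % 3;
    match padding_length {
        // Nothing to write.
        0 => {}
        // A single byte store.
        1 => output[0] = b'=',
        // Two bytes copied from a constant two-byte array.
        2 => output[..2].copy_from_slice(b"=="),
        // `(3 - rem) % 3` is always below 3.
        _ => unreachable!(),
    }
    padding_length
}
```

Each arm works with a length known at compile time, which is the property the `match` version above relies on as well.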

|

I-slow,C-enhancement,T-compiler,A-iterators

|

low

|

Major

|

598,529,555 |

flutter

|

[web] Web application not working on Legacy Edge and IE

|

## Steps to Reproduce

1. Run `flutter build web --release` and serve the output using Python

1. Open the app in Edge or Firefox

1. A blank grey screen is shown (example here: https://drive.getbigger.io)

The output of `flutter doctor -v` is the following:

```

flutter doctor -v

[✓] Flutter (Channel beta, v1.17.0, on Mac OS X 10.15.1 19B88, locale fr-FR)

• Flutter version 1.17.0 at

• Framework revision d3ed9ec945 (6 days ago), 2020-04-06 14:07:34 -0700

• Engine revision c9506cb8e9

• Dart version 2.8.0 (build 2.8.0-dev.18.0 eea9717938)

[✓] Android toolchain - develop for Android devices (Android SDK version 29.0.3)

• Android SDK

• Platform android-29, build-tools 29.0.3

• Java binary at: /Applications/Android Studio.app/Contents/jre/jdk/Contents/Home/bin/java

• Java version OpenJDK Runtime Environment (build 1.8.0_212-release-1586-b4-5784211)

• All Android licenses accepted.

[✓] Xcode - develop for iOS and macOS (Xcode 11.3.1)

• Xcode at /Applications/Xcode.app/Contents/Developer

• Xcode 11.3.1, Build version 11C504

• CocoaPods version 1.9.1

[✓] Chrome - develop for the web

• Chrome at /Applications/Google Chrome.app/Contents/MacOS/Google Chrome

[✓] Android Studio (version 3.6)

• Android Studio at /Applications/Android Studio.app/Contents

• Flutter plugin version 44.0.2

• Dart plugin version 192.7761

• Java version OpenJDK Runtime Environment (build 1.8.0_212-release-1586-b4-5784211)

[✓] VS Code (version 1.44.0)

• VS Code at /Applications/Visual Studio Code.app/Contents

• Flutter extension version 3.9.1

[✓] Connected device (3 available)

• iPhone 11 Pro Max • 3086AEFA-A07A-4A91-B5D0-93D8D059A96E • ios • com.apple.CoreSimulator.SimRuntime.iOS-13-3 (simulator)

• Chrome • chrome • web-javascript • Google Chrome 80.0.3987.163

• Web Server • web-server • web-javascript • Flutter Tools

• No issues found!

```

|

engine,dependency: dart,platform-web,a: production,found in release: 1.17,browser: firefox,P2,team-web,triaged-web

|

low

|

Major

|

598,551,015 |

rust

|

rustc should suggest using async version of Mutex

|

If one accidentally uses `std::sync::Mutex` in asynchronous code and holds a `MutexGuard` across an await, then the future is marked `!Send`, and you can't spawn it off to run on another thread - all correct. Rustc even gives you an excellent error message, pointing out the `MutexGuard` as the reason the future is not `Send`.

But people new to asynchronous programming are not going to immediately realize that there is such a thing as an 'async-friendly mutex'. You need to be aware that ordinary mutexes insist on being unlocked on the same thread that locked them; and that executors move tasks from one thread to another; and that the solution is not to make ordinary mutexes more complex but to create a new mutex type altogether. These make sense in hindsight, but I'll bet that they leap to mind only for a small group of elite users. (But probably a majority of the people who will ever read this bug. Ahem.)

So I think rustc should provide extra help when the value held across an `await`, and thus causing a future not to be `Send`, is a `MutexGuard`, pointing out that one must use an asynchronous version of `Mutex` if one needs to hold guards across an await. It's awkward to suggest `tokio::sync::Mutex` or `async_std::sync::Mutex`, but surely there's some diplomatic way to phrase it that is still explicit enough to be helpful.

Perhaps this could be generalized to other types. For example, if the offending value is an `Rc`, the help should suggest `Arc`.

Here's an illustration of what I mean:

```rust

use std::future::Future;

use std::sync::Mutex;

fn fake_spawn<F: Future + Send + 'static>(f: F) { }

async fn wrong_mutex() {

let m = Mutex::new(1);

let mut guard = m.lock().unwrap();

(async { }).await;

*guard += 1;

}

fn main() {

fake_spawn(wrong_mutex());

//~^ERROR: future cannot be sent between threads safely

}

```

The error message is great:

```

error: future cannot be sent between threads safely

--> src/main.rs:14:5

|

4 | fn fake_spawn<F: Future + Send + 'static>(f: F) { }

| ---------- ---- required by this bound in `fake_spawn`

...

14 | fake_spawn(wrong_mutex());

| ^^^^^^^^^^ future returned by `wrong_mutex` is not `Send`

|

= help: within `impl std::future::Future`, the trait `std::marker::Send` is not implemented for `std::sync::MutexGuard<'_, i32>`

note: future is not `Send` as this value is used across an await

--> src/main.rs:9:5

|

8 | let mut guard = m.lock().unwrap();

| --------- has type `std::sync::MutexGuard<'_, i32>`

9 | (async { }).await;

| ^^^^^^^^^^^^^^^^^ await occurs here, with `mut guard` maybe used later

10 | *guard += 1;

11 | }

| - `mut guard` is later dropped here

```

I just wish it included:

```

help: If you need to hold a mutex guard while you're awaiting, you must use an async-aware version of the `Mutex` type.

help: Many asynchronous foundation crates provide such a `Mutex` type.

```
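For cases where the guard does not actually need to live across the `await`, there is a workaround that needs no async-aware mutex: confine the guard to a block that ends before the await point. The sketch below is a variation on `wrong_mutex` from above, under that assumption; `scoped_guard` and `is_send` are hypothetical names introduced only for illustration.

```rust
use std::sync::Mutex;

/// Compiles only if `T` is `Send`; stands in for a spawn function's bound.
fn is_send<T: Send>(_: &T) -> bool {
    true
}

async fn scoped_guard() {
    let m = Mutex::new(1);
    {
        // The guard lives only inside this block.
        let mut guard = m.lock().unwrap();
        *guard += 1;
    } // `guard` is dropped here, before the await below.
    (async {}).await;
}
```

With this shape, `is_send(&scoped_guard())` type-checks, because no `MutexGuard` is held across the await. It does not help when the lock genuinely has to be held while awaiting, which is the case the proposed help text is about.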

<!-- TRIAGEBOT_START -->

<!-- TRIAGEBOT_ASSIGN_START -->

This issue has been assigned to @LucioFranco via [this comment](https://github.com/rust-lang/rust/issues/71072#issuecomment-658306268).

<!-- TRIAGEBOT_ASSIGN_DATA_START$${"user":"LucioFranco"}$$TRIAGEBOT_ASSIGN_DATA_END -->

<!-- TRIAGEBOT_ASSIGN_END -->

<!-- TRIAGEBOT_END -->

|

C-enhancement,A-diagnostics,T-compiler,A-async-await,A-suggestion-diagnostics,AsyncAwait-Triaged

|

medium

|

Critical

|

598,562,213 |

godot

|

Output spammed when using DLL

|

**Godot version:** 3.2

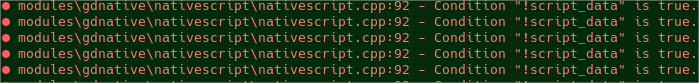

**Issue description:** The output window from the Godot editor gets spammed with this message:

> Condition "!script_data" is true.

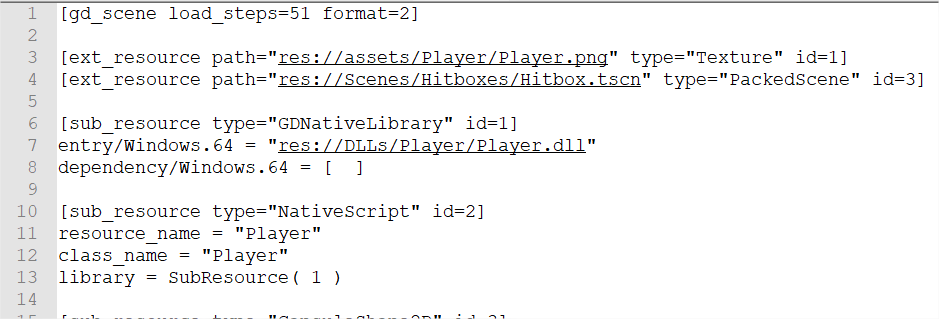

I know my DLL is linking properly to my Player scene because the code gets executed and everything works when you start the game. But you get this message in the output log every time you tab out of and back into the editor (or click outside and then click anywhere in the editor) while the Player scene is open in the editor. The name of the Player class in my C++ code is the same as the resource_name and class_name of the native script. Here's what the DLL linking looks like in my Player scene:

**Minimal reproduction project:**

[Project.zip](https://github.com/godotengine/godot/files/4466972/Project.zip)

|

bug,topic:editor,topic:gdextension

|

low

|

Minor

|

598,570,910 |

flutter

|

Remove AndroidX specific failure message, always show Gradle errors

|

I've seen several cases in the last few minutes of triage where the AndroidX message was shown despite the error being unrelated (keystore issue and ??). We should remove this error filtering and ensure that the raw gradle output is always shown

|

platform-android,tool,t: gradle,P2,team-android,triaged-android

|

low

|

Critical

|

598,597,749 |

nvm

|

cdnvm & carriage return in .nvmrc

|

cdnvm does not like a .nvmrc file created on Windows, for example (output of `od -c`):

0000000 l t s / * \r \n

This can be fixed by replacing

nvm_version=$(<"$nvm_path"/.nvmrc)

with

nvm_version=$(cat "$nvm_path"/.nvmrc | tr -d '\r')

Here I'm running `echo $nvm_version | od -c` before cdnvm:

```

0000000 l t s / * \r \n

0000007

at illegal primary in regular expression *

input record number 16, file

source line number 4

` to browse available versions.te --lts=*

```

P.S. This is what is dropping the error - line 2651 nvm.sh ( nvm -> install )

` VERSION="$(NVM_VERSION_ONLY=true NVM_LTS="${LTS-}" nvm_remote_version "${provided_version}")"`

|

OS: windows

|

low

|

Critical

|

598,615,857 |

rust

|

Interactions in `type_alias_impl_trait` and `associated_type_defaults`

|

A question about impl Trait in trait type aliases: has any thought been given to how they should handle defaults?

I'm trying to do something like this, without success:

```rust

#![feature(type_alias_impl_trait, associated_type_defaults)]

use std::fmt::Debug;

struct Foo {

}

trait Bar {

type T: Debug = impl Debug;

fn bar() -> Self::T {

()

}

}

impl Bar for Foo {

type T = impl Debug;

fn bar() -> Self::T {

()

}

}

```

[(playground link)](https://play.rust-lang.org/?version=nightly&mode=debug&edition=2018&gist=0094ea01075cc106ff3c0be8780c39b1)

I'd guess this just hasn't been RFC'd yet, but does someone know whether thinking on the topic has already started?

_Originally posted by @Ekleog in https://github.com/rust-lang/rust/issues/63063#issuecomment-612619080_

|

T-lang,C-feature-request,needs-rfc,F-type_alias_impl_trait,requires-nightly,F-associated_type_defaults

|

low

|

Critical

|

598,623,108 |

pytorch

|

Wrong results for multiplication of non-finite complex numbers with real numbers

|

## 🐛 Bug

## To Reproduce

```python

import torch

import math

a=torch.tensor([complex(math.inf, 0)])

print(a, a*1.)

#prints tensor([(inf+0.0000j)], dtype=torch.complex64) tensor([(inf+nanj)], dtype=torch.complex64)

```

## Expected behavior

expected answer is inf+0j

This is caused by pytorch first promoting 1. to 1.+0j, causing the unexpected multiplication result. Note, this behavior is consistent with python and numpy, but inconsistent with c++ std::complex (which would produce inf+0j for this computation).
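The arithmetic behind the `nan` can be reproduced without any tensor library. The sketch below is written in Rust purely as an illustration of IEEE 754 behaviour; it is not PyTorch's implementation, and `mul_complex` and `mul_real` are hypothetical helpers that model a complex number as a `(re, im)` pair.

```rust
/// Full complex product: (a + bi)(c + di) = (ac - bd) + (ad + bc)i.
fn mul_complex(a: (f64, f64), b: (f64, f64)) -> (f64, f64) {
    (a.0 * b.0 - a.1 * b.1, a.0 * b.1 + a.1 * b.0)
}

/// Scaling by a real number multiplies each component separately.
fn mul_real(a: (f64, f64), s: f64) -> (f64, f64) {
    (a.0 * s, a.1 * s)
}
```

With `z = (f64::INFINITY, 0.0)`, `mul_complex(z, (1.0, 0.0))` computes the imaginary part as `inf * 0.0 + 0.0 * 1.0`, and `inf * 0.0` is NaN, so the result is `(inf, NaN)`. `mul_real(z, 1.0)` never forms that product and yields `(inf, 0.0)`, which is the result expected above.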

cc @ezyang @anjali411 @dylanbespalko

|

triaged,module: complex,module: numpy

|

low

|

Critical

|

598,676,748 |

ant-design

|

clearFilters in the table's search component has no effect on the extra.currentDataSource passed to onChange

|

- [ ] I have searched the [issues](https://github.com/ant-design/ant-design/issues) of this repository and believe that this is not a duplicate.

### Reproduction link

[https://codesandbox.io/s/zidingyishaixuancaidan-ant-design-demo-vsvud](https://codesandbox.io/s/zidingyishaixuancaidan-ant-design-demo-vsvud)

### Steps to reproduce

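1. Click the search icon and search a field

2. Reset

3. The extra.currentDataSource passed out by onChange does not change; with dataSource bound to it, the reset does not work correctly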

### What is expected?

The reset works normally

### What is actually happening?

The displayed data does not change

| Environment | Info |

|---|---|

| antd | 4.1.0 |

| React | 16.8.3 |

| System | windows10 |

| Browser | chrome |

<!-- generated by ant-design-issue-helper. DO NOT REMOVE -->

|

Inactive

|

low

|

Minor

|

598,873,525 |

flutter

|

ImageCache should optionally store undecoded images (pre-codec decoding)

|

## Use case

In a current-day app, it is typical to process and display high-megapixel images. Some smartphones come with cameras whose resolution is 48 MP or even 92 MP, and >100 is coming soon. The uncompressed byte sizes of each such image are 48 MiB and 92 MiB respectively.

## Proposal

Short term, I propose significantly raising the default value of ImageCache.maximumSizeBytes and/or documenting the fact that this is counted against the number of pixels in the image (rather than the byte size of the uncompressed image).

Eventually, I would suggest (optionally) caching the compressed images instead of the uncompressed ones - the memory / CPU trade-off seems to make much more sense, since repeatedly decoding compressed images is a walk in the park for modern smartphones and certainly avoiding this doesn't justify a tenfold or so increase in RAM usage.

|

c: new feature,framework,a: images,c: proposal,P3,team-framework,triaged-framework

|

low

|

Minor

|

598,876,297 |

ant-design

|

Modal background scroll iOS

|

- [ ] I have searched the [issues](https://github.com/ant-design/ant-design/issues) of this repository and believe that this is not a duplicate.

### Reproduction link

[https://ant.design/components/modal/#header](https://ant.design/components/modal/#header)

### Steps to reproduce

1. Go to https://ant.design/components/modal/#header

2. Scroll a little bit to hide browser header

3. Open modal

4. Scroll at the background

### What is expected?

Scroll at background should be disabled

### What is actually happening?

Scroll is not disabled

| Environment | Info |

|---|---|

| antd | 4.1.3 |

| React | 16.8.6 |

| System | iOS 13.3.1 |

| Browser | Chrome 77.0.3865.103 |

<!-- generated by ant-design-issue-helper. DO NOT REMOVE -->

|

help wanted,Inactive,📱Mobile Device

|

low

|

Major

|

598,888,285 |

rust

|

[codegen] unnecessary panicking branch in `foo().await` (vs equivalent `FutureImpl.await`)

|

I compiled this `no_std` code with (LTO / `-Oz` / `-C panic=abort`) optimizations (full repro instructions at the bottom):

```rust

#![no_std]

#![no_main]

#[no_mangle]

fn main() -> ! {

let mut f = async {

loop {

// uncomment only ONE of these statements

// Foo.await; // NO panicking branch

foo().await; // HAS panicking branch (though it should be equivalent to `Foo.await`?)

// bar().await; // NO panicking branch (because it's implicitly divergent?)

// baz().await; // HAS panicking branch (that it inherits from `foo().await`?)

}

};

let waker = waker();

let mut cx = Context::from_waker(&waker);

loop {

unsafe {

let _ = Pin::new_unchecked(&mut f).poll(&mut cx);

}

}

}

struct Foo;

impl Future for Foo {

type Output = ();

fn poll(self: Pin<&mut Self>, _: &mut Context<'_>) -> Poll<()> {

asm::nop();

Poll::Ready(())

}

}

async fn foo() {

asm::nop();

}

async fn bar() {

asm::nop();

loop {}

}

async fn baz() {

foo().await;

loop {}

}

```

I got machine code that includes a panicking branch:

``` asm

00000400 <main>:

400: push {r5, r6, r7, lr}

402: add r7, sp, #8

404: movs r0, #0

406: strh.w r0, [r7, #-2]

40a: subs r0, r7, #2

40c: bl 412 <app::main::{{closure}}>

410: udf #254 ; 0xfe

00000412 <app::main::{{closure}}>:

412: push {r7, lr}

414: mov r7, sp

416: mov r4, r0

418: ldrb r0, [r0, #0]

41a: cbz r0, 426 <app::main::{{closure}}+0x14>

41c: ldrb r0, [r4, #1]

41e: cbz r0, 42a <app::main::{{closure}}+0x18>

420: bl 434 <core::panicking::panic>

424: udf #254 ; 0xfe

426: movs r0, #0

428: strb r0, [r4, #1]

42a: bl 48e <__nop>

42e: movs r0, #1

430: strb r0, [r4, #1]

432: b.n 426 <app::main::{{closure}}+0x14>

00000434 <core::panicking::panic>:

434: push {r7, lr}

436: mov r7, sp

438: bl 43e <core::panicking::panic_fmt>

43c: udf #254 ; 0xfe

0000043e <core::panicking::panic_fmt>:

43e: push {r7, lr}

440: mov r7, sp

442: bl 48c <rust_begin_unwind>

446: udf #254 ; 0xfe

```

I expected to see no panicking branches in the output. If I comment out `foo().await` and uncomment `Foo.await` (which should be semantically equivalent) then I get the expected output:

``` asm

00000400 <main>:

400: push {r7, lr}

402: mov r7, sp

404: bl 40a <app::main::{{closure}}>

408: udf #254 ; 0xfe

0000040a <app::main::{{closure}}>:

40a: push {r7, lr}

40c: mov r7, sp

40e: bl 458 <__nop>

412: b.n 40e <app::main::{{closure}}+0x4>

```

Interestingly, `bar().await` contains no panicking branch (because it's divergent?), but `baz().await` does (because it inherits it from `foo().await`?).
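One plausible source of the extra branch, stated here as an assumption rather than something this report confirms: a future generated from an `async fn` that can return tracks a "completed" state and panics if it is polled again, whereas the hand-written `Foo` has no such state. The sketch below is a hand-written stand-in for that shape; `FooFuture`, `noop_waker`, and `poll_once` are hypothetical names, and the real compiler-generated state machine is not claimed to look exactly like this.

```rust
use core::future::Future;
use core::pin::Pin;
use core::task::{Context, Poll, RawWaker, RawWakerVTable, Waker};

/// Stand-in for the state machine behind `async fn foo()`.
enum FooFuture {
    Unresumed,
    Returned,
}

impl Future for FooFuture {
    type Output = ();

    fn poll(self: Pin<&mut Self>, _: &mut Context<'_>) -> Poll<()> {
        // `FooFuture` has no fields, so it is `Unpin` and `get_mut` is allowed.
        let this = self.get_mut();
        match *this {
            FooFuture::Unresumed => {
                // The body of `foo` would run here.
                *this = FooFuture::Returned;
                Poll::Ready(())
            }
            // Polling a finished future again is a bug, so it panics.
            FooFuture::Returned => panic!("`async fn` resumed after completion"),
        }
    }
}

/// Waker that does nothing, in the same spirit as `waker()` in the repro.
fn noop_waker() -> Waker {
    unsafe fn clone(_: *const ()) -> RawWaker {
        RawWaker::new(core::ptr::null(), &VTABLE)
    }
    unsafe fn noop(_: *const ()) {}
    static VTABLE: RawWakerVTable = RawWakerVTable::new(clone, noop, noop, noop);
    unsafe { Waker::from_raw(RawWaker::new(core::ptr::null(), &VTABLE)) }
}

/// Polls an `Unpin` future exactly once.
fn poll_once<F: Future + Unpin>(fut: &mut F) -> Poll<F::Output> {
    let waker = noop_waker();
    let mut cx = Context::from_waker(&waker);
    Pin::new(fut).poll(&mut cx)
}
```

A first `poll_once` on `FooFuture::Unresumed` returns `Poll::Ready(())` and leaves the value in the `Returned` state; a second poll would take the panicking arm. A future that never returns, like `bar()`, has no completed state to check, which would be consistent with the observations above.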

### Meta

`rustc --version --verbose`:

```

rustc 1.44.0-nightly (94d346360 2020-04-09)

```

<details><summary>Steps to reproduce</summary>

<p>

``` console

$ git clone https://github.com/rust-embedded/cortex-m-quickstart

$ cd cortex-m-quickstart

$ git reset --hard 1a60c1d94489cec3008166a803bdcf8ac306b98f

$ $EDITOR Cargo.toml && cat Cargo.toml

```

``` toml

[package]

edition = "2018"

name = "app"

version = "0.0.0"

[dependencies]

cortex-m = "0.6.0"

cortex-m-rt = "0.6.10"

cortex-m-semihosting = "0.3.3"

panic-halt = "0.2.0"

[profile.dev]

codegen-units = 1

debug = 1

debug-assertions = false

incremental = false

lto = "fat"

opt-level = 'z'

overflow-checks = false

```

``` console

$ $EDITOR src/main.rs && cat src/main.rs

```

``` rust

#![no_std]

#![no_main]

use core::{

future::Future,

pin::Pin,

task::{Context, Poll, RawWaker, RawWakerVTable, Waker},

};

use cortex_m_rt::entry;

use cortex_m::asm;

use panic_halt as _;

#[no_mangle]

fn main() -> ! {

let mut f = async {

loop {

// uncomment only ONE of these statements

// Foo.await; // NO panicking branch

foo().await; // HAS panicking branch

// bar().await; // NO panicking branch

// baz().await; // HAS panicking branch

}

};

let waker = waker();

let mut cx = Context::from_waker(&waker);

loop {

unsafe {

let _ = Pin::new_unchecked(&mut f).poll(&mut cx);

}

}

}

struct Foo;

impl Future for Foo {

type Output = ();

fn poll(self: Pin<&mut Self>, _: &mut Context<'_>) -> Poll<()> {

asm::nop();

Poll::Ready(())

}

}

async fn foo() {

asm::nop();

}

async fn bar() {

asm::nop();

loop {}

}

async fn baz() {

foo().await;

loop {}

}

fn waker() -> Waker {

unsafe fn clone(_: *const ()) -> RawWaker {

RawWaker::new(&(), &VTABLE)

}

unsafe fn wake(_: *const ()) {}

unsafe fn wake_by_ref(_: *const ()) {}

unsafe fn drop(_: *const ()) {}

static VTABLE: RawWakerVTable = RawWakerVTable::new(clone, wake, wake_by_ref, drop);

unsafe { Waker::from_raw(clone(&())) }

}

```

``` console

$ # target = thumbv7m-none-eabi (see .cargo/config)

$ cargo build

$ arm-none-eabi-objdump -Cd target/thumbv7m-none-eabi/debug/app

```

</p>

</details>

|

A-LLVM,C-enhancement,A-codegen,T-compiler,A-coroutines,I-heavy,A-async-await,AsyncAwait-Triaged,C-optimization

|

low

|

Critical

|

598,922,381 |

godot

|

Implementing a generic interface with a type parameter of a generic class's inner class causes a build error

|

**Godot version:**

Godot Engine v3.2.2.rc.mono.custom_build.36a30f681

Commit: 36a30f681fc8c3256829616f32ee452b15674752

Also encountered on a 3.2.1-stable build, earlier versions not tested.

**OS/device including version:** 5.4.24-1-MANJARO x86_64 GNU/Linux

**Issue description:**

In C#, implementing a generic interface with the inner class of a generic class as its type parameter causes Godot to raise one error per affected source file on build. These errors do not stop the build from completing, and the resulting build appears to work.

Error message:

`modules/mono/glue/gd_glue.cpp:250 - Failed to determine namespace and class for script: <script name>.cs. Parse error: Unexpected token: .`

**Steps to reproduce:**

1. Create generic class with a public inner class

2. Implement a generic interface, using that inner class as the generic parameter

3. Press build, note error in output but a functional build.

**Minimal reproduction project:**

[Test.tar.gz](https://github.com/godotengine/godot/files/4469842/Test.tar.gz)

|

bug,topic:dotnet

|

low

|

Critical

|

598,929,601 |

rust

|

Exploit mitigations applied by default are not documented

|

There seems to be no documentation on exploit mitigations in Rust, specifically:

1. What exploit mitigations are supported?

1. What mitigations are enabled by default?

1. Is that answer different if building with `cargo` instead of `rustc` directly?

1. Does that vary by platform?

1. How to enable/disable specific mitigations?

This is relevant not only for security assessment, but also for performance comparison against other languages - both languages need to have the same exploit mitigations enabled for an apples-to-apples comparison.

|

C-enhancement,A-security,T-compiler,A-docs,PG-exploit-mitigations

|

low

|

Major

|

598,933,304 |

PowerToys

|

[FancyZones] Consider caching results of IsProcessOfWindowElevated

|

Based on https://github.com/microsoft/PowerToys/pull/2103#discussion_r407515294, we might want to consider caching the results of `IsProcessOfWindowElevated`. It might give us a performance gain, but we must profile how much `IsProcessOfWindowElevated` costs first. If it's worth optimizing, we need to introduce the cache and measure its impact, so we don't actually decrease the performance while increasing the complexity.

|

Product-FancyZones,Area-Quality,Priority-3

|

low

|

Major

|

598,939,868 |

bitcoin

|

untrust vs unsafe ?

|

Some RPC commands like listunspent talk about "unsafe" funds, and others like getbalances talk about "untrusted" ones.

If there is no difference between them, it would be good to rename them to a single, consistent term.

|

Bug

|

low

|

Minor

|

598,974,540 |

TypeScript

|

Give `{}` some other type than `{}` so it doesn't cause subtype reduction problems with primitives

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker. Even if you think you've found a *bug*, please read the FAQ first, especially the Common "Bugs" That Aren't Bugs section!

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ: https://github.com/Microsoft/TypeScript/wiki/FAQ

Please fill in the *entire* template below.

-->

<!--

Please try to reproduce the issue with the latest published version. It may have already been fixed.

For npm: `typescript@next`

This is also the 'Nightly' version in the playground: http://www.typescriptlang.org/play/?ts=Nightly

-->

**TypeScript Version:** 3.9.0-beta

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:**

Array

**Code**

```ts

let a = [{a:1},1,2,3] // OK, Expected (number | { a: number; })[]

let b = [{},1,2,3] // Error, Expected: `({}|1)[]` Actual: `{}[]`

```

**Playground Link:**

[Playground Link](https://www.typescriptlang.org/play?ts=3.9.0-beta#code/FAGwpgLgBAhlC8UDaBvGAuAjAXwDSdwCZcBmAXSgHpKoB5AaVygFEAPABzAGMIwATKAAoAdgFcAtgCMwAJygAfKCljooYqbIDcUbAEokZUJCiSEyFHgLFyVGsxkyA9jKYsO3Xn1UADQRfmY+mTeUACCPKIwID4WBt5AA)

**Related Issues:** <!-- Did you find other bugs that looked similar? -->

|

Suggestion,Needs Proposal

|

low

|

Critical

|

599,083,758 |

excalidraw

|

Cache fonts for offline usage

|

We recently added support [for offline usage](https://github.com/excalidraw/excalidraw/pull/1286), but because we need to preload fonts and we add them directly to the `index.html`, they are not included in Webpack's pipeline and thus are not included in the service worker that caches the assets.

We need to find a way to both preload those fonts and cache them for offline usage. At the moment, we haven't ejected our CRA and we don't want to do so.

|

enhancement,help wanted,font

|

low

|

Minor

|

599,099,082 |

javascript

|

What is the point of the "Types" section of the guide?

|

Greetings,

The second section of the guide is "References", which is a prescription to use "const" over "var". This section showcases a "bad" code example and a "good" code example. So far, so good.

The first section of the guide is "Types", which, as far as I can tell, doesn't offer any prescriptions at all. It simply educates the reader on how variables work in the JavaScript programming language. Furthermore, the section does not show any "bad" or "good" code examples.

Is there a particular reason that this section is included in the style guide? It seems notably out of place - every other section seems to impart a specific coding prescription. There is a time and a place to teach JavaScript newbies the basics on how the language works, and it doesn't seem like it should be in a style guide.

|

question

|

low

|

Major

|

599,106,914 |

flutter

|

Update all example/integration test/samples .gitignores to not track .last_build_id

|

https://github.com/flutter/flutter/pull/54428 added it to the generated .gitignore, but didn't actually update any of the example or integration test .gitignores. Running the build_tests shard, for example, left a bunch of .last_build_id files in my working copy.

|

team,tool,P2,team-tool,triaged-tool

|

low

|

Minor

|

599,118,618 |

pytorch

|

Quantized _out functions don't follow same conventions as other out functions in the codebase

|

Currently they're defined like:

```

.op("quantized::add_out(Tensor qa, Tensor qb, Tensor(a!) out)"

"-> Tensor(a!) out",

c10::RegisterOperators::options()

.aliasAnalysis(at::AliasAnalysisKind::FROM_SCHEMA)

.kernel<QAddOut</*ReLUFused=*/false>>(DispatchKey::QuantizedCPU))

```

However, a standard out function looks like this:

```

- func: angle(Tensor self) -> Tensor

use_c10_dispatcher: full

variants: function, method

supports_named_tensor: True

- func: angle.out(Tensor self, *, Tensor(a!) out) -> Tensor(a!)

supports_named_tensor: True

```

cc @jerryzh168 @jianyuh @raghuramank100 @jamesr66a @vkuzo @jgong5 @Xia-Weiwen @leslie-fang-intel @dzhulgakov @kevinbchen (who added the alias analysis annotations to these functions) and @z-a-f (who appears to have originally added these out variants at https://github.com/pytorch/pytorch/pull/23971 )

|

oncall: quantization,low priority,triaged,better-engineering

|

low

|

Major

|

599,122,413 |

go

|

cmd/compile: non-symmetric inline cost when using named vs non-named returns

|

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.14.2 windows/amd64

</pre>

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

set GO111MODULE=

set GOARCH=amd64

set GOBIN=

set GOCACHE=C:\Users\Dante\AppData\Local\go-build

set GOENV=C:\Users\Dante\AppData\Roaming\go\env

set GOEXE=.exe

set GOFLAGS=

set GOHOSTARCH=amd64

set GOHOSTOS=windows

set GOINSECURE=

set GONOPROXY=

set GONOSUMDB=

set GOOS=windows

set GOPATH=C:\Users\Dante\go

set GOPRIVATE=

set GOPROXY=https://proxy.golang.org,direct

set GOROOT=c:\go

set GOSUMDB=sum.golang.org

set GOTMPDIR=

set GOTOOLDIR=c:\go\pkg\tool\windows_amd64

set GCCGO=gccgo

set AR=ar

set CC=gcc

set CXX=g++

set CGO_ENABLED=1

set GOMOD=C:\Users\Dante\Documents\Code\go3mf\go.mod

set CGO_CFLAGS=-g -O2

set CGO_CPPFLAGS=

set CGO_CXXFLAGS=-g -O2

set CGO_FFLAGS=-g -O2

set CGO_LDFLAGS=-g -O2

set PKG_CONFIG=pkg-config

set GOGCCFLAGS=-m64 -mthreads -fmessage-length=0 -fdebug-prefix-map=C:\Users\Dante\AppData\Local\Temp\go-build018008438=/tmp/go-build -gno-record-gcc-switches

</pre></details>

### What did you do?

Code: https://play.golang.org/p/bM-akhk0QXG

`go build -gcflags "-m=2"`

### What did you expect to see?

`NewTriangleInline` is equivalent to `NewTriangleNoInline`; the only difference is that the first uses a named return and the second doesn't. I would therefore expect both to have the same inline cost.

```go

func NewTriangleInline(v1, v2, v3 uint32) (t Triangle) {

	t.SetIndex(0, v1)

	t.SetIndex(1, v2)

	t.SetIndex(2, v3)

	return

}

```

```go

func NewTriangleNoInline(v1, v2, v3 uint32) Triangle {

	var t Triangle

	t.SetIndex(0, v1)

	t.SetIndex(1, v2)

	t.SetIndex(2, v3)

	return t

}

```

### What did you see instead?

```

.\foo.go:11:6: can inline NewTriangleInline as: func(uint32, uint32, uint32) Triangle { t.SetIndex(0, v1); t.SetIndex(1, v2); t.SetIndex(2, v3); return }

.\foo.go:18:6: cannot inline NewTriangleNoInline: function too complex: cost 84 exceeds budget 80

```
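
For anyone who wants to try this without the playground link, here is a minimal self-contained sketch of the same comparison. The `Triangle` type and `SetIndex` method below are assumptions (the real definitions live in the linked code and may differ), and the function names `NewTriangleNamed` and `NewTriangleUnnamed` are made up for illustration. It only shows that the two forms return the same value; the inline costs the compiler reports may vary with the Go version and the real type.

```go
package main

import "fmt"

// Triangle is a hypothetical stand-in for the type used in the issue.
type Triangle [3]uint32

// SetIndex stores v at position i (assumed behavior, not taken from the issue).
func (t *Triangle) SetIndex(i int, v uint32) { t[i] = v }

// NewTriangleNamed uses a named result, like NewTriangleInline above.
func NewTriangleNamed(v1, v2, v3 uint32) (t Triangle) {
	t.SetIndex(0, v1)
	t.SetIndex(1, v2)
	t.SetIndex(2, v3)
	return
}

// NewTriangleUnnamed declares a local and returns it, like NewTriangleNoInline above.
func NewTriangleUnnamed(v1, v2, v3 uint32) Triangle {
	var t Triangle
	t.SetIndex(0, v1)
	t.SetIndex(1, v2)
	t.SetIndex(2, v3)
	return t
}

func main() {
	a := NewTriangleNamed(1, 2, 3)
	b := NewTriangleUnnamed(1, 2, 3)
	// Arrays are comparable in Go, so this checks that both constructors agree.
	fmt.Println(a == b)
}
```

Building this with `go build -gcflags "-m=2"` prints the inlining decision for each function, which can then be compared side by side.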

|

NeedsDecision

|

low

|

Critical

|

599,136,166 |

go

|

runtime: sema: many many goroutines queueing up on many many distinct addresses -> slow

|

Hello up there. [libcsp](https://libcsp.com/performance/) claims to be 10x faster on a [benchmark](https://github.com/shiyanhui/libcsp/blob/ea0c5a41/benchmarks/sum.go#L28-L53) involving `sync.WaitGroup` for a divide-and-conquer summation program. I've analyzed the [profile](https://lab.nexedi.com/kirr/misc/raw/443f9bf4/libcsp/pprof001.svg) and most of the time is being spent in `runtime.(*semaRoot).dequeue` and `runtime.(*semaRoot).queue` triggered by calls to `sync.WaitGroup` `.Done` and `.Wait`. The benchmark uses many (~ Ngoroutines) different WaitGroups and semaphores simultaneously.

Go commit https://github.com/golang/go/commit/45c6f59e1fd9 says

> There is still an assumption here that in real programs you don't have many many goroutines queueing up on many many distinct addresses. If we end up with that problem, we can replace the top-level list with a treap.

It seems that this particular scenario is being hit in this benchmark.
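
To illustrate the access pattern, here is a rough sketch of a divide-and-conquer sum where every split creates its own `sync.WaitGroup`. This is not the libcsp benchmark itself; the function name `parallelSum` and the range size are assumptions chosen for illustration. The point is only that the number of distinct WaitGroups being waited on grows with the number of goroutines.

```go
package main

import (
	"fmt"
	"sync"
)

// parallelSum adds the integers in [lo, hi) by splitting the range in half
// and summing each half in its own goroutine. Each call that splits creates
// a fresh WaitGroup, so many goroutines end up blocked in Wait on many
// distinct addresses at the same time.
func parallelSum(lo, hi int64) int64 {
	if hi-lo <= 1 {
		if hi > lo {
			return lo // single-element range
		}
		return 0 // empty range
	}
	mid := lo + (hi-lo)/2
	var left, right int64
	var wg sync.WaitGroup
	wg.Add(2)
	go func() {
		defer wg.Done()
		left = parallelSum(lo, mid)
	}()
	go func() {
		defer wg.Done()
		right = parallelSum(mid, hi)
	}()
	wg.Wait() // blocks until both halves have been summed
	return left + right
}

func main() {
	fmt.Println(parallelSum(0, 1<<12))
}
```

Profiling a larger range of this sketch (for example with `go test -cpuprofile` in a benchmark wrapper) should show whether the semaphore queue functions dominate in the same way, though that has not been verified here.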

--------

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.14.2 linux/amd64

</pre>

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

GO111MODULE="off"

GOARCH="amd64"

GOBIN=""

GOCACHE="/home/kirr/.cache/go-build"

GOENV="/home/kirr/.config/go/env"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOINSECURE=""

GONOPROXY=""

GONOSUMDB=""

GOOS="linux"

GOPATH="/home/kirr/src/neo:/home/kirr/src/tools/go/g.env"

GOPRIVATE=""

GOPROXY="https://proxy.golang.org,direct"

GOROOT="/home/kirr/src/tools/go/go"

GOSUMDB="sum.golang.org"

GOTMPDIR=""

GOTOOLDIR="/home/kirr/src/tools/go/go/pkg/tool/linux_amd64"

GCCGO="/usr/bin/gccgo"

AR="ar"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

GOMOD=""

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build136588881=/tmp/go-build -gno-record-gcc-switches"

</pre></details>

### What did you do?

Ran benchmarks in libcsp.

### What did you expect to see?

Libcsp and Go versions comparable in terms of speed.

### What did you see instead?

Go version 10x slower.

|

Performance,NeedsInvestigation,compiler/runtime

|

low

|

Critical

|

599,148,538 |

pytorch

|

DDP should divide bucket contents by the number of global replicas instead of world size

|

Currently, DDP divides the bucket content by world size before doing allreduce: