id

int64 393k

2.82B

| repo

stringclasses 68

values | title

stringlengths 1

936

| body

stringlengths 0

256k

⌀ | labels

stringlengths 2

508

| priority

stringclasses 3

values | severity

stringclasses 3

values |

|---|---|---|---|---|---|---|

587,081,697 |

flutter

|

Use system setting for font smoothing

|

Moved from https://github.com/google/flutter-desktop-embedding/issues/524. See that issue for details.

Blocked on #39690

|

c: new feature,engine,platform-linux,a: desktop,P3,team-linux,triaged-linux

|

low

|

Minor

|

587,086,502 |

neovim

|

Display a diff for a buffer that was changed outside the current editing session

|

<!-- Before reporting: search existing issues and check the FAQ. -->

- `nvim --version`: 0.4.3

- Operating system/version: Ubuntu 19.04

- Terminal name/version: gnome-terminal 3.34

- `$TERM`: xterm-256color

### Steps to reproduce using `nvim -u NORC`

1. Open a file to edit in neovim.

2. Open the same file in another editor, make some changes, and write them to the file.

3. Run `:checktime` in neovim. If autoread is set, neovim will directly reload the buffer, else the user will be prompted for reloading the buffer.

### Actual behaviour

Every time the user is prompted to reload the buffer, there is no way of knowing what changes occurred in the buffer.

### Expected behaviour

After implementing this feature, a diff between the buffer opened in neovim and the changed file would be displayed along with a prompt for whether the user wants to reload a buffer or not.

I'd like to implement this feature as a part of the GSoC project for improving autoread.
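A minimal sketch of the idea, written in Python rather than in Neovim's own C or Lua, assuming both the buffer contents and the on-disk file are available as lists of lines. The helper name `diff_buffer_against_disk` and the file labels are hypothetical and only illustrate what the prompt could show.

```python
import difflib


def diff_buffer_against_disk(buffer_lines, disk_lines, name="file"):
    """Return a unified diff from the in-editor buffer to the on-disk file.

    Hypothetical helper for illustration; not part of Neovim.
    """
    return list(
        difflib.unified_diff(
            buffer_lines,
            disk_lines,
            fromfile=f"{name} (buffer)",
            tofile=f"{name} (disk)",
            lineterm="",
        )
    )


# The buffer still holds the old text; another editor changed line 2 on disk.
buffer_lines = ["alpha", "beta", "gamma"]
disk_lines = ["alpha", "beta changed", "gamma"]
diff = diff_buffer_against_disk(buffer_lines, disk_lines, "notes.txt")
print("\n".join(diff))
```

A reload prompt could print these lines before asking whether to reload, so the user sees exactly which lines differ.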

|

enhancement

|

low

|

Major

|

587,168,612 |

nvm

|

$(nvm_sanitize_path "${NPM_CONFIG_PREFIX}");: command not found

|

Getting this in my shell when I open a new bash tab:

```

$(nvm_sanitize_path "${NPM_CONFIG_PREFIX}");: command not found

```

in my ~/.bashrc I have:

```

export NVM_DIR="$HOME/.nvm"

[ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh" # This loads nvm

[ -s "$NVM_DIR/bash_completion" ] && \. "$NVM_DIR/bash_completion" # This loads nvm bash_completion

```

I remember seeing the NPM_CONFIG_PREFIX stuff somewhere but don't see it now. I am on Ubuntu 18.04.

---------------------------

#### Operating system and version: ubuntu 18.04

#### `nvm debug` output:

<details>

<!-- do not delete the following blank line -->

```sh

nvm --version: v0.35.3

$SHELL: /bin/bash

$SHLVL: 1

${HOME}: /home/oleg

${NVM_DIR}: '${HOME}/.nvm'

${PATH}: ${HOME}/Android/Sdk/tools:${HOME}/Android/Sdk/tools/bin:${HOME}/.opam/system/bin:/usr/local/opt/bison/bin:/usr/local/opt/openldap/sbin:/usr/local/opt/openldap/bin:/opt/local/bin:/opt/local/sbin:/usr/local/opt/openldap/sbin:/usr/local/opt/openldap/bin:${HOME}/.oresoftware/bin:${HOME}/.local/bin:${HOME}/.oresoftware/bin:${HOME}/.local/bin:${NVM_DIR}/versions/node/v12.16.1/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin:${HOME}/.dotnet/tools:/usr/local/go/bin:/usr/local/go/bin:${HOME}/.rvm/bin:${HOME}/.local/bin:${HOME}/.rvm/bin

$PREFIX: ''

${NPM_CONFIG_PREFIX}: ''

$NVM_NODEJS_ORG_MIRROR: ''

$NVM_IOJS_ORG_MIRROR: ''

shell version: 'GNU bash, version 4.4.20(1)-release (x86_64-pc-linux-gnu)'

uname -a: 'Linux 4.15.0-91-generic #92-Ubuntu SMP Fri Feb 28 11:09:48 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux'

OS version: Ubuntu 18.04.4 LTS

curl: /usr/bin/curl, curl 7.58.0 (x86_64-pc-linux-gnu) libcurl/7.58.0 OpenSSL/1.1.1 zlib/1.2.11 libidn2/2.0.4 libpsl/0.19.1 (+libidn2/2.0.4) nghttp2/1.30.0 librtmp/2.3

wget: /usr/bin/wget, GNU Wget 1.19.4 built on linux-gnu.

git: /usr/bin/git, git version 2.17.1

grep: /bin/grep (grep --color=auto), grep (GNU grep) 3.1

awk: /usr/bin/awk, awk: not an option: --version

sed: /bin/sed, sed (GNU sed) 4.4

cut: /usr/bin/cut, cut (GNU coreutils) 8.28

basename: /usr/bin/basename, basename (GNU coreutils) 8.28

rm: /bin/rm, rm (GNU coreutils) 8.28

mkdir: /bin/mkdir, mkdir (GNU coreutils) 8.28

xargs: /usr/bin/xargs, xargs (GNU findutils) 4.7.0-git

nvm current: v12.16.1

which node: ${NVM_DIR}/versions/node/v12.16.1/bin/node

which iojs:

which npm: ${NVM_DIR}/versions/node/v12.16.1/bin/npm

npm config get prefix: ${NVM_DIR}/versions/node/v12.16.1

npm root -g: ${NVM_DIR}/versions/node/v12.16.1/lib/node_modules

```

</details>

#### `nvm ls` output:

<details>

<!-- do not delete the following blank line -->

```sh

oleg@xps:~$ nvm ls

v6.17.1

v7.10.1

v8.15.1

v9.11.2

v10.7.0

v10.19.0

v11.3.0

v11.11.0

v11.15.0

v12.6.0

v12.9.0

-> v12.16.1

v13.9.0

v13.11.0

system

default -> 12 (-> v12.16.1)

node -> stable (-> v13.11.0) (default)

stable -> 13.11 (-> v13.11.0) (default)

iojs -> N/A (default)

unstable -> N/A (default)

lts/* -> lts/erbium (-> v12.16.1)

lts/argon -> v4.9.1 (-> N/A)

lts/boron -> v6.17.1

lts/carbon -> v8.17.0 (-> N/A)

lts/dubnium -> v10.19.0

lts/erbium -> v12.16.1

```

</details>

#### How did you install `nvm`?

The curl script

#### Is there anything in any of your profile files that modifies the `PATH`?

Yes definitely

|

needs followup

|

medium

|

Critical

|

587,199,554 |

pytorch

|

Method/constructor which takes as input angle and magnitude and returns a complex tensor

|

cc @ezyang @anjali411 @dylanbespalko

|

triaged,module: complex

|

low

|

Major

|

587,203,546 |

neovim

|

non-string Lua errors in vim.loop callbacks cause segfault

|

<!-- Before reporting: search existing issues and check the FAQ. -->

- `nvim --version`: NVIM v0.4.3

- Operating system/version: Linux 5.5.10

### Steps to reproduce using `nvim -u NORC`

```

nvim -u NORC -c 'lua vim.loop.new_idle():start(function() error() end)'

```

Using `new_idle` to get a function to run in loop callback but also happens in other kinds of callbacks like pipe reads.

### Actual behaviour

Neovim terminated by SIGSEGV.

### Expected behaviour

Error logged as an error message?

|

has:repro,bug-crash,lua

|

low

|

Critical

|

587,213,597 |

node

|

Retry on failed ICU data load, ignoring NODE_ICU_DATA or --icu-data-dir

|

Related to #30825 (I had the idea during its discussion) and, from the icebox, #3460

**Is your feature request related to a problem? Please describe.**

Currently, Node with an invalid/missing ICU data directory ( set with NODE_ICU_DATA or --icu-data-dir ) just fails.

```shell

$ nvm i --lts

Installing latest LTS version.

v12.16.1 is already installed.

$ node --icu-data-dir=/tmp

node: could not initialize ICU (check NODE_ICU_DATA or --icu-data-dir parameters)

```

**Describe the solution you'd like**

It might be possible to fall back *as if* the `--icu-data-dir` was not specified (or the default data dir in #30825 was not present.)

As early as that error message is generated, it might be possible to call `u_cleanup()` to unload ICU and then reinitialize it using the baked-in data or other defaults.

This could be done completely within `InitializeICUDirectory()`, although it might be advantageous to allow programmatic detection of the fact that this fallback happened. Ideas there:

1. expose `const char *u_getDataDirectory()` via the ICU process binding.

2. provide the eventual path string via some kind of variable

3. ~print out a warning~ (not a good idea)

4. some other flag

5. do nothing - this would probably be OK if there isn't a strong use case (other than perhaps test cases)
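The fallback idea, combined with option 2 above (reporting which data source ended up in use), could be sketched roughly as follows. This is Python pseudologic, not Node's C++; `initialize_icu`, `load_from_dir`, and `load_builtin` are hypothetical names standing in for the real initialization steps.

```python
def initialize_icu(requested_dir, load_from_dir, load_builtin):
    """Try the requested data directory, then fall back to built-in data.

    `load_from_dir` and `load_builtin` are hypothetical callables standing in
    for the real ICU initialization; each returns True on success.
    Returns a (source, path) tuple so callers can detect the fallback.
    """
    if requested_dir is not None and load_from_dir(requested_dir):
        return ("requested", requested_dir)
    # The requested directory was missing or invalid. The issue proposes
    # calling u_cleanup() at this point before retrying with the defaults.
    if load_builtin():
        return ("builtin", None)
    raise RuntimeError("could not initialize ICU")


# Stand-in loaders: only /usr/share/icu holds valid data in this demo.
valid_dirs = {"/usr/share/icu"}
result_ok = initialize_icu("/usr/share/icu", lambda d: d in valid_dirs, lambda: True)
result_fallback = initialize_icu("/tmp", lambda d: d in valid_dirs, lambda: True)
print(result_ok)
print(result_fallback)
```

Returning the source alongside the path is one way to make the fallback detectable without printing a warning.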

FYI @sam-github @sgallagher @nodejs/i18n-api

**Describe alternatives you've considered**

There's really no alternative to restarting the node process at this point.

|

i18n-api,cli

|

low

|

Critical

|

587,231,913 |

flutter

|

Consider converting system channels to standard codec

|

Currently many of the Flutter framework's system channels use the JSON codec, presumably purely for legacy reasons. This is not ideal for a number of reasons:

- Not all languages/platforms have a standard JSON library, so some embeddings (e.g., our Windows and Linux embeddings) need to use/link/ship a third-party JSON library just to talk to critical system channels.

- In fact, the Flutter engine is shipping RapidJSON purely for this reason, I believe.

- It essentially forces every new platform to implement two codecs: standard for compatibility with most (~all? I don't know if we have data on this), and JSON for compatibility with the framework.

- It's less efficient than the standard method codec (and the general advantages of JSON over binary don't really apply to ephemeral, internal messages)

This would be a breaking change for all embedding implementations. Some options:

- Just make it a breaking change. It should be very easy for people with custom embeddings to fix (since they will almost certainly have implemented the standard codec anyway)

- Add parallel channels with new names, and an opt-in method for an embeddings to say they want the new ones (set at engine startup).

/cc @Hixie for thoughts.

|

framework,c: proposal,P2,team-framework,triaged-framework

|

low

|

Major

|

587,291,021 |

youtube-dl

|

Support for Onlyfans

|

## Checklist

- [x] I'm reporting a new site support request

- [x] I've verified that I'm running youtube-dl version **2020.03.24**

- [x] I've checked that all provided URLs are alive and playable in a browser

- [x] I've checked that none of provided URLs violate any copyrights

- [x] I've searched the bugtracker for similar site support requests including closed ones

## Example URLs

-Free post https://onlyfans.com/16391013/killercleavage-free

-Free post https://onlyfans.com/15584274/hoodedhofree

-Free post https://onlyfans.com/13634727/lilianlacefree

-Paid post https://onlyfans.com/16352300/entina_cat

-Free post https://onlyfans.com/16384958/silvermoonfree

## Description

I think it would be very helpful to add this to the page list. Especially for mass backups of accounts disconnected from the internet. Always protecting content using credentials.

|

site-support-request

|

low

|

Critical

|

587,341,337 |

ant-design

|

Popconfirm does not work on Dropdown item

|

- [x] I have searched the [issues](https://github.com/ant-design/ant-design/issues) of this repository and believe that this is not a duplicate.

### Reproduction link

[https://codesandbox.io/s/antd-bug-popconfirm-in-dropdown-tiw9l](https://codesandbox.io/s/antd-bug-popconfirm-in-dropdown-tiw9l)

### Steps to reproduce

1. Put a Popconfirm around a Dropdown item.

2. Click the Dropdown item

### What is expected?

Popconfirm appears

### What is actually happening?

Popconfirm appears and immediately disappears, or never shows at all.

| Environment | Info |

|---|---|

| antd | 4.0.4 |

| React | 16.13.1 |

| System | MacOS |

| Browser | Chrome 80.0.3987.132 |

---

This worked in 3.x .

<!-- generated by ant-design-issue-helper. DO NOT REMOVE -->

|

🐛 Bug,Inactive,4.x,3.x

|

medium

|

Critical

|

587,367,651 |

vue

|

Object with prototype of `null` cannot be displayed using text interpolation

|

### Version

2.6.11

### Reproduction link

[https://codepen.io/hezedu/pen/GRJYXGR](https://codepen.io/hezedu/pen/GRJYXGR)

### Steps to reproduce

vue: 2.6.11

https://github.com/vuejs/vue/blob/a59e05c2ffe7d10dc55782baa41cb2c1cd605862/dist/vue.runtime.js#L96-L102

```js

toString(Object.create(null));

```

Will crash:

TypeError: Cannot convert object to primitive value

### What is expected?

TypeError

### What is actually happening?

error

<!-- generated by vue-issues. DO NOT REMOVE -->

|

improvement

|

medium

|

Critical

|

587,378,377 |

TypeScript

|

Module plugin template is misleading

|

**Expected behavior:**

I followed the template exactly and it can't do what it's claiming you can do. I would expect from the template that I could extend an existing module with a new function.

https://www.typescriptlang.org/docs/handbook/declaration-files/templates/module-plugin-d-ts.html

**Actual behavior:**

I am unable to extend the existing module with a new function.

https://github.com/MCKRUZ/TS-Plugin-HW

|

Docs

|

low

|

Minor

|

587,413,838 |

PowerToys

|

Source code line number and highlighter

|

Having written a number of articles and books, I have found that making code listings stand out and easy to reference in the text takes some work. The following website: "[http://www.planetb.ca/syntax-highlight-word](http://www.planetb.ca/syntax-highlight-word)" takes a code snippet copied from VS and, after you select the language, adds line numbers and alternating gray and white bars for each line of code. The result can be copied and pasted into Word, but further work is needed to fix formatting errors in longer code snippets and to choose a better font.

A separate tool or a tool in Visual Studio/VSCode that lets one take code snippets and outputs:

1. Puts in the line numbers with alternating gray and white bars for each line of code.

2. Maintain the code text color and style as it is displayed in Visual Studio.

3. Maintain all indents and code formatting

4. The user can also select the font or change text to one color.

5. The result can be copied into Microsoft Word.

|

Idea-New PowerToy

|

low

|

Critical

|

587,423,016 |

electron

|

Cookie 'before-added' event

|

<!-- As an open source project with a dedicated but small maintainer team, it can sometimes take a long time for issues to be addressed so please be patient and we will get back to you as soon as we can.

-->

### Preflight Checklist

<!-- Please ensure you've completed the following steps by replacing [ ] with [x]-->

* [x] I have read the [Contributing Guidelines](https://github.com/electron/electron/blob/master/CONTRIBUTING.md) for this project.

* [x] I agree to follow the [Code of Conduct](https://github.com/electron/electron/blob/master/CODE_OF_CONDUCT.md) that this project adheres to.

* [x] I have searched the issue tracker for a feature request that matches the one I want to file, without success.

### Problem Description

<!-- Is your feature request related to a problem? Please add a clear and concise description of what the problem is. -->

I'm working on a Node module to add much more privacy to Electron applications, and I wanted to ask about adding a new event to the Cookies API. For web privacy and tracking protection, one of the best measures that can be taken is blocking third-party cookies. The current ['changed'](https://www.electronjs.org/docs/api/cookies?q=before#event-changed) event only gets called ***after*** the cookie gets added. Ideally, there would be an event that is called when a website tries to add a cookie, and the Electron event would include a callback that decides whether to go through with the cookie addition or to cancel the request and not add the cookie.

### Proposed Solution

<!-- Describe the solution you'd like in a clear and concise manner -->

Event for the Electron [Cookies API](https://www.electronjs.org/docs/api/cookies) called **before** a cookie is added:

```js

cookies.on('before-added', (cookie, callback) => {

callback(true); // Keep cookie

callback(false); // Remove cookie

});

```

The callback should take in one boolean parameter determining if the cookie should be added as intended or removed.
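To illustrate the intended semantics outside Electron, here is a small Python sketch of a cancellable "before added" hook. `CookieStore` and `on_before_added` are hypothetical names, and the sketch uses the handler's return value in place of the proposed callback argument.

```python
class CookieStore:
    """Toy cookie store with a cancellable 'before-added' hook (hypothetical)."""

    def __init__(self):
        self.cookies = {}
        self._before_added = []

    def on_before_added(self, handler):
        # handler(cookie) returns True to keep the cookie, False to reject it.
        self._before_added.append(handler)

    def add(self, cookie):
        # Every registered handler must approve the cookie before it is stored.
        if all(handler(cookie) for handler in self._before_added):
            self.cookies[cookie["name"]] = cookie
            return True
        return False


store = CookieStore()
# Reject cookies whose domain differs from the first-party site.
store.on_before_added(lambda cookie: cookie["domain"] == "example.com")

kept = store.add({"name": "session", "domain": "example.com"})
blocked = store.add({"name": "tracker", "domain": "ads.example.net"})
print(kept, blocked, sorted(store.cookies))
```

The key property is that the decision happens before the cookie is stored, which the existing 'changed' event cannot provide.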

### Alternatives Considered

<!-- A clear and concise description of any alternative solutions or features you've considered. -->

One possible, although not as neat, alternative would be adding a callback to the existing ['changed'](https://www.electronjs.org/docs/api/cookies?q=before#event-changed) event so that the change could be cancelled. Yet this method would apply for all forms of cookie modification not just when a cookie is added.

### Additional Information

<!-- Add any other context about the problem here. -->

N/A

|

enhancement :sparkles:

|

medium

|

Major

|

587,439,499 |

vue-element-admin

|

Error when opening the site in the Edge browser

|

<!--

Note: To help us solve your problem, please follow the template, provide complete information, and describe the problem accurately. Issues with incomplete information will be closed.

-->

## Bug report: after opening the page in Edge and clicking refresh, the sidebar cannot be clicked

#### Steps to reproduce

<!--

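1. https://panjiachen.github.io/vue-element-admin/#/permission/role (the problem may appear right after logging in; if not, go to step 2)

2. Click the browser's refresh button

3. The problem appears: the sidebar cannot be clicked and an error is raised

4. Open the console; the following error message appears

TypeError: Unable to get property 'parent' of undefined or null reference render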

-->

####

####

Initial investigation suggests the problem may be in the SiderBarItem.vue component.

<!--

Please only use Codepen, JSFiddle, CodeSandbox or a github repo

-->

#### Other relevant information

The error only occurs in Edge when the console is closed; with the console open, no error is reported.

|

polyfill

|

low

|

Critical

|

587,444,200 |

pytorch

|

NCCL version upgrade for PyTorch

|

## 🐛 Bug

<!-- A clear and concise description of what the bug is. -->

Building PyTorch from source fails on some old Linux releases.

This issue has been described here: https://github.com/NVIDIA/nccl/issues/244, and Nvidia folks had it fixed (https://github.com/NVIDIA/nccl/commit/7f2b337e703d73ed369937c9996e1f3d5f664ad0). However, that change has not been included in the PyTorch source code, so if people need to build PyTorch from source code on some old linux system, it would still fail for the same problem.

The current NCCL version in the latest PyTorch release is still 2.4.8; I think it can be upgraded to 2.5.6 now.

I can see that there is another open ticket for the same issue: https://github.com/pytorch/pytorch/issues/29093

## To Reproduce

Steps to reproduce the behavior:

1. Checkout PyTorch source code.

2. Build on a Linux system with a kernel older than 3.9 (the version in which SO_REUSEPORT was introduced).

## Expected behavior

The build should succeed on Linux systems with a kernel older than 3.9.
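As a rough runtime analogue of the compatibility concern (not NCCL's actual fix, which lives in C++ build code), the following Python sketch enables `SO_REUSEPORT` only when the platform defines it and the kernel accepts it. The helper name `try_enable_reuseport` is hypothetical.

```python
import socket


def try_enable_reuseport(sock):
    """Enable SO_REUSEPORT when available; return True on success."""
    option = getattr(socket, "SO_REUSEPORT", None)
    if option is None:
        # The constant is not defined on this platform.
        return False
    try:
        sock.setsockopt(socket.SOL_SOCKET, option, 1)
    except OSError:
        # The constant exists but the running kernel rejects it.
        return False
    return True


with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
    enabled = try_enable_reuseport(sock)
    print("SO_REUSEPORT enabled:", enabled)
```

Guarding the option this way lets the same code run on systems that predate it, at the cost of silently losing port reuse there.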

```

- PyTorch Version (e.g., 1.0): 1.4 master

- OS (e.g., Linux): Linux (Below 3.9)

- How you installed PyTorch (`conda`, `pip`, source): source

- Build command you used (if compiling from source): python setup.py develop

- Python version: 3.6

- CUDA/cuDNN version: irrelevant

- GPU models and configuration: irrelevant

- Any other relevant information: No

```

## Additional context

No

|

module: build,triaged

|

low

|

Critical

|

587,504,599 |

flutter

|

[Proposal] Icon support Linux desktop application

|

## Use case

The default Linux application icon looks ugly...🥴 Flutter deserves better than that... 😎

## Proposal

This looks much better (photo-shopped)

|

c: new feature,tool,a: quality,platform-linux,c: proposal,a: desktop,a: build,P3,team-linux,triaged-linux

|

high

|

Critical

|

587,524,896 |

TypeScript

|

Incorrectly typed argument variant

|

**TypeScript Version:** 3.8.3

**Search Terms:**

Parameters, Array, Overloading, Type inference

**Code**

```ts

type VariantA = [(err: Error, data: string) => any]

type VariantB = [(data: string) => any, (err: Error) => any]

type Args = VariantA | VariantB

function fn (...rest: Args): void { /* do something */ }

fn((err, data) => {

err.stack // Correct: Typed as Error

data.charAt(0) // Correct: Typed as string

})

fn((data) => {

data // --> Error: Mistyped as any, should be string <--

}, (err) => {

err.stack // Correct: Typed as Error

})

```

**Expected behavior:**

If two functions are passed in (_VariantB_), the first parameter should be typed as `data: string`.

**Actual behavior:**

If two functions are passed in, the first function's parameter is typed as `any`.

|

Bug

|

low

|

Critical

|

587,539,005 |

pytorch

|

How to support single-process-multiple-devices in DistributedDataParallel other than CUDA device

|

Hi,

I am investigating how to extend DistributedDataParallel to accelerator devices other than CUDA devices.

The goal is to support not only single-process-single-device but also single-process-multiple-devices and multiple-processes-multiple-devices.

DistributedDataParallel has many CUDA dependencies.

My question is:

1. How can I override the CUDA-specific logic and dispatch gather and scatter (and the other APIs used) to the c10d backend without modifying distributed.py? https://github.com/pytorch/pytorch/blob/master/torch/nn/parallel/distributed.py

cc @pietern @mrshenli @pritamdamania87 @zhaojuanmao @satgera @rohan-varma @gqchen @aazzolini @osalpekar @jiayisuse @agolynski @SciPioneer @H-Huang @mrzzd @cbalioglu @gcramer23

|

oncall: distributed

|

low

|

Minor

|

587,566,986 |

angular

|

With ivy, injecting a parent directive with the @host annotation fails in nested structures

|

# 🐞 bug report

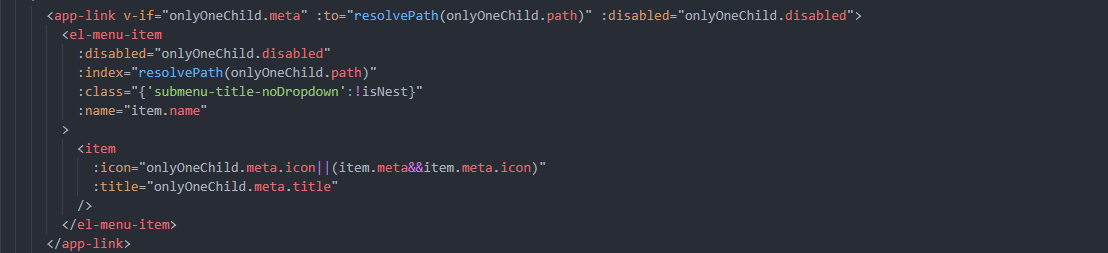

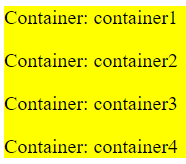

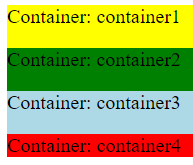

### Is this a regression?

Yes, in Angular 8 this works fine, but after updating to Angular 9 it stops working. If I opt out of Ivy in tsconfig.app.json ("enableIvy": false), it works again.

### Description

The problem occurs if a parent directive is injected with the @Host annotation in nested structures. The first injection works fine, but all injections in more deeply nested structures fail and null is injected.

In the root component a ui property is defined which contains nested child elements.

```

@Component({

selector: 'app-root',

template: `

<app-container [element]="ui"></app-container>

`

})

export class AppComponent {

ui: ElementDef = {

name: 'container1', child: {

name: 'container2', child: {

name: 'container3', child: {

name: 'container4'

}

}

}

};

}

```

The elements are rendered by the container component. Each rendered element is embedded in a div with a directive "appContainerWrapper" attached.

The parent directive of a container is injected in the constructor with the @Host annotation.

```

@Component({

selector: 'app-container',

template: `

<div appContainerWrapper *ngIf="element">

<p>Container: {{element.name}}</p>

<app-container [element]="element.child"></app-container>

</div>

`

})

export class ContainerComponent implements OnInit {

@Input() element: ElementDef;

constructor(@Optional() @Host() private directive: ContainerWrapperDirective) {}

[...]

ngOnInit(): void {

if (this.directive !== null) {

this.directive.update(color);

} else {

// in nested containers this.directive is null if ivy is enabled :(

}

}

}

```

The directive should set the background color of the parent element if `update` is called from the child element.

```

@Directive({

selector: '[appContainerWrapper]'

})

export class ContainerWrapperDirective {

constructor(private containerWrapperRef: ElementRef, private renderer: Renderer2) {}

update(color: string): void {

const nativeElement = this.containerWrapperRef.nativeElement;

this.renderer.setStyle(nativeElement, 'background-color', color);

}

}

```

## 🔬 Minimal Reproduction

The problem cannot be reproduced in StackBlitz. I therefore created a sample application on [Github](https://github.com/martinbu/ivy-directive-issue)

The repository contains two branches:

1. **master:** Angular 9 with Ivy enabled --> the parent directive is only injected in the first container where a directive is available. In all further containers the injected directive is null. Therefore only one background is set.

2. **opt-out-ivy:** same as the master branch but set "enableIvy" in tsconfig.app.json to false --> everything works as expected as with angular 8. In each container the parent directive is injected.

## 🌍 Your Environment

**Angular Version:**

<pre><code>

Angular CLI: 9.0.7

Node: 12.16.1

OS: win32 x64

Angular: 9.0.7

... animations, cli, common, compiler, compiler-cli, core, forms

... language-service, platform-browser, platform-browser-dynamic

... router

Ivy Workspace: Yes

Package Version

-----------------------------------------------------------

@angular-devkit/architect 0.900.7

@angular-devkit/build-angular 0.900.7

@angular-devkit/build-optimizer 0.900.7

@angular-devkit/build-webpack 0.900.7

@angular-devkit/core 9.0.7

@angular-devkit/schematics 9.0.7

@ngtools/webpack 9.0.7

@schematics/angular 9.0.7

@schematics/update 0.900.7

rxjs 6.5.4

typescript 3.7.5

webpack 4.41.2

</code></pre>

|

type: bug/fix,freq2: medium,area: core,state: confirmed,core: di,P3

|

low

|

Critical

|

587,575,696 |

godot

|

Image stretch when Window Resize is enabled

|

**Godot version:**

3.2.1

**OS/device including version:**

macOS Catalina 10.15.4 / MacBookPro11,1

**Issue description:**

If Window Resize is enabled, the image gets stretched horizontally.

It doesn't matter whether Fullscreen is enabled or not. The problem occurs in both situations.

**Note:**

- I set the Environment color to white, but when the image is stretched the borders are black, so it is not showing the environment color. Something else must be happening.

- I am running this on an external monitor that has 1920x1080 resolution (if this has any relevance)

- Here is a complete video that shows the problem: https://drive.google.com/open?id=1rfRweWs5Yo0MgLJRYsfnOl7Uj8REgdKI

**Steps to reproduce:**

Run the attached Godot project with and without Window Resize enabled.

**Minimal reproduction project:**

[Godot_Window_Bug.zip](https://github.com/godotengine/godot/files/4380118/Godot_Window_Bug.zip)

|

platform:macos,topic:porting

|

medium

|

Critical

|

587,616,315 |

go

|

proxy.golang.org: long cache TTL causes confusing UX for 'go get <module>@<branchname>'

|

<!--

Please answer these questions before submitting your issue. Thanks!

For questions please use one of our forums: https://github.com/golang/go/wiki/Questions

-->

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.14.1 windows/amd64

</pre>

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

set GO111MODULE=

set GOARCH=amd64

set GOBIN=

set GOCACHE=C:\Users\josh\AppData\Local\go-build

set GOENV=C:\Users\josh\AppData\Roaming\go\env

set GOEXE=.exe

set GOFLAGS=

set GOHOSTARCH=amd64

set GOHOSTOS=windows

set GOINSECURE=

set GONOPROXY=

set GONOSUMDB=

set GOOS=windows

set GOPATH=E:\go

set GOPRIVATE=

set GOPROXY=https://proxy.golang.org,direct

set GOROOT=c:\go

set GOSUMDB=sum.golang.org

set GOTMPDIR=

set GOTOOLDIR=c:\go\pkg\tool\windows_amd64

set GCCGO=gccgo

set AR=ar

set CC=gcc

set CXX=g++

set CGO_ENABLED=1

set GOMOD=E:\src\rainway-metapod\go.mod

set CGO_CFLAGS=-g -O2

set CGO_CPPFLAGS=

set CGO_CXXFLAGS=-g -O2

set CGO_FFLAGS=-g -O2

set CGO_LDFLAGS=-g -O2

set PKG_CONFIG=pkg-config

set GOGCCFLAGS=-m64 -mthreads -fmessage-length=0 -fdebug-prefix-map=C:\Users\josh\AppData\Local\Temp\go-build763362010=/tmp/go-build -gno-record-gcc-switches

</pre></details>

### What did you do?

`go get github.com/RainwayApp/metapod@modulize` and then attempt to import and use metapod in a program

<!--

If possible, provide a recipe for reproducing the error.

A complete runnable program is good.

A link on play.golang.org is best.

-->

```go

package main

import "github.com/RainwayApp/metapod"

func main() {

metapod.Create()

}

```

### What did you expect to see?

No errors! woo!

### What did you see instead?

```module github.com/RainwayApp/metapod@latest found (v0.0.0-20190515153323-337878358c65), but does not contain package github.com/RainwayApp/metapod```

What's interesting is that the downloaded module is devoid of Go files, which I don't really get (this is even after trying to go get the module again after deleting it from here).

I got around this by creating a new branch (modulize2) which has all the files. However, I'm not sure how it's possible for it to end up in this state in the first place, so I just thought I'd bring it up...

|

NeedsInvestigation,modules,proxy.golang.org

|

low

|

Critical

|

587,639,522 |

TypeScript

|

Set as executable files that are intended to be binaries

|

<!-- 🚨 STOP 🚨 𝗦𝗧𝗢𝗣 🚨 𝑺𝑻𝑶𝑷 🚨

Half of all issues filed here are duplicates, answered in the FAQ, or not appropriate for the bug tracker.

Please help us by doing the following steps before logging an issue:

* Search: https://github.com/Microsoft/TypeScript/search?type=Issues

* Read the FAQ, especially the "Common Feature Requests" section: https://github.com/Microsoft/TypeScript/wiki/FAQ

-->

## Search Terms

<!-- List of keywords you searched for before creating this issue. Write them down here so that others can find this suggestion more easily -->

- `mode`

- `binary`

- `executable`

- `package.json bin`

## Suggestion

<!-- A summary of what you'd like to see added or changed -->

Currently, the TypeScript compiler always generates files with the `644` mode. But some files are meant to be executable: they may start with `#!/usr/bin/env node`; they may be marked as `bin` in `package.json`; they may have an executable mode set on the `.ts` source; and so forth.

It’d be great if the TypeScript compiler would take any of the hints I mentioned above, figure that the file should be executable, and create the `.js` as so.

At least the TypeScript compiler doesn’t **change** the mode of existing `.js` files when overwriting them, so I can `chmod` once, and as long as I never delete the file, it’ll have the right mode.

## Use Cases

<!--

What do you want to use this for?

What shortcomings exist with current approaches?

-->

This is mostly for development and running the binary from the command-line directly. Once you install the package, npm puts the binary in the `node_modules/.bin` folder, `npx` picks it up, and all is good.

## Examples

<!-- Show how this would be used and what the behavior would be -->

You’re working on a project that should provide a binary: `src/my-binary.ts`. You do one of the things I mentioned to indicate that the file should be executable. You run `tsc`, and the generated file at `lib/my-binary.js` is marked as `+x`. Then you can call the binary with `lib/my-binary.js` from the command-line and it works.
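Until the compiler does this itself, a post-build step can approximate the shebang hint. The sketch below is a Python illustration, assuming a POSIX file system and that the emitted `.js` file begins with `#!`; the helper name `mark_executable_if_shebang` is hypothetical and the temporary file merely stands in for `lib/my-binary.js`.

```python
import os
import stat
import tempfile


def mark_executable_if_shebang(path):
    """Add execute bits to `path` when its first two bytes are '#!'."""
    with open(path, "rb") as handle:
        has_shebang = handle.read(2) == b"#!"
    if has_shebang:
        mode = os.stat(path).st_mode
        os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return has_shebang


# Demo on a temporary file standing in for lib/my-binary.js.
with tempfile.TemporaryDirectory() as tmp:
    script = os.path.join(tmp, "my-binary.js")
    with open(script, "w") as handle:
        handle.write("#!/usr/bin/env node\nconsole.log('hi')\n")
    os.chmod(script, 0o644)  # the mode the issue says tsc emits
    changed = mark_executable_if_shebang(script)
    is_executable = bool(os.stat(script).st_mode & stat.S_IXUSR)
    print(changed, is_executable)
```

Running such a step after `tsc` also covers the case where the output file was deleted and regenerated.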

## Checklist

My suggestion meets these guidelines:

* [x] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [x] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

|

Suggestion,Awaiting More Feedback

|

medium

|

Critical

|

587,659,114 |

godot

|

OS.execute trouble with shell evaluation ("export PATH=test && echo $PATH" doesn't work)

|

**Godot version:**

3.2.1

**OS/device including version:**

macos

**Issue description:**

OS.execute("/bin/bash", ['-c',"export PATH=test && echo $PATH"], true, output,true)

print(output)

print result

[/usr/bin:/bin:/usr/sbin:/sbin

]

This doesn't work: the output still shows the original PATH instead of `test`.
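For comparison, here is the same shell invocation from Python rather than GDScript, assuming a POSIX shell is available. It is only a reference for the expected behaviour and says nothing about how `OS.execute` is implemented.

```python
import shutil
import subprocess

# Prefer bash, as in the report; fall back to /bin/sh if bash is not found.
shell = shutil.which("bash") or "/bin/sh"

# Pass the whole command line as one argument to -c so the shell itself
# expands $PATH after the export has taken effect.
completed = subprocess.run(
    [shell, "-c", "export PATH=test && echo $PATH"],
    capture_output=True,
    text=True,
    check=True,
)
print(repr(completed.stdout))
```

Here the shell prints `test`, which is the output the report expects from Godot as well.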

**Steps to reproduce:**

**Minimal reproduction project:**

|

bug,platform:linuxbsd,platform:macos,topic:porting,confirmed

|

low

|

Major

|

587,659,752 |

godot

|

[TextureRect] rect_scale when changing texture

|

**Godot version:**

3.2.1.stable

**OS/device including version:**

Windows 10 Pro

**Issue description:**

(At least when using animations) Changing the texture on a TextureRect (from "null") briefly resets rect_scale to (1,1).

**Steps to reproduce:**

- Create a control and put a texture rect inside it

- Create an animation state machine

- Create a show (start animation), base and hide animation

- At the start of the show animation "update" the texture (set to some texture) and set rect_scale to (0, 0) and at the end move it to (1,1)

- At the end of the hide animation "update" the texture (make it null) and set rect_scale to (1,1) and at the end move it to (0,0)

- Make controls so you can start the show animation and travel to the hide animation

- Start the show animation, travel to the hide animation (wait for it to finish), and then start the show animation again. You can see that rect_scale changes to (1,1) when the texture changes and then, after a brief moment, returns to the value the animation specifies.

**Minimal reproduction project:**

https://drive.google.com/file/d/113X-mEWHL5ad1khAM6G4VG7CpapT7aOP/view?usp=sharing

|

bug,topic:rendering

|

low

|

Minor

|

587,677,110 |

go

|

cmd/compile: consider extending '-spectre' option to other architectures

|

@rsc recently added a `-spectre` flag to the compiler and implemented a couple of speculative execution exploit mitigations on amd64. These involved using conditional moves to prevent out of bounds indexed memory accesses (`-spectre=index` added in https://golang.org/cl/222660) and making use of call stack optimizations to prevent indirect function calls being speculatively executed (`-spectre=ret` added in https://golang.org/cl/222661).
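As a rough illustration of the `-spectre=index` idea, the toy model below masks an index so that an out-of-bounds value collapses to 0 instead of being used for a load. This is only a sketch of the general technique in Python, not the Go compiler's actual lowering; the names `spectre_safe_index` and `load` are invented for this example, and Python itself gives no guarantees about speculative execution.

```python
def spectre_safe_index(i, length):
    """Return i when 0 <= i < length, otherwise 0, using a mask instead of an early return."""
    in_bounds = int(0 <= i < length)  # 1 when in bounds, 0 otherwise
    mask = -in_bounds                 # all ones (-1) when in bounds, 0 otherwise
    return i & mask


def load(table, i):
    """Bounds-checked load that also masks the index it actually uses."""
    if not 0 <= i < len(table):
        raise IndexError(i)
    # Even if the check above were mispredicted, the masked index stays inside the table.
    return table[spectre_safe_index(i, len(table))]


table = [10, 20, 30, 40]
print(load(table, 2))
print(spectre_safe_index(7, len(table)))
```

On real hardware the masking would be done with a conditional move, so the index computation does not depend on a predicted branch.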

My understanding is that these mitigations could be important when the Go program being compiled is something like a hypervisor or operating system that has access to data or functionality that guest programs should be restricted from accessing. I'm still in the process of getting my head round these changes and how they might apply to other CPU architectures but my understanding so far is that, to be totally safe, we should apply similar mitigations on other platforms unless there are hardware mitigations in place.

The new flag also has potential for cross-platform incompatibilities since currently, for example, `-spectre=index` will cause the compiler to fail on non-amd64 platforms. It would be nice if these flags 'just worked' on other platforms.

I personally am responsible for maintaining the s390x port. I think it would be straightforward to implement equivalent mitigations on s390x:

1) index: use conditional moves in exactly the same way as the amd64 backend

2) ret: use EX{,RL} (execute) instructions to indirectly call branch instructions (more info here:

https://www.phoronix.com/scan.php?page=news_item&px=S390-Expoline-Linux-4.16)

Does anyone have any thoughts on what we should do for other architectures, if anything?

|

Security,NeedsInvestigation,compiler/runtime

|

low

|

Major

|

587,719,279 |

flutter

|

SliverPersistentHeader initial state

|

## Proposal

`SliverPersistentHeader` / `SliverPersistentHeaderDelegate`

This header has two main states: minExtent (collapsed) and maxExtent (expanded).

Initially, this header is in the expanded state.

I think it should be possible for this header to be collapsed initially,

just like SearchBar on Android.

It can be expanded or iconified (collapsed) initially.

Thank you for your great support.

Looking forward to hearing from you.

|

c: new feature,framework,f: scrolling,would be a good package,P3,team-framework,triaged-framework

|

low

|

Minor

|

587,749,382 |

node

|

Describe alternatives to require.extensions

|

https://nodejs.org/api/modules.html#modules_require_extensions says `require.extensions` is deprecated.

Per https://github.com/TypeStrong/ts-node/issues/641 and https://github.com/TypeStrong/ts-node/issues/989 there are no alternatives. They reference further the documentation of @babel/node, @babel/register, and coffeescript.

• Either re-introduce require.extensions or describe more precisely the alternatives that should be used.

|

module,deprecations

|

low

|

Major

|

587,756,776 |

material-ui

|

[docs] Allow providing a custom theme and preview it live

|

<!-- Provide a general summary of the feature in the Title above -->

We're going to make a lot of modifications to our theme soon, and I was thinking that it'd be really cool if I could enter a custom theme (JS and all) in the material-ui docs, through an inline editor, and navigate through all the docs with that theme.

Benefits:

* Previewing and testing custom theming options is a breeze

* Remove all "customization" demos from the components, and add a button that loads a pre-defined global theme, which then modifies all demos in real time: "Try Button with different themes"

* Potentially improve the interactivity of the customization docs, making theming more approachable for everyone

This is obviously a huge effort, but as you can already change the theme colors across the website, maybe there is some infrastructure in place already to do this.

|

priority: important,design,scope: docs-infra

|

low

|

Major

|

587,766,574 |

kubernetes

|

Stop applying the beta.kubernetes.io/os and beta.kubernetes.io/arch

|

It seems that, according to plan, Kubernetes 1.18 will remove support for the `beta.kubernetes.io/os` and `beta.kubernetes.io/arch` labels. Will we still do this work?

---

https://kubernetes.io/docs/reference/kubernetes-api/labels-annotations-taints/

|

area/kubelet,sig/node,kind/feature,priority/important-longterm,lifecycle/frozen,triage/accepted

|

medium

|

Critical

|

587,783,734 |

pytorch

|

runtime error when loading heavy dataset

|

## 🐛 Bug

I used torch.save to save two very large tensors, one being the training dataset and the other the test dataset (separated by torch.utils.data.random_split).

The original tensor was of size (4803, 354, 4000).

Saving is very slow (which is understandable), and both files have the same size (which is odd, since the testing set is only 20% of the original while the training set is 80%).

When I try to load the dataset using torch.load, it gives me this error (note: I am on a GPU kernel, but I get the same error on a CPU kernel):

RuntimeError Traceback (most recent call last)

in

----> 1 training_set = torch.load('trainaction.pt')

2 testing_set = torch.load('testaction.pt')

3

4 batch_size = 200

5 DATA_PATH = './data'

~.conda\envs\py36\lib\site-packages\torch\serialization.py in load(f, map_location, pickle_module, **pickle_load_args)

527 with _open_zipfile_reader(f) as opened_zipfile:

528 return _load(opened_zipfile, map_location, pickle_module, **pickle_load_args)

--> 529 return _legacy_load(opened_file, map_location, pickle_module, **pickle_load_args)

530

531

~.conda\envs\py36\lib\site-packages\torch\serialization.py in _legacy_load(f, map_location, pickle_module, **pickle_load_args)

700 unpickler = pickle_module.Unpickler(f, **pickle_load_args)

701 unpickler.persistent_load = persistent_load

--> 702 result = unpickler.load()

703

704 deserialized_storage_keys = pickle_module.load(f, **pickle_load_args)

~.conda\envs\py36\lib\site-packages\torch\serialization.py in persistent_load(saved_id)

661 location = _maybe_decode_ascii(location)

662 if root_key not in deserialized_objects:

--> 663 obj = data_type(size)

664 obj._torch_load_uninitialized = True

665 deserialized_objects[root_key] = restore_location(obj, location)

RuntimeError: [enforce fail at …\c10\core\CPUAllocator.cpp:47] ((ptrdiff_t)nbytes) >= 0. alloc_cpu() seems to have been called with negative number: 18446744066554005248
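The huge number in the message can be reproduced from the reported tensor shape. The sketch below is only one reading that happens to match the digits: it assumes 4-byte (float32) elements and assumes the element count was squeezed through a signed 32-bit integer somewhere along the load path. Neither assumption is stated in the report.

```python
# Shape reported in the issue.
shape = (4803, 354, 4000)
numel = shape[0] * shape[1] * shape[2]


def wrap_int32(n):
    """Interpret the low 32 bits of n as a signed 32-bit integer."""
    n &= 0xFFFFFFFF
    return n - (1 << 32) if n >= (1 << 31) else n


wrapped_numel = wrap_int32(numel)        # element count after 32-bit overflow (assumption)
nbytes = wrapped_numel * 4               # 4 bytes per element (float32 assumption)
as_unsigned = nbytes & ((1 << 64) - 1)   # the same value viewed as an unsigned 64-bit size

print(numel, wrapped_numel, nbytes, as_unsigned)
```

The last printed value equals the number in the error message, which suggests the allocation size went negative through 32-bit overflow rather than through corrupt file contents.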

## Environment

PyTorch version: 1.4.0

Is debug build: No

CUDA used to build PyTorch: 10.1

OS: Microsoft Windows 10 Home

GCC version: Could not collect

CMake version: Could not collect

Python version: 3.6

Is CUDA available: Yes

CUDA runtime version: 10.2.89

GPU models and configuration: GPU 0: GeForce GTX 1070

Nvidia driver version: 441.22

cuDNN version: Could not collect

Versions of relevant libraries:

[pip] numpy==1.18.1

[pip] numpydoc==0.9.2

[pip] torch==1.4.0

[pip] torchvision==0.5.0

[conda] _pytorch_select 1.1.0 cpu

[conda] blas 1.0 mkl

[conda] mkl 2020.0 166

[conda] mkl-service 2.3.0 py36hb782905_0

[conda] mkl_fft 1.0.15 py36h14836fe_0

[conda] mkl_random 1.1.0 py36h675688f_0

[conda] pytorch 1.4.0 py3.6_cuda101_cudnn7_0 pytorch

[conda] torchvision 0.5.0 py36_cu101 pytorch

|

needs reproduction,triaged

|

low

|

Critical

|

587,840,615 |

godot

|

Extra suffix is not appended at the end of a binary name in some cases

|

**Godot version:**

3.0, 4.0

**OS/device including version:**

Windows 10, likely any other OS.

**Steps to reproduce:**

## With mono module enabled:

```

scons platform=windows target=release_debug bits=64 module_mono_enabled=yes mono_glue=no extra_suffix=mygame

```

Results in binary name such as:

`godot.windows.opt.tools.64.mygame.mono.exe`

Should be like:

`godot.windows.opt.tools.64.mono.mygame.exe`

## With sanitizers:

```

scons platform=linuxbsd target=debug tools=yes use_asan=yes use_lsan=yes extra_suffix=mygame

```

Results in binary name such as:

`godot.linuxbsd.tools.64.mygames` (notice appended `s`)

Should be like:

`godot.linuxbsd.tools.64.s.mygame`

---

Should likely be handled by refactor as suggested in #24030.
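A minimal sketch of the ordering described above, in Python since the SCons build scripts are Python. The function `binary_name` and its parameters are hypothetical and are not taken from Godot's build system; the point is only that the user-supplied extra suffix is appended after every feature suffix and joined with a dot.

```python
def binary_name(base, platform_parts, feature_suffixes, extra_suffix="", ext=""):
    """Join name parts with dots, always placing the extra suffix last."""
    parts = [base, *platform_parts, *feature_suffixes]
    if extra_suffix:
        parts.append(extra_suffix)  # extra suffix goes after module/sanitizer suffixes
    return ".".join(parts) + ext


mono_name = binary_name(
    "godot", ["windows", "opt", "tools", "64"], ["mono"], "mygame", ".exe"
)
sanitizer_name = binary_name(
    "godot", ["linuxbsd", "tools", "64"], ["s"], "mygame"
)
print(mono_name)
print(sanitizer_name)
```

Collecting the parts in a list and joining once avoids the string concatenation that produces `mygames` in the sanitizer case.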

|

bug,topic:buildsystem

|

low

|

Critical

|

587,869,569 |

TypeScript

|

New error: Type 'string' is not assignable to type 'string & ...'

|

https://github.com/material-components/material-components-web-components/blob/b1862998d80f4b0b917c817e6efde15a2504d8da/packages/linear-progress/src/mwc-linear-progress-base.ts#L109

```

packages/linear-progress/src/mwc-linear-progress-base.ts:109:9 - error TS2322: Type 'string' is not assignable to type 'string & ((property: string) => string) & ((property: string) => string) & ((index: number) => string) & ((property: string) => string) & ((property: string, value: string | null, priority?: string | undefined) => void)'.

Type 'string' is not assignable to type '(property: string) => string'.

109 this.bufferElement

~~~~~~~~~~~~~~~~~~

110 .style[property as Exclude<keyof CSSStyleDeclaration, 'length'|'parentRule'>] =

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

```

https://github.com/material-components/material-components-web-components/blob/b1862998d80f4b0b917c817e6efde15a2504d8da/packages/linear-progress/src/mwc-linear-progress-base.ts#L119

```

packages/linear-progress/src/mwc-linear-progress-base.ts:119:9 - error TS2322: Type 'string' is not assignable to type 'string & ((property: string) => string) & ((property: string) => string) & ((index: number) => string) & ((property: string) => string) & ((property: string, value: string | null, priority?: string | undefined) => void)'.

Type 'string' is not assignable to type '(property: string) => string'.

119 this.primaryBar

~~~~~~~~~~~~~~~

120 .style[property as Exclude<keyof CSSStyleDeclaration, 'length'|'parentRule'>] =

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

```

To repro:

1) `npm ci`

2) `npm run build:styling`

3) `tsc -b -f`

|

Docs

|

low

|

Critical

|

587,874,956 |

rust

|

Layout for enums does not match MIR (and types) very well

|

Enum layout computation entirely ignores ZST uninhabited ("absent") variants, which can lead to the layout being quite different in structure from the user-visible enum:

* if there are more than 1 non-absent variants, `Variants::Multiple` is used.

* if there is exactly 1 non-absent variant, `Variants::Single` is used.

* if all variants are absent, the layout of `!` is used, so the entire thing ends up looking like a union with 0 fields.

When actually asking for the layout of such an absent variant, it has to be made up "on the spot".
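The three cases above can be modelled with a small Python toy. This is not rustc code: the `Variant` class, its fields, and the `classify` function are invented for illustration, and "absent" is taken to mean "uninhabited and zero-sized" as described in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    uninhabited: bool
    zero_sized: bool

    @property
    def absent(self):
        # "Absent" in the sense used above: uninhabited and a ZST.
        return self.uninhabited and self.zero_sized


def classify(variants):
    """Pick the layout shape from the number of non-absent variants."""
    present = [v for v in variants if not v.absent]
    if len(present) > 1:
        return "Variants::Multiple"
    if len(present) == 1:
        return "Variants::Single"
    return "layout of !"


# Three variants, one of them absent: still lands in the Multiple case.
three = [
    Variant("A", uninhabited=False, zero_sized=True),
    Variant("B", uninhabited=False, zero_sized=False),
    Variant("C", uninhabited=True, zero_sized=True),
]
print(classify(three))
print(classify(three[1:]))
```

In this model the absent variant never influences the result, which is why the chosen layout can differ in structure from the enum as the user wrote it.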

In terms of testing things like Miri (or anything else that needs to work with layout variants information), this leads to the "interesting" situation that we need an enum with at least 3 variants where 1 is "absent" to make sure that `Variants::Multiple` with absent variants is handled correctly.

This could be improved by generalizing `Variants::Multiple` to also support "discriminants" of "type" `()` and `!`, thus uniformly handling all enums.

Also see the second half of https://github.com/rust-lang/rust/issues/69763#issuecomment-595699745, and discussion below https://github.com/rust-lang/rust/issues/69763#issuecomment-603657246.

Cc @eddyb

|

C-cleanup,T-compiler,A-MIR,A-layout

|

low

|

Minor

|

587,877,388 |

flutter

|

Reliance on iana.org for localizations is flakey

|

`dev/tools/localization/localizations_utils.dart` references iana.org which is causing CI to fail sometimes. We should have local mirrors.

example from cirrus:

```

▌22:06:09▐ ELAPSED TIME: 5.306s for bin/cache/dart-sdk/bin/dart dev/tools/localization/bin/gen_localizations.dart --material in .

Unhandled exception:

HttpException: , uri = https://www.iana.org/assignments/language-subtag-registry/language-subtag-registry

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

ERROR: Last command exited with 255.

Command: bin/cache/dart-sdk/bin/dart dev/tools/localization/bin/gen_localizations.dart --material

Relative working directory: .

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

```

Example luci failure: https://logs.chromium.org/logs/flutter/buildbucket/cr-buildbucket.appspot.com/8884911422563139120/+/steps/run_test.dart_for_framework_tests_shard/0/stdout

```

00:22 +43 -2: test/localization/gen_l10n_test.dart: generateCode plural messages should throw attempting to generate a plural message with no resource attributes [E]

HttpException: , uri = https://www.iana.org/assignments/language-subtag-registry/language-subtag-registry

dart:_http _HttpClient.getUrl

localization/localizations_utils.dart 302:50 precacheLanguageAndRegionTags

test/localization/gen_l10n_test.dart 54:11 main.<fn>

```

|

team,tool,a: internationalization,c: proposal,P2,team-tool,triaged-tool

|

low

|

Critical

|

587,887,630 |

rust

|

Tracking Issue for `-Z src-hash-algorithm`

|

This is the tracking issue for the unstable option `-Z src-hash-algorithm`

### Steps

- [x] Implementation PR #69718

- [ ] Adjust documentation

- [ ] Stabilization PR

### Unresolved Questions

- Should we have a separate option in the target specification to specify the preferred hash algorithm, or continue to use `is_like_msvc`?

- Should we continue to have a command line option to override the preferred option? Or is it acceptable to require users to create a custom target specification to override the hash algorithm?

|

A-debuginfo,T-compiler,B-unstable,C-tracking-issue,S-tracking-needs-summary,A-CLI

|

low

|

Major

|

587,891,985 |

flutter

|

if (kRelease) return null; does not treeshake

|

## Use case

Flutter framework uses the following pattern:

```

DiagnosticPropertiesBuilder get builder {
  if (kReleaseMode)
    return null;
  if (_cachedBuilder == null) {
    _cachedBuilder = DiagnosticPropertiesBuilder();
    value?.debugFillProperties(_cachedBuilder);
  }
  return _cachedBuilder;
}

```

`value?.debugFillProperties` prevents all `debugFillProperties` implementations from being tree-shaken, which on the web is a > 2% code size increase (tested using `flutter build web --profile`).

Wrapping this code in an assert, on the other hand, does tree-shake the offending methods.

## Proposal

Options:

1- Consistently handle the above across dart2js and AOT and treeshake code below without assert.

2- Introduce asserts into framework code explicitly. Check all current usages of if (kRelease) across the code base.

|

c: new feature,framework,c: performance,dependency: dart,perf: app size,P3,team-framework,triaged-framework

|

low

|

Critical

|

587,953,480 |

pytorch

|

torch.jit.script works for x*sigmoid(x) but not for x*sin(x)

|

cc: @ptrblck

## 🐛 Bug

In the following example, I observe that `x * sigmoid(x)` is JITted as expected. In the first call to `foo`, I see 1 GPU kernel for sigmoid and 1 for mul. In the second call, I see 1 call to `kernel_0` which is the JITted kernel. However, if I replace `sigmoid` with `sin`, seemingly another simple pointwise operation, then the JIT does not seem to be working and I observe 4 kernels (2 for sin and 2 for mul). However, if I use the flags

```python

torch._C._jit_set_profiling_mode(False)

torch._C._jit_set_profiling_executor(False)

```

then I see `kernel_0` being invoked twice as expected.

## To Reproduce

```python

import torch

@torch.jit.script
def foo(x):
    return x * torch.sigmoid(x)
    #return x * torch.sin(x)

x = torch.rand(2048,2048).cuda()
y = foo(x)
y = foo(x)

```

## Steps to reproduce the behavior:

Run the above code as `nvprof python foo.py` to see the list of kernels.

## Expected behavior

I would expect `x * sin(x)` to be JITted as well.

## Environment

```vim

PyTorch version: 1.4.0a0+a5b4d78

Is debug build: No

CUDA used to build PyTorch: 10.2

OS: Ubuntu 18.04.4 LTS

GCC version: (Ubuntu 7.4.0-1ubuntu1~18.04.1) 7.4.0

CMake version: version 3.14.0

Python version: 3.6

Is CUDA available: Yes

CUDA runtime version: 10.2.89

GPU models and configuration:

GPU 0: Tesla V100-SXM2-16GB

GPU 1: Tesla V100-SXM2-16GB

GPU 2: Tesla V100-SXM2-16GB

GPU 3: Tesla V100-SXM2-16GB

GPU 4: Tesla V100-SXM2-16GB

GPU 5: Tesla V100-SXM2-16GB

GPU 6: Tesla V100-SXM2-16GB

GPU 7: Tesla V100-SXM2-16GB

Nvidia driver version: 440.33.01

cuDNN version: /usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

Versions of relevant libraries:

[pip] msgpack-numpy==0.4.3.2

[pip] numpy==1.17.2

[pip] pytorch-transformers==1.1.0

[pip] torch==1.4.0a0+a5b4d78

[pip] torch-dct==0.1.5

[pip] torchio==0.13.18

[pip] torchtext==0.4.0

[pip] torchvision==0.5.0a0

[conda] magma-cuda101 2.5.2 1 local

[conda] mkl 2020.0 166

[conda] mkl-include 2020.0 166

[conda] nomkl 3.0 0

[conda] pytorch-transformers 1.1.0 pypi_0 pypi

[conda] torch 1.4.0a0+a5b4d78 pypi_0 pypi

[conda] torchtext 0.4.0 pypi_0 pypi

[conda] torchvision 0.5.0a0 pypi_0 pypi

```

cc @suo

|

oncall: jit,triaged

|

low

|

Critical

|

587,967,148 |

flutter

|

Include Gradle version in tool crash GitHub template

|

Include in the **Flutter Application Metadata** section.

https://github.com/flutter/flutter/blob/2dda1297bb499b84de89321c1b19e40516038f5d/packages/flutter_tools/lib/src/reporting/github_template.dart#L133-L143

|

tool,t: gradle,a: triage improvements,P3,team-tool,triaged-tool

|

low

|

Critical

|

587,967,309 |

TypeScript

|

New error: Type of property 'defaultProps' circularly references itself in mapped type

|

https://github.com/nteract/nteract/blob/af734c46893146c617308f4ae1e40bf267e8875f/packages/connected-components/src/header-editor/styled.ts#L24

```

packages/connected-components/src/header-editor/styled.ts:24:34 - error TS2615: Type of property 'defaultProps' circularly references itself in mapped type 'Pick<ForwardRefExoticComponent<Pick<Pick<any, Exclude<keyof ReactDefaultizedProps<StyledComponentInnerComponent<WithC>, ComponentPropsWithRef<StyledComponentInnerComponent<WithC>>>, StyledComponentInnerAttrs<...> | ... 1 more ... | StyledComponentInnerAttrs<...>> | Exclude<...> | Exclude<...> | Exclude<...>> & Parti...'.

24 export const EditableAuthorTag = styled(AuthorTag)`

~~~~~~~~~~~~~~~~~

```

https://github.com/nteract/nteract/blob/af734c46893146c617308f4ae1e40bf267e8875f/packages/presentational-components/src/components/prompt.tsx#L85

Also, I'm seeing each of these errors printed twice.

To repro:

1) `yarn`

2) `tsc -b -f`

Note that it fails with a different (apparently unrelated) error in 3.8.

|

Needs Investigation,Rescheduled

|

medium

|

Critical

|

587,967,332 |

flutter

|

Create way to automate updating of dev/tools/localization/language_subtag_registry.dart

|

`dev/tools/localization/language_subtag_registry.dart` is cached from iana.org, `dev/tools/localization/gen_subtag_registry.dart` generates it but there is nothing that is automatically generating it regularly.

related pr: https://github.com/flutter/flutter/pull/53286

|

tool,framework,a: internationalization,P2,team-framework,triaged-framework

|

low

|

Minor

|

587,977,260 |

pytorch

|

MacOS Error: subprocess.CalledProcessError: Command '['cmake', '--build', '.', '--target', 'install', '--config', 'Release', '--', '-j', '12']' returned non-zero exit status 1.

|

When trying to install Pytorch from source with CUDA enabled, I get the following error after running the last command in the set of instructions for installation (https://github.com/pytorch/pytorch#from-source).

ninja: error: '/usr/local/cuda/lib/libnvToolsExt.dylib', needed by 'lib/libtorch_cuda.dylib', missing and no known rule to make it

Traceback (most recent call last):

File "setup.py", line 745, in <module>

build_deps()

File "setup.py", line 316, in build_deps

cmake=cmake)

File "/Users/jonsaadfalcon/Documents/PoloClub Research/AdversarialProject/pytorch/tools/build_pytorch_libs.py", line 62, in build_caffe2

cmake.build(my_env)

File "/Users/jonsaadfalcon/Documents/PoloClub Research/AdversarialProject/pytorch/tools/setup_helpers/cmake.py", line 339, in build

self.run(build_args, my_env)

File "/Users/jonsaadfalcon/Documents/PoloClub Research/AdversarialProject/pytorch/tools/setup_helpers/cmake.py", line 141, in run

check_call(command, cwd=self.build_dir, env=env)

File "/Users/jonsaadfalcon/anaconda3/lib/python3.7/subprocess.py", line 363, in check_call

raise CalledProcessError(retcode, cmd)

subprocess.CalledProcessError: Command '['cmake', '--build', '.', '--target', 'install', '--config', 'Release', '--', '-j', '12']' returned non-zero exit status 1.

The previously solved issues for this problem were on Windows so idk how to solve it on MacOS. Thank you for the help.

cc @ngimel

|

module: build,module: cuda,triaged,module: macos

|

low

|

Critical

|

587,978,972 |

create-react-app

|

include support for jest-runner-groups

|

### Is your proposal related to a problem?

<!--

Provide a clear and concise description of what the problem is.

For example, "I'm always frustrated when..."

-->

I'd like to categorize my jest tests so that I can run only a certain subset of them.

### Describe the solution you'd like

<!--

Provide a clear and concise description of what you want to happen.

-->

There is already a package to facilitate this called jest-runner-groups, but I cannot use this in conjunction with create-react-app since it needs me to set the "runner" option which is not currently supported.

### Describe alternatives you've considered

<!--

Let us know about other solutions you've tried or researched.

-->

The other alternative is to use `--testNamePattern`, which does work but is more cumbersome, as I need to include a unique word in the title of every test or surround the tests in a `describe` block.

### Additional context

<!--

Is there anything else you can add about the proposal?

You might want to link to related issues here, if you haven't already.

-->

(Write your answer here.)

|

issue: proposal,needs triage

|

low

|

Minor

|

587,981,534 |

pytorch

|

PyTorch / libtorch executables fail when built against libcuda stub library

|

## 🐛 Bug

PyTorch's cmake setup for CUDA includes a couple `stubs/` directories in the search path for `libcuda.so`:

https://github.com/pytorch/pytorch/blob/v1.4.0/cmake/public/cuda.cmake#L179

Searching for libcuda in the stubs/ directories is needed in various cases, for example when:

- the build host is GPU-less and has CUDA Toolkit but not the GPU driver installed, or

- the build toolchain doesn't include normal system search paths that would normally house libcuda. This is likely if the Anaconda toolchain is being used to build PyTorch.

However, PyTorch's build setup tells cmake to add any non-PyTorch-local libraries to built objects' `RPATH`:

https://github.com/pytorch/pytorch/blob/v1.4.0/cmake/Dependencies.cmake#L14

So the few objects that link directly to libcuda (e.g. `lib/libcaffe2_nvrtc.so` and `bin/test_dist_autograd`) may end up with the libcuda stubs/ directory included in RPATH.

During loading, RPATH has priority over normal system search paths and even `LD_LIBRARY_PATH`. So these objects will prefer the libcuda stub, even on hosts where libcuda is present in the normal location.

The same cmake setup is re-used for libtorch, so the same will occur for libtorch apps as well:

https://discuss.pytorch.org/t/torch-is-available-returns-false/73753

The libcuda stub is not a functional CUDA runtime, so PyTorch and libtorch objects affected by this won't be able to use CUDA even if libcuda is installed in the normal system search path.

This change would prevent a libcuda stubs directory from being added to objects' RPATH, but doesn't seem like a great solution. It abuses a cmake internal mechanism to trick it into avoiding adding the stubs directory.

```

diff --git a/cmake/public/cuda.cmake b/cmake/public/cuda.cmake

index a5c50b90df..3a89c9e8ad 100644

--- a/cmake/public/cuda.cmake

+++ b/cmake/public/cuda.cmake

@@ -187,6 +187,20 @@ find_library(CUDA_NVRTC_LIB nvrtc

PATHS ${CUDA_TOOLKIT_ROOT_DIR}

PATH_SUFFIXES lib lib64 lib/x64)

+# Configuration elsewhere (CMAKE_INSTALL_RPATH_USE_LINK_PATH) arranges that any

+# library used during linking will be added to the objects' RPATHs. That's

+# never correct for a libcuda stub library (the stub library is suitable for

+# linking, but not for runtime, and if present in RPATH will be prioritized

+# ahead of system search path). If libcuda was found in the "stubs" directory,

+# abuse CMAKE_PLATFORM_IMPLICIT_LINK_DIRECTORIES to instruct cmake NOT to

+# include it in RPATH This leaves us without a known path to libcuda in the

+# objects, but there's no help for that if the only libcuda visible at build

+# time is the stub.

+if(CUDA_CUDA_LIB MATCHES "stubs")

+ get_filename_component(LIBCUDA_STUB_DIR ${CUDA_CUDA_LIB} DIRECTORY)

+ list(APPEND CMAKE_PLATFORM_IMPLICIT_LINK_DIRECTORIES ${LIBCUDA_STUB_DIR})

+endif()

+

# Create new style imported libraries.

# Several of these libraries have a hardcoded path if CAFFE2_STATIC_LINK_CUDA

# is set. This path is where sane CUDA installations have their static

```

I hope someone more familiar with cmake and the general pytorch build setup might have a better idea.

## To Reproduce

Steps to reproduce the behavior:

1. Build PyTorch on a system with the CUDA Toolkit but no GPU driver installed

1. `objdump -p <install_dir>/lib/libcaffe2_nvrtc.so | grep RPATH`
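The check in the second step can be automated with a small Python sketch that scans `objdump -p` output for RPATH or RUNPATH entries pointing into a `stubs` directory. The function name `stub_rpath_entries` and the sample output string are made up for illustration; the paths in the sample are hypothetical and were not taken from a real build.

```python
def stub_rpath_entries(objdump_output):
    """Return RPATH/RUNPATH entries that contain a `stubs` path component."""
    hits = []
    for line in objdump_output.splitlines():
        fields = line.split(None, 1)  # tag name, then the colon-separated path list
        if len(fields) == 2 and fields[0] in ("RPATH", "RUNPATH"):
            for entry in fields[1].strip().split(":"):
                if "stubs" in entry.split("/"):
                    hits.append(entry)
    return hits


# Hypothetical excerpt in the shape printed by `objdump -p`.
sample_output = """Dynamic Section:
  NEEDED               libcuda.so.1
  RPATH                $ORIGIN:/usr/local/cuda/lib64/stubs:/usr/local/cuda/lib64
"""

stub_entries = stub_rpath_entries(sample_output)
print(stub_entries)
```

A non-empty result for an installed object would indicate the situation described above, where the loader may prefer the stub over the real libcuda.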

## Expected behavior

PyTorch and libtorch objects should build successfully against libcuda stub, and then run successfully against "real" libcuda (at least when libcuda is present in a default system search path).

## Environment

- PyTorch Version (e.g., 1.0): 1.4.0

- OS (e.g., Linux): Linux

- How you installed PyTorch (`conda`, `pip`, source): source

- Build command you used (if compiling from source): `python setup.py install`

- Python version: `Python 3.6.9 :: Anaconda, Inc.`

- CUDA/cuDNN version: 10.2 / 7.6.5

- GPU models and configuration: V100

- Any other relevant information: Using Anaconda toolchain to build

cc @ezyang @gchanan @zou3519 @seemethere

|

high priority,module: binaries,module: build,triaged

|

low

|

Critical

|

588,003,454 |

flutter

|

Text on desktop Windows 10 looks rough(no antialiasing)

|

Flutter channel: master

Flutter version: 1.16.3-pre.40

Windows 10 build: 19592

Monitor native resolution: 3840x2160

System resolution: 2560x1440

Scaling: 100%

[](https://postimg.cc/qtHZnbrb)

Left is flutter, right is UWP(expected text smoothing)

A closer-up screenshot comparison:

[](https://postimg.cc/D8FZjFgn)

flutter

[](https://postimg.cc/0MYvDMhm)

uwp

Reproduction steps:

In powershell, run

`$env:ENABLE_FLUTTER_DESKTOP="true"`

In terminal, run

`flutter config --enable-windows-desktop`

`flutter create <your-app-name>`

`flutter run -d windows`

See the text on the screen for result

|

engine,platform-windows,a: typography,a: desktop,P3,team-windows,triaged-windows

|

medium

|

Critical

|

588,017,406 |

flutter

|

[animations] Allow different containers for source of open and target of closing animation

|

## Use case

I'm using OpenContainer with a FAB to open a new screen and add a new item to a list of OpenContainer Cards. The problem is once you add a new item, the OpenContainer animates back into the FAB which is confusing visually.

My implementation is very similar to:

https://github.com/flutter/packages/blob/master/packages/animations/example/lib/container_transition.dart

## Proposal

Add ability to change the route on the fly and animate back to the item that was just added, similar to what can be achieved with Hero animations.

|

c: new feature,package,c: proposal,p: animations,team-ecosystem,P3,triaged-ecosystem

|

low

|

Major

|

588,020,866 |

react

|

Bug: Event handlers on custom elements work on the client but not on the server

|

<!--

Please provide a clear and concise description of what the bug is. Include

screenshots if needed. Please test using the latest version of the relevant

React packages to make sure your issue has not already been fixed.

-->

React version: 16.13.1

## Steps To Reproduce

```js

ReactDOMServer.renderToString(<div-x onClick={() => console.log('clicked')} />)

```

vs

```js

ReactDOM.render(<div-x onClick={() => { console.log('clicked'); }} />, root)

```

<!--

Your bug will get fixed much faster if we can run your code and it doesn't

have dependencies other than React. Issues without reproduction steps or

code examples may be immediately closed as not actionable.

-->

Link to code example: https://jsfiddle.net/hsug65x0/2/

<!--

Please provide a CodeSandbox (https://codesandbox.io/s/new), a link to a

repository on GitHub, or provide a minimal code example that reproduces the

problem. You may provide a screenshot of the application if you think it is

relevant to your bug report. Here are some tips for providing a minimal

example: https://stackoverflow.com/help/mcve.

-->

## The current behavior

Custom element event handlers only work client side. In SSR the code of the event handler becomes an attribute value.

## The expected behavior

Custom element event handlers work in SSR.

|

Component: Server Rendering,Type: Needs Investigation

|

low

|

Critical

|

588,032,350 |

flutter

|

Channels are ~2x slower on Android versus iOS

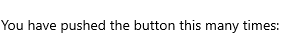

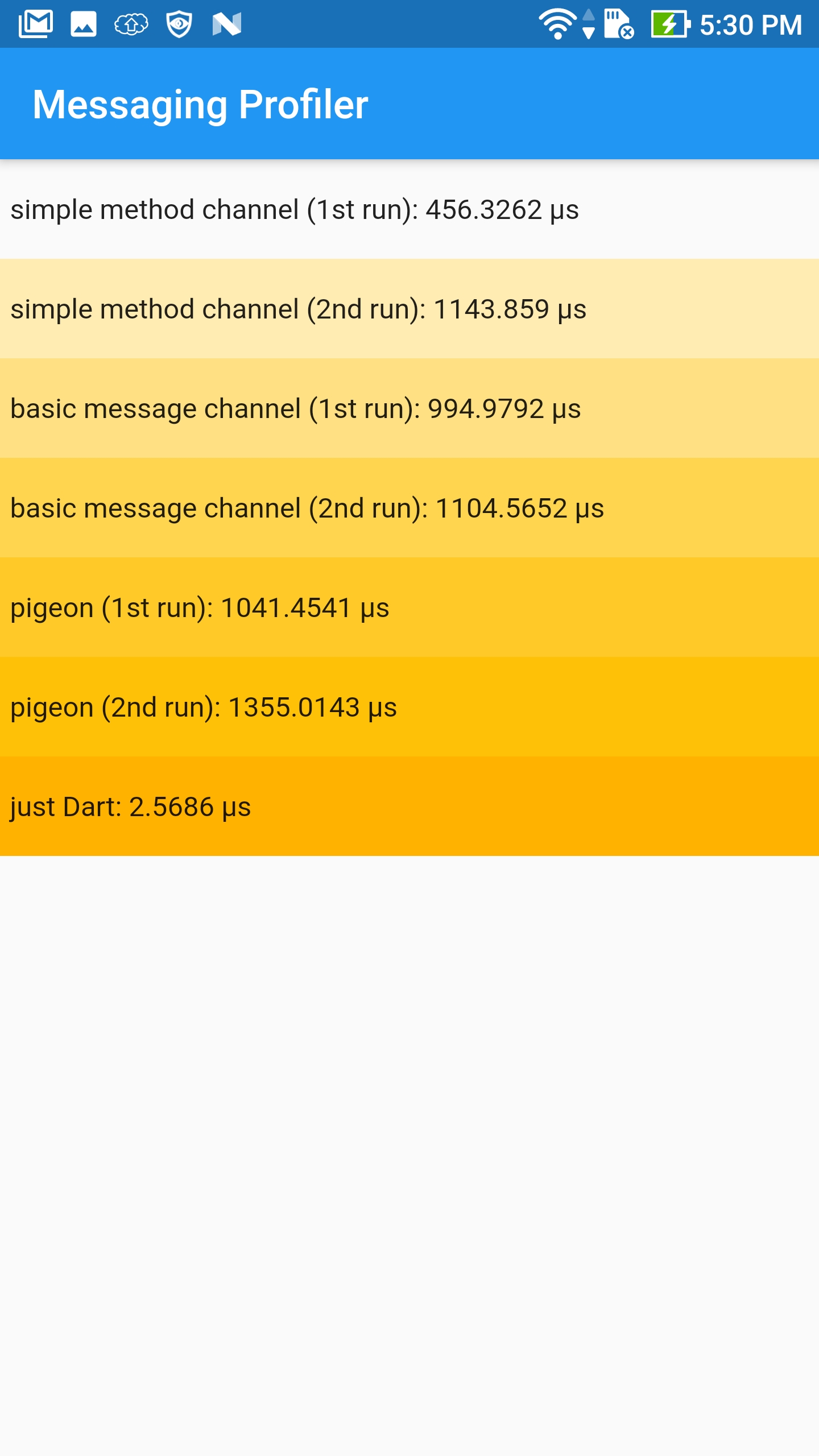

|

Microbenchmarks from https://github.com/gaaclarke/MessagingTiming

Things are going to be a bit slower because of JNI, 1ms seems excessive.

**Math:**

(0.4µs / 2.5µs) * (1000µs / 80µs) = 2

**Android:**

**iOS:**

|

platform-android,engine,c: performance,perf: speed,P3,team-android,triaged-android

|

low

|

Major

|

588,032,524 |

electron

|

Closing a child window brings a previously active application to the front and the parent window is sent to the back

|

### Preflight Checklist

* [x] I have read the [Contributing Guidelines](https://github.com/electron/electron/blob/master/CONTRIBUTING.md) for this project.

* [x] I agree to follow the [Code of Conduct](https://github.com/electron/electron/blob/master/CODE_OF_CONDUCT.md) that this project adheres to.

* [x] I have searched the issue tracker for an issue that matches the one I want to file, without success.

### Issue Details

* **Electron Version:** 8.2.0

* **Operating System:** Windows 10

* **Last Known Working Electron version:** not sure

### Expected Behavior

When my electron app is visible and I have a child window opened, I expect that closing the child window will keep the main electron window at the front.

### Actual Behavior

In a specific case (see below), an application that was previously over the electron app gets brought to the front upon closing the window. Electron app is sent to the back.

### To Reproduce

1. `npm start` the below script.

2. In devtools, open a child window (e.g. `window.open("https://google.com")`).

3. Select another application from the task bar to bring it to the front of the electron app.

4. Click the electron app icon in the taskbar to bring it to the front.

5. Close the window.

6. Observe that the electron app is sent to the back and replaced with the other app that was previously brought to the front.

main.js:

```

const { app, BrowserWindow } = require('electron')

async function createWindow() {
  const mainWindow = new BrowserWindow({ webPreferences: { nativeWindowOpen: true } });
  await mainWindow.loadURL("https://google.com");
  mainWindow.webContents.openDevTools();
  mainWindow.webContents.on("new-window", (e, url, framename, disp, options, additionalFeatures, referrer) => {
    options.parent = mainWindow;
  });
}

app.on('ready', createWindow)

```

|

platform/windows,bug :beetle:,8-x-y,10-x-y

|

medium

|

Major

|

588,034,686 |

flutter

|

Review flutter attach performance

|

In a simple test, it takes ~8s to connect to an already-running Flutter observatory via `flutter attach`, though ~3s of that is spent cleaning up the devfs and closing the process.

Log gist at https://gist.github.com/xster/5becc4937c2cef8d5e84660d0601abd1

There is also a 2397ms stretch in the middle of the process that appears to be pure Dart.

We should optimize.

|

tool,c: performance,a: existing-apps,P2,team-tool,triaged-tool

|

low

|

Major

|

588,053,097 |

TypeScript

|

New error: Type instantiation is excessively deep and possibly infinite.

|

This one's actually new in 3.8. There are some additional errors in 3.9 - I'll post them below.

https://github.com/davidkpiano/xstate/blob/2e0bd5dcb11c8ffc5bfc9fba1d3c66fb7546c4bd/packages/core/src/stateUtils.ts#L157

```

packages/core/src/stateUtils.ts:157:36 - error TS2589: Type instantiation is excessively deep and possibly infinite.

157 return getValueFromAdj(rootNode, getAdjList(config));

~~~~~~~~~~~~~~~~~~

packages/core/src/State.ts:181:12 - error TS2589: Type instantiation is excessively deep and possibly infinite.

181 return new State(config);

~~~~~~~~~~~~~~~~~

packages/core/src/interpreter.ts:208:5 - error TS2589: Type instantiation is excessively deep and possibly infinite.

208 this.parent = parent;

~~~~~~~~~~~~~~~~~~~~

packages/core/src/interpreter.ts:783:13 - error TS2345: Argument of type '{ type: string; data: any; }' is not assignable to parameter of type 'string | EventObject | Event<EventObject>[] | Event<EventObject>'.

Object literal may only specify known properties, and 'data' does not exist in type 'EventObject'.

783 data: err

~~~~~~~~~

```

To repro:

1) `yarn`

2) `tsc -b -f tsconfig.monorepo.json`
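For context on the last error in the log (TS2345), the message describes TypeScript's excess property check on object literals. Here is a minimal sketch of that check, assuming a hypothetical `EventObject` interface and `send` function; neither is xstate's actual definition, and this does not reproduce the TS2589 errors.

```typescript
// Hypothetical stand-in for the EventObject type named in the error message;
// the real xstate definition may differ.
interface EventObject {
  type: string;
}

// Hypothetical function that accepts a union similar to the one in the log.
function send(event: string | EventObject): string {
  return typeof event === "string" ? event : event.type;
}

// Passing a fresh object literal with an unknown property is rejected
// by the excess property check, so this call is left commented out:
// send({ type: "demo.error", data: new Error("boom") });

// Assigning the literal to a variable first skips the excess property check,
// because the argument is no longer a fresh object literal.
const errorEvent = { type: "demo.error", data: new Error("boom") };
const sentType: string = send(errorEvent);
```

Whether an intermediate variable is the right fix for the reported code depends on how the library intends `data` to be typed; the sketch only shows why the compiler flags the literal.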

|

Needs Investigation

|

low

|

Critical

|

588,076,178 |

flutter

|

Don't destroy engine by default in the activity if the engine was provided by a subclass

|

https://cs.opensource.google/flutter/engine/+/master:shell/platform/android/io/flutter/embedding/android/FlutterFragmentActivity.java;l=552

is conceptually at odds with

https://cs.opensource.google/flutter/engine/+/master:shell/platform/android/io/flutter/embedding/android/FlutterFragmentActivity.java;l=150

|

platform-android,engine,P2,team-android,triaged-android

|

low

|

Minor

|

588,081,805 |

pytorch

|

torch::normal only supports (double, double), but at::normal supports (double, double) / (double, Tensor) / (Tensor, double) / (Tensor, Tensor)

|

`torch::normal` only supports (double, double) as input, but `at::normal` supports (double, double) / (double, Tensor) / (Tensor, double) / (Tensor, Tensor) as input.

Python `torch.normal` also supports all four types of inputs (https://pytorch.org/docs/stable/torch.html#torch.normal), and we should do the same for `torch::normal`.

Some notes:

- `torch::normal` is currently defined in `torch/csrc/autograd/generated/variable_factories.h`

- Per conversation with Ed, we should put the tracing logic in VariableType and just dispatch `torch::normal` to VariableType

(This issue was originally reported by @ailzhang)

cc @yf225

cc @ailzhang @ezyang

|

module: cpp,triaged

|

low

|

Minor

|

588,101,099 |

pytorch

|

[JIT] dropout fails on legacy mode

|

## 🐛 Bug

## To Reproduce

Steps to reproduce the behavior:

On pytorch master build (1.5.0a0+efbd6b8), run

```sh

python test/test_jit_legacy.py -k test_dropout_cuda

```

The test fails.

## Expected behavior

However,

```sh

python test/test_jit.py -k test_dropout_cuda

```

passes.

## Environment

- PyTorch Version (e.g., 1.0): 1.5.0a0+efbd6b8

- OS (e.g., Linux): Fedora 29

- Python version: 3.7.6

- CUDA/cuDNN version: 10.1

## Additional context

This may be related to #34361. The test failure may also be related to the legacy profiling executor. With the following line commented out, the legacy test also passes.

https://github.com/pytorch/pytorch/blob/efbd6b8533bfa42f9e57d11b453b8a8bf4cf18ea/test/test_jit.py#L168

I'm also not sure if `test/test_jit_legacy.py` runs on pytorch CI?

cc @suo @ptrblck @jjsjann123

|

oncall: jit,triaged

|

low

|

Critical

|

588,122,896 |

opencv

|

Support the property CAP_PROP_SETTINGS under linux

|

##### System information (version)

<!-- Example

- OpenCV => 4.2

- Operating System / Platform => Windows 64 Bit

- Compiler => Visual Studio 2017

-->

- OpenCV => 4.2

- Operating System / Platform => Ubuntu 18.04

- Compiler => Qt Creator 4.11.0

##### Detailed description

When I use `VideoCapture`, I want to use the `CAP_PROP_SETTINGS` property, but it is only supported by the DirectShow backend on Windows. Would you consider supporting this property under Linux?

<!-- your description -->

##### Steps to reproduce

<!-- to add code example fence it with triple backticks and optional file extension

```.cpp

// C++ code example

```

or attach as .txt or .zip file

-->

##### Issue submission checklist

- [x] I report the issue, it's not a question

<!--

OpenCV team works with answers.opencv.org, Stack Overflow and other communities

to discuss problems. Tickets with question without real issue statement will be

closed.

-->

- [x] I checked the problem with documentation, FAQ, open issues,

answers.opencv.org, Stack Overflow, etc and have not found solution

<!--

Places to check:

* OpenCV documentation: https://docs.opencv.org

* FAQ page: https://github.com/opencv/opencv/wiki/FAQ

* OpenCV forum: https://answers.opencv.org

* OpenCV issue tracker: https://github.com/opencv/opencv/issues?q=is%3Aissue

* Stack Overflow branch: https://stackoverflow.com/questions/tagged/opencv

-->

- [x] I updated to latest OpenCV version and the issue is still there

<!--

master branch for OpenCV 4.x and 3.4 branch for OpenCV 3.x releases.

OpenCV team supports only latest release for each branch.

The ticket is closed, if the problem is not reproduced with modern version.

-->

- [ ] There is reproducer code and related data files: videos, images, onnx, etc

<!--

The best reproducer -- test case for OpenCV that we can add to the library.

Recommendations for media files and binary files:

* Try to reproduce the issue with images and videos in opencv_extra repository

to reduce attachment size