| id | repo | title | body | labels | priority | severity |
|---|---|---|---|---|---|---|

589,193,164 |

PowerToys

|

Add ability to reopen previously closed apps

|

# Summary of the new feature/enhancement

Currently, browsers implement Ctrl+Shift+T to re-open previously closed tabs. A wonderful feature (if possible) would be to have the ability to re-open previously closed applications from the current user + current session with a designated shortcut key.

# Proposed technical implementation details (optional)

<!--

A clear and concise description of what you want to happen.

-->

|

Idea-New PowerToy

|

low

|

Minor

|

589,278,777 |

go

|

x/crypto/ssh: connection.session.Close() returns a EOF as error instead of nil

|

<!--

Please answer these questions before submitting your issue. Thanks!

For questions please use one of our forums: https://github.com/golang/go/wiki/Questions

-->

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.14.1 darwin/amd64

$

</pre>

### Does this issue reproduce with the latest release?

Yes, I believe I am using the latest stable release.

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

GO111MODULE=""

GOARCH="amd64"

GOBIN=""

GOCACHE="/Users/my_username/Library/Caches/go-build"

GOENV="/Users/my_username/Library/Application Support/go/env"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="darwin"

GOINSECURE=""

GONOPROXY=""

GONOSUMDB=""

GOOS="darwin"

GOPATH="/Users/my_username/Learn/go_learn"

GOPRIVATE=""

GOPROXY="https://proxy.golang.org,direct"

GOROOT="/usr/local/go"

GOSUMDB="sum.golang.org"

GOTMPDIR=""

GOTOOLDIR="/usr/local/go/pkg/tool/darwin_amd64"

GCCGO="gccgo"

AR="ar"

CC="clang"

CXX="clang++"

CGO_ENABLED="1"

GOMOD="/Users/my_username/Learn/go_learn/src/my_stuff/ssh_test/go.mod"

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/17/vmjmghsj28d4lzrx7mqshr9r0000gp/T/go-build681325874=/tmp/go-build -gno-record-gcc-switches -fno-common"

$

</pre></details>

### What did you do?

My program connects to an SSH server (just my laptop in this case), runs a command, and then closes the SSH session and the connection:

```

package main

import (

"bytes"

"fmt"

"strings"

"golang.org/x/crypto/ssh"

)

func main() {

sshConfig := &ssh.ClientConfig{

User: "my_username",

Auth: []ssh.AuthMethod{

ssh.Password("my password"),

},

HostKeyCallback: ssh.InsecureIgnoreHostKey(),

}

connection, err := ssh.Dial("tcp", "localhost:22", sshConfig)

if err != nil {

fmt.Printf("Failed to dial: %s\n", err)

return

}

defer func() {

if err := connection.Close(); err != nil {

fmt.Println("Received an error closing the ssh connection: ", err)

} else {

fmt.Println("No error found closing ssh connection")

}

}()

session, err := connection.NewSession()

if err != nil {

fmt.Printf("Failed to create session: %s\n", err)

return

}

defer func() {

if err := session.Close(); err != nil {

fmt.Println("Received an error closing the ssh session: ", err)

} else {

fmt.Println("No error found closing ssh session")

}

}()

fmt.Println("created session")

var stdOut bytes.Buffer

var stdErr bytes.Buffer

session.Stdout = &stdOut

session.Stderr = &stdErr

err = session.Run("pwd")

fmt.Println("Executed command")

fmt.Println("Command stdOut is:", strings.TrimRight(stdOut.String(), "\n"), " --- stdError is:", strings.TrimRight(stdErr.String(), "\n"))

}

```

### What did you expect to see?

At `session.Close()`, I expected a `nil` return value rather than an error.

### What did you see instead?

I'm getting an EOF error from `session.Close()`. Judging by other issues raised here (https://github.com/golang/go/issues/16194), getting EOF at the end of `Run()` sounds reasonable. For `Close()`, however, it seems odd to return it as an error instead of returning `nil`.

It could be that I'm not handling something properly when managing the buffers, executing the command, or closing the session. But the code I pasted above comes from examples online and looks like the standard working pattern.

EOF at close is something I can handle, but to be clear and precise, I believe `Close()` should return `nil`. EOF doesn't seem like an error here either.

|

NeedsInvestigation

|

low

|

Critical

|

589,280,193 |

go

|

crypto/x509: support policyQualifiers in certificatePolicies extension

|

Currently `x509.ParseCertificate`/`x509.CreateCertificate` supports automatically parsing out the `policyIdentifier`s from a `certificatePolicies` extension (into `Certificate.PolicyIdentifiers`) but ignores the optional `policyQualifiers` sequence. This field is often used to transmit a CPS pointer URL (for root and intermediate certificates) and a user notice (for end entity certificates).

It'd be great if we could get automatic parsing for this full structure, instead of just the OIDs, so that we don't have to implement extra post-parsing parsing of extensions to get the full value and/or manually constructing the extension and sticking it in `ExtraExtensions` for creation.

RFC 5280 only defines two possible qualifier types, `id-qt-cps` and `id-qt-unotice`, the values of both of which can be safely mapped to and from a string, so I don't think we need anything fancier than that. I think the simplest implementation would be to add a new field to `Certificate` with the following structure:

```

CertificatePolicies []struct{

Id asn1.ObjectIdentifier

Qualifiers []struct{

Id asn1.ObjectIdentifier

Qualifier string

}

}

```

This would then be populated during parsing, and marshaled into an extension during creation. There is a question of what to do with the existing `PolicyIdentifiers` field, i.e. if both are populated, how should a call to `CreateCertificate` behave? I think for now it'd make sense to document that only one of them is allowed, and that populating both results in an error.

cc @FiloSottile

|

NeedsInvestigation

|

low

|

Critical

|

589,286,497 |

terminal

|

Feature Request: Background color transparency

|

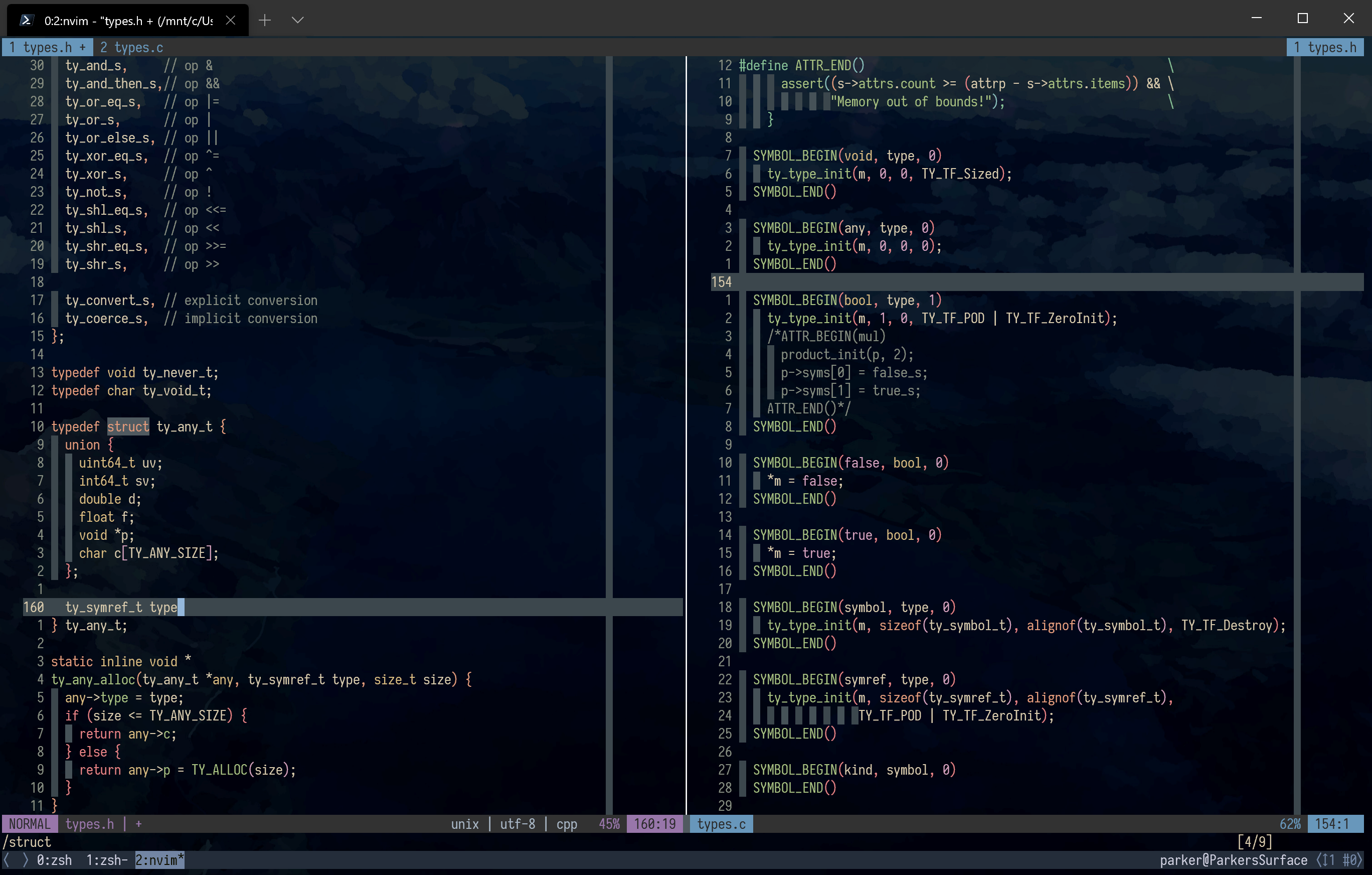

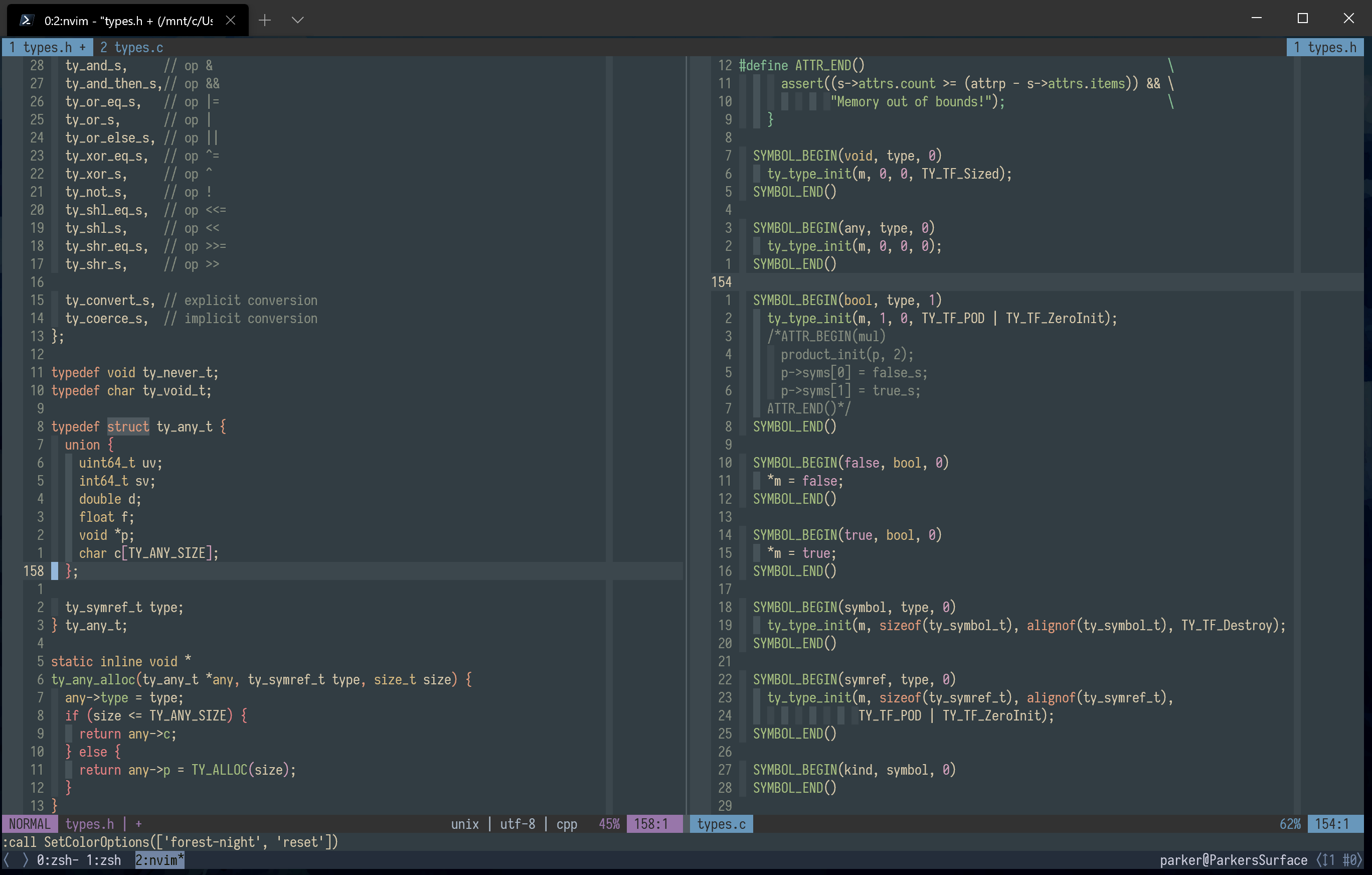

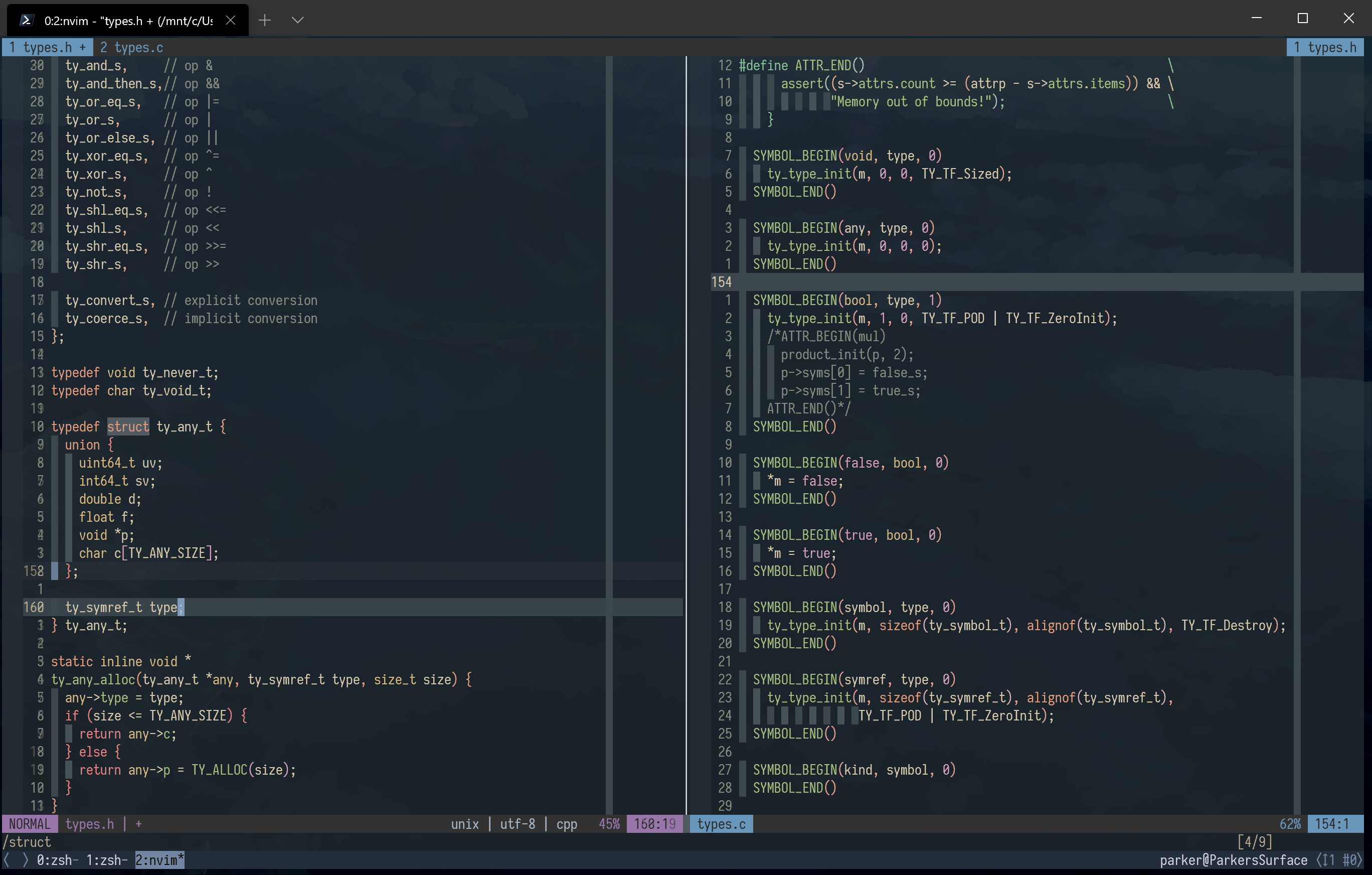

# Description of the new feature/enhancement

This feature would add a setting to change the opacity of the text background when it is set. This is _not_ the screen background which already has this feature. It is also not per-color transparency. The rationale for this is that I have an image background, but I also use vim a lot which tends to want to set a solid background.

Basically, I can do this:

Or this:

And I'd like to be able to do (roughly) this:

It'd look a little different because some solid background is still there, but that should give you the general idea.

# Proposed technical implementation details (optional)

Add a profile setting `backgroundOpacity`. If this is lower than 1, when a cell is printed with a custom background color, that color is blended with the default terminal background instead of being fully opaque.
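To make the blending rule concrete, here is a minimal Python sketch of the arithmetic, not Terminal code. It assumes the default background is a single solid RGB color; with an image background the renderer would presumably draw the cell color with alpha instead. The function names are hypothetical, and only `backgroundOpacity` comes from the proposal.

```python
def blend_channel(cell: int, default: int, opacity: float) -> int:
    """Blend one 0-255 channel of a cell background over the default background."""
    return round(opacity * cell + (1.0 - opacity) * default)


def blend_background(cell_rgb, default_rgb, background_opacity):
    """Linear blend of a custom cell background with the default background."""
    # Clamp so out-of-range settings behave like fully transparent or fully opaque.
    opacity = max(0.0, min(1.0, background_opacity))
    return tuple(
        blend_channel(c, d, opacity) for c, d in zip(cell_rgb, default_rgb)
    )


# Hypothetical example: an orange cell over a blue-ish default at 25% opacity.
print(blend_background((200, 100, 0), (0, 100, 200), 0.25))
```

With `backgroundOpacity` at 1 the cell color is returned unchanged, and at 0 the default background shows through completely.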

Bonus: `backgroundBlend` - Different [color blend modes](https://en.wikipedia.org/wiki/Blend_modes).

I'll also accept pointers on how to add these settings and pipe that info through to the renderer :).

|

Help Wanted,Area-Rendering,Area-Settings,Product-Terminal,Issue-Task

|

low

|

Major

|

589,294,385 |

flutter

|

Image gaplessPlayback keeps evicted images in memory

|

This is most likely intended, given how gaplessPlayback works; otherwise it is a bug.

When TickerMode.of returns false, Image widgets where gaplessPlayback is false remove their reference to the loaded Image. However, if gaplessPlayback is true, the Image widget continues to reference the loaded Image.

Once the image is evicted from the image cache, it doesn't get garbage collected if there's an Image widget with gaplessPlayback set to true. It also isn't tracked in the live image cache, so when the image comes back on screen (TickerMode.of returning true), the image will load a second time because it isn't present in the image cache.

This causes an increase in memory pressure if gaplessPlayback is set to true for an Image widget containing a large image.

We've mitigated this by removing gaplessPlayback, since we don't need it anymore.

Currently working on a minimal testcase.

cc @dnfield

|

framework,c: performance,a: images,perf: memory,P2,team-framework,triaged-framework

|

low

|

Critical

|

589,301,744 |

pytorch

|

caffe2 `DEPTHWISE3x3.Conv` test is broken

|

## 🐛 Bug

The `DEPTHWISE3x3.Conv` test fails with the following lengthy error message:

```

$ ./bin/depthwise3x3_conv_op_test | tail -n 50

/home/nshulga/git/pytorch-worktree/caffe2/share/contrib/depthwise/depthwise3x3_conv_op_test.cc:144: Failure

Value of: relErr <= maxRelErr || absErr <= absErrForRelErrFailure

Actual: false

Expected: true

75 70 (rel err 0.068965516984462738) (1 1 91 78) running N 2 inputC 2 H 90 W 84 outputC 2 kernelH 3 kernelW 3 strideH 1 strideW 1 padT 2 padL 2 padB 2 padR 2 group 2

/home/nshulga/git/pytorch-worktree/caffe2/share/contrib/depthwise/depthwise3x3_conv_op_test.cc:144: Failure

Value of: relErr <= maxRelErr || absErr <= absErrForRelErrFailure

Actual: false

Expected: true

75 70 (rel err 0.068965516984462738) (1 1 91 79) running N 2 inputC 2 H 90 W 84 outputC 2 kernelH 3 kernelW 3 strideH 1 strideW 1 padT 2 padL 2 padB 2 padR 2 group 2

/home/nshulga/git/pytorch-worktree/caffe2/share/contrib/depthwise/depthwise3x3_conv_op_test.cc:144: Failure

Value of: relErr <= maxRelErr || absErr <= absErrForRelErrFailure

Actual: false

Expected: true

75 70 (rel err 0.068965516984462738) (1 1 91 80) running N 2 inputC 2 H 90 W 84 outputC 2 kernelH 3 kernelW 3 strideH 1 strideW 1 padT 2 padL 2 padB 2 padR 2 group 2

/home/nshulga/git/pytorch-worktree/caffe2/share/contrib/depthwise/depthwise3x3_conv_op_test.cc:144: Failure

Value of: relErr <= maxRelErr || absErr <= absErrForRelErrFailure

Actual: false

Expected: true

75 70 (rel err 0.068965516984462738) (1 1 91 81) running N 2 inputC 2 H 90 W 84 outputC 2 kernelH 3 kernelW 3 strideH 1 strideW 1 padT 2 padL 2 padB 2 padR 2 group 2

/home/nshulga/git/pytorch-worktree/caffe2/share/contrib/depthwise/depthwise3x3_conv_op_test.cc:144: Failure

Value of: relErr <= maxRelErr || absErr <= absErrForRelErrFailure

Actual: false

Expected: true

75 70 (rel err 0.068965516984462738) (1 1 91 82) running N 2 inputC 2 H 90 W 84 outputC 2 kernelH 3 kernelW 3 strideH 1 strideW 1 padT 2 padL 2 padB 2 padR 2 group 2

/home/nshulga/git/pytorch-worktree/caffe2/share/contrib/depthwise/depthwise3x3_conv_op_test.cc:144: Failure

Value of: relErr <= maxRelErr || absErr <= absErrForRelErrFailure

Actual: false

Expected: true

75 70 (rel err 0.068965516984462738) (1 1 91 83) running N 2 inputC 2 H 90 W 84 outputC 2 kernelH 3 kernelW 3 strideH 1 strideW 1 padT 2 padL 2 padB 2 padR 2 group 2

/home/nshulga/git/pytorch-worktree/caffe2/share/contrib/depthwise/depthwise3x3_conv_op_test.cc:144: Failure

Value of: relErr <= maxRelErr || absErr <= absErrForRelErrFailure

Actual: false

Expected: true

50 45 (rel err 0.10526315867900848) (1 1 91 84) running N 2 inputC 2 H 90 W 84 outputC 2 kernelH 3 kernelW 3 strideH 1 strideW 1 padT 2 padL 2 padB 2 padR 2 group 2

/home/nshulga/git/pytorch-worktree/caffe2/share/contrib/depthwise/depthwise3x3_conv_op_test.cc:144: Failure

Value of: relErr <= maxRelErr || absErr <= absErrForRelErrFailure

Actual: false

Expected: true

25 20 (rel err 0.2222222238779068) (1 1 91 85) running N 2 inputC 2 H 90 W 84 outputC 2 kernelH 3 kernelW 3 strideH 1 strideW 1 padT 2 padL 2 padB 2 padR 2 group 2

[ FAILED ] DEPTHWISE3x3.Conv (143 ms)

[----------] 1 test from DEPTHWISE3x3 (143 ms total)

[----------] Global test environment tear-down

[==========] 1 test from 1 test case ran. (143 ms total)

[ PASSED ] 0 tests.

[ FAILED ] 1 test, listed below:

[ FAILED ] DEPTHWISE3x3.Conv

1 FAILED TEST

```
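The relative errors in the log are consistent with the absolute difference divided by the mean of the two values (for example, 5 / 72.5 for the pair 75 and 70). The Python sketch below reproduces that arithmetic. The formula is inferred from the logged numbers, not taken from the test source, and the threshold parameters are hypothetical placeholders for `maxRelErr` and `absErrForRelErrFailure`.

```python
def rel_err(a: float, b: float) -> float:
    """Absolute difference divided by the mean magnitude of the two values."""
    denom = (abs(a) + abs(b)) / 2.0
    return abs(a - b) / denom if denom else 0.0


def within_tolerance(a, b, max_rel_err, abs_err_floor):
    """Same shape as the failing assertion in the log:
    relErr <= maxRelErr || absErr <= absErrForRelErrFailure
    """
    return rel_err(a, b) <= max_rel_err or abs(a - b) <= abs_err_floor


# Value pairs taken from the failure messages above.
for a, b in [(75, 70), (50, 45), (25, 20)]:
    print(a, b, rel_err(a, b))
```

The printed values match the logged `rel err` figures to within float32 rounding.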

## To Reproduce

1. Check out pytorch at `b33e38ec475017868534eb114741ad32c9d3b248` on Linux

2. Build it without GPU or MKL-DNN acceleration (i.e. pass `-DUSE_CUDA=NO -DUSE_MKLDNN=OFF`)

3. Compile and run `depthwise3x3_conv_op_test`

<!-- If you have a code sample, error messages, stack traces, please provide it here as well -->

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

Collecting environment information...

PyTorch version: 1.5.0

Is debug build: No

CUDA used to build PyTorch: 10.1

OS: Fedora release 30 (Thirty)

GCC version: (GCC) 9.2.1 20190827 (Red Hat 9.2.1-1)

CMake version: version 3.16.4

Python version: 3.7

Is CUDA available: Yes

CUDA runtime version: 10.1.168

GPU models and configuration: GPU 0: GeForce RTX 2080

Nvidia driver version: 440.31

cuDNN version: /usr/lib64/libcudnn.so.7.5.0

Versions of relevant libraries:

[pip3] numpy==1.16.4

[pip3] torch==1.5.0

[pip3] torchvision==0.6.0.dev20200326+cu101

[conda] Could not collect

## Additional context

cc @mruberry @VitalyFedyunin

|

caffe2,module: tests,triaged

|

low

|

Critical

|

589,319,969 |

youtube-dl

|

ESPN.CO.UK

|

<!--

######################################################################

WARNING!

IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE

######################################################################

-->

## Checklist

<!--

Carefully read and work through this check list in order to prevent the most common mistakes and misuse of youtube-dl:

- First of, make sure you are using the latest version of youtube-dl. Run `youtube-dl --version` and ensure your version is 2020.03.24. If it's not, see https://yt-dl.org/update on how to update. Issues with outdated version will be REJECTED.

- Make sure that all provided video/audio/playlist URLs (if any) are alive and playable in a browser.

- Make sure that site you are requesting is not dedicated to copyright infringement, see https://yt-dl.org/copyright-infringement. youtube-dl does not support such sites. In order for site support request to be accepted all provided example URLs should not violate any copyrights.

- Search the bugtracker for similar site support requests: http://yt-dl.org/search-issues. DO NOT post duplicates.

- Finally, put x into all relevant boxes (like this [x])

-->

- [x] I'm reporting a new site support request

- [x] I've verified that I'm running youtube-dl version **2020.03.24**

- [x] I've checked that all provided URLs are alive and playable in a browser

- [x] I've checked that none of provided URLs violate any copyrights

- [x] I've searched the bugtracker for similar site support requests including closed ones

## Example URLs

https://www.espn.co.uk/video/clip/_/id/25103188

## Description

Sorry in advance if I should have opened a separate issue but...

The same clip is also viewable at:

https://www.espn.com/video/clip/_/id/25103188

and the ESPN extractor is invoked...however the ESPN extractor failed.

So

1) The ESPN extractor should also recognize espn.co.uk (not fall back to generic)

...and

2) The ESPN extractor might need some attention.

Thanks as always

Ringo

|

site-support-request

|

low

|

Critical

|

589,329,054 |

vscode

|

Can't drag files from a remote vscode window to a local window

|

- Have remote and local windows open

- Drag a file from the remote explorer to the local explorer

- Nothing happens

I can do this local -> remote to copy files, and I can do this from a remote vscode window to Finder, but not remote -> local vscode windows.

|

feature-request,file-explorer

|

medium

|

Critical

|

589,404,563 |

PowerToys

|

Memorized clipboard entries

|

Summary: Support assigning key-bindings to clipboard history items.

I occasionally need to run a command over and over again on a number of files.

I typically get my list of files ready as a `\n`-delimited list of paths. To open them, I cut each path to the clipboard, switch to a console, and paste. Once they are open, I need to type a command into the integrated shell. I would love to be able to paste the command, but because I copied the path to the clipboard, the command is no longer at my fingertips. Using the Clipboard history feature of Windows helps, but as I open more files, the command gets pushed further and further down the history list.

As an enhancement to the history list, I would love to be able to assign a key-binding to a particular entry. No matter how far the entry was pushed down the list, the key-binding would let me select it quickly.

When the entry is removed from the list, the key binding should also be freed up.

I suggest the key-binding only work after opening the clipboard history with Win+V, but perhaps a global key binding would be useful to some people too.
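The requested behavior can be sketched as a small data structure. This is a hypothetical Python model, not how Windows clipboard history or PowerToys works. The class name, the method names, and the capacity are all assumptions made for illustration.

```python
class ClipboardHistory:
    """Bounded clipboard history whose entries can be bound to a key."""

    def __init__(self, capacity=25):
        self.capacity = capacity  # arbitrary illustrative limit
        self.entries = []         # newest entry first
        self.bindings = {}        # key -> bound entry text

    def copy(self, text):
        # Re-copying an existing entry moves it back to the top.
        if text in self.entries:
            self.entries.remove(text)
        self.entries.insert(0, text)
        # Entries pushed past the capacity are dropped and their keys freed.
        for evicted in self.entries[self.capacity:]:
            self._unbind_entry(evicted)
        del self.entries[self.capacity:]

    def bind(self, key, text):
        if text not in self.entries:
            raise KeyError(text)
        self.bindings[key] = text

    def remove(self, text):
        self.entries.remove(text)
        self._unbind_entry(text)

    def paste(self, key):
        # Works no matter how far down the list the entry has been pushed.
        return self.bindings[key]

    def _unbind_entry(self, text):
        for key in [k for k, v in self.bindings.items() if v == text]:
            del self.bindings[key]


history = ClipboardHistory(capacity=3)
history.copy("make test")
history.bind("1", "make test")
for path in ["a.txt", "b.txt"]:
    history.copy(path)
print(history.paste("1"))
```

Freeing the binding on eviction follows the rule stated above. A variant could instead pin bound entries so they are never evicted.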

|

Idea-New PowerToy

|

medium

|

Major

|

589,410,409 |

TypeScript

|

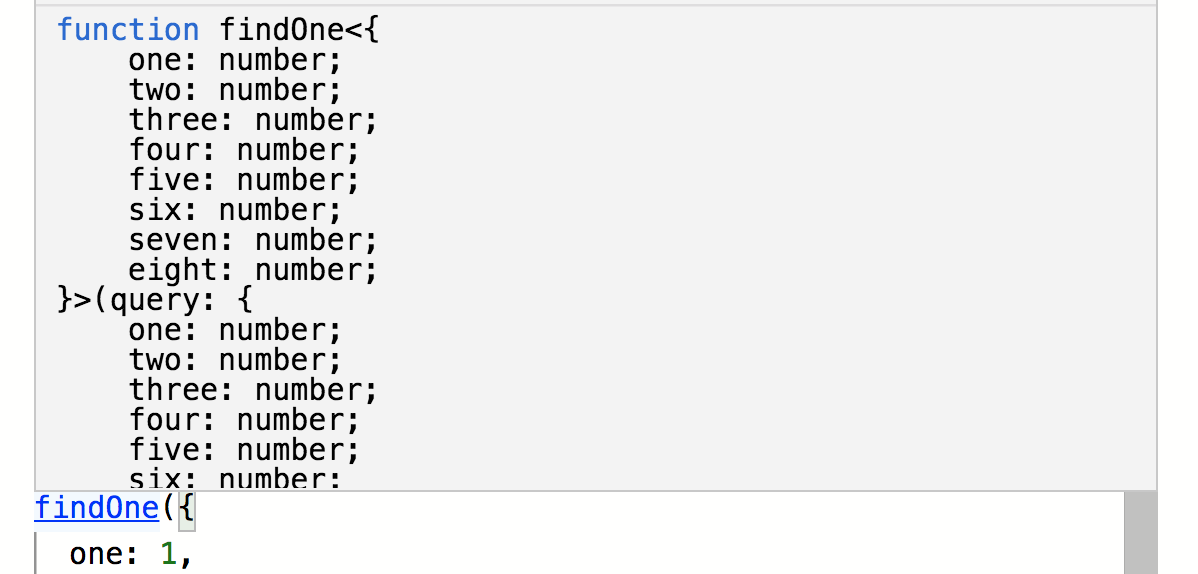

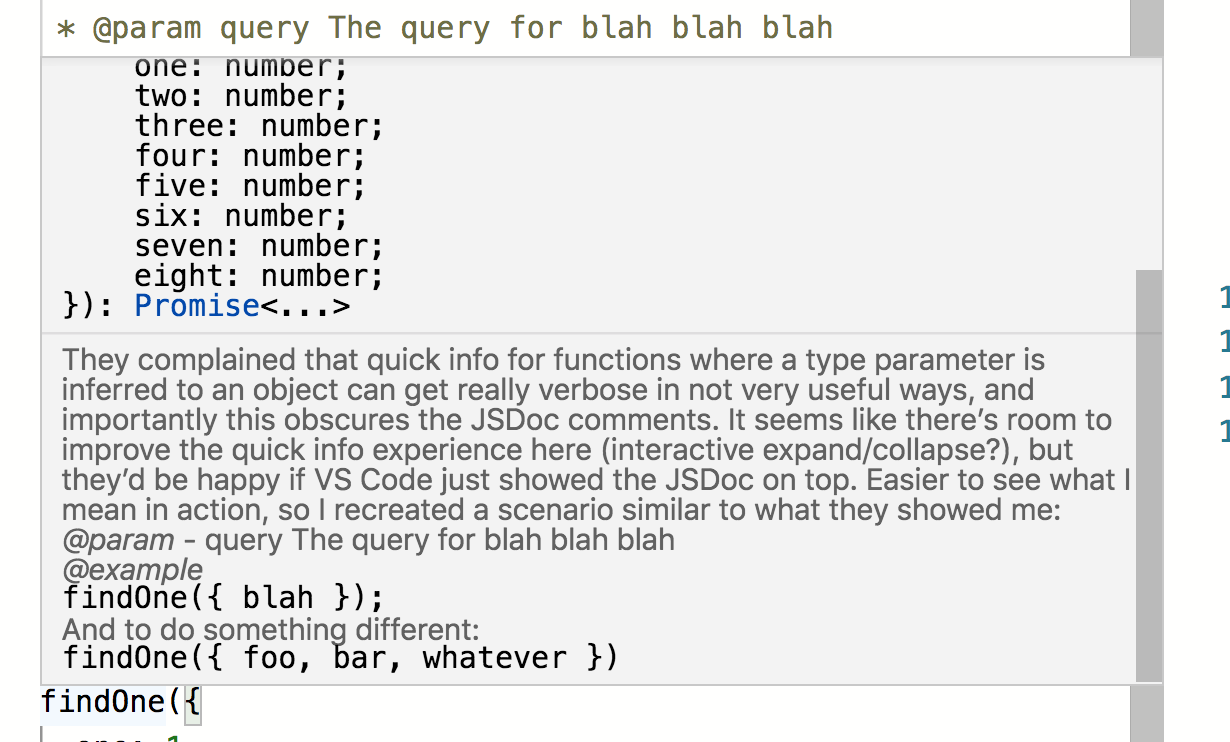

Considering limiting hover length

|

<!--

Please try to reproduce the issue with the latest published version. It may have already been fixed.

For npm: `typescript@next`

This is also the 'Nightly' version in the playground: http://www.typescriptlang.org/play/?ts=Nightly

-->

**TypeScript Version:** 3.7.x-dev.201xxxxx

<!-- Search terms you tried before logging this (so others can find this issue more easily) -->

**Search Terms:** hover, length

**Code**

```ts

// A *self-contained* demonstration of the problem follows...

// Test this by running `tsc` on the command-line, rather than through another build tool such as Gulp, Webpack, etc.

```

**Expected behavior:** JSDocs can always be shown

**Actual behavior:** JSDoc is hidden because the hover is too long

**Playground Link:** https://www.typescriptlang.org/play/#code/FAegVGwARlAqALApgTygYwPYFsAOAbAQwEsA7JAEygBcFDqoBHAV2PQGsoyAzTKXgE79mpdNWKZSAZygB3ZAKRRCNFLiW5CAwtiTUkQ4jJ4HFVan0KkomAEYArJGIxWoAcz1RFhfPjQA3A1tMKSUyKFJMBkCBNGZQ7mZ8OUIUKQAaZVIqYjxMAWoraj8aBCMbWyl0ZkUZWiUAKQBlABFMdAwcXVJqKQA6KABJBlCkbBl8YnYlesVATAIZAUwcGj5c3CXA0qUWNk4ePiQAD3UBYiRRJQUlAAoyfW0xYi3jzWyQLF9CXFCAfgBKTK2ZgMeooOZUWxXb64NDEbhQABqTSgAGFMBQlPZ4iMEJhZJRtlBmm0OpJVrgBgBRQhSc5CCxQUZyOgMQZQXSucKEJ6STJSPjsxTobz6KgqKoXLQSJm5YhEBl8eT0bZoKR4glUXQALmgsAAApptNgmMwDGhEDszbF+PkoLYiAh7Y7nYQEHqoPrjjoCEgPQADQP2KQe7hkCgAeXINwA3q6nQBff4AbgDgY9HoAgtlVlAKHwBbpaGQ3Hn4dwDBdqLqYFA0-7g6Hw1GkLHbZggVpMsr9DEoEn63qQMBMegFUpEqJxOSw9kWwAeOAAPhuLHN2vg-w3AAUltgjEh5wAlJCEfOkPyLpdL1OgECmvZcUi8KDXYCzyPRmPQGzkDcARnSH9qFkTANwAJiAqBSkUJANwAZig20ag3AAWJCw0CDcAFYkLpI4NwANjwpBAlIDcAHYkKQYg3AQasoAADmAAcgA

**Related Issues:** https://github.com/microsoft/vscode/issues/92787

It seems that hovers for certain types are formatted with one property per line. I'm wondering if you could adopt this behavior only when the total number of lines does not exceed a threshold (say, 10 lines), so that it doesn't affect JSDoc.

|

Suggestion,Awaiting More Feedback

|

low

|

Minor

|

589,433,605 |

node

|

child_process.exec/execFile docs have some inconsistencies and inaccuracies

|

I don't have time to fix this now, but while looking at sec issues related to these APIs, I found some oddities.

https://nodejs.org/api/child_process.html#child_process_child_process_execfile_file_args_options_callback says

> shell <boolean> | <string> If true, runs command inside of a shell.

But execFile() doesn't have a `command` argument... this was pasted from exec(), it seems. Probably what happens is that if there is a shell, then `file` and `args` are all concatenated together, `' '` separated, and passed to the shell.
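If the concatenation works as suspected, argument boundaries are lost once a shell re-parses the string. The Python sketch below only models that effect with POSIX-style word splitting from the standard `shlex` module. It is not Node's implementation, and the helper names are hypothetical.

```python
import shlex


def join_like_suspected(file, args):
    """Assumed behavior from the report: file and args joined with single spaces."""
    return " ".join([file, *args])


def join_quoted(file, args):
    """POSIX-shell quoting keeps every argument intact when the shell re-parses it."""
    return " ".join(shlex.quote(part) for part in [file, *args])


args = ["hello world", "$HOME"]

# Word splitting turns the first argument into two separate words.
print(shlex.split(join_like_suspected("echo", args)))

# With quoting, the original two arguments survive the round trip.
print(shlex.split(join_quoted("echo", args)))
```

`shlex` follows POSIX `sh` rules only, so this says nothing about `cmd.exe`, where special characters differ.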

Since the shell option AFAICT ends up following the same path as from exec(), it suggests that the exec docs:

> shell <string> Shell to execute the command with. See Shell Requirements and Default Windows Shell. Default: '/bin/sh' on Unix, process.env.ComSpec on Windows.

are incomplete; `false` would probably work just fine as a value there, making exec() behave exactly like execFile().

This seems to be a bit legacy as well:

> The child_process.execFile() function is similar to child_process.exec() except that it does not spawn a shell by default. Rather, the specified executable file is spawned directly as a new process making it slightly more efficient than child_process.exec().

Now that both exec() and execFile() have a shell option, differing only by the default value, it's probably more accurate to say the difference is that one takes an array of strings as an argument `execFile(file, argv, ..` and the other takes a single string `exec(command, ...)`.

The text following is now wrong:

> The same options as child_process.exec() are supported. Since a shell is not spawned, behaviors such as I/O redirection and file globbing are not supported.

It can't both *support* the same options, and *not support* some of the options.

It should probably say "If a shell is ..." (only one word different, but it's important).

exec should probably have docs saying the same thing, shell behaviours are not supported when shell is `false`.

And execFile() should probably include the warnings from exec about how shell special chars vary by platform.

Some of these issues are shared with the "sync" versions of the APIs.

|

child_process,doc

|

low

|

Minor

|

589,477,012 |

godot

|

[3.x] GDScript autocompletion errors with "Node not found" when using `get_node` with an absolute `/root` path

|

**OS: Microsoft Windows [Version 10.0.18363.720] 64bit**

**Godot: v3.2.1.stable.mono.official 64bit**

It seems get_node (same with $) is not working as expected.

**Specify steps to reproduce:**

Suppose you have the following tree:

/root/Character

/root/Character/Sword

And a script attached to Sword.

Inside that script you have:

func _ready() -> void:

	var bug = get_node("/root/Character")

If you now type `bug.` below `var bug`, you get "Node not found: /root/Character." in the output window of the editor.

[Get_node_bug.zip](https://github.com/godotengine/godot/files/4396428/Get_node_bug.zip)

And you don't get code completion for properties like position if the Character is a Node for example.

|

bug,topic:gdscript,topic:editor,confirmed

|

medium

|

Critical

|

589,481,045 |

flutter

|

Widget focus highlighting for iOS/iPadOS

|

Sister bug to https://github.com/flutter/flutter/issues/43365 for iPad keyboard support since it's different from macOS.

This also raises the question of whether we should attempt to detect platform in Cupertino and have different highlighting behavior depending on the platform.

However, figuring out what the focus highlight spec should be is non-trivial. After testing a bit, iPadOS (13.4) doesn't really have a (consistent) focus highlight handling strategy. It's also definitely different from macOS.

The task switcher looks the most polished. Springboard quick search looks similar, so does Siri search results. Based on our experience with other glass-frosty things like the action sheet, it's likely a combination of overlay and color dodge.

UITableView has a slightly different style: just a simple overlay. Note that it's not very useful. You can't focus on anything other than the master list, and a text field is the only other focusable thing. Pressing Tab just inserts tabs.

WebKit itself has yet another style. The simplest. A blue box.

|

a: text input,platform-ios,framework,f: material design,a: accessibility,a: fidelity,f: cupertino,f: focus,team-design,triaged-design

|

low

|

Critical

|

589,489,427 |

terminal

|

Allow the user to set the height of the tab row

|

<!--

🚨🚨🚨🚨🚨🚨🚨🚨🚨🚨

I ACKNOWLEDGE THE FOLLOWING BEFORE PROCEEDING:

1. If I delete this entire template and go my own path, the core team may close my issue without further explanation or engagement.

2. If I list multiple bugs/concerns in this one issue, the core team may close my issue without further explanation or engagement.

3. If I write an issue that has many duplicates, the core team may close my issue without further explanation or engagement (and without necessarily spending time to find the exact duplicate ID number).

4. If I leave the title incomplete when filing the issue, the core team may close my issue without further explanation or engagement.

5. If I file something completely blank in the body, the core team may close my issue without further explanation or engagement.

All good? Then proceed!

-->

# Please reduce the height of the title bar, there is no need to have that big chunk of area on top of the terminal.

<!--

A clear and concise description of what the problem is that the new feature would solve.

Describe why and how a user would use this new functionality (if applicable).

-->

# Proposal: reduce it by 1/3 or 2/5.

<!--

A clear and concise description of what you want to happen.

-->

|

Area-Settings,Product-Terminal,Issue-Task,Area-Theming

|

medium

|

Critical

|

589,519,834 |

pytorch

|

libtorch_global_deps.so not found.

|

## Bug

The following CDLL call expects `libtorch_global_deps.so` to be present, which is not always the case.

https://github.com/pytorch/pytorch/blob/f1d69cb2f848d07292ad69d7801b8b0b73a42b5d/torch/__init__.py#L85-L89

In our current setup, which is a little bit special, we want to use PyTorch from both C++ and Python, so we first do a CMake build and install it to `/usr/local/lib`, and then run `setup.py` against the same build directory to build the Python wheel.

Currently there's no `lib` dir under `/usr/local/lib/python3.6/dist-packages/torch`.

The same applies to `torch_shm_manager`, which is expected under `bin`.

https://github.com/pytorch/pytorch/blob/f1d69cb2f848d07292ad69d7801b8b0b73a42b5d/torch/__init__.py#L308-L309

I also checked a version I built on Feb 15; that one has `/usr/local/lib/python3.6/dist-packages/torch/{lib,bin}`.
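A quick way to check an installed package for the two files mentioned above is sketched below in Python. The helper name is hypothetical, and the expected relative paths (`lib/libtorch_global_deps.so` and `bin/torch_shm_manager`) are taken from this report rather than from an exhaustive list of what `torch/__init__.py` loads.

```python
from pathlib import Path


def missing_torch_artifacts(package_dir):
    """Return the expected files that are absent under a torch package directory."""
    root = Path(package_dir)
    expected = [
        root / "lib" / "libtorch_global_deps.so",
        root / "bin" / "torch_shm_manager",
    ]
    return [p.relative_to(root).as_posix() for p in expected if not p.is_file()]


if __name__ == "__main__":
    # Install location from the report; adjust for your interpreter.
    print(missing_torch_artifacts("/usr/local/lib/python3.6/dist-packages/torch"))
```

An empty list means both files are in place. Any other result names what the wheel build left out.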

## Environment

- PyTorch Version (e.g., 1.0): master

- OS (e.g., Linux): Ubuntu 18.04

- How you installed PyTorch (`conda`, `pip`, source): source

- Build command you used (if compiling from source): cmake+ninja+gcc-8

- Python version: 3.6.9

- CUDA/cuDNN version: 10.2

|

module: build,triaged,module: regression

|

low

|

Critical

|

589,532,110 |

terminal

|

Add support for touchscreen selection

|

<!--

🚨🚨🚨🚨🚨🚨🚨🚨🚨🚨

I ACKNOWLEDGE THE FOLLOWING BEFORE PROCEEDING:

1. If I delete this entire template and go my own path, the core team may close my issue without further explanation or engagement.

2. If I list multiple bugs/concerns in this one issue, the core team may close my issue without further explanation or engagement.

3. If I write an issue that has many duplicates, the core team may close my issue without further explanation or engagement (and without necessarily spending time to find the exact duplicate ID number).

4. If I leave the title incomplete when filing the issue, the core team may close my issue without further explanation or engagement.

5. If I file something completely blank in the body, the core team may close my issue without further explanation or engagement.

All good? Then proceed!

-->

# Description of the new feature/enhancement

<!--

A clear and concise description of what the problem is that the new feature would solve.

Describe why and how a user would use this new functionality (if applicable).

-->

I'm a Surface Pro user and sometimes need to use CLI tools without picking up the keyboard (although typing on the on-screen keyboard is a mess), but it turns out that Windows Terminal is not optimized for touchscreens at all.

# Proposed technical implementation details (optional)

<!--

A clear and concise description of what you want to happen.

-->

As far as I can tell, the following need to be implemented:

1. Automatically pop up the on-screen keyboard and resize the window to fit it on touch.

2. Long press to select/copy/paste.

3. Zoom with finger gestures (https://github.com/microsoft/terminal/issues/3149)

|

Area-Input,Area-TerminalControl,Product-Terminal,Issue-Task

|

medium

|

Critical

|

589,551,409 |

flutter

|

[multicast_dns] SocketException: Failed to create datagram socket "The requested address is not valid in its context"

|

Hi

I decided to try multicast_dns 0.2.2 to search for mqtt clients.

I've copied the provided example into a dart project.

```

import 'package:multicast_dns/multicast_dns.dart';

Future<void> main() async {

// Parse the command line arguments.

const name = '_mqtt._tcp.local';

final MDnsClient client = MDnsClient();

// Start the client with default options.

await client.start();

// Get the PTR record for the service.

await for (PtrResourceRecord ptr in client

.lookup<PtrResourceRecord>(ResourceRecordQuery.serverPointer(name))) {

// Use the domainName from the PTR record to get the SRV record,

// which will have the port and local hostname.

// Note that duplicate messages may come through, especially if any

// other mDNS queries are running elsewhere on the machine.

await for (SrvResourceRecord srv in client.lookup<SrvResourceRecord>(

ResourceRecordQuery.service(ptr.domainName))) {

// Domain name will be something like "[email protected]._dartobservatory._tcp.local"

final String bundleId =

ptr.domainName; //.substring(0, ptr.domainName.indexOf('@'));

print('Dart observatory instance found at '

'${srv.target}:${srv.port} for "$bundleId".');

}

}

client.stop();

print('Done.');

}

```

Running this from VSCode on Windows 10 gives the following error.

```

Dart Socket ERROR: c:\b\s\w\ir\cache\builder\sdk\runtime\bin\socket_win.cc:181: `reusePort` not supported for Windows.Dart Socket ERROR: c:\b\s\w\ir\cache\builder\sdk\runtime\bin\socket_win.cc:181: `reusePort` not supported for Windows.Dart Socket ERROR: c:\b\s\w\ir\cache\builder\sdk\runtime\bin\socket_win.cc:181: `reusePort` not supported for Windows.Dart Socket ERROR: c:\b\s\w\ir\cache\builder\sdk\runtime\bin\socket_win.cc:181: `reusePort` not supported for Windows.Unhandled exception:

SocketException: Failed to create datagram socket (OS Error: Den begärda adressen är inte giltig i sin kontext.

, errno = 10049), address = , port = 5353

#0 _NativeSocket.bindDatagram (dart:io-patch/socket_patch.dart:668:7)

<asynchronous suspension>

#1 _RawDatagramSocket.bind (dart:io-patch/socket_patch.dart:1964:26)

#2 RawDatagramSocket.bind (dart:io-patch/socket_patch.dart:1922:31)

#3 MDnsClient.start (package:multicast_dns/multicast_dns.dart:127:46)

<asynchronous suspension>

#4 main (file:///C:/experimentalProjects/flutter_mdns/mdns/bin/main.dart:9:16)

#5 _startIsolate.<anonymous closure> (dart:isolate-patch/isolate_patch.dart:307:19)

#6 _RawReceivePortImpl._handleMessage (dart:isolate-patch/isolate_patch.dart:174:12)

```

Please advise.

|

c: crash,package,p: multicast_dns,team-ecosystem,P3,triaged-ecosystem

|

low

|

Critical

|

589,552,850 |

pytorch

|

Increased memory usage in repetitive torch.jit.trace calls

|

## 🐛 Bug

Tracing a model multiple times seems to increase the memory usage.

Reported by [alepack](https://discuss.pytorch.org/u/alepack) in [this post](https://discuss.pytorch.org/t/possible-memory-leak-in-torch-jit-trace/74455).

## To Reproduce

```python

#Utilities

import os

import psutil

#JIT trace test

import torch

import torchvision.models as model_zoo

with torch.no_grad():
    #Create a simple resnet
    model = model_zoo.resnet18()
    model.eval()  # call eval(); a bare `model.eval` is a no-op

    #Create sample input
    sample_input = torch.randn(size=[2, 3, 480, 640], requires_grad=False)

    #Get process id
    process = psutil.Process(os.getpid())

    #Repeat tracing
    for i in range(0, 1000):
        tr_model = torch.jit.trace(model, sample_input, check_trace=False)
        print("Iter: {} = {}".format(i, process.memory_full_info()))

# output:

Iter: 0 = pfullmem(rss=415825920, vms=8240693248, shared=123785216, text=2215936, lib=0, data=6408441856, dirty=0, uss=419287040, pss=420155392, swap=0)

Iter: 1 = pfullmem(rss=456716288, vms=8292564992, shared=125976576, text=2215936, lib=0, data=6460313600, dirty=0, uss=461049856, pss=461918208, swap=0)

Iter: 2 = pfullmem(rss=493195264, vms=8331886592, shared=125976576, text=2215936, lib=0, data=6499635200, dirty=0, uss=495702016, pss=496570368, swap=0)

Iter: 3 = pfullmem(rss=547762176, vms=8391135232, shared=125976576, text=2215936, lib=0, data=6558883840, dirty=0, uss=550371328, pss=551239680, swap=0)

Iter: 4 = pfullmem(rss=577015808, vms=8420626432, shared=125976576, text=2215936, lib=0, data=6588375040, dirty=0, uss=579932160, pss=580800512, swap=0)

Iter: 5 = pfullmem(rss=630874112, vms=8469778432, shared=125976576, text=2215936, lib=0, data=6637527040, dirty=0, uss=629145600, pss=630013952, swap=0)

Iter: 6 = pfullmem(rss=659718144, vms=8499269632, shared=125976576, text=2215936, lib=0, data=6667018240, dirty=0, uss=662802432, pss=663670784, swap=0)

Iter: 7 = pfullmem(rss=698855424, vms=8538853376, shared=125976576, text=2215936, lib=0, data=6706601984, dirty=0, uss=702468096, pss=703336448, swap=0)

Iter: 8 = pfullmem(rss=728879104, vms=8568344576, shared=125976576, text=2215936, lib=0, data=6736093184, dirty=0, uss=732028928, pss=732897280, swap=0)

Iter: 9 = pfullmem(rss=773472256, vms=8617496576, shared=125976576, text=2215936, lib=0, data=6785245184, dirty=0, uss=776867840, pss=777736192, swap=0)

Iter: 10 = pfullmem(rss=802549760, vms=8646987776, shared=125976576, text=2215936, lib=0, data=6814736384, dirty=0, uss=806092800, pss=806961152, swap=0)

```
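The growth can be read off the `rss` values in the output above. The sketch below uses only the numbers printed in this report and the standard library; the variable names are illustrative and not part of the original reproducer. It computes how much the resident set size increases per `torch.jit.trace` call.

```python
# Resident set sizes (bytes) copied from the "Iter: N" lines in the report.
rss_bytes = [
    415825920, 456716288, 493195264, 547762176, 577015808, 630874112,
    659718144, 698855424, 728879104, 773472256, 802549760,
]

MIB = 1024 * 1024

# Increase in RSS between consecutive torch.jit.trace calls.
deltas = [b - a for a, b in zip(rss_bytes, rss_bytes[1:])]
mean_growth_mib = sum(deltas) / len(deltas) / MIB

print("per-iteration growth (MiB):", [round(d / MIB, 1) for d in deltas])
print("mean growth per trace (MiB): {:.1f}".format(mean_growth_mib))
```

Every delta is positive and the mean is roughly 37 MiB per trace, which is consistent with memory not being released between calls rather than with ordinary allocator noise.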

## Environment

alepack's setup based on the forum post:

* PyTorch `1.4.0` installed via conda

I verified it with `1.5.0a0+5b3492df18`.

cc @ezyang @gchanan @zou3519 @suo

|

high priority,oncall: jit,triaged

|

medium

|

Critical

|

589,553,850 |

rust

|

Should `TryFrom` get mentioned in AsRef/AsMut?

|

The documentation of both `AsMut` and `AsRef` contains the following note:

> **Note: This trait must not fail.** If the conversion can fail, use a dedicated method which returns an `Option<T>` or a `Result<T, E>`.

This wording was added in 58d2c7909f9 and 6cda8e4eaac back in 2016/01. It was also very similar in `From` and `Into` up to 71bdeb022a9, where the explicit mention of dedicated methods was replaced by a link to the `Try*` variants.

Since `TryFrom` is now stabilized, one could go ahead and write `AsRef` variants via `try_from`:

```rust

use std::convert::TryFrom;

struct Example {
    dont_panic: bool,
}

struct Panic;

impl TryFrom<&Example> for &u8 {
    type Error = Panic;

    fn try_from(e: &Example) -> Result<Self, Self::Error> {
        if e.dont_panic {
            Ok(&42)
        } else {
            Err(Panic)
        }
    }
}

// full example: https://play.rust-lang.org/?version=stable&mode=debug&edition=2018&gist=8446972919a4ab31222800395accb434

```

However, I'm not sure whether that breaks the original spirit of `AsRef` and `AsMut`, being *cheap* conversions and all. I'm also not sure whether it is intended to use `TryFrom` for references.

If possibly failing (and costly?) *reference* conversions are indeed a use case for `TryFrom`, should this alternative to an `Option<T>` or `Result<T,E>` be added to `AsRef`'s documentation? Or is this a misuse, in which case reference conversion should be mentioned in `TryFrom`'s documentation as a non-goal?

(Note that I don't have a use case for a `TryAsRef` or similar; I just read a lot of Rust's documentation lately and came across the symmetry between `From` and `TryFrom` and the missing counterpart in `AsRef`)

|

C-enhancement,T-libs-api

|

low

|

Critical

|

589,559,997 |

react

|

Devtools: Allow editing context

|

React version: 16.13 and `0.0.0-experimental-aae83a4b9`

## Steps To Reproduce

1. Go to https://codesandbox.io/s/xenodochial-field-rfdjz

2. Try editing value of `MessageListContext.Provider`

Link to code example: https://codesandbox.io/s/xenodochial-field-rfdjz

## The current behavior

Context from `createContext` can't be edited in the current devtools (provider, consumer, hooks)

## The expected behavior

Context value should be editable. I already proposed an implementation for [Provider](https://github.com/facebook/react/pull/18255) and [Consumer](https://github.com/facebook/react/pull/18257).

|

Type: Feature Request,Component: Developer Tools

|

low

|

Minor

|

589,561,455 |

godot

|

dialog window title bar are visible in Windows(os)

|

**Godot version:** 307b1b3a5

**OS/device including version:** windows10

**Issue description:**

The title bar is visible in dialog windows on Windows. Also, dragging them around makes the window lag.

|

enhancement,discussion,topic:editor,topic:porting,usability

|

low

|

Major

|

589,568,591 |

godot

|

GDScript: Strange const scoping with classes

|

**Godot version:** 3.2.1

**OS/device including version:** `Ubuntu 18.04.3 LTS (bionic) - Linux 4.15.0-91-generic #92-Ubuntu SMP Fri Feb 28 11:09:48 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux`

**Issue description:** `const` scoping seems to be very restrictive, with child classes being unable to use constants from their parent or from the top level of the script in constant expressions. This results in having to use `var` instead (as in #20354), or copy consts/enums to each child (maintenance nightmare).

**Steps to reproduce:** See gdscript samples below.

**Minimal reproduction project:**

```

# Singleton named Note
extends Node

enum {NOTE_TAP, NOTE_HOLD}
enum Named {NOTE_TAP, NOTE_HOLD}
const NOTE_TAP1 := 0

class NoteBase:
    ...

class NoteTap extends NoteBase:
    var type = NOTE_TAP # legal, seems like the fix for #20354 was targeted at this case
    const type1 = NOTE_TAP # illegal: "Expected a constant expression."
    const type2 = Named.NOTE_TAP # illegal
    const type3 = Note.NOTE_TAP # illegal: "invalid index 'NOTE_TAP' in constant expression"
    const type4 = Note.Named.NOTE_TAP # illegal
    const type5 = NOTE_TAP1 # illegal
    const type6 = Note.NOTE_TAP1 # illegal

```

```

class NoteBase:
    enum {NOTE_TAP, NOTE_HOLD}
    const NOTE_TAP1 := 0
    ...

class NoteTap extends NoteBase:
    const type = NOTE_TAP # still illegal
    const type1 = NoteBase.NOTE_TAP # still illegal
    const type2 = NOTE_TAP1 # also illegal

```

```

class NoteBase:
    ...

class NoteTap extends NoteBase:
    enum {NOTE_TAP, NOTE_HOLD}
    const type = NOTE_TAP # legal!

```

|

bug,topic:gdscript

|

low

|

Minor

|

589,577,681 |

TypeScript

|

T | (() => T)

|

**TypeScript Version:** 3.9.0 (Nightly)

**Search Terms:** `T | (() => T)`, `T & Function`

**Code**

```ts

type Initializer<T> = T | (() => T)
// type Initializer<T> = T extends any ? (T | (() => T)) : never

function correct<T>(arg: Initializer<T>) {
  return typeof arg === 'function' ? arg() : arg // error
}

```

The commented-out definition on line 2 of the snippet provides a workaround for this.

More info on [stackoverflow](https://stackoverflow.com/questions/60898079/typescript-type-t-or-function-t-usage).

**Expected behavior:** no errors

**Actual behavior:** `This expression is not callable.

Not all constituents of type '(() => T) | (T & Function)' are callable.

Type 'T & Function' has no call signatures.`

**Playground Link:** [here](https://www.typescriptlang.org/play/?ts=3.9.0-dev.20200327&ssl=1&ssc=1&pln=6&pc=2#code/C4TwDgpgBAkgdgS2AghgGwQLwgJwDwAqAfFALxQFQA+UAFLQJRkkEMBQA9B1KJLIsnRZchEuUoQAHsAhwAJgGcoKOCCgB+OpRr0mpFgyYAuKHAgA3XGzYAzAK5wAxsgD2cKI5c4cEZ6NooOADmJvBIqBjY+MRMAN5sUIlQPsB2OO68EC42ysFkpOQA5PZOrnCFGrlBjFAmgUFQXFC4OF5sAL5AA).

**Related Issues:** none

|

Bug

|

high

|

Critical

|

589,584,169 |

godot

|

Unable to inherit from ProjectSettings

|

**Godot version:**

3.2.1

**OS/device including version:**

Arch Linux

**Issue description:**

If I try to extend `ProjectSettings`, I get a parsing error:

```

modules/gdscript/gdscript_compiler.cpp:1864 - Condition "native.is_null()" is true. Returned: ERR_BUG

```

Extending `ProjectSettings` can be very useful to add helper methods such as `add_gravity()` instead of using `get_setting("physics/3d/default_gravity")` every time.

**Steps to reproduce:**

1. Create a script.

2. Extend it from `ProjectSettings`.

3. Add any method or variable.

4. Try to use it in code.

**Minimal reproduction project:**

[PrjectSettings.zip](https://github.com/godotengine/godot/files/4397236/PrjectSettings.zip)

In this project I created a `MySettingsNode` class that inherits from Node and works as expected. I also created a `MySettingsProjectSettings` class that has the same code but does not work (just try to instantiate it from code to see the error).

**Temporary workaround:**

For now, it is possible to create a class with static functions and access all settings via `ProjectSettings`, because it is a singleton. Extending it would still be useful, and the error looks like a bug.

|

discussion,topic:core

|

low

|

Critical

|

589,599,444 |

scrcpy

|

scrcpy on Asus TF101 running Android 6.0.1

|

Hi there!

I am trying to get my old Asus EeePC (TF101) to work with scrcpy on Ubuntu 18.04. The tablet is currently running [KatKiss Marshmallow 6.0.1 ROM](https://forum.xda-developers.com/eee-pad-transformer/development/rom-t3318496) with USB Debugging on:

$ adb devices

List of devices attached

037001494120f517 device

When I run scrcpy I get:

$ scrcpy

INFO: scrcpy 1.12 <https://github.com/Genymobile/scrcpy>

/usr/local/share/scrcpy/scrcpy-server: 1 file pushed. 1.1 MB/s (26196 bytes in 0.022s)

INFO: Initial texture: 1280x800

but no window opens. My scrcpy installation works perfectly with my Samsung S9, so I guess the problem is elsewhere. The output of "adb logcat -d" (attached [logcat.txt](https://github.com/Genymobile/scrcpy/files/4397849/logcat.txt)) has some odd messages related to the Nvidia.h264.encoder:

03-28 15:17:40.792 3848 3865 I OMXClient: Using client-side OMX mux.

03-28 15:17:40.797 111 1270 E OMXNodeInstance: setParameter(2b:Nvidia.h264.encoder, OMX.google.android.index.storeMetaDataInBuffers(0x7fc00007): Output:1 en=0 type=1) ERROR: BadParameter(0x80001005)

03-28 15:17:40.797 3848 3865 E ACodec : [OMX.Nvidia.h264.encoder] storeMetaDataInBuffers (output) failed w/ err -2147483648

03-28 15:17:40.798 3848 3865 W ACodec : do not know color format 0x7f000789 = 2130708361

03-28 15:17:40.799 3848 3865 I ACodec : setupVideoEncoder succeeded

03-28 15:17:40.799 111 1270 E OMXNodeInstance: setParameter(2b:Nvidia.h264.encoder, OMX.google.android.index.enableAndroidNativeBuffers(0x7fc00004): Output:1 en=0) ERROR: BadParameter(0x80001005)

03-28 15:17:40.800 3848 3865 W ACodec : do not know color format 0x7f000789 = 2130708361

03-28 15:17:40.801 111 2380 E OMXNodeInstance: getParameter(2b:Nvidia.h264.encoder, ParamConsumerUsageBits(0x6f800004)) ERROR: NotImplemented(0x80001006)

Any ideas on how to circumvent this?

Thanks in advance,

Marcelo

|

device

|

low

|

Critical

|

589,599,872 |

pytorch

|

Performance bug with convolutions with weights and inputs of similar spatial size

|

This is a performance bug in `conv2d`, when spatial dimensions of the weight and input are the same (or similar). Such convolutions get 5 to 10 times slower when transitioning from image sizes of 30 to 32. There may be an issue with the selection of the CUDNN convolution algorithm.

One possible route to a solution is to allow manual selection of the convolution algorithm.

## To Reproduce

Full minimal working example:

```

import torch as t
t.backends.cudnn.benchmark=True
import torch.nn.functional as F
from timeit import default_timer as timer

device = "cuda"
Cin = 128
Cout = 256
B = 1000

for W in range(20, 40):
    input = t.randn(B, Cin, W, W, device=device)
    weight = t.randn(Cout, Cin, W, W, device=device)

    output = F.conv2d(input, weight, padding=1)
    t.cuda.synchronize(device=device)
    start_time = timer()
    output = F.conv2d(input, weight, padding=1)
    t.cuda.synchronize(device=device)
    print(f"W: {W}, W**2: {W**2:4d}, time: {1000*(timer()-start_time)}")

```

## Expected behavior

The time required should scale linearly with `W**2`. Instead, there is a jump of roughly a factor of 6 between W=30 and W=32 (14.4 ms to 86.8 ms):

```

W: 20, W**2: 400, time: 14.050104655325413

W: 21, W**2: 441, time: 13.91313411295414

W: 22, W**2: 484, time: 13.899956829845905

W: 23, W**2: 529, time: 13.991509564220905

W: 24, W**2: 576, time: 14.103865250945091

W: 25, W**2: 625, time: 14.099820517003536

W: 26, W**2: 676, time: 14.254673384130001

W: 27, W**2: 729, time: 14.209367334842682

W: 28, W**2: 784, time: 14.332166872918606

W: 29, W**2: 841, time: 14.398249797523022

W: 30, W**2: 900, time: 14.406020753085613

W: 31, W**2: 961, time: 53.21667902171612

W: 32, W**2: 1024, time: 86.8472745642066

W: 33, W**2: 1089, time: 84.1836640611291

W: 34, W**2: 1156, time: 113.77124954015017

W: 35, W**2: 1225, time: 84.53960344195366

W: 36, W**2: 1296, time: 125.90546812862158

W: 37, W**2: 1369, time: 84.77605786174536

W: 38, W**2: 1444, time: 141.37933682650328

W: 39, W**2: 1521, time: 149.50636308640242

```

The performance with `benchmark=True` commented out is even more exciting,

```

W: 20, W**2: 400, time: 17.404177226126194

W: 21, W**2: 441, time: 17.446410842239857

W: 22, W**2: 484, time: 17.630738206207752

W: 23, W**2: 529, time: 17.792406491935253

W: 24, W**2: 576, time: 16.371975652873516

W: 25, W**2: 625, time: 13.557913713157177

W: 26, W**2: 676, time: 13.596143573522568

W: 27, W**2: 729, time: 13.673634268343449

W: 28, W**2: 784, time: 13.687632977962494

W: 29, W**2: 841, time: 13.748884201049805

W: 30, W**2: 900, time: 13.820515014231205

W: 31, W**2: 961, time: 53.05525008589029

W: 32, W**2: 1024, time: 118.49690321832895

W: 33, W**2: 1089, time: 84.05695110559464

W: 34, W**2: 1156, time: 84.2417012900114

W: 35, W**2: 1225, time: 84.5922939479351

W: 36, W**2: 1296, time: 84.85969807952642

W: 37, W**2: 1369, time: 85.06182674318552

W: 38, W**2: 1444, time: 84.93016473948956

W: 39, W**2: 1521, time: 85.2658236399293

```

This is pretty painful, because it means my algorithm is very fast on MNIST, but disproportionately slower on any other dataset, such as CIFAR10.
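The size of the jump can be checked directly from the timings reported above. The sketch below is plain Python with the relevant rows copied from both runs; the helper name `slowdown` and the dictionary names are illustrative and not part of the original benchmark. It compares the measured slowdown between W=30 and W=32 with the slowdown expected if time scaled linearly with `W**2`.

```python
# Timings in ms copied from the two runs in the report, keyed by W.
# Only the rows around the jump are included.
benchmark_on = {
    29: 14.398249797523022,
    30: 14.406020753085613,
    31: 53.21667902171612,
    32: 86.8472745642066,
}
benchmark_off = {
    29: 13.748884201049805,
    30: 13.820515014231205,
    31: 53.05525008589029,
    32: 118.49690321832895,
}


def slowdown(times, w_from=30, w_to=32):
    """Return (measured ratio, ratio expected under linear scaling in W**2)."""
    measured = times[w_to] / times[w_from]
    expected = (w_to ** 2) / (w_from ** 2)
    return measured, expected


for label, times in (("benchmark=True", benchmark_on), ("benchmark off", benchmark_off)):
    measured, expected = slowdown(times)
    print("{}: measured {:.2f}x, expected {:.2f}x".format(label, measured, expected))
```

Linear scaling would predict a slowdown of about 1.14x going from W=30 to W=32, while the measured ratios are roughly 6x with `benchmark=True` and roughly 8.6x without it.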

## Environment

```

PyTorch version: 1.4.0

Is debug build: No

CUDA used to build PyTorch: 10.1

OS: Ubuntu 18.04.3 LTS

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

CMake version: version 3.10.2

Python version: 3.7

Is CUDA available: Yes

CUDA runtime version: 10.1.243

GPU models and configuration:

GPU 0: NVS 510

GPU 1: TITAN V

GPU 2: TITAN V

Nvidia driver version: 418.74

cuDNN version: /usr/lib/x86_64-linux-gnu/libcudnn.so.7.6.5

Versions of relevant libraries:

[pip] numpy==1.17.2

[pip] numpydoc==0.9.1

[pip] torch==1.4.0

[pip] torchvision==0.5.0

[conda] blas 1.0 mkl

[conda] mkl 2019.4 243

[conda] mkl-service 2.3.0 py37he904b0f_0

[conda] mkl_fft 1.0.14 py37ha843d7b_0

[conda] mkl_random 1.1.0 py37hd6b4f25_0

[conda] pytorch 1.3.0 py3.7_cuda10.1.243_cudnn7.6.3_0 pytorch

[conda] torchvision 0.4.1 py37_cu101 pytorch

```

cc @csarofeen @ptrblck @VitalyFedyunin @ngimel

|

module: performance,module: cudnn,module: convolution,triaged

|

low

|

Critical

|

589,599,940 |

rust

|

assert_eq(x, y) is not the same as assert_eq(y, x) because of type inference

|

<!--

Thank you for filing a bug report! 🐛 Please provide a short summary of the bug,

along with any information you feel relevant to replicating the bug.

-->

I tried this code ([playground](https://play.rust-lang.org/?version=stable&mode=debug&edition=2018&gist=8c06ef8af87645f50695bdba65f8a723)):

```rust

fn main() {
    assert_eq!(0_i64, S::zero());
    assert_eq!(S::zero(), 0_i64);
}

struct S;

trait Zeroed<T> {
    fn zero() -> T;
}

impl Zeroed<i16> for S {
    fn zero() -> i16 {
        0
    }
}

impl Zeroed<i64> for S {
    fn zero() -> i64 {
        0
    }
}

```

I expected to see this happen: The program compiles and runs successfully.

Instead, this happened:

```

error[E0282]: type annotations needed
 --> src/main.rs:3:16
  |
3 |     assert_eq!(S::zero(), 0_i64);
  |                ^^^^^^^ cannot infer type for type parameter `T` declared on the trait `Zeroed`

```

Note that the `assert_eq!(0_i64, S::zero());` works as expected.

### Meta

<!--

If you're using the stable version of the compiler, you should also check if the

bug also exists in the beta or nightly versions.

-->

`rustc --version --verbose`:

```

rustc 1.42.0 (b8cedc004 2020-03-09)

binary: rustc

commit-hash: b8cedc00407a4c56a3bda1ed605c6fc166655447

commit-date: 2020-03-09

host: x86_64-unknown-linux-gnu

release: 1.42.0

LLVM version: 9.0

```

The same error is present on nightly:

```

rustc 1.44.0-nightly (2fbb07525 2020-03-26)

binary: rustc

commit-hash: 2fbb07525e2f07a815e780a4268b11916248b5a9

commit-date: 2020-03-26

host: x86_64-unknown-linux-gnu

release: 1.44.0-nightly

LLVM version: 9.0

```

|

T-lang,A-inference,C-bug

|

low

|

Critical

|

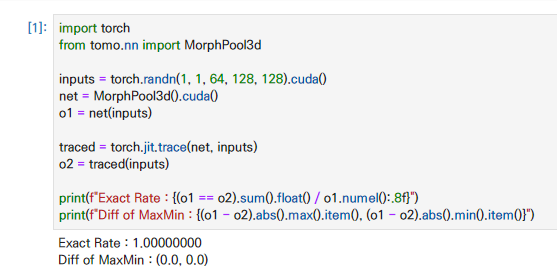

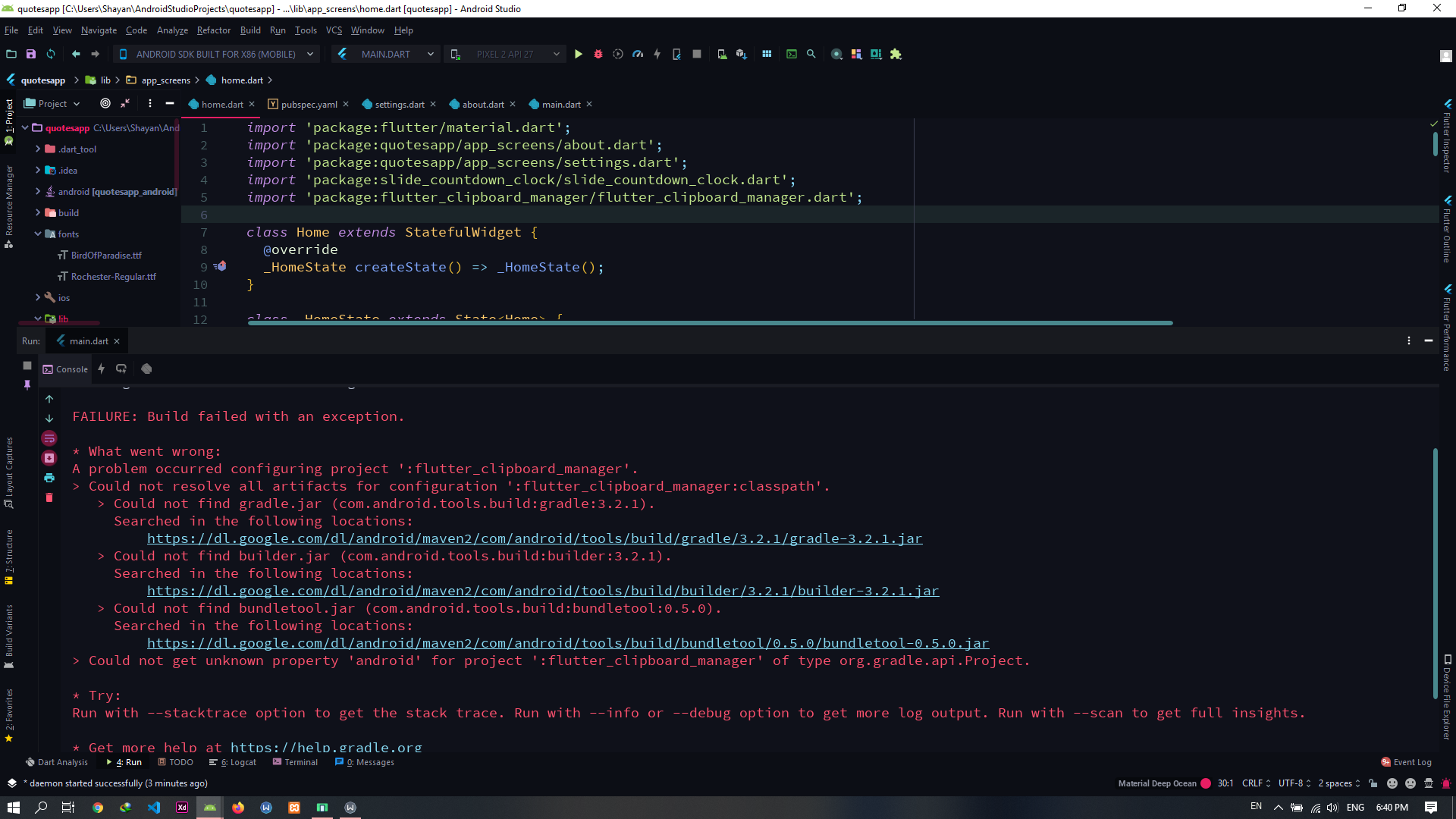

589,621,146 |

pytorch

|

Could not find any similar ops to "foo..." in the `Libtorch`

|

## 🐛 Bug

I want to trace a model with `torch.jit.trace` in Python and run the forward pass on tensors in C++.

In C++, I get the following error:

```

terminate called after throwing an instance of 'torch::jit::script::ErrorReport'

what():

Unknown builtin op: tomo::morph_pool.

Could not find any similar ops to tomo::morph_pool. This op may not exist or may not be currently supported in TorchScript.

....

Serialized File "code/__torch__/torch/nn/modules/module/___torch_mangle_162.py", line 9

argument_1: Tensor) -> Tensor:

_0 = self.morph

x_min_morph = ops.tomo.morph_pool(argument_1, _0)

~~~~~~~~~~~~~~~~~~~ <--- HERE

x, _1 = torch.min(x_min_morph, 2, False)

x_max_morph = ops.tomo.morph_pool(x, _0)

[1] 57316 abort (core dumped) ./forward

```

In Python, however, it works as expected.

## To Reproduce

I'm using a custom extension library structured like `torchvision`.

I registered the operators as follows:

```

static auto registry =
    torch::RegisterOperators()
        .op("tomo::morph_pool", &morph_pool)
        .op("tomo::roi_pool_3d", &roi_pool_3d)
        .op("tomo::roi_align_3d", &roi_align_3d)
        .op("tomo::nms_3d", &nms_3d);

```

And in `setup.py`:

```

def get_extensions():
    this_dir = os.path.dirname(os.path.abspath(__file__))
    extensions_dir = os.path.join(this_dir, "csrc")

    main_file = [os.path.join(extensions_dir, "vision.cpp")]
    source_cpu = glob.glob(os.path.join(extensions_dir, "**", "*.cpp"))
    source_cuda = glob.glob(os.path.join(extensions_dir, "**", "*.cu"))

    sources = main_file + source_cpu
    extension = CppExtension

    extra_compile_args = {"cxx": []}
    define_macros = []

    if (torch.cuda.is_available() and CUDA_HOME is not None) or os.getenv("FORCE_CUDA", "0") == "1":
        extension = CUDAExtension
        sources += source_cuda
        define_macros += [("WITH_CUDA", None)]
        extra_compile_args["nvcc"] = [
            "-DCUDA_HAS_FP16=1",
            "-D__CUDA_NO_HALF_OPERATORS__",
            "-D__CUDA_NO_HALF_CONVERSIONS__",
            "-D__CUDA_NO_HALF2_OPERATORS__",
        ]

        # It's better if pytorch can do this by default ..
        CC = os.environ.get("CC", None)
        if CC is not None:
            extra_compile_args["nvcc"].append("-ccbin={}".format(CC))

    include_dirs = [extensions_dir]

    ext_modules = [
        extension(
            "tomo._C",
            sources,
            include_dirs=include_dirs,
            define_macros=define_macros,
            extra_compile_args=extra_compile_args,
        )
    ]

    return ext_modules

cmdclass={"build_ext": BuildExtension.with_options(no_python_abi_suffix=True)}

```

## Environment

I tried two versions of libtorch, `1.4.0` and the latest:

```

➜ cat libtorch/build-version

1.6.0.dev20200328+cu101

```

```

Collecting environment information...

PyTorch version: 1.4.0

Is debug build: No

CUDA used to build PyTorch: 10.1

OS: Ubuntu 16.04.6 LTS

GCC version: (Ubuntu 8.3.0-16ubuntu3~16.04) 8.3.0

CMake version: version 3.11.0

Python version: 3.7

Is CUDA available: Yes

CUDA runtime version: 10.1.243

GPU models and configuration:

GPU 0: Tesla V100-SXM2-32GB

GPU 1: Tesla V100-SXM2-32GB

GPU 2: Tesla V100-SXM2-32GB

GPU 3: Tesla V100-SXM2-32GB

Nvidia driver version: 418.87.01

cuDNN version: /usr/local/cuda-10.1/targets/x86_64-linux/lib/libcudnn.so.7.6.4

Versions of relevant libraries:

[pip3] numpy==1.17.4

[pip3] pytorch-sphinx-theme==0.0.24

[pip3] torch==1.4.0

[pip3] torchsummary==1.5.1

[pip3] torchvision==0.5.0

[conda] Could not collect

```

## Additional context

<!-- Add any other context about the problem here. -->

cc @suo

|

triage review,oncall: jit,triaged

|

low

|

Critical

|

589,634,897 |

pytorch

|

Randomly error reports

|

## ❓ Questions and Help

### Please note that this issue tracker is not a help form and this issue will be closed.

The same code sometimes runs fine and sometimes reports strange errors.

*** Error in `python': free(): corrupted unsorted chunks: 0x0000559e5b12ec10 ***

======= Backtrace: =========

/lib/x86_64-linux-gnu/libc.so.6(+0x777e5)[0x7fc66db577e5]

/lib/x86_64-linux-gnu/libc.so.6(+0x8037a)[0x7fc66db6037a]

/lib/x86_64-linux-gnu/libc.so.6(+0x83409)[0x7fc66db63409]

/lib/x86_64-linux-gnu/libc.so.6(realloc+0x179)[0x7fc66db64839]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(+0x2266ab)[0x7fc5db03e6ab]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(+0x30fab1)[0x7fc5db127ab1]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(+0x30fd0c)[0x7fc5db127d0c]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(+0x31b221)[0x7fc5db133221]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(+0x31d308)[0x7fc5db135308]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(+0x31d8b8)[0x7fc5db1358b8]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(+0x10007d)[0x7fc5daf1807d]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(+0x100ef2)[0x7fc5daf18ef2]

/usr/lib/x86_64-linux-gnu/libcuda.so.1(cuMemAlloc_v2+0x60)[0x7fc5db086b40]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libcudart-1b201d85.so.10.1(+0x36cb3)[0x7fc65ff20cb3]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libcudart-1b201d85.so.10.1(+0x1531b)[0x7fc65feff31b]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libcudart-1b201d85.so.10.1(cudaMalloc+0x6c)[0x7fc65ff3182c]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libc10_cuda.so(+0x1c69e)[0x7fc66275869e]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libc10_cuda.so(+0x1de6e)[0x7fc662759e6e]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(THCStorage_resize+0xa3)[0x7fc5e43e2713]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(_ZN2at6native18empty_strided_cudaEN3c108ArrayRefIlEES3_RKNS1_13TensorOptionsE+0x616)[0x7fc5e5893b96]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(+0x4224a8a)[0x7fc5e42daa8a]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(_ZN2at14TensorIterator16allocate_outputsEv+0x407)[0x7fc5e1d0a057]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(_ZN2at14TensorIterator5buildEv+0x54)[0x7fc5e1d0f084]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(_ZN2at14TensorIterator9binary_opERNS_6TensorERKS1_S4_b+0x2a7)[0x7fc5e1d0f867]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(_ZN2at6native3addERKNS_6TensorES3_N3c106ScalarE+0x4e)[0x7fc5e1a8f6ee]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(+0x4235d35)[0x7fc5e42ebd35]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(+0x1e67d3b)[0x7fc5e1f1dd3b]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(+0x3bb84b8)[0x7fc5e3c6e4b8]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch.so(+0x1e67d3b)[0x7fc5e1f1dd3b]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch_python.so(+0x1fcca5)[0x7fc6671a1ca5]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch_python.so(_ZNK2at6Tensor3addERKS0_N3c106ScalarE+0xee)[0x7fc6671a28be]

/opt/miniconda/lib/python3.6/site-packages/torch/lib/libtorch_python.so(+0x1c49ff)[0x7fc6671699ff]

python(_PyCFunction_FastCallDict+0x144)[0x559ac46231e4]

python(+0x19ee7c)[0x559ac46b6e7c]

python(_PyEval_EvalFrameDefault+0x30a)[0x559ac46d816a]

python(+0x197c86)[0x559ac46afc86]

python(+0x198cd1)[0x559ac46b0cd1]

python(+0x19ef55)[0x559ac46b6f55]

python(_PyEval_EvalFrameDefault+0x30a)[0x559ac46d816a]

python(_PyFunction_FastCallDict+0x11b)[0x559ac46b110b]

python(_PyObject_FastCallDict+0x26f)[0x559ac46235af]

python(_PyObject_Call_Prepend+0x63)[0x559ac4627fe3]

python(PyObject_Call+0x3e)[0x559ac4622ffe]

python(_PyEval_EvalFrameDefault+0x1a34)[0x559ac46d9894]

python(+0x197c86)[0x559ac46afc86]

python(_PyFunction_FastCallDict+0x1bf)[0x559ac46b11af]

python(_PyObject_FastCallDict+0x26f)[0x559ac46235af]

python(_PyObject_Call_Prepend+0x63)[0x559ac4627fe3]

python(PyObject_Call+0x3e)[0x559ac4622ffe]

python(+0x1694f7)[0x559ac46814f7]

python(_PyObject_FastCallDict+0x8b)[0x559ac46233cb]

python(+0x19efce)[0x559ac46b6fce]

python(_PyEval_EvalFrameDefault+0x30a)[0x559ac46d816a]

python(_PyFunction_FastCallDict+0x11b)[0x559ac46b110b]

python(_PyObject_FastCallDict+0x26f)[0x559ac46235af]

python(_PyObject_Call_Prepend+0x63)[0x559ac4627fe3]

python(PyObject_Call+0x3e)[0x559ac4622ffe]

python(_PyEval_EvalFrameDefault+0x1a34)[0x559ac46d9894]

python(+0x197c86)[0x559ac46afc86]

python(_PyFunction_FastCallDict+0x1bf)[0x559ac46b11af]

python(_PyObject_FastCallDict+0x26f)[0x559ac46235af]

python(_PyObject_Call_Prepend+0x63)[0x559ac4627fe3]

python(PyObject_Call+0x3e)[0x559ac4622ffe]

======= Memory map: ========

200000000-200200000 ---p 00000000 00:00 0

200200000-200400000 rw-s 00000000 00:06 455 /dev/nvidiactl

200400000-202400000 rw-s 00000000 00:06 455 /dev/nvidiactl

202400000-205400000 rw-s 00000000 00:06 455 /dev/nvidiactl

205400000-206000000 ---p 00000000 00:00 0

206000000-206200000 rw-s 00000000 00:06 455 /dev/nvidiactl

206200000-206400000 rw-s 00000000 00:06 455 /dev/nvidiactl

206400000-206600000 rw-s 206400000 00:06 461 /dev/nvidia-uvm

206600000-206800000 rw-s 00000000 00:06 455 /dev/nvidiactl

206800000-206a00000 ---p 00000000 00:00 0

206a00000-206c00000 rw-s 00000000 00:06 455 /dev/nvidiactl

206c00000-207000000 ---p 00000000 00:00 0

207000000-207200000 rw-s 00000000 00:06 455 /dev/nvidiactl

207200000-209200000 rw-s 00000000 00:06 455 /dev/nvidiactl

209200000-20c200000 rw-s 00000000 00:06 455 /dev/nvidiactl

20c200000-20ce00000 ---p 00000000 00:00 0

20ce00000-20d000000 rw-s 00000000 00:06 455 /dev/nvidiactl

20d000000-20d200000 rw-s 00000000 00:06 455 /dev/nvidiactl

20d200000-20d400000 rw-s 20d200000 00:06 461 /dev/nvidia-uvm

20d400000-20d600000 rw-s 00000000 00:06 455 /dev/nvidiactl

20d600000-20d800000 ---p 00000000 00:00 0

20d800000-20da00000 rw-s 00000000 00:06 455 /dev/nvidiactl

20da00000-20de00000 ---p 00000000 00:00 0

20de00000-20e000000 rw-s 00000000 00:06 455 /dev/nvidiactl

20e000000-210000000 rw-s 00000000 00:06 455 /dev/nvidiactl

210000000-213000000 rw-s 00000000 00:06 455 /dev/nvidiactl

213000000-213c00000 ---p 00000000 00:00 0

213c00000-213e00000 rw-s 00000000 00:06 455 /dev/nvidiactl

213e00000-214000000 rw-s 00000000 00:06 455 /dev/nvidiactl

214000000-214200000 rw-s 214000000 00:06 461 /dev/nvidia-uvm

214200000-214400000 rw-s 00000000 00:06 455 /dev/nvidiactl

214400000-214600000 ---p 00000000 00:00 0

214600000-214800000 rw-s 00000000 00:06 455 /dev/nvidiactl

214800000-214c00000 ---p 00000000 00:00 0

214c00000-214e00000 rw-s 00000000 00:06 455 /dev/nvidiactl

214e00000-216e00000 rw-s 00000000 00:06 455 /dev/nvidiactl

216e00000-219e00000 rw-s 00000000 00:06 455 /dev/nvidiactl

219e00000-21aa00000 ---p 00000000 00:00 0

21aa00000-21ac00000 rw-s 00000000 00:06 455 /dev/nvidiactl

21ac00000-21ae00000 rw-s 00000000 00:06 455 /dev/nvidiactl

21ae00000-21b000000 rw-s 21ae00000 00:06 461 /dev/nvidia-uvm

21b000000-21b200000 rw-s 00000000 00:06 455 /dev/nvidiactl

21b200000-21b400000 ---p 00000000 00:00 0

21b400000-21b600000 rw-s 00000000 00:06 455 /dev/nvidiactl

21b600000-600200000 ---p 00000000 00:00 0

10000000000-10410000000 ---p 00000000 00:00 0

559ac4518000-559ac47d6000 r-xp 00000000 08:11 35389532 /opt/miniconda/bin/python3.6

559ac49d5000-559ac49d8000 r--p 002bd000 08:11 35389532 /opt/miniconda/bin/python3.6

559ac49d8000-559ac4a3b000 rw-p 002c0000 08:11 35389532 /opt/miniconda/bin/python3.6

559ac4a3b000-559ac4a6c000 rw-p 00000000 00:00 0

559ac6596000-559e78b36000 rw-p 00000000 00:00 0 [heap]

7fc152000000-7fc21ea00000 ---p 00000000 00:00 0

7fc21ea00000-7fc21ec00000 rw-s 00000000 00:05 123097083 /dev/zero (deleted)

7fc21ec00000-7fc230a00000 ---p 00000000 00:00 0

7fc230a00000-7fc230c00000 rw-s 00000000 00:05 123085684 /dev/zero (deleted)

7fc230c00000-7fc242800000 ---p 00000000 00:00 0

7fc242800000-7fc242a00000 rw-s 00000000 00:05 123151569 /dev/zero (deleted)

7fc242a00000-7fc244000000 ---p 00000000 00:00 0

7fc244000000-7fc244046000 rw-p 00000000 00:00 0

7fc244046000-7fc248000000 ---p 00000000 00:00 0

7fc24a000000-7fc25a000000 ---p 00000000 00:00 0

7fc25a000000-7fc25fb8e000 rw-s 00000000 00:05 123091958 /dev/zero (deleted)

7fc25fb8e000-7fc260000000 ---p 00000000 00:00 0

7fc260000000-7fc265b8e000 rw-s 00000000 00:05 123091957 /dev/zero (deleted)

7fc265b8e000-7fc266000000 ---p 00000000 00:00 0

7fc266000000-7fc26bb8e000 rw-s 00000000 00:05 123091954 /dev/zero (deleted)

7fc26bb8e000-7fc26c000000 ---p 00000000 00:00 0

7fc26c000000-7fc271b8e000 rw-s 00000000 00:05 123091953 /dev/zero (deleted)

7fc271b8e000-7fc272000000 ---p 00000000 00:00 0

7fc272000000-7fc277b8e000 rw-s 00000000 00:05 123091950 /dev/zero (deleted)

7fc277b8e000-7fc278000000 ---p 00000000 00:00 0

7fc278000000-7fc27db8e000 rw-s 00000000 00:05 123091949 /dev/zero (deleted)

7fc27db8e000-7fc27e000000 ---p 00000000 00:00 0

7fc27e000000-7fc283b8e000 rw-s 00000000 00:05 123091946 /dev/zero (deleted)

7fc283b8e000-7fc284000000 ---p 00000000 00:00 0

7fc284000000-7fc289b8e000 rw-s 00000000 00:05 123091945 /dev/zero (deleted)

7fc289b8e000-7fc28a000000 ---p 00000000 00:00 0

7fc28a000000-7fc28fb8e000 rw-s 00000000 00:05 123091942 /dev/zero (deleted)

7fc28fb8e000-7fc290000000 ---p 00000000 00:00 0

7fc290000000-7fc295b8e000 rw-s 00000000 00:05 123091941 /dev/zero (deleted)

7fc295b8e000-7fc296000000 ---p 00000000 00:00 0

7fc296000000-7fc29bb8e000 rw-s 00000000 00:05 123091938 /dev/zero (deleted)

7fc29bb8e000-7fc29c000000 ---p 00000000 00:00 0

7fc29c000000-7fc2a1b8e000 rw-s 00000000 00:05 123091937 /dev/zero (deleted)

7fc2a1b8e000-7fc2a2000000 ---p 00000000 00:00 0

7fc2a2000000-7fc2a7b8e000 rw-s 00000000 00:05 123097080 /dev/zero (deleted)

7fc2a7b8e000-7fc2a8000000 ---p 00000000 00:00 0

7fc2a8000000-7fc2a8049000 rw-p 00000000 00:00 0

7fc2a8049000-7fc2ac000000 ---p 00000000 00:00 0

7fc2ac000000-7fc2ac047000 rw-p 00000000 00:00 0

7fc2ac047000-7fc2b0000000 ---p 00000000 00:00 0

7fc2b0000000-7fc2b004c000 rw-p 00000000 00:00 0

7fc2b004c000-7fc2b4000000 ---p 00000000 00:00 0

7fc2b6000000-7fc2bbb8e000 rw-s 00000000 00:05 123097075 /dev/zero (deleted)

7fc2bbb8e000-7fc2bbe00000 ---p 00000000 00:00 0

7fc2bbe00000-7fc2bc000000 rw-s 00000000 00:05 123153229 /dev/zero (deleted)

7fc2be000000-7fc2c3b8e000 rw-s 00000000 00:05 123097079 /dev/zero (deleted)

7fc2c3b8e000-7fc2c4000000 ---p 00000000 00:00 0

7fc2c6000000-7fc2ca000000 ---p 00000000 00:00 0

7fc2ca000000-7fc2cfb8e000 rw-s 00000000 00:05 123097076 /dev/zero (deleted)

7fc2cfb8e000-7fc2d0000000 ---p 00000000 00:00 0

7fc2d0000000-7fc2d5b8e000 rw-s 00000000 00:05 123097072 /dev/zero (deleted)

7fc2d5b8e000-7fc2d5e00000 ---p 00000000 00:00 0

7fc2d5e00000-7fc2d6000000 rw-s 00000000 00:05 123153225 /dev/zero (deleted)

7fc2d6000000-7fc2dbb8e000 rw-s 00000000 00:05 123097071 /dev/zero (deleted)

7fc2dbb8e000-7fc2dbc00000 ---p 00000000 00:00 0

7fc2dbc00000-7fc2dbe00000 rw-s 00000000 00:06 455 /dev/nvidiactl

7fc2dbe00000-7fc2dc000000 rw-s 00000000 00:05 123153226 /dev/zero (deleted)

7fc2dc000000-7fc2dc021000 rw-p 00000000 00:00 0

7fc2dc021000-7fc2e0000000 ---p 00000000 00:00 0

7fc2e2000000-7fc2e7b8e000 rw-s 00000000 00:05 123097068 /dev/zero (deleted)

7fc2e7b8e000-7fc2e8000000 ---p 00000000 00:00 0

7fc2e8000000-7fc2edb8e000 rw-s 00000000 00:05 123097067 /dev/zero (deleted)

7fc2edb8e000-7fc2edc00000 ---p 00000000 00:00 0

7fc2edc00000-7fc2ede00000 rw-s 00000000 00:06 455 /dev/nvidiactl

7fc2ede00000-7fc2ee000000 ---p 00000000 00:00 0

7fc2ee000000-7fc2f3b8e000 rw-s 00000000 00:05 123097064 /dev/zero (deleted)

7fc2f3b8e000-7fc2f3e00000 ---p 00000000 00:00 0

7fc2f3e00000-7fc2f4000000 rw-s 00000000 00:05 123153228 /dev/zero (deleted)

7fc2f4000000-7fc2f9b8e000 rw-s 00000000 00:05 123097063 /dev/zero (deleted)

7fc2f9b8e000-7fc2f9c00000 ---p 00000000 00:00 0

7fc2f9c00000-7fc2f9ed6000 rw-s 00000000 00:06 455 /dev/nvidiactl

7fc2f9ed6000-7fc30a000000 ---p 00000000 00:00 0

7fc30a000000-7fc30fb8e000 rw-s 00000000 00:05 123097056 /dev/zero (deleted)

7fc30fb8e000-7fc310000000 ---p 00000000 00:00 0

7fc310000000-7fc315b8e000 rw-s 00000000 00:05 123097055 /dev/zero (deleted)

7fc315b8e000-7fc316000000 ---p 00000000 00:00 0

7fc316000000-7fc31bb8e000 rw-s 00000000 00:05 123097052 /dev/zero (deleted)

7fc31bb8e000-7fc31c000000 ---p 00000000 00:00 0

7fc31c000000-7fc321b8e000 rw-s 00000000 00:05 123097051 /dev/zero (deleted)

7fc321b8e000-7fc322000000 ---p 00000000 00:00 0

7fc322000000-7fc327b8e000 rw-s 00000000 00:05 123097048 /dev/zero (deleted)

7fc327b8e000-7fc328000000 ---p 00000000 00:00 0

7fc328000000-7fc32db8e000 rw-s 00000000 00:05 123097047 /dev/zero (deleted)

7fc32db8e000-7fc32e000000 ---p 00000000 00:00 0

7fc32e000000-7fc333b8e000 rw-s 00000000 00:05 123097044 /dev/zero (deleted)

7fc333b8e000-7fc334000000 ---p 00000000 00:00 0

7fc334000000-7fc339b8e000 rw-s 00000000 00:05 123097043 /dev/zero (deleted)

7fc339b8e000-7fc33a000000 ---p 00000000 00:00 0

7fc33a000000-7fc33fb8e000 rw-s 00000000 00:05 123097040 /dev/zero (deleted)

7fc33fb8e000-7fc340000000 ---p 00000000 00:00 0

7fc340000000-7fc345b8e000 rw-s 00000000 00:05 123097039 /dev/zero (deleted)

7fc345b8e000-7fc346000000 ---p 00000000 00:00 0

7fc346000000-7fc34bb8e000 rw-s 00000000 00:05 123097036 /dev/zero (deleted)

7fc34bb8e000-7fc34c000000 ---p 00000000 00:00 0

7fc34c000000-7fc351b8e000 rw-s 00000000 00:05 123097035 /dev/zero (deleted)

7fc351b8e000-7fc352000000 ---p 00000000 00:00 0

7fc352000000-7fc357b8e000 rw-s 00000000 00:05 123097032 /dev/zero (deleted)

7fc357b8e000-7fc358000000 ---p 00000000 00:00 0

7fc358000000-7fc35db8e000 rw-s 00000000 00:05 123097031 /dev/zero (deleted)

7fc35db8e000-7fc35e000000 ---p 00000000 00:00 0

7fc35e000000-7fc363b8e000 rw-s 00000000 00:05 123097028 /dev/zero (deleted)

7fc363b8e000-7fc364000000 ---p 00000000 00:00 0

7fc364000000-7fc369b8e000 rw-s 00000000 00:05 123097027 /dev/zero (deleted)

7fc369b8e000-7fc36a000000 ---p 00000000 00:00 0

7fc36a000000-7fc36fb8e000 rw-s 00000000 00:05 123097024 /dev/zero (deleted)

7fc36fb8e000-7fc370000000 ---p 00000000 00:00 0

7fc370000000-7fc375b8e000 rw-s 00000000 00:05 123097023 /dev/zero (deleted)

7fc375b8e000-7fc376000000 ---p 00000000 00:00 0

7fc378000000-7fc37db8e000 rw-s 00000000 00:05 123097060 /dev/zero (deleted)

7fc37db8e000-7fc37e000000 ---p 00000000 00:00 0

7fc37e000000-7fc383b8e000 rw-s 00000000 00:05 123097059 /dev/zero (deleted)

7fc383b8e000-7fc384000000 ---p 00000000 00:00 0

7fc384000000-7fc389b8e000 rw-s 00000000 00:05 123097016 /dev/zero (deleted)

7fc389b8e000-7fc389e00000 ---p 00000000 00:00 0

7fc389e00000-7fc38a000000 rw-s 00000000 00:05 123153222 /dev/zero (deleted)

7fc38c000000-7fc391b8e000 rw-s 00000000 00:05 123097020 /dev/zero (deleted)

7fc391b8e000-7fc392000000 ---p 00000000 00:00 0

7fc392000000-7fc397b8e000 rw-s 00000000 00:05 123097019 /dev/zero (deleted)

7fc397b8e000-7fc398000000 ---p 00000000 00:00 0

7fc398000000-7fc39db8e000 rw-s 00000000 00:05 123097015 /dev/zero (deleted)

7fc39db8e000-7fc3a2000000 ---p 00000000 00:00 0

7fc3a2000000-7fc3a7b8e000 rw-s 00000000 00:05 123097012 /dev/zero (deleted)

7fc3a7b8e000-7fc3a7c00000 ---p 00000000 00:00 0

7fc3a7c00000-7fc3a7e00000 rw-s 00000000 00:05 123153219 /dev/zero (deleted)

7fc3a7e00000-7fc3a8000000 ---p 00000000 00:00 0

7fc3a8000000-7fc3adb8e000 rw-s 00000000 00:05 123097011 /dev/zero (deleted)

7fc3adb8e000-7fc3ade00000 ---p 00000000 00:00 0

7fc3ade00000-7fc3ae000000 rw-s 00000000 00:05 123153218 /dev/zero (deleted)

7fc3ae000000-7fc3b3b8e000 rw-s 00000000 00:05 123097008 /dev/zero (deleted)

7fc3b3b8e000-7fc3b3e00000 ---p 00000000 00:00 0

7fc3b3e00000-7fc3b4000000 rw-s 00000000 00:06 455 /dev/nvidiactl

7fc3b4000000-7fc3b9b8e000 rw-s 00000000 00:05 123097007 /dev/zero (deleted)

7fc3b9b8e000-7fc3b9c00000 ---p 00000000 00:00 0

7fc3b9c00000-7fc3b9e00000 rw-s 00000000 00:06 455 /dev/nvidiactl

7fc3b9e00000-7fc3ba000000 ---p 00000000 00:00 0

7fc3ba000000-7fc3bfb8e000 rw-s 00000000 00:05 123097004 /dev/zero (deleted)

7fc3bfb8e000-7fc3bfc00000 ---p 00000000 00:00 0

7fc3bfc00000-7fc3bfe00000 rw-s 00000000 00:05 123153221 /dev/zero (deleted)

7fc3bfe00000-7fc3c0000000 ---p 00000000 00:00 0

7fc3c0000000-7fc3c5b8e000 rw-s 00000000 00:05 123097003 /dev/zero (deleted)

7fc3c5b8e000-7fc3c6000000 ---p 00000000 00:00 0

7fc3c6000000-7fc3cbb8e000 rw-s 00000000 00:05 123097000 /dev/zero (deleted)

7fc3cbb8e000-7fc3cbc00000 ---p 00000000 00:00 0

7fc3cbc00000-7fc3cbed6000 rw-s 00000000 00:06 455 /dev/nvidiactl

7fc3cbed6000-7fc3cc000000 ---p 00000000 00:00 0

7fc3cc000000-7fc3d1b8e000 rw-s 00000000 00:05 123096999 /dev/zero (deleted)

7fc3d1b8e000-7fc3e2000000 ---p 00000000 00:00 0

7fc3e2000000-7fc3e7b8e000 rw-s 00000000 00:05 123096992 /dev/zero (deleted)

7fc3e7b8e000-7fc3e8000000 ---p 00000000 00:00 0

7fc3e8000000-7fc3edb8e000 rw-s 00000000 00:05 123096991 /dev/zero (deleted)

7fc3edb8e000-7fc3ee000000 ---p 00000000 00:00 0

7fc3ee000000-7fc3f3b8e000 rw-s 00000000 00:05 123096988 /dev/zero (deleted)

7fc3f3b8e000-7fc3f4000000 ---p 00000000 00:00 0

7fc3f4000000-7fc3f9b8e000 rw-s 00000000 00:05 123096987 /dev/zero (deleted)

7fc3f9b8e000-7fc3fa000000 ---p 00000000 00:00 0

7fc3fa000000-7fc3ffb8e000 rw-s 00000000 00:05 123096984 /dev/zero (deleted)

7fc3ffb8e000-7fc400000000 ---p 00000000 00:00 0

7fc400000000-7fc405b8e000 rw-s 00000000 00:05 123096983 /dev/zero (deleted)

7fc405b8e000-7fc406000000 ---p 00000000 00:00 0

7fc406000000-7fc40bb8e000 rw-s 00000000 00:05 123096980 /dev/zero (deleted)

7fc40bb8e000-7fc40c000000 ---p 00000000 00:00 0

7fc40c000000-7fc411b8e000 rw-s 00000000 00:05 123096979 /dev/zero (deleted)

7fc411b8e000-7fc412000000 ---p 00000000 00:00 0

7fc412000000-7fc417b8e000 rw-s 00000000 00:05 123096976 /dev/zero (deleted)

7fc417b8e000-7fc418000000 ---p 00000000 00:00 0

7fc418000000-7fc41db8e000 rw-s 00000000 00:05 123096975 /dev/zero (deleted)

7fc41db8e000-7fc41e000000 ---p 00000000 00:00 0

7fc41e000000-7fc423b8e000 rw-s 00000000 00:05 123096972 /dev/zero (deleted)

7fc423b8e000-7fc424000000 ---p 00000000 00:00 0

7fc424000000-7fc429b8e000 rw-s 00000000 00:05 123096971 /dev/zero (deleted)

7fc429b8e000-7fc42a000000 ---p 00000000 00:00 0

7fc42a000000-7fc42fb8e000 rw-s 00000000 00:05 123096968 /dev/zero (deleted)

7fc42fb8e000-7fc430000000 ---p 00000000 00:00 0

7fc430000000-7fc435b8e000 rw-s 00000000 00:05 123096967 /dev/zero (deleted)

7fc435b8e000-7fc436000000 ---p 00000000 00:00 0

7fc436000000-7fc43bb8e000 rw-s 00000000 00:05 123096964 /dev/zero (deleted)

7fc43bb8e000-7fc43c000000 ---p 00000000 00:00 0

7fc43c000000-7fc441b8e000 rw-s 00000000 00:05 123096963 /dev/zero (deleted)

7fc441b8e000-7fc442000000 ---p 00000000 00:00 0

7fc442000000-7fc447b8e000 rw-s 00000000 00:05 123096960 /dev/zero (deleted)

7fc447b8e000-7fc448000000 ---p 00000000 00:00 0

7fc448000000-7fc44db8e000 rw-s 00000000 00:05 123096959 /dev/zero (deleted)

7fc44db8e000-7fc44e000000 ---p 00000000 00:00 0

7fc44e000000-7fc453b8e000 rw-s 00000000 00:05 123085673 /dev/zero (deleted)

7fc453b8e000-7fc454000000 ---p 00000000 00:00 0

7fc454000000-7fc459b8e000 rw-s 00000000 00:05 123085672 /dev/zero (deleted)

7fc459b8e000-7fc45a000000 ---p 00000000 00:00 0

7fc45a000000-7fc45fb8e000 rw-s 00000000 00:05 123085669 /dev/zero (deleted)

7fc45fb8e000-7fc460000000 ---p 00000000 00:00 0