| id | repo | title | body | labels | priority | severity |

|---|---|---|---|---|---|---|

424,588,793

|

TypeScript

|

Symbol definition improvement

|


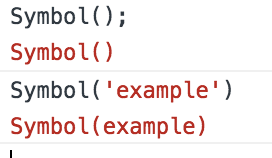

## Search Terms


symbol in:title, symbol improvement, symbol description definition

## Suggestion

As I use `symbol` more and more in my projects, I fill in the optional `Symbol` description to make debugging easier in development mode. Then, for production, I strip all descriptions during the bundling process:

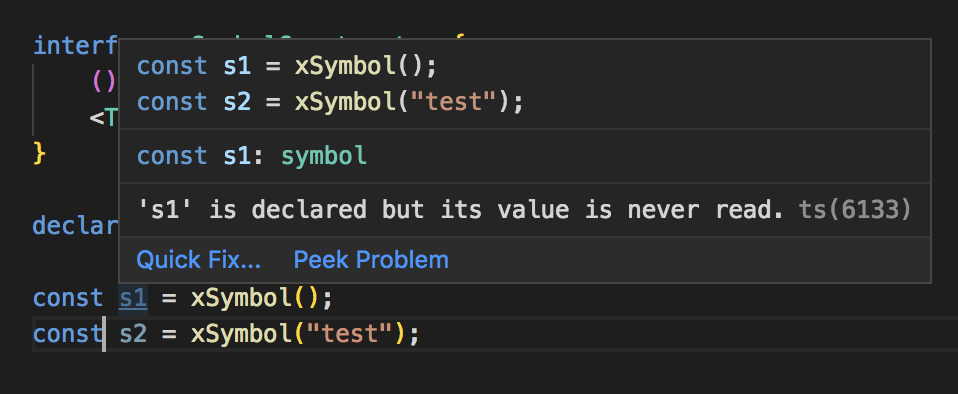

So I was thinking that it could be really useful to have the same approach for TypeScript - [playground](https://www.typescriptlang.org/play/#src=type%20xsymbol%3CT%3E%20%3D%20%7B%7D%3B%0D%0A%0D%0Ainterface%20xSymbolConstructor%20%7B%0D%0A%20%20%20%20()%3A%20symbol%3B%0D%0A%20%20%20%20%3CT%20extends%20string%20%7C%20number%3E(description%3A%20T)%3A%20xsymbol%3CT%3E%3B%0D%0A%7D%0D%0A%0D%0Avar%20xSymbol%3A%20xSymbolConstructor%3B%0D%0A%0D%0Avar%20s1%20%3D%20xSymbol()%3B%0D%0Avar%20s2%20%3D%20xSymbol(%22test%22)%3B):

Imagine that instead of `xsymbol<"test">` we had a "native" `symbol<"test">` (or `symbol("test")`, to match how DevTools displays it).

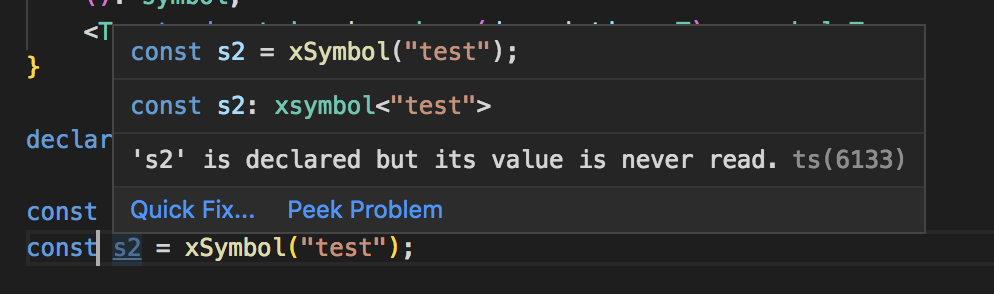

Extending this approach further, it might also help improve type inference.

Given this code:

```ts
const foo = {
    type: 'foo' as 'foo',
    foo: ''
};

const bar = {
    type: 'bar' as 'bar',
    bar: ''
};

type FooBarAction = typeof foo | typeof bar;

function getType<T>(input: {type: T}): T {
    return input.type;
}

function foobar(action: FooBarAction) {
    switch (action.type) {
        case getType(foo):
            action.foo;
            break;
        case getType(bar):
            action.bar;
            break;
    }
}
```

Here, depending on the value of the `type` property, `action` is correctly narrowed, so the `foo` or `bar` property is available.

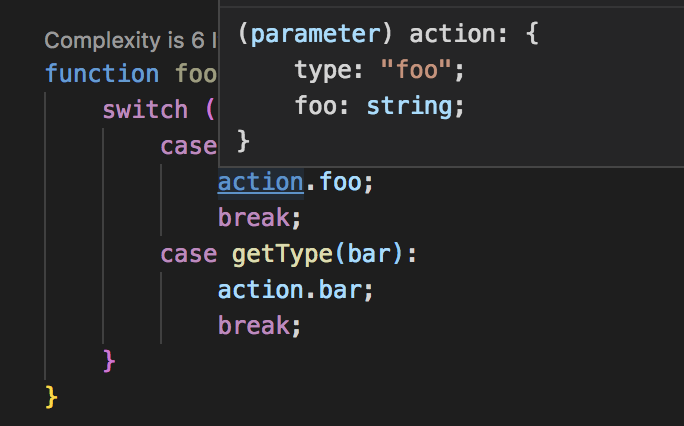

But if I replace them with `symbol`s:

```ts

const sFoo = {

type: Symbol('sfoo'),

sfoo: ''

};

const sBar = {

type: Symbol('sbar'),

sbar: ''

};

type SFooBarAction = typeof sFoo | typeof sBar;

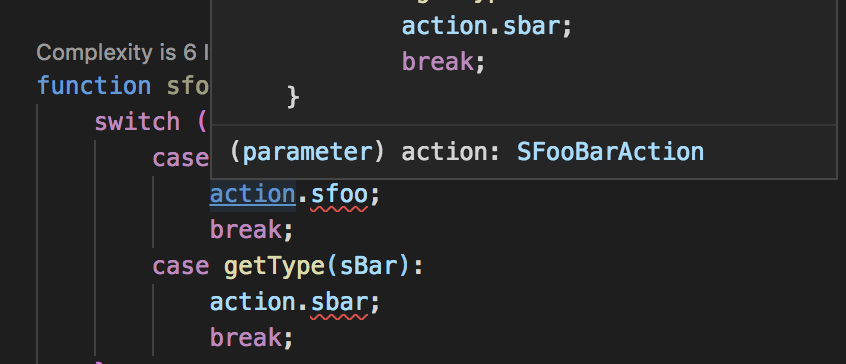

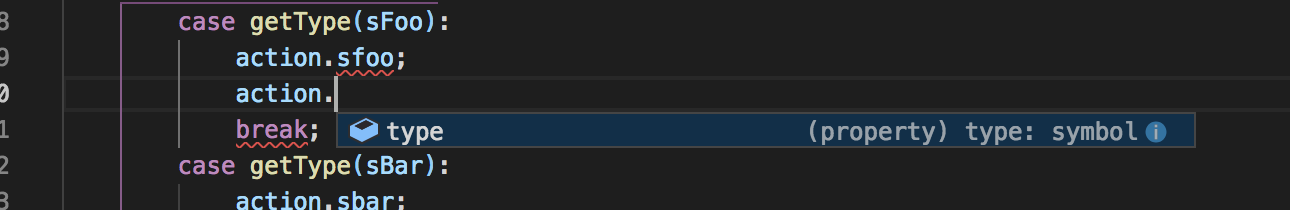

function sfoobar(action: SFooBarAction) {

switch (action.type) {

case getType(sFoo):

action.sfoo;

break;

case getType(sBar):

action.sbar;

break;

}

}

```

Here TypeScript cannot narrow my `action` variable. It keeps reporting the `SFooBarAction` type, so only the `type` property is available.

From PR #30196, I understand that changing how `symbol` works in the core of TypeScript is not easy. It might still help to use the optional `description` of `Symbol` as a descriptor in the type, so that each symbol gets its own unique type. Of course, as soon as two symbols share the same `description`, we would face the same problem as today.

[Full Playground](https://www.typescriptlang.org/play/#src=type%20xsymbol%3CT%3E%20%3D%20%7B%7D%3B%0D%0A%0D%0Ainterface%20xSymbolConstructor%20%7B%0D%0A%20%20%20%20()%3A%20symbol%3B%0D%0A%20%20%20%20%3CT%20extends%20string%20%7C%20number%3E(description%3A%20T)%3A%20xsymbol%3CT%3E%3B%0D%0A%7D%0D%0A%0D%0Avar%20xSymbol%3A%20xSymbolConstructor%3B%0D%0A%0D%0Avar%20s1%20%3D%20xSymbol()%3B%0D%0Avar%20s2%20%3D%20xSymbol(%22test%22)%3B%0D%0A%0D%0Aconst%20foo%20%3D%20%7B%0D%0A%20%20%20%20type%3A%20'foo'%20as%20'foo'%2C%0D%0A%20%20%20%20foo%3A%20''%0D%0A%7D%3B%0D%0A%0D%0Aconst%20bar%20%3D%20%7B%0D%0A%20%20%20%20type%3A%20'bar'%20as%20'bar'%2C%0D%0A%20%20%20%20bar%3A%20''%0D%0A%7D%3B%0D%0A%0D%0Atype%20FooBarAction%20%3D%20typeof%20foo%20%7C%20typeof%20bar%3B%0D%0A%0D%0Afunction%20getType%3CT%3E(input%3A%20%7Btype%3A%20T%7D)%3A%20T%20%7B%0D%0A%20%20%20%20return%20input.type%3B%0D%0A%7D%0D%0A%0D%0Afunction%20foobar(action%3A%20FooBarAction)%20%7B%0D%0A%20%20%20%20switch%20(action.type)%20%7B%0D%0A%20%20%20%20%20%20%20%20case%20getType(foo)%3A%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20action.foo%3B%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20break%3B%0D%0A%20%20%20%20%20%20%20%20case%20getType(bar)%3A%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20action.bar%3B%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20break%3B%0D%0A%20%20%20%20%7D%0D%0A%7D%0D%0A%0D%0Aconst%20sFoo%20%3D%20%7B%0D%0A%20%20%20%20type%3A%20Symbol('sfoo')%2C%0D%0A%20%20%20%20sfoo%3A%20''%0D%0A%7D%3B%0D%0A%0D%0Aconst%20sBar%20%3D%20%7B%0D%0A%20%20%20%20type%3A%20Symbol('sbar')%2C%0D%0A%20%20%20%20sbar%3A%20''%0D%0A%7D%3B%0D%0A%0D%0Atype%20SFooBarAction%20%3D%20typeof%20sFoo%20%7C%20typeof%20sBar%3B%0D%0A%0D%0Afunction%20sfoobar(action%3A%20SFooBarAction)%20%7B%0D%0A%20%20%20%20switch%20(action.type)%20%7B%0D%0A%20%20%20%20%20%20%20%20case%20getType(sFoo)%3A%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20action.sfoo%3B%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20break%3B%0D%0A%20%20%20%20%20%20%20%20case%20getType(sBar)%3A%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20action.sbar%3B%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20break%3B%0D%0A%20%20%20%20%7D%0D%0A%7D%0D%0A%0D%0A%0D%0Aconst%20xFoo%20%3D%20%7B%0D%0A%20%20%20%20type%3A%20xSymbol('sfoo')%2C%0D%0A%20%20%20%20xfoo%3A%20''%0D%0A%7D%3B%0D%0A%0D%0Aconst%20xBar%20%3D%20%7B%0D%0A%20%20%20%20type%3A%20xSymbol('sbar')%2C%0D%0A%20%20%20%20xbar%3A%20''%0D%0A%7D%3B%0D%0A%0D%0Atype%20XFooBarAction%20%3D%20typeof%20xFoo%20%7C%20typeof%20xBar%3B%0D%0A%0D%0Afunction%20xfoobar(action%3A%20XFooBarAction)%20%7B%0D%0A%20%20%20%20switch%20(action.type)%20%7B%0D%0A%20%20%20%20%20%20%20%20case%20getType(xFoo)%3A%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20action.xfoo%3B%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20break%3B%0D%0A%20%20%20%20%20%20%20%20case%20getType(xBar)%3A%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20action.xbar%3B%0D%0A%20%20%20%20%20%20%20%20%20%20%20%20break%3B%0D%0A%20%20%20%20%7D%0D%0A%7D%0D%0A)
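As a workaround available today (this is not the proposed feature), TypeScript's `unique symbol` type can act as a discriminant. The symbols must be declared as separate `const`s and the action types written out explicitly, because an inferred object-literal type widens the property to plain `symbol`. Below is a minimal sketch that assumes TypeScript 2.7 or later. `SFOO`, `SBAR`, `SFooAction` and `SBarAction` are illustrative names mirroring the example above:

```typescript
// Sketch assuming TypeScript 2.7+ `unique symbol` types. The names below are
// illustrative and mirror the sFoo/sBar example in this issue.
const SFOO: unique symbol = Symbol('sfoo');
const SBAR: unique symbol = Symbol('sbar');

// Explicit interfaces keep `type` as the unique symbol type; an inferred
// object literal type would widen it to plain `symbol`.
interface SFooAction {
  type: typeof SFOO;
  sfoo: string;
}
interface SBarAction {
  type: typeof SBAR;
  sbar: string;
}
type SFooBarAction = SFooAction | SBarAction;

function sfoobar(action: SFooBarAction): string {
  switch (action.type) {
    case SFOO:
      // `action` is narrowed to SFooAction here.
      return `sfoo:${action.sfoo}`;
    case SBAR:
      // `action` is narrowed to SBarAction here.
      return `sbar:${action.sbar}`;
    default: {
      // Exhaustiveness check: this only compiles if every case is handled.
      const unreachable: never = action;
      throw new Error(`unhandled action: ${String(unreachable)}`);
    }
  }
}

const fooAction: SFooAction = { type: SFOO, sfoo: 'a' };
console.log(sfoobar(fooAction)); // prints sfoo:a
```

The cost is the separate symbol constants and hand-written action types, which is the boilerplate this proposal hopes to avoid.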

## Use Cases

For the first part: anywhere `Symbol` is used.

For the second part: anywhere a unique value is required, such as an `Event Emitter` or an `Action` in a `Redux` architecture.

## Examples

See the `Suggestion` section above.

## Checklist

My suggestion meets these guidelines:

* [ ] This wouldn't be a breaking change in existing TypeScript/JavaScript code

* [x] This wouldn't change the runtime behavior of existing JavaScript code

* [x] This could be implemented without emitting different JS based on the types of the expressions

* [x] This isn't a runtime feature (e.g. library functionality, non-ECMAScript syntax with JavaScript output, etc.)

* [ ] This feature would agree with the rest of [TypeScript's Design Goals](https://github.com/Microsoft/TypeScript/wiki/TypeScript-Design-Goals).

|

Suggestion,In Discussion

|

low

|

Critical

|

424,591,482

|

flutter

|

Change viewportFraction dynamically in PageController

|


I have searched everywhere and posted on Stack Overflow, with no luck.

I have a PageView and want to zoom on double tap.

Here's the code:

```dart
@override
Widget build(BuildContext context) {
  this.controller = PageController(
    initialPage: 0,
    viewportFraction: _viewportScale,
  );

  return PageView(
    controller: controller,
    onPageChanged: (int pageIndex) {
      setState(() {
        _viewportScale = 1.0;
      });
    },
    children: this.urls.map((String url) {
      return Container(
        child: GestureDetector(
          child: Image.network(url),
          onTap: () => Navigator.pop(context),
          onDoubleTap: () {
            setState(() {
              _viewportScale = _viewportScale == 1.0 ? 2.0 : 1.0;
            });
          },
        ),
      );
    }).toList(),
  );
}
```

When I double-tap, I get this error:

```
I/flutter ( 6492): ══╡ EXCEPTION CAUGHT BY WIDGETS LIBRARY ╞═══════════════════════════════════════════════════════════
I/flutter ( 6492): The following assertion was thrown building NotificationListener<ScrollNotification>:
I/flutter ( 6492): Unexpected call to replaceSemanticsActions() method of RawGestureDetectorState.
I/flutter ( 6492): The replaceSemanticsActions() method can only be called outside of the build phase.
I/flutter ( 6492):
I/flutter ( 6492): When the exception was thrown, this was the stack:
I/flutter ( 6492): #0 RawGestureDetectorState.replaceSemanticsActions.<anonymous closure> (package:flutter/src/widgets/gesture_detector.dart:737:9)
I/flutter ( 6492): #1 RawGestureDetectorState.replaceSemanticsActions (package:flutter/src/widgets/gesture_detector.dart:743:6)
I/flutter ( 6492): #2 ScrollableState.setSemanticsActions (package:flutter/src/widgets/scrollable.dart:379:40)
I/flutter ( 6492): #3 ScrollPosition._updateSemanticActions (package:flutter/src/widgets/scroll_position.dart:445:13)
I/flutter ( 6492): #4 ScrollPosition.notifyListeners (package:flutter/src/widgets/scroll_position.dart:695:5)
I/flutter ( 6492): #5 ScrollPosition.forcePixels (package:flutter/src/widgets/scroll_position.dart:318:5)
I/flutter ( 6492): #6 _PagePosition.viewportFraction= (package:flutter/src/widgets/page_view.dart:263:7)
I/flutter ( 6492): #7 PageController.attach (package:flutter/src/widgets/page_view.dart:173:18)
I/flutter ( 6492): #8 ScrollableState.didUpdateWidget (package:flutter/src/widgets/scrollable.dart:356:26)
I/flutter ( 6492): #9 StatefulElement.update (package:flutter/src/widgets/framework.dart:3884:58)
I/flutter ( 6492): #10 Element.updateChild (package:flutter/src/widgets/framework.dart:2752:15)
I/flutter ( 6492): #11 ComponentElement.performRebuild (package:flutter/src/widgets/framework.dart:3752:16)
I/flutter ( 6492): #12 Element.rebuild (package:flutter/src/widgets/framework.dart:3564:5)
I/flutter ( 6492): #13 StatelessElement.update (package:flutter/src/widgets/framework.dart:3801:5)
I/flutter ( 6492): #14 Element.updateChild (package:flutter/src/widgets/framework.dart:2752:15)
I/flutter ( 6492): #15 ComponentElement.performRebuild (package:flutter/src/widgets/framework.dart:3752:16)
I/flutter ( 6492): #16 Element.rebuild (package:flutter/src/widgets/framework.dart:3564:5)
I/flutter ( 6492): #17 StatefulElement.update (package:flutter/src/widgets/framework.dart:3899:5)
I/flutter ( 6492): #18 Element.updateChild (package:flutter/src/widgets/framework.dart:2752:15)
I/flutter ( 6492): #19 ComponentElement.performRebuild (package:flutter/src/widgets/framework.dart:3752:16)
I/flutter ( 6492): #20 Element.rebuild (package:flutter/src/widgets/framework.dart:3564:5)
I/flutter ( 6492): #21 BuildOwner.buildScope (package:flutter/src/widgets/framework.dart:2277:33)
I/flutter ( 6492): #22 _WidgetsFlutterBinding&BindingBase&GestureBinding&ServicesBinding&SchedulerBinding&PaintingBinding&SemanticsBinding&RendererBinding&WidgetsBinding.drawFrame (package:flutter/src/widgets/binding.dart:700:20)
I/flutter ( 6492): #23 _WidgetsFlutterBinding&BindingBase&GestureBinding&ServicesBinding&SchedulerBinding&PaintingBinding&SemanticsBinding&RendererBinding._handlePersistentFrameCallback (package:flutter/src/rendering/binding.dart:275:5)
I/flutter ( 6492): #24 _WidgetsFlutterBinding&BindingBase&GestureBinding&ServicesBinding&SchedulerBinding._invokeFrameCallback (package:flutter/src/scheduler/binding.dart:990:15)
I/flutter ( 6492): #25 _WidgetsFlutterBinding&BindingBase&GestureBinding&ServicesBinding&SchedulerBinding.handleDrawFrame (package:flutter/src/scheduler/binding.dart:930:9)
I/flutter ( 6492): #26 _WidgetsFlutterBinding&BindingBase&GestureBinding&ServicesBinding&SchedulerBinding._handleDrawFrame (package:flutter/src/scheduler/binding.dart:842:5)
I/flutter ( 6492): #30 _invoke (dart:ui/hooks.dart:209:10)
I/flutter ( 6492): #31 _drawFrame (dart:ui/hooks.dart:168:3)
I/flutter ( 6492): (elided 3 frames from package dart:async)
I/flutter ( 6492): ════════════════════════════════════════════════════════════════════════════════════════════════════
```

As you can see, `viewportFraction` is changed in `onDoubleTap`. It is initially set to 1.0 in the class's field declaration.

I found something similar (https://github.com/flutter/flutter/issues/23873), but that issue is about values smaller than 1.0. Here the problem occurs when `viewportFraction` is changed dynamically.

Usually I solve this kind of issue by trial and error, but here I have no clue what's going on.

|

c: new feature,framework,P2,team-framework,triaged-framework

|

low

|

Critical

|

424,659,533

|

TypeScript

|

refactoring for listing parameters from single line to column and back

|

parameters

```ts
// before
function fn(a: A, b: B, c: C) { /*..*/ }

// after
function fn(
    a: A,
    b: B,
    c: C
) { /*..*/ }

// and back
function fn(a: A, b: B, c: C) { /*..*/ }
```

arguments

```ts
// before
return foldArray(toReversed(values), toListOf<T>(), (result, value) => add(result, value));

// after
return foldArray(
    toReversed(values),
    toListOf<T>(),
    (result, value) => add(result, value)
);
```

|

Suggestion,Awaiting More Feedback

|

low

|

Minor

|

424,674,770

|

TypeScript

|

syntax to control distributiveness

|

from: https://github.com/Microsoft/TypeScript/issues/30569#issuecomment-476007534

So basically, we need a way to state clearly and explicitly in the language whether we want:

```ts
Promise<A> | Promise<B>
```

or

```ts
Promise<A | B>
```

as the result of a type operation.
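Today this choice can only be expressed indirectly. A conditional type distributes over a union only when the checked type is a naked type parameter, and wrapping both sides in a one-element tuple is the usual idiom for opting out. The sketch below shows both behaviors and assumes TypeScript 3.0+ (for `unknown`). All type names, including the `Equals` helper, are illustrative:

```typescript
// A naked type parameter in a conditional type distributes over a union...
type Distributed<T> = T extends unknown ? Promise<T> : never;
// ...while wrapping both sides in a one-element tuple turns distribution off.
type Undistributed<T> = [T] extends [unknown] ? Promise<T> : never;

type A = { a: string };
type B = { b: number };

type D = Distributed<A | B>; // Promise<A> | Promise<B>
type U = Undistributed<A | B>; // Promise<A | B>

// Compile-time type equality check (a common community idiom, not a built-in).
type Equals<X, Y> =
  (<T>() => T extends X ? 1 : 2) extends (<T>() => T extends Y ? 1 : 2) ? true : false;

// Each line compiles only if the two types are identical.
const distributed: Equals<D, Promise<A> | Promise<B>> = true;
const undistributed: Equals<U, Promise<A | B>> = true;
```

A dedicated syntax, as proposed here, would make the intent explicit instead of relying on the tuple idiom.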

|

Suggestion,In Discussion

|

medium

|

Major

|

424,679,779

|

react-native

|

JavaScript strings with NULL character are not handled properly

|

## 🐛 Bug Report

JavaScript strings with NULL character are not handled properly

## To Reproduce

```jsx
<Text style={styles.welcome}>{'Hello \u0000 World'}</Text>
```

The text is cut off after `Hello`.

This does not happen when using Debug JS Remotely.

## Expected Behavior

Hello World
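Until the native side handles embedded NUL characters, one possible workaround is to strip them in JavaScript before rendering. This is an assumption and has not been verified in this report. `stripNul` is a hypothetical helper name:

```typescript
// Hypothetical workaround: remove U+0000 characters before passing the string
// to <Text>, so the native text view never receives them. This changes the
// rendered content, so it only suits cases where NUL carries no meaning.
function stripNul(text: string): string {
  return text.replace(/\u0000/g, '');
}

console.log(stripNul('Hello \u0000 World')); // prints: Hello  World (two spaces remain)
```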

## Code Example

https://github.com/gaodeng/RN-NULL-character-ISSUE

## Environment

```
info
React Native Environment Info:
    System:
      OS: macOS 10.14.3
      CPU: (4) x64 Intel(R) Core(TM) i3-4130 CPU @ 3.40GHz
      Memory: 282.02 MB / 8.00 GB
      Shell: 3.2.57 - /bin/bash
    Binaries:
      Node: 10.15.1 - /usr/local/bin/node
      Yarn: 1.5.1 - /usr/local/bin/yarn
      npm: 6.8.0 - /usr/local/bin/npm
      Watchman: 4.7.0 - /usr/local/bin/watchman
    SDKs:
      iOS SDK:
        Platforms: iOS 12.1, macOS 10.14, tvOS 12.1, watchOS 5.1
      Android SDK:
        API Levels: 22, 23, 24, 25, 26, 27, 28
        Build Tools: 23.0.1, 25.0.0, 25.0.1, 25.0.2, 25.0.3, 26.0.0, 26.0.1, 26.0.2, 26.0.3, 27.0.2, 27.0.3, 28.0.0, 28.0.3
        System Images: android-18 | Google APIs Intel x86 Atom, android-22 | Google APIs Intel x86 Atom, android-23 | Google APIs Intel x86 Atom_64, android-24 | Intel x86 Atom_64, android-25 | Google APIs ARM EABI v7a, android-25 | Google APIs Intel x86 Atom_64, android-27 | Google APIs Intel x86 Atom, android-P | Google APIs Intel x86 Atom, android-P | Google Play Intel x86 Atom_64
    IDEs:
      Android Studio: 3.3 AI-182.5107.16.33.5314842
      Xcode: 10.1/10B61 - /usr/bin/xcodebuild
    npmPackages:
      react: 16.8.3 => 16.8.3
      react-native: 0.59.1 => 0.59.1
    npmGlobalPackages:
      create-react-native-app: 1.0.0
      react-native-cli: 2.0.1
      react-native-create-library: 3.1.2
      react-native-git-upgrade: 0.2.7
      react-native-rename: 2.1.5
```

|

JavaScript,Bug,Never gets stale

|

medium

|

Critical

|

424,691,645

|

pytorch

|

Add layer-wise adaptive rate scaling (LARS) optimizer

|

## 🚀 Feature

Add a PyTorch implementation of layer-wise adaptive rate scaling (LARS) from the paper "[Large Batch Training of Convolutional Networks](https://arxiv.org/abs/1708.03888)" by You, Gitman, and Ginsburg. Namely, include the implementation found [here](https://github.com/noahgolmant/pytorch-lars) in the `torch.optim.optimizers` submodule.

## Motivation

LARS is one of the most popular optimization algorithms for large-batch training. This feature will expose the algorithm through the high-level optimizer interface. There are currently no other implementations of this algorithm available in widely used frameworks.

## Pitch

I have already written/tested a PyTorch optimizer for LARS in [this repo](https://github.com/noahgolmant/pytorch-lars). Changes would be minimal (I just have to make the imports relative at the top of the file).

## Alternatives

The only existing code for this is in Caffe, and it does not appear to be exposed at a higher level within the solver framework. I also do not know whether that code has been tested.

## Additional context

Notably, I was unable to reproduce their results (namely reducing the generalization gap) on CIFAR-10 with ResNet. I would welcome additional tests to see if this implementation is able to replicate their performance on larger datasets like ImageNet.

|

feature,module: optimizer,triaged

|

medium

|

Major

|

424,691,705

|

godot

|

Complex model renders incorrectly with GLES3 unless the blend shape max buffer size is increased

|

**Godot version:**

3.1 official

**OS/device including version:**

Windows 10, Vega 8 and Intel integrated, latest drivers

**Issue description:**

The imported model is not completely rendered when using GLES3, but it is when using GLES2. It also rendered correctly in version 3.0.6.

**Steps to reproduce:**

I'm linking a Git project because the model's complexity makes the project too big to attach. I'm importing models using Fuse CC -> Mixamo -> Custom Script.

[In this video](https://youtu.be/QYfEXgkOYnE) a few gaps are visible in the forehead and the neck areas.

[In this video](https://youtu.be/yzclSGAC4s4) the full left arm is missing.

The models share the same base body mesh, with 65 bones and about 80 blend shapes. The second one has more animations, meshes and materials (meshes were added to hide as much skin as possible, and skull polygons were removed too).

**Minimal reproduction project:**

[Git project](https://gitlab.com/DavidOC/GodotJam062018)

|

enhancement,topic:rendering,documentation,topic:import,topic:3d

|

low

|

Major

|

424,709,346

|

godot

|

Support offset in BoneAttachment

|

When a BoneAttachment is added to a Skeleton, its position is set to that of the bone it is attached to. This makes sense, but if you then explicitly alter the BoneAttachment's transform in the editor, it always snaps back to the bone's position at runtime. This is causing us issues, because the only bones in our character's hands are a) at the wrist and b) at the fingers. As a result, when we place a weapon as a child of the BoneAttachment, it is never in the right position (it's placed either on the wrist or on the fingers). Ideally, if the BoneAttachment is moved in the editor, that offset would be taken into account when setting the BoneAttachment's position.

|

enhancement,topic:core

|

low

|

Major

|

424,719,040

|

rust

|

Cannot implement trait on type alias involving another trait's associated type

|

When trying to implement `From` for nalgebra types, I discovered the following surprising behavior:

crate2/src/lib.rs

```rust
pub trait Foo {
    type Bar;
}

pub struct Baz;

impl Foo for Baz {
    type Bar = ();
}

pub struct Quux<T>(pub T);

pub type Corge = Quux<<Baz as Foo>::Bar>;
```

crate1/src/lib.rs

```rust
pub struct Grault;

// this doesn't compile
impl From<Grault> for crate2::Corge {
    fn from(x: Grault) -> Self {
        Self(())
    }
}

// but this seemingly equivalent impl works fine
impl From<Grault> for crate2::Quux<()> {
    fn from(x: Grault) -> Self {
        Self(())
    }
}
```

Error:

```
error[E0210]: type parameter `<crate2::Baz as crate2::Foo>::Bar` must be used as the type parameter for some local type (e.g. `MyStruct<<crate2::Baz as crate2::Foo>::Bar>`)
 --> src/lib.rs:3:1
  |
3 | impl From<Grault> for crate2::Corge {
  | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ type parameter `<crate2::Baz as crate2::Foo>::Bar` must be used as the type parameter for some local type
  |
  = note: only traits defined in the current crate can be implemented for a type parameter

error: aborting due to previous error

For more information about this error, try `rustc --explain E0210`.
```

The error occurs no matter which crate the type alias is in. Besides the fact that this almost certainly should not fail at all, the error message is strange: `Foo::Bar` is not a type parameter at all!

|

A-type-system,A-associated-items,T-compiler,C-bug,T-types

|

low

|

Critical

|

424,753,998

|

svelte

|

`bind:group` does not work with nested components

|

I'm trying to bind a store variable to a group of checkboxes. It works until I move the checkbox into a separate component; after that, only one checkbox can be checked at a time. Here's an example from the REPL: https://gist.github.com/imbolc/e29205d6901d135c8c1bd8c3eec26d67

|

feature request

|

high

|

Critical

|

424,767,122

|

ant-design

|

Please add a feature for grouping data rows (tr) in the Table component.

|

- [ ] I have searched the [issues](https://github.com/ant-design/ant-design/issues) of this repository and believe that this is not a duplicate.

### What problem does this feature solve?

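When displaying data in a table, we would like related data rows to be shown as groups. For example, a border could be drawn around several data rows so they are displayed as a single unit.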

### What does the proposed API look like?

```js
const data = [
  [{}, {}], // group 1
  [{}, {}], // group 2
  [{}, {}], // group 3
  [{}, {}], // group 4
  // ...
];

<Table dataSource={data} />
```

**If possible, this could extend the existing `dataSource` prop, with developers grouping the data rows themselves.**
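Until something like this exists, a similar effect can probably be approximated with the current API. The idea is to flatten the groups yourself, tag each row with its group, and style the group boundaries through the existing `rowClassName` prop. Below is a minimal sketch. `flattenGroups`, `groupRowClassName`, `GroupMeta` and the CSS class names are all hypothetical:

```typescript
// Hypothetical helpers: flatten developer-defined groups (the proposed
// `[[{}, {}], [{}, {}], ...]` shape) into the flat rows that `dataSource`
// accepts today, tagging each row with its position in its group.
interface GroupMeta {
  groupIndex: number;
  isGroupStart: boolean;
  isGroupEnd: boolean;
}

function flattenGroups<T extends object>(groups: T[][]): Array<T & GroupMeta> {
  const rows: Array<T & GroupMeta> = [];
  groups.forEach((group, groupIndex) => {
    group.forEach((record, i) => {
      rows.push({
        ...record,
        groupIndex,
        isGroupStart: i === 0,
        isGroupEnd: i === group.length - 1,
      });
    });
  });
  return rows;
}

// Builds class names (assumed to be defined in your own stylesheet) that mark
// group boundaries, e.g. for use as `rowClassName={groupRowClassName}`.
function groupRowClassName(row: GroupMeta): string {
  const classes = [row.groupIndex % 2 === 0 ? 'group-even' : 'group-odd'];
  if (row.isGroupStart) classes.push('group-start');
  if (row.isGroupEnd) classes.push('group-end');
  return classes.join(' ');
}
```

Drawing borders on table rows may also require `border-collapse: collapse` on the table, so treat this as a starting point rather than a replacement for built-in grouping.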

<!-- generated by ant-design-issue-helper. DO NOT REMOVE -->

|

💡 Feature Request,Inactive

|

medium

|

Major

|

424,798,765

|

go

|

net/url: make URL parsing return an error on IPv6 literal without brackets

|


### What version of Go are you using (`go version`)?

<pre>
go version go1.11.2 windows/amd64
</pre>

### Does this issue reproduce with the latest release?

<pre>
yes
</pre>

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>
$ go env
set GOARCH=amd64
set GOHOSTARCH=amd64
set GOHOSTOS=windows
</pre></details>

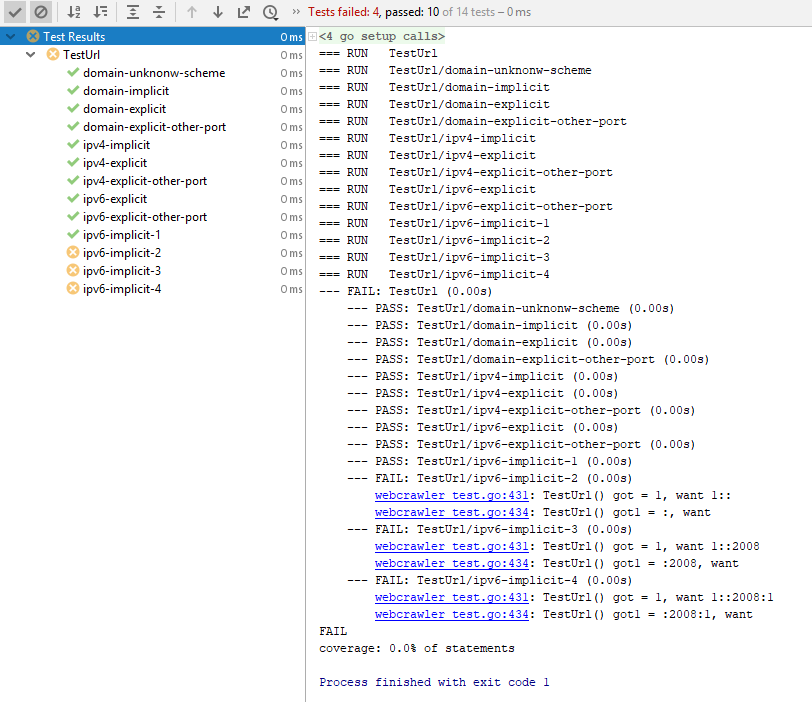

### What did you do?

`url.URL` provides the methods `.Hostname()` and `.Port()`, which should split the URL's host (`.Host`) into its address and port parts. In certain cases, these methods do not interpret IPv6 addresses correctly and return invalid output.

Here is a test function that feeds in different sample URLs and demonstrates the issue (**all test URLs are valid and should succeed!**):

```go
package urltest

import (
	"net/url"
	"testing"
)

func TestUrl(t *testing.T) {
	tests := []struct {
		name     string
		input    string
		wantHost string
		wantPort string
	}{
		{"domain-unknown-scheme", "asfd://localhost/?q=0&p=1#frag", "localhost", ""},
		{"domain-implicit", "http://localhost/?q=0&p=1#frag", "localhost", ""},
		{"domain-explicit", "http://localhost:80/?q=0&p=1#frag", "localhost", "80"},
		{"domain-explicit-other-port", "http://localhost:80/?q=0&p=1#frag", "localhost", "80"},
		{"ipv4-implicit", "http://127.0.0.1/?q=0&p=1#frag", "127.0.0.1", ""},
		{"ipv4-explicit", "http://127.0.0.1:80/?q=0&p=1#frag", "127.0.0.1", "80"},
		{"ipv4-explicit-other-port", "http://127.0.0.1:80/?q=0&p=1#frag", "127.0.0.1", "80"},
		{"ipv6-explicit", "http://[1::]:80/?q=0&p=1#frag", "1::", "80"},
		{"ipv6-explicit-other-port", "http://[1::]:80/?q=0&p=1#frag", "1::", "80"},
		{"ipv6-implicit-1", "http://[1::]/?q=0&p=1#frag", "1::", ""},
		{"ipv6-implicit-2", "http://1::/?q=0&p=1#frag", "1::", ""},
		{"ipv6-implicit-3", "http://1::2008/?q=0&p=1#frag", "1::2008", ""},
		{"ipv6-implicit-4", "http://1::2008:1/?q=0&p=1#frag", "1::2008:1", ""},
	}
	for _, tt := range tests {
		t.Run(tt.name, func(t *testing.T) {
			// Prepare URL
			u, err := url.Parse(tt.input)
			if err != nil {
				t.Errorf("could not parse url: %v", err)
				return
			}

			// Extract hostname and port
			host := u.Hostname()
			port := u.Port()

			// Compare result
			if host != tt.wantHost {
				t.Errorf("TestUrl() got = %v, want %v", host, tt.wantHost)
			}
			if port != tt.wantPort {
				t.Errorf("TestUrl() got1 = %v, want %v", port, tt.wantPort)
			}
		})
	}
}
```

Output:

### What did you expect to see?

All sample URLs in the test cases above are valid, so all tests should succeed as defined.

### What did you see instead?

The top test samples work as expected; however, the bottom three return incorrect results. The bottom three samples are valid IPv6 URLs with an implicit port, but `.Hostname()` and `.Port()` interpret them as IPv4 addresses and return parts of the IPv6 address as if they were the explicit port of an IPv4 input. For example, in one of the test outputs, ":2008" is returned as the port, although it is actually part of the IPv6 address.

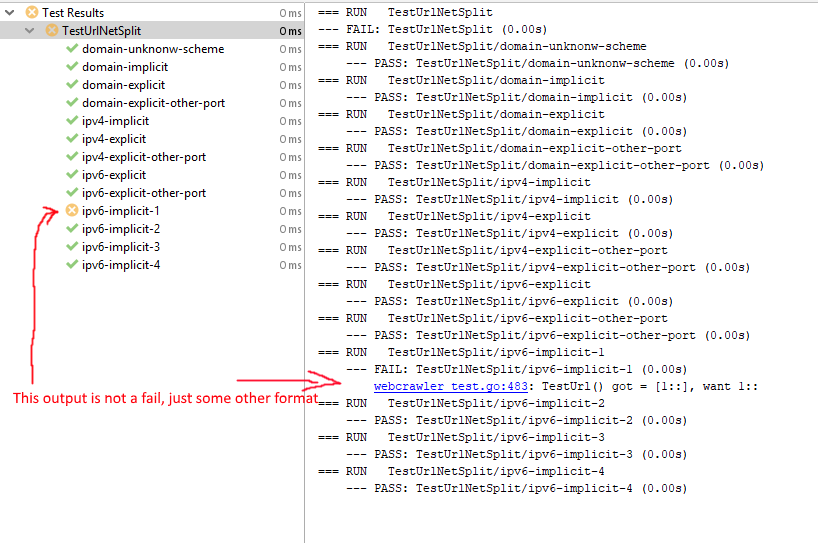

### Where is the bug?

`.Hostname()` and `.Port()` implement their own logic to split the port from the hostname. The `net` package already has a closely related function, `net.SplitHostPort()`, which does its job correctly. If `.Hostname()` and `.Port()` called that function instead of re-implementing the logic, everything should work as expected. Below is a proof in the form of a test function with exactly the same inputs as above, but using `net.SplitHostPort()` instead of `.Hostname()` / `.Port()`:

```go
package urltest

import (
	"net"
	"net/url"
	"strings"
	"testing"
)

func TestUrlNetSplit(t *testing.T) {
	tests := []struct {
		name     string
		input    string
		wantHost string
		wantPort string
	}{
		{"domain-unknown-scheme", "asfd://localhost/?q=0&p=1#frag", "localhost", ""},
		{"domain-implicit", "http://localhost/?q=0&p=1#frag", "localhost", ""},
		{"domain-explicit", "http://localhost:80/?q=0&p=1#frag", "localhost", "80"},
		{"domain-explicit-other-port", "http://localhost:80/?q=0&p=1#frag", "localhost", "80"},
		{"ipv4-implicit", "http://127.0.0.1/?q=0&p=1#frag", "127.0.0.1", ""},
		{"ipv4-explicit", "http://127.0.0.1:80/?q=0&p=1#frag", "127.0.0.1", "80"},
		{"ipv4-explicit-other-port", "http://127.0.0.1:80/?q=0&p=1#frag", "127.0.0.1", "80"},
		{"ipv6-explicit", "http://[1::]:80/?q=0&p=1#frag", "1::", "80"},
		{"ipv6-explicit-other-port", "http://[1::]:80/?q=0&p=1#frag", "1::", "80"},
		{"ipv6-implicit-1", "http://[1::]/?q=0&p=1#frag", "1::", ""},
		{"ipv6-implicit-2", "http://1::/?q=0&p=1#frag", "1::", ""},
		{"ipv6-implicit-3", "http://1::2008/?q=0&p=1#frag", "1::2008", ""},
		{"ipv6-implicit-4", "http://1::2008:1/?q=0&p=1#frag", "1::2008:1", ""},
	}
	for _, tt := range tests {
		t.Run(tt.name, func(t *testing.T) {
			// Prepare URL
			u, err := url.Parse(tt.input)
			if err != nil {
				t.Errorf("could not parse url: %v", err)
				return
			}

			host, port, err := net.SplitHostPort(u.Host)
			if err != nil {
				// Could not split port from host, as there is no port specified.
				// Strip IPv6 brackets (e.g. "[1::]") so the host compares as "1::".
				host = strings.TrimSuffix(strings.TrimPrefix(u.Host, "["), "]")
				port = ""
			}

			// Compare result
			if host != tt.wantHost {
				t.Errorf("TestUrl() got = %v, want %v", host, tt.wantHost)
			}
			if port != tt.wantPort {
				t.Errorf("TestUrl() got1 = %v, want %v", port, tt.wantPort)
			}
		})
	}
}
```

Output:

|

help wanted,NeedsFix

|

medium

|

Critical

|

424,800,710

|

electron

|

TitleBar/MenuBar Settings

|

Good day

I like Electron because it has a good code implementation.

BrowserWindow has a lot of nice options for customization

[https://electronjs.org/docs/api/browser-window](https://electronjs.org/docs/api/browser-window)

But I hope you will add some simple options for title bar customization.

For simple applications, changing the title bar color is more than enough. For more advanced applications, I use an index.html file with custom navbar settings and styles.

Proposed Solution

You have already added the `titleBarStyle` String option, and it works really well for me and my users on macOS.

So I hope you will add some more options in the near future.

My proposition is:

* `titleBarColor`: String (optional), a hex color (e.g. `#80FFFFFF`). The title bar color; this is what I really want for my users.

* `titleBarHeight`: Integer (optional), e.g. `50`. The title bar height.

* `titleBarTextColor`: String (optional), a hex color (e.g. `#80FFFFFF`). The text color in the title bar.

* `titleBarElementsColor`: String (optional), a hex color (e.g. `#80FFFFFF`). The color of button elements in the title bar.

With Great respect

A.W.

|

enhancement :sparkles:

|

low

|

Minor

|

424,872,689

|

flutter

|

PaintingStyle should have a strokeAndFill enum value

|

Hi,

I know that this was removed in https://github.com/flutter/engine/pull/3037.

Is there a reason it was removed?

Alternatively, is there another way to avoid drawing the same pixels twice when doing a stroke draw and a fill draw?

|

framework,engine,d: api docs,P3,team-engine,triaged-engine

|

medium

|

Critical

|

424,918,202

|

scrcpy

|

Can you make scrcpy work as an IP stream, e.g. to play it live in any browser or media player?

|

feature request

|

low

|

Minor

|

|

424,920,401

|

rust

|

[eRFC] Include call graph information in LLVM IR

|

## Summary

Add an experimental compiler feature / flag to add call graph information, in the form of LLVM metadata, to the LLVM IR (`.ll`) files produced by the compiler.

## Motivation

(This section ended up being a bit long-winded. The TL;DR is: improving existing stack usage analysis tools.)

Stack usage analysis is a hard requirement for [certifying] safety-critical (embedded) applications. This analysis is usually implemented as a static analysis tool that computes the worst-case stack usage of an application. The information provided by this tool is used in the certification process to demonstrate that the application won't run into a stack overflow at runtime.

[certifying]: https://www.absint.com/qualification/safety.htm

[Several][a] [such][b] [tools][c] exist for C/C++ programs, mainly in commercial and closed-source forms. A few months ago the Rust compiler gained a feature that's a prerequisite for implementing such tools in the Rust world: [`-Z emit-stack-sizes`]. This flag makes stack usage information about every Rust function available in the binary artifacts (object files) produced by the compiler.

[a]: https://www.absint.com/stackanalyzer/index.htm

[b]: https://www.iar.com/support/tech-notes/general/stack-usage-and-stack-usage-control-files/

[c]: https://www.adacore.com/gnatpro/toolsuite/gnatstack

[`-Z emit-stack-sizes`]: https://doc.rust-lang.org/unstable-book/compiler-flags/emit-stack-sizes.html

And just recently, a tool that uses this flag and call graph analysis to perform whole-program stack usage analysis has been developed: [`cargo-call-stack`][] (*full disclaimer: I'm the author of said tool*). The tool does OK with programs that only use direct function calls, but it's lacking (either over-pessimistic or flat-out incorrect) when analyzing programs that contain indirect function calls, that is, function pointer calls and/or dynamic dispatch.

[`cargo-call-stack`]: https://github.com/japaric/cargo-call-stack

Call graph analysis in the presence of indirect function calls is notoriously hard, but Rust's strong typing makes the problem tractable -- in fact, dynamic dispatch is easier to reason about than function pointer calls. However, this last part only holds true when Rust type information is available to the tool, which is not the case today.

To elaborate: it's important that the call graph is extracted from post-LLVM-optimization output, as that greatly reduces the chance of inserting invalid edges. For example, what appears to be a function call at the (Rust) source level (e.g. `let x = foo();`) may not actually exist in the final binary due to inlining or dead code elimination. For this reason, Rust stack usage analysis tools are limited to two sources of call graph information: the machine code in the final executable and post-optimization LLVM IR (`rustc`'s `--emit=llvm-ir`). The former contains no type information, and the latter contains *LLVM* type information, not Rust type information.

`cargo-call-stack` currently uses the type information available in the

LLVM IR of a crate to reason about indirect function calls. However, LLVM type

information is not as complete as Rust type information because the conversion

is lossy. Consider the following Rust source code and corresponding LLVM IR.

``` rust
use core::sync::atomic::{AtomicPtr, Ordering};

#[no_mangle] // shorter name
static F: AtomicPtr<fn() -> u32> = AtomicPtr::new(foo as *mut _);

fn main() {
    if let Some(f) = unsafe { F.load(Ordering::Relaxed).as_ref() } {
        f(); // function pointer call
    }

    // volatile load to preserve the return value of `baz`
    unsafe {
        core::ptr::read_volatile(&baz());
    }
}

#[no_mangle]
fn foo() -> u32 {
    F.store(bar as *mut _, Ordering::Relaxed);
    0
}

#[no_mangle]
fn bar() -> u32 {
    1
}

#[inline(never)]
#[no_mangle]
fn baz() -> i32 {
    F.load(Ordering::Relaxed) as usize as i32
}
```

``` llvm

define void @main() unnamed_addr #3 !dbg !1240 {

start:

%_14 = alloca i32, align 4

%0 = load atomic i32, i32* bitcast (<{ i8*, [0 x i8] }>* @F to i32*) monotonic, align 4

%1 = icmp eq i32 %0, 0, !dbg !1251

br i1 %1, label %bb5, label %bb3, !dbg !1251

bb3: ; preds = %start

%2 = inttoptr i32 %0 to i32 ()**, !dbg !1252

%3 = load i32 ()*, i32 ()** %2, align 4, !dbg !1254, !nonnull !46

%4 = tail call i32 %3() #9, !dbg !1254

br label %bb5, !dbg !1255

; ..

}

; Function Attrs: norecurse nounwind

define internal i32 @foo() unnamed_addr #0 !dbg !1189 {

; ..

}

; Function Attrs: norecurse nounwind readnone

define internal i32 @bar() unnamed_addr #1 !dbg !1215 {

; ..

}

; Function Attrs: noinline norecurse nounwind

define internal fastcc i32 @baz() unnamed_addr #2 !dbg !1217 {

; ..

}

```

Note how in the LLVM IR output `foo`, `bar` and `baz` all have the same function

signature: `i32 ()`, which is the LLVM version of `fn() -> i32`. There are no

unsigned integer types in LLVM IR so both Rust types, `i32` and `u32`, get

converted to `i32` in the LLVM IR.
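The following toy model shows why the signatures collide. The type and function names are illustrative, not `rustc`'s actual code generation API. LLVM integer types record only a bit width, so the two distinct Rust return types above end up with the same LLVM signature.

```rust
/// The two Rust integer types used in the example above.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum RustInt {
    I32,
    U32,
}

/// An LLVM integer type: only a bit width, no signedness.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct LlvmInt {
    pub bits: u32,
}

pub fn lower(ty: RustInt) -> LlvmInt {
    match ty {
        // Signedness is dropped here; in LLVM it lives in the instructions
        // that use a value (e.g. `sdiv` vs `udiv`), not in the type itself.
        RustInt::I32 | RustInt::U32 => LlvmInt { bits: 32 },
    }
}

/// Renders the LLVM signature of a Rust `fn() -> ret`.
pub fn llvm_fn_sig(ret: RustInt) -> String {
    format!("i{} ()", lower(ret).bits)
}
```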

Line `%4 = ..` in the LLVM IR is the function pointer call. It too, incorrectly,
indicates that a function pointer with signature `i32 ()` (`fn() -> i32`) is
being invoked, whereas the Rust source calls a `fn() -> u32`.

This lossy conversion leads `cargo-call-stack` to incorrectly add an edge

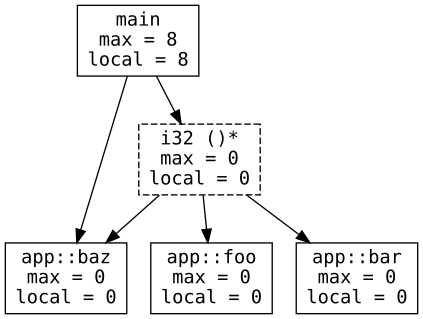

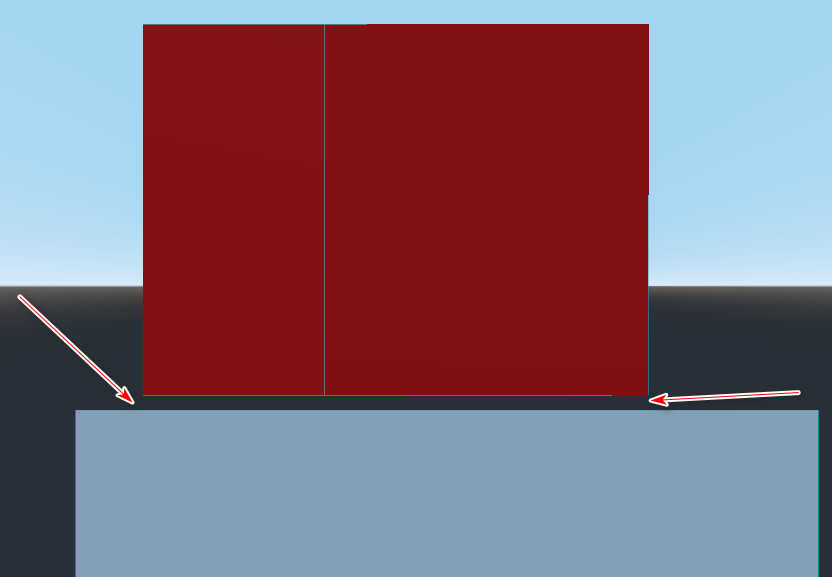

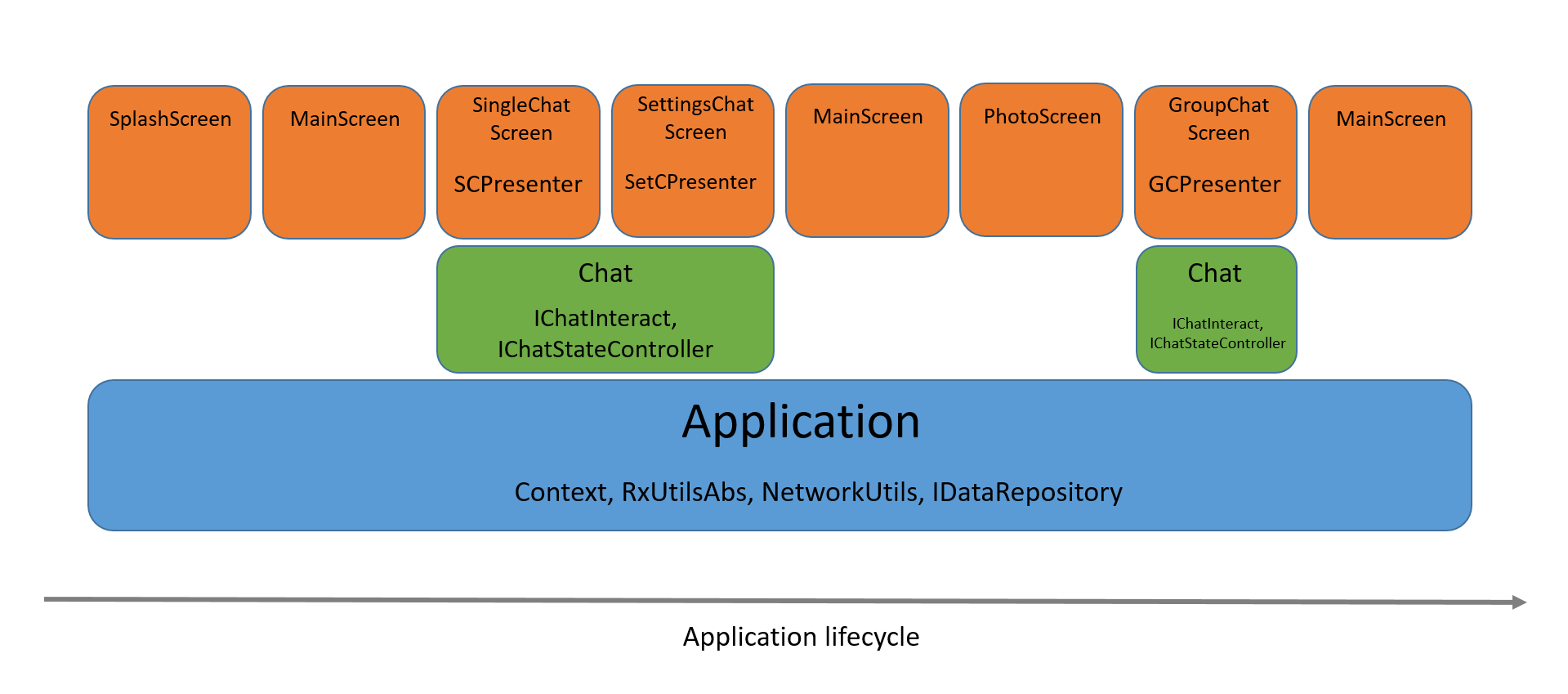

between the node that represents the function pointer call and `baz`. See below:

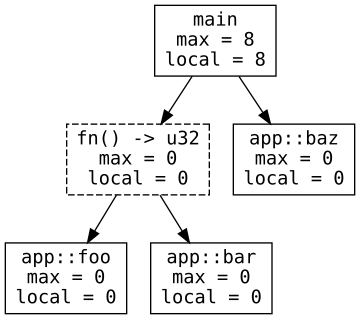

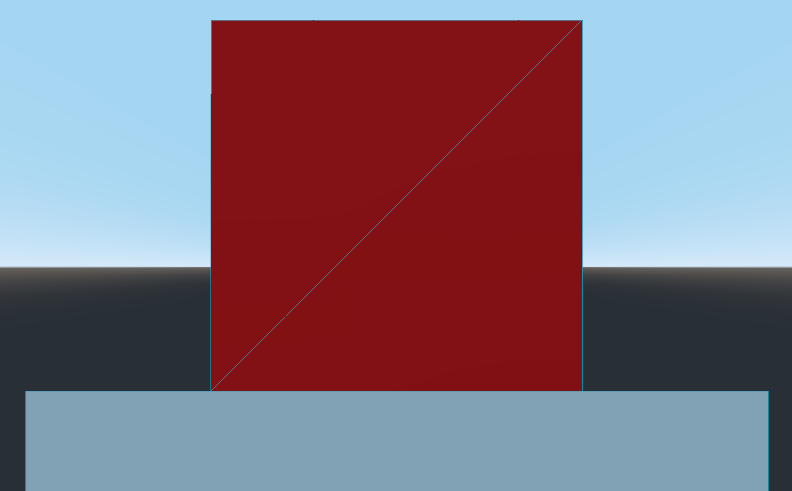

If the tool had access to call graph information from the compiler it would have

produced the following accurate call graph.

This eRFC proposes adding a feature to aid call graph and stack usage analysis.
(For a more in-depth explanation of how `cargo-call-stack` works, please refer to
this blog post: https://blog.japaric.io/stack-analysis/)

## Design

We propose that call graph information be added to the LLVM IR that `rustc`
produces, in the form of [LLVM metadata], when the unstable `-Z call-metadata`
`rustc` flag is used.

[LLVM metadata]: https://llvm.org/docs/LangRef.html#metadata

### Function pointer calls

Functions that are converted into function pointers in Rust source (e.g.
`let x: fn() -> i32 = foo`) will get extra LLVM metadata in their definitions (IR:
`define`). The metadata will have the form `!{!"fn", !"fn() -> i32"}`, where the
second node represents the signature of the function. Likewise, function pointer
calls will get similar LLVM metadata at the call site (IR: `call` / `invoke`).

Revisiting the previous example, the IR would change as shown below:

``` llvm

define void @main() unnamed_addr #3 !dbg !1240 {

; ..

%4 = tail call i32 %3() #9, !dbg !1254, !rust !0

; .. ^^^^^^^^ (ADDED)

}

; Function Attrs: norecurse nounwind

define internal i32 @foo() unnamed_addr #0 !dbg !1189 !rust !0 {

; .. ^^^^^^^^ (ADDED)

}

; Function Attrs: norecurse nounwind readnone

define internal i32 @bar() unnamed_addr #1 !dbg !1215 !rust !0 {

; .. ^^^^^^^^ (ADDED)

}

; Function Attrs: noinline norecurse nounwind

define internal fastcc i32 @baz() unnamed_addr #2 !dbg !1217 {

; ..

}

; ..

; (ADDED) at the bottom of the file

!0 = !{!"fn", !"fn() -> u32"}

; ..

```

Note how `main` and `baz` didn't get the extra `!rust` metadata because they are
never converted into function pointers, whereas both `foo` and `bar` got the
same metadata because they have the same signature and are converted into
function pointers in the source code (in the `static F` initializer and in `F.store`).

When tools parse this LLVM IR they will know that line `%4 = ..` can invoke

`foo` or `bar` (`!rust !0`), but not `baz` or `main` because the latter two

don't have the same "fn" metadata.
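The following is a minimal sketch of how a tool might use "fn" metadata once it has parsed the IR. It assumes each definition has been reduced to its symbol name plus the string node of its optional "fn" metadata. `FnDef` and `fn_ptr_candidates` are hypothetical names. The flag only promises that the string is unique per function type, so the tool compares strings for equality and never interprets them. The signature strings used below are illustrative.

```rust
/// A parsed function definition: symbol name plus the string node of its
/// "fn" metadata, if it has any.
pub struct FnDef<'a> {
    pub name: &'a str,
    pub fn_meta: Option<&'a str>,
}

/// Possible targets of a function pointer call whose call-site "fn" metadata
/// is `call_meta`: exactly the definitions that carry the same string.
pub fn fn_ptr_candidates<'a>(call_meta: &str, defs: &[FnDef<'a>]) -> Vec<&'a str> {
    defs.iter()
        .filter(|d| d.fn_meta == Some(call_meta))
        .map(|d| d.name)
        .collect()
}
```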

This `-Z` flag only promises two things with respect to "fn" metadata:

- Only functions that are converted (coerced) into function pointers in the
  source code will get "fn" metadata -- note that this does *not* necessarily mean that
  the function will be called via a function pointer call.
- The string node that comes after the `!"fn"` node will be *unique* for
  each function type -- the flag does *not* make any promise about the contents
  or syntax of this string node. (Having a stringified version of the function
  signature in the LLVM IR would be nice to have, but it's not required to
  produce an accurate call graph.)

Adding this kind of metadata doesn't affect LLVM optimization passes and, more
importantly, our previous experiments show that this custom metadata is not
removed by LLVM passes.

### Trait objects

There's one more bit of information we can encode in the metadata to make the
analysis less pessimistic: information about trait objects.

Consider the following Rust source code and corresponding LLVM IR.

``` rust

static TO: Mutex<&'static (dyn Foo + Sync)> = Mutex::new(&Bar);

static X: AtomicI32 = AtomicI32::new(0);

fn main() {

(*TO.lock()).foo();

if X.load(Ordering::Relaxed).quux() {

// side effect to keep `quux`'s return value

unsafe { asm!("" : : : "memory" : "volatile") }

}

}

trait Foo {

fn foo(&self) -> bool {

false

}

}

struct Bar;

impl Foo for Bar {}

struct Baz;

impl Foo for Baz {

fn foo(&self) -> bool {

true

}

}

trait Quux {

fn quux(&self) -> bool;

}

impl Quux for i32 {

#[inline(never)]

fn quux(&self) -> bool {

*TO.lock() = &Baz;

unsafe { core::ptr::read_volatile(self) > 0 }

}

}

```

``` llvm

; Function Attrs: noinline noreturn nounwind

define void @main() unnamed_addr #2 !dbg !1418 {

; ..

%8 = tail call zeroext i1 %7({}* nonnull align 1 %4) #8, !dbg !1437, !rust !0

; .. ^^^^^^^^

}

; app::Foo::foo

; Function Attrs: norecurse nounwind readnone

define internal zeroext i1 @_ZN3app3Foo3foo17h5a849e28d8bf9a2eE(

%Bar* noalias nocapture nonnull readonly align 1

) unnamed_addr #0 !dbg !1224 !rust !1 {

; .. ^^^^^^^^

}

; <app::Baz as app::Foo>::foo

; Function Attrs: norecurse nounwind readnone

define internal zeroext i1

@"_ZN37_$LT$app..Baz$u20$as$u20$app..Foo$GT$3foo17h9e4a36340940b841E"(

%Baz* noalias nocapture nonnull readonly align 1

) unnamed_addr #0 !dbg !1236 !rust !2 {

; .. ^^^^^^^^

}

; <i32 as app::Quux>::quux

; Function Attrs: noinline norecurse nounwind

define internal fastcc zeroext i1

@"_ZN33_$LT$i32$u20$as$u20$app..Quux$GT$4quux17haf5232e76b46052fE"(

i32* noalias readonly align 4 dereferenceable(4)

) unnamed_addr #1 !dbg !1245 !rust !3 {

; .. ^^^^^^^^

}

; ..

!0 = !{!"fn", !"fn(*mut ()) -> bool"}

!1 = !{!"fn", !"fn(&Bar) -> bool"}

!2 = !{!"fn", !"fn(&Baz) -> bool"}

!3 = !{!"fn", !"fn(&i32) -> bool"}

; ..

```

In this case we have dynamic dispatch, which shows up in the LLVM IR at line

`%8` as a function pointer call where the signature of the function pointer is

`i1 ({}*)`, which is more or less equivalent to Rust's `fn(*mut ()) -> bool` --

the `{}*` denotes an "erased" type.

With just the function signature metadata tools could at best assume that the

dynamic dispatch could invoke `Bar.foo()`, `Baz.foo()` or `i32.quux()` resulting

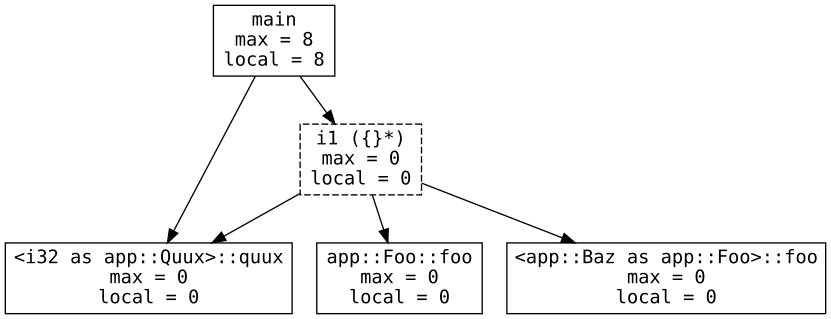

in the following, incorrect call graph.

Thus, we also propose that the `-Z call-metadata` flag add trait-method
information to trait method implementations (IR: `define`) *of traits that are
converted into trait objects*, and to dynamic dispatch sites (IR:
`call _ %_({}* _, ..)`) using the following metadata syntax: `!{!"dyn", !"Foo", !"foo"}`,
where the second node represents the trait and the third node represents the method
being dispatched / defined.

Building upon the previous example, here's how the "dyn" metadata would be

emitted by the compiler:

``` llvm

; Function Attrs: noinline noreturn nounwind

define void @main() unnamed_addr #2 !dbg !1418 {

; ..

%8 = tail call zeroext i1 %7({}* nonnull align 1 %4) #8, !dbg !1437, !rust !0

; ..

}

; app::Foo::foo

; Function Attrs: norecurse nounwind readnone

define internal zeroext i1 @_ZN3app3Foo3foo17h5a849e28d8bf9a2eE(

%Bar* noalias nocapture nonnull readonly align 1

) unnamed_addr #0 !dbg !1224 !rust !0 {

; .. ^^^^^^^^ (CHANGED)

}

; <app::Baz as app::Foo>::foo

; Function Attrs: norecurse nounwind readnone

define internal zeroext i1

@"_ZN37_$LT$app..Baz$u20$as$u20$app..Foo$GT$3foo17h9e4a36340940b841E"(

%Baz* noalias nocapture nonnull readonly align 1

) unnamed_addr #0 !dbg !1236 !rust !0 {

; .. ^^^^^^^^ (CHANGED)

}

; <i32 as app::Quux>::quux

; Function Attrs: noinline norecurse nounwind

define internal fastcc zeroext i1

@"_ZN33_$LT$i32$u20$as$u20$app..Quux$GT$4quux17haf5232e76b46052fE"(

i32* noalias readonly align 4 dereferenceable(4)

) unnamed_addr #1 !dbg !1245 {

; .. ^^^^^^^^ (REMOVED)

}

; ..

!0 = !{!"dyn", !"Foo", !"foo"} ; CHANGED

; ..

```

Note that `<i32 as Quux>::quux` loses its `!rust` metadata because there's no

`dyn Quux` in the source code.

With trait-method information tools would be able to limit the candidates of

dynamic dispatch to the actual implementations of the trait being dispatched.

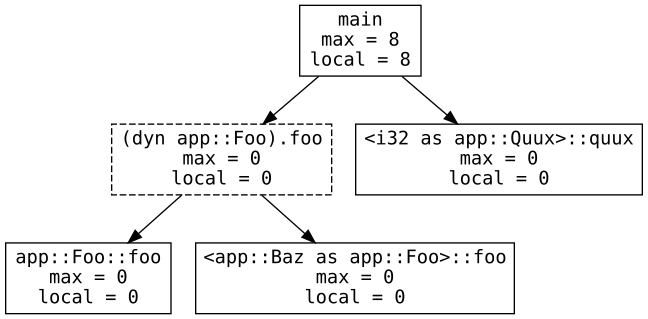

Thus the call graph produced by the tools would become:

Like "fn" metadata, "dyn" metadata only promises two things:

- Only trait method implementations (including default implementations) of
  traits *that appear as trait objects* (e.g. `&dyn Foo`, `Box<dyn Bar>`) in the
  source code will get this kind of metadata.
- The string nodes that come after the `!"dyn"` node will be *unique* for
  each trait and method -- the flag does *not* make any promise about the
  contents or syntax of these string nodes.
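The same idea applies to "dyn" metadata: a dynamic dispatch site can only reach implementations tagged with the same (trait, method) pair. Below is a sketch with hypothetical type and function names. The trait and method strings are again treated as opaque identifiers, and the symbols are the demangled names from the example above.

```rust
/// "dyn" metadata: a (trait, method) pair, compared only for equality.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct DynMeta<'a> {
    pub trait_: &'a str,
    pub method: &'a str,
}

/// A trait method implementation and its optional "dyn" metadata.
pub struct MethodImpl<'a> {
    pub symbol: &'a str,
    pub meta: Option<DynMeta<'a>>,
}

/// Implementations a dispatch site tagged with `site` may invoke.
pub fn dyn_candidates<'a>(site: DynMeta<'_>, impls: &[MethodImpl<'a>]) -> Vec<&'a str> {
    impls
        .iter()
        .filter(|m| m.meta == Some(site))
        .map(|m| m.symbol)
        .collect()
}
```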

#### Destructors

Calling the destructor of a trait object (e.g. `Box<dyn Foo>`) can result in the

destructor of any `Foo` implementer being invoked. This information will also be

encoded in the LLVM IR using "drop" metadata of the form: `!{!"drop", !"Foo"}`

where the second node represents the trait.

Here's an example of this kind of metadata:

``` rust
use std::sync::atomic::{AtomicBool, Ordering};

trait Foo {
    fn foo(&self) {}
}

struct Bar;

impl Foo for Bar {}

struct Baz;

impl Foo for Baz {}

static MAYBE: AtomicBool = AtomicBool::new(false);

fn main() {
    let mut x: Box<dyn Foo> = Box::new(Bar);
    if MAYBE.load(Ordering::Relaxed) {
        x = Box::new(Baz);
    }
    drop(x);
}
```

Unoptimized LLVM IR:

``` llvm

; core::ptr::real_drop_in_place

define internal void @_(%Baz* nonnull align 1) unnamed_addr #4 !rust !199 {

; ..

}

; core::ptr::real_drop_in_place

define internal void @_(%Bar* nonnull align 1) unnamed_addr #4 !rust !199 {

; ..

}

; hello::main

define internal void @() {

; ..

; `drop(x)`

invoke void @_ZN4core3ptr18real_drop_in_place17h258eb03c50ca2fcaE(..)

; ..

}

; core::ptr::real_drop_in_place

define internal void @_ZN4core3ptr18real_drop_in_place17h258eb03c50ca2fcaE(..) {

; ..

; calls destructor on the concrete type behind the trait object

invoke void %8({}* align 1 %3)

to label %bb3 unwind label %cleanup, !dbg !209, !rust !199

; ..

}

!199 = !{!"drop", !"Foo"}

```

Here dropping `x` can result in `Bar`'s or `Baz`'s destructor being invoked (see `!199`).
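Resolving a trait object destructor call works the same way, keyed by the trait alone. A large program can contain many such call sites, so a tool would likely build an index once rather than scan every definition for each call. Below is a minimal sketch of such an index. `index_drops` is a hypothetical helper, and the destructor symbol names are made up for illustration.

```rust
use std::collections::HashMap;

/// Indexes destructors by the trait named in their "drop" metadata.
/// Input: (destructor symbol, trait string) pairs taken from the IR.
pub fn index_drops<'a>(dtors: &[(&'a str, &'a str)]) -> HashMap<&'a str, Vec<&'a str>> {
    let mut by_trait: HashMap<&'a str, Vec<&'a str>> = HashMap::new();
    for &(symbol, trait_name) in dtors {
        by_trait.entry(trait_name).or_default().push(symbol);
    }
    by_trait
}
```

A call site tagged `!{!"drop", !"Foo"}` then resolves with a single lookup of `"Foo"`.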

### Multiple metadata

Some function definitions may get more than one kind of metadata, or several
instances of the same kind. In that case we'll use a metadata tuple
(e.g. `!{!1, !2}`) to group them. An example:

``` rust
trait Foo {
    fn foo(&self) -> bool;
}

trait Bar {
    fn bar(&self) -> i32 {
        0
    }
}

struct Baz;

impl Foo for Baz {
    fn foo(&self) -> bool {
        false
    }
}

impl Bar for Baz {}

fn main() {
    let x: &dyn Foo = &Baz;
    let y: &dyn Bar = &Baz;
    let z: fn(&Baz) -> bool = Baz::foo;
}
```

Unoptimized LLVM IR:

``` llvm

; core::ptr::real_drop_in_place

define internal void @_(%Baz* nonnull align 1) unnamed_addr #2 !rust !107 {

; ..

}

; <hello::Baz as hello::Foo>::foo

define internal zeroext i1 @_(%Baz* noalias nonnull readonly align 1) unnamed_addr #2 !rust !157 {

; ..

}

!105 = !{!"drop", !"Foo"}

!106 = !{!"drop", !"Bar"}

!107 = !{!105, !106}

; ..

!155 = !{!"dyn", !"Foo", !"foo"}

!156 = !{!"fn", !"fn(&Baz) -> bool"}

!157 = !{!155, !156}

```
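When a definition's `!rust` attachment points at a tuple such as `!107` or `!157` above, a tool has to flatten it before matching. The sketch below assumes the tool has already read the numbered metadata nodes into a table and that tuples are never cyclic. `CallMeta`, `Node` and `entries` are hypothetical names. A definition is then a candidate for a call site if *any* of its flattened entries matches.

```rust
use std::collections::HashMap;

#[derive(Clone, Debug, PartialEq, Eq)]
pub enum CallMeta {
    Fn(String),
    Dyn { trait_name: String, method: String },
    Drop(String),
}

/// A numbered metadata node (`!105`, `!157`, ...) as a tool might store it.
pub enum Node {
    Meta(CallMeta),
    Tuple(Vec<u32>), // e.g. `!157 = !{!155, !156}`
}

/// Pushes every `CallMeta` reachable from node `id` onto `out`, flattening tuples.
pub fn entries<'a>(id: u32, nodes: &'a HashMap<u32, Node>, out: &mut Vec<&'a CallMeta>) {
    match nodes.get(&id) {
        Some(Node::Meta(m)) => out.push(m),
        Some(Node::Tuple(ids)) => {
            for &child in ids {
                entries(child, nodes, out);
            }
        }
        None => {} // dangling reference: ignored in this sketch
    }
}
```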

### Summary

In summary, these are the proposed changes:

- Add an unstable `-Z call-metadata` flag.
- Using this flag adds extra LLVM metadata to the LLVM IR produced by `rustc`
  (`--emit=llvm-ir`). Three kinds of metadata will be added:
  - `!{!"fn", !"fn() -> i32"}` metadata will be added to the definitions of
    functions (IR: `define`) *that are coerced into function pointers in the
    source code* and to function pointer calls (IR: `call _ %_(..)`). The second
    node is a string that uniquely identifies the signature (type) of the
    function.
  - `!{!"dyn", !"Trait", !"method"}` metadata will be added to the trait method
    implementations (IR: `define`) of traits *that appear as trait objects in
    the source code* and to dynamic dispatch sites (IR: `call _ %_({}* _, ..)`).
    The second node is a string that uniquely identifies the trait and the third
    node is a string that uniquely identifies the trait method.
  - `!{!"drop", !"Trait"}` metadata will be added to destructors (IR: `define`)
    of types that implement traits *that appear as trait objects in the source
    code* and to the invocations of trait object destructors. The second node is
    a string that uniquely identifies the implemented trait / trait object.
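As a final illustration of the proposed syntax, here is a minimal parser for the textual form of a single metadata node. It is a sketch under simplifying assumptions. String nodes are taken to contain no `"` or `,` characters; real signatures such as `fn(i32, i32)` would break this, as would LLVM's string escaping. `parse_call_meta` is a hypothetical helper, not part of any existing tool.

```rust
#[derive(Debug, PartialEq, Eq)]
pub enum CallMeta {
    Fn(String),
    Dyn { trait_name: String, method: String },
    Drop(String),
}

/// Parses `!{!"kind", !"a", ...}` into a `CallMeta`; `None` if malformed.
pub fn parse_call_meta(text: &str) -> Option<CallMeta> {
    let inner = text.trim().strip_prefix("!{")?.strip_suffix('}')?;
    let mut fields = Vec::new();
    for field in inner.split(',') {
        // Every element must be a metadata string: `!"..."`.
        let s = field.trim().strip_prefix("!\"")?.strip_suffix('"')?;
        fields.push(s.to_string());
    }
    match fields.as_slice() {
        [kind, sig] if kind == "fn" => Some(CallMeta::Fn(sig.clone())),
        [kind, t, m] if kind == "dyn" => Some(CallMeta::Dyn {
            trait_name: t.clone(),
            method: m.clone(),
        }),
        [kind, t] if kind == "drop" => Some(CallMeta::Drop(t.clone())),
        _ => None,
    }
}
```

Rejecting anything that is not a tuple of metadata strings keeps the parser strict, so a malformed node is reported as `None` rather than silently misread.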

## Alternatives

An alternative would be to make type information available in the final binary

artifact, that is in the executable, rather than in the LLVM IR. This would make

the feature harder to implement and *less* portable. Making the type information

available in, say, the ELF format would require designing a (binary) format

to encode the information in a linker section plus non-trivial implementation

work. Making this feature available in other formats (Mach-O, WASM, etc.) would

only multiply the amount of required work, likely leading to this feature being

implemented for some formats but not others.

## Drawbacks

LLVM IR is tied to the LLVM backend; this makes the proposed feature hard, or
maybe even impossible, to port to other backends like Cranelift. I don't think
this is much of an issue as this is an experimental feature; backend portability
can, and should, be revisited when we consider stabilizing this feature (if
ever).

---

Since this is a (hopefully small) experimental compiler feature (along the lines

of [`-Z emit-stack-sizes`][pr51946]) and not a language (semantics or syntax)

change I'm posting this in rust-lang/rust for FCP consideration. If this

warrants a formal RFC I'd be happy to repost this in rust-lang/rfcs.

[pr51946]: https://github.com/rust-lang/rust/pull/51946

cc @rust-lang/compiler @oli-obk

|

A-LLVM,T-compiler,WG-embedded,needs-fcp,A-CLI

|

medium

|

Major

|

424,934,543

|

scrcpy

|

Hotkeys for record screen

|

To record a video I need to run the command:

```scrcpy -r file.mkv```

This method is inconvenient if scrcpy is already running and I need to make a video: I have to return to the console, close the program and enter the command. After recording the video, I have to close scrcpy and reopen it without parameters.

I would like to be able to control video recording from within scrcpy, namely:

1. Start recording

2. Pause recording

3. Stop recording

For this, I can use third-party software such as OBS, but when I test mobile applications in scrcpy, switching between different windows is distracting.

|

feature request,record

|

low

|

Major

|

424,949,450

|

vscode

|

[html] bracket matching in strings

|

Issue Type: <b>Bug</b>

In HTML, a less-than sign (`<`) inside a tag is sometimes recognised as opening a new tag.

For example:

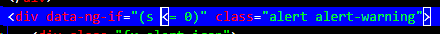

`<div data-ng-if="(something <= 0)" class="alert alert-warning">`

VS Code version: Code 1.32.3 (a3db5be9b5c6ba46bb7555ec5d60178ecc2eaae4, 2019-03-14T23:43:35.476Z)

OS version: Windows_NT x64 10.0.16299

<details>

<summary>System Info</summary>

|Item|Value|

|---|---|

|CPUs|Intel(R) Core(TM) i5-3210M CPU @ 2.50GHz (4 x 2494)|

|GPU Status|2d_canvas: enabled<br>checker_imaging: disabled_off<br>flash_3d: enabled<br>flash_stage3d: enabled<br>flash_stage3d_baseline: enabled<br>gpu_compositing: enabled<br>multiple_raster_threads: enabled_on<br>native_gpu_memory_buffers: disabled_software<br>rasterization: unavailable_off<br>surface_synchronization: enabled_on<br>video_decode: enabled<br>webgl: enabled<br>webgl2: enabled|

|Memory (System)|7.94GB (3.81GB free)|

|Process Argv||

|Screen Reader|no|

|VM|0%|

</details><details><summary>Extensions (3)</summary>

Extension|Author (truncated)|Version

---|---|---

python|ms-|2019.2.5558

cpptools|ms-|0.22.1

vscode-spotify|shy|3.1.0

(2 theme extensions excluded)

</details>

<!-- generated by issue reporter -->

|

bug,html

|

low

|

Critical

|

424,956,527

|

go

|

x/tools/cmd/goimports: prefer main module requirements

|

<!-- Please answer these questions before submitting your issue. Thanks! -->

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.12.1 linux/amd64

</pre>

### Does this issue reproduce with the latest release?

Yes

### What operating system and processor architecture are you using (`go env`)?

<details><summary><code>go env</code> Output</summary><br><pre>

$ go env

GOARCH="amd64"

GOBIN=""

GOCACHE="/home/myitcv/.cache/go-build"

GOEXE=""

GOFLAGS=""

GOHOSTARCH="amd64"

GOHOSTOS="linux"

GOOS="linux"

GOPATH="/home/myitcv/gostuff"

GOPROXY=""

GORACE=""

GOROOT="/home/myitcv/gos"

GOTMPDIR=""

GOTOOLDIR="/home/myitcv/gos/pkg/tool/linux_amd64"

GCCGO="gccgo"

CC="gcc"

CXX="g++"

CGO_ENABLED="1"

GOMOD=""

CGO_CFLAGS="-g -O2"

CGO_CPPFLAGS=""

CGO_CXXFLAGS="-g -O2"

CGO_FFLAGS="-g -O2"

CGO_LDFLAGS="-g -O2"

PKG_CONFIG="pkg-config"

GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build898038110=/tmp/go-build -gno-record-gcc-switches"

</pre></details>

### What did you do?

Ran [`testscript`](https://github.com/rogpeppe/go-internal/blob/master/cmd/testscript/README.md) on the following:

```

# install goimports

env GOMODPROXY=$GOPROXY

env GOPROXY=

go install golang.org/x/tools/cmd/goimports

# switch back to our local proxy

env GOPROXY=$GOMODPROXY

# "warm" the module (download) cache

go get gopkg.in/tomb.v1

go get gopkg.in/tomb.v2

# test goimports

cd mod

go mod tidy

exec goimports main.go

stdout '\Q"gopkg.in/tomb.v2"\E'

-- go.mod --

module goimports

require golang.org/x/tools v0.0.0-20190322203728-c1a832b0ad89

-- mod/go.mod --

module mod

require gopkg.in/tomb.v2 v2.0.0

-- mod/main.go --

package main

import (

"fmt"

)

func main() {

fmt.Println(tomb.Tomb)

}

-- .gomodproxy/gopkg.in_tomb.v1_v1.0.0/.mod --

module gopkg.in/tomb.v1

-- .gomodproxy/gopkg.in_tomb.v1_v1.0.0/.info --

{"Version":"v1.0.0","Time":"2018-10-22T18:45:39Z"}

-- .gomodproxy/gopkg.in_tomb.v1_v1.0.0/go.mod --

module gopkg.in/tomb.v1

-- .gomodproxy/gopkg.in_tomb.v1_v1.0.0/tomb.go --

package tomb

const Tomb = "A great package v1"

-- .gomodproxy/gopkg.in_tomb.v2_v2.0.0/.mod --

module gopkg.in/tomb.v2

-- .gomodproxy/gopkg.in_tomb.v2_v2.0.0/.info --

{"Version":"v2.0.0","Time":"2018-10-22T18:45:39Z"}

-- .gomodproxy/gopkg.in_tomb.v2_v2.0.0/go.mod --

module gopkg.in/tomb.v2

-- .gomodproxy/gopkg.in_tomb.v2_v2.0.0/tomb.go --

package tomb

const Tomb = "A great package v2"

```

### What did you expect to see?

A passing run.

### What did you see instead?

A failed run.

`goimports` should be "consulting" the main module for matches before dropping down to a module cache-based search. Here that would have resulted in `gopkg.in/tomb.v2` being correctly resolved, instead of `gopkg.in/tomb.v1`.

This will, I suspect, also massively improve the speed of `goimports` in a large majority of cases.

cc @heschik

|

NeedsInvestigation,Tools

|

low

|

Critical

|

424,959,472

|

opencv

|

New JasPer release fixing CVEs

|

JasPer 2.0.16 was recently released.

I would like to see a transition to OpenJPEG, as suggested in https://github.com/opencv/opencv/issues/5849, but in the meantime I think updating the sources for libjasper might be a good idea, since the new release fixes two CVEs.

I would have created a PR instead, but since the directory structure at `opencv/3rdparty/libjasper` is unfamiliar to me, I'd rather let you handle it properly.

|

category: imgcodecs

|

low

|

Minor

|

424,982,212

|

react

|

Memoized components should forward displayName

|

**Do you want to request a *feature* or report a *bug*?**

I'd like to report a bug.

**What is the current behavior?**

First of all, thanks for the great work on fixing https://github.com/facebook/react/issues/14807. However there is still an issue with the current implementation.

`React.memo` does not forward displayName for tests. In snapshots, components display as `<Component />` and string assertions such as `.find('MyMemoizedComponent')` won't work.

**What is the expected behavior?**

`React.memo` should forward displayName for the test renderer.

**Which versions of React, and which browser / OS are affected by this issue? Did this work in previous versions of React?**

* React 16.8.5

* Jest 24.5.0

* enzyme 3.9.0

* enzyme-adapter-react-16 1.11.2

---

N.B. - Potentially related to https://github.com/facebook/react/issues/14319, but this is related to the more recent changes to support `memo` in the test renderer. Please close if needed, I'm quite new here!

I'd be happy to submit a PR if the issue is not too complex to look into :smile:

|

Type: Enhancement,Component: Shallow Renderer

|

medium

|

Critical

|

424,995,504

|

flutter

|

google_maps_flutter package - CameraPosition does not follow location updates

|

The GoogleMap widget already gets the location and listens to location updates if it is instantiated with myLocationEnabled. I would like it to be able to do two additional things with this information:

1. Instantiate the map with the CameraPosition set to the user's current location.

2. Update the CameraPosition when the user's location changes. As it is, the blue dot showing the location moves, but the camera does not, letting the location marker go off screen.

I have not found any way to do this without using another plugin that gets the user's initial position and then listens to updates in location. This is not ideal since the map widget is already listening for the location, it seems redundant to have two plugins listening for locations.

|

c: new feature,p: maps,package,c: proposal,team-ecosystem,P3,triaged-ecosystem

|

low

|

Minor

|

425,000,028

|

pytorch

|

improve jit error message for legacy constructor

|

Reported by @jph00

> new_tensor is a legacy constructor and is not supported in the JIT

It'd be helpful to know in the error message what the non-legacy alternative is.

|

module: docs,triaged

|

low

|

Critical

|

425,011,574

|

go

|

x/net/nettest: extend TestConn with optional interface checks

|

EDIT: we'll proceed with Option 1.

---

For the same project as discussed in #30984, I'm using `nettest.TestConn` to test a custom `net.Conn` implementation.

Now that I've got the basics covered, I realize it'd be useful to be able to extend the tests to make sure that my `CloseRead`/`CloseWrite` methods that are not a mandatory part of `net.Conn` could also be tested on my type.

I see two options here:

1) Allow `TestConn` to perform a couple of type assertion tests to see if methods such as `CloseRead` and `CloseWrite` are implemented on the `net.Conn` type, and then run additional tests if so.

This has the advantage that anyone who consumes this package and passes a `net.Conn` with these methods will have these tests run. `net.TCPConn`, `net.UnixConn`, and my custom `vsock.Conn` type (as an example) would run these added tests, but `net.UDPConn` would not.

Perhaps it'd also make sense to have an optional test for `SyscallConn` as well, since it is widely implemented.

2) Export `timeoutWrapper` and `connTester` in some form, to enable callers to create their own local tests in a `nettest.TestConn`-style.

This could be nice to keep the amount of code in this package smaller, but it'd also mean that certain tests such as the `CloseRead`/`CloseWrite` would need to be duplicated between different projects.

With this said, I think option 1 is preferable in order to reduce duplication in the community, but I can understand why one wouldn't want to add additional complexity to this package to test methods that are not a required part of `net.Conn`.

/cc @dsnet @mikioh @acln0

|

Proposal,Proposal-Accepted

|

low

|

Minor

|

425,015,793

|

flutter

|

Support better scaling of Android platform views

|

Right now when a scale transform is applied to an AndroidView the texture is drawn scaled without scaling the virtual display and the internal view.

This can be improved by sending the scale factors to the PlatformViewsController and calling [setScaleX](https://developer.android.com/reference/android/view/View.html#setScaleX(float)) and [setScaleY]( https://developer.android.com/reference/android/view/View.html#setScaleY(float)) on the embedded view.

There will still be timing issues when the scale changes at runtime after the view is visible, but for cases where the scale is always the same, this should be a net improvement.

|

platform-android,framework,a: platform-views,c: proposal,P2,team-android,triaged-android

|

low

|

Minor

|

425,043,188

|

pytorch

|

UnpicklingError when trying to load multiple objects from a file

|

## 🐛 UnpicklingError when trying to load multiple objects from a file

Pickle allows dumping multiple self-contained objects into the same file and later loading them through subsequent reads. PyTorch's serialization behaves the same way when using an in-memory buffer like `io.BytesIO`, but raises an error when using regular files.

## To Reproduce

This is the behavior of the standard `pickle` module:

```python
import io
import torch
import pickle

b=open('/tmp/file.pt', 'wb')
for i in range(3):
    was_at = b.tell()
    pickle.dump(torch.ones(10), b)
    print(f'{i}: {was_at:04d}-{b.tell():04d} ({b.tell()-was_at:03d})')
b.close()
```

```

>>> 0: 0000-0427 (427)

>>> 1: 0427-0854 (427)

>>> 2: 0854-1281 (427)

```

```python
i = 0
b=open('/tmp/file.pt', 'rb')
while True:
    try:
        was_at = b.tell()
        pickle.load(b)
        print(f'{i}: {was_at:04d}-{b.tell():04d} ({b.tell()-was_at:03d})')
        i+=1
    except EOFError:
        break
b.close()
```

```

>>> 0: 0000-0427 (427)

>>> 1: 0427-0854 (427)

>>> 2: 0854-1281 (427)

```

PyTorch works fine with `io.BytesIO`, I get the same behavior:

```python
b=io.BytesIO()
for i in range(3):
    was_at = b.tell()
    torch.save(torch.ones(10), b)
    print(f'{i}: {was_at:04d}-{b.tell():04d} ({b.tell()-was_at:03d})')
```

```

>>> 0: 0000-0377 (377)

>>> 1: 0377-0754 (377)

>>> 2: 0754-1131 (377)

```

```python
i = 0
b.seek(0)
while True:
    try:
        was_at = b.tell()
        torch.load(b)
        print(f'{i}: {was_at:04d}-{b.tell():04d} ({b.tell()-was_at:03d})')
        i+=1
    except EOFError:
        break
```

```

>>> 0: 0000-0377 (377)

>>> 1: 0377-0754 (377)

>>> 2: 0754-1131 (377)

```

However, `UnpicklingError` is raised when using the serialization methods from PyTorch on a regular file:

```python
b=open('/tmp/file.pt', 'wb')
for i in range(3):
    was_at = b.tell()
    torch.save(torch.ones(10), b)
    print(f'{i}: {was_at:04d}-{b.tell():04d} ({b.tell()-was_at:03d})')
b.close()
```

```

>>> 0: 0000-0377 (377)

>>> 1: 0377-0754 (377)

>>> 2: 0754-1131 (377)

```

```python
i = 0
b=open('/tmp/file.pt', 'rb')
while True:
    try:
        was_at = b.tell()
        torch.load(b)
        print(f'{i}: {was_at:04d}-{b.tell():04d} ({b.tell()-was_at:03d})')
        i+=1
    except EOFError:
        break
b.close()
```

```

>>> 0: 0000--425 (-425)

---------------------------------------------------------------------------

UnpicklingError Traceback (most recent call last)

<ipython-input-38-a8789bdba75a> in <module>

12 try:

13 was_at = b.tell()

---> 14 torch.load(b)

15 print(f'{i}: {was_at:04d}-{b.tell():04d} ({b.tell()-was_at:03d})')

16 i+=1

.../python3.7/site-packages/torch/serialization.py in load(f, map_location, pickle_module)

366 f = open(f, 'rb')

367 try:

--> 368 return _load(f, map_location, pickle_module)

369 finally:

370 if new_fd:

.../python3.7/site-packages/torch/serialization.py in _load(f, map_location, pickle_module)

530 f.seek(0)

531

--> 532 magic_number = pickle_module.load(f)

533 if magic_number != MAGIC_NUMBER:

534 raise RuntimeError("Invalid magic number; corrupt file?")

UnpicklingError: invalid load key, '\x0a'.

```

Note how the read position inside the file reported by `b.tell()` turns out to be negative: `-425`.

## Expected behavior

The serialization methods of `pickle` and PyTorch should work in similar ways.

## Environment

```

PyTorch version: 1.0.1.post2

Is debug build: No

CUDA used to build PyTorch: 10.0.130

OS: Ubuntu 18.04.2 LTS

GCC version: (Ubuntu 7.3.0-27ubuntu1~18.04) 7.3.0

CMake version: Could not collect

Python version: 3.7

Is CUDA available: Yes

CUDA runtime version: 10.1

GPU models and configuration: GPU 0: GeForce GTX 1050 Ti with Max-Q Design

Nvidia driver version: 418.43

cuDNN version: Could not collect

Versions of relevant libraries:

[pip] 19.0.3

[conda] pytorch 1.0.1 py3.7_cuda10.0.130_cudnn7.4.2_2 pytorch

```

## Additional context

The main reason I want to serialize multiple objects individually rather than packing them in a list is because they represent inputs that might be created at different times from different processes and that I still want to process in batch.

|

module: pickle,module: serialization,triaged

|

low

|

Critical

|

425,071,304

|

vue

|

TypeScript: Vue types $attrs should be type Record<string, any>

|

### Version

2.6.10

### What is expected?

`Vue.prototype.$attrs` should be type `Record<string, any>` and not `Record<string, string>`.

Since `2.4.0`, `vm.$attrs` has contained extracted bindings not recognized as props. The type of these values is unknown.

### Steps to reproduce

Take the following component:

```ts
new Vue({
  data: function () {
    return {
      count: 0
    }
  },
  mounted() {
    let someFunc = this.$attrs.someBoundAttr as Function
    // Type 'string' cannot be converted to type 'Function'
  },
  template: `
    <button v-on:click="count++" :someBoundAttr="() => count">
      You clicked me {{ count }} times.
    </button>`
})
```

Notice the error in TypeScript checking:

```

Type 'string' cannot be converted to type 'Function'

```

### What is actually happening?

...

### Reproduction link

[http://www.typescriptlang.org/play/](http://www.typescriptlang.org/play/)

<!-- generated by vue-issues. DO NOT REMOVE -->

|

typescript

|

medium

|

Critical

|

425,105,330

|

go

|

x/net/nettest: add TestListener API

|

I'm currently implementing my own `net.Listener`, https://godoc.org/github.com/mdlayher/vsock#Listener (see also #30984 and #31033), and would like to ensure that it fully complies with the `net.Listener` contract, that is:

- `Accept` blocks until a connection is received or it is interrupted

- `Close` terminates the listener, but can also unblock `Accept`

- Maybe: test the `SetDeadline` method (which is commonly implemented by `net.Listener` types; see #6892) and verify that an expired deadline also unblocks `Accept` (this idea is in line with what I propose in #31033)

I've played around with this a bit locally to try to see what makes sense, and I will send a draft CL with my proposed API and a single test. The basic idea is to mirror what `TestConn` is doing, and perhaps the two can share a fair bit of internal code.

/cc @dsnet @mikioh @acln0

|

Proposal,Proposal-Accepted

|

low

|

Major

|

425,122,888

|

TypeScript

|

Enum redeclared as var inside block scope

|

**TypeScript Version:** TS Playground as of 2019-03-25

**Search Terms:** enum declarations can only merge, enum block scope, enum redeclaration

**Code**

```ts
{ enum Foo { } var Foo }
enum Bar { } var Bar;
```

**Expected behavior:**

Either both should be errors, or both should be accepted.

**Actual behavior:**

Only `Bar` is an error

**Playground Link:**

https://www.typescriptlang.org/play/index.html#src=%7B%20enum%20Foo%20%7B%20%7D%20var%20Foo%20%7D%0A%0Aenum%20Bar%20%7B%20%7D%20var%20Bar%3B%0A%0A(function%20()%20%7B%20enum%20Baz%20%7B%20%7D%20var%20Baz%20%7D)

**Related Issues:**

Found while fixing https://github.com/babel/babel/issues/9763

|

Bug

|

low

|

Critical

|

425,140,496

|

vscode

|

[json] Provide support for highlighting the source of the error in json files rather than highlighting the entire file

|

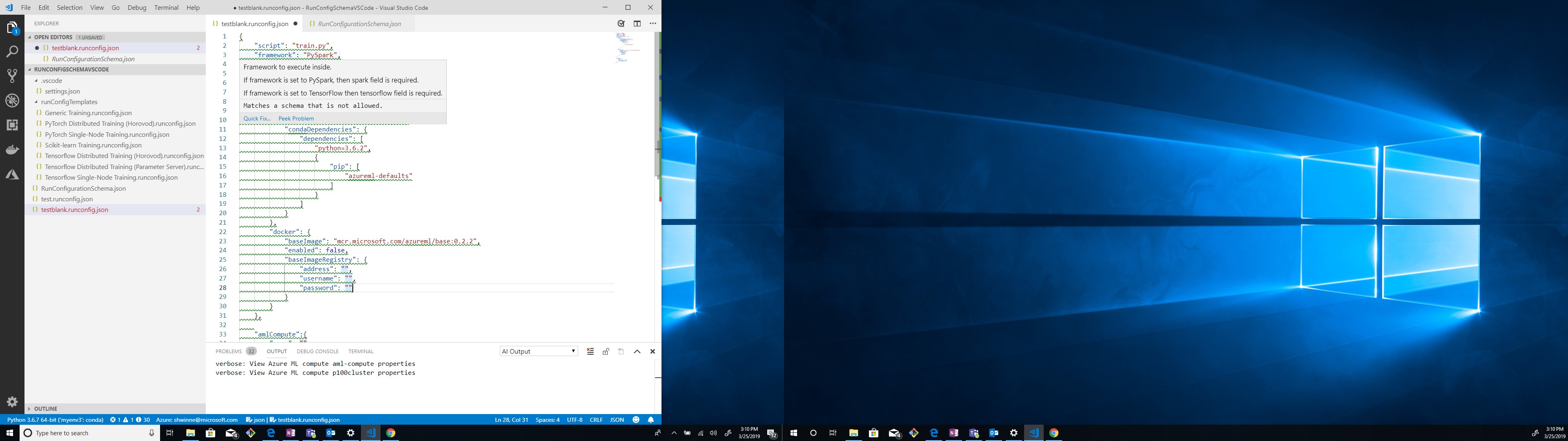

In VSCode, I have a RunConfigurationSchema.json file and a runconfig.json file open. When I edit runconfig.json and make an invalid change to a parameter (as seen for the 'framework' parameter in the attached picture), all the lines in the JSON file are highlighted and underlined with green squiggly lines. It is hard to tell where the error actually comes from unless the user hovers over the specific parameter that contains it. Can we instead highlight only the line that contains the error?

|

feature-request,json

|

low

|

Critical

|

425,170,780

|

rust

|

Add guidance when unused_imports fires on an item only used in cfg(test)

|

This is a very common source of confusion for new Rust users: They import a module for use in tests, then get an "unused import" warning when building a non-test target. Meanwhile, removing the import breaks their tests. [See here](https://users.rust-lang.org/t/seemingly-invalid-unused-import-warning/21465) for an example.

I think it would be tremendously helpful to people learning the language to provide a hint if the item is actually used in `cfg(test)`. I'm not sure how difficult this would be to implement, however.

|

C-enhancement,A-lints,T-compiler,A-libtest,A-suggestion-diagnostics,D-confusing,D-newcomer-roadblock

|

low

|

Major

|

425,172,751

|

godot

|

[3.x] Autocomplete of vars fails unless directly below and also if line previously had error

|

**Godot version:**

3.1 64-bit

**OS/device including version:**

Windows 10

**Issue description:**

Autocomplete does not work

**Steps to reproduce:**

Hello,

I'm a new user. Here are two autocomplete bugs I encountered when using Godot for the first time; they hamper my ability to learn and use the editor and the language properly.

Autocomplete not working for instances

1. Using the latest 64-bit Windows 3.1 standard edition, download the 2D Kinematic demo (or simply use the code below).

2. In the script, move the cursor to directly below line 11, where the `motion` variable is defined as a `Vector2`.

3. Type `motion.` and autocomplete presents the correct list; for example, `normalized()` is offered.

4. Move the cursor to the bottom of the script and repeat step 3. All you see are generic `Object` constants and methods; type-specific methods such as `normalized()` are not available.

I saw an older bug report about this that was marked as fixed, but it clearly is not fully fixed. This bug means you simply cannot rely on autocomplete, and scripting (for new users) becomes trial and error.

Autocomplete stops working entirely after an error is found, even after the error is cleared:

1. Using the sample script, place the cursor anywhere below the `var motion = Vector2()` line.

2. Type `motion.` and press Enter. You will get an error message saying 'expected identifier', and the line turns brown.

3. Go back to this line, remove the `.`, then type `.` again: no autocomplete is shown.

4. Delete all the text back to the start of the line and type `motion.` again: autocomplete is still not shown.

5. Delete all the text on the line, then delete again to remove the line itself; the error disappears and you can type again.

In other words, once an error is found it does not go away, even after you start editing the offending line, and features such as autocomplete keep failing.

I have also sometimes found that even after removing a line and retyping it, autocomplete gives no hints at all; I have to remove the line a couple of times before it eventually works.

Sample code for player.gd is as below:

**Minimal reproduction project:**

```gdscript
extends KinematicBody2D

# This is a demo showing how KinematicBody2D
# move_and_slide works.

# Member variables
const MOTION_SPEED = 160 # Pixels/second

func _physics_process(delta):
	var motion = Vector2()

	if Input.is_action_pressed("move_up"):
		motion += Vector2(0, -1)
	if Input.is_action_pressed("move_bottom"):
		motion += Vector2(0, 1)
	if Input.is_action_pressed("move_left"):
		motion += Vector2(-1, 0)
	if Input.is_action_pressed("move_right"):
		motion += Vector2(1, 0)

	motion = motion.normalized() * MOTION_SPEED

	move_and_slide(motion)
```

|

bug,topic:gdscript,topic:editor

|

low

|

Critical

|

425,198,177

|

go

|

x/review: use alternative remote repository

|

### What version of Go are you using (`go version`)?

<pre>

$ go version

go version go1.12.1 linux/amd64