problem_id (stringlengths 18 to 22) | source (stringclasses, 1 value) | task_type (stringclasses, 1 value) | in_source_id (stringlengths 13 to 58) | prompt (stringlengths 1.71k to 18.9k) | golden_diff (stringlengths 145 to 5.13k) | verification_info (stringlengths 465 to 23.6k) | num_tokens_prompt (int64, 556 to 4.1k) | num_tokens_diff (int64, 47 to 1.02k) |
|---|---|---|---|---|---|---|---|---|

gh_patches_debug_37791

|

rasdani/github-patches

|

git_diff

|

Lightning-AI__pytorch-lightning-1378

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

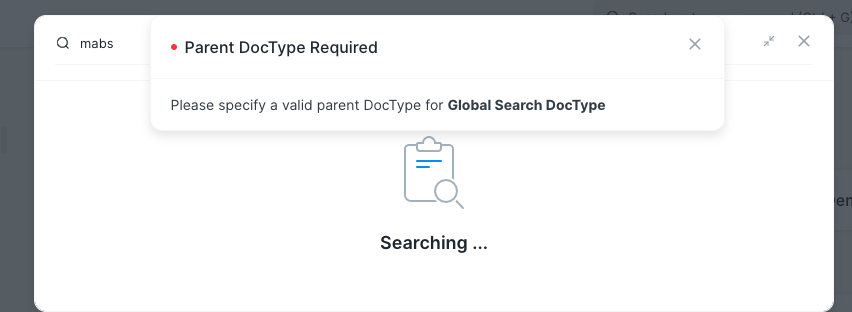

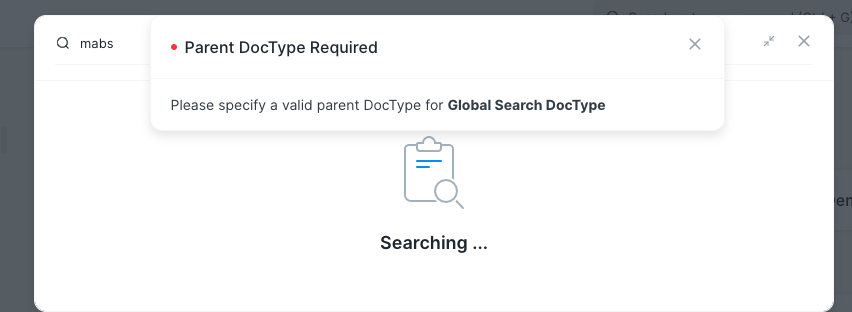

<issue>

Training loop temporarily hangs after every 4 steps

I am porting some of my code to pytorch lightning, and everything seems to work fine. However, for some reason after every 4 training steps I see some temporary hanging (~1 second), which is severely slowing down my overall training time. Am I missing some obvious configuration? This is my Trainer configuration:

```

trainer = pl.Trainer(

gpus=8

num_nodes=1,

distributed_backend='ddp',

checkpoint_callback=False,

max_epochs=50,

max_steps=None,

progress_bar_refresh_rate=1,

check_val_every_n_epoch=1,

val_check_interval=1.0,

gradient_clip_val=0.0,

log_save_interval=0,

num_sanity_val_steps=0,

amp_level='O0',

)

```

</issue>

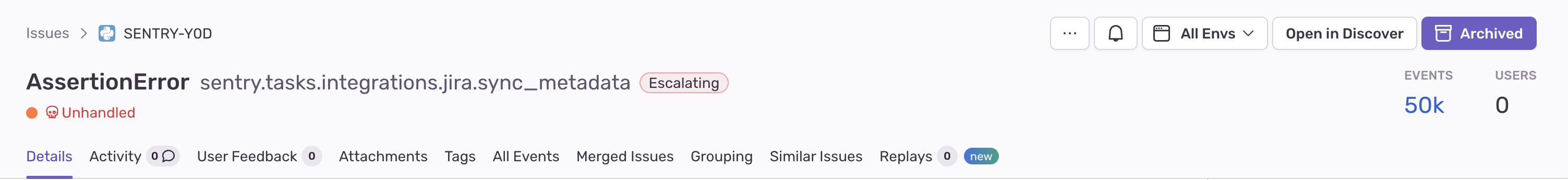

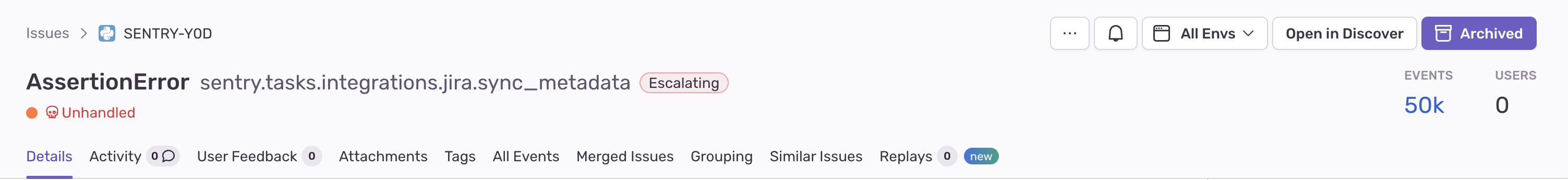

<code>

[start of pytorch_lightning/trainer/data_loading.py]

1 from abc import ABC, abstractmethod

2 from typing import Union, List, Tuple, Callable

3

4 import torch.distributed as torch_distrib

5 from torch.utils.data import SequentialSampler, DataLoader

6 from torch.utils.data.distributed import DistributedSampler

7

8 from pytorch_lightning.core import LightningModule

9 from pytorch_lightning.utilities.exceptions import MisconfigurationException

10

11 try:

12 from apex import amp

13 except ImportError:

14 APEX_AVAILABLE = False

15 else:

16 APEX_AVAILABLE = True

17

18 try:

19 import torch_xla

20 import torch_xla.core.xla_model as xm

21 import torch_xla.distributed.xla_multiprocessing as xmp

22 except ImportError:

23 XLA_AVAILABLE = False

24 else:

25 XLA_AVAILABLE = True

26

27

28 def _has_len(dataloader: DataLoader) -> bool:

29 """ Checks if a given Dataloader has __len__ method implemented i.e. if

30 it is a finite dataloader or infinite dataloader """

31 try:

32 # try getting the length

33 if len(dataloader) == 0:

34 raise ValueError('Dataloader returned 0 length. Please make sure'

35 ' that your Dataloader atleast returns 1 batch')

36 return True

37 except TypeError:

38 return False

39

40

41 class TrainerDataLoadingMixin(ABC):

42

43 # this is just a summary on variables used in this abstract class,

44 # the proper values/initialisation should be done in child class

45 proc_rank: int

46 use_ddp: bool

47 use_ddp2: bool

48 shown_warnings: ...

49 val_check_interval: float

50 use_tpu: bool

51 tpu_local_core_rank: int

52 train_dataloader: DataLoader

53 num_training_batches: Union[int, float]

54 val_check_batch: ...

55 val_dataloaders: List[DataLoader]

56 num_val_batches: Union[int, float]

57 test_dataloaders: List[DataLoader]

58 num_test_batches: Union[int, float]

59 train_percent_check: float

60 val_percent_check: float

61 test_percent_check: float

62

63 @abstractmethod

64 def is_overriden(self, *args):

65 """Warning: this is just empty shell for code implemented in other class."""

66

67 def _percent_range_check(self, name: str) -> None:

68 value = getattr(self, name)

69 msg = f'`{name}` must lie in the range [0.0, 1.0], but got {value:.3f}.'

70 if name == 'val_check_interval':

71 msg += ' If you want to disable validation set `val_percent_check` to 0.0 instead.'

72

73 if not 0. <= value <= 1.:

74 raise ValueError(msg)

75

76 def auto_add_sampler(self, dataloader: DataLoader, train: bool) -> DataLoader:

77

78 # don't do anything if it's not a dataloader

79 if not isinstance(dataloader, DataLoader):

80 return dataloader

81

82 need_dist_sampler = self.use_ddp or self.use_ddp2 or self.use_tpu

83 no_sampler_added = dataloader.sampler is None

84

85 if need_dist_sampler and no_sampler_added:

86

87 skip_keys = ['sampler', 'batch_sampler', 'dataset_kind']

88

89 dl_args = {

90 k: v for k, v in dataloader.__dict__.items() if not k.startswith('_') and k not in skip_keys

91 }

92

93 if self.use_tpu:

94 sampler = DistributedSampler(

95 dataloader.dataset,

96 num_replicas=xm.xrt_world_size(),

97 rank=xm.get_ordinal()

98 )

99 else:

100 sampler = DistributedSampler(dataloader.dataset)

101

102 dl_args['sampler'] = sampler

103 dataloader = type(dataloader)(**dl_args)

104

105 return dataloader

106

107 def reset_train_dataloader(self, model: LightningModule) -> None:

108 """Resets the train dataloader and initialises required variables

109 (number of batches, when to validate, etc.).

110

111 Args:

112 model: The current `LightningModule`

113 """

114 self.train_dataloader = self.request_dataloader(model.train_dataloader)

115 self.num_training_batches = 0

116

117 # automatically add samplers

118 self.train_dataloader = self.auto_add_sampler(self.train_dataloader, train=True)

119

120 self._percent_range_check('train_percent_check')

121

122 if not _has_len(self.train_dataloader):

123 self.num_training_batches = float('inf')

124 else:

125 # try getting the length

126 self.num_training_batches = len(self.train_dataloader)

127 self.num_training_batches = int(self.num_training_batches * self.train_percent_check)

128

129 # determine when to check validation

130 # if int passed in, val checks that often

131 # otherwise, it checks in [0, 1.0] % range of a training epoch

132 if isinstance(self.val_check_interval, int):

133 self.val_check_batch = self.val_check_interval

134 if self.val_check_batch > self.num_training_batches:

135 raise ValueError(

136 f'`val_check_interval` ({self.val_check_interval}) must be less than or equal '

137 f'to the number of the training batches ({self.num_training_batches}). '

138 'If you want to disable validation set `val_percent_check` to 0.0 instead.')

139 else:

140 if not _has_len(self.train_dataloader):

141 if self.val_check_interval == 1.0:

142 self.val_check_batch = float('inf')

143 else:

144 raise MisconfigurationException(

145 'When using an infinite DataLoader (e.g. with an IterableDataset or when '

146 'DataLoader does not implement `__len__`) for `train_dataloader`, '

147 '`Trainer(val_check_interval)` must be `1.0` or an int. An int k specifies '

148 'checking validation every k training batches.')

149 else:

150 self._percent_range_check('val_check_interval')

151

152 self.val_check_batch = int(self.num_training_batches * self.val_check_interval)

153 self.val_check_batch = max(1, self.val_check_batch)

154

155 def _reset_eval_dataloader(self, model: LightningModule,

156 mode: str) -> Tuple[int, List[DataLoader]]:

157 """Generic method to reset a dataloader for evaluation.

158

159 Args:

160 model: The current `LightningModule`

161 mode: Either `'val'` or `'test'`

162

163 Returns:

164 Tuple (num_batches, dataloaders)

165 """

166 dataloaders = self.request_dataloader(getattr(model, f'{mode}_dataloader'))

167

168 if not isinstance(dataloaders, list):

169 dataloaders = [dataloaders]

170

171 # add samplers

172 dataloaders = [self.auto_add_sampler(dl, train=False) for dl in dataloaders if dl]

173

174 num_batches = 0

175

176 # determine number of batches

177 # datasets could be none, 1 or 2+

178 if len(dataloaders) != 0:

179 for dataloader in dataloaders:

180 if not _has_len(dataloader):

181 num_batches = float('inf')

182 break

183

184 percent_check = getattr(self, f'{mode}_percent_check')

185

186 if num_batches != float('inf'):

187 self._percent_range_check(f'{mode}_percent_check')

188

189 num_batches = sum(len(dataloader) for dataloader in dataloaders)

190 num_batches = int(num_batches * percent_check)

191 elif percent_check not in (0.0, 1.0):

192 raise MisconfigurationException(

193 'When using an infinite DataLoader (e.g. with an IterableDataset or when '

194 f'DataLoader does not implement `__len__`) for `{mode}_dataloader`, '

195 f'`Trainer({mode}_percent_check)` must be `0.0` or `1.0`.')

196 return num_batches, dataloaders

197

198 def reset_val_dataloader(self, model: LightningModule) -> None:

199 """Resets the validation dataloader and determines the number of batches.

200

201 Args:

202 model: The current `LightningModule`

203 """

204 if self.is_overriden('validation_step'):

205 self.num_val_batches, self.val_dataloaders =\

206 self._reset_eval_dataloader(model, 'val')

207

208 def reset_test_dataloader(self, model) -> None:

209 """Resets the validation dataloader and determines the number of batches.

210

211 Args:

212 model: The current `LightningModule`

213 """

214 if self.is_overriden('test_step'):

215 self.num_test_batches, self.test_dataloaders =\

216 self._reset_eval_dataloader(model, 'test')

217

218 def request_dataloader(self, dataloader_fx: Callable) -> DataLoader:

219 """Handles downloading data in the GPU or TPU case.

220

221 Args:

222 dataloader_fx: The bound dataloader getter

223

224 Returns:

225 The dataloader

226 """

227 dataloader = dataloader_fx()

228

229 # get the function we'll use to get data

230 if self.use_ddp or self.use_ddp2:

231 # all processes wait until data download has happened

232 torch_distrib.barrier()

233

234 # data download/load on TPU

235 elif self.use_tpu and XLA_AVAILABLE:

236 # all processes wait until data download has happened

237 torch_xla.core.xla_model.rendezvous('pl.TrainerDataLoadingMixin.get_dataloaders')

238

239 return dataloader

240

241 def determine_data_use_amount(self, train_percent_check: float, val_percent_check: float,

242 test_percent_check: float, overfit_pct: float) -> None:

243 """Use less data for debugging purposes

244 """

245 self.train_percent_check = train_percent_check

246 self.val_percent_check = val_percent_check

247 self.test_percent_check = test_percent_check

248 if overfit_pct > 0:

249 if overfit_pct > 1:

250 raise ValueError(

251 f'`overfit_pct` must be not greater than 1.0, but got {overfit_pct:.3f}.')

252

253 self.train_percent_check = overfit_pct

254 self.val_percent_check = overfit_pct

255 self.test_percent_check = overfit_pct

256

[end of pytorch_lightning/trainer/data_loading.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/pytorch_lightning/trainer/data_loading.py b/pytorch_lightning/trainer/data_loading.py

--- a/pytorch_lightning/trainer/data_loading.py

+++ b/pytorch_lightning/trainer/data_loading.py

@@ -1,8 +1,9 @@

+import warnings

from abc import ABC, abstractmethod

from typing import Union, List, Tuple, Callable

import torch.distributed as torch_distrib

-from torch.utils.data import SequentialSampler, DataLoader

+from torch.utils.data import DataLoader

from torch.utils.data.distributed import DistributedSampler

from pytorch_lightning.core import LightningModule

@@ -73,6 +74,12 @@

if not 0. <= value <= 1.:

raise ValueError(msg)

+ def _worker_check(self, dataloader: DataLoader, name: str) -> None:

+ if isinstance(dataloader, DataLoader) and dataloader.num_workers <= 2:

+ warnings.warn(f'The dataloader, {name}, does not have many workers which may be a bottleneck.'

+ ' Consider increasing the value of the `num_workers` argument`'

+ ' in the `DataLoader` init to improve performance.')

+

def auto_add_sampler(self, dataloader: DataLoader, train: bool) -> DataLoader:

# don't do anything if it's not a dataloader

@@ -112,11 +119,13 @@

model: The current `LightningModule`

"""

self.train_dataloader = self.request_dataloader(model.train_dataloader)

+

self.num_training_batches = 0

# automatically add samplers

self.train_dataloader = self.auto_add_sampler(self.train_dataloader, train=True)

+ self._worker_check(self.train_dataloader, 'train dataloader')

self._percent_range_check('train_percent_check')

if not _has_len(self.train_dataloader):

@@ -176,10 +185,10 @@

# determine number of batches

# datasets could be none, 1 or 2+

if len(dataloaders) != 0:

- for dataloader in dataloaders:

+ for i, dataloader in enumerate(dataloaders):

+ self._worker_check(dataloader, f'{mode} dataloader {i}')

if not _has_len(dataloader):

num_batches = float('inf')

- break

percent_check = getattr(self, f'{mode}_percent_check')

|

{"golden_diff": "diff --git a/pytorch_lightning/trainer/data_loading.py b/pytorch_lightning/trainer/data_loading.py\n--- a/pytorch_lightning/trainer/data_loading.py\n+++ b/pytorch_lightning/trainer/data_loading.py\n@@ -1,8 +1,9 @@\n+import warnings\n from abc import ABC, abstractmethod\n from typing import Union, List, Tuple, Callable\n \n import torch.distributed as torch_distrib\n-from torch.utils.data import SequentialSampler, DataLoader\n+from torch.utils.data import DataLoader\n from torch.utils.data.distributed import DistributedSampler\n \n from pytorch_lightning.core import LightningModule\n@@ -73,6 +74,12 @@\n if not 0. <= value <= 1.:\n raise ValueError(msg)\n \n+ def _worker_check(self, dataloader: DataLoader, name: str) -> None:\n+ if isinstance(dataloader, DataLoader) and dataloader.num_workers <= 2:\n+ warnings.warn(f'The dataloader, {name}, does not have many workers which may be a bottleneck.'\n+ ' Consider increasing the value of the `num_workers` argument`'\n+ ' in the `DataLoader` init to improve performance.')\n+\n def auto_add_sampler(self, dataloader: DataLoader, train: bool) -> DataLoader:\n \n # don't do anything if it's not a dataloader\n@@ -112,11 +119,13 @@\n model: The current `LightningModule`\n \"\"\"\n self.train_dataloader = self.request_dataloader(model.train_dataloader)\n+\n self.num_training_batches = 0\n \n # automatically add samplers\n self.train_dataloader = self.auto_add_sampler(self.train_dataloader, train=True)\n \n+ self._worker_check(self.train_dataloader, 'train dataloader')\n self._percent_range_check('train_percent_check')\n \n if not _has_len(self.train_dataloader):\n@@ -176,10 +185,10 @@\n # determine number of batches\n # datasets could be none, 1 or 2+\n if len(dataloaders) != 0:\n- for dataloader in dataloaders:\n+ for i, dataloader in enumerate(dataloaders):\n+ self._worker_check(dataloader, f'{mode} dataloader {i}')\n if not _has_len(dataloader):\n num_batches = float('inf')\n- break\n \n percent_check = 
getattr(self, f'{mode}_percent_check')\n", "issue": "Training loop temporarily hangs after every 4 steps\nI am porting some of my code to pytorch lightning, and everything seems to work fine. However, for some reason after every 4 training steps I see some temporary hanging (~1 second), which is severely slowing down my overall training time. Am I missing some obvious configuration? This is my Trainer configuration:\r\n\r\n```\r\n trainer = pl.Trainer(\r\n gpus=8\r\n num_nodes=1,\r\n distributed_backend='ddp',\r\n checkpoint_callback=False,\r\n max_epochs=50,\r\n max_steps=None,\r\n progress_bar_refresh_rate=1,\r\n check_val_every_n_epoch=1,\r\n val_check_interval=1.0,\r\n gradient_clip_val=0.0,\r\n log_save_interval=0,\r\n num_sanity_val_steps=0,\r\n amp_level='O0',\r\n )\r\n```\r\n\r\n\n", "before_files": [{"content": "from abc import ABC, abstractmethod\nfrom typing import Union, List, Tuple, Callable\n\nimport torch.distributed as torch_distrib\nfrom torch.utils.data import SequentialSampler, DataLoader\nfrom torch.utils.data.distributed import DistributedSampler\n\nfrom pytorch_lightning.core import LightningModule\nfrom pytorch_lightning.utilities.exceptions import MisconfigurationException\n\ntry:\n from apex import amp\nexcept ImportError:\n APEX_AVAILABLE = False\nelse:\n APEX_AVAILABLE = True\n\ntry:\n import torch_xla\n import torch_xla.core.xla_model as xm\n import torch_xla.distributed.xla_multiprocessing as xmp\nexcept ImportError:\n XLA_AVAILABLE = False\nelse:\n XLA_AVAILABLE = True\n\n\ndef _has_len(dataloader: DataLoader) -> bool:\n \"\"\" Checks if a given Dataloader has __len__ method implemented i.e. if\n it is a finite dataloader or infinite dataloader \"\"\"\n try:\n # try getting the length\n if len(dataloader) == 0:\n raise ValueError('Dataloader returned 0 length. 
Please make sure'\n ' that your Dataloader atleast returns 1 batch')\n return True\n except TypeError:\n return False\n\n\nclass TrainerDataLoadingMixin(ABC):\n\n # this is just a summary on variables used in this abstract class,\n # the proper values/initialisation should be done in child class\n proc_rank: int\n use_ddp: bool\n use_ddp2: bool\n shown_warnings: ...\n val_check_interval: float\n use_tpu: bool\n tpu_local_core_rank: int\n train_dataloader: DataLoader\n num_training_batches: Union[int, float]\n val_check_batch: ...\n val_dataloaders: List[DataLoader]\n num_val_batches: Union[int, float]\n test_dataloaders: List[DataLoader]\n num_test_batches: Union[int, float]\n train_percent_check: float\n val_percent_check: float\n test_percent_check: float\n\n @abstractmethod\n def is_overriden(self, *args):\n \"\"\"Warning: this is just empty shell for code implemented in other class.\"\"\"\n\n def _percent_range_check(self, name: str) -> None:\n value = getattr(self, name)\n msg = f'`{name}` must lie in the range [0.0, 1.0], but got {value:.3f}.'\n if name == 'val_check_interval':\n msg += ' If you want to disable validation set `val_percent_check` to 0.0 instead.'\n\n if not 0. 
<= value <= 1.:\n raise ValueError(msg)\n\n def auto_add_sampler(self, dataloader: DataLoader, train: bool) -> DataLoader:\n\n # don't do anything if it's not a dataloader\n if not isinstance(dataloader, DataLoader):\n return dataloader\n\n need_dist_sampler = self.use_ddp or self.use_ddp2 or self.use_tpu\n no_sampler_added = dataloader.sampler is None\n\n if need_dist_sampler and no_sampler_added:\n\n skip_keys = ['sampler', 'batch_sampler', 'dataset_kind']\n\n dl_args = {\n k: v for k, v in dataloader.__dict__.items() if not k.startswith('_') and k not in skip_keys\n }\n\n if self.use_tpu:\n sampler = DistributedSampler(\n dataloader.dataset,\n num_replicas=xm.xrt_world_size(),\n rank=xm.get_ordinal()\n )\n else:\n sampler = DistributedSampler(dataloader.dataset)\n\n dl_args['sampler'] = sampler\n dataloader = type(dataloader)(**dl_args)\n\n return dataloader\n\n def reset_train_dataloader(self, model: LightningModule) -> None:\n \"\"\"Resets the train dataloader and initialises required variables\n (number of batches, when to validate, etc.).\n\n Args:\n model: The current `LightningModule`\n \"\"\"\n self.train_dataloader = self.request_dataloader(model.train_dataloader)\n self.num_training_batches = 0\n\n # automatically add samplers\n self.train_dataloader = self.auto_add_sampler(self.train_dataloader, train=True)\n\n self._percent_range_check('train_percent_check')\n\n if not _has_len(self.train_dataloader):\n self.num_training_batches = float('inf')\n else:\n # try getting the length\n self.num_training_batches = len(self.train_dataloader)\n self.num_training_batches = int(self.num_training_batches * self.train_percent_check)\n\n # determine when to check validation\n # if int passed in, val checks that often\n # otherwise, it checks in [0, 1.0] % range of a training epoch\n if isinstance(self.val_check_interval, int):\n self.val_check_batch = self.val_check_interval\n if self.val_check_batch > self.num_training_batches:\n raise ValueError(\n 
f'`val_check_interval` ({self.val_check_interval}) must be less than or equal '\n f'to the number of the training batches ({self.num_training_batches}). '\n 'If you want to disable validation set `val_percent_check` to 0.0 instead.')\n else:\n if not _has_len(self.train_dataloader):\n if self.val_check_interval == 1.0:\n self.val_check_batch = float('inf')\n else:\n raise MisconfigurationException(\n 'When using an infinite DataLoader (e.g. with an IterableDataset or when '\n 'DataLoader does not implement `__len__`) for `train_dataloader`, '\n '`Trainer(val_check_interval)` must be `1.0` or an int. An int k specifies '\n 'checking validation every k training batches.')\n else:\n self._percent_range_check('val_check_interval')\n\n self.val_check_batch = int(self.num_training_batches * self.val_check_interval)\n self.val_check_batch = max(1, self.val_check_batch)\n\n def _reset_eval_dataloader(self, model: LightningModule,\n mode: str) -> Tuple[int, List[DataLoader]]:\n \"\"\"Generic method to reset a dataloader for evaluation.\n\n Args:\n model: The current `LightningModule`\n mode: Either `'val'` or `'test'`\n\n Returns:\n Tuple (num_batches, dataloaders)\n \"\"\"\n dataloaders = self.request_dataloader(getattr(model, f'{mode}_dataloader'))\n\n if not isinstance(dataloaders, list):\n dataloaders = [dataloaders]\n\n # add samplers\n dataloaders = [self.auto_add_sampler(dl, train=False) for dl in dataloaders if dl]\n\n num_batches = 0\n\n # determine number of batches\n # datasets could be none, 1 or 2+\n if len(dataloaders) != 0:\n for dataloader in dataloaders:\n if not _has_len(dataloader):\n num_batches = float('inf')\n break\n\n percent_check = getattr(self, f'{mode}_percent_check')\n\n if num_batches != float('inf'):\n self._percent_range_check(f'{mode}_percent_check')\n\n num_batches = sum(len(dataloader) for dataloader in dataloaders)\n num_batches = int(num_batches * percent_check)\n elif percent_check not in (0.0, 1.0):\n raise MisconfigurationException(\n 
'When using an infinite DataLoader (e.g. with an IterableDataset or when '\n f'DataLoader does not implement `__len__`) for `{mode}_dataloader`, '\n f'`Trainer({mode}_percent_check)` must be `0.0` or `1.0`.')\n return num_batches, dataloaders\n\n def reset_val_dataloader(self, model: LightningModule) -> None:\n \"\"\"Resets the validation dataloader and determines the number of batches.\n\n Args:\n model: The current `LightningModule`\n \"\"\"\n if self.is_overriden('validation_step'):\n self.num_val_batches, self.val_dataloaders =\\\n self._reset_eval_dataloader(model, 'val')\n\n def reset_test_dataloader(self, model) -> None:\n \"\"\"Resets the validation dataloader and determines the number of batches.\n\n Args:\n model: The current `LightningModule`\n \"\"\"\n if self.is_overriden('test_step'):\n self.num_test_batches, self.test_dataloaders =\\\n self._reset_eval_dataloader(model, 'test')\n\n def request_dataloader(self, dataloader_fx: Callable) -> DataLoader:\n \"\"\"Handles downloading data in the GPU or TPU case.\n\n Args:\n dataloader_fx: The bound dataloader getter\n\n Returns:\n The dataloader\n \"\"\"\n dataloader = dataloader_fx()\n\n # get the function we'll use to get data\n if self.use_ddp or self.use_ddp2:\n # all processes wait until data download has happened\n torch_distrib.barrier()\n\n # data download/load on TPU\n elif self.use_tpu and XLA_AVAILABLE:\n # all processes wait until data download has happened\n torch_xla.core.xla_model.rendezvous('pl.TrainerDataLoadingMixin.get_dataloaders')\n\n return dataloader\n\n def determine_data_use_amount(self, train_percent_check: float, val_percent_check: float,\n test_percent_check: float, overfit_pct: float) -> None:\n \"\"\"Use less data for debugging purposes\n \"\"\"\n self.train_percent_check = train_percent_check\n self.val_percent_check = val_percent_check\n self.test_percent_check = test_percent_check\n if overfit_pct > 0:\n if overfit_pct > 1:\n raise ValueError(\n f'`overfit_pct` must be not 
greater than 1.0, but got {overfit_pct:.3f}.')\n\n self.train_percent_check = overfit_pct\n self.val_percent_check = overfit_pct\n self.test_percent_check = overfit_pct\n", "path": "pytorch_lightning/trainer/data_loading.py"}]}

| 3,590 | 539 |
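
Note on the record above: its golden diff adds a `_worker_check` helper that warns when a `DataLoader` has two or fewer workers, since a low `num_workers` may be a data-loading bottleneck. The snippet below is a minimal standalone sketch of that idea, not the library's code. `FakeLoader`, the `threshold` parameter, and the boolean return value are hypothetical and exist only so the sketch runs without PyTorch installed.

```python
import warnings
from dataclasses import dataclass


@dataclass
class FakeLoader:
    """Hypothetical stand-in for a DataLoader; only `num_workers` is modelled."""
    num_workers: int = 0


def worker_check(loader, name, threshold=2):
    """Warn if `loader` has `threshold` or fewer workers.

    Returns True when a warning was issued, False otherwise.
    Objects without a `num_workers` attribute are ignored.
    """
    num_workers = getattr(loader, "num_workers", None)
    if num_workers is not None and num_workers <= threshold:
        warnings.warn(
            f"The dataloader, {name}, does not have many workers which may be a bottleneck. "
            "Consider increasing the value of the `num_workers` argument."
        )
        return True
    return False
```

Calling `worker_check(FakeLoader(num_workers=0), "train dataloader")` would issue the warning, while a loader with eight workers would pass silently.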

gh_patches_debug_26209

|

rasdani/github-patches

|

git_diff

|

Cog-Creators__Red-DiscordBot-3166

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[p]announce fails if bot belongs to team

# Command bugs

#### Command name

`announce`

#### What cog is this command from?

`Admin`

#### What were you expecting to happen?

Send announcement to all enabled servers, if failed, send message to the one of owners or all owners (like an `[p]contact`)

#### What actually happened?

announcement failed almost immediately with error in console

#### How can we reproduce this issue?

1. Set bot with token belonging to team

2. Create environment, where bot cant send announcement to server

3. Announce an message

4. `[p]announce` silently fails with error:

```py

Traceback (most recent call last):

File "/home/fixator/Red-V3/lib/python3.7/site-packages/redbot/cogs/admin/announcer.py", line 67, in announcer

await channel.send(self.message)

File "/home/fixator/Red-V3/lib/python3.7/site-packages/discord/abc.py", line 823, in send

data = await state.http.send_message(channel.id, content, tts=tts, embed=embed, nonce=nonce)

File "/home/fixator/Red-V3/lib/python3.7/site-packages/discord/http.py", line 218, in request

raise Forbidden(r, data)

discord.errors.Forbidden: 403 FORBIDDEN (error code: 50001): Missing Access

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/fixator/Red-V3/lib/python3.7/site-packages/redbot/cogs/admin/announcer.py", line 70, in announcer

_("I could not announce to server: {server.id}").format(server=g)

File "/home/fixator/Red-V3/lib/python3.7/site-packages/discord/abc.py", line 823, in send

data = await state.http.send_message(channel.id, content, tts=tts, embed=embed, nonce=nonce)

File "/home/fixator/Red-V3/lib/python3.7/site-packages/discord/http.py", line 218, in request

raise Forbidden(r, data)

discord.errors.Forbidden: 403 FORBIDDEN (error code: 50007): Cannot send messages to this user

```

Caused by https://github.com/Cog-Creators/Red-DiscordBot/blob/f0836d7182d99239d1fde24cf2231c6ebf206f72/redbot/cogs/admin/announcer.py#L56

*Kinda related to #2781, i guess*

</issue>

<code>

[start of redbot/cogs/admin/announcer.py]

1 import asyncio

2

3 import discord

4 from redbot.core import commands

5 from redbot.core.i18n import Translator

6

7 _ = Translator("Announcer", __file__)

8

9

10 class Announcer:

11 def __init__(self, ctx: commands.Context, message: str, config=None):

12 """

13 :param ctx:

14 :param message:

15 :param config: Used to determine channel overrides

16 """

17 self.ctx = ctx

18 self.message = message

19 self.config = config

20

21 self.active = None

22

23 def start(self):

24 """

25 Starts an announcement.

26 :return:

27 """

28 if self.active is None:

29 self.active = True

30 self.ctx.bot.loop.create_task(self.announcer())

31

32 def cancel(self):

33 """

34 Cancels a running announcement.

35 :return:

36 """

37 self.active = False

38

39 async def _get_announce_channel(self, guild: discord.Guild) -> discord.TextChannel:

40 channel_id = await self.config.guild(guild).announce_channel()

41 channel = None

42

43 if channel_id is not None:

44 channel = guild.get_channel(channel_id)

45

46 if channel is None:

47 channel = guild.system_channel

48

49 if channel is None:

50 channel = guild.text_channels[0]

51

52 return channel

53

54 async def announcer(self):

55 guild_list = self.ctx.bot.guilds

56 bot_owner = (await self.ctx.bot.application_info()).owner

57 for g in guild_list:

58 if not self.active:

59 return

60

61 if await self.config.guild(g).announce_ignore():

62 continue

63

64 channel = await self._get_announce_channel(g)

65

66 try:

67 await channel.send(self.message)

68 except discord.Forbidden:

69 await bot_owner.send(

70 _("I could not announce to server: {server.id}").format(server=g)

71 )

72 await asyncio.sleep(0.5)

73

74 self.active = False

75

[end of redbot/cogs/admin/announcer.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/redbot/cogs/admin/announcer.py b/redbot/cogs/admin/announcer.py

--- a/redbot/cogs/admin/announcer.py

+++ b/redbot/cogs/admin/announcer.py

@@ -3,6 +3,7 @@

import discord

from redbot.core import commands

from redbot.core.i18n import Translator

+from redbot.core.utils.chat_formatting import humanize_list, inline

_ = Translator("Announcer", __file__)

@@ -53,7 +54,7 @@

async def announcer(self):

guild_list = self.ctx.bot.guilds

- bot_owner = (await self.ctx.bot.application_info()).owner

+ failed = []

for g in guild_list:

if not self.active:

return

@@ -66,9 +67,14 @@

try:

await channel.send(self.message)

except discord.Forbidden:

- await bot_owner.send(

- _("I could not announce to server: {server.id}").format(server=g)

- )

+ failed.append(str(g.id))

await asyncio.sleep(0.5)

+ msg = (

+ _("I could not announce to the following server: ")

+ if len(failed) == 1

+ else _("I could not announce to the following servers: ")

+ )

+ msg += humanize_list(tuple(map(inline, failed)))

+ await self.ctx.bot.send_to_owners(msg)

self.active = False

|

{"golden_diff": "diff --git a/redbot/cogs/admin/announcer.py b/redbot/cogs/admin/announcer.py\n--- a/redbot/cogs/admin/announcer.py\n+++ b/redbot/cogs/admin/announcer.py\n@@ -3,6 +3,7 @@\n import discord\n from redbot.core import commands\n from redbot.core.i18n import Translator\n+from redbot.core.utils.chat_formatting import humanize_list, inline\n \n _ = Translator(\"Announcer\", __file__)\n \n@@ -53,7 +54,7 @@\n \n async def announcer(self):\n guild_list = self.ctx.bot.guilds\n- bot_owner = (await self.ctx.bot.application_info()).owner\n+ failed = []\n for g in guild_list:\n if not self.active:\n return\n@@ -66,9 +67,14 @@\n try:\n await channel.send(self.message)\n except discord.Forbidden:\n- await bot_owner.send(\n- _(\"I could not announce to server: {server.id}\").format(server=g)\n- )\n+ failed.append(str(g.id))\n await asyncio.sleep(0.5)\n \n+ msg = (\n+ _(\"I could not announce to the following server: \")\n+ if len(failed) == 1\n+ else _(\"I could not announce to the following servers: \")\n+ )\n+ msg += humanize_list(tuple(map(inline, failed)))\n+ await self.ctx.bot.send_to_owners(msg)\n self.active = False\n", "issue": "[p]announce fails if bot belongs to team\n# Command bugs\r\n\r\n#### Command name\r\n\r\n`announce`\r\n\r\n#### What cog is this command from?\r\n\r\n`Admin`\r\n\r\n#### What were you expecting to happen?\r\n\r\nSend announcement to all enabled servers, if failed, send message to the one of owners or all owners (like an `[p]contact`)\r\n\r\n#### What actually happened?\r\n\r\nannouncement failed almost immediately with error in console \r\n\r\n#### How can we reproduce this issue?\r\n\r\n1. Set bot with token belonging to team\r\n2. Create environment, where bot cant send announcement to server\r\n3. Announce an message\r\n4. 
`[p]announce` silently fails with error:\r\n```py\r\nTraceback (most recent call last):\r\n File \"/home/fixator/Red-V3/lib/python3.7/site-packages/redbot/cogs/admin/announcer.py\", line 67, in announcer\r\n await channel.send(self.message)\r\n File \"/home/fixator/Red-V3/lib/python3.7/site-packages/discord/abc.py\", line 823, in send\r\n data = await state.http.send_message(channel.id, content, tts=tts, embed=embed, nonce=nonce)\r\n File \"/home/fixator/Red-V3/lib/python3.7/site-packages/discord/http.py\", line 218, in request\r\n raise Forbidden(r, data)\r\ndiscord.errors.Forbidden: 403 FORBIDDEN (error code: 50001): Missing Access\r\nDuring handling of the above exception, another exception occurred:\r\nTraceback (most recent call last):\r\n File \"/home/fixator/Red-V3/lib/python3.7/site-packages/redbot/cogs/admin/announcer.py\", line 70, in announcer\r\n _(\"I could not announce to server: {server.id}\").format(server=g)\r\n File \"/home/fixator/Red-V3/lib/python3.7/site-packages/discord/abc.py\", line 823, in send\r\n data = await state.http.send_message(channel.id, content, tts=tts, embed=embed, nonce=nonce)\r\n File \"/home/fixator/Red-V3/lib/python3.7/site-packages/discord/http.py\", line 218, in request\r\n raise Forbidden(r, data)\r\ndiscord.errors.Forbidden: 403 FORBIDDEN (error code: 50007): Cannot send messages to this user\r\n```\r\n\r\nCaused by https://github.com/Cog-Creators/Red-DiscordBot/blob/f0836d7182d99239d1fde24cf2231c6ebf206f72/redbot/cogs/admin/announcer.py#L56\r\n\r\n*Kinda related to #2781, i guess*\n", "before_files": [{"content": "import asyncio\n\nimport discord\nfrom redbot.core import commands\nfrom redbot.core.i18n import Translator\n\n_ = Translator(\"Announcer\", __file__)\n\n\nclass Announcer:\n def __init__(self, ctx: commands.Context, message: str, config=None):\n \"\"\"\n :param ctx:\n :param message:\n :param config: Used to determine channel overrides\n \"\"\"\n self.ctx = ctx\n self.message = message\n self.config = 
config\n\n self.active = None\n\n def start(self):\n \"\"\"\n Starts an announcement.\n :return:\n \"\"\"\n if self.active is None:\n self.active = True\n self.ctx.bot.loop.create_task(self.announcer())\n\n def cancel(self):\n \"\"\"\n Cancels a running announcement.\n :return:\n \"\"\"\n self.active = False\n\n async def _get_announce_channel(self, guild: discord.Guild) -> discord.TextChannel:\n channel_id = await self.config.guild(guild).announce_channel()\n channel = None\n\n if channel_id is not None:\n channel = guild.get_channel(channel_id)\n\n if channel is None:\n channel = guild.system_channel\n\n if channel is None:\n channel = guild.text_channels[0]\n\n return channel\n\n async def announcer(self):\n guild_list = self.ctx.bot.guilds\n bot_owner = (await self.ctx.bot.application_info()).owner\n for g in guild_list:\n if not self.active:\n return\n\n if await self.config.guild(g).announce_ignore():\n continue\n\n channel = await self._get_announce_channel(g)\n\n try:\n await channel.send(self.message)\n except discord.Forbidden:\n await bot_owner.send(\n _(\"I could not announce to server: {server.id}\").format(server=g)\n )\n await asyncio.sleep(0.5)\n\n self.active = False\n", "path": "redbot/cogs/admin/announcer.py"}]}

| 1,702 | 329 |

gh_patches_debug_41

|

rasdani/github-patches

|

git_diff

|

streamlit__streamlit-3038

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Dark theme does not properly adjust markdown tables

### Summary

When I load the latest Streamlit in dark mode, I cannot see anything in my markdown tables because the text color is changed but the background color is not.

### Steps to reproduce

Code snippet:

```

md = """

| Label | Info |

| -------- | --------- |

| Row | Data |

"""

st.markdown(md)

```

**Expected behavior:**

I would expect that if the text color gets changed to white in the table, the background color would also be changed to something dark.

**Actual behavior:**

Both the text color and background are white so nothing can be seen.

### Is this a regression?

no, consequence of new theme

### Debug info

- Streamlit version: 0.79.0

- Python version: 3.7.9

- pip

- OS version: MacOS Catalina 10.15.7

- Browser version: Chrome 89.0.4389.90

### Additional information

I'm not sure why markdown tables have different background style but they seem to; perhaps other ui elements would be affected as well.

</issue>

<code>

[start of e2e/scripts/st_markdown.py]

1 # Copyright 2018-2021 Streamlit Inc.

2 #

3 # Licensed under the Apache License, Version 2.0 (the "License");

4 # you may not use this file except in compliance with the License.

5 # You may obtain a copy of the License at

6 #

7 # http://www.apache.org/licenses/LICENSE-2.0

8 #

9 # Unless required by applicable law or agreed to in writing, software

10 # distributed under the License is distributed on an "AS IS" BASIS,

11 # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

12 # See the License for the specific language governing permissions and

13 # limitations under the License.

14

15 import streamlit as st

16

17 st.markdown("This **markdown** is awesome! :sunglasses:")

18

19 st.markdown("This <b>HTML tag</b> is escaped!")

20

21 st.markdown("This <b>HTML tag</b> is not escaped!", unsafe_allow_html=True)

22

23 st.markdown("[text]")

24

25 st.markdown("[link](href)")

26

27 st.markdown("[][]")

28

29 st.markdown("Inline math with $\KaTeX$")

30

31 st.markdown(

32 """

33 $$

34 ax^2 + bx + c = 0

35 $$

36 """

37 )

38

[end of e2e/scripts/st_markdown.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/e2e/scripts/st_markdown.py b/e2e/scripts/st_markdown.py

--- a/e2e/scripts/st_markdown.py

+++ b/e2e/scripts/st_markdown.py

@@ -35,3 +35,11 @@

$$

"""

)

+

+st.markdown(

+ """

+| Col1 | Col2 |

+| --------- | ----------- |

+| Some | Data |

+"""

+)

|

{"golden_diff": "diff --git a/e2e/scripts/st_markdown.py b/e2e/scripts/st_markdown.py\n--- a/e2e/scripts/st_markdown.py\n+++ b/e2e/scripts/st_markdown.py\n@@ -35,3 +35,11 @@\n $$\n \"\"\"\n )\n+\n+st.markdown(\n+ \"\"\"\n+| Col1 | Col2 |\n+| --------- | ----------- |\n+| Some | Data |\n+\"\"\"\n+)\n", "issue": "Dark theme does not properly adjust markdown tables\n### Summary\r\n\r\nWhen I load the latest streamlit in darkmode I cannot see anything in my markdown tables because the text color is changed but not the background color.\r\n\r\n### Steps to reproduce\r\n\r\nCode snippet:\r\n\r\n```\r\nmd = \"\"\"\r\n| Label | Info |\r\n| -------- | --------- |\r\n| Row | Data |\r\n\"\"\"\r\nst.markdown(md)\r\n```\r\n\r\n**Expected behavior:**\r\n\r\nI would expect if the text color get changed to white in the table, the background color should get changed to something dark\r\n\r\n**Actual behavior:**\r\n\r\nBoth the text color and background are white so nothing can be seen.\r\n\r\n### Is this a regression?\r\n\r\nno, consequence of new theme\r\n\r\n### Debug info\r\n\r\n- Streamlit version: 0.79.0\r\n- Python version: 3.7.9\r\n- pip\r\n- OS version: MacOS Catalina 10.15.7\r\n- Browser version: Chrome 89.0.4389.90\r\n\r\n### Additional information\r\n\r\nI'm not sure why markdown tables have different background style but they seem to; perhaps other ui elements would be affected as well.\r\n\n", "before_files": [{"content": "# Copyright 2018-2021 Streamlit Inc.\n#\n# Licensed under the Apache License, Version 2.0 (the \"License\");\n# you may not use this file except in compliance with the License.\n# You may obtain a copy of the License at\n#\n# http://www.apache.org/licenses/LICENSE-2.0\n#\n# Unless required by applicable law or agreed to in writing, software\n# distributed under the License is distributed on an \"AS IS\" BASIS,\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\n# See the License for the specific language governing permissions 
and\n# limitations under the License.\n\nimport streamlit as st\n\nst.markdown(\"This **markdown** is awesome! :sunglasses:\")\n\nst.markdown(\"This <b>HTML tag</b> is escaped!\")\n\nst.markdown(\"This <b>HTML tag</b> is not escaped!\", unsafe_allow_html=True)\n\nst.markdown(\"[text]\")\n\nst.markdown(\"[link](href)\")\n\nst.markdown(\"[][]\")\n\nst.markdown(\"Inline math with $\\KaTeX$\")\n\nst.markdown(\n \"\"\"\n$$\nax^2 + bx + c = 0\n$$\n\"\"\"\n)\n", "path": "e2e/scripts/st_markdown.py"}]}

| 1,110 | 98 |

gh_patches_debug_24888

|

rasdani/github-patches

|

git_diff

|

yt-dlp__yt-dlp-8651

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

bfmtv: Unable to extract video block

### DO NOT REMOVE OR SKIP THE ISSUE TEMPLATE

- [X] I understand that I will be **blocked** if I *intentionally* remove or skip any mandatory\* field

### Checklist

- [X] I'm reporting that yt-dlp is broken on a **supported** site

- [X] I've verified that I'm running yt-dlp version **2023.10.13** ([update instructions](https://github.com/yt-dlp/yt-dlp#update)) or later (specify commit)

- [X] I've checked that all provided URLs are playable in a browser with the same IP and same login details

- [X] I've checked that all URLs and arguments with special characters are [properly quoted or escaped](https://github.com/yt-dlp/yt-dlp/wiki/FAQ#video-url-contains-an-ampersand--and-im-getting-some-strange-output-1-2839-or-v-is-not-recognized-as-an-internal-or-external-command)

- [X] I've searched [known issues](https://github.com/yt-dlp/yt-dlp/issues/3766) and the [bugtracker](https://github.com/yt-dlp/yt-dlp/issues?q=) for similar issues **including closed ones**. DO NOT post duplicates

- [X] I've read the [guidelines for opening an issue](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#opening-an-issue)

- [X] I've read about [sharing account credentials](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#are-you-willing-to-share-account-details-if-needed) and I'm willing to share it if required

### Region

France

### Provide a description that is worded well enough to be understood

yt-dlp is unable to extract replay video from bfmtv websites, from domain www.bfmtv.com. It was still working a few days ago.

### Provide verbose output that clearly demonstrates the problem

- [X] Run **your** yt-dlp command with **-vU** flag added (`yt-dlp -vU <your command line>`)

- [ ] If using API, add `'verbose': True` to `YoutubeDL` params instead

- [X] Copy the WHOLE output (starting with `[debug] Command-line config`) and insert it below

### Complete Verbose Output

```shell

yt-dlp -vU https://www.bfmtv.com/alsace/replay-emissions/bonsoir-l-alsace/diabete-le-groupe-lilly-investit-160-millions-d-euros-a-fegersheim_VN-202310230671.html

[debug] Command-line config: ['-vU', 'https://www.bfmtv.com/alsace/replay-emissions/bonsoir-l-alsace/diabete-le-groupe-lilly-investit-160-millions-d-euros-a-fegersheim_VN-202310230671.html']

[debug] Encodings: locale UTF-8, fs utf-8, pref UTF-8, out utf-8, error utf-8, screen utf-8

[debug] yt-dlp version stable@2023.10.13 [b634ba742] (zip)

[debug] Python 3.11.2 (CPython x86_64 64bit) - Linux-6.1.0-13-amd64-x86_64-with-glibc2.36 (OpenSSL 3.0.11 19 Sep 2023, glibc 2.36)

[debug] exe versions: ffmpeg 5.1.3-1 (setts), ffprobe 5.1.3-1, phantomjs 2.1.1, rtmpdump 2.4

[debug] Optional libraries: Cryptodome-3.11.0, brotli-1.0.9, certifi-2022.09.24, mutagen-1.46.0, pyxattr-0.8.1, sqlite3-3.40.1, websockets-10.4

[debug] Proxy map: {}

[debug] Loaded 1890 extractors

[debug] Fetching release info: https://api.github.com/repos/yt-dlp/yt-dlp/releases/latest

Available version: stable@2023.10.13, Current version: stable@2023.10.13

Current Build Hash: be5cfb6be8930e1a5f427533ec32f2a481276b3da7b249d0150ce2b740ccf1ce

yt-dlp is up to date (stable@2023.10.13)

[bfmtv] Extracting URL: https://www.bfmtv.com/alsace/replay-emissions/bonsoir-l-alsace/diabete-le-groupe-lilly-investit-160-millions-d-euros-a-fegersheim_VN-202310230671.html

[bfmtv] 202310230671: Downloading webpage

ERROR: [bfmtv] 202310230671: Unable to extract video block; please report this issue on https://github.com/yt-dlp/yt-dlp/issues?q= , filling out the appropriate issue template. Confirm you are on the latest version using yt-dlp -U

File "/usr/local/bin/yt-dlp/yt_dlp/extractor/common.py", line 715, in extract

ie_result = self._real_extract(url)

^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/bin/yt-dlp/yt_dlp/extractor/bfmtv.py", line 43, in _real_extract

video_block = extract_attributes(self._search_regex(

^^^^^^^^^^^^^^^^^^^

File "/usr/local/bin/yt-dlp/yt_dlp/extractor/common.py", line 1263, in _search_regex

raise RegexNotFoundError('Unable to extract %s' % _name)

```

</issue>

<code>

[start of yt_dlp/extractor/bfmtv.py]

1 import re

2

3 from .common import InfoExtractor

4 from ..utils import extract_attributes

5

6

7 class BFMTVBaseIE(InfoExtractor):

8 _VALID_URL_BASE = r'https?://(?:www\.|rmc\.)?bfmtv\.com/'

9 _VALID_URL_TMPL = _VALID_URL_BASE + r'(?:[^/]+/)*[^/?&#]+_%s[A-Z]-(?P<id>\d{12})\.html'

10 _VIDEO_BLOCK_REGEX = r'(<div[^>]+class="video_block"[^>]*>)'

11 BRIGHTCOVE_URL_TEMPLATE = 'http://players.brightcove.net/%s/%s_default/index.html?videoId=%s'

12

13 def _brightcove_url_result(self, video_id, video_block):

14 account_id = video_block.get('accountid') or '876450612001'

15 player_id = video_block.get('playerid') or 'I2qBTln4u'

16 return self.url_result(

17 self.BRIGHTCOVE_URL_TEMPLATE % (account_id, player_id, video_id),

18 'BrightcoveNew', video_id)

19

20

21 class BFMTVIE(BFMTVBaseIE):

22 IE_NAME = 'bfmtv'

23 _VALID_URL = BFMTVBaseIE._VALID_URL_TMPL % 'V'

24 _TESTS = [{

25 'url': 'https://www.bfmtv.com/politique/emmanuel-macron-l-islam-est-une-religion-qui-vit-une-crise-aujourd-hui-partout-dans-le-monde_VN-202010020146.html',

26 'info_dict': {

27 'id': '6196747868001',

28 'ext': 'mp4',

29 'title': 'Emmanuel Macron: "L\'Islam est une religion qui vit une crise aujourd’hui, partout dans le monde"',

30 'description': 'Le Président s\'exprime sur la question du séparatisme depuis les Mureaux, dans les Yvelines.',

31 'uploader_id': '876450610001',

32 'upload_date': '20201002',

33 'timestamp': 1601629620,

34 'duration': 44.757,

35 'tags': ['bfmactu', 'politique'],

36 'thumbnail': 'https://cf-images.eu-west-1.prod.boltdns.net/v1/static/876450610001/5041f4c1-bc48-4af8-a256-1b8300ad8ef0/cf2f9114-e8e2-4494-82b4-ab794ea4bc7d/1920x1080/match/image.jpg',

37 },

38 }]

39

40 def _real_extract(self, url):

41 bfmtv_id = self._match_id(url)

42 webpage = self._download_webpage(url, bfmtv_id)

43 video_block = extract_attributes(self._search_regex(

44 self._VIDEO_BLOCK_REGEX, webpage, 'video block'))

45 return self._brightcove_url_result(video_block['videoid'], video_block)

46

47

48 class BFMTVLiveIE(BFMTVIE): # XXX: Do not subclass from concrete IE

49 IE_NAME = 'bfmtv:live'

50 _VALID_URL = BFMTVBaseIE._VALID_URL_BASE + '(?P<id>(?:[^/]+/)?en-direct)'

51 _TESTS = [{

52 'url': 'https://www.bfmtv.com/en-direct/',

53 'info_dict': {

54 'id': '5615950982001',

55 'ext': 'mp4',

56 'title': r're:^le direct BFMTV WEB \d{4}-\d{2}-\d{2} \d{2}:\d{2}$',

57 'uploader_id': '876450610001',

58 'upload_date': '20171018',

59 'timestamp': 1508329950,

60 },

61 'params': {

62 'skip_download': True,

63 },

64 }, {

65 'url': 'https://www.bfmtv.com/economie/en-direct/',

66 'only_matching': True,

67 }]

68

69

70 class BFMTVArticleIE(BFMTVBaseIE):

71 IE_NAME = 'bfmtv:article'

72 _VALID_URL = BFMTVBaseIE._VALID_URL_TMPL % 'A'

73 _TESTS = [{

74 'url': 'https://www.bfmtv.com/sante/covid-19-un-responsable-de-l-institut-pasteur-se-demande-quand-la-france-va-se-reconfiner_AV-202101060198.html',

75 'info_dict': {

76 'id': '202101060198',

77 'title': 'Covid-19: un responsable de l\'Institut Pasteur se demande "quand la France va se reconfiner"',

78 'description': 'md5:947974089c303d3ac6196670ae262843',

79 },

80 'playlist_count': 2,

81 }, {

82 'url': 'https://www.bfmtv.com/international/pour-bolsonaro-le-bresil-est-en-faillite-mais-il-ne-peut-rien-faire_AD-202101060232.html',

83 'only_matching': True,

84 }, {

85 'url': 'https://www.bfmtv.com/sante/covid-19-oui-le-vaccin-de-pfizer-distribue-en-france-a-bien-ete-teste-sur-des-personnes-agees_AN-202101060275.html',

86 'only_matching': True,

87 }, {

88 'url': 'https://rmc.bfmtv.com/actualites/societe/transports/ce-n-est-plus-tout-rentable-le-bioethanol-e85-depasse-1eu-le-litre-des-automobilistes-regrettent_AV-202301100268.html',

89 'info_dict': {

90 'id': '6318445464112',

91 'ext': 'mp4',

92 'title': 'Le plein de bioéthanol fait de plus en plus mal à la pompe',

93 'description': None,

94 'uploader_id': '876630703001',

95 'upload_date': '20230110',

96 'timestamp': 1673341692,

97 'duration': 109.269,

98 'tags': ['rmc', 'show', 'apolline de malherbe', 'info', 'talk', 'matinale', 'radio'],

99 'thumbnail': 'https://cf-images.eu-west-1.prod.boltdns.net/v1/static/876630703001/5bef74b8-9d5e-4480-a21f-60c2e2480c46/96c88b74-f9db-45e1-8040-e199c5da216c/1920x1080/match/image.jpg'

100 }

101 }]

102

103 def _real_extract(self, url):

104 bfmtv_id = self._match_id(url)

105 webpage = self._download_webpage(url, bfmtv_id)

106

107 entries = []

108 for video_block_el in re.findall(self._VIDEO_BLOCK_REGEX, webpage):

109 video_block = extract_attributes(video_block_el)

110 video_id = video_block.get('videoid')

111 if not video_id:

112 continue

113 entries.append(self._brightcove_url_result(video_id, video_block))

114

115 return self.playlist_result(

116 entries, bfmtv_id, self._og_search_title(webpage, fatal=False),

117 self._html_search_meta(['og:description', 'description'], webpage))

118

[end of yt_dlp/extractor/bfmtv.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/yt_dlp/extractor/bfmtv.py b/yt_dlp/extractor/bfmtv.py

--- a/yt_dlp/extractor/bfmtv.py

+++ b/yt_dlp/extractor/bfmtv.py

@@ -7,7 +7,7 @@

class BFMTVBaseIE(InfoExtractor):

_VALID_URL_BASE = r'https?://(?:www\.|rmc\.)?bfmtv\.com/'

_VALID_URL_TMPL = _VALID_URL_BASE + r'(?:[^/]+/)*[^/?&#]+_%s[A-Z]-(?P<id>\d{12})\.html'

- _VIDEO_BLOCK_REGEX = r'(<div[^>]+class="video_block"[^>]*>)'

+ _VIDEO_BLOCK_REGEX = r'(<div[^>]+class="video_block[^"]*"[^>]*>)'

BRIGHTCOVE_URL_TEMPLATE = 'http://players.brightcove.net/%s/%s_default/index.html?videoId=%s'

def _brightcove_url_result(self, video_id, video_block):

@@ -55,8 +55,11 @@

'ext': 'mp4',

'title': r're:^le direct BFMTV WEB \d{4}-\d{2}-\d{2} \d{2}:\d{2}$',

'uploader_id': '876450610001',

- 'upload_date': '20171018',

- 'timestamp': 1508329950,

+ 'upload_date': '20220926',

+ 'timestamp': 1664207191,

+ 'live_status': 'is_live',

+ 'thumbnail': r're:https://.+/image\.jpg',

+ 'tags': [],

},

'params': {

'skip_download': True,
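
The first hunk of the diff above loosens `_VIDEO_BLOCK_REGEX` so that the `class` attribute may carry further class names after `video_block`. The sketch below compares the two patterns on sample markup. The two `<div>` strings are hypothetical, written only for illustration, and the real BFMTV page may use different attributes and class names.

```python
import re

# Pattern before the patch: the class attribute must be exactly "video_block".
OLD_VIDEO_BLOCK_REGEX = r'(<div[^>]+class="video_block"[^>]*>)'
# Pattern after the patch: more class names may follow "video_block".
NEW_VIDEO_BLOCK_REGEX = r'(<div[^>]+class="video_block[^"]*"[^>]*>)'

# Hypothetical markup, not copied from the real site.
old_style = '<div id="a" class="video_block" videoid="123">'
new_style = '<div id="b" class="video_block extra_class" videoid="456">'


def matches(pattern, html):
    """Return True if the pattern is found anywhere in the HTML snippet."""
    return re.search(pattern, html) is not None


for label, html in (("old markup", old_style), ("new markup", new_style)):
    print(label, "| old regex:", matches(OLD_VIDEO_BLOCK_REGEX, html),
          "| new regex:", matches(NEW_VIDEO_BLOCK_REGEX, html))
```

If the site did add a second class name to the element, that would explain the `Unable to extract video block` error in the issue, though the issue text itself does not show the page markup.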

|

{"golden_diff": "diff --git a/yt_dlp/extractor/bfmtv.py b/yt_dlp/extractor/bfmtv.py\n--- a/yt_dlp/extractor/bfmtv.py\n+++ b/yt_dlp/extractor/bfmtv.py\n@@ -7,7 +7,7 @@\n class BFMTVBaseIE(InfoExtractor):\n _VALID_URL_BASE = r'https?://(?:www\\.|rmc\\.)?bfmtv\\.com/'\n _VALID_URL_TMPL = _VALID_URL_BASE + r'(?:[^/]+/)*[^/?&#]+_%s[A-Z]-(?P<id>\\d{12})\\.html'\n- _VIDEO_BLOCK_REGEX = r'(<div[^>]+class=\"video_block\"[^>]*>)'\n+ _VIDEO_BLOCK_REGEX = r'(<div[^>]+class=\"video_block[^\"]*\"[^>]*>)'\n BRIGHTCOVE_URL_TEMPLATE = 'http://players.brightcove.net/%s/%s_default/index.html?videoId=%s'\n \n def _brightcove_url_result(self, video_id, video_block):\n@@ -55,8 +55,11 @@\n 'ext': 'mp4',\n 'title': r're:^le direct BFMTV WEB \\d{4}-\\d{2}-\\d{2} \\d{2}:\\d{2}$',\n 'uploader_id': '876450610001',\n- 'upload_date': '20171018',\n- 'timestamp': 1508329950,\n+ 'upload_date': '20220926',\n+ 'timestamp': 1664207191,\n+ 'live_status': 'is_live',\n+ 'thumbnail': r're:https://.+/image\\.jpg',\n+ 'tags': [],\n },\n 'params': {\n 'skip_download': True,\n", "issue": "bfmtv: Unable to extract video block\n### DO NOT REMOVE OR SKIP THE ISSUE TEMPLATE\n\n- [X] I understand that I will be **blocked** if I *intentionally* remove or skip any mandatory\\* field\n\n### Checklist\n\n- [X] I'm reporting that yt-dlp is broken on a **supported** site\n- [X] I've verified that I'm running yt-dlp version **2023.10.13** ([update instructions](https://github.com/yt-dlp/yt-dlp#update)) or later (specify commit)\n- [X] I've checked that all provided URLs are playable in a browser with the same IP and same login details\n- [X] I've checked that all URLs and arguments with special characters are [properly quoted or escaped](https://github.com/yt-dlp/yt-dlp/wiki/FAQ#video-url-contains-an-ampersand--and-im-getting-some-strange-output-1-2839-or-v-is-not-recognized-as-an-internal-or-external-command)\n- [X] I've searched [known issues](https://github.com/yt-dlp/yt-dlp/issues/3766) and the 
[bugtracker](https://github.com/yt-dlp/yt-dlp/issues?q=) for similar issues **including closed ones**. DO NOT post duplicates\n- [X] I've read the [guidelines for opening an issue](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#opening-an-issue)\n- [X] I've read about [sharing account credentials](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#are-you-willing-to-share-account-details-if-needed) and I'm willing to share it if required\n\n### Region\n\nFrance\n\n### Provide a description that is worded well enough to be understood\n\nyt-dlp is unable to extract replay video from bfmtv websites, from domain www.bfmtv.com. It was still working a few days ago.\n\n### Provide verbose output that clearly demonstrates the problem\n\n- [X] Run **your** yt-dlp command with **-vU** flag added (`yt-dlp -vU <your command line>`)\n- [ ] If using API, add `'verbose': True` to `YoutubeDL` params instead\n- [X] Copy the WHOLE output (starting with `[debug] Command-line config`) and insert it below\n\n### Complete Verbose Output\n\n```shell\nyt-dlp -vU https://www.bfmtv.com/alsace/replay-emissions/bonsoir-l-alsace/diabete-le-groupe-lilly-investit-160-millions-d-euros-a-fegersheim_VN-202310230671.html\r\n[debug] Command-line config: ['-vU', 'https://www.bfmtv.com/alsace/replay-emissions/bonsoir-l-alsace/diabete-le-groupe-lilly-investit-160-millions-d-euros-a-fegersheim_VN-202310230671.html']\r\n[debug] Encodings: locale UTF-8, fs utf-8, pref UTF-8, out utf-8, error utf-8, screen utf-8\r\n[debug] yt-dlp version [email protected] [b634ba742] (zip)\r\n[debug] Python 3.11.2 (CPython x86_64 64bit) - Linux-6.1.0-13-amd64-x86_64-with-glibc2.36 (OpenSSL 3.0.11 19 Sep 2023, glibc 2.36)\r\n[debug] exe versions: ffmpeg 5.1.3-1 (setts), ffprobe 5.1.3-1, phantomjs 2.1.1, rtmpdump 2.4\r\n[debug] Optional libraries: Cryptodome-3.11.0, brotli-1.0.9, certifi-2022.09.24, mutagen-1.46.0, pyxattr-0.8.1, sqlite3-3.40.1, websockets-10.4\r\n[debug] Proxy map: {}\r\n[debug] 
Loaded 1890 extractors\r\n[debug] Fetching release info: https://api.github.com/repos/yt-dlp/yt-dlp/releases/latest\r\nAvailable version: [email protected], Current version: [email protected]\r\nCurrent Build Hash: be5cfb6be8930e1a5f427533ec32f2a481276b3da7b249d0150ce2b740ccf1ce\r\nyt-dlp is up to date ([email protected])\r\n[bfmtv] Extracting URL: https://www.bfmtv.com/alsace/replay-emissions/bonsoir-l-alsace/diabete-le-groupe-lilly-investit-160-millions-d-euros-a-fegersheim_VN-202310230671.html\r\n[bfmtv] 202310230671: Downloading webpage\r\nERROR: [bfmtv] 202310230671: Unable to extract video block; please report this issue on https://github.com/yt-dlp/yt-dlp/issues?q= , filling out the appropriate issue template. Confirm you are on the latest version using yt-dlp -U\r\n File \"/usr/local/bin/yt-dlp/yt_dlp/extractor/common.py\", line 715, in extract\r\n ie_result = self._real_extract(url)\r\n ^^^^^^^^^^^^^^^^^^^^^^^\r\n File \"/usr/local/bin/yt-dlp/yt_dlp/extractor/bfmtv.py\", line 43, in _real_extract\r\n video_block = extract_attributes(self._search_regex(\r\n ^^^^^^^^^^^^^^^^^^^\r\n File \"/usr/local/bin/yt-dlp/yt_dlp/extractor/common.py\", line 1263, in _search_regex\r\n raise RegexNotFoundError('Unable to extract %s' % _name)\n```\n\n", "before_files": [{"content": "import re\n\nfrom .common import InfoExtractor\nfrom ..utils import extract_attributes\n\n\nclass BFMTVBaseIE(InfoExtractor):\n _VALID_URL_BASE = r'https?://(?:www\\.|rmc\\.)?bfmtv\\.com/'\n _VALID_URL_TMPL = _VALID_URL_BASE + r'(?:[^/]+/)*[^/?&#]+_%s[A-Z]-(?P<id>\\d{12})\\.html'\n _VIDEO_BLOCK_REGEX = r'(<div[^>]+class=\"video_block\"[^>]*>)'\n BRIGHTCOVE_URL_TEMPLATE = 'http://players.brightcove.net/%s/%s_default/index.html?videoId=%s'\n\n def _brightcove_url_result(self, video_id, video_block):\n account_id = video_block.get('accountid') or '876450612001'\n player_id = video_block.get('playerid') or 'I2qBTln4u'\n return self.url_result(\n self.BRIGHTCOVE_URL_TEMPLATE % (account_id, player_id, 
video_id),\n 'BrightcoveNew', video_id)\n\n\nclass BFMTVIE(BFMTVBaseIE):\n IE_NAME = 'bfmtv'\n _VALID_URL = BFMTVBaseIE._VALID_URL_TMPL % 'V'\n _TESTS = [{\n 'url': 'https://www.bfmtv.com/politique/emmanuel-macron-l-islam-est-une-religion-qui-vit-une-crise-aujourd-hui-partout-dans-le-monde_VN-202010020146.html',\n 'info_dict': {\n 'id': '6196747868001',\n 'ext': 'mp4',\n 'title': 'Emmanuel Macron: \"L\\'Islam est une religion qui vit une crise aujourd\u2019hui, partout dans le monde\"',\n 'description': 'Le Pr\u00e9sident s\\'exprime sur la question du s\u00e9paratisme depuis les Mureaux, dans les Yvelines.',\n 'uploader_id': '876450610001',\n 'upload_date': '20201002',\n 'timestamp': 1601629620,\n 'duration': 44.757,\n 'tags': ['bfmactu', 'politique'],\n 'thumbnail': 'https://cf-images.eu-west-1.prod.boltdns.net/v1/static/876450610001/5041f4c1-bc48-4af8-a256-1b8300ad8ef0/cf2f9114-e8e2-4494-82b4-ab794ea4bc7d/1920x1080/match/image.jpg',\n },\n }]\n\n def _real_extract(self, url):\n bfmtv_id = self._match_id(url)\n webpage = self._download_webpage(url, bfmtv_id)\n video_block = extract_attributes(self._search_regex(\n self._VIDEO_BLOCK_REGEX, webpage, 'video block'))\n return self._brightcove_url_result(video_block['videoid'], video_block)\n\n\nclass BFMTVLiveIE(BFMTVIE): # XXX: Do not subclass from concrete IE\n IE_NAME = 'bfmtv:live'\n _VALID_URL = BFMTVBaseIE._VALID_URL_BASE + '(?P<id>(?:[^/]+/)?en-direct)'\n _TESTS = [{\n 'url': 'https://www.bfmtv.com/en-direct/',\n 'info_dict': {\n 'id': '5615950982001',\n 'ext': 'mp4',\n 'title': r're:^le direct BFMTV WEB \\d{4}-\\d{2}-\\d{2} \\d{2}:\\d{2}$',\n 'uploader_id': '876450610001',\n 'upload_date': '20171018',\n 'timestamp': 1508329950,\n },\n 'params': {\n 'skip_download': True,\n },\n }, {\n 'url': 'https://www.bfmtv.com/economie/en-direct/',\n 'only_matching': True,\n }]\n\n\nclass BFMTVArticleIE(BFMTVBaseIE):\n IE_NAME = 'bfmtv:article'\n _VALID_URL = BFMTVBaseIE._VALID_URL_TMPL % 'A'\n _TESTS = [{\n 'url': 
'https://www.bfmtv.com/sante/covid-19-un-responsable-de-l-institut-pasteur-se-demande-quand-la-france-va-se-reconfiner_AV-202101060198.html',\n 'info_dict': {\n 'id': '202101060198',\n 'title': 'Covid-19: un responsable de l\\'Institut Pasteur se demande \"quand la France va se reconfiner\"',\n 'description': 'md5:947974089c303d3ac6196670ae262843',\n },\n 'playlist_count': 2,\n }, {\n 'url': 'https://www.bfmtv.com/international/pour-bolsonaro-le-bresil-est-en-faillite-mais-il-ne-peut-rien-faire_AD-202101060232.html',\n 'only_matching': True,\n }, {\n 'url': 'https://www.bfmtv.com/sante/covid-19-oui-le-vaccin-de-pfizer-distribue-en-france-a-bien-ete-teste-sur-des-personnes-agees_AN-202101060275.html',\n 'only_matching': True,\n }, {\n 'url': 'https://rmc.bfmtv.com/actualites/societe/transports/ce-n-est-plus-tout-rentable-le-bioethanol-e85-depasse-1eu-le-litre-des-automobilistes-regrettent_AV-202301100268.html',\n 'info_dict': {\n 'id': '6318445464112',\n 'ext': 'mp4',\n 'title': 'Le plein de bio\u00e9thanol fait de plus en plus mal \u00e0 la pompe',\n 'description': None,\n 'uploader_id': '876630703001',\n 'upload_date': '20230110',\n 'timestamp': 1673341692,\n 'duration': 109.269,\n 'tags': ['rmc', 'show', 'apolline de malherbe', 'info', 'talk', 'matinale', 'radio'],\n 'thumbnail': 'https://cf-images.eu-west-1.prod.boltdns.net/v1/static/876630703001/5bef74b8-9d5e-4480-a21f-60c2e2480c46/96c88b74-f9db-45e1-8040-e199c5da216c/1920x1080/match/image.jpg'\n }\n }]\n\n def _real_extract(self, url):\n bfmtv_id = self._match_id(url)\n webpage = self._download_webpage(url, bfmtv_id)\n\n entries = []\n for video_block_el in re.findall(self._VIDEO_BLOCK_REGEX, webpage):\n video_block = extract_attributes(video_block_el)\n video_id = video_block.get('videoid')\n if not video_id:\n continue\n entries.append(self._brightcove_url_result(video_id, video_block))\n\n return self.playlist_result(\n entries, bfmtv_id, self._og_search_title(webpage, fatal=False),\n 
self._html_search_meta(['og:description', 'description'], webpage))\n", "path": "yt_dlp/extractor/bfmtv.py"}]}

| 4,068 | 433 |

gh_patches_debug_15461

|

rasdani/github-patches

|

git_diff

|

wagtail__wagtail-6508

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Python 3.9 compatibility (resolve DeprecationWarning from collections)

### Issue Summary

Wagtail hits the warning `DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working` in various places.

- [x] Jinja2>2.10.1

- [x] html5lib>1.0.1 (see https://github.com/html5lib/html5lib-python/issues/419)

- [x] beautifulsoup4>=4.8.0 (or maybe earlier)

- [x] fix wagtail/utils/l18n/translation.py:5 (#5485)

### Steps to Reproduce

1. run `tox -e py37-dj22-sqlite-noelasticsearch -- --deprecation all`

2. note deprecation warnings:

```

site-packages/jinja2/utils.py:485: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

site-packages/jinja2/runtime.py:318: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

```

Resolved by https://github.com/pallets/jinja/pull/867; the fix is on master but not yet released (as of Jinja2==2.10.1).

```

site-packages/html5lib/_trie/_base.py:3: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import Mapping

```

Fixed in https://github.com/html5lib/html5lib-python/issues/402, but not released yet as of 1.0.1 (see https://github.com/html5lib/html5lib-python/issues/419).

```

site-packages/bs4/element.py:1134: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

if not isinstance(formatter, collections.Callable):

```

https://bugs.launchpad.net/beautifulsoup/+bug/1778909 - resolved by beautifulsoup4>=4.8.0 (and earlier I think)

I'm also seeing one in Wagtail itself from my own project's tests; the tox run above didn't seem to hit it:

```

wagtail/utils/l18n/translation.py:5: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

```

See #5485

* I have confirmed that this issue can be reproduced as described on a fresh Wagtail project:

yes (on master at bb4e2fe2dfe69fc3143f38c6e34dbe6f2f2f01e0 )

### Technical details

* Python version: Run `python --version`.

Python 3.7.3

* Django version: Look in your requirements.txt, or run `pip show django | grep Version`.

Django 2.2

* Wagtail version: Look at the bottom of the Settings menu in the Wagtail admin, or run `pip show wagtail | grep Version:`.

2.7.0.alpha.0

</issue>
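For context, every warning quoted in the issue comes from the same pattern: an abstract base class such as `Mapping` or `Callable` is imported from `collections` rather than from `collections.abc`. The aliases in `collections` were removed in Python 3.10. The following is a minimal sketch of the compatible form; the `describe` helper is a hypothetical name used only for illustration and is not part of Wagtail or any of the libraries mentioned.

```python
# Illustrative only: not taken from the Wagtail code base.
# Import the ABCs from collections.abc, not from collections.
from collections.abc import Callable, Mapping


def describe(obj):
    """Return the names of the ABCs (of the two checked) that obj satisfies."""
    kinds = []
    if isinstance(obj, Mapping):
        kinds.append("mapping")
    if isinstance(obj, Callable):
        kinds.append("callable")
    return kinds
```

With this import, `isinstance` checks such as the one quoted from `bs4/element.py` no longer touch the deprecated aliases.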

<code>

[start of setup.py]

1 #!/usr/bin/env python

2

3 from wagtail import __version__

4 from wagtail.utils.setup import assets, check_bdist_egg, sdist

5

6

7 try:

8 from setuptools import find_packages, setup

9 except ImportError:

10 from distutils.core import setup

11

12

13 # Hack to prevent "TypeError: 'NoneType' object is not callable" error

14 # in multiprocessing/util.py _exit_function when setup.py exits

15 # (see http://www.eby-sarna.com/pipermail/peak/2010-May/003357.html)

16 try:

17 import multiprocessing # noqa

18 except ImportError:

19 pass

20

21

22 install_requires = [

23 "Django>=2.2,<3.2",

24 "django-modelcluster>=5.1,<6.0",

25 "django-taggit>=1.0,<2.0",

26 "django-treebeard>=4.2.0,<5.0",

27 "djangorestframework>=3.11.1,<4.0",

28 "django-filter>=2.2,<3.0",

29 "draftjs_exporter>=2.1.5,<3.0",

30 "Pillow>=4.0.0,<9.0.0",

31 "beautifulsoup4>=4.8,<4.9",

32 "html5lib>=0.999,<2",

33 "Willow>=1.4,<1.5",

34 "requests>=2.11.1,<3.0",

35 "l18n>=2018.5",

36 "xlsxwriter>=1.2.8,<2.0",

37 "tablib[xls,xlsx]>=0.14.0",

38 "anyascii>=0.1.5",

39 ]

40

41 # Testing dependencies

42 testing_extras = [

43 # Required for running the tests

44 'python-dateutil>=2.2',

45 'pytz>=2014.7',

46 'elasticsearch>=1.0.0,<3.0',

47 'Jinja2>=2.8,<3.0',

48 'boto3>=1.4,<1.5',

49 'freezegun>=0.3.8',

50 'openpyxl>=2.6.4',

51 'Unidecode>=0.04.14,<2.0',

52

53 # For coverage and PEP8 linting

54 'coverage>=3.7.0',

55 'flake8>=3.6.0',

56 'isort==5.6.4', # leave this pinned - it tends to change rules between patch releases

57 'flake8-blind-except==0.1.1',

58 'flake8-print==2.0.2',

59 'doc8==0.8.1',

60

61 # For templates linting

62 'jinjalint>=0.5',

63

64 # Pipenv hack to fix broken dependency causing CircleCI failures

65 'docutils==0.15',

66

67 # django-taggit 1.3.0 made changes to verbose_name which affect migrations;

68 # the test suite migrations correspond to >=1.3.0

69 'django-taggit>=1.3.0,<2.0',

70 ]

71

72 # Documentation dependencies

73 documentation_extras = [

74 'pyenchant>=3.1.1,<4',

75 'sphinxcontrib-spelling>=5.4.0,<6',

76 'Sphinx>=1.5.2',

77 'sphinx-autobuild>=0.6.0',

78 'sphinx_rtd_theme>=0.1.9',

79 ]

80

81 setup(

82 name='wagtail',

83 version=__version__,

84 description='A Django content management system.',

85 author='Wagtail core team + contributors',

86 author_email='hello@wagtail.io', # For support queries, please see https://docs.wagtail.io/en/stable/support.html

87 url='https://wagtail.io/',

88 packages=find_packages(),

89 include_package_data=True,

90 license='BSD',

91 long_description="Wagtail is an open source content management \

92 system built on Django, with a strong community and commercial support. \

93 It’s focused on user experience, and offers precise control for \

94 designers and developers.\n\n\

95 For more details, see https://wagtail.io, https://docs.wagtail.io and \

96 https://github.com/wagtail/wagtail/.",

97 classifiers=[

98 'Development Status :: 5 - Production/Stable',

99 'Environment :: Web Environment',

100 'Intended Audience :: Developers',

101 'License :: OSI Approved :: BSD License',

102 'Operating System :: OS Independent',

103 'Programming Language :: Python',

104 'Programming Language :: Python :: 3',

105 'Programming Language :: Python :: 3.6',

106 'Programming Language :: Python :: 3.7',

107 'Programming Language :: Python :: 3.8',

108 'Framework :: Django',

109 'Framework :: Django :: 2.2',

110 'Framework :: Django :: 3.0',

111 'Framework :: Django :: 3.1',

112 'Framework :: Wagtail',

113 'Topic :: Internet :: WWW/HTTP :: Site Management',

114 ],

115 python_requires='>=3.6',

116 install_requires=install_requires,

117 extras_require={

118 'testing': testing_extras,

119 'docs': documentation_extras

120 },

121 entry_points="""

122 [console_scripts]

123 wagtail=wagtail.bin.wagtail:main

124 """,

125 zip_safe=False,

126 cmdclass={

127 'sdist': sdist,

128 'bdist_egg': check_bdist_egg,

129 'assets': assets,

130 },

131 )

132

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -45,7 +45,7 @@

'pytz>=2014.7',

'elasticsearch>=1.0.0,<3.0',

'Jinja2>=2.8,<3.0',

- 'boto3>=1.4,<1.5',

+ 'boto3>=1.16,<1.17',

'freezegun>=0.3.8',

'openpyxl>=2.6.4',

'Unidecode>=0.04.14,<2.0',

@@ -105,6 +105,7 @@

'Programming Language :: Python :: 3.6',

'Programming Language :: Python :: 3.7',

'Programming Language :: Python :: 3.8',

+ 'Programming Language :: Python :: 3.9',

'Framework :: Django',

'Framework :: Django :: 2.2',

'Framework :: Django :: 3.0',

|