| Column | Type | Values |
|---|---|---|
| problem_id | string | length 18 to 22 |
| source | string | 1 distinct value |
| task_type | string | 1 distinct value |
| in_source_id | string | length 13 to 58 |
| prompt | string | length 1.71k to 18.9k |
| golden_diff | string | length 145 to 5.13k |
| verification_info | string | length 465 to 23.6k |
| num_tokens_prompt | int64 | 556 to 4.1k |
| num_tokens_diff | int64 | 47 to 1.02k |

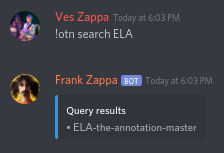

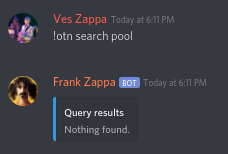

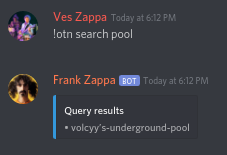

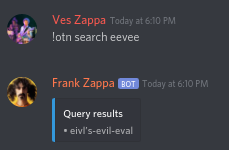

gh_patches_debug_3760 | rasdani/github-patches | git_diff | rotki__rotki-2288 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Rotki premium DB upload does not respect user setting

## Problem Definition

It seems that rotki users who use premium experienced encrypted DB uploads even with the setting off. 2 versions back we broke the functionality and made it always upload.

## Task

Fix it

</issue>

<code>

[start of rotkehlchen/premium/sync.py]

1 import base64
2 import logging
3 import shutil
4 from enum import Enum
5 from typing import Any, Dict, NamedTuple, Optional, Tuple
6
7 from typing_extensions import Literal
8
9 from rotkehlchen.data_handler import DataHandler
10 from rotkehlchen.errors import (
11     PremiumAuthenticationError,
12     RemoteError,
13     RotkehlchenPermissionError,
14     UnableToDecryptRemoteData,
15 )
16 from rotkehlchen.logging import RotkehlchenLogsAdapter
17 from rotkehlchen.premium.premium import Premium, PremiumCredentials, premium_create_and_verify
18 from rotkehlchen.utils.misc import timestamp_to_date, ts_now
19
20 logger = logging.getLogger(__name__)
21 log = RotkehlchenLogsAdapter(logger)
22
23
24 class CanSync(Enum):
25     YES = 0
26     NO = 1
27     ASK_USER = 2
28
29
30 class SyncCheckResult(NamedTuple):
31     # The result of the sync check
32     can_sync: CanSync
33     # If result is ASK_USER, what should the message be?
34     message: str
35     payload: Optional[Dict[str, Any]]
36
37
38 class PremiumSyncManager():
39
40     def __init__(self, data: DataHandler, password: str) -> None:
41         # Initialize this with the value saved in the DB
42         self.last_data_upload_ts = data.db.get_last_data_upload_ts()
43         self.data = data
44         self.password = password
45         self.premium: Optional[Premium] = None
46
47     def _can_sync_data_from_server(self, new_account: bool) -> SyncCheckResult:
48         """
49         Checks if the remote data can be pulled from the server.
50
51         Returns a SyncCheckResult denoting whether we can pull for sure,
52         whether we can't pull or whether the user should be asked. If the user
53         should be asked a message is also returned
54         """
55         log.debug('can sync data from server -- start')
56         if self.premium is None:
57             return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)
58
59         b64_encoded_data, our_hash = self.data.compress_and_encrypt_db(self.password)
60
61         try:
62             metadata = self.premium.query_last_data_metadata()
63         except RemoteError as e:
64             log.debug('can sync data from server failed', error=str(e))
65             return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)
66
67         if new_account:
68             return SyncCheckResult(can_sync=CanSync.YES, message='', payload=None)
69
70         if not self.data.db.get_premium_sync():
71             # If it's not a new account and the db setting for premium syncing is off stop
72             return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)
73
74         log.debug(
75             'CAN_PULL',
76             ours=our_hash,
77             theirs=metadata.data_hash,
78         )
79         if our_hash == metadata.data_hash:
80             log.debug('sync from server stopped -- same hash')
81             # same hash -- no need to get anything
82             return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)
83
84         our_last_write_ts = self.data.db.get_last_write_ts()
85         data_bytes_size = len(base64.b64decode(b64_encoded_data))
86
87         local_more_recent = our_last_write_ts >= metadata.last_modify_ts
88         local_bigger = data_bytes_size >= metadata.data_size
89
90         if local_more_recent and local_bigger:
91             log.debug('sync from server stopped -- local is both newer and bigger')
92             return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)
93
94         if local_more_recent is False:  # remote is more recent
95             message = (
96                 'Detected remote database with more recent modification timestamp '
97                 'than the local one. '
98             )
99         else:  # remote is bigger
100             message = 'Detected remote database with bigger size than the local one. '
101
102         return SyncCheckResult(
103             can_sync=CanSync.ASK_USER,
104             message=message,
105             payload={
106                 'local_size': data_bytes_size,
107                 'remote_size': metadata.data_size,
108                 'local_last_modified': timestamp_to_date(our_last_write_ts),
109                 'remote_last_modified': timestamp_to_date(metadata.last_modify_ts),
110             },
111         )
112
113     def _sync_data_from_server_and_replace_local(self) -> Tuple[bool, str]:
114         """
115         Performs syncing of data from server and replaces local db
116
117         Returns true for success and False for error/failure
118
119         May raise:
120         - PremiumAuthenticationError due to an UnableToDecryptRemoteData
121         coming from decompress_and_decrypt_db. This happens when the given password
122         does not match the one on the saved DB.
123         """
124         if self.premium is None:
125             return False, 'Pulling failed. User does not have active premium.'
126
127         try:
128             result = self.premium.pull_data()
129         except RemoteError as e:
130             log.debug('sync from server -- pulling failed.', error=str(e))
131             return False, f'Pulling failed: {str(e)}'
132
133         if result['data'] is None:
134             log.debug('sync from server -- no data found.')
135             return False, 'No data found'
136
137         try:
138             self.data.decompress_and_decrypt_db(self.password, result['data'])
139         except UnableToDecryptRemoteData as e:
140             raise PremiumAuthenticationError(
141                 'The given password can not unlock the database that was retrieved from '
142                 'the server. Make sure to use the same password as when the account was created.',
143             ) from e
144
145         return True, ''
146
147     def maybe_upload_data_to_server(self, force_upload: bool = False) -> bool:
148         # if user has no premium do nothing
149         if self.premium is None:
150             return False
151
152         # upload only once per hour
153         diff = ts_now() - self.last_data_upload_ts
154         if diff < 3600 and not force_upload:
155             return False
156
157         try:
158             metadata = self.premium.query_last_data_metadata()
159         except RemoteError as e:
160             log.debug('upload to server -- fetching metadata error', error=str(e))
161             return False
162         b64_encoded_data, our_hash = self.data.compress_and_encrypt_db(self.password)
163
164         log.debug(
165             'CAN_PUSH',
166             ours=our_hash,
167             theirs=metadata.data_hash,
168         )
169         if our_hash == metadata.data_hash and not force_upload:
170             log.debug('upload to server stopped -- same hash')
171             # same hash -- no need to upload anything
172             return False
173
174         our_last_write_ts = self.data.db.get_last_write_ts()
175         if our_last_write_ts <= metadata.last_modify_ts and not force_upload:
176             # Server's DB was modified after our local DB
177             log.debug(
178                 f'upload to server stopped -- remote db({metadata.last_modify_ts}) '
179                 f'more recent than local({our_last_write_ts})',
180             )
181             return False
182
183         data_bytes_size = len(base64.b64decode(b64_encoded_data))
184         if data_bytes_size < metadata.data_size and not force_upload:
185             # Let's be conservative.
186             # TODO: Here perhaps prompt user in the future
187             log.debug(
188                 f'upload to server stopped -- remote db({metadata.data_size}) '
189                 f'bigger than local({data_bytes_size})',
190             )
191             return False
192
193         try:
194             self.premium.upload_data(
195                 data_blob=b64_encoded_data,
196                 our_hash=our_hash,
197                 last_modify_ts=our_last_write_ts,
198                 compression_type='zlib',
199             )
200         except RemoteError as e:
201             log.debug('upload to server -- upload error', error=str(e))
202             return False
203
204         # update the last data upload value
205         self.last_data_upload_ts = ts_now()
206         self.data.db.update_last_data_upload_ts(self.last_data_upload_ts)
207         log.debug('upload to server -- success')
208         return True
209
210     def sync_data(self, action: Literal['upload', 'download']) -> Tuple[bool, str]:
211         msg = ''
212
213         if action == 'upload':
214             success = self.maybe_upload_data_to_server(force_upload=True)
215
216             if not success:
217                 msg = 'Upload failed'
218             return success, msg
219
220         return self._sync_data_from_server_and_replace_local()
221
222     def try_premium_at_start(
223             self,
224             given_premium_credentials: Optional[PremiumCredentials],
225             username: str,
226             create_new: bool,
227             sync_approval: Literal['yes', 'no', 'unknown'],
228     ) -> Optional[Premium]:
229         """
230         Check if new user provided api pair or we already got one in the DB
231
232         Returns the created premium if user's premium credentials were fine.
233
234         If not it will raise PremiumAuthenticationError.
235
236         If no credentials were given it returns None
237         """
238
239         if given_premium_credentials is not None:
240             assert create_new, 'We should never get here for an already existing account'
241
242             try:
243                 self.premium = premium_create_and_verify(given_premium_credentials)
244             except PremiumAuthenticationError as e:
245                 log.error('Given API key is invalid')
246                 # At this point we are at a new user trying to create an account with
247                 # premium API keys and we failed. But a directory was created. Remove it.
248                 # But create a backup of it in case something went really wrong
249                 # and the directory contained data we did not want to lose
250                 shutil.move(
251                     self.data.user_data_dir,  # type: ignore
252                     self.data.data_directory / f'auto_backup_{username}_{ts_now()}',
253                 )
254                 raise PremiumAuthenticationError(
255                     'Could not verify keys for the new account. '
256                     '{}'.format(str(e)),
257                 ) from e
258
259         # else, if we got premium data in the DB initialize it and try to sync with the server
260         db_credentials = self.data.db.get_rotkehlchen_premium()
261         if db_credentials:
262             assert not create_new, 'We should never get here for a new account'
263             try:
264                 self.premium = premium_create_and_verify(db_credentials)
265             except PremiumAuthenticationError as e:
266                 message = (
267                     f'Could not authenticate with the rotkehlchen server with '
268                     f'the API keys found in the Database. Error: {str(e)}'
269                 )
270                 log.error(message)
271                 raise PremiumAuthenticationError(message) from e
272
273         if self.premium is None:
274             return None
275
276         result = self._can_sync_data_from_server(new_account=create_new)
277         if result.can_sync == CanSync.ASK_USER:
278             if sync_approval == 'unknown':
279                 log.info('Remote DB is possibly newer. Ask user.')
280                 raise RotkehlchenPermissionError(result.message, result.payload)
281
282             if sync_approval == 'yes':
283                 log.info('User approved data sync from server')
284                 if self._sync_data_from_server_and_replace_local()[0]:
285                     if create_new:
286                         # if we successfully synced data from the server and this is
287                         # a new account, make sure the api keys are properly stored
288                         # in the DB
289                         self.data.db.set_rotkehlchen_premium(self.premium.credentials)
290
291             else:
292                 log.debug('Could sync data from server but user refused')
293         elif result.can_sync == CanSync.YES:
294             log.info('User approved data sync from server')
295             if self._sync_data_from_server_and_replace_local()[0]:
296                 if create_new:
297                     # if we successfully synced data from the server and this is
298                     # a new account, make sure the api keys are properly stored
299                     # in the DB
300                     self.data.db.set_rotkehlchen_premium(self.premium.credentials)
301
302         # else result.can_sync was no, so we do nothing
303
304         # Success, return premium
305         return self.premium
306

[end of rotkehlchen/premium/sync.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/rotkehlchen/premium/sync.py b/rotkehlchen/premium/sync.py
--- a/rotkehlchen/premium/sync.py
+++ b/rotkehlchen/premium/sync.py
@@ -149,6 +149,9 @@
         if self.premium is None:
             return False
 
+        if not self.data.db.get_premium_sync() and not force_upload:
+            return False
+
         # upload only once per hour
         diff = ts_now() - self.last_data_upload_ts
         if diff < 3600 and not force_upload:

|

{"golden_diff": "diff --git a/rotkehlchen/premium/sync.py b/rotkehlchen/premium/sync.py\n--- a/rotkehlchen/premium/sync.py\n+++ b/rotkehlchen/premium/sync.py\n@@ -149,6 +149,9 @@\n if self.premium is None:\n return False\n \n+ if not self.data.db.get_premium_sync() and not force_upload:\n+ return False\n+\n # upload only once per hour\n diff = ts_now() - self.last_data_upload_ts\n if diff < 3600 and not force_upload:\n", "issue": "Rotki premium DB upload does not respect user setting\n## Problem Definition\r\n\r\nIt seems that rotki users who use premium experienced encrypted DB uploads even with the setting off. 2 versions back we broke the functionality and made it always upload.\r\n\r\n## Task\r\n\r\nFix it\n", "before_files": [{"content": "import base64\nimport logging\nimport shutil\nfrom enum import Enum\nfrom typing import Any, Dict, NamedTuple, Optional, Tuple\n\nfrom typing_extensions import Literal\n\nfrom rotkehlchen.data_handler import DataHandler\nfrom rotkehlchen.errors import (\n PremiumAuthenticationError,\n RemoteError,\n RotkehlchenPermissionError,\n UnableToDecryptRemoteData,\n)\nfrom rotkehlchen.logging import RotkehlchenLogsAdapter\nfrom rotkehlchen.premium.premium import Premium, PremiumCredentials, premium_create_and_verify\nfrom rotkehlchen.utils.misc import timestamp_to_date, ts_now\n\nlogger = logging.getLogger(__name__)\nlog = RotkehlchenLogsAdapter(logger)\n\n\nclass CanSync(Enum):\n YES = 0\n NO = 1\n ASK_USER = 2\n\n\nclass SyncCheckResult(NamedTuple):\n # The result of the sync check\n can_sync: CanSync\n # If result is ASK_USER, what should the message be?\n message: str\n payload: Optional[Dict[str, Any]]\n\n\nclass PremiumSyncManager():\n\n def __init__(self, data: DataHandler, password: str) -> None:\n # Initialize this with the value saved in the DB\n self.last_data_upload_ts = data.db.get_last_data_upload_ts()\n self.data = data\n self.password = password\n self.premium: Optional[Premium] = None\n\n def 
_can_sync_data_from_server(self, new_account: bool) -> SyncCheckResult:\n \"\"\"\n Checks if the remote data can be pulled from the server.\n\n Returns a SyncCheckResult denoting whether we can pull for sure,\n whether we can't pull or whether the user should be asked. If the user\n should be asked a message is also returned\n \"\"\"\n log.debug('can sync data from server -- start')\n if self.premium is None:\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n b64_encoded_data, our_hash = self.data.compress_and_encrypt_db(self.password)\n\n try:\n metadata = self.premium.query_last_data_metadata()\n except RemoteError as e:\n log.debug('can sync data from server failed', error=str(e))\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n if new_account:\n return SyncCheckResult(can_sync=CanSync.YES, message='', payload=None)\n\n if not self.data.db.get_premium_sync():\n # If it's not a new account and the db setting for premium syncing is off stop\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n log.debug(\n 'CAN_PULL',\n ours=our_hash,\n theirs=metadata.data_hash,\n )\n if our_hash == metadata.data_hash:\n log.debug('sync from server stopped -- same hash')\n # same hash -- no need to get anything\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n our_last_write_ts = self.data.db.get_last_write_ts()\n data_bytes_size = len(base64.b64decode(b64_encoded_data))\n\n local_more_recent = our_last_write_ts >= metadata.last_modify_ts\n local_bigger = data_bytes_size >= metadata.data_size\n\n if local_more_recent and local_bigger:\n log.debug('sync from server stopped -- local is both newer and bigger')\n return SyncCheckResult(can_sync=CanSync.NO, message='', payload=None)\n\n if local_more_recent is False: # remote is more recent\n message = (\n 'Detected remote database with more recent modification timestamp '\n 'than the local one. 
'\n )\n else: # remote is bigger\n message = 'Detected remote database with bigger size than the local one. '\n\n return SyncCheckResult(\n can_sync=CanSync.ASK_USER,\n message=message,\n payload={\n 'local_size': data_bytes_size,\n 'remote_size': metadata.data_size,\n 'local_last_modified': timestamp_to_date(our_last_write_ts),\n 'remote_last_modified': timestamp_to_date(metadata.last_modify_ts),\n },\n )\n\n def _sync_data_from_server_and_replace_local(self) -> Tuple[bool, str]:\n \"\"\"\n Performs syncing of data from server and replaces local db\n\n Returns true for success and False for error/failure\n\n May raise:\n - PremiumAuthenticationError due to an UnableToDecryptRemoteData\n coming from decompress_and_decrypt_db. This happens when the given password\n does not match the one on the saved DB.\n \"\"\"\n if self.premium is None:\n return False, 'Pulling failed. User does not have active premium.'\n\n try:\n result = self.premium.pull_data()\n except RemoteError as e:\n log.debug('sync from server -- pulling failed.', error=str(e))\n return False, f'Pulling failed: {str(e)}'\n\n if result['data'] is None:\n log.debug('sync from server -- no data found.')\n return False, 'No data found'\n\n try:\n self.data.decompress_and_decrypt_db(self.password, result['data'])\n except UnableToDecryptRemoteData as e:\n raise PremiumAuthenticationError(\n 'The given password can not unlock the database that was retrieved from '\n 'the server. 
Make sure to use the same password as when the account was created.',\n ) from e\n\n return True, ''\n\n def maybe_upload_data_to_server(self, force_upload: bool = False) -> bool:\n # if user has no premium do nothing\n if self.premium is None:\n return False\n\n # upload only once per hour\n diff = ts_now() - self.last_data_upload_ts\n if diff < 3600 and not force_upload:\n return False\n\n try:\n metadata = self.premium.query_last_data_metadata()\n except RemoteError as e:\n log.debug('upload to server -- fetching metadata error', error=str(e))\n return False\n b64_encoded_data, our_hash = self.data.compress_and_encrypt_db(self.password)\n\n log.debug(\n 'CAN_PUSH',\n ours=our_hash,\n theirs=metadata.data_hash,\n )\n if our_hash == metadata.data_hash and not force_upload:\n log.debug('upload to server stopped -- same hash')\n # same hash -- no need to upload anything\n return False\n\n our_last_write_ts = self.data.db.get_last_write_ts()\n if our_last_write_ts <= metadata.last_modify_ts and not force_upload:\n # Server's DB was modified after our local DB\n log.debug(\n f'upload to server stopped -- remote db({metadata.last_modify_ts}) '\n f'more recent than local({our_last_write_ts})',\n )\n return False\n\n data_bytes_size = len(base64.b64decode(b64_encoded_data))\n if data_bytes_size < metadata.data_size and not force_upload:\n # Let's be conservative.\n # TODO: Here perhaps prompt user in the future\n log.debug(\n f'upload to server stopped -- remote db({metadata.data_size}) '\n f'bigger than local({data_bytes_size})',\n )\n return False\n\n try:\n self.premium.upload_data(\n data_blob=b64_encoded_data,\n our_hash=our_hash,\n last_modify_ts=our_last_write_ts,\n compression_type='zlib',\n )\n except RemoteError as e:\n log.debug('upload to server -- upload error', error=str(e))\n return False\n\n # update the last data upload value\n self.last_data_upload_ts = ts_now()\n self.data.db.update_last_data_upload_ts(self.last_data_upload_ts)\n log.debug('upload to 
server -- success')\n return True\n\n def sync_data(self, action: Literal['upload', 'download']) -> Tuple[bool, str]:\n msg = ''\n\n if action == 'upload':\n success = self.maybe_upload_data_to_server(force_upload=True)\n\n if not success:\n msg = 'Upload failed'\n return success, msg\n\n return self._sync_data_from_server_and_replace_local()\n\n def try_premium_at_start(\n self,\n given_premium_credentials: Optional[PremiumCredentials],\n username: str,\n create_new: bool,\n sync_approval: Literal['yes', 'no', 'unknown'],\n ) -> Optional[Premium]:\n \"\"\"\n Check if new user provided api pair or we already got one in the DB\n\n Returns the created premium if user's premium credentials were fine.\n\n If not it will raise PremiumAuthenticationError.\n\n If no credentials were given it returns None\n \"\"\"\n\n if given_premium_credentials is not None:\n assert create_new, 'We should never get here for an already existing account'\n\n try:\n self.premium = premium_create_and_verify(given_premium_credentials)\n except PremiumAuthenticationError as e:\n log.error('Given API key is invalid')\n # At this point we are at a new user trying to create an account with\n # premium API keys and we failed. But a directory was created. Remove it.\n # But create a backup of it in case something went really wrong\n # and the directory contained data we did not want to lose\n shutil.move(\n self.data.user_data_dir, # type: ignore\n self.data.data_directory / f'auto_backup_{username}_{ts_now()}',\n )\n raise PremiumAuthenticationError(\n 'Could not verify keys for the new account. 
'\n '{}'.format(str(e)),\n ) from e\n\n # else, if we got premium data in the DB initialize it and try to sync with the server\n db_credentials = self.data.db.get_rotkehlchen_premium()\n if db_credentials:\n assert not create_new, 'We should never get here for a new account'\n try:\n self.premium = premium_create_and_verify(db_credentials)\n except PremiumAuthenticationError as e:\n message = (\n f'Could not authenticate with the rotkehlchen server with '\n f'the API keys found in the Database. Error: {str(e)}'\n )\n log.error(message)\n raise PremiumAuthenticationError(message) from e\n\n if self.premium is None:\n return None\n\n result = self._can_sync_data_from_server(new_account=create_new)\n if result.can_sync == CanSync.ASK_USER:\n if sync_approval == 'unknown':\n log.info('Remote DB is possibly newer. Ask user.')\n raise RotkehlchenPermissionError(result.message, result.payload)\n\n if sync_approval == 'yes':\n log.info('User approved data sync from server')\n if self._sync_data_from_server_and_replace_local()[0]:\n if create_new:\n # if we successfully synced data from the server and this is\n # a new account, make sure the api keys are properly stored\n # in the DB\n self.data.db.set_rotkehlchen_premium(self.premium.credentials)\n\n else:\n log.debug('Could sync data from server but user refused')\n elif result.can_sync == CanSync.YES:\n log.info('User approved data sync from server')\n if self._sync_data_from_server_and_replace_local()[0]:\n if create_new:\n # if we successfully synced data from the server and this is\n # a new account, make sure the api keys are properly stored\n # in the DB\n self.data.db.set_rotkehlchen_premium(self.premium.credentials)\n\n # else result.can_sync was no, so we do nothing\n\n # Success, return premium\n return self.premium\n", "path": "rotkehlchen/premium/sync.py"}]}

| 3,921 | 136 |
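The golden diff for the rotki row adds one early exit to `maybe_upload_data_to_server`. Automatic uploads now stop when `get_premium_sync()` is off. `sync_data('upload')` still reaches the server because it passes `force_upload=True`. The minimal sketch below models only the local checks that run before any network call, and writes them as a pure function. It substitutes plain booleans and integer timestamps for rotki's `DataHandler`, DB and `Premium` objects. `SyncSettings` and `should_attempt_upload` are hypothetical names and are not part of the rotki API.

```python
from dataclasses import dataclass


@dataclass
class SyncSettings:
    """Hypothetical stand-in for the DB-backed values rotki reads
    (the premium sync setting and the stored last upload timestamp)."""
    premium_sync: bool
    last_data_upload_ts: int


def should_attempt_upload(
    has_premium: bool,
    settings: SyncSettings,
    now: int,
    force_upload: bool = False,
) -> bool:
    """Model the early exits of the patched maybe_upload_data_to_server.

    Only the local checks are modelled here. The hash, timestamp and size
    comparisons against the server's metadata are deliberately left out.
    """
    # No premium subscription: nothing is ever uploaded.
    if not has_premium:
        return False
    # The fix: an automatic upload respects the user's premium sync setting,
    # while an explicit upload request (force_upload=True) still goes through.
    if not settings.premium_sync and not force_upload:
        return False
    # Automatic uploads happen at most once per hour (3600 seconds).
    if now - settings.last_data_upload_ts < 3600 and not force_upload:
        return False
    # Passed the local guards; the real method would now query the server.
    return True


off = SyncSettings(premium_sync=False, last_data_upload_ts=0)
on = SyncSettings(premium_sync=True, last_data_upload_ts=0)
print(should_attempt_upload(True, off, now=10_000))                     # False: setting is off
print(should_attempt_upload(True, off, now=10_000, force_upload=True))  # True: manual upload
print(should_attempt_upload(True, on, now=1_000))                       # False: within the hour
```

The golden diff places the setting check before the hourly throttle and before `query_last_data_metadata()`. As a result, a disabled setting also skips the metadata request and the `compress_and_encrypt_db` call, instead of only skipping the final upload.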

gh_patches_debug_40664 | rasdani/github-patches | git_diff | medtagger__MedTagger-40 |

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Add "radon" tool to the Backend and enable it in CI

## Expected Behavior

Python code in backend should be validated by "radon" tool in CI.

## Actual Behavior

MedTagger backend uses a few linters already but we should add more validators to increase automation and code quality.

</issue>

<code>

[start of backend/scripts/migrate_hbase.py]

1 """Script that can migrate existing HBase schema or prepare empty database with given schema.
2
3 How to use it?
4 --------------
5 Run this script just by executing following line in the root directory of this project:
6
7 (venv) $ python3.6 scripts/migrate_hbase.py
8
9 """
10 import argparse
11 import logging
12 import logging.config
13
14 from medtagger.clients.hbase_client import HBaseClient
15 from utils import get_connection_to_hbase, user_agrees
16
17 logging.config.fileConfig('logging.conf')
18 logger = logging.getLogger(__name__)
19
20 parser = argparse.ArgumentParser(description='HBase migration.')
21 parser.add_argument('-y', '--yes', dest='yes', action='store_const', const=True)
22 args = parser.parse_args()
23
24
25 HBASE_SCHEMA = HBaseClient.HBASE_SCHEMA
26 connection = get_connection_to_hbase()
27 existing_tables = set(connection.tables())
28 schema_tables = set(HBASE_SCHEMA)
29 tables_to_drop = list(existing_tables - schema_tables)
30 for table_name in tables_to_drop:
31     if args.yes or user_agrees('Do you want to drop table "{}"?'.format(table_name)):
32         logger.info('Dropping table "%s".', table_name)
33         table = connection.table(table_name)
34         table.drop()
35
36 for table_name in HBASE_SCHEMA:
37     table = connection.table(table_name)
38     if not table.exists():
39         if args.yes or user_agrees('Do you want to create table "{}"?'.format(table_name)):
40             list_of_columns = HBASE_SCHEMA[table_name]
41             logger.info('Creating table "%s" with columns %s.', table_name, list_of_columns)
42             table.create(*list_of_columns)
43             table.enable_if_exists_checks()
44     else:
45         existing_column_families = set(table.columns())
46         schema_column_families = set(HBASE_SCHEMA[table_name])
47         columns_to_add = list(schema_column_families - existing_column_families)
48         columns_to_drop = list(existing_column_families - schema_column_families)
49
50         if columns_to_add:
51             if args.yes or user_agrees('Do you want to add columns {} to "{}"?'.format(columns_to_add, table_name)):
52                 table.add_columns(*columns_to_add)
53
54         if columns_to_drop:
55             if args.yes or user_agrees('Do you want to drop columns {} from "{}"?'.format(columns_to_drop, table_name)):
56                 table.drop_columns(*columns_to_drop)
57

[end of backend/scripts/migrate_hbase.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/backend/scripts/migrate_hbase.py b/backend/scripts/migrate_hbase.py
--- a/backend/scripts/migrate_hbase.py
+++ b/backend/scripts/migrate_hbase.py
@@ -11,6 +11,8 @@
 import logging
 import logging.config
 
+from starbase import Table
+
 from medtagger.clients.hbase_client import HBaseClient
 from utils import get_connection_to_hbase, user_agrees
 
@@ -22,35 +24,59 @@
 args = parser.parse_args()
 
 
-HBASE_SCHEMA = HBaseClient.HBASE_SCHEMA
-connection = get_connection_to_hbase()
-existing_tables = set(connection.tables())
-schema_tables = set(HBASE_SCHEMA)
-tables_to_drop = list(existing_tables - schema_tables)
-for table_name in tables_to_drop:
+def create_new_table(table: Table) -> None:
+    """Create new table once user agrees on that."""
+    table_name = table.name
+    if args.yes or user_agrees('Do you want to create table "{}"?'.format(table_name)):
+        list_of_columns = HBaseClient.HBASE_SCHEMA[table_name]
+        logger.info('Creating table "%s" with columns %s.', table_name, list_of_columns)
+        table.create(*list_of_columns)
+        table.enable_if_exists_checks()
+
+
+def update_table_schema(table: Table) -> None:
+    """Update table schema once user agrees on that."""
+    table_name = table.name
+    existing_column_families = set(table.columns())
+    schema_column_families = set(HBaseClient.HBASE_SCHEMA[table_name])
+    columns_to_add = list(schema_column_families - existing_column_families)
+    columns_to_drop = list(existing_column_families - schema_column_families)
+
+    if columns_to_add:
+        if args.yes or user_agrees('Do you want to add columns {} to "{}"?'.format(columns_to_add, table_name)):
+            table.add_columns(*columns_to_add)
+
+    if columns_to_drop:
+        if args.yes or user_agrees('Do you want to drop columns {} from "{}"?'.format(columns_to_drop, table_name)):
+            table.drop_columns(*columns_to_drop)
+
+
+def drop_table(table: Table) -> None:
+    """Drop table once user agrees on that."""
+    table_name = table.name
     if args.yes or user_agrees('Do you want to drop table "{}"?'.format(table_name)):
         logger.info('Dropping table "%s".', table_name)
-        table = connection.table(table_name)
         table.drop()
 
-for table_name in HBASE_SCHEMA:
-    table = connection.table(table_name)
-    if not table.exists():
-        if args.yes or user_agrees('Do you want to create table "{}"?'.format(table_name)):
-            list_of_columns = HBASE_SCHEMA[table_name]
-            logger.info('Creating table "%s" with columns %s.', table_name, list_of_columns)
-            table.create(*list_of_columns)
-            table.enable_if_exists_checks()
-    else:
-        existing_column_families = set(table.columns())
-        schema_column_families = set(HBASE_SCHEMA[table_name])
-        columns_to_add = list(schema_column_families - existing_column_families)
-        columns_to_drop = list(existing_column_families - schema_column_families)
-
-        if columns_to_add:
-            if args.yes or user_agrees('Do you want to add columns {} to "{}"?'.format(columns_to_add, table_name)):
-                table.add_columns(*columns_to_add)
-
-        if columns_to_drop:
-            if args.yes or user_agrees('Do you want to drop columns {} from "{}"?'.format(columns_to_drop, table_name)):
-                table.drop_columns(*columns_to_drop)
+
+def main() -> None:
+    """Run main functionality of this script."""
+    connection = get_connection_to_hbase()
+    existing_tables = set(connection.tables())
+    schema_tables = set(HBaseClient.HBASE_SCHEMA)
+    tables_to_drop = list(existing_tables - schema_tables)
+
+    for table_name in tables_to_drop:
+        table = connection.table(table_name)
+        drop_table(table)
+
+    for table_name in HBaseClient.HBASE_SCHEMA:
+        table = connection.table(table_name)
+        if not table.exists():
+            create_new_table(table)
+        else:
+            update_table_schema(table)
+
+
+if __name__ == '__main__':
+    main()

|

{"golden_diff": "diff --git a/backend/scripts/migrate_hbase.py b/backend/scripts/migrate_hbase.py\n--- a/backend/scripts/migrate_hbase.py\n+++ b/backend/scripts/migrate_hbase.py\n@@ -11,6 +11,8 @@\n import logging\n import logging.config\n \n+from starbase import Table\n+\n from medtagger.clients.hbase_client import HBaseClient\n from utils import get_connection_to_hbase, user_agrees\n \n@@ -22,35 +24,59 @@\n args = parser.parse_args()\n \n \n-HBASE_SCHEMA = HBaseClient.HBASE_SCHEMA\n-connection = get_connection_to_hbase()\n-existing_tables = set(connection.tables())\n-schema_tables = set(HBASE_SCHEMA)\n-tables_to_drop = list(existing_tables - schema_tables)\n-for table_name in tables_to_drop:\n+def create_new_table(table: Table) -> None:\n+ \"\"\"Create new table once user agrees on that.\"\"\"\n+ table_name = table.name\n+ if args.yes or user_agrees('Do you want to create table \"{}\"?'.format(table_name)):\n+ list_of_columns = HBaseClient.HBASE_SCHEMA[table_name]\n+ logger.info('Creating table \"%s\" with columns %s.', table_name, list_of_columns)\n+ table.create(*list_of_columns)\n+ table.enable_if_exists_checks()\n+\n+\n+def update_table_schema(table: Table) -> None:\n+ \"\"\"Update table schema once user agrees on that.\"\"\"\n+ table_name = table.name\n+ existing_column_families = set(table.columns())\n+ schema_column_families = set(HBaseClient.HBASE_SCHEMA[table_name])\n+ columns_to_add = list(schema_column_families - existing_column_families)\n+ columns_to_drop = list(existing_column_families - schema_column_families)\n+\n+ if columns_to_add:\n+ if args.yes or user_agrees('Do you want to add columns {} to \"{}\"?'.format(columns_to_add, table_name)):\n+ table.add_columns(*columns_to_add)\n+\n+ if columns_to_drop:\n+ if args.yes or user_agrees('Do you want to drop columns {} from \"{}\"?'.format(columns_to_drop, table_name)):\n+ table.drop_columns(*columns_to_drop)\n+\n+\n+def drop_table(table: Table) -> None:\n+ \"\"\"Drop table once user agrees on 
that.\"\"\"\n+ table_name = table.name\n if args.yes or user_agrees('Do you want to drop table \"{}\"?'.format(table_name)):\n logger.info('Dropping table \"%s\".', table_name)\n- table = connection.table(table_name)\n table.drop()\n \n-for table_name in HBASE_SCHEMA:\n- table = connection.table(table_name)\n- if not table.exists():\n- if args.yes or user_agrees('Do you want to create table \"{}\"?'.format(table_name)):\n- list_of_columns = HBASE_SCHEMA[table_name]\n- logger.info('Creating table \"%s\" with columns %s.', table_name, list_of_columns)\n- table.create(*list_of_columns)\n- table.enable_if_exists_checks()\n- else:\n- existing_column_families = set(table.columns())\n- schema_column_families = set(HBASE_SCHEMA[table_name])\n- columns_to_add = list(schema_column_families - existing_column_families)\n- columns_to_drop = list(existing_column_families - schema_column_families)\n-\n- if columns_to_add:\n- if args.yes or user_agrees('Do you want to add columns {} to \"{}\"?'.format(columns_to_add, table_name)):\n- table.add_columns(*columns_to_add)\n-\n- if columns_to_drop:\n- if args.yes or user_agrees('Do you want to drop columns {} from \"{}\"?'.format(columns_to_drop, table_name)):\n- table.drop_columns(*columns_to_drop)\n+\n+def main() -> None:\n+ \"\"\"Run main functionality of this script.\"\"\"\n+ connection = get_connection_to_hbase()\n+ existing_tables = set(connection.tables())\n+ schema_tables = set(HBaseClient.HBASE_SCHEMA)\n+ tables_to_drop = list(existing_tables - schema_tables)\n+\n+ for table_name in tables_to_drop:\n+ table = connection.table(table_name)\n+ drop_table(table)\n+\n+ for table_name in HBaseClient.HBASE_SCHEMA:\n+ table = connection.table(table_name)\n+ if not table.exists():\n+ create_new_table(table)\n+ else:\n+ update_table_schema(table)\n+\n+\n+if __name__ == '__main__':\n+ main()\n", "issue": "Add \"radon\" tool to the Backend and enable it in CI\n## Expected Behavior\r\n\r\nPython code in backend should be validated by 
\"radon\" tool in CI.\r\n\r\n## Actual Behavior\r\n\r\nMedTagger backend uses a few linters already but we should add more validators to increate automation and code quality.\n", "before_files": [{"content": "\"\"\"Script that can migrate existing HBase schema or prepare empty database with given schema.\n\nHow to use it?\n--------------\nRun this script just by executing following line in the root directory of this project:\n\n (venv) $ python3.6 scripts/migrate_hbase.py\n\n\"\"\"\nimport argparse\nimport logging\nimport logging.config\n\nfrom medtagger.clients.hbase_client import HBaseClient\nfrom utils import get_connection_to_hbase, user_agrees\n\nlogging.config.fileConfig('logging.conf')\nlogger = logging.getLogger(__name__)\n\nparser = argparse.ArgumentParser(description='HBase migration.')\nparser.add_argument('-y', '--yes', dest='yes', action='store_const', const=True)\nargs = parser.parse_args()\n\n\nHBASE_SCHEMA = HBaseClient.HBASE_SCHEMA\nconnection = get_connection_to_hbase()\nexisting_tables = set(connection.tables())\nschema_tables = set(HBASE_SCHEMA)\ntables_to_drop = list(existing_tables - schema_tables)\nfor table_name in tables_to_drop:\n if args.yes or user_agrees('Do you want to drop table \"{}\"?'.format(table_name)):\n logger.info('Dropping table \"%s\".', table_name)\n table = connection.table(table_name)\n table.drop()\n\nfor table_name in HBASE_SCHEMA:\n table = connection.table(table_name)\n if not table.exists():\n if args.yes or user_agrees('Do you want to create table \"{}\"?'.format(table_name)):\n list_of_columns = HBASE_SCHEMA[table_name]\n logger.info('Creating table \"%s\" with columns %s.', table_name, list_of_columns)\n table.create(*list_of_columns)\n table.enable_if_exists_checks()\n else:\n existing_column_families = set(table.columns())\n schema_column_families = set(HBASE_SCHEMA[table_name])\n columns_to_add = list(schema_column_families - existing_column_families)\n columns_to_drop = list(existing_column_families - 
schema_column_families)\n\n if columns_to_add:\n if args.yes or user_agrees('Do you want to add columns {} to \"{}\"?'.format(columns_to_add, table_name)):\n table.add_columns(*columns_to_add)\n\n if columns_to_drop:\n if args.yes or user_agrees('Do you want to drop columns {} from \"{}\"?'.format(columns_to_drop, table_name)):\n table.drop_columns(*columns_to_drop)\n", "path": "backend/scripts/migrate_hbase.py"}]}

| 1,204 | 964 |
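The `migrate_hbase.py` refactor above moves module-level code into small functions (`create_new_table`, `update_table_schema`, `drop_table`, `main`). That kind of split usually lowers the per-block complexity scores a tool like radon reports. The core of the migration is two set differences: one between tables, and one between column families within each table. Below is a minimal sketch of that planning step kept separate from any HBase I/O. It assumes the live state is supplied as a plain dict. `plan_migration` and the table names in the test are hypothetical and not part of MedTagger.

```python
from typing import Dict, List, Set, Tuple

ColumnChanges = Dict[str, Tuple[List[str], List[str]]]


def plan_migration(
    existing: Dict[str, Set[str]],
    schema: Dict[str, List[str]],
) -> Tuple[List[str], List[str], ColumnChanges]:
    """Compute what a schema migration would do, without touching HBase.

    existing: table name -> column families currently present (assumed input shape).
    schema:   table name -> column families wanted (mirrors HBASE_SCHEMA's shape).
    """
    # Tables present in the database but absent from the schema get dropped.
    tables_to_drop = sorted(set(existing) - set(schema))
    # Tables in the schema but missing from the database get created.
    tables_to_create = sorted(set(schema) - set(existing))

    column_changes: ColumnChanges = {}
    for table_name, families in schema.items():
        if table_name not in existing:
            continue  # new tables are created with all their columns at once
        wanted = set(families)
        present = existing[table_name]
        to_add = sorted(wanted - present)
        to_drop = sorted(present - wanted)
        if to_add or to_drop:
            column_changes[table_name] = (to_add, to_drop)

    return tables_to_drop, tables_to_create, column_changes
```

Because the plan is pure data, each prompt (`user_agrees(...)`) and each starbase call (`table.drop()`, `table.add_columns(...)`) can stay in thin wrapper functions. That separation is what the refactor aims for.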

gh_patches_debug_1836

|

rasdani/github-patches

|

git_diff

|

Nitrate__Nitrate-337

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Upgrade django-tinymce to 2.7.0

As per subject.

</issue>

<code>

[start of setup.py]

1 # -*- coding: utf-8 -*-

2

3 from setuptools import setup, find_packages

4

5

6 with open('VERSION.txt', 'r') as f:

7 pkg_version = f.read().strip()

8

9

10 def get_long_description():

11 with open('README.rst', 'r') as f:

12 return f.read()

13

14

15 install_requires = [

16 'PyMySQL == 0.7.11',

17 'beautifulsoup4 >= 4.1.1',

18 'celery == 4.1.0',

19 'django-contrib-comments == 1.8.0',

20 'django-tinymce == 2.6.0',

21 'django-uuslug == 1.1.8',

22 'django >= 1.10,<2.0',

23 'html2text',

24 'kobo == 0.7.0',

25 'odfpy >= 0.9.6',

26 'six',

27 'xmltodict',

28 ]

29

30 extras_require = {

31 # Required for tcms.core.contrib.auth.backends.KerberosBackend

32 'krbauth': [

33 'kerberos == 1.2.5'

34 ],

35

36 # Packages for building documentation

37 'docs': [

38 'Sphinx >= 1.1.2',

39 'sphinx_rtd_theme',

40 ],

41

42 # Necessary packages for running tests

43 'tests': [

44 'coverage',

45 'factory_boy',

46 'flake8',

47 'mock',

48 'pytest',

49 'pytest-cov',

50 'pytest-django',

51 ],

52

53 # Contain tools that assists the development

54 'devtools': [

55 'django-debug-toolbar == 1.7',

56 'tox',

57 'django-extensions',

58 'pygraphviz',

59 ]

60 }

61

62

63 setup(

64 name='Nitrate',

65 version=pkg_version,

66 description='Test Case Management System',

67 long_description=get_long_description(),

68 author='Nitrate Team',

69 maintainer='Chenxiong Qi',

70 maintainer_email='[email protected]',

71 url='https://github.com/Nitrate/Nitrate/',

72 license='GPLv2+',

73 keywords='test case',

74 install_requires=install_requires,

75 extras_require=extras_require,

76 packages=find_packages(),

77 include_package_data=True,

78 classifiers=[

79 'Framework :: Django',

80 'Framework :: Django :: 1.10',

81 'Framework :: Django :: 1.11',

82 'Intended Audience :: Developers',

83 'License :: OSI Approved :: GNU General Public License v2 or later (GPLv2+)',

84 'Programming Language :: Python :: 2',

85 'Programming Language :: Python :: 2.7',

86 'Programming Language :: Python :: 3',

87 'Programming Language :: Python :: 3.6',

88 'Topic :: Software Development :: Quality Assurance',

89 'Topic :: Software Development :: Testing',

90 ],

91 )

92

[end of setup.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/setup.py b/setup.py

--- a/setup.py

+++ b/setup.py

@@ -17,7 +17,7 @@

'beautifulsoup4 >= 4.1.1',

'celery == 4.1.0',

'django-contrib-comments == 1.8.0',

- 'django-tinymce == 2.6.0',

+ 'django-tinymce == 2.7.0',

'django-uuslug == 1.1.8',

'django >= 1.10,<2.0',

'html2text',

|

{"golden_diff": "diff --git a/setup.py b/setup.py\n--- a/setup.py\n+++ b/setup.py\n@@ -17,7 +17,7 @@\n 'beautifulsoup4 >= 4.1.1',\n 'celery == 4.1.0',\n 'django-contrib-comments == 1.8.0',\n- 'django-tinymce == 2.6.0',\n+ 'django-tinymce == 2.7.0',\n 'django-uuslug == 1.1.8',\n 'django >= 1.10,<2.0',\n 'html2text',\n", "issue": "Upgrade django-tinymce to 2.7.0\nAs per subject.\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n\nfrom setuptools import setup, find_packages\n\n\nwith open('VERSION.txt', 'r') as f:\n pkg_version = f.read().strip()\n\n\ndef get_long_description():\n with open('README.rst', 'r') as f:\n return f.read()\n\n\ninstall_requires = [\n 'PyMySQL == 0.7.11',\n 'beautifulsoup4 >= 4.1.1',\n 'celery == 4.1.0',\n 'django-contrib-comments == 1.8.0',\n 'django-tinymce == 2.6.0',\n 'django-uuslug == 1.1.8',\n 'django >= 1.10,<2.0',\n 'html2text',\n 'kobo == 0.7.0',\n 'odfpy >= 0.9.6',\n 'six',\n 'xmltodict',\n]\n\nextras_require = {\n # Required for tcms.core.contrib.auth.backends.KerberosBackend\n 'krbauth': [\n 'kerberos == 1.2.5'\n ],\n\n # Packages for building documentation\n 'docs': [\n 'Sphinx >= 1.1.2',\n 'sphinx_rtd_theme',\n ],\n\n # Necessary packages for running tests\n 'tests': [\n 'coverage',\n 'factory_boy',\n 'flake8',\n 'mock',\n 'pytest',\n 'pytest-cov',\n 'pytest-django',\n ],\n\n # Contain tools that assists the development\n 'devtools': [\n 'django-debug-toolbar == 1.7',\n 'tox',\n 'django-extensions',\n 'pygraphviz',\n ]\n}\n\n\nsetup(\n name='Nitrate',\n version=pkg_version,\n description='Test Case Management System',\n long_description=get_long_description(),\n author='Nitrate Team',\n maintainer='Chenxiong Qi',\n maintainer_email='[email protected]',\n url='https://github.com/Nitrate/Nitrate/',\n license='GPLv2+',\n keywords='test case',\n install_requires=install_requires,\n extras_require=extras_require,\n packages=find_packages(),\n include_package_data=True,\n classifiers=[\n 'Framework :: Django',\n 'Framework :: 
Django :: 1.10',\n 'Framework :: Django :: 1.11',\n 'Intended Audience :: Developers',\n 'License :: OSI Approved :: GNU General Public License v2 or later (GPLv2+)',\n 'Programming Language :: Python :: 2',\n 'Programming Language :: Python :: 2.7',\n 'Programming Language :: Python :: 3',\n 'Programming Language :: Python :: 3.6',\n 'Topic :: Software Development :: Quality Assurance',\n 'Topic :: Software Development :: Testing',\n ],\n)\n", "path": "setup.py"}]}

| 1,344 | 134 |

gh_patches_debug_58946

|

rasdani/github-patches

|

git_diff

|

ivy-llc__ivy-13797

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

diagflat

</issue>

<code>

[start of ivy/functional/frontends/numpy/creation_routines/building_matrices.py]

1 import ivy

2 from ivy.functional.frontends.numpy.func_wrapper import (

3 to_ivy_arrays_and_back,

4 handle_numpy_dtype,

5 )

6

7

8 @to_ivy_arrays_and_back

9 def tril(m, k=0):

10 return ivy.tril(m, k=k)

11

12

13 @to_ivy_arrays_and_back

14 def triu(m, k=0):

15 return ivy.triu(m, k=k)

16

17

18 @handle_numpy_dtype

19 @to_ivy_arrays_and_back

20 def tri(N, M=None, k=0, dtype="float64", *, like=None):

21 if M is None:

22 M = N

23 ones = ivy.ones((N, M), dtype=dtype)

24 return ivy.tril(ones, k=k)

25

26

27 @to_ivy_arrays_and_back

28 def diag(v, k=0):

29 return ivy.diag(v, k=k)

30

31

32 @to_ivy_arrays_and_back

33 def vander(x, N=None, increasing=False):

34 if ivy.is_float_dtype(x):

35 x = x.astype(ivy.float64)

36 elif ivy.is_bool_dtype or ivy.is_int_dtype(x):

37 x = x.astype(ivy.int64)

38 return ivy.vander(x, N=N, increasing=increasing)

39

[end of ivy/functional/frontends/numpy/creation_routines/building_matrices.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/ivy/functional/frontends/numpy/creation_routines/building_matrices.py b/ivy/functional/frontends/numpy/creation_routines/building_matrices.py

--- a/ivy/functional/frontends/numpy/creation_routines/building_matrices.py

+++ b/ivy/functional/frontends/numpy/creation_routines/building_matrices.py

@@ -36,3 +36,12 @@

elif ivy.is_bool_dtype or ivy.is_int_dtype(x):

x = x.astype(ivy.int64)

return ivy.vander(x, N=N, increasing=increasing)

+

+

+# diagflat

+@to_ivy_arrays_and_back

+def diagflat(v, k=0):

+ ret = ivy.diagflat(v, offset=k)

+ while len(ivy.shape(ret)) < 2:

+ ret = ret.expand_dims(axis=0)

+ return ret

|

{"golden_diff": "diff --git a/ivy/functional/frontends/numpy/creation_routines/building_matrices.py b/ivy/functional/frontends/numpy/creation_routines/building_matrices.py\n--- a/ivy/functional/frontends/numpy/creation_routines/building_matrices.py\n+++ b/ivy/functional/frontends/numpy/creation_routines/building_matrices.py\n@@ -36,3 +36,12 @@\n elif ivy.is_bool_dtype or ivy.is_int_dtype(x):\n x = x.astype(ivy.int64)\n return ivy.vander(x, N=N, increasing=increasing)\n+\n+\n+# diagflat\n+@to_ivy_arrays_and_back\n+def diagflat(v, k=0):\n+ ret = ivy.diagflat(v, offset=k)\n+ while len(ivy.shape(ret)) < 2:\n+ ret = ret.expand_dims(axis=0)\n+ return ret\n", "issue": "diagflat\n\n", "before_files": [{"content": "import ivy\nfrom ivy.functional.frontends.numpy.func_wrapper import (\n to_ivy_arrays_and_back,\n handle_numpy_dtype,\n)\n\n\n@to_ivy_arrays_and_back\ndef tril(m, k=0):\n return ivy.tril(m, k=k)\n\n\n@to_ivy_arrays_and_back\ndef triu(m, k=0):\n return ivy.triu(m, k=k)\n\n\n@handle_numpy_dtype\n@to_ivy_arrays_and_back\ndef tri(N, M=None, k=0, dtype=\"float64\", *, like=None):\n if M is None:\n M = N\n ones = ivy.ones((N, M), dtype=dtype)\n return ivy.tril(ones, k=k)\n\n\n@to_ivy_arrays_and_back\ndef diag(v, k=0):\n return ivy.diag(v, k=k)\n\n\n@to_ivy_arrays_and_back\ndef vander(x, N=None, increasing=False):\n if ivy.is_float_dtype(x):\n x = x.astype(ivy.float64)\n elif ivy.is_bool_dtype or ivy.is_int_dtype(x):\n x = x.astype(ivy.int64)\n return ivy.vander(x, N=N, increasing=increasing)\n", "path": "ivy/functional/frontends/numpy/creation_routines/building_matrices.py"}]}

| 903 | 198 |
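The `diagflat` frontend above delegates to `ivy.diagflat(v, offset=k)`. Its `while` loop then ensures the result is at least 2-D, as NumPy's `numpy.diagflat` guarantees. The pure-Python sketch below shows the semantics being matched. It flattens the input, including scalars and nested lists, and places the values on the `k`-th diagonal of a square matrix of side `len(flat) + abs(k)`. The helper names are hypothetical, and the sketch is only for illustration. It is not Ivy's implementation.

```python
from typing import Any, List


def _flatten(v: Any) -> List[Any]:
    """Flatten nested lists/tuples in row-major order; a scalar becomes [scalar]."""
    if isinstance(v, (list, tuple)):
        out: List[Any] = []
        for item in v:
            out.extend(_flatten(item))
        return out
    return [v]


def diagflat(v: Any, k: int = 0) -> List[List[Any]]:
    """Return a 2-D list with flattened `v` on diagonal `k` (k > 0: above, k < 0: below)."""
    flat = _flatten(v)
    n = len(flat) + abs(k)
    out = [[0] * n for _ in range(n)]
    for i, value in enumerate(flat):
        # Positive k shifts columns right; negative k shifts rows down.
        row, col = (i, i + k) if k >= 0 else (i - k, i)
        out[row][col] = value
    return out
```

For example, `diagflat([1, 2], 1)` gives `[[0, 1, 0], [0, 0, 2], [0, 0, 0]]`, and `diagflat(5)` gives `[[5]]`. The scalar case is the one the rank-padding loop in the patch exists to cover.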

gh_patches_debug_23782

|

rasdani/github-patches

|

git_diff

|

Textualize__rich-273

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[BUG] '#' sign is treated as the end of a URL

**Describe the bug**

The `#` a valid element of the URL, but Rich seems to ignore it and treats it as the end of it.

Consider this URL: https://github.com/willmcgugan/rich#rich-print-function

**To Reproduce**

```python

from rich.console import Console

console = Console()

console.log("https://github.com/willmcgugan/rich#rich-print-function")

```

Output:

**Platform**

I'm using Rich on Windows and Linux, with the currently newest version `6.1.1`.

</issue>

<code>

[start of rich/highlighter.py]

1 from abc import ABC, abstractmethod

2 from typing import List, Union

3

4 from .text import Text

5

6

7 class Highlighter(ABC):

8 """Abstract base class for highlighters."""

9

10 def __call__(self, text: Union[str, Text]) -> Text:

11 """Highlight a str or Text instance.

12

13 Args:

14 text (Union[str, ~Text]): Text to highlight.

15

16 Raises:

17 TypeError: If not called with text or str.

18

19 Returns:

20 Text: A test instance with highlighting applied.

21 """

22 if isinstance(text, str):

23 highlight_text = Text(text)

24 elif isinstance(text, Text):

25 highlight_text = text.copy()

26 else:

27 raise TypeError(f"str or Text instance required, not {text!r}")

28 self.highlight(highlight_text)

29 return highlight_text

30

31 @abstractmethod

32 def highlight(self, text: Text) -> None:

33 """Apply highlighting in place to text.

34

35 Args:

36 text (~Text): A text object highlight.

37 """

38

39

40 class NullHighlighter(Highlighter):

41 """A highlighter object that doesn't highlight.

42

43 May be used to disable highlighting entirely.

44

45 """

46

47 def highlight(self, text: Text) -> None:

48 """Nothing to do"""

49

50

51 class RegexHighlighter(Highlighter):

52 """Applies highlighting from a list of regular expressions."""

53

54 highlights: List[str] = []

55 base_style: str = ""

56

57 def highlight(self, text: Text) -> None:

58 """Highlight :class:`rich.text.Text` using regular expressions.

59

60 Args:

61 text (~Text): Text to highlighted.

62

63 """

64 highlight_regex = text.highlight_regex

65 for re_highlight in self.highlights:

66 highlight_regex(re_highlight, style_prefix=self.base_style)

67

68

69 class ReprHighlighter(RegexHighlighter):

70 """Highlights the text typically produced from ``__repr__`` methods."""

71

72 base_style = "repr."

73 highlights = [

74 r"(?P<brace>[\{\[\(\)\]\}])",

75 r"(?P<tag_start>\<)(?P<tag_name>[\w\-\.\:]*)(?P<tag_contents>.*?)(?P<tag_end>\>)",

76 r"(?P<attrib_name>\w+?)=(?P<attrib_value>\"?[\w_]+\"?)",

77 r"(?P<bool_true>True)|(?P<bool_false>False)|(?P<none>None)",

78 r"(?P<number>(?<!\w)\-?[0-9]+\.?[0-9]*(e[\-\+]?\d+?)?\b)",

79 r"(?P<number>0x[0-9a-f]*)",

80 r"(?P<path>\B(\/[\w\.\-\_\+]+)*\/)(?P<filename>[\w\.\-\_\+]*)?",

81 r"(?<!\\)(?P<str>b?\'\'\'.*?(?<!\\)\'\'\'|b?\'.*?(?<!\\)\'|b?\"\"\".*?(?<!\\)\"\"\"|b?\".*?(?<!\\)\")",

82 r"(?P<url>https?:\/\/[0-9a-zA-Z\$\-\_\+\!`\(\)\,\.\?\/\;\:\&\=\%]*)",

83 r"(?P<uuid>[a-fA-F0-9]{8}\-[a-fA-F0-9]{4}\-[a-fA-F0-9]{4}\-[a-fA-F0-9]{4}\-[a-fA-F0-9]{12})",

84 ]

85

86

87 if __name__ == "__main__": # pragma: no cover

88 from .console import Console

89

90 console = Console()

91 console.print("[bold green]hello world![/bold green]")

92 console.print("'[bold green]hello world![/bold green]'")

93

94 console.print(" /foo")

95 console.print("/foo/")

96 console.print("/foo/bar")

97 console.print("foo/bar/baz")

98

99 console.print("/foo/bar/baz?foo=bar+egg&egg=baz")

100 console.print("/foo/bar/baz/")

101 console.print("/foo/bar/baz/egg")

102 console.print("/foo/bar/baz/egg.py")

103 console.print("/foo/bar/baz/egg.py word")

104 console.print(" /foo/bar/baz/egg.py word")

105 console.print("foo /foo/bar/baz/egg.py word")

106 console.print("foo /foo/bar/ba._++z/egg+.py word")

107 console.print("https://example.org?foo=bar")

108

109 console.print(1234567.34)

110 console.print(1 / 2)

111 console.print(-1 / 123123123123)

112

[end of rich/highlighter.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/rich/highlighter.py b/rich/highlighter.py

--- a/rich/highlighter.py

+++ b/rich/highlighter.py

@@ -79,7 +79,7 @@

r"(?P<number>0x[0-9a-f]*)",

r"(?P<path>\B(\/[\w\.\-\_\+]+)*\/)(?P<filename>[\w\.\-\_\+]*)?",

r"(?<!\\)(?P<str>b?\'\'\'.*?(?<!\\)\'\'\'|b?\'.*?(?<!\\)\'|b?\"\"\".*?(?<!\\)\"\"\"|b?\".*?(?<!\\)\")",

- r"(?P<url>https?:\/\/[0-9a-zA-Z\$\-\_\+\!`\(\)\,\.\?\/\;\:\&\=\%]*)",

+ r"(?P<url>https?:\/\/[0-9a-zA-Z\$\-\_\+\!`\(\)\,\.\?\/\;\:\&\=\%\#]*)",

r"(?P<uuid>[a-fA-F0-9]{8}\-[a-fA-F0-9]{4}\-[a-fA-F0-9]{4}\-[a-fA-F0-9]{4}\-[a-fA-F0-9]{12})",

]

@@ -104,7 +104,7 @@

console.print(" /foo/bar/baz/egg.py word")

console.print("foo /foo/bar/baz/egg.py word")

console.print("foo /foo/bar/ba._++z/egg+.py word")

- console.print("https://example.org?foo=bar")

+ console.print("https://example.org?foo=bar#header")

console.print(1234567.34)

console.print(1 / 2)

|

{"golden_diff": "diff --git a/rich/highlighter.py b/rich/highlighter.py\n--- a/rich/highlighter.py\n+++ b/rich/highlighter.py\n@@ -79,7 +79,7 @@\n r\"(?P<number>0x[0-9a-f]*)\",\n r\"(?P<path>\\B(\\/[\\w\\.\\-\\_\\+]+)*\\/)(?P<filename>[\\w\\.\\-\\_\\+]*)?\",\n r\"(?<!\\\\)(?P<str>b?\\'\\'\\'.*?(?<!\\\\)\\'\\'\\'|b?\\'.*?(?<!\\\\)\\'|b?\\\"\\\"\\\".*?(?<!\\\\)\\\"\\\"\\\"|b?\\\".*?(?<!\\\\)\\\")\",\n- r\"(?P<url>https?:\\/\\/[0-9a-zA-Z\\$\\-\\_\\+\\!`\\(\\)\\,\\.\\?\\/\\;\\:\\&\\=\\%]*)\",\n+ r\"(?P<url>https?:\\/\\/[0-9a-zA-Z\\$\\-\\_\\+\\!`\\(\\)\\,\\.\\?\\/\\;\\:\\&\\=\\%\\#]*)\",\n r\"(?P<uuid>[a-fA-F0-9]{8}\\-[a-fA-F0-9]{4}\\-[a-fA-F0-9]{4}\\-[a-fA-F0-9]{4}\\-[a-fA-F0-9]{12})\",\n ]\n \n@@ -104,7 +104,7 @@\n console.print(\" /foo/bar/baz/egg.py word\")\n console.print(\"foo /foo/bar/baz/egg.py word\")\n console.print(\"foo /foo/bar/ba._++z/egg+.py word\")\n- console.print(\"https://example.org?foo=bar\")\n+ console.print(\"https://example.org?foo=bar#header\")\n \n console.print(1234567.34)\n console.print(1 / 2)\n", "issue": "[BUG] '#' sign is treated as the end of a URL\n**Describe the bug**\r\nThe `#` a valid element of the URL, but Rich seems to ignore it and treats it as the end of it. 
\r\nConsider this URL: https://github.com/willmcgugan/rich#rich-print-function\r\n\r\n**To Reproduce**\r\n```python\r\nfrom rich.console import Console\r\n\r\nconsole = Console()\r\n\r\nconsole.log(\"https://github.com/willmcgugan/rich#rich-print-function\")\r\n```\r\n\r\nOutput: \r\n\r\n\r\n\r\n**Platform**\r\nI'm using Rich on Windows and Linux, with the currently newest version `6.1.1`.\r\n\n", "before_files": [{"content": "from abc import ABC, abstractmethod\nfrom typing import List, Union\n\nfrom .text import Text\n\n\nclass Highlighter(ABC):\n \"\"\"Abstract base class for highlighters.\"\"\"\n\n def __call__(self, text: Union[str, Text]) -> Text:\n \"\"\"Highlight a str or Text instance.\n\n Args:\n text (Union[str, ~Text]): Text to highlight.\n\n Raises:\n TypeError: If not called with text or str.\n\n Returns:\n Text: A test instance with highlighting applied.\n \"\"\"\n if isinstance(text, str):\n highlight_text = Text(text)\n elif isinstance(text, Text):\n highlight_text = text.copy()\n else:\n raise TypeError(f\"str or Text instance required, not {text!r}\")\n self.highlight(highlight_text)\n return highlight_text\n\n @abstractmethod\n def highlight(self, text: Text) -> None:\n \"\"\"Apply highlighting in place to text.\n\n Args:\n text (~Text): A text object highlight.\n \"\"\"\n\n\nclass NullHighlighter(Highlighter):\n \"\"\"A highlighter object that doesn't highlight.\n\n May be used to disable highlighting entirely.\n\n \"\"\"\n\n def highlight(self, text: Text) -> None:\n \"\"\"Nothing to do\"\"\"\n\n\nclass RegexHighlighter(Highlighter):\n \"\"\"Applies highlighting from a list of regular expressions.\"\"\"\n\n highlights: List[str] = []\n base_style: str = \"\"\n\n def highlight(self, text: Text) -> None:\n \"\"\"Highlight :class:`rich.text.Text` using regular expressions.\n\n Args:\n text (~Text): Text to highlighted.\n\n \"\"\"\n highlight_regex = text.highlight_regex\n for re_highlight in self.highlights:\n highlight_regex(re_highlight, 
style_prefix=self.base_style)\n\n\nclass ReprHighlighter(RegexHighlighter):\n \"\"\"Highlights the text typically produced from ``__repr__`` methods.\"\"\"\n\n base_style = \"repr.\"\n highlights = [\n r\"(?P<brace>[\\{\\[\\(\\)\\]\\}])\",\n r\"(?P<tag_start>\\<)(?P<tag_name>[\\w\\-\\.\\:]*)(?P<tag_contents>.*?)(?P<tag_end>\\>)\",\n r\"(?P<attrib_name>\\w+?)=(?P<attrib_value>\\\"?[\\w_]+\\\"?)\",\n r\"(?P<bool_true>True)|(?P<bool_false>False)|(?P<none>None)\",\n r\"(?P<number>(?<!\\w)\\-?[0-9]+\\.?[0-9]*(e[\\-\\+]?\\d+?)?\\b)\",\n r\"(?P<number>0x[0-9a-f]*)\",\n r\"(?P<path>\\B(\\/[\\w\\.\\-\\_\\+]+)*\\/)(?P<filename>[\\w\\.\\-\\_\\+]*)?\",\n r\"(?<!\\\\)(?P<str>b?\\'\\'\\'.*?(?<!\\\\)\\'\\'\\'|b?\\'.*?(?<!\\\\)\\'|b?\\\"\\\"\\\".*?(?<!\\\\)\\\"\\\"\\\"|b?\\\".*?(?<!\\\\)\\\")\",\n r\"(?P<url>https?:\\/\\/[0-9a-zA-Z\\$\\-\\_\\+\\!`\\(\\)\\,\\.\\?\\/\\;\\:\\&\\=\\%]*)\",\n r\"(?P<uuid>[a-fA-F0-9]{8}\\-[a-fA-F0-9]{4}\\-[a-fA-F0-9]{4}\\-[a-fA-F0-9]{4}\\-[a-fA-F0-9]{12})\",\n ]\n\n\nif __name__ == \"__main__\": # pragma: no cover\n from .console import Console\n\n console = Console()\n console.print(\"[bold green]hello world![/bold green]\")\n console.print(\"'[bold green]hello world![/bold green]'\")\n\n console.print(\" /foo\")\n console.print(\"/foo/\")\n console.print(\"/foo/bar\")\n console.print(\"foo/bar/baz\")\n\n console.print(\"/foo/bar/baz?foo=bar+egg&egg=baz\")\n console.print(\"/foo/bar/baz/\")\n console.print(\"/foo/bar/baz/egg\")\n console.print(\"/foo/bar/baz/egg.py\")\n console.print(\"/foo/bar/baz/egg.py word\")\n console.print(\" /foo/bar/baz/egg.py word\")\n console.print(\"foo /foo/bar/baz/egg.py word\")\n console.print(\"foo /foo/bar/ba._++z/egg+.py word\")\n console.print(\"https://example.org?foo=bar\")\n\n console.print(1234567.34)\n console.print(1 / 2)\n console.print(-1 / 123123123123)\n", "path": "rich/highlighter.py"}]}

| 2,008 | 431 |
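The Rich fix above is a one-character change: `\#` is added to the character class of the `url` pattern in `ReprHighlighter.highlights`. The sketch below shows the effect with Python's standard `re` module instead of Rich itself. Both patterns are copied from the diff. `matched_url` is a hypothetical helper used only for this comparison.

```python
import re
from typing import Optional

# Pattern before the fix: '#' is not in the character class, so matching stops there.
OLD_URL = r"(?P<url>https?:\/\/[0-9a-zA-Z\$\-\_\+\!`\(\)\,\.\?\/\;\:\&\=\%]*)"
# Pattern after the fix: '\#' is included, so the fragment stays part of the URL.
NEW_URL = r"(?P<url>https?:\/\/[0-9a-zA-Z\$\-\_\+\!`\(\)\,\.\?\/\;\:\&\=\%\#]*)"


def matched_url(pattern: str, text: str) -> Optional[str]:
    """Return the text captured by the `url` group, or None if nothing matches."""
    match = re.search(pattern, text)
    return match.group("url") if match else None
```

On `"see https://github.com/willmcgugan/rich#rich-print-function now"`, the old pattern captures only `https://github.com/willmcgugan/rich`. The new pattern captures the full URL up to the following space.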

gh_patches_debug_23595

|

rasdani/github-patches

|

git_diff

|

python-discord__bot-436

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Enhance off-topic name search feature

We've recently added a search feature for off-topic names. It's a really cool feature, but I think it can be enhanced in a couple of ways. (Use the informal definition of "couple": ["an indefinite small number."](https://www.merriam-webster.com/dictionary/couple))

For all the examples, this is the list of off-topic-names in the database:

**1. Use `OffTopicName` converter for the `query` argument**

We don't use the characters as-is in off-topic names, but we use a translation table on the string input. However, we don't do that yet for the search query of the search feature. This leads to interesting search results:

If we change the annotation to `query: OffTopicName`, we utilize the same conversion for the query as we use for the actual off-topic-names, leading to much better results:

**2. Add a simple membership test of `query in offtopicname`**

Currently, we utilize `difflib.get_close_match` to get results that closely match the query. However, this function will match based on the whole off-topic name, so it doesn't do well if we search for a substring of the off-topic name. (I suspect this is part of the reason we use relatively low cutoff.)

While there's clearly a "pool" in volcyy's underground, we don't find it:

If we add a simple membership test to the existing `get_close_match`, we do get the results we expect:

**3. Given the membership test of suggestion 2, up the cutoff**

The low cutoff does allow us some leeway when searching for partial off-topic names, but it also causes interesting results. Using the current search feature, without the membership testing suggested in 2, we don't find "eevee-is-cooler-than-python" when we search for "eevee", but "eivl's-evil-eval":

However, if we up the cutoff value and combine it with an additional membership test, we can search for "sounds-like" off-topic names and find off-topic names that contain exact matches:

</issue>
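The issue's suggestions 2 and 3 can be combined into one search routine: run `difflib.get_close_matches` with a stricter cutoff, then add every name that contains the query as a substring. Below is a minimal sketch that assumes a list of plain-string names. `search_names` and the `0.7` cutoff are illustrative choices and are not taken from the bot's code. Per suggestion 1, the real cog would first pass the query through the `OffTopicName` converter, so it uses the same translated characters as the stored names.

```python
import difflib
from typing import List


def search_names(query: str, names: List[str], cutoff: float = 0.7) -> List[str]:
    """Return names that closely match `query` or contain it as a substring."""
    # "Sounds-like" matches, scored against each whole name.
    close = difflib.get_close_matches(query, names, n=10, cutoff=cutoff)
    # Exact substring hits, which whole-name fuzzy matching tends to miss.
    contains = [name for name in names if query in name]
    # Merge while keeping order and dropping duplicates.
    return list(dict.fromkeys(close + contains))
```

With names such as `"volcyys-underground-pool"` and `"eevee-is-cooler-than-python"`, a query of `"pool"` or `"eevee"` is found through the membership test, even though neither scores high enough under fuzzy matching alone.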

<code>

[start of bot/cogs/off_topic_names.py]
1 import asyncio
2 import difflib
3 import logging
4 from datetime import datetime, timedelta
5
6 from discord import Colour, Embed
7 from discord.ext.commands import BadArgument, Bot, Cog, Context, Converter, group
8
9 from bot.constants import Channels, MODERATION_ROLES
10 from bot.decorators import with_role
11 from bot.pagination import LinePaginator
12
13
14 CHANNELS = (Channels.off_topic_0, Channels.off_topic_1, Channels.off_topic_2)
15 log = logging.getLogger(__name__)
16
17
18 class OffTopicName(Converter):
19     """A converter that ensures an added off-topic name is valid."""
20
21     @staticmethod
22     async def convert(ctx: Context, argument: str):
23         allowed_characters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ!?'`-"
24
25         if not (2 <= len(argument) <= 96):
26             raise BadArgument("Channel name must be between 2 and 96 chars long")
27
28         elif not all(c.isalnum() or c in allowed_characters for c in argument):
29             raise BadArgument(
30                 "Channel name must only consist of "
31                 "alphanumeric characters, minus signs or apostrophes."
32             )
33
34         # Replace invalid characters with unicode alternatives.
35         table = str.maketrans(
36             allowed_characters, '𝖠𝖡𝖢𝖣𝖤𝖥𝖦𝖧𝖨𝖩𝖪𝖫𝖬𝖭𝖮𝖯𝖰𝖱𝖲𝖳𝖴𝖵𝖶𝖷𝖸𝖹ǃ?’’-'
37         )
38         return argument.translate(table)
39
40
41 async def update_names(bot: Bot):
42     """
43     The background updater task that performs a channel name update daily.
44
45     Args:
46         bot (Bot):
47             The running bot instance, used for fetching data from the
48             website via the bot's `api_client`.
49     """
50
51     while True:
52         # Since we truncate the compute timedelta to seconds, we add one second to ensure
53         # we go past midnight in the `seconds_to_sleep` set below.
54         today_at_midnight = datetime.utcnow().replace(microsecond=0, second=0, minute=0, hour=0)
55         next_midnight = today_at_midnight + timedelta(days=1)
56         seconds_to_sleep = (next_midnight - datetime.utcnow()).seconds + 1
57         await asyncio.sleep(seconds_to_sleep)
58
59         channel_0_name, channel_1_name, channel_2_name = await bot.api_client.get(
60             'bot/off-topic-channel-names', params={'random_items': 3}
61         )
62         channel_0, channel_1, channel_2 = (bot.get_channel(channel_id) for channel_id in CHANNELS)
63
64         await channel_0.edit(name=f'ot0-{channel_0_name}')
65         await channel_1.edit(name=f'ot1-{channel_1_name}')
66         await channel_2.edit(name=f'ot2-{channel_2_name}')
67         log.debug(
68             "Updated off-topic channel names to"
69             f" {channel_0_name}, {channel_1_name} and {channel_2_name}"
70         )
71
72
73 class OffTopicNames(Cog):
74     """Commands related to managing the off-topic category channel names."""
75
76     def __init__(self, bot: Bot):
77         self.bot = bot
78         self.updater_task = None
79
80     def cog_unload(self):
81         if self.updater_task is not None:
82             self.updater_task.cancel()
83
84     @Cog.listener()
85     async def on_ready(self):
86         if self.updater_task is None:
87             coro = update_names(self.bot)
88             self.updater_task = self.bot.loop.create_task(coro)
89
90     @group(name='otname', aliases=('otnames', 'otn'), invoke_without_command=True)
91     @with_role(*MODERATION_ROLES)
92     async def otname_group(self, ctx):
93         """Add or list items from the off-topic channel name rotation."""
94
95         await ctx.invoke(self.bot.get_command("help"), "otname")
96
97     @otname_group.command(name='add', aliases=('a',))
98     @with_role(*MODERATION_ROLES)
99     async def add_command(self, ctx, *names: OffTopicName):
100         """Adds a new off-topic name to the rotation."""
101         # Chain multiple words to a single one
102         name = "-".join(names)
103
104         await self.bot.api_client.post(f'bot/off-topic-channel-names', params={'name': name})
105         log.info(
106             f"{ctx.author.name}#{ctx.author.discriminator}"
107             f" added the off-topic channel name '{name}"
108         )
109         await ctx.send(f":ok_hand: Added `{name}` to the names list.")
110
111     @otname_group.command(name='delete', aliases=('remove', 'rm', 'del', 'd'))
112     @with_role(*MODERATION_ROLES)
113     async def delete_command(self, ctx, *names: OffTopicName):
114         """Removes a off-topic name from the rotation."""
115         # Chain multiple words to a single one
116         name = "-".join(names)
117
118         await self.bot.api_client.delete(f'bot/off-topic-channel-names/{name}')
119         log.info(
120             f"{ctx.author.name}#{ctx.author.discriminator}"
121             f" deleted the off-topic channel name '{name}"
122         )
123         await ctx.send(f":ok_hand: Removed `{name}` from the names list.")
124
125     @otname_group.command(name='list', aliases=('l',))
126     @with_role(*MODERATION_ROLES)
127     async def list_command(self, ctx):
128         """
129         Lists all currently known off-topic channel names in a paginator.
130         Restricted to Moderator and above to not spoil the surprise.
131         """
132
133         result = await self.bot.api_client.get('bot/off-topic-channel-names')
134         lines = sorted(f"• {name}" for name in result)
135         embed = Embed(
136             title=f"Known off-topic names (`{len(result)}` total)",
137             colour=Colour.blue()
138         )
139         if result:
140             await LinePaginator.paginate(lines, ctx, embed, max_size=400, empty=False)
141         else:
142             embed.description = "Hmmm, seems like there's nothing here yet."
143             await ctx.send(embed=embed)
144
145     @otname_group.command(name='search', aliases=('s',))
146     @with_role(*MODERATION_ROLES)
147     async def search_command(self, ctx, *, query: str):
148         """
149         Search for an off-topic name.
150         """
151
152         result = await self.bot.api_client.get('bot/off-topic-channel-names')
153         matches = difflib.get_close_matches(query, result, n=10, cutoff=0.35)
154         lines = sorted(f"• {name}" for name in matches)
155         embed = Embed(
156             title=f"Query results",
157             colour=Colour.blue()
158         )
159
160         if matches:
161             await LinePaginator.paginate(lines, ctx, embed, max_size=400, empty=False)
162         else:
163             embed.description = "Nothing found."
164             await ctx.send(embed=embed)
165
166
167 def setup(bot: Bot):
168     bot.add_cog(OffTopicNames(bot))
169     log.info("Cog loaded: OffTopicNames")
170
[end of bot/cogs/off_topic_names.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

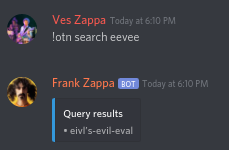

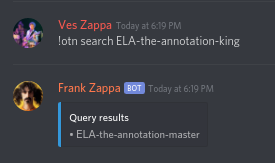

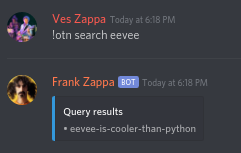

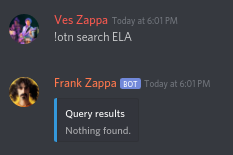

diff --git a/bot/cogs/off_topic_names.py b/bot/cogs/off_topic_names.py
--- a/bot/cogs/off_topic_names.py
+++ b/bot/cogs/off_topic_names.py
@@ -144,20 +144,21 @@
 
     @otname_group.command(name='search', aliases=('s',))
     @with_role(*MODERATION_ROLES)
-    async def search_command(self, ctx, *, query: str):
+    async def search_command(self, ctx, *, query: OffTopicName):
         """
         Search for an off-topic name.
         """
 
         result = await self.bot.api_client.get('bot/off-topic-channel-names')
-        matches = difflib.get_close_matches(query, result, n=10, cutoff=0.35)
-        lines = sorted(f"• {name}" for name in matches)
+        in_matches = {name for name in result if query in name}
+        close_matches = difflib.get_close_matches(query, result, n=10, cutoff=0.70)
+        lines = sorted(f"• {name}" for name in in_matches.union(close_matches))
         embed = Embed(
             title=f"Query results",
             colour=Colour.blue()
         )
 
-        if matches:
+        if lines:
             await LinePaginator.paginate(lines, ctx, embed, max_size=400, empty=False)
         else:
             embed.description = "Nothing found."

|